Multi-Iteration Stochastic Optimizers

Abstract.

We here introduce Multi-Iteration Stochastic Optimizers, a novel class of first-order stochastic optimizers where the relative error is estimated and controlled using successive control variates along the path of iterations. By exploiting the correlation between iterates, control variates may reduce the estimator’s variance so that an accurate estimation of the mean gradient becomes computationally affordable. We name the estimator of the mean gradient Multi-Iteration stochastiC Estimator—MICE. In principle, MICE can be flexibly coupled with any first-order stochastic optimizer, given its non-intrusive nature. Our generic algorithm adaptively decides which iterates to keep in its index set. We present an error analysis of MICE and a convergence analysis of Multi-Iteration Stochastic Optimizers for different classes of problems, including some non-convex cases. Within the smooth strongly convex setting, we show that to approximate a minimizer with accuracy , SGD-MICE requires, on average, stochastic gradient evaluations, while SGD with adaptive batch sizes requires , correspondingly. Moreover, in a numerical evaluation, SGD-MICE achieved with less than the number of gradient evaluations than adaptive batch SGD. The MICE estimator provides a straightforward stopping criterion based on the gradient norm that is validated in consistency tests. To assess the efficiency of MICE, we present several examples in which we use SGD-MICE and Adam-MICE. We include one example based on a stochastic adaptation of the Rosenbrock function and logistic regression training for various datasets. When compared to SGD, SAG, SAGA, SVRG, and SARAH, the Multi-Iteration Stochastic Optimizers reduced, without the need to tune parameters for each example, the gradient sampling cost in all cases tested, also being competitive in runtime in some cases.

AMS subject classifications: 65K05, 90C15, 65C05

Keywords: Stochastic Optimization; Monte Carlo; Multilevel Monte Carlo; Variance Reduction; Control Variates; Machine Learning

1. Introduction

We focus on the stochastic optimization problem of minimizing the objective function $\mathbb{E}[f(\xi, \theta) \,|\, \xi]$, where $f$ is a given real-valued function, $\xi$ is the design variable vector, $\theta$ is a random vector, and $\mathbb{E}[\cdot \,|\, \xi]$ is the expectation conditioned on $\xi$. Stochastic optimization problems [1, 2, 3] are relevant to different fields, such as Machine Learning [4], Stochastic Optimal Control [5, 6], Computational Finance [7, 8, 9], Economics [10], Insurance [11], Communication Networks [12], Queues and Supply Chains [13], and Bayesian Optimal Design of Experiments [14, 15], among many others.

In the same spirit and inspired by the work by Heinrich [16] and Giles [17] on Multilevel Monte Carlo methods, we propose the Multi-Iteration stochastiC Estimator—MICE—to obtain a computationally efficient and affordable approximation of the mean gradient at iteration , , which may be coupled with any first order stochastic optimizer in a non-intrusive fashion. Combining MICE with any stochastic optimizer furnishes Multi-Iteration Stochastic Optimizers, a novel class of efficient and robust stochastic optimizers. In this class of stochastic optimizers, the mean gradient estimator’s relative variance is controlled using successive control variates based on previous iterations’ available information. This procedure results in a more accurate yet affordable estimation of the mean gradient. In approximating the mean gradient, MICE constructs an index set of iterations and performs control variates for every pair of nested elements of this index set. As the stochastic optimization evolves, we increase the number of samples along the index set while keeping the previously sampled gradients, i.e., we use gradient information from previous iterations to reduce the variance in the current gradient estimate, which is a crucial feature to make MICE competitive. We design MICE to achieve a given relative error for the mean gradient with minimum additional gradient sampling cost. Indeed, in the MICE index set constructed along the stochastic optimization path , our generic optimizer optimally decides whether to drop a particular iteration out of the index set or restart it in order to reduce the total optimization work. Moreover, it can decide if it is advantageous, from the computational work perspective, to clip the index set at some point , discarding the iterations before . 
Since we control the gradients’ error using an estimate of the gradient norm, we propose a resampling technique to get a gradient norm estimate, reducing the effect of sampling error and resulting in robust optimizers. We note in passing that MICE can be adjusted to the case of finite populations; see (2), for optimization problems arising in supervised machine learning.

Generally speaking, in first-order stochastic optimization algorithms that produce convergent iterates, the mean gradient converges to zero as the number of iterations, , goes to infinity, that is ; however, the gradient covariance, , does not. Thus, to ensure convergence of the iterates , in the literature it is customary to use decreasing step-size (learning rate) schedules, reducing the effect of the statistical error in the gradient onto the iterates . However, this approach also results in sublinear convergence rates [18]. Another approach to deal with the gradient’s statistical error is to increase the sample sizes (batch sizes) while keeping the step-size fixed, thus avoiding worsening the convergence. Byrd et al. [19] propose to adaptively increase the sample sizes to guarantee that the trace of the covariance matrix of the mean gradient is proportional to its norm. This approach forces the statistical error to decrease as fast as the gradient norm. Balles et al. [20] use a similar approach; however, instead of setting a parameter to control the statistical error, they set a step-size and find the parameter that guarantees the desired convergence. Bollapragada et al. [21] propose yet another approach to control the variance of gradient estimates in stochastic optimization, which they call the inner product test. Their approach ensures that descent directions are generated sufficiently often.

Instead of increasing the sample size, some methods rely on using control variates with respect to previously sampled gradients to reduce the variance in current iterations and thus be able to keep a fixed step-size. Pioneering ideas of control variates in stochastic optimization, by Johnson & Zhang [22], profit on an accurate mean gradient estimation at the initial guess , , to update and compute, via single control variates, an inexpensive and accurate version of the mean gradient at the iteration , . Instead of doing control variates with respect to one starting full-gradient, SARAH, by Nguyen et al. [23], computes an estimate of the gradient at the current iteration by using control variates with respect to the last iteration. An ‘inexact’ version of SARAH is presented in [24], where SARAH is generalized to the minimization of expectations. In the spirit of successive control variates, SPIDER by Fang et al. [25] uses control variates between subsequent iterations; however, it employs the normalized gradient descent instead of plain gradient descent. In a different approach, SAGA, by Defazio et al. [26], keeps in the memory the last gradient observed for each data point and computes using control variates with respect to the average of this memory. Lastly, many algorithms try to ‘adapt’ the initial batch size of the index set of batches using predefined rules, such as exponential or polynomial growth, as presented by Friedlander & Schmidt [27], or based on statistical bounds as discussed by De Soham et al. [28] and Ji et al. [29], to mention a few.

Although Multi-Iteration Stochastic Optimizers share similarities with SVRG [22], SARAH, and SPIDER, our stochastic optimizers distinctly control the relative variance in gradient estimates. We achieve this control by sampling the entire index set of iterations, optimally distributing the samples to minimize the gradient sampling cost. While the previously mentioned methods are devised for finite sum minimization, MICE can tackle both finite sum and expectation minimization. Moreover, we provide additional flexibility by including dropping, restart, and clipping operations in the MICE index set updates.

For strongly-convex and -smooth objective functions, Polyak, in his book [30, Theorem 5, pg 102], shows a convergence rate in the presence of random relative noise. The theorem states a linear (geometric) convergence in terms of the number of iterations. However, the dependency on the relative noise level, , of the constants and is not made explicit. This work presents the explicit form of these constants and their dependency on . Using this, we can estimate the total average computational work in stochastic gradient evaluations and optimize it with respect to the controllable relative noise . Finally, we conclude that to generate an iterate such that , SGD-MICE requires, on average, stochastic gradient evaluations, while SGD with adaptive batch sizes requires the larger , correspondingly. While the reuse of previous data causes the MICE estimator to be conditionally biased, we present an analysis for the bias and characterize the error, including bias and statistical error, which is controlled to achieve convergence of SGD-MICE.

Since MICE is non-intrusive and designed for both continuous and discrete random variables, it can be coupled with most available optimizers with ease. For instance, we couple MICE with SGD [31] and Adam [32], showing the robustness of our approach. The Adam algorithm by Kingma & Ba [32] does not exploit control variates techniques for variance reduction. Instead, it reduces the gradient estimator’s variance based on iterate history by adaptive estimates of lower-order moments, behaving similarly to a filter. Thus, the coupling Adam-MICE profits from the information available in the optimizer path in more than one way. Finally, the reader is referred to the books by Spall [33] and Shapiro, Dentcheva, and Ruszczyński [34] for comprehensive overviews on stochastic optimization.

To assess MICE’s applicability, we numerically minimize expectations of continuous and discrete random variables using analytical functions and logistic regression models. Also, we compare SGD-MICE with SVRG, SARAH, SAG, and SAGA in training the logistic regression model with datasets with different sizes and numbers of features.

The remainder of this work is organized as follows. In §1.1, we describe the stochastic optimization problem and classical stochastic optimization methods, and motivate variance reduction in this context. In §2, we construct the MICE statistical estimator (§2.1), analyze its error (§2.2), compute the optimal number of samples for the current index set (§2.3), and present the operators used to build MICE’s index set and derive a work-based criterion to choose one (§2.4). In §3, we present a convergence analysis of error-controlled SGD, which includes SGD-MICE, showing that these methods converge polynomially for general $L$-smooth problems and exponentially if the objective function is gradient-dominated (§3.1). In §3.2, we present gradient sampling cost analyses for SGD-MICE and SGD-A (SGD with adaptive increase in the sample sizes) on expectation minimization (§3.2.1) and finite sum minimization (§3.2.2). In §4, we discuss practical matters related to the implementation of the MICE estimator. In §5, to assess the efficiency of Multi-Iteration Stochastic Optimizers, we present some numerical examples, ranging from analytical functions to the training of a logistic regression model over datasets with data of size of up to . Appendix A presents detailed pseudocode for the Multi-Iteration Stochastic Optimizers used in this work. In Appendix B, we analyze both the bias and the statistical error of the MICE gradient estimator.

1.1. Optimization of expectations and stochastic optimizers

To state the stochastic optimization problem, let $\xi$ be the design variable in dimension $d_\xi$ and $\theta$ a vector-valued random variable in dimension $d_\theta$, whose probability distribution $\pi$ may depend on $\xi$. Throughout this work, we assume that we can produce as many independent identically distributed samples from $\pi$ as needed. Here, $\mathbb{E}[\cdot \,|\, \xi]$ and $\mathbb{V}[\cdot \,|\, \xi]$ are respectively the expectation and variance operators conditioned on $\xi$. Aiming at optimizing expectations on $\xi$, we state our problem as follows. Find $\xi^*$ such that

$$\xi^* = \arg\min_{\xi \in \mathbb{R}^{d_\xi}} \mathbb{E}[f(\xi, \theta) \,|\, \xi], \tag{1}$$

where $f \colon \mathbb{R}^{d_\xi} \times \mathbb{R}^{d_\theta} \to \mathbb{R}$. In what follows, let the objective function in our problem be denoted by $F(\xi) := \mathbb{E}[f(\xi, \theta) \,|\, \xi]$. In general, the function $F$ might not have a unique minimizer, in which case we define $\Xi^*$ as the set of all $\xi^*$ satisfying (1). The case of minimizing a finite sum of functions is of special interest given its importance for training machine learning models in empirical risk minimization tasks,

$$F(\xi) = \frac{1}{N} \sum_{n=1}^{N} f(\xi, \theta_n), \tag{2}$$

where $N$ is usually a large number. Note that the finite sum case is a special case of the expectation minimization, i.e., let $\theta$ be a random variable with probability mass function

$$\mathbb{P}(\theta = \theta_n) = \frac{1}{N}, \quad n = 1, \dots, N. \tag{3}$$

In minimizing (1) with respect to the design variable $\xi$, SGD is constructed with the following updating rule

$$\xi_{k+1} = \xi_k - \eta_k \upsilon_k, \tag{4}$$

where $\eta_k$ is the step-size at iteration $k$ and $\upsilon_k$ is an estimator of the gradient of $F$ at $\xi_k$. For instance, an unbiased estimator of the gradient of $F$ at $\xi_k$ at the iteration $k$ may be constructed by means of a Monte Carlo estimator, namely

$$\upsilon_k = \frac{1}{M} \sum_{\alpha \in \mathcal{I}} \nabla_\xi f(\xi_k, \theta_\alpha), \tag{5}$$

with $M$ independent and identically distributed (iid) random variables $\theta_\alpha \sim \pi$ given $\xi_k$, $\alpha \in \mathcal{I}$, with $\mathcal{I}$ being an index set with cardinality $M$. Bear in mind that an estimator of the type (5) is, in fact, a random variable and its use in optimization algorithms gives rise to the so-called Stochastic Optimizers. The challenge of computing the gradient of $F$ in an affordable and accurate manner motivated the design of several gradient estimators.
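The update rule (4) with the Monte Carlo estimator (5) can be sketched in a few lines. This is a minimal illustration only: the toy integrand $f(\xi, \theta) = \tfrac{1}{2}(\xi - \theta)^2$ with $\theta \sim \mathcal{N}(1, 1)$, the fixed step size, and the function names are assumptions made for this example, not taken from the paper.

```python
import random

def grad_f(xi, theta):
    """Gradient in xi of the toy integrand f(xi, theta) = 0.5 * (xi - theta)**2."""
    return xi - theta

def mc_gradient(xi, batch_size, rng):
    """Monte Carlo estimator as in (5): mean of batch_size iid gradient samples."""
    thetas = [rng.gauss(1.0, 1.0) for _ in range(batch_size)]  # assumed theta ~ N(1, 1)
    return sum(grad_f(xi, th) for th in thetas) / batch_size

def sgd(xi0, step_size, batch_size, n_iter, seed=0):
    """SGD update as in (4), here with a fixed step size for simplicity."""
    rng = random.Random(seed)
    xi = xi0
    for _ in range(n_iter):
        xi = xi - step_size * mc_gradient(xi, batch_size, rng)
    return xi

# For this toy problem F(xi) = 0.5 * ((xi - 1)**2 + 1), so the minimizer is xi = 1.
xi_final = sgd(xi0=5.0, step_size=0.1, batch_size=100, n_iter=200)
```

With a fixed step size and a fixed batch size, the iterate keeps fluctuating around the minimizer; this residual noise is what the error-control strategies discussed in the paper aim to reduce.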

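For the sake of brevity, the following review of control variates techniques for stochastic optimization is not comprehensive. To motivate our approach, we recall the control variates proposed by Johnson & Zhang [22] (and similarly, by Defazio et al. [26]) for the optimization of a function defined by a finite sum of functions. The idea of control variates is to add and subtract the same quantity, that is, for any $\xi_0$,

$$\nabla_\xi F(\xi_k) = \mathbb{E}[\nabla_\xi f(\xi_k, \theta) - \nabla_\xi f(\xi_0, \theta) \,|\, \xi_k, \xi_0] + \mathbb{E}[\nabla_\xi f(\xi_0, \theta) \,|\, \xi_0], \tag{6}$$

rendering the following sample-based version, with sample index sets $\mathcal{I}_k$ and $\mathcal{I}_0$ of sizes $M_k$ and $M_0$,

$$\nabla_\xi F(\xi_k) \approx \frac{1}{M_k} \sum_{\alpha \in \mathcal{I}_k} \left( \nabla_\xi f(\xi_k, \theta_\alpha) - \nabla_\xi f(\xi_0, \theta_\alpha) \right) + \frac{1}{M_0} \sum_{\alpha' \in \mathcal{I}_0} \nabla_\xi f(\xi_0, \theta_{\alpha'}), \tag{7}$$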

where and are iid samples from the distribution, which does not depend on in their setting. In the original work by Johnson & Zhang [22], is the total population and . Later, Nitanda [35] and Konečnỳ et al. [36] also used the total populations at , but with , to study the efficiency of the algorithm. Additionally, the work [22] restarts the algorithm after a pre-established number of iterations by setting . The efficiency of this algorithm relies on the correlation between the components of the gradients and . If this correlation is high, the variance of the mean gradient estimator (7) is reduced.
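The variance reduction obtained from a single control variate can be illustrated numerically. The sketch below is an assumption-laden toy, not the setup of [22]: the finite-sum data, the quadratic integrand $f(\xi, \theta_n) = \tfrac{1}{2} a_n \xi^2 - b_n \xi$, and all function names are hypothetical. It compares a plain mini-batch estimator with an estimator that adds the full gradient at a reference point to a sampled gradient difference.

```python
import random
import statistics

rng = random.Random(0)
N = 1000
# Hypothetical finite-sum data theta_n = (a_n, b_n).
data = [(rng.uniform(0.5, 1.5), rng.gauss(0.0, 1.0)) for _ in range(N)]

def grad_f(xi, theta):
    """Gradient in xi of f(xi, theta) = 0.5 * a * xi**2 - b * xi."""
    a, b = theta
    return a * xi - b

def full_gradient(xi):
    """Exact mean gradient over the whole population."""
    return sum(grad_f(xi, th) for th in data) / N

def plain_estimate(xi_k, m_k):
    """Plain mini-batch estimator at xi_k (uniform sampling with replacement)."""
    batch = rng.choices(data, k=m_k)
    return sum(grad_f(xi_k, th) for th in batch) / m_k

def cv_estimate(xi_k, xi_0, full_grad_0, m_k):
    """Control variate estimator: sampled difference plus full gradient at xi_0."""
    batch = rng.choices(data, k=m_k)
    diff = sum(grad_f(xi_k, th) - grad_f(xi_0, th) for th in batch) / m_k
    return diff + full_grad_0

xi_0, xi_k, m_k = 1.0, 1.1, 10
g0 = full_gradient(xi_0)
plain = [plain_estimate(xi_k, m_k) for _ in range(2000)]
cv = [cv_estimate(xi_k, xi_0, g0, m_k) for _ in range(2000)]
var_plain = statistics.variance(plain)
var_cv = statistics.variance(cv)
```

Because the two gradients are evaluated on the same samples and the iterates are close, the sampled difference has a much smaller variance than either gradient alone, which is the correlation effect described above.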

2. Multi-iteration stochastic optimizers

2.1. Multi-iteration gradient estimator

We now construct an affordable estimator of the mean gradient at the current iteration $k$, $\nabla_\xi F(\xi_k)$, which we name the Multi-Iteration stochastiC Estimator (MICE). Profiting from available information already computed in previous iterations, MICE uses multiple control variates between pairs of, possibly non-consecutive, iterations along the optimization path to approximate the mean gradient at the iteration $k$. Since stochastic optimization algorithms, in a broad sense, create a convergent path $\{\xi_\ell\}_{\ell \geq 0}$, where $\|\xi_\ell - \xi_q\| \to 0$ as $\ell, q \to \infty$, the gradients evaluated at $\xi_\ell$ and $\xi_q$ should become more and more correlated for $\ell, q \to \infty$. In this scenario, control variates with respect to previous iterations become more efficient, in the sense that one needs fewer and fewer new samples to accurately estimate the mean gradient.

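To introduce the MICE gradient estimator, we first need to establish some notation. Let $\mathcal{L}_k$ be an index set such that $\mathcal{L}_k \subset \{0, \dots, k\}$, where $k$ is the current iteration and $k \in \mathcal{L}_k$. This index set is just $\mathcal{L}_0 = \{0\}$ at the initial iteration, $k = 0$, and for later iterations it contains the indices of the iterations MICE uses to reduce the computational work at the current iteration, $k$, via control variates.

Next, for any $\ell \in \mathcal{L}_k$ with $\ell > \min \mathcal{L}_k$, let $p_k(\ell)$ be the element previous to $\ell$ in $\mathcal{L}_k$,

$$p_k(\ell) := \max \{ \ell' \in \mathcal{L}_k : \ell' < \ell \}. \tag{8}$$

Then, the mean gradient at $\xi_k$ conditioned on the sequence of random iterates, $\{\xi_\ell\}_{\ell \in \mathcal{L}_k}$, indexed by the set $\mathcal{L}_k$ can be decomposed as

$$\nabla_\xi F(\xi_k) = \sum_{\ell \in \mathcal{L}_k} \mu_{\ell,k}, \qquad \mu_{\ell,k} := \mathbb{E}\left[ \Delta_{\ell,k} \,|\, \{\xi_{\ell'}\}_{\ell' \in \mathcal{L}_k} \right], \tag{9}$$

with the gradient difference notation

$$\Delta_{\ell,k} := \begin{cases} \nabla_\xi f(\xi_\ell, \theta) - \nabla_\xi f(\xi_{p_k(\ell)}, \theta), & \text{if } \ell > \min \mathcal{L}_k, \\ \nabla_\xi f(\xi_\ell, \theta), & \text{if } \ell = \min \mathcal{L}_k. \end{cases} \tag{10}$$

Thus, the conditional mean $\mu_{\ell,k}$ defined in (9) is simply

$$\mu_{\ell,k} = \begin{cases} \nabla_\xi F(\xi_\ell) - \nabla_\xi F(\xi_{p_k(\ell)}), & \text{if } \ell > \min \mathcal{L}_k, \\ \nabla_\xi F(\xi_\ell), & \text{if } \ell = \min \mathcal{L}_k. \end{cases} \tag{11}$$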

In what follows, for readability’s sake, we assume that the distribution of $\theta$ does not depend on $\xi$. Observe that this assumption is more general than it may seem; see the discussion in Remark 5.

Assumption 1 (Simplified probability distribution of $\theta$).

The probability distribution of $\theta$, $\pi$, does not depend on $\xi$.

Now we are ready to introduce the MICE gradient estimator.

Definition 1 (MICE gradient estimator).

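Given an index set $\mathcal{L}_k$ such that $k \in \mathcal{L}_k \subset \{0, \dots, k\}$ and positive integer numbers $\{M_{\ell,k}\}_{\ell \in \mathcal{L}_k}$, we define the MICE gradient estimator for $\nabla_\xi F(\xi_k)$ at iteration $k$ as

$$\nabla_\xi \mathcal{F}_k := \sum_{\ell \in \mathcal{L}_k} \frac{1}{M_{\ell,k}} \sum_{\alpha \in \mathcal{I}_{\ell,k}} \Delta_{\ell,k,\alpha}, \tag{12}$$

where, for each index $\ell \in \mathcal{L}_k$, the set of samples, $\mathcal{I}_{\ell,k}$, has cardinality $M_{\ell,k}$. Finally, denote as before the difference to the previous gradient as

$$\Delta_{\ell,k,\alpha} := \nabla_\xi f(\xi_\ell, \theta_\alpha) - \nabla_\xi f(\xi_{p_k(\ell)}, \theta_\alpha), \tag{13}$$

with the subtracted term omitted when $\ell = \min \mathcal{L}_k$.

For each $\ell \in \mathcal{L}_k$, we might increase the sample sizes $M_{\ell,k}$ with respect to $M_{\ell,k-1}$, hence the dependence on both $\ell$ and $k$ in the notation. Definition 1 allows us to manipulate the MICE index set to improve its efficiency; one can pick which $\ell$ to keep in $\mathcal{L}_k$. For example, $\mathcal{L}_k = \{0, k\}$ furnishes an SVRG-like index set, $\mathcal{L}_k = \{0, 1, \dots, k\}$ furnishes a SARAH-like index set, and $\mathcal{L}_k = \{k\}$ results in SGD. The construction of the index set is discussed in §2.4.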
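Definition 1 can be sketched as a short function: one sample mean of gradient differences per element of the index set, summed over the set. This is a minimal scalar illustration; the function name `mice_estimate`, the dictionary-based data layout, and the toy gradient are assumptions made here, and the sketch omits the sample-size selection and cumulative sampling described elsewhere in the paper.

```python
def mice_estimate(iterates, index_set, samples, grad_f):
    """Sum over the index set of sample means of gradient differences.

    iterates:  dict mapping iteration index -> iterate xi_l
    index_set: iterable of iteration indices (the index set at iteration k)
    samples:   dict mapping iteration index -> list of theta samples for that index
    grad_f:    callable (xi, theta) -> gradient of the integrand
    """
    estimate = 0.0
    previous = None  # the first element of the index set has no control variate
    for ell in sorted(index_set):
        thetas = samples[ell]
        total = 0.0
        for theta in thetas:
            delta = grad_f(iterates[ell], theta)
            if previous is not None:
                # Same sample theta evaluated at the previous element of the set.
                delta -= grad_f(iterates[previous], theta)
            total += delta
        estimate += total / len(thetas)
        previous = ell
    return estimate

def toy_grad(xi, theta):
    """Hypothetical gradient of 0.5 * (xi - theta)**2."""
    return xi - theta

iterates = {0: 2.0, 1: 1.5, 2: 1.2}
samples = {0: [1.0, 3.0], 1: [0.0], 2: [5.0, 7.0]}

full_path = mice_estimate(iterates, {0, 1, 2}, samples, toy_grad)  # SARAH-like set
svrg_like = mice_estimate(iterates, {0, 2}, samples, toy_grad)     # SVRG-like set
sgd_like = mice_estimate(iterates, {2}, samples, toy_grad)         # plain SGD
```

The three calls correspond to the three example index sets mentioned above; only the choice of index set changes, not the estimator itself.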

Remark 1 (Cumulative sampling in MICE).

As the stochastic optimization progresses, new additional samples of are taken and others, already available from previous iterations, are reused to compute the MICE estimator at the current iteration,

| (14) |

This sampling procedure is defined by the couples , making a deterministic function of all the samples in the index set .

Remark 2 (About MICE and MLMC).

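Note that MICE resembles the estimator obtained in the Multilevel Monte Carlo (MLMC) method [37, 17, 38]. For instance, if $\mathcal{L}_k = \{0, 1, \dots, k\}$, MICE reads

$$\nabla_\xi \mathcal{F}_k = \frac{1}{M_{0,k}} \sum_{\alpha \in \mathcal{I}_{0,k}} \nabla_\xi f(\xi_0, \theta_\alpha) + \sum_{\ell=1}^{k} \frac{1}{M_{\ell,k}} \sum_{\alpha \in \mathcal{I}_{\ell,k}} \left( \nabla_\xi f(\xi_\ell, \theta_\alpha) - \nabla_\xi f(\xi_{\ell-1}, \theta_\alpha) \right). \tag{15}$$

Indeed, we may think that in MICE, the iterations play the same role as the levels of approximation in MLMC. However, there are several major differences with MLMC, namely: i) MICE exploits the sunk cost of previous computations, computing afresh only what is necessary to have enough accuracy on the current iteration; ii) there is dependence in MICE across iterations; and iii) in MICE, the sample cost for the gradients is the same in different iterations, while in MLMC one usually has a higher cost per sample for deeper, more accurate levels.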

Indeed, assuming the availability of a convergent hierarchy of approximations and following the MLMC lines, the work [39] proposed and analyzed multilevel stochastic approximation algorithms, essentially recovering the classical error bounds for multilevel Monte Carlo approximations in this more complex context. In a similar MLMC hierarchical approximation framework, the work by Yang, Wang, and Fang [40] proposed a stochastic gradient algorithm for solving optimization problems with nested expectations as objective functions. Combining MICE with MLMC ideas like those in [39] and [40] is a natural research avenue to pursue.

2.2. MICE estimator mean squared error

To determine the optimal number of samples per iteration , we begin by defining the square of the error, , as the squared -distance between MICE estimator (12) and the true gradient conditioned on the iterates generated up to , which leads to

| (16) |

The cumulative sampling described in Remark 1 results in a bias once we condition the MICE estimator on the set of iterates generated up to iteration $k$; thus, the error analysis of MICE is not trivial. Here we prove that the error of the MICE estimator is identical to the expectation of the contribution of the statistical error of each element of the index set. Before we start, let us prove the following lemma.

Lemma 1.

Let , as defined in (12), be generated by a multi-iteration stochastic optimizer using MICE as a gradient estimator. Then, for ,

| (17) |

Proof.

First, let us assume without loss of generality. Note that the samples of used to compute and are independent. However, the iterates depend on , thus, and are not independent. To prove Lemma 1, let us use the law of total expectation to write the expectation above as the expectation of an expectation conditioned on . Since and are then conditionally independent,

| (18) |

concluding the proof. ∎

Let be the -th component of the dimensional vector . Then, we define

| (19) |

Lemma 2 (Expected squared error of the MICE estimator for expectation minimization).

Proof.

Remark 3 (Expected squared error of the MICE estimator for finite sum minimization).

When minimizing a finite sum of functions as in (2), we sample the random variables without replacement. Thus, the variance of the estimator should account for the ratio between the actual number of samples used in the estimator and the total population [41, Section 3.7]. In this case, the error analysis is identical to the expectation minimization case up to (23), except in this case we include the correction factor in the sample variance due to the finite population having size , resulting in

| (25) |
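The effect of sampling without replacement can be checked numerically. The sketch below does not reproduce expression (25); it uses the textbook finite population correction for the variance of a sample mean, $\frac{\sigma^2}{M} \frac{N - M}{N - 1}$, where $\sigma^2$ is the population variance. The population data, sizes, and function name are hypothetical choices for illustration.

```python
import random
import statistics

def mean_variance_without_replacement(population, m):
    """Textbook variance of the mean of m draws without replacement."""
    n = len(population)
    sigma2 = statistics.pvariance(population)  # population variance (divisor n)
    return sigma2 / m * (n - m) / (n - 1)

rng = random.Random(0)
population = [rng.gauss(0.0, 1.0) for _ in range(200)]  # hypothetical data, N = 200
m = 150

# Empirical variance of the sample mean over repeated draws without replacement.
means = [statistics.fmean(rng.sample(population, m)) for _ in range(5000)]
empirical = statistics.variance(means)
predicted = mean_variance_without_replacement(population, m)

# Variance of the mean if the same m draws were taken with replacement.
with_replacement = statistics.pvariance(population) / m
```

As the sample size approaches the population size, the corrected variance goes to zero, which is why an index set element sampled over the entire population no longer contributes statistical error.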

2.3. Multi-iteration optimal setting for gradient error control

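First, we define the gradient sampling cost. The number of gradient evaluations is $1$ for $\Delta_{\ell,k,\alpha}$ when $\ell = \min \mathcal{L}_k$ and $2$ otherwise. For this reason, we define the auxiliary index function

$$\mathbb{1}_k(\ell) := \begin{cases} 0, & \text{if } \ell = \min \mathcal{L}_k, \\ 1, & \text{otherwise}, \end{cases} \tag{26}$$

and define the gradient sampling cost in number of gradient evaluations as

$$\mathcal{C}\left( \{M_{\ell,k}\}_{\ell \in \mathcal{L}_k} \right) := \sum_{\ell \in \mathcal{L}_k} \left( 1 + \mathbb{1}_k(\ell) \right) M_{\ell,k}. \tag{27}$$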

Motivated by the analysis of SGD-MICE in §3, here we choose the number of samples for the index set by approximate minimization of the gradient sampling cost (36) subject to a given tolerance on the relative error in the mean gradient approximation, that is

| (28) | ||||

2.3.1. Expectation minimization

As a consequence of (28) and Lemma 2, we define the sample sizes as the solution of the following constrained optimization problem,

| (29) | ||||

An approximate integer-valued solution based on Lagrangian relaxation to problem (29) is

| (30) |

In general, in considering the cost of computing new gradients at the iteration , the expenditure already carried out up to the iteration is sunk cost and must not be included, as described in Remark 1, that is, one should only consider the incremental cost of going from to . Moreover, in the variance constraint of problem (29), since we do not have access to the norm of the mean gradient, , we use a resampling technique combined with the MICE estimator as an approximation; see Remark 6.
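The Lagrangian-relaxation allocation can be sketched for a generic problem of this type: minimize $\sum_\ell c_\ell M_\ell$ subject to $\sum_\ell V_\ell / M_\ell \le \epsilon^2 \|g\|^2$, whose continuous solution is $M_\ell = \sqrt{V_\ell / c_\ell} \, \sum_j \sqrt{V_j c_j} \, / (\epsilon^2 \|g\|^2)$. The names `variances`, `costs`, `eps`, and `grad_norm` are assumptions for this example, and the sketch ignores samples already available from previous iterations, so it should not be read as the paper's expression (30).

```python
import math

def optimal_sample_sizes(variances, costs, eps, grad_norm):
    """Integer sample sizes from the continuous Lagrangian solution, rounded up.

    variances: per-element variances V_l of the gradient differences
    costs:     per-sample costs c_l (1 for the first element, 2 for the others)
    eps:       relative error tolerance on the gradient estimate
    grad_norm: (an estimate of) the norm of the mean gradient
    """
    budget = (eps * grad_norm) ** 2
    weight = sum(math.sqrt(v * c) for v, c in zip(variances, costs))
    return [math.ceil(math.sqrt(v / c) * weight / budget)
            for v, c in zip(variances, costs)]

# Hypothetical three-element index set: variances decay along the path.
variances = [4.0, 0.25, 0.04]
costs = [1, 2, 2]
sizes = optimal_sample_sizes(variances, costs, eps=0.5, grad_norm=1.0)

# Resulting statistical error, to be compared with the budget (eps * grad_norm)**2.
achieved = sum(v / m for v, m in zip(variances, sizes))
```

Elements with small variance, typically gradient differences between nearby iterates, receive few samples, while most of the sampling effort goes to the high-variance base element.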

2.3.2. Finite sum minimization

In view of Remark 3, we define the sample sizes for MICE as the solution of the following optimization problem,

| (31) | ||||

| subject to |

This problem does not have a closed form solution, but can be solved in an iterative process by noting that any such that does not contribute to the error of the estimator. Then, letting

| (32) |

we derive a closed form solution for the sample sizes as

| (33) |

However, it is not possible to know directly the set . So, we initialize and iteratively remove elements that do not satisfy the condition as presented in Algorithm 1.

2.4. Optimal index set operators

As for the construction of the MICE index set at iteration , that is, , from the previous one, , we use one of the following index set operators:

Definition 2.

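[Construction of the index set $\mathcal{L}_k$] For $k = 0$, let $\mathcal{L}_0 = \{0\}$. For $k \geq 1$, there are four possible cases to finish the construction of $\mathcal{L}_k$ from $\mathcal{L}_{k-1}$:

| Operator | Resulting index set |
|---|---|
| Add | $\mathcal{L}_k = \mathcal{L}_{k-1} \cup \{k\}$ |
| Drop | $\mathcal{L}_k = (\mathcal{L}_{k-1} \cup \{k\}) \setminus \{k-1\}$ |
| Restart | $\mathcal{L}_k = \{k\}$ |
| Clip at $\ell^*$ | $\mathcal{L}_k = \{\ell \in \mathcal{L}_{k-1} : \ell \geq \ell^*\} \cup \{k\}$ |

The Add operator simply adds $k$ to the current index set. The Drop operator does the same but also removes $k-1$ from the index set. As the name suggests, Restart resets the index set at the current iterate. Finally, Clip adds $k$ to the current index set and removes all components previous to $\ell^*$. For more details, see §4 for an algorithmic description.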
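The four operators described above can be sketched as plain set manipulations. The function names and the list-based representation are assumptions made for this illustration; in particular, Drop is written here as removing the index `k - 1`, following the description of dropping the previous iteration.

```python
def add(index_set, k):
    """Add: append the current iteration k to the index set."""
    return sorted(set(index_set) | {k})

def drop(index_set, k):
    """Drop: add k and remove the previous iteration k - 1."""
    return sorted((set(index_set) | {k}) - {k - 1})

def restart(index_set, k):
    """Restart: discard the history and keep only the current iteration."""
    return [k]

def clip(index_set, k, ell_star):
    """Clip: add k and remove every index before ell_star."""
    return sorted({ell for ell in index_set if ell >= ell_star} | {k})

previous = [0, 2, 3]  # hypothetical index set at iteration 3
k = 4
after_add = add(previous, k)
after_drop = drop(previous, k)
after_restart = restart(previous, k)
after_clip = clip(previous, k, ell_star=2)
```

Each operator returns a new sorted list, so the previous element of any index can be read off directly as its left neighbor.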

In the previous section, the sample sizes for each element of the index set are chosen so as to minimize the gradient sampling cost while satisfying a relative error constraint. However, to pick one of the operators to update the index set, we must use the work including the overhead of aggregating the index set elements. Let the gradient sampling cost increment at iteration $k$ be

| (34) |

The total work of a MICE evaluation is then the sum of the cost of sampling the gradients and the cost of aggregating the gradients as

| (35) |

where is the work of sampling and is the work of averaging the to construct . Then, the work done in iteration to update MICE is

| (36) |

We choose the index set operator for iteration as the one that minimizes the weighted work increment,

| (37) |

where will be discussed in more detail in §2.4.3, and are parameters used to encourage dropping and restarting. The rationale of introducing these parameters is that one might want to keep the index set as small as possible to reduce MICE’s overhead. We recommend values between and for and values between and for .
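The selection rule can be sketched as a comparison of weighted work increments. The exact form of (37) is not reproduced here: the sketch assumes that the work increments of Drop and Restart are multiplied by their respective parameters, so that values below one favor those operators. The dictionary keys, the function name, and the numerical values are hypothetical.

```python
def choose_operator(work, delta_drop, delta_rest):
    """Pick the operator with the smallest weighted work increment.

    work:       dict with the estimated work increment of each operator
    delta_drop: weight applied to Drop (assumed: smaller values favor dropping)
    delta_rest: weight applied to Restart (assumed: smaller values favor restarting)
    """
    weighted = {
        "add": work["add"],
        "drop": delta_drop * work["drop"],
        "restart": delta_rest * work["restart"],
        "clip": work["clip"],
    }
    return min(weighted, key=weighted.get)

# Hypothetical work increments for one iteration.
work = {"add": 100.0, "drop": 110.0, "restart": 150.0, "clip": 105.0}

unweighted_choice = choose_operator(work, delta_drop=1.0, delta_rest=1.0)
weighted_choice = choose_operator(work, delta_drop=0.9, delta_rest=0.5)
```

In this toy case the unweighted comparison keeps the full index set, while the weighted comparison prefers a restart, trading some gradient sampling cost for a shorter index set and lower overhead.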

2.4.1. Dropping iterations of the MICE index set

Given our estimator’s stochastic nature, at the current iteration , we may wonder if the iteration should be kept or dropped out from the MICE index set since it may not reduce the computational work. The procedure we follow here draws directly from an idea introduced by Giles [38] for the MLMC method. Although the numerical approach is the same, we construct the algorithm in a greedy manner. We only check the case of dropping the previous iteration in the current index set. In this approach, we never drop the initial iteration .

2.4.2. Restarting the MICE index set

As we verified in the previous section on whether we should keep the iteration in the MICE index set, we also may wonder if restarting the estimator may be less expensive than updating it. Usually, in the literature of control variates techniques for stochastic optimization, the restart step is performed after a fixed number of iterations; see, for instance, [22, 35, 36].

2.4.3. Clipping the MICE index set

In some cases, it may be advantageous to discard only some initial iterate indices out of the index set instead of the whole index set. We refer to this procedure as clipping the index set. We propose two different approaches to decide when and where to clip the index set.

- Clipping “A”: The clipping index is as in Definition 2 with

  (38)

  This clipping technique can be applied in both the continuous and discrete cases.

- Clipping “B”: This technique is simpler but can only be used in the finite sum case. It consists in clipping at .

Clipping “A” adds an extra computation overhead when calculating the clipping index at each iteration. Thus, in the finite sum case, we suggest using Clipping “B”. Clipping shortens the index set, thus possibly reducing the general overhead of MICE. Moreover, clipping the index set may reduce the frequency of restarts and the bias of the MICE estimator.

3. SGD-MICE convergence and gradient sampling cost analysis

In this section, we will analyze the convergence of stochastic gradient methods with fixed step size as

| (39) |

with gradient estimates controlled as

| (40) |

In particular, we are interested in SGD-MICE, where the gradient estimate is the MICE estimator defined in (12), and SGD-A, where the gradient estimate is a Monte Carlo estimator of the form (5). Here, SGD-A is SGD where the sample sizes are increased to control the statistical error condition in (40) and can be seen as a special case of SGD-MICE where Restart is used at every iteration. For MICE, this condition is satisfied by the choice of the sample sizes in §2.3.

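Let us state some assumptions.

Assumption 2 (Lipschitz continuous gradient).

If the gradient of $F$ is Lipschitz continuous, then, for some $L > 0$,

$$\| \nabla_\xi F(x) - \nabla_\xi F(y) \| \leq L \| x - y \|, \quad \forall x, y \in \mathbb{R}^{d_\xi}. \tag{41}$$

Assumption 3 (Convexity).

If $F$ is convex, then,

$$F(y) \geq F(x) + \langle \nabla_\xi F(x), y - x \rangle, \quad \forall x, y \in \mathbb{R}^{d_\xi}. \tag{42}$$

Assumption 4 (Strong convexity).

If $F$ is $\mu$-strongly convex, then, for some $\mu > 0$,

$$F(y) \geq F(x) + \langle \nabla_\xi F(x), y - x \rangle + \frac{\mu}{2} \| y - x \|^2, \quad \forall x, y \in \mathbb{R}^{d_\xi}. \tag{43}$$

Assumption 5 (Polyak–Łojasiewicz).

If $F$ is gradient dominated, it satisfies the Polyak–Łojasiewicz inequality

$$\frac{1}{2} \| \nabla_\xi F(x) \|^2 \geq \mu \left( F(x) - F(\xi^*) \right), \quad \forall x \in \mathbb{R}^{d_\xi}, \tag{44}$$

for a constant $\mu > 0$, where $\xi^*$ is the minimizer of $F$.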

3.1. Optimization convergence analysis

Proposition 1 (Local convergence of gradient-controlled SGD on -smooth problems).

Let be a differentiable function satisfying Assumption 2 with constant . Then, SGD methods with relative gradient error control in the -norm sense and step-size reduce the optimality gap in expectation as

| (45) |

Proof.

Let . From -smoothness,

| (46) | ||||

| (47) |

Taking expectation on both sides and then using the Cauchy–Schwarz inequality,

| (50) | ||||

| (51) |

where (40) is used to get the last inequality. Here, the step size that minimizes the term inside the parenthesis is . Substituting the step size in the equation above and taking full expectation on both sides concludes the proof. ∎

If the function is also unimodal, as in the case of satisfying Assumptions 3 or 5, then the convergence presented in Proposition 1 is also global, i.e., .

Proposition 2 (Global convergence of gradient-controlled SGD in gradient-dominated problems).

Corollary 1.

Proof.

3.2. Gradient sampling cost analysis

Assuming the assumptions of Proposition 2 hold, the optimality gap converges with rate . Then, we have the following inequalities that will be used throughout this section,

| (57) |

where . Moreover,

| (58) |

For the sake of simplicity and given the cumulative nature of the computational gradient sampling cost in MICE, we analyze the total gradient sampling cost on a set of iterations converging to as per Proposition 2. Observe that in this simplified setting, the number of iterations required to stop the iteration, , and both the sequences and are still random. Indeed, we define

| (59) |

Corollary 3 (Number of iterations).

Proof.

The expected value of can be bounded using (57) as

| (66) |

Assumption 6 (Bound on second moments of gradient differences).

| (67) |

Let the total gradient sampling cost to reach iteration be

| (74) |

where is defined as in (34). In this section, we present limited analyses of SGD-MICE to reach where we assume only the Add operator is used; thus, using the equation above,

| (75) | ||||

| (76) |

As will be shown in §5, the other index set operators, Drop, Restart, and Clip greatly improve the convergence of SGD-MICE. As a consequence, these analyses considering only the Add operator are pessimistic.

3.2.1. Expectation minimization problems

Corollary 4 (Expected gradient sampling cost of SGD-MICE with linear convergence).

Let the Assumptions of Corollary 1 and Assumption 6 hold. Moreover, let be the smallest such that and all sample sizes at the last iteration be larger than . Then, the expected number of gradient evaluations needed to generate is

| (77) |

Moreover, the relative gradient error that minimizes the expected gradient sampling cost is .

Proof.

We know that iterations are needed to generate . Thus, the whole optimization cost is

| (78) | ||||

| (79) |

Let us analyze the following sum

| (80) | ||||

| (81) | ||||

| (82) | ||||

| (83) |

Taking expectation of the summation above squared,

| (86) | ||||

| (89) | ||||

| (90) | ||||

| (91) | ||||

| (92) |

Substituting back to the expected cost,

| (93) |

Substituting the expected number of iterations from Corollary 3 and using (58) results in (77).

Since the term is , it dominates convergence as , thus the expected work of SGD-MICE without restart or dropping is . Therefore, the relative gradient error that minimizes the total gradient sampling cost is . ∎

Corollary 5 (Expected gradient sampling cost of SGD-A).

Proof.

Let the gradient sampling cost of SGD-A be

| (95) |

The sample sizes are

| (96) |

We can bound as

| (97) | ||||

| (98) | ||||

| (99) |

| (100) | ||||

| (101) | ||||

| (102) | ||||

| (103) |

Taking expectation and substituting from Corollary 3 finishes the proof. ∎

Remark 4 (Stopping criterion).

In practice, applying the stopping criterion (59) requires an approximation of the mean gradient norm at each iteration. A natural approach is to use the MICE estimator as such an approximation, yielding

| (104) |

provided that the error in the mean gradient is controlled in a relative sense. This quality assurance requires a certain number of gradient samples. For example, let us consider the ideal case of stopping when we start inside the stopping region, near the optimal point . To this end, suppose that the initial iteration point, , is such that . What is the cost needed to stop by sampling gradients at without iterating at all? Observing that we need a tolerance , we thus need a number of samples that satisfies

| (105) |

3.2.2. Finite sum minimization problems

Corollary 6 (Cost analysis of SGD-MICE on the finite sum case).

If Assumptions of Corollary 2 hold, SGD-MICE achieves a stopping criterion with expected gradient sampling cost

| (108) |

Proof.

Corollary 7 (Cost analysis of SGD-A on the finite sum case).

If the assumptions of Proposition 2 are satisfied, SGD-A finds an iterate such that with expected gradient sampling cost

| (117) |

Proof.

When using SGD-A to solve the finite sum minimization problem while taking into consideration that variance goes to zero as , the sample size at iteration is

| (118) |

where . Thus, the total gradient sampling cost to reach iteration is

| (119) |

Using (99),

| (120) | ||||

| (121) | ||||

| (122) |

Another bound can be obtained from (121) as

| (123) | ||||

| (124) |

Taking expectation and using (62) concludes the proof. ∎

Remark 5 (More general probability distributions).

Although in Assumption 1 we restricted our attention to the case where the probability distribution of , , does not depend on , it is possible to use mappings to address more general cases. Indeed, let us consider the case where

| (125) |

for some given smooth function and such that the distribution of , , does not depend on . Then we can simply write, letting ,

| (126) |

and, by sampling instead of , we are back in the setup of Assumption 1.
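To illustrate this mapping, the following is a minimal sketch on a hypothetical case that is not one of the examples of this paper: the random variable is Gaussian with mean equal to the (scalar) optimization variable and unit variance, and the integrand is its square. The names `h` and `grad_f_tilde` are illustrative assumptions. Writing the random variable as the optimization variable plus a standard normal sample makes the sampled distribution independent of the optimization variable, and the gradient follows from the chain rule.

```python
import numpy as np


def h(xi, zeta):
    """Hypothetical map: h(xi, zeta) ~ N(xi, 1) whenever zeta ~ N(0, 1)."""
    return xi + zeta


def grad_f_tilde(xi, zeta):
    """Gradient w.r.t. xi of f(h(xi, zeta)) for the assumed f(theta) = theta**2."""
    dh_dxi = 1.0  # derivative of h with respect to xi
    return 2.0 * h(xi, zeta) * dh_dxi


rng = np.random.default_rng(0)
xi = 1.5
zeta = rng.standard_normal(200_000)  # distribution does not depend on xi

grad_estimate = grad_f_tilde(xi, zeta).mean()
exact_grad = 2.0 * xi  # because E[theta**2] = xi**2 + 1
print(grad_estimate, exact_grad)
```

In this toy setting, the Monte Carlo average of the mapped gradients approximates the derivative of the expectation, which is the property needed to return to the setup of Assumption 1.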

4. MICE algorithm

In this section, we describe the MICE algorithm and some of its practical implementation aspects. Before we start, let us discuss the resampling technique used to build an approximated probability distribution for the norm of the gradient.

Remark 6 (Gradient resampling for calculating sample sizes).

To approximate the empirical distribution of , we perform a jackknife [44] resampling of the approximate mean gradient using sample subsets for each iteration .

First, for each element , we partition the index set in disjoint sets with the same cardinality. Then, we create, for each of these sets, their complement with respect to , i.e., for all . We use these complements to compute the average of these gradient differences excluding a portion of the data,

| (127) |

which we then sample for each to get a single sample of the mean gradient,

| (128) |

by independently sampling from a categorical distribution with categories. Sampling times, we construct a set of gradient mean estimates . Then, letting be a quantile of the gradient norms where is the norm of gradient smaller than the quantile, we approximate

| (129) |

Similarly, we set a right tail quantile with to define a gradient norm to be used as a stopping criterion. We stop at if

| (130) |

where is the norm of the gradient respective to the quantile.

To control the work of the resampling technique, we measure the runtime needed to get a sample of and then set so that the overall time does not exceed a fraction of the remaining runtime of MICE. From our numerical tests, we recommend to be set between and , between (for expensive gradients) and , , and .
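The resampling steps of this remark can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in our tests: the function name `resample_gradient_norms`, the argument names `n_part` and `n_samp`, the synthetic gradient differences, and the 5% and 95% quantile levels are all hypothetical choices, and each element of the index set is assumed to hold more samples than there are parts.

```python
import numpy as np


def resample_gradient_norms(deltas, n_part=5, n_samp=100, rng=None):
    """Delete-a-group resampling of the norm of a sum of sample means.

    deltas: list with one array per index-set element, each of shape
            (M_l, d), holding sampled gradient differences (assumed layout).
    """
    rng = np.random.default_rng() if rng is None else rng
    leave_out_means = []
    for d in deltas:
        # Partition the sample indices into n_part (near-)equal disjoint sets.
        parts = np.array_split(rng.permutation(len(d)), n_part)
        total = d.sum(axis=0)
        # Mean over the complement of each part.
        means = np.stack(
            [(total - d[p].sum(axis=0)) / (len(d) - len(p)) for p in parts]
        )
        leave_out_means.append(means)  # shape (n_part, d)

    norms = np.empty(n_samp)
    for s in range(n_samp):
        # Independently pick one complement per index-set element.
        picks = rng.integers(n_part, size=len(deltas))
        grad = sum(m[k] for m, k in zip(leave_out_means, picks))
        norms[s] = np.linalg.norm(grad)
    return norms


rng = np.random.default_rng(0)
deltas = [
    1.0 + 0.1 * rng.standard_normal((50, 3)),
    0.5 + 0.1 * rng.standard_normal((20, 3)),
]
norms = resample_gradient_norms(deltas, n_part=5, n_samp=200, rng=rng)
q_low, q_high = np.quantile(norms, [0.05, 0.95])
print(q_low, q_high)
```

The lower quantile plays the role of the conservative gradient norm used when computing sample sizes, and the upper quantile the one used in the stopping test.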

In Algorithm 2, we present the pseudocode for the MICE estimator, and in Algorithm 3 we present the algorithm to update the index set from according to §2.4. Two coupling algorithms for the multi-iteration stochastic optimizers, SGD-MICE and Adam-MICE, are presented in Appendix A.

In general, keeping all gradient realizations for all iterations in memory may be computationally inefficient, especially for large-dimensional problems. To avoid this unnecessary memory overhead, we use Welford’s online algorithm [45] to estimate the variances. We keep in memory only the sample means and second central moments and update them in an online fashion. This procedure makes the memory overhead much smaller than naively storing all gradients and evaluating variances when needed. Therefore, for each at iteration , we need to store the mean gradient differences estimate, a vector of size ; , a scalar; and , an integer. Also, we store the gradient mean estimate in case we might clip the index set at in the future, and the respective sum of the variances component-wise, also using Welford’s algorithm. Thus, for first-order methods such as Adam-MICE and SGD-MICE, the memory overhead of MICE is of floating-point numbers and integers. Consequently, for large-scale problems, dropping iterations and restarting the index set are very important to reduce memory allocation. Regarding the computational overhead, updating each using Welford’s algorithm at iteration has complexity . Computing the sample sizes using (30) or Algorithm 1 requires a number of operations that is . While sample sizes might be computed several times per iteration due to the progressive sample size increase, this cost does not increase with the dimensionality of the problem. The resampling technique presented in (128) increases the memory overhead by a factor and the computational work by a factor .
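As an illustration of the storage scheme described above, the following minimal sketch keeps only a mean vector, a scalar accumulating the component-wise second central moments, and an integer sample count, updated with Welford's recursion. The class name `RunningMoments` and its methods are hypothetical and are not the interface of the MICE package.

```python
import numpy as np


class RunningMoments:
    """Online mean and sum of component-wise variances (Welford's recursion)."""

    def __init__(self, dim):
        self.count = 0             # number of samples seen (integer)
        self.mean = np.zeros(dim)  # running sample mean (vector)
        self.m2 = 0.0              # summed second central moments (scalar)

    def update(self, batch):
        """Absorb new samples (rows of `batch`) without storing them."""
        for g in np.atleast_2d(batch):
            self.count += 1
            delta = g - self.mean
            self.mean += delta / self.count
            # Sum over components of delta * (g - updated mean).
            self.m2 += float(delta @ (g - self.mean))

    def variance_sum(self):
        """Unbiased estimate of the sum of the component-wise variances."""
        return self.m2 / (self.count - 1)


rng = np.random.default_rng(0)
data = rng.standard_normal((100, 4))
moments = RunningMoments(dim=4)
moments.update(data[:60])  # first batch
moments.update(data[60:])  # later batch, e.g. after a sample-size increase
print(moments.mean, moments.variance_sum())
```

Because only the sum of the component-wise variances is needed, the second moment can be accumulated as a scalar, so the storage per element of the index set is one vector plus two numbers.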

5. Numerical examples

In this section, we present some numerical examples to assess the efficiency of Multi-Iteration Stochastic Optimizers. We focus on SGD-MICE and Adam-MICE and compare their performance with that of SGD, Adam, SAG, SAGA, SVRG, and SARAH in stochastic optimization. When using SGD, with or without MICE, we assume the constant to be known and use it to compute the step-size . As a measure of the performance of the algorithms, we use the optimality gap, which is the difference between the approximate optimal value at iteration and the exact optimal value,

| (131) |

In some examples, we know the optimal value and optimal point analytically; otherwise, we estimate numerically by letting optimization algorithms run for many iterations.

As for MICE parameters, when coupled with SGD, we use , and when coupled with Adam we use . The other parameters are fixed for all problems, showing the robustness of MICE with respect to the tuning: , , is set to for general iterations and for restarts, and the maximum index set cardinality is set to . For the continuous cases, we use the clipping “A”, whereas, for the finite case, we use clipping “B”. As for the resampling parameters, we use , , and with a minimum resampling size of . In our numerical examples, we report the runtime taken by the different algorithms we test in addition to the usual number of gradient evaluations. Note, however, that the current MICE implementation is not optimized for performance and could be much improved in this respect. Regarding the stopping criterion, except in the first example, we do not define a . Instead, we define a fixed gradient sampling cost that, when reached, halts execution. This choice allows us to better compare SGD-MICE with other methods.

5.1. Random quadratic function

This problem is a simple numerical example devised to test the performance of SGD-MICE on the minimization of a strongly convex function. The function whose expected value we want to minimize is

| (132) |

where

| (133) |

is the identity matrix of size , is a vector of ones, and . We use and initial guess . The objective function to be minimized is

| (134) |

where

| (135) |

The optimal point of this problem is . To perform optimization using SGD-MICE and SGD, we use the unbiased gradient estimator

| (136) |

We use the eigenvalues of the Hessian of the objective function, , to calculate and thus define the step-size as . We set a stopping criterion of .

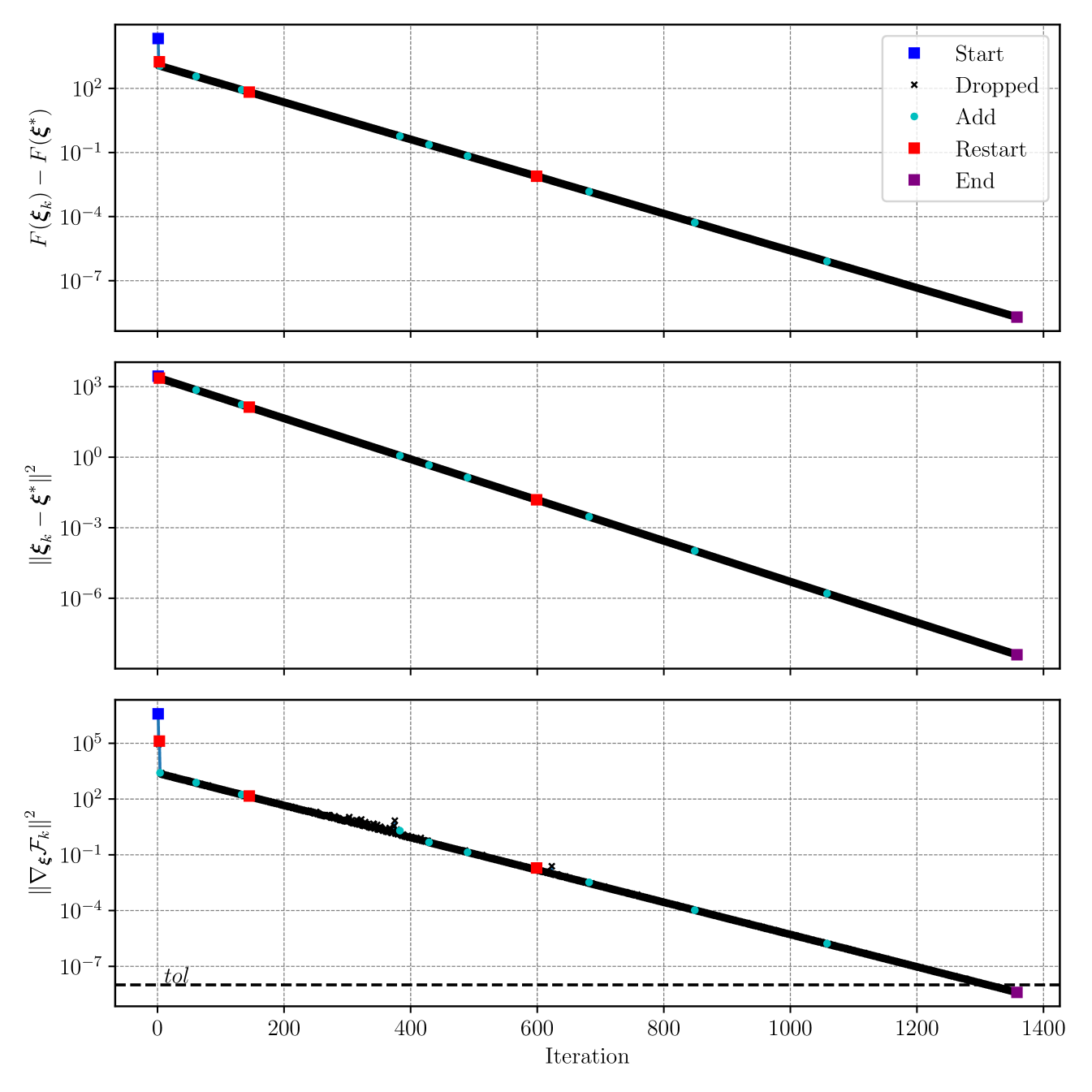

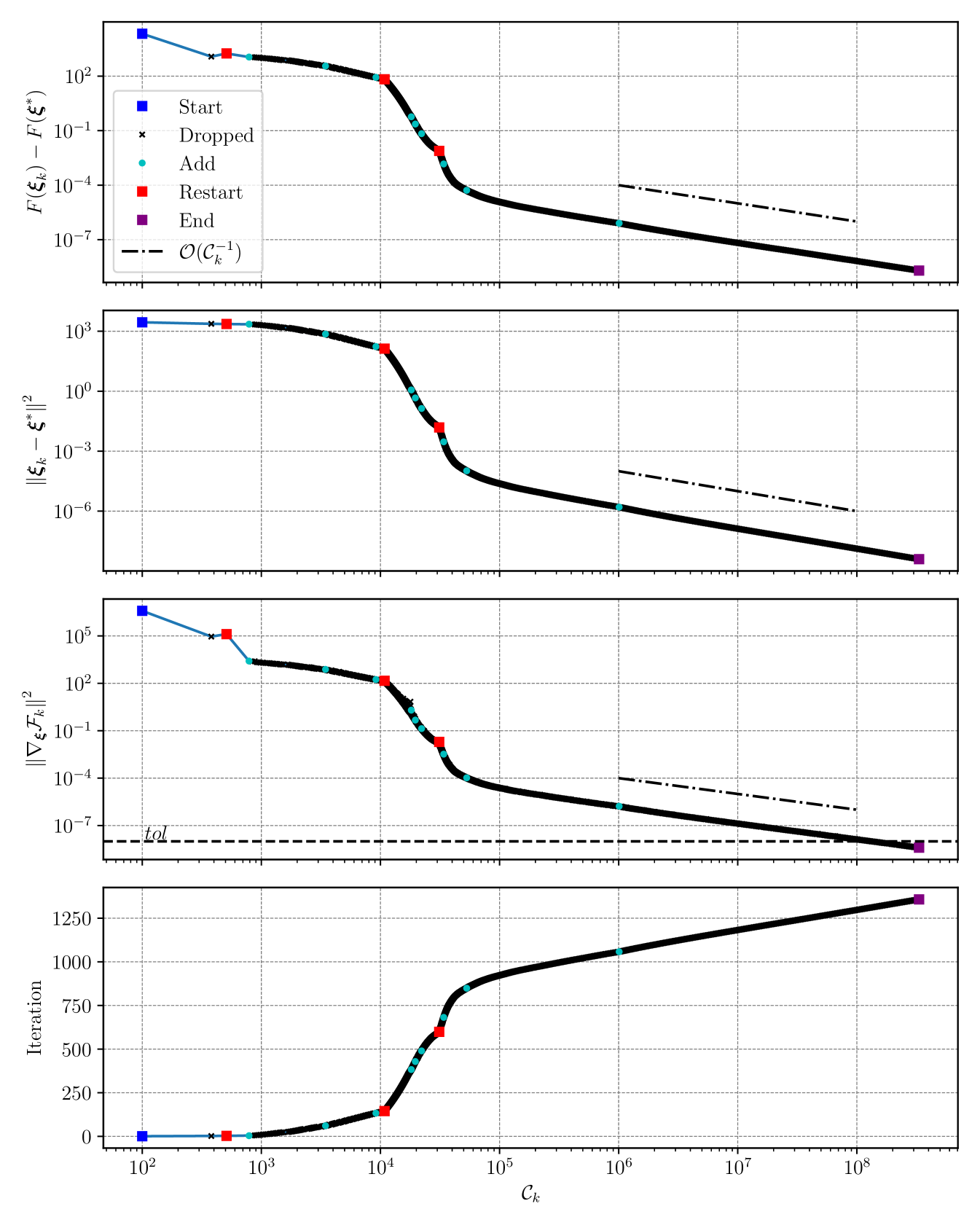

In Figures 1 and 2, we present the optimality gap (131), the squared distance to the optimal point, and the squared norm of the gradient estimate versus iteration and gradient sampling cost, respectively. In Figure 2 we also plot the iteration reached versus gradient sampling cost. We mark the starting points, restarts, and ending points with blue, red, and purple squares, respectively; the dropped points with black , and the remaining iterations in the MICE index set with cyan dots. In Figure 1, one can observe that SGD-MICE attains linear convergence with a constant step-size, as predicted in Proposition 2. In Figure 2, we present the convergence plots versus gradient sampling cost, exhibiting numerical rates of . These rates are expected as the distance to the optimal point converges linearly (see Proposition 2) and the cost of sampling new gradients per iteration grows as , as shown in (30). Note that the convergence is exponential as in the deterministic case until around gradient evaluations. After this point, there is a change of regime in which we achieve the asymptotic rates; after , the cost of performing each iteration grows exponentially.

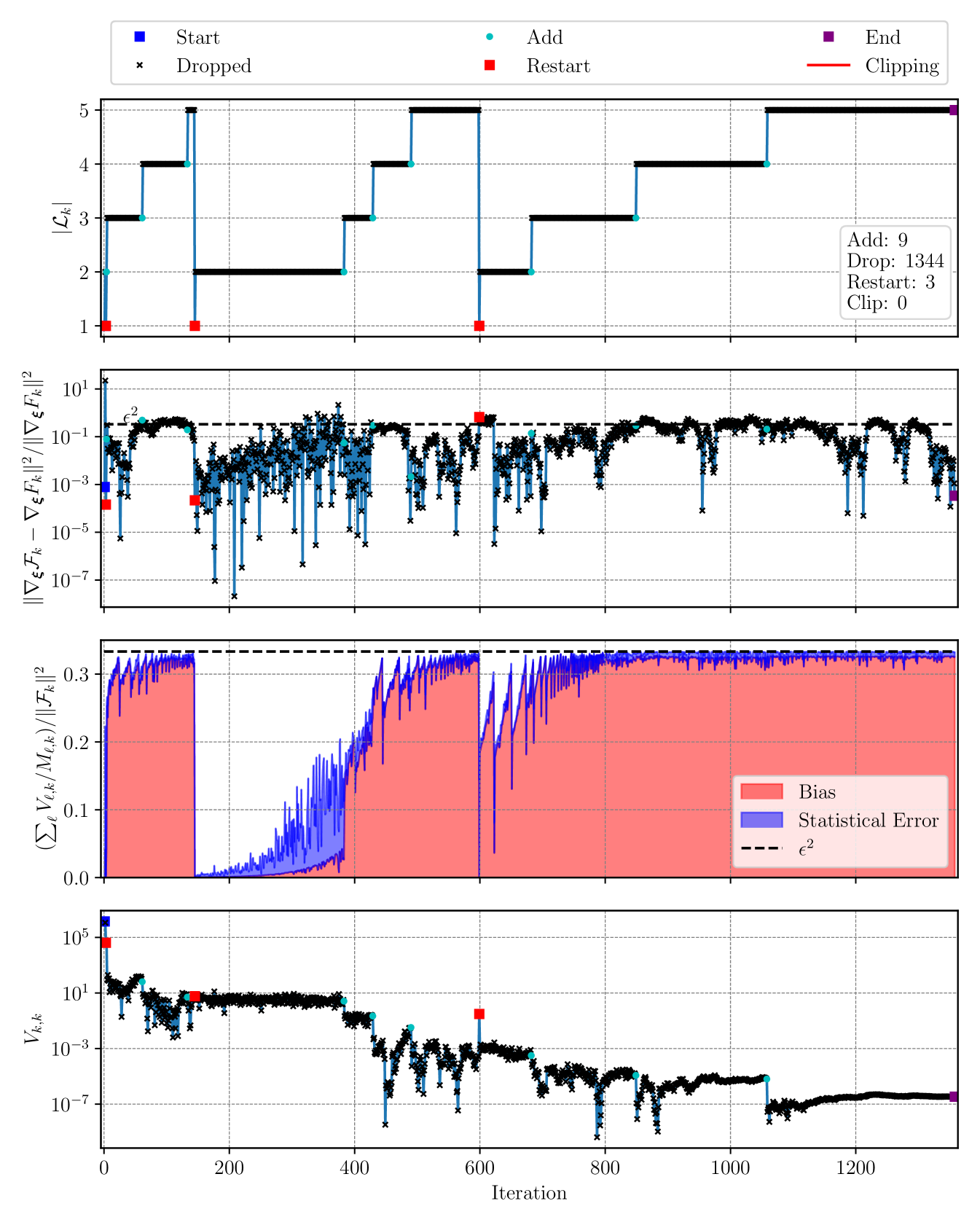

Figure 3 presents the cardinality of the index set , the true squared relative error, an approximation of the error that we control to be less than , and versus iteration. Moreover, on the relative error plots, horizontal lines with the upper bounds we impose are presented. It can be seen that imposing the condition results in the actual relative squared error being below . Also, we split the empirical relative error between bias and statistical error, as discussed in Appendix B. Note that the bias is reset when MICE’s index set is restarted. The plot illustrates how the variance of the last gradient difference decreases with the optimization, notably when a new element is added to the set .

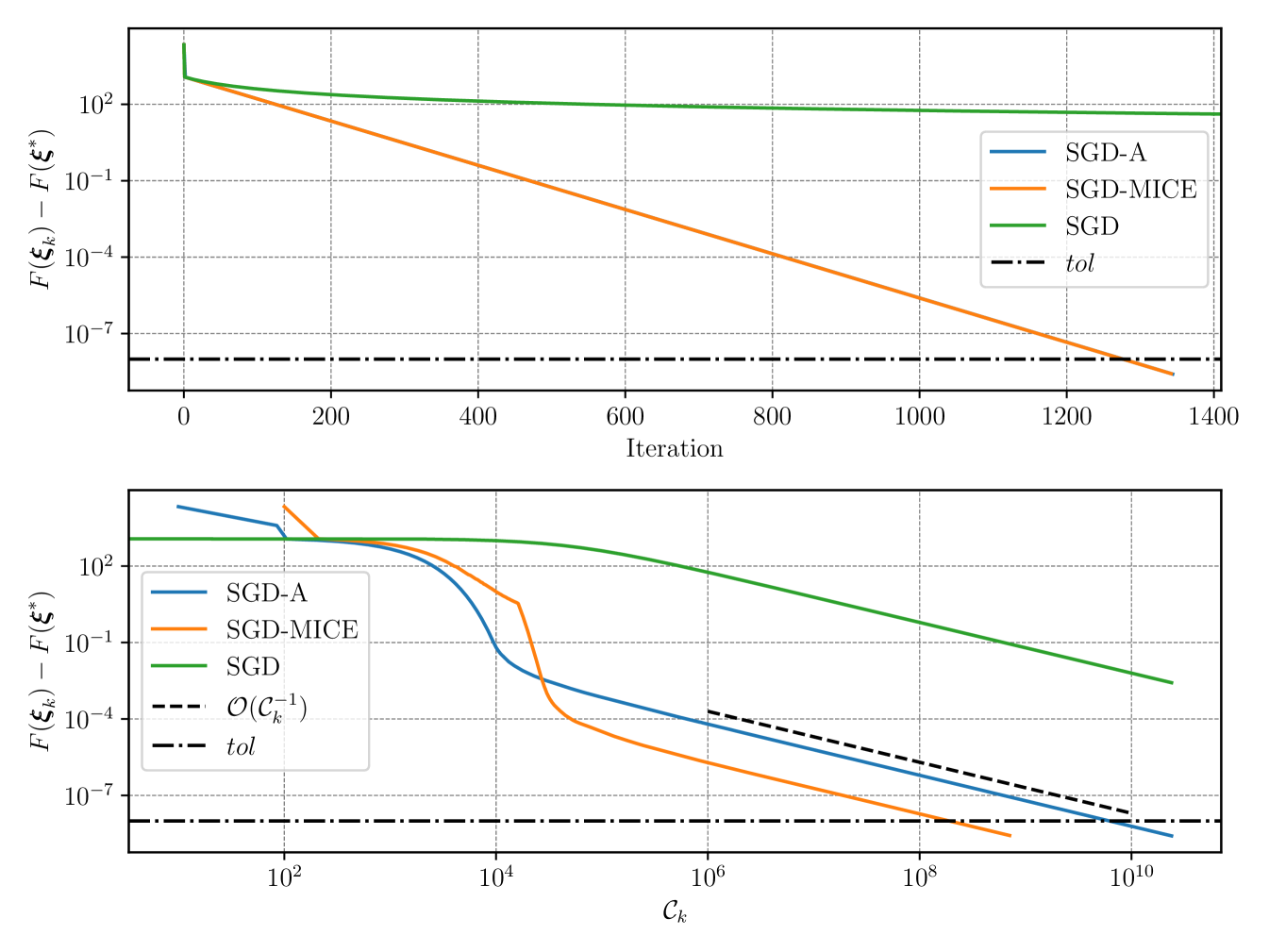

To validate the robustness and performance increase of SGD-MICE, we compare it with SGD using Monte Carlo sampling, at every iteration, to estimate the mean gradient, which we call SGD-A. Like SGD-MICE, SGD-A controls the relative error in the mean gradient approximation. In practice, SGD-A is SGD-MICE equipped only with the Restart index set operator. We run both methods until the tolerance is reached. Then, we run vanilla SGD (Robbins–Monro algorithm [31]) with sample size until it reaches the same work as SGD-A.
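The idea behind SGD-A can be sketched as follows. This is a simplified illustration under several assumptions and not the code used for the reported results: the toy quadratic with additive Gaussian noise, the batch-doubling rule (instead of the sample sizes prescribed by the analysis), the step-size 1/L, the floor `tol` inside the error test, and the values of `eps` and `tol` are all hypothetical choices.

```python
import numpy as np


def sgd_a(grad_sampler, xi0, step, eps=0.7, tol=1e-2, m_min=10,
          max_iter=10_000, rng=None):
    """SGD with fresh Monte Carlo batches sized to control the relative error."""
    rng = np.random.default_rng() if rng is None else rng
    xi = np.asarray(xi0, dtype=float)
    cost = 0
    for k in range(max_iter):
        samples = grad_sampler(xi, m_min, rng)
        while True:
            m = len(samples)
            mean = samples.mean(axis=0)
            var_sum = samples.var(axis=0, ddof=1).sum()
            norm2 = mean @ mean
            # Statistical error of the mean vs. squared gradient norm
            # (floored by tol so that the loop terminates near the optimum).
            if var_sum / m <= eps**2 * max(norm2, tol):
                break
            # Double the batch with new independent samples.
            samples = np.vstack([samples, grad_sampler(xi, m, rng)])
        cost += m
        if norm2 <= tol:  # stopping test on the estimated gradient norm
            return xi, cost, k
        xi = xi - step * mean
    return xi, cost, max_iter


# Hypothetical toy problem: gradient A xi - b plus additive Gaussian noise.
A = np.diag([1.0, 10.0])
b = np.ones(2)
sigma = 0.5


def grad_sampler(xi, m, rng):
    return (A @ xi - b) + sigma * rng.standard_normal((m, xi.size))


xi_final, total_cost, n_iter = sgd_a(
    grad_sampler, xi0=[20.0, 50.0], step=1.0 / 10.0,
    rng=np.random.default_rng(0),
)
true_grad = A @ xi_final - b
print(n_iter, total_cost, true_grad @ true_grad)
```

Since every iteration discards the previous samples, the batch size must grow as the gradient norm shrinks, which is the cost that MICE reduces by reusing gradient differences from earlier iterates.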

In Figure 4, we present the optimality gap per iteration and number of gradient evaluations for SGD-A, SGD-MICE, and vanilla SGD. Our SGD-MICE achieved the desired tolerance with of the cost needed by SGD-A, illustrating the performance improvement of using the data from previous iterations efficiently.

Although this example is very simple, it illustrates the performance of SGD-MICE in an ideal situation where both and are known. Finally, SGD-MICE was able to automatically decide whether to drop iterations, restart, or clip the index set to minimize the overall work required to attain the linear convergence per iteration.

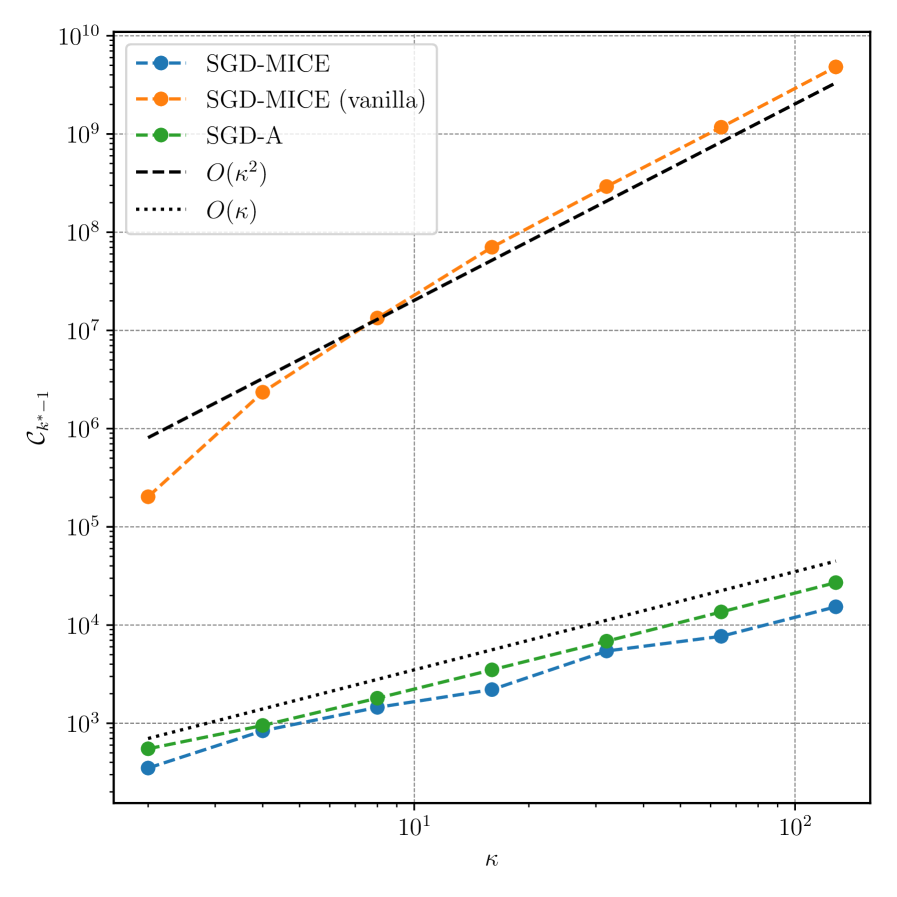

In §3.2, we prove that, for expectation minimization, the gradient sampling cost necessary to reach a certain is for SGD-MICE and for SGD-A. To validate numerically the dependency of the cost on the condition number, we evaluated both SGD-MICE and SGD-A with different condition numbers until the stopping criterion was met. Moreover, we also tested SGD-MICE with and without the index set operators Restart, Drop, and Clip. The reason for this test is that, in the analysis of Corollary 4, we consider the case where all iterates are kept in the index set. However, in practice, one would expect SGD-MICE with the index set operators to perform better than both vanilla SGD-MICE (without the operators) and SGD-A; in one extreme case where all iterates are kept, we recover vanilla SGD-MICE, and in another extreme case we restart every iteration, resulting in SGD-A. The gradient sampling cost versus for these tests is presented in Figure 5.

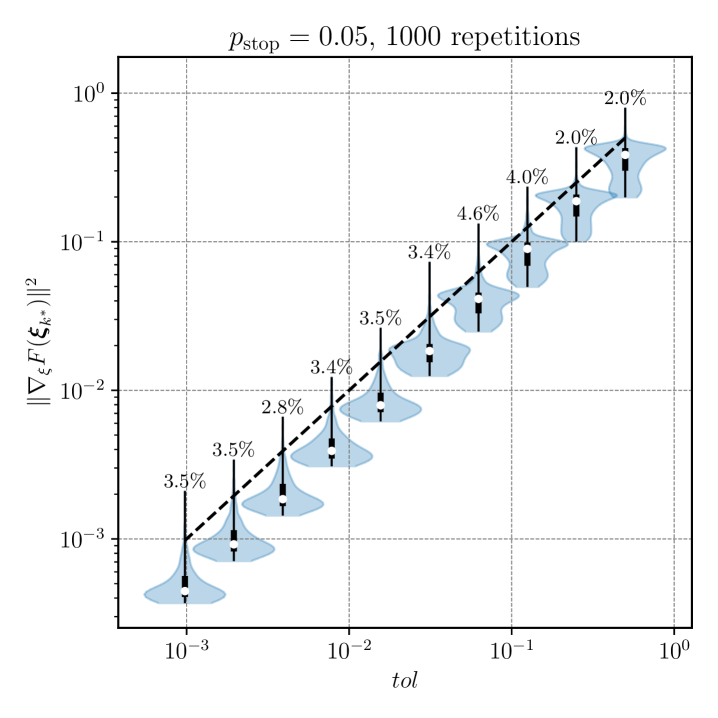

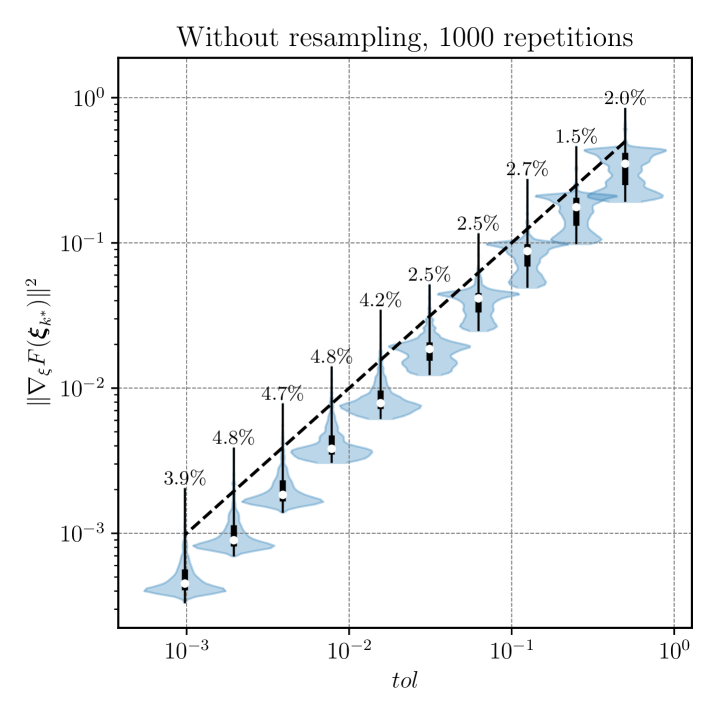

In Remark 6, we present a resampling technique to make more informed decisions on the stopping criterion and error control. To validate our stopping criterion, we performed a thousand independent runs of SGD-MICE for different values of , using the resampling to decide both sample sizes and the stopping criterion. Figure 6 presents violin plots with approximations of empirical distributions of the squared gradient norms where optimization stopped and the percentage of times this quantity exceeded . Moreover, we show both the case where we use the resampling technique and the case where we do not. For lower tolerances, the resampling technique indeed reduced the percentage of premature stops; however, in both cases, a general trend of decrease following is observed.

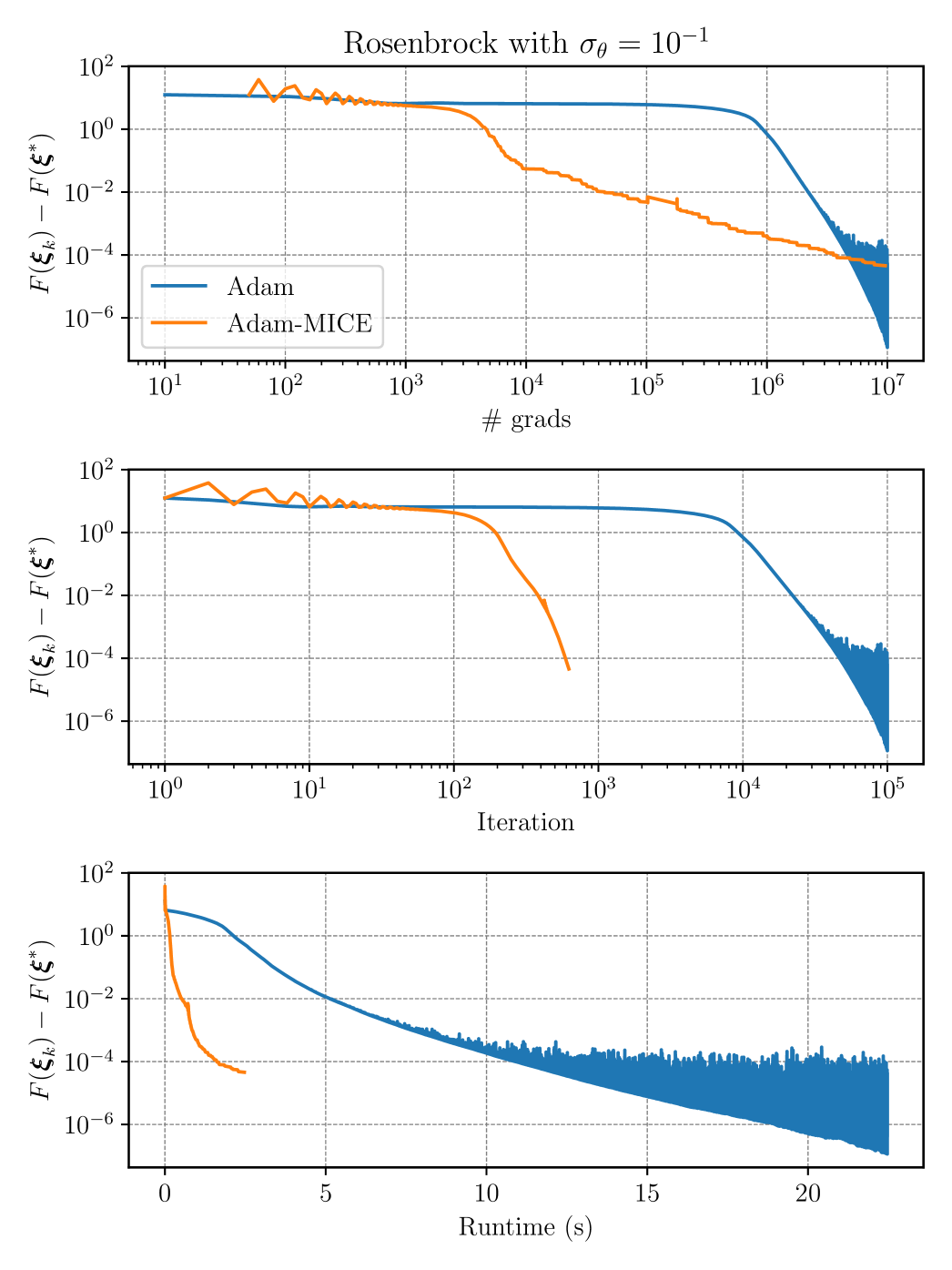

5.2. Stochastic Rosenbrock function

The goal of this example is to test the performance of Adam-MICE, that is, Adam coupled with our gradient estimator MICE, in minimizing the expected value of the stochastic Rosenbrock function in (138), showing that MICE can be coupled with different first-order optimization methods in a non-intrusive manner. Here we adapt the deterministic Rosenbrock function to the stochastic setting, specializing our optimization problem (1) with

| (137) |

where , , . The objective function to be minimized is thus

| (138) |

and its gradient is given by

| (139) |

which coincides with the gradient of the deterministic Rosenbrock function. Therefore, the optimal point of the stochastic Rosenbrock is the same as the one of the deterministic: . To perform the optimization, we sample the stochastic gradient

| (140) |

Although this is still a low dimensional example, minimizing the Rosenbrock function poses a difficult optimization problem for first-order methods; these tend to advance slowly in the region where the gradient has near-zero norm. Moreover, when noise is introduced in gradient estimates, their relative error can become large, affecting the optimization convergence.

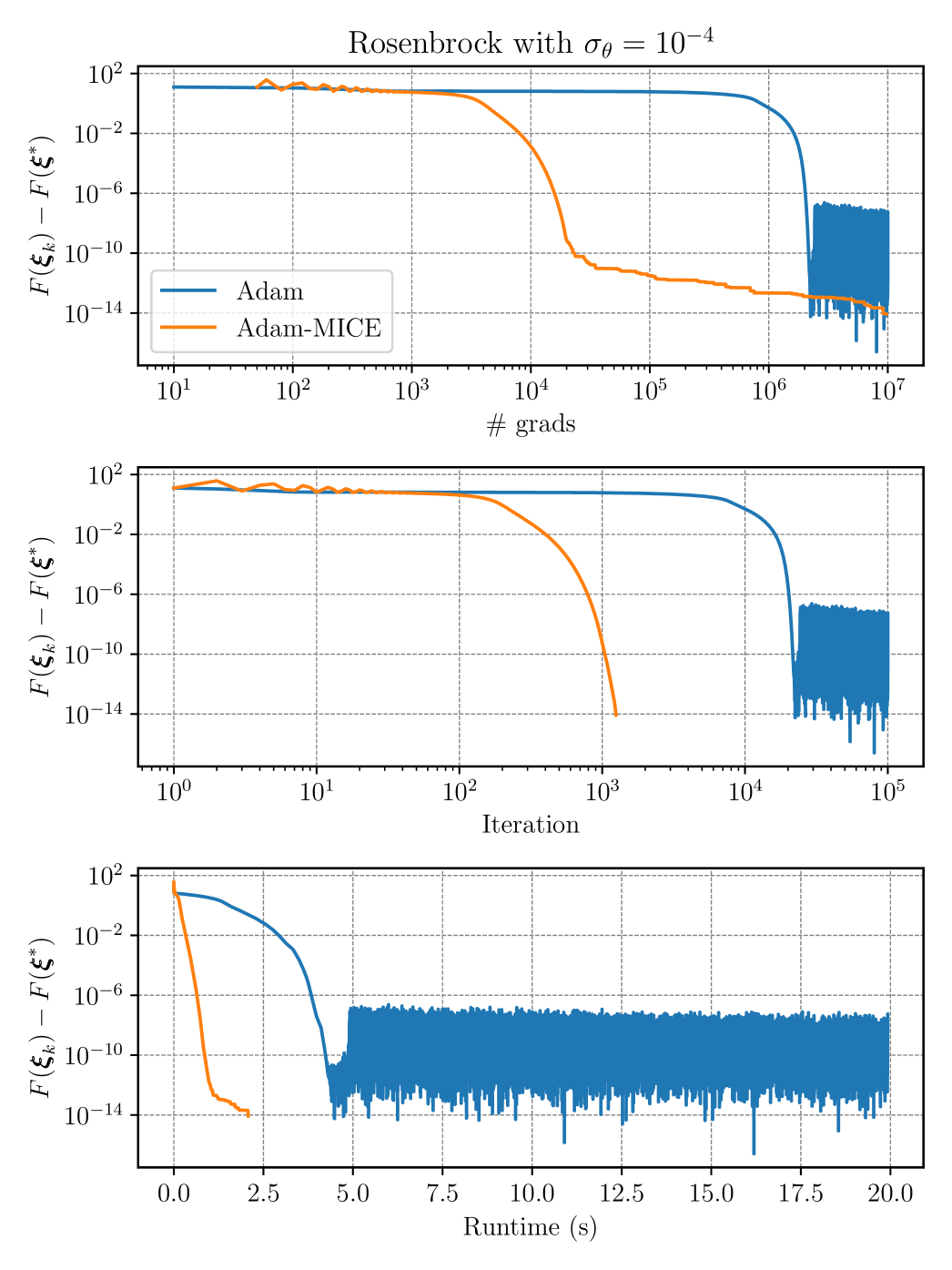

We compare the convergence of the classical Adam algorithm against Adam-MICE. To illustrate the effect of the dispersion of the random variable , two distinct noise levels are considered, namely and . As for the optimization setup, we set Adam-MICE with fixed step-size and Adam with a decreasing step-size , which we observed to be the best step-sizes for each method. The stopping criterion for both algorithms is set as gradient evaluations. For Adam-MICE, we use , whereas for Adam we use a fixed batch size of . In all cases, we start the optimization from

In Figures 7 and 8, we present, for of and , respectively, the optimality gap for both Adam and Adam-MICE versus the number of gradients, iterations, and runtime in seconds. It is clear that Adam-MICE is more stable than Adam as the latter oscillates as it approximates the optimal point in both cases. The efficient control of the error in gradient estimates allows Adam-MICE to converge monotonically in the asymptotic phase. Moreover, the number of iterations and the runtime are much smaller for Adam-MICE than for Adam.

In conclusion, even though Adam has its own mechanisms to control the statistical error of gradients, coupling it with MICE, for this example, has proven to be advantageous as it allows more evaluations to be performed simultaneously. Moreover, as the gradient error is controlled, we can use Adam with a fixed step-size. Also, MICE allows for a stopping criterion based on the gradient norm, which would not be possible for vanilla Adam.

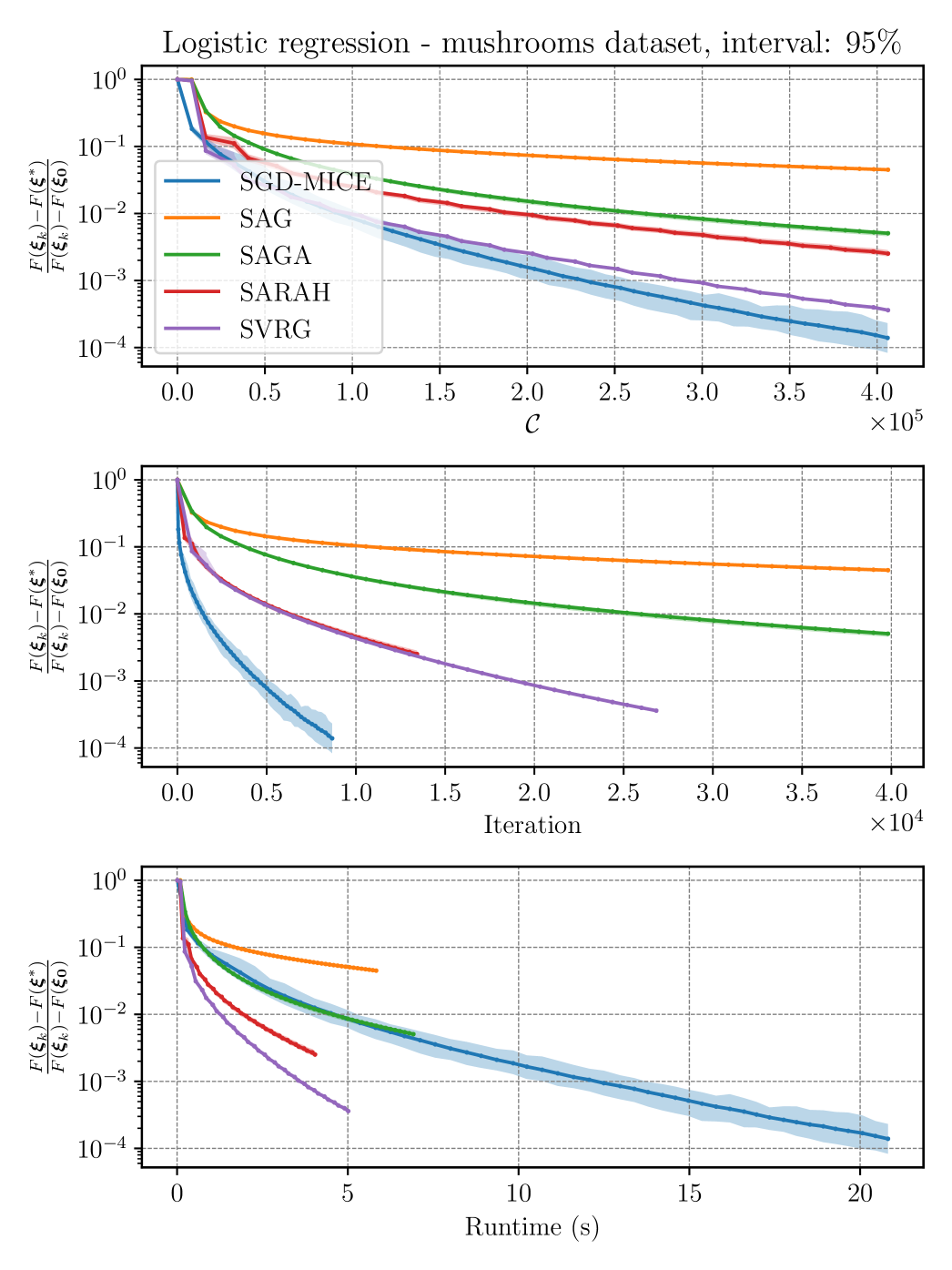

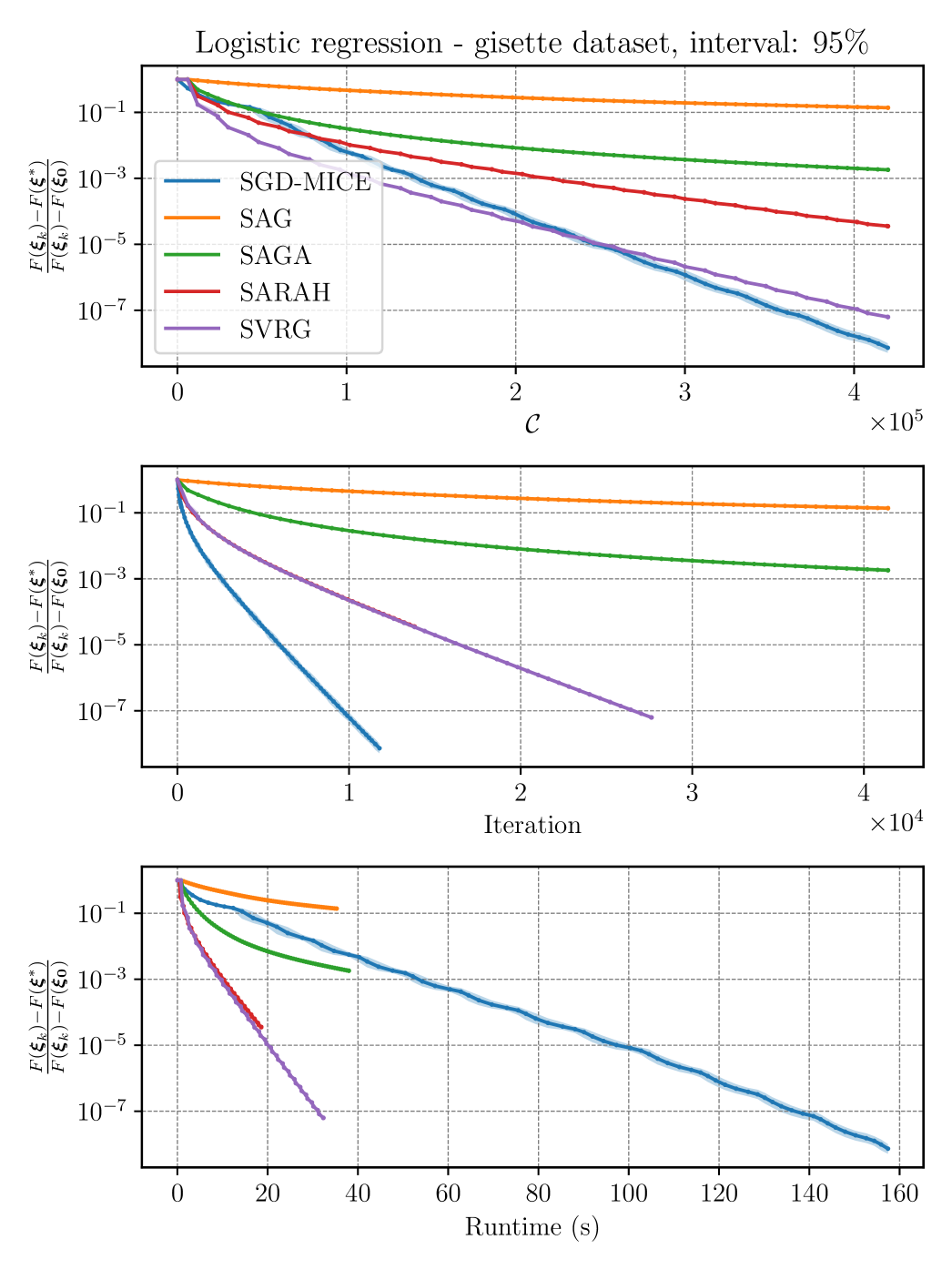

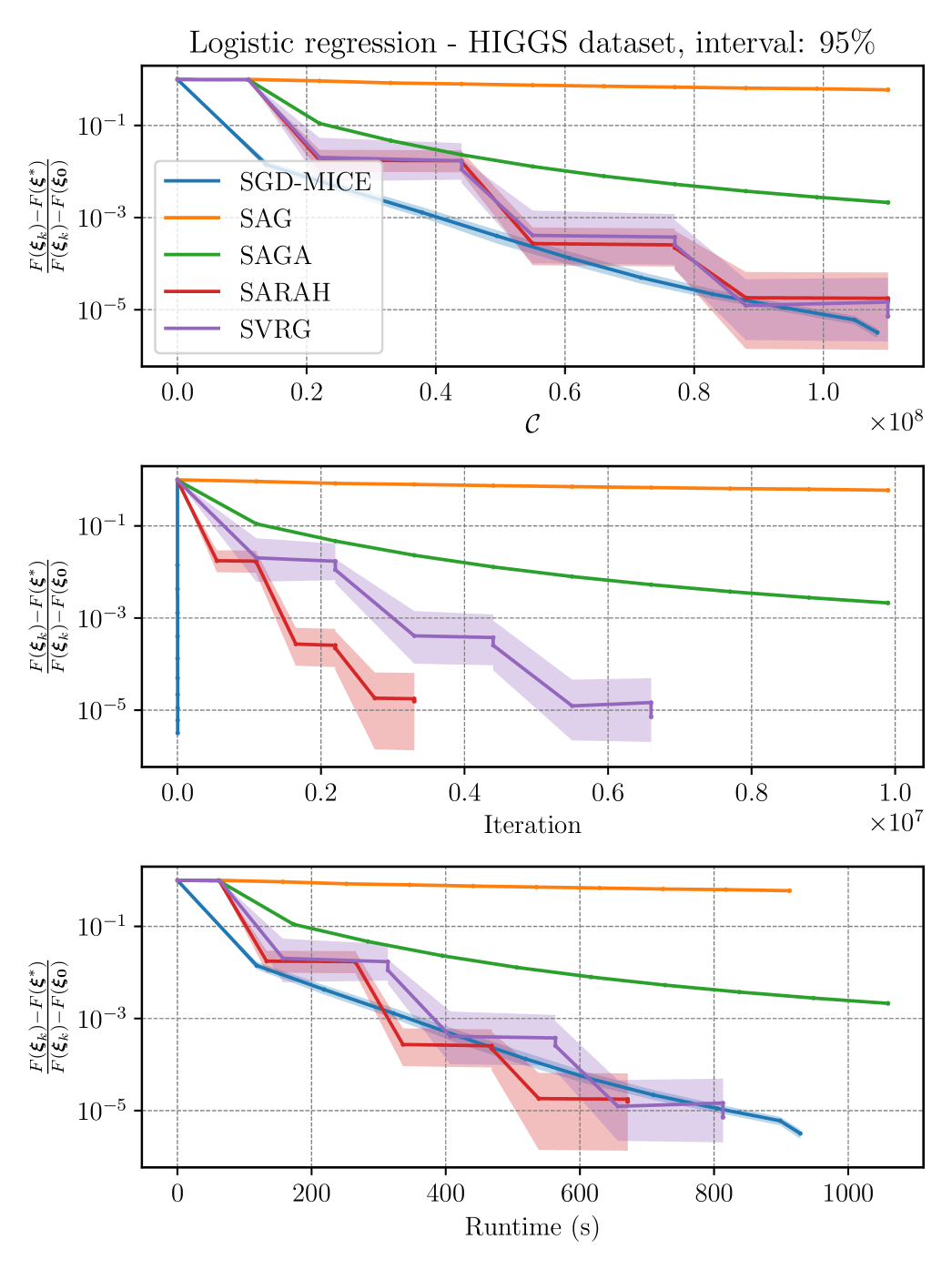

5.3. Logistic regression

In this example, we train logistic regression models using SGD-MICE, SAG [46], SAGA [26], SARAH [23], and SVRG [22] to compare their performances. Here, we present a more practical application of MICE, where we can test its performance on high-dimensional settings with finite populations. Therefore, we calculate the error as in (2) and use Algorithm 1 to obtain the optimal sample sizes. To train the logistic regression model for binary classification, we use the -regularized log-loss function

| (141) |

where each data point is such that and . We use the datasets mushrooms, gisette, and HIGGS, obtained from LibSVM (https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/binary.html). The size of the datasets , number of features , and regularization parameters are presented in Table 1.

| Dataset | Size | Features | | |
|---|---|---|---|---|
| mushrooms | 8124 | 112 | 12316.30 | |
| gisette | 6000 | 5000 | 1811.21 | |
| HIGGS | 11000000 | 28 | 765.76 | |
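For concreteness, a regularized log-loss of this kind and its mini-batch gradient can be sketched as below. The sketch assumes labels in {-1, 1} and a penalty of the form (λ/2)‖ξ‖², which may differ from the scaling used in (141); the function names and the synthetic data are hypothetical.

```python
import numpy as np


def log_loss(xi, X, y, lam):
    """Mean logistic loss plus an assumed (lam / 2) * ||xi||^2 penalty."""
    margins = y * (X @ xi)
    # logaddexp(0, -z) = log(1 + exp(-z)), evaluated stably.
    return np.mean(np.logaddexp(0.0, -margins)) + 0.5 * lam * (xi @ xi)


def log_loss_grad(xi, X, y, lam, idx=None):
    """Gradient of log_loss; if idx is given, use only that mini-batch."""
    if idx is not None:
        X, y = X[idx], y[idx]
    margins = y * (X @ xi)
    # d/dz log(1 + exp(-z)) = -1 / (1 + exp(z)) = -0.5 * (1 - tanh(z / 2))
    weights = -y * 0.5 * (1.0 - np.tanh(0.5 * margins))
    return X.T @ weights / len(y) + lam * xi


rng = np.random.default_rng(0)
X = rng.standard_normal((50, 4))
y = np.where(rng.standard_normal(50) > 0.0, 1.0, -1.0)
xi = rng.standard_normal(4)
lam = 1e-2

full_grad = log_loss_grad(xi, X, y, lam)
batch_grad = log_loss_grad(xi, X, y, lam, idx=rng.choice(50, size=10, replace=False))
print(log_loss(xi, X, y, lam), full_grad, batch_grad)
```

Sampling `idx` without replacement mirrors the finite-population setting, in which the mini-batch gradient is an unbiased estimate of the full gradient.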

When using SGD-MICE for training the logistic regression model, we use . For the other methods, we use batches of size . Since we have finite populations, we use Algorithm 1 to calculate the sample-sizes. The SGD-MICE step-size is based on the Lipschitz smoothness of the true objective function as presented in Proposition 2. Conversely, the other methods rely on a Lipschitz constant that must hold for all data points, which we refer to as . A maximum index set cardinality of is imposed on SGD-MICE; if , we restart the index set. The step-sizes for SAG, SAGA, SARAH, and SVRG are presented in Table 2. These step-sizes were chosen as the best performing for each case, based on the recommendations of the original papers.

| Method | SAG | SAGA | SARAH | SVRG |
|---|---|---|---|---|
| Step-size | | | | |

To evaluate the consistency of SGD-MICE versus the other baseline methods, we perform independent runs of each method for each dataset. Figures 9, 10, and 11 present confidence intervals and median of the relative optimality gap (the optimality gap normalized by its starting value) for, respectively, the mushrooms, gisette, and HIGGS datasets versus the number of gradient evaluations, iterations and runtime in seconds.

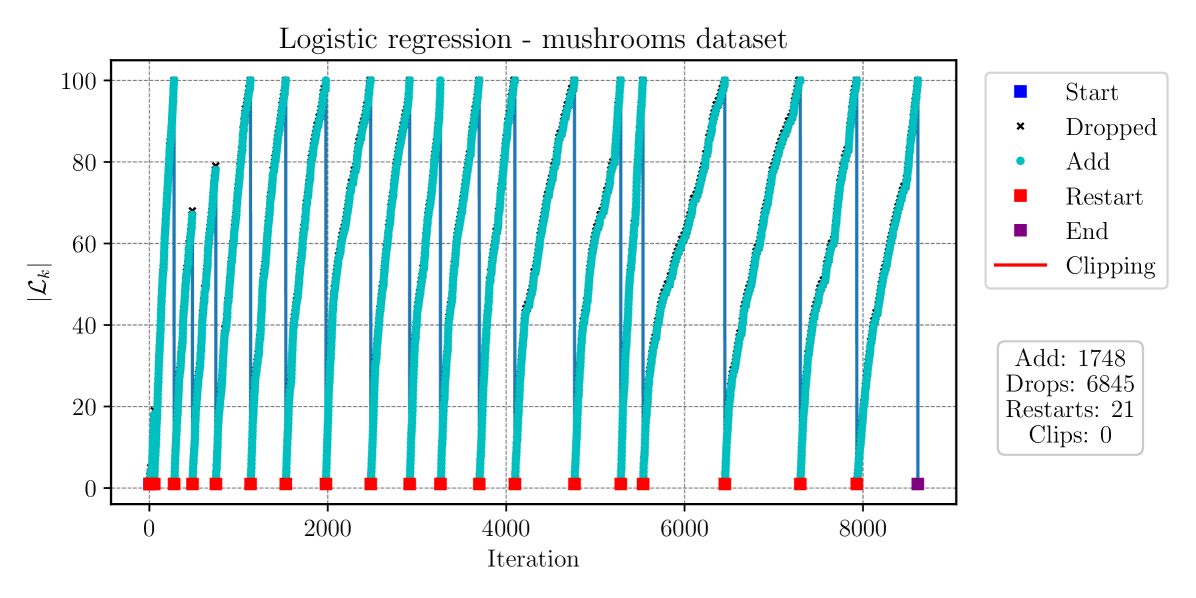

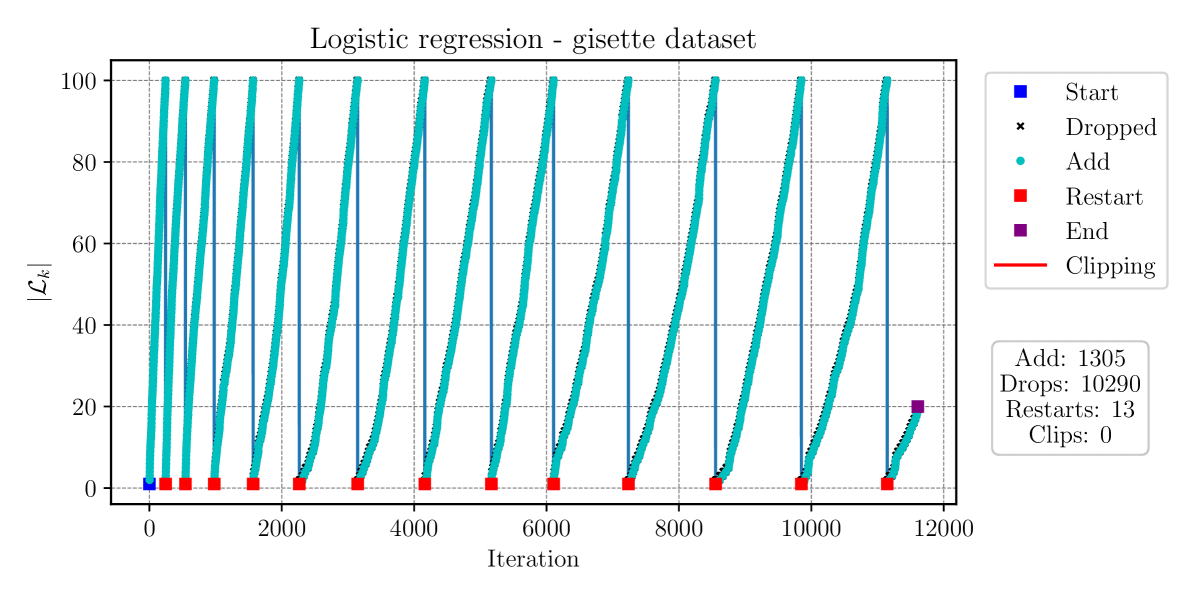

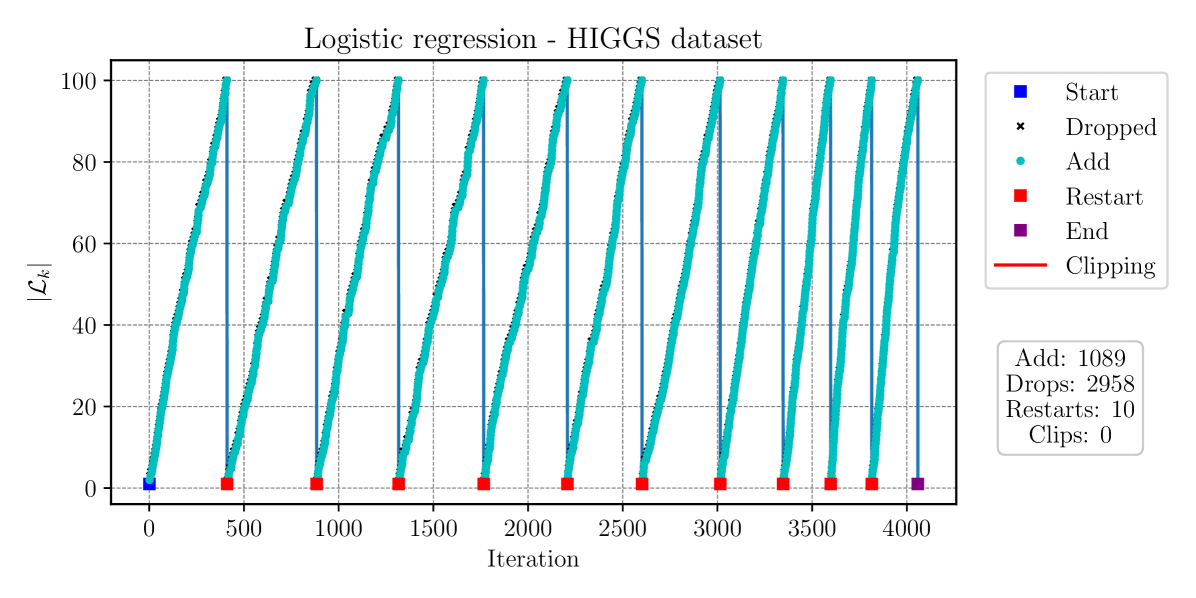

In the mushrooms dataset, SGD-MICE decreases the optimality gap more than the other methods for the same number of gradient samples during the whole optimization process. Moreover, the total number of iterations is much smaller than for the other methods. Yet, the overhead of our SGD-MICE implementation becomes clear when we compare the runtimes; the current implementation of SGD-MICE is more costly than the other methods. In the gisette dataset, SGD-MICE shows a better convergence rate than the other methods, in terms of both the number of gradient evaluations and the number of iterations. The overhead, however, is significantly larger, due to the larger number of optimization variables here, . Finally, for the HIGGS dataset, which is a much larger dataset, SGD-MICE performs better than the other methods in the number of gradient evaluations and iterations, and is competitive with SARAH and SVRG in runtime. Moreover, on average SGD-MICE performed iterations while the other methods required more than iterations. Thus, more gradient evaluations can be performed simultaneously in parallel. Figure 12 presents the index set cardinalities versus iterations of SGD-MICE for the three datasets. Moreover, we present the iterations that were kept in the index set, the ones that were dropped, as well as restarts and clippings.

From the results of this example, we observe that MICE performs well in problems with a reasonably large number of parameters, for instance, in the gisette dataset, and with finite dataset populations ranging from the thousands to the millions. One can conclude from the results obtained that SGD-MICE’s advantage over the other methods increases as the population size grows. Note that both SAG and SAGA need to decrease their step-sizes as the sample-size increases, and that SARAH and SVRG need to reevaluate the full gradient after a few epochs to keep their convergence.

6. Acknowledgments

This publication is based upon work supported by the King Abdullah University of Science and Technology (KAUST) Office of Sponsored Research (OSR) under Award No. OSR-2019-CRG8-4033, the Alexander von Humboldt Foundation, and Coordination for the Improvement of Higher Education Personnel (CAPES).

Last but not least, we want to thank Dr. Sören Wolfers and Prof. Jesper Oppelstrup. They both provided us with valuable ideas and constructive comments.

7. Conflict of interest

The authors have no conflicts to disclose.

8. Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request. A Python implementation of MICE can be found on PyPI at https://pypi.org/project/mice/. We use the datasets mushrooms, gisette, and HIGGS, obtained from LibSVM https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/binary.html.

9. Conclusion

We introduced the Multi-Iteration Stochastic Optimizers, a novel class of first-order stochastic optimization methods that use the Multi-Iteration stochastiC Estimator (MICE). The MICE estimator utilizes successive control variates along optimization paths, efficiently reusing previously computed gradients to achieve accurate mean gradient approximations. At each iteration, it adaptively samples gradients to satisfy a user-specified relative error tolerance. Moreover, it employs a greedy strategy to determine which iterates to retain in memory and which to discard.

Thanks to its ability to control relative gradient error, MICE facilitates robust stopping criteria based on the gradient norm. Moreover, its nonintrusive design makes it readily integrable with existing first-order optimization methods, significantly expanding its applicability.

We provided a rigorous theoretical analysis for SGD-MICE, highlighting its strong performance across different classes of optimization problems. In particular, we proved exponential convergence in the sense for gradient-dominated functions under constant step-size conditions. For strongly convex problems, our results demonstrate that SGD-MICE achieves accuracy with an average complexity of gradient evaluations, outperforming conventional adaptive batch-size SGD methods, which require evaluations.

Numerically, we validated our theory through three illustrative examples, employing consistent MICE parameters across diverse scenarios. The tests ranged from a quadratic function with stochastic Hessian to a stochastic Rosenbrock problem solved via Adam-MICE, and finally logistic regression training on large-scale datasets. In the latter, SGD-MICE demonstrated competitive performance against established variance-reduction methods (SAG, SAGA, SVRG, and SARAH), confirming its practical efficiency and scalability.

Future research directions include extending MICE to quasi-Newton methods, exploring constrained optimization settings through standard techniques like projected gradients and active set methods, and investigating additional gradient estimation error sources, such as biases from numerical discretizations.

References

- [1] K. Marti. Stochastic optimization methods, volume 2. Springer, 2005.

- [2] S. Uryasev and P.M. Pardalos. Stochastic optimization: algorithms and applications, volume 54. Springer Science & Business Media, 2013.

- [3] J.R. Birge and F. Louveaux. Introduction to Stochastic Programming. Springer Publishing Company, Incorporated, 2nd edition, 2011.

- [4] G. Lan. First-order and Stochastic Optimization Methods for Machine Learning. Springer, 2020.

- [5] S.W. Wallace and W.T. Ziemba. Applications of stochastic programming. SIAM, 2005.

- [6] W.H. Fleming and R.W. Rishel. Deterministic and stochastic optimal control, volume 1. Springer Science & Business Media, 2012.

- [7] W.T. Ziemba and R.G. Vickson. Stochastic optimization models in finance. Academic Press, 2014.

- [8] A.J. Conejo, M. Carrión, J.M. Morales, et al. Decision making under uncertainty in electricity markets, volume 1. Springer, 2010.

- [9] C. Bayer, R. Tempone, and S. Wolfers. Pricing American options by exercise rate optimization. Quant. Finance, 20(11):1749–1760, 2020.

- [10] F.-R. Chang. Stochastic optimization in continuous time. Cambridge University Press, 2004.

- [11] P. Azcue and N. Muler. Stochastic optimization in insurance. SpringerBriefs in Quantitative Finance. Springer, New York, 2014. A dynamic programming approach.

- [12] Z. Ding. Stochastic Optimization and Its Application to Communication Networks and the Smart Grid. University of Florida Digital Collections. University of Florida, 2012.

- [13] D.D. Yao, H. Zhang, and X.Y. Zhou, editors. Stochastic modeling and optimization. Springer-Verlag, New York, 2003. With applications in queues, finance, and supply chains.

- [14] E.G. Ryan, C.C. Drovandi, J.M. McGree, and A.N. Pettitt. A review of modern computational algorithms for bayesian optimal design. International Statistical Review, 84(1):128–154, 2016.

- [15] A.G. Carlon, B.M. Dia, L. Espath, R.H. Lopez, and R. Tempone. Nesterov-aided stochastic gradient methods using laplace approximation for bayesian design optimization. Computer Methods in Applied Mechanics and Engineering, 363, 2020.

- [16] S. Heinrich. The multilevel method of dependent tests. In Advances in stochastic simulation methods, pages 47–61. Springer, 2000.

- [17] M.B. Giles. Multilevel Monte Carlo path simulation. Operations research, 56(3):607–617, 2008.

- [18] D. Ruppert. Efficient estimations from a slowly convergent robbins-monro process. Technical report, Cornell University Operations Research and Industrial Engineering, 1988.

- [19] R.H. Byrd, G.M. Chin, J. Nocedal, and Y. Wu. Sample size selection in optimization methods for machine learning. Mathematical programming, 134(1):127–155, 2012.

- [20] L. Balles, J. Romero, and P. Hennig. Coupling adaptive batch sizes with learning rates. arXiv preprint arXiv:1612.05086, 2016.

- [21] R. Bollapragada, R. Byrd, and J. Nocedal. Adaptive sampling strategies for stochastic optimization. SIAM Journal on Optimization, 28(4):3312–3343, 2018.

- [22] R. Johnson and T. Zhang. Accelerating stochastic gradient descent using predictive variance reduction. In Advances in neural information processing systems, pages 315–323, 2013.

- [23] L.M. Nguyen, J. Liu, K. Scheinberg, and M. Takáč. Sarah: A novel method for machine learning problems using stochastic recursive gradient. In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pages 2613–2621. JMLR. org, 2017.

- [24] L. Nguyen, K. Scheinberg, and M. Takáč. Inexact sarah algorithm for stochastic optimization. Optimization methods & software, 08 2020.

- [25] C. Fang, C.J. Li, Z. Lin, and T. Zhang. Spider: Near-optimal non-convex optimization via stochastic path-integrated differential estimator. In Advances in Neural Information Processing Systems, pages 689–699, 2018.

- [26] A. Defazio, F. Bach, and S. Lacoste-Julien. Saga: A fast incremental gradient method with support for non-strongly convex composite objectives. In Advances in neural information processing systems, pages 1646–1654, 2014.

- [27] M.P. Friedlander and M. Schmidt. Hybrid deterministic-stochastic methods for data fitting. SIAM Journal on Scientific Computing, 34(3):A1380–A1405, 2012.

- [28] S. De, A. Yadav, D. Jacobs, and T. Goldstein. Big batch sgd: Automated inference using adaptive batch sizes. arXiv preprint arXiv:1610.05792, 2016.

- [29] K. Ji, Z. Wang, Y. Zhou, and Y. Liang. Faster stochastic algorithms via history-gradient aided batch size adaptation. arXiv preprint arXiv:1910.09670, 2019.

- [30] B.T. Polyak. Introduction to optimization. Optimization Software, Inc., Publications Division, New York, 1987.

- [31] H. Robbins and S. Monro. A stochastic approximation method. The annals of mathematical statistics, pages 400–407, 1951.

- [32] D.P. Kingma and J. Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [33] J.C. Spall. Introduction to stochastic search and optimization: estimation, simulation, and control, volume 65. John Wiley & Sons, 2005.

- [34] A. Shapiro, D. Dentcheva, and A. Ruszczyński. Lectures on stochastic programming: modeling and theory. SIAM, 2014.

- [35] A. Nitanda. Stochastic proximal gradient descent with acceleration techniques. In Advances in Neural Information Processing Systems, pages 1574–1582, 2014.

- [36] J. Konečný, J. Liu, P. Richtárik, and M. Takáč. Mini-batch semi-stochastic gradient descent in the proximal setting. IEEE Journal of Selected Topics in Signal Processing, 10(2):242–255, 2016.

- [37] S. Heinrich. Multilevel Monte Carlo methods. In Large-Scale Scientific Computing, volume 2179 of Lecture Notes in Computer Science, pages 58–67. Springer Berlin Heidelberg, 2001.

- [38] M.B. Giles. Multilevel monte carlo methods. Acta Numerica, 24:259–328, 2015.

- [39] S. Dereich and T. Müller-Gronbach. General multilevel adaptations for stochastic approximation algorithms of Robbins-Monro and Polyak-Ruppert type. Numer. Math., 142(2):279–328, 2019.

- [40] S. Yang, M. Wang, and E.X. Fang. Multilevel stochastic gradient methods for nested composition optimization. SIAM Journal on Optimization, 29(1):616–659, 2019.

- [41] A. C. Davison and D. V. Hinkley. Bootstrap methods and their application. Number 1. Cambridge university press, 1997.

- [42] H. Karimi, J. Nutini, and M. Schmidt. Linear convergence of gradient and proximal-gradient methods under the polyak-łojasiewicz condition. In Joint European conference on machine learning and knowledge discovery in databases, pages 795–811. Springer, 2016.

- [43] Y. Nesterov. Lectures on convex optimization, volume 137. Springer, 2018.

- [44] B. Efron. The jackknife, the bootstrap, and other resampling plans, volume 38. Siam, 1982.

- [45] B.P. Welford. Note on a method for calculating corrected sums of squares and products. Technometrics, 4(3):419–420, 1962.

- [46] M. Schmidt, N. Le Roux, and F. Bach. Minimizing finite sums with the stochastic average gradient. Mathematical Programming, 162(1-2):83–112, 2017.

Appendix A Multi-iteration stochastic optimizers

In this section, we present the detailed algorithms for the multi-iteration stochastic optimizers using the MICE estimator for the mean gradient. In Algorithms 4 and 5, we describe the pseudocode for SGD-MICE and Adam-MICE, respectively.

Appendix B Error decomposition of the MICE estimator

The MICE estimator has a conditional bias due to the reuse of previous information. Here we prove that, if the statistical error of the estimator is controlled at every iteration, then the bias is implicitly controlled as well. Recall that the MICE estimator is defined as

| (142) |

where

| (143) |

The squared error of the MICE estimator can be decomposed as

| (144) |

due to

| (145) |

Before we analyze the bias and statistical errors, let us analyze the conditional expectation of the MICE estimator,

| (146) |

and noting that, for , is deterministic,

| (147) |

Let . Splitting the summands in MICE between the terms computed at and the previous ones,

| (148) |

taking the expectation conditioned on , and using ,

| (151) | ||||

| (153) | ||||

| (154) |

where in (153) we used .

Next, we investigate the bias of the MICE estimator conditioned on the current iterate and its contribution to the squared error.

Proposition 3 (Bias of the MICE estimator in expectation minimization).

Let the bias of the MICE estimator be defined as

| (155) |

Then, the bias is

| (156) |

and its contribution to the squared error is

| (157) |

Proof.

Using Lemma 1 and concludes the proof. ∎

Note from (156) that .

Corollary 8 (Bias of the MICE estimator in finite sum minimization).

The bias of the MICE estimator in finite sum minimization is similar to the expectation minimization one, with the consideration of the finite population correction factor,

| (162) |

Proof.

The proof follows exactly as in Proposition 3, except the finite population correction factor is used in the centered second moment of , . ∎
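The effect of a finite population correction can be checked on a small example. The sketch below uses the textbook identity for the variance of a sample mean drawn without replacement, Var = (S²/M)(1 − M/N), with S² the population variance computed with denominator N − 1; the exact form of the factor in (162) is not reproduced here, and the population values are hypothetical.

```python
import itertools

import numpy as np

# Hypothetical scalar population (e.g. one gradient component per data point).
population = np.array([1.0, 4.0, 2.0, 8.0, 5.0, 7.0])
N, M = len(population), 3

# Exact variance of the sample mean: enumerate every subset of size M,
# each being equally likely when sampling without replacement.
subset_means = np.array(
    [np.mean(c) for c in itertools.combinations(population, M)]
)
exact_var = subset_means.var()

# Closed form with the finite population correction factor (1 - M / N).
s2 = population.var(ddof=1)
fpc_var = s2 / M * (1.0 - M / N)

print(exact_var, fpc_var)
```

The correction vanishes as M approaches N, which is why the statistical error, and with it the bias contribution, goes to zero once the whole population has been sampled.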

Proposition 4 (Statistical error of the MICE estimator in expectation minimization).

The statistical error of the MICE estimator in the case of expectation minimization is

| (163) |

Proof.

Corollary 9 (Statistical error of the MICE estimator in finite sum minimization).

The statistical error in the finite sum minimization case is

| (167) |