Multi-Way Number Partitioning: an Information-Theoretic View

Abstract

The number partitioning problem is the problem of partitioning a given list of numbers into multiple subsets so that the sums of the numbers in the subsets are as nearly equal as possible. We introduce two closely related notions: the “most informative” and the “most compressible” partitions. Most informative partitions satisfy a principle of optimality. We also give an exact algorithm (based on Huffman coding), with a running time of $O(n \log n)$ in the input size $n$, to find the most compressible partition.

Index Terms:

Multi-way number partitioning, Entropy, Huffman codes.

I Introduction

Let $W = (w_1, \dots, w_n)$ be a list of positive integers. The number partitioning problem is the task of partitioning $W$ into $k$ subsets so that the sums of the numbers in the different subsets ($s_i$ denotes the sum of the $i$-th subset, for $i = 1, \dots, k$) are as nearly equal as possible. For instance, if and , we can consider the following partition and . The numbers in each subset add up to 8, so this is a completely balanced partition. Three typical objective functions exist for this problem [1]:

1. [Min-Difference objective function] Minimize the difference between the largest and smallest subset sums, i.e., minimize $\max_i s_i - \min_i s_i$,

2. [Min-Max objective function] Minimize the largest subset sum, i.e., minimize $\max_i s_i$,

3. [Max-Min objective function] Maximize the smallest subset sum, i.e., maximize $\min_i s_i$.
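As a small illustration of the three objectives listed above, the following sketch evaluates them for one candidate partition. The input list and the assignment vector are hypothetical values chosen for illustration (they are not the example from the text), and `subset_sums` and `objectives` are helper names introduced here.

```python
def subset_sums(numbers, assignment, k):
    """Return the k subset sums; assignment[i] is the subset index of numbers[i]."""
    sums = [0] * k
    for w, j in zip(numbers, assignment):
        sums[j] += w
    return sums


def objectives(sums):
    """Evaluate the three classical objective values for the given subset sums."""
    return {
        "min_difference": max(sums) - min(sums),  # to be minimized
        "min_max": max(sums),                     # to be minimized
        "max_min": min(sums),                     # to be maximized
    }


# Hypothetical input: five numbers split into k = 2 subsets.
numbers = [4, 5, 6, 7, 8]
assignment = [0, 0, 1, 1, 0]  # subsets {4, 5, 8} and {6, 7}
sums = subset_sums(numbers, assignment, 2)
print(sums, objectives(sums))
```

For two subsets the three values move together, since the two sums always add up to the same total; with more subsets they can rank partitions differently.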

While these objective functions are equivalent when the number of subsets is $k = 2$, no two of them are equivalent for $k > 2$ [2]. For the case of $k = 2$, Karp proved that the decision version of the number partitioning problem is NP-complete [3]. However, there are algorithms, such as a pseudo-polynomial-time dynamic programming solution and several heuristic algorithms, that solve the problem exactly or approximately [4, 2, 3, 5, 6, 7, 1, 8, 9, 10].

In this paper, we introduce a new objective function for the number partitioning problem, different from the three objective functions described above. Let $S$ be the sum of all numbers in the list, and let $s_i$ be the sum of the numbers in the $i$-th of the $k$ subsets. Then, $(s_1/S, \dots, s_k/S)$ is a probability distribution, and we can measure its distance from the uniform distribution via its Shannon entropy:

$$H\left(\frac{s_1}{S}, \dots, \frac{s_k}{S}\right) = -\sum_{i=1}^{k} \frac{s_i}{S} \log \frac{s_i}{S}.$$

The above Shannon entropy is less than or equal to $\log k$ and reaches its maximum for the uniform distribution. We define a new objective function as maximizing this Shannon entropy and call it the entropic objective function. In information theory, entropy also has an interpretation in terms of the optimal compression rate of a source. This interpretation allows us to define another objective function, closely related to the entropic objective function, which we call the compression objective function. Using a variant of Huffman coding, we present an exact algorithm, with a running time of $O(n \log n)$ for a list of $n$ numbers, to solve the optimization problem with the compression objective function.
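The entropic objective can be evaluated directly from the subset sums. The following is a minimal sketch, assuming base-2 logarithms (so values are in bits); `partition_entropy` is a name introduced here for illustration.

```python
import math


def partition_entropy(sums):
    """Shannon entropy (in bits) of the distribution obtained by
    normalizing the subset sums by their total."""
    total = sum(sums)
    # Empty subsets contribute nothing (0 * log 0 is taken as 0).
    return -sum((s / total) * math.log2(s / total) for s in sums if s > 0)


# Two subsets that each add up to 8 give the uniform distribution on two points.
print(partition_entropy([8, 8]))   # balanced two-way partition
print(partition_entropy([12, 4]))  # unbalanced two-way partition
```

A perfectly balanced $k$-way partition attains the upper bound of $\log_2 k$ bits, and any imbalance lowers the value.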

II Entropic objective function and a principle of optimality

Definition 1.

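Given a list $W = (w_1, \dots, w_n)$, we define a random variable $X$ over the alphabet set $\mathcal{X} = \{1, 2, \dots, n\}$ such that $P(X = i) = w_i / S$, where $S = \sum_{i=1}^{n} w_i$. Let $\mathcal{Y}$ be a set of size $k$. Then, a $k$-partition function is a mapping $f: \mathcal{X} \to \mathcal{Y}$. This partitions $\mathcal{X}$ into the sets $f^{-1}(y)$ for $y \in \mathcal{Y}$. Let $Y = f(X)$. Then, the marginal distribution of $Y$ is characterized by the sums of the numbers in the different subsets divided by $S$.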

Definition 2.

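For two discrete random variables $X$ and $Y$ with the joint probability mass function $p(x, y)$, define

$$H(X) = -\sum_{x} p(x) \log p(x), \qquad H(X|Y) = -\sum_{x, y} p(x, y) \log p(x|y), \qquad I(X;Y) = H(X) - H(X|Y).$$

The number partition problem with the entropic objective function can be expressed as follows:

$$\max_{f} H(Y), \tag{1}$$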

where $Y = f(X)$. Since $I(X;Y) = H(Y)$ whenever $Y$ is a function of $X$, we are looking for a partition function $f$ such that $Y$ is most informative about $X$. As an example, consider the list . This corresponds to a random variable with the following distribution on the set . If , we should consider functions . Assume that and . Then $Y$ is uniform and $H(Y) = 1$ bit.

Note that for $k = 2$, the random variable $Y$ is binary and maximizing $H(Y)$ is equivalent to making the probability of each of its two values as close as possible to $1/2$. Thus, the entropic objective function is equivalent to the Min-Difference, Min-Max and Max-Min objective functions, reviewed in the introduction, for $k = 2$. For $k > 2$, the entropic objective function is related to the Min-Max objective function. Recall that the min-entropy of a random variable $Y$ is defined as $H_\infty(Y) = -\log \max_y P(Y = y)$. Thus, maximizing $H_\infty(Y)$ is equivalent to minimizing the largest subset sum. Since the min-entropy is never larger than the Shannon entropy, the maximum value of $H_\infty(Y)$ yields a lower bound on the maximum of $H(Y)$ (footnote 1). Finally, the entropic objective function depends on all of the subset sums, not just the largest or smallest ones. Next, we discuss a principle of optimality for the entropic objective function.

Footnote 1: Maximizing $H_\infty(Y)$ is equivalent to minimizing $D_\infty(p_Y \| u)$, where $u$ is the uniform distribution on the alphabet of $Y$ and $D_\infty$ is the Rényi divergence of order infinity. The entropic objective function is equivalent to minimizing $D(p_Y \| u)$, where $D$ is the KL divergence. On the other hand, minimizing $D_\infty(u \| p_Y)$ is equivalent to the objective of maximizing the smallest subset sum. Note that minimizing $D(u \| p_Y)$ is equivalent to maximizing the product of all subset sums, a different objective function that also satisfies a principle of optimality as in Theorem 3.
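For very small inputs, the entropic objective can be explored by exhaustive search. The sketch below is not the method of this paper; it simply enumerates all assignments, assuming base-2 logarithms, a hypothetical input list, and helper names (`entropy_bits`, `min_entropy_bits`, `best_entropic_partition`) introduced here.

```python
import itertools
import math


def entropy_bits(probs):
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def min_entropy_bits(probs):
    """Min-entropy in bits: minus the log of the largest probability."""
    return -math.log2(max(probs))


def best_entropic_partition(numbers, k):
    """Exhaustively search all k**n assignments for one maximizing H(f(X)).
    Exponential time; intended only for tiny illustrative inputs."""
    total = sum(numbers)
    best_value, best_assignment = -1.0, None
    for assignment in itertools.product(range(k), repeat=len(numbers)):
        sums = [0] * k
        for w, j in zip(numbers, assignment):
            sums[j] += w
        value = entropy_bits([s / total for s in sums])
        if value > best_value + 1e-12:  # keep the first maximizer found
            best_value, best_assignment = value, assignment
    return best_value, best_assignment


# Hypothetical input: {4, 5, 6} and {7, 8} both add up to 15.
value, assignment = best_entropic_partition([4, 5, 6, 7, 8], 2)
print(value, assignment)
```

On any distribution, `min_entropy_bits` never exceeds `entropy_bits`, which is the inequality used above to relate the Min-Max and entropic objectives.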

Principle of optimality: A property of the Min-Difference objective function is that, in each optimal $k$-way partition, if the numbers in any subsets are optimally partitioned, the new partition is also optimal (principle of optimality) [4]. This property underlies the recursive algorithms of [4]. A different and somewhat more general principle of optimality (called the recursive principle of optimality in [5]) is valid for the Min-Max and Max-Min objective functions [2, 5]. It says that for any optimal $k$-way partition with $k$ subsets and any $m$ with $1 \le m < k$, combining any optimal $m$-way partition of the numbers in $m$ of its subsets with any optimal $(k-m)$-way partition of the numbers in the other $k-m$ subsets results in an optimal partition for the main set [5]. In [6], the authors develop a principle of weakest-link optimality for minimizing the largest subset sum. In [7], the authors incorporate the ideas of [5, 6] and [11] and develop an algorithm that is similar to [6] in the sense of weakest-link optimality. See [1] for a review.

Next, we prove that the entropic objective function has a principle of optimality property similar to the one in [5] (which is the basis of algorithms in [5]).

Theorem 3.

Take an optimal -partition function . Let , be an arbitrary partition of into two sets. Define a partition of into and by . Define a random variable on the set whose distribution equals the conditional distribution of given . Set . Let be an arbitrary optimal -partition function of , and be an arbitrary optimal -partition function of . Then, the following function is an optimal -partition function for :

The following lemma is the key to proving Theorem 3.

Lemma 4 (Grouping Axiom of Entropy).

For any probability vector and ,

| (2) |
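A common two-block form of the grouping identity, which may differ in presentation from (2), reads $H(p_1, \dots, p_n) = H(q, 1-q) + q\, H(p_1/q, \dots, p_m/q) + (1-q)\, H(p_{m+1}/(1-q), \dots, p_n/(1-q))$ with $q = p_1 + \dots + p_m$. The sketch below checks this form numerically on a hypothetical probability vector; the function names are introduced here for illustration and base-2 logarithms are assumed.

```python
import math


def entropy_bits(probs):
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def grouped_entropy(probs, m):
    """Right-hand side of the two-block grouping identity,
    where the first block is probs[:m] and the second is probs[m:]."""
    q = sum(probs[:m])
    r = sum(probs[m:])
    value = entropy_bits([q, r])
    if q > 0:
        value += q * entropy_bits([p / q for p in probs[:m]])
    if r > 0:
        value += r * entropy_bits([p / r for p in probs[m:]])
    return value


probs = [0.1, 0.2, 0.3, 0.4]  # hypothetical probability vector
for m in range(1, len(probs)):
    print(m, entropy_bits(probs), grouped_entropy(probs, m))
```

Both columns agree up to floating-point error for every split point, which is the decomposition the proof of Theorem 3 relies on when a partition is refined block by block.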

Proof of Theorem 3.

Let be an optimal -partition function of . Suppose that is an arbitrary optimal partition function for . Thus, by definition. On the other hand, using Lemma 4 we have , because otherwise combining and results in a -partition function such that . This contradicts the optimality of . A similar argument holds for . Hence, combining any optimal -partition function of with any optimal -partition function of must yield an optimal -partition function of . ∎

III Compression objective function and an algorithm

Observe that $I(X;Y) = H(X) - H(X|Y)$. Since $I(X;Y) = H(Y)$ for $Y = f(X)$, we have $H(Y) = H(X) - H(X|Y)$. Since $H(X)$ does not depend on the choice of partition function, we can minimize $H(X|Y)$ instead of maximizing $H(Y)$. The conditional entropy $H(X|Y)$ can be understood as the average uncertainty remaining in $X$ when $Y$ is revealed. Moreover, $H(X|Y)$ approximates the average number of bits required to compress the source $X$ when $Y$ is revealed. Consider the running example of and . A worst-case partition is to put all numbers in the first subset and nothing in the other subset. This partition is also worst-case from the perspective of compression: assume that the random variable $X$ takes values with probabilities . The partition given above implies that $Y$ takes a single value with probability one, so its revelation provides no information about $X$. Thus, one still needs to fully compress $X$.

To go from the entropic objective function to the compression objective function (which is more operational), we note the following connection between entropy and compression. It is known that the minimum expected length among all prefix-free codes to describe a source is given by the Huffman code [12]. Moreover, we have

$$H(X) \le \sum_{x} p(x)\, \ell(x) < H(X) + 1, \tag{3}$$

where $\ell(x)$ is the length of the Huffman codeword assigned to symbol $x$ [12].
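The standard relation between entropy and the expected Huffman codeword length, $H(X) \le \sum_x p(x)\,\ell(x) < H(X) + 1$, can be checked numerically. The following is a minimal sketch of Huffman's construction using a binary heap; `huffman_lengths` is a name introduced here, and the probability vectors are hypothetical.

```python
import heapq
import math


def huffman_lengths(probs):
    """Return the Huffman codeword length of each symbol."""
    if len(probs) == 1:
        return [0]
    # Heap entries: (probability, unique tie-breaker, symbols in the subtree).
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    counter = len(probs)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        # Every symbol under the merged node moves one level deeper.
        for i in s1 + s2:
            lengths[i] += 1
        heapq.heappush(heap, (p1 + p2, counter, s1 + s2))
        counter += 1
    return lengths


probs = [0.4, 0.3, 0.2, 0.1]
lengths = huffman_lengths(probs)
expected = sum(p * l for p, l in zip(probs, lengths))
entropy = -sum(p * math.log2(p) for p in probs)
print(lengths, expected, entropy)
```

For dyadic distributions such as $(1/2, 1/4, 1/4)$ the expected length equals the entropy exactly; otherwise it exceeds the entropy by less than one bit.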

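Given a $k$-partition function $f$ where $Y = f(X)$, let $p_{X|Y=y}$ be the conditional distribution of $X$ given $Y = y$ for $y \in \mathcal{Y}$. Let $\mathcal{C}_y$ be the Huffman code for compressing $X$ when $Y = y$. Then, the compression objective function is defined as

$$L(f) = \sum_{y} P(Y = y) \sum_{x} P(X = x \mid Y = y)\, \ell_y(x), \tag{4}$$

where $\ell_y(x)$ is the length of the Huffman codeword assigned to symbol $x$ in $\mathcal{C}_y$. Using (3) we obtain

$$H(X|Y) \le L(f) < H(X|Y) + 1. \tag{5}$$

Thus, minimizing $H(X|Y)$ and minimizing $L(f)$ are approximately the same. Unlike $H(X|Y)$, $L(f)$ does not admit an explicit formula. However, we give a fast algorithm for solving

$$\min_{f} L(f), \tag{6}$$

where $Y = f(X)$.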
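To make the compression objective concrete, the sketch below evaluates it for a given partition by building one Huffman code per subset and averaging the codeword lengths with weights $w_i/S$. It uses the fact that, for a Huffman tree, $\sum_i w_i \ell_i$ equals the sum of the weights of all merged nodes. The function names and the example partition are hypothetical, and base-2 logarithms are assumed for the conditional entropy.

```python
import heapq
import math


def huffman_weighted_length(weights):
    """Sum of w_i * l_i over a Huffman code built on unnormalized weights,
    computed as the sum of the weights of all merged (internal) nodes."""
    heap = list(weights)
    heapq.heapify(heap)
    total = 0
    while len(heap) > 1:
        merged = heapq.heappop(heap) + heapq.heappop(heap)
        total += merged
        heapq.heappush(heap, merged)
    return total


def compression_objective(groups):
    """Expected Huffman codeword length of X once its subset is revealed."""
    grand_total = sum(sum(g) for g in groups)
    return sum(huffman_weighted_length(g) for g in groups) / grand_total


def conditional_entropy_bits(groups):
    """H(X|Y) in bits for the partition described by `groups`."""
    grand_total = sum(sum(g) for g in groups)
    h = 0.0
    for g in groups:
        s = sum(g)
        for w in g:
            h -= (w / grand_total) * math.log2(w / s)
    return h


groups = [[4, 5, 8], [6, 7]]  # hypothetical two-way partition
print(compression_objective(groups), conditional_entropy_bits(groups))
```

For this partition the expected length is $39/30 = 1.3$ bits, which lies within one bit above the conditional entropy, as the entropy bound for Huffman codes requires.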

Consider a list $W = (w_1, \dots, w_n)$ where $w_1 \le w_2 \le \dots \le w_n$, and let $S$ be the sum of its entries. We show in Lemma 5 that there is an optimal partition (minimizing the compression objective) such that the two smallest numbers in the list, namely $w_1$ and $w_2$, belong to the same subset in that partition. Knowing this, we can simply merge these two numbers together and replace $w_1$ and $w_2$ by $w_1 + w_2$. We claim that the problem then reduces to finding an optimal partition for the new list $W' = (w_1 + w_2, w_3, \dots, w_n)$. The reason is as follows: assume that $f(1) = f(2) = y$ for some $y$. Then, in the distribution of $X$ conditioned on $Y = y$, the probabilities of symbols $1$ and $2$ are still the two smallest. It is known that a Huffman code starts off by merging the two symbols of lowest probability. Therefore, as symbols $1$ and $2$ are in the same group, an optimal Huffman code also begins by merging them into a single symbol. Thus, there is a one-to-one correspondence between Huffman codes for partitions of $W$ in which $w_1$ and $w_2$ are in the same group, and Huffman codes for partitions of $W'$. Moreover, from (4), the compression objective for a partition of $W$ in which $w_1$ and $w_2$ are in the same group equals $(w_1 + w_2)/S$ plus the compression objective for the corresponding partition of $W'$. Since $(w_1 + w_2)/S$ is a constant that does not depend on the choice of partition, it suffices to proceed by minimizing the compression objective over partitions of $W'$.

Algorithm 1 gives the formal algorithm. This algorithm is similar to Huffman's construction, except that it stops early, once the size of the list becomes equal to $k$.
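The merging procedure described above can be sketched as follows. This is an illustrative reading of the description rather than a transcription of Algorithm 1: it repeatedly merges the two smallest entries and stops when $k$ entries remain. The function name, the tie-breaking rule, and the input list are assumptions made here. A binary heap gives $O(n \log n)$ heap operations for a list of $n$ numbers.

```python
import heapq


def most_compressible_partition(numbers, k):
    """Truncated Huffman merging: merge the two smallest entries until
    only k entries remain. Returns (groups, cost), where cost is the sum
    of all merged weights divided by the total of the list."""
    if not 1 <= k <= len(numbers):
        raise ValueError("k must be between 1 and len(numbers)")
    # Heap entries: (weight, unique tie-breaker, original numbers in the group).
    heap = [(w, i, [w]) for i, w in enumerate(numbers)]
    heapq.heapify(heap)
    counter = len(numbers)
    merged_total = 0
    while len(heap) > k:
        w1, _, g1 = heapq.heappop(heap)
        w2, _, g2 = heapq.heappop(heap)
        merged_total += w1 + w2
        heapq.heappush(heap, (w1 + w2, counter, g1 + g2))
        counter += 1
    groups = [sorted(g) for _, _, g in sorted(heap)]
    return groups, merged_total / sum(numbers)


# Hypothetical input list with k = 2.
groups, cost = most_compressible_partition([4, 5, 6, 7, 8], 2)
print(groups, cost)
```

On this input the successive lists are (4, 5, 6, 7, 8), (6, 7, 8, 9), (8, 9, 13) and (13, 17), so the numbers 6 and 7 end up in one subset and 4, 5 and 8 in the other. By the reduction argument above, each merge contributes its merged weight divided by the total, which is why the sketch reports the accumulated merged weight as the cost.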

Consider the running example of and . The algorithm produces the following lists: . One can see that the numbers are grouped together (adding up to ), and are also grouped together during the execution of the algorithm (adding up to ). This shows that the minimum of equals . The balanced partition and also yields . However, unlike , it is not always the case that is minimized by a perfectly balanced partition (if such a partition exists). Nonetheless, as , maximizing or are approximately the same when and are large.

Lemma 5.

Assume $n > k$. There is an optimal mapping $f$ minimizing (6) such that the two smallest numbers are in the same subset, i.e., $f(1) = f(2)$ for a list $W = (w_1, \dots, w_n)$ where $w_1 \le w_2 \le \dots \le w_n$.

Proof.

Take a list where . Let be an optimal mapping minimizing (6) such that . There are two cases:

1. One cannot find such that or . In this case, the Huffman code for given or has zero length. Since , one can find numbers such that for some . We construct a new partition function such that , and , and , are equal on the other values, then the expected length decreases by

where and are the length of the Huffman codewords assigned to and conditioned on . This is a contradiction with optimality of unless . If , will also be an optimal code satisfying .

2. There exists some such that either , or . Let and . Let and be the Huffman codes for distribution of given and respectively. At least one of the Huffman codes and has a non-zero average length. In any Huffman code with at least two symbols, the two longest codewords have the same length and they are assigned to symbols with the lowest probabilities [12]. First assume that . Then, certainly has more than one codeword. Since has the least probability (corresponds to ), it has the least probability in its group and also its codeword has the largest length in code . Moreover there is another codeword with this length that corresponds to some . Thus, and . Construct the new partition function such that , and , are equal on the other values. Using the same Huffman codewords as before, this change in the mapping reduces the expected length of codewords by This is a contradiction unless which implies optimality of . For case , a similar argument goes through. Therefore, similar to Case 1, we can construct an optimal mapping satisfying . ∎

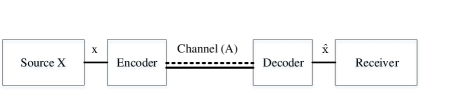

We end this section by giving an information theoretic characterization of in terms of a source coding problem. Suppose that we have a discrete memoryless source with alphabet available at the encoder; see Fig. 1. The encoder has access to two noiseless parallel channels. The first channel (depicted as channel (A) in Fig. 1) is a free channel and can carry a symbol . The second channel is not free, and the encoder is charged for each transmitted bit. The goal is to minimize the average number of bits that are transmitted on the second channel in such a way that the receiver is able to perfectly reconstruct the source . The solution to this problem is .

References

- [1] E. L. Schreiber, R. E. Korf, and M. D. Moffitt, “Optimal multi-way number partitioning,” J. ACM, vol. 65, no. 4, Jul. 2018.

- [2] R. E. Korf, “Objective functions for multi-way number partitioning,” in Third Annual Symposium on Combinatorial Search, 2010.

- [3] R. M. Karp, “Reducibility among combinatorial problems,” in Complexity of computer computations, 1972, pp. 85–103.

- [4] R. E. Korf, “Multi-way number partitioning,” in Twenty-First International Joint Conference on Artificial Intelligence, 2009.

- [5] ——, “A hybrid recursive multi-way number partitioning algorithm,” in Twenty-Second International Joint Conference on Artificial Intelligence, 2011.

- [6] M. D. Moffitt, “Search strategies for optimal multi-way number partitioning,” in Twenty-Third International Joint Conference on Artificial Intelligence, 2013.

- [7] R. E. Korf, E. L. Schreiber, and M. D. Moffitt, “Optimal sequential multi-way number partitioning.” in ISAIM, 2014.

- [8] R. L. Graham, “Bounds on multiprocessing timing anomalies,” SIAM journal on Applied Mathematics, vol. 17, no. 2, pp. 416–429, 1969.

- [9] R. E. Korf, “From approximate to optimal solutions: A case study of number partitioning,” in IJCAI, 1995, pp. 266–272.

- [10] N. Karmarkar and R. M. Karp, The differencing method of set partitioning. University of California Berkeley, 1982.

- [11] R. E. Korf and E. L. Schreiber, “Optimally scheduling small numbers of identical parallel machines,” in Twenty-Third International Conference on Automated Planning and Scheduling, 2013.

- [12] D. A. Huffman, “A method for the construction of minimum-redundancy codes,” Proceedings of the IRE, vol. 40, no. 9, pp. 1098–1101, 1952.