Multifaceted Privacy: How to Express Your Online Persona without Revealing Your Sensitive Attributes

Abstract

Recent works in social network stream analysis show that a user’s online persona attributes (e.g., gender, ethnicity, political interest, location, etc.) can be accurately inferred from the topics the user writes about or engages with. Attribute and preference inferences have been widely used to serve personalized recommendations and directed ads, and to enhance the user experience in social networks. However, revealing a user’s sensitive attributes can represent a privacy threat to some individuals. Microtargeting (e.g., the Cambridge Analytica scandal), surveillance, and discriminating ads are examples of threats to user privacy caused by sensitive attribute inference. In this paper, we propose multifaceted privacy, a novel privacy model that aims to obfuscate a user’s sensitive attributes while preserving the user’s public persona. To achieve multifaceted privacy, we build Aegis, a prototype client-centric social network stream processing system that helps preserve multifaceted privacy, thus allowing social network users to freely express their online personas without revealing their sensitive attributes of choice. Aegis allows social network users to control which persona attributes should be publicly revealed and which ones should be kept private. To this end, Aegis continuously suggests topics and hashtags for users to post in order to obfuscate their sensitive attributes and hence confuse content-based sensitive attribute inferences. The suggested topics are carefully chosen to preserve the user’s publicly revealed persona attributes while hiding their private sensitive persona attributes. Our experiments show that adding as few as 0 to 4 obfuscation posts (depending on how revealing the original post is) successfully hides the user-specified sensitive attributes without changing the user’s public persona attributes.

Keywords Attribute Privacy, Online-Persona, Content-Based Inference

1 Introduction

Over the past decade, social network platforms such as Facebook, Twitter, and Instagram have attracted hundreds of millions of users [11, 13, 12]. These platforms are widely and pervasively used to communicate, create online communities [26], and socialize. Social media users develop, over time, online personas [45] that reflect their overall interests, activism, and diverse orientations. Users have numerous followers who are specifically interested in their personas and in the posts that are aligned with these personas. However, due to the rise of machine learning and deep learning techniques, user posts and social network interactions can be used to accurately and automatically infer many user persona attributes such as gender, ethnicity, age, political interest, and location [33, 38, 47, 46]. Recent work shows that it is possible to predict an individual user’s location solely using content-based analysis of the user’s posts [20, 19]. Zhang et al. [46] show that the hashtags in user posts alone can be used to infer a user’s location with an accuracy of 70% to 76%. Also, an analysis of Facebook likes was successfully used to distinguish between Democrats and Republicans with 85% accuracy [33].

Social network giants have widely used attribute inference to serve personalized trending topics, to suggest pages to like and accounts to follow, and to notify users about hyper-local events. In addition, social networks such as Facebook use tracking [9] and inference techniques to classify users into categories (e.g. Expats, Away from hometown, Politically Liberal, etc.). These categories are used by advertisers and small businesses to enhance directed advertising campaigns. However, recent news about the Cambridge Analytica scandal [6] and similar data breaches [14] suggest that users cannot depend on the social network providers to preserve their privacy. User sensitive attributes such as gender, ethnicity, and location have been widely misused in illegally discriminating ads, microtargeting, and surveillance. A recent ACLU report [5] shows that Facebook illegally allowed employers to exclude women from receiving their job ads on Facebook. Also, several reports have shown that Facebook allows discrimination against some ethnic groups in housing ads [10]. News about the Russian-linked Facebook Ads during the 2016 election suggests that the campaign targeted voters in swing states [2] and specifically in Michigan and Wisconsin [3]. In addition, location data collected from Facebook, Twitter, and Instagram has been used to target activists of color [1].

An online persona can be thought of as the set of user attributes that can be inferred about a user from their online postings and interactions. These attributes fall into two categories: public and private persona attributes. Users should decide which attributes fall in each category. Some attributes (e.g., political orientation and ethnicity) should be publicly revealed, as a user’s followers might follow her because of her public persona attributes. Other attributes (e.g., gender and location) are private and sensitive, and the user would not like them to be revealed. However, with the inference methods mentioned above, the social media providers, as well as any adversary who receives the user’s posts, can infer the user’s sensitive attributes.

To remedy this situation, in this paper, we propose multifaceted privacy, a novel privacy model that aims to obfuscate a user’s sensitive attributes while revealing the user’s public persona attributes. Multifaceted privacy allows users to freely express their online public personas without revealing any sensitive attributes of their choice. For example, a #BlackLivesMatter activist might want to hide her location from the police and from discriminating advertisers while continuing to post about topics specifically related to her political movement. This activist can try to hide her location by disabling the geo-tagging feature of her posts and hiding her IP address using an IP obfuscation browser like Tor [4]. However, recent works have shown that content-based location inferences can successfully and accurately predict a user’s location solely based on the content of her posts [20, 19, 46]. If this activist frequently posts about topics that discuss BLM events in Montpelier, Vermont, she is most probably a resident of Montpelier (Montpelier has a low African American population).

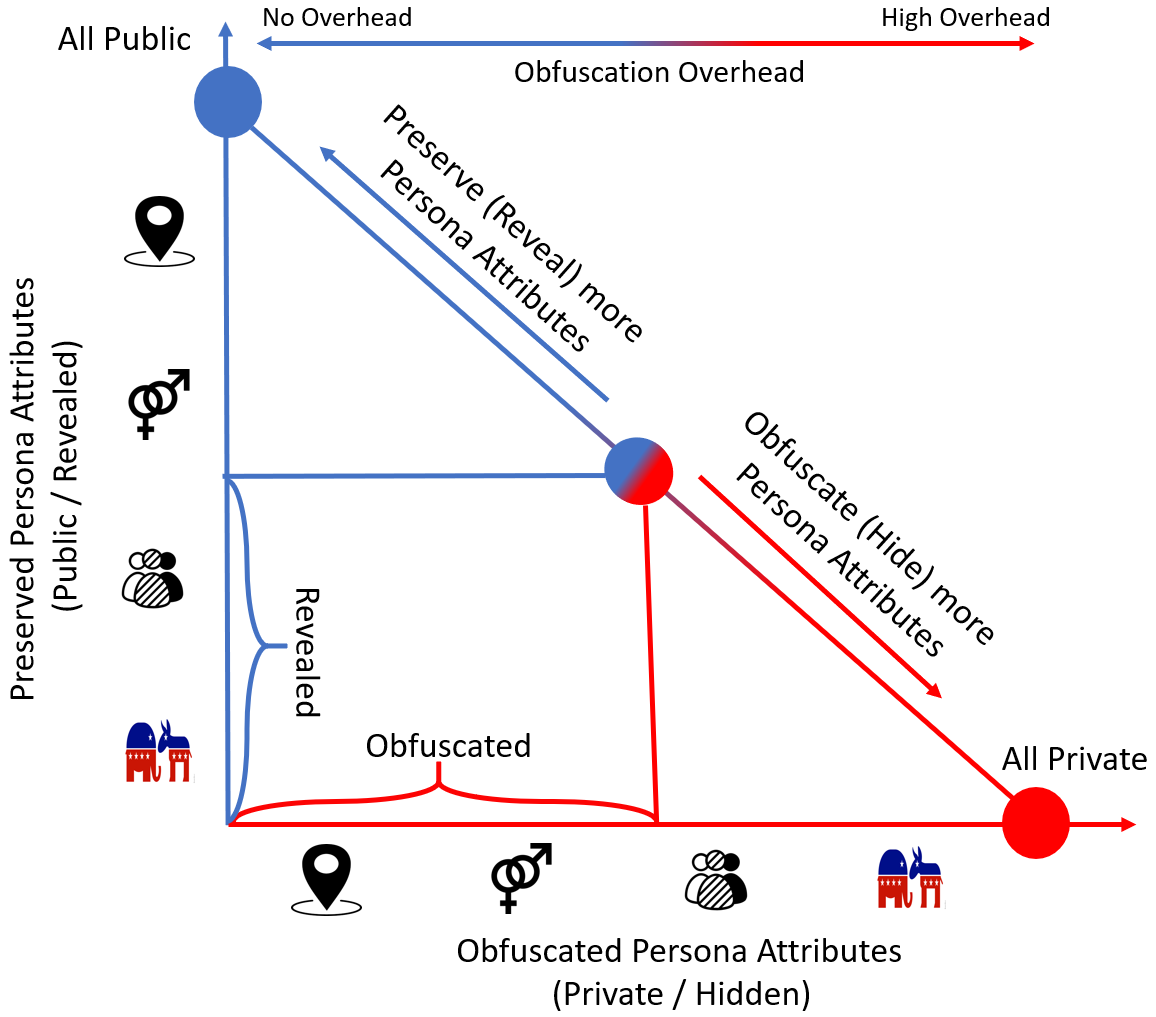

To achieve multifaceted privacy, we build Aegis (named after the shield in Greek mythology), a prototype client-centric social network stream processing system that enables social network users to take charge of protecting their own privacy, instead of depending on the social network providers. Our philosophy in building Aegis is that social network users need to introduce some noisy interactions and obfuscation posts to confuse content-based attribute inferences. This idea is inspired by Rivest’s chaffing and winnowing privacy model in [39]. In [39], the sender and receiver exchange a secret that allows the receiver to easily distinguish the chaff from the wheat. In social networks, by contrast, a user (sender) posts to an open world of followers (receivers), and it is not feasible to exchange a secret with every recipient. In addition, a subset of the recipients could be adversaries who try to infer the user’s sensitive attributes from their postings. Choosing this noise introduces a challenging dichotomy and tension between the utility of the user persona and her privacy. Similar notions of dichotomy between sensitive and non-sensitive personal attributes have been explored in sociology and are referred to as contextual integrity [37]. Obfuscation posts need to be carefully chosen to achieve obfuscation of private attributes without damaging the user’s public persona. For example, a #NoBanNoWall activist loses persona utility if she writes about #BuildTheWall to hide her location.

Multifaceted privacy represents a continuum between privacy and persona utility. Figure 1 captures this continuum, where the x-axis represents the persona attributes that need to be obfuscated or kept private while the y-axis represents the persona attributes that should be publicly preserved or revealed. Figure 1 shows that privacy is the reciprocal of the persona utility: any attribute that needs to be kept private cannot be preserved in the public persona. As illustrated, the more attributes are kept private, the more obfuscation overhead is needed to achieve their privacy. A user who chooses to publicly reveal all her persona attributes achieves no attribute privacy and hence requires no obfuscation posting overhead.

As illustrated in Figure 1, a user chooses a point on the diagonal line of the multifaceted privacy that determines which persona attributes should be publicly revealed (e.g., political interest, ethnicity, etc) and which ones should be kept private (e.g., location, gender, etc). Note that users can reorder the attributes on the axes of Figure 1 in order to achieve their intended public/private attribute separation. Unlike previous approaches that require users to change their posts and hashtags [46] to hide their sensitive attributes, Aegis allows users to publish their original posts without changing their content. Our experiments show that adding obfuscation posts successfully preserves multifaceted privacy. Aegis considers the added noise as the cost to pay for achieving multifaceted privacy. Therefore, Aegis targets users who are willing to write additional posts to hide their sensitive attributes.

Aegis is user-centric, as we believe that users need to take control of their own privacy concerns and cannot depend on the social media providers. This is challenging as it requires direct user engagement and certain sacrifices. However, we believe Aegis will help to better understand the complexity of privacy as well as the role of individual engagement and responsibility. Aegis represents a first step on the long path to better understanding the tensions between user privacy, the utility of social media, and trust in public social media providers. This is an overdue discussion that the scientific community needs to have, and we believe Aegis will provide a medium for it.

Our contributions are summarized as follows:

- We propose multifaceted privacy, a novel privacy model that represents a continuum between the privacy of sensitive private attributes and public persona attributes.

- We build Aegis, a prototype user-centric social network stream processing system that preserves multifaceted privacy. Aegis continuously analyzes social media streams to suggest topics to post that are aligned with the user’s public persona but hide their sensitive attributes.

- We conduct an extensive experimental study to show that Aegis can successfully achieve multifaceted privacy.

The rest of the paper is organized as follows. We explain the models of user, topic, and security in Section 2. Topic classification algorithms and data structures that achieve multifaceted privacy are described in Section 3 and Aegis’s system design is explained in Section 4. Afterwards, an experimental evaluation is conducted in Section 5 to evaluate the effectiveness of Aegis in achieving the multifaceted privacy. The related work is presented in Section 6 and future extensions are presented in Section 7. The paper is concluded in Section 8.

2 Models

In this section, we present the user, topic, and security models. The user and topic models explain how users and topics are represented in the system. The security model presents both the privacy and the adversary models.

2.1 User Model

Our user model is similar to the user model presented in [27]. The set $U$ is the set of social network users, where $U = \{u_1, u_2, \ldots\}$. A user $u \in U$ is represented by a vector of attributes $V_u$ (e.g., gender $g$, ethnicity $e$, age $a$, political interest $p$, location $l$, etc.). Each attribute has a domain, and the attribute values are picked from this domain. For example, the gender attribute $g$ has the domain {male, female}, so a user’s gender value is one of these two. (Due to the limitations of the inference models, the gender attribute is considered binary only; better models could be used to infer non-binary gender attribute values.) An example user $u$ is represented by the vector $V_u$ = ($g$: female, $e$: African American, $a$: 23, $p$: Democrat, $l$: New York). Attribute domains can form a hierarchy (e.g., location: city → county → state → country), and an attribute can be generalized by climbing up this hierarchy. A user who lives in Los Angeles is also a resident of Los Angeles County, California, and the United States. Other attributes form trivial hierarchies (e.g., gender: male or female → * (no knowledge)).

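The user attribute vector $V_u$ is divided into two main categories: 1) the set of public persona attributes $V_u^{\mathrm{pub}}$ and 2) the set of private sensitive attributes $V_u^{\mathrm{priv}}$. Multifaceted privacy aims to publicly reveal all persona attributes in $V_u^{\mathrm{pub}}$ while hiding all sensitive attributes in $V_u^{\mathrm{priv}}$. Each user defines her $V_u^{\mathrm{pub}}$ and $V_u^{\mathrm{priv}}$ a priori. As shown in Figure 1, the attributes in $V_u^{\mathrm{priv}}$ are the complement of the attributes in $V_u^{\mathrm{pub}}$. Therefore, each attribute belongs to either $V_u^{\mathrm{pub}}$ or $V_u^{\mathrm{priv}}$.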
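The split of a user’s attribute vector into a public and a private set can be sketched as a small data structure. This is only an illustration of the model above: the class name `UserProfile` and its field names are hypothetical and are not part of Aegis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserProfile:
    """Illustrative user model (hypothetical names, not from Aegis)."""
    attributes: dict      # attribute name -> attribute value
    private: frozenset    # names of the attributes the user marks as sensitive

    def __post_init__(self):
        # Every sensitive attribute must be one of the user's attributes.
        unknown = self.private - set(self.attributes)
        if unknown:
            raise ValueError(f"unknown sensitive attributes: {sorted(unknown)}")

    @property
    def public(self):
        # The public set is the complement of the private set.
        return frozenset(self.attributes) - self.private


user = UserProfile(
    attributes={
        "gender": "female",
        "ethnicity": "African American",
        "age": 23,
        "party": "Democrat",
        "location": "New York",
    },
    private=frozenset({"gender", "location"}),
)
```

Because the public set is derived as a complement, every attribute lands in exactly one of the two sets, which mirrors the requirement stated above.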

2.2 Topic Model

The set $T$ represents the set of all topics that are discussed by all the social network users in $U$. $T_u$ represents the set of all the topics posted by user $u$ up to a given time, where $T_u \subseteq T$. Social network topics are characterized by the attributes of the users who post about these topics. Unlike user attributes, which are discrete values, topic attributes are represented as distributions. For example, an analysis of the ethnicity of the users who post about the topic #BlackLivesMatter can result in the distribution 10% Asian, 25% White, 15% Hispanic, and 50% Black. This distribution means that Asians, Whites, Hispanics, and Blacks all post about the topic #BlackLivesMatter and that 50% of the users who post about this topic are Black. A topic $t$ is represented by a vector of attribute distributions $V_t = (D_t^g, D_t^e, D_t^p, D_t^l)$, where $D_t^g$, $D_t^e$, $D_t^p$, and $D_t^l$ are respectively the gender, the ethnicity, the political party, and the location distributions of the users who post about $t$.
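As a minimal sketch of how one attribute distribution of a topic could be computed, assume we already hold one inferred attribute value per user who posted about the topic. The function name `attribute_distribution` is illustrative, and the inference step that produces the values is out of scope here.

```python
from collections import Counter


def attribute_distribution(values):
    """Turn a list of per-user attribute values into a distribution."""
    counts = Counter(values)
    total = sum(counts.values())
    return {value: count / total for value, count in counts.items()}


# Ethnicity values of 100 hypothetical users who posted about one topic,
# matching the example proportions in the text.
posters = ["Asian"] * 10 + ["White"] * 25 + ["Hispanic"] * 15 + ["Black"] * 50
ethnicity_distribution = attribute_distribution(posters)
```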

To extract the gender, ethnicity, and political interest attribute distributions of different topics, we use the language models introduced in [26, 41]. However, any other available model could be used to infer user attributes. The location distribution of a topic is inferred using the geo-tagged public posts about this topic, where the location of the publisher is explicitly attached to the post.

2.3 Security Model

An approach that is commonly used for attribute obfuscation is generalization. The idea behind attribute generalization is to report a generalized value of a user’s sensitive attribute in order to hide the actual attribute value within it. Consider location as an example of a sensitive attribute. Many works [36, 46] have used location generalization in different contexts. Mokbel et al. [36] use location generalization to hide a user’s exact location from Location Based Services (LBS). A query that asks "what is the nearest gas station to my exact location in Stanford, CA?" should be altered to "list all gas stations in California". Notice that the returned result of the altered query has to be filtered at the client side to find the answer to the original query. Similarly, Andrés et al. [17] propose geo-indistinguishability, a location privacy model that uses differential privacy to hide a user’s exact location within a circle of radius $r$ from LBS providers. The wider the generalization range, the more privacy is achieved, and the more network and processing overhead is added at the client side. In the context of social networks, Zhang et al. [46] require Twitter users to generalize their location-revealing hashtags in order to hide their exact location. For example, a post that includes "#WillisTower" should be generalized to "#Chicago" to hide the user’s exact location. Notice that generalization requires users to alter their original posts or queries.
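Generalization by climbing an attribute hierarchy can be sketched in a few lines. The `PARENT` table and the `generalize` helper below are illustrative assumptions covering a single path of the location hierarchy; they are not taken from any of the cited systems.

```python
# Hypothetical fragment of a location hierarchy: each value maps to its parent.
PARENT = {
    "Stanford": "Santa Clara County",
    "Santa Clara County": "California",
    "California": "United States",
}


def generalize(value, levels=1):
    """Climb `levels` steps up the hierarchy; '*' means 'no knowledge'."""
    for _ in range(levels):
        value = PARENT.get(value, "*")
    return value
```

Each extra level widens the set of users who share the reported value, which is the privacy gain, at the price of a coarser answer that the client has to filter.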

To overcome these limitations and to allow users to write their posts in their own words, we adopt the notion of k-attribute-indistinguishability privacy, which is defined as follows. For every sensitive attribute $s \in V_u^{\mathrm{priv}}$, the user defines an indistinguishability parameter $k_s$. $k_s$ determines the number of attribute values among which the real value of attribute $s$ is hidden. For example, a user who lives in CA sets $k_l = 3$ in order to hide her original state location, CA, among 3 different states (e.g., CA, IL, and NY). This means that a content-based inference attack should not be able to distinguish the user’s real location among the set {CA, IL, NY}. As explained in Section 2.1, attribute domains either form multi-level hierarchies (e.g., location) or trivial hierarchies (e.g., gender and ethnicity). Unlike in attribute generalization, where a user’s attribute value is generalized by climbing up the attribute hierarchy, k-attribute-indistinguishability achieves the privacy of an attribute value by hiding it among attribute values chosen from the siblings of the actual attribute value at the same hierarchy level (e.g., a user’s state-level location is hidden among other states instead of being generalized to the entire country). The following inference attack explains when k-attribute-indistinguishability is achieved or violated.

The adversary assumptions: the adversary model and the inference attacks are similar to the ones presented in [46]. However, unlike in [46], our inference attack is not limited to the location attribute but can be extended to infer every sensitive attribute of a user in $V_u^{\mathrm{priv}}$. The adversary has access to the set of all topics $T$ and all the public posts related to each topic. This assumption covers any adversary who can crawl or get access to the public posts of every topic in the social network. As discussed in Section 1, the target user $u$ does not reveal her sensitive attribute values to the public (e.g., a user who wants to hide her location must obfuscate her IP address and disable the geo-tagging feature for her posts). Therefore, the adversary can only see the content of the public posts published by $u$. The adversary uses their knowledge about the set of all topics $T$ and the set of topics $T_u$ discussed by $u$ to infer her sensitive attributes. Multifaceted privacy protects users against an adversary who only performs content-based inference attacks. Therefore, multifaceted privacy assumes that the adversary does not have any side-channel knowledge that can be used to reveal a user’s sensitive attribute value (e.g., another online profile that is directly linked to the user where sensitive attributes such as gender, ethnicity, or location are revealed).

Inference attack: the adversary’s ultimate goal is to reveal, or at least have high confidence in their knowledge of, the sensitive attribute values of the target user $u$. For this, the adversary runs a content-based attack as follows. First, the adversary crawls the set of topics $T_u$ that user $u$ wrote about. For each topic, the adversary infers the demographics of the users who wrote about this topic. Then, the adversary aggregates the demographics of all the topics in $T_u$. The adversary uses the aggregated demographics to estimate the sensitive attributes of user $u$. The details of the inference attack are explained as follows.

For each topic $t \in T_u$, an adversary crawls the set of posts $P_t$ that discuss topic $t$, and for each post $p \in P_t$, the adversary uses some models to infer the gender, ethnicity, political interest, and location of the user who wrote this post. Then, the adversary uses the inferred attributes of each post in topic $t$ to populate $t$’s distribution vector $V_t$. For example, $D_t^g$ is the gender distribution of all users who wrote about topic $t$. Similarly, $D_t^e$, $D_t^p$, and $D_t^l$ are the ethnicity, political interest, and location distributions of the users who posted about topic $t$. We define $\hat{V}_u$ as a vector of attribute distributions that is used to estimate the attributes of user $u$. $\hat{V}_u$ is the result of aggregating the normalized $V_t$ for every topic $t \in T_u$, as shown in Equation 1.

$$\hat{V}_u = \frac{1}{|T_u|} \sum_{t \in T_u} \frac{V_t}{|P_t|} \tag{1}$$

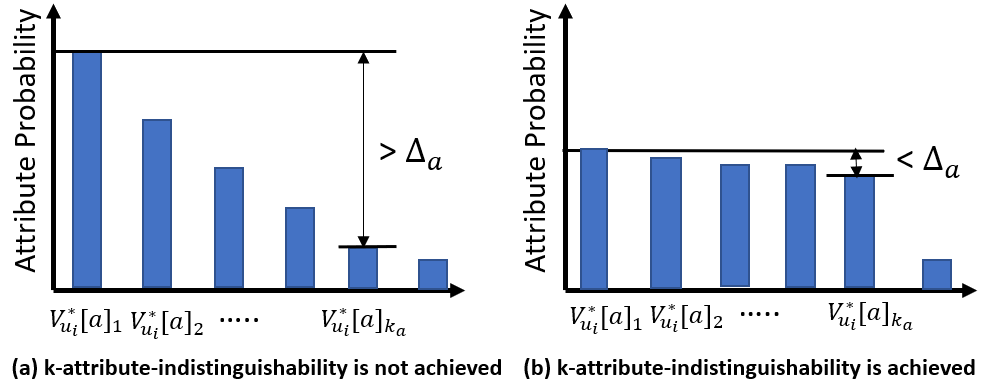

Equation 1 shows that the topic’s attribute distribution vector $V_t$ is first normalized by dividing it by the number of posts in topic $t$, $|P_t|$. This normalization equalizes the effect of every topic on the user’s attribute estimations in $\hat{V}_u$. $\hat{V}_u$ is the summation of the normalized $V_t$ for every topic $t \in T_u$, divided by the number of topics in $T_u$. $\hat{D}_u^s$ is the estimated distribution of attribute $s$ for user $u$. For example, a user $u$ might have a gender distribution $\hat{D}_u^g$ = {female: 0.8, male: 0.2}. This means that the inference attack using $u$’s posted topics suggests that the probability that $u$ is female is 80% while the probability that $u$ is male is only 20%. For every attribute $s$, an attacker uses the attribute value with the maximum estimate in $\hat{D}_u^s$ as an estimation of the actual value. In the previous example, an attacker would infer female as an estimate of the gender of user $u$. An inference attack succeeds in estimating an attribute $s$ if the attacker can have sufficient confidence in estimating the actual value. This confidence is achieved if the difference between the maximum estimated attribute value in $\hat{D}_u^s$ and the top-$k_s$ (that is, the $k_s$-th highest) estimated attribute value in $\hat{D}_u^s$ is greater than a threshold $\Delta$. For example, if $\Delta = 0.1$ and $k_g = 2$ (assuming gender is a binary attribute and it needs to be hidden among the 2 gender attribute values), then an attacker successfully estimates $u$’s gender if the maximum value in $\hat{D}_u^g$ is distinguishable from the second highest value in $\hat{D}_u^g$ by more than 10%. In the previous example where $\hat{D}_u^g$ = {female: 0.8, male: 0.2}, an attacker succeeds in estimating $u$’s gender as female, because the difference $0.8 - 0.2 = 0.6$ is greater than $\Delta = 0.1$.

Figure 2 shows an example of a successful inference attack and another of a failed inference attack on attribute $s$ of user $u$. As shown in Figure 2.a, the maximum estimated attribute value is distinguishable from the top-$k$ ($k_s$ for attribute $s$) attribute values in $\hat{D}_u^s$ by more than $\Delta$. In this scenario, an attacker can conclude with high confidence that the maximum estimated value is a good estimate for the actual value. However, in Figure 2.b, the maximum estimated value is indistinguishable from the top-$k$ attribute values in $\hat{D}_u^s$. In this scenario, the attack is marked as failed and k-attribute-indistinguishability is achieved.
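The attack described above can be sketched for a single attribute as follows. This is a minimal illustration under stated assumptions: each topic is given as a dictionary of per-post counts (the number of posts inferred to carry each attribute value), the number of posts of a topic is taken to be the sum of those counts, and the function names and the padding of missing values with zero are our own choices.

```python
def estimate_user_distribution(topic_counts):
    """Aggregate per-topic counts in the spirit of Equation 1.

    topic_counts holds one dict per topic in the user's topic set, mapping
    an attribute value to the number of posts inferred to carry that value.
    """
    if not topic_counts:
        raise ValueError("the user has no topics")
    totals = {}
    for counts in topic_counts:
        n_posts = sum(counts.values())  # assumed number of posts in this topic
        for value, count in counts.items():
            # Normalize each topic by its post count so all topics weigh equally.
            totals[value] = totals.get(value, 0.0) + count / n_posts
    return {value: total / len(topic_counts) for value, total in totals.items()}


def attack_succeeds(distribution, k, delta):
    """True if the top estimate exceeds the k-th highest one by more than delta."""
    ranked = sorted(distribution.values(), reverse=True)
    ranked += [0.0] * max(0, k - len(ranked))  # pad values that never appear
    return ranked[0] - ranked[k - 1] > delta


# Two hypothetical topics whose posters are 90% and 70% female.
gender = estimate_user_distribution(
    [{"female": 90, "male": 10}, {"female": 70, "male": 30}]
)
gender_revealed = attack_succeeds(gender, k=2, delta=0.1)

# A flat location estimate: the top value is not distinguishable.
location_revealed = attack_succeeds(
    {"CA": 0.36, "IL": 0.33, "NY": 0.31}, k=3, delta=0.1
)
```

In the first case the gap between the two gender estimates is about 0.6, so the attack succeeds; in the second the gap between the highest and third highest location estimates is about 0.05, so k-attribute-indistinguishability holds for k = 3.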

The parameter $k$ is used to determine the number of attribute values within which the user’s actual attribute value is hidden. The bigger the $k$, the lower the attacker’s confidence about the user’s actual attribute value. As a result, increasing $k$ introduces uncertainty in the attacker’s inference and hence raises the adversary’s cost of micro-targeting users who hide their actual attribute values among different attribute values. For example, an adversary who wants to target a user in location CA has to pay 3 times the advertisement cost to reach the same user if the user hides her location equally among 3 locations (e.g., CA, IL, and NY).

We understand that the requirement to determine the sensitive attributes and an indistinguishability parameter value for every sensitive attribute could be challenging for many users. Users might not have a sense of the number of attribute values needed to obfuscate the actual value of a particular attribute. One possible solution to this usability challenge is to design a questionnaire for Aegis’s first-time users. This questionnaire helps Aegis understand which persona attributes are sensitive and how critical the privacy of every sensitive attribute is to every user. This allows Aegis to auto-configure $k_s$ for every sensitive attribute of every user. The details of such an approach are out of the scope of this paper. In this paper, we assume that Aegis is preconfigured with the set $V_u^{\mathrm{priv}}$ and that, for every attribute $s \in V_u^{\mathrm{priv}}$, the value of $k_s$ is determined.

3 Multifaceted Privacy

Multifaceted privacy aims to obfuscate every sensitive attribute in $V_u^{\mathrm{priv}}$ while publicly revealing every attribute of the user’s public persona in $V_u^{\mathrm{pub}}$. Various approaches have been used to obfuscate specific sensitive attributes. In particular, Tagvisor [46] protects users against content-based inference attacks by requiring users to alter their posts by changing or replacing hashtags that reveal their sensitive attributes (location, in their case). Our approach is different, as it is paramount not only to preserve the privacy of the sensitive attributes but also to preserve the online persona of the user, and hence reveal their public attributes. It is critical for users to write their posts in their own words, which reflect their persona. We therefore preserve multifaceted privacy by hiding a specific post among other obfuscation posts. Our approach needs to suggest posts that are aligned with the user’s public persona but linked to alternative values of their sensitive attributes in order to obfuscate them. This requires a topic classification model that simplifies the process of suggesting obfuscation posts. For example, consider State-level location as a sensitive attribute. To achieve k-location-indistinguishability, a user’s exact State should be hidden among other States. This requires suggesting obfuscation posts about topics that are linked to these other States. Users in NY State can use topics that are mainly discussed in IL to obfuscate their location among NY and IL. To discover such potential topics, all topics that are discussed on a social network need to be classified by the sensitive attributes that need to be obfuscated, State-level location in this example. A topic $t$ is linked to some State if the maximum estimated State location of this topic is distinguishable from the other State location estimates in $D_t^l$ by more than $\Delta$. For example, for any threshold $\Delta$ below 0.4, a topic that has a State location distribution of {NY=0.6, IL=0.2, CA=0.1, Others=0.1} is linked to NY State, while a topic that does not have a State location inference distinguishable by more than $\Delta$ is not linked to any State.

In this section, we first explain a simple but incorrect topic classification model that successfully suggests obfuscation posts that hide a user’s sensitive attributes but does not preserve her public persona. Then, we explain how to modify the topic classification model to suggest obfuscation posts that do achieve multifaceted privacy.
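The linking rule for a single attribute can be sketched as below. The function name `linked_value` and the threshold value used in the example are assumptions made for illustration; the rule compares the top estimate against the runner-up, which is one reading of "distinguishable from the other estimates".

```python
def linked_value(distribution, delta):
    """Return the attribute value a topic is linked to, or None.

    A topic is linked to its top value only if that value's share exceeds
    the runner-up's share by more than delta.
    """
    ranked = sorted(distribution.items(), key=lambda item: item[1], reverse=True)
    if not ranked:
        return None
    top_value, top_share = ranked[0]
    runner_up_share = ranked[1][1] if len(ranked) > 1 else 0.0
    return top_value if top_share - runner_up_share > delta else None


# State distribution from the example in the text, with an assumed threshold.
state = linked_value({"NY": 0.6, "IL": 0.2, "CA": 0.1, "Others": 0.1}, delta=0.3)

# A topic discussed almost evenly across States is not linked to any State.
no_state = linked_value({"NY": 0.35, "IL": 0.3, "CA": 0.25, "Others": 0.1}, delta=0.3)
```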

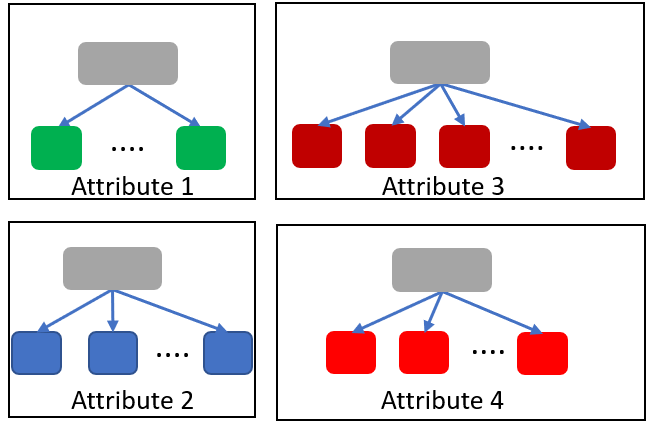

A simple incorrect proposal: in this proposal, topics are classified independently by every sensitive attribute. As shown in Figure 3, each attribute forms an independent hierarchy. The root of the hierarchy has the topics that are not linked to a specific attribute value. Topics that are strongly linked to some attribute value fall down in the hierarchy node that represents this attribute value. For example, State level location attribute forms a hierarchy of two levels. The first level, the root, has all the topics that do not belong to a specific State. A topic like #Trump is widely discussed in all the States and therefore it resides on the root of the location attribute. However, #cowboy is mainly discussed in TX and therefore it falls down in the hierarchy to the TX node. To obfuscate a user’s State location, topics need to be selected from the sibling nodes of the user’s State in the State level location hierarchy. These topics belong to other locations and can be used to achieve k-location-indistinguishability privacy. Although this proposal successfully achieves location privacy, the suggested posts are not necessarily aligned with the user’s public online persona. For example, this obfuscation technique could suggest the topic #BuildTheWall (from TX) to an activist (from NY) who frequently posts about #NoBanNoWall in order to hide her location. This misalignment between the suggested obfuscation posts and the user’s public persona discourages users from seeking privacy fearing the damage to their public online persona.

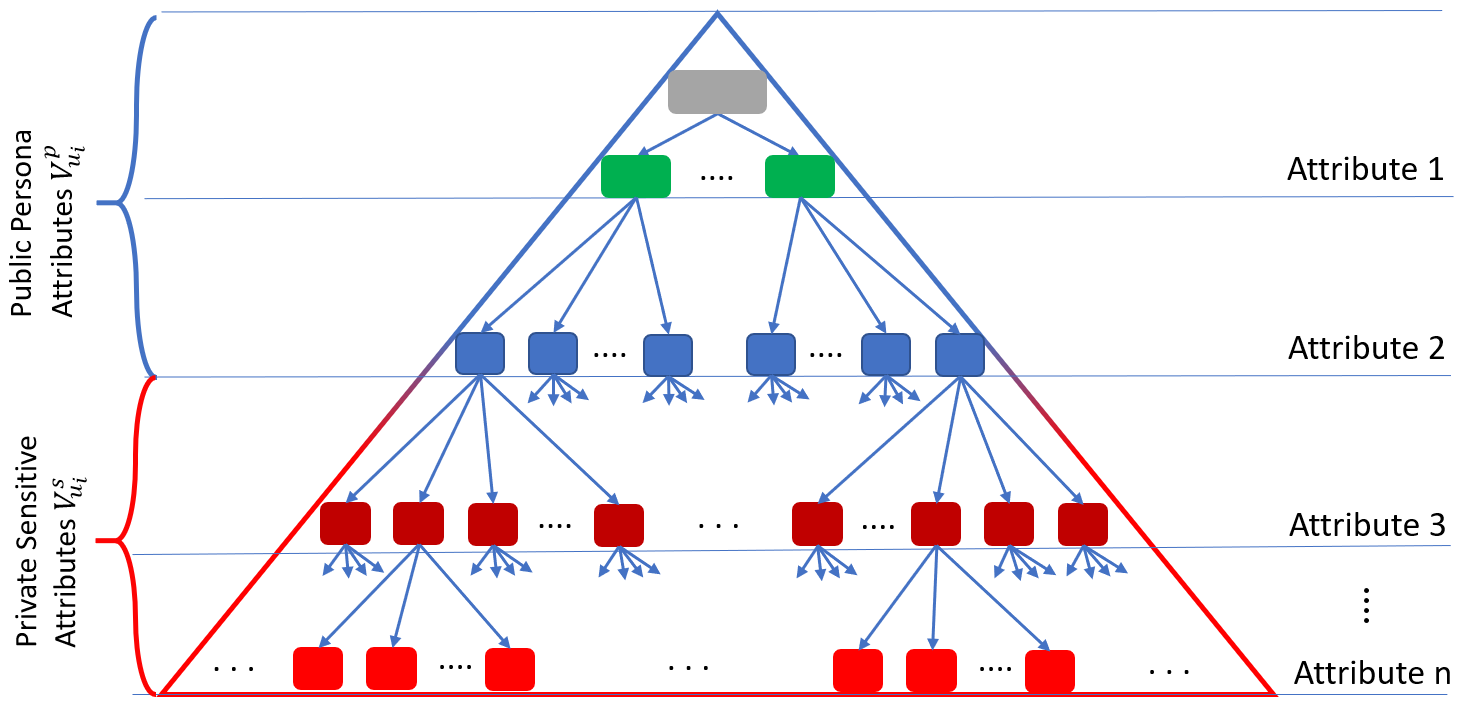

A persona-preserving proposal: To overcome the shortcomings of independent classification, obfuscation posts need to be suggested from a tree hierarchy that captures the relevant dependencies among all user attributes, including both public persona and private sensitive attributes. Public attributes need to reside on the upper levels of the classification tree while private ones reside on the bottom levels of the tree, as shown in Figure 4. To achieve k-attribute-indistinguishability, sibling values of the sensitive attributes are used to hide the actual value of these sensitive attributes. By placing the public attributes higher up in the tree, we ensure that the suggested topics adhere to the public persona. Finally, multifaceted privacy only requires that all the public attributes, regardless of their order, reside on the upper levels of the tree and that all the private attributes, regardless of their order, reside on the lower levels of the tree.

Social network topics are dependently classified in the tree by the attribute domain values at each level. For example, if the topmost level of the tree is the political party attribute, topics that are mainly discussed by Democrats are placed in the left green child while topics that are mainly discussed by Republicans are placed in the right green child. Note that topics that have no inference reside in the root of the hierarchy. Now, if the second public persona attribute is ethnicity (shown as blue nodes in Figure 4), topics in both the Democratic and Republican nodes are classified by the ethnicity domain attribute values. For example, topics that are mainly discussed by White Democrats are placed under the Democratic node in the White ethnicity node, while topics that are mainly discussed by Asian Republicans are put under the Republican node in the Asian ethnicity node. This classification is applied at every tree level for every attribute. Now, assume a user is a White, Female Democrat who lives in CA and wants to hide her location (shown as red nodes in Figure 4) while publicly revealing her ethnicity, gender, and political party. In this case, topics that reside in the sibling nodes of the leaf of her persona path, e.g., topics that are mainly discussed by White Female Democrats who live in locations other than CA (e.g., NY and IL), can be suggested as obfuscation topics. This dependent classification guarantees that the suggested topics are aligned with the user’s public persona but belong to other sensitive attribute values (different locations in this example). Note that this technique is general enough to obfuscate any attribute and any number of attributes. Each user defines her sensitive attribute(s), and the classification tree is constructed with these attributes at the bottom, thus guaranteeing that the suggested posts do not violate multifaceted privacy.
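The dependent classification and the sibling-based suggestion can be sketched as follows. This is a simplified illustration, not the Aegis implementation: it assumes that each topic comes with one record of inferred attributes per poster, it reuses the difference-to-runner-up rule with a threshold `delta`, and all function names and the sample data are hypothetical.

```python
from collections import Counter, defaultdict


def place_topic(posters, attribute_order, delta):
    """Walk down the tree, re-estimating each attribute among the posters
    that matched every attribute value chosen so far (dependent classification)."""
    path = []
    for attr in attribute_order:
        if not posters:
            break
        ranked = Counter(p[attr] for p in posters).most_common(2)
        top_value, top_count = ranked[0]
        runner_up = ranked[1][1] if len(ranked) > 1 else 0
        if (top_count - runner_up) / len(posters) <= delta:
            break  # no distinguishable value: the topic stays at this node
        path.append(top_value)
        posters = [p for p in posters if p[attr] == top_value]
    return tuple(path)


def build_tree(topic_posters, attribute_order, delta):
    """Map every tree node (a path of attribute values) to its topics."""
    tree = defaultdict(list)
    for topic, posters in topic_posters.items():
        tree[place_topic(posters, attribute_order, delta)].append(topic)
    return tree


def suggest_topics(tree, user_path, n_public):
    """Topics in leaves that share the user's public prefix but differ
    from the user's own leaf in the sensitive attribute values."""
    user_path = tuple(user_path)
    prefix = user_path[:n_public]
    return sorted(
        topic
        for path, topics in tree.items()
        if len(path) == len(user_path)
        and path[:n_public] == prefix
        and path != user_path
        for topic in topics
    )


def person(party, ethnicity, state):
    return {"party": party, "ethnicity": ethnicity, "state": state}


# Hypothetical topics with the inferred attributes of their posters.
topic_posters = {
    "#A": [person("Democrat", "White", "CA")] * 8
    + [person("Republican", "White", "TX")] * 2,
    "#B": [person("Democrat", "White", "NY")] * 9
    + [person("Democrat", "Asian", "NY")],
    "#C": [person("Republican", "White", "TX")] * 10,
    "#D": [person("Democrat", "White", "CA")] * 5
    + [person("Republican", "White", "TX")] * 5,
}

# Public attributes first (party, ethnicity), the sensitive one (state) last.
tree = build_tree(topic_posters, ["party", "ethnicity", "state"], delta=0.3)
suggestions = suggest_topics(tree, ("Democrat", "White", "CA"), n_public=2)
```

Here only `#B` is suggested to the White Democrat in CA: it shares her public prefix but is linked to NY. `#C` is linked to another State as well, yet it sits under the Republican branch, which is exactly the kind of persona-damaging suggestion the independent classification could produce.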

4 Aegis System Design

This section presents Aegis, a prototype social network stream processing system that implements multifaceted privacy and overcomes the adversarial content-based attribute inference attacks discussed in Section 2.3. Aegis achieves k-attribute-indistinguishability by suggesting topics to post that are aligned with the social network user’s public persona while hiding the user’s sensitive attributes among their other domain values. To achieve this, Aegis uses the classification and suggestion models discussed in Section 3. Aegis is designed in a user-centric manner and is configured on the user’s local machine. In fact, Aegis can be developed as a browser extension where all the user interactions with the social network are handled through this extension. Every local deployment of Aegis only needs to construct a partition of the attribute-based topic classification hierarchy shown in Figure 4. This partition (or sub-hierarchy) includes the user’s attribute path from the root to a leaf in addition to the sibling nodes of the user’s sensitive attribute nodes. For example, a user whose attributes are Female, White, Democrat, and CA and who wants to obfuscate her State location only requires Aegis to construct the Female, White, Democrat, CA path in addition to other paths with the shared prefix Female, White, Democrat but linked to other States. These States are used to hide the user’s true State in order to achieve k-location-indistinguishability. Note that if another user’s public persona is specifically associated with their location while they consider their ethnicity to be sensitive, then the hierarchy needs to be reordered to reflect this criterion.

Although Aegis can be integrated with different online social network platforms, our prototype implementation of Aegis only supports Twitter to illustrate Aegis’s functionality. Twitter provides developers with several public APIs [8] that allow them to stream tweets that discuss certain topics. In addition, Twitter streaming APIs allow developers to sample 1% of all the tweets posted on Twitter. In Twitter, a topic is represented by either a hashtag or a keyword. Aegis is built to work for new Twitter profiles in order to continuously confuse an adversary about a profile’s true sensitive attribute values from the genesis of this profile. Aegis is not designed to work with existing old profiles, as an adversary could have already used their existing posts to reveal their sensitive attribute values. Even though Aegis suggests obfuscation posts, an adversary can distinguish the old original posts from the newly added original posts accompanied by their obfuscation posts and hence reveal the true values of the user’s sensitive attributes.

Aegis is designed to achieve the following goals:

1. to automate the process of streaming and classifying Twitter topics according to their attributes,
2. to construct and continuously maintain the topic classification sub-hierarchy,
3. and finally to use the topic classification sub-hierarchy to suggest topics to the user that achieve multifaceted privacy.

To achieve these goals, Aegis consists of two main processes:

- a Twitter analyzer process and
- a topic suggestion process.

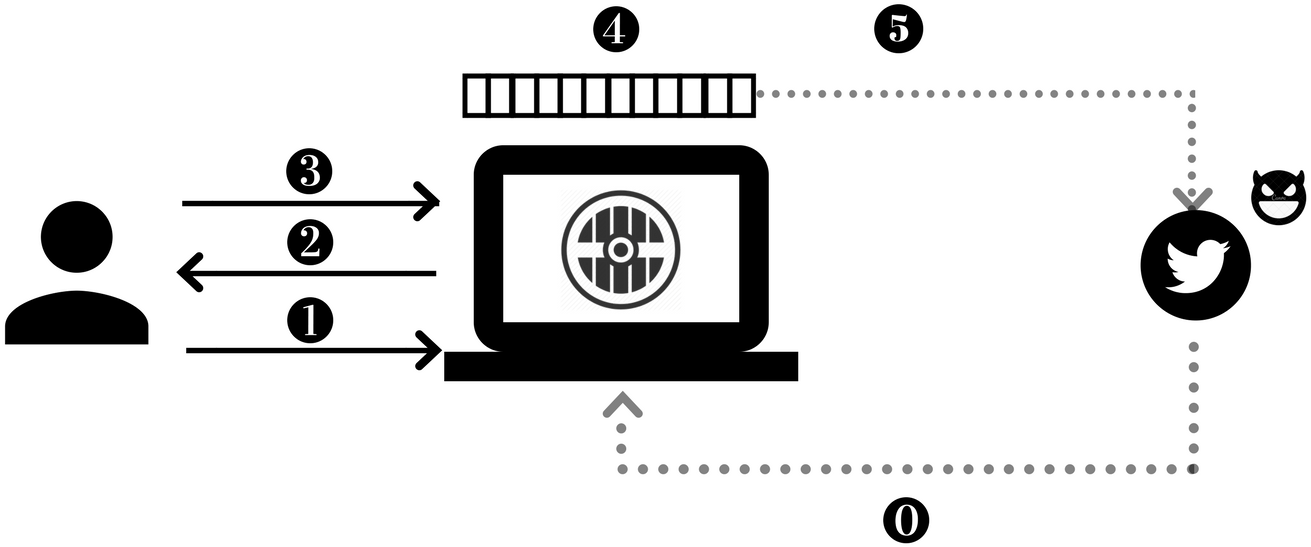

The Twitter analyzer process continuously analyzes the topics that are being discussed on Twitter and, for each topic, uses the topic attribute inference models to infer the topic’s attribute distribution vector. The accuracy of the topic attribute inference increases as the number of posts that discuss the topic increases. The Twitter analyzer process uses a local key-value store as a topic repository where the key is the topic id and the value is the topic attribute distribution vector. In addition, it constructs and continuously maintains the topic classification sub-hierarchy that classifies the topics based on their attributes. This topic classification sub-hierarchy is used for suggesting obfuscation topics. For this, the user provides the Twitter analyzer process with both their attribute vector values and their sensitive attribute vector. Together, these two vectors determine the sub-hierarchy that the Twitter analyzer process needs to maintain in order to obfuscate the sensitive attributes. Figure 5 shows the interactions among the user, Aegis, and the social network. As shown, step 0 represents the continuous Twitter stream analysis performed by the Twitter analyzer process. As it analyzes Twitter streams, it continuously updates the topic repository and the classification sub-hierarchy.
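As a rough illustration of the topic repository, the sketch below keeps a per-topic count of inferred attribute values in an in-memory dictionary and normalizes it on demand. It is a simplification under stated assumptions: the class name `TopicRepository` and its methods are hypothetical, a plain dictionary stands in for the local key-value store, and only a single attribute (e.g., location) is tracked instead of the full attribute distribution vector.

```python
from collections import Counter


class TopicRepository:
    """Hypothetical in-memory stand-in for the local key-value topic store."""

    def __init__(self):
        self._counts = {}  # topic id -> Counter of observed attribute values

    def observe(self, topic, attribute_value):
        """Record one post about `topic` whose writer has `attribute_value`."""
        self._counts.setdefault(topic, Counter())[attribute_value] += 1

    def post_count(self, topic):
        """Number of posts seen for a topic; more posts give a steadier estimate."""
        return sum(self._counts.get(topic, Counter()).values())

    def distribution(self, topic):
        """Normalized distribution of the attribute over the posts seen so far."""
        counts = self._counts.get(topic)
        if not counts:
            return {}
        total = sum(counts.values())
        return {value: count / total for value, count in counts.items()}


repo = TopicRepository()
for state in ["CA", "CA", "CA", "NY"]:
    repo.observe("#example_topic", state)
print(repo.distribution("#example_topic"))  # {'CA': 0.75, 'NY': 0.25}
```

Storing raw counts rather than normalized vectors makes the continuous update a constant-time increment, which fits a process that consumes a stream.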

The topic suggestion process mainly handles user interactions with Twitter. It uses the topic classification sub-hierarchy constructed and maintained by the Twitter analyzer process to suggest obfuscation topics. For every sensitive attribute, the user provides the indistinguishability parameter k that determines how many attribute values from the domain of that attribute should be used to hide its true value. Aegis allows users to configure the privacy parameter for every sensitive attribute. However, to enhance usability, Aegis maintains a default value of 0.1 for the privacy parameter. This means that the privacy of an attribute is achieved if the inference attack cannot distinguish the maximum inferred attribute value from the k-th inferred attribute value by more than 10%.
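The privacy test described above can be written as a short check. The sketch below assumes that an inference is available as a mapping from attribute values to scores in [0, 1]; the function names and the example scores are hypothetical, and treating a gap exactly equal to the privacy parameter as acceptable is an assumption.

```python
def inference_gap(inference, k):
    """Difference between the top-1 and the top-k inferred attribute values."""
    ranked = sorted(inference.values(), reverse=True)
    if not 1 <= k <= len(ranked):
        raise ValueError("k must be between 1 and the number of attribute values")
    return ranked[0] - ranked[k - 1]


def is_k_indistinguishable(inference, k, delta=0.1):
    """True if the adversary cannot separate the top-1 from the top-k value
    by more than the privacy parameter `delta` (default 0.1, i.e. 10%)."""
    return inference_gap(inference, k) <= delta


# Hypothetical aggregated inferences, for illustration only.
gender = {"male": 0.70, "female": 0.30}
location = {"CA": 0.30, "NY": 0.27, "IL": 0.25, "TX": 0.18}

print(is_k_indistinguishable(gender, k=2))    # False: the gap is 0.40
print(is_k_indistinguishable(location, k=3))  # True: the gap is about 0.05
```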

The topic suggestion process uses the sensitive attribute vector, together with the indistinguishability parameter and the privacy parameter of every sensitive attribute, to generate the topic suggestion set. Note that the topic suggestion process is locally deployed at the user’s machine. Therefore, the user does not have to trust any service outside of her machine. Aegis is designed to transfer user trust from the social network providers to the local machine. For every sensitive attribute, the topic suggestion process selects a fixed set of attribute domain values. These attribute values are used to obfuscate the true value of the attribute.

As shown in Figure 5, in Step 1, the user writes a post to publish on Twitter. The topic suggestion process receives this post and queries the Twitter analyzer process about the attributes of all the topics mentioned in this post. The topic suggestion process uses these topic attributes to simulate the adversarial attack. If the post’s topic inference indicates that k-attribute-indistinguishability is violated for any sensitive attribute, the topic suggestion process queries the Twitter analyzer process for topics that match the user’s public persona but are linked to the other attribute values in the selected set of attribute values. For every returned topic, the topic suggestion process ensures that writing about this topic moves the aggregated inference of the original post and the obfuscation posts towards k-attribute-indistinguishability. It adds these topics to the topic suggestion set and returns them to the user (Step 2). The user selects a few topics from the suggestion set to post in Step 3 and submits the posts to the topic suggestion process. Note that users are required to write the obfuscation posts using their personal writing styles to ensure that the original posts and the obfuscation posts are indistinguishable [30, 16]. Afterwards, the topic suggestion process ensures that the aggregated inference of the submitted obfuscation posts in addition to the original post leads to k-attribute-indistinguishability. Otherwise, it keeps suggesting more topics. As every original post along with its obfuscation posts achieves k-attribute-indistinguishability, the aggregated inference over all of the user’s posts achieves k-attribute-indistinguishability. In Step 4, the topic suggestion process queues the original and the obfuscation posts and publishes them on the user’s behalf in random order and at random intervals to prevent timing attacks (Step 5). An adversary can perform a timing attack if the original posts and the obfuscation posts are distinguishable. Queuing and randomly publishing the posts prevents the adversary from distinguishing original posts from the obfuscation posts and hence prevents timing attacks.
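The suggest-and-check loop of Steps 1 to 3 can be sketched as follows. Several parts are assumptions made for illustration rather than details taken from the text: the aggregated inference is computed as the plain average of the per-topic distributions, candidates are picked greedily (the one that most reduces the gap first), and the selection is automated here even though in Aegis the user chooses among the suggested topics. All function and topic names are hypothetical.

```python
def aggregate(distributions):
    """Average per-topic distributions over attribute values (assumed aggregation)."""
    values = set().union(*distributions)
    return {v: sum(d.get(v, 0.0) for d in distributions) / len(distributions)
            for v in values}


def gap(inference, k):
    """Top-1 minus top-k inferred value; missing values count as 0."""
    ranked = sorted(inference.values(), reverse=True)
    ranked += [0.0] * max(0, k - len(ranked))
    return ranked[0] - ranked[k - 1]


def suggest_obfuscation(original, candidates, k, delta=0.1):
    """Greedily add candidate topics until the gap is at most `delta`.

    `original` is the attribute distribution of the user's post and
    `candidates` maps topic names to their attribute distributions.
    Returns the chosen topics and the final aggregated gap.
    """
    posted = [original]
    chosen = []
    remaining = dict(candidates)
    current = gap(aggregate(posted), k)
    while current > delta and remaining:
        best = min(remaining,
                   key=lambda t: gap(aggregate(posted + [remaining[t]]), k))
        best_gap = gap(aggregate(posted + [remaining[best]]), k)
        if best_gap >= current:
            break  # no remaining candidate moves the inference closer
        posted.append(remaining.pop(best))
        chosen.append(best)
        current = best_gap
    return chosen, current


original = {"male": 0.8, "female": 0.2}
candidates = {
    "#topic_a": {"male": 0.25, "female": 0.75},
    "#topic_b": {"male": 0.40, "female": 0.60},
}
print(suggest_obfuscation(original, candidates, k=2))
```

In this example a single obfuscation topic is enough: averaging the original post with `#topic_a` brings the male and female inferences to roughly 0.525 and 0.475, a gap below the 0.1 default. A post whose gap is already within the privacy parameter yields an empty suggestion list.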

We understand that the obfuscation writing overhead might alienate users from Aegis. As a future extension, Aegis can exploit deep neural network language models to learn the user’s writing style [21]. Aegis can use such a model to generate [31] full posts instead of hashtags and users can either directly publish these posts or edit them before publishing.

5 Experimental Evaluation

In this section, we experimentally evaluate the effectiveness of Aegis in achieving multifaceted privacy. We first present the experimental setup and analyze some properties of the used dataset in Section 5.1. Then, Sections 5.2 and 5.3 present illustrative inference and obfuscation examples that show the functionality of Aegis using real Twitter topics. We experimentally show how Aegis can be used to hide user location in Section 5.4 and measure the effect of changing the indistinguishability parameter on the obfuscation overhead in Section 5.5. Finally, Section 5.6 illustrates the efficiency of Aegis on hiding the user gender while preserving other persona attributes.

5.1 Experimental Setup

Although Aegis can be integrated with different social network platforms, our prototype implementation is integrated only with the Twitter social network. Twitter provides developers with several public APIs [8], including the streaming API that allows developers to crawl 1% of all the postings on Twitter. For our experiments, we use the 1% random sample of the Twitter stream during the year 2017. The attributes gender, ethnicity, and location are used to build a three-level topic classification hierarchy that classifies all topics according to their attribute distribution. For simplicity and without loss of generality, we use the language models in [41] to infer both the gender and ethnicity attributes of a post writer. In addition, we infer the location distribution of different topics using the explicitly geo-tagged posts about these topics. In the 1% of Twitter’s 2017 postings, our models were able to extract 2,126,791 unique topics. Our classification hierarchy suggests that 66% of the dataset tweets are posted by males. This analysis is consistent with the statistics published in [7]. In addition, the dataset has 6,864,300 geo-tagged posts, 15% of which originated in California. Finally, the predominant ethnicity extracted from the dataset is White.

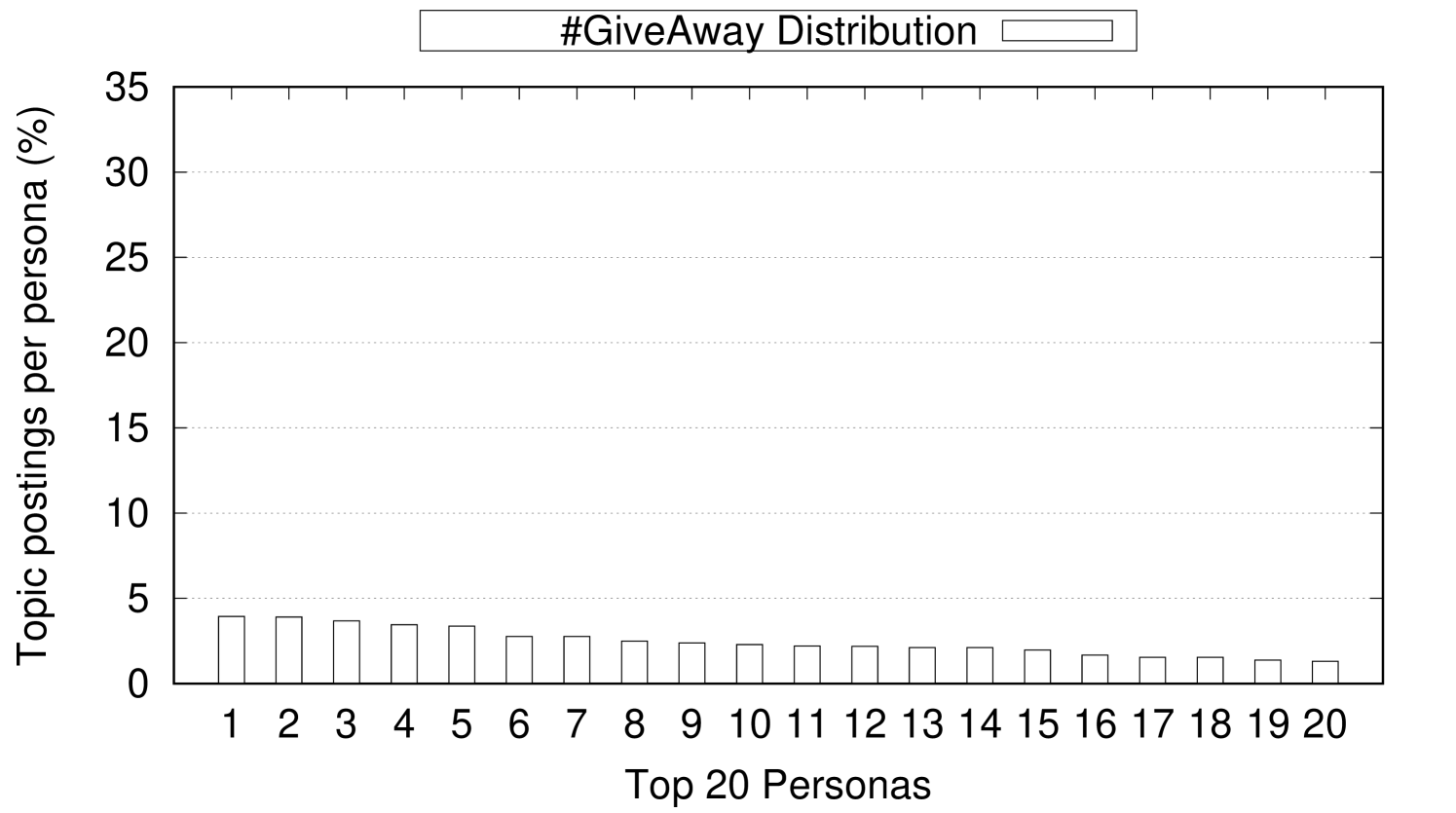

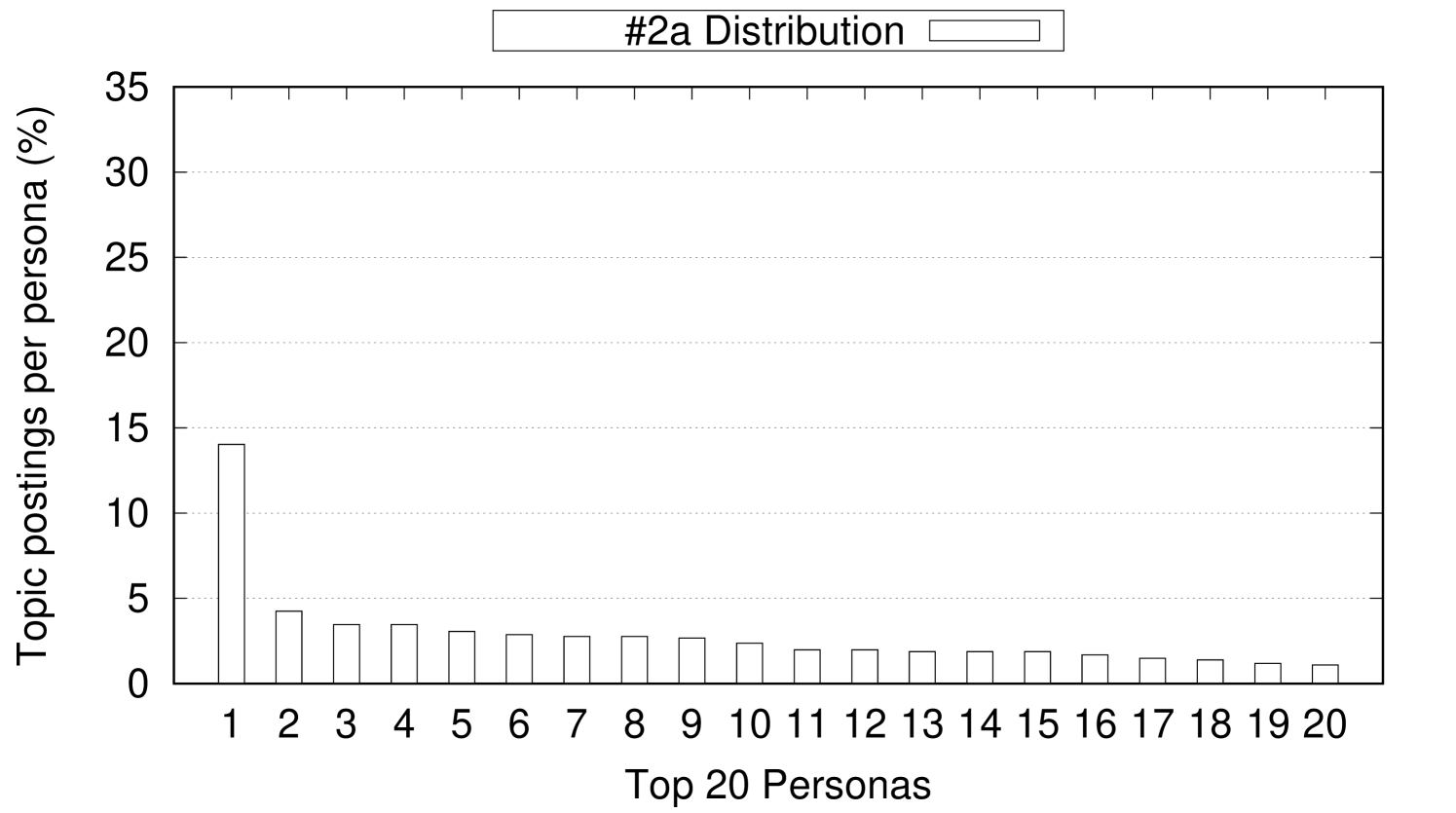

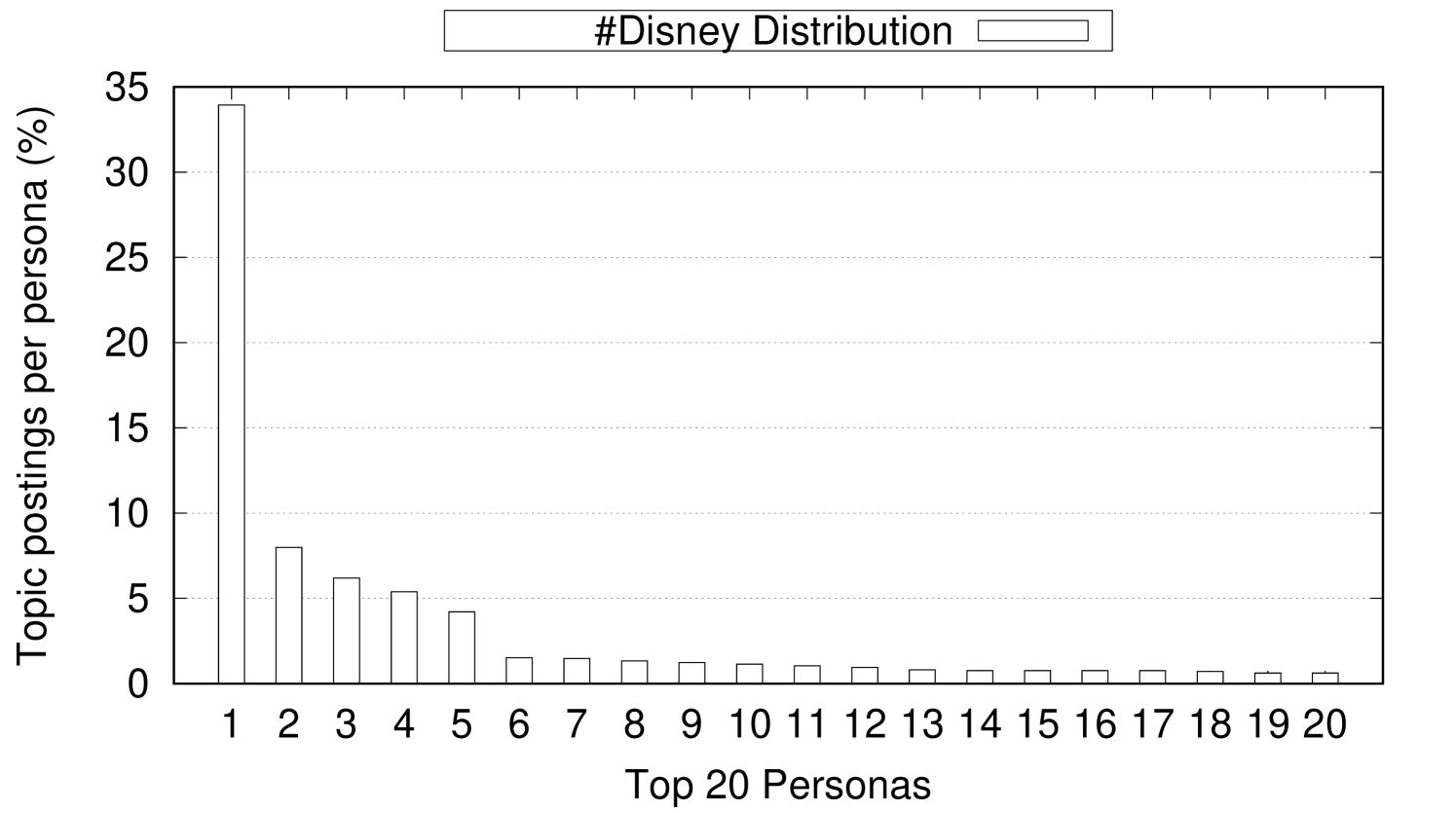

As the classification hierarchy is built using only the gender, ethnicity, and state location attributes, it contains 500 different paths from the root to a leaf. These 500 paths result from all the possible combinations of gender (male, female), ethnicity (White, Black, Asian, Hispanic, Native American), and state location (50 States). The 500 paths represent the 500 different personas considered in our experiments. Our topic classification hierarchy suggests that topics vary significantly in their connection to a specific persona path (a gender, ethnicity, and location combination). For example, #GiveAway is widely discussed among the 500 personas across the 50 States with very little skew towards specific personas over others. For topics that are widely discussed across all personas, the skew is usually proportional to the population of the different States. For example, the five most populous States (CA, TX, FL, NY, and PA) usually appear as the top locations where widely discussed topics are posted. On the other hand, other topics show a strong connection to specific personas. For example, 33.93% of the postings about #Disney come from the Male, Asian persona living in Florida, where Disney World is located. Figure 6 shows 3 examples of topics that have negligible, weak, and strong connections to specific personas. In Figure 6, the x-axis represents the top-20 personas who post about a topic and the y-axis represents the percentage of postings for each persona. As shown in Figure 6(a), #GiveAway has a slight skew (negligible connection) towards some personas over others. Also, the top persona posting about #GiveAway represents only 3.94% of the overall postings about the topic. On the other hand, Figure 6(b) shows that the persona distribution for #2a (which refers to the Second Amendment) has more skew (weak connection) towards some personas over others. As shown, the top posting persona on the topic #2a contributes 14.03% of the overall postings of this topic. Finally, a topic like #Disney has a remarkable skew (strong connection) towards some personas over others. As shown in Figure 6(c), the top posting persona on the topic #Disney contributes 33.93% of the overall postings of this topic.
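The count of 500 persona paths follows directly from the attribute domains (2 genders, 5 ethnicities, 50 States). The short Python sketch below enumerates them; the variable names are illustrative and the State abbreviations are the standard two-letter postal codes.

```python
from itertools import product

genders = ["Male", "Female"]
ethnicities = ["White", "Black", "Asian", "Hispanic", "Native American"]
states = [
    "AL", "AK", "AZ", "AR", "CA", "CO", "CT", "DE", "FL", "GA",
    "HI", "ID", "IL", "IN", "IA", "KS", "KY", "LA", "ME", "MD",
    "MA", "MI", "MN", "MS", "MO", "MT", "NE", "NV", "NH", "NJ",
    "NM", "NY", "NC", "ND", "OH", "OK", "OR", "PA", "RI", "SC",
    "SD", "TN", "TX", "UT", "VT", "VA", "WA", "WV", "WI", "WY",
]

# Every root-to-leaf path is one (gender, ethnicity, state) combination.
personas = list(product(genders, ethnicities, states))
print(len(personas))  # 500 = 2 * 5 * 50
```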

Table 1: Topic to persona connection strength categories.

| Strength | Minimum | Maximum |
|---|---|---|
| Negligible | 0% | 10% |
| Weak | 10% | 20% |
| Mild | 20% | 30% |
| Strong | 30% | 100% |

Table 2: Topic frequency and the rank of four personas for each topic.

| Topic | Frequency | Male-White-CA | Female-White-CA | Male-White-TX | Female-White-TX |
|---|---|---|---|---|---|
| #teen | 7094 | 7 | 1 | 15 | 4 |
| #hot | 7478 | 5 | 1 | 13 | 3 |
| #etsy | 2739 | 6 | 5 | 27 | 1 |
| #diy | 1987 | 3 | 7 | 11 | 1 |
| #actor | 725 | 1 | 2 | 9 | 11 |
| #cowboys | 797 | 5 | 16 | 1 | 2 |

We define a topic to persona connection strength parameter as the difference in posting percentage between the top-1 posting persona and the top-k posting persona. For #2a and k = 3, while for #Disney and for k=3, . We categorize topics into 4 categories according to the value of this parameter. As shown in Table 1, a topic to persona connection that ranges from 0% to 10% represents a negligible connection and hence this topic does not reveal the persona attributes of the users who write about it. As the topic to persona connection increases, the potential of revealing the attributes of the users who write about this topic increases. In our experiments, we measure the overhead of obfuscation for three different distributions with weak, mild, and strong connections, whose topic to persona connection strength ranges are shown in Table 1.
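The connection strength and its categories can be computed as in the sketch below. The function names and the example shares are hypothetical and are not taken from the dataset. Because the ranges in Table 1 share their endpoints, the sketch assumes half-open intervals, so that a value of exactly 10% counts as weak rather than negligible.

```python
def connection_strength(shares, k):
    """Top-1 posting share minus top-k posting share, in percentage points."""
    ranked = sorted(shares, reverse=True)
    if not 1 <= k <= len(ranked):
        raise ValueError("k must be between 1 and the number of personas")
    return ranked[0] - ranked[k - 1]


# (name, minimum, maximum) in percent, following Table 1.
CATEGORIES = [
    ("Negligible", 0, 10),
    ("Weak", 10, 20),
    ("Mild", 20, 30),
    ("Strong", 30, 100),
]


def categorize(strength):
    """Map a connection strength to its Table 1 category (half-open ranges)."""
    for name, low, high in CATEGORIES:
        if low <= strength < high:
            return name
    if strength == 100:
        return "Strong"
    raise ValueError("strength must lie between 0 and 100")


# Hypothetical posting shares (percent) of the top personas for one topic.
shares = [33.0, 12.0, 8.0, 5.0]
strength = connection_strength(shares, k=3)
print(strength, categorize(strength))  # 25.0 Mild
```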

5.2 Illustrative Inference Example

Using our tree data structure, we can infer interesting information about different topics on Twitter in our dataset, specifically regarding correlations between personas and topics. In Table 2 we analyze six topics and their connection to 4 of the most prominent personas in our dataset (Male-White-CA, Female-White-CA, Male-White-TX, Female-White-TX). Frequency denotes the number of times the topic was observed, and the number under a persona for a particular topic denotes the rank of that persona among all personas ordered by how much they discuss the topic. For example, among all the personas we analyze in our dataset, #actor is most discussed by White Male Californians (rank 1), closely followed by White Females also from California (rank 2). These are followed by other personas outside the focus of Table 2, until White Male Texans are reached at rank 9 and White Female Texans at rank 11. Also, Table 2 shows that both #teen and #hot are discussed the most by Female White Californians and that, overall, Females (in both CA and TX) discuss these topics more than Males in both CA and TX. We can also observe correlations across topics that have semantic connections, like #etsy and #diy. Etsy is an online platform for users to sell DIY (Do It Yourself) projects. Female White Texans are much more interested in such DIY-specific topics than any of the other personas. Lastly, high correlations of certain topics can be observed with specific locations, such as #actor with California and #cowboys with Texas. As Table 2 reveals, the topics you post on social media significantly reveal your attributes, even private sensitive attributes you are unwilling to share. As such, we need a tool like Aegis to prevent adversaries from inferring private attributes while preserving others.

5.3 Illustrative Obfuscation Example

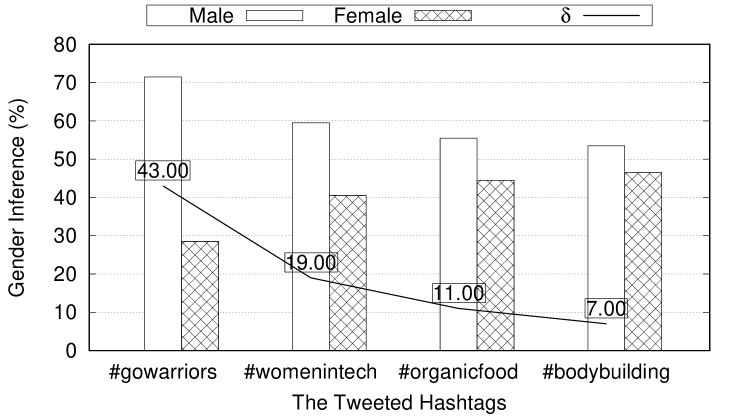

In this section, we provide an illustrative obfuscation example that shows how Aegis achieves multifaceted privacy. This example begins with a newly created Twitter profile of a Male, White user who lives in California. The user wants to obfuscate his gender among the gender domain values {male, female}, achieving 2-gender-indistinguishability. Indistinguishability holds if the privacy parameter is met. The privacy parameter is set to 0.1 (or 10%) to ensure that users who write about topics with only a negligible topic to persona connection strength do not have to add any obfuscation posts to their timelines, as the topics they post do not reveal their sensitive attributes. In addition, the user wants to preserve his ethnicity and location attributes as his public persona. Now, assume that the user tweets #gowarriors to show his support for his favorite Californian basketball team, the Golden State Warriors.

Unfortunately, as shown in Figure 7, #gowarriors has a strong connection to the male gender attribute value. In Figure 7, the x-axis represents the posted hashtags one after the other and the y-axis represents the aggregated gender inferences for both male and female attribute values over all the posted hashtags. In addition, the figure reports the difference of the aggregated gender inference between the male and female attribute values. As shown, the initial difference indicates a strong link between the user’s gender and the male attribute value. As this difference exceeds the 10% privacy parameter, 2-gender-indistinguishability is violated.

Therefore, Aegis suggests posting topics that are mainly discussed by White people who live in California but linked to the female gender attribute value. Figure 7 shows the effect of posting subsequent topics on the aggregated gender inference. The topic #womenintech helps to reduce the aggregate inference difference to 19%. #organicfood brings the difference down to 11% and #bodybuilding reduces it to 7%. Notice that a difference of 7% is below the 10% privacy parameter and hence 2-gender-indistinguishability is achieved. Notice that the same example holds if the user’s true gender is female. The goal of Aegis is not to invert the gender attribute value but to achieve inference indistinguishability among different gender attribute values.

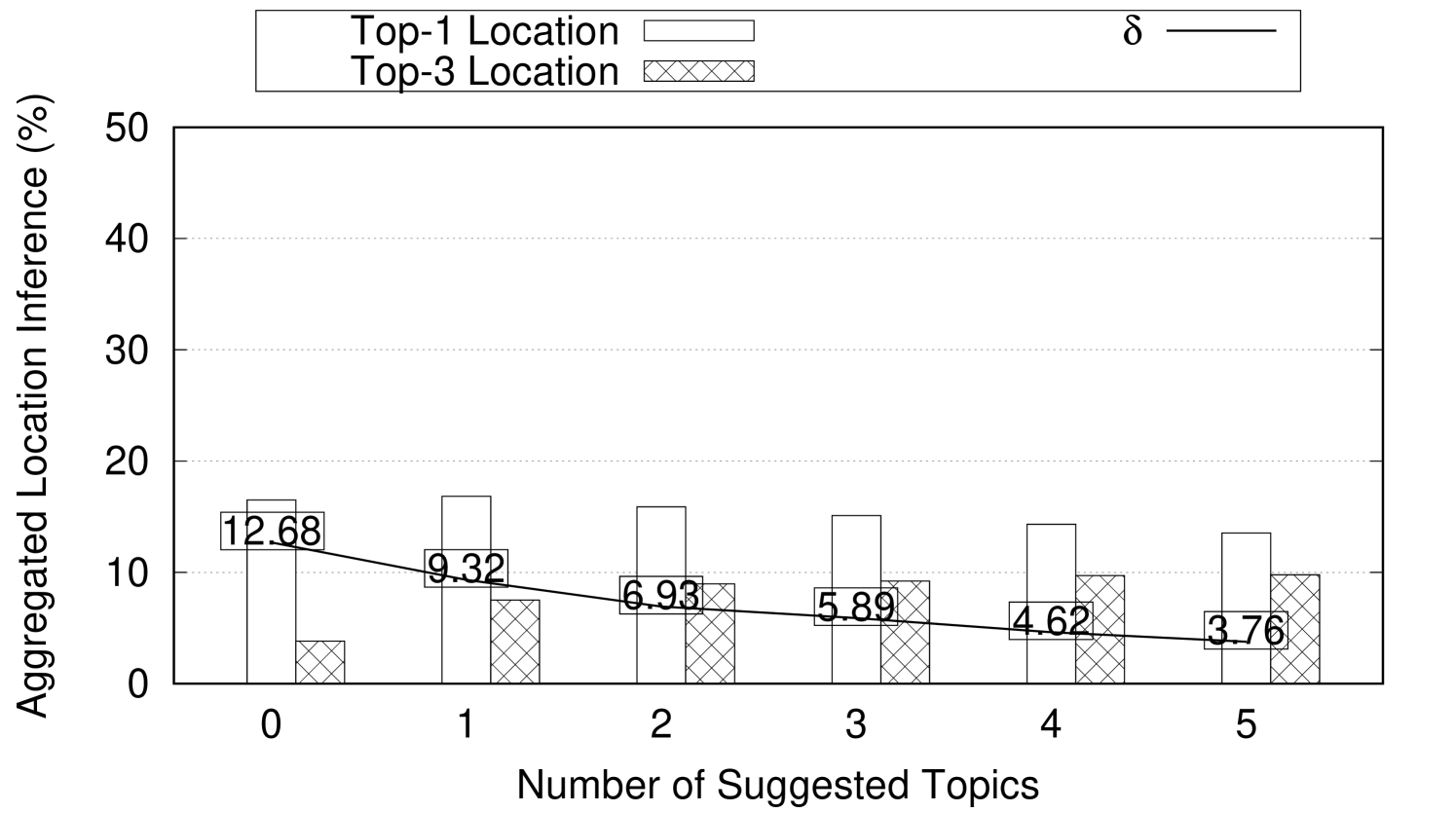

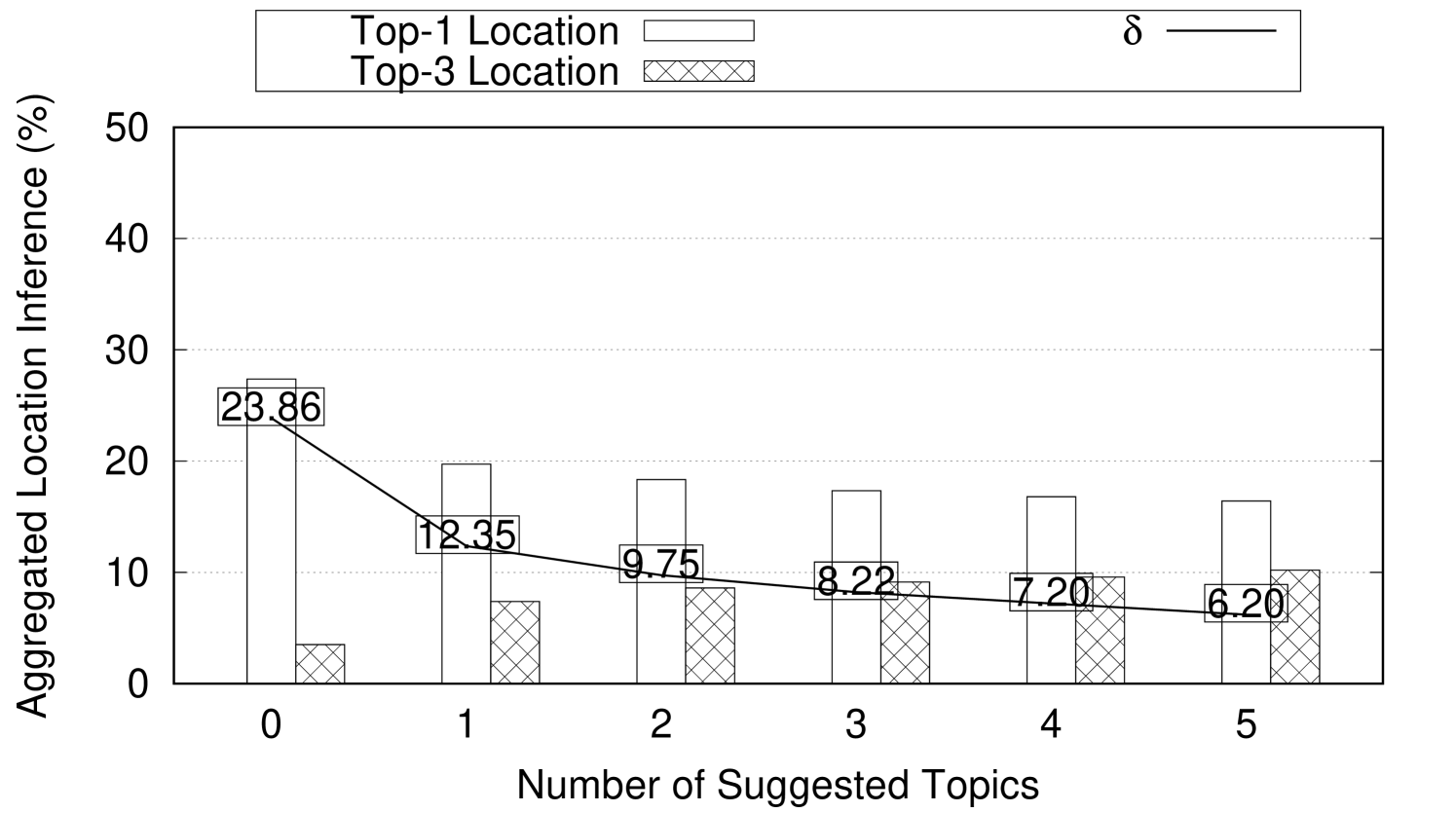

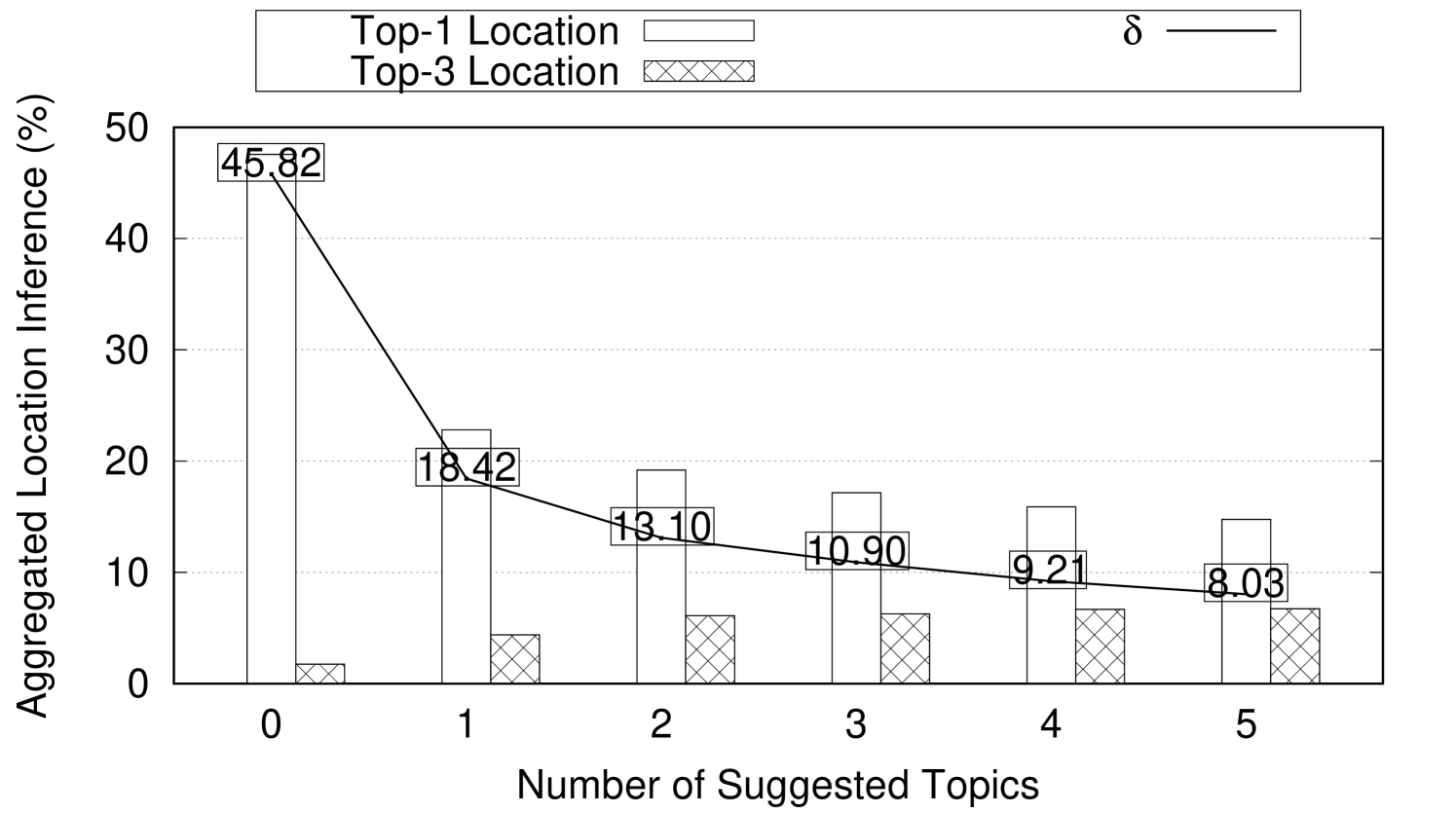

5.4 User Location Obfuscation

The number and the topic to persona connection strength of the obfuscation topics largely depend on the topic to persona connection strength of the original posts. In this experiment, we show how Aegis is used to hide user location while preserving their gender and ethnicity. We set k = 3, so that user location is hidden among three locations. The privacy parameter is set to 0.1 to indicate that 3-location-indistinguishability is achieved if the difference between the highest (top-1) aggregated location inference and the (top-3) aggregated location inference is less than 10%. The number of obfuscation posts needed and their effect on the user persona are reported. This experiment runs using 4707 weak topics, 1984 mild topics, and 1106 strong topics collected from Twitter over several weeks. This experiment assumes a newly created Twitter profile simulated with one of the personas in Table 2. First, a post with some topic to location connection strength (weak, mild, or strong) is added to the user profile. Then, we add the suggested obfuscation posts one at a time to the user’s timeline. After every added obfuscation post, the location and persona inferences are reported. The reported numbers are aggregated and averaged for every topic to location connection strength category. Figure 8 shows the effect of adding obfuscation posts on both the location inference and the persona inference for weak, mild, and strong topics. As shown in Figure 8(a), the 4707 weak topics on average need just one obfuscation suggestion to achieve 3-location-indistinguishability when the privacy parameter is 0.1. Note that adding more obfuscation posts (e.g., 4 or 5 suggestions) achieves 3-location-indistinguishability for smaller values of the privacy parameter. Also, adding one suggestion post already reduces the difference between the top-1 and top-3 location inferences. The same experiment is repeated for mild and strong topics and the results are reported in Figures 8(b) and 8(c) respectively. Notice that strong topics require four suggestions on average to achieve 3-location-indistinguishability when the privacy parameter is 0.1. Also, in Figure 8(c), adding a single suggestion post reduces this difference.

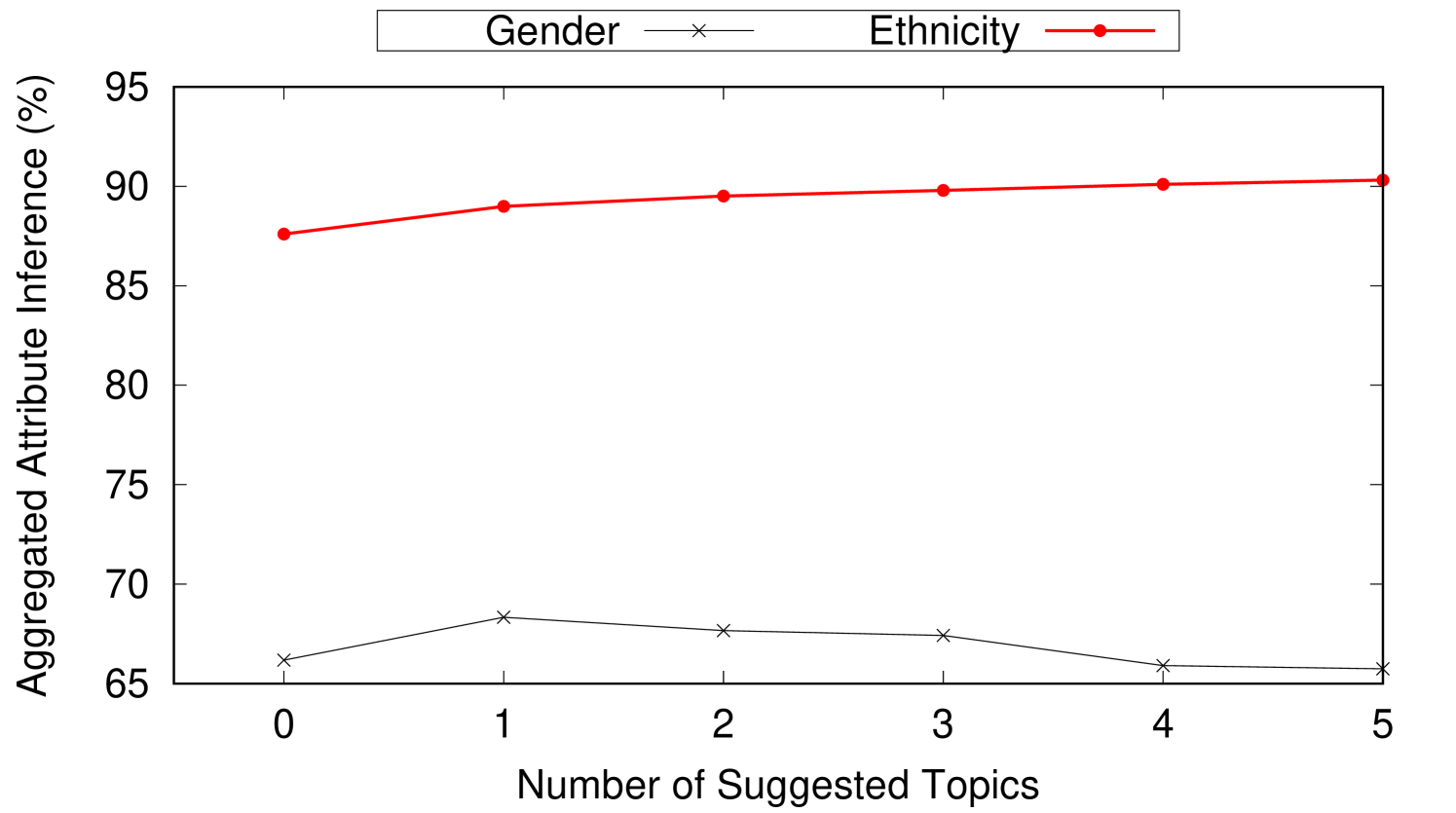

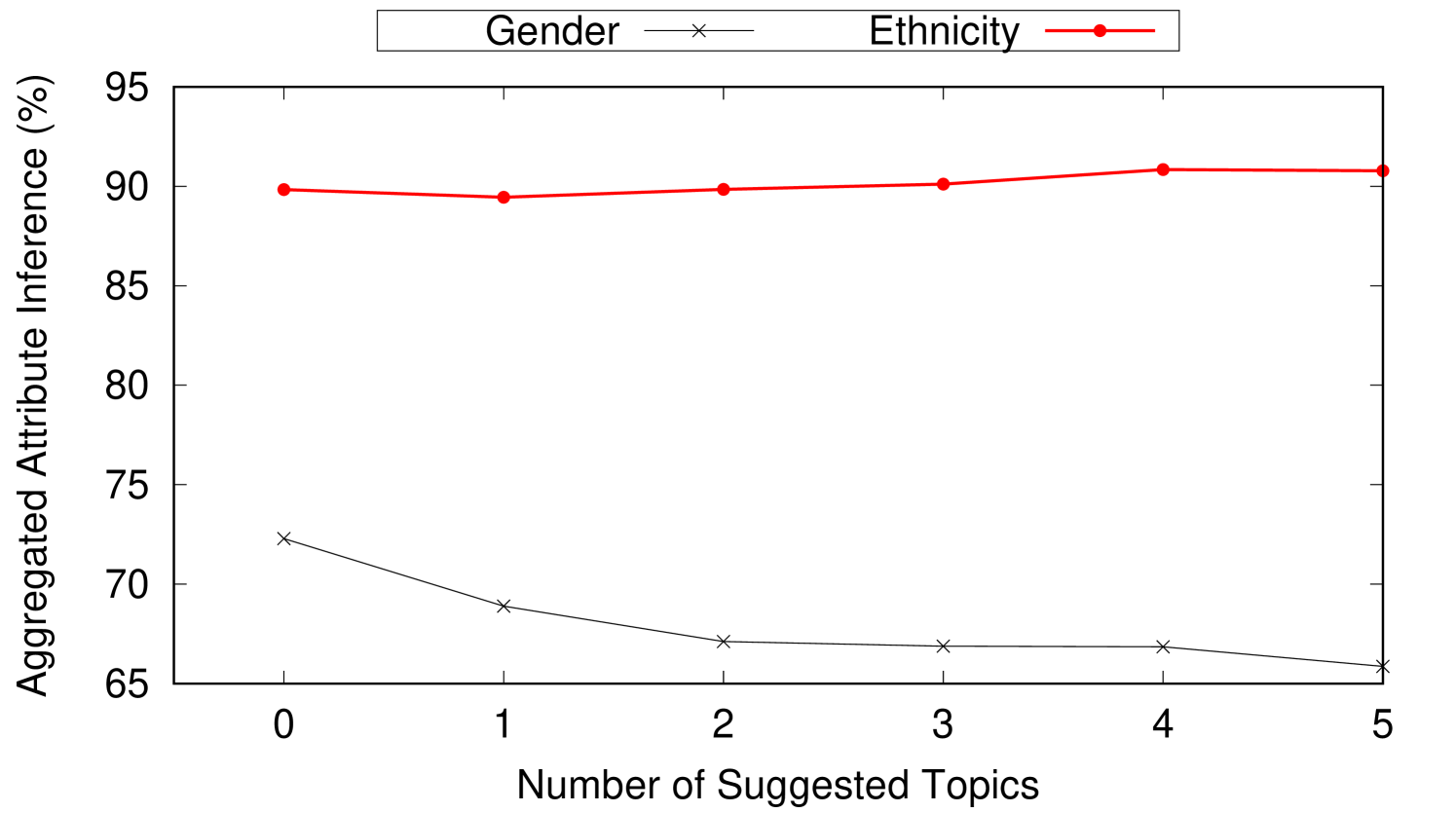

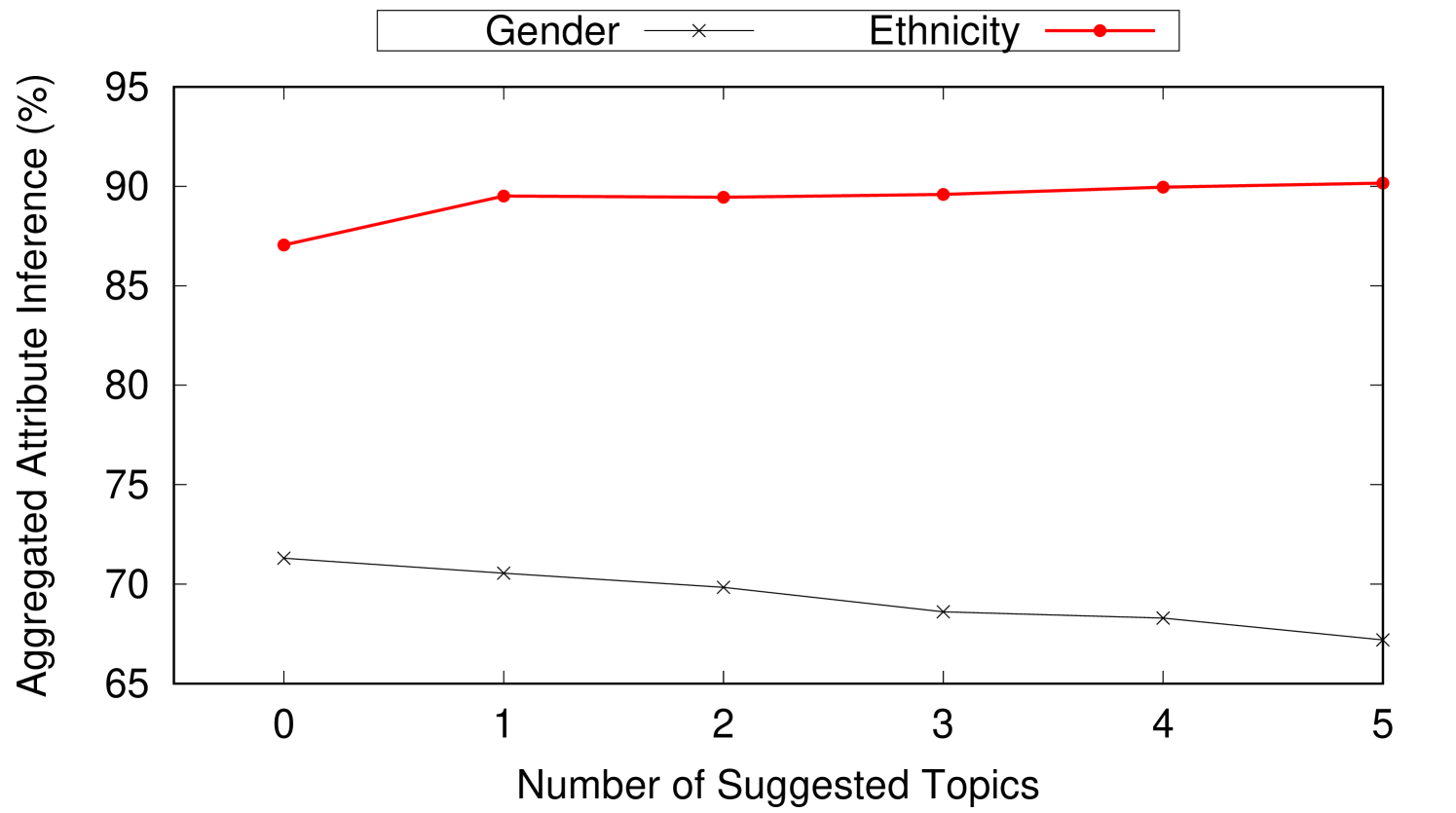

Figures 8(d), 8(e), and 8(f) show the effect on user persona after adding obfuscation posts to weak, mild, and strong posts respectively. User persona is represented by gender and ethnicity. As obfuscation posts are carefully chosen to align with the user persona, we observe negligible changes on the average gender and ethnicity inferences after adding obfuscation posts.

5.5 The Effect of Changing k

In this experiment, we measure the effect of changing the indistinguishability parameter k on the number of obfuscation posts required to achieve k-location-indistinguishability. The parameter k determines the number of locations within which a user wants to hide her true location. Increasing k increases the achieved privacy but also raises the obfuscation overhead required to achieve k-location-indistinguishability. Assume users in Texas hide their State-level location among 3 States: Texas, Alabama, and Arizona. A malicious advertiser who wants to target users in Texas is uncertain about their location and now has to pay 3 times the cost of the original advertisement campaign to reach the same target audience. Therefore, increasing k inflates the cost of micro-targeting.

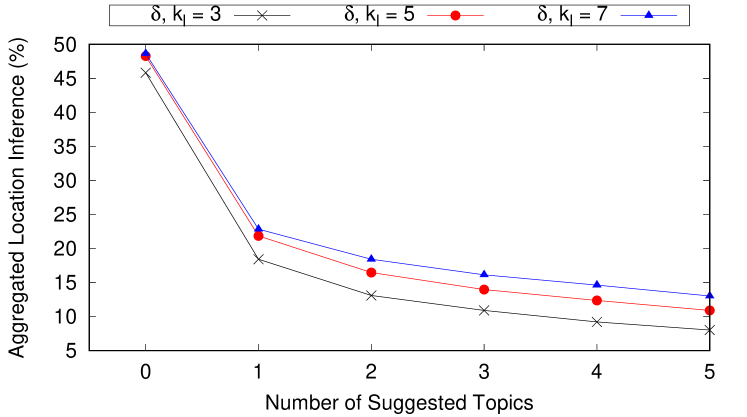

This experiment uses 621 strong topics collected from Twitter over several weeks, and the reported results are averaged over all topics. In addition, the effect of adding obfuscation posts is reported for different parameter values. Figure 9 shows the effect of adding obfuscation posts on the aggregated location inference for different values of k, while the privacy parameter is held fixed.

As shown in Figure 9, achieving 3-location-indistinguishability for strong topics requires 4 obfuscation posts on average for the chosen privacy parameter. On the other hand, 7-location-indistinguishability requires more than 5 obfuscation posts for the same value of the privacy parameter. This result highlights the trade-off between privacy and obfuscation overhead. Achieving higher privacy levels by increasing k or lowering the privacy parameter requires more obfuscation posts and hence more overhead. Obfuscation posts are carefully chosen to align with the user persona. Therefore, we observe negligible changes in the average gender and ethnicity inferences after adding obfuscation posts for different values of k.

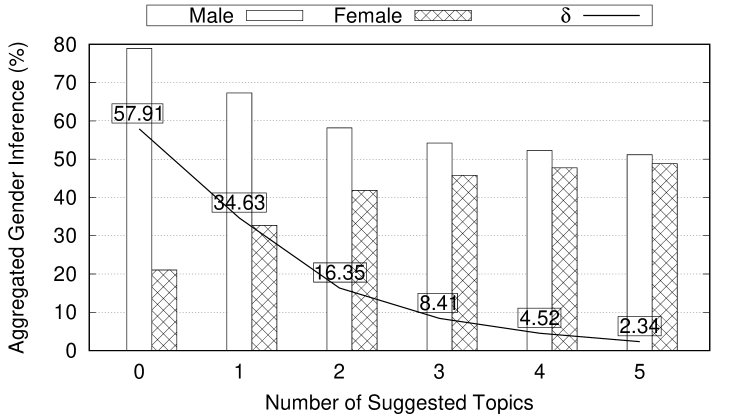

5.6 User Gender Obfuscation

This experiment shows how Aegis is used to hide user gender while preserving their ethnicity and location. In this experiment, we set k = 2, so that user gender is hidden among the male and female gender domain values. The privacy parameter is set to 0.1 to indicate that 2-gender-indistinguishability is achieved if the difference between the highest (top-1) aggregated gender inference and the (top-2) aggregated gender inference is less than 10%. This experiment runs over 40 topics that are strongly connected to gender. The aggregated gender inference is reported when adding the original strong post to the user’s timeline and after adding every obfuscation post one at a time. In addition, the difference between the male gender inference and the female gender inference is reported. 2-gender-indistinguishability is achieved if this difference is below 10%. As shown in Figure 10, topics that are strongly connected to gender result in a large difference that violates the 2-gender-indistinguishability privacy target. Therefore, Aegis suggests obfuscation posts that reduce this difference. Figure 10 shows that strong topics need on average 3 obfuscation posts to achieve the gender privacy target. This result is consistent with the location obfuscation experiments in Section 5.4. This shows that Aegis’s obfuscation mechanism is generic and can be efficiently used to hide different user sensitive attributes. Finally, as the obfuscation posts are carefully chosen to align with the user’s public persona attributes, we observed negligible changes in the average location and ethnicity inferences after adding obfuscation posts.

6 Related Work

The problem of sensitive attribute privacy of social network users has been extensively studied in the literature from different angles. k-anonymity [40, 43, 42] and its successors l-diversity [35] and t-closeness [34] are well-known and widely used privacy models in publishing dataset to hide user information among a set of indistinguishable users in the dataset. Also, differential privacy [22, 23, 24] has been widely used in the context of dataset publishing to hide the identity of a user in a published dataset. These models focus on hiding user identity among other users in the published dataset. Another variation of differential privacy is pan-privacy [25]. Pan-privacy is designed to work for data streams and hence it is more suitable for social network streams privacy. However, these models are service centric and assume trusted service providers. In this paper, we tackle the privacy problem from the end-user angle where the user identity is known and all their online social network postings are public and connected to their identity. Our goal is to confuse content-based sensitive attribute inference attacks by hiding the user’s original public posts among other obfuscation posts. Our multifaceted privacy achieves the privacy of user sensitive attributes without altering their public persona.

In the context of social network privacy, earlier works focus on sensitive attribute inferences due to the structure of social networks. In [47], Zheleva and Getoor attempt to infer the user’s sensitive attributes using public and private user profiles. However, the authors do not provide a solution to prevent such inference attacks. Georgiou et al. [27, 26] study the inference of sensitive attributes in the presence of community-aware trending topic reports. An attacker can increase their inference confidence by consuming these reports and the corresponding community characteristics of the involved users. In [27], a mechanism is proposed to prevent social network services from publishing trending topics that reveal information about individual users. However, this mechanism is service centric and is not suitable for hiding a user’s sensitive attributes against content-based inference attacks. Ahmad et al. [15] introduce a client-centered obfuscation solution for protecting user privacy in personalized web searches. The privacy of a search query is achieved by hiding it among other obfuscation search queries. Although this work is client-centered, it is not suitable for social network privacy, where the user’s online persona has to be preserved.

Recent works have focused on the privacy of some sensitive attributes such as the location of social network users. Ghufran et al. [28] show that social graph analysis can reveal user location from friends’ and followers’ locations. Although it is important to protect user sensitive attributes like location against this attack, Aegis focuses only on content-based inference attacks. Yakout et al. [44] proposed a system called Privometer, which measures how much privacy leaks from certain user actions (or from their friends’ actions) and creates a set of suggestions that could reduce the risk of a sensitive attribute being successfully inferred. Similar to Privometer, [32] proposes sanitation techniques for the structure of the social graph that introduce noise and obfuscate edges in the social graph to prevent sensitive information inference. Andrés et al. [17] propose geo-indistinguishability, a location privacy model that uses differential privacy to hide a user’s exact location in a circle of radius r from location-based service providers. In a recent work, Zhang et al. [46] introduce Tagvisor, a system to protect users against content-based inference attacks. However, Tagvisor requires users to alter their posts by changing or replacing hashtags that reveal their location. Other works [29, 18] depend on user collaboration to hide an individual’s exact location among the locations of the collaboration group. This approach requires group members to collaborate and synchronously change their identities to confuse adversaries. However, these techniques are prone to content-based inference attacks, and collaboration between users might be hard to achieve in the social network context. For location-based services, Mokbel et al. [36] use location generalization and k-anonymity to hide the exact location of a query. These works do not preserve the user’s online persona while achieving location privacy. In addition, these works do not provide a generic mechanism to hide other sensitive attributes such as user gender and ethnicity.

This paper presents Aegis, the first persona-friendly system that enables users to hide their sensitive attributes while preserving their online persona. Aegis is a client-centric solution that can be used to hide any user-specified sensitive attribute. Aegis does not require users to alter their original posts or topics. Instead, Aegis hides the user’s original posts among other obfuscation posts that are aligned with their persona but linked to other sensitive attribute values, achieving k-attribute-indistinguishability.

7 Future Extensions

Research in social network privacy has focused on dataset publishing and obfuscating user information among other users. Such focus is service centric and assumes that service providers are trusted. However, Aegis is user centric and aims to give users control over their own privacy. This control comes with a cost represented by the obfuscation posts that need to be posted by users. The role of Aegis is to automate the topic suggestion process and to ensure that k-attribute-indistinguishability holds against content-based inference attacks. There are several directions in which these obfuscation and privacy models can evolve.

Obfuscation Post Generation. Aegis suggests topics as keywords or hashtags and requires users to write the obfuscation posts using their personal writing styles to ensure that the original posts and the obfuscation posts are indistinguishable. However, the obfuscation writing overhead might alienate users from Aegis. Instead, Aegis can exploit deep neural network language models to learn the user’s writing style. Aegis can use such a model to suggest full posts instead of hashtags and users can either directly publish these posts or edit them before publishing. This extension aims to reduce the overhead on the users by automating the obfuscation post generation.

Social Graph Attack Prevention. Aegis is mainly designed to obfuscate the user sensitive attributes against content-based inference attacks. An orthogonal attack is to use the attribute values of friends and followers to infer a user’s real sensitive attribute value. Take location as a sensitive attribute example. Ghufran et al., [28] show that user location can be inferred from the locations of followers and friends. A user whose friends are mostly from NYC is highly probable to be from NYC. Aegis can be extended to prevent this attack. Users of similar persona but different locations can create an indistinguishability network where users in this network have followers and friends from different locations. Similar to the obfuscation topics, Aegis could suggest users to follow with similar persona but different locations.

8 Conclusion

In this paper, we propose multifaceted privacy, a novel privacy model that obfuscates a user’s sensitive attributes while publicly revealing their public online persona. To achieve multifaceted privacy, we build Aegis, a prototype client-centric social network stream processing system. Aegis is user-centric and allows social network users to control which persona attributes should be publicly revealed and which should be kept private. Aegis is designed to transfer user trust from the social network providers to the user’s local machine. For this, Aegis continuously suggests topics and hashtags to social network users to post in order to obfuscate their sensitive attributes and hence confuse content-based sensitive attribute inferences. The suggested topics are carefully chosen to preserve the user’s publicly revealed persona attributes while hiding their private sensitive persona attributes. Our experiments show that Aegis is able to achieve privacy for sensitive attributes such as location and gender. Adding as few as 0 to 4 obfuscation posts (depending on how strongly connected the original post is to a persona) successfully hides the user-specified sensitive attributes without altering the user’s public persona attributes.

References

- [1] Facebook, instagram, and twitter provided data access for a surveillance product marketed to target activists of color. https://www.aclunc.org/blog/facebook-instagram-and-twitter-provided-data-access-surveillance-product-marketed-target, 2016.

- [2] Russia-linked facebook ads reportedly aimed for swing states. http://fortune.com/2017/10/04/trump-russia-facebook-ads-michigan-wisconsin-swing/, 2017.

- [3] Russian-linked facebook ads targeted michigan and wisconsin. https://www.cnn.com/2017/10/03/politics/russian-facebook-ads-michigan-wisconsin/index.html, 2017.

- [4] Tor Browser. https://www.torproject.org/, 2017.

- [5] Aclu and workers take on facebook for gender discrimination in job ads. https://www.aclu.org/news/aclu-and-workers-take-facebook-gender-discrimination-job-ads, 2018.

- [6] Cambridge analytica data breach. http://www.businessinsider.com/cambridge-analytica-data-breach-harvested-private-facebook-messages-2018-4, 2018.

- [7] Distribution of twitter users worldwide as of october 2018, by gender. https://www.statista.com/statistics/828092/distribution-of-users-on-twitter-worldwide-gender/, 2018.

- [8] Docs – twitter developers. https://developer.twitter.com/en/docs.html, 2018.

- [9] Facebook pixel. https://www.facebook.com/business/learn/facebook-ads-pixel, 2018.

- [10] Facebook will remove 5,000 ad targeting categories to prevent discrimination. https://www.theverge.com/2018/8/21/17764480/facebook-ad-targeting-options-removal-housing-racial-discrimination, 2018.

- [11] Monthly active Facebook users. https://www.statista.com/statistics/264810/number-of-monthly-active-facebook-users-worldwide/, 2018.

- [12] Monthly active instagram users. https://www.statista.com/statistics/253577/number-of-monthly-active-instagram-users/, 2018.

- [13] Monthly active Twitter users. https://www.statista.com/statistics/282087/number-of-monthly-active-twitter-users/, 2018.

- [14] Other data breaches like cambridge analytica. https://www.cnbc.com/2018/04/08/cubeyou-cambridge-like-app-collected-data-on-millions-from-facebook.html, 2018.

- [15] W. U. Ahmad, K.-W. Chang, and H. Wang. Intent-aware query obfuscation for privacy protection in personalized web search. In The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, pages 285–294. ACM, 2018.

- [16] M. Anderson. Researchers take author recognition to neural networks. https://thestack.com/world/2016/06/07/researchers-take-author-recognition-to-neural-networks/, 2016.

- [17] M. E. Andrés, N. E. Bordenabe, K. Chatzikokolakis, and C. Palamidessi. Geo-indistinguishability: Differential privacy for location-based systems. In Proc. of ACM SIGSAC, CCS ’13, pages 901–914. ACM, 2013.

- [18] J. W. Brown, O. Ohrimenko, and R. Tamassia. Haze: Privacy-preserving real-time traffic statistics. In Proc. of ACM SIGSPATIAL, pages 540–543. ACM, 2013.

- [19] S. Chandra, L. Khan, and F. B. Muhaya. Estimating twitter user location using social interactions–a content based approach. In Privacy, Security, Risk and Trust (PASSAT) and 2011 IEEE Third Inernational Conference on Social Computing (SocialCom), 2011 IEEE Third International Conference on, pages 838–843. IEEE, 2011.

- [20] Z. Cheng, J. Caverlee, and K. Lee. You are where you tweet: a content-based approach to geo-locating twitter users. In Proceedings of the 19th ACM international conference on Information and knowledge management, pages 759–768. ACM, 2010.

- [21] S. H. Ding, B. C. Fung, F. Iqbal, and W. K. Cheung. Learning stylometric representations for authorship analysis. IEEE Transactions on Cybernetics, 2017.

- [22] C. Dwork. Automata, Languages and Programming: ICALP 2006, Proceedings, Part II, chapter Differential Privacy, pages 1–12. Springer Berlin Heidelberg, 2006.

- [23] C. Dwork. Differential privacy: A survey of results. In International Conference on Theory and Applications of Models of Computation, pages 1–19. Springer, 2008.

- [24] C. Dwork. Differential privacy. In Encyclopedia of Cryptography and Security, pages 338–340. Springer, 2011.

- [25] C. Dwork, M. Naor, T. Pitassi, G. N. Rothblum, and S. Yekhanin. Pan-private streaming algorithms. In Proceedings of ICS, 2010.

- [26] T. Georgiou, A. El Abbadi, and X. Yan. Extracting topics with focused communities for social content recommendation. In Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing, 2017.

- [27] T. Georgiou, A. El Abbadi, and X. Yan. Privacy-preserving community-aware trending topic detection in online social media. In IFIP Annual Conference on Data and Applications Security and Privacy, pages 205–224. Springer, 2017.

- [28] M. Ghufran, G. Quercini, and N. Bennacer. Toponym disambiguation in online social network profiles. In Proceedings of the 23rd SIGSPATIAL International Conference on Advances in Geographic Information Systems, page 6. ACM, 2015.

- [29] X. Gong, X. Chen, K. Xing, D.-H. Shin, M. Zhang, and J. Zhang. Personalized location privacy in mobile networks: A social group utility approach. In Computer Communications (INFOCOM), 2015 IEEE Conference on, pages 1008–1016. IEEE, 2015.

- [30] T. Grant. How text-messaging slips can help catch murderers. https://www.independent.co.uk/voices/commentators/dr-tim-grant-how-text-messaging-slips-can-help-catch-murderers-923503.html, 2008.

- [31] A. Graves. Generating sequences with recurrent neural networks. arXiv preprint arXiv:1308.0850, 2013.

- [32] R. Heatherly, M. Kantarcioglu, and B. Thuraisingham. Preventing private information inference attacks on social networks. IEEE TKDE, 25(8):1849–1862, 2013.

- [33] M. Kosinski, D. Stillwell, and T. Graepel. Private traits and attributes are predictable from digital records of human behavior. Proceedings of the National Academy of Sciences, 110(15):5802–5805, 2013.

- [34] N. Li, T. Li, and S. Venkatasubramanian. t-closeness: Privacy beyond k-anonymity and l-diversity. In IEEE ICDE, pages 106–115, April 2007.

- [35] A. Machanavajjhala, D. Kifer, J. Gehrke, and M. Venkitasubramaniam. L-diversity: Privacy beyond k-anonymity. ACM Trans. Knowl. Discov. Data, 1(1), Mar. 2007.

- [36] M. F. Mokbel, C.-Y. Chow, and W. G. Aref. The new casper: Query processing for location services without compromising privacy. In Proc. of VLDB, pages 763–774, 2006.

- [37] H. Nissenbaum. Privacy as contextual integrity. Wash. L. Rev., 79:119, 2004.

- [38] M. J. Paul and M. Dredze. You are what you tweet: Analyzing twitter for public health. Icwsm, 20:265–272, 2011.

- [39] R. L. Rivest et al. Chaffing and winnowing: Confidentiality without encryption. CryptoBytes (RSA laboratories), 4(1):12–17, 1998.

- [40] P. Samarati. Protecting respondents identities in microdata release. IEEE TKDE, 13(6):1010–1027, 2001.

- [41] H. A. Schwartz, J. C. Eichstaedt, M. L. Kern, L. Dziurzynski, S. M. Ramones, M. Agrawal, A. Shah, M. Kosinski, D. Stillwell, M. E. Seligman, et al. Personality, gender, and age in the language of social media: The open-vocabulary approach. PloS one, 8(9):e73791, 2013.

- [42] L. Sweeney. Achieving k-anonymity privacy protection using generalization and suppression. International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems, 10(05):571–588, 2002.

- [43] L. Sweeney. K-anonymity: A model for protecting privacy. Int. J. Uncertain. Fuzziness Knowl.-Based Syst., 10(5):557–570, Oct. 2002.

- [44] M. Yakout, M. Ouzzani, H. Elmeleegy, N. Talukder, and A. K. Elmagarmid. Privometer: Privacy protection in social networks. IEEE ICDEW, 00:266–269, 2010.

- [45] V. Zakhary, C. Sahin, T. Georgiou, and A. El Abbadi. Locborg: Hiding social media user location while maintaining online persona. In Proceedings of the 25th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, page 12. ACM, 2017.

- [46] Y. Zhang, M. Humbert, T. Rahman, C.-T. Li, J. Pang, and M. Backes. Tagvisor: A privacy advisor for sharing hashtags. arXiv preprint arXiv:1802.04122, 2018.

- [47] E. Zheleva and L. Getoor. To join or not to join: the illusion of privacy in social networks with mixed public and private user profiles. In Proc. of WWW, pages 531–540. ACM, 2009.