Multiple entropy production for multitime quantum processes

Abstract

Entropy production and the detailed fluctuation theorem (FT) are of fundamental importance for thermodynamic processes. In this paper, we study multiple entropy productions for multitime quantum processes in a unified framework. For closed quantum systems and Markovian open quantum systems, all the entropy productions considered satisfy the detailed FT relation, which also shows that the entropy production rate under these processes is non-negative. For non-Markovian open quantum systems, the memory effect can lead to a negative entropy production rate, so the entropy production of a marginal distribution does not, in general, satisfy the detailed FT relation. Our framework can be applied to a wide range of physical systems and dynamics, and it provides a systematic tool for studying entropy production and its rate under arbitrary quantum processes.

I Introduction

The fluctuation theorem generalises the second law of thermodynamics and implies the Green-Kubo relations. It applies to fluctuations far from equilibrium and is of fundamental importance to non-equilibrium statistical mechanics. Roughly speaking, fluctuation theorems (FTs) are closely related to time-reversal symmetry and to the relations between the probabilities of forward and backward processes. For isolated quantum systems, the forward and backward processes can be described by unitary evolution, and a well-established detailed FT always holds [1]. For open quantum systems, one can treat the system and environment together as a large closed system and use the detailed FT of that closed system. Within this framework, the state of the environment must be measurable. If it is not, the backward map cannot fully recover the system state because information about the environment is missing. An alternative approach is to use the Petz recovery map as the backward process and to establish the relation between a quantum channel and its Petz recovery map [2]. The two approaches give the same entropy production for maps with global fixed points [3].

FTs focus mainly on entropy production. If an entropy production satisfies the detailed FT, we call it a fluctuation quantity here. In the single-shot scenario, the entropy production depends on two-point measurements. For multitime processes, the intermediate measurements may affect the system state and the subsequent evolution, so the entropy production may depend on multipoint measurements. A process with feedback control is a typical multitime process, and the corresponding FTs must be modified to account for the information gained from the measurements [4, 5, 6]. With multipoint measurements, it becomes natural to study the entropy production rate. An important observation is that a non-negative entropy production does not guarantee a non-negative entropy production rate: the rate is determined by the relation between the entropy productions of processes with different numbers of steps. Since a non-negative average entropy production is a natural consequence of the FT, studying the entropy production and the detailed FT for multitime processes can help clarify the relationship between the sign of the entropy production rate and the occurrence of non-Markovian effects.

It should be noted that, in the single-shot scenario, the entropy production rate can also be discussed by comparing the average entropy production for different evolution times. However, as we explain later, although evolutions of different durations can be described by the same evolution process, the corresponding measurement processes are completely different. Therefore, entropy productions at different evolution times do not correspond to the same overall process and cannot be described by the same joint distribution.

Usually the FT is directly related to actual observations, and multipoint measurements give a joint probability distribution that reflects the multitime properties of the system. In the quantum regime, however, measurements are generally invasive, not only to the system itself but also to the subsequent dynamics of an open system. On the one hand, since the measurement disturbs the state, it contributes directly to the entropy production. The cost of quantum measurement in a thermodynamic process is addressed in [7, 8]. Ref. [7] uses a single measurement and obtains a Jarzynski-like equality. Because only a single-point measurement is involved, the properties it yields depend only on a single time; there is neither a backward process nor a fluctuation-dissipation theorem related to two-point measurements. On the other hand, because the measurement is invasive to the subsequent dynamics of an open system, it must also affect the entropy production of multitime processes indirectly. Therefore, in this paper we consider the combined effects of evolution and measurement on the entropy production and the FT.

Quantum dynamical semigroups are the standard Markovian quantum processes; their entropy production and detailed FT have been studied in [9, 10]. For non-Markovian quantum processes, the memory effect makes the evolution much more complex. Process tensors [11, 12] are powerful operational tools for studying the temporally extended properties of general quantum processes. Using these tools, Ref. [13] set up a framework for quantum stochastic thermodynamics and discussed the entropy production of Markovian processes. In our previous work [14], we used an equivalent form of process tensors to obtain FTs for non-Markovian processes. In this work, we continue to use this form and consider the marginal distributions of multitime quantum processes. A detailed FT for the joint probability does not guarantee that the marginal distributions satisfy such relations. If they do, then several compatible fluctuation quantities can exist in the same process. As we show later, the existence of multiple compatible fluctuation quantities is directly related to the entropy production rate.

Because measurements are invasive, the marginal distributions do not correspond to the derived processes in which some measurements are not performed. Moreover, since the memory effect can lead to a negative entropy production rate, the entropy production of a marginal distribution does not, in general, satisfy the detailed FT relation. Only when the Kolmogorov consistency condition is satisfied is not performing a measurement equivalent to averaging over its outcomes [15]. In that case, the entropy production of the corresponding marginal distribution equals that of the derived process. Since the entropy production of the derived process satisfies the detailed FT relation, so does that of the marginal distribution. Another interesting connection between the Kolmogorov condition and quantum thermodynamics concerns work extraction [16]. Work extraction itself is not a fluctuation quantity, and the proof in [16] does not involve backward processes. The relation between the sum of the intermediate entropy productions and the total entropy production therefore remains unclear; we discuss it briefly in this paper.

This paper is organised as follows. In section II, we first briefly introduce the general framework of operator states and process states. Then, for closed quantum systems, Markovian open quantum systems and non-Markovian open quantum systems, we derive the entropy production and the detailed FT for the joint probability and the marginal distributions. We show that, in closed systems, the Kolmogorov condition makes the sum of the intermediate entropy productions equal to the total entropy production, whereas this fails for the other systems. We also show how multiple compatible fluctuation quantities are related to the entropy production rate. In section III, we discuss the average entropy production of a simple Jaynes-Cummings model. Section IV concludes.

II Entropy production for multitime quantum processes

In the operator-state formalism [17, 14], operators are treated as states. The inner product of these states is defined as

| (1) |

The operator vector space is orthonormalized as follows:

| (2) |

where . The completeness relation is

| (3) |

The evolution of the system can generally be described by a quantum channel , a superoperator that maps a density matrix to another density matrix . The operator state is often used to link the input and output of the channel. It differs from the maximally entangled state only by a normalization factor . Hence, the state is nothing but the Choi state obtained from the Choi-Jamiołkowski isomorphism. We use the Choi-state form of the process tensors, which we call process states. This form helps separate measurement from evolution and makes it convenient to use known results on the Choi states of quantum channels.
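As a concrete illustration of the Choi-state construction, the sketch below builds the unnormalized Choi matrix of a single qubit channel from Kraus operators and recovers the channel action from it. It is a minimal sketch, not the paper's code: the amplitude-damping channel and the helper names (`amplitude_damping_kraus`, `choi_matrix`, `channel_from_choi`) are hypothetical choices, and dividing `J` by the dimension gives the normalized Choi state discussed above.

```python
import numpy as np

def amplitude_damping_kraus(gamma):
    """Kraus operators of a qubit amplitude-damping channel (hypothetical example)."""
    return [np.array([[1.0, 0.0], [0.0, np.sqrt(1.0 - gamma)]]),
            np.array([[0.0, np.sqrt(gamma)], [0.0, 0.0]])]

def apply_channel(kraus, rho):
    """Lambda(rho) = sum_a K_a rho K_a^dagger."""
    return sum(k @ rho @ k.conj().T for k in kraus)

def choi_matrix(kraus, d):
    """Unnormalized Choi matrix J = sum_ij |i><j| (input) ⊗ Lambda(|i><j|) (output)."""
    J = np.zeros((d * d, d * d), dtype=complex)
    for i in range(d):
        for j in range(d):
            E_ij = np.zeros((d, d))
            E_ij[i, j] = 1.0
            J += np.kron(E_ij, apply_channel(kraus, E_ij))
    return J

def channel_from_choi(J, rho, d):
    """Recover Lambda(rho) = Tr_in[(rho^T ⊗ 1) J]."""
    M = (np.kron(rho.T, np.eye(d)) @ J).reshape(d, d, d, d)
    return np.trace(M, axis1=0, axis2=2)

kraus = amplitude_damping_kraus(0.3)
J = choi_matrix(kraus, 2)
choi_state = J / 2                   # normalized Choi state (unit trace)
```

Tracing out the output factor of `J` gives the identity exactly when the channel is trace preserving, and positivity of `J` is equivalent to complete positivity, which is why the Choi form is convenient for checking the properties of process states.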

| Time evolution | Unitary | Markovian | Non-Markovian |
|---|---|---|---|
| Satisfy the FT relation | ,,,… | ,,,… | , Others to be determined |
| Average relation | Depends on conditions | | |
| Subsequent entropy production | Depends on conditions | | |

II.1 Closed quantum system

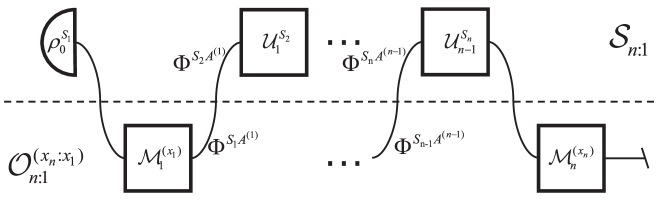

In general, the time evolution of a closed quantum system is described by unitary operators acting on the system. Under multistep evolution, the forward process state is

| (4) |

In such processes, it is natural to measure the state and use the measurement results as input for the next step. Therefore, we define the multipoint measurement operation as

| (5) |

where the operation acts on . With these notations in hand, the joint probability for the multipoint measurements can be expressed as

| (6) |

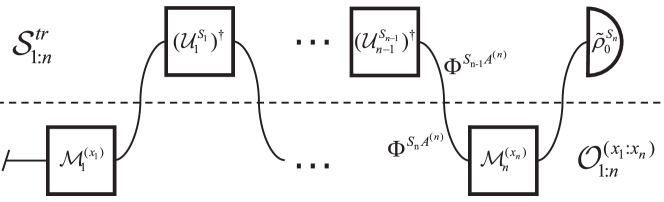

Unitary evolution can be inverted by the time-reversed evolution, so the backward process state is

| (7) |

where is the initial state of the backward process, and is the adjoint map. For single-shot evolution, the final state of the forward process is usually chosen as the initial state of the backward process; for open quantum systems, other choices are also possible [3]. Here we choose

| (8) |

which is the final state of the forward process without any control operations. The backward multipoint measurement operation can be defined as

| (9) |

The joint probability for backward process can be expressed as

| (10) |

It is easy to see that

| (11) |

The entropy production is defined as usual as the logarithm of the ratio of the forward and backward probabilities

| (12) |

It follows from the forward and backward joint probabilities and Eq. 11 that

| (13) |

which depends only on local measurements of the initial and final states. The distribution of entropy production is given by

| (14) |

| (15) |

It then follows that

| (16) |

which gives the detailed FT.
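The single-shot, two-point version of this construction can be checked numerically. The sketch below assumes a diagonal initial state in the first measurement basis, projective measurements in a fixed basis at both times, and a backward process that starts from the populations of the forward final state; the random unitary and all variable names are hypothetical. It verifies the pointwise relation P_B = P_F e^{-σ}, which is the pointwise form of a detailed FT, and that the mean entropy production equals a non-negative Shannon-entropy difference.

```python
import numpy as np

def random_unitary(d, rng):
    """Random unitary from the QR decomposition of a complex Gaussian matrix (phases fixed)."""
    z = (rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    return q * (np.diag(r) / np.abs(np.diag(r)))

def shannon(x):
    """Shannon entropy in nats of a strictly positive probability vector."""
    return -np.sum(x * np.log(x))

rng = np.random.default_rng(7)
d = 3
U = random_unitary(d, rng)
p = rng.dirichlet(np.ones(d))        # initial populations p_i in the first measurement basis
T = np.abs(U) ** 2                   # T[j, i] = |<j|U|i>|^2 (doubly stochastic)

# Forward process: outcome i at the initial time, outcome j at the final time.
P_fwd = T * p[np.newaxis, :]         # P_fwd[j, i] = p_i |<j|U|i>|^2
q = P_fwd.sum(axis=1)                # final populations q_j, used as the backward initial state

# Backward process: start from populations q_j and evolve with U^dagger.
P_bwd = q[:, np.newaxis] * T         # P_bwd[j, i] = q_j |<i|U^dagger|j>|^2

sigma = np.log(P_fwd / P_bwd)        # stochastic entropy production, equal to ln p_i - ln q_j
mean_sigma = np.sum(P_fwd * sigma)   # equals S(q) - S(p) >= 0
```

Here the entropy production depends only on the initial and final outcomes, mirroring the locality statement after Eq. 13; the intermediate steps of the multitime case are absent by construction.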

Now consider the marginal distributions of the forward and backward probabilities. Without loss of generality, we divide the overall process into two parts: all steps except the last one, and the last step. Summing over the outcomes of the last measurement , we obtain a marginal distribution of the forward probability

| (17) |

The backward one can be similarly defined. With these two probabilities, we can define another entropy production

| (18) |

Similar to Eq. 13, it is easy to show that

| (19) |

where . Here, is a dephasing map. The entropy production depends only on local measurements of and . The corresponding distribution of entropy production is given by

| (20) |

| (21) |

The entropy production also satisfies the detailed FT

| (22) |

Summing instead over the outcomes of the measurements , we obtain another marginal distribution of the forward probability

| (23) |

Similar to previous procedures, we can define

| (24) |

and show that

| (25) |

where

| (26) |

The corresponding distribution of entropy production is given by

| (27) |

| (28) |

The entropy production also satisfies the detailed FT. Thus, a single multitime quantum process admits multiple entropy production terms, all of which satisfy the detailed FT. The procedure above applies to every marginal distribution, and the corresponding entropy production again depends only on local measurements.

If the joint probabilities and satisfy the Kolmogorov consistency condition [15], then measuring and summing over the outcomes is equivalent to not measuring. In such cases, the probability distributions for all subsets of times can be obtained by marginalization, and the detailed FTs above are all equivalent to two-point-measurement FTs of suitable derived processes. In addition, when the Kolmogorov condition is met, we have and . If we choose the initial state of the backward process as in Eq. 8, then we have

| (29) |

and . Under these circumstances, the previously mentioned entropy production terms have the following relation

| (30) |

With this relation, one can easily see that , which is very similar to the relation for the average work shown in [16]. However, work is not an entropy production, and the average-work relation does not involve backward processes. The two relations are therefore very different.
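Whether the Kolmogorov consistency condition holds can be tested directly for a closed qubit. In the sketch below (hypothetical helpers, projective measurements in the computational basis assumed at three times), the three-point distribution summed over the middle outcome is compared with the two-point distribution obtained when the middle measurement is not performed. A Hadamard step makes the middle measurement invasive and breaks the condition, while a diagonal phase gate leaves the measured populations untouched and preserves it.

```python
import numpy as np

def projectors(d):
    """Rank-one projectors onto the computational basis."""
    return [np.outer(e, e) for e in np.eye(d)]

def three_point(rho0, U1, U2):
    """p(i, j, k): projective measurements at t0, t1 and t2, with U1 and U2 in between."""
    P = projectors(rho0.shape[0])
    d = len(P)
    p = np.zeros((d, d, d))
    for i in range(d):
        r_i = P[i] @ rho0 @ P[i]
        for j in range(d):
            r_ij = P[j] @ U1 @ r_i @ U1.conj().T @ P[j]
            for k in range(d):
                p[i, j, k] = np.real(np.trace(P[k] @ U2 @ r_ij @ U2.conj().T))
    return p

def two_point(rho0, U1, U2):
    """p(i, k) when the measurement at t1 is not performed."""
    P = projectors(rho0.shape[0])
    d = len(P)
    U = U2 @ U1
    p = np.zeros((d, d))
    for i in range(d):
        r_i = P[i] @ rho0 @ P[i]
        for k in range(d):
            p[i, k] = np.real(np.trace(P[k] @ U @ r_i @ U.conj().T))
    return p

def kolmogorov_consistent(rho0, U1, U2, atol=1e-12):
    """True if summing over the t1 outcome reproduces the unmeasured two-point distribution."""
    return np.allclose(three_point(rho0, U1, U2).sum(axis=1), two_point(rho0, U1, U2), atol=atol)

H = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)   # Hadamard gate
phase = np.diag([1.0, np.exp(0.7j)])                    # diagonal phase gate
rho0 = np.diag([1.0, 0.0])
```

With these definitions, `kolmogorov_consistent(rho0, H, H)` is `False` (the marginal gives 1/2 for each final outcome, while the unmeasured evolution H·H returns the qubit to |0⟩ with certainty), and `kolmogorov_consistent(rho0, phase, H)` is `True`.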

Combining Eq. 30 and the detailed FT of , we have

| (31) |

The conditions above are strong, but they are not necessary for Eq. 31. In fact, if both and satisfy the detailed FT, then we have

| (32) |

Using Jensen’s inequality , Eq. 31 implies

| (33) |

which means that the total average entropy production is not less than the intermediate average entropy production. This also implies that the entropy production rate at the final step is non-negative. This argument applies to all cases where the joint probability is well defined.

Now let’s calculate the average entropy production. The Kolmogorov consistency condition and Eq. 8 are used above mainly to obtain . Without these conditions, using Eq. 13, Eq. 26 and the expression for the entropy production of the first marginal, the average entropy production can be expressed generally as

| (34) |

where is the quantum relative entropy. In the third equality of Eq. 34 we use the fact that unitary transformations do not change entropy. For single-shot unitary evolution, if the two-point measurements do not disturb , and , then we obtain the commonly used average entropy production [1]

| (35) |

The averages of the entropy productions and are

| (36) |

Using the non-negativity of relative entropy and the data processing inequality, one easily finds that these average entropy productions are non-negative. Combining Eqs. 34 and 36, we find

| (37) |

If the Kolmogorov consistency condition is not satisfied, the sum of the segmental entropy productions is generally greater than the total average entropy production.
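The non-negativity arguments above rest on two properties of the quantum relative entropy: S(ρ‖σ) ≥ 0, and monotonicity under CPTP maps (data processing). The sketch below, with hypothetical helper names, computes S(ρ‖σ) in nats via eigendecompositions for full-rank qubit states and checks both properties, using a non-selective projective measurement (a dephasing map Δ) as the CPTP map. It also checks the standard identity S(ρ‖Δ(ρ)) = S(Δ(ρ)) − S(ρ), one common way to express the entropy cost of a measurement; identifying it with the measurement term in the averages above is an assumption.

```python
import numpy as np

def logm_psd(rho, eps=1e-15):
    """Matrix logarithm of a positive semidefinite matrix; zero eigenvalues are clipped to eps."""
    w, v = np.linalg.eigh(rho)
    return (v * np.log(np.clip(w, eps, None))) @ v.conj().T

def relative_entropy(rho, sigma):
    """S(rho||sigma) = Tr[rho (ln rho - ln sigma)]; assumes supp(rho) is inside supp(sigma)."""
    return float(np.real(np.trace(rho @ (logm_psd(rho) - logm_psd(sigma)))))

def vn_entropy(rho):
    """Von Neumann entropy -Tr[rho ln rho] in nats."""
    w = np.linalg.eigvalsh(rho)
    w = w[w > 1e-15]
    return float(-np.sum(w * np.log(w)))

def dephase(rho):
    """Non-selective projective measurement in the computational basis."""
    return np.diag(np.diag(rho))

rho = np.array([[0.7, 0.25 + 0.1j], [0.25 - 0.1j, 0.3]])   # hypothetical full-rank states
sigma = np.array([[0.4, -0.1j], [0.1j, 0.6]])
```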

II.2 Markovian open quantum system

The time evolution of a Markovian open quantum system can be described by a sequence of independent CPTP maps [18]. Under multistep evolution, the forward process state is

| (38) |

With the measurements (5), the joint probability for the multipoint measurements can still be expressed as

| (39) |

For open quantum systems, the lack of information about the environment makes the evolution irreversible. It is common to use the Petz recovery map as the backward map [24]. For multistep evolution, the backward process state can be written as

| (40) |

where is the Petz recovery map of , is the rescaling map, and is the reference state that can be freely chosen. The Petz recovery map is a CPTP map and fully recovers the reference state. With the measurements (9), the joint probability for backward process can be expressed as

| (41) |

Similar to Eq. 11, the adjoint map and obey the following relation:

| (42) |
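The Petz recovery map used here can be written, for a channel Λ with Kraus operators K_a and a full-rank reference state σ, as P(X) = σ^{1/2} Λ†(Λ(σ)^{-1/2} X Λ(σ)^{-1/2}) σ^{1/2}, where the outer factors play the role of the rescaling maps. The sketch below (hypothetical helper names, amplitude-damping channel as an example) builds P from its Kraus operators σ^{1/2} K_a† Λ(σ)^{-1/2}, which makes complete positivity manifest, and checks trace preservation and exact recovery of the reference state.

```python
import numpy as np

def apply_channel(kraus, rho):
    """Lambda(rho) = sum_a K_a rho K_a^dagger."""
    return sum(k @ rho @ k.conj().T for k in kraus)

def psd_power(a, p, eps=1e-14):
    """Matrix power of a positive (semi)definite matrix via its eigendecomposition."""
    w, v = np.linalg.eigh(a)
    return (v * np.clip(w, eps, None) ** p) @ v.conj().T

def petz_kraus(kraus, sigma):
    """Kraus operators sigma^{1/2} K_a^dagger Lambda(sigma)^{-1/2} of the Petz recovery map."""
    s_half = psd_power(sigma, 0.5)
    l_inv_half = psd_power(apply_channel(kraus, sigma), -0.5)
    return [s_half @ k.conj().T @ l_inv_half for k in kraus]

gamma_damp = 0.3                                   # hypothetical damping strength
kraus = [np.array([[1.0, 0.0], [0.0, np.sqrt(1 - gamma_damp)]]),
         np.array([[0.0, np.sqrt(gamma_damp)], [0.0, 0.0]])]
sigma = np.array([[0.6, 0.1], [0.1, 0.4]])         # full-rank reference state
recovery = petz_kraus(kraus, sigma)
```

Trace preservation requires Λ(σ) to be full rank; otherwise the map is trace preserving only on the support of Λ(σ).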

Because of the rescaling map in the Petz recovery map, the joint probabilities Eqs. 39 and 41 generally do not obey a relation similar to Eq. 16. A detailed FT can be obtained only with the following operation

| (43) |

where . The basis is chosen such that it diagonalizes the reference state and is chosen as the eigenbasis of . In this basis, we have , where

| (44) |

With the operation (43), we obtain a quasiprobability distribution [2]

| (45) |

This distribution is not positive in general, but it can be obtained from observable quantities [2]. The operation (43) can also be fully reconstructed as a linear combination of multipoint positive-operator-valued measurement (POVM) operations. Moreover, the joint probability can be derived directly from the quasiprobability

| (46) |

The backward quasi-measurements operation can be defined as

| (47) |

with which we obtain the quasiprobability distribution of backward processes

| (48) |

The joint probability of the backward process can likewise be derived directly from the backward quasiprobability, as in Eq. 46. Similar to Eq. 12, the corresponding entropy production is defined as

| (49) |

With Eq. 42, it is easy to show that

| (50) |

Combining this with Eqs. 45 and 48, we find that

| (51) |

is independent of intermediate measurements . The distribution of entropy production for forward processes is given by

| (52) |

The backward one can be similarly defined. The detailed FT

| (53) |

has been shown in Ref. [14].

Following the same procedure as in section II.1, the marginal distribution can be defined as

| (54) |

The corresponding entropy production is

| (55) |

which is independent of intermediate measurements :

| (56) |

where . The distributions of entropy production can be defined similarly to the previous one, and the detailed FT also holds.

After summing over the outcomes of the measurements , we obtain the marginal distribution

| (57) |

The corresponding entropy production is

| (58) |

It then follows that

| (59) |

where

| (60) |

The distributions of entropy production can be defined similarly to the previous one, and the detailed FT also holds.

Due to the irreversibility of open-system evolution, there is no relation like Eq. 29 for and , even if the Kolmogorov condition is met, and the additive relation between entropy productions found in section II.1 no longer holds for the entropy productions defined here. The backward process of is derived from . If we use the following backward processes instead

| (61) |

then we can obtain quasiprobability distribution

| (62) |

and another entropy production

| (63) |

which satisfies

| (64) |

We can similarly define a distribution of entropy production and prove the detailed FT. However, the obtained fluctuation relation is related to processes (61), not to processes (40). The entropy production and share the same forward processes, but use different initial states in the backward processes. Obviously,

| (65) |

Similarly, we can use different initial states in the forward processes

| (66) |

to obtain another entropy production , which satisfies

| (67) |

Similar to section II.1, if both and satisfy the detailed FT, then

| (68) |

With Jensen’s inequality, the conclusion that the total average entropy production is not less than the intermediate average entropy production still holds, and the entropy production rate is still non-negative. Note that the forward process of is different from that of and , so in general we have

| (69) |

where is the probability distribution from two-point measurement of the processes (66) and is the corresponding probability average.

As in section II.1, using the entropy productions derived above (including Eq. 60), the average entropy production here can be expressed generally as

| (70) |

Comparing Eqs. 34 and 70, we see that both contain the term , which is the direct contribution of the measurements to the entropy production [7]. If we choose , set the reference states and assume that all measurements are non-invasive, then the average entropy production simplifies to

| (71) |

where the evolution map

| (72) |

For single-shot CPTP evolution, the average entropy production is [2]

| (73) |

where the reference state can be chosen freely as needed; Gibbs states are a common choice. Since Gibbs states are fixed points of many processes, the following average entropy production formula is often used [21, 20, 23]

| (74) |

If the Gibbs state is not a fixed point, however, Eq. 74 is inappropriate; see [19] for a related discussion.
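Equation 74 is not legible in this text. A commonly used form when the Gibbs state γ is a fixed point of the channel Λ is the Spohn-type expression Σ = S(ρ‖γ) − S(Λ(ρ)‖γ), which is non-negative by the data processing inequality. The sketch below assumes this form (not necessarily identical to Eq. 74) and uses a qubit generalized amplitude-damping channel as a hypothetical example whose fixed point is thermal. It also shows the failure mode noted above: for a zero-temperature amplitude-damping channel, γ is not a fixed point and the same expression becomes negative for ρ = γ.

```python
import numpy as np

def logm_psd(rho, eps=1e-15):
    w, v = np.linalg.eigh(rho)
    return (v * np.log(np.clip(w, eps, None))) @ v.conj().T

def relative_entropy(rho, sigma):
    """S(rho||sigma) in nats for full-rank sigma."""
    return float(np.real(np.trace(rho @ (logm_psd(rho) - logm_psd(sigma)))))

def apply_channel(kraus, rho):
    return sum(k @ rho @ k.conj().T for k in kraus)

def gad_kraus(g, n):
    """Generalized amplitude damping in the basis (ground, excited); fixed point diag(1 - n, n)."""
    a, b = np.sqrt(1 - n), np.sqrt(n)
    return [a * np.array([[1, 0], [0, np.sqrt(1 - g)]]),
            a * np.array([[0, np.sqrt(g)], [0, 0]]),
            b * np.array([[np.sqrt(1 - g), 0], [0, 1]]),
            b * np.array([[0, 0], [np.sqrt(g), 0]])]

def spohn_entropy_production(kraus, rho, gamma):
    """S(rho||gamma) - S(Lambda(rho)||gamma); non-negative whenever Lambda(gamma) = gamma."""
    return relative_entropy(rho, gamma) - relative_entropy(apply_channel(kraus, rho), gamma)

beta_omega = 1.0                                   # hypothetical inverse temperature times gap
n_th = np.exp(-beta_omega) / (1 + np.exp(-beta_omega))
gamma = np.diag([1 - n_th, n_th])                  # qubit Gibbs state
rho = np.array([[0.2, 0.3], [0.3, 0.8]])           # hypothetical initial state
thermal = gad_kraus(0.4, n_th)                     # Gibbs state is a fixed point
cold = gad_kraus(0.4, 0.0)                         # zero-temperature damping: Gibbs state is not fixed
```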

If we choose and assume that all measurements are non-invasive, then from Eq. 70 we obtain

| (75) |

When the evolution of the system state can be described through an exact time-convolutionless master equation

| (76) |

one can choose the instantaneous fixed point as the reference state, where is a null eigenvector of the generator of the dynamics. For continuous evolution and measurement, the total average entropy production can be written as

| (77) |
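Finding the instantaneous fixed point amounts to computing a null eigenvector of the generator. The sketch below assumes, for illustration only, that the generator at a given time has Lindblad form with hypothetical positive decay and excitation rates for a qubit; it vectorizes the generator with column stacking, vec(AXB) = (Bᵀ ⊗ A) vec(X), and extracts the eigenvector with eigenvalue closest to zero. For this generator the stationary populations obey p_e/p_g = γ↑/γ↓.

```python
import numpy as np

def lindblad_superoperator(H, jumps):
    """Column-stacking matrix of L(rho) = -i[H, rho] + sum_k (J rho J^+ - {J^+ J, rho}/2).

    `jumps` is a list of jump operators J_k with their rates already absorbed.
    """
    d = H.shape[0]
    I = np.eye(d)
    L = -1j * (np.kron(I, H) - np.kron(H.T, I))
    for J in jumps:
        JdJ = J.conj().T @ J
        L += np.kron(J.conj(), J) - 0.5 * np.kron(I, JdJ) - 0.5 * np.kron(JdJ.T, I)
    return L

def instantaneous_fixed_point(L, d):
    """Normalized density matrix spanning the (assumed unique) null space of L."""
    w, v = np.linalg.eig(L)
    vec = v[:, np.argmin(np.abs(w))]
    rho = vec.reshape(d, d, order="F")     # undo column stacking
    rho = rho / np.trace(rho)
    return 0.5 * (rho + rho.conj().T)

omega, g_down, g_up = 1.0, 0.3, 0.1        # hypothetical parameters
sm = np.array([[0.0, 1.0], [0.0, 0.0]])    # |g><e| in the basis (g, e)
H = np.diag([0.0, omega])
L = lindblad_superoperator(H, [np.sqrt(g_down) * sm, np.sqrt(g_up) * sm.T])
rho_ss = instantaneous_fixed_point(L, 2)
```

For a time-dependent generator the same computation would be repeated at each time with the instantaneous rates.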

The averages of the entropy productions and are

| (78) |

Combining these results, we have

| (79) |

Unlike the unitary case, even if the Kolmogorov consistency condition is satisfied, the irreversibility of the evolution generally makes the sum of the segmental entropy productions greater than the total average entropy production.

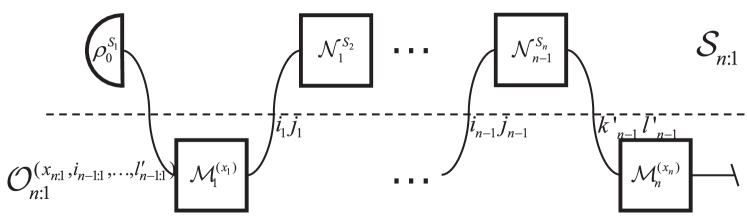

II.3 Non-Markovian open quantum system

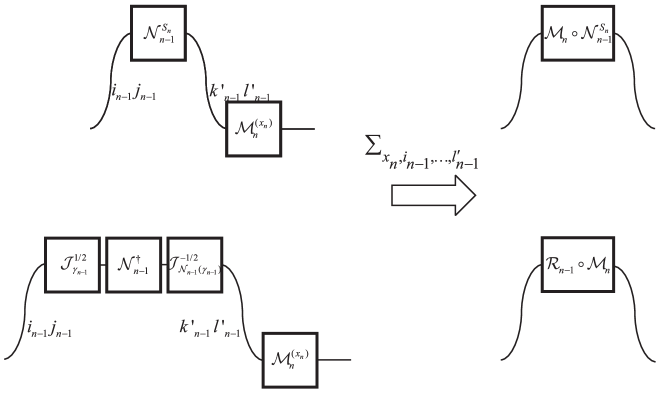

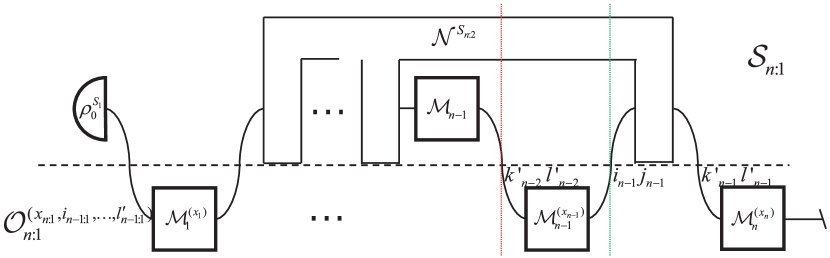

In general, non-Markovian processes are indivisible. Under multistep evolution, the forward process state can be written as

| (80) |

where

| (81) |

With the measurements (5), the joint probability for the multipoint measurements can still be expressed as Eq. 39. If we use the Petz recovery map of to construct the backward process

| (82) |

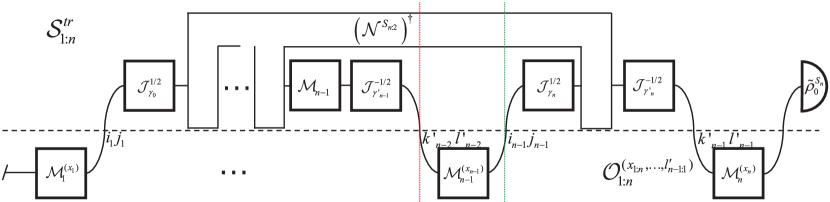

then the backward process is not linkable [14]. For such processes, measuring with the measurement (9) leads to an ill-defined probability distribution. One can freshly prepare the system state at each step and obtain the detailed FT for the many-body channels, or insert a special operation and obtain the detailed FT for the derived channel [14]. The former has no connection with the joint probability distribution . The latter has input states that are independent, with no time ordering among them. Therefore, neither is suitable for studying multiple entropy productions under the same process.

For non-Markovian processes, the intermediate measurements can influence the subsequent evolution of the system and themselves increase the entropy. Hence, only by incorporating the intermediate measurements into the process itself can we guarantee that the entropy production is due to the process rather than to the measurements. In this manner, the forward process state is

| (83) |

where . Note that the induction condition is naturally satisfied in the present framework: the present state and its measurement outcome cannot be affected by future measurements. Hence, we can obtain the first-step evolution by ignoring the results of the subsequent evolution . Performing a measurement and discarding the outcomes does not affect a re-measurement of the intermediate state

| (84) |

Thus, with the measurements Eq. 5, the joint probability distribution given by is the same as that given by Eq. 80. However, since incorporates the intermediate measurements into the process itself, its final state differs from that given by Eq. 80 even without further measurements

| (85) |

For the same reason, their reference final states are also different.

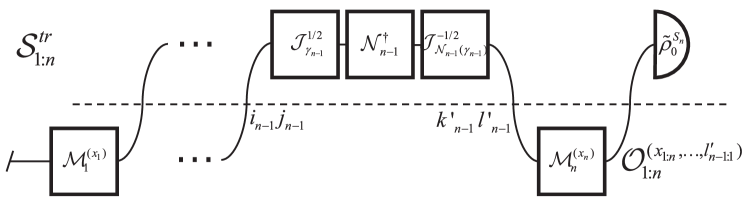

Here we propose the following backward process state

| (86) |

where

| (87) |

and

| (88) |

is the output of after steps of evolution. Accordingly, for , is the output state of the measurement , so they share the same basis. We set and for the others . We still use the quasi-measurements Eq. 43 for the forward processes and Eq. 47 for the backward processes. Now the basis is chosen such that it diagonalizes and is chosen as the eigenbasis of . Relation (46) still holds, as does its backward counterpart.

Before discussing the entropy production further, we briefly analyze the probability distribution of the backward processes. Since and share the same basis, we have

| (89) |

Combining this with the definition of , we obtain

| (90) |

where

| (91) |

is related to the intermediate measurement results. It is easy to show that , which leads to

| (92) |

So the backward joint probability distribution is normalized.

Now we return to the FTs. The quasiprobability, the entropy production and its probability distribution can be defined as in section II.2. The entropy production reads

| (93) |

If we choose , the average of the entropy production is

| (94) |

Since the intermediate measurements are already included in the process , the applied intermediate measurements are non-invasive. Furthermore, the intermediate reference states cannot be chosen arbitrarily. These features make the entropy production (94) quite similar to the form (71). Note, however, that the underlying evolutions are completely different: the evolution (85) entering Eq. 94 contains measurements and cannot be divided because of memory effects, which makes it quite different from the evolution (72).

Before considering the marginal distribution , we need to clarify the relation between and . Unlike the cases in section II.2, the memory effect makes the subsequent evolution depend on the previous measurement results, so the relation is more complex here. From the definition (81), we can use

| (95) |

to bridge and . Since the measurements on the system will destroy quantum correlations between system and environment [25], we have

| (96) |

represents the state of the environment. Since both and are CPTP maps, is also a CPTP map and this requires

| (97) |
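The statement that a measurement on the system destroys system-environment quantum correlations can be illustrated with a two-qubit toy model in which a single qubit stands in for the environment (a hypothetical simplification). The sketch prepares a maximally entangled state, applies a non-selective projective measurement to the system, and computes the negativity from the partial transpose: it drops from 1/2 to 0, the post-measurement state is block diagonal in the measured basis (a classical-quantum state), and the reduced environment state is unchanged.

```python
import numpy as np

dS, dE = 2, 2   # system and (toy) environment dimensions

def measure_system(rho):
    """Non-selective projective measurement of the system in its computational basis."""
    out = np.zeros_like(rho)
    for k in range(dS):
        P = np.kron(np.outer(np.eye(dS)[k], np.eye(dS)[k]), np.eye(dE))
        out += P @ rho @ P
    return out

def partial_transpose_S(rho):
    """Transpose on the system factor of an S ⊗ E operator."""
    r = rho.reshape(dS, dE, dS, dE)
    return r.transpose(2, 1, 0, 3).reshape(dS * dE, dS * dE)

def negativity(rho):
    """N(rho) = (||rho^{T_S}||_1 - 1) / 2; zero for separable two-qubit states."""
    return 0.5 * (np.sum(np.abs(np.linalg.eigvalsh(partial_transpose_S(rho)))) - 1.0)

def reduced_E(rho):
    """Partial trace over the system."""
    return np.trace(rho.reshape(dS, dE, dS, dE), axis1=0, axis2=2)

phi = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2)   # (|00> + |11>)/sqrt(2), ordering S ⊗ E
rho_SE = np.outer(phi, phi.conj())
rho_meas = measure_system(rho_SE)
```

Classical correlations survive the measurement (the outcome on the system still predicts the environment state), which is consistent with the statement that measurements destroy only part of the memory.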

Equation 96 relates to . According to the definition (87), the recovery map can be rewritten as

| (98) |

Combining this with the relation derived above, we obtain

| (99) |

The quasiprobability, the entropy production and its probability distribution can also be defined as in section II.2. Unlike in section II.2, however, Eq. 99 implies that the entropy production becomes

| (100) |

The density matrix depends on the measurement history

| (101) |

where the evolution depends on the state of the environment

| (102) |

The detailed FT relation still holds, but the entropy production Eq. 100 is no longer a combination of time-localized measurements; it contains temporally nonlocal measurements. Only when the evolution does not change with the measurement history, as in Markovian processes, does the entropy production depend only on two-point measurements.

Now let’s discuss another marginal distribution. Summing over corresponds to the following process

| (103) |

As in the previous case, the measurement makes

| (104) |

where . For the same reason as in Eq. 97, this requires . With , the quasiprobability distribution of the forward processes can be written as

| (105) |

where , and the notation indicates that we omit common factors such as , which also appear in the quasiprobability distribution of the backward processes. The quasiprobability distribution of the backward processes is

| (106) |

From Eqs. 105 and 106, we find that the sum over cannot be eliminated or attributed to a local measurement, so in general there is no detailed FT for . Only when is independent of , as is also the case in Markovian processes, do we have

| (107) |

where

| (108) |

The proof uses the fact that and share the same basis. The previous analysis shows that, for the entropy production of the marginal distribution to satisfy the FT with time-localized measurements, the evolution must not change with the measurement history; that is, the measurement must not be invasive to the evolution.

Let’s briefly discuss why the marginal distribution in non-Markovian processes does not necessarily give a detailed FT. The Jensen-inequality argument of section II.1 also applies here: if both and satisfy the detailed FT, then we have . For non-Markovian processes, the state of the system may be fully recovered, so according to Eq. 94 there must be . However, is very natural when the state space of the system contracts. This conflict shows that the detailed FT for marginal distributions does not hold unconditionally.

Since the argument of section II.1 applies to all cases considered here, we reach the following conclusion: for a marginal distribution, if the corresponding entropy production satisfies the detailed FT, then its average is a lower bound on the total average entropy production.

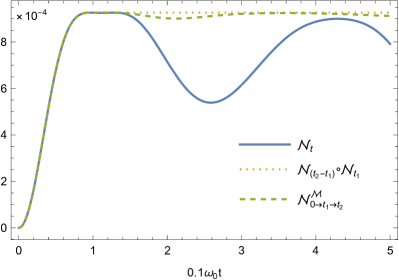

III Example

To illustrate our framework, we briefly discuss the Jaynes-Cummings model. For simplicity, we consider a two-level atom coupled to a single harmonic-oscillator mode and take the atom as the system of interest. The non-Markovian dynamics and memory effects of this model have been discussed in [27, 28]. Ref. [22] used it as an example to calculate the entropy production of single-shot evolutions of different durations and thereby obtain the entropy production rate. The total Hamiltonian is given by

| (109) |

Its eigenstate is

| (110) |

where denotes the number of radiation quanta in the mode, the letters denote the excited and ground states, respectively, and . The energy eigenvalues associated with the eigenstates are given by

| (111) |

where . The unitary evolution operator in the basis is given by the following matrix [27]

| (112) |

where the operators

| (113) |

and . All functions related to the particle number operator satisfy the following relations

| (114) |

Using these relations and

| (115) |

one can easily verify the unitarity of . In addition, using

| (116) |

it is easy to verify that .
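The operator-valued matrix of Eq. 112 is not legible in this text, so the sketch below checks the same kind of properties numerically rather than symbolically. It assumes the resonant rotating-wave Hamiltonian H = ω(σ₊σ₋ + a†a) + g(σ₊a + σ₋a†) (the paper's Eq. 109 may include a detuning), truncates the field at `nmax` Fock levels, and builds U(t) from the spectral decomposition; all parameter values are hypothetical. It checks unitarity, conservation of the excitation number, and the Rabi-type amplitude |⟨g, n+1|U|e, n⟩| = |sin(g√(n+1) t)|.

```python
import numpy as np

# Hypothetical parameters (hbar = 1); resonant rotating-wave model assumed.
omega, g, nmax = 1.0, 0.3, 12

sm = np.array([[0.0, 1.0], [0.0, 0.0]])       # sigma_- = |g><e| in the atom basis (g, e)
a = np.diag(np.sqrt(np.arange(1, nmax)), 1)    # field annihilation operator, nmax Fock levels
I2, If = np.eye(2), np.eye(nmax)

N_exc = np.kron(sm.T @ sm, If) + np.kron(I2, a.T @ a)   # excitation number sigma_+sigma_- + a^dagger a
V_int = np.kron(sm.T, a) + np.kron(sm, a.T)              # sigma_+ a + sigma_- a^dagger
H = omega * N_exc + g * V_int

def propagator(t):
    """U(t) = exp(-iHt) from the spectral decomposition of the Hermitian matrix H."""
    E, V = np.linalg.eigh(H)
    return (V * np.exp(-1j * E * t)) @ V.conj().T

def index(atom, n):
    """Position of |atom, n> in the atom ⊗ field basis (atom 0 = g, 1 = e)."""
    return atom * nmax + n

t = 2.1
U = propagator(t)
```

At resonance H commutes with the excitation number, so U is block diagonal in the two-dimensional subspaces spanned by |e, n⟩ and |g, n+1⟩; this is the structure behind the 2×2 operator form of the propagator.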

Suppose the initial state of the system and the environment is factorized, , with the environment initially in the thermal equilibrium (Gibbs) state . Then the dynamical map of the system is

| (117) |

Since the unitary evolution obeys the energy conservation relation , the thermal equilibrium state of the system is a fixed point of the map . For single-shot evolution, the corresponding forward process state is

| (118) |

where . See Eq. 123 for its specific matrix form.
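The fixed-point property and a single-shot entropy production can also be checked numerically. Under the same kind of assumptions as a resonant rotating-wave model with a truncated field and hypothetical parameters, the sketch computes the reduced map Λ_t(ρ) = Tr_E[U(ρ ⊗ γ_E)U†], confirms that the atomic Gibbs state at the field temperature is a fixed point, and evaluates the Spohn-type quantity S(ρ₀‖γ_S) − S(Λ_t(ρ₀)‖γ_S) on a time grid. This quantity stands in for, and is not claimed to equal, the entropy productions (71), (73) and (94). It stays non-negative by data processing; its finite-difference rate, in contrast, cannot be negative for CP-divisible dynamics with fixed point γ_S, so a negative value would signal memory effects. The code does not assume any particular sign pattern.

```python
import numpy as np

# Hypothetical parameters: resonant rotating-wave JC model, hbar = 1.
omega, g, beta, nmax = 1.0, 0.2, 1.0, 30

sm = np.array([[0.0, 1.0], [0.0, 0.0]])              # atom lowering |g><e|, basis (g, e)
a = np.diag(np.sqrt(np.arange(1, nmax)), 1)          # truncated field annihilation operator
I2, If = np.eye(2), np.eye(nmax)
H = (omega * np.kron(I2, a.T @ a) + omega * np.kron(sm.T @ sm, If)
     + g * (np.kron(sm.T, a) + np.kron(sm, a.T)))
E, V = np.linalg.eigh(H)

def propagator(t):
    return (V * np.exp(-1j * E * t)) @ V.conj().T

# Truncated thermal state of the field and Gibbs state of the atom at the same temperature.
pf = np.exp(-beta * omega * np.arange(nmax))
gamma_E = np.diag(pf / pf.sum())
pa = np.array([1.0, np.exp(-beta * omega)])
gamma_S = np.diag(pa / pa.sum())

def reduced_map(rho_S, t):
    """Lambda_t(rho_S) = Tr_E[U (rho_S ⊗ gamma_E) U^dagger] for a factorized initial state."""
    U = propagator(t)
    rho = U @ np.kron(rho_S, gamma_E) @ U.conj().T
    return np.trace(rho.reshape(2, nmax, 2, nmax), axis1=1, axis2=3)

def logm_psd(rho, eps=1e-15):
    w, v = np.linalg.eigh(rho)
    return (v * np.log(np.clip(w, eps, None))) @ v.conj().T

def relative_entropy(rho, sigma):
    return float(np.real(np.trace(rho @ (logm_psd(rho) - logm_psd(sigma)))))

def entropy_production(rho0, t):
    """Spohn-type form S(rho0||gamma_S) - S(Lambda_t(rho0)||gamma_S) (an assumed stand-in)."""
    return relative_entropy(rho0, gamma_S) - relative_entropy(reduced_map(rho0, t), gamma_S)

rho0 = np.diag([0.0, 1.0])                            # atom initially excited
times = np.linspace(0.0, 60.0, 301)
sigma_t = np.array([entropy_production(rho0, t) for t in times])
rate = np.diff(sigma_t) / np.diff(times)              # finite-difference entropy production rate
```

The fixed-point check is exact even after truncation, because at resonance the joint Gibbs state is a function of the excitation number, which commutes with the truncated Hamiltonian.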

For two-step evolution, the corresponding channel is

| (119) |

The corresponding forward process state is given by

| (120) |

The specific matrix form of can be found in appendix A.

Fig. 5 compares the entropy productions (71), (73) and (94). Comparing the entropy production of with , we find that the memory effect reduces the entropy production. When the memory is erased (by refreshing the environment state), the restoration of the state is prevented. Comparing with , we find that the recovery of the state is weakened, which means that the measurement can destroy part of the memory. This is consistent with the fact that memory can be divided into quantum and classical parts. Comparing with , we find that negative entropy production still exists, which means that the measurement does not destroy all of the memory. In addition, for the process , we see that . This makes it impossible for the corresponding marginal distribution to give a detailed FT.

IV Conclusion and discussion

In this paper, we have studied the entropy production and the detailed FT for multitime quantum processes in the framework of the operator-state formalism. For closed quantum systems and Markovian open quantum systems, the entropy productions of the joint probability and of the marginal distributions all satisfy the detailed FT relation. This leads to a non-negative entropy production rate, and the total average entropy production is not less than the intermediate average entropy production. For closed quantum systems, we further showed that the sum of the intermediate entropy productions can equal the total entropy production if the Kolmogorov condition is satisfied. For non-Markovian open quantum systems, the memory effect can lead to a negative entropy production rate; therefore, the entropy production of a marginal distribution generally does not satisfy the detailed FT relation.

To illustrate the framework, we have calculated the total average entropy production of the three processes in the Jaynes-Cummings model. The results show that memory effects reduce the average entropy production, while measurements destroy part of the memory. For the non-Markovian open quantum system, the intermediate measurements can destroy the system-environment quantum correlations and thus part of the memory. If these intermediate measurements are not performed, the full memory effect is preserved. If the environment state is refreshed at each step, as in [26], the evolution is completely Markovian and the memory effect is completely destroyed. Further study of how measurements affect different kinds of memory, and of how these memories affect entropy production, would help clarify the influence of memory effects on the fluctuation theorems.

This paper focuses on the fluctuation theorems of multiple entropy productions under the same process and the same measurement. The multiple fluctuations here are obtained by calculating the marginal distributions of measurements at different times. Previous studies such as [29] have considered fluctuation theorems for a single-shot process with many-body measurements, where the multiple fluctuations are obtained by calculating the marginal distributions of different measured parties (such as the system and a reference). Since the conditional mutual information obtained by introducing an auxiliary system can be used to quantify the memory effect, studying fluctuation theorems for many-body measurements under multitime evolution may yield interesting results.
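The conditional mutual information mentioned above is I(A:C|B) = S(AB) + S(BC) - S(B) - S(ABC). A minimal sketch computing it for a small tripartite density matrix with NumPy follows; the helper names are hypothetical, entropies are in nats, and which subsystem plays the auxiliary system, the present or the future is left open, since the text does not fix that assignment.

```python
import numpy as np

def von_neumann_entropy(rho):
    """S(rho) = -Tr rho ln rho in nats; (numerically) zero eigenvalues are dropped."""
    evals = np.linalg.eigvalsh(rho)
    evals = evals[evals > 1e-12]
    return float(-np.sum(evals * np.log(evals)))

def partial_trace(rho, dims, keep):
    """Reduced state on the subsystems listed in `keep` (indices into dims)."""
    n = len(dims)
    rho_t = rho.reshape(list(dims) + list(dims))  # ket axes first, then bra axes
    # Trace out discarded subsystems from the highest index down so that the
    # remaining axis numbers stay valid after each contraction.
    for i in sorted(set(range(n)) - set(keep), reverse=True):
        rho_t = np.trace(rho_t, axis1=i, axis2=i + rho_t.ndim // 2)
    d = int(np.prod([dims[i] for i in keep]))
    return rho_t.reshape(d, d)

def conditional_mutual_information(rho, dims):
    """I(A:C|B) = S(AB) + S(BC) - S(B) - S(ABC) for a state on A (x) B (x) C."""
    def s(keep):
        return von_neumann_entropy(partial_trace(rho, dims, keep))
    return s([0, 1]) + s([1, 2]) - s([1]) - s([0, 1, 2])
```

For example, a Bell pair shared by A and C with B in a pure state gives I(A:C|B) = 2 ln 2, while the maximally mixed three-qubit state gives zero.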

In [30, 31], the entropy production and the detailed FT relation of multiple channels are used to develop an arrow-of-time statistic associated with the measurement dynamics. Evolution with memory effects lies beyond these frameworks, so irreversibility may be violated. The framework of this paper allows us to discuss path probabilities of non-Markovian processes and thus may deepen our understanding of the relation between the Poincaré recurrence theorem and the statistical arrow of time.

The FTs considered here are completely general but are useful only when can be expressed exclusively in terms of physical and measurable quantities. For closed quantum systems and Markovian open quantum systems, the entropy production defined here is consistent with previous work, so we do not go into details here. The main difference arises in the non-Markovian case, where memory effects cause the entropy production to include temporally nonlocal measurements. This issue needs further investigation.

Acknowledgements.

Z.H. is supported by the National Natural Science Foundation of China under Grant No. 12305035.

Appendix A Overall process state and open system process state

Here we obtain the open-system process state from the process state of the overall system-environment unitary evolution. The overall process state corresponding to a single-shot unitary evolution is

| (121) |

where can be expressed as the following matrix

| (122) |

where the rows are and the columns are , and the entries are . According to Eq. 81, the process state of the open system can be obtained from the overall process state. When the environment state commutes with the number operator, we have , where

| (123) |

and . For two-step unitary evolution, the following overall process state can be obtained

| (124) |

Again, we can use to obtain the specific form of the map . Using Eqs. 114, 115 and III repeatedly, one obtains

| (125) |

where , , , , and

| (130) | ||||

| (135) |

where

| (136) |

When , we have , and it is easy to verify that

| (137) |

This map indeed corresponds to the channel . In addition, the one-step evolution can be derived from the two-step evolution when the intermediate states are directly connected. Accordingly, one can verify that

| (138) |
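The statement that the one-step evolution follows from the two-step evolution when the intermediate states are directly connected can be illustrated with Choi matrices. This sketch uses the standard unnormalized Choi matrix Σ_ij |i⟩⟨j| ⊗ Λ(|i⟩⟨j|) as a stand-in for the process states above (whose normalization and index ordering may differ), a hypothetical resonant Jaynes-Cummings unitary with assumed parameters `G`, `T`, `N_FOCK`, and a vacuum environment. Connecting the two steps directly reproduces the map for duration 2T, whereas composing two one-step reduced maps, which amounts to refreshing the environment in between, gives a different map.

```python
import numpy as np

# Hypothetical parameters for illustration only.
N_FOCK = 3       # truncation of the bosonic mode (environment)
G, T = 1.0, 0.3  # coupling strength and single-step duration

def jc_unitary(t, g=G, n=N_FOCK):
    """exp(-i H t) for H = g (sigma_+ a + sigma_- a^dagger); qubit index 0 = |e>, 1 = |g>."""
    a = np.diag(np.sqrt(np.arange(1, n)), k=1)
    sp = np.array([[0, 1], [0, 0]], dtype=complex)
    h = g * (np.kron(sp, a) + np.kron(sp.conj().T, a.conj().T))
    evals, evecs = np.linalg.eigh(h)
    return evecs @ np.diag(np.exp(-1j * evals * t)) @ evecs.conj().T

def reduced_map(rho_s, u, n=N_FOCK):
    """Attach a vacuum environment, evolve with u, then trace out the mode."""
    vac = np.zeros((n, n), dtype=complex)
    vac[0, 0] = 1.0
    rho = u @ np.kron(rho_s, vac) @ u.conj().T
    return np.einsum('ikjk->ij', rho.reshape(2, n, 2, n))

def choi(channel, d=2):
    """Unnormalized Choi matrix sum_ij |i><j| (x) channel(|i><j|), input factor first."""
    out = np.zeros((d * d, d * d), dtype=complex)
    for i in range(d):
        for j in range(d):
            e_ij = np.zeros((d, d), dtype=complex)
            e_ij[i, j] = 1.0
            out += np.kron(e_ij, channel(e_ij))
    return out

u = jc_unitary(T)
# Intermediate states directly connected: the environment is carried over.
direct = choi(lambda r: reduced_map(r, u @ u))
# Single-shot evolution over the total duration 2T.
single_2t = choi(lambda r: reduced_map(r, jc_unitary(2 * T)))
# Two one-step reduced maps composed: the environment is refreshed in between.
refreshed = choi(lambda r: reduced_map(reduced_map(r, u), u))
```

Tracing the output factor of `direct` returns the identity, which is the trace-preservation condition in this Choi convention.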

If the intermediate states are measured, assuming the measurement is performed in the basis , then from

| (139) |

we obtain the map corresponding to Eq. 85. Its specific matrix form is

| (140) |

where

| (141) |

The map in Eq. 140 can be used to calculate the entropy production in Eq. 94.

References

- [1] M. Esposito, U. Harbola, S. Mukamel, Nonequilibrium fluctuations, fluctuation theorems, and counting statistics in quantum systems, Reviews of Modern Physics, 81 (2009) 1665-1702.

- [2] H. Kwon, M.S. Kim, Fluctuation Theorems for a Quantum Channel, Physical Review X, 9 (2019) 031029.

- [3] G.T. Landi, M. Paternostro, Irreversible entropy production: From classical to quantum, Reviews of Modern Physics, 93 (2021) 035008.

- [4] J.M. Horowitz, S. Vaikuntanathan, Nonequilibrium detailed fluctuation theorem for repeated discrete feedback, Physical Review E, 82 (2010) 061120.

- [5] S. Lahiri, S. Rana, A.M. Jayannavar, Fluctuation theorems in the presence of information gain and feedback, Journal of Physics A: Mathematical and Theoretical, 45 (2012) 065002.

- [6] P.A. Camati, R.M. Serra, Verifying detailed fluctuation relations for discrete feedback-controlled quantum dynamics, Physical Review A, 97 (2018) 042127.

- [7] S. Deffner, J.P. Paz, W.H. Zurek, Quantum work and the thermodynamic cost of quantum measurements, Physical Review E, 94 (2016) 010103.

- [8] S. Deffner, Energetic cost of Hamiltonian quantum gates, Europhysics Letters, 134 (2021) 40002.

- [9] V. Jakšić, C.A. Pillet, M. Westrich, Entropic Fluctuations of Quantum Dynamical Semigroups, Journal of Statistical Physics, 154 (2014) 153-187.

- [10] J.-F. Bougron, L. Bruneau, Linear Response Theory and Entropic Fluctuations in Repeated Interaction Quantum Systems, Journal of Statistical Physics, 181 (2020) 1636-1677.

- [11] F.A. Pollock, C. Rodríguez-Rosario, T. Frauenheim, M. Paternostro, K. Modi, Non-Markovian quantum processes: Complete framework and efficient characterization, Physical Review A, 97 (2018) 012127.

- [12] S. Milz, K. Modi, Quantum stochastic processes and quantum non-Markovian phenomena, PRX Quantum, 2 (2021) 030201.

- [13] P. Strasberg, Operational approach to quantum stochastic thermodynamics, Physical Review E, 100 (2019) 022127.

- [14] Z. Huang, Fluctuation theorems for multitime processes, Physical Review A, 105 (2022) 062217.

- [15] S. Milz, D. Egloff, P. Taranto, T. Theurer, M.B. Plenio, A. Smirne, S.F. Huelga, When is a non-Markovian quantum process classical?, Physical Review X, 10 (2020) 041049.

- [16] H. Miller, J. Anders, Leggett-Garg Inequalities for Quantum Fluctuating Work, Entropy, 20 (2018) 200.

- [17] D.E. Parker, X.-y. Cao, A. Avdoshkin, T. Scaffidi, E. Altman, A universal operator growth hypothesis, Physical Review X, 9 (2019) 041017.

- [18] F.A. Pollock, C. Rodríguez-Rosario, T. Frauenheim, M. Paternostro, K. Modi, Operational Markov Condition for Quantum Processes, Physical Review Letters, 120 (2018) 040405.

- [19] P. Strasberg, M. Esposito, Non-Markovianity and negative entropy production rates, Physical Review E, 99 (2019) 012120.

- [20] S. Alipour, F. Benatti, F. Bakhshinezhad, M. Afsary, S. Marcantoni, A.T. Rezakhani, Correlations in quantum thermodynamics: Heat, work, and entropy production, Scientific Reports, 6 (2016) 35568.

- [21] O. Zografos, M. Manfrini, A. Vaysset, B. Sorée, F. Ciubotaru, C. Adelmann, R. Lauwereins, P. Raghavan, I.P. Radu, Exchange-driven Magnetic Logic, Scientific Reports, 7 (2017) 12154.

- [22] A. Colla, H.-P. Breuer, Open-system approach to nonequilibrium quantum thermodynamics at arbitrary coupling, Physical Review A, 105 (2022) 052216.

- [23] M. Popovic, B. Vacchini, S. Campbell, Entropy production and correlations in a controlled non-Markovian setting, Physical Review A, 98 (2018) 012130.

- [24] D. Petz, Sufficient subalgebras and the relative entropy of states of a von Neumann algebra, Communications in Mathematical Physics, 105 (1986) 123-131.

- [25] V. Madhok, A. Datta, Interpreting quantum discord through quantum state merging, Physical Review A, 83 (2011) 032323.

- [26] K. Modi, C.A. Rodríguez-Rosario, A. Aspuru-Guzik, Positivity in the presence of initial system-environment correlation, Physical Review A, 86 (2012) 064102.

- [27] A. Smirne, B. Vacchini, Nakajima-Zwanzig versus time-convolutionless master equation for the non-Markovian dynamics of a two-level system, Physical Review A, 82 (2010) 022110.

- [28] S. Deffner, E. Lutz, Quantum Speed Limit for Non-Markovian Dynamics, Physical Review Letters, 111 (2013) 010402.

- [29] K. Zhang, X. Wang, Q. Zeng, J. Wang, Conditional Entropy Production and Quantum Fluctuation Theorem of Dissipative Information: Theory and Experiments, PRX Quantum, 3 (2022) 030315.

- [30] S.K. Manikandan, C. Elouard, A.N. Jordan, Fluctuation theorems for continuous quantum measurements and absolute irreversibility, Physical Review A, 99 (2019) 022117.

- [31] P.M. Harrington, D. Tan, M. Naghiloo, K.W. Murch, Characterizing a Statistical Arrow of Time in Quantum Measurement Dynamics, Physical Review Letters, 123 (2019) 020502.