Multiple Speaker Separation from Noisy Sources in Reverberant Rooms using Relative Transfer Matrix

††thanks: Thanks to Australian Research Council (ARC) Discovery Project Grant DP200100693 for funding.

Abstract

Separation of simultaneously active multiple speakers is a difficult task in environments with strong reverberation and many background noise sources. This paper uses the relative transfer matrix (ReTM), a generalization of the relative transfer function of a room, to propose a simple yet novel approach for separating concurrent speakers using noisy multichannel microphone recordings. The proposed method (i) allows multiple speech and background noise sources, (ii) includes reverberation, (iii) does not need the knowledge of the locations of speech and noise sources nor microphone locations and their relative geometry, and (iv) uses relatively small segment of recordings for training. We illustrate the speech source separation capability with improved intelligibility using a simulation study consisting of four speakers in the presence of three noise sources in a reverberant room. We also show the applicability of the method in a practical experiment in a real room.

Index Terms:

Low SNR, multiple microphones, multiple sound sources, relative transfer matrix, speaker separationI Introduction

Separation of multiple simultaneously active speakers in a room is a challenging acoustic signal processing problem. The aim is to recover the original speech signals or their equivalent version from the multichannel audio mixture which is contaminated by reverberation and background noise. Speaker separation will provide spatially selective listening for applications in augmented, virtual, and mixed reality.

Various approaches to speaker separation have been proposed using either single-channel or multichannel audio mixture, such as independent component analysis (ICA) [1, 2], non-negative matrix factorization (NMF) [3, 4], beamforming [5, 6], relative transfer function (ReTF) [7], and their combinations [8], as well as machine learning techniques [9, 10, 11, 12, 13, 14, 15, 16]. Nevertheless, most of the existing multi-talker separation methods, including the aforementioned ones, assumed the sparsity of the signals, or learned the discriminative patterns of speech, speakers, and background noise directly from rigorous training for a relative array geometry with or without given location information to extract the target speaker.

In [17], we introduced the concept of Relative Transfer Matrix (ReTM) which relates the signal received between two sets of microphone groups with respect to all active sources present in a room. Similar to the ReTF [18], the ReTM is independent of source signals but dependent on the spatial location of the sources and the environment. Recently, the ReTM of the noise sources has been used to propose a multi-channel speech denoising algorithm [19, 20]. In this paper, we extend [19], for multi-speaker separation in a reverberant room under low signal-to-noise ratio (SNR) conditions. The key aspects of our method are as follows: (i) microphones are spatially distributed over the room, where microphone locations are unknown, (ii) microphones are divided into two groups, and (iii) neither source counting nor knowledge of the number of sources is required prior to the separation task. The method relies on estimating the ReTM with respect to all undesired sources (including noise) either semi-blindly or pre-training. We also assume that the spatial properties of the environment, room, noise sources and speakers do not change with time. We show the performance of the proposed method using both simulation and real recordings in noisy environments. Also, we discuss merits of the proposed method with respect to recent work in speech separation.

II Problem Formulation and Relative Transfer Matrix

In this section, we first formulate the problem of multichannel reverberant speech separation, and then review the relative transfer matrix (ReTM) method presented in [17], which divides the microphones into two groups.

II-A System Model

Consider a reverberant environment with concurrently active speech and background noise sources. Let . In the short time Fourier transform (STFT) domain, we denote , and , as the speech and background noise signals, respectively.

Let there be arbitrary distributed microphones in the room. We divide them to two groups of microphones, and with and microphones, respectively (). We denote and as the vector of received signals at microphone groups A and B, respectively. Then the received signals at each microphone group in matrix form as

| (1) |

| (2) |

where , and is the matrix transpose. Here, and are the matrices with elements defined by the acoustic transfer functions. The microphone thermal noise vector and are similarly defined.

The aim of this paper is to separate each individual speech signal , , from the concurrent speakers and the background noise sources .

II-B Background on the ReTM

The ReTM, , is define as in [17]

| (3) |

where is Moore-Penrose inverse, assuming the validity, i.e., . Thus, we can relate the received signal at group and using

Note that is defined by the spatial properties of the sound sources such that it is independent of the sound source signals. In applications, the ReTM is invariant for a stationary environment.

The next section shows how to separate the desired speakers by estimating the ReTM of the undesired sources in practice.

III Multichannel Speaker Separation using ReTM

In the following, we extract the target speech of the speaker. Note that all speakers, , in the mixture can be similarly extracted.

Let the target speech denotes as out of concurrent speakers. The rest of the undesired source signals including background noise source signals can be grouped as We express the source signal vector .

Let be the ReTM of the combination of all sound sources except the target source. Here, we assume a specific scenario of two time segments with known segment boundaries as (i) undesired sources-only segment () (to be used to estimate ReTM), and (ii) a sound segment () with a mixture of active sources including the desired source. We use the covariance matrices-based approach as in [17] to blindly estimate the using segment of the microphone recording in which the target speaker is inactive. Hence,

| (4) |

with , and , where denotes the expectation which can be obtained by averaging across the time frames. Although blind estimation of ReTM is used in this paper, the proposed method can also be implemented by pre-trained or semi-blind estimation (e.g., conference rooms with pre-arranged settings).

We remove all undesired speech sources and background noise from the group microphone signals by multiplying group microphone signals by and subtracting as

| (5) |

where is a vector consists copies of estimated target speech signal . For convenience, we omit the dependency of time () and frequency () in the rest of this section. Using (1) and (2) in (5), we obtain

| (6) |

Let and be the acoustic transfer function vectors from the speech source to group and microphones, respectively. Also, let and be the acoustic transfer function matrices from all other sources except the speech source to group A and B microphones, respectively. Thus,

| (7) |

Equation (7), along with an accurate estimate of , , provides a complete separation of all speakers from the mixture. However, (7) is a ‘distorted’ version of the target speech signal in terms of the room transfer matrix of both speech and background noise sources with some microphone thermal noise. We find that the impact of the thermal noise term in (7) is minimal in our experiments.

IV Experimental Evaluation

This section shows the implementation of the proposed separation method under low (below dB) signal-to-background noise ratio (SNR) conditions in a reverberant room using both simulation and real recordings.

IV-A Simulated Environments

We utilize an open-source toolbox [21] to model the room impulse response (RIR) from the sound sources to irregularly distributed microphones in a m rectangular room ( ms). We consider four speech sources, three background noise sources, and microphones. Four speaker locations are: (1): (3 m, 4.5 m, 1.2 m), (2): (3 m, 2.5 m, 1.2 m), (3): (4.5 m, 3.5 m, 1.2 m), and (4): (1.5 m, 3.5 m, 1.2 m) and three background noise sources locations are: (1): (2 m, 0.9 m, 1.8 m), (2): (1 m, 6 m, 2.5 m), and (3): (3.5 m, 5 m, 1.8 m) with respect to the origin position in the left corner of the room. We convolve the speech sources RIRs with both male and female speech utterances from the TIMIT dataset [22] and noise sources RIRs with wall air-conditioner noise, vacuum noise, and music signal. The received signals are down-sampled to kHz and ranged from to dB SNR of background noise and added with dB SNR of white Gaussian noise at each microphone. Here, we define the SNR by averaging SNR at each receiver over all receivers.

We assume this undesired sound sources-only signal has been obtained when each target speaker is inactive and use a second segment of this recording for training. Then, the recordings are short-time-Fourier-transformed with an 8192-point window size that was long relative to the length of the RIR to satisfy the multiplicative transfer function [23]. We assign varying number of receivers to group , and a fixed number of receivers to group .

We use two qualitative measures to assess the separation performance: (i) Signal-to-Interference Ratio (SIR), and (ii) Signal-to-Distortion Ratio (SDR), using the BSS-Eval toolbox [24]. We also measure speech intelligibility through Short-Time Objective Intelligibility (STOI) [25]. The SNR is defined with respect to all sources in the mixture, whereas SIR is defined considering one speaker as the target signal and the rest of the interfering speakers as the noise signal.

We first evaluate performance in a varying number of microphones with fixed at dB SNR level in Table I. We observe that both group A sizes of and yield the best results, however, obtains the highest SDR. The total number of sources in the mixture is , suggesting a lower bound for the groups size for the improved separation capabilities. The results confirm that with the higher accurately separated the speech signals in noisy reverberant environments.

| Speaker | STOI | SIR | SDR | |

|---|---|---|---|---|

| Num. | () | (dB) | (dB) | |

| 1 | Unprocessed | 37.35 | 1.40 | - |

| O/P | 84.79 | 15.41 | 7.22 | |

| O/P | 92.16 | 25.37 | 9.49 | |

| O/P | 91.83 | 31.23 | 7.53 | |

| 2 | Unprocessed | 23.85 | -6.82 | - |

| O/P | 59.78 | 9.94 | 0.86 | |

| O/P | 73.02 | 19.09 | 3.47 | |

| O/P | 72.61 | 26.68 | 2.98 | |

| 3 | Unprocessed | 20.79 | -14.35 | - |

| O/P | 40.65 | 0.82 | -7.21 | |

| O/P | 66.67 | 10.96 | -1.86 | |

| O/P | 69.76 | 21.83 | -2.83 | |

| 4 | Unprocessed | 29.59 | -5.69 | - |

| O/P | 60.77 | 9.56 | -0.15 | |

| O/P | 71.50 | 19.65 | 1.45 | |

| O/P | 71.37 | 23.89 | -0.16 |

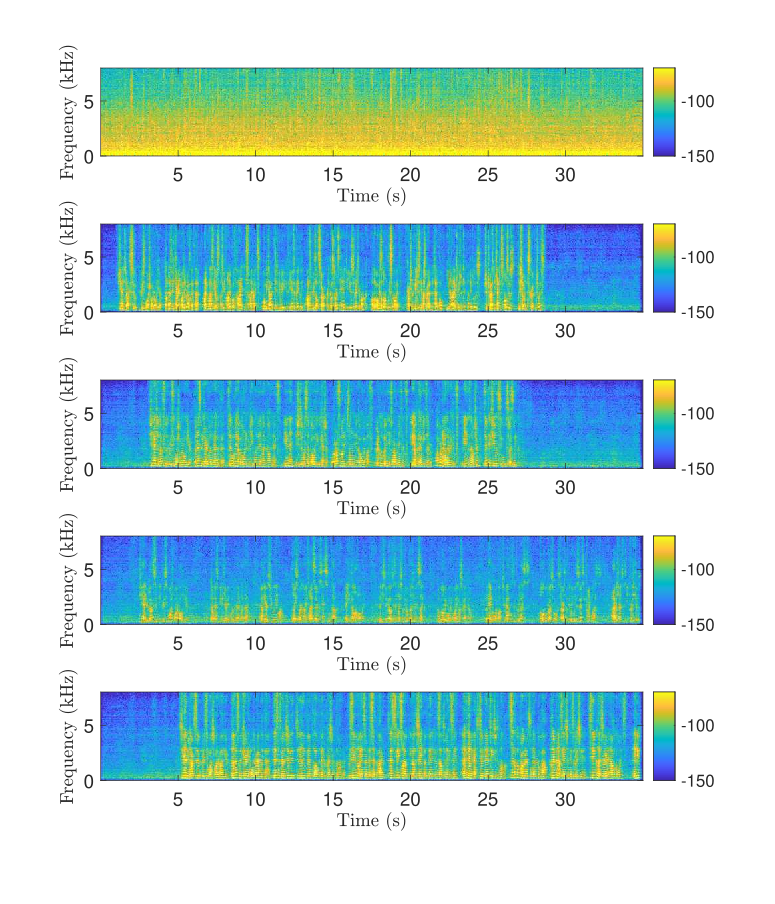

We will now examine the spectrogram plots of the mixture signal, separated speech signal outputs at the microphone of in Fig. 1. We observe that the input signal is contaminated by background noise, however, the separated output for each speaker is less noisy. We share a link to the mixture signal and the separation speech signals to listen.111https://github.com/wnilmini/SS-ReTM

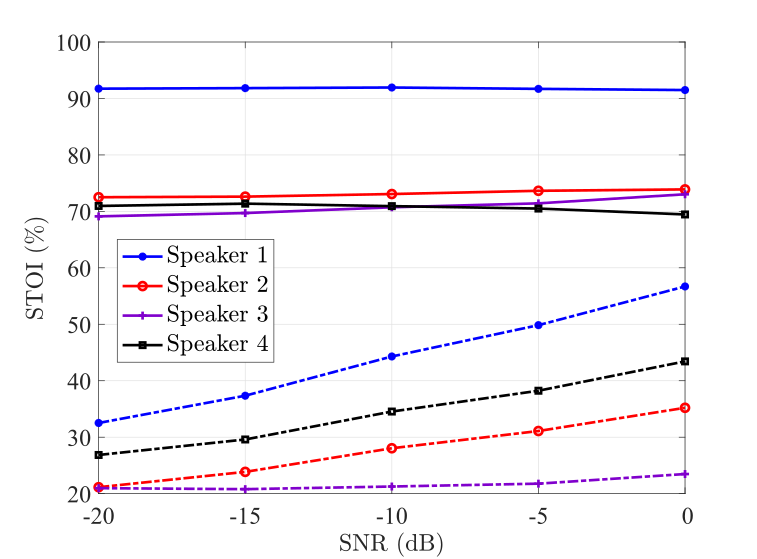

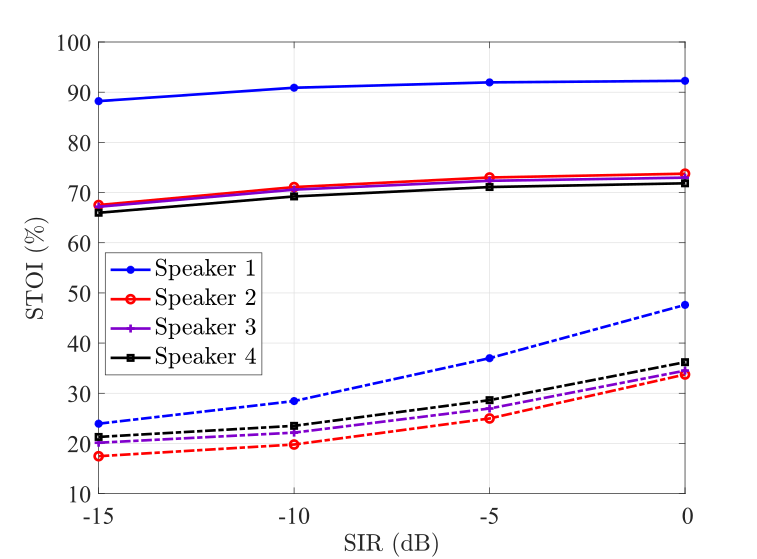

Next, we examine the performance of the proposed algorithm with different SNR levels in Fig. 2. Given that the proposed method works better with more microphones in groups A and B than the number of sound sources, we set and with varying SNR levels from dB to dB.

Results of Fig. 2 show that the proposed method is able to successfully separate all speakers in the mixture, especially with the different input speaker configurations, i.e., STOI, SIR, at low SNR levels. Three key observations are made from Fig. 2. First is in terms of intelligibility improvement, where we observe the highest output STOI values for the same speaker with the highest input STOI while the rest achieve comparable results. The second observation is on separation results, where we observe improved SIR, and SDR for individual speakers with a performance gap that the first speaker is dominated which has the highest SIR value if four speakers are with different SIR levels. Our third observation is that the speaker separation results are seen to be nearly constant for each speaker over the low input SNR conditions as focusing constant input SIR of the individual speaker.

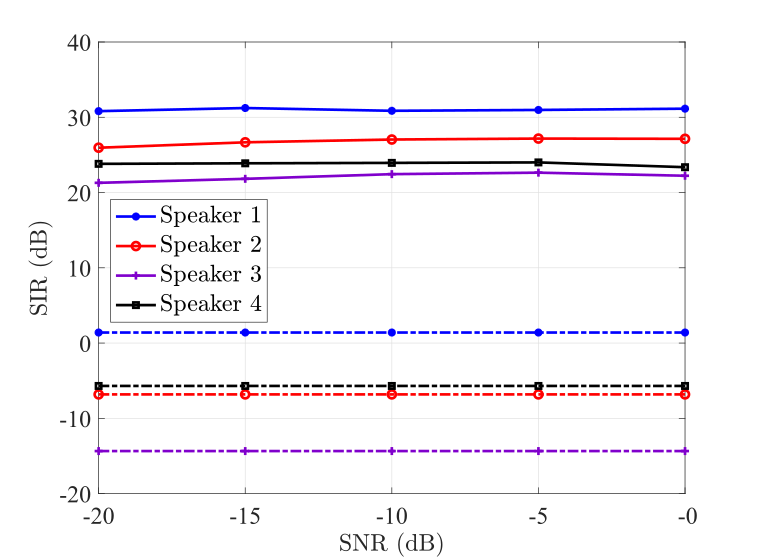

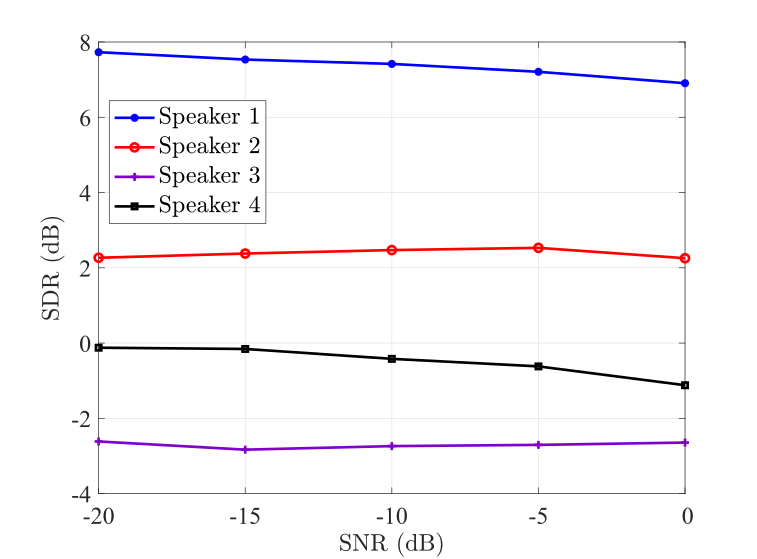

Finally, we consider intelligibility performance with different SIR levels from dB to dB and a fixed SNR level of dB to explain both the second and the third observations on speaker separation. In Fig. 3 we present four scenarios with the assumption that the target speaker’s SIR levels are varied given that the other three speakers and the background noise sources in the mixture are kept the same. This is similar to Fig. 2(a), where the first speaker with the highest SIR among all four speakers in the mixture is well-separated by the proposed method achieving the highest STOI performance. It can be seen in Fig. 3 that the proposed method has indistinguishable intelligibility performance for the other three speakers in the mixture. Note that the algorithm’s robustness to room reverberation is not included in Section IV, since it provided similar performance.

IV-B Real-life Environments

Now, we conduct a preliminary study of this work in a real-life scenario with highly overlapped speakers. The real recordings are measured in an office room at the Australian National University with dimensions m, and ms. We consider speakers, background noise sources (fan and room air cooler), and randomly distributed microphones over the room (as shown in Fig. 4). We assigned microphones (channels ) to group A, and microphones (channels ) to group B. We examined the proposed method’s performance in separating the desired speakers inside a real room. We share a link to the audio files.222https://zenodo.org/records/15009085 By listening to the output signals, we observe that the leakage of the interfering sources into the separated desired speakers. However, the desired speakers are clearly audible to understand their utterances. Note that the separation results are inferior to the results obtained in simulations. We attribute this discrepancy can be modified by using Wiener filtering techniques to enhance the separation performance, which we will continue to discuss in future work.

IV-C Comparison Methods

The machine learning-based methods [3, 4, 11, 12, 13, 14, 15, 16] for audio speaker separation consider data-driven approaches and exploit either speech features or sparsity in their models/algorithms, perhaps both in a supervised [11, 14] or unsupervised [3] manner whereas in the proposed algorithm only requires speaker spatial location or environment-based blind (or semi-blind) training among the microphone groups. Both DNN-based methods and NMF-based methods require greater computational power which aggravates with the number of microphone channels in the mixture whereas our proposed method alleviates the computational cost of multichannel speaker separation using one to two minutes of the ReTM training. Moreover, DNN-based methods rely on numerous assumptions about the acoustic environment or the background noise sources and essential rigorous training for specific microphone array structures and/or a given number of speakers in the mixture. However, the proposed method is independent of the microphone placement in the specific acoustic scenario, however, requires a more spatially diverse microphone configuration to learn the ReTM. Furthermore, we note that our algorithm is independent of the source signals, and the number of sources prior to the separation task as well as, speech-based features like pitch and sparsity which are unreliable under noisy environments.

As our method requires multiple microphones (groups A and B should have microphones more than the number of sound sources in the mixture), it is not direct and easy to compare fairly with existing datasets and tasks to reproduce with baseline methods with appropriate configurations. But in the future, we seek to have a fair comparison with the baseline speaker separation methods.

For now, we will present the performance evaluation of the DNN-based method, SepFormer [16]. To fairly evaluate the algorithms, we use the same settings given in IV-A with SNR dB. Table II depicts the speaker separation results for . The results confirm that both methods effectively separate the speaker with the highest loudness. Although ’SepFormer’ exhibits slightly higher performance than the input signal, it fails to effectively separate the concurrent speakers when their loudness levels vary, whereas our method successfully achieves this separation. Note that the results for low SNR levels are not reported here, since it is severely impacted by the noise. We share a link to the audio files.333https://github.com/wnilmini/SS-ReTM/tree/main/Baseline

| Spk. No. | STOI () | SIR (dB) | SDR (dB) | |

|---|---|---|---|---|

| 1 | Unprocessed | 63.05 | 3.92 | - |

| SepFormer [16] | 74.30 | 7.20 | 4.68 | |

| Our Method | ||||

| 2 | Unprocessed | 39.63 | -5.71 | - |

| SepFormer [16] | 38.63 | 2.89 | -5.96 | |

| Our Method | ||||

| 3 | Unprocessed | 32.58 | -10.77 | - |

| SepFormer [16] | 34.10 | -4.62 | -14.20 | |

| Our Method |

V Conclusion

In this paper, we have presented a method for multichannel reverberant speech separation in low SNR levels. The method exploits a unique spatial signature of the sound sources distributed throughout the environment using the ReTM. The ReTMs of the undesired sound sources are blindly estimated when the desired speaker is not active to separate the individual speakers. The simulation results have shown that the proposed algorithm provides good speaker separation performance with an increased number of microphone channels in both groups than the total number of sound sources in the mixture. In the future, we will conduct a fair comparison of the proposed speaker separation method with the state-of-the-art methods and further examine the performance of the multiple-speaker separation in real-life scenarios.

References

- [1] N. Mitianoudis and M. E. Davies, “Audio source separation of convolutive mixtures,” IEEE Trans. on Speech and Audio Process., vol. 11, no. 5, pp. 489–497, Aug. 2003.

- [2] H. Sawada, R. Mukai, S. Araki, and S. Makino, “A robust and precise method for solving the permutation problem of frequency-domain blind source separation,” IEEE Trans. on Speech and Audio Process., vol. 12, no. 5, pp. 530–538, Aug. 2004.

- [3] A. Ozerov and C. Févotte, “Multichannel nonnegative matrix factorization in convolutive mixtures for audio source separation,” IEEE/ACM Trans. on Audio, Speech, and Lang. Process., vol. 18, no. 3, pp. 550–563, Sep. 2009.

- [4] A. Cichocki, R. Zdunek, A. H. Phan, and S. i. Amari, “Nonnegative matrix and tensor factorizations: Applications to exploratory multi-way data analysis and blind source separation,” Hoboken, NJ. USA: Wiley, 2009.

- [5] S. Gannot, E. Vincent, S. Markovich-Golan, and A. Ozerov, “A consolidated perspective on multimicrophone speech enhancement and source separation,” IEEE/ACM Trans. on Audio, Speech, and Lang. Process., vol. 25, no. 4, pp. 692–730, Jan. 2017.

- [6] S. Markovich, S. Gannot, and I. Cohen, “Multichannel eigenspace beamforming in a reverberant noisy environment with multiple interfering speech signals,” IEEE/ACM Trans. on Audio, Speech, and Lang. Process., vol. 17, no. 6, pp. 1071–1086, Jun. 2009.

- [7] A. P. Bates, D. Grixti-Cheng, P. Samarasinghe, and T. Abhayapala, “On the use of the relative transfer function for source separation using two-channel recordings,” in Proc. Asia-Pacific Signal and Inf. Process. Assoc. Annu. Summit and Conf., Dec. 2009, pp. 734–738.

- [8] B. Laufer-Goldshtein, R. Talmon, and S. Gannot, “Source counting and separation based on simplex analysis,” IEEE Signal Process. Mag., vol. 66, no. 24, pp. 6458–6473, Oct. 2018.

- [9] D. Wang and J. Chen, “Supervised speech separation based on deep learning: An overview,” IEEE/ACM Trans. on Audio, Speech, and Lang. Process., vol. 26, no. 10, pp. 1702–1726, May 2018.

- [10] A. Défossez, N. Usunier, L. Bottou, and F. Bach, “Music source separation in the waveform domain,” arXiv preprint arXiv:1911.13254, 2019.

- [11] E. Nachmani, Y. Adi, and L. Wolf, “Voice separation with an unknown number of multiple speakers,” in Proc. Int. Conf. on Machine Learning, Nov. 2020, pp. 7164–7175.

- [12] Z. Wang and D. Wang, “Combining spectral and spatial features for deep learning based blind speaker separation,” IEEE/ACM Trans. on Audio, Speech, and Lang. Process., vol. 27, no. 2, pp. 457–468, Nov.

- [13] R. Gu, L. Chen, S. Zhang, J. Zheng, Y. Xu, M. Yu, D. Su, Y. Zou, and D. Yu, “Neural spatial filter: Target speaker speech separation assisted with directional information.” in Proc. INTERSPEECH, Sep. 2019, pp. 4290–4294.

- [14] K. Tesch and T. Gerkmann, “Multi-channel speech separation using spatially selective deep non-linear filters,” IEEE/ACM Trans. on Audio, Speech, and Lang. Process., vol. 32, pp. 542–553, Nov. 2023.

- [15] V. A. Kalkhorani and D. Wang, “Crossnet: Leveraging global, cross-band, narrow-band, and positional encoding for single-and multi-channel speaker separation,” arXiv preprint arXiv:2403.03411, Mar. 2024.

- [16] C. Subakan, M. Ravanelli, S. Cornell, M. Bronzi, and J. Zhong, “Attention is all you need in speech separation,” in Proc. IEEE Int. Conf. on Acoust., Speech and Signal Process., Jun. 2021, pp. 21–25.

- [17] T. D. Abhayapala, L. Birnie, M. Kumar, D. Grixti-Cheng, and P. N. Samarasinghe, “Generalizing the relative transfer function to a matrix for multiple sources and multichannel microphones,” in Proc. Eur. Signal Process. Conf., Sep. 2023, pp. 336–340.

- [18] R. Talmon, I. Cohen, and S. Gannot, “Relative transfer function identification using convolutive transfer function approximation,” IEEE Trans. on Audio, Speech, and Lang. Process., vol. 17, no. 4, pp. 546–555, Mat. 2009.

- [19] M. Kumar and et al., “Speech denoising in multi-noise source environments using multiple microphone devices via relative transfer matrix,” in Eur. Signal Process. Conf., Sep. 2024, pp. 336–340.

- [20] W. N. Manamperi and T. D. Abhayapala, “Relative transfer matrix for drone audition applications: Source enhancement,” in Proc. Asia-Pacific Signal and Inf. Process. Assoc. Annu. Summit and Conf., Dec. 2024.

- [21] E. A. Habets, “Room impulse response (RIR) generator,” 2006, [Online]. Available: https://www.audiolabserlangen.de/fau/professor/habets/software/rir-generator.

- [22] J. S. Garofolo, “Timit acoustic phonetic continuous speech corpus,” Linguistic Data Consortium, 1993.

- [23] Y. Avargel and I. Cohen, “On multiplicative transfer function approximation in the short-time fourier transform domain,” IEEE Signal Process. Letters, vol. 14, no. 5, pp. 337–340, Apr. 2007.

- [24] E. Vincent, R. Gribonval, and C. Févotte, “Performance measurement in blind audio source separation,” IEEE Trans. on Audio, Speech, and Lang. Process., vol. 14, no. 4, pp. 1462–1469, Jun. 2006.

- [25] C. H. Taal, R. C. Hendriks, R. Heusdens, and J. Jensen, “An algorithm for intelligibility prediction of time–frequency weighted noisy speech,” IEEE/ACM Trans. on Audio, Speech, and Lang. Process., vol. 19, no. 7, pp. 2125–2136, Feb. 2011.