Multivariate Generalized Gaussian Distribution: Convexity and Graphical Models

Abstract

We consider covariance estimation in the multivariate generalized Gaussian distribution (MGGD) and elliptically symmetric (ES) distribution. The maximum likelihood optimization associated with this problem is non-convex, yet it has been proved that its global solution can be often computed via simple fixed point iterations. Our first contribution is a new analysis of this likelihood based on geodesic convexity that requires weaker assumptions. Our second contribution is a generalized framework for structured covariance estimation under sparsity constraints. We show that the optimizations can be formulated as convex minimization as long the MGGD shape parameter is larger than half and the sparsity pattern is chordal. These include, for example, maximum likelihood estimation of banded inverse covariances in multivariate Laplace distributions, which are associated with time varying autoregressive processes.

Index Terms:

Multivariate generalized Gaussian distribution, geodesic convexity, graphical models, Cholesky decomposition.I Introduction

Covariance estimation is a fundamental problem in multivariate statistics. Many techniques for hypothesis testing, inference, denoising and prediction rely on accurate estimation of the true covariance. The problem is challenging when the available data is high dimensional and non-Gaussian. Such settings are typical in many applications including speech, radar, wireless communication, finance and more. These led to a growing interest in both robust and structured covariance estimation. Specifically, in this paper, we consider maximum likelihood estimation (MLE) in the multivariate generalized Gaussian distribution, with and without sparsity constraints on the inverse covariance.

The first part of this paper considers the geodesic convexity in MLE in elliptically symmetric (ES) distributions. Methods for robust covariance estimation date back to the early works of [18, 31]. A popular approach is Tyler’s scatter estimate. It involves a non-convex optimization yet can be solved via simple fixed point iteration. It has been rigorously analyzed and successfully applied to different problems [31, 26]. Recently, it was shown that the result is in fact the solution to a geodesically convex minimization [6, 10, 32, 33, 36]. Geodesic convexity ensures that any local minima is also globally optimal and leads to a much simpler analysis [27]. It also allows for numerous extensions, e.g., regularized solutions. In a competing line of works, a different class of robust covariance estimation techniques was proposed based on the MGGD [23, 11]. A well known example of MGGD is the multivariate Laplace distribution [14]. Fixed point iterations for MGGD estimation and their analyses has recently been considered in [24, 9, 25]. The first contribution in this paper is a new analysis which shows that the negative log-likelihood in MGGD is also geodesically convex. This result requires weaker conditions than previous analyses, provides more intuition and paves the road to numerous generalizations.

The second part of this paper addresses structured covariance estimation. Structure exploitation is a main ingredient in modern statistics that allows accurate high dimensional estimation via a small number of samples. A promising approach is based on sparse inverse covariance models. In the multivariate Gaussian case these are known as graphical models and characterize conditional independence [13, 20, 8, 4]. These models have been successfully applied to speech recognition, sensor networks, computer networks and other fields in signal processing [10, 35]. Our goal is to combine such models with non-Gaussian distributions, e.g., MGGD. Recently, a similar problem was addressed using an expectation maximization technique [15]. Another line of work focused on combining Tyler’s scatter estimate with a banded inverse covariance prior [2, 1]. Graphical models for transelliptical distributions were discussed in [21]. In this paper, we combine the MGGD framework with prior sparsity constraints on the inverse covariance. We show that the optimization can be formulated into a convex form as long as the MGGD scale parameter is larger than half and the sparsity satisfies a chordal structure. Chordal models, also known as decomposable or triangulated models, include banded structures, multiscale settings and other practical scenarios [34, 16, 12]. Such structures are associated with a perfect ordering of the variables. A typical example is banded models associated with time-varying autoregressive processes [3].

Our results are also applicable to the case of unknown sparsity pattern, i.e., structure learning via sparsity inducing penalties, but require prior knowledge of the perfect order. Recent works on structure learning in directed acyclic graphs provide data driven techniques for learning this order [30, 28].

The outline of the paper is as follows. We begin in Section II with a few mathematical preliminaries that will be useful in our work. Then, we continue to our two main contributions. In Section III we provide a new geodesic analysis of MGGD estimation, and in Section IV we introduce a convex optimization framework for chordal structured MGGD estimation. Simulation results are described in Section V, and concluding remarks are offered in Section VI.

We use the following notations. We denote the set of real, symmetric and positive definite matrices by . We denote the span operator by .

II Preliminaries

We begin with a brief review of two mathematical concepts which will be instrumental in the next sections.

II-A Geodesic convexity

Geodesic convexity is an extension of classical convexity which replaces lines with geodesic paths in manifolds. More details on this topic can be found in [27]. Given a Riemannian manifold and a set , we say a function is geodesically convex, if every geodesic of with endpoints (i.e., is a function from to with and ) lies in , and

| (1) |

If the inequality in (1) is replaced by strict inequality, we call the function geodesically strictly convex. An equivalent definition follows from [22, Theorem 1.1.4].

Proposition 1

For continuous function , the definition in (1) is equivalent to the condition

| (2) |

The importance of geodesic convexity stems from the following properties (see [27, Theorem 2.1] for more details).

Proposition 2

Any local minimizer of a geodesically convex function is also its global minimizer.

Proposition 3

Any strictly geodesically convex function has a unique global minimizer.

In particular, we consider geodesic convexity on the manifold of positive definite matrices denoted by . The geodesic connecting and is defined as [7, Chapter 6]

| (3) |

II-B Chordal graphs

In statistical graphical modeling, graphs are used to characterize the sparsity pattern of the corresponding inverse covariance matrices. When the pattern belongs to a special class known as chordal graphs, these concentration matrices satisfy an appealing structure which will be exploited in Section IV. More details on chordal graphs and their relation to graphical models can be found in [20, 34, 16, 12].

A graph is chordal if every cycle of length has an edge joining two nonconsecutive vertices of the cycle. For a chordal graph, there is a perfect elimination ordering of vertices, such that for any , the neighbor of , , satisfies that induces a fully connected clique, i.e., a set of fully connected nodes.

It is convenient to define the sparsity pattern of a square matrix via a graph with vertices. We say that is -sparse if

| (4) |

A Cholesky decomposition is a generalization of the squared root operation to positive definite matrices. Any has a unique decomposition where the Cholesky factor is a lower-triangular matrix with positive diagonal elements.

The following result characterizes the relation between sparse Cholesky decompositions and chordal graphs.

Proposition 4

Let be a chordal graph with a natural111If the order is not natural, this result holds by permuting the columns of the matrix. perfect order, i.e., for . If is -sparse, real and positive definite, then there exists a unique -sparse lower triangular with positive definite diagonal entries such that . If is -sparse and lower-triangular, then is -sparse and positive definite.

The existence proof can be found in [16, Section 2.1], and the uniqueness is a direct consequence of the perfect elimination order.

Example 1

For a banded matrix with band width , it corresponds to a graph defined as follows: when . Now we check that this is a chordal graph: for every cycle with length , the indices of the four nodes differ by at most, therefore the edges connect all nodes in the cycle, which corresponds to the definition of chordal graph.

The elimination order for this chordal graph turns out to be the natural order . Therefore Proposition 4 shows that the Cholesky decomposition of a banded matrix is the product of a banded (with the same bandwidth ) lower-triangular matrix with its transpose.

One can also easily show that the product of a banded lower-triangular matrix with its transpose is still a banded (with the same band width), positive definite matrix, which exemplifies the last sentence in Proposition 4.

III Geodesic convexity in MGGD

In this section, we consider unconstrained MLE in MGGD. More precisely, we address a more general family of elliptically symmetric (ES) distributions (see [11] for a review and [24] for a recent generalization to complex case). These problems involve non-convex minimizations, yet it has been shown that their global minima can be efficiently found using simple fixed point iterations. We will show that the negative log-likelihoods are in fact geodesically convex, and that this may be the underlying principle behind their success.

An random variable has a ES distribution in real space if its probability density function (p.d.f.) is

| (5) |

where such that , is a normalization constant such that the integral of the distribution is . In (5), is called a scatter matrix, and is the center of the distribution.

MGGD [23] is a widely used special case of ES when

| (6) |

where is the shape parameter. In particular, for it gives multivariate analog of Laplace distribution, and the multivariate Gaussian distribution is obtained for .

We consider the estimation of given independent and identically distributed (i.i.d) realizations of a zero mean ES random vector denoted by . The MLE is the parameter that minimizes the negative-log-likelihood

where . The following Theorem 1 characterizes the existence and uniqueness properties of this MLE. Theorem 1(a) characterizes the uniqueness and is the main contribution in this section; Theorem 1(b) characterize the existence and is borrowed from [31, Theorem 2.1].

Theorem 1

(a) Assume that is continuous in , nondecreasing and is convex, then is geodesically convex in . If additionally , is strictly increasing and is strictly convex, then is geodesically strictly convex in .

(b) If is continuous in , is positive, and ,

| for any linear subspace , | (7) |

then there exists a minimizer of .

Before proving this theorem, a few comments are in order. We remark that the condition is also implicated by (7), since otherwise violated the assumption. This condition is necessary since otherwise as , where is the projector to subspace ) and therefore the minimizer of does not exist.

Theorem 1 relaxes the conditions for the uniqueness/existence of the minimizer of presented in [19, Theorem 2.2]:

M1 is non-negative, continuous and nonincreasing.

M2 is strictly increasing.

M3 condition (7).

Our assumption that is increasing corresponds to , which follows from M1, where is nonnegative and M2 (which exclude the possibility that for ); and our assumption that is strictly convex corresponds to M2, where is strictly increasing. In comparison, our conditions does not require to be differentiable, and we do not assume their assumptions in M1 that is continuous or nonincreasing.

A special case of Theorem 1 is Tyler’s M-estimator in which . Following the first part of Theorem 1(a), is geodesically convex, which has been previously identified in [6, 32, 33, 36]. The geodesic convexity does not contradict the non-uniqueness of Tyler’s M-estimator since this convexity is not strict. Another special case is the class of MGGD estimators. The following corollary follows from Theorem 1 and the fact that in Theorem 1(b) is .

Corollary 1

For all , the ML estimator for MGGD exists and unique if .

Existence and uniqueness of the MLE in MGGD has been previously addressed in [24, Section V.A]. However, this contribution does not identify geodesic convexity and applies only to . Our theorem applies to MGGD with all and provides additional insight based on geodesic convexity. Furthermore, it applies to other ES distributions, including cases where is not continuous, e.g., when and when .

Here we remark that although ML estimator for elliptically symmetric (ES) distribution is the motivation of the argument, Theorem 1 (and Theorem 2 in next section) hold even if does not relate to an elliptically symmetric (ES) distribution. For example, Tyler’s M-estimator can be written in the form of (5) with ; however this corresponds to the central angular Gaussian which is not a member in ES class [24, (36)]. Another example is in [24, page 5610, Example 1], where is set to be a huber function.

We remark that the Theorem 1 also applied to the complex elliptically symmetric (CES) distributions defined by

| (8) |

where the MLE estimator minimizes

Then Theorem 1 holds with . This is also a generalization of the uniqueness/existence of the minimizer of presented in [24, Section V.A].

Proof:

(a) Applying [33, Proposition 1], if is the geodesic mean of and defined in (3), then

| (9) |

Combining this fact with the convex/monotone properties of , we have

| (10) | ||||

| (11) |

Since

| (12) |

we proved

| (13) |

By Proposition 1, we proved the geodesic convexity of .

When is strictly convex, is geodesically strictly convex since the equalities in (10) and (11) can not hold simultaneously for all . The proof is as follows. Following the proof of [36, Theorem III.1], when , the equality in (9) (and therefore the equality in (11)) holds for all only when for some . However, would fail the equality in (10) due to the strict convexity of . ∎

In practice, various numerical techniques can be used to find a local minima of the MGGD negative log-likelihood. Theorem 1 and geodesic convexity then ensure that this local minima will also be global minima. A promising approach is the classical iterative reweighed scheme due to [19, 5]:

| (14) |

where . It has been shown in [5, Proposition 1] that when , is continuous and nonnegative, then decreases monotonically ( is assumed in order to make sure that is well-defined). [5, Proposition 3] shows that any limiting point of the sequence is a stationary point. When the assumptions in Theorem 1 hold, this point is the unique minimizer of .

IV Convexity in chordal MGGD

In this section, we consider structured MGGD estimation. In particular, we consider MLE of the MGGD scatter matrix subject to sparsity constraints. Thus, we are interested in the solution to

| (17) |

where the objective is the negative MGGD log-likelihood as described in Section III, and is the edge set of a known graph associated with the sparsity of . Unfortunately, the above minimization is not convex in or . Nor is it geodesically convex in it. Indeed, the sparsity constraints are not preserved by the positive definite geodesic in (3). Thus, it is not clear whether its global minima can be found in an efficient manner.

In what follows, we propose a simple trick to “convexify” the optimization in many interesting cases of MGGD. In particular, we assume the is chordal and represent with its Cholesky factor . Due to Proposition 4, a unique chordal decomposition exists and we obtain

| (20) |

where

| (23) |

and we have used

| (24) |

The following theorem characterizes the properties of this optimization.

Theorem 2

Proof:

Negative logarithms are strictly convex, and it remains to show that

| (25) |

where we have used the triangle inequality and the convexity of .

To prove the existence of minimizer, we need to prove that for , as or the smallest eigenvalue of goes to . When , since and is full rank, there exists such that . Consider that is convex, there exists a constant and such that when ,

Therefore as , .

When the smallest eigenvalue of goes to , we have and . In another aspect, since is convex, exists (by convexity it is larger than ). Combining it with the fact that is nondecreasing, as the smallest eigenvalue of goes to , and the existence of the minimizer of is proved. ∎

The theorem shows that, under suitable conditions, any local minimizer of (20) is globally optimal. This holds for any ES distribution with satisfying the assumptions. In particular, an important special case is chordal graphical models in MGGD:

Corollary 2

If , under chordal graphical models the ML estimator for MGGD exists and unique for all .

Unfortunately, the recent result in [2] on does not satisfy our conditions and requires a different analysis. And there is no detailed proof to support the claim in [2] that this banded Tyler estimators converge to unique fixed points.

When the condition in Theorem 2 holds, the objective function is convex with respect to . Therefore, it can be numerically minimized via any general convex optimization solver. For example, in the MGGD case with the problem can be expressed as

| (29) |

where are auxiliary variables. This formulation with second order cone constraints can be easily solved using the popular CVX package [17].

Alternatively, the optimization can be addressed using a majorization - minimization (MM) technique, e.g. [5]. The method begins with an initial estimate and updates it according the following iterations

| (32) |

where

| (33) |

and is chosen such that . Since is continuous and nonnegative, we have

| (34) |

and converges as . Each iteration step in (32)-(33) can be interpreted as a Gaussian graphical model optimization (the weights are constant with respect to the optimization variable). These minimizations have a simple closed form solution when is chordal (see appendix). Thus, the proposed technique is very efficient for implementation in practice.

Finally, it is worth mentioning that our framework can also be extended to structure learning in MGGD graphical models. Structure learning, also known as covariance selection, considers the estimation of an inverse covariance which is known to be sparse but the sparsity pattern itself is unknown [13, 8, 29]. Adding a sparsity constraint usually destroys the convexity. Instead, the modern approach relies on a convex relaxation based on an L1 norm penalty. In our context, the problem is convex in the Cholesky factor rather than in the inverse covariance itself. This leads to the following convex minimization

| (35) |

where is a regularization parameter, and is a matrix version of the L1 norm, namely a sum over the absolute values of the elements in . It is important to emphasize that this approach is only applicable to chordal graphical models and it assumes that the perfect order of the variables is known a priori. Recent developments in high dimensional covariance estimation provide data-driven methods for identifying this order and structure [30, 28]. We leave this topic as a possible direction for future research.

Furthermore, our methodology can be easily extended to joint estimation of the covariance and the centering parameter . For this purpose, consider the optimization of

| (38) |

where When the conditions in Theorem 2(a) hold, the objective function is jointly convex with respect to , therefore any of its local minimizer is also its global minimizer. Its algorithm can be similarly addressed using a majorization - minimization (MM) technique as in (32)-(33).

V Numerical results

In this section, we present results of numerical simulations. The purpose of these simulations is three fold: to validate our theoretical results using synthetic data, to provide insight on a few open theoretical questions using synthetic data and to demonstrate the usefulness of our theoretical models in a real world setting.

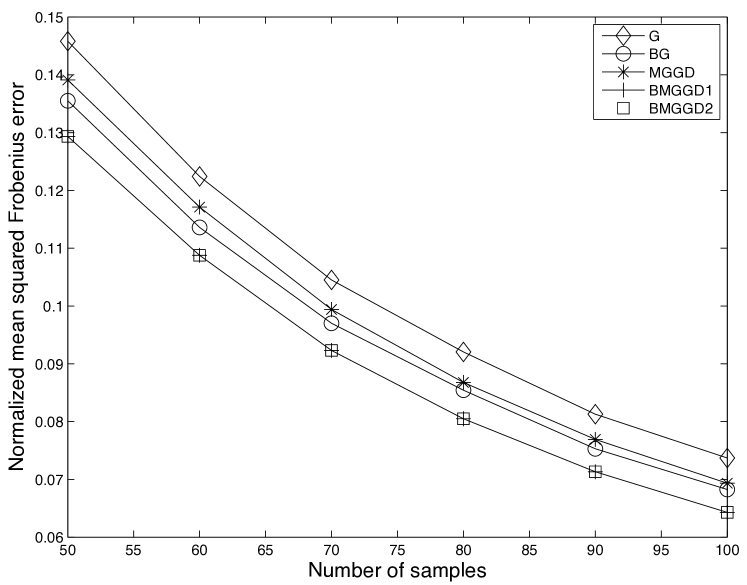

The first experiment considered inverse covariance estimation using synthetic data generated from a known MGGD distribution with and a chordal structure. The theory in Section IV shows that, in this case, the MLE can be formulated as a convex optimization. To verify this result, we generated i.i.d. realizations of an MGGD with , (corresponding to a classical multivariate Laplacian distribution), and a banded inverse covariance of width . In particular, we used a Toeplitz inverse covariance with diagonal elements and off-diagonal elements within the main band. We estimated the unknown covariance using five estimators:

-

•

G: A Gaussian MLE with no prior knowledge of the structure, corresponding to the classical sample covariance.

-

•

BG: A Gaussian banded MLE as detailed in the appendix.

-

•

MGGD: An MGGD MLE with no prior knowledge of the structure via iterations of (14).

-

•

BMGGD1: An MGGD banded MLE via iterations of (32) and the subroutine in the appendix. This estimator is initialized with .

-

•

BMGGD2: An MGGD banded MLE via iterations of (32) and the subroutine in the appendix. This estimator is initialized with (which is clearly impossible in practice).

In all estimators above we use as the number of iterations since in our simulations the fixed point algorithms (14) and (32) converge well after iterations.

Figure 1 shows the normalized mean squared Frobenius error in the covariance averaged over independent simulations as a function of the number of samples . It is easy to see the performance advantage of banded MGGD estimator. As expected, BMGGD1 and BMGGD2 converge to the identical fixed points irrespective of their initial condition.

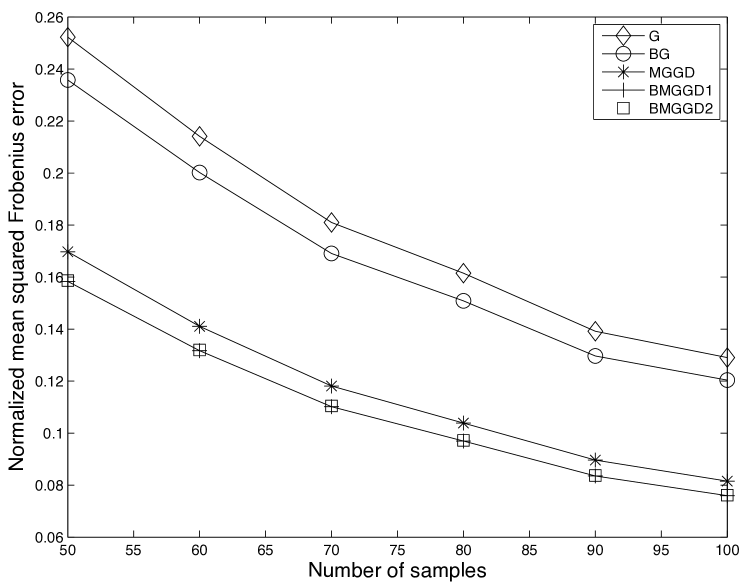

The second experiment is very similar to the first except that . Our theory assumes and therefore does not hold for this value of shape parameter. Yet, according to [2] banded Tyler estimators do converge to unique fixed points, and these can be interpreted as the limit of MGGD when . Our proposed fixed point iteration in (32) can be implemented with a small . Therefore, it is interesting to examine its performance and we repeat the first experiment with . The results are presented in Figure 2. Interestingly, BMGGD1 and BMGGD2 converged to identical fixed points irrespective of their initial condition. Thus, although we have no proof for this behavior, we conjecture that, in practice, the iteration can also be used for all MGGDs.

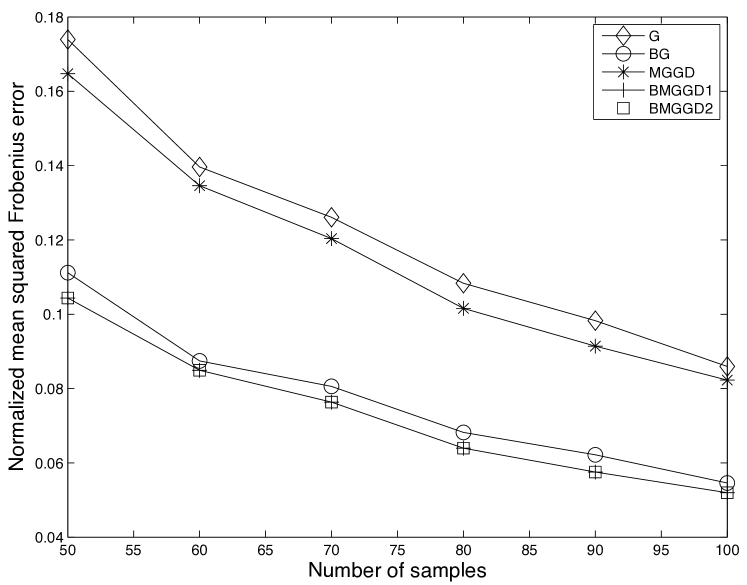

The third experiment focused on non-chordal structures. Our theory assumes a chordal sparsity pattern and it is interesting to see whether this assumption is indeed critical. Thus, we repeated the first experiment associated with MGGD , but replaced the banded inverse covariance with a loopy structure. In particular, we constructed a two dimensional grid graph of size with the edges , , , , , , , , , , , , , , , , , , , , , , , and . All the non-zero off diagonal elements were assigned a constant value small enough to ensure that will be well conditioned. In contrast to the chordal case, MLE in general Gaussian Graphical models in does not satisfy a closed form solution. Instead, we solved (17) using the CVX optimization package [17]. The latter was used for implementing BG, and for the inner solution in each iteration of BMGGD1 and BMGGD2. The results averaged over simulations222Due to CVX these simulations are highly time consuming. are provided in Figure 3. Here too, the iterations converged to identical solutions and seem to be independent of their initial conditions.

The fourth experiment addressed the practical use of the BMGGD1 estimator in a real world example. Following [29] we considered the SONAR dataset from the UCI machine learning data repository. This dataset has 111 spectra from metal cylinders and 97 spectra from rocks, where each spectrum has 60 frequency band energy measurements. Quadratic discriminant test is used to classify the metals and the rocks. It requires the estimation of the covariance in both classes, and previous work demonstrated the advantage of BG over the classical sample covariance and the naive diagonal estimate. We repeated the experiment step by step and added BMGGD1. Specifically, we chose as the parameter within that maximizes the MGGD likelihood, and used the same band which was used by BG (selected via 10 random splits with of the data for training and the validation likelihood). For the QDA test we applied the covariance of MGGD, which is , where . The test errors over a standard leave-one-out cross validation are provided in Table I. These results remained stable over different randomizations, and demonstrate the advantage of the proposed BMGGD1 framework.

| Sample covariance | Naive Bayes | BG | BMGGD1 |

|---|---|---|---|

VI Discussion

In this paper, we consider covariance estimation in the multivariate generalized Gaussian distribution (MGGD). We proved that the MGGD negative log-likelihood is geodesically convex for . In the sparsely constrained case, we proved that a simple change of variables can transform it into a convex function as long as and the underlying graph is chordal. This means that any local solution of these minimization is globally optimal and the problems can be solved using standard descent methods. In practice, we observed this behavior also for smaller values of . This agrees with a similar result on banded Tyler methods which can be interpreted as . An interesting direction for future work is a rigorous analysis of these phenomenon when . Another direction is relaxing the chordal assumption on the sparsity pattern. Here too, our numerical experience suggests that simple descent methods converge to the global solution and are independent of their initial conditions. Finally, as mentioned above, it in interesting to examine the problem of structure learning in MGGDs via sparsity enforcing priors.

In this appendix, we review a simple closed form solution for the MLE in chordal (decomposable) Gaussian graphical models [20, 34, 4]. The problem is

| (42) |

where

| (43) |

are the iteration weights which do not depend on . Using the chordal Cholesky decomposition , the problem is equivalent to

| (46) |

This minimization is completely separable and each column of can be simply obtained by a linear regression. Let be vectors consists of the -th components of , , and assume that in the linear regression of with respect to , the parameter on is , and standard error is . Then

| (47) | |||||

| (48) |

and finally .

References

- [1] Y. Abramovich and O. Besson. Regularized covariance matrix estimation in complex ellipti-cally symmetric distributions using the expected likelihood approach. ISAE, Tech. Rep, 2012.

- [2] Y. Abramovich and B. Johnson. Expected likelihood approach for covariance matrix estimation: Complex angular central gaussian case. In Sensor Array and Multichannel Signal Processing Workshop (SAM), 2012 IEEE 7th, pages 317–320. IEEE, 2012.

- [3] Y. Abramovich, N. Spencer, and M. Turley. Time-varying autoregressive (tvar) models for multiple radar observations. Signal Processing, IEEE Transactions on, 55(4):1298–1311, 2007.

- [4] H. Andersen, M. Hojbjerre, D. Sorensen, and P. Eriksen. Linear and Graphical Models: For the Multivariate Complex Normal Distribution, volume 101. Springer, 1995.

- [5] O. Arslan. Convergence behavior of an iterative reweighting algorithm to compute multivariate M-estimates for location and scatter. Journal of Statistical Planning and Inference, 118(1-2):115 – 128, 2004.

- [6] C. Auderset, C. Mazza, and E. A. Ruh. Angular gaussian and cauchy estimation. Journal of Multivariate Analysis, 93(1):180 – 197, 2005.

- [7] R. Bhatia. Positive Definite Matrices. Princeton Series in Applied Mathematics. Princeton University Press, 2007.

- [8] P. Bickel and E. Levina. Regularized estimation of large covariance matrices. The Annals of Statistics, 36(1):199–227, 2008.

- [9] L. Bombrun, F. Pascal, J. Tourneret, and Y. Berthoumieu. Performance of the maximum likelihood estimators for the parameters of multivariate generalized gaussian distributions. In Acoustics, Speech and Signal Processing (ICASSP), 2012 IEEE International Conference on, pages 3525–3528. IEEE, 2012.

- [10] Y. Chen, A. Wiesel, and A. Hero. Robust shrinkage estimation of high-dimensional covariance matrices. Signal Processing, IEEE Transactions on, 59(9):4097 –4107, sept. 2011.

- [11] M. A. Chmielewski. Elliptically symmetric distributions: A review and bibliography. International Statistical Review / Revue Internationale de Statistique, 49(1):pp. 67–74, 1981.

- [12] J. Dahl, L. Vandenberghe, and V. Roychowdhury. Covariance selection for nonchordal graphs via chordal embedding. Optimization Methods Software, 23(4):501–520, Aug. 2008.

- [13] A. Dempster. Covariance selection. Biometrics, pages 157–175, 1972.

- [14] T. Eltoft, T. Kim, and T. Lee. On the multivariate laplace distribution. Signal Processing Letters, IEEE, 13(5):300–303, 2006.

- [15] M. Finegold and M. Drton. Robust graphical modeling of gene networks using classical and alternative t-distributions. The Annals of Applied Statistics, 5(2A):1057–1080, 2011.

- [16] M. Fukuda, M. Kojima, K. Murota, and K. Nakata. Exploiting sparsity in semidefinite programming via matrix completion i: General framework. Siam Journal on Optimization, 11:647–674, 1999.

- [17] M. Grant, S. Boyd, and Y. Ye. Cvx: Matlab software for disciplined convex programming. Online accessiable: http://stanford. edu/˜ boyd/cvx, 2008.

- [18] P. Huber. Robust statistics. 1981.

- [19] J. T. Kent and D. E. Tyler. Redescending M-estimates of multivariate location and scatter. The Annals of Statistics, 19(4):pp. 2102–2119, 1991.

- [20] S. Lauritzen. Graphical models, volume 17. Oxford University Press, USA, 1996.

- [21] H. Liu, F. Han, and C. Zhang. Transelliptical graphical models. In Advances in Neural Information Processing Systems 25, pages 809–817, 2012.

- [22] C. Niculescu and L. Persson. Convex functions and their applications: a contemporary approach. Number v. 13 in CMS books in mathematics. Springer, 2006.

- [23] M. Novey, T. Adali, and A. Roy. A complex generalized gaussian distribution; characterization, generation, and estimation. Signal Processing, IEEE Transactions on, 58(3):1427 –1433, march 2010.

- [24] E. Ollila, D. E. Tyler, V. Koivunen, and H. V. Poor. Complex Elliptically Symmetric Distributions: Survey, New Results and Applications. IEEE Transactions on Signal Processing, 60(11):5597–5625, Nov. 2012.

- [25] F. Pascal, L. Bombrun, J.-Y. Tourenet, and Y. Berthoumieu. Parameter estimation for multivariate generalized gaussian distributions. Signal Processing, IEEE Transactions on (submitted to), 2012. [Online]. http://arxiv.org/abs/1302.6498.

- [26] F. Pascal, Y. Chitour, J. Ovarlez, P. Forster, and P. Larzabal. Covariance structure maximum-likelihood estimates in compound gaussian noise: Existence and algorithm analysis. Signal Processing, IEEE Transactions on, 56(1):34–48, 2008.

- [27] T. Rapcsak. Geodesic convexity in nonlinear optimization. Journal of Optimization Theory and Applications, 69:169–183, 1991. 10.1007/BF00940467.

- [28] B. Rolfs and B. Rajaratnam. Natural order recovery for banded covariance models. In Sensor Array and Multichannel Signal Processing Workshop (SAM), 2012 IEEE 7th, pages 365–368. IEEE, 2012.

- [29] A. J. Rothman, E. Levina, and J. Zhu. A new approach to cholesky-based covariance regularization in high dimensions. Biometrika, 97(3):539–550, 2010.

- [30] P. Rütimann and P. Bühlmann. High dimensional sparse covariance estimation via directed acyclic graphs. Electronic Journal of Statistics, 3:1133–1160, 2009.

- [31] D. E. Tyler. A distribution-free m-estimator of multivariate scatter. The Annals of Statistics, 15(1):pp. 234–251, 1987.

- [32] A. Wiesel. Geodesic convexity and covariance estimation. Signal Processing, IEEE Transactions on, 60(12):6182 –6189, dec. 2012.

- [33] A. Wiesel. Unified framework to regularized covariance estimation in scaled gaussian models. Signal Processing, IEEE Transactions on, 60(1):29 –38, jan. 2012.

- [34] A. Wiesel, Y. Eldar, and A. Hero. Covariance estimation in decomposable gaussian graphical models. Signal Processing, IEEE Transactions on, 58(3):1482 –1492, march 2010.

- [35] A. Wiesel and A. Hero. Decomposable principal component analysis. Signal Processing, IEEE Transactions on, 57(11):4369–4377, 2009.

- [36] T. Zhang. Robust subspace recovery by geodesically convex optimization. arXiv preprint arXiv:1206.1386, 2012.