Networks obtained by Implicit-Explicit Method: Discrete-time distributed median solver

Abstract

To make consensus algorithms robust to outliers, consensus on the median value has recently attracted attention. It is applicable, for instance, to the construction of a resilient distributed state estimator. However, most existing works consider continuous-time algorithms that use high-gain and discontinuous vector fields. When such algorithms are discretized by the explicit method for practical use, two problems follow: small time steps are required, and chattering occurs. In this paper, we show that these issues vanish when the Implicit-Explicit Method is used instead, for a broader class of networks designed by the blended dynamics approach. In particular, for undirected and connected graphs, we propose a discrete-time distributed median solver that does not suffer from chattering. We also verify by simulation that it requires fewer iterations to reach a steady state.

Index Terms:

consensus protocols, resilient consensus, multi-agent systems, blended dynamics

I Introduction

The consensus problem is the problem of designing a network whose agents agree on their states. It has attracted much attention over the past decades [1]. Its popularity stems from its many uses, for instance in mobile multi-robot systems for coordination and in sensor networks for estimation. The consensus value obtained by such couplings has usually been designed or obtained as a (weighted) average of initial values or external inputs.

However, in its typical applications, such as large networks of cheap robots or sensors, it is hard to assume that every individual is reliable. Such large-scale distributed algorithms should therefore be resilient to faults, outliers, and malicious attacks. In this respect, consensus on the average is inappropriate, because the mean is sensitive to these abnormalities. By contrast, the median is robust to outliers.

Motivated by this observation, researchers have recently tackled the problem of designing a network that achieves consensus on the median of initial values or external inputs [2, 3, 4, 5, 6]. Consensus on the median remains useful in the applications described above. In particular, [5] applies it to distributed estimation under malicious attacks.

However, most existing works deal with continuous-time algorithms that use high-gain and discontinuous vector fields. Implementing them in a discrete-time framework with the usual explicit method therefore causes trouble. For stiff (high-gain) dynamics, the explicit method requires a small time step that depends on the gain. More importantly, the discontinuous dynamics yield chattering, which stems from the theoretical use of the Filippov solution [7] in continuous time. Under this solution concept, the vector field at a discontinuity may take any value in an interval, and along a solution it takes the particular value that ensures the existence of the solution. This particular value is usually hard to find, so implementing such dynamics in a discrete-time framework requires an alternative. Without one, a sufficiently small time step is needed. One way to resolve these issues is to use an implicit method.

Thus, in this paper, we illustrate that networks constructed by the blended dynamics approach (an approach using strong diffusive coupling), such as the distributed median solver given in [5], can be successfully discretized by the Implicit-Explicit Method, as in [8]. In particular, the resulting network does not suffer from an excess of tuning parameters. Unlike with the explicit method, an appropriate time step need not be chosen anew each time the coupling gain is changed. We concentrate on the network introduced in [5], because its discontinuity appears only in the individual vector fields, which makes the Implicit-Explicit Method easier to apply. This example also illustrates that the method applies well to the class of networks designed by the well-developed blended dynamics approach [9].

One exception among previous works, which introduces a discrete-time algorithm, is [6]. However, it relies on an additional layer of network to perform the task. The network proposed in this paper has a smaller dimension than that network, but in exchange it achieves only approximate consensus.

This paper is organized as follows. In Section II, we briefly introduce the blended dynamics approach and illustrate how such networks can be discretized by the Implicit-Explicit Method. In Section III, we follow this outline to propose our discrete-time distributed median solver, and we prove its convergence. Section IV verifies by simulation its ability to remove chattering, and Section V concludes the paper.

II Discretization of networks under strong diffusive coupling

The recently developed blended dynamics approach [9] is based on the following observation: strong diffusive coupling makes a heterogeneous multi-agent system behave like a single dynamical system whose vector field is the average of all the individual vector fields in the network [10, 11]. In particular, consider a network given as

$$\dot{x}_i = f_i(x_i) + k\sum_{j\in\mathcal{N}_i}\alpha_{ij}(x_j - x_i), \quad i\in\mathcal{N},$$

where $\mathcal{N} := \{1,\dots,N\}$ is the set of agent indices, $N$ is the number of agents, and $\mathcal{N}_i \subseteq \mathcal{N}$ contains the indices of the agents that send information to agent $i$. Here, the coefficient $\alpha_{ij}$ is the $(i,j)$th element of the adjacency matrix that represents the interconnection graph. We assume hereafter that the graph is undirected and connected. Then, as the coupling gain $k$ approaches infinity, the network achieves approximate synchronization with arbitrary precision, and its synchronized behavior can be characterized by the single dynamics

$$\dot{s} = \frac{1}{N}\sum_{i=1}^{N} f_i(s),$$

which is called the blended dynamics. This holds under the sole assumption that the blended dynamics is stable, e.g., that it has the contraction property or an asymptotically stable limit cycle. The theory rests on a singular perturbation argument and has wide applicability in network design, such as distributed optimization, distributed estimation, and formation control. We refer to [12] for an exhaustive review of the topic.
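The averaging effect described above can be illustrated with a toy example. The following Python sketch is our own and does not come from the cited works. It assumes three hypothetical agents with linear vector fields $f_i(x) = -(x - a_i)$, connected by a complete graph with unit weights and coupling gain $k$. The network is integrated with forward Euler, and the result is compared with the equilibrium $\bar a = \frac{1}{N}\sum_i a_i$ of the blended dynamics $\dot s = -(s - \bar a)$. The names `simulate_network` and `a` are illustrative only.

```python
def simulate_network(a, k, h, steps):
    """Forward-Euler simulation of dx_i/dt = f_i(x_i) + k * sum_{j != i} (x_j - x_i)
    on a complete graph with unit weights, for the hypothetical f_i(x) = -(x - a_i)."""
    n = len(a)
    x = [0.0] * n
    for _ in range(steps):
        total = sum(x)
        # On the complete graph, sum_{j != i} (x_j - x_i) = total - n * x_i.
        x = [xi + h * (-(xi - ai) + k * (total - n * xi)) for xi, ai in zip(x, a)]
    return x


a = [0.0, 1.0, 5.0]   # hypothetical individual set points
k = 50.0              # coupling gain
# The stiff (disagreement) modes decay at rate 1 + k*n = 151 here, so forward Euler
# needs h < 2/151: the admissible time step shrinks as the coupling gain k grows.
x = simulate_network(a, k, h=1e-3, steps=10_000)
s_star = sum(a) / len(a)  # equilibrium of the blended dynamics
print(x, s_star)
```

With $k = 50$, the three states settle within about $0.02$ of $\bar a = 2$. For this example, their exact equilibrium $(a_i + 6k)/(1 + 3k)$ approaches $\bar a$ as $k \to \infty$, consistent with the approximate synchronization described above.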

In this section, we propose a discretization that uses the Implicit-Explicit Method to avoid the problem the explicit method has with stiff dynamics. Here, the stiffness shows up as the need for smaller time steps as the coupling gain increases, which makes decentralized design harder. In fact, even to ensure stability of the network obtained by the explicit method, the time step has to be selected sufficiently small. This problem does not arise in the following approach, which leaves the coupling gain as the only global parameter to tune. In particular, we discretize in the manner given as

or equivalently as

This yields a network of the form

where is the inverse of , where . By the implicit function theorem, such a function is well defined semi-globally for sufficiently large $k$. By definition, it satisfies the following identity.
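One concrete reading of such an Implicit-Explicit step, which we assume here purely for illustration, treats the individual vector field and the self-coupling term implicitly and the neighbors' states explicitly:

$$x_i[t+1] = x_i[t] + h f_i(x_i[t+1]) + hk\sum_{j\in\mathcal N_i}\alpha_{ij}\big(x_j[t] - x_i[t+1]\big),$$

with a step size $h$, coupling gain $k$, and adjacency weights $\alpha_{ij}$. The update then requires inverting the scalar map $y \mapsto (1 + hkd_i)y - hf_i(y)$, where $d_i = \sum_{j\in\mathcal N_i}\alpha_{ij}$. This map is strictly increasing whenever $hf_i'(y) < 1 + hkd_i$, for example for large $k$ on a bounded set. The sketch below solves the scalar equation by bisection. The helper names, the step size $h$, and the linear test agents are assumptions, not the paper's notation.

```python
def solve_increasing(g, target, lo, hi, tol=1e-12, max_iter=200):
    """Return y with g(y) ~= target, for a continuous, strictly increasing g (bisection)."""
    while g(lo) > target:      # widen the bracket to the left
        lo -= 2.0 * (hi - lo)
    while g(hi) < target:      # widen the bracket to the right
        hi += 2.0 * (hi - lo)
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if g(mid) < target:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


def imex_step(x, f, adj, k, h):
    """Hypothetical IMEX step: f_i and the self-coupling are implicit, neighbors explicit."""
    n = len(x)
    new_x = []
    for i in range(n):
        d_i = sum(adj[i][j] for j in range(n) if j != i)
        z_i = x[i] + h * k * sum(adj[i][j] * x[j] for j in range(n) if j != i)
        c_i = 1.0 + h * k * d_i
        # Solve c_i * y - h * f_i(y) = z_i for y = x_i[t+1].
        g_i = lambda y, c=c_i, fi=f[i]: c * y - h * fi(y)
        new_x.append(solve_increasing(g_i, z_i, lo=x[i] - 1.0, hi=x[i] + 1.0))
    return new_x


# Usage with hypothetical linear agents f_i(x) = -(x - a_i) on a complete graph.
a = [0.0, 1.0, 5.0]
f = [lambda y, ai=ai: -(y - ai) for ai in a]
adj = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
k = 50.0
results = {}
for h in (1.0, 5.0):  # forward Euler would need h < 2/(1 + 3k) = 2/151 here
    x = [0.0, 0.0, 0.0]
    for _ in range(2000):
        x = imex_step(x, f, adj, k, h)
    results[h] = x
print(results)
```

In this toy run, both step sizes settle on the same fixed point, which coincides with the equilibrium $(a_i + 6k)/(1 + 3k)$ of the continuous-time network. This is in line with the claim that the time step need not be retuned whenever $k$ changes. It is only a sanity check, not a proof.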

The utility of this approach is demonstrated in Section III with the particular example of the distributed median solver.

III Discrete-time distributed median solver

The continuous-time distributed median solver that we want to discretize in the manner illustrated in Section II is motivated by the blended dynamics approach and is given in [5] as

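$$\dot{x}_i = \mathrm{sgn}(r_i - x_i) + k\sum_{j\in\mathcal{N}_i}\alpha_{ij}(x_j - x_i), \quad i\in\mathcal{N}, \qquad (1)$$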

where, by increasing $k$ sufficiently, the network becomes approximately synchronized to the median value of the collection of real numbers $r_i$, $i\in\mathcal{N}$. The function $\mathrm{sgn}$ denotes the signum function, defined as $\mathrm{sgn}(s) := s/|s|$ for nonzero $s$ and $\mathrm{sgn}(0) := [-1, 1]$.

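In this paper, the median is defined as a real number that belongs to the set

$$\mathrm{median}(\{r_i\}_{i\in\mathcal{N}}) := \left[\, r_{\lceil N/2\rceil},\ r_{\lfloor N/2\rfloor+1} \,\right],$$

where the $r_i$'s are sorted (their indices rearranged) such that $r_1\le r_2\le\cdots\le r_N$. With this relaxed definition of the median, finding the median of $\{r_i\}_{i\in\mathcal{N}}$ amounts to solving the simple optimization problem

$$\min_{x\in\mathbb{R}}\ \sum_{i=1}^{N}|r_i - x|.$$

Then, the gradient descent algorithm given by

$$\dot{x} = \sum_{i=1}^{N}\mathrm{sgn}(r_i - x)$$

solves this minimization problem, i.e., $\lim_{t\to\infty}\mathrm{dist}\big(x(t),\mathrm{median}(\{r_i\}_{i\in\mathcal{N}})\big) = 0$. Here, for a set $S$, $\mathrm{dist}(x,S) := \inf_{y\in S}\|x-y\|$ denotes the distance between the vector $x$ and $S$. This motivates the network (1) according to the blended dynamics approach introduced in Section II.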
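The relaxed median and the role of an implicit step can already be seen for a single, centralized variable. The Python sketch below is our own illustration with hypothetical data $r = (1, 2, 5)$, and it is not the network (2). It computes the median interval $[r_{\lceil N/2\rceil}, r_{\lfloor N/2\rfloor+1}]$ and then compares two discretizations of $\dot x = \sum_i \mathrm{sgn}(r_i - x)$ with step size $h$:

- A forward-Euler step, which needs a single-valued selection; we take $\mathrm{sgn}(0) = 0$.
- A backward-Euler step, which solves $x[t] \in x[t+1] - h\sum_i \mathrm{sgn}(r_i - x[t+1])$ with the set-valued $\mathrm{sgn}(0) = [-1, 1]$. This is the proximal step of $h\sum_i |r_i - x|$.

```python
import math


def median_interval(r):
    """Relaxed median [r_(ceil(N/2)), r_(floor(N/2)+1)] using 1-based sorted indices."""
    s = sorted(r)
    n = len(s)
    return s[math.ceil(n / 2) - 1], s[n // 2]


def objective(x, r):
    return sum(abs(ri - x) for ri in r)


def explicit_step(x, r, h):
    """Forward Euler on dx/dt = sum_i sgn(r_i - x), with the selection sgn(0) = 0."""
    return x + h * sum((ri > x) - (ri < x) for ri in r)


def implicit_step(x, r, h):
    """Backward Euler: find y with x in y - h * sum_i sgn(r_i - y), sgn(0) = [-1, 1]."""
    vals = sorted(set(r))
    # Case 1: y sits exactly at a data point, where sgn is set-valued.
    for v in vals:
        above = sum(ri > v for ri in r)
        below = sum(ri < v for ri in r)
        eq = sum(ri == v for ri in r)
        if v - h * (above - below + eq) <= x <= v - h * (above - below - eq):
            return v
    # Case 2: y lies strictly between consecutive data points, where sgn is constant.
    pts = [-math.inf] + vals + [math.inf]
    for lo, hi in zip(pts, pts[1:]):
        m = sum(ri >= hi for ri in r) - sum(ri <= lo for ri in r)
        y = x + h * m
        if lo < y < hi:
            return y
    raise RuntimeError("no solution found")


r = [1.0, 2.0, 5.0]   # hypothetical data; the median is 2
h = 0.1
x_exp = x_imp = 0.05
tail_exp = []
for t in range(200):
    x_exp = explicit_step(x_exp, r, h)
    x_imp = implicit_step(x_imp, r, h)
    if t >= 180:
        tail_exp.append(x_exp)
print(median_interval(r), x_imp, min(tail_exp), max(tail_exp))
```

In this run, the explicit iterate keeps jumping between roughly $1.95$ and $2.05$. The implicit iterate lands exactly on the median $2$ and stays there, because the set-valued signum absorbs the residual. This is a one-dimensional picture of the chattering issue discussed in Section I.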

In this manner, we discretize the network (1) accordingly as

where is a left inverse of . Since

we obtain

In particular, the network is simply

(2)

for . We have the following convergence result.

Theorem 1

Under the assumption that the communication graph induced by the adjacency element is undirected and connected, for any , there exists such that, for each and initial condition , , the solution to (2) exists for all , and satisfies

for all .

Proof:

Note first that the network (2) can be written as

where , , , and is a stochastic matrix. Note also that

is the left eigenvector of associated with the unique eigenvalue . The uniqueness comes from the connectivity of the network. Therefore, we obtain

and

with some and [13, 14]. In particular, and . See Appendix A for its illustration.

Now, since

we can conclude that

Therefore,

This implies that for any , there exists such that for each and initial condition , there exists such that

(3)

for all . Note that , and thus, we have

for such .

Having proved approximate synchronization with arbitrary precision, let us now examine how the averaged variable behaves:

where . This implies that if the overall balance is positive, then the averaged value increases, while if the balance is negative, then the value decreases. On the other hand, by its construction if and only if (see (2))

and thus, for , if

then by (3), we have

hence . Similarly, if

then we have . This finally implies that if

then we have , hence the averaged value increases by at least , and similarly, if

then we have , hence the averaged value decreases accordingly. Therefore, we can conclude that

which concludes the proof with the help of (3). ∎
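As a numerical illustration of Theorem 1, the Python sketch below iterates one assumed form of the Implicit-Explicit update for (1):

$$x_i[t+1] = x_i[t] + h\,\mathrm{sgn}(r_i - x_i[t+1]) + hk\sum_{j\in\mathcal N_i}\alpha_{ij}\big(x_j[t] - x_i[t+1]\big),$$

where the step size $h$ is our notation. Write $z_i = x_i[t] + hk\sum_j \alpha_{ij}x_j[t]$ and $c_i = 1 + hkd_i$. The implicit equation then has the closed-form solution $r_i$ whenever $|z_i - c_ir_i| \le h$, and $(z_i \mp h)/c_i$ otherwise. The inputs $r = (1, 2, 5)$, the gain $k = 50$, and the complete graph with unit weights are hypothetical choices.

```python
def imex_median_step(x, r, adj, k, h=1.0):
    """Assumed IMEX update for (1), solved in closed form for each agent i:
    c_i * y - h * sgn(r_i - y) = z_i, with sgn(0) = [-1, 1]."""
    n = len(x)
    new_x = []
    for i in range(n):
        d_i = sum(adj[i][j] for j in range(n) if j != i)
        z_i = x[i] + h * k * sum(adj[i][j] * x[j] for j in range(n) if j != i)
        c_i = 1.0 + h * k * d_i
        w_i = z_i - c_i * r[i]
        if w_i > h:        # solution above r_i, where sgn(r_i - y) = -1
            new_x.append(r[i] + (w_i - h) / c_i)
        elif w_i < -h:     # solution below r_i, where sgn(r_i - y) = +1
            new_x.append(r[i] + (w_i + h) / c_i)
        else:              # dead zone: y = r_i, with sgn value -w_i / h in [-1, 1]
            new_x.append(r[i])
    return new_x


adj = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]   # complete graph, unit weights
r = [1.0, 2.0, 5.0]                      # hypothetical inputs; the median is 2
k = 50.0
results = {}
for h in (1.0, 10.0):
    x = [0.0, 0.0, 0.0]
    for _ in range(3000):
        x = imex_median_step(x, r, adj, k, h)
    results[h] = x
print(results)
```

For $k = 50$, the iterates settle at $(2 - \tfrac{1}{150},\ 2,\ 2 + \tfrac{1}{150})$ for both $h = 1$ and $h = 10$. The agent holding the median input sits exactly at $r_2$, and the other two are within $1/(3k)$ of it. This is consistent with the approximate consensus stated in Theorem 1, and the iterates show no chattering.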

In the next section, we observe in simulation that the chattering phenomenon is removed, in contrast to the network obtained by the explicit method.

IV Simulation

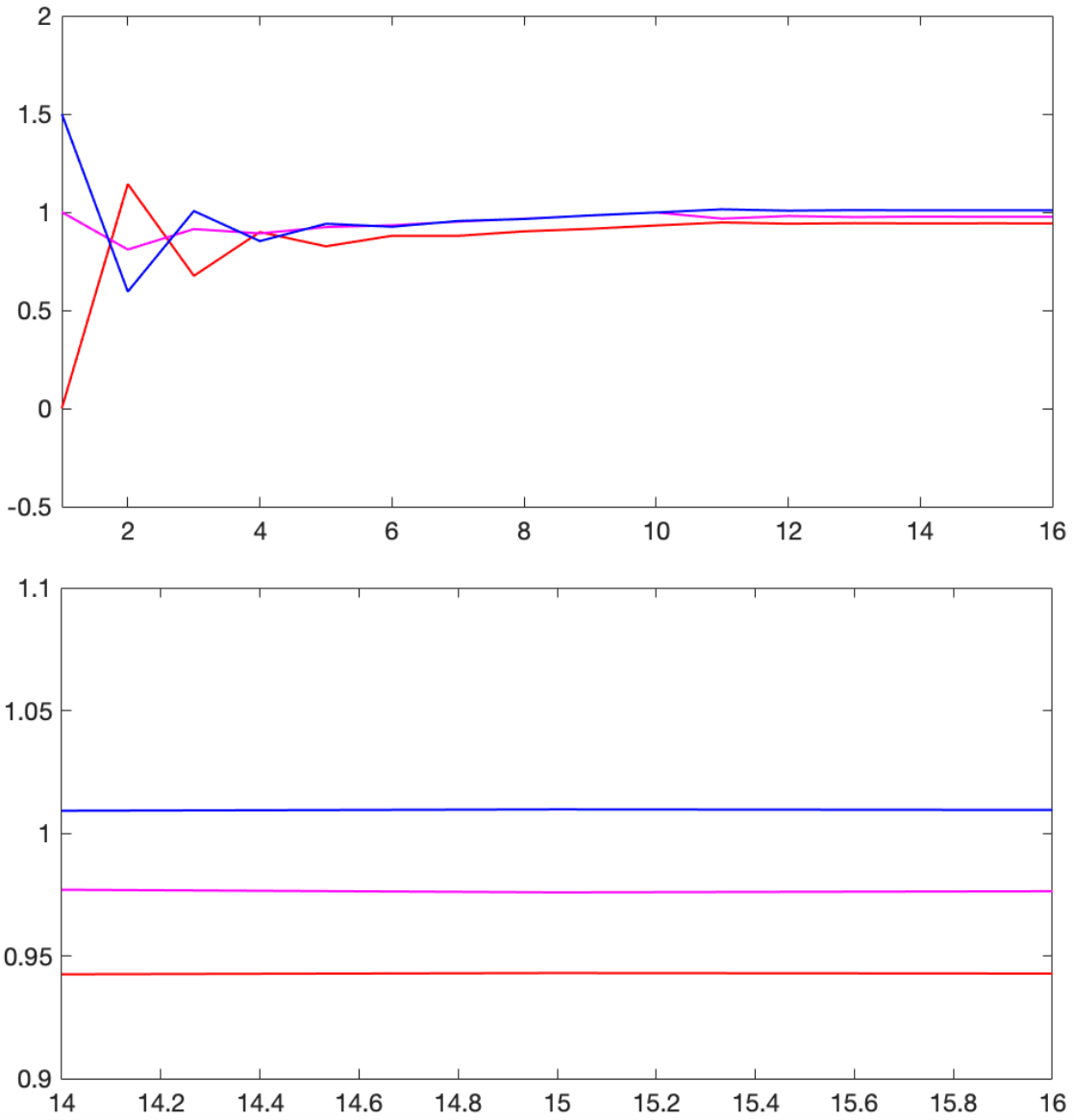

To examine the chattering phenomenon, we consider a simple network of three agents, where , , and . The communication graph is complete with unit weights, i.e., $\alpha_{ij} = 1$ for all $i \neq j$. The simulation result of the network (2) with is given in Figure 1.

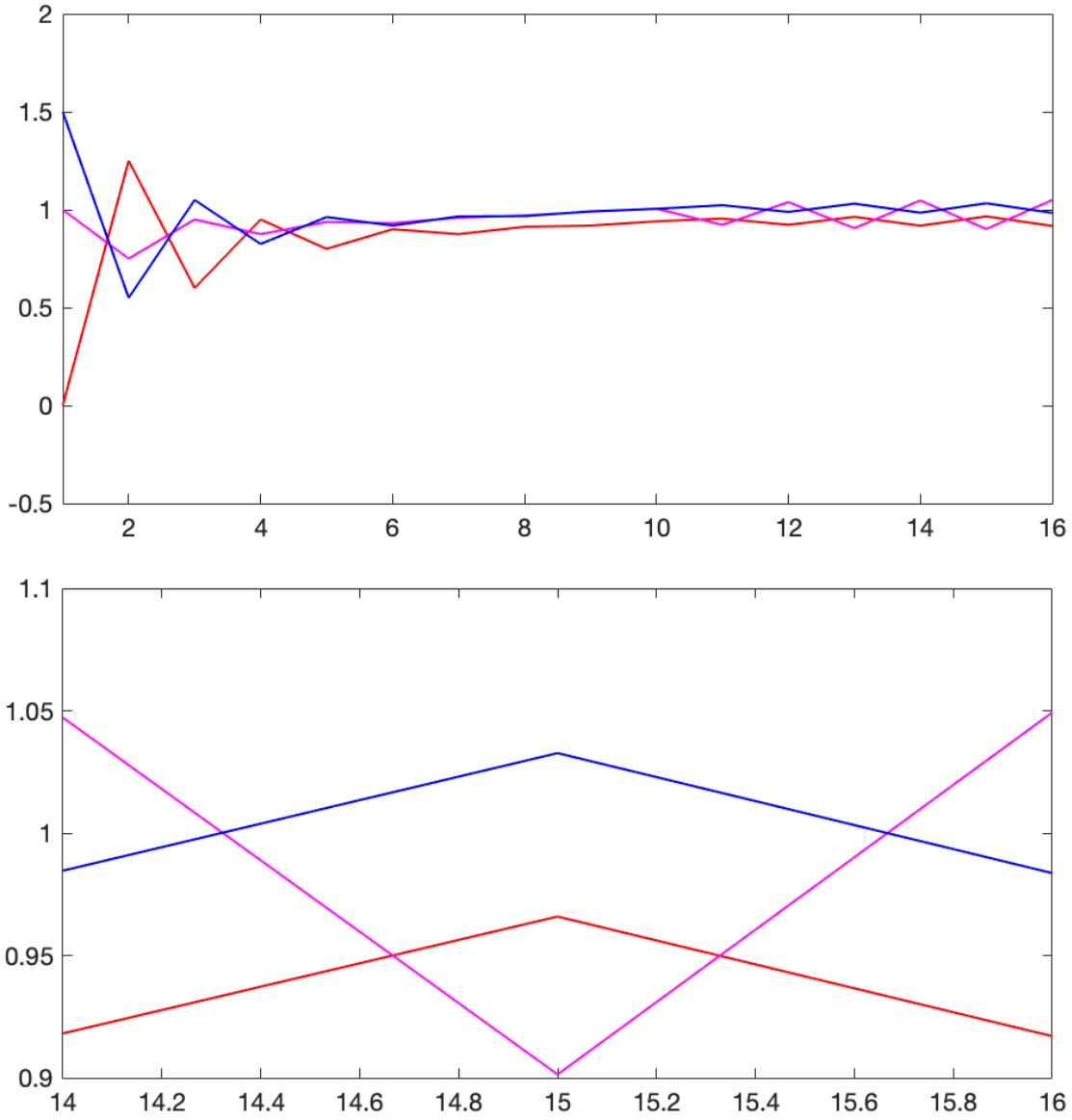

On the other hand, if we simulate a network obtained by discretizing (1) with the explicit method, given as

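$$x_i[t+1] = x_i[t] + h\Big(\mathrm{sgn}\big(r_i - x_i[t]\big) + k\sum_{j\in\mathcal{N}_i}\alpha_{ij}\big(x_j[t] - x_i[t]\big)\Big), \quad i\in\mathcal{N}, \qquad (4)$$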

for a sufficiently small time step $h > 0$, then with , we obtain the trajectories illustrated in Figure 2. Slightly increasing the time step to , however, yields unstable trajectories.

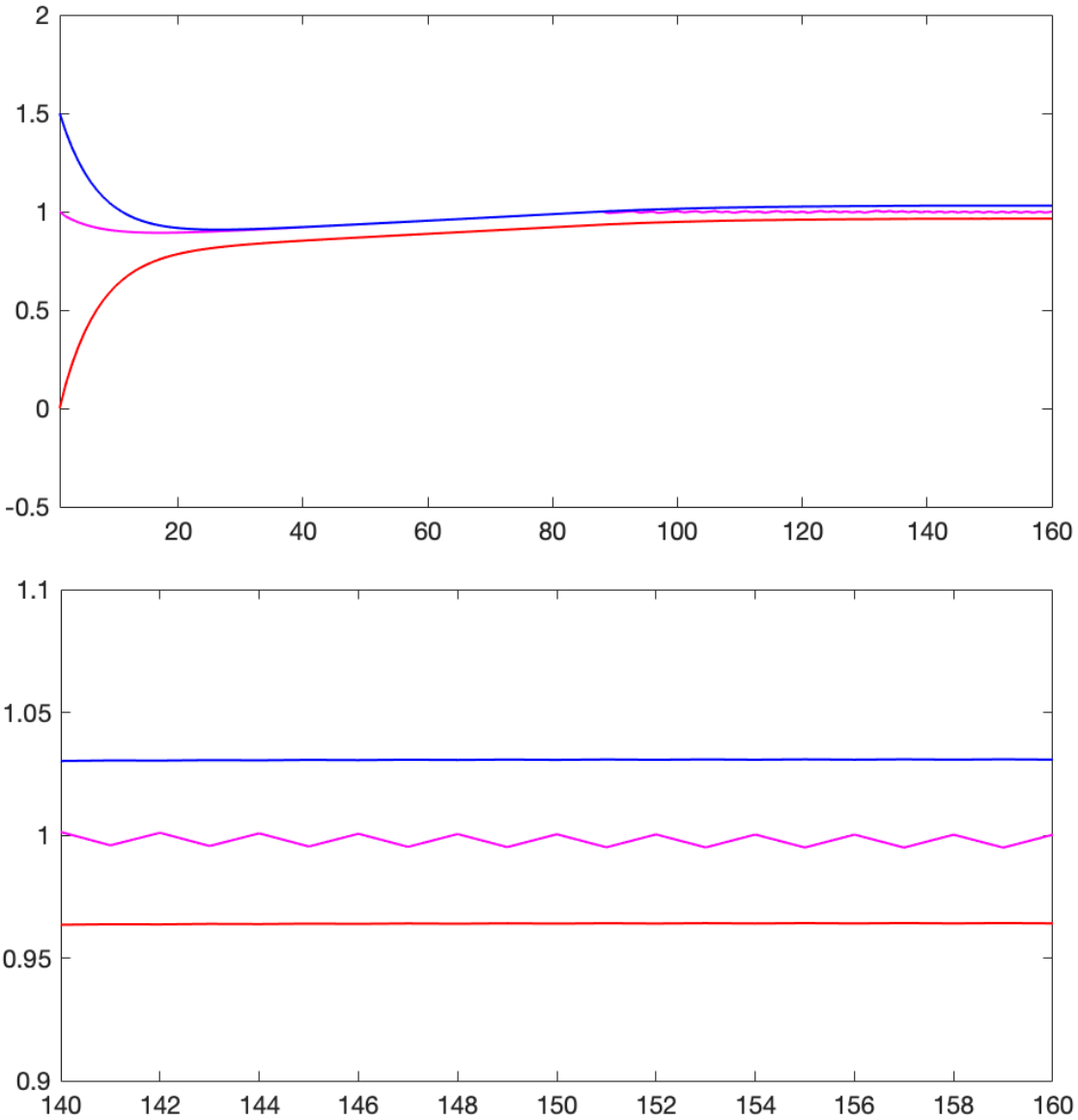

Note that we observe not only a larger steady-state error but also chattering. To recover the steady-state accuracy, we need to employ , which yields the result shown in Figure 3, but at the cost of a larger number of iterations.
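For comparison, the following Python sketch runs the forward-Euler discretization of (1) on a three-agent complete graph with unit weights. It uses hypothetical inputs $r = (1, 2, 5)$, which are not the values behind the figures, a coupling gain $k = 50$, and the selection $\mathrm{sgn}(0) = 0$. The Laplacian of the complete graph on three nodes has eigenvalues $0, 3, 3$, so the coupling part of the explicit scheme is stable only for $3hk < 2$. The sketch checks that a step 10% below this bound stays bounded near the median, while a step 5% above it diverges.

```python
def explicit_median_step(x, r, adj, k, h):
    """Forward-Euler step of (1), using the single-valued selection sgn(0) = 0."""
    n = len(x)

    def sgn(s):
        return (s > 0) - (s < 0)

    return [
        x[i] + h * (sgn(r[i] - x[i])
                    + k * sum(adj[i][j] * (x[j] - x[i]) for j in range(n) if j != i))
        for i in range(n)
    ]


def run(h, steps, r, adj, k):
    x = [0.0] * len(r)
    traj = []
    for _ in range(steps):
        x = explicit_median_step(x, r, adj, k, h)
        traj.append(x)
    return traj


adj = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]   # complete graph, unit weights
r = [1.0, 2.0, 5.0]                      # hypothetical inputs; the median is 2
k = 50.0
h_crit = 2.0 / (3.0 * k)                 # forward-Euler limit for the coupling modes
stable = run(0.9 * h_crit, 5000, r, adj, k)
unstable = run(1.05 * h_crit, 1000, r, adj, k)
print(stable[-1], max(abs(v) for v in unstable[-1]))
```

The bound $2/(3k)$ shrinks as $k$ grows, so the admissible time step depends on the coupling gain. The Implicit-Explicit update avoids exactly this dependence.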

V Conclusion

Using the particular example of a distributed median solver, we have shown the utility of the Implicit-Explicit Method for discretizing networks constructed by the blended dynamics approach. Because of the stiffness of their dynamics, such networks, when discretized by the explicit method, require a small time step that depends on the coupling gain. Moreover, the Implicit-Explicit Method was shown to remove the chattering phenomenon when discretizing a system with a discontinuous vector field. Future work will address general results on the use of the Implicit-Explicit Method for this class of networks, as well as an analytical verification of its advantages over other methods.

References

- [1] W. Ren and Y. Cao, Distributed coordination of multi-agent networks: emergent problems, models, and issues. Springer, 2010.

- [2] M. Franceschelli, A. Giua, and A. Pisano, “Finite-time consensus on the median value by discontinuous control,” in Proceedings of American Control Conference, 2014, pp. 946–951.

- [3] ——, “Finite-time consensus on the median value with robustness properties,” IEEE Transactions on Automatic Control, vol. 62, no. 4, pp. 1652–1667, 2017.

- [4] Z. A. Z. S. Dashti, C. Seatzu, and M. Franceschelli, “Dynamic consensus on the median value in open multi-agent systems,” in Proceedings of 58th IEEE Conference on Decision and Control, 2019, pp. 3691–3697.

- [5] J. G. Lee, J. Kim, and H. Shim, “Fully distributed resilient state estimation based on distributed median solver,” IEEE Transactions on Automatic Control, vol. 65, no. 9, pp. 3935–3942, 2020.

- [6] G. Vasiljević, T. Petrović, B. Arbanas, and S. Bogdan, “Dynamic median consensus for marine multi-robot systems using acoustic communication,” IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 5299–5306, 2020.

- [7] A. F. Filippov, Differential equations with discontinuous righthand sides. Kluwer Academic Publishers, 1988.

- [8] X. Wang, J. Zhou, S. Mou, and M. J. Corless, “A distributed algorithm for least squares solutions,” IEEE Transactions on Automatic Control, vol. 64, no. 10, pp. 4217–4222, 2019.

- [9] J. G. Lee and H. Shim, “A tool for analysis and synthesis of heterogeneous multi-agent systems under rank-deficient coupling,” Automatica, vol. 117, p. 108952, 2020.

- [10] J. Kim, J. Yang, H. Shim, J.-S. Kim, and J. H. Seo, “Robustness of synchronization of heterogeneous agents by strong coupling and a large number of agents,” IEEE Transactions on Automatic Control, vol. 61, no. 10, pp. 3096–3102, 2016.

- [11] E. Panteley and A. Loría, “Synchronization and dynamic consensus of heterogeneous networked systems,” IEEE Transactions on Automatic Control, vol. 62, no. 8, pp. 3758–3773, 2017.

- [12] J. G. Lee and H. Shim, “Design of heterogeneous multi-agent system for distributed computation,” in Trends in Nonlinear and Adaptive Control: A Tribute to Laurent Praly for his 65th Birthday, Lecture Notes in Control and Information Sciences. Springer, 2021 (chapter), available at arXiv:2101.00161.

- [13] A. Nedic and A. Ozdaglar, “Distributed subgradient methods for multi-agent optimization,” IEEE Transactions on Automatic Control, vol. 54, no. 1, pp. 48–61, 2009.

- [14] A. Nedic and J. Liu, “A Lyapunov approach to discrete-time linear consensus,” in Proceedings of IEEE Global Conference on Signal and Information Processing, 2014, pp. 842–846.

Appendix A Illustration of and in the proof of Theorem 1

First, define the Laplacian matrix of a graph as $L := D - \mathcal{A}$, where $\mathcal{A}$ is the adjacency matrix of the graph and $D$ is the diagonal matrix whose diagonal entries are $d_i := \sum_{j\in\mathcal{N}_i}\alpha_{ij}$, $i\in\mathcal{N}$. By construction, $L$ has at least one zero eigenvalue, whose corresponding eigenvector is $1_N := [1,\dots,1]^\top$, and all the other eigenvalues have nonnegative real parts. For undirected graphs, the zero eigenvalue is simple if and only if the graph is connected. Moreover, has its eigenvalues contained in the closed unit disk, and the eigenvalue with magnitude one is unique if and only if the graph is connected.
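The spectral facts above, and the left-eigenvector property used in the proof of Theorem 1, can be checked numerically. The Python sketch below builds the Laplacian $L = D - \mathcal A$ from a symmetric adjacency matrix $\mathcal A$ with degrees $d_i$. It confirms $L 1_N = 0$ and bounds the spectrum of $L$ by $[0, 2\max_i d_i]$ using the Gershgorin circle theorem.

It then iterates an assumed row-stochastic matrix $W = \mathrm{diag}(c)^{-1}(I + hk\mathcal A)$, with $c_i = 1 + hkd_i$ and coupling gain $k$. This matrix is our guess at the linear part of an Implicit-Explicit network, not necessarily the paper's exact matrix. For symmetric $\mathcal A$, it satisfies $c^\top W = c^\top$, so the iterates converge to the $c$-weighted average of the initial condition. All helper names and graphs are illustrative.

```python
def laplacian(adj):
    """L = D - A for an undirected graph with symmetric adjacency matrix adj."""
    n = len(adj)
    deg = [sum(adj[i][j] for j in range(n) if j != i) for i in range(n)]
    return [[float(deg[i]) if i == j else -float(adj[i][j]) for j in range(n)]
            for i in range(n)]


def gershgorin_bounds(M):
    """Smallest interval containing every Gershgorin disc of a symmetric matrix M."""
    n = len(M)
    radii = [sum(abs(M[i][j]) for j in range(n) if j != i) for i in range(n)]
    return (min(M[i][i] - radii[i] for i in range(n)),
            max(M[i][i] + radii[i] for i in range(n)))


def mixing_matrix(adj, k, h=1.0):
    """Assumed IMEX linear part W = diag(c)^{-1} (I + h*k*A), with c_i = 1 + h*k*d_i."""
    n = len(adj)
    c = [1.0 + h * k * sum(adj[i][j] for j in range(n) if j != i) for i in range(n)]
    W = [[(1.0 if i == j else h * k * adj[i][j]) / c[i] for j in range(n)]
         for i in range(n)]
    return W, c


def mat_vec(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]


L_k3 = laplacian([[0, 1, 1], [1, 0, 1], [1, 1, 0]])
print(mat_vec(L_k3, [1, 1, 1]))    # [0.0, 0.0, 0.0]: 1_N is in the kernel
print(mat_vec(L_k3, [1, 0, -1]))   # [3.0, 0.0, -3.0]: eigenvalue 3
print(gershgorin_bounds(L_k3))     # (0.0, 4.0), which contains the spectrum {0, 3, 3}

path = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]                          # path graph 1 - 2 - 3
W, c = mixing_matrix(path, k=2.0)                                 # c = [3.0, 5.0, 3.0]
row_sums = [sum(row) for row in W]                                # all equal to 1
left = [sum(c[i] * W[i][j] for i in range(3)) for j in range(3)]  # c^T W, equals c
x = [1.0, 0.0, 0.0]
for _ in range(200):
    x = mat_vec(W, x)              # converges to (c^T x0 / sum(c)) * 1_N
print(row_sums, left, x)           # every entry of x is close to 3/11
```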

Then, we have the representation

and thus,

Since is a symmetric positive semi-definite matrix, there exist normalized eigenvectors associated with eigenvalues such that

for and , . This implies that

where . Therefore, by noting that is the normalized vector of , hence

we can conclude that

This finally implies

where

Now, noting that has the same set of eigenvalues as the matrix , by the Gershgorin circle theorem we can certify that , hence . In particular, we have

where are the eigenvalues of