∗Department of Applied Mathematics, University of California, Merced, 5200 N. Lake Rd, Merced, CA 95343 USA

1E-mail: mreeves3@ucmerced.edu

2E-mail: hbhat@ucmerced.edu

Neural Continuous-Time Markov Models

Abstract

Continuous-time Markov chains are used to model stochastic systems where transitions can occur at irregular times, e.g., birth-death processes, chemical reaction networks, population dynamics, and gene regulatory networks. We develop a method to learn a continuous-time Markov chain’s transition rate functions from fully observed time series. In contrast with existing methods, our method allows for transition rates to depend nonlinearly on both state variables and external covariates. The Gillespie algorithm is used to generate trajectories of stochastic systems where propensity functions (reaction rates) are known. Our method can be viewed as the inverse: given trajectories of a stochastic reaction network, we generate estimates of the propensity functions. While previous methods used linear or log-linear methods to link transition rates to covariates, we use neural networks, increasing the capacity and potential accuracy of learned models. In the chemical context, this enables the method to learn propensity functions from non-mass-action kinetics. We test our method with synthetic data generated from a variety of systems with known transition rates. We show that our method learns these transition rates with considerably more accuracy than log-linear methods, in terms of mean absolute error between ground truth and predicted transition rates. We also demonstrate an application of our methods to open-loop control of a continuous-time Markov chain.

keywords:

System Identification, Continuous-Time Markov Chains, Neural Networks, Parameter Estimation1 Introduction

Continuous-time Markov chains (CTMCs) are stochastic processes that model a variety of different systems, e.g., chemical reaction networks, population dynamics, gene regulatory networks, and infectious disease dynamics. CTMC sample paths are piecewise-constant functions that take values in the state space; for a given sample path, each jump discontinuity corresponds to a time at which the system transitions from one state to the next. On a discrete state space , a CTMC is completely characterized by its transition rates, an array that describes the rate at which the system transitions from one state to another. Given an initial condition, which can be expressed as a distribution over the possible states, one can use the transition rates to generate sample paths of the system. However, the inverse problem is not as straightforward. Learning the transition rates from sample path data is an active field of research.

Our focus in this work is on a particular class of CTMCs that model the stochastic dynamics of reaction networks [1]. For this class of CTMCs, there has been a wealth of prior work on estimation and identification. In the structure identification problem, the goal is to estimate the reaction network itself. Here we see approaches that focus on graphical modeling [2], sparse identification [3, 4, 5], and subnetwork reconstruction [6].

Our work has more in common with the parameter estimation problem, where all reactions and corresponding transition rates are known up to a finite set of parameters, which one seeks to estimate from data. Various prior approaches can then be distinguished based on methodology and assumptions regarding the data. In situations where one assumes access only to steady-state observations, we find both Bayesian [7] and moment-based methods [8]. In the case where we assume access to inexact measurements of all chemical species, we see moment-based methods [8], variational Bayes methods [9], Gaussian adaptation methods [10], and expectation maximization methods, either for general systems [11], or for the particular case of birth-death processes [12]. There also exist filtering and Bayesian methods to estimate states and parameters from exact measurements of a subset of all chemical species in a given system [13], and adjoint methods for reaction-diffusion systems [14].

In this paper, we model CTMC transition rates with functions that we parameterize and estimate using neural networks. We assume that all reactions are known, and that trajectories are fully observed. Unlike prior parameter estimation studies we have seen, we assume nothing regarding the functional form of the transition rates (or, equivalently, propensity functions). The neural CTMC (N-CTMC) method we describe allows for transition rates with arbitrary kinetics and dependence on external covariates, such as temperature.

Recent work has introduced covariate dependence via generalized linear models (GLM): for Markov chains in discrete/continuous-time, transition probabilities/rates are modeled as a softmax/exponential function of a linear function of the covariates [15, 16]. In this paper, on tests with synthetic data, we show that the N-CTMC method can model kinetics that lie outside mass-action or Michaelis-Menten models. This goes beyond the capabilities of GLM models.

The likelihood function is often used to learn the transition rate matrix of a CTMC. The likelihood measures how probable a sample path is to occur given a transition rate matrix. To find CTMC transition rates that best fit one or more given sample paths, we solve for the transition rates that maximize the likelihood function. For stochastic reaction networks with even a small number of reactions, the state space can be large or infinite, rendering the likelihood intractable. Strategies to deal with the intractable likelihood include truncating the state space [17], or using likelihood-free approaches such as approximate Bayesian computation [18, 19]. Truncating the state space introduces error, and does not allow for the full model to be learned.

Instead of attempting to estimate a large or infinite-dimensional matrix , N-CTMC uses the current state as one of the inputs to the neural network. The neural network then outputs a vector of transition rates, one for each reaction. In a setting with species of interest, every unique integer -tuple denotes a state. If each , the state space has maximal dimension . If we were to estimate each entry of individually, we would have to estimate constant parameters. In contrast, the number of parameters in the neural network model is independent of .

After deriving and explaining the method, we demonstrate its capabilities using tests with synthetic data. In each test, we start with sample paths generated from a known reaction network. We demonstrate that after training on these sample paths, N-CTMC succeeds in learning transition rates in close agreeement with the ground truth. We explain and show how N-CTMC significantly reduces the computational cost of learning transition rates. As we increase the sizes of our training sets, we show that the error of N-CTMC’s estimates decreases. Finally, we show that N-CTMC significantly outperforms a GLM of a similar construction.

2 Background

2.1 Stochastic Reaction Networks

We first review an established framework for stochastic reaction networks. In general, a reaction network with species, reactions, reaction rates , and stoichiometric coefficients and can be written as:

| () |

The state of the system at time is described by the state vector , where is a non-negative integer describing the count of species and denotes the change in the state after reaction :

Propensity functions describe how often reactions occur and are functions of the reaction rate and the current species count: . In mass action kinetics, is often a polynomial function of . The time until the next reaction is calculated using , where , i.e., is sampled uniformly on the interval . The next reaction is decided by sampling again, , and then determining for which it is true that

When , we define the summation on the left to be . Once we have determined both and , we update the state vector via .

2.2 Maximum Likelihood Estimation

Before proceeding, we review the maximum likelihood estimate (MLE) of CTMC transition rates. Let denote the state space for the CTMC. Assume we have a sequence of state observations at times :

For states such that , let denote the rate at which the CTMC transitions from state to state . The are the parameters to be estimated here. Let .

Given transition rates , the likelihood of observing the short sequence can be decomposed into two pieces. The first piece stems from the time spent in state before transitioning; this sojourn time has an distribution. The second piece is the probability of transitioning from state to another state : this equals . Carrying this out across the entire observation sequence, we obtain the likelihood

| (1) |

This likelihood can be rewritten in state space:

Here is the total time spent in state , and is the total number of transitions . Because is monotonic, we can maximize by maximizing

Differentiating with respect to and setting the derivative equal to zero, we obtain the MLE

| (2) |

We see that is the mean time spent in state .

3 Methods and Metrics

We now detail the neural CTMC (N-CTMC) method. For a stochastic reaction network () as described in Section 2.1, assume we have complete observations: . Specifically, at time , we have , a vector of counts (of all species), and , the associated covariates. Let .

We look to model the propensity functions , where is the number of reactions in the reaction network (). In this formulation, we assume no knowledge of the reaction rates .

We now formulate the likelihood function for the sequence of 3-tuples . In this likelihood function, we replace the constant transition rate matrix with a vector of propensity function . To model we use a neural network, a universal function approximator, with parameters . The network takes as input and outputs the propensity . In contrast with Section 2.1, depends not only on the state but also on the associated covariates . Taking the negative of (1) gives

| (3) |

In the data , the transition equates to the change in count vector ; let denote the reaction to which this change corresponds. Then we can replace by the -th element of the neural network’s output, which we denote by . Similarly, we can replace , the rate of leaving state , by , the sum of the propensity functions for each reaction in (). Rewritten in terms of and , the negative log likelihood (3) becomes

Let be the number of times reaction occurs. Suppose we collect all row vectors that correspond to states just before reaction occurs. We stack these vertically into an matrix . Similarly, let be a vector of time spent in state before reaction occurs. With this, we rewrite the above negative log likelihood as

| (4) |

To train the N-CTMC model, we apply gradient descent to minimize this negative log likelihood. Note that the internal sum over does not require a for loop in our implementation. With neural network input vectorization, we can pass all of into at once. The -th row of the output will be identical to the output we would have obtained had we passed in only , i.e., . Using this, the internal sum can be accomplished entirely with matrix algebra, which is highly optimized in modern neural network frameworks. The only remaining loop corresponds to the sum over from to ; this should be contrasted with sums over elements that would have resulted from naïve fusion of neural networks with the methods from Section 2.2. Together with automatic differentiation, our formulation leads to more efficient computation of and resulting minimization of the loss (4).

Note that the above N-CTMC approach learns a model without direct observations of ground truth propensity functions. We observe the propensities only indirectly through their effect on the system, as evidenced in the data . This varies from standard neural network training approaches where one starts with ground truth observations of a response variable and then minimizes the mean squared error beween predicted and true values of the response.

Here we briefly sketch how one might prove that neural networks approximations can converge to true propensity functions. For brevity, let . Let and denote propensity functions estimated by, respectively, a neural network and maximum likelihood estimation as in (2). More specifically, we begin by binning the covariate space . For a given bin , using transitions from such that , we form MLE estimates as in (2). Now given a state and covariates , we find the bin corresponding to and evaluate the MLE estimates (2) in that bin associated with all transitions of the form . Organizing these estimates by reaction number, we form . Let us assume that MLE consistency results for covariate-free CTMCs [20] can be extended to include covariates, assuming conditions on the distribution of covariates in the data and on the ergodicity of the CTMC, such that as , we will have almost surely (a.s.). Next, treating as ground truth, we train a neural network with suitable activation functions, width and depth ; as , we have [21]. Putting everything together, we obtain a.s. as both .

To evaluate the N-CTMC method, we will use several metrics: the mean absolute error (MAE) and mean squared error (MSE) across each unique state in , together with the weighted mean absolute error (W-MAE) and weighted mean squared error (W-MSE). The W-MAE and W-MSE examines the prevalence of each unique state in and weights the contribution to the overall absolute error accordingly. This means that a state that is rarely visited in will have less effect on the W-MAE and W-MSE than on the MAE and MSE.

4 Numerical Experiments

We examine three different applications of the N-CTMC: a birth-death process, Lotka-Volterra population dynamics, and a temperature-dependent chemical reaction network. Each application is chosen to test the N-CTMC model in a specific way.

In the birth-death process, we use a single covariate and let propensity functions depend solely on this covariate and not on the current population count. While this model lacks realism, it allows us to compare the N-CTMC to the counting-based MLE (2) for calculating rate parameters described in Section 2.2. To test the N-CTMC’s ability to capture arbitrary kinetics, we turn to a Lotka-Volterra population model. Here there are no covariates, but the propensity functions do not follow the law of mass action. The final reaction network we consider tests the N-CTMC’s ability to model arbitrary dependence on covariates. Here the system of reactions follows the law of mass action, but the propensities depend on temperature nonlinearly.

For each system, we generate trajectories using the Gillespie algorithm [22] and then use N-CTMC to learn propensity functions (equivalently, transition rates). We model all systems with an increasing amount of training data to show empirical model convergence.

4.1 Birth-Death Process

We analyze a birth-death process where the birth and death rates do not depend on population size:

Reactions:

-

•

-

•

Propensity Functions:

-

•

-

•

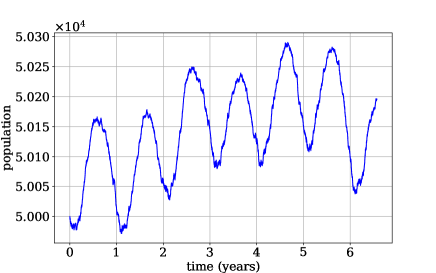

Let denote time in days. Then , rounded to the tenths decimal place to discretize the covariate space to . The birth and death rates and fluctuate annually, leading to sample trajectories such as that displayed in Figure 1. We generate training trajectories of increasing length, for , all with an initial population of , and train the N-CTMC model, yielding estimates .

While N-CTMC can handle continuous covariates, having a finite, discrete covariate space helps us compute transition rates using the counting-based MLE (2). Specifically, for each distinct value of the predictor, we find all transitions in the training set with predictor equal to . Using those transitions, we compute the counting-based MLEs (2), resulting in estimates .

In Table 1, we compute in turn the MAE between the true and predicted transition rates and from the N-CTMC and counting approaches. This yields, respectively, the errors labeled as N-MAE and C-MAE. Carrying out the same comparison with MSE, we obtain the N-MSE and C-MSE errors.

Table 1 shows convergence of both the N-CTMC and counting-based estimated propensity functions to the true propensity functions, as the number of transitions increases. Table 1 also shows that the error rates of the N-CTMC and counting-based methods are similar. This demonstrates that N-CTMC converges to the ground truth at a comparable rate to the counting methods derived by analytically maximizing the likelihood. As the number of predictors increases, this calculation becomes more arduous. Finally, Table 1 gives evidence that, under appropriate hypotheses, a consistency result for covariate-dependent CTMCs should be possible.

| Transitions | N-MAE | C-MAE | N-MSE | C-MSE |

|---|---|---|---|---|

| 5000 | ||||

| 50000 | ||||

| 500000 |

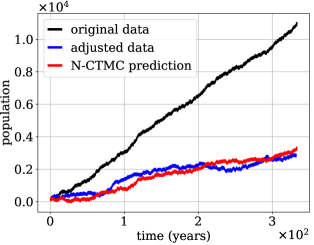

We now illustrate a potential open-loop control application of the N-CTMC method. Assume we have a population of, for instance, a contagion/pest, that evolves stochastically according to the birth-death process described previously. Suppose we simulate the system forward in time from an initial population of and find that it leads to too large of a population, the black curve in Figure 2. What changes to the propensities are required to reduce population growth by a particular amount? To answer: we process the data, switching 1.5% of the births (uniformly at random) to deaths, resulting in the blue curve in Figure 2. We then train our N-CTMC model on the blue curve. With the estimated propensities, we then generate predictions starting from the same initial population of —see the red curve in Figure 2. The close agreement indicates that N-CTMC succeeds in finding new propensity functions that, up to stochastic fluctuations, yield prescribed population dynamics.

4.2 Population Dynamics Modeling

Since neural networks are universal function approximators, N-CTMC should be able to model arbitrary transition rates. To test this, we consider a system that does not follow the law of mass action. We apply our method to a predator-prey system [23], which models dynamics with prey (A) and two predators (B, C). This system includes contingencies for A, B, and C entering and leaving the system, breeding between species A, species B hunting species A, and species C hunting species B.

Reactions:

-

•

-

•

-

•

-

•

-

•

-

•

-

•

-

•

-

•

Propensity Functions:

-

•

-

•

-

•

-

•

-

•

-

•

-

•

-

•

-

•

Note that reaction 4 and 7 have the same outcome on the state of the system, so the neural network cannot distinguish between the two reactions. Hence these reactions will be modeled jointly: . Additionally, reactions 8 and 9 model hunting and do not follow the law of mass action.

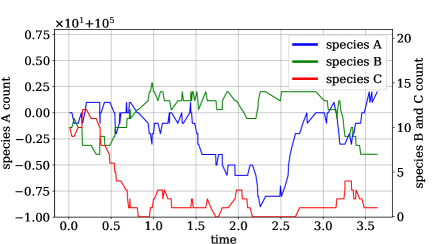

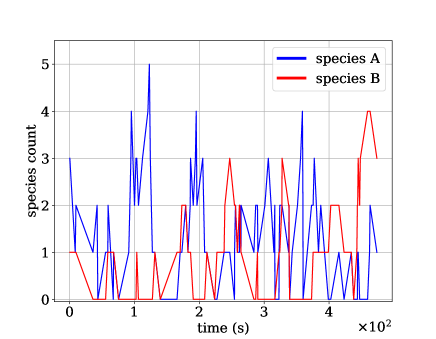

We generate three training sets with, respectively, 100, 1000, and 10000 trajectories. Each trajectory has length , initial conditions and parameters . An example trajectory can be seen in Figure 3. Using this data, we train both the N-CTMC model and, for the largest training set, a CTMC plus GLM model in which transition rates depend linearly on inputs. The N-CTMC models propensities via the following neural network:

For , we use the scaled exponential linear unit (selu) activation function [24]. We set , the softplus function. We chose softplus for because the in the loss (4) forces . During model formulation, we tested several activation functions that guarantee positive output, and softplus performed the best.

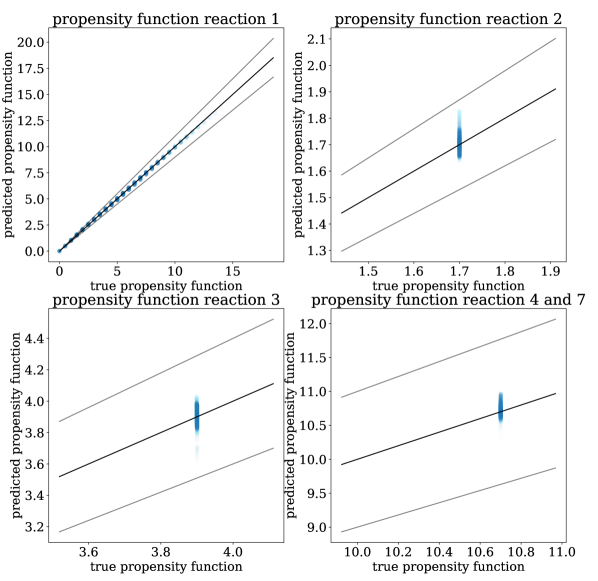

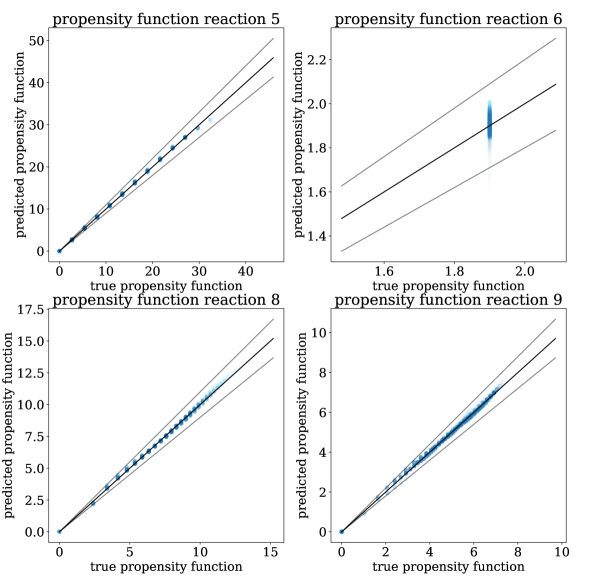

For each of the three training sets, we use the four error metrics described at the end of Section 3 to compare N-CTMC and GLM propensities against the true propensities. As we see in Table 2, for the N-CTMC model, as the training set size increases, all error metrics decrease. This implies empirical convergence to the true model. From the weighted MAE and MSE metrics, we see that N-CTMC learns the true propensity very well for states that the trajectories visit frequently. The unweighted MAE and MSE metrics show that it is more difficult to learn propensity functions for rarely visited states. This can be easily visualized in Figure 4 where predicted versus true propensity functions are plotted with intensity based on the prevalence of the state in the training data. When comparing the N-CTMC with the GLM, we see that the N-CTMC dramatically outperforms the GLM regardless of metric. Additionally, because the unweighted MAE and MSE are much larger for the GLM than for the N-CTMC, we conclude that the N-CTMC learns propensities for rarely visited states much better than the GLM.

| Trajectories | MAE | W-MAE | MSE | W-MSE | Epochs |

|---|---|---|---|---|---|

| 100 | 0.304 | 0.244 | 12,900 | ||

| 1000 | 0.157 | 0.093 | 33,500 | ||

| 10000 | 0.099 | 0.051 | 89,800 | ||

| 10000 (GLM) | 1.028 | 3.208 | 2,300 |

For each of the three training sets, the number of unique states explored is 3767, 8380, and 13435, respectively. We see an increase in the state space size due to the stochastic nature of the trajectory generation: the more data generated, the more the state space is explored. Using counting-based likelihood-based approaches, we would need to estimate a transition rate for each distinct pair of states where both and . Estimating each rate accurately would be highly inefficient and require a gargantuan amount of data. The N-CTMC model has 67,721 parameters, as compared with the 107,480 non-zero elements of the transition rate matrix for the system with 13,435 states. By sharing propensity function parameters across , N-CTMC requires less data and less computational expense.

4.3 Chemical Reaction Network Modeling

In the third system, a modification of a system from [22], we model the effect of temperature on a chemical reaction network. Reaction rates increase as a function of temperature according to , where is the frequency factor, is the activation energy, is the ideal gas constant, and is the temperature in Kelvin. This network has two species, A and B:

Reactions:

-

•

-

•

-

•

-

•

Propensity Functions:

-

•

-

•

-

•

-

•

Note that and . For each fixed choice of , we generate training sets with , , and trajectories, each with integer initial conditions drawn uniformly . This leads to total trajectories, as trajectories are generated at 5 different temperatures: . A sample trajectory at 273 K can be seen in Figure 5. Using this synthetic data, we train both a N-CTMC model and (using the largest training set only) a CTMC plus GLM model in which propensities depend linearly on inputs.

In the N-CTMC, we use a convolutional neural network. We expand the inputs with a 96-unit dense layer, reshape into a matrix, and perform 1D convolutions across the rows with 10 output channels and a kernel according to where is the input and is the kernel. We then flatten the results and apply two linear layers with 32 nodes each, followed by a linear layer with 4 nodes. We use selu activation functions for all layers except the final layer where we use softplus to ensure positive output. Including a convolutional layer improved model performance over a dense neural network. This model has 3,854 parameters, as compared to 5,820 parameters required in a naïve counting-based approach with 291 unique states and 4 reactions at 5 different temperatures. In this model, we implemented batch training, updating model parameters on each trajectory in turn, instead of on the whole data set at once. This improved model performance, possibly by allowing less frequently visited state spaces to have more impact on the overall likelihood gradients.

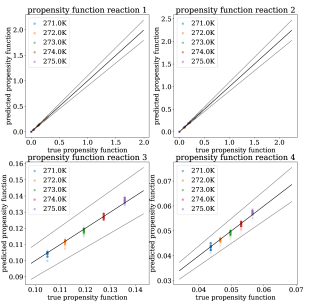

In Table 3, we quantify N-CTMC and GLM errors using the metrics described at the end of Section 3. As we increase the number of trajectories, N-CTMC errors decrease, implying empirical convergence to ground truth propensity functions. In Figure 6, we plot N-CTMC predicted versus true transition rates, with varying intensities based on their prevalence in the training data. Based on the W-MAE and W-MSE errors in Table 3 and the results in Figure 6, our conclusions regarding the strong performance of N-CTMC on frequently visited states are the same as in the discussion of results for the population dynamics system above. Across all metrics, the N-CTMC outperforms the GLM, and especially in learning rare state transition rates.

| Trajectories | MAE | W-MAE | MSE | W-MSE | Epochs |

|---|---|---|---|---|---|

| 100 | 0.024 | 0.004 | 7,700 | ||

| 300 | 0.020 | 0.003 | 6,900 | ||

| 500 | 0.015 | 0.002 | 4,000 | ||

| 500 (GLM) | 0.250 | 1.345 | 12,000 |

5 Discussion

We have assumed access to complete observations of trajectories together with knowledge of the number of reactions and associated stoichiometric coefficients. Subject to these assumptions, we have demonstrated that the N-CTMC method learns consistent transition rates for stochastic reaction networks with both mass action and non-mass action kinetics, including for one system with more than 13,000 states.

Unlike other methods where the likelihood is truncated, N-CTMC uses the entire state space in the likelihood calculation. The N-CTMC estimated propensity function can be evaluated on any state in , including but not limited to states visited in the training set. We can only guarantee accurate estimates of propensity functions on states that have been visited sufficiently often in the training data. On rarely visited states, however, N-CTMC outperformed a GLM approach in which propensity functions depend linearly on inputs (count vectors and/or covariates); such GLM approaches have been explored in prior work [15, 16]. The N-CTMC also models transition rates which vary as arbitrary functions of covariates, allowing for study of systems where propensity functions depend on external stimuli.

In the current work, we focused on systems with exponentially distributed sojourn times, an unreasonable assumption for some systems [25]. Using the framework developed here, the negative log likelihood (NLL) can be easily modified to accommodate a semi-Markov process [26]. For the birth-death example, we showed that N-CTMC can also be used to control—in an open-loop fashion—covariate-dependent CTMC systems. We hope to explore both semi-Markov versions and control applications of N-CTMC in future work. This will expand the class of problems to which we can apply our methods.

Acknowledgments. M. Reeves and H. S. Bhat acknowledge support from, respectively, NSF DGE-1633722 and NSF DMS-1723272. This research also benefited from computational resources that include the Pinnacles cluster at UC Merced (supported by NSF ACI-2019144) and Nautilus, supported by the Pacific Research Platform (NSF ACI-1541349), CHASE-CI (NSF CNS-1730158), Towards a National Research Platform (NSF OAC-1826967), and UCOP.

References

- [1] D. F. Anderson and T. G. Kurtz, “Continuous time Markov chain models for chemical reaction networks,” in Design and Analysis of Biomolecular Circuits: Engineering Approaches to Systems and Synthetic Biology (H. Koeppl, G. Setti, M. di Bernardo, and D. Densmore, eds.), pp. 3–42, New York, NY: Springer, 2011.

- [2] Y. J. Cho, N. Ramakrishnan, and Y. Cao, “Reconstructing chemical reaction networks: Data mining meets system identification,” in Proceedings of the 14th ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining (Y. Li, B. Liu, and S. Sarawagi, eds.), pp. 142–150, 2008.

- [3] K. K. K. Kim, H. Jang, R. B. Gopaluni, J. H. Lee, and R. D. Braatz, “Sparse identification in chemical master equations for monomolecular reaction networks,” in American Control Conference, ACC 2014, Portland, OR, USA, June 4-6, 2014, pp. 3698–3703, 2014.

- [4] A. Klimovskaia, S. Ganscha, and M. Claassen, “Sparse regression based structure learning of stochastic reaction networks from single cell snapshot time series,” PLoS Comput. Biol., vol. 12, no. 12, p. e1005234, 2016.

- [5] R. M. Jiang, P. Singh, F. Wrede, A. Hellander, and L. R. Petzold, “Identification of dynamic mass-action biochemical reaction networks using sparse Bayesian methods,” PLoS Comput. Biol., vol. 18, no. 1, 2022.

- [6] E. Yeung, J. Kim, J. M. Gonçalves, and R. M. Murray, “Global network identification from reconstructed dynamical structure subnetworks: Applications to biochemical reaction networks,” in 54th IEEE Conference on Decision and Control, CDC 2015, pp. 881–888, 2015.

- [7] A. Gupta, M. Khammash, and G. Sanguinetti, “Bayesian parameter estimation for stochastic reaction networks from steady-state observations,” in Int. Conf. on Computational Methods in Systems Biology, pp. 342–346, Springer, 2019.

- [8] M. Backenköhler, L. Bortolussi, and V. Wolf, “Moment-based parameter estimation for stochastic reaction networks in equilibrium,” IEEE/ACM Transactions on Computational Biology and Bioinformatics, vol. 15, no. 4, pp. 1180–1192, 2017.

- [9] A. Ruttor, G. Sanguinetti, and M. Opper, “Approximate inference for stochastic reaction processes,” in Learning and Inference in Computational Systems Biology (N. D. Lawrence, M. A. Girolami, M. Rattray, and G. Sanguinetti, eds.), Computational Molecular Biology, pp. 277–296, MIT Press, 2010.

- [10] C. L. Müller, R. Ramaswamy, and I. F. Sbalzarini, “Global parameter identification of stochastic reaction networks from single trajectories,” in Advances in Systems Biology (I. I. Goryanin and A. B. Goryachev, eds.), (New York, NY), pp. 477–498, Springer, 2012.

- [11] A. Horváth and D. Manini, “Parameter estimation of kinetic rates in stochastic reaction networks by the EM method,” in 2008 International Conference on BioMedical Engineering and Informatics, vol. 1, pp. 713–717, 2008.

- [12] F. W. Crawford, V. N. Minin, and M. A. Suchard, “Estimation for general birth-death processes,” J. Am. Stat. Assoc., vol. 109, no. 506, pp. 730–747, 2014.

- [13] M. Rathinam and M. Yu, “State and parameter estimation from exact partial state observation in stochastic reaction networks,” J. Chem. Phys., vol. 154, no. 3, p. 034103, 2021.

- [14] I. Yang and C. J. Tomlin, “Reaction-diffusion systems in protein networks: Global existence and identification,” Syst. Control. Lett., vol. 74, pp. 50–57, 2014.

- [15] S. Barratt and S. Boyd, “Fitting feature-dependent Markov chains,” J. Glob. Optim., 2022.

- [16] A. Awasthi, V. Minin, J. Huang, D. Chow, and J. Xu, “Fitting a stochastic model of intensive care occupancy to noisy hospitalization time series,” arXiv:2203.00229, 2023.

- [17] A. Andreychenko, L. Mikeev, D. Spieler, and V. Wolf, “Approximate maximum likelihood estimation for stochastic chemical kinetics,” EURASIP J. Bioinform. Syst. Biol., vol. 2012, no. 1, pp. 1–14, 2012.

- [18] J. Owen, D. J. Wilkinson, and C. S. Gillespie, “Scalable inference for Markov processes with intractable likelihoods,” Stat. Comput., vol. 25, no. 1, pp. 145–156, 2015.

- [19] T. Toni, D. Welch, N. Strelkowa, A. Ipsen, and M. P. Stumpf, “Approximate Bayesian computation scheme for parameter inference and model selection in dynamical systems,” J. R. Soc. Interface, vol. 6, no. 31, pp. 187–202, 2009.

- [20] B. Ranneby, “On necessary and sufficient conditions for consistency of MLE’s in Markov chain models,” Scand. J. Stat., pp. 99–105, 1978.

- [21] G. Gripenberg, “Approximation by neural networks with a bounded number of nodes at each level,” J. Approx. Theory, vol. 122, no. 2, pp. 260–266, 2003.

- [22] R. Erban, J. Chapman, and P. Maini, “A practical guide to stochastic simulations of reaction-diffusion processes,” arXiv:0704.1908, 2007.

- [23] G. Enciso and J. Kim, “Accuracy of multiscale reduction for stochastic reaction systems,” Multiscale Model. Simul., vol. 19, no. 4, pp. 1633–1658, 2021.

- [24] G. Klambauer, T. Unterthiner, A. Mayr, and S. Hochreiter, “Self-normalizing neural networks,” in Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, December 4-9, 2017 (I. Guyon, U. von Luxburg, S. Bengio, H. M. Wallach, R. Fergus, S. V. N. Vishwanathan, and R. Garnett, eds.), pp. 971–980, 2017.

- [25] D. Chiarugi, M. Falaschi, D. Hermith, C. Olarte, and L. Torella, “Modelling non-Markovian dynamics in biochemical reactions,” BMC Systems Biology, vol. 9, no. S8, pp. 1–13, 2015.

- [26] A. Asanjarani, B. Liquet, and Y. Nazarathy, “Estimation of semi-Markov multi-state models: a comparison of the sojourn times and transition intensities approaches,” Int. J. Biostat., vol. 18, no. 1, pp. 243–262, 2022.