Neural Integral Equations

Abstract

Nonlinear operators with long-distance spatiotemporal dependencies are fundamental in modeling complex systems across sciences, yet learning these nonlocal operators remains challenging in machine learning. Integral equations (IEs), which model such nonlocal systems, have wide-ranging applications in physics, chemistry, biology, and engineering. We introduce Neural Integral Equations (NIE), a method for learning unknown integral operators from data using an IE solver. To improve scalability and model capacity, we also present Attentional Neural Integral Equations (ANIE), which replaces the integral with self-attention. Both models are grounded in the theory of second-kind integral equations, where the indeterminate appears both inside and outside the integral operator. We provide theoretical analysis showing how self-attention can approximate integral operators under mild regularity assumptions, further deepening previously reported connections between transformers and integration, and deriving corresponding approximation results for integral operators. Through numerical benchmarks on synthetic and real-world data — including Lotka-Volterra, Navier-Stokes, and Burgers’ equations, as well as brain dynamics and integral equations — we showcase the models’ capabilities and their ability to derive interpretable dynamics embeddings. Our experiments demonstrate that ANIE outperforms existing methods, especially for longer time intervals and higher-dimensional problems. Our work addresses a critical gap in machine learning for nonlocal operators and offers a powerful tool for studying unknown complex systems with long-range dependencies.

1 Introduction

Integral equations (IEs) are functional equations where the indeterminate function appears under the sign of integration [SRH+81]. The theory of IEs has a long history in pure and applied mathematics, dating back to the 1800’s, and it is thought to have started with Fourier’s Theorem [Gro07]. One other early application of IEs, was found in the Dirichelet’s problem (a PDE), which was originally solved through its integral formulation. Subsequent studies, carried out by Fredholm, Volterra, and Hilbert and Schmidt, have significantly contributed to the establishment of this theory. IEs appear in many applications ranging from physics and chemistry, to biology and engineering [Waz11, Gro07], for instance in potential theory, diffraction and inverse problems such as scattering in quantum mechanics [Waz11, Gro07, Lak95]. Neural field equations, that model brain activity, can be described using IEs and integro-differential equations (IDEs), due to their highly non-local nature ([Ama77]). IEs are related to the theory of ordinary differential equations (ODEs) and partial differential equations (PDEs), however they possess unique properties. While ODEs and PDEs describe local behavior, IEs model global (long-distance) spatiotemporal relations. Moreover, ODEs and PDEs have IE forms that in certain circumstances can be solved more effectively and efficiently due to the better stability properties of IE solvers compared to ODE and PDE solvers [Rok85, Rok90]. See also [GK98] for an example of PDE system that is solved with high accuracy through an IE method.

Learning nonlocal operators for dynamics with long-distance relations is an open problem in deep learning. In this article, we introduce and address the problem of learning nonlocal dynamics from data through integral equations. Namely, we introduce the Neural Integral Equation (NIE) and the Attentional Neural Integral Equation (ANIE). Our setup is that of an operator learning problem, where we learn the integral operator that generates dynamics that fit given data. Often, one has observations of a dynamical system without knowing its analytical form. Our approach permits modeling the system purely from the observations. This model, via the learned integral operator, can be used to generate dynamics, as well as be used to infer the spatiotemporal relations that generated the data. The innovation of our proposed method lies in the fact that we formulate the operator learning problem associated to dynamics in the form of an optimization problem for the solutions of an IE obtained through an IE solver. Unlike other operator learning methods that learn dynamics as a mapping between function spaces for fixed time points, i.e. as a mapping , where and are function spaces each representing a time coordinate, NIE and ANIE allow to continuously learn dynamics with arbitrary time resolution. Our solver outputs solutions through an iterative procedure [Waz11], which converges to a solution of the IE.

1.1 Our Contributions

In this article, we introduce Neural Integral Equations (NIE) and Attentional Neural Integral Equations (ANIE), which are novel neural network based methods for learning dynamics, in the form of IEs, from data. Our architectures allow modeling dynamics with long-distance spatiotemporal relations typical of non-local functional equations. Our main contributions are as follows:

-

•

We introduce a novel method for learning dynamics from data as solutions of IEs of the second kind through an IE solver.

-

•

We implement a fully differentiable IE solver in PyTorch111Code available at: https://github.com/emazap7/ANIE.

-

•

We implement a highly scalable version of the solver where integration is done with a self-attention mechanism.

-

•

We derive theoretical results on convergence of the solver, and approximation capabilities of our models.

-

•

Our model provides explainable dynamics and meaningful embeddings of these dynamics.

-

•

Finally, we use our method to model and interpret non-local dynamics from brain activity recordings.

1.2 Background and related work

1.2.1 Integral equations in numerical analysis

Due to their wide range of applications, the theory of IEs has attracted the attention of mathematicians, physicists, and engineers for a long time. Detailed accounts on integral equations can be found in [Zem12, Waz11, Bôc26]. Along with their theoretical properties, much attention has been devoted to the development of efficient integral equation solvers, focusing on rapidly obtaining highly accurate solutions of certain PDE systems [Rok85, Rok90]. In fact, it is known that integral equation solvers obtain more accurate solutions than differential solvers for a variety of ODEs and PDEs.

1.2.2 Operator learning

IE solvers are used to solve given equations through some iterative procedure, see e.g. [Waz11, DM88]. Moreover, machine learning approaches to solve given types of IEs have been implemented [GFZJ22, Que16, KD19, GLS+21, EB12]. In such cases, the IE is known, and we seek the solution of it. However, in practice, we often do not have access to the analytical form of the equation and we only have data sampled from a system. In such cases, we want to model the system by learning an operator that can reproduce the system. This is the setting of operator learning problems, and several approaches to operator learning, including using deep learning, have been presented [KLL+21, LJP+21, LKA+21, LKA+20, Cao21, HWS+23, MKH+22, KLS24, PMB+22, BdBR+24, OOK+23, OSG+22]. Typical operator learning problems are formulated on finite grids (finite difference methods) that approximate the domain of the functions. In this case, recovering the continuous limit is a very challenging problem, and irregularly sampled data can completely alter the evaluation of the learned operator. Operator learning for integral equations has not been considered thus far, and it constitutes the main novelty of the present article. This is entailed in the formulation of the operator learning problem through an IE solver. The convenience of this approach lies in the capability of the solver to sample the domain of integration continuously, and the capabilities of integral equations to model very complex dynamics, due to their highly non-local behavior. A similar approach for Integro-Differential Equations (IDEs) has been followed in [ZFM+23]. However, in the present work our implementation does not include differential solvers, and the reformulation of such dynamical problems in terms of IEs has great benefits in terms of solver speed and stability. Moreover, our version of an IE solver that approximates integrals via self-attention allows for higher dimensional integrals than those considered in [ZFM+23].

1.2.3 Learning continuous dynamics

Modeling continuous dynamics from discretely sampled data is a fundamental task in data science. Methods for continuous modeling include those based on ODEs [CRBD18, CAN21]. While ODEs are useful for modeling temporal dynamics, they are fundamentally local equations which neither model spatial nor long-range temporal relations. The authors of [CRBD18] have employed auxiliary tools, such as RNNs, in order to include non-locality. We point out that RNNs can be seen as performing a temporal integration (in discrete steps), in order to codify some degree of non-local (temporal) dependence in the dynamics. In this work, we introduce a framework that provides a more general and formal solution to this non-local integration problem. Moreover, the dynamics are not produced sequentially with respect to time, as is done by ODE solvers, but are processed in parallel thus providing increased efficiency, as we will demonstrate experimentally.

1.2.4 Integration via self-attention

The self-attention mechanism and transformers were introduced in [VSP+17] and applied to machine translation tasks. Thanks to their initial success, they have since been used in many other domains, including operator learning for dynamics [Cao21, GZ22]. Interestingly, the self-attention mechanism can be interpreted as the Nystrom method for approximating integrals [XZC+21]. Making use of this connection, we approximate the integral kernel of our model using self-attention, allowing efficient integration over higher dimensions.

2 Neural integral equations

An IE (of Urysohn type) takes the general form given by

| (1) |

where the variable is the local time used for integration for each , which is the global time. Due to their fundamentally non-local behavior, integral equations have been used to model physical and biological phenomena, such as brain dynamics, virus spreading, and plasma physics [Waz11, Gro07, Ama77]. The case considered in this article, where the indeterminate function appears both under the sign of integration and outside of it, is termed an equation of the second kind, as opposed to the first kind where the indeterminate function appears only in the integral operator. IEs of the second kind are more stable than of the first kind for reasons rooted in functional analysis, as explained in Appendix B.4.

We introduce Neural Integral Equations (NIEs), a deep neural network model based on integral equations. NIEs are integral equations as defined by Equation 1 where is a neural network, parameterized by , and indicated by . Training a NIE consists of optimizing in such a way that the corresponding solution to Equation 1 fits the given data. At each step of the training, we perform two fundamental procedures. The first one is to solve the IE determined by , and the second one is to optimize for in such a way that solving the corresponding IE produces a function that fits a given dataset. Details on the solver procedure and the training are given in Appendix D.

IEs, in contrast to ODEs and PDEs, are non-local equations [SRH+81] since in order to evaluate the integral operator on a function , we need the value of over the full integration domain. In fact, to evaluate the RHS of Equation 1 at an arbitrary time point the function between and is needed. Here, and are arbitrary functions and common choices include and (called Fredholm equations), or and (called Volterra equations). Consequently, solving an integral equation requires an iterative procedure, based on the notion of Picard iterations (successive approximation method), where the solution is obtained as a sequence of approximations that converge to the solution. We refer the reader to Appendix B.3 for details on the solver implemented in this article, the theory upon which it is based, and the proofs regarding the convergence of our algorithms to a solution of the given IE (see Theorem B.1 and Corollary B.2). We also refer to [Waz11] for an elementary and computationally driven introduction to the theory behind the methods that motivate this procedure, and [DM88] for a more detailed account.

Interestingly, utilizing NIEs to model ODEs allows to bypass the use of the ODE solvers, as the one introduced in [CRBD18, CAN21]. The convenience in this approach is that the integral equation solver is more stable than the ODE solver [KR12]. ODE solver instabilities, induced by equation stiffness, have been previously considered in [GBD+20, FJNO20]. The IE solver presented in this work thus does not suffer from these problems, and is also significantly faster.

It is often useful to consider a more specific form for IEs, where the function factors in the product of a kernel and a generally non-linear function as . Here, is matrix-valued and it carries the dependence on the time (both and ), while depends only on the indeterminate function . The form of this IE is therefore:

| (2) |

NIEs in this form comprise two neural networks, namely and . We observe that in IEs, the initial condition is embedded in the equation itself, and it is not an arbitrary value to be specified as an extra condition. To solve the IE we implement a solver that performs an iterative procedure to obtain a solution, see Appendix B.3 and Appendix D. During the iterations, Monte Carlo sampling is performed to evaluate the integrals. This procedure allows our deep learning model to be independent of the temporal grid points, therefore resulting in a continuous model, since the model internally uses randomly sampled points to generate the successive iterations, as opposed to using fixed grid points. The general algorithm for training NIE is given in Algorithm 1, and a diagrammatic overview of it is shown in Figure 1. See also Figure 2 for a visualization of the general solving procedure.

2.1 Space, time and higher dimensional integration

IEs can have multiple space dimensions in addition to time. Such equations are formulated as

| (3) |

where is a domain in , and , for some interval . More commonly, in the literature, one finds a simpler case of higher dimensional IEs, where the integral component is obtained as a sum of terms with only partial integrations. Such an equation takes the form

| (4) |

These equations are the integral counterpart of PDEs, similarly to the relation between one-dimensional IEs and ODEs, and they are called Partial Integral Equations (PIEs). With slight abuse of notation we will still refer to Equation 3 as a PIE, as we will in practice use such an approach to model PDEs in the case of Burgers’ equation and the Navier-Stokes equation.

2.2 Attentional neural integral equations

Training of NIE requires an integration step at each time point, incurring in a potentially high computational cost. This integration step is implemented using the torchquad package [GTM21], a high performance numerical Monte Carlo integration method, resulting in fast integration and high scalability of NIE. For example, solving ODEs using NIE is significantly faster than using traditional ODE solvers (Table 5). However, several limitations are associated with the torchquad integration method. In fact, torchquad requires significantly increasing numbers of sampled points with increasing numbers of dimensions. To use NIE for solving PDEs and (P)IEs we require efficient spatial integration in high dimensions.

To address these challenges, we have employed an approach to NIE where the integral operator is based on a self-attention mechanism. In fact, self-attention can be viewed as an approximation of an integration procedure [TBY+19, XZC+21], where the product of queries and keys coincides with the notion of a kernel, as the one discussed in Section 2. In [Cao21] the parallelism between self-attention and integration of kernels was further explored to interpret transformers as Galerkin projections in operator learning tasks.

We have replaced the analytical integral in Equation 1 with a self-attention procedure. The resulting model, which we call Attentional Neural Integral Equation (ANIE), follows the same principle of iterative IE solving presented in Section 2 but where the neural networks and are replaced by attention matrices. It can be shown, see Appendix B.3, that the successive approximation method is still applicable in this case to obtain a solution for the corresponding equation. Observe, following the comparison between integration and self-attention, that is decomposed in the product of queries and keys, as described for instance in [Cao21]. The interval of integration is determined, in the attentional approximation, by means of the mask. In particular, if there is no mask we have a Fredholm IE, while the causal attention mask [YZQC21] corresponds to a Volterra type of IE.

An iterative procedure similar to the one discussed in Algorithm 1 is implemented to solve the corresponding IE, see Appendix B.3 and Appendix D.2. During iterations, we sample points uniformly from the spatiotemporal domain, and the corresponding integral operator does not depend on the grid points of the dataset. Our experiments on the Burgers’ dataset (Section 3.1 show that our model is stable with respect to change of spatiotemporal stamps since the model internally uses randomly sampled points to generate the successive iterations, rather than the fixed grid points. A detailed description of the integration procedure, along with solver steps and training for ANIE is given in Appendix D.2. Moreover, Theorem B.1, Corollary B.2 and Remark B.3 show that the solver procedure converges to a solution under certain mild assumptions.

Algorithm 2 summarizes the solving and training procedures for ANIE. A detailed description of the meaning of is found in Appendix D.2. Theoretical considerations on Fredholm generalized equations with general operators, integral operator approximation through self-attention, and existence of the solutions for these equations are given in Appendix B.4. Figure 13 gives a diagrammatic representation of the integration procedure implemented in this article, and Figure 14 gives a schematic representation of the solver procedure with space and time.

3 Experiments

3.1 Modeling PDEs with IEs: Burgers’ and Navier-Stokes equations

PDEs can be reformulated as IEs in several circumstances, and dynamics generated by differential operators can therefore be modeled through ANIE as a PIE, where integration is performed in space and time. We consider two well known types of PDEs, namely the Burgers’ equation and the Navier-Stokes equation. Since NIE is implemented only for time integration, we use only ANIE in these experiments, which allows for efficient space and time integration. We observe that our implementation of Algorithm 2 applied to the case of Navier-Stokes equation closely parallels the IE method employed in [GK98], with the main difference that we learn the Green’s function through gradient descent, since no knowledge of the underlying Navier-Stokes equations is assumed.

| Navier-Stokes | Burgers’ | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| t = 3 | t = 5 | t = 10 | t = 20 | t=10 | t=15 | t=25 | ||||

| s = 256 | s = 512 | s = 256 | s = 512 | s = 256 | s = 512 | |||||

| LSTM | ||||||||||

| ResNet | ||||||||||

| Conv1DLSTM | ||||||||||

| Conv2DLSTM | ||||||||||

| FNO1D | ||||||||||

| Galerkin | NA | NA | NA | |||||||

| FNO2D | NA | NA | ||||||||

| FNO3D | NA | NA | ||||||||

| ViT | ||||||||||

| ViTsmall | ||||||||||

| ViTparallel | ||||||||||

| ViT3D | ||||||||||

| ANIE (ours) | ||||||||||

For the Burgers’ equation, we focus on the ability of ANIE to model both space and time continuously, and we therefore perform an interpolation taks, where the model outputs time points that are not included in the training test, and for unseen initial conditions. This is in contrast to [Cao21, LKA+21] where a “static” Burgers’ equation was considered in which the learned operator maps the initial condition () to the final time (), thus treating time as a discrete two point set. In our approach, we model the system continuously over a time interval and randomly sample points during iterations to perform the quadrature of the temporal integrals. In this experiment, the Galerkin model [Cao21], was not included for the higher spatial dimension setting because the amount of memory required exceeded what was available to us during the experiments. The results are reported in Table 1 (right side), and an example of the learned dynamics is given in Figure 4.

For the Navier-Stokes equation, we consider an extrapolation task where we evaluate the model on unseen initial conditions. Previous works have shown high performance in predicting dynamics of Navier-Stokes from new initial conditions, but they require several frames (i.e. several time points) to be fed into the model in order to achieve such performance. We see that since ANIE learns the full dynamics from arbitrarily chosen initial conditions, we achieve good performance even when a single initial condition is used to initialize the system. We train FNO2D, ViT, ViTsmall and ViTparallel with initialization on a single time point, while convolutional LSTM, FNO3D, and ViT3D are trained with , and times for initialization, respectively. The results are given in Table 1 (left side). We note that ANIE outperforms also the models that use more data points for initialization. FNO2D did not converge for higher number of points, and therefore results for time points and have not been reported, while for FNO3D, we have conducted the experiments only for and since using fewer points for the time dimension would have effectively reduced FNO3D to FNO2D. An example of the Navier-Stokes dynamics is given in Figure 3.

3.2 Modeling brain dynamics using ANIE

Brain activity can be modeled as a spatiotemporal dynamical system [VGSS20]. While most connections between neurons are localized in space, there are a significant number of interactions that are long-range [ERML+13]. As such, brain dynamics can be modeled using integral equations [Ama77] which, unlike PDEs, allow for non-local interactions. Since ANIE allows efficient learning of integral operators from data, we demonstrate the ability of ANIE to learn non-local brain dynamics from functional Magnetic Resonance Imaging (fMRI) recordings.

To obtain fMRI data that has arbitrary time duration as well as unlimited trials, we make use of neurolib [CJO21], an fMRI simulation package. The data provided by this tool permit for more extensive comparison and statistical power. neurolib simulates whole-brain activity using a system of delay differential equations, which are non-local equations, thus allowing testing of ANIE’s ability to model non-local systems. Here we show the performance of ANIE and other models in modeling data generated by neurolib. Details about data generation and preprocessing can be found in the Appendix F.4.

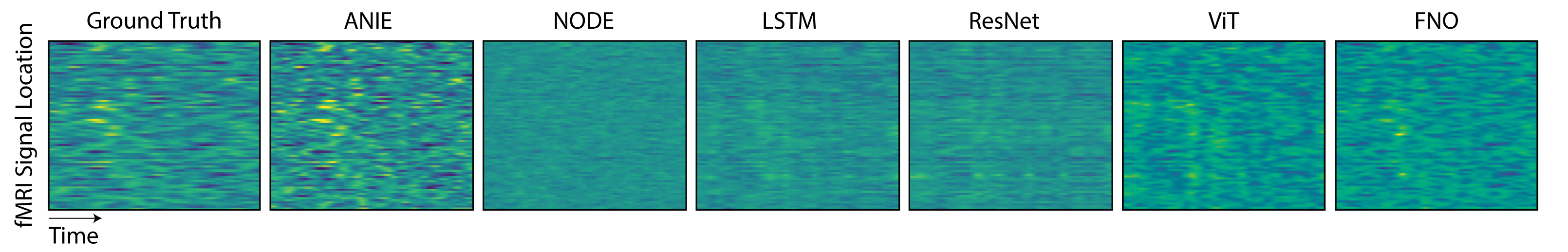

The generated fMRI data comprises neural activity for 80 nodes localized across the cortex. The first half of the data is used for training and the second half is used for testing. For training, the data are divided into segments of 20 time points, where the first time point is used as the initial condition, and the loss is computed over all 20 points. As such, the models are trained as an initial condition problem. During inference, the models are given points from the test set as new initial conditions and asked to extrapolate for the following 19 points. The mean error per point for 200 new initial conditions is shown in Figure 7 and summarized in Table 2. Figure 5 shows the data and model per fMRI recording node over time. We show that ANIE has significantly better performance than other benchmarked methods for medium () and long () time step predictions, demonstrating its ability to model non-local dynamics. For shorter and more localized dynamics (), FNO1D shows better performance, which can be explained by the fact that FNO1D outputs the average of the initial points provided as the prediction for the first 5 time steps.

3.3 Interpretable dynamics

In addition to modeling and generating new dynamics, it is useful to get insight into the underlying process that generates the dynamics. For example, in neuroscience, a major goal is to understand how specific brain activity patterns give rise to cognition, learning, and behavior. To explore the interpretability of ANIE we carry out two experiments. For the first experiment, we augment the spacetime integration domain with a CLS token [DCLT18], such that each dynamics is projected into a single vector. This vector can then be related to specific properties of the dynamics. Specifically, we embed these vectors for different Navier-Stokes dynamics and find that the resulting manifold (projected using PCA) has a highly nonrandom structure. This is in contrast to the projection of the raw data (see Figure 6). To further explore the resulting dynamics manifold, we color it by the velocities of the dynamics, a property that was not explicitly seen by the model during training. We find that the manifold highly correlates with velocity whereas the embedding of the raw data has no such correlation. To quantify this we compute the kNN regression error on the embeddings with respect to the velocities and find that the embedding obtained from ANIE has significantly lower error (see Table 3).

For the second experiment, we inspect the attention weights of the model when predicting brain dynamics (calcium imaging, Appendix G), in order to infer which cortical loci drive neuronal dynamics. Figure 11 shows that the motor and visual cortices are the areas of the brain with the highest attention values. We note that the attention plots are not directly correlated with the brain activity inputs, suggesting that they point to new information about the data. To validate this, we compare the performance of predicting the visual stimulus, which was not explicitly provided to the model, from either the raw data or the attention values using a kNN-regressor (k=3) (see Appendix G). In Table 4 we show that the attention weights significantly () outperform the raw data, thus demonstrating that ANIE can provide insights into the modeled dynamics.

3.4 Further experiments

In Appendix A we include several more experiments regarding the training speed of ANIE showcasing that it is significantly faster than ODE solver based models, hyperparameter sensitivity of the model, and modeling of IE dynamics, along with further tables and figures on the experiments of Subsections 3.1, 3.2 and 3.3. In Appendix A.5 we have explored the convergence of the solver to fixed points of the corresponding IE.

4 Limitations

An important consideration relates to the theoretical guarantees for convergence of the solver as considered in Appendix B.3. In fact, enforcing contractivity of the integral operator might be needed for certain types of applications as seen in Appendix B.3. However, we mention that Lipschitz constraints (in particular contractivity) have been long studied in machine learning, including the case of transformers [KPM21, QWC+23]. We therefore expect that in cases when the solver is unstable, and convergence is problematic, one can enforce convergence using a Lipschitz constraint.

5 Conclusions

We have presented a neural network-based integral equation solver that can learn an integral operator and its associated dynamics from data, and capture non-local spatiotemporal interactions. We have demonstrated the ability of our method to learn ODE, PDE, and IE systems better than other dynamics models, in terms of better predictability as well as scalability. We have studied the approximation capabilities of NIE and ANIE, and obtained theoretical guarantees for the convergence of the IE solver, enhancing the understanding of this deep-learning approach. Moreover, we have shown that ANIE is interpretable and produces meaningful dynamics embeddings, as demonstrated in our applications to the Navier-Stokes equation and brain activity dynamics. Since IEs can be used to model many real-world systems, we anticipate that NIE and ANIE will have broad applications in science and engineering.

6 Data availability statement

We have included descriptions regarding how to reproduce all synthetic datasets, used in Figures 4, 3, 5, 8 as well as Table 1. All synthetic datasets are available at https://github.com/emazap7/ANIE. Access to the datasets can be obtained also at https://figshare.com/articles/dataset/IE_spirals/25606242 for integral equation spirals, https://figshare.com/articles/dataset/Burgers_1k_t400/25606149 for Burgers data, https://figshare.com/articles/dataset/Navier_Stokes_Dataset_mat/25606152 for Navier-Stokes data, and https://figshare.com/articles/dataset/fMRI_data/25606272 for the simulated fMRI data. Lokta-Volterra and Lorentz system datasets can be generated using the notebooks found at https://github.com/emazap7/ANIE. The calcium imaging dataset is not available open source. We have included a detailed account of the techniques used in [BHS+20, HSF+20] (see Appendix G), where information on how to obtain the dataset is included.

7 Code availability statement

All codes are available at: https://github.com/emazap7/ANIE. Detailed installation descriptions are found thereby. Jupyter notebooks for training and testing of the models for the main experiments are provided in the repository. Pre-trained models are accessible directlty, and instructions on how to run the notebooks are added in the form of comments throughout the notebooks. The main codes for the models, along with the experiments, are found in the directory “IE_source”.

8 Author contributions

EZ conceived the algorithmic framework, obtained the theoretical results, and contributed to the numerical experiments. AF and JOC contributed to the numerical experiments. AM, MH and JC provided the calcium imaging data. DvD led the study and conceived the algorithmic framework. EZ, AF, JOC and DvD contributed to writing the article.

References

- [Ama77] Shun-ichi Amari. Dynamics of pattern formation in lateral-inhibition type neural fields. Biological cybernetics, 27(2):77–87, 1977.

- [BdBR+24] Francesca Bartolucci, Emmanuel de Bezenac, Bogdan Raonic, Roberto Molinaro, Siddhartha Mishra, and Rima Alaifari. Representation equivalent neural operators: a framework for alias-free operator learning. Advances in Neural Information Processing Systems, 36, 2024.

- [BHS+20] Daniel Barson, Ali S Hamodi, Xilin Shen, Gyorgy Lur, R Todd Constable, Jessica A Cardin, Michael C Crair, and Michael J Higley. Simultaneous mesoscopic and two-photon imaging of neuronal activity in cortical circuits. Nature methods, 17(1):107–113, 2020.

- [Bôc26] Maxime Bôcher. An introduction to the study of integral equations. Number 10. University Press, 1926.

- [BP72] Edward R Benton and George W Platzman. A table of solutions of the one-dimensional burgers equation. Quarterly of Applied Mathematics, 30(2):195–212, 1972.

- [Bra69] HJ Brascamp. The fredholm theory of integral equations for special types of compact operators on a separable hilbert space. Compositio Mathematica, 21(1):59–80, 1969.

- [BRSS17] Małgorzata Borówko, Wojciech Rżysko, Stefan Sokołowski, and Tomasz Staszewski. Integral equations theory for two-dimensional systems involving nanoparticles. Molecular Physics, 115(9-12):1065–1073, 2017.

- [CAN21] Ricky T. Q. Chen, Brandon Amos, and Maximilian Nickel. Learning neural event functions for ordinary differential equations. International Conference on Learning Representations, 2021.

- [Cao21] Shuhao Cao. Choose a transformer: Fourier or galerkin. Advances in neural information processing systems, 34:24924–24940, 2021.

- [Cho68] Alexandre Joel Chorin. Numerical solution of the navier-stokes equations. Mathematics of computation, 22(104):745–762, 1968.

- [CJO21] Caglar Cakan, Nikola Jajcay, and Klaus Obermayer. neurolib: a simulation framework for whole-brain neural mass modeling. Cognitive Computation, pages 1–21, 2021.

- [CRBD18] Ricky TQ Chen, Yulia Rubanova, Jesse Bettencourt, and David K Duvenaud. Neural ordinary differential equations. Advances in neural information processing systems, 31, 2018.

- [DAK23] Waleed Diab and Mohammed Al-Kobaisi. U-deeponet: U-net enhanced deep operator network for geologic carbon sequestration. arXiv preprint arXiv:2311.15288, 2023.

- [Dav60] Harold Thayer Davis. Introduction to nonlinear differential and integral equations. US Atomic Energy Commission, 1960.

- [DBK+20] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- [DCLT18] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- [DKM+20] Talgat Daulbaev, Alexandr Katrutsa, Larisa Markeeva, Julia Gusak, Andrzej Cichocki, and Ivan Oseledets. Interpolation technique to speed up gradients propagation in neural odes. Advances in Neural Information Processing Systems, 33:16689–16700, 2020.

- [DM88] Leonard Michael Delves and Julie L Mohamed. Computational methods for integral equations. CUP Archive, 1988.

- [DR07] Philip J Davis and Philip Rabinowitz. Methods of numerical integration. Courier Corporation, 2007.

- [EB12] Sohrab Effati and Reza Buzhabadi. A neural network approach for solving fredholm integral equations of the second kind. Neural Computing and Applications, 21(5):843–852, 2012.

- [ERML+13] Mária Ercsey-Ravasz, Nikola T Markov, Camille Lamy, David C Van Essen, Kenneth Knoblauch, Zoltán Toroczkai, and Henry Kennedy. A predictive network model of cerebral cortical connectivity based on a distance rule. Neuron, 80(1):184–197, 2013.

- [Fef00] Charles L Fefferman. Existence and smoothness of the navier-stokes equation. The millennium prize problems, 57:67, 2000.

- [FJNO20] Chris Finlay, Jörn-Henrik Jacobsen, Levon Nurbekyan, and Adam Oberman. How to train your neural ode: the world of jacobian and kinetic regularization. In International conference on machine learning, pages 3154–3164. PMLR, 2020.

- [GBD+20] Arnab Ghosh, Harkirat Behl, Emilien Dupont, Philip Torr, and Vinay Namboodiri. Steer: Simple temporal regularization for neural ode. Advances in Neural Information Processing Systems, 33:14831–14843, 2020.

- [GFZJ22] Yu Guan, Tingting Fang, Diankun Zhang, and Congming Jin. Solving fredholm integral equations using deep learning. International Journal of Applied and Computational Mathematics, 8(2):1–10, 2022.

- [GIKM10] Yurii N Grigoriev, Nail H Ibragimov, Vladimir F Kovalev, and Sergey V Meleshko. Symmetries of integro-differential equations: with applications in mechanics and plasma physics, volume 806. Springer, 2010.

- [GK98] Leslie Greengard and Mary Catherine Kropinski. An integral equation approach to the incompressible navier–stokes equations in two dimensions. SIAM Journal on Scientific Computing, 20(1):318–336, 1998.

- [GLS+21] Rui Guo, Zhichao Lin, Tao Shan, Maokun Li, Fan Yang, Shenheng Xu, and Aria Abubakar. Solving combined field integral equation with deep neural network for 2-d conducting object. IEEE Antennas and Wireless Propagation Letters, 20(4):538–542, 2021.

- [Gro07] Charles W Groetsch. Integral equations of the first kind, inverse problems and regularization: a crash course. In Journal of Physics: Conference Series, volume 73, page 012001. IOP Publishing, 2007.

- [GTM21] Pablo Gómez, Håvard Hem Toftevaag, and Gabriele Meoni. torchquad: Numerical integration in arbitrary dimensions with pytorch. Journal of Open Source Software, 6(64):3439, 2021.

- [GZ22] Nicholas Geneva and Nicholas Zabaras. Transformers for modeling physical systems. Neural Networks, 146:272–289, 2022.

- [HSF+20] Ali S Hamodi, Aude Martinez Sabino, N Dalton Fitzgerald, Dionysia Moschou, and Michael C Crair. Transverse sinus injections drive robust whole-brain expression of transgenes. Elife, 9:e53639, 2020.

- [HSW89] Kurt Hornik, Maxwell Stinchcombe, and Halbert White. Multilayer feedforward networks are universal approximators. Neural networks, 2(5):359–366, 1989.

- [HWS+23] Zhongkai Hao, Zhengyi Wang, Hang Su, Chengyang Ying, Yinpeng Dong, Songming Liu, Ze Cheng, Jian Song, and Jun Zhu. Gnot: A general neural operator transformer for operator learning. In International Conference on Machine Learning, pages 12556–12569. PMLR, 2023.

- [KBJD20] Jacob Kelly, Jesse Bettencourt, Matthew J Johnson, and David K Duvenaud. Learning differential equations that are easy to solve. Advances in Neural Information Processing Systems, 33:4370–4380, 2020.

- [KCL21] Patrick Kidger, Ricky TQ Chen, and Terry Lyons. “hey, that’s not an ode”: faster ode adjoints with 12 lines of code. Journal of Machine Learning Research, 2021.

- [KD19] Alexander Keller and Ken Dahm. Integral equations and machine learning. Mathematics and Computers in Simulation, 161:2–12, 2019.

- [KGES19] Manochehr Kazemi, Hamid Mottaghi Golshan, Reza Ezzati, and Mohsen Sadatrasoul. New approach to solve two-dimensional fredholm integral equations. Journal of Computational and Applied Mathematics, 354:66–79, 2019.

- [KLL+21] Nikola Kovachki, Zongyi Li, Burigede Liu, Kamyar Azizzadenesheli, Kaushik Bhattacharya, Andrew Stuart, and Anima Anandkumar. Neural operator: Learning maps between function spaces. arXiv preprint arXiv:2108.08481, 2021.

- [KLS24] Nikola B Kovachki, Samuel Lanthaler, and Andrew M Stuart. Operator learning: Algorithms and analysis. arXiv preprint arXiv:2402.15715, 2024.

- [KPM21] Hyunjik Kim, George Papamakarios, and Andriy Mnih. The lipschitz constant of self-attention. In International Conference on Machine Learning, pages 5562–5571. PMLR, 2021.

- [KR12] Dan Kushnir and Vladimir Rokhlin. A highly accurate solver for stiff ordinary differential equations. SIAM Journal on Scientific Computing, 34(3):A1296–A1315, 2012.

- [Kra] MA Krasnosel’skii. Geometrical methods of nonlinear analysis, springer-verlag, berlin, 1984. MR 85b, 47057.

- [Kra64] Yu P Krasnosel’skii. Topological methods in the theory of nonlinear integral equations. Pergamon Press, 1964.

- [Lak95] Vangipuram Lakshmikantham. Theory of integro-differential equations, volume 1. CRC press, 1995.

- [LJP+21] Lu Lu, Pengzhan Jin, Guofei Pang, Zhongqiang Zhang, and George Em Karniadakis. Learning nonlinear operators via deeponet based on the universal approximation theorem of operators. Nature Machine Intelligence, 3(3):218–229, 2021.

- [LKA+20] Zongyi Li, Nikola Kovachki, Kamyar Azizzadenesheli, Burigede Liu, Kaushik Bhattacharya, Andrew Stuart, and Anima Anandkumar. Neural operator: Graph kernel network for partial differential equations. In International Conference on Learning Representations, Workshop on Integration of Deep Neural Models and Differential Equations, 2020.

- [LKA+21] Zongyi Li, Nikola Kovachki, Kamyar Azizzadenesheli, Burigede Liu, Kaushik Bhattacharya, Andrew Stuart, and Anima Anandkumar. Fourier neural operator for parametric partial differential equations. In International Conference on Learning Representations, 2021.

- [LLS21] Seung Hoon Lee, Seunghyun Lee, and Byung Cheol Song. Vision transformer for small-size datasets. arXiv preprint arXiv:2112.13492, 2021.

- [LPW+17] Zhou Lu, Hongming Pu, Feicheng Wang, Zhiqiang Hu, and Liwei Wang. The expressive power of neural networks: A view from the width. Advances in neural information processing systems, 30, 2017.

- [LR02] Xian-Fang Li and Er-Qian Rong. Solution of a class of two-dimensional integral equations. Journal of computational and applied mathematics, 145(2):335–343, 2002.

- [MKH+22] Andreas Maier, Harald Köstler, Marco Heisig, Patrick Krauss, and Seung Hee Yang. Known operator learning and hybrid machine learning in medical imaging—a review of the past, the present, and the future. Progress in Biomedical Engineering, 4(2):022002, 2022.

- [Mor13] Valter Moretti. Spectral theory and quantum mechanics: with an introduction to the algebraic formulation. Springer Science & Business Media, 2013.

- [OOK+23] Oded Ovadia, Vivek Oommen, Adar Kahana, Ahmad Peyvan, Eli Turkel, and George Em Karniadakis. Real-time inference and extrapolation via a diffusion-inspired temporal transformer operator (ditto). arXiv preprint arXiv:2307.09072, 2023.

- [OSG+22] Vivek Oommen, Khemraj Shukla, Somdatta Goswami, Rémi Dingreville, and George Em Karniadakis. Learning two-phase microstructure evolution using neural operators and autoencoder architectures. npj Computational Materials, 8(1):190, 2022.

- [PMB+22] Michael Poli, Stefano Massaroli, Federico Berto, Jinkyoo Park, Tri Dao, Christopher Ré, and Stefano Ermon. Transform once: Efficient operator learning in frequency domain. Advances in Neural Information Processing Systems, 35:7947–7959, 2022.

- [PMSR21] Avik Pal, Yingbo Ma, Viral Shah, and Christopher V Rackauckas. Opening the blackbox: Accelerating neural differential equations by regularizing internal solver heuristics. In International Conference on Machine Learning, pages 8325–8335. PMLR, 2021.

- [PMY+20] Michael Poli, Stefano Massaroli, Atsushi Yamashita, Hajime Asama, and Jinkyoo Park. Hypersolvers: Toward fast continuous-depth models. Advances in Neural Information Processing Systems, 33:21105–21117, 2020.

- [PYD20] Kourosh Parand, Hafez Yari, and Mehdi Delkhosh. Solving two-dimensional integral equations of the second kind on non-rectangular domains with error estimate. Engineering with Computers, 36(2):725–739, 2020.

- [Que16] Qichao Que. Integral Equations For Machine Learning Problems. PhD thesis, The Ohio State University, 2016.

- [QWC+23] Xianbiao Qi, Jianan Wang, Yihao Chen, Yukai Shi, and Lei Zhang. Lipsformer: Introducing lipschitz continuity to vision transformers. arXiv preprint arXiv:2304.09856, 2023.

- [RCD19] Yulia Rubanova, Ricky TQ Chen, and David K Duvenaud. Latent ordinary differential equations for irregularly-sampled time series. Advances in neural information processing systems, 32, 2019.

- [Rok85] Vladimir Rokhlin. Rapid solution of integral equations of classical potential theory. Journal of computational physics, 60(2):187–207, 1985.

- [Rok90] Vladimir Rokhlin. Rapid solution of integral equations of scattering theory in two dimensions. Journal of Computational Physics, 86(2):414–439, 1990.

- [SB51] Edwin E Salpeter and Hans Albrecht Bethe. A relativistic equation for bound-state problems. Physical Review, 84(6):1232, 1951.

- [SRH+81] Harlan W Stech, Samuel M Rankin, Terry L Herdman, et al. Integral and functional differential equations, volume 67. CRC Press, 1981.

- [TBY+19] Yao-Hung Hubert Tsai, Shaojie Bai, Makoto Yamada, Louis-Philippe Morency, and Ruslan Salakhutdinov. Transformer dissection: A unified understanding of transformer’s attention via the lens of kernel. In Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 4344––4353, 2019.

- [TCEN+22] Hugo Touvron, Matthieu Cord, Alaaeldin El-Nouby, Jakob Verbeek, and Hervé Jégou. Three things everyone should know about vision transformers. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXIV, pages 497–515. Springer, 2022.

- [TF59] W Tobocman and LL Foldy. Integral equations for the schrödinger wave function. American Journal of Physics, 27(7):483–490, 1959.

- [VGSS20] Saurabh Vyas, Matthew D Golub, David Sussillo, and Krishna V Shenoy. Computation through neural population dynamics. Annual Review of Neuroscience, 43:249, 2020.

- [VSP+17] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. Advances in neural information processing systems, 30, 2017.

- [Waz11] Abdul-Majid Wazwaz. Linear and nonlinear integral equations, volume 639. Springer, 2011.

- [XZC+21] Yunyang Xiong, Zhanpeng Zeng, Rudrasis Chakraborty, Mingxing Tan, Glenn Fung, Yin Li, and Vikas Singh. Nyströmformer: A nystöm-based algorithm for approximating self-attention. In Proceedings of the… AAAI Conference on Artificial Intelligence. AAAI Conference on Artificial Intelligence, volume 35, page 14138. NIH Public Access, 2021.

- [YBR+19] Chulhee Yun, Srinadh Bhojanapalli, Ankit Singh Rawat, Sashank J Reddi, and Sanjiv Kumar. Are transformers universal approximators of sequence-to-sequence functions? arXiv preprint arXiv:1912.10077, 2019.

- [YZQC21] Xu Yang, Hanwang Zhang, Guojun Qi, and Jianfei Cai. Causal attention for vision-language tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9847–9857, 2021.

- [Zem12] Stephen M Zemyan. The classical theory of integral equations: a concise treatment. Springer Science & Business Media, 2012.

- [ZFM+23] Emanuele Zappala, Antonio H de O Fonseca, Andrew H Moberly, Michael J Higley, Chadi Abdallah, Jessica A Cardin, and David van Dijk. Neural integro-differential equations. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 37, pages 11104–11112, 2023.

Appendix A Additional Experiments

A.1 Additional figures and tables

We hereby report additional figures and tables that complement the results given in Section 3.

| t = 5 | t = 10 | t = 20 | |

|---|---|---|---|

| NODE | |||

| LSTM | |||

| Residual Network | |||

| FNO1D | 0.4735 0.04857 | ||

| ViT | |||

| DeepOnet+AE | |||

| DeepOnet+UNET | |||

| ANIE (ours) |

| PCA | UMAP | LSTM | ViT | ANIE (ours) |

|---|---|---|---|---|

| PCA | ViT | ConvLSTM | ANIE (ours) |

|---|---|---|---|

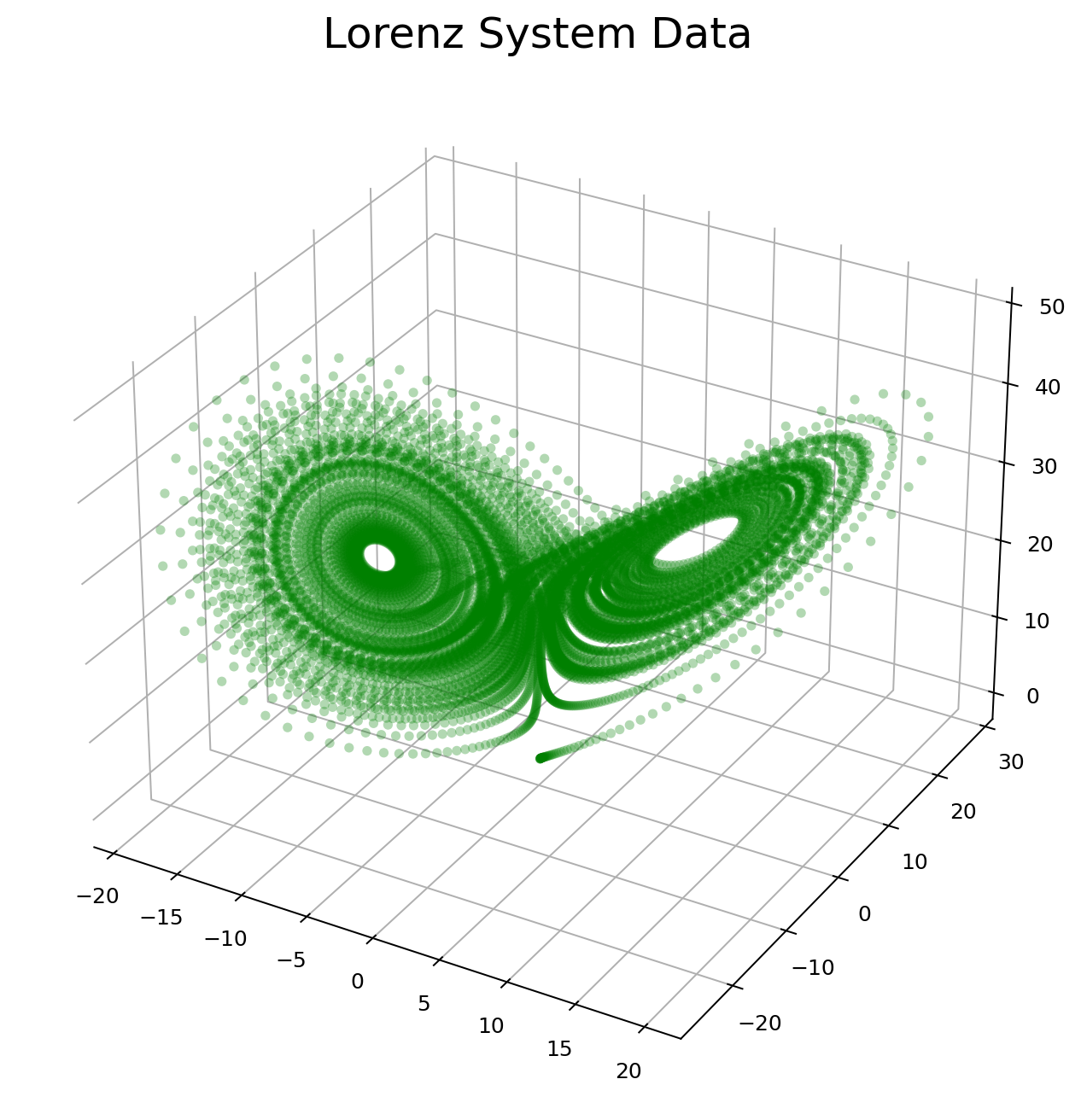

A.2 Benchmark of (A)NIE training speed

Neural ODEs (NODEs) can be slow and have poor scalability [KBJD20]. As such, several methods have been introduced to improve their performance [KBJD20, KCL21, DKM+20, PMY+20, PMSR21]. Despite these improvements, NODE is still significantly slower than discrete methods such as LSTMs. We hypothesize that (A)NIE has significantly better scalability than NODE, comparable to fast but discrete LSTMs, despite being a continuous model. To test this, we compare NIE and ANIE to the latest optimized version of (latent) NODE [RCD19] and to LSTM on three different dynamical systems: Lotka-Volterra equations, Lorenz system, and IE-generated 2D spirals (see Appendix F for data generation details). During training, models were initialized with the first half of the data and were tasked to predict the second half. Training speed are reported in Table 5. While all models achieve comparable (good) fits to the data, we find that ANIE outperforms all models in 2 out of the 3 datasets in terms of speed. Furthermore, ANIE has better MSE compared to all other models.

| Wall Time (sec per iteration) | Mean Squared Error | |||||

| Models | Lotka-Volterra | Lorenz | 2D-Spirals | Lotka-Volterra | Lorenz | 2D-Spirals |

| LSTM | ||||||

| Latent NeuralODE | ||||||

| NIE (ours) | ||||||

| ANIE (ours) | ||||||

A.3 Hyperparameter sensitivity benchmark

For most deep learning models, including NODE, finding numerically stable solutions usually requires an extensive hyperparameter search. Since IE solvers are known to be more stable than ODE solvers, we hypothesize that (A)NIE is less sensitive to hyperparameter changes. To test this, we quantify model fit, for the Lotka-Volterra dynamical system, as a function of two different hyperparameters: learning rate and L2 norm weight regularization. We perform this experiment for three different models: LSTM, Latent NODE, and ANIE. As shown in Figure 10, we find that ANIE generally has lower validation error as well as more consistent errors across hyperparameter values, compared to LSTM and NODE, therefore validating our hypothesis.

A.4 Modeling 2D IE spirals

To further test the ability of ANIE in modeling non-local systems, we benchmark ANIE, NODE, and LSTM on a dataset of 2D spirals generated by integral equations. This data consists of 500 2D curves of 100 time points each. The data was split in half for training and testing. During training, the first 20 points were given as initial condition and the models were tasked to predict the full 100 point dynamics. Details on the data generation are described in Appendix F. For ANIE, the initialization is given via the free function , which assumes the values of the first 20 points and sets the remaining 80 points to be equal to the value of the 20th point. For NODE, the initialization is given as the reverse RNN on the first 20 points, which outputs a distribution corresponding to the first time point (see [CRBD18] for more details on their Latent ODE experiments). For LSTM, we input the data in segments of 20 points to predict the consecutive point of the sequence. The process is repeated with the output of the previous step until all points of the curve are predicted. During inference, we test the models’ performance on never before seen initial conditions. Table 6 shows the correlation between the ground truth curve and the model predictions. Figure 9 shows the correlation coefficients for the 500 curves. In summary, ANIE significantly outperforms the other tested methods in predicting IE generated non-local dynamics.

| NODE | LSTM | ViT | FNO | ANIE (ours) |

|---|---|---|---|---|

A.5 Solver convergence

We now consider the convergence of the solver to a solution of an IE for a trained model. Our experiment here considers a model that has been trained with a number of iteration, and we explore whether the solver iterations converge to a solution at the end of the training. These results show that the model learns to converge to a solution of Equation 5 within the iterations that are fixed during training. They show that a fixed point for IE is obtained when outputting a prediction.

Appendix B Integral Equations

Integral Equations (IEs) are equations where the unknown function appears under the sign of integral. These equations can be given in general as

| (5) |

where is an integral operator, e.g. as in Equation 1 and Equation 3, and is a known term of the equation. In fact, this functional equations have been studied for classes of compact operators that are not necessarily in the form of integral operators – see [Bra69] and references therein. We can distinguish two fundamental kinds of equations from the form given in Equation 5 that have been studied extensively throughout the years. When , we say that the corresponding IE is of the first kind, while when we say that it is of the second kind.

In this article we formulate our methods based on equations of the second kind for the following important theoretical considerations which apply to the case where is bounded over an infinite space (such as the space of functions as we consider in the article). Firstly, an equation of the first kind can easily have no solution, as the range of a bounded operator on an infinite space is not the whole space (see Proposition 4.41 in [Mor13]). Therefore, for choices of , there is no such that and therefore Equation 5 has no solutions. The other issue is intrinsic to the nature of equation of the first kind, and does not relate to the existence of solutions. In fact, any compact injective operator (on an infnite space) does not admit a bounded left inverse (see Proposition 4.42 in [Mor13]). In practice, this means that if Equation 5 has a unique solution for , then varying by a a small amount can result in very significant variations in the corresponding solution . This is clearly a potential issue when dealing with a deep learning model that aims at learning the operator from data. In fact, observations from which is learned might be noisy, which might result in very considerable perturbations of the solution and, consequently, considerable perturbations on the operator that the model converges to. Since equations of the second kind are much more stable, we have formulated all the theory in this setting, and implemented our solver for such equations. The issues relating to existence and uniqueness of the solution for these equations are discussed in more detail in Section B.4 below.

The theories of IEs and Integro-Differential Equations (IDEs) are tightly related, and it is often the case to reduce problems in IEs to problems in IDEs and vice versa, both in practical and theoretical situations. IEs are also related to differential equations, and it is possible to reformulate problems in ODEs in the language of IEs or IDEs. In certain cases, IEs can also be converted to differential equations problems, even though this is not always possible [Zem12, GIKM10]. In fact, the theory of IEs is not equivalent to that of differential equations. The most intuitive way of understanding this is by considering the local nature of differential euqations, as opposed to the non-local origin of IEs. By non-locality of IEs it is meant that each spatiotemporal point in an IE depends on an integration over the full domain of the solution function . In the case of differential equations, each local point depends only on the contiguous points through the local definition of the differential operators.

B.1 Integral Equations (1D)

We first discuss IEs where the integral operator only involves a temporal integration (i.e. 1D), as discussed in Section 2. In analogy with the case of differential equations, this case can be considered as the one corresponding to ODEs.

These IEs are given by an equation of type

| (6) |

where is the free term, which does not depend on , while the unknown function appears both in the LHS, and in the RHS under the sign of integral. The term is an integral operator from the space of integrable functions over some domain of , into itself. We observe that the variables and appearing in are both in , and they are interpreted as time variables. We refer to them as global and local times, respectively, following the same convention of [ZFM+23]. The functions and determine the extremes of integration for each (global) time . Common choices for and include and (Volterra equations), or and (Fredholm equations).

The fundamental question in the theory of IEs is whether solutions exists and are unique. It turns out that under relatively mild assumptions on the regularity of IEs admit unique solutions [Zem12]. Furthermore, the proofs in the first chapter of [Lak95] show the close relation between IEs and IDEs, as existence an uniqueness problems for IDEs are shown to be equivalent to analogous problems for IEs. Then the fixed point theorems of Schauder and Tychonoff are used to prove the results.

B.2 Integral Equations (n+1D)

We now discuss the case of IEs where the integral operator involves integration over a multi-dimensional domain of . This is the IE version of PDEs, and they are commonly referred to as Partial Integral Equations (PIEs) when integration occurs on different components separately. We will consider equations where the multi-dimensional integral is obtained through multiple integrations. An equation of this type takes the form

| (7) |

where is a domain in , and . Here does not necessarily coincide with .

PIEs and higher dimensional IEs have been studied in some restricted form since the 1800’s, as they have been employed to formulate the laws of electromagnetism before the unified version of Maxwell’s equations was published. In addition, early work on the Dirichelet’s problem found the IE approach proficuous, and it is well known that several problems in scattering theory (molecular, atomic and nuclear) are formulated in terms of (P)IEs. In fact, the Schrödinger equation can be recast as an IE [TF59]. See also [SB51], where bound-state problems are treated with the IE formalism.

B.3 Generalities on solving Integral Equations

The most striking difference between the procedure of solving an IE and an ODE, is that for an IE in order to evaluate at a single time point, one needs to know the solution for all time points. This is clearly an issue, since solving for one point requires that we already know a solution for all points. In order to better elucidate this point, we consider a simple comparison between the solution procedure of an ODE equation of type , and an IE of type .

Let us assume we are solving an ODE of type and that is known at time points . Then one can obtain at by means of the Euler method by using the known value at by taking small enough steps forward in time. In general, therefore, one starts by the initial condition and determines the solution at the points by taking small steps and representing the derivative as for small intervals . Of course, more sophisticated methods are possible for the numerical solution of the ODE, but they essentially produce the next time point from the previous one in a sequential way. Let us now consider an analogous Fredholm IE to the ODE given above. This is a simple equation of type . Suppose we know at time points . In order to determine , we need to compute , which requires us to know over the full interval , as is integrated over . It is obvious that knowing a single time point for (or a sequence of values) does not suffice anymore. In a Volterra type of equation the integral would be between (where the unknown value is included!), which does not really change the essence of the issue.

Although several methods can be employed to solve IEs, most (if not all) of them are based on the concept of iteration over some initial guess for the solution of the IE. Iterating on the initial guess produces a sequence of functions that then converges to a solution of the integral equation. More specifically, one can consider the von Neumann series of the integral operator as we now discuss. In fact, let us consider Equation 5, which can be rewritten as

where we assume for a moment that is a linear operator. Observe that if we can find the inverse of , then we obtain as . This can be done by writing the von Neumann series for . This expression makes sense whenever the series converges in operator norm, which is guaranteed in important cases such as when converges (e.g. when ), while milder conditions on the convergence of the series exist as well. In such a situation when the von Neumann series is meaningful, we can then obtain by iteratively applying to . The nonlinear case is handled in a similar iterative procedure, which is called Method of Successive Approximations, or also Picard’s Iterations [Dav60]. It is in fact straightforward to convince oneself that under mild conditions, the method will output a solution of the integral equation. Conditions under which such succession is guaranteed to converge can be found in [Dav60]. A particularly well known case is when the integrand of the integral operator is contractive (i.e. Lipschitz with constant between and ) with respect to the variable . We give a proof of such approach for our setting, c.f. similar results found in [Dav60].

Theorem B.1.

Let be fixed, and suppose that is Lipschitz with constant . Then, we can find such that , independently of the choice of .

Proof.

Let us set and and consider the term . We have

For an arbitrary we have

Therefore, applying the same procedure to until we reach , we obtain the inequality

Since , the term is eventually smaller than , for all for some choice of . Defining for such gives the following

∎

The following now follows easily.

Corollary B.2.

Proof.

From the proof of Theorem B.1 it follows that the sequence is a Cauchy sequence. Since is Banach, then converges to . By continuity of , is a solution to Equation 5. For the second part of the statement, observe that when is contractive with respect to , then we can apply Theorem B.1 to show that the sequence generated following Algorithm 1 is Cauchy, and we can proceed as in the first part of the proof. ∎

Remark B.3.

Observe that the result in Corollary B.2 applies to Algorithm 2 as well, under the assumptions that the transformer architecture is contractive with respect to the input sequence . Also, a statement that refers to higher dimensional IEs can be obtained (and proved) similarly to the second part of the statement of Corollary B.2, using the measure of instead of the value .

In practice, the method of successive approximations is implemented as follows. The initial guess for the integral equation is simply given by the free function (i.e. ), which is used to initialize the iterative procedure. Then, we apply to to obtain a new solution . We set and apply to the solution and repeat. Here is a smoothing factor that determines the amount of contribution from the new approximation to consider at each step. As the iterations grow, the fractions of the contributions due to the smoothing factor tend to . Observe that when we sum with , we obtain the terms of the von Neumann series up to degree applied to : . The smoothing factor generally shows good empirical regularization for IE solvers, and we have set throughout our experiments, even though we have not seen any concrete difference between different values of . This procedure is shown in Fugure 2.

In [DM88], Chapter 4, computations on the error bounds for the iterative procedure described above when the integrand function splits into the product of a kernel (see above) and a linear function are given. In the same chapter a detailed description of the Nyström approximation for the computation of the error is given as well. We describe a concrete realization of the iterative procedure discussed above in Section D, along with the learning steps for the training of our model. Moreover, we additionally observe that the procedure described above does not depend on being an integral operator or a general operator and, therefore, applying this methodology to the case where we have a transformer instead of is still meaningful, in the assumption that is such that the iterated series of approximations is convergent.

Depending on the specific IE that one is solving (e.g. Fredholm or Volterra, or etc.) the actual numerical procedure for finding a numerical solution can vary. For instance, a list of articles that showcase such a wide variety of specific methods for the solution of certain types of equations is [KR12, BRSS17, LR02, KGES19, PYD20]. Such variations upon the same theme of iterative procedure depend on finding the most efficient way of converging to a solution, finding the best error bounds, improving stability of the solver and substantially depend on the form of the integral operator. As our method is applied without the actual knowledge of the shape of the integral operator, but it actually aims at inferring (i.e. learning) the integral operator from data, we implement an iterative procedure which is fixed and depends only on a hyperparameter smoothing factor. This is described in detail in the next section. However, we point out that since the integrand, and therefore the integral operator itself, is learned during the training, one can assume that the model will optimize with respect to the procedure in a way that our iterations are in a sense “optimal” with respect to the target.

Thus far, our considerations on the implementation of IE solvers seem to point to a fundamental computational issue, since they entail a more sophisticated solving procedure than that of ODEs or PDEs. However, in various situations, even solving ODEs and PDEs through IE solvers presents significant advantages that are not necessarily obvious from the above discussions. The first advantage is that IE solvers are significantly more stable than ODE and PDE solvers, as shown for instance in the articles [KR12, Rok85, Rok90]. This in particular provides a new solution to the issue of underflowing during training of NODEs that does not consist of a regularization, but of a complete change of perspective. In addition, even though to solve an IE one needs to iterate, in general the number of iterations is not particularly high. In fact, in our experiments the total number of iterations turned out to be sufficient to be fixed between and . However, when solving for instance an ODE, one needs to go sequentially through each time step. These can be in the order of the (as in some of our experiments). On the contrary, our IE solver processes the full time interval in parallel for each iteration. This results in a much faster algorithm compared to differential solvers, as shown in our experiments.

B.4 Existence and uniqueness of solutions

The solver procedure described in the previous subsection of course assumes that there exists a solution to start with. As mentioned at the beginning of the section, we treat equations of the second kind in this article also because the existence conditions are better behaved than for the equations of the first kind. We give now some theoretical considerations in this regard. We will also discuss when these solutions are uniquely determined. Existence and uniqueness are two fundamental parts of the well-posedness of IEs, the other being the continuity of solutions with respect to initial data.

A concise and relatively self-contained reference for the existence and uniqueness of solutions for (linear) Fredholm IEs is Section 4.5 in [Mor13]. In fact, it is shown in Theorem 4.43 that if a Fredholm equation has Hermitian kernel, then the IE has a unique solution whenever is not an eigenvalue of the integral operator. For real coefficients, which is the case we are interested into, one can simply reduce the case to symmetric kernels, which are kernels for which for all . Since in this article we have assumed , the condition becomes equivalent to saying that there is no function such that for all .

For more general (linear) integral operators (bounded on a Hilbert space), a similar result holds. In fact, from Theorem 4.44 in [Mor13] we have that a generalized Fredholm integral equation admits solutions if and only if the free function is orthogonal to each solution of the associated homogeneous adjoint equation. The latter admits the zero function as a solution (so the solution set is not empty), and is obtained from Equation 5 by deleting , and by taking the adjoint of and the complex conjugate of . In the real case, the conjugate of is itself. Moreover, uniqueness is guaranteed if the associated homogeneous equation has only trivial solutions. In the case of nonlinear integral operators several existence and uniqueness conditions can be found in the literature. The reader is referred to [Dav60, Zem12, Lak95] for specific formulations. Generally speaking, such conditions are assumed on the integrand functions that determine the integral operator, in such a way that contractive theorems (such as Schauder’s and Tikhonoff’s) can be applied.

Observe that such formulations of the existence and uniqueness based on the contractive properties of the operator are particularly interesting in the case where the integral operator is replaced by a general neural network (between function spaces) which is not necessarily obtained through integration. In practice, when is a general neural network that is possibly nonlinear on all the entries, except with respect to the function , can be approximated by an integral equation using the following reasoning. It is known that Hilber-Schmidt operators on the Hilbert space of square integrable functions are approximated by integral operators – see e.g. [Mor13] Theorem 4.26. It is reasonable to assume that neural network operators are well behaved enough to be considered Hilbert-Schmidt operators. They therefore approximate some integral operator, and the training process therefore learns an integral equation.

More generally, for IEs of Urysohn or Hammerstein type, the existence and uniqueness problems are well known under much milder conditions, namely when the operator is completely continuous [Kra64, Kra]. In this situation, it is sufficient for the operator to have a nonzero topological index to guarantee that the corresponding IE admits a solution, and to study the problem of uniqueness one can determine the value of the topological index in a bounded subset of the Banach space in consideration, since this is directly related to the number of fixed points of the given IE.

The previous discussion, however, does not directly apply to the case when is a transformer, due to the fact that is not linear in in this case. Such equations can still be considered generalized Fredholm equations, and conditions on nonlinear operators being approximated by integral operators can be found in the literature, but the extent to which such equations are equivalent to integral equations is a fascinating question which will not be explicitly considered in this article.

As a word of warning, we mention that the general theory ensures the existence and uniqueness of solutions under some (mild) assumptions. Of course, in principle one should impose constraints to ensure that such assumptions are satisfied and that the results would apply. However, in our experiments we have observed good stability and good convergence without imposing any additional constraints. This does not apply in general, but we hypothesize that during optimization the model converges towards operators whose associated IE is well behaved, in order to avoid regimes of poor stability due to lack of solutions or lack of uniqueness of solutions. For different datasets such behavior might not be satisfied, and extra care in this regard might be needed.

B.5 The initial condition for IEs

NIE does not learn a dynamical system via the derivative of a function , as is the case for ODEs and IDEs. Therefore, we do not need to specify an initial condition in the solver during training and evaluation. In fact, the initial condition for IEs is encoded in the equation itself. For instance, taking in a Volterra or a Fredholm equation uniquely fixes for all .

Therefore, we can specify a condition for IEs by constraining the free function . In what follows, we will make use of this paradigm several times. There are two immediate ways one could impose constraints on the free function. The simplest is to fix a value and let be fixed to be for all . Alternatively, one could choose an arbitrary function and keep this function fixed. In practice, the latter is conceptually more meaningful. For instance, in theoretical physics, when transforming the Schrödinger equation into an integral equation, on the RHS one can choose an arbitrary function which corresponds to the wave function of free particles, i.e. without potential . Applications of this procedure are found below in the experiments.

Appendix C Approximation capabilities

In this appendix we consider the capabilities of our models to approximate (nonlinear) integral operators and integral equations.

C.1 NIE

We consider two settings, where the integral operarator is modeled by a single hidden layer feed forward neural network of arbitrary width, or by an arbitrarily deep neural network.

We want to show that when we restrict ourselves to single hidden layer feed forward neural networks of arbitrary depth for our function in Equation 1, we can approximate a wide class of integral equations over a suitable subset of the space of functions. In the case of deep neural networks, we will argue that the NIE architecture can approximate any “regular enough” integral operator, where regularity will be described below. We restrict our considerations to the case of function spaces where the domain is , since the higher dimensional case is easily adapted from this discussion. We will therefore use instead of to indicate the elements of the domain of the integral operators.

Let be an integral operator on the space of continuous functions, defined as for continuous functions , and a continuous . In fact, for what follows we could consider as being Borel measurable, instead of imposing the more restrictive condition of being continuous. However, since in applications continuity is generally required, we impose this more restrictive conditions. Moreover, our discussion easily extends to the case when the definition intervals are instead of with simple modifications, and a similar approach also extends to higher dimensional integrals. We assume that is such that the corresponding integral equation of the second kind, i.e. Equation 1, admits a solution in . Since is continuous, there exists a compact , for , such that . Let us consider now a neighborhood of in the compact-open topology such that for all we have the property . This could be, for instance, the space of functions mapping into the open . We can therefore restrict to the domain , and we will still indicate this restriction by and the corresponding integral operator by (defined over the neighborhood ), for notational simplicity.

For an arbirtary chosen , we want to show that we can approximate with error at most in the metric induced by on through an NIE integral operator . To this purpose, let us set , and observe that by applying the Universal Approximation Theorem for single hidden layer feed forward neural networks (see [HSW89]) to the function , we can find a single hidden layer neural network such that for all we have . With such a , for all functions we have for any fixed in

Therefore, uniformly on we have that

But this means that with the metric on induced by that of .

We observe that while this approximation does not hold in complete generality, it is valid for a class of integral operators of importance, since we are usually interested in operators whose corresponding IE is admits continuous solutions, and we are interested in modeling the operator in a neighborhood of a solution. Moreover, under mild assumptions (e.g. discussed in Appendix B.4 the dependence of the solution on the initial data is continuous, and therefore the solutions to the equation for perturbed lie in a neighborhood of a solution obtained for . So, our results apply in such important cases. Lastly, we point out that throughout the previous reasoning we have implicitly assumed that numerical integration is performed with infinite precision. Of course this is not the case in practice, but since we can reduce the numerical error in the integration procedure arbitrarily by choosing densely enough samples for a choice of the integration scheme, the error due to numerical integration can be rendered small enough so that the previous inequalities hold.

We now consider the case where we allow deep neural networks as in [LPW+17], Theorem 1. In this case, we argue that for any IE of the second kind as in Equation 1 where we set for a Lebesgue integral function , we can approximate the integral operator with arbitrary precision. As a consequence, there is a NIE model which realizes any IE as in Equation 1 with arbitrary accuracy. We can proceed as for the case of single hidden layers neural networks above, with the main difference that applying Theorem 1 from [LPW+17] we do not need to restrict ourselves to a neighborhood of a solution of the integral equation, and the neural integral operator approximates uniformly with respect to for any . Observe that in order to use Theorem 1 in [LPW+17] we need to precompose and by a characteristic function , which does not affect the result.

C.2 ANIE

We give some comments on the approximation properties of ANIE with respect to generalized Fredholm equations. For simplicity, we consider the case where the integration is performed only over time, even though the same reasoning can be extended to spatiotemporal domains. Let denote a Fredholm integral operator defined through the assignment . Observe that this integral form is more general than considered in Equation 1, and it follows the interpretation of integration in terms of self-attention (c.f. Appendix D.2, where the integration approximation used in this article is given in more detail).

Let us assume that the integral equation admits a unique continuous solution , and that is regular enough so that the equation admits unique solution in for given functions in a neighborhood of in the compact open topology. Observe, that such well-posedness conditions are usually relatively mild (see for instance Appendix B.4 and references therein), and this is the main situation of interest in applications. Then, there exists a compact such that and we can choose a neighborhood of in the compact-open topology of such that for all . In fact, one can simply choose . Under such hypothesis, there are numerical integration schemes that can approximate the integral for any fixed choice of with arbitrary precision, upon choosing a number of points for evaluation that is sufficiently large. For instance, for any fixed , the error for trapezoidal rules is bound by a term that goes to zero as grows, where is the number of points chosen in for approximating the integral, see Equation 2.1.6 in [DR07]. This term is the modulus of continuity

For each choice of , there exists a compact such that maps into , and restricted to has for all . In this situation we can choose a neighborhood of , such that for all for each choice of , and this numerical integration approximates the value of with arbitrarily high accuracy.

We indicate our numerical integration scheme using the formula

where indicates the grid point of . We can therefore obtain the evaluation of at the grid points as

by choosing to be one of the grid points.

From our regularity assumptions on the derivatives we can uniformly bound the error on evaluating at the points , so that for sufficiently dense grids the evaluation error is smaller than for any choice of , when evaluating on functions in a neighborhood of .

Let us consider now a permutation of the input of for some . This means that we permute the grid points as . The approximated integration above gives