
Email: wuchuhan15@gmail.com, yfhuang@tsinghua.edu.cn

Fangzhao Wu, Xing Xie: Microsoft Research Asia, Beijing 100080, China

Email: wufangzhao@gmail.com, xingx@microsoft.com

Neural News Recommendation with Negative Feedback

Abstract

News recommendation is important for online news services. Precise user interest modeling is critical for personalized news recommendation. Existing news recommendation methods usually rely on implicit user feedback such as news clicks to model user interest. However, news clicks do not necessarily reflect user interest, because users may click a news article that has an attractive title but feel disappointed by its content. The dwell time of news reading is an important clue for user interest modeling, since a short reading dwell time usually indicates low or even negative interest. Thus, incorporating the negative feedback inferred from the dwell time of news reading can improve the quality of user modeling. In this paper, we propose a neural news recommendation approach which can incorporate implicit negative user feedback. We propose to distinguish positive and negative news clicks according to their reading dwell time, and to learn separate user representations from positive and negative news clicks via a combination of Transformer and additive attention network. In addition, we propose to compute a positive click score and a negative click score based on the relevance between candidate news representations and the user representations learned from the positive and negative news clicks. The final click score is a combination of the positive and negative click scores. Besides, we propose an interactive news modeling method to consider the relatedness between title and body in news modeling. Extensive experiments on a real-world dataset validate that our approach can achieve more accurate user interest modeling for news recommendation.

Keywords: News recommendation · Dwell time · Negative feedback

1 Introduction

Online news services such as Google News (https://news.google.com) and Microsoft News (https://www.msn.com) can collect news from various sources and display them to users in a unified view das2007google ; wu2020mind . However, a large number of news articles are generated every day, and it is overwhelming for users to find the news they are interested in okura2017embedding . Thus, personalized news recommendation is critical for these news services to target user interests and alleviate information overload wu2019neural .

Accurate user interest modeling is a core problem in news recommendation. Existing news recommendation methods usually learn representations of users from their historical news click behaviors okura2017embedding ; wang2018dkn ; wu2019npa ; wu2019neuralnrms . For example, Okura et al. okura2017embedding proposed to learn user representations from the clicked news via a gated recurrent unit (GRU) network. Wang et al. wang2018dkn proposed to learn user representations from clicked news with a candidate-aware attention network that evaluates the relevance between clicked news and candidate news. In fact, users usually click news because they are interested in the news titles. However, news titles are usually short, and the information they condense is very limited and sometimes misleading. Users may be disappointed after reading the content of their clicked news. Thus, news click behaviors do not necessarily indicate user interest, and modeling users solely based on news clicks may not be accurate enough.

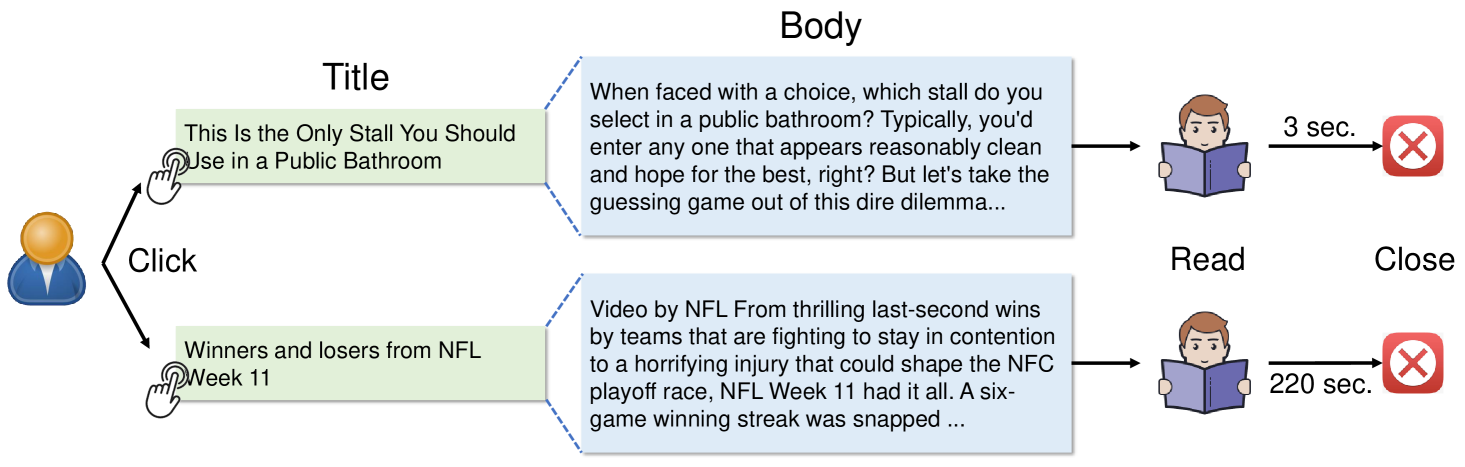

Besides news clicks, users also provide other implicit feedback like the dwell time of news reading, which is an important indication of user interest. As shown in Fig. 1, the user clicks two news articles and respectively reads them for 3 and 220 seconds before closing the news webpages. We can infer that this user may not be interested in the first news because she reads the body of this news for only 3 seconds before her leaving. Thus, a short dwell time of news reading can be regarded as a kind of implicit negative user feedback, which can help model user interest more accurately. In addition, since users usually decide which news to click according to news titles and read news bodies for detailed information, incorporating both news title and body is useful for news and user interest modeling. Moreover, the title and body of the same news usually have some inherent relatedness in describing news content. For example, in Fig. 1 the title of the second news indicates that this news is about NFL games, and the body introduces the details of these events. Capturing the relations between news title and body may help better understand news content and facilitate subsequent user interest modeling.

In this paper, we propose a neural news recommendation approach with negative feedback (NRNF). We propose to distinguish positive and negative news click behaviors according to the reading dwell time, and learn separate user representations from the positive and negative news clicks with a Transformer to capture behavior relations and an additive attention network to select important news for user modeling. In addition, we propose to compute a positive click score and a negative click score based on the relevance between the candidate news representation and the user representations learned from positive and negative news clicks, respectively. Besides, we propose an interactive news modeling method to incorporate both news title and body by using an interactive attention network to model their relatedness. Extensive experiments conducted on a real-world dataset demonstrate that our approach can effectively improve the performance of user modeling by incorporating implicit negative user feedback.

2 Related Work

News recommendation is an important task in the data mining field, and has gained increasing attention in recent years zheng2018drn . Learning accurate representations of users is critical for news recommendation. Many existing news recommendation methods rely on manual feature engineering to build user representations liu2010personalized ; capelle2012semantics ; son2013location ; karkali2013match ; garcin2013personalized ; bansal2015content ; ren2015personalized ; chen2017location ; zihayat2019utility . For example, Li et al. li2010contextual proposed to represent users using their demographics, geographic information and behavioral categories which summarize the consumption history on Yahoo!. Garcin et al. garcin2012personalized proposed to model users by aggregating the LDA features of all clicked news into a user vector by averaging. Lian et al. lian2018towards proposed to build user representations from various handcrafted features like user ID, demographics, locations and the feature collection of clicked news. However, these methods rely on manual feature engineering, which needs massive domain knowledge to craft. In addition, handcrafted features are usually not optimal for representing user interest.

In recent years, several news recommendation methods based on deep learning techniques have been proposed okura2017embedding ; khattar2018weave ; wang2018dkn ; wu2019neural ; an2019neural ; wu2019neuralnaml ; wu2019neuralnrhub ; wu2019neuralnrms ; ge2020graph ; hu2020graph . For example, Okura et al. okura2017embedding proposed to learn representations of users from the representations of their browsed news using a GRU network. Wang et al. wang2018dkn proposed to learn user representations from clicked news based on their relevance to the candidate news. Zhu et al. zhu2019dan proposed to generate user representations using a combination of a CNN network and an attention-based LSTM network. Wu et al. wu2019neuralnrms proposed to use a multi-head self-attention network to learn user representations by capturing the interactions between clicked news. These methods rely only on news click behaviors to learn user representations, which may be insufficient because news clicks may not exactly reflect user preferences. Different from these methods, our approach learns representations of users by incorporating the implicit negative feedback inferred from their reading dwell times, which can provide useful clues to calibrate the user interest inferred from news click behaviors.

3 Our Approach

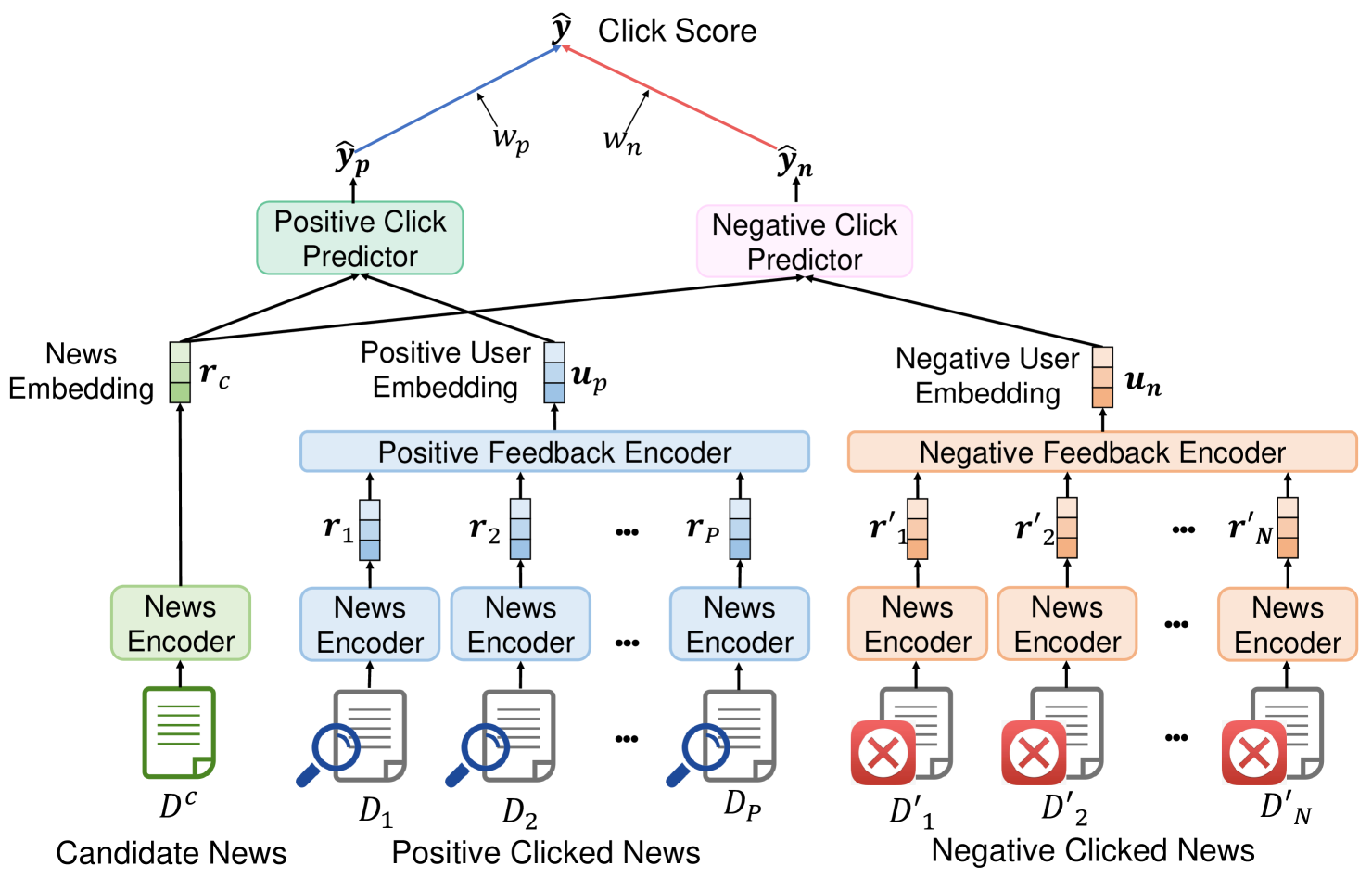

In this section, we introduce the details of our neural news recommendation approach with negative feedback (NRNF). The architecture of NRNF is illustrated in Fig. 2. We will introduce each core technique in our method, including user modeling, click prediction, news modeling, and model training, in the following sections.

3.1 User Modeling with Negative Feedback

In this section, we introduce how to model user interests from their clicked news by considering negative feedback. Usually, users' interest in the news they read carefully is very different from their interest in the news they close quickly after glancing at the body. For example, as shown in Fig. 1, we can infer that the user may indeed be interested in the second news since she reads it for about 220 seconds. However, she is probably not interested in or is unsatisfied with the first news since she reads it for only 3 seconds after the click. Thus, incorporating short reading dwell time as the implicit negative feedback of users has the potential to enhance the modeling of user interest. To characterize how well different clicked news represent the real user interests, we use their reading dwell times to distinguish positive news clicks from negative ones. We regard the clicked news with dwell time $t < T$ as negative news clicks and those with $t \geq T$ as positive news clicks, where $T$ is a time threshold. Since simply aggregating all news together will ignore the differences between positive and negative news, in the user encoder module we propose to use a multi-view learning framework to incorporate positive and negative news clicks as different views of users. Their details are introduced as follows.
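The dwell-time split described above can be sketched as follows. This is a minimal illustration, not the authors' code: the `(news_id, dwell_seconds)` record layout and the function name `split_clicks` are hypothetical, the default threshold of 10 seconds is the value reported in the experimental settings, and treating a dwell time exactly equal to the threshold as positive is an assumption.

```python
from typing import List, Tuple

# Hypothetical record layout: (news_id, dwell_time_in_seconds).
Click = Tuple[str, float]


def split_clicks(clicks: List[Click], threshold: float = 10.0) -> Tuple[List[str], List[str]]:
    """Partition clicked news into a positive and a negative view by dwell time.

    Assumption: dwell time below the threshold counts as a negative click,
    dwell time at or above it counts as a positive click.
    """
    positive = [news_id for news_id, dwell in clicks if dwell >= threshold]
    negative = [news_id for news_id, dwell in clicks if dwell < threshold]
    return positive, negative


# The two clicks from the Fig. 1 example: 3 seconds and 220 seconds.
history = [("news_1", 3.0), ("news_2", 220.0)]
positive_clicks, negative_clicks = split_clicks(history)
```

Each of the two resulting lists would then be fed to its own user encoder view.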

The positive news click view is used to learn a positive user embedding from positive clicked news. We denote the positive clicked news of a user as $[D^p_1, D^p_2, ..., D^p_N]$. We first use a news encoder to obtain their representations, which are denoted as $\mathbf{R}^p = [\mathbf{r}^p_1, \mathbf{r}^p_2, ..., \mathbf{r}^p_N]$. Then, we use a positive feedback encoder to learn a unified user embedding from these news representations. Its architecture is similar to the user encoder used by Wu et al. wu2019neuralnrms . Since different clicked news usually have some interactions with each other, we use a news-level Transformer vaswani2017attention to capture the interactions among them (we add position embeddings to the news embeddings to capture behavior orders), and the output sequence $\hat{\mathbf{R}}^p$ is computed as:

$$\hat{\mathbf{R}}^p = \mathrm{Transformer}(\mathbf{R}^p, \mathbf{R}^p, \mathbf{R}^p), \tag{1}$$

where the three parameters in $\mathrm{Transformer}(\cdot, \cdot, \cdot)$ respectively denote the input query, key and value. In addition, since different clicked news have different importance in modeling user interests, we use an additive attention yang2016hierarchical network to select important news to learn more informative user representations. The final positive user embedding $\mathbf{u}^p$ learned from this view is computed as:

$$\mathbf{u}^p = \mathrm{Attention}(\mathbf{q}^p, \hat{\mathbf{R}}^p, \hat{\mathbf{R}}^p), \tag{2}$$

where $\mathbf{q}^p$ is a query parameter vector, and $\hat{\mathbf{R}}^p$ is regarded as the key and value. It summarizes user interests inferred from the positive user feedback.

The negative news click view is used to learn representations of users from their negative clicked news. Similar to positive news, we use a combination of Transformer and additive attention network to learn the negative user embedding from the representations of the negative clicked news. We denote the negative user embedding learned from this view as $\mathbf{u}^n$, which condenses the information of negative user interest inferred from the negative clicked news.
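To make the attention pooling step of each view concrete, the sketch below pools a list of news vectors into a single user vector with additive attention. It is an illustration under stated assumptions: the scoring form `query . tanh(W r + b)` is the common additive attention formulation and is not confirmed by the text above, the names `additive_attention`, `W` and `b` are hypothetical, and the news-level Transformer that precedes this step is omitted for brevity.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_attention(query: Vector, W: List[Vector], b: Vector,
                       news: List[Vector]) -> Vector:
    """Pool news vectors into one user vector.

    Assumed scoring form: score_i = query . tanh(W @ news_i + b).
    The output is the softmax-weighted sum of the news vectors.
    """
    scores = []
    for r in news:
        hidden = [math.tanh(sum(w * x for w, x in zip(row, r)) + bias)
                  for row, bias in zip(W, b)]
        scores.append(sum(q * h for q, h in zip(query, hidden)))
    weights = softmax(scores)
    dim = len(news[0])
    return [sum(w * r[d] for w, r in zip(weights, news)) for d in range(dim)]


# Toy example: two 2-dimensional news vectors, identity projection.
identity = [[1.0, 0.0], [0.0, 1.0]]
user_vec = additive_attention([1.0, 0.0], identity, [0.0, 0.0],
                              [[1.0, 0.0], [0.0, 1.0]])
```

The same function would be applied twice with separate parameters, once to the positive clicked news and once to the negative clicked news, yielding the two user embeddings.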

3.2 Click Prediction

In this section, we introduce the details of click prediction in our approach. Usually, if a candidate news is similar to the news that a user reads carefully, it has a high probability of being clicked by this user. However, if it is very similar to the news that the user previously closed very quickly, it will probably not be clicked by this user. Motivated by these observations, we propose to calculate a positive click score $\hat{y}^p$ and a negative click score $\hat{y}^n$ based on the relevance of the candidate news to the positive and negative user interest, respectively. We denote the embedding of a candidate news as $\mathbf{r}^c$, and the user embeddings learned from the positive and negative views as $\mathbf{u}^p$ and $\mathbf{u}^n$. Following okura2017embedding , $\hat{y}^p$ and $\hat{y}^n$ are respectively computed by the inner product of the candidate news embedding and the user embeddings learned from the positive and negative views, which are formulated as:

$$\hat{y}^p = \mathbf{u}^p \cdot \mathbf{r}^c, \quad \hat{y}^n = \mathbf{u}^n \cdot \mathbf{r}^c. \tag{3}$$

The final click probability score $\hat{y}$ is a weighted summation of the positive score $\hat{y}^p$ and the negative score $\hat{y}^n$, which is formulated as:

$$\hat{y} = \alpha \hat{y}^p + \beta \hat{y}^n, \tag{4}$$

where $\alpha$ and $\beta$ are learnable parameters. A positive parameter means that the relevance between candidate news and the corresponding user embedding has a positive correlation with the final click score, and this correlation is negative if the parameter is negative.
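The scoring rule of this section, two inner products followed by a weighted sum, can be sketched as below. The function name `click_score` and the parameter names `alpha` and `beta` for the two learnable weights are illustrative choices, and the numeric values are made up; in the approach itself the weights are learned during training rather than fixed by hand.

```python
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def click_score(candidate: Vector, user_pos: Vector, user_neg: Vector,
                alpha: float, beta: float) -> float:
    """Combine the positive and negative click scores into one score."""
    y_pos = dot(user_pos, candidate)  # relevance to carefully read news
    y_neg = dot(user_neg, candidate)  # relevance to quickly closed news
    return alpha * y_pos + beta * y_neg


# Illustrative values only: a negative beta penalizes candidates that
# resemble the news the user closed quickly.
score = click_score(candidate=[1.0, 0.0],
                    user_pos=[0.5, 0.5],
                    user_neg=[2.0, 0.0],
                    alpha=1.0, beta=-0.5)
```

With these toy numbers the positive score is 0.5 and the negative score is 2.0, so the combined score is 0.5 - 1.0 = -0.5.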

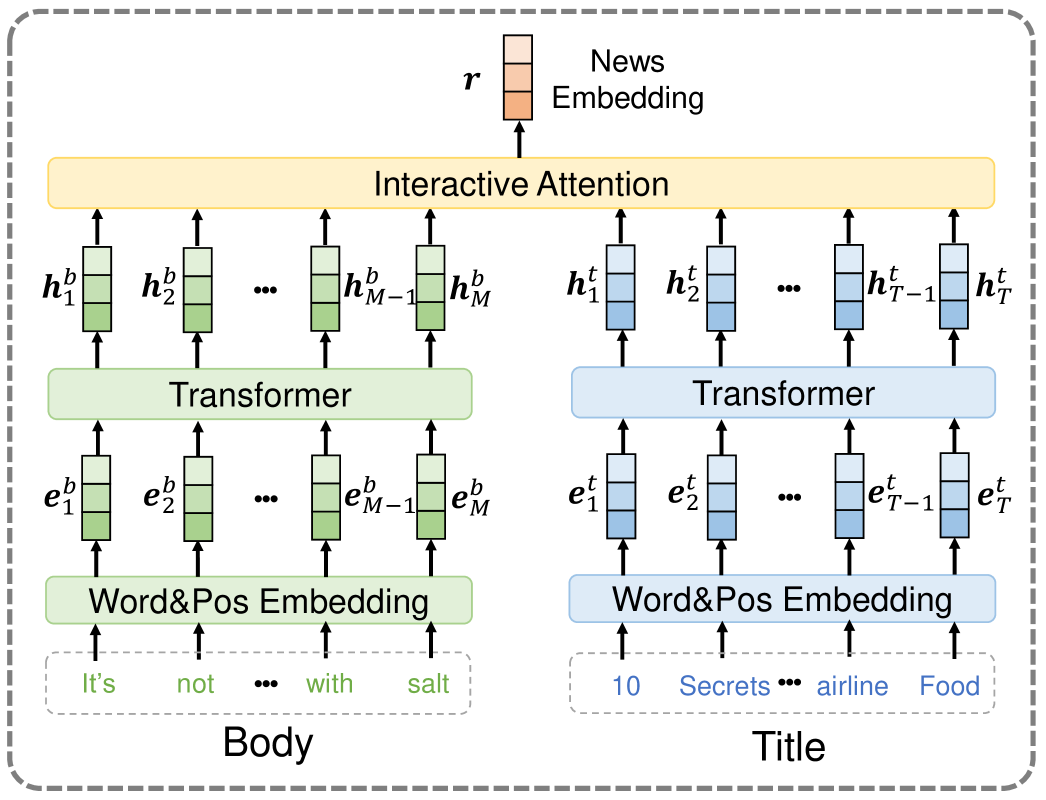

3.3 News Modeling

In this section, we introduce the architecture of the news encoder in our approach for news modeling. It is used to learn representations of news from their titles and bodies. Since news titles and bodies usually have very different characteristics, simply concatenating title and body together may not be optimal. Thus, in this module we use a multi-view learning framework to incorporate the information of title and body as different views of news. In addition, since there is usually inherent relatedness between news title and body, we propose to use an interactive attention network to capture their relations and form the unified news embedding. The details of each module in the news encoder are introduced as follows.

The first module in the news encoder is a title transformer, which is used to capture the contexts of words in a news title. We first use a word and position embedding layer to convert the sequence of words in a news title into a sequence of low-dimensional word embeddings with their position information. Denote a news title with $M$ words as $[w^t_1, w^t_2, ..., w^t_M]$. It is converted into an embedding sequence $\mathbf{E}^t = [\mathbf{e}^t_1, \mathbf{e}^t_2, ..., \mathbf{e}^t_M]$. Then, we use a Transformer to learn the contextual representation of each word, which is computed as follows:

$$\mathbf{H}^t = \mathrm{Transformer}(\mathbf{E}^t, \mathbf{E}^t, \mathbf{E}^t). \tag{5}$$

The second module in the news encoder is a body transformer, which aims to capture the contexts of words in a news body. Similar to the news title, we also use a word and position embedding layer to obtain the embeddings of the words in a news body, which are denoted as $\mathbf{E}^b = [\mathbf{e}^b_1, \mathbf{e}^b_2, ..., \mathbf{e}^b_P]$. Then, we use a Transformer to capture the contexts of words, and its output is computed as

$$\mathbf{H}^b = \mathrm{Transformer}(\mathbf{E}^b, \mathbf{E}^b, \mathbf{E}^b). \tag{6}$$

The third module is an interactive attention network, which is used to capture the relations between news title and body when forming the unified news embedding. We first use two additive attention networks to respectively attend to the important words in news title and body to form the title and body representations (denoted as $\mathbf{r}^t$ and $\mathbf{r}^b$), which are formulated as

$$\mathbf{r}^t = \mathrm{Attention}(\mathbf{q}^t, \mathbf{H}^t, \mathbf{H}^t), \tag{7}$$

$$\mathbf{r}^b = \mathrm{Attention}(\mathbf{q}^b, \mathbf{H}^b, \mathbf{H}^b), \tag{8}$$

where $\mathbf{q}^t$ and $\mathbf{q}^b$ are learnable query vectors. Then, we use the title representation as the query of the body attention network to select words in news body according to their relevance to the content of news title, and the output title-aware body representation $\hat{\mathbf{r}}^b$ is computed as follows:

$$\hat{\mathbf{r}}^b = \mathrm{Attention}(\mathbf{r}^t, \mathbf{H}^b, \mathbf{H}^b). \tag{9}$$

We learn a body-aware title representation $\hat{\mathbf{r}}^t$ in a similar way by using the body representation as the title attention query, which is formulated as:

$$\hat{\mathbf{r}}^t = \mathrm{Attention}(\mathbf{r}^b, \mathbf{H}^t, \mathbf{H}^t). \tag{10}$$

We finally aggregate the four title and body representations $\mathbf{r}^t$, $\mathbf{r}^b$, $\hat{\mathbf{r}}^t$ and $\hat{\mathbf{r}}^b$ into a unified news representation $\mathbf{r}$.
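The interactive attention idea, using the pooled representation of one view as the attention query over the words of the other view, can be sketched as follows. This is an illustration only: it scores words with a plain dot product instead of the additive attention used in the approach, the function names `attend` and `interactive_views` are hypothetical, the word vectors stand in for Transformer outputs, and the final aggregation into a single news vector is left out because its exact form is not given in the text.

```python
import math
from typing import List, Tuple

Vector = List[float]


def attend(query: Vector, words: List[Vector]) -> Vector:
    """Weight each word vector by softmax(query . word) and sum them.

    Assumption: dot-product scoring, used here only to keep the sketch short.
    """
    scores = [sum(q * w for q, w in zip(query, vec)) for vec in words]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(words[0])
    return [sum(a * vec[d] for a, vec in zip(weights, words)) for d in range(dim)]


def interactive_views(title_words: List[Vector], body_words: List[Vector],
                      q_title: Vector, q_body: Vector
                      ) -> Tuple[Vector, Vector, Vector, Vector]:
    """Return title, body, body-aware title and title-aware body vectors."""
    r_title = attend(q_title, title_words)      # title view
    r_body = attend(q_body, body_words)         # body view
    r_body_hat = attend(r_title, body_words)    # title-aware body
    r_title_hat = attend(r_body, title_words)   # body-aware title
    return r_title, r_body, r_title_hat, r_body_hat


# Toy contextual word vectors (stand-ins for Transformer outputs).
title_words = [[1.0, 0.0], [0.0, 1.0]]
body_words = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
r_title, r_body, r_title_hat, r_body_hat = interactive_views(
    title_words, body_words, q_title=[5.0, 0.0], q_body=[0.0, 0.0])
```

In this toy case the title vector leans toward the first dimension, so the title-aware body vector puts more weight on body words aligned with that dimension than the plain body vector does.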

3.4 Model Training

Following huang2013learning ; wu2019npa , we use negative sampling techniques to construct labeled data from the raw news impression logs for model training. For each news clicked by a user, we randomly sample $K$ news that are displayed in the same impression but not clicked by this user. We denote the click probability score of the $i$-th clicked news sample as $\hat{y}^+_i$ and those of its associated unclicked news samples as $[\hat{y}^-_{i,1}, \hat{y}^-_{i,2}, ..., \hat{y}^-_{i,K}]$. We re-formulate the news click prediction problem as a pseudo $(K+1)$-way classification task to jointly predict these scores. We normalize these scores via the softmax function to compute the posterior click probability of the clicked news, which is formulated as follows:

$$p_i = \frac{\exp(\hat{y}^+_i)}{\exp(\hat{y}^+_i) + \sum_{j=1}^{K} \exp(\hat{y}^-_{i,j})}. \tag{11}$$

The final loss function we used is the negative log-likelihood of all clicked news samples, which is formulated as:

$$\mathcal{L} = -\sum_{i \in \mathcal{S}} \log(p_i), \tag{12}$$

where $\mathcal{S}$ is the set of clicked news samples for training.
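The training objective of this section, a softmax over one clicked news and its sampled unclicked news followed by a negative log-likelihood, can be sketched in a few lines. The function names are illustrative and the scores are made up; a real implementation would compute the scores with the model and backpropagate through this loss.

```python
import math
from typing import List, Sequence, Tuple


def click_probability(pos_score: float, neg_scores: Sequence[float]) -> float:
    """Softmax probability of the clicked news among itself and its negatives."""
    scores = [pos_score] + list(neg_scores)
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    return exps[0] / sum(exps)


def nll_loss(samples: List[Tuple[float, Sequence[float]]]) -> float:
    """Negative log-likelihood summed over all clicked news samples."""
    return -sum(math.log(click_probability(pos, negs)) for pos, negs in samples)


# One clicked news with four sampled unclicked news, all scored equally.
uniform_prob = click_probability(0.0, [0.0, 0.0, 0.0, 0.0])
uniform_loss = nll_loss([(0.0, [0.0, 0.0, 0.0, 0.0])])
```

When all five scores are equal, the clicked news receives probability 1/5 and the loss equals log 5, which is the value an untrained model would start from with four negatives per click.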

4 Experiments

4.1 Datasets and Experimental Settings

Our experiments were conducted on a real-world news recommendation dataset collected from MSN News (https://www.msn.com/en-us/news) logs from Oct. 31, 2018 to Jan. 29, 2019. The detailed statistics are shown in Table 1. We sorted all sessions by time, and we used the first 80% of sessions for training, the next 10% for validation and the remaining 10% for testing.

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| # users | 337,699 | avg. # words per title | 10.99 |
| # news | 249,038 | avg. # words per body | 719.34 |
| # sessions | 500,000 | avg. dwell time | 294.91 s |
| # samples | 40,400,206 | avg. # negative news per user | 5.00 |
| # clicked samples | 990,624 | # unclicked samples | 39,409,582 |
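The chronological 80%/10%/10% split of sessions described in this section can be sketched as follows. The `(timestamp, session_id)` tuple layout and the function name `chronological_split` are assumptions made for illustration; the actual log format is not described in the text.

```python
from typing import List, Tuple

# Hypothetical session layout: (timestamp, session_id).
Session = Tuple[int, str]


def chronological_split(sessions: List[Session],
                        train_frac: float = 0.8,
                        valid_frac: float = 0.1
                        ) -> Tuple[List[Session], List[Session], List[Session]]:
    """Sort sessions by time and cut them into train/validation/test parts."""
    ordered = sorted(sessions, key=lambda s: s[0])
    n_train = int(len(ordered) * train_frac)
    n_valid = int(len(ordered) * valid_frac)
    train = ordered[:n_train]
    valid = ordered[n_train:n_train + n_valid]
    test = ordered[n_train + n_valid:]  # everything that remains
    return train, valid, test


# Ten toy sessions given in reverse time order.
toy_sessions = [(t, f"s{t}") for t in reversed(range(10))]
train_set, valid_set, test_set = chronological_split(toy_sessions)
```

Splitting by time rather than at random keeps every test session later than every training session, which matches how a deployed recommender sees data.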

In our experiments, the word embeddings were 300-dimensional and initialized by Glove pennington2014glove . The Transformers had 8 attention heads, whose output dimension was 32. The maximum lengths of title and body were set to 30 and 200, respectively. The numbers of positive and negative clicked news were limited to 50 and 20, respectively. Following huang2013learning , the negative sampling ratio was set to 4. The dwell time threshold $T$ was set to 10 seconds. Adam kingma2014adam was used as the optimizer, and the batch size was 30. To mitigate overfitting, we applied dropout srivastava2014dropout to the word embeddings and Transformer networks, and the dropout ratio was set to 0.2. These hyperparameters were tuned on the validation set. We independently repeated each experiment 10 times and reported the average results in terms of AUC, MRR, nDCG@5 and nDCG@10.
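For readers unfamiliar with the reported metrics, the sketch below computes AUC, MRR and nDCG@k for a single impression from binary click labels and predicted scores. It uses the standard textbook definitions with binary gains; whether the experiments used exactly these variants (for example, how ties are handled) is an assumption, and each impression is assumed to contain at least one clicked and one unclicked news.

```python
import math
from typing import List


def auc(labels: List[int], scores: List[float]) -> float:
    """Fraction of (clicked, unclicked) pairs ranked correctly; ties count half."""
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def ranked_labels(labels: List[int], scores: List[float]) -> List[int]:
    """Labels reordered by descending predicted score."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [labels[i] for i in order]


def mrr(labels: List[int], scores: List[float]) -> float:
    """Mean reciprocal rank of the clicked news."""
    ranked = ranked_labels(labels, scores)
    reciprocal = [1.0 / (rank + 1) for rank, l in enumerate(ranked) if l == 1]
    return sum(reciprocal) / len(reciprocal)


def dcg(ranked: List[int], k: int) -> float:
    """Discounted cumulative gain of the top-k labels with binary gains."""
    return sum(l / math.log2(rank + 2) for rank, l in enumerate(ranked[:k]))


def ndcg(labels: List[int], scores: List[float], k: int) -> float:
    """DCG@k normalized by the DCG@k of the ideal ordering."""
    return dcg(ranked_labels(labels, scores), k) / dcg(sorted(labels, reverse=True), k)


# Toy impression: five candidates, two of them clicked.
toy_labels = [0, 1, 0, 0, 1]
toy_scores = [0.9, 0.8, 0.1, 0.2, 0.3]
toy_auc = auc(toy_labels, toy_scores)
toy_mrr = mrr(toy_labels, toy_scores)
toy_ndcg5 = ndcg(toy_labels, toy_scores, 5)
```

In the toy impression the clicked news land at ranks 2 and 3, giving an AUC of 4/6 and an MRR of (1/2 + 1/3)/2 = 5/12. Per-impression values would then be averaged over all test impressions.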

4.2 Performance Evaluation

We evaluate the performance of our approach by comparing it with many baseline methods, including:

- LibFM rendle2012factorization , a factorization machine based method for recommendation. We extracted the TF-IDF features from the titles and bodies of news clicked by users to build their representations.

- DSSM huang2013learning , deep structured semantic model. We regarded the concatenation of the news clicked by a user as the query and candidate news as documents, and we used the same features as LibFM for news and user representation.

- Wide&Deep cheng2016wide , a well-known neural recommendation method which consists of a wide linear channel and a deep neural network channel. We used the same features as LibFM as the input of both channels.

- DeepFM guo2017deepfm , another widely used neural recommendation method which uses a combination of factorization machines and deep neural networks. We fed the same TF-IDF features to both components.

- DFM lian2018towards , a deep fusion model for news recommendation, which combines fully connected layers with different depths. We used TF-IDF features and word embeddings as the input.

- EBNR okura2017embedding , an embedding based neural news recommendation method which uses denoising autoencoders to learn news representations and a GRU network to learn user representations.

- DKN wang2018dkn , a neural news recommendation method which learns news representations via knowledge-aware CNNs and learns user representations via an attention network based on the relevance between clicked news and candidate news.

- DAN zhu2019dan , a neural news recommendation method which learns news representations via two parallel CNNs and learns user representations using a combination of attentional LSTM and CNN.

- NAML wu2019neuralnaml , a neural news recommendation approach with attentive multi-view learning.

- NRMS wu2019neuralnrms , a neural news recommendation method that uses multi-head self-attention for news and user modeling.

- NRNF-basic, a variant of our NRNF approach that does not consider negative feedback.

- NRNF, our news recommendation approach with negative feedback.

For fair comparison, in EBNR, DKN, DAN and NRMS we used the concatenation of news title and news body to learn news representations. The results of these methods are summarized in Table 2.

| Methods | AUC | MRR | nDCG@5 | nDCG@10 |
|---|---|---|---|---|
| LibFM rendle2012factorization | 0.6296 ± 0.0049 | 0.2233 ± 0.0041 | 0.2467 ± 0.0045 | 0.3044 ± 0.0039 |
| DSSM huang2013learning | 0.6514 ± 0.0031 | 0.2300 ± 0.0028 | 0.2539 ± 0.0032 | 0.3099 ± 0.0030 |
| Wide&Deep cheng2016wide | 0.6367 ± 0.0029 | 0.2257 ± 0.0026 | 0.2496 ± 0.0024 | 0.3050 ± 0.0022 |
| DeepFM guo2017deepfm | 0.6410 ± 0.0041 | 0.2275 ± 0.0035 | 0.2502 ± 0.0037 | 0.3069 ± 0.0032 |
| DFM lian2018towards | 0.6444 ± 0.0037 | 0.2288 ± 0.0033 | 0.2511 ± 0.0038 | 0.3082 ± 0.0035 |
| EBNR okura2017embedding | 0.6554 ± 0.0022 | 0.2324 ± 0.0019 | 0.2556 ± 0.0024 | 0.3125 ± 0.0021 |
| DKN wang2018dkn | 0.6531 ± 0.0036 | 0.2311 ± 0.0028 | 0.2544 ± 0.0030 | 0.3112 ± 0.0026 |
| DAN zhu2019dan | 0.6621 ± 0.0016 | 0.2354 ± 0.0012 | 0.2588 ± 0.0014 | 0.3153 ± 0.0012 |
| NAML wu2019neuralnaml | 0.6739 ± 0.0015 | 0.2412 ± 0.0014 | 0.2634 ± 0.0013 | 0.3215 ± 0.0016 |
| NRMS wu2019neuralnrms | 0.6789 ± 0.0019 | 0.2440 ± 0.0017 | 0.2671 ± 0.0017 | 0.3272 ± 0.0018 |
| NRNF-basic | 0.6818 ± 0.0016 | 0.2456 ± 0.0016 | 0.2688 ± 0.0017 | 0.3290 ± 0.0019 |
| NRNF* | 0.6896 ± 0.0015 | 0.2506 ± 0.0014 | 0.2780 ± 0.0015 | 0.3323 ± 0.0016 |

From Table 2, we have several observations. First, compared with baseline methods that use handcrafted features to represent news and users (e.g., LibFM, DSSM and DeepFM), the models using neural networks for learning news and user representations (e.g., EBNR, NAML and NRNF) achieve better recommendation performance. This is probably because neural networks can learn more informative news and user representations than handcrafted features. Second, compared with other neural news recommenders like DAN, NAML and NRMS, NRNF and its variant NRNF-basic achieve better performance. This is because NRNF and NRNF-basic can capture the relatedness between news title and body to enhance news modeling, while the other methods cannot. Third, NRNF outperforms all baseline methods, and further hypothesis tests show that the improvement is significant. This is probably because incorporating the implicit negative user feedback can help learn more accurate user interest representations.

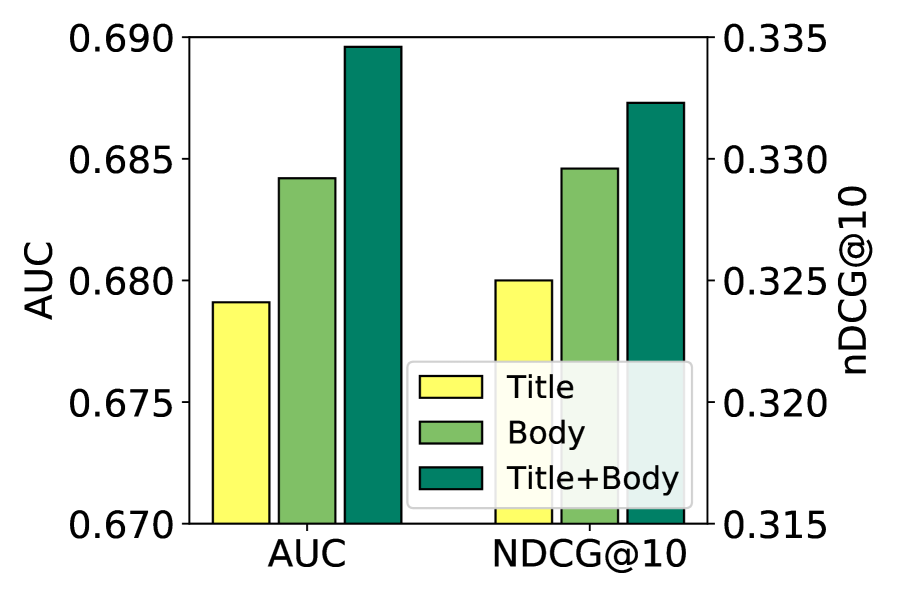

4.3 Effectiveness of Different News Information

Next, we explore the influence of incorporating different news information for news modeling. We compare the performance of our approach with its variants with different combinations of news information, and the results are shown in Fig. 4. From Fig. 4, we have several observations. First, both news title and body are useful for news recommendation. This is because users usually decide which news to click based on its title, and read news body for detailed information. Thus, both news title and body can provide important information for news recommendation. Second, news body is more useful than news title in representing news. This is intuitive because news body usually contains the details of news, which can provide richer information than news title. Besides, combining both title and body of news can further improve the performance of our approach. These results validate the effectiveness of the multi-view learning framework in our approach.

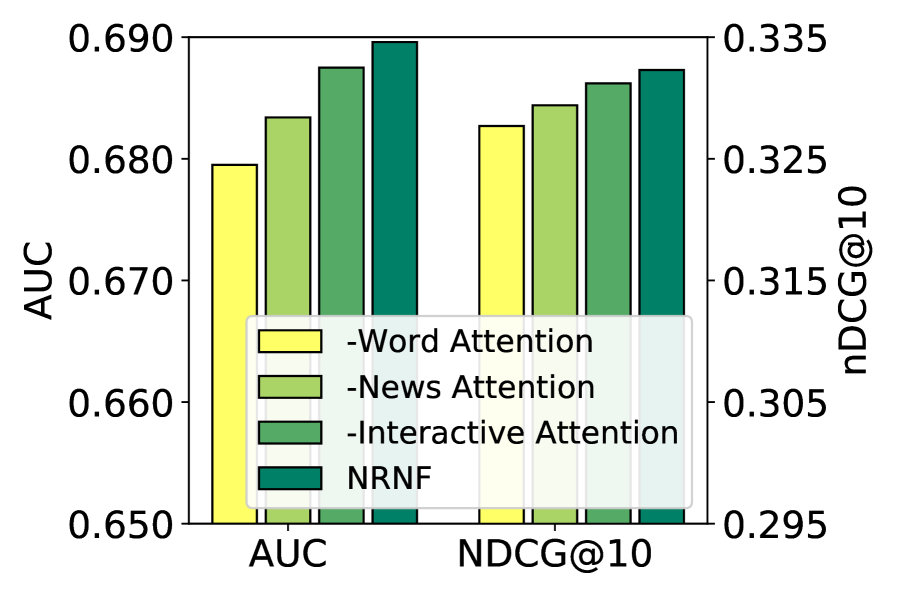

4.4 Effectiveness of Attention Network

Then, we explore the influence of different attention networks on the performance of our approach. The results are shown in Fig. 5. From Fig. 5, we find that word-level attention networks are very useful for our approach. This is because different words in news titles and bodies usually have different importance in representing news. Thus, selecting important words can help learn more informative news representations. In addition, news-level attention network is also important. This is because different news usually have different informativeness in representing the preferences of users, and selecting informative news can benefit user representation learning. Besides, the interactive attention network can also boost the performance. This may be because it can help capture the relations between news title and body to enhance news modeling. Moreover, combining all these kinds of attention networks can further improve the performance of our approach. These results verify the effectiveness of each kind of attention mechanism in our approach.

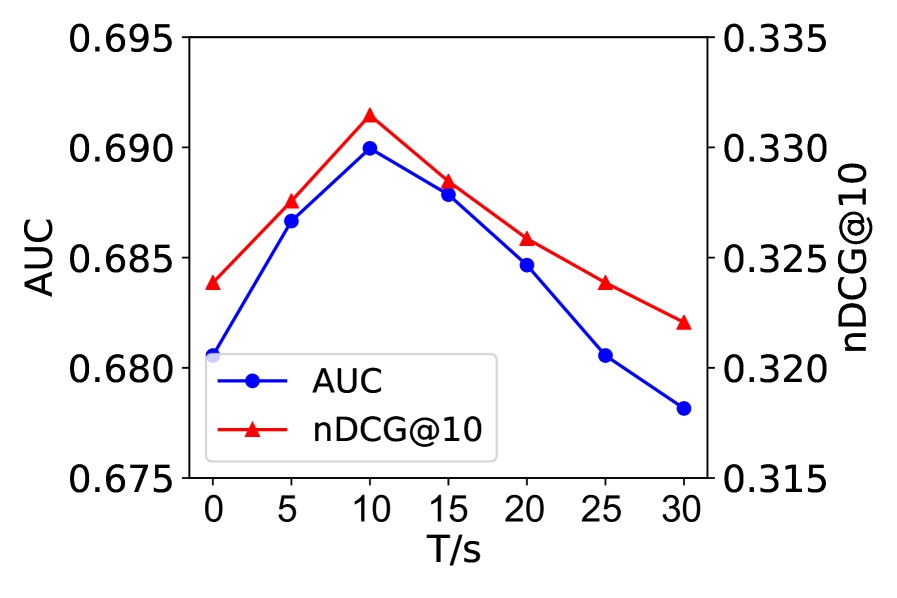

4.5 Influence of Hyperparameters

In this section, we study the influence of an important hyperparameter on our approach, i.e., the dwell time threshold $T$. We compare the performance of our approach w.r.t. different values of $T$. The results are illustrated in Fig. 6. We find that the performance of our approach first improves as the threshold increases. This is probably because when $T$ is too small, many negative news clicks cannot be distinguished from the positive ones, and the modeling of user interest is not accurate enough. In addition, the performance of our approach declines when $T$ becomes too large. This is also intuitive because when $T$ is too large, many positive news clicks will be mistakenly regarded as negative ones and the errors will be encoded into the negative user embedding, which leads to sub-optimal performance. Therefore, a moderate value of $T$, e.g., 10 seconds, may be more appropriate for our approach, which is also consistent with our news browsing experiences.

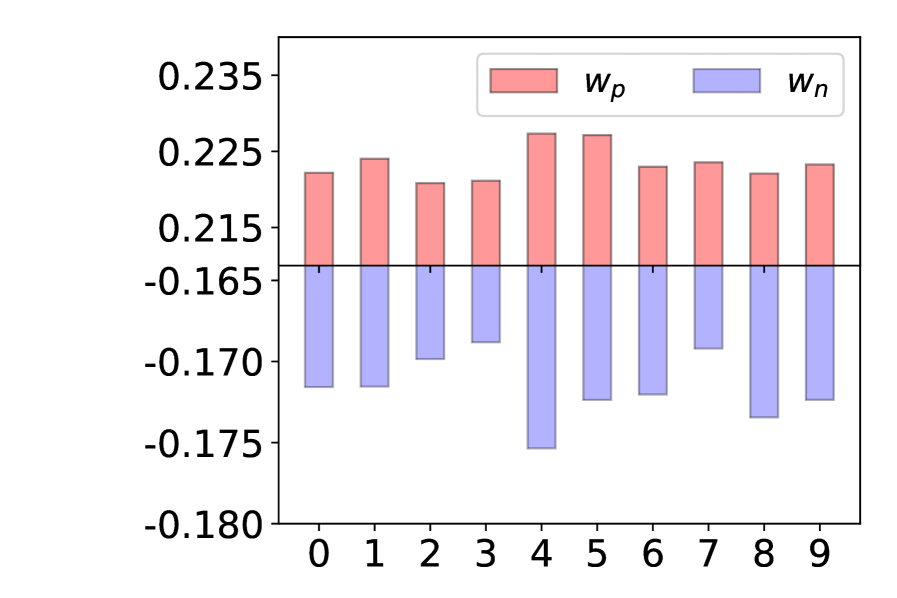

4.6 Parameter Analysis

In this section, we study the values of the other two key parameters learned by our model, i.e., the weight $\alpha$ of the positive click score and the weight $\beta$ of the negative click score. The results of 10 independent experiments are illustrated in Fig. 7. According to these results, we find that $\alpha$ is consistently positive. This is intuitive because the news read carefully by users can usually represent their preferences. Thus, the similarities between the candidate news and the positive clicked news should have a positive impact on the click probability. In addition, we find that $\beta$ is consistently negative. This may be because if a user closes a news article very quickly, we can infer that he/she is probably not interested in this news. Thus, the click probability should have a negative correlation with the similarities between candidate news and negative clicked news.

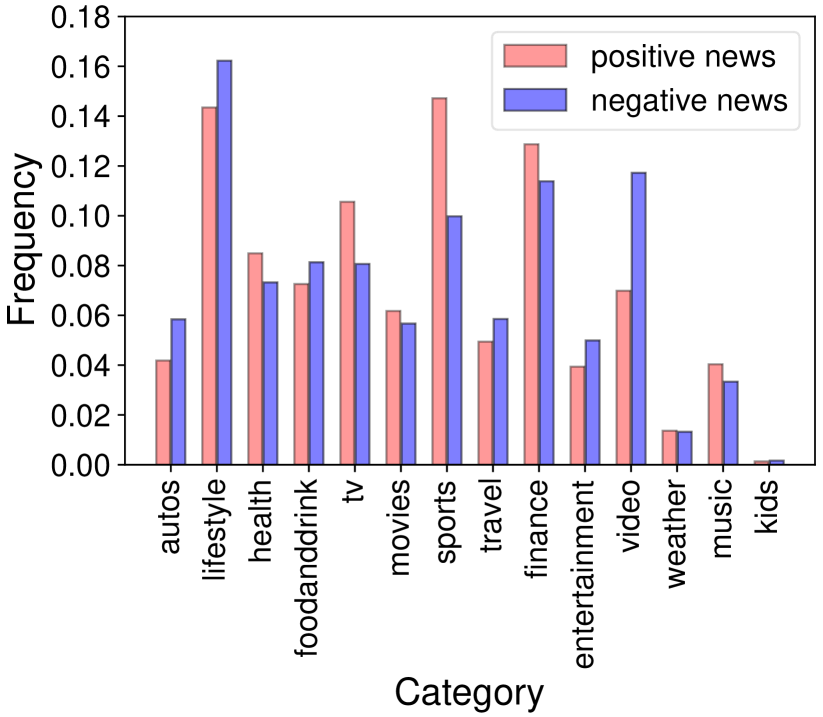

4.7 Qualitative Analysis of Negative News

In this section, we conduct several qualitative analyses of the characteristics of the negative clicked news. First, we compare the topic distributions of positive and negative news, and the results are shown in Fig. 8. According to Fig. 8, the ratio of negative news differs across topic categories. For example, it is interesting that the ratio of negative news is the highest in the “video” category. This may be because many users are attracted by the titles of the news in this category, but they are not interested in the content of these news. Thus, incorporating the information of negative feedback may also implicitly encode topical information into user representations, which is useful for more accurate news recommendation.

| Clicked News | NF Ratio |
|---|---|
| The 30 Most Heartwarming Random Acts of Kindness from 2018 | 75.8% |
| Pink Lettuce Is the Newest Pink Produce | 73.8% |
| 10 Secrets of Airline Food | 73.4% |
| Get Ready to Load Up Your Plates! 20 Restaurants Where Kids Eat Free | 73.2% |
| Christmas coat drive for Paterson students | 73.0% |
| The Best All-You-Can-Eat Deal in Every State | 72.0% |
| The Most Ingenious Foods Featured On Shark Tank | 71.7% |
| The Five Bikes Sabrina is Most Excited to See in 2019 | 70.3% |
| The greatest sports siblings in history | 67.1% |
| 2018 Holiday Gift Guide: The 25 Best Gift Ideas Under $500 | 66.7% |

In addition, we aim to discover some common patterns of negative news, e.g., the contents and writing styles of their titles. We calculate the ratio of negative feedback (NF) for each news (we ignore the news with fewer than 10 clicks to filter out possibly noisy news), and the titles of the top 10 news with the highest NF ratios are listed in Table 3. From Table 3, we find that many news with top NF ratios are clickbaits, and they have very similar writing styles, i.e., describing something in a sensationalized way without providing details. Thus, many users may be attracted by the news titles and click these news. However, after taking a glance at the news body, many users may be disappointed and close these news quickly. Thus, frequently recommending clickbaits to users harms the user experience of news platforms. Fortunately, by incorporating the negative feedback of users, our approach has the potential to avoid recommending too many clickbaits, which can improve the reading experience of users.
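The per-news NF ratio described above can be sketched as follows: the share of clicks on a news article whose dwell time falls below the threshold, computed only for articles with at least 10 clicks. The `(news_id, dwell_seconds)` record layout and the function name `nf_ratios` are hypothetical, and the 10-second threshold is the value from the experimental settings; treating a dwell time below the threshold as negative is an assumption about the boundary.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def nf_ratios(clicks: Iterable[Tuple[str, float]],
              threshold: float = 10.0,
              min_clicks: int = 10) -> Dict[str, float]:
    """Ratio of negative clicks per news, skipping rarely clicked news."""
    total: Dict[str, int] = defaultdict(int)
    negative: Dict[str, int] = defaultdict(int)
    for news_id, dwell in clicks:
        total[news_id] += 1
        if dwell < threshold:  # assumed definition of a negative click
            negative[news_id] += 1
    return {news_id: negative[news_id] / count
            for news_id, count in total.items()
            if count >= min_clicks}


# Toy log: news "a" has 10 clicks (6 short), news "b" has only 3 clicks.
toy_log = [("a", 2.0)] * 6 + [("a", 60.0)] * 4 + [("b", 1.0)] * 3
toy_ratios = nf_ratios(toy_log)
```

Sorting the resulting dictionary by value in descending order would give a ranking of the kind shown in Table 3.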

| Clicked News | Dwell time |
|---|---|
| 23 Celebs Who Still Live in Their Hometown | 75s (+) |
| Ranking the Oscar’s Best Picture winners from every year | 1s (-) |
| Oscars 2019: ’The Favourite,’ ’Roma’ Lead With 10 Nominations | 2s (-) |
| Gladys Knight to Perform the National Anthem at Super Bowl LIII | 44s (+) |
| Five Players Who Could Be Traded During Super Bowl Week | 187s (+) |

| Candidate News | Score (w/o NF) | Score (w/ NF) |
|---|---|---|
| Ranking the possible Super Bowl LIII matchups | 0.669 | 0.893 |
| Bradley Cooper Snubbed by Oscars for Best Director And More Nomination Shocker | 0.912 | 0.075 |
| Olympic swimmer Nathan Adrian reveals he has testicular cancer | 0.212 | 0.158 |

4.8 Case Study

In this section, we conducted several case studies to show the effectiveness of our approach. First, we want to visually explore the effectiveness of incorporating the negative feedback of users. The clicked news and candidate news of a randomly selected user are listed in Table 4. We calculate the click probability score respectively using our approach and its variant without negative feedback. According to Table 4, the second candidate news is assigned the highest click score when negative feedback is not considered. This is probably because it is very similar to the second and third clicked news, since all of them are about Oscars. However, we can infer that this user is probably not very interested in this topic since she closes the second and third clicked news very quickly. Thus, it may be ineffective to recommend Oscars related news to this user. Fortunately, our approach can assign the second candidate news a low click score. This is because our approach can take the implicit negative user feedback into consideration, which is beneficial for modeling user preferences more accurately.

5 Conclusion

In this paper, we propose a neural news recommendation approach that can consider the implicit negative feedback of users. We propose to distinguish positive and negative news clicks according to users’ reading dwell time, and to learn two different embeddings of a user from her positive and negative news clicks, respectively. In addition, we propose to compute a unified news click score based on a combination of the relevance scores between candidate news and these two user embeddings. Besides, we propose an interactive news modeling method that can learn unified news representations from title and body with full consideration of their relatedness. Extensive experiments on a real-world dataset show that incorporating negative user feedback can effectively improve the performance of user modeling for news recommendation.

Acknowledgements.

The authors would like to thank Microsoft News for providing technical support and data. This work was supported by the National Key Research and Development Program of China under Grant number 2018YFC1604002, and the National Natural Science Foundation of China under Grant numbers U1936216, U1936208, U1705261 and U1836204.

Conflict of interest

The authors declare that they have no conflict of interest.
