Neural Volumetric Blendshapes: Computationally Efficient Physics-Based Facial Blendshapes

Abstract

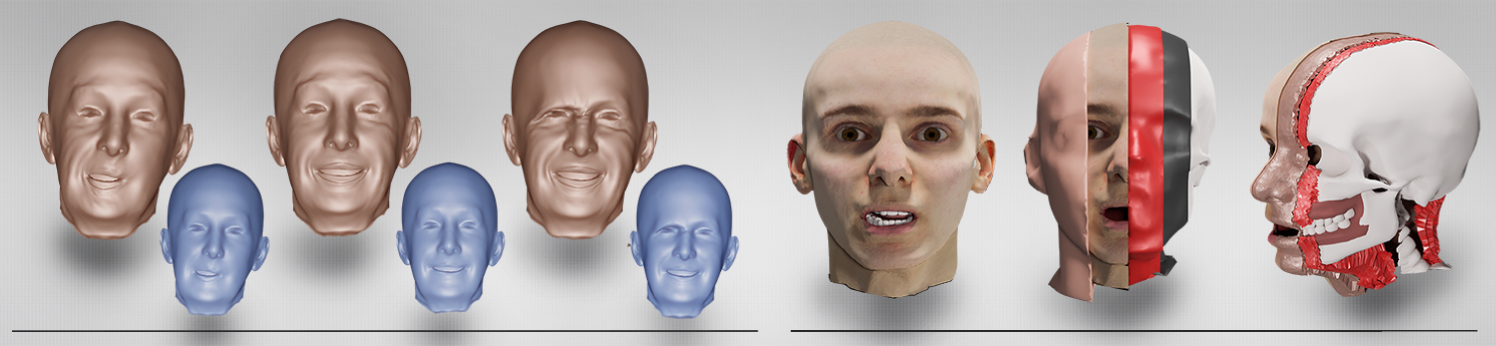

Computationally weak systems and demanding graphical applications are still mostly dependent on linear blendshape models for facial animations. At this, artifacts such as self-intersections, loss of volume, or missing soft tissue elasticity are often avoided by using comprehensively designed blendshape rigs. However, hundreds of blendshapes have to be manually created or scanned in for high-quality animations, which is very costly to scale to many characters. Non-linear physics-based animation systems provide an alternative approach which avoid most artifacts by construction. Nonetheless, they are cumbersome to implement and require immense computational effort at runtime. We propose neural volumetric blendshapes, a realtime approach on consumer-grade CPUs that combines the advantages of physics-based simulations while the handling is as effortless and fast as that of linear blendshapes. To this end, we present a neural network that efficiently approximates volumetric simulations and generalizes across human identities as well as facial expressions. Furthermore, it only requires a single neutral face mesh as input in the minimal setting. Along with the design of the network, we introduce a pipeline for the challenging creation of anatomically and physically plausible training data. Part of the pipeline is a novel layered head model that densely positions the biomechanical anatomy within a skin surface while avoiding intersections. The fidelity of all parts of the data generation pipeline as well as the accuracy and efficiency of the network are evaluated in this work. Upon publication, the trained models and associated code will be released.

1 Introduction

At present, research in the field of head avatars and facial animation is mainly concerned with obtaining photorealistic results through neural networks [13, 24, 4] which can be operated on computationally rich systems, require comprehensive per-person training data, and time-consuming individualization. What currently falls short, however, is the inclusion of less capable hardware setups and efficient training pipelines that avoid extensive data collection. For this, various adaptions of linear blendshape models paired with deformation transfer [54, 9] and example-based facial rigging [38] are still the usual means in production. Although linear facial models have been intensively researched and improved over the past decades, there are still known shortcomings like physically implausible distortions, loss of volume, anatomically impossible expressions, missing volumetric elasticity, or self-intersections. Physics-based simulations have been proposed that overcome most artifacts of linear blendshapes [27, 26, 18, 16], but they are usually laborious to handle and computationally expensive. Hybrid models that try to combine the best of both worlds are either not sophisticated enough in the quality of the simulated physical properties [6] or still too inefficient to be used on slower devices [26].

An auspicious approach in the latter category is physics-based volumetric blendshapes [26]. These can be animated with physical plausibility, anatomical constraints can be taken into account, self-intersections can be prevented, and the control is identical to linear blendshapes. Although the level of detail is slightly sacrificed in comparison to other physics simulations [18, 27], volumetric blendshapes can still only be used at low frame rates. We improve on this approach with neural volumetric blendshapes that approximate the involved calculations of physical and anatomical constraints with an efficient and lightweight neural network. Thereby, realtime inference of physics-based non-linear blendshapes on consumer-grade hardware becomes possible and only a slight computational overhead is necessary compared to linear blendshapes. The principal challenge we solve in this work is the creation of training data for neural volumetric blendshapes that reflects the previously discussed animation advantages and facilitates a generalization across different human identities. Thus, in contrast to other recent work that tries to approximate physics-based facial simulations by neural networks [53, 55, 16], we avoid time-consuming individualizations for a straightforward deployment. In addition, our facial animation method does not require sequences of optical scans or manually crafted facial animations. Instead, the network trained in this work induces an anatomical plausible deformation transfer such that our system is instantly applicable to neutral head surfaces or on top of arbitrary linear blendshape rigs.

The aforementioned data generation pipeline is unique in that, to the best of our knowledge, there are no other comprehensive datasets that relate a broad range of head shapes in diverse facial expressions to the underlying anatomical and physical characteristics. To curate such a dataset for the first time, we bring together multiple data sources such as CT data to reflect the anatomy of heads, 3D reconstructions of images in the wild to collect diverse head shapes, or facial expressions in the form of recorded blendshape weights from dyadic conversational situations. The result is a dataset of heads with neutral and non-neutral expressions represented by a novel standardized layered head model, relating skin surface displacements with the underlying biomechanical volumetric deformations and transformations of muscles, skull bones, and soft tissue.

The key novelties and contributions we present in this paper can be summarized as follows:

-

•

A novel layered head model (LHM) representing the skin surface as well as the entire biomechanical anatomy.

-

•

A data-driven procedure for fitting the LHM to neutral skin surfaces.

-

•

An inverse physics-based simulation which fits the LHM to skin surfaces of non-neutral facial expressions.

-

•

A novel neural network design that approximates physics-based simulations to efficiently implement neural volumetric blendshapes.

-

•

A pipeline for creating training data of the neural network that among other parts includes the LHM and the associated fitting procedures.

2 Related Work

2.1 Personalized Anatomical Models

Algorithms that create personalized anatomical models can essentially be distinguished according to two paradigms: heuristic-based and data-driven. Considering heuristic-based approaches, Anatomy Transfer [2] applies a space warp to a template anatomical structure to fit a target skin surface. The skull and other bones are only deformed by an affine transformation. A similar idea is proposed by Gilles et al. [23]. While they also implement a statistical validation of bone shapes, the statistics are collected from artificially deformed bones. In [26, 30], an inverse physics simulation was used to reconstruct anatomical structures from multiple 3D expression scans. Saito et al. [45] simulate the growth of soft tissue, muscles, and bones. A musculoskeletal biomechanical model is fitted from sparse measurements in [48] but not qualitatively evaluated.

There are only a few data-driven approaches because combined data sets of surface scans and MRI, CT or DXA images are hard to obtain for various reasons (e.g. data privacy or unnecessary radiation exposure). The recent work OSSO [32] predicts full body skeletons from 2000 DXA images that do not carry precise 3D information. Further, bones are positioned within a body by predicting only three anchor points per bone group and not avoiding intersections between skin and skull. A model that prevents skin-skull intersections and also considers muscles is based on fitting encapsulating wraps instead of the anatomy itself [34]. However, no accurate algorithm based on medical imaging but a BMI (Body mass index) regressor [41] is used to position the wraps. A much more accurate, pure face model, was developed by Achenbach et al. [1]. Here, CT scans are combined with optical scans by a multilinear model (MLM) which can map from skulls to faces and vice versa. As before, no self-intersections are prevented and only bones are fitted. Building on the data from [1] and following the idea of a layered body model [34], we create a statistical layered head model including musculature that avoids self-intersections.

2.2 Physics-Based Facial Animation

A variety of techniques for animating faces have been developed in the past [25, 12, 56, 43]. Data-driven models [36, 26, 37], which have recently been significantly improved by deep learning [57, 21, 13, 20, 51, 4], are certainly dominant. Due to their simplicity and speed, linear blendshapes [36] are still most commonly used in demanding applications and whenever no computationally rich hardware is available. Physics-based models have been developed for a long time [50] and avoid artifacts like implausible contortions and self-intersections, but due to their complexity and computational effort, they are rarely used. Hybrid approaches add surface-based physics to linear blendshapes for more detailed facial expressions [6, 7, 16]. However, by construction they can not model volumetric effects.

The pioneering work of Sifakis et al. [50] is the first fully physics-based facial animation. The simulation is conducted on a personalized tetrahedron mesh, which can only be of a limited resolution due to a necessary dense optimization problem. With Phace [27], this problem was overcome by an improved physics simulation. An art-directed muscle model [17, 5, 18] additionally represents muscles as B-splines and allows control of expressions via trajectories of spline control points. A solely inverse model for determining the physical properties of faces was proposed in [29]. Neural soft-tissue dynamics [46, 14] extend the SMPL (Skinned Multi-Person Linear Model) proposed in [40] with secondary motion. Recently, [53, 55, 16] adapted neural soft-body dynamics to learn the physical properties of a particular person. However, these approaches must be retrained for new objects and are slow in inference. With volumetric blendshapes [26], a hybrid approach has been presented that combines the structure of linear blendshapes with physical and anatomical plausibility. We extend this work to neural volumetric blendshapes to make physical plausibility realtime capable while maintaining the control structure of standard linear blendshapes.

3 Method

The foundation of our neural volumetric blendshapes approach (Sections 3.2 and 3.3) is a novel layered head representation (Section 2). Starting from there, we design a physics-based facial animation model (Section 3.4) and distill it into a defining dataset (Section 3.5). With this dataset, a neural network that makes the animation model realtime capable is trained.

3.1 Layered Head Model

We represent a head with neutral expression through a component-wise transformation (see Section 3.5 for details) of a layered head model (LHM) template

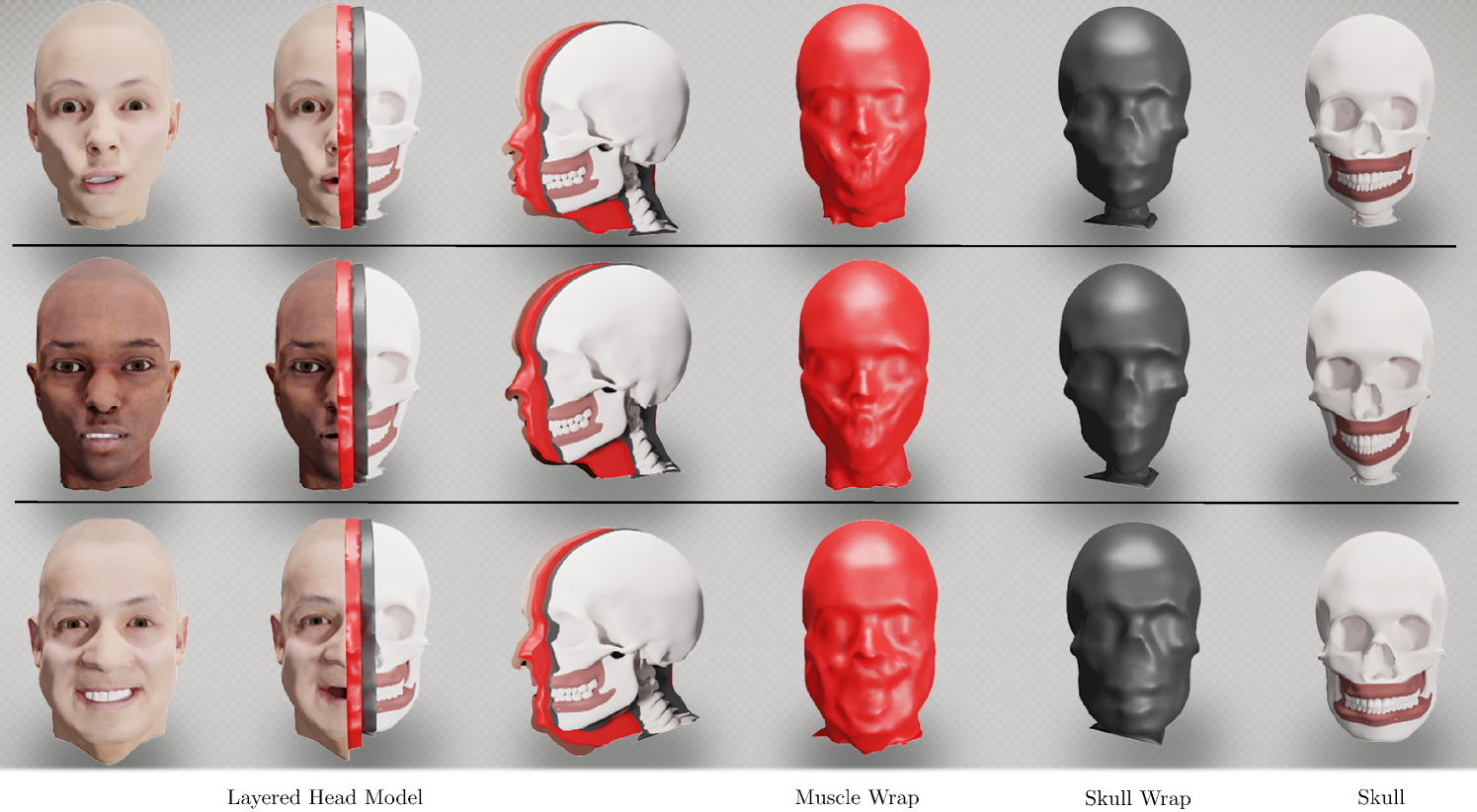

| (1) |

that consists of six triangle meshes. describes the skin surface including the eyes, the mouth cavity, and the tongue, the surface of all skull bones and teeth, the surface of all muscles and the cartilages of the ears and nose. is the skin layer, i.e. a closed wrap enveloping , the skull layer that envelopes , and the muscle layer that envelopes . Other anatomical structures are omitted for simplicity. The template structures and were designed by an experienced digital artist. The skin, skull, and muscle layers , and have the same triangulation and were generated by shrink-wrapping a sphere as close as possible to the corresponding surfaces without intersections. The complete template is shown in Figure 2.

The skull and muscle layers are further massaged such that the quads of all prisms that can be spanned between corresponding faces of the skin, muscle, and skull layer are as rectangular as possible while preserving the original geometries. To that end, we determine for the skull layer

| (2) |

within the efficient projective dynamics [11] optimization framework, initializing with . Here, is the two-sided Hausdorff distance to the non-massaged shape and

| (3) | ||||

induces the rectangular prism shapes. After the optimization we set . The same optimization is run for the muscle layer.

| Mesh | ||||

|---|---|---|---|---|

| #Vertices | 21875 | 14572 | 16388 | 7826 |

| #Faces / #Tets | 42738 | 28856 | 32370 | 15648 |

| Mesh | ||||

| #Vertices | 7826 | 7826 | 49852 | |

| #Faces / #Tets | 15648 | 15648 | 123429 | 73681 |

The wrapping layers of the LHM allow for at least two significant advantages. On the one hand, they provide a simplified representation of the skin surface, the musculature, and the skull, which we exploit in Section 3.5 for determining the LHM template transformation . On the other hand, they can be used for topologically and semantically consistent tetrahedralization of the head volume by splitting the prisms between the layers canonically into tetrahedrons.

More precisely, the construction of the LHM also defines a soft tissue tetrahedron mesh (i.e. between the skin and the muscle layer) and a muscle tissue tetrahedron mesh (i.e. between the muscle and the skull layer). The massage of the muscle and skull layers ensures nicely shaped tets with minimal shearing. Further, can be fine-tuned by removing all vertices and connected tets outside of , adding the vertices of , and connecting them via Delaunay tetrahedralization. The complexities of all template components are given in Table 1.

3.2 Linear & Volumetric Blendshapes

Building on the LHM representation, we can now introduce neural volumetric blendshapes. For this, the classical concept of linear blendshapes is reviewed first. Thereupon, we derive volumetric blendshapes and the involved physics-based simulations that are in general not real time capable. Finally, we introduce neural volumetric blendshapes (Section 3.3) as an efficient and fast alternative.

For a specific head , a linear blendshape model consists of surface blendshapes

| (4) |

and determines an unknown facial expression as the linear interpolation

| (5) |

The are the blending weights and determine the share of each blendshape in the expression.

The corresponding set of volumetric blendshapes can be defined as

| (6) |

where , describe soft and muscle tissue deformations as vectors of deformation gradients. describes the skull after rigid motion of jaw and cranium. An anatomically plausible inverse physics model (see Section 3.4 for details) relates the linear rig to the volumetric rig as

| (7) |

In words, determines the biomechanical volumetric deformations of that cause the skin surface of a facial expression. Vice versa, a forward physics model acts as a left inverse to and maps volumetric deformations back to surface deformations as

| (8) |

In reality, is often not an anatomically legal facial expression with respect to . As a consequence, is built such that is an anatomically reachable expression which, in Euclidean sense, is close but not necessarily equal to .

The major advantage of a volumetric blendshape model is that unknown facial expressions calculated as

| (9) |

can be shaped anatomically more plausible and thus more realistic, provided a suitable choice of interpolation (and extrapolation) functions and . At this, the essential requirement to and is the biologically necessary volume preservation of the deformation gradients. The common approach would be to to separately interpolate the stretch and the rotation components of the deformation gradients [26, 49] and the positions of the skull bones. Considering the stretching components, volume-preserving interpolation methods [3, 28] have been proposed. Considering the rotation components, quaternion-interpolation satisfies the volume-preservation by construction. However, to the best of our knowledge, it is yet to be discussed how both components should be extrapolated for facial animations if the blending weights do not form a convex combination. In this work, we therefore use a novel hybrid approach that calculates facial expressions as

| (10) |

This way, inter- and extrapolation capabilities of linear surface blendshapes can be used while maintaining the advantages of anatomical plausibility through .

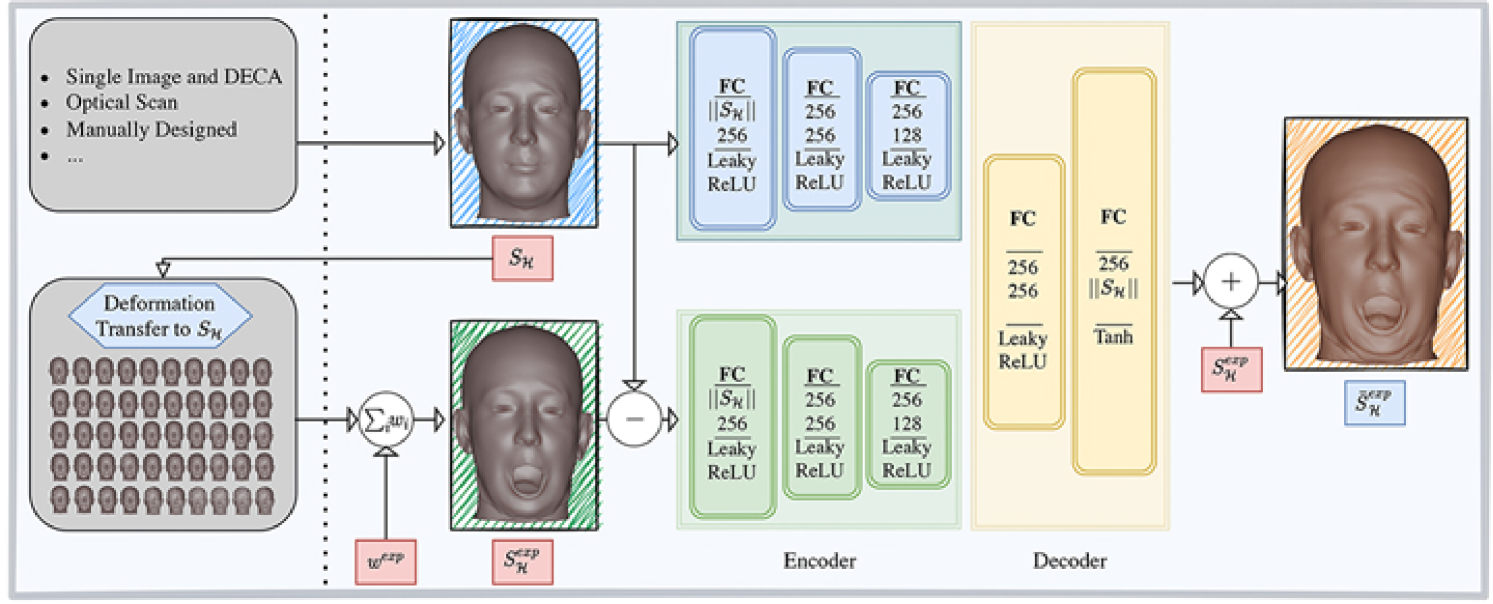

3.3 Neural Volumetric Blendshapes

Regardless of the construction of and , the calculation of facial expressions is in general not realtime-capable for high-resolution volumetric blendshapes and sophisticated physics models and . We therefore present the neural volumetric blendshape model that like the linear blendshape model (4) consists of a set of (not necessarily anatomically plausible) expression surfaces . However, unknown anatomically plausible expressions are formed by a neural network that is trained such that

| (11) |

The structure of Equation (11) enables us to implement efficiently as fully connected networks. More precisely, both inputs to , the neutral surface and the vector of the differences to the linear interpolation result , are tokenized with the help of respective encoders. Subsequently, the tokens are processed by a decoder that outputs the vertex-wise deformations from to the anatomically plausible expression .

The inputs and outputs of are justified as discussed next. Considering Equation (10) which is approximated by , it seems reasonable to use the same inputs and only learn the evaluation of . However, the accompanying tetrahedron meshes are significantly higher-dimensional than the corresponding surface meshes and would be slow downed severely in the inference speed. We therefore expect to implicitly learn the linking between linear and volumetric blendshapes as shown in Equation (7) as well as the fitting of the LHM. Since we demonstrate in Section 3.5 how the LHM can be fitted given only the neutral surface and also the linking does not need any further inputs, the neutral surface carries sufficient information to omit the tetrahedron meshes. For the second input, the difference vector, the blending weights or the linear interpolation result could alternatively be inserted. Inputting only the weights, however, would considerably limit the flexibility of because it does not allow the underlying surface blendshapes to be changed after training. On the other hand, inputting the linear interpolation result exhibits disadvantages at training time. The target deltas are mostly Gaussian distributed and can therefore be learned more easily by a neural network [39]. In the same spirit, the output of is chosen to be the differences to the anatomically plausible expression.

We evaluated alternatives to fully connected networks such as set transformers [35], convolutional networks on geometry images, graph neural networks [47], or implicit architectures [42], but all have exhibited substantially slower inference speeds while reaching a similar accuracy.

Our design of is not only fast, i.e., runs only 8 ms per frame on a consumer grade Intel i5 12600K, but it is also straightforward to deploy. A single neutral surface of a head is sufficient on which deformation transfer [9] can be applied. Building on this, an anatomically plausible animation can be performed with as shown in Figure 3. Further, no more volumetric information has to be processed in complex simulation frameworks. Thereby, and because only simple fully connected layers are used, the mesh is portable and easy to use on many different (computationally weak) devices.

Next, the construction of and is described and subsequently a pipeline for creating a dataset to learn is structured.

3.4 Physics-Based Simulations

We realize the anatomically plausible inverse physics and the left inverse as projective dynamics energies and , respectively. Starting with , separate terms for soft tissue, muscle tissue, the skin, the skull, and auxiliary components are applied. Considering the soft tissue , we closely follow the model of [27] and impose

| (12) |

which for each tetrahedron penalizes changes of volume

| (13) |

and strain

| (14) |

denotes the deformation gradient of a tetrahedron , the optimal rotation, and the Frobenius norm.

To reflect the biological structure of the skin, we additionally formulate a dedicated strain energy

| (15) |

on each triangle of the skin.

For the muscle tets , we follow the arguments of [29] that capturing fiber directions for tetrahedralized muscles is in general too restrictive. Hence, only a volume-preservation term

| (16) |

is applied. The weight is set sufficiently high such that the volume-preservation is almost a hard constraint.

The skull is not tetrahedralized as it is assumed to be non-deformable even though it is rigidly movable. The non-deformability of the skull is represented by

| (17) |

i.e. a strain on the triangles and mean curvature regularization

| (18) |

on the vertices of the skull. The matrix denotes the optimal rotation keeping the vertex Laplacian as close as possible to its initial value . The vertex Laplacian is discretized using the cotangent weights and the Voronoi areas [10]. We do not model the non-deformability as a rigidity constrain due to the significantly higher computational burden. Like for the volume constraints, the non-deformability of the skull is weighted to be an almost hard constraint.

To connect the muscle tets as well as the eyes to the skull, connecting tets are introduced. For the muscle tets, each skull vertex connects to the closest three vertices in to form a connecting tet. For the eyes, connecting tets are formed by connecting each eye vertex to the three closest vertices in . On these connecting tets, the energy with the same constraints as in Equation (12) is imposed as almost hard constraints. By this design, the jaw and the cranium are moved independent from each other though muscle activations but the eyes remain rigid and move only with the cranium.

Finally, the energy

| (19) |

of soft Dirichlet constraints

| (20) |

is added, attracting each vertex of the skin surface to the corresponding vertex from the target expression . As previously mentioned, the expression might be anatomically implausible. As a countermeasure, we can impose a maximum strain by balancing with . Thus, together with the almost hard constraints and by the construction of projective dynamics, always results in a plausible expression close to the target. The weighted sum of the aforementioned energies gives the total energy

| (21) | ||||

of the backward model .

The forward model is considerably simpler in structure and is realized as the energy

| (22) | ||||

that aims to achieve given deformation gradients while matching the positions of the targeted skull . Here,

| (23) |

is similar to Equation (14) and attracts the deformation of each tetrahedron to a corresponding target deformation gradient .

For both models we resolve self-intersections between colliding lips or teeth in a subsequent projective dynamics update. In the second update, colliding vertices are resolved as in [33]. The distinctive feature here is that no collision-gaps can occur after dissolving the self-intersections.

3.5 Generation of Training Data

To approximate Equation (10) with , a defining training dataset is required in first place. By the construction of , this training dataset must consist of instances that relate diverse facial expressions created through linear blendshapes to the corresponding anatomically plausible surfaces. This dataset must also cover a variety of distinct head shapes to train to be as generally applicable as and .

In the following, we describe a pipeline for creating instances of such dataset, which can be roughly divided into two high-level steps. First, in order to evaluate Equation (10) on a reasonable domain, it is necessary to model a head from an extensive head-model and determine the corresponding neutral soft and muscle tissue tetrahedron meshes and . Second, has to be deformed to an expression and mapped to the anatomically plausible . A more detailed algorithmic description is given in Algorithm 1.

Sampling Head Shapes We start the first part of the pipeline by randomly drawing a neutral skin surface from DECA [20], one of the most comprehensive high-resolution face models currently available. More specifically, we randomly draw an image from the Flickr-Faces-HQ [31] dataset and let DECA determine the corresponding neutral head shape. Further, a precomputed mapping is applied to adapt the DECA topology to our template.

| Algorithm 1 Data Generation | |

|---|---|

| Head Sampling and LHM Fitting | |

| 1. a) |

Draw a random image of a head from the

FlickerHQ dataset. |

| b) | Calculate skin surface with neutral expression parameters. |

| c) | Find LHM transformation from , build up tetrahedron meshes and . |

| Expression Sampling and Simulation | |

| 2. a) | Create ARKit blendshapes from with deformation transfer. |

| b) | Sample weights from dyadic recordings and calculate . |

| c) | Get from . |

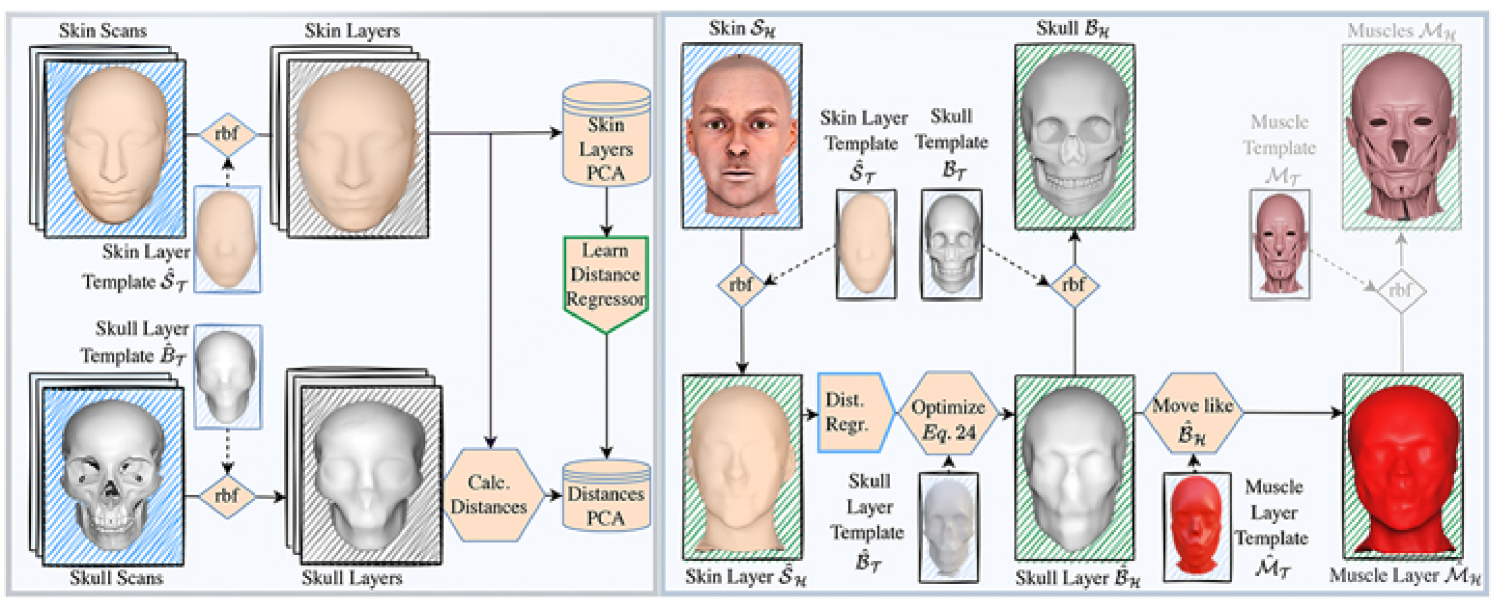

Fitting the LHM Next, the template LHM is aligned with the skin surface by finding that maps each of the template components individually. For this, we rely on a hybrid approach that is largely data-driven but also based on heuristics that ensure anatomic plausibility and avoids intersections.

As the first of the remaining five template meshes, we fit the skin layer by setting

| (24) |

The RBF function is a space warp based on triharmonic radial basis functions [8] that is calculated to displace from the template skin surface to the target and is then applied to the template skin layer. By construction, the skin layer will be warped semantically consistent and stick close to the targeted skin surface.

Next, we fit the skull layer by invoking a linear regressor that predicts the distances from the vertices of to the corresponding vertices of and subsequently minimizing with projective dynamics

| (25) | ||||

Here,

| (26) |

ensures that for each vertex the predicted distances is adhered to. Apart from , the same regularizing terms as in Equation (LABEL:eq::rect) and 18 are used. The optimization is initialized with where are area-weighted vertex normals. is trained on the dataset of [22] (SKULLS) that relates MRI skull measurements to skin surface scans. To ease the learning task, we learn the regressor between PCAs of the skin layers and the skin-to-skull-layer distances. Predicted lengths are set to a minimum value if they fall below a threshold, thus, avoiding skin-skull intersections and numerical issues in downstream physics-based simulations. In Figure 4a, the linear regressor training is visualized.

The muscle layer is fitted by positioning its vertices at the same absolute distances between the corresponding skin and skull layer vertices as in the template, and only passing on a small relative amount of the distance changes compared to the template. This approach assumes that the muscle mass in the facial area is only moderately affected by body weight and skull size.

The skull mesh is placed by setting

| (27) |

The properties of the RBF space warp ensure that the skull mesh remains within the skull layer if the layer is of sufficient resolution. The muscle mesh could be placed in a similar fashion but is not needed in our pipeline any further.

Finally, the soft and muscle tissue tetrahedron meshes and can be constructed as described in Section 2. On average, the complete fitting pipeline takes only about 500ms for one instance on an Intel i5 12600K processor. Figure 4b) visualizes the overall fitting process.

Sampling Expressions In the second part of the pipeline, the actual training instance is created. Beginning with deformation transfer [9] to transfer ARKit111https://developer.apple.com/ surface-based blendshapes to , we create expression by linearly blending the blendshapes with weights that are obtained from 8 around 10 minutes long dyadic conversations recorded with a custom iOS app.

Training Instance Finally, can be computed. Computing one training instance takes approximately 40 seconds on a AMD Threadripper Pro 3995wx.

4 Experiments

Before demonstrating the accuracy and efficiency (Section 4.3) of neural volumetric blendshapes, we first evaluate the fitting precision of the LHM (Section 4.1) as well as the quality of the proposed physical models (Section 4.2).

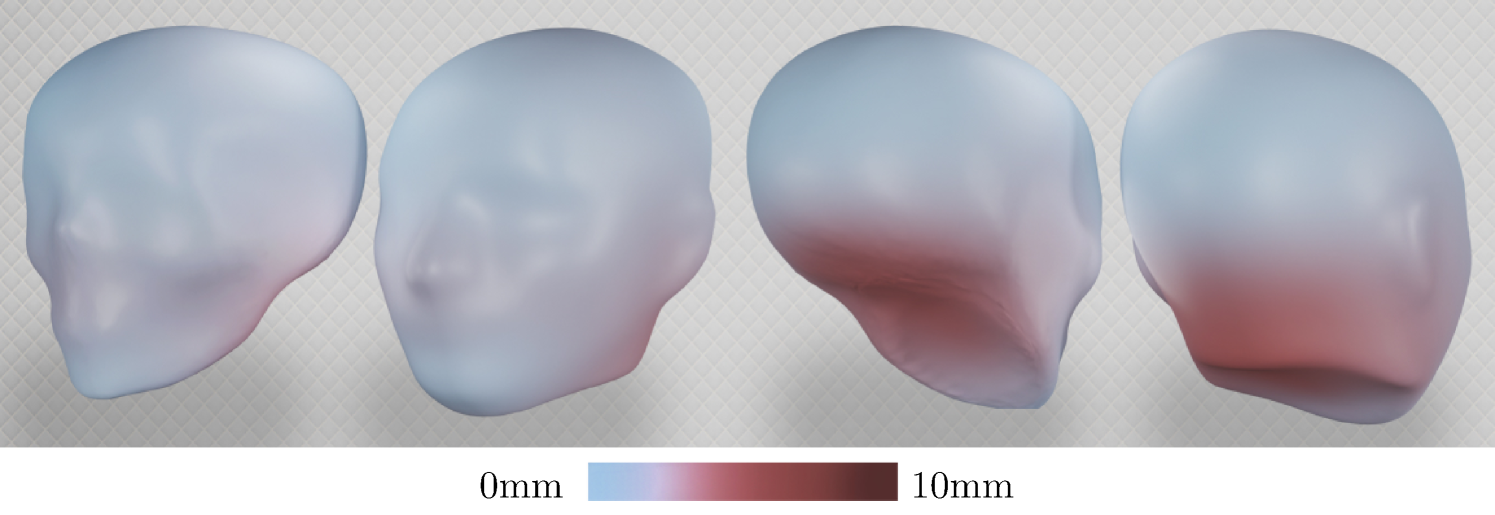

4.1 LHM Fitting

The fitting of the LHM is mainly composed of the data-driven positioning of the skull layer and the subsequent heuristic fitting of the muscle layer. We evaluate the crucial fitting of the skull layer with the SKULLS [22] dataset. Since this dataset consists of 43 instances only, a leave-one-out validation is performed in which the vertex-wise L2 errors are measured. The results are compared to the multilinear model as originally used for SKULLS in [1].

Both models cannot achieve a medical-grade positioning with errors between approximately and . The MLM achieves a higher precision with a mean error of than our approach that dispositions the skull by on average. However, the MLM cannot prevent collisions that might crash physics-based simulations. Also, our fitting algorithm produces large errors only in regions that are of less importance for facial simulations as can be seen in Figure 5. The errors are predominately distributed in the back area of the skull, since here the rectangular constraints of our fitting procedure can presumably no longer be aligned well to the skin layer. The following section demonstrates in downstream physics-based simulations that the prediction quality in the frontal face region is sufficiently adequate for detailed facial animations. Figure 6 displays fitting examples.

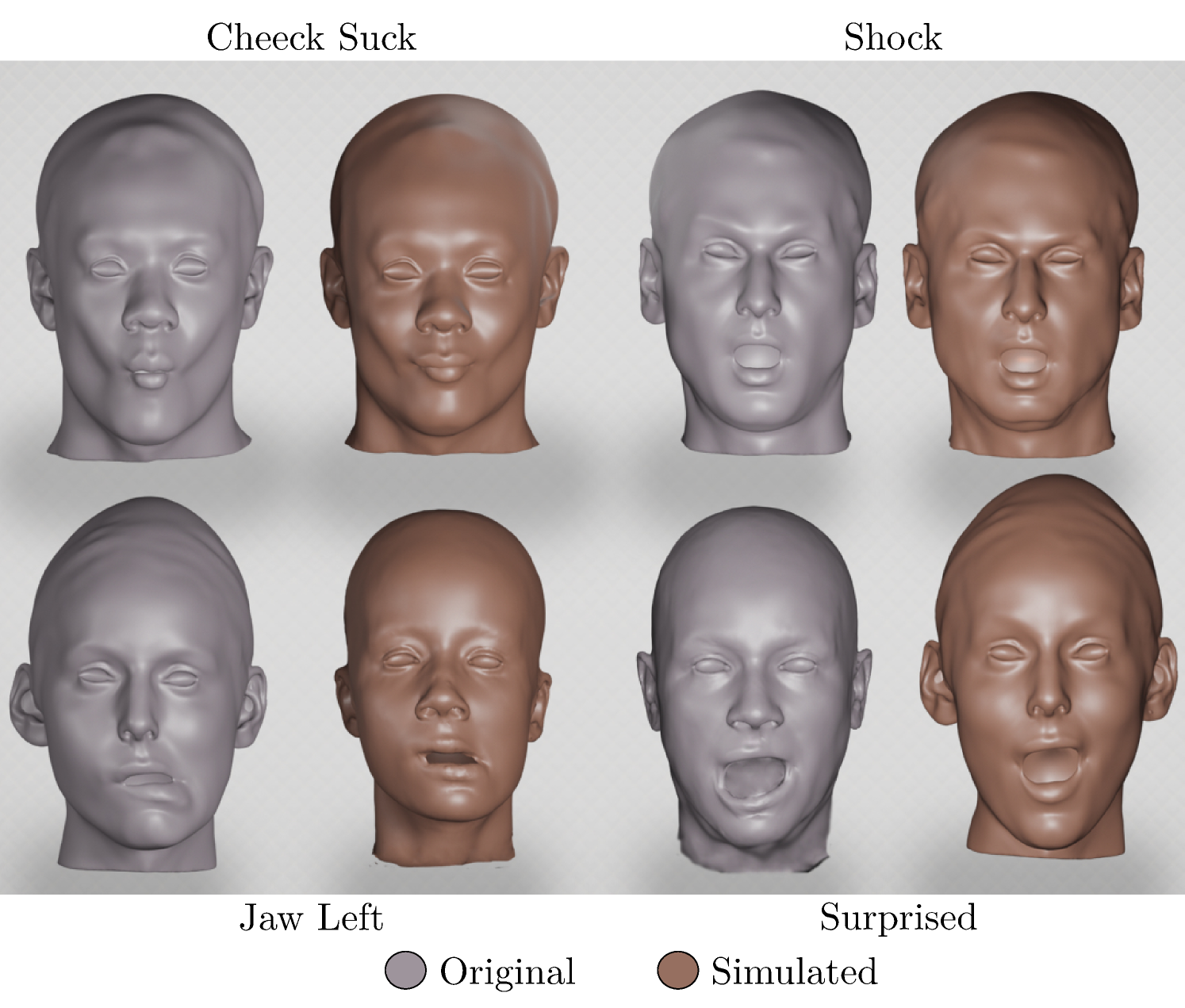

4.2 Physics-Based Simulation

To investigate the quality of and we perform an experiment on nearly 300 high-resolution optical facial expression scans from the proprietary 3DScanstore222https://www.3dscanstore.com dataset. First, we fit the LHM to each of the scans using and store only the resulting muscle deformation gradients and bone movements. Then, in turn, we apply these to the neutral scans of the corresponding individuals through and simulate the soft tissue as does. Only if our physic models and the previous LHM fitting operate with reasonable precision, do we expect a low L2-loss between the original expression and the simulated expression as well as visually appealing results.

Across all expression scans, we observe an average L2 reconstruction error of only 1.6 mm. The reproduction quality of the physical models is also reflected in the visual results of Figure 7. Further, the accuracy of the LHM fitting investigated in the previous section is again underlined. Additionally, we inspected expression retargeting by applying the extracted muscle and bone transformations to other identities. Again, visual appealing examples can be found Figure 7. The weights used to realize , and the LHM fitting in this and the following experiments are stated in Table 2.

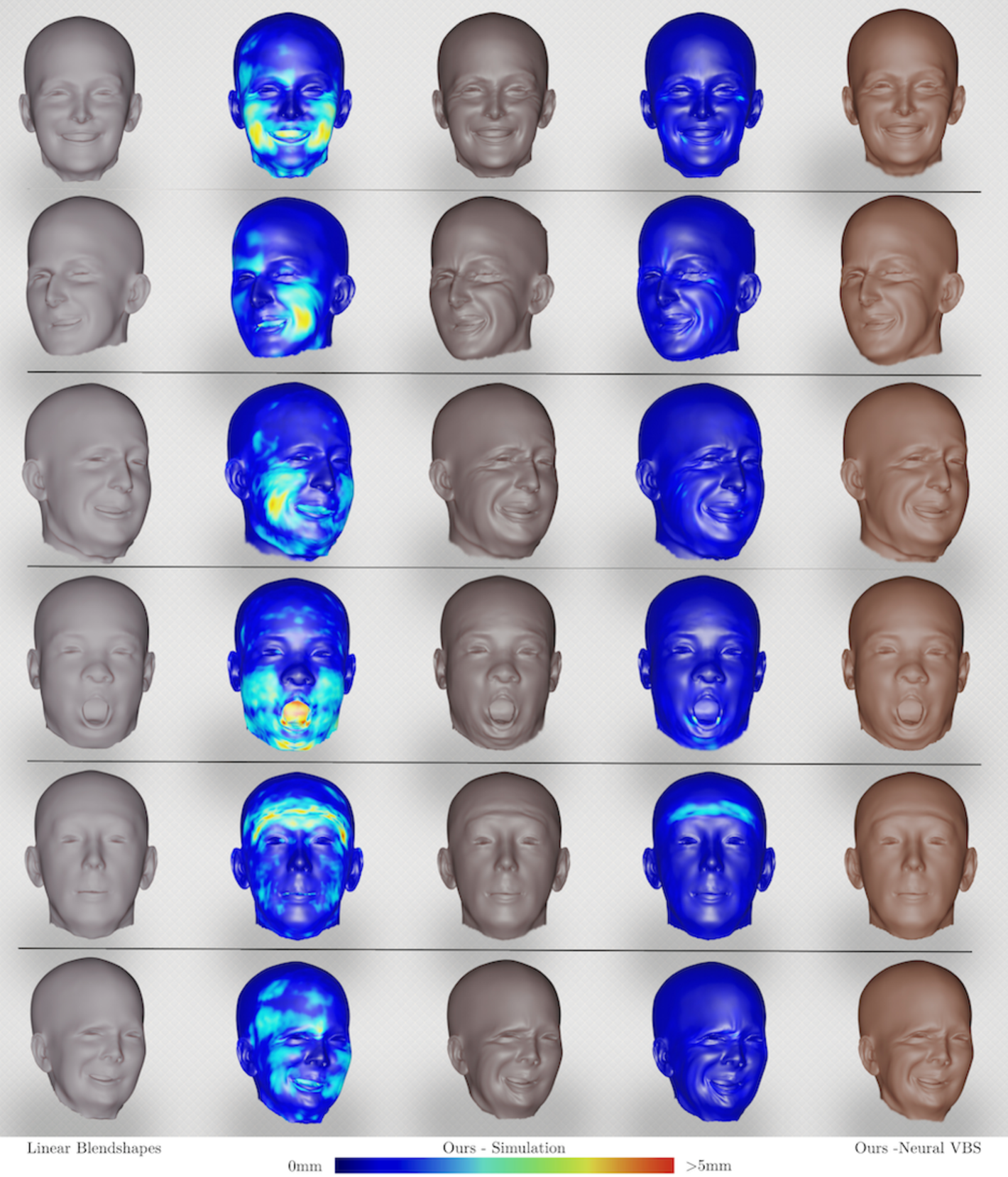

4.3 Neural Volumetric Blendshapes

To train and evaluate , we assemble a dataset of 50000 training and test instances by using the pipeline from Section 3.5. At this, we sample 10000 different head shapes and compute 5 different expressions per head. For training, the Adam optimizer performs 200k update steps with a learning rate of 0.0001. The learning rate is linearly decreased to 0.00005 over the course of training and a batch size of 128 is used. In total, the training specifications result in an approximate runtime of 8 hours on an NVIDIA A6000. The comparatively short training time can straightforwardly be explained by the less noisy training data as usually encountered for image-based deep learning models and the efficient network design. The identities are used 90% for training and 10% for testing.

First, we evaluate the efficiency of . The initial runtime of and in Equation (10) with 6 global projective dynamics updates as in [26] is about on a workstation AMD threadripper pro 3995wx with 128 cores (implemented with ShapeOp [19]). Our implementation of only takes 8ms on a consumer-grade Intel i5 12600K (implemented with PyTorch333https://pytorch.org). Moreover, a forward pass on an NVIDIA RTX3090 is calculated in less than one millisecond. Thus, neural volumetric blendshapes are suitable for realtime applications on weaker hardware setups and also offer advantages when many facial animations are to be executed in parallel on a GPU.

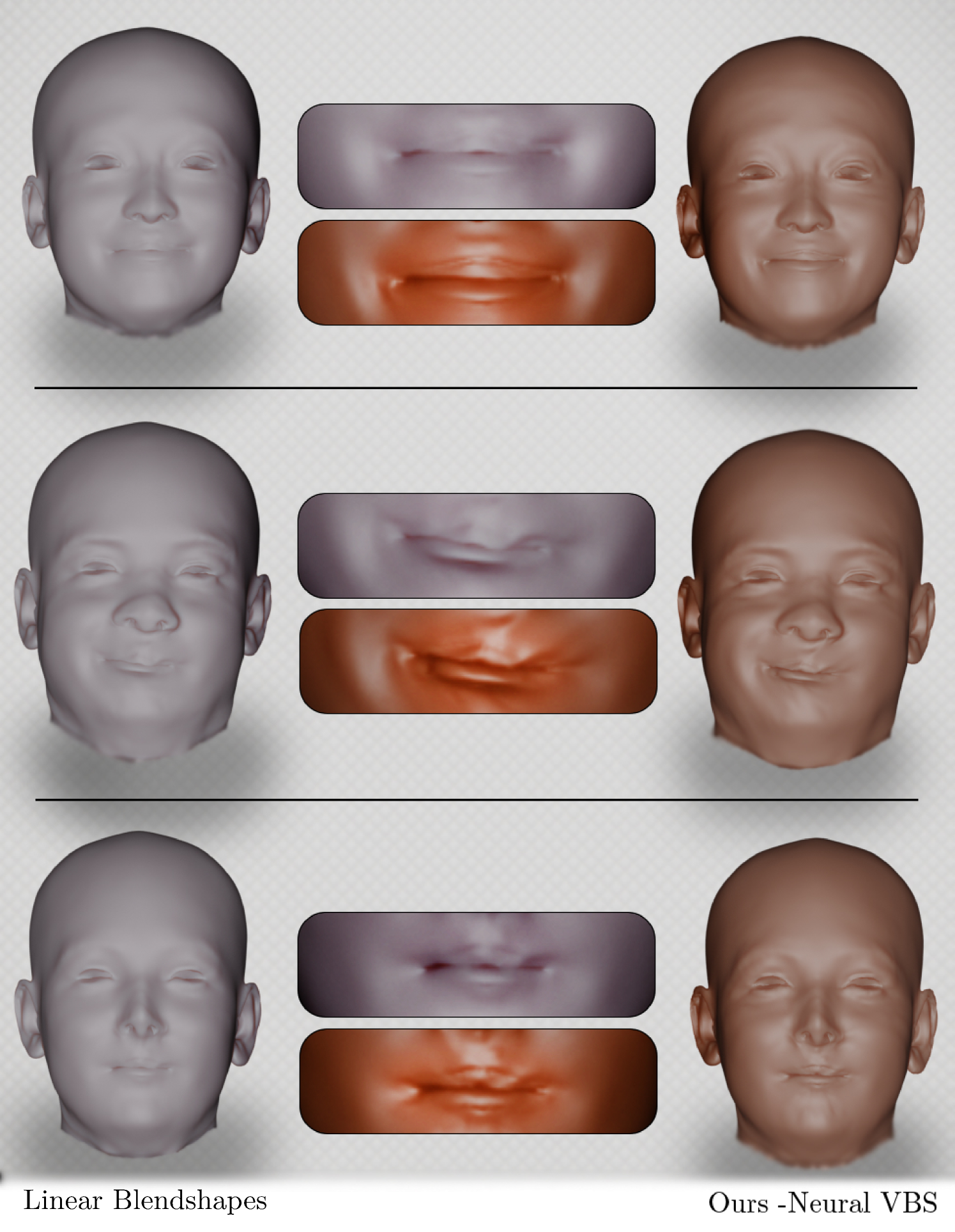

The time advantage alone is not useful if is not an adequate approximation. We evaluate the approximation quality by measuring the mean L2 error on the test dataset. More precisely, we randomly generate 5 training-test splits of the generated dataset and report the average of the test losses after training on each training set. We achieve an average test error of only , meaning that we can successfully achieve the approximation target of Equation (11) and generalizes well across head shapes and facial expressions. Another open question is the temporal consistency of which is inherent in physics-based simulations. To this end, we invite the reader to watch the attached video in the supplementary material. Finally, visual results of neural volumetric blendshapes as shown in Figure 8 exhibit the same improvements towards more realistic facial expressions as much slower physics-based simulations before. These include the resolution of self-intersections, the simulation of wrinkles, the adherence to anatomical boundaries, and the realism-enhancing constraining of volume preservation. Close-up images of resolved self-intersections can be seen in Figure 9. The only other approach that to the best of our knowledge can animate detailed faces at this speed, requires only a neutral surface as input, and allows for semantically consistent control, are linear blendshapes paired with a variant of deformation transfer [9, 39, 15]. As can be seen in Figure 8, too, neural volumetric blendshapes produce far more immersive facial animations.

5 Conclusion

In this work, we presented neural volumetric blendshapes, an efficient realization of physics-based facial animations even on consumer-grade hardware. Our approach yields more realistic results than the commonly used linear blendshapes, since artifacts such as volume-loss, self-intersections, or anatomical flaws are avoided and effects like volumetric elasticity and wrinkles are added. It is also convenient to use, since existing surface-based blendshape rigs can be extended into anatomically and physically plausible animations with almost no integration overhead while keeping the actuation as before.

We aim to improve neural volumetric blendshapes in at least two directions. On the one hand, with an even more accurate anatomical model that represents e.g. trachea and esophagus more precisely. On the other hand, recent results [44] show that contact deformations can also be efficiently learned. Since people touch their faces dozens of times [52] a day, adding contact-handling for more realistic gestures may improve the immersion significantly.

References

- [1] Jascha Achenbach et al. “A multilinear model for bidirectional craniofacial reconstruction” In Proceedings of the Eurographics Workshop on Visual Computing for Biology and Medicine, 2018, pp. 67–76

- [2] Dicko Ali-Hamadi et al. “Anatomy transfer” In ACM transactions on graphics (TOG) 32.6 ACM New York, NY, USA, 2013, pp. 1–8

- [3] Vincent Arsigny, Pierre Fillard, Xavier Pennec and Nicholas Ayache “Geometric means in a novel vector space structure on symmetric positive-definite matrices” In SIAM journal on matrix analysis and applications 29.1 SIAM, 2007, pp. 328–347

- [4] ShahRukh Athar et al. “RigNeRF: Fully Controllable Neural 3D Portraits” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 20364–20373

- [5] Michael Bao, Matthew Cong, Stéphane Grabli and Ronald Fedkiw “High-quality face capture using anatomical muscles” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 10802–10811

- [6] Vincent Barrielle, Nicolas Stoiber and Cédric Cagniart “Blendforces: A dynamic framework for facial animation” In Computer Graphics Forum 35.2, 2016, pp. 341–352 Wiley Online Library

- [7] Bernd Bickel et al. “Pose-Space Animation and Transfer of Facial Details.” In Symposium on Computer Animation, 2008, pp. 57–66

- [8] Mario Botsch and Leif Kobbelt “Real-time shape editing using radial basis functions” In Computer graphics forum 24.3, 2005, pp. 611–621 Blackwell Publishing, Inc Oxford, UKBoston, USA

- [9] Mario Botsch, Robert Sumner, Mark Pauly and Markus Gross “Deformation transfer for detail-preserving surface editing” In Vision, Modeling & Visualization, 2006, pp. 357–364 Citeseer

- [10] Mario Botsch et al. “Polygon mesh processing” CRC press, 2010

- [11] Sofien Bouaziz et al. “Projective dynamics: Fusing constraint projections for fast simulation” In ACM transactions on graphics (TOG) 33.4 ACM New York, NY, USA, 2014, pp. 1–11

- [12] Derek Bradley, Wolfgang Heidrich, Tiberiu Popa and Alla Sheffer “High resolution passive facial performance capture” In ACM SIGGRAPH 2010 papers, 2010, pp. 1–10

- [13] Chen Cao et al. “Authentic volumetric avatars from a phone scan” In ACM Transactions on Graphics (TOG) 41.4 ACM New York, NY, USA, 2022, pp. 1–19

- [14] Dan Casas and Miguel A Otaduy “Learning nonlinear soft-tissue dynamics for interactive avatars” In Proceedings of the ACM on Computer Graphics and Interactive Techniques 1.1 ACM New York, NY, USA, 2018, pp. 1–15

- [15] Prashanth Chandran, Loı̈c Ciccone, Markus Gross and Derek Bradley “Local anatomically-constrained facial performance retargeting” In ACM Transactions on Graphics (TOG) 41.4 ACM New York, NY, USA, 2022, pp. 1–14

- [16] Byungkuk Choi et al. “Animatomy: an Animator-centric, Anatomically Inspired System for 3D Facial Modeling, Animation and Transfer” In SIGGRAPH Asia 2022 Conference Papers, 2022, pp. 1–9

- [17] Matthew Cong and Ronald Fedkiw “Muscle-based facial retargeting with anatomical constraints” In ACM SIGGRAPH 2019 Talks, 2019, pp. 1–2

- [18] Matthew Deying Cong “Art-directed muscle simulation for high-end facial animation” Stanford University, 2016

- [19] Mario Deuss et al. “ShapeOp—a robust and extensible geometric modelling paradigm” In Modelling Behaviour Springer, 2015, pp. 505–515

- [20] Yao Feng, Haiwen Feng, Michael J Black and Timo Bolkart “Learning an animatable detailed 3D face model from in-the-wild images” In ACM Transactions on Graphics (ToG) 40.4 ACM New York, NY, USA, 2021, pp. 1–13

- [21] Stephan J Garbin et al. “VolTeMorph: Realtime, Controllable and Generalisable Animation of Volumetric Representations” In arXiv preprint arXiv:2208.00949, 2022

- [22] Thomas Gietzen et al. “A method for automatic forensic facial reconstruction based on dense statistics of soft tissue thickness” In PloS one 14.1 Public Library of Science San Francisco, CA USA, 2019, pp. e0210257

- [23] Benjamin Gilles, Lionel Reveret and Dinesh K Pai “Creating and animating subject-specific anatomical models” In Computer Graphics Forum 29.8, 2010, pp. 2340–2351 Wiley Online Library

- [24] Philip-William Grassal et al. “Neural head avatars from monocular RGB videos” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 18653–18664

- [25] Alexandru Eugen Ichim, Sofien Bouaziz and Mark Pauly “Dynamic 3D avatar creation from hand-held video input” In ACM Transactions on Graphics (ToG) 34.4 ACM New York, NY, USA, 2015, pp. 1–14

- [26] Alexandru Eugen Ichim, Ladislav Kavan, Merlin Nimier-David and Mark Pauly “Building and animating user-specific volumetric face rigs.” In Symposium on Computer Animation, 2016, pp. 107–117

- [27] Alexandru-Eugen Ichim, Petr Kadleček, Ladislav Kavan and Mark Pauly “Phace: Physics-based face modeling and animation” In ACM Transactions on Graphics (TOG) 36.4 ACM New York, NY, USA, 2017, pp. 1–14

- [28] Sungkyu Jung, Armin Schwartzman and David Groisser “Scaling-rotation distance and interpolation of symmetric positive-definite matrices” In SIAM Journal on Matrix Analysis and Applications 36.3 SIAM, 2015, pp. 1180–1201

- [29] Petr Kadleček and Ladislav Kavan “Building accurate physics-based face models from data” In Proceedings of the ACM on Computer Graphics and Interactive Techniques 2.2 ACM New York, NY, USA, 2019, pp. 1–16

- [30] Petr Kadleček et al. “Reconstructing personalized anatomical models for physics-based body animation” In ACM Transactions on Graphics (TOG) 35.6 ACM New York, NY, USA, 2016, pp. 1–13

- [31] Tero Karras, Samuli Laine and Timo Aila “A style-based generator architecture for generative adversarial networks” In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 4401–4410

- [32] Marilyn Keller, Silvia Zuffi, Michael J Black and Sergi Pujades “OSSO: Obtaining Skeletal Shape from Outside” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 20492–20501

- [33] Martin Komaritzan and Mario Botsch “Projective skinning” In Proceedings of the ACM on Computer Graphics and Interactive Techniques 1.1 ACM New York, NY, USA, 2018, pp. 1–19

- [34] Martin Komaritzan, Stephan Wenninger and Mario Botsch “Inside Humans: Creating a Simple Layered Anatomical Model from Human Surface Scans” In Frontiers in Virtual Reality 2 Frontiers Media SA, 2021, pp. 694244

- [35] Juho Lee et al. “Set transformer: A framework for attention-based permutation-invariant neural networks” In International conference on machine learning, 2019, pp. 3744–3753 PMLR

- [36] John P Lewis et al. “Practice and theory of blendshape facial models.” In Eurographics (State of the Art Reports) 1.8, 2014, pp. 2

- [37] John P Lewis, Jonathan Mooser, Zhigang Deng and Ulrich Neumann “Reducing blendshape interference by selected motion attenuation” In Proceedings of the 2005 symposium on Interactive 3D graphics and games, 2005, pp. 25–29

- [38] Hao Li, Thibaut Weise and Mark Pauly “Example-based facial rigging” In Acm transactions on graphics (tog) 29.4 ACM New York, NY, USA, 2010, pp. 1–6

- [39] Jiaman Li et al. “Dynamic facial asset and rig generation from a single scan.” In ACM Trans. Graph. 39.6, 2020, pp. 215–1

- [40] Matthew Loper et al. “SMPL: A Skinned Multi-Person Linear Model” In ACM Trans. Graphics (Proc. SIGGRAPH Asia) 34.6 ACM, 2015, pp. 248:1–248:16

- [41] Nadia Maalin et al. “Beyond BMI for self-estimates of body size and shape: A new method for developing stimuli correctly calibrated for body composition” In Behavior Research Methods 53.3 Springer, 2021, pp. 1308–1321

- [42] Ben Mildenhall et al. “Nerf: Representing scenes as neural radiance fields for view synthesis” In Communications of the ACM 65.1 ACM New York, NY, USA, 2021, pp. 99–106

- [43] Frederic I Parke “Control parameterization for facial animation” In Computer Animation’91, 1991, pp. 3–14 Springer

- [44] Cristian Romero, Dan Casas, Maurizio M Chiaramonte and Miguel A Otaduy “Contact-centric deformation learning” In ACM Transactions on Graphics (TOG) 41.4 ACM New York, NY, USA, 2022, pp. 1–11

- [45] Shunsuke Saito, Zi-Ye Zhou and Ladislav Kavan “Computational bodybuilding: Anatomically-based modeling of human bodies” In ACM Transactions on Graphics (TOG) 34.4 ACM New York, NY, USA, 2015, pp. 1–12

- [46] Igor Santesteban, Elena Garces, Miguel A Otaduy and Dan Casas “SoftSMPL: Data-driven Modeling of Nonlinear Soft-tissue Dynamics for Parametric Humans” In Computer Graphics Forum 39.2, 2020, pp. 65–75 Wiley Online Library

- [47] Franco Scarselli et al. “The graph neural network model” In IEEE transactions on neural networks 20.1 IEEE, 2008, pp. 61–80

- [48] Robert Schleicher et al. “BASH: Biomechanical Animated Skinned Human for Visualization of Kinematics and Muscle Activity.” In VISIGRAPP (1: GRAPP), 2021, pp. 25–36

- [49] Ken Shoemake and Tom Duff “Matrix animation and polar decomposition” In Graphics Interface 92, 1992, pp. 258–264

- [50] Eftychios Sifakis, Igor Neverov and Ronald Fedkiw “Automatic determination of facial muscle activations from sparse motion capture marker data” In ACM SIGGRAPH 2005 Papers, 2005, pp. 417–425

- [51] Steven L Song, Weiqi Shi and Michael Reed “Accurate face rig approximation with deep differential subspace reconstruction” In ACM Transactions on Graphics (TOG) 39.4 ACM New York, NY, USA, 2020, pp. 34–1

- [52] Jente L Spille, Martin Grunwald, Sven Martin and Stephanie M Mueller “Stop touching your face! A systematic review of triggers, characteristics, regulatory functions and neuro-physiology of facial self touch” In Neuroscience & Biobehavioral Reviews 128 Elsevier, 2021, pp. 102–116

- [53] Sangeetha Grama Srinivasan et al. “Learning active quasistatic physics-based models from data” In ACM Transactions on Graphics (TOG) 40.4 ACM New York, NY, USA, 2021, pp. 1–14

- [54] Robert W Sumner and Jovan Popović “Deformation transfer for triangle meshes” In ACM Transactions on graphics (TOG) 23.3 ACM New York, NY, USA, 2004, pp. 399–405

- [55] Lingchen Yang et al. “Implicit neural representation for physics-driven actuated soft bodies” In ACM Transactions on Graphics (TOG) 41.4 ACM New York, NY, USA, 2022, pp. 1–10

- [56] Li Zhang, Noah Snavely, Brian Curless and Steven M Seitz “Spacetime faces: High-resolution capture for˜ modeling and animation” In Data-Driven 3D Facial Animation Springer, 2008, pp. 248–276

- [57] Yufeng Zheng et al. “Im avatar: Implicit morphable head avatars from videos” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 13545–13555