Neural–Shadow Quantum State Tomography

Abstract

Quantum state tomography (QST) is the art of reconstructing an unknown quantum state through measurements. It is a key primitive for developing quantum technologies. Neural network quantum state tomography (NNQST), which aims to reconstruct the quantum state via a neural network ansatz, is often implemented via a basis-dependent cross-entropy loss function. State-of-the-art implementations of NNQST are often restricted to characterizing a particular subclass of states, to avoid an exponential growth in the number of required measurement settings. To provide a more broadly applicable method for efficient state reconstruction, we present “neural–shadow quantum state tomography” (NSQST)—an alternative neural network-based QST protocol that uses infidelity as the loss function. The infidelity is estimated using the classical shadows of the target state. Infidelity is a natural choice for training loss, benefiting from the proven measurement sample efficiency of the classical shadow formalism. Furthermore, NSQST is robust against various types of noise without any error mitigation. We numerically demonstrate the advantage of NSQST over NNQST at learning the relative phases of three target quantum states of practical interest, as well as the advantage over direct shadow estimation. NSQST greatly extends the practical reach of NNQST and provides a novel route to effective quantum state tomography.

I Introduction

Efficient methods for state reconstruction are essential in the development of advanced quantum technologies. Important applications include the efficient characterization, readout, processing, and verification of quantum systems in a variety of areas ranging from quantum computing and quantum simulation to quantum sensors and quantum networks [1, 2, 3, 4, 5, 6]. However, with physical quantum platforms growing larger in recent years [7], reconstructing the target quantum state through brute-force quantum state tomography (QST) has become much more computationally demanding due to an exponentially increasing number of required measurements. To address this issue, various approaches have been proposed that are efficient in both the number of required measurement samples and in the number of parameters used to characterize the quantum state. These include classical shadows [8] and neural network quantum state tomography (NNQST) [9]. The goal of NNQST is to produce a neural network representation of a complete physical quantum state that is close to some target state. In contrast, the classical shadows formalism does not aim to reconstruct a full quantum state, but rather to obtain a reduced classical description that allows for efficient evaluation of certain observables.

A neural network quantum state ansatz has been shown to have sufficient expressivity to represent a wide range of quantum states [10, 11, 12, 13] using a number of model parameters that scales polynomially in the number of qubits. Furthermore, as methods for training neural networks have long been investigated in the machine learning community, many useful strategies for neural network model design and optimization have been directly adopted for NNQST [14, 15, 16]. Following the introduction of neural network quantum states [17], Torlai et al. proposed the first version of NNQST, an efficient QST protocol based on a restricted Boltzmann machine (RBM) neural network ansatz and a cross-entropy loss function [9]. NNQST has been applied successfully to characterize various pure states, including W states, the ground states of many-body Hamiltonians, and time-evolved many-body states [9, 18, 19]. Despite the promising results of NNQST in many use cases, the protocol faces a fundamental challenge: an exponentially large number of measurement settings is required to identify a general unknown quantum state (although a polynomial number is sufficient in some examples [20]). During NNQST, a series of measurements is performed in random local Pauli bases $\boldsymbol{b} = (b_1, \ldots, b_n)$ for qubits $j = 1, \ldots, n$, where $b_j \in \{X, Y, Z\}$. Because this set of $3^n$ bases is exponentially large, some convenient subset of all possible $\boldsymbol{b}$ must be selected for a large system, but this subset may limit the ability of NNQST to identify certain states. An important example is the phase-shifted multi-qubit GHZ state, relevant to applications such as quantum sensing. In this case, the relative phase associated with non-local correlations cannot be captured by measurement samples from almost-diagonal local Pauli bases, i.e., bases with indices $\boldsymbol{b}$ for which $b_j = Z$ for all $j$, or $b_j \neq Z$ for only a single $j$. Nonetheless, this limited set of almost-diagonal Pauli bases is widely used in NNQST implementations to avoid an exponential cost in classical post-processing [9, 18].

To address this challenge, we use the classical shadows of the target quantum state to estimate the infidelity between the model and target states. This is in contrast with approaches that use the conventional basis-dependent cross-entropy as the training loss for the neural network. This choice is motivated by two main factors. Firstly, infidelity is a natural candidate for a loss function compared to cross-entropy; the magnitude of the basis-dependent cross-entropy loss is in general not indicative of the distance between the neural network quantum state and the target state. Additionally, the infidelity is a monotonic function of the Bures distance [21], a measure of the statistical distance between quantum states that enjoys metric properties such as symmetry and the triangle inequality. The infidelity is therefore a better-behaved objective function for optimization. Secondly, the classical shadow formalism of Huang et al. was originally developed to address precisely the scaling issues of brute-force QST [8]. Instead of reconstructing the unknown state, shadow-based protocols, first proposed by Aaronson [22], predict certain properties of the quantum state with a polynomial number of measurements. Therefore, classical shadows provide the following two main advantages in our work: (i) they are provably efficient in the number of required measurement samples for predicting various observables (e.g., the infidelity), and (ii) no choice of measurement bases is required, and therefore no prior knowledge of the target state is assumed.

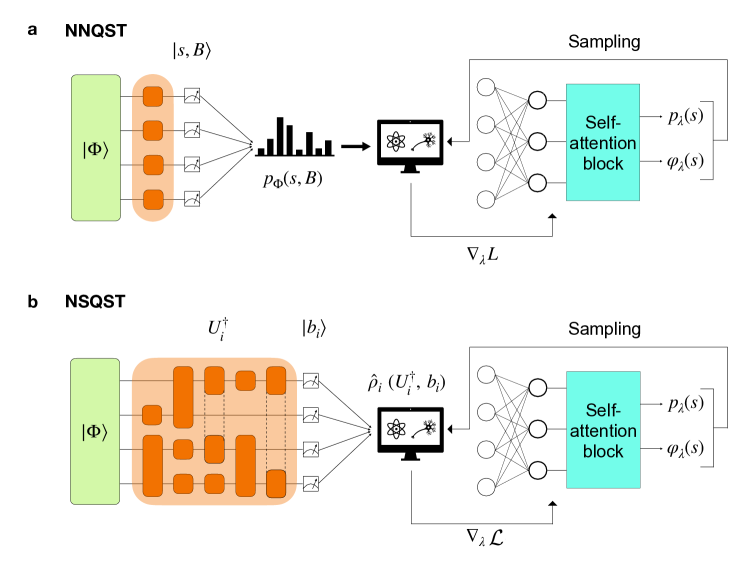

Our new pure-state QST protocol, “neural–shadow quantum state tomography” (NSQST), reconstructs the unknown quantum state in a neural network quantum state ansatz by using classical shadow estimations of the gradients of infidelity for training (Fig. 1b). In our numerical experiments, NSQST demonstrates clear advantages in three example tasks: (i) reconstructing a time-evolved state in one-dimensional quantum chromodynamics, (ii) reconstructing a time-evolved state for an antiferromagnetic Heisenberg model, and (iii) reconstructing a phase-shifted multi-qubit GHZ state. Moreover, the natural appearance and inversion of a depolarizing channel from randomized measurements in the classical shadow formalism make NSQST noise-robust without any calibration or modifications to the loss function, whereas one of these two extra steps is required in noise-robust classical shadows [23, 24]. We numerically demonstrate NSQST’s robustness against two of the most dominant sources of noise across a wide range of physical implementations: two-qubit CNOT errors and readout errors. The rest of this paper is organized as follows: In Sec. II, we summarize the methods used in our numerical simulations, including the neural network quantum state ansatz, NNQST, classical shadows, NSQST, and NSQST with pre-training. In Sec. III and Sec. IV, we provide numerical simulation results for NNQST, NSQST, and NSQST with pre-training in three useful examples, both noise-free and in the presence of noise. In particular, we provide a comparison to direct shadow estimation in Sec. III.4. Finally, Sec. V summarizes the key advantages of NSQST and some possible future directions. We provide additional technical details and suggestions for further improvements to NSQST in the appendices.

II Methods

In this section, we describe existing methods for characterizing quantum states and then introduce and describe two variants of NSQST. We introduce neural network quantum states in Sec. II.1. State-of-the-art NNQST implementations and the classical shadow protocol are summarized in Sec. II.2 and Sec. II.3, respectively. Our proposed NSQST protocol is described in Sec. II.4. In addition, a modified NSQST protocol with pre-training is described in Sec. II.5.

II.1 Neural network quantum state

Our pure-state neural network quantum state ansatz is adopted from Ref. [18]. The parameterized model is based on the transformer architecture [15], widely used in natural language processing and computer vision [25, 26]. As compared to older architectures such as the RBM, the transformer is superior in modelling long-range interactions and allows for more efficient sampling of the encoded probability distribution due to its autoregressive property [18].

The transformer neural network quantum state ansatz takes a bit-string $\boldsymbol{s} = (s_1, \ldots, s_n) \in \{0,1\}^n$ corresponding to the computational basis state $|\boldsymbol{s}\rangle$, and produces a complex-valued amplitude parameterized by $\boldsymbol{\theta}$ as

$$\langle\boldsymbol{s}|\psi_{\boldsymbol{\theta}}\rangle = \psi_{\boldsymbol{\theta}}(\boldsymbol{s}) = \sqrt{p_{\boldsymbol{\lambda}}(\boldsymbol{s})}\, e^{i\varphi_{\boldsymbol{\mu}}(\boldsymbol{s})}, \tag{1}$$

where $\boldsymbol{\lambda}$ and $\boldsymbol{\mu}$ (collectively denoted $\boldsymbol{\theta}$) are vectors of real-valued model parameters for the normalized probability amplitudes and the phases of the neural network quantum state, respectively. These amplitudes and phases may be parameterized by the neural network quantum state in various fashions. One approach is to use two completely disjoint models, independently parameterized by $\boldsymbol{\lambda}$ and $\boldsymbol{\mu}$ for the amplitude and phase values, respectively [9, 27]. Another approach is to use a single model parameterized by $\boldsymbol{\theta}$ to encode both the amplitude and phase outputs, either via complex-valued model parameters [17, 28] or by using real-valued model parameters with two disjoint layers of output neurons connected to a common preceding neural network [18]. In our numerical experiments, we use the latter parameterization for NNQST and NSQST, but the modified NSQST protocol with pre-training in Sec. II.5 uses two separately parameterized neural networks. See Appendix A for a more detailed account of the transformer architecture.
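To make Eq. 1 concrete, here is a minimal sketch of an autoregressive amplitude-and-phase ansatz. It is a hypothetical toy, not the transformer of Ref. [18]: the class `ToyAutoregressiveAnsatz`, the logistic conditionals, and the linear phase model are assumptions chosen only to show how a product of normalized conditionals $p(s_j \mid s_{<j})$ yields a normalized $p_{\boldsymbol{\lambda}}(\boldsymbol{s})$ that can be sampled exactly, one qubit at a time.

```python
import itertools
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class ToyAutoregressiveAnsatz:
    """Hypothetical stand-in for the transformer: psi(s) = sqrt(p_lam(s)) * exp(i * phi_mu(s))."""

    def __init__(self, n, rng):
        self.n = n
        # lam: strictly lower-triangular weights, so qubit j only "sees" the bits s_<j
        self.W = rng.normal(size=(n, n)) * np.tri(n, n, -1)
        self.b = rng.normal(size=n)
        # mu: a linear phase model phi_mu(s) = mu . s (illustrative only)
        self.mu = rng.normal(size=n)

    def prob(self, s):
        """p_lam(s) as a product of conditionals p(s_j | s_<j)."""
        s = np.asarray(s, dtype=float)
        q = sigmoid(self.W @ s + self.b)  # q[j] = p(s_j = 1 | s_<j)
        return float(np.prod(np.where(s == 1, q, 1.0 - q)))

    def amplitude(self, s):
        s = np.asarray(s, dtype=float)
        return np.sqrt(self.prob(s)) * np.exp(1j * (self.mu @ s))

    def sample(self, rng):
        """Exact ancestral sampling, one qubit at a time."""
        s = np.zeros(self.n)
        for j in range(self.n):
            q_j = sigmoid(self.W[j] @ s + self.b[j])
            s[j] = float(rng.random() < q_j)
        return s.astype(int)


rng = np.random.default_rng(0)
model = ToyAutoregressiveAnsatz(4, rng)
norm = sum(abs(model.amplitude(s)) ** 2 for s in itertools.product([0, 1], repeat=4))
print(norm)  # equals 1 up to rounding, by construction
```

The strictly lower-triangular mask loosely plays the role that causal masking plays in an autoregressive transformer: it guarantees normalization without ever summing over all $2^n$ bit-strings.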

Given a trained neural network quantum state, observables and other state properties of interest can be predicted by drawing (classical) samples from the neural network model. The number of samples required to predict the expectation value of an arbitrary Pauli string (independent of its weight) and the fidelity to a computationally tractable state with bounded additive error is independent of the system size [29]. Computationally tractable states include stabilizer states and neural network quantum states. Thus, if sampling can be performed efficiently, the prediction errors from neural network quantum states are primarily due to imperfect training. We also note that not every neural network quantum state ansatz has this property of efficient observable and fidelity prediction; an important counterexample is a class of generative models trained on informationally complete positive-operator valued measures (IC-POVMs) [30, 31].

II.2 Neural network quantum state tomography (NNQST)

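NNQST (Fig. 1a) aims at obtaining a trained neural network representation that closely approximates an unknown target quantum state. The training is done by iteratively adjusting the neural network parameters along a loss gradient estimated from the measurement samples in various local Pauli bases (obtained by applying single-qubit rotations before performing measurements in the computational basis) [18]. We denote a local Pauli basis as $\boldsymbol{b} = (b_1, \ldots, b_n)$, with $b_j \in \{X, Y, Z\}$. If a measurement sample $\boldsymbol{s}$ is obtained after performing the rotations $U_{\boldsymbol{b}}$ to the Pauli basis $\boldsymbol{b}$, we store the pair $(\boldsymbol{s}, \boldsymbol{b})$ as a training sample, corresponding to the product state $U_{\boldsymbol{b}}^\dagger|\boldsymbol{s}\rangle$.

After choosing a subset $\mathcal{B}$ of Pauli bases for collecting measurement samples, we estimate a loss function that represents the distance between the target state $|\psi\rangle$ and the neural network quantum state $|\psi_{\boldsymbol{\theta}}\rangle$. The loss function in NNQST is based on the cross-entropy of the measurement outcome distributions for the target and neural network states in each basis $\boldsymbol{b} \in \mathcal{B}$, which is then averaged over the set of bases $\mathcal{B}$. Ignoring a $\boldsymbol{\theta}$-independent contribution arising from the average entropy of the target-state measurement distribution, this procedure gives the cross-entropy loss function for NNQST [18]:

$$\mathcal{L}_{\mathrm{CE}}(\boldsymbol{\theta}) = -\frac{1}{|\mathcal{B}|}\sum_{\boldsymbol{b}\in\mathcal{B}}\sum_{\boldsymbol{s}} q^{\boldsymbol{b}}(\boldsymbol{s})\, \log p^{\boldsymbol{b}}_{\boldsymbol{\theta}}(\boldsymbol{s}). \tag{2}$$

Here, $q^{\boldsymbol{b}}(\boldsymbol{s})$ is the probability of measuring the outcome $\boldsymbol{s}$ from the target state after rotating to the Pauli basis $\boldsymbol{b}$, and $p^{\boldsymbol{b}}_{\boldsymbol{\theta}}(\boldsymbol{s})$ is the corresponding probability for the neural network quantum state, defined as

$$p^{\boldsymbol{b}}_{\boldsymbol{\theta}}(\boldsymbol{s}) = \left|\langle\boldsymbol{s}|U_{\boldsymbol{b}}|\psi_{\boldsymbol{\theta}}\rangle\right|^2 = \Bigg|\sum_{\boldsymbol{s}'} \langle\boldsymbol{s}|U_{\boldsymbol{b}}|\boldsymbol{s}'\rangle\, \psi_{\boldsymbol{\theta}}(\boldsymbol{s}')\Bigg|^2, \tag{3}$$

where the overlap between the Pauli product state $U_{\boldsymbol{b}}^\dagger|\boldsymbol{s}\rangle$ and the neural network quantum state requires a summation over the computational basis states $|\boldsymbol{s}'\rangle$ that satisfy $s'_j = s_j$ for all $j$ with $b_j = Z$. Note that the number of these states is $2^{k}$, with $k$ being the number of positions where $b_j \neq Z$. This suggests that an efficient and exact calculation of $p^{\boldsymbol{b}}_{\boldsymbol{\theta}}(\boldsymbol{s})$ requires the projective measurements to be in almost-diagonal Pauli bases for a generic neural network quantum state [18].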
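The following sketch checks Eq. 3 numerically on a dense state vector (an assumption made so the example is self-contained; a real network only exposes amplitudes $\psi_{\boldsymbol{\theta}}(\boldsymbol{s})$). It evaluates $p^{\boldsymbol{b}}_{\boldsymbol{\theta}}(\boldsymbol{s})$ by summing only over the $2^k$ states $\boldsymbol{s}'$ that agree with $\boldsymbol{s}$ on the $Z$-measured qubits, and compares the result with the full basis rotation. The rotation convention ($H$ for $X$, $HS^\dagger$ for $Y$) and the helper names are illustrative choices.

```python
import itertools
import numpy as np

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
SDG = np.diag([1, -1j])
# single-qubit rotations that map each Pauli eigenbasis onto the computational (Z) basis
ROT = {"X": H, "Y": H @ SDG, "Z": np.eye(2)}


def full_rotation_probs(psi, basis):
    """p^b(s) = |<s|U_b|psi>|^2 for every s, using the full 2^n x 2^n rotation."""
    U = np.array([[1.0]])
    for b in basis:
        U = np.kron(U, ROT[b])
    return np.abs(U @ psi) ** 2


def restricted_sum_prob(psi, basis, s):
    """Probability of a single outcome s, summing only over the 2^k states s' that agree
    with s on every qubit measured in Z (k = number of non-Z qubits).
    Returns (probability, number of terms summed)."""
    free = [j for j, b in enumerate(basis) if b != "Z"]
    amp = 0.0j
    for bits in itertools.product([0, 1], repeat=len(free)):
        s_prime = list(s)
        coeff = 1.0 + 0.0j
        for j, bit in zip(free, bits):
            s_prime[j] = bit
            coeff *= ROT[basis[j]][s[j], bit]  # <s_j| U_{b_j} |s'_j>
        index = int("".join(map(str, s_prime)), 2)  # qubit 0 is the most significant bit
        amp += coeff * psi[index]
    return abs(amp) ** 2, 2 ** len(free)


rng = np.random.default_rng(1)
psi = rng.normal(size=16) + 1j * rng.normal(size=16)
psi /= np.linalg.norm(psi)
p_full = full_rotation_probs(psi, "XZYZ")
print(restricted_sum_prob(psi, "XZYZ", (0, 1, 1, 0)), p_full[0b0110])
```

The returned term count makes the cost explicit: it doubles with every additional non-$Z$ entry in $\boldsymbol{b}$.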

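Using the law of large numbers, the cross-entropy loss can be approximated via a finite training data set $\mathcal{D}$ as

$$\mathcal{L}_{\mathrm{CE}}(\boldsymbol{\theta}) \approx -\frac{1}{|\mathcal{D}|}\sum_{(\boldsymbol{s}, \boldsymbol{b})\in\mathcal{D}} \log p^{\boldsymbol{b}}_{\boldsymbol{\theta}}(\boldsymbol{s}). \tag{4}$$

An approximation for the gradient $\nabla_{\boldsymbol{\theta}}\mathcal{L}_{\mathrm{CE}}$ is then found directly from Eq. 4. During training, the gradient is provided to an optimization algorithm such as stochastic gradient descent (SGD) or one of its variants (e.g., the Adam optimizer [16]). In this paper, we exclusively use the Adam optimizer.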
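A minimal sketch of the estimator in Eq. 4 follows, with a hypothetical `model_prob(s, b)` callable standing in for $p^{\boldsymbol{b}}_{\boldsymbol{\theta}}(\boldsymbol{s})$. The single-qubit toy data also illustrate the $\boldsymbol{\theta}$-independent entropy term dropped from Eq. 2: even a model that matches the target exactly leaves the loss at the target entropy rather than at zero, which is one reason the loss value alone says little about the distance to the target state.

```python
import numpy as np


def nnqst_cross_entropy(dataset, model_prob):
    """Eq. 4: -(1/|D|) * sum_{(s, b) in D} log p_theta^b(s).
    `model_prob(s, b)` is a hypothetical callable returning the model probability."""
    return -float(np.mean([np.log(model_prob(s, b)) for s, b in dataset]))


# single-qubit toy: target outcome distribution q = (0.7, 0.3) in the Z basis
rng = np.random.default_rng(0)
q = np.array([0.7, 0.3])
data = [(int(s), "Z") for s in rng.choice(2, size=20000, p=q)]

perfect = nnqst_cross_entropy(data, lambda s, b: q[s])  # model equals target
uniform = nnqst_cross_entropy(data, lambda s, b: 0.5)   # mismatched model
entropy = -np.sum(q * np.log(q))
print(perfect, entropy, uniform)  # perfect is close to the entropy (> 0); uniform is log 2
```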

II.3 Classical shadows

Shadow tomography relies on the ingenious observation that a polynomial number of measurement samples is sufficient to predict certain observables for quantum states of arbitrary size [22]. The classical shadow protocol further exploits the efficiency of the stabilizer formalism, making this procedure ready for practical experiments [8, 32, 33, 34]. In this paper, we focus on estimating linear observables of the form $\mathrm{Tr}(O\rho)$ for a pure state $\rho = |\psi\rangle\langle\psi|$. An important example (for $O = |\phi\rangle\langle\phi|$) is the fidelity between the target state $|\psi\rangle$ and a reference state $|\phi\rangle$. The first step in the protocol is to collect the so-called classical shadows of $\rho$. To obtain a single classical shadow sample, we apply a randomly sampled Clifford unitary $U$ to the quantum state and measure all qubits in the computational basis, resulting in a single bit-string $\boldsymbol{x} \in \{0,1\}^n$. The stabilizer states $U^\dagger|\boldsymbol{x}\rangle$ contain valuable information about $\rho$. Using representation theory [35], it can be shown that the density matrix obtained from an average over both the random unitaries and the measured bit-strings coincides with the output of a depolarizing noise channel:

$$\mathbb{E}_{U \sim \mathrm{Cl}(n)}\,\mathbb{E}_{\boldsymbol{x}}\left[U^\dagger|\boldsymbol{x}\rangle\langle\boldsymbol{x}|U\right] = \mathcal{D}_{1/(2^n+1)}(\rho), \tag{5}$$

where $\boldsymbol{x}$ is drawn with probability $\langle\boldsymbol{x}|U\rho U^\dagger|\boldsymbol{x}\rangle$ and $\mathcal{D}_f(\rho) = f\rho + (1-f)\,\mathbb{1}/2^n$ denotes the depolarizing noise channel of strength $f$. The original state can then be recovered as an average over classical shadows by inverting the above formula, $\rho = \mathbb{E}\left[(2^n+1)\,U^\dagger|\boldsymbol{x}\rangle\langle\boldsymbol{x}|U - \mathbb{1}\right]$. We emphasize that the original state can only be recovered exactly after sampling from a prohibitively large number of Clifford unitaries and, for each of them, an exponentially large number of bit-strings. The classical shadows are therefore defined by [8]:

$$\hat{\rho} = (2^n+1)\,U^\dagger|\boldsymbol{x}\rangle\langle\boldsymbol{x}|U - \mathbb{1}. \tag{6}$$
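For a single qubit ($n = 1$), the identity in Eq. 5 can be checked exactly by enumerating the 24 single-qubit Clifford unitaries (modulo global phase) generated by $H$ and $S$. This is a sketch for intuition only; multi-qubit Cliffords require a dedicated sampler, which is not attempted here. With $n = 1$, Eq. 5 predicts $\mathcal{D}_{1/3}(\rho) = (\rho + \mathbb{1})/3$, and inverting it recovers $\rho$ as in Eq. 6.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
S = np.diag([1, 1j])


def canonical_key(U):
    """Hashable key for U modulo global phase (first nonzero entry made real positive)."""
    flat = U.ravel()
    k = np.flatnonzero(np.abs(flat) > 1e-9)[0]
    V = U * np.conj(flat[k]) / abs(flat[k])
    return (np.round(V, 8) + (0.0 + 0.0j)).tobytes()  # adding 0 turns -0.0 into 0.0


def single_qubit_cliffords():
    """Enumerate the single-qubit Clifford group (mod phase) generated by H and S."""
    group, frontier = {}, [np.eye(2, dtype=complex)]
    while frontier:
        U = frontier.pop()
        key = canonical_key(U)
        if key not in group:
            group[key] = U
            frontier += [H @ U, S @ U]
    return list(group.values())


def measurement_channel(rho, cliffords):
    """Left-hand side of Eq. 5: E_U sum_x <x|U rho U^dag|x> U^dag|x><x|U."""
    out = np.zeros((2, 2), dtype=complex)
    for U in cliffords:
        for x in range(2):
            ket = U.conj().T[:, x]                  # U^dag |x>
            prob = np.real(ket.conj() @ rho @ ket)  # Born probability of outcome x
            out += prob * np.outer(ket, ket.conj())
    return out / len(cliffords)


cliffords = single_qubit_cliffords()
rng = np.random.default_rng(0)
v = rng.normal(size=2) + 1j * rng.normal(size=2)
v /= np.linalg.norm(v)
rho = np.outer(v, v.conj())
channel = measurement_channel(rho, cliffords)
print(len(cliffords))                               # 24
print(np.allclose(channel, (rho + np.eye(2)) / 3))  # depolarizing with f = 1/3
print(np.allclose(3 * channel - np.eye(2), rho))    # the inversion behind Eq. 6
```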

More generally, in the presence of a gate-independent, time-stationary, and Markovian noise channel $\Lambda$ afflicting the segment of the circuit between the preparation of the state and the measurements, this definition extends to [23]:

$$\hat{\rho} = \frac{1}{f}\left(U^\dagger|\boldsymbol{x}\rangle\langle\boldsymbol{x}|U - \frac{1-f}{2^n}\,\mathbb{1}\right). \tag{7}$$

Here, $f$ is the strength of a depolarizing noise channel comprising the combined effects of the channel in Eq. 5 and the twirling of the additional noise $\Lambda$ by the random Clifford unitaries, which effectively imposes further depolarization. Koh and Grewal [23] derived an analytic expression for $f$ as

$$f = \frac{F_Z(\Lambda) - 1}{2^{2n} - 1}, \tag{8}$$

where

$$F_Z(\Lambda) = \sum_{\boldsymbol{x}\in\{0,1\}^n} \langle\boldsymbol{x}|\Lambda\big(|\boldsymbol{x}\rangle\langle\boldsymbol{x}|\big)|\boldsymbol{x}\rangle \tag{9}$$

is the sum of fidelities for the noise channel $\Lambda$ acting on each of the $2^n$ computational basis states ($0 \le F_Z \le 2^n$; at the lower bound, $F_Z = 0$, the depolarizing parameter becomes negative, $f = -1/(2^{2n}-1)$, but the associated depolarizing channel remains physical [23]). When the noise channel is not exactly known, extra calibration procedures are required in noise-robust classical shadow protocols [24].
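As an illustration of Eqs. 8 and 9, the sketch below assumes independent readout bit flips with probability $p$ on each qubit (a hypothetical noise model, diagonal in the computational basis). For such noise, $F_Z$ is simply the trace of the classical confusion matrix, $2^n(1-p)^n$. The noise-free case reproduces $f = 1/(2^n+1)$, and $F_Z = 0$ gives the negative value of $f$ mentioned above.

```python
import numpy as np


def bitflip_confusion(n, p):
    """T[y, x] = Pr(read y | prepared x) for independent readout bit flips (assumed noise model)."""
    t1 = np.array([[1 - p, p], [p, 1 - p]])
    T = np.array([[1.0]])
    for _ in range(n):
        T = np.kron(T, t1)
    return T


def fidelity_sum(T):
    """Eq. 9: F_Z = sum_x <x|Lambda(|x><x|)|x>, i.e. the trace of the confusion matrix."""
    return float(np.trace(T))


def depolarizing_parameter(F_Z, n):
    """Eq. 8: f = (F_Z - 1) / (2^(2n) - 1)."""
    return (F_Z - 1) / (4 ** n - 1)


n, p = 3, 0.02
f_ideal = depolarizing_parameter(2 ** n, n)  # Lambda = identity, F_Z = 2^n
f_noisy = depolarizing_parameter(fidelity_sum(bitflip_confusion(n, p)), n)
print(f_ideal, 1 / (2 ** n + 1), f_noisy)
```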

Once the classical shadow samples are collected, we calculate $\hat{o}_i = \mathrm{Tr}(O\hat{\rho}_i)$ for each of the $N$ classical shadows and obtain an estimator for the observable $\mathrm{Tr}(O\rho)$ from an average over the samples (alternatively, the median-of-means estimator can be used to improve the success probability of the protocol; see Ref. [8] for more details). The key advantage of classical shadows is the bounded variance of observable estimates, which in turn bounds the number of classical shadow samples required to predict linear observables of the quantum state within a target precision. Indeed, as shown in Refs. [24, 23], the number $N$ of classical shadow samples required to estimate a set of arbitrary linear observables $\{O_i\}$, up to an additive error $\epsilon$, scales as

| (10) |

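In the case of noise-free Clifford tails, $\Lambda = \mathrm{id}$, we have $F_Z = 2^n$ and $f = 1/(2^n+1)$, and we recover the sample complexity presented in [8], which is indeed independent of the system size $n$. In this case, the variance of observable estimators is also bounded as $\mathrm{Var}[\hat{o}_i] \le 3\,\mathrm{Tr}(O_0^2)$, where $O_0 = O - \mathrm{Tr}(O)\,\mathbb{1}/2^n$ is the traceless part of $O$; for projectors such as $O = |\phi\rangle\langle\phi|$, this bound is independent of the system size.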
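The median-of-means alternative mentioned above can be sketched as follows; the function name and the choice of dropping leftover samples to form equal batches are our own assumptions. Each entry of `samples` would be a single-shot estimate $\hat{o}_i = \mathrm{Tr}(O\hat{\rho}_i)$.

```python
import numpy as np


def median_of_means(samples, num_groups):
    """Split single-shot estimates into `num_groups` equal batches, average each batch,
    and return the median of the batch means (leftover samples are dropped)."""
    samples = np.asarray(samples, dtype=float)
    usable = len(samples) - len(samples) % num_groups
    batch_means = samples[:usable].reshape(num_groups, -1).mean(axis=1)
    return float(np.median(batch_means))


print(median_of_means([1, 2, 3, 4, 5, 6], 3))  # batch means 1.5, 3.5, 5.5 -> 3.5
print(median_of_means([1] * 9 + [1000], 5))    # 1.0, while the plain mean is 100.9
```

A single outlying snapshot shifts the plain mean substantially but leaves the median of batch means unchanged, which is why this estimator tightens the failure probability.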

II.4 Neural–shadow quantum state tomography (NSQST)

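Given a pure target state $|\psi\rangle$, our goal in NSQST (Fig. 1b) is to progressively adjust the model parameters $\boldsymbol{\theta}$ such that the associated pure state $|\psi_{\boldsymbol{\theta}}\rangle$ (see Eq. 1) approaches $|\psi\rangle$ during optimization. We approximate the fidelity between the model and target states using the classical shadow formalism described in Sec. II.3, taking $O = |\psi_{\boldsymbol{\theta}}\rangle\langle\psi_{\boldsymbol{\theta}}|$ as a linear observable, averaged with respect to $\rho = |\psi\rangle\langle\psi|$. The number of observables we predict during optimization therefore coincides with the number of descent steps taken by the optimizer, as updating $\boldsymbol{\theta}$ changes the observable in every iteration. By collecting $N$ classical shadows $\hat{\rho}_i$ (Eq. 7), with associated stabilizer states $|\phi_i\rangle = U_i^\dagger|\boldsymbol{x}_i\rangle$, we can approximate our loss function (the infidelity) via

$$\begin{aligned} \mathcal{L}(\boldsymbol{\theta}) &= 1 - \frac{1}{N}\sum_{i=1}^{N} \langle\psi_{\boldsymbol{\theta}}|\hat{\rho}_i|\psi_{\boldsymbol{\theta}}\rangle \\ &= 1 + \frac{1-f}{2^n f} - \frac{1}{fN}\sum_{i=1}^{N} \left|\langle\psi_{\boldsymbol{\theta}}|\phi_i\rangle\right|^2. \end{aligned} \tag{11}$$

In the noise-free case, $f = 1/(2^n+1)$, this expression simplifies to

$$\mathcal{L}(\boldsymbol{\theta}) = 2 - \frac{2^n+1}{N}\sum_{i=1}^{N} \left|\langle\psi_{\boldsymbol{\theta}}|\phi_i\rangle\right|^2. \tag{12}$$

We see that, independent of the noise (as long as $f > 0$), training the model is simply equivalent to increasing the average overlap between the random stabilizer states $|\phi_i\rangle$ and the model quantum state.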
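A direct transcription of Eq. 11 (and of its noise-free limit, Eq. 12) is sketched below, taking the squared overlaps $|\langle\psi_{\boldsymbol{\theta}}|\phi_i\rangle|^2$ as given. The check at the end uses the channel identity behind Eq. 7, $\mathbb{E}\,|\langle\psi_{\boldsymbol{\theta}}|\phi_i\rangle|^2 = fF + (1-f)/2^n$ with $F = |\langle\psi_{\boldsymbol{\theta}}|\psi\rangle|^2$, to confirm that the estimator is unbiased for any known $f$; the numbers chosen for $n$, $f$, and $F$ are arbitrary.

```python
import numpy as np


def shadow_infidelity(overlaps_sq, n, f=None):
    """Eq. 11: 1 + (1 - f) / (2^n f) - mean_i |<psi_theta|phi_i>|^2 / f.
    f defaults to the noise-free value 1/(2^n + 1), for which this equals Eq. 12."""
    d = 2 ** n
    if f is None:
        f = 1.0 / (d + 1)
    return 1.0 + (1.0 - f) / (d * f) - float(np.mean(overlaps_sq)) / f


# Unbiasedness check: plugging in the exact mean overlap should return 1 - F.
n, f, F = 2, 0.05, 0.8
expected_overlap = f * F + (1 - f) / 2 ** n
print(shadow_infidelity([expected_overlap], n, f))  # 1 - F = 0.2 up to rounding
```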

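The next step in NSQST requires classical post-processing to estimate the overlaps $\langle\psi_{\boldsymbol{\theta}}|\phi_i\rangle$. For certain states (e.g., stabilizer states), the overlap can be calculated efficiently. Many states of interest do not fall into this class, leading to a potential exponential overhead. However, we can obtain a Monte Carlo estimate of the overlap by sampling from the model quantum state $|\psi_{\boldsymbol{\theta}}\rangle$. In the model, we associate a probability

$$p_{\boldsymbol{\theta}}(\boldsymbol{s}) = \left|\psi_{\boldsymbol{\theta}}(\boldsymbol{s})\right|^2 = \left|\langle\boldsymbol{s}|\psi_{\boldsymbol{\theta}}\rangle\right|^2 \tag{13}$$

to each computational basis state $|\boldsymbol{s}\rangle$. Therefore,

$$\langle\psi_{\boldsymbol{\theta}}|\phi_i\rangle = \sum_{\boldsymbol{s}} \psi_{\boldsymbol{\theta}}^*(\boldsymbol{s})\,\langle\boldsymbol{s}|\phi_i\rangle = \sum_{\boldsymbol{s}} p_{\boldsymbol{\theta}}(\boldsymbol{s})\,\frac{\langle\boldsymbol{s}|\phi_i\rangle}{\psi_{\boldsymbol{\theta}}(\boldsymbol{s})}. \tag{14}$$

It is now straightforward to provide a Monte Carlo estimate of the above quantity [36] (see Appendix D for an alternative approach). For each sample $\boldsymbol{s}$ from the neural network, we have direct access to the exact complex-valued amplitude $\psi_{\boldsymbol{\theta}}(\boldsymbol{s})$ of the neural network quantum state in the computational basis. Moreover, we can compute the stabilizer state projections $\langle\boldsymbol{s}|\phi_i\rangle$ in time polynomial in $n$, in view of the Gottesman-Knill theorem [37, 38, 39]. Note that decomposing a randomly sampled Clifford operator $U_i$ into primitive unitary gates (e.g., Hadamard, S, and CNOT gates) also requires polynomial classical time [39, 40]. However, this is a one-time procedure to be run for each $U_i$ and can be done in advance of state tomography.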
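The estimator in Eq. 14 can be sketched with dense vectors standing in for both the network amplitudes $\psi_{\boldsymbol{\theta}}(\boldsymbol{s})$ and the projections $\langle\boldsymbol{s}|\phi\rangle$. This is an assumption for self-containment: in NSQST the latter would come from a stabilizer tableau, and `phi` here is a random state rather than a stabilizer state, which does not change the estimator. Because $\sum_{\boldsymbol{s}} p_{\boldsymbol{\theta}}(\boldsymbol{s})\,|\langle\boldsymbol{s}|\phi\rangle/\psi_{\boldsymbol{\theta}}(\boldsymbol{s})|^2 \le 1$, the standard error of the mean is at most $1/\sqrt{M}$ for $M$ samples.

```python
import numpy as np


def mc_overlap(psi_theta, phi, num_samples, rng):
    """Eq. 14: <psi_theta|phi> = E_{s ~ p_theta}[ <s|phi> / psi_theta(s) ]."""
    p = np.abs(psi_theta) ** 2  # Eq. 13
    s = rng.choice(len(p), size=num_samples, p=p / p.sum())
    return np.mean(phi[s] / psi_theta[s])


rng = np.random.default_rng(0)
dim = 2 ** 4
psi_theta = rng.normal(size=dim) + 1j * rng.normal(size=dim)
psi_theta /= np.linalg.norm(psi_theta)
phi = rng.normal(size=dim) + 1j * rng.normal(size=dim)
phi /= np.linalg.norm(phi)

exact = np.vdot(psi_theta, phi)  # sum_s psi_theta(s)^* phi(s)
estimate = mc_overlap(psi_theta, phi, 200_000, rng)
print(exact, estimate)
```

The absolute error is small here, but overlaps of random stabilizer states with a fixed state are typically exponentially small in $n$, so a fixed absolute error does not translate into a fixed relative error.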

For first-order optimization methods (such as SGD and Adam), it is the gradient of the loss function rather than the loss function itself that must be estimated. From Eq. 11 and using the log-derivative trick, we obtain the gradient

| (15) |

where we define the diagonal operator as

| (16) | ||||

A simple but important observation is that the noise enters Eq. 15 only through the overall prefactor $1/f$, since the additive constant $(1-f)/(2^n f)$ in Eq. 11 does not depend on $\boldsymbol{\theta}$. Thus, provided $f > 0$, the noise may affect the effective learning rate, but it will not affect the direction of the gradient. This suggests that gradient-based optimization schemes can yield an accurate neural network quantum state without any noise calibration or mitigation. This is despite the fact that the same noise generally biases the estimated infidelity (see Eq. 11).
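This claim can be checked on a hypothetical one-qubit model, $|\psi_\theta\rangle = (\cos\theta, \sin\theta)$, with three fixed stabilizer states standing in for classical shadows (both assumptions made for illustration). Central finite differences of Eq. 11 at two values of $f$ differ exactly by the ratio of the prefactors $1/f$, because the $f$-dependent offset does not depend on $\theta$.

```python
import numpy as np

# hypothetical fixed stabilizer states |phi_i> standing in for classical shadows
PHIS = [np.array([1, 0]), np.array([1, 1]) / np.sqrt(2), np.array([1, 1j]) / np.sqrt(2)]


def shadow_loss(theta, f, n=1):
    """Eq. 11 for the toy model |psi_theta> = (cos theta, sin theta)."""
    psi = np.array([np.cos(theta), np.sin(theta)])
    mean_overlap = np.mean([abs(np.vdot(psi, phi)) ** 2 for phi in PHIS])
    return 1 + (1 - f) / (2 ** n * f) - mean_overlap / f


def finite_diff_grad(theta, f, h=1e-6):
    """Central-difference approximation of d L / d theta."""
    return (shadow_loss(theta + h, f) - shadow_loss(theta - h, f)) / (2 * h)


theta = 0.3
g_ideal = finite_diff_grad(theta, f=1 / 3)  # noise-free value of f for n = 1
g_noisy = finite_diff_grad(theta, f=0.2)    # a smaller f, i.e. additional noise
print(g_noisy / g_ideal, (1 / 3) / 0.2)     # same direction, rescaled magnitude
```

With an adaptive optimizer such as Adam, which normalizes the update magnitude per parameter, this overall rescaling is largely absorbed.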

We now discuss a possible limitation of our approach to classical post-processing. Given a set of classical shadows collected experimentally, the number of computational basis samples that must be drawn from the neural network quantum state to guarantee a bounded standard error in the Monte Carlo approximation of the gradient in Eq. 15 may need to scale with the Hilbert-space dimension $2^n$. Since this dimension grows exponentially with $n$, there may be an exponential cost in performing the Monte Carlo estimations. We emphasize that this potential exponential cost in classical post-processing does not affect the required number of classical shadows from measurements. For significantly larger systems, an exact sum over all computational basis states will eventually become hopeless; however, the Monte Carlo average may still lead to successful convergence with only a sub-exponential number of samples in some cases (further details and an alternative approach to performing the Monte Carlo average are discussed in Appendix D). In our numerical simulations with six qubits (see Sec. III), having $2^6 = 64$ computational basis states, we have evaluated Eq. 15 using 5000 Monte Carlo samples. With this many samples, the Monte Carlo estimation error is negligible, and statistical fluctuations in the gradient are predominantly due to the finite number of classical shadows collected.

II.5 NSQST with pre-training

Along with the standard NSQST protocol, we also outline a modified NSQST protocol we call “NSQST with pre-training”, which combines the resources used in NNQST and the standard NSQST protocol. NSQST with pre-training aims to find a solution with a lower infidelity than either of the other protocols alone. In this protocol, we train two models with disjoint sets of parameters $\boldsymbol{\lambda}$ and $\boldsymbol{\mu}$, which we call the probability amplitude model and the phase model, respectively. Figure 2 provides a visual overview of the protocol for NSQST with pre-training.

First, the parameters $\boldsymbol{\lambda}$ are optimized to produce an accurate distribution $p_{\boldsymbol{\lambda}}(\boldsymbol{s})$ from measurements performed exclusively in the computational basis [$\boldsymbol{b} = (Z, \ldots, Z)$]. Note that we can efficiently evaluate the loss function, Eq. 4, and its gradient in this case, as they depend only on the probabilities and not on the phases. Next, we perform NSQST to train the model parameters $\boldsymbol{\mu}$, learning the phases $\varphi_{\boldsymbol{\mu}}(\boldsymbol{s})$. However, unlike the case of standard NSQST, to perform a Monte Carlo estimate of the gradient, Eq. 15, we here draw random computational basis states according to the pre-trained distribution $p_{\boldsymbol{\lambda}}(\boldsymbol{s})$. Since this distribution stays fixed while $\boldsymbol{\mu}$ is trained, the Monte Carlo samples do not need to follow the model in an on-policy fashion, and re-sampling in every iteration is no longer necessary. Nevertheless, we still re-sampled in our numerical experiments (described below) to reduce the sampling bias, and because the classical sampling procedure was not computationally costly in our examples.

NSQST with pre-training resembles coordinate descent optimization, with $\boldsymbol{\lambda}$ and $\boldsymbol{\mu}$ being the two coordinate blocks. Optimizing $\boldsymbol{\lambda}$ first and fixing it for the optimization of $\boldsymbol{\mu}$ reduces the dimension of the parameter space for the optimizers throughout the training. However, this does not guarantee convergence to a better local minimum in the loss landscape. We do not intend to demonstrate a clear advantage for NSQST with pre-training over the standard NSQST protocol, as the former uses more computational resources both experimentally (by requiring more measurements) and classically (in the form of the memory, time, and energy consumed to train the neural network quantum state). See Appendix C for a comparison of the number of model parameters and the number of measurements used for each of the two approaches. Another motivation for introducing this modified NSQST protocol is to provide new perspectives on the differences between learning the probability amplitudes and the phases of a target quantum state, as well as to inspire other useful hybrid protocols in the future.

III Numerical simulations without noise

In this section, we first demonstrate the advantage of our NSQST protocols over the NNQST protocol in three physically relevant scenarios, and then demonstrate advantages over direct shadow estimation. Specifically, we consider a model from high-energy physics (time evolution for one-dimensional quantum chromodynamics), a model from condensed-matter physics (time evolution of a Heisenberg spin chain), and a model relevant to precision measurements and quantum information science (a phase-shifted GHZ state).

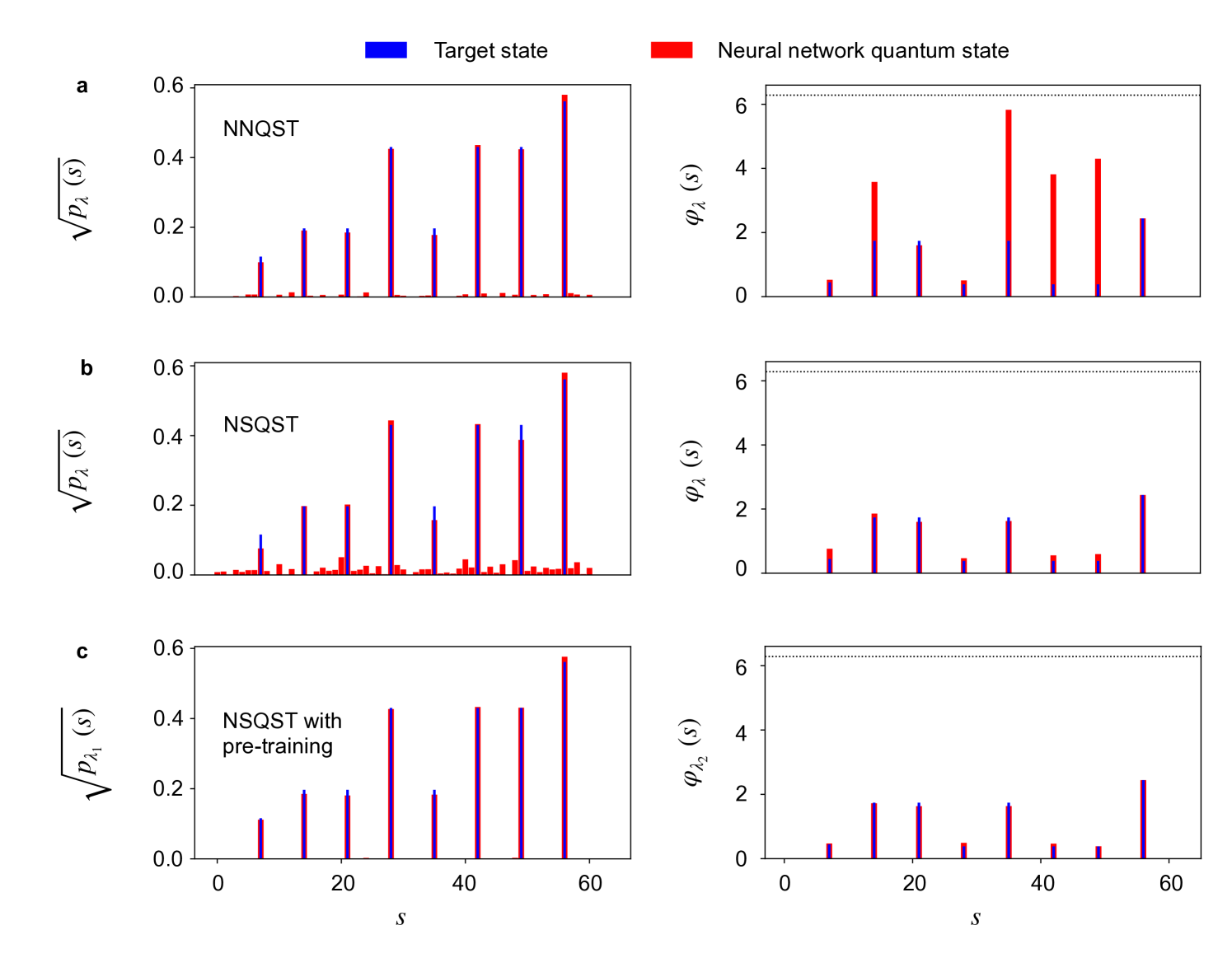

For all three physical settings, we compare the performance of NNQST, NSQST, and NSQST with pre-training by measuring the exact infidelity of the trained model quantum states to the target state, averaged over the last 100 iterations of training (or epochs for NNQST; see Appendix C). For NNQST’s basis selection, since none of our target states is known to be the ground state of a $k$-local Hamiltonian (i.e., a Hamiltonian with each term acting non-trivially on at most $k$ qubits), we simply use all of the almost-diagonal and nearest-neighbour local Pauli bases (i.e., Pauli bases with at most two non-$Z$ terms, which must act on neighbouring qubits). The number of these bases scales linearly with the system size $n$. All NSQST protocols use only a fixed number of classical shadows per iteration, re-sampled in every iteration, for model parameter updates. We perform ten independent trials of each protocol (NNQST, NSQST, and NSQST with pre-training) in each of the three examples.
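The basis set used for NNQST here can be enumerated by brute force. The sketch assumes an open chain, so that "neighbouring" means qubits $j$ and $j+1$; under that assumption the count is $1 + 2n + 4(n-1) = 6n - 3$, compared with $3^n$ local Pauli bases in total.

```python
import itertools


def nnqst_bases(n):
    """Local Pauli bases with at most two non-Z entries; if there are two,
    they must sit on neighbouring qubits of an (assumed) open chain."""
    bases = []
    for b in itertools.product("XYZ", repeat=n):
        non_z = [j for j, p in enumerate(b) if p != "Z"]
        if len(non_z) <= 1 or (len(non_z) == 2 and non_z[1] - non_z[0] == 1):
            bases.append("".join(b))
    return bases


for n in range(2, 7):
    print(n, len(nnqst_bases(n)), 6 * n - 3, 3 ** n)
```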

Finally, we adopt an improved pre-training strategy described in Appendix D and fix a set of Clifford shadows without re-sampling to demonstrate advantages of NSQST over direct shadow estimation with Clifford shadows or Pauli shadows.

III.1 Time-evolved state in one-dimensional quantum chromodynamics

Quantum chromodynamics (QCD) studies the fundamental strong interaction responsible for the nuclear force [41]. Lattice gauge theory, an important non-perturbative tool for studying QCD, discretizes spacetime into a lattice, from which continuum results can be obtained through extrapolation [42]. Although lattice gauge theory has been extremely successful in QCD studies, simulations of many important physical phenomena such as real-time evolution are still out of reach due to the sign problem in current simulation techniques. Quantum computers are envisioned to overcome this barrier in lattice gauge theory-based QCD simulations, and they may open the door to new discoveries in QCD [43, 44, 45, 46].

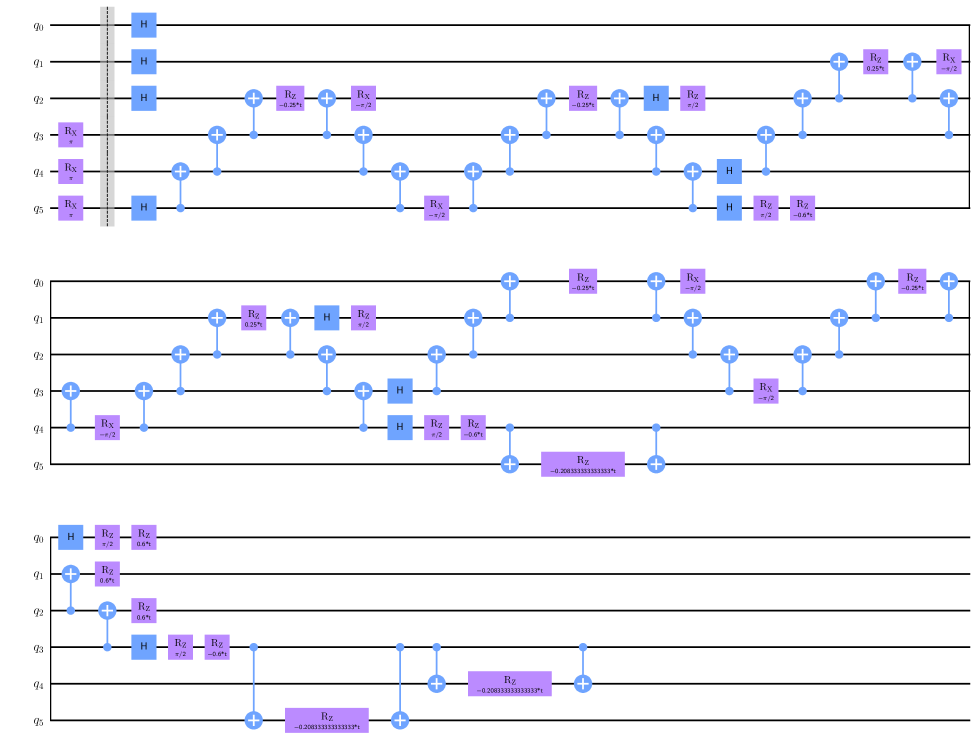

We consider a Trotterized time evolution with the gauge group SU(3) and aim to reconstruct the time-evolved quantum state after a given amount of time. To this end, we use the qubit formulation in Ref. [47] and study a single unit cell of the lattice. This corresponds to $n = 6$ qubits representing three quarks (red, green, blue) and three antiquarks (anti-red, anti-green, anti-blue), as shown in Fig. 3a. The Trotterized time evolution starts from an initial state known as the strong-coupling baryon-antibaryon state. The Hamiltonian governing the evolution is (from Ref. [47]):

| (17) |

where

| (18) |

with dimensionless couplings defined in terms of the lattice spacing, the bare quark mass, and the gauge coupling constant (see Ref. [47]). We use two Trotter steps of equal duration in our simulation. See Appendix B and Ref. [47] for more details on the circuit and the physical significance of this time evolution.

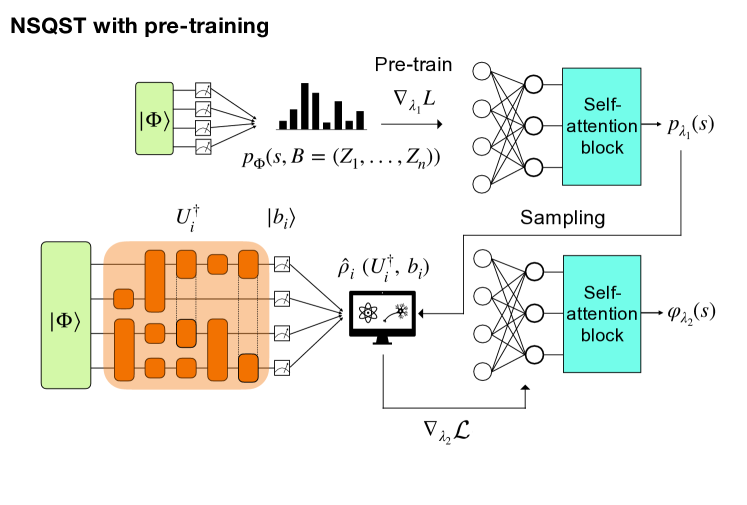

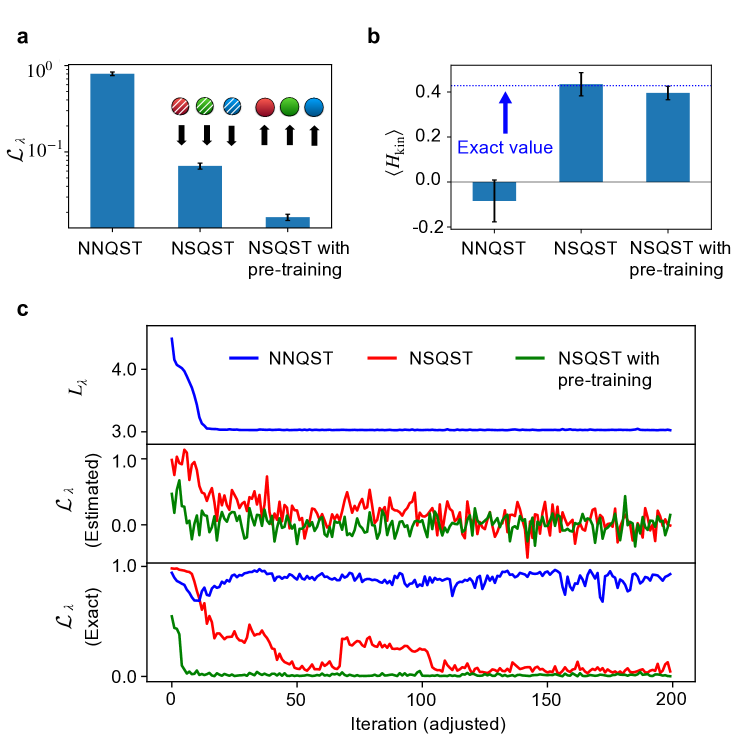

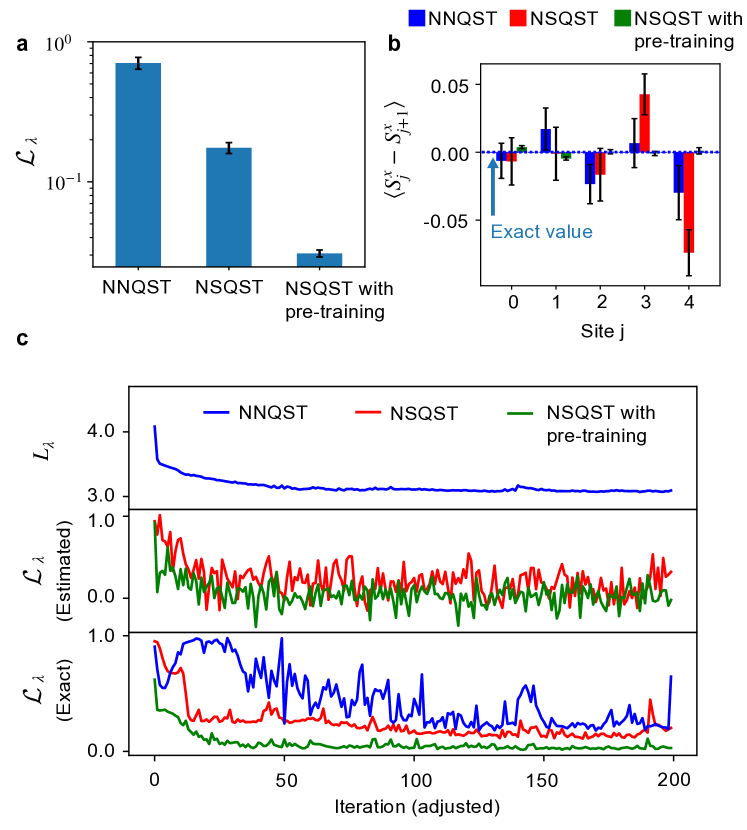

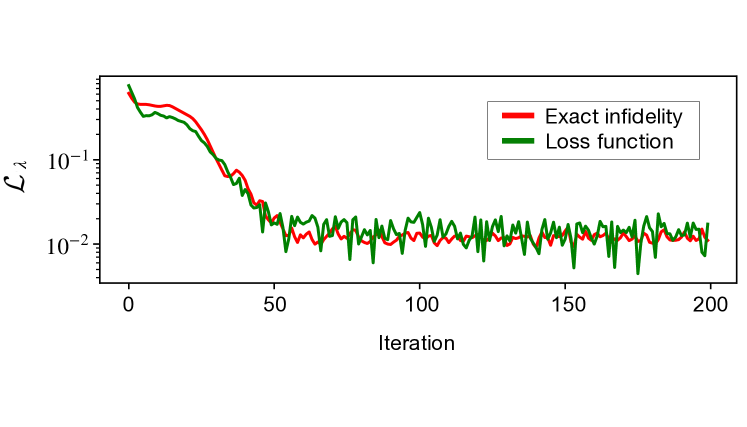

Figure 3 shows the results of simulating tomography on the time-evolved state using NNQST, NSQST, and NSQST with pre-training. Note that, although the NSQST protocols (with and without pre-training) are run for 2000 iterations, we plot them in increments of ten iterations to provide a visual comparison with NNQST (which is run for 200 epochs due to the faster convergence of its loss function). For NSQST with pre-training, we display the optimization progress curve only after the probabilities have been pre-trained; this explains the lower initial infidelity for NSQST with pre-training. See Appendix C for further details on the simulation hyperparameters.

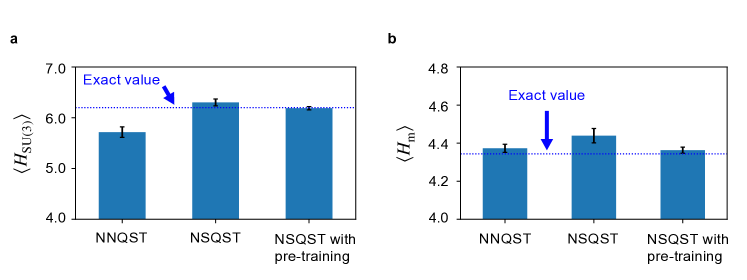

Based on Fig. 3, NSQST and NSQST with pre-training both result in a lower final-state infidelity than NNQST, and both predict the mean kinetic energy better than NNQST. Figure 3c further depicts the optimization progress curves of a typical trial. We see that for NNQST, the cross-entropy loss quickly converges with very little fluctuation, while the state infidelity in the lower plot keeps fluctuating at a high value, indicating a very small overlap with the target state. On the other hand, standard NSQST and NSQST with pre-training both converge to a final state very close to the target state, despite fluctuations in the loss function caused by the finite number of classical shadows in each iteration. Moreover, NSQST with pre-training not only starts from a state of lower infidelity after pre-training, but also converges to a solution of lower infidelity than standard NSQST, with much more stable convergence at the end of training. One unexpected outcome is that NSQST with pre-training does not predict the kinetic energy better than standard NSQST, despite having lower infidelity; this may be an artifact of insufficient statistics given only ten trials. The predicted total energy and mass are also plotted in Fig. 13 (Appendix E), where NSQST and NSQST with pre-training yield significantly better predictions of the total energy, but not of the mass, which is a local observable.

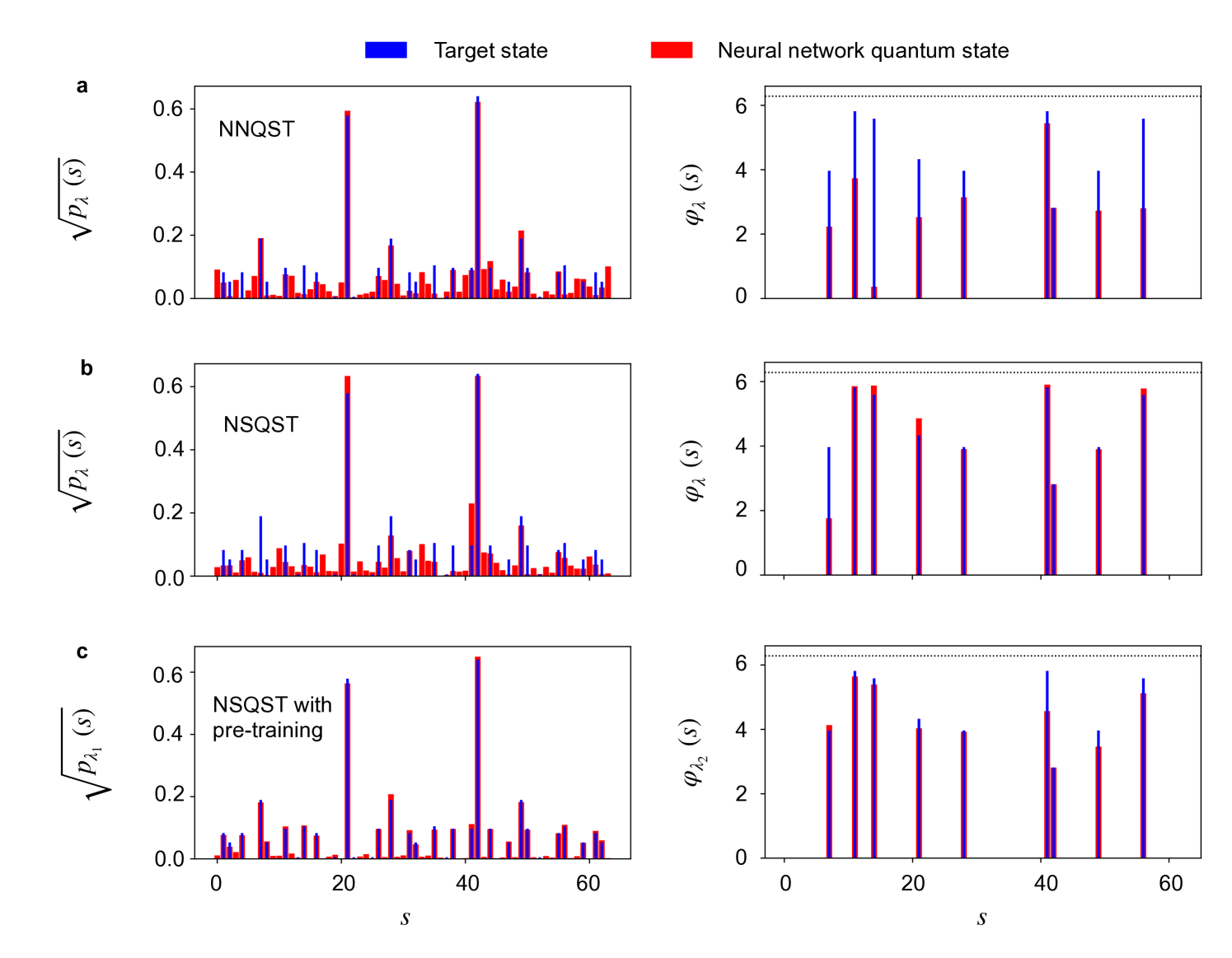

In Fig. 4, the amplitudes and phases of typical final neural network quantum states are displayed for each protocol. We see that the NNQST protocol fails to learn the phase structure of the target state, despite accurately learning the probability distribution. This observation is consistent with NNQST’s convergence to a poor local minimum in the lower plot of Fig. 3c, with the infidelity stuck at a high value. On the other hand, standard NSQST and NSQST with pre-training both successfully learn the phase structure of the target state, while NSQST with pre-training also learns the probability distribution better.

III.2 Time-evolved state for a one-dimensional Heisenberg antiferromagnet

The Heisenberg model describes magnetic systems quantum mechanically. Understanding the properties of the quantum Heisenberg model is crucial in many fields, including condensed matter physics, material science, and quantum information theory [48, 49, 50]. In this example, we perform tomography on a state that has evolved in time under the action of the one-dimensional antiferromagnetic Heisenberg (AFH) Hamiltonian. We use four Trotter time steps to approximate the time evolution.

The one-dimensional AFH model Hamiltonian is

| (19) |

We take open boundary conditions for our simulation. The 6-qubit initial state is set to the classical Néel-ordered state, and our target state is obtained by evolving it under the Heisenberg Hamiltonian up to a fixed final time. The circuit describing the Trotterized time evolution is given in Appendix B.
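A minimal first-order Trotter sketch of this kind of evolution follows. It assumes the standard form $H = J\sum_{j}(X_jX_{j+1} + Y_jY_{j+1} + Z_jZ_{j+1})$ with $J > 0$ and open boundaries, an even/odd bond splitting, and illustrative values of $n$, $J$, and $t$; none of these choices is taken from Eq. 19 or Appendix B, whose circuit may differ. Dense matrices are used only because the example is small.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]])
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def bond(j, n, J):
    """J (X_j X_{j+1} + Y_j Y_{j+1} + Z_j Z_{j+1}) on n qubits (qubit 0 = leftmost factor)."""
    left, right = np.eye(2 ** j), np.eye(2 ** (n - j - 2))
    return J * sum(np.kron(np.kron(left, np.kron(P, P)), right) for P in (X, Y, Z))


def expm_herm(Hm, t):
    """exp(-i H t) for a Hermitian matrix H via its eigendecomposition."""
    w, V = np.linalg.eigh(Hm)
    return (V * np.exp(-1j * w * t)) @ V.conj().T


def trotter_evolve(psi0, n, J, t, steps):
    """First-order even/odd-bond Trotterization of the assumed open-chain Heisenberg model."""
    zero = np.zeros((2 ** n, 2 ** n), dtype=complex)
    even = sum((bond(j, n, J) for j in range(0, n - 1, 2)), zero)
    odd = sum((bond(j, n, J) for j in range(1, n - 1, 2)), zero)
    step = expm_herm(odd, t / steps) @ expm_herm(even, t / steps)
    psi = psi0
    for _ in range(steps):
        psi = step @ psi
    return psi


n, J, t = 4, 1.0, 1.0
psi0 = np.zeros(2 ** n, dtype=complex)
psi0[int("0101", 2)] = 1.0  # Neel state |0101>
exact = expm_herm(sum(bond(j, n, J) for j in range(n - 1)), t) @ psi0
for steps in (4, 16, 64):
    approx = trotter_evolve(psi0, n, J, t, steps)
    print(steps, 1 - abs(np.vdot(exact, approx)) ** 2)  # Trotter infidelity shrinks with steps
```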

Figure 5 shows the simulation results for performing tomography on the time-evolved AFH state using NNQST, NSQST, and NSQST with pre-training. We see in Fig. 5a that NSQST and NSQST with pre-training both reach a lower final-state infidelity than NNQST. Figure 5b displays the mean staggered magnetization (along $z$) at each site. We observe that, across all sites, NSQST with pre-training results in a tighter spread of values about the exact result than NNQST or NSQST. Comparing NNQST and standard NSQST, we observe that the standard NSQST protocol gives significantly worse predictions than NNQST at sites 3 and 4, with a mean more than one standard error away from the exact value, despite having a significantly lower final-state infidelity. This is likely because NNQST is trained using nearly-diagonal measurement data, which provides direct access to the staggered magnetization observable of interest, whereas NSQST was trained using the infidelity loss. This result demonstrates that reaching a lower infidelity does not necessarily imply a better prediction of local observables, although the standard NSQST protocol can be improved by using more classical shadows per iteration. Finally, the typical optimization progress curves in Fig. 5c are consistent with the statistical results shown in Fig. 5a: for NNQST, the infidelity does not converge stably, despite the convergence of its loss function.
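Why nearly-diagonal data gives NNQST direct access to this observable can be seen from the estimator itself: the staggered magnetization is computed from computational-basis bit-strings alone. The sketch assumes the convention $m_j = (-1)^j\langle Z_j\rangle$ with $Z|0\rangle = |0\rangle$ and sites indexed from zero; the sign convention used for Fig. 5b may differ.

```python
import numpy as np


def staggered_magnetization(samples):
    """Per-site estimate of m_j = (-1)^j <Z_j> from computational-basis bit-strings."""
    samples = np.asarray(samples)
    z = 1 - 2 * samples  # bit 0 -> Z = +1, bit 1 -> Z = -1
    signs = (-1) ** np.arange(samples.shape[1])
    return signs * z.mean(axis=0)


neel = [[0, 1, 0, 1, 0, 1]] * 10
print(staggered_magnetization(neel))  # [1. 1. 1. 1. 1. 1.]
```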

The probability amplitudes and phases obtained after training on a time-evolved AFH state are shown in Fig. 6. Here, the two highest peaks correspond to the two Néel states, |010101⟩ and |101010⟩ [51]. Figure 6 further confirms the advantage of NSQST with pre-training. Not only does NSQST with pre-training find more accurate phases, it also finds a better description of the probability distribution, since pre-training uses many measurement samples from the computational basis (whereas NNQST splits the same number of measurement samples over multiple bases).
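The AFH setup can be sketched in a few lines of NumPy. The sketch builds a one-dimensional Heisenberg Hamiltonian with open boundaries and applies a first-order Trotterized evolution to the Néel state |010101⟩. It then evaluates the site-resolved staggered magnetization (-1)^k ⟨Z_k⟩. Eq. (19) is not reproduced here, so several choices are assumptions: the common form H = J Σ_i (X_i X_{i+1} + Y_i Y_{i+1} + Z_i Z_{i+1}), an even-bond/odd-bond splitting, and illustrative values of t and J. The helper names are hypothetical, and the circuit actually used is the one in Appendix B.

```python
import numpy as np

# Single-qubit Pauli matrices
I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def op_on(ops, n):
    """Kronecker product with ops[k] on site k (site 0 = leftmost bit), identity elsewhere."""
    out = np.array([[1.0 + 0j]])
    for k in range(n):
        out = np.kron(out, ops.get(k, I2))
    return out


def bond(i, n, J=1.0):
    """Assumed bond term J (X_i X_{i+1} + Y_i Y_{i+1} + Z_i Z_{i+1})."""
    return J * sum(op_on({i: P, i + 1: P}, n) for P in (X, Y, Z))


def evolve(Hm, psi, t):
    """Apply exp(-i t Hm) to psi via the eigendecomposition of the Hermitian matrix Hm."""
    w, V = np.linalg.eigh(Hm)
    return V @ (np.exp(-1j * t * w) * (V.conj().T @ psi))


def trotter_evolve(psi, n, t, steps, J=1.0):
    """First-order Trotter steps exp(-i dt H_odd) exp(-i dt H_even), open boundaries."""
    zero = np.zeros((2 ** n, 2 ** n), dtype=complex)
    even = sum((bond(i, n, J) for i in range(0, n - 1, 2)), zero)
    odd = sum((bond(i, n, J) for i in range(1, n - 1, 2)), zero)
    dt = t / steps
    for _ in range(steps):
        psi = evolve(odd, evolve(even, psi, dt), dt)
    return psi


def neel_state(n):
    """Computational-basis state |0101...> (bit k equals k mod 2)."""
    psi = np.zeros(2 ** n, dtype=complex)
    psi[int("".join(str(k % 2) for k in range(n)), 2)] = 1.0
    return psi


def staggered_magnetization(psi, n):
    """Site-resolved (-1)^k <Z_k>; equals +1 on every site for |0101...>."""
    return np.array([(-1) ** k * np.real(np.vdot(psi, op_on({k: Z}, n) @ psi)) for k in range(n)])


n, t, steps = 6, 0.2, 4                  # illustrative values, not those used in the paper
psi0 = neel_state(n)
H = sum(bond(i, n) for i in range(n - 1))
exact = evolve(H, psi0, t)
trotter = trotter_evolve(psi0, n, t, steps)
print("Trotter fidelity:", abs(np.vdot(exact, trotter)) ** 2)
print("staggered magnetization:", staggered_magnetization(trotter, n))
```

Because every bond term conserves the total Z magnetization, the Trotterized state stays in the same magnetization sector as the Néel state. This gives a cheap sanity check on any implementation.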

III.3 The phase-shifted GHZ state

In this last example, we consider the tomography of a phase-shifted GHZ state. Here, our target is a 6-qubit GHZ state with a relative phase of . A GHZ state is a maximally entangled state that is highly relevant to quantum information science because of its non-classical correlations [52]. Moreover, the GHZ state is the only multi-qubit pure state that cannot be uniquely determined from its reduced density matrices on all but one qubit [53], indicating genuine multipartite entanglement.
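A phase-shifted GHZ state can be written as (|0…0⟩ + e^{iφ}|1…1⟩)/√2. The following NumPy sketch checks three elementary facts about it; the helper names and the value φ = 0.7 are illustrative.

- Its fidelity with the unshifted GHZ state is cos²(φ/2).
- ⟨X^{⊗n}⟩ = cos φ.
- The outcome distribution in any product Pauli basis that reads out at least one qubit in Z does not depend on φ. That qubit reveals which branch the state is in, so the two branches cannot interfere.

The last point is one way to see why measurement data from nearly-diagonal bases, which rotate only a few qubits away from Z, can leave the relative phase undetermined.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
SDG = np.diag([1, -1j])
# Rotation U with U P U^dagger = Z, mapping the eigenbasis of P onto the computational basis
ROT = {"Z": np.eye(2, dtype=complex), "X": H, "Y": H @ SDG}


def ghz(n, phi):
    """(|0...0> + e^{i phi} |1...1>) / sqrt(2) as a dense state vector."""
    psi = np.zeros(2 ** n, dtype=complex)
    psi[0] = 1 / np.sqrt(2)
    psi[-1] = np.exp(1j * phi) / np.sqrt(2)
    return psi


def probs_in_basis(psi, basis):
    """Outcome distribution when qubit k is measured in the Pauli basis basis[k]."""
    n = len(basis)
    t = psi.reshape([2] * n)                 # axis k <-> qubit k (qubit 0 = leftmost bit)
    for k, b in enumerate(basis):
        t = np.moveaxis(np.tensordot(ROT[b], t, axes=([1], [k])), 0, k)
    return np.abs(t.reshape(-1)) ** 2


def x_string(psi, n):
    """<X^{(x) n}>: X on every qubit maps basis index i to i XOR (2^n - 1)."""
    return np.real(np.vdot(psi, psi[np.arange(2 ** n) ^ (2 ** n - 1)]))


n, phi = 6, 0.7
psi = ghz(n, phi)
print(abs(np.vdot(ghz(n, 0.0), psi)) ** 2, np.cos(phi / 2) ** 2)   # fidelity vs cos^2(phi/2)
print(x_string(psi, n), np.cos(phi))                               # <X...X> vs cos(phi)
```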

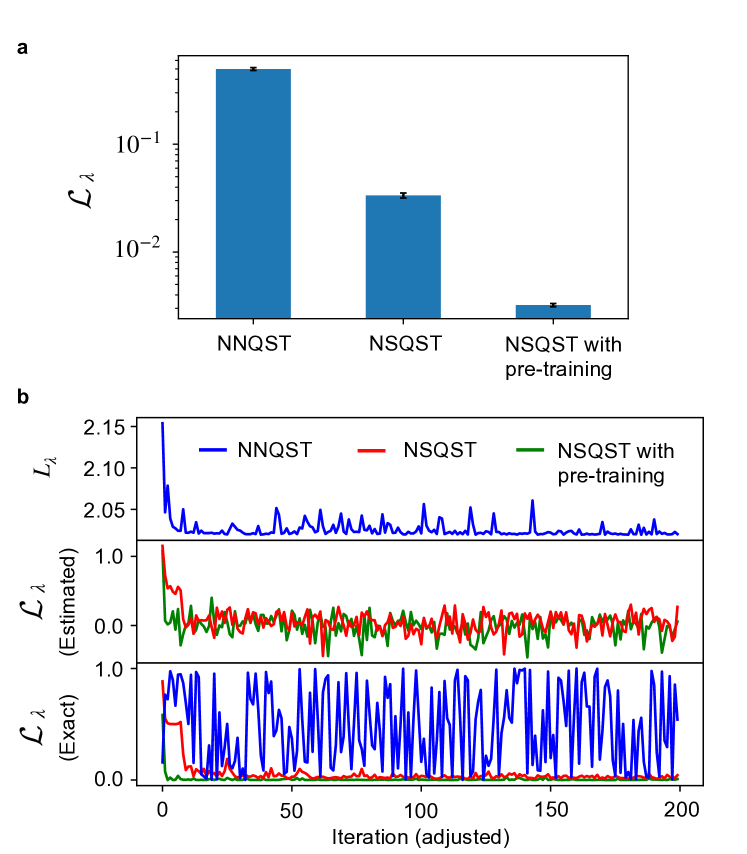

As shown in Fig. 7a, both NSQST and NSQST with pre-training achieve a significantly lower average final state infidelity than NNQST. The optimization progress curves in Fig. 7b confirm this result: the infidelity of the NNQST state fluctuates rapidly during training, in contrast to the previous two examples. Given that we employed a widely used adaptive optimizer (Adam) with a reasonable initial learning rate (see Appendix C), such a failure to converge is likely because the NNQST loss function does not incorporate tomographically complete information about the target GHZ state.

III.4 Comparison with direct shadow estimation

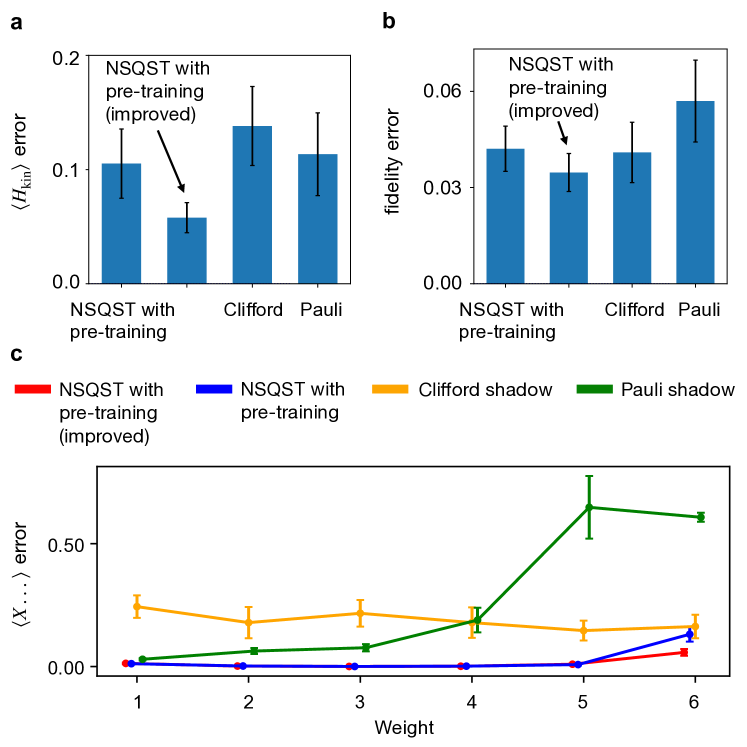

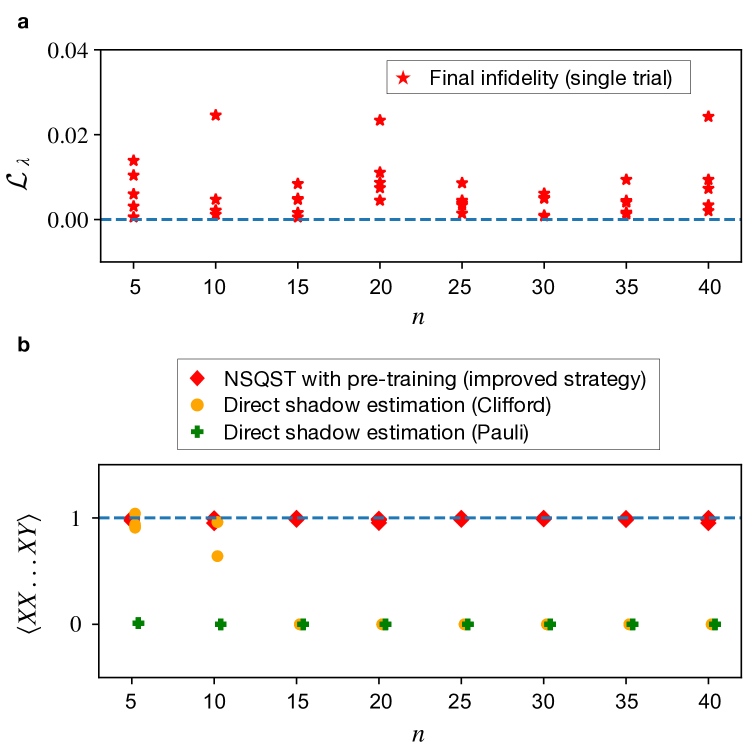

So far we have only compared the performance of NNQST, NSQST, and NSQST with pre-training, but an important question is whether any of these methods has an advantage over direct shadow estimation. In this subsection, we compare the performance of NSQST with pre-training and direct shadow estimation for the one-dimensional QCD time-evolved state from Sec. III.1. In addition, we perform a scalability study of the phase-shifted GHZ state with up to qubits, comparing NSQST to direct shadow estimation. To minimize the number of Clifford shadows used for training, we fix Clifford shadows as training data and do not re-sample them in every iteration. In addition to the original pre-training protocol from Sec. II.5, we adopt the improved pre-training strategy described in Appendix D. A typical optimization progress curve with the improved pre-training strategy is shown in Fig. 14 of Appendix E; very few iterations are required for convergence. Once training is completed, we compare the prediction errors of NSQST with pre-training, Clifford shadows, and Pauli shadows.

Figure 8 compares the absolute prediction error of NSQST with pre-training (with and without the improved strategy) with that of two direct shadow estimation methods. For a fair comparison, we randomly sampled the same number () of measurements for each method. For shadow reconstruction, Clifford shadows or Pauli shadows were used. For NSQST with pre-training, computational-basis measurements were used for pre-training and shadows were used for training. In Fig. 8a, we observe that, with the improved strategy, NSQST with pre-training achieves a significantly smaller prediction error than either Pauli shadows or Clifford shadows. This is expected, as the kinetic-energy Hamiltonian in Eq. 18 contains high-weight Pauli strings, and Pauli shadows are provably efficient only at predicting local observables [8]. On the other hand, Clifford shadows have an exponentially growing variance bound for any Pauli observable irrespective of locality (since in Eq. 10), which explains their large prediction error for the kinetic energy. In Fig. 8b, we report the prediction error in the fidelity to the ideal time-evolved state. Pauli shadows yield the largest error in fidelity estimation because of the exponentially growing variance bound for non-local observables. Finally, in Fig. 8c, we apply the four methods to predicting a single Pauli string of increasing weight, where each increase in weight replaces the identity on one more site with Pauli-X. This non-local observable corresponds to the Wilson loop operator in lattice gauge theory with symmetry [54]. Since predicting high-weight observables is hard for both shadow protocols, the prediction error of NSQST with pre-training is much lower than that of either Clifford or Pauli shadows for most of these observables. We also observe that the prediction error of Pauli shadows increases consistently with the weight.
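The next sketch shows why Pauli (random local-basis) shadows struggle with high-weight strings. It implements the standard single-snapshot estimator for a Pauli string P from Ref. [8]. A snapshot contributes Π_{k∈supp(P)} 3(-1)^{b_k} if the randomly chosen local bases match P on its whole support, and 0 otherwise. Its second moment therefore grows as 3^w for weight w. The phase-shifted GHZ test state, the helper names, and the shot counts are illustrative assumptions, not the settings behind Fig. 8.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
ROT = {"Z": np.eye(2, dtype=complex), "X": H, "Y": H @ np.diag([1, -1j])}


def probs_in_basis(psi, basis):
    """Outcome distribution when qubit k is measured in the Pauli basis basis[k]."""
    n = len(basis)
    t = psi.reshape([2] * n)
    for k, b in enumerate(basis):
        t = np.moveaxis(np.tensordot(ROT[b], t, axes=([1], [k])), 0, k)
    p = np.abs(t.reshape(-1)) ** 2
    return p / p.sum()


def snapshot_value(pauli, basis, outcome, n):
    """Single-snapshot estimate of <pauli> from one local-Pauli measurement outcome."""
    val = 1.0
    for k, (P, b) in enumerate(zip(pauli, basis)):
        if P == "I":
            continue
        if P != b:
            return 0.0                        # mismatched basis: snapshot carries no information
        bit = (outcome >> (n - 1 - k)) & 1     # qubit 0 is the most significant bit
        val *= 3.0 * (-1) ** bit
    return val


def pauli_shadow_estimate(psi, pauli, shots, rng):
    """Average of single-snapshot estimates over `shots` random local-Pauli measurements."""
    n = len(pauli)
    letters = np.array(list("XYZ"))
    cache, total = {}, 0.0
    for row in rng.integers(0, 3, size=(shots, n)):
        basis = "".join(letters[row])
        if any(P != "I" and P != b for P, b in zip(pauli, basis)):
            continue                          # contributes 0 whatever the outcome
        if basis not in cache:
            cache[basis] = probs_in_basis(psi, basis)
        outcome = int(rng.choice(2 ** n, p=cache[basis]))
        total += snapshot_value(pauli, basis, outcome, n)
    return total / shots


rng = np.random.default_rng(7)
n, phi = 4, 0.7
psi = np.zeros(2 ** n, dtype=complex)
psi[0], psi[-1] = 1 / np.sqrt(2), np.exp(1j * phi) / np.sqrt(2)    # phase-shifted GHZ
for pauli, exact in [("ZZII", 1.0), ("XXXX", np.cos(phi))]:
    weight = sum(P != "I" for P in pauli)
    est = pauli_shadow_estimate(psi, pauli, 20000, rng)
    print(pauli, round(est, 3), "exact:", round(exact, 3), "variance bound 3^w:", 3 ** weight)
```

Only a fraction 3^{-w} of snapshots match the basis pattern, and each of those is scaled by 3^w. The estimate stays unbiased, but its spread grows exponentially with the weight.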

A natural question that arises is the scalability of NSQST’s advantages over direct shadow estimation. While a trained neural network quantum state closely approximating the target state has more predictive power than classical shadows alone, there is no guarantee of successful convergence during training. For instance, learning a general multi-qubit probability distribution without any prior knowledge in the pre-training step is hard [55] and would eventually require exponentially growing resources. The key to having a scalable advantage is to leverage prior knowledge of the prepared target state and to find ways to impose these known constraints in the neural network ansatz and the loss function [36, 56, 57].

As a proof of concept, we numerically study the sample complexity scaling of learning a phase-shifted GHZ state using NSQST with pre-training and the improved strategy from Appendix D. With measurements in the computational basis, Clifford shadows, and Monte Carlo samples for each system size, we investigate the scaling of the final infidelity. As shown in Fig. 9a, the final infidelity does not grow as the system size increases. This is expected, as the multi-qubit GHZ state is sparse in the computational basis and only a single relative phase needs to be determined. Although learning the GHZ state with a neural network ansatz is a trivial example, direct shadow estimation does not exploit this sparsity, and the Clifford shadows collected as training data are not, on their own, sample-efficient at predicting Pauli observables. In Fig. 9b, we observe that direct shadow estimation fails to predict expectation values accurately and returns only values of zero as the system size increases. The results in Fig. 9 suggest that NSQST may be able to reconstruct sufficiently sparse states with sub-exponentially growing resources, and potentially non-sparse states as well when enough known constraints are imposed. Finally, we emphasize that, compared with the neural network quantum state ansatz trained on IC-POVM data in Ref. [30], our chosen state ansatz described in Sec. II.1 is sample-efficient at predicting Pauli-string observables of arbitrary weight, as well as the fidelity to any classically tractable state.
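A short argument explains the zeros in Fig. 9b.

1. For a uniformly random global Clifford U and a non-identity Pauli string P on n qubits, U P U† is a uniformly random non-identity Pauli.
2. That Pauli is diagonal in the computational basis with probability (2^n - 1)/(4^n - 1) = 1/(2^n + 1).
3. The single-snapshot estimate (2^n + 1)⟨b|U P U†|b⟩ - tr(P) therefore equals ±(2^n + 1) with that probability and exactly 0 otherwise.
4. Unbiasedness then fixes the mean sign to ⟨P⟩.

The sketch below samples from this three-point distribution directly rather than simulating Clifford circuits. The function name and parameters are hypothetical. With a fixed number of shadows much smaller than 2^n, almost every run averages to exactly zero.

```python
import numpy as np


def clifford_snapshot_values(n, expval, shots, rng):
    """Single-snapshot global-Clifford estimates of a Pauli string P with <P> = expval.

    Each value is 0 with probability 2^n / (2^n + 1) and +/-(2^n + 1) otherwise,
    with the sign biased so that the estimator is unbiased.
    """
    d = 2 ** n
    diagonal = rng.random(shots) < 1.0 / (d + 1)      # U P U^dagger landed on a Z-type Pauli
    sign = np.where(rng.random(shots) < (1.0 + expval) / 2.0, 1.0, -1.0)
    return np.where(diagonal, (d + 1) * sign, 0.0)


rng = np.random.default_rng(0)
for n in (2, 6, 12, 20):
    estimate = clifford_snapshot_values(n, expval=np.cos(0.7), shots=1000, rng=rng).mean()
    print(n, estimate)
```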

We make a final remark on the predictive power of randomized measurements alone versus a trained variational pure state. While one may generally expect the trained pure state to inherit the features of the training data, this may not be true in specific cases for NSQST and Clifford shadows, where the trained pure state’s predictive power mainly depends on the global reconstruction error (quantum infidelity), rather than an estimator for a particular observable. Moreover, the variational training framework of NSQST is not limited to a neural network quantum state with Clifford shadows. Other variational ansatzes such as matrix product states and other randomized measurement schemes such as Hamiltonian-driven shadows should be explored with proper locality adjustments, for practical advantages such as hardware-aware measurements and scalable classical post-processing [58, 59, 60, 61].

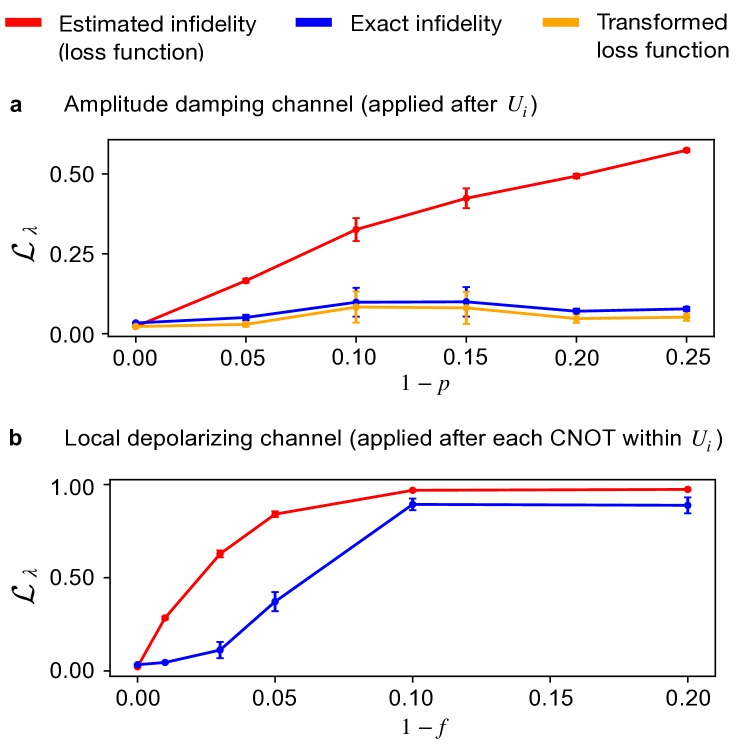

IV Numerical simulations with noise

We now numerically investigate the noise robustness of our NSQST protocol, focusing on the same phase-shifted GHZ state as in Sec. III.3. We consider two different sources of noise affecting the Clifford circuit used to evaluate our infidelity-based loss function with classical shadows (see Sec. II.4). The first noise model (a particular case of the model introduced in Sec. II.3) describes either measurement (readout) errors or gate-independent, time-stationary, and Markovian (GTM) noise. The second noise model describes imperfect two-qubit entangling gates in the Clifford circuit. In the following, we introduce both noise models and discuss their effects on the fidelity of the reconstructed state.

Our first model is an amplitude damping channel applied before measurements. The amplitude damping channel is suitable for investigating the effect of measurement errors in the computational basis. The -qubit amplitude damping noise channel with channel parameter is defined as

| (20) |

where

| (21) |

Apart from modeling measurement noise, this channel is also a suitable model for studying GTM noise [24]. In that case, every gate in the Clifford circuit is subject to the same noise map, and the resulting noisy random Clifford circuit can be decomposed into with being a noise channel applied after the ideal Clifford unitary.
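Eqs. (20) and (21) are not reproduced here, so the sketch below assumes the textbook single-qubit amplitude damping Kraus operators K0 = diag(1, √(1-γ)) and K1 = √γ |0⟩⟨1|, applied independently to every qubit. It checks two properties:

- The channel is trace preserving.
- For computational-basis readout, its only effect is a classical 1 → 0 flip with probability γ applied to the diagonal of the pre-measurement state. The measured bit-string distribution therefore depends only on that diagonal.

The helper names and the random test state are illustrative.

```python
from functools import reduce

import numpy as np


def amp_damp_kraus(gamma):
    """Assumed single-qubit amplitude damping Kraus operators."""
    K0 = np.array([[1, 0], [0, np.sqrt(1 - gamma)]], dtype=complex)
    K1 = np.array([[0, np.sqrt(gamma)], [0, 0]], dtype=complex)
    return [K0, K1]


def product_kraus(gamma, n):
    """Kraus operators of the n-qubit channel that damps every qubit independently."""
    ops = [np.eye(1, dtype=complex)]
    for _ in range(n):
        ops = [np.kron(A, K) for A in ops for K in amp_damp_kraus(gamma)]
    return ops


def apply_channel(rho, kraus):
    return sum(K @ rho @ K.conj().T for K in kraus)


def readout_matrix(gamma, n):
    """Classical transition matrix on bit-strings: each 1 decays to 0 with probability gamma."""
    T1 = np.array([[1, gamma], [0, 1 - gamma]])
    return reduce(np.kron, [T1] * n)


rng = np.random.default_rng(3)
n, gamma = 2, 0.1
A = rng.normal(size=(2 ** n, 2 ** n)) + 1j * rng.normal(size=(2 ** n, 2 ** n))
rho = A @ A.conj().T
rho /= np.trace(rho)                                  # random full-rank density matrix

kraus = product_kraus(gamma, n)
completeness = sum(K.conj().T @ K for K in kraus)
p_measured = np.real(np.diag(apply_channel(rho, kraus)))
p_classical = readout_matrix(gamma, n) @ np.real(np.diag(rho))
print(np.allclose(completeness, np.eye(2 ** n)), np.allclose(p_measured, p_classical))
```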

To demonstrate the noise robustness of our NSQST protocol, we first perform tomography on a phase-shifted GHZ state (with a relative phase of ). Despite the presence of the amplitude damping noise , we simulate NSQST using the noise-free gradient expression in Eq. 15. As discussed in Sec. II.4, the noise-free gradient expression still yields an estimate that points along the true gradient, differing only by an overall prefactor. In contrast, the noise-free loss function and the true loss are related nontrivially in the presence of noise:

| (22) |

As a result, the estimated loss function no longer converges to zero, even though the infidelity between the neural network quantum state and the target state approaches zero during training.

Figure 10a shows the simulation results for an amplitude damping channel applied before measurement. First, we observe that the exact infidelity averaged over the last 100 iterations remains small despite the growing noise strength. The increasing loss function value (red curves) reflects the growing variance of our gradient estimates, which will eventually cause the optimizer to fail to converge to a state close to the target state. Intuitively, because classical-shadow post-processing uses only the measured bit-strings and not the phases, only the diagonal bit-flip errors in Eq. 21 contribute to the noise model, and the random Clifford circuits twirl these into depolarizing noise. Finally, the agreement between the exact infidelity (blue curve) and the transformed loss function (the right-hand side of Eq. 22, orange curve) supports our theoretical analysis. Here, we have used Eq. 8 to find the depolarizing noise channel strength

| (23) |

Note that may be hard to estimate in practice. However, since this parameter does not affect the direction of the estimated gradient, we expect training to converge to the same optimal parameters with or without noise. It is therefore not necessary to compensate for noise by computing the linear transformation in Eq. 22, as long as we can verify that training has converged successfully.
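For the Adam optimizer listed in Appendix C, an overall positive prefactor on the gradient leaves the updates essentially unchanged, not just their direction. Rescaling every gradient by c > 0 rescales Adam's first-moment estimate by c and the square root of its second-moment estimate by c. The update m̂/(√v̂ + ε) is therefore unchanged, up to the small constant ε. The sketch below is a hand-written Adam run on a toy quadratic loss, with and without a hypothetical noise prefactor c = 0.3. The loss, c, and the hyperparameters are illustrative and not taken from the simulations.

```python
import numpy as np


def adam(grad_fn, theta0, lr=0.05, steps=500, beta1=0.9, beta2=0.999, eps=1e-8):
    """Plain Adam (Kingma and Ba) returning the final parameters."""
    theta = np.array(theta0, dtype=float)
    m = np.zeros_like(theta)
    v = np.zeros_like(theta)
    for t in range(1, steps + 1):
        g = grad_fn(theta)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g ** 2
        m_hat = m / (1 - beta1 ** t)            # bias-corrected first moment
        v_hat = v / (1 - beta2 ** t)            # bias-corrected second moment
        theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta


target = np.array([1.0, -2.0])
grad_clean = lambda th: th - target             # gradient of 0.5 * ||theta - target||^2
c = 0.3                                         # hypothetical noise-induced prefactor
grad_noisy = lambda th: c * grad_clean(th)

theta_clean = adam(grad_clean, [0.0, 0.0])
theta_noisy = adam(grad_noisy, [0.0, 0.0])
print(theta_clean, theta_noisy)
```

With plain gradient descent, the same prefactor would instead act as a rescaled learning rate. The fixed points would stay the same, but the trajectory would change.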

We proceed now with a discussion of the second noise model, which assumes that entangling gates are the dominant source of error. For numerical simulations, we decompose each random Clifford unitary into CNOT, Hadamard, and phase gate operations. Subsequently, a local two-qubit noise map is applied after each CNOT gate in . We consider the depolarizing noise channel

| (24) |

with and a fixed noise strength . This noise model is not GTM and no longer has an analytic noisy shadow expression. However, we still expect NSQST to be fairly noise robust based on the numerically demonstrated robustness of classical shadows against many non-GTM errors, such as pulse miscalibration noise [24].

As shown in Fig. 10b, NSQST exhibits some noise robustness even under this more realistic non-GTM noise model. This is reflected in the positive curvature of the blue curve for decreasing noise, , leading to a weak-noise limit in which the exact infidelity (blue curve) is small relative to the estimated infidelity (red curve). Our randomly sampled six-qubit Clifford unitaries contain 21 CNOT gates on average (see Appendix C), leading to a substantial accumulation of errors. Thus, the noise parameter controlling one-time measurement errors is not comparable to the parameter controlling the noise on individual CNOT gates. No transformed loss function curve is shown in Fig. 10b because our local (two-qubit) depolarizing noise model does not yield an analytic expression. The robustness of the classical shadows formalism against many other non-GTM noise models (with an extra calibration step) has been studied in detail in Ref. [24], whereas our NSQST protocol shows similar noise robustness without any extra calibration step.
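Eq. (24) is not reproduced here. The sketch below assumes one common parameterization of a two-qubit depolarizing channel: E(ρ) = (1 - p)ρ + (p/15) Σ_{P≠I⊗I} PρP†, summed over the 15 non-identity two-qubit Paulis. Applied after a CNOT that prepares (|00⟩ + |11⟩)/√2, it lowers the fidelity to 1 - 4p/5, because three of the fifteen Paulis (XX, YY, ZZ) leave that Bell state invariant up to a sign. The last line computes (1 - p)^21, the weight of the error-free branch after 21 noisy CNOTs. This gives a rough sense of how errors accumulate over a random six-qubit Clifford circuit. The value p = 0.01 is illustrative.

```python
import itertools

import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
PAULIS = [I2, X, Y, Z]
# Control = left qubit (most significant bit), target = right qubit
CNOT = np.array([[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]], dtype=complex)


def depolarize_2q(rho, p):
    """Assumed form: (1 - p) rho + p/15 * sum of P rho P^dagger over non-identity two-qubit Paulis."""
    out = (1 - p) * rho
    for a, b in itertools.product(range(4), repeat=2):
        if a == 0 and b == 0:
            continue
        P = np.kron(PAULIS[a], PAULIS[b])
        out = out + (p / 15) * (P @ rho @ P.conj().T)
    return out


plus_zero = np.kron(np.array([1, 1]) / np.sqrt(2), np.array([1, 0])).astype(complex)
bell = CNOT @ plus_zero                                   # (|00> + |11>) / sqrt(2)
p = 0.01
noisy = depolarize_2q(np.outer(bell, bell.conj()), p)     # noise map applied after the CNOT
fidelity = np.real(bell.conj() @ noisy @ bell)
print(fidelity, 1 - 4 * p / 5)
print((1 - p) ** 21)                                      # about 0.81 for p = 0.01
```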

V Conclusions and Outlook

In this work, we have proposed a new QST protocol, neural–shadow quantum state tomography (NSQST). We have demonstrated its clear advantages over state-of-the-art implementations of neural network quantum state tomography (NNQST) in three relevant settings, as well as advantages over direct shadow estimation. We have further shown that NSQST is noise robust. Our study of the benefits of NSQST suggests that the choice of infidelity as a loss function has great potential to broaden the applicability of neural network-based tomography methods to a wider range of quantum states.

In Appendix D, we describe technical developments (re-use of classical shadows and alternative Monte Carlo methods) that could further enhance the performance of NSQST. Another direction for future work is to tailor NSQST more closely to emerging quantum hardware platforms, for example by exploring NSQST with alternative shadow protocols. In particular, it would be interesting to investigate hardware-aware classical shadows that use the native interactions of the quantum device [62, 58, 59]. In addition, future work should extend NSQST to mixed-state protocols [31].

Classical shadow protocols allow efficient prediction of fidelities and local observables but not efficient state reconstruction. NSQST, by contrast, reconstructs a physical state that approximates the target quantum state via a variational ansatz. The variational ansatz offers the convenience of an explicit quantum state and can be used to predict many global observables of interest that are beyond the reach of direct shadow estimation [63]. Moreover, NSQST inherits the advantages of NNQST. For example, symmetry constraints of the target state can be incorporated, reducing the computational resources needed [64, 36, 56]. Once trained, the variational ansatz may also provide a more efficient classical representation of the state than the large number of classical shadows that must be collected. Finally, as demonstrated by NSQST with pre-training, a trained variational ansatz approximating the target state can be fed into a second round of optimization with respect to a new loss function. This possibility provides great flexibility in addressing a variety of tasks, including, for example, error mitigation in classical post-processing [18].

NSQST is an efficient state reconstruction method. It will be useful as a benchmarking tool, an important element for testing the performance of near-term quantum devices as they scale up. In particular, NSQST can be used to construct a “digital twin” [65] of the prepared target state, where a neural network quantum state can be used for experimentally-relevant simulation [66, 67], cross-platform verification [68], error mitigation [18] and other uses. Having access to a digital twin of the target quantum state will become increasingly relevant for accelerating the development of quantum technologies. Further down the road, we also foresee great potential for NSQST as a stepping stone for interfacing classical probabilistic graphical models and quantum circuits, where data stored in quantum circuits can be transferred to classical memory and vice versa, leading to new hybrid computing approaches.

Appendix A Neural network quantum state architecture

For NNQST and NSQST, we use the transformer-based neural network quantum state ansatz directly adopted from Ref. [18]. A central component in the ansatz is the transformer layer, which has a self-attention block followed by a linear layer. With a bit-string as input, is extended to by prefixing a zero bit. Then, each bit is encoded into a -dimensional representation space using a learned embedding governed by , yielding the encoded bit with and . The encoded input is then processed using transformer layers.

We outline the parameters involved in a single transformer layer indexed by in the ansatz, and refer the reader to Ref. [18] for more details:

1. The query, key, value matrices , , and , where , , . We require to be an integer.
2. A matrix to process the output of the self-attention heads, , with .
3. A weight matrix and a bias vector of the linear layer, and , with .

Once we have passed the final transformer layer, scalar-valued logits are obtained by using an extra linear layer. The conditional probabilities directly used in sampling are then given by

| (25) |

where is the logistic sigmoid function. Since the outcome at index is conditional on the preceding indices , we can efficiently draw unbiased samples from the probability distribution by proceeding one bit at a time. The phase output is obtained by first concatenating the outputs of the final transformer layer into a vector of length , then projecting this vector to a single scalar value using a linear layer (separate from the linear layer used to obtain ).
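The autoregressive sampling procedure can be sketched without the transformer itself. In the sketch, a hypothetical `toy_logit` function stands in for the network. It receives the shifted input (0, σ_1, …, σ_{i-1}) and returns a logit whose logistic sigmoid is taken as the conditional probability that σ_i = 1; that convention is an assumption here. Bit-strings are sampled one bit at a time while their log-probability is accumulated. Each factor is a normalized Bernoulli distribution, so the product of conditionals sums to one over all bit-strings. This is why the samples are exact draws from the modelled distribution.

```python
import itertools
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def toy_logit(shifted):
    """Hypothetical stand-in for the transformer: logit of the next bit given (0, s_1, ..., s_{i-1})."""
    return 0.8 * sum(shifted) - 0.3 * len(shifted) + 0.1


def sample(n, rng, logit_fn=toy_logit):
    """Draw one bit-string bit by bit and return it together with its log-probability."""
    bits, logp = [], 0.0
    for _ in range(n):
        q = sigmoid(logit_fn([0] + bits))        # p(s_i = 1 | s_1 ... s_{i-1})
        b = 1 if rng.random() < q else 0
        logp += math.log(q if b else 1.0 - q)
        bits.append(b)
    return tuple(bits), logp


def prob(bits, logit_fn=toy_logit):
    """Exact probability of a bit-string as the product of its conditionals."""
    p = 1.0
    for i, b in enumerate(bits):
        q = sigmoid(logit_fn([0] + list(bits[:i])))
        p *= q if b else 1.0 - q
    return p


n = 4
total = sum(prob(bits) for bits in itertools.product([0, 1], repeat=n))
print(total)                                     # 1 up to rounding
s, logp = sample(n, random.Random(0))
print(s, math.exp(logp), prob(s))
```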

For NSQST with pre-training, our is parameterized in the same way as in standard NSQST, except that we remove the phase output layer. The phase is encoded in a separate transformer-based neural network ansatz, from which we remove the other linear layer (the one producing the scalar-valued logits for the probability amplitudes). Thus, the quantum state in NSQST with pre-training has its probability distribution and phase output parameterized separately, by model parameters and , respectively.

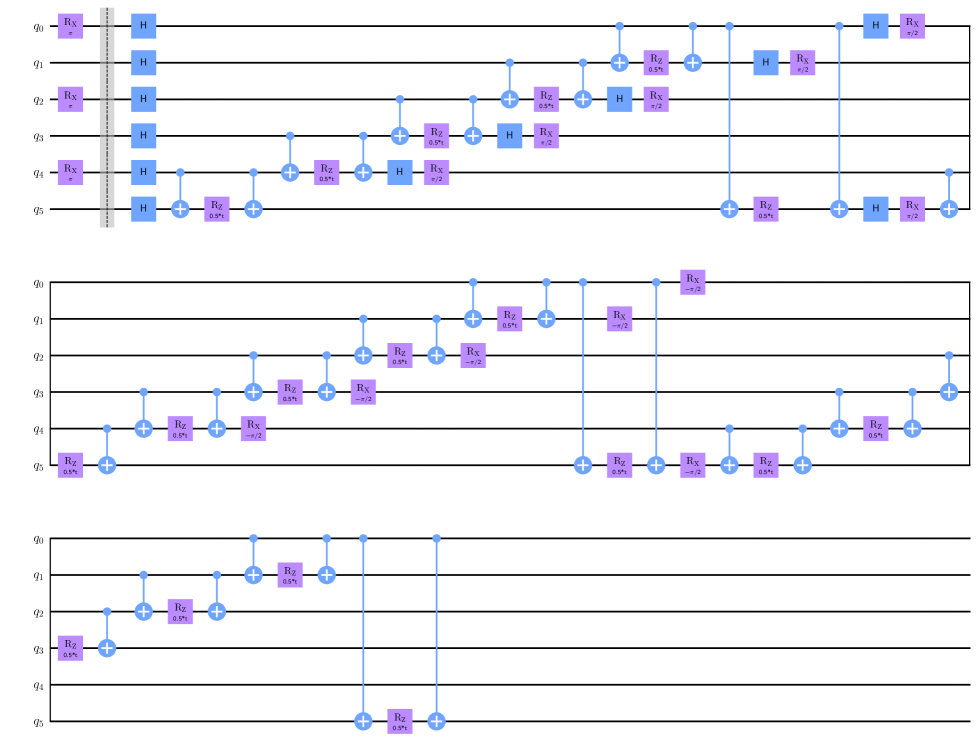

Appendix B Trotterized time evolution circuits

In this section, we elaborate on the details of the Trotterized time evolution circuits for the two noiseless numerical experiments (Secs. III.1 and III.2).

B.1 One-dimensional QCD time evolution

B.2 One-dimensional AFH time evolution

Appendix C Simulation hyperparameters

For all transformer-based neural network quantum states, we have used two transformer layers (), four attention heads (), and eight internal dimensions (). The neural network quantum states for NNQST and the standard NSQST have model parameters. NSQST with pre-training uses two neural networks for the same quantum state, where the probability distribution ansatz has model parameters and the phase output ansatz has model parameters, a total of model parameters. It is likely that we have used more model parameters than necessary for a 6-qubit pure state, as the focus of our demonstrations is studying the new loss function. For transformer-based neural network quantum states used for NSQST with pre-training, we have used three transformer layers (), four attention heads (), and eight internal dimensions (), corresponding to a number of model parameters from to for system size from to .

All NNQST protocol trials use 21 ( with ) nearly-diagonal and nearest-neighbour Pauli bases, with 512 measurement samples per basis. The batch size is 128, and all NNQST simulations are run for 200 epochs, where one epoch is one sweep over the entire training data set. In all simulations, the Adam optimizer is used. For the one-dimensional QCD and AFH time evolution examples, the learning rate is set to . For the GHZ state tomography, a learning rate of is used.
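The exact basis set is not spelled out in this section. One simple construction is consistent with the count of 21 for six qubits, and we sketch it here as an assumption. It takes the all-Z basis plus, for each of the n - 1 nearest-neighbour pairs, the four bases that measure that pair in {X, Y} × {X, Y} and every other qubit in Z. That gives 1 + 4(n - 1) bases. The function name is hypothetical. The snippet also tallies the resulting measurement budget of 21 × 512 samples.

```python
def nearly_diagonal_bases(n):
    """Hypothetical nearly-diagonal, nearest-neighbour basis set: all-Z plus one rotated adjacent pair."""
    bases = ["Z" * n]
    for k in range(n - 1):
        for a in "XY":
            for b in "XY":
                bases.append("Z" * k + a + b + "Z" * (n - k - 2))
    return bases


bases = nearly_diagonal_bases(6)
print(len(bases), bases[:5])
print("total NNQST measurement samples:", len(bases) * 512)
```

Under this construction, every basis measures at least n - 2 qubits in Z.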

All NSQST protocol trials (including the ones with pre-training) use 100 classical shadow samples () and 5000 neural network quantum state samples (both re-sampled in every iteration) per iteration. All simulations are run for 2000 iterations with the learning rate for the Adam optimizer. Note that we have not leveraged the re-usability of the classical shadows, which can bring a significant reduction in the number of measurements. The randomly sampled -qubit Clifford circuits have CNOT gates, assuming all-to-all connectivity.

The modified NSQST experiment with pre-training uses computational basis measurement samples in the pre-training stage within NNQST's optimization framework, with hyperparameters identical to those of the NNQST protocol. NSQST with pre-training (with or without the improved strategy) in Sec. III.4 uses computational basis measurements for and computational basis measurements for in the pre-training stage, and a fixed set of Clifford classical shadows is used to train the relative phases.

For predicting observable values given a trained neural network quantum state, exact calculations are done for and Monte Carlo samples are used for .

Appendix D Method improvement

For simplicity, we have naively re-sampled the 100 classical shadows in every iteration, which is not necessary as the previously-sampled classical shadows could be re-used. We expect many possible improvements could be made to reduce the number of sampled Clifford circuits and the number of measurements, such as measuring more bit-strings for each . Hybrid training protocols beyond NSQST with pre-training should also be explored.

We recognize that convergence may incur an exponential classical computational cost because of the growing variance of the gradient in Eq. 15. To see this intuitively, note that estimating the overlap in Eq. 14 to within additive error with failure probability at most requires classical samples drawn from [70]. However, the prefactor in Eq. 15 makes the error in the gradient estimate exponentially large. Since we want to be bounded, we demand to be exponentially small, and this directly translates to as discussed in Sec. II.4. Imposing stronger constraints on the quantum state ansatz may remove or alleviate this issue, and numerical experiments on larger system sizes should be carried out in the future to explore the limitations of NSQST.

An additional complication can arise when estimating the inner product shown in Eq. 14 from a finite number of Monte Carlo samples. In practice, the overlap is estimated in terms of a subset of distinct bit-strings using:

| (26) |

Here, is determined from the frequency of the bit-string found from samples drawn according to the probability distribution . For a transformer-based neural network architecture, the samples can be generated efficiently bit by bit using the procedure described in Appendix A. Up to a constant factor, the right-hand side of Eq. 26 can be interpreted as the exact overlap between a stabilizer state and a fictitious state with wavefunction:

| (27) |

The normalization constant approaches when (e.g., when the sample set includes all ). However, a problem arises when we sample only a subset of the possible bit-strings . In this case, it may be that for some , leading to . Estimating the infidelity from classical shadows to obtain the NSQST loss function (Eq. 11) through Monte Carlo samples as in Eq. 26 requires the estimated overlaps . When an incomplete sample set is taken, the factor can become very large, causing an unphysical blow-up that can produce estimated overlaps . In this limit, the Monte Carlo estimate is meaningless. A simple solution could be to truncate the set , keeping only bit-strings for which exceeds some threshold value, and then to replace the normalization constant . For a given task, however, it may be difficult to establish truncation thresholds that maintain convergence to an accurate state. In the rest of this appendix, we give an alternative procedure that avoids the ill-conditioned “blow-up” caused by a finite Monte Carlo sample size, without requiring predetermined truncation thresholds.
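The blow-up can be reproduced with a few lines of NumPy. The sketch assumes our reading of Eqs. (26) and (27), whose exact form is not reproduced here:

- the estimator ⟨φ|ψ⟩ ≈ Σ_{σ∈S} f(σ) φ*(σ)/ψ*(σ), where f(σ) is the empirical frequency of σ among samples drawn from |ψ(σ)|², and
- the normalization Z = Σ_{σ∈S} f(σ)²/|ψ(σ)|² of the corresponding fictitious state.

By the Cauchy-Schwarz inequality the estimate is bounded by √Z rather than by 1. When a rare bit-string is over-represented in a small sample, Z ≫ 1 and the estimate can exceed one. The function name and the test states are hypothetical.

```python
import numpy as np


def mc_overlap(psi, phi, samples):
    """Monte Carlo estimate of <phi|psi> from computational-basis samples of |psi|^2.

    Returns the estimate and the normalization Z of the fictitious state f(s) / psi*(s).
    """
    idx, counts = np.unique(np.asarray(samples), return_counts=True)
    f = counts / counts.sum()                                  # empirical frequencies
    estimate = np.sum(f * np.conj(phi[idx]) / np.conj(psi[idx]))
    Z = np.sum(f ** 2 / np.abs(psi[idx]) ** 2)
    return estimate, Z


# Frequencies equal to |psi|^2: the estimate is exact and Z = 1.
p = np.array([0.5, 0.25, 0.25, 0.0])
psi = np.sqrt(p) * np.exp(1j * np.array([0.0, 0.4, -1.1, 0.0]))
phi = np.array([0.5, 0.5j, -0.5, 0.5])
est, Z = mc_overlap(psi, phi, [0, 0, 1, 2])
print(est, np.vdot(phi, psi), Z)

# A rare bit-string (probability 1e-4) drawn once in 20 samples: Z >> 1 and |estimate| > 1.
eps = 1e-4
psi_rare = np.array([np.sqrt(1 - eps), np.sqrt(eps)], dtype=complex)
phi_rare = np.array([0.0, 1.0], dtype=complex)
est_rare, Z_rare = mc_overlap(psi_rare, phi_rare, [0] * 19 + [1])
print(abs(est_rare), np.sqrt(Z_rare), abs(np.vdot(phi_rare, psi_rare)))
```

In the second case the true overlap is 0.01, but the estimate is 5.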

To avoid the pitfalls of representing a Monte Carlo average as in Eq. 26, we consider a hybrid NSQST protocol. In this hybrid protocol, the classical shadows are only used to learn the phases . The probability amplitudes are learned using NNQST from measurements performed in the computational basis (similar to NSQST with pre-training): with . The difference between this new hybrid protocol and NSQST with pre-training lies in how the phases are learned. To train the phase model, we calculate the gradient of the loss function with an alternative approximation for the inner product:

| (28) |

where we have introduced an alternative normalized state:

| (29) |

The estimated loss function in this new hybrid protocol is the shadow-estimated infidelity between the target state and the state . The gradient of this infidelity can be calculated to optimize the phases from variations in the parameters :

| (30) |

We can efficiently evaluate the right-hand side of Eq. 30 exactly for a sub-exponential number of distinct bit-strings . The optimization procedure is then limited only by the expressivity and accuracy of the sparse approximation for the neural network quantum state , arising from a finite number of samples. This new hybrid NSQST protocol does not suffer from the “blow-up” described above, and it may converge with a sub-exponential number of samples, especially when is sufficiently sparse in the computational basis. This alternative strategy was unnecessary in most of our numerical experiments, given the very small system size and the very large number of Monte Carlo samples .
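A minimal sketch of the phase-learning step, under several simplifying assumptions:

- The target is the phase-shifted GHZ state with an illustrative phase of 0.7.
- The sparse state ψ̃(σ) = √f(σ) e^{iθ(σ)} lives on the two sampled bit-strings, with hypothetical empirical frequencies 0.48 and 0.52. This is one natural reading of Eq. (29).
- The phase model is a single hypothetical parameter μ on |1…1⟩.
- The exact overlap with the target stands in for the shadow-estimated infidelity, which is not simulated.

Because ψ̃ is normalized by construction, the overlap can never exceed one. Gradient descent on the infidelity recovers the relative phase. The small residual infidelity comes only from the frequencies differing from 1/2, which illustrates that accuracy is limited by the sparse approximation.

```python
import numpy as np

n, phi_target = 6, 0.7                      # illustrative values
support = [(0,) * n, (1,) * n]              # distinct bit-strings seen in computational-basis data
freqs = np.array([0.48, 0.52])              # hypothetical empirical frequencies (sum to 1)


def target_amp(bits):
    """Amplitude of the phase-shifted GHZ target (a classically tractable stand-in)."""
    if all(b == 0 for b in bits):
        return 1 / np.sqrt(2)
    if all(b == 1 for b in bits):
        return np.exp(1j * phi_target) / np.sqrt(2)
    return 0.0


def infidelity(mu):
    """1 - |<target|psi_tilde>|^2 with psi_tilde(s) = sqrt(f(s)) exp(i theta_mu(s)) on the support."""
    phases = np.array([0.0, mu])            # hypothetical one-parameter phase model
    amps = np.sqrt(freqs) * np.exp(1j * phases)
    overlap = sum(np.conj(target_amp(s)) * a for s, a in zip(support, amps))
    return 1.0 - abs(overlap) ** 2


mu, lr, h = 0.0, 1.0, 1e-6
for _ in range(100):
    grad = (infidelity(mu + h) - infidelity(mu - h)) / (2 * h)   # central finite difference
    mu -= lr * grad
print(mu, infidelity(mu))
```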

Appendix E Additional plots

In this section, we provide additional plots relevant to the numerical simulation results presented in Sec. III.

In Fig. 13, the total energy and mass predicted by the three protocols are plotted. Figure 13a shows that NNQST fails to predict the total energy better than NSQST. However, as shown in Fig. 13b, NNQST predicts the mass, a local observable in Eq. 18, more accurately than NSQST.

In Fig. 14, a typical optimization progress curve is plotted for the numerical results presented in Sec. III.4. The iteration number is not adjusted and pre-training is repeated for every trial.

Acknowledgements.

We thank Abhijit Chakraborty, Luca Dellantonio, David Gosset, Arsalan Motamedi, Jinglei Zhang, Jan Friedrich Haase, Yasar Atas, and Randy Lewis for useful discussions. This work has been supported by the Transformative Quantum Technologies Program (CFREF), the Natural Sciences and Engineering Research Council (NSERC), the New Frontiers in Research Fund (NFRF), and the Fonds de Recherche-Nature et Technologies (FRQNT). CM acknowledges the Alfred P. Sloan Foundation for a Sloan Research Fellowship and an Ontario Early Researcher Award. PR further acknowledges the support of NSERC Discovery grant RGPIN-2022-03339, Mike and Ophelia Lazaridis, Innovation, Science and Economic Development Canada (ISED), 1QBit, and the Perimeter Institute for Theoretical Physics. Research at the Perimeter Institute is supported in part by the Government of Canada through ISED, and by the Province of Ontario through the Ministry of Colleges and Universities.

Code Availability Statement

The numerical implementation of NNQST from Ref. [18] can be found at https://github.com/1QB-Information-Technologies/NEM. The numerical implementation of NSQST can be found at https://github.com/victor11235/Neural-Shadow-QST.

References

- Eisert et al. [2020] J. Eisert, D. Hangleiter, N. Walk, I. Roth, D. Markham, R. Parekh, U. Chabaud, and E. Kashefi, Quantum certification and benchmarking, Nature Reviews Physics 2, 382 (2020).

- Knill et al. [2008] E. Knill, D. Leibfried, R. Reichle, J. Britton, R. B. Blakestad, J. D. Jost, C. Langer, R. Ozeri, S. Seidelin, and D. J. Wineland, Randomized benchmarking of quantum gates, Physical Review A 77, 012307 (2008).

- Carrasco et al. [2021] J. Carrasco, A. Elben, C. Kokail, B. Kraus, and P. Zoller, Theoretical and experimental perspectives of quantum verification, PRX Quantum 2, 010102 (2021).

- Choi et al. [2023] J. Choi, A. L. Shaw, I. S. Madjarov, X. Xie, R. Finkelstein, J. P. Covey, J. S. Cotler, D. K. Mark, H.-Y. Huang, A. Kale, et al., Preparing random states and benchmarking with many-body quantum chaos, Nature 613, 468 (2023).

- Elben et al. [2023] A. Elben, S. T. Flammia, H.-Y. Huang, R. Kueng, J. Preskill, B. Vermersch, and P. Zoller, The randomized measurement toolbox, Nature Reviews Physics 5, 9 (2023).

- Stricker et al. [2022] R. Stricker, M. Meth, L. Postler, C. Edmunds, C. Ferrie, R. Blatt, P. Schindler, T. Monz, R. Kueng, and M. Ringbauer, Experimental single-setting quantum state tomography, PRX Quantum 3, 040310 (2022).

- Preskill [2018] J. Preskill, Quantum computing in the NISQ era and beyond, Quantum 2, 79 (2018).

- Huang et al. [2020] H.-Y. Huang, R. Kueng, and J. Preskill, Predicting many properties of a quantum system from very few measurements, Nature Physics 16, 1050 (2020).

- Torlai et al. [2018] G. Torlai, G. Mazzola, J. Carrasquilla, M. Troyer, R. Melko, and G. Carleo, Neural-network quantum state tomography, Nature Physics 14, 447 (2018).

- Huang et al. [2021] Y. Huang, J. E. Moore, et al., Neural network representation of tensor network and chiral states, Physical Review Letters 127, 170601 (2021).

- Sharir et al. [2022] O. Sharir, A. Shashua, and G. Carleo, Neural tensor contractions and the expressive power of deep neural quantum states, Physical Review B 106, 205136 (2022).

- Chen et al. [2018] J. Chen, S. Cheng, H. Xie, L. Wang, and T. Xiang, Equivalence of restricted Boltzmann machines and tensor network states, Physical Review B 97, 085104 (2018).

- Deng et al. [2017] D.-L. Deng, X. Li, and S. Das Sarma, Quantum entanglement in neural network states, Physical Review X 7, 021021 (2017).

- Hinton [2012] G. E. Hinton, A practical guide to training restricted Boltzmann machines, in Neural Networks: Tricks of the Trade (Springer, 2012) pp. 599–619.

- Vaswani et al. [2017] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, Attention is all you need, Advances in Neural Information Processing Systems 30 (2017).

- Kingma and Ba [2015] D. Kingma and J. Ba, Adam: A method for stochastic optimization, in International Conference on Learning Representations (ICLR) (San Diego, CA, USA, 2015).

- Carleo and Troyer [2017] G. Carleo and M. Troyer, Solving the quantum many-body problem with artificial neural networks, Science 355, 602 (2017).

- Bennewitz et al. [2022] E. R. Bennewitz, F. Hopfmueller, B. Kulchytskyy, J. Carrasquilla, and P. Ronagh, Neural error mitigation of near-term quantum simulations, Nature Machine Intelligence 4, 618 (2022).

- Torlai et al. [2019] G. Torlai, B. Timar, E. P. Van Nieuwenburg, H. Levine, A. Omran, A. Keesling, H. Bernien, M. Greiner, V. Vuletić, M. D. Lukin, et al., Integrating neural networks with a quantum simulator for state reconstruction, Physical Review Letters 123, 230504 (2019).

- Chen et al. [2013] J. Chen, H. Dawkins, Z. Ji, N. Johnston, D. Kribs, F. Shultz, and B. Zeng, Uniqueness of quantum states compatible with given measurement results, Physical Review A 88, 012109 (2013).

- Luo and Zhang [2004] S. Luo and Q. Zhang, Informational distance on quantum-state space, Physical Review A 69, 032106 (2004).

- Aaronson [2018] S. Aaronson, Shadow tomography of quantum states, in Proceedings of the 50th Annual ACM SIGACT Symposium on Theory of Computing (2018) pp. 325–338.

- Koh and Grewal [2022] D. E. Koh and S. Grewal, Classical shadows with noise, Quantum 6, 776 (2022).

- Chen et al. [2021] S. Chen, W. Yu, P. Zeng, and S. T. Flammia, Robust shadow estimation, PRX Quantum 2, 030348 (2021).

- Wolf et al. [2020] T. Wolf, L. Debut, V. Sanh, J. Chaumond, C. Delangue, A. Moi, P. Cistac, T. Rault, R. Louf, M. Funtowicz, et al., Transformers: State-of-the-art natural language processing, in Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations (2020) pp. 38–45.

- Khan et al. [2022] S. Khan, M. Naseer, M. Hayat, S. W. Zamir, F. S. Khan, and M. Shah, Transformers in vision: A survey, ACM Computing Surveys (CSUR) 54, 1 (2022).

- Torlai and Melko [2018] G. Torlai and R. G. Melko, Latent space purification via neural density operators, Physical Review Letters 120, 240503 (2018).

- Vicentini et al. [2022] F. Vicentini, D. Hofmann, A. Szabó, D. Wu, C. Roth, C. Giuliani, G. Pescia, J. Nys, V. Vargas-Calderón, N. Astrakhantsev, et al., Netket 3: Machine learning toolbox for many-body quantum systems, SciPost Physics Codebases, 007 (2022).

- Havlicek [2023] V. Havlicek, Amplitude ratios and neural network quantum states, Quantum 7, 938 (2023).

- Carrasquilla et al. [2019] J. Carrasquilla, G. Torlai, R. G. Melko, and L. Aolita, Reconstructing quantum states with generative models, Nature Machine Intelligence 1, 155 (2019).

- Cha et al. [2021] P. Cha, P. Ginsparg, F. Wu, J. Carrasquilla, P. L. McMahon, and E.-A. Kim, Attention-based quantum tomography, Machine Learning: Science and Technology 3, 01LT01 (2021).

- Struchalin et al. [2021] G. Struchalin, Y. A. Zagorovskii, E. Kovlakov, S. Straupe, and S. Kulik, Experimental estimation of quantum state properties from classical shadows, PRX Quantum 2, 010307 (2021).

- Becker et al. [2024] S. Becker, N. Datta, L. Lami, and C. Rouzé, Classical shadow tomography for continuous variables quantum systems, IEEE Transactions on Information Theory (2024).

- Zhang et al. [2021] T. Zhang, J. Sun, X.-X. Fang, X.-M. Zhang, X. Yuan, and H. Lu, Experimental quantum state measurement with classical shadows, Physical Review Letters 127, 200501 (2021).

- Webb [2015] Z. Webb, The Clifford group forms a unitary 3-design, Quantum Inf. Comput. 16, 1379 (2015).

- Choo et al. [2018] K. Choo, G. Carleo, N. Regnault, and T. Neupert, Symmetries and many-body excitations with neural-network quantum states, Physical Review Letters 121, 167204 (2018).

- Gottesman [1997] D. Gottesman, Stabilizer codes and quantum error correction (California Institute of Technology, 1997).

- Gidney [2021] C. Gidney, Stim: a fast stabilizer circuit simulator, Quantum 5, 497 (2021).

- Aaronson and Gottesman [2004] S. Aaronson and D. Gottesman, Improved simulation of stabilizer circuits, Physical Review A 70, 052328 (2004).

- Bravyi and Maslov [2021] S. Bravyi and D. Maslov, Hadamard-free circuits expose the structure of the Clifford group, IEEE Transactions on Information Theory 67, 4546 (2021).

- Marciano and Pagels [1978] W. Marciano and H. Pagels, Quantum chromodynamics, Physics Reports 36, 137 (1978).

- Kogut [1983] J. B. Kogut, The lattice gauge theory approach to quantum chromodynamics, Reviews of Modern Physics 55, 775 (1983).

- Banuls et al. [2020] M. C. Banuls, R. Blatt, J. Catani, A. Celi, J. I. Cirac, M. Dalmonte, L. Fallani, K. Jansen, M. Lewenstein, S. Montangero, et al., Simulating lattice gauge theories within quantum technologies, The European Physical Journal D 74, 1 (2020).

- Nachman et al. [2021] B. Nachman, D. Provasoli, W. A. De Jong, and C. W. Bauer, Quantum algorithm for high energy physics simulations, Physical Review Letters 126, 062001 (2021).

- Dalmonte and Montangero [2016] M. Dalmonte and S. Montangero, Lattice gauge theory simulations in the quantum information era, Contemporary Physics 57, 388 (2016).

- Martinez et al. [2016] E. A. Martinez, C. A. Muschik, P. Schindler, D. Nigg, A. Erhard, M. Heyl, P. Hauke, M. Dalmonte, T. Monz, P. Zoller, et al., Real-time dynamics of lattice gauge theories with a few-qubit quantum computer, Nature 534, 516 (2016).

- Atas et al. [2023] Y. Y. Atas, J. F. Haase, J. Zhang, V. Wei, S. M.-L. Pfaendler, R. Lewis, and C. A. Muschik, Simulating one-dimensional quantum chromodynamics on a quantum computer: Real-time evolutions of tetra- and pentaquarks, Physical Review Research 5, 033184 (2023).

- Motta et al. [2020] M. Motta, C. Sun, A. T. Tan, M. J. O’Rourke, E. Ye, A. J. Minnich, F. G. Brandão, and G. K. Chan, Determining eigenstates and thermal states on a quantum computer using quantum imaginary time evolution, Nature Physics 16, 205 (2020).

- Gong et al. [2014] S.-S. Gong, W. Zhu, and D. Sheng, Emergent chiral spin liquid: Fractional quantum Hall effect in a kagome Heisenberg model, Scientific Reports 4, 1 (2014).

- Arovas and Auerbach [1988] D. P. Arovas and A. Auerbach, Functional integral theories of low-dimensional quantum Heisenberg models, Physical Review B 38, 316 (1988).

- Kennedy et al. [1988] T. Kennedy, E. H. Lieb, and B. S. Shastry, Existence of Néel order in some spin-1/2 Heisenberg antiferromagnets, Journal of Statistical Physics 53, 1019 (1988).

- Żukowski et al. [1998] M. Żukowski, A. Zeilinger, M. Horne, and H. Weinfurter, Quest for GHZ states, Acta Phys. Pol. A 93, 187 (1998).

- Walck and Lyons [2008] S. N. Walck and D. W. Lyons, Only n-qubit Greenberger-Horne-Zeilinger states are undetermined by their reduced density matrices, Physical Review Letters 100, 050501 (2008).

- Zohar [2020] E. Zohar, Local manipulation and measurement of nonlocal many-body operators in lattice gauge theory quantum simulators, Physical Review D 101, 034518 (2020).

- Blum et al. [2003] A. Blum, A. Kalai, and H. Wasserman, Noise-tolerant learning, the parity problem, and the statistical query model, Journal of the ACM (JACM) 50, 506 (2003).

- Morawetz et al. [2021] S. Morawetz, I. J. De Vlugt, J. Carrasquilla, and R. G. Melko, U(1)-symmetric recurrent neural networks for quantum state reconstruction, Physical Review A 104, 012401 (2021).

- Haldar et al. [2021] A. Haldar, O. Tavakol, and T. Scaffidi, Variational wave functions for Sachdev-Ye-Kitaev models, Physical Review Research 3, 023020 (2021).

- Hu and You [2022] H.-Y. Hu and Y.-Z. You, Hamiltonian-driven shadow tomography of quantum states, Physical Review Research 4, 013054 (2022).

- Hu et al. [2023] H.-Y. Hu, S. Choi, and Y.-Z. You, Classical shadow tomography with locally scrambled quantum dynamics, Physical Review Research 5, 023027 (2023).

- Cramer et al. [2010] M. Cramer, M. B. Plenio, S. T. Flammia, R. Somma, D. Gross, S. D. Bartlett, O. Landon-Cardinal, D. Poulin, and Y.-K. Liu, Efficient quantum state tomography, Nature Communications 1, 149 (2010).

- Lanyon et al. [2017] B. Lanyon, C. Maier, M. Holzäpfel, T. Baumgratz, C. Hempel, P. Jurcevic, I. Dhand, A. Buyskikh, A. Daley, M. Cramer, et al., Efficient tomography of a quantum many-body system, Nature Physics 13, 1158 (2017).

- Van Kirk et al. [2022] K. Van Kirk, J. Cotler, H.-Y. Huang, and M. D. Lukin, Hardware-efficient learning of quantum many-body states, arXiv preprint arXiv:2212.06084 (2022).

- Hibat-Allah et al. [2023] M. Hibat-Allah, R. G. Melko, and J. Carrasquilla, Investigating topological order using recurrent neural networks, Physical Review B 108, 075152 (2023).

- Luo et al. [2021] D. Luo, G. Carleo, B. K. Clark, and J. Stokes, Gauge equivariant neural networks for quantum lattice gauge theories, Physical Review Letters 127, 276402 (2021).

- Tao et al. [2018] F. Tao, H. Zhang, A. Liu, and A. Y. Nee, Digital twin in industry: State-of-the-art, IEEE Transactions on Industrial Informatics 15, 2405 (2018).

- Gutiérrez and Mendl [2022] I. L. Gutiérrez and C. B. Mendl, Real time evolution with neural-network quantum states, Quantum 6, 627 (2022).

- Medvidović and Carleo [2021] M. Medvidović and G. Carleo, Classical variational simulation of the quantum approximate optimization algorithm, npj Quantum Information 7, 101 (2021).

- Elben et al. [2020] A. Elben, B. Vermersch, R. van Bijnen, C. Kokail, T. Brydges, C. Maier, M. K. Joshi, R. Blatt, C. F. Roos, and P. Zoller, Cross-platform verification of intermediate scale quantum devices, Physical Review Letters 124, 010504 (2020).

- Aleksandrowicz et al. [2019] G. Aleksandrowicz, T. Alexander, P. Barkoutsos, L. Bello, Y. Ben-Haim, D. Bucher, F. J. Cabrera-Hernández, J. Carballo-Franquis, A. Chen, C.-F. Chen, et al., Qiskit: An open-source framework for quantum computing, Accessed on: Mar 16 (2019).

- Tang [2019] E. Tang, A quantum-inspired classical algorithm for recommendation systems, in Proceedings of the 51st Annual ACM SIGACT Symposium on Theory of Computing (2019) pp. 217–228.