Neuron: Learning Context-Aware Evolving Representations for Zero-Shot Skeleton Action Recognition

Abstract

Zero-shot skeleton action recognition is a non-trivial task that requires robust unseen generalization with prior knowledge from only seen classes and shared semantics. Existing methods typically build the skeleton-semantics interactions by uncontrollable mappings and conspicuous representations, thereby can hardly capture the intricate and fine-grained relationship for effective cross-modal transferability. To address these issues, we propose a novel dyNamically Evolving dUal skeleton-semantic syneRgistic framework with the guidance of cOntext-aware side informatioN (dubbed Neuron), to explore more fine-grained cross-modal correspondence from micro to macro perspectives at both spatial and temporal levels, respectively. Concretely, 1) we first construct the spatial-temporal evolving micro-prototypes and integrate dynamic context-aware side information to capture the intricate and synergistic skeleton-semantic correlations step-by-step, progressively refining cross-model alignment; and 2) we introduce the spatial compression and temporal memory mechanisms to guide the growth of spatial-temporal micro-prototypes, enabling them to absorb structure-related spatial representations and regularity-dependent temporal patterns. Notably, such processes are analogous to the learning and growth of neurons, equipping the framework with the capacity to generalize to novel unseen action categories. Extensive experiments on various benchmark datasets demonstrated the superiority of the proposed method 111The code is available at: https://anonymous.4open.science/r/Neuron..

1 Introduction

Action recognition has gained considerable attention for years due to its broad applications, e.g., sports analysis [37], rehabilitation assessment [15], anomaly action recognition [30], etc. Benefiting from the excellent privacy-preserving and data-efficiency properties of skeleton modality, a wealth of innovative research has sprung up to advance this field. Among these developments, several challenging and realistic issues have emerged, notably spurring interest in zero-shot skeleton action recognition [6, 21, 41].

Conventional supervised and self-supervised skeleton-based action recognition methods [6, 7] are usually hindered by their dependence on expensive annotated data and limited generalization to novel unseen categories. These methods generally confine their classification abilities to actions present in both training and test sets [40, 38, 5, 8]. In contrast, zero-shot skeleton action recognition effectively addresses the above dilemmas by leveraging only limited seen skeleton samples and a predefined semantic corpus, thus enabling the recognition of previously unseen action categories [6, 21, 41, 39, 20, 13, 18]. Furthermore, such a zero-shot learning (ZSL) setting can be extended to generalized zero-shot learning (GZSL), wherein both seen and unseen categories are recognized, making the task more challenging yet more applicable to real-world scenarios. The common solutions involve establishing robust cross-modal relationships by embedding skeleton and semantic data within a joint space, forming a bridge that transfers knowledge from seen to unseen actions [18, 13, 20, 39]. In pursuit of improved generalization, recent studies have further sought ways for fine-grained alignment by decomposing representations either explicitly [6, 41] or implicitly [21].

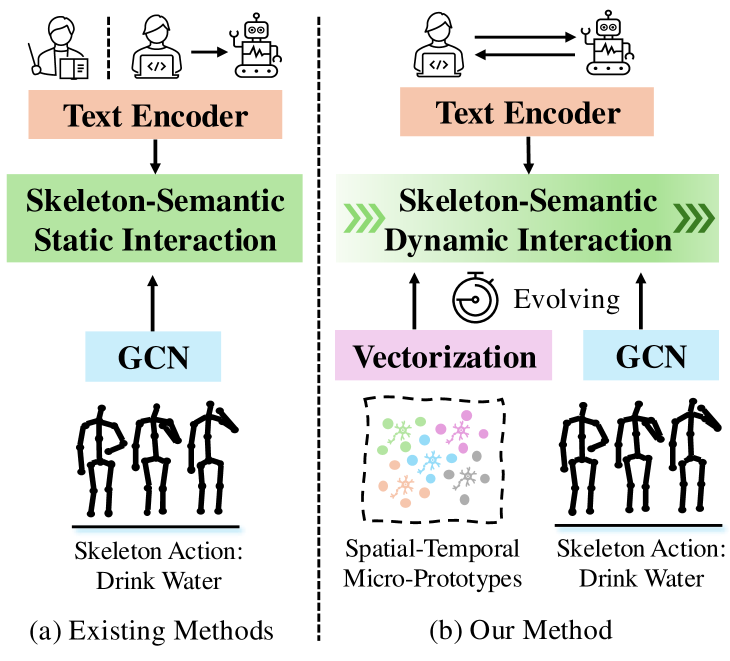

Despite the progress made, crucial challenges remain unsolved, i.e., 1) existing semantics are limited to manual-designed category names or one-turn LLMs-generated action descriptions (Fig. 1 (a)), leading to high homogeneity semantics among similar actions. Taking “kicking” and “side kick” as samples, homogeneous semantics have made the joint embedding space indivisible among these highly similar inter-class actions. Additionally, simple action descriptions cannot encapsulate the intra-class diversity of skeletons, where spatial variations (e.g., movement scale) and temporal differences (e.g., speed) vary across individuals; and 2) directly aligning skeleton and semantic features into a joint embedding space lacks fine-grained control over this process, which causes the model to overemphasize irrelevant or shortcut semantics, i.e., they cannot locate the vital skeleton features with intended semantics correctly, resulting in suboptimal cross-modal fine-grained correspondence. Inspired by the growth process of neurons from small to large that can learn and adapt incrementally through repeated stimuli to form complex connections that enable generalization, we consider designing a dynamic and controllable fine-grained aligning framework from micro to macro perspectives that can refine the interactions incrementally by the guidance of context-aware side information, allowing better generalization to unseen actions.

Specifically, we propose a novel dynamically evolving dual skeleton-semantic synergistic framework via the guidance from context-aware side information, termed Neuron, to enable controlled fine-grained cross-modal interactions (Fig. 1 (b)). Firstly, we employ LLMs (e.g., GPT-4o) with multi-turn interactive prompts to generate enriched, contextual side information, providing detailed semantic descriptions of skeletons that span spatial and temporal variations. Spatial variations range from coarse to mid- and fine-grained structural granularity, while temporal dynamics cover the evolution of movements from start to mid and end phases. With this contextual semantic guidance, we construct spatial-temporal micro-prototypes to capture the synergistic skeleton-semantic relationship iteratively. The spatial micro-prototype evolves through the spatial compression mechanism, guided by stepwise semantic granularity upgrades to isolate discriminative structure-related spatial representations. Similarly, the temporal micro-prototype grows dynamically through the temporal memory mechanism, directed by temporal phase-evolution semantics in turn, to search effective regularity-dependent temporal patterns. Both micro-prototypes are updated iteratively from the micro to the macro perspectives, emulating neuron-like incremental growth to refine their understanding of skeleton actions. This process enhances cross-modal transferability, empowering the framework to generalize effectively to unseen action categories.

The main contributions can be summarized as follows:

-

•

We introduce dynamic, context-aware side information to guide the spatial-temporal micro-prototypes in evolving from micro to macro perspectives, achieving controlled, fine-grained cross-modal alignment.

-

•

We propose the spatial compression and temporal memory mechanisms, enabling the micro-prototypes to explore structure-related spatial representations and regularity-dependent temporal patterns.

-

•

Extensive experiments show that the proposed method achieves state-of-the-art results in both ZSL and GZSL settings on NTU RGB+D, NTU RGB+D 120, and PKU-MMD datasets.

2 Related Work

| Alignment | Adaptability | Semantic Source | Method | ||||

| GL | FG | ST | DY | Manual | H-LLMs | C-LLMs | |

| ✓ | ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | RelationNet [18], SynSE [13] |

| ✓ | ✗ | ✓ | ✗ | ✓ | ✓ | ✗ | SMIE [39] |

| ✗ | ✓ | ✓ | ✗ | ✓ | ✓ | ✗ | PURLS [41], STAR [6] |

| ✗ | ✓ | ✓ | ✗ | ✓ | ✗ | ✗ | SA-DAVE [21] |

| ✗ | ✓ | ✗ | ✓ | ✗ | ✗ | ✓ | Neruon (ours) |

(a) Evolving Representation Learning (b) Stepwise Cross-Modal Alignment

2.1 Zero-Shot Skeleton Action Recognition

Zero-shot skeleton action recognition methods aim to establish the relationship between the seen skeletons and their associated semantics to recognize novel action categories. A pioneering approach, RelationNet [18], identified novel categories by learning deep non-linear metrics between global skeleton features and category names in an embedding manner. Differently, SynSE [13] paves the generative way using VAEs to align global skeleton features with verb-noun embeddings of category names. SMIE [39] employs the temporal constraint with maximizing mutual information to achieve global alignment. However, these methods rely on global alignment with manual-designed category names, limiting the granularity and diversity of captured semantics. Recently, PURLS [41] and STAR [6] explicitly decompose skeletons into overlapping parts with LLMs-generated part-specific descriptions, enabling fine-grained alignment. Unfortunately, these generated descriptions are homogenous across actions, reducing their discriminative capacity. SA-DAVE [21] improves generalization by decoupling skeleton representations into semantic-related and irrelevant components. [Summary]: Unlike prior methods, our proposed framework advances zero-shot skeleton action recognition in two aspects. First, we utilize the context-aware side information generated through multi-turn interactive prompting on LLMs, providing more discriminative, diverse, and context-rich semantics that go beyond manual-designed category names or homogeneous descriptions generated by one-turn interaction. Second and more importantly, we design a dynamic, evolving, and synergistic alignment framework to explore better cross-modal correspondence, contrasting with prior methods that employ static, fixed, and isolated alignment processes, as shown in Table 1.

2.2 Multi-modal Skeleton Representation Learning

Inspired by the success of CLIP [28], numerous studies have explored multi-modal fusion or cross-model distillation strategies to enhance skeleton representation capacity. These approaches incorporate data from complementary modalities, such as RGB videos [23, 33, 2, 19, 7], depth sequences [10], text descriptions [25, 7, 36], flow information [34, 26], and sensor signals [27]. The core of the multi-modal fusion methods [14, 23, 33, 34, 10, 2, 19] is to compensate for the shortcomings of skeletons by relying on the strengths of other modalities, while the cross-modal distillation methods [7, 36, 25, 26, 27] aim to capture modality-agnostic representations for action recognition. Nevertheless, both methods lack explicit control over the representation learning process, resulting in models that capture shortcut features. Recently, some methods have made strides in controlled representation learning [1, 11, 24], yet not in the skeleton action recognition area. [Summary]: In this paper, we propose the spatial compression and temporal memory mechanisms that guide the constructed micro-prototypes to capture skeleton representations in a controlled manner, progressing from micro to macro perspectives. This approach allows the model to focus on intended semantics and avoid being stuck into shortcut features, thereby enhancing the transferability and robustness of learned representations.

3 Method

3.1 Problem Formulation

For the zero-shot skeleton action recognition problem, the skeleton dataset with categories can be divided into three overlapping parts: the train-set with seen categories , the test-set with unseen categories , and another test-set with seen categories . Here, represents the skeleton sequence, where 3 corresponds to the 3D coordinates of human joints, denotes the sequence frames, is the number of human joints, and indicates the human numbers. The symbol is the corresponding action label, where signifies the associated semantics. Notably, and . For each action category, a manual-designed category name and one-turn LLMs-generated action description are adopted as the semantics in prior works [18, 13, 39, 41, 6, 21]. During the training phase, we establish the relationship between the and within the train-set . In the inference phase, we need to predict categories in both and under the ZSL and GZSL settings through the above-constructed relationship, respectively. For simplicity, we omit the superscript for seen (s) and unseen (u) categories in the following sections.

3.2 Contextual Side Information Generation

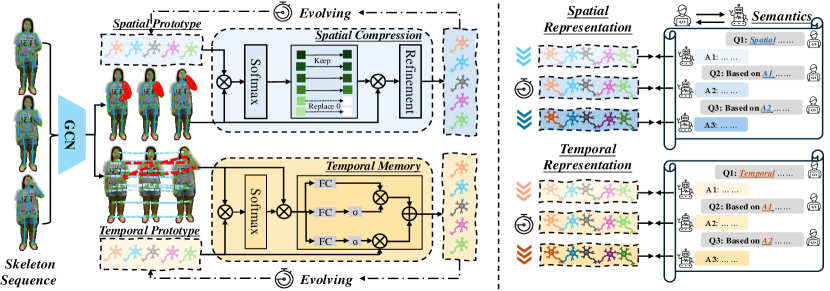

We generate more heterogeneous and multifaceted contextual semantic descriptions of skeletons from the perspective of spatial structure granularity and temporal resolution variation than previous methods [18, 13, 21, 39, 41, 6]. Specifically, we engage the LLM (e.g., GPT-4o) to produce semantic descriptions of skeleton movements in multi-turn inquiries, including the hierarchical spatial structure and evolving temporal resolutions, as shown in Fig. 2 (b). In the -th phase, we can obtain state descriptions for the same skeleton category , represented by different individuals, thereby enriching the intra-class diversity of semantics. With these step-by-step queries, the semantics among the categories become more discriminative, prompting inter-class separability of semantics. Upon this iterative process, we enhance the coherent and contextual representation capabilities of the semantics , where is the number of phase. Meanwhile, the evolving property of semantics from LLMs can guide the learning process of skeleton representation for better cross-modal correspondences. Afterward, we utilize pre-trained text encoder to extract the contextual semantic features , where , where and are the spatial and temporal semantics of -th action category at -th phase, d is the dimension of semantic feature. In this work, we set the .

3.3 Spatial-Temporal Synergistic Growing

Spatial-Temporal Micro-prototypes Construction. Decoupling spatial structures and temporal variations is vital in distinguishing similar actions. As described in [38], temporal variations help differentiate ”put things into a bag” and ”take things out of a bag”, while spatial structures are significant for distinguishing them from ”reach into pockets”. Thus, we separate spatial and temporal representations for alignment independently. First, we extract the skeleton representations by skeleton encoder , where represents the spatial-temporal dimension after transformations, is the feature dimension. Then, we pool the temporal and spatial dimensions respectively to obtain the spatial feature and the temporal feature . To effectively align the skeleton and semantic spaces, we design the learnable spatial micro-prototype to capture the patterns of skeleton topology structure and construct the learnable temporal micro-prototype to record the temporal motion variations of the skeleton, where and are randomly initialized attributes of skeleton representation (e.g., amplitude and velocity [6]), and are the number of primitives. Guided by semantics , and progressively learn useful skeleton representations, similar to the growth process of neurons.

Spatial Compression Mechanism. For the given spatial micro-prototype , we aim to enable it to progressively and controllably absorb structure-related spatial patterns from skeletons across three phases, enhancing the cross-modal correspondence at the spatial level from coarse-grained to fine-grained. Specifically, in the first phase, we treat the initialized as the query matrix to calculate similarity scores with spatial skeleton representations :

| (1) |

where denotes the similarity between the -th joint feature and the -th spatial micro-prototype attribute at the coarse-grained level. Normally, a straightforward approach is to aggregate the joint features to obtain the updated micro-prototype for alignment with semantics:

| (2) |

where is the refinement function composed of MLPs. However, not all the micro-prototype attributes are relevant for each joint across different samples; aggregating all attributes may inadvertently overemphasize those unrelated to the action itself (e.g., camera views). To mitigate this issue, we selectively drop joint-unrelated attributes, thus avoiding shortcut feature learning. We sort the matrix row-wise and partition it into two subsets with the hyper-parameter . Then, we maintain the top- scores and replace others with zeros. Using the modified matrix in Eq 2, we can get the updated micro-prototype . Considering coarse-grained exploration alone is insufficient, we repeat the above operations at the mid-grained and fine-grained levels further. Meanwhile, is incrementally increased in each phase to allow for more precise attribute selection. Through these controllable compression processes, the spatial micro-prototype is growing to , capturing more general and concise spatial pattern representations.

Temporal Memory Mechanism. Compared to the spatial micro-prototype growing through the refinement of hierarchical structure, the temporal micro-prototype develops alongside the evolution of resolution. Unlike the complete spatial feature , we split the temporal feature into three phases to obtain segment features for progressive alignment. Initially, we calculate the similarity scores between the initialized and 1-st temporal feature , and then aggregate all frame features to update the temporal micro-prototype as follows:

| (3) | ||||

| (4) |

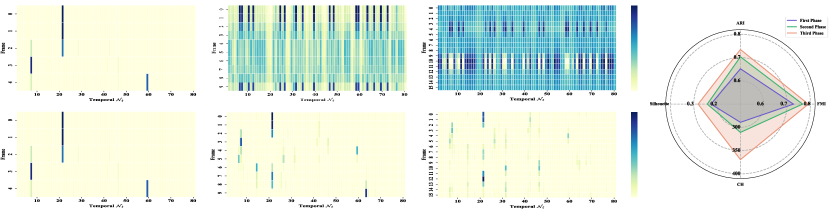

We do not adopt the compression mechanism here because the sequential temporal features in each phase can constrain the learning process not stuck into the short features. However, the updated temporal micro-prototype in the -th phase faces the challenge of knowledge oblivion that is learned in the -th phase, as shown in the first row of Fig. 5 (a) - 5 (c). To address this, in each phase, we introduce the memory mechanism to remember new information while selectively recalling the knowledge from the previous phase:

| (5) |

where is the sigmoid function to quantize the remember and recall probability, and are MLPs for projection, is the refine functions. Through the evolution from the first to the third phase, the micro-prototype progressively captures the regularity-dependent temporal clues, controllable forming to the at the macro level.

3.4 Overall Objective

To align the skeleton and semantic spaces at the fine-grained level, we start from the coarse-grained level, step-by-step, instructing micro-prototypes to update iteratively and controllably from micro to macro perspectives. For each -th phase, the spatial-temporal micro-prototypes and contain the specific skeleton patterns for different attributes, so we pool all the channels to produce the skeleton representation . Meanwhile, we pool all the contextual semantic features into . Then, we define the alignment operation as:

| (6) | |||

| (7) |

where , , and are project functions. Last, the overall optimization objective is defined as:

| (8) |

3.5 ZSL/GZSL Prediction

In the inference stage, we can contain the spatial-temporal features that are updated by micro-prototypes. Then, we can predict the unseen skeleton category by calculating the similarity with unseen semantics. Furthermore, the calibrated stacking method [4] is employed to mitigate the domain shift for GZSL prediction. The whole process is formulated as follows:

| (9) | |||

| (10) |

where corresponds to the ZSL/GZSL setting, and are calibration factors. As mentioned above, different actions have different propensities for spatial and temporal, so we define the final prediction as .

4 Experiments

4.1 Datasets

NTU RGB+D 60 [32]. It contains 56880 skeleton sequences with 60 action categories performed by 40 subjects captured from 3 distinct views. Generally, two benchmarks are commonly utilized for evaluation: cross-subject (Xsub) and cross-view (Xview). The Xsub splits all sequences based on subject indices, with 20 subjects allocated for training and the remaining 20 subjects for testing. The Xview divides data based on view variations, using views 2 and 3 for training while reserving view 1 for testing.

NTU RGB+D 120 [22]. As an extended version of the NTU RGB+D 60 dataset, it encompasses 114,480 skeleton sequences with 120 action categories. Similarly, it also provides the two official benchmarks: cross-subject (Xsub) and cross-setup (Xset). The Xsub task requires 53 subjects for training, with the remaining subjects designated for testing. Meanwhile, the Xset task utilizes data captured from even camera IDs for training and others for testing.

PKU-MMD [9]. Almost 20000 skeleton sequences with 51 categories are collected in this dataset, which can be split into two phases with increasing challenges. It offers two benchmarks: cross-subject (Xsub) and cross-view (Xview). For the Xsub task, 57 subjects are designated for training, while 9 subjects are reserved for testing. For Xview, the middle and right views data is included in the training set, with the left view used for testing. In this work, we utilize the first phase for experiments following the [39, 6].

| Method | Xsub | Xview | ||||||||||||||

| 55/5 Split | 48/12 Split | 55/5 Split | 48/12 Split | |||||||||||||

| ZSL | GZSL | ZSL | GZSL | ZSL | GZSL | ZSL | GZSL | |||||||||

| ReViSE [17] | 69.5 | 40.8 | 50.2 | 45.0 | 24.0 | 21.8 | 14.8 | 17.6 | 54.4 | 25.8 | 29.3 | 27.4 | 17.2 | 34.2 | 16.4 | 22.1 |

| JPoSE [35] | 73.7 | 66.5 | 53.5 | 59.3 | 27.5 | 28.6 | 18.7 | 22.6 | 72.0 | 61.1 | 59.5 | 60.3 | 28.9 | 29.0 | 14.7 | 19.5 |

| CADA-VAE [31] | 76.9 | 56.1 | 56.0 | 56.0 | 32.1 | 50.4 | 25.0 | 33.4 | 75.1 | 65.7 | 56.1 | 60.5 | 32.9 | 49.7 | 25.9 | 34.0 |

| SynSE [13] | 71.9 | 51.3 | 47.4 | 49.2 | 31.3 | 44.1 | 22.9 | 30.1 | 68.0 | 65.5 | 45.6 | 53.8 | 29.9 | 61.3 | 24.6 | 35.1 |

| SMIE [39] | 77.9 | - | - | - | 41.5 | - | - | - | 79.0 | - | - | - | 41.0 | - | - | - |

| PURLS [41] | 79.2 | - | - | - | 41.0 | - | - | - | - | - | - | - | - | - | - | - |

| SA-DAVE [21] | 82.4 | 62.8 | 70.8 | 66.3 | 41.4 | 50.2 | 36.9 | 42.6 | - | - | - | - | - | - | - | - |

| STAR [6] | 81.4 | 69.0 | 69.9 | 69.4 | 45.1 | 62.7 | 37.0 | 46.6 | 81.6 | 71.9 | 70.3 | 71.1 | 42.5 | 66.2 | 37.5 | 47.9 |

| Neuron (Ours) | 86.9 | 69.1 | 73.8 | 71.4 | 62.7 | 61.6 | 56.8 | 59.1 | 87.8 | 70.6 | 75.9 | 73.2 | 63.3 | 65.3 | 58.1 | 61.5 |

| Method | Xusb | Xset | ||||||||||||||

| 110/10 Split | 96/24 Split | 110/10 Split | 96/24 Split | |||||||||||||

| ZSL | GZSL | ZSL | GZSL | ZSL | GZSL | ZSL | GZSL | |||||||||

| ReViSE [17] | 19.8 | 0.6 | 14.5 | 1.1 | 8.5 | 3.4 | 1.5 | 2.1 | 30.2 | 4.0 | 23.7 | 6.8 | 13.5 | 2.6 | 3.4 | 2.9 |

| JPoSE [35] | 57.3 | 53.6 | 11.6 | 19.1 | 38.1 | 41.0 | 3.8 | 6.9 | 52.8 | 23.6 | 4.4 | 7.4 | 38.5 | 79.3 | 2.6 | 4.9 |

| CADA-VAE [31] | 52.5 | 50.2 | 43.9 | 46.8 | 38.7 | 48.3 | 27.5 | 35.1 | 52.5 | 46.0 | 44.5 | 45.2 | 38.7 | 47.6 | 26.8 | 34.3 |

| SynSE [13] | 52.4 | 57.3 | 43.2 | 49.5 | 41.9 | 48.1 | 32.9 | 39.1 | 59.3 | 58.9 | 49.2 | 53.6 | 41.4 | 46.8 | 31.8 | 37.9 |

| SMIE [39] | 61.3 | - | - | - | 42.3 | - | - | - | 57.0 | - | - | - | 42.3 | - | - | - |

| PURLS [41] | 72.0 | - | - | - | 52.0 | - | - | - | - | - | - | - | - | - | - | - |

| SA-DAVE [21] | 68.8 | 61.1 | 59.8 | 60.4 | 46.1 | 58.8 | 35.8 | 44.5 | - | - | - | - | - | - | - | - |

| STAR [6] | 63.3 | 59.9 | 52.7 | 56.1 | 44.3 | 51.2 | 36.9 | 42.9 | 65.3 | 59.3 | 59.5 | 59.4 | 44.1 | 53.7 | 34.1 | 41.7 |

| Neuron (Ours) | 71.5 | 67.6 | 59.5 | 63.3 | 57.1 | 67.5 | 44.4 | 53.6 | 71.1 | 67.5 | 58.9 | 62.9 | 54.0 | 67.0 | 44.9 | 53.8 |

| Method | Xsub | Xview | ||||||||||||||

| 46/5 Split | 39/12 Split | 46/5 Split | 39/12 Split | |||||||||||||

| ZSL | GZSL | ZSL | GZSL | ZSL | GZSL | ZSL | GZSL | |||||||||

| ReViSE [17] | 54.2 | 44.9 | 34.5 | 39.1 | 19.3 | 35.7 | 13.0 | 19.0 | 54.1 | 50.7 | 39.9 | 44.6 | 12.7 | 34.5 | 9.43 | 14.8 |

| JPoSE [35] | 57.4 | 67.0 | 43.0 | 52.4 | 27.0 | 64.8 | 26.5 | 37.6 | 53.1 | 72.9 | 42.5 | 53.7 | 22.8 | 57.6 | 20.2 | 29.9 |

| CADA-VAE [31] | 73.9 | 76.2 | 51.8 | 61.7 | 33.7 | 69.0 | 29.3 | 41.1 | 74.5 | 79.9 | 61.5 | 69.5 | 29.5 | 62.4 | 28.3 | 39.0 |

| SynSE [13] | 69.5 | 77.8 | 40.2 | 53.0 | 36.5 | 71.9 | 30.0 | 42.3 | 71.7 | 69.9 | 51.1 | 59.0 | 25.4 | 61.9 | 22.6 | 33.1 |

| SMIE [39] | 72.9 | - | - | - | 44.2 | - | - | - | 71.6 | - | - | - | 40.7 | - | - | - |

| STAR [6] | 76.3 | 59.1 | 72.3 | 65.0 | 50.2 | 72.7 | 44.7 | 55.4 | 75.4 | 73.5 | 72.2 | 72.8 | 50.5 | 69.8 | 47.5 | 56.5 |

| Neuron (Ours) | 89.2 | 78.9 | 75.9 | 77.4 | 61.4 | 78.3 | 56.8 | 65.9 | 88.2 | 78.7 | 77.8 | 78.3 | 62.2 | 75.4 | 57.1 | 65.0 |

4.2 Evaluation Settings

Protocols. We follow the previous studies [6] to conduct the experiments using predefined seen/unseen protocols and tasks in the ZSL and GZSL settings. For NTU 60, we adopt the 55/5 and 48/12 protocols. For NTU 120, they are extended to 110/10 and 96/24 protocols. For PKU-MMD I, we take the 46/5 and 39/12 split strategies.

Metrics. We evaluate the performance of our method using Top-1 classification accuracy . In the ZSL setting, we calculate accuracy () on the . For the GZSL setting, we first compute the seen accuracy () on the and unseen accuracy () on the , respectively. Then, the harmonic mean accuracy () can be calculated by .

4.3 Implementation Details

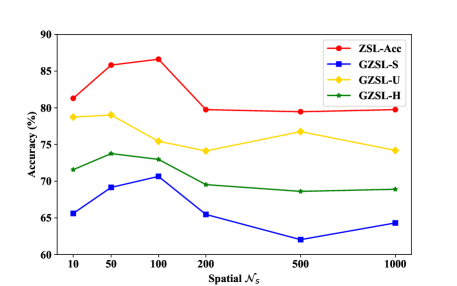

We sample the skeleton sequences to 64 frames using the data processing procedure adopted in [6]. For the skeleton encoder, we employ the Shift-GCN [8], which is pre-trained on the seen categories. Concurrently, we take the text encoder from the pre-trained ViT-L/14@336px model of CLIP [28] to extract semantic embeddings. The optimizer is SGD with a weight decay of 0.0005. The batch size is 64. The base learning rate is 0.1 and reduced with 0.1 multiplied at epochs 10 and 20. The number of and are set to 80. The hyperparameter and are set to 0.0003 and 0.0002. All the experiments are finished using the PyTorch platform on a GeForce RTX 4090 Ti GPU.

|

|

| (a) Spatial | (b) Temporal |

4.4 Comparison with State-of-the-Art

Zero-Shot Learning. We evaluate the performance of our method, Neuron, against state-of-the-art approaches in the ZSL setting, as shown in Table 2 - 4. Across three distinct datasets representing varied scenarios, our proposed method achieves superior performance. Furthermore, the performance of our method is robust across all tasks, demonstrating its ability to learn core spatial-temporal patterns from skeletons that enhance cross-modal correspondences, thereby facilitating better cross-modal transferability.

Generalized Zero-Shot Learning. As shown in Table 2 - 4, our method outperforms previous studies in both seen and unseen categories. Additionally, our method achieves an excellent balance between the seen and unseen categories, i.e., mitigating the tendency of the model towards the seen categories (domain bias), improving a considerable margin over others on the value of harmonic mean metrics. Thus, our synergistic framework actually helps to avoid shortcut representation learning, emphasizing spatial structure-related and temporal regularity-dependent feature learning.

| Spatial Stream | Temporal Stream | (%) | |||

| Granularity Update | Spatial Compression | Phase Evolving | Temporal Memory | ZSL | GZSL |

| ✗ | ✗ | ✗ | ✗ | 79.9 | 65.9 |

| ✓ | ✗ | ✗ | ✗ | 81.0 | 66.3 |

| ✓ | ✗ | ✓ | ✗ | 81.2 | 67.1 |

| ✓ | ✓ | ✓ | ✗ | 81.7 | 69.9 |

| ✓ | ✓ | ✓ | ✓ | 86.9 | 71.4 |

4.5 Ablation Study

Influence of Seen-Unseen Category Settings. We evaluate the robustness of our method under the different seen-unseen categories settings. To this end, we re-partition all skeleton categories into three different seen-unseen combinations that are non-overlap, keeping the same as the previous studies [39, 6], and then compute the average results of three settings to minimize variance. We report the ZSL and GZSL results in Table 5. It can be seen that our method consistently achieves state-of-the-art results in different settings, showing excellent stability and robustness.

|

|

|

|

| (a) Coarse-Grained Phase | (b) Mid-Grained Phase | (c) Fine-Grained Phase | (d) Intra-Class Compactness |

(a) First Phase (b) Second Phase (c) Third Phase (d) Intra-Class Compactness

Influence of Components. We evaluate the efficiency of different components of our method in Table 6. It is noted that the performance of Neuron drops significantly without the temporal memory mechanism, around 5.2%/1.5%, showing this technique can mitigate the situation of knowledge oblivion effectively. Additionally, the dual guidance on spatial granularity updating and temporal phase evolving is crucial in progressively controlling the process of cross-modal alignment. Meanwhile, the spatial compression mechanism can improve generalization, increasing the GZSL from 67.1% to 69.9%.

Influence of and in Micro-prototypes. As illustrated in Fig. 3 (a) - 3 (b), we observe that the accuracy initially improves and then decreases with the attribute number increases. The best performance of our method can be obtained when the is around 100 and the is approximately 50. With further increases in attribute values, accuracy stabilizes and remains consistently high, highlighting the effectiveness of our progressive learning approach.

4.6 Qualitative Analysis

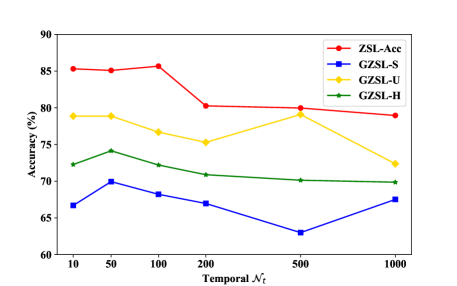

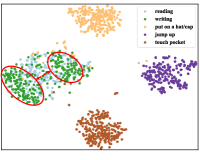

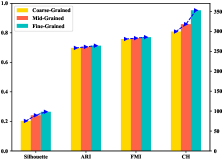

Visualization of Spatial Compression. Here, we plot the t-SNE visualization of unseen skeleton representation distribution at each phase during the spatial compression process, as shown in Fig. 4 (a) - 4 (c). Moving from the coarse-grained to the mid-grained phase, we can see that the compactness of features in ”writing” becomes better. Furthermore, the inter-class separability between the ”reading” and ”put on a hat/cup” actions can also be improved in transitioning from the mid-grained to fine-grained steps. To quantify intra-class compactness, we calculate the compactness of features based on mainstream clustering evaluation metrics, including the silhouette score [29], Adjusted Rand Index (ARI) [16], Fowlkes-Mallows Index (FMI) [12], and Calinski-Harabasz Index (CH) [3], as shown in Fig. 4 (d). The intra-class compactness continually improves, underscoring Neuron’s effective core feature learning.

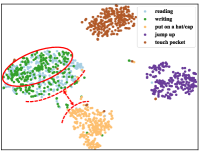

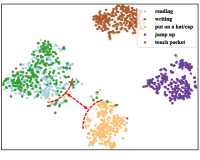

Visualization of Temporal Memory. As shown in Fig. 5 (a) - 5 (c), we draw the updating process of temporal micro-prototypes over multiple steps, comparing results with and without the temporal memory mechanism. In the first row, the updated temporal micro-prototype without the memory mechanism guidance will be chaotic as frames increase, leading to knowledge oblivion. In contrast, the second row exhibits a more effective learning process, where past knowledge is retained while new information is integrated. We also compute the intra-class compactness with the memory mechanism based on the above metrics [29, 16, 12, 3], all of them achieve better with the learning process ongoing.

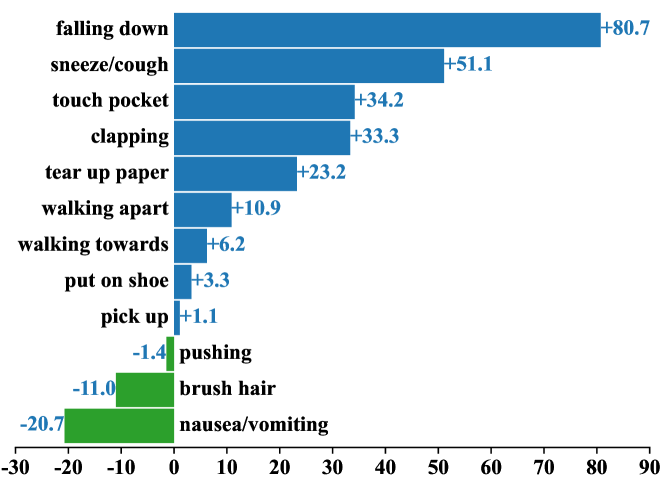

Fine-Grained Action Recognition. To compare the performance of our method with the fine-grained method STAR [6] more intuitive, we calculate the absolute difference value of recognition accuracy between Neuron and STAR in each unseen category. As shown in Fig. 6, we find the accuracy of 9 action categories recognized by our method over the STAR a lot. Specifically, the performance improved for ”walking apart” and ”walking towards” actions as we paid isolated attention to temporal cues during the training process. Meanwhile, some spatial-oriented actions, e.g., ”clapping” and ”falling down” actions, are improving significantly. However, some failure cases, such as the ”nausea/vomiting” action, are incorrectly classified into the ”sneeze/cough” action with highly similar skeletons. Meanwhile, the homogeneous situation still exists between their contextual side information. So, further exploration is still needed for those extremely similar and abstract actions.

5 Conclusion

In this paper, we propose Neuron, a novel dyNamically Evolving dUal skeleton-semantic syneRgistic framework guided by cOntext-aware side informatioN, to explore the desirable fine-grained cross-modal correspondence. By introducing the spatial-temporal evolving micro-prototypes to imitate the learning and growth of neurons, the synergistic skeleton-semantic correlations can be established and refined step-by-step under the guidance of contextual side information. In addition, the spatial compression and temporal memory mechanisms are appropriately designed to mitigate the shortcut feature learning and the situation of temporal knowledge oblivion, absorbing the structure-related and regularity-dependent core spatial-temporal representations. Experimental results indicate excellent cross-modal transferability and effective generalizability from seen to unseen categories.

References

- Bleeker [2022] Maurits Bleeker. Multi-modal learning algorithms and network architectures for information extraction and retrieval. In Proceedings of the 30th ACM International Conference on Multimedia, pages 6925–6929, 2022.

- Bruce et al. [2022] XB Bruce, Yan Liu, Xiang Zhang, Sheng-hua Zhong, and Keith CC Chan. Mmnet: A model-based multimodal network for human action recognition in rgb-d videos. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45(3):3522–3538, 2022.

- Caliński and Harabasz [1974] Tadeusz Caliński and Jerzy Harabasz. A dendrite method for cluster analysis. Communications in Statistics-theory and Methods, 3(1):1–27, 1974.

- Chao et al. [2016] Wei-Lun Chao, Soravit Changpinyo, Boqing Gong, and Fei Sha. An empirical study and analysis of generalized zero-shot learning for object recognition in the wild. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part II 14, pages 52–68. Springer, 2016.

- Chen et al. [2021] Yuxin Chen, Ziqi Zhang, Chunfeng Yuan, Bing Li, Ying Deng, and Weiming Hu. Channel-wise topology refinement graph convolution for skeleton-based action recognition. In Proceedings of the IEEE/CVF international conference on computer vision, pages 13359–13368, 2021.

- Chen et al. [2024a] Yang Chen, Jingcai Guo, Tian He, Xiaocheng Lu, and Ling Wang. Fine-grained side information guided dual-prompts for zero-shot skeleton action recognition. In Proceedings of the 32nd ACM International Conference on Multimedia, pages 778–786, 2024a.

- Chen et al. [2024b] Yang Chen, Tian He, Junfeng Fu, Ling Wang, Jingcai Guo, Ting Hu, and Hong Cheng. Vision-language meets the skeleton: Progressively distillation with cross-modal knowledge for 3d action representation learning. IEEE Transactions on Multimedia, 2024b.

- Cheng et al. [2020] Ke Cheng, Yifan Zhang, Xiangyu He, Weihan Chen, Jian Cheng, and Hanqing Lu. Skeleton-based action recognition with shift graph convolutional network. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 183–192, 2020.

- Chunhui et al. [2017] Liu Chunhui, Hu Yueyu, Li Yanghao, Song Sijie, and Liu Jiaying. Pku-mmd: A large scale benchmark for continuous multi-modal human action understanding. arXiv preprint arXiv:1703.07475, 2017.

- Cui and Kang [2023] Yufeng Cui and Yimei Kang. Multi-modal gait recognition via effective spatial-temporal feature fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 17949–17957, 2023.

- Dancette et al. [2021] Corentin Dancette, Remi Cadene, Damien Teney, and Matthieu Cord. Beyond question-based biases: Assessing multimodal shortcut learning in visual question answering. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 1574–1583, 2021.

- Fowlkes and Mallows [1983] Edward B Fowlkes and Colin L Mallows. A method for comparing two hierarchical clusterings. Journal of the American statistical association, 78(383):553–569, 1983.

- Gupta et al. [2021] Pranay Gupta, Divyanshu Sharma, and Ravi Kiran Sarvadevabhatla. Syntactically guided generative embeddings for zero-shot skeleton action recognition. In 2021 IEEE International Conference on Image Processing (ICIP), pages 439–443. IEEE, 2021.

- He et al. [2024a] Tian He, Yang Chen, Xu Gao, Ling Wang, Ting Hu, and Hong Cheng. Enhancing skeleton-based action recognition with language descriptions from pre-trained large multimodal models. IEEE Transactions on Circuits and Systems for Video Technology, 2024a.

- He et al. [2024b] Tian He, Yang Chen, Ling Wang, and Hong Cheng. An expert-knowledge-based graph convolutional network for skeleton-based physical rehabilitation exercises assessment. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 2024b.

- Hubert and Arabie [1985] Lawrence Hubert and Phipps Arabie. Comparing partitions. Journal of classification, 2:193–218, 1985.

- Hubert Tsai et al. [2017] Yao-Hung Hubert Tsai, Liang-Kang Huang, and Ruslan Salakhutdinov. Learning robust visual-semantic embeddings. In Proceedings of the IEEE International conference on Computer Vision, pages 3571–3580, 2017.

- Jasani and Mazagonwalla [2019] Bhavan Jasani and Afshaan Mazagonwalla. Skeleton based zero shot action recognition in joint pose-language semantic space. arXiv preprint arXiv:1911.11344, 2019.

- Kim et al. [2023] Sangwon Kim, Dasom Ahn, and Byoung Chul Ko. Cross-modal learning with 3d deformable attention for action recognition. In Proceedings of the IEEE/CVF international conference on computer vision, pages 10265–10275, 2023.

- Li et al. [2023] Ming-Zhe Li, Zhen Jia, Zhang Zhang, Zhanyu Ma, and Liang Wang. Multi-semantic fusion model for generalized zero-shot skeleton-based action recognition. In International Conference on Image and Graphics, pages 68–80. Springer, 2023.

- Li et al. [2025] Sheng-Wei Li, Zi-Xiang Wei, Wei-Jie Chen, Yi-Hsin Yu, Chih-Yuan Yang, and Jane Yung-jen Hsu. Sa-dvae: Improving zero-shot skeleton-based action recognition by disentangled variational autoencoders. In European Conference on Computer Vision, pages 447–462. Springer, 2025.

- Liu et al. [2019] Jun Liu, Amir Shahroudy, Mauricio Perez, Gang Wang, Ling-Yu Duan, and Alex C Kot. Ntu rgb+ d 120: A large-scale benchmark for 3d human activity understanding. IEEE transactions on pattern analysis and machine intelligence, 42(10):2684–2701, 2019.

- Liu et al. [2024] Jinfu Liu, Chen Chen, and Mengyuan Liu. Multi-modality co-learning for efficient skeleton-based action recognition. arXiv preprint arXiv:2407.15706, 2024.

- Lu et al. [2024a] Chenyu Lu, Jun Yin, Hao Yang, and Shiliang Sun. Enhancing multi-modal fusion in visual dialog via sample debiasing and feature interaction. Information Fusion, 107:102302, 2024a.

- Lu et al. [2024b] Mingqi Lu, Siyuan Yang, Xiaobo Lu, and Jun Liu. Cross-modal contrastive pre-training for few-shot skeleton action recognition. IEEE Transactions on Circuits and Systems for Video Technology, 2024b.

- Mao et al. [2022] Yunyao Mao, Wengang Zhou, Zhenbo Lu, Jiajun Deng, and Houqiang Li. Cmd: Self-supervised 3d action representation learning with cross-modal mutual distillation. In European Conference on Computer Vision, pages 734–752. Springer, 2022.

- Ni et al. [2022] Jianyuan Ni, Anne HH Ngu, and Yan Yan. Progressive cross-modal knowledge distillation for human action recognition. In Proceedings of the 30th ACM International Conference on Multimedia, pages 5903–5912, 2022.

- Radford et al. [2021] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pages 8748–8763. PMLR, 2021.

- Rousseeuw [1987] Peter J Rousseeuw. Silhouettes: a graphical aid to the interpretation and validation of cluster analysis. Journal of computational and applied mathematics, 20:53–65, 1987.

- Sato et al. [2023] Fumiaki Sato, Ryo Hachiuma, and Taiki Sekii. Prompt-guided zero-shot anomaly action recognition using pretrained deep skeleton features. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 6471–6480, 2023.

- Schonfeld et al. [2019] Edgar Schonfeld, Sayna Ebrahimi, Samarth Sinha, Trevor Darrell, and Zeynep Akata. Generalized zero-shot learning via aligned variational autoencoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pages 54–57, 2019.

- Shahroudy et al. [2016] Amir Shahroudy, Jun Liu, Tian-Tsong Ng, and Gang Wang. Ntu rgb+ d: A large scale dataset for 3d human activity analysis. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1010–1019, 2016.

- Song et al. [2020] Sijie Song, Jiaying Liu, Yanghao Li, and Zongming Guo. Modality compensation network: Cross-modal adaptation for action recognition. IEEE Transactions on Image Processing, 29:3957–3969, 2020.

- Sun et al. [2023] Shengkai Sun, Daizong Liu, Jianfeng Dong, Xiaoye Qu, Junyu Gao, Xun Yang, Xun Wang, and Meng Wang. Unified multi-modal unsupervised representation learning for skeleton-based action understanding. In Proceedings of the 31st ACM International Conference on Multimedia, pages 2973–2984, 2023.

- Wray et al. [2019] Michael Wray, Diane Larlus, Gabriela Csurka, and Dima Damen. Fine-grained action retrieval through multiple parts-of-speech embeddings. In Proceedings of the IEEE/CVF international conference on computer vision, pages 450–459, 2019.

- Xiang et al. [2023] Wangmeng Xiang, Chao Li, Yuxuan Zhou, Biao Wang, and Lei Zhang. Generative action description prompts for skeleton-based action recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10276–10285, 2023.

- Xu et al. [2024] Jinglin Xu, Guohao Zhao, Sibo Yin, Wenhao Zhou, and Yuxin Peng. Finesports: A multi-person hierarchical sports video dataset for fine-grained action understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 21773–21782, 2024.

- Zhou et al. [2023a] Huanyu Zhou, Qingjie Liu, and Yunhong Wang. Learning discriminative representations for skeleton based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10608–10617, 2023a.

- Zhou et al. [2023b] Yujie Zhou, Wenwen Qiang, Anyi Rao, Ning Lin, Bing Su, and Jiaqi Wang. Zero-shot skeleton-based action recognition via mutual information estimation and maximization. In Proceedings of the 31st ACM International Conference on Multimedia, pages 5302–5310, 2023b.

- Zhou et al. [2024] Yuxuan Zhou, Xudong Yan, Zhi-Qi Cheng, Yan Yan, Qi Dai, and Xian-Sheng Hua. Blockgcn: Redefine topology awareness for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2049–2058, 2024.

- Zhu et al. [2024] Anqi Zhu, Qiuhong Ke, Mingming Gong, and James Bailey. Part-aware unified representation of language and skeleton for zero-shot action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 18761–18770, 2024.