Neuroscience-Inspired Algorithms for the Predictive Maintenance of Manufacturing Systems

Abstract

If machine failures can be detected preemptively, then maintenance and repairs can be performed more efficiently, reducing production costs. Many machine learning techniques for performing early failure detection using vibration data have been proposed; however, these methods are often power and data-hungry, susceptible to noise, and require large amounts of data preprocessing. Also, training is usually only performed once before inference, so they do not learn and adapt as the machine ages. Thus, we propose a method of performing online, real-time anomaly detection for predictive maintenance using Hierarchical Temporal Memory (HTM). Inspired by the human neocortex, HTMs learn and adapt continuously and are robust to noise. Using the Numenta Anomaly Benchmark, we empirically demonstrate that our approach outperforms state-of-the-art algorithms at preemptively detecting real-world cases of bearing failures and simulated 3D printer failures. Our approach achieves an average score of 64.71, surpassing state-of-the-art deep-learning (49.38) and statistical (61.06) methods.

Index Terms:

Predictive Maintenance, Prognostics, Anomaly Detection, Hierarchical Temporal MemoryI Introduction

Predictive Maintenance (PM) is an emerging new paradigm in manufacturing where symptoms of machine degradation are detected before failures occur. It is a major part of the Industry 4.0 and smart manufacturing vision. Using sensor readings, process parameters, and other operational characteristics, PM can help maximize tool life by reducing the number of unnecessary repairs performed while also reducing the likelihood of unexpected failures [1]. In the United States alone, improper maintenance and the resulting outages cost more than 60 billion dollars per year [2]. Thus, smart data-driven paradigms such as PM have the potential to reduce industrial production costs significantly.

Recently, many statistical, machine learning (ML), and deep learning (DL) techniques for PM have been proposed. However, these methods are not without their shortcomings: statistical methods require extensive domain knowledge and often do not generalize well to more complex use cases, while DL and ML techniques often require large amounts of training data and are susceptible to increased error as machines age over time. Furthermore, ML and DL algorithms are highly susceptible to noise, making them insufficiently robust for industrial settings without data preprocessing. Due to the high noise level and diversity among industrial systems, PM models that do not require significant preprocessing or domain knowledge are considered more practical [3].

To overcome these issues, we propose the use of a learning algorithm inspired by neuroscience called Hierarchical Temporal Memory (HTM), pioneered by Hawkins and Blakeslee [4]. Using binary sparse distributed representations (SDRs) to represent data and an architecture incorporating feed-forward, lateral, and feedback connections, HTMs emulate the interactions between pyramidal neurons in the neocortex. HTMs are online learning algorithms that require less application-specific tuning, are robust to noise, and adapt to variations in the data as they continuously learn. In practice, this means HTMs can efficiently learn from a single training pass over small training datasets with little to no hyperparameter tuning. These characteristics also enable HTMs to learn in near real-time. For these reasons, they are suitable for practical applications such as detecting early symptoms of failure in manufacturing equipment. In this work, we demonstrate the effectiveness of an HTM-based anomaly detection methodology at detecting these symptoms in roller-element bearings and 3D printers.

I-A Related Work

We focus on the specific task of PM on roller-element bearings due to their broad application and utility in manufacturing. We also evaluate Additive Manufacturing (AM) as it is a modern technique that presents unique challenges due to the dynamics of 3D printers. Here, we briefly discuss works related to PM for roller bearings and additive manufacturing.

Many PM methods use statistical models due to their simplicity and explainability. These approaches rely on extracted time and frequency domain features. For example, the energy entropy mean and root mean squared (RMS) values of wavelets were used to diagnose ball bearing faults in [5]. In another example, the spectral kurtosis (SK) of vibration and current signals was used to detect and classify the surface roughness of ball bearings in [6]. Using a particle filter method, Zhang et al. performed fault detection on bearings similar to those found in helicopter oil cooler fans [7].

In addition to statistical methods, ML techniques have been applied to a wide array of industrial prognosis tasks. One such method: AutoRegressive Integrated Moving Average (ARIMA), is one of the most popular techniques for time-series forecasting and was used to predict failures and identify quality defects in a slitting machine in [8]. In another approach, Tobon-Mejia et al. used Mixture of Gaussians HMMs and Wavelet Packet Decomposition to estimate the Remaining Useful Life (RUL) of roller-element bearings [9].

DL methods such as Long Short-Term Memory (LSTM) Networks and Convolutional Neural Networks (CNNs) have also been used extensively for PM. In one example, Feng et al. used an LSTM for detecting anomalies in industrial control systems [10]. Additionally, an RNN-LSTM was used to perform PM on an air booster compressor motor used in oil and gas equipment in [11].

Due to the increased complexity and relatively late adoption of AM systems, PM techniques for AM have not been studied in great detail. Proposed approaches often draw from research in related applications, such as PM for bearings. For example, Yoon et al. evaluated the feasibility of AM equipment fault diagnosis using a piezoelectric strain sensor and an acoustic sensor. In this work, features such as RMS value, kurtosis, skewness, and crest factor were used to detect faults [12]. Deep learning has also been used for AM anomaly detection, such as in [13] where a neural network was used to classify faults in 3D printer vibration data.

Despite the proliferation of statistical, ML, and DL approaches to PM for manufacturing, to the best of our knowledge, no HTM-based solutions have been proposed. However, the structural and temporal properties of HTM algorithms allow them to excel at cross-domain tasks that apply to manufacturing, such as anomaly detection [14]. Since the core objective of PM in manufacturing is detecting early symptoms of part failure, HTMs are a natural candidate for this task. HTMs were shown to match or surpass neural networks at detecting and classifying foreign materials on a conveyor belt in a cigarette manufacturing plant [15]. HTMs have also proven effective at detecting anomalies in crowd movements [16], traffic patterns [17], human vital signs [18], electrical grids [19], and computer hardware [20].

I-B Research Challenges

Overall, PM for manufacturing presents the following key research challenges:

-

1.

Identifying time-series anomalies in near real-time despite ambient noise.

-

2.

Learning efficiently from small training datasets to improve applicability to practical use cases.

-

3.

Developing a solution that can be generalized to many heterogeneous manufacturing systems without requiring extensive domain-specific tuning.

-

4.

Adapting to changes in data statistics (i.e., machine aging).

Despite the successes achieved by existing methods in the aforementioned applications, industrial manufacturing systems are diverse and complex, making it difficult to find solutions that generalize across applications. Consequently, PM systems require specialization, which necessitates specialized knowledge and cross-domain skills. This is especially true in the case of bearing-failure prognosis, as bearing design and lifetime management lies squarely in the mechanical and materials engineering domains.

It is difficult for any single technique to address all these research challenges effectively. For example, statistical methods such as thresholding based on kurtosis or spectral analysis are highly efficient and real-time capable but require explicitly defined health indicators and thresholds, which are machine- and application-specific. Also, stationary methods including RMS, kurtosis, and crest factor are only effective for stationary signals (signals with time-invariant statistical properties), but bearing vibration signals are generally cyclostationary (statistical properties vary cyclically) or non-stationary (statistical properties change depending on speed and load conditions)[21]. Spectral kurtosis is applicable to non-stationary and non-periodic signals but is sensitive to noise and outliers [22].

Classical ML algorithms such as AR Models, Support Vector Machines, Hidden Markov Models (HMM), Random Forests, and k-Nearest Neighbors have been demonstrated for PM in existing work, but require the extraction of explicit health indicators (features) from data [23]. These algorithms also require application-specific hyperparameter tuning, data preprocessing as they have poor noise robustness [3], and regular updates of model settings as they do not adapt to account for machine aging [23]. Moreover, both HMM and AR methods are ineffective on non-stationary signals [21].

In DL algorithms such as neural networks and LSTMs, health indicators can be learned implicitly by the network. However, a network trained for one machine cannot generalize to a new machine without retraining with a large amount of data for hundreds or thousands of epochs. Larger models may be able to generalize better, but the complexity of training and optimizing these models increases drastically with size [23]. This domain-specific training and tuning process can be expensive, time-consuming, and impractical for real-world use cases. Like the ML methods, DL algorithms also have poor noise robustness [24] and require high-quality data, or else performance can suffer significantly [3]. To address this, significant preprocessing steps are often needed to generate clean data for these models [3].

As stated in Section I-A, HTM-based anomaly detection methods have demonstrated success in several distinct fields. However, to the best of our knowledge, no prior work has comprehensively explored HTM’s ability to model vibration data or demonstrated its practical value for PM. Overall, all of these existing methods fall short of addressing one or more research challenges.

I-C Our Novel Contributions

To address these key research challenges and improve on the PM performance demonstrated by previous works, our paper presents the following contributions:

-

1.

We demonstrate the ability of HTM-based anomaly detectors to detect early symptoms of bearing failure in several months’ worth of real-world vibration data. We show that HTM’s can efficiently learn with only a single training pass.

-

2.

We demonstrate the ability of HTMs to generalize across applications without much fine-tuning and their ability to continuously learn and adapt by evaluating their anomaly detection performance on a second, highly dynamic application: 3D printer vibration data. These characteristics of HTMs make them more practical for real-world use cases.

-

3.

We compare the performance of HTM anomaly detection methods against state-of-the-art anomaly detection techniques and traditional machine prognosis methods such as condition-based maintenance. Specifically, we evaluate each algorithm’s anomaly detection accuracy and robustness to noise.

-

4.

We demonstrate the efficiency and real-time capability of HTM-based prognosis by comparing its execution time with that of the other techniques.

II Background Theory

II-A Hierarchical Temporal Memory

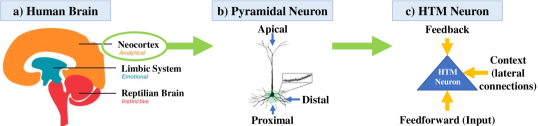

Hierarchical temporal memory is a sequence learning framework modeled after the structure of the neocortex in the human brain [4].

The basic unit of HTM is a neuron modeled after those present in the neocortex (Fig. 1(b)). These neurons are stacked on top of one another to form a column like the ‘cortical column’ of the neocortex. The final HTM is a composition of many such columns. A single HTM neuron (Fig. 1(c)), is connected to two types of segments: (i) proximal segments (aggregation of feed-forward connections from the input) and (ii) distal segments (aggregation of lateral connections from neurons of the other columns). Each HTM neuron can be in three states: (i) inactive (the default state), (ii) predictive, and (iii) active. The predictive state of a neuron is determined by the activity of the distal segments, which in turn is determined by the activation state of the other neurons. A neuron becomes active at any time only if it was in the predictive state at the previous instant, with an exception that will be described in Section III-A. When the sequences of activations are viewed temporally, it is easy to see that the distal segments provide the temporal context for activation and thus capture the temporal relations. The column structure augments this capability of HTM by enabling them to store multiple such overlapping temporal sequences. Further details on the HTM-based anomaly detection methodology are discussed in Section III-A.

II-B PM of Roller-Element Bearings

Roller-element bearings perform the critical task of reducing friction between rotating parts in machinery. Generally, catastrophic bearing failures present warning signs such as anomalous vibrations and/or noise. These anomalies can occur due to environmental factors (moisture or debris entering the bearing) as well as installation errors (misalignment, excessive loads, or poor/improper lubrication) [25]. Recently, sensor-based techniques that leverage vibration and temperature data to monitor bearing health have been proposed. For example, the NASA Bearing Dataset and the Pronostia Bearing Dataset contain vibration and temperature data for several bearings which were run until failure [26, 27]. In both datasets, anomalies in the vibration and temperature signals increase in size and frequency as the bearings approach failure, showing a strong correlation between the sensors’ readings and system state.

II-C PM of 3D Printers

3D printing is a manufacturing process where a physical object is constructed from layers of material in an iterative process. Fused Deposition Modeling (FDM) is a standard technique where melted thermoplastic is extruded through a moving print head nozzle to build each layer. To ensure precision, stepper motors control the extrusion rate of the nozzle as well as the X, Y, and Z-axis movement of the print head. Since the motors, bearings, and belts are moving parts, they are prone to wear and must be regularly maintained to prevent component failures. As shown in [28], these components leak vibration information that can be used by PM systems. However, this leaked information is non-stationary since 3D printers move on multiple axes and change direction and speed often, presenting a challenge for conventional PM methods.

III Methodology

III-A Anomaly Detection using Hierarchical Temporal Memory

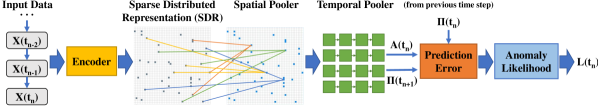

The end-to-end framework for the HTM-based detector is shown in Figure 2. Our methodology for anomaly detection consists of the following steps. First, the time-series vibration data is taken as input and encoded into a Sparse Distributed Representation (SDR). Next, the SDR is passed through the spatial pooler. The spatial pooler’s output is fed into the temporal pooler, which then outputs a prediction for the next activation . Simultaneously, the prediction from the previous time step is compared with the column activations in the current time step to give a prediction error value: a high error value indicates that this activation was not expected and may be anomalous. Finally, the anomaly detector uses the historical distribution of anomaly scores to calculate the anomaly likelihood for the current data point based on the prediction error value; if exceeds a set threshold, then is flagged as an anomaly. In the following paragraphs, we describe each of these components in detail.

III-A1 Encoder

The first stage in processing the input data is the encoder. The encoder converts the incoming data point into a sparse distributed representation (SDR). This representation is a vector of binary values, and it is sparse because only of the bits are activated for any input. This contrasts with deep learning methods that store and learn a dense, distributed representation. Later, we shall describe the advantages of using a sparse representation. We denote the output of the encoder by , a vector.

III-A2 Spatial Pooling

The second stage is spatial pooling. The spatial pooler identifies spatial relations between different regions of the encoder’s output through the proximal connections. Spatial poolers can also be stacked to identify more complex relations. The proximal segment of each neuron in a column is initialized such that each neuron, where the neurons of the same column share the same proximal segment, is connected to a large fraction of the inputs (). The output of this stage is also an SDR representing the columns of the HTM that will be activated in the final output. We denote the spatial pooling operation mathematically by , where the input is the list of columns ordered in decreasing order of their proximal segment values, and k indicates the number of columns to be picked for activation from the top of this list. The number is typically the top , so the output representation is sparse. Let denote the activation of the columns and denote the proximal connections where is a binary matrix of size . Then

| (1) |

III-A3 Prediction

The next stage is prediction. The prediction for the next time step is the predictive state of the HTM at the end of the current time step. Let the weights of the lateral connections of the th distal segment of the th neuron of th column be . We note that only those weights of connections which are above a certain threshold are considered to be established and the rest are set to zero. A neuron enters the predictive state provided the sum of activations of at least one of the distal segments exceeds a certain threshold, . Denote the predictive state of a neuron at time by . We denote the current activation state of all neurons at time by . We denote the total predictive state by the matrix , whose elements are therefore . Mathematically, is given by,

| (2) |

where denotes the element-wise multiplication operation.

III-A4 Temporal Pooling

The final stage is temporal pooling. Temporal pooling computes the activation state (an matrix where M is the number of neurons per mini-column and N is the number of mini-columns in the layer) of the HTM, which is also the output of HTM based on a temporal context. A neuron is activated provided its column is activated, i.e., and provided it is in the predictive state, i.e., . The other neurons in this column are inhibited. If none of the neurons in a column that is active are in the predictive state, then all the neurons of this column are activated. Here, the predictive state from the previous time step is the temporal context. This temporal context is updated at the end of this time step as described in the prediction step above. Let be the th element of denoting the activation state of neuron in column . Then, the temporal pooling operation can be mathematically described as:

| (3) |

Figure 2 shows the different stages of HTM processing in the context of anomaly detection. After activation, the prediction error between the prediction from the previous time step and the current activation state is computed and passed to the anomaly likelihood block, which uses the historical distribution of anomaly scores to determine if is a true anomaly.

III-A5 Learning

HTMs use a Hebbian-type learning algorithm that reinforces the connection weights of the segments that correctly predict the activation at the next time-step. Each time step, the weights are re-evaluated as follows. The connection weights of an activated neuron’s segments that originated from previously active neurons are increased. The connection weights from neurons that were not active in the previous time-step are decreased. Additionally, weights of connections that are wrongly predicted are also decreased but at a lesser rate, i.e., forgetting happens at a slower rate than updating. It is this type of learning that allows HTMs to learn continuously and adapt to changes over a long term. The learning algorithm is discussed in much greater detail in [29].

III-A6 On Capacity, Robustness, and Efficiency

Here, we illustrate why HTMs are efficient and robust to noise. Let us consider an HTM with a large , where denotes the size of the encoder’s output, , a binary vector. Denote by the maximum number of bits that can be one. Typically, is small relative to . Given this, lets define: . Here, is a measure of sparsity and denotes the fraction of the bits that can be active in the SDR of size . An example would be, and and so .

The number of possible unique encodings, that can be stored in vector , given and , is given by,

| (4) |

For example, if and then . Given , the probability that one SDR will match another SDR , which is randomly picked, is trivially computable:

| (5) |

Thus, the probability of a false match is, for all practical purposes, zero. This shows that SDRs can store and recall reliably an astronomically large number of vectors. Consequently, it follows that HTMs can store and recall reliably an astronomically large number of sequences.

We can now relax the requirement and say that two SDRs are equivalent if or more bits match. In this case, the matching is allowed an error of up to bits. Denote by the set of sparse vectors (of size and sparsity ) that have an overlap of bits with . Then, the probability that a false match will be generated, , is given by,

| (6) |

Clearly, the probability of a false match has increased by allowing an error of up to . In the same example as above, if , then , that is an error up to is allowed. We find that the probability of a false match is still , which for all practical purposes is zero. This is what gives SDRs and thereby HTMs robustness to noise.

The sparsity of allows for sparse computation, which makes computations with SDRs very efficient. For a representation of size and sparsity , one does not need to store information on all the bits. Instead, one can just store the address of the locations of bits of value one. Then, for an operation like matching, one just needs to check the value of the bits of the vector at its corresponding locations; this is doable almost in constant time. We can trivially extend this argument to show that the spatial pooling, prediction, and temporal pooling operations described above can also be performed very efficiently in HTMs, thus giving HTMs their computational efficiency. Next, we discuss our experimental setup for demonstrating the performance of the HTM-based anomaly detector.

III-B Experimental Setup

We evaluate our proposed methodology on real-world bearing failure and simulated 3D printer failure datasets. Here, we discuss details about these datasets and the scoring system used for evaluation.

III-B1 Bearing Dataset

We used the NASA Bearing Dataset and the Pronostia Bearing Dataset [26, 27]. The NASA Bearing Dataset contains three tests of bearings run to failure. The Pronostia Bearing Dataset contains vibration snapshots recorded with three different radial load and RPM settings. The accelerometer data for Test 2 of the NASA Dataset is shown in Figure 3. In total, our testing set consists of 40 vibration data files and 191 labeled anomalies.

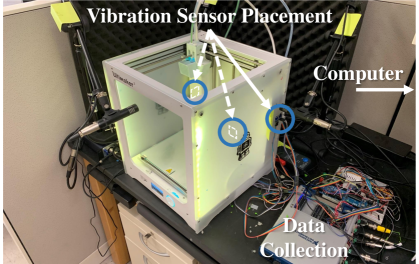

III-B2 3D Printer Dataset

Our experimental testbed for collecting vibration data from a 3D printer is shown in Figure 4. The 3D printer uses one stepper motor to control each movement axis (X, Y, and Z). We placed one accelerometer directly behind each stepper motor to capture vibration data from prints of various 3D objects. To the best of our knowledge, no publicly available 3D printer component-failure datasets exist, and generating real-world failures would risk damaging our equipment. Thus, we instead opted to generate synthetic anomalies in the 3D printer vibration data.

3D printer vibration signals are inherently non-stationary, meaning that their statistical properties vary with time. However, since printers contain bearings and rotating components with similar dynamics, they share the same time-series and frequency domain features as those correlated with bearing health, such as power spectral density (PSD) [21, 22]. For example, in Figure 3 it is clear that the overall power of the vibration signal increases as the bearing nears failure. Intuitively, this same phenomenon will occur in a 3D printer as components wear out. Thus, we synthesized anomalies in the 3D printer vibration data by mapping the PSD from our bearing failure data to the 3D printer data. This composition enabled us to simulate the magnitude changes characteristic of bearing and component failures in the 3D printer while preserving the frequency components unique to the 3D printer.

Our PSD mapping algorithm, shown in Algorithm 1, operates on a sliding window over one bearing vibration file and one 3D printer vibration file. For each window , the following steps are performed: First, the Fast Fourier Transform (FFT) of the bearing time-series data is calculated for a pre-set frequency bin-size. Next, the power in each frequency bin is calculated. Then, we calculate the ratio between the previous window’s power value and the current power value in each bin. This ratio is used to scale the corresponding frequency bin in the FFT of the 3D printer data , yielding an FFT with synthesized anomalies . Finally, the Inverse FFT (IFFT) of is taken and added to the output at location .

The result after all iterations is a 3D printer vibration signal with synthesized anomalies . Using this mapping algorithm, we produced a simulated 3D printer failure dataset containing 15 test cases and 57 hand-labeled anomalies.

III-B3 Anomaly Detectors

To evaluate the performance of HTMs at PM, we use the following two HTM-based anomaly detectors in our approach with slightly different temporal memory implementations, which we denote as HTM [14] and TM-HTM [30]. To explore the effectiveness of anomaly likelihood for HTM-based detectors, we evaluated HTM and TM-HTM with three different anomaly likelihood configurations:

-

1.

no anomaly likelihood: the prediction error of the HTM was directly used as the anomaly score.

-

2.

historical distribution (HD): the implementation described in Section III-A.

-

3.

LSTM-based predictor (LP): The HD anomaly likelihood block was replaced with a 2-layer LSTM predictor trained to predict normal HTM prediction error values in order to filter out false positives/noise. The prediction error of the LSTM was used as the final anomaly score.

We also evaluated baseline and state-of-the-art anomaly detectors including an RNN-based detector configured to use LSTM cells (denoted as LSTM) [31] (similar to [10, 11]), Windowed Gaussian (based on the tail probability of the distribution over a sliding window), a threshold-based detector (similar to condition-based maintenance and [5]), EXPoSE [32], Contextual Anomaly Detector (CAD-OSE) [33], Relative Entropy [34], Etsy Skyline [35], KNN Conformal Anomaly Detector (KNN-CAD) [36], Bayesian Changepoint (BC) [37], Random (random anomaly score), and Null (constant anomaly score). All of the listed algorithms except LSTM were exposed to the training data once before testing and updated their models as they were exposed to unseen test data. LSTM was trained for over 1000 epochs on the training data and was tested with the model settings that resulted in the lowest validation loss. LSTM was tested offline, meaning that it did not update its model weights during testing. The LP anomaly likelihood configuration was also trained in this manner but used the HTM output as its input data instead.

III-B4 Scoring

To score each algorithm fairly, we rely on the Numenta Anomaly Benchmark (NAB) [14]. NAB was designed to fairly benchmark anomaly detection algorithms against one another. It contains a built-in anomaly scoring algorithm, normalization, and three threshold optimization settings: standard, low false positives (Low FP), and low false negatives (Low FN). NAB takes in datasets with labeled anomalies and produces anomaly windows. These are used to score anomaly detectors on how precisely they can pinpoint anomalies; early/on-time detections are rewarded, and very early/late detections are penalized.

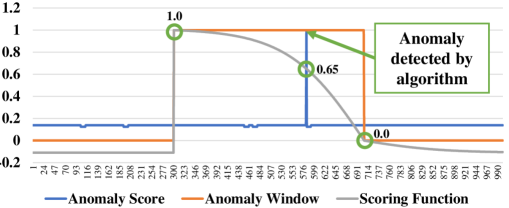

The NAB scoring function is as follows: given an application profile specifying the weights for each kind of detection, and the position of the detection relative to the anomaly window, the scoring function for each detection is:

| (7) |

These scores are summed up for all the detections in a file; the following weighted penalty is deducted for every missed detection (): . The summed score is then normalized to a 0-100 scale where 0 represents equivalent (or worse) performance to the Null detector, and 100 represents a perfect anomaly detector. An example of the scoring functionality is shown in Figure 5. To provide ground-truth values of anomaly locations in the dataset, we followed the NAB official anomaly labeling guide and manually labeled anomalies in each dataset. The first 15% of each vibration data file was used for training with the remaining 85% used for testing and scoring.

IV Results

IV-A Roller Bearing Anomaly Detection

Table I shows the NAB results for the selected algorithms on the labeled bearing failure dataset as well as the total running time of each algorithm. The runtime was recorded over the complete dataset using a PC with an Intel Core i7-7700k processor. As shown in Table I, TM-HTM+HD achieved the highest anomaly detection score for the Standard and Low FN profiles while HTM+LP achieved the highest score for the Low FP profile. TM-HTM+HD scored 67.05, 73.33, and 56.57 for the Standard, Low FN, and Low FP profiles, respectively. The approach that scored closest to HTM was Windowed Gaussian, which achieved scores of 64.70, 70.50, and 57.35 for the same profiles, respectively. HTM and HTM+LP performed better than TM-HTM TM-HTM+LP, indicating that TM-HTM’s implementation only works well with the HD anomaly likelihood block.

As expected, the statistical methods (Windowed Gaussian, Threshold-Based, Relative Entropy) processed the dataset faster than the DL, ML, and HTM-based methods, albeit with lower performance. The HTMs using HD were 1.41x slower than the HTMs with no anomaly likelihood and 3.76x faster than the HTMs using LP on average. TM-HTM+HD processed the dataset 8.3x faster than LSTM.

| Anomaly Detector | Scoring Profile | Runtime (s) | ||

|---|---|---|---|---|

| Standard | Low FN | Low FP | ||

| TM-HTM+HD (Ours) | 67.05 | 73.33 | 56.57 | 4728 |

| HTM+HD (Ours) | 66.38 | 71.93 | 55.33 | 5792 |

| Windowed Gaussian | 64.70 | 70.50 | 57.35 | 336 |

| HTM+LP (Ours) | 64.03 | 69.12 | 57.47 | 21084 |

| HTM (Ours) | 59.75 | 66.24 | 47.63 | 4277 |

| TM-HTM+LP (Ours) | 54.12 | 61.53 | 43.03 | 18508 |

| TM-HTM (Ours) | 54.39 | 63.33 | 32.47 | 3156 |

| Etsy Skyline [35] | 47.53 | 51.51 | 43.75 | 742632 |

| CAD-OSE [33] | 46.88 | 52.81 | 40.96 | 3589 |

| EXPoSE [32] | 41.75 | 44.80 | 36.96 | 5575 |

| Threshold-Based | 37.75 | 43.75 | 25.21 | 125 |

| Relative Entropy [34] | 34.97 | 37.05 | 32.94 | 806 |

| LSTM [31] | 33.99 | 38.13 | 28.38 | 43698 |

| KNN-CAD [36] | 32.31 | 43.06 | 4.69 | 4393 |

| Random | 3.06 | 9.16 | 0.00 | 233 |

| BC [37] | 0.00 | 0.00 | 0.00 | 10270 |

| Null | 0.00 | 0.00 | 0.00 | 235 |

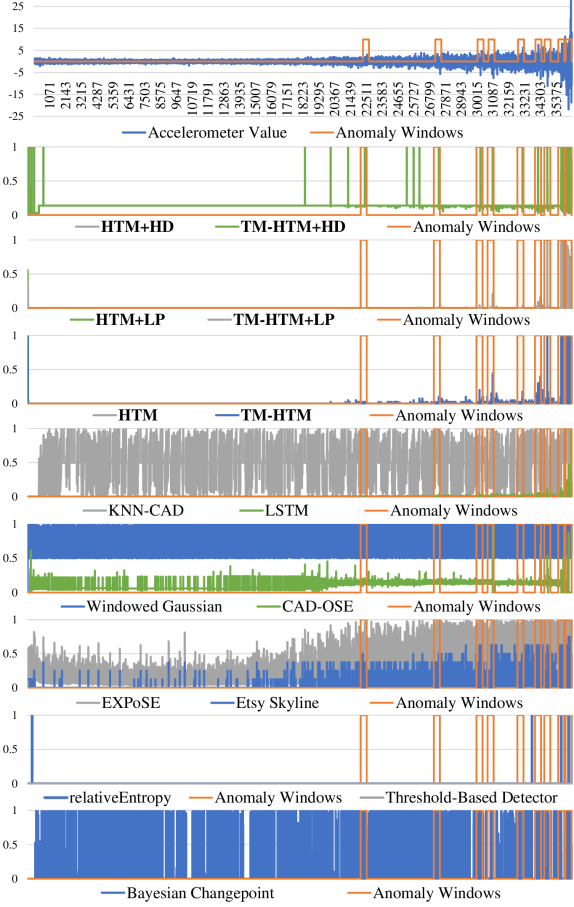

To evaluate the qualitative performance of each anomaly detector, we plotted the anomaly scores over time for each detector for Test 1 of the Pronostia Bearing dataset and compared them to the labeled ground truth anomaly windows in Figure 6.

IV-B 3D-Printer Anomaly Detection

Table II shows our experimental results for the 3D printer dataset. HTM+HD achieved the highest score on the Low FN profile while LSTM achieved the highest score on the Standard and Low FP profiles. HTM+HD achieved scores of 63.03, 73.18, and 42.23 for the Standard, Low FN, and Low FP scoring profiles, respectively. LSTM scored 64.76, 71.43, and 51.34 at the same profiles, respectively. On both applications the HTM, TM-HTM, and TM-HTM+LP detectors performed worse than the HTM+HD, HTM+LP, and TM-HTM+HD detectors. Overall, the use of HD anomaly likelihood yielded the best HTM performance across applications. Each algorithm’s execution time is consistent with the results shown in Table I.

| Anomaly Detector | Scoring Profile | Runtime (s) | ||

|---|---|---|---|---|

| Standard | Low FN | Low FP | ||

| HTM+HD (Ours) | 63.03 | 73.18 | 42.23 | 5813 |

| LSTM [31] | 64.76 | 71.43 | 51.34 | 16414 |

| TM-HTM+HD (Ours) | 58.01 | 68.13 | 44.14 | 3364 |

| Windowed Gaussian | 57.42 | 67.99 | 49.31 | 189 |

| HTM+LP (Ours) | 54.56 | 65.45 | 36.76 | 10062 |

| CAD-OSE [33] | 54.69 | 63.27 | 34.72 | 1573 |

| KNN-CAD [36] | 53.76 | 65.55 | 23.48 | 3375 |

| HTM (Ours) | 49.21 | 62.25 | 31.16 | 2713 |

| TM-HTM+LP (Ours) | 45.31 | 57.90 | 21.93 | 7456 |

| TM-HTM (Ours) | 41.43 | 54.43 | 13.86 | 1724 |

| Relative Entropy [34] | 41.78 | 52.49 | 15.96 | 668 |

| Etsy Skyline [35] | 39.16 | 47.84 | 19.91 | 146165 |

| BC [37] | 21.33 | 23.64 | 17.55 | 1946 |

| Random | 11.34 | 24.75 | 3.74 | 49 |

| EXPoSE [32] | 6.04 | 6.20 | 6.04 | 7136 |

| Threshold-Based | 0.00 | 0.00 | 0.00 | 54 |

| Null | 0.00 | 0.00 | 0.00 | 49 |

V Discussion

V-A Overall Performance and Adaptability

Interestingly, algorithms that performed well on the bearing dataset, such as EXPoSE and Etsy Skyline performed worse on the 3D printer dataset. Additionally, algorithms that performed worse on the bearing dataset, such as LSTM and BC performed much better on the 3D printer dataset. Our HTM-based methodology using HD anomaly likelihood achieved consistently high performance on both applications without any hyperparameter tuning, demonstrating that this configuration can generalize and adapt to different applications without domain-specific tuning. This result also suggests that HTMs significantly benefit from the inclusion of an HD anomaly likelihood block.

Also, HTM+LP was the best performing model on the Low FP profile for the bearing dataset. However, this performance was not replicated in the 3D printer dataset. Similarly, LSTM beat HTM on the Standard and Low FP profile for the 3D printer dataset while performing worse than HTM on the bearing dataset. Hence, our results suggest that LSTMs are highly data-dependent and need to be re-tuned for every machine and/or application. Thus, the LSTM approach is time-consuming, expensive, and impractical for real-world applications.

The benefits of HTM’s continuous learning capability are clearly shown in Figure 6: after identifying earlier anomalies, the HTM-based approaches learn the new baseline for the signal and can pinpoint the future anomalies despite higher signal amplitudes. CAD-OSE also appears to learn continuously, but not as well as the HTMs.

V-B Real-Time Detection Capability

In addition to detection accuracy and precision, an optimal PM system should be able to detect failure symptoms in real-time to allow adequate time for repairs to be scheduled and performed.

However, part failures are infrequent and generally present progressive symptoms before failure, so a hard real-time requirement for processing raw sensor data may unnecessarily limit the complexity (and subsequently the performance) of anomaly detection methods. Thus, we evaluate the anomaly detectors in the context of ”soft real-time,” where we determine if each detector can process a subsampled data segment before the next subsampled data segment arrives. For example, 1 second of data can be recorded each minute as a data segment to reduce data size while still ensuring that a wide range of vibration frequencies are captured at frequent intervals.

Both HTM+HD and TM-HTM+HD were able to process the complete bearing failure dataset in under 100 minutes; since the bearing dataset contains several months’ worth of vibration data and minimal data preprocessing was performed (subsampling and timestamping), this demonstrates that HTMs can accurately detect failure symptoms in real-time, meaning that machine operators can be notified of degradation promptly. Other complex algorithms such as CAD-OSE, KNN-CAD, and EXPoSE had execution time on the same order of magnitude as HTMs and are thus also capable of real-time anomaly detection. Although HTM+LP, TM-HTM+LP, and LSTM took longer to process the dataset than HTM+HD and TM-HTM+HD, they can still be considered real-time due to the aforementioned dataset characteristics. However, the significant training time associated with the LSTM (over 12 hours on our hardware platform) and the need for application-specific hyperparameter tuning put LSTM at a disadvantage in terms of applicability to practical use cases.

V-C Tunability, and Robustness to Noise

Figure 6 clearly shows HTM’s ability to pinpoint anomalies while remaining robust to noise in the input. This is likely due to HTMs use of sparse encodings, making it unlikely that bit errors in the input due to noise will affect the bits corresponding to the input pattern, making them robust to noise. From the figure, it is also clear that the HTM implementations using anomaly likelihood blocks were more robust to noise outside of the anomaly windows than the HTM or TM-HTM alone. This is likely because the anomaly likelihood components filter out smaller detections to isolate only the most plausible anomalies. The HTM+HD and TM-HTM+HD detected anomalies earlier than the other configurations, albeit with slightly more false positives. The outputs of the different HTMs starkly contrast with the highly variable anomaly score outputs of Windowed Gaussian, EXPoSE, KNN-CAD, and BC, among others. These detectors record high anomaly scores even when there is relatively low noise in the input, meaning that they will likely suffer from false positives at higher noise levels.

A detector’s threshold can be tuned to account for higher noise levels; however, for detectors such as Windowed Gaussian, which used the maximum detection threshold of 1.0, the threshold cannot be increased further to reduce its sensitivity. In contrast, TM-HTM+HD used a threshold of 0.5497 on the Standard profile. Thus, although Windowed Gaussian outperformed TM-HTM+HD on the Low FP scoring profile, it lacks tunability and will likely perform much worse than this HTM configuration in more noisy environments.

LSTM appears to have good robustness to noise, as shown in Figure 6. However, it is clear from the figure that it missed some of the earlier anomaly windows completely. In the context of PM, this can mean that an observer will only be warned of degradation later and will not have much time to organize repairs. Overall, our methodology demonstrates significant noise-robustness, better tunability, and the ability to detect early anomalies as well as larger, late-stage anomalies.

V-D Limitations and Future Work

Another related PM problem is Remaining Useful Life (RUL) estimation. In many cases, RUL and anomaly detection go hand in hand as part of a comprehensive PM system. Although we did not evaluate the performance of HTM at RUL estimation, the core architecture of HTM is good at sequence prediction and could likely be used to solve this problem. We leave this for future work.

Another limitation of our work is the use of synthesized 3D printer anomalies instead of real-world examples of 3D printer failures. Due to resource constraints, we opted not to perform these experiments and used synthetic failure data instead. The question of whether HTM’s performance on synthetic anomalies translates to real-world PM remains an open research problem.

V-E Feasibility

The idea of predicting machine failures in advance is not brand new; many variants of PM systems have already been implemented in real-world manufacturing applications. However, based on our results, we believe that HTM is a better solution than current state-of-the-art methods. Our results demonstrate that HTMs are efficient enough to run on consumer-grade processors while learning and adapting continuously. Additionally, HTMs can be easily installed on existing PM systems as they only require time-series sensor inputs, which likely already exist in the system. As shown by our results, the industry-standard LSTM requires a significant amount of time for training (over 1000 epochs) as well as application-specific tuning. In contrast, HTMs do not require any application-specific parameter tuning and are essentially plug-and-play since they only need to be trained with a single pass on normal sensor data. These characteristics make HTMs an extremely viable, out-of-the-box solution for industrial PM.

VI Conclusion

Existing methods for predicting machine failures from sensor data are limited in their practicality due to shortcomings, including poor noise resistance, efficiency, and adaptability. Our experiments demonstrated that our methodology outperforms state-of-the-art approaches at detecting anomalies in both bearing and 3D printer failure data with minimal to no pre-processing or application-specific tuning. On the Standard scoring profile, our methodology using HD anomaly likelihood achieved an average NAB score of 64.71. In comparison, the other top algorithms: LSTM and Windowed Gaussian, achieved average scores of 49.38 and 61.06, respectively. Furthermore, our qualitative results show that our methodology is significantly more noise-resistant than the Windowed Gaussian, KNN-CAD, EXPoSE, and BC detectors, which we attribute to the use of SDRs and an anomaly likelihood component. We also demonstrated that our methodology is real-time capable, with an execution time on the same order of magnitude as state-of-the-art methods. Consequently, we conclude that HTM-based anomaly detection is a novel, practical solution for a wide range of industrial PM applications.

Acknowledgment

This work was partially supported by the National Science Foundation (NSF) under awards CMMI-1739503 and ECCS-1839429 as well as by Graduate Assistance in Areas of National Need (GAANN) under award P200A180052. Any opinions, findings, conclusions, or recommendations expressed in this paper are those of the authors and do not necessarily reflect the views of the funding agencies.

References

- [1] C. Scheffer and P. Girdhar, Practical machinery vibration analysis and predictive maintenance. Elsevier, 2004.

- [2] R. K. Mobley, An introduction to predictive maintenance. Elsevier, 2002.

- [3] J. Fausing Olesen and H. R. Shaker, “Predictive maintenance for pump systems and thermal power plants: State-of-the-art review, trends and challenges,” Sensors, vol. 20, no. 8, p. 2425, 2020.

- [4] J. Hawkins and S. Blakeslee, On intelligence: How a new understanding of the brain will lead to the creation of truly intelligent machines. Macmillan, 2007.

- [5] O. Seryasat, F. Honarvar, A. Rahmani et al., “Multi-fault diagnosis of ball bearing using fft, wavelet energy entropy mean and root mean square (rms),” in 2010 IEEE International Conference on Systems, Man and Cybernetics. IEEE, 2010, pp. 4295–4299.

- [6] F. Immovilli, M. Cocconcelli, A. Bellini, and R. Rubini, “Detection of generalized-roughness bearing fault by spectral-kurtosis energy of vibration or current signals,” IEEE Transactions on Industrial Electronics, vol. 56, no. 11, pp. 4710–4717, 2009.

- [7] B. Zhang, C. Sconyers, C. Byington, R. Patrick, M. E. Orchard, and G. Vachtsevanos, “A probabilistic fault detection approach: Application to bearing fault detection,” IEEE Transactions on Industrial Electronics, vol. 58, no. 5, pp. 2011–2018, 2010.

- [8] A. Kanawaday and A. Sane, “Machine learning for predictive maintenance of industrial machines using iot sensor data,” in 2017 8th IEEE International Conference on Software Engineering and Service Science (ICSESS). IEEE, 2017, pp. 87–90.

- [9] D. A. Tobon-Mejia, K. Medjaher, N. Zerhouni, and G. Tripot, “A data-driven failure prognostics method based on mixture of gaussians hidden markov models,” IEEE Transactions on reliability, vol. 61, no. 2, pp. 491–503, 2012.

- [10] C. Feng, T. Li, and D. Chana, “Multi-level anomaly detection in industrial control systems via package signatures and lstm networks,” in 2017 47th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN). IEEE, 2017, pp. 261–272.

- [11] T. Abbasi, K. H. Lim, and K. San Yam, “Predictive maintenance of oil and gas equipment using recurrent neural network,” in IOP Conference Series: Materials Science and Engineering, vol. 495, no. 1. IOP Publishing, 2019, p. 012067.

- [12] J. Yoon, D. He, and B. Van Hecke, “A phm approach to additive manufacturing equipment health monitoring, fault diagnosis, and quality control,” in Proceedings of the prognostics and health management society conference, vol. 29. Citeseer, 2014, pp. 1–9.

- [13] C.-T. Yen and P.-C. Chuang, “Application of a neural network integrated with the internet of things sensing technology for 3d printer fault diagnosis,” Microsystem Technologies, pp. 1–11, 2019.

- [14] S. Ahmad, A. Lavin, S. Purdy, and Z. Agha, “Unsupervised real-time anomaly detection for streaming data,” Neurocomputing, vol. 262, pp. 134–147, 2017.

- [15] L. Rodriguez-Cobo, P. B. Garcia-Allende, A. Cobo, J. M. Lopez-Higuera, and O. M. Conde, “Raw material classification by means of hyperspectral imaging and hierarchical temporal memories,” IEEE Sensors Journal, vol. 12, no. 9, pp. 2767–2775, 2012.

- [16] A. Bamaqa, M. Sedky, T. Bosakowski, and B. B. Bastaki, “Anomaly detection using hierarchical temporal memory (htm) in crowd management,” in Proceedings of the 2020 4th International Conference on Cloud and Big Data Computing, 2020, pp. 37–42.

- [17] A. Almehmadi, T. Bosakowski, M. Sedky, and B. B. Bastaki, “Htm based anomaly detecting model for traffic congestion,” in Proceedings of the 2020 4th International Conference on Cloud and Big Data Computing, 2020, pp. 97–101.

- [18] B. B. Bastaki, “Application of hierarchical temporal memory to anomaly detection of vital signs for ambient assisted living,” Ph.D. dissertation, Staffordshire University, 2019.

- [19] A. Barua, D. Muthirayan, P. P. Khargonekar, and M. A. Al Faruque, “Hierarchical temporal memory based one-pass learning for real-time anomaly detection and simultaneous data prediction in smart grids,” IEEE Transactions on Dependable and Secure Computing, 2020.

- [20] S. Faezi, R. Yasaei, A. Barua, and M. A. Al Faruque, “Brain-inspired golden chip free hardware trojan detection,” IEEE Transactions on Information Forensics & Security, 2021.

- [21] W. Yan, H. Qiu, and N. Iyer, “Feature extraction for bearing prognostics and health management (phm)-a survey (preprint),” AIR FORCE RESEARCH LAB WRIGHT-PATTERSON AFB OH MATERIALS AND MANUFACTURING …, Tech. Rep., 2008.

- [22] D. Wang, K.-L. Tsui, and Q. Miao, “Prognostics and health management: A review of vibration based bearing and gear health indicators,” IEEE Access, vol. 6, pp. 665–676, 2017.

- [23] J. Wang, Y. Ma, L. Zhang, R. X. Gao, and D. Wu, “Deep learning for smart manufacturing: Methods and applications,” Journal of Manufacturing Systems, vol. 48, pp. 144–156, 2018.

- [24] M. Kordos and A. Rusiecki, “Reducing noise impact on mlp training,” Soft Computing, vol. 20, no. 1, pp. 49–65, 2016.

- [25] ISO 15243:2017, “Rolling bearings — damage and failures — terms, characteristics and causes,” International Organization for Standardization, Standard, Mar. 2017. [Online]. Available: https://www.iso.org/standard/59619.html

- [26] J. Lee, H. Qiu, G. Yu, J. Lin et al., “Bearing data set,” IMS, University of Cincinnati, NASA Ames Prognostics Data Repository, Rexnord Technical Services, 2007.

- [27] P. Nectoux, R. Gouriveau, K. Medjaher, E. Ramasso, B. Chebel-Morello, N. Zerhouni, and C. Varnier, “Pronostia: An experimental platform for bearings accelerated degradation tests.” in IEEE International Conference on Prognostics and Health Management, PHM’12. IEEE Catalog Number: CPF12PHM-CDR, 2012, pp. 1–8.

- [28] S. R. Chhetri and M. A. Al Faruque, “Side channels of cyber-physical systems: Case study in additive manufacturing,” IEEE Design & Test, vol. 34, no. 4, pp. 18–25, 2017.

- [29] J. Hawkins and S. Ahmad, “Why neurons have thousands of synapses, a theory of sequence memory in neocortex,” Frontiers in neural circuits, vol. 10, p. 23, 2016.

- [30] numenta, “Numenta Temporal Memory Implementation,” Feb 2020, [Online; accessed 10. Feb. 2020]. [Online]. Available: https://github.com/numenta/nupic.core/blob/master/src/nupic/algorithms/TemporalMemory.hpp

- [31] J. Park, “RNN based Time-series Anomaly Detector Model Implemented in Pytorch,” 2018, [Online code repository]. [Online]. Available: {https://github.com/chickenbestlover/RNN-Time-series-Anomaly-Detection}

- [32] M. Schneider, W. Ertel, and F. Ramos, “Expected similarity estimation for large-scale batch and streaming anomaly detection,” Machine Learning, vol. 105, no. 3, pp. 305–333, 2016.

- [33] M. Smirnov, “Contextual Anomaly Detector,” Aug 2016, [Online code repository]. [Online]. Available: {https://github.com/smirmik/CAD}

- [34] C. Wang, K. Viswanathan, L. Choudur, V. Talwar, W. Satterfield, and K. Schwan, “Statistical techniques for online anomaly detection in data centers,” in 12th IFIP/IEEE International Symposium on Integrated Network Management (IM 2011) and Workshops. IEEE, 2011, pp. 385–392.

- [35] A. Stanway, “Etsy Skyline,” Oct 2015, [Online code repository]. [Online]. Available: https://github.com/etsy/skyline

- [36] E. Burnaev and V. Ishimtsev, “Conformalized density-and distance-based anomaly detection in time-series data,” arXiv preprint arXiv:1608.04585, 2016.

- [37] R. P. Adams and D. J. MacKay, “Bayesian online changepoint detection,” arXiv preprint arXiv:0710.3742, 2007.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8ae2295e-cb55-4966-94de-51bb7417126e/arnav_headshot.jpg) |

Arnav V. Malawade received a B.S. in Computer Science and Engineering from the University of California Irvine (UCI) in 2018. He is currently an M.S./Ph.D. Student studying Computer Engineering at UCI under the supervision of Professor Mohammad Al Faruque. His research interests include the design and security of cyber-physical systems in connected/autonomous vehicles, manufacturing, IoT, and healthcare. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8ae2295e-cb55-4966-94de-51bb7417126e/NathanCosta.jpg) |

Nathan D. Costa received a B.S. in Computer Science and Engineering from the University of California Irvine (UCI) in 2020. He is currently applying to industries relevant to his interests, those being embedded software development and embedded system design. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8ae2295e-cb55-4966-94de-51bb7417126e/Deepan_Photo.png) |

Deepan Muthirayan is currently a Post-doctoral Researcher in the department of Electrical Engineering and Computer Science at University of California at Irvine. He obtained his Phd from the University of California at Berkeley (2016) and B.Tech/M.tech degree from the Indian Institute of Technology Madras (2010). His doctoral thesis work focused on market mechanisms for integrating demand flexibility in energy systems. Before his term at UC Irvine he was a post-doctoral associate at Cornell University where his work focused on online scheduling algorithms for managing demand flexibility. His current research interests include control theory, machine learning, topics at the intersection of learning and control, online learning, online algorithms, game theory, and their application to smart systems. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8ae2295e-cb55-4966-94de-51bb7417126e/PPK.png) |

Pramod P. Khargonekar received B. Tech. Degree in electrical engineering in 1977 from the Indian Institute of Technology, Bombay, India, and M.S. degree in mathematics in 1980 and Ph.D. degree in electrical engineering in 1981 from the University of Florida, respectively. He was Chairman of the Department of Electrical Engineering and Computer Science from 1997 to 2001 and also held the position of Claude E. Shannon Professor of Engineering Science at The University of Michigan. From 2001 to 2009, he was Dean of the College of Engineering and Eckis Professor of Electrical and Computer Engineering at the University of Florida till 2016. After serving briefly as Deputy Director of Technology at ARPA-E in 2012-13, he was appointed by the National Science Foundation (NSF) to serve as Assistant Director for the Directorate of Engineering (ENG) in March 2013, a position he held till June 2016. Currently, he is Vice Chancellor for Research and Distinguished Professor of Electrical Engineering and Computer Science at the University of California, Irvine. His research and teaching interests are centered on theory and applications of systems and control. He has received numerous honors and awards including IEEE Control Systems Award, IEEE Baker Prize, IEEE CSS Axelby Award, NSF Presidential Young Investigator Award, AACC Eckman Award, and is a Fellow of IEEE, IFAC, and AAAS. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8ae2295e-cb55-4966-94de-51bb7417126e/alfaruque.jpg) |

Mohammad A. Al Faruque (M’06, SM’15) received his B.Sc. degree in Computer Science and Engineering (CSE) from Bangladesh University of Engineering and Technology (BUET) in 2002, and M.Sc. and Ph.D. degrees in Computer Science from Aachen Technical University and Karlsruhe Institute of Technology, Germany in 2004 and 2009, respectively. He is currently with the University of California Irvine (UCI) as an Associate Professor and Directing the Embedded and Cyber-Physical Systems Lab. He served as an Emulex Career Development Chair from October 2012 till July 2015. Before, he was with Siemens Corporate Research and Technology in Princeton, NJ as a Research Scientist. His current research is focused on the system-level design of embedded and Cyber-Physical-Systems (CPS) with special interest in low-power design, CPS security, data-driven CPS design, etc. He is an ACM senior member. He is the author of 2 published books. Besides many other awards, he is the recipient of the School of Engineering Mid-Career Faculty Award for Research 2019, the IEEE Technical Committee on Cyber-Physical Systems Early-Career Award 2018, and the IEEE CEDA Ernest S. Kuh Early Career Award 2016. He is also the recipient of the UCI Academic Senate Distinguished Early-Career Faculty Award for Research 2017 and the School of Engineering Early-Career Faculty Award for Research 2017. Besides 120+ IEEE/ACM publications in the premier journals and conferences, he holds 9 US patents. |