Node-Variant Graph Filters in Graph Neural Networks

Abstract

Graph neural networks (GNNs) have been successfully employed in a myriad of applications involving graph signals. Theoretical findings establish that GNNs use nonlinear activation functions to create low-eigenvalue frequency content that can be processed in a stable manner by subsequent graph convolutional filters. However, the exact shape of the frequency content created by nonlinear functions is not known and cannot be learned. In this work, we use node-variant graph filters (NVGFs), which are linear filters capable of creating frequencies, as a means of investigating the role that frequency creation plays in GNNs. We show that, by replacing nonlinear activation functions with NVGFs, frequency creation mechanisms can be designed or learned. By doing so, the role of frequency creation is separated from the nonlinear nature of traditional GNNs. Simulations on graph signal processing problems are carried out to pinpoint the role of frequency creation.

1 Introduction

Graph neural networks (GNNs) [1, 2] are learning architectures that have been successfully applied to a wide array of graph signal processing (GSP) problems ranging from recommendation systems [3, 4] and authorship attribution [5, 6], to physical network problems including wireless communications [7], control [8], and sensor networks [9]. In the context of GSP, frequency analysis has been successful at providing theoretical insight into the observed success of GNNs [10, 11, 12] and graph scattering transforms [13, 14].

Of particular interest is the seminal work by Mallat [15, 16], concerning discrete-time signals and images, that argues that the improved performance of convolutional neural networks (CNNs) over linear convolutional filters is due to the activation functions. Concretely, nonlinear activation functions allow for high-frequency content to be spread into lower frequencies, where it can be processed in a stable manner. This feat cannot be achieved by convolutional filters alone, which are unstable when processing high frequencies. Leveraging the notion of graph Fourier transform (GFT) [17], these results have been extended to GNNs [10], establishing that the use of functions capable of creating low-eigenvalue frequency content allows them to be robust to changes in the graph topology, facilitating scalability and transferability [18].

While nonlinear activation functions play a key role in the creation of low-eigenvalue frequency content, it is not possible to know the exact shape in which this frequency content is actually generated. Node-variant graph filters (NVGFs) [19], which essentially assign a different filter tap to each node in the graph, are also able to generate frequency content. Different from nonlinear activation functions, this frequency content can be exactly computed, given the filter taps. Thus, by learning or carefully designing these filter taps, it is possible to know exactly how the frequency content is being generated.

The NVGF is a linear filter, which means that replacing the nonlinear activation functions with NVGFs actually renders the whole architecture a linear one. Therefore, by comparing the performance of this architecture to that of a traditional GNN, it is possible to isolate the role of frequency creation in the overall performance of the architecture, from that of the nonlinear nature of mappings. The contributions of this paper can be summarized as follows:

- (C1) We introduce NVGFs as a means of replacing nonlinear activation functions, motivated by their ability to create frequencies. We obtain closed-form expressions for the frequency response of NVGFs as a function of the filter taps.

- (C2) We prove that NVGFs are Lipschitz continuous with respect to changes in the underlying graph topology.

- (C3) We put forth a framework for designing NVGFs.

- (C4) We propose a GNN architecture where the nonlinear activation function is replaced by a NVGF. The filter taps of the NVGF can be either learned from data (Learn NVGF) or obtained by design (Design NVGF). The aim of the resulting architecture is to decouple the role of frequency creation from the nonlinear nature of the GNN.

- (C5) We investigate the problem of authorship attribution to demonstrate, both quantitatively and qualitatively, the role of frequency creation in the performance of a GNN, and its relationship to the nonlinear nature of traditional architectures.

In essence, we show that nonlinear activation functions are not strictly required for creating frequencies, as originally thought in [15, 10], but that linear NVGF activation functions are sufficient. Furthermore, we demonstrate that this frequency content can be learned with respect to the specific problem under study. All proofs, as well as further simulations, can be found in the appendices. Code can be found online at http://github.com/fgfgama/nvgf.

Related work. GNNs constitute a very active area of research [20, 21]. From a GSP perspective, spectral filtering is used in [22]; it is then replaced by computationally simpler Chebyshev polynomials [23], and subsequently by general graph convolutional filters [6]. GNNs were also adopted in non-GSP problems [24, 25, 26]. The proposed replacement of nonlinear activation functions by NVGFs creates a linear architecture that uses both convolutional and non-convolutional graph filters.

NVGFs are first introduced in [19] to extend time-variant filters into the realm of graph signals. In that work, NVGFs are used to optimally approximate linear operators in a distributed manner. In this paper, we focus on the frequency response of NVGFs and on their capacity to create frequency content.

Leveraging the notion of GFT, [10] shows that a GNN is Lipschitz continuous to small changes in the underlying graph structure. Likewise, frequency analysis has been used to understand graph scattering transforms, where the filters used in the GNN are chosen to be wavelets (and not learned) [13, 14]. In this paper, we focus on the role of frequency creation that is put forth in [15, 10], and study NVGFs as linear mechanisms for achieving this.

2 The Node-Variant Graph Filter

Let $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ be an undirected, possibly weighted, graph with a set of $N$ nodes $\mathcal{V} = \{v_1, \ldots, v_N\}$ and a set of edges $\mathcal{E} \subseteq \mathcal{V} \times \mathcal{V}$. Define a graph signal as the function $\mathsf{x}: \mathcal{V} \to \mathbb{R}$ that associates a scalar value to each node. For a fixed ordering of the nodes, the graph signal can be conveniently described by means of a vector $\mathbf{x} \in \mathbb{R}^{N}$ such that the $i$th entry $[\mathbf{x}]_i$ is the value of the signal on node $v_i$, i.e., $[\mathbf{x}]_i = \mathsf{x}(v_i)$.

Describing a graph signal as the vector $\mathbf{x}$ is mathematically convenient but carries no information about the underlying graph topology that supports it. This information can be recovered by defining a graph matrix description (GMD) $\mathbf{S} \in \mathbb{R}^{N \times N}$, which is a matrix that respects the sparsity pattern of the graph, i.e., $[\mathbf{S}]_{ij} = 0$ for all distinct indices $i$ and $j$ such that $(v_j, v_i) \notin \mathcal{E}$. Examples of GMDs widely used include the adjacency matrix, the Laplacian matrix, and their corresponding normalizations [27, 28, 29].

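The GMD $\mathbf{S}$ can thus be leveraged to process the graph signal $\mathbf{x}$ in such a way that the underlying graph structure is exploited. The most elementary example is the linear map between graph signals given by $\mathbf{y} = \mathbf{S}\mathbf{x}$. This linear map is a linear combination of the information located in the one-hop neighborhood of each node:

$$[\mathbf{y}]_i = [\mathbf{S}\mathbf{x}]_i = \sum_{j=1}^{N} [\mathbf{S}]_{ij} [\mathbf{x}]_j = \sum_{j : v_j \in \mathcal{N}_i \cup \{v_i\}} [\mathbf{S}]_{ij} [\mathbf{x}]_j \tag{1}$$

where $\mathcal{N}_i = \{v_j \in \mathcal{V} : (v_j, v_i) \in \mathcal{E}\}$ is the set of nodes that share an edge with node $v_i$. The last equality in the above equation is due to the sparsity pattern of the GMD $\mathbf{S}$.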

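More generally, a graph filter is a mapping between graph signals that leverages the structure encoded in $\mathbf{S}$ [19]. In particular, linear shift-invariant graph filters (LSIGFs) are those that can be built as a polynomial in $\mathbf{S}$:

$$\mathbf{H}^{\mathrm{lsi}}(\mathbf{x}; \mathbf{h}, \mathbf{S}) = \sum_{k=0}^{K} h_k \mathbf{S}^k \mathbf{x} = \mathbf{H}^{\mathrm{lsi}}(\mathbf{S})\, \mathbf{x} \tag{2}$$

where $\mathbf{H}^{\mathrm{lsi}}(\mathbf{S}) = \sum_{k=0}^{K} h_k \mathbf{S}^k$ with $\mathbf{S}^0 = \mathbf{I}$. Note that $\mathbf{H}^{\mathrm{lsi}}(\mathbf{x}; \mathbf{h}, \mathbf{S})$ is written in the form of $\mathbf{H}^{\mathrm{lsi}}(\mathbf{S})\mathbf{x}$ to emphasize that the function is linear in the input $\mathbf{x}$, i.e., $\mathbf{x}$ is multiplied by a matrix that is parametrized by $\mathbf{S}$. LSIGFs inherit their name from the fact that they satisfy the property that $\mathbf{H}^{\mathrm{lsi}}(\mathbf{S})\, \mathbf{S}\mathbf{x} = \mathbf{S}\, \mathbf{H}^{\mathrm{lsi}}(\mathbf{S})\, \mathbf{x}$. The polynomial coefficients $\{h_k\}_{k=0}^{K}$ are called filter taps, and can be collected in a vector $\mathbf{h} \in \mathbb{R}^{K+1}$ defined as $[\mathbf{h}]_{k+1} = h_k$ for all $k \in \{0, \ldots, K\}$. Note that the term $\mathbf{S}^k \mathbf{x}$ is a convenient mathematical formulation, but in practice it is computed by exchanging information $k$ times with one-hop neighbors, i.e., there are no matrix powers involved. In general, GSP regards $\mathbf{S}$ as given by the structure of the problem, and regards $\mathbf{x}$ as the actionable data [27, 28, 29].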
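As a minimal sketch of the remark that no matrix powers are needed, the NumPy snippet below applies a polynomial graph filter through repeated one-hop exchanges and checks the shift-invariance property numerically. The function names `lsigf` and `ring_with_chord`, the test graph, and the random taps are illustrative assumptions and are not taken from the paper's code.

```python
import numpy as np

def lsigf(S, h, x):
    """Apply sum_k h[k] * S^k x using repeated one-hop exchanges only."""
    z = np.array(x, dtype=float)   # S^0 x
    y = h[0] * z
    for hk in h[1:]:
        z = S @ z                  # one more exchange with one-hop neighbors
        y = y + hk * z
    return y

def ring_with_chord(N):
    """Hypothetical test graph: undirected ring on N nodes plus one chord."""
    S = np.zeros((N, N))
    for i in range(N):
        S[i, (i + 1) % N] = S[(i + 1) % N, i] = 1.0
    S[0, 3] = S[3, 0] = 1.0
    return S

rng = np.random.default_rng(0)
N, K = 8, 3
S = ring_with_chord(N)
h = rng.standard_normal(K + 1)     # arbitrary filter taps
x = rng.standard_normal(N)         # arbitrary graph signal

# Same result as the explicit matrix polynomial, without ever forming S^k.
H_explicit = sum(h[k] * np.linalg.matrix_power(S, k) for k in range(K + 1))
matches_polynomial = np.allclose(lsigf(S, h, x), H_explicit @ x)

# Shift invariance: filtering the shifted signal equals shifting the output.
shift_invariant = np.allclose(lsigf(S, h, S @ x), S @ lsigf(S, h, x))
print(matches_polynomial, shift_invariant)
```

The recursion costs $K$ sparse matrix-vector products, which is why the explicit polynomial is only used here as a reference for the check.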

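This paper focuses on NVGFs [19], which are linear filters that assign a different filter tap to each node, for each application of $\mathbf{S}$. This can be compactly written as follows:

$$\mathbf{H}^{\mathrm{nv}}(\mathbf{x}; \mathbf{H}, \mathbf{S}) = \sum_{k=0}^{K} \mathrm{diag}\big(\mathbf{h}^{(k)}\big)\, \mathbf{S}^k \mathbf{x} \tag{3}$$

where $\mathbf{h}^{(k)} \in \mathbb{R}^{N}$ and $\mathrm{diag}(\cdot)$ is the diagonal operator that takes a vector and creates a diagonal matrix with the elements of the vector in the diagonal. Since the NVGF is linear in the input, it holds that $\mathbf{H}^{\mathrm{nv}}(\mathbf{x}; \mathbf{H}, \mathbf{S}) = \mathbf{H}^{\mathrm{nv}}(\mathbf{S})\, \mathbf{x}$, where $\mathbf{H}^{\mathrm{nv}}(\mathbf{S}) = \sum_{k=0}^{K} \mathrm{diag}(\mathbf{h}^{(k)})\, \mathbf{S}^k$. The $i$th entry of the vector $\mathbf{h}^{(k)}$, $[\mathbf{h}^{(k)}]_i = h_{ik}$, is the filter tap that node $v_i$ uses to weigh the information incoming after $k$ exchanges with its neighbors. The set of all filter taps can be conveniently collected in a matrix $\mathbf{H} \in \mathbb{R}^{N \times (K+1)}$ where the $(k+1)$th column is $\mathbf{h}^{(k)}$ and the $i$th row, denoted by $\mathbf{h}_i^{\mathsf{T}}$ with $\mathbf{h}_i \in \mathbb{R}^{K+1}$, contains the filter taps used by node $v_i$, i.e., $[\mathbf{h}_i]_{k+1} = h_{ik}$.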

The LSIGF in (2) and the NVGF in (3) are both linear and local processing operators. They depend linearly on the input graph signal $\mathbf{x}$, as indicated by the matrix multiplication notation $\mathbf{H}^{\mathrm{lsi}}(\mathbf{S})\mathbf{x}$ in (2) and $\mathbf{H}^{\mathrm{nv}}(\mathbf{S})\mathbf{x}$ in (3). They are local in the sense that, to compute the output, each node requires only information relayed directly by its immediate neighbors. The LSIGF is characterized by the collection of $K+1$ filter taps. The NVGF, on the other hand, is characterized by $N(K+1)$ filter taps. While the NVGF requires additional memory to store more parameters, this storage can be distributed throughout the graph. Both the LSIGF and the NVGF have the same computational complexity.
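The comparison between the two filters can be sketched in a few lines of NumPy. The function name `nvgf`, the ring-with-chord test graph, and the random taps below are assumptions made for illustration. The sketch checks that an NVGF whose nodes all share the same taps reduces to an LSIGF, and that a generic NVGF does not commute with the shift.

```python
import numpy as np

def nvgf(S, H, x):
    """Node-variant filter: sum_k diag(H[:, k]) S^k x.

    H has shape (N, K+1); row i holds the taps used by node i.
    """
    z = np.array(x, dtype=float)   # S^0 x
    y = H[:, 0] * z                # each node weighs its own value
    for k in range(1, H.shape[1]):
        z = S @ z                  # same one-hop exchange as the LSIGF
        y = y + H[:, k] * z        # node-specific tap for the k-th exchange
    return y

rng = np.random.default_rng(1)
N, K = 8, 3

# Hypothetical test graph: undirected ring plus one chord.
S = np.zeros((N, N))
for i in range(N):
    S[i, (i + 1) % N] = S[(i + 1) % N, i] = 1.0
S[0, 3] = S[3, 0] = 1.0

x = rng.standard_normal(N)
h = rng.standard_normal(K + 1)          # K+1 shared taps (LSIGF)
H = rng.standard_normal((N, K + 1))     # N(K+1) node-specific taps (NVGF)

# With identical rows, the NVGF is exactly the polynomial (LSI) filter.
H_shared = np.tile(h, (N, 1))
poly = sum(h[k] * np.linalg.matrix_power(S, k) for k in range(K + 1))
reduces_to_lsigf = np.allclose(nvgf(S, H_shared, x), poly @ x)

# With node-specific taps, shift invariance is lost in general.
commutes_with_shift = np.allclose(nvgf(S, H, S @ x), S @ nvgf(S, H, x))
print(reduces_to_lsigf, commutes_with_shift)
```

Both filters perform the same $K$ exchanges; only the elementwise weighting differs, which is consistent with the equal computational complexity noted above.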

3 Frequency Analysis

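The GMD $\mathbf{S}$ can be used to define a spectral representation of the graph signal $\mathbf{x}$ [17]. Since the graph is undirected, assume that $\mathbf{S}$ is symmetric so that it can be diagonalized by an orthonormal basis of eigenvectors $\{\mathbf{v}_i\}_{i=1}^{N}$, where $\mathbf{v}_i \in \mathbb{R}^{N}$ and $\mathbf{S}\mathbf{v}_i = \lambda_i \mathbf{v}_i$, with $\lambda_i$ being the corresponding eigenvalue. Then, it holds that $\mathbf{S} = \mathbf{V} \boldsymbol{\Lambda} \mathbf{V}^{\mathsf{T}}$, where the $i$th column of $\mathbf{V}$ is $\mathbf{v}_i$ and where $\boldsymbol{\Lambda}$ is the diagonal matrix given by $[\boldsymbol{\Lambda}]_{ii} = \lambda_i$ for $i \in \{1, \ldots, N\}$. We assume throughout this paper that the eigenvalues are distinct, which is typically the case for random connected graphs.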

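The spectral representation of a graph signal $\mathbf{x}$ with respect to its underlying graph support described by $\mathbf{S}$ is given by

$$\tilde{\mathbf{x}} = \mathbf{V}^{\mathsf{T}} \mathbf{x} \tag{4}$$

where $\tilde{\mathbf{x}} \in \mathbb{R}^{N}$, see [17]. The spectral representation $\tilde{\mathbf{x}}$ of the graph signal contains the coordinates of representing $\mathbf{x}$ on the eigenbasis of the support matrix $\mathbf{S}$. The resulting vector $\tilde{\mathbf{x}}$ is known as the frequency response of the signal $\mathbf{x}$. The $i$th entry $[\tilde{\mathbf{x}}]_i$ of the frequency response measures how much the $i$th eigenvector $\mathbf{v}_i$ contributes to the signal $\mathbf{x}$. The operation in (4) is called the GFT, and thus the frequency response $\tilde{\mathbf{x}}$ is often referred to as the GFT of the signal $\mathbf{x}$.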

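The GFT offers an alternative representation of the graph signal $\mathbf{x}$ that takes into account the graph structure in $\mathbf{S}$. The effect of a filter can be characterized in the spectral domain by computing the GFT of the output. For instance, when considering a LSIGF, the spectral representation of the output is

$$\tilde{\mathbf{y}} = \mathbf{V}^{\mathsf{T}} \sum_{k=0}^{K} h_k \mathbf{S}^k \mathbf{x} = \sum_{k=0}^{K} h_k \boldsymbol{\Lambda}^k \tilde{\mathbf{x}} = \mathrm{diag}(\tilde{\mathbf{h}})\, \tilde{\mathbf{x}} \tag{5}$$

where the eigendecomposition of $\mathbf{S}$, the GFT of $\mathbf{x}$, and the fact that $\mathbf{V}^{\mathsf{T}}\mathbf{V} = \mathbf{I}$ were all used. Note that $\tilde{\mathbf{y}}$ is a shorthand notation that means $\tilde{\mathbf{y}} = \mathbf{V}^{\mathsf{T}} \mathbf{H}^{\mathrm{lsi}}(\mathbf{x}; \mathbf{h}, \mathbf{S})$. The vector $\tilde{\mathbf{h}} \in \mathbb{R}^{N}$ is known as the frequency response of the filter and its $i$th entry is given by

$$[\tilde{\mathbf{h}}]_i = h(\lambda_i) \tag{6}$$

where $h(\lambda) = \sum_{k=0}^{K} h_k \lambda^k$ is a polynomial defined by the set of filter taps $\mathbf{h}$. The function $h(\cdot)$ depends only on the filter coefficients and not on the specific graph on which it is applied, and thus is valid for all graphs. The effect of filtering on a specific graph comes from instantiating $h(\cdot)$ on the specific eigenvalues of that graph. The function $h(\cdot)$ is denoted as the frequency response as well, and it will be clear from context whether we refer to the function $h(\cdot)$ or to the vector $\tilde{\mathbf{h}}$ given in (6) that stems from evaluating $h(\cdot)$ at each of the eigenvalues $\lambda_i$. Note that since $h(\cdot)$ is an analytic function, it can be applied to the square matrix $\mathbf{S}$ so that $h(\mathbf{S}) = \mathbf{H}^{\mathrm{lsi}}(\mathbf{S})$.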

In the case of the LSIGF, it is observed from (5) that the $i$th entry of the frequency response of the output is given by the elementwise multiplication

$$[\tilde{\mathbf{y}}]_i = [\tilde{\mathbf{h}}]_i\, [\tilde{\mathbf{x}}]_i = h(\lambda_i)\, [\tilde{\mathbf{x}}]_i \tag{7}$$

This implies that the frequency response of the output is the elementwise multiplication of the frequency response of the filter $\tilde{\mathbf{h}}$ and the frequency response of the input $\tilde{\mathbf{x}}$. This makes (7) the analogue of the convolution theorem for graph signals. For this reason, the LSIGF in (2) is often called a graph convolution. It is observed from (7) that LSIGFs are capable of learning any type of frequency response (low-pass, high-pass, etc.), but that they are not able to create frequencies, i.e., if $[\tilde{\mathbf{x}}]_i = 0$, then $[\tilde{\mathbf{y}}]_i = 0$.

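Unlike in the discrete-time setting, the frequency response of the LSIGF is not computed in the same manner as the frequency response of graph signals. More specifically, the frequency response of the filter can be directly computed from the filter taps $\mathbf{h}$ by means of a Vandermonde matrix $\boldsymbol{\Lambda}_K \in \mathbb{R}^{N \times (K+1)}$ given by $[\boldsymbol{\Lambda}_K]_{i(k+1)} = \lambda_i^k$ as follows:

$$\tilde{\mathbf{h}} = \boldsymbol{\Lambda}_K \mathbf{h} \tag{8}$$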

This implies that when processing graph signals, the graph filter cannot be uniquely represented by a graph signal [27, Th. 5], and thus the concept of impulse response is no longer valid. Furthermore, the graph convolution is not symmetric, i.e., the filter and the graph signal do not commute. Finally, it is interesting to note that, when is the adjacency matrix of a directed cycle and , then and the GFT of the signal and of the filter taps (in time, known as the impulse response) becomes equivalent, as expected.
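The spectral relations for the LSIGF can be checked numerically. The sketch below, under assumed names and an assumed test graph, computes the GFT with `numpy.linalg.eigh`, builds the Vandermonde matrix of eigenvalue powers with `numpy.vander`, and verifies both the graph convolution theorem and the fact that a polynomial filter cannot create frequencies.

```python
import numpy as np

rng = np.random.default_rng(2)
N, K = 8, 3

# Hypothetical test graph: undirected ring plus one chord (symmetric S).
S = np.zeros((N, N))
for i in range(N):
    S[i, (i + 1) % N] = S[(i + 1) % N, i] = 1.0
S[0, 3] = S[3, 0] = 1.0

lam, V = np.linalg.eigh(S)          # S = V diag(lam) V^T, V orthonormal
h = rng.standard_normal(K + 1)      # arbitrary filter taps
x = rng.standard_normal(N)          # arbitrary graph signal

H_lsi = sum(h[k] * np.linalg.matrix_power(S, k) for k in range(K + 1))

# Frequency response of the filter from the taps via a Vandermonde matrix:
# column k of Lam_K holds lam**k, so (Lam_K @ h)[i] = sum_k h[k] * lam[i]**k.
Lam_K = np.vander(lam, K + 1, increasing=True)
h_tilde = Lam_K @ h

# Graph convolution theorem: GFT(output) = h_tilde * GFT(input), entrywise.
x_tilde = V.T @ x
y_tilde = V.T @ (H_lsi @ x)
conv_theorem = np.allclose(y_tilde, h_tilde * x_tilde)

# No frequency creation: a single-frequency input stays single-frequency.
t = N - 1
y1_tilde = V.T @ (H_lsi @ V[:, t])
no_new_frequencies = np.allclose(np.delete(y1_tilde, t), 0.0)
print(conv_theorem, no_new_frequencies)
```

Note that the taps live in $\mathbb{R}^{K+1}$ while the frequency response lives in $\mathbb{R}^{N}$, which illustrates why the filter is not represented by a graph signal in general.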

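When considering NVGFs, as in (3), the convolution theorem (7) no longer holds. Instead, the frequency response of the output is given in the following proposition.

Proposition 1 (Frequency response of NVGF).

Let $\mathbf{y} = \mathbf{H}^{\mathrm{nv}}(\mathbf{x}; \mathbf{H}, \mathbf{S})$ be the output of an arbitrary NVGF characterized by some filter tap matrix $\mathbf{H} \in \mathbb{R}^{N \times (K+1)}$. Then, the frequency response $\tilde{\mathbf{y}} = \mathbf{V}^{\mathsf{T}} \mathbf{y}$ of the output is given by

$$\tilde{\mathbf{y}} = \mathbf{V}^{\mathsf{T}} \big( \mathbf{V} \circ (\mathbf{H} \boldsymbol{\Lambda}_K^{\mathsf{T}}) \big)\, \tilde{\mathbf{x}} \tag{9}$$

where $\circ$ denotes elementwise product of matrices and $\boldsymbol{\Lambda}_K$ is the $N \times (K+1)$ Vandermonde matrix of eigenvalue powers used in (8).

It is immediately observed that NVGFs are capable of generating new frequency content, even though they are linear.

Corollary 2.

If the matrix $\mathbf{V}^{\mathsf{T}} ( \mathbf{V} \circ (\mathbf{H} \boldsymbol{\Lambda}_K^{\mathsf{T}}) )$ is not diagonal, then the output exhibits frequency content that is not present in the input.

As an example, consider the case where the input is given by a single frequency signal, i.e., $\mathbf{x} = \mathbf{v}_t$ for some $t \in \{1, \ldots, N\}$ so that $\tilde{\mathbf{x}} = \mathbf{e}_t$, where $[\mathbf{e}_t]_i = 1$ if $i = t$ and $0$ otherwise. This is a signal that has a single frequency component. Yet, the $i$th entry of the frequency response of the NVGF output is $[\tilde{\mathbf{y}}]_i = \mathbf{v}_i^{\mathsf{T}}\, \mathrm{diag}(\tilde{\mathbf{h}}^{(t)})\, \mathbf{v}_t$, where $[\tilde{\mathbf{h}}^{(t)}]_j = h_j(\lambda_t)$ and $h_j(\lambda)$ is the frequency response obtained from using the coefficients $\mathbf{h}_j$ at node $v_j$. Unless $h_j(\lambda_t)$ is a constant for all $j$, i.e., $h_j(\lambda_t) = c$, the $i$th entry of the GFT of the output is, in general, nonzero even when the $i$th entry of the GFT of the input is zero. This also shows that by carefully designing the filter taps for each node, the frequencies that are allowed to be created can be chosen, analyzed, and understood.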
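The single-frequency example can be reproduced numerically. The following sketch assumes a small ring-with-chord graph, random node-variant taps, and the spectral-domain matrix $\mathbf{V}^{\mathsf{T}}(\mathbf{V} \circ (\mathbf{H}\boldsymbol{\Lambda}_K^{\mathsf{T}}))$ obtained by expanding the NVGF on the eigenbasis, with $\boldsymbol{\Lambda}_K$ the Vandermonde matrix of eigenvalue powers. All names are illustrative.

```python
import numpy as np

def nvgf(S, H, x):
    """Node-variant filter: sum_k diag(H[:, k]) S^k x."""
    z = np.array(x, dtype=float)
    y = H[:, 0] * z
    for k in range(1, H.shape[1]):
        z = S @ z
        y = y + H[:, k] * z
    return y

rng = np.random.default_rng(3)
N, K = 8, 3

# Hypothetical connected test graph: undirected ring plus one chord.
S = np.zeros((N, N))
for i in range(N):
    S[i, (i + 1) % N] = S[(i + 1) % N, i] = 1.0
S[0, 3] = S[3, 0] = 1.0

lam, V = np.linalg.eigh(S)
H = rng.standard_normal((N, K + 1))            # node-specific taps
Lam_K = np.vander(lam, K + 1, increasing=True) # [i, k] = lam[i]**k

# Entry (i, j) of H @ Lam_K.T is h_i(lam_j): node i's response at frequency j.
M = V.T @ (V * (H @ Lam_K.T))                  # spectral-domain NVGF matrix

# Single-frequency input: the eigenvector of the largest eigenvalue.
t = N - 1
x = V[:, t]
y_tilde = V.T @ nvgf(S, H, x)

formula_holds = np.allclose(y_tilde, M @ (V.T @ x))
num_active = int(np.sum(np.abs(y_tilde) > 1e-8))  # frequencies in the output
print(formula_holds, num_active)
```

Because the taps are known, the created frequency content is simply column `t` of `M`, which is what makes the mechanism inspectable in a way that a pointwise nonlinearity is not.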

To finalize the frequency analysis of NVGFs, we establish their Lipschitz continuity to changes in the underlying graph support, as described by the matrix $\mathbf{S}$. In what follows, we denote the spectral norm of a matrix $\mathbf{A}$ by $\|\mathbf{A}\|_2$.

Theorem 3 (Lipschitz continuity of the NVGF with respect to ).

Let and be two graphs with nodes, described by GMDs and , respectively. Let be the coefficients of any NVGF. Given a constant , if , it holds that

| (10) |

where and are the NVGF on and , respectively, and where is the Lipschitz constant of the frequency responses at each node, i.e., for all .

Theorem 3 establishes the Lipschitz continuity of the NVGF filter with respect to the support matrix (Lipschitz continuity with respect to the input is immediately given for bounded filter taps) as long as the graphs and are similar, i.e., . The bound is proportional to this difference, , and to the shape of the frequency responses at each node through the Lipschitz constant . It also depends on the number of nodes , but it is fixed for given graphs with the same number of nodes. In short, Theorem 3 gives mild guarantees on the expected performance of the NVGF across a wide range of graphs that are close to the graph .

4 Approximating Activation Functions

One of the main roles of activation functions in neural networks is to create low-frequency content that can be processed in a stable manner [15]. However, the way the nonlinearities create this frequency content is unknown and cannot be shaped. One alternative for tailoring the frequency creation process to the specific problem under study is to learn the NVGF filter taps (Sec. 5). However, doing so implies that the number of learnable parameters depends on $N$, which may lead to overfitting. In what follows, we propose one method of designing, instead of learning, the NVGFs.

Problem statement. Assume that each data point is a random graph signal with mean , correlation matrix , and covariance matrix . The objective is to estimate a pointwise nonlinear function such that , using a NVGF-based estimator as for as in (3) and . Given the random variable , the aim is to find the filter taps that minimize the mean squared error (MSE)

| (11) |

Our particular focus is set on obtaining unbiased estimators.

Lemma 4 (Unbiased estimator).

Let . The NVGF-based estimator is unbiased if and only if .

From Lemma 4, the unbiased estimator is now

| (12) |

Therefore, the objective becomes finding the filter tap matrix for some fixed value of that satisfies

| (13) |

Note that, due to the orthogonal nature of the GFT, minimizing (13) is equivalent to minimizing the difference of the corresponding frequency responses.

The optimal filter taps for each node, i.e., the rows of that solve (13), can be obtained by solving a linear system of equations as follows.

Proposition 5 (Optimal NVGF).

Let denote the row of , be the covariance matrix of the frequency response at node for the input , and denote the correlation between the filtered signal and the target nonlinearity. Then, a set of filter taps is optimal for (13) if and only if they solve the system of linear equations

| (14) |

From Proposition 5, it is immediate that only knowledge of the first and second moments of , and of the correlation between the input and the output , is required to solve for the optimal NVGF. These moments can be estimated from training data. Also observed is that the optimal filter taps for each node can be computed separately at each node.
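A sample-based counterpart of this design step can be sketched as follows. This is not the closed-form expression of Proposition 5 but an empirical least-squares analogue under stated assumptions: the moments are replaced by sample averages, each node solves its own small linear system built from its centered, shifted inputs, and the synthetic data model, the ReLU target, and all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
N, K, M = 8, 2, 2000                     # nodes, filter order, samples

# Hypothetical test graph (ring plus chord), normalized to unit spectral norm.
S = np.zeros((N, N))
for i in range(N):
    S[i, (i + 1) % N] = S[(i + 1) % N, i] = 1.0
S[0, 3] = S[3, 0] = 1.0
S = S / np.abs(np.linalg.eigvalsh(S)).max()

# Synthetic graph signals (rows) correlated through the graph; an assumption.
X = rng.standard_normal((M, N)) @ (np.eye(N) + 0.5 * S) + 0.3
T = np.maximum(X, 0.0)                   # target: pointwise ReLU
mu_x, mu_t = X.mean(axis=0), T.mean(axis=0)

# U[m, i, k] = [S^k (x_m - mu_x)]_i ; S is symmetric, so rows multiply by S.
shifted = [X - mu_x]
for _ in range(K):
    shifted.append(shifted[-1] @ S)
U = np.stack(shifted, axis=2)            # shape (M, N, K+1)
Tc = T - mu_t

# Each node solves its own (K+1) x (K+1) system from sample moments.
H = np.zeros((N, K + 1))
for i in range(N):
    R_i = U[:, i, :].T @ U[:, i, :] / M  # sample second moment at node i
    p_i = U[:, i, :].T @ Tc[:, i] / M    # sample cross-moment with target
    H[i] = np.linalg.lstsq(R_i, p_i, rcond=None)[0]

pred_nv = np.einsum("mik,ik->mi", U, H) + mu_t
mse_nv = np.mean((pred_nv - T) ** 2)

# Baseline: one shared set of taps for all nodes (an LSI filter).
h_lsi = np.linalg.lstsq(U.reshape(-1, K + 1), Tc.reshape(-1), rcond=None)[0]
mse_lsi = np.mean((U @ h_lsi + mu_t - T) ** 2)
print(mse_nv, mse_lsi)
```

On the samples used for fitting, the per-node solution can never do worse than the shared-tap baseline, since shared taps are a special case of node-variant taps. The offset `mu_t` keeps the sample mean of the prediction equal to that of the target.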

We now consider the specific case of random graph signals with zero-mean, independent, identically distributed (i.i.d.) entries, to illustrate the form of the optimal NVGF.

Corollary 6 (Zero-mean, i.i.d., ReLU nonlinearity).

Assume that the elements of are i.i.d., and that and for some . Consider an unbiased NVGF-based estimator of the form

| (15) |

with and , where is distributed as the elements of . Then, the estimator (15) is optimal for (13). If, additionally, and the distribution of is symmetric around , then and , so that (15) reduces to .

Corollary 6 shows that if the elements of the graph signal are zero-mean and i.i.d., then the NVGF boils down to a LSI graph filter. Hence, this optimal unbiased NVGF is not capable of generating frequencies. This is sensible since the elements of the graph signal bear no relationship to the underlying graph support, and thus the NVGF does not leverage that support to create the appropriate frequencies. Furthermore, if the distribution of each element of the signal is symmetric around zero, then an optimal approximation of the ReLU nonlinearity amounts to a LSI graph filter that outputs half of the input, as one would expect.
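The "half of the input" behaviour is easy to check with a quick Monte Carlo experiment. The sketch below assumes standard Gaussian entries (one possible zero-mean symmetric distribution) and fits the best affine approximation of the ReLU in the mean-squared sense; the sample size and variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(5)

# i.i.d. zero-mean samples with a distribution symmetric around zero.
x = rng.standard_normal(200_000)
r = np.maximum(x, 0.0)                   # ReLU applied pointwise

# Best affine fit r ~ slope * x + offset in the least-squares sense.
slope = np.mean((x - x.mean()) * (r - r.mean())) / np.var(x)
offset = r.mean() - slope * x.mean()
print(slope, offset)
```

The slope concentrates around $1/2$ because $\mathbb{E}[x \max(x, 0)] = \mathbb{E}[x^2]/2$ for a symmetric distribution, and for the standard Gaussian the offset concentrates around $\mathbb{E}[\max(x, 0)] = 1/\sqrt{2\pi}$.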

Corollary 7 (Stationary graph processes).

It is observed from Corollary 7 that the effect of the contribution of each frequency component to node gets modulated by the power spectral density . Also, the filter in this case is different for each node, and thus optimal unbiased NVGFs for processing stationary graph processes actually create frequencies.

5 Graph Neural Network Architectures Using Node-Variant Graph Filters

A graph convolutional neural network (GCNN) has a layered architecture [23, 6], where each layer applies a LSIGF as in (2), followed by a pointwise nonlinear activation function applied to each node and

| (16) |

The LSIGF is characterized by the specific filter taps . Note that the output of the GCNN is collected as the output of the last layer . This notation emphasizes that the input is , while the matrix is given by the problem, and the filter taps are learned from data.

Nonlinear activation functions are used in GCNNs to enable them to learn nonlinear relationships between input and output. Additionally, theoretical results have found that they play a key role in creating frequency content that can be better processed by subsequent graph convolutional filters. As previously discussed, NVGFs are also capable of creating new frequency content, albeit in a linear manner. Therefore, by replacing the nonlinear activation functions by NVGFs, it is possible to decouple the contribution made by frequency creation from that made by the architecture’s nonlinearity.

The first architecture proposed here is to use a designed NVGF in lieu of the activation function. That is, instead of using the nonlinear activation function , an optimal NVGF filter designed as in Proposition 5 is used. This requires estimating the first and second moments of the NVGF input data. The architecture, herein termed “Design NVGF”, is given by

| (17) |

Note that, in this case, the filter taps of the NVGF are obtained by Proposition 5, while the filter taps of the LSIGF are learned from data.

Alternatively, the filter taps of the NVGF replacing the nonlinear activation function can also be learned from data, together with the filter taps of the LSIGF:

| (18) |

where the filter taps to be learned are . This approach avoids the need to estimate first and second moments. Additionally, it allows the NVGF to learn how to create frequency content tailored to the application at hand, instead of just approximating the chosen nonlinear activation function. We note that while the increased number of parameters may lead to overfitting, this can be tackled by dropout. This architecture is termed “Learn NVGF”.
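The structure of this architecture can be sketched as a composition of the two filter types. The snippet below is a simplified single-feature version with random (untrained) taps and hypothetical function names, so it only illustrates the forward pass. It checks that the resulting map is linear in the input while still creating frequency content.

```python
import numpy as np

def lsigf(S, h, x):
    """Shift-invariant filter: sum_k h[k] S^k x."""
    z = np.array(x, dtype=float)
    y = h[0] * z
    for hk in h[1:]:
        z = S @ z
        y = y + hk * z
    return y

def nvgf(S, H, x):
    """Node-variant filter: sum_k diag(H[:, k]) S^k x."""
    z = np.array(x, dtype=float)
    y = H[:, 0] * z
    for k in range(1, H.shape[1]):
        z = S @ z
        y = y + H[:, k] * z
    return y

def learn_nvgf_forward(S, params, x):
    """Each layer: LSIGF followed by an NVGF in place of the nonlinearity."""
    for h, H in params:
        x = nvgf(S, H, lsigf(S, h, x))
    return x

rng = np.random.default_rng(6)
N, K, L = 8, 2, 2

# Hypothetical connected test graph: undirected ring plus one chord.
S = np.zeros((N, N))
for i in range(N):
    S[i, (i + 1) % N] = S[(i + 1) % N, i] = 1.0
S[0, 3] = S[3, 0] = 1.0

# Random stand-ins for the taps that would be learned from data.
params = [(rng.standard_normal(K + 1), rng.standard_normal((N, K + 1)))
          for _ in range(L)]

# Linearity: superposition holds for the whole architecture.
x1, x2 = rng.standard_normal(N), rng.standard_normal(N)
a, b = 0.7, -1.3
is_linear = np.allclose(
    learn_nvgf_forward(S, params, a * x1 + b * x2),
    a * learn_nvgf_forward(S, params, x1) + b * learn_nvgf_forward(S, params, x2),
)

# Frequency creation: a single-frequency input yields several frequencies.
lam, V = np.linalg.eigh(S)
out_tilde = V.T @ learn_nvgf_forward(S, params, V[:, -1])
num_active = int(np.sum(np.abs(out_tilde) > 1e-8))
print(is_linear, num_active)
```

In a training setup, the entries of `params` would be the learnable quantities, typically with a readout layer on top. That part is omitted here.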

6 Numerical Experiments

The objectives of the experiments are twofold. First, they aim to show how the architecture obtained by replacing the nonlinear activation function with a NVGF performs when compared to other popular GNNs. The second objective is to isolate the impact that frequency creation has on the overall performance. Due to space constraints, we focus on the problem of authorship attribution [5]. Other applications can be found in the supplementary material, where the observations are summarized at the end of this section for reference.

Problem statement. In the problem of authorship attribution, the goal is to determine whether a given text has been written by a certain author, relying on other texts that the author is known to have written. To this end, word adjacency networks (WANs) are leveraged. WANs are graphs that are built by considering function words (i.e., words without semantic meaning) as nodes, and considering their co-occurrences as edges [5]. As it happens, the way each author uses function words gives rise to a stylistic signature that can be used to attribute authorship. In what follows, we address this problem by leveraging previously constructed WANs as graphs, and the frequency count (histogram) of function words as the corresponding graph signals.

Dataset. For illustrative purposes, in what follows, works by Jane Austen are considered. Attribution of other century authors can be found in the supplementary material. A WAN consisting of nodes (function words) and edges is built from texts belonging to a given corpus considered to be the training set. These texts are partitioned into segments of approximately words each, and the frequency count of those function words in each of the texts is obtained. These represent the graph signals that are considered to be part of the training set. Each of these is assigned a label to indicate that they have been authored by Jane Austen. An equal number of segments from other contemporary authors are randomly selected, and then their frequency count is computed and added to the training set with the label to indicate that they have not been written by Jane Austen. The total number of labeled samples in the training set is , of which are left aside for validation. The test set is built analogously by considering other text segments that were not part of the training set (and thus, not used to build the WAN either), totaling graph signals (half corresponding to texts authored by Jane Austen, and half corresponding to texts by other contemporary authors).

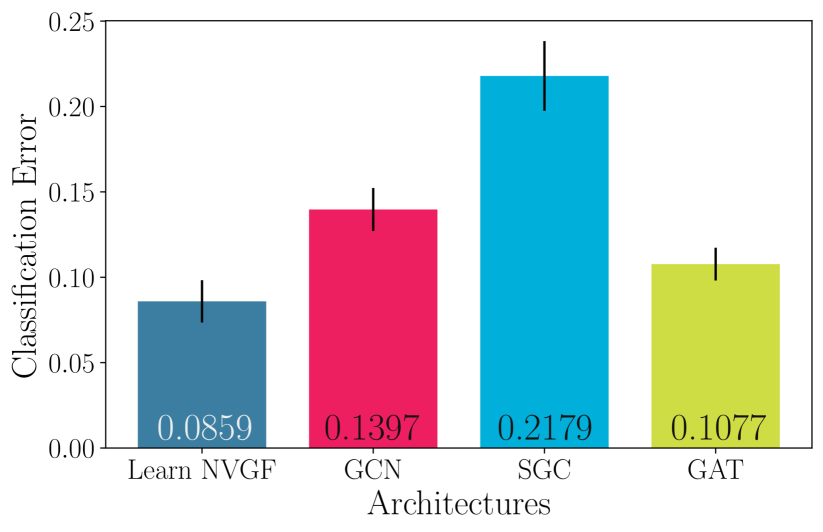

Architectures and training. For the first experiment, we compare the Learn NVGF architecture (18) with arguably three of the most popular non-GSP GNNs, namely, GCN [24], SGC [25], and GAT [26]. Note that the Learn NVGF is an entirely linear architecture, but one capable of creating frequencies due to the nature of the NVGF. The filter taps of both the LSIGF and the NVGF are learned from data. The other three architectures are nonlinear since they include a ReLU activation function after the first filtering layer. All architectures include a learnable linear readout layer. Dropout with a probability is included after the first layer. All architectures are trained for epochs with a batch size of , using the ADAM algorithm [32] with the forgetting factors and , respectively, as well as a learning rate . The value of the learning rate , the number of hidden units , and the number of filter taps are chosen by exhaustive search over triplets in the set .

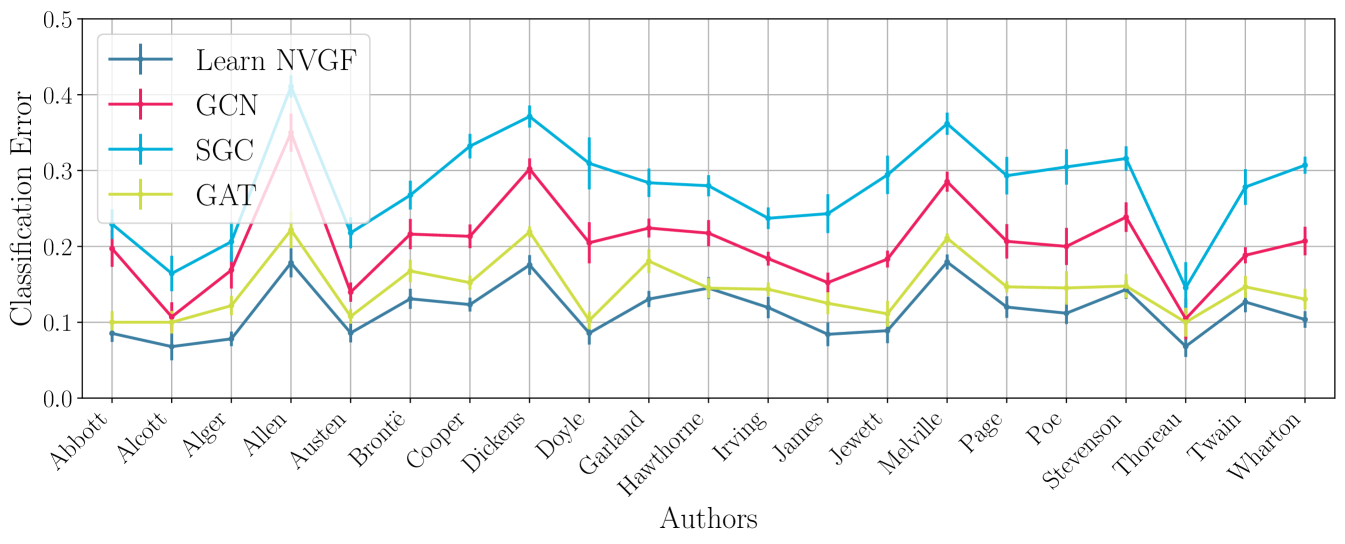

Experiment 1: Performance comparison. The objective of this first experiment is to illustrate that the performance of the Learn NVGF is comparable to the performance of popular (non-GSP) GNNs. The best results for each architecture are shown in Figure 1a, where the classification error was averaged over random splits of texts that are assigned to the training and test sets. One third of the standard deviation is also shown. It is observed that the Learn NVGF architecture has a comparable performance. It is emphasized that the objective of this paper is not to achieve state-of-the-art performance, but to offer insight on the role of nonlinear activation functions in frequency creation and how this translates to performance, as discussed next. Among the three popular architectures, GAT (, ) exhibits the best performance, which is better than SGC (, , ) and better than GCN (, ). This is expected since the graph filters involved in GAT are non-convolutional, and thus have more expressivity. Yet, when compared to the linear Learn NVGF (, , ), it is observed that the latter exhibits better performance than the GAT.

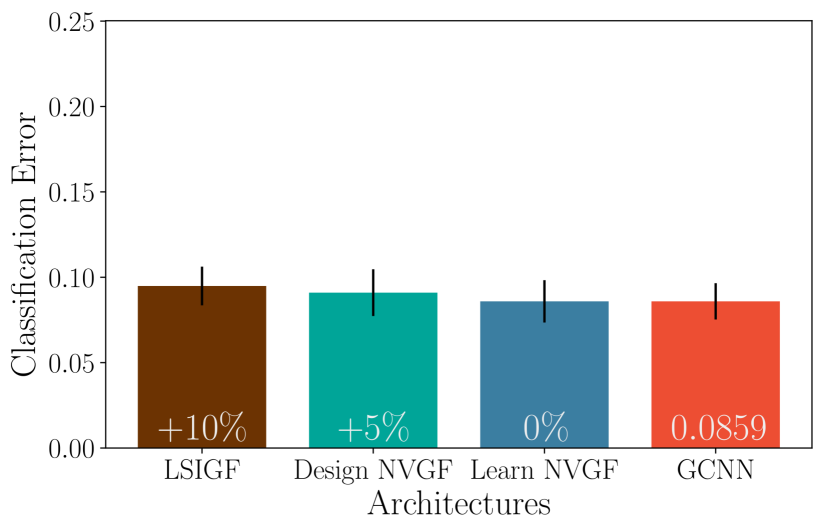

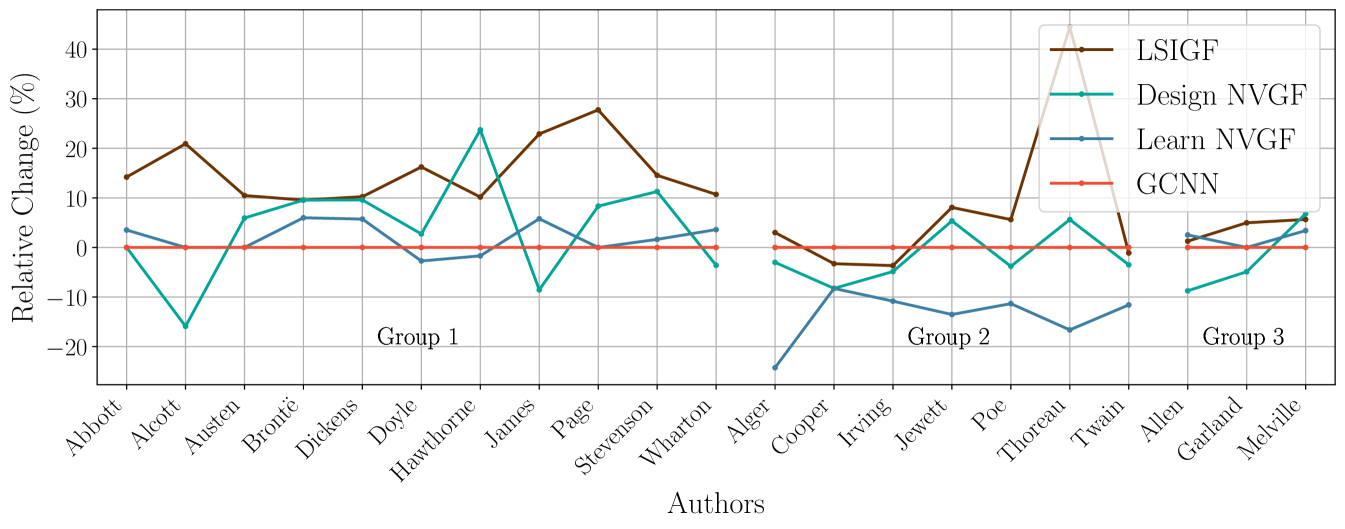

Experiment 2: The role of frequency creation. For the second experiment, we compare the Learn NVGF of the previous experiment with (i) a simple LSIGF, (ii) a Design NVGF as in (17), and (iii) a GCNN as in (16). The same hyperparameter values are used for all architectures as a means of fixing all sources of variability other than the nonlinearity/frequency creation. The results are shown in Figure 1b. Note that the GCNN in (16) can be interpreted as a stand-in for ChebNets [23], arguably the most popular GSP-based GNN architecture.

Discussion. First, note that the LSIGF, Design NVGF, and Learn NVGF architectures are linear, whereas the GCNN is not. The LSIGF, however, is not capable of creating frequencies, while the other three are, albeit through different mechanisms. Second, Figure 1b shows the percentage difference in performance with respect to the base architecture (the GCNN). Essentially, the difference in performance between the LSIGF and the Learn NVGF can be pinned down to the frequency creation, because both architectures are linear, while the difference between the Learn NVGF and the GCNN can be tied to the nonlinear nature of the GCNN. It is thus observed that the LSIGF performs considerably worse than the Learn NVGF and the GCNN, which perform the same. It is observed that the Design NVGF performs halfway between the GCNN and the LSIGF. The Design NVGF depends on the ability to accurately estimate the first and second moments from the data, and this has an impact on its performance. In any case, it is noted that this experiment suggests that the main driver of improved performance is the frequency creation and not necessarily the nonlinear nature of the GCNN.

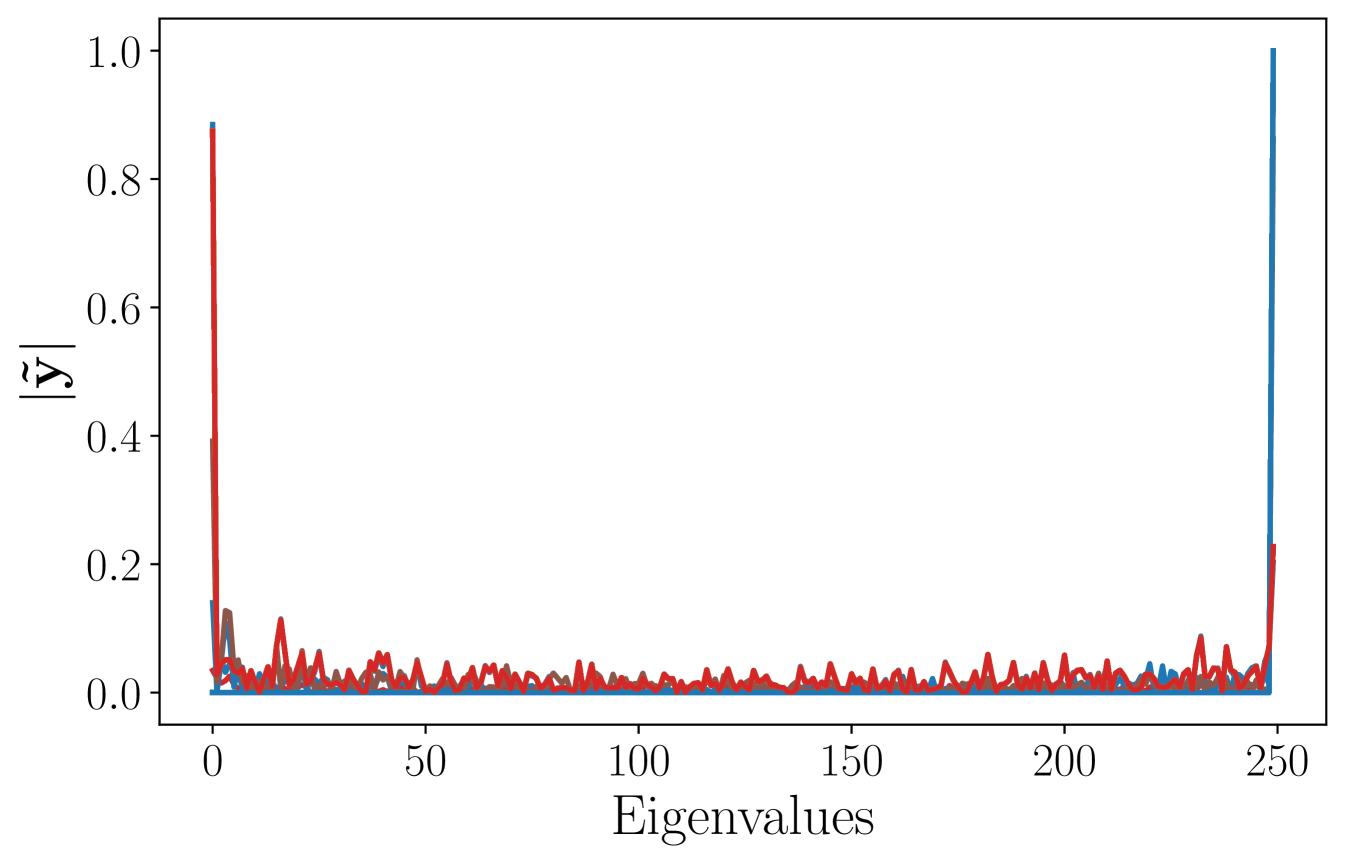

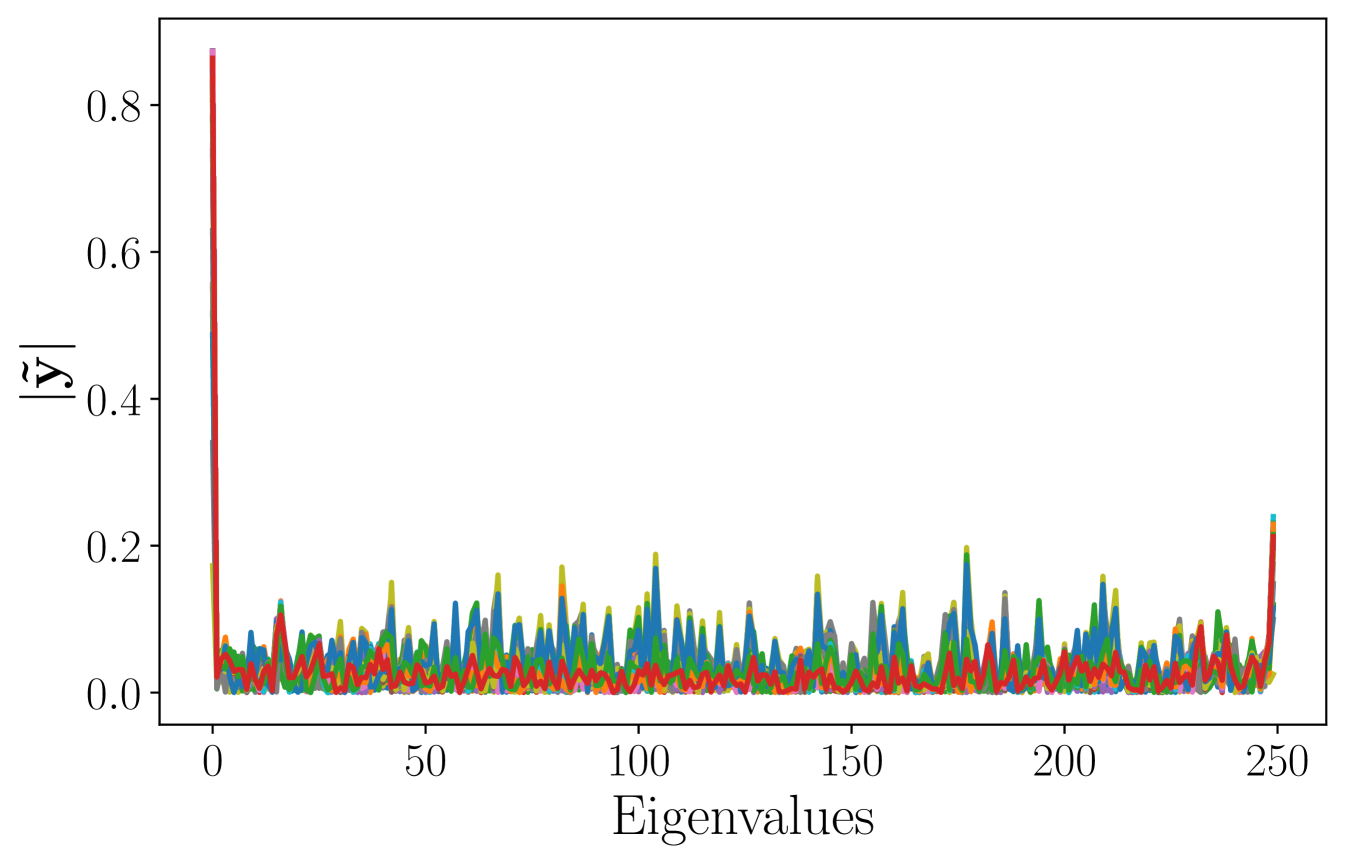

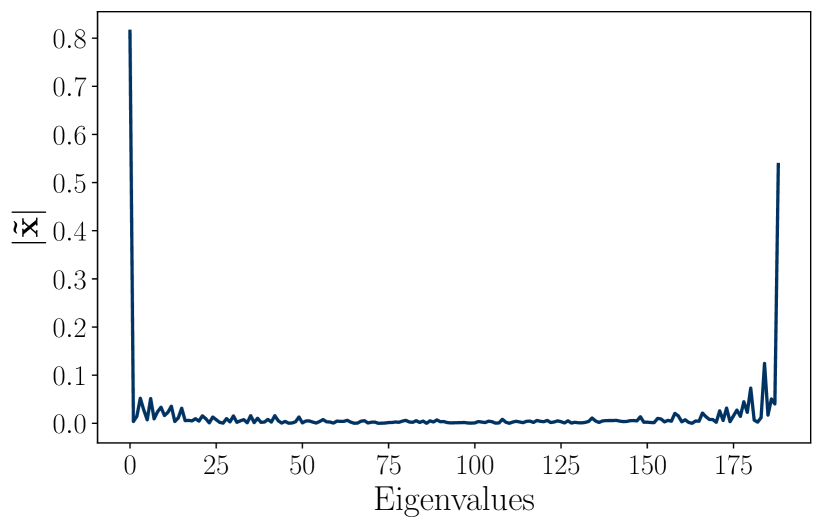

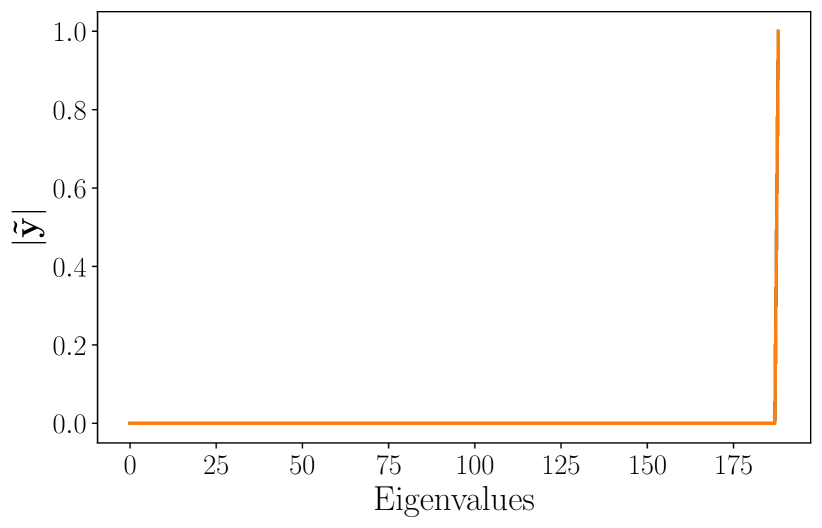

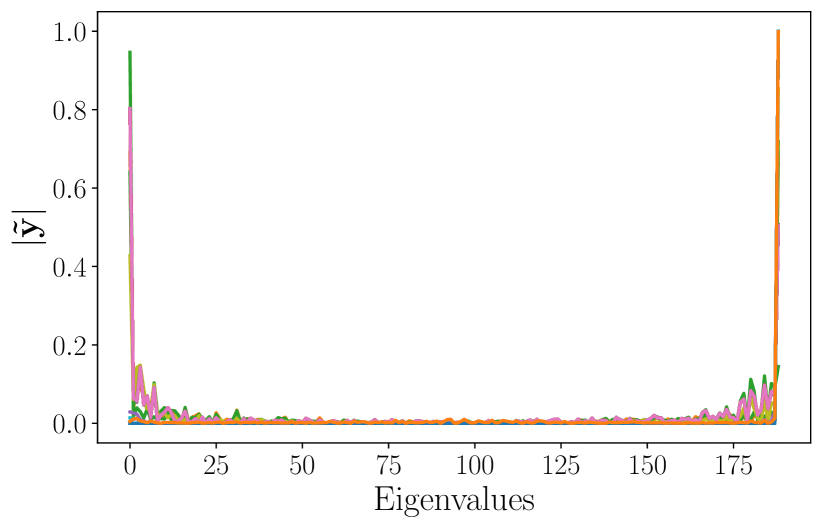

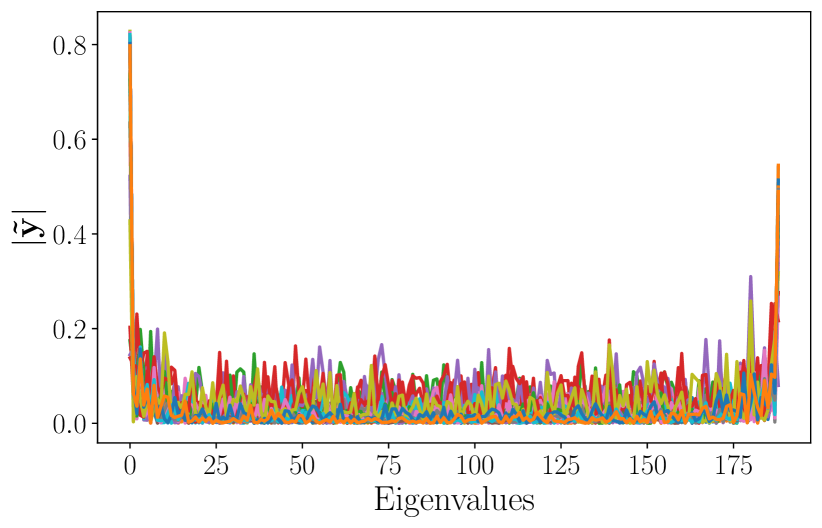

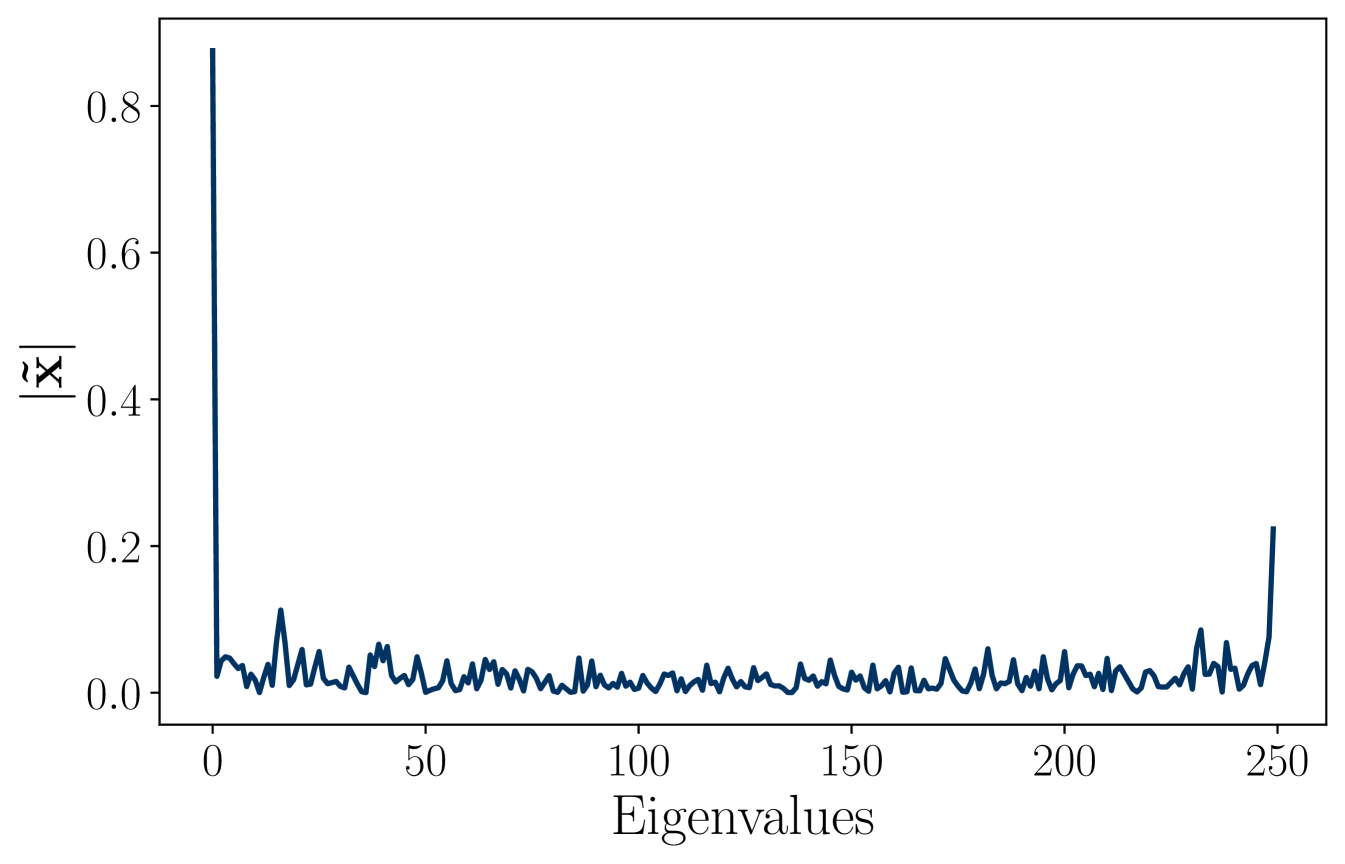

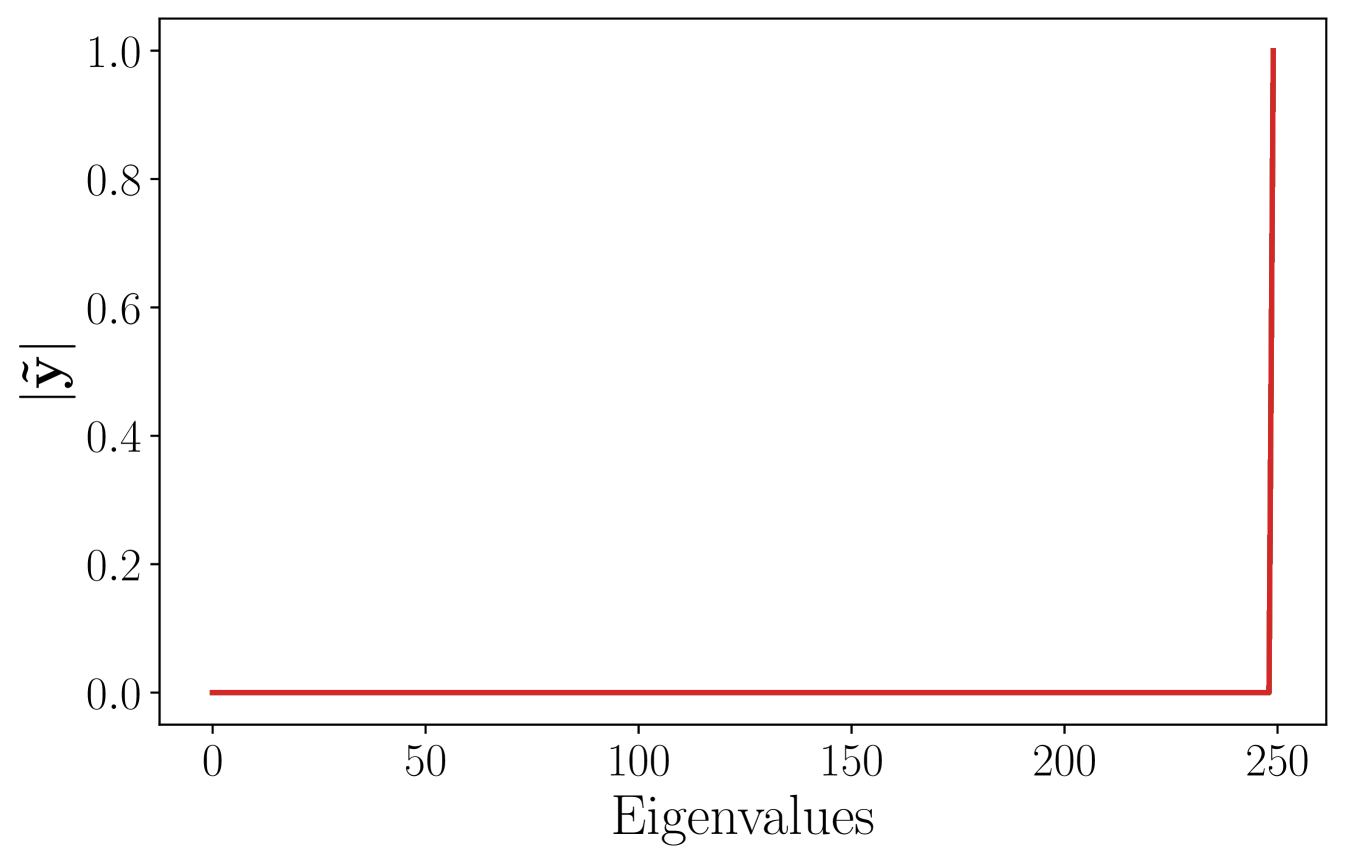

From a qualitative standpoint, the average frequency response of the signals in the test set is shown in Figure 1c. Since the high-eigenvalue content is significant, it is expected that the ability to better process this content will impact the overall performance. This explains the relatively poor performance of the LSIGF. In Figure 2, we show the frequency response of the output for each of the three architectures (LSIGF, Learn NVGF, and GCNN) when the input has a single high frequency, i.e., so that . Figure 2a shows that the output frequency response of the LSIGF exhibits a single frequency, the same as the input. Figure 2b shows that the output frequency response of the GCNN has content in all frequencies, but most notably a low-frequency peak appears. Figure 2c shows that the output frequency response of the Learn NVGF contains all frequencies, in a much more spread manner than the GCNN.

General observations. In the supplementary material, a similar analysis is carried out for other authors. Additionally, it is noted that Jane Austen is representative of the largest group (consisting of authors) where the Learn NVGF and the GCNN have similar performance and are better than the LSIGF. For other authors, the Learn NVGF actually performs better than the GCNN. Finally, for the remaining authors, there is no significant difference between the performance of the LSIGF, the GCNN, and the Learn NVGF, which implies that the high-eigenvalue frequency content is less significant for these authors. Additionally, the problem of movie recommendation is considered [33]. First, it is observed that the Learn NVGF exhibits better performance than the methods in [3, 4] and the nearest neighbor algorithm. Second, it is noted that the input signals do not have significant high-eigenvalue content and thus the LSIGF, the GCNN, and the Learn NVGF exhibit similar performance. For a detailed analysis, please see the supplementary material.¹

¹ It is noted that the popular benchmark of semi-supervised learning over citation networks does not fit the empirical risk minimization framework nor the graph signal processing framework, and thus, it does not admit a frequency analysis, precluding its use in this work.

Problems beyond processing graph signals. Two popular tasks that utilize graph-based data are semi-supervised learning and graph classification. The former involves a framework where each sample represents a node in the graph, i.e., for features instead of for nodes. This implies that the notion of graph frequency response used in (4) does not translate to the semi-supervised setting. Therefore, extending these results to this problem requires careful determination of the notion of frequency. For the graph classification problem, each sample in the dataset represents a signal together with a graph, and the graph associated to each sample is usually different. In this case, while the notion of frequency is properly defined for each sample, an overarching frequency response for all the samples is not. Possible extensions of this framework to the graph classification problem would entail redefining a common notion of frequency response for the dataset, for example, using graphons.

7 Conclusion

The objective of this work was to study the role of frequency creation in GSP problems. To do so, nonlinear activation functions (which theoretical findings suggest give rise to frequency creation) were replaced by NVGFs, which are also capable of creating frequencies, but in a linear manner. In this way, frequency creation was decoupled from the nonlinear nature of activation functions. Numerical experiments suggest that the main driver of improved performance is frequency creation and not necessarily the nonlinear nature of GCNNs. We also discussed the caveats of extending this framework to non-GSP problems such as semi-supervised node classification and graph classification, which require a careful definition of an appropriate notion of graph frequency. Carrying out this extension is an interesting area of future research. Finally, we note that it would be possible to use shift-variant filters to leverage this framework when using CNNs.

References

- [1] M. M. Bronstein, J. Bruna, Y. LeCun, A. Szlam, and P. Vandergheynst, “Geometric deep learning: Going beyond Euclidean data,” IEEE Signal Process. Mag., vol. 34, no. 4, pp. 18–42, July 2017.

- [2] F. Gama, E. Isufi, G. Leus, and A. Ribeiro, “Graphs, convolutions, and neural networks: From graph filters to graph neural networks,” IEEE Signal Process. Mag., vol. 37, no. 6, pp. 128–138, Nov. 2020.

- [3] F. Monti, M. M. Bronstein, and X. Bresson, “Geometric matrix completion with recurrent multi-graph neural networks,” in 31st Conf. Neural Inform. Process. Syst. Long Beach, CA: Neural Inform. Process. Syst. Foundation, 4-9 Dec. 2017, pp. 3700–3710.

- [4] R. Levie, F. Monti, X. Bresson, and M. M. Bronstein, “CayleyNets: Graph convolutional neural networks with complex rational spectral filters,” IEEE Trans. Signal Process., vol. 67, no. 1, pp. 97–109, 5 Nov. 2018.

- [5] S. Segarra, M. Eisen, and A. Ribeiro, “Authorship attribution through function word adjacency networks,” IEEE Trans. Signal Process., vol. 63, no. 20, pp. 5464–5478, 30 June 2015.

- [6] F. Gama, A. G. Marques, G. Leus, and A. Ribeiro, “Convolutional neural network architectures for signals supported on graphs,” IEEE Trans. Signal Process., vol. 67, no. 4, pp. 1034–1049, 17 Dec. 2018.

- [7] M. Eisen and A. Ribeiro, “Optimal wireless resource allocation with random edge graph neural networks,” IEEE Trans. Signal Process., vol. 68, pp. 2977–2991, 20 Apr. 2020.

- [8] F. Gama and S. Sojoudi, “Distributed linear-quadratic control with graph neural networks,” Signal Process., 11 Feb. 2022, early access. [Online]. Available: http://doi.org/10.1016/j.sigpro.2022.108506

- [9] D. Owerko, F. Gama, and A. Ribeiro, “Predicting power outages using graph neural networks,” in 2018 IEEE Global Conf. Signal and Inform. Process. Anaheim, CA: IEEE, 26-29 Nov. 2018, pp. 743–747.

- [10] F. Gama, J. Bruna, and A. Ribeiro, “Stability properties of graph neural networks,” IEEE Trans. Signal Process., vol. 68, pp. 5680–5695, 25 Sep. 2020.

- [11] H. Kenlay, D. Thanou, and X. Dong, “On the stability of graph convolutional neural networks under edge rewiring,” in 46th IEEE Int. Conf. Acoust., Speech and Signal Process. Toronto, ON: IEEE, 6-11 June 2021.

- [12] R. Levie, W. Huang, L. Bucci, M. Bronstein, and G. Kutyniok, “Transferability of spectral graph convolutional neural networks,” arXiv:1907.12972v2 [cs.LG], 5 March 2020. [Online]. Available: http://arxiv.org/abs/1907.12972

- [13] F. Gao, G. Wolf, and M. Hirn, “Geometric scattering for graph data analysis,” in 36th Int. Conf. Mach. Learning, vol. 97. Long Beach, CA: Proc. Mach. Learning Res., 9-15 June 2019, pp. 2122–2131.

- [14] D. Zou and G. Lerman, “Graph convolutional neural networks via scattering,” Appl. Comput. Harmonic Anal., vol. 49, no. 3, pp. 1046–1074, Nov. 2020.

- [15] S. Mallat, “Group invariant scattering,” Commun. Pure Appl. Math., vol. 65, no. 10, pp. 1331–1398, Oct. 2012.

- [16] J. Bruna and S. Mallat, “Invariant scattering convolution networks,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 35, no. 8, pp. 1872–1886, Aug. 2013.

- [17] A. Sandryhaila and J. M. F. Moura, “Discrete signal processing on graphs: Frequency analysis,” IEEE Trans. Signal Process., vol. 62, no. 12, pp. 3042–3054, 30 Apr. 2014.

- [18] L. Ruiz, F. Gama, and A. Ribeiro, “Graph neural networks: Architectures, stability and transferability,” Proc. IEEE, vol. 109, no. 5, pp. 660–682, May 2021.

- [19] S. Segarra, A. G. Marques, and A. Ribeiro, “Optimal graph-filter design and applications to distributed linear networks operators,” IEEE Trans. Signal Process., vol. 65, no. 15, pp. 4117–4131, 11 May 2017.

- [20] S. Rey, V. Tenorio, S. Rozada, L. Martino, and A. G. Marques, “Deep encoder-decoder neural network architectures for graph output signals,” in 53rd Asilomar Conf. Signals, Systems and Comput. Pacific Grove, CA: IEEE, 3-6 Nov. 2019, pp. 225–229.

- [21] S. Rey, V. Tenorio, S. Rozada, L. Martino, and A. G. Marques, “Overparametrized deep encoder-decoder schemes for inputs and outputs defined over graphs,” in 28th Eur. Signal Process. Conf. Amsterdam, the Netherlands: Eur. Assoc. Signal Process., 18-21 Jan. 2021, pp. 855–859.

- [22] J. Bruna, W. Zaremba, A. Szlam, and Y. LeCun, “Spectral networks and deep locally connected networks on graphs,” in 2nd Int. Conf. Learning Representations, Banff, AB, 14-16 Apr. 2014, pp. 1–14.

- [23] M. Defferrard, X. Bresson, and P. Vandergheynst, “Convolutional neural networks on graphs with fast localized spectral filtering,” in 30th Conf. Neural Inform. Process. Syst. Barcelona, Spain: Neural Inform. Process. Syst. Foundation, 5-10 Dec. 2016, pp. 3844–3858.

- [24] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” in 5th Int. Conf. Learning Representations, Toulon, France, 24-26 Apr. 2017, pp. 1–14.

- [25] F. Wu, T. Zhang, A. H. de Souza Jr., C. Fifty, T. Yu, and K. Q. Weinberger, “Simplifying graph convolutional networks,” in 36th Int. Conf. Mach. Learning, vol. 97. Long Beach, CA: Proc. Mach. Learning Res., 9-15 June 2019, pp. 6861–6871.

- [26] P. Veličković, G. Cucurull, A. Casanova, A. Romero, P. Liò, and Y. Bengio, “Graph attention networks,” in 6th Int. Conf. Learning Representations, Vancouver, BC, 30 Apr.-3 May 2018, pp. 1–12.

- [27] A. Sandryhaila and J. M. F. Moura, “Discrete signal processing on graphs,” IEEE Trans. Signal Process., vol. 61, no. 7, pp. 1644–1656, 11 Jan. 2013.

- [28] D. I. Shuman, S. K. Narang, P. Frossard, A. Ortega, and P. Vandergheynst, “The emerging field of signal processing on graphs: Extending high-dimensional data analysis to networks and other irregular domains,” IEEE Signal Process. Mag., vol. 30, no. 3, pp. 83–98, May 2013.

- [29] A. Ortega, P. Frossard, J. Kovačević, J. M. F. Moura, and P. Vandergheynst, “Graph signal processing: Overview, challenges and applications,” Proc. IEEE, vol. 106, no. 5, pp. 808–828, May 2018.

- [30] N. Perraudin and P. Vandergheynst, “Stationary signal processing on graphs,” IEEE Trans. Signal Process., vol. 65, no. 13, pp. 3462–3477, 3 Apr. 2017.

- [31] A. G. Marques, S. Segarra, G. Leus, and A. Ribeiro, “Stationary graph processes and spectral estimation,” IEEE Trans. Signal Process., vol. 65, no. 22, pp. 5911–5926, 11 Aug. 2017.

- [32] D. P. Kingma and J. L. Ba, “ADAM: A method for stochastic optimization,” in 3rd Int. Conf. Learning Representations, San Diego, CA, 7-9 May 2015, pp. 1–15.

- [33] F. M. Harper and J. A. Konstan, “The MovieLens datasets: History and context,” ACM Trans. Interactive Intell. Syst., vol. 5, no. 4, pp. 19:1–19:19, Jan. 2016.

Appendix A Frequency Response of Node-Variant Graph Filters

Proof of Proposition 1.

The output graph signal of a node-variant graph filter is given by (3), which is reproduced here for ease of exposition:

| (19) |

Recall that is the column of the matrix containing the filter taps, that is the support matrix describing the graph, and that is the input graph signal. As given by (4), the spectral representation of the input graph signal are the coordinates of representing on the eigenbasis of the support matrix , i.e., . Similarly, . Therefore, by (19) together with the fact that , it holds that

| (20) | ||||

where is the -vector with element given by . Denoting , note that , so that

| (21) | ||||

where is the frequency response of the filter taps at node ; see (6). Next, observe that the filter taps for each node are collected in the rows of . Therefore, in analogy to (8), it holds that , with

| (22) |

Substituting (22) into (21) gives that

| (23) |

so that (20) becomes

| (24) |

This completes the proof. ∎

Proof of Corollary 2.

The element of the graph Fourier transform of the output, , is

| (25) |

where collects the frequency response of all nodes at eigenvalue . Note that, if depends on for some , then frequencies are created. When is not diagonal, there exists such that and . In this case, (25) indeed shows that frequencies are created.

Now suppose that no frequency creation occurs. Then must be diagonal. Therefore, there exists such that for all . Recalling that the set of eigenvectors of is an orthonormal basis, we have that , so it must be that for all . This implies for all that or . In the case that for all , it is clear that , meaning that the frequency response at all nodes is the same. This restriction implies that the NVGF filter is a LSI graph filter. This concludes the proof. ∎
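The frequency-creation mechanism can be illustrated with a minimal numerical sketch. It assumes that the NVGF takes the usual form of a polynomial in the support matrix with one set of filter taps per node, as in [19]. The two-node graph, the tap values, and the function names are illustrative choices and are not taken from the experiments.

```python
import math


def matvec(S, x):
    """Multiply the support matrix S (list of rows) by the signal x."""
    return [sum(S[i][j] * x[j] for j in range(len(x))) for i in range(len(S))]


def nvgf(taps, S, x):
    """Apply a node-variant graph filter.

    taps[i][k] is the k-th filter tap used at node i (one row per node).
    When all rows are equal, the filter reduces to an LSI graph filter.
    """
    y = [0.0] * len(x)
    z = list(x)  # z holds S^k x, starting with k = 0
    for k in range(len(taps[0])):
        for i in range(len(x)):
            y[i] += taps[i][k] * z[i]
        z = matvec(S, z)
    return y


def gft(V, x):
    """Project x onto the orthonormal eigenvectors stored as the rows of V."""
    return [sum(v[i] * x[i] for i in range(len(x))) for v in V]


# Two-node graph with a single edge: eigenvalues +1 and -1.
S = [[0.0, 1.0], [1.0, 0.0]]
r = 1.0 / math.sqrt(2.0)
V = [[r, r], [r, -r]]

# Input with content on the first frequency only.
x = V[0]

lsi_taps = [[0.5, 0.25], [0.5, 0.25]]   # same taps at both nodes
nv_taps = [[0.5, 0.25], [1.0, -0.5]]    # different taps at each node

lsi_spectrum = gft(V, nvgf(lsi_taps, S, x))
nv_spectrum = gft(V, nvgf(nv_taps, S, x))

print("LSI output spectrum: ", lsi_spectrum)
print("NVGF output spectrum:", nv_spectrum)
```

In this toy case the LSI filter leaves the second frequency component at zero, whereas the node-dependent taps produce a nonzero second component, which is the behavior described in Corollary 2.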

Appendix B Lipschitz Continuity of Node-Variant Graph Filters

Proof of Theorem 3.

Leveraging the fact that the filter taps of both and are the same, start by writing the difference between the filter outputs as

| (26) |

Let and note that is symmetric and satisfies by assumption. Recall that with such that . Leveraging this fact in (26), it yields

| (27) | ||||

with satisfying , since the filter taps define analytic frequency responses with bounded derivatives for all . The input graph signal can be rewritten as using the GFT for the support matrix . Then, (27) becomes

| (28) | ||||

Using the fact that is an eigenvector of the first term in (28) can be conveniently rewritten as

| (29) | ||||

Denoting by the eigendecomposition of with the corresponding eigenvectors and eigenvalues , [10, Lemma 1] states that with for an appropriate matrix that depends on , , and . Using this in (29),

| (30) | ||||

| (31) |

is obtained. For the term (30), note that and that , so that the term (30) is equivalent to

| (32) | ||||

where is a vector where the entry is the derivative of the frequency response of node , i.e., . To rewrite (31), consider the following lemma, which is conveniently proved after completing the current proof.

Lemma 8.

For all , define by

| (33) |

where is the frequency response at node and is its derivative. Then

| (34) | ||||

With Lemma 8 in place, use (32) in (30) and (34) in (31), and this in turn back into (28) to obtain

| (35) | ||||

Next, compute the norm of (35) by applying the triangle inequality to compute the norms of each of the three summands. For the norm of the first term in (35), the triangle inequality gives

| (36) | ||||

where the submultiplicative property of the operator norm was used to bound . Leveraging that , that by Lipschitz continuity, and that by the hypothesis that , the norm can be further bounded as

| (37) | ||||

Note that the inequality between the -norm and the -norm was used, as well as the fact that the GFT is a Parseval operator. For the norm of the second term in (35), the triangle inequality together with the submultiplicativity of the operator norm are used to get

| (38) | ||||

For the last inequality to hold, denote by the entrywise maximum norm of a matrix, and note that for all , by the Lipschitz continuity of the frequency responses and the fact that is an orthogonal matrix, so for all and . Also recall that . Finally, note that the inequality between the -norm and -norm of vectors together with the Parseval nature of the GFT were used. For the third term in (35), it simply holds that

| (39) | ||||

Substituting (37), (38), and (39) into (35) gives (10), which concludes the proof. ∎

Proof of Lemma 8.

We have for all that

| (40) | ||||

The last summation is a diagonal matrix, where the element of the diagonal can be written as

| (41) | ||||

so that

| (42) | ||||

Note that the subindex in indicates that this is parametrized by , while each entry of this vector, i.e., the entry, actually depends on . Now, remark that since we have a full matrix in between two diagonal matrices, the matrix product does not commute.

To continue simplifying the expressions, start by considering a vector , a matrix and another vector . Observe that

Therefore, it can be written

| (43) |

where is the matrix defined in (33);

| (44) | ||||

Using this result in (40) and (42) gives that

| (45) |

This equality, together with the fact that , gives the desired result (34). ∎

Appendix C Optimal Unbiased Node-Variant Graph Filters

Proof of Lemma 4.

The NVGF-based estimator is given by:

| (46) |

This estimator is unbiased if and only if . By (46) and linearity of expectation, this holds precisely when

| (47) |

This is equivalent to the condition on that

| (48) |

which completes the proof. ∎

Proof of Proposition 5.

Given an unbiased estimator , an optimal matrix of filter taps is one that minimizes (13). First, note that the estimator output at node , i.e., the entry of , is given by

| (49) |

where , is the row of , and is the row of the matrix ; see [19]. Note that if are three -dimensional real vectors, then , which means that they commute, i.e., . Using this fact in (49) yields

| (50) |

with

The objective function in (13) can be rewritten as

| (51) |

Since each depends only on , as indicated in (50), minimizing (51) over is equivalent to minimizing each of the summands in (51) over . Therefore, (13) becomes equivalent to the following system of optimization problems over each of the rows of :

| (52) |

Substituting (50) into the objective function of (52) gives

| (53) | ||||

Now, with

the objective (53) becomes

| (54) |

Since is a positive semidefinite matrix, (54) is a convex quadratic function in . Therefore, setting the gradient of this function to zero, it can be concluded that is a global minimizer of (54) if and only if , or, equivalently,

| (55) |

This completes the proof. ∎

Appendix D Authorship Attribution

Problem objective. Consider a set of texts that are known to be written by author . Given a new text the objective is to determine whether was written by or not.

Approach. The approach is to leverage word adjacency networks (WAN) built from the set of known texts to build a graph support , and then use the word frequency count of the function words in as the graph signal . Then, is processed through a GNN (in any of the variants discussed in Section 5) to obtain a predictor of the text being written by author .

Graph construction. The WAN can be modeled by a graph where the set of nodes consists of function words (i.e., words that do not carry semantic meaning but express grammatical relationships among other words within a sentence, e.g., “the”, “and”, “a”, “of”, “to”, “for”, “but”). The existence of an edge connecting words and the corresponding edge weight are determined as follows. Consider each text and split it into a total of sentences where each sentence gives the function word present in each position within a sentence, or if the word is not a function word. Then, given a discount factor and a window length , the edge weight between nodes and is computed as

| (56) | ||||

where is the indicator function that takes value when condition is met and 0 otherwise, see [5]. Equation (56) essentially computes each weight by going text by text and sentence by sentence , looking position by position for the corresponding word to match the function word . Once the word is matched, the following words in the window length are looked at and, if the word matches , then the discounted weight is added. In this way, not only co-occurrence of words, but also their proximity counts in establishing the WAN. Note that the edge weight function (56) is asymmetric, which results in a directed graph.
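The procedure described for (56) can be sketched as follows. This is a minimal sketch under stated assumptions: texts are given as lists of tokenized sentences, the discount applied to a word found `d` positions after the matched word is `alpha ** (d - 1)` (so that adjacent words contribute a weight of one), and pairs of identical function words are not excluded. The function name `wan_weights` and the toy sentence are hypothetical; see [5] for the exact definition.

```python
from collections import defaultdict


def wan_weights(texts, function_words, alpha, window):
    """Accumulate directed WAN edge weights.

    texts:  list of texts; each text is a list of sentences and each
            sentence is a list of word tokens.
    alpha:  discount factor in (0, 1).
    window: number of following positions inspected after each match.
    """
    vocab = set(function_words)
    weights = defaultdict(float)
    for sentences in texts:                      # text by text
        for sentence in sentences:               # sentence by sentence
            for e, word_i in enumerate(sentence):  # position by position
                if word_i not in vocab:
                    continue
                for d in range(1, window + 1):
                    if e + d >= len(sentence):
                        break
                    word_j = sentence[e + d]
                    if word_j in vocab:
                        # Assumed discount: closer words weigh more.
                        weights[(word_i, word_j)] += alpha ** (d - 1)
    return dict(weights)


toy_texts = [[["the", "cat", "and", "the", "dog"]]]
toy_weights = wan_weights(toy_texts, ["the", "and"], alpha=0.5, window=2)
print(toy_weights)
```

In the toy sentence, the weight from "and" to "the" differs from the weight from "the" to "and", which reflects the asymmetry of the edge weight function.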

Graph signal processing description. The support matrix is chosen to be

| (57) |

where the is the adjacency matrix with entry equal to and is the degree matrix. The operation normalizes the matrix by rows, and the support matrix comes from symmetrizing the matrix by adopting one half of the weight on each direction. The graph signal contains a normalized word frequency count for each word

| (58) |

Note that the graph signals are normalized by the -norm and can therefore be interpreted as the probability of finding the function word in text .
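The two normalizations above can be sketched in a few lines. The sketch assumes that the degree of a node is the sum of its row in the adjacency matrix, that rows with zero degree are left as zeros, and that the symmetrization averages the row-normalized matrix with its transpose. The function names and the toy inputs are illustrative.

```python
def support_matrix(A):
    """Row-normalize the adjacency matrix A and symmetrize the result."""
    n = len(A)
    A_bar = []
    for row in A:
        degree = sum(row)
        # Assumption: isolated nodes (zero degree) keep an all-zero row.
        A_bar.append([a / degree if degree > 0 else 0.0 for a in row])
    # One half of the weight in each direction.
    return [[0.5 * (A_bar[i][j] + A_bar[j][i]) for j in range(n)]
            for i in range(n)]


def normalized_counts(counts):
    """Normalize word counts so that they sum to one (1-norm)."""
    total = sum(counts)
    if total == 0:
        return [0.0] * len(counts)
    return [c / total for c in counts]


A = [[0, 2, 2],
     [1, 0, 0],
     [0, 0, 0]]
S = support_matrix(A)
x = normalized_counts([3, 1, 0])
print(S)
print(x)
```

The resulting matrix is symmetric by construction, and the entries of the signal can be read as empirical probabilities of each function word.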

Dataset and code. The dataset is presented in [5], and is publicly available at http://github.com/alelab-upenn/graph-neural-networks/tree/master/datasets/authorshipData. The dataset consists of authors from the century. The corpus of each author is split in texts of about words. The WANs and the word frequency count for each text are already present in the dataset. The texts are split, at random, in for training and for testing. The weights of the WANs of the texts selected for training are averaged to obtain an average WAN from which the support matrix is obtained by following (57). The resulting support matrix is further normalized to have unit spectral norm. Note that no text from the test set is used in building the WAN. From the texts in the training set, are further separated to build the validation set. Denote by , , and the number of texts in the training, validation, and test set, respectively. The word frequency counts for each text are normalized as in (58) and used as graph signals. A label of is attached to these signals to indicate that they have been written by author in a supervised learning context. To complete the datasets, an equal number of texts are obtained at random from other contemporary authors, and their frequency word counts are normalized and incorporated into the corresponding sets and assigned a label of . In this way, the resulting training, validation, and test set have , , and samples, respectively (half of them labeled with and the other half with ). The code to run the simulations will be provided as a .zip file.

Architectures for comparison. The Learn NVGF architecture in (18) is compared against three of the most popular GNN architectures in the literature, namely, the GCN [24], the SGC [25], and the GAT [26]. The Learn NVGF adopts the support matrix in (57) and consists of a LSI graph filter (a graph convolution) that outputs features (hidden units) and has filter taps, followed by a NVGF that takes those input features and applies a NVGF with filter taps, and also outputs features. The GCN considers in (57) to be the adjacency matrix of the graph and thus adopts a support matrix given by where as indicated in [24]. The graph convolutional layer consists of LSI graph filters, each one of the form for . This is followed by a ReLU activation function. The SGC considers the same support matrix as the GCN and learns output features, where each filter in the bank is of the form for a predetermined hyperparameter . This is followed by a ReLU activation function. Finally, for the GAT, the support matrix is (although this is relevant only in terms of the nonzero elements, which are the same in and —except for the diagonal elements—since the weights of each edge are learned through the attention mechanism), and the output features are learned through the attention mechanism exactly as described in [26]. All four architectures are followed by a readout layer consisting of a learnable linear transform that maps the output features into a vector of size that is interpreted to be the logits for the two classes (either the text is written by the author or not).

Architectures for analysis. To analyze the impact of the nonlinearity, four architectures are considered. First, as a baseline, a GCNN consisting of a graph convolutional layer that outputs features, with filter taps, followed by a ReLU activation function, as indicated in (16). Second, the Learn NVGF that replaces the ReLU activation function by a NVGF with filter taps that can be learned from data as in (18). Third, the Design NVGF where the nonlinear activation function of the GCNN is replaced by a NVGF, but one whose filter taps are designed to mimic the ReLU (the LSI graph filters in the Design NVGF are the same ones learned by the GCNN). Fourth, a LSI graph filter with taps that outputs features. Note that the GCNN is a nonlinear architecture, while the other three are linear. Also, note that the first three architectures are capable of creating frequency content while the fourth one, the LSIGF, is not. For fair comparison, the values of and are the same for all architectures.

Training. The loss function during training is a cross-entropy loss between the logits obtained from the output of each architecture, and the labels in the training set. All the architectures are trained by using an ADAM optimizer [32] with forgetting factors and , and with a learning rate . The training is carried out for epochs with batches of size . Dropout with probability is included before the readout layer, during training, to avoid overfitting. At testing time, the weights are correspondingly rescaled. Validation is run every training steps. The learned filters that result in the best performance on the validation set are kept and used during the testing phase. For each experiment, realizations of the random train/test split are carried out (also randomizing the selection of the texts by other authors that complete the training, validation, and test sets). The average evaluation performance (measured as classification error, i.e., the ratio of texts in the test set that are wrongly attributed) is reported, together with the estimated standard deviation.

Hyperparameter selection. The number of hidden units , , , and , the polynomial order and , and the learning rate , , , and are selected, independently for each architecture, from the set for the number of hidden units, for the polynomial order, and for the learning rate. In other words, all possible combinations of these three parameters are run for each architecture, and the ones that show the best performance on the test set are kept.
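The exhaustive search over hyperparameter combinations can be sketched as below. The candidate values are the ones that appear in Table 1 and are used here only as an assumption about the search sets. The `evaluate` callback is hypothetical: it stands in for training an architecture with the given configuration and returning its classification error, and `toy_evaluate` is a made-up function used purely to make the sketch runnable.

```python
from itertools import product


def grid_search(evaluate, hidden_units, orders, learning_rates):
    """Try every combination and keep the one with the lowest error."""
    best_config, best_error = None, float("inf")
    for F, K, lr in product(hidden_units, orders, learning_rates):
        error = evaluate(F, K, lr)
        if error < best_error:
            best_config, best_error = (F, K, lr), error
    return best_config, best_error


def toy_evaluate(F, K, lr):
    """Made-up stand-in for 'train and return the classification error'."""
    return abs(F - 32) / 64 + abs(K - 3) / 4 + abs(lr - 0.005)


best, err = grid_search(
    toy_evaluate,
    hidden_units=[16, 32, 64],
    orders=[2, 3, 4],
    learning_rates=[0.001, 0.005, 0.01],
)
print(best, err)
```

For architectures without a polynomial order, the same loop applies with a single-element list for `orders`.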

| Author | Learn NVGF: learning rate | Learn NVGF: hidden units | Learn NVGF: polynomial order | GCN [24]: learning rate | GCN [24]: hidden units | SGC [25]: learning rate | SGC [25]: hidden units | SGC [25]: polynomial order | GAT [26]: learning rate | GAT [26]: hidden units |
|---|---|---|---|---|---|---|---|---|---|---|
| Abbott | 0.001 | 32 | 2 | 0.005 | 64 | 0.01 | 64 | 2 | 0.01 | 64 |
| Alcott | 0.01 | 16 | 4 | 0.01 | 32 | 0.01 | 32 | 2 | 0.01 | 32 |
| Alger | 0.005 | 16 | 4 | 0.01 | 32 | 0.001 | 64 | 2 | 0.005 | 64 |
| Allen | 0.005 | 64 | 2 | 0.01 | 64 | 0.005 | 32 | 2 | 0.005 | 64 |
| Austen | 0.001 | 32 | 3 | 0.01 | 64 | 0.005 | 64 | 2 | 0.01 | 64 |
| Brontë | 0.001 | 32 | 3 | 0.01 | 64 | 0.005 | 16 | 2 | 0.01 | 16 |
| Cooper | 0.005 | 16 | 3 | 0.005 | 64 | 0.005 | 64 | 2 | 0.01 | 64 |
| Dickens | 0.001 | 32 | 4 | 0.005 | 32 | 0.005 | 64 | 2 | 0.01 | 64 |
| Doyle | 0.005 | 16 | 3 | 0.005 | 16 | 0.005 | 64 | 2 | 0.005 | 32 |
| Garland | 0.005 | 32 | 3 | 0.01 | 64 | 0.005 | 64 | 2 | 0.01 | 32 |
| Hawthorne | 0.01 | 16 | 3 | 0.005 | 64 | 0.01 | 64 | 2 | 0.01 | 16 |
| Irving | 0.005 | 16 | 3 | 0.005 | 64 | 0.01 | 64 | 2 | 0.005 | 64 |
| James | 0.001 | 32 | 2 | 0.005 | 64 | 0.01 | 64 | 2 | 0.01 | 64 |
| Jewett | 0.001 | 16 | 2 | 0.01 | 64 | 0.01 | 64 | 2 | 0.01 | 32 |
| Melville | 0.005 | 16 | 4 | 0.01 | 64 | 0.005 | 64 | 2 | 0.005 | 64 |
| Page | 0.005 | 16 | 2 | 0.005 | 64 | 0.005 | 64 | 2 | 0.005 | 64 |
| Poe | 0.005 | 32 | 4 | 0.005 | 64 | 0.005 | 32 | 2 | 0.01 | 32 |
| Stevenson | 0.001 | 16 | 4 | 0.005 | 64 | 0.005 | 32 | 2 | 0.01 | 64 |
| Thoreau | 0.005 | 64 | 3 | 0.01 | 64 | 0.01 | 16 | 2 | 0.01 | 32 |
| Twain | 0.005 | 16 | 2 | 0.01 | 16 | 0.001 | 64 | 2 | 0.01 | 32 |
| Wharton | 0.005 | 16 | 4 | 0.01 | 64 | 0.01 | 64 | 2 | 0.001 | 64 |

Experiment 1: Performance comparison. In the first experiment, the performance is measured by classification error (ratio of texts in the test set that were wrongly attributed), and the Learn NVGF, the GCN [24], the SGC [25], and the GAT [26] are compared for all authors. The hyperparameters used for each architecture and each author are listed in Table 1 (recall that the hyperparameters kept are those that exhibit the best performance, i.e., the lowest classification error on the test set).

Results are shown in Figure 3. The general trend observed is for the Learn NVGF to exhibit better performance than the GAT, which in turn is better than the GCN, and all of them are better than SGC. The differences are usually significant between the four architectures, with a marked improvement by the Learn NVGF. It is observed, however, that for Doyle, Hawthorne, and Stevenson, the performance of the Learn NVGF and the GAT is comparable.

Experiment 2: Impact of nonlinearities. In the second experiment, the objective is to decouple the contribution made by frequency creation from that made by the nonlinear nature of the architecture. To do this, the GCNN architecture is taken as a baseline (a nonlinear, frequency-creating architecture), and the relative change in performance of the three other architectures is measured (Learn NVGF and Design NVGF, both linear and frequency-creating architectures, and LSIGF, which is linear but cannot create frequencies). The learning rate , the number of hidden units , and order of the filters are the same for all four architectures, and are those found in Table 1 under the column of Learn NVGF. The results showing relative change in the mean classification error with respect to the GCNN are shown in Figure 4.
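As a minimal sketch of the quantity being compared, the relative change with respect to the GCNN baseline can be computed as below. The sign convention (negative values mean a lower error than the GCNN) is an assumption, and the error values are made-up numbers used only to exercise the function; they are not results from the experiments.

```python
def relative_change(error, baseline_error):
    """Relative change of a classification error with respect to a baseline."""
    return (error - baseline_error) / baseline_error


# Made-up mean classification errors, for illustration only.
errors = {"GCNN": 0.10, "Learn NVGF": 0.09, "LSIGF": 0.15}

changes = {
    name: relative_change(err, errors["GCNN"])
    for name, err in errors.items()
    if name != "GCNN"
}
for name, change in changes.items():
    print(f"{name}: {100 * change:+.1f}%")
```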

The authors have been classified into three groups according to their relative behavior. Group 1 consists of those authors where the Learn NVGF has a comparable performance with respect to the GCNN (i.e., or less relative variation in performance), and both have a considerably better performance than the LSIGF. These results suggest that, for this group of authors (the most numerous one, consisting of authors, or of the total), the improvement in performance is mostly due to the capability of the architectures to create frequency, and not necessarily due to the nonlinear nature of the GCNN.

Group 2 consists of those authors where the Learn NVGF exhibits a better performance than the GCNN, which in turn exhibits a similar performance to the LSIGF (except for Jewett and Thoreau, where the GCNN still exhibits considerably better performance than the LSIGF). This group of 7 authors ( of the total) suggests that in some cases, frequency creation is the key contributor to improved performance, and that the inclusion of a nonlinear mapping could possibly have a negative impact. In essence, we observe that a linear architecture capable of creating frequencies outperforms the rest, and that a frequency-creating nonlinear architecture performs similarly to a linear architecture that cannot create frequencies. This suggests that the relationship between input and output is approximately linear with frequencies being created, but that attempting to model this frequency creation with a nonlinear architecture is not a good approach.

Finally, Group 3 consists of those authors for which all architectures exhibit a similar performance. This group consists of authors ( of the total). This may be explained by the fact that the high-frequency content for these authors does not carry useful information, and thus the role of frequency creation is less relevant.

With respect to the Design NVGF, a somewhat erratic behavior is observed. In nearly half of the cases ( authors), the performance of the Design NVGF closely resembles the performance of the GCNN, as expected. In a few other cases (Alcott, James, Cooper, and Allen), the Design NVGF results are better, and in the rest they are considerably worse (Brontë, Dickens, Hawthorne, Page, Stevenson, and Melville). The Design NVGF architecture is designed to mimic the GCNN, but its accurate design depends on good estimates of the first and second moments of the data. Thus, one possible explanation is that there is not enough data to get good estimates of these values. Another possible explanation is that higher-order moments have a larger impact in these cases, and the NVGF, being linear, is not able to accurately capture them.

Overall, this second experiment shows the importance of frequency creation in improving performance, especially when high-frequency content is significant. Of the two ways of creating frequencies (linear and nonlinear), in most cases they perform essentially the same, although there are cases in which creating frequency content in a linear manner is better (Group 2). In any case, this experiment calls for further research on what other contributions the nonlinear nature of the activation function has on performance.

Appendix E Movie Recommendation

Problem objective. Consider a set of items, and let be the ratings assigned by user to these items, i.e., is the rating assigned by user to item ; this function yields if the item has not been rated. The objective is to estimate what rating a user would give to some target item not yet rated [3, 4].

Approach. The idea is to create a graph of rating similarities and take the graph signal to be the ratings given by a user to some of the items. Then is processed through a GNN (in any of the variants discussed in Section 5) to obtain the estimated rating the user would give to the target item , by looking at the output value of the GNN on node . In short, this amounts to an interpolation problem.

Graph construction. Let be a set where collects the ratings given by user to some of the items, such that if item has been rated and if . Denote by the set of users that have rated item , and by the set of users that have rated both items and . Define the mean intersection score as . Note that this is the rating average for item , computed among those users that have rated both and . Then, the rating similarity between items and is computed by means of the Pearson correlation as

| (59) |

These weights can be used to build a complete graph where is the set of items and is the complete set of edges with being the corresponding weights. In what follows, the -nearest neighbor graph of is built with , and the matrix is used to denote the weighted adjacency matrix of , such that if , and otherwise.
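The rating similarity can be sketched as a Pearson correlation restricted to the users that have rated both items. The sketch assumes that a rating of 0 marks an unrated item, that the means are the mean intersection scores described above, and that the similarity is set to zero when it is undefined (no common users or zero variance). The function name and the toy ratings are illustrative.

```python
import math


def rating_similarity(ratings, i, j):
    """Pearson correlation between items i and j over their common raters.

    ratings: list of per-user rating lists; 0 is assumed to mean 'not rated'.
    """
    common = [r for r in ratings if r[i] != 0 and r[j] != 0]
    if not common:
        return 0.0
    # Mean intersection scores: averages over users that rated both items.
    mu_ij = sum(r[i] for r in common) / len(common)
    mu_ji = sum(r[j] for r in common) / len(common)
    num = sum((r[i] - mu_ij) * (r[j] - mu_ji) for r in common)
    den = (math.sqrt(sum((r[i] - mu_ij) ** 2 for r in common))
           * math.sqrt(sum((r[j] - mu_ji) ** 2 for r in common)))
    # Assumption: undefined correlations are mapped to zero similarity.
    return num / den if den > 0 else 0.0


toy_ratings = [
    [5, 4, 0],
    [3, 2, 1],
    [1, 0, 5],
]
print(rating_similarity(toy_ratings, 0, 1))
print(rating_similarity(toy_ratings, 0, 2))
print(rating_similarity(toy_ratings, 1, 2))
```

A nearest-neighbor graph can then be obtained by keeping, for each item, only the edges with the largest similarities.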

Graph signal processing description. The support matrix is chosen to be

| (60) |

This problem can be cast as a supervised interpolation problem. Given the set and the target item , consider the set of all users that have rated the item . Extract the specific rating as a label, and set a in the entry of . The resulting vector is a graph signal which always has a in the entry. The resulting set contains all the users that have rated the item with the corresponding rating extracted as a label and the entry of the graph signal set to zero, i.e., for all such that .
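The construction of the supervised interpolation dataset can be sketched as follows, assuming that ratings are stored as one list per user and that 0 marks an unrated item. The function name `build_samples` and the toy ratings are hypothetical.

```python
def build_samples(ratings, target):
    """Build (graph signal, label) pairs for a given target item.

    Only users that have rated the target item are kept. Their rating of
    the target becomes the label and is zeroed out in the graph signal.
    """
    samples = []
    for user_ratings in ratings:
        if user_ratings[target] == 0:   # user has not rated the target
            continue
        signal = list(user_ratings)     # copy, so the input is not modified
        label = signal[target]
        signal[target] = 0
        samples.append((signal, label))
    return samples


toy_ratings = [
    [5, 4, 0],
    [3, 2, 1],
    [1, 0, 5],
]
samples = build_samples(toy_ratings, target=2)
print(samples)
```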

Dataset and code. The dataset is the MovieLens-100k dataset [33] publicly available at http://files.grouplens.org/datasets/movielens/ml-100k.zip. This dataset consists of one hundred thousand ratings given by users to movies, and where each user has rated at least of them. The ratings are integers ranging from to meaning that for every user . In particular, the subset of movies that have received the largest number of ratings is used to build a graph with nodes. The resulting dataset has ratings given by users to some of these movies. The five movies with the largest number of ratings are considered as target movies, namely “Star Wars” with pairs , “Contact” with pairs, “Fargo” with , “Return of the Jedi” with , and “Liar, Liar” with . Each of these datasets is split randomly into for training, for validation, and for testing. The code to run the simulations will be provided as a .zip file.

Architectures for comparison. The Learn NVGF architecture in (18) is compared against two graph signal processing based methods for movie recommendation, namely the RMGCNN in [3] and the RMCayley in [4], which are implemented exactly as in the corresponding papers, with the same values for the hyperparameters. For the Learn NVGF, the values are hidden units and filters of order . The values used are for Star Wars and Contact, for Fargo, for Return of the Jedi, and for Liar, Liar. The support matrix is given by (60). All architectures are followed by a learnable local linear transformation (the same for all nodes, i.e., a LSI graph filter with ) that takes the value of the hidden units and outputs a single scalar that represents the estimated rating for that movie. A comparison with the nearest neighbor method (i.e., averaging the ratings of the nearest nodes) is also included.

Architectures for analysis. The architectures for analyzing the role of frequency creation are the same four architectures as in the authorship attribution problem. Namely, the LSI graph filter is a linear architecture unable to create frequencies, the Design NVGF and the Learn NVGF are linear frequency-creating architectures, and the GCNN with a ReLU nonlinearity is a nonlinear frequency-creating architecture. The values of and in all cases are the same.

Training. The loss function during training is the “Smooth L1” loss between the output scalar at the target node and the labels in the training set. All architectures are trained by using an ADAM optimizer [32] with forgetting factors and , and a learning rate . The training is carried out for epochs with batches of size . Validation is run every training steps. The learned filters that result in the best performance on the validation set are kept and used during the testing phase. For each experiment, realizations of the random dataset split are carried out. The average evaluation performance (measured by RMSE) is reported, together with the estimated standard deviation.
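For reference, the training loss and the evaluation metric can be written in a few lines. The sketch below assumes the common definition of the Smooth L1 loss, quadratic for errors below a threshold `beta` and linear above it, with `beta = 1` chosen here as an assumption; the helper names are illustrative and are not taken from the simulation code.

```python
import numpy as np

def smooth_l1(pred, target, beta=1.0):
    """Mean Smooth L1 loss: quadratic for |error| < beta, linear otherwise."""
    err = np.abs(np.asarray(pred, dtype=float) - np.asarray(target, dtype=float))
    loss = np.where(err < beta, 0.5 * err**2 / beta, err - 0.5 * beta)
    return loss.mean()

def rmse(pred, target):
    """Root mean squared error used to report evaluation performance."""
    diff = np.asarray(pred, dtype=float) - np.asarray(target, dtype=float)
    return np.sqrt(np.mean(diff**2))

pred = [3.5, 1.0]     # hypothetical estimated ratings
target = [4.0, 3.0]   # hypothetical true ratings
train_loss = smooth_l1(pred, target)
test_rmse = rmse(pred, target)
```

The first error (0.5) falls in the quadratic region and the second (2.0) in the linear region, which makes the loss less sensitive to large rating errors than the squared error.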

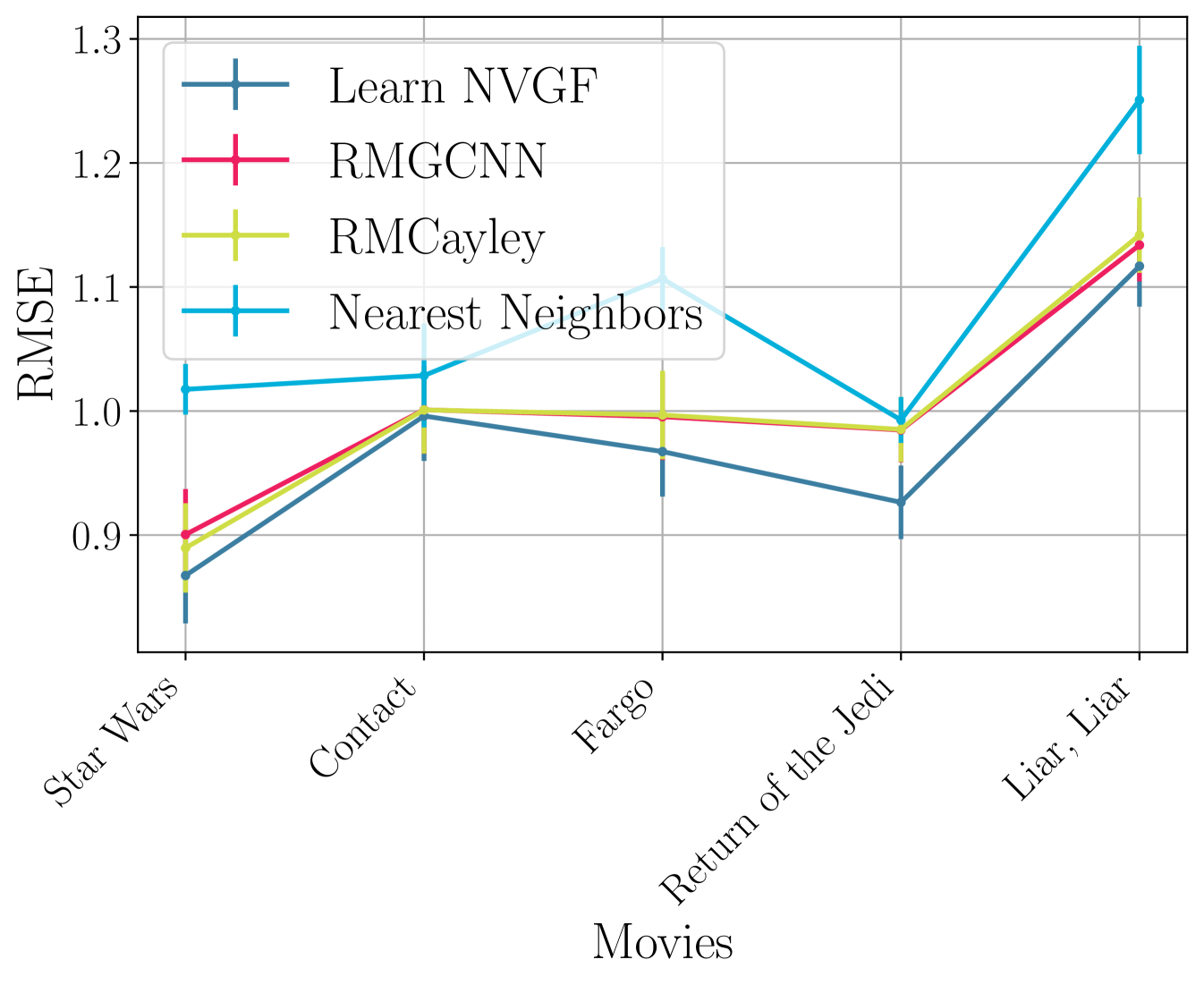

Experiment 1: Performance comparison. In the first experiment, the performance is measured by the RMSE, and the comparison between the Learn NVGF, the RMGCNN [3], the RMCayley [4], and the Nearest Neighbor approach is carried out for the aforementioned movies with the largest number of ratings. Results are shown in Figure 5. It is generally observed that the Learn NVGF performs better than the alternatives, although the performance is comparable to the RMGCNN and the RMCayley in the case of the movie Contact. The nearest neighbor approach yields worse performance.

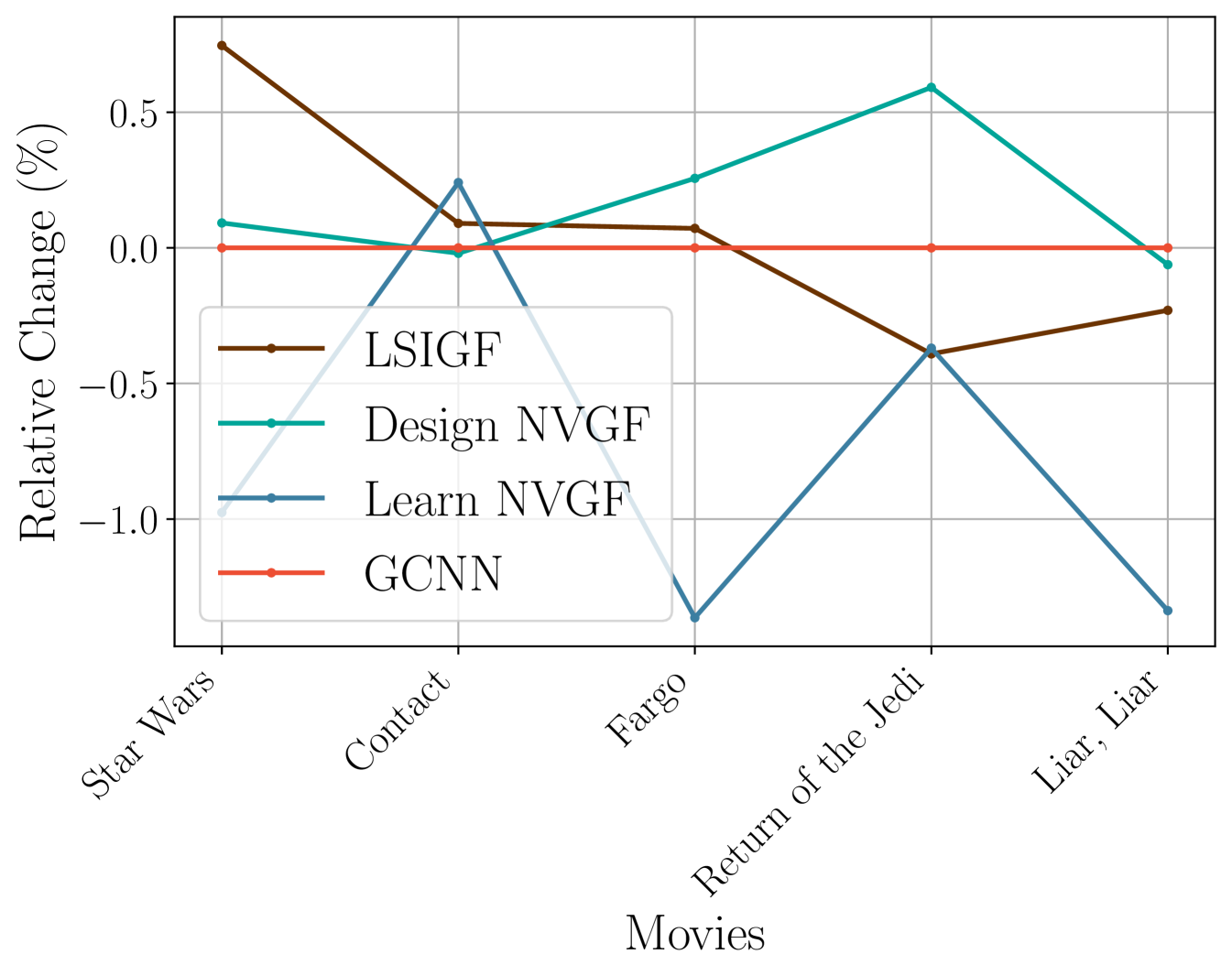

Experiment 2: Impact of nonlinearities. In this second experiment, the objective is to decouple the contribution made by frequency creation from that made by the nonlinear nature of the architecture. To do this, the GCNN architecture is taken as a baseline (a nonlinear, frequency-creating architecture), and the relative change in performance of the three other architectures is measured. Figure 6 shows that the relative change is quite small (approximately change in the highest case, the movies Fargo and Liar, Liar), which implies that all four architectures have relatively similar performance. This can be explained by computing the average frequency response of the signals in the test set of the movie Star Wars. The result is shown in Figure 7. It is observed that it is a signal with low-eigenvalue frequency content. Therefore, as expected, there is not much to gain from using architectures that create frequencies. In Figures 8, 9, and 10, the frequency responses of the output of each architecture to an input that is equal to the eigenvector associated with the largest eigenvalue, i.e., , are shown. As expected, the LSIGF (Figure 8) does not create frequency content, while the other two architectures do (Figures 9 and 10). However, since this high-eigenvalue frequency content is not really significant, the frequency creation capabilities do not markedly improve the performance.
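The frequency-creation check described above can be reproduced on a toy graph. The following sketch is illustrative only: the random symmetric support matrix, the filter taps, and the sizes `n` and `K` are all hypothetical, and the node-variant filter is assumed to take the usual form in which each power of the support matrix is weighted by a different coefficient at each node.

```python
import numpy as np

rng = np.random.default_rng(0)
n, K = 6, 3                        # hypothetical number of nodes and filter taps

# Hypothetical symmetric support matrix S with zero diagonal.
A = rng.random((n, n))
S = (A + A.T) / 2
np.fill_diagonal(S, 0.0)

# Eigendecomposition S = V diag(eigvals) V^T; eigh returns ascending eigenvalues.
eigvals, V = np.linalg.eigh(S)

def gft(x):
    """Graph Fourier transform: projection onto the eigenvectors of S."""
    return V.T @ x

x = V[:, -1]                       # eigenvector of the largest eigenvalue

# LSI graph filter: the same scalar tap h[k] at every node.
h = rng.standard_normal(K)
y_lsi = sum(h[k] * (np.linalg.matrix_power(S, k) @ x) for k in range(K))

# Node-variant graph filter: a different tap H[k, i] at every node i.
H = rng.standard_normal((K, n))
y_nv = sum(H[k] * (np.linalg.matrix_power(S, k) @ x) for k in range(K))

lsi_spectrum = np.abs(gft(y_lsi))  # energy stays on the input frequency
nv_spectrum = np.abs(gft(y_nv))    # energy appears on other frequencies
```

Because the input is an eigenvector of the support matrix, the LSI filter can only rescale it, so `lsi_spectrum` is nonzero in a single entry; the node-variant filter multiplies each node by a different coefficient and therefore spreads energy over the remaining frequencies.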