Non-asymptotic estimation of risk measures using stochastic gradient Langevin dynamics

Abstract

In this paper we will study the approximation of some law invariant risk measures. As a starting point, we approximate the average value at risk using stochastic gradient Langevin dynamics, which can be seen as a variant of the stochastic gradient descent algorithm. Further, the Kusuoka’s spectral representation allows us to bootstrap the estimation of the average value at risk to extend the algorithm to general law invariant risk measures. We will present both theoretical, non-asymptotic convergence rates of the approximation algorithm and numerical simulations.

Keywords Convex risk measure Stochastic Optimization Risk minimization Average value at risk Stochastic gradient Langevin

Mathematics Subject Classification 91G70 90C90

Statements and Declarations The authors gratefully acknowledge support from the NSF grant DMS-2005832. The authors have no competing interests to declare.

1 Introduction

Every financial decision involves some degree of risk. Quantifying risk associated with a future random outcome allows organizations to compare financial decisions and develop risk management plans to prepare for potential loss and uncertainty. By the seminal work of Artzner, Delbaen, Eber, and Heath [3], the canonical way to quantify the riskiness of a random financial position is to compute the number for a convex risk measure , whose definition we recall.

Definition 1.1.

A mapping is a convex risk measure if it satisfies the following conditions for all :

-

•

translation invariance: for all

-

•

monotonicity: if

-

•

convexity: for .

Intuitively333In the rest of the paper, for notation simplicity, we will assume risk measures to be increasing. That is, we work with . This does not restrict the generality., measures the minimum amount of capital that should be added to the current financial position to make it acceptable. Due to its fundamental importance in quantitative finance, the theory of risk measures (sometimes called quantitative risk management) has been extensively developed. We refer for instance to [18, 28, 42, 16, 45, 15, 33, 46] for a few milestones and the influential textbooks of McNeil, Frey, and Embrechts [48] and Föllmer and Schied [30] for overviews.

An important problem for risk managers in practice is to efficiently simulate the number for a financial position and a risk measure . The difficulty here stems from the fact that, unless is a “simple enough” risk measure and the law of belongs to a tractable family of distributions, there are no closed form formula allowing to compute . The goal of this paper is to develop a method allowing to numerically simulate the riskiness for general convex risk measures, and when the law of is not necessarily known (as it is the case in practical applications).

One commonly used measure of the riskiness of a financial position is the value at risk (VaR). For a given risk intolerance , the value at risk of is the -quantile of the distribution of . Despite the various shortcomings of this measure of risk documented by the academic community [48], remains the standard in the banking industry, and due to its widespread use, the computation of has been extensively studied. We refer interested readers for instance to [35, 38, 10, 25], and references therein for various simulation techniques. Recommendations [50] from the Basel Committee on Banking Supervision which advises on risk management for financial institutions have revived the development of convex risk measures such as the average value at risk (AVaR), also called conditional value at risk or expected shortfall. This risk measure is the expected loss given that losses are greater than or equal to the . That is, is given by:

| (1.1) |

For general distributions of , usually does not have closed form expressions. Therefore, in practice, numerical estimations are often required. As a result, the estimation of has received considerable attention. We refer for instance to works by Eckstein and Kupper [24] and Bühler, Gonon, Teichmann, and Wood [9] in which (among other things) the simulation of optimized certainty equivalents (of which is a particular case) are considered using deep learning techniques. One approximation technique for the is based on Monte-Carlo type algorithm. In this direction, let us refer for instance to Hong and Liu. [37] and Chen [13] on Monte Carlo estimation of and , Zhu and Zhou [64] on nested Monte Carlo estimation. More recently, motivated by developments of gradient descent methods in stochastic optimization, (in particular the stochastic Langevin gradient descent (SGLD) technique), Sabanis and Zhang [56] provide non-asymptotic error bounds for the estimation of . Other works developing such gradient descent techniques in the context of risk management include Iyengar and Ma [41], Tamar, Glassner, and Mannor [59] and Soma and Yoshida [58]. Essentially, these papers take advantage of new developments in machine learning and optimization, see e.g. Allen-Zhu [2], Gelfand and Mitter [34], Nesterov [49] and Raginsky, Rakhlin, and Telgarsky [53]. Let us also mention the recent work of Reppen and Soner [54] who develop a data–driven approach based on ideas from learning theory.

In this work we go beyond the numerical simulation of by extending stochastic gradient descent type techniques to compute a large family of risk measures, including the . We are interested in this work in deriving explicit (non–asymptotic) error estimates for the approximation. We will restrict our attention to law-invariant convex risk measures (whose definition we recall below), since in practice, only the law of a financial position can be (approximately) observed. In fact, the requirement for a risk measure to be law-invariant is natural and is satisfied by most risk measures444All risk measures considered in this work will be implicitly assumed to be convex law–invariant risk measures..

Definition 1.2.

[32] A risk measure is law-invariant if for all with the same distribution, we have

To the best of our knowledge, the papers considering (non-parametric) estimation of general convex risk measures are Weber [61], Belomestny and Krätschmer [6] and Bartl and Tangpi [4]. These papers consider a (data-driven) Monte-Carlo estimation method by proposing a plug-in estimator based on the empirical measure of the historical observations of the underlying distribution of the random outcome. Weber [61] proves a large deviation theorem, and Belomestny and Krätschmer [6] provide a central limit theorem. Note that both of these papers give asymptotic estimation results. Bartl and Tangpi [4] provide sharp non-asymptotic convergence rates for the estimation.

To estimate general law-invariant convex risk measures, we rely on the Kusuoka’s spectral representation [46]. Intuitively, this representation says that any law invariant risk measure can be constructed as an integral of the risk measure. Therefore, the first step of our approximation of general law–invariant risk measures is to estimate . Since we would like to analyze approximation algorithms for the risk of claims with possibly non-convex payoffs, we employ the idea of Raginsky, Rakhlin, and Telgarsky [53] and use the stochastic gradient Langevin dynamic which, essentially, adds a Gaussian noise to the unbiased estimate of the gradient in stochastic gradient descent. To quantify the distance between the estimator and the true value of the risk measure, we present non–asymptotic rates on the mean squared estimation error both in the case of a , and of general law–invariant risk measures. The proof of the mean squared error of estimating the makes use of the observation that the SGLD algorithm is a variant of the Euler-Maruyama discretization of the solution of the Langevin stochastic differential equation (SDE). This observation allows us to use results on the convergence rate of the Euler-Maruyama scheme and classical techniques of deriving the convergence rate of the solution of the Langevin SDE to the invariant measure. For the rate on the mean squared estimation error of the general case, our proof relies heavily on Kusuoka’s representation which allows to build general law–invariant risk measures from .

Beyond our theoretical guarantees for the convergence of approximation algorithms for general convex risk measures, the present work also contributes to the non-convex optimization literature in that we propose a new proof for the convergence of SGLD algorithms for some non–convex objective functions. The idea is essentially to reduce the problem into the analysis of contractivity properties of the semi–group originating from a Langevin diffusion with non–convex potential. This problem was notably investigated by Eberle [23].

The paper is organized as follows: We start by describing the approximation techniques and presenting the main results in Section 2. In the same section, we also present numerical results on the estimation of AVaR. In Section 3, we prove the rates on the mean squared error for the estimation of AVaR. The derivation of the mean squared error for the estimation of a general law-invariant risk measure is done in Section 4.

Notations: Let and let be the set of real positive numbers. Fix an arbitrary Polish space endowed with a metric . Throughout this paper, for every -dimensional -valued vector with , we denote by its coordinates. For , we also denote by the usual inner product, with associated norm , which we simplify to when is equal to . For any , will denote the space of matrices with -valued entries.

Let be the Borel -algebra on (for the topology generated by the metric on . For any , for any two probability measures and on with finite -moments, we denote by the -Wasserstein distance between and , that is

where the infimum is taken over the set of all couplings of and , that is, probability measures on with marginals and on the first and second factors respectively.

2 Approximation technique and main results

In this section we rigorously describe the approximation method developed in this article as well as our main results. Throughout, we fix a probability space on which all random variables will be defined, unless otherwise stated. Let us denote by the space of essentially bounded random variables on this probability space. The starting point of our method is based on the following spectral representation of law-invariant risk measures due to Kusuoka [46]:

Theorem 2.1.

A mapping is a law-invariant risk measure if and only if it satisfies

| (2.1) |

for some functional , where is the set of all Borel probability measures on .

In fact, this spectral representation suggests that the risk measure is the “basic building block” allowing to construct all convex law–invariant risk measures. Thus, the idea will be to propose an approximation algorithm for that will be later bootstrapped to derive an algorithm for general law invariant risk measures. This approach is also used in [4] for a very different approximation method.

2.1 Approximation of average value at risk

Let us first focus on estimating . For this purpose, recall (see e.g. [30, Proposition 4.51]) that for every and , takes the form

In other words, is nothing but the value of a stochastic optimization problem. In most financial applications, the contingent claim whose risk is assessed is of the form where is a –dimensional random vector of risk factors, and , see e.g. [48, Section 2.1] for details. Note that here the space can be infinite dimensional. A standard practice to approach the infinite dimensional case is to use neural networks for approximation, which leads to non-convex objective functions. Therefore, we allow to be non-convex with certain regularity conditions. A standard example arises when is the profit and loss (PL) of an investment strategy. In this case, is the portfolio and the random vector represents (increments) of the stock prices. That is, where and are the values of the stock at times and , respectively. Hence, we let be in a compact and convex set , and our goal will be to estimate the value of the (multi-dimensional) risk minimization problem

| (2.2) |

A natural way to numerically solve such problems is by gradient descent. However, when the dataset is large, gradient descent usually does not perform well, since computing the gradient on the full dataset at each iteration is computationally expensive.

Among others, one method that has been proposed to get around the high computational cost of gradient descent is the stochastic gradient descent (SGD) algorithm, which replaces the true gradient with an unbiased estimate calculated from a random subset of the data. A more recent approach, called the Stochastic Langevin Gradient Descent, injects a random noise to an unbiased estimate of the gradient at each iteration of the SGD algorithm. Originally introduced by Welling and Teh. [62] as a tool for Bayesian posterior sampling on large scale and high dimensional datasets, SGLD maintains the scalability property of SGD, and has a few advantages over the SGD: By adding a noise to SGD, SGLD navigates out of saddle points and local minima more easily [7], outperforms SGD in terms of accuracy [19], and overcomes the curse of dimensionality [14]. Moreover, SGLD also applies to cases where the objective function is non-convex but sufficiently regular [34] [53].

We will apply the SGLD in the present context of estimation of . Recall that our goal is to solve the optimization problem given in equation (2.2). Let , and consider the (objective) function

and, given a strictly positive constant , let

| (2.3) |

be the usual penalized objective function, where

denotes the squared distance from to the set . Since for small we have

we will approximate the left hand side above using the SGLD algorithm, which consists in approximating its minimizer by the (support of the) invariant measure of the Markov chain given by

| (2.4) |

where are independent Gaussian random variables. In the practice of financial risk management, the distribution of is typically unknown. This is a well-studied issue in quantitative finance, refer for instance to [5, 17, 44] and the references therein. In particular, cannot be directly computed. It will be replaced by an unbiased estimator. Following Monte–Carlo simulation ideas, we let be independent copies of and be independent Brownian motions, and we thus let

In the following, we will take for simplicity. Put

| (2.5) |

| (2.6) |

and

| (2.7) |

Hence we will show that

| (2.8) |

approximates . Note that the optimal portfolio can be easily recovered. It is simply the last coordinates of . Similarly, the value-at-risk can be obtained from the Markov chain . See Remark 3.1 for details. Let us now formulate the assumptions we make on and the random vector .

Assumption 2.2.

The random variable takes values in and

the function is Borel measurable, and they satisfy

has finite fourth moment.

The function Lipschitz–continuous and continuously differentiable.

.

The random variable is bounded, uniformly in , and is Lipschitz, uniformly in

Consider the function defined as

It holds

Let us briefly comment on these conditions before stating the result. The integrability, regularity, and lower boundedness conditions allow to ensure that the problem is well-posed. The boundedness condition is assumed mostly to simplify the exposition. Most of our statements will remain true if it is replaced by a suitable integrability condition. We introduce the more involved condition to make for the possible lack of convexity of the objective function . This condition is by now standard when employing coupling by reflection techniques to prove contractivity of diffusion semigroups. We refer for instance to Eberle [23, 22] or the earlier work of Chen and Li [12]. Note, for instance, that this condition is automatically satisfied if is convex (since in this case is strongly convex) or when is strictly convex outside a given ball (see [23, Example 1]).

The following is the first main result of this work:

Theorem 2.3.

Let Assumptions 2.2 hold. Let be such that . For all and , we have

| (2.9) |

where the constants are given in the appendix.

Theorem 2.3 provides a non-asymptotic rate for the convergence of the estimator to the (optimized) average value at risk. Such a rate is crucial in applications since it gives a precise order of magnitude for the choice of the parameters and needed to achieve a desired order of accuracy. Moreover, the rate is independent of the dimension , implying in particular that the rate is not made worst when increasing the size of the portfolio (or in general the number of risk factors). Furthermore, observe that this estimator is rather easy to simulate: one only needs to simulate independent Gaussian random variables, for each of them simulate the iterative scheme (2.6) and compute the empirical average of the outcomes. We provide numerical results on the estimation of AVaR in Section 2.3 below.

Remark 2.4.

Observe that the method developed here can also allow (with minor changes) to simulate the value function of utility maximization problems of the form

where is a concave utility function and the expectation when , or even of the robust utility maximization problem

where is the set of possible distributions of . In the latter case, one will need to compute (or find an appropriate unbiased estimator of) with

This is easily done for instance when is a ball with respect to the Wasserstein metric around a given distribution , see e.g. [5].

2.2 Approximation of general convex risk measures

Let us return to the problem of approximating general law-invariant convex risk measures. In this context (as in the case of ) our goal is to simulate the optimized risk measure

To that end, let us recall a notion of regularity of risk measures introduced in [4] that will be needed to derive an explicit non-asymptotic convergence rate. Recall that a random variable is said to follow the Pareto distribution with scale parameter and shape parameter if

Definition 2.5.

[4] Let , and let follow Pareto distribution with scale parameter 1 and shape parameter . A convex risk measure is said to be -regular if it satisfies

We refer to [4] for a discussion on this notion of regularity, but note for instance that is –regular for all and that this notion of regularity is slightly stronger than the well-known Fatou property and the Lebesgue property often assumed for risk measures, see e.g. Föllmer and Schied [30]. Moreover, one consequence of –regularity is the following slight refinement of Kusuoka’s representation: The risk measure satisfies

| (2.10) |

for some , see [4, Lemma 4.4] for details. Thus, the estimator we consider for is given by

| (2.11) |

for some , and where is the estimator of given by (2.8), which implicitly depends on through the objective functions and . The following theorem gives a convergence rate for the approximation of the general law-invariant convex risk measure by .

Theorem 2.6.

Let be a –regular convex risk measure with . Let be bounded and satisfy the assumptions of Theorem 2.3. Let . For all and , we have

where is given in the Appendix, and constants and correspond to those given in the Appendix, with replaced by .

2.3 Numerical results on AVaR

Let us complement the above theoretical guarantees with empirical experiments555Code available at https://github.com/jiaruic/sgld_risk_measures. We first focus on the approximation of the average value at risk and the value at risk with respect to the time evolution of the Markov chain in the SGLD algorithm. Thus, for the numerical computations, we set

We will consider two cases in our experiments. In the first case we assume the underlying distribution to be known and use Monte–Carlo simulation, and in the second case we use real historical stock price data.

2.3.1 Monte Carlo simulation

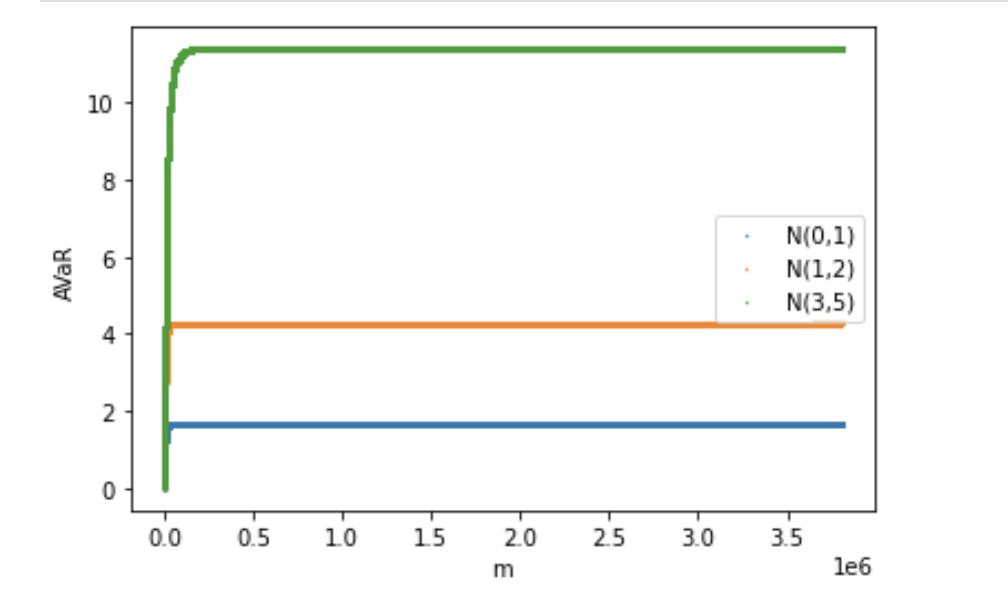

For the Monte Carlo experiments, we set . Figure 1(a) shows the convergence of AVaR in the 1 dimensional case with , where is sampled from a Gaussian distributions. Figure 1(b) shows the estimation error, , where is the theoretical average value at risk for 1-dimensional Gaussian distributions given by

| (2.12) |

where and are respectively, the PDF and the CDF of a standard Gaussian distribution.

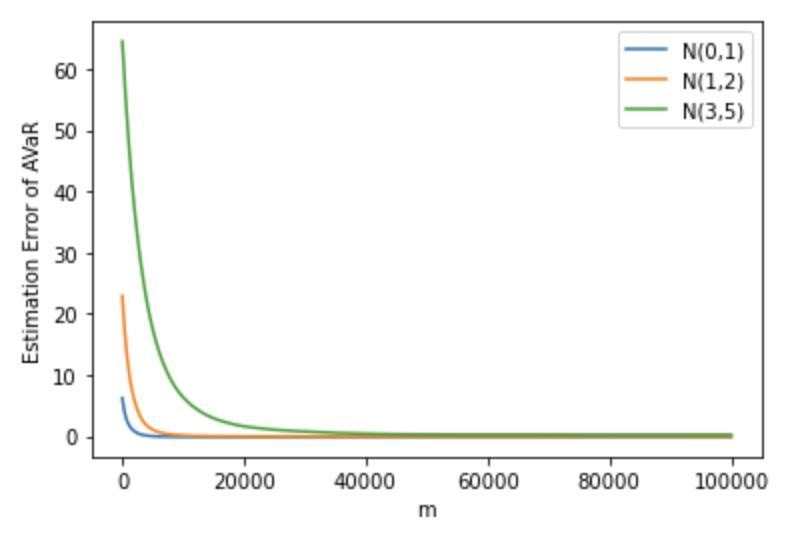

For the multi-dimensional case, we take the function

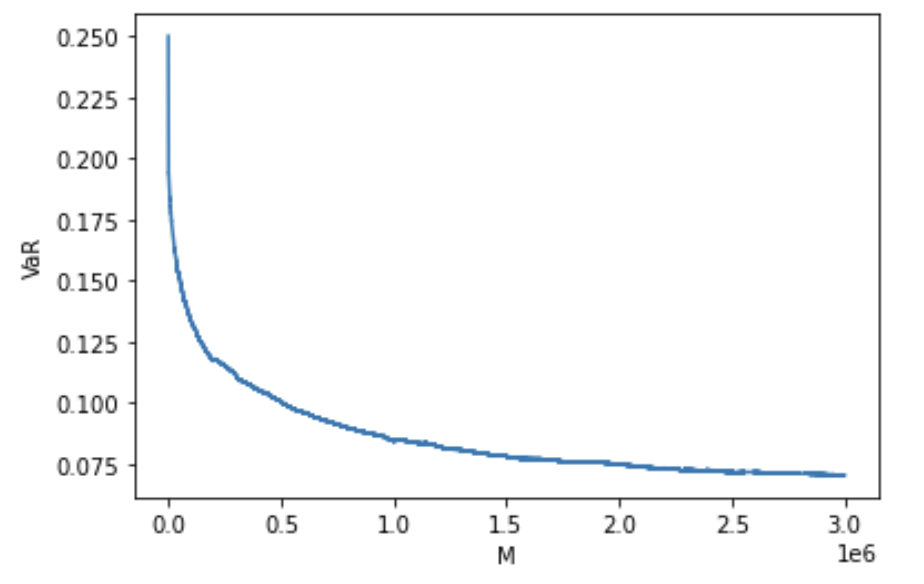

Figure 2(a) and Figure 2(b) show the convergence of VaR and AVaR in the 2-dimensional case, where is sampled from and is sampled from .

2.3.2 Numerical results with real data

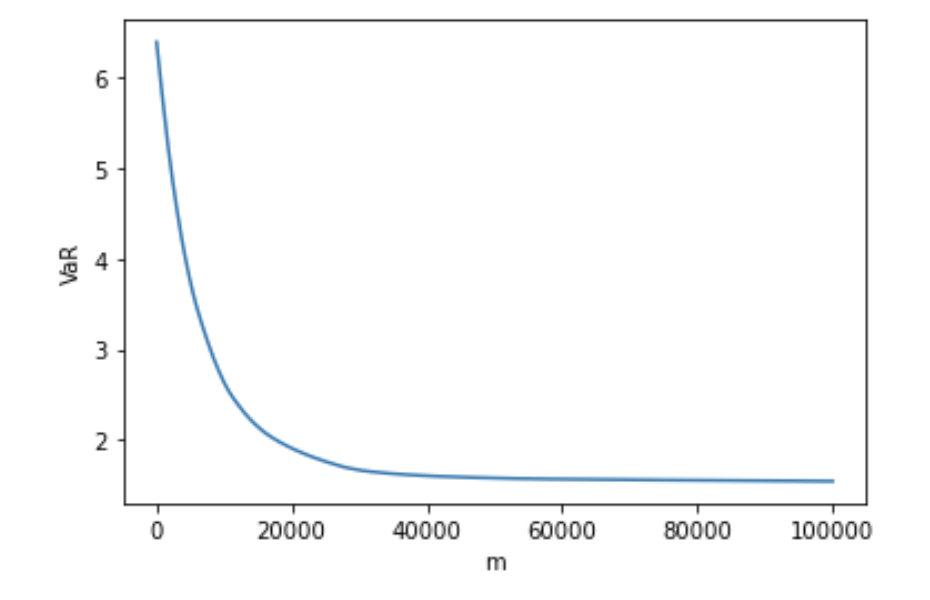

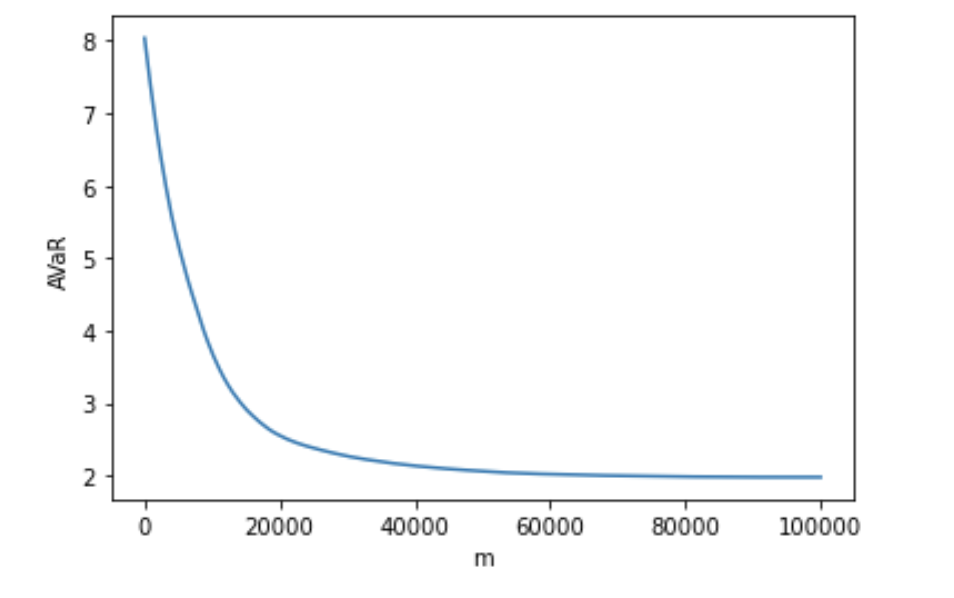

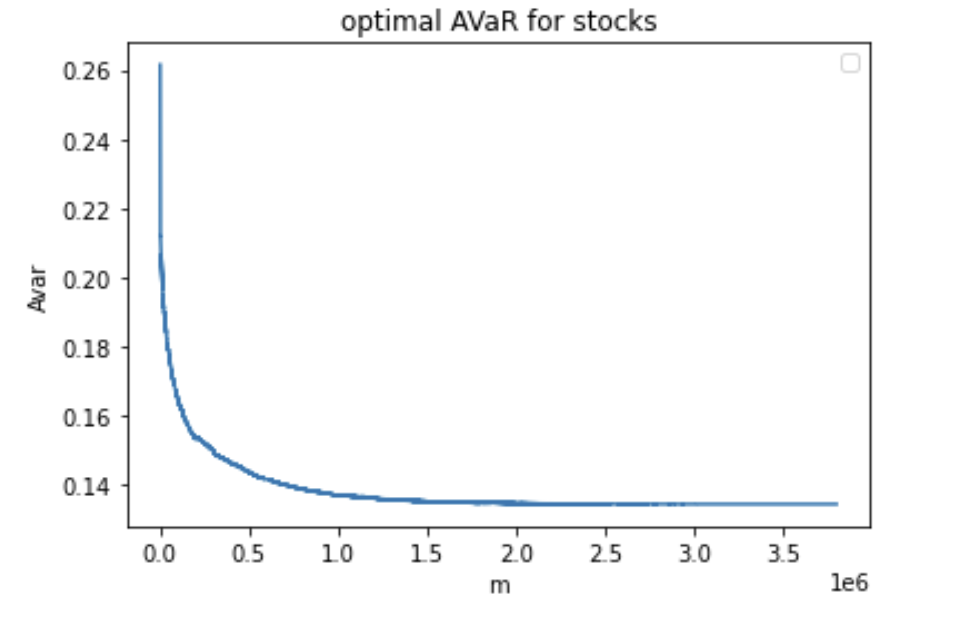

In this subsection, we compute AVaR for a portfolio of 106 stocks using real aggregated stock prices over 15-minute time intervals from January 2, 2015 to August 31, 2015. Among 128 NASDAQ stocks that are "sufficiently liquid", we remove the ones with missing values, and use the remaining 106 stocks. For a detailed description of the data used and for a definition of "sufficiently liquid", please refer to Section 3.2 of Pohl, Ristig, Schachermayer, and Tangpi [52]. We use changes in stock prices instead of stock prices themselves, because stock prices are highly dependent. We present paths of the estimated optimized VaR and AVaR of the portfolio of 106 stocks in Figures 3(a) and 3(b) respectively.

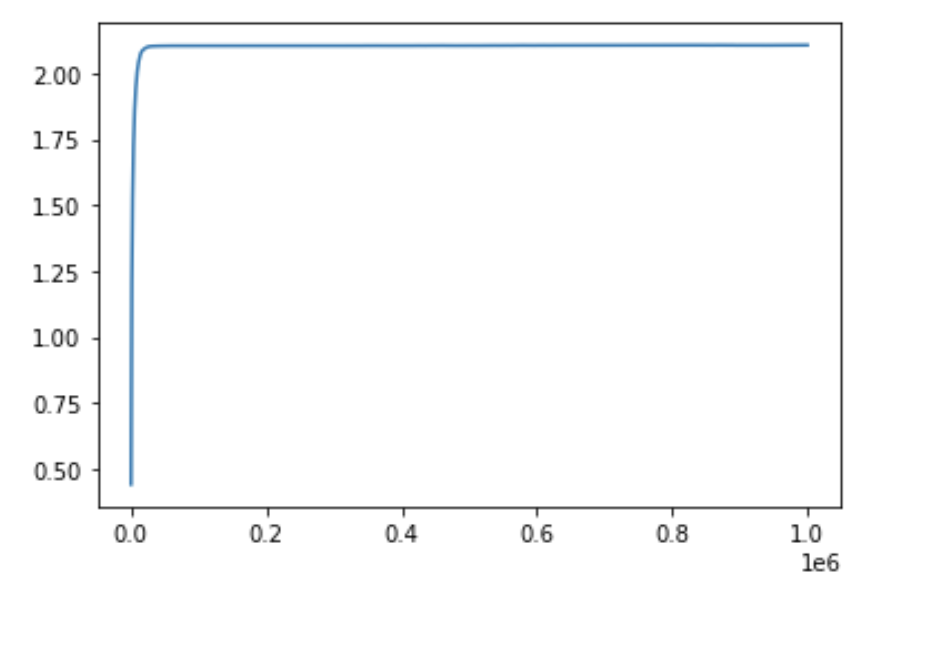

In addition, our approach can also be easily applied to a fixed portfolio of stocks. We take 20 stocks from the 106 stocks described above, and consider a fixed portfolio of equal weights, i.e., for each . We present paths the estimated AVaR in Figure 4.

2.4 Numerical results on general risk measures

In order to simulate general risk measures one needs to specify the penalty function , or alternatively the precise form of since is given by [31]

In general, the simulation of as given in (2.11) will probably require introducing neural networks since it is the value of an infinite dimensional optimization problem. This will be addressed in future research. We will focus here on a case where the problem can be simplified.

In fact, denote by the so–called linear functional derivative of . It is defined as the function such that

Up to an additive constant, there exists a unique such derivative , see e.g. [11]. We have the following:

Proposition 2.7.

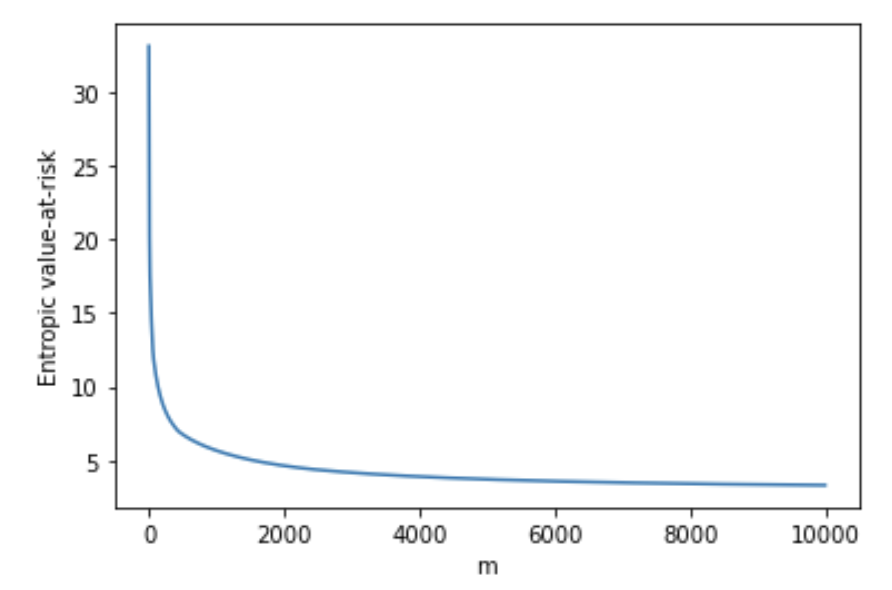

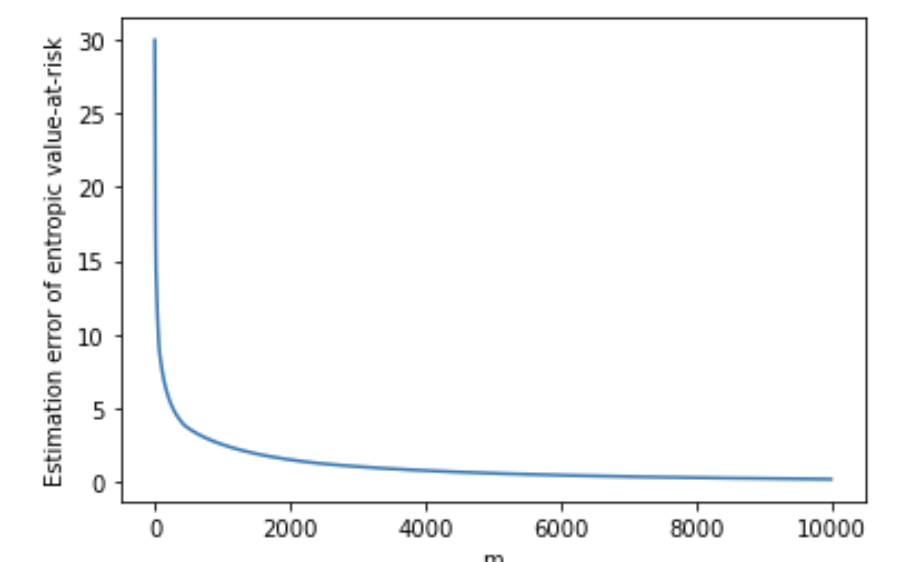

As an illustrative example, we consider the so–called entropic value-at-risk introduced by Ahmadi-Javid [1] and studied e.g. by Pichler and Schlotter [51] and Föllmer and Knispel [27] in connection to large portfolio asymptotics. This is a risk measure based on the Rényi entropy given by

with for . The associated penalty function takes the form

To numerically compute entropic value-at-risk, for a large , we simulate

For Monte Carlo simulation, we set , , , and estimate the supremum over by the maximum of 5000 random partitions, each consisting of points, of the interval . Figure 5(a) shows the convergence of the entropic value at risk in the 1 dimensional case with , where is sampled from . Figure 5(b) shows the estimation error compared to the theoretical entropic value-at-risk for given by .

3 Rates for the average value at risk

This section is dedicated to the proof of Theorem 2.3. We will start by some preliminary considerations allowing us to introduce ideas used in the proofs. The details of the proofs will be given in the subsection 3.2.

3.1 Preliminaries

The starting point of our method is to recognize (2.6) as the –th step of the Euler-Maruyama scheme that discretizes the stochastic differential equation

| (3.1) |

where is a -dimensional Brownian motion. This SDE is the Langevin SDE, with inverse temperature parameter . The Langevin SDE is widely studied in physics [57] and for the sampling of Gibbs distribution via Markov chain Monte–Carlo methods [21]. Equipping the probability space with the –completion of the filtration of , the equation (3.1) admits a unique strong solution. It is well-known that this solution has a unique invariant measure (that we denote by ) and whose density reads666As usual, in this article, we use the same notation for a probability measure on for any and its density function.

| (3.2) |

see e.g. [47, Lemma 2.1]. In this work, the interest of the Langevin equation (aside from its analytical tractability) stems from the fact that the limiting measure of as concentrates on the minimizers of , which we will show exist. This follows from results of Hwang [40]. Intuitively, this means that if is the minimizer of , then for

| (3.3) |

Moreover, the Langevin equation allows us to exploit classical techniques in order to derive explicit convergence rates to the invariant measure in the present non–convex potential case.

Remark 3.1.

One interesting byproduct of our method is that, the simulation of directly allows to compute the value at risk and the optimal portfolios, as well as deriving non–asymptotic rates. Let us illustrate this on the problem of simulation of optimal portfolios in Equation 2.2. As observed above, converges to a measure supported on the optimal portfolios. Now, let be a strictly convex function such that the gradient is invertible. Then, by Taylor’s expansion we have

for some random variable , showing that

Therefore, provided that the inverse of does not grow too fast, the argument we give below to prove Theorem 2.3 would allow to derive theoretical guarantees for the optimal portfolio as well, replacing by .

3.2 Proof of Theorem 2.3

Throughout this section we assume that the assumptions of Theorem 2.3 are satisfied. We split the proof into several intermediate lemmas. The first one is probably well known, it asserts that the optimization problem defining admits a solution.

Lemma 3.2.

The function defined in Equation 2.3 admits a minimum.

Proof.

In [40, Proposition 2.1], Hwang gives a sufficient condition for admitting a minimum: is tight. A sufficient condition for the tightness of is that there exists such that the set is compact [40, Proposition 2.3]. The rest of the proof checks the compactness of set for any .

Since is continuous, the set is closed as the pre-image of the closed set . In addition, since for large enough, we have that is bounded. To see this, assume to the contrary that is unbounded. Then there exists a sequence such that . Then for the subsequence of with , we have , which contradicts . Thus, the set is bounded, and is therefore compact. ∎

To derive the claimed convergence rate, we decompose the expected error into terms that will be handled independently. First, we will exploit the approximation (3.3). Next, using the independent Brownian motions introduced just before (2.6), we construct i.i.d. copies of the solution of the Langevin equation as solutions of the SDEs

| (3.4) |

Recall that

and

We decompose the error as

| (3.5) |

The rest of the proof consists in controlling each term above separately.

Lemma 3.3.

Proof.

Let denote the last coordinates of . By the definition of and Jensen’s inequality, we have

| (3.6) |

For the first term in (3.6), using the definition of , we have

| (3.7) |

where we used that is -Lipschitz in the last step.

To control , using the definitions of and , we have

Using this recursive relationship, it can be checked by induction that we have

| (3.8) |

Note that the derivative of is where we denote . In addition, . Therefore, is Lipschitz. By adding and then subtracting , and then using the definitions of and , we have

| (3.9) |

Combining equations (3.8) and (3.9), we have

Using the discrete version of the Grönwall’s inequality [36, Proposition 5], we have

| (3.10) |

For the second term in (3.6), we rewrite as

| (3.11) |

where the last step follows because the set is compact.

It remains to bound the fourth moments of and . Using the definition of , and letting we have

where we used the compactness of in the last step. Using this recursive relationship, it can be checked by induction that for all , we have

| (3.12) |

where the second inequality uses sum of geometric series. The bound of is given in the proof of Lemma 3.4 below. Combining equations (3.6), (3.2), (3.10),(3.11), and the moments given in equations (3.2) and (3.2), and taking , and , we have the result of the lemma. ∎

Lemma 3.4.

Proof.

By the definition of and Jensen’s inequality, we have

| (3.13) |

where the last inequality follows by Cauchy-Schwarz inequality.

Next, we will bound the fourth moments of and . Recall . Using the definition of , we have

where the third inequality follows from the fact that is Lipschitz. Using this recursive relationship, it can be checked by induction that for all ,

| (3.14) |

where the second inequality follows by properties of geometric series. By the same argument, we also have

| (3.15) |

Let us now turn to the first term on the right hand side of (3.13). Adding and subtracting , we have

| (3.17) |

Using that is –Lipschitz, it follows that

Next, we will bound the fourth moment of the difference between and . Using the definitions of and , given in Equation 2.4 and Equation 2.6 respectively, we have

Using this recursive relationship, it can be checked by induction that we have

| (3.18) |

Let (resp. ) denote the last coordinates of (resp. ). Define the vector , and let be a vector with in the first entry and everywhere else. Adding and subtracting , we get

| (3.19) | ||||

| (3.20) |

where the last inequality follows by Assumption 2.2 . Putting equations (3.18), (3.20) together, we have

Using the discrete version of the Grönwall’s inequality [36, Proposition 5], we have

| (3.21) |

Therefore, it follows that

For the second term on the right hand side of (3.17), we use a law of large number type argument. In fact, we have

| (3.22) |

For , using that and are independent, we have

Therefore, we can estimate the desired term as

| (3.23) | |||

| (3.24) |

with given in (5.4). Here the third to last step follows by Jensen’s inequality and tower property. The penultimate step follows by the boundedness of and Lipschitzness of . In the last step, we used that has finite fourth moment, and equation (3.2). Finally, putting (3.13), (3.16) and (3.24) together yields the lemma. ∎

We now investigate the second term on the right hand side of (3.5).

Lemma 3.5.

Under the conditions of Theorem 2.3, for all and , we have

where constants , and are given in Equations (5.6) and (5.7).

Proof.

Following the same argument as in the proof of Lemma 3.3, we have

| (3.25) |

Let be a continuous time approximation of the Euler-Maruyama scheme in (2.6). One way to define such an approximation is by setting

with . Note that for each , and , we have . In other words, coincides with at the time discretization points. For the first term in (3.25), we have

where we used that is –Lipschitz in the first inequality. By standard results on the error estimation for SDE approximations, see e.g. [43, Theorems 10.3.5 and 10.6.3], we have

| (3.26) |

for some constant . Thus, we have

| (3.27) |

For the second term on the right hand side of (3.25), by Cauchy-Schwartz inequality, we have

| (3.28) |

where we used equation (3.26) in the last step.

It remains to control the fourth moment of , since that of was bounded in Equation (3.2). Since solves the SDE

with a linearly growing drift, the following bound on the fourth moment of the solution follows by standard arguments:

| (3.29) |

where is a constant depending only on the Lipschitz constant of . We omit the proof. Putting equations (3.27), (3.28), (3.29) and (3.2) together, and recalling that , we have the result of the lemma. ∎

Now we move on to analyzing the third term in (3.5).

Lemma 3.6.

Proof.

Since are i.i.d. copies of , a standard law of large numbers argument gives

where is the variance of . Since is –Lipschitz, it follows by [20, Corollary 5.11], that the law of satisfies the Poincaré inequality. That is,

Now using that is –Lipschitz, we have . Thus, it follows that

where the last inequality follows from (3.29). Taking yields the result of the lemma. ∎

In the next lemma we analyze the fourth term in (3.5). Essentially, this concerns the rate of convergence of the law of the solution of the Langevin equation to its invariant measure .

Lemma 3.7.

Proof.

The investigation of convergence rates to the invariance measure is an active research area, see e.g. [8]. In the present case with non-convex potential functions , this follows from the so-called coupling by reflection arguments of Eberle [22]. In fact, by 2.2. , it follows from [22, Corollary 2.1], (see also [23, Corollary 2]) that there is a constant depending only on and such that

| (3.30) |

It now remains to bound by . Let be an optimal coupling of and , i.e. such that

see e.g. [60] for existence of . Above, we denote by the expectation under of , with . By definition of and Lipschitz–continuity of , it holds

Taking the expectation with respect to we have

| (3.31) |

where . As in the proof of Lemma 3.5, see e.g. Equation 3.29, has second moment bounded by a constant . Concerning the term , note that it holds

Since is -Lipschitz, for some constant . We thus have

| (3.32) |

Using the same argument, . Therefore, we have . Combining this with (3.30) and (3.31) yields the desired result. ∎

Remark 3.8.

If the function is convex, it follows that is strongly convex in the sense that , where is the identity matrix. In this case, the exponential convergence to equilibrium follows by standard arguments, see e.g. [8]. It fact, we have the following bound is second order Wasserstein distance:

| (3.33) |

In this case, a slight modification of the above arguments allow to get the bound

The estimation of the fifth term in the decomposition (3.5) is an immediate consequence of the existence of second moment of the invariant measure obtained in the proof of the preceding lemma. In fact, by definition of and we have

| (3.34) |

for a constant . We conclude the proof of the theorem with the following lemma estimating the last term on the right hand side of (3.5).

Lemma 3.9.

Proof.

First recall, see Equation 2.2 and Lemma 3.2 that where is the optimizer in (2.2). Now, consider the differential entropy

Rearranging the terms gives the following expression for the integral of :

| (3.36) |

Since for any continuous random variable, a Gaussian distribution with the same second moment maximizes the differential entropy ([63, Theorem 10.48]), it holds

| (3.37) |

and using that (see equation (3.32)) and subtracting from both sides of (3.36), we have

Hence, using the fact that is -Lipschitz, we can estimate the exponent in the integral above as

Thanks to the above inequality, using we obtain

For the other side of the inequality, since is a minimizer of , we have

Using and concludes the proof. ∎

4 Rate for general law invariant convex risk measures

We now focus on the estimation of the approximation error of general convex risk measures. As explained in Section 2, the main argument for the derivation of the rate is the representation of the (law invariant) convex risk measure with respect to . One technical difficulty is that this representation involves an integral with respect to the risk aversion level of . Notice that both the functions and as well as the Markov chain in the approximation scheme depend on . We will make this dependence explicit in this section by writing , , and for the function and processes defined in (2.3), (3.4) and (2.6) respectively.

4.1 Proof of Theorem 2.6

This subsection covers the proof of Theorem 2.6. Recall that we approximate a general law invariant convex risk measure by

with . Further define

| (4.1) |

To begin, we decompose the approximation error into two parts:

| (4.2) |

Let us estimate the first term. First, we will show that for all , satisfies

| (4.3) | ||||

Note that going from the definition in (4.1) to the expression in (4.3) requires interchaging the infimum and the supremum and then the infimum and the integral in the definition given in (4.1). Since the supremum is taken over a compact set and the function is continuous in and upper semi–continuous and concave in , by Fan’s minimax theorem, see [26, Theorem 2], we can interchange the supremum and the infimum. To interchange the infimum and the integral, we apply Rockafellar’s interchange theorem [55]. Thus, using Equation 2.10 and Equation 4.3, it holds that

Let us partition the interval into , where for every . Defining

, we obtain the estimation

| (4.4) |

Now by [4, Lemma 4.5 and Lemma 4.3], we have

| (4.5) |

for every . Therefore, for any given and , it holds

| (4.6) |

Choosing and using (4.4), (4.5) and (4.6), we have

Since , it holds for some universal constant , and thus

| (4.7) |

For the second term in equation (4.2), we use the error rate for the estimation of AVaR. Let be the set of all random probability measures on . We have

For each random measure , define the corresponding random variable , and let be the set of all such random variables. To make use of the error rate for the estimation of AVaR, we first show the set of random variables is directed upward, i.e. for any pair of random variables , there exists with . Then, by the following theorem, we can rewrite for some increasing sequence .

Theorem 4.1.

[29] If is directed upward, there exists an increasing sequence such that -almost surely.

To show is directed upward, for any define the set of events

| (4.8) |

and the random measure

| (4.9) |

Then, we have and . Therefore, is directed upward. By Theorem 4.1, there exists an increasing sequence in with -almost surely, and we have

where we used monotone convergence theorem and Fubini’s theorem in the second line. Using Theorem 2.3 and taking the supremum over , we have the result of the theorem.

5 Appendix

Here we give explicit formulas for constants in the proof of Theorem 2.3.

| (5.1) | ||||

| (5.2) | ||||

| (5.3) | ||||

| (5.4) | ||||

| (5.5) | ||||

| (5.6) | ||||

| (5.7) | ||||

| (5.8) | ||||

| (5.9) | ||||

| (5.10) | ||||

| (5.11) | ||||

| (5.12) | ||||

| (5.13) | ||||

| (5.14) | ||||

| (5.15) | ||||

| (5.16) | ||||

| (5.17) |

References

- Ahmadi-Javid [2012] A. Ahmadi-Javid. Entropic value-at-risk: A new coherent risk measure. J Optimiz Theory App, 155(3):1105–1123, 2012.

- Allen-Zhu [2018] Zeyuan Allen-Zhu. Natasha 2: Faster non-convex optimization than sgd. Advances in neural information processing systems, 31, 2018.

- Artzner et al. [1999] Philippe Artzner, Freddy Delbaen, Jean Marc Eber, and David Heath. Coherent measures of risk. Math. Finance, 9:203–228, 1999.

- Bartl and Tangpi [2022] Daniel Bartl and Ludovic Tangpi. Nonasymptotic convergence rates for the plug-in estimation of risk measures. Mathematics of Operations Research, 2022.

- Bartl et al. [2020] Daniel Bartl, Samuel Drapeau, and Ludovic Tangpi. Computational aspects of robust optimized certainty equivalents and option pricing. Math. Finance, 30:287–309, 2020.

- Belomestny and Krätschmer [2012] Denis Belomestny and Volker Krätschmer. Central limit theorems for law-invariant coherent risk measures. Journal of Applied Probability, 49(1):1–21, 2012.

- Bhardwaj [2019] Chandrasekaran Anirudh Bhardwaj. Adaptively preconditioned stochastic gradient langevin dynamics. arXiv preprint arXiv:1906.04324, 2019.

- Bolley et al. [2012] François Bolley, Ivan Gentil, and Armand Guillin. Convergence to equilibrium in Wasserstein distance for Fokker–Planck equations. J. Funct. Anal., 263(8):2430–2457, 2012.

- Bühler et al. [2019] Hans Bühler, Lukas Gonon, Josef Teichmann, and Ben Wood. Deep hedging. Quantitative Finance, 19(8):1271–1291, 2019.

- Butler and Schachter [1997] JS Butler and Barry Schachter. Estimating value-at-risk with a precision measure by combining kernel estimation with historical simulation. Rev. Deriv. Res., 1:371–390, 1997.

- Carmona and Delarue [2018] René Carmona and François Delarue. Probabilistic theory of mean field games with applications. I, volume 83 of Probability Theory and Stochastic Modelling. Springer, Cham, 2018. ISBN 978-3-319-56437-1; 978-3-319-58920-6. Mean field FBSDEs, control, and games.

- Chen and Li [1989] Mu-Fa Chen and Shao-Fu Li. Coupling methods for multidimensional diffusion processes. The Ann. Probab., pages 151–177, 1989.

- Chen [2008] Song Xi Chen. Nonparametric estimation of expected shortfall. Journal of Financial Econometrics, 6(1):87–107, 2008.

- Chen et al. [2014] Tianqi Chen, Emily Fox, and Carlos Guestrin. Stochastic gradient hamiltonian monte carlo. In International conference on machine learning, pages 1683–1691. PMLR, 2014.

- Cheridito and Li [2009] Patrick Cheridito and Tianhui Li. Risk measures on Orlicz hearts. Math. Finance, 19(2):189–214, 2009.

- Cheridito et al. [2006] Patrick Cheridito, Freddy Delbaen, and Michael Kupper. Dynamic monetary risk measures for bounded discrete-time processes. Electron. J. Probab., 11(3):57–106, 2006.

- Cont et al. [2010] Rama Cont, Romain Deguest, and Giacomo Scandolo. Robustness and sensitivity analysis of risk measurement procedures. Quantitative finance, 10(6):593–606, 2010.

- Delbaen [2012] Freddy Delbaen. Monetary Utility Functions. Osaka University Press, 2012.

- Deng et al. [2020] Wei Deng, Guang Lin, and Faming Liang. A contour stochastic gradient langevin dynamics algorithm for simulations of multi-modal distributions. Advances in neural information processing systems, 33:15725–15736, 2020.

- Djellout et al. [2004] H. Djellout, A. Guillin, and L. Wu. Transportation cost-information inequalities and applications to random dynamical systems and diffusions. The Annals of Probability, 32(3B):2702–2732, 2004.

- "Durmus et al. [2019] Alain "Durmus, Szymon Majewski, and Blażej" Miasojedow. Analysis of langevin monte carlo via convex optimization. The Journal of Machine Learning Research, 20(1):2666–2711, 2019.

- Eberle [2011] Andreas Eberle. Reflection coupling and wasserstein contractivity without convexity. C. R. Math, 349(19-20):1101–1104, 2011.

- Eberle [2016] Andreas Eberle. Reflection couplings and contraction rates for diffusions. Probab. Theory Relat. Fields, 166(3):851–886, 2016.

- Eckstein and Kupper [2021] Stephan Eckstein and Michael Kupper. Computation of optimal transport and related hedging problems via penalization and neural networks. Applied Mathematics & Optimization, 83:639–667, 2021.

- Fan and Gu [2003] Jianqing Fan and Juan Gu. Semiparametric estimation of value at risk. Econom. J., 6(2):261–290, 2003.

- Fan [1953] Ky Fan. Minimax theorems. PNAS USA, 39(1):42, 1953.

- Föllmer and Knispel [2011] Hans Föllmer and T. Knispel. Entropic risk measures: Coherence vs. convexity, model ambiguity and robust large deviations. Stoch. Dyn., 11(02n03):333–351, 2011.

- Föllmer and Schied [2002] Hans Föllmer and Alexander Schied. Convex measures of risk and trading constraint. Finance Stoch., 6(4):429–447, 2002.

- Föllmer and Schied [2004] Hans Föllmer and Alexander Schied. Stochastic Finance: An Introduction in Discrete Time. Walter de Gruyter, Berlin, New York, 2 edition, 2004.

- Föllmer and Schied [2004] Hans Föllmer and Alexander Schied. Stochastic Finance. An Introduction in Discrete Time. de Gruyter Studies in Mathematics. Walter de Gruyter, Berlin, New York, 2 edition, 2004.

- Föllmer and Schied [2016] Hans Föllmer and Alexander Schied. Stochastic finance. de Gruyter, 2016.

- Frittelli and Gianin. [2005] Marco Frittelli and Emanuela Rosazza Gianin. Law invariant convex risk measures. Advances in mathematical economics., pages 33–46, 2005.

- Frittelli and Rosazza Gianin [2002] Marco Frittelli and Emanuela Rosazza Gianin. Putting order in risk measures. Journal of Banking & Finance, 26(7):1473–1486, July 2002.

- Gelfand and Mitter [1991] Saul B Gelfand and Sanjoy K Mitter. Recursive stochastic algorithms for global optimization in r^d. SIAM J Control Optim, 29(5):999–1018, 1991.

- Glasserman et al. [2000] Paul Glasserman, Philip Heidelberger, and Perwez Shahabuddin. Variance reduction techniques for estimating value-at-risk. Manag. Sci, 46(10):1349–1364, 2000.

- Holte [2009] John M Holte. Discrete gronwall lemma and applications. In MAA-NCS meeting at the University of North Dakota, volume 24, pages 1–7, 2009.

- Hong and Liu. [2011] L. Jeff Hong and Guangwu Liu. Monte carlo estimation of value-at-risk, conditional value-at-risk and their sensitivities. Proceedings of the 2011 Winter Simulation Conference (WSC). IEEE, 2011.

- Hoogerheide and van Dijk [2010] Lennart Hoogerheide and Herman K van Dijk. Bayesian forecasting of value at risk and expected shortfall using adaptive importance sampling. Int. J. Forecast., 26(2):231–247, 2010.

- Hu et al. [2020] Kaitong Hu, Shenjie Ren, David Siska, and Lukasz Szpruch. Mean–field Langevin dynamics and energy landscape of neural networks. Annales de l’Institut Henri Poincaré (B) Probabilités and Statistiques, to appear, 2020.

- Hwang [1980] C. R. Hwang. Laplace’s method revisited: weak convergence of probability measures. Annals of Probability, pages 2189–2211, 1980.

- Iyengar and Ma [2013] Garud Iyengar and Alfred Ka Chun Ma. Fast gradient descent method for mean-cvar optimization. Ann. Oper. Res., 205(1):203–212, 2013.

- Jouini et al. [2006] Elyès Jouini, Walter Schachermayer, and Nizar Touzi. Law invariant risk measures have the Fatou property. In Shigeo Kusuoka and Akira Yamazaki, editors, Advances in Mathematical Economics, volume 9 of Advances in Mathematical Economics, pages 49–71. Springer Japan, 2006.

- Kloeden and Platen [2013] P. E. Kloeden and E. Platen. Numerical solution of stochastic differential equations. Springer Science and Business Media, 2013.

- Krätschmer et al. [2014] Volker Krätschmer, Alexander Schied, and Henryk Zähle. Comparative and quantitative robustness for law-invariant risk measures. Finance Stoch., 2014.

- Kupper and Schachermayer [2009] Michael Kupper and Walter Schachermayer. Representation results for law invariant time consistent functions. Math. Financ. Econ., 2(3):189–210, 2009.

- Kusuoka [2001] Shigeo Kusuoka. On law invariant coherent risk measures. In Advances in mathematical economics, pages 83–95. Springer, 2001.

- Lacker et al. [2020] D. Lacker, M. Shkolnikov, and J. Zhang. Inverting the markovian projection, with an application to local stochastic volatility models. Annals of Probability, 48(5):2189–2211, 2020.

- McNeil et al. [2015] Alexander J. McNeil, Rüdiger Frey, and Paul Embrechts. Quantitative Risk Management. Princeton University Press, 2015.

- Nesterov [2005] Yu Nesterov. Smooth minimization of non-smooth functions. Math. Program., 103(1):127–152, 2005.

- on Banking Supervision [2014] Basel Committee on Banking Supervision. Fundamental review of the trading book: A revised market risk framework. Technical report, Bank of international settlments, 2014.

- Pichler and Schlotter [2020] Alois Pichler and R. Schlotter. Entropic based risk measures. European Journal on Operational Research, 285(1):223–236, 2020.

- Pohl et al. [2020] Mathias Pohl, Alexander Ristig, Walter Schachermayer, and Ludovic Tangpi. Theoretical and empirical analysis of trading activity. Math. Program., 181(2):405–434, 2020.

- Raginsky et al. [2017] Maxim Raginsky, Alexander Rakhlin, and Matus Telgarsky. Non-convex learning via stochastic gradient langevin dynamics: a nonasymptotic analysis. In COLT, pages 1674–1703. PMLR, 2017.

- Reppen and Soner [2023] Anders Max Reppen and Halil Mete Soner. Deep empirical risk minimization in finance: looking into the future. Mathematical Finance, 33(1):116–145, 2023.

- Rockafellar [1968] Ralph Rockafellar. Integrals which are convex functionals. Pacific journal of mathematics, 24(3):525–539, 1968.

- Sabanis and Zhang [2020] Sotirios Sabanis and Ying Zhang. A fully data-driven approach to minimizing CVaR for portfolio of assets via SGLD with discontinuous updating. arXiv preprint arXiv:2007.01672, 2020.

- Sekimoto [2010] Ken Sekimoto. Stochastic energetics, volume 799. Springer, 2010.

- Soma and Yoshida [2020] Tasuku Soma and Yuichi Yoshida. Statistical learning with conditional value at risk. arXiv preprint arXiv:2002.05826, 2020.

- Tamar et al. [2015] Aviv Tamar, Yonatan Glassner, and Shie Mannor. Optimizing the cvar via sampling. In AAAI-15, 2015.

- Villani [2009] C. Villani. Optimal Transport. Old and New, volume 338 of Grundlehren der mathematischen Wissenschaften. Springer, 2009.

- Weber [2007] Stefan Weber. Distribution-invariant risk measures, Entropy, and large deviations. J. Appl. Prob., 44:16–40, 2007.

- Welling and Teh. [2011] Max Welling and Yee W. Teh. Bayesian learning via stochastic gradient langevin dynamics. In Proceedings of the 28th international conference on machine learning (ICML-11), pages 681–688, 2011.

- Yeung [2008] R. W. Yeung. Information theory and network coding. Springer Science and Business Media, 2008.

- Zhu and Zhou [2015] Helin Zhu and Enlu Zhou. Estimation of conditional value-at-risk for input uncertainty with budget allocation. In Proc. 2015 WSC, pages 655–666. IEEE, 2015.