Nonlinear Functional Principal Component Analysis Using Neural Networks

Abstract

Functional principal component analysis (FPCA) is an important technique for dimension reduction in functional data analysis (FDA). Classical FPCA method is based on the Karhunen-Loève expansion, which assumes a linear structure of the observed functional data. However, the assumption may not always be satisfied, and the FPCA method can become inefficient when the data deviates from the linear assumption. In this paper, we propose a novel FPCA method that is suitable for data with a nonlinear structure by neural network approach. We construct networks that can be applied to functional data and explore the corresponding universal approximation property. The main use of our proposed nonlinear FPCA method is curve reconstruction. We conduct a simulation study to evaluate the performance of our method. The proposed method is also applied to two real-world data sets to further demonstrate its superiority.

Keywords : Functional principal component analysis; Neural Network; Nonlinear dimension reduction; Curve reconstruction.

1 Introduction

Functional data analysis (FDA) has become widely concerned, with the rapid development of data collection technology. There are many monographs that provide a detailed introduction of FDA, such as Ramsay and Silverman (2005), Ferraty and Vieu (2006) and Horváth and Kokoszka (2012). Functional principal component analysis (FPCA), as a dimension reduction technique, plays a greatly important role in FDA, since functional data is a type of data with infinite dimensional. However, traditional FPCA is merely a linear approach, and the linear assumption can limit the effectiveness of dimensional reduction. In this paper, we aim to develop a nonlinear FPCA method based on the use of neural networks.

Recently, neural network approach draws more and more attention in the field of FDA and shows strong potential. Thind et al. (2023) proposed a functional neural network to handle nonlinear regression model with functional covariates and scalar response. Further, Rao and Reimherr (2023) introduced a continuous layer in the construction of functional neural networks, so that the functional nature of the data can be maintained as long as possible. The nonlinear function-on-scalar regression model has been considered by Wu et al. (2022) with the use of neural networks. Moreover, neural network method is also employed in the classification problem of functional data, such as Thind et al. (2020) and Wang et al. (2022a). The above works all focus on supervised learning, as the regression or classification issues for functional data are considered, where label variables are defined. Furthermore, unsupervised learning problems for functional data have also been studied by neural networks. Wang et al. (2021) discussed the mean function estimation for functional data using neural networks. Multi-dimensional functional data is taken into account by Wang and Cao (2022a), and a robust location function estimator is proposed via neural networks. Sarkar and Panaretos (2022) concentrated on covariance estimation for multi-dimensional functional data, and three types of covariance networks are defined correspondingly. Though neural networks have gained extensive interest in FDA, there are only very few studies working on nonlinear dimensional reduction for functional data through neural networks. Wang and Cao (2022b) presented a functional nonlinear learning method, which is a representation learning approach for multivariate functional data and can be applied to curve reconstruction and classification. However, their method is developed based on recurrent neural network (RNN), thus only discrete values of the data are used in the neural network. Therefore, a nonlinear dimension reduction method by neural networks that treats the continuously observed data from a functional perceptive is needed.

FPCA is a crucial dimension reduction tool in FDA. There has been a great many works contributing to the development of FPCA in various aspects. These include, but are not limited to the study of principal component analysis for sparsely observed functional data (Yao et al., 2005; Hall et al., 2006; Li and Hsing, 2010). Robust FPCA approaches were introduced in Locantore et al. (1999); Gervini (2008) and Zhong et al. (2022). Moreover, Chiou et al. (2014) and Happ and Greven (2018) discussed principal component analysis methods for more complex functional data, such as multivariate functional data and multi-dimensional functional data. For nonlinear FPCA, Song and Li (2021) generalized kernel principal component analysis to accommodate functional data. Currently, research on nonlinear FPCA is not sufficient enough. Nevertheless, the consideration of nonlinear structure of functional data can be beneficial, since more parsimonious representation can be obtained.

To this end, we propose a new nonlinear functional principal component analysis method by neural networks, which can be simply denoted as nFunNN. In specific, we borrow the idea of the autoassociative neural networks in (Kramer, 1991) for the construction of our networks, to realize dimension reduction and curve reconstruction. Kramer (1991) achieved the purpose of dimension reduction for multivariate data through an internal “bottleneck” layer. However, the extension to functional data is nontrivial due to the infinite dimensional nature of functional data, which adds the complexity of the neural networks and increases the difficulty in the optimization. For our proposed neural network, both input and output are functions. To the best of our knowledge, though neural networks with functional input have been studied in the existing works, networks with both functional input and functional output have not been taken into account yet, and the consideration of which can be more complicated. B-spline basis functions are employed in our computation and backpropagation algorithm is applied. The simulation study and real data application show the superiority of the nFunNN method under various nonlinear settings. Moreover, we also establish the universal approximation property of our proposed nonlinear model.

The contributions of this paper can be summarized as follows. First, our work is the first attempt to the generalization of the autoassociative neural networks to functional data settings, which is not straightforward. The use of neural networks provides new framework of nonlinear dimension reduction for functional data. Second, the universal approximation property of the proposed model is discussed, which brings theoretical guarantees to our method. Third, we present an innovative algorithm for the computation in practice and develop a python package, called nFunNN, for implementation.

The organization of this paper is as follows. In Section 2, we first give an explanation of nonlinear FPCA, and then introduce a functional autoassociative neural network to complete nonlinear principal component analysis for functional data. We also discuss the practical implementation of our method. In Section 3, we display the simulation results in our numerical study. The evaluation of our method by real-world data is provided in Section 4. In Section 5, we conclude this paper with some discussions.

2 Methodology

2.1 Nonlinear FPCA

Let be a smooth random function in , where is a bounded and closed interval. In this paper, is set as if there is no specific explanation. Let and denote the mean function and covariance function of , respectively. For linear FPCA, according to the Karhunen-Loève Theorem, admits the following expansion

where is the -th functional principal component score, is the -th eigenfunction of and satisfies and for . Moreover, the functional principal component scores are uncorrelated random variables with mean zero and , where is the -th eigenvalue of . In practice, as only the first several functional principal component scores dominate the variation, a truncated expansion

| (1) |

is often applied, where is the number of functional principal components that used. Furthermore, we also have that

| (2) |

It is obvious that is mapped into a lower dimensional space through a linear transformation. However, with the constraint of linear map, the nonlinear structure is ignored, which may lower the efficiency of dimension reduction.

For nonlinear FPCA, we extend the linear map in (2) to arbitrary nonlinear map. That is

| (3) |

where is a nonlinear function that maps function in square integrable space to scalar in . Similarly, (1) can be generalized into nonlinear version as

where and is a nonlinear function that maps from a vector space to a square integrable space. The scores obtained from nonlinear FPCA also contain the main information of , but the dimension of the feature space can be lower, as the nonlinear structure is taken into account in the process of dimension reduction. To estimate the nonlinear functional principal component scores, the nonlinear functions and have to be learnt, and a neural network method is employed.

2.2 Neural Networks for Nonlinear FPCA

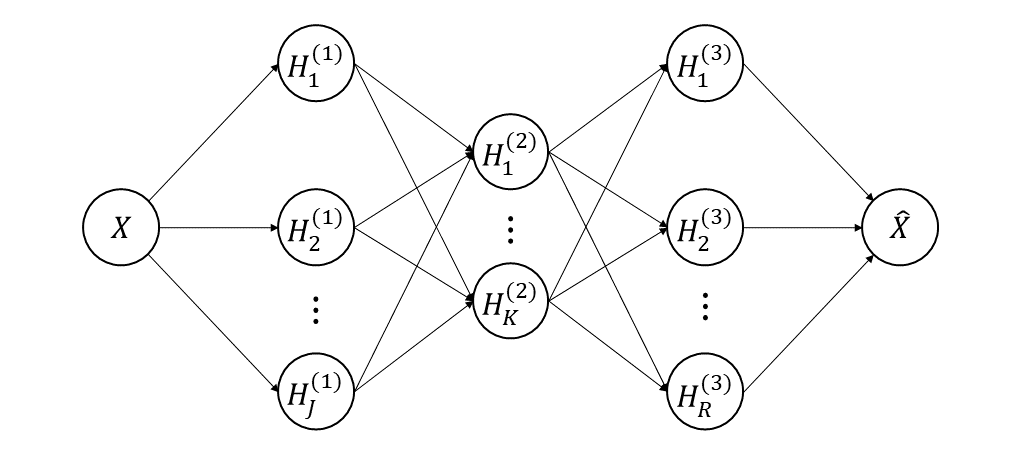

In this section, we construct a functional autoassociative neural network for nonlinear FPCA. The structure of the proposed neural network is shown in Figure 1. The output is the reconstruction of the input data, thus both input and output are functions in our network, which brings challenge to the optimization of the neural network. Furthermore, dimension reduction is realized by the second hidden layer, which can also be called “bottleneck” layer as in (Kramer, 1991). More details about the computation of the proposed functional autoassociative neural network are given below.

To be specific, the left two hidden layers are designed to learn the nonlinear functions ’s that map the functional input to the scores, which can be viewed as a dimension reduction process. For the th neuron in the first hidden layer, we define that

where is the intercept, is the weight function, is a nonlinear activation function, and is the number of neurons in the first hidden layer. This is a natural generalization of the first two layers of a multilayer perceptron to adapt to the functional input. As , computation of the score in the second hidden layer can be promoted naturally. The th neuron in the second hidden layer is

where is the weight. Moreover, let be the set of functions from to of the form

According to Corollary 5.1.2 in (Stinchcombe, 1999) and the proof in Section 6.1.2 of (Rossi and Conan-Guez, 2006), has the universal approximation property for . That means any nonlinear function from to can be approximated up to arbitrary degree of precision by functions in .

The right two layers in Figure 1, which correspond to the estimation of the nonlinear function , are used to reconstruct by the low-dimensional scores in the second hidden layer. The procedure can be more challenging, since we have to get functional output from scalars in the second hidden layer. To this end, the th neuron in the third layer is defined as

where is the intercept function, is the weight function, and is the number of the neurons in the third hidden layer. It can be observed that each neuron in the third hidden layer is a function. Then, is reconstructed by

| (4) |

where is the weight. The whole network is trained by minimizing the following reconstruction error

As functional data is involved in the proposed network, some of the parameters need to be estimated are functions, which makes the optimization of the network nontrivial. In Section 2.3, we introduce the optimization algorithm for practical implementation.

Note that can be viewed as the estimation of in (3). Therefore, the dimension of is reduced to through the functional autoassociative neural network. We can use the low-dimensional vector to complete further inference, such as curve reconstruction, regression and clustering. In this paper, we mainly focus on the curve reconstruction by the low-dimensional representation.

2.3 The Transformed Network for Practical Implementation

As discussed in Section 2.2, it can be hard to optimize the proposed functional autoassociative neural network in Figure 1, since many parameters appear as a function. Here, we employ the B-spline basis functions to transform the estimation of functions to the estimation of their coefficients. Let be a set of B-spline basis functions with degree , where is the number of basis functions. Then, we have

for , , and , where , and are the basis expansion coefficients of , and , respectively.

With the use of B-spline basis functions, the computation of the first two layers for the proposed functional autoassociative neural network turns out to be

| (5) |

where . In practice, is calculated through the B-spline expansion of , that is , where is the basis expansion coefficient of . Then, we have

where . The following theorem discusses the universal approximation property of the first two layers based on B-spline basis functions. The proof is provided in the Appendix.

Theorem 1.

Let be a continuous non polynomial function from to , and be the set of functions from to of the form

where is the th coordinate of on the basis , , , and . Then, has the universal approximation property. That is for any compact subset of , for any from to and for any , there exists such that for all , .

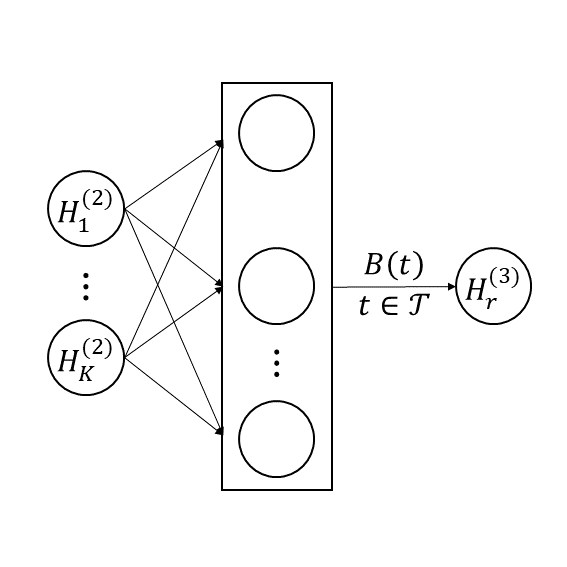

For the computation of the third hidden layer of the proposed functional autoassociative neural network, we have

where , , and . For the term above, it can be viewed as weighted sums of . Thus, the computation from the second hidden layer to the third hidden layer of the functional autoassociative neural network can be transformed accordingly, that is

| (6) |

the structure of which is shown in Figure 2. The middle layer in Figure 2 represents the elements of the -dimensional vector . Furthermore, the reconstruction of can be obtained by (4) as discussed in Section 2.2.

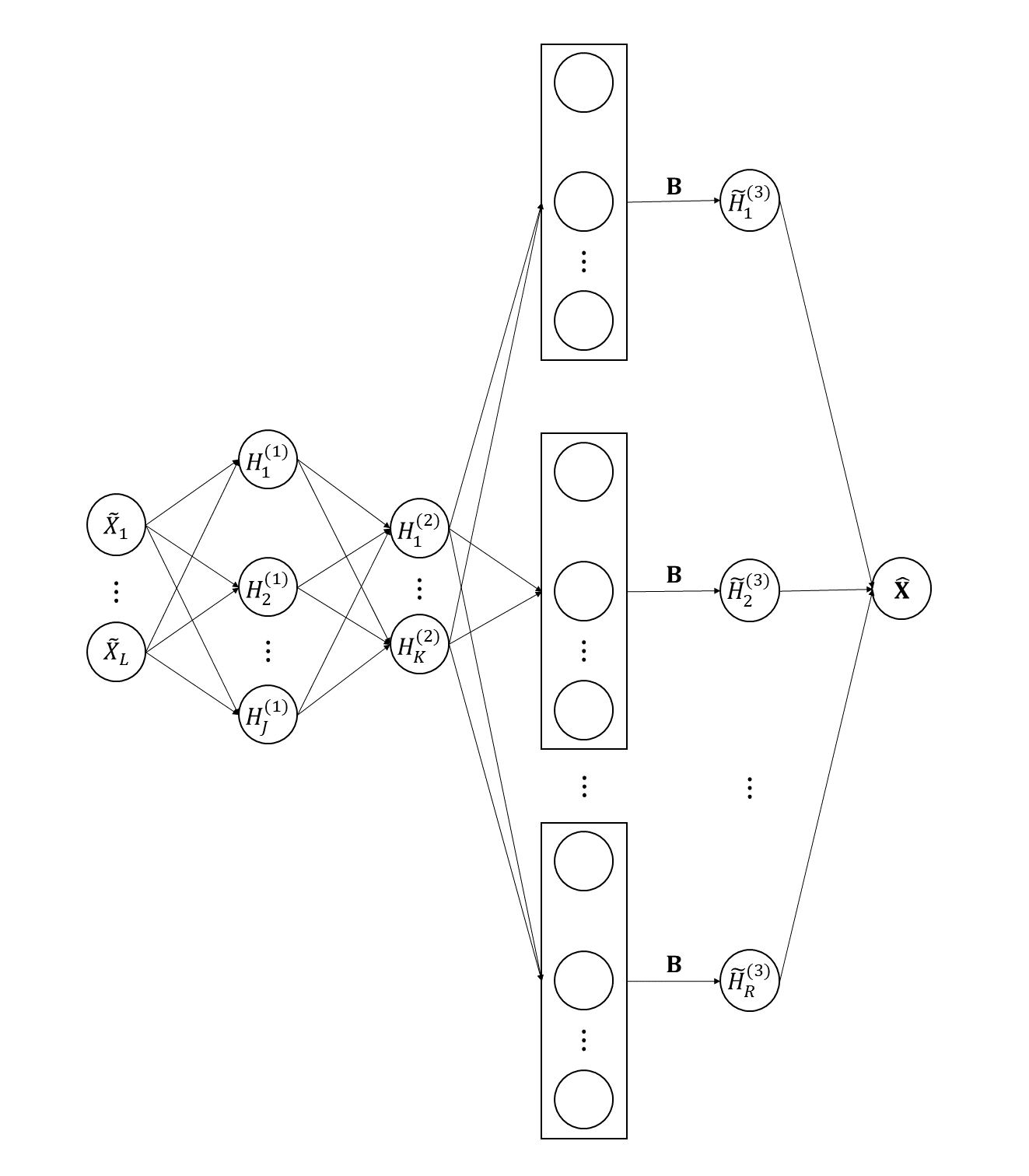

In practice, suppose that are independent realizations of , where is the sample size. Then, the loss function for the network can be represented as

However, integral appeared in the loss function can increase the difficulty of optimization. Hence, right-hand Riemann sum is employed for the computation. Specifically, let be some equally spaced times points on . Moreover, denote be the observation time points of the random curves, where is the observation size. Then, we consider the following loss function

| (7) |

where is the estimation of using the observed data by smoothing. Note that only values of the curves at involved in the loss function. Therefore, we just need to consider discrete values of in the last two layers of the network. Let for , and . The th neuron of the third hidden layer can be computed by

| (8) |

Then the output is given by

| (9) |

where . To be clear, the transformed functional autoassociative neural network is shown in Figure 3. The computation involved in the transformed autoassociative neural network corresponds to (5), (8) and (9) respectively.

By turning the proposed functional autoassociative neural network in Section 2.2 into the transformed functional autoassociative neural network, the Adam algorithm (Kingma and Ba, 2014) can be employed in the optimization. This algorithm is popularly-used, and it can be realized by the Python package torch. Moreover, we also provide the Python package nFunNN for the implementation specific to our method.

3 Simulation

3.1 Numerical performance

In this section, we conduct a simulation study to explore the performance of the proposed nFunNN method. For the simulated data, we take into account the measurement error to make it more consistent with the practical cases. Specifically, the observed data is generated by

where is the th observation for the th subject, and ’s are the independent measurement errors, which we obtain from the normal distribution . We set , and the observation size is set as . The observation grids are equally spaced on . For the setting of , we consider the following cases.

-

•

Case 1: , where ’s and ’s are simulated from and , respectively.

-

•

Case 2: , where both ’s and ’s are simulated from .

-

•

Case 3: , where both ’s and ’s are simulated in the same way as that in Case 2.

-

•

Case 4: , where both ’s and ’s are simulated in the same way as that in Case 2.

-

•

Case 5: , where both ’s and ’s are simulated in the same way as that in Case 2.

The above setups include various structures of . In Case 1, is actually generated through the Karhunen-Loève expansion, with zero mean, , , and for . Thus, in Case 1 has a linear structure, and a linear method may be suitable enough for this case. Moreover, the other four cases consider the nonlinear structure of . Case 2 and Case 3 impose only one nonlinear term in the setup, while Case 4 and Case 5 combine the linear terms in Case 1 with nonlinear terms in Case 2 and Case 3 respectively. Further, whether a linear structure or a nonlinear structure is considered, all the five cases are set to contain two principal components.

The proposed nFunNN method is compared with the classical linear FPCA method (Ramsay and Silverman, 2005). Specifically, the numbers of neurons in different layers for our transformed functional autoassociative network are set as , , , and . And the number of principal components for the linear FPCA method is selected as . To evaluate the performance of curve reconstruction, we consider the following criteria:

| RMSE | |||

| RRMSE |

where RRMSE means the relative RMSE. Note that the prediction at is assessed, and these time points can be different from the observation time points. In our simulation study, are also equally spaced on with being set as . We consider the performance for both training set and test set in the evaluation, and the sizes of both sets are set as . Moreover, Monte Carlo runs are conducted for each considered case.

Table 1 lists the simulation results of the proposed nFunNN method and FPCA method in all the five cases. In Case 1, though FPCA method yields slightly better RMSE and RRMSE than our nFunNN method, both methods perform well for training set and test set. The result is not surprising, since is generated by the Karhunen-Loève expansion in Case 1, and classical linear FPCA is good enough to handle such case. For Case 2 and Case 3, where nonlinear structure is considered, it is evident that the proposed nFunNN method outperforms the FPCA method and gives more accurate prediction. That implies the advantage of our nFunNN method when solving nonlinear cases. Furthermore, FPCA method is almost invalid for Case 4 and Case 5, while the proposed nFunNN method still provides encouraging curve reconstruction results. In the setting of in Case 4 and Case 5, we add a nonlinear term besides two linear terms, which makes the linear FPCA method cannot fulfill the prediction with only two principal components. It can be observed that the prediction error of our nFunNN method is much lower than that of the FPCA method in Case 4 and Case 5. That indicates our method can achieve more effective dimension reduction results in nonlinear settings.

To sum up, the proposed nFunNN method gives great predicting results for all the five cases. Although the error can be a bit larger than that of the linear method in linear case, the predicting results of our nFunNN method is still reasonable. Furthermore, the nFunNN method shows obvious superiority for the nonlinear cases. Therefore, our nFunNN method can be a good choice, when we have no idea whether the data at hand has a linear structure or a nonlinear structure.

| Training set | Test set | ||||

|---|---|---|---|---|---|

| RASE | RRASE | RASE | RRASE | ||

| Case 1 | nFunNN | 0.0285 (0.0088) | 0.0112 (0.0034) | 0.0324 (0.0187) | 0.0127 (0.0072) |

| FPCA | 0.0201 (0.0003) | 0.0079 (0.0002) | 0.0201 (0.0003) | 0.0079 (0.0002) | |

| Case 2 | nFunNN | 0.0453 (0.0146) | 0.0386 (0.0121) | 0.0607 (0.0182) | 0.0519 (0.0154) |

| FPCA | 0.0890 (0.0177) | 0.0760 (0.0147) | 0.0878 (0.0192) | 0.0753 (0.0164) | |

| Case 3 | nFunNN | 0.0790 (0.0250) | 0.0488 (0.0156) | 0.1036 (0.0300) | 0.0639 (0.0182) |

| FPCA | 0.1955 (0.0255) | 0.1207 (0.0156) | 0.2033 (0.0290) | 0.1254 (0.0174) | |

| Case 4 | nFunNN | 0.1828 (0.0576) | 0.0791 (0.0252) | 0.2181 (0.0746) | 0.0943 (0.0320) |

| FPCA | 0.9992 (0.0279) | 0.4323 (0.0093) | 1.0065 (0.0275) | 0.4356 (0.0096) | |

| Case 5 | nFunNN | 0.2248 (0.0514) | 0.0877 (0.0199) | 0.2781 (0.0637) | 0.1080 (0.0244) |

| FPCA | 0.6573 (0.0291) | 0.2565 (0.0112) | 0.6628 (0.0348) | 0.2566 (0.0123) | |

3.2 Effect of the tuning parameters

In this section, we discuss the effect of , , , and on the performance of the proposed nFunNN method. Though the values of and can be different for our method, we set for simplicity here. We consider the settings of Case 1, Case 2 and Case 4 for explanation in this section. For the discussion of the effect of , we fix and , and the value of can be selected by , , and . Moreover, we set , and , for the exploration of the influence of . When discussing the effect of , we set , and . Note that the number of parameters in the network is when . As the sample size should be larger than the number of parameters, we increase the sample size of training set to , and the size of test set is still as in Section 3.1.

Tables 2–4 present the simulation results of our nFunNN method with the use of various , and . From Table 2, it can be observed that with the rise of , both RMSE and RRMSE increase slightly. As shown in Table 3, there is no obvious difference in the prediction errors with the use of various , which implies that the effect of is not significant. For results in Table 4, the prediction errors for Case 1 are similar when different values of are considered. However, for Case 2 and Case 4, which are both nonlinear cases, various values of lead to different performance of the network. In Case 2, the prediction error first decreases with the increase of , and then shows a minor growth. Furthermore, the performance of the nFunNN method gets much better when larger is used in Case 4. We conjecture the reason is related to the complex setting of Case 4.

To summarize, according to the simulation results, the effects of and are not very obvious, while different values of can bring large changes for the prediction in some complex cases. Moreover, the selection of tuning parameters in the neural network can be completed through the validation set.

| Training set | Test set | ||||

|---|---|---|---|---|---|

| RASE | RRASE | RASE | RRASE | ||

| Case 1 | 0.0247 (0.0038) | 0.0097 (0.0015) | 0.0262 (0.0042) | 0.0103 (0.0016) | |

| 0.0301 (0.0060) | 0.0119 (0.0024) | 0.0312 (0.0062) | 0.0122 (0.0024) | ||

| 0.0375 (0.0082) | 0.0147 (0.0032) | 0.0386 (0.0080) | 0.0151 (0.0031) | ||

| Case 2 | 0.0414 (0.0084) | 0.0354 (0.0071) | 0.0522 (0.0162) | 0.0443 (0.0138) | |

| 0.0547 (0.0209) | 0.0469 (0.0179) | 0.0628 (0.0240) | 0.0533 (0.0204) | ||

| 0.0708 (0.0309) | 0.0605 (0.0261) | 0.0780 (0.0318) | 0.0663 (0.0276) | ||

| Case 4 | 0.1802 (0.0770) | 0.0777 (0.0328) | 0.1956 (0.0796) | 0.0845 (0.0352) | |

| 0.1881 (0.0678) | 0.0812 (0.0293) | 0.2018 (0.0763) | 0.0870 (0.0327) | ||

| 0.2350 (0.1851) | 0.1014 (0.0804) | 0.2473 (0.1885) | 0.1067 (0.0812) | ||

| Training set | Test set | ||||

|---|---|---|---|---|---|

| RASE | RRASE | RASE | RRASE | ||

| Case 1 | 0.0228 (0.0031) | 0.0090 (0.0012) | 0.0251 (0.0060) | 0.0099 (0.0023) | |

| 0.0231 (0.0024) | 0.0091 (0.0009) | 0.0250 (0.0041) | 0.0098 (0.0016) | ||

| 0.0246 (0.0035) | 0.0097 (0.0014) | 0.0262 (0.0043) | 0.0103 (0.0017) | ||

| Case 2 | 0.0522 (0.0122) | 0.0445 (0.0104) | 0.0599 (0.0165) | 0.0512 (0.0137) | |

| 0.0457 (0.0112) | 0.0390 (0.0094) | 0.0541 (0.0147) | 0.0463 (0.0125) | ||

| 0.0413 (0.0088) | 0.0352 (0.0074) | 0.0506 (0.0136) | 0.0433 (0.0114) | ||

| Case 4 | 0.2102 (0.0507) | 0.0907 (0.0220) | 0.2266 (0.0612) | 0.0978 (0.0259) | |

| 0.1953 (0.0794) | 0.0843 (0.0340) | 0.2145 (0.0898) | 0.0926 (0.0386) | ||

| 0.1684 (0.0413) | 0.0727 (0.0179) | 0.1854 (0.0507) | 0.0801 (0.0224) | ||

| Training set | Test set | ||||

|---|---|---|---|---|---|

| RASE | RRASE | RASE | RRASE | ||

| Case 1 | 0.0248 (0.0042) | 0.0097 (0.0016) | 0.0258 (0.0045) | 0.0102 (0.0018) | |

| 0.0255 (0.0038) | 0.0100 (0.0015) | 0.0266 (0.0040) | 0.0105 (0.0016) | ||

| 0.0263 (0.0029) | 0.0103 (0.0012) | 0.0275 (0.0036) | 0.0108 (0.0015) | ||

| Case 2 | 0.0394 (0.0082) | 0.0337 (0.0071) | 0.0512 (0.0185) | 0.0436 (0.0158) | |

| 0.0271 (0.0016) | 0.0232 (0.0015) | 0.0349 (0.0133) | 0.0297 (0.0114) | ||

| 0.0290 (0.0015) | 0.0248 (0.0014) | 0.0352 (0.0096) | 0.0300 (0.0082) | ||

| Case 4 | 0.1733 (0.0522) | 0.0748 (0.0224) | 0.1899 (0.0663) | 0.0820 (0.0283) | |

| 0.0610 (0.0084) | 0.0263 (0.0036) | 0.0754 (0.0158) | 0.0326 (0.0067) | ||

| 0.0334 (0.0027) | 0.0144 (0.0012) | 0.0405 (0.0079) | 0.0175 (0.0033) | ||

4 Real Data Analysis

In this section, we discuss the performance of the proposed nFunNN method for real data application. Yoga data set and StarLightCurves data set from (Chen et al., 2015) are considered. In specific, we aim to assess the predicting ability of the nFunNN method by these two data sets.

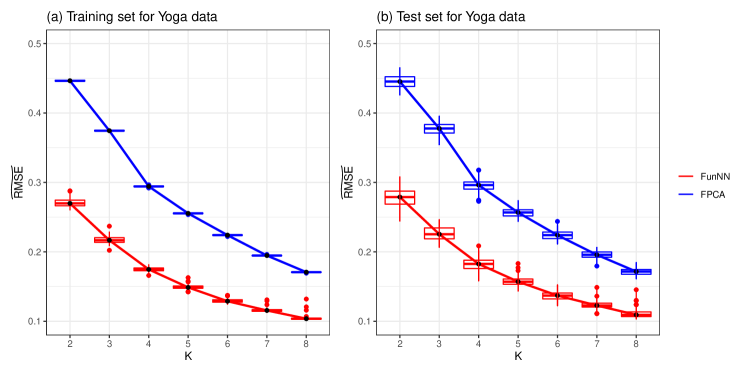

For the Yoga data set, it contains samples and the observation size is for each subject. We randomly divide the data set into training set and test set with the sample size being and respectively. The values at these observation grids are predicted by both our nFunNN method and the classical FPCA method via different . The numbers of neurons in other layers for our transformed functional autoassociative neural network are set as , , and . The following criteria are considered:

where for the Yoga data set. The data set is randomly split for times, and the predicting results for both training set and test set are presented in Table 5. It is shown that with the rise of , the predicting results are getting better for both nFunNN and FPCA methods. Moreover, the proposed nFunNN method can provide more precise predicting results when using the same as the FPCA method, which demonstrates the advantage of our method in real data application. Figure 4 exhibits the predicting performance of both methods for training set and test set by boxplots. It can be observed that nFunNN method always produces less predicting error.

| Training set | Test set | ||||

|---|---|---|---|---|---|

| FunNN | 0.2709 (0.0061) | 0.2712 (0.0061) | 0.2777 (0.0127) | 0.2780 (0.0127) | |

| FPCA | 0.4464 (0.0009) | 0.4469 (0.0009) | 0.4453 (0.0093) | 0.4459 (0.0093) | |

| FunNN | 0.2172 (0.0052) | 0.2174 (0.0052) | 0.2265 (0.0097) | 0.2267 (0.0097) | |

| FPCA | 0.3745 (0.0009) | 0.3750 (0.0009) | 0.3769 (0.0085) | 0.3773 (0.0085) | |

| FunNN | 0.1747 (0.0032) | 0.1749 (0.0032) | 0.1821 (0.0089) | 0.1823 (0.0089) | |

| FPCA | 0.2943 (0.0009) | 0.2947 (0.0009) | 0.2952 (0.0092) | 0.2955 (0.0092) | |

| FunNN | 0.1492 (0.0032) | 0.1494 (0.0032) | 0.1572 (0.0068) | 0.1574 (0.0068) | |

| FPCA | 0.2554 (0.0007) | 0.2557 (0.0007) | 0.2565 (0.0068) | 0.2568 (0.0068) | |

| FunNN | 0.1294 (0.0029) | 0.1295 (0.0029) | 0.1366 (0.0059) | 0.1367 (0.0059) | |

| FPCA | 0.2240 (0.0006) | 0.2243 (0.0006) | 0.2242 (0.0063) | 0.2245 (0.0063) | |

| FunNN | 0.1159 (0.0028) | 0.1161 (0.0028) | 0.1232 (0.0055) | 0.1234 (0.0055) | |

| FPCA | 0.1947 (0.0005) | 0.1949 (0.0005) | 0.1955 (0.0055) | 0.1958 (0.0055) | |

| FunNN | 0.1043 (0.0036) | 0.1044 (0.0036) | 0.1110 (0.0063) | 0.1111 (0.0063) | |

| FPCA | 0.1707 (0.0005) | 0.1709 (0.0005) | 0.1714 (0.0049) | 0.1716 (0.0049) | |

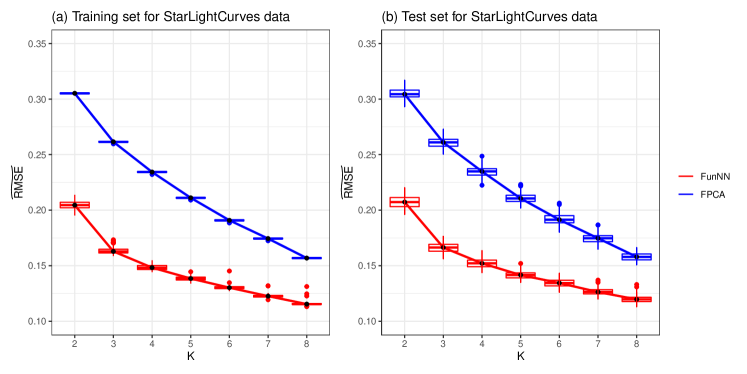

Furthermore, we consider the analysis of the StarLightCurves data set. There are subjects in this data set and each subject has observations. Similar to the analysis of Yoga data set, we randomly divide the StarLightCurves data set into training set and test set, and the sizes are set as and respectively. We intend to predict the values at these observation grids by both nFunNN and FPCA methods using various . The numbers of the neurons for the transformed functional autoassociative neural network are set as the same as that in the analysis of Yoga data set. We also conduct runs for StarLightCurves data set, and the averaged and are reported in Table 6. The trend of the prediction error for both methods is analogous with that for Yoga data set. Figure 5 provides visual illustration for the comparison of nFunNN and FPCA methods. It is shown that our nFunNN method constantly outperforms the linear FPCA method with the use of different . That further indicates the effectiveness of the proposed nFunNN method in real-world application.

| Training set | Test set | ||||

|---|---|---|---|---|---|

| FunNN | 0.2048 (0.0039) | 0.2049 (0.0039) | 0.2074 (0.0057) | 0.2075 (0.0057) | |

| FPCA | 0.3052 (0.0007) | 0.3053 (0.0007) | 0.3047 (0.0046) | 0.3048 (0.0046) | |

| FunNN | 0.1636 (0.0032) | 0.1637 (0.0032) | 0.1662 (0.0045) | 0.1663 (0.0045) | |

| FPCA | 0.2615 (0.0007) | 0.2617 (0.0007) | 0.2609 (0.0047) | 0.2611 (0.0047) | |

| FunNN | 0.1484 (0.0023) | 0.1485 (0.0023) | 0.1519 (0.0044) | 0.1520 (0.0044) | |

| FPCA | 0.2344 (0.0008) | 0.2345 (0.0008) | 0.2346 (0.0050) | 0.2347 (0.0050) | |

| FunNN | 0.1386 (0.0019) | 0.1387 (0.0019) | 0.1415 (0.0033) | 0.1416 (0.0033) | |

| FPCA | 0.2110 (0.0007) | 0.2112 (0.0007) | 0.2110 (0.0044) | 0.2111 (0.0044) | |

| FunNN | 0.1305 (0.0020) | 0.1305 (0.0020) | 0.1344 (0.0032) | 0.1344 (0.0032) | |

| FPCA | 0.1908 (0.0007) | 0.1909 (0.0007) | 0.1919 (0.0048) | 0.1920 (0.0048) | |

| FunNN | 0.1228 (0.0017) | 0.1229 (0.0017) | 0.1267 (0.0032) | 0.1267 (0.0032) | |

| FPCA | 0.1744 (0.0007) | 0.1745 (0.0007) | 0.1747 (0.0044) | 0.1748 (0.0044) | |

| FunNN | 0.1158 (0.0021) | 0.1158 (0.0021) | 0.1198 (0.0031) | 0.1199 (0.0031) | |

| FPCA | 0.1568 (0.0006) | 0.1569 (0.0006) | 0.1580 (0.0037) | 0.1581 (0.0037) | |

5 Conclusions and Discussion

In this paper, we introduce a nonlinear FPCA method to realize effective dimension reduction and curve reconstruction. We generalize the autoassociative neural network to our functional data analysis framework and construct a transformed functional autoassociative neural network for practical implementation. The proposed method takes into account the nonlinear structure of the functional observations. A Python package is developed for the convenience of using the proposed nFunNN method. The theoretical properties of the proposed networks are also considered. Moreover, the results of the simulation study and real data application further suggest the superiority of our nFunNN method.

There are also several possible extension for our work. First, we only consider usual functional data in the development of our method. However, complex function data, such as multivariate functional data (Chiou et al., 2014) and multidimensional functional data (Wang et al., 2022b), becomes more and more common nowadays. Thus, considering nonlinear FPCA method for these types of data and generalizing our method to solve such issue can be of great significance. Second, only curve reconstruction error is considered in the construction of the loss function (7) for our method. So, our method is particularly suitable for the curve reconstruction issue. It can be beneficial if other concerns can be imposed in the loss function, such as regression and clustering problems. To achieve this goal, some modifications of the proposed neural network are needed, which is worth further research.

Acknowledgments

This work was supported by Public Health Disease Control and Prevention, Major Innovation Planning Interdisciplinary Platform for the “Double-First Class” Initiative, Renmin University of China. This work was also supported by the Outstanding Innovative Talents Cultivation Funded Programs 2021 of Renmin University of China.

Appendix A Proof of Theorem 1

Proof.

Let be a compact subset of . Recall that is the set of functions from to of the form

where , , and . According to Corollary 5.1.2 in (Stinchcombe, 1999) and Section 6.1.2 of (Rossi and Conan-Guez, 2006), has the universal approximation property. Hence, for any continuous function from to and for any , there exist such that for all ,

| (10) |

As is continuous in , we have that for any , there exist such that for any , . By the approximation property of B-splines (Zhong et al., 2023) and the compactness of , we can get

| (11) |

similar to the proof in Section 6.1.3 of (Rossi and Conan-Guez, 2006), where . Then according to (10) and (11),

Moreover,

Define , then we have . The proof is completed.

∎

References

- Ramsay and Silverman [2005] James O. Ramsay and Bernard W. Silverman. Functional Data Analysis (2nd ed.). Springer Series in Statistics, New York: Springer, 2005.

- Ferraty and Vieu [2006] Frédéric Ferraty and Philippe Vieu. Nonparametric Functional Data Analysis: Theory and Practice. Springer, New York, 2006.

- Horváth and Kokoszka [2012] Lajos Horváth and Piotr Kokoszka. Inference for Functional Data with Applications. Springer, New York, 2012.

- Thind et al. [2023] Barinder Thind, Kevin Multani, and Jiguo Cao. Deep learning with functional inputs. Journal of Computational and Graphical Statistics, 32(1):171–180, 2023.

- Rao and Reimherr [2023] Aniruddha Rajendra Rao and Matthew Reimherr. Non-linear functional modeling using neural networks. Journal of Computational and Graphical Statistics, pages 1–20, 2023.

- Wu et al. [2022] Sidi Wu, Cédric Beaulac, and Jiguo Cao. Neural networks for scalar input and functional output. arXiv preprint arXiv:2208.05776, 2022.

- Thind et al. [2020] Barinder Thind, Kevin Multani, and Jiguo Cao. Neural networks as functional classifiers. arXiv preprint arXiv:2010.04305, 2020.

- Wang et al. [2022a] Shuoyang Wang, Guanqun Cao, and Zuofeng Shang. Deep neural network classifier for multi-dimensional functional data. arXiv preprint arXiv:2205.08592, 2022a.

- Wang et al. [2021] Shuoyang Wang, Guanqun Cao, Zuofeng Shang, and Alzheimer’s Disease Neuroimaging Initiative. Estimation of the mean function of functional data via deep neural networks. Stat, 10(1):e393, 2021.

- Wang and Cao [2022a] Shuoyang Wang and Guanqun Cao. Robust deep neural network estimation for multi-dimensional functional data. Electronic Journal of Statistics, 16(2):6461–6488, 2022a.

- Sarkar and Panaretos [2022] Soham Sarkar and Victor M Panaretos. Covnet: Covariance networks for functional data on multidimensional domains. Journal of the Royal Statistical Society Series B: Statistical Methodology, 84(5):1785–1820, 2022.

- Wang and Cao [2022b] Haixu Wang and Jiguo Cao. Functional nonlinear learning. arXiv preprint arXiv:2206.11424, 2022b.

- Yao et al. [2005] Fang Yao, Hans-Georg Müller, and Jane-Ling Wang. Functional data analysis for sparse longitudinal data. Journal of the American statistical association, 100(470):577–590, 2005.

- Hall et al. [2006] Peter Hall, Hans-Georg Müller, and Jane-Ling Wang. Properties of principal component methods for functional and longitudinal data analysis. The Annals of Statistics, 34(3):1493–1517, 2006.

- Li and Hsing [2010] Yehua Li and Tailen Hsing. Uniform convergence rates for nonparametric regression and principal component analysis in functional/longitudinal data. The Annals of Statistics, 38(6):3321–3351, 2010.

- Locantore et al. [1999] N Locantore, JS Marron, DG Simpson, N Tripoli, JT Zhang, KL Cohen, Graciela Boente, Ricardo Fraiman, Babette Brumback, Christophe Croux, et al. Robust principal component analysis for functional data. Test, 8:1–73, 1999.

- Gervini [2008] Daniel Gervini. Robust functional estimation using the median and spherical principal components. Biometrika, 95(3):587–600, 2008.

- Zhong et al. [2022] Rou Zhong, Shishi Liu, Haocheng Li, and Jingxiao Zhang. Robust functional principal component analysis for non-gaussian longitudinal data. Journal of Multivariate Analysis, 189:104864, 2022.

- Chiou et al. [2014] Jeng-Min Chiou, Yu-Ting Chen, and Ya-Fang Yang. Multivariate functional principal component analysis: A normalization approach. Statistica Sinica, 24:1571–1596, 2014.

- Happ and Greven [2018] Clara Happ and Sonja Greven. Multivariate functional principal component analysis for data observed on different (dimensional) domains. Journal of the American Statistical Association, 113(522):649–659, 2018.

- Song and Li [2021] Jun Song and Bing Li. Nonlinear and additive principal component analysis for functional data. Journal of Multivariate Analysis, 181:104675, 2021.

- Kramer [1991] Mark A Kramer. Nonlinear principal component analysis using autoassociative neural networks. AIChE journal, 37(2):233–243, 1991.

- Stinchcombe [1999] Maxwell B Stinchcombe. Neural network approximation of continuous functionals and continuous functions on compactifications. Neural Networks, 12(3):467–477, 1999.

- Rossi and Conan-Guez [2006] Fabrice Rossi and Brieuc Conan-Guez. Theoretical properties of projection based multilayer perceptrons with functional inputs. Neural Processing Letters, 23(1):55–70, 2006.

- Kingma and Ba [2014] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- Chen et al. [2015] Yanping Chen, Eamonn Keogh, Bing Hu, Nurjahan Begum, Anthony Bagnall, Abdullah Mueen, and Gustavo Batista. The ucr time series classification archive, July 2015. www.cs.ucr.edu/~eamonn/time_series_data/.

- Wang et al. [2022b] Jiayi Wang, Raymond KW Wong, and Xiaoke Zhang. Low-rank covariance function estimation for multidimensional functional data. Journal of the American Statistical Association, 117(538):809–822, 2022b.

- Zhong et al. [2023] Rou Zhong, Shishi Liu, Haocheng Li, and Jingxiao Zhang. Sparse logistic functional principal component analysis for binary data. Statistics and Computing, 33(1):15, 2023.