Nonlinear Kalman Filtering based on Self-Attention Mechanism and Lattice Trajectory Piecewise Linear Approximation

Abstract

The traditional Kalman filter (KF) is widely applied in control systems, but it relies heavily on the accuracy of the system model and noise parameters, leading to potential performance degradation when facing inaccuracies. To address this issue, introducing neural networks into the KF framework offers a data-driven solution to compensate for these inaccuracies, improving the filter’s performance while maintaining interpretability. Nevertheless, existing studies mostly employ recurrent neural network (RNN), which fails to fully capture the dependencies among state sequences and lead to an unstable training process. In this paper, we propose a novel Kalman filtering algorithm named the attention Kalman filter (AtKF), which incorporates a self-attention network to capture the dependencies among state sequences. To address the instability in the recursive training process, a parallel pre-training strategy is devised. Specifically, this strategy involves piecewise linearizing the system via lattice trajectory piecewise linear (LTPWL) expression, and generating pre-training data through a batch estimation algorithm, which exploits the self-attention mechanism’s parallel processing ability. Experimental results on a two-dimensional nonlinear system demonstrate that AtKF outperforms other filters under noise disturbances and model mismatches.

I INTRODUCTION

In the field of modern control theory, the Kalman filter (KF) [1] and its variant, the extended Kalman filter (EKF) [2], are fundamental tools for state estimation in control system design. However, the performance of these model-based filters depends significantly on the accuracy of the system model and noise parameters. Inaccurate settings can lead to a notable decline in KF’s performance.

To address this challenge, many studies improved KF by integrating data-driven approaches, which are mainly categorized into external combination and internal embedding [3]. External combination approaches employ neural networks for either identifying system parameters or enhancing fusion filtering with KF, where the neural network works independently of KF. Gao et al. [4] proposed an adaptive KF that uses reinforcement learning to estimate process noise covariance dynamically, thus improving navigation accuracy and robustness. Tian et al. [5] devised a battery state estimation method that merges the outputs of a deep neural network with the ampere-hour counting method through a linear KF, yielding faster and more precise estimations. Internal embedding strategies integrate neural networks within the KF framework and replace certain parts of the traditional KF. Jung et al. [6] introduced a memorized KF that uses long short-term memory (LSTM) networks [7] to learn transition probability density functions. This approach effectively surpasses the Markovian and linearity constraints inherent in traditional KF. KalmanNet [8] combined KF with gated recurrent units (GRU) [9] to estimate the Kalman gain, showing improved filtering performance in model mismatched and nonlinear systems. Directly embedding neural networks into the KF framework represents a novel and promising research direction.

However, most current approaches employ LSTM or GRU to learn from time series data. These recurrent neural networks (RNN) perform poorly in comprehensively capturing the dependencies in time series data. Additionally, their recursive training processes suffer from instability and inefficiency.

Inspired by KalmanNet [8], we introduce a novel technique that incorporates the self-attention mechanism from Transformer [10] into the Kalman filtering. By analyzing state sequences over historical time windows, our method aims to capture dependencies among state sequences more effectively, thereby enhancing estimation accuracy and robustness. However, due to KF’s recursive structure, directly applying the attention mechanism within KF leads to an inherently recursive training process, which is incapable of addressing the issues of instability and inefficiency. To solve this, we design a pre-training method that constructs all pre-training data through batch estimation. It estimates the system states over a period in one go, thereby avoiding the recursive process. This approach sets up better starting points for the attention network, enabling it to replicate the benefits of extensive training through a minimal number of iterations.

Nevertheless, for batch estimation of nonlinear systems, it is necessary to perform linearization first, for which the lattice trajectory piecewise linear (LTPWL) expression offers an analytical and compact solution. The lattice piecewise linear (PWL) expression is named for its algebraic properties of performing max and min operations on affine functions [11]. Tarela et al. [11] summarized several representation methods of lattice PWL functions from [12] [13]. Ovchinnikov [14] provided proof that lattice PWL can represent any PWL function, and Xu et al. [15] introduced methods for removing redundant terms and literals in lattice PWL. Wang et al. [16] proposed a LTPWL method for approximating nonlinear systems with lattice PWL. Here, we use the LTPWL to perform piecewise linearization of the nonlinear system, then generate pre-training data through a batch estimation algorithm for non-nested training of the network.

The main contributions of this paper can be summarized as follows:

-

•

A Kalman filtering algorithm embedded with a simplified attention mechanism is proposed, which better captures the dependencies among state sequences, thereby improving the accuracy and robustness of state estimation.

-

•

A pre-training method based on the LTPWL and batch estimation algorithm is designed, addressing the instability and inefficiency of the recursive training process, while fully leveraging the parallel processing capabilities of the self-attention network.

The paper is structured as follows: Section 2 introduces the self-attention mechanism and LTPWL expression. Section 3 details the structure of AtKF and the pre-training method. Section 4 evaluates our approach through experiments on a two-dimensional nonlinear system, and Section 5 concludes the paper.

II PRELIMINARIES

II-A Self-attention Mechanism

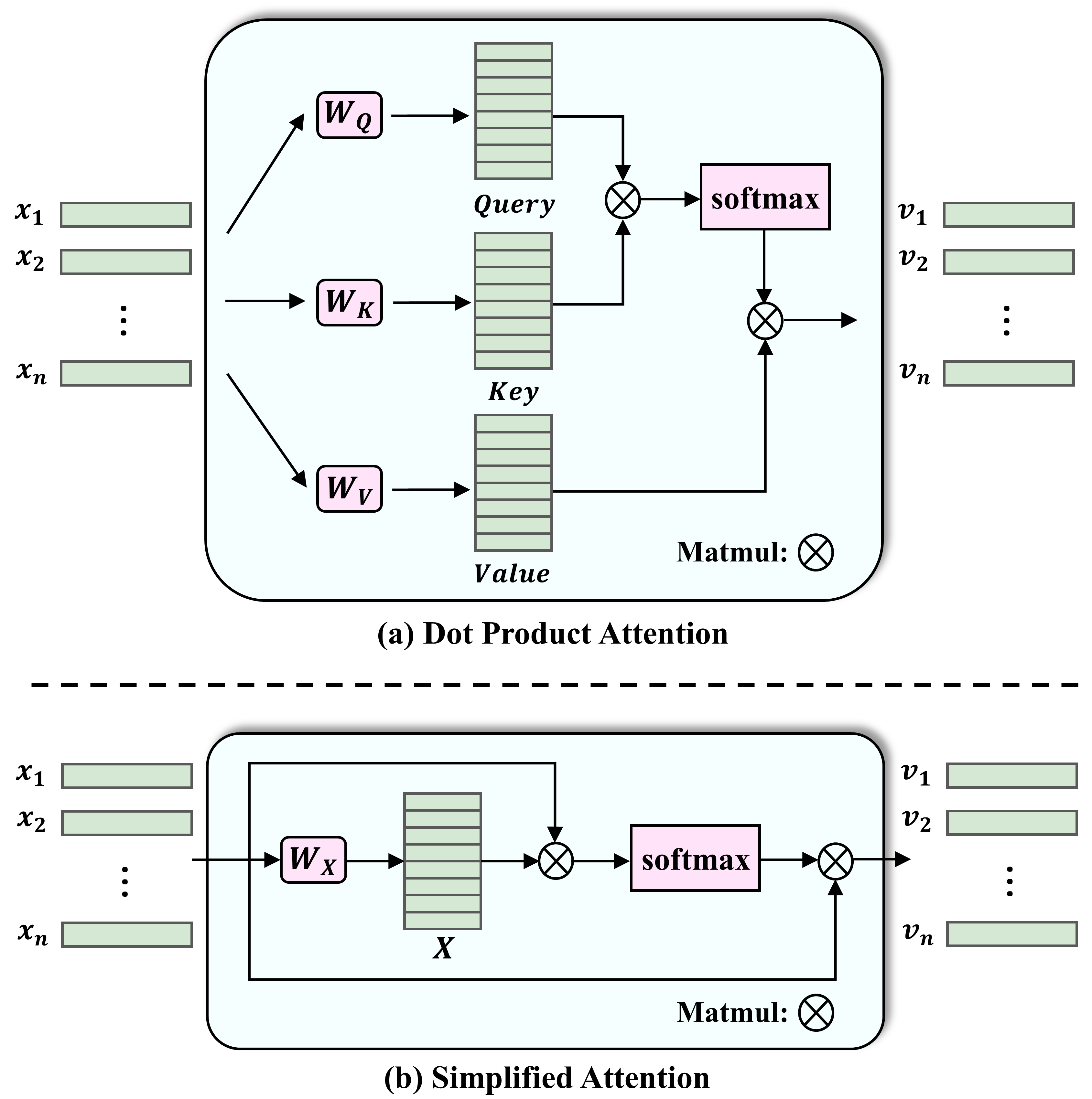

This section introduces the operation of the attention mechanism and our proposed simplified attention network. The self-attention mechanism is shown in Fig. 1(a). It transforms the input sequence into query, key, and value matrices through linear mappings. For each sequence element , it calculates the dot products with all sequence elements, forming an attention distribution through softmax that indicates the elements’ dependencies. This attention distribution is then multiplied with value to produce the output sequence , where each element integrates information from the entire sequence. This ensures each processed element reflects the influence of every other element, overcoming the limitations of distance.

A simplified version of this mechanism, as depicted in Fig. 1(b), streamlines the process by using a single matrix for multiplying both the attention distribution and the output sequence. This is feasible because , and the dimension of matches that of the input sequence matrix, eliminating the need for separate and extra linear mappings. Given the small amount of sequence data and the simple distribution of features in the Kalman filtering process, employing the full multi-head attention mechanism can increase training difficulty and lead to overfitting. By reducing the number of parameters, this simplified approach boosts efficiency without sacrificing the model’s ability to capture crucial dependencies.

II-B Lattice Trajectory Piecewise Linear Expression

The LTPWL expression is an approximate method of constructing a lattice PWL expression for a nonlinear function. It can simultaneously accomplish the linearization of the nonlinear system and the construction of lattice PWL expression. Its main process involves selecting a set of linearization points along the system’s state trajectory, constructing linear segments at each of these points, and finally using these segments to build a lattice PWL expression to approximate the original nonlinear system.

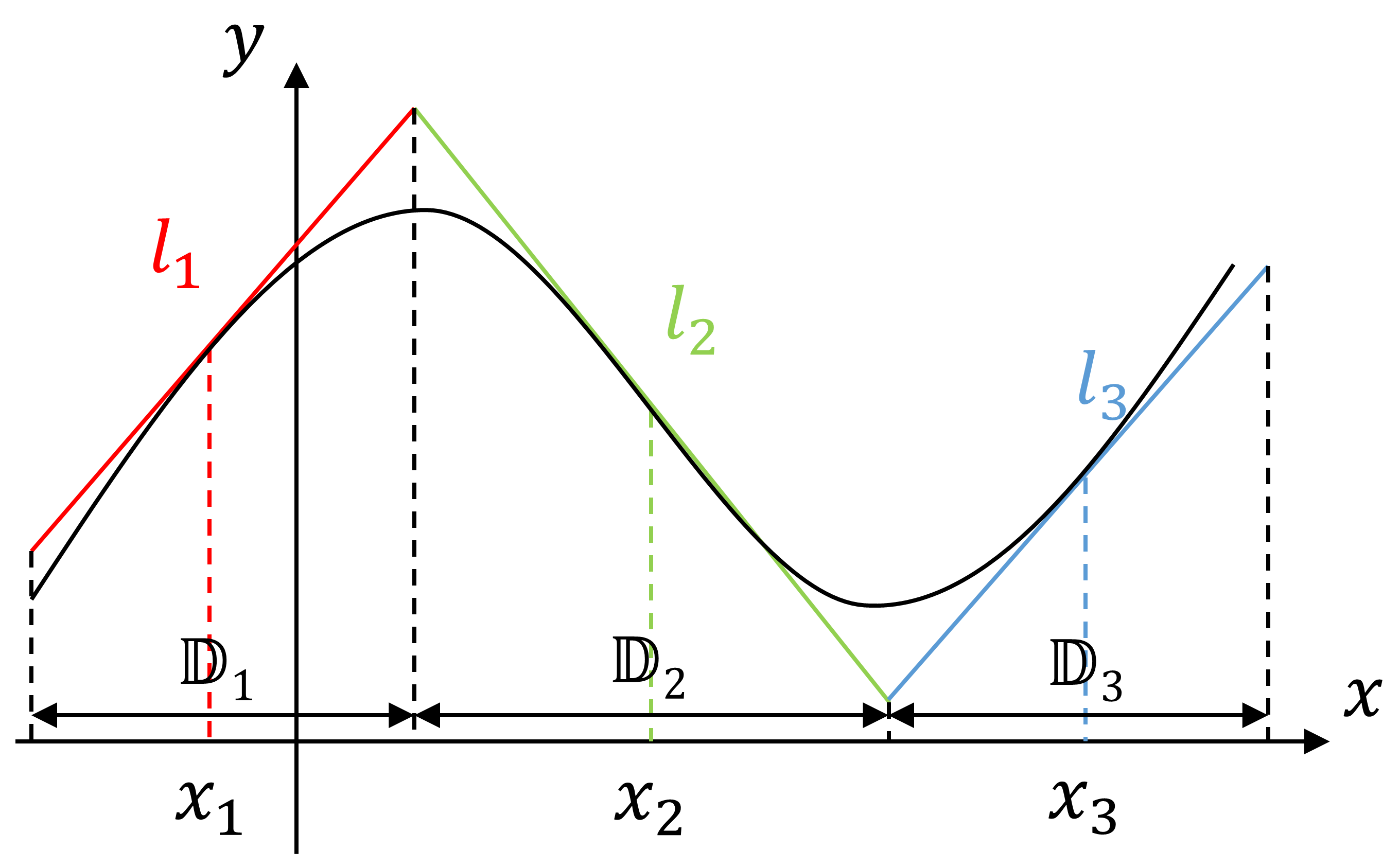

Taking the nonlinear function shown in Fig. 2 as an example, by selecting points and along the state trajectory (here, the -axis), and creating corresponding linear segments and at each point, we represent the PWL function as shown in (1),

| (1) |

this method simplifies the approximation of nonlinear functions without defining specific interval ranges for , making it a more compact solution compared with traditional piecewise form (2),

| (2) |

The general form of the lattice PWL is shown in (3),

| (3) |

where is the number of base regions , with each being a polyhedron satisfying . Furthermore, denotes the index set of affine functions in the base region that are greater than or equal to the linear segment , i.e., .

For the PWL function shown in Fig. 2 with three basic regions, . Taking the linear segment as an example, in the basic region , the affine functions that are greater than or equal to are and . Then, a minimum operation is applied to and to construct a “term” in the lattice PWL expression, resulting in . Terms for linear segments and are constructed in a similar way, resulting in and , respectively. Then, a maximum operation on these three terms yields the final lattice PWL expression (1). When evaluating the lattice piecewise linear expression, it is only necessary to substitute the value of the variable , without the need to consider the interval range of the variable as in the traditional piecewise form. Moreover, we can conveniently identify the linear segment that represents the current system dynamics through comparison operations. As we will see in Section III-D2, this property of the lattice expression is particularly suited for batch estimation algorithms to generate pre-training data.

III Kalman Filtering Algorithm with Attention Mechanism

This section offers an overview of the AtKF framework and the pre-training approach based on the LTPWL expression. It is structured into four parts: the system model, the overall architecture, the network structure, and the training methodology.

III-A System Model

Considering the discrete-time nonlinear system given by (4),

| (4a) | ||||

| (4b) | ||||

| (4c) | ||||

where and represent the nonlinear state transition and observation functions, respectively, denotes the state vector at time step , and represents the observation at . and correspond to the process noise and observation noise at , respectively, both assumed to be Gaussian white noise with their covariance matrices and .

III-B Overall Architecture

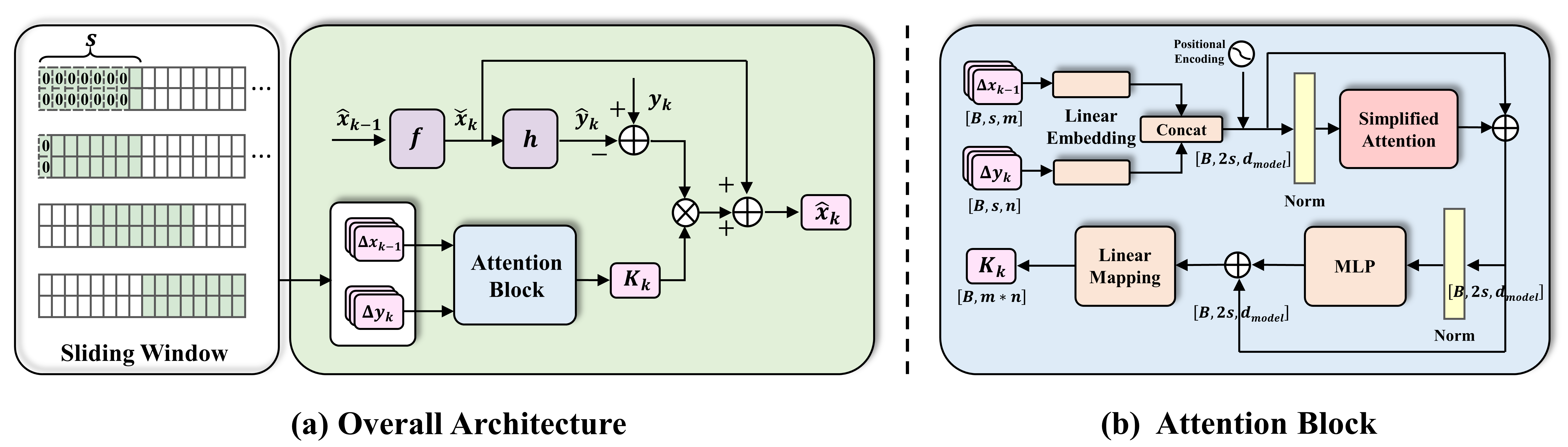

As shown in Fig. 3(a), the framework aligns with the traditional Kalman filtering algorithm, using the system model for state recursion and output prediction at each time step. At any given moment, such as time step , and represent the prior estimate of the state and the predicted system output, respectively, while is the posterior state estimate. A self-attention mechanism is incorporated to predict the Kalman gain . The gain, acting as a fusion coefficient between model predictions and system observations, adjusts based on process and observation noise . By leveraging the self-attention mechanism, the network captures sequential data dependencies more effectively, leading to enhanced estimation accuracy by fitting the system’s noise characteristics.

The input to the self-attention mechanism network comprises two types of features. which can be expressed as in (5) at time step ,

| (5a) | ||||

| (5b) | ||||

represents the forward update difference, and represents the innovation. To fully utilize the sequence processing capability of the self-attention network and to capture the features among state sequences thoroughly, values from a past time window for each feature are collected to form a sequence, which then serves as the network input. Moreover, as the filtering process goes, the window slides accordingly. Assuming the size of the time window is , the network input at can be represented as in (6),

| (6) |

If the historical data is insufficient (i.e., when ), the network fills missing feature values in the input sequence with zeros, as shown in Fig. 3(a). Then the network uses the processed input sequence to predict the Kalman gain , which is subsequently utilized to update the system state.

Similar to the traditional KF, the overall filtering framework can also be summarized into prediction and update steps:

-

•

Prediction step:

(7a) (7b) -

•

Update step:

(8a) (8b)

III-C Network Structure

As shown in Fig. 3(b), the entire self-attention network consists of two linear embedding layers for initial processing, a positional encoding layer to integrate sequence position information, a simplified attention layer for capturing dependencies within the sequence, and two fully connected layers within a multi-layer perceptron (MLP) block to enrich feature representation. It concludes with a linear mapping layer that projects the processed features to predict the Kalman gain . Starting with input features and , the network fuses and transforms these inputs into a sequence via the embedding layers. is then enhanced for dependency recognition in the attention layer and further processed by the MLP to capture non-linear characteristics, finally outputting the predicted Kalman gain .

III-D Network Training

III-D1 Training

We conduct end-to-end training of the entire AtKF framework. The training dataset is constructed from a nonlinear system (4) and includes noise that is generated randomly. Each instance in the training dataset captures the true state and system output across a defined period, with the dataset encompassing instances, each spanning time steps. The -th training data instance is specified as (9),

| (9) |

Assuming represents all trainable parameters of AtKF, the network is trained using a loss function defined as (10),

| (10) |

Furthermore, since the loss is derivatively related to the network output, the Kalman gain , as formulated in (11),

| (11) | ||||

where , thus enabling the entire AtKF framework to be trained end-to-end through backpropagation.

III-D2 PreTraining

The recursive nature of the KF leads to nested forward and backward propagation during training, posing risks of gradient issues and making the training unstable. Moreover, this recursive training process fails to fully exploit the parallel processing strengths of the self-attention network. To address these issues, we propose a pre-training method based on batch estimation and LTPWL. Here, we first linearize the system with LTPWL and then use batch estimation to directly estimate the system states over a period at once, thus generating pre-training data. This method avoids the recursive limitations of KF and enhances training stability and efficiency.

Batch estimation requires a linear system; thus, we consider approximating a nonlinear system (4) through LTPWL. Assuming that a state trajectory with state points is derived from the initial state estimate and , select all state points as linearization points. At each point , a linear segment is constructed through a first-order Taylor expansion, as shown in (12):

| (12a) | ||||

| (12b) | ||||

where and represent the linear segments constructed at for and , respectively. Then, the LTPWL expression can be constructed, with and . Assuming training data (9) from time step 1 to , the application of batch estimation for this LTPWL model is formulated as detailed in Lemma 1.

Lemma 1

Define vectors and as in (13),

| (13a) | ||||

| (13b) | ||||

where , , and . and are noise covariance matrices in moment .

Proof:

The constructed LTPWL system is equivalent to a discrete-time linear time-varying system, where the dynamics of the system at each moment are determined by the state variables. With the evaluation properties of LTPWL described in the preliminaries, it is straightforward to derive the transition matrix and observation matrix for every moment. This leads to a concise system model representation, as outlined in (17),

| (17a) | |||

| (17b) | |||

where and represent the system input and observation at time , and represent the process and observation noise, respectively, both following Gaussian distributions. According to [17], for the linear time-varying system (17), the batch posterior estimate can be derived using (16), in which the vector , the matrices and are defined as in (13a), (14) and (15), respectively.

∎

In summary, with training data (9) available, we systematically construct the matrices and for each moment based on the true states , facilitating batch estimation from moments 1 to as outlined in Lemma 1. Based on the batch estimated values , alongside the system model , , and the initial training dataset, we prepare a pre-training dataset. This dataset comprises input features (6) for the network and the corresponding true state values, with each pre-training instance specified as (18),

| (18) |

The pre-training dataset is denoted as . By employing the loss function (10), the network can be pre-trained in a batch, avoiding recursion. This pre-training serves as a better starting point for subsequent formal training.

IV EXPERIMENTS

This section presents simulation experiments on a two-dimensional nonlinear system [8] with AtKF, under varying noise conditions and model mismatches. Results are compared to those from the traditional EKF, unscented Kalman filter (UKF), particle filter (PF) and KalmanNet.

IV-A System Function and Parameters

The system function is given by (19), where both the system state and output are two-dimensional vectors, i.e., . The parameters of the system are shown in Table I.

| (19a) | ||||

| (19b) | ||||

| 0.9 | 1.1 | 0.01 | 1 | 1 | 0 | ||

| 1 | 1 | 0 | 0 | 1 | 1 | 0 |

represents the true parameters of the system, and denotes the parameters of the model. The system’s state transition function (19a) and observation function (19b) are both nonlinear. and represent the process noise and observation noise, respectively, both assumed to be Gaussian white noise with covariance matrices denoted by and .

IV-B Experimental Setup

We use the original nonlinear model (19), starting with the initial state . datasets for training, validation, and testing are generated under random noise. The training dataset contains data entries, each with time steps. The validation dataset contains data entries, each with time steps. The test dataset contains data entries, each with time steps. Moreover, to generate pre-training data, a noise-free state trajectory of 10 time steps is produced using the same nonlinear model and initial state.

For training parameters, the self-attention network’s sliding window size was set to , with a batch size of 50 and a learning rate of 1e-4. For the AtKF, pre-training was conducted for 50 epochs and the subsequent training phase for 20 epochs. To ensure fairness, KalmanNet was trained for 70 epochs. All training processes are conducted on a GTX-3090 GPU.

IV-C Results and Analysis

IV-C1 Noise Robustness

By setting the weight coefficients to different values, simulation experiments are conducted for various noise levels and the model used by the filter is consistent with the true system. The results are shown in Table II.

| 1 | 2 | 4 | 8 | 16 | |

| EKF | 3.0216 | 7.6312 | 20.5524 | 64.4445 | 218.2332 |

| UKF | 3.1972 | 9.8430 | 29.0027 | 103.1582 | 460.5745 |

| PF | 1.4986 | 2.8381 | 5.6377 | 11.2771 | 23.9068 |

| KalmanNet | 1.6303 | 3.3716 | 6.4136 | 9.6848 | 18.5984 |

| AtKF | 1.6175 | 2.9235 | 4.9186 | 8.7522 | 16.6712 |

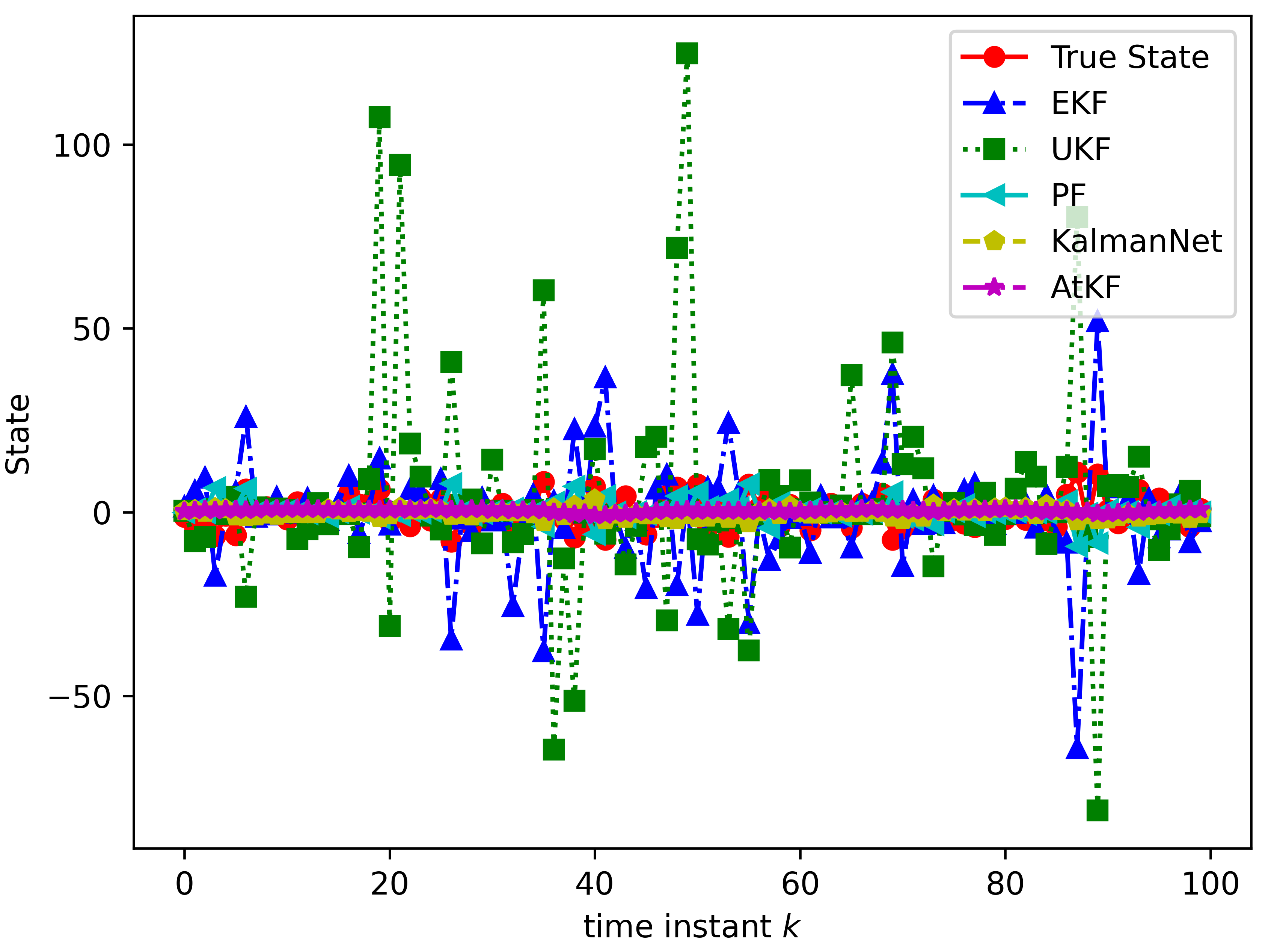

It is obvious that when the noise is significant, our AtKF shows superior performance compared with other filters. Although our performance is similar to PF under low noise, AtKF significantly surpass PF under high noise. Specifically when , AtKF achieves an MSE of 16.6712, while our performance is 30% better than PF. This superiority is attributed to the attention network’s ability to compensate for noise. Since our capability to capture dependencies among sequences and start from a good initialization point through pre-training, our results outperform KalmanNet. Fig. 4 shows the first component of the true state values and the estimated values from different filters for a selected data entry in the test dataset. It is evident that AtKF provides the best tracking of the true state. In conclusion, the results demonstrate that the noise characteristics in the system can be better fitted with the assistance of neural networks, leading to improved estimation results. Furthermore, networks based on the attention mechanism achieve better estimation performance by more comprehensively capturing the dependencies among sequences.

IV-C2 Model Mismatch

Here we assume a difference between the model used by the filter and the true system. Simulation experiments are conducted under model mismatch conditions for different noise levels. The results are shown in Table III.

| 1 | 2 | 4 | 8 | 16 | |

| EKF | 3.7272 | 8.1047 | 20.2963 | 60.7735 | 211.4128 |

| UKF | 3.7043 | 7.8158 | 24.1762 | 81.3889 | 319.6542 |

| PF | 1.6882 | 2.9876 | 5.8008 | 11.3298 | 23.9946 |

| KalmanNet | 2.0326 | 3.2508 | 6.3598 | 9.5641 | 18.6151 |

| AtKF | 1.4880 | 2.8058 | 4.5026 | 8.4523 | 16.5934 |

It is observed that filters embedded with neural networks remain widely better than model-based filtering. Furthermore, the attention mechanism network-based AtKF outperforms the GRU-based KalmanNet. This shows that AtKF offers greater robustness, and attention mechanism networks are better at capturing the dependencies between state sequences, thereby achieving superior filtering results.

V CONCLUSIONS

This paper introduces the attention Kalman filter (AtKF), a novel approach that integrates self-attention with the KF to improve the accuracy and robustness of state estimation. AtKF addresses traditional KFs’ shortcomings in handling system model inaccuracies and noise parameters. Specifically, AtKF uses self-attention to comprehensively capture dependencies in state sequences and perform an innovative pre-training strategy, which includes LTPWL for system linearization and batch estimation for data generation. AtKF addresses the challenges of instability and inefficiency associated with recursive training. Experiments with a two-dimensional nonlinear system demonstrate AtKF’s effectiveness in managing noise disturbances and model mismatches. This work enhances KF’s performance with neural network architectures and paves the way for future research on integrating data-driven techniques with traditional estimation methods for complex systems.

References

- [1] R. E. Kalman, “A New Approach to Linear Filtering and Prediction Problems,” Journal of Basic Engineering, vol. 82, no. 1, pp. 35–45, 1960.

- [2] P. S. Maybeck, Stochastic models, estimation, and control. Academic press, 1982.

- [3] Y. Bai, B. Yan, C. Zhou, T. Su, and X. Jin, “State of art on state estimation: Kalman filter driven by machine learning,” Annual Reviews in Control, vol. 56, p. 100909, 2023.

- [4] X. Gao, H. Luo, B. Ning, F. Zhao, L. Bao, Y. Gong, Y. Xiao, and J. Jiang, “Rl-akf: An adaptive kalman filter navigation algorithm based on reinforcement learning for ground vehicles,” Remote Sensing, vol. 12, no. 11, p. 1704, 2020.

- [5] J. Tian, R. Xiong, W. Shen, and J. Lu, “State-of-charge estimation of lifepo4 batteries in electric vehicles: A deep-learning enabled approach,” Applied Energy, vol. 291, p. 116812, 2021.

- [6] S. Jung, I. Schlangen, and A. Charlish, “A mnemonic kalman filter for non-linear systems with extensive temporal dependencies,” IEEE Signal Processing Letters, vol. 27, pp. 1005–1009, 2020.

- [7] S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural Computation, vol. 9, no. 8, pp. 1735–1780, 1997.

- [8] G. Revach, N. Shlezinger, X. Ni, A. L. Escoriza, R. J. Van Sloun, and Y. C. Eldar, “Kalmannet: Neural network aided kalman filtering for partially known dynamics,” IEEE Transactions on Signal Processing, vol. 70, pp. 1532–1547, 2022.

- [9] J. Chung, C. Gulcehre, K. Cho, and Y. Bengio, “Empirical evaluation of gated recurrent neural networks on sequence modeling,” in NIPS 2014 Workshop on Deep Learning, 2014.

- [10] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in Neural Information Processing Systems, vol. 30, 2017.

- [11] J. Tarela and M. Martínez, “Region configurations for realizability of lattice piecewise-linear models,” Mathematical and Computer Modelling, vol. 30, no. 11, pp. 17–27, 1999.

- [12] J.-N. Lin and R. Unbehauen, “Explicit piecewise-linear models,” IEEE Transactions on Circuits and Systems I: Fundamental Theory and Applications, vol. 41, no. 12, pp. 931–933, 1994.

- [13] J. Tarela, E. Alonso, and M. Martínez, “A representation method for pwl functions oriented to parallel processing,” Mathematical and Computer Modelling, vol. 13, no. 10, pp. 75–83, 1990.

- [14] S. Ovchinnikov, “Max-min representation of piecewise linear functions,” Beiträge zur Algebra und Geometrie, vol. 43, no. 1, pp. 297–302, 2002.

- [15] J. Xu, T. J. van den Boom, B. De Schutter, and S. Wang, “Irredundant lattice representations of continuous piecewise affine functions,” Automatica, vol. 70, pp. 109–120, 2016.

- [16] J. Wang, J. Xu, and S. Wang, “Lattice trajectory piecewise linear method for the simulation of diode circuits,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 68, no. 5, pp. 2069–2081, 2021.

- [17] T. D. Barfoot, State estimation for robotics. Cambridge University Press, 2017.