Nonlinear reduced models for state and parameter estimation

Abstract

State estimation aims at approximately reconstructing the solution to a parametrized partial differential equation from linear measurements, when the parameter vector is unknown. Fast numerical recovery methods have been proposed in [19] based on reduced models, that is, linear spaces of moderate dimension tailored to approximate the solution manifold where the solution sits. These methods can be viewed as deterministic counterparts to Bayesian estimation approaches, and are proved to be optimal when the prior is expressed by approximability of the solution with respect to the reduced model [2]. However, they are inherently limited by their linear nature, which bounds from below their best possible performance by the Kolmogorov width of the solution manifold. In this paper we propose to break this barrier by using simple nonlinear reduced models that consist of a finite union of linear spaces , each having dimension at most and leading to different estimators . A model selection mechanism based on minimizing the PDE residual over the parameter space is used to select from this collection the final estimator . Our analysis shows that meets optimal recovery benchmarks that are inherent to the solution manifold and not tied to its Kolmogorov width. The residual minimization procedure is computationally simple in the relevant case of affine parameter dependence in the PDE. In addition, it results in an estimator for the unknown parameter vector. In this setting, we also discuss an alternating minimization (coordinate descent) algorithm for joint state and parameter estimation, which potentially improves the quality of both estimators.

1 Introduction

1.1 Parametrized PDEs and inverse problems

Parametrized partial differential equations are commonly used to model complex physical systems. Such equations can generally be written in abstract form as

| (1.1) |

where is a vector of scalar parameters ranging in some domain . We assume well-posedness, that is, for any the problem admits a unique solution in some Hilbert space . We may therefore consider the parameter to solution map

| (1.2) |

from to , which is typically nonlinear, as well as the solution manifold

| (1.3) |

that describes the collection of all admissible solutions. Throughout this paper, we assume that is compact in and that the map (1.2) is continuous. Therefore is a compact set of . We sometimes refer to the solution as the state of the system for the given parameter vector .

The parameters are used to represent physical quantities such as diffusivity, viscosity, velocity, source terms, or the geometry of the physical domain in which the PDE is posed. In several relevant instances, may be high or even countably infinite dimensional, that is, or .

In this paper, we are interested in inverse problems which occur when only a vector of linear measurements

| (1.4) |

is observed, where each is a known continuous linear functional on . We also sometimes use the notation

| (1.5) |

One wishes to recover from the unknown state or even the underlying parameter vector for which . Therefore, in an idealized setting, one partially observes the result of the composition map

| (1.6) |

for the unknown . More realistically, the measurements may be affected by additive noise

| (1.7) |

and the model itself might be biased, meaning that the true state deviates from the solution manifold by some amount. Thus, two types of inverse problems may be considered:

(i) State estimation: recover an approximation of the state from the observation . This is a linear inverse problem, in which the prior information on is given by the manifold which has a complex geometry and is not explicitly known.

(ii) Parameter estimation: recover an approximation of the parameter from the observation when . This is a nonlinear inverse problem, for which the prior information available on is given by the domain .

These problems become severely ill-posed when has dimension . For this reason, they are often addressed through Bayesian approaches [17, 25]: a prior probability distribution being assumed on (thus inducing a push forward distribution for ), the objective is to understand the posterior distributions of or conditioned by the observations in order to compute plausible solutions or under such probabilistic priors. The accuracy of these solutions should therefore be assessed in some average sense.

In this paper, we do not follow this avenue: the only priors made on and are their membership in and . We are interested in developing practical estimation methods that offer uniform recovery guarantees under such deterministic priors in the form of upper bounds on the worst case error for the estimators over all or . We also aim to understand whether our error bounds are optimal in some sense. Our primary focus will actually be on state estimation (i). Nevertheless we present in §4 several implications on parameter estimation (ii), which to our knowledge are new. For state estimation, error bounds have recently been established for a class of methods based on linear reduced modeling, as we recall next.

1.2 Reduced models: the PBDW method

In several relevant instances, the particular parametrized PDE structure allows one to construct linear spaces of moderate dimension that are specifically tailored to the approximation of the solution manifold , in the sense that

| (1.8) |

where is a certified bound that decays with significantly faster than when using classical approximation spaces of dimension such as finite elements, algebraic or trigonometric polynomials, or spline functions. Throughout this paper

| (1.9) |

denotes the norm of the Hilbert space . The natural benchmark for such approximation spaces is the Kolmogorov -width

| (1.10) |

The space that achieves the above minimum is thus the best possible reduced model for approximating all of , however it is computationally out of reach.

One instance of computational reduced model spaces is generated by sparse polynomial approximations of the form

| (1.11) |

where is a conveniently chosen set of multi-indices such that . Such approximations can be derived, for example, by best -term truncations of infinite Taylor or orthogonal polynomial expansions. We refer to [11, 12] where convergence estimates of the form

| (1.12) |

are established for some even when . Therefore, the space approximates the solution manifold with accuracy .

Another instance, known as reduced basis approximation, consists of using spaces of the form

| (1.13) |

where are instances of solutions corresponding to a particular selection of parameter values (see [20, 23, 24]). One typical selection procedure is based on a greedy algorithm: one picks such that is furthest away from the previously constructed space in the sense of maximizing a computable and tight a-posteriori bound of the projection error over a sufficiently fine discrete training set . In turn, this method also delivers a computable upper estimate for . It was proved in [1, 16] that the reduced basis spaces resulting from this greedy algorithm have near-optimal approximation property, in the sense that if has a certain polynomial or exponential rate of decay as , then the same rate is achieved by .
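The greedy selection described above can be sketched in a finite-dimensional setting. This is only an illustration under stated assumptions: the states are vectors in a Euclidean space, the exact projection error replaces the computable a-posteriori bound, and the function name `greedy_reduced_basis` as well as the toy training set are hypothetical.

```python
import numpy as np

def greedy_reduced_basis(snapshots, n_max, tol=1e-12):
    """Greedy selection of snapshots (rows) from a discrete training set.

    Assumption: the exact Euclidean projection error stands in for the
    a-posteriori bound mentioned in the text.
    Returns an orthonormal basis (rows) and the maximal projection error
    over the training set before each selection step.
    """
    residual = np.array(snapshots, dtype=float)  # training set minus its projection
    basis, errors = [], []
    for _ in range(n_max):
        norms = np.linalg.norm(residual, axis=1)
        i = int(np.argmax(norms))                # snapshot furthest from the current space
        errors.append(norms[i])
        if norms[i] <= tol:                      # training set already captured
            break
        q = residual[i] / norms[i]
        basis.append(q)
        # Remove the new direction from every training residual.
        residual = residual - np.outer(residual @ q, q)
    return np.array(basis), errors

# Toy training set: samples of y -> 1 / (1 + y x) on a grid in x (hypothetical example).
x = np.linspace(0.0, 1.0, 50)
train = np.array([1.0 / (1.0 + y * x) for y in np.linspace(0.0, 5.0, 200)])
basis, errors = greedy_reduced_basis(train, n_max=5)
```

The list `errors` plays the role of the computable upper estimate delivered by the greedy algorithm: its entry of index n is the largest distance from a training snapshot to the space spanned by the first n selected snapshots.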

In both cases, these reduced models come in the form of a hierarchy , with computable decreasing error bounds , where corresponds to the level of truncation in the first case and the step of the greedy algorithm in the second case. Given a reduced model , one way of tackling the state estimation problem is to replace the complex solution manifold by the simpler prior class described by the cylinder

| (1.14) |

that contains . The set therefore reflects the approximability of by . This point of view leads to the Parametrized Background Data Weak (PBDW) method introduced in [19], also termed the one-space method and further analyzed in [2], which we recall below in a nutshell.

In the noiseless case, the knowledge of is equivalent to that of the orthogonal projection , where

| (1.15) |

and are the Riesz representers of the linear functionals , that is

| (1.16) |

Thus, the data indicates that belongs to the affine space

| (1.17) |

Combining this information with the prior class , the unknown state thus belongs to the ellipsoid

| (1.18) |

For this posterior class , the optimal recovery estimator that minimizes the worst case error is therefore the center of the ellipsoid, which is equivalently given by

| (1.19) |

It can be computed from the data in an elementary manner by solving a finite set of linear equations. The worst case performance for this estimator, both over and , for any , is thus given by the half-diameter of the ellipsoid which is the product of the width of and the quantity

| (1.20) |

Note that is the inverse of the cosine of the angle between and . For , this quantity can be computed as the inverse of the smallest singular value of the cross-Gramian matrix with entries between any pair of orthonormal bases and of and , respectively. It is readily seen that one also has

| (1.21) |

allowing us to extend the above definition to the case of the zero-dimensional space for which .
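As a hedged illustration of the cross-Gramian computation just described, the following sketch evaluates the stability constant for subspaces of a Euclidean space. The function name `stability_constant` is hypothetical, the Euclidean inner product stands in for the Hilbert space inner product, and the input matrices are assumed to have full column rank.

```python
import numpy as np

def stability_constant(V, W, tol=1e-14):
    """Inverse of the smallest singular value of the cross-Gramian.

    V: (N, n) array whose columns span the reduced space.
    W: (N, m) array whose columns span the measurement space.
    Assumption: both have full column rank, Euclidean inner product.
    """
    Qv, _ = np.linalg.qr(V)          # orthonormal basis of the reduced space
    Qw, _ = np.linalg.qr(W)          # orthonormal basis of the measurement space
    n, m = Qv.shape[1], Qw.shape[1]
    if n > m:
        # Some nonzero element of the reduced space is orthogonal to the
        # measurement space, so the constant is infinite.
        return np.inf
    G = Qw.T @ Qv                    # m x n cross-Gramian
    s_min = np.linalg.svd(G, compute_uv=False)[-1]
    return np.inf if s_min <= tol else 1.0 / s_min

# Two lines in the plane at angle pi/3: the constant is 1 / cos(pi/3) = 2.
theta = np.pi / 3
V = np.array([[1.0], [0.0]])
W = np.array([[np.cos(theta)], [np.sin(theta)]])
mu = stability_constant(V, W)
```

The result does not depend on the choice of orthonormal bases, since changing them multiplies the cross-Gramian by orthogonal matrices and leaves its singular values unchanged.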

Since , the worst case error bound over of the estimator, defined as

| (1.22) |

satisfies the error bound

| (1.23) |
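The following sketch shows one standard way to compute the one-space estimator in a finite-dimensional Euclidean setting and checks the resulting error against the product of the stability constant and the distance to the reduced space. The function name `pbdw_estimate`, the random test spaces, and the least-squares formulation are assumptions made for illustration; they are not taken from the text.

```python
import numpy as np

def pbdw_estimate(Qv, Qw, c):
    """One-space estimator from observed coefficients c = Qw.T @ u.

    Qv: (N, n) orthonormal basis of the reduced space, n <= m.
    Qw: (N, m) orthonormal basis of the measurement space.
    Assumed formulation: fit the reduced-space component to the data in
    the least-squares sense, then add the correction in the measurement
    space so that the estimator reproduces the data exactly.
    """
    G = Qw.T @ Qv                                  # cross-Gramian
    a, *_ = np.linalg.lstsq(G, c, rcond=None)      # reduced-space coefficients
    return Qv @ a + Qw @ (c - G @ a)

rng = np.random.default_rng(0)
N, n, m = 40, 3, 8
Qv, _ = np.linalg.qr(rng.standard_normal((N, n)))
Qw, _ = np.linalg.qr(rng.standard_normal((N, m)))

# A state close to, but not in, the reduced space.
u = Qv @ rng.standard_normal(n) + 0.01 * rng.standard_normal(N)
u_star = pbdw_estimate(Qv, Qw, Qw.T @ u)

mu = 1.0 / np.linalg.svd(Qw.T @ Qv, compute_uv=False)[-1]
dist_to_V = np.linalg.norm(u - Qv @ (Qv.T @ u))
err = np.linalg.norm(u - u_star)
```

In this toy setting `err` stays below `mu * dist_to_V`, and a state lying exactly in the reduced space is recovered exactly.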

Remark 1.1

The estimation map is linear with norm and does not depend on . It thus satisfies, for any individual and ,

| (1.24) |

We may therefore account for an additional measurement noise and model bias: if the observation is with , and if the true states do not lie in but satisfy , the guaranteed error bound (1.23) should be modified into

| (1.25) |

In practice, the noise component typically results from a noise vector affecting the observation according to . Assuming a bound where is the Euclidean norm in , we thus obtain the above error bound with , where is the matrix that transforms the representer basis into an orthonormal basis of . Here estimation accuracy benefits from decreasing noise without increasing computational cost. This is in contrast to Bayesian methods for which a small noise level induces computational difficulties due to the concentration of the posterior distribution.

Remark 1.2

To bring out the essential mechanisms, we have idealized (and technically simplified) the description of the PBDW method by omitting certain discretization aspects that are unavoidable in computational practice and should be accounted for. To start with, the snapshots (or the polynomial coefficients ) that span the reduced basis spaces cannot be computed exactly, but only up to some tolerance by a numerical solver. One typical instance is the finite element method, which yields an approximate parameter to solution map

| (1.26) |

where is a reference finite element space ensuring a prescribed accuracy

| (1.27) |

The computable states are therefore elements of the perturbed manifold

| (1.28) |

The reduced model spaces are low dimensional subspaces of , and with certified accuracy

| (1.29) |

The true states do not belong to and this deviation can therefore be interpreted as a model bias in the sense of the previous remark with . The application of the PBDW method also requires the introduction of the Riesz lifts in order to define the measurement space . Since we operate in the space , these can be defined as elements of this space satisfying

| (1.30) |

thus resulting in a measurement space . For example, if is the Sobolev space for some domain and is a finite element subspace, the Riesz lifts are the unique solutions to the Galerkin problem

| (1.31) |

and can be identified by solving linear systems. Measuring accuracy in , i.e., in a metric dictated by the continuous PDE model, the idealization, to be largely maintained in what follows, also helps understanding how to properly adapt the background-discretization to the overall achievable estimation accuracy. Other computational issues involving the space will be discussed in §3.4.

Note that increases with and that its finiteness imposes that , that is . Therefore, one natural way to decide which space to use is to take the value of that minimizes the bound . This choice is somewhat crude since it might not be the value of that minimizes the true reconstruction error for a given , and for this reason it was referred to as a poor man algorithm in [1].

The PBDW approach to state estimation can be improved in various ways:

• One variant that is relevant to the present work is to use reduced models of affine form

| (1.32) |

where is a linear space and is a given offset. The optimal recovery estimator is again defined by the minimization property (1.19). Its computation amounts to the same type of linear systems and the reconstruction map is now affine. The error bound (1.23) remains valid with and a bound for . Note that is also a bound for the distance of to the linear space of dimension . However, using this linear space instead could result in a stability constant that is much larger than , in particular, when the offset is close to .

• Another variant proposed in [10] consists in using a large set of precomputed solutions in order to train the reconstruction maps by minimizing the least-square fit over all linear or affine maps, which amounts to optimizing the choice of the space in the PBDW method.

• Conversely, for a given reduced basis space , it is also possible to optimize the choice of linear functionals giving rise to the data, among a dictionary that represents a set of admissible measurement devices. The objective is to minimize the stability constant for the resulting space , see in particular [3] where a greedy algorithm is proposed for selecting the . We do not take this view in the present paper and think of the space as fixed once and for all: the measurement devices are given to us and cannot be modified.

1.3 Objective and outline

The simplicity of the PBDW method and its above variants comes together with a fundamental limitation of its performance: since the map is linear or affine, the reconstruction necessarily belongs to an or dimensional space, and therefore the worst case performance is necessarily bounded from below by the Kolmogorov width or . In view of this limitation, one principal objective of the present work is to develop nonlinear state estimation techniques which provably overcome the bottleneck of the Kolmogorov width .

In §2, we introduce various benchmark quantities that describe the best possible performance of a recovery map in a worst case sense. We first consider an idealized setting where the state is assumed to exactly satisfy the theoretical model described by the parametric PDE, that is . Then we introduce similar benchmark quantities in the presence of model bias and measurement noise. All these quantities can be substantially smaller than .

In §3, we discuss a nonlinear recovery method, based on a family of affine reduced models , where each has dimension and serves as a local approximation to a portion of the solution manifold. Applying the PBDW method with each such space results in a collection of state estimators . The value for which the true state belongs to being unknown, we introduce a model selection procedure in order to pick a value , and define the resulting estimator . We show that this estimator has performance comparable to the benchmark introduced in §2. Such performance cannot be achieved by the standard PBDW method due to the limitations described above.

Model selection is a classical topic of mathematical statistics [22], with representative techniques such as complexity penalization or cross-validation in which the data are used to select a proper model. Our approach differs from these techniques in that it exploits (in the spirit of data assimilation) the PDE model which is available to us, by evaluating the distance to the manifold

| (1.33) |

of the different estimators for , and picking the value that minimizes it. In practice, the quantity (1.33) cannot be exactly computed and we instead rely on a computable surrogate quantity expressed in terms of the residual to the PDE, see § 3.4.

One typical instance where such a surrogate is available is when (1.1) has the form of a linear operator equation

| (1.34) |

where is boundedly invertible from to , or more generally, from for a test space different from , uniformly over . Then is obtained by minimizing the residual

| (1.35) |

over . This task itself is greatly facilitated in the case where the operators and source terms have affine dependence in . One relevant example that has been often considered in the literature is the second order elliptic diffusion equation with affine diffusion coefficient,

| (1.36) |

In §4, we discuss the more direct approach for both state and parameter estimation based on minimizing over both and . The associated alternating minimization algorithm amounts to a simple succession of quadratic problems in the particular case of linear PDEs with affine parameter dependence. Such an algorithm is not guaranteed to converge to a global minimum (since the residual is not globally convex), and for this reason its limit may miss the optimality benchmark. On the other hand, using the estimator derived in §3 as a “good initialization point” to this minimization algorithm leads to a limit state that has at least the same order of accuracy.

These various approaches are numerically tested in §5 for the elliptic equation (1.36), for both the overdetermined regime , and the underdetermined regime .

2 Optimal recovery benchmarks

In this section we describe the performance of the best possible recovery map

| (2.1) |

in terms of its worst case error. We consider first the case of noiseless data and no model bias. In a subsequent step we take such perturbations into account. While these best recovery maps cannot be implemented by a simple algorithm, their performance serves as benchmark for the nonlinear state estimation algorithms discussed in the next section.

2.1 Optimal recovery for the solution manifold

In the absence of model bias and when a noiseless measurement is given, our knowledge on is that it belongs to the set

| (2.2) |

The best possible recovery map can be described through the following general notion.

Definition 2.1

The Chebychev ball of a bounded set is the closed ball of minimal radius that contains . One denotes by the Chebychev center of and its Chebychev radius.

In particular one has

| (2.3) |

where is the diameter of . Therefore, the recovery map that minimizes the worst case error over for any given , and therefore over is defined by

| (2.4) |

Its worst case error is

| (2.5) |

In view of the equivalence (2.3), we can relate to the quantity

| (2.6) |

by the equivalence

| (2.7) |

Note that injectivity of the measurement map over is equivalent to . We provide in Figure (1(a)) an illustration of the above benchmark concepts.

If for some , then any such that , meets the ideal benchmark . Therefore, one way for finding such a would be to minimize the distance to the manifold over all functions such that , that is, solve

| (2.8) |

This problem is computationally out of reach since it amounts to the nested minimization of two non-convex functions in high dimension.

Computationally feasible algorithms such as the PBDW methods are based on a simplification of the manifold which induces an approximation error. We introduce next a somewhat relaxed benchmark that takes this error into account.

2.2 Optimal recovery under perturbations

In order to account for manifold simplification as well as model bias, for any given accuracy , we introduce the -offset of ,

| (2.9) |

Likewise, we introduce the perturbed set

| (2.10) |

which, however, still excludes uncertainties in . Our benchmark for the worst case error is now defined as (see Figure (1(b)) for an illustration)

| (2.11) |

The map satisfies some elementary properties:

• Monotonicity and continuity: it is obviously non-decreasing

| (2.12) |

Simple finite dimensional examples show that this map may have jump discontinuities. Take for example a compact set consisting of the two points and , and where . Then for , while . Using the compactness of , it is possible to check that is continuous from the right and in particular .

• Bounds from below and above: for any , and for any , let and with . Then, and , which shows that , and

| (2.13) |

In particular,

| (2.14) |

On the other hand, we obviously have the upper bound .

• The quantity

| (2.15) |

may be viewed as a general stability constant inherent to the recovery problem, similar to that is more specific to the particular PBDW method: in the special case where and , one has and for all . Note that in view of (2.14).

Measurement noise suggests introducing the quantity

| (2.16) |

The two quantities and are not equivalent, however one has the framing

| (2.17) |

To prove the upper inequality, we note that for any such that , there exists at distance from , respectively, and therefore such that . Conversely, for any such that , there exists at distance from , respectively, such that , which gives the lower inequality. Note that the upper inequality in (2.17) combined with (2.14) implies that .

In the following analysis of reconstruction methods, we use the quantity as a benchmark which, in view of this last observation, also accounts for the lack of accuracy in the measurement of . Our objective is therefore to design an algorithm that, for a given tolerance , recovers from the measurement an approximation to with accuracy comparable to . Such an algorithm requires that we are able to capture the solution manifold up to some tolerance by some reduced model.

3 Nonlinear recovery by reduced model selection

3.1 Piecewise affine reduced models

Linear or affine reduced models, as used in the PBDW algorithm, are not suitable for approximating the solution manifold when the required tolerance is too small. In particular, when one would then need to use a linear space of dimension , therefore making infinite.

One way out is to replace the single space by a family of affine spaces

| (3.1) |

each of them having dimension

| (3.2) |

such that the manifold is well captured by the union of these spaces, in the sense that

| (3.3) |

for some prescribed tolerance . This is equivalent to saying that there exists a partition of the solution manifold

| (3.4) |

such that we have local certified bounds

| (3.5) |

We may thus think of the family as a piecewise affine approximation to . We stress that, in contrast to the hierarchies of reduced models discussed in §1.2, the spaces do not have dimension and are not nested. Most importantly, is not limited by while each is.

The objective of using a piecewise reduced model in the context of state estimation is to have a joint control on the local accuracy as expressed by (3.5) and on the stability of the PBDW when using any individual . This means that, for some prescribed , we ask that

| (3.6) |

According to (1.23), the worst case error bound over when using the PBDW method with a space is given by the product . This suggests to alternatively require from the collection , that for some prescribed , one has

| (3.7) |

This leads us to the following definitions.

Definition 3.1

Obviously, any -admissible family is -admissible with . In this sense the notion of -admissibility is thus more restrictive than that of -admissibility. The benefit of the first notion is in the uniform control on the size of which is critical in the presence of noise, as hinted at by Remark 1.1.

If is our unknown state and is its observation, we may apply the PBDW method for the different in the given family, which yields a corresponding family of estimators

| (3.8) |

If is -admissible, we find that the accuracy bound

| (3.9) |

holds whenever .

Therefore, if in addition to the observed data one had an oracle giving the information on which portion of the manifold the unknown state sits, we could derive an estimator with worst case error

| (3.10) |

This information is, however, not available and such a worst case error estimate cannot be hoped for, even with an additional multiplicative constant. Indeed, as we shall see below, can be fixed arbitrarily small by the user when building the family , while we know from §2.1 that the worst case error is bounded from below by which could be non-zero. We will thus need to replace the ideal choice of by a model selection procedure only based on the data , that is, a map

| (3.11) |

leading to a choice of estimator . We shall prove further that such an estimator is able to achieve the accuracy

| (3.12) |

that is, the benchmark introduced in §2.2. Before discussing this model selection, we discuss the existence and construction of -admissible or -admissible families.

3.2 Constructing admissible reduced model families

For any arbitrary choice of and , the existence of an -admissible family results from the following observation: since the manifold is a compact set of , there exists a finite -cover of , that is, a family such that

| (3.13) |

or equivalently, for all , there exists a such that . With such an cover, we consider the family of trivial affine spaces defined by

| (3.14) |

thus with for all . The covering property implies that (3.5) holds. On the other hand, for the dimensional space, one has

| (3.15) |

and therefore (3.6) also holds. The family is therefore -admissible, and also -admissible with .

This family is however not satisfactory for algorithmic purposes for two main reasons. First, the manifold is not explicitly given to us and the construction of the centers is by no means trivial. Second, asking for an -cover would typically require that becomes extremely large as goes to . For example, assuming that the parameter to solution map has Lipschitz constant ,

| (3.16) |

for some norm of , then an cover for would be induced by an cover for which has cardinality growing like as . Having a family of moderate size is important for the estimation procedure since we intend to apply the PBDW method for all .

In order to construct -admissible or -admissible families of better controlled size, we need to split the manifold in a more economical manner than through an -cover, and use spaces of general dimensions for the various manifold portions . To this end, we combine standard constructions of linear reduced model spaces with an iterative splitting procedure operating on the parameter domain . Let us mention that various ways of splitting the parameter domain have already been considered in order to produce local reduced bases having both, controlled cardinality and prescribed accuracy [18, 21, 5]. Here our goal is slightly different since we want to control both the accuracy and the stability with respect to the measurement space .

We describe the greedy algorithm for constructing -admissible families, and explain how it should be modified for -admissible families. For simplicity we consider the case where is a rectangular domain with sides parallel to the main axes, the extension to a more general bounded domain being done by embedding it in such a hyper-rectangle. We are given a prescribed target value and the splitting procedure starts from .

At step , a disjoint partition of into rectangles with sides parallel to the main axes has been generated. It induces a partition of given by

| (3.17) |

To each we associate a hierarchy of affine reduced basis spaces

| (3.18) |

where with the vector defined as the center of the rectangle . The nested linear spaces

| (3.19) |

are meant to approximate the translated portion of the manifold . For example, they could be reduced basis spaces obtained by applying the greedy algorithm to , or spaces resulting from local -term polynomial approximations of on the rectangle . Each space has a given accuracy bound and stability constant

| (3.20) |

We define the test quantity

| (3.21) |

If , the rectangle is not split and becomes a member of the final partition. The affine space associated to is

| (3.22) |

where for the value of that minimizes . The rectangles with are, on the other hand, split into a finite number of sub-rectangles in a way that we discuss below. This results in the new larger partition after relabelling the . The algorithm terminates at the step as soon as for all , and the family is -admissible. In order to obtain an -admissible family, we simply modify the test quantity by defining it instead as

| (3.23) |

and splitting the cells for which .

The splitting of one single rectangle can be performed in various ways. When the parameter dimension is moderate, we may subdivide each side-length at the mid-point, resulting into sub-rectangles of equal size. This splitting becomes too costly as gets large, in which case it is preferable to make a choice of and subdivide at the mid-point of the side-length in the -coordinate, resulting in only sub-rectangles. In order to decide which coordinate to pick, we consider the possibilities and take the value of that minimizes the quantity

| (3.24) |

where are the values of for the two subrectangles obtained by splitting along the -coordinate. In other words, we split in the direction that decreases most effectively. In order to be certain that all side-lengths are eventually split, we can mitigate the greedy choice of in the following way: if has been generated by consecutive refinements, and therefore has volume , and if is even, we choose . This means that at each even level we split in a cyclic manner in the coordinates .

Using such elementary splitting rules, we are ensured that the algorithm must terminate. Indeed, we are guaranteed that for any , there exists a level such that any rectangle generated by consecutive refinements has side-length smaller than in each direction. Since the parameter-to-solution map is continuous, for any , we can pick such that

| (3.25) |

Applying this to and , we find that for

| (3.26) |

Therefore, for any rectangle of generation , we find that the trivial affine space has local accuracy and , which implies that such a rectangle would no longer be refined by the algorithm.
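The splitting procedure of this section can be sketched as follows for a rectangular parameter domain. This is a simplified illustration under several assumptions: the test quantity is supplied by the user as a function of the rectangle, the greedy direction is taken to minimize the larger of the two children's test quantities, the cyclic rule at even levels is instantiated as coordinate (level // 2) mod d, and the toy test quantity is a Lipschitz-type bound rather than one derived from actual reduced bases. The names `split_parameter_domain`, `halves` and `tau` are hypothetical.

```python
import numpy as np

def halves(lo, hi, i):
    """Split the rectangle [lo, hi] at the mid-point of coordinate i."""
    mid = 0.5 * (lo[i] + hi[i])
    left_hi, right_lo = hi.copy(), lo.copy()
    left_hi[i], right_lo[i] = mid, mid
    return [(lo, left_hi), (right_lo, hi)]

def split_parameter_domain(lower, upper, test_quantity, target, max_cells=10_000):
    """Refine a rectangle until test_quantity(lo, hi) <= target on every cell."""
    accepted = []
    pending = [(np.asarray(lower, float), np.asarray(upper, float), 0)]
    while pending:
        lo, hi, level = pending.pop()
        if test_quantity(lo, hi) <= target:
            accepted.append((lo, hi))          # cell joins the final partition
            continue
        if len(accepted) + len(pending) >= max_cells:
            raise RuntimeError("cell budget exhausted")
        d = lo.size
        if level % 2 == 0:
            i = (level // 2) % d               # cyclic choice at even levels (assumed rule)
        else:
            # Greedy choice: direction whose worse child has the smallest test quantity.
            i = min(range(d), key=lambda j: max(
                test_quantity(c_lo, c_hi) for c_lo, c_hi in halves(lo, hi, j)))
        for c_lo, c_hi in halves(lo, hi, i):
            pending.append((c_lo, c_hi, level + 1))
    return accepted

# Toy anisotropic test quantity: a Lipschitz-type bound on the radius of the
# manifold portion around the cell center (hypothetical choice).
lipschitz = np.array([4.0, 1.0])
def tau(lo, hi):
    return 0.5 * float(lipschitz @ (hi - lo))

cells = split_parameter_domain([0.0, 0.0], [1.0, 1.0], tau, target=0.5)
```

The returned cells form a partition of the initial rectangle on which the test quantity is below the target. In an actual implementation the test quantity would be computed from the local reduced spaces and their stability constants, which is the expensive part omitted here.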

3.3 Reduced model selection and recovery bounds

We return to the problem of selecting an estimator within the family defined by (3.8). In an idealized version, the selection procedure picks the value that minimizes the distance of to the solution manifold, that is,

| (3.27) |

and takes for the final estimator

| (3.28) |

Note that also depends on the observed data . This estimation procedure is not realistic, since the computation of the distance of a known function to the manifold

| (3.29) |

is a high-dimensional non-convex problem, which requires exploring the solution manifold. A more realistic procedure is based on replacing this distance by a surrogate quantity that is easily computable and satisfies a uniform equivalence

| (3.30) |

for some constants . We then instead take for the value that minimizes this surrogate, that is,

| (3.31) |
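A minimal sketch of this selection step on a toy problem follows. Everything specific here is an assumption made for illustration: the manifold is the curve y -> (y, y^2, y^3) in three-dimensional Euclidean space, the measurement space is spanned by the first coordinate vector, the reduced models are tangent lines at a few parameter values, and the surrogate is the distance to a dense sample of the curve rather than a PDE residual. The names `affine_pbdw` and `select_estimator` are hypothetical.

```python
import numpy as np

def affine_pbdw(u_bar, Qv, Qw, c):
    """One-space estimator for the affine model u_bar + span(Qv)."""
    c_shift = c - Qw.T @ u_bar                     # data seen from the offset
    G = Qw.T @ Qv
    a, *_ = np.linalg.lstsq(G, c_shift, rcond=None)
    return u_bar + Qv @ a + Qw @ (c_shift - G @ a)

def select_estimator(family, Qw, c, surrogate):
    """Compute all estimators and pick the one minimizing the surrogate."""
    estimators = [affine_pbdw(u_bar, Qv, Qw, c) for u_bar, Qv in family]
    scores = [surrogate(e) for e in estimators]
    k_star = int(np.argmin(scores))
    return k_star, estimators[k_star], scores

def u_of(y):                                       # toy parameter-to-solution map
    return np.array([y, y**2, y**3])

def du_of(y):                                      # its derivative (tangent direction)
    return np.array([1.0, 2.0 * y, 3.0 * y**2])

# Family of one-dimensional affine models: tangent lines at a few parameters.
family = []
for yk in [0.1, 0.3, 0.5, 0.7, 0.9]:
    d = du_of(yk)
    family.append((u_of(yk), (d / np.linalg.norm(d)).reshape(3, 1)))

Qw = np.array([[1.0], [0.0], [0.0]])               # observe the first coordinate only

# Surrogate: distance to a dense sample of the toy manifold.
samples = np.array([u_of(y) for y in np.linspace(0.0, 1.0, 2001)])
def surrogate(v):
    return float(np.min(np.linalg.norm(samples - v, axis=1)))

u_true = u_of(0.42)
k_star, u_hat, scores = select_estimator(family, Qw, Qw.T @ u_true, surrogate)
err = np.linalg.norm(u_true - u_hat)
```

In this toy case the measurement is injective on the curve, and the selected estimator comes from a tangent line attached to a parameter value near the true one, although the selection only sees the data and the surrogate.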

Before discussing the derivation of in concrete cases, we establish a recovery bound in the absence of model bias and noise.

Theorem 3.2

Proof: Let be an unknown state and . There exists , such that , and for this value, we know that

| (3.34) |

Since , it follows that

| (3.35) |

On the other hand, for the value selected by (3.31) and , we have

| (3.36) |

It follows that belongs to the offset . Since and , we find that

| (3.37) |

which establishes the recovery estimate (3.33). The estimate (3.32) for the idealized estimator follows since it corresponds to having .

Remark 3.3

One possible variant of the selection mechanism, which is actually adopted in our numerical experiments, consists in picking the value that minimizes the distance of to the corresponding local portion of the solution manifold, or a surrogate with equivalence properties analogous to (3.30). It is readily checked that Theorem 3.2 remains valid for the resulting estimator with the same type of proof.

In the above result, we do not obtain the best possible accuracy satisfied by the different , since we do not have an oracle providing the information on the best choice of . We next show that this order of accuracy is attained in the particular case where the measurement map is injective on and the stability constant of the recovery problem defined in (2.15) is finite.

Theorem 3.4

Assume that and that

| (3.38) |

Then, for any given state with observation , the estimator obtained by the model selection procedure (3.31) satisfies the oracle bound

| (3.39) |

In particular, if is -admissible, it satisfies

| (3.40) |

Proof: Let be the value for which . Reasoning as in the proof of Theorem 3.2, we find that

| (3.41) |

and therefore

| (3.42) |

which is (3.39). We then obtain (3.40) using the fact that

for the value of such that .

We next discuss how to incorporate model bias and noise in the recovery bound, provided that we have a control on the stability of the PBDW method, through a uniform bound on , which holds when we use -admissible families.

Theorem 3.5

Proof: There exists such that

| (3.44) |

and therefore

| (3.45) |

As already noted in Remark 1.1, we know that the PBDW method for this value of has accuracy

| (3.46) |

Therefore

| (3.47) |

and in turn

| (3.48) |

On the other hand, we define

| (3.49) |

so that

| (3.50) |

Since , we conclude that , from which (3.43) follows.

While the reduced model selection approach provides us with an estimator of a single plausible state, the estimated distance of some of the other estimates may be of comparable size. Therefore, one could be interested in recovering a more complete estimate on a plausible set that may contain the true state or even several states in sharing the same measurement. This more ambitious goal can be viewed as a deterministic counterpart to the search for the entire posterior probability distribution of the state in a Bayesian estimation framework, instead of only searching for a single estimated state, for instance, the expectation of this distribution. For simplicity, we discuss this problem in the absence of model bias and noise. Our goal is therefore to approximate the set

| (3.51) |

Given the family , we consider the ellipsoids

| (3.52) |

which have center and diameter at most . We already know that is contained inside the union of the which could serve as a first estimator. In order to refine this estimator, we would like to discard the that do not intersect the associated portion of the solution manifold.

For this purpose, we define our estimator of as the union

| (3.53) |

where is the set of those such that

| (3.54) |

It is readily seen that implies that . The following result shows that this set approximates with an accuracy of the same order as the recovery bound established for the estimator .

Theorem 3.6

For any state with observation , one has the inclusion

| (3.55) |

If the family is -admissible for some , the Hausdorff distance between the two sets satisfies the bound

| (3.56) |

Proof: Any is a state from that gives the observation . This state belongs to for some particular , for which we know that belongs to the ellipsoid and that

| (3.57) |

This implies that , and therefore . Hence , which proves the inclusion (3.55). In order to prove the estimate on the Hausdorff distance, we take any , and notice that

| (3.58) |

and therefore, for all such and all , we have

| (3.59) |

Since , it follows that

| (3.60) |

which proves (3.56).

Remark 3.7

If we could take to be exactly the set of those such that , the resulting would still contain but with a sharper error bound. Indeed, any belongs to a set that intersects at some , so that

| (3.61) |

In order to identify if a belongs to this smaller , we need to solve the minimization problem

| (3.62) |

and check whether the minimum is zero. As explained next, the quantity is itself obtained by a minimization problem over . The resulting double minimization problem is globally non-convex, but it is convex separately in and , which allows one to apply simple alternating minimization techniques. These procedures (which are not guaranteed to converge to the global minimum) are discussed in §4.2.

3.4 Residual based surrogates

The computational realization of the above concepts hinges on two main constituents, namely (i) the ability to evaluate bounds for as well as (ii) to have at hand computationally affordable surrogates for . In both cases one exploits the fact that errors in are equivalent to residuals in a suitable dual norm. Regarding (i), the derivation of bounds has been discussed extensively in the context of Reduced Basis Methods [23], see also [15] for the more general framework discussed below. Substantial computational effort in an offline phase provides residual based surrogates for permitting frequent parameter queries at an online stage needed, in particular, to construct reduced bases. This strategy becomes challenging though for high parameter dimensionality and we refer to [9] for remedies based on trading deterministic certificates against probabilistic ones at significantly reduced computational cost. Therefore, we focus here on task (ii).

One typical setting where a computable surrogate can be derived is when is the solution to a parametrized operator equation of the general form

| (3.63) |

Here we assume that for every the right side belongs to the dual of a Hilbert test space , and is boundedly invertible from to . The operator equation has therefore an equivalent variational formulation

| (3.64) |

with parametrized bilinear form and linear form . This setting includes classical elliptic problems with , as well as saddle-point and unsymmetric problems such as convection-diffusion problems or space-time formulations of parabolic problems.

We assume continuous dependence of and with respect to , which by compactness of , implies uniform boundedness and invertibility, that is

| (3.65) |

for some . It follows that for any , one has the equivalence

| (3.66) |

where

| (3.67) |

is the residual of the PDE for a state and parameter .

Therefore the quantity

| (3.68) |

provides us with a surrogate of that satisfies the required framing (3.30).

A first advantage of this surrogate quantity is that, for each given , the evaluation of the residual does not require computing the solution . A second advantage is that the minimization in is facilitated in the relevant case where and have affine dependence on , that is,

| (3.69) |

Indeed, then amounts to the minimization over of the function

| (3.70) |

which is a convex quadratic polynomial in . Hence, a minimizer of the corresponding constrained linear least-squares problem exists, rendering the surrogate well-defined.

In all the above mentioned examples the norm can be efficiently computed. For instance, in the simplest case of an -elliptic problem one has with

| (3.71) |

The obvious obstacle is then, however, the computation of the dual norm which in the particular example above is the -norm. A viable strategy is to use the Riesz lift , defined by

| (3.72) |

which implies that . Thus, is computed for a given by introducing the lifted elements

| (3.73) |

so that, by linearity

| (3.74) |
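To make the structure of (3.74) concrete, the sketch below works in a discrete setting under explicit assumptions: the lifted elements are plain coefficient vectors, the test-space inner product is the Euclidean one, and the parameter domain is the box with each coordinate in [-1, 1]. The helper names are hypothetical. The squared residual norm then becomes a quadratic in the parameter vector whose coefficients are Gram-type quantities, and a cyclic coordinate descent with exact one-dimensional steps is one simple way (among many standard solvers) to minimize it over the box.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def assemble_quadratic(e0, es):
    """Data of ||e0 + sum_j y_j es[j]||^2 = c + 2 g.y + y^T G y."""
    G = [[dot(ei, ej) for ej in es] for ei in es]   # Gram matrix of the lifts
    g = [dot(ej, e0) for ej in es]
    c = dot(e0, e0)
    return G, g, c


def residual_sq(G, g, c, y):
    d = len(y)
    quad = sum(y[i] * G[i][j] * y[j] for i in range(d) for j in range(d))
    return c + 2.0 * dot(g, y) + quad


def minimize_on_box(G, g, lo=-1.0, hi=1.0, sweeps=200):
    """Cyclic coordinate descent for min 2 g.y + y^T G y with lo <= y_j <= hi."""
    d = len(g)
    y = [0.5 * (lo + hi)] * d
    for _ in range(sweeps):
        for j in range(d):
            if G[j][j] == 0.0:
                continue  # the residual does not depend on y_j
            # Exact minimizer in y_j with the other coordinates frozen,
            # followed by projection onto [lo, hi].
            off = sum(G[j][i] * y[i] for i in range(d) if i != j)
            y[j] = min(hi, max(lo, -(g[j] + off) / G[j][j]))
    return y


# Toy data (assumption): three-dimensional "lifts" and two parameters.
e0 = [-0.5, 3.0, 2.0]
es = [[1.0, 0.0, 0.0], [1.0, 1.0, 0.0]]
G, g, c = assemble_quadratic(e0, es)
y_min = minimize_on_box(G, g)
value = residual_sq(G, g, c, y_min)
```

Once the Gram data are assembled, each evaluation of the quadratic costs a number of operations that depends only on the number of parameters, not on the dimension of the discretization.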

Note that the above derivation is still idealized as the variational problems (3.73) are posed in the infinite dimensional space . As already stressed in Remark 1.2, all computations take place in reference finite element spaces and . We thus approximate the by , for , using the Galerkin approximation of (3.72). This gives rise to a computable least-squares functional

| (3.75) |

The practical distance surrogate is then defined through the corresponding constrained least-squares problem

| (3.76) |

which can be solved by standard optimization methods. As indicated earlier, the recovery schemes can be interpreted as taking place in a fixed discrete setting, with replaced by , comprised of approximate solutions in a large finite element space , and measuring accuracy only in this finite dimensional setting. One should note though that the approach allows one to disentangle discretization errors from recovery estimates, even with regard to the underlying continuous PDE model. In fact, given any target tolerance , using a posteriori error control in , the spaces can be chosen large enough to guarantee that

| (3.77) |

Accordingly, one has , so that recovery estimates remain meaningful with respect to the continuous setting as long as remains sufficiently dominated by the thresholds appearing in the above results. For notational simplicity we therefore continue working in the continuous setting.

4 Joint parameter and state estimation

4.1 An estimate for

Searching for a parameter , that explains an observation , is a nonlinear inverse problem. As shown next, a quantifiable estimate for can be obtained from a state estimate combined with a residual minimization.

For any state estimate which we compute from , the most plausible parameter is the one associated to the metric projection of into , that is,

Note that depends on but we omit the dependence in the notation in what follows. Finding is a difficult task since it requires solving a non-convex optimization problem.

However, as we have already noticed, a near metric projection of to can be computed through a simple convex problem in the case of affine parameter dependence (3.69), minimizing the residual given by (3.70). Our estimate for the parameter is therefore

| (4.1) |

and it satisfies, in view of (3.66),

| (4.2) |

Hence, if we use, for instance, the state estimate from (3.28), we conclude by Theorem 3.2 that deviates from the true state by

| (4.3) |

where is the benchmark quantity defined in (2.11). If in addition also is injective so that and if and are favorably oriented, as detailed in the assumptions of Theorem 3.4, one even obtains

| (4.4) |

To derive from such bounds estimates for the deviation of from , more information on the underlying PDE model is needed. For instance, for the second order parametric family of elliptic PDEs (1.36) and strictly positive right hand side , it is shown in [4] that the parameter-to-solution map is injective. If in addition the parameter dependent diffusion coefficient belongs to , one has a quantitative inverse stability estimate of the form

| (4.5) |

Combining this, for instance, with (4.3), yields

| (4.6) |

Under the favorable assumptions of Theorem 3.4, one obtains a bound of the form

| (4.7) |

Finally, in relevant situations (Karhunen-Loève expansions) the functions in the expansion of form an -orthogonal system. The above estimates then translate into estimates for a weighted -norm,

| (4.8) |

where .

4.2 Alternating residual minimization

The state estimate is defined by selecting among the potential estimates the one that sits closest to the solution manifold, in the sense of the surrogate distance . Finding the element in that is closest to would provide a possibly improved state estimate, and as pointed out in the previous section, also an improved parameter estimate. As explained earlier, it would help in addition with improved set estimators for .

Adhering to the definition of the residual from (3.67), we are thus led to consider the double minimization problem

| (4.9) |

We first show that a global minimizing pair meets the optimal benchmarks introduced in §2. In the unbiased and non-noisy case, the value of the global minimum is , attained by the exact parameter and state . Any global minimizing pair will thus satisfy and . In other words, the state estimate belongs to , and therefore meets the optimal benchmark

| (4.10) |

In the case of model bias and noise of amplitude, the state satisfies

| (4.11) |

It follows that there exists a parameter such that and a state such that . For this state and parameter, one thus has

| (4.12) |

Any global minimizing pair will thus satisfy

| (4.13) |

Therefore belongs to the set , as defined by (2.10), with and so does since . In turn, the state estimate meets the perturbed benchmark

| (4.14) |

From a numerical perspective, the search for a global minimizing pair is a difficult task due to the fact that is generally not a convex function. However, it should be noted that in the case of affine parameter dependence (3.69), the residual given by (3.70) is a convex function in each of the two variables separately, keeping the other one fixed. More precisely is a quadratic convex function in each variable. This suggests the following alternating minimization procedure. Starting with an initial guess , we iteratively compute for ,

| (4.15) | ||||

| (4.16) |

Each problem has a simply computable minimizer, as discussed in the next section, and the residuals are non-increasing

| (4.17) |

Of course, one cannot guarantee in general that converges to a global minimizer, and the procedure may stagnate at a local minimum.
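The alternating procedure (4.15)-(4.16) and the monotonicity property (4.17) can be illustrated on a small toy problem. The sketch below rests on assumptions that are not part of the paper: states are vectors in the plane whose first component is fixed by the observation, the parameter is a single scalar in [-1, 1], and the residual is (A0 + y A1) u - f measured in the Euclidean norm. Both steps are then exact one-dimensional least-squares problems.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(B, u):
    return [dot(row, u) for row in B]


def residual(A0, A1, f, u, y):
    """R(u, y) = (A0 + y*A1) u - f for a scalar parameter y."""
    r0, r1 = matvec(A0, u), matvec(A1, u)
    return [a + y * b - fi for a, b, fi in zip(r0, r1, f)]


def alternating_minimization(A0, A1, f, w, t0, steps=50):
    """States are u = (w, t): w is fixed by the observation, t is free.
    Each iteration minimizes ||R(u, y)||^2 exactly in y, then exactly in t."""
    t, y, history = t0, 0.0, []
    for _ in range(steps):
        u = [w, t]
        # Parameter step: ||a + y b||^2 with a = A0 u - f, b = A1 u, y in [-1, 1].
        a = [p - q for p, q in zip(matvec(A0, u), f)]
        b = matvec(A1, u)
        if dot(b, b) > 0.0:
            y = min(1.0, max(-1.0, -dot(a, b) / dot(b, b)))
        # State step: ||c + t d||^2 with B = A0 + y A1, c = w*B[:,0] - f, d = B[:,1].
        B = [[A0[i][j] + y * A1[i][j] for j in range(2)] for i in range(2)]
        c = [B[i][0] * w - f[i] for i in range(2)]
        d = [B[i][1] for i in range(2)]
        if dot(d, d) > 0.0:
            t = -dot(c, d) / dot(d, d)
        r = residual(A0, A1, f, [w, t], y)
        history.append(dot(r, r))
    return [w, t], y, history


# Toy data (assumption): generated from y = 0.3 and u = (1, 2).
A0 = [[2.0, 1.0], [1.0, 3.0]]
A1 = [[1.0, 0.0], [0.0, 1.0]]
f = [4.3, 7.6]
u_est, y_est, history = alternating_minimization(A0, A1, f, w=1.0, t0=0.0)
```

Because each step is an exact minimization over a set containing the current iterate, the recorded squared residuals cannot increase. Nothing in this sketch guarantees convergence to a global minimizer.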

The above improvement property still tells us that if we initialize the algorithm by taking the state estimate from (3.28) and , then we are ensured at step that

| (4.18) |

and therefore, by the same arguments as in the proof of Theorem 3.5, one finds that

| (4.19) |

with and as in (3.43). In other words, the new estimate satisfies at least the same accuracy bound as . The numerical tests performed in §5.3 reveal that it can be significantly more accurate.

4.3 Computational issues

We now explain how to efficiently compute the steps in (4.15) and (4.16). We continue to consider a family of linear parametric PDEs with affine parameter dependence (3.69), admitting a uniformly stable variational formulation over the pair of trial and test spaces , see (3.64)-(3.65).

Minimization of (4.15):

Minimization of (4.16):

Problem (4.16) is of the form

| (4.21) |

for a fixed . A naive approach for solving (4.21) would consist in working in a closed subspace of of sufficiently large dimension. We would then optimize over . However, this would lead to a large quadratic problem of size which would involve Riesz representer computations. We next propose an alternative strategy involving the solution of only variational problems. To that end, we assume in what follows that is continuously embedded in , which is the case for all the examples of interest, mentioned earlier in the paper.

The proposed strategy is based on two isomorphisms from to that preserve inner products in a sense to be explained next. We make again heavy use of the Riesz-isometry defined in (3.72) and consider the two isomorphisms

| (4.22) |

where and are the previously introduced Riesz lifts. One then observes that, by standard duality arguments, they preserve inner products in the sense that for

| (4.23) |

where we have used selfadjointness of Riesz isometries. In these terms the objective functional takes the form

| (4.24) |

We can use (4.23) to reformulate (4.21) as

| (4.25) |

where we have used that to obtain the last equality. Note that the unique solution to the right hand side gives a solution to the original problem through the relationship . The minimizer can be obtained by an appropriate orthogonal projection onto . This indeed amounts to solving a fixed number of variational problems without compromising accuracy by choosing a perhaps too moderate dimension for a subspace of .

More precisely, we have where minimizes , and therefore

| (4.26) |

This shows that

| (4.27) |

Thus, a single iteration of the type (4.21) requires assembling followed by solving the variational problem

| (4.28) |

that gives . Assembling involves

- (i) evaluating , which means solving the Riesz-lift , ;

- (ii) computing the Riesz-lift by solving , ;

- (iii) computing the projection . This requires computing the transformed basis functions , which are solutions to the variational problems

| (4.29) |

Of course, these variational problems are solved only approximately in appropriate large but finite dimensional spaces, along the lines of the remarks at the end of the previous section. While approximate Riesz-lifts involve symmetric variational formulations which are well-treated by Galerkin schemes, the problems involving the operator or may in general require an unsymmetric variational formulation where and Petrov-Galerkin schemes on the discrete level. For each of the examples (such as a time-space variational formulation of parabolic or convection diffusion equations) stable discretizations are known, see e.g. [6, 8, 14, 15, 26].

A particularly important strategy for unsymmetric problems is to write the PDE first as an equivalent system of first order PDEs permitting a so-called “ultraweak” formulation where the (infinite-dimensional) trial space is actually an -space and the required continuous embedding holds. The mapping is then just the identity and so-called Discontinuous Petrov Galerkin methods offer a way of systematically finding appropriate test spaces in the discrete case with uniform inf-sup stability, [7]. In this context, the mapping from (4.22) plays a pivotal role in the identification of “optimal test spaces” and is referred to as “trial-to-test-map”.

Of course, in the case of problems that admit a symmetric variational formulation, i.e., , things simplify even further. To exemplify this, consider a parametric family of elliptic PDEs (1.36). In this case one has (assuming homogeneous boundary conditions) so that . Due to the selfadjointness of the underlying elliptic operators in this case, the problems (4.29) are of the same form as in (4.28) that can be treated on the discrete level by standard Galerkin discretizations.

5 Numerical illustration

In this section we illustrate the construction of nonlinear reduced models, and demonstrate the mechanism of model selection using the residual surrogate methods outlined in §3.4.

In our tests we consider the elliptic problem mentioned in §1.3 on the unit square with homogeneous Dirichlet boundary conditions, and a parameter dependence in the diffusivity field . Specifically, we consider the problem

| (5.1) |

with on , with . The classical variational formulation uses the same trial and test space . We perform space discretization by the Galerkin method using finite elements to produce solutions , with a triangulation on a regular grid of mesh size .

5.1 Test 1: pre-determined splittings

In this first test, we examine the reconstruction performance with localized reduced bases on a manifold having a predetermined splitting. Specifically, we consider two partitions of the unit square, and , with

The partitions are symmetric along the axis as illustrated in Figure 5.2. This will play a role in the understanding of the results below. We next define two parametric diffusivity fields

| (5.2) |

where the vector of parameters ranges in and is the indicator function of (similarly for ). The fields and are mirror images of each other along . In the numerical tests that follow, we take and .

We denote by the solution to the elliptic problem (5.1) with diffusivity field , and then label by the resulting solution manifold. Strictly speaking, we should write as our solutions are finite dimensional approximations, however we suppress the as there should be little ambiguity going forward. Similarly, is the set of all solutions of (5.1) over where the diffusivity field is given by . We take their union to be our global solution manifold that has the obvious pre-determined splitting available to us.

For our computations, we generate training and test sets. For the training, we draw independent samples that are uniformly distributed over . The collection of solutions and , are used as training sets for and . The training set for the full manifold is . Since we use the same parameter points for both sets, any solution in has a corresponding solution in that is its symmetric image along the axis . To test our reconstruction methods, we generate independent points in that are distinct from the training set. The corresponding test solution sets are and . All computations are done by solving (5.1) in the finite element space.

Given an unknown , we want to recover it from its observation . For the measurement space , we take a collection of measurement functionals that are local averages over small boxes of width , each placed randomly in the unit square. The measurement space is then .
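As an illustration of such measurement functionals, the following sketch evaluates local averages of a grid function over randomly placed square boxes. It is only a schematic stand-in under stated assumptions: the function is sampled on a uniform grid of the unit square, the average is taken over the grid nodes inside the box rather than by finite element quadrature, and the grid size, box width, number of boxes and random seed are hypothetical values.

```python
import random


def local_average(u, h, x0, y0, width, eps=1e-12):
    """Average of the nodal values u[i][j] ~ u(i*h, j*h) over the box
    [x0, x0 + width] x [y0, y0 + width] (simple node-based quadrature)."""
    vals = [u[i][j]
            for i in range(len(u)) for j in range(len(u[0]))
            if x0 - eps <= i * h <= x0 + width + eps
            and y0 - eps <= j * h <= y0 + width + eps]
    if not vals:
        raise ValueError("box contains no grid node; refine the grid")
    return sum(vals) / len(vals)


# Hypothetical setup: 33 x 33 grid on the unit square, u(x, y) = x + y.
n = 33
h = 1.0 / (n - 1)
u = [[i * h + j * h for j in range(n)] for i in range(n)]

# One box with a known answer: the average of x + y over [0.25, 0.5]^2 is 0.75.
avg = local_average(u, h, 0.25, 0.25, 0.25)

# A small observation vector from randomly placed boxes (seeded for repeatability).
rng = random.Random(0)
m, width = 5, 0.25
boxes = [(rng.uniform(0.0, 1.0 - width), rng.uniform(0.0, 1.0 - width))
         for _ in range(m)]
obs = [local_average(u, h, x0, y0, width) for x0, y0 in boxes]
```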

Since we are only given , we do not know if the function to reconstruct is in or and we consider two possibilities for reconstruction:

- Affine method: We use affine reduced models generated for the full manifold . In our example, we take and is computed by the greedy selection algorithm over . Of course the spaces with sufficiently large have high potential for approximation of the full manifold , and obviously also for the subsets and (see Figure 5.4). Yet, we can expect some bad artefacts in the reconstruction with this space since the true solution will be approximated by snapshots, some of which come from the wrong part of the manifold and are thus associated to the wrong partition of . In addition, we can only work with and this may not be sufficient regarding the approximation power. Our estimator uses the space , where the dimension is the one that reaches as defined in (3.20) and (3.21). Figure 5.4 shows the product for and we see that .

- Nonlinear method: We generate affine reduced basis spaces and , each one specific for and . Similarly as for the affine method, we take as offsets , for , and we run two separate greedy algorithms over and to build and . We select the dimensions that reach for . From Figure 5.4, we deduce¹ that and . This yields two estimators and . We can expect better results than the affine approach if we can detect well in which part of the manifold the target function is located. The main question is thus whether our model selection strategy outlined in Section 3.3 is able to detect well from the observed data if the true lies in or . For this, we compute the surrogate manifold distances

  ¹ Due to the spatial symmetry along the axis for the functions in and , the greedy algorithm selects exactly the same candidates to build as for , except that each element is mirrored in the axis. One may thus wonder why . That different values are chosen for each manifold reflects the fact that the measurement space introduces a symmetry break and the reconstruction scheme is no longer spatially symmetric contrary to the .

| (5.3) |

where

is the residual of related to the PDE with diffusion field . To solve problem (5.3), we follow the steps given in Section 3.4. The final estimator is , where

Table 1 quantifies the quality of the model selection approach. It displays how many times our model selection strategy yields the correct result or incorrect result for the functions from the test set (and vice-versa for ). Recalling that these test sets have snapshots, we conclude that the residual gives us the correct manifold portion roughly 81% of the time. We can compare this performance with the one given by the oracle estimator (see Table 1)

In this case, we see that the oracle selection is very efficient since it gives us the correct manifold portion roughly 99% of the time. Figure 5.3 completes the information given in Table 1 by showing the distribution of the values of the residuals and oracle errors. The distributions give visual confirmation that both the model and oracle selection tend to pick the correct model by giving residual/error values which are lower in the right manifold portion. Last but not least, Figure 5.4 gives information on the value of inf-sup constants and residual errors leading to the choice of the dimension for the reduced models. Table 2 summarizes the reconstruction errors.

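| Surrogate selection | Test function from first manifold portion | Test function from second manifold portion |
|---|---|---|
| First model selected | 1625 | 386 |
| Second model selected | 375 | 1614 |
| Success rate | 81.2 % | 80.7 % |

| Oracle selection | Test function from first manifold portion | Test function from second manifold portion |
|---|---|---|
| First model selected | 1962 | 9 |
| Second model selected | 38 | 1991 |
| Success rate | 98.1 % | 99.5 % |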
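The success rates reported in Table 1 follow directly from the counts: for each column, the rate is the number of correctly assigned test functions divided by the column total. The short check below recomputes them. The function name is hypothetical, and the layout assumption is that rows index the selected model and columns the manifold portion the test function comes from.

```python
def success_rates(counts):
    """counts[i][j]: number of test functions from portion j assigned to model i.
    Returns, for each portion j, the fraction assigned to the matching model j."""
    n = len(counts)
    totals = [sum(counts[i][j] for i in range(n)) for j in range(n)]
    return [counts[j][j] / totals[j] for j in range(n)]


surrogate_counts = [[1625, 386], [375, 1614]]
oracle_counts = [[1962, 9], [38, 1991]]

surrogate_rates = success_rates(surrogate_counts)
oracle_rates = success_rates(oracle_counts)
```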

| Average error | Test function from first manifold portion | Test function from second manifold portion |
|---|---|---|
| Affine method | 6.047e-02 | 6.661e-02 |
| Nonlinear with oracle model selection | 5.057e-02 | 4.855e-02 |
| Nonlinear with surrogate model selection | 5.522e-02 | 5.201e-02 |

| Worst case error | Test function from first manifold portion | Test function from second manifold portion |
|---|---|---|
| Affine method | 4.203e-01 | 4.319e-01 |
| Nonlinear with oracle model selection | 2.786e-01 | 2.641e-01 |
| Nonlinear with surrogate model selection | 4.798e-01 | 2.660e-01 |

5.2 Test 2: constructing -admissible families

In this example we examine the behavior of the splitting scheme to construct -admissible families outlined in §3.2.

The manifold is given by the solutions to equation (5.1) associated to the diffusivity field

| (5.4) |

where is the indicator function on the set , and parameters ranging uniformly in . We study the impact of the intrinsic dimensionality of the manifold by considering two cases for the partition of the unit square , a uniform grid partition resulting in parameters, and a grid partition of resulting in parameters. We also study the impact of coercivity and anisotropy on our reconstruction algorithm by examining the different manifolds generated by taking with or and or . The value corresponds to a severe degeneration of coercivity, and the rate corresponds to a more pronounced anisotropy.

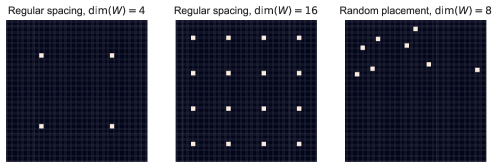

We use two different measurement spaces, one with evenly spaced local averages and the other with evenly spaced local averages. The measurement locations are shown diagrammatically in Figure 5.5. The local averages are exactly as in the last test, taken in squares of side-length . Note that the two values and which we consider for the dimension of the measurement space are the same as the parameter dimensions and of the manifolds. This allows us to study different regimes:

- When , we have a highly ill-posed problem since the intrinsic dimension of the manifold is larger than the dimension of the measurement space. In particular, we expect that the fundamental barrier is strictly positive. Thus we cannot expect very accurate reconstructions even with the splitting strategy.

- When , the situation is more favorable and we can expect that the reconstruction involving manifold splitting brings significant accuracy gains.

As in the previous case, the training set is generated by a subset of samples taken uniformly on . We build the -admissible families outlined in §3.2 using a dyadic splitting and the splitting rule is given by (3.21). For example, our first split of results in two rectangular cells and , and the corresponding collections of parameter points and , as well as split collections of solutions and . On each we apply the greedy selection procedure, resulting in , with computable values and . The coordinate direction in which we split is precisely the direction that gives us the smallest resulting , so we need to compute greedy reduced bases for each possible splitting direction before deciding which results in the lowest . Subsequent splittings are performed in the same manner, but at each step we first choose the cell to be split.
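The splitting loop described above can be sketched as follows. This is a schematic illustration under stated assumptions, not the implementation used in the tests: the user-supplied function `score` is a hypothetical placeholder for the computable quantity obtained from the greedy reduced basis on a cell, the cell chosen for refinement is assumed to be the one with the largest score, and a direction is rated by the larger of its two child scores.

```python
def split_cell(cell, axis):
    """Halve the rectangle `cell` (a list of (lo, hi) intervals) along `axis`."""
    lo, hi = cell[axis]
    mid = 0.5 * (lo + hi)
    left = cell[:axis] + [(lo, mid)] + cell[axis + 1:]
    right = cell[:axis] + [(mid, hi)] + cell[axis + 1:]
    return left, right


def best_split(cell, score):
    """Try every coordinate direction and keep the one whose worse child
    has the smallest score (ties broken by the lowest axis index)."""
    options = []
    for axis in range(len(cell)):
        children = split_cell(cell, axis)
        options.append((max(score(c) for c in children), axis, children))
    worst, axis, children = min(options, key=lambda o: (o[0], o[1]))
    return axis, children, worst


def refine(domain, score, n_splits):
    """Perform n_splits dyadic splits, each time on the cell with the largest score."""
    cells = [domain]
    for _ in range(n_splits):
        k = max(range(len(cells)), key=lambda i: score(cells[i]))
        _, (left, right), _ = best_split(cells[k], score)
        cells[k:k + 1] = [left, right]
    return cells


# Placeholder score (assumption): anisotropic weighted side lengths, so that
# the first coordinate matters four times more than the second.
def toy_score(cell, weights=(4.0, 1.0)):
    return sum(w * (hi - lo) for w, (lo, hi) in zip(weights, cell))


cells = refine([(-1.0, 1.0), (-1.0, 1.0)], toy_score, n_splits=3)
```

With this placeholder score all three splits occur along the first coordinate, which mimics how an anisotropic parameter dependence steers the refinement. In the actual procedure each call to `score` would involve a greedy reduced basis computation, which is why all directions must be explored before a split is accepted.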

After splits, the parameter domain is divided into disjoint subsets and we have computed a family of affine reduced spaces . For a given , we have possible reconstructions and we select a value with the surrogate based model selection outlined in §3.4. The test is done on a test set of snapshots which are different from the ones used for the training set .

In Figure 5.6 we plot the reconstruction error, averaged over the test set, as a function of the number of splits for all the different configurations: we consider the 2 different diffusivity fields with and parameters, the two measurement spaces of dimension and , and the different ellipticity/coercivity regimes of in . We also plot the error when taking for the oracle value that corresponds to the value of that contains the parameter which gave rise to the snapshot and measurement.

Our main findings can be summarized as follows:

- (i) The error decreases with the number of splits. As anticipated, the splitting strategy is more effective in the overdetermined regime .

- (ii) Degrading coercivity has a negative effect on the estimation error, while anisotropy has a positive effect.

- (iii) Choosing by the surrogate based model selection yields error curves that are above yet close to those obtained with the oracle choice. The largest discrepancy is observed when coercivity degrades.

Figure 5.7 presents the error bounds which are known to be upper bounds for the estimation error when choosing the oracle value for at the given step of the splitting procedure. We observe that these worst upper bounds have similar behaviour as the averaged error curves depicted on Figure 5.6. In Figure 5.8, for the particular configuration , we demonstrate that indeed acts as an upper bound for the worst case error of the oracle estimator.

5.3 Test 3: improving the state estimate by alternating residual minimization

The goal of this test is to illustrate how the alternating residual minimization outlined in §4.2 allows one to improve the accuracy of the state estimate. We use the same setting as Test 2, in particular, we consider the solution manifold of equation (5.1) with the random field defined as in (5.4). Again we consider the cases where the from (5.4) are from the and grid, resulting in and respectively. Our test uses all three measurement regimes presented in Figure 5.5, with and evenly spaced local average functions, and randomly placed local averages confined to the upper half. We use the coercivity/anisotropy regime .

In this test we compare three different candidates for , the starting point of the alternating minimization procedure:

- , the measurement vector without any further approximation, or equivalently the reconstruction of minimal norm among all functions that agree with the observations.

- , the PBDW state estimation using the greedy basis over the whole manifold, thus starting the minimization from a “lifted” candidate that we hope is closer to the manifold and should thus offer better performance.

- , the surrogate-chosen local linear reconstruction from the same family of local linear models from §5.2 (where is the index of the chosen local linear model). In this last case we take local linear models, i.e. where has been split 19 times.

Furthermore, in the third case, we restrict our parameter range to be the local parameter range chosen by the surrogate, that is we alter the step outlined in (4.15) to be

where denotes the parameter found at the -th step of the procedure. The hope is that we have correctly chosen the local linear model and restricted parameter range from which the true solution comes thanks to our local model selection. The alternating minimization will thus have a better starting position and then a faster convergence rate due to the restricted parameter range.

In our test we use the same training set as in the previous test, with samples, in order to generate the reduced basis spaces. We consider a set of snapshots, distinct from any snapshots in , and perform the alternating minimization for each of the snapshots in the test set.

The final figures display the state error trajectories for each snapshot (dashed lines) as well as their geometric average (full lines), in different colors depending on the initialization choice. Similarly we display the residual trajectories and parameter error trajectories . Our main findings can be summarized as follows:

- (i) In all cases, there is a substantial gain in taking , the surrogate-chosen local linear reconstruction, as the starting point. In certain cases, the iterative procedure initiated from the two other choices or stagnates at an error level that is even higher than .

- (ii) The state error, residual and parameter error decrease to zero in the overdetermined configurations where is , or , with equally spaced measurement sites. In the underdetermined configurations , the state and parameter errors stagnate while the residual decreases to zero. This reflects the fact that there are several satisfying , making the fundamental barrier strictly positive.

- (iii) The state error, residual and parameter error do not decrease to zero in the overdetermined configuration where the measurement sites are concentrated on the upper half of the domain. This case is interesting: while we may expect that there is a unique pair reaching the global minimal value , the algorithm seems to get trapped in local minima.
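The geometric average used to summarize the per-snapshot trajectories is the arithmetic mean on a logarithmic scale, which suits errors that span several orders of magnitude. A minimal sketch follows, assuming strictly positive error values and trajectories of equal length. The function name and the sample data are hypothetical and are not taken from the experiments.

```python
import math

def geometric_mean_trajectory(trajectories):
    """Pointwise geometric mean over equal-length, strictly positive trajectories."""
    n_steps = len(trajectories[0])
    if any(len(t) != n_steps for t in trajectories):
        raise ValueError("all trajectories must have the same length")
    count = len(trajectories)
    # Average the logarithms at each iteration, then exponentiate.
    return [
        math.exp(sum(math.log(t[k]) for t in trajectories) / count)
        for k in range(n_steps)
    ]

# Two made-up error trajectories (one per snapshot), three iterations each.
errors = [[1.0, 0.1, 0.01], [4.0, 0.4, 0.04]]
avg = geometric_mean_trajectory(errors)
print(avg)  # close to [2.0, 0.2, 0.02], up to rounding
```

A trajectory that reaches exactly zero would need special handling, since its logarithm is undefined.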

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0b36db21-d00d-4fb8-8614-8a4a13350698/x7.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0b36db21-d00d-4fb8-8614-8a4a13350698/x8.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0b36db21-d00d-4fb8-8614-8a4a13350698/x9.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0b36db21-d00d-4fb8-8614-8a4a13350698/x10.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0b36db21-d00d-4fb8-8614-8a4a13350698/x11.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0b36db21-d00d-4fb8-8614-8a4a13350698/x12.png)

References

- [1] P. Binev, A. Cohen, W. Dahmen, R. DeVore, G. Petrova, and P. Wojtaszczyk, Convergence Rates for Greedy Algorithms in Reduced Basis Methods, SIAM Journal on Mathematical Analysis 43, 1457-1472, 2011. DOI: 10.1137/100795772

- [2] P. Binev, A. Cohen, W. Dahmen, R. DeVore, G. Petrova, and P. Wojtaszczyk, Data assimilation in reduced modeling, SIAM/ASA J. Uncertain. Quantif. 5, 1-29, 2017. DOI: 10.1137/15M1025384

- [3] P. Binev, A. Cohen, O. Mula and J. Nichols, Greedy algorithms for optimal measurements selection in state estimation using reduced models, SIAM/ASA Journal on Uncertainty Quantification 6, 1101-1126, 2018. DOI: 10.1137/17M1157635

- [4] A. Bonito, A. Cohen, R. DeVore, G. Petrova and G. Welper, Diffusion Coefficients Estimation for Elliptic Partial Differential Equations, SIAM J. Math. Anal. 49, 1570-1592, 2017. DOI: 10.1137/16M1094476

- [5] A. Bonito, A. Cohen, R. DeVore, D. Guignard, P. Jantsch and G. Petrova, Nonlinear methods for model reduction, arXiv:2005.02565, 2020.

- [6] D. Broersen and R. P. Stevenson, A Petrov-Galerkin discretization with optimal test space of a mild-weak formulation of convection-diffusion equations in mixed form, IMA J. Numer. Anal. 35 (2015), no. 1, 39–73. DOI 10.1093/imanum/dru003.

- [7] C. Carstensen, L. Demkowicz, J. Gopalakrishnan, Breaking spaces and forms for the DPG method and applications including Maxwell equations, Comput. Math. Appl. 72 (2016), no. 3, 494–522. DOI: 10.1016/j.camwa.2016.05.004

- [8] A. Cohen, W. Dahmen, and G. Welper, Adaptivity and variational stabilization for convection-diffusion equations, ESAIM Math. Model. Numer. Anal. 46 (2012), no. 5, 1247–1273, DOI: 10.1051/m2an/2012003.

- [9] A. Cohen, W. Dahmen, R. DeVore, and J. Nichols, Reduced Basis Greedy Selection Using Random Training Sets, ESAIM: Mathematical Modelling and Numerical Analysis, vol. 54, no. 5, 2020. DOI: 10.1051/m2an/2020004

- [10] A. Cohen, W. Dahmen, R. DeVore, J. Fadili, O. Mula and J. Nichols, Optimal reduced model algorithms for data-based state estimation, arXiv:1903.07938. Accepted in SIAM Journal on Numerical Analysis, 2020.

- [11] A. Cohen and R. DeVore, Approximation of high dimensional parametric PDEs, Acta Numerica, no. 24, 1-159, 2015. DOI: 10.1017/S0962492915000033

- [12] A. Cohen, R. DeVore and C. Schwab, Analytic Regularity and Polynomial Approximation of Parametric Stochastic Elliptic PDEs, Analysis and Applications 9, 11-47, 2011. DOI: 10.1142/S0219530511001728

- [13] A. Cohen, R. DeVore, G. Petrova, and P. Wojtaszczyk, Finding the minimum of a function, Methods and Applications of Analysis 20, 393-410, 2013. DOI: 10.4310/MAA.2013.v20.n4.a4

- [14] W. Dahmen, C. Huang, C. Schwab, and G. Welper, Adaptive Petrov-Galerkin methods for first order transport equations, SIAM J. Numer. Anal. 50 (2012), no. 5, 2420–2445. DOI: 10.1137/110823158. MR3022225

- [15] W. Dahmen, C. Plesken, G. Welper, Double Greedy Algorithms: Reduced Basis Methods for Transport Dominated Problems, ESAIM: Mathematical Modelling and Numerical Analysis, 48(3) (2014), 623–663. DOI: 10.1051/m2an/2013103.

- [16] R. DeVore, G. Petrova, and P. Wojtaszczyk, Greedy algorithms for reduced bases in Banach spaces, Constructive Approximation, 37, 455-466, 2013. DOI: 10.1007/s00365-013-9186-2

- [17] M. Dashti and A.M. Stuart, The Bayesian Approach to Inverse Problems, Handbook of Uncertainty Quantification, 311–428, Editors R. Ghanem, D. Higdon and H. Owhadi, Springer, 2017. DOI: 10.1007/978-3-319-12385-1_7

- [18] J.L. Eftang, A.T. Patera and E.M. Ronquist, An "hp" certified reduced basis method for parametrized elliptic partial differential equations, SIAM J. Sci. Comput. 32, 3170-3200, 2010. DOI: 10.1137/090780122

- [19] Y. Maday, A.T. Patera, J.D. Penn and M. Yano, A parametrized-background data-weak approach to variational data assimilation: Formulation, analysis, and application to acoustics, Int. J. Numer. Meth. Eng. 102, 933-965, 2015. DOI: 10.1002/nme.4747

- [20] Y. Maday, A. T. Patera, and G. Turinici, Global a priori convergence theory for reduced-basis approximations of single-parameter symmetric coercive elliptic partial differential equations, Comptes Rendus Académie des Sciences, Série I, Math. 335, 289-294, 2002. DOI: 10.1016/S1631-073X(02)02466-4

- [21] Y. Maday and B. Stamm, Locally adaptive greedy approximations for anisotropic parameter reduced basis spaces, SIAM J. Scientific Computing 35, 2417-2441, 2013. DOI: 10.1137/120873868

- [22] P. Massart, Concentration inequalities and model selection, Springer 2007. DOI: 10.1007/978-3-540-48503-2

- [23] G. Rozza, D.B.P. Huynh, and A.T. Patera, Reduced basis approximation and a posteriori error estimation for affinely parametrized elliptic coercive partial differential equations - application to transport and continuum mechanics, Archives of Computational Methods in Engineering 15, 229-275, 2008. DOI: 10.1007/s00791-006-0044-7

- [24] S. Sen, Reduced-basis approximation and a posteriori error estimation for many-parameter heat conduction problems, Numerical Heat Transfer B-Fund 54, 369-389, 2008. DOI: 10.1080/10407790802424204

- [25] A. M. Stuart, Inverse problems: A Bayesian perspective, Acta Numerica, 451-559, 2010. DOI: 10.1017/S0962492910000061

- [26] R. P. Stevenson, J. Westerdiep, Stability of Galerkin discretizations of a mixed space-time variational formulation of parabolic evolution equations, IMA J. Numer. Anal. (2020). DOI: 10.1093/imanum/drz069