Nonparametric estimation of a covariate-adjusted counterfactual treatment regimen response curve

Abstract

Flexible estimation of the mean outcome under a treatment regimen (i.e., the value function) is a key step toward personalized medicine. We define our target parameter as a conditional value function given a set of baseline covariates, which we refer to as a stratum-based value function. We focus on a semiparametric class of decision rules and propose a sieve-based nonparametric covariate-adjusted regimen-response curve estimator within that class. Our work contributes in several ways. First, we propose an inverse probability weighted, nonparametrically efficient estimator of the smoothed regimen-response curve function. We show that asymptotic linearity is achieved when the nuisance functions are sufficiently undersmoothed. Asymptotic and finite-sample criteria for undersmoothing are proposed. Second, using Gaussian process theory, we propose simultaneous confidence intervals for the smoothed regimen-response curve function. Third, we provide consistency and a convergence rate for the optimizer of the regimen-response curve estimator; this enables us to estimate an optimal semiparametric rule. The latter is important because the optimizer corresponds to the optimal dynamic treatment regimen. Some finite-sample properties are explored with simulations.


1 Introduction

Marginal structural models have been widely used in causal inference as a tool for estimating the mean outcome under a given decision rule (Robins, 1998) and for constructing optimal treatment regimens. This approach requires modeling the potential outcome given a set of baseline covariates, including treatment and possibly some subset of baseline information on a subject. Existing methods typically impose a parametric causal model on the mean of the potential outcome and draw inference under the assumption that the model is correctly specified (Robins, Hernan and Brumback, 2000; Murphy et al., 2001; Joffe et al., 2004; Orellana, Rotnitzky and Robins, 2010). The latter is particularly restrictive when the conditioning set of treatment and baseline covariates includes some continuous variables. Although model selection approaches have been proposed (van der Laan and Robins, 2003), finite sample sizes are likely to prevent recovery of the true model and to lead to poor out-of-sample performance (Shinozaki and Suzuki, 2020). Neugebauer and van der Laan (2007) proposed a nonparametric marginal structural model framework to improve the causal interpretability of the estimated parameters of a potentially misspecified parametric working model. However, when the working model is misspecified, the causally interpretable parameters might not be the parameters of interest, and the resulting treatment regimen might be suboptimal.

The parameters of the proposed marginal structural model can be estimated using an inverse probability weighted loss function, where the weights are the inverse of the conditional probabilities of following a particular decision rule. The consistency and causal interpretability of the corresponding estimators rely on a correctly specified weight function. To mitigate the chance of model misspecification, one may decide to model the weight function nonparametrically using data-adaptive techniques. However, this choice may result in nonregular estimators of the primary parameters in the marginal structural model. This lack of regularity arises because the rate of convergence of inverse probability weighted estimators relies on the rate of convergence of the weight function, which will be slower than the desired root-n rate when data-adaptive techniques are used. To overcome this concern, authors have used so-called doubly robust estimators for the parameters in the working marginal structural model; that is, estimators that are consistent as long as either the treatment model or the outcome model is correctly specified (Robins, 2004; Wahed and Tsiatis, 2004; Orellana, Rotnitzky and Robins, 2010; Rosenblum, 2011; Petersen et al., 2014; Ertefaie et al., 2015). However, doubly robust nonparametric estimators may suffer from two important issues: (1) the performance of the estimators depends on the modeling choice of nuisance parameters, and they can be irregular with large bias and a slow rate of convergence (van der Laan and Luedtke, 2014; Benkeser et al., 2017); and (2) the quality of the constructed decision rule still relies on a correctly specified marginal structural model.

As an alternative to the marginal structural model framework, several authors have proposed methods to estimate nonparametric decision rules that circumvent the need for specifying a parametric outcome model. Zhao et al. (2012) proposed an inverse probability weighting approach to estimate a nonparametric decision rule (Dudík, Langford and Li, 2011; Zhao et al., 2015). Doubly robust augmented inverse probability weighting procedures have also been proposed (Rubin and van der Laan, 2012; Zhang et al., 2012; Liu et al., 2018; Athey and Wager, 2021). Due to the nonparametric nature of the estimated decision rule, inference for the resulting value function (i.e., the mean outcome under the estimated rule) is challenging. Zhao et al. (2012) established Fisher consistency, an excess risk bound, and the convergence rate of the value function to provide a theoretical guarantee for their proposed method. Athey and Wager (2021) derived bounds for the regret function under the estimated nonparametric treatment strategy and showed that the bounds decay at the root-n rate as long as the Vapnik–Chervonenkis (VC) dimension of the class of decision rules does not grow too fast. Other recent germane contributions on estimating optimal treatment regimes and conditional average treatment effects include Swaminathan and Joachims (2015), Kallus (2018), Nie and Wager (2021), Zhao et al. (2022), and references therein.

We define a stratum-based value function as the conditional expectation of the potential outcome under a treatment regimen given a prespecified set of baseline covariates V. The value is a function of the regimens, and we are interested in understanding the functional association between the mean outcome and the regimens within a class, which we refer to as the regimen-response curve. We consider a current status data setting with two levels of coarsening at random (i.e., treatment assignment and censoring). We overcome the two important shortcomings of the existing methods by: (1) allowing the regimen-response curve to be estimated nonparametrically; and (2) estimating the weight functions of the inverse probability weighted loss function using a data-adaptive technique. Recently, Ertefaie, Hejazi and van der Laan (2022) proposed a nonparametric efficient inverse probability weighted estimator for the average causal effect, where the weights are estimated using an undersmoothed highly adaptive lasso. Below, we generalize this technique to estimate conditional average causal effects via nonparametric marginal structural models. An important byproduct of our nonparametric regimen-response curve estimator is a method to construct semiparametric decision rules in which the parameters of the rule are allowed to be a nonparametric function of the set of baseline covariates V (i.e., a V-specific optimal decision rule). The parameters are defined as the optimizer of the regimen-response curve, which allows us to provide a rate of convergence for the corresponding functional parameter estimators using empirical process theory. Our theoretical contributions can be summarized as follows:

• Considering a kernel-smoothed regimen-response curve function as the target parameter, we show that the proposed inverse probability weighted estimator (1) is nonparametrically efficient when the weight functions are properly undersmoothed; (2) converges to a Gaussian process at the root-n rate; and (3) is uniformly consistent at a derived rate.

• Considering the regimen-response curve function as the target parameter, we derive the asymptotic normality of our estimator and show that the bias caused by kernel smoothing vanishes under some mild assumptions with properly undersmoothed kernel and weight functions.

• Using a novel empirical process approach, we show that the minimizer of the estimated regimen-response curve is a consistent estimator of the minimizer of the true regimen-response curve and derive its convergence rate. This result is of independent interest as it provides a rate of convergence for an optimizer of a nonparametrically estimated function.

In contrast to the existing literature, which targets the value function under the estimated rule, our estimand is the stratum-based value function. Deriving the asymptotic properties of our estimator is more challenging because the estimand is a non-pathwise-differentiable functional when V includes at least one continuous variable (Bickel et al., 1998). Moreover, existing methods estimate either parametric or nonparametric rules, sacrificing flexibility in the former case and interpretability in the latter. Our proposed semiparametric rule lies in between and provides a reasonable trade-off between flexibility and interpretability. For example, clinicians might have a strong opinion about an important tailoring variable and want to ensure that the decision rule captures the correct functional form of that variable. In such settings, we can include that variable in the set V and provide a stratum-based decision rule that is a nonparametric function of V.

2 Preliminaries

2.1 Notation and Formulation

Let be a univariate failure time, be a vector of all available baseline covariates measured before treatment , and be a censoring variable. Let be a subvector of and where . For simplicity of notation, we denote as . A decision rule is defined as a function that maps values of to a treatment option . In our formulation is a class of deterministic rules indexed by a vector of coefficients. Specifically, we consider with where is an indicator function. Let be the potential outcome that would have been observed under the decision rule for a given value of . Suppose that we have the full data where is a nonparametric full data model. Define where is a class of cadlag functions with sectional variation norm bounded by and is a predetermined time point. The full-data risk of is defined as where is the log-likelihood loss function, and is a weight function/measure on the Euclidean set of all -values (e.g., multivariate normal with possibly large variance). Define a V-specific optimal decision rule that maximizes the -year survival probability as . Hence, we allow to be a functional parameter mapping onto .
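To make the rule class concrete, here is a minimal sketch assuming a hypothetical linear-threshold form, treat if and only if the linear index s'θ exceeds zero (the exact index used in the paper is not legible in this text), together with a grid search for a V-specific optimizer of a supplied regimen-response curve. The names `rule`, `optimal_theta` and `toy_curve` are illustrative, not the authors' code, and the toy curve is made up.

```python
from typing import Callable, Sequence


def rule(s: Sequence[float], theta: Sequence[float]) -> int:
    """Hypothetical deterministic rule d_theta(s) = 1{s' theta > 0}."""
    index = sum(s_j * t_j for s_j, t_j in zip(s, theta))
    return 1 if index > 0 else 0


def optimal_theta(
    curve: Callable[[float, float], float],
    v: float,
    grid: Sequence[float],
) -> float:
    """Grid approximation of the V-specific optimizer argmax_theta curve(v, theta)."""
    return max(grid, key=lambda theta: curve(v, theta))


def toy_curve(v: float, theta: float) -> float:
    """Made-up survival-probability curve that peaks at theta = v / 2."""
    return 0.8 - (theta - v / 2) ** 2


grid = [i / 10 for i in range(-20, 21)]
print(rule([1.0, 2.0], [0.5, -1.0]))        # index = -1.5, so the rule assigns 0
print(optimal_theta(toy_curve, 1.0, grid))  # 0.5
```

Because the optimizer is computed separately for each value v, the resulting coefficient is itself a function of V, which is the sense in which the rule is semiparametric.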

The observed data follow some probability distribution that lies in some nonparametric model . Suppose we observe independent, identically distributed trajectories of . Let and be a functional nuisance parameter where . In our setting, denotes the joint treatment and censoring mechanism under a distribution . We refer to as and as . Under the coarsening at random assumption, . Denote and . We denote the latter two functions under as and . Also define and where . Let be a projection operator in the Hilbert space with inner product .

The loss function is based on the full data, which are not observable, and thus it cannot be used to define our full-data functional parameter . However, under the consistency and no-unmeasured-confounders assumptions (Assumption 2), the parameter can be identified using the observed data distribution . Specifically, we define an inverse probability weighting mapping of the full-data loss as

| (1) |

Accordingly, define the regimen-response curve as . Following our convention, we denote as . We define a highly adaptive lasso estimator of as where is data-adaptively selected and is an estimator of . The choice of function is inconsequential to the derivation of the efficient influence function of our target parameter, but it may impact the level of undersmoothing needed in finite samples. One may consider or . The latter choice corresponds to the stabilized weight.
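The structure of an inverse probability weighted loss of the form (1) is sketched below under explicit assumptions, since the exact expression is not legible here: the outcome is the indicator 1{T > t0}, the rule is the hypothetical d_θ(v) = 1{vθ > 0}, the integral over θ is replaced by a finite grid weighted by h, and a subject contributes only if it follows the rule and is uncensored, with weight 1/(g·G). `ipw_loss` and its arguments are hypothetical names, not the paper's implementation.

```python
import math
from typing import Callable, Dict, Sequence


def ipw_loss(
    obs: Dict[str, float],
    psi: Callable[[float, float], float],
    g: Callable[[Dict[str, float]], float],
    G: Callable[[Dict[str, float]], float],
    thetas: Sequence[float],
    h: Sequence[float],
    t0: float,
) -> float:
    """IPW log-likelihood loss for one subject, with the theta integral replaced by a grid.

    Assumed keys of obs: 'v' (tailoring covariate), 'a' (treatment),
    'delta' (1 if uncensored), 'time' (observed follow-up time).
    psi(v, theta) must lie strictly in (0, 1); g(obs) is the probability of the
    observed treatment and G(obs) the probability of remaining uncensored.
    """
    y = 1.0 if obs["time"] > t0 else 0.0  # hypothetical outcome 1{T > t0}
    total = 0.0
    for theta, h_theta in zip(thetas, h):
        d = 1 if obs["v"] * theta > 0 else 0  # hypothetical rule d_theta(v)
        if obs["a"] != d or obs["delta"] == 0:
            continue  # zero weight: off-rule or censored subject
        weight = 1.0 / (g(obs) * G(obs))
        p = psi(obs["v"], theta)
        log_lik = y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
        total += h_theta * weight * (-log_lik)
    return total
```

Only subjects whose observed treatment agrees with the rule at a given θ contribute at that θ, which is why the weights must be inflated by the inverse treatment and censoring probabilities.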

2.2 Highly adaptive lasso

The highly adaptive lasso is a nonparametric regression approach that can be used to estimate infinite-dimensional functional parameters (Benkeser and van der Laan, 2016; van der Laan, 2017; van der Laan and Bibaut, 2017). It forms a linear combination of indicator basis functions to minimize the expected value of a loss function under the constraint that the L1-norm of the coefficient vector is bounded by a finite constant.

Let be the Banach space of d-variate real valued cadlag functions (right-continuous with left-hand limits) on a cube . For each function define the supremum norm as . For any subset of , we partition in and define the sectional variation norm of such as

where the sum is over all subsets of . For a given subset , define and as the complement of . Then, defined as . Thus, is a section of that sets the components in the complement of equal to zero and varies only along the variables in .

Let and denote the treatment and censoring mechanism under an arbitrary distribution . Assuming that our nuisance functional parameter has finite sectional variation norm, we can represent as (Gill, van der Laan and Wellner, 1993)

| (2) |

The representation (2) can be approximated using a discrete measure that puts mass on each observed denoted by . Let where are support points of . We then have

where is an approximation of the sectional variation norm of . The loss based highly adaptive lasso estimator is defined as

where is a given loss function. Denote as the highly adaptive lasso estimate of . Similarly, we can define as the highly adaptive lasso estimate . When the nuisance parameter corresponds to a conditional probability of a binary variable (e.g., the propensity score with a binary treatment or a binary censoring indicator), the log-likelihood loss can be used. In practice, is unknown and must be obtained using data. We refer to as a value of that is determined using data.
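To illustrate the basis behind representation (2) and the L1 approximation of the sectional variation norm, the sketch below builds zero-order (indicator) basis functions φ_{s,i}(x) = ∏_{j∈s} 1{x_j ≥ x_{i,j}} with knots at the observed points, one per nonempty coordinate subset s and observation i, and approximates the norm by |β0| + Σ|β|. The lasso step that would choose β subject to the bound is omitted, and all function names are illustrative.

```python
from itertools import combinations
from typing import List, Sequence, Tuple

Basis = Tuple[Tuple[int, ...], Tuple[float, ...]]  # (subset s, knot values on s)


def hal_basis(knots: Sequence[Sequence[float]]) -> List[Basis]:
    """Zero-order HAL basis: one indicator per nonempty subset s and observed knot."""
    d = len(knots[0])
    basis: List[Basis] = []
    for size in range(1, d + 1):
        for s in combinations(range(d), size):
            for x_i in knots:
                basis.append((s, tuple(x_i[j] for j in s)))
    return basis


def eval_basis(phi: Basis, x: Sequence[float]) -> float:
    """phi_{s,i}(x) = prod_{j in s} 1{x_j >= x_{i,j}}."""
    s, cut = phi
    return 1.0 if all(x[j] >= c for j, c in zip(s, cut)) else 0.0


def hal_predict(beta0: float, beta: Sequence[float], basis: Sequence[Basis], x: Sequence[float]) -> float:
    """Linear combination beta0 + sum_k beta_k phi_k(x)."""
    return beta0 + sum(b * eval_basis(phi, x) for b, phi in zip(beta, basis))


def sectional_variation_approx(beta0: float, beta: Sequence[float]) -> float:
    """L1 norm of the coefficients, approximating the sectional variation norm."""
    return abs(beta0) + sum(abs(b) for b in beta)


knots = [(0.1, 0.5), (0.4, 0.2), (0.9, 0.7)]
basis = hal_basis(knots)
print(len(basis))  # 3 knots * (2**2 - 1) subsets = 9
```

Bounding `sectional_variation_approx` by a constant is exactly the L1 constraint in the highly adaptive lasso, so increasing that bound is what undersmoothing means in practice.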

3 Dynamic marginal structural models with highly adaptive lasso

3.1 The challenge

The target functional parameter is not pathwise differentiable, which makes inference challenging. The existing literature approximates this functional by a pathwise differentiable function , where is a vector of unknown parameters (van der Laan, 2006; van der Laan and Petersen, 2007; Murphy et al., 2001; Robins, Orellana and Rotnitzky, 2008; Orellana, Rotnitzky and Robins, 2010; Neugebauer and van der Laan, 2007). The working model provides a user-specified summary of the unknown true function relating the expectation of the potential outcome to the strategy. Hence, the quality of the constructed decision rule depends on how well the working model approximates the true function. To mitigate this concern, we propose a kernel-smoothed highly adaptive lasso estimator of . Specifically, we define a regimen-response curve estimator as

| (3) |

where and is a highly adaptive lasso estimate of obtained based on the loss function (1). In this formulation, with where is a kernel satisfying . Moreover, we assume that is a product kernel defined by a univariate kernel and is a J-orthogonal kernel such that and for .
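One way to obtain a J-orthogonal kernel, assumed here purely for illustration, is the Gaussian-based fourth-order kernel K(u) = (3 − u²)φ(u)/2, whose moments of orders 1 to 3 vanish, so J = 3. The sketch checks those moments by midpoint-rule integration and forms a product kernel K_h(v − v0) = ∏_j h^{-1} K((v_j − v0_j)/h). This is not necessarily the kernel used in the paper.

```python
import math
from typing import Sequence


def gaussian(u: float) -> float:
    """Standard normal density."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def kernel4(u: float) -> float:
    """Gaussian-based fourth-order kernel: integrates to one, moments 1..3 vanish."""
    return 0.5 * (3.0 - u * u) * gaussian(u)


def product_kernel(v: Sequence[float], v0: Sequence[float], h: float) -> float:
    """K_h(v - v0) = prod_j h^{-1} K((v_j - v0_j) / h)."""
    out = 1.0
    for vj, v0j in zip(v, v0):
        out *= kernel4((vj - v0j) / h) / h
    return out


def moment(j: int, lo: float = -12.0, hi: float = 12.0, steps: int = 24000) -> float:
    """Midpoint-rule approximation of the integral of u^j K(u) du."""
    du = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        u = lo + (k + 0.5) * du
        total += (u ** j) * kernel4(u) * du
    return total


for j in range(5):
    print(j, round(moment(j), 6))
# moments 0..3 are approximately 1, 0, 0, 0; the fourth is approximately -3
```

Higher-order kernels of this kind take negative values, which is the price paid for removing bias terms up to order J in the kernel-smoothed parameter.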

We study the asymptotic behaviour of our estimator relative to and a kernel smoothed parameter

where is a fixed bandwidth. In general, when the nuisance parameter is estimated using data-adaptive regression techniques, the resulting regimen-response curve estimator is not asymptotically linear, which makes inference challenging. For example, for the target parameter , we have

| (4) |

The first term on the right-hand side of (4) is exactly linear, and the third term is negligible under a Donsker condition on . The problem arises because data-adaptive regression techniques have a rate of convergence slower than the desired root-n rate, and thus the bias of diverges to infinity.

3.2 The intuition

We show that when the nuisance parameters are properly undersmoothed, the asymptotic linearity of our estimator can be retrieved. Specifically, in the proof of Theorem 4.2, we show that

| (5) | ||||

| (6) | ||||

| (7) |

where includes certain components of the canonical gradient of our statistical parameter and thus, contributes to the efficient influence function of our estimator. The last three terms do not converge to zero at the appropriate rate and thus induce non-negligible bias. However, the terms (5), (6) and (7) resemble the form of score equations for , and .

We first discuss the score equations for where . We can generate scores for a nonparametric estimator by paths , such that

where is a uniformly bounded function on . Accordingly, when a function is estimated using a highly adaptive lasso, the score functions can be generated by a path for a bounded vector as

| (8) |

where is the log-likelihood loss function. For example, for the treatment indicator, . The penalty in the highly adaptive lasso imposes a restriction on the function. Specifically, the path can be generated using only those functions that do not change the L1-norm of the parameters. Let denote the set of perturbed parameters. Then, for small enough such that , . Hence, the restriction is satisfied when the inner product of and the vector is zero (i.e., ).

We now provide a thought experiment on how undersmoothing the nuisance function estimates using a highly adaptive lasso can eliminate the terms (5), (6) and (7). We first ignore the L1-norm restriction on the choice of function, which is erroneous but contains the main idea of the approach. Without the restriction, one can choose . The latter choice perturbs one coefficient at a time and corresponds to maximum likelihood estimators. Then, for any and , the score function (19) becomes

Because is a finite constant for all and , solving the above score equation is equivalent to solving

For example, for the treatment indicator, the score equation is given by . As we undersmooth the fit, we solve more and more score equations (i.e., the number of score equations increases with the number of features included in the model), and any linear combination of those score equations will also be solved. Now consider the term (7) and let . Assuming that , and are càdlàg with finite sectional variation norm (Assumption 1 in Section 4), Gill, van der Laan and Wellner (1993) showed that can be approximated as a linear combination of indicator basis functions. Therefore, if we undersmooth sufficiently, we will solve (7) up to a root-n factor. That is
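The thought experiment can be checked numerically. The sketch below, on made-up toy data, computes the empirical score means P_n φ(W)(A − g_n(W)) for each basis function φ. A saturated logistic fit (intercept plus the single indicator 1{w ≥ 1}, whose maximum likelihood fit equals the cell proportions) solves both equations, whereas a constant fit of 0.5 does not. `score_means` is an illustrative helper, not the paper's code.

```python
from typing import Callable, List, Sequence


def score_means(
    basis: Sequence[Callable[[float], float]],
    w: Sequence[float],
    a: Sequence[int],
    g_hat: Sequence[float],
) -> List[float]:
    """Empirical means P_n phi(W) (A - g_n(W)), one per basis function phi."""
    n = len(a)
    return [sum(phi(w_i) * (a_i - g_i) for w_i, a_i, g_i in zip(w, a, g_hat)) / n
            for phi in basis]


# Toy data: binary covariate W; a saturated fit sets g_n(w) to the treated share per cell.
w = [0, 0, 0, 1, 1, 1, 1]
a = [1, 0, 0, 1, 1, 0, 1]
share = {c: sum(ai for wi, ai in zip(w, a) if wi == c) / w.count(c) for c in (0, 1)}
g_hat = [share[wi] for wi in w]
basis = [lambda x: 1.0, lambda x: 1.0 if x >= 1 else 0.0]  # intercept and 1{w >= 1}
print(score_means(basis, w, a, g_hat))  # both entries are zero up to rounding
```

Adding more indicator features (a larger L1 bound) adds more such equations that the fit must solve, which is the mechanism the undersmoothing argument exploits.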

The same argument can be made to show that the other terms (5) and (6) can be made negligible when and are properly undersmoothed. Hence, the challenging term will be asymptotically linear with

Now, we study the actual case where the choice of function is restricted to those that do not change the norm of the coefficients. Lemma A.2 shows that when (23), (24) and (25) are satisfied, our proposed estimator achieves asymptotic linearity. As we undersmooth the fit, we start adding features with small coefficients into the model. Conditions (23), (24) and (25) imply that the norm must be increased until one of the corresponding score equations is solved to a precision of .

Here we provide details for the score equations corresponding to the treatment indicator where . Let . For small enough ,

Hence, for any satisfying (i.e., does not change the norm of the coefficients), we have .

Let , where is defined above, and let be an approximation of using the basis functions that satisfy condition (24). Then, . Thus, there exists such that ; however, for this particular choice of , may not be zero (i.e., the restriction on might be violated). Now, define such that for ; is defined such that

| (9) |

That is, matches everywhere but for a single point , where it is forced to take a value such that . As a result, for such a choice of , by definition. Below, we show that which then implies that . We note that the choice of is inconsequential.
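The construction in (9) can be mimicked in a few lines. Assuming the standard multiplicative highly adaptive lasso path β_j(ε) = (1 + ε h_j) β_j, the L1 norm is unchanged for small ε exactly when Σ_j h_j |β_j| = 0. The sketch overwrites a single coordinate j* of an arbitrary direction h to enforce that constraint and then checks the norm numerically; the coefficient values are arbitrary and the helper names are hypothetical.

```python
from typing import List, Sequence


def l1(beta: Sequence[float]) -> float:
    """L1 norm of a coefficient vector."""
    return sum(abs(b) for b in beta)


def norm_preserving_direction(h: Sequence[float], beta: Sequence[float], j_star: int) -> List[float]:
    """Copy h but overwrite coordinate j_star so that sum_j h_j |beta_j| = 0."""
    if beta[j_star] == 0:
        raise ValueError("beta[j_star] must be nonzero")
    rest = sum(h_j * abs(b_j) for j, (h_j, b_j) in enumerate(zip(h, beta)) if j != j_star)
    out = list(h)
    out[j_star] = -rest / abs(beta[j_star])
    return out


def perturb(beta: Sequence[float], h: Sequence[float], eps: float) -> List[float]:
    """Multiplicative path beta_j(eps) = (1 + eps * h_j) * beta_j."""
    return [(1.0 + eps * h_j) * b_j for h_j, b_j in zip(h, beta)]


beta = [0.8, -0.3, 0.0, 1.2]
h = [1.0, 2.0, 5.0, 0.5]
h_star = norm_preserving_direction(h, beta, j_star=0)
# eps is small enough that every factor 1 + eps * h_j stays positive
print(l1(beta), l1(perturb(beta, h_star, eps=0.01)))  # the two norms agree up to rounding
```

As in the text, the choice of j* does not matter as long as its coefficient is nonzero; the modified direction differs from h at that single coordinate only.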

The details of the fourth equality are given in the proof of Lemma A.2, and the last equality follows from the assumption that for being the log-likelihood loss (i.e., condition (24)). As , it follows that . Using this result, under mild assumptions, we show that , indicating that the term (7) will be asymptotically negligible (see the proof of Lemma A.2 for details).

3.3 The estimator

To improve the finite-sample performance of our estimator, we propose to estimate the nuisance parameters using cross-fitting (Klaassen, 1987; Zheng and van der Laan, 2011; Chernozhukov et al., 2017). We split the data at random into mutually exclusive and exhaustive sets of size approximately . Let and denote the empirical distributions of a training and a validation sample, respectively. For a given and , exclude a single (validation) fold of data and fit the highly adaptive lasso estimator using data from the remaining folds; use this model to estimate the nuisance parameters for samples in the holdout (validation) fold. By repeating the process times, we obtain estimates of the nuisance parameters for all sample units. Accordingly, we define the cross-fitted IPW estimator

| (10) |

where , and are highly adaptive lasso estimates of and applied to the training sample for the bth sample split for a given . The estimator uses a ()-orthogonal kernel with bandwidth centered at .
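The cross-fitting bookkeeping described above is sketched below. `fit` is a placeholder for fitting the highly adaptive lasso at a given L1 bound on the training folds; a trivial training-sample mean of the treatment stands in so the sketch runs. Forming folds by shuffling indices with a seeded generator is an arbitrary choice, and all names are illustrative.

```python
import random
from typing import Callable, List, Sequence


def make_folds(n: int, n_folds: int, seed: int = 0) -> List[List[int]]:
    """Randomly split indices 0..n-1 into disjoint, exhaustive folds of near-equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[b::n_folds] for b in range(n_folds)]


def cross_fit(
    data: Sequence[dict],
    n_folds: int,
    fit: Callable[[Sequence[dict]], Callable[[dict], float]],
    seed: int = 0,
) -> List[float]:
    """Out-of-fold nuisance predictions: fit on the other folds, predict on the held-out one."""
    preds: List[float] = [float("nan")] * len(data)
    for valid in make_folds(len(data), n_folds, seed):
        held_out = set(valid)
        train = [row for i, row in enumerate(data) if i not in held_out]
        model = fit(train)
        for i in valid:
            preds[i] = model(data[i])
    return preds


def fit_mean_treatment(train: Sequence[dict]) -> Callable[[dict], float]:
    """Hypothetical stand-in for an undersmoothed HAL fit: the training treated share."""
    p = sum(row["a"] for row in train) / len(train)
    return lambda row: p


data = [{"a": i % 2} for i in range(10)]
print(cross_fit(data, n_folds=5, fit=fit_mean_treatment))
```

Each subject's nuisance estimate comes from a model that never saw that subject, which is what removes the need for a Donsker condition on the nuisance estimators in many cross-fitting arguments.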

3.4 Data-adaptive bandwidth selector

The proposed estimator approaches as at the cost of increasing the variance of the estimator. The mean squared error (MSE) provides an ideal criterion for the bias-variance trade-off. However, because the bias is unknown, the MSE cannot be used directly. Here we propose an approach that circumvents the need to know the bias while targeting the optimal bias-variance trade-off. Let be a consistent estimator of where is the efficient influence function for (see Theorem 4.2). The goal is to find an that minimizes the MSE or, equivalently, sets the derivative of the MSE to zero. That is

We know that the optimal (up to a constant) bias-variance trade-off implies (van der Laan, Bibaut and Luedtke, 2018). Hence, the optimal will also solve one of the following equations

| (11) |

where is a positive constant. Consider a scenario where shows an increasing trend as approaches zero. This implies that . We then define our optimal finite sample bandwidth selector as

Similarly, when shows a decreasing trend as approaches zero, we define . A natural choice for the constant is the -quantile of the standard normal distribution (i.e., ). Hence, is the bandwidth value that minimizes the difference between the true value and the corresponding lower (upper) confidence bound.
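A minimal sketch of the bandwidth rule, under our reading of it: when the estimates trend upward as h shrinks, choose the grid value that maximizes the lower confidence bound ψ_nh − z·se_h; when they trend downward, minimize the upper bound ψ_nh + z·se_h. The standard errors are taken as given (for instance from the efficient influence function or the conservative estimator (18)), z = 1.96 is an assumed default, and all numbers in the usage lines are made up.

```python
from typing import Sequence


def select_bandwidth(
    h_grid: Sequence[float],
    psi: Sequence[float],
    se: Sequence[float],
    increasing_as_h_shrinks: bool,
    z: float = 1.96,
) -> float:
    """Confidence-bound bandwidth selector over a finite grid of bandwidths.

    Upward trend as h -> 0: maximize the lower bound psi - z * se.
    Downward trend as h -> 0: minimize the upper bound psi + z * se.
    """
    idx = range(len(h_grid))
    if increasing_as_h_shrinks:
        lower = [p - z * s for p, s in zip(psi, se)]
        best = max(idx, key=lambda k: lower[k])
    else:
        upper = [p + z * s for p, s in zip(psi, se)]
        best = min(idx, key=lambda k: upper[k])
    return h_grid[best]


h_grid = [0.8, 0.4, 0.2, 0.1]
se = [0.005, 0.01, 0.02, 0.04]  # made-up standard errors growing as h shrinks
print(select_bandwidth(h_grid, [0.50, 0.56, 0.59, 0.60], se, True))   # 0.2
print(select_bandwidth(h_grid, [0.60, 0.55, 0.52, 0.51], se, False))  # 0.2
```

The rule stops shrinking h once the gain in bias reduction is outweighed by the widening of the confidence bound, which mirrors the bias-variance balance behind (11).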

3.5 Undersmoothing criteria

The asymptotic linearity results of Theorems 4.2 and 4.3 rely on estimating the corresponding nuisance parameters using an undersmoothed highly adaptive lasso. Specifically, Theorem 4.2 requires that

-

(a)

,

-

(b)

,

-

(c)

,

where , and . Motivated by our theoretical results, we propose the following practical L1-norm bound selection criteria for , and :

| (12) | ||||

| (13) | ||||

| (14) |

where , , and are cross-validated highly adaptive lasso estimates of the corresponding nuisance parameters with the L1-norm bound based on the global cross-validation selector.
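Criteria (12)–(14) amount to a one-dimensional search over the L1-norm bound. The sketch below scans a grid of bounds at or above the cross-validated choice and returns the smallest one whose criterion value falls below a cutoff. The callable `lhs`, which in practice would refit the highly adaptive lasso at each bound and evaluate the corresponding empirical score mean, is a hypothetical placeholder, and the cutoff σ/(√n log n) in the usage lines is an assumption for illustration, since the exact threshold is not legible in this text.

```python
import math
from typing import Callable, Optional, Sequence


def undersmooth_bound(
    bounds: Sequence[float],
    lhs: Callable[[float], float],
    lambda_cv: float,
    threshold: float,
) -> Optional[float]:
    """Smallest L1-norm bound >= lambda_cv whose criterion value is below threshold.

    lhs(lam) stands for the left-hand side of the undersmoothing condition
    (e.g. an empirical score mean) evaluated at the fit with bound lam.
    """
    for lam in sorted(b for b in bounds if b >= lambda_cv):
        if abs(lhs(lam)) < threshold:
            return lam
    return None  # no bound on the grid satisfies the criterion


# Made-up example: a criterion that shrinks as the bound grows.
n, sigma = 400, 0.5
cutoff = sigma / (math.sqrt(n) * math.log(n))  # assumed cutoff, for illustration only
print(undersmooth_bound([1, 2, 4, 8, 16, 32], lambda lam: 0.05 / lam, 2, cutoff))  # 16
```

Returning `None` when no grid value qualifies signals that the grid of bounds should be extended rather than silently falling back to the cross-validated fit.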

To achieve the Gaussian process convergence results in Theorem 4.3 (with a fixed ), we would require stronger conditions. Specifically, the conditions listed above must hold uniformly for any , that is, ,

and . Accordingly, the practical criteria are given by

| (15) | ||||

| (16) | ||||

| (17) |

where includes sample points of . The asymptotic linearity result of Theorem 4.3 with a fixed bandwidth relies on undersmoothing criteria similar to those listed in (a)–(c), but with being replaced by one, and thus the same practical undersmoothing criteria as (12)–(14) can be considered.

In Theorems 4.2 and 4.3, we show that the proposed estimators are asymptotically efficient when they solve the efficient influence function equation, that is, and , respectively, for . The criteria proposed in this section correspond to the most efficient estimators for the given data. Increasing results in decreasing the empirical mean of the pseudo score functions and and increasing the variance of and . At a certain point in the grid of , decreases in the empirical mean of the pseudo score functions are no longer sufficient for satisfying the conditions, which starts increasing on account of and . Unlike and , decreases as increases, which motivates the undersmoothing selection criteria (14) and (17). Specifically, the sectional variation norm and are defined as the smallest value (larger than that chosen by the cross-validation selector) for which the left-hand side of the condition is less than , where is a consistent estimator of with being the efficient influence function for . This cutpoint leads to a bias of smaller magnitude than the standard error and thus does not affect coverage.

Remark 1.

The variance estimator is calculated using the efficient influence function. One can, instead, use a conservative variance estimator obtained by an influence function where the nuisance parameters are assumed to be known. That is

| (18) |

where can be replaced by their corresponding estimators. The variance estimator (18) can also be used in Section 3.4 where we proposed an adaptive bandwidth selector.

4 Theoretical results

The asymptotic properties of our estimator rely on the following assumptions.

Assumption 1 (Complexity of nuisance functions).

The functions , , and are càdlàg with finite sectional variation norm.

Assumption 1 characterizes the global smoothness assumption of the true functions, which is much weaker than local smoothness assumptions like those characterized by an assumption utilizing Hölder balls (e.g., Robins et al., 2008, 2017; Mukherjee, Newey and Robins, 2017). The assumption facilitates the fast convergence rate of obtainable by the highly adaptive lasso (regardless of dimensionality ) (van der Laan, 2017; van der Laan and Bibaut, 2017; Bibaut and van der Laan, 2019). The latter rate is generally faster than the typical minimax rates that appear under differing, but similarly positioned, assumptions regarding function classes in nonparametric estimation. In fact, we expect that almost all true conditional mean models that we have to deal with in real data will satisfy Assumption 1. The sectional variation norm is defined in more detail in Appendix B.

Assumption 2.

(causal inference and censoring assumptions) For ,

-

(a)

Consistency of the observed outcome: ;

-

(b)

Unconfoundedness of the treatment and censoring mechanisms: , and ;

-

(c)

Positivity of the treatment and censoring mechanisms: for all where is a small positive constant.

Assumption 2 lists standard causal identifiability assumptions. The consistency assumption ensures that a subject’s potential outcome is not affected by other subjects’ treatment levels and that there are no different versions of treatment. Assumption 2(b) is a version of the coarsening at random assumption that imposes conditional independence between (1) treatment and potential outcomes given the measured covariates; and (2) the censoring indicator and the observed outcome given the treatment and measured covariates. Positivity states that every subject has some chance of receiving treatment level with censoring status regardless of covariates. Under Assumption 2, the full-data risk of can be identified using the observed data as

where and were defined in Section 2.1. Even if Assumptions 2(a)–(b) do not hold, it may still be of interest to estimate using as an adjusted measure of association.

Remark 2.

The censoring at random assumption implies that on , which is weaker than (van der Laan and Robins, 2003, Chapter 1). However, because the additional restriction cannot be identified using the observed data, it does not impose any restriction on the tangent space, and thus the statistical model remains nonparametric.

Assumption 3 (Undersmoothing).

The level of undersmoothing satisfies the following conditions:

- (a)

- (b)

where is an appropriate measure (i.e., the Lebesgue or the counting measure).

Assumption 3 is arguably the most important of the conditions for retaining the asymptotic linearity of . Assumption 3 (a) states that when the bandwidth is fixed and the estimated coarsening mechanisms (i.e., and ) and are sufficiently undersmoothed, the generated features can approximate functions , and with rate. Lemma A.2 in the supplementary material provides the required theoretical undersmoothing conditions. Assumption 3 (b) corresponds to the case where the bandwidth is allowed to converge to zero and requires similar conditions as in part (a). Under Assumption 1, , and (and , and ) will fall into the class of càdlàg functions with finite sectional variation norm. Hence, these functions can be approximated using the highly adaptive lasso generated basis functions at rate (up to a factor). Importantly, the required rate in Assumption 3 (a) is , which is slower than the rate obtained by the highly adaptive lasso estimator. In Remark 4, we discuss that, under a certain smoothness assumption, the required rate in Assumption 3 (b) can also be slowed down to . Consequently, this key assumption is not particularly strong.

Assumption 4.

There exist constants and such that, for all , .

Assumption 4 is the margin assumption of Audibert and Tsybakov (2007), which can also be found in Tsybakov (2004). The assumption is needed to derive the rate of convergence of (Theorem 4.4). The extreme case of corresponds to a case where there is a margin around zero, that is, . As shown in the proof of Theorem 4.4, the latter leads to the fastest rate of convergence for .

Theorem 4.1.

Let be a functional parameter that is identified as where the loss function is defined in (1). Assume that the functional parameter space is contained in the set of all -variate càdlàg functions with a bound on its variation norm . Let where is the cross-validated selector of and let . Also assume that falls into the class of càdlàg functions with a bound on its variation norm . Then, under Assumptions 1 and 2, .

Theorem 4.1 generalizes the results of van der Laan (2017) to settings where the estimation of involves the estimation of nuisance parameters . The proof requires techniques to handle the additional complexities due to the presence of such nuisance parameters. The theorem relies on Assumptions 1 and 2 without the need for undersmoothing the nuisance function estimators.

Theorem 4.2.

Let be -times continuously differentiable at . Suppose the support of is uniformly bounded, i.e., for some finite constant . Let , and be highly adaptive lasso estimators of , and with -norm bounds equal to , and , respectively. Assuming that

-

(i)

the bandwidth satisfies and ,

- (ii)

we have

where is obtained using a ()-orthogonal kernel, and is the corresponding efficient influence function defined in the proof of the theorem. Moreover, assuming that , converges to a mean zero normal random variable.

Theorem 4.2 gives conditions under which the proposed kernel estimator is consistent for , and it also gives the corresponding rate of convergence. In general, this result follows if the bandwidth decreases with sample size sufficiently slowly and if the nuisance functions are estimated sufficiently well. The standard local linear kernel smoothing requires that to control the variance (Wasserman, 2006, Chapter 5). Using the entropy number of the class of càdlàg functions with finite sectional variation norm, we show that the bandwidth can converge to zero at a much faster rate (i.e., ) than in the standard results. Condition (ii) comprises the standard causal assumptions. Condition (iii) indicates the level of undersmoothing of the nuisance parameter estimators required to achieve asymptotic linearity of our estimator. Section S6 in the supplementary material provides uniform convergence results with a rate for our regimen-response curve estimator for all .

Remark 3 (The optimal bandwidth rate).

Theorem 4.2 shows that in order to eliminate the bias term, we must undersmooth the kernel such that (i.e., ). In combination with rate condition (i) in the theorem, we have . Note that for the latter constraint to hold we must have . Also, the theoretical undersmoothing conditions in Lemma A.1 require . Hence, the constraint implies that the optimal bandwidth rate is with .

Remark 4 (Assumption 3 (b)).

We can allow a slower rate of convergence of in Assumption 3 (b) (i.e., , and ) at the cost of requiring a higher level of smoothness for . Specifically, the optimal bandwidth rate would be with .

Theorem 4.3.

Let . Suppose the support of is uniformly bounded, i.e., for some finite constant . Let , and be highly adaptive lasso estimators of , and with -norm bounds equal to , and , respectively. Under Assumptions 1, 2 and 3, the estimator will be asymptotically linear. That is

where and is the corresponding efficient influence function defined in the proof of the theorem. Moreover, converges weakly as a random element of the cadlag function space endowed with the supremum norm to a Gaussian process with covariance structure implied by the covariance function .

Theorem 4.3 strengthens the results of Theorem 4.2 when the bandwidth is fixed. Specifically, it shows that for a fixed bandwidth , converges to a Gaussian process. This is an important result because it enables us to construct simultaneous confidence intervals for the entire curve and .
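Weak convergence to a Gaussian process is what makes simultaneous intervals possible. One common way to compute the simultaneous critical value in practice is a Gaussian multiplier bootstrap of the estimated influence function over a grid of points. The sketch below assumes you already have an n × m matrix `IF` of estimated influence-function values (one column per grid point) and point estimates `psi_hat`. The multiplier bootstrap is a standard technique named here as an assumption, not necessarily the procedure the authors use, and the function name is hypothetical.

```python
import numpy as np


def simultaneous_band(psi_hat, IF, alpha=0.05, B=5000, rng=None):
    """Gaussian multiplier-bootstrap band over a grid of m points.

    psi_hat: (m,) point estimates; IF: (n, m) estimated influence-function
    values, one column per grid point.
    """
    rng = np.random.default_rng() if rng is None else rng
    n = IF.shape[0]
    IFc = IF - IF.mean(axis=0)                    # center each column
    sigma = np.sqrt(np.mean(IFc**2, axis=0))      # pointwise standard deviations
    xi = rng.standard_normal((B, n))              # Gaussian multipliers
    G = xi @ IFc / np.sqrt(n)                     # B draws approximating the Gaussian process
    sup_stat = np.max(np.abs(G) / sigma, axis=1)  # sup over the grid of the standardized process
    c = np.quantile(sup_stat, 1.0 - alpha)        # simultaneous critical value
    half_width = c * sigma / np.sqrt(n)
    return psi_hat - half_width, psi_hat + half_width, c


rng = np.random.default_rng(1)
IF_demo = rng.standard_normal((500, 10))          # hypothetical influence-function values
lower, upper, c = simultaneous_band(np.zeros(10), IF_demo, rng=rng)
print(round(c, 2))  # typically larger than the pointwise value 1.96
```

With a single grid point the critical value reduces to the usual pointwise 1.96. With many grid points it grows, because the band must cover the whole curve at once.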

Characterizing the minimizer (or in general optimizer) of the estimated regimen-response curve is of interest as it forms an optimal individualized decision rule. Under the margin assumption (i.e., Assumption 4) and using a novel iterative empirical process theory, in Theorem 4.4, we derive the convergence rate of a minimizer of a nonparametrically estimated function .
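The plug-in idea behind the estimated optimal rule is to evaluate the estimated curve over candidate rule parameters and take its minimizer. The following toy sketch assumes a one-dimensional rule parameter, a finite grid, and a hypothetical estimated curve whose error is a smooth O(n^{-1/2}) perturbation of the truth (t - 0.3)^2. In this toy setting the minimizer moves by roughly z1 / (2 √n). The rate in Theorem 4.4 instead depends on the margin assumption and is not reproduced here.

```python
import numpy as np


def plug_in_minimizer(curve_hat, grid):
    """Return the grid point minimizing an estimated regimen-response curve."""
    values = np.array([curve_hat(t) for t in grid])
    return grid[int(np.argmin(values))]


def toy_curve_hat(n, rng):
    """Hypothetical estimate: true curve (t - 0.3)^2 plus a smooth O(n^{-1/2}) error."""
    z0, z1 = rng.standard_normal(2)
    return lambda t: (t - 0.3) ** 2 + (z0 + z1 * t) / np.sqrt(n)


rng = np.random.default_rng(2)
grid = np.linspace(0.0, 1.0, 1001)
for n in (100, 10_000, 1_000_000):
    print(n, plug_in_minimizer(toy_curve_hat(n, rng), grid))
```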

5 Simulation Studies

We examine the performance of our estimator through two simulation studies. Within these studies, we compare the results of our estimator against theoretical values and random forest based estimation, demonstrating the practical benefits of using undersmoothed highly adaptive lasso based estimation when the data generating mechanism is unknown.

In both scenarios and . Additionally, and . For each scenario we sample observations and apply our estimator to each sample. The results displayed below are averages over 360 replicates of each scenario and sample size pair, where we set . The latter implies that is obtained by solving a set of simpler score equations (compared with ), which may call for more undersmoothing in order to solve .

The fundamental difference between the scenarios lies in the treatment assignment mechanism. In Scenario 1, we have , giving a randomized trial scenario, whereas in Scenario 2, we have . The highly adaptive lasso estimate of requires solving fewer score equations in Scenario 1 than in Scenario 2. In fact, the only score equation to be solved in the former is . Hence, a higher level of undersmoothing is required to solve . In general, the simpler the , the more undersmoothing is needed to solve and .

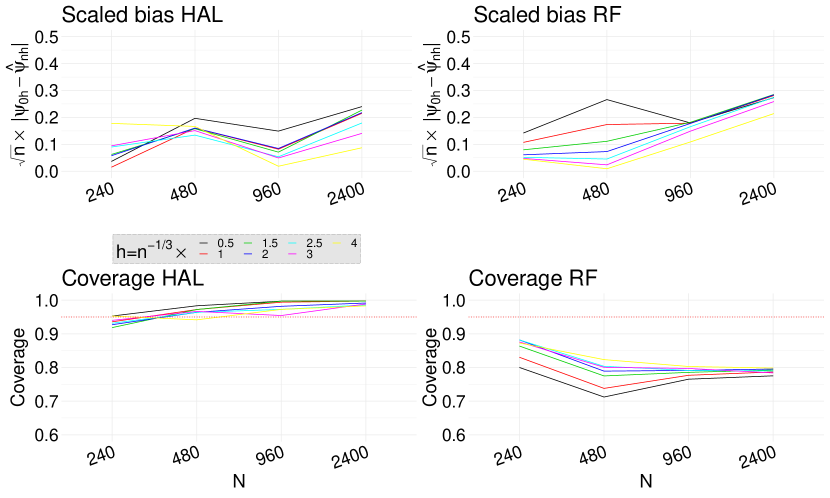

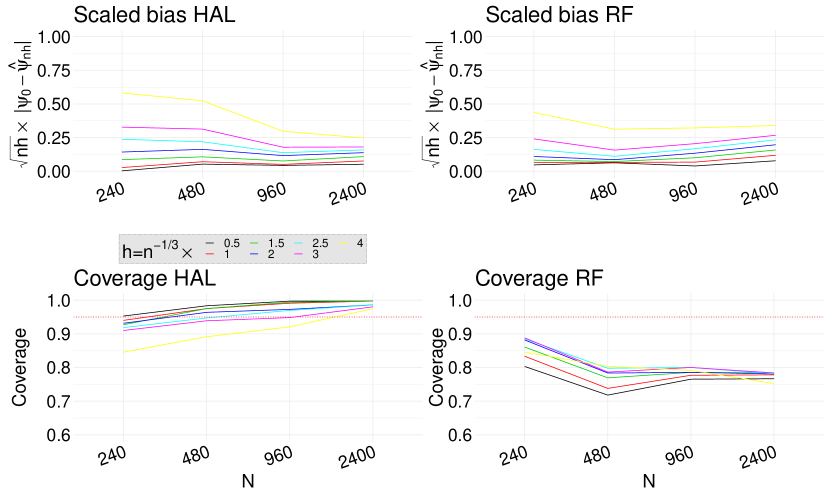

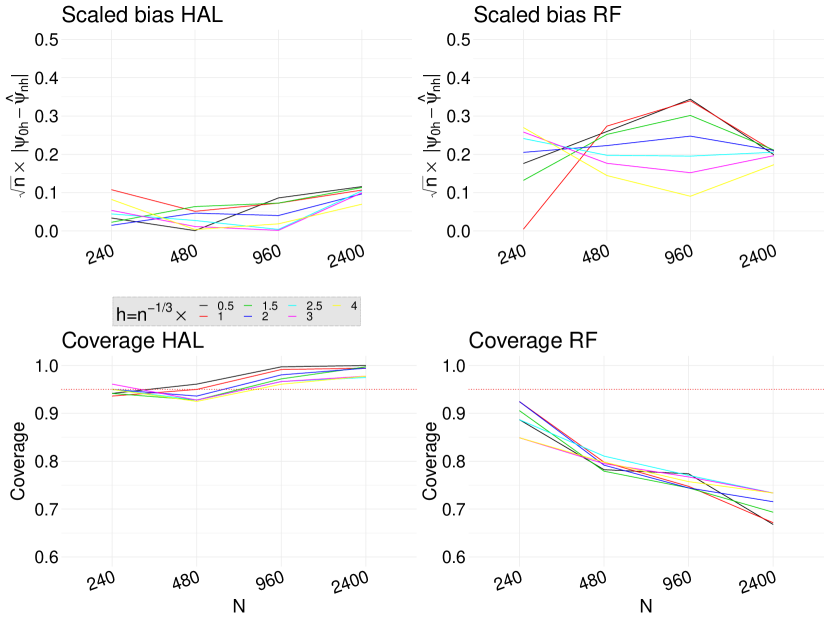

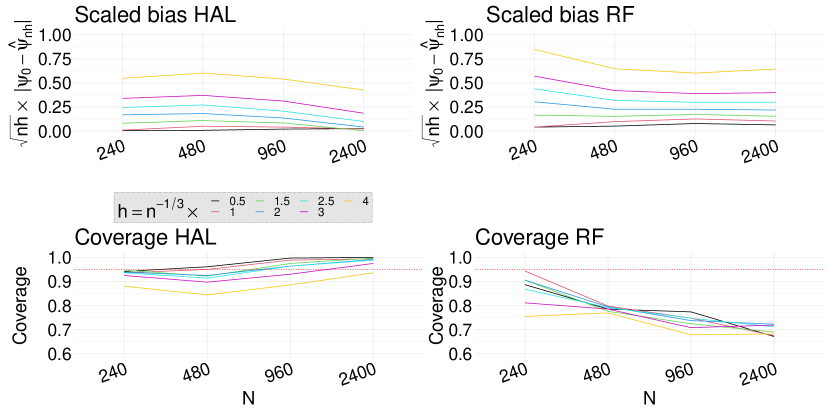

In all simulations we target the estimands and use the estimator where and we consider the bandwidths . Recall that the optimal bandwidth rate is with . In our simulation studies, and we set , leading to a rate which is faster than the rate obtained by setting . The choice of rate matters in cases where the target parameter is , and the goal is to show that the scaled biases remain constant and the coverage rates remain nominal even under the faster rate of (Figures 2 and 3). Coverage results are also given for , where for each sample is chosen from the possible bandwidths as described in Section 3.4. In all cases, the highly adaptive lasso was implemented using the R package hal9001 with quadratic basis functions for up to 2-way interactions between all predictor variables.

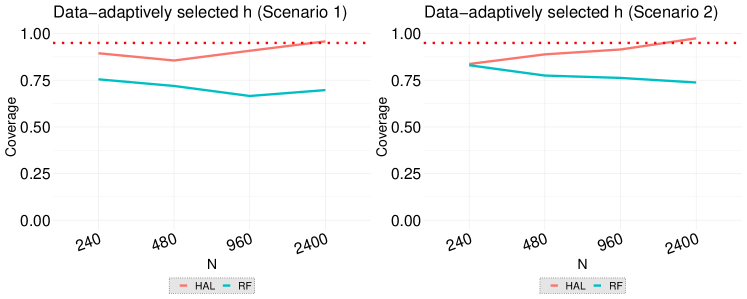

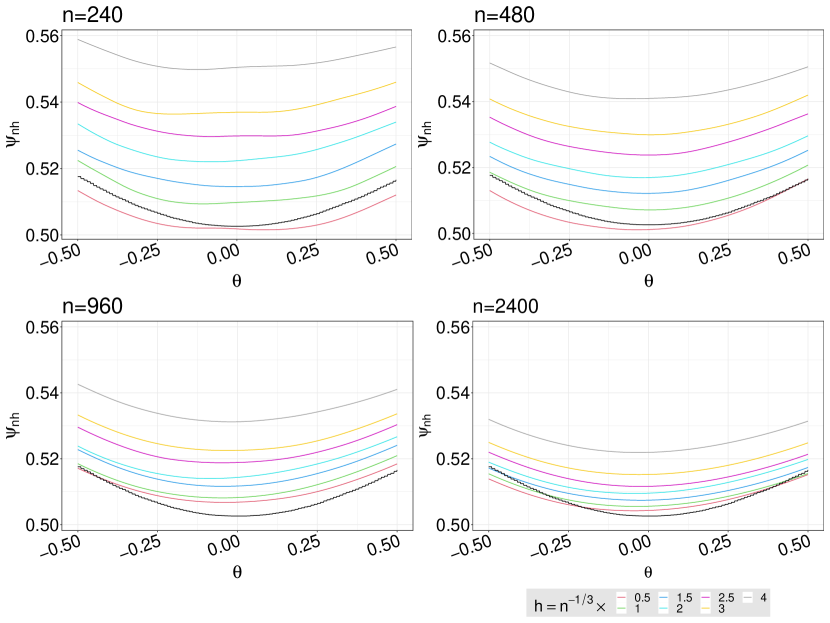

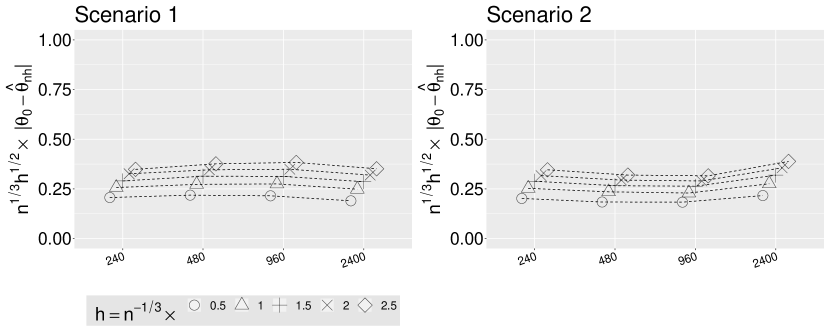

We estimate the weight functions (i.e., the propensity score and censoring indicator models) using an undersmoothed highly adaptive lasso (HAL) and a random forest (RF). In both cases, is a highly adaptive lasso estimate of obtained based on the loss function (1). Figure 1 shows the scaled bias and the coverage rate of the estimators when the target parameter is . The results confirm our theoretical result presented in Theorem 4.3. The scaled bias of the estimator with undersmoothed HAL is considerably smaller than that obtained using RF. Importantly, while the coverage rate of the undersmoothed HAL estimator remains close to the nominal rate of 95%, the coverage rate of the RF-based estimator sharply declines as the sample size increases. To assess our theoretical result of Theorem 4.2, we plot the scaled bias and the coverage rate of the estimators when the target parameter is . Figure 2 shows that the undersmoothed HAL outperforms RF-based estimators. Figures S5-S6 in the Supplementary Material show that similar results hold in Scenario 2. Finally, Figure 3 shows that our proposed data-adaptive bandwidth selector performs very well when combined with undersmoothed HAL estimators of the weight functions. Specifically, even with the smaller sample size of , our approach attains a coverage rate of nearly 95%, and as the sample size increases it quickly reaches the nominal rate. Figure 4 displays against values. As the sample size increases, the bias decreases across all bandwidth values. Figure S7 in the Supplementary Material shows the scaled bias of when the weight functions are estimated using an undersmoothed highly adaptive lasso. The plot shows that the scaled bias is relatively constant across different sample sizes, thereby confirming our result in Theorem 4.4 (by setting and ).
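The two summaries reported in the figures can be computed from replicate-level output as sketched below. The scale factor is an assumption. This section does not restate it, and a natural choice is √n for fixed-bandwidth targets and a bandwidth-dependent rate for the kernel-localized target. The replicate estimates below are synthetic draws from an unbiased normal estimator, used only to show the calculation. The choice of 360 replicates mirrors the simulation design.

```python
import numpy as np


def scaled_bias(estimates, truth, scale):
    """|mean(estimates) - truth| multiplied by a rate-dependent scale factor."""
    return scale * abs(np.mean(estimates) - truth)


def coverage(lower, upper, truth):
    """Fraction of replicate intervals that contain the true value."""
    return np.mean((lower <= truth) & (truth <= upper))


# Hypothetical replicates of an unbiased, asymptotically normal estimator.
rng = np.random.default_rng(4)
n, reps, truth = 1000, 360, 2.0
se = 1.0 / np.sqrt(n)
est = truth + se * rng.standard_normal(reps)
lo, hi = est - 1.96 * se, est + 1.96 * se
print(scaled_bias(est, truth, scale=np.sqrt(n)), coverage(lo, hi, truth))
```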

6 Concluding Remarks

6.1 Multi-stage decision making

A natural and important extension of our work is to learn dynamic treatment regimens in multi-stage problems. In settings with many decision points (e.g., mobile health), it would be beneficial to consider a stochastic class of decision rules rather than a deterministic one (Kennedy, 2019; Luckett et al., 2019).

6.2 Score based undersmoothing criteria

The practical undersmoothing criteria proposed in Section 3.5 require calculating the efficient influence function and deriving the relevant terms. The latter can be challenging and tedious, for example, in multi-stage settings (i.e., time-varying treatment) with many decision points. This motivates the derivation of score-based criteria that require only the score of the corresponding nuisance function. Such criteria would closely resemble the theoretical undersmoothing conditions listed in Lemmas A.1, A.2 and A.3 in the Appendix and Supplementary Material. For example, for the treatment assignment model, we can consider

(19)

in which is the -norm of the coefficients in the highly adaptive lasso estimator for a given , and . Note that involves the score equation for the propensity score model (i.e., ) and the inverse of the estimated propensity score. A more detailed discussion of score-based criteria is provided in Section 4.2 of Ertefaie, Hejazi and van der Laan (2022). However, the theoretical properties of these criteria and their performance in regimen-curve estimation problems are unknown and merit further research.

6.3 Near positivity violation

In general, near positivity violation can negatively impact the performance of causal estimators. In particular, it can increase the bias and variance of inverse probability weighted estimators, and undersmoothing may exacerbate the issue. One possible remedy is truncation, where individuals whose estimated weight functions are smaller than a given cutpoint are removed from the analysis (Crump et al., 2009). Other methods trim the population in an interpretable way (Traskin and Small, 2011; Fogarty et al., 2016). Another possible way to improve the performance of our approach under near positivity violation is to fit a highly adaptive lasso while enforcing the positivity constraints and , for a positive constant . One can even make data dependent (i.e., consider ). This is an interesting direction from both a methodological and a practical point of view.
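The two remedies mentioned above can be sketched in a few lines of Python. Trimming removes subjects whose estimated weight function falls below a cutpoint. Bounding clips an estimated propensity score into [γ, 1 − γ]. Clipping after fitting is a simpler stand-in for, not the same as, fitting the highly adaptive lasso under the positivity constraint. The shrinking bound `gamma_n` = 5 / (√n log n) is a hypothetical data-dependent choice used only for illustration, and all function names are hypothetical.

```python
import numpy as np


def trim_mask(g_obs_hat, cut):
    """Trimming: keep subjects whose estimated weight function is at least `cut`."""
    return g_obs_hat >= cut


def bound_propensity(g1_hat, gamma):
    """Post hoc bounding of estimated P(A = 1 | W) into [gamma, 1 - gamma]."""
    return np.clip(g1_hat, gamma, 1.0 - gamma)


def gamma_n(n):
    """Hypothetical data-dependent bound that shrinks with the sample size."""
    return 5.0 / (np.sqrt(n) * np.log(n))


g = np.array([0.001, 0.2, 0.999])
print(trim_mask(g, 0.01), bound_propensity(g, 0.01), gamma_n(1000))
```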

6.4 Variable selection

In our proposed method, we assume that the sets of variables included in the regimen response-curve function and in the decision rule (i.e., and , respectively) are specified a priori. In health research in particular, variable selection in decision making is important, as it may reduce treatment costs and the burden on patients. Moreover, the quality of the constructed rules can be severely hampered by the inclusion of many extraneous variables (Jones, Ertefaie and Strawderman, 2022). Therefore, it will be interesting to systematically investigate how to perform variable selection in the regimen response-curve function and the decision rule, and to provide theoretical guarantees.

Appendix A Theoretical undersmoothing conditions

Lemma A.1 (Theoretical undersmoothing conditions when ).

Let , and denote , the treatment and censoring mechanism under an arbitrary distribution . Let , and be the highly adaptive lasso estimators of , and using -norm bound , and , respectively. Choosing , and such that

(20)

(21)

(22)

where, in (20), is the loss function (1), and, in (21), is log-likelihood loss. Also, is a set of indices for the basis functions with nonzero coefficients in the corresponding model. Let , , and . The functions , and are such that , and are càdlàg with finite sectional variation norm. Let , and be projections of , and onto the linear span of the basis functions in , where satisfies condition (20) and (21), respectively. Then, under the optimal bandwidth rate and , we have , and .

Lemma A.2 (Theoretical undersmoothing conditions for a fixed bandwidth ).

Let , and denote , the treatment and censoring mechanism under an arbitrary distribution . Let , and be the highly adaptive lasso estimators of , and using -norm bound , and , respectively. Choosing , and such that

(23)

(24)

(25)

where, in (23), is the loss function (1), and, in (24) and (25), is log-likelihood loss. Also, is a set of indices for the basis functions with nonzero coefficients in the corresponding model. Let , , and . The functions , and are càdlàg with finite sectional variation norm. Let , and be projections of , and onto the linear span of the basis functions in , where satisfies condition (23) and (24), respectively. Then, we have , and .

Lemma A.3 (Theoretical undersmoothing conditions for uniform convergence with fixed bandwidth ).

Let , and denote , the treatment and censoring mechanism under an arbitrary distribution . Let , and be the highly adaptive lasso estimators of , and using -norm bound , and , respectively. Choosing , and such that

(26)

(27)

(28)

where, in (26), is the loss function (1), and, in (27) and (28), is log-likelihood loss. Also, is a set of indices for the basis functions with nonzero coefficients in the corresponding model. Let , , and . Here, , and are càdlàg with finite sectional variation norm, and we let , and be projections of , and onto the linear span of the basis functions in , where satisfies condition (26) and (27), respectively. Assuming , and , it follows that , , , and .

Appendix B Sectional variation norm

Suppose that the function of interest falls into a càdlàg class of functions on a p-dimensional cube with finite sectional variation norm where is the upper bound of all supports which is assumed to be finite. The p-dimensional cube can be represented as a union of lower dimensional cubes (i.e., -dimensional with ) plus the origin. That is where is over all subsets of .

Denote as the Banach space of -variate real-valued càdlàg functions on a cube . For each function , we define the th section as . By definition, varies along the variables in according to while setting other variables to zero. We define the sectional variation norm of a given as

where the sum is over all subsets of the coordinates . The term denotes the specific variation norm. To clarify the definition, let’s consider a simple case of . In this case, the variation norm of function over is defined as

where , is a partition of such that and is the class of all possible partitions. For differentiable functions , the sectional variation norm is .
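For p = 1 the definition reduces to |f(0)| plus the total variation of f on [0, τ]. For differentiable f, this equals |f(0)| + ∫|f′(x)| dx. A minimal grid approximation is sketched below. On a finite grid it is a lower bound, and it is exact for piecewise monotone f when the grid contains every turning point. The function name is hypothetical.

```python
import numpy as np


def sectional_variation_1d(fvals):
    """Approximate ||f||_v = |f(0)| + total variation of f on [0, tau].

    fvals holds f evaluated on an increasing grid whose first point is 0.
    """
    fvals = np.asarray(fvals, dtype=float)
    return abs(fvals[0]) + np.sum(np.abs(np.diff(fvals)))


x = np.linspace(0.0, 1.0, 10_001)
print(sectional_variation_1d(np.sin(2 * np.pi * x)))   # |f(0)| = 0, variation 1 + 2 + 1
print(sectional_variation_1d(x**2 + 1.0))              # |f(0)| = 1, plus integral of |2x|
```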

For , the variation norm of function over is defined as

where , is a partition of such that , and is the class of all possible partitions.
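For p = 2, the sum over subsets has three sections. The first two are f(·, 0) and f(0, ·), and the third is f itself, whose variation is the Vitali variation: the sum of absolute rectangle increments f(x′, y′) − f(x′, y) − f(x, y′) + f(x, y). The grid approximation below follows that decomposition. It assumes the grid starts at the origin, and the function name is hypothetical.

```python
import numpy as np


def sectional_variation_2d(F):
    """Approximate the sectional variation norm of f on [0, tau]^2.

    F[i, j] = f(x_i, y_j) on a rectangular grid with x_0 = y_0 = 0. The norm is
    |f(0, 0)| + variation of the section f(., 0) + variation of the section
    f(0, .) + Vitali variation of f (sum of absolute second differences).
    """
    F = np.asarray(F, dtype=float)
    origin = abs(F[0, 0])
    sec_x = np.sum(np.abs(np.diff(F[:, 0])))                      # section varying x only
    sec_y = np.sum(np.abs(np.diff(F[0, :])))                      # section varying y only
    mixed = np.sum(np.abs(np.diff(np.diff(F, axis=0), axis=1)))   # rectangle increments
    return origin + sec_x + sec_y + mixed


g = np.linspace(0.0, 1.0, 101)
X1, X2 = np.meshgrid(g, g, indexing="ij")
print(sectional_variation_2d(X1 * X2))   # sections vanish; mixed part integrates to 1
print(sectional_variation_2d(X1 + X2))   # no interaction; each section contributes 1
```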

In practice, we choose the support points to be the observed values of denoted as . Hence the indicator basis functions will be . For simplicity, let us consider ; then, in a hypothetical example, the design matrix based on indicator basis functions would be

| | | | | | |
|---|---|---|---|---|---|
| 1.45 | 1 | 1 | 0 | 1 | |
| 0.84 | 0 | 1 | 0 | 1 | |
| 1.0 | 1 | 1 | 1 | 1 | |
| 0.16 | 0 | 0 | 0 | 1 | |
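The indicator basis can be generated mechanically. The sketch below builds a zero-order design matrix with knots at every observed point and one block of columns for each nonempty subset of coordinates. For one-dimensional data, entry (i, j) is I(X_i ≥ X_j). The data are hypothetical, and the function name is illustrative. The simulations in Section 5 used quadratic basis functions through hal9001, which this sketch does not reproduce.

```python
import itertools

import numpy as np


def hal_indicator_basis(X):
    """Zero-order indicator design matrix with knots at the observed points.

    Column (s, j) is prod_{k in s} I(x_k >= X[j, k]) for every nonempty subset s
    of the p coordinates and every observation j, giving n * (2^p - 1) columns.
    """
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]
    n, p = X.shape
    blocks, labels = [], []
    for size in range(1, p + 1):
        for s in itertools.combinations(range(p), size):
            block = np.ones((n, n), dtype=int)
            for k in s:
                # entry [i, j] is I(X[i, k] >= X[j, k])
                block *= (X[:, [k]] >= X[:, k][None, :]).astype(int)
            blocks.append(block)
            labels.extend((s, j) for j in range(n))
    return np.hstack(blocks), labels


H1, _ = hal_indicator_basis([0.3, 0.9, 0.5])
print(H1)

rng = np.random.default_rng(0)
H2, labels2 = hal_indicator_basis(rng.uniform(size=(3, 2)))
print(H2.shape, labels2[:4])
```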

The first author was supported by National Institute on Drug Abuse under award number R01DA048764, and National Institute of Neurological Disorders and Stroke under award numbers R33NS120240 and R61NS12024. The third author was supported in part by NIH Grant R01AI074345.

References

- Athey, S. and Wager, S. (2021). Policy learning with observational data. Econometrica 89, 133–161.

- Audibert, J.-Y. and Tsybakov, A. B. (2007). Fast learning rates for plug-in classifiers. The Annals of Statistics 35, 608–633.

- Benkeser, D. and van der Laan, M. (2016). The highly adaptive lasso estimator. In Proceedings of the… International Conference on Data Science and Advanced Analytics. IEEE International Conference on Data Science and Advanced Analytics 2016, 689. NIH Public Access.

- Benkeser, D., Carone, M., van der Laan, M. J. and Gilbert, P. B. (2017). Doubly robust nonparametric inference on the average treatment effect. Biometrika 104, 863–880.

- Bibaut, A. F. and van der Laan, M. J. (2019). Fast rates for empirical risk minimization over cadlag functions with bounded sectional variation norm. arXiv preprint arXiv:1907.09244.

- Bickel, P. J., Klaassen, C. A., Ritov, Y. and Wellner, J. A. (1998). Efficient and Adaptive Estimation for Semiparametric Models 2. Springer, New York.

- Chernozhukov, V., Chetverikov, D., Demirer, M., Duflo, E., Hansen, C. and Newey, W. (2017). Double/debiased/Neyman machine learning of treatment effects. American Economic Review 107, 261–265.

- Crump, R. K., Hotz, V. J., Imbens, G. W. and Mitnik, O. A. (2009). Dealing with limited overlap in estimation of average treatment effects. Biometrika 96, 187–199.

- Dudík, M., Langford, J. and Li, L. (2011). Doubly robust policy evaluation and learning. arXiv preprint arXiv:1103.4601.

- Ertefaie, A., Hejazi, N. S. and van der Laan, M. J. (2022). Nonparametric inverse probability weighted estimators based on the highly adaptive lasso. Biometrics.

- Ertefaie, A., Wu, T., Lynch, K. G. and Nahum-Shani, I. (2015). Identifying a set that contains the best dynamic treatment regimes. Biostatistics 17, 135–148.

- Fogarty, C. B., Mikkelsen, M. E., Gaieski, D. F. and Small, D. S. (2016). Discrete optimization for interpretable study populations and randomization inference in an observational study of severe sepsis mortality. Journal of the American Statistical Association 111, 447–458.

- Gill, R. D., van der Laan, M. J. and Wellner, J. A. (1993). Inefficient Estimators of the Bivariate Survival Function for Three Models. Rijksuniversiteit Utrecht, Mathematisch Instituut.

- Joffe, M. M., Ten Have, T. R., Feldman, H. I. and Kimmel, S. E. (2004). Model selection, confounder control, and marginal structural models: review and new applications. The American Statistician 58, 272–279.

- Jones, J., Ertefaie, A. and Strawderman, R. L. (2022). Valid post-selection inference in robust Q-learning. arXiv preprint arXiv:2208.03233.

- Kallus, N. (2018). Balanced policy evaluation and learning. Advances in Neural Information Processing Systems 31.

- Kennedy, E. H. (2019). Nonparametric causal effects based on incremental propensity score interventions. Journal of the American Statistical Association 114, 645–656.

- Klaassen, C. A. J. (1987). Consistent estimation of the influence function of locally asymptotically linear estimators. The Annals of Statistics 15, 1548–1562.

- Liu, Y., Wang, Y., Kosorok, M. R., Zhao, Y. and Zeng, D. (2018). Augmented outcome-weighted learning for estimating optimal dynamic treatment regimens. Statistics in Medicine 37, 3776–3788.

- Luckett, D. J., Laber, E. B., Kahkoska, A. R., Maahs, D. M., Mayer-Davis, E. and Kosorok, M. R. (2019). Estimating dynamic treatment regimes in mobile health using V-learning. Journal of the American Statistical Association.

- Mukherjee, R., Newey, W. K. and Robins, J. M. (2017). Semiparametric efficient empirical higher order influence function estimators. arXiv preprint arXiv:1705.07577.

- Murphy, S. A., van der Laan, M. J., Robins, J. M. and Conduct Problems Prevention Research Group (2001). Marginal mean models for dynamic regimes. Journal of the American Statistical Association 96, 1410–1423.

- Neugebauer, R. and van der Laan, M. (2007). Nonparametric causal effects based on marginal structural models. Journal of Statistical Planning and Inference 137, 419–434.

- Nie, X. and Wager, S. (2021). Quasi-oracle estimation of heterogeneous treatment effects. Biometrika 108, 299–319.

- Orellana, L., Rotnitzky, A. and Robins, J. M. (2010). Dynamic regime marginal structural mean models for estimation of optimal dynamic treatment regimes, part I: main content. The International Journal of Biostatistics 6.

- Petersen, M., Schwab, J., Gruber, S., Blaser, N., Schomaker, M. and van der Laan, M. (2014). Targeted maximum likelihood estimation for dynamic and static longitudinal marginal structural working models. Journal of Causal Inference 2, 147–185.

- Robins, J. M. (1998). Marginal structural models. In 1997 Proceedings of the American Statistical Association, Section on Bayesian Statistical Science, 1–10.

- Robins, J. M. (2004). Optimal structural nested models for optimal sequential decisions. In Proceedings of the Second Seattle Symposium in Biostatistics, 189–326. Springer.

- Robins, J. M., Hernan, M. A. and Brumback, B. (2000). Marginal structural models and causal inference in epidemiology.

- Robins, J. M., Orellana, L. and Rotnitzky, A. (2008). Estimation and extrapolation of optimal treatment and testing strategies. Statistics in Medicine 27, 4678–4721.

- Robins, J., Li, L., Tchetgen, E., van der Vaart, A. et al. (2008). Higher order influence functions and minimax estimation of nonlinear functionals. Probability and Statistics: Essays in Honor of David A. Freedman 2, 335–421.

- Robins, J. M., Li, L., Mukherjee, R., Tchetgen, E. T. and van der Vaart, A. (2017). Minimax estimation of a functional on a structured high-dimensional model. The Annals of Statistics 45, 1951–1987.

- Rosenblum, M. (2011). Marginal structural models. In Targeted Learning, 145–160. Springer.

- Rubin, D. B. and van der Laan, M. J. (2012). Statistical issues and limitations in personalized medicine research with clinical trials. The International Journal of Biostatistics 8, 18.

- Shinozaki, T. and Suzuki, E. (2020). Understanding marginal structural models for time-varying exposures: pitfalls and tips. Journal of Epidemiology JE20200226.

- Swaminathan, A. and Joachims, T. (2015). Batch learning from logged bandit feedback through counterfactual risk minimization. The Journal of Machine Learning Research 16, 1731–1755.

- Traskin, M. and Small, D. S. (2011). Defining the study population for an observational study to ensure sufficient overlap: a tree approach. Statistics in Biosciences 3, 94–118.

- Tsybakov, A. B. (2004). Optimal aggregation of classifiers in statistical learning. The Annals of Statistics 32, 135–166.

- van der Vaart, A. W. and Wellner, J. A. (1996). Weak Convergence and Empirical Processes: With Applications to Statistics. Springer.

- van der Laan, M. J. (2006). Causal effect models for intention to treat and realistic individualized treatment rules.

- van der Laan, M. (2017). A generally efficient targeted minimum loss based estimator based on the highly adaptive lasso. The International Journal of Biostatistics 13.

- van der Laan, M. J. and Bibaut, A. F. (2017). Uniform consistency of the highly adaptive lasso estimator of infinite dimensional parameters. arXiv preprint arXiv:1709.06256.

- van der Laan, M. J., Bibaut, A. and Luedtke, A. R. (2018). CV-TMLE for nonpathwise differentiable target parameters. In Targeted Learning in Data Science, 455–481. Springer.

- van der Laan, M. J. and Robins, J. M. (2003). Unified Methods for Censored Longitudinal Data and Causality. Springer Science & Business Media.

- van der Laan, M. J. and Luedtke, A. R. (2014). Targeted learning of an optimal dynamic treatment, and statistical inference for its mean outcome. U.C. Berkeley Division of Biostatistics Working Paper Series.

- van der Laan, M. J. and Petersen, M. L. (2007). Statistical learning of origin-specific statically optimal individualized treatment rules. The International Journal of Biostatistics 3.

- van der Laan, M. J. and Rose, S. (2018). Targeted Learning in Data Science: Causal Inference for Complex Longitudinal Studies. Springer.

- Wahed, A. S. and Tsiatis, A. A. (2004). Optimal estimator for the survival distribution and related quantities for treatment policies in two-stage randomization designs in clinical trials. Biometrics 60, 124–133.

- Wasserman, L. (2006). All of Nonparametric Statistics. Springer Science & Business Media.

- Zhang, B., Tsiatis, A. A., Laber, E. B. and Davidian, M. (2012). A robust method for estimating optimal treatment regimes. Biometrics 68, 1010–1018.

- Zhao, Y., Zeng, D., Rush, A. J. and Kosorok, M. R. (2012). Estimating individualized treatment rules using outcome weighted learning. Journal of the American Statistical Association 107, 1106–1118.

- Zhao, Y.-Q., Zeng, D., Laber, E. B. and Kosorok, M. R. (2015). New statistical learning methods for estimating optimal dynamic treatment regimes. Journal of the American Statistical Association 110, 583–598.

- Zhao, Q., Small, D. S., Ertefaie, A. et al. (2022). Selective inference for effect modification via the lasso. Journal of the Royal Statistical Society Series B 84, 382–413.

- Zheng, W. and van der Laan, M. J. (2011). Cross-validated targeted minimum-loss-based estimation. In Targeted Learning, 459–474. Springer.

Supplement to “Nonparametric estimation of a covariate-adjusted counterfactual treatment regimen response curve”

Appendix S1 Proof of Lemmas A.1, A.2 and A.3

Proof of Lemma A.1.

We first prove the result for the treatment indicator and . Let and , where is a càdlàg function with a finite sectional variation norm, and let be an approximation of using the basis functions that satisfy condition (21).
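The basis functions referred to here are, for the zero-order highly adaptive lasso, products of coordinate indicators. The sketch below builds that design matrix in numpy, assuming knots placed at the observed covariate values and a zero-order (indicator) basis; the names `hal_basis` and `sectional_variation_norm` are hypothetical and do not come from any HAL software. It also records the fact these proofs rely on: for a fit f = β₀ + Σ_k β_k φ_k with distinct basis functions, the sectional variation norm is |β₀| + Σ_k |β_k|.

```python
import itertools

import numpy as np


def hal_basis(X, knots):
    """Zero-order HAL basis phi_{s,u}(x) = prod_{j in s} 1{x_j >= u_j}.

    X: (n, d) evaluation points; knots: (m, d) knot points (assumed here to be
    the observed covariates). Returns an (n, m * (2**d - 1)) 0/1 matrix with
    one column per (non-empty section s, knot u) pair.
    """
    X = np.asarray(X, dtype=float)
    knots = np.asarray(knots, dtype=float)
    d = X.shape[1]
    cols = []
    for size in range(1, d + 1):
        for s in itertools.combinations(range(d), size):
            s = list(s)
            # (n, m) indicator that x_j >= u_j for every coordinate j in s
            ind = np.all(X[:, None, s] >= knots[None, :, s], axis=2)
            cols.append(ind.astype(float))
    return np.hstack(cols)


def sectional_variation_norm(beta, intercept=0.0):
    """Sectional variation norm of intercept + sum_k beta_k phi_k, assuming
    the basis columns are distinct: the L1 norm of all coefficients."""
    return abs(intercept) + float(np.sum(np.abs(beta)))
```

Bounding the sectional variation norm is therefore the same as bounding the L1 norm of the lasso coefficients, which is why the lasso penalty controls the complexity of the fit.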

The last equality follows from and Lemma S1 as

Moreover, by the convergence rate of the highly adaptive lasso estimate (i.e., ),

Therefore, the proof is complete if we show

Considering the optimal bandwidth rate of , the first term satisfies the desired rate when which implies . The inequality is satisfied for . We can similarly show that for . Hence, for ,

In the following we show that is also of the order .

For simplicity of notation, let . Define a set of score functions generated by a path for a uniformly bounded vector as

When is the log-likelihood loss function, . Thus, and . Let . For small enough ,

Hence, for any satisfying , we have .
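For a binary treatment and the log-likelihood loss, the score obtained by perturbing the coefficient of basis function φ_j is P_n φ_j (A − g). As a sanity check, the sketch below fits an unpenalized logistic regression on a small basis by Newton-Raphson and confirms that every such empirical score vanishes. A lasso fit instead sets these scores to ±λ on the active set (up to the scaling convention for λ), which is why undersmoothing, i.e. a small λ, is needed. The helper names are hypothetical and only numpy is used.

```python
import numpy as np


def logistic_mle(Phi, A, n_iter=50, tol=1e-12):
    """Unpenalized logistic regression of A on the columns of Phi (Newton-Raphson)."""
    beta = np.zeros(Phi.shape[1])
    for _ in range(n_iter):
        g = 1.0 / (1.0 + np.exp(-Phi @ beta))        # fitted P(A = 1 | W)
        score = Phi.T @ (A - g)                       # gradient of the log-likelihood
        info = Phi.T @ (Phi * (g * (1.0 - g))[:, None])  # observed information
        step = np.linalg.solve(info, score)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return beta


def empirical_scores(Phi, A, beta):
    """P_n phi_j (A - g) for each basis function phi_j."""
    g = 1.0 / (1.0 + np.exp(-Phi @ beta))
    return Phi.T @ (A - g) / len(A)
```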

Because , there exists such that . However, for this particular choice of , may not be zero. Now, define such that for ; is defined such that

| (S29) |

That is, matches everywhere but for a single point , where it is forced to take a value such that . As a result, for such a choice of , by definition. Below, we show that which then implies that . We note that the choice of is inconsequential.
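The device of changing the direction at a single index can be checked numerically. The sketch assumes that the path has the coordinatewise form β_ε = (1 + εh)β and that the constraint imposed on h is Σ_j h_j |β_j| = 0, which is the usual way such arguments keep the L1 (sectional variation) norm fixed; both are assumptions about the elided displays, and `l1_preserving_direction` is a hypothetical helper. For |ε| max_j |h_j| < 1 we have Σ_j |(1 + εh_j)β_j| = ‖β‖₁ + ε Σ_j h_j |β_j|, so the constraint leaves the norm unchanged along the path.

```python
import numpy as np


def l1_preserving_direction(beta, h, j_star):
    """Return h_tilde equal to h except at j_star, where it is chosen so that
    sum_j h_tilde_j * |beta_j| = 0. Requires beta[j_star] != 0."""
    beta = np.asarray(beta, dtype=float)
    h_tilde = np.array(h, dtype=float)          # copy, so h is not modified
    if beta[j_star] == 0:
        raise ValueError("beta[j_star] must be nonzero")
    others = np.arange(len(beta)) != j_star
    h_tilde[j_star] = -np.sum(h_tilde[others] * np.abs(beta[others])) / abs(beta[j_star])
    return h_tilde
```

As in the text, the choice of the index j_star is inconsequential as long as its coefficient is nonzero.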

where the third equality follows from equation (S29) above with

Moreover,

Assuming has finite sectional variation norm, the norm of the coefficients approximating will be finite, which implies that is finite, and thus . Then,

where the last equality follows from the assumption that for

being the log-likelihood loss. As , it follows that and thus .

We just showed that . Similarly, we can show that and , which completes the proof.

∎

Proof of Lemma A.2.

The proof follows from that of Lemma A.1 with the bandwidth replaced by a constant (e.g., one). ∎

Proof of Lemma A.3.

We first prove the result for the treatment indicator and .

The last equality follows from and Lemma S1 as

Moreover, by the convergence rate of the highly adaptive lasso estimate (i.e., ),

Therefore, the proof is complete if we show

In the following we show that is also of the order .

For simplicity of notation, let . Define a set of score functions generated by a path for a uniformly bounded vector as

When is the log-likelihood loss function, . Thus, and . Let . For small enough ,

Hence, for any satisfying , we have .

Because , there exists such that . However, for this particular choice of , may not be zero. Now, define such that for ; is defined such that

| (S30) |

That is, matches everywhere but for a single point , where it is forced to take a value such that . As a result, for such a choice of , by definition. Below, we show that which then implies that . We note that the choice of is inconsequential.

where the third equality follows from equation (S30) above with

Moreover,

Assuming has finite sectional variation norm, the norm of the coefficients approximating will be finite, which implies that is finite, and thus . Hence,

where the last equality follows from the assumption that for

being the log-likelihood loss. As , it follows that .

We just showed that . Similarly, we can show that and , which completes the proof.

∎

Appendix S2 Other Lemmas

Lemma S1 (Lemma 3.4.2 in van der Vaart and Wellner (1996)).

For a class of functions with envelope bounded from above by , we have

This implies that is bounded in probability by the right-hand side.
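To see which part of this bound dominates, the sketch below evaluates the two summands of the right-hand side of Lemma 3.4.2 in van der Vaart and Wellner (1996), J(δ)(1 + J(δ)M/(δ²√n)), up to a universal constant, and locates the δ at which they are equal. It assumes the HAL-type entropy integral J(δ) = δ^{1/2} log(1/δ)^{d−1} with all constants set to one; the function names are hypothetical. For d = 1 the crossing point is exactly δ = n^{−1/3}, the kind of switch between regimes invoked in the proof of Theorem 4.4.

```python
import math


def entropy_integral(delta, d):
    """Assumed entropy integral J(delta) = sqrt(delta) * log(1/delta)**(d - 1),
    constants set to one, for 0 < delta < 1."""
    return math.sqrt(delta) * math.log(1.0 / delta) ** (d - 1)


def lemma_s1_terms(delta, n, M=1.0, d=1):
    """The two summands of J * (1 + J * M / (delta**2 * sqrt(n)))."""
    J = entropy_integral(delta, d)
    return J, J ** 2 * M / (delta ** 2 * math.sqrt(n))


def switch_point(n, M=1.0, d=1, lo=1e-12, hi=0.999, iters=200):
    """Solve J(delta) * M / (delta**2 * sqrt(n)) = 1 by bisection on the log
    scale; the left-hand side is decreasing in delta on (0, 1)."""
    def ratio_minus_one(delta):
        return entropy_integral(delta, d) * M / (delta ** 2 * math.sqrt(n)) - 1.0

    for _ in range(iters):
        mid = math.sqrt(lo * hi)
        if ratio_minus_one(mid) > 0:
            lo = mid        # second summand still dominates: move right
        else:
            hi = mid
    return math.sqrt(lo * hi)
```

For δ above the switch point the first summand J(δ) dominates; below it, the second summand does.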

Lemma S2 (Proposition 2 in Bibaut and van der Laan (2019)).

Let and . Denote by the class of càdlàg functions on with sectional variation norm smaller than . Suppose that is such that, for all , for all real-valued functions on , , for some , and where is the Lebesgue measure. Then, for any , the bracketing entropy integral of with respect to the norm is bounded by

where is a constant that depends only on and .
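The entropy integral in Lemma S2 can also be evaluated numerically. The sketch assumes a bracketing entropy of the form log N_[](ε) = ε^{−1} log(1/ε)^{2(d−1)}, with the variation bound and all constants set to one and the additive 1 inside the square root ignored (assumptions for illustration), and compares ∫_0^δ √(log N(ε)) dε with its leading term 2δ^{1/2} log(1/δ)^{d−1}. For d = 2 the integral equals 2δ^{1/2} log(1/δ) + 4δ^{1/2}, so the ratio to the leading term is 1 + 2/log(1/δ), which tends to one only slowly as δ → 0.

```python
import numpy as np


def entropy_integral_numeric(delta, d, N=400_000):
    """Midpoint-rule value of int_0^delta sqrt(log N(eps)) d eps under the
    assumed entropy log N(eps) = eps**-1 * log(1/eps)**(2*(d-1)).

    The substitution eps = t**2 removes the eps**-1/2 singularity: the
    integrand becomes 2 * (2*log(1/t))**(d-1) on (0, sqrt(delta)).
    """
    edges = np.linspace(0.0, np.sqrt(delta), N + 1)
    t = 0.5 * (edges[1:] + edges[:-1])                 # midpoints, all > 0
    integrand = 2.0 * (2.0 * np.log(1.0 / t)) ** (d - 1)
    return float(np.sum(integrand) * (edges[1] - edges[0]))


def leading_term(delta, d):
    """Leading term 2 * sqrt(delta) * log(1/delta)**(d - 1)."""
    return 2.0 * np.sqrt(delta) * np.log(1.0 / delta) ** (d - 1)
```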

Lemma S3.

Consider a sequence of processes where

Assume the following:

- (i) The function belongs to a càdlàg class of functions with finite sectional variation norm.

- (ii) The function is the highly adaptive lasso estimate of .

- (iii) The bandwidth satisfies and .

Then .

Proof.

Let . Define . The function belongs to a class of functions . We have

where the last equality follows from the kernel and the highly adaptive lasso rate of convergence. Using the result of Lemma S2, . Therefore,

Thus, to obtain the desired rate we must have , which implies that must converge to zero more slowly than (i.e., ). Note that under continuity alone, with no further smoothness assumptions, the bandwidth rate is , which is much slower than . Hence, under continuity, for every level of smoothness the optimal choice of satisfies this rate (i.e., no additional condition is imposed).

∎

Lemma S4.

Let the function be the highly adaptive lasso estimate of , where belongs to a càdlàg class of functions with finite sectional variation norm. Then

Proof.

We have

The last equality follows because by the highly adaptive lasso rate of convergence. ∎

Lemma S5.

Let where is the weighted squared-loss function defined in (1), and let be the set of càdlàg functions with variation norm smaller than . Then, uniformly over , we have

where is a positive finite constant.

Lemma S6.

Suppose and . Under Assumption 4, .

Proof.

We assume that and are uniformly bounded by some constants and . Moreover, by the strong positivity assumption, for all and . By definition, the norm can be written as

The fourth inequality follows from Assumption 4, the Cauchy-Schwarz inequality and . The latter holds because when (a) and ; or (b) and . Cases (a) and (b) imply that and , respectively, and thus . The fifth inequality follows from Markov's inequality. The upper bound is minimized when for a constant that depends on and . Hence,

| (S31) |

Hence, when there is a margin around zero, that is, , the right-hand side of (S31) reduces to . ∎
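The case analysis in this proof, for when the estimated and the true rule disagree, can be checked by simulation for a concrete rule class. The sketch assumes, for illustration only, linear threshold rules d_θ(v) = 1{vᵀθ > 0}; the names `rule`, `theta0` and `theta` are hypothetical. It verifies that whenever the two rules disagree, |vᵀθ₀| ≤ |vᵀ(θ − θ₀)| ≤ ‖v‖ ‖θ − θ₀‖, so the disagreement probability is controlled by the probability mass of vᵀθ₀ near zero, which is what a margin condition bounds.

```python
import numpy as np


def rule(V, theta):
    """Hypothetical linear decision rule d_theta(v) = 1{v' theta > 0}."""
    return (V @ theta > 0).astype(int)


rng = np.random.default_rng(1)
V = rng.normal(size=(10_000, 3))
theta0 = np.array([1.0, -0.5, 0.25])
theta = theta0 + np.array([0.05, 0.02, -0.03])

disagree = rule(V, theta) != rule(V, theta0)
# On disagreement, v'theta0 and v'theta have opposite signs (or v'theta0 = 0),
# so |v'theta0| <= |v'(theta - theta0)| <= ||v|| * ||theta - theta0||.
bound_event = np.abs(V @ theta0) <= np.linalg.norm(V, axis=1) * np.linalg.norm(theta - theta0)
print(disagree.mean(), bound_event.mean())  # disagreement rate <= bound-event rate
```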

Appendix S3 Proof of Theorem 4.1: Rate of convergence of HAL with unknown nuisance parameters

Define . Then using the definition of the loss-based dissimilarity, we have

Moreover, using Lemma S5 we have

where is a positive finite constant. The last equality follows from the convergence rate of the HAL minimum-loss-based estimator of . Letting and , the above equality is equivalent to

which implies that .

Appendix S4 Proof of Theorem 4.2: Asymptotic linearity of the regimen-response curve estimator

We represent the difference between and as

Moreover, we can write

| (S32) | ||||

S4.1 Convergence rate of

We consider a ()-orthogonal kernel with bandwidth centered at . Then, following Lemma 25.1 in van der Laan and Rose (2018), as ,

where
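A higher-order kernel of this kind can be checked numerically. Assuming the convention that a J-orthogonal kernel satisfies ∫ s^j K(s) ds = 0 for j = 1, ..., J, the fourth-order Gaussian kernel K(u) = (3 − u²)φ(u)/2, with φ the standard normal density, is 3-orthogonal: it integrates to one, its moments of order 1 to 3 vanish, and its fourth moment is −3. With such a kernel, smoothing a function with four bounded derivatives incurs a bias of order h⁴. This particular kernel is an illustrative choice, not necessarily the one used in the paper.

```python
import numpy as np


def gaussian_kernel_order4(u):
    """Fourth-order Gaussian kernel K(u) = (3 - u**2) / 2 * phi(u)."""
    return 0.5 * (3.0 - u ** 2) * np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)


def trapezoid(y, x):
    """Composite trapezoidal rule (kept explicit to avoid NumPy version differences)."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))


u = np.linspace(-12.0, 12.0, 200_001)       # tails beyond |u| = 12 are negligible
K = gaussian_kernel_order4(u)
moments = [trapezoid(u ** k * K, u) for k in range(5)]
# moments is approximately [1, 0, 0, 0, -3]
```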

S4.2 Asymptotic behaviour of the first term on the RHS of (S32)

Because ,

The second and third equalities follow because , and thus, those are of order . In the last equality we have also used the consistency of .

S4.3 Asymptotic negligibility of the second term on the RHS of (S32)

We will show that the second-order term . Let . Define and . The function belongs to a class of functions . We have

The rest follows from Lemma S3. Therefore, when the bandwidth satisfies and .

S4.4 Asymptotic behaviour of the fourth term on the RHS of (S32)

The last term on the RHS can be represented as

Using Lemma S3 and techniques similar to those in Section S4.3, we have .

Let . Then

Using the rate of convergence of the highly adaptive lasso estimator . Moreover,

Hence, if we undersmooth and such that and , then
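One way to operationalize such an undersmoothing requirement in finite samples is to check that the empirical scores of the basis functions with nonzero coefficients are small relative to σ/(√n log n). The threshold σ/(√n log n), the use of the residual standard deviation for σ, and the helper `undersmoothing_check` are assumptions for illustration, not necessarily the criterion proposed in the paper. An unpenalized least-squares fit solves every score equation exactly, so it passes trivially; with a lasso fit, one would decrease the penalty until the check first passes.

```python
import numpy as np


def undersmoothing_check(Phi, y, fitted, beta):
    """Hypothetical finite-sample check: is max_j |P_n phi_j (y - fitted)|,
    over basis functions with nonzero coefficients, at most
    sigma / (sqrt(n) * log(n))? Returns (max score, threshold, passed)."""
    n = len(y)
    resid = y - fitted
    threshold = float(np.std(resid) / (np.sqrt(n) * np.log(n)))
    active = np.flatnonzero(beta != 0)
    if active.size == 0:
        return 0.0, threshold, True
    scores = np.abs(Phi[:, active].T @ resid) / n
    max_score = float(scores.max())
    return max_score, threshold, max_score <= threshold
```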

S4.5 The influence function.

Gathering all the terms, we have,

Thus, asymptotic normality follows from choosing the bandwidth such that , which implies . Hence, converges to a mean-zero normal random variable when (i.e., when converges to zero faster than ).
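A generic version of this inference step is sketched below: a kernel-localized inverse probability weighted mean of the outcome among subjects whose observed treatment agrees with a rule d, together with a Wald interval built from the linearization of the ratio estimator. It assumes a known propensity of the observed treatment and an Epanechnikov kernel, and it is not the paper's estimator, which also involves estimated nuisance functions; all names are hypothetical. Because the linearized terms carry the 1/h kernel scaling, their standard deviation divided by √n automatically has the 1/√(nh) order of a local estimator.

```python
import numpy as np


def epanechnikov(u):
    return 0.75 * (1.0 - u ** 2) * (np.abs(u) <= 1.0)


def local_ipw(v0, h, V, A, Y, d_of_V, g_obs):
    """Hypothetical kernel-localized IPW estimate of E[Y^d | V = v0] with a
    95% Wald interval. g_obs is P(A = A_i | W_i), assumed known here."""
    k = epanechnikov((V - v0) / h) / h
    w = (A == d_of_V) / g_obs                  # inverse probability weights
    psi = np.sum(k * w * Y) / np.sum(k)
    infl = k * (w * Y - psi) / np.mean(k)      # linearized terms of the ratio
    se = np.std(infl) / np.sqrt(len(Y))
    return psi, (psi - 1.96 * se, psi + 1.96 * se)
```

In a randomized simulation with treatment probability 0.5, rule d ≡ 1 and E[Y¹ | V = v] = 1 + 2v, the estimate at v0 = 0.5 should be close to 2.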

S4.6 The optimal bandwidth rate.

Appendix S5 Proof of Theorem 4.3: Asymptotic linearity of the regimen-response curve estimator for a fixed bandwidth

| (S33) | ||||

S5.1 Asymptotic behaviour of the first term on the RHS of (S33)

S5.2 Asymptotic negligibility of the second term on the RHS of (S33)

We show that . Let and . Then we have

By the consistency of , we can consider , which implies that the right-hand side of the inequality above is of order .

S5.3 Asymptotic behaviour of the fourth term on the RHS of (S33)

S5.4 Convergence to a Gaussian process

If we undersmooth , and such that , , and , then

where

Hence, for all , converges weakly, as a random element of the càdlàg function space endowed with the supremum norm, to a Gaussian process with covariance structure implied by the covariance function .
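Simultaneous bands based on a Gaussian process limit are commonly computed with a Gaussian multiplier bootstrap of the supremum of the standardized process. The sketch takes an n × K matrix of estimated influence-function values at K grid points (assumed given) and returns a critical value c such that ψ̂(v_k) ± c σ̂_k/√n covers all K grid points simultaneously with approximate probability `level`. The multiplier bootstrap is a standard choice, not necessarily the procedure used in the paper, and `sup_t_critical_value` is a hypothetical name.

```python
import numpy as np


def sup_t_critical_value(infl, level=0.95, B=2000, seed=0):
    """Gaussian multiplier bootstrap quantile of max_k |G_b(k)| / sigma_k.

    infl: (n, K) matrix of influence-function values at K grid points.
    """
    rng = np.random.default_rng(seed)
    n, _ = infl.shape
    centered = infl - infl.mean(axis=0)
    sigma = np.sqrt(np.mean(centered ** 2, axis=0))   # pointwise standard deviations
    xi = rng.standard_normal((B, n))                  # Gaussian multipliers
    G = xi @ centered / np.sqrt(n)                    # (B, K) bootstrap process draws
    T = np.max(np.abs(G) / sigma, axis=1)             # sup of the standardized process
    return float(np.quantile(T, level))
```

With a single grid point the critical value reduces to the usual normal quantile of about 1.96; adding grid points can only widen the band.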

Appendix S6 Uniform consistency of the regimen-response curve estimator

In Theorem 4.2 we showed that our estimator is pointwise consistent. In this section we show that it is also uniformly consistent, in the sense that , and derive its rate of convergence. Recall that

where

| (S34) | ||||

We know that , , and . Moreover, . Thus, assuming that , we have

Appendix S7 Proof of Theorem 4.4: Consistency of

The upper bound of given in Lemma S1 is dominated either by or by . The switch occurs when . By Lemma S2, for the càdlàg class of functions , we have . Therefore, the switch occurs when , which implies (this is because , where is the leading term). So the result of Lemma S1 can be written as

In the following, for the ease of notation, we denote and . Define a loss-based dissimilarity . Then by definition

The last equality follows from Theorem 4.2. Assuming (i.e., converges to zero faster than ), we have

Let . Using the rate obtained for the remainder terms in Theorem 4.2, we have . We proceed assuming that the convergence rate of is the dominating rate, and show later in the proof that this assumption holds.

Recall,

Let and . Then,

| (S35) |

Equality (S35) follows from Markov's inequality and the result of Lemma S6. Hence, . Using a Taylor expansion, we have , which implies . Let . Using Lemma S6,

| (S36) |

Then . The iteration continues until there is no improvement in the rate. That is which implies

Hence, . When there is a margin around zero, that is, , we will have .
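The iteration described above, in which each improved rate for the estimated rule parameter is fed back into the bound to obtain a better one, converges to a fixed point of the update map. The sketch iterates a hypothetical update of the rate exponent e, for a rate of the form n^{−e}; the affine map e ↦ 1/6 + e/2 is purely illustrative and is not the map derived from (S36). Its fixed point solves e = 1/6 + e/2, that is e = 1/3, and the iteration reaches it geometrically fast because the map is a contraction.

```python
def iterate_rate_exponent(update, e0=0.0, tol=1e-12, max_iter=1000):
    """Iterate e <- update(e) until the improvement is below tol.

    Returns (fixed point, number of iterations used)."""
    e = e0
    for k in range(max_iter):
        e_new = update(e)
        if abs(e_new - e) < tol:     # no further improvement in the rate
            return e_new, k + 1
        e = e_new
    return e, max_iter


# Hypothetical contraction on rate exponents; fixed point e = 1/3.
e_star, n_iter = iterate_rate_exponent(lambda e: 1.0 / 6.0 + 0.5 * e)
```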

At each iteration the convergence rate of , and at the fixed point it achieves the rate of . Hence, to show that the latter rate dominates the remainder-term rate, it suffices to show that

The condition is satisfied when . The right-hand side is a decreasing function of , and as , . This rate condition is satisfied when , where and for the optimal rate of . Hence, no further condition is imposed on the bandwidth rate, and the optimal rate remains . Consequently,

Appendix S8 Additional figures
