Novel Nonlinear Neural-Network Layers for High Performance and Generalization in Modulation-Recognition Applications

Abstract

The paper presents a novel type of capsule network (CAP) that uses custom-defined neural network (NN) layers for blind classification of digitally modulated signals using their in-phase/quadrature (I/Q) components. The custom NN layers of the CAP are inspired by cyclostationary signal processing (CSP) techniques and implement feature extraction capabilities that are akin to the calculation of the cyclic cumulant (CC) features employed in conventional CSP-based approaches to blind modulation classification and signal identification. The classification performance and the generalization abilities of the proposed CAP are tested using two distinct datasets that contain similar classes of digitally modulated signals but that have been generated independently, and numerical results obtained reveal that the proposed CAP with novel NN feature extraction layers achieves high classification accuracy while also outperforming alternative deep learning (DL)-based approaches for signal classification in terms of both classification accuracy and generalization abilities.

Index Terms:

Capsule Networks, Cyclic Cumulants, Digital Communications, Machine Learning, Modulation Recognition, Signal Classification.

I Introduction

Identifying wirelessly transmitted digital communication signals and classifying them by modulation scheme, without prior knowledge of the signal parameters, is a challenging problem that occurs in both military and commercial applications such as spectrum monitoring, signal intelligence, and electronic warfare [1]. Conventional approaches to modulation classification, which employ either likelihood-based methods [2] or CSP-based techniques [3], can themselves prove challenging [4]. As an alternative, DL-based techniques have been explored in recent years for blind classification of digitally modulated signals. For NN training and signal classification, these techniques use either the raw I/Q signal components [5, 6, 7, 8] or features obtained by pre-processing them, such as CC features [9] or other information that characterizes the signal amplitude/phase and frequency-domain representations [10, 11, 12, 13].

We note that a common theme of the DL-based approaches proposed for signal classification is the use of NN layers with structures developed in the context of image processing, which appear to be poorly suited to extracting information from time-domain signal samples. Specifically, DL-based approaches that use raw I/Q data as inputs along with conventional image processing NN layers have been shown to be unable to generalize to new I/Q data when the distribution of the signal parameters of the new data differs slightly from that of the training data [5, 6]. In contrast, pre-processing the I/Q data to obtain CCs and using these in conjunction with CAPs having conventional image processing NN layers results in robust signal classification and generalization performance [9].

Motivated by the brittleness of DL-based classifiers that feed I/Q signal components into conventional image processing NN layers, and with the knowledge that DL-based classifiers using CCs are robust, we propose a new approach to DL-based classification of digitally modulated signals, which involves custom-designed NN layers that perform specific nonlinear mathematical functions on the input I/Q data to enable downstream conventional image processing NN capsules to identify specific features that are similar to those implied by the CCs. By combining novel function layers that force the NN to extract periodic (cyclic) features with capsules, our proposed approach enables a DL-based classifier to use the I/Q signal components as inputs, obtain generalization that outperforms other types of NNs in the classification of digitally modulated signals [5], yet obviates the computational expense of estimating CC features. We note that the robustness and generalization abilities of the proposed DL-based digital modulation classifier are assessed on two distinct datasets that are publicly available [14] and include signals with similar digital modulation schemes but which have been generated independently using distinct signal distribution parameters, such that data from the testing dataset is not used in the training dataset.

The remainder of this paper is organized as follows: we provide related background information on CSP and the cycle frequency (CF) features relevant for digital modulation classification in Section II, followed by presentation of the novel NN function layers and proposed NN structure in Section III. In Section IV we provide details on the datasets used for training and testing the NNs, and we present numerical performance results in Section V. We conclude the paper with discussion and final remarks in Section VI.

II CSP and Periodic Features for Digital Modulation Classification

CSP provides a set of analytical tools for estimating distinct features that are exhibited by digital modulation schemes and that can be used to perform classification of digitally modulated signals in various scenarios involving stationary noise and/or co-channel interference. These tools include higher-order CC estimators [15, 16] and spectral correlation function (SCF) estimators [17, 18] that can be compared to a set of theoretical CCs or SCFs for classifying the digital modulation scheme embedded in a noisy signal.

To accurately estimate CC features for NN classification, specific signal parameters must be either known or accurately estimated from the I/Q data prior to estimating the CC features [9]. These parameters include the symbol interval $T_0$ and symbol rate $1/T_0$, the carrier-frequency offset (CFO) $f_c$, the excess bandwidth of the signal (for square-root raised-cosine (SRRC) pulse shaping, this is implied by the roll-off parameter), and the signal power level (which directly impacts the in-band signal-to-noise ratio (SNR)). These signal parameters define the CFs needed for CC computation, with a key processing step involving blind estimation of the coarse second-order CF pattern for the signal. CFs for which a CC is non-zero for typical communication signals include harmonics of the symbol rate $1/T_0$, multiples of the carrier frequency $f_c$, and combinations of these two sets, such that a CF $\alpha$ can be written as

$$\alpha(n, m, k) = (n - 2m) f_c \pm \frac{k}{T_0} \tag{1}$$

where $n$ is the order, $m$ is the number of conjugations, and $k$ is a non-negative integer, typically constrained not to exceed a chosen maximum value.
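As a rough illustration of how candidate CFs can be enumerated, the sketch below assumes the common CSP form in which a CF equals $(n - 2m) f_c \pm k/T_0$, with $n$ the order, $m$ the number of conjugations, $f_c$ the CFO, and $T_0$ the symbol interval. The function name, the argument names, and the numerical values are hypothetical and chosen only for the example.

```python
from fractions import Fraction


def candidate_cycle_frequencies(n, m, f_c, T0, k_max):
    """Return sorted candidate CFs (n - 2m) * f_c +/- k / T0 for k = 0..k_max.

    n: order, m: number of conjugations, f_c: carrier frequency offset,
    T0: symbol interval in samples, k_max: assumed harmonic limit.
    """
    base = (n - 2 * m) * f_c
    cfs = set()
    for k in range(k_max + 1):
        harmonic = Fraction(k) / T0
        cfs.add(base + harmonic)
        cfs.add(base - harmonic)
    return sorted(cfs)


# Illustrative values only, in normalized frequency (sampling rate of 1 Hz).
cfs_20 = candidate_cycle_frequencies(n=2, m=0, f_c=Fraction(1, 100), T0=8, k_max=1)
cfs_21 = candidate_cycle_frequencies(n=2, m=1, f_c=Fraction(1, 100), T0=8, k_max=1)
print([str(a) for a in cfs_20])
print([str(a) for a in cfs_21])
```

Exact fractions are used here only to keep the arithmetic easy to check; floating-point values would work equally well.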

Although the set of possible CFs for all PSK, QAM, and staggered QPSK (SQPSK) signals is determined by the symbol rate and the carrier frequency offset through (1), most signals exhibit only a subset of the maximum possible set. Moreover, there are only a few basic patterns: BPSK-like, QAM-like, π/4-DQPSK-like, 8-PSK-like, and SQPSK-like. If we can find the pattern, we can also determine the actual number of cycle frequencies needed to fully characterize the modulation type through its set of associated CC values.

Two distinct features that are meaningful for identifying which of the five basic CF patterns is present in a signal of interest are [17]:

1. The Fourier transform of the squared signal
2. The Fourier transform of the signal raised to the fourth power
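To see why these spectra are informative, consider a minimal sketch that assumes a noise-free, rectangular-pulse BPSK signal with a CFO. Squaring removes the ±1 symbols, so the spectrum of the squared signal contains a single line at twice the CFO, and the fourth power gives a line at four times the CFO. All parameter values and the helper `peak_frequency` are illustrative assumptions, not settings from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

num_symbols, samples_per_symbol = 256, 8   # illustrative values
f_c = 0.03125                              # assumed CFO in cycles/sample

# Rectangular-pulse BPSK at complex baseband with a carrier frequency offset.
symbols = rng.choice([-1.0, 1.0], size=num_symbols)
baseband = np.repeat(symbols, samples_per_symbol)
t = np.arange(baseband.size)
x = baseband * np.exp(2j * np.pi * f_c * t)


def peak_frequency(signal):
    """Frequency (cycles/sample) of the largest FFT magnitude, zero bin centered."""
    spectrum = np.abs(np.fft.fftshift(np.fft.fft(signal)))
    freqs = np.fft.fftshift(np.fft.fftfreq(signal.size))
    return freqs[np.argmax(spectrum)]


peak_sq = peak_frequency(x ** 2)   # spectral line of the squared signal
peak_4 = peak_frequency(x ** 4)    # spectral line of the fourth-power signal
print(peak_sq, peak_4)
```

Pulse-shaped and noisy signals behave less cleanly than this idealized case, which is why the pattern is estimated rather than read off directly.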

Furthermore, features that are meaningful in relation to even-order CC estimates are:

1. The squared signal
2. The signal raised to the fourth power
3. The signal raised to the sixth power
4. The signal raised to the eighth power

Since second-order and higher even-order features in both the time and frequency domains are necessary to estimate CC features, we create custom NN function layers that apply fixed mathematical operations to the I/Q inputs. These feature extraction layers produce outputs that are more consistently meaningful than the raw I/Q inputs, in that they are proportional to CC features, before the data reaches the trainable branches and final layers, which contain many learnable parameters. The aforementioned list of features is not exhaustive with regard to CCs, as it includes only orders for which no conjugations have been performed. This subset of CC features was chosen for the proposed feature extraction layers to limit the size and complexity of the resulting NN structure.

III CAP with Custom Feature Extraction NN Layers for Modulation Classification

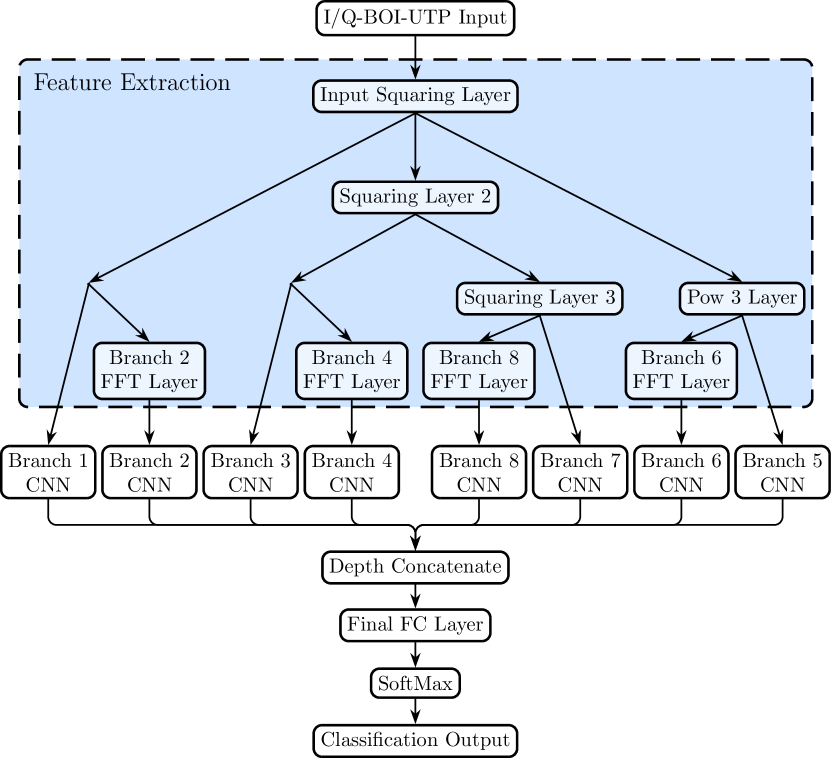

To consistently extract the second-, fourth-, sixth-, and eighth-order signal features in both the time and frequency domains from the I/Q signal data, we need to implement only three custom NN layers for the required nonlinear computations: a squaring layer, a raise-to-the-power-of-three (Pow3) layer, and a fast Fourier transform (FFT) layer. These custom layers are then connected as shown in Fig. 1, such that their outputs branch out until all eight feature types have been extracted.

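Noting that the I/Q signal data is input as pairs of real numbers $(I, Q)$, the squaring and power-of-three layers can be implemented as follows:

– Squaring Layer:

$$I_{\text{out}} = I^2 - Q^2 \tag{2}$$

$$Q_{\text{out}} = 2IQ \tag{3}$$

– Pow3 Layer:

$$I_{\text{out}} = I^3 - 3IQ^2 \tag{4}$$

$$Q_{\text{out}} = 3I^2Q - Q^3 \tag{5}$$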

For the FFT layer, only the magnitude is needed at the output, with the zero frequency bin in the center, as this information is most likely to reveal CF patterns present in the signal. Therefore, after taking the FFT, its absolute value is obtained to ensure the output magnitude is a real number, suitable for use by downstream trainable NN layers.
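The three custom layers can be sketched in a few lines of NumPy. This is an illustration under stated assumptions rather than the implementation used in this work, which was done in MATLAB: the function names are hypothetical, and the chaining in `extract_features` is one plausible way to reach orders two through eight, whereas Fig. 1 defines the actual wiring.

```python
import numpy as np


def squaring_layer(i, q):
    """(I + jQ)^2 on real pairs: real part I^2 - Q^2, imaginary part 2IQ."""
    return i * i - q * q, 2.0 * i * q


def pow3_layer(i, q):
    """(I + jQ)^3 on real pairs."""
    return i**3 - 3.0 * i * q**2, 3.0 * i**2 * q - q**3


def fft_magnitude_layer(i, q):
    """Magnitude of the FFT of I + jQ with the zero-frequency bin centered."""
    return np.abs(np.fft.fftshift(np.fft.fft(i + 1j * q)))


def extract_features(i, q):
    """Assumed chaining: orders 2, 4, 6, 8 in time, then FFT magnitudes."""
    second = squaring_layer(i, q)
    fourth = squaring_layer(*second)   # (x^2)^2
    sixth = pow3_layer(*second)        # (x^2)^3
    eighth = squaring_layer(*fourth)   # (x^4)^2
    time_feats = {2: second, 4: fourth, 6: sixth, 8: eighth}
    freq_feats = {n: fft_magnitude_layer(*pair) for n, pair in time_feats.items()}
    return time_feats, freq_feats


# Random stand-in for one I/Q record.
rng = np.random.default_rng(1)
i_in, q_in = rng.standard_normal(64), rng.standard_normal(64)
time_feats, freq_feats = extract_features(i_in, q_in)
```

Keeping the data as real pairs, rather than complex numbers, mirrors the way the I/Q samples are presented to the network.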

Table I: CNN branch layers.

| Layer | (# Filters)[Filter Size] | Activations |
|---|---|---|
| Input | | |
| ConvMaxPool | ()[] | |
| ConvMaxPool | ()[] | |
| ConvMaxPool | ()[] | |
| ConvMaxPool | ()[] | |
| ConvMaxPool | ()[] | |
| ConvAvgPool | ()[] | |
| FC | | # Classes |

Table II: ConvMaxPool layer.

| Layer | (# Filters)[Filter Size] | Stride | Activations |
|---|---|---|---|
| Input | ()[] | | |
| Conv | ()[] | [] | |
| Batch Norm | | | |
| ReLU | | | |
| Max Pool | ()[] | [] | |

Table III: ConvAvgPool layer.

| Layer | (# Filters)[Filter Size] | Stride | Activations |
|---|---|---|---|
| Input | ()[] | | |
| Conv | ()[] | [] | |
| Batch Norm | | | |
| ReLU | | | |
| Avg Pool | ()[] | [] | |

The CAP includes eight convolutional neural network (CNN) branches that implement the primary capsules and is trained to classify eight common digital modulation schemes: BPSK, QPSK, 8-PSK, π/4-DQPSK, MSK, 16-QAM, 64-QAM, and 256-QAM. The primary capsules contain the majority of the network's learnable parameters, with each branch taking one of the eight desired features as its input. At the output of each branch, a fully connected layer with eight outputs reduces the branch output size to the number of modulation classes in the training dataset, which gives each branch the ability to distinguish between all modulation types in the training dataset. This ability is important because, while not every branch will be able to fully distinguish between all modulation types, each branch can learn during NN training which classes it is able to distinguish. For example, due to the nature of the CF patterns, we expect that the branch with second-order frequency-domain inputs will be able to distinguish between BPSK and MSK, but all other modulation types will not be distinguishable on this branch. Likewise, the branch with fourth-order frequency-domain inputs should be able to distinguish between 8-PSK-like, π/4-DQPSK-like, and SQPSK-like patterns, but QAM-like and BPSK-like CF patterns may not be distinguishable to this branch. The outputs of all branches are then concatenated and followed by a final fully connected layer, which learns the weights to apply to the outputs of each CNN branch so that the abilities of the individual branches are combined to minimize the error on the training dataset.
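The way the branch outputs are combined can be sketched as follows. The sketch replaces the trained CNN branches with random stand-ins and uses random placeholder weights for the final fully connected layer; the softmax at the end is an assumption added to turn the scores into class probabilities and is not stated in the text.

```python
import numpy as np

NUM_BRANCHES = 8   # one branch per extracted feature type
NUM_CLASSES = 8    # modulation classes in the training dataset

rng = np.random.default_rng(0)

# Stand-ins for the per-branch fully connected outputs (one score per class).
branch_outputs = [rng.standard_normal(NUM_CLASSES) for _ in range(NUM_BRANCHES)]

# Concatenate all branch outputs into a single vector.
fused_input = np.concatenate(branch_outputs)

# Final fully connected layer; placeholders for weights learned in training.
weights = rng.standard_normal((NUM_CLASSES, fused_input.size))
bias = np.zeros(NUM_CLASSES)
logits = weights @ fused_input + bias

# Assumed softmax output: numerically stable form.
exp_logits = np.exp(logits - logits.max())
probabilities = exp_logits / exp_logits.sum()
predicted_class = int(np.argmax(probabilities))
```

Because every branch reports a score for every class, the final layer can learn to down-weight a branch on the classes that branch cannot separate.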

The overall structure of the proposed CAP with custom feature extracting NN layers is shown in Fig. 1, with the characteristics of the subsequent CNN branches for classifying digitally modulated signals outlined in Tables I – III. The number of filters and the filter size for each convolutional layer are defined in Table I, as is the number of output activations for each layer.

Table IV: Signal generation parameters for the two datasets.

| Parameter | CSPB.ML.2018 | CSPB.ML.2022 |
|---|---|---|
| Sampling Frequency | 1 Hz | 1 Hz |
| CFO | | |
| Symbol Period Range | | |
| Excess Bandwidth Range | | |
| In-Band SNR Range (dB) | | |
| SNR Center of Mass (dB) | | |

IV Datasets Used for NN Training and Testing

To train and test the performance of the proposed CAP (including its generalization ability) we use two publicly available datasets that both contain signals corresponding to the eight digital modulation schemes of interest (BPSK, QPSK, 8-PSK, π/4-DQPSK, MSK, 16-QAM, 64-QAM, and 256-QAM). The two datasets are available from [14] as CSPB.ML.2018 and CSPB.ML.2022, and their signal generation parameters are listed in Table IV.

We note that the CFO ranges corresponding to signals in the two datasets are non-intersecting, which allows evaluation of the generalization ability of the trained CAP. Specifically, if the CAP trained on a large portion of the CSPB.ML.2018 dataset displays high classification accuracy on the remaining signals in the dataset, and its performance when classifying signals in the CSPB.ML.2022 dataset is at similarly high levels, then the CAP is robust and has a high ability to generalize. Likewise, if its classification accuracy is high on signals in CSPB.ML.2018 but low on signals in CSPB.ML.2022, then its generalization ability is low.

Prior to training the proposed CAP on these datasets, a blind band-of-interest (BOI) detector [19] is used to locate the bandwidth for signals in the datasets, filter out-of-band noise, and center the I/Q data at zero frequency based on the CFO estimate provided by the BOI detector. Finally, the I/Q data is normalized to unit total power (UTP) so that it does not prevent the activation functions of the NN from converging. To show that using a blind BOI detector to shift the signal to an estimated zero frequency does not necessarily enable an I/Q-trained CAP such as the one in [5] to generalize between the CSPB.ML.2018 and CSPB.ML.2022 datasets, we also use this data to retrain the CAP in [5] as an alternative to provide a point of comparison with the proposed CAP.
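The UTP normalization step amounts to dividing each record by the square root of its average power. A minimal sketch, with a hypothetical function name and a random stand-in for a filtered and centered I/Q record:

```python
import numpy as np


def normalize_unit_total_power(x):
    """Scale complex I/Q samples so that mean(|x|^2) equals 1 (signal plus noise)."""
    power = np.mean(np.abs(x) ** 2)
    if power == 0:
        raise ValueError("cannot normalize an all-zero record")
    return x / np.sqrt(power)


# Random stand-in for one pre-processed I/Q record.
rng = np.random.default_rng(0)
x = 3.0 * (rng.standard_normal(1024) + 1j * rng.standard_normal(1024))
x_utp = normalize_unit_total_power(x)
```

Note that the measured power includes any in-band noise, so the signal component alone generally ends up with less than unit power.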

V Network Training and Numerical Results

The proposed CAP and the alternative CAP in [5] have been implemented in MATLAB and trained on a high-performance computing cluster, such that each CAP was trained and tested two separate times as follows:

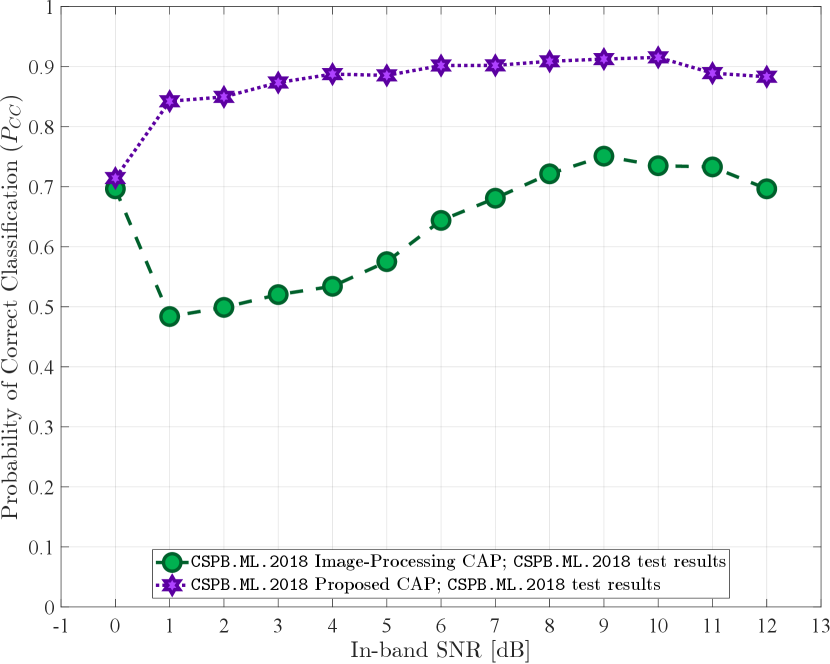

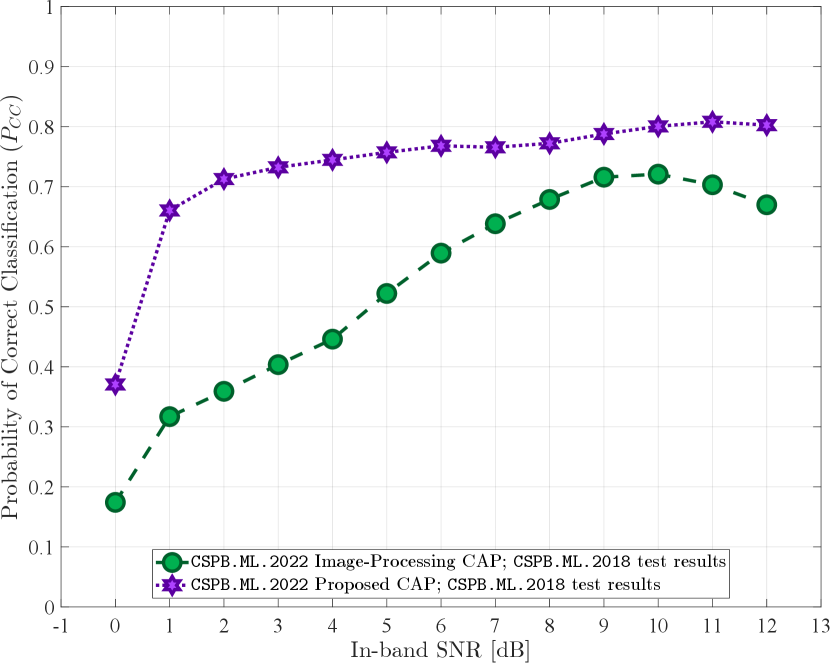

- In the first training instance, dataset CSPB.ML.2018 was used, with the available signals split into training, validation, and testing subsets. The probability of correct classification obtained on the test portion of the signals in CSPB.ML.2018 is shown in Fig. 2.

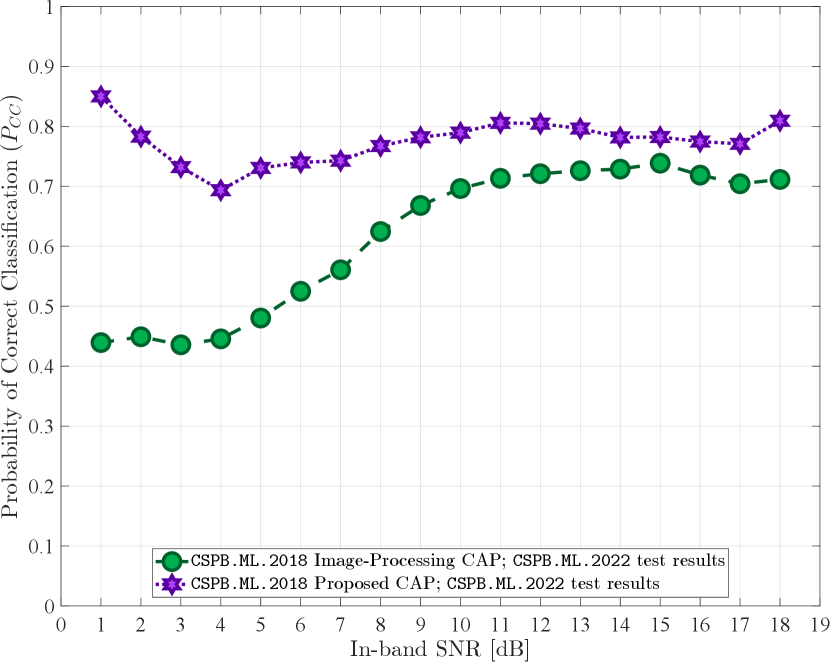

The CAPs trained on CSPB.ML.2018 are then tested on dataset CSPB.ML.2022 to assess the generalization abilities of a trained CAP when classifying all signals available in CSPB.ML.2022. The probability of correct classification for this test is shown in Fig. 3.

-

•

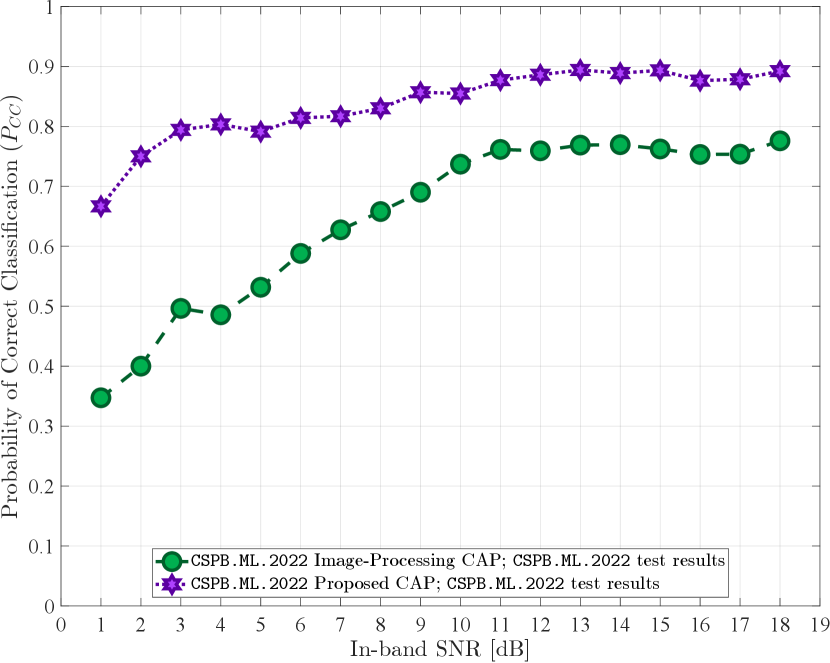

In the second training instance, the NN was reset and trained anew using signals in dataset CSPB.ML.2022, with a similar split of signals used for training, for validation, and for testing. The probability of correct classification for the test results obtained using the test portion of signals in CSPB.ML.2022 is shown in Fig. 4.

The CAPs trained on CSPB.ML.2022 are then tested on dataset CSPB.ML.2018 to assess the generalization abilities of the trained NN when classifying all signals available in CSPB.ML.2018. The probability of correct classification for this test is shown in Fig. 5.

In summary, from the plots shown in Figs. 2 – 5, we note that the proposed CAP with custom feature extraction layers outperforms the alternative CAP from [5] in all training and testing scenarios, displaying high classification performance and generalization abilities.

The overall probability of correct classification for all experiments performed is shown in Table V and provides further confirmation that the proposed CAP containing the novel custom layers is able to perform feature extraction tailored to the modulated signals of interest more effectively than the alternative CAP in [5], which employs only the conventional NN layers used in the context of image processing. The lowest classification performance of the proposed CAP came from training on CSPB.ML.2022 and testing on CSPB.ML.2018, while the highest classification performance observed for the alternative CAP in [5] came from training and testing on CSPB.ML.2022. Thus, even the lowest performance of the proposed CAP exceeds the best performance of the alternative CAP in [5].

[Table V: Overall probability of correct classification for each classification model and each training/testing dataset combination.]

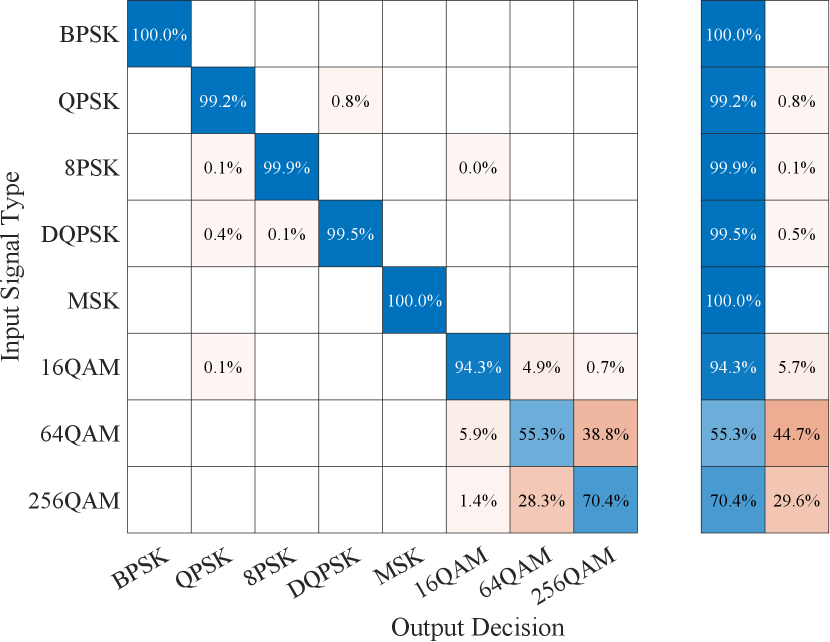

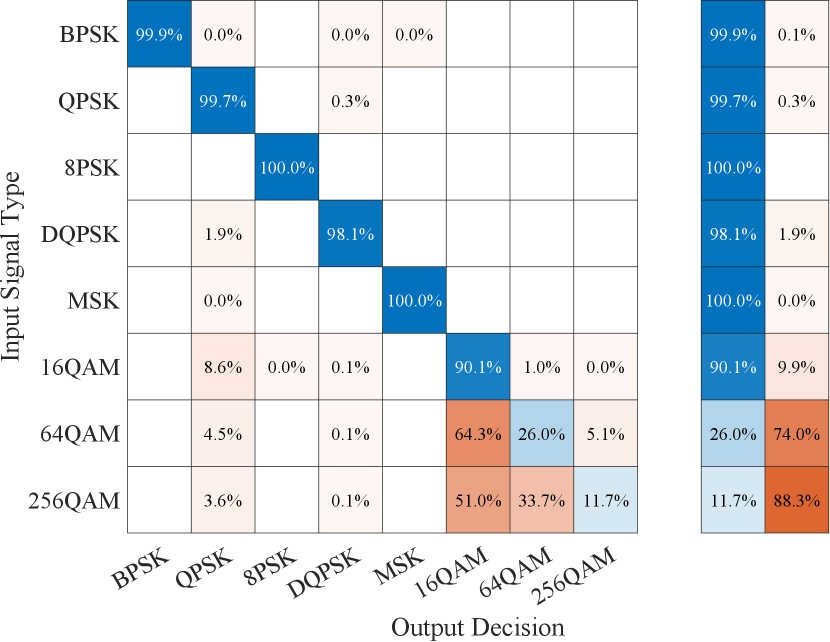

For additional insight into the classification performance of the proposed CAP with custom feature extracting layers, we have also looked at its corresponding confusion matrices, which are shown in Figs. 6 and 7. As can be observed from these figures, the classification performance for the individual BPSK, QPSK, 8-PSK, π/4-DQPSK, and MSK modulation schemes when the CAP is trained and tested on the CSPB.ML.2018 dataset is close to the performance observed when the CAP is trained on the CSPB.ML.2018 dataset and tested on the CSPB.ML.2022 dataset, indicating excellent generalization performance for these five types of digital modulation schemes. Furthermore, the only modulation schemes for which the classification performance of the proposed CAP decreased when the testing dataset was changed from CSPB.ML.2018 to CSPB.ML.2022 are 16-QAM, 64-QAM, and 256-QAM. (Similar confusion matrices are obtained when the CAP is trained and tested on the CSPB.ML.2022 dataset and when the CAP is trained on CSPB.ML.2022 and tested on CSPB.ML.2018, but the corresponding plots are omitted due to space considerations.)
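For readers who want to reproduce this kind of analysis, the sketch below shows how a confusion matrix, the overall probability of correct classification, and the per-class values can be computed from label vectors. The labels are toy data invented for the example, and the function name is hypothetical.

```python
import numpy as np


def confusion_matrix(true_labels, predicted_labels, num_classes):
    """Count matrix with rows indexing the true class and columns the predicted class."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (true_labels, predicted_labels), 1)
    return cm


# Toy labels for three classes (not results from this work).
true_labels = np.array([0, 0, 1, 1, 2, 2, 2, 2])
predicted_labels = np.array([0, 0, 1, 2, 2, 2, 1, 2])

cm = confusion_matrix(true_labels, predicted_labels, num_classes=3)
overall_pcc = np.trace(cm) / cm.sum()           # fraction of correct decisions
per_class_pcc = np.diag(cm) / cm.sum(axis=1)    # correct fraction for each true class
print(cm)
print(overall_pcc, per_class_pcc)
```

Comparing the per-class values across the two test datasets is what exposes which modulation types lose accuracy when the test data changes.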

The proposed CAP is unable to generalize its training well for the three QAM modulation types because these types are distinguishable from each other at higher orders only when their signal power is known or accurately estimated. Since we normalized the total (signal+noise) power rather than just the signal power, the relative differences between the power levels of the 16-QAM, 64-QAM, and 256-QAM higher-order moments were removed. As a result, the extracted features for 16-QAM, 64-QAM, and 256-QAM differed enough between CSPB.ML.2018 and CSPB.ML.2022 that the proposed CAP could not generalize well on these three modulation types.
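The effect of normalizing total power rather than signal power can be made explicit with a short calculation. It is a sketch under stated assumptions: the noise is additive and uncorrelated with the signal, and the symbols $P_s$ (signal power), $P_n$ (in-band noise power), and $\gamma$ (in-band SNR as a linear ratio) are introduced here for illustration only.

$$
P_s + P_n = 1, \qquad \gamma = \frac{P_s}{P_n} \quad \Longrightarrow \quad P_s = \frac{\gamma}{1 + \gamma}.
$$

For a homogeneous $n$th-order function, the contribution of the signal component scales with $P_s^{n/2}$:

$$
P_s^{n/2} = \left(\frac{\gamma}{1 + \gamma}\right)^{n/2}, \qquad \text{e.g. } \gamma = 1,\ n = 8: \quad \left(\tfrac{1}{2}\right)^{4} = \tfrac{1}{16}.
$$

Under these assumptions, the scale of the higher-order features varies with the SNR of each record instead of being fixed, which is consistent with the difficulty described above for constellations that differ mainly in the magnitudes of their higher-order moments.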

In future work, we plan to investigate additional custom NN function layers that process the I/Q signal data with only signal power normalization, rather than total power normalization, in order to overcome the issue of poor generalization for QAM modulation types, without needing to resort to full estimates of the CCs.

VI Conclusions

This paper presents a novel DL-based neural network (NN) classifier for digitally modulated signals that uses a capsule (CAP) network with custom feature-extraction layers. The proposed CAP takes as input pre-processed I/Q data, on which blind band-of-interest (BOI) estimation has been applied to enable out-of-band noise filtering and CFO-estimate-based spectral centering. The input data is normalized to unit total power prior to input to the CAP. The proposed custom function layers are essentially homogeneous even-order nonlinear functions, and perform feature generation and extraction on the pre-processed I/Q data more reliably and predictably than convolutional NN layers alone, resulting in very good classification and generalization performance. Prior to this effort, we had been unable to achieve significant generalization using any conventional CAP or convolutional NN that takes I/Q data as input, and we know of no other published successes.

Future research will involve additional novel NN function layers that enable the use of I/Q data which has been normalized to unit signal power rather than unit total (signal+noise) power, to further improve classification and generalization performance for the QAM modulation schemes of interest (16-QAM, 64-QAM, and 256-QAM). Additionally, the inclusion of NN function layers that extract features proportional to both non-conjugate and conjugate CCs, including those for which the number of conjugations is greater than zero, will be considered, as this may prove necessary to distinguish between expanded input classes, such as multiple kinds of FSK/CPM/CPFSK, which can have little or no cyclostationarity for some choices of order and number of conjugations. In this way we intend to create neural-network classifiers that can accept I/Q data samples as input, yet simultaneously produce excellent classification performance and high generalization, proving to be highly robust in the face of probability-model departures from the training-set density functions.

Acknowledgment

The authors would like to acknowledge the use of Old Dominion University High-Performance Computing facilities for obtaining numerical results presented in this work.

References

- [1] O. Dobre, A. Abdi, Y. Bar-Ness, and W. Su, “Blind Modulation Classification: A Concept Whose Time Has Come,” in Proceedings 2005 IEEE/Sarnoff Symposium on Advances in Wired and Wireless Communication, Princeton, NJ, April 2005, pp. 223–228.

- [2] F. Hameed, O. A. Dobre, and D. C. Popescu, “On the Likelihood-Based Approach to Modulation Classification,” IEEE Transactions on Wireless Communications, vol. 8, no. 12, pp. 5884–5892, December 2009.

- [3] C. M. Spooner, W. A. Brown, and G. K. Yeung, “Automatic Radio-Frequency Environment Analysis,” in Proceedings of the Thirty-Fourth Annual Asilomar Conference on Signals, Systems, and Computers, vol. 2, Monterey, CA, October 2000, pp. 1181–1186.

- [4] O. A. Dobre, “Signal Identification for Emerging Intelligent Radios: Classical Problems and New Challenges,” IEEE Instrumentation & Measurement Magazine, vol. 18, no. 2, pp. 11–18, 2015.

- [5] J. A. Latshaw, D. C. Popescu, J. A. Snoap, and C. M. Spooner, “Using Capsule Networks to Classify Digitally Modulated Signals with Raw I/Q Data,” in Proceedings IEEE International Communications Conference (COMM), Bucharest, Romania, June 2022.

- [6] J. A. Snoap, D. C. Popescu, and C. M. Spooner, “On Deep Learning Classification of Digitally Modulated Signals Using Raw I/Q Data,” in Proceedings IEEE Annual Consumer Communications Networking Conference (CCNC), Las Vegas, NV, January 2022, pp. 441–444.

- [7] T. J. O’Shea, T. Roy, and T. C. Clancy, “Over-the-Air Deep Learning Based Radio Signal Classification,” IEEE Journal of Selected Topics in Signal Processing, vol. 12, no. 1, pp. 168–179, February 2018.

- [8] T. O’Shea and J. Hoydis, “An Introduction to Deep Learning for the Physical Layer,” IEEE Transactions on Cognitive Communications and Networking, vol. 3, no. 4, pp. 563–575, October 2017.

- [9] J. A. Snoap, J. A. Latshaw, D. C. Popescu, and C. M. Spooner, “Robust Classification of Digitally Modulated Signals Using Capsule Networks and Cyclic Cumulant Features,” in Proceedings 2022 IEEE Military Communications Conference (MILCOM), Rockville, MD, December 2022, pp. 298–303.

- [10] D. Zhang, W. Ding, C. Liu, H. Wang, and B. Zhang, “Modulated Autocorrelation Convolution Networks for Automatic Modulation Classification Based on Small Sample Set,” IEEE Access, vol. 8, pp. 27097–27105, February 2020.

- [11] M. Kulin, T. Kazaz, I. Moerman, and E. De Poorter, “End-to-End Learning From Spectrum Data: A Deep Learning Approach for Wireless Signal Identification in Spectrum Monitoring Applications,” IEEE Access, vol. 6, pp. 18484–18501, March 2018.

- [12] S. Rajendran, W. Meert, D. Giustiniano, V. Lenders, and S. Pollin, “Deep Learning Models for Wireless Signal Classification With Distributed Low-Cost Spectrum Sensors,” IEEE Transactions on Cognitive Communications and Networking, vol. 4, no. 3, pp. 433–445, September 2018.

- [13] K. Bu, Y. He, X. Jing, and J. Han, “Adversarial Transfer Learning for Deep Learning Based Automatic Modulation Classification,” IEEE Signal Processing Letters, vol. 27, pp. 880–884, May 2020.

- [14] C. Spooner, J. Snoap, J. Latshaw, and D. Popescu, “Synthetic Digitally Modulated Signal Datasets for Automatic Modulation Classification,” 2022. [Online]. Available: https://dx.doi.org/10.21227/pcj5-zr38

- [15] C. M. Spooner, “On the Utility of Sixth-Order Cyclic Cumulants for RF Signal Classification,” in Conference Record of Thirty-Fifth Asilomar Conference on Signals, Systems and Computers, vol. 1, Pacific Grove, CA, November 2001, pp. 890–897.

- [16] ——, “Classification of Co-channel Communication Signals Using Cyclic Cumulants,” in Conference Record of The Twenty-Ninth Asilomar Conference on Signals, Systems and Computers, vol. 1, Pacific Grove, CA, November 1995, pp. 531–536.

- [17] W. Gardner and C. Spooner, “The Cumulant Theory of Cyclostationary Time-Series, Part I: Foundation,” IEEE Transactions on Signal Processing, vol. 42, no. 12, pp. 3387–3408, December 1994.

- [18] C. Spooner and W. Gardner, “The Cumulant Theory of Cyclostationary Time-Series, Part II: Development and Applications,” IEEE Transactions on Signal Processing, vol. 42, no. 12, pp. 3409–3429, December 1994.

- [19] C. M. Spooner, “Multi-Resolution White-Space Detection for Cognitive Radio,” in Proceedings 2007 IEEE Military Communications Conference (MILCOM), Orlando, FL, October 2007, pp. 1–9.