Numerical Stability for Differential Equations with Memory

Abstract

In this note, we systematically investigate linear multi-step methods for differential equations with memory, focusing in particular on their numerical stability. Based on this investigation, we give sufficient conditions for the stability and convergence of some common multi-step methods and, accordingly, introduce a notion of A-stability for differential equations with memory. Finally, we illustrate the computational performance of our theory through numerical examples.

Keywords— Differential Equations with Memory, Linear Multistep Methods, Zero-Stability, A-Stability

1 Introduction

The past few decades have witnessed strong interest among physicists, engineers and mathematicians in the theory and numerical modeling of ordinary differential equations (ODEs). Some ODEs can be solved analytically; see, for example, the excellent book by V.I. Arnold [2] and the references therein. However, only special cases of these equations can be solved analytically, so we turn to numerical solutions as an alternative. Sophisticated methods have been developed for the numerical solution of ODEs: to name a few, the most commonly used Euler methods, the elegant Runge-Kutta and extrapolation methods, and multistep and general multi-value methods, all of which can be found in the well-known book by Hairer et al. [7].

According to [1], Picard (1908) emphasized the importance of considering hereditary effects in the modeling of physical systems. Careful studies of the real world compel us to accept, reluctantly, that the rate of change of physical systems depends not only on their present state, but also on their past history [3]. The development of the theory of delay differential equations (DDEs) ([1, 16]) gained much momentum after that. DDEs belong to the class of functional differential equations, which are infinite dimensional, as opposed to ODEs. The study of DDEs is also popular, and the stability of some linear methods for DDEs has been discussed in [19, 20]. However, the hypothesis that a physical system depends on the time-lagged solution at a single given point is not realistic, and one should rather extend its dependence over a longer period of time instead of an instant [7]. Volterra (1909, 1928) discussed the integro-differential equations that model viscoelasticity, and he wrote a book on the role of hereditary effects in models for the interaction of species (1931). To illustrate the difference, DDEs are equations with “time lags”, such as

| (1.1) |

whilst an integro-differential equation reads

| (1.2) |

where the memory term comes into effect and is the duration of the memory. Moreover, instead of integro-differential equations that are only concerned with the local “time lags”, we focus on ODEs with memory, which contain all the global states. Generally, an ODE with memory in this note reads

| (1.3) |

where , , . In fact, the memory term in (1.3) is quite common in various fields. A popular example is the time-fractional differential equation [8, 14], which is used to describe the subdiffusion process. Numerical schemes and their analysis for these differential equations with memory have been established in [10, 11, 9, 13].

In this note, we aim to develop new tools for the analysis of linear multi-step methods (LMMs) designed for the initial value problem (1.3). Truncation errors and numerical stability of classical LMMs, i.e., LMMs designed for ODEs without memory, are well understood. Due to the presence of the memory term, however, one may find the implementation of LMMs for (1.3) challenging. A natural resolution is the introduction of quadratures, a broad family of algorithms for calculating the numerical value of a definite integral, for the purpose of approximating the memory integral. Hence, LMMs for ODEs with memory are numerical methods combining characteristics of classical LMMs and quadratures. However, such LMMs are far from being well understood from a numerical analysis perspective. More importantly, numerical stability might not be guaranteed, due to the accumulation of error arising from the memory integration. In this note, we present our study of LMMs designed for ODEs with memory, in particular providing a definition of stability that is consistent with the classical ODE case. We believe our methods can be extended to nonlinear methods such as Runge-Kutta.

2 Preliminaries

2.1 Notations

We begin this section by introducing some notations that will be used in the rest of this note. We set as the constant step size, then the -th grid point for all . We let , and we use and for the standard Big-O and Small-O notations. Finally, we denote vector norm as , vector or function norm as , matrix spectral (operator) norm as , and matrix infinity norm as .

2.2 Linear Multistep Methods

Definition 1.

A linear -step method for the initial value problem (1.3) reads

| (2.1) |

In the above formula, the coefficients and satisfy , and , . In terms of LMMs, if , we say the method is explicit. Otherwise, it is implicit. In terms of quadratures, if for all , we say that the numerical integration is open. Otherwise, it is closed.

Remark 1.

The series of weights varies for each .

In Section 2.3 and Section 2.4, we summarize the two main ingredients of our methods designed for ODEs with memory. One is the classical LMMs; we remark that throughout this note, the term LMMs refers to LMMs in the sense of Definition 1, and we say classical LMMs whenever we need to distinguish the two. The other is the numerical quadratures, i.e., the methods used to approximate the integration term .

2.3 Classical Linear Multistep Methods

For the initial value problem

| (2.2) |

where and , we consider the general finite difference scheme

| (2.3) |

where , and (2.3) includes all considered methods as special cases. Specifically, some commonly used classical LMMs designed for (2.2) are listed out as follows

| BDF1/AM1/Backward Euler method: | (2.4) | |||

| BDF2: | ||||

| AM2/Trapezoidal rule method: | ||||

| AB1/Euler method: | ||||

| AB2: | ||||

| MS1/Mid-point method: | ||||

| MS2/Milne method: |

and many other classical LMMs can be found in the literature of [6, 7, 21].
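As a concrete companion to the list above, the following Python sketch stores the standard textbook coefficients of these seven methods and determines their order of consistency from the usual order conditions. It assumes the normalization sum_j alpha_j y_{n+j} = h sum_j beta_j f_{n+j} with step size h; this indexing convention, the dictionary `METHODS` and the helper `consistency_order` are illustrative choices and are not notation taken from this note.

```python
from math import factorial

# Coefficients (alpha, beta), lowest index first, for the assumed convention
#   sum_j alpha[j] * y_{n+j} = h * sum_j beta[j] * f_{n+j}.
METHODS = {
    "BDF1 / Backward Euler": ([-1.0, 1.0], [0.0, 1.0]),
    "BDF2": ([0.5, -2.0, 1.5], [0.0, 0.0, 1.0]),
    "AM2 / Trapezoidal": ([-1.0, 1.0], [0.5, 0.5]),
    "AB1 / Euler": ([-1.0, 1.0], [1.0, 0.0]),
    "AB2": ([0.0, -1.0, 1.0], [-0.5, 1.5, 0.0]),
    "MS1 / Mid-point": ([-1.0, 0.0, 1.0], [0.0, 2.0, 0.0]),
    "MS2 / Milne": ([-1.0, 0.0, 1.0], [1 / 3, 4 / 3, 1 / 3]),
}


def consistency_order(alpha, beta, max_order=10, tol=1e-12):
    """Return the largest p such that the order conditions C_0 = ... = C_p = 0 hold.

    C_0 = sum_j alpha_j,
    C_q = sum_j j^q/q! * alpha_j - sum_j j^(q-1)/(q-1)! * beta_j  (q >= 1).
    Returns -1 if even C_0 = 0 fails.
    """
    if abs(sum(alpha)) > tol:
        return -1
    for q in range(1, max_order + 1):
        lhs = sum(j**q / factorial(q) * a for j, a in enumerate(alpha))
        rhs = sum(j ** (q - 1) / factorial(q - 1) * b for j, b in enumerate(beta))
        if abs(lhs - rhs) > tol:
            return q - 1
    return max_order


# Order of consistency of every listed method.
ORDERS = {name: consistency_order(a, b) for name, (a, b) in METHODS.items()}
```

With these coefficients the two Euler variants come out as first order, BDF2, AM2, AB2 and MS1 as second order, and MS2 as fourth order, which matches the orders quoted for BDF2 and MS2 in the numerical experiments.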

2.4 Numerical Quadratures

In order to approximate the integration term

| (2.5) |

only the composite rules of Newton–Cotes formulas are considered in this note. The formulas are termed closed when the end points of the integration interval are included in the formula; otherwise, if they are excluded, we have an open Newton–Cotes quadrature. Some commonly used Newton–Cotes formulas designed for (2.5) are listed below:

| Trapezoidal rule (closed): | (2.6) | |||

| Simpson’s rule (closed): | ||||

| Mid-point rule (open): | ||||

| Trapezoidal rule (open): | ||||

| Milne’s rule (open): |

and many other quadratures can be found in the literature of [4, 5, 18].

We remark that the implementation of these rules on the initial value problem (1.3) can be somewhat tricky. For instance, the closed Simpson’s rule presents a challenge in that its formula requires to be an even number. However, such a condition may not always be met, as the time step tends to increase and varies with time due to the presence of memory effects. In the circumstances where is odd, we may circumvent this complication by using Simpson’s rule to approximate the term instead of . As for the residue term , it can be estimated by applying Simpson’s rule to the time region , i.e., . Therefore, we suggest that the grid points be computed with a comparatively small time step at the initial stage.
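The composite rules and the parity issue described above can be sketched in Python as follows. The functions take samples of the integrand on a uniform grid t_0, ..., t_n with spacing `h`; the function names are hypothetical. One deliberate simplification should be noted: when the number of subintervals is odd, this sketch closes the last subinterval with a single trapezoid, which is a simple stand-in and not necessarily the treatment of the residue term intended in this note.

```python
import numpy as np


def trapezoid_closed(g, h):
    """Composite closed trapezoidal rule on samples g[0..n] with spacing h."""
    g = np.asarray(g, dtype=float)
    if g.size < 2:
        return 0.0  # degenerate interval [t_0, t_0]
    return h * (0.5 * g[0] + g[1:-1].sum() + 0.5 * g[-1])


def simpson_closed(g, h):
    """Composite closed Simpson rule; needs an even number n of subintervals.

    Assumption of this sketch: for odd n, Simpson is applied on [t_0, t_{n-1}]
    and the residue on [t_{n-1}, t_n] is handled by one trapezoid.
    """
    g = np.asarray(g, dtype=float)
    n = g.size - 1
    if n < 1:
        return 0.0
    if n % 2 == 1:
        return simpson_closed(g[:-1], h) + 0.5 * h * (g[-2] + g[-1])
    return h / 3.0 * (g[0] + 4.0 * g[1:-1:2].sum() + 2.0 * g[2:-1:2].sum() + g[-1])


def midpoint_open(g, h):
    """Composite open mid-point rule on panels of width 2h.

    Only odd-indexed samples are used, so neither end point enters the formula.
    """
    g = np.asarray(g, dtype=float)
    n = g.size - 1
    if n % 2 == 1:
        raise ValueError("the composite mid-point rule needs an even number of subintervals")
    return 2.0 * h * g[1::2].sum()
```

For example, with `grid = np.linspace(0.0, 1.0, 11)` and `g = grid**2`, `simpson_closed(g, 0.1)` reproduces the integral 1/3 up to rounding, since Simpson's rule is exact for polynomials of degree at most three, while the other two rules carry an error of second order in `h`.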

2.5 Truncation Errors, Consistency, Convergence and Zero-Stability

Before we proceed to the definition of local and global truncation errors for classical LMM, we associate with (2.1) the numerical solution at denoted by , given exact solution to the initial value problem (1.3), i.e., satisfies

| (2.7) | ||||

and for any , we set for convenience.

Definition 2.

The local truncation error reads

| (2.8) |

and we say the numerical scheme is consistent if for . Moreover, a numerical scheme is said to be consistent of order if for sufficiently regular differential equations (1.3), .

Definition 3.

The global truncation error reads

| (2.9) |

and we say the numerical method converges if holds uniformly for all .

Definition 4 (Zero-Stability).

A classical LMM (2.3) is called zero-stable, if the generating polynomial

| (2.10) |

satisfies the root condition, i.e.,

-

i)

The roots of lie on or within the unit circle;

-

ii)

The roots on the unit circle are simple.

Another equivalent definition of zero-stability is given as follows.

Definition 5.

Consistency and zero-stability are in fact the necessary and sufficient conditions for the convergence of classical LMMs, as stated by the Dahlquist equivalence theorem [7, Theorem 4.5].

Theorem 1 (Convergence for classical LMMs).

A classical LMM is convergent if and only if it is consistent and zero-stable.
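The root condition of Definition 4 is easy to test numerically. The sketch below is one possible check, assuming the generating polynomial is rho(z) = sum_j alpha_j z^j with coefficients given lowest index first; the function name and the tolerance are illustrative choices. The tolerance is deliberately loose because a numerically computed double root is typically perturbed by roughly the square root of machine precision.

```python
import numpy as np


def satisfies_root_condition(alpha, tol=1e-6):
    """Check the root condition for rho(z) = sum_j alpha[j] * z**j.

    i)  every root lies in the closed unit disc;
    ii) every root on the unit circle is simple.
    """
    # np.roots expects the coefficient of the highest power first.
    roots = np.roots(np.asarray(alpha, dtype=float)[::-1])
    for i, r in enumerate(roots):
        if abs(r) > 1.0 + tol:
            return False  # root outside the unit disc
        if abs(abs(r) - 1.0) <= tol:
            # A second root within tol of r signals a multiple root on the circle.
            for k, s in enumerate(roots):
                if k != i and abs(r - s) <= tol:
                    return False
    return True
```

For instance, BDF2 with `alpha = [0.5, -2.0, 1.5]` has roots 1 and 1/3 and passes, whereas `alpha = [1.0, -2.0, 1.0]`, i.e. rho(z) = (z - 1)^2, fails because of the double root at 1.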

2.6 A-Stability

Another crucial concept of stability in numerical analysis is the A-stability [6, Definition 6.3]. For the test problem

| (2.12) |

where , and with negative real part, i.e., , its solution reads

Hence, as , regardless of . A-stable methods are the class of classical LMMs for which, when applied to (2.12) with any given step size , the numerical solutions also tend to zero as , regardless of the choice of starting values . We formalize this by the following definition [6, Definition 6.3].

Definition 6 (Absolute Stability).

A classical LMM is said to be absolutely stable if, when applied to the test problem (2.12) with some given value of , its solutions tend to zero as for any choice of starting values.

We introduce the single parameter since the parameters and occur together as a product. A classical LMM is not always absolutely stable for every choice of , hence we are led to the definition of the region of absolute stability [6, Definition 6.5].

Definition 7 (Region of Absolute Stability).

The set of values in the complex -plane for which a classical LMM is absolutely stable forms its region of absolute stability.

With these two definitions, we are able to give an equivalent definition of A-stability for the classical LMMs.

Definition 8 (A-stability).

A classical LMM designed for the initial value problem (2.12) is said to be A-stable if its region of absolute stability includes the entire left half plane, i.e.,

| (2.13) |
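Definitions 6 to 8 can be explored numerically through the stability polynomial of a classical LMM. The sketch below assumes the standard characterization: for a given value z of the product of step size and the parameter of the test problem, the method is absolutely stable when all roots of rho(zeta) - z * sigma(zeta) lie strictly inside the unit circle, where rho and sigma are built from the coefficients alpha and beta (lowest index first). The function name and tolerance are hypothetical.

```python
import numpy as np


def is_absolutely_stable(alpha, beta, z, tol=1e-12):
    """Return True if all roots of rho(zeta) - z*sigma(zeta) have modulus < 1.

    alpha[j] and beta[j] multiply y_{n+j} and f_{n+j}, respectively.
    """
    coeffs = np.asarray(alpha, dtype=complex) - z * np.asarray(beta, dtype=complex)
    if abs(coeffs[-1]) < tol:
        # Vanishing leading coefficient: a root has escaped to infinity.
        return False
    roots = np.roots(coeffs[::-1])  # highest power first
    return bool(np.all(np.abs(roots) < 1.0 - tol))


# Coefficients of the two Euler methods in the assumed convention.
BACKWARD_EULER = ([-1.0, 1.0], [0.0, 1.0])
FORWARD_EULER = ([-1.0, 1.0], [1.0, 0.0])
```

Sampling z over a grid in the left half plane gives a crude picture of the region of absolute stability: Backward Euler is stable at every sampled point with negative real part, while Forward Euler is stable at z = -1 but not at z = -3.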

2.7 Formulation as One-step Methods

We are now at the point where it is useful to rewrite an LMM as a one-step method in a higher dimensional space [7]. To this end, we define associated with the LMM

| (2.14) |

as follows

then the LMM (2.14) can be written as

| (2.15) |

For any , we introduce a -dimensional vector

equipped with

and

then the LMM (2.14) can be written in a closed form as follows: For any ,

| (2.16) |

where

and denotes the Kronecker tensor product.
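To make the one-step reformulation concrete, the following sketch builds the companion matrix of a k-step method and lifts it to a system of dimension d with a Kronecker product. It assumes the ordering (y_{n+k-1}, ..., y_n) of the stacked vector used in [7] and coefficients normalized by the leading one; since the displayed matrices are not reproduced here, the layout below is an assumption and may differ from the note's by a permutation.

```python
import numpy as np


def companion(alpha):
    """Companion matrix of rho(z) = sum_j alpha[j] z**j (lowest index first).

    First row: -alpha_{k-1}/alpha_k, ..., -alpha_0/alpha_k.
    Sub-diagonal: ones, which shift the stored values by one step.
    """
    alpha = np.asarray(alpha, dtype=float)
    k = alpha.size - 1
    normalized = alpha[:-1] / alpha[-1]
    A = np.zeros((k, k))
    A[0, :] = -normalized[::-1]
    A[1:, :-1] = np.eye(k - 1)
    return A


def lifted(alpha, d):
    """Companion matrix acting on k stacked d-dimensional states."""
    return np.kron(companion(alpha), np.eye(d))
```

The eigenvalues of `companion(alpha)` are exactly the roots of the generating polynomial, so the root condition is equivalent to the powers of this matrix staying bounded. For BDF2, `companion([0.5, -2.0, 1.5])` has eigenvalues 1 and 1/3.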

3 Zero-Stability of Linear Multistep Methods

3.1 Zero-Stability and Root Condition

Our results concerning zero-stability are essentially based on the following lemma.

Lemma 1 (Induction lemma for zero-stability).

For and , let be a non-negative sequence satisfying

| (3.1) |

then given any , the following holds for all ,

| (3.2) |

Proof.

We prove this by induction. Suppose that (3.2) holds for , then

It suffices to show that

| (3.3) |

As we notice that , then

which finishes the proof. ∎

We impose some technical conditions on and in the initial value problem (1.3).

Assumption 1 (Uniform Lipschitz condition).

There exists , such that

| (3.4) | ||||

hold uniformly for all and .

Theorem 2 (Zero-stability for LMM with memory).

If a linear -step method

| (3.5) |

whose generating polynomial

| (3.6) |

satisfies the root condition, then it is zero-stable.

Proof.

Let and be the numerical solutions with initial values and , then we have

Taking their difference leads to

and by taking -norm on both sides,

| (3.7) | ||||

Note that the coefficients , and series of weights satisfy that: For each and ,

for some universal constant . By Assumption 1, we have that

then we obtain that

hence

| (3.8) | ||||

Then, if we apply the inequality above to (3.7), the following holds

| (3.9) | ||||

As we set , then for sufficiently small , by direct application of Lemma 1, we obtain that

for some universal constants and , which finishes the proof. ∎
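The stability estimate can be illustrated with a small experiment: two numerical solutions started from nearby initial values should stay close, uniformly in the step size. The sketch below is only an illustration under stated assumptions. It assumes the problem has the form y'(t) = f(t, y(t)) + integral over [0, t] of K(t, s, y(s)) ds, and it uses Forward Euler with a closed composite trapezoidal rule for the memory term. The function names, the test problem and the perturbation size are all hypothetical.

```python
import numpy as np


def forward_euler_memory(f, K, y0, h, n_steps):
    """Forward Euler for y' = f(t, y) + int_0^t K(t, s, y(s)) ds (assumed form).

    The memory integral at t_n is approximated by the closed composite
    trapezoidal rule on the already computed values y_0, ..., y_n.
    """
    t = h * np.arange(n_steps + 1)
    y = np.empty(n_steps + 1)
    y[0] = y0
    for n in range(n_steps):
        if n == 0:
            memory = 0.0  # integral over the degenerate interval [t_0, t_0]
        else:
            g = np.array([K(t[n], t[j], y[j]) for j in range(n + 1)])
            memory = h * (0.5 * g[0] + g[1:-1].sum() + 0.5 * g[-1])
        y[n + 1] = y[n] + h * (f(t[n], y[n]) + memory)
    return t, y


def max_gap(h, delta, T=2.0):
    """Largest distance between solutions started from 1 and 1 + delta."""
    f = lambda t, y: -y
    K = lambda t, s, y: np.exp(-(t - s)) * y
    n_steps = int(round(T / h))
    _, y = forward_euler_memory(f, K, 1.0, h, n_steps)
    _, y_perturbed = forward_euler_memory(f, K, 1.0 + delta, h, n_steps)
    return np.max(np.abs(y - y_perturbed))
```

Calling `max_gap(h, 1e-3)` for `h` in `(0.1, 0.05, 0.025)` returns gaps of the same size as the initial perturbation for all three step sizes, which is the behavior a zero-stable scheme should show.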

4 Convergence for Linear Multistep Methods

4.1 Consistency for LMM with quadrature

Lemma 2 (Consistency for LMM with memory).

If the classical LMM is consistent of order , , and equipped with a quadrature of order , , then this LMM is consistent and has a local truncation error .

Proof.

Recall the scheme reads

| (4.1) |

Suppose that we can calculate the integral explicitly and denote the exact value of the integral by , then the scheme reads

| (4.2) |

As the classical LMM is consistent of order , then the local truncation error of (4.2) satisfies

| (4.3) |

Since we have to apply quadratures to approximate , and as the quadrature is of order , we obtain that: For any ,

hence the truncation error of (4.1) reads

| (4.4) | ||||

∎

4.2 Convergence

In the case of LMM with quadrature, we still have the Dahlquist equivalence theorem.

Theorem 3 (Convergence for LMM with memory).

Under Assumption 1, if a linear -step method

| (4.5) |

satisfies the root condition and is consistent, then it is convergent. Moreover, if the method has local truncation errors of order , then the global error converges with order .
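A quick way to observe this convergence result is to halve the step size and compare errors. The sketch below does so for Backward Euler combined with the closed trapezoidal rule, under the assumption that the problem is the linear equation y' = lam * y + integral over [0, t] of k(t - s) y(s) ds. The chosen data (lam = -1, k(r) = exp(-r), y(0) = 1) are hypothetical and were picked because the exact solution 1/2 + exp(-2t)/2 follows from the Laplace transform; they are not one of the examples of this note.

```python
import numpy as np


def backward_euler_memory(lam, k, y0, h, n_steps):
    """Backward Euler with closed trapezoidal quadrature for the assumed problem
    y' = lam*y + int_0^t k(t - s) y(s) ds. The kernel k must accept arrays."""
    t = h * np.arange(n_steps + 1)
    y = np.empty(n_steps + 1)
    y[0] = y0
    for n in range(n_steps):
        # Trapezoid weights on the known nodes t_0..t_n; the node t_{n+1}
        # carries weight 1/2 and is moved to the left-hand side.
        w = np.ones(n + 1)
        w[0] = 0.5
        known = h * np.dot(w * k(t[n + 1] - t[: n + 1]), y[: n + 1])
        y[n + 1] = (y[n] + h * known) / (1.0 - h * lam - 0.5 * h * h * k(0.0))
    return t, y


def max_error(n_steps, T=1.0):
    """Maximum grid error against the exact solution of the hypothetical problem."""
    t, y = backward_euler_memory(-1.0, lambda r: np.exp(-r), 1.0, T / n_steps, n_steps)
    return np.max(np.abs(y - (0.5 + 0.5 * np.exp(-2.0 * t))))


errors = [max_error(n) for n in (50, 100, 200)]
observed_orders = [np.log2(errors[i] / errors[i + 1]) for i in range(2)]
```

Since Backward Euler is of first order and the trapezoidal rule of second order, the lower of the two orders governs, and `observed_orders` should be close to 1.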

5 Weak A-Stability

5.1 Definition of Weak A-Stability

Recall that the general solution for the test problem

| (5.1) |

has the form , and as , we have that as , regardless of the value of . Hence, in order to extend the notion of A-Stability to ODEs with memory, we endeavor to choose an appropriate class of test problems, and dig into the long time behavior of the solutions to the test problems. Our test problem is chosen to be a linear equation equipped with a stationary memory kernel, i.e.,

| (5.2) |

WLOG, we assume throughout this note that , , and furthermore, is an function over the region , i.e., . To study the long-time behavior of the solution to our test problem (5.2), we employ the Laplace transform [17].

Definition 9 (Laplace transform).

The Laplace transform of a locally integrable function is defined by

| (5.3) |

for those where the integral makes sense.

Proposition 1 (Sufficient condition for Absolute stability).

Proof.

Taking Laplace transform on (5.2), we have

Thus

Applying the final value theorem, we obtain that

The standard assumptions for the final value theorem [15, 17] require that the Laplace transform have all of its poles either in the open left half-plane or at the origin, with at most a single pole at the origin. Our condition (5.4) implies that , and

| (5.6) |

Then has no poles in the open right half-plane or on the imaginary axis, which finishes the proof.

∎
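For an exponential kernel, the pole argument in the proof can be carried out by hand, which makes a useful sanity check. The sketch below assumes the test problem has the form y' = lam * y + integral over [0, t] of k(t - s) y(s) ds with k(t) = c * exp(-mu * t), real lam and mu > 0; this form, the parameter names and both helper functions are assumptions made for illustration. Under them the Laplace transform of the solution is Y(s) = y0 (s + mu) / ((s - lam)(s + mu) - c), so the poles are the roots of a quadratic.

```python
import numpy as np


def poles_exponential_kernel(lam, c, mu):
    """Poles of Y(s) for the assumed kernel k(t) = c*exp(-mu*t):
    roots of s^2 + (mu - lam) s - (lam*mu + c)."""
    return np.roots([1.0, mu - lam, -(lam * mu + c)])


def final_value(lam, c, mu, y0=1.0, tol=1e-14):
    """Limit of y(t) as t grows, via lim_{s -> 0} s*Y(s).

    Only meaningful when the final value theorem applies, i.e. all poles lie
    in the open left half-plane except possibly a simple pole at the origin.
    """
    if abs(lam * mu + c) > tol:
        return 0.0  # no pole at the origin, so s*Y(s) -> 0
    # Simple pole at the origin: Y(s) = y0 (s + mu) / (s (s + mu - lam)).
    return y0 * mu / (mu - lam)
```

With `lam = -2, c = 1, mu = 1`, both poles are real and negative and the solution decays to zero. With `lam = -1, c = 1, mu = 1`, one pole sits at the origin and the solution tends to 1/2 instead, so both the parameter and the kernel influence the long-time behavior.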

Proposition 1 reveals that both and the kernel have impact on the long time behavior of the solution , hence it is natural that both components have impact on our notions of the regions of absolute stability for ODEs with memory.

Definition 10 (Regions of Absolute Stability).

We define the region of absolute stability for the exact solution to the test problem (5.2) as

| (5.7) |

and we define the region of absolute stability for the numerical scheme as

| (5.8) |

Then directly from Proposition 1, we obtain that

| (5.9) |

and we are able to give the definition of weak A-stability.

Definition 11 (Weak A-Stability).

An LMM designed for test problem (5.2) is weak A-stable if the following relation holds

| (5.10) |

We would like to show that Definition 11 is coherent with Definition 6 in that for the test problem (2.12), since and , then the region of absolute stability for the exact solution reads

and the region of absolute stability for the numerical scheme coincides with in Definition 7, hence the set relation (5.10) reads

which is exactly the case in (2.13).

5.2 Weak A-Stability for One-step Methods

Our results concerning weak A-stability are essentially based on the following lemma.

Lemma 3 (Induction lemma for weak A-stability).

For , given , and a non-negative absolutely convergent infinite series whose summands equal to , i.e., , then for all and , consider the non-negative sequence satisfying

| (5.11) |

we have that

| (5.12) |

Proof.

WLOG, we set . We claim that given any , there exists , such that for all ,

| (5.13) |

for some constant

We will prove (5.13) by induction.

(i). The base case: for , we show that there is a constant such that for , . Indeed, we set . For , we have

For , we have

For , suppose that for all , we have , then for , we have

(ii). The inductive step: For , suppose that for all , there exists such that for , . Our goal is to prove that for , there exists , such that for , .

As the infinite series is absolutely convergent, for large enough , there exists , such that for any ,

we choose , i.e.,

and we set , as we have for , then for ,

| (5.14) | ||||

which finishes our proof. ∎

In the case where , we have that

Proposition 2 (Weak A-stability for Backward Euler method).

In the case where , we have that

Proposition 3 (Weak A-stability for Trapezoidal method).

6 Numerical experiments

In this section, we present several numerical examples to verify the proposed zero-stability and weak A-stability definitions and theorems for ordinary differential equations (ODEs) with memory. We consider different types of memory kernels and initial conditions, and compare the numerical solutions obtained by the proposed methods with the exact solutions or reference solutions. We also measure the errors and convergence rates of the methods, and demonstrate the stability of each method. The numerical examples illustrate the applicability and validity of our theoretical results for ODEs with memory.

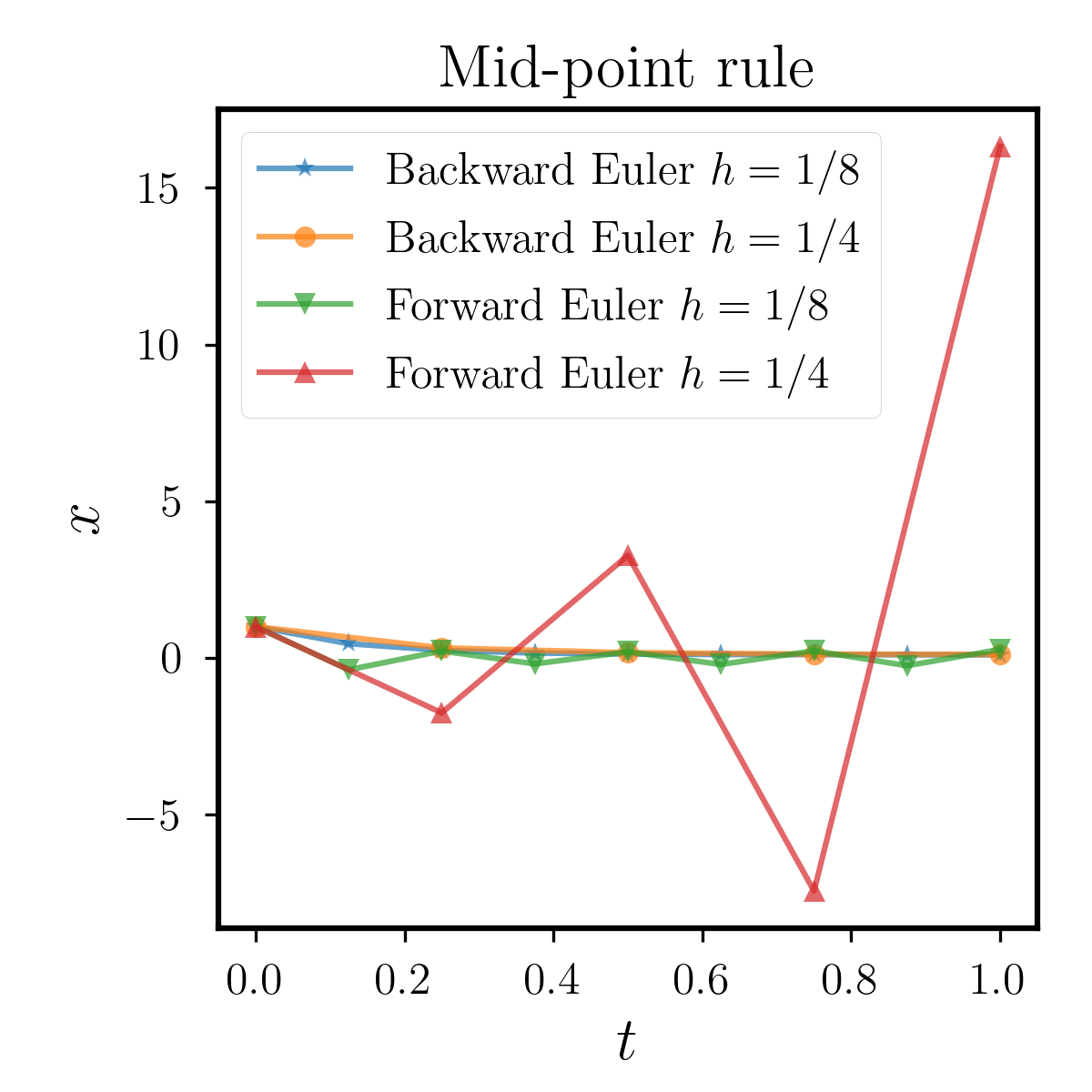

6.1 Zero-stability

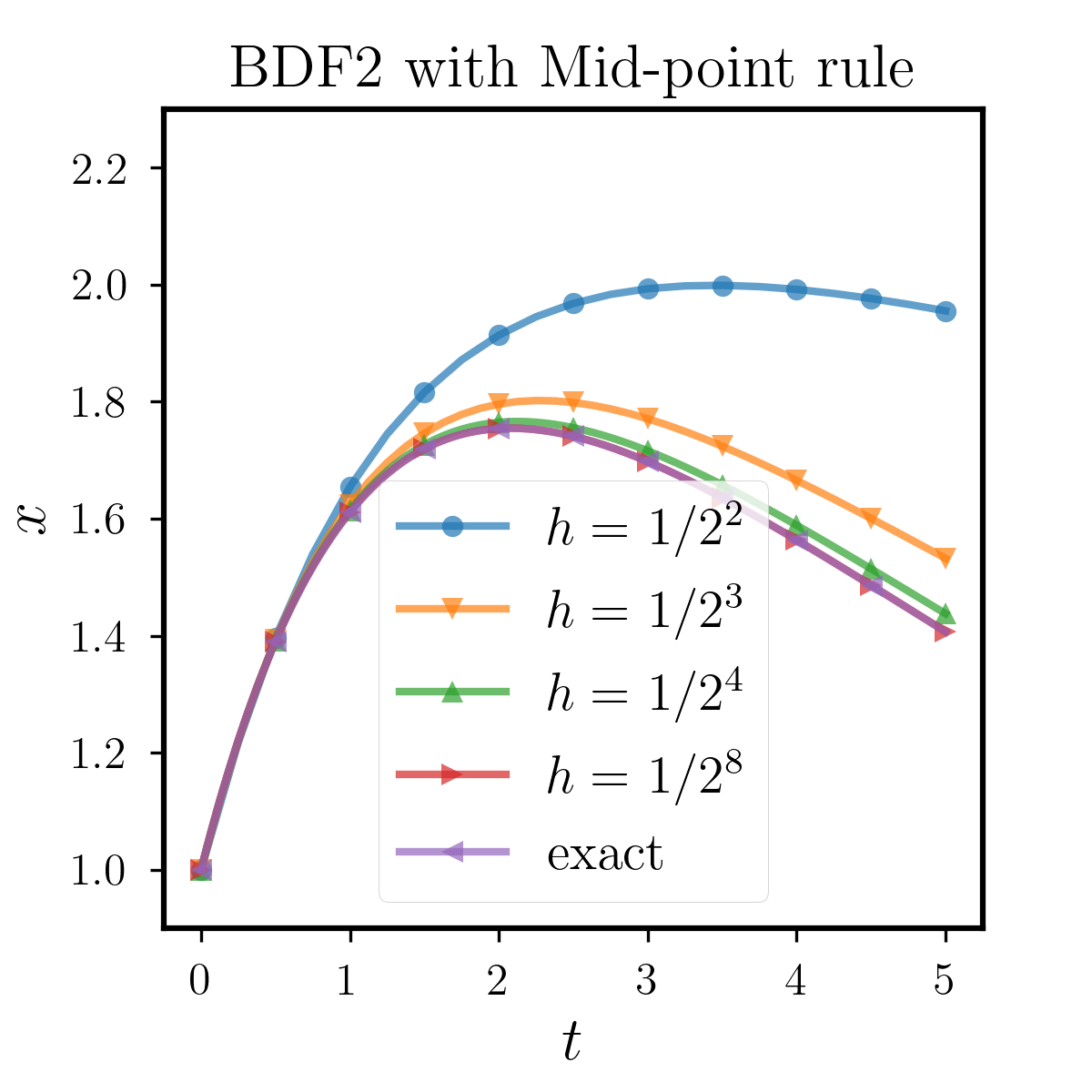

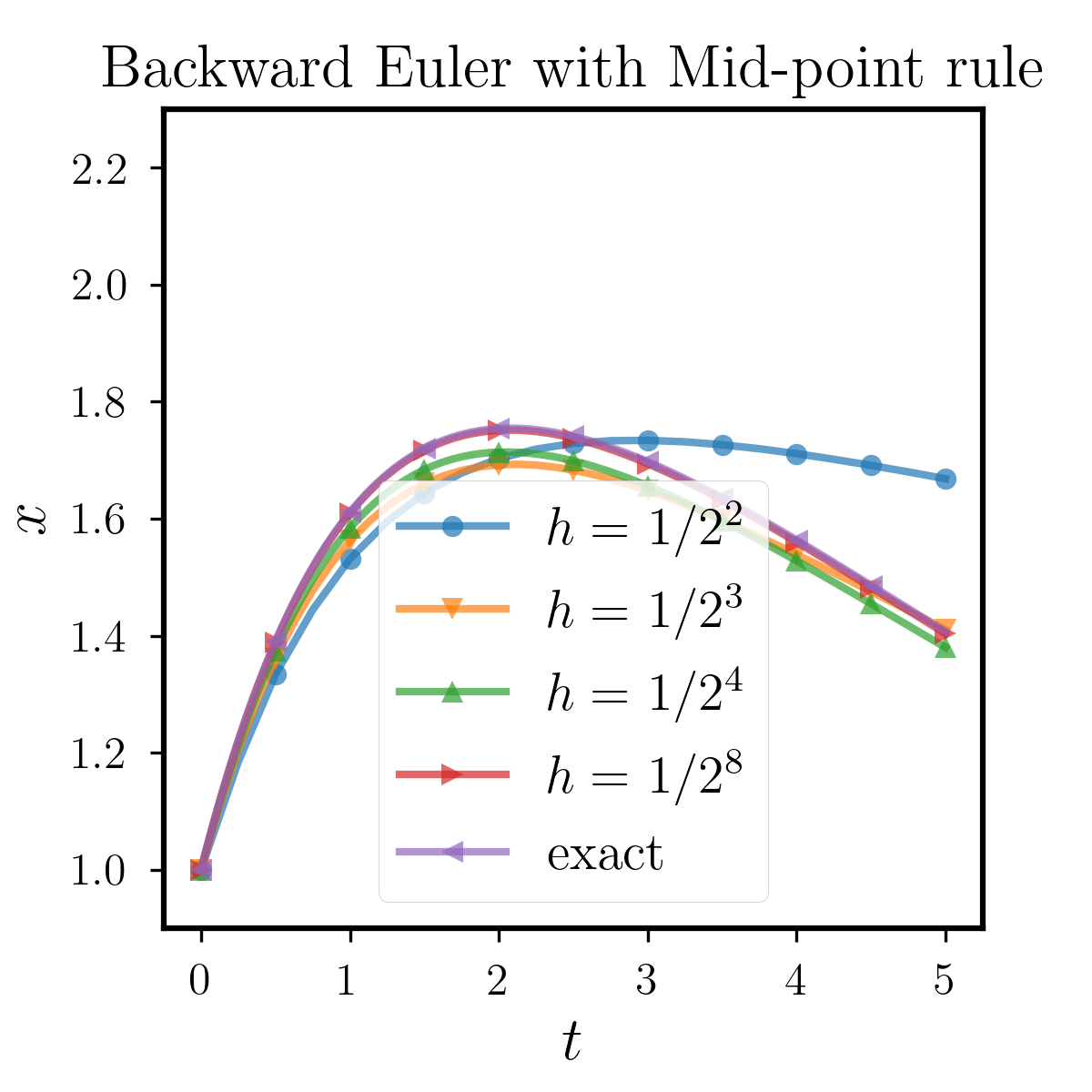

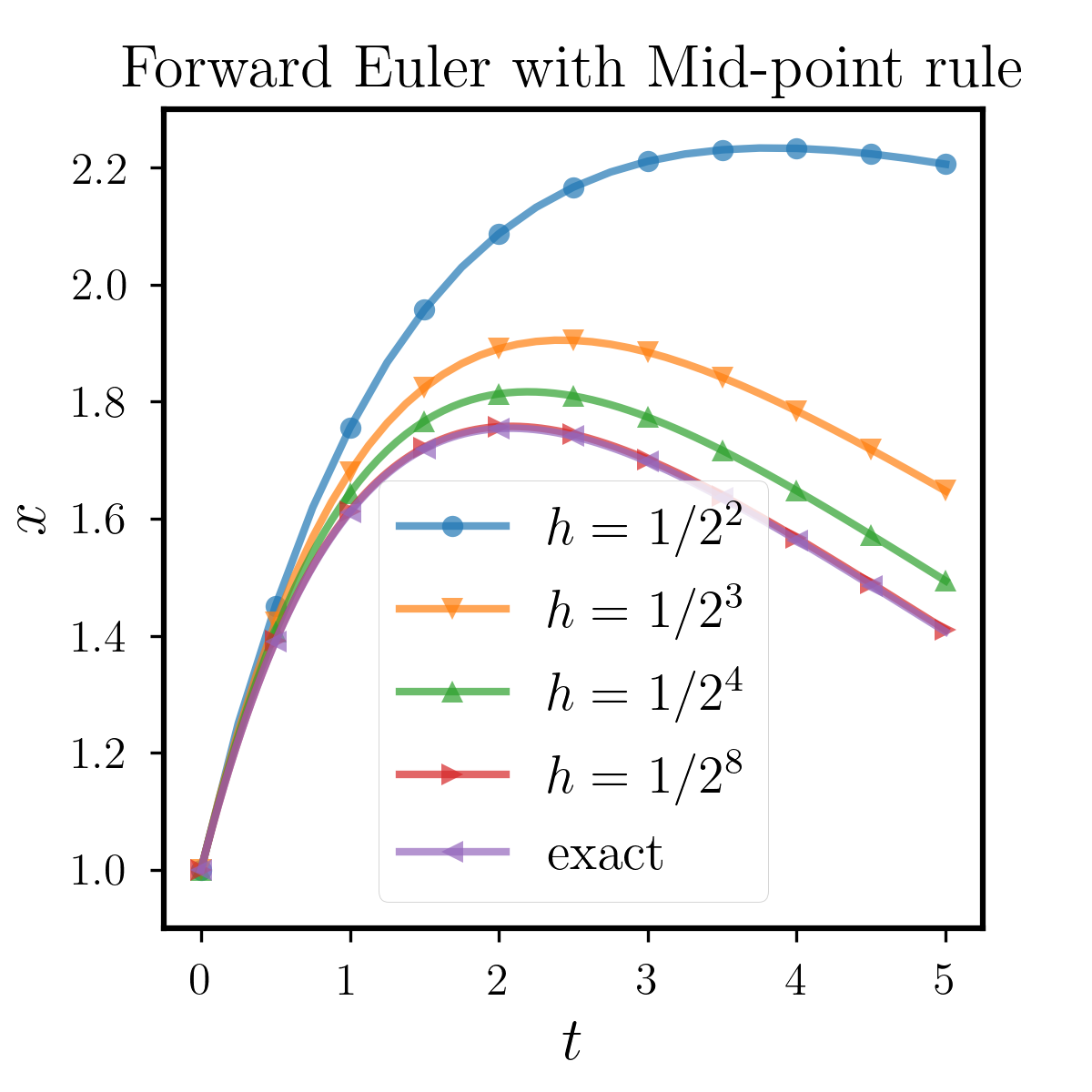

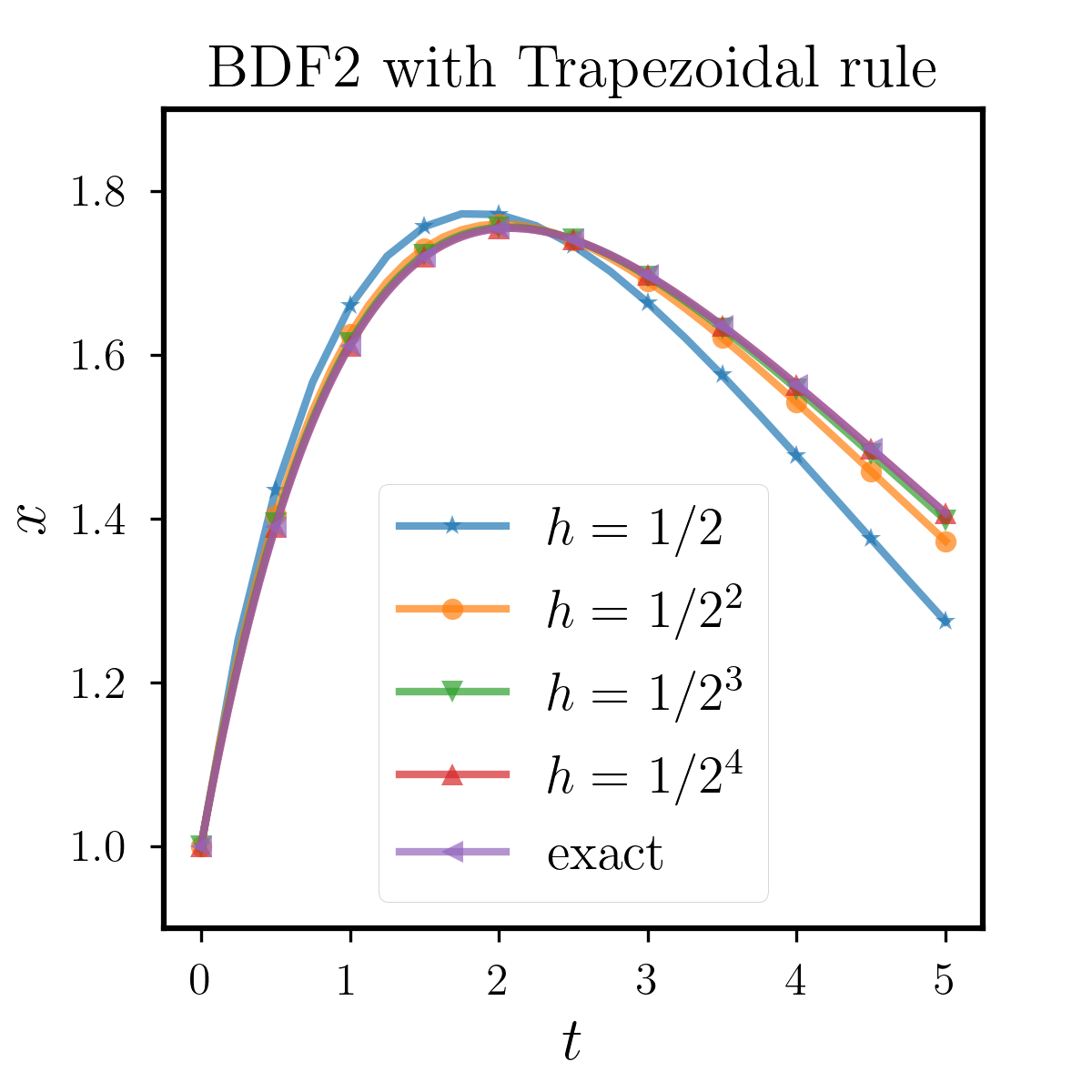

First, we perform numerical experiments to verify the zero-stability proved above. By Theorem 2, all classical LMMs that satisfy the root condition remain zero-stable after being equipped with an open quadrature. In this subsection, we consider the most commonly used methods, i.e., Forward Euler, Backward Euler and BDF2, all of which are zero-stable by our result. We vary the time step from to , and observe the performance of the numerical solutions.

6.1.1 Example 1

We consider the following ODE with memory:

| (6.1) |

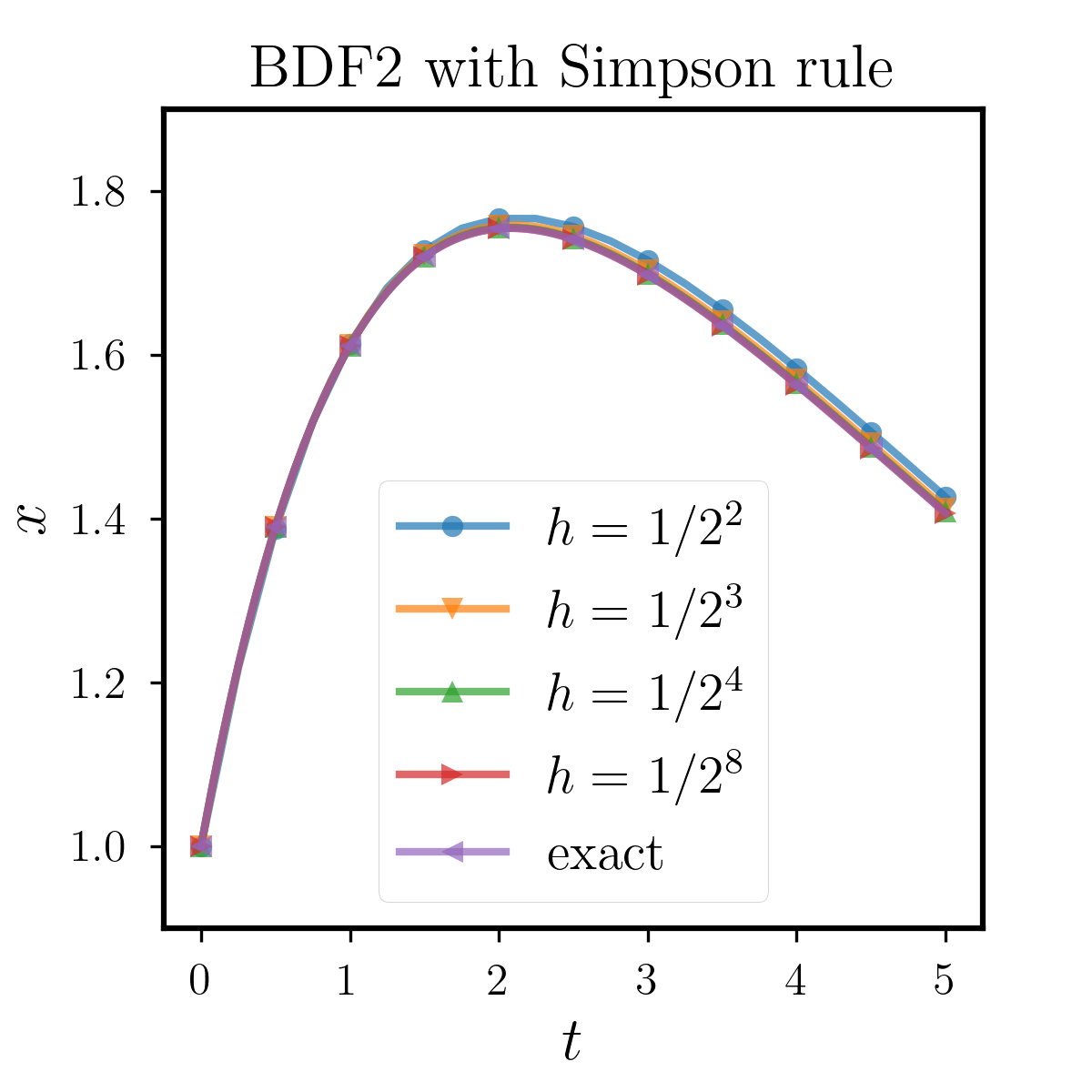

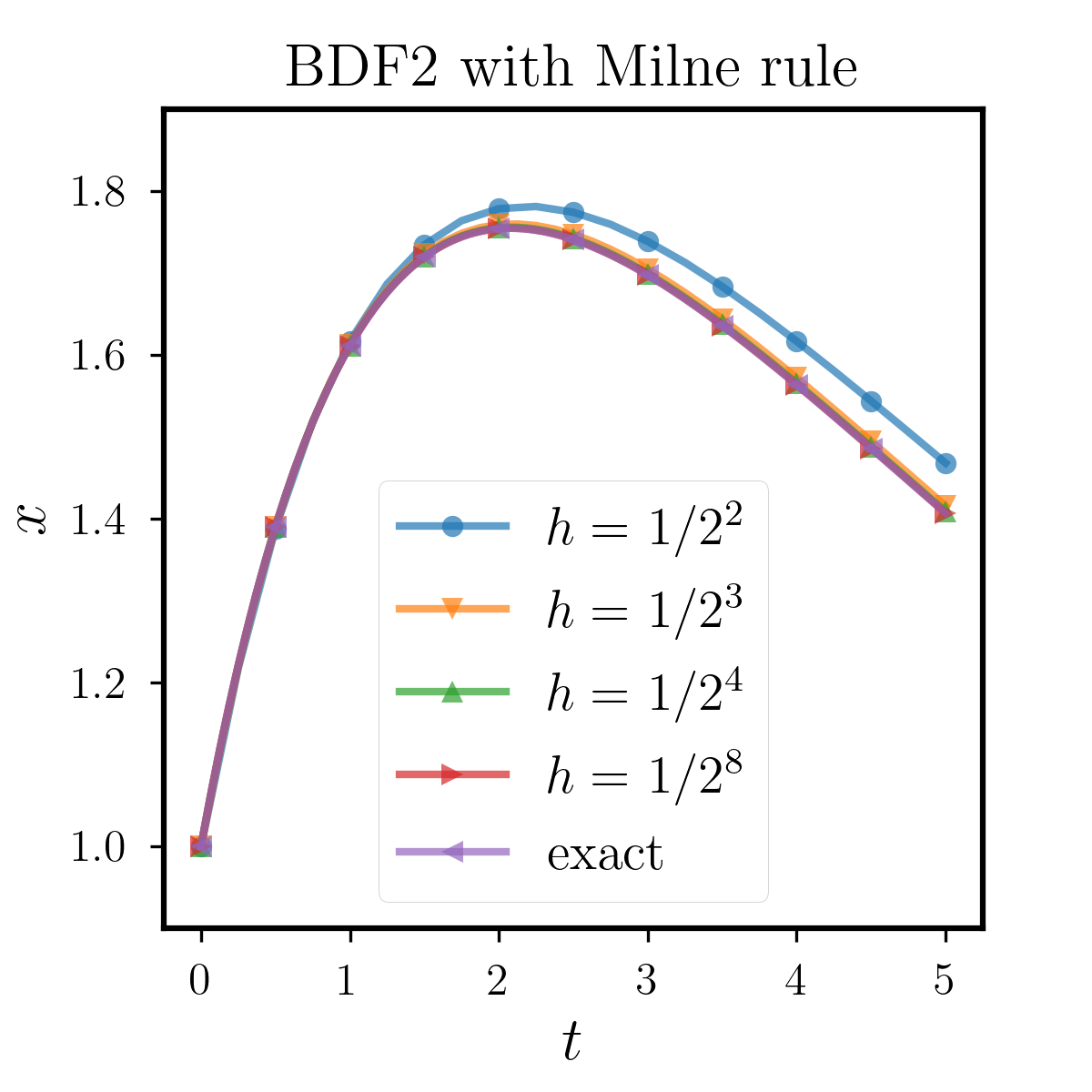

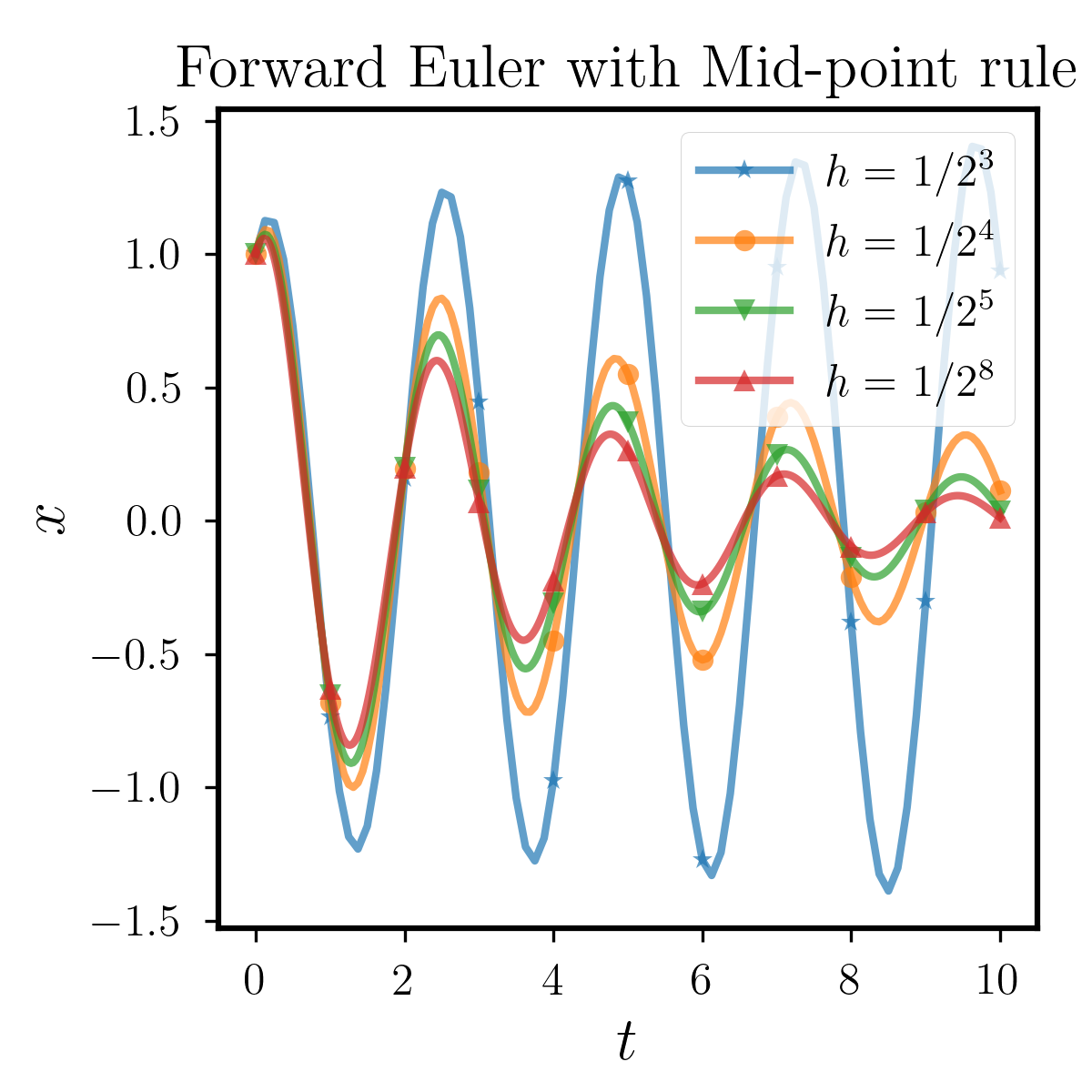

We can see that the memory term in this example is an exponential function, thus we can obtain the exact solution of the equation through the Laplace transform. We first fix the quadrature as the open Mid-point rule, and compare the stability of the BDF2, Backward Euler and Forward Euler methods. The results are shown in Figure 6.1.1, where we can observe that when the step size is relatively small, the three methods approximate the true solution well. However, as we increase , the methods exhibit different errors due to their accuracy. With the same Mid-point quadrature, the performance of Forward Euler is relatively poor, while Backward Euler and BDF2 perform similarly and better. Moreover, to study the effects of different quadratures, we test BDF2 with the Trapezoidal rule, Simpson rule and Milne rule. All of them have higher order than the Mid-point rule, and the results are shown in Figure 6.1.2. We can see that all of these combinations perform better than BDF2 with the Mid-point rule, as expected. From this example, we conclude that the two implicit methods have better numerical performance than the explicit Forward Euler. As for the choice of quadrature, the above tests show that the stable domain depends strongly on the quadrature rule, which should be chosen carefully in applications.

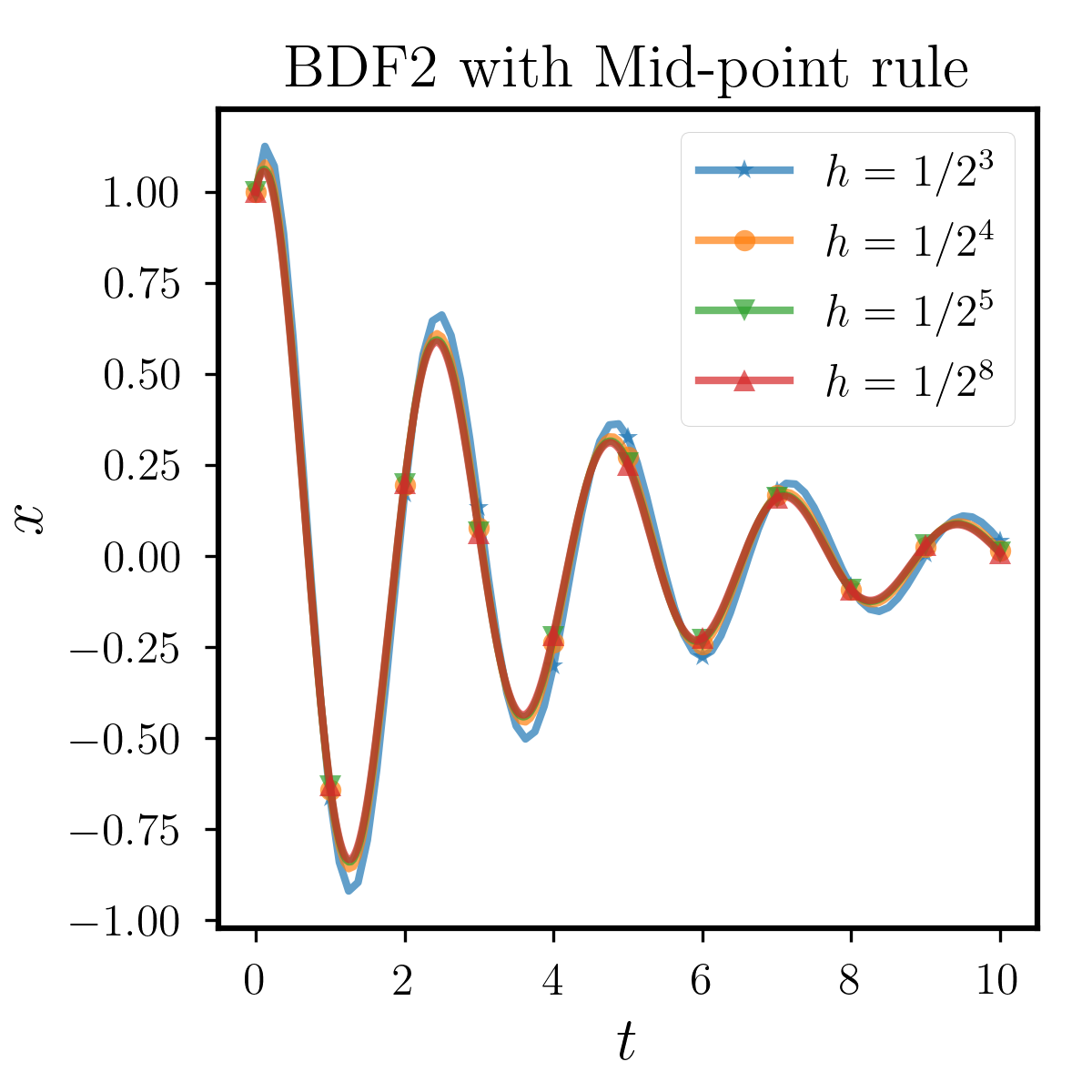

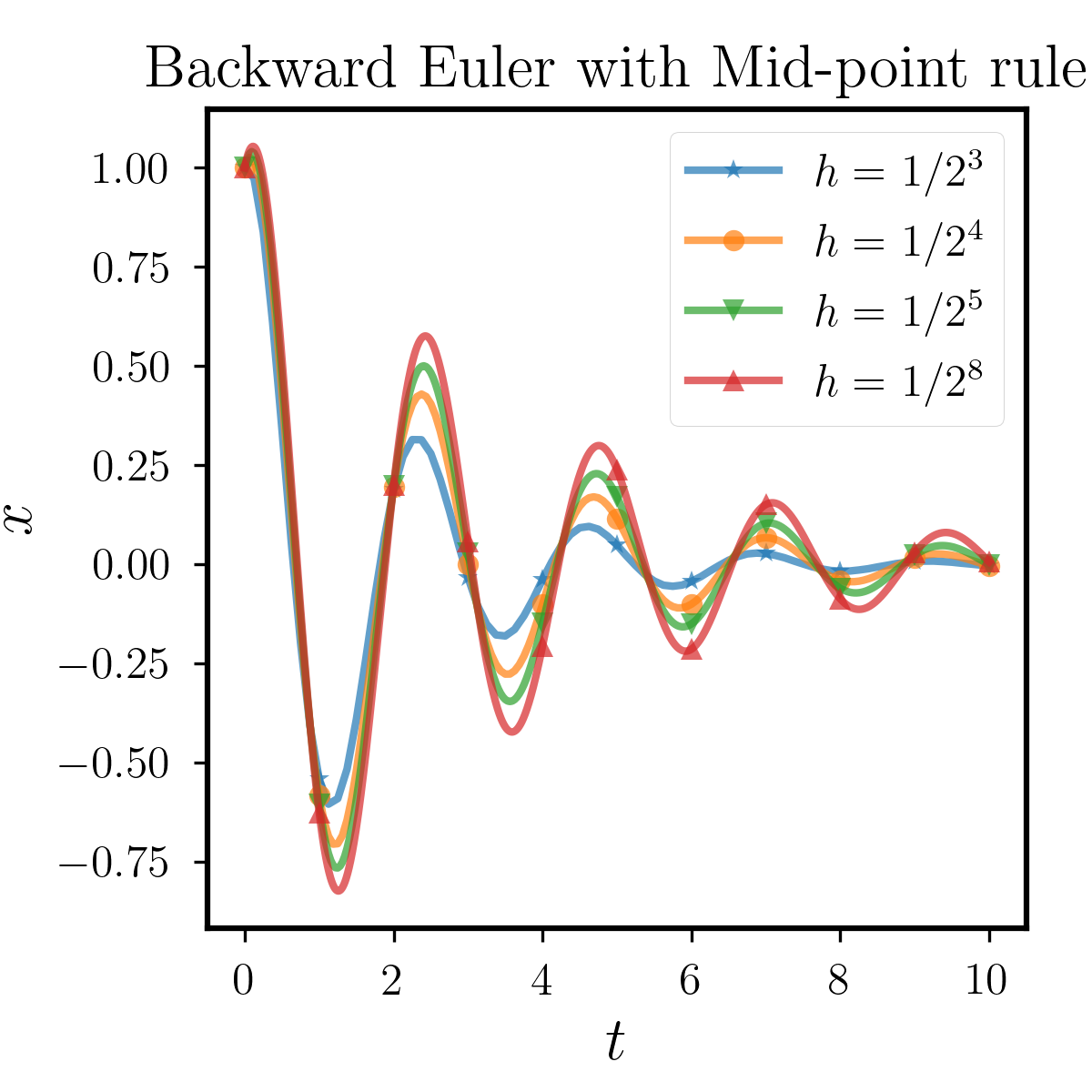

6.1.2 Example 2

In the previous example, we considered an exponential memory term, which has a relatively weak impact on the solution. In this example, we change the kernel to a power function, so that the equation reads:

| (6.2) |

Here we cannot derive the analytic solution, so we treat the numerical solution with as the exact solution. The results are shown in Figure 6.1.3, where we observe that the solution of (6.2) is periodically oscillatory and decaying. In this example, we still use the Mid-point rule to compare the three multi-step methods. It can be seen that for various step sizes, BDF2 captures this periodic oscillation well, while for the two Euler methods, when the step size is relatively large, the numerical solution has a significantly higher or lower amplitude. Therefore, BDF2 has a clear advantage over the two Euler methods in this example.

6.2 Convergence

In this subsection, we test the convergence of the proposed methods for ODEs with memory. In the following examples, we set various time steps and measure the error of the numerical solutions. We use the following error measure:

| (6.3) |

where the exact solution is obtained analytically and is a grid point. By Lemma 2, the order of an LMM is determined by both the classical LMM and the quadrature. Thus we combine different methods with different quadratures and verify the order of the error defined in (6.3).

6.2.1 Example 3

In this example we consider the equation

| (6.4) |

This equation has an analytical solution:

| (6.5) |

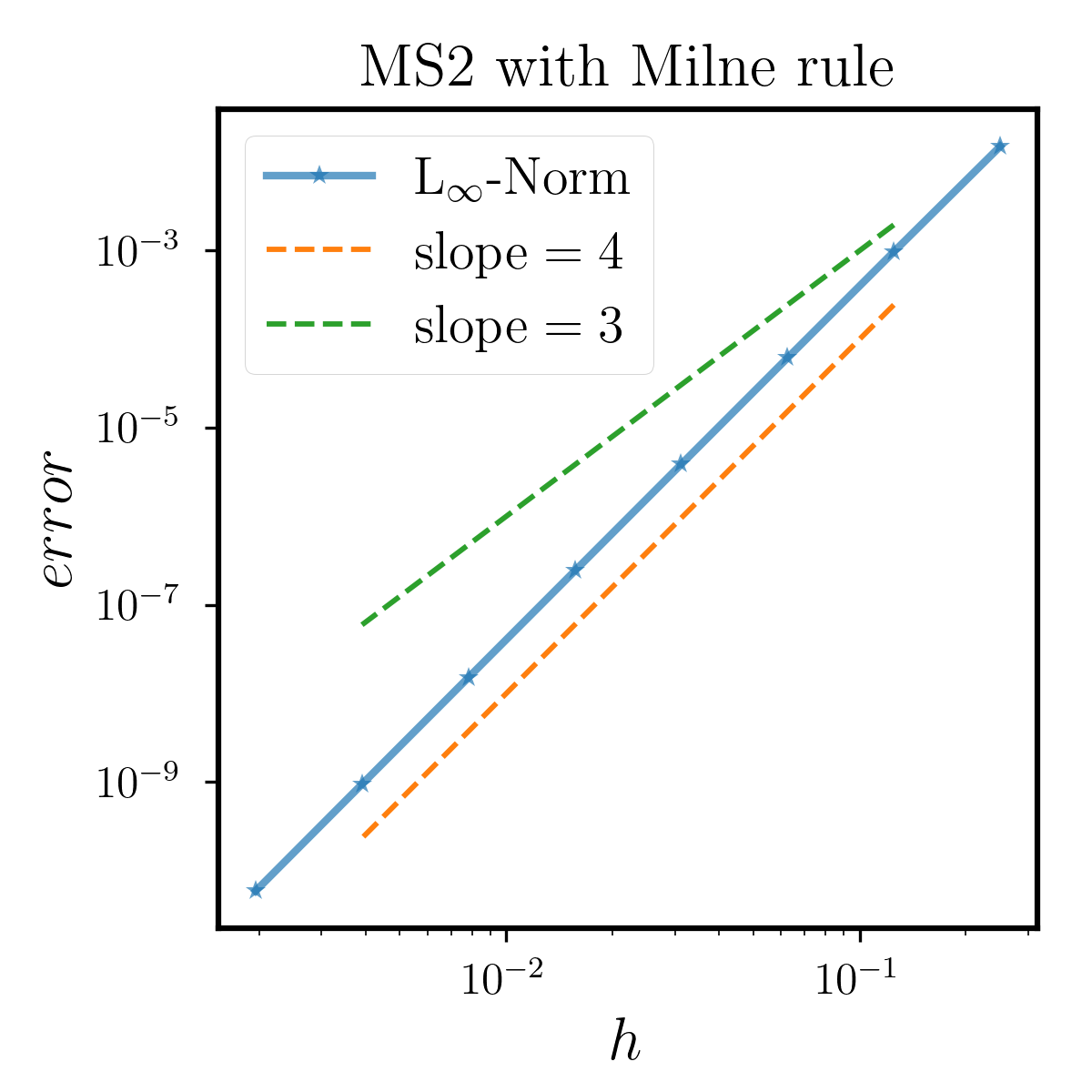

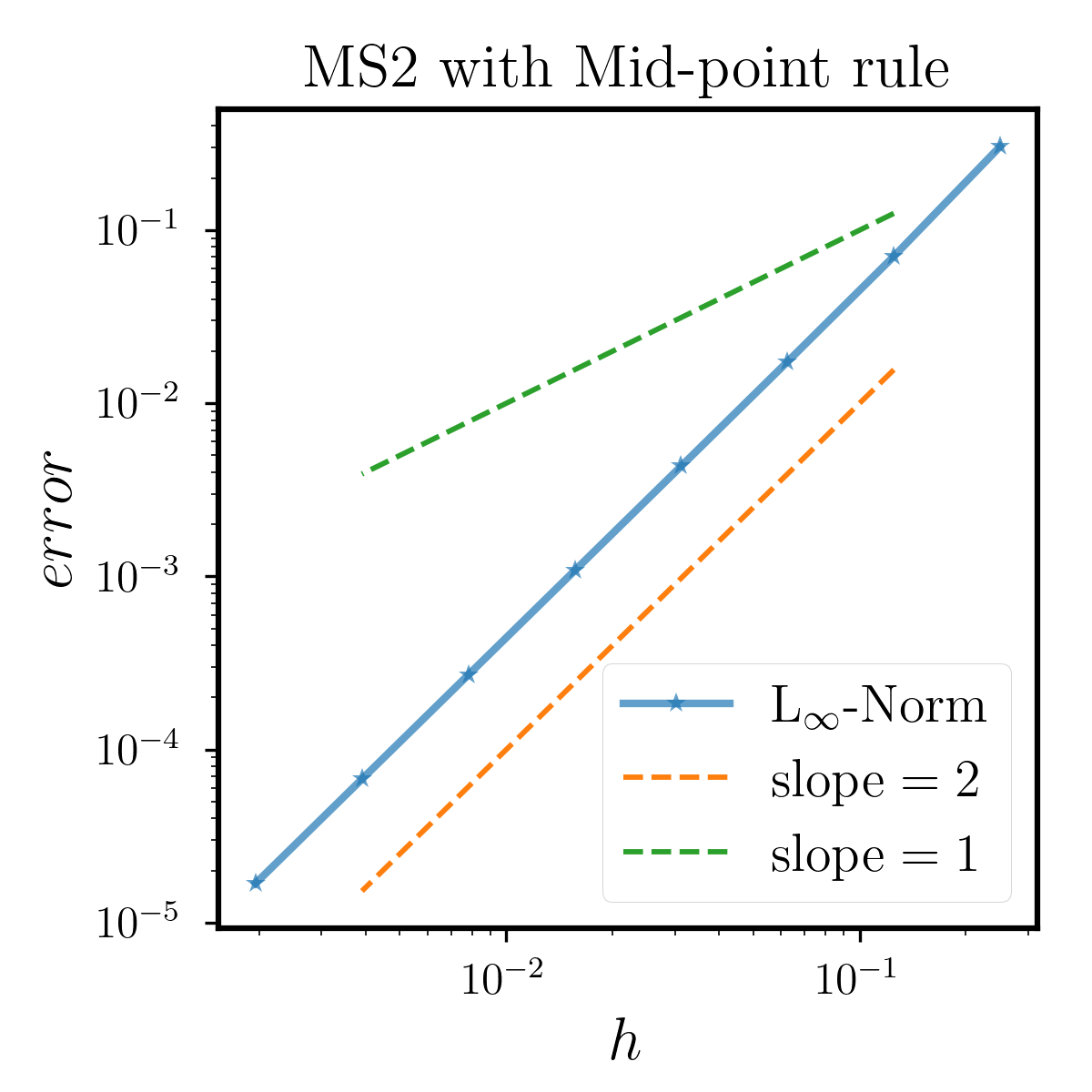

We test MS2 with both the Milne rule and the Mid-point rule for convergence from to . Recall that MS2 is a fourth-order method, and that the orders of the composite Milne rule and the composite Mid-point rule are and , respectively. The results are shown in Figure 6.2.1, where both the -axis and -axis are on a logarithmic scale. They show that MS2 with the Milne rule is fourth-order accurate, as both components have order , while MS2 with the Mid-point rule is only second-order accurate, since it is restricted by the accuracy of the quadrature.

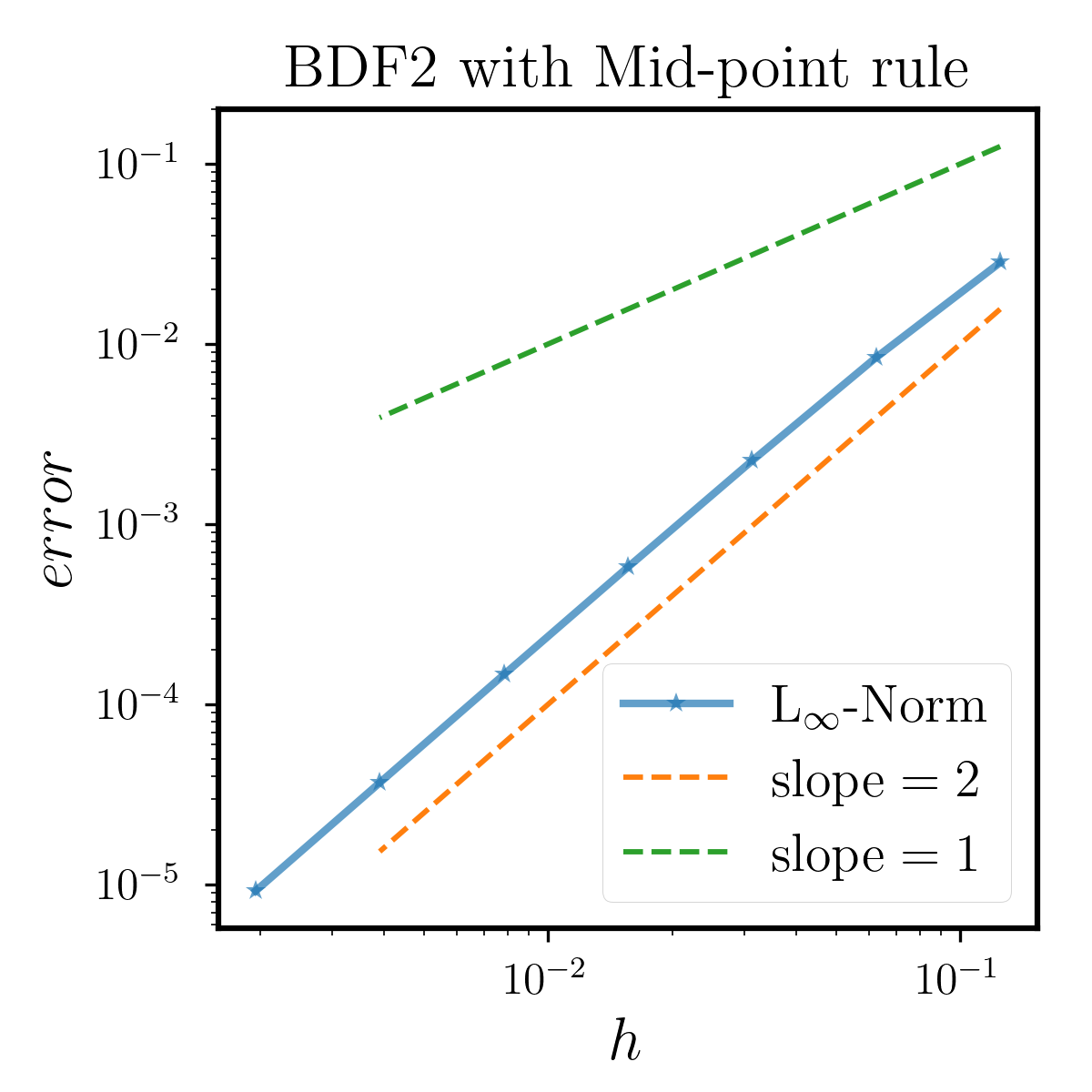

6.2.2 Example 4

In this example, we change the memory kernel and use BDF2 instead to solve the equation which reads

| (6.6) |

The analytical solution is

| (6.7) |

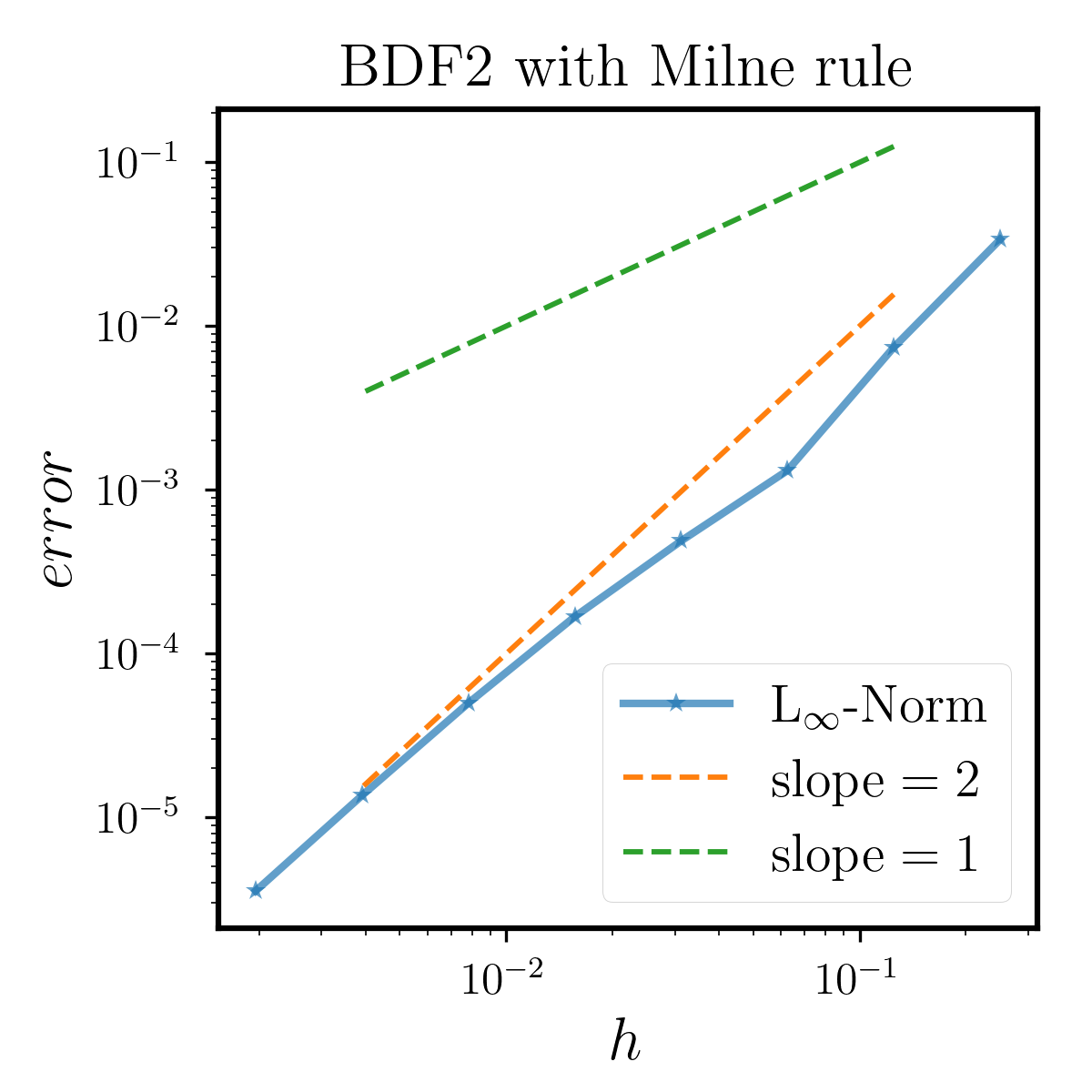

We test the convergence rate of BDF2 with both Milne rule and Mid-point rule from to . The results are shown in Figure 6.2.2, which shows that both methods are second-order accurate. Unlike the former example, the two methods are mainly restricted by the accuracy of the BDF2, as it is just of order .

From the above two examples, we can see that the order of the method is determined by both the classical LMM and the quadrature rule, e.g., when we use MS2 with the Mid-point rule, the error of the integral part is dominant. From this perspective, it is unnecessary to use a method with a large difference of order between the two components.

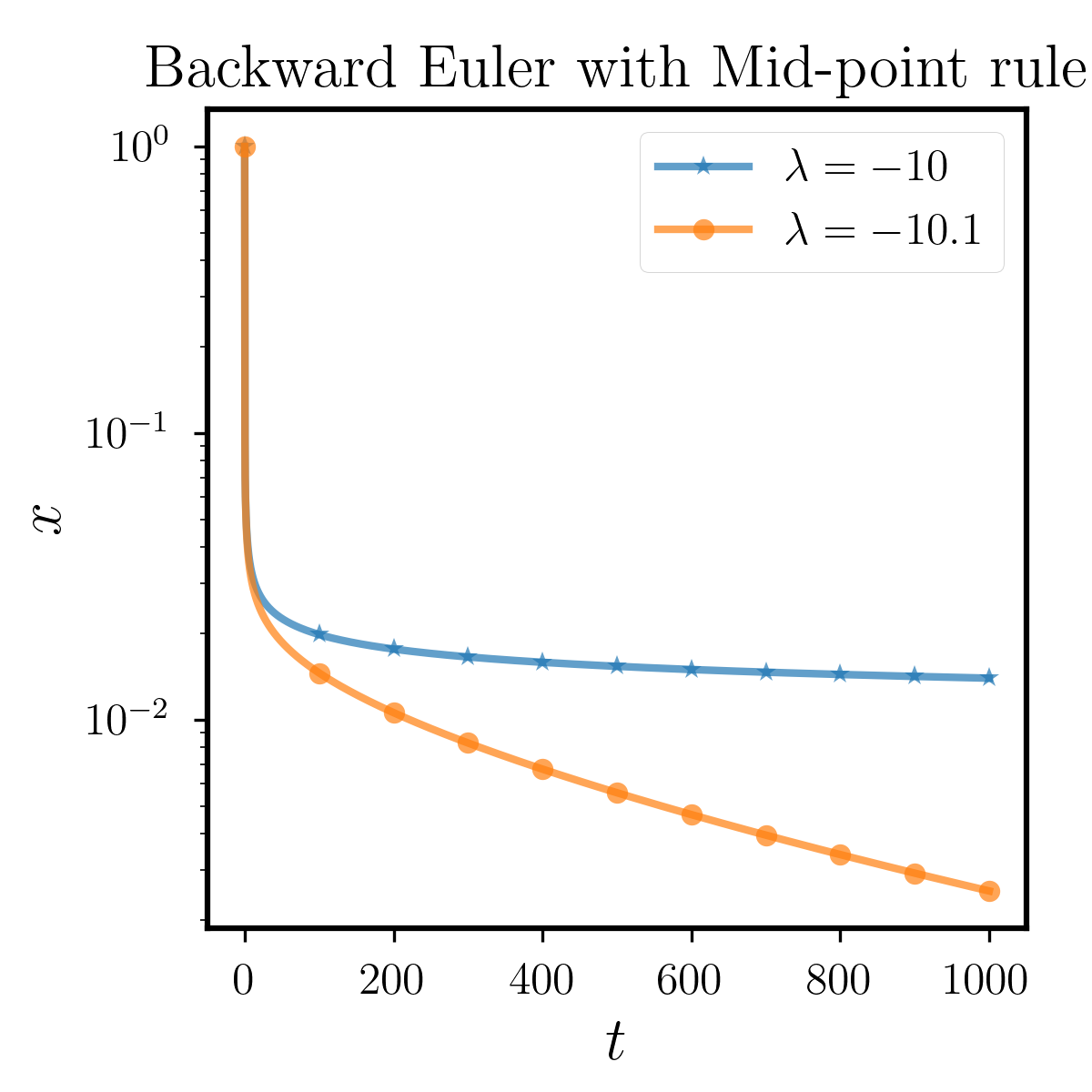

6.3 Weak A-stability

Finally, we verify the weak A-stability results proved in Section 5, where we give the modified Definition 11 and obtain that Backward Euler is weak A-stable.

6.3.1 Example 5

In this example, we consider the equation

| (6.8) |

Here , , thus and the solution of (6.8) is absolutely stable. Numerically, we use the Mid-point rule as the quadrature, with values of and , and employ the Forward Euler method and the Backward Euler method. The results are shown on the left side of Figure 6.3.1. It is observed that the numerical solutions obtained from the Backward Euler method, which is weak A-stable, are stable for both . For the Forward Euler method, in contrast, the numerical solution obtained with the larger , i.e., , is divergent, indicating that the method is not weak A-stable.
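The qualitative behavior described in this example can be reproduced with a short script. The sketch below is not the setup of (6.8): it assumes a linear test problem y' = lam * y + integral over [0, t] of k(t - s) y(s) ds with the hypothetical data lam = -20, k(r) = exp(-r), y(0) = 1, step size 0.2 and 50 steps, and it replaces the Mid-point quadrature by the closed trapezoidal rule to keep the code short. All function names are illustrative.

```python
import numpy as np


def forward_euler_linear(lam, k, y0, h, n_steps):
    """Forward Euler + closed trapezoidal rule for the assumed linear problem."""
    t = h * np.arange(n_steps + 1)
    y = np.empty(n_steps + 1)
    y[0] = y0
    for n in range(n_steps):
        if n == 0:
            memory = 0.0  # no history has accumulated yet
        else:
            w = np.ones(n + 1)
            w[0] = w[-1] = 0.5
            memory = h * np.dot(w * k(t[n] - t[: n + 1]), y[: n + 1])
        y[n + 1] = y[n] + h * (lam * y[n] + memory)
    return t, y


def backward_euler_linear(lam, k, y0, h, n_steps):
    """Backward Euler + closed trapezoidal rule; the implicit step is linear
    in y_{n+1}, so it is solved in closed form."""
    t = h * np.arange(n_steps + 1)
    y = np.empty(n_steps + 1)
    y[0] = y0
    for n in range(n_steps):
        w = np.ones(n + 1)
        w[0] = 0.5
        known = h * np.dot(w * k(t[n + 1] - t[: n + 1]), y[: n + 1])
        y[n + 1] = (y[n] + h * known) / (1.0 - h * lam - 0.5 * h * h * k(0.0))
    return t, y


kernel = lambda r: np.exp(-r)  # hypothetical kernel with unit L1 norm
_, y_fe = forward_euler_linear(-20.0, kernel, 1.0, 0.2, 50)
_, y_be = backward_euler_linear(-20.0, kernel, 1.0, 0.2, 50)
```

For these values the product of step size and lam is -4, far outside the stability interval of Forward Euler, so `y_fe` grows without bound while `y_be` decays towards zero. Reducing the step size to 0.05 brings Forward Euler back into its stable range.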

6.3.2 Example 6

In this example, we discuss our modified definition of weak A-stability, where the “weakness” results from the fact that we treat (5.9) as the region of absolute stability for the exact solution, which should be a proper subset of the region. However, we can derive the analytic solution when the kernel is exponential, and we find that indeed equals in the case of an exponential kernel. In this example, we consider the power function kernel and study the distinction between the two sets in (5.9). We look at the following equation:

| (6.9) |

with or .

In this experiment, we use Backward Euler with the Mid-point rule and set so that we can treat the solution as the exact one. The results are shown on the right side of Figure 6.3.1, where we take a logarithmic scale on the -axis to observe the changes in the function value. When , we find that the solution converges to a non-zero value, indicating that this setting does not belong to the absolute stability region . However, when , we can see that the solution continues to decrease monotonically. We compute its long-term behavior up to and conclude that it converges to zero, although it does so slowly, indicating that this setting is within . These phenomena show that numerically equals when we use the type of kernel in (6.9). From this point of view, our modified definition is reasonable for at least some types of kernel function.

References

- [1] O. Arino, M. L. Hbid, and E. A. Dads, Delay Differential Equations and Applications: Proceedings of the NATO Advanced Study Institute held in Marrakech, Morocco, 9-21 September 2002, vol. 205, Springer Science & Business Media, 2007.

- [2] V. I. Arnold, Ordinary Differential Equations, 10th printing, 1998.

- [3] R. E. Bellman and K. L. Cooke, Differential-difference Equations, Rand Corporation, 1963.

- [4] R. L. Burden and J. D. Faires, Numerical Analysis, PWS Publishing Company, 1985.

- [5] P. J. Davis and P. Rabinowitz, Methods of Numerical Integration, Courier Corporation, 2007.

- [6] D. F. Griffiths and D. J. Higham, Numerical Methods for Ordinary Differential Equations: Initial Value Problems, Springer Science & Business Media, 2010.

- [7] E. Hairer, S. P. Nørsett, and G. Wanner, Solving Ordinary Differential Equations I: Nonstiff Problems, Springer-Verlag, 1987.

- [8] R. Hilfer, Applications of Fractional Calculus in Physics, World Scientific, 2000.

- [9] H.-L. Liao, D. Li, and J. Zhang, Sharp error estimate of the nonuniform L1 formula for linear reaction-subdiffusion equations, SIAM Journal on Numerical Analysis, 56 (2018), pp. 1112–1133.

- [10] H.-L. Liao, W. McLean, and J. Zhang, A second-order scheme with nonuniform time steps for a linear reaction-subdiffusion problem, arXiv preprint arXiv:1803.09873, (2018).

- [11] H.-L. Liao, W. McLean, and J. Zhang, A discrete Gronwall inequality with applications to numerical schemes for subdiffusion problems, SIAM Journal on Numerical Analysis, 57 (2019), pp. 218–237.

- [12] P. Linz, Analytical and numerical methods for Volterra equations, SIAM, 1985.

- [13] N. Liu, H. Qin, and Y. Yang, Unconditionally optimal H1-norm error estimates of a fast and linearized Galerkin method for nonlinear subdiffusion equations, Computers & Mathematics with Applications, 107 (2022), pp. 70–81.

- [14] I. Podlubny, An introduction to fractional derivatives, fractional differential equations, to methods of their solution and some of their applications, Math. Sci. Eng, 198 (1999), p. 340.

- [15] J. L. Schiff, The Laplace transform: theory and applications, Springer Science & Business Media, 1999.

- [16] H. Smith, An introduction to delay differential equations with applications to the life sciences, Springer, 2011.

- [17] M. R. Spiegel, Laplace transforms, McGraw-Hill New York, 1965.

- [18] J. Stoer and R. Bulirsch, Introduction to Numerical Analysis, vol. 12, Springer Science & Business Media, 2013.

- [19] L. Torelli, Stability of numerical methods for delay differential equations, Journal of Computational and Applied Mathematics, 25 (1989), pp. 15–26.

- [20] W.-S. Wang, S.-F. Li, and K. Su, Nonlinear stability of general linear methods for neutral delay differential equations, Journal of Computational and Applied Mathematics, 224 (2009), pp. 592–601.

- [21] G. Wanner and E. Hairer, Solving Ordinary Differential Equations II: Stiff and Differential-Algebraic Problems, Springer Berlin Heidelberg, 1996.