ODE Discovery for Longitudinal Heterogeneous Treatment Effects Inference

Abstract

Inferring unbiased treatment effects has received widespread attention in the machine learning community. In recent years, our community has proposed numerous solutions in standard settings, high-dimensional treatment settings, and even longitudinal settings. While very diverse, the solution has mostly relied on neural networks for inference and simultaneous correction of assignment bias. New approaches typically build on top of previous approaches by proposing new (or refined) architectures and learning algorithms. However, the end result—a neural-network-based inference machine—remains unchallenged. In this paper, we introduce a different type of solution in the longitudinal setting: a closed-form ordinary differential equation (ODE). While we still rely on continuous optimization to learn an ODE, the resulting inference machine is no longer a neural network. Doing so yields several advantages such as interpretability, irregular sampling, and a different set of identification assumptions. Above all, we consider the introduction of a completely new type of solution to be our most important contribution as it may spark entirely new innovations in treatment effects in general. We facilitate this by formulating our contribution as a framework that can transform any ODE discovery method into a treatment effects method.

1 Introduction

Inferring treatment effects over time has received a lot of attention from the machine-learning community (Lim, 2018; Schulam & Saria, 2017; Gwak et al., 2020; Bica et al., 2020b). A major reason for this is the wide range of applications one can apply such a longitudinal treatment effects model. Consider for example the important problem of constructing a treatment plan for cancer patients or even a training schedule to combat unemployment.

The increased attention from the machine learning community resulted in many methods relying on novel neural network architectures and learning algorithms. Once trained, these neural nets are used as inference machines, with innovation focusing on new architectures and learning strategies. This type of approach has indeed yielded many successes, but we believe that for some situations, one may want to consider an entirely different type of model: the ordinary differential equation (ODE).

This point is illustrated in fig.˜1, where we show a standard treatment effects (TE) model on the left, and our new approach on the right. Essentially, a standard TE model will first build a representation to adjust the covariate shift—e.g. through propensity weighting (Robins et al., 2000) or adversarial learning (Bica et al., 2020b)—used to model the outcome. Conversely, our new approach does not adjust the dataspace in any way, and instead discovers a global ODE which we refine per patient, as the goal of ODE discovery is finding underlying closed-form concise ODEs from observed trajectories.

Doing so yields advantages ranging from interpretability to irregular sampling (Lok, 2008; Saarela & Liu, 2016; Ryalen et al., 2019), and even modifying certain assumptions relied upon by contemporary techniques (Pearl, 2009; Bollen & Pearl, 2013). Most of all, we believe that proposing this new strategy may spark a radically different approach to inferring treatment effects over time. Moreover, given that the resulting model is an equation, one may use the discovered solution to engage in further research and data collection, given that we can now understand certain behaviours of the treatment in the environment. The latter is, of course, not possible when using black-box neural network models (Rudin, 2019; Angrist, 1991; Kraemer et al., 2002; Bica et al., 2020a; 2021).

Our contribution is a usable framework that allows us to translate any ODE discovery method (Brunton et al., 2016) into the treatment effects problem formulation. While other TE inference methods have used ideas from ODE literature, none have focused on ODE discovery, we have devoted section˜C.1 to explain this subtle but important difference. Hence, in this paper, we first explain the differences between ODE discovery (and ODEs in general), and treatment effects inference, before connecting them through our proposal. Using our framework, we build an example method (called INSITE)111We provide code at https://github.com/samholt/ODE-Discovery-for-Longitudinal-Heterogeneous-Treatment-Effects-Inference., tested in accepted benchmark settings used throughout the literature. Transforming an ODE discovery method into a TE method results in a new set of identification assumptions. This need not be a limitation, instead, it can be seen as an extension as the typical TE identification assumptions may not always hold in ODE discovery settings.

2 Heterogeneous Treatment effects over time

To reformulate the longitudinal heterogeneous treatment effects problem as an ODE discovery problem, in this section, we first introduce longitudinal treatment effects with its assumptions and then introduce ODE discovery in section˜3. Let random variables , and be the individual’s features, treatment, and outcome at time , with the time horizon. We also denote the static covariates of the individual as . In the treatment effects literature, typically and . Unless required for clarity, we will drop the individual indicator . We use the following notation to denote the observed features in the time window . The observed dataset contains the realizations of the random variables above, .

Of interest is estimating the expected potential outcome , for some under hypothetical future treatments given the historical features and the previous treatments (Neyman, 1923; Rubin, 1980):

| (1) |

By definition, the potential outcome defined above includes multiple hypothetical scenarios that cannot be simultaneously observed in the real world. This problem is often referred to as the fundamental challenge to treatment effects and causal inference (Holland, 1986).

As such, treatment effects (over time) literature typically introduces a set of assumptions to link the (unobservable) potential outcomes to the observable quantities , , and , to correctly estimate in eq.˜1. These assumptions are:

Assumption 2.1 (Consistency)

For an observed treatment process , the potential outcome is the same as the factual outcome .

Assumption 2.2 (Overlap)

The treatment intensity process is not deterministic given any filtration 222We further explain treatment intensity processes and filtrations in the assumptions in appendix B. (Klenke, 2008) and time point , i.e.,

Assumption 2.3 (Ignorability)

The intensity process given the filtration is equal to the intensity process that is generated by the filtration that includes the -algebras generated by future outcomes .

The above generalizes the standard identification assumptions made in static treatment effect literature (Rosenbaum & Rubin, 1983; Seedat et al., 2022). This generalization is largely based on previous extensions to continuous-time causal effects (Lok, 2008; Saarela & Liu, 2016; Ryalen et al., 2019).

Assum.˜2.2 and assum.˜2.3 rely on a treatment intensity process, which can be considered a generalization of the propensity score in continuous time (Robins, 1999). Essentially, assum.˜2.2 allows that any treatment can be chosen at time , given the past observations in the filtering . Furthermore, assum.˜2.3 ensures it is sufficient to condition on a patient’s past observed trajectory to block any backdoor paths to future potential outcomes (eq.˜1).

Given the above, we are allowed the following equality, which identifies the potential outcome (LHS) to be estimated using the observed variables (RHS):

| (2) |

which is exploited by most works in the treatment effects literature (albeit by first regularizing models such that they respect assum.˜2.1, 2.2 and 2.3) (Bica et al., 2020b; Lim, 2018; Melnychuk et al., 2022). A thorough overview of related works is presented in appendix˜C.

3 Underpinnings of Ordinary Differential Equation Discovery

We now propose to model this treatment effect over time problem as a dynamical system, where their temporal evolution can often be well represented by ODEs (Hamilton, 2020). That means we assume the time-varying features and outcomes of the individual () are discrete (and possibly noisy) measurements of underlying continuous trajectories of observed features and potential outcomes , where is called the time horizon (Birkhoff, 1927). To make our formalism consistent, we also assume there is a treatment trajectory such that ’s either constitute snapshots of the underlying continuous treatment or if the treatments are administered at discrete times then is a step function whose values corresponds to the currently administered treatment.

There are many fields which already use ODEs as a formal language to express time dynamics. One such example is pharmacology, where a large portion of the literature is dedicated to recovering an ODE from observational data. The found ODE is then used to reason about possible treatments and disease progression (Geng et al., 2017; Butner et al., 2020). We further assume that this system is modelled by a system of ODEs which describes the time derivative of as a function of , and . The outcome trajectory, , depends on the features . In particular, we assume

| (3) |

where is the differential of . is prespecified by the user and often models the outcome as one of the features (), e.g., the tumour volume. This is a general formulation that may also include direct effects of on . We further discuss eq.˜3 in appendix˜D. The goal of ODE discovery is to recover the underlying system of ODEs based on the observed dataset (Brunel, 2008; Brunel et al., 2014). Of course, the reliance on such a dataset implies that, while the trajectories are defined in continuous time, they are observed in discrete time. Moreover, methods for ODE discovery make the following assumptions:

Assumption 3.1 (Existence and Uniqueness)

The underlying process can be modelled by a system of ODEs ,333Current ODE discovery methods are not designed to work with static features but they can be adapted to this setting by considering them as time-varying features that are constant throughout the trajectory. and for every initial condition , and treatment plan at , there exists a unique continuous solution satisfying the ODEs for all (Lindelöf, 1894; Ince, 1956).

Assumption 3.2 (Observability)

All dimensions of all variables in are observed for all individuals, ensuring sufficient data to identify the system’s dynamics and infer the ODE’s structure and parameters (Kailath, 1980).

Assumption 3.3 (Functional Space)

Each ODE in belongs to some subspace of closed-form ODEs. These are equations that can be represented as mathematical expressions consisting of binary operations , input variables, some well-known functions (e.g., ), and numeric constants (e.g., ) (Schmidt & Lipson, 2009).

Identification. Assum.˜3.1 and 3.2 play a crucial role in ODE discovery. Essentially, we require making such assumptions in order to correctly identify the underlying equation. The assumptions made in the treatment effects literature (assum.˜2.3, 2.2 and 2.1) serve a similar purpose as they allow us to interpret the estimand as a causal effect, i.e., they are necessary for identification (Imbens & Rubin, 2015; Rosenbaum & Rubin, 1983; Imbens, 2004).

Assum.˜3.1 ensures that the discovered ODE has a unique solution, which is essential for making reliable predictions, assum.˜3.2 is necessary such that the observed data can be used to accurately identify the underlying ODE. Finally, assum.˜3.3 defines the space of equations for the optimization algorithm to consider. We review methods for ODE discovery in appendix˜C.

4 Connecting treatment effects inference and ODE discovery

—the framework

From eqs.˜2 and 3, one can see that both treatment effects and ODE discovery involve learning a function that can issue predictions about future states. Although the connection is natural, there remain some discrepancies between these two fields. To apply ODE discovery methods for treatment effects inference, we have to resolve these discrepancies. Essentially, we establish a framework one can use to apply any ODE discovery technique in treatment effects.

We identify three discrepancies between the treatment effects literature and the ODE discovery literature: (1) different assumptions, (2) discrete (not continuous) treatment plans, and (3) variability across subjects. Each discrepancy is explained and reconciled in a dedicated subsection below with actionable steps.

Figure˜2 shows the areas ODE discovery methods can be expanded to, using our framework. Ranging from simple adaption, to proposing a completely new method (in section˜5), our framework significantly increases the reach of existing ODE discovery methods. Our new method—INSITE—should be considered the result after implementing our practical framework we present in the remainder of this section.

4.1 Deciding on assumptions (discr.˜1)

Discrepancy 1 (Different assumptions.)

In sections˜2 and 3 we listed the most common assumptions made in treatment effects and ODE discovery literature, respectively. While solving similar problems, these assumptions do not correspond one-to-one (table˜1). However, the fact that they do not can be seen as a major advantage—in some scenarios it may be more appropriate to assume assum.˜2.1, 2.2 and 2.3 versus assum.˜3.1, 3.2 and 3.3 or vice versa, which expands the application domain.

Increasingly, the treatment effects literature is considering settings that violate the overlap assumption assum.˜2.2 (D’Amour et al., 2021). It has been shown that correct model specification can weaken or even replace the overlap assumption (Gelman & Hill, 2006, Chapter 10). The same holds true for the ODE discovery methods, where overlap in assum.˜2.2 can be relaxed with assum.˜3.1 and 3.3. Consider an example where the true and specified models are both linear. Here, overlap can be violated as we can safely extrapolate outside the support of either treatment distribution. In contrast, the recent neural network-based treatment effects models can rarely satisfy correct model specification (Lim, 2018; Bica et al., 2020b; Berrevoets et al., 2021; Melnychuk et al., 2022). As a result, the overlap assumption plays a key role for these methods. We show this empirically in section˜6.

Framework step 1: Accept ODE discovery assumptions (assum.˜3.1, 3.2 and 3.3).

4.2 Incorporating diverse treatment types (discr.˜2)

Discrepancy 2 (Discrete treatment plans.)

In section˜3 we assume that is a trajectory like and , with a snapshot observed similarly to observing covariates and outcomes. This implies that, like covariates and outcomes, the treatment plan is defined in continuous time, and the treatment is itself a continuous value. While there exist scenarios where this could be possible (e.g., in settings where treatment is constantly administered), most settings violate this. Hence, we need to reconcile modelling treatments in continuous time and values to settings violating these basic setups.

To connect ODE discovery with the treatment effects literature, we require incorporating different types of treatment plans. Continuous valued treatment that is administered over time is adopted quite naturally in a differential equation. However, the treatment effects literature is typically focused on other types of treatment (Bica et al., 2021): binary treatments, categorical treatments, multiple simultaneous treatments, and dosage. Each of these treatments can be static (i.e., constant throughout the trajectory) or dynamic. With such diverse treatments, it might be impossible to express as a closed-form expression. For instance, where the treatment is a categorical variable (i.e., ). This violates assum.˜3.3 of continuous solutions made by ODE discovery methods.

To reconcile it, we need to decide how to incorporate treatment into . This can be done in two ways: either we discover different (piecewise) closed-form ODEs for different treatments (Trefethen et al., 2017; Jianwang et al., 2021), or we incorporate the action variable into the closed-form ODE. Depending on the type of treatment one or both of these approaches can be chosen. In appendix˜E we outline the ways of incorporating the treatment plan in according to the treatment types listed above. In summary, there are 4 different treatment types: binary, categorical, multiple, and continuous treatments (Bica et al., 2020b). Each of them can be a static or dynamic treatment, which is either constant or changes during a trajectory, respectively. This results in 8 scenarios, which we model in two ways: either the ODE changes for each treatment option; or the treatment is part of the starting condition. These are all outlined in table˜6 in appendix˜E.

In section˜6 we demonstrate the effectiveness of discovering ODEs per categorical treatment. {actionpoint} Framework step 2: Incorporate treatment plans as dynamical systems using appendix˜E.

4.3 Modelling between-subject variability (discr.˜3)

Discrepancy 3 (Between-subject variability.)

In a classical ODE discovery setting, we usually have only one kind of between-subject variability corresponding to a noisy measurement - residual unexplained variability (RUV). However, there are a few other sources of variability that need to be accounted for before considering it as an ODE discovery problem.

As pharmacological models play a prominent role in treatment effect literature (Geng et al., 2017; Bica et al., 2020b; Berrevoets et al., 2021; Lim, 2018; Seedat et al., 2022) we employ formalism from pharmacology literature to discuss between-subject variability (BSV).

There are two types of BSV (Mould & Upton, 2012): (i) Unexplained variability which includes RUV and parameter distributions; and (ii) Explained variability includes covariate models where we model the impact of static covariates on the equation’s parameters.

We base the model of our causal dynamical system on the formalism introduced in Peters et al. (2022). In particular, they use the term Deterministic Casual Kinetic Model (DCKM), which can be summarised as a system of first-order autonomous ODEs. This is the simplest pharmacological model with no BSV (setting A in Table˜2). In light of realism, however, we can add different layers of BSV, making the model more complex and thus more difficult to discover by current methods.

Table˜2 outlines different types of BSV as graphical models, each with increasing complexity. In section˜6, we relate the parameter columns in table˜2 to the underlying ground-truth equations used in our experiments. We now detail these layers in increasing complexity.

B: RUV - noisy measurements. Noisy measurements are one of the biggest challenges of ODE discovery (Brunton et al., 2016). This is because noisy measurements cause derivative estimation to be very challenging. Recently, methods based on the weak formulation of ODEs (Qian et al., 2022; Messenger & Bortz, 2021) have managed to circumvent the derivative estimation step, making them more robust to noisy measurements. We represent DCKMs with noisy measurements graphically similar to the standard DCKM but will now explicitly add noise-related nodes (table˜2 row B).

C: Covariate models. One way we can incorporate explainable variability into the model is by modelling the impact of the covariates on the model parameters. The covariates are incorporated into the model by closed-form expressions. Usually simple ones such as linear, exponential, or power (Mould & Upton, 2012). Sometimes other equations are used, such as complex closed-form expressions or piece-wise functions (Chung et al., 2021). We add a node corresponding to static covariates to represent covariate models graphically (table˜2 row C).

D: Parameter distributions. Although the covariate models let us calculate the group parameters—parameters for a specific group of people based, e.g., on their age or weight, the actual parameter for an individual might deviate from this value in some random way. We can model this by assuming a distribution of parameter values with its mean depending on the covariates. We can depict this graphically by adding the nodes corresponding to the parameters and the associated noise nodes coming into them. Similarly, ODE discovery methods are not designed to discover such models (table˜2 row D).

Framework step 3: Decide on the BSV using table˜2 and, if type D, adjust as in section˜5.

| (i) | (ii) | (iii) | (iv) | Causal graph | Example | ||

| ODE. | +RUV | +Cov. | +Dist | Parameters (eq.˜5) | |||

| A | ✓ | ✗ | ✗ | ✗ | |||

| B | ✓ | ✓ | ✗ | ✗ | |||

| C | ✓ | ✓ | ✓ | ✗ | |||

| D | ✓ | ✓ | ✓ | ✓ | , | ||

5 INSITE —the framework in practice

Having introduced our general framework of applying ODE discovery techniques to treatment effects inference (in section˜4), we now turn to apply it. Following the framework steps (FS) as described in section˜4, we have to: (FS1) accept a new set of assumptions (discr.˜1), (FS2) incorporate treatment plans (discr.˜2), and (FS3) decide on the BSV type (discr.˜3). For our purposes, we use SINDy (Brunton et al., 2016), one of the most widely adopted and used methods in ODE discovery. One can use any underlying ODE discovery methods as long as they: (1) model ODEs that include a set of numeric constants (, with numeric constants), and (2) we accept that BSV is modelled through these numeric constants alone, i.e., two varying subjects can only differ by having different model numeric constants.444Essentially, we cannot have a mathematical expression changed across subjects (such as an changed to a . In fact, a scenario such as this would violate assum. 3.3).

Individualized Nonlinear Sparse Identification Treatment Effect (INSITE) consists of two main steps, reminiscent of the remaining discr.˜2 and 3: (1) discover the population (global) differential equation for all patients, and (2) discover the patient-specific differential equation by fine-tuning the population equation’s numeric constants—as outlined in algorithm˜1. Having a set of numeric constants (one for each patient) we derive a population differential equation where each individual’s numeric constants are represented by a sample from this population differential equation distribution.

◆ Step 1 (cfr. table˜1): Discovering the Population Differential Equation. Given an observed dataset of patients, we aim to discover the population differential equation governing the patient covariates’ interaction with their potential outcomes. We can use any deterministic differential equation discovery method such as SINDy (Brunton et al., 2016) to recover the population ODE . We adapt it to handle treatments as outlined in section˜4.2 and appendix˜E (as per discr.˜2), where for each separate categorical (or binary) treatment, we discover a separate ODE—which is only active for that categorical treatment, i.e., .

◆ Step 2 (cfr. discr.˜3): Discovering Patient-Specific Differential Equations. Once the population differential equation is discovered, we then fine-tune the numeric constants in the population equation to obtain patient-specific differential equations . By keeping the same functional form but allowing unique numeric constants for each patient to be refined, we can model the individualized treatment effect and account for patient heterogeneity (between-subject variability). Crucially, to avoid overfitting and allow only small deviations away from the initial population numeric constants , we fit the observed patient trajectory up to the current time by minimizing,

| (4) |

a Mean Squared Error (MSE) of the predicted trajectory evolution for the observed trajectory. Additionally, we also require a regularization term in eq.˜4. Specifically, for each individual trajectory, , we refine the population set of parameters () to obtain while still using them to regularize to prevent overfitting. The latter is an insight borrowed from transfer learning (Tommasi et al., 2010; Tommasi & Caputo, 2009; Takada & Fujisawa, 2020). Interestingly, without such regularization, we find INSITE to underperform significantly (cfr. section˜K.1). We use Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm (Fletcher, 2013) to minimize eq.˜4 as is standard in symbolic regression (Petersen et al., 2020), and provide full inference details in section˜G.1.

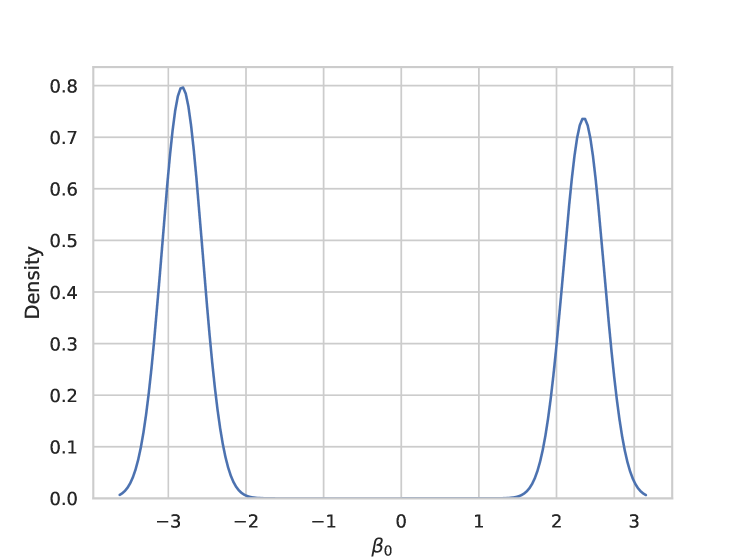

Parameter distributions. Unique to our method is that after obtaining the patient-specific differential equations, we can derive a population differential equation where each numeric constant is represented by a distribution, such as a normal distribution or a mixture of distributions—recovering a probabilistic interpretation of the underlying data generating process. This is explored in section˜K.2.

6 Experiments and evaluation

To allow for a robust and systematic comparison of longitudinal treatment effect (LTE) methods and ODE discovery techniques, we create a synthetic testbed designed for treatment effect prediction across different synthetic datasets generated from different classes of pharmacological models. We note that using synthetic datasets is common in benchmarking LTE methods, as for a real dataset the counterfactual outcomes are unknown (Lim, 2018; Bica et al., 2020b). Our testbed consists of:

Diverse underlying pharmacological models . The synthetic datasets are generated by sampling a pharmacological model with a given treatment assignment policy. To ensure robustness across diverse pharmacological scenarios, we include the model classes outlined in section˜4.3, from A to D—which differ between noise, static covariates, and parametric distributions of parameters. We analyze two standard Pharmacokinetic-Pharmacodynamic (PKPD) models for each model class (section˜4.3). First, a one-compartmental PKPD model (eq.˜5) with a binary static action. Second, a state-of-the-art biomedical PKPD model of tumor growth, used to simulate the combined effects of chemotherapy and radiotherapy in lung cancer (Geng et al., 2017) (eq.˜6)—this has been extensively used by other works (Seedat et al., 2022; Bica et al., 2020b; Melnychuk et al., 2022). Here, to explore continuous types of treatments, we use a continuous chemotherapy treatment and a binary radiotherapy treatment , both changing over time. For both models , is the volume of the tumor days after diagnosis, modeled separately as:

| (5) |

| (6) |

where the parameters are sampled according to the different layers of between-subject variability (table˜2) forming variations of A-D, with parameter distributions following that as described in Geng et al. (2017) or otherwise detailed in appendix˜F. We also compare to the standard implementation of eq.˜6 (labelled as Cancer PKPD) to ensure standard comparison to existing state-of-the-art methods, where the treatments are both binary. We further detail dataset generation for each in appendix˜F.

Action assignment policy. We introduce time-dependent confounding by making the treatment assignment vary from a purely random treatment assignment to purely deterministic based on a threshold of the outcome predictor value, controlled by a scalar —such that corresponds to no time-dependent confounding and larger values correspond to increasing time-dependent confounding. Further details of this treatment policy are in appendix˜F.

Benchmark methods. We seek to compare against the existing state-of-the-art (SOTA) methods from (1) longitudinal treatment effect models and (2) ODE discovery. First, longitudinal treatment effect models often consist of black-box neural network-based approaches, i.e., not closed-form or easily interpretable. A significant theme in these works is the development of methods to mitigate time-dependent confounding. Many mitigation methods have been proposed that we use as benchmarks, such as propensity networks in Marginal Structural Models (MSM) (Robins et al., 2000) and Recurrent Marginal Structural Networks (RMSN) (Lim, 2018), Gradient Reversal in Counterfactual Recurrent Networks (CRN) (Bica et al., 2020b), Confusion in Causal Transformers (CT) (Melnychuk et al., 2022) and g-computation in G-Net (G-Net) (Li et al., 2021). Second, ODE discovery methods aim to discover an underlying closed-form mathematical equation that best fits the underlying controlled ODE of the observed state and action trajectories dataset, . In these methods, the state derivative is unobserved; therefore, they have to approximate the derivative using finite differences, as in Sparse Identification of Nonlinear Dynamics (Brunton et al., 2016), or employ a variational loss as the objective, as in Weak Sparse Identification of Nonlinear Dynamics (Messenger & Bortz, 2021)555We only include results for WSINDy when it is possible to use it, as it does not support sparse short trajectories. Detailed further in appendix G.. To make these two ODE discovery methods more competitive, we adapt them to model individual ODEs per categorical treatment (section˜4.2), (termed A-SINDy, A-WSINDy respectively). We further discuss why we chose these SOTA methods and their implementation details in appendix˜G.

Evaluation. We evaluate against the standard longitudinal treatment effect metrics of test counterfactual -step ahead prediction normalized root mean squared error (RMSE), where , for a sliding treatment plan (Melnychuk et al., 2022). Unless otherwise specified, each result is run for five random seed runs with time-dependent confounding of —see appendix˜H for details.

Main results. The test counterfactual normalized RMSE for 6-step ahead prediction for each benchmark dataset is tabulated in table˜3. Our method, INSITE, achieves the lowest test counterfactual normalized RMSE across all methods. We provide additional experimental results in appendix˜K.

Interpretable equations. Unique to the proposed framework and INSITE, is that the discovered differential equation is fully interpretable; however, it relies on strong assumptions. Some of these discovered equations are shown in appendix˜J. It is clear that even with binary actions, INSITE is able to discover a useful equation that performs well and is similar in form to the true underlying equation, even discovering it exactly in some simple settings of eq.˜5. The method can even do this in the presence of noise (BSV layers of B-D) and extrapolate well for future -step predictions, fig.˜3 (a).

Model misspecification. INSITE and the adapted ODE discovery methods (A-SINDy, A-WSINDy) are only correctly specified for eq.˜5.A-D datasets, as they use the feature library of . Crucially, the ODE discovery methods are misspecified for eq.˜6.A-D, and the Cancer PKPD datasets, as their underlying equation would require the feature library of to be correctly specified. Although this misspecification persists, as noticeable from the increased order of magnitude increase in error, we still observe INSITE achieves a lower error than the longitudinal treatment effect models (app. K.6).

| Method | eq.˜5.A | eq.˜5.B | eq.˜5.C | eq.˜5.D | eq.˜6.A | eq.˜6.B | eq.˜6.C | eq.˜6.D | Cancer PKPD | |

| LTE | MSM | 0.998.37e-17 | 0.990.00 | 0.978.37e-17 | 2.090.00 | 2.550.13 | 2.550.13 | 2.060.16 | 2.110.04 | 2.300.12 |

| RMSN | 1.920.24 | 1.940.23 | 1.680.19 | 1.910.18 | 1.230.15 | 1.250.15 | 1.100.18 | 1.100.11 | 1.040.17 | |

| CRN | 1.050.10 | 1.050.10 | 0.820.09 | 1.980.14 | 1.050.03 | 1.050.03 | 1.030.08 | 1.030.10 | 0.920.08 | |

| G-Net | 0.910.20 | 0.910.20 | 0.720.14 | 0.970.15 | 1.330.27 | 1.340.27 | 1.020.11 | 1.250.15 | 1.220.14 | |

| CT | 0.900.18 | 0.900.18 | 0.750.14 | 1.000.14 | 1.290.07 | 1.290.10 | 1.030.11 | 1.140.10 | 1.070.07 | |

| ODE-D | A-SINDy | 0.110.00 | 0.110.00 | 0.132.09e-17 | 0.150.00 | 1.450.03 | 1.450.03 | 1.400.01 | 1.510.09 | 1.230.13 |

| A-WSINDy | 0.117.24e-05 | 0.112.49e-04 | 0.121.47e-03 | 0.107.61e-04 | NA | NA | NA | NA | NA | |

| INSITE | 0.02 2.62e-18 | 0.03 0.00 | 0.04 0.00 | 0.05 5.23e-18 | 0.94 0.05 | 0.94 0.05 | 0.84 0.04 | 0.87 0.08 | 0.79 8.37e-17 |

Relaxing the overlap assumption. We can relax the overlap assumption (assum.˜2.2) by increasing the time-dependent confounding of the treatment given, increasing . We observe, in fig.˜3 (b), that INSITE can still discover a good approximating underlying equation even in the presence of high time-dependent confounding—hence where there is low overlap.

| ODE per Cat. | Fine Tuning | eq.˜5.D -step |

| (section˜4.2) | n-RMSE | |

| ✓ | ✓ | 0.05 5.23e-18 |

| ✗ | ✓ | 0.43 7.71e-17 |

| ✓ | ✗ | 0.15 0.00 |

| ✗ | ✗ | 0.87 0.00 |

INSITE Ablation. INSITE’s gain in performance derives from our framework’s discr.˜2, to discover individual ODEs per categorical treatment, as well as the ability to discover individualized (fine-tuned) ODEs. Notably, the improvement arising from the ability to discover individual ODEs per categorical treatment can be widely applied to existing ODE discovery methods using our framework for treatment effects over time. Furthermore, we conduct additional insight experiments to evaluate the methods against datasets of smaller sample sizes and increasing observation noise in appendix˜K.

7 Conclusion and Future Work

In conclusion, we presented a first framework that connects longitudinal heterogeneous treatment effects with ODE discovery methods, enabling improved interpretability and performance. Naturally, this is just the beginning. We hope that building on our framework (appendix˜A), the connection between treatment effects and ODE discovery is further solidified and perhaps extended to other types of differential equations and dynamical systems in general (see e.g. appendix˜C).

Ethics statement. This paper’s novel approach of integrating ODE discovery methods into treatment effects inference can revolutionize precision medicine by enabling personalized, effective treatment strategies. However, misuse or application in inappropriate contexts could lead to incorrect treatment decisions. Moreover, despite the potential for individualized treatment, privacy concerns arise. Thus, proper data governance, clear communication of model limitations, and rigorous validation using diverse datasets are vital for responsible application.

Regarding limitations of our method, INSITE, and our framework in general, we list 3 major limitations one should take into account before applying our work in practice:

-

1.

A correct set of candidate functions (tokens) is necessary for correct model recovery. To show the importance of this, we include experiments where we have wrongly specified this token library (see section˜K.6) and observe degrading performance as a result.

-

2.

ODE discovery works best in sparse settings. The reason is two-fold: from a technical point of view, sparse equations are much less complex and simply easier to recover; from a usability point of view, the usefulness of non-sparse equations is limited as interpretability is negatively affected by non-sparse (or non-parsimonious) equations (Crabbe et al., 2020).

-

3.

ODEs are noise free. Since we recover ODEs, the found equations do not model a source of noise as is typically the case in structural equation modelling. To model noise terms explicitly, our framework should be extended into stochastic DEs, as is stated in our future works paragraph above.

Reproducibility statement. We provide all code at https://github.com/samholt/ODE-Discovery-for-Longitudinal-Heterogeneous-Treatment-Effects-Inference. To ensure this paper is fully reproducible, we include an extensive appendix with all implementation and experimental details to recreate the method and experiments. These are outlined as the following: for benchmark dataset details, see appendix˜F; benchmark method implementation details, including those of the treatment effects baselines and adapted ODE discovery methods which include the proposed INSITE method see G; evaluation metric details see appendix˜H; dataset generation and model training see appendix˜I.

Acknowledgements. The authors would like to acknowledge and thank their corresponding funders, where SH is funded by AstraZeneca, JB is funded by W.D. Armstrong Trust, KK is funded by Roche, ZQ is funded by the Office of Naval Research (ONR). Moreover, we would like to warmly thank all the anonymous reviewers, alongside research group members of the van der Schaar lab (www.vanderschaar-lab.com), for their valuable input, comments, and suggestions as the paper was developed.

References

- Alaa & van der Schaar (2019) Ahmed M Alaa and Mihaela van der Schaar. Attentive state-space modeling of disease progression. Advances in neural information processing systems, 32, 2019.

- Alvarez & Lawrence (2009) Mauricio A Alvarez and Neil D Lawrence. Sparse convolved multiple output gaussian processes. arXiv preprint arXiv:0911.5107, 2009.

- Angrist (1991) Joshua Angrist. Instrumental variables estimation of average treatment effects in econometrics and epidemiology, 1991.

- Angrist et al. (1996) Joshua D Angrist, Guido W Imbens, and Donald B Rubin. Identification of causal effects using instrumental variables. Journal of the American statistical Association, 91(434):444–455, 1996.

- Athey & Imbens (2006) Susan Athey and Guido W Imbens. Identification and inference in nonlinear difference-in-differences models. Econometrica, 74(2):431–497, 2006.

- Berrevoets et al. (2021) Jeroen Berrevoets, Alicia Curth, Ioana Bica, Eoin McKinney, and Mihaela van der Schaar. Disentangled counterfactual recurrent networks for treatment effect inference over time. arXiv preprint arXiv:2112.03811, 2021.

- Berrevoets et al. (2023) Jeroen Berrevoets, Krzysztof Kacprzyk, Zhaozhi Qian, and Mihaela van der Schaar. Causal deep learning. arXiv preprint arXiv:2303.02186, 2023.

- Bica et al. (2020a) Ioana Bica, Ahmed Alaa, and Mihaela Van Der Schaar. Time series deconfounder: Estimating treatment effects over time in the presence of hidden confounders. In International Conference on Machine Learning, pp. 884–895. PMLR, 2020a.

- Bica et al. (2020b) Ioana Bica, Ahmed M. Alaa, James Jordon, and Mihaela van der Schaar. Estimating counterfactual treatment outcomes over time through adversarially balanced representations. In International Conference on Learning Representations, 2020b.

- Bica et al. (2021) Ioana Bica, Ahmed M. Alaa, Craig Lambert, and Mihaela Schaar. From Real-World Patient Data to Individualized Treatment Effects Using Machine Learning: Current and Future Methods to Address Underlying Challenges. Clinical Pharmacology & Therapeutics, 109(1):87–100, January 2021. ISSN 0009-9236, 1532-6535. doi: 10.1002/cpt.1907.

- Birkhoff (1927) George David Birkhoff. Dynamical systems, volume 9. American Mathematical Soc., 1927.

- Bollen & Pearl (2013) Kenneth A Bollen and Judea Pearl. Eight myths about causality and structural equation models. Handbook of causal analysis for social research, pp. 301–328, 2013.

- Brunel (2008) Nicolas JB Brunel. Parameter estimation of ode’s via nonparametric estimators. 2008.

- Brunel et al. (2014) Nicolas JB Brunel, Quentin Clairon, and Florence d’Alché Buc. Parametric estimation of ordinary differential equations with orthogonality conditions. Journal of the American Statistical Association, 109(505):173–185, 2014.

- Brunton et al. (2016) Steven L Brunton, Joshua L Proctor, and J Nathan Kutz. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proceedings of the national academy of sciences, 113(15):3932–3937, 2016.

- Butner et al. (2020) Joseph D Butner, Dalia Elganainy, Charles X Wang, Zhihui Wang, Shu-Hsia Chen, Nestor F Esnaola, Renata Pasqualini, Wadih Arap, David S Hong, James Welsh, et al. Mathematical prediction of clinical outcomes in advanced cancer patients treated with checkpoint inhibitor immunotherapy. Science advances, 6(18):eaay6298, 2020.

- Camps-Valls et al. (2023) Gustau Camps-Valls, Andreas Gerhardus, Urmi Ninad, Gherardo Varando, Georg Martius, Emili Balaguer-Ballester, Ricardo Vinuesa, Emiliano Diaz, Laure Zanna, and Jakob Runge. Discovering causal relations and equations from data. arXiv preprint arXiv:2305.13341, 2023.

- Cho et al. (2014) Kyunghyun Cho, Bart Van Merriënboer, Caglar Gulcehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, and Yoshua Bengio. Learning phrase representations using rnn encoder-decoder for statistical machine translation. arXiv preprint arXiv:1406.1078, 2014.

- Chung et al. (2021) Erin Chung, Jonathan Sen, Priya Patel, and Winnie Seto. Population Pharmacokinetic Models of Vancomycin in Paediatric Patients: A Systematic Review. Clinical Pharmacokinetics, 60(8):985–1001, August 2021. ISSN 0312-5963, 1179-1926. doi: 10.1007/s40262-021-01027-9.

- Crabbe et al. (2020) Jonathan Crabbe, Yao Zhang, William Zame, and Mihaela van der Schaar. Learning outside the black-box: The pursuit of interpretable models. In H. Larochelle, M. Ranzato, R. Hadsell, M.F. Balcan, and H. Lin (eds.), Advances in Neural Information Processing Systems, volume 33, pp. 17838–17849. Curran Associates, Inc., 2020. URL https://proceedings.neurips.cc/paper_files/paper/2020/file/ce758408f6ef98d7c7a7b786eca7b3a8-Paper.pdf.

- Cranmer et al. (2020) Miles Cranmer, Alvaro Sanchez Gonzalez, Peter Battaglia, Rui Xu, Kyle Cranmer, David Spergel, and Shirley Ho. Discovering symbolic models from deep learning with inductive biases. Advances in Neural Information Processing Systems, 33:17429–17442, 2020.

- Datta & Mohan (1995) Kanti Bhushan Datta and Bosukonda Murali Mohan. Orthogonal functions in systems and control, volume 9. World Scientific, 1995.

- D’Amour et al. (2021) Alexander D’Amour, Peng Ding, Avi Feller, Lihua Lei, and Jasjeet Sekhon. Overlap in observational studies with high-dimensional covariates. Journal of Econometrics, 221(2):644–654, 2021.

- Falcon (2019) William A Falcon. Pytorch lightning. GitHub, 3, 2019.

- Fletcher (2013) Roger Fletcher. Practical methods of optimization. John Wiley & Sons, 2013.

- Florens et al. (2008) Jean-Pierre Florens, James J Heckman, Costas Meghir, and Edward Vytlacil. Identification of treatment effects using control functions in models with continuous, endogenous treatment and heterogeneous effects. Econometrica, 76(5):1191–1206, 2008.

- Funk et al. (2011) Michele Jonsson Funk, Daniel Westreich, Chris Wiesen, Til Stürmer, M Alan Brookhart, and Marie Davidian. Doubly robust estimation of causal effects. American journal of epidemiology, 173(7):761–767, 2011.

- Ganin & Lempitsky (2015) Yaroslav Ganin and Victor Lempitsky. Unsupervised domain adaptation by backpropagation. In International conference on machine learning, pp. 1180–1189. PMLR, 2015.

- Gasull et al. (2004) Armengol Gasull, Antoni Guillamon, and Jordi Villadelprat. The period function for second-order quadratic odes is monotone. Qualitative Theory of Dynamical Systems, 4(2):329–352, 2004.

- Gelman & Hill (2006) Andrew Gelman and Jennifer Hill. Data analysis using regression and multilevel/hierarchical models. Cambridge university press, 2006.

- Geng et al. (2017) Changran Geng, Harald Paganetti, and Clemens Grassberger. Prediction of Treatment Response for Combined Chemo- and Radiation Therapy for Non-Small Cell Lung Cancer Patients Using a Bio-Mathematical Model. Scientific Reports, 7(1):13542, October 2017. ISSN 2045-2322. doi: 10.1038/s41598-017-13646-z.

- Goyal & Benner (2022) Pawan Goyal and Peter Benner. Discovery of nonlinear dynamical systems using a runge–kutta inspired dictionary-based sparse regression approach. Proceedings of the Royal Society A, 478(2262):20210883, 2022.

- Gwak et al. (2020) Daehoon Gwak, Gyuhyeon Sim, Michael Poli, Stefano Massaroli, Jaegul Choo, and Edward Choi. Neural ordinary differential equations for intervention modeling. arXiv preprint arXiv:2010.08304, 2020.

- Hamilton (2020) James Douglas Hamilton. Time series analysis. Princeton university press, 2020.

- Hernán et al. (2001) Miguel A Hernán, Babette Brumback, and James M Robins. Marginal structural models to estimate the joint causal effect of nonrandomized treatments. Journal of the American Statistical Association, 96(454):440–448, 2001.

- Hızlı et al. (2022) Çağlar Hızlı, ST John, Anne Tuulikki Juuti, Tuure Tapani Saarinen, Kirsi Hannele Pietiläinen, and Pekka Marttinen. Joint point process model for counterfactual treatment-outcome trajectories under policy interventions. In NeurIPS 2022 Workshop on Learning from Time Series for Health, 2022.

- Hochreiter & Schmidhuber (1997) Sepp Hochreiter and Jürgen Schmidhuber. Long short-term memory. Neural computation, 9(8):1735–1780, 1997.

- Holland (1986) Paul W Holland. Statistics and causal inference. Journal of the American statistical Association, 81(396):945–960, 1986.

- Holt et al. (2023a) Samuel Holt, Alihan Hüyük, Zhaozhi Qian, Hao Sun, and Mihaela van der Schaar. Neural laplace control for continuous-time delayed systems. In International Conference on Artificial Intelligence and Statistics, pp. 1747–1778. PMLR, 2023a.

- Holt et al. (2023b) Samuel Holt, Zhaozhi Qian, and Mihaela van der Schaar. Deep generative symbolic regression. arXiv preprint arXiv:2401.00282, 2023b.

- Holt et al. (2024) Samuel Holt, Alihan Hüyük, and Mihaela van der Schaar. Active observing in continuous-time control. Advances in Neural Information Processing Systems, 36, 2024.

- Holt et al. (2022) Samuel I Holt, Zhaozhi Qian, and Mihaela van der Schaar. Neural laplace: Learning diverse classes of differential equations in the laplace domain. In International Conference on Machine Learning, pp. 8811–8832. PMLR, 2022.

- Imbens (2004) Guido W Imbens. Nonparametric estimation of average treatment effects under exogeneity: A review. Review of Economics and statistics, 86(1):4–29, 2004.

- Imbens & Rubin (2015) Guido W Imbens and Donald B Rubin. Causal inference in statistics, social, and biomedical sciences. Cambridge University Press, 2015.

- Ince (1956) Edward L Ince. Ordinary differential equations. Courier Corporation, 1956.

- Jha et al. (2020) Rakshit Jha, Mattijs De Paepe, Samuel Holt, James West, and Shaun Ng. Deep learning for digital asset limit order books. arXiv preprint arXiv:2010.01241, 2020.

- Jianwang et al. (2021) Hong Jianwang, Ricardo A Ramirez-Mendoza, and Xiang Yan. Statistical inference for piecewise affine system identification. Mathematical Problems in Engineering, 2021:1–9, 2021.

- Johansson et al. (2016) Fredrik Johansson, Uri Shalit, and David Sontag. Learning representations for counterfactual inference. In International conference on machine learning, pp. 3020–3029. PMLR, 2016.

- Johnson et al. (2019) Kaitlyn E Johnson, Grant Howard, William Mo, Michael K Strasser, Ernesto ABF Lima, Sui Huang, and Amy Brock. Cancer cell population growth kinetics at low densities deviate from the exponential growth model and suggest an allee effect. PLoS biology, 17(8):e3000399, 2019.

- Kailath (1980) Thomas Kailath. Linear systems, volume 156. Prentice-Hall Englewood Cliffs, NJ, 1980.

- Kingma & Ba (2014) Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- Klenke (2008) Achim Klenke. Probability theory. universitext, 2008.

- Kraemer et al. (2002) Helena Chmura Kraemer, G Terence Wilson, Christopher G Fairburn, and W Stewart Agras. Mediators and moderators of treatment effects in randomized clinical trials. Archives of general psychiatry, 59(10):877–883, 2002.

- Kuzmanovic et al. (2023) Milan Kuzmanovic, Tobias Hatt, and Stefan Feuerriegel. Estimating conditional average treatment effects with missing treatment information. In International Conference on Artificial Intelligence and Statistics, pp. 746–766. PMLR, 2023.

- Lechner & Hasani (2020) Mathias Lechner and Ramin Hasani. Learning long-term dependencies in irregularly-sampled time series. arXiv preprint arXiv:2006.04418, 2020.

- Li et al. (2021) Rui Li, Stephanie Hu, Mingyu Lu, Yuria Utsumi, Prithwish Chakraborty, Daby M Sow, Piyush Madan, Jun Li, Mohamed Ghalwash, Zach Shahn, et al. G-net: a recurrent network approach to g-computation for counterfactual prediction under a dynamic treatment regime. In Machine Learning for Health, pp. 282–299. PMLR, 2021.

- Lim (2018) Bryan Lim. Forecasting treatment responses over time using recurrent marginal structural networks. Advances in neural information processing systems, 31, 2018.

- Lindelöf (1894) Ernest Lindelöf. Sur l’application de la méthode des approximations successives aux équations différentielles ordinaires du premier ordre. Comptes rendus hebdomadaires des séances de l’Académie des sciences, 116(3):454–457, 1894.

- Ljung (1998) Lennart Ljung. System identification. Springer, 1998.

- Lok (2008) Judith J Lok. Statistical modeling of causal effects in continuous time. 2008.

- Melnychuk et al. (2022) Valentyn Melnychuk, Dennis Frauen, and Stefan Feuerriegel. Causal transformer for estimating counterfactual outcomes. In International Conference on Machine Learning, pp. 15293–15329. PMLR, 2022.

- Messenger & Bortz (2021) Daniel A. Messenger and David M. Bortz. Weak SINDy: Galerkin-Based Data-Driven Model Selection. Multiscale Modeling & Simulation, 19(3):1474–1497, January 2021. ISSN 1540-3459, 1540-3467. doi: 10.1137/20M1343166.

- Mould & Upton (2012) Dr Mould and Rn Upton. Basic Concepts in Population Modeling, Simulation, and Model-Based Drug Development. CPT: Pharmacometrics & Systems Pharmacology, 1(9):6, 2012. ISSN 2163-8306. doi: 10.1038/psp.2012.4.

- Mouli et al. (2023) S Chandra Mouli, Muhammad Ashraful Alam, and Bruno Ribeiro. Metaphysica: Ood robustness in physics-informed machine learning. arXiv preprint arXiv:2303.03181, 2023.

- Neyman (1923) Jersey Neyman. Sur les applications de la théorie des probabilités aux experiences agricoles: Essai des principes. Roczniki Nauk Rolniczych, 10(1):1–51, 1923.

- Noorbakhsh & Rodriguez (2022) Kimia Noorbakhsh and Manuel Rodriguez. Counterfactual temporal point processes. Advances in Neural Information Processing Systems, 35:24810–24823, 2022.

- Pearl (2009) Judea Pearl. Causal inference in statistics: An overview. 2009.

- Peters et al. (2022) Jonas Peters, Stefan Bauer, and Niklas Pfister. Causal models for dynamical systems. In Probabilistic and Causal Inference: The Works of Judea Pearl, pp. 671–690. ACM, 2022.

- Petersen et al. (2020) Brenden K Petersen, Mikel Landajuela Larma, Terrell N Mundhenk, Claudio Prata Santiago, Soo Kyung Kim, and Joanne Taery Kim. Deep symbolic regression: Recovering mathematical expressions from data via risk-seeking policy gradients. In International Conference on Learning Representations, 2020.

- Qian et al. (2020) Zhaozhi Qian, Ahmed M Alaa, and Mihaela van der Schaar. When and how to lift the lockdown? global covid-19 scenario analysis and policy assessment using compartmental gaussian processes. Advances in Neural Information Processing Systems, 33:10729–10740, 2020.

- Qian et al. (2022) Zhaozhi Qian, Krzysztof Kacprzyk, and Mihaela van der Schaar. D-code: Discovering closed-form odes from observed trajectories. In International Conference on Learning Representations, 2022.

- Rasmussen et al. (2006) Carl Edward Rasmussen, Christopher KI Williams, et al. Gaussian processes for machine learning, volume 1. Springer, 2006.

- Robins (1994) James M Robins. Correcting for non-compliance in randomized trials using structural nested mean models. Communications in Statistics-Theory and methods, 23(8):2379–2412, 1994.

- Robins (1999) James M Robins. Association, causation, and marginal structural models. Synthese, 121(1/2):151–179, 1999.

- Robins & Hernán (2009) James M Robins and Miguel A Hernán. Estimation of the causal effects of time-varying exposures. Longitudinal data analysis, 553:599, 2009.

- Robins et al. (2000) James M Robins, Miguel Angel Hernan, and Babette Brumback. Marginal structural models and causal inference in epidemiology. Epidemiology, pp. 550–560, 2000.

- Rosenbaum & Rubin (1983) Paul R Rosenbaum and Donald B Rubin. The central role of the propensity score in observational studies for causal effects. Biometrika, 70(1):41–55, 1983.

- Rubin (1980) Donald B. Rubin. Comment on "randomization analysis of experimental data: The fisher randomization test". Journal of the American Statistical Association, 75(371):591, 1980. doi: 10.2307/2287653. URL https://doi.org/10.2307/2287653.

- Rudin (2019) Cynthia Rudin. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature machine intelligence, 1(5):206–215, 2019.

- Ryalen et al. (2019) Pl C Ryalen, Mats J Stensrud, and Kjetil Røysland. The additive hazard estimator is consistent for continuous-time marginal structural models. Lifetime data analysis, 25:611–638, 2019.

- Ryalen et al. (2020) Pl Christie Ryalen, Mats Julius Stensrud, Sophie Foss, and Kjetil Røysland. Causal inference in continuous time: an example on prostate cancer therapy. Biostatistics, 21(1):172–185, 2020.

- Saarela & Liu (2016) Olli Saarela and Zhihui Liu. A flexible parametric approach for estimating continuous-time inverse probability of treatment and censoring weights. Statistics in medicine, 35(23):4238–4251, 2016.

- Schmidt & Lipson (2009) Michael Schmidt and Hod Lipson. Distilling free-form natural laws from experimental data. science, 324(5923):81–85, 2009.

- Schulam & Saria (2017) Peter Schulam and Suchi Saria. Reliable decision support using counterfactual models. Advances in neural information processing systems, 30, 2017.

- Seedat et al. (2022) Nabeel Seedat, Fergus Imrie, Alexis Bellot, Zhaozhi Qian, and Mihaela van der Schaar. Continuous-time modeling of counterfactual outcomes using neural controlled differential equations. arXiv preprint arXiv:2206.08311, 2022.

- Sherstinsky (2020) Alex Sherstinsky. Fundamentals of recurrent neural network (rnn) and long short-term memory (lstm) network. Physica D: Nonlinear Phenomena, 404:132306, 2020.

- Soleimani et al. (2017) Hossein Soleimani, Adarsh Subbaswamy, and Suchi Saria. Treatment-response models for counterfactual reasoning with continuous-time, continuous-valued interventions. arXiv preprint arXiv:1704.02038, 2017.

- Stone (1948) Marshall H Stone. The generalized weierstrass approximation theorem. Mathematics Magazine, 21(5):237–254, 1948.

- Takada & Fujisawa (2020) Masaaki Takada and Hironori Fujisawa. Transfer learning via \ell_1 regularization. In H. Larochelle, M. Ranzato, R. Hadsell, M.F. Balcan, and H. Lin (eds.), Advances in Neural Information Processing Systems, volume 33, pp. 14266–14277. Curran Associates, Inc., 2020. URL https://proceedings.neurips.cc/paper_files/paper/2020/file/a4a83056b58ff983d12c72bb17996243-Paper.pdf.

- Thron (1974) C. D. Thron. Linearity and Superposition in Pharmacokinetics. Pharmacological Reviews, 26(1):3–31, March 1974. ISSN 0031-6997, 1521-0081.

- Tommasi & Caputo (2009) Tatiana Tommasi and Barbara Caputo. The more you know, the less you learn: from knowledge transfer to one-shot learning of object categories. In Proceedings of the British Machine Vision Conference, number CONF, pp. 80–1, 2009.

- Tommasi et al. (2010) Tatiana Tommasi, Francesco Orabona, and Barbara Caputo. Safety in numbers: Learning categories from few examples with multi model knowledge transfer. In 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 3081–3088. IEEE, 2010.

- Trefethen et al. (2017) Lloyd N Trefethen, Ásgeir Birkisson, and Tobin A Driscoll. Exploring ODEs. SIAM, 2017.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. Advances in neural information processing systems, 30, 2017.

- Wang et al. (2023) Bochen Wang, Liang Wang, Jiahui Peng, Mingyue Hong, and Wei Xu. The identification of piecewise non-linear dynamical system without understanding the mechanism. Chaos: An Interdisciplinary Journal of Nonlinear Science, 33(6), 2023.

- Williams & Zipser (1989) Ronald J Williams and David Zipser. A learning algorithm for continually running fully recurrent neural networks. Neural computation, 1(2):270–280, 1989.

- Xu et al. (2016) Yanbo Xu, Yanxun Xu, and Suchi Saria. A bayesian nonparametric approach for estimating individualized treatment-response curves. In Machine learning for healthcare conference, pp. 282–300. PMLR, 2016.

Appendix

Author Contributions

All authors provided valuable contributions to the paper from the idea, writing, and editing, and managing the project’s progress.

KK wrote the formalism in section˜3, proposed (and described) how to adapt ODE discovery methods to include discrete treatment plans (section˜4.2) and different layers of between-subject variability (section˜4.3), constructed the initial experimental settings in table˜8, and provided the idea for fig.˜2.

SH wrote parts of the introduction, all the ODE assumptions in section˜3; proposed and wrote the method in section˜5, developed and wrote all the experiments in section˜6, and the conclusion in section˜7; designed and came up with the method INSITE; solely coded up the method and all baseline implementations, initial exploratory experiments for INSITE; all coding and experiment design, implementation and running for all nine datasets, seven baselines, evaluation; led all results within the paper in section˜6, and eight additional new experimental settings and ablations of all the baselines across all datasets in appendix˜K; solely wrote 20 appendix sections, of additional experiments, ablations, explanations of key parts of the paper, additional related work, baseline descriptions, and synthetic dataset descriptions.

JB connected causal treatment effect estimation with ordinary differential equation discovery leading to the framework presented in this paper.

Appendix A Future Work

In our paper we have been very explicit about the settings in which we can use ODE discovery settings (see section˜3 in particular). As these settings do not correspond one-to-one with standard TE settings, our paper can be viewed as an expansion of typical TE application areas. However, there is still more to be done! In particular, future endeavours could include: discovering stochastic differential equations, leveraging latent variable models for handling unobserved variables, learning piece-wise continuous ODE systems (Wang et al., 2023), and developing strategies to transfer population-level ODEs to other populations where there is limited data. Furthermore, INSITE could be considered a first link in connecting equation discovery over time with causality (Berrevoets et al., 2023), we hope many more works of this type will follow.

Appendix B Explanation of Treatment Intensity Processes and Filtrations in the Assumptions

The concept of treatment intensity processes has its roots in the generalization for treatment effects in continuous time, introduced in seminal works such as Lok (2008) and later embraced by Saarela & Liu (2016). Intensity processes serve as an extension of propensity scores, traditionally denoted as , to a continuous time framework.

In treatment effects literature, propensity scores provide the probability of treatment assignment conditioned on observed covariates. However, when dealing with continuous-time data, the traditional propensity scores pose a challenge. Specifically, propensities in this setting would govern entire treatment trajectories, considering not just the treatment at a specific time point , but all potential future treatments. Given the vast space of potential trajectories in discrete time, this complexity becomes unmanageable in continuous time.

To address this challenge, filtrations are employed to restrict when a new treatment can be sampled. Filtrations essentially offer a controlled space for the random processes at play. Using the terminology from probability theory:

For a stochastic process , the filtration is a -algebra. This means the filtration limits the stochastic process to only those random variables for which , even though the original probability space might encompass variables with .

Such a structure becomes increasingly important in continuous time applications, as it enables us to understand and manage the dependencies among observations over time. Furthermore, intensity processes in this framework can be seen as analogous to well-known processes in other contexts, such as the Hawkes point processes (that model excitation) or the Poisson point processes (which assume no influence from past observations).

Now, relating back to assum.˜2.2 (Overlap) and assum.˜2.3 (Ignorability): The intensity process, represented by , captures the propensity of an individual receiving treatment at time given all the information up to that point, encapsulated in the filtration . The overlap assumption ensures that the intensity process is never deterministic, implying that there’s always some randomness in treatment assignment irrespective of past information. On the other hand, the ignorability assumption implies that the intensity process conditioned on past information (up to time ) is the same as the intensity process that considers even the future outcomes beyond time . This ensures that treatment assignment is independent of potential outcomes, thereby satisfying a key requirement for unbiased causal inference.

Appendix C Extended Related Work

C.1 Related work approaches

This research brings together two previously separated fields, namely temporal treatment effect estimation and ODE discovery, and there exists a plethora of works within each domain. Here, we will focus on the most relevant and recent ones.

Treatment Effects over Time. There has been substantial research on the problem of estimating treatment effects. One of the pioneering works in this area is the potential outcomes framework introduced by Neyman (1923) and further developed by Rubin (1980).

Traditional methods for estimating treatment effects include propensity score matching (Rosenbaum & Rubin, 1983), instrumental variables (Angrist et al., 1996; Angrist, 1991), and difference-in-differences (Athey & Imbens, 2006). However, these methods are primarily suited for static treatment settings and may have limitations when extended to handle treatment effects over (continuous) time.

The treatment effects literature is well represented in discrete time settings. With more traditional work stemming from epidemiology, based on g-computation, Structural Nested Models, Gaussian Processes, and Marginal Structural Models (MSMs) (Robins, 1994; Robins & Hernán, 2009; Xu et al., 2016; Robins et al., 2000); and more recent work relying on advances in deep learning architectures (Bica et al., 2020b; Melnychuk et al., 2022; Berrevoets et al., 2021; Lim, 2018). Some works have expanded the static treatment effect literature to the continuous time setting (Lok, 2008; Saarela & Liu, 2016; Ryalen et al., 2019), introducing new assumptions to help identify potential outcomes. Nevertheless, these works still face some critical challenges. For example, they often assume constant treatment effects and do not naturally handle irregular sampling and continuous treatments.

ODE discovery. The field of ODE discovery has also seen significant progress over the years. One of the primary goals of ODE discovery is to learn a model of a system’s behaviour from observational data. This involves discovering the underlying system of ODEs that governs the system.

The methods that solve this problem range from linear ODE discovery techniques (Ljung, 1998) to more recent machine learning approaches like Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM) (Hochreiter & Schmidhuber, 1997; Sherstinsky, 2020). However, these later methods often produce black-box models that lack the interpretability required for many applications, including those in regulated domains.

Recent efforts in the ML community have focused on finding interpretable models using techniques such as Sparse Identification of Nonlinear Dynamics (SINDy) (Brunton et al., 2016), which discovers sparse dynamical systems from data. However, traditional ODE discovery methods largely focus on homogeneous systems where the system dynamics are the same across different systems or individuals. They do not readily handle the heterogeneity of treatment effects (between-subject variability), which is a major concern in real-world applications like personalized medicine.

We consider ODE discovery to be a sub-field of the wider Symbolic Regression field, which is concerned with discovering a more general class of equation (beyond ODEs) (Camps-Valls et al., 2023; Mouli et al., 2023; Cranmer et al., 2020).

Dynamical systems, ODEs, and treatment effects. We want to explicitly state that, ODE discovery is a subfield of the broader area of dynamical systems identification. We believe this to be necessary as often work is presented as an identification method for dynamical systems, while they are only applicable to ODEs which is only one particular type of dynamical system. That means that works such as Schulam & Saria (2017); Hızlı et al. (2022); Noorbakhsh & Rodriguez (2022); Ryalen et al. (2020); Alaa & van der Schaar (2019); Qian et al. (2020); Soleimani et al. (2017) may appear related as they model treatment effects in a type of dynamical system. However, it is crucial to note that none of these works model treatment effects as an ODE, nor are they concerned with actually uncovering the underlying ground truth dynamical system in general. Instead, they model treatment effects as a parameterized dynamical system (such as, for example, a point process (Noorbakhsh & Rodriguez, 2022; Alaa & van der Schaar, 2019)), which is learned purely for accurate inference. In contrast, while we are also interested in accurate inference, the resulting model (the ODE) is additionally important.

Fusing Treatment Effects and ODE discovery. Our work is the first, to our knowledge, to combine these two fields for the problem of treatment effect estimation. We seek to harness the strengths of both fields to address their respective challenges, such as the lack of interpretability and robustness in the treatment effects literature and the assumptions about system behaviours in the ODE discovery community. In particular, our INSITE approach is unique in its ability to address the challenges from both communities and thus provides a promising new direction for these intertwined fields.

There are some ideas that seem to correspond, but only at first glance. For example Florens et al. (2008) connects control (a field which is very much related to ODE discovery), yet differs one some crucial points: (i) time is not considered; (ii) no equations are discovered; (iii) the assumption sets differ widely.

C.2 Related work methods

Longitudinal Treatment Effect Methods. Longitudinal treatment effect methods focus on estimating the outcome of treatment at a given time , often using black-box neural network-based approaches, i.e., not closed-form or easily interpretable. A significant theme in these works is the development of methods to mitigate time-dependent confounding. Many mitigation methods have been proposed that we use as benchmarks, such as propensity networks in Marginal Structural Models (MSM) (Robins et al., 2000) and Recurrent Marginal Structural Networks (RMSN) (Lim, 2018), Gradient Reversal in Counterfactual Recurrent Networks (CRN) (Bica et al., 2020b), Confusion in Causal Transformers (CT) (Melnychuk et al., 2022) and g-computation in G-Net (G-Net) (Li et al., 2021).

ODE Discovery Methods. ODE discovery methods aim to discover an underlying closed-form mathematical equation that best fits the underlying controlled ODE of the observed state and action trajectories dataset, . In these methods, the state derivative is unobserved; therefore, they have to approximate the derivative using finite differences, as in Sparse Identification of Nonlinear Dynamics (Brunton et al., 2016), or employ a variational loss as the objective, as in Weak Sparse Identification of Nonlinear Dynamics (Messenger & Bortz, 2021). Underlying these methods is the broader class of methods for symbolic regression (Holt et al., 2023b). To make these two ODE discovery methods more competitive, we adapt them to model individual ODEs per categorical treatment (section˜4.2), (termed A-SINDy, A-WSINDy respectively).

Other Related Approaches. In addition to the aforementioned categories, several other methods have been proposed to model longitudinal data and estimate treatment effects. For instance, recurrent neural networks (RNNs) (Cho et al., 2014) and long short-term memory (LSTM) networks (Hochreiter & Schmidhuber, 1997) are commonly used for handling sequential data, as well as other sequential time series models (Holt et al., 2022), and they have been applied to estimate treatment effects in longitudinal settings (Lim, 2018). Gaussian processes (Rasmussen et al., 2006) and continuous-time Gaussian processes (Alvarez & Lawrence, 2009) have also been employed for modelling and learning from longitudinal data. Furthermore, counterfactual regression (Johansson et al., 2016) and doubly robust estimation (Funk et al., 2011) are statistical methods used to estimate causal effects in observational studies, and they can be adapted to handle time-dependent confounding in longitudinal settings. Additionally, once a model is learned, it can be used for optimal planning (Holt et al., 2023a; 2024), and such time-series models can also applied to other related domains such as in finance (Jha et al., 2020).

A more detailed comparison of the related approaches discussed above can be seen in table˜5. This table compares various aspects of these methods, such as interpretability, between-subject variability, continuous-time modelling, robustness to observation noise and the ability to handle categorical and continuous treatments, and data size requirements.

| Approach | Ref | Interpretable? | BSV? | Continuous-time? | Noise? | Categorical ? | Continuous ? |

| Longitudinal Treatment Effect Methods | |||||||

| MSM | (Robins et al., 2000) | ✗ | ✓ | ✗ | ✓ | ✓ | ✗ |

| RMSN | (Lim, 2018) | ✗ | ✓ | ✗ | ✓ | ✓ | ✗ |

| CRN | (Bica et al., 2020b) | ✗ | ✓ | ✗ | ✓ | ✓ | ✗ |

| G-Net | (Li et al., 2021) | ✗ | ✓ | ✗ | ✓ | ✓ | ✗ |

| CT | (Melnychuk et al., 2022) | ✗ | ✓ | ✗ | ✓ | ✓ | ✗ |

| ODE discovery Methods | |||||||

| A-SINDy | (Brunton et al., 2016) | ✓ | ✗-population only | ✓ | ✗ | ✓ | ✓ |

| A-WSINDy | (Messenger & Bortz, 2021) | ✓ | ✗-population only | ✓ | ✓ | ✓ | ✓ |

| INSITE | (Ours) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

Appendix D ODE formalism for treatment effect estimation

In this section, we want to justify our ODE formalism for treatment effect estimation, eq.˜3, and explain its generality.

We assume that the treatment effect is a deterministic function of the modelled covariates (often the identity). This is inspired by examples considered in treatment effect papers, where we equate the treatment effect to the tumour volume or the response to a drug given a particular concentration (through a dose-response curve) (Bica et al., 2020b; Berrevoets et al., 2021; Lim, 2018; Seedat et al., 2022).

We want to emphasize that a direct link between and can be included through extended covariates and the function that links covariates to the outcome as . Then the new treatment effect variable is defined as . This is the same as treating one of the covariates as the outcome. Please note that our formalism extends this setting and allows for a more complicated treatment outcome that depends on several covariates.

Appendix E Treatments

In the following, we summarize the ways of incorporating the treatment plan in according to the treatment types of binary treatments, categorical treatments, multiple simultaneous treatments, and continuous treatments. This is necessary so that we can simplify the complex and possibly not closed-form into simpler closed-form ’s that we can discover using current methods. In this subsection, every function denoted by the letter or is assumed to be closed-form.

As described in Bica et al. (2021) there are four main types of treatments considered in the treatment effect estimation literature

-

•

Binary treatment (treatment or no treatment)

-

•

Categorical treatment (one treatment out of multiple possible treatment options)

-

•

Multiple treatments assigned simultaneously

-

•

Single treatment or multiple treatments with associated dosage

Each of these treatments can be either static (constant throughout the trajectory) or dynamic. As we are interested in the discovery of closed-form ODEs, the treatment can be incorporated into in two ways.

-

•

We learn different closed-form ODEs for different treatments

-

•

We incorporate the action variable into the closed-form ODE.

Depending on the type of treatment one or both of these approaches can be chosen. We summarize the ways of treating in based on the type of treatment in Table 6.

Binary treatment considers only two kinds of treatments (usually "treatment" and "no treatment"). The action trajectory is one-dimensional with values in . In a static setting, is constant, in a dynamic setting it is piece-wise constant—that means that we are allowed to change the treatment throughout the trajectory. This kind of action can be incorporated in in two ways. We can either learn two different closed-form systems of ODEs ( and ) or incorporate directly in the equation, e.g., .

Categorical treatment considers one treatment out of multiple possible treatments. The action trajectory is one-dimensional with values in . As previously, is constant in a static setting and piece-wise constant in a dynamic setting. We no longer can easily incorporate into the closed-form expression (as it attains discrete values), so the only option is to learn separate closed-form systems of ODEs .

Multiple treatments considers scenarios where multiple treatments can be assigned simultaneously. We represent as a vector , where if the treatment was assigned at time . As previously, is constant in a static setting and piece-wise constant in a dynamic setting. We can consider this case as just having treatments (all possible subsets) and reduce to a categorical treatment where we learn separate equations. An alternative approach would be to learn separate terms for each treatment and combine them using a principle of superposition (Thron, 1974). We can then represent the system of ODEs at time as . However, no current ODE discovery algorithm leverages this representation.

Continuous treatment is usually considered when we want to model the dosage or the strength of the treatment. is represented as a -dimensional real vector where is the dose/strength of the treatment. In a static setting, we consider to be constant. In a dynamic setting, we consider it to be continuous. As can have any real value, the only way to incorporate it into the equations is to have it directly in the closed-form ODE .

| Treatment | S/D | Domain of | Constant | |

| Binary | S | Yes | or | |

| D | Piece-wise | |||

| Categorical | S | Yes | ||

| D | Piece-wise | |||

| Multiple | S | Yes | or | |

| D | Piece-wise | |||

| Continuous | S | Yes | ||

| D | No |

Appendix F Benchmark Dataset Details

In the following we outline the standard Cancer PKPD equation, then outline the between-subject variability layers that we use to generate the different equation classes from the two base PKPD equation models. We also provide the parameter distributions used to generate the different equation classes. For dataset generation and model training see appendix˜I.

F.1 Cancer PKPD

This is a state-of-the-art biomedical Pharmacokinetic-Pharmacodynamic (PKPD) model of tumour growth, used to simulate the combined effects of chemotherapy and radiotherapy in lung cancer (Geng et al., 2017) (eq.˜7)—this has been extensively used by other works (Seedat et al., 2022; Bica et al., 2020b; Melnychuk et al., 2022). Specifically, this models the volume of the tumour for days after the cancer diagnosis—where the outcome is one-dimensional. The model has two binary treatments: (1) radiotherapy and (2) chemotherapy .

| (7) |

Where the parameters for each simulated patient are sampled distributions detailed in Geng et al. (2017), which are also described in table˜7. Here is random noise, modelling randomness in the tumour growth.

| Model | Variable | Parameter | Distribution | Parameter Value ()) |

| Tumor growth | Growth parameter | Normal | , | |

| Carrying capacity | Constant | 30 | ||

| Radiotherapy | Radio cell kill () | Normal | 0.0398, 0.168 | |

| Radio cell kill () | - | Set s.t. =10 | ||

| Chemotherapy | Chemo cell kill | Normal | 0.028, 0.0007 |

Furthermore, we incorporate heterogeneous responses, following Bica et al. (2020b); Lim (2018); Melnychuk et al. (2022), where the means are modified for and by creating three groups of patients (i.e., to represent three types of patients with heterogeneity in treatment response).

For patient group 1, we modify the mean of so that and for patient group 3, we modify the mean of so that .

Additionally, the chemotherapy drug concentration follows an exponential decay relationship with a half-life of one day:

| (8) |

where the chemotherapy binary action represents increasing the concentration by of Vinblastine given at time .

Whereas the radiotherapy concentration represents fractions of radiotherapy given at timestep , where Gy is the Gray ionizing radiation dose.

Time-dependent confounding. We introduce time-varying confounding into the data generation process. This is accomplished by characterizing the allocation of chemotherapy and radiotherapy as Bernoulli random variables. The associated probabilities, and , are determined by the tumor diameter as follows:

| (9) |

where represents the largest tumor diameter, and signifies the mean tumor diameter. The parameters and manage the extent of time-varying confounding. Higher values of amplify the influence of this confounding factor over time.