On a Security vs Privacy Trade-off in Interconnected Dynamical Systems

Abstract

We study a security problem for interconnected systems, where each subsystem aims to detect local attacks using local measurements and information exchanged with neighboring subsystems. The subsystems also wish to maintain the privacy of their states and, therefore, use privacy mechanisms that share limited or noisy information with other subsystems. We quantify the privacy level based on the estimation error of a subsystem’s state and propose a novel framework to compare different mechanisms based on their privacy guarantees. We develop a local attack detection scheme without assuming the knowledge of the global dynamics, which uses local and shared information to detect attacks with provable guarantees. Additionally, we quantify a trade-off between security and privacy of the local subsystems. Interestingly, we show that, for some instances of the attack, the subsystems can achieve a better detection performance by being more private. We provide an explanation for this counter-intuitive behavior and illustrate our results through numerical examples.

keywords:

Privacy, Attack-detection, Interconnected Systems, Chi-squared test

1 Introduction

Dynamical systems are becoming increasingly distributed, diverse, complex, and integrated with cyber components. Usually, these systems are composed of multiple subsystems, which are interconnected with each other via physical, cyber, and other types of couplings [1]. An example of such a system is a smart city, which consists of subsystems such as the power grid, the transportation network, the water distribution network, and others. Although these subsystems are interconnected, it is usually difficult to directly measure the couplings and dependencies between them [1]. As a result, they are often operated independently without knowledge of the other subsystems’ models and dynamics.

Modern dynamical systems are also increasingly more vulnerable to cyber/physical attacks that can degrade their performance or may even render them inoperable [2]. There have been many recent studies on analyzing the effect of different types of attacks on dynamical systems and possible remedial strategies (see [3] and the references therein). A key component of these strategies is detection of attacks using the measurements generated by the system. Due to the autonomous nature of the subsystems, each subsystem is primarily concerned with detection of local attacks which affect its operation directly. However, local attack detection capability of each subsystem is limited due to the absence of knowledge of the dynamics and couplings with external subsystems. One way to mutually improve the detection performance is to share information and measurements among the subsystems. However, these measurements may contain some confidential information about the subsystem and, typically, subsystem operators may be willing to share only limited information due to privacy concerns. In this paper, we propose a privacy mechanism that limits the shared information and characterize its privacy guarantees. Further, we develop a local attack detection strategy using the local measurements and the limited shared measurements from other subsystems. We also characterize the trade-off between the detection performance and the amount/quality of shared measurements, which reveals a counter-intuitive behavior of the involved chi-squared detection scheme.

Related Work: Centralized attack detection and estimation schemes in dynamical systems have been studied in both deterministic [4, 5, 6] and stochastic [7, 8] settings. Recently, there have also been studies on distributed attack detection involving information exchange among the components of a dynamical system. Distributed strategies against attacks in power systems are presented in [9, 10, 11]. In [5, 12], centralized and decentralized monitor design was presented for deterministic attack detection and identification. In [13, 14], distributed strategies for joint attack detection and state estimation are presented. Residual-based tests [15] and unknown-input observer-based approaches [16] have also been proposed for attack detection. A comparison between centralized and decentralized attack detection schemes was presented in [17]. The local detectors in [17] use only local measurements, whereas we allow the local detectors to use measurements from other subsystems as well.

Distributed fault detection techniques requiring information sharing among the subsystems have also been widely studied. In [18, 19, 20, 21, 22], fault detection for non-linear interconnected systems is presented. These works typically use observers to estimate the state/output, compute the residuals and compare them with appropriate thresholds to detect faults. For linear systems, distributed fault detection is studied using consensus-based techniques in [23, 24] and unknown-input observer-based techniques in [25].

There have also been recent studies related to privacy in dynamical systems. Differential privacy based mechanisms in the context of consensus, filtering and distributed optimization have been proposed (see [26] and the references therein). These works develop additive noise-based privacy mechanisms, and characterize the trade-offs between the privacy level and the control performance. Other privacy measures based on information theoretic metrics like conditional entropy [27], mutual information [28, 29] and Fisher information [30] have also been proposed. In [31], a privacy vs. cooperation trade-off for multi-agent systems was presented. In [32], a privacy mechanism for consensus was presented, where privacy is measured in terms of estimation error covariance of the initial state. The authors in [33] showed that the privacy mechanism can be used by an attacker to execute stealthy attacks in a centralized setting.

In contrast to these works, we identify a novel and counter-intuitive trade-off between security and privacy in interconnected dynamical systems. In a preliminary version of this work [34], we compared the detection performance between the cases when the subsystems share full measurements (no privacy mechanism) and when they do not share any measurements. In this paper, we introduce a privacy framework and present an analytic characterization of privacy-performance trade-offs.

Contributions: The main contributions of this paper are as follows. First, we propose a privacy mechanism to keep the states of a subsystem private from other subsystems in an interconnected system. The mechanism limits both the amount and quality of shared measurements by projecting them onto an appropriate subspace and adding suitable noise to the measurements. This is in contrast to prior works, which use only additive noise for privacy. We define a privacy ordering and use it to quantify and compare the privacy of different mechanisms. Second, we propose and characterize the performance of a chi-squared ($\chi^2$) attack detection scheme to detect local attacks in the absence of knowledge of the global system model. The detection scheme uses local measurements and measurements received from neighboring subsystems. Third, we characterize the trade-off between the privacy level and the local detection performance in both qualitative and quantitative ways. Interestingly, our analysis shows that in some cases both privacy and detection performance can be improved by sharing less information. This reveals a counter-intuitive behavior of the widely used $\chi^2$ test for attack detection [7, 8, 35], which we illustrate and explain.

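Mathematical notation: $\operatorname{tr}(\cdot)$, $\operatorname{Im}(\cdot)$, $\operatorname{Null}(\cdot)$, and $\operatorname{rank}(\cdot)$ denote the trace, image, null space, and rank of a matrix, respectively. $A^T$ and $A^+$ denote the transpose and Moore-Penrose pseudo-inverse of a matrix $A$. A positive (semi)definite matrix $A$ is denoted by $A \succ 0$ ($A \succeq 0$). $\operatorname{blkdiag}(A_1, \ldots, A_n)$ denotes a block diagonal matrix whose block diagonal elements are $A_1, \ldots, A_n$. The identity matrix is denoted by $I$ (or $I_n$ to denote its dimension explicitly). A scalar $\lambda$ is called a generalized eigenvalue of $(A, B)$ if $A - \lambda B$ is singular. $\otimes$ denotes the Kronecker product. A zero mean Gaussian random variable $y$ is denoted by $y \sim \mathcal{N}(0, \Sigma_y)$, where $\Sigma_y$ denotes the covariance of $y$. The (central) chi-square distribution with $q$ degrees of freedom is denoted by $\chi^2_q$, and the noncentral chi-square distribution with noncentrality parameter $\lambda$ is denoted by $\chi^2_q(\lambda)$. For $x \in \mathbb{R}$, let $Q_q(x)$ and $Q_q(x; \lambda)$ denote the right tail probabilities of the chi-square and noncentral chi-square distributions, respectively.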

2 Problem Formulation

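We consider an interconnected discrete-time LTI dynamical system composed of $N$ subsystems. Let $\mathcal{N} \triangleq \{1, \ldots, N\}$ denote the set of all subsystems and let $-i \triangleq \mathcal{N} \setminus \{i\}$, where $\setminus$ denotes the exclusion operator. The dynamics of the subsystems are given by:

$$x_i(k+1) = A_i x_i(k) + B_i x_{-i}(k) + w_i(k), \tag{1}$$

$$y_i(k) = C_i x_i(k) + v_i(k), \tag{2}$$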

where and are the state and output/measurements of subsystem , respectively. Let . Subsystem is coupled with other subsystems through the interconnection term , where denotes the states of all other subsystems. We refer to as the interconnection signal. Further, and are the process and measurement noise, respectively. We assume that and for all , with and . The process and measurement noise are assumed to be white and independent for different subsystems. Finally, we assume that the initial state is independent of and for all . We make the following assumption regarding the interconnected system:

Assumption 1: Subsystem has perfect knowledge of its dynamics, i.e., it knows , the statistical properties of , and . However, it does not have knowledge of the dynamics, states, and the statistical properties of the noise of the other subsystems.

Remark 1

(Control input) The dynamics in (1) typically include a control input. However, since each subsystem knows its own control input, its effect can be easily included in the attack detection procedure. Therefore, for ease of presentation, we omit the control input.

We consider the scenario where each subsystem can be under an attack. We model the attacks as external linear additive inputs to the subsystems. The dynamics of the subsystems under attack are given by

| (3) | ||||

| (4) |

where is the local attack input for Subsystem , which is assumed to be a deterministic but unknown signal for all . The matrix dictates how the attack affects the state of Subsystem , which we assume to be unknown to Subsystem .

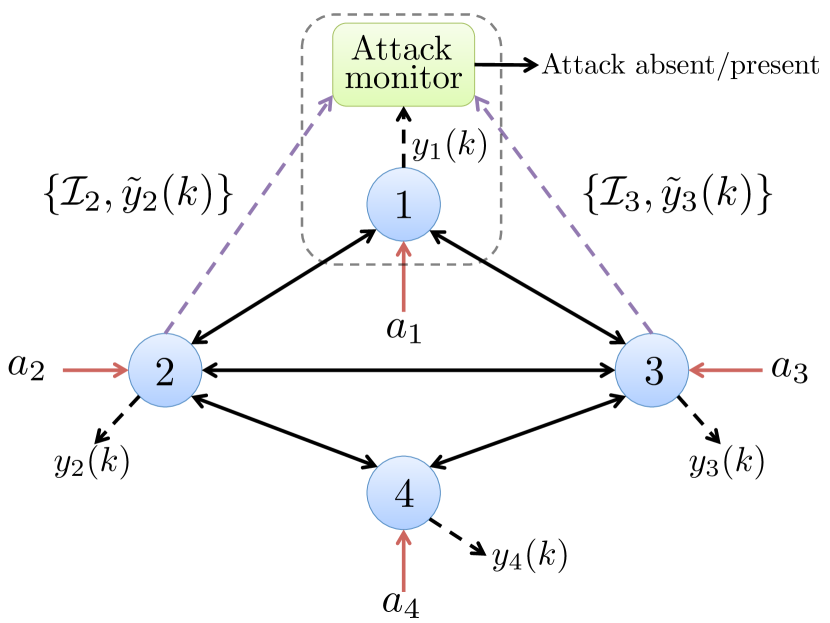

Each subsystem is equipped with an attack monitor whose goal is to detect the local attack using the local measurements. Since Subsystem does not know , it can only detect . The detection procedure requires the knowledge of the statistical properties of which depend on the interconnection signal . Since the subsystems do not have knowledge of the interconnection signals (c.f. Assumption 1), they share their measurements among each other to aid the local detection of attacks (see Fig. 1). The details of how these shared measurements are used for attack detection are presented in Section 3.

While the shared measurements help in detecting local attacks, they can reveal sensitive information of the subsystems. For instance, some of the states/outputs of a subsystem may be confidential, which it may not be willing to share with other subsystems. To protect the privacy of such states/outputs, we propose a privacy mechanism through which a subsystem limits the amount and quality of its shared measurements. Thus, instead of sharing the complete measurements in (4), Subsystem shares limited measurements (denoted as ) given by:

| (5) |

where is a selection matrix suitably chosen to select a subspace of the outputs, and is an artificial white noise (independent of and ) added to introduce additional inaccuracy in the shared measurements. Without loss of generality, we assume to be full row rank for all . Thus, a subsystem can limit its shared measurement via a combination of the following two mechanisms (i) by sharing fewer (or a subspace of) measurements, and (ii) by sharing more noisy measurements. Intuitively, when Subsystem limits its shared measurements, the estimates of its states/outputs computed by the other subsystems become more inaccurate. This prevents other subsystems from accurately determining the confidential states/outputs of Subsystem , thereby protecting its privacy. We will explain this phenomenon in detail in the next section.

Let the parameters corresponding to the limited measurements of subsystem be denoted by . We make the following assumption:

Assumption 2: Each subsystem shares its limited measurements in (5) and the parameters with all subsystems.¹

¹ To be precise, this information sharing is required only between neighboring subsystems, i.e., between subsystems that are directly coupled with each other in (1).

Under Assumptions 1 and 2, the goal of each subsystem is to detect the local attack using its local measurements and the limited measurements received from the other subsystems (see Fig. 1). Further, we are interested in characterizing the trade-off between the privacy level and the detection performance.

3 Local attack detection

In this section, we present the local attack detection procedure of the subsystems and characterize their detection performance. For ease of presentation, we describe the analysis for Subsystem 1 and remark that the procedure is analogous for the other subsystems.

3.1 Measurement collection

We employ a batch detection scheme in which each subsystem collects the measurements for , with , and performs detection based on the collective measurements. In this subsection, we model the collected local and shared measurements for Subsystem .

Local measurements: Let the time-aggregated local measurements, interconnection signals, attacks, process noise and measurement noise corresponding to Subsystem be respectively denoted by

| (6) | ||||

By using (3) recursively and (4), the local measurements can be written as

| (7) | ||||

Note that and with

Let denote the effective local noise in the measurement equation (7). Using the fact that are independent, the overall local measurements of the subsystem are given by

| (8) | ||||

Shared measurements: Let

denote the limited measurements received by Subsystem from all the other subsystems at time . Further, let and denote similar aggregated vectors of and , respectively.

Then, from (5), we have

| (9) | ||||

Further, let the time-aggregated limited measurements received by Subsystem be denoted by

, and let denote similar time-aggregated vector of . Then, from (9), the overall limited measurements received by Subsystem read as

| (10) | ||||

The goal of Subsystem is to detect the local attack using the local and received measurements given by (8) and (10), respectively.

3.2 Measurement processing

Since Subsystem does not have access to the interconnection signal , it uses the received measurements to obtain an estimate of . Note that Subsystem is oblivious to the statistics of the stochastic signal . Therefore, it computes an estimate of assuming that is a deterministic but unknown quantity.

According to (10), , and the Maximum Likelihood (ML) estimate of based on is computed by maximizing the log-likelihood function of , and is given by:

| (11) | ||||

is any real vector of appropriate dimension, and equality follows from Lemma A.1 in the Appendix. If (or equivalently ) is not full column rank, then the estimate can lie anywhere in Null() = Null() (shifted by ). Thus, the component of that lies in Null() cannot be estimated and only the component of that lies in Im() = Im() can be estimated. Based on this discussion, we decompose as

| (12) |

Substituting from (12) in (8), we get

| (13) |

Next, we process the local measurements in two steps. First, we subtract the known term . Second, we eliminate the component (which cannot be estimated) by premultiplying (13) with a matrix , where

| (14) |

Since the columns of are basis vectors, is full column rank. The processed measurements are given by

| (15) |

where . The random variables and are independent because they depend exclusively on the local and external subsystems’ noise, respectively. Using this fact

| (16) |

where follows from the facts that is full column rank and . The processed measurements in (15) depend only on the local attack  and the Gaussian noise, whose statistics are known to Subsystem (cf. Assumptions 1 and 2), i.e., . Thus, Subsystem uses to perform attack detection. Note that the attack vectors that belong to Null() cannot be detected.
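The estimate in (11) is a weighted least-squares solution computed with a pseudo-inverse. The NumPy sketch below illustrates this for a generic linear Gaussian model $y = Cx + v$, $v \sim \mathcal{N}(0, \Sigma)$, with $x$ deterministic but unknown. The names `C`, `Sigma`, and `ml_estimate` and the numbers are illustrative assumptions, not the paper's notation. It also shows that when $C$ is rank deficient, only the component of $x$ in $\operatorname{Im}(C^T)$ is recovered.

```python
import numpy as np


def ml_estimate(C, Sigma, y):
    """ML (weighted least-squares) estimate for y = C x + v, v ~ N(0, Sigma).

    x is treated as deterministic but unknown. If C is not full column rank,
    the pseudo-inverse returns the solution lying in Im(C^T); the component
    of x in Null(C) cannot be estimated.
    """
    W = np.linalg.inv(Sigma)          # inverse noise covariance (weights)
    F = C.T @ W @ C                   # information matrix, Null(F) = Null(C)
    return np.linalg.pinv(F) @ C.T @ W @ y


# Hypothetical example: 3 measurements of a 3-dimensional x, rank(C) = 2.
C = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0],
              [1.0, 1.0, 0.0]])
Sigma = np.diag([0.5, 1.0, 2.0])
x_true = np.array([1.0, -2.0, 5.0])   # the third entry lies in Null(C)
y = C @ x_true                        # noiseless for illustration
x_hat = ml_estimate(C, Sigma, y)
print(x_hat)                          # third component is not recovered
```

With noiseless data, the estimate equals the orthogonal projection of `x_true` onto $\operatorname{Im}(C^T)$, so the third entry is returned as zero rather than 5.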

The operation of elimination of the unknown component from also eliminates a component of the attack . As a result, this operation increases the space of undetectable attack vectors from Null() to Null(). In some cases, this operation could also result in complete elimination of attacks as shown in the next result.
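The elimination step and its side effect on the attack can be illustrated with a generic sketch. Premultiplying by a matrix whose rows span the left null space of the matrix through which the non-estimable signal enters removes that signal. It also removes any attack component lying in the same image. The sketch uses `scipy.linalg.null_space`. The names `M`, `N`, and `elimination_matrix` and all dimensions are hypothetical stand-ins for the quantities in (13)-(14).

```python
import numpy as np
from scipy.linalg import null_space


def elimination_matrix(M):
    """Return N whose orthonormal rows span the left null space of M (N @ M = 0).

    N^T has full column rank by construction.
    """
    return null_space(M.T).T


rng = np.random.default_rng(0)
M = rng.standard_normal((4, 2))       # hypothetical map of the non-estimable signal
N = elimination_matrix(M)

u = rng.standard_normal(2)            # non-estimable signal (never estimated)
e = rng.standard_normal(4)            # noise
attack_in_span = M @ np.array([1.0, -1.0])   # attack lying in Im(M)
attack_generic = rng.standard_normal(4)      # attack with a component outside Im(M)

z_attack_in_span = M @ u + attack_in_span + e
z_attack_generic = M @ u + attack_generic + e

print(N.shape)                                    # (2, 4)
print(np.allclose(N @ z_attack_in_span, N @ e))   # True: attack removed entirely
print(np.allclose(N @ z_attack_generic, N @ e))   # False: part of the attack survives
```

The second case mirrors the interpretation of Lemma 3.1. An attack lying entirely in the eliminated subspace leaves no signature in the processed measurements.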

Lemma 3.1

Proof: Since Null() = Null(), we have

Let . Then, substituting from (7) in (14), we get

| (18) |

If (17) holds, then there exists a matrix such that . Thus, from (7), we have

The above result has the following intuitive interpretation: if the attacks lie in the subspace of the interconnections that cannot be estimated, then eliminating these interconnections also eliminates the attacks. In this case, the processed measurements do not have any signature of the attacks, which, therefore, cannot be detected. This result highlights the limitation of our measurement processing procedure. Next, we illustrate the result using an example.

Example 1

Consider an interconnected system consisting of two subsystems with the following parameters (see Fig. 2):

and . We have and . Consider the following two cases:

Case (i): Subsystem shares its 2nd state, i.e., . In this case, Subsystem does not get information about the interconnection affecting its 1st state and the elimination of this interconnection also eliminates the attack. It can be verified that

and .

Case (ii): Subsystem shares its 1st state, i.e., . In this case, Subsystem gets information about the interconnection affecting its 1st state. Thus, its elimination is not required and this preserves the attack. It can be verified that

and .

3.3 Statistical hypothesis testing

The goal of Subsystem 1 is to determine whether it is under attack or not using the processed measurements in (15). Recall that, since Subsystem 1 does not know , it can only detect . Let . Then, from (7), we have , where . Thus, the processed measurements are distributed according to . We cast the attack detection problem as a binary hypothesis testing problem. Since Subsystem 1 does not know the attack , we consider the following composite (simple vs. composite) testing problem:

We use the Generalized Likelihood Ratio Test (GLRT) criterion [36] for the above testing problem, which is given by

| (19) | ||||

are the probability density functions of the multivariate Gaussian distribution of under hypothesis and , respectively, and is a suitable threshold. Using the result in Lemma A.1 in the Appendix to compute the denominator in (19) and taking the logarithm, the test (19) can be equivalently written as

| (20) | ||||

| where |

and is the threshold. The above test is a $\chi^2$ test since the test statistic follows a chi-squared distribution (see Lemma 3.3). The next result simplifies the test statistic and provides an interpretation of the test.

Lemma 3.2

(Simplification of test statistic) Let denote the Cholesky decomposition of . Then,

| (21) |

where is a matrix whose columns are the orthonormal basis vectors of .

Proof: Let . Then

Thus, we have

Since is the orthogonal projection operator on , , and the proof is complete.
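As a numerical illustration, assume the processed measurements take the generic form $z \sim \mathcal{N}(Ma, \Sigma)$, with $M$ and $\Sigma \succ 0$ known and $a$ unknown. This is a hypothetical stand-in for the model in (15). The statistic described in Lemma 3.2 whitens $z$ with the Cholesky factor of $\Sigma$ and projects it onto an orthonormal basis of the whitened attack subspace. The sketch checks that this equals the familiar quadratic form $z^T\Sigma^{-1}M(M^T\Sigma^{-1}M)^+M^T\Sigma^{-1}z$. Function names are illustrative.

```python
import numpy as np
from scipy.linalg import cholesky, orth, solve_triangular


def glrt_statistic(z, M, Sigma):
    """Squared norm of the projection of L^{-1} z onto Im(L^{-1} M), Sigma = L L^T.

    Returns the statistic and its degrees of freedom rank(M).
    """
    L = cholesky(Sigma, lower=True)
    z_w = solve_triangular(L, z, lower=True)        # whitened measurements L^{-1} z
    M_w = solve_triangular(L, M, lower=True)        # whitened attack matrix L^{-1} M
    U = orth(M_w)                                   # orthonormal basis of Im(L^{-1} M)
    return float(np.sum((U.T @ z_w) ** 2)), U.shape[1]


def glrt_statistic_quadratic(z, M, Sigma):
    """Equivalent closed form z^T W M (M^T W M)^+ M^T W z with W = Sigma^{-1}."""
    W = np.linalg.inv(Sigma)
    G = M.T @ W
    return float(z @ G.T @ np.linalg.pinv(G @ M) @ G @ z)


rng = np.random.default_rng(1)
A = rng.standard_normal((5, 5))
Sigma = A @ A.T + 5 * np.eye(5)                     # hypothetical noise covariance
M = rng.standard_normal((5, 2))                     # hypothetical attack matrix
z = rng.standard_normal(5)
stat, q = glrt_statistic(z, M, Sigma)
print(q)                                                        # 2
print(np.isclose(stat, glrt_statistic_quadratic(z, M, Sigma)))  # True
```

The projected form avoids explicitly inverting $\Sigma$ and makes the degrees of freedom ($\operatorname{rank}$ of the whitened attack matrix) explicit.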

Using Lemma 3.2, the test (20) can be written as

| (22) |

Thus, the test compares the energy of the signal with a given threshold to detect the attacks. Next, we derive the distribution of the test statistic under both hypotheses.

Lemma 3.3

(Distribution of test statistic) The distribution of the test statistic in (22) is given by

| (23) | ||||

| (24) |

where and .

Proof: By the definition of in (21), and recalling with being non-singular, we have

Let . Under , . Thus,

where follows from and . Therefore, .
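A Monte Carlo check of Lemma 3.3 under the same hypothetical model $z \sim \mathcal{N}(Ma, \Sigma)$ is shown below. Samples of the statistic should have mean $q$ under $H_0$ and mean $q + \lambda$ under $H_1$. At a threshold sized for $P_F = 0.05$, the empirical detection rate should match the noncentral chi-square tail. The matrices, attack vector, and sample size are arbitrary choices for illustration.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(2)
n, q, trials = 6, 3, 200_000
A = rng.standard_normal((n, n))
Sigma = A @ A.T + np.eye(n)                 # hypothetical noise covariance
M = rng.standard_normal((n, q))             # hypothetical attack matrix (rank q)
a = np.array([0.5, -0.3, 0.2])              # hypothetical attack vector

L = np.linalg.cholesky(Sigma)               # Sigma = L L^T, L lower triangular
M_w = np.linalg.solve(L, M)                 # whitened attack matrix L^{-1} M
U, _ = np.linalg.qr(M_w)                    # orthonormal basis of Im(L^{-1} M)
lam = float(np.sum((U.T @ M_w @ a) ** 2))   # noncentrality parameter (SNR)


def statistic(Z):
    """Rows of Z are samples of z; returns ||U^T L^{-1} z||^2 for each row."""
    Z_w = np.linalg.solve(L, Z.T)
    return np.sum((U.T @ Z_w) ** 2, axis=0)


noise = rng.multivariate_normal(np.zeros(n), Sigma, size=trials)
t0 = statistic(noise)                       # H0: no attack
t1 = statistic(noise + M @ a)               # H1: attack a present

tau = stats.chi2.isf(0.05, q)               # threshold for P_F = 0.05
p_emp = np.mean(t1 > tau)                   # empirical detection rate
p_th = stats.ncx2.sf(tau, q, lam)           # Lemma 3.3 prediction

print(t0.mean(), q)          # close to q
print(t1.mean(), q + lam)    # close to q + lam
print(p_emp, p_th)           # close to each other
```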

Remark 2

(Interpretation of detection parameters ) The parameter denotes the number of independent observations of the attack vector in the processed measurements (15). The parameter can be interpreted as the signal to noise ratio (SNR) of the processed measurements in (15), where the signal of interest is the attack.

Next, we characterize the performance of the test (20). Let the probability of false alarm and probability of detection for the test be respectively denoted by

where and follow from (23) and (24), respectively. Recall that and denote the right tail probabilities of chi-square and noncentral chi-square distributions, respectively. Inspired by the Neyman-Pearson test framework, we select the size () of the test and determine the threshold which provides the desired size. Then, we use the threshold to perform the test and compute the detection probability. Thus, we have

| (25) | ||||

| (26) |

The arguments in and explicitly denote the dependence of these quantities on the detection parameters and the probability of false alarm (). Note that the detection performance of Subsystem is characterized by the pair , where a lower value of and a higher value of are desirable. Later, in order to compare the performance of two different tests, we select a common value of for both of them, and then compare the detection probability .
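The sizing procedure in (25)-(26) maps directly onto SciPy's `chi2` and `ncx2` distributions. The threshold is the inverse right tail of $\chi^2_q$ at the chosen $P_F$, and $P_D$ is the right tail of $\chi^2_q(\lambda)$ at that threshold. Below is a minimal sketch. The function names are illustrative, and the parameter values are arbitrary.

```python
from scipy import stats


def chi2_threshold(p_fa, q):
    """Threshold tau with P(chi2_q > tau) = p_fa."""
    return stats.chi2.isf(p_fa, q)


def detection_probability(q, lam, p_fa):
    """P_D: right tail of the noncentral chi-square chi2_q(lam) at tau."""
    tau = chi2_threshold(p_fa, q)
    return stats.ncx2.sf(tau, q, lam)


tau = chi2_threshold(0.05, 2)
print(round(tau, 3))                                # 5.991
print(detection_probability(2, 4.5, 0.05))
```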

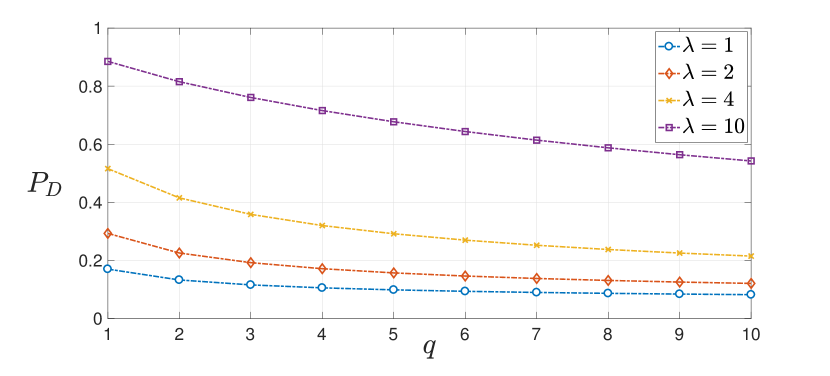

The next result states the dependence of the detection probability on the detection parameters .

Lemma 3.4

(Dependence of detection performance on detection parameters ) For any given false alarm probability , the detection probability is decreasing in and increasing in .

Proof: Since is fixed, we omit it in the notation. It is a standard result that for a fixed (and ), the CDF of a noncentral chi-square random variable is decreasing in [37]. Thus, is increasing in .

Next, we have [37]

From [38, Corollary 3.1], it follows that is decreasing in for all . Thus, is decreasing in .

Figure 3 illustrates the dependence of the detection probability on the parameters . Lemma 3.4 implies that for a fixed , a higher SNR () leads to a better detection performance, which is intuitive. However, for a fixed , an increase in the number of independent observations () results in degradation of the detection performance. This counter-intuitive behavior is due to the fact that the GLRT in (19) is not a uniformly most powerful (UMP) test for all values of the attack . In fact, a UMP test does not exist in this case [39]. Thus, the test can perform better for some particular attack values, while it may not perform as well for other attack values. This suboptimality is an inherent property of the GLRT in (19). It arises due to the composite nature of the test and the fact that the value of the attack vector is not known to the attack monitor.
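The monotonicity in Lemma 3.4 is easy to reproduce numerically. The parameter grids below are arbitrary and only illustrate the trends described above. They are not the values used for Figure 3.

```python
from scipy import stats


def detection_probability(q, lam, p_fa=0.05):
    """P_D of the chi-square test with q degrees of freedom and SNR lam."""
    tau = stats.chi2.isf(p_fa, q)
    return stats.ncx2.sf(tau, q, lam)


# Fixed SNR, growing number of independent observations: P_D decreases.
pd_vs_q = [detection_probability(q, 5.0) for q in (1, 2, 5, 10, 20)]

# Fixed number of observations, growing SNR: P_D increases.
pd_vs_lam = [detection_probability(4, lam) for lam in (1.0, 4.0, 9.0, 16.0)]

print([round(p, 3) for p in pd_vs_q])
print([round(p, 3) for p in pd_vs_lam])
```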

Remark 3

(Composite vs. simple test) If the value of the attack vector (say ) is known, we can cast a simple (simple vs. simple) binary hypothesis testing problem as vs. and use the standard Likelihood Ratio Test criterion for detection. In this case the detection probability depends only on and SNR , and for any given , the detection performance improves as the SNR increases.
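For a known attack, the likelihood ratio test reduces to thresholding the scalar $\mu^T\Sigma^{-1}z$, where $\mu$ is the (assumed known) attack signature in the processed measurements. This scalar is $\mathcal{N}(0, \lambda)$ under $H_0$ and $\mathcal{N}(\lambda, \lambda)$ under $H_1$, with $\lambda = \mu^T\Sigma^{-1}\mu$. Hence $P_D = \bar\Phi(\bar\Phi^{-1}(P_F) - \sqrt{\lambda})$, where $\bar\Phi$ is the standard Gaussian right tail, and this does not depend on $q$. The sketch compares it with the $\chi^2$ GLRT at the same $P_F$ and $\lambda$. By the Neyman-Pearson lemma, the simple test cannot be worse. The parameter values are arbitrary.

```python
import numpy as np
from scipy import stats


def pd_glrt(q, lam, p_fa):
    """Composite case: chi-square GLRT with q degrees of freedom and SNR lam."""
    return stats.ncx2.sf(stats.chi2.isf(p_fa, q), q, lam)


def pd_lrt_known_attack(lam, p_fa):
    """Simple case (known attack): P_D = Phibar(Phibar^{-1}(p_fa) - sqrt(lam)),
    with Phibar the standard Gaussian right tail; independent of q."""
    return stats.norm.sf(stats.norm.isf(p_fa) - np.sqrt(lam))


lam, p_fa = 5.0, 0.05
print(round(pd_lrt_known_attack(lam, p_fa), 3))
for q in (1, 2, 5, 10):
    print(q, round(pd_glrt(q, lam, p_fa), 3))   # GLRT power decays with q
```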

4 Privacy quantification

In this section, we quantify the privacy of the mechanism in (5) in terms of the estimation error covariance of the state . For simplicity, we assume , and this estimation is performed by Subsystem , which is directly coupled with Subsystem and receives limited measurements from it. Then, we use this quantification to compare and rank different privacy mechanisms.

We use a batch estimation scheme in which the estimate is computed based on the collective measurements obtained for , with . Let , and let , , be similar time-aggregated vectors of , respectively. Then, using (5), we have

| (27) |

where with . Note that Subsystem that receives measurements (27) from Subsystem knows (cf. Assumption 2). However, it is oblivious to the statistics of the confidential stochastic signal . Therefore, it computes an estimate of assuming that is a deterministic but unknown quantity. Further, this estimate is computed by Subsystem using the measurements received only from Subsystem , and it does not use its local measurements or the measurements received from other subsystems for this purpose. The reason is twofold. First, although the local measurements of Subsystem depend on due to the interconnected nature of the system (see (8)), they cannot be used due to the presence of the unknown attack on Subsystem given by , where is known and is unknown. If we try to eliminate these unknown attacks by pre-multiplying (8) with a matrix , where is the basis of Null(), this operation also eliminates (and ), since . Second, the measurements received from other subsystems cannot be used since Subsystem does not have knowledge of the dynamics or attacks on these other subsystems.²

² For these reasons, the estimation capability of any subsystem trying to infer will be the same.

According to (27), , and the Maximum Likelihood (ML) estimate of based on is computed by maximizing the log-likelihood function of , and is given by:

| (28) | ||||

is any real vector of appropriate dimension, and equality follows from Lemma A.1 in the Appendix. If (or equivalently ) is not full column rank, then the estimate can lie anywhere in Null() = Null() (shifted by ). Thus, the component of that lies in Null() cannot be estimated and only the component of that lies in Im() = Im() can be estimated. Let denote the projection operator on Im(). The estimation error in this subspace is given by:

| (29) |

and the estimation error covariance is given by:

| (30) |

Note that since the model in (27) is linear with Gaussian noise, is the minimum-variance unbiased (MVU) estimate of projected on Im(). Thus, the covariance captures the fundamental limit on how accurately can be estimated and, therefore, it is a suitable metric to quantify privacy.
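The two privacy knobs (the rank of the estimable subspace and the size of the error covariance) can be computed directly. Consider a generic shared-measurement model $\bar y = \bar C x + \bar v$, $\bar v \sim \mathcal{N}(0, \bar\Sigma)$, assumed here as a stand-in for (27). Its projected ML error covariance is $(\bar C^T\bar\Sigma^{-1}\bar C)^+$. In the minimal sketch below, sharing noisier measurements enlarges this covariance in the positive semidefinite sense, and sharing fewer measurements lowers its rank. The matrices and the helper names are hypothetical.

```python
import numpy as np


def psd_pinv(F, tol=1e-9):
    """Pseudo-inverse of a symmetric PSD matrix, discarding tiny eigenvalues."""
    w, V = np.linalg.eigh(F)
    keep = w > tol * np.abs(w).max()
    return (V[:, keep] / w[keep]) @ V[:, keep].T


def projected_error_covariance(C_bar, Sigma_bar):
    """Covariance of the ML error restricted to Im(C_bar^T)."""
    F = C_bar.T @ np.linalg.solve(Sigma_bar, C_bar)
    return psd_pinv(F)


C = np.eye(2)                                   # hypothetical: both states measured
E_low_noise = projected_error_covariance(C, 0.1 * np.eye(2))
E_high_noise = projected_error_covariance(C, 1.0 * np.eye(2))
E_fewer = projected_error_covariance(C[:1], 0.1 * np.eye(1))  # share only the first output

print(np.linalg.eigvalsh(E_high_noise - E_low_noise).min() >= 0)  # True: noisier is more private
print(np.linalg.matrix_rank(E_fewer))                             # 1: second state not estimable
```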

The privacy level of mechanism in (5) is characterized by two quantities: (i) rank(), and (ii) . Intuitively, if rank() is small, then Subsystem shares fewer measurements and, as a result, the component of that cannot be estimated becomes large. Further, if is large (in a positive semi-definite sense), the component of that can be estimated is estimated less accurately. Thus, a lower value of rank() and a larger value of imply a higher level of privacy. Based on this discussion, we next define an ordering between two privacy mechanisms.

Consider two privacy mechanisms and , and let , denote the limited measurements and estimates corresponding to the two mechanisms, respectively. Further, let denote the quantities defined above corresponding to and .

Definition 1

(Privacy ordering) Mechanism is more private than , denoted by , if

| (31) | ||||

The first condition implies that is a limited version of and is required for the ordering to be well defined. Under this condition, it is easy to see that . Thus, the estimated component lies in a subspace that is contained in the subspace of the estimated component . For a fair comparison between the two mechanisms, we consider the projection of on , given by . Then, we compare its estimation error (given by ) with the estimation error of (given by ) to obtain the second condition in (31). Next, we present an example to illustrate Definition 1.

Example 2

Let , , , and consider two privacy mechanisms given by:

with and . Mechanism shares both components of the measurement vector () whereas shares only the first component (), and both add some artificial noise. The state estimates under the two mechanisms (using (28)) are given by

Thus, under both components of can be estimated while under , only the first component can be estimated. Further, we have and . Thus, the estimation error covariance of the first component of under and are and , respectively, and is more private than if .

On the other hand, if , then an ordering between the mechanisms cannot be established. In this case, under , both the state components can be estimated but the estimation error in first component is large. In contrast, under , only the first component can be estimated but its estimation error is small.

Next, we state a sufficient condition on the noise added by two privacy mechanisms that guarantees the ordering of the mechanisms. This condition implies that, if one privacy mechanism shares a subspace of the measurements of the other mechanism and injects a sufficiently large amount of noise, then it is more private.

Lemma 4.1

Proof: From (27) and (28), we have

Since satisfy (31), there always exists a full row rank matrix satisfying . Next, we have

| (33) |

where and follows from (using (32)) and Lemma A.3 in the Appendix. From (4), it follows that

where follows from [40, Lemma 1], and , follow from the facts that is symmetric and . Thus, both conditions in (31) are satisfied and .

We conclude this section by showing that the privacy mechanism in (5) exhibits an intuitive post-processing property: if we further limit the measurements produced by a privacy mechanism, then this operation cannot decrease the privacy of the measurements. This post-processing property also holds in the differential privacy framework [26].

Lemma 4.2

(Post-processing increases privacy) Consider two privacy mechanisms and , where further limits the measurements of as:

where is full row rank and . Then, is more private than .
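Lemma 4.2 can be sanity-checked numerically on random instances. The sketch builds a hypothetical mechanism with effective output matrix `C1` and noise covariance `Sigma1`. It then post-processes that mechanism with a full-row-rank `S` and extra noise. Finally, it checks the two properties discussed after Definition 1, under our reading of (31): the estimable subspace can only shrink, and on that smaller subspace the error covariance can only grow. This is a numerical illustration under stated assumptions, not a proof.

```python
import numpy as np


def psd_pinv(F, tol=1e-9):
    """Pseudo-inverse of a symmetric PSD matrix, discarding tiny eigenvalues."""
    w, V = np.linalg.eigh(F)
    keep = w > tol * np.abs(w).max()
    return (V[:, keep] / w[keep]) @ V[:, keep].T


rng = np.random.default_rng(3)
n, m1, m2 = 4, 3, 2

# Hypothetical mechanism 1: y1 = C1 x + v1, v1 ~ N(0, Sigma1).
C1 = rng.standard_normal((m1, n))
B = rng.standard_normal((m1, m1))
Sigma1 = B @ B.T + 0.1 * np.eye(m1)

# Mechanism 2 post-processes mechanism 1: y2 = S y1 + zeta, zeta ~ N(0, Sigma_z).
S = rng.standard_normal((m2, m1))     # full row rank (almost surely)
Sigma_z = 0.5 * np.eye(m2)
C2 = S @ C1
Sigma2 = S @ Sigma1 @ S.T + Sigma_z

# Projected ML error covariances (C^T Sigma^{-1} C)^+ of both mechanisms.
F1 = C1.T @ np.linalg.solve(Sigma1, C1)
F2 = C2.T @ np.linalg.solve(Sigma2, C2)
E1, E2 = psd_pinv(F1), psd_pinv(F2)
P2 = E2 @ F2                          # orthogonal projector onto Im(C2^T)
P2 = 0.5 * (P2 + P2.T)                # symmetrize against round-off

# (i) Im(C2^T) is contained in Im(C1^T).
subspace_ok = np.linalg.matrix_rank(np.vstack([C1, C2])) == np.linalg.matrix_rank(C1)
# (ii) On Im(C2^T), mechanism 1's projected error covariance is no larger.
gap = E2 - P2 @ E1 @ P2
covariance_ok = (np.linalg.eigvalsh(0.5 * (gap + gap.T)).min()
                 >= -1e-9 * max(1.0, np.abs(E2).max()))

print(subspace_ok, covariance_ok)
```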

Remark 4

(Comparison with Differential Privacy (DP)) Additive noise based privacy mechanisms have also been proposed in the framework of DP. Specifically, the notion of $(\epsilon, \delta)$-DP uses a zero-mean Gaussian noise [26]. Although the frameworks of DP and this paper both use additive Gaussian noise, there are conceptual differences between the two. The DP framework distinguishes between the cases when a single subsystem is present or absent in the system, and tries to make the output statistically similar in both cases. It allows access to arbitrary side information and does not involve any specific estimation algorithm. In contrast, our privacy framework assumes no side information, and its privacy guarantees are specific to the considered estimation procedure. Moreover, besides adding noise, our framework also allows for an additional means to vary privacy by sending fewer measurements, which is not feasible in the DP framework.

5 Detection performance vs privacy trade-off

In this section, we present a trade-off between the attack detection performance and privacy of the subsystems. As before, we focus on detection for Subsystem 1 and consider two measurement sharing privacy mechanisms and for all other subsystems . The trade-off is between the detection performance of Subsystem 1 and the privacy level of all other subsystems. We begin by characterizing the relation between the detection parameters corresponding to these two sets of privacy mechanisms.

Theorem 5.1

(Relation among the detection parameters of privacy mechanisms) Let be more private than for all . Given any attack vector , let and denote the detection parameters under the privacy mechanisms , for . Then, we have

| (34) |

where and are the largest and smallest generalized eigenvalues of , respectively.

Proof: From (5), (9) and (10), for , we have

Since for all , the first condition in (31) results in

From (11), we have . Since , from (14), it follows that . Recalling from (24) that , it follows that .

Since , we have for some full column rank matrix . Let . From (16), we have

| (35) |

Next, we show that . . Using , and using (14) for both , , we have

| (36) |

Thus, we get

| (37) |

where the last inequality follows from (36) and the fact that . Next, we have,

| (38a) | ||||

| (38b) | ||||

where is a permutation matrix with . Substituting (38a) and (38b) in (5), we have

where follows from the second condition in (31) for all . Next, from (24), we have,

where follows from Lemma A.3 in the Appendix, and the facts that and is full column rank. Finally, the second condition in (34) follows from Lemma A.4 in the Appendix, and the proof is complete.

Theorem 5.1 shows that when the subsystems share measurements with Subsystem 1 using more private mechanisms, both the number of processed measurements and the SNR decrease. This has implications for the detection performance of Subsystem 1, as explained next. To compare the performance corresponding to the two sets of privacy mechanisms, we select the same false alarm probability for both cases and compare the detection probabilities. Theorem 5.1 and Lemma 3.4 imply that can be greater or smaller than depending on the actual values of the detection parameters. In fact, ignoring the dependence on since it is the same in both cases, we have

Intuitively, if the decrease in due to the decrease in the SNR () is larger than the increase in due to the decrease in the number of measurements (), then the detection performance decreases, and vice versa. Note that the SNR depends on the attack vector (via (24)), which is not known a priori; thus, depending on the actual attack value, the SNR can take any positive value. The next result formalizes this intuition.
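A short sketch can make the two competing effects concrete. It assumes a fixed false alarm probability. Without attacks, the test statistic is taken to be chi-squared with p degrees of freedom (the number of processed measurements). Under attack, it is taken to be noncentral chi-squared with noncentrality equal to the SNR. This form of the detection parameters is an assumption made for illustration. The function name and the numbers are hypothetical.

```python
from scipy.stats import chi2, ncx2


def detection_probability(alpha, p, snr):
    """Detection probability of a chi-squared test with p degrees of freedom,
    false alarm probability alpha, and noncentrality parameter snr > 0."""
    threshold = chi2.ppf(1.0 - alpha, df=p)   # fixes the false alarm rate
    return ncx2.sf(threshold, df=p, nc=snr)   # P(statistic > threshold)


alpha = 0.05
# A lower SNR with the same p reduces detection ...
print(detection_probability(alpha, 4, 5.0), detection_probability(alpha, 4, 10.0))
# ... whereas fewer measurements with the same SNR improve it.
print(detection_probability(alpha, 8, 10.0), detection_probability(alpha, 2, 10.0))
# Both effects together: p = 10 with SNR 9 versus p = 1 with SNR 8.
print(detection_probability(alpha, 10, 9.0), detection_probability(alpha, 1, 8.0))
```

The last comparison shows the counter-intuitive case. The configuration with a single measurement has both fewer measurements and a lower SNR, yet it achieves a higher detection probability (about 0.81 for p = 1 and SNR 8).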

Theorem 5.2

The above result presents an analytical condition for the trade-off. When this condition is violated, a counter trade-off exists. This is an interesting and counter-intuitive relation between detection performance and privacy (information sharing): it implies that, in certain cases, sharing less information can lead to better detection performance. This phenomenon occurs because the GLRT for the considered hypothesis testing problem is a suboptimal test, as discussed before.
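The break-even SNR offers a complementary view of the counter trade-off. Take a configuration with p0 processed measurements and SNR snr0. The break-even SNR is the smallest SNR at which a configuration with p1 < p0 measurements reaches the same detection probability. If a more private mechanism leaves an attack with an SNR between this value and snr0, sharing less information improves detection. The sketch assumes a chi-squared test with p degrees of freedom whose noncentrality under attack equals the SNR. It locates the break-even point with `scipy.optimize.brentq`. This is an illustration under these assumptions, not the condition stated in Theorem 5.2, and the function names are hypothetical.

```python
from scipy.optimize import brentq
from scipy.stats import chi2, ncx2


def detection_probability(alpha, p, snr):
    """P_D of a chi-squared test (p degrees of freedom, noncentrality snr)."""
    return ncx2.sf(chi2.ppf(1.0 - alpha, df=p), df=p, nc=snr)


def break_even_snr(alpha, p0, snr0, p1):
    """Smallest SNR at which a test with p1 < p0 degrees of freedom matches
    the detection probability of a test with p0 degrees of freedom and snr0."""
    target = detection_probability(alpha, p0, snr0)
    gap = lambda snr: detection_probability(alpha, p1, snr) - target
    # gap < 0 for a vanishing SNR (P_D -> alpha) and gap > 0 at snr0 (p1 < p0),
    # so the bracket below contains a root.
    return brentq(gap, 1e-6, snr0)


snr_star = break_even_snr(0.05, p0=10, snr0=9.0, p1=1)
print(snr_star)   # with p1 = 1, any SNR in (snr_star, 9.0] detects better
```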

5.1 Privacy using only noise

In this subsection, we analyze the special case when the subspace of the shared measurements is fixed, and the privacy level can be varied by changing only the noise level. We begin by comparing the detection performance corresponding to two privacy mechanisms that share the same subspace of measurements.

Corollary 5.3

(Strict security-privacy trade-off) Consider two privacy mechanisms such that for . Let () denote the detection parameters of Subsystem 1 under the privacy mechanisms , for . Then, for any given , we have

Proof: Since the mechanisms share the same subspace of measurements, from the proof of Theorem 5.1, we have

The fact that follows from Theorem 5.1, and the result then follows from Lemma 3.4.

The above result implies that there is a strict trade-off between privacy and detection performance when the subspace of the shared measurements is fixed and the privacy level is varied by changing the noise level. In this case, more private mechanisms result in poorer detection performance, and vice versa.
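Corollary 5.3 can be illustrated numerically under a simplifying assumption. The subspace, and hence the number of measurements p, is held fixed. The SNR is then taken to have the hypothetical form mu^T (Sigma + sigma^2 I)^{-1} mu. Here mu is an attack-induced mean shift, Sigma a baseline noise covariance, and sigma^2 the variance of the artificial noise. This is a stand-in for (24), not its exact expression. Detection assumes a chi-squared test with p degrees of freedom and noncentrality equal to the SNR. All names and values are hypothetical.

```python
import numpy as np
from scipy.stats import chi2, ncx2


def snr(mu, Sigma, noise_var):
    """Hypothetical SNR mu^T (Sigma + noise_var * I)^{-1} mu."""
    K = Sigma + noise_var * np.eye(len(mu))
    return float(mu @ np.linalg.solve(K, mu))


def detection_probability(alpha, p, nc):
    """P_D of a chi-squared test with p degrees of freedom, false alarm
    probability alpha, and noncentrality nc > 0 under attack."""
    return ncx2.sf(chi2.ppf(1.0 - alpha, df=p), df=p, nc=nc)


mu = np.array([1.0, 2.0, 0.5])    # hypothetical attack-induced mean shift
Sigma = 0.2 * np.eye(3)           # hypothetical baseline noise covariance
p, alpha = 3, 0.05                # subspace fixed, so p stays the same
noise_levels = [0.0, 0.5, 1.0, 2.0, 4.0]
p_detect = [detection_probability(alpha, p, snr(mu, Sigma, s2))
            for s2 in noise_levels]
print([round(float(v), 3) for v in p_detect])
```

For a nonzero mu, this SNR is strictly decreasing in sigma^2. The detection probability therefore decreases monotonically with the artificial noise, consistent with the strict trade-off.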

Corollary 5.3 qualitatively captures the security-privacy trade-off. Next, we present a quantitative analysis that determines the best possible detection performance subject to a given privacy level. Note that since the subspace of the shared measurements is fixed, the detection parameter is also fixed, as are and the attack . Thus, according to Lemma 3.4, the detection performance can be improved by increasing . Intuitively, is large (irrespective of ) if is large, or when is small in the positive-semidefinite sense; minimizing allows us to formulate a semidefinite optimization problem, as we show later. Further, the privacy level is quantified by the error covariance in (30). Based on this, we formulate the following optimization problem for Subsystem 1 (a similar problem can be formulated for the whole system, whose cost is the sum of the costs of the individual subsystems):

| (39) | |||||

| s.t. |

where is the minimum desired privacy level of Subsystem . The design variables of the above optimization problem are the positive semi-definite noise covariance matrices . Next, we show that under some mild assumptions, (39) is a semidefinite optimization problem.

Lemma 5.4

Assume that in (6) and for are full row rank. Let , where the matrices , are the block diagonal elements of . Further, let

Then, and , where .

Proof: From (30), , where and . Since and are assumed to be full row rank, is full row rank. Next, we have

where follows from Lemma A.5 in the Appendix.

6 Simulation Example

We consider a power network model of the IEEE 39-bus test case [42] consisting of 10 generators interconnected by transmission lines whose resistances are assumed to be negligible. Each generator is modeled according to the following second-order swing equation [43]:

| (41) |

where and denote the rotor angle, moment of inertia, damping coefficient, internal voltage and mechanical power input of the generator, respectively. Further, denotes the reactance of the transmission line connecting generators and ( if they are not connected). We linearize (41) around an equilibrium point to obtain the following collective small-signal model:

| (42) |

where denotes a small deviation of from the equilibrium value, , , and is a symmetric Laplacian matrix given by

| (43) |

Further, models small malicious alterations (attacks) in the mechanical power input of the generators that need to be detected. We assume that generators are under attack. Thus, , where denotes the canonical vector. We assume that the power network is divided into 3 subsystems consisting of generators , and . Accordingly, we permute the state vector in (42) using a permutation matrix such that , where consists of the rotor angles and velocities of all generators in Subsystem . The transformed system is given by , where and . Next, we sample this continuous-time system with sampling time to obtain a discrete-time system with and . We assume that the discrete-time process dynamics are affected by process noise according to (1). The rotor angle and the angular velocity of all generators are measured using Phasor Measurement Units (PMUs) according to the noisy model (2). The time horizon is .
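For reference, the standard lossless swing model and its small-signal linearization [43] can be written as follows. The notation is assumed here: m_i, d_i, E_i, P_{m,i}, and X_{ij} denote the inertia, damping, internal voltage, mechanical power input, and line reactance. In addition, ω = δ̇, M = diag(m_i), D = diag(d_i), a superscript 0 marks the equilibrium, and 1/X_{ij} = 0 for unconnected generators. This textbook form may differ in notation or scaling from (41)–(43).

```latex
\begin{aligned}
& m_i \ddot{\delta}_i + d_i \dot{\delta}_i
  = P_{m,i} - \sum_{j \neq i} \frac{E_i E_j}{X_{ij}} \sin(\delta_i - \delta_j),
  \qquad i = 1, \dots, n, \\
& \begin{bmatrix} \Delta\dot{\delta} \\ \Delta\dot{\omega} \end{bmatrix}
  = \begin{bmatrix} 0 & I \\ -M^{-1} L & -M^{-1} D \end{bmatrix}
    \begin{bmatrix} \Delta\delta \\ \Delta\omega \end{bmatrix}
  + \begin{bmatrix} 0 \\ M^{-1} \end{bmatrix} \Delta P_m, \\
& L_{ij} = -\frac{E_i E_j}{X_{ij}} \cos(\delta_i^0 - \delta_j^0) \ (i \neq j),
  \qquad L_{ii} = -\sum_{j \neq i} L_{ij}.
\end{aligned}
```

Linearizing the sine coupling around the equilibrium produces the cosine weights. The attack enters through the deviation of the mechanical power input, ΔP_m.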

The generator voltage and angle values are obtained from [42]. We fix the damping coefficient for each generator as , and the moment of inertia values are chosen as . The reactance matrix is generated randomly, where each entry of is distributed independently according to . We focus on the attack detection for Subsystem , where Subsystems and use privacy mechanisms to share their measurements with Subsystem 1. The parameters of Subsystem 1 can be extracted from as and . The noise covariances are and and .
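The model construction described above can be sketched in a few functions. These cover the Laplacian, the continuous-time matrices with the attack entering through the mechanical power, the permutation that groups each subsystem's angles and velocities, and the sampling step. The sketch assumes the standard linearization and encodes a missing line as an infinite reactance. It also assumes a zero-order-hold discretization computed from the exponential of an augmented matrix, which is an assumption about the sampling method. The four-generator network, unit voltages, zero equilibrium angles, and random reactances are hypothetical placeholders, not the IEEE 39-bus data or the parameter values used here.

```python
import numpy as np
from scipy.linalg import expm


def swing_laplacian(E, delta0, X):
    """L_ij = -E_i E_j cos(delta0_i - delta0_j) / X_ij for i != j; rows sum
    to zero. A missing line is encoded as X_ij = inf."""
    n = len(E)
    L = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            if i != j and np.isfinite(X[i, j]):
                L[i, j] = -E[i] * E[j] * np.cos(delta0[i] - delta0[j]) / X[i, j]
        L[i, i] = -L[i].sum()   # diagonal entry is still zero at this point
    return L


def continuous_model(m, d, L):
    """State [angle deviations; velocity deviations]; input = power deviations."""
    n = len(m)
    Minv = np.diag(1.0 / m)
    A = np.block([[np.zeros((n, n)), np.eye(n)],
                  [-Minv @ L, -Minv @ np.diag(d)]])
    B = np.vstack([np.zeros((n, n)), Minv])
    return A, B


def subsystem_permutation(groups, n):
    """Permutation whose rows place each subsystem's angles and velocities
    next to each other; groups lists the generator indices per subsystem."""
    order = []
    for g in groups:
        order += list(g) + [n + k for k in g]
    return np.eye(2 * n)[order]


def zoh(A, B, T):
    """Zero-order-hold sampling via the exponential of an augmented matrix."""
    nx, nu = B.shape
    aug = np.zeros((nx + nu, nx + nu))
    aug[:nx, :nx], aug[:nx, nx:] = A, B
    Phi = expm(aug * T)
    return Phi[:nx, :nx], Phi[:nx, nx:]


rng = np.random.default_rng(0)
n = 4                                       # hypothetical number of generators
X = rng.uniform(0.1, 0.5, (n, n))
X = np.triu(X, 1) + np.triu(X, 1).T         # symmetric reactances
np.fill_diagonal(X, np.inf)
X[0, 3] = X[3, 0] = np.inf                  # generators 0 and 3 not connected
L = swing_laplacian(np.ones(n), np.zeros(n), X)
A, B = continuous_model(np.full(n, 2.0), np.full(n, 1.0), L)
P = subsystem_permutation([[0, 1], [2], [3]], n)
Ad, Bd = zoh(P @ A @ P.T, P @ B, T=0.01)
```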

We consider the following three cases of privacy mechanisms for Subsystems 2 and 3:

- (i) Case 0: Subsystems 2 and 3 do not use any privacy mechanism and share their actual measurements, i.e., , and .
- (ii) Case 1: Subsystem 2 does not use any privacy mechanism () while Subsystem 3 shares noisy measurements of generators (, ).
- (iii) Case 2: Subsystems 2 and 3 share noisy measurements of generators and , respectively (, and ).

Using Lemma 4.1, it can be verified that the following privacy ordering holds: . Recall that the detection performance is completely characterized by and the detection parameters . We choose for all the cases. Let , denote the detection parameters for the above three cases. Recall that the parameter depends only on the system parameters, whereas the parameter depends on both the system parameters and the attack values. For the above cases, we have and . Recalling (24), the value of can lie anywhere between depending on the attack value . Thus, for simplicity, we present the results in this section in terms of .

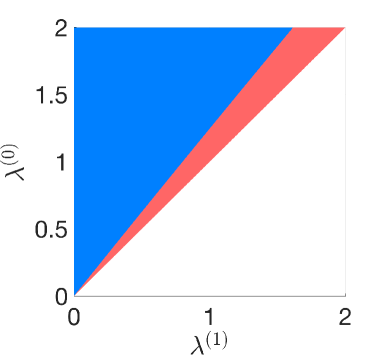

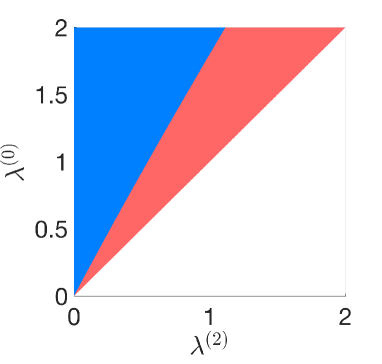

We aim to compare the detection performance of case 0 with that of cases 1 and 2. We are interested in identifying the ranges of the detection parameters for which one case performs better than the other. As mentioned previously, the parameters are fixed for the three cases, so we compare the performance for different values of the parameter . Fig. 4 presents the performance comparison of case 0 with case 1 (Fig. 4(a)) and with case 2 (Fig. 4(b)). Any point in the colored regions is achievable by an attack, i.e., there exists an attack such that and , whereas the white region is inadmissible (see (34)). The blue region corresponds to the pairs for which case 0 performs better than case , i.e., for . In the red region, case performs better than case 0, .

We observe that case 0 performs better than case if is large, and vice versa. This shows that if the attack vector is such that is small, then the detection performance corresponding to a more private mechanism () is better. This implies that there is a non-strict trade-off between privacy and detection performance. This counter-intuitive result is due to the suboptimality of the GLRT used to perform detection, as explained before (cf. the discussion above Remark 3). Further, we observe that the red region of Fig. 4(b) is larger than (and contains) the red region of Fig. 4(a). This is because is more private than .

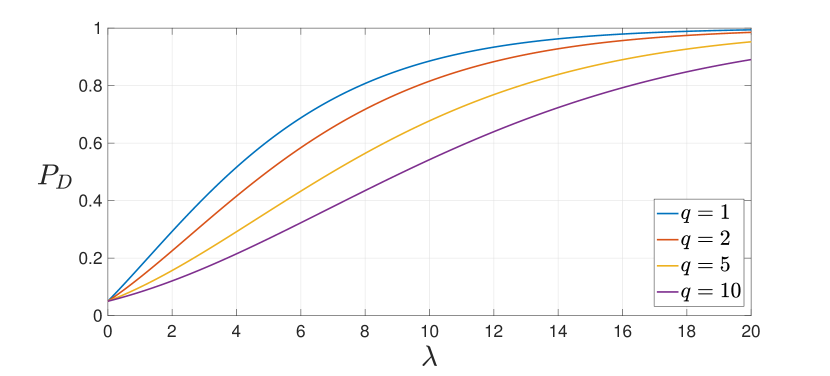

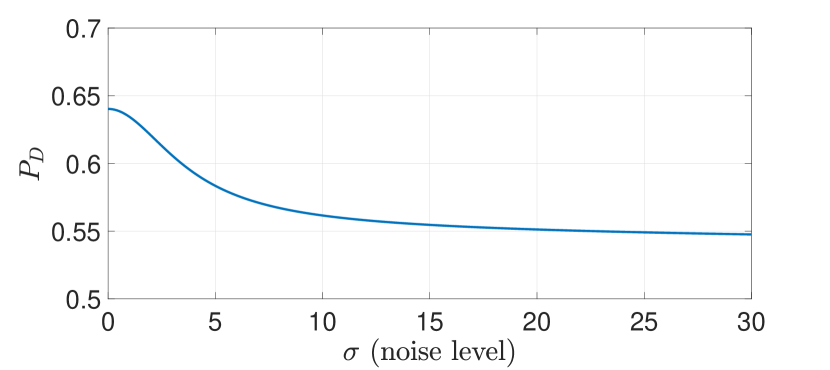

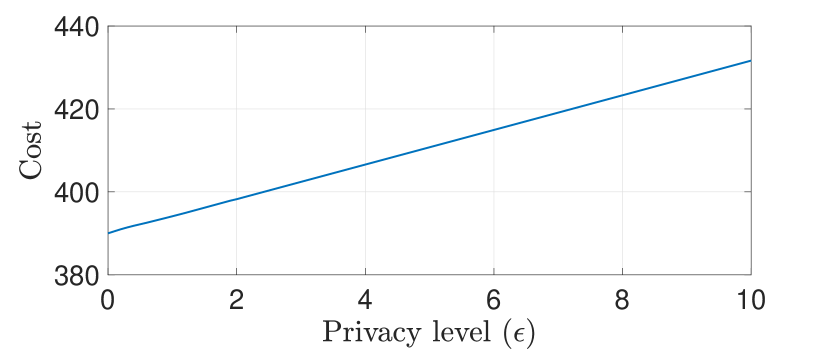

Next, we consider the case where Subsystems 2 and 3 implement their privacy mechanisms by only adding artificial noise in (2). Thus, , and the artificial noise covariances are given by and . The attack on Subsystem 1 (that is, on generator ) is for . Clearly, as the noise level increases, the privacy level also increases. Fig. 5 shows the detection performance of Subsystem 1 for varying noise level . We observe that the detection performance is a decreasing function of the noise level (cf. Corollary 5.3), implying a strict trade-off between detection performance and privacy in this case. Finally, we illustrate this strict trade-off by also explicitly solving the optimization problem (40) and computing the optimal noise covariance matrices. We fix the same desired privacy level for Subsystems 2 and 3: . Fig. 6 shows that the optimal cost in (40) increases with , indicating that the detection performance decreases as the privacy level increases.

7 Conclusion

We study an attack detection problem in interconnected dynamical systems where each subsystem is tasked with detecting local attacks without any knowledge of the dynamics of the other subsystems and their interconnection signals. The subsystems share measurements among themselves to aid attack detection, but they also limit the amount and quality of the shared measurements due to privacy concerns. We show that there exists a non-strict trade-off between privacy and detection performance, and that in some cases sharing fewer measurements can improve the detection performance. We argue that this counter-intuitive result is due to the suboptimality of the considered test.

Future work includes exploring whether this counter-intuitive trade-off exists for alternative detection schemes (for instance, unknown-input observers) and for other types of statistical tests. Recursive schemes to compute the state estimates, eliminate the interconnections, and compute the detection probability will also be explored. Finally, a privacy ordering of two mechanisms irrespective of their subspaces of shared measurements will be defined using a suitable weighting matrix for each subspace.

APPENDIX

Lemma A.1

The optimal solutions of the following weighted least squares problem:

| (44) |

with are given by

| (45) |

where and is any real vector of appropriate dimension. Further, the optimal value of the cost is

| (46) |
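The solutions in (45) contain a free vector that does not affect the optimal cost. This structure arises when the weighting matrix is positive definite but the regressor matrix is rank deficient. The sketch below checks this numerically, assuming (44) has the form min_x (y - H x)^T W (y - H x) with W positive definite. The symbols H, W, y, z, the function names, and the data are illustrative and may not match the notation of (44)–(46).

```python
import numpy as np


def wls_solution(H, W, y, z, rcond=1e-10):
    """An optimal solution of min_x (y - H x)^T W (y - H x) with W positive
    definite; the free vector z only moves x within the null space of H."""
    G = H.T @ W @ H
    G_pinv = np.linalg.pinv(G, rcond=rcond)    # treat tiny singular values as 0
    x_min_norm = G_pinv @ H.T @ W @ y          # minimum-norm normal-eq. solution
    null_projector = np.eye(H.shape[1]) - G_pinv @ G
    return x_min_norm + null_projector @ z


def wls_optimal_cost(H, W, y, rcond=1e-10):
    """Optimal value y^T (W - W H (H^T W H)^+ H^T W) y."""
    G_pinv = np.linalg.pinv(H.T @ W @ H, rcond=rcond)
    return float(y @ (W - W @ H @ G_pinv @ H.T @ W) @ y)


def cost(H, W, y, x):
    """Weighted least-squares cost at x."""
    r = y - H @ x
    return float(r @ W @ r)


H = np.array([[1.0, 2.0], [2.0, 4.0], [0.0, 0.0], [1.0, 2.0]])   # rank 1
W = np.diag([1.0, 2.0, 3.0, 0.5])
y = np.array([1.0, -1.0, 2.0, 0.5])
best = wls_optimal_cost(H, W, y)
x_a = wls_solution(H, W, y, np.zeros(2))
x_b = wls_solution(H, W, y, np.array([3.0, -1.0]))
print(best, cost(H, W, y, x_a), cost(H, W, y, x_b))   # equal up to rounding
```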

Lemma A.2

Let be a positive definite matrix with , . Further, let . Then,

Proof: Using the Schur complement, we have

where the Schur complement . Further,

Since , . Thus,

and the result follows.
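The Schur-complement argument can be checked numerically. Consider a symmetric positive definite matrix partitioned as M = [[A, B], [B^T, C]], with block names assumed here. The sketch verifies two standard facts of the kind used in such proofs. First, the leading block of M^{-1} equals the inverse of the Schur complement A - B C^{-1} B^T. Second, this block dominates A^{-1} in the positive-semidefinite sense. It is a sanity check of these identities, not a restatement of Lemma A.2.

```python
import numpy as np


def schur_blocks(M, k):
    """Split M = [[A, B], [B^T, C]] with A of size k x k and return A, the
    Schur complement S = A - B C^{-1} B^T, and the leading block of M^{-1}."""
    A, B, C = M[:k, :k], M[:k, k:], M[k:, k:]
    S = A - B @ np.linalg.solve(C, B.T)
    return A, S, np.linalg.inv(M)[:k, :k]


rng = np.random.default_rng(3)
F = rng.standard_normal((5, 5))
M = F @ F.T + np.eye(5)                      # symmetric positive definite
A, S, top_left = schur_blocks(M, 2)
print(np.allclose(top_left, np.linalg.inv(S)))
gap = top_left - np.linalg.inv(A)            # should be positive semidefinite
print(np.linalg.eigvalsh(0.5 * (gap + gap.T)).min() >= -1e-10)
```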

Lemma A.3

Let and with , and let be full (column) rank. Then,

| (47) |

Proof: Since is full column rank, , is invertible and . Thus, . Let denote a matrix whose columns form a basis of . Then, since , is non-singular. Let . Then, we have . Thus,

The result follows from Lemma A.2.

Lemma A.4

Let , and let . Then, the maximum and minimum values of subject to are given by and respectively, where and are the largest and smallest generalized eigenvalues of , respectively.

Proof: Consider the following optimization problem

The Lagrangian of this problem is given by , where is the Lagrange multiplier. By differentiating , the first order optimality condition is given by . Thus, is a generalized eigenvalue of . Further, using , the cost at the optimum is given by and the maximum and minimum values of the cost given in the lemma follow.
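Lemma A.4 can be checked with a generalized symmetric eigensolver. The sketch assumes the lemma concerns the extrema of x^T A x subject to x^T B x = c, with A symmetric, B positive definite, and c > 0 (names assumed here), in line with the Lagrangian argument above. It relies on `scipy.linalg.eigh`, which returns the generalized eigenvalues in ascending order. When eigenvectors are requested, `eigh` also normalizes them so that V^T B V = I.

```python
import numpy as np
from scipy.linalg import eigh


def constrained_extremes(A, B, c):
    """Max and min of x^T A x over x^T B x = c (A symmetric, B pos. definite)."""
    lam = eigh(A, B, eigvals_only=True)   # ascending generalized eigenvalues
    return c * lam[-1], c * lam[0]


rng = np.random.default_rng(1)
Q = rng.standard_normal((4, 4))
A = Q + Q.T
R = rng.standard_normal((4, 4))
B = R @ R.T + 4.0 * np.eye(4)
c = 2.0
hi, lo = constrained_extremes(A, B, c)

# Random feasible points stay within [lo, hi] ...
values = []
for _ in range(500):
    x = rng.standard_normal(4)
    x *= np.sqrt(c / (x @ B @ x))          # rescale onto x^T B x = c
    values.append(x @ A @ x)
# ... and the top generalized eigenvector (V^T B V = I) attains hi.
w, V = eigh(A, B)
x_star = np.sqrt(c) * V[:, -1]
print(lo, min(values), max(values), hi, x_star @ A @ x_star)
```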

Lemma A.5

Let where and has full row rank. Then, .

Proof: Let be the Cholesky decomposition.

where follows since is full row rank.

References

- [1] S. M. Rinaldi, J. P. Peerenboom, and T. K. Kelly. Identifying, understanding, and analyzing critical infrastructure interdependencies. IEEE Control Systems Magazine, 21(6):11–25, 2001.

- [2] A. Cardenas, S. Amin, and S. Sastry. Secure control: Towards survivable cyber-physical systems. In International Conference on Distributed Computing Systems Workshops, pages 495–500, Beijing, China, 2008.

- [3] J. Giraldo, E. Sarkar, A. Cardenas, M. Maniatakos, and M. Kantarcioglu. Security and privacy in cyber-physical systems: A survey of surveys. IEEE Design & Test, 34(4):7–17, 2017.

- [4] H. Fawzi, P. Tabuada, and S. Diggavi. Secure estimation and control for cyber-physical systems under adversarial attacks. IEEE Transactions on Automatic Control, 59(6):1454–1467, 2014.

- [5] F. Pasqualetti, F. Dörfler, and F. Bullo. Attack detection and identification in cyber-physical systems. IEEE Transactions on Automatic Control, 58(11):2715–2729, 2013.

- [6] Y. Chen, S. Kar, and J. M. F. Moura. Dynamic attack detection in cyber-physical systems with side initial state information. IEEE Transactions on Automatic Control, 62(9):4618–4624, 2017.

- [7] Y. Mo and B. Sinopoli. On the performance degradation of cyber-physical systems under stealthy integrity attacks. IEEE Transactions on Automatic Control, 61(9):2618–2624, 2016.

- [8] Y. Chen, S. Kar, and J. M. F. Moura. Optimal attack strategies subject to detection constraints against cyber-physical systems. IEEE Transactions on Control of Network Systems, 5(3):1157–1168, 2018.

- [9] H. Nishino and H. Ishii. Distributed detection of cyber attacks and faults for power systems. In IFAC World Congress, pages 11932–11937, Cape Town, South Africa, August 2014.

- [10] S. Cui, Z. Han, S. Kar, T. T. Kim, H. V. Poor, and A. Tajer. Coordinated data-injection attack and detection in the smart grid: A detailed look at enriching detection solutions. IEEE Signal Processing Magazine, 29(5):106–115, 2012.

- [11] F. Dörfler, F. Pasqualetti, and F. Bullo. Distributed detection of cyber-physical attacks in power networks: A waveform relaxation approach. In Allerton Conf. on Communications, Control and Computing, September 2011.

- [12] F. Pasqualetti, F. Dörfler, and F. Bullo. A divide-and-conquer approach to distributed attack identification. In IEEE Conf. on Decision and Control, pages 5801–5807, Osaka, Japan, December 2015.

- [13] N. Forti, G. Battistelli, L. Chisci, S. Li, B. Wang, and B. Sinopoli. Distributed joint attack detection and secure state estimation. IEEE Transactions on Signal and Information Processing over Networks, 4(1):96–110, 2018.

- [14] Y. Guan and X. Ge. Distributed attack detection and secure estimation of networked cyber-physical systems against false data injection attacks and jamming attacks. IEEE Transactions on Signal and Information Processing over Networks, 4(1):48–59, 2018.

- [15] F. Boem, A. J. Gallo, G. Ferrari-Trecate, and T. Parisini. A distributed attack detection method for multi-agent systems governed by consensus-based control. In IEEE Conf. on Decision and Control, pages 5961–5966, Melbourne, Australia, 2017.

- [16] A. Teixeira, H. Sandberg, and K. H. Johansson. Networked control systems under cyber attacks with applications to power networks. In American Control Conference, pages 3690–3696, 2010.

- [17] R. Anguluri, V. Katewa, and F. Pasqualetti. Centralized versus decentralized detection of attacks in stochastic interconnected systems. IEEE Transactions on Automatic Control, 2019. To appear.

- [18] R. M. G. Ferrari, T. Parisini, and M. M. Polycarpou. Distributed fault detection and isolation of large-scale discrete-time nonlinear systems: An adaptive approximation approach. IEEE Transactions on Automatic Control, 57(2):275–290, 2012.

- [19] C. Keliris, M. M. Polycarpou, and T. Parisini. A robust nonlinear observer-based approach for distributed fault detection of input–output interconnected systems. Automatica, 53:408–415, 2015.

- [20] V. Reppa, M. M. Polycarpou, and C. G. Panayiotou. Distributed sensor fault diagnosis for a network of interconnected cyber-physical systems. IEEE Transactions on Control of Network Systems, 2(1):11–23, 2015.

- [21] X. Zhang and Q. Zhang. Distributed fault diagnosis in a class of interconnected nonlinear uncertain systems. International Journal of Control, 85(11):1644–1662, 2012.

- [22] X. G. Yan and C. Edwards. Robust decentralized actuator fault detection and estimation for large-scale systems using a sliding mode observer. International Journal of Control, 81(4):591–606, 2008.

- [23] E. Franco, R. Olfati-Saber, T. Parisini, and M. M. Polycarpou. Distributed fault diagnosis using sensor networks and consensus-based filters. In IEEE Conf. on Decision and Control, pages 386–391, San Diego, CA, USA, December 2006.

- [24] S. Stankovic, N. Ilic, Z. Djurovic, M. Stankovic, and K. H. Johansson. Consensus based overlapping decentralized fault detection and isolation. In Conference on Control and Fault Tolerant Systems, Nice, France, 2010.

- [25] I. Shames, A. M. H. Teixeira, H. Sandberg, and K. H. Johansson. Distributed fault detection for interconnected second-order systems. Automatica, 47:2757–2764, 2011.

- [26] J. Cortes, G. E. Dullerud, S. Han, J. Le Ny, S. Mitra, and G. J. Pappas. Differential privacy in control and network systems. In IEEE Conf. on Decision and Control, pages 4252–4272, Las Vegas, USA, 2016.

- [27] E. Akyol, C. Langbort, and T. Basar. Privacy constrained information processing. In IEEE Conf. on Decision and Control, Osaka, Japan, 2015.

- [28] F. Farokhi and G. Nair. Privacy-constrained communication. In IFAC Workshop on Distributed Estimation and Control in Networked Systems, pages 43–48, Tokyo, Japan, September 2016.

- [29] T. Tanaka, M. Skoglund, H. Sandberg, and K. H. Johansson. Directed information and privacy loss in cloud-based control. In American Control Conference, Seattle, USA, 2017.

- [30] F. Farokhi and H. Sandberg. Ensuring privacy with constrained additive noise by minimizing Fisher information. Automatica, 99:275–288, 2019.

- [31] V. Katewa, F. Pasqualetti, and V. Gupta. On privacy vs cooperation in multi-agent systems. International Journal of Control, 91(7):1693–1707, 2018.

- [32] Y. Mo and R. M. Murray. Privacy-preserving average consensus. IEEE Transactions on Automatic Control, 62(2):753–765, 2017.

- [33] J. Giraldo, A. Cardenas, and M. Kantarcioglu. Security and privacy trade-offs in CPS by leveraging inherent differential privacy. In IEEE Conference on Control Technology and Applications, pages 1313–1318, Hawaii, USA, 2017.

- [34] R. Anguluri, V. Katewa, and F. Pasqualetti. On the role of information sharing in the security of interconnected systems. In Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, Honolulu, HI, 2018.

- [35] A. S. Willsky. A survey of design methods for failure detection in dynamic systems. Automatica, 12:601–611, 1976.

- [36] L. Wasserman. All of Statistics: A Concise Course in Statistical Inference. Springer, 2004.

- [37] N. L. Johnson, S. Kotz, and N. Balakrishnan. Continuous Univariate Distributions, Volume 2. Wiley-Interscience, 1995.

- [38] E. Furman and R. Zitikis. A monotonicity property of the composition of regularized and inverted-regularized gamma functions with applications. Journal of Mathematical Analysis and Applications, 348(2):971–976, 2008.

- [39] E. L. Lehmann and J. P. Romano. Testing Statistical Hypotheses. Springer-Verlag New York, 2005.

- [40] R. E. Hartwig. A note on the partial ordering of positive semi-definite matrices. Linear and Multilinear Algebra, 6(3):223–226, 1978.

- [41] L. Vandenberghe and S. Boyd. Semidefinite programming. SIAM Review, 38(1):49–95, 1996.

- [42] T. Athay, R. Podmore, and S. Virmani. A practical method for the direct analysis of transient stability. IEEE Transactions on Power Apparatus and Systems, PAS-98(2):573–584, 1979.

- [43] P. Kundur. Power System Stability and Control. McGraw-Hill Education, 1994.