On Bounds for Greedy Schemes in String Optimization based on Greedy Curvatures

Abstract

We consider the celebrated bound introduced by Conforti and Cornuéjols (1984) for greedy schemes in submodular optimization. The bound assumes a submodular function defined on a collection of sets forming a matroid and is based on greedy curvature. We show that the bound holds for a very general class of string problems that includes maximizing submodular functions over set matroids as a special case. We also derive a bound that is computable in the sense that it depends only on quantities along the greedy trajectory. We prove that our bound is superior to the greedy curvature bound of Conforti and Cornuéjols. In addition, our bound holds under a condition that is weaker than submodularity.

I INTRODUCTION

In many sequential decision-making or machine learning problems, we encounter the problem of optimally choosing a string (ordered set) of actions over a finite horizon to maximize a given objective function. String optimization problems have the added complexity relative to set optimization problems in that the objective function depends on both the set of actions taken and the order in which the actions are taken. In such problems, determining the optimal solution (optimal string of actions) can become computationally intractable with increasing size of state/action space and optimization horizon. Therefore, we often have to resort to approximate solutions. One of the most common approximation schemes is the greedy scheme, in which we sequentially select the action that maximizes the increment in the objective function at each step. A natural question that arises is how good is the greedy solution to a string optimization problem relative to the optimal solution?

In this paper, we derive a ratio bound for the performance of greedy solutions to string optimization problems relative to that of the optimal solution. By a ratio bound, we mean a bound of the form

$$\frac{f(G_K)}{f(O)} \geq \beta,$$

where $f(G_K)$ and $f(O)$ are the objective function values of the greedy solution and optimal solution, respectively, and $K$ is the horizon. The bound guarantees that the greedy solution achieves at least a factor of $\beta$ of the optimal solution. Our focus is on establishing a bound in which the factor $\beta$ is easily computable.

Prior work: Bounding greedy solutions to set optimization problems has a rich history, especially in the context of submodular set functions. The most celebrated results are from Fisher et al. [1] and Nemhauser et al. [2]. They showed that $\beta = 1/2$ over a finite general set matroid [1] and $\beta = 1 - (1 - 1/K)^K > 1 - 1/e$ over a finite uniform set matroid with $K$ being the horizon [2]. Improved bounds (with $\beta$ larger than $1 - 1/e$) have been developed by Conforti and Cornuéjols [3], Vondrák [4, 5], and Wang et al. [6], by introducing various notions of curvature for set submodular functions under uniform and/or general matroid settings.

However, for most of these bounds, computing the value of $\beta$ is intractable and hence the bound is effectively not computable in problems with large action spaces and decision horizons. In addition, the value of $\beta$ depends on the values of the objective function beyond the optimization horizon. One notable exception is the bound derived by Conforti and Cornuéjols [3] that is based on a quantity called greedy curvature. This bound is easily computable. Another notable exception is the bound derived by Welikala et al. [7], which is also computable and in some problems has been shown to be larger than other curvature bounds. However, the value of $\beta$ for this bound depends on values of the objective function beyond the optimization horizon.

Recently, Zhang et al. [8] extended the concept of set submodularity to string submodularity and established a $1 - 1/e$ bound for greedy solutions of string optimization problems. They also derived improved bounds involving various notions of curvature for string submodular functions. However, these curvatures have the same computational intractability issue as mentioned above. Alaei et al. [9] proved $1 - 1/e$ and $1 - 1/e^{(1 - 1/e)}$ bounds for online advertising and query rewriting problems, both of which can be formulated under the framework of string submodular optimization.

Main contributions: The contributions of this paper are as follows:

1. We first derive a computable bound for greedy solutions to string optimization problems by extending the notion of greedy curvature and the proof technique used by Conforti and Cornuéjols [3] from set functions to string functions. Our bound coincides with the greedy curvature bound in [3] when the string objective function reduces to a set objective function. However, in deriving our bound, we do not use submodularity. Rather, we rely on weaker conditions on the string objective function. Therefore, our bounding result is stronger. This result is reported in Theorem 1.

2. We then establish an even stronger result. We derive another computable bound that relies on weaker assumptions than those used in deriving our first bound. This is done in Theorem 2.

3. We then show that the value of the bound in Theorem 2 is larger than the value of the bound in Theorem 1 (i.e., the generalization of the Conforti and Cornuéjols bound). This is shown in Theorem 3.

Organization: The paper is organized as follows. Section II introduces the mathematical preliminaries and formulates the string optimization problem. Section III presents the main results regarding our computable bounds. Applications of our theoretical results to task scheduling and sensor coverage problems are demonstrated in Section IV. Finally, conclusions are given in Section V.

II DEFINITIONS FOR STRING OPTIMIZATION

In this section, we introduce notation, terminology, and definitions that we use in our paper and present a formulation for a general string optimization problem.

Definition 1.

Let $\mathbb{A}$ be a set. In our context, $\mathbb{A}$ is called the ground set and its elements are called symbols.

1. Let $a_1, a_2, \ldots, a_k \in \mathbb{A}$. Then $A = a_1 a_2 \cdots a_k$ is a string with length $|A| = k$.

2. Let $K$ be a positive integer, called the horizon.

3. Let $\mathbb{A}_K^*$ be the set of all possible strings of length up to $K$, including the empty string $\varnothing$. It is also called the uniform string matroid of rank $K$.

Definition 2.

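Consider two strings $A = a_1 a_2 \cdots a_m$ and $B = b_1 b_2 \cdots b_n$ in $\mathbb{A}_K^*$.

1. Define the concatenation of $A$ and $B$ as $A \cdot B = a_1 \cdots a_m b_1 \cdots b_n$.

2. We say that $A$ is a prefix of $B$ if $B = A \cdot C$ for some $C \in \mathbb{A}_K^*$, in which case we write $A \preceq B$.

3. We say that $\mathbb{X} \subseteq \mathbb{A}_K^*$ is prefix-closed if for all $B \in \mathbb{X}$ and $A \preceq B$, $A \in \mathbb{X}$.

4. Let $f: \mathbb{A}_K^* \to \mathbb{R}$ be the objective function.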

Definition 3.

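Let $\mathbb{X} \subseteq \mathbb{A}_K^*$. Then $\mathbb{X}$ is a finite rank string matroid if

1. $|A| \leq K$ for all $A \in \mathbb{X}$.

2. If $B \in \mathbb{X}$ and $A \preceq B$, then $A \in \mathbb{X}$.

3. For every $A, B \in \mathbb{X}$ where $|A| + 1 = |B|$, there exists $a \in \mathbb{A}$ that is a symbol in $B$ and such that $A \cdot a \in \mathbb{X}$.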

Definition 4.

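The function $f$ is string submodular on $\mathbb{X}$ if

1. $\forall A \preceq B \in \mathbb{X}$, $f(A) \leq f(B)$.

2. $\forall A \preceq B \in \mathbb{X}$ and $\forall a \in \mathbb{A}$ such that $A \cdot a, B \cdot a \in \mathbb{X}$, $f(A \cdot a) - f(A) \geq f(B \cdot a) - f(B)$.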
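The definitions above can be checked by brute force on small instances. The following is a minimal sketch, assuming strings are represented as Python tuples and that string submodularity means monotonicity along prefixes plus diminishing increments along prefixes (the usual reading of Definition 4). The function names are illustrative and not taken from the paper.

```python
from itertools import product
from typing import Callable, Hashable, Iterable, Set, Tuple

String = Tuple[Hashable, ...]


def uniform_string_matroid(ground: Iterable[Hashable], K: int) -> Set[String]:
    """All strings over `ground` of length 0..K, represented as tuples."""
    symbols = tuple(ground)
    return {s for k in range(K + 1) for s in product(symbols, repeat=k)}


def is_prefix_closed(X: Set[String]) -> bool:
    """True if every proper prefix of every string in X is also in X."""
    return all(s[:i] in X for s in X for i in range(len(s)))


def is_string_submodular(
    f: Callable[[String], float],
    X: Set[String],
    ground: Iterable[Hashable],
    tol: float = 1e-12,
) -> bool:
    """Brute-force check of the two assumed conditions on a finite X."""
    symbols = tuple(ground)
    for B in X:
        for i in range(len(B) + 1):
            A = B[:i]  # A is a prefix of B (possibly A == B)
            if A not in X:
                continue
            # Condition 1: f is nondecreasing along prefixes.
            if f(A) > f(B) + tol:
                return False
            # Condition 2: increments do not grow when the prefix is extended.
            for a in symbols:
                Aa, Ba = A + (a,), B + (a,)
                if Aa in X and Ba in X:
                    if f(Aa) - f(A) < f(Ba) - f(B) - tol:
                        return False
    return True
```

The check enumerates every prefix pair, so it is only practical for very small ground sets and horizons; it is meant as a sanity test for toy objective functions rather than a scalable procedure.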

Remark 1.

1. Unlike the permutation invariance in the set case, the order of the symbols in a string matters. Different orders of the same set of symbols represent different strings.

2. Note that if and only if .

3. In the matroid literature, a prefix-closed collection is also said to satisfy the hereditary property and is called an independence system. An independence system does not require condition 3 in Definition 3.

Remark 2.

The definitions of finite rank string matroid and string submodular function are introduced in [10]. Their formulations are inspired by the definitions of set matroid and set submodular function in [3]. Most of the previous work is established in the set case. Here, we extend the theoretical results to the more general string case.

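Consider an objective function $f: \mathbb{A}_K^* \to \mathbb{R}$ and a prefix-closed $\mathbb{X} \subseteq \mathbb{A}_K^*$ with $f(\varnothing) = 0$. The general string optimization problem is given by

$$\begin{aligned} \text{maximize} \quad & f(A) \\ \text{subject to} \quad & A \in \mathbb{X}. \end{aligned} \tag{1}$$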

Remark 3.

1. The stipulation that $f(\varnothing) = 0$ is without loss of generality because if $f(\varnothing) \neq 0$, we can subtract $f(\varnothing)$ from all values of $f$ without changing the maximizer of the optimization problem (1).

2. We deal with the case that for any .

3. The constraint set $\mathbb{X}$ here is not necessarily the uniform string matroid $\mathbb{A}_K^*$ or a finite rank string matroid. In particular, our analysis applies to general prefix-closed sets as constraint sets subject to certain assumptions.

4. The stipulation that $\mathbb{X} \subseteq \mathbb{A}_K^*$ is without loss of generality because we can always define $K = \max_{A \in \mathbb{X}} |A|$.

Definition 5.

Any solution to the optimization problem (1) is said to be optimal, denoted by , where .

Remark 4.

-

1.

The length of an optimal solution could be anything from to .

-

2.

There may be multiple optimal solutions.

-

3.

If is finite, then and are finite, and an optimal solution always exists.

Definition 6.

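We define $G_K = g_1 g_2 \cdots g_K$ to be a greedy solution if for all $k = 1, \ldots, K$,

$$g_k \in \operatorname*{arg\,max}_{g \in \mathbb{A} :\, G_{k-1} \cdot g \in \mathbb{X}} f(G_{k-1} \cdot g).$$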

Note that we are going all the way to horizon . This implicitly assumes that is nondecreasing along and . These implicit conditions are reflected in assumption in Section III.

Remark 5.

1. The length of a greedy solution is always $K$.

2. A greedy scheme is one that adds a symbol to the existing string at each time $k$, so that the resulting string produces the highest value of $f$ without regard to future times. In other words, a greedy solution is generated by a greedy scheme.

3. The maximizing symbol above may be nonunique, in which case there are multiple greedy solutions.
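The greedy scheme and the exhaustive search it approximates can be sketched as follows. This is an illustrative sketch only: strings are tuples, the constraint set is passed explicitly as a finite prefix-closed set `X`, and the two-agent instance (`P`, `f`) is a hypothetical toy example, not one from the paper.

```python
from itertools import permutations
from typing import Callable, Hashable, Sequence, Set, Tuple

String = Tuple[Hashable, ...]


def greedy_string(
    f: Callable[[String], float],
    ground: Sequence[Hashable],
    X: Set[String],
    K: int,
) -> String:
    """Append, at each step, the feasible symbol giving the largest f."""
    G: String = ()
    for _ in range(K):
        candidates = [G + (a,) for a in ground if G + (a,) in X]
        if not candidates:
            break  # no feasible extension exists for this prefix
        G = max(candidates, key=f)  # ties broken by order in `ground`
    return G


def optimal_string(f: Callable[[String], float], X: Set[String]) -> String:
    """Exhaustive search over X; only viable for tiny instances."""
    return max(X, key=f)


# Hypothetical toy instance: P[agent][stage] is a success probability and
# each agent may be used at most once (strings without repeated symbols).
P = {0: (0.5, 0.45), 1: (0.45, 0.1)}


def f(s: String) -> float:
    miss = 1.0
    for stage, agent in enumerate(s):
        miss *= 1.0 - P[agent][stage]
    return 1.0 - miss


GROUND = (0, 1)
K = 2
X = {s for k in range(K + 1) for s in permutations(GROUND, k)}
G = greedy_string(f, GROUND, X, K)  # greedy picks agent 0 first
O = optimal_string(f, X)            # exhaustive search prefers agent 1 first
```

In this toy instance the greedy string and the optimal string differ, which is exactly the situation a ratio bound is meant to quantify.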

Definition 7.

We define as the performance bound of the greedy solution.

Remark 6.

$f(G_K)$ can be computed exactly, but $f(O)$ is computationally intractable. Finding a valid lower bound on $f(G_K)/f(O)$ is equivalent to finding an upper bound on $f(O)$.

III MAIN RESULTS

In this section, we present our bounding results. We first introduce the assumptions that our bounding results rely on. Then we generalize the greedy curvature bound of Conforti and Cornuéjols [3] from set optimization problems to string optimization problems. Then, we establish a stronger bounding result that relies on fewer assumptions and has a larger bound value.

Given any string and , denote if and if . For simplicity, we use the abbreviation . Note that by definition, .

Let . Define the notation as follows: for each , , called the increment of . In [3], is called a discrete derivative.

For any , let . The set contains the actions that are feasible with respect to following string . The set is frequently referred to in our derivations. When , . For , is nonempty because by definition.

Assumptions: We introduce the following three key assumptions regarding feasibility along the greedy sequence and the diminishing return property along the optimal and greedy paths:

Our bounding results (Theorems 1-3) rely on a subset or all of the above three assumptions. We note that the diminishing-return assumptions are weaker conditions than string submodularity: they only involve diminishing returns along the optimal sequence instead of any sequence in $\mathbb{X}$. Any string submodular function satisfies , as stated in the following lemma.

Lemma 1.

If is string submodular, then satisfies .

Proof.

By condition (2) of string submodularity in Definition 4, we can set . Then and are both feasible in the domain of and . ∎

We now establish our first bounding result. We define the greedy curvature for a string function as

| (2) |

Remark 7.

Based on assumptions and , always holds. This can be proved by contradiction. Assume . By the definition of and assumptions and , we have:

| (3) | ||||

Adding and summing over for all yield:

| (4) | ||||

which contradicts .

Then, we have the following theorem.

Theorem 1.

Assuming , , where

Proof.

The definition of gives us:

for every and

By assumption ,

| (5) |

Moreover, by assumption ,

| (6) |

in which holds since , by the definition of greedy solution, dominates each term in .

Then,

| (7) | ||||

where is by the relation in inequality , and is by the definition of .

Dividing on both sides of yields:

| (8) | ||||

where is due to inequality and the fact that from Remark 7. ∎

We note that the above proof technique is similar to the one used in Theorem 3.1 of [3] by Conforti and Cornuéjols in deriving their greedy curvature bound for set optimization problems. However, Theorem 1 is more general in that it applies to bounding greedy solutions in string optimization problems, which subsume set optimization problems, and it does not rely on submodularity. The weaker assumptions suffice. For a set submodular function, the value of the bound in Theorem 1 coincides with the value of the bound of Conforti and Cornuéjols. The result in Theorem 1 also generalizes our prior result for bounding string optimization problems [10].
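To illustrate how a greedy-curvature bound is evaluated using only quantities along the greedy trajectory, the sketch below assumes the form used by Conforti and Cornuéjols for sets, transcribed to strings: the curvature is the largest relative drop $1 - [f(G_i \cdot a) - f(G_i)]/f(a)$ over greedy prefixes $G_i$ and feasible symbols $a$ with $f(a) > 0$, and the resulting ratio is $1 - \alpha_G (K-1)/K$. These expressions are an assumption made for illustration, and the toy instance and function names are hypothetical.

```python
from itertools import permutations
from typing import Callable, Hashable, Sequence, Set, Tuple

String = Tuple[Hashable, ...]


def greedy_curvature(
    f: Callable[[String], float],
    ground: Sequence[Hashable],
    X: Set[String],
    G: String,
) -> float:
    """Assumed string analogue of the greedy curvature of [3]."""
    alpha = 0.0  # the empty prefix contributes a drop of exactly zero
    for i in range(1, len(G)):
        Gi = G[:i]
        for a in ground:
            if Gi + (a,) not in X:
                continue  # a is not feasible after the greedy prefix Gi
            single = f((a,))
            if single > 0:
                increment = f(Gi + (a,)) - f(Gi)
                alpha = max(alpha, 1.0 - increment / single)
    return alpha


def curvature_ratio(alpha: float, K: int) -> float:
    """Assumed form of the greedy curvature ratio: 1 - alpha * (K - 1) / K."""
    return 1.0 - alpha * (K - 1) / K


# Hypothetical toy instance: P[agent][stage], each agent used at most once.
P = {0: (0.5, 0.45), 1: (0.45, 0.1)}


def f(s: String) -> float:
    miss = 1.0
    for stage, agent in enumerate(s):
        miss *= 1.0 - P[agent][stage]
    return 1.0 - miss


GROUND = (0, 1)
K = 2
X = {s for k in range(K + 1) for s in permutations(GROUND, k)}
G = (0, 1)  # greedy string for this instance
ALPHA = greedy_curvature(f, GROUND, X, G)
RATIO = curvature_ratio(ALPHA, K)
```

Every evaluation of `f` involves either a single symbol or a one-symbol extension of a greedy prefix, which is what makes a bound of this type computable without knowing the optimal string.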

We now establish a stronger result.

Theorem 2.

Assuming and , , where

| (9) |

Proof.

By , for . Therefore,

where is by and is by and the definition of the operator. ∎

Theorem 2 essentially shows that the sum of the objective values under the first greedy and feasible actions serves as a valid upper bound for $f(O)$. This upper bound is easy to compute along the greedy trajectory and is obtained under weaker assumptions than those in Theorem 1.
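One way to read the upper bound described above is as the sum, over the greedy steps, of the largest single-symbol objective value among the symbols feasible at that step. The sketch below computes that sum and the corresponding ratio under this assumed reading; the toy instance and function names are hypothetical, and the sketch does not verify the assumptions under which the theorem holds.

```python
from itertools import permutations
from typing import Callable, Hashable, Sequence, Set, Tuple

String = Tuple[Hashable, ...]


def top_action_upper_bound(
    f: Callable[[String], float],
    ground: Sequence[Hashable],
    X: Set[String],
    G: String,
) -> float:
    """Sum over greedy steps of the best feasible single-symbol value."""
    total = 0.0
    for i in range(len(G)):
        prefix = G[:i]
        # Symbols feasible after the greedy prefix; nonempty because G is in X.
        feasible = [a for a in ground if prefix + (a,) in X]
        total += max(f((a,)) for a in feasible)
    return total


def trajectory_ratio(
    f: Callable[[String], float],
    ground: Sequence[Hashable],
    X: Set[String],
    G: String,
) -> float:
    """Greedy value divided by the assumed upper bound on the optimum."""
    return f(G) / top_action_upper_bound(f, ground, X, G)


# Hypothetical toy instance: P[agent][stage], each agent used at most once.
P = {0: (0.5, 0.45), 1: (0.45, 0.1)}


def f(s: String) -> float:
    miss = 1.0
    for stage, agent in enumerate(s):
        miss *= 1.0 - P[agent][stage]
    return 1.0 - miss


GROUND = (0, 1)
X = {s for k in range(3) for s in permutations(GROUND, k)}
G = (0, 1)  # greedy string for this instance
UPPER = top_action_upper_bound(f, GROUND, X, G)
RATIO = trajectory_ratio(f, GROUND, X, G)
```

In this instance the optimal value is attained by the string `(1, 0)`, and the computed sum does lie above it, so the ratio is a valid (if conservative) guarantee here.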

We now show that the bound in Theorem 2 is better than the bound in Theorem 1.

Theorem 3.

Assuming , .

Proof.

Let denote the maximizer of for where . Then by the definition of . The definition of further gives us:

| (10) | ||||

Dividing on both sides of inequality (10) yields:

| (11) | ||||

where inequality (a) is due to the fact that and from Remark 7. ∎

Remark 8.

IV APPLICATIONS

IV-A Task Scheduling

As a canonical optimization problem in operations research, task scheduling was studied in [11] and further investigated in [8] and [12]. In task scheduling, we aim to assign agents at multiple stages throughout the task to maximize the probability of successful completion. The detailed mathematical formulation is as follows.

Assume a task is comprised of stages, and an agent needs to be assigned at stage to accomplish the task. We have a pool of agents, denoted by , and no agent is allowed to be repeatedly selected. For each agent , the probability of accomplishing the task at each stage is denoted by . Therefore, the probability of accomplishment after selecting in the end is:

| (13) |

where the subscript in and represents the selected agent number. Note that the probability of accomplishing the task for each agent is stage-variant. Hence, the objective function in (13) is a string function and is order dependent. Streeter and Golovin proved in [11] that the greedy scheme yields a performance bound when the objective function is submodular and monotone.

Consider the following example with , , and the probabilities of successful completion in Table I. The optimal sequence and greedy sequence are equal in this example with . We can verify that assumption is satisfied, and is string submodular. The three performance bounds are as follows:

We observe that the bound outperforms the other two bounds. This example shows that the curvature bounds are not necessarily better than the original bound: on some problems they are better, on others they are not. Therefore, we need to choose the largest of the three bounds. This is easy, because all three bounds are easily computable. In the next example, we show a plot that compares the three bounds under different parameters of another optimization problem.

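Table I: Probabilities of successful completion for each agent (rows) at each stage (columns).

| Agent | Stage 1 | Stage 2 | Stage 3 |
|---|---|---|---|
| 1 | 0.20 | 0.16 | 0.14 |
| 2 | 0.18 | 0.16 | 0.14 |
| 3 | 0.16 | 0.14 | 0.14 |
| 4 | 0.14 | 0.12 | 0.10 |
| 5 | 0.12 | 0.10 | 0.08 |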
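The example can be reproduced numerically. The sketch below assumes that each table row corresponds to one agent, that the objective is the probability that at least one stage succeeds, $1 - \prod_i (1 - p_i)$, and that no agent is selected twice. Agents are indexed from 0 in the code, and the function names are illustrative.

```python
from itertools import permutations
from typing import Tuple

# Success probabilities from Table I: P[agent][stage], agents indexed from 0.
P = [
    [0.20, 0.16, 0.14],
    [0.18, 0.16, 0.14],
    [0.16, 0.14, 0.14],
    [0.14, 0.12, 0.10],
    [0.12, 0.10, 0.08],
]
K = 3  # number of stages


def success_probability(agents: Tuple[int, ...]) -> float:
    """Probability that at least one stage succeeds (assumed objective)."""
    miss = 1.0
    for stage, agent in enumerate(agents):
        miss *= 1.0 - P[agent][stage]
    return 1.0 - miss


def greedy_schedule() -> Tuple[int, ...]:
    """Pick, stage by stage, the unused agent that maximizes the objective."""
    chosen: Tuple[int, ...] = ()
    for _ in range(K):
        remaining = [a for a in range(len(P)) if a not in chosen]
        chosen = max((chosen + (a,) for a in remaining), key=success_probability)
    return chosen


def optimal_schedule() -> Tuple[int, ...]:
    """Exhaustive search over all ordered selections of K distinct agents."""
    return max(permutations(range(len(P)), K), key=success_probability)


GREEDY = greedy_schedule()
OPTIMAL = optimal_schedule()
```

Under these assumptions the greedy and optimal schedules coincide, consistent with the statement in the text, and both select the first three agents in order.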

IV-B Multi-agent Sensor Coverage

The multi-agent sensor coverage problem was originally studied in [13] and further analyzed in [14] and [7]. In a given mission space, we need to find a placement of a set of homogeneous sensors to maximize the probability of detecting randomly occurring events. This problem can be formulated as a set optimization problem, which is a special case in the string setting. In this subsection, we demonstrate our theoretical results by applying them to a discrete version of the coverage problem. Our simplified version can be easily generalized to more complicated settings within the same theoretical framework.

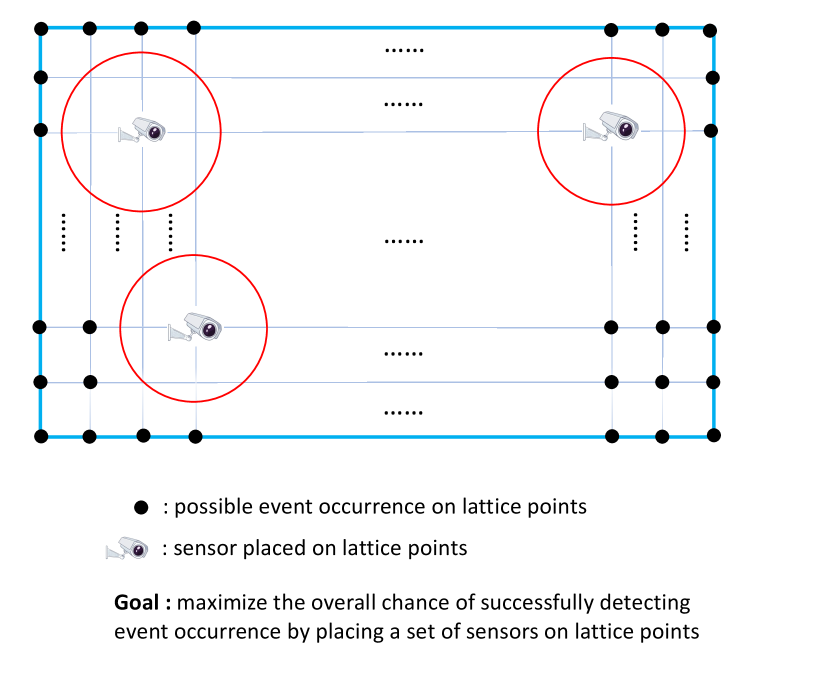

The mission space is modeled as a non-self-intersecting polygon where homogeneous sensors will be placed to detect a randomly occurring event in . For simplicity of calculation, we assume both the sensors and the random event can only be placed and occur at lattice points. We denote the feasible space for sensor placement and event occurrence as . Our goal is to maximize the overall likelihood of successful detection in the mission space, as illustrated in Fig. 1.

The likelihood of event occurrence over is given by an event mass function , and we assume that . may reflect a particular distribution if some prior information is available. Otherwise, when no prior information is obtained. The locations of all the sensors are represented as , where are coordinates of the placed sensors. Each sensor placed at can detect any occurring event at location with probability , where is the decay rate characterizing how quickly the sensing capability decays with distance.

Assuming all the sensors are working independently, the probability of detecting an occurring event at location after placing homogeneous sensors at locations is . In order to consider the whole feasible space for event occurrence, we need to incorporate the event mass function. Our objective function becomes . The goal is to maximize :

| (14) | ||||

If lattice points in are feasible for sensor placement, we need to select out of locations with its complexity being . This becomes a set optimization problem, and exhaustive search is computationally intractable when is large. Therefore, the greedy algorithm is a natural approach for obtaining an approximate solution in polynomial time. It was proved in [14] that the continuous version of is submodular, and it is not difficult to verify that its discrete version is also submodular.
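A discrete coverage objective of this kind, together with greedy and exhaustive placement, can be sketched as follows. The sketch assumes an exponential sensing model, detection probability `exp(-decay * distance)`, independent sensors, and a uniform event mass function on a small 3-by-3 lattice. These modeling choices and all names are assumptions made for illustration, not the paper's experimental setup.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

Point = Tuple[int, int]


def detection_probability(event: Point, sensor: Point, decay: float) -> float:
    """Assumed sensing model: probability decays exponentially with distance."""
    return math.exp(-decay * math.dist(event, sensor))


def coverage(
    sensors: Sequence[Point],
    points: Sequence[Point],
    mass: Dict[Point, float],
    decay: float,
) -> float:
    """Mass-weighted probability that at least one sensor detects the event."""
    total = 0.0
    for x in points:
        miss = 1.0
        for s in sensors:
            miss *= 1.0 - detection_probability(x, s, decay)
        total += mass[x] * (1.0 - miss)
    return total


def greedy_placement(
    points: Sequence[Point], mass: Dict[Point, float], decay: float, n: int
) -> List[Point]:
    """Place sensors one at a time at the free point with the largest gain."""
    placed: List[Point] = []
    for _ in range(n):
        free = [p for p in points if p not in placed]
        best = max(free, key=lambda p: coverage(placed + [p], points, mass, decay))
        placed.append(best)
    return placed


def best_placement(
    points: Sequence[Point], mass: Dict[Point, float], decay: float, n: int
) -> Tuple[Point, ...]:
    """Exhaustive search over all n-subsets; only viable for tiny lattices."""
    return max(combinations(points, n), key=lambda S: coverage(S, points, mass, decay))


# Hypothetical 3-by-3 lattice with a uniform event mass function.
POINTS = [(x, y) for x in range(3) for y in range(3)]
MASS = {p: 1.0 / len(POINTS) for p in POINTS}
```

The exhaustive search examines every subset of the given size and therefore grows combinatorially with the lattice, whereas the greedy placement evaluates the objective only a number of times proportional to the lattice size times the number of sensors.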

In our experiment, we consider a rectangular mission space of size . A set of homogeneous sensors are waiting to be deployed on those integer coordinates within , denoted by . For a point , the event mass function is given by , where and are the largest values of the and coordinates in the mission space. This event mass function implies that the random events are more likely to occur around the top right corner of the rectangular mission space.

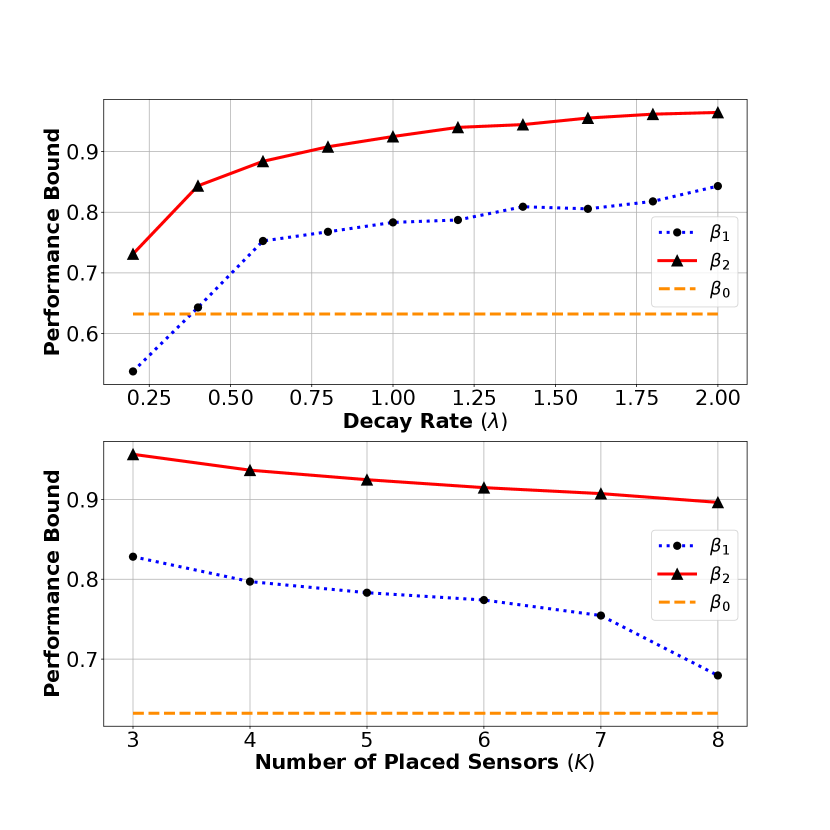

A comparison of the performance bounds under different decay rate with is shown in the upper figure of Fig. 2. Small decay rates imply good sensing capability and strong submodularity, under which the greedy scheme does not produce nearly optimal objective function value. In the upper figure of Fig. 2, the bound (red line) always exceeds the bound (blue line), illustrating Theorem 3. In addition, we can observe instances in which the bound is larger than the , while the bound is below this value.

A comparison of the performance bounds under different numbers of placed sensors with is shown in the lower figure of Fig. 2. We again observe that the bound (red line) always exceeds the bound (blue line) by a significant margin. Both and bound decrease as the number of placed sensors increases.

V CONCLUSION

We derived a computable bound for greedy solutions to string optimization problems by extending the notion of greedy curvature and the proof technique used by Conforti and Cornuéjols [3] from set functions to string functions. In deriving our bound, we did not use submodularity. Rather, we relied on weaker conditions on the string objective function, and hence produced a stronger bounding result. We then derived another computable bound that relies on even weaker assumptions than those used in deriving our first bound, further strengthening the bounding result. We also showed that this second bound has a larger value than the first bound. We demonstrated the superiority of our bound on two applications, task scheduling and multi-agent sensor coverage.

References

- [1] M. L. Fisher, G. L. Nemhauser, and L. A. Wolsey, “An analysis of approximations for maximizing submodular set functions—II,” in Polyhedral Combinatorics. Springer Berlin Heidelberg, 1978, pp. 73–87.

- [2] G. L. Nemhauser, L. A. Wolsey, and M. L. Fisher, “An analysis of approximations for maximizing submodular set functions—I,” Math. Program., vol. 14, pp. 265–294, 1978.

- [3] M. Conforti and G. Cornuéjols, “Submodular set functions, matroids and the greedy algorithm: Tight worst-case bounds and some generalizations of the Rado-Edmonds theorem,” Discrete Appl. Math., vol. 7, no. 3, pp. 251–274, 1984.

- [4] J. Vondrák, “Submodularity and curvature: The optimal algorithm (combinatorial optimization and discrete algorithms),” RIMS Kokyuroku Bessatsu B, vol. 23, pp. 253–266, 2010.

- [5] G. Calinescu, C. Chekuri, M. Pal, and J. Vondrák, “Maximizing a monotone submodular function subject to a matroid constraint,” SIAM J. Comput., vol. 40, no. 6, pp. 1740–1766, 2011.

- [6] Z. Wang, B. Moran, X. Wang, and Q. Pan, “Approximation for maximizing monotone non-decreasing set functions with a greedy method,” J. Comb. Optim., vol. 31, pp. 29–43, 2016.

- [7] S. Welikala, C. G. Cassandras, H. Lin, and P. J. Antsaklis, “A new performance bound for submodular maximization problems and its application to multi-agent optimal coverage problems,” Automatica, vol. 144, p. 110493, 2022.

- [8] Z. Zhang, E. K. P. Chong, A. Pezeshki, and W. Moran, “String submodular functions with curvature constraints,” IEEE Trans. Autom. Control, vol. 61, no. 3, pp. 601–616, 2015.

- [9] S. Alaei, A. Makhdoumi, and A. Malekian, “Maximizing sequence-submodular functions and its application to online advertising,” Manage. Sci., vol. 67, no. 10, pp. 6030–6054, 2021.

- [10] B. Van Over, B. Li, E. K. P. Chong, and A. Pezeshki, “An improved greedy curvature bound in finite-horizon string optimization with an application to a sensor coverage problem,” in Proc. 62nd IEEE Conf. Decision Control (CDC), Singapore, Dec. 2023, pp. 1257–1262.

- [11] M. Streeter and D. Golovin, “An online algorithm for maximizing submodular functions,” in Proc. Adv. in Neural Inf. Process. Syst. 21 (NIPS 2008), vol. 21, Vancouver, BC, Canada, Dec. 2008, pp. 1577–1584.

- [12] Y. Liu, Z. Zhang, E. K. P. Chong, and A. Pezeshki, “Performance bounds with curvature for batched greedy optimization,” J. Optim. Theory Appl., vol. 177, pp. 535–562, 2018.

- [13] M. Zhong and C. G. Cassandras, “Distributed coverage control and data collection with mobile sensor networks,” IEEE Trans. Autom. Control, vol. 56, no. 10, pp. 2445–2455, 2011.

- [14] X. Sun, C. G. Cassandras, and X. Meng, “Exploiting submodularity to quantify near-optimality in multi-agent coverage problems,” Automatica, vol. 100, pp. 349–359, 2019.