On Distributionally Robust Multistage Convex Optimization: Data-driven Models and Performance

Abstract

This paper presents a novel algorithmic study with extensive numerical experiments of distributionally robust multistage convex optimization (DR-MCO).

Following the previous work on the dual dynamic programming (DDP) algorithmic framework for DR-MCO [48], we focus on data-driven DR-MCO models with Wasserstein ambiguity sets that allow probability measures with infinite supports.

These data-driven Wasserstein DR-MCO models have out-of-sample performance guarantees and adjustable in-sample conservatism.

Then by exploiting additional concavity or convexity in the uncertain cost functions, we design exact single stage subproblem oracle (SSSO) implementations that ensure the convergence of DDP algorithms.

We test the data-driven Wasserstein DR-MCO models against multistage robust convex optimization (MRCO), risk-neutral and risk-averse multistage stochastic convex optimization (MSCO) models on multi-commodity inventory problems and hydro-thermal power planning problems.

The results show that our DR-MCO models could outperform MRCO and MSCO models when the data size is small.

Keywords: distributionally robust optimization, multistage convex optimization, dual dynamic programming algorithm, multi-commodity inventory, hydro-thermal power planning problem

1 Introduction

Multistage convex optimization is a decision-making problem where objective functions and constraints are convex and decisions need to be made each time some of the uncertainty information is revealed. As it is often challenging to gain precise knowledge of the probability distributions of the uncertainty, distributionally robust multistage convex optimization (DR-MCO) allows ambiguity in the probability distributions and aims to find optimal decisions that minimize the overall expected objective cost with respect to the worst-case distribution. The DR-MCO framework encompasses multistage stochastic convex optimization (MSCO) and multistage robust convex optimization (MRCO) as special cases, and thus has been widely applied in many areas including energy systems, supply chain and inventory planning, portfolio optimization, and finance [43, 6].

Distributionally robust optimization (DRO) has received significant research attention in recent years. Common choices of the ambiguity sets include moment-based ambiguity sets [40, 10, 42, 47], discrepancy or distance-based ambiguity sets [5, 4, 36, 7], and many others that are constructed from shape requirements or special properties of the distributions (we refer any interested reader to the review papers [37, 29] for more details). Among all these choices, data-driven Wasserstein ambiguity sets, i.e., Wasserstein distance balls centered at an empirical probability distribution obtained from given uncertainty data, have gained increasing popularity for the following reasons: (1) measure concentration in Wasserstein distance guarantees with high probability that the decisions or policies obtained from solving the in-sample model provide an upper bound on the out-of-sample mean cost [16, 15]; and (2) even if we consider general probability distributions in the ambiguity sets, tractable finite-dimensional reformulations or approximations can be derived using strong duality for the 1-Wasserstein distance of probability measures on Euclidean spaces [15, 50, 23], or more generally for any p-Wasserstein distance of probability measures on Polish spaces [17]. We remark that these reformulations or approximations are derived for single-stage or two-stage settings. To the best of our knowledge, it remains unclear how to solve DR-MCO problems with Wasserstein ambiguity sets and infinite uncertainty sets.

There are also many works on DRO in the multistage settings. In particular, since taking expectation with respect to a worst-case probability distribution in an ambiguity set gives a coherent risk measure, multistage DRO falls into the category of risk-averse multistage stochastic optimization [43, 35], which dates back to at least [14]. When the underlying uncertainty is stagewise independent, a random nested cutting plane algorithm, which is called stochastic dual dynamic programming (DDP) and is very often used for risk-neutral multistage stochastic linear optimization (MSLO) problems [31], has been extended to risk-averse MSLO with polyhedral risk measures in [21, 45]. DDP algorithms iteratively build under-approximations of the worst-case expected cost-to-go functions in the dynamic programming recursion, which lead to policies that minimize the approximate total cost starting from each stage. To estimate the quality of the obtained policy, a deterministic over-approximation is proposed in addition to the under-approximations for risk-averse MSLO problems in [34]. Alternatively, for time-consistent conditional-value-at-risk (CVaR) risk measures, a new sampling scheme based on importance sampling is proposed to achieve tighter estimation of the policy quality [25]. Further exploiting the deterministic over-approximation, a deterministic version of the DDP algorithm is proposed for risk-averse MSLO in [1]. As a variant, robust DDP is proposed for multistage robust linear optimization (MRLO) using a similar deterministic over-approximation of cost-to-go functions in [18]. In [32], the stochastic DDP algorithm is used to solve distributionally robust multistage linear optimization (DR-MLO) using ambiguity sets defined by a modified χ²-distance for probability distributions supported on the historical data. In [13], the stochastic DDP algorithm is used to solve DR-MLO with Wasserstein ambiguity sets on finitely supported probability distributions with asymptotic convergence and promising out-of-sample performance. We comment that the above solution approaches for risk-averse MSLO or DR-MLO rely either on sample average approximations of the risk measures or on the assumption that all uncertainties have finite support.

As DDP-type algorithms are extensively applied in solving risk-neutral and risk-averse MSLO and DR-MLO, their convergence analysis and complexity study become central questions. The finite time convergence of stochastic DDP algorithms is first proved for MSLO problems using polyhedrality of cost-to-go functions in [33, 41]. Such convergence is similarly proved for deterministic DDP algorithms for MSLO in [2], for MRLO in [18], and for DR-MLO in [1]. An asymptotic convergence of stochastic DDP algorithms is proved using monotone convergence argument in the space of convex cost-to-go function approximations for general risk-neutral multistage stochastic convex optimization (MSCO) in [19] and risk-averse MSCO in [20]. Complexity study of DDP algorithms is established using Lipschitz continuity of the under-approximations of the cost-to-go functions for risk-neutral MSCO in [26, 27], and for risk-neutral multistage mixed-integer nonlinear optimization in [49]. Our recent work [48] adopts an abstract definition of single stage subproblem oracles (SSSO) and proves SSSO-based complexity bounds, hence also the convergence, for two DDP algorithms applied to DR-MCO problems with general uncertainty supports and ambiguity sets.

Following [48], we aim to further study data-driven DR-MCO models and compare their numerical performance to other baseline models. In particular, our paper makes the following contributions to the literature.

1. We prove the out-of-sample performance guarantee using measure concentration results, and the adjustable in-sample conservatism assuming Lipschitz continuity of the value functions in the uncertainty variables, for the data-driven DR-MCO models with Wasserstein ambiguity sets.

2. We discuss implementations of SSSO in the context of Wasserstein ambiguity sets containing infinitely supported probability distributions. Such SSSO implementations allow DDP algorithms to converge with provable complexity bounds to an ε-global optimal first-stage solution, which could help minimize the influence of the sub-optimality caused by early termination of the algorithms when we compare model performances.

3. We present extensive numerical experiments using multi-commodity inventory problems with either uncertain demands or uncertain prices, and hydro-thermal power planning problems with real-world data, that compare the out-of-sample performances of our DR-MCO models against risk-neutral and CVaR risk-averse MSCO models, as well as the MRCO model. To the best of our knowledge, these are the first numerical experiments in the literature that compare DR-MCO models with MSCO and MRCO models when the uncertainty has infinitely many outcomes.

The rest of the paper is organized as follows. In Section 2, we define the DR-MCO model considered in this paper and show some of its favorable properties. In Section 3, we review DDP algorithms for DR-MCO and study the implementation of SSSO for Wasserstein ambiguity sets. In Section 4, we present numerical experiment results comparing DR-MCO models against other baseline models on two application problems. We provide some concluding remarks in Section 5.

2 Data-driven Model and Its Properties

2.1 Data-driven Model Formulation

In this section, we present a data-driven model for DR-MCO and some of its properties. Let denote the set of stage indices. In each stage , we use to denote the convex state space and its elements, which is known as the state vector. We denote the set of uncertainties before stage as and its elements as . For simplicity, we use the notation and to denote parameter sets of the given initial state. After each stage , the uncertainty is assumed to be distributed according to an unknown probability measure taken from a subset of the probability measures supported on . The cost in each stage is described through a nonnegative, lower semicontinuous local cost function , which is assumed to be convex in and for every . We allow to take to model constraints relating the states and the uncertainty (so that can be independent of and ). The DR-MCO can be written in a nested formulation as follows.

| (1) | ||||

Here, is the expectation with respect to variable distributed according to the probability measure . We remark that both the uncertainty sets and the ambiguity sets are independent between stages, a property usually referred to as stagewise independence.

Based on stagewise independence in the nested formulation, we can write the following recursion that is equivalent to (1) using the (worst-case expected) cost-to-go functions,

| (2) |

for each and we set by convention for any . To simplify the notation, we also define the following value function for each stage :

| (3) |

Using these value functions, we may write the optimal value of the DR-MCO (1) as and further simplify the recursion (2) as

| (4) |

While there are many different choices of the ambiguity set for each stage (see e.g., [47]), we focus on the data-driven ambiguity sets constructed as follows. Suppose we have the knowledge of samples of the uncertainty . The empirical probability measure is given by , where for each , is the Dirac probability measure supported at the point , i.e., for any compactly supported function on . Such an empirical probability measure captures the information from the sample data and is often used to build the sample average approximation for multistage stochastic optimization [43].

Fix any distance function on . The Wasserstein distance of order 1 (a.k.a. the Kantorovich-Rubinstein distance) is defined as

| (5) |

for any two probability measures , where is the pushforward measure induced by the projection maps by sending , for or . That is, the joint probability measure in (5) has marginal probability measures equal to and .
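As a concrete illustration of definition (5), the following minimal sketch computes the order-1 Wasserstein distance in one special case: two empirical measures on the real line with the same number of equally weighted atoms, and the absolute difference as the distance function. In that case an optimal coupling matches the sorted samples, so no transport problem has to be solved. The function name `wasserstein1_empirical_1d` is hypothetical and not part of the model.

```python
def wasserstein1_empirical_1d(samples_a, samples_b):
    """Order-1 Wasserstein distance between two equally weighted empirical
    measures on the real line with the same number of atoms (assumed setting)."""
    if len(samples_a) != len(samples_b) or not samples_a:
        raise ValueError("both sample lists must be nonempty and of equal size")
    # On the real line, an optimal coupling pairs the sorted atoms in order.
    a, b = sorted(samples_a), sorted(samples_b)
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


distance = wasserstein1_empirical_1d([0.0, 1.0, 2.0], [3.0, 1.0, 2.0])
```

Both marginal constraints in (5) are satisfied by this pairing, since each atom of either measure receives exactly its own weight.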

It can be shown that is indeed a distance on the space of probability measures [46, Definition 6.1] except that it may take the value of . Thus it is natural to restrict our attention to the convex subset of probability measures with finite distance to a Dirac measure on

| (6) |

Note that any continuous function that satisfies for some and would be integrable for any probability measure in . Now given any such continuous functions on and a real vector , we define the Wasserstein ambiguity set as

| (7) |

The first inequality constraint in the definition (7) bounds the Wasserstein distance of the probability measure from the empirical measure , while the second set of constraints on are defined as , which could be bounds on the moments.

It is well known that the recursion (2) for data-driven Wasserstein DR-MCO problems can be reformulated as a finite-dimensional minimum-supremum optimization problem, under the assumption of strict feasibility.

Assumption 1.

The empirical probability measure is a strictly feasible solution of (7), i.e. it satisfies for all .

Theorem 1.

We provide the derivation and proof details in Section A. As a corollary, we can prove a special version of the Kantorovich-Rubinstein duality formula [46, Remark 6.5].

Corollary 2.

Under Assumption 1, if the value function is -Lipschitz continuous in the uncertainty for any , then we have
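The role of Lipschitz continuity can be illustrated numerically. The sketch below uses only the standard Kantorovich-Rubinstein inequality: for an L-Lipschitz function, the means under two probability measures differ by at most L times their order-1 Wasserstein distance. It assumes a scalar uncertainty on the whole real line, the absolute difference as the distance function, and no additional moment constraints in (7); the names `empirical_mean` and `lipschitz_worst_case_bound` are hypothetical.

```python
def empirical_mean(value, samples):
    """Sample average of a function under the empirical measure."""
    return sum(value(s) for s in samples) / len(samples)


def lipschitz_worst_case_bound(value, samples, radius, lipschitz):
    """Upper bound on the worst-case mean over a Wasserstein-1 ball
    of the given radius around the empirical measure (assumed setting)."""
    return empirical_mean(value, samples) + radius * lipschitz


value = lambda xi: 2.0 * abs(xi)      # a 2-Lipschitz function of the uncertainty
samples = [1.0, 2.0, 3.0]             # hypothetical data
radius = 0.5
bound = lipschitz_worst_case_bound(value, samples, radius, lipschitz=2.0)

# Shifting every sample by the radius gives a measure at distance exactly
# `radius` from the empirical one, and here it attains the bound.
shifted_mean = empirical_mean(value, [s + radius for s in samples])
```

In this example the bound is tight because the function grows at its maximal rate in the direction of the shift; for other functions the bound may be strict.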

2.2 Out-of-Sample Performance Guarantee

A major motivation for using Wasserstein DR-MCO models is the out-of-sample performance guarantee, which ensures that the decisions evaluated on the true probability distribution would perform no worse than the in-sample training with high probability. To begin with, we say that a probability measure is sub-Gaussian if for some constant , or it has finite third moments if . Our discussion is based on the following specialized version of the measure concentration inequality [16, Theorem 2].

Theorem 3.

Fix any probability measure in stage and let denote the empirical measure constructed from independent and identically distributed (iid) samples of . Then for any , we have

| if is sub-Gaussian and , | (9) | ||||

| if has finite third moments, | (10) |

for some positive constants that depend only on .

The measure concentration bound in (9) becomes slightly more intricate when the dimension of the uncertainty (see the details in [16]), so we focus our discussion below on the other cases.

The out-of-sample performance refers to the evaluation of the solutions and policies obtained from solving DR-MCO (1) on the true probability measures for each . To be precise, fix any optimal policy given by the DR-MCO (1), i.e., an optimal initial stage state , and a collection of mappings for , such that

1. is Borel measurable for any ;

2. for any and .

We recursively define the out-of-sample evaluation cost-to-go functions as

| (11) |

with for any . Then the out-of-sample evaluation mean cost associated with this policy is defined as . The next theorem provides a lower bound on the probability that the event happens, which is often known as the out-of-sample performance guarantee.

Theorem 4.

Fix any probability measure and let denote the empirical measure from iid samples of for all stages . Assume that for , and for all . Then for any , we have with probability at least if either of the following conditions holds for each :

1. the probability measure is sub-Gaussian, , and

2. the probability measure has finite third moments and

where and are the positive constants in Theorem 3, that depend only on .

Proof.

If either of the conditions is satisfied, then it is straightforward to check from Theorem 3 that the probability . By the assumption on the iid sampling of and that for , the event has the probability

To complete the proof, we claim that for all and everywhere on . Note that for all . Now if for all , then

Therefore, on the event , we have

This recursion shows that for all , and thus

∎

While Theorem 4 is a direct consequence of Theorem 3, it shows some interesting aspects of DR-MCO using Wasserstein ambiguity sets. First, to get a certain probabilistic bound for the out-of-sample performance guarantee, we may need to increase the number of samples or the Wasserstein distance bound for a larger number of stages . Second, for probability measures that are not sub-Gaussian (or more generally those with heavy tails, see [15]), for which we would like to apply the second condition, such an increase requires the product to grow approximately on the order of when is close to 1. Therefore, it is sometimes useful to take larger values of for out-of-sample performance guarantees, especially when the number of samples is limited, or when the true probability measures are not sub-Gaussian.
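The dependence on the number of stages can be made concrete under one simplifying assumption: if the per-stage concentration events are independent and each holds with the same probability, then the probability that all of them hold is the product of the per-stage probabilities. The sketch below computes the per-stage confidence level needed to reach a target overall level. The names `per_stage_confidence`, `target`, and `num_random_stages` are hypothetical, and the constants of Theorem 3 are not modeled.

```python
def per_stage_confidence(target, num_random_stages):
    """Per-stage probability needed so that independent stage events
    jointly hold with probability `target` (assumed equal across stages)."""
    if not 0.0 < target < 1.0:
        raise ValueError("target must lie strictly between 0 and 1")
    if num_random_stages < 1:
        raise ValueError("at least one random stage is required")
    return target ** (1.0 / num_random_stages)


# A 90% overall guarantee over 4 random stages needs more than 90% per stage.
level = per_stage_confidence(0.9, 4)
```

The required per-stage level approaches 1 as the number of stages grows, which is why more samples or a larger Wasserstein distance bound may be needed for longer horizons.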

2.3 Adjustable In-Sample Conservatism

Another well studied approach to guarantee out-of-sample performance is the multistage robust convex optimization (MRCO) model [18, 48]. However, MRCO considers only the worst-case outcomes of the uncertainties and thus can be overly conservative. To be precise, we define the nominal MSCO from the empirical measures without risk aversion by the following recursion

| (12) |

where

| (13) |

and for any . Any MRCO that is built directly from data could have a much larger optimal cost than the nominal MSCO with a probability growing with the numbers of samples and stages , as illustrated by the following example.

Example 1.

Consider an MSCO with local cost functions and state spaces for all . For each , the uncertainties are described by a probability measure on the set such that for any , we have . Now to approximate this MSCO, suppose we are given iid samples from the probability measure in each stage . Then any MRCO defined by the following recursion of expected cost-to-go functions

where is an uncertainty subset constructed from the data, would have its optimal value , where . The optimal value of the corresponding nominal MSCO is where . Therefore, for any constant , we can show that

which goes to 1 as .

In contrast, the difference of the optimal values between the DR-MCO and the nominal MSCO can be bounded by the Wasserstein distances , as shown below.

Theorem 5.

Suppose the value function is -Lipschitz continuous in for any , for each stage . Under Assumption 1, the difference of optimal values between the DR-MCO and the nominal MSCO satisfies

Proof.

We first observe that if there exists such that , then the value functions satisfy by the definitions (3) and (13). Now we prove by recursion that for any , which holds trivially for . For any , we have

where the first part before the inequality is bounded by using Corollary 2, and the second part is bounded by our observation above. This recursion shows that , which completes the proof by the same observation. ∎

Theorem 5 shows that the conservatism of the DR-MCO can be adjusted linearly with the Wasserstein distance bound , assuming the Lipschitz continuity of the value functions in the uncertainties. However, as the out-of-sample performance guarantee in Theorem 4 depends on some unknown constants in Theorem 3, it is not easy to numerically determine the Wasserstein distance bounds . We discuss some practical choices of the bounds in Section 4.

3 Dual Dynamic Programming Algorithm

In this section, we first review the recursive cutting plane approximations and the dual dynamic programming (DDP) algorithm. Then we focus on different realizations of the single stage subproblem oracles (SSSO) for DR-MCO with Wasserstein ambiguity sets that would guarantee the convergence of the DDP algorithm.

3.1 Recursive Approximations and Regularization

Recall that for any convex function , an affine function is called a (valid) linear cut if for all . A collection of such valid linear cuts defines a valid under-approximation of . Similarly by convexity, given a collection of overestimate values for , we can define a valid over-approximation by the convex envelope , where when and otherwise, is the convex indicator function centered at . The validness of these approximations for all suggests that we may use them in the place of for recursive updates during a stagewise decomposition algorithm.
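The two approximations can be sketched for a convex function of a single variable. The code below is a minimal illustration, not the implementation used in the experiments: the under-approximation is the pointwise maximum of affine cuts, and the over-approximation is the convex envelope of the overestimate values, which in one dimension only requires interpolating between pairs of visited points. All function names are hypothetical.

```python
import math


def tangent_cut(func, grad, x0):
    """Return (intercept, slope) of the tangent cut of a convex function at x0."""
    g = grad(x0)
    return func(x0) - g * x0, g


def under_approx(cuts, x):
    """Pointwise maximum of affine cuts: a valid lower bound of the function."""
    return max(a + g * x for a, g in cuts)


def over_approx(points, x):
    """Convex envelope of the overestimates (x_j, v_j) in one dimension;
    +inf outside the convex hull of the visited points."""
    best = math.inf
    for xi, vi in points:
        for xj, vj in points:
            if xi <= x <= xj:
                lam = 1.0 if xi == xj else (xj - x) / (xj - xi)
                best = min(best, lam * vi + (1.0 - lam) * vj)
    return best


func = lambda x: x * x
grad = lambda x: 2.0 * x
visited = [-1.0, 0.0, 2.0]
cuts = [tangent_cut(func, grad, x0) for x0 in visited]
points = [(x0, func(x0)) for x0 in visited]
lower, upper = under_approx(cuts, 1.0), over_approx(points, 1.0)
```

At any visited point the two approximations coincide with the function value, so the gap between them vanishes there.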

To see how the recursive updates may work, let us assume temporarily in this subsection that the worst-case probability measure in (4) exists and can be found. Given any under-approximation for , for any , we can generate a linear cut for the value function with some , where and are the optimal value and an optimal dual solution to the following Lagrangian dual of the under-approximation problem that is parametrized by :

| (14) |

assuming that exists. Then we can aggregate these linear cuts into a linear cut for , where the expectation is taken componentwise with respect to the probability measure . In this way, we can use the under-approximation for to update the under-approximation for by the aggregated linear cut .
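The aggregation step can be sketched in the simplest case, assuming that the worst-case probability measure is finitely supported with known weights (an assumption made only for this illustration). Each scenario contributes one cut, stored as an intercept and a slope vector, and the aggregated cut is their componentwise expectation. The function name `aggregate_cuts` is hypothetical.

```python
def aggregate_cuts(cuts, probabilities):
    """Componentwise expectation of scenario cuts.

    cuts: list of (intercept, slope_vector), one per scenario.
    probabilities: scenario weights of an assumed finitely supported measure.
    """
    if len(cuts) != len(probabilities):
        raise ValueError("one probability per cut is required")
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to one")
    dim = len(cuts[0][1])
    intercept = sum(p * a for (a, _), p in zip(cuts, probabilities))
    slope = [sum(p * g[k] for (_, g), p in zip(cuts, probabilities))
             for k in range(dim)]
    return intercept, slope


scenario_cuts = [(1.0, [2.0, 0.0]), (3.0, [0.0, 4.0])]
aggregated = aggregate_cuts(scenario_cuts, [0.5, 0.5])
```

Since every scenario cut lies below its value function, the weighted average lies below the corresponding expectation, which is what makes the aggregated cut valid.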

Likewise, given any over-approximation for , for any and , we can solve the following over-estimation problem

| (15) |

which gives an overestimate value . Again we can aggregate the overestimate value by setting , which by definition satisfies and thus can be used to update the over-approximation for .

There are, however, some potential issues with this recursive approximation method. First, the supremum in the Lagrangian dual problem (14) may not be attained, which could happen if (i.e., is an infeasible state for ) or if any neighborhood of contains such an infeasible state. In this case, we may fail to generate a linear cut . Second, Lipschitz constants of the linear cuts may be affected by the under-approximation , causing a worse approximation quality. This may happen when is an extreme point of . In fact, it is shown in [48] that the Lipschitz constants of could exceed those of and grow with the total number of stages . Third, the over-approximation function evaluates to +∞ at any point that is not in the convex hull of previously visited points, which makes the gap less useful as an estimate of the quality of the current solutions or policies.

To remedy these issues, we consider a technique called Lipschitzian regularization. Given regularization factors , we define the regularized local cost function as

| (16) |

and the regularized value function

| (17) |

recursively for , where is the regularized expected cost-to-go function defined as

| (18) |

for , and for any . It is then straightforward to check that is uniformly -Lipschitz continuous in for each , and consequently is also -Lipschitz continuous. In the definitions (14) and (15), we can replace accordingly the original cost functions with the regularized cost function , which would guarantee the -Lipschitz continuity of the generated cuts and allow us to enhance the over-approximation to be -Lipschitz continuous.
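A one-dimensional sketch may help to see why such a regularization yields Lipschitz continuity. It assumes that the regularization is an infimal convolution of the cost with a scaled norm, which is one standard construction with this property and is used here only as an assumption; the minimization is carried out over a finite grid, and the name `lipschitz_regularize` is hypothetical.

```python
def lipschitz_regularize(cost, grid, sigma):
    """Grid values of min_z cost(z) + sigma * |x - z| (assumed construction)."""
    return [min(cost(z) + sigma * abs(x - z) for z in grid) for x in grid]


grid = [i / 10.0 for i in range(-30, 31)]   # points -3.0, -2.9, ..., 3.0
cost = lambda z: z * z                      # steep far from the origin
sigma = 1.0
regularized = lipschitz_regularize(cost, grid, sigma)

# The regularized values never exceed the original cost (take z = x) ...
is_lower_bound = all(r <= cost(x) + 1e-12 for r, x in zip(regularized, grid))
# ... and neighboring values differ by at most sigma times the grid spacing.
is_lipschitz = all(
    abs(regularized[i + 1] - regularized[i])
    <= sigma * (grid[i + 1] - grid[i]) + 1e-12
    for i in range(len(grid) - 1)
)
```

Where the original cost already has slope at most sigma, the regularized function agrees with it, which is consistent with the remark that regularization gives under-approximations in general.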

Lipschitzian regularization in general only gives under-approximations of the true value and expected cost-to-go functions. We need the following assumption to preserve the optimality and feasibility of the solutions.

Assumption 2.

For the given regularization factors , , the optimal value of the regularized DR-MCO satisfies

and the sets of optimal first-stage solutions are the same, i.e.,

The following proposition shows that Assumption 2 is satisfied in any problem that already has a uniformly Lipschitz continuous value function for all .

Proposition 6 (Proposition 4, [48]).

Suppose each state space is full dimensional, i.e., . Then Assumption 2 holds if for each stage , the value function is -Lipschitz continuous for any .

In general, we can execute the DDP algorithm even with , as described below.

3.2 Dual Dynamic Programming Algorithm

DDP algorithms generally refer to the recursive cutting plane algorithms that exploit the stagewise independence structure. We first review the definitions of single stage subproblem oracles (SSSO), which symbolize the subroutines of subproblem solving in each stage with under- and over-approximations of the expected cost-to-go functions [48].

Definition 1 (Initial stage subproblem oracle).

Let denote two lsc convex functions, representing an under-approximation and an over-approximation of the cost-to-go function in (18), respectively. Consider the following subproblem for the first stage ,

| (I) |

The initial stage subproblem oracle provides an optimal solution to (I) and calculates the approximation gap at the solution. We thus define the subproblem oracle formally as a map .

Definition 2 (Noninitial stage subproblem oracle).

Fix any regularization parameter . Let denote two lsc convex functions, representing an under-approximation and an over-approximation of the cost-to-go function in (18), respectively, for some stage . Then given a feasible state , the noninitial stage subproblem oracle provides a feasible state , an -Lipschitz continuous linear cut , and an over-estimate value such that

• they are valid, i.e., for any and ;

• the gap is controlled, i.e., .

We thus define the subproblem oracle formally as a map .

Now we can present the (consecutive) DDP algorithm based on the SSSO. In Algorithm 1, each iteration consists of two steps: the noninitial stage step and the initial stage step. In the noninitial stage step, we evaluate the SSSO from stage to to collect feasible states , overestimate values , and a valid linear cut for updating the approximations and . Then we evaluate the initial stage SSSO to get an optimal solution and its optimality gap . We terminate the algorithm when the gap is sufficiently small.
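The interplay between the two steps and the termination test can be sketched on a toy problem. The code below is not Algorithm 1: it assumes only two stages, a scalar state, a known cost-to-go function standing in for the noninitial stage oracle, and grid search standing in for the initial stage oracle. All names and data (`cutting_plane_two_stage`, `demands`, `penalty`) are hypothetical.

```python
import math


def cutting_plane_two_stage(first_cost, value, slope, grid, tol=1e-9, max_iter=100):
    """Toy loop: refine bounds on a convex cost-to-go until the gap closes."""
    cuts = [(0.0, 0.0)]  # (intercept, slope); trivial cut since costs are nonnegative
    points = []          # visited (state, overestimate value) pairs

    def under(x):
        return max(a + g * x for a, g in cuts)

    def over(x):
        best = math.inf  # stays +inf outside the convex hull of visited states
        for xi, vi in points:
            for xj, vj in points:
                if xi <= x <= xj:
                    lam = 1.0 if xi == xj else (xj - x) / (xj - xi)
                    best = min(best, lam * vi + (1.0 - lam) * vj)
        return best

    for iteration in range(1, max_iter + 1):
        # Initial stage step: minimize the under-approximated total cost.
        x = min(grid, key=lambda y: first_cost(y) + under(y))
        if over(x) - under(x) <= tol:
            return x, first_cost(x) + under(x), iteration
        # Noninitial stage step: evaluate the cost-to-go and refine both bounds.
        v, g = value(x), slope(x)
        cuts.append((v - g * x, g))
        points.append((x, v))
    raise RuntimeError("gap did not close within max_iter iterations")


demands = [1.0, 2.0, 3.0]   # hypothetical demand samples
penalty = 3.0               # hypothetical unit shortage penalty


def value(x):
    return penalty * sum(max(d - x, 0.0) for d in demands) / len(demands)


def slope(x):
    return -penalty * sum(1 for d in demands if d > x) / len(demands)


grid = [0.5 * k for k in range(9)]  # candidate order quantities 0.0, ..., 4.0
x_opt, total, iterations = cutting_plane_two_stage(lambda x: x, value, slope, grid)
```

In this toy instance the total cost equals 3.0 for every order quantity between 2 and 3, and the loop stops as soon as the under- and over-approximations agree at the current first-stage solution.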

Algorithm 1 is proved to terminate with an -optimal solution in finitely many iterations [48]. We include the theorem here for completeness.

Theorem 7.

Suppose that all the state spaces have the dimensions bounded by and diameters by , and let . If for each stage , the local cost functions are strictly positive for all feasible solutions , uncertainties and some constant , then the total number of noninitial stage subproblem oracle evaluations before achieving an -relative optimal solution for Algorithm 1 is bounded by

We remark that the DDP algorithm can also be executed in a nonconsecutive way with a similar complexity bound. However, since we need to run the algorithm for a fixed number of iterations to compare different models, we restrict our attention to the consecutive version here. Any interested reader is referred to [48] for more detailed discussion.

In Section 3.1, we have seen how SSSO could be implemented if the worst-case probability measure exists in (4) and can be found. However, it is well known that the worst-case probability measure may not exist (see e.g., [15, Example 2]). Moreover, the integration with respect to some worst-case probability measure could also be numerically challenging. Therefore, we next provide two possible SSSO implementation methods directly using the recursion (8).

3.3 Subproblem Oracles: Concave Uncertain Cost Functions

If the following assumption holds, we are able to reformulate the recursion (8) into a convex optimization problem.

Assumption 3.

The local cost function is concave and upper semicontinuous in the uncertainty for any and .

A direct consequence of Assumption 3 is that the effective domain of the state does not depend on the uncertainty , as shown in the following lemma.

Lemma 8.

Under Assumption 3, we have for any and .

Proof.

Assume for contradiction that there exists some , , and such that but . Then for , we have by the concavity and nonnegativity of . It follows from upper semicontinuity that , which is a contradiction. ∎

We are now ready to prove the alternative formulation of the recursion (8).

Theorem 9.

Under Assumption 3, if we further assume that the continuous functions are convex for and for , then we have

| (19) | ||||

where for each , is defined as

Proof.

By Lemma 8, for any , we can define a set which is independent of and closed by the lower semicontinuity of . Note that the norm function has the dual representation . Thus by the recursion (8), we can write

Now for any fixed and , we see that the sets and are compact. Moreover, the function inside the supremum of is concave and upper semicontinuous in , while convex and lower semicontinuous in and . Thus the result follows by applying Sion’s minimax theorem [24]. ∎

Remark.

The proof remains valid if we replace simultaneously , , and with , , and in the theorem. In this case we use to denote the convex conjugate functions.

We provide a possible implementation for noninitial stage SSSO in Algorithm 2 based on Theorem 9. Its correctness is verified by the following corollary.

Corollary 10.

Proof.

To check the validness of , let denote an optimal solution in the minimization (19) with replaced by . Then we have

where the last inequality is due to the feasibility of in the minimization (19). For the validness of , note that the value and the dual solution define a valid linear under-approximation for the function defined by replacing with in the minimization (19). Since clearly for all , we see that is a valid under-approximation for . Finally the gap is controlled. ∎

Theorem 9 and Algorithm 2 would be most useful when the functions can be written explicitly as minimization problems. We thus devote the rest of this section to deriving the form of in a special yet practically important case, where the local cost function can be written as

| (20) | ||||

for some compact convex set in each stage . It is straightforward to check that in (20) is lower semicontinuous and convex in for any . To simplify our discussion, we assume that is -Lipschitz continuous, so by Proposition 6 for all . The problem (20) is a common formulation in the usual MSCO literature, such as [41], where is supposed to be a polytope.

Proposition 11.

Suppose the local cost function is given in the form (20) and uniformly -Lipschitz continuous in the variable . Fix any point and let denote the support function of the set . If the functions are quadratic with coefficients and for , then we can write

| (21) | ||||

Here, is a real matrix such that is the pseudoinverse of .

Proof.

Under the assumptions, we can write the function as

Note that the objective function in (21) is continuous in both and , and the projection of onto the variables is compact. Thus by the minimax theorem [24], we can exchange the supremum and minimum operations

| (22) | ||||

where is the convex indicator function of the set , the convex conjugate of which is the support function by definition. If we further denote , the supremum can be written using convex conjugacy as

Note that for each , the parametrized conjugate function can be written as [39, Example 11.10]

which is nonnegative and second-order conic representable. Here the convention for is consistent: we have and for any because , which implies that . Now using the formula for convex conjugate of sum of convex functions, we have

| (23) |

where denotes the infimal convolution (a.k.a. epi-addition) of two convex functions and denotes the lower semicontinuous hull of a proper function. Since , the support function is coercive, i.e., . Moreover, each is bounded below as it is nonnegative. Therefore, the closure operation is superfluous and the convex conjugate of the sum is indeed lower semicontinuous [3, Proposition 12.14]. The rest of the proof follows from substitution of this convex conjugate expression (23) into the supremum in (22). ∎

3.4 Subproblem Oracles: Convex Uncertain Cost Functions

We provide another useful reformulation of the recursion (8) in this section, based on the following assumption.

Assumption 4.

The local cost function is jointly convex in the state variable and the uncertainty , for any . Moreover, the uncertainty set is a pointed polyhedron and the distance function is polyhedrally representable.

We first mention some direct consequences of Assumption 4. First, the value function would be a convex function in the uncertainty for each state , although it may not be a jointly convex function. Second, recall that a polyhedron is pointed if it does not contain any lines. Any point in a pointed polyhedron can be written as a convex combination of its extreme points and rays [9, Theorem 3.37]. Now under Assumption 4, we may define a lifted uncertainty set as . It is easy to see that is also a pointed polyhedron. We denote the finite set of extreme points of it as where is the set of indices, and want to show that the maximization in (8) can be taken over the finite set in two important cases. First, we consider problems with bounded uncertainty sets .

Proposition 12.

Proof.

From the definition of lifted uncertainty set , we have

To see the last equality, note that if is bounded, then the only recession direction of the lifted uncertainty set is . Since , any maximum solution lies in the convex hull of . Now the last equality follows from the convexity of the function in terms of and . Finally, the reformulation is done by replacing the maximum of finitely many functions by its epigraphical representation for all and . ∎
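The key step of this proof, that a convex function attains its maximum over a bounded polyhedron at an extreme point, can be checked numerically. The following is a minimal sketch; the function `g` and the box-shaped set are illustrative assumptions and are not the lifted uncertainty set of the paper.

```python
import itertools
import random


def g(xi):
    """An illustrative convex function: a maximum of affine pieces plus a 1-norm."""
    a, b = xi
    return max(2.0 * a - b, -a + 3.0 * b, 0.5) + abs(a) + abs(b)


# Extreme points of the box [-1, 2] x [0, 3] (a hypothetical bounded polyhedron).
bounds = [(-1.0, 2.0), (0.0, 3.0)]
vertices = list(itertools.product(*bounds))
vertex_max = max(g(v) for v in vertices)

# Randomly sampled points of the box never exceed the best vertex value.
rng = random.Random(0)
samples = [tuple(rng.uniform(lo, hi) for lo, hi in bounds) for _ in range(10_000)]
sample_max = max(g(s) for s in samples)
```

Enumerating the four vertices is therefore enough to evaluate the maximum in this toy case, which mirrors how the maximization is restricted to finitely many extreme points.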

If the uncertainty sets are unbounded, then in general the supremum in (8) can take the value $+\infty$ along some unbounded directions of , even when the value function has finite values everywhere. To avoid such a situation, we consider the growth rate of the value function defined as

| (25) |

for any real-valued , where the limit superior is in fact independent of the choice of , and the inequality is due to that is assumed to be lower bounded by 0. Our convention is to set when is bounded. We now consider problems with unbounded uncertainty sets .
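To make the notion of growth rate concrete, the sketch below estimates it for a scalar toy function. It assumes that the growth rate is the limit superior of the ratio between the function value and the distance to a reference point; the function `value` and the helper `growth_estimate` are hypothetical and only serve as an illustration.

```python
def value(xi):
    """Hypothetical nonnegative convex function of a scalar uncertainty."""
    return max(0.0, 2.0 * xi - 1.0, -0.5 * xi)


def growth_estimate(reference, radius):
    """Largest ratio value(xi) / |xi - reference| over the two points at the given distance."""
    candidates = (reference + radius, reference - radius)
    return max(value(xi) / radius for xi in candidates)


# The estimate approaches the steepest asymptotic slope (2.0 for this function),
# regardless of the reference point used in the denominator.
est_a = growth_estimate(reference=0.0, radius=1e8)
est_b = growth_estimate(reference=5.0, radius=1e8)
```

Both estimates agree up to a vanishing error, which is consistent with the remark that the limit superior does not depend on the chosen reference point.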

Proposition 13.

Proof.

We claim that the supremum

if and only if , for each . Suppose . By definition (25), there exists a sequence and a constant such that as and . Thus as for are bounded.

Conversely, by definition (25), there exists a constant such that for all with . Thus we have

where , and the maximum is finite because it is attained on some extreme point by convexity, so .

Now from this claim, we see that for any such that , the problem (8) can be formulated equivalently as

The supremum can be attained in : otherwise there exists a point and such that

In other words, defines a strictly increasing ray of , which by convexity implies that the supremum is , a contradiction. Using the convexity again as in the proof of Proposition 12, we conclude that the supremum is indeed attained in , and this completes the proof. ∎

Proposition 13 reduces to Proposition 12 when the uncertainty set is bounded, since the growth rate and any continuous function over a bounded polyhedron is bounded. The finite growth rate condition is often satisfied, especially when the value function is Lipschitz continuous. However, it is in general difficult to estimate the growth rate (25). Fortunately, in some application problems, we can derive the growth rate explicitly, as discussed in Section 4.3.

Note that the problems (24) and (26) are standard linear optimization problems in the variables and . Thus by strong duality, we can write the dual problem as

| (27) | ||||

Consequently, any feasible dual solutions and to the dual (27) define a valid under-approximation

| (28) |

We now describe an SSSO implementation in Algorithm 3. Its correctness is verified in the following corollary.

Corollary 14.

Proof.

The validity of follows directly from the inequality (28) and the fact that for any and by definition. To see the validity of , note that for any and , we have

by the definition of in Algorithm 3. Thus for any optimal solution to the problem (26) with replaced by , we have

and consequently since is also a feasible solution to the minimization in (26). Finally, the gap is controlled since . ∎

4 Numerical Experiments

In this section, we first introduce baseline models used for comparison against the DR-MCO model (1). Then we present comprehensive numerical studies of two application problems: the multi-commodity inventory problem with either uncertain demands or uncertain prices, and the hydro-thermal power system planning problem with uncertain water inflows.

4.1 Baseline Models and Experiment Settings

For performance comparison, we introduce two types of baseline models in addition to the DR-MCO with Wasserstein ambiguity sets (7). The first baseline model is the simple multistage robust convex optimization (MRCO) model, where we simply consider the worst-case outcome out of the uncertainty set in each stage . Namely, the cost-to-go functions of the MRCO can be defined recursively as

| (29) |

When the sum is jointly convex in the state and the uncertainty for any given , the supremum can be attained at some extreme point of the convex hull of if it is finite. In particular, if we have relatively complete recourse (i.e., the sum is always finite for any given ) and if is a polytope (i.e., it is a convex hull of finitely many points), then we can enumerate the extreme points of to find the supremum, which allows us to solve the simple MRCO by Algorithm 1. In general, if the uncertainty set is unbounded, then the cost-to-go functions of the MRCO model can take the value $+\infty$ everywhere, so we only use the baseline MRCO model when we have polytope uncertainty sets .

The second type of baseline models consists of risk-neutral and risk-averse multistage stochastic convex optimization (MSCO) models. The nominal probability measures in the MSCO models can be either the empirical measure , or a probability measure associated with the sample average approximation (SAA) of a fitted probability measure, which we denote as . The main difference here is that the outcomes in an SAA probability measure can be different from those given by the empirical measure . Moreover, we are able to take for a potentially better training effect. To ease the notation, we also allow to happen when we describe the risk measures in the rest of this section.

For the risk-averse MSCO models, we use the conditional value-at-risk (CVaR, a.k.a. average value-at-risk or expected shortfall) as the risk measure. Its coherence leads to a dual representation [34], which allows the risk-averse MSCO models to be solved by Algorithm 1 with a straightforward implementation of the SSSO. For simplicity, we only introduce the CVaR risk-averse MSCO based on this dual representation, and refer interested readers to [43] for the primal definition and the proof of duality.

Given parameters and , we define the cost-to-go functions associated with the -CVaR risk measures recursively for as

| (30) |

where the ambiguity set is defined as

| (31) |

Note that when , the ambiguity set has only one element corresponding to the SAA probability measure . Thus the CVaR risk-averse MSCO model (30) reduces to the risk-neutral nominal MSCO in this case. Alternatively, if and , then the CVaR risk-averse MSCO model (30) considers only the worst outcome of the SAA probability measure in each stage. We remark that both the simple MRCO model (29) and the CVaR risk-averse MSCO model (30) can be solved by Algorithm 1 since only finitely many outcomes need to be considered in each stage . More details on the SSSO for these two baseline models can be found in [48].
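The two limiting cases above can be reproduced on a small finite sample. The sketch below assumes the common parametrization in which the risk measure is a convex combination of the expectation (weight `1 - alpha`) and the CVaR over the worst `beta` fraction of outcomes (weight `alpha`); the names `alpha`, `beta`, and `cvar_mixture` are illustrative assumptions and may differ from the exact form of (30) and (31).

```python
def cvar_mixture(costs, probs, alpha, beta):
    """Evaluate (1 - alpha) * E[Z] + alpha * CVaR_beta[Z] on a finite sample.

    The CVaR term uses its dual form: maximize sum(q_k * z_k) over probability
    vectors q with q_k <= probs[k] / beta, solved greedily from the largest cost down.
    """
    mean = sum(p * z for p, z in zip(probs, costs))
    remaining = 1.0
    tail = 0.0
    for z, p in sorted(zip(costs, probs), reverse=True):
        q = min(p / beta, remaining)
        tail += q * z
        remaining -= q
        if remaining <= 0.0:
            break
    return (1.0 - alpha) * mean + alpha * tail


costs = [4.0, 1.0, 10.0, 3.0]
probs = [0.25] * 4
risk_neutral = cvar_mixture(costs, probs, alpha=0.0, beta=0.5)  # plain mean
worst_case = cvar_mixture(costs, probs, alpha=1.0, beta=0.25)   # largest outcome only
half_tail = cvar_mixture(costs, probs, alpha=1.0, beta=0.5)     # mean of the two largest
```

Because the inner maximization runs over finitely many reweighted outcomes, it fits the same finite enumeration that makes these baseline models solvable stage by stage.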

Our numerical experiments aim to demonstrate two attractive aspects of the DR-MCO models on some application problems: better out-of-sample performance compared to the baseline models, and the ability to achieve an out-of-sample performance guarantee with reasonable conservatism. For ease of evaluation, we assume that we have the knowledge of the true underlying probability measure , and thus the marginal probability measures , where is the pushforward of the canonical projection map , for . Here, we do not restrict our attention to the case that is a product of , i.e., the true probability measure is stagewise independent, so our modeling (2) can be used as an approximation for problems under general stochastic processes. The experiments are then carried out according to the following procedure with a uniform number of data points in each stage.

1. Draw iid samples from to form the empirical probability measures ;

2. Construct the baseline models and DR-MCO models using ;

3. Solve these models using our DDP algorithm (Algorithm 1) to a desired accuracy or within the maximum number of iterations or computation time;

4. Draw iid sample paths from and evaluate the performance profiles (mean, variance, and quantiles) of the models on these sample paths.
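The sampling and evaluation steps of this procedure can be sketched in a few lines. The snippet below is a single-stage toy version under stated assumptions: the lognormal sampler stands in for the true measure, the "policy" is a placeholder that orders the empirical mean, and the helper names `draw_training_sets` and `performance_profile` are hypothetical. It does not build or solve any DR-MCO model.

```python
import random
import statistics


def draw_training_sets(sample_true, sizes, rng):
    """Draw nested training sets: smaller sets are prefixes of larger ones."""
    pool = [sample_true(rng) for _ in range(max(sizes))]
    return {n: pool[:n] for n in sizes}


def performance_profile(costs):
    """Summarize evaluation costs by mean, variance, and 10%/50%/90% quantiles."""
    deciles = statistics.quantiles(costs, n=10)
    return {
        "mean": statistics.fmean(costs),
        "variance": statistics.variance(costs),
        "q10": deciles[0],
        "median": statistics.median(costs),
        "q90": deciles[-1],
    }


rng = random.Random(42)
sample_true = lambda r: r.lognormvariate(0.0, 0.5)  # stand-in for the true measure
training = draw_training_sets(sample_true, sizes=[5, 10, 20], rng=rng)

# Evaluation samples are drawn once and reused for every model and sample size.
evaluation = [sample_true(rng) for _ in range(1000)]

# Placeholder policy: order the empirical mean; the cost is the absolute mismatch.
profiles = {}
for n, data in training.items():
    order = statistics.fmean(data)
    profiles[n] = performance_profile([abs(order - xi) for xi in evaluation])
```

Reusing one evaluation set and nesting the training sets keeps the comparison across sample sizes free of additional sampling noise.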

In particular, we focus on limited or moderate training sample sizes , while keeping the number of evaluation sample paths relatively large (). In each independent test run of our numerical experiment, the training samples used in a smaller-sized test are kept in larger-sized tests, and the evaluation sample paths are held unchanged for all models and sample sizes.

Our algorithms and numerical examples are implemented using Julia 1.6 [8], with Gurobi 9.0 [22] interfaced through the JuMP package (version 0.23) [12]. We use 25 single-core 2.1GHz Intel Xeon processors (24 for the worker processes and 1 for the manager process) with 50 GByte of RAM to allow parallelization of the SSSO (Algorithm 3).

4.2 Multi-commodity Inventory Problems

We consider a multi-commodity inventory problem which is adapted from the ones studied in [18, 48]. Let denote the set of product indices. We first describe the variables in each stage . We use to denote the variable of inventory level, (resp. ) to denote the amount of express (resp. standard) order fulfilled in the current (resp. subsequent) stage, and to denote the amount of rejected order of each product . Let be the state variable and be the internal variable for each stage . The stage subproblem can be defined through the local cost functions as

| (32) | ||||

In the definition (32), we use (resp. ) to denote the uncertain express (resp. standard) order unit cost, (resp. ) the inventory holding (resp. backlogging) unit cost, the penalty on order rejections, a positive fixed cost, (resp. ) the bound for the express (resp. standard) order, and the bounds on the backlogging and inventory levels, the uncertain demand for the product , respectively. The first constraint in (32) is a cumulative bound on the express orders, the second constraint characterizes the change in the inventory level, and the rest are bounds on the decision variables with respect to each product. The notations and are used to denote the positive and negative parts of a real number . The initial state is given by for all . Before we discuss the details of the uncertain parameters or , we make the following remarks on the definition (32).

First, it is easy to check that if the order unit costs (resp. the demands ) are deterministic, then Assumption 4 (resp. Assumption 3) is satisfied, so we are able to apply the SSSO implementations discussed in Sections 3.4 and 3.3, respectively. Second, as the bounds do not change with and all orders can be rejected (i.e., is feasible for all ), we see that the problem (32) has relatively complete recourse. Third, the Lipschitz constant of the value functions is uniformly bounded by , so Proposition 6 can be applied here if we set the regularization factors to be sufficiently large . Besides, the Lipschitz continuity guarantees the in-sample adjustable conservatism by Theorem 5. Last, since all state variables are bounded, together with the above observation, we know by Theorem 7 that Algorithm 1 would always converge on our inventory problem (32).

4.2.1 Inventory Problems with Uncertain Demands

First, we consider the inventory problems with uncertain demands, where the goal is to seek a policy with minimum mean inventory cost plus the penalty on order rejections. The uncertain demands are modeled by the following expression:

| (33) |

Here, is a factor and is the period for the base demands, and is the bound on the uncertain demands. The uncertain vector has its components described as follows: , and for , we have

| (34) |

For the experiments, we consider products and stages. The unit prices of each product are deterministically set to and for all ; the inventory holding and backlogging costs are and , and the rejection costs are , for each . The bounds are set to , , , , and for each . We pick the uncertainty parameters and . We terminate the DDP algorithm if it reaches a 1% relative optimality gap or 2000 iterations. For Wasserstein ambiguity sets, we only consider the radius constraint (i.e., ) with the radius set relative to the following estimate of the distance among data points:

| (35) |

For the MSCO models, we directly use the empirical probability measures for each . Further, we consider parameters and for the CVaR risk-averse MSCO models.

Using the experiment procedure described in Section 4.1, we present the results of our data-driven DR-MCO model with Wasserstein ambiguity sets and the baseline models.

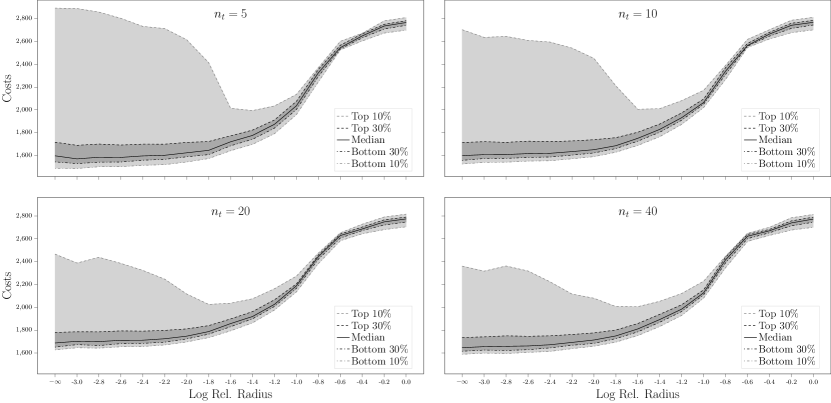

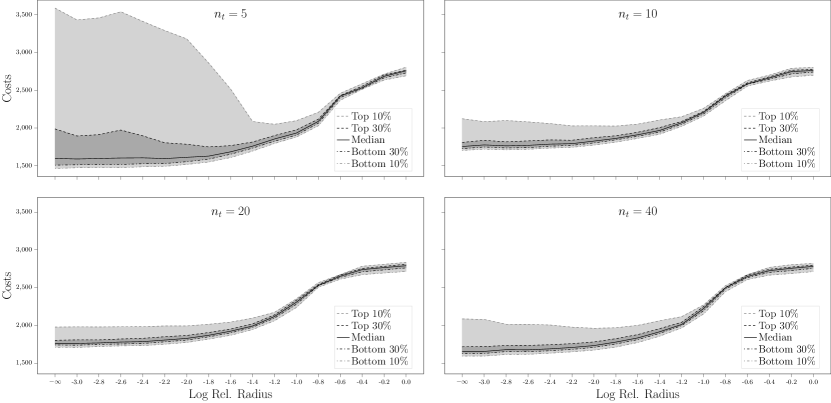

Figure 1 (and Figures 5 and 6 in Section B) displays the out-of-sample cost quantiles of the nominal stochastic model and the DR-MCO models with different Wasserstein radii, constructed from the empirical probability measures . Here we use the log radius to denote the nominal stochastic model, i.e., . From the plots, we see that in the small-sample case (), the Wasserstein DR-MCO model significantly reduces the top 10% out-of-sample evaluation costs when the radius is set to be of the estimate . Moreover, the difference between the top 10% and bottom 10% of the out-of-sample evaluation costs becomes smaller around the even for larger sample sizes. However, the median out-of-sample cost increases with the Wasserstein radius, suggesting that larger Wasserstein radii may lead to overly conservative policies.

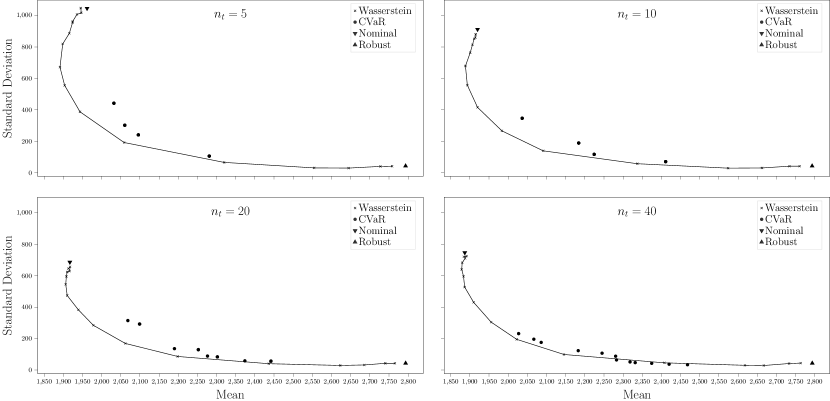

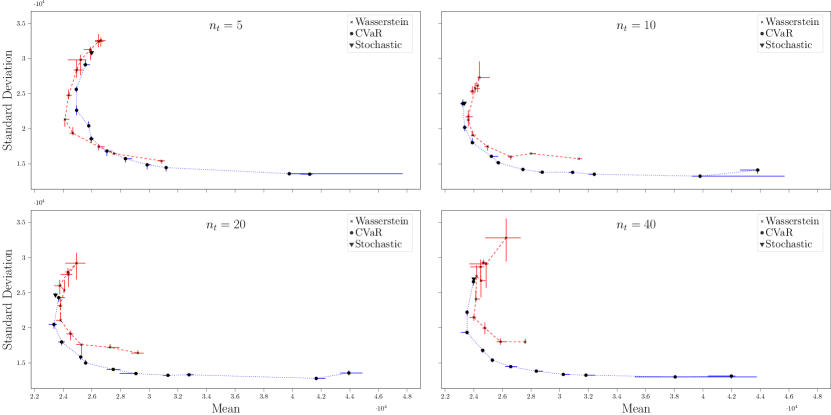

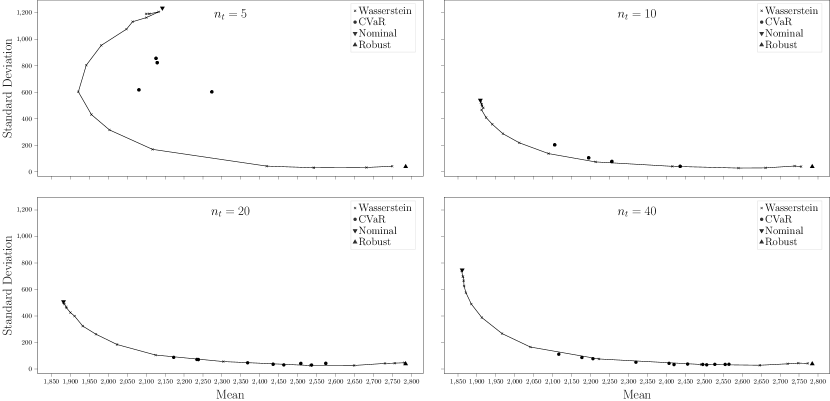

To better quantify the trade-off between mean and variance of the out-of-sample evaluation costs, we present Figure 2 (and Figures 7 and 8 in Section B). Here, the lines connect the points representing Wasserstein DR-MCO models from the smallest radius to the largest one. We say one policy dominates another policy if the former has a smaller mean and a smaller standard deviation than the latter, and make the following observations. First, in all cases, the policy from the robust model is dominated by some policy from the Wasserstein DR-MCO model. Second, the policies from the nominal and CVaR MSCO models are all dominated by some policy from the DR-MCO model, when the sample size is small (). Last, policies from the CVaR MSCO model could penetrate the frontier formed by policies from the DR-MCO model, especially when the sample sizes increase to or .
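The dominance relation used in these observations is easy to state in code. The sketch below uses made-up evaluation costs for three hypothetical policies, purely to illustrate the definition; the numbers are not results from the experiments.

```python
import statistics


def summarize(costs):
    """Return (mean, standard deviation) of out-of-sample evaluation costs."""
    return statistics.fmean(costs), statistics.stdev(costs)


def dominates(a, b):
    """True if summary a has both a smaller mean and a smaller standard deviation than b."""
    return a[0] < b[0] and a[1] < b[1]


def non_dominated(summaries):
    """Names of policies that no other policy dominates (the mean-deviation frontier)."""
    return [
        name
        for name, s in summaries.items()
        if not any(dominates(other, s) for key, other in summaries.items() if key != name)
    ]


# Hypothetical evaluation costs for three policies (made-up numbers for illustration).
summaries = {
    "nominal": summarize([90.0, 100.0, 140.0, 150.0]),
    "dro_small_radius": summarize([95.0, 100.0, 110.0, 115.0]),
    "robust": summarize([120.0, 125.0, 150.0, 165.0]),
}
frontier = non_dominated(summaries)
```

A policy "penetrates the frontier" in this sense when it is not dominated by any policy already on the frontier.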

4.2.2 Inventory Problems with Uncertain Prices

Now we discuss the inventory problems with uncertain prices and fixed demands. These problems can be viewed as a simplified model for supply contract problems [28], where the goal is to estimate the total cost of such supply contract and under-estimation would be very undesirable. The uncertain prices are modeled by the following expression:

| (36) |

Here, is a factor for express orders. The uncertain vector follows a truncated multivariate normal distribution:

| (37) |

where the maximum is taken componentwise, is a factor for base prices, is the period, is the magnitude on the price variation, is the lower bound on the prices, and the covariance matrix is randomly generated (by multiplying a uniformly distributed random matrix with its transpose) and normalized to have its maximum eigenvalue equal to 1. The demands are deterministically given by

| (38) |
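The covariance construction described for (37), multiplying a uniformly distributed random matrix with its transpose and normalizing the largest eigenvalue to 1, can be sketched with the standard library alone. The helper names and the use of power iteration are assumptions made for this illustration; the truncation and sampling of the normal distribution are not shown.

```python
import random


def mat_vec(matrix, vector):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def top_eigenvalue(matrix, iterations=500):
    """Largest eigenvalue of a symmetric positive semidefinite matrix via power iteration."""
    v = [1.0] * len(matrix)
    for _ in range(iterations):
        w = mat_vec(matrix, v)
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    # Rayleigh quotient of the (unit-norm) iterate.
    return sum(a * b for a, b in zip(mat_vec(matrix, v), v))


def random_covariance(dim, rng):
    """Form U U^T from a uniform random matrix U and scale it so the top eigenvalue is 1."""
    u = [[rng.random() for _ in range(dim)] for _ in range(dim)]
    gram = [[sum(u[i][k] * u[j][k] for k in range(dim)) for j in range(dim)] for i in range(dim)]
    scale = top_eigenvalue(gram)
    return [[entry / scale for entry in row] for row in gram]


sigma = random_covariance(dim=3, rng=random.Random(1))
```

Normalizing the spectrum this way keeps the overall price variation controlled by the separate magnitude parameter rather than by the random draw of the matrix.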

For the experiments, we consider products, stages, and the period . The price uncertainty has parameters , , , and . We choose the demand parameters and . The inventory holding and backlogging costs are and , and the rejection costs are , for each . The bounds are set to , , , , and for each . The Wasserstein radii in the DR-MCO models are set relative to defined in (35). The baseline MSCO models are constructed in the same way as described in Section 4.2.1. We do not consider the baseline MRCO model here as the uncertainty set is unbounded.

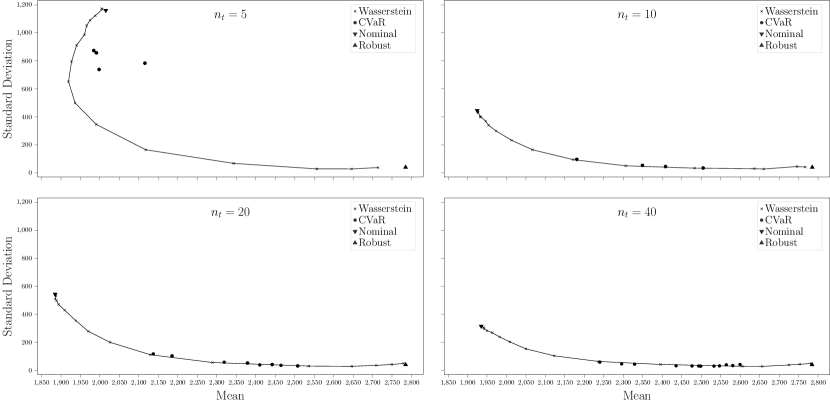

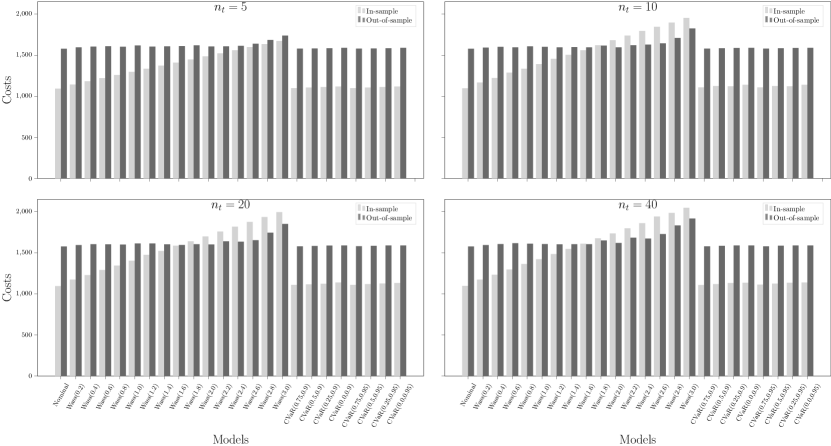

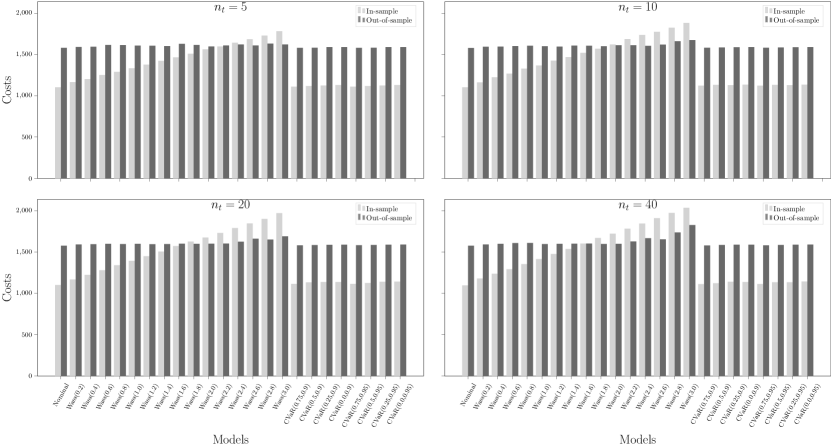

We plot the in-sample objective costs and out-of-sample mean evaluation costs in Figure 3 (and Figures 9 and 10 in Section B). The label refers to the nominal risk-neutral MSCO model using the empirical probability measures ; refers to the Wasserstein DR-MCO model with radius in each stage ; and refers to the CVaR risk-averse MSCO model with parameters and . As the uncertainty vectors now have an unbounded support, the robust model is no longer applicable. We see that in all cases, the in-sample objective cost grows linearly with respect to the Wasserstein distance, as predicted by Theorem 5. As the nominal stochastic model inevitably under-estimates the mean evaluation costs, using Wasserstein DR-MCO models with a relative radius depending on the sample size could achieve the out-of-sample performance guarantee in almost all test cases. Moreover, none of the CVaR risk-averse models in the experiments could achieve a similar effect. Thus we believe that the Wasserstein DR-MCO models are particularly favorable in the context of supply contracts. It is however worth mentioning that we do not observe any improvement in the mean or the variance of evaluation costs from the Wasserstein DR-MCO model over the baseline models.

4.3 Hydro-thermal Power Planning Problem

We next consider the Brazilian interconnected power system planning problem described in [11]. Let denote the indices of four regions in the system, and the indices of thermal power plants, where each of the disjoint subsets is associated with the region . We first describe the decision variables in each stage . We use to denote the stored energy level, to denote the hydro power generation, and to denote the energy spillage, of some region ; and to denote the thermal power generation for some thermal power plant . For two different regions , we use to denote the energy exchange from region to region , and to denote the deficit account for region in region . Let be the state vector of energy levels, the internal decision vector consisting of and for , for all , and for any ; and the uncertain vector of energy inflows in stage . We define the DR-MCO by specifying the cost functions as

| (39) | ||||

Here in the formulation, denotes the unit penalty on energy spillage, the unit cost of thermal power generation of plant , the unit cost of power exchange from region to region , the unit cost on the energy deficit account for region in region , the deterministic power demand in stage and region , the bound on the storage level in region , the bound on hydro power generation in region , the lower and upper bounds of thermal power generation in plant , the bound on the deficit account for region in region such that , and the bound on the energy exchange from region to region . The first constraint in (39) characterizes the change of energy storage levels in each region, the second constraint imposes the power generation-demand balance for each region, and the rest are bounds on the decision variables. The initial state and uncertainty vector are given by data. In our experiment, we consider and all other parameters used in this problem can be found in [11].

For the problem (39), we have the following remarks. First, we always have relatively complete recourse as we allow spillage for extra energy inflows and deficit for energy demands in each region. It is then straightforward to check that the Lipschitz constant of in either or can be bounded by the maximum of the deficit cost and the spillage penalty . Second, as the uncertainty now has an unbounded support , we need to estimate the growth rate of the value function . Note that if for any region the inflow is sufficiently large such that all demands and energy exchanges have met their upper bounds, then the only cost incurred by further increasing the inflow is simply the spillage penalty. Thus we conclude that , which is a constant function for all . Last, it is easy to see that Assumption 4 holds for the problem (39) and that the set of state variables is compact, so our SSSO implementation (Algorithm 3) guarantees the convergence of our DDP algorithm (Algorithm 1).

Now we assume that the true uncertainty can be described by the following logarithmic autoregressive time series:

| (40) |

where the logarithm and the product are taken componentwise, and the parameters and are fit from historical data (see modeling details in [44] and coding details in [11]). Note that (40) is not linear with respect to the uncertainty vectors , and consequently it cannot directly be reformulated into a stagewise independent MSCO (or a DR-MCO) [30]. While there are approaches based on Markov chain DDP or a linearized version of the model (40), they would require altering the DDP algorithm or increasing the state space dimension. Instead, we study the effects of the stagewise independence assumption [13] and the Wasserstein ambiguity sets in our DR-MCO model (2).
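To illustrate why such a time series is stagewise dependent, the sketch below simulates a scalar first-order logarithmic autoregression. It assumes the form ln xi_t = a + b ln xi_{t-1} + eps_t with Gaussian noise; this form, the coefficients, and the function name `simulate_log_ar1` are placeholders chosen for illustration and are not the fitted model (40).

```python
import math
import random


def simulate_log_ar1(a, b, sigma, xi0, stages, rng):
    """Simulate ln xi_t = a + b * ln xi_{t-1} + eps_t with eps_t ~ N(0, sigma^2)."""
    path = [xi0]
    for _ in range(stages):
        log_next = a + b * math.log(path[-1]) + rng.gauss(0.0, sigma)
        path.append(math.exp(log_next))
    return path


rng = random.Random(7)
paths = [
    simulate_log_ar1(a=0.2, b=0.8, sigma=0.3, xi0=1.0, stages=12, rng=rng)
    for _ in range(2000)
]

# Sample correlation between ln xi_11 and ln xi_12 across paths: a value away from
# zero signals the stagewise dependence that a stagewise independent model ignores.
x = [math.log(p[11]) for p in paths]
y = [math.log(p[12]) for p in paths]
mx, my = sum(x) / len(x), sum(y) / len(y)
cov = sum((u - mx) * (v - my) for u, v in zip(x, y))
corr = cov / math.sqrt(sum((u - mx) ** 2 for u in x) * sum((v - my) ** 2 for v in y))
```

Every simulated inflow is positive and, for fixed stage, lognormally distributed in this toy model, while consecutive stages remain strongly correlated.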

We can see that under the assumption on the true uncertainty (40), each uncertainty follows a multivariate lognormal distribution. Thus instead of directly using the empirical probability measures in the MSCO models, we can fit lognormal distributions based on the empirical outcomes , from which we further construct the SAA probability measures for the MSCO models. Moreover, we can also use this SAA probability measure to estimate the Wasserstein distance bound using

| (41) | ||||

We then set the Wasserstein radius to be for the relative factors . For the baseline risk-averse MSCO models, we use CVaR parameters and .

Note that as the SAA resampling step is random, the performance of our DR-MCO models and MSCO models is also random. In addition, as it is often very challenging to solve the problem (39) to a prescribed optimality gap, we choose to terminate the algorithm after a maximum of 1000 iterations, and allow random sampling in Algorithm 1, in which the noninitial stage step does not strictly follow Definition 2 and guarantees only the validity. The benefit of such random sampling is that empirically the under-approximations (hence the policies) often improve faster, especially in the early iterations of the algorithm. We refer interested readers to the stochastic DDP literature (e.g., [2, 49, 26]) for more details.

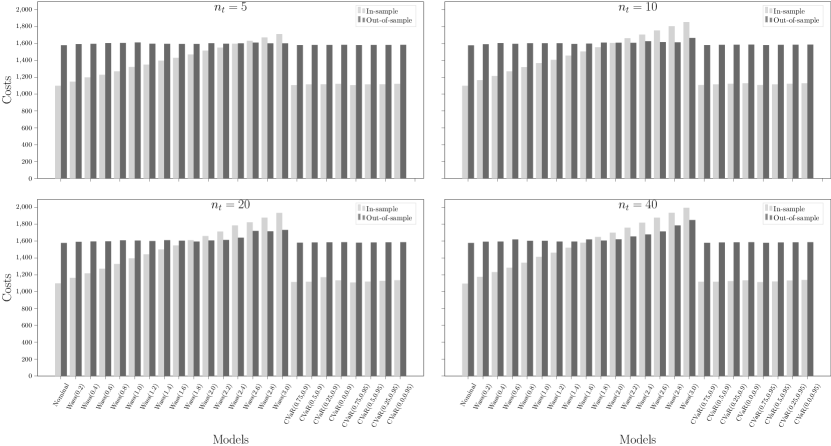

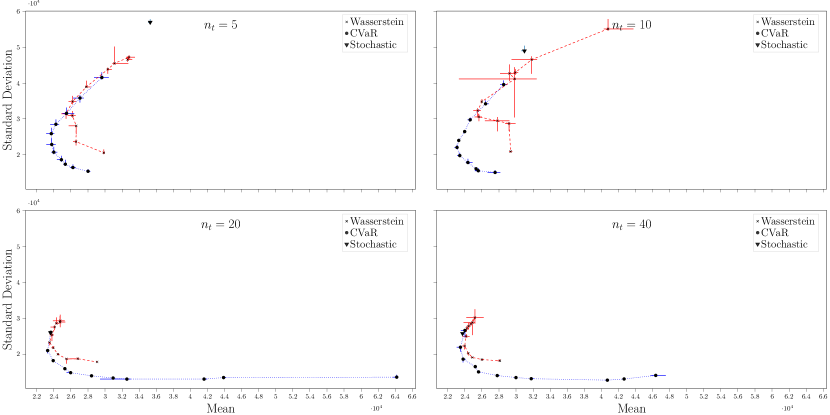

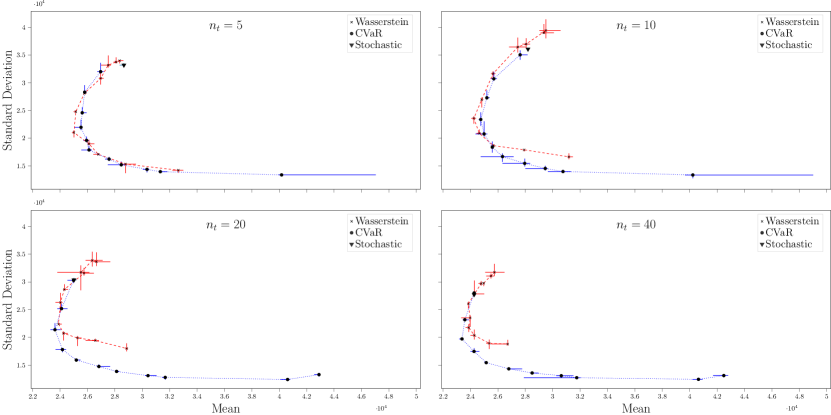

Due to the randomness of the models and the algorithm, we repeat each test 3 times with the same empirical probability measures in the experiment procedure. Then we plot the median values with error bars indicating the maximum and minimum values in Figure 4 (and Figures 11 and 12 in Section B) with increasing values of in the Wasserstein DR-MCO models and decreasing values of in the CVaR risk-averse MSCO models. First, we see that most of the Wasserstein DR-MCO models and the CVaR risk-averse MSCO models achieve better performance in either the mean or the standard deviation of evaluation costs, compared with the risk-neutral MSCO model. This observation justifies the use of ambiguity sets or risk aversion when we approximate a stagewise dependent problem with stagewise independent models. Second, when the sample sizes are small ( or ), we see that the Wasserstein DR-MCO could outperform the CVaR risk-averse MSCO models (e.g., for ) in the out-of-sample mean cost. Third, as the sample size grows to or , the policies obtained from the Wasserstein DR-MCO models are always dominated by those from the CVaR risk-averse MSCO models, i.e., the latter achieve both a lower mean cost and a lower standard deviation. Our conjecture is that for larger sample sizes, the cost-to-go functions of the Wasserstein DR-MCO models have more complicated shapes, which makes them hard to approximate within the limit of 1000 iterations. This also suggests that the CVaR risk-averse MSCO models could lead to good out-of-sample performance when fitting a probability distribution is possible and the computation budget is limited.

5 Concluding Remarks

In this work, we study the data-driven DR-MCO models with Wasserstein ambiguity sets. We show that with a sufficiently large Wasserstein radius, such a DR-MCO model satisfies an out-of-sample performance guarantee with high probability even with limited data sizes. Using convex dual reformulation, we show that the in-sample conservatism is linearly bounded by the radius when the value functions are Lipschitz continuous in the uncertainties. To numerically solve the data-driven DR-MCO models, we design exact SSSO subproblems for the DDP algorithms by exploiting either the concavity or the convexity of the cost functions in terms of the uncertainties. We conduct extensive numerical experiments to compare the performance of our DR-MCO models against MRCO models and risk-neutral and risk-averse MSCO models. On the multi-commodity inventory problems with uncertain demands, we observe that the DR-MCO models are able to provide policies that dominate those baseline model policies in the out-of-sample evaluations when the in-sample data size is small. Moreover, on the inventory problems with uncertain prices, the DR-MCO models could achieve an out-of-sample performance guarantee with little compromise of the objective value, which has not been achieved by the baseline models. On the hydro-thermal power planning problems with uncertain energy inflows, we see that with a limited number of iterations, while the policies from the DR-MCO models could achieve better out-of-sample performance than the risk-averse MSCO models for small data sizes, they are dominated by the risk-averse MSCO models for larger data sizes. We hope these numerical experiments could serve as benchmarks for future studies on DR-MCO problems.

References

- [1] R Baucke, A Downward, and G Zakeri. A deterministic algorithm for solving stochastic minimax dynamic programmes. Optimization Online, 2018.

- [2] Regan Baucke, Anthony Downward, and Golbon Zakeri. A deterministic algorithm for solving multistage stochastic programming problems. Optimization Online, pages 1–25, 2017.

- [3] Heinz H Bauschke, Patrick L Combettes, et al. Convex analysis and monotone operator theory in Hilbert spaces, volume 408. Springer, 2011.

- [4] Güzin Bayraksan and David K Love. Data-driven stochastic programming using phi-divergences. In The operations research revolution, pages 1–19. INFORMS, 2015.

- [5] Aharon Ben-Tal, Dick Den Hertog, Anja De Waegenaere, Bertrand Melenberg, and Gijs Rennen. Robust solutions of optimization problems affected by uncertain probabilities. Management Science, 59(2):341–357, 2013.

- [6] Aharon Ben-Tal, Laurent El Ghaoui, and Arkadi Nemirovski. Robust optimization, volume 28. Princeton university press, 2009.

- [7] Dimitris Bertsimas, Vishal Gupta, and Nathan Kallus. Data-driven robust optimization. Mathematical Programming, 167(2):235–292, 2018.

- [8] Jeff Bezanson, Alan Edelman, Stefan Karpinski, and Viral B Shah. Julia: A fresh approach to numerical computing. SIAM review, 59(1):65–98, 2017.

- [9] Michele Conforti, Gérard Cornuéjols, Giacomo Zambelli, et al. Integer programming, volume 271. Springer, 2014.

- [10] Erick Delage and Yinyu Ye. Distributionally robust optimization under moment uncertainty with application to data-driven problems. Operations research, 58(3):595–612, 2010.

- [11] Lingquan Ding, Shabbir Ahmed, and Alexander Shapiro. A Python package for multi-stage stochastic programming. Optimization Online, pages 1–42, 2019.

- [12] Iain Dunning, Joey Huchette, and Miles Lubin. JuMP: A modeling language for mathematical optimization. SIAM Review, 59(2):295–320, 2017.

- [13] Daniel Duque and David P Morton. Distributionally robust stochastic dual dynamic programming. SIAM Journal on Optimization, 30(4):2841–2865, 2020.

- [14] Andreas Eichhorn and Werner Römisch. Polyhedral risk measures in stochastic programming. SIAM Journal on Optimization, 16(1):69–95, 2005.

- [15] Peyman Mohajerin Esfahani and Daniel Kuhn. Data-driven distributionally robust optimization using the Wasserstein metric: Performance guarantees and tractable reformulations. Mathematical Programming, 171(1):115–166, 2018.

- [16] Nicolas Fournier and Arnaud Guillin. On the rate of convergence in Wasserstein distance of the empirical measure. Probability Theory and Related Fields, 162(3):707–738, 2015.

- [17] Rui Gao and Anton Kleywegt. Distributionally robust stochastic optimization with Wasserstein distance. Mathematics of Operations Research, 2022.

- [18] Angelos Georghiou, Angelos Tsoukalas, and Wolfram Wiesemann. Robust dual dynamic programming. Operations Research, 67(3):813–830, 2019.

- [19] Pierre Girardeau, Vincent Leclere, and Andrew B Philpott. On the convergence of decomposition methods for multistage stochastic convex programs. Mathematics of Operations Research, 40(1):130–145, 2015.

- [20] Vincent Guigues. Convergence analysis of sampling-based decomposition methods for risk-averse multistage stochastic convex programs. SIAM Journal on Optimization, 26(4):2468–2494, 2016.

- [21] Vincent Guigues and Werner Römisch. Sampling-based decomposition methods for multistage stochastic programs based on extended polyhedral risk measures. SIAM Journal on Optimization, 22(2):286–312, 2012.

- [22] Gurobi Optimization, LLC. Gurobi Optimizer Reference Manual, 2022.

- [23] Grani A Hanasusanto and Daniel Kuhn. Conic programming reformulations of two-stage distributionally robust linear programs over Wasserstein balls. Operations Research, 66(3):849–869, 2018.

- [24] Hidetoshi Komiya. Elementary proof for Sion’s minimax theorem. Kodai Mathematical Journal, 11(1):5–7, 1988.

- [25] Václav Kozmík and David P Morton. Evaluating policies in risk-averse multi-stage stochastic programming. Mathematical Programming, 152(1):275–300, 2015.

- [26] Guanghui Lan. Complexity of stochastic dual dynamic programming. Mathematical Programming, pages 1–38, 2020.

- [27] Guanghui Lan. Correction to: Complexity of stochastic dual dynamic programming. Mathematical Programming, pages 1–3, 2022.

- [28] Chung-Lun Li and Panos Kouvelis. Flexible and risk-sharing supply contracts under price uncertainty. Management science, 45(10):1378–1398, 1999.

- [29] Fengming Lin, Xiaolei Fang, and Zheming Gao. Distributionally robust optimization: A review on theory and applications. Numerical Algebra, Control & Optimization, 12(1):159, 2022.

- [30] Nils Löhndorf and Alexander Shapiro. Modeling time-dependent randomness in stochastic dual dynamic programming. European Journal of Operational Research, 273(2):650–661, 2019.

- [31] Mario VF Pereira and Leontina MVG Pinto. Multi-stage stochastic optimization applied to energy planning. Mathematical programming, 52(1):359–375, 1991.

- [32] Andrew B Philpott, Vitor L de Matos, and Lea Kapelevich. Distributionally robust SDDP. Computational Management Science, 15(3):431–454, 2018.

- [33] Andrew B Philpott and Ziming Guan. On the convergence of stochastic dual dynamic programming and related methods. Operations Research Letters, 36(4):450–455, 2008.

- [34] Andy Philpott, Vitor de Matos, and Erlon Finardi. On solving multistage stochastic programs with coherent risk measures. Operations Research, 61(4):957–970, 2013.

- [35] Alois Pichler and Alexander Shapiro. Mathematical foundations of distributionally robust multistage optimization. SIAM Journal on Optimization, 31(4):3044–3067, 2021.

- [36] Hamed Rahimian, Güzin Bayraksan, and Tito Homem-de Mello. Identifying effective scenarios in distributionally robust stochastic programs with total variation distance. Mathematical Programming, 173(1):393–430, 2019.

- [37] Hamed Rahimian and Sanjay Mehrotra. Distributionally robust optimization: A review. arXiv preprint arXiv:1908.05659, 2019.

- [38] R Tyrrell Rockafellar. Convex analysis. Number 28. Princeton university press, 1970.

- [39] R Tyrrell Rockafellar and Roger J-B Wets. Variational analysis, volume 317. Springer Science & Business Media, 2009.

- [40] Herbert E Scarf. A min-max solution of an inventory problem. Rand Corporation Santa Monica, 1957.

- [41] Alexander Shapiro. Analysis of stochastic dual dynamic programming method. European Journal of Operational Research, 209(1):63–72, 2011.

- [42] Alexander Shapiro and Shabbir Ahmed. On a class of minimax stochastic programs. SIAM Journal on Optimization, 14(4):1237–1249, 2004.

- [43] Alexander Shapiro, Darinka Dentcheva, and Andrzej Ruszczynski. Lectures on stochastic programming: modeling and theory. SIAM, 2021.

- [44] Alexander Shapiro, Wajdi Tekaya, Joari P da Costa, and Murilo P Soares. Final report for technical cooperation between Georgia Institute of Technology and ONS - Operador Nacional do Sistema Elétrico. Georgia Tech ISyE Report, 2012.

- [45] Alexander Shapiro, Wajdi Tekaya, Joari Paulo da Costa, and Murilo Pereira Soares. Risk neutral and risk averse stochastic dual dynamic programming method. European Journal of Operational Research, 224(2):375–391, 2013.

- [46] Cédric Villani. Optimal transport: old and new, volume 338. Springer Science & Business Media, 2008.

- [47] Wolfram Wiesemann, Daniel Kuhn, and Melvyn Sim. Distributionally robust convex optimization. Operations Research, 62(6):1358–1376, 2014.

- [48] Shixuan Zhang and Xu Andy Sun. On distributionally robust multistage convex optimization: New algorithms and complexity analysis. arXiv preprint arXiv:2010.06759, 2020.

- [49] Shixuan Zhang and Xu Andy Sun. Stochastic dual dynamic programming for multistage stochastic mixed-integer nonlinear optimization. Mathematical Programming, pages 1–51, 2022.

- [50] Chaoyue Zhao and Yongpei Guan. Data-driven risk-averse stochastic optimization with Wasserstein metric. Operations Research Letters, 46(2):262–267, 2018.

Appendix A Finite-dimensional Dual Recursion for DR-MCO

In this section, we briefly review general strong Lagrangian duality theory and then apply it to derive the finite-dimensional dual recursion for the DR-MCO problems (2).

A.1 Generalized Slater Condition and Lagrangian Duality

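Given an $\mathbb{R}$-vector space $\mathcal{X}$, we consider the following optimization problem.

$$v^* := \inf_{x \in X} \Big\{ f_0(x) \;:\; f_i(x) \le 0,\ i = 1, \dots, m; \quad h_j(x) = 0,\ j = 1, \dots, l \Big\}. \tag{42}$$

Here, $X \subseteq \mathcal{X}$ is a convex subset, the functions $f_i$ are convex for each $i = 0, 1, \dots, m$, and $h_j$ are affine for each $j = 1, \dots, l$. Using a vector of multipliers $\lambda \in \mathbb{R}^{m+l}$, the Lagrangian dual problem of (42) can be written as

$$w^* := \sup_{\lambda \in \Lambda} \, \inf_{x \in X} \Big\{ f_0(x) + \sum_{i=1}^{m} \lambda_i f_i(x) + \sum_{j=1}^{l} \lambda_{m+j} h_j(x) \Big\}, \tag{43}$$

where the admissible set for the multipliers is defined as $\Lambda := \{\lambda \in \mathbb{R}^{m+l} : \lambda_i \ge 0,\ i = 1, \dots, m\}$. We want to show the strong duality between (42) and (43), given the following condition.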

Definition 3.

Recall that the effective domain of a convex function is defined as , which is clearly a convex set. The affine hull of a convex set is defined to be the smallest affine space containing , and the relative interior of is the interior of viewed as a subset of its affine hull (equipped with the subspace topology). By convention, we have if there is no such that for all .

Proposition 15.

Assuming the Slater condition, the strong duality holds with an optimal dual solution (i.e., the supremum in the dual problem (43) is attained).

Proof.

The weak duality holds with a standard argument of exchanging the $\inf$ and $\sup$ operators, so it suffices to show that . If then the inequality holds trivially, so we assume that . Given the Slater condition, the value function of the primal problem (42) must be proper for all (see Theorem 7.2 in [38]) because is in the relative interior of the effective domain of and . Thus it is also subdifferentiable at the point (see Theorem 23.4 in [38]), i.e., there exists a subgradient vector such that for any . Here, for each , the multiplier must be nonnegative since the function is nonincreasing in the -th component, so we have . Since the inequality holds for any , we have

The first equality here results from exchanging two infimum operators, while the second one follows by taking , due to the nonnegativity of for each , and replacing with for each . ∎
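To make the statement of Proposition 15 concrete, the following minimal sketch checks numerically that the primal and dual values coincide on a toy one-dimensional instance. The instance (minimize $x^2$ subject to $1 - x \le 0$ on $[-5, 5]$), the grids, and all function names are our own assumptions for illustration and do not come from the paper; the point $x = 2$ satisfies the constraint strictly, so a Slater point exists.

```python
# Toy instance (an illustrative assumption, not taken from the paper):
#   primal:  minimize x^2  subject to  1 - x <= 0,  x in [-5, 5]
#   dual:    maximize over lam >= 0 of  min_x { x^2 + lam * (1 - x) }
# The point x = 2 satisfies the constraint strictly (a Slater point).

def objective(x):
    return x * x

def constraint(x):
    return 1.0 - x  # feasible when the value is <= 0

# Brute-force grids for the decision x and the multiplier lam.
xs = [-5.0 + 0.005 * k for k in range(2001)]
lams = [0.05 * k for k in range(101)]

def primal_value():
    # Smallest objective over grid points that are feasible
    # (up to a small floating-point tolerance).
    return min(objective(x) for x in xs if constraint(x) <= 1e-9)

def dual_function(lam):
    # Inner minimization of the Lagrangian over the grid.
    return min(objective(x) + lam * constraint(x) for x in xs)

def dual_value():
    # Outer maximization over nonnegative multipliers.
    return max(dual_function(lam) for lam in lams)

if __name__ == "__main__":
    print("primal value:", primal_value())
    print("dual value:  ", dual_value())
```

In this instance the dual function is $\lambda - \lambda^2/4$, which is maximized at $\lambda = 2$, so both values agree up to grid and rounding error and the dual supremum is attained.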

The strong Lagrangian duality guaranteed by the Slater condition is useful for many applications because we do not have to specify a topology on the underlying vector space. The corollary below summarizes a special case where there are no equality constraints and all the inequality constraints can be strictly satisfied.

Corollary 16.

Proof.

Let for , and be an open hyperrectangle. Then for any , we have . Therefore, we know that the Slater condition holds because , and the result follows from Proposition 15. ∎

Assuming that the underlying vector space is normed and complete, we could have another generalized Slater condition for problems with equality constraints. However, it is in general much harder to check, so we do not base our discussion in Section 2 on it.

A.2 Finite-dimensional Dual Recursion

Now we derive the finite-dimensional dual recursion for the DR-MCO (2). Recall that by the definition of Wasserstein distance (5), the constraint is ensured if there exists a probability measure on the product space with marginal probability measures and , such that

| (44) |

where we define to be the probability measure conditioned on and for any . Then we have by the law of total probability. Due to this condition, we define the following parametrized optimization problem for any and any state
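The coupling argument above can be illustrated on a small discrete example. The sketch below is only an illustration under our own assumptions: the two sample points, the candidate atoms, the coupling matrix, and the absolute-value transport cost are hypothetical and not taken from the paper. It checks that a coupling has the required marginals, computes its transport cost (an upper bound on the Wasserstein distance), and recovers the candidate measure from the conditional measures by the law of total probability.

```python
# Hypothetical discrete example (not from the paper).
samples = [0.0, 1.0]      # atoms of the empirical measure, weight 1/2 each
cand_atoms = [0.5, 2.0]   # atoms of a candidate probability measure

# coupling[k][j] = mass transported from samples[k] to cand_atoms[j]
coupling = [[0.5, 0.0],
            [0.0, 0.5]]

def row_marginal(pi):
    # Marginal on the sample side (should be the empirical weights).
    return [sum(row) for row in pi]

def col_marginal(pi):
    # Marginal on the candidate side.
    return [sum(row[j] for row in pi) for j in range(len(pi[0]))]

def transport_cost(pi, src, dst):
    # Expected distance under the coupling, with |x - y| as the cost.
    return sum(pi[k][j] * abs(src[k] - dst[j])
               for k in range(len(src)) for j in range(len(dst)))

def conditionals(pi):
    # Candidate measure conditioned on each sample point.
    return [[mass / sum(row) for mass in row] for row in pi]

def mixture(weights, conds):
    # Law of total probability: weighted average of the conditionals.
    return [sum(w * c[j] for w, c in zip(weights, conds))
            for j in range(len(conds[0]))]

if __name__ == "__main__":
    print("marginals:", row_marginal(coupling), col_marginal(coupling))
    print("transport cost:", transport_cost(coupling, samples, cand_atoms))
    print("mixture:", mixture(row_marginal(coupling), conditionals(coupling)))
```

Any radius at least as large as the reported transport cost is therefore enough for the candidate measure to satisfy the Wasserstein constraint in this toy setting.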

| (45) | ||||

From the discussion above, we see that . We next show that the equality holds assuming the strict feasibility of the empirical measure .

Let denote a multiplier vector. Assumption 1 guarantees by Corollary 16 the strong duality of the following Lagrangian dual problem

| (46) |

where the second equality holds because each Dirac measure centered at satisfies and each is a probability measure. We are now ready to show that , which implies Theorem 1.

Theorem 17.

Proof.

Let us fix any . The first assertion on the concavity of follows directly from (46) since is a minimum of affine functions. Moreover, from the definition (45) we see that for any as the measures satisfy the constraints and by the nonnegativity of the cost functions . If , then the equality holds trivially as we already showed that . Otherwise, we must have for any due to the concavity. Thus is a continuous function on .

To prove the inequality , take any . From the definition (5), the constraint implies that there exists a probability measure with marginal probability measures and such that . In other words, we have . Now by the continuity of , we conclude that . ∎
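The concavity argument in the proof can be observed numerically on a single-stage toy problem. The sketch below assumes the standard Wasserstein dual form, in which the worst-case expectation over a ball of radius $\rho$ equals $\min_{\lambda \ge 0} \{ \lambda \rho + \frac{1}{n} \sum_{k} \sup_{\xi} [ f(\xi) - \lambda |\xi - \hat{\xi}_k| ] \}$; we assume this mirrors the structure of (46), but the cost function, the samples, the gridded support, and all names are hypothetical choices of ours rather than the paper's model.

```python
# Single-stage toy model (all data below are illustrative assumptions).

def cost(xi):
    # Nonnegative, convex, 1-Lipschitz cost in the uncertainty xi.
    return max(0.0, xi - 4.0)

support = [i / 10 for i in range(101)]     # grid on the support [0, 10]
samples = [2.0, 5.0, 7.0]                  # empirical sample points
multipliers = [j / 20 for j in range(41)]  # grid of lam values in [0, 2]

def inner_sup(lam, sample):
    # Worst-case cost penalized by lam times the transport distance.
    return max(cost(xi) - lam * abs(xi - sample) for xi in support)

def dual_value(radius):
    # Minimum over lam of an expression that is affine in the radius,
    # hence concave and nondecreasing in the radius.
    best = float("inf")
    for lam in multipliers:
        avg = sum(inner_sup(lam, s) for s in samples) / len(samples)
        best = min(best, lam * radius + avg)
    return best

if __name__ == "__main__":
    for radius in [0.0, 1.0, 2.0, 4.0, 8.0]:
        print(radius, dual_value(radius))
```

With radius zero the value reduces to the empirical mean of the cost, and for a sufficiently large radius it saturates at the maximum cost over the support, which matches the interpolation between the risk-neutral and robust models that the radius is meant to control.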

Appendix B Supplemental Numerical Results

In this section, we display supplemental results from our numerical experiments in Section 4.