On Kalman-Bucy filters, linear quadratic control and active inference

Abstract

Linear Quadratic Gaussian (LQG) control is a framework first introduced in control theory that provides an optimal solution to linear problems of regulation in the presence of uncertainty. This framework combines Kalman-Bucy filters for the estimation of hidden states with Linear Quadratic Regulators for the control of their dynamics. Nowadays, LQG is also a common paradigm in neuroscience, where it is used to characterise different approaches to sensorimotor control based on state estimators, forward and inverse models. According to this paradigm, perception can be seen as a process of Bayesian inference and action as a process of optimal control. Recently, active inference has been introduced as a process theory derived from a variational approximation of Bayesian inference problems that describes, among others, perception and action in terms of (variational and expected) free energy minimisation. Active inference relies on a mathematical formalism similar to LQG, but offers a rather different perspective on problems of sensorimotor control in biological systems based on a process of biased perception. In this note we compare the mathematical treatments of these two frameworks for linear systems, focusing on their respective assumptions and highlighting their commonalities and technical differences.

1 Introduction

The Linear Quadratic Regulator (LQR) is is a method originally developed in the field of control theory [57, 55, 3, 85] with the goal of describing a solution to linear control problems using “full-state” feedback methods, i.e., methods relying on a state of the system to regulate (or plant) that is fully available. In more realistic applications however, it is common to assume that the state of a plant and its ensuing dynamics cannot be fully observed, i.e., they are only partially observable, or hidden. With incomplete information, an observer (or estimator) is usually introduced to first infer the hidden dynamics of a system, combining this inference on the (hidden) state of a plant with LQR methods to build an optimal feedback law [5, 3, 85]. In the case of linear continuous time problems, the optimal observer has the form of a Kalman-Bucy filter [50, 4, 85]. The combination of Kalman-Bucy filters and LQR often goes by the name of Linear Quadratic Gaussian (LQG) controllers [105, 5, 3, 85]. Despite some of its well-known limitations (e.g., its robustness issues [25]), LQG constitutes nonetheless a standard approach for a large class of linear problems in stochastic optimal control [5], due to its analytical tractability and guaranteed optimality under a given set of assumptions. In the last few decades, LQG and some of its extensions have also become a standard paradigm outside of the field of control theory, in particular for models of sensorimotor control in neuroscience [68, 95, 92, 31]. This reflects recent developments in computational models of perception and behaviour, nowadays mostly described in terms of state estimation, or inference [64, 80, 66, 63, 35], and optimal control [62, 103, 95, 91, 31] respectively.

In the last few years, active inference has also been introduced in computational and cognitive neuroscience as a theory to describe brain functioning in terms of (approximate Bayesian) inference and control [38, 35, 40, 39, 78]. Similarly to LQG, active inference has also been instrumental in defining models of perceptual and motor processes, from physiological accounts of motor control [1], all the way to computational descriptions of optimal behaviour claimed to be contrasting LQG [36]. The rapidly evolving nature of this theory has so far encouraged different authors to cover and summarise some of its different technical aspects [19, 21, 17, 81, 47, 23]. At the moment, however, the relationship between this framework and popular LQG-based models in neuroscience remains still unclear, especially considering how the two share a common mathematical background based on methods coming from (approximate) Bayesian inference and stochastic optimal control, but generate rather different predictions. The goal of this note is thus to produce a comparison of the mathematical treatments introduced by active inference and LQG. The discussion will be based on 1) continuous time linear problems, using 2) standard formulations of both frameworks (thus not explicitly addressing more advanced proposals, such as the “optimal feedback control” extension of LQG [95, 92], leaving this for future work), to focus on 3) their possible implications for the neural and the cognitive sciences.

2 Linear Quadratic Gaussian control for linear multivariate continuous time systems

In this section we introduce the mathematical underpinnings of LQG control, starting from a general formulation of the Kalman-Bucy filter and LQR for multivariate systems in continuous time, and then combining their results under the separation principle [105] to form LQG controllers. A full derivation, including mathematical proofs and other technical details not relevant to our comparison can be found in standard treatments of Kalman filtering [50, 22] and LQR/LQG [3, 85].

2.1 Kalman-Bucy filter or Linear Quadratic Estimator (LQE)

The Kalman-Bucy filter, also known as Linear Quadratic Estimator (LQE), is an algorithm for the estimation of hidden or latent variables evolving over time, i.e., hidden states with some (linear) dynamics [56, 84, 50, 22]. Its popularity is mainly due to the fact that

- •

-

•

it provides a recursive algorithm well suited for computer simulations and online applications with new data added over time, and

-

•

it makes no assumptions on the stationarity of the hidden dynamics a system, particularly relevant for real-world applications and especially control problems.

In this treatment we will focus on the continuous formulation of this algorithm, the Kalman-Bucy filter [58], as opposed to its discrete formulation, often simply going by the name of Kalman filter [56]. To introduce the Kalman-Bucy filter, we initially define a (linear) continuous dynamical systems in state-space form, representing the simplest plant whose hidden state the filter ought to estimate:

| (1) |

Here the bold characters indicate vectors. The first equation describes linear dynamics for a vector of hidden states . In the second equation, is a vector of noisy measurements, or observations, of hidden states . Vectors correspond to Wiener processes for state and observation equations respectively, with thus defined as zero-mean white Gaussian random variables with covariance matrices [50, 22]. Matrices are represented using capital letters, with as the observation matrix mapping dynamics to observations and as the state transition matrix characterising the time-dependent evolution of .

The Kalman-Bucy filter is known in the literature to be the optimal estimator for linear systems with quadratic cost functions and Gaussian white random variables [56, 50, 22, 6]. Under these assumptions, it is also a minimum variance estimator [50, 22], i.e., it minimises an objective function represented by the mean square error (MSE), or variance of the error, given by:

| (2) |

where is a vector of the estimates of states . Through the Kalman-Bucy algorithm, one can determine estimates via [50, 85, 22]:

| (3) |

The vector is thus updated using the sum of the current best estimate multiplied by the known transition matrix, , and prediction errors (or innovations), . These prediction errors are multiplied by the Kalman gain matrix, , expressed in the second equation, and representing the optimal trade-off between previous estimates and information gathered from new observations. To calculate the Kalman gain matrix one then needs to estimate , the a-posteriori error covariance matrix, expressing the accuracy of the state estimate in the first equation. The trace of (i.e., the sum of the components on the main diagonal) gives the sum of the independent components of the covariance matrix equivalent to the MSE in equation (2), thus describing the accuracy of the state estimation process too. Kalman(-Bucy) filters can also be described in terms of Bayesian inference, with and representing mean and covariance matrix of an estimated (multivariate) Gaussian distribution of hidden states [50, 71, 22].

2.2 Linear Quadratic Regulator (LQR)

Linear Quadratic Regulator (LQR) is defined for deterministic linear systems with quadratic cost functions. Under these assumptions, the optimal control law is equivalent to a negative (proportional) feedback mechanism222In the case of infinite-horizon control problem. [57, 55, 3]. The noiseless plant to regulate is represented by a linear differential equation:

| (4) |

with as a vector of measured variables to be controlled. In this case we assume that the state of the plant is directly observable (no measurement noise, in the LQE formulation) so while the differential form is not strictly necessary (i.e., we could replace with and drop the notation), it is maintained for consistency with the Kalman-Bucy filter definition provided previously. is the state transition matrix, as in the case of the Kalman-Bucy filter, while is the control matrix mapping actions to outputs . Actions are often represented by in the control theory literature, however here we highlight a difference that will become important later: in the present note, describes inputs from a more general state-space perspective, i.e., all the variables that affect the (hidden) states of a system but are not states themselves, such that

| (5) |

In this formulation, is used to represent only a part of all possible inputs, i.e., the subset of inputs produced by a regulator that have an effect on the hidden state of a system (in the sense on Kalman’s controllability of a plant [55, 85]), such as the motor actions of an agent that affect its environment. Variables include, on the other hand, disturbances that influence a system but cannot be governed by a controller. Often, the definitions of and are often used interchangeably, assuming either that

- •

- •

In LQR, the goal is to stabilise (control, compensate or regulate) variables around target values defined by 333Here we want to highlight that in general , meaning that the degree of controllability of a system, or rather the dimension of action vector to reach target values , need not necessarily coincide with the number of states in ., controlling through actions . Such actions are determined via the optimisation of a function that accumulates costs over time, called cost-to-go or value functional:

| (6) |

which represents the infinite horizon simplification of the problem, i.e., the upper limit of the integral is infinity. The instantaneous version, simply called cost function or cost rate, is defined for LQR as:

| (7) |

with and as arbitrary matrices representing the relative balance between the minimisation of the distance from the target and costs for control respectively. In LQR, the optimal action vector is computed using [3, 85, 93]:

| (8) |

The first equation implements a negative feedback mechanism on using actions . In the second equation, is the feedback gain matrix, determined using the matrix , which is the Hessian of the cost-to-go functional defined in equation (6) [94].

2.3 Linear Quadratic Gaussian (LQG) control

One of the limitations of standard LQR controllers lies in the fact that they do not explicitly deal with state/observation uncertainty (or noise), i.e., the original formulation is for deterministic systems only [55]. On the other hand, in real-world engineering applications as well as in biological systems, it is more common to think of systems with limited access to information from the environment and actions thus applied to a set of hidden states when only noisy measurements are available. In the control theory literature, Linear Quadratic Gaussian (LQG) control [5, 8, 3, 85] is thus introduced as a combination of estimation and control for linear systems, building on the previously defined Kalman-Bucy filter and LQR. Under a particular set of assumptions, LQG controllers can then be seen as “modular”, with estimation and control processes independently designed to form an optimal solution following the separation principle of control theory [54, 105, 46]. The idea behind the separation theorem is very closely related to the certainty equivalence described in econometrics and decision making [83, 90], although some works, including [102, 14, 85], highlight their differences especially in the context of “dual effects” (see Discussion) and risk-sensitive control. In information theory, Shannon [82] also introduced a different notion of a separation principle, to explain coding via two (separate) phases of source compression and channel coding [45]. The connections between Shannon’s work and the separation principle in control theory have become more clear in recent years, thanks to a growing literature showing how Shannon’s definition captures and potentially generalises the results from control theory, see for instance [89, 88, 30]. Here however, the focus will be on the principle traditionally described in control theory for LQG in continuous systems [54, 105], under the following standard assumptions [105, 5, 14, 3, 85]:

-

1.

linear process dynamics and observation laws describing the environment and its latent variables,

-

2.

Gaussian white additive (cf. [92]) noise in both process and measurement equations,

-

3.

known covariance matrices for both process and measurement noise,

-

4.

quadratic cost function used to measure the performance of a system, and

-

5.

known inputs, .

The last condition is particularly relevant for a comparison with active inference and is due to the assumptions behind the Kalman-Bucy filter, defined as an optimal estimator in an unbiased sense only for known inputs [32], outputs (i.e., measurements) and parameters. To provide a more formal treatment of the separation principle, we then define a general linear system to be regulated in the presence of noise or uncertainty:

| (9) |

where all the variables and parameters follow the notation previously defined for Kalman-Bucy filters and LQR. In this case, the cost rate is modified to deal with a stochastic system with white additive noise on both dynamics and observations. To do so, the standard formulation of LQR is extended, including stochastic variables so to minimise the expected cost-to-go [85, 93]:

| (10) |

Importantly, one can show that under the assumptions introduced above

| (11) |

where we replaced states with their estimates , thus implying that the optimal control can be computed using only the state (point) estimates, i.e., means . Minimising the expected value of the cost-to-go is thus equivalent to minimising the cost-to-go for the expected state.

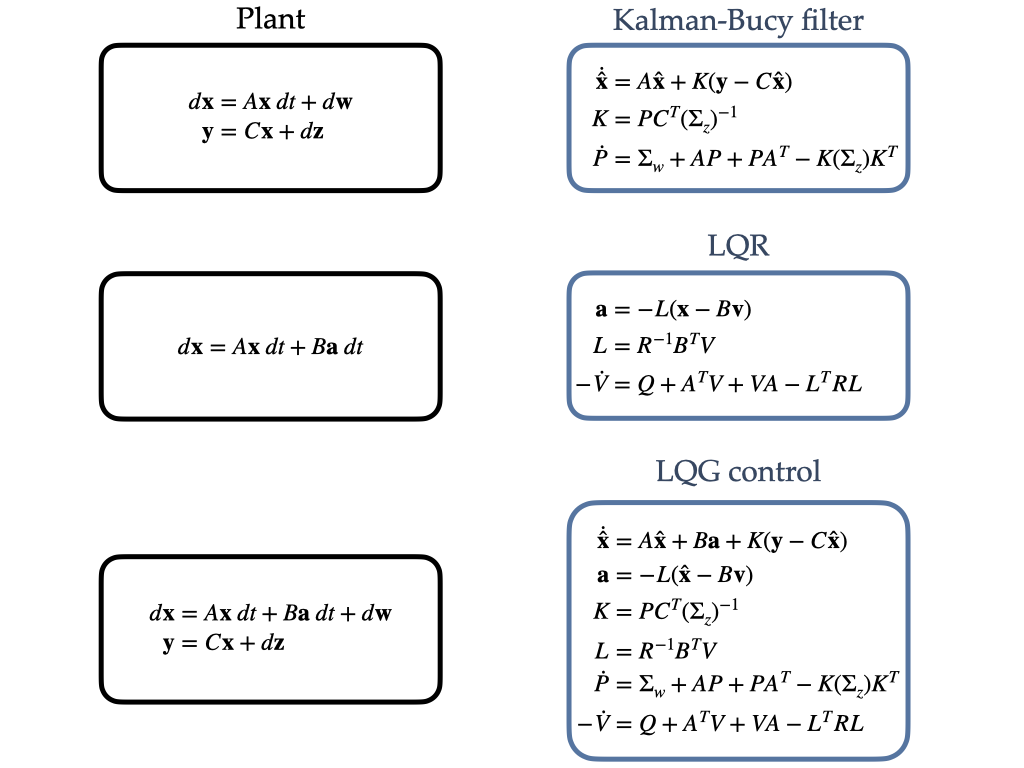

Optimal estimation and control are then achieved by sequentially combining two separate parts, a Kalman-Bucy filter and a LQR, to produce a regulator optimal in a minimum-variance, unbiased sense [3, 85]. One thus obtain the following scheme:

| (12) |

where the Kalman-Bucy filter provides optimal (unbiased) state-estimates of observations that are conditionally dependent only on the vector of motor actions that contributed to the generation of the current observations (i.e., at the time of the estimation), thanks to a Markov assumption due to the presence of white noise [22]. The LQR then uses these point estimates to implement an optimal controller that essentially treats the problem as a deterministic one by replacing the state of a plant with its best estimate, the mean, see also Fig. 1. The ability to analytically evaluate the expected cost rate using only expectations , see equation (11), is thus at the core of LQG architectures. In this way, estimation and control processes interact via the sharing of some information in the form of estimates to the controller and a copy of actions to the estimator, but are otherwise seen as fundamentally separable modules to be optimised independently.

3 Active inference for linear multivariate continuous time systems

Active inference is a process theory describing brain functioning and behaviour in living systems using ideas from Bayesian inference and stochastic optimal control [38, 35, 40, 21, 39]. In this section we begin to establish its relations to the LQG architecture, building an active inference solution to the regulation problem of a linear multivariate system. To do so, we first define a generative model [15, 18] for a system that prescribes 1) the dynamics of the plant to control (see equation (9)) and 2) how these relate to observations. The linear generative model is presented in a state-space form, similar to the the one expressed in equation (9), but is a rather different mathematical object: while equation (9) describes the “real” plant (i.e., its generative process [38, 21, 39]), in a generative model we write a set of equations embodying our assumptions regarding a plant that are used to derive update equations that define a controller. Importantly, the mapping between a generative process and a generative model is not necessarily one-to-one, and different generative models can ensure regulation while representing different sets of assumptions [9, 96]. The generative model to regulate the same linear system previously introduced in equation (9) is thus defined as444It should be noted that Wiener processes in continuous time are often presented using a notation in terms of differentials , and where is a Wiener process or Brownian motion, see equation (9). However, it is also possible to simply use a Langevin form as we do here even in the case of white noise, at least until an appropriate calculus (e.g., Ito or Stratonovich) is introduced and the appropriate interpretation applied [98]. Notice also that we adopt here a Lagrange notation for derivatives, with Newton’s notation introduced later to specify the ensemble dynamics of the recognition dynamics, see [21].:

| (13) |

where the hat over matrices highlights the important fact that such matrices do not (have to) mirror their counterparts describing the world dynamics in equation (9), i.e., as explained above one can describe several generative models for a given plant. In the simplest case, this may simply imply that matrices are unknown and should also be estimated [79, 34]. More in general however, different matrices in a generative model can implement desired dynamics that substantially differ from the observed ones in order to obtain specific desired behaviours555within reachability and controllability constraints [85] as previously shown in for instance [37, 38, 10, 13]. As we will discuss later, this constitutes a point of departure from the traditional assumption behind architectures built on the separation principle, where the variables described in LQG controllers reflect, by construction, the linear dynamics of the observed system, such that

| (14) |

Furthermore, while in LQG one has to assume full knowledge of motor actions for the unbiasedness of Kalman filters [85], in active inference this vector is not explicitly modeled, leading to a case where no copy of motor signals is available or even necessary, relying instead on a process of biased estimation [12] (cf. [32]). Active inference proposes that a deeper duality of estimation and control exists whereby, in the simplest case, actions are just peripheral open-loop responses to the presence of prediction errors on observations, irrespectively of the causes of sensations: self-generated, , or external, [36, 41, 20, 1]. While our focus remains on the continuous time formulation of active inference, it should be noted that in more recent discrete formulations of behaviour under this framework, this account was extended with action cast as a problem of minimising expected free energy, inferring (fictitious) control states or rather, time-dependent sequences of control states, or policies 666called and in [40]. associated to a set of actions [41, 40, 96]. Importantly however, both these proposals rely on theories in neuroscience that suggest a lack of information on self-produced controls (i.e., efference copy [101]), proposing an alternative for effective motor control in biological systems [27, 36, 1, 28, 65]. The generative model thus does not describe directly the role of actions as seen in equation (9). In their place, the vector encodes instead priors in the form of external or exogenous inputs in a general state-space models sense, (cf. in equation (5)), or biases in probabilistic settings. In this light, priors can be used as targets for the regulation problem (see equation (6) and equation (10)), effectively biasing the estimator to infer desired rather than observed states, with a controller instantiating actions on the world to fulfill the target (state or trajectory) of a controller [13]. Variables model noise in or uncertainty about the environment in a Stratonovich sense, i.e., having non-zero autocorrelations (see Discussion).

Following the general formulation of the variational free energy under Gaussian assumptions (Laplace and variational Gaussian approximations) applied to the multivariate case, we define (dropping terms constant during the minimisation process):

| (15) |

with a full derivation found in, for instance, [21, 24, 9]. Here denotes the set of all the hidden variables of a generative model, such that , with defined above and parameters including the matrices previously introduced, while hyperparameters encode the stochastic properties of the generative model, i.e., the covariance matrices and their possible reparameterizations.

With Gaussian assumptions on , the state-space model in equation (13) can then be written down in a probabilistic form, mapping the measurements equation to a likelihood

| (16) |

assuming that observations are conditionally independent on inputs . The system’s dynamics are also then expressed as a prior [34]

| (17) |

where we assumed that inputs are known, with their prior thus reducing to distribution with its mass densely concentrated around its mean (in the limit, a delta function). This assumption simply expresses the fact that in our current formulation of control problems, will encode values assigned by us and representing the target-state or trajectory of the system. Since the probabilities densities are both multivariate Gaussian, they can be written as:

| (18) |

where represent the dimensions of vectors and and are the determinants of the respective covariance matrices. By substituting equation (18) in equation (15), the variational free energy for a generic linear multivariate system becomes:

| (19) |

where we replaced with their expectations , since under the Laplace and the variational Gaussian assumptions, the free energy in equation (15) must be evaluated at the mode of , equivalent to the mean for Gaussian variables. In the same way, we then introduced precision matrices as the inverse of the best estimate of covariance matrices , derived from an application of the Laplace approximation to covariances [34]. It is important to highlight that, in general, the covariance matrices (or their inverse, the precision matrices) used in the generative model can in fact be different from the ones used to describe the environment or generative process [13]. The same approximations can in principle be applied to [34], but to simplify the treatment (and in line with the idea of comparing active inference and LQG on equal ground), we assumed that these parameters are known quantities, even if different from their respective matrices in the generative process (see equation (14)).

The recognition dynamics, prescribing estimation and control in a system minimising variational free energy [43, 42, 21] are implemented in standard active inference formulations as a gradient descent scheme on free energy with respect to and for estimation:

| (20) |

This system for the update of the means of and is similar to the standard form of prediction and update steps in a Kalman filter for discrete systems [22, 33]. The equations include a term , ensuring the convergence of the scheme when higher embedding orders are also optimised, i.e., , see [43]. This differential operator is defined so that and thus gives 0 for all higher orders of derivatives that are normally assumed to be white noise (see discussion in [43]). Its use is equivalent to the presence of a-priori state estimates found in the update equations of traditional treatments of Kalman(-Bucy) filters, and computed in the prediction step. In practice the system in equation (20) collapses to a single equation when the update step (second line in equation (20)) is assumed to be faster:

giving an equation resembling the Kalman-Bucy matrix equation for the estimated mean in equation (3):

On the other hand, control is defined via a gradient descent on actions , assuming the perspective of a system that can only infer that actions have an effect on observations (i.e., no direct knowledge of how affect the latent states or their estimates ):

| (21) |

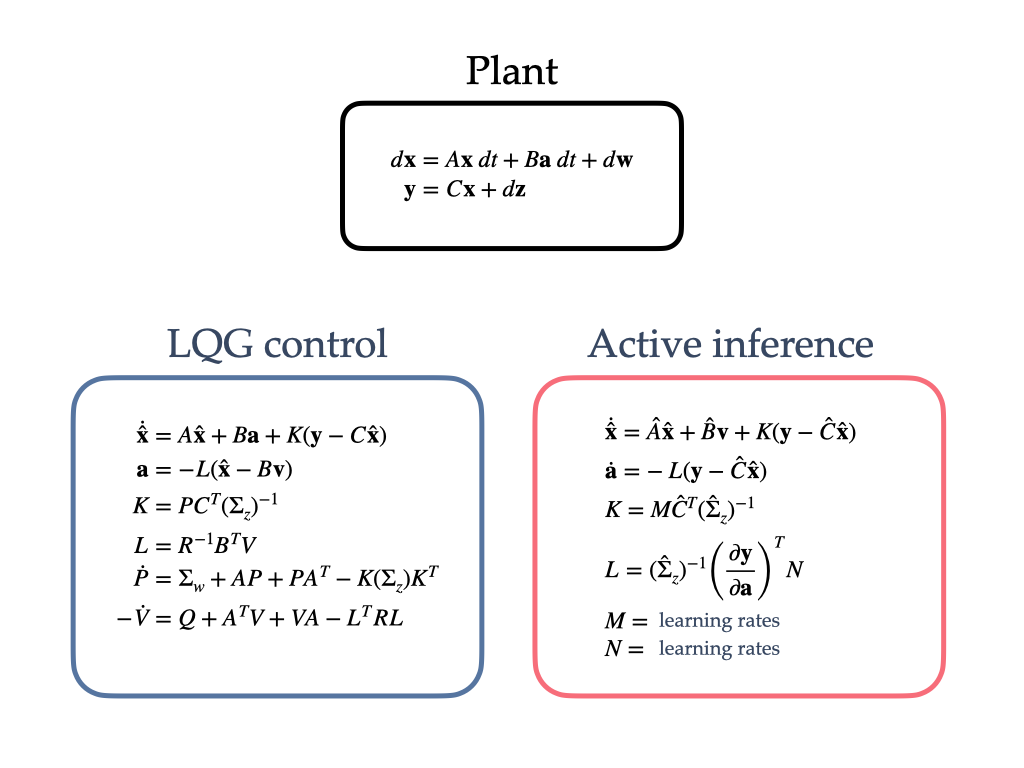

These equations are finally reported in Fig. 2, with the introduction of learning rates and to facilitate a more direct comparison.

4 A comparison of LQG and active inference formulations

4.1 Related work

In recent years, several reviews [19, 21, 17, 47, 23] have tackled different mathematical aspects of active inference, in order to analyse some of its claims and possibly fully uncover its potential as a general theory for the neurosciences. This note however differs in crucial ways from said reviews, offering a technical perspective that should be seen as complementary to previous works. In particular, unlike [19] here we focus on sensorimotor control rather than on pure perceptual accounts of cognitive systems. The current mathematical treatment builds on [21] where an explicit derivation of active inference in generalised coordinates of motion for non-Markovian univariate continuous time stochastic processes was introduced, inspired by more general formulations found, for instance, in [43, 34]. Here we extend this formulation to multivariate systems to introduce a comparison with the standard LQG paradigm, inspired by work initially discussed in [11, 12, 9]. In [17, 81, 23] the focus is on discrete time formulations of active inference [40, 39] based on partially observable Markov decision processes (POMDPs) and thus differ in fundamental ways from the work presented here which, on the other hand, relies on continuous time systems [43, 38, 21]. Finally, the work presented in [47] also focuses on discrete formulations, without including an explicit account of control and behaviour (similar to [19]).

4.2 Differences

As seen in previous sections, and summarised in Fig. 2, LQG and active inference share a common mathematical background rooted in Bayesian inference and optimal control. In practice, however, there are a few key differences between these two architectures for linear continuous time systems. Some of them are rather substantial and affect even more general cases, while others end up playing a relatively minor role, especially for the simplified linear systems presented in this note. Here we will discuss, in particular, differences due to:

-

•

state-space model formulations

-

•

action selection policies

-

•

minimisation schemes

-

•

cost functionals and general derivations

4.2.1 Different state-space model formulations

LQG and active inference both rely on state-space model formulations, however while LQG implements a more traditional one, active inference is said to introduce a definition of models in generalised coordinates of motion, i.e., higher embedding orders for continuous time models [43, 34]. These models are equivalent to a continuous time version of standard treatments of coloured (Gaussian) noise, i.e., non-Markovian (Markovian of order N, or semi-Markovian) processes, in terms of augmented state-space models seen for discrete systems [50, 34]. When the noise is not white, the typical strategy involves increasing the dimensionality of the state-space, treating coloured noise as an autoregressive model of independent white noise components that can be explicitly separated by introducing higher embedding dimensions that maintain an overall Markov property [50]. In generalised state-space models for continuous systems, random variables are treated as analytical noise with non-zero autocorrelation, replacing the (Markovian) Wiener processes typical of Ito’s formulations of stochastic variables777In Ito calculus autocorrelations are strictly equal to zero [50]. and adopted in most standard formulations of Kalman-Bucy filters and LQG control. Wiener processes are usually considered a good approximation of coloured noise only when the time scale of an estimator is much slower than the true time scale of the dynamics of an environment/plant. In this light, non-Markovian processes should simply be seen as a natural generalisation of treatments based on Wiener noise, whose derivatives are mathematically not well defined in the continuous limit, thus making it less suited for models whose characteristic time scale more closely matches the real dynamics. This is often the case for systems studied in various areas of engineering [86, 50], physics [29, 99, 69, 44], ecology [48, 100] and neuroscience [34, 97, 67], among others. Generalised state-space models use a Stratonovich’ formulation that allows for differentiable (analytical) noise [16, 86], often thought to be a better model for coloured noise [104, 86, 98, 34]. This generalisation is claimed to allow for the treatment of non-Markovian continuous random variables in a more principled way where derivatives of stochastic processes are defined as in traditional (non-stochastic) calculus and included as extra dimensions of the state-space in terms of higher embedding orders (cf. the role of delayed coordinates in embedding theorems [77, 87]). These “extra” embedding orders represent a set of coordinates expressed in terms of “position”, “velocity”, “acceleration”, etc., for each variable and correspond to a linearised (for convenience [34, 21]) path generated by a Taylor expansion in time of each trajectory.

The differences between generalised and standard state-space models are however less noticeable for the linear case treated in this note, often used as a starting point for models of sensorimotor control in computational and cognitive neuroscience. This is due both to 1) the treatment in terms of white noise adopted in equation (9), making higher embedding orders redundant when the plant’s model is known, and to 2) the white noise being additive. Under these assumptions, while the theoretical interpretations of Ito and the Stratonovich formulations still differ [98], they lead to the same results [50]. For nonlinear problems the discrepancies between these two approaches become more obvious, at the cost however, of analytical tractability since stronger assumptions are required for the treatment of more general cases. For instance, in LQG architectures, the separation principle heavily relies on the linearity of observations and dynamics, and only weaker versions of this principle are at the moment defined for some special classes of nonlinear systems, e.g., [7]. In active inference, the effects of the local linearity assumption on higher embedding order remains largely unexplored especially for non-smooth processes. More in general, however, while it is sometimes claimed that active inference has a clear edge over traditional approaches Markovian noisem, such as LQG [43, 42], one can show that non-Markovian systems can be formulated as Markovian ones using an augmented state-space [50, 34], in the same way higher embedding orders increase the number of state equations in active inference [34]. Due to this, some comparisons between active inference algorithms and existing methods in the literature may appear at the moment slightly confusing. For instance, in [43] we see an analysis comparing (extended) Kalman-Bucy filters and Dynamic Expectation Maximisation (DEM, one of the standard algorithmic implementations of estimation algorithms in active inference). In presence of white noise, the two methods achieve very similar performances, while for non-white noise, DEM is claimed to display better results [43]. At the same time, while the number the state-space dimension for DEM was effectively increased via the use of generalised coordinates of motion, the dimension of the state-space of the (extended) Kalman-Bucy filter remained unchanged, thus suggesting that differences are mainly due to the treatments (or lack of) of coloured noise, with the (extended) Kalman-Bucy filter capable of replicating the same results with an adequate state augmentation.

4.2.2 Different update equations for action

As shown in Fig. 2, the update equations for action in LQG and active inference present a few crucial differences. Importantly, actions in LQG are implemented using an algebraic equation equivalent to standard negative proportional feedback mechanisms, while in active inference the same update relies, effectively, on an implementation based on biased perception and akin to integral control [13]. This is mainly due to the fact that while LQG requires full knowledge of inputs to calculate the best estimates , active inference relies on an implicit mechanism to handle the lack of such inputs: integral control. In particular, the target value for the control problem, encoded by the vector , is introduced as a prior in the form of a constant “disturbance”, or bias, in the generative model in equation (13). This unaccounted disturbance generates a biased estimate (cf. [32]) that encodes the desired state/trajectory of a system, reached via the use of an integral controller that acts to “regulate” such bias [51, 52]. Integral control can in fact be seen as an implicit mechanism to estimate linear (i.e., constant) perturbations/inputs, inferred by accumulating evidence based on steady-state errors generated by the presence of the bias vector term 888An explicit version of this method corresponds to introducing extra variables in a state-space model for the inference of (linear) inputs as shown, for instance, in [53]..

A second key point is that actions built in LQG schemes use prediction errors on hidden states, while active inference updates are based on errors on observed states. In LQG, this is due to an assumption on the invertibility of matrix , mapping estimated states to observations , see for instance eqn. 4.1-1 to 4.1-8 in [3]. Thanks to this assumption, the desired/target trajectory can be expressed directly into the frame of reference of hidden rather than observed states, adapting (thanks to the separation principle) a problem of output-feedback control into one of state-feedback. While this assumption holds for the linear systems defined in LQG under the separation principle, it is not trivially generalised to nonlinear cases where processes of perception and control have strong co-dependences [14]. On the other hand, active inference implementations can in principle easily provide approximate solutions to increasingly complicated problems of (sensorimotor) control for a reasonable choice of the peripheral (open-loop) “inverse” model, [13].

Furthermore, it should also be noted that the update equation for action in active inference replaces the arbitrary positive definite weight matrix , standard in LQG control, with the expected precision matrix of observation noise . This move can be seen in light of the established duality of deterministic control and stochastic filtering/estimation problems first formalised by Kalman [56, 57, 58]. Unlike standard uses of this duality theorem, normally simply highlighting matching terms in the solutions of these two problems in the linear case under a coordinate transformation (including time reversal), active inference assumes that the matrix in fact just – is – the expected precision matrix of observation noise . The implications of this idea include, among others, an intrinsic “dual effect” of actions within the active inference framework due to the lack the conditions for the separation principle, cf. LQG [14]. This dual effect can be seen as an instantiation of the fundamental exploration-exploitation dilemma [26], portraying the time-constrained trade-off between inferring/learning about the unknown structure of a system and regulating its state to a desired target. The active inference formulation for linear systems constitutes thus an intermediate stage between LQG models where dual effects are not present [14], and more general dualities of estimation and control for nonlinear problems found for instance in [72, 94]. These generalised dualities are related to the formulation of the information filter, a linear estimator propagating the error precision, rather than covariance, matrix. The information filter provides a formulation that simplifies the duality relations by rearranging matching terms, creating new and different dualities, and in particular by replacing a difficult generalisation of the matrix transpose operator with a second time reversal (for details see [94]). The same method based on an exponential transformation was likewise applied in path integral control [60, 59]. In this scheme, one can also show how (rather than as in the Kalman duality expressed by active inference in the linear case) for a class of tractable (i.e., linear in ) fully-observable nonlinear problems where the conditions for the standard separation principle are not met (i.e., LQG emerges only as a special case [60]).

4.2.3 Different minimisation schemes

The updates proposed with active inference are apparently consistent with Kalman-Bucy filters, although an important difference is clear: the Kalman gain, , and feedback gain, are not explicitly computed in active inference. The Kalman gain, , and feedback gain, , matrices prescribe the optimal (i.e., minimum variance) update speed of estimates of hidden states and controls, while balancing prior information and new observations. Both and require solving Riccati equations involving knowledge of the covariance matrices of dynamics and observation noise in the first case, and weights representing costs for estimation and control in the cost-to-go function for the latter. In current formulations of active inference, the matrices and are not as clearly directly defined as they are in LQG architectures. If we consider only the Kalman gain , the main reason is that active inference relies on a series of simplifying assumptions perhaps not best suited to computation, online, Kalman and feedback gains. In particular, while the Laplace [15] and variational Gaussian approximations [75] greatly simplify the variational Bayesian treatment of inference and control problems (and are, together, exact for linear, Gaussian plants), they are usually accompanied by further approximations. These include, for example, the post-hoc optimisation of the covariance matrix of an approximate density under the variational Gaussian approximation, i.e., performed after a certain number of observations [43] (although see [42] for an approximate online version). In general however, no equations of the Riccati type currently appear in standard formulations of variational free energy minimisation in active inference. Under further assumptions, for example by considering fading-memory Kalman filters, it is however possible to draw a more direct comparison that will be developed in future work. Kalman filters with fading memory can, in fact, be shown to be equivalent to a natural gradient descent on statistical manifolds ([2]) in the univariate case, see for instance [73, 74]. Following then the known correspondence ([70]) between natural gradient and Gauss-Newton type methods used in active inference [34] for exponential family models, one can consider cases where active inference emerges as a special (i.e., fading-memory) case of (extended) Kalman-filters, and then discuss a similar approach for the dual equations involving action and the feedback matrix . It should be highlighted that, unlike repeatedly stated in the literature, we believe it is all but clear how the minimisation scheme used by active inference ought to generalise algorithms such as (extended) Kalman-filters beyond, perhaps, the use of higher embedding orders (however see above). At the moment, most practical applications simply replace explicit and with approximations based on the use of learning rates, e.g. [10], or on local linearisation methods [76] with varying integration time-steps [43].

4.2.4 Different cost functionals and derivations

A further point of departure between these models can be found in the cost functional minimised by the two methods: a value, or cost-to-go, functional for LQG, equation (10), and a variational free energy functional for active inference, equation (19). The most striking difference is that the former includes a time integral of costs, while the second one doesn’t. This difference is crucial in nonlinear cases, or rather in cases where the separation principle does not hold: the minimisation of variational free energy can in fact be seen as giving a “time-independent” equilibrium strategy, generating a fixed control strategy independent of future observations [61] or equivalently, following [14], one can see the minimisation of variational free energy as implementing a feedback rather than closed-loop policy that assumes fixed dynamics and costs in the limit of an infinite time horizon [61]. At the same time, LQG methods based on the separation principle rely on the same (future) time-independence assumption [14], thus implying that the two functionals are not fundamentally different at this level. A more appropriate comparison may be drawn on generalisations of both approaches, path integral control/KL control on one side and the minimisation of expected free energy on the other, where active inference is claimed to generalise other control approaches [39, 23], however this comparison lies outside the scope of this note, focusing instead on linear continuous time and continuous state-space models.

Finally, the free energy functional equation (15) appears to include extra terms when compared to the value function of LQG equation (10). The presence of multiple prediction errors in the variational free energy formulation is however just an expression of the probabilistic (i.e., Bayesian) derivation of inference methods [50], now generalised to control problems. The different predictions errors in the variational free energy directly map to likelihood and prior distributions as obtained from the joint density of observed and hidden states used to define free energy, see [21]. On the other hand, LQG derivations are often based on least-square methods [50, 85] with no a-priori interpretation of uncertainty, normally included only post-hoc.

References

- [1] Rick A Adams, Stewart Shipp, and Karl J Friston. Predictions not commands: active inference in the motor system. Brain Structure and Function, 218(3):611–643, 2013.

- [2] Shun-Ichi Amari. Natural gradient works efficiently in learning. Neural computation, 10(2):251–276, 1998.

- [3] Brian Anderson and John B Moore. Optimal control: linear quadratic methods. Prentice-Hall, Inc., 1990.

- [4] Philip W Anderson. More is different. Science, 177(4047):393–396, 1972.

- [5] Karl J Åström. Introduction to stochastic control theory. Academic Press, 1970.

- [6] Karl J Åström and Richard M Murray. Feedback systems: an introduction for scientists and engineers. Princeton university press, 2010.

- [7] Ahmad N Atassi and Hassan K Khalil. A separation principle for the stabilization of a class of nonlinear systems. IEEE Transactions on Automatic Control, 44(9):1672–1687, 1999.

- [8] Michael Athans. The role and use of the stochastic linear-quadratic-gaussian problem in control system design. IEEE transactions on automatic control, 16(6):529–552, 1971.

- [9] Manuel Baltieri. Active inference: building a new bridge between control theory and embodied cognitive science. PhD thesis, University of Sussex, 2019.

- [10] Manuel Baltieri and Christopher L Buckley. An active inference implementation of phototaxis. In Artificial Life Conference Proceedings, pages 36–43. MIT Press, 2017.

- [11] Manuel Baltieri and Christopher L Buckley. The modularity of action and perception revisited using control theory and active inference. In Artificial Life Conference Proceedings, pages 121–128. MIT Press, 2018.

- [12] Manuel Baltieri and Christopher L Buckley. Nonmodular architectures of cognitive systems based on active inference. In 2019 International Joint Conference on Neural Networks (IJCNN), pages 1–8. IEEE, 2019.

- [13] Manuel Baltieri and Christopher L Buckley. PID control as a process of active inference with linear generative models. Entropy, 21(3):257, 2019.

- [14] Yaakov Bar-Shalom and Edison Tse. Dual effect, certainty equivalence, and separation in stochastic control. IEEE Transactions on Automatic Control, 19(5):494–500, 1974.

- [15] Matthew J. Beal. Variational algorithms for approximate Bayesian inference. University of London London, 2003.

- [16] Yu K Belyaev. Analytic random processes. Theory of Probability & Its Applications, 4(4):402–409, 1959.

- [17] Martin Biehl, Christian Guckelsberger, Christoph Salge, Simón C. Smith, and Daniel Polani. Expanding the active inference landscape: More intrinsic motivations in the perception-action loop. Frontiers in Neurorobotics, 12:45, 2018.

- [18] Christopher M Bishop. Pattern Recognition and Machine Learning. Springer-Verlag New York, 2006.

- [19] Rafal Bogacz. A tutorial on the free-energy framework for modelling perception and learning. Journal of mathematical psychology, 76:198–211, 2017.

- [20] Harriet Brown, Rick A Adams, Isabel Parees, Mark Edwards, and Karl J Friston. Active inference, sensory attenuation and illusions. Cognitive processing, 14(4):411–427, 2013.

- [21] Christopher L Buckley, Chang Sub Kim, Simon McGregor, and Anil K Seth. The free energy principle for action and perception: A mathematical review. Journal of Mathematical Psychology, 14:55–79, 2017.

- [22] Zhe Chen. Bayesian filtering: From kalman filters to particle filters, and beyond. Statistics, 182(1):1–69, 2003.

- [23] Lancelot Da Costa, Thomas Parr, Noor Sajid, Sebastijan Veselic, Victorita Neacsu, and Karl J Friston. Active inference on discrete state-spaces: a synthesis. arXiv preprint arXiv:2001.07203, 2020.

- [24] Jean Daunizeau. The variational laplace approach to approximate bayesian inference. arXiv preprint arXiv:1703.02089, 2017.

- [25] John C Doyle. Guaranteed margins for lqg regulators. IEEE Transactions on automatic Control, 23(4):756–757, 1978.

- [26] Alexander A Feldbaum. Dual-control theory i-iv. Optimal Control Systems, 1965.

- [27] Anatol G Feldman. New insights into action–perception coupling. Experimental Brain Research, 194(1):39–58, 2009.

- [28] Anatol G Feldman. Active sensing without efference copy: referent control of perception. Journal of neurophysiology, 116(3):960–976, 2016.

- [29] Ronald F Fox. Stochastic calculus in physics. Journal of statistical physics, 46(5-6):1145–1157, 1987.

- [30] Roy Fox and Naftali Tishby. Minimum-information lqg control part i: Memoryless controllers. In 2016 IEEE 55th Conference on Decision and Control (CDC), pages 5610–5616. IEEE, 2016.

- [31] David W Franklin and Daniel M Wolpert. Computational mechanisms of sensorimotor control. Neuron, 72(3):425–442, 2011.

- [32] Bernard Friedland. Treatment of bias in recursive filtering. IEEE Transactions on Automatic Control, 14(4):359–367, 1969.

- [33] Karl Friston, Biswa Sengupta, and Gennaro Auletta. Cognitive dynamics: From attractors to active inference. Proceedings of the IEEE, 102(4):427–445, 2014.

- [34] Karl J Friston. Hierarchical models in the brain. PLoS Computational Biology, 4(11), 2008.

- [35] Karl J Friston. The free-energy principle: a unified brain theory? Nature reviews. Neuroscience, 11(2):127–138, 2010.

- [36] Karl J Friston. What is optimal about motor control? Neuron, 72(3):488–498, 2011.

- [37] Karl J Friston, Jean Daunizeau, and Stefan J Kiebel. Reinforcement learning or active inference? PLoS One, 4(7):e6421, 2009.

- [38] Karl J Friston, Jean Daunizeau, James Kilner, and Stefan J. Kiebel. Action and behavior: A free-energy formulation. Biological Cybernetics, 102(3):227–260, 2010.

- [39] Karl J Friston, Thomas FitzGerald, Francesco Rigoli, Philipp Schwartenbeck, and Giovanni Pezzulo. Active inference: a process theory. Neural Computation, 29(1):1–49, 2017.

- [40] Karl J Friston, Francesco Rigoli, Dimitri Ognibene, Christoph Mathys, Thomas Fitzgerald, and Giovanni Pezzulo. Active inference and epistemic value. Cognitive neuroscience, pages 1–28, 2015.

- [41] Karl J Friston, Spyridon Samothrakis, and Read Montague. Active inference and agency: Optimal control without cost functions. Biological Cybernetics, 106(8-9):523–541, 2012.

- [42] Karl J Friston, Klaas Stephan, Baojuan Li, and Jean Daunizeau. Generalised filtering. Mathematical Problems in Engineering, 2010, 2010.

- [43] Karl J Friston, N. Trujillo-Barreto, and J. Daunizeau. DEM: A variational treatment of dynamic systems. NeuroImage, 41(3):849–885, 2008.

- [44] Crispin Gardiner. Stochastic methods: a handbook for the natural and social sciences, volume 4. springer Berlin, 2009.

- [45] Michael Gastpar, Bixio Rimoldi, and Martin Vetterli. To code, or not to code: Lossy source-channel communication revisited. IEEE Transactions on Information Theory, 49(5):1147–1158, 2003.

- [46] Tryphon T Georgiou and Anders Lindquist. The separation principle in stochastic control, redux. IEEE Transactions on Automatic Control, 58(10):2481–2494, 2013.

- [47] Samuel J Gershman. What does the free energy principle tell us about the brain? arXiv preprint arXiv:1901.07945, 2019.

- [48] John M Halley. Ecology, evolution and 1f-noise. Trends in ecology & evolution, 11(1):33–37, 1996.

- [49] Jiping He, William S Levine, and Gerald E Loeb. Feedback gains for correcting small perturbations to standing posture. In Proceedings of the 28th IEEE Conference on Decision and Control,, pages 518–526. IEEE, 1991.

- [50] Andrew H Jazwinski. Stochastic Processes and Filtering Theory, volume 64. Academic Press, 1970.

- [51] Carroll D. Johnson. Optimal control of the linear regulator with constant disturbances. IEEE Transactions on Automatic Control, 13(4):416–421, 1968.

- [52] Carroll D. Johnson. Further study of the linear regulator with disturbances – The case of vector disturbances satisfying a linear differential equation. IEEE Transactions on Automatic Control, 15(2):222–228, 1970.

- [53] Carroll D. Johnson. On observers for systems with unknown and inaccessible inputs. International journal of control, 21(5):825–831, 1975.

- [54] D Peter Joseph and T Julius Tou. On linear control theory. Transactions of the American Institute of Electrical Engineers, Part II: Applications and Industry, 80(4):193–196, 1961.

- [55] Rudolf E Kalman. Contributions to the theory of optimal control. Bol. Soc. Mat. Mexicana, 5(2):102–119, 1960.

- [56] Rudolf E Kalman. A new approach to linear filtering and prediction problems. Journal of basic Engineering, 82(1):35–45, 1960.

- [57] Rudolf E Kalman. On the general theory of control systems. In Proceedings First International Conference on Automatic Control, Moscow, USSR, 1960.

- [58] Rudolf E Kalman and Richard S Bucy. New results in linear filtering and prediction theory. Journal of basic engineering, 83(1):95–108, 1961.

- [59] Hilbert J Kappen. Linear theory for control of nonlinear stochastic systems. Physical review letters, 95(20):200201, 2005.

- [60] Hilbert J Kappen. Path integrals and symmetry breaking for optimal control theory. Journal of statistical mechanics: theory and experiment, 2005(11):P11011, 2005.

- [61] Hilbert J Kappen, Vicenç Gómez, and Manfred Opper. Optimal control as a graphical model inference problem. Machine learning, 87(2):159–182, 2012.

- [62] Mitsuo Kawato. Internal models for motor control and trajectory planning. Current opinion in neurobiology, 9(6):718–727, 1999.

- [63] David C Knill and Alexandre Pouget. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends in Neurosciences, 27(12):712–719, 2004.

- [64] David C Knill and Whitman Richards. Perception as Bayesian inference. Cambridge University Press, 1996.

- [65] Mark L Latash. Physics of Biological Action and Perception. Academic Press, 2019.

- [66] Tai Sing Lee and David Mumford. Hierarchical bayesian inference in the visual cortex. JOSA A, 20(7):1434–1448, 2003.

- [67] Baojuan Li, Jean Daunizeau, Klaas E Stephan, Will Penny, Dewen Hu, and Karl J Friston. Generalised filtering and stochastic dcm for fmri. neuroimage, 58(2):442–457, 2011.

- [68] Gerald E Loeb, WS Levine, and Jiping He. Understanding sensorimotor feedback through optimal control. In Cold Spring Harbor symposia on quantitative biology, volume 55, pages 791–803. Cold Spring Harbor Laboratory Press, 1990.

- [69] J Łuczka. Non-markovian stochastic processes: Colored noise. Chaos: An Interdisciplinary Journal of Nonlinear Science, 15(2):026107, 2005.

- [70] James Martens. New insights and perspectives on the natural gradient method. arXiv preprint arXiv:1412.1193, 2014.

- [71] Richard J Meinhold and Nozer D Singpurwalla. Understanding the kalman filter. The American Statistician, 37(2):123–127, 1983.

- [72] Sanjoy K Mitter and Nigel J Newton. A variational approach to nonlinear estimation. SIAM journal on control and optimization, 42(5):1813–1833, 2003.

- [73] Yann Ollivier. Online natural gradient as a kalman filter. Electronic Journal of Statistics, 12(2):2930–2961, 2018.

- [74] Yann Ollivier. The extended kalman filter is a natural gradient descent in trajectory space. arXiv preprint arXiv:1901.00696, 2019.

- [75] Manfred Opper and Cédric Archambeau. The variational gaussian approximation revisited. Neural computation, 21(3):786–792, 2009.

- [76] Tohru Ozaki. A bridge between nonlinear time series models and nonlinear stochastic dynamical systems: a local linearization approach. Statistica Sinica, pages 113–135, 1992.

- [77] Norman H Packard, James P Crutchfield, J Doyne Farmer, and Robert S Shaw. Geometry from a time series. Physical review letters, 45(9):712, 1980.

- [78] Thomas Parr and Karl J Friston. The discrete and continuous brain: from decisions to movement—and back again. Neural computation, 30(9):2319–2347, 2018.

- [79] Rajesh PN Rao. An optimal estimation approach to visual perception and learning. Vision research, 39(11):1963–1989, 1999.

- [80] Rajesh PN Rao and Dana H Ballard. Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nature neuroscience, 2(1):79–87, 1999.

- [81] Noor Sajid, Philip J Ball, and Karl J Friston. Active inference: demystified and compared. arXiv preprint arXiv:1909.10863, 2019.

- [82] Claude Elwood Shannon. A mathematical theory of communication. Bell system technical journal, 27(3):379–423, 1948.

- [83] Herbert A Simon. Dynamic programming under uncertainty with a quadratic criterion function. Econometrica, Journal of the Econometric Society, pages 74–81, 1956.

- [84] Harold W Sorenson. Least-squares estimation: from gauss to kalman. IEEE spectrum, 7(7):63–68, 1970.

- [85] Robert F Stengel. Optimal control and estimation. Courier Corporation, 1994.

- [86] Rouslan L Stratonovich. Topics in the theory of random noise, volume 2. CRC Press, 1967.

- [87] Floris Takens. Detecting strange attractors in turbulence. In Dynamical systems and turbulence, Warwick 1980, pages 366–381. Springer, 1981.

- [88] Takashi Tanaka, P Mohajerin Esfahani, and Sanjoy K Mitter. Lqg control with minimal information: Three-stage separation principle and sdp-based solution synthesis. arXiv preprint arXiv:1510.04214, 2015.

- [89] Sekhar C Tatikonda. Control under communication constraints. PhD thesis, Massachusetts Institute of Technology, 2000.

- [90] Henri Theil. A note on certainty equivalence in dynamic planning. Econometrica: Journal of the Econometric Society, pages 346–349, 1957.

- [91] Emanuel Todorov. Optimality principles in sensorimotor control. Nature neuroscience, 7(9):907–915, 2004.

- [92] Emanuel Todorov. Stochastic optimal control and estimation methods adapted to the noise characteristics of the sensorimotor system. Neural computation, 17(5):1084–1108, 2005.

- [93] Emanuel Todorov. Optimal control theory. Bayesian brain: probabilistic approaches to neural coding, pages 269–298, 2006.

- [94] Emanuel Todorov. General duality between optimal control and estimation. In Decision and Control, 2008. CDC 2008. 47th IEEE Conference on, pages 4286–4292. IEEE, 2008.

- [95] Emanuel Todorov and Michael I Jordan. Optimal feedback control as a theory of motor coordination. Nature neuroscience, 5(11):1226, 2002.

- [96] Alexander Tschantz, Anil K Seth, and Christopher L Buckley. Learning action-oriented models through active inference. PLOS Computational Biology, 16(4):e1007805, 2020.

- [97] Pedro A Valdes-Sosa, Alard Roebroeck, Jean Daunizeau, and Karl J Friston. Effective connectivity: influence, causality and biophysical modeling. Neuroimage, 58(2):339–361, 2011.

- [98] Nicolaas G Van Kampen. Itô versus stratonovich. Journal of Statistical Physics, 24(1):175–187, 1981.

- [99] Nicolaas G Van Kampen. Stochastic processes in physics and chemistry, volume 1. Elsevier, 1992.

- [100] David A Vasseur and Peter Yodzis. The color of environmental noise. Ecology, 85(4):1146–1152, 2004.

- [101] Erich von Holst and Horst Mittelstaedt. Das reafferenzprinzip. Naturwissenschaften, 37(20):464–476, 1950.

- [102] Peter Whittle. Risk-sensitive linear/quadratic/gaussian control. Advances in Applied Probability, 13(4):764–777, 1981.

- [103] Daniel M Wolpert and Zoubin Ghahramani. Computational principles of movement neuroscience. Nature neuroscience, 3(11s):1212, 2000.

- [104] Eugene Wong and Moshe Zakai. On the convergence of ordinary integrals to stochastic integrals. The Annals of Mathematical Statistics, 36(5):1560–1564, 1965.

- [105] W Murray Wonham. On the separation theorem of stochastic control. SIAM Journal on Control, 6(2):312–326, 1968.