
email: jupatel@wpi.com

P. Sonar, Worcester Polytechnic Institute, email: prajankya@wpi.com

C. Pinciroli, Worcester Polytechnic Institute, email: cpinciroli@wpi.com

On Multi-Human Multi-Robot Remote Interaction

Abstract

In this paper, we investigate how to design an effective interface for remote multi-human multi-robot interaction. While significant research exists on interfaces for individual human operators, little research exists for the multi-human case. Yet this problem must be solved to enable complex, large-scale missions in which direct human involvement is impossible or undesirable and robot swarms act as semi-autonomous agents. This paper's contribution is twofold. The first contribution is an exploration of the design space of computer-based interfaces for multi-human multi-robot operations. In particular, we focus on information transparency and on the factors that affect inter-human communication in ideal conditions, i.e., without communication issues. Our second contribution addresses the same problem under increasing degrees of information loss, defined as intermittent reception of data with noticeable gaps between individual receipts. We derive a set of design recommendations based on two user studies involving 48 participants.

Keywords:

Information Transparency · Inter-Human Communication · Information Loss · Remote Interaction · Multi-Human Multi-Robot Interaction

1 Introduction

Robot swarms promise solutions for missions in which direct human involvement is either impossible or undesirable, such as search-and-rescue, firefighting, planetary exploration, and ocean restoration murphy2012decade . When robot swarms are deployed to perform complex missions, autonomy is only part of the picture. Along with autonomy, it is equally important for human operators to monitor and affect the behavior of the swarm. This creates the issue of designing effective solutions for remote interaction between humans and robot swarms.

While a significant body of work exists in remote interaction involving single humans and one or more robots, the scenario in which multiple humans interact with a robot swarm has received little attention. In this paper, we argue that it will be common for multiple humans to cooperate in the supervision of robot swarms. First, the amount of information generated by robot swarms is likely to exceed the span of apprehension of any individual operator miller1956magical , even when considering highly skilled ones such as video gamers. Cooperation among human operators would make monitoring more efficient. Second, the involvement of multiple humans allows for improved flexibility in robot control and task assignment, an important advantage in complex operations.

However, the involvement of multiple humans comes with old and new challenges. Among the old, we highlight the need for information transparency, which is the ability of the interface-swarm system to convey useful data for the operators to understand and modify the status of the swarm wohleber_effects_2017 ; chen_situation_2018 ; roundtree_transparency:_2019 ; bhaskara_agent_2020 ; chakraborti_explicability_nodate ; tulli_eects_nodate . Multiple operators also create the new challenge of conveying intentions and actions to other operators, i.e., effective inter-human communication, for better cooperation and conflict mitigation tomasello2010origins . Inter-human communication can be either direct or indirect. Direct communication includes verbal and non-verbal communication (e.g., gestures) holdcroft1976forms . Indirect communication is mediated through the remote interface (e.g., a graphical user interface on a laptop or tablet). Effective indirect communication requires inter-operator transparency, which pushes for interface designs that make it simple for operators far away from each other to exchange information on their intentions and plans breazeal2005effects ; lyons2013being ; chen_situation_2014 ; wohleber_effects_2017 ; roundtree_transparency:_2019 ; bhaskara_agent_2020 .

In this paper, we explore the design space of remote interfaces for multi-human multi-robot interaction. We study the role of direct and indirect communication among operators, and investigate how to achieve high levels of information and inter-operator transparency through several variants of our interface. The result of this work is a set of recommendations on which design elements contribute to making a remote interface effective. This part of our study builds upon previous work Patel2021 in which we investigated the effect of transparency and inter-human communication on the performance of human operators in proximal interaction. Proximal interaction occurs when humans and robots share the same environment.

Remote interaction allows us to study another important aspect: the role of information loss. In this paper, we define information loss as a decrease in the frequency of the visual information presented to the operators. We measure information loss as the time interval, in seconds, between the delivery of consecutive video frames (the inverse of the frame rate in frames per second). Packet loss, bandwidth limitations, and the geographical distance between the operators and the robots are causal factors for information loss. Information loss leads to degraded operator performance, lack of awareness and trust, and an increase in cognitive workload ellis2004generalizeability .
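Since information loss is defined as the gap between consecutive frames, it can be computed either from a nominal frame rate or from observed frame arrival times. A minimal Python sketch, assuming arrival timestamps in seconds are available; the function names are hypothetical and not part of our system:

```python
from statistics import mean


def loss_from_fps(fps):
    """Information loss in seconds for a stream delivered at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1.0 / fps


def loss_from_timestamps(timestamps):
    """Mean gap, in seconds, between consecutive frame arrival times."""
    if len(timestamps) < 2:
        raise ValueError("need at least two frames")
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    return mean(gaps)


print(loss_from_fps(2.0))  # 0.5
print(loss_from_timestamps([0.0, 0.5, 1.0, 2.0]))
```

With observed timestamps, a single long gap (here, 1 s) raises the average even when most frames arrive on time, which is why intermittent reception shows up as increased loss.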

The last factor we consider in our study is that, in the presence of non-ideal communication, the operators are likely to experience heterogeneous levels of information loss, causing a disparity in workload and situational awareness across operators.

The main contributions of this paper can be summarized as follows:

- We provide an extensive investigation of the design space of remote interfaces for multi-human multi-robot interaction. We consider factors such as direct and indirect communication, information and inter-operator transparency, and homogeneous and heterogeneous information loss.
- We compile a set of design recommendations validated through two user studies involving a total of 48 participants. We implemented a highly configurable remote interface that incorporates these recommendations and enables future studies of this kind.

This paper is organized as follows. We discuss related literature on remote human-robot interaction in Sec. 2. In Sec. 3, we discuss the design of our configurable remote interface. We report the results of our user study in ideal conditions in Sec. 4. We then introduce different types of information loss and report the results of a dedicated user study in Sec. 5. We summarize our contributions and outline directions for future work in Sec. 6.

2 Related Work

Remote robot control and manipulation have been a field of interest since Goertz and Thompson laid the foundation of modern tele-operation goertz1954electronically . The field has mostly focused on manipulators hokayem_bilateral_2006 ; lichiardopol_survey_2007 ; varkonyi_survey_2014 ; jung_robotic_2018 ; li_operator_2018 rather than on mobile robots. This body of research has contributed advancements in tele-presence ferreira_immersive_nodate ; klow_privacy_2017 ; dimitoglou_telepresence_2019 ; salichs_privacy_2019 , tele-robotics nak_young_chong_remote_2000 ; rakita_remote_2019 , tele-operation jingtai_liu_competitive_2005 ; ma_teleoperation_2010 ; hutchison_evaluation_2010 ; mansour_dynamic_2012 ; hong_visual_2013 , and tele-surgery patel_long_2019 ; shahzad_telesurgery_2019 ; dardona_remote_2019 . This research has focused on identifying suitable interfaces and improving their usability lager_remote_2019 ; lunghi_multimodal_2019 ; roldan_bringing_2019 ; regenbrecht_intuitive_nodate ; music_humanrobot_2019 ; esfahlani_mixed_2019 ; welburn_mixed_2019 ; jang_omnipotent_2019 , as well as on proposing novel control architectures for these interfaces cheung_semi-autonomous_2011 ; do_multiple_2011 ; lee_development_2012 ; lee_semiautonomous_2013 . Chen et al. chen_human_2007 categorize existing research according to the factors that affect remote control of robots: field of view, system orientation, camera viewpoints, depth perception, degraded video quality, time delay, and camera motion. Building upon this work, Feth et al. dillmann_shared-control_2009 and Kim et al. kim_implementation_2012 ; kim_implementation_2013 present shared control frameworks that allow multiple operators to interact with manipulators. Lee et al. dong_gun_lee_human-centered_2013 extend these shared control frameworks to study the impact of information delay on the performance of human operators. In their work, the authors incorporate a passivity-based controller to counteract the negative effects of information delay on the operators' performance. These works are limited to interface design for remote interaction with industrial manipulators, and their findings may not apply to remote interfaces for controlling numerous mobile robots. To the best of our knowledge, our study is the first to investigate the impact of transparency and inter-human communication on multi-human multi-robot interaction.

Loss of information has been recognized as a key factor in the performance and engagement of human operators chen_human_2007 ; mackenzie1993lag ; ellis2004generalizeability ; lane2002effects ; sheridan1963remote ; rastogi1997design ; darken2014spatial ; watson1998effects ; massimino1994teleoperator ; chen2008human . Research suggests that the effect of information loss, and the ability to cope with it, vary with the task and with the interface used to interact with the system. Three families of methods have been proposed to mitigate the effects of information loss on the performance of human operators: passivity-based control lewis_two_2011 ; varkonyi_survey_2014 ; cheung_semi-autonomous_2011 ; jung_robotic_2018 ; hokayem_bilateral_2006 , predictive displays keskinpala2004objective ; baker2004improved ; calhoun200611 ; collett2006developer ; daily2003world ; nielsen2006comparing ; sheridan2002humans ; kheddar2014virtual ; ricks2004ecological , and higher granularity of control patel2019 ; ayanian_controlling_2014 ; kolling_human_2013 . However, these studies are limited to the scenario in which a single operator interacts with one or more robots. Our study furthers this line of research by providing an extensive investigation of the factors that affect the design of remote interfaces for multi-human multi-robot interaction in the presence of information loss.

3 System Design

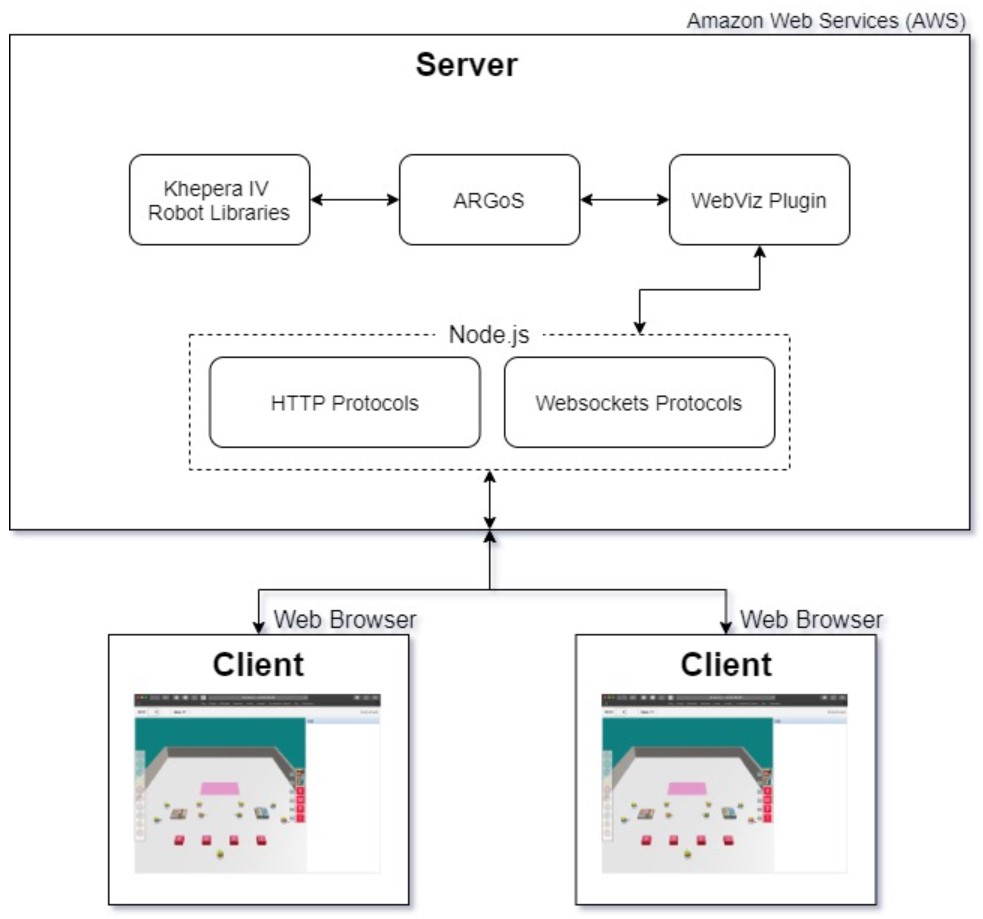

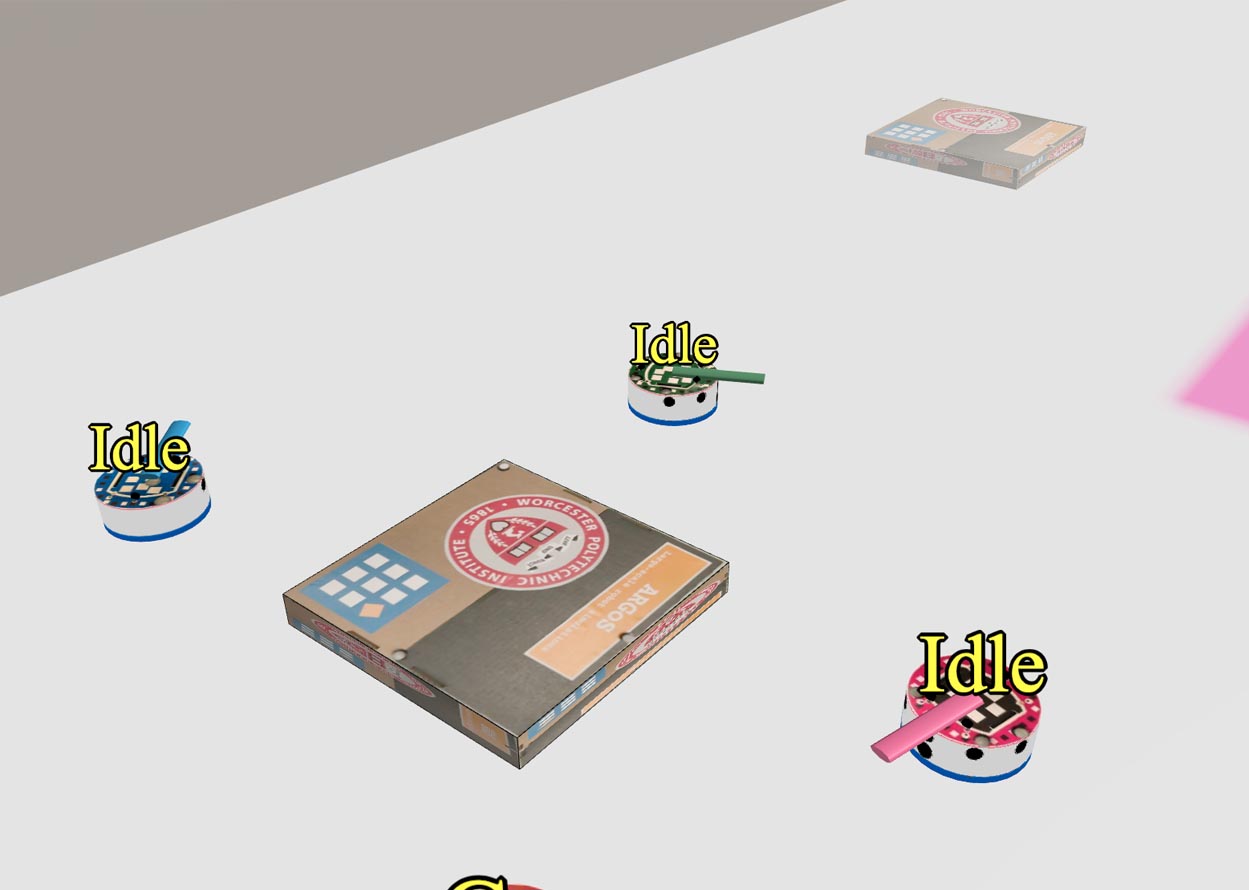

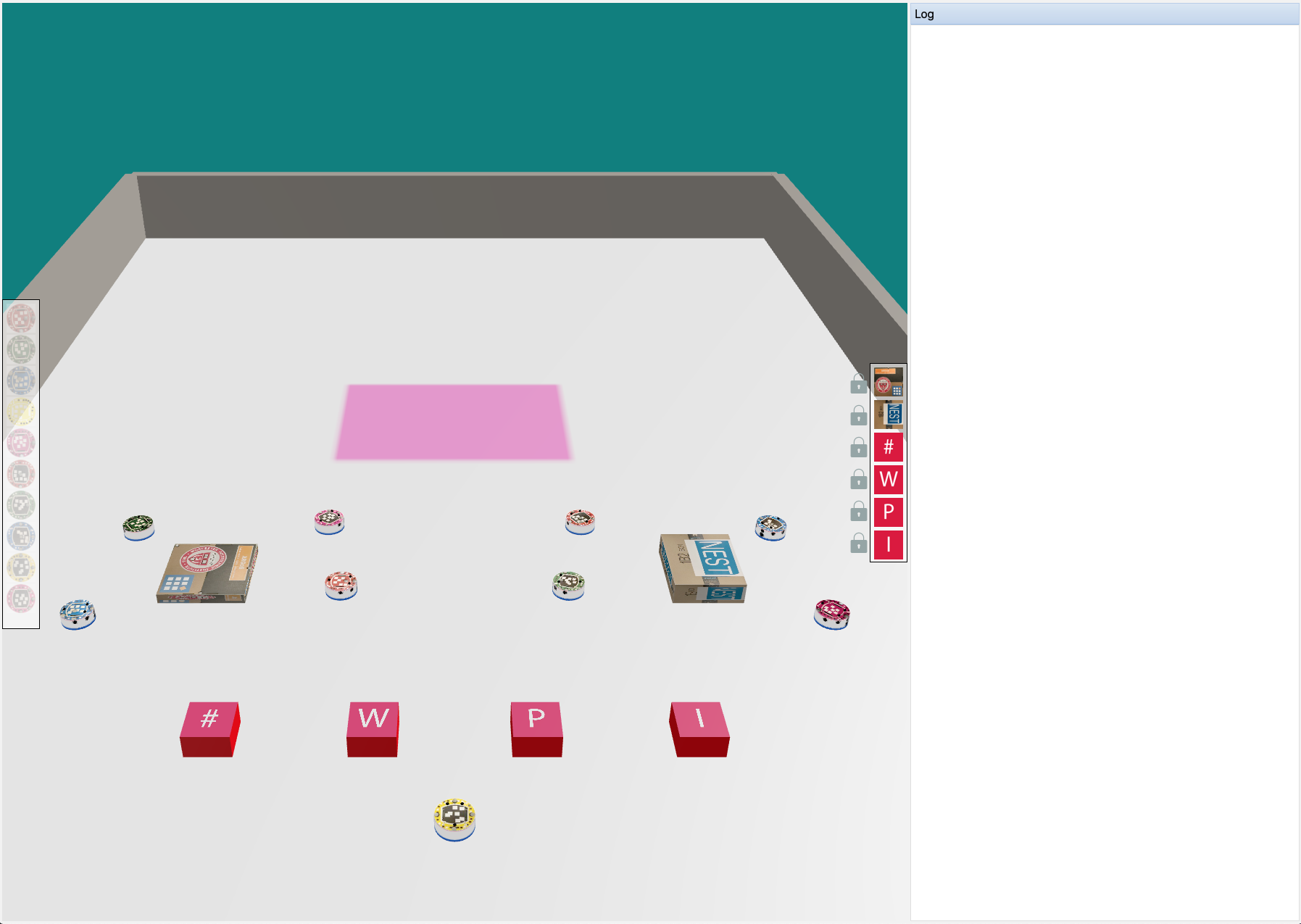

In this section, we present the main features of our remote interface and the behavior of the robots. At its core, our interface is a web-based client-server architecture. The server runs ARGoS Pinciroli:SI2012 , a fast multi-robot simulator, on a node offered by Amazon Web Services (https://aws.amazon.com/). The server is implemented as a visualization plugin that accepts multiple connections from the clients. The client is a web application implemented with Node.js (https://nodejs.org/) and WebGL (https://get.webgl.org/) that offers features similar to those of the original graphical visualization of ARGoS. A diagram of the client-server architecture is reported in Fig. 1, and a screenshot of the web interface is shown in Fig. 2. The source code of the system is available online as open-source software (https://github.com/NESTLab/argos3-webviz).
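The core of this architecture is a fan-out: one simulation broadcasts the same state to several operator clients. The following is a toy, in-process Python model with hypothetical names. The real server is an ARGoS visualization plugin, and the network transport between server and browser is deliberately omitted:

```python
import queue
from dataclasses import dataclass, field


@dataclass
class BroadcastServer:
    """Toy stand-in for a server that fans simulation state out to clients."""

    clients: dict = field(default_factory=dict)  # client id -> queue.Queue outbox

    def connect(self, client_id):
        # Each client gets its own unbounded outbox, so enqueuing a snapshot
        # never waits on a client that is slow to read.
        outbox = queue.Queue()
        self.clients[client_id] = outbox
        return outbox

    def disconnect(self, client_id):
        self.clients.pop(client_id, None)

    def broadcast(self, step, state):
        # Every connected client receives its own copy of the same snapshot.
        for outbox in self.clients.values():
            outbox.put({"step": step, "state": dict(state)})


server = BroadcastServer()
op1 = server.connect("operator-1")
op2 = server.connect("operator-2")
server.broadcast(1, {"robot-0": (0.0, 0.0)})
print(op1.get_nowait()["step"], op2.get_nowait()["step"])  # 1 1
```

Per-client outboxes keep the simulation loop independent of how quickly each operator's connection drains. This property becomes relevant once different operators experience different amounts of information loss.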

The process starts when a user issues a command on the client. The web interface allows the user to operate at multiple levels of granularity. In our previous work patel_mixed-granularity_2019 , we found that mixed granularity of control offers superior usability in complex missions that require both navigation and environment modification. As in patel_mixed-granularity_2019 , in this paper we focus on a collective transport scenario because of its compositional nature: collective transport combines navigation, task allocation, and object manipulation. Our interface is therefore designed for this scenario, and it mirrors many of the features we presented in patel_mixed-granularity_2019 . It is important to highlight, however, that the remote interface presented here is a completely new artifact based on a different technology: the work in patel_mixed-granularity_2019 studied proximal interaction with a touch-based interface.

3.1 Collective Transport

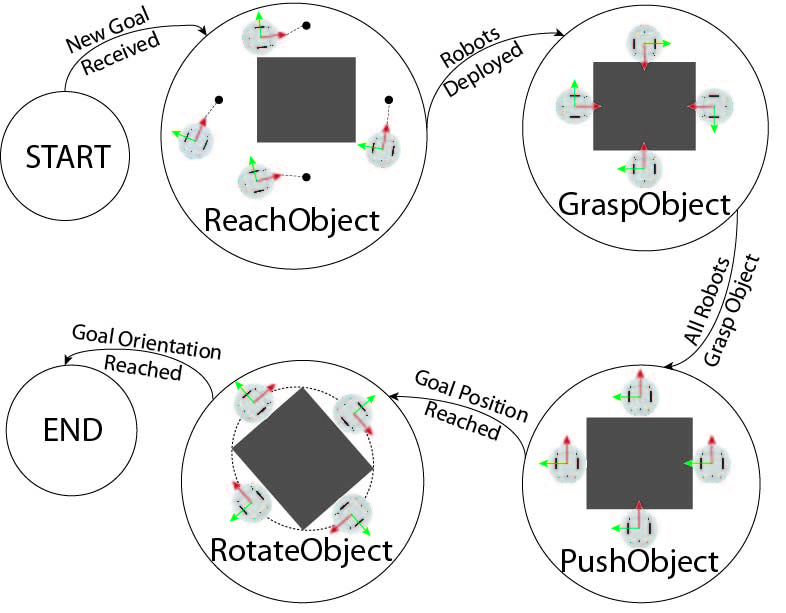

We employ a collective transport behavior based on the finite state machine shown in Fig. 3. The behavior is identical to the one discussed in our previous work patel_mixed-granularity_2019 . The states in the finite state machine are as follows:

Reach Object. On receiving the desired goal position for the object, the robots in the transport team navigate and organize themselves around the object in a circular manner. These positions are generated based on the number of robots in the team and their distance from the object. The state comes to an end once all the robots reach their designated positions.

Approach Object. After organizing themselves, the robots move towards the centroid of the object. The state comes to an end once all the robots are touching the object.

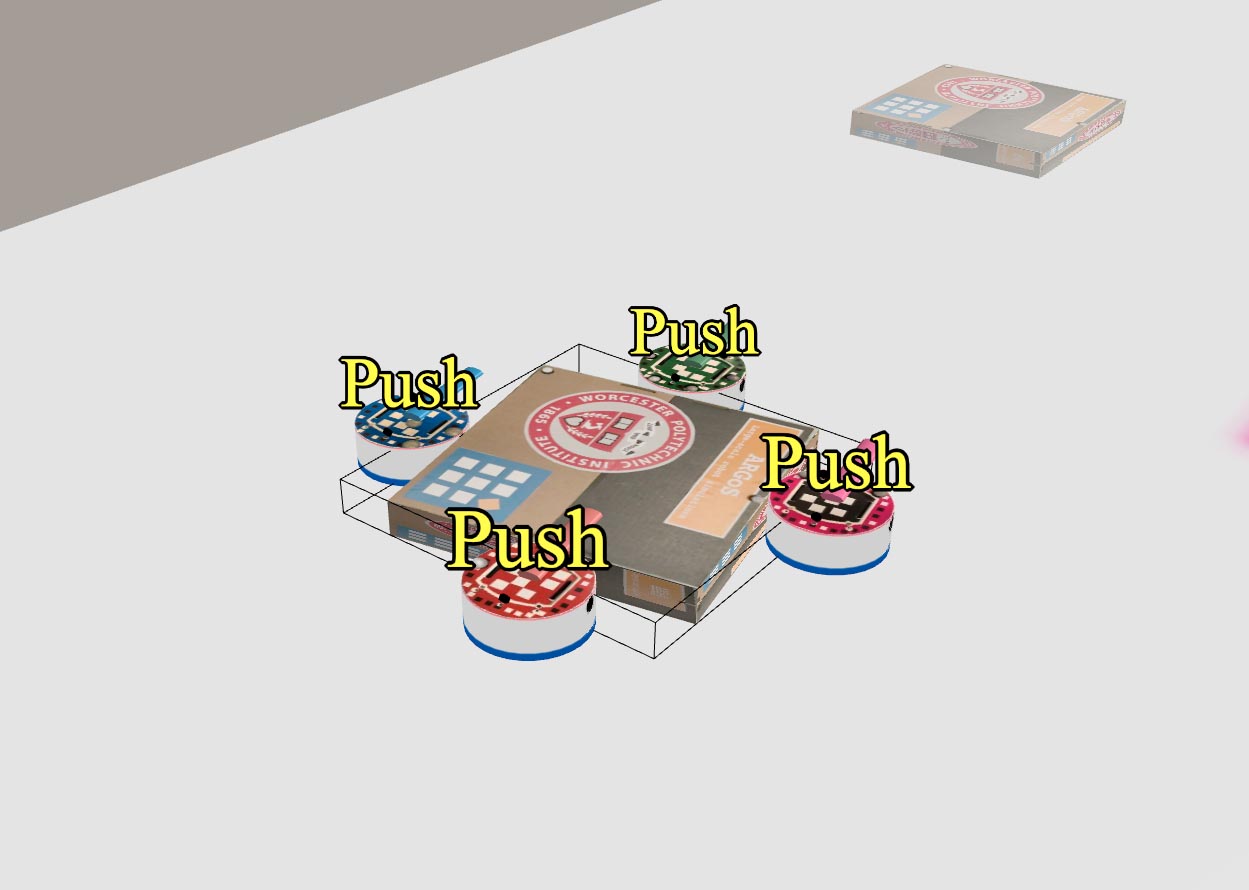

Push Object. Once the robots are in contact with the object, they rotate in place to face the direction of the goal. The robots then move at equal speed towards the goal while maintaining a fixed distance from the centroid of the object. This strategy prevents the robots at the front and on the sides from breaking formation. If a robot breaks the formation, the robots switch back to Reach Object, wait for its completion, and then resume the transport. The state comes to an end once the object reaches the goal position.

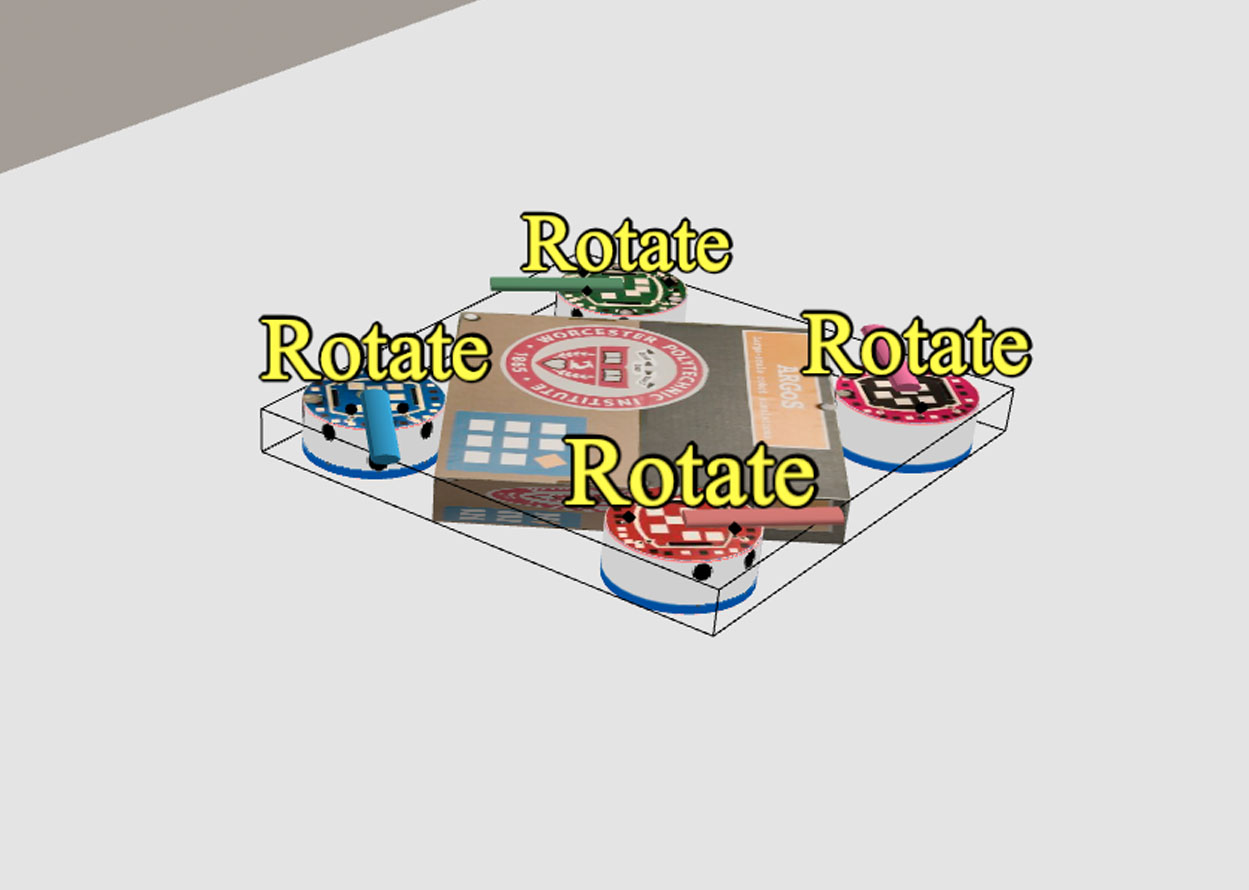

Rotate Object. The robots rearrange themselves around the object and move in a circular path in the outward direction, thereby rotating the object in place. If any robot breaks the formation, the robots rearrange themselves and resume rotating the object. The state comes to an end once the robots achieve the desired rotation.
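The four states and their exit conditions can be condensed into a small transition function. The Python sketch below is illustrative only. It assumes that Rotate Object follows Push Object (Fig. 3 defines the actual transitions), places robots evenly on a circle of a hypothetical fixed radius, and flags a broken formation with a hypothetical distance tolerance. None of these names come from our controller:

```python
import math
from enum import Enum, auto


def ring_slots(center, radius, n):
    """n evenly spaced target positions on a circle of the given radius around the object."""
    cx, cy = center
    return [(cx + radius * math.cos(2 * math.pi * i / n),
             cy + radius * math.sin(2 * math.pi * i / n)) for i in range(n)]


def formation_broken(positions, centroid, radius, tolerance):
    """True if any robot's distance from the centroid differs from radius by more than tolerance."""
    return any(abs(math.dist(p, centroid) - radius) > tolerance for p in positions)


class State(Enum):
    REACH_OBJECT = auto()
    APPROACH_OBJECT = auto()
    PUSH_OBJECT = auto()
    ROTATE_OBJECT = auto()
    DONE = auto()


def next_state(state, *, in_position=False, touching=False, at_goal=False,
               rotation_done=False, broken=False):
    """One transition of a simplified collective-transport state machine."""
    if state is State.REACH_OBJECT:
        return State.APPROACH_OBJECT if in_position else state
    if state is State.APPROACH_OBJECT:
        return State.PUSH_OBJECT if touching else state
    if state is State.PUSH_OBJECT:
        if broken:
            return State.REACH_OBJECT  # regroup around the object before resuming
        return State.ROTATE_OBJECT if at_goal else state
    if state is State.ROTATE_OBJECT:
        # A break here is repaired by rearranging without leaving the state.
        return State.DONE if rotation_done and not broken else state
    return state  # DONE is absorbing


# Walk one hypothetical run: reach, approach, push, rotate.
slots = ring_slots((0.0, 0.0), 1.0, 2)
print(formation_broken(slots, (0.0, 0.0), 1.0, 0.05))  # False
state = State.REACH_OBJECT
for event in [{"in_position": True}, {"touching": True}, {"at_goal": True}, {"rotation_done": True}]:
    state = next_state(state, **event)
print(state)  # State.DONE
```

Because all pushing robots move at the same speed, their distances to the centroid stay constant in the ideal case. A simple distance check against the ring radius is therefore enough to detect a broken formation.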

3.2 User Interface

Object Manipulation. Object manipulation is triggered when an operator selects an object with a left click; the goal position is then always assigned with a right click. The interface overlays the selected object with a transparent bounding box. The operator can also define goal positions for multiple objects. In this case, the robots autonomously distribute themselves across the objects and transport them using the collective transport behavior. If two or more operators manipulate the same object, the interface keeps the position specified by the last operator. Fig. 4(a) shows a selected object overlaid with a bounding box. Fig. 4(b) illustrates how the goal position is visualized. The desired position and orientation of the object are conveyed by the interface as shown in Fig. 4(c) and 4(d).

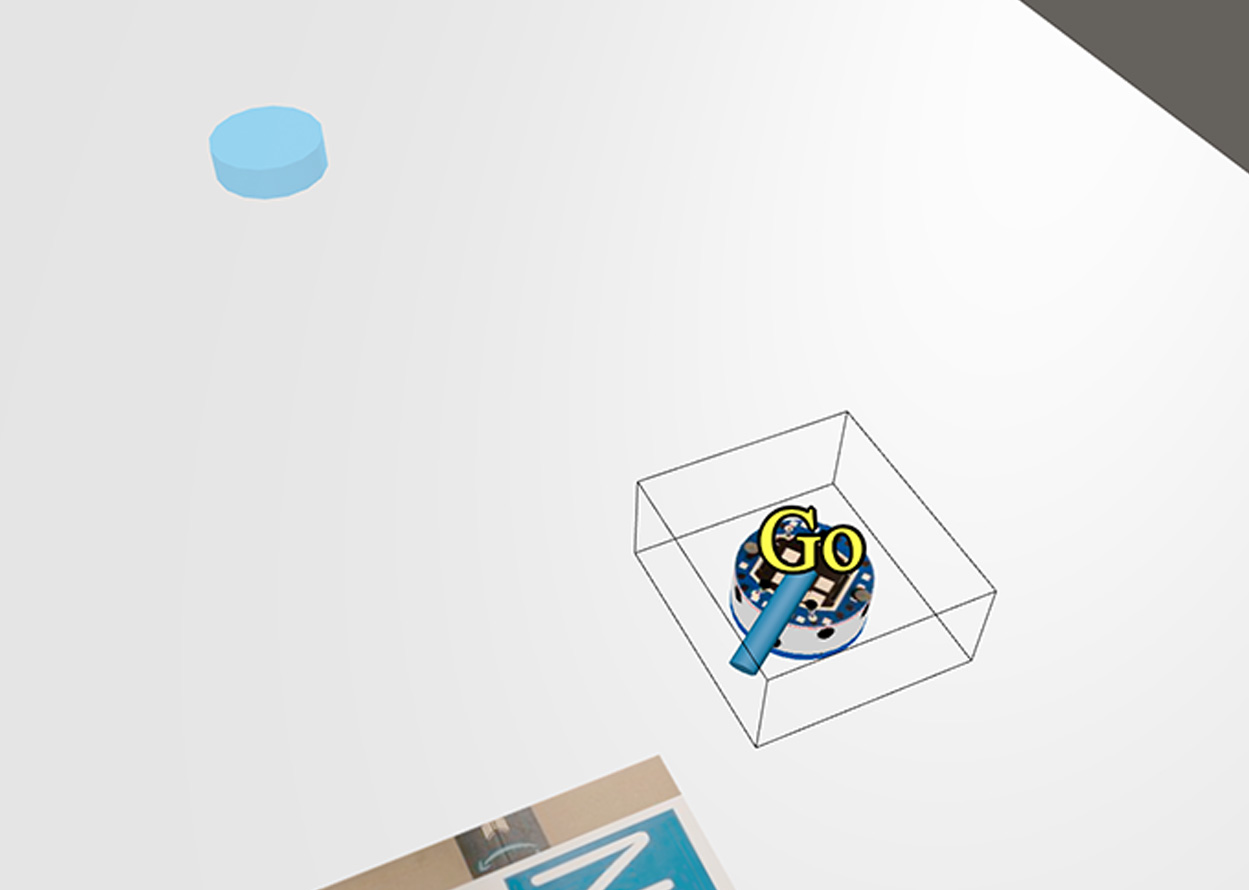

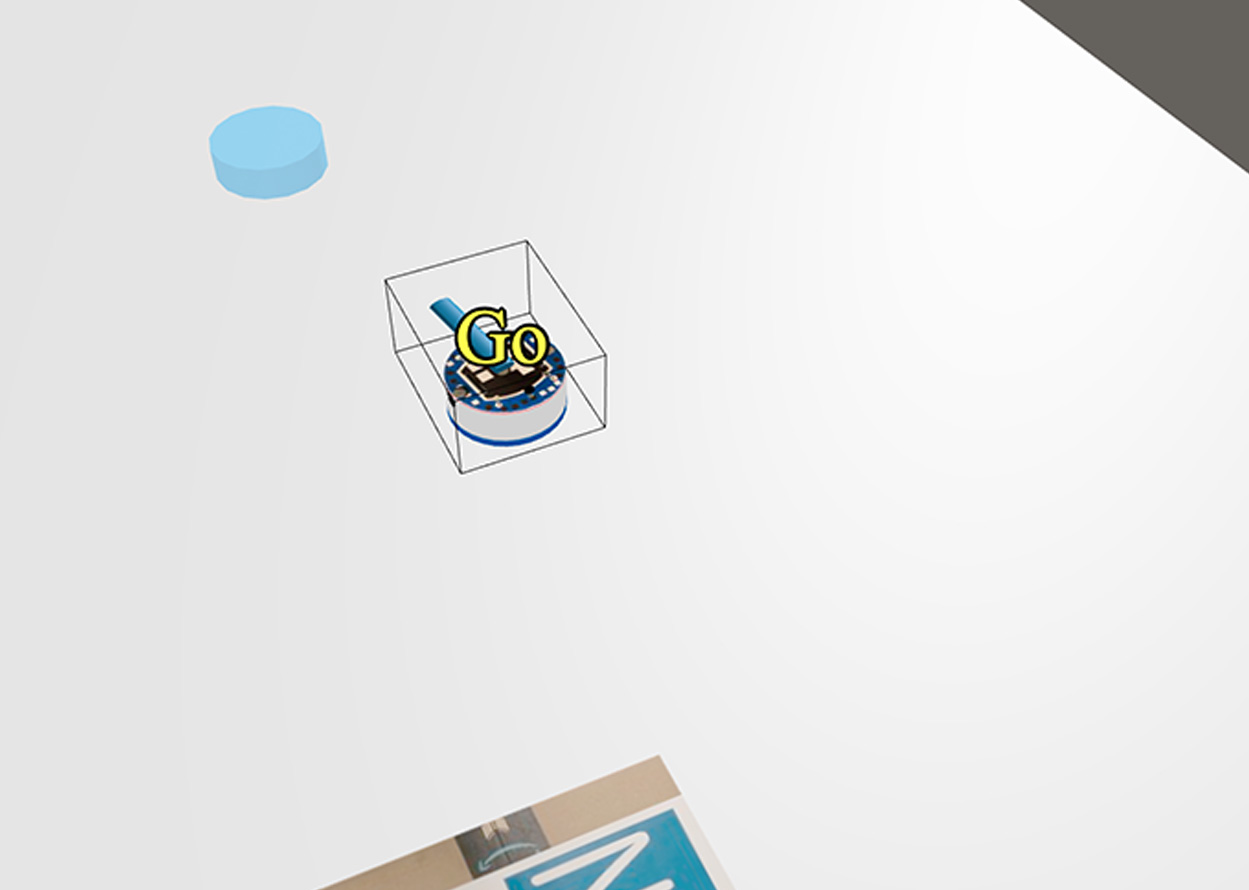

Robot Manipulation. Robot manipulation starts with an operator selecting a robot with a left click; the goal position is assigned with a right click. The interface overlays the selected robot with a transparent bounding box to convey the current selection. The operator can define the goal position for multiple robots at once. If the selected robot is performing the collective transport behavior when the request arrives, the other robots in the transport team pause their operation until the selected robot reaches the desired position. If the robot is part of an operator-defined team, the selected robot navigates to the newly specified position while the other robots continue their respective operations. When two or more operators manipulate the same robot, the interface processes the position specified by the last operator. Fig. 5(a) shows a selected robot overlaid with a bounding box to visualize the current selection. Fig. 5(b) shows the goal position set by the operator, visualized as a colored representation of the selected robot. The color of the goal position matches the color of the robot's fiducial markers, which differentiates the goal positions of different robots. Fig. 5(c) shows the selected robot navigating to the specified goal position.

Robot Team Selection and Manipulation. In addition to manipulating a single robot, the operator can select a team of robots by holding the Control key while clicking the left mouse button. The goal position is still assigned with a right click. The interface overlays a transparent bounding box over all the selected robots to identify the current selection. If two or more operators have the same robot in their teams, the common robot navigates to the position specified by the last operator, without affecting the other robots in the other teams. Fig. 6(a) shows a screenshot in which the selected robots are overlaid with a bounding box. Fig. 6(b) shows the goal position visualized as colored virtual objects, one for each of the selected robots. The color of the virtual objects matches the color of the fiducial markers on the body of the robots. Fig. 6(c) shows the robots navigating to the goal position.
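Object, robot, and team manipulation share the same conflict rule: the most recent command wins, applied entity by entity. This rule can be sketched with a per-entity dictionary. `GoalRegistry` is a hypothetical helper, and "last" here means last processed by the server, which we assume matches arrival order:

```python
from dataclasses import dataclass, field


@dataclass
class GoalRegistry:
    """Per-entity goals where the most recently processed command wins."""

    goals: dict = field(default_factory=dict)  # entity id -> (goal, operator id)

    def command(self, operator, entity_ids, goal):
        # A team command is applied entity by entity, so overwriting one shared
        # robot leaves the other robots of the earlier team untouched.
        for eid in entity_ids:
            self.goals[eid] = (goal, operator)


registry = GoalRegistry()
registry.command("op1", ["r1", "r2"], (0, 5))
registry.command("op2", ["r2", "r3"], (4, 4))
print(registry.goals["r1"], registry.goals["r2"])  # ((0, 5), 'op1') ((4, 4), 'op2')
```

Team commands are decomposed into per-robot entries, so the second operator takes over only the shared robot `r2`, while `r1` keeps the first operator's goal.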

3.3 Transparency Modes

To investigate the role of various elements of the user interface, we endowed our client with the ability to present information to the user in several modalities. The main insight in our work is to consider the natural field of view of the human eye (see Fig. 7). We implemented our client to allow for both central transparency, i.e., displaying elements in the center of the screen or directly above robots and objects (green region in Fig. 7), and peripheral transparency, i.e., relegating interface elements to the borders of the screen (yellow region in Fig. 7). The key difference between central and peripheral transparency is the type and quantity of information displayed. With central transparency, the information is contextual and limited to the robots actually visible on the screen, which change as the operator modifies the camera pose. Peripheral transparency, on the other hand, always displays summary information on all the robots and on the progress of each task.
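The two modalities differ mainly in which robots each one covers. In the following sketch, central transparency filters robots by visibility, while peripheral transparency always summarizes the whole swarm. The sketch uses hypothetical function names and assumes a top-down, axis-aligned view rectangle as a stand-in for the real camera frustum:

```python
from collections import Counter


def central_view(robots, view):
    """Per-robot task labels, only for robots inside the visible rectangle.

    view: (xmin, ymin, xmax, ymax) on the ground plane.
    """
    xmin, ymin, xmax, ymax = view
    return {
        rid: r["task"]
        for rid, r in robots.items()
        if xmin <= r["pos"][0] <= xmax and ymin <= r["pos"][1] <= ymax
    }


def peripheral_summary(robots):
    """Task counts over all robots, independent of where the camera points."""
    return Counter(r["task"] for r in robots.values())


robots = {
    "r1": {"pos": (1.0, 1.0), "task": "Push Object"},
    "r2": {"pos": (9.0, 9.0), "task": "Reach Object"},
    "r3": {"pos": (2.0, 3.0), "task": "Push Object"},
}
print(central_view(robots, (0.0, 0.0, 5.0, 5.0)))  # {'r1': 'Push Object', 'r3': 'Push Object'}
print(peripheral_summary(robots))
```

Moving the view rectangle changes the output of `central_view` but never that of `peripheral_summary`. This mirrors the contextual versus global split described above.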

The interface can be configured to show or hide every element. For the purposes of our work, we identified four essential “transparency modes”:

- No Transparency (NT). The interface hides all the information originating from the robots or other operators. The operator can still interact with robots and objects using all the control modalities.
- Central Transparency (CT). The interface overlays a direction pointer and a text label to indicate the heading and current task of each robot (as shown in Fig. 8). The color of the pointer matches the color of the fiducial markers on each robot to differentiate between multiple pointers. The robot status displays the current operation executed by the robot, corresponding to the states of the collective transport finite state machine (see Fig. 3). Additionally, the interface indicates the commands of other operators, to foster shared awareness across operators. This information is available only for entities in the operator's field of view. The operator can move around the environment to view information on robots and objects that are not in the current field of view.
- Peripheral Transparency (PT). The interface offers a robot panel, an object panel, and a log window containing global information on the system and its constituents (see Fig. 9). The robot panel contains one icon for each robot. The panel highlights the icons of the robots that are moving or performing operator-defined actions. The panel also displays a warning, through a blinking exclamation point, to notify the operators of fault conditions, such as getting stuck on an obstacle, and software or hardware failures. The object panel shows all the objects in the environment and highlights the objects currently manipulated by the robots. The panel also lets an operator select an object by clicking on its lock icon, thereby conveying the intention to manipulate that object. The interface shows a blue lock icon to signify one's own selection and a red lock icon to indicate the selection of another operator. An operator can lock only one object at a time and cannot overwrite the selection of other operators.
- Mixed Transparency (MT). The interface also allows one to enable both central and peripheral transparency. In this case, the displayed information is a combination of the two transparency modes.
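The lock semantics of the object panel can be modeled as a small class: one lock per operator, no overwriting, and blue versus red icons. This is a hypothetical sketch, not our client code. In particular, it assumes that locking a new object silently releases the operator's previous lock, which the description above does not specify:

```python
class ObjectLocks:
    """Hypothetical model of the object-panel locks used in peripheral transparency."""

    def __init__(self):
        self.owner = {}  # object id -> operator id

    def lock(self, operator, obj):
        """Lock obj for operator; return False if another operator already holds it."""
        holder = self.owner.get(obj)
        if holder is not None and holder != operator:
            return False  # cannot overwrite another operator's selection
        # One lock per operator: release any object this operator held before.
        for other, who in list(self.owner.items()):
            if who == operator:
                del self.owner[other]
        self.owner[obj] = operator
        return True

    def color(self, viewer, obj):
        """Icon color seen by viewer: blue for own lock, red for another's, None if free."""
        holder = self.owner.get(obj)
        if holder is None:
            return None
        return "blue" if holder == viewer else "red"


locks = ObjectLocks()
print(locks.lock("A", "box1"), locks.lock("B", "box1"))  # True False
print(locks.color("A", "box1"), locks.color("B", "box1"))  # blue red
```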

3.4 Communication Modes

Analogously to transparency modes, the interface also defines different modes for inter-human communication. We classify inter-human communication into direct, indirect, and a combination of both. The communication modes are described as follows.

- No Communication (NC). In this mode, the operators are completely unable to communicate with each other. The interface hides all the information originating from other operators, such as which robots are being used and which objects are being manipulated.
- Direct Communication (DC). In this mode, the operators can communicate verbally while performing the task. We established a verbal communication channel using Zoom (www.zoom.us), a video-conferencing application. The operators are allowed to ask for help and strategize at will towards the completion of the task.
- Indirect Communication (IC). In contrast to direct communication, in this mode the operators cannot verbally communicate their intentions and actions, but they can use the transparency modes presented above to communicate indirectly. In this paper, we determined which transparency mode was active at experiment time, for the purposes of our study. In a realistic setting, however, each operator would be allowed to choose the most appropriate mode.
- Mixed Communication (MC). In this mode, the operators can communicate both directly and indirectly throughout the duration of the experiment.

4 User Study under Ideal Conditions

4.1 Preliminaries

The main purpose of this first set of experiments is to validate the usability of the various transparency and communication modes in remote interaction under ideal conditions, i.e., with negligible loss of information. We base the experiments on the following main hypotheses.

Hypotheses on the impact of different transparency modes:

- H1: Mixed transparency (MT) has the best outcome with respect to the other modes.
- H2: Operators prefer mixed transparency (MT) over the other modes.
- H3: Operators prefer central transparency (CT) over peripheral transparency (PT).

Hypotheses on the impact of different communication modes:

- H1: Mixed communication (MC) has the best outcome with respect to the other modes.
- H2: Operators prefer mixed communication (MC) over the other modes.
- H3: Operators prefer direct communication (DC) over indirect communication (IC).

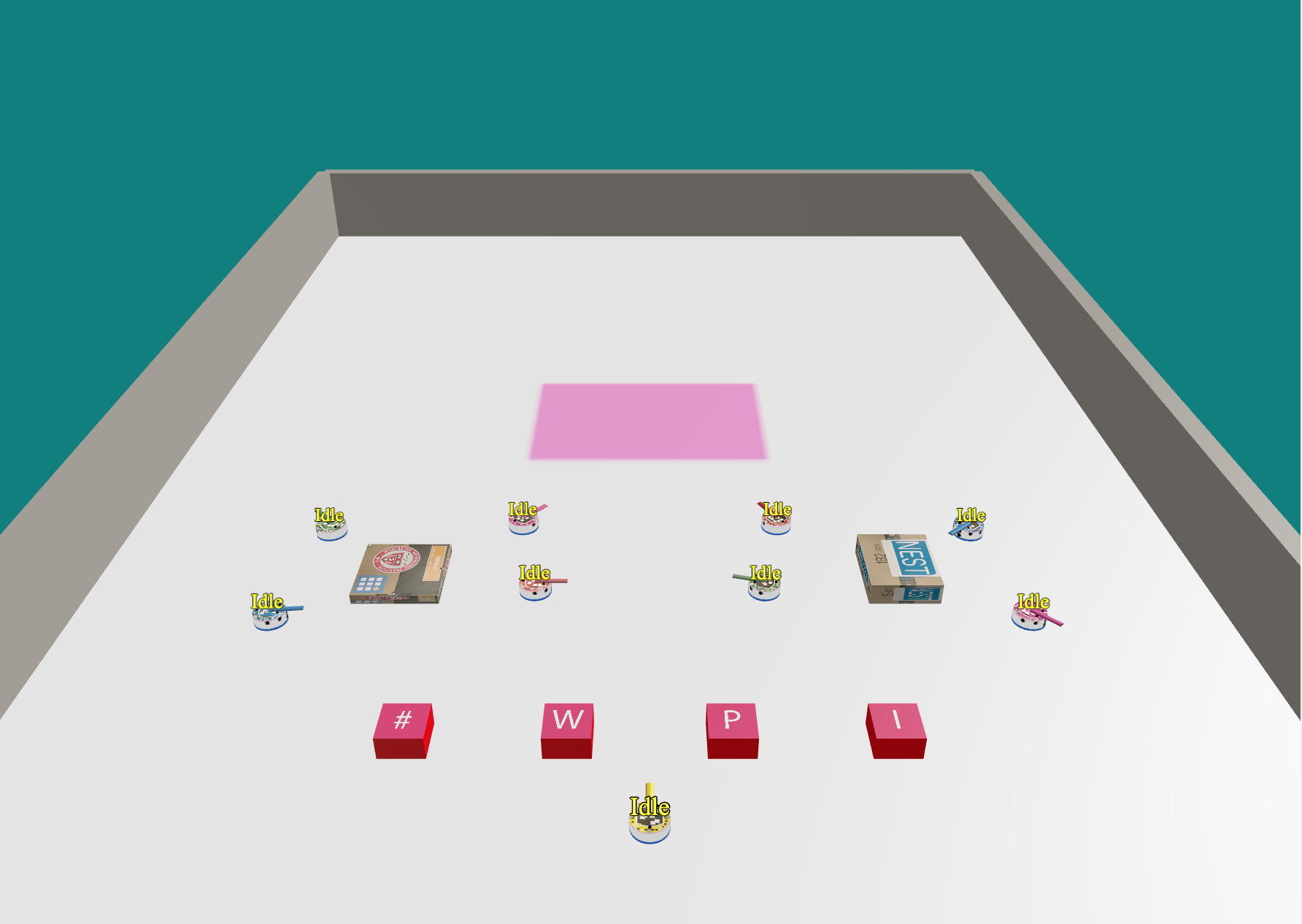

Experimental Setup. We designed a game scenario (shown in Fig. 10) in which the operators were given 9 robots to transport 6 objects (2 big and 4 small) to a goal region. Big objects were worth 2 points each, and small objects were worth 1 point each. The operators had to work as a team to score as many points as possible, out of a maximum of 8, in experiments lasting 8 minutes. The operators could move the big objects using the collective transport behavior, or directly use individual robot or team manipulation commands to push the objects.
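The scoring rule is simple arithmetic: 2 big objects × 2 points + 4 small objects × 1 point gives the maximum of 8 points. A sketch with hypothetical names:

```python
POINTS = {"big": 2, "small": 1}   # points per delivered object
OBJECTS = {"big": 2, "small": 4}  # objects available in the arena


def score(delivered):
    """Points for objects delivered to the goal region, e.g. {"big": 1, "small": 3}."""
    for kind, count in delivered.items():
        if count > OBJECTS[kind]:
            raise ValueError(f"only {OBJECTS[kind]} {kind} objects exist")
    return sum(POINTS[kind] * count for kind, count in delivered.items())


MAX_SCORE = score(OBJECTS)  # 2*2 + 4*1
print(MAX_SCORE)  # 8
print(score({"big": 1, "small": 3}))  # 5
```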

Participant Sample. For this user study, we recruited 28 university students. Fourteen of them (5 female, 9 male), aged 19 to 37, performed the task four times, each time with a different transparency mode (NT, CT, PT, and MT). The other 14 participants (4 female, 10 male), aged 18 to 48, performed the task four times, each time with a different communication mode (NC, DC, IC, and MC). We formed the teams and made the assignments at random. No participant had prior experience with the remote interface.

Procedures. Each session of the study involved two participants and took approximately 105 minutes in total. After the participants signed the consent form, we explained the task and gave each participant 10 minutes to familiarize themselves with the system. We randomized the order of the tasks and the modalities to reduce the influence of learning effects. After each task, the participants answered a subjective questionnaire.
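Per-session randomization of the mode order can be done with a seeded shuffle, so that the order used in each session can be regenerated later. The sketch below is illustrative, and its function names are hypothetical. The cyclic Latin square is shown only as a common alternative for counterbalancing, not as the procedure we used:

```python
import random

TRANSPARENCY_MODES = ["NT", "CT", "PT", "MT"]


def session_order(modes, seed):
    """Random order of the modes for one session; the seed makes it reproducible."""
    rng = random.Random(seed)
    order = list(modes)
    rng.shuffle(order)
    return order


def cyclic_latin_square(modes):
    """Alternative: across rows, each mode appears exactly once in every position."""
    k = len(modes)
    return [[modes[(row + col) % k] for col in range(k)] for row in range(k)]


for session in range(3):
    print(session, session_order(TRANSPARENCY_MODES, seed=session))
```

A cyclic Latin square balances the position of each mode but not which mode precedes which. Random ordering trades exact balance for simplicity.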

Metrics. We recorded subjective and objective measures for each participant and each task. We used the following common measures:

- Situational Awareness. We used the Situational Awareness Rating Technique (SART) taylor2017situational on a 4-point Likert scale likert to assess situational awareness after each task.
- Task Workload. We used the NASA TLX hart1988development scale on a 4-point Likert scale to compare the perceived workload in each task.
- Trust. We used the trust questionnaire uggirala2004measurement on a 4-point Likert scale to compare the trust in the interface under each mode.
- Quality of Interaction. We used a custom questionnaire on a 5-point Likert scale to assess team-level and robot-level interaction. The interaction questionnaire is reported in Fig. 11.
- Performance. We used the points earned in each task as the measure of the performance achieved with each mode.
- Usability. We asked participants to select the features (log, robot panel, object panel, and on-robot status) they used during the study. Additionally, we asked them to rank the modes of their study (transparency or communication) from 1 to 4, with 1 being the highest rank.

- Did you understand your teammate’s intentions? Were you able to understand why your teammate was taking a certain action?
- Could you understand your teammate’s actions? Could you understand what your teammate was doing at any particular time?
- Could you follow the progress of the task? While performing the tasks, were you able to gauge how much of it was pending?
- Did you understand what the robots were doing? At all times, were you sure how and why the robots were behaving the way they did?
- Was the information provided by the interface clear to understand?

Table 1: Results of the transparency user study.

| Attribute | Relationship | p-value |
|---|---|---|
| **SART SUBJECTIVE SCALE** | | |
| Instability of Situation (−) | NT PT CT MT | ∗∗ |
| Complexity of Situation (−) | NT PT CT MT | ∗∗ |
| Variability of Situation (−) | not significant | |
| Arousal | MT CT PT NT | ∗∗ |
| Concentration of Attention | MT CT PT NT | ∗∗ |
| Spare Mental Capacity | not significant | |
| Information Quantity | MT CT PT NT | ∗∗ |
| Information Quality | MT CT PT NT | ∗∗ |
| Familiarity with Situation | CT MT PT NT | ∗ |
| **NASA TLX SUBJECTIVE SCALE** | | |
| Mental Demand (−) | NT PT CT MT | ∗∗ |
| Physical Demand (−) | not significant | |
| Temporal Demand (−) | not significant | |
| Performance | not significant | |
| Effort (−) | PT NT MT CT | ∗∗ |
| Frustration (−) | not significant | |
| **TRUST SUBJECTIVE SCALE** | | |
| Competence | MT CT PT NT | ∗∗ |
| Predictability | MT CT PT NT | ∗∗ |
| Reliability | MT CT PT NT | ∗ |
| Faith | MT CT PT NT | ∗∗ |
| Overall Trust | MT CT PT NT | ∗∗ |
| Accuracy | MT CT PT NT | ∗∗ |
| **INTERACTION SUBJECTIVE SCALE** | | |
| Teammate’s Intent | MT CT PT NT | ∗∗ |
| Teammate’s Action | MT CT NT PT | ∗∗ |
| Task Progress | MT CT PT NT | ∗ |
| Robot Status | MT CT PT NT | ∗∗ |
| Information Clarity | CT MT PT NT | ∗∗ |
| **PERFORMANCE OBJECTIVE SCALE** | | |
| Points Scored | not significant | |

Table 2: Borda count scores for the transparency modes.

| Borda Count | NT | CT | PT | MT |
|---|---|---|---|---|
| Based on Collected Data Ranking (Table 1) | 22 | 63.5 | 38 | 76.5 |
| Based on Preference Data Ranking (Fig. 13) | 16 | 40 | 29 | 55 |

Table 3: Results of the communication user study.

| Attribute | Relationship | p-value |
|---|---|---|
| **SART SUBJECTIVE SCALE** | | |
| Instability of Situation (−) | NC DC IC MC | ∗∗ |
| Complexity of Situation (−) | NC IC DC MC | ∗∗ |
| Variability of Situation (−) | NC DC IC MC | ∗∗ |
| Arousal | MC DC IC NC | ∗∗ |
| Concentration of Attention | MC DC IC NC | ∗∗ |
| Spare Mental Capacity | MC DC IC NC | ∗∗ |
| Information Quantity | not significant | |
| Information Quality | not significant | |
| Familiarity with Situation | MC DC IC NC | ∗∗ |
| **NASA TLX SUBJECTIVE SCALE** | | |
| Mental Demand (−) | NC IC DC MC | ∗∗ |
| Physical Demand (−) | NC IC DC MC | ∗∗ |
| Temporal Demand (−) | NC IC DC MC | ∗∗ |
| Performance | MC DC IC NC | ∗∗ |
| Effort (−) | NC IC DC MC | ∗∗ |
| Frustration (−) | NC IC DC MC | ∗∗ |
| **TRUST SUBJECTIVE SCALE** | | |
| Competence | MC DC IC NC | ∗∗ |
| Predictability | MC IC DC NC | ∗∗ |
| Reliability | MC IC DC NC | ∗ |
| Faith | MC DC IC NC | ∗∗ |
| Overall Trust | MC DC IC NC | ∗∗ |
| Accuracy | MC DC IC NC | ∗∗ |
| **INTERACTION SUBJECTIVE SCALE** | | |
| Teammate’s Intent | MC DC IC NC | ∗∗ |
| Teammate’s Action | MC DC IC NC | ∗∗ |
| Task Progress | MC DC IC NC | ∗∗ |
| Robot Status | MC IC DC NC | ∗∗ |
| Information Clarity | MC IC DC NC | ∗∗ |
| **PERFORMANCE OBJECTIVE SCALE** | | |
| Points Scored | not significant | |

4.2 Analysis and Discussion

4.2.1 Collected Data

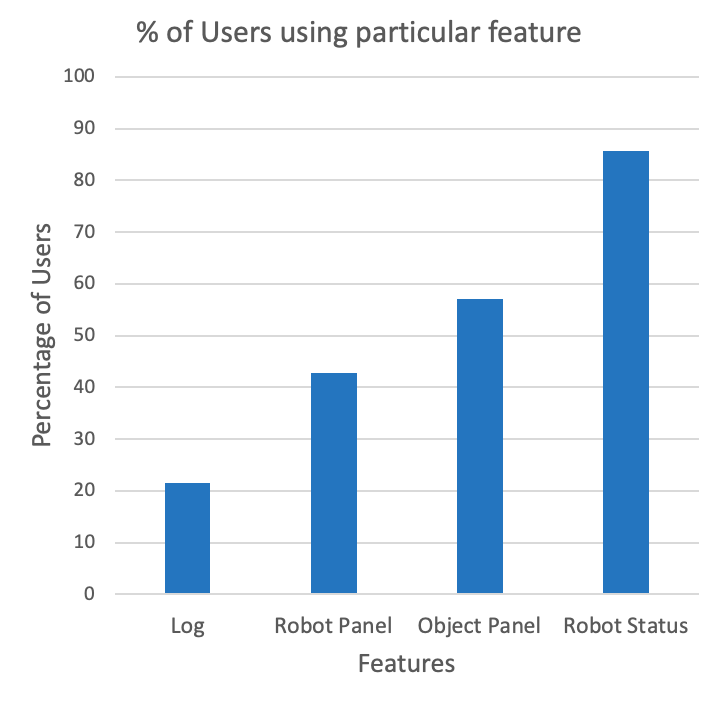

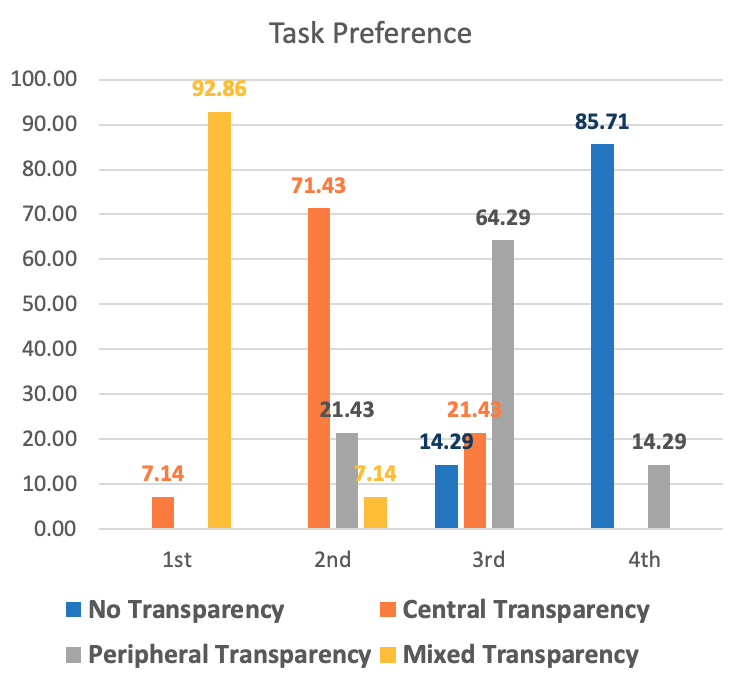

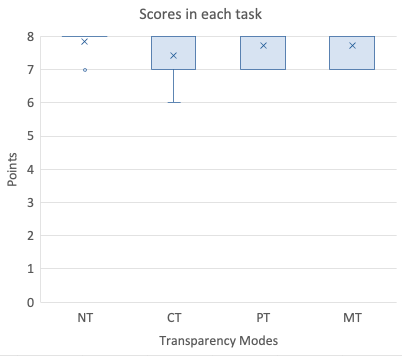

Transparency Data. Table 1 summarizes the results for all the subjective scales and the objective performance. We used the Friedman test friedman1937use to analyze the data and assess the significance of the differences between transparency modes. For all the attributes that showed statistical or marginal significance, we derived a ranking of the modes based on their mean ranks. Fig. 12 shows the percentage of operators who used each feature. Fig. 13 shows the percentage of participants assigning each rank to each transparency mode. We used the Borda count method black1976partial to calculate the overall ranking from the collected data and from the preference data, inverting the ranking of the negative scales. Table 2 shows the results of the Borda count for each category.
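The Friedman test compares related samples (each participant experiences every mode) by ranking the modes within each participant. Below is a minimal pure-Python sketch of the classic statistic, χ² = 12/(nk(k+1)) ΣR_j² − 3n(k+1). It also returns the mean ranks from which orderings like those in Table 1 can be read. The sketch omits the tie correction and the p-value that library implementations such as SciPy's `scipy.stats.friedmanchisquare` provide. The ratings are toy values, not study data:

```python
def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # sorted positions i..j are 0-based
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def friedman(blocks):
    """Return (chi-square statistic without tie correction, mean rank per column).

    blocks: one row per participant, one column per mode.
    """
    n, k = len(blocks), len(blocks[0])
    rank_sums = [0.0] * k
    for row in blocks:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    stat = 12.0 / (n * k * (k + 1)) * sum(R * R for R in rank_sums) - 3.0 * n * (k + 1)
    return stat, [R / n for R in rank_sums]


# Columns: NT, CT, PT, MT (toy ratings for three participants).
ratings = [[1, 3, 2, 4], [2, 3, 1, 4], [1, 2, 3, 4]]
stat, mean_ranks = friedman(ratings)
print(round(stat, 2), mean_ranks)
```

Under the null hypothesis, the statistic is approximately χ²-distributed with k − 1 degrees of freedom (3 for four modes).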

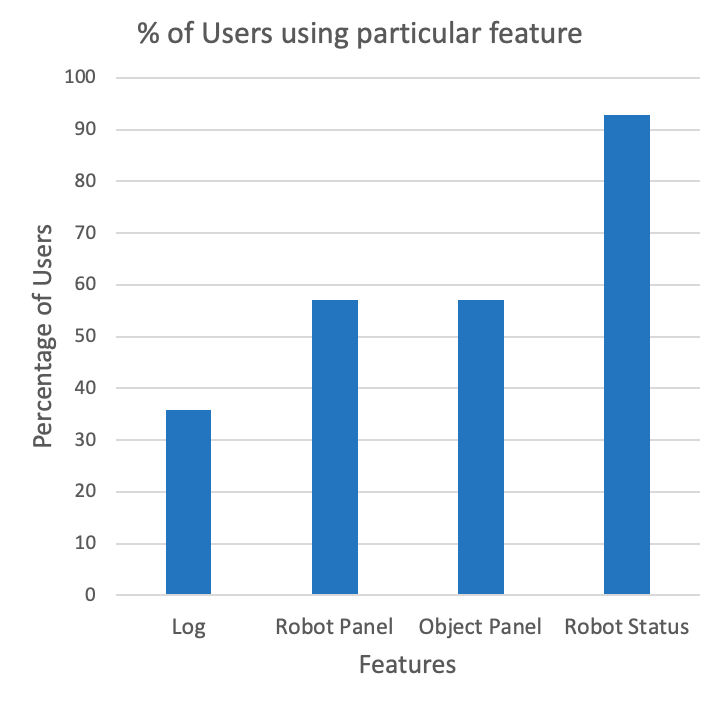

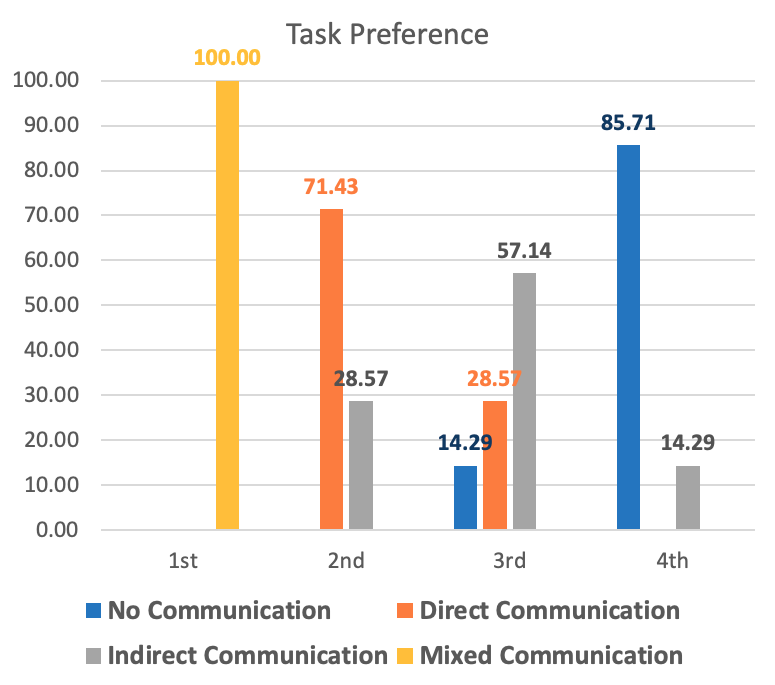

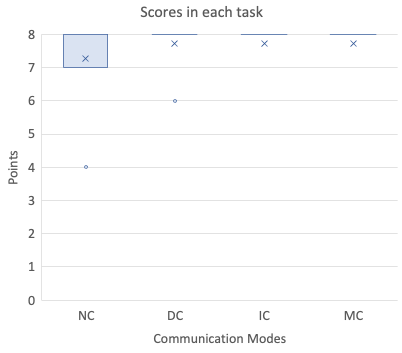

Communication Data. Table 3 summarizes the results of the communication user study. We analyzed the data using the Friedman test friedman1937use to assess the significance of the differences among communication modes. For the attributes that showed statistical or marginal significance, we derived a ranking based on the mean ranks. Fig. 14 shows the percentage of operators who used each feature. Fig. 15 shows the percentage of participants assigning each rank to each communication mode. Using the Borda count method, we derived an overall ranking based on the collected data and on the user preference data (shown in Table 4). We inverted the ranking of the negative scales when computing the Borda count scores.
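Once each significant attribute is turned into an ordering of the modes, Borda scores such as those in Table 2 can be computed mechanically. The collected-data row of Table 2 sums to 200 (20 significant attributes in Table 1 × 10), and the preference row sums to 140 (14 participants × 10). Both totals are consistent with awarding 4, 3, 2, and 1 points per ordering. The sketch below assumes that scheme and lets tied modes share the average of the points they span. The tie in the example is invented to show where half points come from:

```python
def borda(orderings, negative=frozenset()):
    """Borda scores: k points for the top position down to 1 for the bottom.

    orderings: {attribute: [tier, ...]}, tiers listed from highest to lowest mean
               rank, each tier a list of modes that are tied.
    negative:  attributes where a higher mean rank is worse; their order is inverted.
    """
    scores = {}
    for attribute, tiers in orderings.items():
        if attribute in negative:
            tiers = list(reversed(tiers))
        k = sum(len(tier) for tier in tiers)
        position = 0
        for tier in tiers:
            # Tied modes share the average of the points for the positions they span.
            points = sum(k - p for p in range(position, position + len(tier))) / len(tier)
            for mode in tier:
                scores[mode] = scores.get(mode, 0.0) + points
            position += len(tier)
    return scores


example = {
    "Arousal": [["MT"], ["CT"], ["PT"], ["NT"]],
    "Mental Demand": [["NT"], ["PT"], ["CT"], ["MT"]],  # negative scale
    "Clarity (invented tie)": [["CT", "MT"], ["PT"], ["NT"]],
}
print(borda(example, negative={"Mental Demand"}))  # {'MT': 11.5, 'CT': 9.5, 'PT': 6.0, 'NT': 3.0}
```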

4.2.2 Transparency Modes

Table 2 shows that mixed transparency (MT) is the best transparency mode in terms of usability, supporting hypotheses H1 and H2. The results also show that central transparency (CT) dominates peripheral transparency (PT), supporting hypothesis H3. We further analyzed each transparency mode in terms of the sub-scales of the subjective data, as follows.

Mixed Transparency. This mode is the best overall choice for the operators. The results suggest that it provides the best situational awareness: the lowest instability and complexity of the situation, and the highest arousal, concentration of attention, information quality, and information quantity. With this mode, the operators had the most information about the actions and intentions of their teammates and the robots, as well as about the task progress. Correspondingly, the operators reported the highest trust across all trust sub-scales.

Central Transparency. This mode is the second-best choice after mixed transparency. With it, the operators reported the highest familiarity with the information provided by the interface and the greatest clarity of that information. They also experienced the lowest mental load and reported the least effort in performing the task. Fig. 12 supports these findings: 13 out of 14 operators (about 93%) indicated the on-robot status as the most useful feature.

Peripheral Transparency. The operators reported peripheral transparency as the most cumbersome mode. With it, they experienced the lowest awareness, which in turn degraded their trust. The operators reported that this mode was only marginally better than no transparency (NT), because some information is still better than none.

Comparison with Proximal Interaction. Overall, the conclusions of this study are in line with those we reported for proximal interaction. However, the differences observed in this paper are more pronounced than those we observed for proximal interaction. Unlike in proximal interaction, mixed transparency in remote interaction was the clear winner, in both the collected-data ranking and the preference-data ranking (see Table 2). Central transparency not only outperformed peripheral transparency in remote interaction, but did so more markedly than in the study with proximal interaction. We speculate that this difference arises because, in proximal interaction, the operators had to devote effort to avoid bumping into robots and other operators while walking. This made the operators alert and anxious, diverting their focus from the information offered by the interface and the transparency modes. In remote interaction, since there was no need to physically move, the operators could focus on the displayed information more effectively.

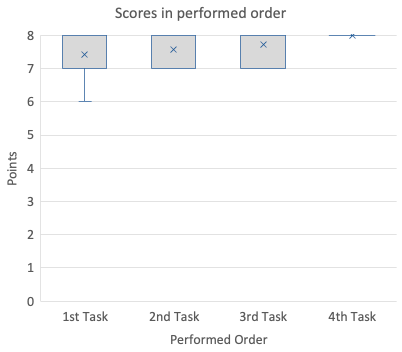

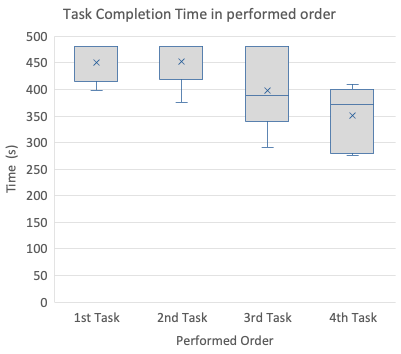

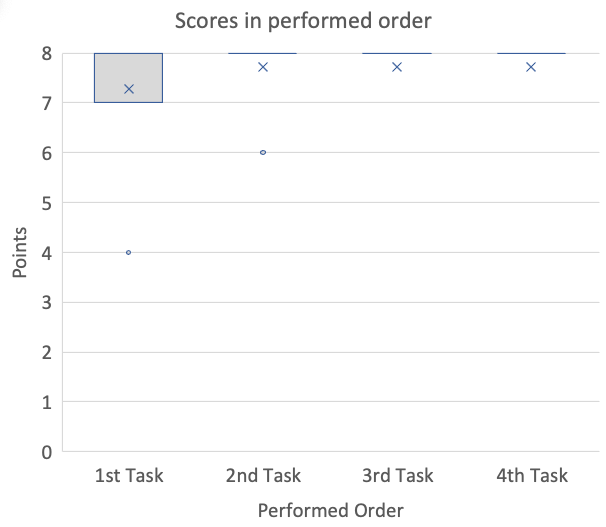

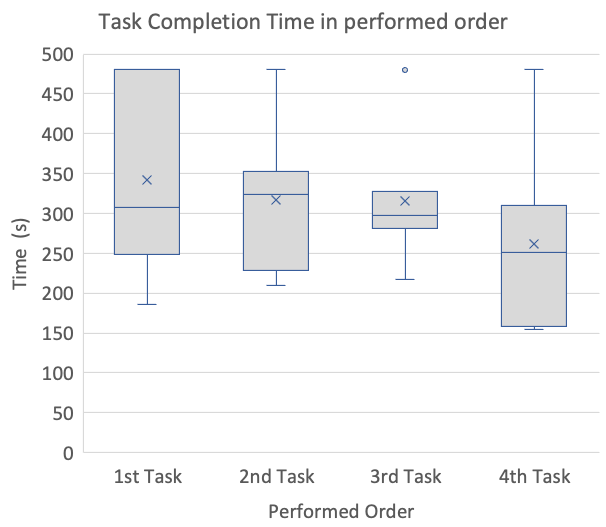

Our experiments did not reveal a substantial difference in performance across transparency modes. We hypothesize that this lack of difference is due to a learning effect across the four runs that each team had to perform. Fig. 16 shows the performance in each task, and Fig. 17 reports the increase in performance as a function of task order (learning effect). Since most teams completed the task in less than 8 minutes, Fig. 18 also shows the time taken to complete the task as a function of task order; its decrease further indicates the impact of the learning effect.
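One simple way to expose such a learning effect is to average the outcome by the position of each run in a team's sequence, ignoring which mode the run used. The sketch below assumes that completion times are stored per team in the order in which the runs were performed and that the assignment of modes to run positions varied across teams; the function names are hypothetical and any numbers passed to them here are illustrative, not study data.

```python
from statistics import mean
from typing import List, Sequence


def mean_by_run_position(times_per_team: Sequence[Sequence[float]]) -> List[float]:
    """Average completion time of the 1st, 2nd, ... run across teams.

    times_per_team[t][i] is team t's completion time on its i-th run,
    regardless of the mode used in that run.
    """
    n_runs = len(times_per_team[0])
    return [mean(team[i] for team in times_per_team) for i in range(n_runs)]


def is_monotonically_decreasing(values: Sequence[float]) -> bool:
    """True if every run is, on average, faster than the previous one."""
    return all(b < a for a, b in zip(values, values[1:]))
```

If modes are spread evenly over run positions, a steady decrease across positions points to learning rather than to an effect of any single mode.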

4.2.3 Modes of Communication

Table 4 suggests that mixed communication (MC) is the best mode of communication, both in terms of usability preference and in terms of the data collected during the user study, supporting hypotheses H1 and H2. In addition, direct communication (DC) outperformed indirect communication (IC), confirming hypothesis H3. We further analyzed each communication mode on the sub-scales of the subjective data, as follows.

Mixed Communication. Mixed communication was recognized as the best mode, not only by the Borda count but also by the subjective data. This mode yielded the best situational awareness, trust in the system, and interaction with the robots and the other operator, along with the lowest task load.

Direct Communication. This mode was the second best. It outperformed indirect communication in terms of information awareness and communication with the other operator (operator-level information), resulting in better trust in the system and lower workload with respect to indirect communication.

Indirect Communication. This mode was the third-best choice. Compared to direct communication, it proved better at conveying robot-level information, allowing the operators to better understand and predict robot actions. As a result, the operators trusted this mode more in terms of predictability and reliability, but at the cost of a higher workload than with mixed communication and direct communication.

Comparison with Proximal Interaction. As with transparency, these observations are in line with the results of the proximal interaction study Patel2021 . However, the results of this study were more decisive than those of the proximal interaction study. In this case too, we observed that proximal interaction made the operators alert and anxious about the robots and the other operator. Moreover, as the operators had to physically walk around the robots, the interaction at times felt cumbersome. This observation is supported by the workload results of the proximal interaction studies in our previous work, which indicated a high workload in all modes of communication. In contrast, the workload results in remote interaction showed significant differences between communication modes.

Our experiments did not reveal a significant difference in performance across communication modes. As for transparency, we hypothesize that this lack of difference is due to a learning effect across the four runs that each team had to perform. Fig. 19 shows the points earned by the operators in each task, and Fig. 20 shows the learning effect as an increase in points earned as a function of task order. Since most operator teams completed the task in less than 8 minutes, Fig. 21 shows the time taken to complete the task as a function of task order; its decrease is a clear indicator of the learning effect.

5 User Study with Information Loss

The study presented so far was based on the assumption that the information flow was fast and continuous for every operator. This was possible because all the users involved in our experimental evaluation had fast, stable Internet connections that showed no issues. However, in remote operations, fast and stable connectivity cannot be taken for granted.

For this reason, we investigate the role that intermittent information flow plays in the efficiency of remote multi-human multi-robot interaction. In this paper, we measure information loss as the time elapsed between two consecutive updates of the graphical user interface; in other words, information loss is the inverse of the interface frame rate. With operators and robots in separate environments, different operators are likely to experience different levels of information loss. When this happens, we speak of heterogeneous information loss.
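To make this definition concrete, the following sketch emulates a given level of information loss on the operator side by forwarding a state update to the interface only when at least the chosen interval has elapsed since the last displayed update. It is an illustrative assumption, not a description of how loss was injected in our system: it assumes the simulator emits updates on an integer millisecond clock (which avoids floating-point drift), and the class and function names are hypothetical. Intermediate updates are simply dropped, so the displayed state jumps from one frame to the next.

```python
from typing import Iterable, List, Optional


class InformationLossGate:
    """Hypothetical operator-side gate that emulates a fixed information loss.

    An update is displayed only if at least `loss_ms` milliseconds have passed
    since the last displayed update; all other updates are dropped.
    """

    def __init__(self, loss_ms: int) -> None:
        self.loss_ms = loss_ms
        self.last_shown_ms: Optional[int] = None

    def offer(self, t_ms: int) -> bool:
        """Return True if the update produced at time t_ms reaches the display."""
        if self.last_shown_ms is None or t_ms - self.last_shown_ms >= self.loss_ms:
            self.last_shown_ms = t_ms
            return True
        return False


def displayed_updates(loss_ms: int, update_times_ms: Iterable[int]) -> List[int]:
    """Timestamps of the updates an operator would actually see."""
    gate = InformationLossGate(loss_ms)
    return [t for t in update_times_ms if gate.offer(t)]


def effective_frame_rate_hz(loss_ms: int) -> float:
    """Information loss is the inverse of the frame rate (loss_ms must be > 0)."""
    return 1000.0 / loss_ms
```

With a loss of 500 ms and updates produced every 100 ms, only the updates at 0, 500, 1000, 1500, and 2000 ms are displayed, i.e., an effective frame rate of 2 Hz. If the update period does not divide the target loss, the displayed interval rounds up to the next multiple of the period.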

For the purposes of our study, we categorize information loss into two usability ranges. The high usability range (UH) corresponds to levels of information loss that cause negligible discomfort to the operators who experience them. Conversely, we are in the low usability range (UL) when the level of information loss is such that an operator cannot ignore its presence and experiences some discomfort.

In general, the exact extent of these ranges varies across operators. We thus split our study into two parts. In the pilot study (Sec. 5.1), we investigate the extent of the usability ranges in experiments that involve a single operator. Next, in the main study (Sec. 5.2), we turn to multiple operators and assess the effect of heterogeneous information loss, using the homogeneous case as a baseline reference.

5.1 Information Loss Pilot Study

Experimental Setup. For our pilot study with a single operator, we used the game scenario presented in Sec. 4 (see Fig. 10). The operator was tasked with performing half of the game: moving 1 big object and 2 small objects. In contrast to the previous game, we set no time limit, and instead declared the task complete when the required objects reached the goal region. Every participant performed the task 6 times, each time with a different level of information loss. The levels spanned from 0 s to 2.5 s in increments of 0.5 s. To compensate for possible learning effects or other confounding factors, we used four different orderings of the levels:

- Increasing order: information loss increases with every task.
- Decreasing order: information loss decreases with every task.
- Random 1: information loss is in the order s.
- Random 2: the reverse order with respect to Random 1.
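The four orderings can be generated programmatically, as in the sketch below. The seeded shuffle that stands in for Random 1 is purely illustrative and is not the order used in the study. The balanced assignment of participants to orderings (five per ordering for 20 participants) is also our assumption, since the text only states that orderings were assigned at random. All names are hypothetical.

```python
import random
from typing import Dict, List, Sequence

LEVELS_S: List[float] = [0.5 * i for i in range(6)]  # 0.0, 0.5, ..., 2.5 s
ORDERINGS = ("increasing", "decreasing", "random_1", "random_2")


def task_orderings(seed: int) -> Dict[str, List[float]]:
    """The four orderings of information-loss levels.

    Random 1 is a seeded shuffle used only for illustration; it is not the
    order used in the study. Random 2 is always its reverse.
    """
    random_1 = LEVELS_S[:]
    random.Random(seed).shuffle(random_1)
    return {
        "increasing": LEVELS_S[:],
        "decreasing": LEVELS_S[::-1],
        "random_1": random_1,
        "random_2": random_1[::-1],
    }


def assign_orderings(participants: Sequence[str], seed: int) -> Dict[str, str]:
    """Randomly assign one ordering per participant with balanced group sizes (assumption)."""
    slots = [ORDERINGS[i % len(ORDERINGS)] for i in range(len(participants))]
    random.Random(seed).shuffle(slots)
    return dict(zip(participants, slots))
```

Pairing Random 2 with the exact reverse of Random 1 means that, across the two random groups, each level appears at mirrored positions in the sequence, in the same way as the increasing and decreasing orders.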

Participant Sample. We recruited 20 university students (7 females, 13 males) with ages ranging from 18 to 31 years. Each participant was randomly assigned one of the task orderings and performed the 6 tasks in that order. No participant had prior experience with the remote interface.

Pilot Study Procedure. Each session of the study took approximately 90 minutes. After the participant signed the consent form, we explained the task setup and gave the participant 12 minutes to become familiar with the system. After each task, the participant answered a subjective questionnaire.

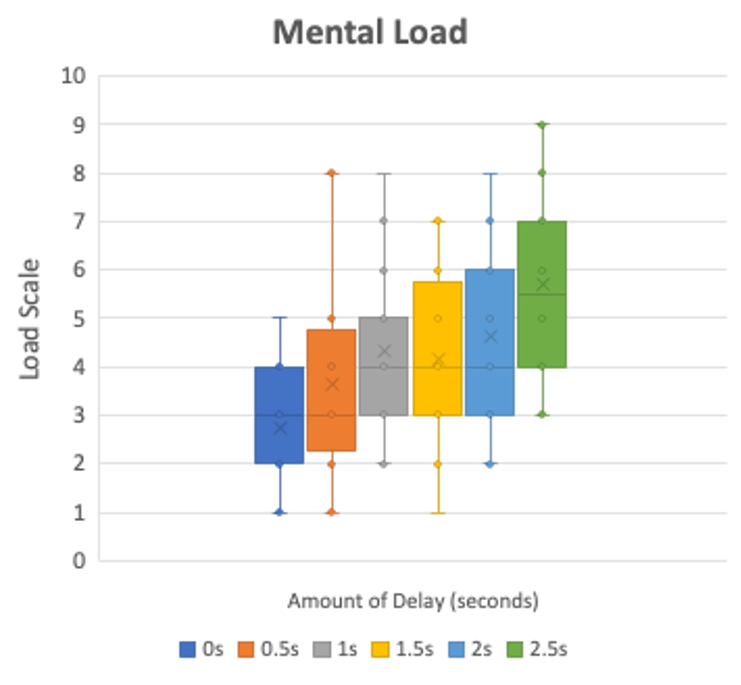

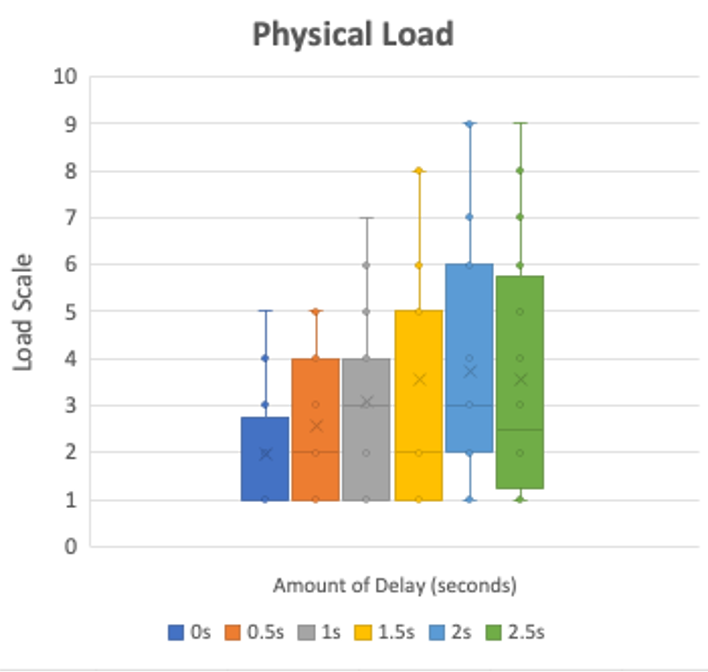

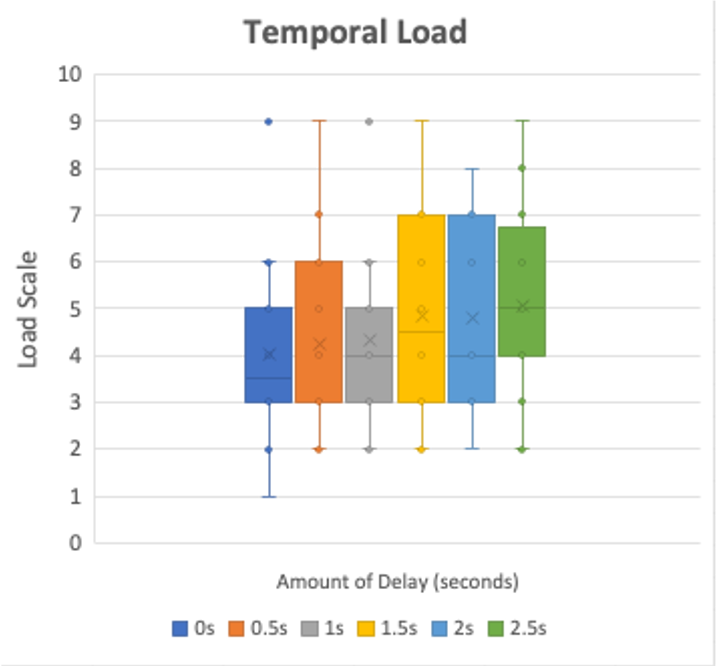

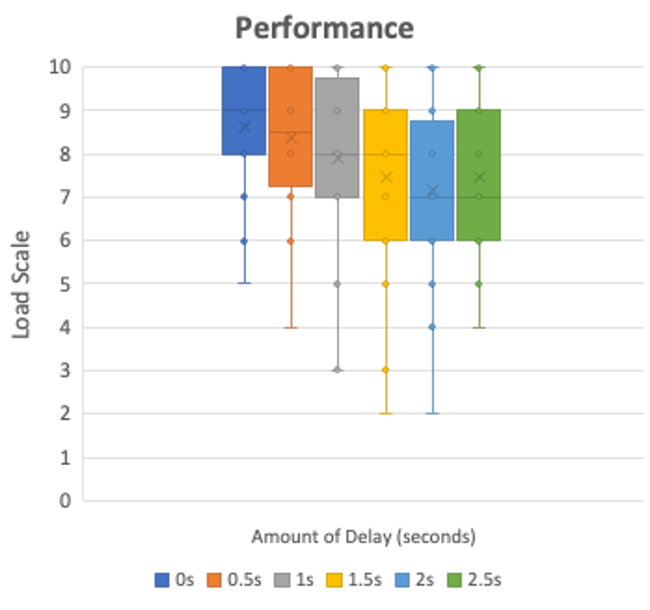

Metrics. We recorded subjective and objective measures for each participant and each task. The performance of the operator was measured as the time taken to complete a task. We used the NASA TLX hart1988development, administered on a 10-point Likert scale, to compare the perceived workload in each task. In addition to the workload questionnaire, the participants reported the discomfort they experienced on a 10-point Likert scale, followed by a comment box for a free-form description of the type of discomfort.

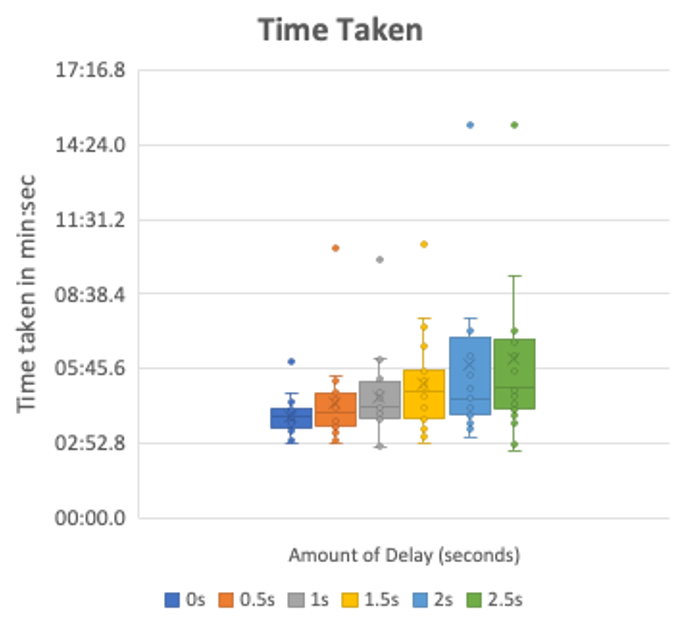

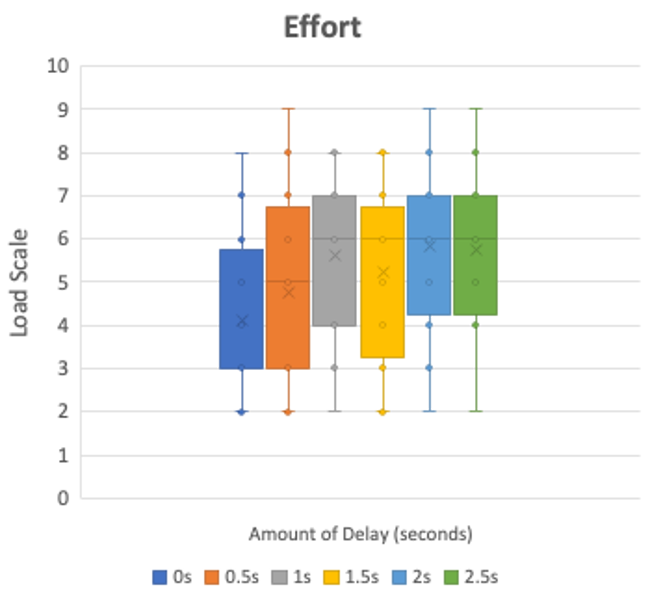

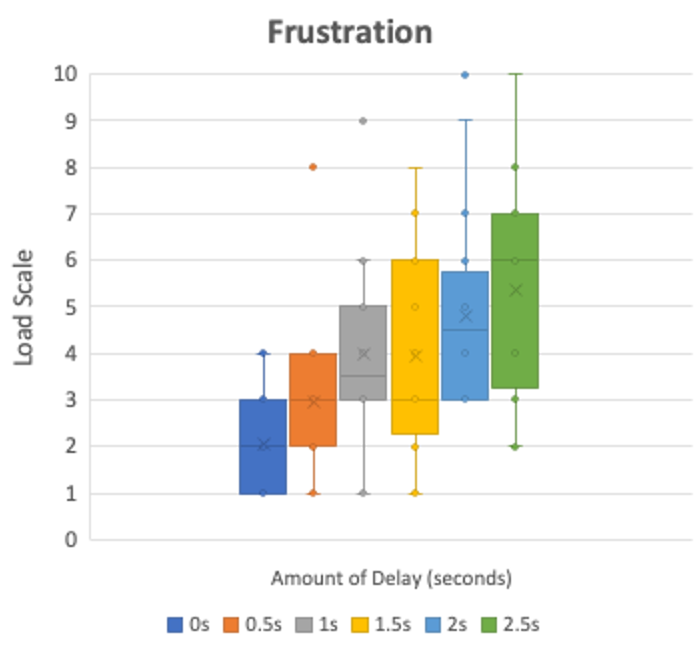

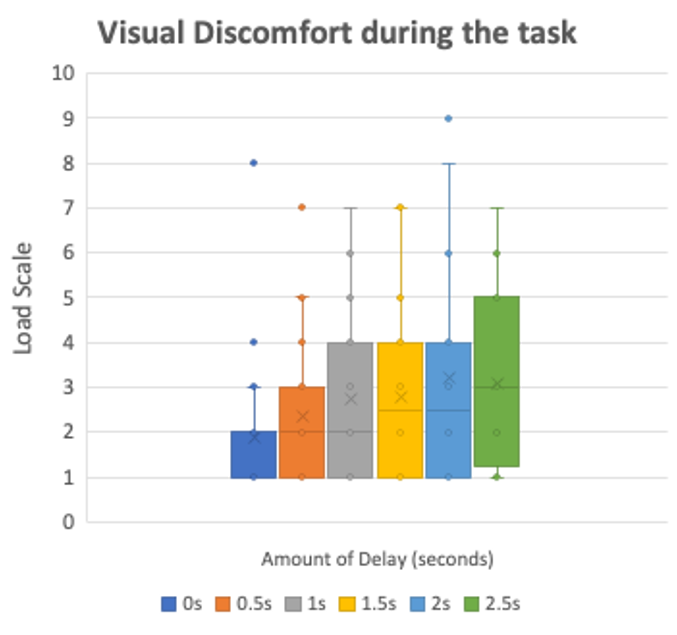

Results. For the completion time, each NASA TLX item, and the discomfort rating, we report a significance matrix based on the Friedman test, which we used to identify the two usability ranges. The results are shown in Tables 5-12. The green cells in these tables indicate the high usability range and the red cells indicate the low usability range. Table 13 superimposes the usability ranges of all these metrics. From our data, we estimate the high usability range to lie between 0 s and 0.5 s, and the low usability range between 2 s and 2.5 s. For the main study on information loss (Sec. 5.2), we took the midpoints of these ranges (0.25 s and 2.25 s). Figures 22-29 report the box plots of the recorded readings for the respective metrics.

| Performance | 0s | 0.5s | 1s | 1.5s | 2s | 2.5s |
|---|---|---|---|---|---|---|
| 0s | | | 0.007 | 0.007 | 0.001 | 0.002 |
| 0.5s | | | | | 0.025 | 0.025 |
| 1s | 0.007 | | | | 0.025 | 0.025 |
| 1.5s | 0.007 | | | | | |
| 2s | 0.001 | 0.025 | 0.025 | | | |
| 2.5s | 0.002 | 0.025 | 0.025 | | | |

| ML | 0s | 0.5s | 1s | 1.5s | 2s | 2.5s |
|---|---|---|---|---|---|---|
| 0s | | | 0.001 | 0.008 | 0.001 | 0.001 |
| 0.5s | | | 0.008 | | 0.012 | 0.003 |
| 1s | 0.001 | 0.008 | | | | 0.018 |
| 1.5s | 0.008 | | | | 0.033 | 0.005 |
| 2s | 0.001 | 0.012 | | 0.033 | | 0.002 |
| 2.5s | 0.001 | 0.003 | 0.018 | 0.005 | 0.002 | |

| PL | 0s | 0.5s | 1s | 1.5s | 2s | 2.5s |
|---|---|---|---|---|---|---|
| 0s | | 0.007 | 0.004 | 0.002 | 0.001 | 0.001 |
| 0.5s | 0.007 | | 0.008 | 0.004 | 0.02 | 0.033 |
| 1s | 0.004 | 0.008 | | | | |
| 1.5s | 0.002 | 0.004 | | | | |
| 2s | 0.001 | 0.02 | | | | |
| 2.5s | 0.001 | 0.033 | | | | |

| TL | 0s | 0.5s | 1s | 1.5s | 2s | 2.5s |
|---|---|---|---|---|---|---|
| 0s | | | | 0.013 | | |
| 0.5s | | | | 0.046 | | |
| 1s | | | | | | |
| 1.5s | 0.013 | 0.046 | | | | |
| 2s | | | | | | |
| 2.5s | | | | | | |

| PP | 0s | 0.5s | 1s | 1.5s | 2s | 2.5s |
|---|---|---|---|---|---|---|
| 0s | | | | 0.021 | 0.002 | |
| 0.5s | | | 0.034 | 0.02 | 0.033 | 0.033 |
| 1s | | 0.034 | | | | |
| 1.5s | 0.021 | 0.02 | | | | |
| 2s | 0.002 | 0.033 | | | | |
| 2.5s | | 0.033 | | | | |

| E | 0s | 0.5s | 1s | 1.5s | 2s | 2.5s |
|---|---|---|---|---|---|---|
| 0s | | | 0.005 | | 0.018 | 0.039 |
| 0.5s | | | 0.029 | | 0.013 | 0.046 |
| 1s | 0.005 | 0.029 | | | | |
| 1.5s | | | | | | |
| 2s | 0.018 | 0.013 | | | | |
| 2.5s | 0.039 | 0.046 | | | | |

| F | 0s | 0.5s | 1s | 1.5s | 2s | 2.5s |
|---|---|---|---|---|---|---|
| 0s | | 0.012 | 0.002 | 0.001 | 0.001 | 0.001 |
| 0.5s | 0.012 | | 0.001 | 0.02 | 0.001 | 0.001 |
| 1s | 0.002 | 0.001 | | | | 0.018 |
| 1.5s | 0.001 | 0.02 | | | 0.029 | 0.005 |
| 2s | 0.001 | 0.001 | | 0.029 | | |
| 2.5s | 0.001 | 0.001 | 0.018 | 0.005 | | |

| VD | 0s | 0.5s | 1s | 1.5s | 2s | 2.5s |
|---|---|---|---|---|---|---|
| 0s | | | | 0.035 | 0.001 | 0.013 |
| 0.5s | | | | | 0.004 | 0.021 |
| 1s | | | | | | |
| 1.5s | 0.035 | | | | | |
| 2s | 0.001 | 0.004 | | | | |
| 2.5s | 0.013 | 0.021 | | | | |

Table 13: Usability ranges superimposed across all metrics (high usability range: 0 s to 0.5 s; low usability range: 2 s to 2.5 s).

5.2 Information Loss Main Study

Experimental Setup. The final study we performed concerns the role of information loss in remote interaction between multiple humans and multiple robots. A particular aspect we intend to explore is the role of heterogeneous information loss across operators; to this end, we also consider the homogeneous case as a baseline. From the results of the pilot study in Sec. 5.1, we identified two levels of information loss: a low level, corresponding to high usability (0.25 s), and a high level, corresponding to low usability (2.25 s). We again used our collective transport game scenario and asked every participant to perform four experiments, one for each combination of information loss levels across the two operators. Once more, we randomized the order of the tasks to mitigate learning effects and other artifacts. In the following figures and tables, we use these symbols to denote the four cases:

- HoLL: low homogeneous information loss;
- HoHH: high homogeneous information loss;
- HeLH: heterogeneous information loss in which operator 1 has low loss and operator 2 has high loss;
- HeHL: heterogeneous information loss in which the operators are reversed with respect to HeLH.
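The four labels follow mechanically from the 2 × 2 design: each operator receives either the low (0.25 s) or the high (2.25 s) level, and the prefix records whether the two levels coincide. A minimal, hypothetical sketch of this naming scheme, with function names of our own choosing:

```python
from itertools import product
from typing import Dict, Tuple

LOSS_S = {"L": 0.25, "H": 2.25}  # low and high levels chosen from the pilot study


def case_label(op1: str, op2: str) -> str:
    """Label a case from the levels ('L' or 'H') of operator 1 and operator 2."""
    prefix = "Ho" if op1 == op2 else "He"  # homogeneous vs heterogeneous
    return f"{prefix}{op1}{op2}"


def all_cases() -> Dict[str, Tuple[float, float]]:
    """Map each case label to (operator 1 loss, operator 2 loss) in seconds."""
    return {case_label(a, b): (LOSS_S[a], LOSS_S[b])
            for a, b in product("LH", repeat=2)}
```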

Hypotheses. We seek to validate the following working hypotheses:

- HIL1: Low homogeneous information loss is the best case overall in terms of the measured metrics.
- HIL2: The operators prefer low homogeneous information loss to the other cases.
- HIL3: In the heterogeneous information loss case, operators prefer to be the ones with low information loss.
- HIL4: Operators prefer experiencing high information loss in the heterogeneous case to being in the high homogeneous loss case.

Participant Sample. We randomly paired the participants of the pilot study, forming 10 teams. Each team went through the four aforementioned cases.

Procedures. Each session took approximately 105 minutes. Each session began with a training period, followed by 12 minutes of independent exploration of the system by the participants. After each task, each participant answered a subjective questionnaire.

Metrics. We recorded subjective and objective metrics for each participant and for each case, using the same metrics presented in Sec. 4. In addition, we recorded the number of interactions the participants made with the interface, as well as the time interval between those interactions. This allowed us to analyze the difference in workload between operators of the same team.
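The two interaction metrics can be derived from a timestamped log of each operator's interface actions. The sketch below assumes such a log (seconds from task start, one entry per interaction) and summarizes the time between consecutive interactions by its median, the statistic plotted in Fig. 31 for the pilot study. The log format, the function names, and the median-gap ratio used to compare teammates are hypothetical.

```python
from statistics import median
from typing import Dict, Sequence


def interaction_metrics(timestamps_s: Sequence[float]) -> Dict[str, float]:
    """Summarize one operator's interaction log for one task.

    timestamps_s: time of each interaction with the interface, in seconds
    from task start (hypothetical log format).
    """
    ts = sorted(timestamps_s)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    return {
        "num_interactions": float(len(ts)),
        # Median gap between consecutive interactions; undefined with < 2 events.
        "median_gap_s": median(gaps) if gaps else float("nan"),
    }


def gap_ratio(op_a: Sequence[float], op_b: Sequence[float]) -> float:
    """Ratio of median gaps of two teammates (> 1: operator A waits longer)."""
    gap_a = interaction_metrics(op_a)["median_gap_s"]
    gap_b = interaction_metrics(op_b)["median_gap_s"]
    return gap_a / gap_b
```

The median is less sensitive than the mean to the occasional long pause, which matters when a few long waits could otherwise dominate the summary.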

| Attributes | Relationship | p-value |
|---|---|---|
| SART SUBJECTIVE SCALE | | |
| Instability of Situation- | HoHeHeHoLL | |
| Complexity of Situation- | HoHeHeHoLL | |
| Variability of Situation- | HoHeHeHoLL | |
| Arousal | HoHeHoHeHL | |
| Concentration of Attention | HoHeHeHoHH | |
| Spare Mental Capacity | HoHeHoHeHL | |
| Information Quantity | HoHeHoHeHL | |
| Information Quality | HoHeHeHoHH | |
| Familiarity with Situation | HoHeHoHeHL | |
| NASA TLX SUBJECTIVE SCALE | | |
| Mental Demand- | HoHeHeHoLL | |
| Physical Demand- | HoHH=HeHeHoLL | |
| Temporal Demand- | not significant | |
| Performance | HoHeHeHoHH | |
| Effort- | HoHeHeHoLL | |
| Frustration- | HoHeHeHoLL | |
| TRUST SUBJECTIVE SCALE | | |
| Competence | HoHeHeHoHH | |
| Predictability | HoHeHeHoHH | |
| Reliability | HoHeHeHoHH | |
| Faith | HoHeHeHoHH | |
| Overall Trust | HoHeHeHoHH | |
| Accuracy | HoHeHeHoHH | |
| INTERACTION SUBJECTIVE SCALE | | |
| Teammate’s Intent | not significant | |
| Teammate’s Action | HoHoHeHeLH | |
| Task Progress | HoHeHoHeHL | |
| Robot Status | HoHeHoHeHL | |
| Information Clarity | HoHoHeHeHL | |

| Attributes | Relationship | p-value |
|---|---|---|
| PERFORMANCE OBJECTIVE SCALE | | |
| Time Taken for the task | HoHeHL=HeHoLL | |
| Number of Interactions | HeHoHoHeHL | |
| Time gap between interactions | HoHeHeHoLL | |

| Borda Count | HoLL | HoHH | HeLH | HeHL |
|---|---|---|---|---|
| Based on Collected Data Ranking (Tables 14 & 15) | 104 | 36.5 | 74.5 | 45 |
| Based on Preference Data Ranking (Fig. 30) | 77 | 29 | 52 | 42 |

| Attributes | Homogeneous IL, HoLL: χ² | p-value | Homogeneous IL, HoHH: χ² | p-value | Heterogeneous IL: χ² | p-value |
|---|---|---|---|---|---|---|
| SART SUBJECTIVE SCALE | | | | | | |
| Instability of Situation | 0 | 1 | 0 | 1 | 0.6 | 0.439 |
| Complexity of Situation | 3 | 0.083 | 0 | 1 | 0.529 | 0.467 |
| Variability of Situation | 2.667 | 0.102 | 1.286 | 0.257 | 1.143 | 0.285 |
| Arousal | 0.5 | 0.480 | 0.667 | 0.414 | 7.143 | 0.008 |
| Concentration of Attention | 2.667 | 0.102 | 0.667 | 0.414 | 2.778 | 0.096 |
| Spare Mental Capacity | 0.2 | 0.655 | 1.286 | 0.257 | 5.444 | 0.02 |
| Information Quantity | 0.5 | 0.480 | 0.5 | 0.480 | 1.667 | 0.197 |
| Information Quality | 0.5 | 0.480 | 0.143 | 0.750 | 5.444 | 0.02 |
| Familiarity with Situation | 0.2 | 0.655 | 0 | 1 | 0.057 | 0.796 |
| NASA TLX SUBJECTIVE SCALE | | | | | | |
| Mental Demand | 0 | 1 | 0.5 | 0.48 | 3.257 | 0.071 |
| Physical Demand | 0.333 | 0.564 | 0.111 | 0.739 | 1.143 | 0.285 |
| Temporal Demand | 1 | 0.317 | 0.143 | 0.705 | 0.077 | 0.782 |
| Performance | 2 | 0.157 | 0.111 | 0.739 | 7.143 | 0.008 |
| Effort | 0 | 1 | 0.2 | 0.655 | 5.444 | 0.02 |
| Frustration | 0.333 | 0.564 | 0 | 1 | 3.267 | 0.071 |
| TRUST SUBJECTIVE SCALE | | | | | | |
| Competence | 0 | 1 | 1.8 | 0.180 | 9.308 | 0.002 |
| Predictability | 2 | 0.157 | 0 | 1 | 6.231 | 0.013 |
| Reliability | 0.333 | 0.564 | 2.667 | 0.102 | 6.231 | 0.013 |
| Faith | 0.333 | 0.564 | 0.667 | 0.414 | 3.769 | 0.052 |
| Overall Trust | 0 | 1 | 0.2 | 0.655 | 6.231 | 0.013 |
| Accuracy | 0 | 1 | 0.2 | 0.655 | 5.444 | 0.02 |
| INTERACTION SUBJECTIVE SCALE | | | | | | |
| Teammate’s Intent | 0.667 | 0.414 | 0 | 1 | 0.057 | 0.795 |
| Teammate’s Action | 1.8 | 0.180 | 0.143 | 0.705 | 0 | 1 |
| Task Progress | 0.333 | 0.564 | 2.667 | 0.102 | 2.579 | 0.108 |
| Robot Status | 0.667 | 0.414 | 0.4 | 0.527 | 5.333 | 0.021 |
| Information Clarity | 0.143 | 0.705 | 0.5 | 0.480 | 0.286 | 0.593 |

| Attributes | Homogeneous IL, HoLL: χ² | p-value | Homogeneous IL, HoHH: χ² | p-value | Heterogeneous IL: χ² | p-value |
|---|---|---|---|---|---|---|
| PERFORMANCE OBJECTIVE SCALE | | | | | | |
| Number of Interactions | 0.111 | 0.739 | 1 | 0.317 | 0 | 1 |
| Time gap between interactions | 0.4 | 0.527 | 1.6 | 0.206 | 0 | 1 |

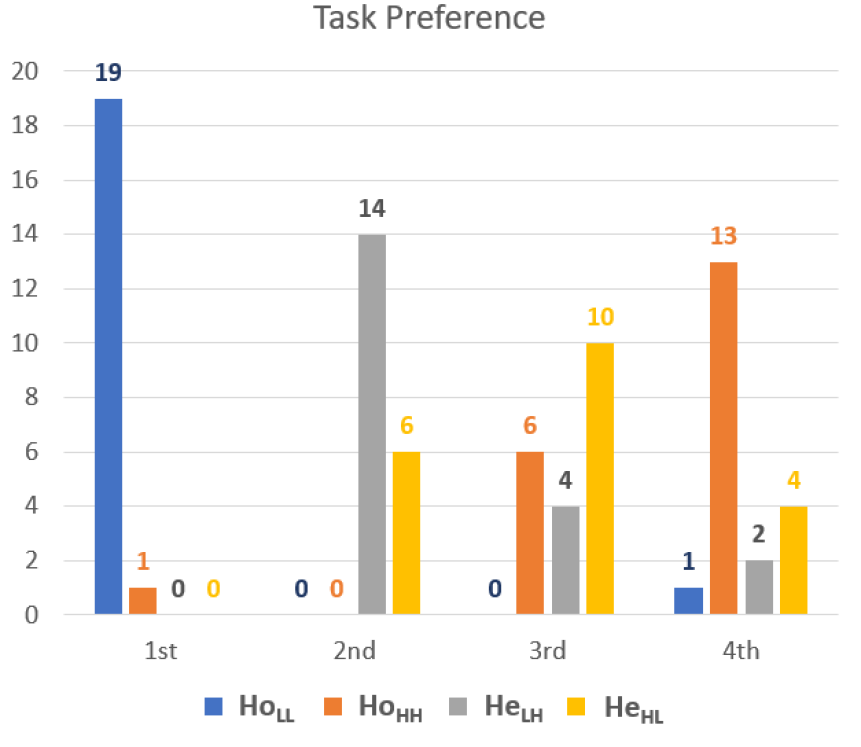

Results. Tables 14 and 15 summarize the results for the subjective scales and the objective metrics. We used the Friedman test to establish the significance of the differences between cases, and formed rankings based on the mean ranks of all the attributes that showed statistically or marginally significant differences. Tables 17 and 18 report an imbalance in awareness, workload, trust, and interaction quality between operators of the same team in tasks with heterogeneous information loss. Fig. 30 shows which information loss cases each operator preferred. We used the Borda count black1976partial to calculate the overall ranking; Table 16 shows the results of the Borda count for each category.

5.2.1 Pilot and Main Study: Comparative Analysis

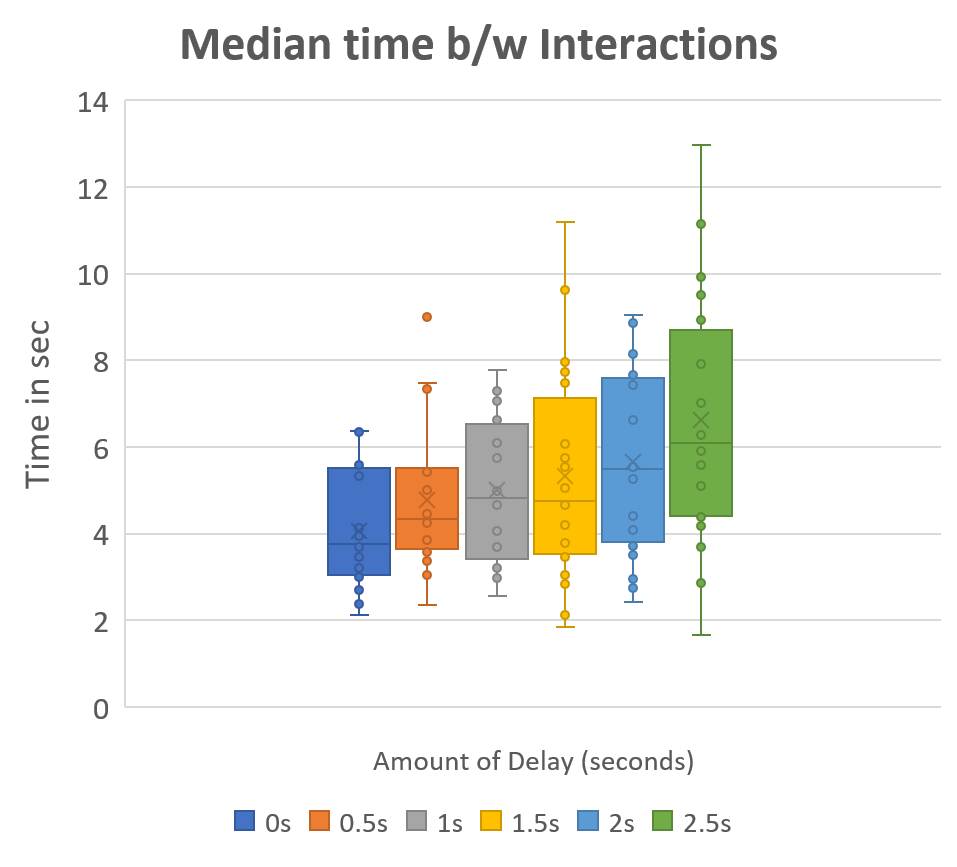

Pilot Study Data Analysis. Tables 5-12 and Figures 22-29 indicate that, as information loss increases, the workload experienced by the operator increases while performance degrades. We compared the number of interactions made at each level of information loss and found no significant difference. We also recorded the time interval between interactions. The box plot of the median values (shown in Fig. 31) indicates a significant increase in the time waited between interactions, consistent with the well-known waiting strategy observed in user studies with traditional teleoperation and remote interaction systems ellis2004generalizeability .

Pilot Study Behavioral Analysis. We observed the behavior of the operators during and after each session. Two operators (out of 20) chose to stop their sessions with 2 s and 2.5 s of information loss, reporting that they had reached the limit of their ability to handle high information loss. Eleven operators reported that they had reached their limit of frustration at 2.5 s, but nevertheless chose to continue because of their never-give-up attitude and their willingness to help our research. Seven operators reported that they could have handled more than 2.5 s of loss thanks to their past experience with laggy systems and Internet connections. As for discomfort, three operators started experiencing it at 1 s of information loss, four at 1.5 s, three at 2 s, and six at 2.5 s. The reported discomfort included a slight headache and eye fatigue. As part of the exit interview for the pilot study, we asked the participants whether the task order assigned to them had affected their performance. The participants assigned to the increasing order reported that each increase in loss prepared them for the next task, and that they expected the loss to keep increasing. They reported that, with each task, they became more familiar with the loss and thus better trained to handle it. All the participants in this group reported that they would have been more frustrated if the task order had been reversed, and most frustrated if they had experienced the maximum information loss in the first task. Conversely, the participants assigned to the decreasing order reported that they would have been more frustrated if the information loss had increased with each task. All the participants in this group reported that, as the loss was decreasing, they knew the worst was over and the tasks would only get easier from there on. We call this the count one's blessings phenomenon: the participants preferred and defended their own task order, assuming that the reverse order would only harm their performance and interaction quality.

Main Study Data Analysis. Table 16 shows that HoLL is the best information loss case, both in terms of usability preference and according to the data collected during the user study. This supports hypotheses HIL1 and HIL2, i.e., that low homogeneous information loss is the best case overall. The HeLH case is the participants' next best choice, indicating a preference for low personal information loss; this supports hypothesis HIL3. The HeHL case is the third choice, showing that having one operator with low loss is still better than having both operators experience high information loss; this supports hypothesis HIL4.

Main Study Behavioral Analysis. We also observed the behavior of the operators during and after the sessions. Based on the preferences shown in Fig. 30, we could categorize the participants into four typologies. (a) The Egocentrics: ten participants gave higher preference to the tasks with low information loss and lower preference to the tasks with high information loss. When they had to rank the options of taking low or high information loss for themselves and leaving the other level to their teammate, these participants opted for low information loss, even though this meant that their teammate might become more frustrated by experiencing higher loss. (b) The Altruists: five participants preferred to handle high information loss so that their teammate, interacting with low information loss, might face less frustration. These participants reported that they were confident in their ability to handle high information loss, and that having their teammate experience low information loss might increase their chances of completing the task. (c) The Egalitarians: four participants preferred homogeneous loss over heterogeneous loss, even if that meant that both operators would experience high information loss. These participants reported that, with homogeneous information loss, they could actively interact with their teammate and share the workload equally, which they did not experience in tasks with heterogeneous information loss. (d) The Thinker: one participant preferred high information loss over low information loss. This participant reported that high information loss provided more time to think before making the next move and to interact more with their teammate while doing so.

On the Out-of-the-Loop Performance Problem. Tables 17 and 18 show that the participants experienced unbalanced awareness, workload, trust, and interaction quality while engaging in the tasks with heterogeneous information loss. This imbalance indicates that the operator experiencing high information loss will go out of the loop endsley1995out ; gouraud2017autopilot . However, the interaction quality scales show that the significant difference in information awareness concerns only robot-level information, not operator-level information. We attribute this to the absence of loss or delay in the communication channel between the operators in this user study; future work could investigate the impact of communication loss between the operators.

6 Conclusions and Future Work

In this paper, we studied the effects of transparency, inter-human communication, and information loss on multi-human multi-robot interaction. We first studied which interface elements most effectively support information transparency and inter-operator communication. We analyzed the usability of our interface through a user study with 28 operators, measuring awareness, workload, trust, and interaction efficiency. The findings of the user study indicated mixed transparency as the best transparency mode and mixed communication as the best communication mode.

We then studied the effects of information loss on the performance of the operators through two user studies. The first, a pilot study, aimed to identify which amounts of information loss can be considered noticeable but bearable for the average operator, and which are unbearable. Using the results of this study, we performed a thorough exploration of the role of information loss in multi-operator scenarios, comparing heterogeneous and homogeneous cases. We derived a set of behavioral typologies of users, revealing that remote interaction must account for personal preferences and individual attitudes when forming groups of operators.

Future work will focus on the role of training in multi-human multi-robot interaction. In this study, we assumed that no participant had prior experience with the interface, and we provided minimal guidance to avoid biasing our studies. However, effective multi-human multi-robot interaction for complex missions cannot ignore the need for training and proper teaming according to individual skills.

References

- (1) Ayanian, N., Spielberg, A., Arbesfeld, M., Strauss, J., Rus, D.: Controlling a team of robots with a single input. In: Robotics and Automation (ICRA), 2014 IEEE International Conference on, pp. 1755–1762. IEEE (2014). URL http://ieeexplore.ieee.org/abstract/document/6907088/

- (2) Baker, M., Casey, R., Keyes, B., Yanco, H.A.: Improved interfaces for human-robot interaction in urban search and rescue. In: 2004 IEEE International Conference on Systems, Man and Cybernetics (IEEE Cat. No. 04CH37583), vol. 3, pp. 2960–2965. IEEE (2004)

- (3) Bhaskara, A., Skinner, M., Loft, S.: Agent Transparency: A Review of Current Theory and Evidence. IEEE Transactions on Human-Machine Systems pp. 1–10 (2020). DOI 10.1109/THMS.2020.2965529. URL https://ieeexplore.ieee.org/document/8982042/

- (4) Black, D.: Partial justification of the Borda count. Public Choice pp. 1–15 (1976)

- (5) Breazeal, C., Kidd, C.D., Thomaz, A.L., Hoffman, G., Berlin, M.: Effects of nonverbal communication on efficiency and robustness in human-robot teamwork. In: 2005 IEEE/RSJ international conference on intelligent robots and systems, pp. 708–713. IEEE (2005)

- (6) Calhoun, G.L., Draper, M.H.: Multi-sensory interfaces for remotely operated vehicles. In: Human Factors of Remotely Operated Vehicles, chap. 11. Emerald Group Publishing Limited (2006)

- (7) Chakraborti, T., Kulkarni, A., Sreedharan, S., Smith, D.E., Kambhampati, S.: Explicability? Legibility? Predictability? Transparency? Privacy? Security? The Emerging Landscape of Interpretable Agent Behavior. Proceedings of the International Conference on Automated Planning and Scheduling 29, 86–96 (2019)

- (8) Chen, J.Y., Durlach, P.J., Sloan, J.A., Bowens, L.D.: Human–robot interaction in the context of simulated route reconnaissance missions. Military Psychology 20(3), 135–149 (2008)

- (9) Chen, J.Y., Procci, K., Boyce, M., Wright, J., Garcia, A., Barnes, M.: Situation Awareness-Based Agent Transparency. Tech. rep., Defense Technical Information Center, Fort Belvoir, VA (2014). DOI 10.21236/ADA600351. URL http://www.dtic.mil/docs/citations/ADA600351

- (10) Chen, J.Y.C., Haas, E.C., Barnes, M.J.: Human Performance Issues and User Interface Design for Teleoperated Robots. IEEE Transactions on Systems, Man and Cybernetics, Part C (Applications and Reviews) 37(6), 1231–1245 (2007). DOI 10.1109/TSMCC.2007.905819. URL http://ieeexplore.ieee.org/document/4343985/

- (11) Chen, J.Y.C., Lakhmani, S.G., Stowers, K., Selkowitz, A.R., Wright, J.L., Barnes, M.: Situation awareness-based agent transparency and human-autonomy teaming effectiveness. Theoretical Issues in Ergonomics Science 19(3), 259–282 (2018). DOI 10.1080/1463922X.2017.1315750. URL https://www.tandfonline.com/doi/full/10.1080/1463922X.2017.1315750

- (12) Cheung, Y., Chung, J.H.: Semi-Autonomous Control of Single-Master Multi-Slave Teleoperation of Heterogeneous Robots for Multi-Task Multi-Target Pairing. International Journal of Control and Automation 4(3), 17 (2011)

- (13) Collett, T., MacDonald, B.: Developer oriented visualization of a robot program: An augmented reality approach. In: Proc of the 2006 ACM Conference on Human-Robotic Interaction, pp. 2–4 (2006)

- (14) Daily, M., Cho, Y., Martin, K., Payton, D.: World embedded interfaces for human-robot interaction. In: Proceedings of the 36th Annual Hawaii International Conference on System Sciences, 6 pp. IEEE (2003)

- (15) Dardona, T., Eslamian, S., Reisner, L.A., Pandya, A.: Remote Presence: Development and Usability Evaluation of a Head-Mounted Display for Camera Control on the da Vinci Surgical System. Robotics 8(2), 31 (2019). DOI 10.3390/robotics8020031. URL https://www.mdpi.com/2218-6581/8/2/31

- (16) Darken, R.P., Peterson, B.: Spatial orientation, wayfinding, and representation. (2014)

- (17) Dimitoglou, G.: Telepresence: Evaluation of Robot Stand-Ins for Remote Student Learning. Journal of Computing Sciences in Colleges 35, 15 (2019)

- (18) Do, N.D., Yamashina, Y., Namerikawa, T.: Multiple Cooperative Bilateral Teleoperation with Time-Varying Delay. SICE Journal of Control, Measurement, and System Integration 4(2), 89–96 (2011). DOI 10.9746/jcmsi.4.89. URL http://japanlinkcenter.org/JST.JSTAGE/jcmsi/4.89?lang=en&from=CrossRef&type=abstract

- (19) Dong Gun Lee, Gun Rae Cho, Min Su Lee, Byung-Su Kim, Sehoon Oh, Hyoung Il Son: Human-centered evaluation of multi-user teleoperation for mobile manipulator in unmanned offshore plants. In: 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 5431–5438. IEEE, Tokyo (2013). DOI 10.1109/IROS.2013.6697142. URL http://ieeexplore.ieee.org/document/6697142/

- (20) Ellis, S.R., Mania, K., Adelstein, B.D., Hill, M.I.: Generalizeability of latency detection in a variety of virtual environments. In: Proceedings of the Human Factors and Ergonomics Society Annual Meeting, vol. 48, pp. 2632–2636. SAGE Publications Sage CA: Los Angeles, CA (2004)

- (21) Endsley, M.R., Kiris, E.O.: The out-of-the-loop performance problem and level of control in automation. Human Factors 37(2), 381–394 (1995)

- (22) Esfahlani, S.S.: Mixed reality and remote sensing application of unmanned aerial vehicle in fire and smoke detection. Journal of Industrial Information Integration p. S2452414X18300773 (2019). DOI 10.1016/j.jii.2019.04.006. URL https://linkinghub.elsevier.com/retrieve/pii/S2452414X18300773

- (23) Ferreira, L.R.N., Pereira, L.T.: Immersive Mobile Telepresence Systems: A Systematic Literature Review. Journal of Mobile Multimedia 15, 16 (2020)

- (24) Feth, D., Tran, B.A., Groten, R., Peer, A., Buss, M.: Shared-Control Paradigms in Multi-Operator-Single-Robot Teleoperation. In: R. Dillmann, D. Vernon, Y. Nakamura, S. Schaal, H. Ritter, G. Sagerer, R. Dillmann, M. Buss (eds.) Human Centered Robot Systems, vol. 6, pp. 53–62. Springer Berlin Heidelberg, Berlin, Heidelberg (2009). DOI 10.1007/978-3-642-10403-9_6. URL http://link.springer.com/10.1007/978-3-642-10403-9_6. Series Title: Cognitive Systems Monographs

- (25) Friedman, M.: The use of ranks to avoid the assumption of normality implicit in the analysis of variance. Journal of the American Statistical Association 32(200), 675–701 (1937)

- (26) Goertz, R.C., Thompson, W.M.: Electronically controlled manipulator. Nucleonics 12 (1954)

- (27) Gouraud, J., Delorme, A., Berberian, B.: Autopilot, mind wandering, and the out of the loop performance problem. Frontiers in Neuroscience 11, 541 (2017)

- (28) Hart, S.G., Staveland, L.E.: Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In: Advances in Psychology, vol. 52, pp. 139–183. Elsevier (1988)

- (29) Hokayem, P.F., Spong, M.W.: Bilateral teleoperation: An historical survey. Automatica 42(12), 2035–2057 (2006). DOI 10.1016/j.automatica.2006.06.027. URL https://linkinghub.elsevier.com/retrieve/pii/S0005109806002871

- (30) Holdcroft, D.: Forms of indirect communication: An outline. Philosophy & Rhetoric pp. 147–161 (1976)

- (31) Hong, A., Bulthoff, H.H., Son, H.I.: A visual and force feedback for multi-robot teleoperation in outdoor environments: A preliminary result. In: 2013 IEEE International Conference on Robotics and Automation, pp. 1471–1478. IEEE, Karlsruhe, Germany (2013). DOI 10.1109/ICRA.2013.6630765. URL http://ieeexplore.ieee.org/document/6630765/

- (32) Jang, I., Hu, J., Arvin, F., Carrasco, J., Lennox, B.: Omnipotent Virtual Giant for Remote Human-Swarm Interaction. arXiv:1903.10064 [cs] (2019). URL http://arxiv.org/abs/1903.10064. ArXiv: 1903.10064

- (33) Jingtai Liu, Lei Sun, Tao Chen, Xingbo Huang, Chunying Zhao: Competitive Multi-robot Teleoperation. In: Proceedings of the 2005 IEEE International Conference on Robotics and Automation, pp. 75–80. IEEE, Barcelona, Spain (2005). DOI 10.1109/ROBOT.2005.1570099. URL http://ieeexplore.ieee.org/document/1570099/

- (34) Jung, H., Song, Y.E.: Robotic remote control based on human motion via virtual collaboration system: A survey. Journal of Advanced Mechanical Design, Systems, and Manufacturing 12(7), JAMDSM0126–JAMDSM0126 (2018). DOI 10.1299/jamdsm.2018jamdsm0126. URL https://www.jstage.jst.go.jp/article/jamdsm/12/7/12_2018jamdsm0126/_article

- (35) Keskinpala, H.K., Adams, J.A.: Objective data analysis for a PDA-based human robotic interface. In: 2004 IEEE International Conference on Systems, Man and Cybernetics (IEEE Cat. No. 04CH37583), vol. 3, pp. 2809–2814. IEEE (2004)

- (36) Kheddar, A., Chellali, R., Coiffet, P.: Virtual environment–assisted teleoperation (2014)

- (37) Kim, J., Oh, S., Kim, D.: Implementation of a work distribution function for tele-operation under multi-user and multi-robot environments. 2012 12th International Conference on Control, Automation and Systems p. 4 (2012)

- (38) Kim, J., Yun, S., Yoo, J., Kim, D.: Implementation of N:1/1:N work distribution function for tele-operation under multi-user and multi-robot environments. In: 2013 10th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), pp. 328–330. IEEE, Jeju, Korea (South) (2013). DOI 10.1109/URAI.2013.6677378. URL http://ieeexplore.ieee.org/document/6677378/

- (39) Klow, J., Proby, J., Rueben, M., Sowell, R.T., Grimm, C.M., Smart, W.D.: Privacy, Utility, and Cognitive Load in Remote Presence Systems. In: Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, pp. 167–168. ACM, Vienna Austria (2017). DOI 10.1145/3029798.3038341. URL https://dl.acm.org/doi/10.1145/3029798.3038341

- (40) Klow, J., Proby, J., Rueben, M., Sowell, R.T., Grimm, C.M., Smart, W.D.: Privacy, Utility, and Cognitive Load in Remote Presence Systems. In: M.A. Salichs, S.S. Ge, E.I. Barakova, J.J. Cabibihan, A.R. Wagner, A. Castro-González, H. He (eds.) Social Robotics, vol. 11876, pp. 730–739. Springer International Publishing, Cham (2019). DOI 10.1007/978-3-030-35888-4_68. URL http://link.springer.com/10.1007/978-3-030-35888-4_68. Series Title: Lecture Notes in Computer Science

- (41) Kolling, A., Sycara, K., Nunnally, S., Lewis, M.: Human Swarm Interaction: An Experimental Study of Two Types of Interaction with Foraging Swarms. Journal of Human-Robot Interaction 2(2) (2013). DOI 10.5898/JHRI.2.2.Kolling. URL http://dl.acm.org/citation.cfm?id=3109714

- (42) Lager, M., Topp, E.A., Malec, J.: Remote Supervision of an Unmanned Surface Vessel - A Comparison of Interfaces. In: 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), pp. 546–547. IEEE, Daegu, Korea (South) (2019). DOI 10.1109/HRI.2019.8673100. URL https://ieeexplore.ieee.org/document/8673100/

- (43) Lane, J.C., Carignan, C.R., Sullivan, B.R., Akin, D.L., Hunt, T., Cohen, R.: Effects of time delay on telerobotic control of neutral buoyancy vehicles. In: Proceedings 2002 IEEE International Conference on Robotics and Automation (Cat. No. 02CH37292), vol. 3, pp. 2874–2879. IEEE (2002)

- (44) Lee, D., Franchi, A., Son, H.I., Ha, C., Bulthoff, H.H., Giordano, P.R.: Semiautonomous Haptic Teleoperation Control Architecture of Multiple Unmanned Aerial Vehicles. IEEE/ASME Transactions on Mechatronics 18(4), 1334–1345 (2013). DOI 10.1109/TMECH.2013.2263963. URL http://ieeexplore.ieee.org/document/6522198/

- (45) Lee, S., Lucas, N.P., Ellis, R.D., Pandya, A.: Development and human factors analysis of an augmented reality interface for multi-robot tele-operation and control. In: Unmanned Systems Technology XIV, vol. 8387, p. 83870N. Baltimore, Maryland, USA (2012). DOI 10.1117/12.919751. URL http://proceedings.spiedigitallibrary.org/proceeding.aspx?doi=10.1117/12.919751

- (46) Lewis, B., Sukthankar, G.: Two hands are better than one: Assisting users with multi-robot manipulation tasks. In: 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 2590–2595. IEEE, San Francisco, CA (2011). DOI 10.1109/IROS.2011.6094815. URL http://ieeexplore.ieee.org/document/6094815/

- (47) Li, H., Zhang, L., Kawashima, K.: Operator dynamics for stability condition in haptic and teleoperation system: A survey. The International Journal of Medical Robotics and Computer Assisted Surgery 14(2), e1881 (2018). DOI 10.1002/rcs.1881. URL http://doi.wiley.com/10.1002/rcs.1881

- (48) Lichiardopol, S.: A Survey on Teleoperation. Technische Universitat Eindhoven, DCT report 20, 34 (2007)

- (49) Likert, R.: A technique for the measurement of attitudes. Archives of Psychology 140, 55 (1932). URL https://ci.nii.ac.jp/naid/10024177101/en/

- (50) Lunghi, G., Marin, R., Di Castro, M., Masi, A., Sanz, P.J.: Multimodal Human-Robot Interface for Accessible Remote Robotic Interventions in Hazardous Environments. IEEE Access 7, 127290–127319 (2019). DOI 10.1109/ACCESS.2019.2939493. URL https://ieeexplore.ieee.org/document/8823931/

- (51) Lyons, J.B.: Being transparent about transparency: A model for human-robot interaction. In: 2013 AAAI Spring Symposium Series (2013)

- (52) Ma, L., Yan, J., Zhao, J., Chen, Z., Cai, H.: Teleoperation System of Internet-Based Multi-Operator Multi-Mobile-Manipulator. In: 2010 International Conference on Electrical and Control Engineering, pp. 2236–2240. IEEE, Wuhan, China (2010). DOI 10.1109/iCECE.2010.551. URL http://ieeexplore.ieee.org/document/5631615/

- (53) MacKenzie, I.S., Ware, C.: Lag as a determinant of human performance in interactive systems. In: Proceedings of the INTERACT’93 and CHI’93 conference on Human factors in computing systems, pp. 488–493 (1993)

- (54) Mansour, C., Shammas, E., Elhajj, I.H., Asmar, D.: Dynamic bandwidth management for teleoperation of collaborative robots. In: 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO), pp. 1861–1866. IEEE, Guangzhou, China (2012). DOI 10.1109/ROBIO.2012.6491239. URL http://ieeexplore.ieee.org/document/6491239/

- (55) Massimino, M.J., Sheridan, T.B.: Teleoperator performance with varying force and visual feedback. Human Factors 36(1), 145–157 (1994)

- (56) Miller, G.A.: The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review 63(2), 81 (1956)

- (57) Murphy, R.R.: A decade of rescue robots. In: 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 5448–5449. IEEE (2012)

- (58) Music, S., Salvietti, G., Dohmann, P.B.g., Chinello, F., Prattichizzo, D., Hirche, S.: Human–Robot Team Interaction Through Wearable Haptics for Cooperative Manipulation. IEEE Transactions on Haptics 12(3), 350–362 (2019). DOI 10.1109/TOH.2019.2921565. URL https://ieeexplore.ieee.org/document/8733002/

- (59) Nak Young Chong, Kotoku, T., Ohba, K., Komoriya, K., Matsuhira, N., Tanie, K.: Remote coordinated controls in multiple telerobot cooperation. In: Proceedings 2000 ICRA. Millennium Conference. IEEE International Conference on Robotics and Automation. Symposia Proceedings (Cat. No.00CH37065), vol. 4, pp. 3138–3143. IEEE, San Francisco, CA, USA (2000). DOI 10.1109/ROBOT.2000.845146. URL http://ieeexplore.ieee.org/document/845146/

- (60) Nielsen, C.W., Goodrich, M.A.: Comparing the usefulness of video and map information in navigation tasks. In: Proceedings of the 1st ACM SIGCHI/SIGART conference on Human-robot interaction, pp. 95–101 (2006)

- (61) Patel, J., Ramaswamy, T., Li, Z., Pinciroli, C.: Direct and indirect communication in multi-human multi-robot interaction. IEEE Transactions on Human-Machine Systems (2021). Submitted

- (62) Patel, J., Xu, Y., Pinciroli, C.: Mixed-granularity human-swarm interaction. In: Robotics and Automation (ICRA), 2019 IEEE International Conference on. IEEE (2019)

- (63) Patel, J., Xu, Y., Pinciroli, C.: Mixed-Granularity Human-Swarm Interaction. In: 2019 IEEE International Conference on Robotics and Automation (ICRA). IEEE, Montreal, Canada (2019). URL http://arxiv.org/abs/1901.08522. ArXiv: 1901.08522

- (64) Patel, T.M., Shah, S.C., Pancholy, S.B.: Long Distance Tele-Robotic-Assisted Percutaneous Coronary Intervention: A Report of First-in-Human Experience. EClinicalMedicine 14, 53–58 (2019). DOI 10.1016/j.eclinm.2019.07.017. URL https://linkinghub.elsevier.com/retrieve/pii/S2589537019301373

- (65) Pinciroli, C., Trianni, V., O’Grady, R., Pini, G., Brutschy, A., Brambilla, M., Mathews, N., Ferrante, E., Di Caro, G., Ducatelle, F., Birattari, M., Gambardella, L.M., Dorigo, M.: ARGoS: a modular, parallel, multi-engine simulator for multi-robot systems. Swarm Intelligence 6(4), 271–295 (2012)

- (66) Rakita, D., Mutlu, B., Gleicher, M.: Remote Telemanipulation with Adapting Viewpoints in Visually Complex Environments. In: Robotics: Science and Systems XV. Robotics: Science and Systems Foundation (2019). DOI 10.15607/RSS.2019.XV.068. URL http://www.roboticsproceedings.org/rss15/p68.pdf

- (67) Rastogi, A.: Design of an interface for teleoperation in unstructured environments using augmented reality displays. M.A.Sc. thesis, University of Toronto (Canada) (1997)

- (68) Regenbrecht, J., Tavakkoli, A., Loffredo, D.: An intuitive human interface for remote operation of robotic agents in immersive virtual reality environments. In: 2016 IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (2016)

- (69) Ricks, B., Nielsen, C.W., Goodrich, M.A.: Ecological displays for robot interaction: A new perspective. In: 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)(IEEE Cat. No. 04CH37566), vol. 3, pp. 2855–2860. IEEE (2004)

- (70) Roldan, J.J., Pena-Tapia, E., Garcia-Aunon, P., Del Cerro, J., Barrientos, A.: Bringing Adaptive and Immersive Interfaces to Real-World Multi-Robot Scenarios: Application to Surveillance and Intervention in Infrastructures. IEEE Access 7, 86319–86335 (2019). DOI 10.1109/ACCESS.2019.2924938. URL https://ieeexplore.ieee.org/document/8746262/

- (71) Roundtree, K.A., Goodrich, M.A., Adams, J.A.: Transparency: Transitioning From Human–Machine Systems to Human-Swarm Systems. Journal of Cognitive Engineering and Decision Making p. 155534341984277 (2019). DOI 10.1177/1555343419842776. URL http://journals.sagepub.com/doi/10.1177/1555343419842776