On Multivariate Extensions of Value-at-Risk

Abstract

In this paper, we introduce two alternative extensions of the classical univariate Value-at-Risk (VaR) in a multivariate setting. The two proposed multivariate VaR are vector-valued measures with the same dimension as the underlying risk portfolio. The lower-orthant VaR is constructed from level sets of multivariate distribution functions whereas the upper-orthant VaR is constructed from level sets of multivariate survival functions. Several properties are derived. In particular, we show that both risk measures satisfy the positive homogeneity and translation invariance properties. Comparisons between univariate risk measures and components of multivariate VaR are provided. We also analyze how these measures are impacted by a change in marginal distributions, by a change in dependence structure and by a change in risk level. Illustrations are given in the class of Archimedean copulas.

keywords:

Multivariate risk measures, Level sets of distribution functions, Multivariate probability integral transformation, Stochastic orders, Copulas and dependence.

Introduction

During the last decades, researchers have joined efforts to properly compare, quantify and manage risk. Regulators issue rules for bankers and insurers to improve their risk management and to avoid crises, not always successfully, as illustrated by recent events.

Traditionally, risk measures are thought of as mappings from a set of real-valued random variables to the real numbers. However, it is often insufficient to consider a single real measure to quantify risks created by business activities, especially if the latter are affected by other external risk factors. Let us consider for instance the problem of solvency capital allocation for financial institutions with multi-branch businesses confronted with risks with specific characteristics. Under Basel II and Solvency II, a bottom-up approach is used to estimate a “top-level” solvency capital. This is done by using risk aggregation techniques that may capture risk mitigation or risk diversification effects. Then this global capital amount is re-allocated to each subsidiary or activity for internal risk management purposes (“top-down approach”). Note that the solvency of each individual branch may strongly be affected by the degree of dependence amongst all branches. As a result, the capital allocated to each branch has to be computed in a multivariate setting where both marginal effects and dependence between risks should be captured. In this respect, the “Euler approach” (e.g., see Tasche, 2008) involving vector-valued risk measures has already been tested by risk management teams of some financial institutions.

Whereas the previous risk allocation problem only involves internal risks associated with businesses in different subsidiaries, the solvency of financial institutions could also be affected by external risks whose sources cannot be controlled. These risks may also be strongly heterogeneous in nature and difficult to diversify away. One can think for instance of systemic risk or contagion effects in a strongly interconnected system of financial companies. As we experienced during the 2007-2009 crisis, the risks undertaken by some particular institutions may have a significant impact on the solvency of the others. In this regard, micro-prudential regulation has been criticized because of its failure to limit the systemic risk within the system. This question has been dealt with recently by, among others, Gauthier et al. (2010) and Zhou (2010), who highlight the benefit of a “macro-prudential” approach as an alternative solution to the existing “micro-prudential” one (Basel II), which does not take into account interactions between financial institutions.

In the last decade, much research has been devoted to risk measures and many extensions to multidimensional settings have been investigated.

On theoretical grounds, Jouini et al. (2004) propose a class of set-valued coherent risk measures. Ekeland et al. (2012) derive a multivariate generalization of Kusuoka’s representation for coherent risk measures.

Unsurprisingly, the main difficulty regarding multivariate generalizations of risk measures is the fact that vector preorders are, in general, partial preorders. Then, what can be considered in a context of multidimensional portfolios as the analogue of a “worst case” scenario and a related “tail distribution”? This is why several definitions of quantile-based risk measures are possible in higher dimensions.

For example, Massé and Theodorescu (1994) defined multivariate quantiles as half-planes and Koltchinskii (1997) provided a general treatment of multivariate quantiles as inversions of mappings. Another approach is to use geometric quantiles (see, for example, Chaouch et al., 2009). Along with the geometric quantile, the notion of depth function has been developed in recent years to characterize the quantile of multidimensional distribution functions (for further details see, for instance, Chauvigny et al., 2011).

We refer to Serfling (2002) for a broad review of multivariate quantiles. When it comes to generalizing the Value-at-Risk measure, Embrechts and Puccetti (2006), Nappo and Spizzichino (2009) and Prékopa (2012) use the notion of quantile curve, which is defined as the boundary of the upper-level set of a distribution function or the lower-level set of a survival function.

In this paper, we introduce two alternative extensions of the classical univariate Value-at-Risk (VaR) in a multivariate setting. The proposed measures are based on Embrechts and Puccetti (2006)’s definitions of multivariate quantiles. We define the lower-orthant Value-at-Risk at risk level $\alpha$ as the conditional expectation of the underlying vector of risks $\mathbf{X}$ given that the latter stands in the $\alpha$-level set of its distribution function. Alternatively, we define the upper-orthant Value-at-Risk of $\mathbf{X}$ at level $\alpha$ as the conditional expectation of $\mathbf{X}$ given that $\mathbf{X}$ stands in the $(1-\alpha)$-level set of its survival function. Contrary to Embrechts and Puccetti (2006)’s approach, the extensions of Value-at-Risk proposed in this paper are real-valued vectors with the same dimension as the considered portfolio of risks. This feature can be relevant from an operational point of view.

Several properties have been derived. In particular, we show that the lower-orthant Value-at-Risk and the upper-orthant Value-at-Risk both satisfy the positive homogeneity and the translation invariance property. We compare the components of these vector-valued measures with the univariate VaR of marginals.

We prove that the lower-orthant Value-at-Risk (resp. upper-orthant Value-at-Risk) turns out to be more conservative (resp. less conservative) than the vector composed of univariate VaR. We also analyze how these measures are impacted by a change in marginal distributions, by a change in dependence structure and by a change in risk level.

In particular, we show that, for Archimedean families of copulas, the lower-orthant Value-at-Risk and the upper-orthant Value-at-Risk are both increasing with respect to the risk level whereas their behavior is different with respect to the degree of dependence. More precisely, an increase of the dependence amongst risks tends to lower the lower-orthant Value-at-Risk whereas it tends to raise the upper-orthant Value-at-Risk.

In addition, these two measures may be useful for some applications where risks are heterogeneous in nature. Indeed, contrary to many existing approaches, no arbitrary real-valued aggregate transformation is involved (sum, min, max, etc.).

The paper is organized as follows. In Section 1, we introduce some notation, tools and technical assumptions. In Section 2, we propose two multivariate extensions of the Value-at-Risk measure. We study the properties of our multivariate VaR in terms of Artzner et al. (1999)’s invariance properties of risk measures (see Section 2.1). Illustrations in some Archimedean copula cases are presented in Section 2.2. We also compare the components of these multivariate risk measures with the associated univariate Value-at-Risk (see Section 2.3). The behavior of our multivariate VaR with respect to a change in marginal distributions, a change in dependence structure and a change in risk level is discussed respectively in Sections 2.4, 2.5 and 2.6. In the conclusion, we discuss open problems and possible directions for future work.

1 Basic notions and preliminaries

In this section, we first introduce some notation and tools which will be used later on.

Stochastic orders

From now on, let $Q_X(\alpha)$ be the univariate quantile function of a risk $X$ at level $\alpha \in (0,1)$. More precisely, given a univariate continuous and strictly monotonic loss distribution function $F_X$, $Q_X(\alpha) = F_X^{-1}(\alpha)$, for all $\alpha \in (0,1)$. We recall here the definition and some properties of useful univariate and multivariate stochastic orders.

Definition 1.1 (Stochastic dominance order)

Let $X$ and $Y$ be two random variables. Then $X$ is said to be smaller than $Y$ in stochastic dominance, denoted as $X \preceq_{st} Y$, if the inequality $Q_X(\alpha) \le Q_Y(\alpha)$ is satisfied for all $\alpha \in (0,1)$.

Definition 1.2 (Stop-loss order)

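Let $X$ and $Y$ be two random variables. Then $X$ is said to be smaller than $Y$ in the stop-loss order, denoted as $X \preceq_{sl} Y$, if for all $t \in \mathbb{R}$, $\mathbb{E}[(X-t)_+] \le \mathbb{E}[(Y-t)_+]$, with $x_+ := \max\{x, 0\}$.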

Definition 1.3 (Increasing convex order)

Let $X$ and $Y$ be two random variables. Then $X$ is said to be smaller than $Y$ in the increasing convex order, denoted as $X \preceq_{icx} Y$, if $\mathbb{E}[f(X)] \le \mathbb{E}[f(Y)]$ for all non-decreasing convex functions $f$ such that the expectations exist.

The stop-loss order and the increasing convex order are equivalent (see Theorem 1.5.7 in Müller and Stoyan, 2001). Note that stochastic dominance order implies stop-loss order. For more details about stop-loss order we refer the interested reader to Müller (1997).

Finally, we introduce the definition of supermodular function and supermodular order for multivariate random vectors.

Definition 1.4 (Supermodular function)

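A function $f: \mathbb{R}^d \to \mathbb{R}$ is said to be supermodular if for any $\mathbf{x}, \mathbf{y} \in \mathbb{R}^d$ it satisfies

$$f(\mathbf{x}) + f(\mathbf{y}) \le f(\mathbf{x} \wedge \mathbf{y}) + f(\mathbf{x} \vee \mathbf{y}),$$

where the operators $\wedge$ and $\vee$ denote coordinatewise minimum and maximum respectively.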

Definition 1.5 (Supermodular order)

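Let $\mathbf{X}$ and $\mathbf{Y}$ be two $d$-dimensional random vectors such that $\mathbb{E}[f(\mathbf{X})] \le \mathbb{E}[f(\mathbf{Y})]$ for all supermodular functions $f: \mathbb{R}^d \to \mathbb{R}$, provided the expectations exist. Then $\mathbf{X}$ is said to be smaller than $\mathbf{Y}$ with respect to the supermodular order (denoted by $\mathbf{X} \preceq_{sm} \mathbf{Y}$).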

This will be a key tool to analyze the impact of dependence on our multivariate risk measures.

Kendall distribution function

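Let $\mathbf{X} = (X_1, \ldots, X_d)$ be a $d$-dimensional random vector, $d \ge 2$. As we will see later on, our study of multivariate risk measures strongly relies on the key concept of Kendall distribution function (or multivariate probability integral transformation), that is, the distribution function of the random variable $F(\mathbf{X})$, where $F$ is the multivariate distribution of random vector $\mathbf{X}$. From now on, the Kendall distribution will be denoted by $K$, so that $K(\alpha) = \mathbb{P}[F(\mathbf{X}) \le \alpha]$, for $\alpha \in [0,1]$. We also denote by $\overline{K}(\alpha)$ the survival distribution function of $F(\mathbf{X})$, i.e., $\overline{K}(\alpha) = \mathbb{P}[F(\mathbf{X}) > \alpha]$.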

For more details on the multivariate probability integral transformation, the interested reader is referred to Capéraà et al., (1997), Genest and Rivest (2001), Nelsen et al. (2003), Genest and Boies (2003), Genest et al. (2006) and Belzunce et al. (2007).

In contrast to the univariate case, it is not generally true that the distribution function $K$ of $F(\mathbf{X})$ is uniform on $[0,1]$, even when $F$ is continuous. Note also that it is not possible to characterize the joint distribution $F$ or reconstruct it from the knowledge of $K$ alone, since the latter does not contain any information about the marginal distributions (see Genest and Rivest, 2001). Indeed, as a consequence of Sklar’s Theorem, the Kendall distribution only depends on the dependence structure or the copula function $C$ associated with $\mathbf{X}$ (see Sklar, 1959). Thus, we also have $K(\alpha) = \mathbb{P}[C(\mathbf{U}) \le \alpha]$ where $\mathbf{U} = (U_1, \ldots, U_d)$ and $U_1 = F_{X_1}(X_1), \ldots, U_d = F_{X_d}(X_d)$.

Furthermore:

1. For a $d$-dimensional random vector $\mathbf{U}$ with copula $C$, the Kendall distribution function $K(\alpha)$ is linked to the Kendall’s tau correlation coefficient via $\tau_C = \dfrac{2^d\, \mathbb{E}[C(\mathbf{U})] - 1}{2^{d-1} - 1}$, for $d \ge 2$ (see Section 5 in Genest and Rivest, 2001).

2. The Kendall distribution can be obtained explicitly in the case of multivariate Archimedean copulas with generator $\phi$, i.e., $C(u_1, \ldots, u_d) = \phi^{-1}(\phi(u_1) + \cdots + \phi(u_d))$ for all $(u_1, \ldots, u_d) \in [0,1]^d$. (Note that $\phi$ generates a $d$-dimensional Archimedean copula if and only if its inverse $\phi^{-1}$ is $d$-monotone on $[0, \infty)$; see Theorem 2.2 in McNeil and Nešlehová, 2009.) Table 1 provides the expression of Kendall distributions associated with Archimedean, independent and comonotonic $d$-dimensional random vectors (see Barbe et al., 1996). Note that the Kendall distribution is uniform for comonotonic random vectors.

   | Copula | Kendall distribution $K(\alpha)$ |
   |---|---|
   | Archimedean case | $\alpha + \sum_{i=1}^{d-1} \frac{1}{i!} \left(-\phi(\alpha)\right)^i \left(\phi^{-1}\right)^{(i)}\!\left(\phi(\alpha)\right)$ |
   | Independent case | $\alpha + \alpha \sum_{i=1}^{d-1} \frac{\left(\ln(1/\alpha)\right)^i}{i!}$ |
   | Comonotonic case | $\alpha$ |

   Table 1: Kendall distribution in some classical $d$-dimensional dependence structures.

   For further details the interested reader is referred to Section 2 in Barbe et al. (1996) and Section 5 in Genest and Rivest (2001). For instance, in the bivariate case, the Kendall distribution function is equal to $\alpha - \phi(\alpha)/\phi'(\alpha)$, $\alpha \in (0,1)$, for Archimedean copulas with differentiable generator $\phi$. It is equal to $\alpha(1 - \ln \alpha)$, $\alpha \in (0,1)$, for the bivariate independence copula and to $1$ for the counter-monotonic bivariate copula.

3. It holds that $\alpha \le K(\alpha) \le 1$, for all $\alpha \in [0,1]$, i.e., the graph of the Kendall distribution function is above the first diagonal (see Section 5 in Genest and Rivest, 2001). This is equivalent to stating that, for any random vector $\mathbf{U}$ with copula function $C$ and uniform marginals, $C(\mathbf{U}) \preceq_{st} C^c(\mathbf{U}^c)$ where $\mathbf{U}^c = (U_1^c, \ldots, U_d^c)$ is a comonotonic random vector with copula function $C^c$ and uniform marginals.

This last property suggests that when the level of dependence between $X_1, \ldots, X_d$ increases, the Kendall distribution also increases in some sense. The following result, using the definitions of stochastic orders described above, makes this intuition rigorous.

Proposition 1.1

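Let $\mathbf{U} = (U_1, \ldots, U_d)$ (resp. $\mathbf{U}^* = (U_1^*, \ldots, U_d^*)$) be a random vector with copula $C$ (resp. $C^*$) and uniform marginals.

If $\mathbf{U} \preceq_{sm} \mathbf{U}^*$ then $C(\mathbf{U}) \preceq_{sl} C^*(\mathbf{U}^*)$.

Proof: Trivially, $\mathbf{U} \preceq_{sm} \mathbf{U}^* \Rightarrow C(\mathbf{u}) \le C^*(\mathbf{u})$, for all $\mathbf{u} \in [0,1]^d$ (see Section 6.3.3 in Denuit et al., 2005). Let $f: [0,1] \to \mathbb{R}$ be a non-decreasing and convex function. It holds that $f(C(\mathbf{u})) \le f(C^*(\mathbf{u}))$, for all $\mathbf{u} \in [0,1]^d$, and $\mathbb{E}[f(C(\mathbf{U}))] \le \mathbb{E}[f(C^*(\mathbf{U}))]$. Remark that since $C^*$ is non-decreasing and supermodular and $f$ is non-decreasing and convex then $f \circ C^*$ is a non-decreasing and supermodular function (see Theorem 3.9.3 in Müller and Stoyan, 2001). Then, by assumption, $\mathbb{E}[f(C^*(\mathbf{U}))] \le \mathbb{E}[f(C^*(\mathbf{U}^*))]$. This implies $\mathbb{E}[f(C(\mathbf{U}))] \le \mathbb{E}[f(C^*(\mathbf{U}^*))]$. Hence the result.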

From Proposition 1.1, we remark that $\mathbf{U} \preceq_{sm} \mathbf{U}^*$ implies an ordering relation between the corresponding Kendall’s tau: $\tau_C \le \tau_{C^*}$. Note that the supermodular order between $\mathbf{U}$ and $\mathbf{U}^*$ does not necessarily yield the stochastic dominance order between $C(\mathbf{U})$ and $C^*(\mathbf{U}^*)$ (i.e., $C(\mathbf{U}) \preceq_{st} C^*(\mathbf{U}^*)$ does not hold in general). For a bivariate counter-example, the interested reader is referred to, for instance, Capéraà et al. (1997) or Example 3.1 in Nelsen et al. (2003).

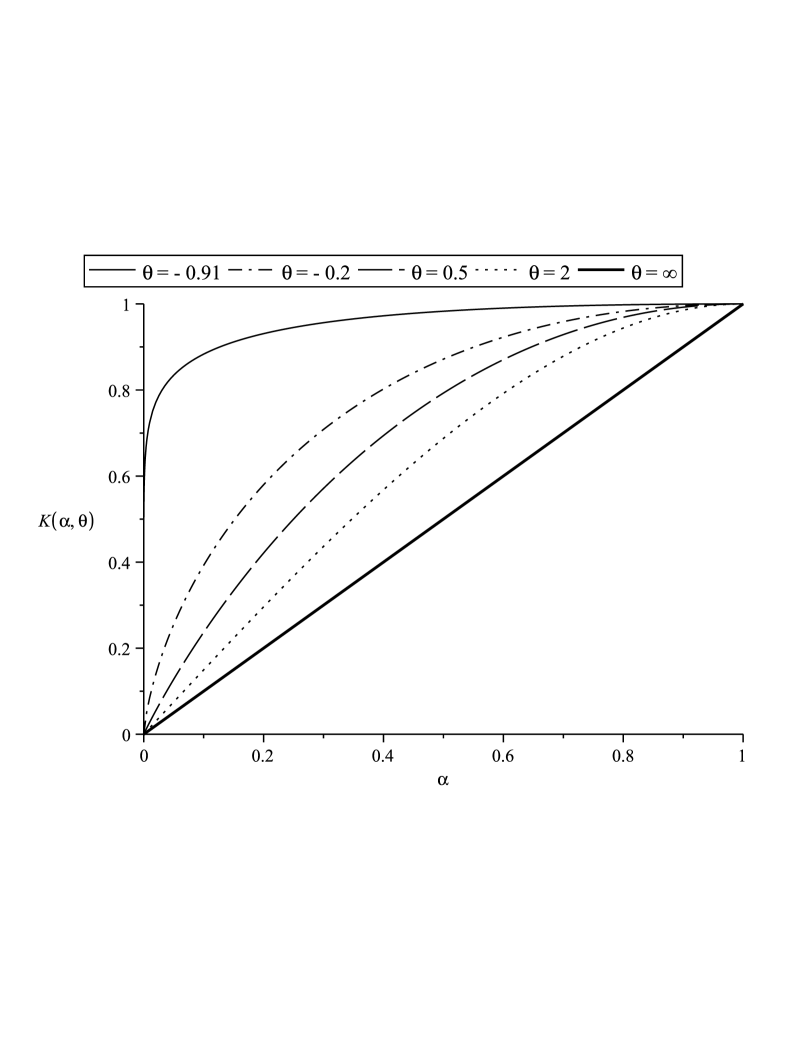

Let us now focus on some classical families of bivariate Archimedean copulas. In Table 2, we obtain analytical expressions of the Kendall distribution function for Gumbel, Frank, Clayton and Ali-Mikhail-Haq families.

| Copula | Kendall distribution | |

|---|---|---|

| Gumbel | ||

| Frank | ||

| Clayton | ||

| Ali-Mikhail-Haq |

Remark 1

Bivariate Archimedean copulas can be extended to $d$-dimensional copulas with $d > 2$ as long as the inverse $\phi^{-1}$ of the generator is a $d$-monotone function on $[0, \infty)$ (see McNeil and Nešlehová, 2009 for more details). For the $d$-dimensional Clayton copulas, the underlying dependence parameter must be such that $\theta > -\frac{1}{d-1}$ (see Example 4.27 in Nelsen, 1999). Frank copulas can be extended to $d$-dimensional copulas for $\theta > 0$ (see Example 4.24 in Nelsen, 1999).

Note that parameter $\theta$ governs the level of dependence amongst components of the underlying random vector. Indeed, it can be shown that, for all Archimedean copulas in Table 2, an increase of $\theta$ yields an increase of dependence in the sense of the supermodular order, i.e., $\theta \le \theta^* \Rightarrow C_\theta \preceq_{sm} C_{\theta^*}$ (see further examples in Joe, 1997 and Wei and Hu, 2002). Then, as a consequence of Proposition 1.1, the following comparison result holds:

$$\theta \le \theta^* \;\Rightarrow\; C_\theta(\mathbf{U}) \preceq_{sl} C_{\theta^*}(\mathbf{U}^*).$$

In fact, a stronger comparison result can be derived for Archimedean copulas of Table 2, as shown in the following remark.

Remark 2

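For copulas in Table 2, one can check that $\frac{\partial K(\alpha, \theta)}{\partial \theta} \le 0$, for all $\alpha \in [0,1]$. This means that, for these classical examples, the associated Kendall distributions actually increase with respect to the stochastic dominance order when the dependence parameter $\theta$ increases, i.e., $\theta \le \theta^* \Rightarrow C_\theta(\mathbf{U}) \preceq_{st} C_{\theta^*}(\mathbf{U}^*)$.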

In order to illustrate this property we plot in Figure 1 the Kendall distribution function $K(\cdot, \theta)$ for different choices of parameter $\theta$ in the bivariate Clayton copula case and in the bivariate Gumbel copula case.
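The bivariate Archimedean expression of the Kendall distribution, $K(\alpha) = \alpha - \phi(\alpha)/\phi'(\alpha)$, can be checked numerically. The following is a minimal sketch, not part of the original paper: the function names are hypothetical, and the Clayton generator $\phi(t) = (t^{-\theta} - 1)/\theta$ (with $\theta > 0$) and Gumbel generator $\phi(t) = (-\ln t)^{\theta}$ (with $\theta \ge 1$) are the usual textbook parametrizations, assumed here to match those of Table 2.

```python
import math

def kendall_archimedean(alpha, phi, dphi):
    """Bivariate Kendall distribution K(alpha) = alpha - phi(alpha) / phi'(alpha)."""
    return alpha - phi(alpha) / dphi(alpha)

def clayton_generator(theta):
    # Assumed parametrization: phi(t) = (t^(-theta) - 1) / theta, theta > 0.
    phi = lambda t: (t ** (-theta) - 1.0) / theta
    dphi = lambda t: -(t ** (-theta - 1.0))
    return phi, dphi

def gumbel_generator(theta):
    # Assumed parametrization: phi(t) = (-ln t)^theta, theta >= 1.
    phi = lambda t: (-math.log(t)) ** theta
    dphi = lambda t: -theta * (-math.log(t)) ** (theta - 1.0) / t
    return phi, dphi

def independence_generator():
    # phi(t) = -ln t generates the independence copula.
    phi = lambda t: -math.log(t)
    dphi = lambda t: -1.0 / t
    return phi, dphi

alphas = [0.1, 0.3, 0.5, 0.7, 0.9]
thetas = [1.0, 2.0, 5.0, 10.0]

def kendall_table(make_generator):
    """One row per theta, one column per alpha."""
    return [[kendall_archimedean(a, *make_generator(th)) for a in alphas]
            for th in thetas]

clayton_rows = kendall_table(clayton_generator)
gumbel_rows = kendall_table(gumbel_generator)

for th, row in zip(thetas, clayton_rows):
    print("Clayton theta=%5.1f" % th, ["%.4f" % k for k in row])
for th, row in zip(thetas, gumbel_rows):
    print("Gumbel  theta=%5.1f" % th, ["%.4f" % k for k in row])
```

Under these assumptions, each printed row stays above the diagonal ($K(\alpha) \ge \alpha$) and the rows decrease as $\theta$ grows, which is the behavior described in Remark 2.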

2 Multivariate generalization of the Value-at-Risk measure

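From the usual definition in the univariate setting, the Value-at-Risk is the minimal amount of the loss which accumulates a probability $\alpha$ to the left tail and $1 - \alpha$ to the right tail. Then, if $F_X$ denotes the cumulative distribution function associated with risk $X$ and $\overline{F}_X$ its associated survival function, then

$$\mathrm{VaR}_\alpha(X) := \inf\{x \in \mathbb{R} : F_X(x) \ge \alpha\}$$

and equivalently,

$$\mathrm{VaR}_\alpha(X) := \inf\{x \in \mathbb{R} : \overline{F}_X(x) \le 1 - \alpha\}.$$

Consequently, the classical univariate VaR can be viewed as the boundary of the set $\{x \in \mathbb{R} : F_X(x) \ge \alpha\}$ or, similarly, the boundary of the set $\{x \in \mathbb{R} : \overline{F}_X(x) \le 1 - \alpha\}$.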

This idea can be easily extended to higher dimensions, keeping in mind that the two previous sets are different in general as soon as $d \ge 2$. We propose a multivariate generalization of Value-at-Risk for a portfolio of $d$ dependent risks. As a starting point, we consider Definition 17 in Embrechts and Puccetti (2006). They suggest defining the multivariate lower-orthant Value-at-Risk at probability level $\alpha \in (0,1)$, for an increasing function $G: \mathbb{R}^d \to [0,1]$, as the boundary of its $\alpha$-upper-level set, i.e., $\partial\{\mathbf{x} \in \mathbb{R}^d : G(\mathbf{x}) \ge \alpha\}$ and analogously, the multivariate upper-orthant Value-at-Risk, for a decreasing function $\overline{G}: \mathbb{R}^d \to [0,1]$, as the boundary of its $(1-\alpha)$-lower-level set, i.e., $\partial\{\mathbf{x} \in \mathbb{R}^d : \overline{G}(\mathbf{x}) \le 1 - \alpha\}$.

Note that the generalizations of Value-at-Risk by Embrechts and Puccetti (2006) (see also Nappo and Spizzichino, 2009; Tibiletti, 1993) are represented by an infinite number of points (a hyperspace of dimension $d-1$, under some regularity conditions on the functions $G$ and $\overline{G}$). This choice can be unsuitable when we face real risk management problems. Then, we propose more parsimonious and synthetic versions of Embrechts and Puccetti (2006)’s measures. In particular, instead of considering the whole hyperspace $\partial\{\mathbf{x} : G(\mathbf{x}) \ge \alpha\}$ (or $\partial\{\mathbf{x} : \overline{G}(\mathbf{x}) \le 1 - \alpha\}$) we only focus on the particular point in $\mathbb{R}^d_+$ that matches the conditional expectation of $\mathbf{X}$ given that $\mathbf{X}$ stands in this set. This means that our measures are real-valued vectors with the same dimension as the considered portfolio of risks.

In addition, to be consistent with the univariate definition of $\mathrm{VaR}$, we choose $G$ (resp. $\overline{G}$) as the $d$-dimensional loss distribution function $F$ (resp. the survival distribution function $\overline{F}$) of the risk portfolio. This allows us to capture information coming both from the marginal distributions and from the multivariate dependence structure, without using an arbitrary real-valued aggregate transformation (for more details see the Introduction).

In analogy with Embrechts and Puccetti’s notation we will denote by $\underline{\mathrm{VaR}}$ our multivariate lower-orthant Value-at-Risk and by $\overline{\mathrm{VaR}}$ the upper-orthant one.

In the following, we will consider a non-negative absolutely-continuous random vector $\mathbf{X} = (X_1, \ldots, X_d)$ (with respect to Lebesgue measure $\lambda$ on $\mathbb{R}^d$) with partially increasing multivariate distribution function $F$ and such that $\mathbb{E}(X_i) < \infty$ for $i = 1, \ldots, d$. These conditions will be called regularity conditions. We restrict ourselves to $\mathbb{R}^d_+$ because, in our applications, components of $d$-dimensional vectors correspond to random losses and are then valued in $\mathbb{R}_+$. A function $F(x_1, \ldots, x_d)$ is partially increasing on $\mathbb{R}^d_+ \setminus \{(0, \ldots, 0)\}$ if the functions of one variable $g(\cdot) = F(x_1, \ldots, x_{j-1}, \cdot, x_{j+1}, \ldots, x_d)$ are increasing; for properties of partially increasing multivariate distribution functions we refer the interested reader to Rossi (1973) and Tibiletti (1991).

However, extensions of our results to the case of multivariate distribution functions on the entire space $\mathbb{R}^d$ or in the presence of plateaus in the graph of $F$ are possible. Starting from these considerations, we introduce here a multivariate generalization of the VaR measure.

Definition 2.1 (Multivariate lower-orthant Value-at-Risk)

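Consider a random vector $\mathbf{X} = (X_1, \ldots, X_d)$ with distribution function $F$ satisfying the regularity conditions. For $\alpha \in (0,1)$, we define the multidimensional lower-orthant Value-at-Risk at probability level $\alpha$ by

$$\underline{\mathrm{VaR}}_\alpha(\mathbf{X}) = \mathbb{E}[\mathbf{X} \mid \mathbf{X} \in \partial\underline{L}(\alpha)] = \begin{pmatrix} \mathbb{E}[X_1 \mid \mathbf{X} \in \partial\underline{L}(\alpha)] \\ \vdots \\ \mathbb{E}[X_d \mid \mathbf{X} \in \partial\underline{L}(\alpha)] \end{pmatrix},$$

where $\partial\underline{L}(\alpha)$ is the boundary of the set $\underline{L}(\alpha) := \{\mathbf{x} \in \mathbb{R}^d_+ : F(\mathbf{x}) \ge \alpha\}$. Under the regularity conditions, $\partial\underline{L}(\alpha)$ is the $\alpha$-level set of $F$, i.e., $\partial\underline{L}(\alpha) = \{\mathbf{x} \in \mathbb{R}^d_+ : F(\mathbf{x}) = \alpha\}$ and the previous definition can be restated as

$$\underline{\mathrm{VaR}}_\alpha(\mathbf{X}) = \mathbb{E}[\mathbf{X} \mid F(\mathbf{X}) = \alpha] = \begin{pmatrix} \mathbb{E}[X_1 \mid F(\mathbf{X}) = \alpha] \\ \vdots \\ \mathbb{E}[X_d \mid F(\mathbf{X}) = \alpha] \end{pmatrix}.$$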

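Note that, under the regularity conditions, $\partial\underline{L}(\alpha)$ has Lebesgue-measure zero in $\mathbb{R}^d_+$ (e.g., see Property 3 in Tibiletti, 1990). Then we make sense of Definition 2.1 using the limit procedure in Feller (1966), Section 3.2:

$$\mathbb{E}[X_i \mid F(\mathbf{X}) = \alpha] = \lim_{h \to 0} \mathbb{E}[X_i \mid \alpha < F(\mathbf{X}) \le \alpha + h] = \lim_{h \to 0} \frac{\int_{Q_{X_i}(\alpha)}^{\infty} x \left( \int_{\alpha}^{\alpha+h} f_{(X_i, F(\mathbf{X}))}(x, y)\, dy \right) dx}{\int_{\alpha}^{\alpha+h} K'(y)\, dy}, \tag{1}$$

for $i = 1, \ldots, d$.

Dividing numerator and denominator in (1) by $h$, we obtain, as $h \to 0$,

$$\mathbb{E}[X_i \mid F(\mathbf{X}) = \alpha] = \frac{\int_{Q_{X_i}(\alpha)}^{\infty} x\, f_{(X_i, F(\mathbf{X}))}(x, \alpha)\, dx}{K'(\alpha)}, \tag{2}$$

for $i = 1, \ldots, d$, where $K'(\alpha) = \frac{dK(\alpha)}{d\alpha}$ is the Kendall distribution density function. This procedure gives a rigorous sense to our $\underline{\mathrm{VaR}}_\alpha(\mathbf{X})$ in Definition 2.1. Remark that the existence of $f_{(X_i, F(\mathbf{X}))}$ and $K'$ in (2) is guaranteed by the regularity conditions (for further details, see Proposition 1 in Imlahi et al., 1999 or Proposition 4 in Chakak and Ezzerg, 2000).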
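The limit procedure can be mimicked by simulation: condition on the thin band $\alpha < F(\mathbf{X}) \le \alpha + h$ and average the components that fall into it. The sketch below is illustrative only and is not taken from the paper. It assumes two independent uniform risks, so that $F(x, y) = xy$ on $[0,1]^2$; the function name, the bandwidth `h` and the sample size are arbitrary choices. The reference value $(\alpha - 1)/\ln \alpha$ is what the Archimedean formula of Section 2.2 gives for the independence copula with uniform margins.

```python
import math
import random

def lower_orthant_var_mc(alpha, h=0.01, n=400_000, seed=0):
    """Monte Carlo version of the band conditioning E[X | alpha < F(X) <= alpha + h].

    Assumes two independent uniform(0, 1) risks, so F(x, y) = x * y.
    Returns the two estimated components and the number of retained samples.
    """
    rng = random.Random(seed)
    sum_x = 0.0
    sum_y = 0.0
    count = 0
    for _ in range(n):
        x, y = rng.random(), rng.random()
        level = x * y  # joint distribution function evaluated at the sample
        if alpha < level <= alpha + h:
            sum_x += x
            sum_y += y
            count += 1
    return sum_x / count, sum_y / count, count

alpha = 0.5
est = lower_orthant_var_mc(alpha)
reference = (alpha - 1.0) / math.log(alpha)  # independence copula, uniform margins
print("estimate:", est[:2], "samples in band:", est[2])
print("reference:", reference)
```

Shrinking `h` reduces the bias of the band approximation but leaves fewer samples in the band, so the bandwidth and the sample size have to be balanced in practice.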

In analogy with Definition 2.1, we now introduce another possible generalization of the VaR measure based on the survival distribution function.

Definition 2.2 (Multivariate upper-orthant Value-at-Risk)

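Consider a random vector $\mathbf{X} = (X_1, \ldots, X_d)$ with survival distribution $\overline{F}$ satisfying the regularity conditions. For $\alpha \in (0,1)$, we define the multidimensional upper-orthant Value-at-Risk at probability level $\alpha$ by

$$\overline{\mathrm{VaR}}_\alpha(\mathbf{X}) = \mathbb{E}[\mathbf{X} \mid \mathbf{X} \in \partial\overline{L}(\alpha)] = \begin{pmatrix} \mathbb{E}[X_1 \mid \mathbf{X} \in \partial\overline{L}(\alpha)] \\ \vdots \\ \mathbb{E}[X_d \mid \mathbf{X} \in \partial\overline{L}(\alpha)] \end{pmatrix},$$

where $\partial\overline{L}(\alpha)$ is the boundary of the set $\overline{L}(\alpha) := \{\mathbf{x} \in \mathbb{R}^d_+ : \overline{F}(\mathbf{x}) \le 1 - \alpha\}$. Under the regularity conditions, $\partial\overline{L}(\alpha)$ is the $(1-\alpha)$-level set of $\overline{F}$, i.e., $\partial\overline{L}(\alpha) = \{\mathbf{x} \in \mathbb{R}^d_+ : \overline{F}(\mathbf{x}) = 1 - \alpha\}$ and the previous definition can be restated as

$$\overline{\mathrm{VaR}}_\alpha(\mathbf{X}) = \mathbb{E}[\mathbf{X} \mid \overline{F}(\mathbf{X}) = 1 - \alpha] = \begin{pmatrix} \mathbb{E}[X_1 \mid \overline{F}(\mathbf{X}) = 1 - \alpha] \\ \vdots \\ \mathbb{E}[X_d \mid \overline{F}(\mathbf{X}) = 1 - \alpha] \end{pmatrix}.$$

As for $\partial\underline{L}(\alpha)$, under the regularity conditions, $\partial\overline{L}(\alpha)$ has Lebesgue-measure zero in $\mathbb{R}^d_+$ (e.g., see Property 3 in Tibiletti, 1990) and we make sense of Definition 2.2 using Feller’s limit procedure (see Equations (1)-(2)).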

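From now on, we denote by $\underline{\mathrm{VaR}}^1_\alpha(\mathbf{X}), \ldots, \underline{\mathrm{VaR}}^d_\alpha(\mathbf{X})$ the components of the vector $\underline{\mathrm{VaR}}_\alpha(\mathbf{X})$ and by $\overline{\mathrm{VaR}}^1_\alpha(\mathbf{X}), \ldots, \overline{\mathrm{VaR}}^d_\alpha(\mathbf{X})$ the components of the vector $\overline{\mathrm{VaR}}_\alpha(\mathbf{X})$.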

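Note that if $\mathbf{X}$ is an exchangeable random vector, $\underline{\mathrm{VaR}}^i_\alpha(\mathbf{X}) = \underline{\mathrm{VaR}}^j_\alpha(\mathbf{X})$ and $\overline{\mathrm{VaR}}^i_\alpha(\mathbf{X}) = \overline{\mathrm{VaR}}^j_\alpha(\mathbf{X})$ for any $i, j = 1, \ldots, d$. Furthermore, given a univariate random variable $X$, $\mathbb{E}[X \mid F_X(X) = \alpha] = \mathbb{E}[X \mid \overline{F}_X(X) = 1 - \alpha] = \mathrm{VaR}_\alpha(X)$, for all $\alpha$ in $(0,1)$. Hence, lower-orthant VaR and upper-orthant VaR are the same for (univariate) random variables and Definitions 2.1 and 2.2 can be viewed as natural multivariate versions of the univariate case. As remarked above, in Definitions 2.1-2.2 instead of considering the whole hyperspace $\partial\underline{L}(\alpha)$ (or $\partial\overline{L}(\alpha)$), we only focus on the particular point in $\mathbb{R}^d_+$ that matches the conditional expectation of $\mathbf{X}$ given that $\mathbf{X}$ falls in $\partial\underline{L}(\alpha)$ (or in $\partial\overline{L}(\alpha)$).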

2.1 Invariance properties

In the present section, the aim is to analyze the lower-orthant VaR and upper-orthant VaR introduced in Definitions 2.1-2.2 in terms of classical invariance properties of risk measures (we refer the interested reader to Artzner et al., 1999). As these measures are not the same in general for dimension greater than or equal to $2$, we also provide some connections between these two measures.

We now introduce the following results (Proposition 2.1 and Corollary 2.1) that will be useful in order to prove invariance properties of our risk measures.

Proposition 2.1

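Let the function $h$ be such that $h(x_1, \ldots, x_d) = (h_1(x_1), \ldots, h_d(x_d))$.

- If $h_1, \ldots, h_d$ are non-decreasing functions, then the following relations hold

  $$\underline{\mathrm{VaR}}^i_\alpha(h(\mathbf{X})) = \mathbb{E}[h_i(X_i) \mid F_{\mathbf{X}}(\mathbf{X}) = \alpha], \quad i = 1, \ldots, d.$$

- If $h_1, \ldots, h_d$ are non-increasing functions, then the following relations hold

  $$\underline{\mathrm{VaR}}^i_\alpha(h(\mathbf{X})) = \mathbb{E}[h_i(X_i) \mid \overline{F}_{\mathbf{X}}(\mathbf{X}) = \alpha], \quad i = 1, \ldots, d.$$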

From Proposition 2.1 one can trivially obtain the following property which links the multivariate upper-orthant Value-at-Risk and lower-orthant one.

Corollary 2.1

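Let $h$ be a linear function such that $h(x_1, \ldots, x_d) = (h_1(x_1), \ldots, h_d(x_d))$.

- If $h_1, \ldots, h_d$ are non-decreasing functions then it holds that

  $\underline{\mathrm{VaR}}_\alpha(h(\mathbf{X})) = h(\underline{\mathrm{VaR}}_\alpha(\mathbf{X}))$ and $\overline{\mathrm{VaR}}_\alpha(h(\mathbf{X})) = h(\overline{\mathrm{VaR}}_\alpha(\mathbf{X}))$.

- If $h_1, \ldots, h_d$ are non-increasing functions then it holds that

  $\underline{\mathrm{VaR}}_\alpha(h(\mathbf{X})) = h(\overline{\mathrm{VaR}}_{1-\alpha}(\mathbf{X}))$ and $\overline{\mathrm{VaR}}_\alpha(h(\mathbf{X})) = h(\underline{\mathrm{VaR}}_{1-\alpha}(\mathbf{X}))$.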

Example 1

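If $\mathbf{X} = (X_1, \ldots, X_d)$ is a random vector with uniform margins and if, for all $i = 1, \ldots, d$, we consider the functions $h_i$ such that $h_i(x) = 1 - x$, $x \in [0,1]$, then from Corollary 2.1,

$$\overline{\mathrm{VaR}}^i_\alpha(\mathbf{X}) = 1 - \underline{\mathrm{VaR}}^i_{1-\alpha}(\mathbf{1} - \mathbf{X}), \tag{3}$$

for all $i = 1, \ldots, d$, where $\mathbf{1} - \mathbf{X} = (1 - X_1, \ldots, 1 - X_d)$. In this case, $\overline{\mathrm{VaR}}_\alpha(\mathbf{X})$ is the point reflection of $\underline{\mathrm{VaR}}_{1-\alpha}(\mathbf{1} - \mathbf{X})$ with respect to the point $I$ with coordinates $(\frac{1}{2}, \ldots, \frac{1}{2})$. If $\mathbf{X}$ and $\mathbf{1} - \mathbf{X}$ have the same distribution function, then $\mathbf{X}$ is invariant in law by central symmetry and additionally the copula of $\mathbf{X}$ and its associated survival copula are the same. In that case $\overline{\mathrm{VaR}}_\alpha(\mathbf{X})$ is the point reflection of $\underline{\mathrm{VaR}}_{1-\alpha}(\mathbf{X})$ with respect to $I$. This property holds for instance for elliptical copulas or for the Frank copula.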

Finally, we can state the following result that proves positive homogeneity and translation invariance for our measures.

Proposition 2.2

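Consider a random vector $\mathbf{X}$ satisfying the regularity conditions. For $\alpha \in (0,1)$, the multivariate upper-orthant and lower-orthant Value-at-Risk satisfy the following properties:

Positive Homogeneity: for all $\mathbf{c} \in \mathbb{R}^d_+$, $\underline{\mathrm{VaR}}_\alpha(\mathbf{c}\mathbf{X}) = \mathbf{c}\, \underline{\mathrm{VaR}}_\alpha(\mathbf{X})$ and $\overline{\mathrm{VaR}}_\alpha(\mathbf{c}\mathbf{X}) = \mathbf{c}\, \overline{\mathrm{VaR}}_\alpha(\mathbf{X})$, where products are taken componentwise.

Translation Invariance: for all $\mathbf{c} \in \mathbb{R}^d_+$, $\underline{\mathrm{VaR}}_\alpha(\mathbf{c} + \mathbf{X}) = \mathbf{c} + \underline{\mathrm{VaR}}_\alpha(\mathbf{X})$ and $\overline{\mathrm{VaR}}_\alpha(\mathbf{c} + \mathbf{X}) = \mathbf{c} + \overline{\mathrm{VaR}}_\alpha(\mathbf{X})$.

The proof follows from Corollary 2.1.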
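As a hedged sketch of how this argument might be unpacked (the specific maps $h_i$ below are an illustrative choice, not taken from the original proof), both properties can be read off the non-decreasing case of Corollary 2.1 by choosing componentwise affine maps with positive slope:

```latex
% Positive homogeneity: take h_i(x) = c_i x with c_i > 0 (non-decreasing).
\underline{\mathrm{VaR}}_{\alpha}(\mathbf{c}\mathbf{X})
  = \underline{\mathrm{VaR}}_{\alpha}(h(\mathbf{X}))
  = h\bigl(\underline{\mathrm{VaR}}_{\alpha}(\mathbf{X})\bigr)
  = \mathbf{c}\,\underline{\mathrm{VaR}}_{\alpha}(\mathbf{X}).

% Translation invariance: take h_i(x) = x + c_i (non-decreasing).
\underline{\mathrm{VaR}}_{\alpha}(\mathbf{c} + \mathbf{X})
  = \underline{\mathrm{VaR}}_{\alpha}(h(\mathbf{X}))
  = h\bigl(\underline{\mathrm{VaR}}_{\alpha}(\mathbf{X})\bigr)
  = \mathbf{c} + \underline{\mathrm{VaR}}_{\alpha}(\mathbf{X}).
```

The same two choices of $h$ applied to the upper-orthant statement of Corollary 2.1 give the corresponding identities for $\overline{\mathrm{VaR}}_{\alpha}$.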

2.2 Archimedean copula case

Surprisingly enough, the $\underline{\mathrm{VaR}}$ and $\overline{\mathrm{VaR}}$ introduced in Definitions 2.1-2.2 can be computed analytically for any $d$-dimensional random vector with an Archimedean copula dependence structure. This is due to McNeil and Nešlehová’s stochastic representation of Archimedean copulas.

Proposition 2.3

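(McNeil and Nešlehová, 2009) Let $\mathbf{U} = (U_1, \ldots, U_d)$ be distributed according to a $d$-dimensional Archimedean copula with generator $\phi$, then

$$(\phi(U_1), \ldots, \phi(U_d)) \overset{d}{=} R\, \mathbf{S}, \tag{4}$$

where $\mathbf{S} = (S_1, \ldots, S_d)$ is uniformly distributed on the unit simplex $\{\mathbf{x} \ge 0 \mid \sum_{k=1}^d x_k = 1\}$ and $R$ is an independent non-negative scalar random variable which can be interpreted as the radial part of $(\phi(U_1), \ldots, \phi(U_d))$ since $\sum_{k=1}^d S_k = 1$. The random vector $\mathbf{S}$ follows a symmetric Dirichlet distribution whereas the distribution of $R$ is directly related to the generator $\phi$ through the inverse Williamson transform of $\phi^{-1}$.

Recall that a $d$-dimensional Archimedean copula with generator $\phi$ is defined by $C(u_1, \ldots, u_d) = \phi^{-1}(\phi(u_1) + \cdots + \phi(u_d))$, for all $(u_1, \ldots, u_d) \in [0,1]^d$. Then, the radial part $R$ of representation (4) is directly related to the generator $\phi$ and the probability integral transformation of $\mathbf{U}$, that is,

$$R \overset{d}{=} \sum_{k=1}^d \phi(U_k) = \phi(C(\mathbf{U})).$$

As a result, any random vector $\mathbf{U} = (U_1, \ldots, U_d)$ which follows an Archimedean copula with generator $\phi$ can be represented as a deterministic function of $C(\mathbf{U})$ and an independent random vector $\mathbf{S} = (S_1, \ldots, S_d)$ uniformly distributed on the unit simplex, i.e.,

$$(U_1, \ldots, U_d) \overset{d}{=} \bigl(\phi^{-1}(S_1\, \phi(C(\mathbf{U}))), \ldots, \phi^{-1}(S_d\, \phi(C(\mathbf{U})))\bigr). \tag{5}$$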

The previous relation allows us to obtain an easily tractable expression of $\underline{\mathrm{VaR}}_\alpha(\mathbf{X})$ for any random vector $\mathbf{X}$ with an Archimedean copula dependence structure.

Corollary 2.2

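Let $\mathbf{X}$ be a $d$-dimensional random vector with marginal distributions $F_1, \ldots, F_d$. Assume that the dependence structure of $\mathbf{X}$ is given by an Archimedean copula with generator $\phi$. Then, for any $i = 1, \ldots, d$,

$$\underline{\mathrm{VaR}}^i_\alpha(\mathbf{X}) = \mathbb{E}\bigl[F_i^{-1}\bigl(\phi^{-1}(S_i\, \phi(\alpha))\bigr)\bigr], \tag{6}$$

where $S_i$ is a random variable with $\mathrm{Beta}(1, d-1)$ distribution.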

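Proof: Note that $\mathbf{X}$ is distributed as $(F_1^{-1}(U_1), \ldots, F_d^{-1}(U_d))$ where $\mathbf{U} = (U_1, \ldots, U_d)$ follows an Archimedean copula $C$ with generator $\phi$. Then, each component $i = 1, \ldots, d$ of the multivariate risk measure introduced in Definition 2.1 can be expressed as $\underline{\mathrm{VaR}}^i_\alpha(\mathbf{X}) = \mathbb{E}[F_i^{-1}(U_i) \mid C(\mathbf{U}) = \alpha]$. Moreover, from representation (5) the following relation holds

$$[\mathbf{U} \mid C(\mathbf{U}) = \alpha] \overset{d}{=} \bigl(\phi^{-1}(S_1\, \phi(\alpha)), \ldots, \phi^{-1}(S_d\, \phi(\alpha))\bigr), \tag{7}$$

since $\mathbf{S}$ and $C(\mathbf{U})$ are stochastically independent. The result follows from the fact that the random vector $(S_1, \ldots, S_d)$ follows a symmetric Dirichlet distribution.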

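Note that, using (7), the marginal distributions of $\mathbf{U}$ given $C(\mathbf{U}) = \alpha$ can be expressed in a very simple way, that is, for any $i = 1, \ldots, d$,

$$\mathbb{P}(U_i \le u \mid C(\mathbf{U}) = \alpha) = \left(1 - \frac{\phi(u)}{\phi(\alpha)}\right)^{d-1}, \quad \text{for } 0 < \alpha < u < 1. \tag{8}$$

The latter relation derives from the fact that $S_i$, which is $\mathrm{Beta}(1, d-1)$-distributed, is such that $S_i \overset{d}{=} 1 - V^{\frac{1}{d-1}}$ where $V$ is uniformly-distributed on $(0,1)$.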
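Formula (6) lends itself to a direct numerical evaluation, since $S_i$ can be written as $1 - V^{1/(d-1)}$ with $V$ uniform. The following sketch is illustrative and not part of the paper. It assumes (6) reads $\mathbb{E}[F_i^{-1}(\phi^{-1}(S_i \phi(\alpha)))]$, uses a plain midpoint rule over $V$, and takes the Clayton generator $\phi(t) = (t^{-\theta} - 1)/\theta$ as the usual textbook parametrization. All function names are hypothetical, and the closed form used for comparison is obtained by integrating (6) by hand for uniform margins and $d = 2$.

```python
import math

def lower_var_archimedean(alpha, phi, phi_inv, quantile, d=2, n=20_000):
    """Midpoint-rule approximation of E[quantile(phi_inv(S * phi(alpha)))],
    where S = 1 - V**(1/(d-1)) is Beta(1, d-1) distributed for V uniform on (0, 1)."""
    total = 0.0
    for k in range(n):
        v = (k + 0.5) / n
        s = 1.0 - v ** (1.0 / (d - 1))
        total += quantile(phi_inv(s * phi(alpha)))
    return total / n

def identity(u):
    # Quantile function of a uniform(0, 1) margin.
    return u

theta = 2.0
clayton_phi = lambda t: (t ** (-theta) - 1.0) / theta          # assumed generator
clayton_phi_inv = lambda s: (1.0 + theta * s) ** (-1.0 / theta)

indep_phi = lambda t: -math.log(t)                             # independence copula
indep_phi_inv = lambda s: math.exp(-s)

alpha = 0.9
clayton_value = lower_var_archimedean(alpha, clayton_phi, clayton_phi_inv, identity)
indep_value = lower_var_archimedean(alpha, indep_phi, indep_phi_inv, identity)
trivariate_value = lower_var_archimedean(alpha, clayton_phi, clayton_phi_inv,
                                         identity, d=3)

# Hand-integrated bivariate Clayton value for uniform margins (theta not in {0, 1}).
clayton_closed_form = (theta / (theta - 1.0)
                       * (alpha ** theta - alpha) / (alpha ** theta - 1.0))

print("Clayton d=2:", clayton_value, "closed form:", clayton_closed_form)
print("Independence d=2:", indep_value, "closed form:", (alpha - 1.0) / math.log(alpha))
print("Clayton d=3:", trivariate_value)
```

Passing a different `quantile` function (for instance an exponential or Pareto quantile) gives the corresponding component for non-uniform margins without changing the rest of the computation.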

We now adapt Corollary 2.2 for the multivariate upper-orthant Value-at-Risk, i.e., $\overline{\mathrm{VaR}}$.

Corollary 2.3

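Let $\mathbf{X}$ be a $d$-dimensional random vector with marginal survival distributions $\overline{F}_1, \ldots, \overline{F}_d$. Assume that the survival copula of $\mathbf{X}$ is an Archimedean copula with generator $\phi$. Then, for any $i = 1, \ldots, d$,

$$\overline{\mathrm{VaR}}^i_\alpha(\mathbf{X}) = \mathbb{E}\bigl[\overline{F}_i^{-1}\bigl(\phi^{-1}(S_i\, \phi(1 - \alpha))\bigr)\bigr], \tag{9}$$

where $S_i$ is a random variable with $\mathrm{Beta}(1, d-1)$ distribution.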

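Proof: Note that $\mathbf{X}$ is distributed as $(\overline{F}_1^{-1}(U_1), \ldots, \overline{F}_d^{-1}(U_d))$ where $\mathbf{U} = (U_1, \ldots, U_d)$ follows an Archimedean copula $\overline{C}$ with generator $\phi$. Then, each component $i = 1, \ldots, d$ of the multivariate risk measure introduced in Definition 2.2 can be expressed as $\overline{\mathrm{VaR}}^i_\alpha(\mathbf{X}) = \mathbb{E}[\overline{F}_i^{-1}(U_i) \mid \overline{C}(\mathbf{U}) = 1 - \alpha]$. Then, relation (7) also holds for $\mathbf{U}$ and $\overline{C}(\mathbf{U})$, i.e., $[\mathbf{U} \mid \overline{C}(\mathbf{U}) = 1 - \alpha] \overset{d}{=} \bigl(\phi^{-1}(S_1\, \phi(1-\alpha)), \ldots, \phi^{-1}(S_d\, \phi(1-\alpha))\bigr)$. Hence the result.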

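In the following, from (6) and (9), we derive analytical expressions of the lower-orthant and the upper-orthant Value-at-Risk for a random vector $\mathbf{X} = (X_1, \ldots, X_d)$ distributed as a particular Archimedean copula. Let us first remark that, as Archimedean copulas are exchangeable, the components of $\underline{\mathrm{VaR}}$ (resp. $\overline{\mathrm{VaR}}$) are the same. Moreover, as long as closed-form expressions are available for the lower-orthant $\underline{\mathrm{VaR}}$ of $\mathbf{X}$, it is also possible to derive an analogous expression for the upper-orthant $\overline{\mathrm{VaR}}$ of $\tilde{\mathbf{X}} = \mathbf{1} - \mathbf{X}$ since from Example 1

$$\overline{\mathrm{VaR}}^1_\alpha(\tilde{\mathbf{X}}) = 1 - \underline{\mathrm{VaR}}^1_{1-\alpha}(\mathbf{X}). \tag{10}$$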

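Clayton family in dimension $2$:

As an example, let us now consider the Clayton family of bivariate copulas. This family is interesting since it contains the counter-monotonic, the independence and the comonotonic copulas as particular cases. Let $(X, Y)$ be a random vector distributed as a Clayton copula with parameter $\theta \ge -1$. Then, $X$ and $Y$ are uniformly-distributed on $[0,1]$ and the joint distribution function $C_\theta$ of $(X, Y)$ is such that

$$C_\theta(x, y) = \left(\max\{x^{-\theta} + y^{-\theta} - 1,\, 0\}\right)^{-1/\theta}, \quad \text{for } \theta \ge -1,\ (x, y) \in [0,1]^2. \tag{11}$$

Table 3 gives analytical expressions for the first (equal to the second) component of $\underline{\mathrm{VaR}}$ as a function of the risk level $\alpha$ and the dependence parameter $\theta$. For $\theta = -1$ and $\theta = \infty$ we obtain the Fréchet-Hoeffding lower and upper bounds: $W(x, y) = \max\{x + y - 1, 0\}$ (counter-monotonic copula) and $M(x, y) = \min\{x, y\}$ (comonotonic copula) respectively. The settings $\theta = 0$ and $\theta = 1$ correspond to degenerate cases. For $\theta = 0$ we have the independence copula $\Pi(x, y) = xy$. For $\theta = 1$, we obtain the copula denoted by $\frac{\Pi}{\Sigma - \Pi}$ in Nelsen (1999), where $\frac{\Pi}{\Sigma - \Pi}(x, y) = \frac{xy}{x + y - xy}$.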

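| Copula | $\theta$ | $\underline{\mathrm{VaR}}^1_{\alpha,\theta}(X, Y)$ |
|---|---|---|
| Clayton $C_\theta$ | $(-1, \infty)$ | $\frac{\theta}{\theta - 1}\, \frac{\alpha^\theta - \alpha}{\alpha^\theta - 1}$ |
| Counter-monotonic $W$ | $-1$ | $\frac{1 + \alpha}{2}$ |
| Independent $\Pi$ | $0$ | $\frac{\alpha - 1}{\ln \alpha}$ |
| Comonotonic $M$ | $\infty$ | $\alpha$ |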

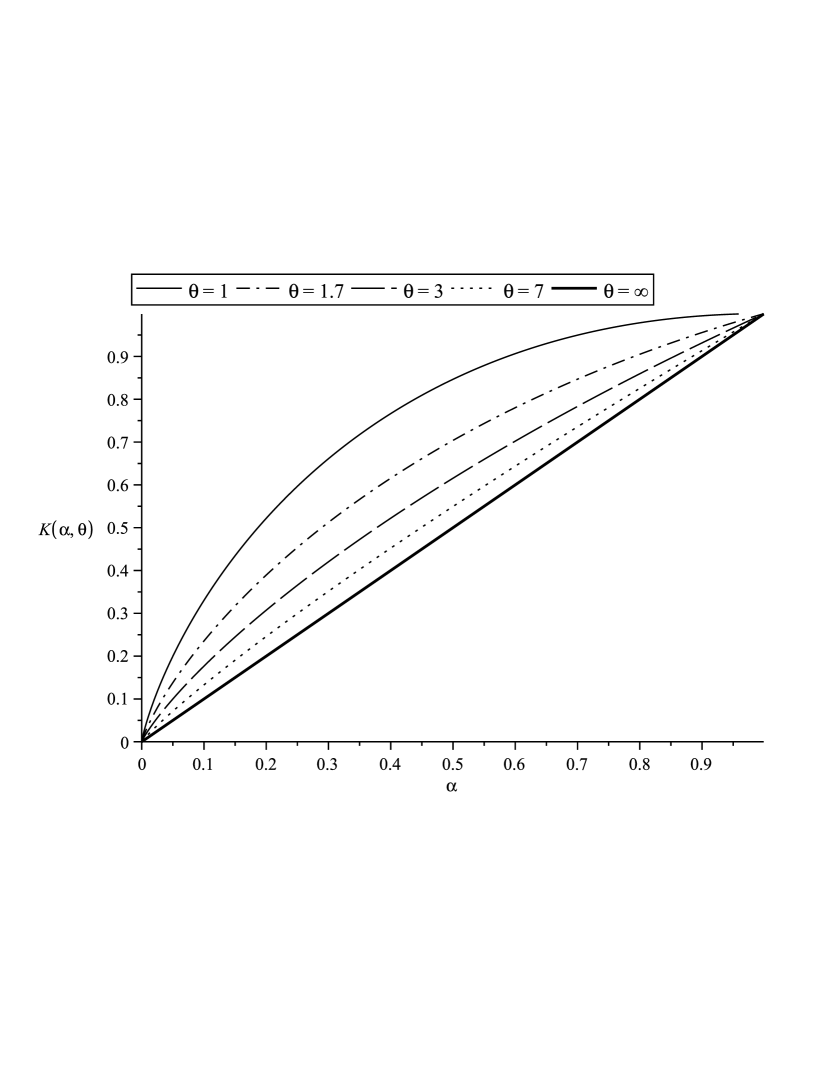

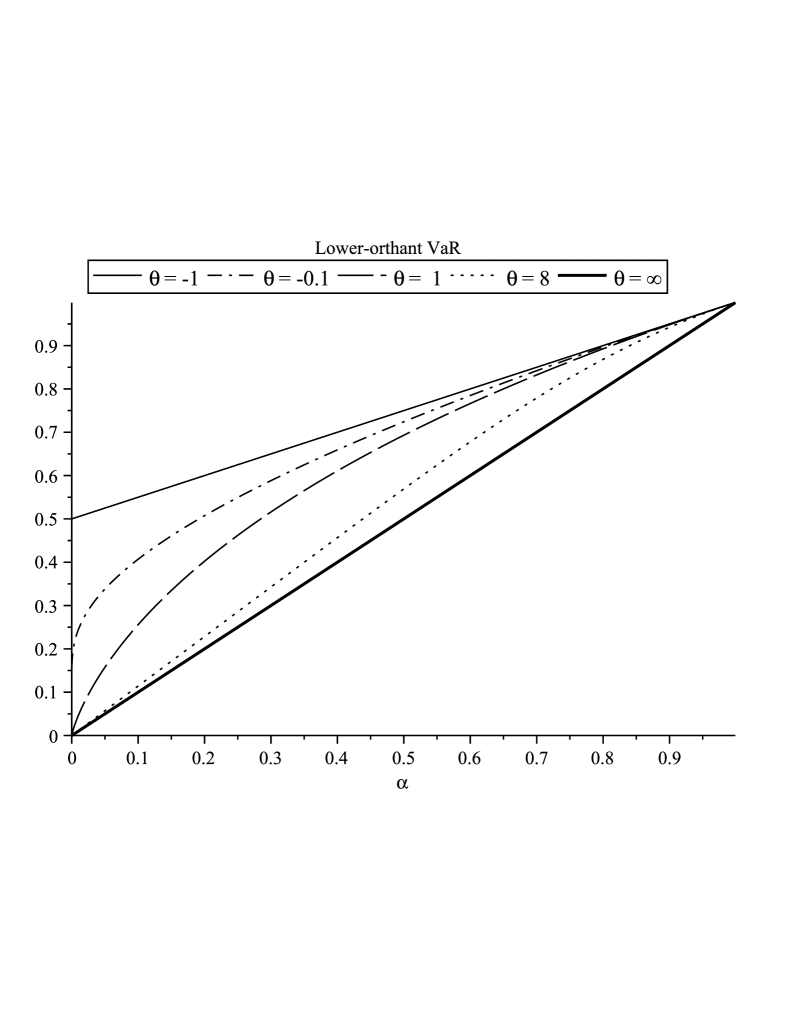

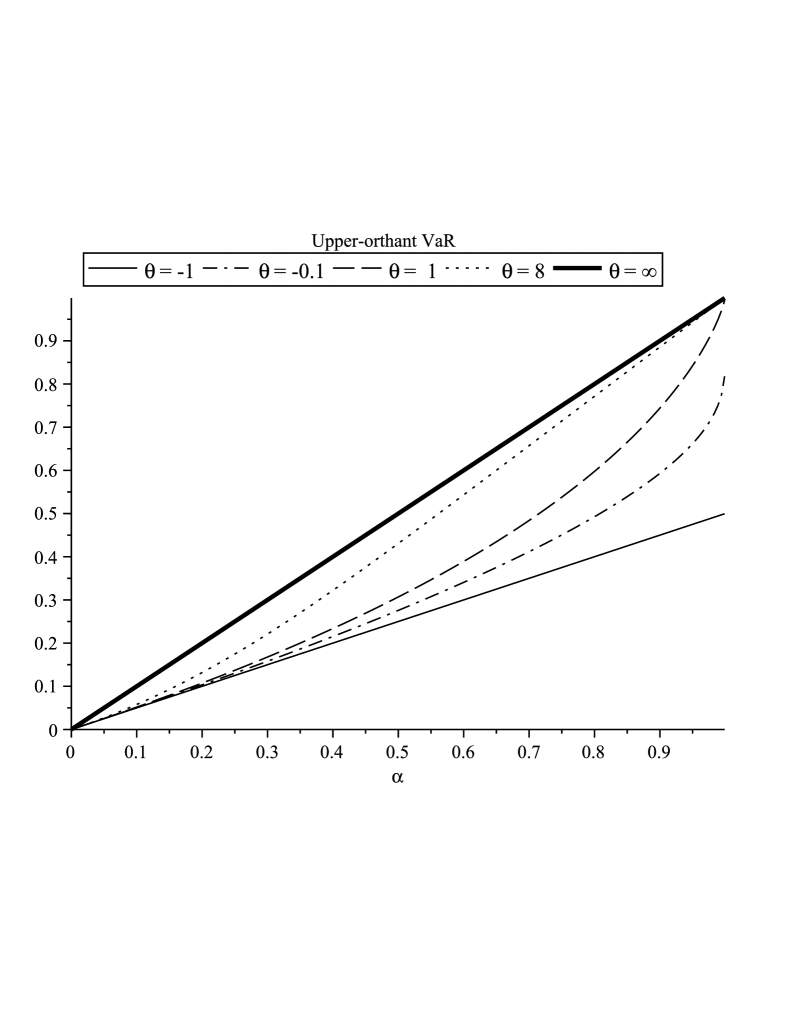

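Interestingly, one can readily show that $\frac{\partial \underline{\mathrm{VaR}}^1_{\alpha,\theta}}{\partial \alpha} \ge 0$ and $\frac{\partial \underline{\mathrm{VaR}}^1_{\alpha,\theta}}{\partial \theta} \le 0$, for $\theta \ge -1$ and $\alpha \in (0,1)$. This proves that, for Clayton-distributed random couples, the components of our multivariate VaR are increasing functions of the risk level $\alpha$ and decreasing functions of the dependence parameter $\theta$. Note also that the multivariate VaR in the comonotonic case corresponds to the vector composed of the univariate VaR associated with each component. These properties are illustrated in Figure 2 (left) where $\underline{\mathrm{VaR}}^1_{\alpha,\theta}(X, Y)$ is plotted as a function of the risk level $\alpha$ for different values of the parameter $\theta$. Observe that an increase of the dependence parameter $\theta$ tends to lower the VaR up to the perfect dependence case where $\underline{\mathrm{VaR}}^1_{\alpha,\theta}(X, Y) = \mathrm{VaR}_\alpha(X) = \alpha$. These empirical behaviors will be formally confirmed in the next sections.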

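In the same framework, using Equation (10), one can readily show that $\frac{\partial \overline{\mathrm{VaR}}^1_{\alpha,\theta}}{\partial \alpha} \ge 0$ and $\frac{\partial \overline{\mathrm{VaR}}^1_{\alpha,\theta}}{\partial \theta} \ge 0$, for $\theta \ge -1$ and $\alpha \in (0,1)$. This proves that, for random couples with uniform margins and Clayton survival copula, the components of our multivariate $\overline{\mathrm{VaR}}$ are increasing functions both of the risk level $\alpha$ and of the dependence parameter $\theta$. Note also that the multivariate $\overline{\mathrm{VaR}}$ in the comonotonic case corresponds to the vector composed of the univariate VaR associated with each component. These properties are illustrated in Figure 2 (right) where $\overline{\mathrm{VaR}}^1_{\alpha,\theta}$ is plotted as a function of the risk level $\alpha$ for different values of the parameter $\theta$. Observe that, contrary to the lower-orthant $\underline{\mathrm{VaR}}$, an increase of the dependence parameter $\theta$ tends to increase the $\overline{\mathrm{VaR}}$. The upper bound is represented by the perfect dependence case where $\overline{\mathrm{VaR}}^1_{\alpha,\theta} = \mathrm{VaR}_\alpha(X) = \alpha$. These empirical behaviors will be formally confirmed in the next sections.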
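The monotonicity statements for the bivariate Clayton case can be explored numerically. The sketch below is an illustration under stated assumptions rather than the paper's own computation: the closed form $\frac{\theta}{\theta-1}\frac{\alpha^\theta - \alpha}{\alpha^\theta - 1}$ is the one obtained by integrating formula (6) for uniform margins, its limits at $\theta = 0$ and $\theta = 1$ are handled explicitly, and the upper-orthant value is derived from relation (10). Function names and the chosen grids are hypothetical.

```python
import math

def clayton_lower_var(alpha, theta):
    """First component of the lower-orthant VaR, bivariate Clayton copula,
    uniform margins, theta >= -1 (closed form assumed from formula (6))."""
    if theta == 0.0:   # independence copula (limit theta -> 0)
        return (alpha - 1.0) / math.log(alpha)
    if theta == 1.0:   # limit of the general expression as theta -> 1
        return alpha * math.log(alpha) / (alpha - 1.0)
    return theta / (theta - 1.0) * (alpha ** theta - alpha) / (alpha ** theta - 1.0)

def clayton_upper_var(alpha, theta):
    """First component of the upper-orthant VaR for uniform margins and a
    Clayton survival copula, obtained through relation (10)."""
    return 1.0 - clayton_lower_var(1.0 - alpha, theta)

thetas = [-1.0, -0.5, 0.0, 0.5, 1.0, 2.0, 10.0]
alphas = [0.1, 0.25, 0.5, 0.75, 0.9]

for theta in thetas:
    lower = [clayton_lower_var(a, theta) for a in alphas]
    upper = [clayton_upper_var(a, theta) for a in alphas]
    print("theta=%5.1f lower=%s upper=%s" % (
        theta,
        ["%.3f" % v for v in lower],
        ["%.3f" % v for v in upper],
    ))
```

Reading the printed rows from top to bottom shows the lower-orthant values decreasing and the upper-orthant values increasing in $\theta$, with the univariate level $\alpha$ lying between the two.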

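Ali-Mikhail-Haq in dimension $2$:

Let $(X, Y)$ be a random vector distributed as an Ali-Mikhail-Haq copula with parameter $\theta \in [-1, 1)$. In particular, the marginal distributions of $X$ and $Y$ are uniform. Then, the distribution function $C_\theta$ of $(X, Y)$ is such that

$$C_\theta(x, y) = \frac{xy}{1 - \theta(1 - x)(1 - y)}, \quad \text{for } \theta \in [-1, 1),\ (x, y) \in [0,1]^2.$$

Using Corollary 2.2, we give in Table 4 analytical expressions for the first (equal to the second) component of the $\underline{\mathrm{VaR}}$, i.e., $\underline{\mathrm{VaR}}^1_{\alpha,\theta}(X, Y)$. When $\theta = 0$ we obtain the independence copula $\Pi(x, y) = xy$.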

| Copula | ||

|---|---|---|

| Ali-Mikhail-Haq copula | ||

| Independent |

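Clayton family in dimension $3$:

We now consider a $3$-dimensional vector $\mathbf{X} = (X_1, X_2, X_3)$ with Clayton copula and parameter $\theta > -\frac{1}{2}$ (see Remark 1) and uniform marginals. In this case we give an analytical expression of $\underline{\mathrm{VaR}}^i_{\alpha,\theta}(\mathbf{X})$ for $i = 1, 2, 3$. Results are given in Table 5.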

| Copula | ||

|---|---|---|

| Clayton | ||

| Independent |

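As in the bivariate case above, one can readily show that $\frac{\partial \underline{\mathrm{VaR}}^i_{\alpha,\theta}}{\partial \alpha} \ge 0$ and $\frac{\partial \underline{\mathrm{VaR}}^i_{\alpha,\theta}}{\partial \theta} \le 0$ when $\mathbf{X}$ is distributed as a $3$-dimensional Clayton copula. In addition, using Equation (10), $\frac{\partial \overline{\mathrm{VaR}}^i_{\alpha,\theta}}{\partial \alpha} \ge 0$ and $\frac{\partial \overline{\mathrm{VaR}}^i_{\alpha,\theta}}{\partial \theta} \ge 0$ when $\mathbf{X}$ admits a trivariate Clayton survival copula.

Then, the results obtained above in the bivariate case are also confirmed in higher dimension. These empirical behaviors will be formally confirmed in the next sections.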

2.3 Comparison of univariate and multivariate VaR

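Note that, using a change of variable, each component of the multivariate VaR can be represented as an integral transformation of the associated univariate VaR. Let us denote by $F_{X_i}$ the marginal distribution functions of $X_i$ for $i = 1, \ldots, d$ and by $C$ (resp. $\overline{C}$) the copula (resp. the survival copula) associated with $\mathbf{X}$. Using Sklar’s theorem we have $F(x_1, \ldots, x_d) = C(F_{X_1}(x_1), \ldots, F_{X_d}(x_d))$ (see Sklar, 1959). Then the random variables $U_i$ defined by $U_i = F_{X_i}(X_i)$, for $i = 1, \ldots, d$, are uniformly distributed and their joint distribution is equal to $C$. Using these notations and since $F_{X_i}^{-1}(\gamma) = \mathrm{VaR}_\gamma(X_i)$, we get

$$\underline{\mathrm{VaR}}^i_\alpha(\mathbf{X}) = \frac{1}{K'(\alpha)} \int_\alpha^1 \mathrm{VaR}_\gamma(X_i)\, f_{(U_i, C(\mathbf{U}))}(\gamma, \alpha)\, d\gamma, \tag{12}$$

for $i = 1, \ldots, d$, where $K'$ is the density of the Kendall distribution associated with copula $C$ and $f_{(U_i, C(\mathbf{U}))}$ is the density function of the bivariate vector $(U_i, C(\mathbf{U}))$.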

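As for the upper-orthant VaR, let $V_i = \overline{F}_{X_i}(X_i)$, for $i = 1, \ldots, d$. Using these notations and since $\overline{F}_{X_i}^{-1}(\gamma) = \mathrm{VaR}_{1-\gamma}(X_i)$, we get

$$\overline{\mathrm{VaR}}^i_\alpha(\mathbf{X}) = \frac{1}{K'_{\overline{C}}(1 - \alpha)} \int_0^\alpha \mathrm{VaR}_\gamma(X_i)\, f_{(V_i, \overline{C}(\mathbf{V}))}(1 - \gamma, 1 - \alpha)\, d\gamma, \tag{13}$$

for $i = 1, \ldots, d$, where $K'_{\overline{C}}$ is the density of the Kendall distribution associated with the survival copula $\overline{C}$ and $f_{(V_i, \overline{C}(\mathbf{V}))}$ is the density function of the bivariate vector $(V_i, \overline{C}(\mathbf{V}))$.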

Note that the bounds of integration in (12) and (13) derive from the geometrical properties of the considered level curve, i.e., the $\alpha$-level curve of $F$ (resp. the $(1-\alpha)$-level curve of $\bar{F}$) is bounded below (resp. above) by the marginal univariate quantile functions at level $\alpha$.
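As a sanity check of the integral representation for the lower-orthant VaR, one can work out the bivariate independence case by hand. The derivation below is a sketch under stated assumptions: the lower-orthant component is read as $E[X_i \mid C(\mathbf{U}) = \alpha]$, written as $\frac{1}{K'(\alpha)}\int_\alpha^1 \mathrm{VaR}_\gamma(X_i)\, f_{(U_i, C(\mathbf{U}))}(\gamma,\alpha)\,d\gamma$, and the symbols $U$, $V$ are introduced here for illustration only.

```latex
% Assumption: (X, Y) = (U, V) with U, V independent and uniform on (0,1),
% so that C(u, v) = uv and VaR_gamma(U) = gamma.
\begin{align*}
K(\alpha) &= \mathbb{P}[UV \le \alpha] = \alpha - \alpha \ln \alpha,
  \qquad K'(\alpha) = -\ln \alpha, \\
f_{(U,\,UV)}(\gamma, \alpha) &= \frac{1}{\gamma}, \qquad \alpha < \gamma < 1, \\
\mathbb{E}[U \mid UV = \alpha]
  &= \frac{1}{K'(\alpha)} \int_{\alpha}^{1} \gamma \cdot \frac{1}{\gamma}\, d\gamma
   = \frac{1 - \alpha}{-\ln \alpha}
   = \frac{\alpha - 1}{\ln \alpha}.
\end{align*}
```

The lower integration bound $\alpha$ appears because $UV = \alpha$ forces $U \ge \alpha$, which is the geometric property of the level curve mentioned above.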

The following proposition allows us to compare the multivariate lower-orthant and upper-orthant Value-at-Risk with the corresponding univariate VaR.

Proposition 2.4

Consider a random vector $\mathbf{X}$ satisfying the regularity conditions. Assume that its multivariate distribution function $F$ is quasi concave (see footnote 6). Then, for all $\alpha \in (0, 1)$, the following inequalities hold

$$\overline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}) \;\le\; \mathrm{VaR}_{\alpha}(X_i) \;\le\; \underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}), \tag{14}$$

for $i = 1, \ldots, d$.

(Footnote 6: A function $F$ is quasi concave if the upper level sets of $F$ are convex sets. Tibiletti (1995) points out families of distribution functions which satisfy the property of quasi concavity. For instance, multivariate elliptical distributions and Archimedean copulas are quasi concave functions; see Theorem 4.3.2 in Nelsen, 1999, for a proof in dimension 2, and Proposition 3 in Tibiletti, 1995, for a proof in dimension $d \geq 2$.)

Proof: Let . From the definition of the accumulated probability, it is easy to show that is inferiorly bounded by the marginal univariate quantile functions. Moreover, recall that is a convex set in from the quasi concavity of (see Section 2 in Tibiletti, 1995). Then, for all , and trivially, is greater than , for . Then , for all and . Analogously, from the definition of the survival accumulated probability, it is easy to show that is superiorly bounded by the marginal univariate quantile functions at level . Moreover, recall that is a convex set in . Then, for all , and trivially, is smaller than , for all and . Hence the result

Proposition 2.4 states that the multivariate lower-orthant $\underline{\mathrm{VaR}}_{\alpha}(\mathbf{X})$ (resp. the multivariate upper-orthant $\overline{\mathrm{VaR}}_{\alpha}(\mathbf{X})$) is more conservative (resp. less conservative) than the vector composed of the univariate $\alpha$-Value-at-Risk of marginals. Furthermore, we can prove that the bounds in (14) are attained for comonotonic random vectors.
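The ordering between the two multivariate measures and the univariate VaR can be made concrete in the simplest case. The following worked example is a hedged illustration, assuming independent uniform margins and assuming that the upper-orthant VaR at level $\alpha$ conditions on the survival function being equal to $1 - \alpha$; the lower-orthant value follows from a direct computation with the joint density of $(U, UV)$.

```latex
% Assumptions: U, V independent and uniform on (0,1); univariate VaR_alpha(U) = alpha.
\begin{align*}
\text{lower-orthant:}\quad
  & \mathbb{E}[U \mid UV = \alpha] = \frac{\alpha - 1}{\ln \alpha}, \\
\text{upper-orthant:}\quad
  & \mathbb{E}[U \mid (1-U)(1-V) = 1-\alpha]
    = 1 - \mathbb{E}[1-U \mid (1-U)(1-V) = 1-\alpha]
    = 1 + \frac{\alpha}{\ln(1-\alpha)}, \\
\text{ordering:}\quad
  & 1 + \frac{\alpha}{\ln(1-\alpha)} \;\le\; \alpha \;\le\; \frac{\alpha - 1}{\ln \alpha},
    \qquad \alpha \in (0,1).
\end{align*}
% Both inequalities reduce to x ln x - x + 1 >= 0 on (0,1),
% with x = 1 - alpha and x = alpha respectively.
% At alpha = 0.5 (rounded): 0.2787 <= 0.5 <= 0.7213.
```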

Proposition 2.5

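Consider a comonotonic non-negative random vector $\mathbf{X}$. Then, for all $\alpha \in (0, 1)$, it holds that

$$\underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}) = \mathrm{VaR}_{\alpha}(X_i) = \overline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}),$$

for $i = 1, \ldots, d$.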

Proof: Let . If is a comonotonic non-negative random vector then there exists a random variable and increasing functions such that X is equal to in distribution. So the set becomes where is the quantile function of . So, trivially, , for and . Since then for . Hence the result.

2.4 Behavior of the multivariate VaR with respect to marginal distributions

In this section we study the behavior of our VaR measures with respect to a change in marginal distributions. Results presented below provide natural multivariate extensions of some classical results in the univariate setting (see, e.g., Denuit and Charpentier, 2004).

Proposition 2.6

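Let $\mathbf{X}$ and $\mathbf{Y}$ be two $d$-dimensional continuous random vectors satisfying the regularity conditions and with the same copula $C$. If $X_i \overset{d}{=} Y_i$ then it holds that

$$\underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}) = \underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{Y}), \quad \text{for all } \alpha \in (0, 1),$$

and

$$\overline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}) = \overline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{Y}), \quad \text{for all } \alpha \in (0, 1).$$

The proof of the previous result follows directly from Equations (12) and (13). From Proposition 2.6, we remark that, for a fixed copula $C$, the $i$-th components $\underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X})$ and $\overline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X})$ do not depend on the marginal distributions of the other components $j$ with $j \neq i$.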

In order to derive the next result, we use the definitions of stochastic orders presented in Section 1.

Proposition 2.7

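Let $\mathbf{X}$ and $\mathbf{Y}$ be two $d$-dimensional continuous random vectors satisfying the regularity conditions and with the same copula $C$. If $X_i \preceq_{st} Y_i$ then it holds that

$$\underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}) \le \underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{Y}), \quad \text{for all } \alpha \in (0, 1),$$

and

$$\overline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}) \le \overline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{Y}), \quad \text{for all } \alpha \in (0, 1).$$

Proof: The proof follows from formulas (12)-(13) and Definition 1.1. Furthermore, we remark that if $X_i \preceq_{st} Y_i$ then $F_{X_i}^{-1}(x) \le F_{Y_i}^{-1}(x)$ for all $x$, and $\bar{F}_{X_i}^{-1}(x) \le \bar{F}_{Y_i}^{-1}(x)$ for all $x$. Hence the result.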

Note that the result in Proposition 2.7 is consistent with the one-dimensional setting (see Section 3.3.1 in Denuit et al., 2005). Indeed, as in dimension one, an increase of marginals with respect to the first order stochastic dominance yields an increase in the corresponding components of the multivariate VaR.
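The effect of a change in one margin can be illustrated numerically. The sketch below makes simplifying assumptions that are not in the text: the copula is the bivariate independence copula (for which $U = \alpha^{S}$ with $S$ uniform on $(0,1)$, given $UV = \alpha$), and the margin of interest is exponential. Only the quantile function of the component under study enters the computation, in line with Proposition 2.6. Function names are hypothetical.

```python
import math


def exponential_quantile(u, mean):
    """Quantile function of an exponential distribution with the given mean."""
    return -mean * math.log1p(-u)


def lower_var_component_independence(alpha, quantile, n=100000):
    """Midpoint-rule approximation of E[F^{-1}(U) | U * V = alpha].

    Assumption: independence copula, so that U = alpha**S with S uniform
    on (0, 1) conditionally on U * V = alpha. `quantile` is F^{-1} for the
    component of interest; the other margin plays no role.
    """
    total = 0.0
    for k in range(n):
        s = (k + 0.5) / n
        total += quantile(alpha ** s)
    return total / n


# A stochastically larger margin (mean 2 instead of mean 1) gives a larger component.
var_mean_1 = lower_var_component_independence(0.9, lambda u: exponential_quantile(u, 1.0))
var_mean_2 = lower_var_component_independence(0.9, lambda u: exponential_quantile(u, 2.0))
```

Because the exponential quantile scales linearly with the mean, the second value is twice the first, which also illustrates positive homogeneity in this example.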

As a result, in an economy with several interconnected financial institutions, the capital required for one particular institution is affected by its own marginal risk. But, for a fixed dependence structure, the solvency capital required for this specific institution does not depend on the marginal risks borne by the others. Thus, our multivariate VaR implies a “fair” allocation of solvency capital with respect to individual risk-taking behavior. In other words, individual financial institutions may not have to pay more for risky business activities undertaken by the others.

2.5 Behavior of multivariate VaR with respect to the dependence structure

In this section we study the behavior of our VaR measures with respect to a variation of the dependence structure, with unchanged marginal distributions.

Proposition 2.8

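Let $\mathbf{X}$ and $\mathbf{X}^*$ be two $d$-dimensional continuous random vectors satisfying the regularity conditions and with the same margins $F_{X_i}$ and $F_{X_i^*}$, for $i = 1, \ldots, d$, and let $C$ (resp. $C^*$) denote the copula function associated with $\mathbf{X}$ (resp. $\mathbf{X}^*$) and $\hat{C}$ (resp. $\hat{C}^*$) the survival copula function associated with $\mathbf{X}$ (resp. $\mathbf{X}^*$).

Let $U_i = F_{X_i}(X_i)$, $U_i^* = F_{X_i^*}(X_i^*)$, $\mathbf{U} = (U_1, \ldots, U_d)$ and $\mathbf{U}^* = (U_1^*, \ldots, U_d^*)$.

If $[U_i \mid C(\mathbf{U}) = \alpha] \preceq_{st} [U_i^* \mid C^*(\mathbf{U}^*) = \alpha]$, then $\underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}) \le \underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}^*)$.

Let $V_i = \bar{F}_{X_i}(X_i)$, $V_i^* = \bar{F}_{X_i^*}(X_i^*)$, $\mathbf{V} = (V_1, \ldots, V_d)$ and $\mathbf{V}^* = (V_1^*, \ldots, V_d^*)$.

If $[V_i \mid \hat{C}(\mathbf{V}) = 1 - \alpha] \preceq_{st} [V_i^* \mid \hat{C}^*(\mathbf{V}^*) = 1 - \alpha]$, then $\overline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}) \ge \overline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}^*)$.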

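Proof: Let $\tilde{U}_i \overset{d}{=} [U_i \mid C(\mathbf{U}) = \alpha]$ and $\tilde{U}_i^* \overset{d}{=} [U_i^* \mid C^*(\mathbf{U}^*) = \alpha]$. We recall that $\tilde{U}_i \preceq_{st} \tilde{U}_i^*$ if and only if $E[f(\tilde{U}_i)] \le E[f(\tilde{U}_i^*)]$, for all non-decreasing functions $f$ such that the expectations exist (see Denuit et al., 2005; Proposition 3.3.14). We now choose $f(u) = F_{X_i}^{-1}(u)$, for $u \in (0, 1)$. Then, we obtain

$$E[F_{X_i}^{-1}(U_i) \mid C(\mathbf{U}) = \alpha] \le E[F_{X_i}^{-1}(U_i^*) \mid C^*(\mathbf{U}^*) = \alpha].$$

But the right-hand side of the previous inequality is equal to

$$E[F_{X_i^*}^{-1}(U_i^*) \mid C^*(\mathbf{U}^*) = \alpha]$$

since $X_i$ and $X_i^*$ have the same distribution. Finally, from formula (12) we obtain $\underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}) \le \underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X}^*)$.

Let now $\tilde{V}_i \overset{d}{=} [V_i \mid \hat{C}(\mathbf{V}) = 1 - \alpha]$ and $\tilde{V}_i^* \overset{d}{=} [V_i^* \mid \hat{C}^*(\mathbf{V}^*) = 1 - \alpha]$. We now choose the non-decreasing function $f(u) = -\bar{F}_{X_i}^{-1}(u)$, for $u \in (0, 1)$. Since $X_i$ and $X_i^*$ have the same distribution, we obtain

$$E[\bar{F}_{X_i}^{-1}(V_i) \mid \hat{C}(\mathbf{V}) = 1 - \alpha] \ge E[\bar{F}_{X_i^*}^{-1}(V_i^*) \mid \hat{C}^*(\mathbf{V}^*) = 1 - \alpha].$$

Hence the result.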

We now provide an illustration of Proposition 2.8 in the case of $d$-dimensional Archimedean copulas.

Corollary 2.4

Consider a $d$-dimensional random vector $\mathbf{X}$, satisfying the regularity conditions, with marginal distributions $F_{X_i}$, for $i = 1, \ldots, d$, copula $C$ and survival copula $\hat{C}$.

- If $C$ belongs to one of the $d$-dimensional families of Archimedean copulas introduced in Table 2, an increase of the dependence parameter yields a decrease in each component of $\underline{\mathrm{VaR}}_{\alpha}(\mathbf{X})$.

- If $\hat{C}$ belongs to one of the $d$-dimensional families of Archimedean copulas introduced in Table 2, an increase of the dependence parameter yields an increase in each component of $\overline{\mathrm{VaR}}_{\alpha}(\mathbf{X})$.

Proof: Let and be two Archimedean copulas of the same family with generator and such that . Given Proposition 2.8, we have to check that the relation holds for all where and are distributed (resp.) as and . However, using formula (8), we can readily prove that the previous relation can be restated as a decreasing condition on the ratio of generators and , i.e.,

Finally, we have checked that, for every Archimedean family introduced in Table 2, the function defined by is indeed decreasing when . We immediately obtain from Proposition 2.8 that each component of is a decreasing function of . The proof of the second statement of Corollary 2.4 follows using the same arguments.

Example 2

From Corollary 2.4 the multivariate (resp. ) for copulas in Table 2 is increasing (resp. decreasing) with respect to the dependence parameter (coordinate by coordinate). In particular, this means that, in the case of Archimedean copula, limit behaviors of dependence parameters are associated with bounds for our multivariate risk measure. For instance, let be a bivariate random vector with a Clayton dependence structure and fixed margins and be a bivariate random vector with a Clayton survival copula and the same margins as . If we denote by (resp. ) the first component of the lower-orthant VaR (resp. upper-orthant VaR) when the dependence parameter is equal to , then the following comparison result holds for all and all :

Note that the upper bound corresponds to comonotonic random variables, so that , for a random vector () with uniform marginal distributions.
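The monotonicity in the dependence parameter can be observed numerically for the bivariate Clayton copula with uniform margins. This is a minimal sketch, assuming as before that $\phi(U)/\phi(\alpha)$ is uniform on $(0,1)$ given $C(U,V) = \alpha$, with the Clayton generator $\phi(t) = (t^{-\theta} - 1)/\theta$ for $\theta > 0$; the grid of parameter values is an arbitrary illustrative choice.

```python
def clayton_lower_var(alpha, theta, n=20000):
    """Midpoint-rule approximation of E[U | C(U, V) = alpha], Clayton copula, theta > 0.

    Uses phi^{-1}(s * phi(alpha)) = (1 + s * (alpha**(-theta) - 1))**(-1 / theta).
    """
    a = alpha ** (-theta) - 1.0
    total = 0.0
    for k in range(n):
        s = (k + 0.5) / n
        total += (1.0 + s * a) ** (-1.0 / theta)
    return total / n


# Illustrative grid of dependence parameters at risk level alpha = 0.5.
thetas = [0.5, 2.0, 5.0, 20.0]
values = [clayton_lower_var(0.5, theta) for theta in thetas]
```

On this grid the values decrease with $\theta$ and stay above $\alpha = 0.5$, the value associated with the comonotonic limit.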

2.6 Behavior of multivariate VaR with respect to risk level

In order to study the behavior of the multivariate lower-orthant Value-at-Risk with respect to the risk level $\alpha$, we need to introduce the positive regression dependence concept. For a bivariate random vector $(X, Y)$ we mean by positive dependence that $X$ and $Y$ are likely to be large or to be small together. An excellent presentation of positive dependence concepts can be found in Chapter 2 of the book by Joe (1997). The positive dependence concept that will be used in the sequel has been called positive regression dependence (PRD) by Lehmann (1966), but most authors use the term stochastically increasing (SI) (see Nelsen, 1999; Section 5.2.3).

Definition 2.3 (Positive regression dependence)

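A bivariate random vector $(X, Y)$ is said to admit positive regression dependence with respect to $X$, PRD$(Y \mid X)$, if

$$P[Y > y \mid X = x] \text{ is a non-decreasing function of } x, \text{ for all } y. \tag{15}$$

Clearly, condition (15) is a positive dependence notion (see Section 2.1.2 in Joe, 1997).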

From Definition 2.3, it is straightforward to derive the following result.

Proposition 2.9

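Consider a $d$-dimensional random vector $\mathbf{X}$, satisfying the regularity conditions, with marginal distributions $F_{X_i}$, for $i = 1, \ldots, d$, copula $C$ and survival copula $\hat{C}$. Let $U_i = F_{X_i}(X_i)$, $V_i = \bar{F}_{X_i}(X_i)$, $\mathbf{U} = (U_1, \ldots, U_d)$ and $\mathbf{V} = (V_1, \ldots, V_d)$. Then it holds that:

- If $(U_i, C(\mathbf{U}))$ is PRD$(U_i \mid C(\mathbf{U}))$ then $\underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X})$ is a non-decreasing function of $\alpha$.

- If $(V_i, \hat{C}(\mathbf{V}))$ is PRD$(V_i \mid \hat{C}(\mathbf{V}))$ then $\overline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X})$ is a non-decreasing function of $\alpha$.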

Then and , for any which proves that and are non-decreasing functions of .

Note that the behavior of the multivariate VaR with respect to a change in the risk level does not depend on the marginal distributions of $\mathbf{X}$.

The following result proves that the assumptions of Proposition 2.9 are satisfied in the large class of $d$-dimensional Archimedean copulas.

Corollary 2.5

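Consider a $d$-dimensional random vector $\mathbf{X}$, satisfying the regularity conditions, with marginal distributions $F_{X_i}$, for $i = 1, \ldots, d$, copula $C$ and survival copula $\hat{C}$.

If $C$ is a $d$-dimensional Archimedean copula, then $\underline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X})$ is a non-decreasing function of $\alpha$.

If $\hat{C}$ is a $d$-dimensional Archimedean copula, then $\overline{\mathrm{VaR}}^{i}_{\alpha}(\mathbf{X})$ is a non-decreasing function of $\alpha$.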

Proof: Let , , and . If is the copula of , then U is distributed as and if is Archimedean, is a non-decreasing function of from formula (8). In addition, if is the survival copula of , then V is distributed as and if is Archimedean, is a non-decreasing function of from the same argument. The result then derives from Proposition 2.9.
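The monotonicity in the risk level can be checked on a grid for two bivariate Archimedean examples with uniform margins. The sketch below is illustrative only and again assumes that $\phi(U)/\phi(\alpha)$ is uniform on $(0,1)$ given $C(U,V) = \alpha$; the generator pairs and the grid of levels are hypothetical choices made for the example.

```python
import math


def lower_var_uniform(alpha, generator, inverse_generator, n=20000):
    """Midpoint-rule approximation of E[U | C(U, V) = alpha] for a bivariate
    Archimedean copula with the given generator (uniform margins assumed)."""
    phi_alpha = generator(alpha)
    total = 0.0
    for k in range(n):
        s = (k + 0.5) / n
        total += inverse_generator(s * phi_alpha)
    return total / n


# Independence copula: phi(t) = -log(t); Clayton with theta = 2: phi(t) = (t**-2 - 1) / 2.
alphas = [0.1, 0.3, 0.5, 0.7, 0.9]
independence_values = [
    lower_var_uniform(a, lambda t: -math.log(t), lambda x: math.exp(-x)) for a in alphas
]
clayton_values = [
    lower_var_uniform(a, lambda t: (t ** -2.0 - 1.0) / 2.0, lambda x: (1.0 + 2.0 * x) ** -0.5)
    for a in alphas
]
```

For the Clayton copula with $\theta = 2$ the integral has the closed form $2\alpha/(1+\alpha)$, which is visibly increasing in $\alpha$ and matches the numerical values.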

Conclusion and perspectives

In this paper, we proposed two multivariate extensions of the classical Value-at-Risk for continuous random vectors. As in the approach of Embrechts and Puccetti (2006), the introduced risk measures are based on multivariate generalizations of quantiles, but they are able to quantify risks in a much more parsimonious and synthetic way: the risk of a $d$-dimensional portfolio is evaluated by a point in $\mathbb{R}^d$. The proposed multivariate risk measures may be useful for applications where risks are heterogeneous in nature or cannot be diversified away by an aggregation procedure.

We analyzed our multivariate risk measures in several directions. Interestingly, we showed that many properties satisfied by the univariate VaR extend to the two proposed multivariate versions under some conditions. In particular, the lower-orthant VaR and the upper-orthant VaR both satisfy the positive homogeneity and the translation invariance properties, which are part of the classical axiomatic properties of Artzner et al. (1999). Using the theory of stochastic ordering, we also analyzed the effect of some risk perturbations on these measures. In the same vein as for the univariate VaR, we proved that an increase of marginal risks yields an increase of the multivariate VaR. We also gave the conditions under which an increase of the risk level tends to increase the components of the proposed multivariate extensions, and we showed that these conditions are satisfied for $d$-dimensional Archimedean copulas. We also studied the effect of dependence between risks on the individual components of the multivariate VaR, and we proved that, for different families of Archimedean copulas, an increase of the dependence parameter tends to lower the components of the lower-orthant VaR whereas it raises the components of the upper-orthant VaR. In the extreme case where risks are perfectly dependent, or comonotonic, our multivariate risk measures are equal to the vector composed of the univariate risk measures associated with each component.

Because the Kendall distribution is not known analytically for elliptical random vectors, it is still an open question whether components of our proposed measures are increasing with respect to the risk level for such dependence structures. However, numerical experiments in the case of Gaussian copulas support this hypothesis. More generally, the extension of the McNeil and Nešlehová representation (see Proposition 2.3) to a generic copula and the study of the behavior of distribution , with respect to , are potential improvements to this paper that will be investigated in future work.

As a future perspective, it would also be interesting to discuss the extension of our risk measures to the case of discrete distribution functions, using “discrete level sets” as multivariate definitions of quantiles. For further details the reader is referred, for instance, to Laurent (2003). Another subject of future research would be to introduce a similar multivariate extension for the Conditional-Tail-Expectation and to compare the proposed VaR and CTE measures with existing multivariate generalizations of risk measures, both theoretically and experimentally. An article on this topic is in preparation.

Acknowledgements: The authors thank an anonymous referee for constructive remarks and valuable suggestions to improve the paper. The authors thank Véronique Maume-Deschamps and Clémentine Prieur for their comments and help and Didier Rullière for fruitful discussions. This work has been partially supported by the French research national agency (ANR) under the reference ANR-08BLAN-0314-01. Part of this work also benefited from the support of the MIRACCLE-GICC project.

References

- [1] P. Artzner, F. Delbaen, J. M. Eber, and D. Heath. Coherent measures of risk. Math. Finance, 9(3):203–228, 1999.

- [2] P. Barbe, C. Genest, K. Ghoudi, and B. Rémillard. On Kendall’s process. J. Multivariate Anal., 58(2):197–229, 1996.

- [3] P. Capéraà, A.-L. Fougères, and C. Genest. A stochastic ordering based on a decomposition of Kendall’s tau. In Distributions with given marginals and moment problems (Prague, 1996), pages 81–86. Kluwer Acad. Publ., Dordrecht, 1997.

- [4] A. Chakak and M. Ezzerg. Bivariate contours of copula. Comm. Statist. Simulation Comput., 29(1):175–185, 2000.

- [5] M. Chaouch, A. Gannoun, and J. Saracco. Estimation de quantiles géométriques conditionnels et non conditionnels. J. SFdS, 150(2):1–27, 2009.

- [6] M. Chauvigny, L. Devineau, S. Loisel, and V. Maume-Deschamps. Fast remote but not extreme quantiles with multiple factors. Applications to Solvency II and enterprise risk management. To appear in European Actuarial Journal, 2012.

- [7] M. Denuit and A. Charpentier. Mathématiques de l’assurance non-vie. Tome 1: Principes fondamentaux de théorie du risque. Economica, 2004.

- [8] M. Denuit, J. Dhaene, M. Goovaerts, and R. Kaas. Actuarial Theory for Dependent Risks. Wiley, 2005.

- [9] P. Embrechts and G. Puccetti. Bounds for functions of multivariate risks. Journal of Multivariate Analysis, 97(2):526–547, 2006.

- [10] W. Feller. An introduction to probability theory and its applications. Vol. II. John Wiley & Sons Inc., New York, 1966.

- [11] C. Gauthier, A. Lehar, and M. Souissi. Macroprudential regulation and systemic capital requirements. Working Papers 10-4, Bank of Canada, 2010.

- [12] C. Genest and J.-C. Boies. Detecting dependence with Kendall plots. The American Statistician, 57:275–284, 2003.

- [13] C. Genest, J.-F. Quessy, and B. Rémillard. Goodness-of-fit procedures for copula models based on the probability integral transformation. Scandinavian Journal of Statistics, 33:337–366, 2006.

- [14] C. Genest and L.-P. Rivest. On the multivariate probability integral transformation. Statist. Probab. Lett., 53(4):391–399, 2001.

- [15] M. Henry, A. Galichon, and I. Ekeland. Comonotonic measures of multivariate risks. Open Access publications from Université Paris-Dauphine urn:hdl:123456789/2278, Université Paris-Dauphine, 2012.

- [16] L. Imlahi, M. Ezzerg, and A. Chakak. Estimación de la curva mediana de una cópula. Rev. R. Acad. Cien. Exact. Fis. Nat, 93(2):241–250, 1999.

- [17] H. Joe. Multivariate models and dependence concepts, volume 73 of Monographs on Statistics and Applied Probability. Chapman & Hall, London, 1997.

- [18] E. Jouini, M. Meddeb, and N. Touzi. Vector-valued coherent risk measures. Finance Stoch., 8(4):531–552, 2004.

- [19] V. I. Koltchinskii. M-estimation, convexity and quantiles. Ann. Statist., 25(2):435–477, 1997.

- [20] J.-P. Laurent. Sensitivity analysis of risk measures for discrete distributions. Working paper, 2003.

- [21] E. L. Lehmann. Some concepts of dependence. Ann. Math. Statist., 37:1137–1153, 1966.

- [22] J.-C. Massé and R. Theodorescu. Halfplane trimming for bivariate distributions. J. Multivariate Anal., 48(2):188–202, 1994.

- [23] A. J. McNeil and J. Nešlehová. Multivariate Archimedean copulas, d-monotone functions and l1-norm symmetric distributions. The Annals of Statistics, 37:3059–3097, 2009.

- [24] A. Müller. Stop-loss order for portfolios of dependent risks. Insurance Math. Econom., 21(3):219–223, 1997.

- [25] A. Müller and D. Stoyan. Comparison methods for stochastic models and risks. Wiley, 2001.

- [26] G. Nappo and F. Spizzichino. Kendall distributions and level sets in bivariate exchangeable survival models. Information Sciences, 179:2878–2890, August 2009.

- [27] R. B. Nelsen. An introduction to copulas, volume 139 of Lecture Notes in Statistics. Springer-Verlag, New York, 1999.

- [28] R. B. Nelsen, J. J. Quesada-Molina, J. A. Rodríguez-Lallena, and M. Úbeda-Flores. Kendall distribution functions. Statistics and Probability Letters, 65:263–268, 2003.

- [29] A. Prékopa. Multivariate value at risk and related topics. Annals of Operations Research, 193:49–69, 2012. doi:10.1007/s10479-010-0790-2.

- [30] C. Rossi. Sulle curve di livello di una superficie di ripartizione in due variabili. Giornale dell’Istituto Italiano degli Attuari, 36:87–108, 1973.

- [31] R. Serfling. Quantile functions for multivariate analysis: approaches and applications. Statist. Neerlandica, 56(2):214–232, 2002. Special issue: Frontier research in theoretical statistics, 2000 (Eindhoven).

- [32] M. Sklar. Fonctions de répartition à n dimensions et leurs marges. Publ. Inst. Statist. Univ. Paris, 8:229–231, 1959.

- [33] D. Tasche. Capital allocation to business units and sub-portfolios: the Euler Principle. Quantitative finance papers, arXiv.org, 2008.

- [34] L. Tibiletti. Gli insiemi di livello delle funzioni di ripartizione n-dimensionali: un’applicazione statistica (Level sets of n-dimensional distribution functions: a statistic application). Quaderni dell’Istituto di Matematica Finanziaria dell’Università di Torino: terza serie, 56:1–11, 1990.

- [35] L. Tibiletti. Sulla quasi concavità delle funzioni di ripartizione n-dimensionali (On quasi-concavity of n-dimensional distribution functions). In Atti del XV convegno A.M.A.S.E.S., pages 503–515, 1991.

- [36] L. Tibiletti. On a new notion of multidimensional quantile. Metron. International Journal of Statistics, 51(3-4):77–83, 1993.

- [37] L. Tibiletti. Quasi-concavity property of multivariate distribution functions. Ratio Mathematica, 9:27–36, 1995.

- [38] G. Wei and T. Hu. Supermodular dependence ordering on a class of multivariate copulas. Statist. Probab. Lett., 57(4):375–385, 2002.

- [39] C. Zhou. Why the micro-prudential regulation fails? The impact on systemic risk by imposing a capital requirement. Dnb working papers, Netherlands Central Bank, Research Department, 2010.