On Risk Evaluation and Control of Distributed Multi-Agent Systems

Abstract

In this paper, we deal with risk evaluation and risk-averse optimization of complex distributed systems with general risk functionals. We postulate a novel set of axioms for the functionals evaluating the total risk of the system. We derive a dual representation for the systemic risk measures and propose a way to construct non-trivial families of measures by using either a collection of linear scalarizations or non-linear risk aggregation. The new framework facilitates risk-averse sequential decision-making by distributed methods. The proposed approach is compared theoretically and numerically to some of the systemic risk measurements in the existing literature.

We formulate a two-stage decision problem with monotropic structure and systemic measure of risk. The structure is typical for distributed systems arising in energy networks, robotics, and other practical situations. A distributed decomposition method for solving the two-stage problem is proposed and it is applied to a problem arising in communication networks. We have used this problem to compare the methods of systemic risk evaluation. We show that the proposed risk aggregation leads to less conservative risk evaluation and results in a substantially better solution of the problem at hand as compared to an aggregation of the risk of individual agents and other methods.

Keywords stochastic programming, risk of complex systems, risk measures for multivariate risk, distributed risk-averse optimization, optimal wireless information exchange

1 Introduction

Evaluation of the risk of a system consisting of multiple agents is one of the fundamental problems relevant to many fields. A crucial question is the assessment of the total risk of the system taking into account the risk of each agent and its contribution to the total risk. Another issue arises when the risk evaluation is based on confidential or proprietary information. There is extensive literature addressing the properties of risk measures and their use in finance. Our goal is to address situations related to robotics, energy systems, business systems, logistic problems, etc. The analysis in financial literature may not be applicable in such situations due to the heterogeneity of the sources of risk, the nature, and the complexity of relations in those systems. In many systems, the source of risk is associated with highly non-trivial aggregation of the features of its agents, which may not be available in an analytical form. For example, in automated robotic systems, the exchange of information may be limited or distorted due to the speed of operation, the distance in space between the agents, or other reasons. Another difficulty associated with the evaluation of risk arises when the risk of one agent stems from various sources of uncertainty of different nature. The question of how to aggregate those risk factors in one loss function does not have a straightforward answer.

The risk of one loss function can be evaluated using a coherent measure of risk such as Average Value-at-Risk, mean-semideviation or others. More traditional (non-coherent) measures of risk such as Value-at-Risk (VaR) are also very popular and frequently used. We refer to [14] for an extensive treatment of risk measures for scalar-valued random variables, as well as to [31] where risk-averse optimization problems are analyzed as well.

The main objective of this paper is to suggest a new approach to the risk of a distributed system and show its viability and potential in application to risk-averse decision problems for distributed multi-agent systems. While building on the developments thus far, our goal is to identify a framework that is theoretically sound but also amenable to efficient numerical computations for risk-averse optimization of large multi-agent systems. We propose a set of axioms for functionals defined on the space of random vectors. The random vector is comprising risk factors of various sources, or is representing the loss of each individual agent in a multi-agent system. While axioms for random vectors have been proposed earlier, our set of axioms differs from those in the literature most notably with respect to the translation equivariance condition, which we explain in due course. The resulting systemic risk measures reduce to coherent measures of risk for scalar-valued random variables when the dimension of the random vectors becomes one. We derive the dual representation of the systemic measures of risk with less assumptions than known for multi-variate risks. In our derivation, we establish one-to-one correspondences between the axioms and properties of the dual variables. We also propose several ways to construct systemic risk measures and analyze their properties. The important features of the proposed measures are the following. They are conformant with the axioms; they can be calculated efficiently, and are amenable to distributed optimization methods.

We have formulated a risk-averse two-stage optimization problem with a structure, which is typical for a system of loosely coupled subsystems. The proposed numerical method is applied to manage the risk of a distributed operation of agents. The distributed method lets each subsystem optimize its operation with minimal information exchange among the other subsystems (agents). This aspect is important for multi-agent systems where some proprietary information is involved or when privacy concerns exist. The method demonstrates that distributed calculation of the systemic risk is possible without a big computational burden. We then consider a two-stage model in wireless communication networks, which extends the static model discussed in [21]. It addresses a situation when a team of robots explores an area and each robot reports relevant information. The goal is to determine a few reporting points so that the communication is conducted most efficiently while managing the risk of losing information. We conduct several numerical experiments to compare various systemic risk measures.

Our paper is organized as follows. In section 2 we provide preliminary information on coherent measures of risk for scalar-valued random variables and survey existing methods for risk evaluation of complex systems. Section 3 contains the set of axioms, the dual representation associated with the resulting systemic risk measures, and two ways to construct such measures in practice. Section 4 provides a theoretical comparison of the new measures of risk to other notions. In particular, we discuss other sets of axioms, explore relations to two notions of multivariate Average Value-at-Risk, and pay attention to the effect of the aggregation of risk before and after risk evaluation. In section 5, we formulate a risk-averse two-stage stochastic programming problem modeling wireless information exchange and seeking to locate a constraint number of information exchange points. We devise a distributed method for solving the problem and report a numerical comparison with several measures of risk, and other systemic measures. We pay attention to the comparison between the principles of aggregation for the purpose of total risk evaluation.

2 Preliminaries

2.1 Coherent risk measures

The widely accepted axiomatic framework for coherent measures of risk was proposed in [2] and further analyzed in [8], [14], [20], [29, 30], [25] and many others works. It is worth noting that another axiomatic approach was initiated in [18] and this line of thinking was developed to an entire framework in [27]. For a detailed exposition, we refer to [31] and the references therein. Let be the space of real-valued random variables, defined on the probability space , that have finite -th moments, , and are indistinguishable on events with zero probability. We shall assume that the random variables represent random costs or losses. A lower semi-continuous functional is a coherent risk measure if it is convex, positively homogeneous, monotonic with respect to the a.s. comparison of random variables, and satisfies the the following translation property

If is monotonicity, convex, and satisfies the translation property, then it is called a convex risk measure. Some examples of coherent measures of risk include Average Value-at-Risk (also called Conditional Value-at-Risk) and mean-semideviations measure, which are defined as follows. The Average Value-at-Risk at level for a random variable is defined as

It is a special case of the higher-order measures of risk:

where refers to the norm in . The mean semi-deviation of order is given by

The space equipped with its norm topology is paired with the space equipped with the weak∗ topology where . For any and , we use the bilinear form:

The following result is known as a dual representation of coherent measures of risk. A proper lower semicontinuous coherent risk measure has a dual representation

| (1) |

where is the convex-analysis subdifferential .

Risk measures have also been defined by specifying a set of desired values for the random quantity in question; this set is called an acceptance set. Denoting the acceptance set by , the risk of a random outcome is defined as:

| (2) |

In finance, this notion of risk is interpreted as the minimum amount of capital that needs to be invested to make the final position acceptable. It is easy to verify that in (2) is a coherent measure if and only if is a convex cone (cf. [13]).

2.2 Risk measures for complex systems

As the risk is not additive, when we deal with distributed complex systems, we need to address the question of risk evaluation for the entire system. This risk is usually called systemic in financial literature and the proposed measures for its evaluation are termed systemic risk measures.

Assume that the system consists of agents. One approach to evaluating the risk of a system is to use an aggregation function, , and univariate risk measures. Let be an -dimensional random vector comprising the costs incurred by the system, where each component corresponds to the costs of one agent. The first approach to systemic risk is to choose a univariate risk measure and apply it to the aggregated cost If we prefer to use an acceptance set as in (2), the systemic risk can be defined as:

| (3) |

In ([7] this point of view is analyzed in finite probability spaces and it is shown that any monotonic, convex, positively homogeneous function provides a risk evaluation as in (3) as long as it is consistent with the preferences represented in the definition of . The point of view presented in definition (3) is further extended in [11], where the authors analyzed convex risk measures defined on a general measurable space and proposed examples of aggregation functions suitable for a financial system. In both studies, the structural decomposition of the systemic risk measure (3) is established when the aggregation function satisfies properties similar to the axioms postulated for risk measures. In [4], the authors considered a particular case of an aggregation function, proposing an evaluation method for the risk associated with the cumulative externalities or costs endured by financial institutions. Note that these evaluation methods rely on a choice of one aggregation function suitable for a specific problem.

The translation property for constant vectors is introduced in [5] for convex risk measures defined for bounded random vectors. This property differs from the one we propose here. The authors analyzed the maximal risk over a class of aggregation functions rather than using one specific function. We refer to [28] for an overview of the risk measures constructed this way. A similar approach is taken in [10], where law-invariant risk measures for bounded random vectors are investigated for the purpose of obtaining a Kusuoka representation. The axioms proposed in [5, 10] are closest to ours and we provide more detailed discussion in section 3.

Another approach to risk evaluation of complex systems consists of evaluation of the risk of individual agents first and aggregation of the obtained values next. This method is used, for example, in [3] and [12]. Using the notion of acceptance sets the systemic risk measure is defined in [3] in the following way:

The proposed measures of risk in section 3 also accommodate this point of view. A further extension in [3] replaces the constant vector by a random vector , where is a given set of admissible allocations. This formulation of the risk measure allows to decide scenario-dependent allocations, where the total amount can be determined ahead of time while individual allocations may be decided in the future when uncertainty is revealed. In [12] a set-valued counterpart of this approach is proposed by defining the systemic risk measure as the set of all vectors that make the outcome acceptable. Once the set of all acceptable allocations is constructed, one can derive a scalar-valued efficient allocation rule by minimizing the weighted sum of components of the vectors in the set. Set-valued risk measures were proposed in [17], see also [1, 16] for duality theory including the dual representation for certain set-valued risk measures. In fast majority of literature, the systemic risk depends on the choice of the aggregation function and how well it captures the interdependence between the components. To capture the dependence, an approach based on copula theory was put forward in [24]. It is assumed that independent operation does not carry systemic risk and, hence, the local risk can be optimized by each agent independently. The systemic risk measures are then constructed based on the copulas of the distributions.

Another line of work includes methods that use some multivariate counterpart of the univariate risk measures. The main notion here is the Multivariate Value-at-Risk () for random vectors, which is identified with the set of -efficient points. Let be the right-continuous distribution function of a random vector with realizations in . A -efficient points for is a point such that and there is no point that satisfies with componentwise. This notion plays a key role in optimization problems with chance constraints (see e.g. [31]). Multivariate Value-at-Risk satisfies the properties of translation equivariance, positive homogeneity and monotonicity. This notion is used to define Average Value-at-Risk for multivariate distributions () in [19, 23, 26]. Let be the set of all points, each of which is component-wise larger than some -efficient point:

In [19], Lee and Prekopa define the of a random vector at level as

| (4) |

where is assumed integrable with respect to , i.e., is finite. It is shown in [19] that is translation equivariant, positive homogeneous and subadditive only when all of the components of the random vector are independent.

While the definition of above is scalar-valued, in [22] the authors define a Multivariate Average Value-at-Risk () using the notion of -efficient points as and the extremal representation of the Average Value-at-Risk. First for given probability , we consider the vectors

where , . Then, the following vector-optimization problem is solved:

The vector-valued Multivariate Average Value-at-Risk is monotonic, positively homogeneous, translation equivariant, but is not subadditive. Note that in both and , one needs to use a scalarization function to obtain a scalar value for the risk.

We shall compare our proposal to the aforementioned risk measures in section 4.

3 Axiomatic Approach to Risk Measures for Random Vectors

In this section, we propose a set of axioms to measures of risk for random vectors with realizations in . This framework is analogous to the coherent risk measures properties for scalar-valued random variables. In fact, if , the proposed set of axioms exactly coincides with those in [31]. We denote by be the space of random vectors with realizations in , defined on . Throughout the paper, we shall consider risk measure for random vectors in to be a lower-semi-continuous functional with non-empty domain. We denote the -dimensional vector, whose components are all equal to one by and the random vector with realizations equal to by .

Definition 1.

A lower semi-continuous functional is a coherent risk measure with preference to small outcomes, iff it satisfies the following axioms:

-

A1.

Convexity: For all and , we have:

-

A2.

Monotonicity: For all , if for all components -a.s., then .

-

A3.

Positive homogeneity: For all and , we have .

-

A4.

Translation equivariance: For all and , we have

A lower semi-continuous functional is a convex risk measure with preference to small outcomes, iff it satisfies axioms A1, A2, and A4.

The axioms of convexity and positive homogeneity are defined in a similar way to the properties of coherent risk measures, while the random vectors are now compared component-wise for the property of monotonicity. The main difference is the definition of a translation equivariance axiom. It suggests that if the random loss increases by a constant amount for all components, then the risk should also increase by the same amount. These axioms differ from the previous axioms proposed in the literature.

3.1 Dual representation

In order to derive a dual representation of the multivariate risk measure, we pair the space of random vectors , with the space , where is such that , for . For and the bilinear form on the product space is defined as follows:

The Fenchel conjugate function of the risk measure is given by

and the conjugate of (the bi-conjugate function) is

Fenchel-Moreau theorem implies that if is convex and lower semicontinuous, then and that

| (5) |

where is the domain of the conjugate function . Then based on the Fenchel-Moreau theorem and the axioms proposed in this paper, we show the following theorem.

Theorem 1.

Suppose is a convex and lower semicontinuous risk functional. Then the following holds:

-

(i)

Property A2 is satisfied if and only if a.s. for all in the domain of .

-

(ii)

Property A3 is satisfied if and only if is the indicator function of , i.e.

(6) -

(iii)

Property A4 is satisfied if and only if for all , where .

Proof.

Since is convex and lower semicontinuous and we have assumed that it has non-empty domain, the representation (5) holds by virtue of the Fenchel-Moreau theorem.

(i) Suppose satisfies the monotonicity condition. Assume that for with for some component . Define equal to the indicator function of the event and for . Take any with support in such that is finite and define . Then for , we have that , and by monotonicity. Consequently,

It follows that for every with at least one negative component, thus . Conversely, suppose that has realizations in with nonnegative components -a.s. Then whenever , we have:

Consequently,

Hence, the monotonicity condition holds.

(ii) Suppose the positive homogeneity property holds. If for all , then for any fixed , we get

Hence, if is finite, then as claimed. Conversely, if , then is positively homogeneous as a support function of a convex set.

(iii) Suppose the translation property is satisfied, i.e.

for any and a constant . Then for any and , we get:

If is finite, then .

Let us denote , then we obtain

| (7) |

Conversely, suppose . Then for any and :

Hence, the translation property is satisfied. ∎

It follows from Theorem 1 that if a risk measure is lower semicontinuous and satisfies the axioms of monotonicity, convexity and translation equivariance, then representation (6) holds with the set defined as:

Corollary 1.

If a risk measure is coherent, then

Proof.

If is also positive homogeneous, then is the support function of . Then

To show the form of the set recall that

Hence, for all , (6) implies that . On the other hand, if , then by the definition of a support function. ∎

We shall consider further the following property.

-

Normalization a coherent measure of risk is normalized iff .

Corollary 2.

For a normalized coherent measure of risk , we have .

Proof.

It follows from equation (7) that , as stated. This entails that for all , can be interpreted as a probability measure on the space . ∎

In the paper [5], the authors have adopted the following translation axiom:

T. For any constant and any vector whose -th component is 1 () and all other components are zero, we have

Theorem 2.

Assume that is a proper lower-semicontinuous convex risk functional. Property T holds if and only if for all for all . Furthermore, if property T holds, then

| (8) | |||

| (10) |

Proof.

Suppose T holds. Then for a random vector in the domain of and every , we have

It follows that for any such that . This entails that for every constant vector , the risk value is

| (12) |

The other direction is straightforward. Indeed,

Additionally, property T also implies that

Due to equation (12), for all and , we obtain

which completes the proof. ∎

We also observe that a particular implication of Theorem 2 is that risk measures are linear on constant vectors.

Corollary 3.

If a coherent measure of risk satisfies property T, then it is linear on constant vectors.

Proof.

Indeed, a special case of (LABEL:e:T-randomsum) shows that

This combined with the fact that and the positive homogeneity of the risk measure proves the statement. ∎

In [10], the authors have analyzed law-invariant risk measures for bounded random vectors. They have introduced a set of axioms that are closest to ours: their axioms include our axioms together with the two normalization properties and . We do not need these normalization properties to establish the dual representation for general random vectors with finite -moments, ; we derive that the risk of the deterministic zero vector is zero from the dual representation. The property of strong coherence of risk measures, introduced in that paper implies in particular that which appears to be a strong assumption.

3.2 Risk measures obtained via sets of linear scalarizations

Suppose we have a random vector with a right-continuous distribution function and marginal distribution function of each component . We consider linear scalarization using vectors taken from the simplex

Let be a lower semi-continuous risk measure. For any fixed set , we define the risk measure

| (13) |

It is straightforward to see that and hence, the risk measure is well-defined on

Theorem 3.

If is a coherent (convex) risk measure, then for any set , the risk measure is coherent (convex) according to Definition 1.

Proof.

For two random vectors with component-wise a.s., we have a.s. for all . This implies that a.s. and, hence, . Thus, the monotonicity axiom is satisfied. Given two random vectors and , consider their convex combination . Due to the convexity and monotonicity of , we have

Thus, the convexity axiom is satisfied. Given a random vector and a constant , it follows:

Positive homogeneity follows in a straightforward manner. ∎

If the set is a singleton, we obtain the following.

Corollary 4.

Let be a coherent (convex) risk measure. For any vector , the risk measure is coherent (convex) according to Definition 1.

Using the dual representation of the coherent risk measure for scalar-valued random variables, we obtain the following:

| (14) |

Additionally, a measurable selection exists by the Kuratowski-Ryll-Nadjevski theorem; we shall use the notation for any such selection.

Notice that the representations just derived have the form of the dual representation in (6), however we have not established that coincides with the domain of its conjugate function.

We observe the following properties of the aggregation by a single linear scalarization.

Proposition 1.

Given a coherent risk measure and a scalarization vector , for any random vector risk of the vector measured by does not exceed the maximal risk of its components measured by Furthermore, the following relation between aggregation methods holds

Proof.

The dual representation implies the following:

∎

The penultimate relation implies the second claim of the theorem.

We also show the following useful result, which implies that we can use statistical methods to estimate the systemic risk measure

Proposition 2.

If is a law-invariant risk measure, then for any set , the systemic risk measure is law-invariant.

Proof.

It is sufficient to show that for two random vectors and , which have the same distribution, the respective random variables and have the same distribution. ∎

We observe that and have the same distribution for any vector . Hence, for any real number , the following relations hold:

which shows the equality of the distribution functions.

3.3 Systemic Risk Measures Obtained via Nonlinear Scalarization

The second aggregation method that falls within the scope of our axiomatic framework is that of nonlinear scalarization. This class of risk measures cannot be obtained within the framework of aggregations by non-linear functions, and does not fit the axiomatic approaches in [7] or in [5]. Furthermore, we shall see that this method of evaluating systemic risk allows to maintain fairness between the system’s participants.

We define and consider a probability space , where and contains all subsets of . We view as a probability mass function of the space . Given an -dimensional random vector and a collection of univariate measures of risk , we define the random variable on the space as follows:

| (15) |

Choosing a scalar measure of risk , the measure of systemic risk is defined as follows:

| (16) |

This is a nonlinear aggregation of the individual risks , hence this approach falls within the category of methods that evaluate the risk of each component first and then aggregate their values. The measure satisfies the axioms postulated for systemic risk measures.

Theorem 4.

Suppose the univariate measures of risk , are coherent and let be defined as in (16). Then satisfies properties (A1)–(A4).

Proof.

(i) Given any and , we consider the random vector . We have , . Defining a random variable on by setting , we obtain that . Using the monotonicity and convexity of we obtain

Hence .

(ii) Suppose the vectors satisfy a.s. This implies that a.s. and, hence, for all by the monotonicity property of . This further implies that , entailing that . Thus (A2) is satisfied.

(iii) Given a random vector , , we have

where we have used the positive homogeneity property of for all .

(iv) Given a random vector and a constant , we have . Hence This shows property (A4). ∎

Examples A. Systemic Mean-AVaR measure

Consider the case when is a convex combination of the expected value and the Average Value-at-Risk at some level and all components of are evaluated by the same measure of risk . Then for any and , we have:

Here the infimum with respect to is taken over the individual risks of the components , . Hence, this method of aggregation imposes additional penalties for the components whose risk exceeds some threshold.

B. Systemic Mean-Semideviation measure

Now let be a Mean-Upper-Semideviation risk measure of the first order and all components of are evaluated by the same measure of risk . Then the measure of systemic risk can be defined as:

The last representation shows that this risk measure is an aggregation of the individual risk of the components, which compares the risk of every component with the weighted average risk of all components and penalizes the deviation of the individual risk from that average.

The presented method of non-linear aggregation maintains fairness within the system and keeps the components functioning within the same level of risk.

4 Relations to multivariate measures of systemic risk

In this section, we compare the proposed risk measures with the multivariate notions mentioned in section 2.2.

Consider first the Multivariate Value-at-Risk () is given as the set of -efficient points of the respective probability distribution. The following facts are shown in [9]. For every the level set of a the distribution function of a random vector is nonempty and closed. For a given scalarization vector , the -efficient points can be generated by solving the following optimization problem:

| (17) | ||||

| s.t. |

For every the solution set of the optimization problem (17) is nonempty and contains a -efficient point. Hence, given a random vector and a scalarization vector , at level can be calculated as:

Therefore, using linear scalarizations, one can find the -efficient point corresponding to any given vector .

Consider now the Multivariate Average Value-at-Risk () defined in (4). When small outcomes are preferred then, the unfavorable set of realizations of a random vector is given by the -level set of . Hence . If , then there exists a -efficient point such that . If the scalarization function is monotonically nondecreasing, then . Denote the -quantile of by . Then we observe that Therefore:

for all where the cumulative distribution function of is continuous. It follows that the Average Value-at-Risk of scalarized by a monotonically nondecreasing function has a smaller value than defined in (4). This implies in particular that for any ,

We not turn to the Vector-valued Multivariate Average Value-at-Risk. It is calculated as one of the Pareto-efficient optimal solution of the following optimization problem:

| (18) | ||||

| s.t. |

It is well-known that a feasible solution of a convex multiobjective optimization problem is Pareto-efficient if and only if it is an optimal solution of the scalarized problem with an objective function which is a convex combination of the multiple objectives. Then , which is the Pareto-efficient solution of the multiobjective optimization problem 18, is also optimal for the following problem:

| (19) | ||||

| s.t. |

where is a scalarization vector taken from the simplex .

Now for , we consider:

due to the convexity of the max function. It follows that:

In the scalar-valued case () the minimizer of the optimization problem defining is the for a random variable . In the multivariate case (), we established that the solution of (17) is the -efficient point, or , corresponding to a given scalarization vector . Denoting this -efficient point as , it follows that:

The relation implies that . Denoting the -quantile of as , it follows that: i.e. is not larger than . Therefore:

It follows that the scalarization of results in a smaller value of the Average Value-at-Risk of the scalarized random vector, which is one of the systemic risk measures following the constructions in section 3.

We do not pursue further investigation on set-valued systemic measures of risk as their calculation is numerically very expensive.

5 Two-stage stochastic programming problem with systemic risk measures

Our goal is to address a situation, when the agents cooperate on completing a common task and risk is associated (among other factors) with the successful completion of the task. This type of situations are typical in robotics, as well as in energy systems, where the units cover the energy demand in certain area.

5.1 Two-stage monotropic optimization problem with a systemic risk measure

In this section, we consider how the proposed approaches to evaluate systemic risk can be applied to a two-stage stochastic optimization problem with a monotropic structure. Specifically, we focus on a problem formulated as follows:

| (20) |

where has realizations defined as the optimal value of the second-stage problem in scenario :

| (21) | ||||

| s.t. | (22) | |||

| (23) | ||||

| (24) | ||||

| (25) |

Here is a continuous function that represents the cost of the first-stage decision and is a closed convex set. The random vector comprises the random data of the second-stage problem. In the second-stage problem, we would like to minimize the sum of cost functions for that depend on two second-stage decision variables: local decision variables for and the common decision variable . The decision variables are local for every , and the local constraints are represented as a closed convex set . The decision variable is common for all and needs global information to be calculated. The matrix is of size and the set is a closed convex set. Note that the constraints (22) linking the first-stage decision variable and the local second-stage decision variables are defined for every separately, where matrices , and depend on the scenario . The constraint (23) is a coupling constraint that links the local decision variables , where and depend on the scenario .

We define the total cost as the aggregation of the individual cost functions using some scalarization vector such that and we would like to develop a numerical method to solve the two-stage problem in a distributed way. Specifically, we use decomposition ideas based on the risk-averse multicut method proposed in [15] and the multi-cut methods in risk-neutral stochastic programming to solve the two-stage problem, but we also decompose the second-stage problem into subproblems that can be solved independently in order to allow for a distributed operation of units (agents).

First, we discuss how to apply the decomposition method to solve the two-stage problem. We use the multicut method to construct a piecewise linear approximation of the optimal value of the second-stage problem and we approximate the measure of risk by subgradient inequalities based on the dual representation of coherent risk measures . To this end, we introduce auxiliary variable , which will contain the lower approximation of the measure of risk. Further, we designate the random variable with realizations which represent the lower approximations of the function Then the master problem in our method takes on the following form:

| (26) | ||||

| s.t. | ||||

The optimal value contains the value of the approximation of where is the solution of the master problem at iteration . Notice that the approximation of is given by

with being the probability measures from calculated as subgradients in the previous iterations. We shall explain how the subgradients are obtained in due course. The value is the optimal value of the second-stage problem in scenario at iteration and is the subgradient calculated using the optimal dual variables of the constraints . One can solve the second-stage problem where the objective function consists of a scalarization of cost functions, but we would like to decompose the second-stage problem into subproblems that can be solved independently in a distributed manner.

Consider the second-stage problem for a fixed first-stage decision variable . To decompose the global problem into local subproblems, we need to handle two problems: (i) distribute the common decision variable to individual subproblems ; (ii) decompose the coupling constraints. The common decision variable can be distributed to subproblems by creating its copy for every , where . Then we ensure the uniqueness of by enforcing the decision variables to be equal to each other. Then the second-stage problem can be rewritten as:

| (27) | ||||

| s.t. | (28) | |||

| (29) | ||||

| (30) | ||||

| (31) |

In order to distribute the coupling constraints (29), (30), we can apply Lagrange relaxation using Lagrange multipliers and . Then the global augmented Lagrangian problem associated with the second-stage problem is defined as:

where is a penalty coefficient. This problem can be decomposed into subproblems with a local augmented Lagrangian defined as:

where are decision variables of subproblem , and contain given optimal decisions of other subproblems . Note that the first penalty term can be expanded as:

This implies that for such that , the remaining terms are constant with respect to and can be omitted from the optimization problem. Hence, the subproblem needs access to the decisions of which are coupled with in constraint . Similarly, consider the second penalty term:

The terms contained in the last summation that do not include are constants and can be excluded from the optimization problem. Hence, we can define the subproblem for every as follows:

| (32) | ||||

| s.t. | (33) | |||

| (34) |

For a fixed , in every scenario , we can implement the Accelerated Distributed Augmented Lagrangian (ADAL) method, we refer to [6] for detailed analysis of the method. The method consists of three main steps: (i) we solve every subproblem to find the optimal primal variables ; (ii) update the primal variables and check if the coupling constraints (29), (30) are satisfied; (iii) if the constraints are not satisfied, update the dual variables and go back to step (i). The ADAL method converges to the optimal solution in a finitely many steps and we can calculate the optimal value of the objective function for every subproblem . Then the global objective function of the second-stage problem can be calculated as .

Once the second-stage problem is solved for every scenario , we construct objective cuts for every scenario defined as:

where is the subgradient of at and scenario . Now note that

Hence, at , the subgradient for scenario can be calculated as . The subgradient is given as , where is the Lagrange multiplier associated with the constraint (28) in subproblem . Then the proposed method for solving the two-stage problem is formulated as follows:

-

Step 0. Set and define initial .

-

Step 1. Solve the master problem (26) and denote its optimal solution as .

-

Step 2. For every scenario apply the following method.

-

(a) Set and define initial Lagrange multipliers , and initial primal variables .

-

(b) Given the Lagrange multipliers and decision variables of the neighboring nodes , every node calculates its optimal solution by solving its local problem:

(35) s.t. -

(c) Every node updates its primal variables:

-

(d) If the constraints

(36) are satisfied, then calculate the following quantities and go to Step 3:

where is the optimal Lagrange multiplier associated with the constraint (33) in subproblem and is the optimal value of the objective function (35).

If any of the constraints (36) are not satisfied, update their Lagrange multipliers as follows:

Increase by one and return to Step (b).

-

-

Step 3. Calculate and .

-

Step 4. If , stop; otherwise, increase by one and go to Step 1.

Note that the penalty parameter can be chosen for every scenario according to the structure of the problem. The ADAL method converges to the optimal solution in scenario if the penalty parameter , where is the maximum number of nonzero rows in matrices for . Hence, can be chosen close to

5.2 Two-stage wireless information exchange problem

In this section, we formulate a two-stage information exchange problem and implement the proposed numerical method to solve it. Consider a problem in a wireless communication network consisting of robots. We denote the team of all robots by . The robots collect information about the unknown environment and send the information to a set of active reporting points by multi-hop communication. The active reporting points can receive information from robots and store it. The communication links between robots and reporting points are subject to the risk of information loss. Therefore, the objective is to choose the optimal set of active reporting points to minimize the risk associated with the amount of information lost and the proportion of the total information that was gathered but has not reached the reporting points. To this end, we shall formulate a two-stage stochastic programming problem.

The first-stage decision variables are known as the here and now variables. In our problem, these are binary variables for , where if the -th location is selected as an active reporting point is active and otherwise. We assume that at most reporting points can be active, where

Once the reporting points are chosen, the spatial configuration of the robots is observed. We model the uncertainty of the spacial configuration and the amount of information to be observed by a set of scenarios. The robots gather information about the environment and either deliver it to the reporting points or exchange it with their neighbors who then deliver it to the active reporting points. The following second-stage decision variables are involved in the second-stage optimization problem. The variables stand for the amount of information that is sent by node to node in scenario . The amount of information observed but not sent by robot in scenarios is denoted by . The proportion of information successfully delivered to the reporting points in scenario is denoted by . Every robot generates information and can send it to its neighbors within some communication range. These communication links between the nodes depend on a function that calculates what proportion of information sent by node is received and correctly decoded by node . Then is the amount of information received and correctly decoded by node in scenario . Then the set of neighbors of node in scenario can be defined as the set of nodes within its communication range .

We associate a local risk with each robot about the information that is not communicated to neighbors or delivered to the reporting points because the information might be lost due to damage to the robot or other issues. For every we define:

as the amount of information not communicated to neighbors nor to any of the reporting points by robot . The systemic risk associated with the team of robots is represented by the total proportion of information not delivered to the reporting points; it is defined as , where is calculated as follows:

| (37) |

To implement the distributed method for the operation of the robots, we introduce copies of the total proportion variable for each robot (denoted ). We then introduce additional constraints to impose equality among the auxiliary variables . Constraint (37) is then replaced by the following set of constraints:

Using these variables, we can express the loss function of every robot in scenario as follows

where is the weight associated with the local risk, while is the systemic risk. These are modeling parameters. We have used a choice of in-line with our theoretical proposal for aggregating sources of risk. The first-stage optimization problem takes on the following form:

| s.t. | |||

Here is a random variable with realizations for denoting the optimal values of the second-stage problem. The second-stage problem deals with the operation of the robots after the location of the reporting points is fixed; it is formulated as follows:

| (38) | ||||

| (39) | ||||

| (40) | ||||

| (41) | ||||

| (42) | ||||

| (43) | ||||

| (44) | ||||

| (45) |

Here is a large constant, which helps us provide a logical upper bound for communication only to the active reporting points in constraint (43). Additionally, are weights associated with the loss functions of individual robots. Here again, we propose according to the proposed systemic measures of risk. We notice that the second-stage problems are always feasible for any feasible first-stage decision. Hence, the two-stage problem has a relatively complete recourse. Furthermore, the recourse function has finitely many realizations for every fixed argument . This implies that we can use any coherent measure of risk for evaluating the risk of ; the value is well defined and finite for all feasible first-stage decisions

5.3 Numerical results

We solve the problem using the distributed method proposed in 5.1. In the given problem, the decision variables are local to every node and there are four coupling constraints that need to be distributed to the nodes:

Since the ADAL algorithm operates equality constraints, we can introduce auxiliary variables and redefine (40) as . Then the coupling constraints can be rewritten using appropriate matrices and vectors stacking decision variables of every node . Let be a -dimensional vector stacking all decision variables of the node . Then the flow conservation constraint (39) can be rewritten as , where is a -dimensional vector and is dimensional matrix defined as:

where all elements in the -th row are equal to 1 except of terms and the last two columns which are equal to . Note that for all , hence the terms are equal to . Similarly, constraints (40) and (41) can be rewritten as

using appropriate matrices for every node , where is a vector of all ones. Note that since nodes can share information only with the neighbors, one can enforce the equality of the proportion variable between neighboring nodes and rewrite the constraint (42) as follows:

| (46) |

where is the set of nodes within communication range of node in scenario . If the network is connected, constraint (46) enforces all to be equal to each other and ensures the consistency and uniqueness of .

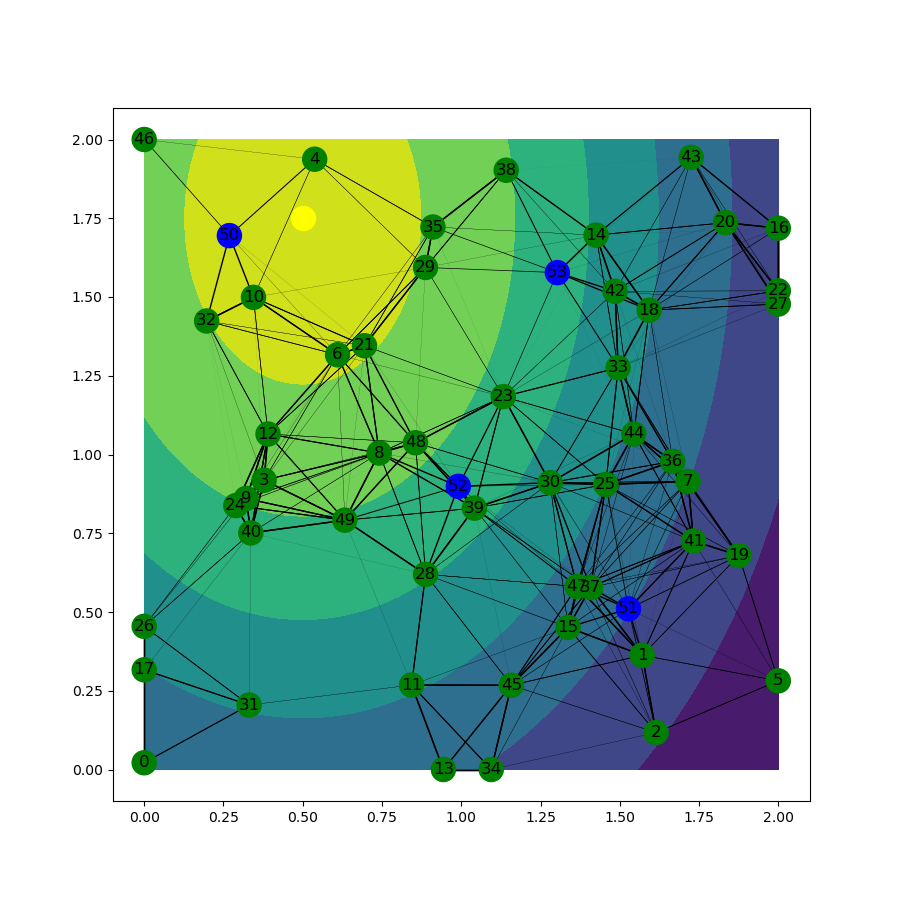

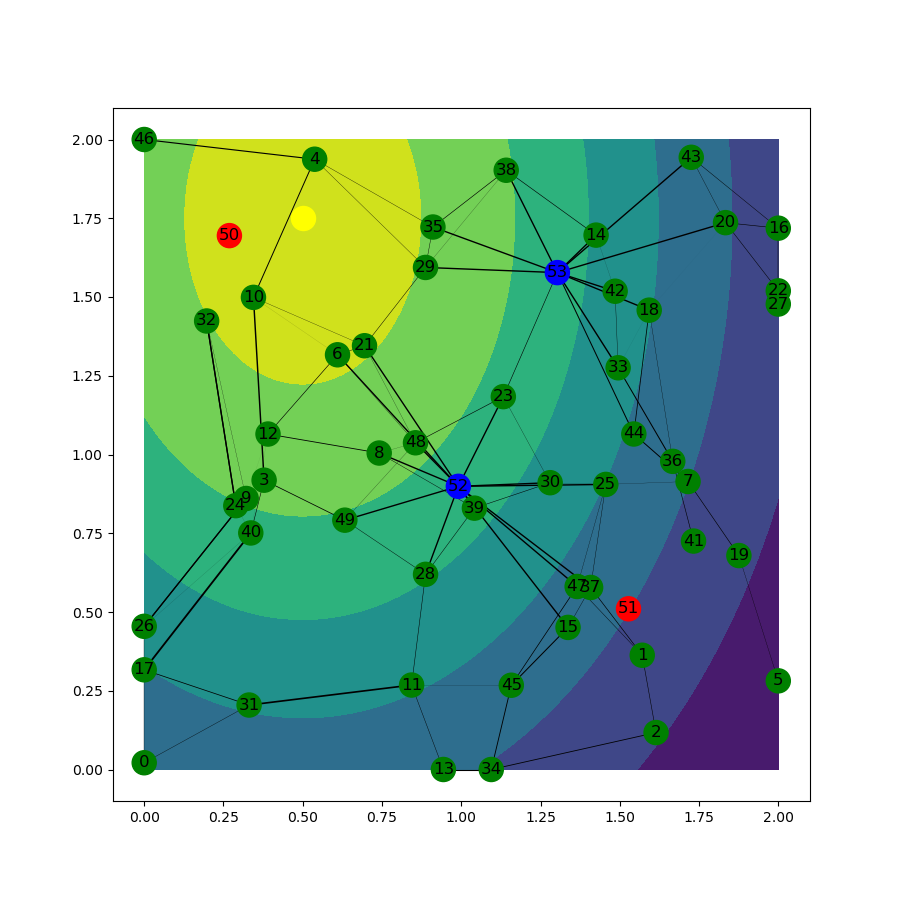

We assume that the team of robots works on a square map given by the points with relative coordinates and . The spatial distribution of available information to be gathered follows a normal distribution with an expected value in the upper left corner of the map. The network consists of 50 robots and 4 potential locations of the reporting points. We generated 200 scenarios for different spatial configurations of the robots. The four potential locations for the reporting points are fixed in the positions . The rate function depends on the distance between the nodes in the network and is defined as:

where is the distance between the nodes in scenario . We set and , and values and are chosen so that is a continuous function. This function is commonly used in literature, see e.g. [32]. The information gathered by robot in scenario , depends on the robot’s position relative to the expected value given above. In our experiments is calculated as follows:

where is the positions of robot is a scaling factor, and is a covariance matrix, which keep fixed for all experiments.

Comparison of aggregation methods. We solved the optimization problem using two different aggregation methods:

-

•

aggregate first Using the proposed multivariate measures of risk, we aggregate the individual losses of the robots with a fixed scalarization , we calculate for each scenario and evaluate its risk by several scalar-valued measures of risk;

-

•

evaluate first We evaluate the individual risk of every robot across all scenarios and calculate . Then we aggregate their values using two examples of nonlinear aggregation shown in section 4.2.1.

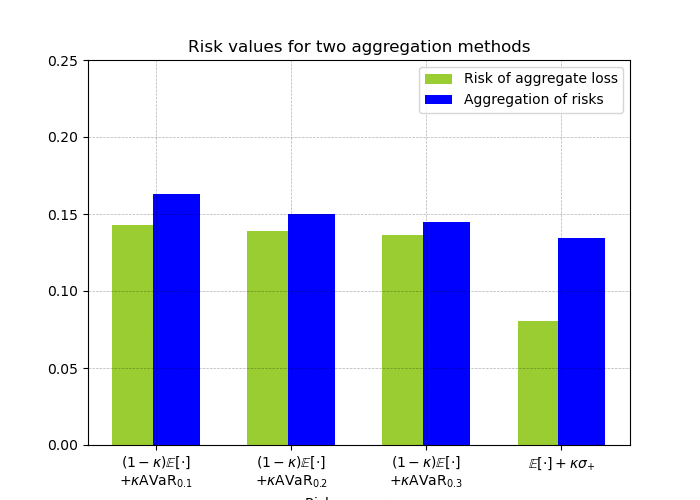

We solve the problem using a linear scalarization vector with equal weights for all , and for three values of .

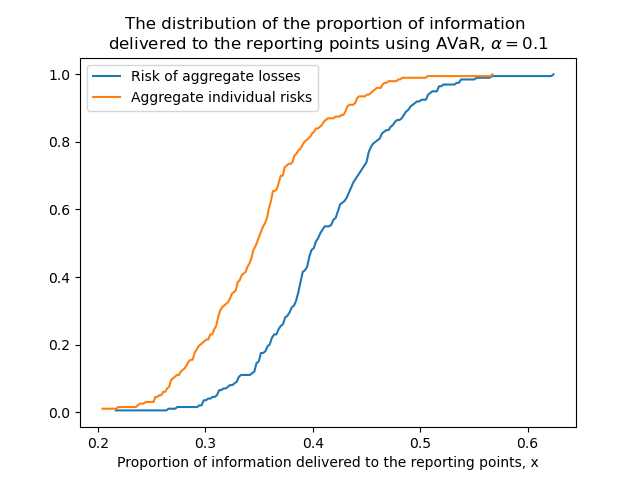

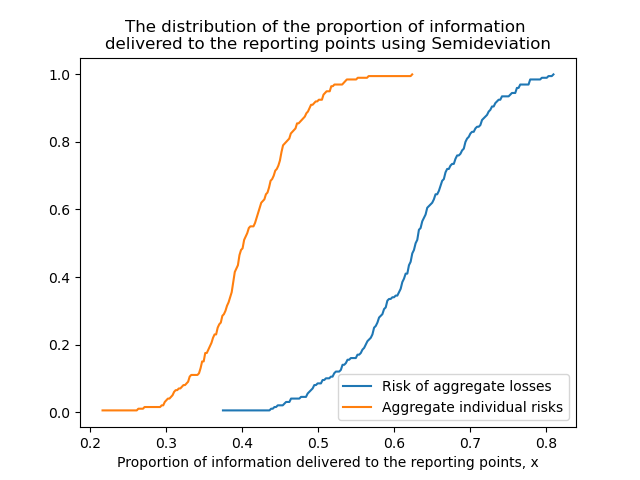

The setup of the communication network problem and the optimal solutions in one of the scenarios for both methods are shown in Fig. 1. One can notice that depending on what kind of aggregation method is used, the set of optimal reporting points might be different. The distribution of the proportion of information delivered to the reporting points for two methods is shown in Fig. 2. It can be seen that more information is delivered to the reporting points if we aggregate the losses of robots and evaluate the risk. This observation is also reflected in the values of the risk for both methods: imposing a risk measure on linear scalarization of the individual losses results in smaller values than aggregation of individual risks.

Using the optimal values of the decision variables, we can calculate and to compare their values with the AVaR applied on linear scalarization of the random cost. The following formulas were used to calculate the values:

| (47) | |||

| (48) | |||

| (49) |

The values of , and are shown in Table 1. It can be seen that results in smaller values than and at all confidence levels as it was shown theoretically in section 4.Those measures of risk are computationally very demanding and not amenable to the type of decision problems, we are considering. Hence, we only compare their values for the decision obtained via our proposed method.

| 0.1 | 0.2 | 0.3 | |

|---|---|---|---|

| 0.1429 | 0.1389 | 0.1364 | |

| 0.1992 | 0.1693 | 0.1634 | |

| 0.174 | 0.1622 | 0.1553 |

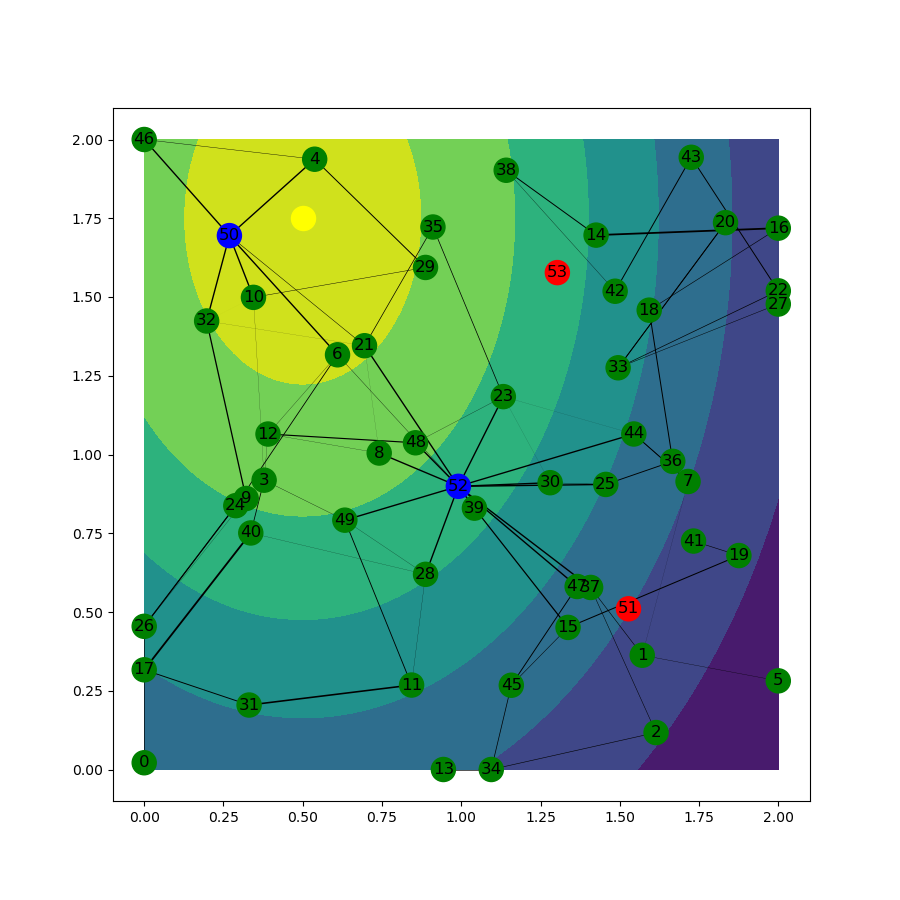

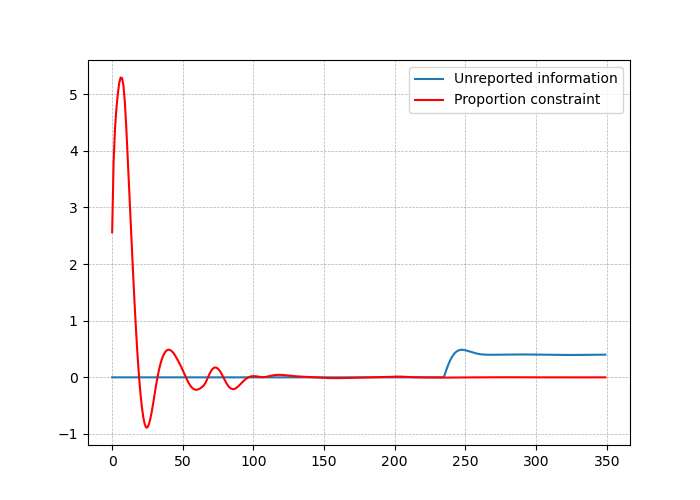

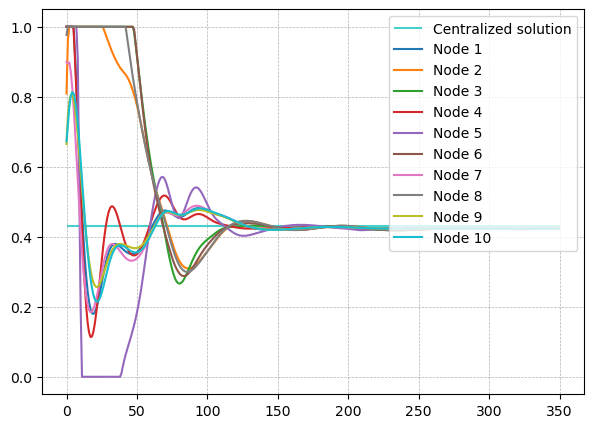

When we solve the problem in a distributed way, we use a smaller network consisting of 20 robots and 4 reporting points in a 1.5 by 1.5 square over 100 scenarios. It is assumed that the network is connected in all possible scenarios, that is, every node has at least one neighbor within the communication range, and all nodes are connected to the reporting points through multiple hops. This assumption is necessary for the proper calculation of the proportion of information delivered to the reporting points. If one of the nodes is isolated from the network, the rest of the group converges to a solution that does not take into account the isolated node’s contribution. The problem is solved in both centralized and distributed ways, and the results for one of the scenarios are shown in Fig. 3. As it can be seen in Fig. 3 (b), nodes converge to the centralized solution of the proportion of information delivered to the reporting points.

6 Conclusions

Our contributions can be summarized as follows. We propose a sound axiomatic approach to measures of risk for distributed systems. We show that several classes of non-trivial measures that satisfy the axioms can be constructed. These measures can be calculated efficiently and are less conservative than most of the other systemic measures of risk. The class of measures proposed in section 3.3 goes beyond the popular ways to evaluate risk of agents then aggregate.

We have devised a distributed method for solving the risk-averse two-stage problems with monotropic structure, which works for any measure of risk not only for those that are representable as expected value. The construction is quite general and could serve as a template for devising other distributed methods for problems with systemic measures of risk.

We demonstrate the viability of the proposed framework on a non-trivial two-stage problem involving wireless communication. The numerical experiments confirm the theoretical observations and show the advantage of the proposed approach to risk aggregation in distributed systems.

In conclusion, the advantage of the new approach is the good balance of robustness to the uncertainty, optimality of the loss functions involved, and the efficiency of the numerical operation.

References

- [1] Çağın Ararat, and Birgit Rudloff. Dual representations for systemic risk measures. Mathematics and Financial Economics, 14:139-174, 2020.

- [2] Philippe Artzner, Freddy Delbaen, Jean-Marc Eber, and David Heath. Coherent measures of risk. Mathematical Finance, 9(3):203–228, July 1999.

- [3] Francesca Biagini, Jean-Pierre Fouque, Marco Frittelli, and Thilo Meyer-Brandis. A Unified Approach to Systemic Risk Measures via Acceptance Sets. 2015.

- [4] Markus Brunnermeier and Patrick Cheridito. Measuring and Allocating Systemic Risk. Risks, 7(2), 2019.

- [5] Christian Burgert and Ludger Rüschendorf. Consistent risk measures for portfolio vectors. Insurance: Mathematics and Economics, 38(2):289–297, 2006.

- [6] Nikolaos Chatzipanagiotis, Darinka Dentcheva, and Michael Zavlanos. An augmented lagrangian method for distributed optimization. Mathematical Programming, 152, 01 2014.

- [7] Chen Chen, Garud Iyengar, and C. Ciamac Moallemi. An Axiomatic Approach to Systemic Risk. Management Science, 59(6):1373–1388, 2013.

- [8] Freddy Delbaen. Coherent risk measures on general probability spaces. Advances in Finance and Stochastics, pages 1–37, March 2000.

- [9] Darinka Dentcheva, Bogumila Lai, and Andrzej Ruszczyński. Dual methods for probabilistic optimization. Mathematical Methods of Operations Research, 60:331–346, 2004.

- [10] Ivar Ekeland and Walter Schachermayer, Law invariant risk measures on Statistics & Risk Modeling, 28(3): 195–225, 2011.

- [11] Kromer Eduard, Overbeck Ludger, and Zilch Katrin. Systemic Risk Measures on General Measurable Spaces. 05 2016.

- [12] Zachary Feinstein, Birgit Rudloff, and Stefan Weber. Measures of Systemic Risk. SIAM Journal on Financial Mathematics, 8(1):672–708, Jan 2017.

- [13] Hans Föllmer and Alexander Schied. Convex measures of risk and trading constraints. Finance and Stochastics, 6:429–447, 2002.

- [14] Hans Föllmer and Alexander Schied. Stochastic Finance: An Introduction in Discrete Time, 3rd Edition. Walter De Gruyter, 2011.

- [15] Sıtkı Gülten and Andrzej Ruszczyński. Two-stage portfolio optimization with higher-order conditional measures of risk. Annals of Operations Research, 229:409–427, 06 2015.

- [16] Andreas Hamel, and Frank Heyde, Duality for set-valued measures of risk, SIAM Journal on Financial Mathematics, 1(1):66–95,2010.

- [17] Elyes Jouini, Moncef Meddeb, and Nizar Touzi, Vector-valued coherent risk measures, Finance and stochastics, 8(4): 531–552, 2004.

- [18] M. Kijima and M. Ohnishi. Mean-risk analysis of risk aversion and wealth effects on optimal portfolios with multiple investment opportunities. Ann. Oper. Res., 45:147–163, 1993.

- [19] Jinwook Lee and András Prékopa. Properties and calculation of multivariate risk measures: MVaR and MCVaR. Annals of Operations Research, 211:225–254, 2013.

- [20] J. Leitner. A short note on second-order stochastic dominance preserving coherent risk measures. Mathematical Finance, 15:649–651, 2005.

- [21] Ma, Wann-Jiun and Oh, Chanwook and Liu, Yang and Dentcheva, Darinka and Zavlanos, Michael M., Risk-Averse Access Point Selection in Wireless Communication Networks, IEEE Transactions on Control of Network Systems, 6(1): 24–36, 2019.

- [22] Merve Meraklı and Simge Küçükyavuz. Vector-Valued Multivariate Conditional Value-at-Risk. Operations Research Letters, 46(3):300–305, 2018.

- [23] Nilay Noyan and Gábor Rudolf. Optimization with multivariate conditional value-at-risk constraints. Operations research, 61(4):990–1013, 2013.

- [24] Georg Pflug and Alois Pichler, Systemic risk and copula models, Central European Journal of Operations Research, 26: 465–483 2018.

- [25] G.Ch. Pflug and W. Römisch. Modeling, Measuring and Managing Risk. World Scientific, Singapore, 2007.

- [26] András Prékopa. Multivariate Value at Risk and Related Topics. Annals of Operations Research, 193:49–69, 2012.

- [27] R Tyrrell Rockafellar and Stan Uryasev. The fundamental risk quadrangle in risk management, optimization and statistical estimation. Surveys in Operations Research and Management Science, 18(1-2):33–53, 2013.

- [28] Ludger Rüschendorf. Mathematical Risk Analysis. Springer, 2015.

- [29] A. Ruszczyński and A. Shapiro. Optimization of risk measures. In G. Calafiore and F. Dabbene, editors, Probabilistic and Randomized Methods for Design under Uncertainty, pp. 117–158, Springer-Verlag, London, 2005.

- [30] A. Ruszczyński and A. Shapiro. Optimization of convex risk functions. Mathematics of Operations Research, 31:433–452, 2006.

- [31] Alexander Shapiro, Darinka Dentcheva, and Andrzej Ruszczyński. Lectures on Stochastic Programming: Modeling and Theory. Society for Industrial & Applied Mathematics (SIAM), 2009.

- [32] Michael M. Zavlanos, Alejandro Ribeiro, and George J. Pappas. Network integrity in mobile robotic networks. IEEE Transactions on Automatic Control, 58(1):3–18, 2013.