On the fitting of mixtures of multivariate skew -distributions via the EM algorithm

Abstract

We show how the expectation-maximization (EM) algorithm can be applied exactly for the fitting of mixtures of a general multivariate skew (MST) distributions, eliminating the need for computationally expensive Monte Carlo estimation. Finite mixtures of MST distributions have proven to be useful in modelling heterogeneous data with asymmetric and heavy tail behaviour. Recently, they have been exploited as an effective tool for modelling flow cytometric data. However, without restrictions on the the characterizations of the component skew -distributions, Monte Carlo methods have been used to fit these models. In this paper, we show how the EM algorithm can be implemented for the iterative computation of the maximum likelihood estimates of the model parameters without resorting to Monte Carlo methods for mixtures with unrestricted MST components. The fast calculation of semi-infinite integrals on the E-step of the EM algorithm is effected by noting that they can be put in the form of moments of the truncated multivariate -distribution, which subsequently can be expressed in terms of the non-truncated form of the -distribution function for which fast algorithms are available. We demonstrate the usefulness of the proposed methodology by some applications to three real data sets.

1 Introduction

Finite mixture distributions have become increasingly popular in the modelling and analysis of data due to their flexibility. This use of finite mixture distributions to model heterogeneous data has undergone intensive development in the past decades, as witnessed by the numerous applications in various scientific fields such as bioinformatics, cluster analysis, genetics, information processing, medicine, and pattern recognition. Comprehensive surveys on mixture models and their applications can be found, for example, in the monographs by Everitt and Hand (1981), Titterington, Smith, and Markov (1985), Lindsay (1995), McLachlan and Basford (1988), and McLachlan and Peel (2000), among others; see also the papers by Banfield and Raftery (1993) and Fraley and Raftery (1999).

Mixtures of multivariate -distributions, as proposed by McLachlan and Peel (1998, 2000), provide extra flexibility over normal mixtures. The thickness of tails can be regulated by an additional parameter – the degrees of freedom, thus enabling it to accommodate outliers better than normal distributions. However, in many practical problems, the data often involve observations whose distributions are highly asymmetric as well as having longer tails than the normal, for example, datasets from flow cytometry (Pyne et al., 2009). Azzalini (1985) introduced the so-called skew-normal (SN) distribution for modelling symmetry in data sets. Following the development of the SN and skew -mixture models by Lin, Lee, and Yen (2007), and Lin, Lee, and Hsieh (2007), respectively, Basso et al. (2010) studied a class of mixture models where the components densities are scale mixtures of skew-normal distributions introduced by Branco and Dey (2001), which include the classical skew-normal and skew -distributions as special cases. Recently, Cabral, Lachos, and Prates (2012) have extended the work of Basso et al. (2010) to the multivariate case.

In a study of automated flow cytometry analysis, Pyne et al. (2009) proposed a finite mixture of multivariate skew -distributions based on a ‘restricted’ variant of the skew -distribution introduced by Sahu, Dey, and Branco (2003). Lin (2010) considered a similar approach, but working with the original (unrestricted) characterization by Sahu et al. (2003). However, with this more general formulation, maximum likelihood (ML) estimation via the EM algorithm (Dempster, Laird, and Rubin, 1977) can no longer be implemented in closed form due to the intractability of some of the conditional expectations involved on the E-step. To work around this, Lin (2010) proposed a Monte Carlo (MC) version of the E-step. One potential drawback of this approach is that the model fitting procedure relies on MC estimates which can be computationally expensive.

In this paper, we show how the EM algorithm can be implemented exactly to calculate the ML estimates of the parameters for the (unrestricted) multivariate skew -mixture model, based on analytically reduced expressions for the conditional expectations, suitable for numerical evaluation using readily available software. A key factor in being able to compute the integrals quickly by numerical means is the recognition that they can be expressed as moments of a truncated multivariate -distribution, which in turn can be expressed in terms of the distribution function of a (non-truncated) multivariate central -random vector, for which fast programs already exist. We show that the proposed algorithm is highly efficient compared to the version with a MC E-step. It produces highly accurate results for which, if MC were to achieve comparable accuracy, a large number of draws would be necessary.

The remainder of the paper is organized as follows. In Section 2, for the sake of completeness, we include a brief description of the multivariate skew -distribution (MST) used for defining the multivariate skew -mixture model. We also describe the truncated -distribution in the multivariate case, critical for the swift evaluation of the integrals on the E-step occurring in the calculation of some of the conditional expectations. Section 3 presents the development of an EM algorithm for obtaining ML estimates for the MST distribution. In the following section, the finite mixture of MST (FM-MST) distributions is defined. Section 5 presents an implementation of the EM algorithm to the fitting of the FM-MST model. An approximation to the observed information matrix is discussed in Section 6. Finally, we present some applications of the proposed methodology in Section 7.

2 Preliminaries

We begin by defining the multivariate skew -distribution and briefly describing some related properties. Some alternative versions of the distribution are also discussed. Next, we introduce the truncated multivariate -distribution and provide some formulas for computing its moments. These expressions are crucial for the swift evaluation of the conditional expectations on the E-step to be discussed in the next section.

2.1 The Multivariate Skew -Distribution

Following Sahu et al. (2003), a random vector is said to follow a -dimensional (unrestricted) skew -distribution with location vector , scale matrix , skewness vector , and scalar degrees of freedom , if its density is given by

| (1) |

where

Here the operator denotes a diagonal matrix with diagonal elements specifed by the vector . Also, we let be the -dimensional -density with location vector , scale matrix , and degrees of freedom , and the corresponding (cumulative) distribution function. The notation will be used. Note that when , (1) reduces to the symmetric -density . Also, when , we obtain the skew normal distribution.

The MST distribution admits a convenient hierarchical form,

where is the identity matrix, denotes the multivariate normal distribution with mean and covariance matrix , represents the -dimensional half-normal distribution with mean and scale matrix , and is the Gamma distribution with mean .

We observe from (LABEL:MST_H) that the MST distribution (1) has the following stochastic representation. Suppose that conditional on the value of the gamma random variable ,

| (3) |

where denotes the identity matrix, denotes the zero vector of appropriate dimension, and is a -dimensional random vector. Then

| (4) |

has the multivariate skew -distribution density (1). In the above, denotes the vector whose th element is the magnitude of the th element of the vector . It is important to note that, although also known as the multivariate skew -distribution, the versions considered by Azzalini and Dalla Valle (1996), Gupta (2003), and Lachos, Ghosh, and Arellano-Valle (2010), among others, are different from (1). These versions are simpler in that the skew -density is defined in terms involving only the univariate -distribution function instead of the multivariate form of the latter as used in (1). Recently, Pyne et al. (2009) proposed a simplified version of the skew -density given by (1) by replacing the term in (4) by the term , where is a univariate central -random variable with degrees of freedom, leading to the reduced skew -density:

| (5) |

where . We shall refer to this characterization of skew -distribution as the ‘restricted’ multivariate skew (rMST)distribution. One immediate consequence of this type of ‘simplification’ is that the correlation structure of the original symmetric model is affected by the introduction of skewness, whereas for (1) the correlation structure remains the same, as noted in Arellano-Valle, Bolfarine, and Lachos (2007). Nevertheless, one major advantage of having simplified forms like (5) is that calculations on the E-step can be expressed in closed form. However, the form of skewness is limited in these characterizations. Here, we extend their approach to the more general form of the skew -density as proposed by Sahu et al. (2003).

2.2 The truncated multivariate -distribution

Let be a -dimensional random variable having a multivariate -distribution with location vector , scale matrix , and degrees of freedom. Truncating to the hyperplane region , where means each element is less than or equal to for , results in a right-truncated -distribution whose density is given by

| (6) |

For a random vector with density (6), we write . For our purposes, we will be concerned with the first two moments of , specifically and . Explicit formulas for the truncated central -distribution in the univariate case were provided by O’Hagan (1973), who expressed the moments in terms of the non-truncated -distribution. The multivariate case was studied in O’Hagan (1976), but still considering the central case only. We will generalize these results to the case with non-zero location vector here.

Before presenting the expressions, it will be convenient to introduce some

notation. Let be a vector, where denotes the th element

and is a two-dimensional vector with elements and

. Also, and represents the

and -dimensional vector, respectively, with the corresponding elements

removed. For a matrix , denotes the th element, and

defines the matrix consisting of the elements

, , and . is created by

removing the th row and column from . Similarly, is the -dimensional square matrix resulting from the removal of the th and

th row and column from . Lastly, is the

th and th column of with the elements of removed, yielding a matrix. We now proceed to the

expressions for the first two moments of .

With some effort, one can show that the first moment of (6) is

| (7) |

where , and is a vector with elements

for , and where

| and | ||||

The second moment is given by

| (8) | |||||

where is a matrix with off-diagonal elements

and diagonal elements,

| and | ||||

It is worth noting that evaluation of the expressions (7) and (8) rely on algorithms for computing the multivariate central -distribution function for which highly efficient procedures are readily available in many statistical packages. For example, an implementation of Genz’s algorithm (Genz and Bretz, 2002; Kotz and Nadarajah, 2004) is provided by the mvtnorm package available from the R website.

3 ML Estimation for the MST Distribution

In this section, we describe an EM algorithm for the ML estimation of the MST distribution specified by (1). To apply the EM algorithm, the observed data vector is regarded as incomplete, and we introduce two latent variables denoted by and , as defined by (LABEL:MST_H). We let be the parameter containing the elements of the location parameter , the distinct elements of the scale matrix , the elements of the skew parameter , and the degrees of freedom . It follows that the complete-data log-likelihood function for is given by

| (9) | |||||

where does not depend on .

The implementation of the EM algorithm requires alternating repeatedly the E- and M-steps until convergence in the case where the sequence of the log likelihood values is bounded above. Here denotes the value of after the th iteration.

On the th iteration, the E-step requires the calculation of the conditional expectation of the complete-data log likelihood given the observed data , using the current estimate for . That is, we have to calculate the so-called -function defined by

| (10) |

where denotes the expectation operator, using for . This, in effect, requires the calculation of the conditional expectations

Note that the -function does not admit a closed form expression for this

problem, due to the conditional expectations , ,

and not being able to be evaluated in

closed form.

Concerning the calculation of the expectation , the conditional density of given , is given by

| (11) |

where

and is the zero vector of appropriate dimension.

The conditional expectation can be reduced to

| (12) | |||||

where the last term is given by

| (13) | |||||

and is an integral given by

| (14) | |||||

and denotes the Digamma function.

We note that the term will be very small in practice since it would be zero if we adopted a one-step late (OSL) EM algorithm (Green, 1990). In which case, there would be no need to calculate the multiple integral in (13). Hence then, can be reduced to

| (16) | |||||

It can be easily shown that can be written in closed form (see, for example, Lin (2010)), given by

| (17) |

To obtain and , first note that the joint density of , , and is given by

| (18) | |||||

Using Bayes’ rule, the conditional density of and given can be written as

From (3), standard conditional expectation calculations yield

| (19) |

where represents the expected value of a truncated -dimensional -variate , which is distributed as,

| (20) |

That is, the random vector is truncated to lie in the positive hyperplane .

Analogously, can be reduced to

| (21) |

where represents the second moment of . The truncated moments and can be swiftly evaluated using the expressions (7) and (8) in Section 2.2.

3.1 M-step

On the th iteration, the M-step consists of the maximization of the -function (10) with respect to . For easier computation, we employ the ECM extension of the EM algorithm, where the M-step is replaced by four conditional–maximization (CM)-steps, corresponding to the decomposition of into four subvectors, , where , , is the vector containing the distinct elements of , and . To compute , we maximize with respect to , and to compute , we first update to and then maximize with respect to , and so on.

We let denote the operator that produces a vector by extracting the diagonal elements of . Straightforward algebraic manipulations lead to the following closed form expressions for , , and ,

| (22) | ||||

| (23) | ||||

| and | ||||

| (24) | ||||

where denotes the Hadamard or element-wise product, and .

An updated estimate of the degrees of freedom is obtained by solving the equation

| (25) |

4 The Multivariate Skew -Mixture Model

The probability density function (pdf) of a finite mixture of multivariate skew -components, using the notation above, is given by

| (26) |

where

denotes the th MST component of the mixture model as defined by (1),

with location parameter , scale matrix , skew parameter

, and degrees of freedom . The mixing proportions

satisfy and . We shall

denote the model defined by (26) by FM-MST (finite mixture of MST)

distributions. Let contain all the unknown parameters of the FM-MST model;

that is, where now consists of the unknown parameters of

the th component density function.

To formulate the estimation of the unknown parameters in the FM-MST model as an incomplete-data problem in the EM framework, a set of latent component labels is introduced, where each element is a binary variable defined as

| (27) |

and . Hence, the random vector corresponding to follows a multinomial distribution with one trial and cell probabilities ; that is, . It follows that the FM-MST model can be represented in the hierarchical form given by

| (28) |

where and .

5 ML Estimation for FM-MST Distributions

From the hierarchical characterization (28) of the FM-MST distributions, the complete-data log-likelihood function is given by

| (29) |

where

| (30) |

and where

It is clear from (29) that maximization of the -function of the complete-data log likelihood (McLachlan and Krishnan, 2008),

only requires maximization of the components functions separately . The necessary conditional expectations involved in computing the -function with respect to (30) are, namely,

| (31) |

The posterior probability of membership of the th component by , using the current estimate for , is given using Bayes’ Theorem by

| (32) |

The other four expectations have analogous expressions to their one-component counterpart given in Section 3. They are given by

| (33) | ||||

| (34) | ||||

| (35) | ||||

| (36) |

where is a scalar defined by

is a vector whose th element is

| (38) |

and is a matrix whose th element is

| (39) |

where, for convenience of notation, is used to denote .

It is important to note that and are related to the first two moments of a truncated -dimensional -variate . More specifically, let

the truncated -distribution as defined by (6). Then

| and | ||||

and hence (35) and (36) reduces to and respectively,

which can be implicitly expressed in terms of the parameters , ,

, using results (7) and (8). It is

worth emphasizing that computation of and

depends on algorithms for evaluating the multivariate -distribution function,

for which fast procedures are available.

In summary, the ECM algorithm is implemented as follows on the th iteration:

E-step: Given , compute using

(32), and , , , and

as described by (33), (34), (35), and

(36) respectively, for and .

M-step: Update the estimate of by calculating for , the following estimates of the parameters in ,

| and | ||||

An update of the degrees of freedom is obtained by solving iteratively the equation

A program for implementing this EM algorithm has been written in R.

6 The Empirical Information Matrix

We consider an approximation to the asymptotic covariance matrix of the ML estimates using the inverse of the empirical information matrix (Basford et al., 1997). The empirical information matrix is given by

| (40) |

where are the individual scores, consisting of

We let denote the complete-data log likelihood formed from the single observation . An estimate of the covariance matrix of is given by taking the inverse of (40). After some algebraic manipulations, one can show that the elements of are given by the following explicit expressions:

7 Examples

In this section, we fit the FM-MST model to three real data sets to demonstrate its usefulness in analyzing and clustering multivariate skewed data. In the first example, we focus on the flexibility of the FM-MST model in capturing the asymmetric shape of flow cytometric data. The next example illustrates the clustering capability of the model. In the final example, we demonstrate the computational efficiency of the proposed algorithm.

7.1 Lymphoma Data

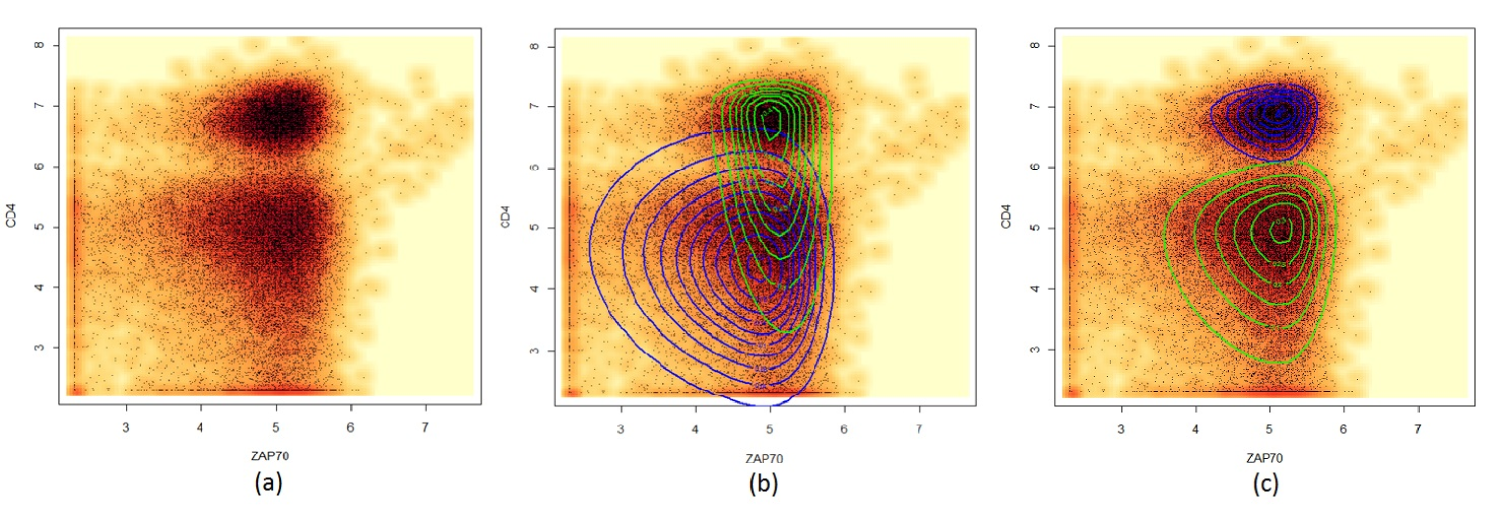

We consider a subset of the T-cell phosphorylation data collected by Maier et al. (2007). In the original data, blood samples from 30 subjects were stained with four fluorophore-labeled antibodies against CD4, CD45RA, SLP76(pY128), and ZAP70(pY292) before and after an anti-CD3 stimulation. In this example, we focus on a reduced subset of the data in two variables – CD4 and ZAP70. This bivariate sample (Figure 1) is apparently bimodal and exhibits asymmetric pattern. Hence we fit a two-component FM-MST model to the data. More specifically, the fitted model can be written as

where

For comparison, we include the fitting of a two-component mixture of skew -distributions from the skew-normal independent (SNI) family (Lachos, Ghosh, and Arellano-Valle, (2010)), hereafter named the FM-SNI-ST model. The estimated FM-SNI-ST density can be computed from the R package mixsmsn (Cabral, Lachos, and Prates, (2012)). Note that the MST distribution is different to the SNI-ST distribution since the skewing function is not of dimension one. Note also that the SNI-ST distribution is equivalent to the restricted MST distribution (5) after reparametrization. Moreover, under the FM-SNI-ST settings, the correlation structure of will also be dependent on the skewness parameter, whereas for the FM-MST distributions the covariance structure is not affected by . The contours of the fitted SNI-ST and MST component densities are depicted in Fig 1(b) and Fig 1(c), respectively. To better visualize the shape of the fitted models, we display the estimated densities of each component instead of the mixture contours. It can be seen that the FM-MST model provides a noticeably better fit. From a clustering point of view, the FM-MST model also shows better performance as it is able to separate the two clusters correctly. Moreover, it adapts to the asymmetric shape of each cluster more adequately. Thus the superiority of FM-MST model is evident in dealing with asymmetric and heavily tailed data in this data set.

7.2 GvHD Data

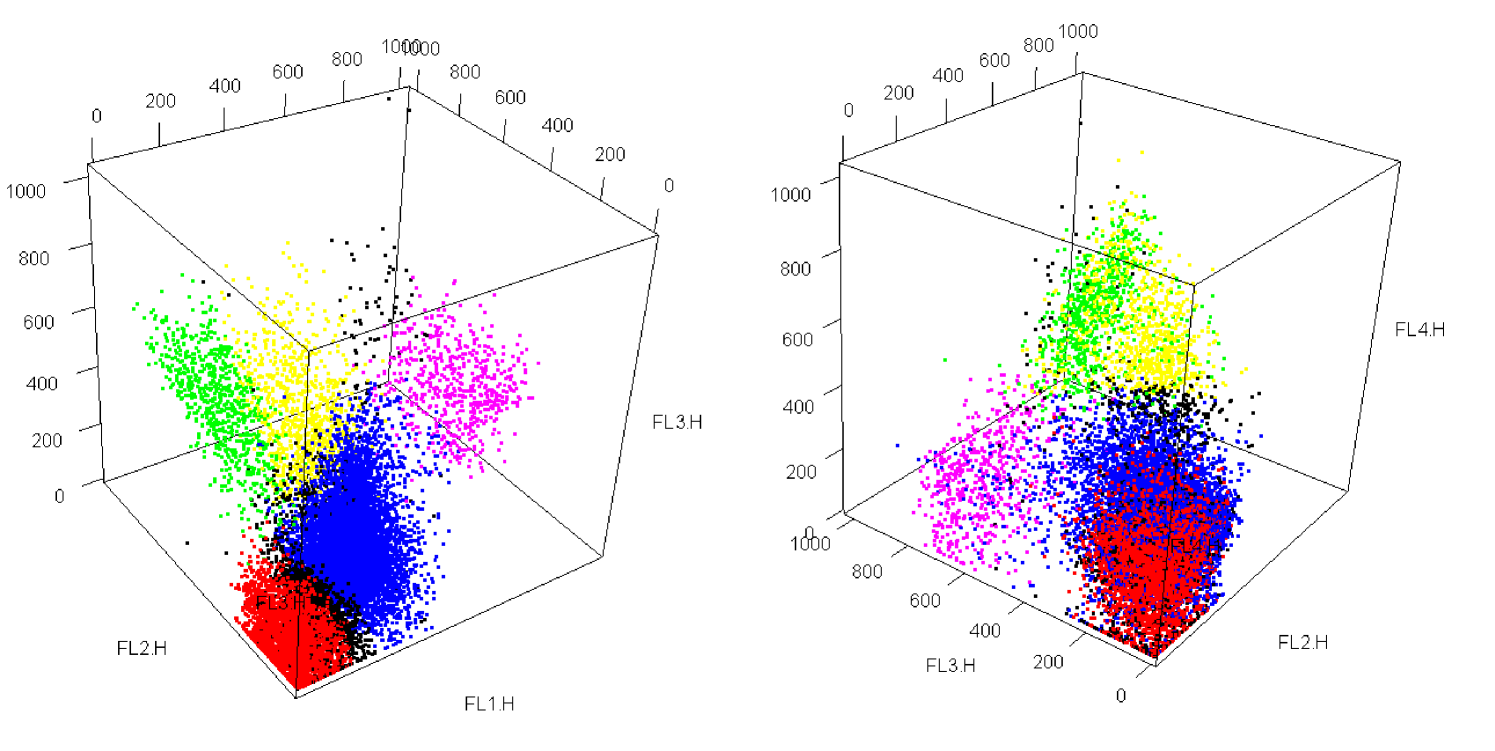

Our second example concerns a data set collected by Brinkman et al. (2007), where peripheral blood samples were collected weekly from patients following blood and bone marrow transplant. The original goal was to identify cellular signatures that can predict or assist in early detection of Graft versus Host Disease (GvHD), a common post-transplantation complication in which the recipient’s bone marrow was attacked by the new donor material. Samples were stained with four fluorescence reagents: CD4 FITC, CD8 PE, CD3 PerCP, and CD8 APC. Hence we fit a 4-variate FM-MST model to a case sample with a population of 13773 cells. The data set is shown in Figure 2, where cells are displayed in five different colours according to a manual expert clustering into five clusters. In addition, we include the results for the FM-SNI-ST model and the restricted MST mixture model introduced in Section 2.1 (equation 5), hereafter denoted by FM-RMST.

We compare the performance of the three models FM-MST, FM-SNI-ST, and FM-RMST in assigning cells to the expert clusters. Manual gating suggests there are five clusters in this case sample. Hence we applied the algorithm for the fitting of each model with predefined as . For a fair comparison, we started the three algorithms using the same initial values. The initial clustering is based on -means.The degrees of freedom are set to be identical for all components for the first iteration and assigned a relatively large value. A similar strategy was described in Lin (2010).

To assess the performance of these three algorithms, we take the manual expert clustering as being the ‘true’ class membership and we calculated the error rate of classification against this benchmark result with dead cells removed, measured by choosing among the possible permutations of class labels the one that gives the highest value.

As anticipated, the optimal clustering result was given by the FM-MST model. It achieved the lowest misclassification rate. The FM-SNI-ST model has a higher number of misallocations. The FM-RMST model has a disappointing performance in terms of clustering. Its error rate is almost double that of its competitors. It is worth pointing out that both the FM-MST and FM-RMST models have 99 free parameters, while the FM-SNI-ST model has 95 parameters. It is evident that these two restricted models have inferior performance. This reveals some evidence of the extra flexibility offered by the more general FM-MST model.

| Model | Error rate | Number of free parameters |

|---|---|---|

| FM-MST | 0.0875 | 99 |

| FM-SNI-ST | 0.13078 | 95 |

| FM-RMST | 0.20700 | 99 |

7.3 AIS Data

We now illustrate the computational efficiency of our exact implementation of the E-step of the EM algorithm as in Section 5. We denote this version of the EM algorithm with the exact E-step as EM-exact. In addition, we consider the EM alternative with a Monte Carlo (MC) E-step as given by Lin (2010), which is denoted by EM-MC. Since both models are based on the same characterization of the multivariate skew -distribution defined by Sahu et al. (2003), it is appropriate to compare their computation time. We assess their time performance on the well-analyzed Australian Institute of Sport (AIS) data, which consists of measurements made on athletes. As in Lin (2010), we limit this illustration to a bivariate subset of two variables – body mass index (BMI) and the percentage of body fat (Bfat). As noted by Lin (2010), these data are apparently bimodal. Hence a two-component mixture model is fitted to the data set.

A summary of the results are listed in Table 2. Also reported there are the values of the log-likelihood, the Akaike information criterion (AIC) (Akaike, 1974) and the Bayesian information criterion (BIC) (Schwarz, 1978) defined by

| (41) |

respectively, where is the value of the log likelihood at , is the number of free parameters, and is the sample size. Models with smaller AIC and BIC values are preferred when comparing different fitted results. The best value from each criterion are highlighted in bold font in Table 2. For this illustration, the EM-MC E-step is undertaken with random draws as recommended by Lin (2010). Note that the degrees of freedom is not restricted to be the same for the two components. The gender of each individual in this data set is also recorded, thus enabling us to evaluate the error rate of binary classification for the two methods.

Not surprisingly, the model selection criteria favour the EM-exact algorithm. Not only did it achieve lower AIC and BIC values, the computation time is remarkably lower than its competitor. It is more than five times faster than the EM-MC alternative.

| Model | EM-exact | EM-MC | |||

|---|---|---|---|---|---|

| Component | 1 | 2 | 1 | 2 | |

| 0.44 | 0.56 | 0.59 | 0.41 | ||

| 19.74 | 21.83 | 19.89 | 22.47 | ||

| 15.99 | 5.89 | 15.50 | 7.30 | ||

| 3.03 | 3.16 | 2.96 | 3.23 | ||

| 7.71 | 0.54 | 6.17 | 1.34 | ||

| 2.36 | 0.11 | 25.80 | 2.14 | ||

| 3.34 | 1.44 | 2.72 | 0.71 | ||

| 3.15 | 3.76 | 2.22 | 1.13 | ||

| 42.05 | 3.82 | 23.98 | 25.93 | ||

| -1077.257 | -1088.066 | ||||

| AIC | 2188.514 | 2207.956 | |||

| BIC | 2244.755 | 2264.197 | |||

| error rate | 0.0792 | 0.0891 | |||

| time | 64.63 | 349.9 | |||

8 Computation Time and Accuracy for E-step

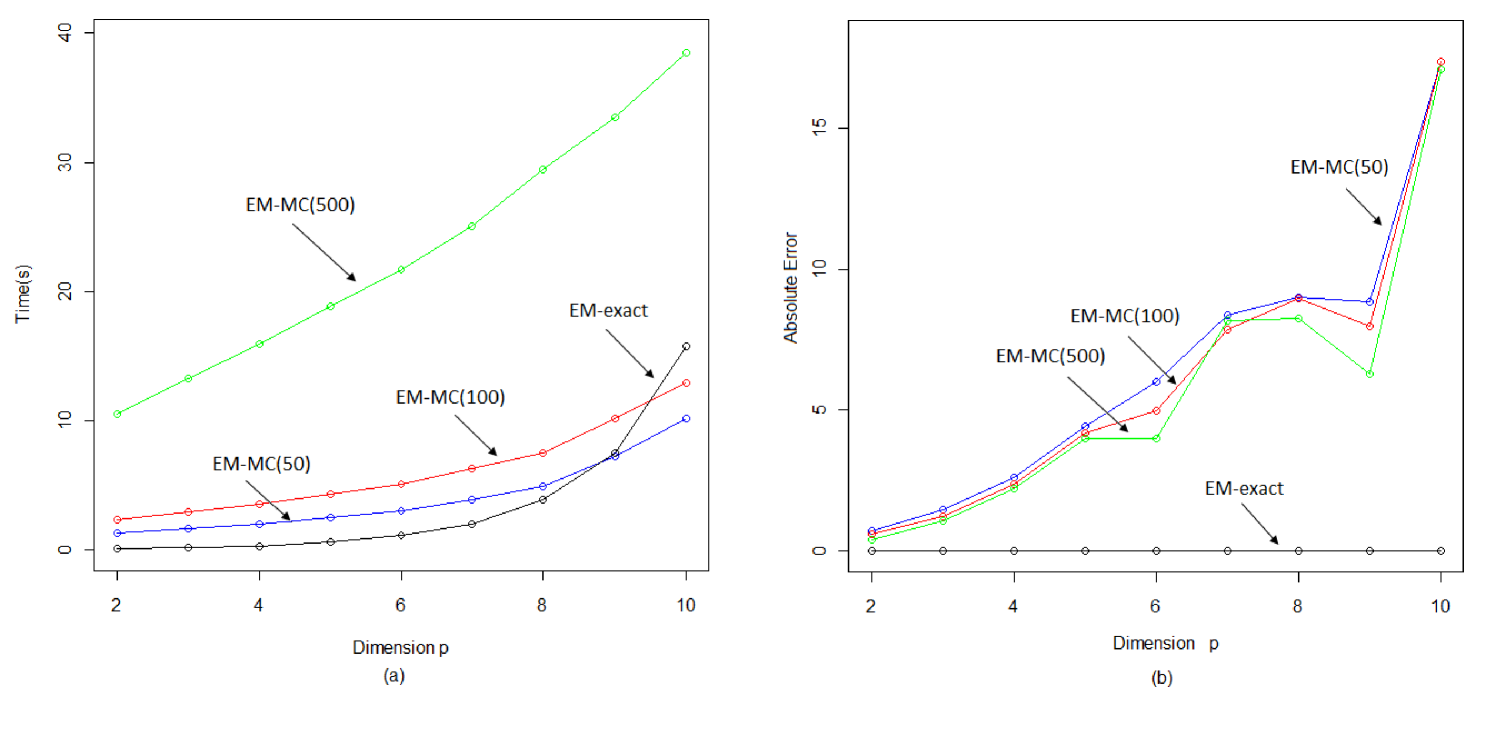

We now proceed to two interesting experiments for evaluating the computational cost and accuracy of using the EM-exact and EM-MC algorithms on high-dimensional data. As pointed out previously, the main computational cost for EM-exact is evaluating the multivariate -distribution function. Calculation of the first two moments of a -variate truncated -distribution requires the evaluation of two functions, evaluations of , and evaluations of . Hence, the computation time will increase substantially with the number of dimensions. However, with the EM-exact algorithm, accuracy can be compromised for time.

We sampled 100 data from a Brain Tumor dataset supplied by Geoff Osborne from the Queensland Brain Institute at the University of Queensland. In both experiments we varied the dimension of the sample. The graph in Figure 3(a) shows the typical CPU time per each E-step iteration for various dimensions of the data; EM-MC represents the EM-MC algorithm with random draws using the Gibbs sampling approach described in Lin (2010). It is worth noting that in both experiments EM-exact is evaluated with a default tolerance of at least . As seen in Figure 3, EM-exact is the fastest among the four versions of the E-step for low dimensions. For example, at , EM-exact at least 25 times faster than EM-MC(50). It is important to note that although EM-MC(50) is slightly faster than EM-exact at higher dimensions, EM-exact produces results to a significantly higher accuracy, while EM-MC requires a large number of draws to achieve comparable results. We note that in our simulations, for example, at , 50 draws is insufficient to achieve acceptable estimates. Preliminary results suggests that at least 500 draws is required to generate reasonable approximations when is greater than 6. In this case, EM-exact is at least ten times quicker. Furthermore, EM-exact also has an additional advantage over the EM-MC alternative in that its results are reproducible.

To compare the accuracy of the EM-exact and EM-MC algorithms, we compute the total absolute error against the baseline EM-exact with a maximum tolerance of . For each of the EM-MC algorithms, the average total absolute error of 100 trials is used. For EM-exact, the default tolerance is set to . The results are shown in Figure 3(b). Not surprisingly, the absolute error of the EM-MC algorithm is significantly higher than that of the EM-exact algorithm. It can be observed that the absolute error is very high even for EM-MC(500). At , for example, EM-exact is at least 30000 times more accurate and takes less than half the time required for EM-MC(500).

It is important to emphasize that as the dimension of the data increases, EM-MC requires considerably more draws to provide a comparable (and acceptable) level of accuracy as EM-exact, which can be computationally intensive. Hence we advocate the use of EM-exact, especially for applications involving high dimensional data.

9 Concluding Remarks

We have described an exact EM algorithm for evaluating the parameters of a general multivariate skew -mixture model. This model has a more general characterization than various alternative versions of the skew -distribution available in the literature and hence offers greater flexibility in capturing the asymmetric shape of skewed data.

Our proposed method is based on reduced analytical expressions for the E-step conditional expectations, which can be formulated in terms of the first and second moments of a multivariate truncated -distribution. The latter can then be expressed further in terms of the distribution function of the multivariate central -distribution for which fast algorithms capable of producing highly accurate results already exist. It is demonstrated to have a marked advantage over the EM algorithm with a Monte Carlo E-step. To achieve comparable accuracy to that of the EM algorithm with the E-step implemented using the above numerical approach, the version of the algorithm with a Monte Carlo E-step would require a large number of draws, which would be computationally expensive.

Acknowledgments

This work is supported by a grant from the Australian Research Council. Also, we would like to thank Professor Seung-Gu Kim for comments and corrections, and Dr Kui (Sam) Wang for his helpful discussions on this topic.

References

- [1] H. Akaike. A new look at the statistical model identification. Automatic Control, 19:716–723, 1974.

- [2] R.B. Arellano-Valle, H. Bolfarine, and V.H. Lachos. Bayesian inference for skew-normal linear mixed models. Journal of Applied Statistics, 34(6):663–682, 2007.

- [3] A. Azzalini. A class of distributions which includes the normal ones. Scandinavian Journal of Statistics, 12:171–178, 1985.

- [4] A. Azzalini and A Dalla Valle. The multivariate skew-normal distribution. Biometrika, 83(4):715–726, 1996.

- [5] J. D. Banfield and A. Raftery. Model-based gaussian and non-gaussian clustering. Biometrics, 49:803–821, 1993.

- [6] K. E. Basford, D. R. Greenway, G. J. McLachlan, and D. Peel. Standard errors of fitted means under normal mixture. Computational Statistics, 12:1–17, 1997.

- [7] R. M. Basso, V. H. Lachos, C. R. B. Cabral, and P. Ghosh. Robust mixture modeling based on scale mixtures of skew-normal distributions. Computational Statistics and Data Analysis, 54:2926–2941, 2010.

- [8] M. D. Branco and D. K. Dey. A general class of multivariate skew-elliptical distributions. Journal of Multivariate Analysis, 79:99–113, 2001.

- [9] R. Brinkman, M. Gaspareto, S.-J. Lee, A. Ribickas, J. Perkins, W. Janssen, R. Smiley, and C. Smith. High content flow cytometry and temporal data analysis for defining a cellular signature of graft versus host disease. Biological of Blood and Marrow Transplantation, 13:691–700, 2007.

- [10] C.S. Cabral, V.H. Lachos, and M.O. Prates. Multivariate mixture modeling using skew-normal independent distributions. Computational Statistics and Data Analysis, 56:126–142, 2012.

- [11] A.P. Dempster, N. M. Laird, and D. Rubin. Maximum likelihood from incomplete data via the em algorithm. Journal of Royal Statistical Society , Series B, 39:1–38, 1977.

- [12] B. S. Everitt and D. J. Hand. Finite Mixture Distributions. Chapman and Hall, London, 1981.

- [13] C. Fraley and A. E. Raftery. How many clusters? which clustering methods? answers via model-based cluster analysis. Computer Journal, 41:578–588, 1999.

- [14] A Genz and F. Bretz. Methods for the computation of multivariate t-probabilities. Journal of Computational and Graphical Statistics, 11:950–971, 2002.

- [15] P. J. Green. On use of the em algorithm for penalized likelihood estimation. Journal of the Royal Statistical Society B, 52:443–452, 1990.

- [16] A. K. Gupta. Multivariate skew- distribution. Statistics, 37:359–363, 2003.

- [17] S. Kotz and S. Nadarajah. Multivariate t Distributions and Their Applications. Cambridge University Press, Cambridge, 2004.

- [18] V. H. Lachos, P. Ghosh, and R. B. Arellano-Valle. Likelihood based inference for skew normal independent linear mixed models. Statistica Sinica, 20:303–322, 2010.

- [19] T. I. Lin. Robust mixture modeling using multivariate skew distribution. Statistics and Computing, 20:343–356, 2010.

- [20] T. I. Lin, J. C. Lee, and W. J. Hsieh. Robust mixture modeling using the skew- distribution. Statistics and Computing, 17:81–92, 2007.

- [21] T. I. Lin, J. C. Lee, and S. Y. Yen. Finite mixture modelling using the skew normal distribution. Statistica Sinica, 17:909–927, 2007.

- [22] B. G. Lindsay. Mixture Models: Theory, Geometry, and Applications. NSF-CBMS Regional Conference Series in probability and Statistics, Volume 5, Institute of Mathematical Statistics, Hayward, CA, 1995.

- [23] L. M. Maier, D. E. Anderson, P. L. De Jager, L.S. Wicker, and D. A. Hafler. Allelic variant in ctla4 alters t cell phosphorylation patterns. Proceedings of the National Academy of Sciences of the United States of America, 104:18607–18612, 2007.

- [24] G. J. McLachlan and K. E. Basford. Mixture Models: Inference and Applications. Marcel Dekker, New York, 1988.

- [25] G. J. McLachlan and T. Krishnan. The EM Algorithm and Extensions. Wiley-Interscience, Hokoben, N. J., 2nd edition, 2008.

- [26] G. J. McLachlan and D. Peel. Finite Mixture Models. Wiley Series in Probability and Statistics, 2000.

- [27] G.J. McLachlan and D. Peel. Robust cluster analysis via mixtures of multivariate -distributions. In A. Amin, D. Dori, P. Pudil, and H. Freeman, editors, Lecture Notes in Computer Science, volume 1451, pages 658–666. Springer-Verlag, Berlin, 1998.

- [28] A. O’Hagan. Bayes estimation of a convex quadratic. Biometrika, 60:565–571, 1973.

- [29] A. O’Hagan. Moments of the truncated multivariate- distribution. 1976.

- [30] S. Pyne, X. Hu, K. Wang, E. Rossin, T.-I. Lin, L. M. Maier, C. Baecher-Allan, G. J. McLachlan, P. Tamayo, D. A. Hafler, P. L. De Jager, and J. P. Mesirow. Automated high-dimensional flow cytometric data analysis. PNAS, 106:8519–8524, 2009.

- [31] S.K. Sahu, D.K. Dey, and M.D. Branco. A new class of multivariate skew distributions with applications to bayesian regression models. The Canadian Journal of Statistics, 31:129–150, 2003.

- [32] G. Schwarz. Estimating the dimension of a model. Annals of Statistics, 6:461–464, 1978.

- [33] D. M. Titterington, A. F. M. Smith, and U. E. Markov. Statistical Analysis of Finite Mixture Distributions. Wiley, New York, 1985.