On the resolution power of Fourier extensions for oscillatory functions

Abstract

Functions that are smooth but non-periodic on a certain interval possess Fourier series that lack uniform convergence and suffer from the Gibbs phenomenon. However, they can be represented accurately by a Fourier series that is periodic on a larger interval. This is commonly called a Fourier extension. When constructed in a particular manner, Fourier extensions share many of the features of a standard Fourier series. In particular, one can compute Fourier extensions which converge spectrally fast whenever the function is smooth, and exponentially fast if the function is analytic, much the same as the Fourier series of a smooth/analytic and periodic function.

With this in mind, the purpose of this paper is to describe, analyze and explain the observation that Fourier extensions, much like classical Fourier series, also have excellent resolution properties for representing oscillatory functions. The resolution power, or required number of degrees of freedom per wavelength, depends on a user-controlled parameter and, as we show, it varies between $2$ and $\pi$. The former value is optimal and is achieved by classical Fourier series for periodic functions, for example. The latter value is the resolution power of algebraic polynomial approximations. Thus, Fourier extensions with an appropriate choice of parameter are eminently suitable for problems with moderate to high degrees of oscillation.

1 Introduction

In many physical problems, one encounters the phenomenon of oscillation. When approximating the solution to such a problem with a given numerical method, this naturally leads to the question of resolution power. That is, how many degrees of freedom are required in a given scheme to resolve such oscillations? Whilst it may be impossible to answer this question in general, important heuristic information about a given approximation scheme can be gained by restricting one's interest to certain simple classes of oscillatory functions (e.g. complex exponentials for problems in bounded intervals).

Resolution power represents an a priori measure of the efficiency of a numerical scheme for a particular class of problems. Schemes with low resolution power require more degrees of freedom, and hence increased computational cost, before the onset of convergence. Conversely, schemes with high resolution power capture oscillations with fewer degrees of freedom, resulting in decreased computational expense.

Consider the case of the unit interval $[-1,1]$ (the primary subject of this paper). Here one typically studies the question of resolution via the complex exponentials

$$f(x) = \exp(\mathrm{i}\pi\omega x), \qquad x \in [-1,1], \quad \omega \in \mathbb{R}. \tag{1}$$

To this end, let , be a sequence of approximations of the function which converges to as (here is the number of degrees of freedom in the approximation ). For , let be the minimal such that

We now define the resolution constant of the approximation scheme as

Note that need not be well defined for an arbitrary scheme (for example, if were to scale superlinearly in ). However, for all schemes encountered in this paper, this will be the case.

Loosely speaking, the resolution constant corresponds to the required number of degrees of freedom per wavelength to capture oscillatory behaviour, a common concept in the literature on oscillatory problems [6, 20]. In particular, we say that a given scheme has high (respectively low) resolution power if it has small (large) resolution constant. It is also worth noting that, in many schemes of interest, the approximation is based on collocation at a particular set of nodes in $[-1,1]$. In this circumstance, the resolution constant is equivalent to the number of points per wavelength required to resolve an oscillatory wave (for further details, see §1.3).

With little doubt, the approximation of a smooth, periodic function via its truncated Fourier series is one of the most effective numerical methods known. Fourier series, when computed via the FFT, lead to highly efficient, stable methods for the numerical solution of a large range of problems (in particular, PDEs with periodic boundary conditions). A simple argument leads to a resolution constant of $2$ in this case (for periodic oscillations), with exponential convergence occurring once the number of Fourier coefficients exceeds $2\omega$.

However, the situation is altered completely once periodicity is lost. The slow pointwise convergence of the Fourier series of a nonperiodic function, as well as the presence of Gibbs oscillations near the domain boundaries, means that nonperiodic oscillations cannot be resolved by such an approximation. A standard alternative is to approximate with a sequence of orthogonal polynomials (e.g. Chebyshev polynomials). Such approximations possess exponential convergence, without periodicity, yet the resulting resolution constant increases to the value $\pi$, making such an approach clearly less than ideal.
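The figure of roughly $\pi$ points per wavelength for polynomial approximation can be observed numerically. The following is a minimal sketch rather than the analysis of §4: it uses Chebyshev interpolation of the real function $\cos(\pi\omega x)$ in place of the complex exponential, and the tolerance `delta`, the frequency `omega` and the helper name `min_degree` are our own choices for illustration.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def min_degree(omega, delta=1e-2, max_deg=2000):
    """Smallest Chebyshev interpolation degree whose uniform error
    on [-1, 1] for cos(pi*omega*x) drops below delta (illustrative helper)."""
    f = lambda x: np.cos(np.pi * omega * x)
    xs = np.linspace(-1.0, 1.0, 4001)      # fine grid used to estimate the error
    for deg in range(1, max_deg + 1):
        coef = C.chebinterpolate(f, deg)   # interpolant at Chebyshev points
        if np.max(np.abs(C.chebval(xs, coef) - f(xs))) < delta:
            return deg
    raise ValueError("tolerance not reached; increase max_deg")


omega = 30.0                               # number of wavelengths in [-1, 1]
points_per_wavelength = min_degree(omega) / omega
print(points_per_wavelength)               # expected to lie somewhat above pi
```

For finite $\omega$ the observed ratio sits slightly above $\pi$, since convergence only sets in a little beyond the threshold; the ratio approaches $\pi$ as $\omega$ grows.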

1.1 Fourier extensions

The purpose of this paper is to discuss an alternative to polynomial approximation for nonperiodic functions: the so-called Fourier extension. Our main result is to show that Fourier extensions have excellent resolution properties. In particular, there is a user-determined parameter $T$ that allows for continuous variation of the resolution constant from the value $2$ (in the limit $T \to 1$), the figure corresponding to Fourier series, to $\pi$ ($T \to \infty$), the value obtained by polynomial approximations. Thus, Fourier extensions are highly suitable tools for problems with oscillations at moderate to high frequencies.

Let us now describe Fourier extensions in more detail. As discussed, Fourier series are eminently suitable for approximating smooth and periodic oscillatory functions. It follows that a potential means to recover a highly accurate approximation of a nonperiodic function is to seek to extend to a periodic function defined on a larger domain and compute the Fourier series of . Unfortunately, unless is itself periodic, no periodic extension will be analytic, and hence only spectral convergence can be expected. Preferably, for analytic , we seek an approximation that converges exponentially fast.

To remedy this situation, rather than computing an extension explicitly, it was proposed in [7, 11] to directly compute a Fourier representation of on the domain via the so-called Fourier extension problem:

Problem 1.1.

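Let $G_N$ be the space of $2T$-periodic functions of the form

$$g(x) = \frac{a_0}{2} + \sum_{n=1}^{N} \left[ a_n \cos\frac{n\pi x}{T} + b_n \sin\frac{n\pi x}{T} \right]. \tag{2}$$

The (continuous) Fourier extension of $f$ to the interval $[-T,T]$ is the solution to the optimization problem

$$F_N(f) := \underset{g \in G_N}{\operatorname{argmin}} \; \| f - g \|_{L^2(-1,1)}. \tag{3}$$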

Note that there are infinitely many ways in which to define a smooth and periodic extension of a function , of which (3) is but one. We say an extension is a Fourier extension if is a finite Fourier series on . Note, however, that even within this stipulation, there are still infinitely many ways to define . We refer to the extension defined by (3) as the continuous Fourier extension of (in the sense that it minimizes the continuous -norm).

As numerically observed in [7, 11], the convergence of to is exponential, provided is analytic. When , this was confirmed by the analysis presented in [25]. A pivotal role is played by the map

| (4) |

The importance of this map is that it transforms the trigonometric basis functions that span the space into polynomials in . In this setting, Problem 1.1 reduces simply to the computation of expansions in a suitable basis of nonclassical orthogonal polynomials, since the least squares criterion corresponds exactly to an orthogonal projection in a particular weighted norm. Well-known results on orthogonal polynomials can then be used to establish convergence properties of the Fourier extension. We recall this analysis in greater detail in §2, along with its generalization to arbitrary .

The map (4) demonstrates the close relationship that exists between Fourier series and polynomials. Note the similarity to the classical Chebyshev map that takes Chebyshev polynomials to trigonometric basis functions. Compared to Chebyshev expansions, however, it is important to note that the roles of polynomials and trigonometric functions are interchanged. The Chebyshev expansion of a given function is a polynomial approximation, which is equivalent to the Fourier series of a related function. The Fourier extension of a function on the other hand is a trigonometric approximation, which is equivalent to the polynomial expansion of a related function. In that sense, Fourier extensions and Chebyshev expansions are dual to each other.

1.2 Key results

The main result of this paper is that the resolution constant of the continuous Fourier extension satisfies

Accordingly, the Fourier extension of the function (1) will begin to converge once exceeds (recall that involves degrees of freedom). In particular, we find that for and as . Note that the resolution of the function using classical Fourier series on would require a minimum of degrees of freedom (whenever is periodic). Thus, Fourier extensions exhibit comparable performance for close to . However, as increases, can be resolved more efficiently via its Fourier extension than if it had been directly expanded in a Fourier series on . In particular, the resolution power is bounded above for all by , which is precisely the resolution constant for polynomial approximations.
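The dependence of the resolution constant on the extension parameter can be tabulated directly. The sketch below assumes that the expression in the main result is the closed form $2T\sin(\pi/(2T))$, which reproduces the limiting values $2$ and $\pi$ quoted above; the function name `resolution_constant` is ours.

```python
import numpy as np


def resolution_constant(T):
    """Assumed closed form 2*T*sin(pi/(2*T)) for the resolution constant."""
    T = np.asarray(T, dtype=float)
    return 2.0 * T * np.sin(np.pi / (2.0 * T))


Ts = np.linspace(1.0, 20.0, 200)
values = resolution_constant(Ts)

for T in (1.0, 1.5, 2.0, 4.0, 1e6):
    print(T, float(resolution_constant(T)))
```

Under this assumption the constant increases monotonically with $T$, so the choice of $T$ trades resolution power against the convergence rate discussed next.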

Aside from establishing when asymptotic convergence will occur, we also show that the convergence in this regime is exponential, and that for all sufficiently large $N$ the rate is precisely $E(T)$, where

$$E(T) = \cot^2\left(\frac{\pi}{4T}\right)$$

(this result actually holds for all sufficiently analytic functions $f$, not just (1)). Here, we note that $E(1) = 1$, implying no exponential convergence for $T = 1$. This is of course a consequence of the Gibbs phenomenon of Fourier series on $[-1,1]$. Convergence is exponential for all $T$ greater than $1$, however, and the rate increases for larger $T$.

In summary, the main conclusion we draw in this paper is the following. Smaller yields better resolution power of the continuous Fourier extension, at a cost of slower, but still exponential, convergence. Conversely, larger yields faster exponential convergence, at the expense of reduced resolution power. Formally, one may also obtain a resolution constant of in the limit by allowing as , and towards the end of the paper we shall discuss different strategies for doing this, some of which are quite effective in practice.

Unfortunately, the linear system of equations to be solved when computing (3) is severely ill-conditioned. As a result, the best attainable accuracy with a continuous Fourier extension is of order $\sqrt{\epsilon}$, where $\epsilon$ is the machine precision used. To overcome this, we present a new Fourier extension (referred to as the discrete Fourier extension), based on a judicious choice of interpolation nodes, which leads to far less severe condition numbers. Numerical examples demonstrate much higher attainable accuracies with this approach, typically of order $\epsilon$, whilst retaining both the same rates of convergence and resolution constant.

Inherent to Fourier extensions is the concept of redundancy: namely, there are infinitely many possible extensions of a given function. As we explain, this not only leads to the ill-conditioning mentioned above, it also means that the result of a numerical computation may differ somewhat from the ‘theoretical’ (i.e. infinite precision) continuous or discrete Fourier extension. Later in the paper, we discuss this observation and its consequences in some detail.

1.3 Relation to previous work

The question of resolution power of Fourier series and Chebyshev polynomial expansions was first developed rigorously by Gottlieb and Orszag [20, p.35] (see also [33]), who introduced and popularized the concept of points per wavelength. Therein the figures of $2$ and $\pi$ respectively were derived, the latter being generalized to arbitrary Gegenbauer polynomial expansions in [21] (in §4 we provide an alternative proof, valid for almost any orthogonal polynomial system (OPS)). Resolution was also discussed in detail in [6, chpt. 2], where, aside from Fourier and Chebyshev expansions, the extremely poor performance of finite difference schemes was noted.

One-dimensional Fourier extensions were, arguably, first studied in detail in [7, 11], where they were employed to overcome the Gibbs phenomenon in standard Fourier expansions, as well as the Runge phenomenon in equispaced polynomial interpolation (see also [9]). Application to surface parametrizations was also explored in [11].

Similar ideas (typically referred to as Fourier embeddings) were previously proposed to solve PDEs in complex geometries. These work by embedding the domain in a larger bounding box, and computing a Fourier series approximation on this domain. See [4, 5, 8, 12, 13, 14, 15, 19, 26, 29, 34, 36]. Such methods can be shown to work well, in principle even for arbitrary regions, but they are typically prohibitively computationally expensive.

More recently, a very effective method was developed in [10, 32] to solve time-dependent PDEs in complex geometries. This approach is based on a technique for obtaining one-dimensional Fourier extensions, known as the FE–Gram method, which is then combined with an alternating direction technique, as well as standard FFTs, to solve the PDE efficiently and accurately. This approach has also been applied to the Navier–Stokes equations [3]. Interestingly, it is shown and emphasized in [32] (see also [3]) that this method leads to an absence of dispersion errors (or pollution errors) – another beneficial property for wave simulations shared with classical Fourier series, and very much related to resolution power.

Having said this, we note at this point that the FE–Gram approach is quite different to the Fourier extensions we consider in this paper. The FE–Gram approximation is not exponentially convergent, and in practice, the order of convergence is usually limited by the user to ensure stability in the overall PDE solver. However, the FE–Gram approximation is designed specifically to be computed efficiently, in time, using only function evaluations on an equispaced grid. Although there has been some recent progress in the rapid computation of exponentially-convergent Fourier extensions of the form (2) from equispaced data [30, 31], this is not an issue we shall dwell on in this paper. Thus, the main contribution of this paper is approximation-theoretic: we show that one can construct exponentially-convergent Fourier extensions with a resolution constant arbitrarily close to optimal. In §6 we discuss ongoing and future work pertaining to these issues.

This aside, our use of Fourier extensions in this paper to resolve oscillatory functions is superficially quite similar to the Kosloff–Tal–Ezer mapping (see [27], [6, chpt 16.9] and references therein, and more recently, [24]) for improving the severe time-step restriction inherent in Chebyshev spectral methods. Such an approach also improves the very much related property of resolution power. In this technique, one replaces the standard Chebyshev interpolation nodes with a sequence of mapped points, and expands in a nonpolynomial basis defined via the particular mapping. Roughly speaking, with Fourier extensions, the situation is reversed. Rather than fixing interpolation nodes, one specifies a particular basis (i.e. the Fourier basis for a particular ) that gives rapidly convergent expansions and good resolution properties, and chooses an appropriate means for computing the extension (for example, a particular configuration of collocation nodes) via the corresponding mapping.

Finally, we remark in passing that there are a number of commonly used alternatives to Fourier extensions. Such methods typically arise from the desire to reconstruct a function directly from its Fourier coefficients (or pointwise values on an equispaced grid), whether via re-expanding in a sequence of Gegenbauer polynomials [22, 23], or by smoothing the function by implicitly matching its derivatives at the domain boundary [18]. Whilst the latter retains a resolution constant of $2$, it only yields algebraic convergence of a finite order, and suffers from severe ill-conditioning. Conversely, the Gegenbauer reconstruction procedure offers exponential convergence, but with a significant deterioration in resolution power [21].

The outline of the remainder of this paper is as follows. In §2 we detail the convergence of Fourier extensions for arbitrary , and in §3 we address numerical computation. In §4 we consider the resolution power of polynomial expansions, and in §5 we derive the resolution constant for Fourier extensions.

2 Fourier extensions on arbitrary intervals

In this section, we recall and generalize the analysis given in [25] for the case . We first show a new result, namely that the continuous Fourier extension converges spectrally for all smooth functions and for all fixed. Next, a more involved analysis in §2.2 demonstrates that the continuous Fourier extension does in fact converge exponentially whenever is analytic. In doing so, we repeat some of the reasoning in [25] for the clarity of presentation, as well as for establishing notation that is needed later in the paper.

Before presenting these results, a word about terminology is in order to avoid confusion. Throughout this paper, we will refer to the following three types of convergence of an approximation $F_N(f)$ to a given function $f$. We say that $F_N(f)$ converges algebraically fast to $f$ at rate $k$ if $\|f - F_N(f)\| = \mathcal{O}(N^{-k})$ as $N \to \infty$. In contrast, $F_N(f)$ converges spectrally fast to $f$ if the error decays faster than any algebraic power of $N^{-1}$, and exponentially fast if there exists some constant $\rho > 1$ such that $\|f - F_N(f)\| = \mathcal{O}(\rho^{-N})$ for all large $N$.

2.1 Spectral convergence

Standard approximations based on orthogonal polynomials (or Fourier series in the periodic case) converge exponentially fast provided is analytic, and spectrally fast if is only smooth [16, chpts 2,5]. Whenever has only finite regularity, convergence is algebraic at a rate determined by the degree of smoothness: specifically, if is -times continuously differentiable in and exists almost everywhere and is square-integrable (equivalently, – the th standard Sobolev space of functions defined on ), then converges algebraically fast at rate . Our first result regarding Fourier extensions illustrates identical convergence in this setting:

Theorem 2.1.

Suppose that for some and that . Then, for all and ,

| (5) |

where is independent of , and , is the standard norm on and is the continuous Fourier extension of on defined by (3).

Proof.

Recall that there exists an extension operator with and for some positive constant independent of [1].

Let be monotonically decreasing and satisfy for and for . We define the bump function by

Note that the function and has support in . Thus its restriction to is periodic for any . Since minimizes the norm error over all functions from the set , we have

where denotes the th partial Fourier sum of on . Since and is periodic on , a well-known estimate [16, eqn (5.1.10)] gives

Moreover, for some constant independent of . Therefore, the result follows. ∎

2.2 Exponential convergence

2.2.1 The continuous Fourier extension

As mentioned in the introduction, the continuous Fourier extension defined by (3) can be characterized in terms of certain nonclassical orthogonal polynomial expansions. This was demonstrated for the case in [25], but can be shown for any with relatively minor modifications.

Our approach is to construct an orthogonal basis for the space of -periodic functions. Since the least squares criterion (3) corresponds to an orthogonal projection, it then suffices to expand a given function in this basis in order to find its Fourier extension.

The cosine and sine functions in are already mutually orthogonal and hence can be treated separately. Consider the cosines first, i.e. the set

Trigonometric functions are closely related to polynomials through an appropriate cosine mapping. This can be seen, for example, from the defining property of the Chebyshev polynomials of the first kind $T_n(y)$,

$$T_n(\cos\theta) = \cos(n\theta).$$

In the same spirit, we define the map

| (6) |

and note that, since is an algebraic polynomial of degree in , the function is a polynomial in . From this we conclude that the set of cosines is a basis for the space of algebraic polynomials in of degree .
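This correspondence is easy to confirm numerically. The sketch below assumes that the map (6) is $y = \cos(\pi x / T)$, so that $\cos(n\pi x/T) = T_n(y)$; the value of $T$ and the helper name are arbitrary choices for illustration.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

T = 2.0                                  # extension parameter (illustrative value)
x = np.linspace(-1.0, 1.0, 201)
y = np.cos(np.pi * x / T)                # assumed form of the cosine map (6)


def chebyshev_T(n, y):
    """Evaluate the degree-n Chebyshev polynomial of the first kind at y."""
    coeffs = np.zeros(n + 1)
    coeffs[n] = 1.0
    return C.chebval(y, coeffs)


# Largest deviation between cos(n*pi*x/T) and T_n(y) over n = 0, ..., 7
max_dev = max(
    np.max(np.abs(chebyshev_T(n, y) - np.cos(n * np.pi * x / T)))
    for n in range(8)
)
print(max_dev)
print(y.min(), np.cos(np.pi / T))        # image of [-1, 1] is [cos(pi/T), 1]
```

The second printed line shows that the image of $[-1,1]$ under the map is only a subinterval of $[-1,1]$, which is why the resulting orthogonal polynomials are nonclassical.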

Since the functions in are linearly independent, an orthogonal basis exists. Moreover, we may write the basis functions as polynomials in , say . Orthogonality in implies

where, for ease of notation, we have defined the -dependent constant

In the latter step above, we applied the substitution (6), which maps the interval to and introduces the Jacobian with the inverse square root. It follows that the are orthonormal polynomials on with respect to the weight function

| (7) |

This weight differs only by a constant factor from the typical weight function of Chebyshev polynomials of the first kind . However, the interval of orthogonality is different from that of the Chebyshev polynomials, since is contained within for , whereas Chebyshev polynomials are orthogonal over the whole interval .

We now consider the set of sines in ,

Analogously, this leads to orthogonal polynomials resembling Chebyshev polynomials of the second kind $U_n(y)$. Indeed, from the property

$$U_n(\cos\theta) = \frac{\sin((n+1)\theta)}{\sin\theta},$$

we find that is also a polynomial in , but only up to an additional factor. This factor is

We therefore consider an orthogonal basis in the form

Orthogonality in implies

Thus, the are again orthonormal polynomials. The weight function

| (8) |

corresponds to that of the Chebyshev polynomials of the second kind, but here too the interval of orthogonality differs from the classical case in the same -dependent manner.

Since the functions , comprise a basis for the space , and since the continuous Fourier extension is the orthogonal projection of onto , we now deduce the following theorem:

2.2.2 Exponential convergence of the continuous Fourier extension

The functions being expanded in the theory of the continuous Fourier extension above are related to through the inverse of the map (6). It is necessary to distinguish between the even and odd parts of ,

Since

| (11) |

we see that the even part of the Fourier extension is precisely the orthogonal polynomial expansion of the function

A similar reasoning for the odd part of , based on the coefficients , yields the second function

with the odd part of , when divided by , corresponding to the expansion of in the polynomials .

Let us now recall some standard theory on orthogonal polynomials (see, for example, [6, 35, 37] for an in-depth treatment). The convergence rate of the expansion of an analytic function $f$ in a set of orthogonal polynomials is exponential, and for a wide class of orthogonal polynomials on the interval $[-1,1]$ the precise rate of convergence is determined by the largest Bernstein ellipse

$$B(\rho) = \left\{ \tfrac{1}{2}\left( \rho e^{\mathrm{i}\theta} + \rho^{-1} e^{-\mathrm{i}\theta} \right) : \theta \in [-\pi,\pi] \right\}$$

within which $f$ is analytic. We shall use the convention $\rho > 1$ throughout. Note that the ellipse $B(\rho)$ has foci $\pm 1$, and the parameter $\rho$ coincides with the sum of the lengths of its major and minor semiaxes. Suppose now that $f$ is analytic within the Bernstein ellipse of radius $\rho$ and not analytic in any ellipse $B(\rho')$ with $\rho' > \rho$. Then the rate of convergence of the expansion of $f$ in a set of orthogonal polynomials on $[-1,1]$ is precisely $\rho$.

Returning to the continuous Fourier extension, we now see that its convergence rate is determined by the nearest singularity of or to the interval . Note that, even when is entire, singularities are introduced by the inverse cosine mapping at the points . One can verify that the singularity at is removable for both and . Thus, the nearest singularity lies at .

To apply the above polynomial theory, it remains to transform the interval to the standard interval . This is achieved by the affine map

| (12) |

with inverse mapping to . Note that maps the point to the point

which lies on the negative real axis. Since the Bernstein ellipse crosses the negative real axis in the point , equating this with yields the maximal value of . We therefore deduce the following result:

Theorem 2.3.

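For all sufficiently analytic functions $f$, the error in approximating $f$ by its continuous Fourier extension $F_N(f)$ satisfies

$$|f(x) - F_N(f)(x)| \leq c_f \, E(T)^{-N} \tag{13}$$

uniformly for $x \in [-1,1]$, where $c_f$ is a constant depending on $f$ only, and

$$E(T) = \cot^2\left(\frac{\pi}{4T}\right).$$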

This theorem extends Theorem 3.14 of [25] to the case of general . Note that the estimate (13) holds for all and . For the sake of brevity, we have limited the exposition here to the case of sufficiently analytic functions . Yet, we make the following comments:

- The rate of exponential convergence may be slower than $E(T)$ when $f$ is not sufficiently analytic as a function of $y$, i.e. if it has a singularity closer to the interval than that introduced by the inverse cosine mapping.

- The convergence may also be faster than $E(T)$ if $f$ is analytic and periodic on $[-T,T]$. In that case, one can verify that the singularity introduced by the inverse cosine at $y = -1$ is also removable. Thus, the convergence rate is no longer limited by the singularity introduced by the map between the $x$ and $y$ domains.

- For the case $T = 2$ we recover the convergence rate found in [25, §3.4].

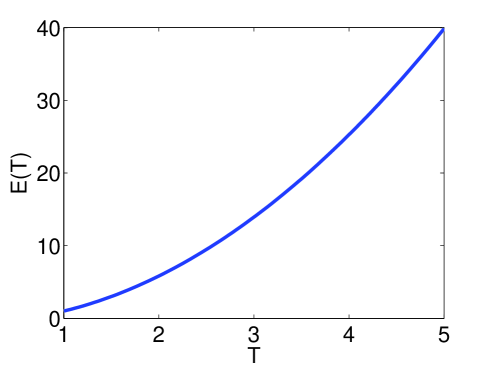

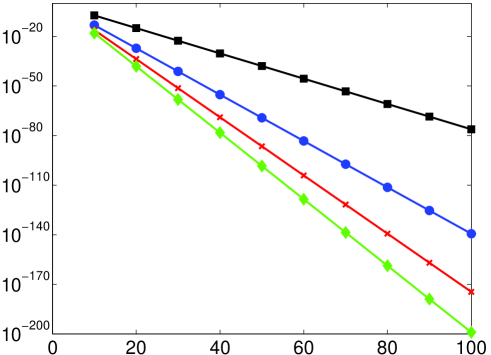

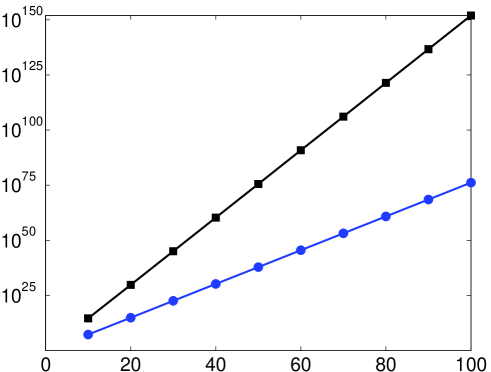

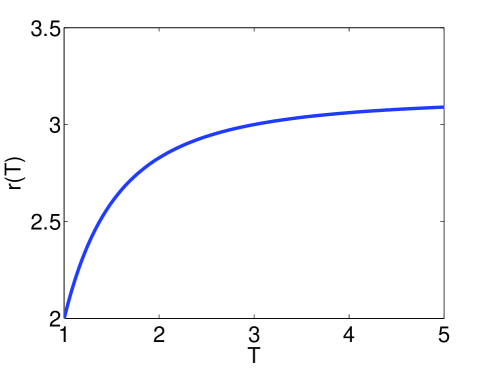

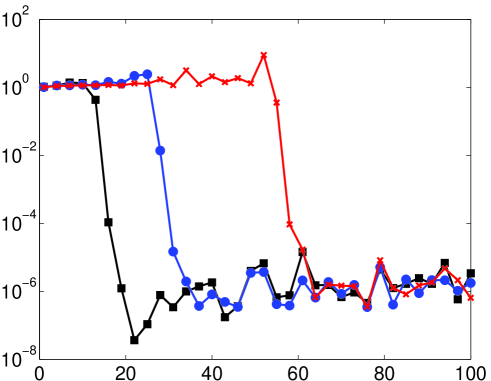

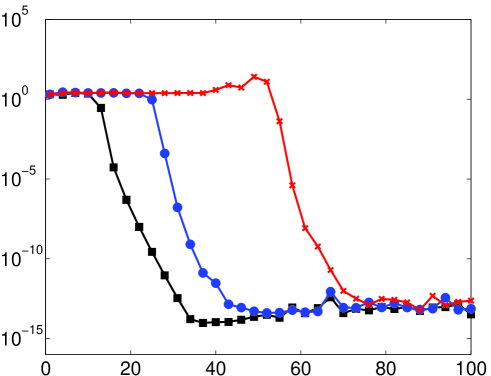

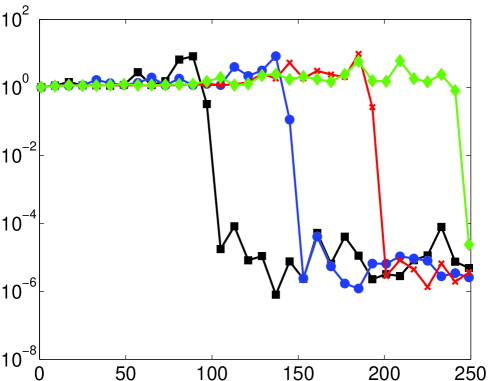

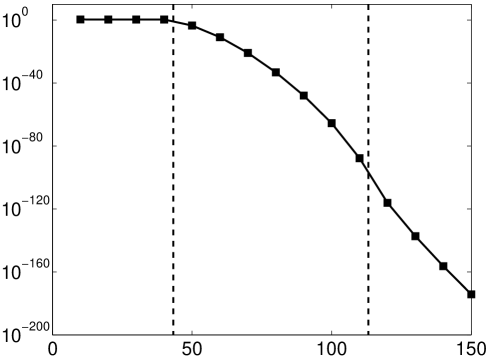

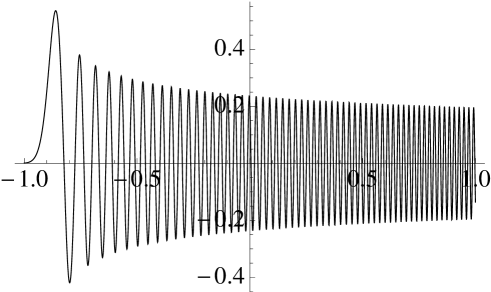

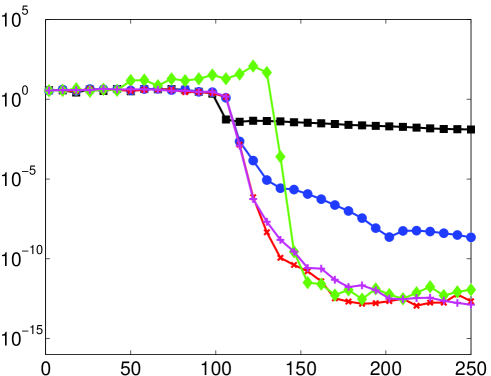

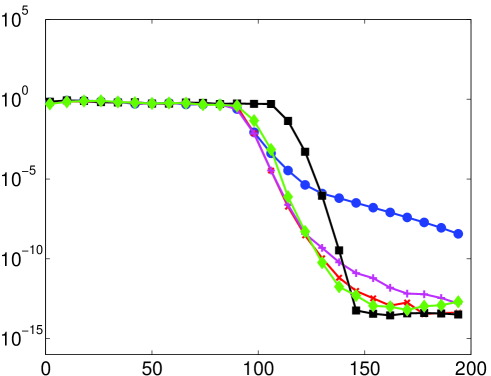

The function is depicted in Fig. 1. It is monotonically increasing on as a function of , and behaves like for and for . Thus, larger leads to more rapid exponential convergence. This is confirmed in Fig. 2, where we plot the error in approximating by for various and (for this example we use high precision to avoid any numerical effects – see §3). Note the close correspondence between the observed and predicted convergence rates, as well as the significant increase in convergence rate for larger . Having said this, it transpires that increasing adversely affects the resolution power, a topic we will consider further in §5.
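The behaviour of the rate can also be reproduced without reference to the figures. The following sketch assumes $c(T) = \cos(\pi/T)$, the affine map $m(y) = 2(y - c(T))/(1 - c(T)) - 1$ for (12), and the closed form $E(T) = \cot^2(\pi/(4T))$; it checks that the Bernstein ellipse through $m(-1)$ has precisely this parameter. All function names are ours.

```python
import numpy as np


def c(T):
    """Left endpoint of the interval of orthogonality, assumed cos(pi/T)."""
    return np.cos(np.pi / T)


def rate_closed_form(T):
    """Assumed closed form E(T) = cot^2(pi / (4 T))."""
    return 1.0 / np.tan(np.pi / (4.0 * T)) ** 2


def rate_from_ellipse(T):
    """Solve (rho + 1/rho)/2 = -m(-1) for rho >= 1, where m maps [c(T), 1] to [-1, 1]."""
    m_at_minus_one = 2.0 * (-1.0 - c(T)) / (1.0 - c(T)) - 1.0
    a = -m_at_minus_one                  # real semi-axis of the ellipse
    return a + np.sqrt(a * a - 1.0)


for T in (1.1, 2.0, 5.0, 50.0):
    print(T, rate_closed_form(T), rate_from_ellipse(T))

Ts = np.linspace(1.0, 10.0, 100)
rates = rate_closed_form(Ts)
```

For $T = 2$ both expressions give $3 + 2\sqrt{2} \approx 5.83$, and the values grow quadratically in $T$ for large $T$.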

3 Computation of Fourier extensions

Although in §2.2.1 we introduced a new, orthogonal basis for the set , this basis is never actually used in computations. Instead, we always use either complex exponentials or real sines and cosines. The reason for this is primarily simplicity (recall that the relevant orthogonal basis relates to nonstandard orthogonal polynomials, which therefore would need to be precomputed), and the fact that having a Fourier extension in the form of a standard Fourier series is certainly most convenient in practice.

With this in mind, let be an enumeration of a ‘standard’ basis for the set (e.g. complex exponentials), and suppose that the continuous Fourier extension of a function has coefficients in this basis. Since is the solution to (3), it is straightforward to show that

| (14) |

where and have entries and respectively, and is the vector of coefficients . Thus, given , we may compute the continuous Fourier extension by solving (14).
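A minimal sketch of this computation follows, under several assumptions of ours: the basis is taken to be the complex exponentials $\phi_n(x) = e^{\mathrm{i}\pi n x/T}$, $|n| \leq N$, for which the Gram entries have the closed form $2\,\mathrm{sinc}((n-m)/T)$; the right-hand side is computed by Gauss–Legendre quadrature; and the system is solved with a truncated-SVD least squares routine. It is an illustration, not the procedure used for the experiments in this paper.

```python
import numpy as np


def continuous_fe(f, N, T, quad_pts=400):
    """Coefficients of a continuous Fourier extension in the basis exp(i*pi*n*x/T)."""
    n = np.arange(-N, N + 1)
    # Gram matrix: integral over [-1, 1] of exp(i*pi*(n_k - n_j)*x/T) = 2*sinc((n_k - n_j)/T)
    A = (2.0 * np.sinc((n[None, :] - n[:, None]) / T)).astype(complex)
    # Right-hand side b_j = <f, phi_j>, approximated by Gauss-Legendre quadrature
    xq, wq = np.polynomial.legendre.leggauss(quad_pts)
    b = np.exp(-1j * np.pi * np.outer(n, xq) / T) @ (wq * f(xq))
    # Least squares solve; small singular values are truncated by the solver
    a, *_ = np.linalg.lstsq(A, b, rcond=None)
    return n, a, A


def evaluate(n, a, T, x):
    """Evaluate the extension with coefficients a at the points x."""
    return np.exp(1j * np.pi * np.outer(x, n) / T) @ a


T, N = 2.0, 20
n, a, A = continuous_fe(np.exp, N, T)
xs = np.linspace(-1.0, 1.0, 501)
err = np.max(np.abs(evaluate(n, a, T, xs) - np.exp(xs)))
cond = np.linalg.cond(A)
print(err, cond)
```

In double precision one should expect the printed condition number to be extremely large even for this modest $N$, and the error to stagnate well above machine precision.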

There are two issues with computing the continuous Fourier extension. First, one requires knowledge (or prior computation) of the integrals . Second, the condition number [25]. Such severe ill-conditioning typically limits the maximal achievable accuracy (by this we mean the smallest possible error that can be attained in finite precision for any ) of the continuous Fourier extension to , where is the machine precision used [25].

Ill-conditioning of the exact extension is due to the redundancy of the set . This is explained in the next section. Fortunately, its effect can be greatly mitigated by computing a so-called discrete Fourier extension instead. This extension, which converges at precisely the same rate as the continuous Fourier extension, involves only pointwise evaluations of , and consequently also avoids the first issue stated above. This is discussed in §3.2.

3.1 Ill-conditioning

The reason for ill-conditioning in the exact Fourier extension is simple: although the functions form an orthogonal basis on , they only constitute a frame when restricted to the interval .

Lemma 3.1.

The set

| (15) |

is a normalized tight frame for with frame bound .

Proof.

Let be given and define as the extension of by zero to . Since

| (16) |

we find that is precisely the th Fourier coefficient of on . By Parseval’s relation

as required. ∎
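The Parseval identity underlying this proof can be checked numerically. In the sketch below we use unnormalized exponentials $e_n(x) = e^{\mathrm{i}\pi n x/T}$, so that the identity reads $\sum_n |\langle f, e_n\rangle|^2 = 2T\,\|f\|^2$ with the norm taken over $[-1,1]$; the normalization in (15) differs only by a constant. The test function $f(x) = e^{\mathrm{i}\pi\omega x}$, the truncation level `M` and the function name are our own choices.

```python
import numpy as np


def frame_energy(omega, T, M=200_000):
    """Truncated sum of |<f, e_n>|^2 for f(x) = exp(i*pi*omega*x) on [-1, 1]."""
    n = np.arange(-M, M + 1)
    # <f, e_n> = integral over [-1, 1] of exp(i*pi*(omega - n/T)*x) = 2*sinc(omega - n/T)
    coeffs = 2.0 * np.sinc(omega - n / T)
    return np.sum(coeffs ** 2)


omega = 3.7
norm_sq = 2.0                            # ||f||^2 on [-1, 1], since |f| = 1
for T in (1.5, 2.0, 3.0):
    print(T, frame_energy(omega, T), 2.0 * T * norm_sq)
```

The slow $1/M$ decay of the truncation error reflects the fact that these are the Fourier coefficients of a discontinuous function, namely the extension of $f$ by zero.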

For a general introduction to the theory of frames, see [17].

Lemma 3.1 has several implications. Recall that frames are usually redundant: any given function typically has infinitely many representations in a particular frame. This implies that there will be approximate linear dependencies amongst the columns of the Gram matrix for all large . This makes ill-conditioned, and in the case of the frame (15), leads to the aforementioned exponentially large condition numbers.

Let us consider several particular representations of a smooth function in the frame (15). Recall that associated to any frame is the so-called canonical dual frame [17]. A representation of , the frame decomposition, is given in terms of this frame by

Since the frame (15) is tight, it coincides with its canonical dual up to a constant factor that is equal to the frame bound, in this case . Therefore its frame decomposition is precisely

where the ’s are given by (16). However, as illustrated in the proof of the previous lemma, this is precisely the Fourier series of the discontinuous function , and thus this infinite series converges only slowly and suffers from a Gibbs phenomenon.

On the other hand, as shown in the proof of Theorem 2.1, by extending $f$ more smoothly to $[-T,T]$, one obtains representations of $f$ in the frame (15) that converge algebraically at arbitrarily fast rates. Thus, we conclude the following: different frame expansions of the same function may give rise to wildly different approximations.

Clearly, given its exponential rate of convergence, the continuous Fourier extension will not coincide with any of the aforementioned representations (recall also that does not typically converge outside [25], and therefore its limit cannot be a frame expansion in general). More importantly, however, it is not certain that the solution of the linear system (14), when computed in finite precision, will actually coincide with the continuous Fourier extension itself. The reason is straightforward: for large , is approximately underdetermined, and therefore there will be many approximate solutions to (14) corresponding to different frame expansions of . A typical linear solver will usually select an approximate solution with bounded coefficient vector , and there is no guarantee that this need coincide with .

This phenomenon has a significant potential consequence: since the numerically computed extension need not coincide with the continuous Fourier extension , theoretical results for the behaviour of are not guaranteed to be inherited by the numerical solution. Having said this, in numerical experiments, one typically witnesses exponential convergence, exactly as predicted by Theorem 2.3. However, a difference can arise when investigating other properties, such as resolution power, as we discuss and explain in §5.

The above discussion is not intended to be rigorous. A full analysis of the behaviour of ‘numerical’ Fourier extensions is outside the scope of this paper. It transpires that this can be done, and a complete analysis will appear in a future paper [2]. Instead, in the remainder of this paper, we focus mainly on approximation-theoretic properties of theoretical extensions; resolution power, in particular. Nonetheless, in §5.3 we revisit the issue of numerical extensions insomuch as it relates to resolution, and give an explanation of the phenomenon of differing resolution power mentioned above.

3.2 A discrete Fourier extension

The continuous Fourier extension is defined by a least squares criterion, and as such it suffers from the drawback of requiring computation of the integrals . To avoid this issue one may instead define a Fourier extension by a collocation condition. In other words, if is a set of nodes in we replace the matrix and the vector in (14) by and with entries and respectively. We then define once more.

The key question is how to determine good nodes. Recall that , as defined by (3), is essentially a sum of two polynomial approximations in the variable . The polynomial interpolant of an analytic function in at Chebyshev nodes (appropriately scaled to the interval ) converges exponentially fast at the same rate as its orthogonal polynomial expansion. Therefore, such nodes, when mapped to the original domain via , will ensure exponential convergence at rate of the resulting Fourier extension.

It is now slightly easier to redefine to be the space of dimension spanned by the functions and . The collocation nodes in therefore take the form , where

| (17) |

Recall that . We refer to such nodes as symmetric mapped Chebyshev nodes, and the corresponding extension as the discrete Fourier extension of the function .

Besides removing the requirement to compute the integrals , this choice of nodes also has the significant effect of improving conditioning, as we now explain. We first note the following:

Lemma 3.2.

Let be the collocation matrix based on the symmetric mapped Chebyshev nodes (17), and suppose that is the diagonal matrix of weights . Then the matrix has entries

where is the positive, integrable function given by

Proof.

Note that is a block matrix with blocks corresponding to inner products of sines and cosines with sines and cosines. Consider the upper left block of the matrix . In the th entry, using the symmetry of the collocation points, we have that

where are Chebyshev nodes on . Recall that is the affine map from to , as defined in §2.2.2. Since the product is a polynomial in of degree at most , the Gaussian quadrature rule associated with the points is exact and it follows that

where is given by

The first factor is the Jacobian of the mapping to , the second factor is the scaling of the standard Chebyshev weight to that interval. The change of variables now gives

which is easily found to coincide with . The case corresponding to the sine functions is identical. ∎

This lemma demonstrates that the normal equations for the discrete Fourier extension are the equations of a continuous Fourier extension based on the weighted optimization problem. It is well known that forming normal equations leads to worse numerical results [28, Ch.19], and exactly the same is true in this case. Indeed, since is an integrable weight function, we may expect that , i.e. exactly as in the unweighted case. Thus collocation leads to a significant reduction in condition number, with as opposed to . As a result, one typically observes a much higher accuracy, as opposed to , with this approach.

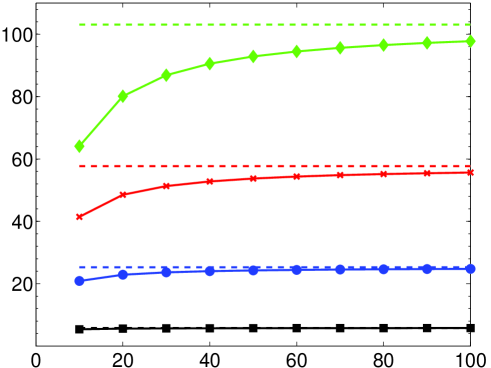

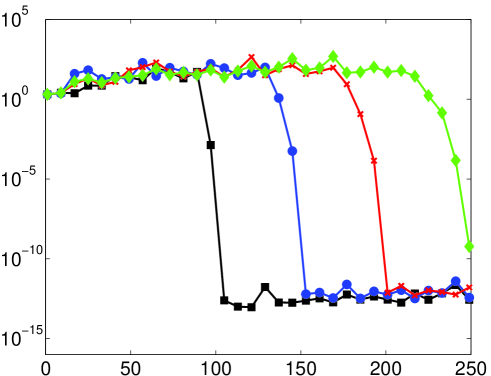

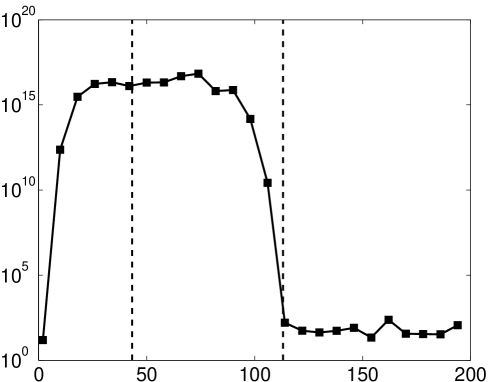

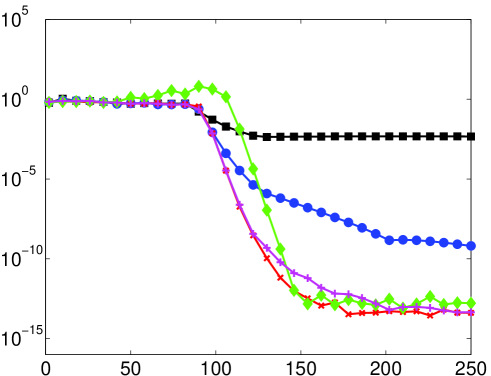

This improvement is illustrated in Fig. 3 for the example . As shown in the left panel, the best attainable error with the continuous Fourier extension is roughly , whereas the discrete extension obtains much closer to machine epsilon. The right panel indicates the significantly milder growth in condition number.
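The squaring effect of the normal equations can be observed directly in a small experiment. The following is a minimal sketch rather than the authors' implementation. It assumes that the redefined space is spanned by cos(nπx/T) and sin((n+1)πx/T) for n = 0, ..., N, and that the nodes (17) are Chebyshev roots scaled to (cos(π/T), 1), mapped through arccos and mirrored about the origin; both are assumptions, since the formulas are not restated here. The function names are hypothetical. The diagonal weight matrix is omitted: if the weights are the (equal) Chebyshev–Gauss weights, including them only rescales the matrix and leaves the condition number unchanged.

```python
import numpy as np

def symmetric_mapped_nodes(N, T):
    """Assumed node formula: Chebyshev roots scaled to (cos(pi/T), 1), mapped by arccos, mirrored."""
    k = np.arange(N + 1)
    y = np.cos((2 * k + 1) * np.pi / (2 * N + 2))  # Chebyshev roots in (-1, 1)
    c = np.cos(np.pi / T)
    z = c + (1.0 - c) * (1.0 + y) / 2.0            # affine scaling to (c, 1)
    x_pos = T / np.pi * np.arccos(z)               # N + 1 nodes in (0, 1)
    return np.concatenate([-x_pos[::-1], x_pos])   # 2N + 2 nodes in (-1, 1)

def collocation_matrix(N, T):
    """Square collocation matrix for the assumed basis cos(n pi x/T), sin((n+1) pi x/T)."""
    x = symmetric_mapped_nodes(N, T)
    n = np.arange(N + 1)
    cos_block = np.cos(np.pi / T * np.outer(x, n))
    sin_block = np.sin(np.pi / T * np.outer(x, n + 1))
    return np.hstack([cos_block, sin_block])

def condition_numbers(N, T):
    """Return (cond of collocation matrix, cond of its normal-equation matrix)."""
    A = collocation_matrix(N, T)
    return np.linalg.cond(A), np.linalg.cond(A.T @ A)

for N in (2, 3, 4):
    k_colloc, k_normal = condition_numbers(N, 2.0)
    print(f"N={N}: cond(A)={k_colloc:.3e}, cond(A^T A)={k_normal:.3e}")
```

The value T = 2 and the small values of N are arbitrary choices that keep both condition numbers well within double precision, so that the comparison itself is reliable.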

Such an improvement is by no means unique to this choice of nodes. A suitable alternative choice of collocation points follows from using the roots of the polynomials . With an appropriate number of points, the matrix , with containing the weights of the associated Gaussian quadrature rule on its diagonal, is precisely the matrix of the unweighted continuous Fourier extension, rather than the weighted extension that was identified in Lemma 3.2. The use of these points as Gaussian quadrature was already explored in [25]. However, these points depend on in a non-trivial way and, although they can easily be computed, are not available in closed form. As numerical experiments indicate that the performance of such point sets is not significantly better than the points proposed in this section, we forego a more detailed analysis.

This aside, we remark that, in some senses, the continuous and discrete Fourier extensions are analogous to the orthogonal expansion of a function in Chebyshev polynomials and its Chebyshev polynomial interpolant (sometimes known as the discrete Chebyshev expansion). Specifically, the discrete versions both arise by essentially replacing an inner product by a quadrature. However, the correspondence is not exact, since in the discrete Fourier extension the quadrature approximates the weighted inner product on . As mentioned, this is done for computational convenience. Nonetheless, the approximation-theoretic properties of the discrete and continuous Fourier extensions are nearly identical, with the only key difference being that of the condition number.

We have purposefully kept this section on numerical computation of the continuous and discrete Fourier extensions short. The main conclusion is that, despite quite severe ill-conditioning (even in the discrete case we have exponentially large condition numbers), one can typically obtain very high accuracies (i.e. close to machine precision) with the discrete Fourier extension. Although this apparent contradiction can be comprehensively explained, it is beyond the scope of this paper, and will be reported in a subsequent work [2].

4 Resolution power of polynomial expansions

Having introduced and analyzed the continuous and discrete Fourier extensions, and discussed their numerical implementation, in §5 we establish their resolution power. Before doing so, since similar techniques will be used subsequently, we first derive the well-known result for orthogonal polynomial expansions.

Suppose that is defined on and is analytic in a neighbourhood thereof. Let be its -term expansion in orthogonal polynomials with respect to the positive and integrable weight function . If is the space of weighted square integrable functions with respect to , then is given by

In particular, for any ,

Hence, we note that to study the resolution power of any orthogonal polynomial expansion it suffices to consider only one particular example. Without loss of generality, we now focus on expansions in Chebyshev polynomials (i.e. ), in which case

| (18) |

where

| (19) |

and ′ indicates that the first term of the sum should be halved. Since the infinite sum (18) converges uniformly, we have the estimate

Therefore it suffices to examine the nature of the coefficients for the function

| (20) |

We use the following standard estimate, given in [35, p.175]:

| (21) |

Here corresponds to any Bernstein ellipse in which is analytic and is the maximum of along that ellipse.

For a given and , we consider the minimum of all bounds of the form (21). Since is a complex exponential, reaches a maximum at the point on the ellipse with the smallest (negative) imaginary part. This corresponds to and thus we have

| (22) |

The bound (21) now becomes

| (23) |

For fixed and , we find the minimal value of this bound by differentiating (23) with respect to . Equating the derivative to zero

| (24) |

yields two solutions

| (25) |

Consider the case . Both solutions of (24) are complex-valued. Note that

and

It follows that is strictly increasing as a function of . For , the best possible bound for of the form (21) is .

Consider next the case . Since it is easily seen that the roots (25) are inverses of each other, we restrict our attention to the one greater than , i.e.,

Since initially decays at , is the unique minimum of the bound for . Thus,

In particular, this implies exponential decay of the next coefficients:

Finally, consider the value . Then is a global minimum of the bound (23), since

and

In conclusion, we have seen that for an oscillatory function of the form (20), we need degrees of freedom before exponential decay of the coefficients sets in. This corresponds to degrees of freedom per wavelength – the well-known result on the resolution power of polynomials.
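This threshold is easy to observe numerically. The sketch below assumes that the oscillatory function (20) is exp(iπωx) on [-1, 1], which has ω wavelengths on the interval, and computes its Chebyshev coefficients by Chebyshev–Gauss quadrature (a standard technique chosen here for convenience, not taken from the text). The helper name and the values ω = 20 and M = 1024 are illustrative assumptions.

```python
import numpy as np

def chebyshev_coefficients(f, n_max, M=1024):
    """Approximate Chebyshev coefficients a_0..a_{n_max} of f by M-point Chebyshev-Gauss quadrature."""
    theta = (np.arange(M) + 0.5) * np.pi / M      # quadrature angles
    fx = f(np.cos(theta))                         # samples at Chebyshev roots
    n = np.arange(n_max + 1)
    return (2.0 / M) * (np.cos(np.outer(n, theta)) @ fx)

omega = 20.0
coeffs = chebyshev_coefficients(lambda x: np.exp(1j * np.pi * omega * x), n_max=150)
magnitude = np.abs(coeffs)
threshold = np.pi * omega                         # predicted onset of exponential decay

print(f"predicted threshold n = pi*omega = {threshold:.1f}")
for n in (20, 40, 60, 80, 100, 120):
    print(f"n={n:3d}: |a_n| = {magnitude[n]:.3e}")
```

Under these assumptions the coefficients should remain of order one tenth for n well below πω and fall off rapidly beyond it, which corresponds to π degrees of freedom per wavelength.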

Note that this value was previously derived in [21] for orthogonal polynomial expansions corresponding to the Gegenbauer weight , (see also [20, p.35] for ). This result was based on explicit expressions for the Gegenbauer polynomial coefficients of . The previous arguments generalize this result to arbitrary weight functions . In fact, we could have also used the explicit formula for the Chebyshev polynomial expansion of to derive estimates similar to those given above (and possibly more accurate). However, we shall use similar techniques to those presented here in the next section, where such explicit expressions are not available.
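For reference, the optimization of the bound used in this section can be written out explicitly. The following is a sketch under two assumptions that are not restated above: that the function (20) is $f(x) = e^{i\pi\omega x}$, and that the estimate (21) has the standard form $|a_n| \le 2 M(\rho) \rho^{-n}$, with $\mathcal{E}_\rho$ denoting the Bernstein ellipse with parameter $\rho$. The symbol $B(\rho)$ is introduced here only for illustration.

```latex
\begin{align*}
M(\rho) &= \max_{z \in \mathcal{E}_\rho} \left| e^{i\pi\omega z} \right|
         = \exp\!\left( \tfrac{\pi\omega}{2} \left( \rho - \rho^{-1} \right) \right), \\
B(\rho) &= 2 \rho^{-n} \exp\!\left( \tfrac{\pi\omega}{2} \left( \rho - \rho^{-1} \right) \right), \\
\frac{\partial}{\partial \rho} \log B(\rho)
        &= -\frac{n}{\rho} + \frac{\pi\omega}{2} \left( 1 + \rho^{-2} \right) = 0
        \quad \Longleftrightarrow \quad
        \pi\omega \rho^2 - 2 n \rho + \pi\omega = 0, \\
\rho_{\pm} &= \frac{n \pm \sqrt{n^2 - \pi^2 \omega^2}}{\pi\omega},
        \qquad \rho_{+} \rho_{-} = 1 .
\end{align*}
```

With these expressions the roots are real only when $n \ge \pi\omega$, they coincide at $\rho = 1$ when $n = \pi\omega$, and for $n < \pi\omega$ the derivative is positive for all $\rho \ge 1$, so the best bound of this form is attained at $\rho = 1$. This is consistent with the three cases distinguished above.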

5 Resolution power of Fourier extensions

We now consider the resolution power of the continuous and discrete Fourier extensions. As commented in §3, the continuous/discrete Fourier extension need not be realized in a finite precision numerical computation. Hence, we divide this section between theoretical estimates for the resolution power of the continuous/discrete extension, and its numerical realization.

5.1 Resolution power of the continuous Fourier extension

A naïve estimate for the resolution constant follows immediately from the bound (5) in Theorem 2.1. Indeed, for we have

and therefore satisfies , with spectral convergence occurring once exceeds .

On first viewing, this estimate seems plausible. For example, consider the special situation where the frequency of oscillation is an integer multiple of . Then the function is precisely the th complex exponential in the Fourier basis on . Given that the continuous Fourier extension of is error minimizing amongst all functions in , we can expect to be recovered perfectly (i.e. ) by its continuous Fourier extension whenever . Thus, for oscillations at frequencies , , the estimate appears correct.
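The special role of these 'periodic' frequencies can be checked with a few lines of code. This is a minimal sketch under stated assumptions: the approximation space is taken to be spanned by cos(nπx/T) and sin((n+1)πx/T), n = 0, ..., N, the extension is computed by collocation at assumed mapped Chebyshev nodes instead of by the least squares criterion, and the oscillatory function is exp(iπωx) with ω = k/T. All names and parameter values are illustrative.

```python
import numpy as np

def basis(x, N, T):
    """Evaluate the assumed basis cos(n pi x/T), sin((n+1) pi x/T), n = 0..N, at the points x."""
    n = np.arange(N + 1)
    return np.hstack([np.cos(np.pi / T * np.outer(x, n)),
                      np.sin(np.pi / T * np.outer(x, n + 1))])

def symmetric_mapped_nodes(N, T):
    """Assumed collocation nodes: mapped Chebyshev roots, mirrored about the origin."""
    k = np.arange(N + 1)
    y = np.cos((2 * k + 1) * np.pi / (2 * N + 2))
    c = np.cos(np.pi / T)
    x_pos = T / np.pi * np.arccos(c + (1.0 - c) * (1.0 + y) / 2.0)
    return np.concatenate([-x_pos[::-1], x_pos])

T, N, k = 2.0, 5, 5                                # omega = k / T is a 'periodic' frequency

def f(x):
    return np.exp(1j * np.pi * (k / T) * x)

nodes = symmetric_mapped_nodes(N, T)
coeffs = np.linalg.solve(basis(nodes, N, T).astype(complex), f(nodes))

grid = np.linspace(-1.0, 1.0, 1001)                # error is measured away from the nodes
max_error = np.max(np.abs(basis(grid, N, T) @ coeffs - f(grid)))
print(f"max error on [-1, 1]: {max_error:.2e}")
print(f"coefficient norm:     {np.linalg.norm(coeffs):.6f}")
```

Because the exponential already belongs to the space once N is at least k, the computed coefficient vector should have essentially two nonzero entries (one cosine and one sine coefficient) and the error should be at the level of rounding.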

However, empirical results indicate that such an estimate is only accurate for small : for large it transpires that . We shall prove this result subsequently. Before doing so, however, let us note the following unexpected conclusion: when is large, we can actually resolve the oscillatory exponentials accurately on using Fourier extensions comprised of relatively non-oscillatory exponentials , .

To obtain an accurate estimate for , we need to argue along the same lines as §2.2 and exploit the close relationship between Fourier extensions and certain orthogonal polynomial expansions. Recall that the theory of §2.2 treats even and odd cases separately. Let us first assume that is even, so that

(we consider the odd case later). Upon applying the transformation we obtain

The Fourier extension of is precisely the expansion of in the orthogonal polynomials . If we now map the domain to via

then this equates to an orthogonal polynomial expansion of the function

| (26) |

Thus, as in §4, to determine the resolution power of , it suffices to consider the expansion of in Chebyshev polynomials on .

In view of the bound (21), we now seek the maximum value of along the Bernstein ellipse . We first require the following lemma:

Lemma 5.1.

For and sufficiently large , the maximum value of on the Bernstein ellipse occurs at .

Proof.

Let . Then

Hence, for sufficiently large , the maximal value of on some curve in the complex plane occurs at the point where is maximized.

When , we may write , where and are defined by

and, letting for (recall that ),

Expanding the cosine, and equating real and imaginary parts, we find that

We seek to maximize . Note trivially that cannot vanish identically for all , and thus it suffices to consider only those for which . Let . Then is defined by

Rearranging,

To complete the proof, it suffices to show that attains its maximum value at . Suppose not. Then for some (with ), and, after differentiating the above expression, we obtain

i.e.

Let us assume first that . Hence

| (27) |

Suppose the left-hand side of (5.1) vanishes, i.e.

| (28) |

Since the right-hand side of (5.1) must also vanish, and therefore

| (29) |

Equations (28) and (29) cannot hold simultaneously (since ), and therefore the left-hand side of (5.1) cannot vanish. We deduce that

We now substitute this into the quadratic equation for to give

Since , we must have that

However, substituting this into (5.1), gives , a contradiction. Therefore . It is easy to see that leads to a smaller value of than . Hence the result follows. ∎

Using this lemma, we deduce that the th coefficient of the Chebyshev expansion of is bounded by

We proceeded in §4 by computing the roots of the partial derivative of the bound with respect to . The same approach applies here, but unfortunately it does not lead to explicit expressions. However, a simple modification makes the bound more manageable. We write

Since the argument of the cosine for is purely imaginary, with positive imaginary part, the second exponential dominates the first. Hence, for large , we may approximate by

| (30) |

One can then explicitly find roots of the partial derivative of with respect to . We have

| (31) |

These two roots are again each other’s inverse. One quickly verifies that the square root is real and positive if and only if

| (32) |

with

| (33) |

If the condition (32) is satisfied, selecting the sign in (31) yields the root that is greater than .

A little care is necessary when applying the bound (30). Recall that is only analytic in Bernstein ellipses with . It may be the case that and therefore we cannot use this bound directly. However, explicit computation yields the condition

| (34) |

Moreover, if is given by (33), then

With this to hand, we are now able to distinguish between the following cases:

1. In this case, the argument of the square root in (31) is negative and hence both roots are imaginary. The function is either monotonically increasing or decreasing as a function of on . Reasoning as before, note that

| (35) |

The partial derivative vanishes precisely when and it is positive for smaller . Thus, the bound is increasing and the best possible bound we can find of the form (21) is

2. In this case the argument of the square root in (31) is positive. Moreover, the condition ensures that the overall expression for both roots is positive (note that the denominator switches sign at ). Thus, there is a minimum of at , and this minimum satisfies . Therefore, we obtain the bound

Hence, we deduce exponential decay of the coefficients once exceeds .

3. The arguments of the previous case still hold, but now . Thus, the minimum value of is obtained at and therefore

| (36) |

Note that this bound is valid for all , not just in the stated range. However, it only gives an accurate portrait of the coefficient decay once exceeds .

This derivation establishes the resolution constant , but only for even oscillations . To prove the complete result, we need to obtain an equivalent statement for the odd functions

| (37) |

We proceed along similar lines to the cosine case. First, note that the continuous Fourier extension of (37) equates to the orthogonal polynomial expansion of

It is not immediately apparent that this function is analytic at . However, simple analysis reveals that the square-root type singularity is removable, and consequently is analytic in any Bernstein ellipse with .

Proceeding as before, we find that the th Chebyshev polynomial coefficient of admits the bound , , where

and . Suppose first that . Letting , we find that

Next suppose that . Then, for sufficiently large , we can replace by

where is the bound (30) of the cosine case. Hence, we deduce that

Note that the square root term is bounded since . Indeed, it attains its maximal value at , and hence is bounded in both and . This confirms exponential decay of the coefficients for .

In the third scenario, i.e. , we use the value to give

Note the similarity of this bound with (36) for the cosine case.

To sum up, we have shown the following theorem:

Theorem 5.2.

The resolution constant of the continuous Fourier extension is precisely . In particular, for and for .

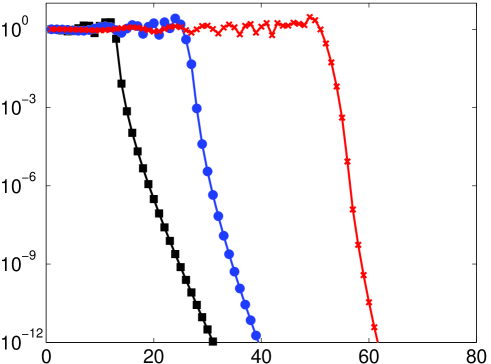

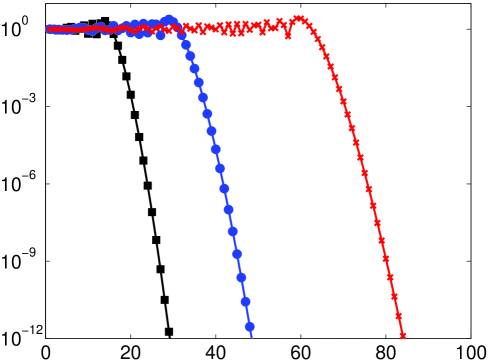

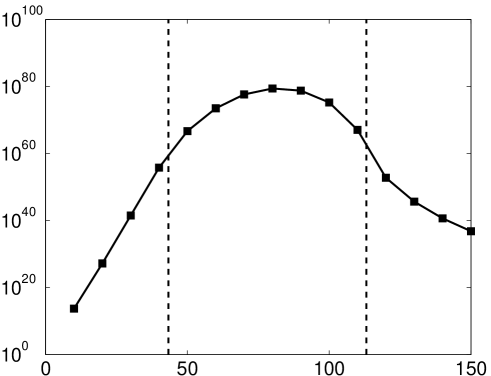

The resolution constant is illustrated as a function of in Fig. 4. In Fig. 5 we confirm this theorem with several numerical examples. As discussed in §3, the result of the numerical computation of (3) may not coincide with the exact solution . We shall discuss the question of resolution power of the numerical solution, as opposed to the exact solution, in detail in §5.3. For the moment, so that we can illustrate Theorem 5.2, we have removed this issue by carrying out the computations in Fig. 5 in additional precision.

As shown in the right panel of Fig. 5, the resolution constant for . Incidentally, the fact that is independent of for large can be seen by studying the quantity . For fixed , we find that

The leading term is independent of , thus we expect to approach a constant value as . It is also natural to expect that this constant is the same as the resolution constant of polynomial approximations. Indeed, for large the functions and are not at all oscillatory on . In particular, they are well approximated on by their Taylor series. As such, the span of the first such functions is very close to the span of their Taylor series, which is exactly the space of polynomials of degree . Thus, for fixed and sufficiently large , the Fourier extension of an arbitrary function closely resembles the best polynomial approximation to in the sense; in other words, the Legendre polynomial expansion. The value of for the resolution constant arises from the discussion in §4.

As commented at the start of this section, oscillations at ‘periodic’ frequencies , , are informative in that they illustrate when the Fourier extension behaves roughly like a classical Fourier series on , that is, when , and when it does not, i.e. . The decay of the coefficients for these oscillations is also quite special. Since is entire and periodic on , the corresponding function given by (26) is also entire in the variable . This means that is analytic in any Bernstein ellipse , and hence the corresponding Chebyshev coefficients (respectively, the errors ) decay superexponentially fast, as opposed to merely exponentially fast at rate . Comparison of the left and right panels of Fig. 5 illustrates this difference.

We now summarize this observation, along with the other convergence characteristics derived above, in the following theorem:

Theorem 5.3.

The error in approximating by its continuous Fourier extension is for . Once exceeds the error begins to decay exponentially, and when , the rate of exponential convergence is precisely , unless for some , in which case it is superexponential.

5.2 Points per wavelength and resolution power of the discrete Fourier extension

The discrete Fourier extension possesses exactly the same resolution power as its continuous counterpart. This is a consequence of the fact that the error committed by the polynomial interpolant of a function at Chebyshev nodes can be bounded by a logarithmically growing factor (the Lebesgue constant) multiplied by the error of its expansion in Chebyshev polynomials [35].

However, the discrete Fourier extension does conveniently connect the concept of resolution power with the notion of points per wavelength (see §1). As shown by Theorem 5.2, Fourier extensions require points per wavelength to resolve oscillatory behaviour, provided the corresponding nodes are of the form (17).

As it transpires, the exact value for the resolution constant can also be heuristically obtained by looking at the maximal spacing between the symmetric mapped Chebyshev nodes (as defined in (17)). Intuitively speaking, if the maximal node spacing for a set of nodes is , then one can only expect to be able to resolve oscillations of frequency . For the symmetric mapped Chebyshev nodes, one can show that the maximal spacing

Given that there are a total of nodes, one also obtains the stipulated value for the resolution constant in this manner. Note that identical arguments based on either equispaced or Chebyshev nodes in give maximal grid spacings and respectively. These correspond to the resolution constants of Fourier series and polynomial approximations, in agreement with previous discussions.
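The node-spacing heuristic can be evaluated numerically. The sketch below assumes that the nodes (17) are Chebyshev roots scaled to (cos(π/T), 1), mapped through (T/π) arccos and mirrored about the origin, and that the oscillation is exp(iπωx), so that a maximal spacing h resolves frequencies up to 1/h (two points per wavelength). Under these assumptions the number of nodes divided by the largest resolvable ω equals the node count times the maximal spacing. The function names are hypothetical.

```python
import numpy as np

def symmetric_mapped_nodes(N, T):
    """Assumed form of the nodes: 2N + 2 mapped Chebyshev points in (-1, 1), sorted."""
    k = np.arange(N + 1)
    y = np.cos((2 * k + 1) * np.pi / (2 * N + 2))   # Chebyshev roots in (-1, 1)
    c = np.cos(np.pi / T)
    z = c + (1.0 - c) * (1.0 + y) / 2.0             # scaled to (cos(pi/T), 1)
    x_pos = T / np.pi * np.arccos(z)                # nodes in (0, 1)
    return np.sort(np.concatenate([-x_pos, x_pos]))

def points_per_wavelength(N, T):
    """Heuristic resolution constant: (number of nodes) x (maximal node spacing)."""
    x = symmetric_mapped_nodes(N, T)
    return x.size * np.max(np.diff(x))

for T in (1.01, 1.5, 2.0, 4.0, 50.0):
    estimate = points_per_wavelength(400, T)
    closed_form = 2.0 * T * np.sin(np.pi / (2.0 * T))
    print(f"T={T:6.2f}: heuristic={estimate:.4f}, 2T sin(pi/(2T))={closed_form:.4f}")
```

For the assumed node formula, a short calculation gives 2T sin(π/(2T)) as the large-N limit of this quantity; it tends to 2 as T approaches 1 and to π as T grows, in line with the Fourier and polynomial limits discussed above.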

5.3 Resolution power of numerical approximations

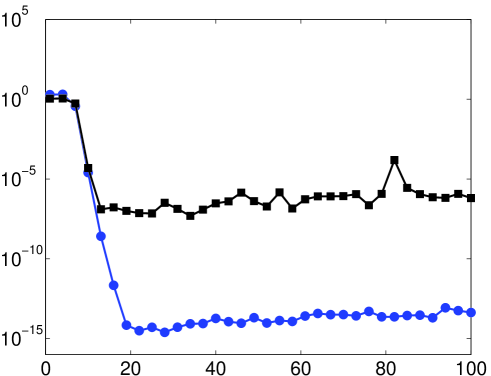

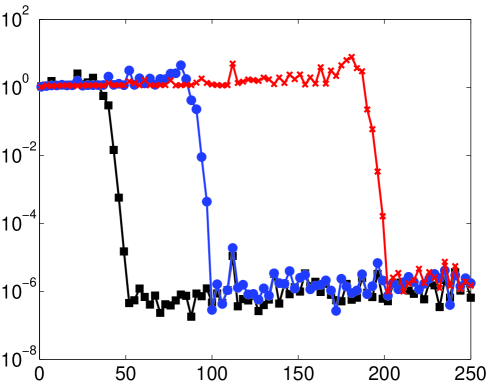

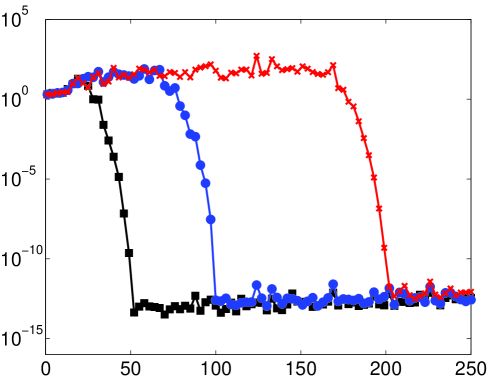

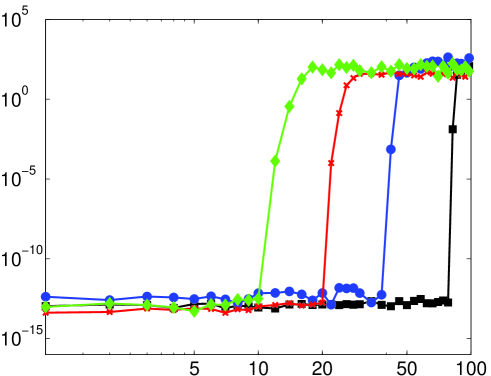

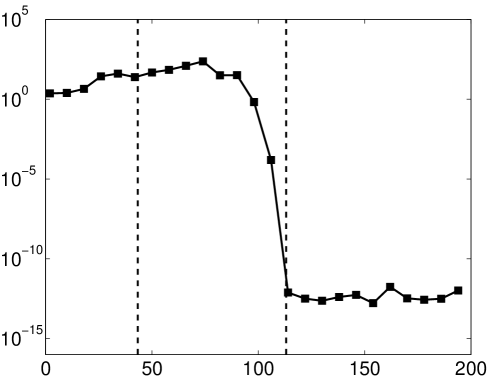

As mentioned, it is not necessarily the case that the numerical solution of (14) (or its discrete counterpart) will coincide with the continuous (discrete) Fourier extension. Therefore, the predictions of Theorem 5.2 may not be witnessed in computations. In Fig. 6 we give results for the numerical computation of the continuous/discrete Fourier extensions. For small there is little difference from the left panel of Fig. 5 (except, as mentioned in §3.2, the computation of the continuous extension only attains around eight digits of accuracy). However, when the numerically computed extension appears to possess a much larger resolution constant than the value predicted by Theorem 5.2. In fact, as demonstrated in Fig. 7, the numerical Fourier extension appears to have a resolution constant of for all , much like the naïve estimate given at the start of §5.1. This observation is further corroborated in Fig. 8, where a linear dependence of the numerical resolution constant on is witnessed.

This difference can be explained intuitively. The improvement of Theorem 5.2 over the naïve estimate given at the beginning of §5.1 revolves around the observation that slowly oscillatory functions in the frame can be recombined to approximate functions with higher frequencies with exponential accuracy. This is due to the redundancy of the frame. However, such combinations necessarily yield large coefficients: the orthogonal polynomials that give the exact solution grow rapidly outside (see [25]) and, hence their coefficients when represented in the frame consisting of exponentials that are bounded on must be large. In fact, one has the following result:

Lemma 5.4.

Let the continuous Fourier extension of have coefficient vector . Then

for some constant depending on only.

Proof.

By Parseval’s relation, . Writing as in Theorem 2.2, we obtain

where , and and are given by (9) and (10) respectively. It can be shown that

(see [25, Thm. 3.16] for the case – the extension to general is straightforward), and therefore

for some independent of and . By the analysis of the previous section, for , and hence we deduce the result. ∎

This lemma indicates that the coefficient vector of the continuous Fourier extension may well be exponentially large in the unresolved regime. As discussed (see §3), the numerical solution favours representations in the frame with bounded coefficients, since for large the system becomes underdetermined and most least squares solvers will seek a solution vector with minimal norm. We therefore conclude that, whilst the resolution power of the frame is bounded by , the resolution power of all representations in the frame with ‘reasonably small’ coefficients is only .

In Fig. 9 we illustrate this discrepancy by comparing the ‘theoretical’ Fourier extension and its counterpart computed in standard precision. In this example, , i.e. , and we therefore witness rapid exponential growth of the theoretical coefficient vector, in agreement with Lemma 5.4. In particular, the coefficient vector is of magnitude at the point at which the function begins to be resolved. On the other hand, since for large the numerical solver favours small norm coefficient vectors, the computed coefficient vector initially grows exponentially and then levels off around . Thus, in finite precision one cannot obtain the coefficient vector required to resolve at the point . As commented previously, these arguments can be made rigorous by performing an analysis of the numerical Fourier extension [2].

5.4 Fixed versus varying

Whilst the principal issue highlighted in the previous section, namely, that for large we witness rather than in practice, is unfortunate, it is mainly the case that is of interest, since this gives the highest resolution power.

However, with fixed independently of , the resolution constant ; in other words, greater than the optimal Fourier resolution constant (although this difference can be made arbitrarily small). One way to formally obtain the value of is to allow with increasing . For example, if

| (38) |

for some and , then we find that

thereby giving formally optimal resolution power. The disadvantage of such a choice of is that one forfeits exponential convergence. In fact,

and therefore

| (39) |

which indicates subexponential decay of the error (yet still spectral). Larger values of lead to slow, algebraic convergence, and are consequently inadvisable.

An alternative way to choose is found in the literature on the Kosloff–Tal–Ezer mapping [6, 27] (recall the discussion in §1.3). In [27] the authors suggest choosing the mapping parameter based on some tolerance . This limits the best achievable accuracy to but gives a formally optimal time-step restriction. We may do the same with the Fourier extension, by solving the equation

This gives

| (40) |

Note that

and therefore

Hence we expect formally optimal resolution power with this approach, as well as a best achievable accuracy on the order of .

As we show in the next section, choosing in this manner works particularly well in practice.
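The tolerance-based choice can be sketched in a few lines. The code below assumes that the exponential convergence rate of the Fourier extension is E(T) = cot²(π/(4T)), as established earlier in the paper but not restated in this section, and that the equation being solved is E(T)^(-N) = ε; the resulting closed form for T is therefore a reconstruction under those assumptions, and the function names are hypothetical. The tolerance 1e-13 matches the value used in the experiments of §5.5.

```python
import math

def convergence_rate(T):
    """Assumed convergence rate E(T) = cot^2(pi / (4 T)) of the Fourier extension."""
    return 1.0 / math.tan(math.pi / (4.0 * T)) ** 2

def extension_parameter(N, eps):
    """Solve E(T)^(-N) = eps for T under the assumed rate."""
    return math.pi / (4.0 * math.atan(eps ** (1.0 / (2.0 * N))))

eps = 1e-13
for N in (10, 20, 40, 80, 160):
    T = extension_parameter(N, eps)
    print(f"N={N:4d}: T={T:.4f}, E(T)^(-N)={convergence_rate(T) ** (-N):.3e}")
```

With this choice T decreases towards 1 as N grows, which is what drives the resolution constant towards its optimal value, while the attainable accuracy is capped near ε.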

5.5 Numerical experiments

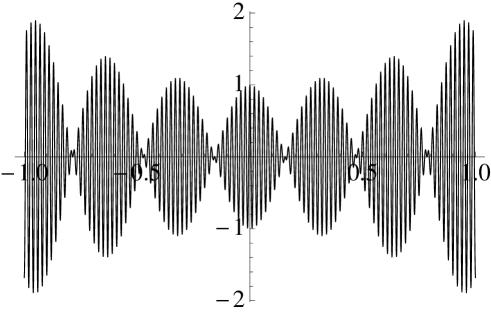

In this final section we present numerical results for the Fourier extension applied to the oscillatory functions

| (41) |

where is the Airy function. Graphs of these functions are given in the top row of Fig. 10.

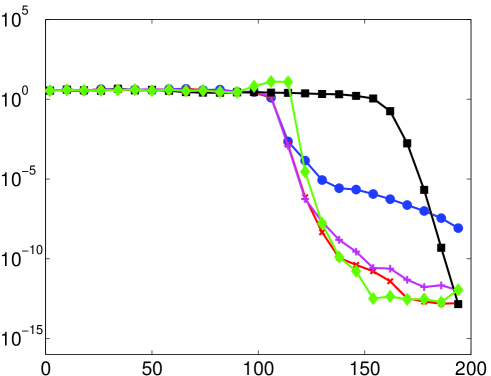

In the bottom row of Fig. 10 we present the error committed by the discrete Fourier extension for various choices of . The convergence rates predicted in the previous section are confirmed for these examples. For decreasing with the oscillations are resolved slightly sooner, but there is slower convergence in the resolved regime. Whether the approximation error is better or worse than that obtained from a fixed value of (in this case ) depends on what level of accuracy is desired. Yet, even when high accuracy is required, (i.e. ) appears to be a good choice for these two examples, in spite of the theoretical subexponential convergence rate. The varying value of , with tolerance set to 1e-13, also yields comparable results. The convergence rate for the case of larger is slower, as expected from the estimate (39).

As discussed, one motivation for using Fourier extensions is that, as rigorously proved in this paper, they offer a higher resolution power for small than polynomial approximations, for which the resolution constant is (see §4). In Fig. 11 we compare the behaviour of the Chebyshev expansion and the Fourier extension approximation for the functions (41). Note that the latter resolves the oscillatory behaviour using fewer degrees of freedom, in agreement with the result of §5. Indeed, for the first example function shown in the left panel, the Chebyshev expansion only begins to converge once exceeds (here, for purposes of comparison, the number of expansion coefficients is ), in agreement with a resolution constant of .

The results for the second function are shown in the right panel of Fig. 11. The differences in resolution are smaller in this case. Yet, the fixed choice and varying choices of still require significantly fewer degrees of freedom than the Chebyshev expansion to resolve the oscillatory behaviour. As before, a slower asymptotic convergence rate is observed for the case. Note that the oscillations of are not harmonic, with the frequency increasing towards the right endpoint of the approximation interval. This seems to have the effect of reducing the advantage of schemes with optimized resolution properties.

6 Conclusions and challenges

The purpose of this paper was to describe and analyze the observation that Fourier extensions are eminently well suited for resolving oscillations. To do this, we derived an exact expression for the so-called resolution constant of the continuous/discrete Fourier extension, and offered an explanation as to why this constant need not be realized in the case of large .

Although we have touched upon the subject of numerical behaviour of Fourier extensions in this paper, we have not provided a full description. As mentioned, the continuous/discrete Fourier extensions both result in severe ill-conditioning. However, numerical examples in this paper and elsewhere (see [7, 11, 30, 31]) point towards an apparent contradiction. Namely, despite this ill-conditioning, the best achievable accuracy can still be very high (close to machine epsilon for the discrete extension). It transpires that this effect can be quite comprehensively explained, with the main conclusion being the following: the discrete Fourier extension, although the result of solving an ill-conditioned linear system, is in fact numerically stable. A paper on this topic is in preparation [2]. Incidentally, the analysis presented therein also allows one to make rigorous the arguments given in §5.3 on the differing resolution constants of theoretical and numerical Fourier extensions for large .

The discrete Fourier extension introduced in this paper uses values of on a particular nonequispaced mesh to compute the Fourier extension. As mentioned, one important use of Fourier extensions is to solve PDEs in complex geometries. In this setting, such meshes are usually infeasible. For this reason, it is of interest to consider the question of how to compute rapidly convergent, numerically stable Fourier extensions with good resolution power from function values on equispaced meshes (as discussed, related methods based on the FE–Gram extension are developed in [3, 10, 32]). It transpires that this can be done, and we will report the details in the upcoming paper [2] (see also [30]).

Finally, we remark that there has been little discussion in this paper on the computational cost of computing Fourier extensions. Instead, we have focused on approximation-theoretic properties, such as resolution. However, M. Lyon has recently introduced a fast algorithm for this purpose [31], which allows for computation of Fourier extensions in operations.

Acknowledgements

The authors would like to thank Rodrigo Platte for pointing out the relation to the Kosloff–Tal–Ezer mapping. They would also like to thank Jean–François Maitre for useful discussions and comments.

References

- [1] R. A. Adams. Sobolev Spaces. Academic Press, 1975.

- [2] B. Adcock, D. Huybrechs, and J. Martín-Vaquero. On the numerical stability of Fourier extensions. In preparation, 2012.

- [3] N. Albin and O. P. Bruno. A spectral FC solver for the compressible Navier–Stokes equations in general domains I: Explicit time-stepping. J. Comput. Phys., 230(16):6248–6270, 2011.

- [4] L. Badea and P. Daripa. On a Fourier method of embedding domains using an optimal distributed control. Numer. Algorithms, 32(2–4):261–273, 2003.

- [5] F. P. Bertolotti, T. Herbert, and P. R. Spalart. Linear and nonlinear stability of the Blasius boundary layer. J. Fluid Mech., 242:441–474, 1992.

- [6] J. P. Boyd. Chebyshev and Fourier Spectral Methods. Springer–Verlag, 1989.

- [7] J. P. Boyd. A comparison of numerical algorithms for Fourier Extension of the first, second, and third kinds. J. Comput. Phys., 178:118–160, 2002.

- [8] J. P. Boyd. Fourier embedded domain methods: extending a function defined on an irregular region to a rectangle so that the extension is spatially periodic and $C^\infty$. Appl. Math. Comput., 161(2):591–597, 2005.

- [9] J. P. Boyd and J. R. Ong. Exponentially-convergent strategies for defeating the Runge phenomenon for the approximation of non-periodic functions. I. Single-interval schemes. Commun. Comput. Phys., 5(2–4):484–497, 2009.

- [10] O. Bruno and M. Lyon. High-order unconditionally stable FC-AD solvers for general smooth domains I. Basic elements. J. Comput. Phys., 229(6):2009–2033, 2010.

- [11] O. P. Bruno, Y. Han, and M. M. Pohlman. Accurate, high-order representation of complex three-dimensional surfaces via Fourier continuation analysis. J. Comput. Phys., 227(2):1094–1125, 2007.

- [12] A. Bueno-Orovio. Fourier embedded domain methods: period and extension of a function defined on an irregular region to a rectangle via convolution with Gaussian kernels. Appl. Math. Comput., 183(2):813–818, 2006.

- [13] A. Bueno-Orovio and V. M. Pérez-García. Spectral smoothed boundary methods: The role of external boundary conditions. Numer. Methods Partial Differential Equations, 22(2):435–448, 2006.

- [14] A. Bueno-Orovio, V. M. Pérez-García, and F. H. Fenton. Spectral methods for partial differential equations on irregular domains: The spectral smoothed boundary method. SIAM J. Sci. Comput., 28(3):886–900, 2006.

- [15] M. Buffat and L. Le Penven. A spectral fictitious domain method with internal forcing for solving elliptic PDEs. J. Comput. Phys., 230(7):2433–2450, 2011.

- [16] C. Canuto, M. Y. Hussaini, A. Quarteroni, and T. A. Zang. Spectral Methods: Fundamentals in Single Domains. Springer, 2006.

- [17] O. Christensen. An Introduction to Frames and Riesz Bases. Birkhäuser, 2003.

- [18] K. S. Eckhoff. On a high order numerical method for functions with singularities. Math. Comp., 67(223):1063–1087, 1998.

- [19] M. Garbey. On some applications of the superposition principle with Fourier basis. SIAM J. Sci. Comput., 22(3):1087–1116, 2000.

- [20] D. Gottlieb and S. A. Orszag. Numerical Analysis of Spectral Methods: Theory and Applications. Society for Industrial and Applied Mathematics, 1st edition, 1977.

- [21] D. Gottlieb and C.-W. Shu. Resolution properties of the Fourier method for discontinuous waves. Comput. Methods Appl. Mech. Engrg., 116:27–37, 1994.

- [22] D. Gottlieb and C.-W. Shu. On the Gibbs’ phenomenon and its resolution. SIAM Rev., 39(4):644–668, 1997.

- [23] D. Gottlieb, C.-W. Shu, A. Solomonoff, and H. Vandeven. On the Gibbs phenomenon I: Recovering exponential accuracy from the Fourier partial sum of a nonperiodic analytic function. J. Comput. Appl. Math., 43(1–2):91–98, 1992.

- [24] N. Hale and L. N. Trefethen. New quadrature formulas from conformal maps. SIAM J. Numer. Anal., 46(2):930–948, 2008.

- [25] D. Huybrechs. On the Fourier extension of non-periodic functions. SIAM J. Numer. Anal., 47(6):4326–4355, 2010.

- [26] H. H. Juang, S. Hong, and M. Kanamitsu. The NCEP regional model: An update. Mon. Weather Rev., 129:2904–2922, 2001.

- [27] D. Kosloff and H. Tal-Ezer. A modified Chebyshev pseudospectral method with an time step restriction. J. Comput. Phys., 104:457–469, 1993.

- [28] C. L. Lawson and R. J. Hanson. Solving Least Squares Problems. Classics in Applied Mathematics. SIAM, Philadelphia, 1996.

- [29] S. H. Lui. Spectral domain embedding for elliptic PDEs in complex domains. J. Comput. Appl. Math., 225(2):541–557, 2009.

- [30] M. Lyon. Approximation error in regularized SVD-based Fourier continuations. Preprint, 2011.

- [31] M. Lyon. A fast algorithm for Fourier continuation. SIAM J. Sci. Comput., 33(6):3241–3260, 2012.

- [32] M. Lyon and O. Bruno. High-order unconditionally stable FC-AD solvers for general smooth domains II. Elliptic, parabolic and hyperbolic PDEs; theoretical considerations. J. Comput. Phys., 229(9):3358–3381, 2010.

- [33] S. A. Orszag and M. Israeli. Numerical simulation of incompressible flow. Annu. Rev. Fluid Mech., 6:281–318, 1974.

- [34] R. Pasquetti and M. Elghaoui. A spectral embedding method applied to the advection–diffusion equation. J. Comput. Phys., 125:464–476, 1996.

- [35] T. Rivlin. Chebyshev Polynomials: From Approximation Theory to Algebra and Number Theory. Wiley, New York, 1990.

- [36] F. Sabetghadam, S. Sharafatmandjoor, and F. Norouzi. Fourier spectral embedded boundary solution of the Poisson’s and Laplace equations with Dirichlet boundary conditions. J. Comput. Phys., 228(1):55–74, 2009.

- [37] L. N. Trefethen. Is Gauss quadrature better than Clenshaw-Curtis? SIAM Rev., 50(1):67–87, 2008.