On the Sparse DAG Structure Learning

Based on Adaptive Lasso

Abstract

Learning the underlying Bayesian Networks (BNs), represented by directed acyclic graphs (DAGs), of the concerned events from purely-observational data is a crucial part of evidential reasoning. This task remains challenging due to the large and discrete search space. A recent flurry of developments followed NOTEARS [1] recast this combinatorial problem into a continuous optimization problem by leveraging an algebraic equality characterization of acyclicity. However, the continuous optimization methods suffer from obtaining non-spare graphs after the numerical optimization, which leads to the inflexibility to rule out the potentially cycle-inducing edges or false discovery edges with small values. To address this issue, in this paper, we develop a completely data-driven DAG structure learning method without a predefined value to post-threshold small values. We name our method NOTEARS with adaptive Lasso (NOTEARS-AL), which is achieved by applying the adaptive penalty method to ensure the sparsity of the estimated DAG. Moreover, we show that NOTEARS-AL also inherits the oracle properties under some specific conditions. Extensive experiments on both synthetic and a real-world dataset demonstrate that our method consistently outperforms NOTEARS.

Index Terms:

Bayesian Networks learning, Sparse DAG, Continuous Optimization, Adaptive LassoI Introduction

Bayesian networks (BNs) [2] is a kind of graphical model that bridges probability theory and graph theory to represent a joint distribution, which can also be leveraged to model the shape and level of the relationships between random variables by representing conditional dependence as edges and corresponding parameters in a directed acyclic graph (DAG). With success in encoding uncertain expert knowledge in expert systems [3], BN has been widely used in many applications such as causal inference[4], biology [5], and healthcare [6].

Over the last decades, lots of work has been done on discovering Bayesian networks from purely-observational data. In general, the problem is ill-posed since there may be multiple DAGs producing the same distribution. Furthermore, concerning the computation complexity [7], since the search space of DAGs is discrete, many standard numerical algorithms cannot be utilized, which leads to the DAG structure learning problem NP-hard [8, 9]. In other words, searching the optimal DAG in the DAGs space scales super-exponentially by the number of variables.

In general, there are mainly two classes of DAG structure learning methods. One is constraint-based algorithms. The other is score-based algorithms. Constraint-based methods, such as SGS [10], PC [11], and GS [12], read the conditional independence relationships encoded in the data and try to find a completed partially directed acyclic graph (CPDAG) that entails all (and only) the corresponding d-separations by Markovian and Faithfulness assumptions [13]. That is to say, the output of these algorithms is a set of DAGs (equivalence class) instead of the ground-truth DAG. The main idea of score-based methods is to establish a suitable score function as the objective function and then find the graph structure that maximizes the objective in the solution space. Common score functions include BGe score [14], BDe score [15], MDL [16] and BIC [14]. Based on the idea of order search and greedy search, some approaches that optimize the score functions over the DAGs space are proposed, such as order-based search (OBS) [17], the max-min hill climbing method [18], greedy equivalence search (GES) [19], fast GES [20], and A* [21].

To improve the searching efficiency, recently, Zheng et al. [1] introduced a new approach, named NOTEARS, which seeks to replace the traditional combinatorial constraint by a smooth characterization of acyclicity whose value is set to be zero as a DAG. In this way, the combinatorial optimization problem is converted into a continuous optimization such that a wide range of numerical methods can be applied. As NOTEARS only considers the linear model, some following works [22, 23, 24, 25, 26, 27] extend it to non-linear or non-parametric situations by leveraging neural networks or orthogonal series. Following these works, many works also extend this work to time-series data [28], unmeasured common confounders [29], interventional data [30], distributed learning [31, 32] and incomplete data [33, 34]. Further related works include GOLEM [35], a likelihood-based method that suggests using likelihood as the learning objective with soft constraints. NOFEARS [36] focuses on optimization by considering the Karush–Kuhn–Tucker (KTT) conditions. Truncated Matrix Power Iteration [37] finds that enlarging the coefficients for higher-order terms can better approximate the DAG constraints and leads to a better graph recovery performance. The convergences of the augmented Lagrangian method and the quadratic penalty method are studied in [38].

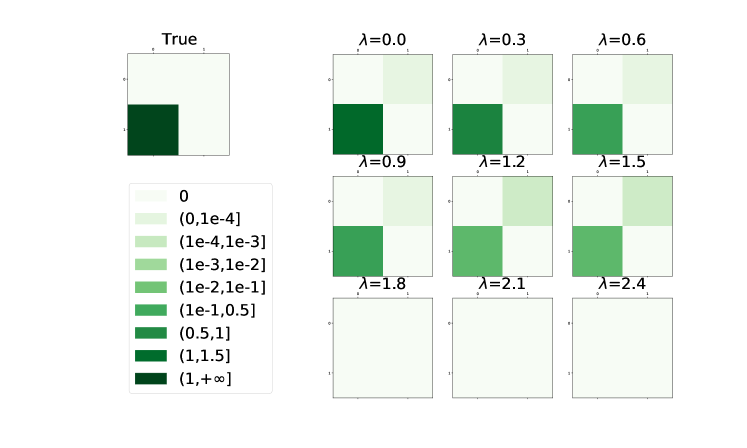

It is worth noting that NOTEARS and most of its following works [27, 26, 25, 24, 23, 35] cannot directly obtain a sparse solution since the numerical optimization always introduces many small false positive estimates. From Figure 1, we can observe that even with a large sample size over a -node graph, the false positive edge (the right one) always exists and cannot be ruled out by regularization. Moreover, as the penalty level goes larger, the bias of the estimates becomes larger and larger until a pure zeros matrix. This implies that, in NOTEARS, only increasing the value of the penalty coefficient cannot guarantee a consistent estimate, and extra procedures are necessary for obtaining a sparse solution.

To deal with this issue, NOTEARS utilizes a hard threshold to post-process the initial estimators. The fixed thresholding strategy is a direct method to cut down the false positive estimates caused by numerical error. On the other hand, the hard-threshold strategy is not a flexible and systematic way to reduce the number of false discoveries. Firstly, choosing a fixed and sub-optimal value as a threshold cannot adapt to different types of graphs. Secondly, although the amount of false positive edges is diminished via adopting a hard-thresholding strategy, small coefficients in the true model may also be deleted indiscriminately (see Section IV-B).

For NOTEARS, on the one hand, it cannot obtain a sparse solution without thresholding. On the other hand, threshold strategy causes inflexibility, and this is not a systematic way. Motivated by this, we develop a new DAG structure learning method based on the adaptive lasso, which abandons the fixed-threshold strategy and directly leads to a sparse output. As we will show, the main contributions are as follows.

-

1.

We propose a purely data-driven method that does not need a predefined threshold and then illustrate the specific algorithm.

-

2.

We study the oracle properties that our method possesses, including sparsity and asymptotic normality, which means that our method has the ability to select the right subset model and converges to the true model with an optimal rate when the sample size increases.

-

3.

We consider using a more sufficient and suitable validation set in cross-validation for our goal to find the optimal subset model.

-

4.

We demonstrate the effectiveness of our method through simulation experiments with weights generated from a broader range, e.g., Gaussian distributed weights with mean zero.

The rest of this paper is organized as follows: in Section II, we review some basic concepts of the DAG model and the specific algorithm of NOTEARS, as well as the limitation of its fixed-threshold strategy. Then, based on the idea of adaptive Lasso [39], we introduce our method and study its asymptotic behavior when the sample size goes to infinity in Section III. In Section IV, to verify our theoretical study and the effectiveness of our method, we compare our method with NOTEARS and existing state-of-the-art by simulated experiments. Finally, we conclude our work in Section V.

II Related works

In this section, we review the linear DAG model and the NOTEARS method. In section II-A, we first briefly review some fundamental concepts of the DAG model with linear structure assumption and the corresponding score function used to maximize the likelihood of observations. Then, we detail the NOTEARS method and its optimization algorithm in Section II-B.

II-A DAG with Linear Structure

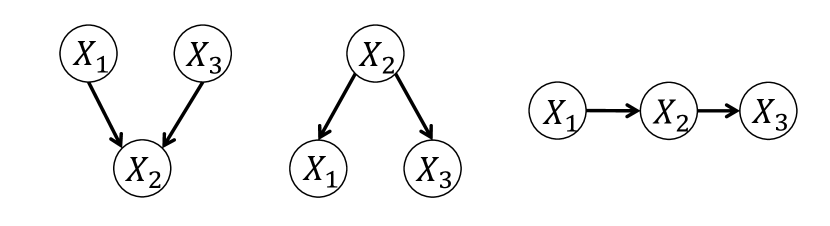

A DAG can be encoded as , where refers to the set of nodes and is the set of directed edges. The nodes in represent variables under consideration. And directed edges correspond to the direct conditional relationships between parental nodes and child nodes. For example, , represents is the parent of . Figure 2 illustrates three possible three-node DAGs with two edges.

The task of DAG structure learning is to seek a DAG from the DAGs space of nodes (discrete) that best fits the observational data . Let represent the observations consisting of -dimensional vectors. We assume that these vectors are realizations of identically independently distributed (iid) random vectors with joint distribution , where . Importantly, with no latent variable and Markovian assumptions [40], the joint distribution can be decomposed as follows:

| (1) |

where represents the parental set of . Then, we model the relationships of variables in by a structure equation model (SEM), denoted as , where refers to the uncovered variables. In this paper, we narrow our focus on the DAG with additive noise models (ANMs). By introducing the concept of weighted adjacency matrix , we can establish the linear ANMs as , where is a -dimensional random vector, denoted as . Each represents the noise term added to . In this paper, we assume are independent and identically distributed. The information about the structure of a DAG is completely involved in , which is defined in the following way:

| (2) |

We note as the graph induced by . To derive , we can maximize the likelihood of observations . Benefiting from the well-known probability density transformation method [35], this target can be equally achieved by minimizing the least-square loss function over the search space. A regularization term is added to deduce a sparse solution. Then, the overall score function is

| (3) |

II-B DAG with no NOTEARS

Previous score-based methods suffer from searching the discrete DAGs space and lead to low efficiency. To overcome this problem, Zheng et al.[1] proposed the NOTEARS method, which seeks to use a function satisfying these four desiderata: i) is a necessary and sufficient condition for to be a DAG; ii) its value reflects the level of acyclicity of the graph; iii) smooth; iv) its first derivative exists and is easy to compute. Based on the method in [41], which starts to use the matrix algebra to investigate the number of circles in a graph, NOTEARS verifies that is a proper function to characterize the acyclicity with the derivative . After determining the smooth equality constraint, the problem can be written as the following optimization program:

| (4) | ||||

| subject to |

In order to solve the program (4), this constrained optimization program is converted to a sequence of unconstrained problems by the augmented Lagrangian method [42]. The procedure is shown as follows.

| (5) | ||||

For each iteration, the first step can be solved by the L-BFGS proximal quasi-Newton method [43, 44] with the derivative as To overcome the problem caused by the non-smooth point zero in the penalty term, i.e., , the original weighted adjacency matrix is cast into the difference between two matrices with non-negative elements [45].

Remark After obtaining the initial estimate, NOTEARS zeros out small coefficients if their absolute values are less than the predefined threshold to reduce the number of false positive discovery edges. However, this strategy leads to more missing edges with small weights and is hard to adapt to different scenarios. For example, if the weights of a DAG are generated from a uniform distribution over , by setting , the estimates of small weights, which are located in the interval , have a high risk to be forced to in the post-processing step. Since the simulation experiments in previous work commonly set a gap to zero, for instance, in the simulation part of [1], the weights of edges commonly sampled uniformly from , where is a weight scale, this side effect is not obvious. The specific algorithm of NOTEARS with a fixed predefined threshold is presented in Algorithm 1.

III The proposed method

In this section, we extend the NOTEARS method with adaptive Lasso to achieve learning the sparse DAG structure from purely observational data and name our method as NOTEARS with adaptive Lasso (NOTEARS-AL). We first introduce the statistical tool we use in our method, named adaptive lasso, in Section III-A. After detailing our method in Section III-B, we investigate the asymptotic behavior of NOTEARS-AL theoretically in Section III-C. We then develop an algorithm to implement this method in Section III-D, and illustrate how we deal with our model’s hyper-parameters in Section III-E. Finally, We introduce how to extend NOTEARS to generalized linear models in Section III-F.

III-A Adaptive Lasso

In recent decades, variable selection has been studied extensively. In particular, Lasso [46] was introduced to improve the prediction accuracy and, simultaneously, reduce overfitting. In the linear model, the lasso estimates are obtained by optimizing the following program:

| (6) |

where is related to the sample size . Although Lasso variable selection has been shown to be consistent under some conditions [47], Zou[48] illustrated certain scenarios where the lasso is an inconsistent procedure in terms of variable selection. Zou[48] argued that the setup in lasso is somewhat unfair since it treats all the coefficients with indistinctive penalty levels in the sparsity term. To fix this problem, Zou[48] proposed a new methodology called adaptive lasso. In adaptive lasso, the weights of penalty levels are totally data-driven and cleverly chosen. The adaptive lasso estimates are obtained by optimizing the following program:

| (7) |

where is a predefined penalty weight. For example, we can use the ordinary least square estimates to define . Choose a , and define the penalty weight , where commonly be , , or determined by the cross-validation method.

III-B The Overall Learning Objective

From Figure 1, we can find that when the penalty level is relatively low, the learned false edges cannot be zeroed out. On the other hand, assigning a larger weight to the regularization term may lead to even worse results. This implies that Lasso put much more penalty on large coefficients rather than false positive ones, which is not consistent with our requirement. Motivated by this observation, to improve the criterion (4) on variable selection, one intuition is to apply the adaptive penalty levels to different coefficients, which has been developed in [39]. Following this motivation, we modify Eq. (4) to

| (8) | ||||

| subject to |

where is the specified penalty for . Expecting the minor false positive edges can be shrunk to zeros and reserve the true edges at the same time, we would like the corresponding to be large for the former and small for the latter. Hence, the minor false edges are heavily penalized, and the true positive edges are lightly penalized. More details about how to choose are given in Section III-C. With this modification of the score function, no extra predefined threshold is needed to post-processing the initial estimate anymore.

III-C Asymptotic Oracle Properties

In this section, we show that with a proper choice of and under some mild conditions, our method possesses the oracle properties when the sample number goes to infinity. Similar to procedure (5), via the augmented Lagrangian method, a dual ascent process can solve the ECP (8). The first step of each iteration can be regarded as finding the local minimum of the Lagrangian with a fixed Lagrange multiplier , that is,

| (9) | ||||

Let denote the underlying true adjacency matrix. And then, we define

which means that collects the indices for terms whose true parameters are nonzero, and contains the indices for terms that do not exist in the underlying true model. Here, we first make two conditions:

-

1.

, where are identically independent distributed with mean and variance .

-

2.

is finite and converges to a positive definite matrix .

Under these two conditions, the oracle properties of NOTEARS-AL are described in the following theorems. Rigorous proofs are given in Appendix VI.

Theorem 1 (Local Minimizer) Under above two conditions, let , where refer to the ’s associated with nonzero coefficients in , if for some , then there exists a local minimizer of , which is -consistent to . Let denotes the local minimizer in Theorem 1, which satisfies . Consistency is a nice start, and this motivates us to study more on its asymptotic properties as sample size goes to infinity. Before introducing the oracle properties, we need to define more notations because now we want to analyze the estimators of nonzero parameters in the underlying true model. We define

In condition 1), we already assume as . Furthermore, without loss of generality, we assume . Let

where is a matrix corresponding to the coefficients with index in .

Theorem 2 (Oracle Properties) Under the two conditions, let , where refer to the ’s associated with zero coefficients in , if as , then the local minimizer in Theorem 1 must satisfy the following:

-

•

(Sparsity)

-

•

(Asymptotic normality) For , as .

Firstly, for the zero coefficients part, Theorem 2 shows that given for some and , NOTEARS-AL can consistently remove all irrelevant variables with probability tending to 1. Secondly, by magnifying the difference by for the nonzero estimators, we can see that the pattern turns out to be a normal distribution. Then, there is only one question left. That is how to determine ’s such that the nonzero related part for some and zero related part . Here, using the fact that ordinary least square (OLS) estimates are -consistent estimates of (the process of proof is consistent with Theorem1), suppose that and , then, we can define ’s by with some specific power so that the above two requirements are met. Besides, specific details to determine are given in III-E.

| (10) | ||||

| subject to |

III-D NOTEARS with Adaptive Lasso

The algorithm can be divided into two parts. The first part is to find the solution for Eq. (10), and the second part is plugging in the adaptive penalty parameters to Eq. (9) and solving it. These equality-constrained programs (ECPs) are then solved by the augmented Lagrangian method, i.e., using the Lagrangian method to handle the hard DAG constraint . The first part follows the same procedure as the original NOTEARS without the thresholding step. And then, we obtain a matrix with element . Let , where is the Hadamard product. Thus, we have . In matrix notation, it is , where refers to Hadamard division. Then, ECP (4) can be transformed as

| (11) | ||||

where is the final estimate for . Then, similar to (5), via the augmented Lagrangian method, the above ECP (11) can be solved by a dual ascent process.

| (12) | ||||

To complete the above optimization, we need to derive a new form of the gradient for the first step in Eq. (12). Here we also reformulate the original as the difference between two matrices with positive elements. For the Loss function:

For the acyclicity term :

To sum up, the algorithm proceeds as Algorithm 2.

III-E Hyper-parameter

Choosing the tuning parameter is an important issue. We found that the results of NOTEARS-based methods are sensitive to the value of hyper-parameter, thus it is essential to choose a good one. Our goal is to choose the optimal model which contains the true variables as many as possible. Here, following Zou [48], we utilize cross-validation to find an optimal . From NOTEARS-AL, we can obtain a set of candidate models , where . The cross-validation procedure we use shows as Algorithm 3.

Another key modification is that we need a more sufficient validation set to find the best model to achieve the restricted model-selection consistency, especially when the candidate model set is large[49]. Motivated by this, instead of using the traditional -fold cross-validation method, whose validation set is only of data, we suggest swapping the proportions of the validation set and training set.

III-F Extension to Generalized Linear Models

Zheng et al.[27] also extend the idea of NOTEARS to generalized linear models (GLMs). Traditionally, a GLM is formulated as follows:

| (13) |

where is a link function used in GLMs, and . To extend the DAG constraint beyond the linear setting, the key point is to find a sensible way to redefine the weighted adjacency matrix since there is no explicit form of in the GLMs setting. Based on the idea in [50], which encodes the importance of each variable considered via utilizing corresponding partial derivatives, it is then easy to show that the dependence among variables can be precisely measured by the -norm of corresponding partial derivatives [27]. This implies the weighted adjacency matrix in GLMs can be defined as follows:

| (14) |

Thus, for linear mean functions, i.e. , is equivalent to . We can simplify the DAG constraint by plugging in . To construct the score function for GLMs, we need a more suitable loss function here. In this paper, we focus on one specific model in GLMs, the logistic regression model, where . For logistic regression, the least-square loss is not appropriate anymore since it will result in a non-convex optimal function. Here we introduce the concept named Log Loss, which is commonly used as the loss function for logistic regression, defined as follows:

| (15) |

where .

IV Experiments

We conduct experiments with a large sample size to verify our asymptotic oracle properties (Section III-C). To validate the effectiveness of our proposed method, we compare it against NOTEARS with fixed threshold, greedy equivalent search (GES) [19, 20] and the PC algorithm [4]. For NOTEARS, the value of the threshold is , and set the penalty level in our experiments. This setting can not only promise a DAG result but also remain a relatively good performance.

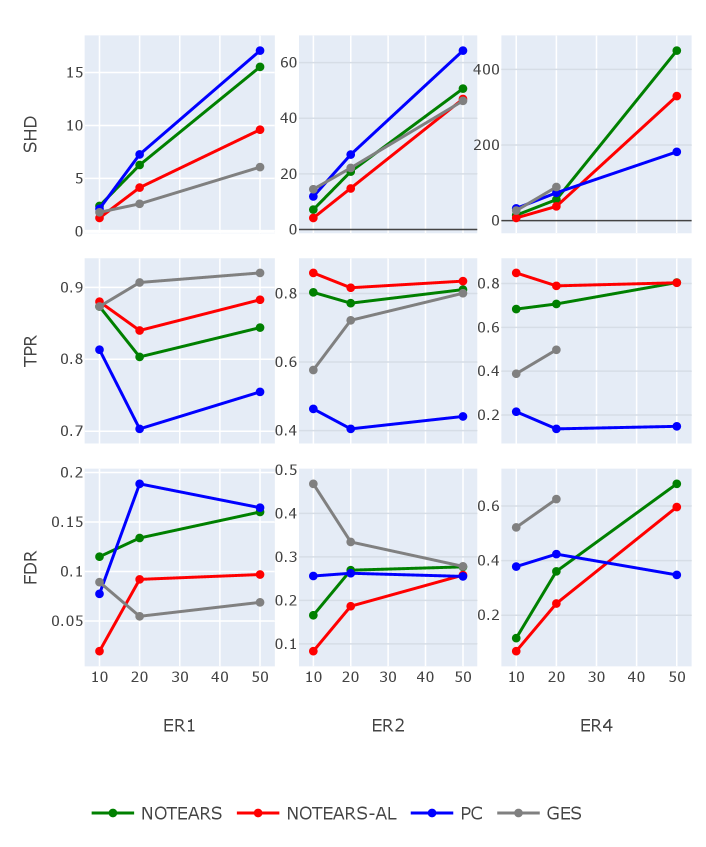

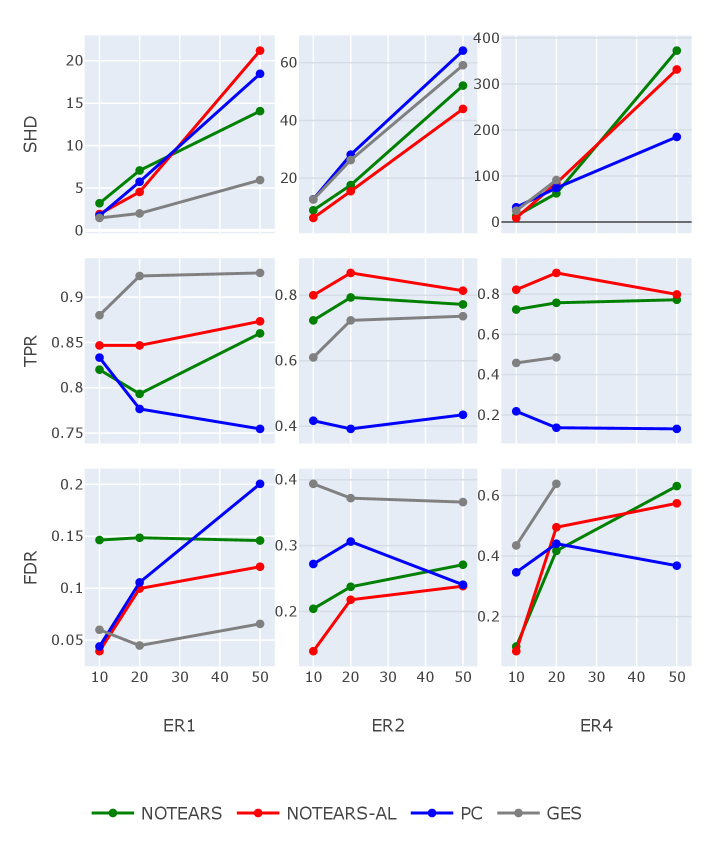

The basic setup of our experiments is as follows. For each experiment, the ground-truth DAG is generated from the random graph model: Erdös-Rényi (ER). We use ER to denote an ER graph with edges and similar meanings for ER and ER. Based on the ground truth DAG, we generate the weights of existing edges from ) a normal distribution with mean and variance ; 2) a uniform distribution over the interval , where . And then, we simulate the sample via , and the noise . Here, we mainly consider the linear case and extend to one type of GLMs named the logistic regression model. The metrics we use to evaluate the estimated DAGs include structural Hamming distance (SHD), true positive rate (TPR), and false discovery rate (FDR).

IV-A Numerical Results: Gaussian Weights

Compared to the original weight-generating interval of NOTEARS, which is Uniform , there are two points we consider to improve in our method. The first one is about the upper and lower bound, which are set to be . We expect our method can extend NOTEARS to a wider range with a better estimate. The second one is the coefficients near , which cannot be learned by the fixed-thresholding post-processing step. For the above two improvement points, we first perform the structure learning study in Gaussian-weight cases, where the weights of existing edges are sampled from a normal distribution with mean and variance . In this way, we can investigate the performance of NOTEARS-AL under a wider range of coefficients.

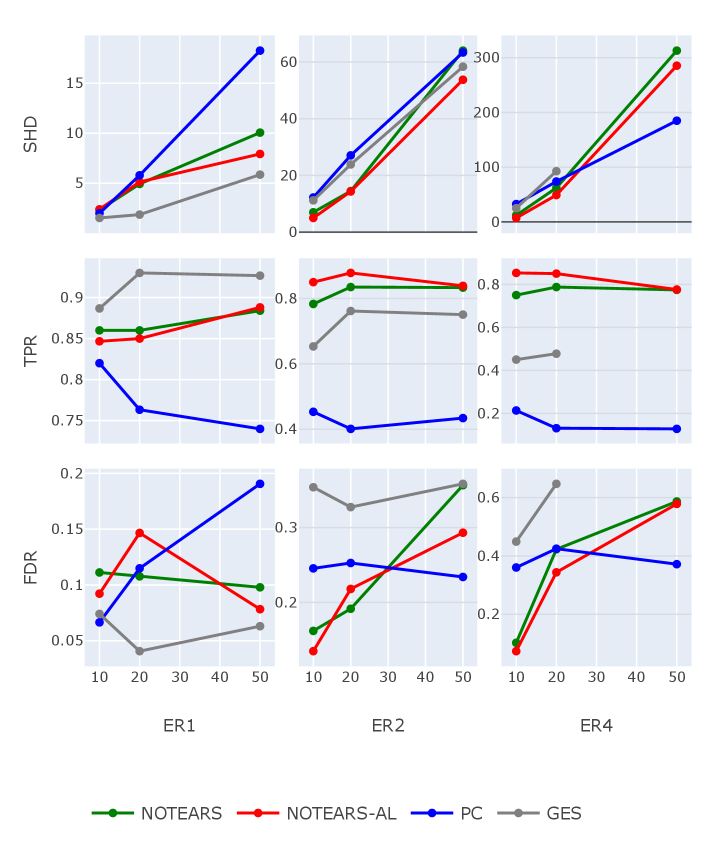

In our experiments, we generate {ER, ER, ER} graphs with different numbers of nodes , graphs for each type. And then, we simulate samples with iid Gaussian noises. The results are shown in Figure 3. For better visualization, only the average values of metrics are reported. Consistent with previous work GES, it shows a significant advantage in the ER case, no matter which metric we evaluate. But the performances of GES deteriorate rapidly when the level of sparsity decreases. Also, as we can see from Figure 3, for ER with nodes, the algorithm of GES takes too long to obtain the results because of the large searching space and low searching efficiency. Not surprisingly, the performance of NOTEARS and NOTEARS-AL show a positive relationship. This is natural since NOTEARS-AL extracts the weights of penalty level from conventional NOTEARS, which means that the performance of NOTEARS-AL is also sensitive to the results of the conventional one. Nonetheless, our approach performs uniformly better than NOTEARS across different graphs in terms of all metrics.

Figure 4 and Figure 5 show structure recovery results for SEM with exponential noise and Gumbel noise, respectively, where the weights of existing edges are sampled from a normal distribution with mean and variance .

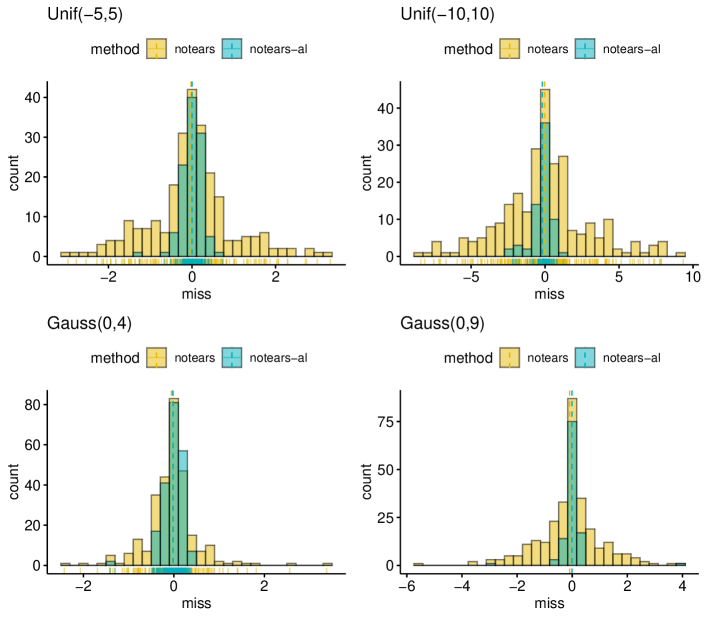

IV-B Coefficients in the Neighborhood of

As we mentioned, one side effect of the fixed-threshold strategy is that small coefficients in the true model may be deleted indiscriminately. Since the simulation experiments in previous work commonly set a gap to zero, which is , this side effect is not obvious. Hence, we investigate the value of missing edges when weights are generated from a range that covers zero, e.g., Uniform , Uniform , Gaussian and Gaussian . For each kind of distribution, we generated DAG structures from the ER model with nodes and then simulated samples. Based on the different methodologies of these two methods, one with a hard threshold of and the other with adaptive penalty levels, our method is more flexible and suitable to handle the coefficients around zero. Indeed, Figure 6 confirms this intuition. We plot the histogram of missing edges. In all three cases, our method (blue bar) loses fewer edges than NOTEARS with hard thresholding (yellow bar), especially the area around zero. Moreover, as the range becomes wider or the variance increases, the gap between these two methods becomes more and more significant.

IV-C High-dimension Cases

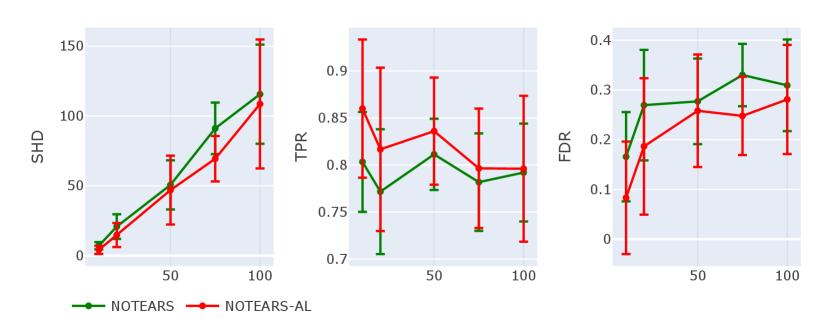

To investigate the performance of our method for estimating DAG from high-dimensional graphical models, we increase the number of nodes to and compare the results to those of fixed-thresholding NOTEARS. Here, to illustrate the validity of the evaluation criteria, we draw error bars with sample standard deviations. The results are shown in Figure 7. We can find that under nearly the same value of SHD, the true positive rate of NOTEARS-AL is significantly higher than that of NOTEARS with a fixed threshold. Besides, in terms of FDR (lower is better), NOTEARS-AL has lower values for all graphs. In general, NOTEARS-AL consistently outperforms the original fixed-threshold one, even in high-dimensional settings.

IV-D Generalized Linear Models

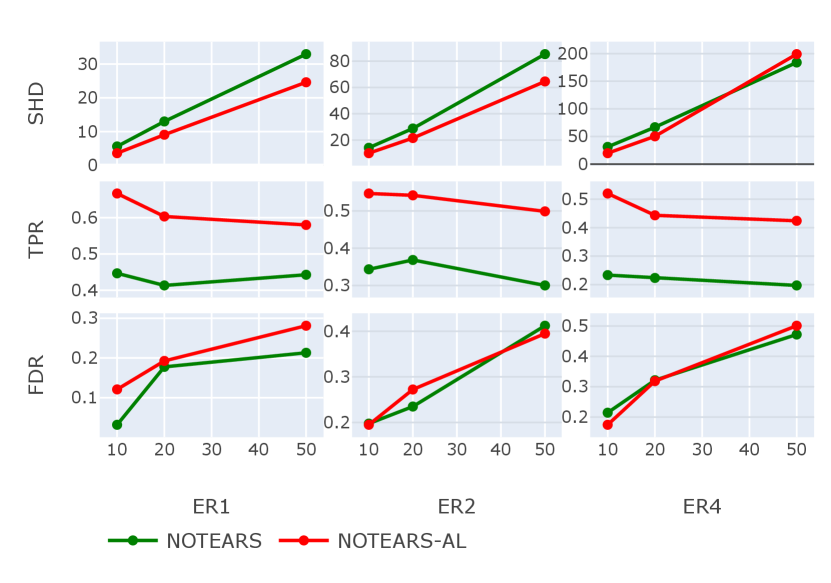

For GLMs, we concentrate on logistic regression for dichotomous data , with link function . As we introduced in Section III-F, for linear mean functions, the elements of in DAG constraint can be substituted by ’s, the coefficients in linear mean functions. We compare the performance of fixed-thresholding NOTEARS to NOTEARS-AL with edge weights following Gaussian .

Figure 8 shows the structure recovery results for . By comparison, We can find the TPR of NOTEARS-AL is significantly higher than that of NOTEARS with a fixed threshold. At the same time, in terms of the other two metrics, i.e., SHD and FDR, both methods keep at a similar level. In general, NOTEARS-AL consistently outperforms the original NOTEARS.

IV-E Real Data

Finally, consistent with Zheng et al. [1], we compare our method to NOTEARS on the same real dataset, which records the expression levels of phosphoproteins and phospholipids in human cells. This dataset is commonly used in the literature on Bayesian network structure inference as it provides a network that is widely accepted by the biological community. Based on cell types and observational samples, the ground truth structure given in [5] contains edges. In our experiments, NOTEARS with threshold estimates edges with an SHD of , compared to estimates for NOTEARS-AL with an SHD of .

V Conclusion

Based on the methodology of NOTEARS, we present a method for score-based learning of DAG models that do not need a hard thresholding post-processing step. The critical technical design is to use an adaptive penalty level for each edge rather than a common one. Notably, we prove that our method enjoys the oracle properties when the adaptive penalties satisfy some mild conditions. With a suitable choice of hyper-parameter in the second step, where we apply a modified -fold cross-validation to handle, our method can zero out edges automatically in each iteration. Our simulation experiments show the effectiveness of our method, and it is worth emphasizing that the adaptive strategy in our method is generally more flexible than the hard-threshold strategy since it is a purely data-driven procedure, which enables our method to own the ability to adapt to different types of graphs.

References

- [1] X. Zheng, B. Aragam, P. K. Ravikumar, and E. P. Xing, “Dags with no tears: Continuous optimization for structure learning,” Advances in Neural Information Processing Systems, vol. 31, 2018.

- [2] J. Pearl, Probabilistic reasoning in intelligent systems: networks of plausible inference. Morgan kaufmann, 1988.

- [3] D. Heckerman, “A tutorial on learning with bayesian networks,” Innovations in Bayesian networks, pp. 33–82, 2008.

- [4] P. Spirtes, C. N. Glymour, R. Scheines, and D. Heckerman, Causation, prediction, and search. MIT press, 2000.

- [5] K. Sachs, O. Perez, D. Pe’er, D. A. Lauffenburger, and G. P. Nolan, “Causal protein-signaling networks derived from multiparameter single-cell data,” Science, vol. 308, no. 5721, pp. 523–529, 2005.

- [6] P. J. Lucas, L. C. Van der Gaag, and A. Abu-Hanna, “Bayesian networks in biomedicine and health-care,” Artificial intelligence in medicine, vol. 30, no. 3, pp. 201–214, 2004.

- [7] R. Ganian and V. Korchemna, “The complexity of bayesian network learning: Revisiting the superstructure,” Advances in Neural Information Processing Systems, vol. 34, pp. 430–442, 2021.

- [8] M. Chickering, D. Heckerman, and C. Meek, “Large-sample learning of bayesian networks is np-hard,” Journal of Machine Learning Research, vol. 5, pp. 1287–1330, 2004.

- [9] D. M. Chickering, “Learning bayesian networks is np-complete,” in Learning from data. Springer, 1996, pp. 121–130.

- [10] P. Spirtes, C. Glymour, and R. Scheines, “Causality from probability,” 1989.

- [11] P. Spirtes and C. Glymour, “An algorithm for fast recovery of sparse causal graphs,” Social science computer review, vol. 9, no. 1, pp. 62–72, 1991.

- [12] D. Margaritis and S. Thrun, “Bayesian network induction via local neighborhoods,” Advances in neural information processing systems, vol. 12, 1999.

- [13] J. Peters, D. Janzing, and B. Schölkopf, Elements of causal inference: foundations and learning algorithms. The MIT Press, 2017.

- [14] D. Geiger and D. Heckerman, “Learning gaussian networks,” in Uncertainty Proceedings 1994. Elsevier, 1994, pp. 235–243.

- [15] D. Heckerman, D. Geiger, and D. M. Chickering, “Learning bayesian networks: The combination of knowledge and statistical data,” Machine learning, vol. 20, no. 3, pp. 197–243, 1995.

- [16] R. R. Bouckaert, “Probabilistic network construction using the minimum description length principle,” in European conference on symbolic and quantitative approaches to reasoning and uncertainty. Springer, 1993, pp. 41–48.

- [17] M. Teyssier and D. Koller, “Ordering-based search: A simple and effective algorithm for learning bayesian networks,” arXiv preprint arXiv:1207.1429, 2012.

- [18] I. Tsamardinos, L. E. Brown, and C. F. Aliferis, “The max-min hill-climbing bayesian network structure learning algorithm,” Machine Learning, vol. 65, no. 1, pp. 31–78, 2006.

- [19] D. M. Chickering, “Optimal structure identification with greedy search,” Journal of machine learning research, vol. 3, no. Nov, pp. 507–554, 2002.

- [20] J. Ramsey, M. Glymour, R. Sanchez-Romero, and C. Glymour, “A million variables and more: the fast greedy equivalence search algorithm for learning high-dimensional graphical causal models, with an application to functional magnetic resonance images,” International journal of data science and analytics, vol. 3, no. 2, pp. 121–129, 2017.

- [21] I. Ng, Y. Zheng, J. Zhang, and K. Zhang, “Reliable causal discovery with improved exact search and weaker assumptions,” in Advances in Neural Information Processing Systems, vol. 34, 2021.

- [22] D. Kalainathan, O. Goudet, I. Guyon, D. Lopez-Paz, and M. Sebag, “Structural agnostic modeling: Adversarial learning of causal graphs,” arXiv preprint arXiv:1803.04929, 2018.

- [23] I. Ng, S. Zhu, Z. Fang, H. Li, Z. Chen, and J. Wang, “Masked gradient-based causal structure learning,” in SIAM International Conference on Data Mining, 2022, pp. 424–432.

- [24] Y. Yu, J. Chen, T. Gao, and M. Yu, “DAG-GNN: DAG structure learning with graph neural networks,” in International Conference on Machine Learning, 2019.

- [25] S. Lachapelle, P. Brouillard, T. Deleu, and S. Lacoste-Julien, “Gradient-based neural dag learning,” in International Conference on Learning Representations, 2020.

- [26] S. Zhu, I. Ng, and Z. Chen, “Causal discovery with reinforcement learning,” in International Conference on Learning Representations, 2020.

- [27] X. Zheng, C. Dan, B. Aragam, P. Ravikumar, and E. Xing, “Learning sparse nonparametric dags,” in International Conference on Artificial Intelligence and Statistics. PMLR, 2020, pp. 3414–3425.

- [28] R. Pamfil, N. Sriwattanaworachai, S. Desai, P. Pilgerstorfer, K. Georgatzis, P. Beaumont, and B. Aragam, “Dynotears: Structure learning from time-series data,” in International Conference on Artificial Intelligence and Statistics, 2020.

- [29] R. Bhattacharya, T. Nagarajan, D. Malinsky, and I. Shpitser, “Differentiable causal discovery under unmeasured confounding,” in International Conference on Artificial Intelligence and Statistics, 2021.

- [30] P. Brouillard, S. Lachapelle, A. Lacoste, S. Lacoste-Julien, and A. Drouin, “Differentiable causal discovery from interventional data,” in Advances in Neural Information Processing Systems, 2020.

- [31] I. Ng and K. Zhang, “Towards federated bayesian network structure learning with continuous optimization,” in International Conference on Artificial Intelligence and Statistics, 2022.

- [32] E. Gao, J. Chen, L. Shen, T. Liu, M. Gong, and H. Bondell, “FedDAG: Federated DAG structure learning,” Transactions on Machine Learning Research, 2022.

- [33] T. Geffner, J. Antoran, A. Foster, W. Gong, C. Ma, E. Kiciman, A. Sharma, A. Lamb, M. Kukla, N. Pawlowski et al., “Deep end-to-end causal inference,” arXiv preprint arXiv:2202.02195, 2022.

- [34] E. Gao, I. Ng, M. Gong, L. Shen, W. Huang, T. Liu, K. Zhang, and H. Bondell, “MissDAG: Causal discovery in the presence of missing data with continuous additive noise models,” in Advances in Neural Information Processing Systems, 2022.

- [35] I. Ng, A. Ghassami, and K. Zhang, “On the role of sparsity and dag constraints for learning linear dags,” Advances in Neural Information Processing Systems, vol. 33, pp. 17 943–17 954, 2020.

- [36] D. Wei, T. Gao, and Y. Yu, “Dags with no fears: A closer look at continuous optimization for learning bayesian networks,” in Advances in Neural Information Processing Systems, 2020.

- [37] Z. Zhang, I. Ng, D. Gong, Y. Liu, E. M. Abbasnejad, M. Gong, K. Zhang, and J. Q. Shi, “Truncated matrix power iteration for differentiable DAG learning,” in Advances in Neural Information Processing Systems, 2022.

- [38] I. Ng, S. Lachapelle, N. R. Ke, S. Lacoste-Julien, and K. Zhang, “On the convergence of continuous constrained optimization for structure learning,” in International Conference on Artificial Intelligence and Statistics. PMLR, 2022, pp. 8176–8198.

- [39] H. Zou, “The adaptive lasso and its oracle properties,” Journal of the American statistical association, vol. 101, no. 476, pp. 1418–1429, 2006.

- [40] P. Spirtes, C. Glymour, R. Scheines et al., Causation, Prediction, and Search. The MIT Press, 2001, vol. 1.

- [41] F. Harary and B. Manvel, “On the number of cycles in a graph,” Matematickỳ časopis, vol. 21, no. 1, pp. 55–63, 1971.

- [42] D. P. Bertsekas, “Nonlinear programming,” Journal of the Operational Research Society, vol. 48, no. 3, pp. 334–334, 1997.

- [43] R. H. Byrd, P. Lu, J. Nocedal, and C. Zhu, “A limited memory algorithm for bound constrained optimization,” SIAM Journal on scientific computing, vol. 16, no. 5, pp. 1190–1208, 1995.

- [44] K. Zhong, I. E.-H. Yen, I. S. Dhillon, and P. K. Ravikumar, “Proximal quasi-newton for computationally intensive l1-regularized m-estimators,” Advances in Neural Information Processing Systems, vol. 27, 2014.

- [45] X. Zheng, C. Dan, B. Aragam, P. Ravikumar, and E. P. Xing, “Learning sparse nonparametric dags,” 2019. [Online]. Available: https://arxiv.org/abs/1909.13189

- [46] R. Tibshirani, “Regression shrinkage and selection via the lasso,” Journal of the Royal Statistical Society: Series B (Methodological), vol. 58, no. 1, pp. 267–288, 1996.

- [47] N. Meinshausen and P. Bühlmann, “Variable selection and high-dimensional graphs with the lasso,” Ann Stat, vol. 34, pp. 1436–1462, 2006.

- [48] H. Zou, “The adaptive lasso and its oracle properties,” Journal of the American Statistical Association, vol. 101, no. 476, pp. 1418–1429, 2006. [Online]. Available: http://www.jstor.org/stable/27639762

- [49] Y. Feng and Y. Yu, “The restricted consistency property of leave--out cross-validation for high-dimensional variable selection,” 2013. [Online]. Available: https://arxiv.org/abs/1308.5390

- [50] L. A. Rosasco, S. Villa, S. Mosci, M. Santoro, and A. Verri, “Nonparametric sparsity and regularization,” 2013.

- [51] N. H. Choi, W. Li, and J. Zhu, “Variable selection with the strong heredity constraint and its oracle property,” Journal of the American Statistical Association, vol. 105, no. 489, pp. 354–364, 2010.

- [52] A. W. Van der Vaart, Asymptotic statistics. Cambridge university press, 2000, vol. 3.

VI Proof of Theorems

VI-A Proof of Theorem 1

Let , , , , where is a constant.

where .

Let , , we can derive

where we define

For ,

For ,

For ,

where , and .

For , since , , we can derive

.

Thus,

We can find when and , \@slowromancapii@ dominates \@slowromancapi@ and \@slowromancapiii@, then, .

Thus, we can conclude that there exists a local minimizer of with optimal convergence rate [51].

VI-B Proof of Theorem 2

Firstly, for sparsity property, it is sufficient to prove ,

| (16) |

with probability tending to 1 where .

Then,

where we can find

Let . Therefore, for , if , then, the sign of is dominated by .

Secondly, for asymptotic normality property, we concentrate on set , , and use to denote the sub adjacency matrix of graphs, to denote the sub observation data of corresponding variables, and to denote the corresponding adaptive penalty weights.

Denote

Then,

By Taylor expansion at , we have

Therefore, now we have

From Theorem 1, we have , , and , then,

Therefore, under condition 2, by Lindeberg-Feller central limit theorem in linear regression case, as our design satisfies the following mild condition[52]