On the training of sparse and dense deep neural networks: less parameters, same performance.

Abstract

Deep neural networks can be trained in reciprocal space, by acting on the eigenvalues and eigenvectors of suitable transfer operators in direct space. Adjusting the eigenvalues, while freezing the eigenvectors, yields a substantial compression of the parameter space. This latter scales by definition with the number of computing neurons. The classification scores, as measured by the displayed accuracy, are however inferior to those attained when the learning is carried in direct space, for an identical architecture and by employing the full set of trainable parameters (with a quadratic dependence on the size of neighbor layers). In this Letter, we propose a variant of the spectral learning method as appeared in Giambagli et al Nat. Comm. 2021, which leverages on two sets of eigenvalues, for each mapping between adjacent layers. The eigenvalues act as veritable knobs which can be freely tuned so as to (i) enhance, or alternatively silence, the contribution of the input nodes, (ii) modulate the excitability of the receiving nodes with a mechanism which we interpret as the artificial analogue of the homeostatic plasticity. The number of trainable parameters is still a linear function of the network size, but the performances of the trained device gets much closer to those obtained via conventional algorithms, these latter requiring however a considerably heavier computational cost. The residual gap between conventional and spectral trainings can be eventually filled by employing a suitable decomposition for the non trivial block of the eigenvectors matrix. Each spectral parameter reflects back on the whole set of inter-nodes weights, an attribute which we shall effectively exploit to yield sparse networks with stunning classification abilities, as compared to their homologues trained with conventional means.

Automated learning from data via deep neural networks bishop_pattern_2011 ; cover_elements_1991 ; hastie2009elements ; hundred is becoming popular in an ever-increasing number of applications sutton2018reinforcement ; graves2013speech ; sebe2005machine ; grigorescu2020survey . Systems can learn from data, by identifying distinctive features which form the basis of decision making, with minimal human intervention. The weights, which link adjacent nodes across feedforward architectures, follow the optimisation algorithm and store the information needed for the trained network to perform the assigned tasks with unprecedented fidelity chen2014big ; meyers2008using ; caponetti2011biologically . A radically new approach to the training of a deep neural network has been recently proposed which anchors the process to reciprocal space, rather than to the space of the nodes giambagli2021 . Reformulating the learning in reciprocal space enables one to shape key collective modes, the eigenvectors, which are implicated in the process of progressive embedding, from the input layer to the detection point. Even more interestingly, one can assume the eigenmodes of the inter-layer transfer operator to align along suitable random directions and identify the associated eigenvalues as target for the learning scheme. This results in a dramatic compression of the training parameters space, yielding accuracies which are superior to those attained with conventional methods restricted to operate with an identical number of tunable parameters. Nonetheless, neural networks trained in the space of nodes with no restrictions on the set of adjusted weights, achieve better classification scores, as compared to their spectral homologues with quenched eigendirections. In the former case, the number of free parameters grows as the product of the sizes of adjacent layer pairs, thus quadratically in terms of hosted neurons. In the latter, the number of free parameters increases linearly with the size of the layers (hence with the number of neurons), when the eigenvalues are solely allowed to change. Also training the eigenvectors amounts to dealing with a set of free parameters equivalent to that employed when the learning is carried out in direct space: in this case, the two methods yield performances which are therefore comparable.

Starting from this setting, we begin by discussing a straightforward generalisation of the spectral learning scheme presented in giambagli2021 , which proves however effective in securing a significant improvement on the recorded classification scores, while still optimising a number of parameters which scales linearly with the size of the network. The proposed generalisation paves the way to a biomimetic interpretation of the spectral training scheme. The eigenvalues can be tuned so as to magnify/damp the contribution associated to the input nodes. At the same time, they modulate the excitability of the receiving nodes, as a function of the local field. This latter effect is reminiscent of the homeostatic plasticity Surmeier2004 as displayed by living neurons. Further, we will show that the residual gap between conventional and spectral trainings methods can be eventually filled by resorting to apt decompositions of the non trivial block of the eigenvectors matrix, which place the emphasis on a limited set of collective variables. Finally, we will prove that working in reciprocal space turns out to be by far more performant, when aiming at training sparse neural networks. Because of the improvement in terms of computational load, and due to the advantage of operating with collective target variables as we will make clear in the following, it is surmised that modified spectral learning of the type here discussed should be considered as a viable standard for deep neural networks training in artificial intelligence applications.

To test the effectiveness of the proposed method we will consider classification tasks operated on three distinct database of images. The first is the celebrated MNIST database of handwritten digits lecun1998mnist , the second is Fashion-MNIST (F-MNIST), a dataset of Zalando’s article images, the third is CIFAR-10 a collection of images from different classes (airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks). In all considered cases, use can be made of a deep neural network to perform the sought classification, namely to automatically assign the image supplied as an input to the class it belongs to. The neural network is customarily trained via standard backpropagation algorithms to tune the weights that connect consecutive stacks of the multi-layered architecture. The assigned weights, target of the optimisation procedure, bear the information needed to allocate the examined images to their reference category.

Consider a deep feedforward network made of distinct layers and label each layer with the progressive index . Denote by the number of computing units, the neurons, that belong to layer . The total number of parameters that one seeks to optimise in a dense neural network setting (all neurons of any given layer with are linked to every neurons of the adjacent layer) equals , when omitting additional bias. As we shall prove in the following, impressive performance can be also achieved by pursuing a markedly different procedure, which requires acting on just free parameters (not including bias). To this end, let us begin by reviewing the essence of spectral learning method as set forth in giambagli2021 .

Introduce and create a column vector , of size , whose first entries are the intensities (from the top-left to the bottom-right, moving horizontally) as displayed on the pixels of the input image. All other entries of are set to zero. The ultimate goal is to transform into an output vector , of size , whose last elements reflect the intensities of the output nodes where reading takes eventually place. This is achieved with a nested sequence of linear transformations, as exemplified in the following. Consider the generic vector , with , as obtained after compositions of the above procedure. This latter vector undergoes a linear transformation to yield . Further, is processed via a suitably defined non-linear function, denoted by , where stands for an optional bias. Focus now on , a matrix with a rather specific structure, as we will highlight hereafter. Posit , by invoking a spectral decomposition. is the diagonal matrix of the eigenvalues of . By construction we impose, for and . The remaining elements are initially set to random numbers, e.g. extracted from a uniform distribution, and define the target of the learning scheme 111The only noticeable exception is when . In this case, the first diagonal elements of take part to the training.. Back to the spectral decomposition of , is assumed to be the identity matrix , with the inclusion of a sub-diagonal rectangular block (k) of size . This choice corresponds to dealing with a feedforward arrangements of nested layers. A straightforward calculation returns , which readily yields . In the simplest setting that we shall inspect in the following, the off-diagonal elements of matrix are frozen to nominal values, selected at random from a given distribution. In this minimal version, the spectral decomposition of the transfer operators enables one to isolate a total of adjustable parameters, the full collection of non trivial eigenvalues, which can be self-consistently trained. To implement the learning scheme on these premises, we consider , the image on the output layer of the input vector :

| (1) |

and calculate where stands for the softmax operation. We then introduce the categorical cross-entropy loss function where the quantity identifies the label attached to reflecting the category to which it belongs via one-hot encoding aggarwal2018NNdeeplearn . More specifically, the -th elements of vector is equal to unit (the other entries being identically equal to zero) if the image supplied as an input is associated to the class of items grouped under label .

The loss function can be minimized by acting on a limited set of free parameters, the collection of non trivial eigenvalues of matrices (i.e. eigenvalues of , eigenvalues of ,…, eigenvalues of ). In principle, the sub-diagonal blocks (k) (the non orthogonal entries of the basis that diagonalises ) can be optimised in parallel, but this choice nullifies the gain in terms of parameters containment, as achieved via spectral decomposition, when the eigenvalues get solely modulated. The remaining part of this Letter is entirely devoted to overcoming this limitation, while securing the decisive enhancement of the neural network’s performance.

The first idea, as effective as it is simple, is to extend the set of trainable eigenvalues. When mapping layer into layer , we can in principle act on eigenvalues, without restricting the training to the elements, which were identified as the sole target of the spectral method in its original conception (except for the first mapping, from the input layer to its adjacent counterpart). As we shall clarify in the following, the eigenvalues can be trained twice, depending on whether they originate from incoming or outcoming nodes, along the successive arrangement of nested layers. The global number of trainable parameters is hence , as anticipated above. A straightforward calculation, carried out in the annexed Supplementary Information, returns a closed analytical expression for , the weights of the edges linking nodes and in direct space, as a function of the underlying spectral quantities. In formulae:

| (2) |

where and , with and . More specifically, runs on the nodes at the departure layer (), whereas identifies those sitting at destination (layer ). In the above expression, stand for the first eigenvalues of . The remaining eigenvalues are labelled . To help comprehension denote by the activity on nodes . Then,

| (3) |

The eigenvalues modulate the density at the origin, while appears to regulate the local node’s excitability relative to the network activity in its neighbourhood. This is the artificial analogue of the homeostatic plasticity, the strategy implemented by living neurons to maintain the synaptic basis for learning, respiration, and locomotion Surmeier2004 .

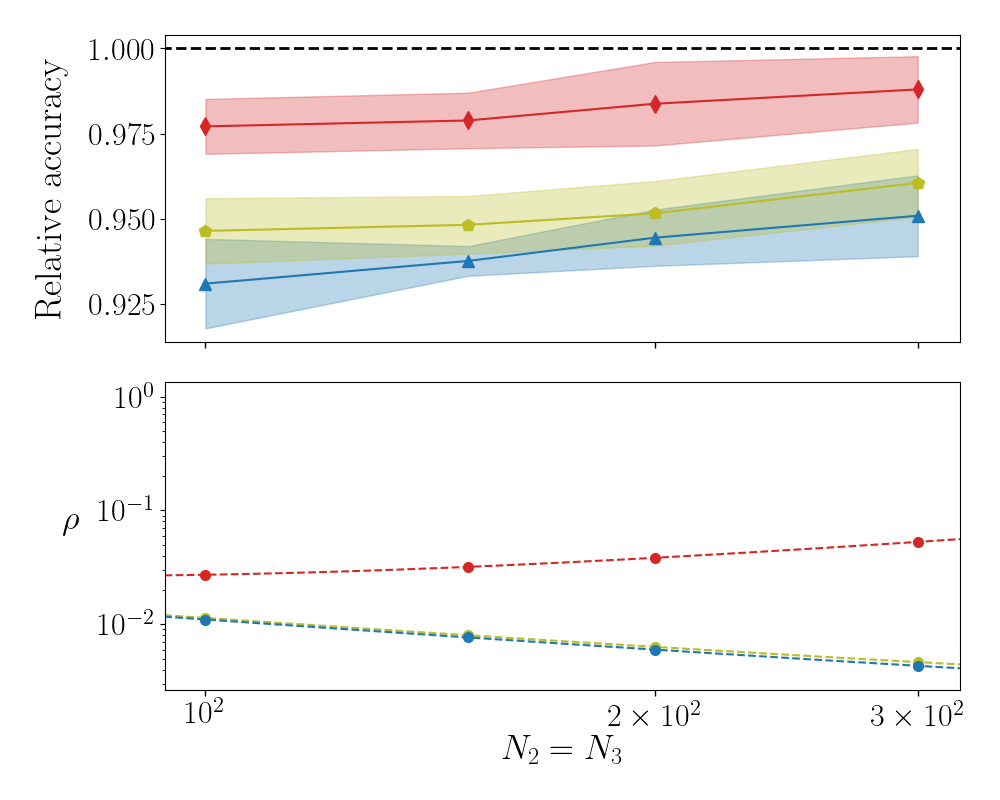

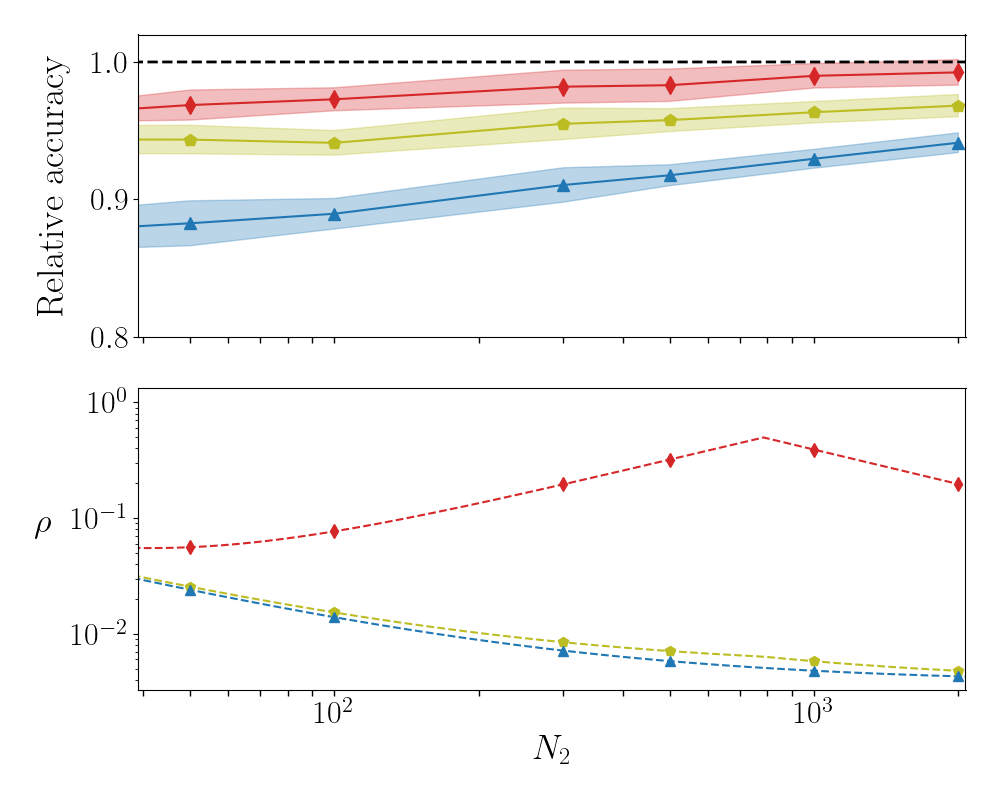

To illustrate the effectiveness of the proposed methodology we make reference to Fig. 1, which summarises a first set of results obtained for MNIST. To keep the analysis as simple as possible we have here chosen to deal with . The sizes of the input () and output () layers are set by the specificity of the considered dataset. Conversely, the size of the intermediate layer () can be changed at will. We then monitor the relative accuracy, i.e. the accuracy displayed by the deep neural networks trained according to different strategies, normalised to the accuracy achieved with an identical network trained with conventional methods. In the upper panel of Fig. 1, the performance of the neural networks trained via the modified spectral strategy (referred to as to Spectral) is displayed in blue (triangles). The recorded accuracy is satisfactory (about 90% of that obtained with usual means and few percent more than that obtained with the spectral method of original conception giambagli2021 ), despite the modest number of trained parameters. To exemplify this, in the bottom panel of Fig. 1 we plot the relative ratio of the number of tuned parameters (Spectral) vs. conventional one) against (blue triangles) : the reduction in the number of parameters as follows the modified spectral method is staggering. Working with the other employed dataset, respectively F-MNIST and CIFAR-10, yields analogous conclusions (see annexed Supplementary Information).

One further improvement can be achieved by replacing (k) with its equivalent singular value decomposition (SVD), a factorization that generalizes the eigendecomposition to rectangular (in this framework, ) matrices (see Gabri__2019 for an application to neural networks). In formulae, this amounts to postulate (k) where and are, respectively, and real orthogonal matrices. On the contrary, is a rectangular diagonal matrix, with non-negative real numbers on the diagonal. The diagonal entries of are the singular values of k. The symbol , stands for the transpose operation. The learning scheme can be hence reformulated as follows. For each , generate two orthogonal random matrices and . These latter are not updated during the successive stages of the learning process. At variance, the non trivial elements of take active part to the optimisation process. For each , parameters can be thus modulated to optimize the information transfer, from layer to layer . Stated differently, free parameters adds up to the eigenvalues that get modulated under the original spectral approach. One can hence count on a larger set of parameters as compared to that made available via the spectral method, restricted to operate with the eigenvalues. Nonetheless, the total number of parameters scales still with the linear size of the deep neural network, and not quadratically, as for a standard training carried out in direct space. This addition (referred to as the S-SVD scheme) yields an increase of the recorded classification score, as compared to the setting where the Spectral method is solely employed, which is however not sufficient to fill the gap with conventional schemes (see Fig. 1). Similar scenarios are found for F-MNIST and CIFAR-10 (see Supplementary Information), with varying degree of improvement, which reflects the specificity of the considered dataset.

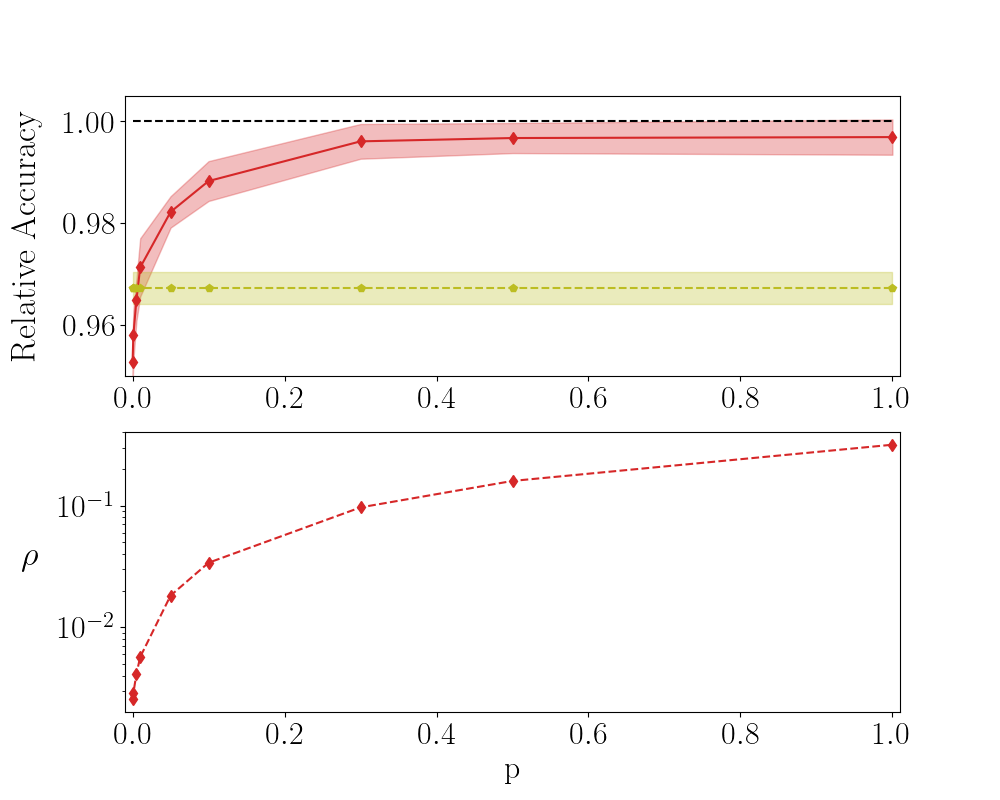

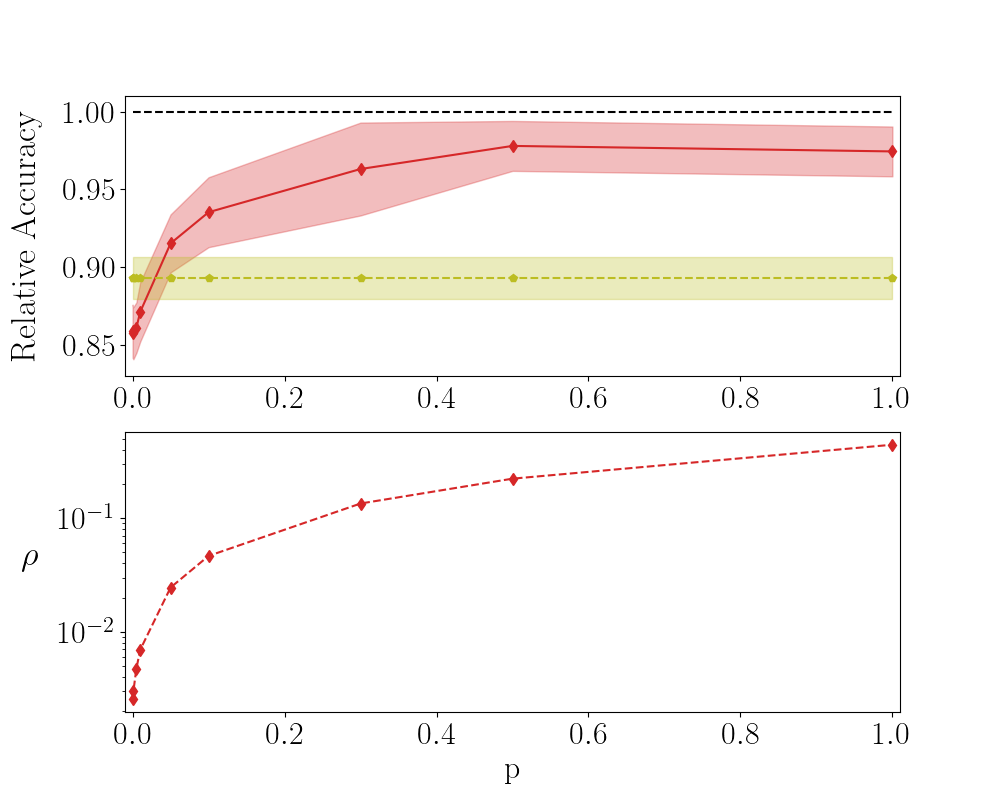

A decisive leap forward is however accomplished by employing a QR factorization of matrix (k). For , this corresponds to writing the matrix k as the product of an orthogonal matrix and an upper triangular matrix . Conversely, when , we factorize , in such a way that the square matrix has linear dimension . In both cases, matrix is randomly generated and stays frozen during gradient descent optimisation. The entries of the matrix can be adjusted so as to improve the classification ability of the trained network (this strategy of training, integrated to the Spectral method, is termed S-QR ). Results are depicted in Fig. 1 with (red) diamonds. The achieved performance is practically equivalent to that obtained with a conventional approach to learning. Also in this case , the gain in parameter reduction being noticeable when is substantially different (smaller or larger) than , for the case at hand. Interestingly enough, for a chief improvement of the performance, over the SVD reference case, it is sufficient to train a portion of the off diagonal elements of . In the Supplementary Information, we report the recorded accuracy against , the probability to train the entries that populate the non null triangular part of . The value of the accuracy attained with conventional strategies to the training is indeed approached, already at values of which are significantly different from unit.

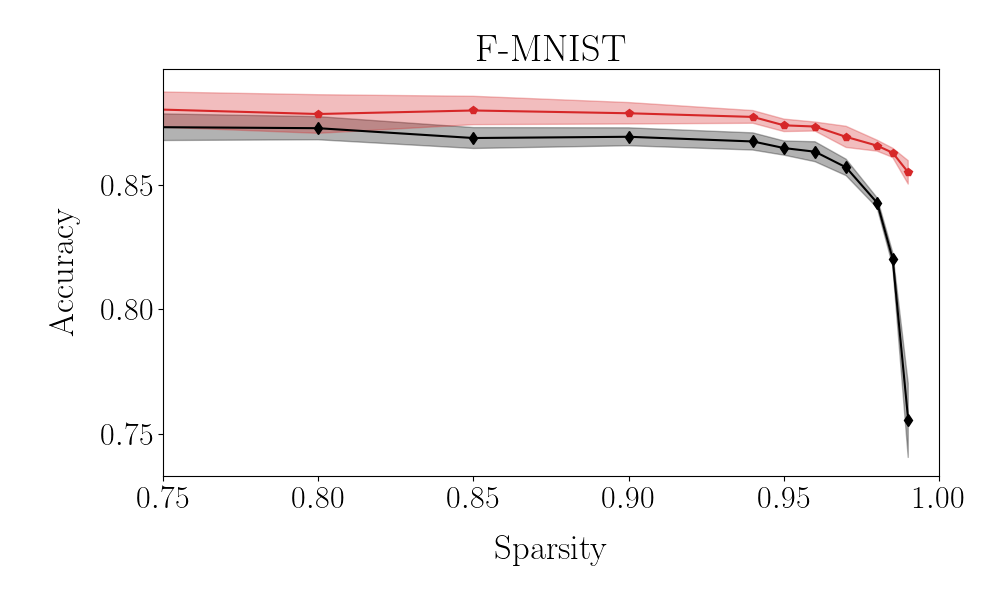

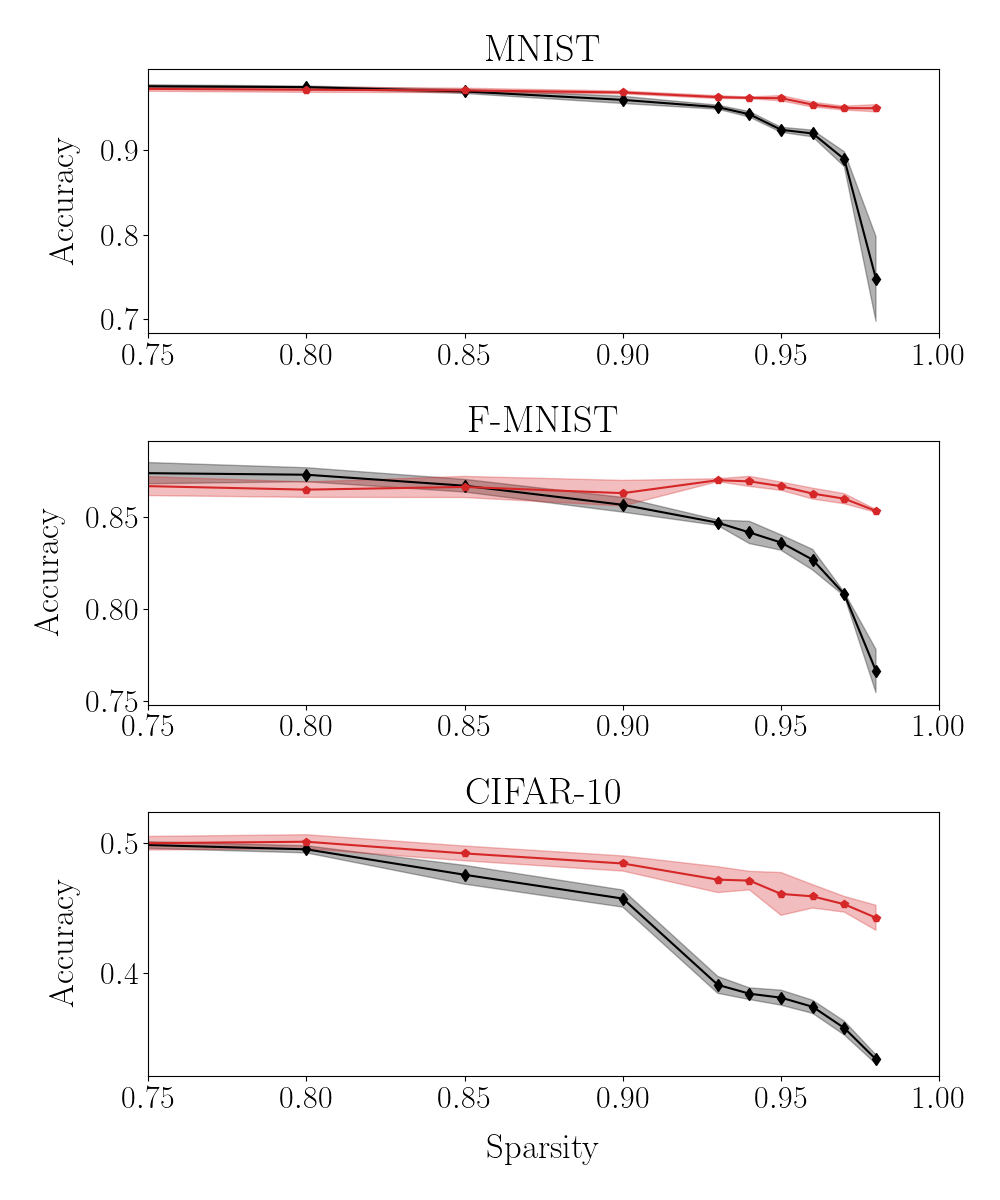

The quest for a limited subset of key parameters which define the target of a global approach to the training is also important for its indirect implications, besides the obvious reduction in terms of algorithmic complexity. As a key application to exemplify this point, we shall consider the problem of performing the classification tasks considered above, by training a neural network with a prescribed degree of imposed sparsity. This can be achieved by applying a non linear filter on each individual weight . The non linear mask is devised so as to return zero (no link present) when . Here, is an adaptive cut-off which can be freely adjusted to allow for the trained network to match the requested amount of sparsity. This latter is measured by a scalar quantity, spanning the interval : when the degree of sparsity is set to zero, the network is dense. At the opposite limit, when the sparsity equals one, the nodes of the network are uncoupled and the information cannot be transported across layers. Working with the usual approach to the training, which seeks to modulate individual weights in direct space, one has to face an obvious problem. When the weight of a given link is turned into zero, then it gets excluded by the subsequent stages of the optimisation process. Consequently, a weight that has been silenced cannot regain an active role in the classification handling. This is not the case when operating under the spectral approach to learning, also when complemented by the supplemental features tested above. The target of the optimisation, the spectral attributes of the transfer operators, are not biased by any filtering masks: as a consequence, acting on them, one can rescue from oblivion weights that are deemed useless at a given iteration (and, as such, silenced), but which might prove of help, at later stages of the training. In Fig. 2, the effect of the imposed sparsity on the classification accuracy is represented for conventional vs. S-QR method. The latter is definitely more performant in terms of displayed accuracy, when the degree of sparsity gets more pronounced. The drop in accuracy as exhibited by the sparse network trained with the S-QR modality is clearly less pronounced, than that reported for an equivalent network optimised in direct space. Deviations between the two proposed methodologies become indeed appreciable in the very sparse limit, i.e. when the residual active links are too few for a proper functioning of the direct scheme. In fact, edges which could prove central to the classification, but that are set silent at the beginning, cannot come back to active. At variance, the method anchored to reciprocal space can identify an optimal pool of links (still constraint to the total allowed for) reversing to the active state, those that were initially set to null. Interestingly, it can be shown that a few hubs emerge in the intermediate layer, which collect and process the information delivered from the input stack.

Taken altogether, it should be unequivocally concluded that a large body of free parameters, usually trained in machine learning applications, is de facto unessential. The spectral learning scheme, supplemented with a QR training of the non trivial portion of eigenvectors’ matrix, enabled us to identify a limited subset of key parameters which prove central to the learning procedure, and reflect back with a global impact on the computed weights in direct space. This observation could materialise in a drastic simplification of current machine learning technologies, a challenge at reach via algorithmic optimisation carried out in dual space. Quite remarkably, working in reciprocal space yields trained networks with better classification scores, when operating at a given degree of imposed sparsity. This finding suggests that shifting the training to the spectral domain might prove beneficial for a wide gallery of deep neural networks applications.

References

- [1] Christopher M. Bishop. Pattern Recognition and Machine Learning. Springer, New York, 1st ed. 2006. corr. 2nd printing 2011 edition edition, April 2011.

- [2] T. M. Cover and Joy A. Thomas. Elements of information theory. Wiley series in telecommunications. Wiley, New York, 1991.

- [3] Trevor Hastie, Robert Tibshirani, and Jerome Friedman. The elements of statistical learning: data mining, inference, and prediction. Springer Science & Business Media, 2009.

- [4] http://themlbook.com/.

- [5] Richard S Sutton and Andrew G Barto. Reinforcement learning: An introduction. MIT press, 2018.

- [6] Alex Graves, Abdel-rahman Mohamed, and Geoffrey Hinton. Speech recognition with deep recurrent neural networks. In 2013 IEEE international conference on acoustics, speech and signal processing, pages 6645–6649. IEEE, 2013.

- [7] Nicu Sebe, Ira Cohen, Ashutosh Garg, and Thomas S Huang. Machine learning in computer vision, volume 29. Springer Science & Business Media, 2005.

- [8] Sorin Grigorescu, Bogdan Trasnea, Tiberiu Cocias, and Gigel Macesanu. A survey of deep learning techniques for autonomous driving. Journal of Field Robotics, 37(3):362–386, 2020.

- [9] Min Chen, Shiwen Mao, and Yunhao Liu. Big data: A survey. Mobile networks and applications, 19(2):171–209, 2014.

- [10] Ethan Meyers and Lior Wolf. Using biologically inspired features for face processing. International Journal of Computer Vision, 76(1):93–104, 2008.

- [11] Laura Caponetti, Cosimo Alessandro Buscicchio, and Giovanna Castellano. Biologically inspired emotion recognition from speech. EURASIP journal on Advances in Signal Processing, 2011(1):24, 2011.

- [12] Lorenzo Giambagli, Lorenzo Buffoni, Timoteo Carletti, Walter Nocentini, and Duccio Fanelli. Machine learning in spectral domain. Nature Communications, 12(1):1330, 2021.

- [13] Foehring R. Surmeier, D. A mechanism for homeostatic plasticity. Nature Neuroscience, 7, 2004.

- [14] Yann LeCun. The mnist database of handwritten digits. http://yann. lecun. com/exdb/mnist/, 1998.

- [15] The only noticeable exception is when . In this case, the first diagonal elements of take part to the training.

- [16] Charu C. Aggarwal. Neural Networks and Deep Learning. Springer, 2018.

- [17] Marylou Gabrié, Andre Manoel, Clément Luneau, Jean Barbier, Nicolas Macris, Florent Krzakala, and Lenka Zdeborová. Entropy and mutual information in models of deep neural networks. Journal of Statistical Mechanics: Theory and Experiment, 2019(12):124014, dec 2019.

*

Appendix A Analytical characterisation of inter-nodes weights in direct space

In the following, we will derive Eq. (2) as reported in the main body of the paper. Recall that is a matrix. From extract a square block of size . This is formed by the set of elements with and , with , . For the sake of simplicity, we use to identify the obtained matrix. We proceed in analogy for and . Then:

| (4) | ||||

From hereon, we shall omit the apex . Let identify the eigenvalues of the transfer operator , namely

the diagonal entries of . In formulae, .

The quantities and can be cast in the form:

where runs on the nodes at the departure layer (), whereas . Hence, . The above expression for can be further processed to yield

Finally we can express the difference in (4) as

| (5) |

From the above expression, one readily obtains the sought equation, upon shifting the index to have it spanning the interval . Recall in fact that, by definition, (the matrix of the weights, see main body of the paper) is a matrix.

Appendix B Testing the S-SVD and S-QR methods on F-MNIST and CIFAR-10 database

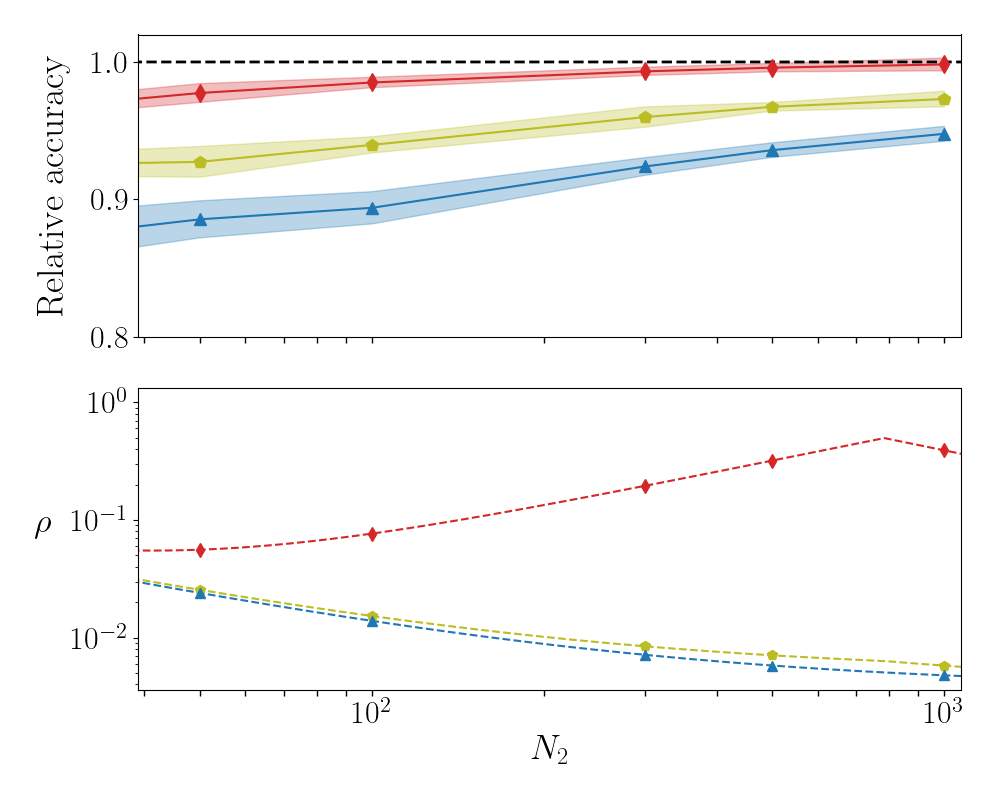

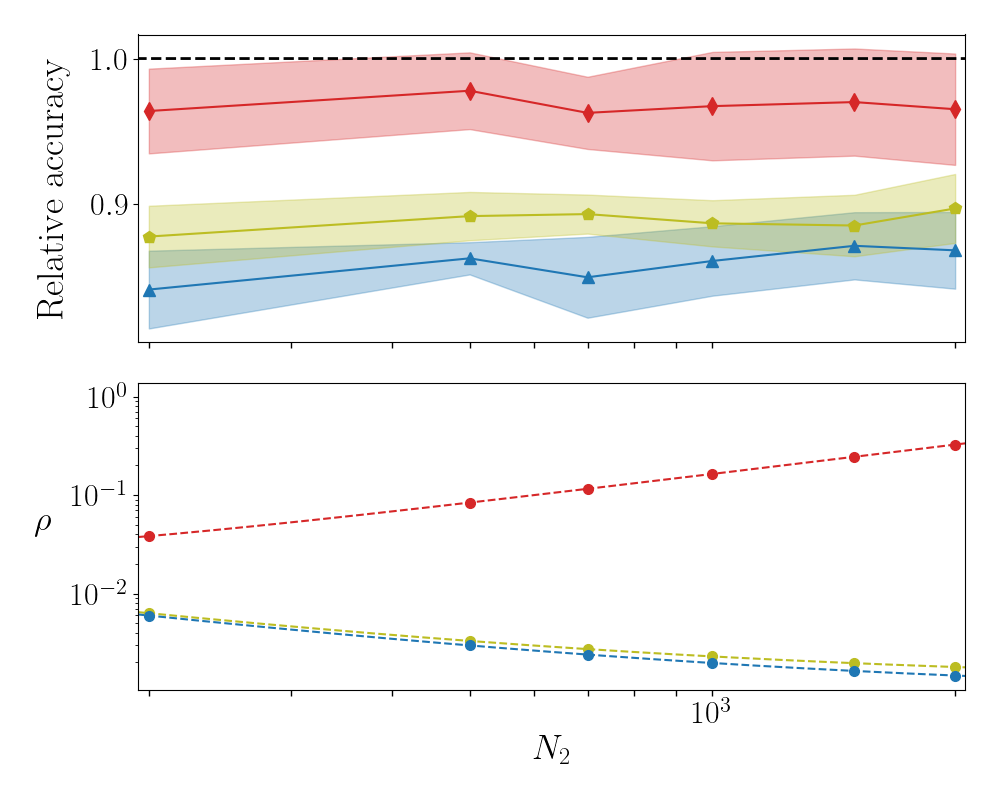

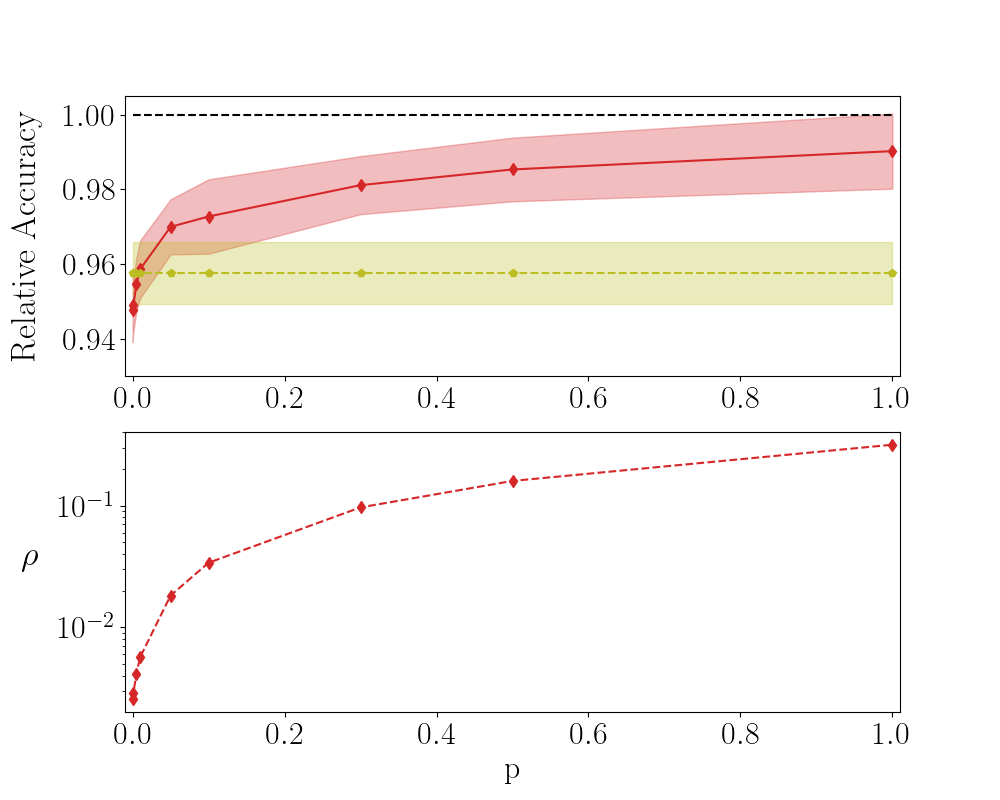

In the following we report on the accuracy of the S-SVD and S-QR methods when applied to the case of F-MNIST and CIFAR-10. The analysis refers to a three layers setting. The results displayed in Figs. 3 and 4 are in line with those discussed in the main body of the paper.

*

Appendix C Reducing the number of trainable parameters in the S-QR method

Introduce . When , the diagonal elements of in the S-QR method are solely trained. The off-diagonal elements are instead frozen to random values. In the opposite limit, when all elements of matrix are assumed to be trained. Intermediate values of interpolate between the aforementioned limiting conditions. More specifically, the entries that undergo optimisation, are randomly chosen from the pool of available the available ones, as reflecting the selected fraction. In Fig. 5 the relative accuracy for MNIST is plotted against . Here, the network is made of layers with . A limited fraction of parameters is sufficient to approach the accuracy displayed by the network trained with conventional means. In Figs. 6 and 7 the results relative to F-MNIST and CIFAR-10 are respectively reported.

*

Appendix D Testing the performance of the introduced methods on a multi-layered architecture.

In this Section we will test the setting of a multi-layered architecture by generalising beyond the case study that we employed in the main body of the paper. More specifically, we have trained according to different modalities a four-layer () deep neural network, by modulating over a finite window. As usual, the size of the incoming and outgoing layers are set by the specificity of the examined datasets. The results reported in Fig. 8 refer to F-MNIST and confirm that the S-QR strategy yields performance that are comparable to those reached with conventional learning approaches, but relying on a much smaller set of trainable parameters. In Fig. 9 the effect of the imposed sparsity on the classification accuracy is displayed for both conventional and S-QR method. Similar conclusions can be reached for MNIST and CIFAR-10.