Online Human Activity Recognition Employing Hierarchical Hidden Markov Models

Abstract

In the last few years there has been growing interest in Human Activity Recognition (HAR). Sensor-based HAR approaches, in particular, have been gaining popularity owing to their privacy-preserving nature. Furthermore, due to the widespread accessibility of the internet, a broad range of streaming-based applications, such as online HAR, has emerged over the past decades. However, proposing a sufficiently robust online activity recognition approach for smart environments is still considered a remarkable challenge. This paper presents a novel online application of the Hierarchical Hidden Markov Model to detect the current activity on a live stream of sensor events. Our method consists of two phases. In the first phase, the data stream is segmented based on the beginning and ending of the activity patterns, and the ongoing activity is reported with every received observation. This phase is implemented using Hierarchical Hidden Markov Models. The second phase is devoted to correcting the label provided for the segmented data stream based on statistical features, such as the time of day at which the activity happened and the duration of the activity. The proposed model can also discover activities that happen during another activity, so-called interrupted activities. We validated our proposed method by testing it against two different smart home datasets and demonstrated its effectiveness, which is competitive with state-of-the-art methods.

Index Terms:

Online Activity Recognition, Streaming Sensor Data, Activity Segmentation, Hierarchical Hidden Markov Models, Smart Homes, Internet of Things

I Introduction

Nowadays, the marked improvement of sensor technology, alongside the remarkable progress in Machine Learning methods, has opened up a wide variety of real-world applications that combine different types of sensors and machine learning techniques for data acquisition and processing, respectively. Various sensors have been utilized in research projects. Numerous studies have used visual sensors such as cameras, which deliver sequences of images or videos as the sensor data. Despite the successful performance of vision-based solutions, non-visual sensors are still required to address their limitations. Non-visual sensors can be installed on the human body or in the environment, as wearable and ambient sensors, respectively. Exploiting networks of heterogeneous sensors to enhance performance has also been of interest in many recent studies.

Human Activity Recognition (HAR), as an active research area in Machine Learning, is of great importance. In many real-world applications, the system’s decision is based on the current human activity, which its HAR module infers from sensor data.

The Smart Environment is a notable real-world application that aims to monitor the behavior of individuals in an environment. Such an environment is enriched with sensors, actuators, and processing units, which can also be embedded in objects. Monitoring systems, as a use case of Smart Environments, are deployed to predict, detect, and neutralize abnormal behaviors of individuals in environments such as metro stations.

Ambient Assisted Living (AAL) approaches use Activity Recognition methods to monitor residents’ behavior and support them in order to improve their quality of life. HAR has also been exploited in game consoles and in fitness and health monitoring applications on smartphones. Many research laboratories have paid considerable attention to Smart Environment topics and, consequently, several research projects such as CASAS [1], MAVhome [2], PlaceLab [3], CARE [4], and AwareHome [5] have been deployed. Activity recognition in this context denotes recognizing the daily activities of a person who lives in the environment. Activities of Daily Living (ADLs) is a term defined in the healthcare domain that refers to people’s daily self-care activities [6].

I-A Activity Modeling

In general, there are two main approaches to modeling activities: data-driven and knowledge-driven. Data-driven models analyze large sets of data for each activity by means of machine learning and data mining techniques. Knowledge-driven models instead acquire sufficient prior knowledge of personal preferences and tendencies by applying knowledge engineering and management methods. The activity recognition task poses several challenges, such as labeling the activities, interrupted activities, sensors with non-valuable data, and activity extraction. However, online recognition is noted as one of the most dominant challenges, since the main requirement in many modern real-world applications is to recognize the user’s behavior from an online stream of data.

In the present study, the stream of data is generated from the output of a set of binary environmental sensors. The majority of online activity recognition approaches are fed with data that has been preprocessed using the sliding window technique. The main drawback of this approach is the problem of optimizing the window size parameter itself.

To address this issue, we propose a method that detects the activity pane on the data stream by discovering the sequential pattern of the sensor data at the beginning and termination of each activity. By detecting the activity boundaries, we can recognize the activity using a proper segment of the streaming sensor data. Once the beginning of an activity is detected, the model keeps reporting the identified activity until its end is recognized by its sequential pattern. While the model is reporting the current activity, it is capable of recognizing other activities that interrupt the ongoing one. Once an activity’s segment is identified, statistical features of the segment, including the activity duration, are taken as input to correct the predicted label of the activity. Consequently, we get rid of the non-valuable sensor data by ignoring all intervals other than activity panes, and we avoid tuning the window size parameter.

Our model consists of a set of Hidden Markov Models implemented in a hierarchical manner in order to recognize the beginning, estimate the ongoing activity, and detect the end and class of each activity. Our evaluation results demonstrate the superiority of the proposed model over some existing ones and competitive performance compared to the state-of-the-art models.

The remainder of the paper is organized as follows. Section II outlines previous research in this domain. Section III describes the proposed method and implementation details. Evaluation and experimental results are presented in Section IV, and Section V summarizes the results of this work and draws conclusions.

II Related Work

Smart environments obtain knowledge from the physical platform and its residents in order to adapt settings to the residents’ patterns of interaction with the environment. These systems pursue numerous goals, such as monitoring the environment, assisting residents, and controlling resources. The first idea of using sensor technology to create a smart environment dates back to 1990. Mozer’s neural network house adapts environmental conditions based on user behavior patterns [7]. The following decades witnessed considerable progress in this field, obtained by exploiting HAR techniques.

Several publications have documented research on Human Activity Recognition. Generally, sensor-based and vision-based approaches have been documented in the literature as the two main categories of HAR. This paper concentrates on sensor-based HAR. The common basis for the majority of attempts of this type is to process the sequence of sensor events and detect the corresponding activity based on the discovered pattern. Furthermore, activity recognition has been widely used in various applications and environments. Hence, it is important to have a robust Activity Recognition model that is invariant to environmental settings such as the structure and placement of sensors in the smart environment.

To address this requirement, the author in [8] proposed a robust model of the sensors that extracts different features, such as the duration of the activity. In this generalized model, the problem is independent of the sensor environment and can be implemented for different users. Besides, having enough correctly labeled data is indispensable for evaluating the models. Nevertheless, manual labeling of the sensor data is prohibitive because it is highly time-consuming and often inaccurate.

Moreover, the way in which annotation has been done is often ignored, although it introduces bias into the data. In the most common annotation approach, residents of the smart environment are asked to perform an activity, and annotation is then done based on the activated sensors. Nonetheless, this approach may not be practical in all situations. The labeling mechanism presented in [9] is an example of existing solutions for annotating sensor data automatically.

Much research on activity recognition has been done using pre-segmented data, which means that the beginning and end of the activities are pre-determined in the dataset [10, 11]. Such approaches are far from realistic compared to real-world settings and are not applicable in online applications, as the beginning and end of the activities are not known when it comes to a stream of data. Researchers in [12, 13] developed methods for sensor stream segmentation, which bring activity recognition systems closer to real-world conditions.

Despite all the research on HAR, open challenges such as overlapping or concurrent activities have yet to be solved. Overlap is the phenomenon in which different activity classes trigger the same set of sensor events, which makes overlapping activities hard to discriminate based only on the types of sensor events that they have triggered [14]. AALO is an activity recognition system for overlapped activities that works based on Active Learning. It can recognize overlapped activities through a preprocessing step and an item-set mining phase [15]. A key limitation of this research is that it is not capable of recognizing overlapping activities that happen in the same location. In addition, operation on an online data stream is still lacking in this study.

Moreover, researchers in [16] suggest a two-phase method based on emerging patterns that can recognize complex activities. In the first phase, this method extracts emerging patterns for distinct activities. In the second phase, it segments the streaming sensor data and then uses the time dependency between segments to concatenate the relevant segments. Segment concatenation lets the method recognize complex activities. In [17], Quero et al. proposed a real-time method for recognizing interleaved activities based on Fuzzy Logic and Recurrent Neural Networks.

Authors in [18, 19] studied the problem of handling the large proportion of available data that is not categorized into predefined classes and addressed it by discovering patterns in this data and segmenting it into learnable classes. This kind of data usually belongs to sensors that are not exclusively involved in the predefined classes of activities.

Several Machine Learning approaches have been examined in the domain of Activity Recognition. Ensemble methods [20], non-parametric models [21], temporal frequent pattern mining [22], SVM-based models [10], Recurrent Neural Networks [23], and probabilistic models like the Hidden Markov Model and the Markov Random Field [24, 25, 26] have been exploited in the literature. Nonetheless, less attention has been paid to Online Activity Recognition, which processes a stream of sensor data, in contrast to the conventional approaches that utilize pre-segmented data.

Most of the presented solutions for streaming data processing are based on the sliding window technique [13, 27, 28, 29, 30]. The sliding window approach, briefly called windowing, frames the data mainly by temporal relation or by number of sensors. One of the key bottlenecks of this approach is fine-tuning the window size. One basic solution is to employ a constant, pre-determined window size [25, 27]. However, as the number of activated sensors varies across activities, many researchers have turned to dynamic window sizes [31, 32, 29, 13].
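The two framing criteria mentioned above can be illustrated with a minimal sketch. It is not taken from any of the cited works; the event format `(timestamp, sensor id)`, the function names, and the sensor identifiers are assumptions made for illustration, and the events are assumed to arrive sorted by time.

```python
from typing import List, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, sensor id); hypothetical event format


def count_windows(events: List[Event], size: int) -> List[List[Event]]:
    """Sensor-based windowing: each window holds the last `size` events."""
    return [events[i - size + 1 : i + 1] for i in range(size - 1, len(events))]


def time_windows(events: List[Event], span: float) -> List[List[Event]]:
    """Time-based windowing: each window holds the events of the last `span` seconds."""
    windows = []
    start = 0
    for end, (t_end, _) in enumerate(events):
        # Advance the left edge until the window covers at most `span` seconds.
        while t_end - events[start][0] > span:
            start += 1
        windows.append(events[start : end + 1])
    return windows


# Illustrative stream: three events in quick succession, then a long pause.
events = [(0.0, "M01"), (2.0, "M02"), (3.0, "M02"), (40.0, "D01"), (41.0, "M05")]
print(len(count_windows(events, 3)))
print([len(w) for w in time_windows(events, 5.0)])
```

With a fixed count, the window that ends at the door event still contains two stale motion events from before the pause, while the fixed time span yields windows of very different lengths. Both effects depend directly on the chosen size, which is the tuning problem discussed in this section.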

Authors in [29, 30] present a probabilistic method to determine the window size. A different window size is initialized for each class of activity based on a prior estimation, and it is updated by the upcoming sensor events.

Krishnan et al. [27] consider temporal dependency among sensors as a criterion for sliding windows, so that sensors that get activated within a certain time interval are examined as one activity. Their other proposed solution is a sensor-based method that uses a Mutual Information measure to recognize a group of sensors that were continuously activated together as a window. Authors in [28] extended the model of [27] and improved its performance by altering the computation of Mutual Information.

Researchers in [24] presented a multi-stage classification method. The first stage is to cluster the activities using a Hidden Markov Model based on location data and then in the next stage, another HMM classifies the exact activity using a sequence of sensor data. The major weakness of this method is that it makes no attempt to specify the boundaries of activities which negatively affects the performance.

Another method to tackle online recognition was introduced by Li et al. [33]. They proposed cumulative fixed sliding windows for real-time activity recognition. Their segmentation method consists of several fixed-length time windows that overlap with each other. These overlapping windows are considered together as one window, and its information is used to detect the ongoing activities.

III Proposed approach

The windowing technique is among the most widely used solutions for processing a stream of sensor data. However, one practical question that arises with this approach is how to determine the proper window size. There is still some controversy surrounding window size tuning, although it has a great impact on performance, as the decision-making process halts until the model receives a complete window of data.

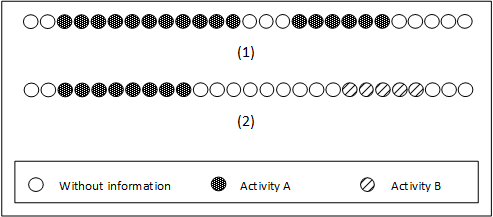

Moreover, the selected window passed to the model often contains irrelevant data that belongs to none of the predefined classes. A dynamic sliding window size may seem an appropriate solution. However, as shown in Fig. 2, not only do different classes of activity need different window sizes, but distinct samples of one class also require distinct window sizes.

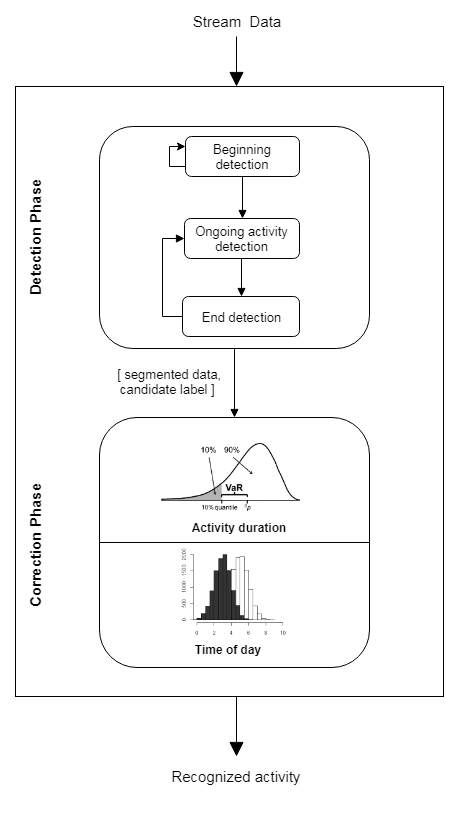

This paper seeks to address these problems by presenting a new model based on the dynamic segmentation of the data stream. Our proposed model breaks down the problem of Online Activity Recognition into two sub-problems, as depicted in Fig. 1. In the initial stage, the model detects the activity pane by recognizing the sensor event patterns that mark the start and finish of activities, and it makes predictions while receiving data. With the beginning and end of the activities determined, in the next stage the model refines the prediction by classifying the activities into the predefined classes and the Other class. The predefined classes, proposed by medical specialists [6], include Activities of Daily Living such as eating, bathing, and sleeping. The Other class label is devoted to activities that do not fit into the predefined classes. The following sections explain each stage in detail.

The main contributions and innovations of this paper are as follows:

- Description of a novel method based on the occurrence pattern of activities that addresses the window size problem of previous sliding window methods.

- Providing an estimation of the ongoing activity with each sensor observation, which delivers real-time Activity Recognition.

- Recognizing interrupted activities, even those which occur in the same location.

III-A Detection Phase

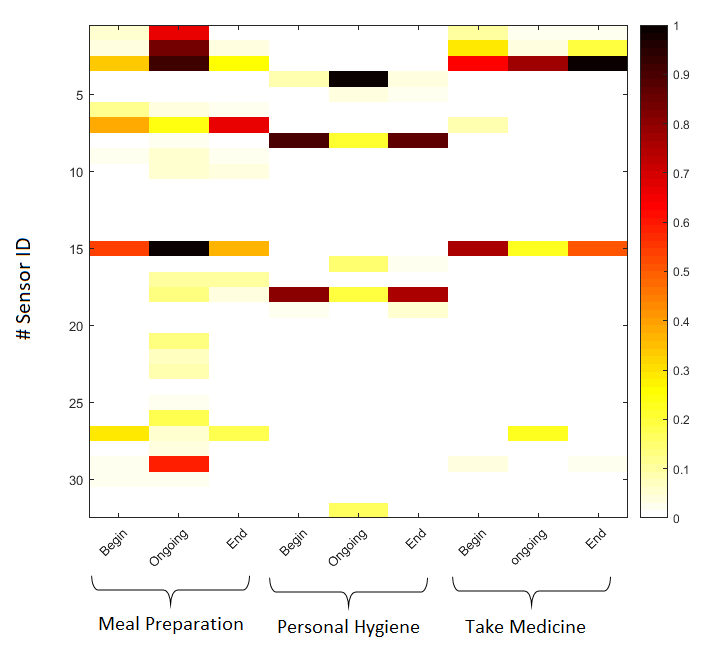

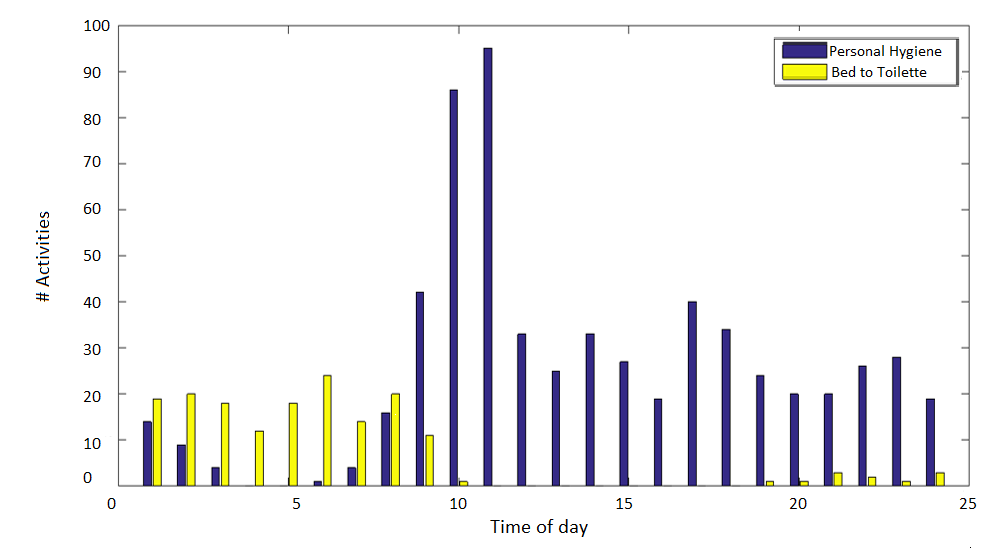

The first step in recognizing an activity on the stream of sensor data is to detect its occurrence. The idea is to investigate the sensor events at the beginning and end of the activities in order to extract the corresponding start and end patterns. As can be inferred from Fig. 3, the set of activated sensors for each activity is almost unchanged across most of its occurrences. This assumption can be justified by considering a locally limited functional zone for each activity. Meal preparation, for example, happens in the kitchen; thus it should be the kitchen’s sensors that get activated during this activity.

The number of observations taken into account for beginning detection affects the model performance. Table I compares different values for this parameter and the corresponding results. Based on this comparison, we opted for considering 3 sensor events in our model. Indeed, the number of considered sensor events should be small enough to keep recognition of the next activity feasible. For example, when the resident opens or closes the door, only a few sensors get activated; hence waiting for more observations is not an option.

| Dataset | 2 | 3 | 4 | 5 |

|---|---|---|---|---|

| HomeA | 78.7 | 97 | 92.9 | 93.2 |

| HomeB | 84.3 | 96.4 | 94.2 | 91 |
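To make the role of the three leading sensor events concrete, the following sketch replaces the HMM-based beginning detector with a much simpler stand-in: a lookup table of start patterns counted from labeled activities. The function names, the training examples, and the sensor identifiers are hypothetical, and exact matching of triples is an assumption that a probabilistic detector would relax.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Tuple

N_START = 3  # number of leading sensor events inspected, as selected in Table I


def learn_start_patterns(
    activities: List[Tuple[str, List[str]]]
) -> Dict[Tuple[str, ...], Counter]:
    """Count, per leading sensor triple, which activity labels it opened."""
    patterns: Dict[Tuple[str, ...], Counter] = defaultdict(Counter)
    for label, sensors in activities:
        if len(sensors) >= N_START:
            patterns[tuple(sensors[:N_START])][label] += 1
    return patterns


def detect_start(patterns, recent: List[str]) -> Optional[str]:
    """Return the most frequent label for the last N_START events, if known."""
    key = tuple(recent[-N_START:])
    if key not in patterns:
        return None
    return patterns[key].most_common(1)[0][0]


# Hypothetical labeled activities: (label, ordered sensor ids).
training = [
    ("Meal Preparation", ["M15", "M16", "M17", "M16"]),
    ("Meal Preparation", ["M15", "M16", "M17", "D09"]),
    ("Leave Home", ["M10", "D01", "D01"]),
]
patterns = learn_start_patterns(training)
print(detect_start(patterns, ["M03", "M15", "M16", "M17"]))  # Meal Preparation
print(detect_start(patterns, ["M03", "M04", "M05"]))         # None
```

A short pattern length keeps brief activities such as Leave Home detectable, since they may produce only a handful of events in total.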

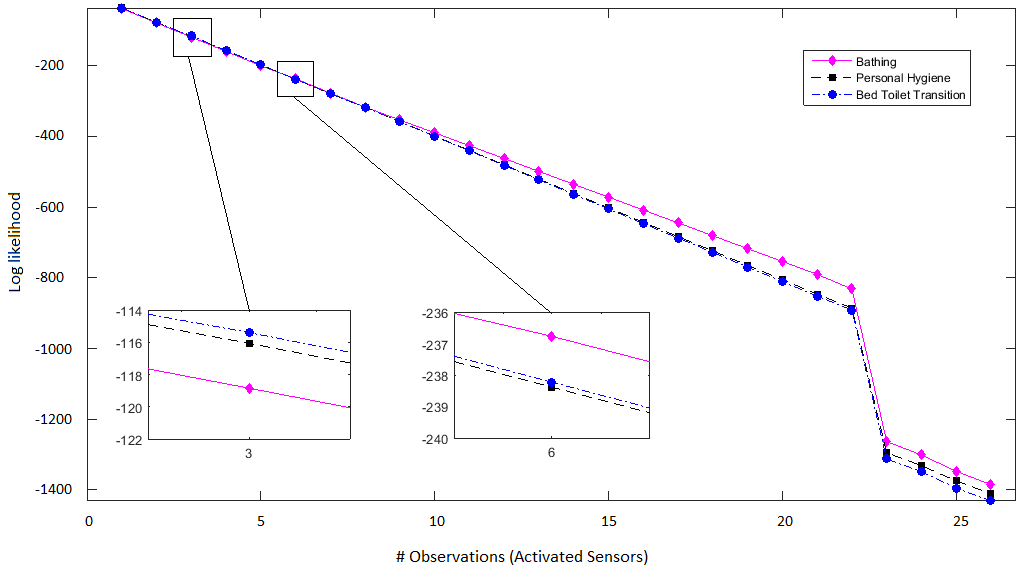

On the other hand, there exist some classes of activities such as Personal Hygiene and Bathing, which share a common set of activated sensors in the beginning and the end. Therefore, recognizing these activities requires more information like sensor activation sequences. Once the beginning of an activity gets recognized, the model utilizes the upcoming sequence of sensor events to recognize the activity class itself and as it receives more events, its prediction gradually becomes more confident. This trend can be seen in Fig. 4.

As Fig. 4 shows, at first the most probable activity is Bed to Toilet transition, while after receiving more observations, the estimated activity changes to Bathing. These activities overlap due to their similar sensors. Therefore, more information is needed to produce a reliable prediction.
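The gradual shift of the estimate can be reproduced with a small sketch of a running posterior. It is a simplification rather than the model used in this paper: events are treated as independent given the activity, and the sensor names and likelihood values in `EMISSION` are invented for illustration.

```python
from typing import Dict, List

# Hypothetical per-activity sensor likelihoods P(sensor | activity); illustrative values only.
EMISSION: Dict[str, Dict[str, float]] = {
    "Bed to Toilet": {"M_bed": 0.5, "M_hall": 0.3, "M_bath": 0.15, "M_shower": 0.05},
    "Bathing": {"M_bed": 0.1, "M_hall": 0.2, "M_bath": 0.3, "M_shower": 0.4},
}


def running_posterior(events: List[str]) -> List[Dict[str, float]]:
    """Update a uniform prior over activities after each sensor event."""
    belief = {a: 1.0 / len(EMISSION) for a in EMISSION}
    history = []
    for sensor in events:
        # Bayes rule with events treated as independent given the activity.
        belief = {a: belief[a] * EMISSION[a].get(sensor, 1e-6) for a in belief}
        total = sum(belief.values())
        belief = {a: p / total for a, p in belief.items()}
        history.append(dict(belief))
    return history


stream = ["M_bed", "M_hall", "M_bath", "M_shower", "M_shower"]
for step in running_posterior(stream):
    best = max(step, key=step.get)
    print(best, round(step[best], 2))
```

With these assumed values, the first three events favor Bed to Toilet, and the estimate switches to Bathing once shower events arrive, which mirrors the behavior described for Fig. 4.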

Numerous Machine Learning methods such as SVM, Neural Networks, Decision Trees, and Probabilistic Graphical Models have been applied in the activity recognition field. The Hidden Markov Model is one of the most popular models in the literature for sequential data processing, as it does not suffer from computationally intensive calculations like Neural Networks. Besides, prior knowledge can be easily leveraged in probabilistic models, which are also more robust to noise.

Taking into account the aforementioned reasons, we chose the HMM to recognize the activities. An HMM relates a vector of hidden states to a vector of observations, where each hidden state takes one of K possible values. In our case, the observations are the sequence of sensor events. Equation (1) gives the calculation used to obtain the most probable class based on the observations:

| (1) |
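One common way to realize a decision rule of this kind is to train one HMM per activity class and to pick the class whose model, weighted by the class prior, gives the observed events the highest likelihood. The sketch below illustrates that idea with the scaled forward algorithm. The class `DiscreteHMM`, the function `classify`, the two toy models, and all probability values are assumptions made for illustration and are not the parameters of the proposed model. The observation list is assumed to be non-empty.

```python
import math
from typing import Dict, List, Sequence


class DiscreteHMM:
    """Minimal discrete HMM: start probabilities pi, transitions A, emissions B."""

    def __init__(self, pi: Sequence[float], A: Sequence[Sequence[float]],
                 B: Sequence[Dict[str, float]]):
        self.pi, self.A, self.B = pi, A, B

    def log_likelihood(self, obs: List[str]) -> float:
        """Scaled forward algorithm; returns log P(obs | model)."""
        k = len(self.pi)
        # Unseen symbols get a small floor probability instead of zero.
        alpha = [self.pi[i] * self.B[i].get(obs[0], 1e-9) for i in range(k)]
        log_p = 0.0
        for t in range(len(obs)):
            if t > 0:
                alpha = [
                    sum(alpha[i] * self.A[i][j] for i in range(k))
                    * self.B[j].get(obs[t], 1e-9)
                    for j in range(k)
                ]
            scale = sum(alpha)
            log_p += math.log(scale)
            alpha = [a / scale for a in alpha]
        return log_p


def classify(models: Dict[str, DiscreteHMM], priors: Dict[str, float],
             obs: List[str]) -> str:
    """Pick the class maximizing log P(obs | class) + log P(class)."""
    return max(models, key=lambda c: models[c].log_likelihood(obs) + math.log(priors[c]))


# Two toy two-state models with invented sensor names and probabilities.
models = {
    "Meal Preparation": DiscreteHMM(
        [1.0, 0.0], [[0.7, 0.3], [0.2, 0.8]],
        [{"M_kitchen": 0.8, "M_fridge": 0.2}, {"M_stove": 0.9, "M_kitchen": 0.1}],
    ),
    "Bathing": DiscreteHMM(
        [1.0, 0.0], [[0.6, 0.4], [0.1, 0.9]],
        [{"M_bath": 0.9, "M_hall": 0.1}, {"M_shower": 0.9, "M_bath": 0.1}],
    ),
}
priors = {"Meal Preparation": 0.5, "Bathing": 0.5}
print(classify(models, priors, ["M_kitchen", "M_fridge", "M_stove"]))
```

Rescaling the forward variables at every step keeps the computation numerically stable on longer event sequences, where the unscaled probabilities would underflow.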

The Hidden Markov Model is not well suited to processing long sequences, which led us to employ an extension of the HMM called the Hierarchical Hidden Markov Model in our study. The Hierarchical-HMM is suitable for problems that contain multilevel temporal dependencies and follow a hierarchical structure in their context.

A Hierarchical-HMM is represented by a triple whose elements stand for the model structure, the set of observations, and the model’s parameters, respectively. The model structure defines the number of levels and the parent-child relations on each level. States in the lowest level are the only generative ones, that is, the only ones that generate observations. The parameter set is defined as follows [34]:

| (2) |

The parameter set is defined for each abstract state at a given level together with its set of children. It comprises the probability that a generative state generates a given observation, the prior probability of each child, the probability of a transition between children of the abstract state, and the probability that the abstract state terminates in a given child state. The proper values of the parameters are obtained by the Maximum Likelihood technique.

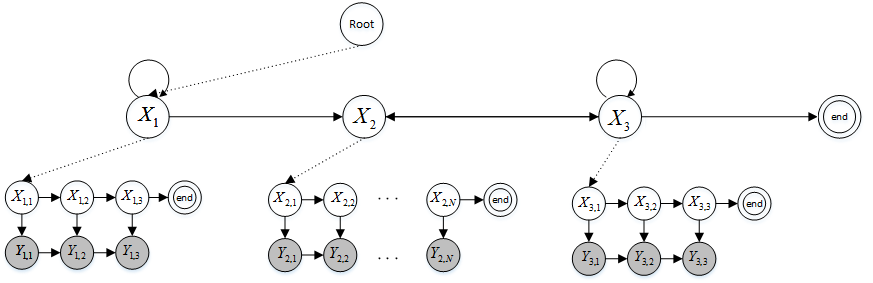

Fig. 6 shows the transition diagram of our implemented Hierarchical-HMM. This diagram contains two types of transitions, vertical and horizontal. Vertical transitions are shown by dotted arcs and occur between different levels of the HHMM. Horizontal transitions happen among nodes of the same level, when the task of one node finishes.

Additionally, there are two types of nodes in an HHMM: abstract nodes, which produce a sequence of observations, and production nodes, which produce a single observation. In Fig. 6, the three sub-HMM nodes are abstract nodes, each of which is treated as a distinct HMM. In this model, the first sub-HMM is responsible for detecting the beginning of an activity. It is an auto-regressive HMM that consists of 3 observations. After receiving 3 sensor observations, if the beginning of an activity is detected, control passes to the second sub-HMM in order to recognize the class of the ongoing activity.

In the second sub-HMM, the corresponding activity is recognized with each incoming observation. This process continues until the termination of the activity, which is detected by the third sub-HMM. At the end of the cycle, control is given back to the root node. The output of this process is a segment of the data that holds the related information.
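The control flow between the root node and the sub-HMMs can be sketched as a small state machine. In this sketch the sub-HMMs are abstracted as plain callables (`is_start`, `classify`, `is_end`), which are hypothetical stand-ins and not the trained models. Inspecting the last `n` events for end detection is also an assumption, since only the beginning detector is stated to use 3 observations.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

Segment = Tuple[int, int, str]  # (first event index, last event index, label)


def segment_stream(
    events: Iterable[str],
    is_start: Callable[[List[str]], bool],
    classify: Callable[[List[str]], str],
    is_end: Callable[[List[str]], bool],
    n: int = 3,
) -> Iterator[Segment]:
    """Cycle root -> begin detector -> recognizer -> end detector -> root."""
    buffer: List[str] = []  # all events seen so far (unbounded in this sketch)
    begin = None            # index where the current activity started, if any
    label = ""
    for idx, sensor in enumerate(events):
        buffer.append(sensor)
        recent = buffer[-n:]
        if begin is None:
            # Begin detector: fires once the last n events match a start pattern.
            if len(recent) == n and is_start(recent):
                begin = idx - n + 1
                label = classify(buffer[begin:])
        else:
            # Recognizer: re-estimate the ongoing activity with every event.
            label = classify(buffer[begin:])
            # End detector: on a match, emit the segment and return to the root.
            if is_end(recent):
                yield (begin, idx, label)
                begin = None


# Toy detectors with invented sensor ids.
stream = ["M1", "K1", "K2", "K3", "K2", "K9", "M1", "M1"]
segments = list(segment_stream(
    stream,
    is_start=lambda r: r == ["K1", "K2", "K3"],
    classify=lambda seg: "Meal Preparation",
    is_end=lambda r: r[-1] == "K9",
))
print(segments)  # [(1, 5, 'Meal Preparation')]
```

Events outside a detected segment, such as the leading and trailing `M1` events here, are never passed to the recognizer, which is how intervals without an activity are ignored.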

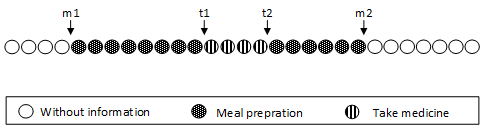

Sometimes the subject interrupts the current activity and commences a new one. There exist some techniques that can recognize the latter activity independently of the first one [35]. However, their discrimination is limited to activities that happen at different locations. Since our hierarchical detection technique is location invariant, the proposed model is capable of recognizing interrupted activities that take place in a common location. Fig. 5 shows an example of interrupted activities.

III-B Correction Phase

With the completion of the Detection phase, our model moves to the next stage. In this stage, the joint probability density function (joint PDF) of the time length and time of day is extracted for each class. Fig. 7 exemplifies how the time of day, as a statistical feature, can ease the discrimination. The extracted PDFs can then be used to measure how strongly a data segment belongs to each of the classes. As Equation (3) shows, if this probability passes the threshold for a class, the segment is labeled with that class. Otherwise, it is labeled as the Other class. A fine-tuning process was carried out to find a proper value for the threshold. Table II details this trial.

| (3) |

| Dataset | 0.02 | 0.04 | 0.06 | 0.08 | 0.10 |

|---|---|---|---|---|---|

| HomeA | 59.7 | 62.1 | 63 | 65.2 | 64.8 |

| HomeB | 54 | 53 | 60 | 58 | 57.4 |
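The correction step can be sketched under simplifying assumptions. Here the joint distribution of time of day and duration is approximated by a two-dimensional histogram, only the label predicted by the detection phase is checked against the threshold, and "passes" is read as greater than or equal. The bin widths, function names, and sample values are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Sample = Tuple[float, float]  # (start hour of day in [0, 24), duration in minutes)
Cell = Tuple[int, int]


def bin_of(sample: Sample, hour_bin: float = 3.0, dur_bin: float = 10.0) -> Cell:
    """Map a sample to a (time-of-day bin, duration bin) cell."""
    hour, duration = sample
    return int(hour // hour_bin), int(duration // dur_bin)


def fit_joint(samples: List[Sample]) -> Dict[Cell, float]:
    """Histogram estimate of the joint distribution over the two features."""
    counts = Counter(bin_of(s) for s in samples)
    return {cell: c / len(samples) for cell, c in counts.items()}


def correct_label(predicted: str, sample: Sample,
                  joints: Dict[str, Dict[Cell, float]], threshold: float) -> str:
    """Keep the predicted label if its joint probability passes the threshold."""
    p = joints.get(predicted, {}).get(bin_of(sample), 0.0)
    return predicted if p >= threshold else "Other"


# Invented training samples for one class.
joints = {
    "Sleeping in Bed": fit_joint([(22.5, 420), (23.0, 425), (22.0, 450), (23.5, 428)]),
}
print(correct_label("Sleeping in Bed", (22.8, 421), joints, 0.08))  # Sleeping in Bed
print(correct_label("Sleeping in Bed", (14.0, 30), joints, 0.08))   # Other
```

A segment that the detection phase labeled as Sleeping in Bed but that starts in the early afternoon and lasts half an hour falls into an empty histogram cell and is therefore relabeled as Other.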

| Attribute | HomeA | HomeB |

|---|---|---|

| Motion sensors | 20 | 18 |

| Door sensors | 12 | 12 |

| Residents | 1 Person | 1 Person |

| Sensor events collected | 371925 | 274920 |

| Timespan | 5 months | 5 months |

In summary, this model first detects the occurrence of an activity using the beginning pattern of activities. Next, it recognizes the class of the ongoing activity based on the activated sensors until the end of the activity is detected. To improve the discrimination between similar activities, our model also exploits the time of day at which the activity occurs as a statistical feature.

IV Evaluation

The performance of our proposed approach is assessed by conducting 2 experiments on 2 different datasets to recognize the current activity on the stream of data. We compared our approach with a set of the most common Machine Learning models and achieved convincing results.

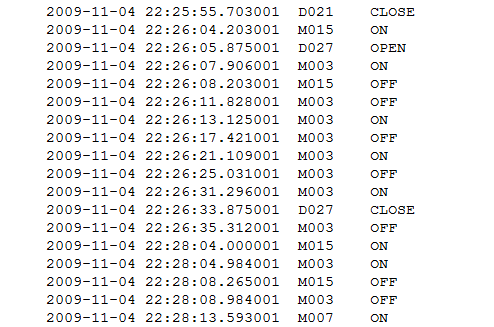

IV-A Dataset

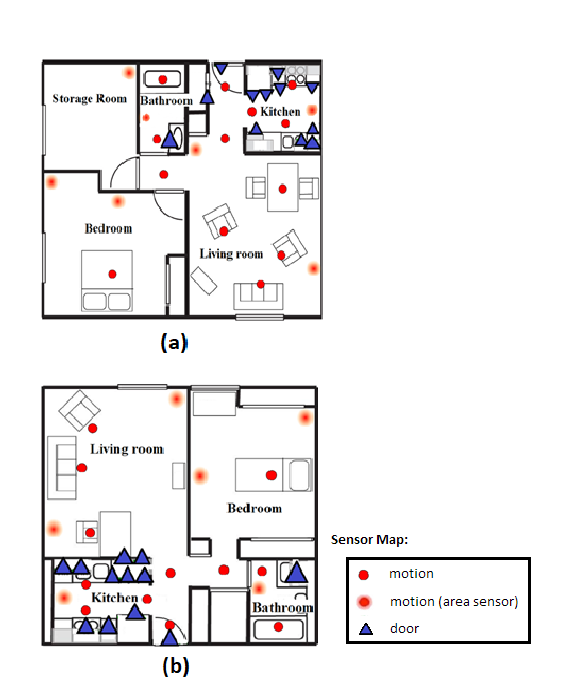

We evaluated our proposed model on the HomeA (http://eecs.wsu.edu/~nazerfard/AIR/datasets/data1.zip) and HomeB (http://eecs.wsu.edu/~nazerfard/AIR/datasets/data2.zip) datasets. Fig. 8 shows a sample slice of these datasets. The 2 datasets were collected using 32 and 30 sensors, respectively, deployed in the 2 different houses depicted in Fig. 9 over a period of 5 months. Labeling of the datasets was done later by human experts. Table III summarizes the characteristics of data acquisition for the HomeA and HomeB datasets. These datasets contain 11 classes of interest. Details on the sample distribution of the classes are given in Table VI.

| Activity | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1=Personal Hygiene | 0.88 | 0.00 | 0.00 | 0.02 | 0.00 | 0.00 | 0.00 | 0.02 | 0.00 | 0.00 | 0.00 | 0.08 |

| 2=Leave Home | 0.00 | 0.14 | 0.00 | 0.00 | 0.29 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.57 |

| 3=Enter Home | 0.00 | 0.30 | 0.14 | 0.00 | 0.00 | 0.00 | 0.03 | 0.00 | 0.00 | 0.00 | 0.00 | 0.54 |

| 4=Bathing | 0.08 | 0.00 | 0.00 | 0.67 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.25 |

| 5=Meal Preparation | 0.00 | 0.00 | 0.00 | 0.00 | 0.52 | 0.00 | 0.08 | 0.00 | 0.04 | 0.00 | 0.00 | 0.36 |

| 6=Napping | 0.00 | 0.00 | 0.02 | 0.00 | 0.01 | 0.13 | 0.00 | 0.12 | 0.00 | 0.00 | 0.00 | 0.72 |

| 7=Take Medicine | 0.00 | 0.00 | 0.00 | 0.00 | 0.13 | 0.00 | 0.55 | 0.00 | 0.05 | 0.00 | 0.00 | 0.27 |

| 8=Eating Food | 0.00 | 0.00 | 0.01 | 0.00 | 0.01 | 0.00 | 0.00 | 0.28 | 0.00 | 0.00 | 0.00 | 0.69 |

| 9=Housekeeping | 0.00 | 0.00 | 0.00 | 0.00 | 0.13 | 0.00 | 0.26 | 0.00 | 0.00 | 0.00 | 0.00 | 0.61 |

| 10=Sleeping in Bed | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.40 | 0.02 | 0.58 |

| 11=Bed to Toilet transition | 0.60 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.27 | 0.13 |

| 12=Other | 0.19 | 0.01 | 0.25 | 0.02 | 0.04 | 0.01 | 0.13 | 0.03 | 0.01 | 0.01 | 0.02 | 0.28 |

| Activity | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1=Personal Hygiene | 0.57 | 0.00 | 0.00 | 0.04 | 0.01 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.08 | 0.31 |

| 2=Leave Home | 0.00 | 0.50 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.50 |

| 3=Enter Home | 0.00 | 0.65 | 0.09 | 0.00 | 0.03 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.21 |

| 4=Bathing | 0.00 | 0.00 | 0.00 | 1.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| 5=Meal Preparation | 0.00 | 0.01 | 0.00 | 0.00 | 0.30 | 0.00 | 0.02 | 0.00 | 0.08 | 0.00 | 0.00 | 0.59 |

| 6=Napping | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.13 | 0.00 | 0.05 | 0.00 | 0.00 | 0.00 | 0.82 |

| 7=Take Medicine | 0.00 | 0.00 | 0.00 | 0.00 | 0.11 | 0.00 | 0.09 | 0.00 | 0.56 | 0.00 | 0.00 | 0.23 |

| 8=Eating Food | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.33 | 0.04 | 0.00 | 0.00 | 0.63 |

| 9=Housekeeping | 0.00 | 0.00 | 0.00 | 0.00 | 0.05 | 0.00 | 0.00 | 0.00 | 0.35 | 0.00 | 0.00 | 0.60 |

| 10=Sleeping in Bed | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.96 | 0.00 | 0.04 |

| 11=Bed to Toilet transition | 0.13 | 0.00 | 0.00 | 0.03 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.56 | 0.28 |

| 12=Other | 0.14 | 0.01 | 0.05 | 0.01 | 0.00 | 0.00 | 0.00 | 0.00 | 0.01 | 0.01 | 0.05 | 0.71 |

| Activity | HomeA | HomeB |

|---|---|---|

| Personal Hygiene | 704 | 782 |

| Leave Home | 420 | 245 |

| Enter Home | 417 | 245 |

| Bathing | 72 | 41 |

| Meal Preparation | 554 | 245 |

| Napping | 564 | 138 |

| Take Medicine | 473 | 81 |

| Eating Meal | 334 | 208 |

| Housekeeping | 13 | 175 |

| Sleeping in Bed | 210 | 207 |

| Bed to Toilet transition | 96 | 236 |

| TOTAL | 3857 | 2603 |

| Model | Accuracy (HomeA) | Accuracy (HomeB) | F1-measure (HomeA) | F1-measure (HomeB) |

|---|---|---|---|---|

| Proposed model | 65.20% | 60% | 51% | 49% |

| SW | 58% | 48% | 51% | 49% |

| TW | 59% | 52% | 53% | 54% |

| SWMI | 64% | 54% | 60% | 57% |

| SWTW | 62% | 45% | 55% | 55% |

| DW | 59% | 55% | 58% | 58% |

| PWPA | 62% | 50% | 55% | 51% |

IV-B Baseline models

We have compared performance of our proposed model with several existing approaches:

- SW: This model uses a constant window size. Sensors contribute equally to the window size calculation.

- TW: It employs a constant window size that is obtained from a time interval.

- SWMI: It treats the window size as a constant value. The Mutual Information of sensors and activity classes is taken into account in the window size calculation.

- SWTW: The window size remains constant in this model and is calculated by time-based weighting of the sensor events.

- DW: In this model, the window size is variable for each activity, and its value is calculated using probabilistic information of sensor events.

- PWPA: It uses a 2-level window with fixed sizes. The first level contains the probabilistic information of the previous windows and activities. The second level includes the data of the current step, with the possible activity as an augmented feature.

IV-C Experimental Results and Analysis

We compare the performance of the models in terms of F1-measure and Accuracy, as reported in Tables IV, V, and VII. For the HomeA dataset, the Personal Hygiene, Bathing, Sleeping, Take Medicine, Meal Preparation, and Eating Meal classes can be highly discriminated. However, unsatisfactory results were reached for the Enter Home, Leave Home, and Napping classes. This performance degradation is understandable given that Enter Home and Leave Home both activate the same set of sensors and these two classes of activity often occur in sequence. Besides, the resident sometimes walks out of the house just for a short moment, which is not considered as Leave Home.

In the HomeB dataset, Bathing and Sleeping are well discriminated, and detection of Meal Preparation, Personal Hygiene, Eating, and Bed to Toilet transition is satisfactory. The performance dropped for Take Medicine, Napping, Enter Home, and Leave Home. According to Table VI, the Housekeeping activity has only 13 instances in the HomeA dataset, which is dramatically low compared to the rest of the classes. This imbalanced distribution may have caused the inadequate performance of the model on this class.

Generally, classes of activity that share the same local domain, such as Napping and Eating Meal, are hard to discriminate. Note that a long time gap can compensate for local overlap, as it does in distinguishing Personal Hygiene from Bed to Toilet transition.
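For reference, both reported metrics can be computed from a confusion matrix of counts, as in the sketch below. The averaging scheme for the F1-measure is not stated in this paper; the macro average used here is an assumption, and the 3-class matrix is invented for illustration.

```python
from typing import List


def accuracy(cm: List[List[int]]) -> float:
    """Fraction of samples on the diagonal; cm[i][j] = true class i predicted as j."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def macro_f1(cm: List[List[int]]) -> float:
    """Unweighted mean of per-class F1 scores."""
    n = len(cm)
    scores = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                         # true class c, predicted otherwise
        fp = sum(cm[r][c] for r in range(n)) - tp    # predicted c, true class differs
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / n


# Invented counts for three classes.
cm = [[8, 2, 0],
      [1, 5, 4],
      [0, 3, 7]]
print(round(accuracy(cm), 3), round(macro_f1(cm), 3))
```

Because the macro average weights every class equally, a class with very few instances can lower the F1-measure noticeably while barely affecting Accuracy.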

V Conclusions and Future Work

Robust online activity recognition in smart environments is considered one of the most dominant challenges. While most previous approaches suffer from window size parameter tuning, this paper has presented a new activity recognition approach on sensor data streams that determines the beginning and end of the activities. In addition, our proposed model is capable of recognizing interrupted activities. The experimental results of this study indicate the efficiency of the proposed model compared to existing ones.

In general, the accuracy of activity recognition methods cannot exceed a certain level due to the uncertainty of human behavior. Nevertheless, uncertain predictions can be offered through a recommendation context in smart environments.

Further work is needed to improve recognition of the Other class, the most problematic activity class. Current recognition systems require a fair amount of labeled data to reach satisfactory accuracy, while data acquisition and labeling in this domain are impractical. Future studies are therefore recommended in the domain of Knowledge Transfer to reduce the crucial need for labeled data.

References

- [1] D. Cook, M. Schmitter-Edgecombe, A. Crandall, C. Sanders, and B. Thomas, “Collecting and disseminating smart home sensor data in the casas project,” in Proceedings of the CHI workshop on developing shared home behavior datasets to advance HCI and ubiquitous computing research, pp. 1–7, 2009.

- [2] D. J. Cook, M. Youngblood, E. O. Heierman, K. Gopalratnam, S. Rao, A. Litvin, and F. Khawaja, “Mavhome: An agent-based smart home,” in Pervasive Computing and Communications, 2003.(PerCom 2003). Proceedings of the First IEEE International Conference on, pp. 521–524, IEEE, 2003.

- [3] S. S. Intille, K. Larson, J. Beaudin, E. M. Tapia, P. Kaushik, J. Nawyn, and T. J. McLeish, “The placelab: A live-in laboratory for pervasive computing research (video),” Proceedings of PERVASIVE 2005 Video Program, 2005.

- [4] B. Kröse, T. Van Kasteren, C. Gibson, T. Van Den Dool, et al., “Care: Context awareness in residences for elderly,” in International Conference of the International Society for Gerontechnology, Pisa, Tuscany, Italy, pp. 101–105, 2008.

- [5] C. D. Kidd, R. Orr, G. D. Abowd, C. G. Atkeson, I. A. Essa, B. MacIntyre, E. Mynatt, T. E. Starner, and W. Newstetter, “The aware home: A living laboratory for ubiquitous computing research,” in International Workshop on Cooperative Buildings, pp. 191–198, Springer, 1999.

- [6] S. Katz, T. D. Downs, H. R. Cash, and R. C. Grotz, “Progress in development of the index of adl,” The gerontologist, vol. 10, no. 1_Part_1, pp. 20–30, 1970.

- [7] M. C. Mozer, “The neural network house: An environment hat adapts to its inhabitants,” in Proc. AAAI Spring Symp. Intelligent Environments, vol. 58, 1998.

- [8] D. J. Cook, “Learning setting-generalized activity models for smart spaces,” IEEE intelligent systems, vol. 27, no. 1, pp. 32–38, 2012.

- [9] S. Szewcyzk, K. Dwan, B. Minor, B. Swedlove, and D. Cook, “Annotating smart environment sensor data for activity learning,” Technology and Health Care, vol. 17, no. 3, pp. 161–169, 2009.

- [10] D. Sánchez, M. Tentori, and J. Favela, “Activity recognition for the smart hospital,” IEEE intelligent systems, vol. 23, no. 2, 2008.

- [11] A. Fleury, N. Noury, and M. Vacher, “Supervised classification of activities of daily living in health smart homes using svm,” in Engineering in Medicine and Biology Society, 2009. EMBC 2009. Annual International Conference of the IEEE, pp. 6099–6102, IEEE, 2009.

- [12] X. Hong and C. D. Nugent, “Segmenting sensor data for activity monitoring in smart environments,” Personal and ubiquitous computing, vol. 17, no. 3, pp. 545–559, 2013.

- [13] G. Okeyo, L. Chen, H. Wang, and R. Sterritt, “Dynamic sensor data segmentation for real-time knowledge-driven activity recognition,” Pervasive and Mobile Computing, vol. 10, pp. 155–172, 2014.

- [14] J. Wen, M. Zhong, and Z. Wang, “Activity recognition with weighted frequent patterns mining in smart environments,” Expert Systems with Applications, vol. 42, no. 17-18, pp. 6423–6432, 2015.

- [15] E. Hoque and J. Stankovic, “Aalo: Activity recognition in smart homes using active learning in the presence of overlapped activities,” in Pervasive Computing Technologies for Healthcare (PervasiveHealth), 2012 6th International Conference on, pp. 139–146, IEEE, 2012.

- [16] H. T. Malazi and M. Davari, “Combining emerging patterns with random forest for complex activity recognition in smart homes,” Applied Intelligence, vol. 48, no. 2, pp. 315–330, 2018.

- [17] J. Quero, C. Orr, S. Zang, C. Nugent, A. Salguero, and M. Espinilla, “Real-time recognition of interleaved activities based on ensemble classifier of long short-term memory with fuzzy temporal windows,” in Multidisciplinary Digital Publishing Institute Proceedings, vol. 2, p. 1225, 2018.

- [18] D. J. Cook, N. C. Krishnan, and P. Rashidi, “Activity discovery and activity recognition: A new partnership,” IEEE transactions on cybernetics, vol. 43, no. 3, pp. 820–828, 2013.

- [19] H. Gjoreski and D. Roggen, “Unsupervised online activity discovery using temporal behaviour assumption,” in Proceedings of the 2017 ACM International Symposium on Wearable Computers, pp. 42–49, ACM, 2017.

- [20] A. Jurek, C. Nugent, Y. Bi, and S. Wu, “Clustering-based ensemble learning for activity recognition in smart homes,” Sensors, vol. 14, no. 7, pp. 12285–12304, 2014.

- [21] F.-T. Sun, Y.-T. Yeh, H.-T. Cheng, C. Kuo, M. Griss, et al., “Nonparametric discovery of human routines from sensor data,” in 2014 IEEE international conference on pervasive computing and communications (PerCom), pp. 11–19, IEEE, 2014.

- [22] E. Nazerfard, “Temporal features and relations discovery of activities from sensor data,” J Ambient Intell Human Comput (2018), https://doi.org/10.1007/s12652-018-0855-7.

- [23] D. Singh, E. Merdivan, I. Psychoula, J. Kropf, S. Hanke, M. Geist, and A. Holzinger, “Human activity recognition using recurrent neural networks,” in International Cross-Domain Conference for Machine Learning and Knowledge Extraction, pp. 267–274, Springer, 2017.

- [24] M. H. Kabir, M. R. Hoque, K. Thapa, and S.-H. Yang, “Two-layer hidden markov model for human activity recognition in home environments,” International Journal of Distributed Sensor Networks, vol. 12, no. 1, p. 4560365, 2016.

- [25] T. Kasteren, G. Englebienne, and B. Kröse, “An activity monitoring system for elderly care using generative and discriminative models,” Personal and ubiquitous computing, vol. 14, no. 6, pp. 489–498, 2010.

- [26] S. Yan, Y. Liao, X. Feng, and Y. Liu, “Real time activity recognition on streaming sensor data for smart environments,” in Progress in Informatics and Computing (PIC), 2016 International Conference on, pp. 51–55, IEEE, 2016.

- [27] N. C. Krishnan and D. J. Cook, “Activity recognition on streaming sensor data,” Pervasive and mobile computing, vol. 10, pp. 138–154, 2014.

- [28] N. Yala, B. Fergani, and A. Fleury, “Feature extraction for human activity recognition on streaming data,” in Innovations in Intelligent SysTems and Applications (INISTA), 2015 International Symposium on, pp. 1–6, IEEE, 2015.

- [29] F. Al Machot, H. C. Mayr, and S. Ranasinghe, “A windowing approach for activity recognition in sensor data streams,” in Ubiquitous and Future Networks (ICUFN), 2016 Eighth International Conference on, pp. 951–953, IEEE, 2016.

- [30] F. Al Machot, A. H. Mosa, M. Ali, and K. Kyamakya, “Activity recognition in sensor data streams for active and assisted living environments,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 28, no. 10, pp. 2933–2945, 2018.

- [31] J. Wan, M. J. O’grady, and G. M. O’hare, “Dynamic sensor event segmentation for real-time activity recognition in a smart home context,” Personal and Ubiquitous Computing, vol. 19, no. 2, pp. 287–301, 2015.

- [32] M. Espinilla, J. Medina, J. Hallberg, and C. Nugent, “A new approach based on temporal sub-windows for online sensor-based activity recognition,” Journal of Ambient Intelligence and Humanized Computing, pp. 1–13, 2018.

- [33] M. Li, M. O’Grady, X. Gu, M. A. Alawlaqi, G. O’Hare, et al., “Time-bounded activity recognition for ambient assisted living,” IEEE Transactions on Emerging Topics in Computing, 2018.

- [34] N. T. Nguyen, D. Q. Phung, S. Venkatesh, and H. Bui, “Learning and detecting activities from movement trajectories using the hierarchical hidden markov model,” in Computer Vision and Pattern Recognition, 2005. CVPR 2005. IEEE Computer Society Conference on, vol. 2, pp. 955–960, IEEE, 2005.

- [35] T. Nef, P. Urwyler, M. Büchler, I. Tarnanas, R. Stucki, D. Cazzoli, R. Müri, and U. Mosimann, “Evaluation of three state-of-the-art classifiers for recognition of activities of daily living from smart home ambient data,” Sensors, vol. 15, no. 5, pp. 11725–11740, 2015.