Online Learning with Optimism and Delay

Abstract

Inspired by the demands of real-time climate and weather forecasting, we develop optimistic online learning algorithms that require no parameter tuning and have optimal regret guarantees under delayed feedback. Our algorithms—DORM, DORM+, and AdaHedgeD—arise from a novel reduction of delayed online learning to optimistic online learning that reveals how optimistic hints can mitigate the regret penalty caused by delay. We pair this delay-as-optimism perspective with a new analysis of optimistic learning that exposes its robustness to hinting errors and a new meta-algorithm for learning effective hinting strategies in the presence of delay. We conclude by benchmarking our algorithms on four subseasonal climate forecasting tasks, demonstrating low regret relative to state-of-the-art forecasting models.


1 Introduction

Online learning is a sequential decision-making paradigm in which a learner is pitted against a potentially adversarial environment (Shalev-Shwartz, 2007; Orabona, 2019). At time $t$, the learner must select a play $w_t$ from some set of possible plays $W$. The environment then reveals the loss function $\ell_t$ and the learner pays the cost $\ell_t(w_t)$. The learner uses information collected in previous rounds to improve its plays in subsequent rounds. Optimistic online learners additionally make use of side-information or “hints” about expected future losses to improve their plays. Over a period of length $T$, the goal of the learner is to minimize regret, an objective that quantifies the performance gap between the learner and the best possible constant play in retrospect in some competitor set $U$: $\mathrm{Regret}_T \triangleq \sup_{u \in U} \mathrm{Regret}_T(u)$ for $\mathrm{Regret}_T(u) \triangleq \sum_{t=1}^{T} \ell_t(w_t) - \ell_t(u)$. Adversarial online learning algorithms provide robust performance in many complex real-world online prediction problems such as climate or weather forecasting.
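As a concrete illustration of the regret objective described above, the following minimal sketch computes the regret of a sequence of plays against the best fixed competitor from a finite candidate set. The helper name `regret` and the toy linear losses are illustrative assumptions, not part of the paper's library.

```python
import numpy as np

def regret(losses, plays, competitors):
    """Cumulative loss of the plays minus that of the best fixed competitor.

    `competitors` is a finite list standing in for the competitor set;
    this is an illustrative simplification.
    """
    learner_loss = sum(loss(w) for loss, w in zip(losses, plays))
    best_fixed = min(sum(loss(u) for loss in losses) for u in competitors)
    return learner_loss - best_fixed

# Toy example: linear losses <g_t, w> over two experts.
gradients = [np.array([1.0, 0.0]), np.array([0.0, 1.0]), np.array([1.0, 0.0])]
losses = [lambda w, g=g: float(g @ w) for g in gradients]
plays = [np.array([0.5, 0.5])] * 3                       # always play uniform weights
competitors = [np.array([1.0, 0.0]), np.array([0.0, 1.0])]
toy_regret = regret(losses, plays, competitors)
```

Here the uniform play pays 0.5 per round, while the second expert pays 1 in total, so the learner trails the best fixed expert by 0.5.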

In traditional online learning paradigms, the loss for round $t$ is revealed to the learner immediately at the end of round $t$. However, many real-world applications produce delayed feedback, i.e., the loss for round $t$ is not available until round $t + D$ for some delay period $D$ (our initial presentation will assume constant delay $D$, but we provide extensions to variable and unbounded delays in App. O). Existing delayed online learning algorithms achieve optimal worst-case regret rates against adversarial loss sequences, but each has drawbacks when deployed for real applications with short horizons $T$. Some use only a small fraction of the data to train each learner (Weinberger & Ordentlich, 2002; Joulani et al., 2013); others tune their parameters using uniform bounds on future gradients that are often challenging to obtain or overly conservative in applications (McMahan & Streeter, 2014; Quanrud & Khashabi, 2015; Joulani et al., 2016; Korotin et al., 2020; Hsieh et al., 2020). Only the concurrent work of Hsieh et al. (2020, Thm. 13) can make use of optimistic hints and only for the special case of unconstrained online gradient descent.

In this work, we aim to develop robust and practical algorithms for real-world delayed online learning. To this end, we introduce three novel algorithms—DORM, DORM+, and AdaHedgeD—that use every observation to train the learner, have no parameters to tune, exhibit optimal worst-case regret rates under delay, and enjoy improved performance when accurate hints for unobserved losses are available. We begin by formulating delayed online learning as a special case of optimistic online learning and use this “delay-as-optimism” perspective to develop:

- 1.

- 2.

- 3.

- 4.

- 5. The first meta-algorithm for learning a low-regret optimism strategy under delay (Thm. 13).

We validate our algorithms on the problem of subseasonal forecasting in Sec. 7. Subseasonal forecasting, the task of predicting precipitation and temperature 2-6 weeks in advance, is crucial for allocating water resources and preparing for weather extremes (White et al., 2017). Subseasonal forecasting presents several challenges for online learning algorithms. First, real-time subseasonal forecasting suffers from delayed feedback: multiple forecasts are issued before receiving feedback on the first. Second, the regret horizons are short: a common evaluation period for semimonthly forecasting is one year, resulting in 26 total forecasts. Third, forecasters cannot have difficult-to-tune parameters in real-time, practical deployments. We demonstrate that our algorithms DORM, DORM+, and AdaHedgeD successfully overcome these challenges and achieve consistently low regret compared to the best forecasting models.

Our Python library for Optimistic Online Learning under Delay (PoolD) and experiment code are available at https://github.com/geflaspohler/poold.

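Notation For integers $a, b$, we use the shorthand $[b] \triangleq \{1, \dots, b\}$ and $g_{a:b} \triangleq \sum_{i=a}^{b} g_i$. We say a function $f$ is proper if it is somewhere finite and never $-\infty$. We let $\partial f(w) = \{g \in \mathbb{R}^d : f(u) \ge f(w) + \langle g, u - w\rangle, \ \forall u \in \mathbb{R}^d\}$ denote the set of subgradients of $f$ at $w \in \mathbb{R}^d$ and say $f$ is $\mu$-strongly convex over a convex set $W \subseteq \operatorname{int} \operatorname{dom} f$ with respect to $\|\cdot\|$ with dual norm $\|\cdot\|_*$ if $\forall w, u \in W$ and $g \in \partial f(w)$, we have $f(u) \ge f(w) + \langle g, u - w\rangle + \frac{\mu}{2}\|w - u\|^2$. For differentiable $\psi$, we define the Bregman divergence $B_\psi(w, u) \triangleq \psi(w) - \psi(u) - \langle \nabla\psi(u), w - u\rangle$. We define $\operatorname{diam}(W) \triangleq \sup_{w, u \in W} \|w - u\|$, $(r)_+ \triangleq \max(r, 0)$, and $\min(r, s)_+ \triangleq (\min(r, s))_+$.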

2 Preliminaries: Optimistic Online Learning

Standard online learning algorithms, such as follow the regularized leader (FTRL) and online mirror descent (OMD), achieve optimal worst-case regret against adversarial loss sequences (Orabona, 2019). However, many loss sequences encountered in applications are not truly adversarial. Optimistic online learning algorithms aim to improve performance when loss sequences are partially predictable, while remaining robust to adversarial sequences (see, e.g., Azoury & Warmuth, 2001; Chiang et al., 2012; Rakhlin & Sridharan, 2013b; Steinhardt & Liang, 2014). In optimistic online learning, the learner is provided with a “hint” in the form of a pseudo-loss $\tilde\ell_t$ at the start of round $t$ that represents a guess for the true unknown loss. The online learner can incorporate this hint before making play $w_t$.

In standard formulations of optimistic online learning, the convex pseudo-loss is added to the standard FTRL or OMD regularized objective function and leads to optimistic variants of these algorithms: optimistic FTRL (OFTRL, Rakhlin & Sridharan, 2013a) and single-step optimistic OMD (SOOMD, Joulani et al., 2017, Sec. 7.2). Let $\tilde g_{t+1}$ and $g_t$ denote subgradients of the pseudo-loss and true loss respectively. The inclusion of an optimistic hint leads to the following linearized update rules for play $w_{t+1}$:

$$w_{t+1} = \operatorname*{argmin}_{w \in W} \ \langle g_{1:t} + \tilde g_{t+1}, w\rangle + \lambda\psi(w) \tag{OFTRL}$$

$$w_{t+1} = \operatorname*{argmin}_{w \in W} \ \langle g_t + \tilde g_{t+1} - \tilde g_t, w\rangle + B_{\lambda\psi}(w, w_t) \quad \text{with } \tilde g_0 = 0 \text{ and arbitrary } w_0 \tag{SOOMD}$$

where $\tilde g_{t+1}$ is the hint subgradient, $\lambda \ge 0$ is a regularization parameter, and $\psi$ is a proper regularization function that is $1$-strongly convex with respect to a norm $\|\cdot\|$. The optimistic learner enjoys reduced regret whenever the hinting error $\|g_{t+1} - \tilde g_{t+1}\|_*$ is small (Rakhlin & Sridharan, 2013a; Joulani et al., 2017). Common choices of optimistic hints include the last observed subgradient $\tilde g_{t+1} = g_t$ or the average of previously observed subgradients $\tilde g_{t+1} = \frac{1}{t} g_{1:t}$ (Rakhlin & Sridharan, 2013a). We note that the standard FTRL and OMD updates can be recovered by setting the optimistic hints to zero.
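To make the optimistic FTRL update concrete, the sketch below instantiates it on the probability simplex with a negative entropy regularizer, for which the minimizer of a linear term plus the scaled regularizer has the closed form of a softmax. The function names and the constant-gradient toy sequence are illustrative assumptions; the hint used is the last observed subgradient mentioned above.

```python
import numpy as np

def oftrl_entropy_play(grad_sum, hint, lam):
    """Optimistic FTRL play on the simplex with negative entropy regularization.

    Minimizing <grad_sum + hint, w> + lam * sum_j w_j log w_j over the simplex
    gives weights proportional to exp(-(grad_sum + hint) / lam).
    """
    z = -(grad_sum + hint) / lam
    z -= z.max()                      # stabilize the exponentials
    w = np.exp(z)
    return w / w.sum()

def run_oftrl(gradients, lam=1.0, use_hint=True):
    """Return regret against the best single coordinate for linear losses."""
    d = gradients.shape[1]
    grad_sum, last, total = np.zeros(d), np.zeros(d), 0.0
    for g in gradients:
        hint = last if use_hint else np.zeros(d)   # last-gradient hint or no hint
        w = oftrl_entropy_play(grad_sum, hint, lam)
        total += float(g @ w)
        grad_sum += g
        last = g
    return total - gradients.sum(axis=0).min()

# A perfectly predictable sequence: the first expert always incurs loss 1.
predictable = np.tile([1.0, 0.0], (20, 1))
regret_with_hint = run_oftrl(predictable, use_hint=True)
regret_without_hint = run_oftrl(predictable, use_hint=False)
```

On this predictable sequence the hinted learner moves weight away from the bad expert one round earlier, so its regret is smaller than that of the unhinted learner; setting the hint to zero recovers plain FTRL, as noted in the text.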

3 Online Learning with Optimism and Delay

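In the delayed feedback setting with constant delay of length $D$, the learner only observes $(\ell_i)_{i=1}^{t-D}$ before making play $w_{t+1}$. In this setting, we propose counterparts of the OFTRL and SOOMD online learning algorithms, which we call optimistic delayed FTRL (ODFTRL) and delayed optimistic online mirror descent (DOOMD) respectively:

$$w_{t+1} = \operatorname*{argmin}_{w \in W} \ \langle g_{1:t-D} + h_{t+1}, w\rangle + \lambda\psi(w) \tag{ODFTRL}$$

$$w_{t+1} = \operatorname*{argmin}_{w \in W} \ \langle g_{t-D} + h_{t+1} - h_t, w\rangle + B_{\lambda\psi}(w, w_t) \quad \text{with } h_0 = 0,\ g_t = 0 \ \forall t \le 0, \text{ and arbitrary } w_0 \tag{DOOMD}$$

for hint vector $h_{t+1}$. Our use of the notation $h_{t+1}$ instead of $\tilde g_{t+1}$ for the optimistic hint here is suggestive. Our regret analysis in Thms. 5 and 6 reveals that, instead of hinting only for the “future” missing loss $g_{t+1}$, delayed online learners should use hints that guess at the summed subgradients of all delayed and future losses: $h_{t+1} = \sum_{s=t-D+1}^{t+1} \tilde g_s$.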

3.1 Delay as Optimism

To analyze the regret of the ODFTRL and DOOMD algorithms, we make use of the first key insight of this paper: “Learning with delay is a special case of learning with optimism.” In particular, ODFTRL and DOOMD are instances of OFTRL and SOOMD respectively with a particularly “bad” choice of optimistic hint $\tilde g_{t+1}$ that deletes the unobserved loss subgradients $g_{t-D+1:t}$.

The implication of this reduction of delayed online learning to optimistic online learning is that any regret bound shown for undelayed OFTRL or SOOMD immediately yields a regret bound for ODFTRL and DOOMD under delay. As we demonstrate in the remainder of the paper, this novel connection between delayed and optimistic online learning allows us to bound the regret of optimistic, self-tuning, and tuning-free algorithms for the first time under delay.
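The reduction can be checked numerically. The sketch below assumes the “bad” hint is the delayed learner's hint minus the sum of the unobserved subgradients, so that the undelayed optimistic learner ends up minimizing over exactly the observed subgradients plus the delayed hint. The entropy-regularized closed form, the random data, and all names are illustrative assumptions.

```python
import numpy as np

def ftrl_entropy(linear_term, lam=1.0):
    """FTRL play on the simplex with negative entropy regularization (softmax)."""
    z = -linear_term / lam
    w = np.exp(z - z.max())
    return w / w.sum()

rng = np.random.default_rng(0)
T, D, d = 12, 3, 4
g = rng.normal(size=(T, d))        # g[s-1] is the loss subgradient of round s
h = rng.normal(size=(T + 2, d))    # h[t+1] is an arbitrary delayed hint for play t+1

max_gap = 0.0
for t in range(D, T):                              # t = number of completed rounds
    observed = g[: t - D].sum(axis=0)              # subgradients of rounds 1..t-D
    unobserved = g[t - D : t].sum(axis=0)          # subgradients of rounds t-D+1..t
    delayed_play = ftrl_entropy(observed + h[t + 1])
    bad_hint = h[t + 1] - unobserved               # hint that deletes unobserved terms
    optimistic_play = ftrl_entropy(g[:t].sum(axis=0) + bad_hint)
    max_gap = max(max_gap, float(np.abs(delayed_play - optimistic_play).max()))
```

The two plays coincide up to floating-point error on every round, which is why a regret bound for the undelayed optimistic learner transfers directly to the delayed learner.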

Finally, it is worth reflecting on the key property of OFTRL and SOOMD that enables the delay-to-optimism reduction: each algorithm depends on $g_t$ and $\tilde g_{t+1}$ only through the sum $g_{1:t} + \tilde g_{t+1}$ (for SOOMD, $g_{1:t} + \tilde g_{t+1} = \sum_{i=0}^{t} (g_i + \tilde g_{i+1} - \tilde g_i)$ with $g_0 = \tilde g_0 = 0$). For the “bad” hints of Lems. 1 and 2, these sums are observable even though $g_t$ and $\tilde g_{t+1}$ are not separately observable at time $t+1$ due to delay. A number of alternatives to SOOMD have been proposed for optimistic OMD (Chiang et al., 2012; Rakhlin & Sridharan, 2013a, b; Kamalaruban, 2016). Unlike SOOMD, these procedures all incorporate optimism in two steps, as in the updates

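$$w_{t+1/2} = \operatorname*{argmin}_{w \in W} \ \langle g_t, w\rangle + B_{\lambda\psi}(w, w_{t-1/2}) \tag{3}$$

$$w_{t+1} = \operatorname*{argmin}_{w \in W} \ \langle \tilde g_{t+1}, w\rangle + B_{\lambda\psi}(w, w_{t+1/2}) \tag{4}$$

described in Rakhlin & Sridharan (2013a, Sec. 2.2). It is unclear how to reduce delayed OMD to an instance of one of these two-step procedures, as knowledge of the unobserved $g_t$ is needed to carry out the first step.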

3.2 Delayed and Optimistic Regret Bounds

To demonstrate the utility of our delay-as-optimism perspective, we first present the following new regret bounds for OFTRL and SOOMD, proved in Apps. B and C respectively.

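Both results feature the robust Huber penalty (Huber, 1964)

$$\operatorname{huber}(\|\tilde g_t - g_t\|_*, \|g_t\|_*) \triangleq \tfrac{1}{2}\|\tilde g_t - g_t\|_*^2 - \tfrac{1}{2}\left(\|\tilde g_t - g_t\|_* - \|g_t\|_*\right)_+^2 \le \min\left(\tfrac{1}{2}\|\tilde g_t - g_t\|_*^2, \ \|g_t\|_* \|\tilde g_t - g_t\|_*\right) \tag{8}$$

in place of the more common squared error term $\frac{1}{2}\|\tilde g_t - g_t\|_*^2$. As a result, Thms. 3 and 4 strictly improve the rate-optimal OFTRL and SOOMD regret bounds of Rakhlin & Sridharan (2013a); Mohri & Yang (2016); Orabona (2019, Thm. 7.28) and Joulani et al. (2017, Sec. 7.2) by revealing a previously undocumented robustness to inaccurate hints $\tilde g_t$. We will use this robustness to large hint error to establish optimal regret bounds under delay.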
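The robustness claim can be seen in a few lines of code. The sketch below assumes the penalty takes the standard Huber form, quadratic in the hint error while the error is below the subgradient magnitude and linear beyond it; the function name and argument names are illustrative.

```python
def huber(hint_error_norm, grad_norm):
    """Huber-style penalty: quadratic for small hint error, linear for large.

    Assumed form: 0.5 * a**2 - 0.5 * max(a - b, 0)**2 with a the hint error
    norm and b the subgradient norm.
    """
    a, b = float(hint_error_norm), float(grad_norm)
    return 0.5 * a**2 - 0.5 * max(a - b, 0.0) ** 2

small_error = huber(1.0, 2.0)   # quadratic regime: equals 0.5 * 1.0**2
large_error = huber(3.0, 1.0)   # linear regime: equals 3.0 * 1.0 - 0.5 * 1.0**2
```

In the second call the squared-error term alone would be 4.5, whereas the Huber penalty is 2.5, illustrating how a very inaccurate hint is charged only linearly in its error.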

As an immediate consequence of this regret analysis and our delay-as-optimism perspective, we obtain the first general analyses of FTRL and OMD with optimism and delay.

Theorem 6 (DOOMD regret).

If is differentiable and , then, for all , the DOOMD iterates satisfy

| (11) | ||||

| (12) |

Our results show a compounding of regret due to delay: the $b_{t,F}$ term of Thm. 5 is of size $O(D+1)$ whenever $\|h_t\|_* = O(D+1)$, and the same holds for $b_{t,O}$ of Thm. 6 if $\|h_{t+1} - h_t\|_* = O(1)$. An optimal setting of $\lambda$ therefore delivers $O(\sqrt{(D+1)T})$ regret, yielding the minimax optimal rate for adversarial learning under delay (Weinberger & Ordentlich, 2002). Thms. 5 and 6 also reveal the heightened value of optimism in the presence of delay: in addition to providing an effective guess of the future subgradient $g_t$, an optimistic hint can approximate the missing delayed feedback ($g_{t-D:t-1}$) and thereby significantly reduce the penalty of delay. If, on the other hand, the hints are a poor proxy for the missing loss subgradients, the novel Huber term ensures that we still only pay the minimax optimal penalty for delayed feedback.

Related work A classical approach to delayed feedback in online learning is the so-called “replication” strategy in which $D+1$ distinct learners take turns observing and responding to feedback (Weinberger & Ordentlich, 2002; Joulani et al., 2013; Agarwal & Duchi, 2011; Mesterharm, 2005). While minimax optimal in adversarial settings, this strategy has the disadvantage that each learner only sees $T/(D+1)$ losses and is completely isolated from the other replicates, exacerbating the problem of short prediction horizons. In contrast, we develop and analyze non-replicated delayed online learning strategies that use a combination of optimistic hinting and self-tuned regularization to mitigate the effects of delay while retaining optimal worst-case behavior.

We are not aware of prior analyses of DOOMD, and, to our knowledge, Thm. 5 and its adaptive generalization Thm. 10 provide the first general analysis of delayed FTRL, apart from the concurrent work of Hsieh et al. (2020, Thm. 1). Hsieh et al. (2020, Thm. 13) and Quanrud & Khashabi (2015, Thm. 2.1) focus only on delayed gradient descent, Korotin et al. (2020) study General Hedging, and Joulani et al. (2016, Thm. 4) and Quanrud & Khashabi (2015, Thm. A.5) study non-optimistic OMD under delay. Thms. 5, 6, and 10 strengthen these results from the literature, which feature a sum of subgradient norms ($\sum_{s=t-D}^{t-1} \|g_s\|_*$ or $D\|g_t\|_*$) in place of $\|\sum_{s=t-D}^{t-1} g_s\|_*$. Even in the absence of optimism, the latter can be significantly smaller: e.g., if the gradients are i.i.d. mean-zero vectors, the former has size $\Omega(D)$ while the latter has expectation $O(\sqrt{D})$. In the absence of optimism, McMahan & Streeter (2014) obtain a bound comparable to Thm. 5 for the special case of one-dimensional unconstrained online gradient descent.

In the absence of delay, Cutkosky (2019) introduces meta-algorithms for imbuing learning procedures with optimism while remaining robust to inaccurate hints; however, unlike OFTRL and SOOMD, the procedures of Cutkosky require separate observation of $\tilde g_{t+1}$ and each $g_t$, making them unsuitable for our delay-to-optimism reduction.

3.3 Tuning Regularizers with Optimism and Delay

The online learning algorithms introduced so far all include a regularization parameter $\lambda$. In theory and in practice, these algorithms only achieve low regret if the regularization parameter $\lambda$ is chosen appropriately. In standard FTRL, for example, one such setting that achieves optimal regret is $\lambda = \sqrt{\sum_{t=1}^{T} \|g_t\|_*^2}$. This choice, however, cannot be used in practice as it relies on knowledge of all future unobserved loss subgradients. To make use of online learning algorithms, the tuning parameter $\lambda$ is often set using coarse upper bounds on, e.g., the maximum possible subgradient norm. However, these bounds are often very conservative and lead to poor real-world performance.

In the following sections, we introduce two strategies for tuning regularization with optimism and delay. Sec. 4 introduces the DORM and DORM+ algorithms, variants of ODFTRL and DOOMD that are entirely tuning-free. Sec. 5 introduces the AdaHedgeD algorithm, an adaptive variant of ODFTRL that is self-tuning; a sequence of regularization parameters $\lambda_t$ is set automatically using new, tighter bounds on algorithm regret. All three algorithms achieve the minimax optimal regret rate under delay, support optimism, and have strong real-world performance as shown in Sec. 7.

4 Tuning-free Learning with Optimism and Delay

Regret matching (RM) (Blackwell, 1956; Hart & Mas-Colell, 2000) and regret matching+ (RM+) (Tammelin et al., 2015) are online learning algorithms that have strong empirical performance. RM was developed to find correlated equilibria in two-player games and is commonly used to minimize regret over the simplex. RM+ is a modification of RM designed to accelerate convergence and used to effectively solve the game of Heads-up Limit Texas Hold’em poker (Bowling et al., 2015). RM and RM+ support neither optimistic hints nor delayed feedback, and known regret bounds have a suboptimal scaling with respect to the problem dimension (Cesa-Bianchi & Lugosi, 2006; Orabona & Pál, 2015). To extend these algorithms to the delayed and optimistic setting and recover the optimal regret rate, we introduce our generalizations, delayed optimistic regret matching (DORM)

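$$w_{t+1} = \tilde w_{t+1} / \langle \mathbf{1}, \tilde w_{t+1}\rangle \quad \text{for} \quad \tilde w_{t+1} \triangleq \max\left(0, (r_{1:t-D} + h_{t+1})/\lambda\right)^{q-1} \tag{DORM}$$

and delayed optimistic regret matching+ (DORM+)

$$w_{t+1} = \tilde w_{t+1} / \langle \mathbf{1}, \tilde w_{t+1}\rangle \quad \text{for} \quad h_0 = \tilde w_0 \triangleq 0, \quad \tilde w_{t+1} \triangleq \max\left(0, \tilde w_t^{\,p-1} + (r_{t-D} + h_{t+1} - h_t)/\lambda\right)^{q-1}. \tag{DORM+}$$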

Each algorithm makes use of an instantaneous regret vector $r_t \triangleq \mathbf{1}\langle g_t, w_t\rangle - g_t$ that quantifies the relative performance of each expert with respect to the play $w_t$ and the linearized loss subgradient $g_t$. The updates also include a parameter $q \ge 2$ and its conjugate exponent $p = q/(q-1)$ that is set to recover the minimax optimal scaling of regret with the number of experts (see Cor. 9). We note that DORM and DORM+ recover the standard RM and RM+ algorithms when $D = 0$, $\lambda = 1$, $q = 2$, and $h_t = 0$.
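The following is a minimal sketch of a delayed, optimistic regret matching+ style learner. It assumes the update adds the delayed instantaneous regret and the change in hint to the previous unnormalized weights (raised to the conjugate power), clips at zero, and normalizes, with a uniform play when all unnormalized weights vanish; that fallback, the function names, and the toy data are assumptions for illustration and should be checked against the displayed update.

```python
import numpy as np

def dorm_plus_step(w_tilde, r_delayed, h_new, h_old, lam=1.0, q=2.0):
    """One assumed DORM+-style update; returns (unnormalized, normalized) weights."""
    p = q / (q - 1.0)                                   # conjugate exponent of q
    base = np.maximum(0.0, w_tilde ** (p - 1.0) + (r_delayed + h_new - h_old) / lam)
    w_tilde_new = base ** (q - 1.0)
    total = w_tilde_new.sum()
    d = len(w_tilde_new)
    # Assumed convention: play uniform weights when every entry is zero.
    w = w_tilde_new / total if total > 0 else np.full(d, 1.0 / d)
    return w_tilde_new, w

def run_dorm_plus(gradients, D=0, hints=None, lam=1.0, q=2.0):
    """Simulate T rounds with constant delay D; hints[t] is the hint for play t."""
    T, d = gradients.shape
    h = np.zeros((T + 1, d)) if hints is None else np.asarray(hints, dtype=float)
    r = np.zeros((T + 1, d))            # r[s] = instantaneous regret of round s
    w_tilde = np.zeros(d)
    plays = np.zeros((T, d))
    for t in range(T):
        # Feedback from round t - D is the most recent that has arrived.
        r_delayed = r[t - D] if t - D >= 1 else np.zeros(d)
        w_tilde, w = dorm_plus_step(w_tilde, r_delayed, h[t + 1], h[t], lam, q)
        plays[t] = w
        g = gradients[t]                # subgradient of round t + 1, revealed later
        r[t + 1] = g @ w - g            # 1 <g, w> - g
    return plays

# Expert 0 always has loss 0 and expert 1 always has loss 1.
toy = np.tile([0.0, 1.0], (6, 1))
plays_no_delay = run_dorm_plus(toy, D=0)
plays_delayed = run_dorm_plus(toy, D=2)
```

With no delay, no hints, and the default parameters, this reduces to regret matching+ and switches to the better expert after one round; with a delay of two, the learner keeps playing uniform weights until the first feedback arrives.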

4.1 Tuning-free Regret Bounds

To bound the regret of the DORM and DORM+ plays, we prove that DORM is an instance of ODFTRL and DORM+ is an instance of DOOMD. This connection enables us to immediately provide regret guarantees for these regret-matching algorithms under delayed feedback and with optimism. We first highlight a remarkable property of DORM and DORM+ that is the basis of their tuning-free nature. Under mild conditions:

The normalized DORM and DORM+ iterates $w_t$ are independent of the choice of regularization parameter $\lambda > 0$.

Lem. 7, proved in App. E, implies that DORM and DORM+ are automatically optimally tuned with respect to $\lambda$, even when run with a default value of $\lambda = 1$. Hence, these algorithms are tuning-free, a very appealing property for real-world deployments of online learning.
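This invariance is easy to verify numerically for a regret-matching style play. The sketch below assumes the DORM play normalizes the clipped, rescaled sum of observed regrets and hint raised to the power $q - 1$; since the regularization parameter only rescales the unnormalized weights, it cancels on normalization. The function name and random inputs are illustrative.

```python
import numpy as np

def dorm_play(regret_sum, hint, lam, q):
    """Assumed DORM-style play: clip, rescale by lam, raise to q - 1, normalize."""
    w_tilde = np.maximum(0.0, (regret_sum + hint) / lam) ** (q - 1.0)
    total = w_tilde.sum()
    d = len(w_tilde)
    return w_tilde / total if total > 0 else np.full(d, 1.0 / d)

rng = np.random.default_rng(1)
regret_sum, hint = rng.normal(size=5), rng.normal(size=5)
# The same inputs played with three very different regularization strengths.
lam_plays = [dorm_play(regret_sum, hint, lam, q=3.0) for lam in (0.01, 1.0, 100.0)]
```

All three plays agree up to floating-point error, so no search over the regularization parameter is needed.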

To show that DORM and DORM+ also achieve optimal regret scaling under delay, we connect them to ODFTRL and DOOMD operating on the nonnegative orthant with a special surrogate loss (see App. D for our proof):

Lem. 8 enables the following optimally-tuned regret bounds for DORM and DORM+ run with any choice of $\lambda > 0$:

Cor. 9, proved in App. F, suggests a natural hinting strategy for reducing the regret of DORM and DORM+: predict the sum of unobserved instantaneous regrets $\sum_{s=t-D}^{t} r_s$. We explore this strategy empirically in Sec. 7. Cor. 9 also highlights the value of the $q$ parameter in DORM and DORM+: using the easily computed value $q = \operatorname*{argmin}_{q' \ge 2} d^{2/q'}(q'-1)$ yields the minimax optimal dependence of regret on dimension (Cesa-Bianchi & Lugosi, 2006; Orabona & Pál, 2015). By Lem. 8, setting $q$ in this way is equivalent to selecting a robust $\frac{1}{2}\|\cdot\|_p^2$ regularizer (Gentile, 2003) for the underlying ODFTRL and DOOMD problems.

Related work Without delay, Farina et al. (2021) independently developed optimistic versions of RM and RM+ by reducing them to OFTRL and a two-step variant of optimistic OMD (4). Unlike SOOMD, this two-step optimistic OMD requires separate observation of $\tilde g_{t+1}$ and $g_t$, making it unsuitable for our delay-as-optimism reduction and resulting in a different algorithm from DORM+ even when $D = 0$. In addition, their regret bounds and prior bounds for RM and RM+ (special cases of DORM and DORM+ with $q = 2$) have suboptimal regret when the dimension $d$ is large (Bowling et al., 2015; Zinkevich et al., 2007).

5 Self-tuned Learning with Optimism and Delay

In this section, we analyze an adaptive version of ODFTRL with time-varying regularization $\lambda_t \psi$ and develop strategies for setting $\lambda_t$ appropriately in the presence of optimism and delay. We begin with a new general regret analysis of optimistic delayed adaptive FTRL (ODAFTRL)

$$w_{t+1} = \operatorname*{argmin}_{w \in W} \ \langle g_{1:t-D} + h_{t+1}, w\rangle + \lambda_{t+1}\psi(w) \tag{ODAFTRL}$$

where $h_{t+1}$ is an arbitrary hint vector revealed before $w_{t+1}$ is generated, $\psi$ is $1$-strongly convex with respect to a norm $\|\cdot\|$, and $\lambda_t \ge 0$ is a regularization parameter.

Theorem 10 (ODAFTRL regret).

If $\psi$ is nonnegative and $\lambda_t$ is non-decreasing in $t$, then, $\forall u \in W$, the ODAFTRL iterates $w_t$ satisfy

| (20) | ||||

| (21) | ||||

| (22) |

The proof of this result in App. G builds on a new regret bound for undelayed optimistic adaptive FTRL (OAFTRL). In the absence of delay ($D = 0$), Thm. 10 strictly improves existing regret bounds (Rakhlin & Sridharan, 2013a; Mohri & Yang, 2016; Joulani et al., 2017) for OAFTRL by providing tighter guarantees whenever the hinting error $\|h_t - \sum_{s=t-D}^{t} g_s\|_*$ is larger than the subgradient magnitude $\|g_t\|_*$. In the presence of delay, Thm. 10 benefits both from robustness to hinting error in the worst case and the ability to exploit accurate hints in the best case. The bounded-domain factors $a_{t,F}$ strengthen both standard OAFTRL regret bounds and the concurrent bound of Hsieh et al. (2020, Thm. 1) when $\operatorname{diam}(W)$ is small and will enable us to design practical $\lambda_t$-tuning strategies under delay without any prior knowledge of unobserved subgradients. We now turn to these self-tuning protocols.

5.1 Conservative Tuning with Delayed Upper Bound

Setting aside the bounded-domain factors in Thm. 10 for now, the adaptive sequence is known to be a near-optimal minimizer of the ODAFTRL regret bound (McMahan, 2017, Lemma 1). However, this value is unobservable at time . A common strategy is to play the conservative value , where is a uniform upper bound on the unobserved terms (Joulani et al., 2016; McMahan & Streeter, 2014). In practice, this requires computing an a priori upper bound on any subgradient norm that could possibly arise and often leads to extreme over-regularization (see Sec. 7).

As a preliminary step towards fully adaptive settings of $\lambda_t$, we analyze in App. H a new delayed upper bound (DUB) tuning strategy which relies only on observed terms and does not require upper bounds for future losses.

Theorem 11 (DUB regret).

As desired, the DUB setting of $\lambda_t$ depends only on previously observed $a_{t,F}$ and $b_{t,F}$ terms and achieves optimal regret scaling with the delay period $D$. However, the terms $a_{t,F}$, $b_{t,F}$ are themselves potentially loose upper bounds for the instantaneous regret at time $t$. In the following section, we show how the DUB regularization setting can be refined further to produce AdaHedgeD adaptive regularization.

5.2 Refined Tuning with AdaHedgeD

As noted by Erven et al. (2011); de Rooij et al. (2014); Orabona (2019), the effectiveness of an adaptive regularization setting that uses an upper bound on regret (such as $b_{t,F}$) relies heavily on the tightness of that bound. In practice, we want to set $\lambda_t$ using as tight a bound as possible. Our next result introduces a new tuning sequence that can be used with delayed feedback and is inspired by the popular AdaHedge algorithm (Erven et al., 2011). It makes use of the tightened regret analysis underlying Thm. 10 to enable tighter settings of $\lambda_t$ compared to DUB, while still controlling algorithm regret (see proof in App. I).

Theorem 12 (AdaHedgeD regret).

Remarkably, Thm. 12 yields a minimax optimal dependence on the delay parameter $D$ and nearly matches the Thm. 5 regret of the optimal constant $\lambda$ tuning. Although this regret bound is identical to that in Thm. 11, in practice the $\lambda_t$ values produced by AdaHedgeD can be orders of magnitude smaller than those of DUB, granting additional adaptivity. We evaluate the practical implications of these settings in Sec. 7.

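As a final note, when $\psi$ is bounded on $U$, we recommend choosing $\alpha$ so that $\alpha = \sup_{u \in U} \psi(u)$. For negative entropy regularization $\psi(u) = \sum_{j=1}^{d} u_j \ln u_j + \ln d$ on the simplex $U = W = \triangle_{d-1}$, this yields $\alpha = \ln d$ and a regret bound with minimax optimal $\sqrt{\ln d}$ dependence on $d$ (Cesa-Bianchi & Lugosi, 2006; Orabona & Pál, 2015).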

Related work Our AdaHedgeD $\delta_t$ terms differ from standard AdaHedge increments (see, e.g., Orabona, 2019, Sec. 7.6) due to the accommodation of delay, the incorporation of optimism, and the inclusion of the final two terms in the $\min$. These non-standard terms are central to reducing the impact of delay on our regret bounds. Prior and concurrent approaches to adaptive tuning under delay do not incorporate optimism and require an explicit upper bound on all future subgradient norms, a quantity which is often difficult to obtain or very loose (McMahan & Streeter, 2014; Joulani et al., 2016; Hsieh et al., 2020). Our optimistic algorithms, DUB and AdaHedgeD, admit comparable regret guarantees (Thms. 11 and 12) but require no prior knowledge of future subgradients.

6 Learning to Hint with Delay

As we have seen, optimistic hints play an important role in online learning under delay: effective hinting can counteract the increase in regret under delay. In this section, we consider the problem of choosing amongst several competing hinting strategies. We show that this problem can again be treated as a delayed online learning problem. In the following, we will call the original online learning problem the “base problem” and the learning-to-hint problem the “hinting problem.”

Suppose that, at time $t$, we observe the hints of $m$ different hinters arranged into a $d \times m$ matrix $H_t$. Each column of $H_t$ is one hinter’s best estimate of the sum of missing loss subgradients $g_{t-D:t}$. Our aim is to output a sequence of combined hints $h_t(\omega_t) \triangleq H_t \omega_t$ with low regret relative to the best constant combination strategy $\omega \in \triangle_{m-1}$ in hindsight. To achieve this using delayed online learning, we make use of a convex loss function for the hint learner that upper bounds the base learner regret.

Assumption 1 (Convex regret bound).

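For any hint sequence $(h_t)_{t=1}^{T}$ and $u \in U$, the base problem admits the regret bound $\mathrm{Regret}_T(u) \le C_0(u) + C_1(u)\sqrt{\sum_{t=1}^{T} f_t(h_t)}$ for $C_1(u), C_0(u) \ge 0$ and convex functions $f_t$ independent of $h_t$.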

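As we detail in App. K, Assump. 1 holds for all of the learning algorithms introduced in this paper. For example, by Cor. 9, if the base learner is DORM, we may choose $C_0(u) = 0$, $C_1(u) = \sqrt{d^{2/q}(q-1)/2}$, and the convex function $f_t(h_t) = \|r_t\|_q \|h_t - \sum_{s=t-D}^{t} r_s\|_q$ (the alternative choice $f_t(h_t) = \frac{1}{2}\|h_t - \sum_{s=t-D}^{t} r_s\|_q^2$ also bounds regret but may have size $\Theta((D+1)^2)$).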

For any base learner satisfying Assump. 1, we choose $\ell_t(\omega) = f_t(H_t \omega)$ as our hinting loss, use the tuning-free DORM+ algorithm to output the combination weights $\omega_t$ on each round, and provide the hint $h_t(\omega_t) = H_t \omega_t$ to the base learner. The following result, proved in App. J, shows that this learning to hint strategy performs nearly as well as the best constant hint combination strategy in retrospect.

Theorem 13 (Learning to hint regret).

To quantify the size of this regret bound, consider again the DORM base learner with . By Lem. 26 in App. K, for the maximum absolute entry of $H_t$. Each column of $H_t$ is a sum of $D+1$ subgradient hints, so is . Thus, for this choice of hinter loss, the term is , and the hint learner suffers only additional regret from learning to hint. Notably, this additive regret penalty is if (and when ), so the learning to hint strategy of Thm. 13 preserves minimax optimal regret rates.

Related work Rakhlin & Sridharan (2013a, Sec. 4.1) propose and analyze a method to learn optimism strategies for a two-step OMD base learner. Unlike Thm. 13, the approach does not accommodate delay, and the analyzed regret is only with respect to single hinting strategies rather than combination strategies, $h_t(\omega)$.

7 Experiments

Table 1: Average yearly RMSE of the three online learning algorithms and the six input models on each forecasting task.

| Task | AdaHedgeD | DORM | DORM+ | Model1 | Model2 | Model3 | Model4 | Model5 | Model6 |
|---|---|---|---|---|---|---|---|---|---|
| Precip. 3-4w | 21.726 | 21.731 | 21.675 | 21.973 | 22.431 | 22.357 | 21.978 | 21.986 | 23.344 |
| Precip. 5-6w | 21.868 | 21.957 | 21.838 | 22.030 | 22.570 | 22.383 | 22.004 | 21.993 | 23.257 |
| Temp. 3-4w | 2.273 | 2.259 | 2.247 | 2.253 | 2.352 | 2.394 | 2.277 | 2.319 | 2.508 |
| Temp. 5-6w | 2.316 | 2.316 | 2.303 | 2.270 | 2.368 | 2.459 | 2.278 | 2.317 | 2.569 |

We now apply the online learning techniques developed in this paper to the problem of adaptive ensembling for subseasonal forecasting. Our experiments are based on the subseasonal forecasting data of Flaspohler et al. (2021) that provides the forecasts of $d = 6$ machine learning and physics-based models for both temperature and precipitation at two forecast horizons: 3-4 weeks and 5-6 weeks. In operational subseasonal forecasting, feedback is delayed; models make $D = 2$ or $D = 3$ forecasts (depending on the forecast horizon) before receiving feedback. We use delayed, optimistic online learning to play a time-varying convex combination of input models and compete with the best input model over a year-long prediction period ($T = 26$ semimonthly dates). The loss function is the geographic root-mean squared error (RMSE) across $G = 514$ locations in the Western United States.
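To illustrate the ensembling setup, the sketch below evaluates the RMSE of a convex combination of model forecasts together with its gradient in the combination weights, which is the quantity a linearized online learner would consume. The function name, array shapes, and the zero-gradient convention at zero loss are assumptions for illustration; the paper's experimental details are in its appendices.

```python
import numpy as np

def rmse_loss_and_grad(forecasts, truth, w):
    """RMSE of the ensemble forecast and its gradient with respect to w.

    forecasts: (G, d) array, one column per input model, one row per location.
    truth:     (G,) array of observed values.
    w:         (d,) array of convex combination weights.
    """
    resid = forecasts @ w - truth
    G = len(truth)
    loss = float(np.sqrt(np.mean(resid**2)))
    # d/dw sqrt(mean(resid^2)) = forecasts^T resid / (G * loss); use 0 at loss == 0.
    grad = forecasts.T @ resid / (G * loss) if loss > 0 else np.zeros_like(w)
    return loss, grad

# Two locations and two models, each perfect at one location only.
forecasts = np.array([[1.0, 0.0], [0.0, 1.0]])
truth = np.array([1.0, 0.0])
loss, grad = rmse_loss_and_grad(forecasts, truth, np.array([0.5, 0.5]))
```

In this toy case the gradient is negative for the first model and positive for the second, so a learner following it shifts weight toward the model that matched the observations.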

We evaluate the relative merits of the delayed online learning techniques presented by computing yearly regret and mean RMSE for the ensemble plays made by the online learner in each year from 2011-2020. Unless otherwise specified, all online learning algorithms use the recent_g hint $\tilde g_s = g_{t-D-1}$, which approximates each unobserved subgradient at time $t$ with the most recent observed subgradient $g_{t-D-1}$. See App. L for full experimental details, App. N for algorithmic details, and App. M for extended experimental results.
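A recent_g style hint can be sketched as follows, assuming the delayed learner's hint is a sum of one guess per missing subgradient, so that guessing the most recent observed subgradient for each of the missing terms gives that subgradient scaled by the number of missing terms. The function name, the explicit dimension argument, and the zero hint before any feedback arrives are illustrative assumptions.

```python
import numpy as np

def recent_g_hint(observed_gradients, D, d):
    """Hint for the D + 1 missing subgradients using the latest observed one.

    observed_gradients: sequence of subgradients that have already arrived.
    D: constant delay; d: dimension (used when nothing has been observed yet).
    """
    if len(observed_gradients) == 0:
        return np.zeros(d)                       # assumed: no information, no hint
    latest = np.asarray(observed_gradients[-1], dtype=float)
    return (D + 1) * latest                      # one copy per missing subgradient

hint_example = recent_g_hint([[1.0, 2.0], [3.0, 4.0]], D=2, d=2)
empty_example = recent_g_hint([], D=2, d=2)
```

With a delay of two, three subgradients are missing at play time, so the hint is three times the latest observed subgradient.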

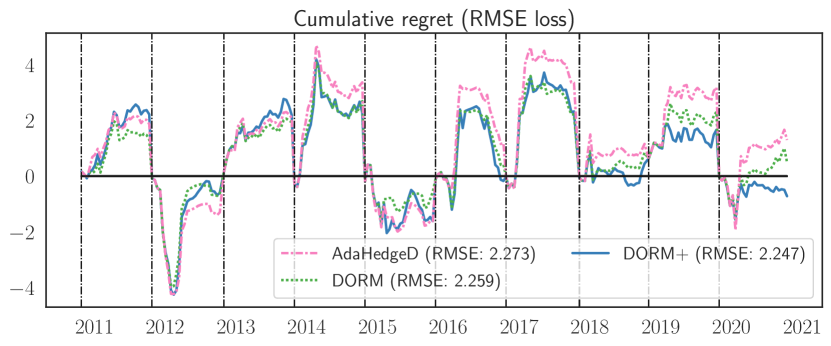

Competing with the best input model The primary benefit of online learning in this setting is its ability to achieve small average regret, i.e., to perform nearly as well as the best input model in the competitor set without knowing which is best in advance. We run our three delayed online learners—DORM, DORM+, and AdaHedgeD—on all four subseasonal prediction tasks and measure their average loss.

The average yearly RMSE for the three online learning algorithms and the six input models is shown in Table 1. The DORM+ algorithm tracks the performance of the best input model for all tasks except Temp. 5-6w. All online learning algorithms achieve negative regret for both precipitation tasks. Fig. 1 shows the yearly cumulative regret (in terms of the RMSE loss) of the online learning algorithms over the $10$-year evaluation period. There are several years (e.g., 2012, 2014, 2020) in which all online learning algorithms substantially outperform the best input forecasting model. The consistently low regret year-to-year of DORM+ compared to DORM and AdaHedgeD makes it a promising candidate for real-world delayed subseasonal forecasting. Notably, RM+ (a special case of DORM+) is known to have small tracking regret, i.e., it competes well even with strategies that switch between input models a bounded number of times (Tammelin et al., 2015, Thm. 2). We suspect that this is one source of DORM+’s superior performance. We also note that the self-tuned AdaHedgeD performs comparably to the optimally-tuned DORM, demonstrating the effectiveness of our self-tuning strategy.

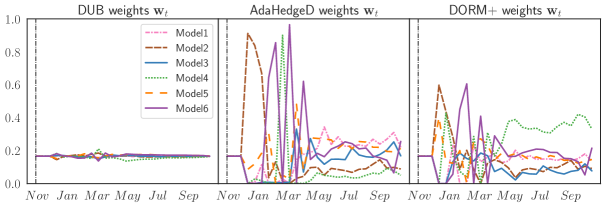

Impact of regularization We evaluate the impact of the three regularization strategies developed in this paper: 1) the upper bound DUB strategy, 2) the tighter AdaHedgeD strategy, and 3) the DORM+ algorithm that is tuning-free. This tuning-free property has evident practical benefits, as this section demonstrates.

Fig. 2 shows the yearly regret of the DUB, AdaHedgeD, and DORM+ algorithms. A consistent pattern appears in the yearly regret: DUB has moderate positive regret, AdaHedgeD has both the largest positive and negative regret values, and DORM+ sits between these two extremes. If we examine the weights played by each algorithm (Fig. 3), the weights of DUB and AdaHedgeD appear respectively over- and under-regularized compared to DORM+ (the top model for this task). DUB’s use of the upper bound results in a very large regularization setting and a virtually uniform weight setting. AdaHedgeD’s tighter bound produces a value for $\lambda$ that is two orders of magnitude smaller. However, in this short-horizon forecasting setting, AdaHedgeD’s aggressive plays result in higher average RMSE. By nature of its $\lambda$-free updates, DORM+ produces more moderately regularized plays and negative regret.

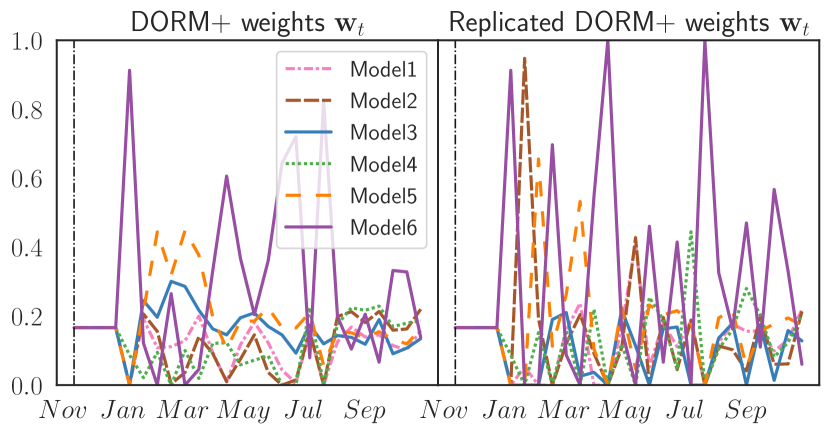

To replicate or not to replicate In this section, we compare the performance of replicated and non-replicated variants of our DORM+ algorithm. Both algorithms perform well (see Sec. M.3), but in all tasks, DORM+ outperforms replicated DORM+ (in which $D+1$ independent copies of DORM+ make staggered predictions). Fig. 4 provides an example of the weight plots produced by the replication strategy in the Temp. 5-6w task with $D = 3$. The separate nature of the replicated learner’s plays is evident in the weight plots and leads to a higher average RMSE than the 2.303 achieved by DORM+ in the Temp. 5-6w task.

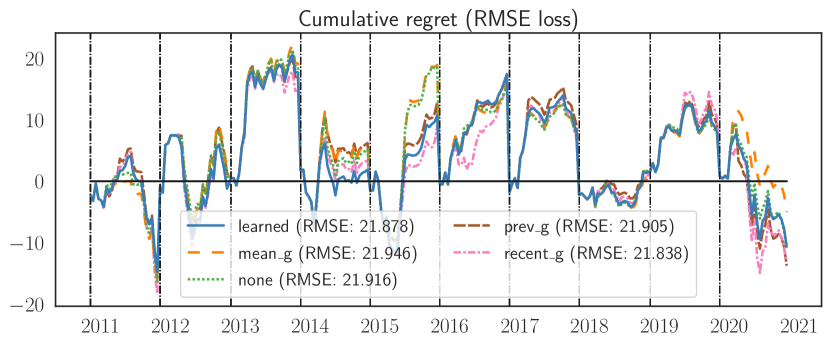

Learning to hint Finally, we examine the effect of optimism on the DORM+ algorithm and the ability of our “learning to hint” strategy to recover the performance of the best optimism strategy in retrospect. Following the hint construction protocol in Sec. N.2, we run the DORM+ base algorithm with $m = 4$ subgradient hinting strategies: $\tilde g_s = g_{t-D-1}$ (recent_g), $\tilde g_s = g_{s-D-1}$ (prev_g), $\tilde g_s = \frac{1}{t-D-1} g_{1:t-D-1}$ (mean_g), or $\tilde g_s = 0$ (none). We also use DORM+ as the meta-algorithm for hint learning to produce the learned optimism strategy that plays a convex combination of the four hinters. In Fig. 5, we first note that several optimism strategies outperform the none hinter, confirming the value of optimism in reducing regret. The learned variant of DORM+ avoids the worst-case performance of the individual hinters in any given year (e.g., 2015), while staying competitive with the best strategy (although it does not outperform the dominant recent_g strategy overall). We believe the performance of the online hinter could be further improved by developing tighter convex bounds on the regret of the base problem in the spirit of Assump. 1.

8 Conclusion

In this work, we confronted the challenges of delayed feedback and short regret horizons in online learning with optimism, developing practical non-replicated, self-tuned and tuning-free algorithms with optimal regret guarantees. Our “delay as optimism” reduction and our refined analysis of optimistic learning produced novel regret bounds for both optimistic and delayed online learning and elucidated the connections between these two problems. Within the subseasonal forecasting domain, we demonstrated that delayed online learning methods can produce zero-regret forecast ensembles that perform robustly from year-to-year. Our results highlighted DORM+ as a particularly promising candidate due to its tuning-free nature and small tracking regret.

In future work, we are excited to further develop optimism strategies under delay by 1) employing tighter convex loss bounds on the regret of the base algorithm to improve the learning to hint algorithm, 2) exploring the relative impact of hinting for “past” () versus “future” () missing subgradients (see Sec. M.5 for an initial exploration), and 3) developing adaptive self-tuning variants of the DOOMD algorithm. Within the subseasonal domain, we plan to leverage the flexibility of our optimism formulation to explore hinting strategies that use meteorological expertise to improve beyond the generic mean and past subgradient hints and to deploy our open-source subseasonal forecasting algorithms operationally.

Acknowledgements

This work was supported by Microsoft AI for Earth, an NSF GRFP, and the NSF grants no. 1925930 “Collaborative Research: TRIPODS Institute for Optimization and Learning”, no. 1908111 “AF: Small: Collaborative Research: New Representations for Learning Algorithms and Secure Computation”, and no. 2022446 “Foundations of Data Science Institute”. FO also thanks Nicolò Cesa-Bianchi and Christian Kroer for discussions on RM and RM+.

References

- Agarwal & Duchi (2011) Agarwal, A. and Duchi, J. C. Distributed delayed stochastic optimization. In Shawe-Taylor, J., Zemel, R., Bartlett, P., Pereira, F., and Weinberger, K. Q. (eds.), Advances in Neural Information Processing Systems, volume 24. Curran Associates, Inc., 2011.

- Azoury & Warmuth (2001) Azoury, K. S. and Warmuth, M. K. Relative loss bounds for on-line density estimation with the exponential family of distributions. Machine Learning, 43(3):211–246, 2001.

- Blackwell (1956) Blackwell, D. An analog of the minimax theorem for vector payoffs. Pacific Journal of Mathematics, 6(1):1–8, 1956.

- Bowling et al. (2015) Bowling, M., Burch, N., Johanson, M., and Tammelin, O. Heads-up limit hold’em poker is solved. Science, 347(6218):145–149, 2015. ISSN 0036-8075. doi: 10.1126/science.1259433.

- Cesa-Bianchi & Lugosi (2006) Cesa-Bianchi, N. and Lugosi, G. Prediction, learning, and games. Cambridge university press, 2006.

- Chiang et al. (2012) Chiang, C.-K., Yang, T., Lee, C.-J., Mahdavi, M., Lu, C.-J., Jin, R., and Zhu, S. Online optimization with gradual variations. In Mannor, S., Srebro, N., and Williamson, R. C. (eds.), Proceedings of the 25th Annual Conference on Learning Theory, volume 23, pp. 6.1–6.20, Edinburgh, Scotland, 25–27 Jun 2012.

- Cutkosky (2019) Cutkosky, A. Combining online learning guarantees. In Beygelzimer, A. and Hsu, D. (eds.), Proceedings of the Thirty-Second Conference on Learning Theory, volume 99 of Proceedings of Machine Learning Research, pp. 895–913, Phoenix, USA, 25–28 Jun 2019. PMLR.

- Danskin (2012) Danskin, J. M. The theory of max-min and its application to weapons allocation problems, volume 5. Springer Science & Business Media, 2012.

- de Rooij et al. (2014) de Rooij, S., van Erven, T., Grünwald, P. D., and Koolen, W. M. Follow the leader if you can, hedge if you must. Journal of Machine Learning Research, 15(37):1281–1316, 2014.

- Erven et al. (2011) Erven, T., Koolen, W. M., Rooij, S., and Grünwald, P. Adaptive hedge. In Shawe-Taylor, J., Zemel, R., Bartlett, P., Pereira, F., and Weinberger, K. Q. (eds.), Advances in Neural Information Processing Systems, volume 24, pp. 1656–1664. Curran Associates, Inc., 2011.

- Farina et al. (2021) Farina, G., Kroer, C., and Sandholm, T. Faster game solving via predictive blackwell approachability: Connecting regret matching and mirror descent. Proceedings of the AAAI Conference on Artificial Intelligence, 35(6):5363–5371, May 2021.

- Flaspohler et al. (2021) Flaspohler, G., Orabona, F., Cohen, J., Mouatadid, S., Oprescu, M., Orenstein, P., and Mackey, L. Replication Data for: Online Learning with Optimism and Delay, 2021. URL https://doi.org/10.7910/DVN/IOCFCY.

- Gentile (2003) Gentile, C. The robustness of the p-norm algorithms. Machine Learning, 53(3):265–299, 2003.

- Hart & Mas-Colell (2000) Hart, S. and Mas-Colell, A. A simple adaptive procedure leading to correlated equilibrium. Econometrica, 68(5):1127–1150, 2000.

- Hsieh et al. (2020) Hsieh, Y.-G., Iutzeler, F., Malick, J., and Mertikopoulos, P. Multi-agent online optimization with delays: Asynchronicity, adaptivity, and optimism. arXiv preprint arXiv:2012.11579, 2020.

- Huber (1964) Huber, P. J. Robust Estimation of a Location Parameter. The Annals of Mathematical Statistics, 35(1):73 – 101, 1964. doi: 10.1214/aoms/1177703732. URL https://doi.org/10.1214/aoms/1177703732.

- Hwang et al. (2019) Hwang, J., Orenstein, P., Cohen, J., Pfeiffer, K., and Mackey, L. Improving subseasonal forecasting in the western U.S. with machine learning. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pp. 2325–2335, 2019.

- Joulani et al. (2013) Joulani, P., Gyorgy, A., and Szepesvári, C. Online learning under delayed feedback. In International Conference on Machine Learning, pp. 1453–1461, 2013.

- Joulani et al. (2016) Joulani, P., Gyorgy, A., and Szepesvári, C. Delay-tolerant online convex optimization: Unified analysis and adaptive-gradient algorithms. In Thirtieth AAAI Conference on Artificial Intelligence, 2016.

- Joulani et al. (2017) Joulani, P., György, A., and Szepesvári, C. A modular analysis of adaptive (non-) convex optimization: Optimism, composite objectives, and variational bounds. In International Conference on Algorithmic Learning Theory, pp. 681–720. PMLR, 2017.

- Kamalaruban (2016) Kamalaruban, P. Improved optimistic mirror descent for sparsity and curvature. arXiv preprint arXiv:1609.02383, 2016.

- Koolen et al. (2014) Koolen, W., Van Erven, T., and Grunwald, P. Learning the learning rate for prediction with expert advice. In Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., and Weinberger, K. Q. (eds.), Advances in Neural Information Processing Systems, volume 27, pp. 2294–2302. Curran Associates, Inc., 2014.

- Korotin et al. (2020) Korotin, A., V’yugin, V., and Burnaev, E. Adaptive hedging under delayed feedback. Neurocomputing, 397:356–368, 2020.

- Liu & Wright (2015) Liu, J. and Wright, S. J. Asynchronous stochastic coordinate descent: Parallelism and convergence properties. SIAM Journal on Optimization, 25(1):351–376, 2015.

- Liu et al. (2014) Liu, J., Wright, S., Ré, C., Bittorf, V., and Sridhar, S. An asynchronous parallel stochastic coordinate descent algorithm. In International Conference on Machine Learning, pp. 469–477. PMLR, 2014.

- McMahan & Streeter (2014) McMahan, B. and Streeter, M. Delay-tolerant algorithms for asynchronous distributed online learning. In Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., and Weinberger, K. Q. (eds.), Advances in Neural Information Processing Systems, volume 27, pp. 2915–2923. Curran Associates, Inc., 2014.

- McMahan (2017) McMahan, H. B. A survey of algorithms and analysis for adaptive online learning. The Journal of Machine Learning Research, 18(1):3117–3166, 2017.

- McQuade & Monteleoni (2012) McQuade, S. and Monteleoni, C. Global climate model tracking using geospatial neighborhoods. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 26, 2012.

- Mesterharm (2005) Mesterharm, C. On-line learning with delayed label feedback. In International Conference on Algorithmic Learning Theory, pp. 399–413. Springer, 2005.

- Mohri & Yang (2016) Mohri, M. and Yang, S. Accelerating online convex optimization via adaptive prediction. In Artificial Intelligence and Statistics, pp. 848–856. PMLR, 2016.

- Monteleoni & Jaakkola (2004) Monteleoni, C. and Jaakkola, T. Online learning of non-stationary sequences. In Thrun, S., Saul, L., and Schölkopf, B. (eds.), Advances in Neural Information Processing Systems, volume 16, pp. 1093–1100. MIT Press, 2004.

- Monteleoni et al. (2011) Monteleoni, C., Schmidt, G. A., Saroha, S., and Asplund, E. Tracking climate models. Statistical Analysis and Data Mining: The ASA Data Science Journal, 4(4):372–392, 2011.

- Nesterov (2012) Nesterov, Y. Efficiency of coordinate descent methods on huge-scale optimization problems. SIAM Journal on Optimization, 22(2):341–362, 2012.

- Nowak et al. (2020) Nowak, K., Beardsley, J., Brekke, L. D., Ferguson, I., and Raff, D. Subseasonal prediction for water management: Reclamation forecast rodeo I and II. In 100th American Meteorological Society Annual Meeting. AMS, 2020.

- Orabona (2019) Orabona, F. A modern introduction to online learning. ArXiv, abs/1912.13213, 2019.

- Orabona & Pál (2015) Orabona, F. and Pál, D. Scale-free algorithms for online linear optimization. In International Conference on Algorithmic Learning Theory, pp. 287–301. Springer, 2015.

- Orabona & Pál (2015) Orabona, F. and Pál, D. Optimal non-asymptotic lower bound on the minimax regret of learning with expert advice. arXiv preprint arXiv:1511.02176, 2015.

- Quanrud & Khashabi (2015) Quanrud, K. and Khashabi, D. Online learning with adversarial delays. In Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., and Garnett, R. (eds.), Advances in Neural Information Processing Systems, volume 28, pp. 1270–1278, 2015.

- Rakhlin & Sridharan (2013a) Rakhlin, A. and Sridharan, K. Online learning with predictable sequences. In Shalev-Shwartz, S. and Steinwart, I. (eds.), Proceedings of the 26th Annual Conference on Learning Theory, pp. 993–1019. PMLR, 2013a.

- Rakhlin & Sridharan (2013b) Rakhlin, S. and Sridharan, K. Optimization, learning, and games with predictable sequences. In Burges, C. J. C., Bottou, L., Welling, M., Ghahramani, Z., and Weinberger, K. Q. (eds.), Advances in Neural Information Processing Systems, pp. 3066–3074. Curran Associates, Inc., 2013b.

- Recht et al. (2011) Recht, B., Re, C., Wright, S., and Niu, F. Hogwild!: A lock-free approach to parallelizing stochastic gradient descent. In Shawe-Taylor, J., Zemel, R., Bartlett, P., Pereira, F., and Weinberger, K. Q. (eds.), Advances in Neural Information Processing Systems, volume 24, pp. 693–701. Curran Associates, Inc., 2011.

- Rockafellar (1970) Rockafellar, R. T. Convex analysis, volume 36. Princeton university press, 1970.

- Shalev-Shwartz (2007) Shalev-Shwartz, S. Online learning: Theory, algorithms, and applications. PhD thesis, The Hebrew University, 2007.

- Shalev-Shwartz (2012) Shalev-Shwartz, S. Online learning and online convex optimization. Foundations and Trends® in Machine Learning, 4(2):107–194, 2012.

- Sra et al. (2016) Sra, S., Yu, A. W., Li, M., and Smola, A. AdaDelay: Delay adaptive distributed stochastic optimization. In Gretton, A. and Robert, C. C. (eds.), Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, volume 51, pp. 957–965. PMLR, 2016.

- Steinhardt & Liang (2014) Steinhardt, J. and Liang, P. Adaptivity and optimism: An improved exponentiated gradient algorithm. In International Conference on Machine Learning, pp. 1593–1601, 2014.

- Syrgkanis et al. (2015) Syrgkanis, V., Agarwal, A., Luo, H., and Schapire, R. E. Fast convergence of regularized learning in games. In Cortes, C., Lawrence, N., Lee, D., Sugiyama, M., and Garnett, R. (eds.), Advances in Neural Information Processing Systems, volume 28. Curran Associates, Inc., 2015.

- Tammelin et al. (2015) Tammelin, O., Burch, N., Johanson, M., and Bowling, M. Solving heads-up limit texas hold’em. In Twenty-Fourth International Joint Conference on Artificial Intelligence, 2015.

- Weinberger & Ordentlich (2002) Weinberger, M. J. and Ordentlich, E. On delayed prediction of individual sequences. IEEE Transactions on Information Theory, 48(7):1959–1976, 2002.

- White et al. (2017) White, C. J., Carlsen, H., Robertson, A. W., Klein, R. J., Lazo, J. K., Kumar, A., Vitart, F., Coughlan de Perez, E., Ray, A. J., Murray, V., et al. Potential applications of subseasonal-to-seasonal (s2s) predictions. Meteorological applications, 24(3):315–325, 2017.

- Zinkevich et al. (2007) Zinkevich, M., Johanson, M., Bowling, M. H., and Piccione, C. Regret minimization in games with incomplete information. In Platt, J., Koller, D., Singer, Y., and Roweis, S. (eds.), Advances in Neural Information Processing Systems, volume 20. Curran Associates, Inc., 2007.

Appendix A Extended Literature Review

We review here additional prior work not detailed in the main paper.

A.1 General online learning

A.2 Online learning with optimism but without delay

Syrgkanis et al. (2015) analyzed optimistic FTRL and a two-step variant of optimistic MD without delay. The work focuses on a particular form of optimism (using the last observed subgradient as a hint) and shows improved rates of convergence to correlated equilibria in multiplayer games. In the absence of delay, Steinhardt & Liang (2014) combined optimism and adaptivity to obtain improvements over standard optimistic regret bounds.

A.3 Online learning with delay but without optimism

Overview

Delayed stochastic optimization

FTRL-Prox vs. FTRL

Joulani et al. (2016) analyzed the delayed feedback regret of the FTRL-Prox algorithm, which regularizes toward the last played iterate as in online mirror descent, but did not study the standard FTRL algorithms (sometimes called FTRL-Centered) analyzed in this work.

A.4 Self-tuned online learning without delay or optimism

A.5 Online learning without delay for climate forecasting

Monteleoni et al. (2011) applied the Learn-α online learning algorithm of Monteleoni & Jaakkola (2004) to the task of ensembling climate models. The authors considered historical temperature data from 20 climate models and tracked the changing sequence of which model predicts best at any given time. In this context, the algorithm used was based on a set of generalized Hidden Markov Models, in which the identity of the current best model is the hidden variable and the updates are derived as Bayesian updates. This work was extended to take into account the influence of regional neighboring locations when performing updates (McQuade & Monteleoni, 2012). These initial results demonstrated the promise of applying online learning to climate model ensembling, but both methods rely on receiving feedback without delay.

Appendix B Proof of Thm. 3: OFTRL regret

We will prove the following more general result for optimistic adaptive FTRL (OAFTRL)

| (OAFTRL) |

from which Thm. 3 will follow with the choice for all .

Theorem 14 (OAFTRL regret).

If is nonnegative and is non-decreasing, then, , the OAFTRL iterates satisfy,

| (38) | ||||

| (39) |

for

| (40) | ||||

| (41) | ||||

| (42) | ||||

| (43) |

Proof.

Consider a sequence of arbitrary auxiliary subgradient hints and the auxiliary OAFTRL sequence

| (44) |

Generalizing the forward regret decomposition of Joulani et al. (2017) and the prediction drift decomposition of Joulani et al. (2016), we will decompose the regret of our original sequence into the regret of the auxiliary sequence and the drift between and .

For each time , define the auxiliary optimistic objective function . Fixing any , we have the regret bound

| (45) | ||||

| (46) |

To control the drift term we employ the following lemma, proved in Sec. B.1, which bounds the difference between two OAFTRL optimizers with different losses but common regularizers.

Letting , we now bound each drift term summand using the Fenchel-Young inequality for dual norms and Lem. 15:

| (48) |

To control the auxiliary regret, we begin by invoking the OAFTRL regret bound of Orabona (2019, proof of Thm. 7.28), the nonnegativity of , and the assumption that is non-decreasing:

| (49) | ||||

| (50) |

We next bound the summands in this expression in two ways. Since is the minimizer of , we may apply the Fenchel-Young inequality for dual norms to conclude that

| (51) | ||||

| (52) |

Moreover, by Orabona (2019, proof of Thm. 7.28) and the fact that minimizes over ,

| (53) |

Our collective bounds establish that

| (54) | ||||

| (55) | ||||

| (56) |

To obtain an interpretable bound on regret, we will minimize the final expression over all convex combinations of and . The optimal choice is given by

| (57) | ||||

| (58) | ||||

| (59) |

For this choice, we obtain the bound

| (60) | ||||

| (61) | ||||

| (62) | ||||

| (63) | ||||

| (64) |

and therefore

| (65) |

Since is arbitrary, the advertised regret bounds follow as

| (66) | ||||

| (67) | ||||

| (68) |

∎

B.1 Proof of Lem. 15: OAFTRL difference bound

Fix any time , and define the optimistic objective function and the auxiliary optimistic objective function so that and . We have

| (69) | ||||

| (70) |

Summing the above inequalities and applying the Fenchel-Young inequality for dual norms, we obtain

| (71) |

which yields the first half of our target bound after rearrangement. The second half follows from the definition of diameter, as .

Appendix C Proof of Thm. 4: SOOMD regret

We will prove the following more general result for adaptive SOOMD (ASOOMD)

| (ASOOMD) |

from which Thm. 4 will follow with the choice for all .

Theorem 16 (ASOOMD regret).

Fix any . If each is proper and differentiable, , and , then, for all , the ASOOMD iterates satisfy

| (72) | ||||

| (73) |

Proof.

Fix any , instantiate the notation of Joulani et al. (2017, Sec. 7.2), and consider the choices

- , for , so that for ,
- for ,
- and for all ,
- ,
- for all .

Since, for each , and is convex, the Ada-MD regret inequality of Joulani et al. (2017, Eq. (24)) and the choice imply that

| (74) | ||||

| (75) | ||||

| (76) | ||||

| (77) | ||||

| (78) | ||||

| (79) |

To obtain our advertised bound, we begin with the expression (79) and invoke the -strong convexity of and the nonnegativity of to find

| (80) | ||||

| (81) |

We will bound the final sum in this expression using two lemmas. The first is a bound on the difference between subsequent ASOOMD iterates distilled from Joulani et al. (2016, proof of Prop. 2).

Lemma 17 (ASOOMD iterate bound (Joulani et al., 2016, proof of Prop. 2)).

If is differentiable and -strongly convex with respect to , then the ASOOMD iterates satisfy

| (82) |

The second, proved in Sec. C.1, is a general bound on under a norm constraint on .

Lemma 18 (Norm-constrained conjugate).

For any and ,

| (83) | ||||

| (84) | ||||

| (85) | ||||

| (86) | ||||

| (87) |

C.1 Proof of Lem. 18: Norm-constrained conjugate

By the definition of the dual norm,

| (90) | ||||

| (91) |

We compare to the values of less constrained optimization problems to obtain the final inequalities:

| (92) | ||||

| (93) |

Appendix D Proof of Lem. 8: DORM is ODAFTRL and DORM+ is DOOMD

Our derivations will make use of several facts about norms, summarized in the next lemma.

Lemma 19 ( norm facts).

For , , and any vectors and ,

| (94) | ||||

| (95) | ||||

| (96) | ||||

| (97) | ||||

| (98) | ||||

| (99) | ||||

| (100) | ||||

| (101) | ||||

| (102) | ||||

| (103) | ||||

| (104) |

Proof.

The fact (94) follows from the chain rule as

| (105) | ||||

| (106) |

We now prove each claim in turn.

D.1 DORM is ODAFTRL

Fix , , and . The ODAFTRL iterate with hint , , , loss subgradients , and regularization parameter takes the form

| (107) | ||||

| (108) | ||||

| (109) | ||||

| (110) | ||||

| (111) |

proving the claim.

D.2 DORM+ is DOOMD

Fix and , and let denote the unnormalized iterates generated by DORM+ with hints , instantaneous regrets , regularization parameter , and hyperparameter . For , let denote the sequence generated by DOOMD with , hints , , , loss subgradients , and regularization parameter . We proceed by induction to show that, for each , .

Base case

By assumption, , confirming the base case.

Inductive step

Fix any and assume that for each , . Then, by the definition of DOOMD and our norm facts,

| (112) | ||||

| (113) | ||||

| (114) | ||||

| (115) | ||||

| (116) | ||||

| (117) | ||||

| (118) | ||||

| (119) |

completing the inductive step.

Appendix E Proof of Lem. 7: DORM and DORM+ are independent of

We will prove the following more general result, from which the stated result follows immediately.

Lemma 20 (DORM and DORM+ are independent of ).

Consider either DORM or DORM+ plays as a function of , and suppose that for all time points , the observed subgradient and chosen hint only depend on through and respectively. Then if is independent of the choice of , then so is for all time points . As a result, is also independent of the choice of at all time points.

Proof.

We prove each result by induction on .

E.1 Scaled DORM iterates are independent of

Base case

By assumption, is independent of the choice of . Hence is independent of , confirming the base case.

Inductive step

Fix any , suppose is independent of the choice of for all , and consider

| (120) |

Since depends on only through and for , our dependence assumptions for ; the fact that, for each , ; and our inductive hypothesis together imply that is independent of .

E.2 Scaled DORM+ iterates are independent of

Base case

By assumption, is independent of the choice of , confirming the base case.

Inductive step

Fix any and suppose is independent of the choice of for all . Since ,

| (121) |

Since depends on only through and , our dependence assumptions for ; the fact that, for each , ; and our inductive hypothesis together imply that is independent of . ∎

Appendix F Proof of Cor. 9: DORM and DORM+ regret

Fix any and , consider the unnormalized DORM or DORM+ iterates , and define for each . For either algorithm, we will bound our regret in terms of the surrogate losses

| (122) |

defined for . Since , , and each is convex, we have

| (123) |

For DORM, Lem. 8 implies that are ODFTRL iterates, so the ODFTRL regret bound (Thm. 5) and the fact that is -strongly convex with respect to (see Shalev-Shwartz, 2007, Lemma 17) with imply

| (124) |

Similarly, for DORM+, Lem. 8 implies that are DOOMD iterates with , so the DOOMD regret bound (Thm. 6) and the strong convexity of yield

| (125) |

Since, by Lem. 7, the choice of does not impact the iterate sequences played by DORM and DORM+, we may take the infimum over in these regret bounds. The second advertised inequality comes from the identity and the norm equivalence relations and for , as shown in Lem. 21 below. The final claim follows as

| (126) |

since .

Lemma 21 (Equivalence of -norms).

If and , then .

Proof.

To show for , suppose without loss of generality that . Then, . Hence .

For the inequality , applying Hölder’s inequality yields

| (127) |

so . ∎
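As a numerical sanity check of this kind of norm equivalence, the sketch below tests the standard relation $\|x\|_q \le \|x\|_{q'} \le n^{1/q' - 1/q}\,\|x\|_q$ for $q > q' \ge 1$ and $x \in \mathbb{R}^n$. The inline statement of Lem. 21 is not legible in this copy, so treating it as this standard relation is an assumption; the function names are hypothetical.

```python
def p_norm(x, p):
    """The l_p norm of a vector x for finite p >= 1."""
    return sum(abs(v) ** p for v in x) ** (1.0 / p)


def check_norm_equivalence(x, q, q_prime, tol=1e-9):
    """Check ||x||_q <= ||x||_q' <= n^(1/q' - 1/q) ||x||_q for q > q' >= 1."""
    n = len(x)
    lower = p_norm(x, q)
    middle = p_norm(x, q_prime)
    upper = n ** (1.0 / q_prime - 1.0 / q) * lower
    return lower <= middle + tol and middle <= upper + tol
```

The upper bound is attained (up to floating-point error) by constant vectors such as `[1.0, 1.0, 1.0]`, and the lower bound by vectors with a single nonzero entry.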

Appendix G Proof of Thm. 10: ODAFTRL regret

Appendix H Proof of Thm. 11: DUB Regret

Fix any . By Thm. 10, ODAFTRL admits the regret bound

| (128) |

To control the second term in this bound, we apply the following lemma proved in Sec. H.1.

Lemma 22 (DUB-style tuning bound).

Fix any and any non-negative sequences , . If

| (129) |

then

| (130) |

Since , the result now follows by setting and , so that

| (131) |

H.1 Proof of Lem. 22: DUB-style tuning bound

We prove the claim

| (132) |

by induction on .

Base case

For ,

| (133) |

confirming the base case.

Inductive step

Now fix any and suppose that

| (134) |

for all . We apply this inductive hypothesis to deduce that, for each ,

| (135) | ||||

| (136) | ||||

| (137) | ||||

| (138) | ||||

| (139) |

Now, we sum this inequality over , to obtain

| (140) | ||||

| (141) | ||||

| (142) | ||||

| (143) |

Solving this quadratic inequality and applying the triangle inequality, we have

| (144) | ||||

| (145) |
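The "solve the quadratic inequality, then apply the triangle inequality" step follows a standard pattern, sketched here for generic nonnegative scalars $x$, $a$, and $b$. These symbols are placeholders introduced for illustration and are not the specific quantities appearing in (143).

```latex
\begin{align*}
x^2 \le a + b\,x
&\;\Longrightarrow\; x \le \tfrac{1}{2}\bigl(b + \sqrt{b^2 + 4a}\bigr)
&& \text{(larger root of } x^2 - b\,x - a = 0\text{)} \\
&\;\Longrightarrow\; x \le \tfrac{1}{2}\bigl(b + b + 2\sqrt{a}\bigr) = b + \sqrt{a}
&& \text{(since } \sqrt{b^2 + 4a} \le b + 2\sqrt{a}\text{)}.
\end{align*}
```

The second implication is the triangle inequality $\sqrt{u + v} \le \sqrt{u} + \sqrt{v}$ applied with $u = b^2$ and $v = 4a$.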

Appendix I Proof of Thm. 12: AdaHedgeD Regret

Fix any . Since the AdaHedgeD regularization sequence is non-decreasing, Thm. 14 gives the regret bound

| (146) |

and the proof of Thm. 14 gives the upper estimate (65):

| (147) |

Hence, it remains to bound . Since and for ,

| (148) | ||||

| (149) | ||||

| (150) | ||||

| (151) | ||||

| (152) | ||||

| (153) |

Solving the above quadratic inequality for and applying the triangle inequality, we find

| (154) | ||||

| (155) |

Appendix J Proof of Thm. 13: Learning to hint regret

We begin by bounding the hinting problem regret. Since DORM+ is used for the hinting problem, the following result is an immediate corollary of Cor. 9.

Corollary 23 (DORM+ hinting problem regret).

With convex losses and no meta-hints, the DORM+ hinting problem iterates satisfy, for each ,

| (156) | ||||

| (157) | ||||

| (158) |

If, in addition, , then .

Our next lemma, proved in Sec. J.1, provides an interpretable bound for each term in terms of the hinting problem subgradients .

Lemma 24 (Hinting problem subgradient regret bound).

Under the notation and assumptions of Cor. 23,

| (159) | ||||

| (160) | ||||

| (161) |

Now fix any . We invoke Assump. 1, Cor. 23, and Lem. 24 in turn to bound the base problem regret

| (162) | ||||

| (163) | ||||

| (164) | ||||

| (165) |

The advertised bound now follows from the triangle inequality.

J.1 Proof of Lem. 24: Hinting problem subgradient regret bound

Fix any . The triangle inequality implies that

| (166) |

since . We repeatedly apply this finding in conjunction with Jensen’s inequality to conclude

| (167) | ||||

| (168) |

Appendix K Examples: Learning to Hint with DORM+ and AdaHedgeD

These choices give rise to the hinting losses

| (169) | ||||

| (170) |

The following lemma, proved in Sec. K.1, identifies subgradients of these hinting losses.

Lemma 25 (Hinting loss subgradient).

If for some and , then

| (171) |

for and .

Our next lemma, proved in Sec. K.2, bounds the -norm of this hinting loss subgradient in terms of the base problem subgradients.

Lemma 26 (Hinting loss subgradient bound).

Under the assumptions and notation of Lem. 25, the subgradient satisfies for the maximum absolute entry of .

K.1 Proof of Lem. 25: Hinting loss subgradient

The result follows immediately from the chain rule and the following lemma.

Lemma 27 (Subgradients of -norms).

Suppose and . Then

| (172) |

Proof.

Since is a minimizer of , we have for any and hence .

For , by the chain rule, if ,

| (173) | ||||

| (174) | ||||

| (175) |

For , we have that . By the Danskin-Bertsekas Theorem (Danskin, 2012) for subdifferentials, , where is the convex hull operation. ∎

K.2 Proof of Lem. 26: Hinting loss subgradient bound

If , we have

| (176) | ||||

| (177) | ||||

| (178) |

If , we have

| (179) |

Appendix L Experiment Details

L.1 Subseasonal Forecasting Application

We apply the online learning techniques developed in this paper to the problem of adaptive ensembling for subseasonal weather forecasting. Subseasonal forecasting is the problem of predicting meteorological variables, often temperature and precipitation, 2-6 weeks in advance. These mid-range forecasts are critical for managing water resources and mitigating wildfires, droughts, floods, and other extreme weather events (Hwang et al., 2019). However, the subseasonal forecasting task is notoriously difficult due to the joint influences of short-term initial conditions and long-term boundary conditions (White et al., 2017).

To improve subseasonal weather forecasting capabilities, the US Bureau of Reclamation launched the Sub-Seasonal Climate Forecast Rodeo competition (Nowak et al., 2020), a yearlong real-time forecasting competition for the Western United States. Our experiments are based on Flaspohler et al. (2021), a snapshot of public subseasonal model forecasts including both physics-based and machine learning models. These models were developed for the subseasonal forecasting challenge and make semimonthly forecasts for the contest period (19 October 2019 – 29 September 2020).

To expand our evaluation beyond the subseasonal forecasting competition, we used the forecasts in Flaspohler et al. (2021) for analogous yearlong periods (26 semi-monthly dates starting from the last Wednesday in October) beginning in Oct. 2010 and ending in Sep. 2020. Throughout, we refer to the yearlong period beginning in Oct. 2010 – Sep. 2011 as the 2011 year and so on for each subsequent year. For each forecast date , the models in Flaspohler et al. (2021) were trained only on data available at time and model hyper-parameters were tuned to optimize average RMSE loss on the 3-year period preceding the forecast date . For a few of the forecast dates, one or more models had missing forecasts; only dates for which all models have forecasts were used in evaluation.

L.2 Problem Definition

Denote the set of input models with labels: llr (Model1), multillr (Model2), tuned_catboost (Model3), tuned_cfsv2 (Model4), tuned_doy (Model5) and tuned_salient_fri (Model6). On each semimonthly forecast date, each model makes a prediction for each of two meteorological variables (cumulative precipitation and average temperature over 14 days) and two forecasting horizons (3-4 weeks and 5-6 weeks). For the 3-4 week and 5-6 week horizons respectively, the forecaster experiences a delay of and forecasts. Each model makes a total of semimonthly forecasts for these four tasks.

At each time , each input model produces a prediction at gridpoints in the Western United States: for task at time . Let be the matrix containing each input model’s predictions as columns. The true meteorological outcome for task is . As online learning is performed for each task separately, we drop the task superscript in the following.

At each timestep, the online learner makes a forecast prediction by playing , corresponding to a convex combination of the individual models: . The learner then incurs a loss for the play according to the root mean squared error (RMSE) over the geography of interest:

| (180) | ||||

| (181) |
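The loss just described can be sketched in a few lines. The display equations are not legible in this copy, so the sketch assumes the standard form: the ensemble forecast is `X w`, the loss is the square root of the mean squared residual over gridpoints, and the subgradient follows from the chain rule. The names `X`, `y`, `w` and the helper functions are illustrative assumptions.

```python
import math


def ensemble_forecast(X, w):
    """Convex combination of model forecasts; X has one row per gridpoint."""
    return [sum(x_gj * w_j for x_gj, w_j in zip(row, w)) for row in X]


def rmse_loss(X, y, w):
    """Root mean squared error of the ensemble forecast over all gridpoints."""
    pred = ensemble_forecast(X, w)
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, y)) / len(y))


def rmse_subgradient(X, y, w):
    """A subgradient of the RMSE loss with respect to the weights w.

    Away from zero residual this is X^T (X w - y) / (G * rmse), where G is
    the number of gridpoints; at zero residual the zero vector is returned,
    which is a valid subgradient there.
    """
    resid = [p - t for p, t in zip(ensemble_forecast(X, w), y)]
    loss = math.sqrt(sum(r * r for r in resid) / len(y))
    if loss == 0.0:
        return [0.0] * len(w)
    return [
        sum(row[j] * r for row, r in zip(X, resid)) / (len(y) * loss)
        for j in range(len(w))
    ]
```

Because the loss is convex in `w`, a subgradient of this form is the feedback a first-order online learner would consume once the delayed outcome `y` becomes available.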

Our objective for the subseasonal forecasting application is to produce an adaptive ensemble forecast that competes with the best input model over the yearlong period. Hence, in our evaluation, we take the competitor set to be the set of individual models .
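Regret against this competitor set is the learner's cumulative loss minus the cumulative loss of the single best input model over the same period. A minimal sketch, with hypothetical argument names and made-up numbers in the usage note only:

```python
def regret_vs_best_model(learner_losses, model_losses):
    """Cumulative learner loss minus that of the best single input model.

    learner_losses -- per-round losses of the online learner
    model_losses   -- one list of per-round losses for each input model
    """
    best_model_total = min(sum(losses) for losses in model_losses)
    return sum(learner_losses) - best_model_total
```

For example, `regret_vs_best_model([1, 2, 3], [[2, 2, 2], [1, 3, 1]])` compares a learner total of 6 against the better model total of 5. A negative value means the adaptive ensemble beat every individual model over the period.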

Appendix M Extended Experimental Results

We present complete experimental results for the four experiments presented in the main paper (see Sec. 7).

M.1 Competing with the Best Input Model

Results for our three delayed online learning algorithms (DORM, DORM+, and AdaHedgeD) on the four subseasonal prediction tasks, for the four optimism strategies described in Sec. 7 (recent_g, prev_g, mean_g, none), are presented below. For each algorithm and task, the tables show the average RMSE loss and the figures show the annual regret versus the best input model in any given year.

DORM+ is a competitive model for all three hinting strategies and under the recent_g hinting strategy achieves negative regret on all tasks except Temp. 5-6w. For the Temp. 5-6w task, no online learning model outperforms the best input model for any hinting strategy. For the precipitation tasks, the online learning algorithms presented achieve negative regret using all three hinting strategies for all four tasks. Within the subseasonal forecasting domain, precipitation is often considered a more challenging forecasting task than temperature (White et al., 2017). The gap between the best model and the worst model tends to be larger for precipitation than for temperature, and this could in part explain the strength of the online learning algorithms for these tasks.

| recent_g | AdaHedgeD | DORM | DORM+ | Model1 | Model2 | Model3 | Model4 | Model5 | Model6 |
|---|---|---|---|---|---|---|---|---|---|
| Precip. 3-4w | 21.726 | 21.731 | 21.675 | 21.973 | 22.431 | 22.357 | 21.978 | 21.986 | 23.344 |
| Precip. 5-6w | 21.868 | 21.957 | 21.838 | 22.030 | 22.570 | 22.383 | 22.004 | 21.993 | 23.257 |
| Temp. 3-4w | 2.273 | 2.259 | 2.247 | 2.253 | 2.352 | 2.394 | 2.277 | 2.319 | 2.508 |
| Temp. 5-6w | 2.316 | 2.316 | 2.303 | 2.270 | 2.368 | 2.459 | 2.278 | 2.317 | 2.569 |

| prev_g | AdaHedgeD | DORM | DORM+ | Model1 | Model2 | Model3 | Model4 | Model5 | Model6 |
|---|---|---|---|---|---|---|---|---|---|
| Precip. 3-4w | 21.760 | 21.777 | 21.729 | 21.973 | 22.431 | 22.357 | 21.978 | 21.986 | 23.344 |
| Precip. 5-6w | 21.943 | 21.964 | 21.911 | 22.030 | 22.570 | 22.383 | 22.004 | 21.993 | 23.257 |
| Temp. 3-4w | 2.266 | 2.269 | 2.250 | 2.253 | 2.352 | 2.394 | 2.277 | 2.319 | 2.508 |
| Temp. 5-6w | 2.306 | 2.307 | 2.305 | 2.270 | 2.368 | 2.459 | 2.278 | 2.317 | 2.569 |

| mean_g | AdaHedgeD | DORM | DORM+ | Model1 | Model2 | Model3 | Model4 | Model5 | Model6 |
|---|---|---|---|---|---|---|---|---|---|
| Precip. 3-4w | 21.864 | 21.945 | 21.830 | 21.973 | 22.431 | 22.357 | 21.978 | 21.986 | 23.344 |
| Precip. 5-6w | 21.993 | 22.054 | 21.946 | 22.030 | 22.570 | 22.383 | 22.004 | 21.993 | 23.257 |
| Temp. 3-4w | 2.273 | 2.277 | 2.257 | 2.253 | 2.352 | 2.394 | 2.277 | 2.319 | 2.508 |
| Temp. 5-6w | 2.311 | 2.320 | 2.314 | 2.270 | 2.368 | 2.459 | 2.278 | 2.317 | 2.569 |

| none | AdaHedgeD | DORM | DORM+ | Model1 | Model2 | Model3 | Model4 | Model5 | Model6 |
|---|---|---|---|---|---|---|---|---|---|
| Precip. 3-4w | 21.760 | 21.835 | 21.796 | 21.973 | 22.431 | 22.357 | 21.978 | 21.986 | 23.344 |
| Precip. 5-6w | 21.860 | 21.967 | 21.916 | 22.030 | 22.570 | 22.383 | 22.004 | 21.993 | 23.257 |
| Temp. 3-4w | 2.266 | 2.272 | 2.258 | 2.253 | 2.352 | 2.394 | 2.277 | 2.319 | 2.508 |
| Temp. 5-6w | 2.296 | 2.311 | 2.308 | 2.270 | 2.368 | 2.459 | 2.278 | 2.317 | 2.569 |
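As a quick reading aid for the tables in this subsection, the following sketch computes, for the recent_g strategy, the gap between each learner's average RMSE and the average RMSE of the best single input model. The values are copied from the recent_g table; the function and variable names are ours and purely illustrative. Note that this gap in average RMSE is only a coarse proxy and is not the annual regret plotted in the figures.

```python
# Average RMSE under the recent_g hinting strategy, copied from the recent_g table.
# Each entry maps a task to (learner RMSEs, input-model RMSEs).
LEARNERS = ["AdaHedgeD", "DORM", "DORM+"]
RECENT_G = {
    "Precip. 3-4w": ([21.726, 21.731, 21.675],
                     [21.973, 22.431, 22.357, 21.978, 21.986, 23.344]),
    "Precip. 5-6w": ([21.868, 21.957, 21.838],
                     [22.030, 22.570, 22.383, 22.004, 21.993, 23.257]),
    "Temp. 3-4w": ([2.273, 2.259, 2.247],
                   [2.253, 2.352, 2.394, 2.277, 2.319, 2.508]),
    "Temp. 5-6w": ([2.316, 2.316, 2.303],
                   [2.270, 2.368, 2.459, 2.278, 2.317, 2.569]),
}


def gap_to_best_input(table):
    """Return {task: {learner: learner RMSE minus best input-model RMSE}}."""
    gaps = {}
    for task, (learner_rmse, model_rmse) in table.items():
        best = min(model_rmse)  # best single input model for this task
        gaps[task] = {
            name: round(rmse - best, 3)
            for name, rmse in zip(LEARNERS, learner_rmse)
        }
    return gaps


GAPS = gap_to_best_input(RECENT_G)
for task, row in GAPS.items():
    print(task, row)
```

A negative entry means the learner's average RMSE is below that of the best input model. Under recent_g, the DORM+ entry is negative for every task except Temp. 5-6w.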

M.2 Impact of Regularization

We present results for three regularization strategies (AdaHedgeD, DORM+, and DUB) on all four subseasonal prediction tasks, as described in Sec. 7. Fig. 10 shows the annual regret versus the best input model in any given year for each algorithm and task, and Fig. 11 presents an example of the weights played by each algorithm in the final evaluation year, as well as the regularization weight used by each algorithm.

The under- and over-regularization of AdaHedgeD and DUB respectively compared with DORM+ is evident in all four tasks, both in the regret and weight plots. Due to the looseness of the regularization settings used in DUB, its plays can be seen to be very close to the uniform ensemble in all four tasks. For this subseasonal prediction problem, the uniform ensemble is competitive, especially for the 5-6 week horizons. However, in problems where the uniform ensemble has higher regret, this over-regularization property of DUB would be undesirable. The more adaptive plays of DORM+ and AdaHedgeD have the potential to better exploit heterogeneous performance among different input models.

M.3 To Replicate or Not to Replicate

We compare the performance of replicated and non-replicated variants of our DORM+ algorithm as in Sec. 7. Both algorithms perform well, but in all tasks, DORM+ outperforms replicated DORM+ (in which independent copies of DORM+ make staggered predictions). Fig. 12 provides an example of the weight plots produced by the replication strategy for all four tasks.

The replicated algorithms only have the opportunity to learn from plays. For the 3-4 week horizons tasks and for the 5-6 week horizons tasks . Because our forecasting horizons are short (), further limiting the feedback available to each online learner via replication could be detrimental to practical model performance.

| Task | DORM+ | Replicated DORM+ | Model1 | Model2 | Model3 | Model4 | Model5 | Model6 |
|---|---|---|---|---|---|---|---|---|
| Precip. 3-4w | 21.675 | 21.720 | 21.973 | 22.431 | 22.357 | 21.978 | 21.986 | 23.344 |
| Precip. 5-6w | 21.838 | 21.851 | 22.030 | 22.570 | 22.383 | 22.004 | 21.993 | 23.257 |
| Temp. 3-4w | 2.247 | 2.249 | 2.253 | 2.352 | 2.394 | 2.277 | 2.319 | 2.508 |
| Temp. 5-6w | 2.303 | 2.315 | 2.270 | 2.368 | 2.459 | 2.278 | 2.317 | 2.569 |
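To illustrate why replication limits the feedback seen by each learner, the sketch below assigns rounds to copies in a round-robin fashion and counts the plays per copy. It assumes the standard replication scheme with D + 1 copies taking turns, so that each copy's previous loss has arrived before its next play; the values of T and D are illustrative and are not taken from the text.

```python
def replica_for_round(t, D):
    """Index of the copy that plays at round t (1-indexed) when D + 1 copies take turns."""
    return (t - 1) % (D + 1)


def plays_per_replica(T, D):
    """Count how many of the T rounds are played (and later learned from) by each copy."""
    counts = [0] * (D + 1)
    for t in range(1, T + 1):
        counts[replica_for_round(t, D)] += 1
    return counts


# Illustrative values only (assumptions, not read from the paper).
T, D = 26, 2
COUNTS = plays_per_replica(T, D)
print(COUNTS)  # [9, 9, 8]
```

Each copy sees roughly a 1/(D + 1) fraction of the rounds, whereas a single non-replicated learner eventually receives feedback for every round it plays.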

M.4 Learning to Hint

We examine the effect of optimism on the DORM+ algorithm and the ability of our “learning to hint” strategy to recover the performance of the best optimism strategy in retrospect as described in Sec. 7. We use DORM+ as the meta-algorithm for hint learning to produce the learned optimism strategy that plays a convex combination of the three constant hinters.

As reported in the main text, the regret of the base algorithm using the learned hinting strategy generally falls between the worst and the best hinting strategy for any given year. Because the best hinting strategy for any given year is unknown a priori, the adaptivity of the hint learner is useful practically. Currently, the hint learner is only optimizing a loose upper bound on base problem regret. Deriving loss functions for hint learning that more accurately quantify the effect of the hinter on base model regret is an important next step in achieving negative regret for online hinting algorithms.

M.5 Impact of Different Forms of Optimism

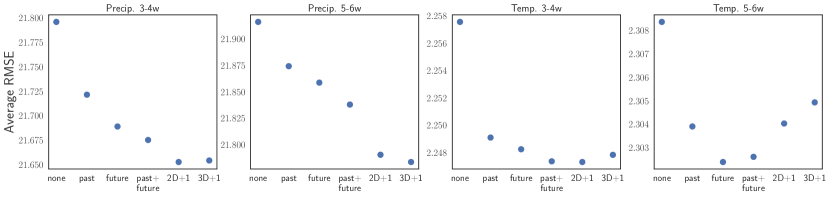

The regret analysis presented in this work suggests that optimistic strategies under delay can benefit from hinting at both the “past” missing losses and the “future” unobserved loss . To study the impact of different forms of optimism on DORM+, we provide a recent_g hint for either only the missing future loss , only the missing past losses , or both past and future losses (the strategy used in this paper) . Inspired by the recommendation of an anonymous reviewer, we also test two hint settings that only hint at the future unobserved loss but multiply the weight of that hint by 2D+1 or 3D+1, effectively increasing the importance of the future hint in the online learning optimization. Fig. 14 presents the experimental results.

In this experiment, all settings of optimism improve upon the non-optimistic algorithm, and, for all tasks, providing hints for missing future losses outperforms hinting at missing past losses. For all tasks save Temp. 5-6w, hinting at both missing past and future losses yields a further improvement. The 2D+1 and 3D+1 settings demonstrate that, for some tasks, increasing the magnitude of the optimistic hint can further improve performance in line with the online gradient descent predictions of Hsieh et al. (2020, Thm. 13).
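The five hint settings compared in this experiment can be sketched as follows. The sketch assumes that recent_g uses the most recently observed gradient as the guess for every missing gradient and that a hint covering several losses is the sum of the per-loss guesses; both are our reading of the setup rather than statements from this appendix, and the function name and arguments are hypothetical.

```python
def recent_g_hint(recent_gradient, D, mode="both", scale=1):
    """Build a hint vector from the most recently observed gradient.

    mode="future": hint only at the one unobserved future loss
    mode="past":   hint only at the D missing past losses
    mode="both":   hint at all D + 1 missing losses
    scale multiplies the resulting hint, e.g. 2 * D + 1 with mode="future".
    """
    copies = {"future": 1, "past": D, "both": D + 1}[mode]
    return [scale * copies * g for g in recent_gradient]


g = [1.0, -2.0]  # illustrative gradient
D = 2            # illustrative delay
HINTS = {
    "future": recent_g_hint(g, D, "future"),
    "past": recent_g_hint(g, D, "past"),
    "both": recent_g_hint(g, D, "both"),
    "future x (2D+1)": recent_g_hint(g, D, "future", scale=2 * D + 1),
    "future x (3D+1)": recent_g_hint(g, D, "future", scale=3 * D + 1),
}
for name, hint in HINTS.items():
    print(name, hint)
```

Under these assumptions every setting points in the same direction and differs only in magnitude, which is one way to read the observation that increasing the weight of the future hint can help on some tasks.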

Appendix N Algorithmic Details

N.1 ODAFTRL with AdaHedgeD and DUB tuning

The AdaHedgeD and DUB algorithms presented in the experiments are implementations of ODAFTRL with a negative entropy regularizer , which is -strongly convex with respect to the norm (Shalev-Shwartz, 2007, Lemma 16) with dual norm . Each algorithm optimizes over the simplex and competes with the simplex: . We choose . In the following, define for . Our derivations of the update equations for AdaHedgeD and DUB make use of the following properties of the negative entropy regularizer, proved in Sec. N.4.

Lemma 28 (Negative entropy properties).

The negative entropy regularizer with for satisfies the following properties on the simplex .

| (182) | ||||

| (183) | ||||

| (184) |

Corollary 29 (Optimal ODAFTRL objectives).

Instantiate the notation of Lem. 28, and define the functions for . Then

| (185) | ||||

| (186) |

Proposition 30 (AdaHedgeD ).

Leveraging these results, we present the pseudocode for the AdaHedgeD and DUB instantiations of ODAFTRL in Algorithm 1.
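For intuition, a single entropy-regularized follow-the-regularized-leader step on the simplex has a closed form: the minimizer of a linear objective plus a positive multiple of the negative entropy is a softmax of the negated, rescaled linear term. The sketch below implements only this step for a fixed regularization weight `lam`; the AdaHedgeD and DUB rules for choosing that weight are not reproduced, and all names are illustrative.

```python
import math


def entropic_ftrl_play(observed_gradient_sum, hint, lam):
    """Minimize <g + h, w> + lam * sum_j w_j ln w_j over the simplex.

    The minimizer is softmax(-(g + h) / lam); an additive constant in the
    regularizer does not change it. Requires lam > 0.
    """
    scores = [-(g + h) / lam for g, h in zip(observed_gradient_sum, hint)]
    top = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


# Illustrative inputs: three experts, no hint, unit regularization weight.
PLAY = entropic_ftrl_play([1.0, 0.0, 2.0], [0.0, 0.0, 0.0], lam=1.0)
print(PLAY)
```

Larger values of `lam` pull the play toward the uniform distribution, while smaller values concentrate it on the coordinates with the smallest accumulated (and hinted) loss.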

N.2 DORM and DORM+

The DORM and DORM+ algorithms presented in the experiments are implementations of ODAFTRL and DOOMD respectively that play iterates in using the default value . Both algorithms use a -norm regularizer , which is -strongly convex with respect to (see Shalev-Shwartz, 2007, Lemma 17) with . For the paper experiments, we choose the optimal value to obtain scaling in the algorithm regret; for , . The update equations for each algorithm are given in the main text by DORM and DORM+ respectively. The optimistic hinters provide delayed gradient hints , which are then used to compute regret gradient hints , where and .
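The following is a minimal sketch of a regret-matching style play, included only to make the roles of the regret gradients and hints concrete. It assumes the generic template in which the play is proportional to the positive part of the accumulated observed regret plus the hint, raised to the power q - 1, with a uniform fallback when that vector is zero. The exact DORM and DORM+ updates are those given in the main text and are not reproduced here; function names and the default q = 2 are our assumptions.

```python
def regret_gradient(gradient, play):
    """Instantaneous regret of the play against each coordinate: <g, w> - g_j."""
    inner = sum(g * w for g, w in zip(gradient, play))
    return [inner - g for g in gradient]


def regret_matching_play(observed_regret_sum, hint, q=2.0):
    """Play proportional to max(0, r + h)^(q - 1); uniform if every entry is zero."""
    d = len(observed_regret_sum)
    raw = [max(0.0, r + h) ** (q - 1) for r, h in zip(observed_regret_sum, hint)]
    total = sum(raw)
    if total == 0.0:
        return [1.0 / d] * d
    return [x / total for x in raw]


# Illustrative values: accumulated regret for three input models and a zero hint.
PLAY = regret_matching_play([1.0, -1.0, 3.0], [0.0, 0.0, 0.0])
print(PLAY)  # [0.25, 0.0, 0.75]
```

Because the play is normalized, rescaling the accumulated regret and hint by any positive constant leaves this sketch's output unchanged.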

N.3 Adaptive Hinting

For the adaptive hinting experiments, we use DORM+ as both the base and hint learner. For the hint learner with DORM base algorithm, the hint loss function is given by 169 with . The plays of the online hinter are used to generate the hints for the base algorithm using the hint matrix . The -th column of contains hinter ’s predictions for the cumulative missing regret subgradients . The final hint for the base learner is . Pseudocode for the adaptive hinter is given in Algorithm 2.
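The combination step described above, in which the hint learner's play weights the columns of the hint matrix, can be sketched as a matrix-vector product. The names `hint_matrix` and `hinter_weights` are illustrative stand-ins for the hint matrix and the online hinter's play; the example values are made up.

```python
def combine_hints(hint_matrix, hinter_weights):
    """Return the product of the hint matrix with the hinter's play.

    hint_matrix[i][j] is hinter j's prediction for coordinate i, so column j
    holds hinter j's full hint. hinter_weights is a point on the simplex.
    """
    n_hinters = len(hinter_weights)
    return [
        sum(row[j] * hinter_weights[j] for j in range(n_hinters))
        for row in hint_matrix
    ]


# Two coordinates, two hinters (illustrative numbers).
H = [[1.0, 3.0],
     [2.0, 4.0]]
MIXED = combine_hints(H, [0.5, 0.5])
print(MIXED)  # [2.0, 3.0]
```

When the hinter's play puts all of its mass on one hinter, the combined hint reduces to that hinter's column.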

N.4 Proof of Lem. 28: Negative entropy properties

The expression of the Fenchel conjugate for is derived by solving an appropriate constrained convex optimization problem for , as shown in Orabona (2019, Section 6.6). The value of uses the properties of the Fenchel conjugate (Rockafellar, 1970; Orabona, 2019, Theorem 5.5) and is shown in Orabona (2019, Theorem 6.6).

N.5 Proof of Prop. 30: AdaHedgeD

First suppose . The first term in the of AdaHedgeD’s setting is derived as follows:

| (203) | ||||

| (204) | ||||

| (205) | ||||

| (206) | ||||

| because | (207) | |||

| (208) | ||||

| (209) | ||||

| (210) | ||||

| (211) |

The expression for the third term in the of AdaHedgeD’s setting follows from identical reasoning.

Now suppose . We have

| (212) | ||||

| (213) | ||||

| (214) |

Identical reasoning yields the advertised expression for the third term.

Appendix O Extension to Variable and Unbounded Delays

In this section we detail how our main results generalize to the case of variable and potentially unbounded delays. For each time , we define as the largest index for which is observable at time (that is, available for constructing ) and as the first time at which is observable at time (that is, available for constructing ).
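The two indices defined above can be computed from the arrival times of the feedback. The sketch below assumes our reading of the definitions: the first index is the length of the longest prefix of losses that is fully available when a play is constructed, and the second is the first round whose play can use an entire prefix. The list `available_at` and the function names are hypothetical.

```python
def last_observable(t, available_at):
    """Largest s such that losses 1..s are all available when constructing play t.

    available_at[s - 1] is the first round whose play can use loss s.
    Returns 0 if not even the first loss is available.
    """
    s = 0
    for arrival in available_at:
        if arrival > t:
            break
        s += 1
    return s


def first_observable(t, available_at):
    """First round whose play can use all of losses 1..t."""
    return max(available_at[:t])


# Constant delay D: loss s is usable from round s + D + 1 onward.
D, T = 2, 6
CONSTANT = [s + D + 1 for s in range(1, T + 1)]
print(last_observable(5, CONSTANT))   # 2, i.e. t - D - 1
print(first_observable(3, CONSTANT))  # 6, i.e. t + D + 1
```

With a constant delay the two indices reduce to t - D - 1 and t + D + 1. With out-of-order arrivals, a single late loss holds back the whole prefix that contains it.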

O.1 Regret of DOOMD with variable delays

Consider the DOOMD variable-delay generalization

| (DOOMD with variable delays) |

We first note that DOOMD with variable delays is an instance of SOOMD with a “bad” choice of optimistic hint that deletes the unobserved loss subgradients .

Lemma 31 (DOOMD with variable delays is SOOMD with a bad hint).

DOOMD with variable delays is SOOMD with

Theorem 32 (Regret of DOOMD with variable delays).

If is differentiable and , then, for all , the DOOMD with variable delays iterates satisfy

| (215) | ||||

| (216) |

O.2 Regret of ODAFTRL with variable delays

Consider the ODAFTRL variable-delay generalization

| (ODAFTRL with variable delays) |

Since ODAFTRL with variable delays is an instance of OAFTRL with , the following result follows immediately from the OAFTRL regret bound, Thm. 14.

Theorem 33 (Regret of ODAFTRL with variable delays).

If is nonnegative and is non-decreasing in , then, , the ODAFTRL with variable delays iterates satisfy

| (217) | ||||

| (218) | ||||

| (219) |

O.3 Regret of DUB with variable delays

Consider the DUB variable-delay generalization

| (DUB with variable delays) |

Theorem 34 (Regret of DUB with variable delays).

Fix , and, for as in 218, consider the DUB with variable delays sequence. If is nonnegative, then, for all , the ODAFTRL with variable delays iterates satisfy

| (220) | |||

| (221) |

Proof.

Fix any . By Thm. 33, ODAFTRL with variable delays admits the regret bound

| (222) |

To control the second term in this bound, we apply the following lemma proved in Sec. O.4.

Lemma 35 (DUB with variable delays-style tuning bound).

Fix any and any non-negative sequences , . If is non-decreasing and

| (223) |

then

| (224) |

∎

Since , , and , the result now follows by setting and , so that

| (225) |

O.4 Proof of Lem. 35: DUB with variable delays-style tuning bound

We prove the claim

| (226) |

by induction on .

Base case

For , since , we have

| (227) | ||||

| (228) |

confirming the base case.

Inductive step

Now fix any and suppose that

| (229) |

for all . Since and is non-decreasing in , we apply this inductive hypothesis to deduce that, for each ,

| (230) | ||||

| (231) | ||||

| (232) | ||||

| (233) | ||||

| (234) | ||||

| (235) |

Now, we sum this inequality over , to obtain

| (236) | ||||

| (237) | ||||

| (238) | ||||

| (239) |

We now solve this quadratic inequality, apply the triangle inequality, and invoke the relation to conclude that

| (240) | ||||

| (241) | ||||

| (242) |

O.5 Regret of AdaHedgeD with variable delays

Consider the AdaHedgeD variable-delay generalization

| (AdaHedgeD with variable delays) |

Theorem 36 (Regret of AdaHedgeD with variable delays).