Online Local Differential Private Quantile Inference via Self-normalization

Abstract

Based on binary inquiries, we develop an algorithm to estimate population quantiles under Local Differential Privacy (LDP). By self-normalizing, our algorithm provides asymptotically normal estimation with valid inference, resulting in tight confidence intervals without the need to estimate nuisance parameters. Our proposed method can be conducted fully online, leading to high computational efficiency and minimal storage requirements with $\mathcal{O}(1)$ space. We also prove an optimality result for the frequently encountered median estimation problem by an elegant application of a central limit theorem of Gaussian Differential Privacy (GDP). With mathematical proof and extensive numerical testing, we demonstrate the validity of our algorithm both theoretically and experimentally.

1 Introduction

Personal data is currently widely used for various purposes, such as facial recognition, personalized advertising, medical trials, and recommendation systems, to name a few. While there are potential benefits, it is also important to consider the risks associated with handling sensitive personal information. For instance, research on diabetes can provide valuable insights that may benefit society as a whole in the long term. However, participants may suffer direct consequences, such as a rise in health insurance premiums, if their data is not properly protected through controlled disclosure.

The concept of Differential Privacy (DP; Dwork et al., 2006) has been successful in providing a rigorous condition for controlled disclosure by bounding the change in the distribution of outputs of a query made on a dataset under the alteration of one data point. This has led to a vast amount of literature under the umbrella of DP, resulting in various generalizations, tools, and applications. However, while enjoying the mathematically solid guarantee of DP and its variants, concerns about a weak link in the process, the trusted curator, are beginning to arise.

The use of trusted curators undermines the spirit of the solid cryptographic level of privacy protection that DP provides. This risk is not limited to information security breaches and rogue researchers but also includes legal proceedings where researchers may be compelled to hand over the raw data, breaking the initial promise of DP made at the time of data collection. Two concepts, Local Differential Privacy (LDP) and pan-DP, have been proposed as solutions. Pan-DP directly counters this issue by hardening the algorithm to withstand multiple announced intrusions (subpoenas) or one unannounced intrusion (hackers). The concept of LDP was first introduced formally by Kasiviswanathan et al. (2011), but its early form can be traced back to Evfimievski et al. (2003) and Warner (1965), under the names "amplification" and "randomized response survey," respectively.

In LDP settings, the sensitive information never leaves the control of the users unprotected. The users encode and alter their data locally before sending them to an untrusted central data server for further analysis and computation. Recently, Amin et al. (2020) unveiled a connection between pan-DP and LDP by considering variants of the pan-DP framework that can defend against multiple unannounced intrusions. Surprisingly, this requirement can only be fulfilled if the data is scrambled before it leaves the owner's control, which goes back to the definition of LDP. For better privacy protection, many big tech companies have already implemented LDP into their products, such as Google (Erlingsson et al., 2014) and Microsoft (Ding et al., 2017).

This discovery rekindled the research interest in LDP. Researchers have begun to consider fundamental statistical problems, such as parameter estimation, modeling, and hypothesis testing, under this constraint. The quantiles, including the median, are basic summary statistics that have been widely studied within the framework of differential privacy. Early research in this area includes the estimation of quantiles under the central DP setting, as presented in Dwork & Lei (2009) and Lei (2011). Further advancements, such as Smith (2011), have proposed a rate-optimal sample quantile estimator that does not rely on the evaluation of histograms. Gillenwater et al. (2021) further extended this research by estimating multiple quantiles simultaneously. Despite these advances, quantile estimation under the central DP setting remains an active area of research, with new work in various applications such as Alabi et al. (2022) and Ben-Eliezer et al. (2022).

In the central DP setting, a trusted curator can acquire the actual sample quantiles and other summary statistics, with the only limitation being that the release of the output must conform to the DP condition. However, under the local DP setting, the curator does not have access to the true data and can only see proxies generated by the users. This makes it more challenging to design local DP algorithms that provide valid results, which in turn makes it harder to develop the corresponding theoretical properties and to provide further statistical inference.

Researchers often propose consistent estimators for the parameters of interest and derive their asymptotic normality. However, these estimators often involve nuisance parameters that are not trivial to obtain or estimate, making them difficult to deploy in real-world scenarios. To address this issue, Shao (2010) developed the methodology of self-normalization for constructing confidence intervals. This method involves designing a statistic called the self-normalizer, which is proportional to the nuisance parameters, and placing the original estimate in the numerator and the self-normalizer in the denominator. The nuisance parameters then cancel out, which leads to an asymptotically pivotal distribution. This methodology provides a powerful tool for statistical inference under complex data, particularly in the context of LDP frameworks where obtaining accurate original data or consistently estimating nuisance parameters without an additional privacy budget is challenging.

Efficient computation is essential for the practicality of LDP algorithms, as large sample sizes are necessary to counteract the effects of local perturbations and achieve optimal performance. Meanwhile, online computation is another valuable attribute of LDP algorithms, as it reduces storage requirements and diminishes risks associated with information storage. Early attempts to introduce online computation to DP algorithms can be traced back to Jain et al. (2012), where additive Gaussian noise was injected into the gradient to provide DP protection. Later, Agarwal & Singh (2017) gave an online linear optimization DP algorithm with optimal regret bounds. The concept of online computation has also been incorporated into federated learning, as discussed by Wei et al. (2020). More recently, Lee et al. (2022) facilitated online computation for a random scaling quantity using only the trajectory of stochastic optimization, effectively eliminating the need for past state storage and enhancing computational efficiency. In contrast to traditional studies on DP online algorithms, our emphasis is on harnessing online computation for convenience. Our theoretical analysis concentrates on the statistical properties of the proposed estimators, such as consistency and asymptotic normality.

In this paper, our contributions are listed as follows.

- We propose a new LDP algorithm for population quantile estimation that does not require a trusted curator. Under some mild conditions, we derive the consistency and asymptotic normality of the proposed quantile estimator.

- We construct the confidence interval of the population quantiles via self-normalization, which eliminates the need for estimating the asymptotic variance in the limiting distribution. Furthermore, this procedure can be implemented online without storing all past statuses.

- We also discuss the optimality of the proposed algorithm. By combining it with the central limit theorem of GDP, we demonstrate that our algorithm for median estimation achieves the lower bound of asymptotic variance among all median estimators constructed by a binary random response-based sequential interactive mechanism under LDP.

The structure of this paper is as follows. We begin by providing an overview of the concepts of central DP and LDP. We then present our proposed methodology, detailing the algorithms and their corresponding theoretical results. Finally, we provide experimental results to demonstrate the effectiveness of our approach.

2 Preliminaries

2.1 Central Differential Privacy

Definition 2.1.

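(Dwork et al., 2006) A randomized algorithm $\mathcal{A}$, taking a dataset consisting of individuals as its input, is $(\epsilon,\delta)$-differentially private if, for any pair of datasets $S$ and $S'$ that differ in the record of a single individual and any event $E$, it satisfies the condition below:

$$\mathbb{P}[\mathcal{A}(S)\in E] \le e^{\epsilon}\,\mathbb{P}[\mathcal{A}(S')\in E] + \delta.$$

When $\delta = 0$, $\mathcal{A}$ is called $\epsilon$-Differentially Private ($\epsilon$-DP).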

The concept of DP only imposes constraints on the output distribution of an algorithm $\mathcal{A}$, rather than placing restrictions on the credibility of the entity running the algorithm or protecting the internal states of $\mathcal{A}$. The existence of the curator who has access to the raw data set is why this approach is known as "Central" DP. The curator simplifies the algorithm design and often leads to an asymptotically negligible loss of accuracy from privacy protection (Cai et al., 2021).

2.2 Local Differential Privacy

Despite the varying definitions of LDP due to the level of interactions, all of them depend on the following concept called an $(\epsilon,\delta)$-randomizer.

Definition 2.2.

(Joseph et al., 2019) An $(\epsilon,\delta)$-randomizer is an $(\epsilon,\delta)$-differentially private function taking a single data point as input.

The definition of a randomizer is mathematically a special case of central DP. The main difference between central and local DP is the role of the curator, which is further determined by the level of interactions allowed. In LDP, the curator coordinates interactions between users, each of whom holds their own private information. In each round of interaction, the curator selects a user and assigns them a randomizer. If the randomizer's privacy parameters are allowed by the experiment setting, the user will run the randomizer on their private information and release the output to the curator.

The level of interactions can vary from full-interactive, where the curator can choose the randomizer and the next user based on all previous interactions, to sequential (also called one-shot) interactive, where the curator is not allowed to pick one user twice but is still able to adaptively pick the next user-randomizer pair based on all previous interactions, to non-interactive, where adaptivity is forbidden and all user-randomizer pairs must be determined before any information is collected. If the curator is further forbidden from varying the randomizer and from tracing outputs back to a specific user, this leads to another interesting setting called shuffle-DP (Cheu et al., 2019).

2.3 Notations

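In this paper, we employ the following notations. $\mathbb{1}(\cdot)$ is the indicator function and $\lfloor x \rfloor$ denotes the largest integer that does not exceed $x$. $O(\cdot)$ (or $o(\cdot)$) denotes a sequence of real numbers of a certain order. For instance, $o(a_n)$ means a smaller order than $a_n$, and $O_{a.s.}(\cdot)$ (or $o_{a.s.}(\cdot)$) means $O(\cdot)$ (or $o(\cdot)$) almost surely. For sequences $a_n$ and $b_n$, denote $a_n \asymp b_n$ if there exist positive constants $c$ and $C$ such that $c\,b_n \le a_n \le C\,b_n$. The symbol $\Rightarrow$ means weak convergence or convergence in distribution.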

3 Algorithm and Main Results

3.1 Algorithm

Let $X_1,\dots,X_n$ be independent and identically distributed (i.i.d.) random variables defined on $\mathbb{R}$ representing the private information of each user, with target quantile $\tau\in(0,1)$ and corresponding true value $Q$, i.e., $\mathbb{P}(X_i\le Q)=\tau$. To ensure the uniqueness of quantiles, we assume the $X_i$'s are continuous random variables with positive density at the target quantile. In practice, we can perturb the data by a small amount of additive data-independent noise to remove atoms in the distribution, as is done in (Gillenwater et al., 2021).

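The design of the local randomizer is crucial for LDP mechanisms, as it must properly choose the inquiry to the user in order to maximize the information gathered about the target quantile without violating privacy conditions. The population quantile can be considered as a minimizer of the expected check loss function, where $\tau$ denotes the target quantile level, $Q$ its true value, and $X$ a generic observation:

$$Q = \arg\min_{q\in\mathbb{R}} \mathbb{E}\left[\rho_\tau(X-q)\right], \qquad \rho_\tau(u) = u\left(\tau - \mathbb{1}(u<0)\right).$$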

In the non-DP case, a known solution is the use of stochastic gradient descent, as outlined in (Joseph & Bhatnagar, 2015). It is important to note that for each point, the gradient it contributes is purely determined by the binary variable representing whether the value is greater than the current iterate or not. This motivates us to modify the stochastic gradient descent process by adding a local randomization process, resulting in Algorithms 1 and 2 outlined below:

In Algorithm 1, generating the random fallback response before the if-condition fork may seem wasteful, but it prevents side-channel attacks such as inferring the true value from the timing of the response (Coppens et al., 2009; Lawson, 2009). Algorithm 2 collects the random responses and generates the next inquiry accordingly. Therefore, Algorithm 2 satisfies the definition of sequential interactive local DP.
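As a rough illustration of the two steps described above, the sketch below pairs a binary local randomizer with a sequential stochastic-gradient update. It is not a transcription of Algorithms 1 and 2, whose listings are not reproduced in this text. The function names, the fair-coin fallback, the centering constant `p_one`, and the step-size schedule `c / n**a` are all assumptions made for illustration.

```python
import random


def local_randomizer(x, q, r, rng):
    """Answer "is x > q?" truthfully with probability r, otherwise with a fair coin.

    Both random draws happen before any branching, so the amount of work
    does not depend on the private value x (assumed design, see lead-in).
    """
    truthful = rng.random() < r
    coin = rng.random() < 0.5
    answer = x > q
    return int(answer if truthful else coin)


def ldp_quantile_sgd(samples, tau, r, q0=0.0, a=0.51, c=2.0, seed=0):
    """Sequentially query each user once and return (last iterate, running average).

    The schedule c / n**a is a hypothetical choice, not the paper's.
    """
    rng = random.Random(seed)
    q, q_bar = q0, 0.0
    # Probability of receiving a "1" when q sits exactly at the tau-quantile.
    p_one = r * (1.0 - tau) + (1.0 - r) / 2.0
    for n, x in enumerate(samples, start=1):
        s = local_randomizer(x, q, r, rng)
        step = c / n ** a
        # Expected move is step * r * (tau - F(q)): upward while q is too small.
        q += step * (s - p_one)
        # Running average of the iterates, kept online.
        q_bar += (q - q_bar) / n
    return q, q_bar
```

Each user is queried exactly once and only the single bit `s` leaves the user's side, which is the property the sequential interactive setting relies on.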

The following algorithm can be used when estimations and confidence intervals are required. These values are not calculated at every step to minimize computational expenses.

The use of dichotomous inquiry in data privacy brings multiple advantages. One benefit is the reduced communication cost, as it only takes one bit to respond. Additionally, the binary response can make full use of the DP budget, as opposed to methods such as the Laplace mechanism, which may provide unnecessary privacy guarantees beyond $\epsilon$-DP, as outlined in Theorem 3 in (Balle et al., 2018) and Theorem 2.1 in (Liu et al., 2022).

Furthermore, people tend to be more comfortable answering dichotomous questions than open-ended ones (Brown et al., 1996): a choice between two options may be perceived as less threatening than an open-ended question that requires a more detailed and nuanced response. In addition, the binary approach is easy for users to understand. With a proper choice of the truthful response rate $r$, the randomized response can be simulated through coin flips or dice rolls, allowing users to understand it fully and even to "run" it without the help of electronic devices. This is in contrast to DP mechanisms that draw from random distributions on the real numbers. Because computers are finite, the imperfection of floating-point arithmetic leads to serious risks with effective exploits. For more information, please refer to (Mironov, 2012; Jin et al., 2021; Haney et al., 2022).

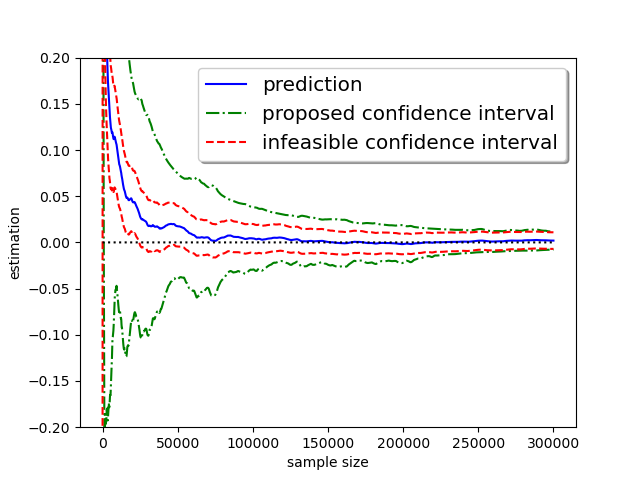

Before discussing the specific characteristics of our estimator, we first demonstrate its performance through a sample trajectory. The experiment is conducted with a truthful response rate of 0.5, which means half of the responses are purely random. The objective is to estimate the median from i.i.d. samples. The true underlying distribution is a standard normal distribution.

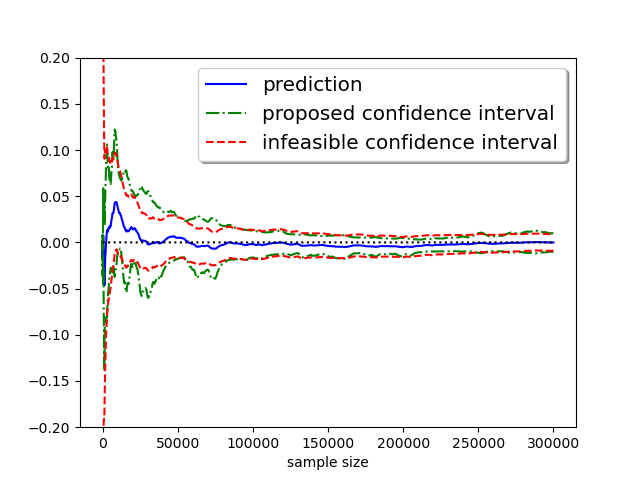

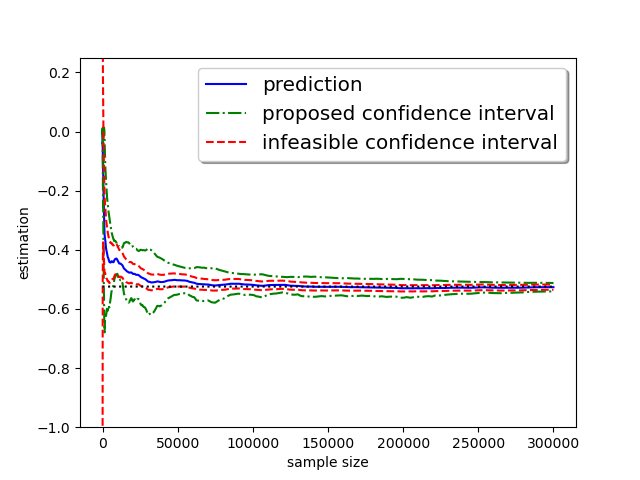

It can be seen from Figure 1 that the proposed estimator converges to the true value, and both the infeasible and the proposed confidence intervals, defined later, contain the true value at a slightly larger sample size. Also, the proposed confidence intervals are highly competitive with the infeasible one in width. Refer to Figures 5 and 6 for convergence trajectories under different initializations or target quantiles.

Next, we show the LDP property of our algorithm:

Theorem 3.1.

Algorithm 1 is an $\epsilon$-randomizer with $\epsilon = \log\frac{1+r}{1-r}$.

Proof.

See Appendix C.1. ∎

Algorithm 2 adaptively selects the next randomizer, determined by the query point passed to Algorithm 1, based on its internal state. However, it never revisits previous users. As a result, Algorithm 2 satisfies sequential interactive $\epsilon$-LDP, where $\epsilon = \log\frac{1+r}{1-r}$ (equivalently, $r = \frac{e^{\epsilon}-1}{e^{\epsilon}+1}$).

Throughout the remainder of this paper, we will use the truthful response rate $r$ to represent the privacy budget, as opposed to the more standard $\epsilon$. This choice is made for the following reasons:

In the context of LDP, it is crucial to ensure understanding and acceptance by end-users who may not possess expertise in the field. The truthful response rate, denoted by $r$, has a more intuitive interpretation. Additionally, $r$ appears in multiple results presented in this paper, and maintaining this form allows for a more direct presentation. If necessary, the results can be easily converted by replacing all instances of $r$ with $(e^{\epsilon}-1)/(e^{\epsilon}+1)$. For a conversion table, please refer to Table 5.
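The conversion between the truthful response rate and the privacy budget can be sketched as follows. The formula used here is the standard one for a binary randomized response that answers truthfully with probability `r` and otherwise flips a fair coin. That mechanism is an assumption about Algorithm 1, and the function names are hypothetical.

```python
import math


def epsilon_from_rate(r):
    """Privacy budget of the assumed randomized response, for 0 <= r < 1."""
    return math.log((1.0 + r) / (1.0 - r))


def rate_from_epsilon(eps):
    """Inverse map: truthful response rate that spends exactly eps."""
    return math.expm1(eps) / (math.exp(eps) + 1.0)


def response_probabilities(truth, r):
    """Return (P[output = 0], P[output = 1]) given the true binary answer."""
    p_match = r + (1.0 - r) / 2.0  # truthful answer, or the coin happens to agree
    if truth == 1:
        return (1.0 - p_match, p_match)
    return (p_match, 1.0 - p_match)
```

Under these assumptions a rate of 0.5 corresponds to a budget of $\log 3 \approx 1.10$, because the two possible inputs produce the same output with probabilities 0.75 and 0.25.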

3.2 Consistency

To discuss the asymptotic properties of estimator , we rewrite it as a recursive equation. Let and be the i.i.d. Bernoulli sequences with

For ,

| (1) |

where the step size , satisfies

The step size is vital for the convergence of , but it has a relatively minor effect on . The following theorem guarantees consistency:

Theorem 3.2.

For increasing positive number , satisfied

the -step output enjoys that

Proof.

See Appendix C.2. ∎

In particular, if , for some constant and , then for some , and for the sake of simplicity, we will set the step sizes as .

3.3 Asymptotic Normality

Next, the asymptotic normality will be discussed.

Theorem 3.3.

If , then

where is the value on for density function of .

Proof.

See Appendix C.2. ∎

Notice that the conditions on in Theorem 3.2 and Theorem 3.3 are different. It is possible that fails to converge to , but still enjoys asymptotic normality. Following Theorem 3.3, one can construct the confidence interval of , if can be obtained or estimated by . Denote as the upper quantile of the standard normal distribution. The infeasible confidence interval with significance level is:

| (2) |

However, obtaining a consistent estimator , such as using non-parametric methods under our differential privacy framework, is not straightforward, since we can only obtain the binary sequence for protecting privacy, and the original data set cannot be accessed directly.

An alternative approach to estimating the nuisance parameter is to use bootstrap methods to simulate the asymptotic distribution. Traditional bootstrap methods that rely on re-sampling are not suitable for the stochastic gradient descent method because they fail to recover the special dependence structure defined in (1).

Recently, Fang et al. (2018) proposed online bootstrap confidence intervals for stochastic gradient descent, which involve recursively updating randomly perturbed stochastic estimates. Although this approach performs well when there are no DP constraints, it requires multiple interactions with the users and will therefore blow up the privacy budget.

3.4 Inference via Self-normalization

To overcome the difficulties above, we propose a novel inference procedure of quantiles under the LDP framework via self-normalization, which will avoid estimating the nuisance parameter . We hope to construct an estimator that is proportional to the nuisance parameters. To approach that, we will first establish further theoretical properties of the proposed estimator . Define the process , .

Theorem 3.4.

If , then

where is the Brownian motion in .

Proof.

See Appendix C.2. ∎

Notice that Theorem 3.3 is the special case of Theorem 3.4 when . Then, following Theorem 3.4, we define the self-normalizer:

By the continuous mapping theorem, we can derive:

where the asymptotic distribution is not associated with any unknown parameters, and its quantile can be computed by Monte Carlo simulation. Therefore, we have constructed an asymptotically pivotal quantity. Denote the quantile of , the self-normalized confidence interval of is constructed by:
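The Monte Carlo step mentioned above can be sketched as follows. Since the displayed formulas are not reproduced in this text, the sketch assumes the pivotal limit has the form $W(1)\big/\sqrt{\int_0^1 (W(t)-tW(1))^2\,dt}$ with $W$ a standard Brownian motion, which is the form that appears in the random-scaling literature cited in this section. The function name and the discretization are hypothetical.

```python
import math
import random


def simulate_pivot_quantile(level, n_paths=2000, n_steps=200, seed=0):
    """Approximate a quantile of W(1) / sqrt(int_0^1 (W(t) - t W(1))^2 dt).

    Brownian motion is approximated by a Gaussian random walk on a grid of
    n_steps points, and the integral by a Riemann sum (assumed limit form).
    """
    rng = random.Random(seed)
    sd = math.sqrt(1.0 / n_steps)
    ratios = []
    for _ in range(n_paths):
        w = 0.0
        path = []
        for _ in range(n_steps):
            w += rng.gauss(0.0, sd)
            path.append(w)
        w1 = path[-1]
        # Riemann sum of the squared bridge-like process W(t) - t W(1).
        integral = sum(
            (wk - (k / n_steps) * w1) ** 2 for k, wk in enumerate(path, start=1)
        ) / n_steps
        ratios.append(w1 / math.sqrt(integral))
    ratios.sort()
    idx = min(n_paths - 1, int(level * n_paths))
    return ratios[idx]
```

Because the limit is symmetric about zero, a two-sided interval at significance level $\alpha$ would use the simulated quantile at level $1-\alpha/2$. More paths and a finer grid reduce the simulation error at the cost of run time.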

| (3) |

As noted by (Shao, 2015), the distribution of has a heavier tail than that of the standard normal distribution, which is analogous to the heavier tail of the $t$-distribution compared to the standard normal distribution, resulting in a wider but not conservative corresponding confidence interval. However, the average width of the confidence interval constructed through self-normalization is not excessively large when compared to the infeasible confidence interval, as demonstrated by numerical experiments in Figure 1. Furthermore, the construction of an asymptotically pivotal quantity is not unique. See Appendix B for examples of other possibilities.

Whether there are theoretical advantages between the different constructions of the self-normalizer is still open to discussion, but according to (Lee et al., 2022), the proposed self-normalizer can be computed in a fully online fashion and is computationally efficient, as outlined in Algorithms 2 and 3. The algorithm only needs to store a single integer and four floating-point numbers, and performs only about a dozen arithmetic operations.
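A minimal sketch of such an online computation is given below. It assumes the self-normalizer has the random-scaling form $V_n = n^{-2}\sum_{s=1}^{n} s^2(\bar q_s - \bar q_n)^2$, where $\bar q_s$ is the running average of the first $s$ iterates. That form is an assumption, because the display is not reproduced in this text. The class and attribute names are hypothetical.

```python
import math


class OnlineSelfNormalizer:
    """Tracks the running average and the assumed self-normalizer in O(1) memory."""

    def __init__(self):
        self.n = 0        # number of iterates seen (the single integer)
        self.q_bar = 0.0  # running average of the iterates
        self.sum_a = 0.0  # sum over s of s^2 * q_bar_s^2
        self.sum_b = 0.0  # sum over s of s^2 * q_bar_s

    def update(self, q):
        """Absorb the newest iterate q and refresh the three accumulators."""
        self.n += 1
        self.q_bar += (q - self.q_bar) / self.n
        w = float(self.n) ** 2
        self.sum_a += w * self.q_bar ** 2
        self.sum_b += w * self.q_bar

    def value(self):
        """V_n obtained by expanding the square, so no past averages are stored."""
        n = self.n
        sum_sq = n * (n + 1) * (2 * n + 1) / 6.0  # closed form of sum of s^2
        v = self.sum_a - 2.0 * self.q_bar * self.sum_b + self.q_bar ** 2 * sum_sq
        return max(v, 0.0) / n ** 2

    def interval(self, critical_value):
        """Interval q_bar +/- critical_value * sqrt(V_n / n)."""
        half = critical_value * math.sqrt(self.value() / self.n)
        return self.q_bar - half, self.q_bar + half
```

Together with the current iterate held by the update loop, this state amounts to one integer and four floating-point numbers. The `critical_value` argument would be a quantile of the pivotal limit distribution obtained by simulation.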

3.5 Discussion of Optimality

In this subsection, we will discuss the optimality of the proposed algorithm. To generalize the setting, we consider all binary random response-based sequential interactive mechanisms. The random response mechanism can be written as the following :

Let be a collection of binary query functions, which means , for some subset . In the sequential interactive LDP setting, the curator will generate its output based on the transcript and the choice of may depend on the transcript up to this point:. Notice that the Algorithm 1 is a special case where , and is given by

We aim to determine a lower bound for the estimation variance. Therefore, any lower bounds derived under specific conditions also serve as a general lower bound for the estimation variance. To demonstrate this, we will present a pair of distributions with distinct medians that are, to the best of our knowledge, the most indistinguishable given randomized binary queries.

Define:

| (4) |

Let . Simple computation yields that for any

| (5) |

Interestingly, if we consider the truth as a data set containing only one data point, (5) shows that is -DP. Notice that the transcript is a -fold adaptive composition (Kairouz et al., 2015) of -DP mechanisms. By Theorem 8 (Dong et al., 2021a), the transcript and all post-processing of it (Proposition 4; (Dong et al., 2021a)) asymptotically satisfies the Gaussian Differential Privacy condition with (or briefly $\mu$-GDP).

We will now examine the limit on the best possible variance imposed by the $\mu$-GDP condition. Denote the estimator of median as . First, we will consider asymptotically normal, unbiased, shift-invariant estimators of the median. By restricting our discussion to unbiased, shift-invariant estimators, we ensure that no estimator has an unfair advantage by favoring specific values. Under the null hypothesis, for the standard deviation of , one has that

and under the alternative hypothesis,

The $\mu$-GDP condition implies that for sufficiently large , (see Appendix C.3). By plugging in the values and , we deduce that:

which gives us an asymptotic lower bound of the variance: . This lower bound matches the asymptotic variance obtained in Theorem 3.3, showing the optimality of our approach. Although most estimators we are interested in have an asymptotically normal distribution, we wish to generalize the minimal variance result to other families as the theorem below.
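The step from the GDP condition to a variance bound can be sketched as follows. The symbols $Q_0$ and $Q_1$ (the medians under the two hypotheses), $\sigma_n$ (the common standard deviation of the estimator), and $G_\mu$ (the Gaussian trade-off function) are notation introduced here for illustration, and the estimator is assumed to be exactly normal for simplicity.

```latex
\begin{align*}
&\text{Testing } N(Q_0,\sigma_n^2) \text{ against } N(Q_1,\sigma_n^2)
  \text{ has trade-off function } G_{|Q_1-Q_0|/\sigma_n},
  \quad G_\mu(\alpha) = \Phi\!\left(\Phi^{-1}(1-\alpha)-\mu\right). \\
&\text{Post-processing cannot make a } \mu\text{-GDP transcript easier to distinguish, so }
  G_{|Q_1-Q_0|/\sigma_n} \ge G_\mu \text{ pointwise.} \\
&\text{Since } G_\mu \text{ is decreasing in } \mu: \quad
  \frac{|Q_1-Q_0|}{\sigma_n} \le \mu
  \quad\Longrightarrow\quad
  \sigma_n \ge \frac{|Q_1-Q_0|}{\mu}.
\end{align*}
```

In words, an estimator that separated the two hypotheses more sharply than $\mu$ standard deviations would contradict the privacy guarantee, so the gap between the two medians divided by $\mu$ is a floor on the standard deviation.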

Theorem 3.5.

If is a median estimator based on the random response of binary-based sequential interactive inquiries such that:

where has a log-concave density on such that , , and .

Then,

The minimal variance result can be attributed to two factors. First, in Appendix C.3, we demonstrate that asymptotic GDP imposes a condition on the variance of estimators that follow a normal distribution. This condition serves as a lower bound for $\mu$-GDP estimators, without relying on any specific mechanism assumption. Second, the relaxation from the assumption of normality to milder conditions on the function is a consequence of Theorem 1.2 in (Dong et al., 2021b). This theorem establishes that among all $\mu$-GDP estimators satisfying the aforementioned conditions, the variance is lower bounded by . This lower bound is attainable when the underlying distribution is normal.

4 Experiments

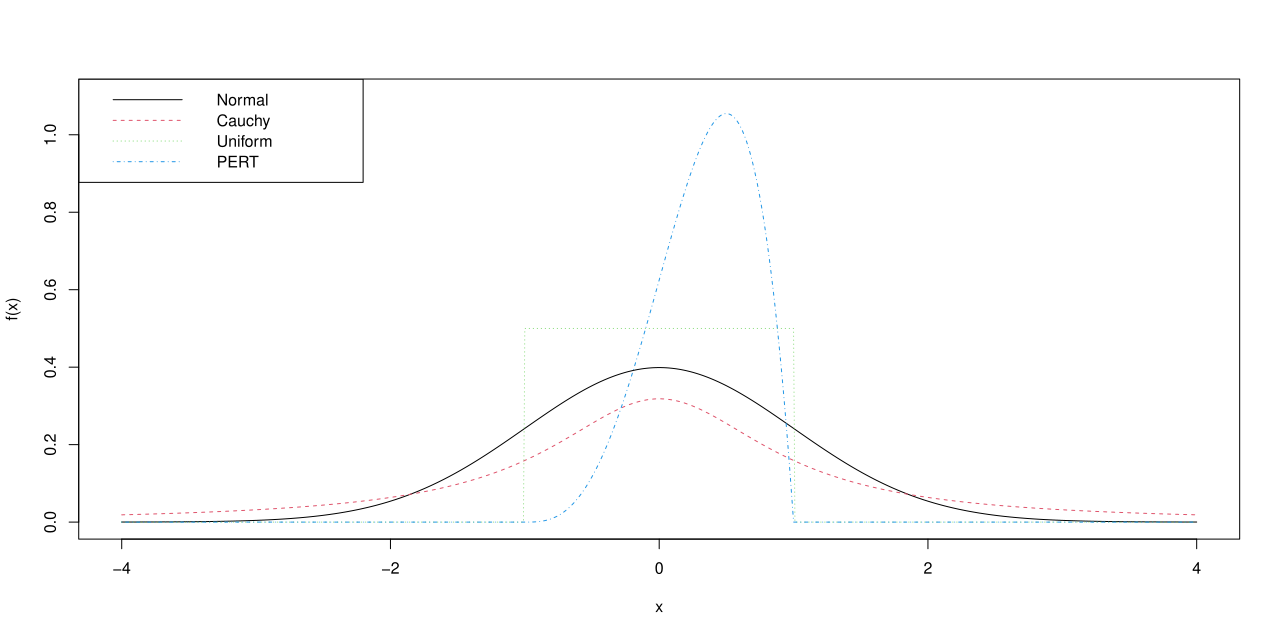

We evaluate the performance of our algorithms using a variety of distributions. The data come from four cases: standard Normal , Uniform , standard Cauchy , and PERT distribution (Clark, 1962) with probability density function:

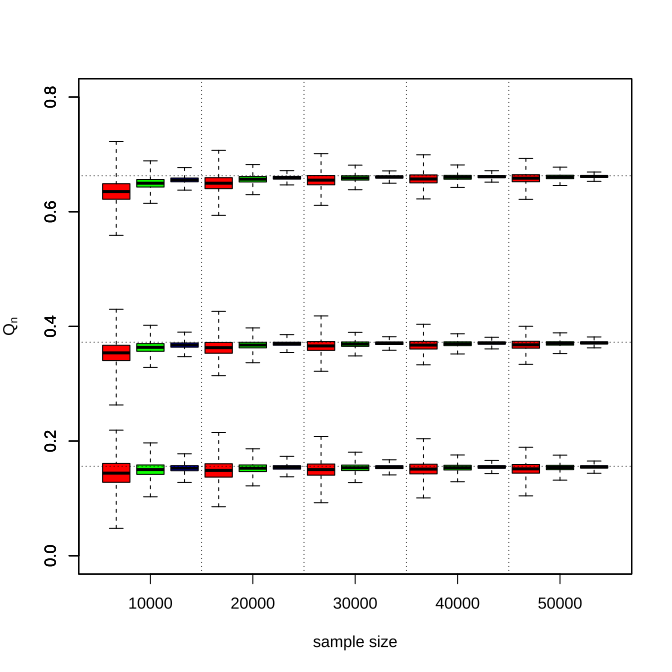

These cases represent situations with heavy tails, compact or non-compact support, and asymmetric distributions commonly found in practice, as shown in Figure 2.

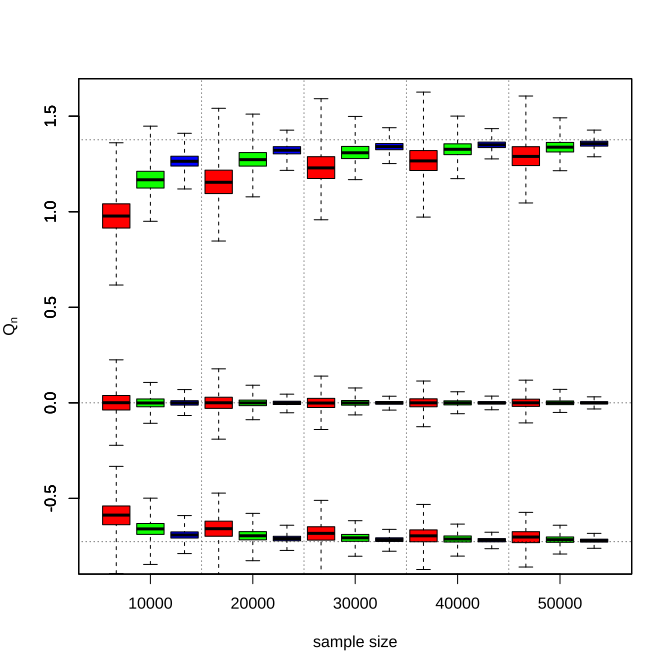

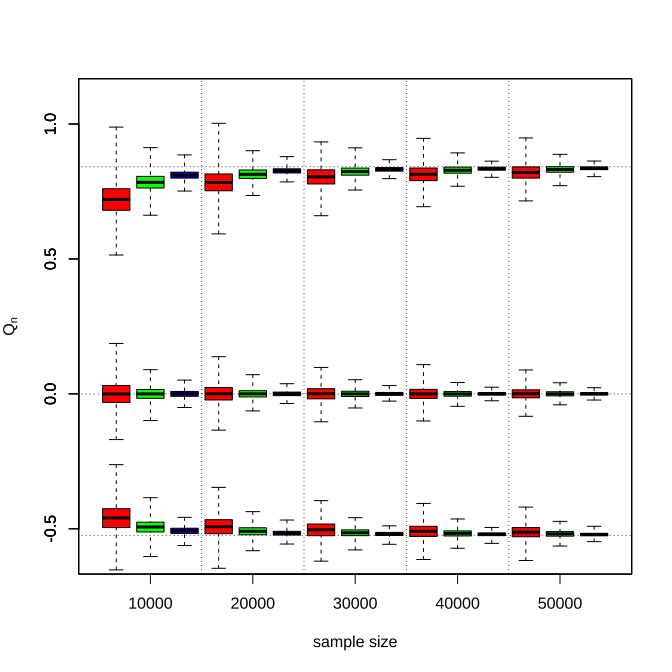

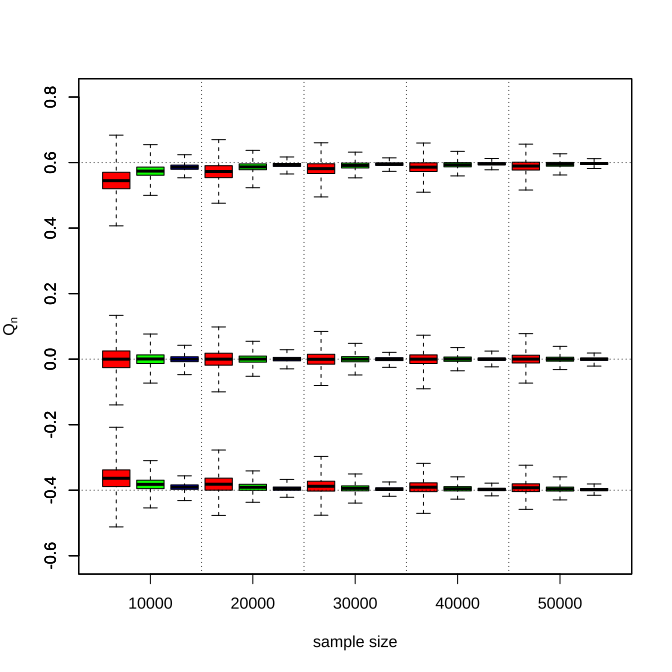

The target quantiles are , and the truthful response rate , which correspond to the privacy budgets respectively. We use the step sizes for all experiments, which satisfy the assumptions of Theorems 3.3 and 3.4. The range of sample size is , the initial value , and the number of replications is . The experiments at different sample sizes are run independently from scratch to eliminate the correlation among experiments.

To show the consistency of the proposed estimator , Figure 3 displays the box plots of estimator under Normal distribution with sample size . As the sample size increases, the estimation becomes closer to the true values , the corresponding standard errors decay across all settings, and the truthful response rate leads to significantly better performance in small finite sample sizes but has diminishing effects afterward. Meanwhile, we can also see that the proximity between the true target value and the initialization is beneficial to early performances. But in an asymptotic view, the proposed algorithm is insensitive to the initial value selection.

We also demonstrate the empirical coverage rate and mean absolute error of the developed method in Table 1. The empirical coverage rate of the proposed method becomes closer to the nominal confidence level as the sample size increases in most cases and the mean absolute error decreases to zero. The corresponding figures and tables of other distributions can be found in Appendix A, which describes a similar phenomenon.
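The two summary statistics reported here can be computed from independent replications as in the sketch below. The function name and the input layout, one `(estimate, lower, upper)` triple per replication, are assumptions made for illustration.

```python
def coverage_and_mae(results, truth):
    """Empirical coverage rate and mean absolute error over replications.

    results: iterable of (estimate, lower, upper) triples, one per replication.
    truth:   the true quantile value being estimated.
    """
    results = list(results)
    covered = sum(1 for _, lower, upper in results if lower <= truth <= upper)
    abs_err = sum(abs(estimate - truth) for estimate, _, _ in results)
    return covered / len(results), abs_err / len(results)
```

A coverage rate close to the nominal confidence level, together with a mean absolute error that shrinks as the sample size grows, is the pattern the asymptotic theory predicts.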

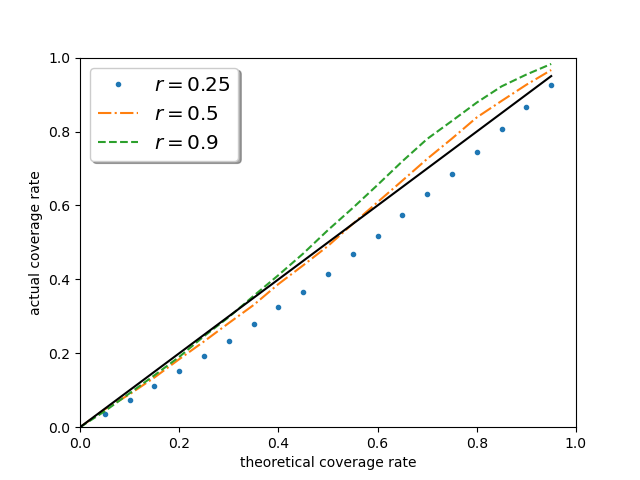

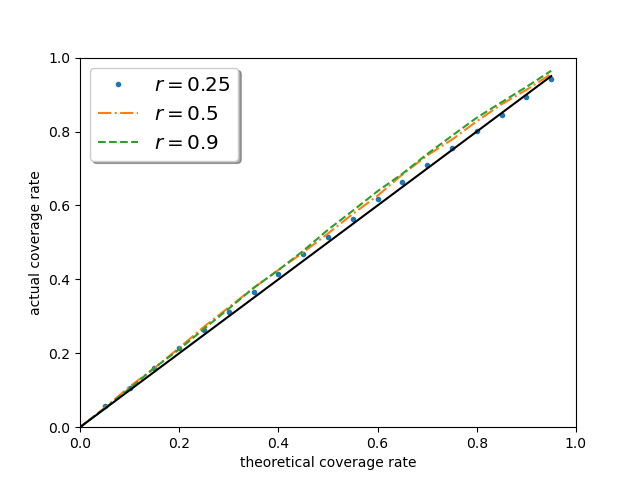

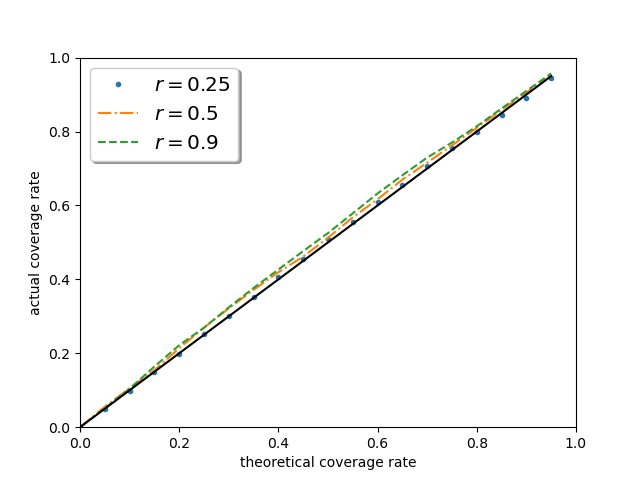

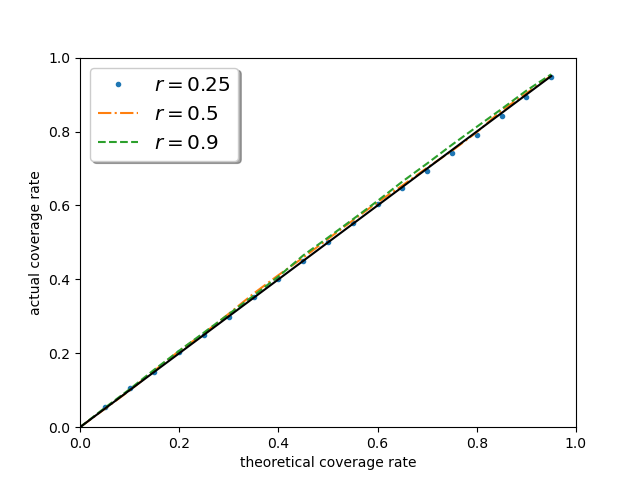

Figure 4 investigates the performance of the proposed confidence interval in other nominal levels. One can discover that the curves of the empirical coverage rate are getting closer to uniformly, as sample size increases in all privacy budget settings, which reveals the performance of the proposed method is irrelevant to the pre-determined significance level. It is worth noting that when , the effective sample size is of the original one, yet the performance of the proposed method remains excellent, which strongly supports the asymptotic theory.

| Sample size | Target quantile | Coverage (MAE) | Coverage (MAE) | Coverage (MAE) |
|---|---|---|---|---|
| 10000 | 0.3 | 0.926(0.069) | 0.965(0.034) | 0.982(0.018) |
| | 0.5 | 0.834(0.037) | 0.897(0.019) | 0.911(0.011) |
| | 0.8 | 0.962(0.121) | 0.992(0.058) | 0.999(0.031) |
| 20000 | 0.3 | 0.936(0.041) | 0.958(0.020) | 0.971(0.011) |
| | 0.5 | 0.888(0.027) | 0.915(0.014) | 0.936(0.008) |
| | 0.8 | 0.965(0.063) | 0.984(0.030) | 0.994(0.016) |
| 40000 | 0.3 | 0.943(0.025) | 0.958(0.013) | 0.967(0.007) |
| | 0.5 | 0.910(0.020) | 0.931(0.010) | 0.937(0.006) |
| | 0.8 | 0.966(0.035) | 0.978(0.017) | 0.984(0.009) |
| 100000 | 0.3 | 0.946(0.015) | 0.954(0.007) | 0.958(0.004) |
| | 0.5 | 0.929(0.013) | 0.944(0.006) | 0.941(0.004) |
| | 0.8 | 0.954(0.019) | 0.965(0.009) | 0.973(0.005) |
| 200000 | 0.3 | 0.947(0.010) | 0.951(0.005) | 0.956(0.003) |
| | 0.5 | 0.942(0.009) | 0.949(0.004) | 0.947(0.002) |
| | 0.8 | 0.956(0.013) | 0.960(0.006) | 0.964(0.003) |
| 400000 | 0.3 | 0.945(0.007) | 0.953(0.004) | 0.948(0.002) |
| | 0.5 | 0.942(0.006) | 0.949(0.003) | 0.944(0.002) |
| | 0.8 | 0.952(0.009) | 0.957(0.004) | 0.958(0.002) |

5 Conclusion and Future Works

In this paper, we proposed a novel algorithm for estimating population quantiles under the settings of LDP. The core design idea of the algorithm is based on using dichotomous inquiry. The proposed estimator enjoys excellent theoretical properties, including consistency, asymptotic normality, and optimality in some special cases. Importantly, by applying the technique of self-normalization to cancel out the nuisance parameters, we can construct confidence intervals of population quantiles for statistical inference. Finally, our algorithm is designed in an online setting, making it suitable for handling large streaming data without the need for data storage. Extensive simulation studies reveal a positive confirmation of the asymptotic theory.

Despite the contributions above, this article still leaves many exciting questions unanswered, which opens many avenues for future research. A general tight lower bound for other quantiles under our setting is still undetermined, and we have yet to consider other variants of LDP (e.g. full-interactive). Other directions include exploring data that is not independently and identically distributed, such as time series or spatial series data. Additionally, the quantile of interest may be influenced by other covariates, leading to the study of LDP quantile regression. This paper focuses on estimating quantiles for a specific sample size , with the potential for developing consistent bounds, resulting in the transition from quantile confidence intervals to confidence sequences.

References

- Agarwal & Singh (2017) Agarwal, N. and Singh, K. The price of differential privacy for online learning. In Precup, D. and Teh, Y. W. (eds.), Proceedings of the 34th International Conference on Machine Learning, volume 70 of Proceedings of Machine Learning Research, pp. 32–40. PMLR, 06–11 Aug 2017.

- Alabi et al. (2022) Alabi, D., Ben-Eliezer, O., and Chaturvedi, A. Bounded space differentially private quantiles. arXiv preprint arXiv:2201.03380, 2022.

- Amin et al. (2020) Amin, K., Joseph, M., and Mao, J. Pan-private uniformity testing. In Abernethy, J. and Agarwal, S. (eds.), Proceedings of Thirty Third Conference on Learning Theory, volume 125 of Proceedings of Machine Learning Research, pp. 183–218. PMLR, 09–12 Jul 2020.

- Balle et al. (2018) Balle, B., Barthe, G., and Gaboardi, M. Privacy amplification by subsampling: Tight analyses via couplings and divergences. Advances in Neural Information Processing Systems, 31, 2018.

- Ben-Eliezer et al. (2022) Ben-Eliezer, O., Mikulincer, D., and Zadik, I. Archimedes meets privacy: On privately estimating quantiles in high dimensions under minimal assumptions. arXiv preprint arXiv:2208.07438, 2022.

- Brown et al. (1996) Brown, T. C., Champ, P. A., Bishop, R. C., and McCollum, D. W. Which response format reveals the truth about donations to a public good? Land Economics, pp. 152–166, 1996.

- Cai et al. (2021) Cai, T. T., Wang, Y., and Zhang, L. The cost of privacy: Optimal rates of convergence for parameter estimation with differential privacy. The Annals of Statistics, 49(5):2825–2850, 2021.

- Cheu et al. (2019) Cheu, A., Smith, A., Ullman, J., Zeber, D., and Zhilyaev, M. Distributed differential privacy via shuffling. In Advances in Cryptology–EUROCRYPT 2019: 38th Annual International Conference on the Theory and Applications of Cryptographic Techniques, Darmstadt, Germany, May 19–23, 2019, Proceedings, Part I 38, pp. 375–403. Springer, 2019.

- Clark (1962) Clark, C. E. The PERT model for the distribution of an activity time. Operations Research, 10(3):405–406, 1962.

- Coppens et al. (2009) Coppens, B., Verbauwhede, I., De Bosschere, K., and De Sutter, B. Practical mitigations for timing-based side-channel attacks on modern x86 processors. In 2009 30th IEEE Symposium on Security and Privacy, pp. 45–60, 2009. doi: 10.1109/SP.2009.19.

- Ding et al. (2017) Ding, B., Kulkarni, J., and Yekhanin, S. Collecting telemetry data privately. Advances in Neural Information Processing Systems, 30, 2017.

- Dippon (1998) Dippon, J. Globally convergent stochastic optimization with optimal asymptotic distribution. Journal of Applied Probability, 35(2):395–406, 1998.

- Dong et al. (2021a) Dong, J., Roth, A., and Su, W. J. Gaussian differential privacy. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 2021a.

- Dong et al. (2021b) Dong, J., Su, W., and Zhang, L. A central limit theorem for differentially private query answering. In Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P., and Vaughan, J. W. (eds.), Advances in Neural Information Processing Systems, volume 34, pp. 14759–14770. Curran Associates, Inc., 2021b.

- Dwork & Lei (2009) Dwork, C. and Lei, J. Differential privacy and robust statistics. In Proceedings of the forty-first annual ACM symposium on Theory of computing, pp. 371–380, 2009.

- Dwork et al. (2006) Dwork, C., Kenthapadi, K., McSherry, F., Mironov, I., and Naor, M. Our data, ourselves: Privacy via distributed noise generation. In Annual International Conference on the Theory and Applications of Cryptographic Techniques, pp. 486–503. Springer, 2006.

- Erlingsson et al. (2014) Erlingsson, Ú., Pihur, V., and Korolova, A. RAPPOR: Randomized aggregatable privacy-preserving ordinal response. In Proceedings of the 2014 ACM SIGSAC conference on computer and communications security, pp. 1054–1067, 2014.

- Evfimievski et al. (2003) Evfimievski, A., Gehrke, J., and Srikant, R. Limiting privacy breaches in privacy preserving data mining. In Proceedings of the twenty-second ACM SIGMOD-SIGACT-SIGART symposium on Principles of database systems, pp. 211–222, 2003.

- Fang et al. (2018) Fang, Y., Xu, J., and Yang, L. Online bootstrap confidence intervals for the stochastic gradient descent estimator. The Journal of Machine Learning Research, 19(1):3053–3073, 2018.

- Gillenwater et al. (2021) Gillenwater, J., Joseph, M., and Kulesza, A. Differentially private quantiles. In Meila, M. and Zhang, T. (eds.), Proceedings of the 38th International Conference on Machine Learning, volume 139 of Proceedings of Machine Learning Research, pp. 3713–3722. PMLR, 18–24 Jul 2021.

- Haney et al. (2022) Haney, S., Desfontaines, D., Hartman, L., Shrestha, R., and Hay, M. Precision-based attacks and interval refining: how to break, then fix, differential privacy on finite computers. arXiv preprint arXiv:2207.13793, 2022.

- Jain et al. (2012) Jain, P., Kothari, P., and Thakurta, A. Differentially private online learning. In Conference on Learning Theory, pp. 24–1. JMLR Workshop and Conference Proceedings, 2012.

- Jin et al. (2021) Jin, J., McMurtry, E., Rubinstein, B. I., and Ohrimenko, O. Are we there yet? Timing and floating-point attacks on differential privacy systems. arXiv preprint arXiv:2112.05307, 2021.

- Joseph & Bhatnagar (2015) Joseph, A. G. and Bhatnagar, S. A stochastic approximation algorithm for quantile estimation. In International Conference on Neural Information Processing, pp. 311–319. Springer, 2015.

- Joseph et al. (2019) Joseph, M., Mao, J., Neel, S., and Roth, A. The role of interactivity in local differential privacy. In 2019 IEEE 60th Annual Symposium on Foundations of Computer Science (FOCS), pp. 94–105, 2019. doi: 10.1109/FOCS.2019.00015.

- Kairouz et al. (2015) Kairouz, P., Oh, S., and Viswanath, P. The composition theorem for differential privacy. In International conference on machine learning, pp. 1376–1385. PMLR, 2015.

- Kasiviswanathan et al. (2011) Kasiviswanathan, S. P., Lee, H. K., Nissim, K., Raskhodnikova, S., and Smith, A. What can we learn privately? SIAM Journal on Computing, 40(3):793–826, 2011.

- Langley (2000) Langley, P. Crafting papers on machine learning. In Langley, P. (ed.), Proceedings of the 17th International Conference on Machine Learning (ICML 2000), pp. 1207–1216, Stanford, CA, 2000. Morgan Kaufmann.

- Lawson (2009) Lawson, N. Side-channel attacks on cryptographic software. IEEE Security & Privacy, 7(6):65–68, 2009. doi: 10.1109/MSP.2009.165.

- Lee et al. (2022) Lee, S., Liao, Y., Seo, M. H., and Shin, Y. Fast and robust online inference with stochastic gradient descent via random scaling. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 36, pp. 7381–7389, 2022.

- Lei (2011) Lei, J. Differentially private M-estimators. Advances in Neural Information Processing Systems, 24, 2011.

- Liu et al. (2022) Liu, Y., Sun, K., Kong, L., and Jiang, B. Identification, amplification and measurement: A bridge to Gaussian differential privacy. Advances in Neural Information Processing Systems, 2022.

- Mironov (2012) Mironov, I. On significance of the least significant bits for differential privacy. In Proceedings of the 2012 ACM conference on Computer and communications security, pp. 650–661, 2012.

- Shao (2010) Shao, X. A self-normalized approach to confidence interval construction in time series. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 72(3):343–366, 2010.

- Shao (2015) Shao, X. Self-normalization for time series: a review of recent developments. Journal of the American Statistical Association, 110(512):1797–1817, 2015.

- Smith (2011) Smith, A. Privacy-preserving statistical estimation with optimal convergence rates. In Proceedings of the forty-third annual ACM symposium on Theory of computing, pp. 813–822, 2011.

- Warner (1965) Warner, S. L. Randomized response: A survey technique for eliminating evasive answer bias. Journal of the American Statistical Association, 60(309):63–69, 1965.

- Wei et al. (2020) Wei, K., Li, J., Ding, M., Ma, C., Yang, H. H., Farokhi, F., Jin, S., Quek, T. Q. S., and Vincent Poor, H. Federated learning with differential privacy: Algorithms and performance analysis. IEEE Transactions on Information Forensics and Security, 15:3454–3469, 2020. doi: 10.1109/TIFS.2020.2988575.

Appendix A Additional figures and tables

| | | | | |
|---|---|---|---|---|
| 10000 | 0.3 | 0.894(0.140) | 0.972(0.068) | 0.987(0.037) |
| | 0.5 | 0.807(0.045) | 0.876(0.024) | 0.906(0.014) |
| | 0.8 | 0.853(0.399) | 0.989(0.207) | 1.000(0.112) |
| 20000 | 0.3 | 0.928(0.076) | 0.966(0.037) | 0.982(0.020) |
| | 0.5 | 0.872(0.034) | 0.908(0.018) | 0.927(0.010) |
| | 0.8 | 0.950(0.219) | 0.991(0.105) | 0.998(0.055) |
| 40000 | 0.3 | 0.944(0.044) | 0.964(0.022) | 0.974(0.012) |
| | 0.5 | 0.900(0.025) | 0.926(0.012) | 0.939(0.007) |
| | 0.8 | 0.965(0.114) | 0.984(0.053) | 0.993(0.028) |
| 100000 | 0.3 | 0.944(0.025) | 0.956(0.012) | 0.963(0.007) |
| | 0.5 | 0.927(0.016) | 0.935(0.008) | 0.945(0.004) |
| | 0.8 | 0.956(0.054) | 0.970(0.026) | 0.980(0.013) |
| 200000 | 0.3 | 0.948(0.017) | 0.954(0.008) | 0.958(0.004) |
| | 0.5 | 0.936(0.011) | 0.944(0.006) | 0.945(0.003) |
| | 0.8 | 0.952(0.034) | 0.966(0.017) | 0.971(0.008) |
| 400000 | 0.3 | 0.942(0.012) | 0.954(0.006) | 0.952(0.003) |
| | 0.5 | 0.944(0.008) | 0.949(0.004) | 0.946(0.002) |
| | 0.8 | 0.948(0.023) | 0.960(0.011) | 0.961(0.005) |

| | | | | |
|---|---|---|---|---|
| 10000 | 0.3 | 0.900(0.021) | 0.927(0.011) | 0.938(0.006) |
| | 0.5 | 0.951(0.022) | 0.970(0.011) | 0.971(0.006) |
| | 0.8 | 0.990(0.029) | 0.997(0.014) | 0.998(0.008) |
| 20000 | 0.3 | 0.920(0.015) | 0.932(0.007) | 0.941(0.004) |
| | 0.5 | 0.950(0.014) | 0.957(0.007) | 0.962(0.004) |
| | 0.8 | 0.983(0.016) | 0.990(0.008) | 0.992(0.004) |
| 40000 | 0.3 | 0.927(0.011) | 0.937(0.005) | 0.936(0.003) |
| | 0.5 | 0.947(0.009) | 0.951(0.004) | 0.955(0.002) |
| | 0.8 | 0.974(0.009) | 0.978(0.005) | 0.982(0.002) |
| 100000 | 0.3 | 0.934(0.007) | 0.936(0.003) | 0.942(0.002) |
| | 0.5 | 0.948(0.005) | 0.948(0.003) | 0.956(0.001) |
| | 0.8 | 0.967(0.005) | 0.969(0.003) | 0.972(0.001) |
| 200000 | 0.3 | 0.936(0.005) | 0.935(0.002) | 0.939(0.001) |
| | 0.5 | 0.943(0.004) | 0.952(0.002) | 0.949(0.001) |
| | 0.8 | 0.960(0.004) | 0.963(0.002) | 0.964(0.001) |
| 400000 | 0.3 | 0.936(0.003) | 0.935(0.002) | 0.936(0.001) |
| | 0.5 | 0.946(0.003) | 0.946(0.001) | 0.946(0.001) |
| | 0.8 | 0.955(0.003) | 0.956(0.001) | 0.956(0.001) |

| | | | | |
|---|---|---|---|---|
| 10000 | 0.3 | 0.922(0.043) | 0.956(0.021) | 0.972(0.011) |
| | 0.5 | 0.853(0.030) | 0.898(0.016) | 0.928(0.009) |
| | 0.8 | 0.965(0.057) | 0.984(0.028) | 0.994(0.015) |
| 20000 | 0.3 | 0.930(0.027) | 0.950(0.013) | 0.963(0.007) |
| | 0.5 | 0.896(0.022) | 0.928(0.011) | 0.934(0.006) |
| | 0.8 | 0.960(0.032) | 0.977(0.016) | 0.984(0.008) |
| 40000 | 0.3 | 0.939(0.017) | 0.953(0.009) | 0.959(0.004) |
| | 0.5 | 0.921(0.016) | 0.934(0.008) | 0.943(0.004) |
| | 0.8 | 0.959(0.019) | 0.969(0.009) | 0.974(0.005) |
| 100000 | 0.3 | 0.942(0.010) | 0.953(0.005) | 0.955(0.003) |
| | 0.5 | 0.939(0.010) | 0.942(0.005) | 0.943(0.003) |
| | 0.8 | 0.954(0.011) | 0.959(0.005) | 0.960(0.003) |
| 200000 | 0.3 | 0.944(0.007) | 0.950(0.003) | 0.950(0.002) |
| | 0.5 | 0.938(0.007) | 0.947(0.004) | 0.946(0.002) |
| | 0.8 | 0.950(0.007) | 0.956(0.004) | 0.957(0.002) |
| 400000 | 0.3 | 0.945(0.005) | 0.947(0.002) | 0.950(0.001) |
| | 0.5 | 0.944(0.005) | 0.951(0.002) | 0.950(0.001) |
| | 0.8 | 0.946(0.005) | 0.955(0.002) | 0.948(0.001) |

| | | | |
|---|---|---|---|
| 0 | 0 | 0.5 | 1.10 |
| 0.05 | 0.10 | 0.55 | 1.24 |
| 0.1 | 0.20 | 0.6 | 1.39 |
| 0.15 | 0.30 | 0.65 | 1.55 |
| 0.2 | 0.40 | 0.7 | 1.73 |
| 0.25 | 0.51 | 0.75 | 1.95 |
| 0.3 | 0.62 | 0.8 | 2.20 |
| 0.35 | 0.73 | 0.85 | 2.51 |
| 0.4 | 0.85 | 0.9 | 2.94 |
| 0.45 | 0.97 | 0.95 | 3.66 |
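The pairs in the table above appear consistent with the privacy level of binary randomized response, $\epsilon = \log\{(1+r)/(1-r)\}$, under the assumption that the first and third columns list the truthful response rate $r$ and the second and fourth list the corresponding $\epsilon$. The following Python sketch recomputes that mapping on the same grid; the function name and the mechanism it describes are illustrative assumptions, and the recomputed values agree with the tabulated ones to within 0.01.

```python
import math


def epsilon_from_rate(r: float) -> float:
    """Privacy level of a binary randomized response (assumed mechanism):
    answer truthfully with probability r, otherwise report a fair coin flip.
    The likelihood ratio of any output is then at most (1 + r) / (1 - r)."""
    if not 0.0 <= r < 1.0:
        raise ValueError("r must lie in [0, 1)")
    return math.log((1.0 + r) / (1.0 - r))


# Recompute the grid r = 0, 0.05, ..., 0.95 that the table appears to use.
table = [(k / 20, epsilon_from_rate(k / 20)) for k in range(20)]
for r, eps in table:
    print(f"r = {r:.2f}  epsilon = {eps:.2f}")
```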

Appendix B Alternative self-normalizers

The following self-normalizer can also be used to construct the asymptotically pivotal quantity,

and based on the continuous mapping theorem again, one has that,

Appendix C Proof

C.1 Proof of Theorem 3.1

Exhaustive computation yields that for any

| (6) |

C.2 Proof of Theorems 3.2, 3.3, and 3.4

One can verify that the recursive equation (1) is asymptotically equivalent to

where , . Let

Hence is equivalent to . Then, one will find that the estimation of with sample under LDP is equivalent to the estimation of with sample without LDP constraints. The standard framework for SGD, such as Theorems 2 and 3 of Dippon (1998), can therefore be applied, and the statements of Theorems 3.2, 3.3, and 3.4 follow.
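To make the reduction concrete, the following Python sketch simulates one plausible form of the procedure. It is an illustration under stated assumptions, not a transcription of recursion (1): each user answers the binary query "is your value at most $q$?" truthfully with probability $r$ and with a fair coin otherwise, so the perturbed answer equals 1 with probability $rF(q) + (1-r)/2$. Estimating the $\tau$-quantile under LDP then amounts to finding the root of this shifted response curve at level $r\tau + (1-r)/2$ with ordinary SGD. The function name, the step-size constants `c` and `a`, and the use of iterate averaging are choices made here for illustration.

```python
import random


def ldp_quantile_sgd(samples, tau, r, q0=0.0, c=2.0, a=0.51, seed=0):
    """Averaged SGD on randomized binary responses (hypothetical sketch).

    samples : iterable of private values, each used exactly once
    tau     : target quantile level in (0, 1)
    r       : probability of a truthful response, in (0, 1]
    """
    rng = random.Random(seed)
    # Probability that the perturbed response is 1 when q is the tau-quantile.
    target = r * tau + (1.0 - r) / 2.0
    q, q_bar = q0, 0.0
    for n, x in enumerate(samples, start=1):
        truth = 1 if x <= q else 0
        # Randomized response: truthful with probability r, fair coin otherwise.
        if rng.random() < r:
            v = truth
        else:
            v = 1 if rng.random() < 0.5 else 0
        # Step size c / n^a; move q down when responses exceed the target rate.
        q -= (c / n ** a) * (v - target)
        # Running average of the iterates, stored in O(1) memory.
        q_bar += (q - q_bar) / n
    return q_bar


# Usage: median of a standard normal stream with truthful rate r = 0.5.
data_rng = random.Random(1)
stream = (data_rng.gauss(0.0, 1.0) for _ in range(50_000))
print(ldp_quantile_sgd(stream, tau=0.5, r=0.5))
```

Only the current iterate and its running average are stored, which matches the fully online, constant-memory setting described in the paper.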

C.3

We prove this by contradiction. Assume that for any there is a such that :

Let

We choose a sufficiently large such that for any

and

Then,

Then is not -DP and therefore is not asymptotically -GDP, leading to a contradiction.