Online Projected Gradient Descent for Stochastic Optimization with Decision-Dependent Distributions

Abstract

This paper investigates the problem of tracking solutions of stochastic optimization problems with time-varying costs that depend on random variables with decision-dependent distributions. In this context, we propose the use of an online stochastic gradient descent method to solve the optimization, and we provide explicit bounds in expectation and in high probability for the distance between the optimizers and the points generated by the algorithm. In particular, we show that when the gradient error due to sampling is modeled as a sub-Weibull random variable, then the tracking error is ultimately bounded in expectation and in high probability. The theoretical findings are validated via numerical simulations in the context of charging optimization of a fleet of electric vehicles.

Optimization, Optimization algorithms.

1 Introduction

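This paper considers the problem of developing and analyzing online algorithms to track the solutions of time-varying stochastic optimization problems, where the distribution of the underlying random variables is decision-dependent. Formally, we consider problems of the form¹:

$$x_t^* \in \arg\min_{x\in\mathcal{C}_t} \; \mathbb{E}_{z\sim\mathcal{D}_t(x)}\,\ell_t(x,z), \qquad t\in\mathbb{N}_0, \tag{1}$$

where $t$ is a time index, $x\in\mathbb{R}^d$ is the decision variable, $\mathcal{D}_t$ is a map from the set $\mathbb{R}^d$ to the space of distributions, $z\in\mathcal{M}$ is a random variable (with $\mathcal{M}$ the union of the supports of $\mathcal{D}_t(x)$ for all $x\in\mathbb{R}^d$), $\ell_t:\mathbb{R}^d\times\mathcal{M}\to\mathbb{R}$ is the loss function, and $\mathcal{C}_t\subseteq\mathbb{R}^d$ is a closed and convex set. Problems of this form arise in sequential learning and strategic classification [1]. They also arise in power and energy systems applications [2, 3], where they model uncertainty in pricing and human behavior. Moreover, the framework (1) can be used to solve control problems for dynamical systems with unknown dynamics. In that setting, the variable $z$ accounts for the lack of knowledge of the underlying system dynamics, similarly to problems in feedback-based optimization [4, 5].

¹Notation. We let $\mathbb{N}_0 := \mathbb{N}\cup\{0\}$, where $\mathbb{N}$ denotes the set of natural numbers. For a given column vector $x\in\mathbb{R}^n$, $\|x\|$ is the Euclidean norm. Given a differentiable function $f:\mathbb{R}^n\to\mathbb{R}$, $\nabla f(x)$ denotes the gradient of $f$ at $x$ (taken to be a column vector). Given a closed convex set $\mathcal{C}\subseteq\mathbb{R}^n$, $\mathrm{Proj}_{\mathcal{C}}(y)$ denotes the Euclidean projection of $y$ onto $\mathcal{C}$, namely $\mathrm{Proj}_{\mathcal{C}}(y) := \arg\min_{v\in\mathcal{C}}\|v-y\|$. For a given random variable $X$, $\mathbb{E}[X]$ denotes the expected value of $X$, and $\mathbb{P}(X\le a)$ denotes the probability of $X$ taking values smaller than or equal to $a$. We also let $\|X\|_k := (\mathbb{E}[|X|^k])^{1/k}$ for any $k\ge1$. Finally, $e$ denotes Euler's number.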

Since the distribution of $z$ in (1) depends on the decision variable $x$, finding $x_t^*$ is computationally burdensome in general. It is intractable when $\mathcal{D}_t$ is unknown, even when the loss function is convex in $x$ [6, 7]. For this reason, we focus on finding decisions that are optimal with respect to the distribution that they induce. We refer to these points as performatively stable [6], and to solutions of the original problem (1) as performatively optimal. We obtain explicit error bounds between performatively optimal and performatively stable points by leveraging tools from [6, 7]. The main focus of this work is to propose and analyze online algorithms that can determine performatively stable points in settings where the loss function and constraint set are revealed sequentially. Since the distributional map may be unknown in practice, we then extend our techniques to stochastic methods that only require samples of $z$.

Prior Work: Online (projected) gradient descent methods have been well investigated using tools from the controls community. We refer to the representative works [8, 9, 10, 11, 12] and to the pertinent references therein. Convergence guarantees for online stochastic gradient methods where drift and noise terms satisfy sub-Gaussian assumptions were recently provided in [13]. Online stochastic optimization problems with time-varying distributions are studied in, e.g., [1, 14, 15]. On the other hand, time-varying costs are considered in [16], along with sampling strategies that satisfy regret guarantees. For static optimization problems, the notion of performatively stable points is introduced in [6]. That work also provides error bounds for risk minimization and gradient descent methods applied to stochastic problems with decision-dependent distributions. Stochastic gradient methods to identify performatively stable points for decision-dependent distributions are studied in [7, 17]; the latter also provides results in expectation for an online setting. A stochastic gradient method for time-invariant distributional maps is presented in [18].

Contributions: We offer the following main contributions. C1) First, we propose an online projected gradient descent (OPGD) method to solve (1), and we show that the tracking error (relative to the performatively stable points) is ultimately bounded by terms that account for the temporal drift of the optimizers. C2) Second, we propose an online stochastic projected gradient descent (OSPGD) and we provide error bounds in expectation and in high probability. Our bounds in high probability are derived by modeling the gradient error as a sub-Weibull random variable [19]: this allows us to capture a variety of sub-cases, including scenarios where the error follows sub-Gaussian and sub-Exponential distributions [20], or any distribution with finite support.

Relative to [1, 14, 15, 16], our distributions are decision-dependent; relative to [6, 7, 17, 18], our costs and distributional maps are time-varying. Moreover, our results do not rely on bias or variance assumptions on the gradient estimator. In the absence of distributional shift and without a sub-Weibull error, our upper bounds reduce to the results of [9]. Relative to [18], we seek performatively stable points rather than performatively optimal points. In doing so, we incur the error characterized in [6]. However, we neither restrict attention to distributional maps that yield continuous distributions nor require finite-difference approximations. With respect to the available literature on stochastic optimization, we provide for the first time explicit bounds in expectation and in high probability for stochastic optimization with decision-dependent distributions in the presence of time-varying distributional maps.

2 Preliminaries

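We first introduce preliminary definitions and results. We consider random variables that take values in a metric space $(\mathcal{M}, d_{\mathcal{M}})$, where the set $\mathcal{M}$ is equipped with the Borel $\sigma$-algebra induced by the metric $d_{\mathcal{M}}$. We assume that $(\mathcal{M}, d_{\mathcal{M}})$ is a complete and separable metric space (hence a Polish space). We let $\mathcal{P}(\mathcal{M})$ denote the set of Radon probability measures on $\mathcal{M}$ with finite first moment. Given $\mathcal{D}\in\mathcal{P}(\mathcal{M})$, $z\sim\mathcal{D}$ denotes that the random variable $z$ is distributed according to $\mathcal{D}$. By the Kantorovich-Rubinstein duality, the Wasserstein-1 distance between $\mathcal{D}_1,\mathcal{D}_2\in\mathcal{P}(\mathcal{M})$ can be defined as [21]:

$$W_1(\mathcal{D}_1,\mathcal{D}_2) := \sup_{f\in\mathrm{Lip}_1}\Big\{\mathbb{E}_{z\sim\mathcal{D}_1}[f(z)] - \mathbb{E}_{z\sim\mathcal{D}_2}[f(z)]\Big\}, \tag{2}$$

where $\mathrm{Lip}_1$ is the set of 1-Lipschitz functions $f:\mathcal{M}\to\mathbb{R}$. We note that the pair $(\mathcal{P}(\mathcal{M}), W_1)$ is a metric space of probability measures.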
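For intuition, consider two one-dimensional empirical distributions with the same number of atoms. Their Wasserstein-1 distance can be computed by sorting: on the real line the monotone coupling is optimal, so $W_1$ reduces to the mean absolute difference between order statistics. The sketch below assumes $\mathcal{M}=\mathbb{R}$ and equal sample sizes. The helper `w1_empirical_1d` is hypothetical and is not part of the paper.

```python
import numpy as np

def w1_empirical_1d(u, v):
    """Wasserstein-1 distance between two empirical measures on the real line.

    Assumes both samples have the same size n, each atom carrying mass 1/n.
    On R the monotone (sorted) coupling is optimal, so
    W1 = (1/n) * sum_i |u_(i) - v_(i)|.
    """
    u = np.sort(np.asarray(u, dtype=float))
    v = np.sort(np.asarray(v, dtype=float))
    if u.shape != v.shape:
        raise ValueError("samples must have the same size")
    return float(np.mean(np.abs(u - v)))

# Location family: shifting every atom by a constant moves W1 by exactly |shift|.
rng = np.random.default_rng(0)
base = rng.normal(size=10_000)
mu_a, mu_b = 0.0, 0.75
print(w1_empirical_1d(mu_a + base, mu_b + base))  # 0.75 up to floating-point rounding
```

With shared base samples the two empirical measures are exact translates of each other. This is why the computed distance equals the shift.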

Heavy-Tailed Distributions. In this paper, we will utilize the sub-Weibull model [19], introduced next.

Definition 1

(Sub-Weibull Random Variable) A random variable $X$ is sub-Weibull, denoted by $X\sim\mathrm{subW}(\theta,\nu)$, if there exist $\theta>0$ and $\nu>0$ such that $\|X\|_k\le\nu\,k^{\theta}$ for all $k\ge1$.

The parameter $\theta$ measures the heaviness of the tail (higher values correspond to heavier tails) and the parameter $\nu$ measures the proxy-variance [19]. In what follows, we will also use an equivalent tail characterization. A random variable $X$ is sub-Weibull with tail parameter $\theta$ if and only if there exists $K>0$ such that $\mathbb{P}(|X|\ge s)\le 2\exp\big(-(s/K)^{1/\theta}\big)$ for all $s>0$. As shown in [22], the two characterizations are equivalent, with $K$ and $\nu$ related by a constant that depends only on $\theta$. The class of sub-Weibull random variables enjoys the following properties.

Proposition 2.1

(Closure of Sub-Weibull) Let and be (possibly coupled) sub-Weibull random variables and let . Then, the following holds:

-

1.

;

-

2.

, ;

-

3.

;

-

4.

.
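As a sanity check on the moment condition $\|X\|_k\le\nu k^{\theta}$ for all $k\ge1$ (the moment form of the sub-Weibull definition used, e.g., in [23]), the sketch below evaluates exact moment norms for two textbook cases. The first is a standard Gaussian, for which $\mathbb{E}|X|^k = 2^{k/2}\Gamma((k+1)/2)/\sqrt{\pi}$. The second is a unit-rate exponential, for which $\mathbb{E}X^k = k!$. The ratios $\|X\|_k/k^{\theta}$ stay bounded with $\theta=1/2$ and $\theta=1$, respectively, matching the sub-Gaussian and sub-exponential cases. The function names are illustrative.

```python
import math

def gaussian_moment_norm(k):
    """||X||_k = (E|X|^k)^(1/k) for X ~ N(0, 1), computed in log space."""
    # E|X|^k = 2^(k/2) * Gamma((k+1)/2) / sqrt(pi)
    log_moment = 0.5 * k * math.log(2.0) + math.lgamma((k + 1) / 2.0) - 0.5 * math.log(math.pi)
    return math.exp(log_moment / k)

def exponential_moment_norm(k):
    """||X||_k for X ~ Exp(1), using E[X^k] = k! (log-gamma avoids overflow)."""
    return math.exp(math.lgamma(k + 1) / k)

ks = range(1, 51)
# A bounded ratio ||X||_k / k^theta means X ~ subW(theta, nu) with nu = sup of the ratio.
gauss_ratios = [gaussian_moment_norm(k) / k**0.5 for k in ks]  # theta = 1/2 (sub-Gaussian)
expo_ratios = [exponential_moment_norm(k) / k**1.0 for k in ks]  # theta = 1 (sub-exponential)

print(max(gauss_ratios))  # attained at k = 1: sqrt(2/pi), about 0.798
print(max(expo_ratios))   # attained at k = 1: 1.0
```

Working with `math.lgamma` keeps the computation stable for large $k$, where $k!$ and $\Gamma(\cdot)$ would overflow.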

3 Online Projected Gradient Descent

In this section, we propose and study an OPGD method to solve (1). In Section 4, we will leverage the results derived in this section to analyze its stochastic counterpart, OSPGD.

We begin by outlining our main assumptions.

Assumption 1

(Strong Convexity) For any fixed $z\in\mathcal{M}$, the map $x\mapsto\ell_t(x,z)$ is $\gamma$-strongly convex, where $\gamma>0$, for all $t\in\mathbb{N}_0$.

Assumption 2

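(Joint smoothness) For all $t\in\mathbb{N}_0$, the map $x\mapsto\nabla_x\ell_t(x,z)$ is $\beta$-Lipschitz continuous for all $z\in\mathcal{M}$, and the map $z\mapsto\nabla_x\ell_t(x,z)$ is $\beta$-Lipschitz continuous for all $x\in\mathbb{R}^d$.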

Assumption 3

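(Distributional Sensitivity) For all $t\in\mathbb{N}_0$, there exists $\varepsilon\ge0$ such that

$$W_1\big(\mathcal{D}_t(x),\mathcal{D}_t(y)\big)\le\varepsilon\,\|x-y\| \tag{3}$$

for any $x,y\in\mathbb{R}^d$.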
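A location shift is a simple family that satisfies the sensitivity assumption; it is given here as a hypothetical illustration, not as a model from the paper. Suppose $z\sim\mathcal{D}_t(x)$ is generated as $z=\mu_t+A_t x+\xi$, where $\mu_t$ and the matrix $A_t$ are illustrative parameters and $\xi$ has a fixed distribution with finite first moment. Take $\mathcal{M}=\mathbb{R}^m$ with the Euclidean metric. Use the coupling (primal) form of $W_1$, which is equivalent to (2) by Kantorovich-Rubinstein duality, with the same $\xi$ for both distributions. This gives

$$W_1\big(\mathcal{D}_t(x),\mathcal{D}_t(y)\big) \le \mathbb{E}\,\big\|(\mu_t + A_t x + \xi) - (\mu_t + A_t y + \xi)\big\| = \|A_t(x-y)\| \le \|A_t\|\,\|x-y\|,$$

so Assumption 3 holds with sensitivity constant $\sup_t\|A_t\|$ (spectral norm) whenever this supremum is finite.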

Assumption 4

(Convex Constraint Set) For all $t\in\mathbb{N}_0$, the set $\mathcal{C}_t\subseteq\mathbb{R}^d$ is closed and convex.

3.1 Performatively stable points

Since the objective function and the distribution in (1) both depend on the decision variable , the problem (1) is intractable in general, even when the loss is convex. For this reason, we follow the approach of [6, 7] and seek optimization algorithms that can determine the performatively stable point, defined as follows:

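$$x_t^{\mathrm{PS}} = \arg\min_{x\in\mathcal{C}_t}\;\mathbb{E}_{z\sim\mathcal{D}_t(x_t^{\mathrm{PS}})}\,\ell_t(x,z). \tag{4}$$

Convergence to a performatively stable point is desirable because it guarantees that $x_t^{\mathrm{PS}}$ is optimal for the distribution $\mathcal{D}_t(x_t^{\mathrm{PS}})$ that it induces on $z$. The following result, adapted from [7, Prop. 3.3], establishes existence and uniqueness of a performatively stable point.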

Lemma 3.1.

In general, performatively stable points may not coincide with the optimizers of the original problem (1). However, an explicit error bound can be derived, as formally stated next.

Lemma 3.2.

The proof of Lemma 3.2 follows from [6, Thm. 3.5, Thm. 4.3]. In the remainder of this paper, we assume that the assumptions of Lemma 3.1 are satisfied, so that the sequence of performatively stable points $\{x_t^{\mathrm{PS}}\}$ is unique. We illustrate the difference between $x_t^*$ and $x_t^{\mathrm{PS}}$ in the following example.

Example 3.3.

Consider an instance of (1) where , , . In this case, the objective can be specified in closed form as: , and thus the unique performatively optimal point is given by . To determine the performatively stable point, notice that , and thus satisfies , which implies . The bound in (5) thus holds by noting that , , and .
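The gap between the two notions can also be checked numerically. The sketch below uses a hypothetical scalar instance that is not necessarily the one in Example 3.3: $\ell(x,z)=\tfrac12x^2-zx$ with $z\sim\mathcal{N}(\mu+\varepsilon x,\sigma^2)$ and an unconstrained decision. Here $\mathbb{E}_{z\sim\mathcal{D}(x)}\ell(x,z)=(\tfrac12-\varepsilon)x^2-\mu x$, so for $\varepsilon<1/2$ the performatively optimal point is $\mu/(1-2\varepsilon)$. The stable point instead solves $x=\mu+\varepsilon x$, i.e., $x^{\mathrm{PS}}=\mu/(1-\varepsilon)$. The code recovers $x^{\mathrm{PS}}$ by repeated risk minimization, $x\mapsto\arg\min_v\mathbb{E}_{z\sim\mathcal{D}(x)}\ell(v,z)$, which contracts with factor $\varepsilon$ here.

```python
def repeated_risk_minimization(mu, eps, x0=0.0, iters=200):
    """Fixed-point iteration x <- argmin_v E_{z ~ N(mu + eps*x, s^2)} [0.5 v^2 - z v].

    For this loss the inner minimizer is the mean of z, i.e. mu + eps * x,
    so the variance s^2 plays no role and the iteration contracts with factor
    eps when 0 <= eps < 1.
    """
    x = x0
    for _ in range(iters):
        x = mu + eps * x
    return x

mu, eps = 1.0, 0.3
x_ps = repeated_risk_minimization(mu, eps)  # performatively stable point
x_po = mu / (1.0 - 2.0 * eps)               # minimizer of (1/2 - eps) x^2 - mu x (eps < 1/2)
print(x_ps, mu / (1.0 - eps))  # both about 1.428571
print(x_po)                    # about 2.5
print(abs(x_po - x_ps))        # about 1.071
```

In this instance the stable point underestimates the optimum. The decision shifts the mean of $z$, and the stable point ignores that effect when it optimizes.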

3.2 Online projected gradient descent

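We now propose an OPGD method that seeks to track the trajectory of the performatively stable points $\{x_t^{\mathrm{PS}}\}$. To this end, in what follows we adopt the following notation:

$$f_t(x;\mathcal{D}) := \mathbb{E}_{z\sim\mathcal{D}}\,\ell_t(x,z), \tag{6}$$

for any $x\in\mathbb{R}^d$, $t\in\mathbb{N}_0$, and $\mathcal{D}\in\mathcal{P}(\mathcal{M})$. When $\mathcal{D}$ is the distribution induced by a decision variable $y$, namely $\mathcal{D}=\mathcal{D}_t(y)$, we use the notation $f_t(x;y) := f_t(x;\mathcal{D}_t(y))$. Moreover, we denote by $\nabla f_t(x;y)$ the gradient of $x\mapsto f_t(x;y)$. By the dominated convergence theorem, the expectation and gradient operators can be interchanged.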

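The OPGD amounts to the following step at each $t\in\mathbb{N}_0$:

$$x_{t+1} = \mathcal{G}_t(x_t), \tag{7}$$

where $\mathcal{G}_t(x) := \mathrm{Proj}_{\mathcal{C}_t}\big(x - \eta\,\mathbb{E}_{z\sim\mathcal{D}_t(x)}[\nabla_x\ell_t(x,z)]\big)$, with $\eta>0$ denoting a stepsize.

First, we note that a performatively stable point is a fixed point of the algorithmic map (7), namely, $x_t^{\mathrm{PS}} = \mathcal{G}_t(x_t^{\mathrm{PS}})$. This is the first-order optimality condition of the strongly convex problem (4), written in projected-gradient form. Next, we focus on characterizing the error between the updates (7) and the performatively stable points $x_t^{\mathrm{PS}}$. To this aim, we denote the temporal drift in the performatively stable points by $\Delta_t := \|x_{t+1}^{\mathrm{PS}} - x_t^{\mathrm{PS}}\|$. We denote the tracking error relative to the performatively stable points by $e_t := \|x_t - x_t^{\mathrm{PS}}\|$. Our error bound for OPGD is presented next.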

Theorem 3.4.

Before presenting the proof, some remarks are in order.

Remark 3.5.

By application of Lemma 3.2, OPGD guarantees that the error between the algorithmic updates and the performatively optimal points is bounded at all times. Precisely, the following estimate holds:

Remark 3.6.

Next, we present the proof of Theorem 3.4. The following lemmas are instrumental.

Lemma 3.7.

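(Gradient Deviations) Under Assumption 2, for any $x\in\mathbb{R}^d$, $t\in\mathbb{N}_0$, and measures $\mathcal{D}_1,\mathcal{D}_2\in\mathcal{P}(\mathcal{M})$, the following bound holds:

$$\Big\|\mathbb{E}_{z\sim\mathcal{D}_1}[\nabla_x\ell_t(x,z)] - \mathbb{E}_{z\sim\mathcal{D}_2}[\nabla_x\ell_t(x,z)]\Big\| \le \beta\,W_1(\mathcal{D}_1,\mathcal{D}_2). \tag{11}$$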

Lemma 3.8.

The proof of Lemma 3.7 follows by iterating the reasoning in [7, Lemma 2.1] for all $t$; the proof of Lemma 3.8 is standard and is omitted due to space limitations.
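Lemma 3.8 is described as standard. A common contraction estimate of this type is the following; that Lemma 3.8 has exactly this form is our assumption. For a $\gamma$-strongly convex function with $\beta$-Lipschitz gradient, the gradient step $x\mapsto x-\eta\nabla f(x)$ is Lipschitz with constant $\max\{|1-\eta\gamma|,|1-\eta\beta|\}$. Consider a quadratic $f(x)=\tfrac12x^\top Qx$ whose Hessian spectrum contains both $\gamma$ and $\beta$. For such an $f$ the constant is attained, as the sketch below checks numerically in a random orthogonal basis.

```python
import numpy as np

rng = np.random.default_rng(1)
d, gamma, beta = 5, 0.5, 4.0
# Random orthogonal basis via QR; the spectrum lies in [gamma, beta] and includes both endpoints.
U, _ = np.linalg.qr(rng.normal(size=(d, d)))
eigs = np.concatenate(([gamma, beta], rng.uniform(gamma, beta, size=d - 2)))
Q = U @ np.diag(eigs) @ U.T

def step_lipschitz(eta):
    """Lipschitz constant of x -> x - eta * Q x, i.e., the spectral norm of I - eta Q."""
    return np.linalg.norm(np.eye(d) - eta * Q, ord=2)

for eta in (0.1, 2.0 / (gamma + beta), 0.45):
    predicted = max(abs(1 - eta * gamma), abs(1 - eta * beta))
    print(f"eta={eta:.3f}  ||I - eta Q||={step_lipschitz(eta):.6f}  predicted={predicted:.6f}")
```

The step $\eta=2/(\gamma+\beta)$ balances the two terms of the maximum. Any $\eta\in(0,2/\beta)$ makes the step a strict contraction.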

Proof of Theorem 3.4: Note that $x_t\in\mathcal{C}_t$ for all $t$ follows directly from the definition of the Euclidean projection. By using the triangle inequality, we find that

where the first identity follows from the definition of and the second inequality follows by adding and subtracting . Applying (11) and Lemma 3.8 yields:

| (13) |

Thus we obtain the following by expanding the recursion:

where we defined . The bound (8) then follows by definition of the sequences and .

To prove (10), we show that for appropriate . Fix . Then, by the definition of , holds if the following two conditions are satisfied simultaneously:

| (14) |

The first inequality holds if and only if or, equivalently, . The second inequality holds if and only if . By using , both inequalities are satisfied when

Thus, to satisfy the maximum, it is sufficient to enforce that . The result (10) then follows from the geometric series.
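To see the tracking behavior on a concrete case, the sketch below runs the OPGD step on a hypothetical instance in which every quantity is available in closed form. The loss is $\ell_t(x,z)=\tfrac{\gamma}{2}\|x\|^2-z^\top x$, the distribution is $\mathcal{D}_t(x)=\mathcal{N}(\mu_t+ax,\sigma^2I)$ with a rotating mean $\mu_t$, and the constraint is the fixed box $\mathcal{C}_t=[-2,2]^2$. The exact gradient at the induced distribution is $\gamma x-\mu_t-ax$. The stable point is obtained by iterating $x\mapsto\mathrm{Proj}_{\mathcal{C}}((\mu_t+ax)/\gamma)$. For this instance the OPGD map contracts with factor $\rho=|1-\eta(\gamma-a)|$, so the code checks the recursion $e_{t+1}\le\rho\,e_t+\Delta_t$. All names and constants are illustrative.

```python
import numpy as np

# Illustrative (hypothetical) problem constants.
GAMMA, A, ETA = 1.0, 0.3, 0.5   # strong convexity, sensitivity, stepsize

def proj_box(y, lo=-2.0, hi=2.0):
    """Euclidean projection onto the box C = [lo, hi]^d (componentwise clipping)."""
    return np.clip(y, lo, hi)

def stable_point(mu, gamma=GAMMA, a=A, iters=200):
    """Stable point for l(x, z) = gamma/2 ||x||^2 - z^T x with z ~ N(mu + a x, .).

    For a fixed distribution the minimizer over C is Proj_C(E[z] / gamma), so we
    iterate x <- Proj_C((mu + a x) / gamma), a contraction with factor a / gamma.
    """
    x = np.zeros_like(mu)
    for _ in range(iters):
        x = proj_box((mu + a * x) / gamma)
    return x

def opgd(T=200, radius=2.0, omega=0.05):
    """Run OPGD with exact gradients on a rotating mean mu_t; return errors and drifts."""
    mus = [radius * np.array([np.cos(omega * t), np.sin(omega * t)]) for t in range(T + 1)]
    x_ps = [stable_point(m) for m in mus]
    x = np.zeros(2)
    errors, drifts = [np.linalg.norm(x - x_ps[0])], []
    for t in range(T):
        # Exact gradient E_{z ~ D_t(x)}[grad_x l_t(x, z)] = gamma x - (mu_t + a x).
        grad = GAMMA * x - (mus[t] + A * x)
        x = proj_box(x - ETA * grad)                           # OPGD step
        errors.append(np.linalg.norm(x - x_ps[t + 1]))         # e_{t+1}
        drifts.append(np.linalg.norm(x_ps[t + 1] - x_ps[t]))   # Delta_t
    return np.array(errors), np.array(drifts)

errors, drifts = opgd()
rho = abs(1.0 - ETA * (GAMMA - A))  # contraction factor of the OPGD map here (0.65)
print(errors[0], errors[-1])
```

The initial error decays geometrically. The error then settles at a level set by the drift of the stable points divided by $1-\rho$.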

Finally, we observe that when the objective and constraints are time-invariant, we recover the result of [7, Sec. 5] as formalized next.

Corollary 3.9.

4 Online Stochastic Gradient Descent

An exact expression for the distributional map $\mathcal{D}_t$ may not be available in general. Even if it is available, evaluating the gradient may be computationally burdensome. We consider the case where we have access to a finite number of samples of $z$ at each time step to estimate the gradient. For example, given a mini-batch of samples of , the approximate gradient is computed as ; when we have a “greedy” estimate and when we have a “lazy” estimate [17]. Accordingly, we consider an OSPGD described by:

| (15) |

where is a stepsize. In the remainder, we focus on finding error bounds in the spirit of Theorem 3.4 for OSPGD.
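The OSPGD step can be illustrated on a hypothetical instance: $\ell_t(x,z)=\tfrac{\gamma}{2}\|x\|^2-z^\top x$ on the box $[-2,2]^2$, with $z\sim\mathcal{N}(\mu_t+ax,\sigma^2I)$ and a rotating mean $\mu_t$. The sketch assumes the gradient is estimated by averaging $\nabla_x\ell_t(x_t,z)=\gamma x_t-z$ over a mini-batch of $m$ samples drawn from $\mathcal{D}_t(x_t)$ at the current iterate. It does not reproduce the paper's greedy or lazy settings. It records $\|w_t\|$, where $w_t$ (a label introduced here) is the sampled minus the exact gradient, our reading of the error (16). It then checks the pathwise recursion $e_{t+1}\le\rho e_t+\Delta_t+\eta\|w_t\|$. Here $e_t$ is the distance to the stable point, $\Delta_t$ is its drift, and $\rho=|1-\eta(\gamma-a)|$. The recursion holds because the projection is nonexpansive and the exact-gradient map contracts. All constants are illustrative.

```python
import numpy as np

GAMMA, A, ETA, SIGMA, M = 1.0, 0.3, 0.5, 0.5, 10   # illustrative (hypothetical) values

def proj_box(y, lo=-2.0, hi=2.0):
    """Euclidean projection onto [lo, hi]^d."""
    return np.clip(y, lo, hi)

def stable_point(mu, iters=200):
    """Fixed point of x <- Proj_C((mu + A x) / GAMMA); contraction factor A / GAMMA."""
    x = np.zeros_like(mu)
    for _ in range(iters):
        x = proj_box((mu + A * x) / GAMMA)
    return x

def ospgd(T=200, seed=0):
    rng = np.random.default_rng(seed)
    mus = [2.0 * np.array([np.cos(0.05 * t), np.sin(0.05 * t)]) for t in range(T + 1)]
    x_ps = [stable_point(m) for m in mus]
    x = np.zeros(2)
    errors, drifts, grad_errs = [np.linalg.norm(x - x_ps[0])], [], []
    for t in range(T):
        # Mini-batch of M samples from the distribution induced by the current iterate.
        z = rng.normal(loc=mus[t] + A * x, scale=SIGMA, size=(M, 2))
        g_hat = GAMMA * x - z.mean(axis=0)       # sampled gradient estimate
        g_true = GAMMA * x - (mus[t] + A * x)    # exact gradient (unknown in practice)
        grad_errs.append(np.linalg.norm(g_hat - g_true))
        x = proj_box(x - ETA * g_hat)            # OSPGD step
        errors.append(np.linalg.norm(x - x_ps[t + 1]))
        drifts.append(np.linalg.norm(x_ps[t + 1] - x_ps[t]))
    return np.array(errors), np.array(drifts), np.array(grad_errs)

errors, drifts, grad_errs = ospgd()
rho = abs(1.0 - ETA * (GAMMA - A))
print(errors[-100:].mean(), grad_errs.mean())
```

Increasing the batch size `M` shrinks the gradient error roughly like $1/\sqrt{M}$. This lowers the error floor toward the one obtained with exact gradients.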

4.1 Bounds in expectation and high-probability

Throughout our analysis, we interpret OSPGD as an inexact OPGD with gradient error given by the random variable:

| (16) |

We make the following assumption.

Assumption 5

(Sub-Weibull Error) The gradient error is sub-Weibull; i.e., for some .

Assumption 5 allows us to describe a variety of sub-cases, including scenarios where the error follows sub-Gaussian and sub-Exponential distributions [20], or any distribution with finite support. Further, notice that Assumption 5 does not require the random variables to be independent. Examples of random variables that satisfy Assumption 5 are described in Section 4.2. Our error bounds for OSPGD are presented next.

Theorem 4.1.

Proof 4.2.

Note that for all directly follows by definition of Euclidean projection. To show (17), we first find a stochastic recursion. By the triangle inequality:

where the second inequality follows by adding and subtracting . By iterating (3.2), we have , where , and thus . This yields the stochastic recursion . Expanding the recursion yields

or, equivalently,

| (19) |

Thus, (17) follows by taking the expectation on both sides.

The bound (17) generalizes the estimate in Theorem 3.4 by accounting for the gradient error. It is also worth pointing out that (17) and (18) have a similar structure. Indeed, (18) differs only by a logarithmic factor and by the introduction of the tail parameters, which replace the expectation term.

Remark 4.3.

An alternative high-probability bound can be obtained by using (17) and Markov’s inequality. For any $\delta\in(0,1)$, Markov’s inequality guarantees that:

| (21) |

with probability at least $1-\delta$. However, if we increase the confidence of the bound by letting $\delta\to0$, the right-hand side of (21) grows more rapidly than (18).
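The remark can be made concrete with a generic comparison. Assume only a nonnegative variable $X$ with known mean and a sub-Weibull tail $\mathbb{P}(X\ge s)\le2\exp(-(s/K)^{1/\theta})$; the constants below are chosen for illustration. Markov's inequality gives the level $\mathbb{E}[X]/\delta$, which is exceeded with probability at most $\delta$. Inverting the tail gives the level $K(\log(2/\delta))^{\theta}$. The first grows like $1/\delta$ as $\delta\to0$, while the second grows only polylogarithmically. For a unit-mean exponential variable one can take $\theta=1$ and $K=1$, since $\mathbb{P}(X\ge s)=e^{-s}\le2e^{-s}$.

```python
import math

def markov_level(mean, delta):
    """Level exceeded with probability at most delta, by Markov's inequality."""
    return mean / delta

def subweibull_level(K, theta, delta):
    """Level s solving 2*exp(-(s/K)**(1/theta)) = delta, so P(X >= s) <= delta."""
    return K * math.log(2.0 / delta) ** theta

# Unit-rate exponential: E[X] = 1 and P(X >= s) = exp(-s) <= 2 exp(-s), so theta = 1, K = 1.
for delta in (1e-1, 1e-2, 1e-4, 1e-6):
    print(f"delta={delta:g}  Markov={markov_level(1.0, delta):.1f}  "
          f"sub-Weibull={subweibull_level(1.0, 1.0, delta):.2f}")
```

Both levels are valid upper bounds on the exact $(1-\delta)$-quantile $\log(1/\delta)$ of this variable. Only the tail-based level stays close to it as $\delta$ shrinks.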

Note that the bounds in Theorem 4.1 are valid for any . The asymptotic behavior is noted in the next remark.

4.2 Remarks on the error model

The class of sub-Weibull distributions allows one to consider a variety of error models. For instance, it includes sub-Gaussian and sub-exponential distributions as sub-cases, obtained by setting the tail parameter to 1/2 and 1, respectively. We note that a sub-Gaussian assumption was typically used in prior works on stochastic gradient descent; for example, the assumption in [25] corresponds to sub-Gaussian tail behavior. However, recent works suggest that stochastic gradient descent may exhibit errors with tails heavier than sub-Gaussian (see, e.g., [26]). To further illustrate the flexibility of a sub-Weibull model, we provide the following additional examples.

Example 4.5.

Suppose that each entry of the gradient error follows a distribution , for given . Then is sub-Weibull with [23].

Example 4.6.

Suppose that an entry of the gradient error is Gaussian with zero mean and variance $\sigma^2$. Then it is sub-Gaussian with sub-Gaussian norm $C\sigma$, with $C$ an absolute constant [20]. It is therefore sub-Weibull with tail parameter $1/2$ and proxy-variance parameter $c\,\sigma$, with $c$ an absolute constant.

Example 4.7.

Suppose that is a random variable with mean , such that almost surely. Then [23].

5 Application to Electric Vehicle Charging

This section illustrates the use of the proposed algorithms in an application inspired by [3], where the operator of a fleet of electric vehicles (EVs) seeks to determine an optimal charging policy in order to minimize its charging costs. The region of interest is modeled as a graph , where each node in represents a charging station (or a group thereof), and an edge in allows vehicles to transfer from node to . We assume that the graph is strongly connected, so that EVs can be redirected from one node to any other node. We let denote the energy requested by the fleet at node . We assume that the net energy available is limited, and define the set , for a given . Given , the operator of the power grid strategically chooses a price per unit of energy so as to optimize its revenue from selling energy; we let denote the selected price in region , and we hypothesise that , as an example. We note that the grid operator could choose arbitrarily high prices to maximize its revenue. However, high prices may compel the fleet operator to withdraw its demand, which motivates a model where the mean grows linearly with the energy demand. Accordingly, we model the cost function of the EV operator as follows [3]:

| (22) |

where , models the charging aggressiveness of the fleet operator, and quantifies the satisfaction the fleet operator achieves from consuming one unit of energy. In (22), the term describes the charging cost at station , the quantity , and models the energy demand at the -th station. Notice that, because the displacement of vehicles can change over time, we assume that the parameters and are time dependent. We note that: (i) because of the capacity constraint , the decision variables are coupled, and (ii) although the optimization could be solved in a distributed fashion since (22) is separable, our focus is to solve it in a centralized way since the EV operator is unique.

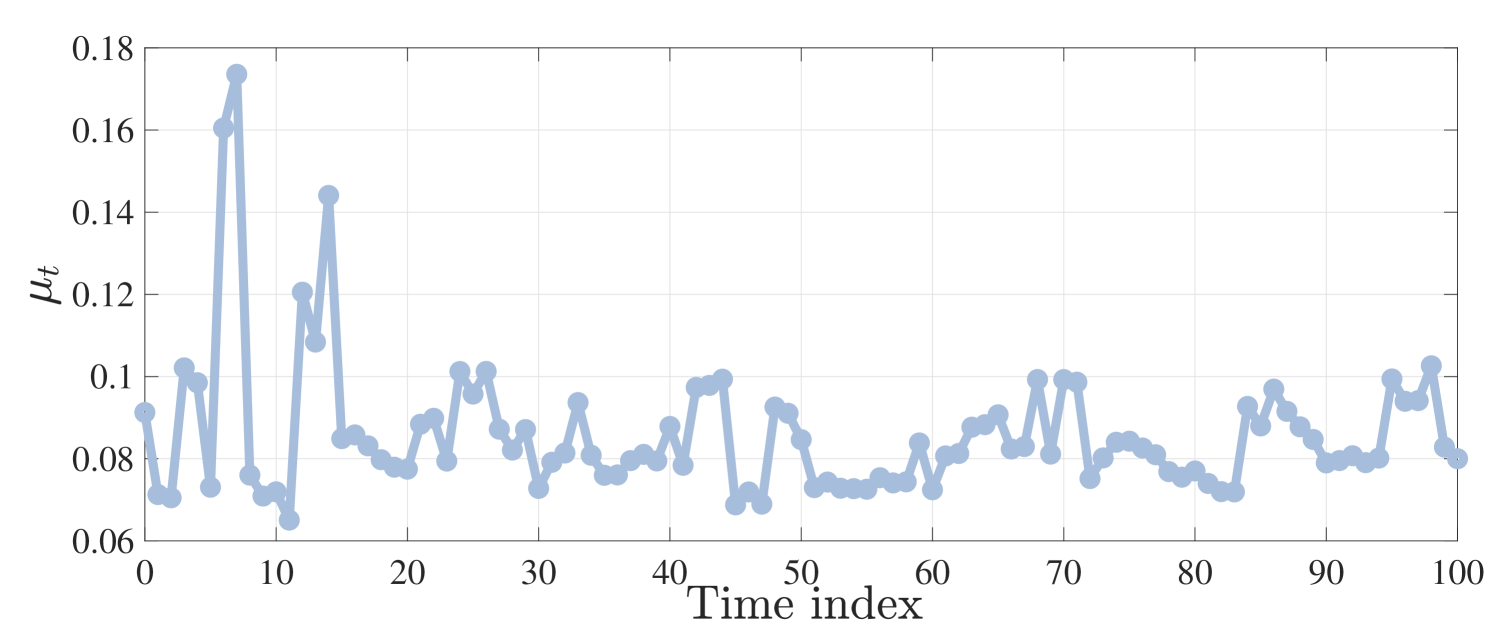

We apply the proposed methods to a system of 10 homogeneous charging stations over 100 time steps with fixed net energy (). Namely, and for . The charging cost distribution is informed by and ; in our case, is the time series data of CAISO real-time prices depicted in Fig. 1 (taken from http://www.energyonline.com) and . Given these parameter values, the cost is -strongly convex and -jointly smooth with . Following the results in [27], the distributional maps are -sensitive with . The sequence of performatively stable points is computed in closed form by solving the KKT equations.

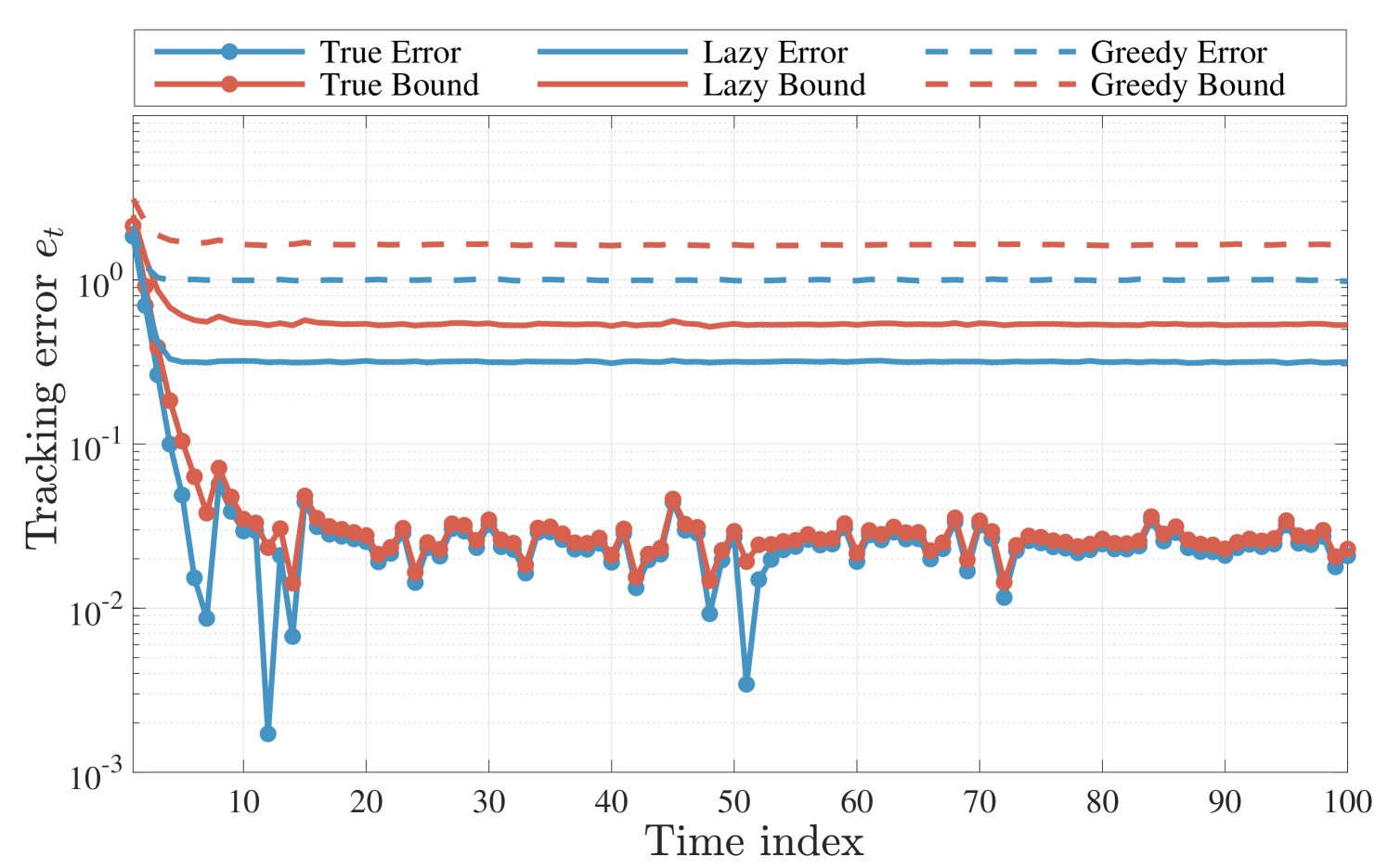

For each experiment, we run OPGD and OSPGD with a fixed step size , drawing the initial state uniformly from a sphere of radius . For OSPGD, we compute the mean tracking error for both greedy and lazy deployments. The mean tracking error for each is computed via Monte Carlo simulation using realizations of the initial state.
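The Monte Carlo procedure can be sketched as follows. We assume that drawing "uniformly from a sphere" means uniformly on the sphere surface of the given radius. Such points can be sampled by normalizing a standard Gaussian vector, whose distribution is rotation invariant. The function `run_tracking` is a toy stand-in for a routine that runs OPGD or OSPGD from a given initial state and returns per-step tracking errors. The dimension, radius, and number of realizations below are illustrative, not the paper's settings.

```python
import numpy as np

def sample_sphere(n, dim, radius, rng):
    """n points uniformly distributed on the sphere {x : ||x|| = radius} in R^dim."""
    g = rng.normal(size=(n, dim))
    return radius * g / np.linalg.norm(g, axis=1, keepdims=True)

def mean_tracking_error(run_tracking, n_runs, dim, radius, seed=0):
    """Average the per-step tracking errors of run_tracking(x0) over random initial states."""
    rng = np.random.default_rng(seed)
    x0s = sample_sphere(n_runs, dim, radius, rng)
    return np.mean([run_tracking(x0) for x0 in x0s], axis=0)

# Toy stand-in for an algorithm: the error halves each step toward a fixed x* = 0.
def run_tracking(x0, T=5):
    errs, x = [], x0.copy()
    for _ in range(T):
        errs.append(np.linalg.norm(x))
        x = 0.5 * x
    return np.array(errs)

print(mean_tracking_error(run_tracking, n_runs=100, dim=10, radius=3.0))
```

In this toy every initial state has the same norm, so the averaged curve is approximately $[3, 1.5, 0.75, 0.375, 0.1875]$. With a real OPGD or OSPGD run, the average would also reflect the direction of the initial state and the sampling noise.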

In Fig. 2, we illustrate the tracking errors and the corresponding upper bounds presented in Theorems 3.4 and 4.1. “True” (i.e., true gradient) refers to the OPGD, “greedy” to the OSPGD with , and “lazy” to the OSPGD with . We notice that the upper bound curve mimics the evolution of the tracking error; for OSPGD, however, the bound is looser than for OPGD.

6 Conclusions

This paper considered online gradient and stochastic gradient methods for tracking solutions of time-varying stochastic optimization problems with decision-dependent distributions. Under a distributional sensitivity assumption, we derived explicit error bounds for the two methods. In particular, we derived convergence in expectation and in high probability for the OSPGD by assuming that the error in the gradient follows a sub-Weibull distribution. To the best of our knowledge, our convergence results for online gradient methods are the first in the literature for time-varying stochastic optimization problems with decision-dependent distributions.

References

- [1] C. Wilson, Y. Bu, and V. V. Veeravalli, “Adaptive sequential machine learning,” Sequential Analysis, vol. 38, no. 4, pp. 545–568, 2019.

- [2] J. L. Mathieu, D. S. Callaway, and S. Kiliccote, “Examining uncertainty in demand response baseline models and variability in automated responses to dynamic pricing,” in IEEE Conference on Decision and Control, 2011, pp. 4332–4339.

- [3] W. Tushar, W. Saad, H. V. Poor, and D. B. Smith, “Economics of electric vehicle charging: A game theoretic approach,” IEEE Transactions on Smart Grid, vol. 3, no. 4, pp. 1767–1778, 2012.

- [4] A. Hauswirth, S. Bolognani, G. Hug, and F. Dörfler, “Optimization algorithms as robust feedback controllers,” arXiv preprint arXiv:2103.11329, 2021.

- [5] G. Bianchin, M. Vaquero, J. Cortes, and E. Dall’Anese, “Online stochastic optimization for unknown linear systems: Data-driven synthesis and controller analysis,” arXiv preprint arXiv:2108.13040, 2021.

- [6] J. Perdomo, T. Zrnic, C. Mendler-Dünner, and M. Hardt, “Performative prediction,” in International Conference on Machine Learning. PMLR, 2020, pp. 7599–7609.

- [7] D. Drusvyatskiy and L. Xiao, “Stochastic optimization with decision-dependent distributions,” arXiv preprint arXiv:2011.11173, 2020.

- [8] A. Y. Popkov, “Gradient methods for nonstationary unconstrained optimization problems,” Automation and Remote Control, vol. 66, no. 6, pp. 883–891, 2005.

- [9] D. D. Selvaratnam, I. Shames, J. H. Manton, and M. Zamani, “Numerical optimisation of time-varying strongly convex functions subject to time-varying constraints,” in IEEE Conference on Decision and Control, 2018, pp. 849–854.

- [10] A. Mokhtari, S. Shahrampour, A. Jadbabaie, and A. Ribeiro, “Online optimization in dynamic environments: Improved regret rates for strongly convex problems,” in IEEE Conference on Decision and Control, 2016, pp. 7195–7201.

- [11] A. Simonetto, “Time-varying convex optimization via time-varying averaged operators,” arXiv preprint arXiv:1704.07338, 2017.

- [12] L. Madden, S. Becker, and E. Dall’Anese, “Bounds for the tracking error of first-order online optimization methods,” Journal of Optimization Theory and Applications, vol. 189, no. 2, pp. 437–457, 2021.

- [13] J. Cutler, D. Drusvyatskiy, and Z. Harchaoui, “Stochastic optimization under time drift: iterate averaging, step decay, and high probability guarantees,” arXiv preprint arXiv:2108.07356, 2021.

- [14] X. Cao, J. Zhang, and H. V. Poor, “Online stochastic optimization with time-varying distributions,” IEEE Tran. on Automatic Control, vol. 66, no. 4, pp. 1840–1847, 2021.

- [15] I. Shames and F. Farokhi, “Online stochastic convex optimization: Wasserstein distance variation,” arXiv preprint arXiv:2006.01397, 2020.

- [16] C. Wilson, V. V. Veeravalli, and A. Nedić, “Adaptive sequential stochastic optimization,” IEEE Tran. on Automatic Control, vol. 64, no. 2, pp. 496–509, 2018.

- [17] C. Mendler-Dünner, J. C. Perdomo, T. Zrnic, and M. Hardt, “Stochastic optimization for performative prediction,” arXiv preprint arXiv:2006.06887, 2020.

- [18] Z. Izzo, L. Ying, and J. Zou, “How to learn when data reacts to your model: performative gradient descent,” arXiv preprint arXiv:2102.07698, 2021.

- [19] M. Vladimirova, S. Girard, H. Nguyen, and J. Arbel, “Sub-Weibull distributions: Generalizing sub-Gaussian and sub-Exponential properties to heavier tailed distributions,” Stat, vol. 9, no. 1, p. e318, 2020.

- [20] R. Vershynin, High-dimensional probability: An introduction with applications in data science. Cambridge University Press, 2018.

- [21] L. V. Kantorovich and S. Rubinshtein, “On a space of totally additive functions,” Vestnik of the St. Petersburg University: Mathematics, vol. 13, no. 7, pp. 52–59, 1958.

- [22] K. C. Wong, Z. Li, and A. Tewari, “Lasso guarantees for β-mixing heavy-tailed time series,” The Annals of Statistics, vol. 48, no. 2, pp. 1124–1142, 2020.

- [23] N. Bastianello, L. Madden, R. Carli, and E. Dall’Anese, “A stochastic operator framework for inexact static and online optimization,” arXiv preprint arXiv:2105.09884, 2021.

- [24] Z.-P. Jiang and Y. Wang, “Input-to-state stability for discrete-time nonlinear systems,” Automatica, vol. 37, no. 6, pp. 857–869, 2001.

- [25] A. Nemirovski, A. Juditsky, G. Lan, and A. Shapiro, “Robust stochastic approximation approach to stochastic programming,” SIAM Journal on Optimization, vol. 19, no. 4, pp. 1574–1609, 2009.

- [26] L. Hodgkinson and M. W. Mahoney, “Multiplicative noise and heavy tails in stochastic optimization,” arXiv preprint arXiv:2006.06293, 2020.

- [27] C. R. Givens and R. M. Shortt, “A class of Wasserstein metrics for probability distributions,” Michigan Mathematical Journal, vol. 31, no. 2, pp. 231–240, 1984.