These authors contributed equally to this work. \equalcontThese authors contributed equally to this work. [1]\fnmMao \surSu [1]\fnmShufei \surZhang

1]\orgnameShanghai Artificial Intelligence Laboratory, \orgaddress\cityShanghai, \postcode200232, \countryChina

2]\orgnameMultimedia Laboratory, the University of Hong Kong, \orgaddress\cityHongkong, \postcode999077, \countryChina

3]\orgdivShenzhen International Graduate School, \orgnameTsinghua University, \orgaddress\cityShenzhen, \postcode518055, \countryChina

4]\orgdivCAS Key Laboratory of Theoretical Physics, \orgnameInstitute of Theoretical Physics, \orgnameChinese Academy of Sciences, \orgaddress\cityBeijing, \postcode100190, \countryChina

5]\orgdivSchool of Physical Sciences, \orgnameUniversity of Chinese Academy of Sciences, \orgaddress\cityBeijing, \postcode100049, \countryChina

6]\orgdivKey Laboratory for Computational Physical Sciences (MOE), State Key Laboratory of Surface Physics, Department of Physics, \orgnameFudan University, \orgaddress\cityShanghai, \postcode200433, \countryChina

7]\orgnameShanghai Qi Zhi Institute, \orgaddress\cityShanghai, \postcode200232, \countryChina

Online Test-time Adaptation for Interatomic Potentials

Abstract

Machine learning interatomic potentials (MLIPs) enable more efficient molecular dynamics (MD) simulations with ab initio accuracy, which have been used in various domains of physical science. However, distribution shift between training and test data causes deterioration of the test performance of MLIPs, and even leads to collapse of MD simulations. In this work, we propose an online Test-time Adaptation Interatomic Potential (TAIP) framework to improve the generalization on test data. Specifically, we design a dual-level self-supervised learning approach that leverages global structure and atomic local environment information to align the model with the test data. Extensive experiments demonstrate TAIP’s capability to bridge the domain gap between training and test dataset without additional data. TAIP enhances the test performance on various benchmarks, from small molecule datasets to complex periodic molecular systems with various types of elements. Remarkably, it also enables stable MD simulations where the corresponding baseline models collapse.

keywords:

Test-time Adaptation, Interatomic Potential, Molecular Dynamics Simulation1 Introduction

Molecular Dynamics (MD) simulation serves as a crucial technique across various disciplines including biology, chemistry, and material science [1, 2, 3, 4]. MD simulations are typically based on interatomic potential functions that characterize the potential energy surface of the system, with atomic forces derived as the negative gradients of the potential energies. Subsequently, Newton’s laws of motion are applied to simulate the dynamic trajectories of the atoms. In ab initio MD simulations [5], the energies and forces are accurately determined by solving the equations in quantum mechanics. However, the computational demands of ab initio MD limit its practicality in many scenarios. By learning from ab initio calculations, machine learning interatomic potentials (MLIPs) have been developed to achieve much more efficient MD simulations with ab initio-level accuracy [6, 7, 8].

Despite their successes, the crucial challenge of implementing MLIPs is the distribution shift between training and test data. When using MLIPs for MD simulations, the data for inference are atomic structures that are continuously generated during simulations based on the predicted forces, and the training set should encompass a wide range of atomic structures to guarantee the accuracy of predictions. However, in fields such as phase transition [9, 10], catalysis [11, 12], and crystal growth [13, 14], the objective of MD simulations involves unveiling microscopic processes of rare events, in which the atomic structures are difficult to be captured especially in the initial training set. Consequently, a distribution shift between training and test datasets often occurs, which causes the degradation of test performance and leads to the emergence of unrealistic atomic structures, and finally the MD simulations collapse [15]. Although strategies such as active learning [16, 17, 18] and pretraining [19, 20, 21, 22, 23] have been developed to alleviate this challenge, they still struggle to explore unknown atomic structures and inevitably consume more computational resources. Therefore, a method that address the distribution shift without exploring additional atomic structures is desired.

Test-time adaptation [24, 25, 26, 27] emerges as a promising solution that tackles the issue of distribution shifts by fine-tuning the models during the testing phase. Different from the aforementioned methods, test-time adaptation does not require exploring additional atomic structures or extra training data, instead opting for on-the-fly model adjustments to the model in response to the characteristic of test data with only a modest increase in computational overhead. Test-time adaptation has been proven effective in various domains such as image classification [26, 27, 28, 29], semantic segmentation [30, 31, 32, 33], and object detection [34, 35, 36, 37]. Nevertheless, its application in predicting interatomic potentials remains unexplored. For a successful implementation of test-time adaptation in MLIP, it is crucial to devise task-specific strategies which account for the specific characteristics of atomic structure data. A well-crafted test-time adaptation tailored for MLIP holds substantial promise in improving the accuracy of MLIP, as well as the stability and reliability of MD simulations driven by MLIPs.

In this work, we propose an online Test-time Adaptation strategy for Interatomic Potentials (TAIP) aimed at mitigating the impact of distribution shifts on MLIP applications. We design a dual-level self-supervised learning scheme that help extract both global and local structural information using an encoder. During training, the encoder is trained by the combined losses from both MLIP and self-supervised learning tasks. At the inference stage, the encoder is updated once per test sample by minimizing the self-supervised learning loss, subsequently yielding the final energy and force predictions. This fine-tuning process during inference allows the encoder to extract more adaptive features for test data. We test the accuracy of MLIPs on four datasets, including MD17, ISO17, water, and electrolyte solutions. Compared to baselines, TAIP reduces the prediction errors by an average of 30% without using any additional data. Moreover, we assess the influence of TAIP on the MD simulation stability using periodic water and electrolyte solution datasets and find that TAIP enables stable MD simulations throughout even under conditions where baselines collapse. Finally, visual analysis of feature distributions confirms that TAIP curtails the distribution shifts between training and test datasets.

2 Results

2.1 Overview of TAIP

Three stages of TAIP include training, test-time adaptation, and inference, as shown in Figure 1. In the training phase, the network parameters are updated according to the supervised learning loss of the main task, namely energy and force prediction, as well as three self-supervised learning losses. In the test-time adaptation phase, we first input the test sample into the encoder and update its parameters once per test sample to reduce the self-supervised learning losses. This unique process facilitates the model’s adaptation to the test data, thereby raising its generalization capabilities on test samples. Since the parameter update during adaptation is performed only once, the computational time and cost will not increase substantially. At the inference stage, the test sample passes through the encoder to predict the energy and forces, which is updated during the adaptation phase.

The main task shown in block 1 of Figure 1 is a typical MLIP task that predicts energy and force. We use a graph to represent a molecular conformation and feed it into the encoder to generate the graph feature. The graph feature is then utilized to predict the potential energy of the molecule, and the force exerted on each atom is calculated by taking the negative gradient of the potential energy.

The noise intensity prediction task is shown in block 2 of Figure 1. For each sample, we perturb the coordinates of atoms with random noises drawn from a Gaussian distribution, and the variance is defined as the noise intensity. The encoder takes the perturbed structure as input and produces the noisy graph feature. Subsequently, the noisy graph feature is combined with the original clean graph feature to jointly estimate the noise intensity, which reflects the dissimilarity between noisy and clean data. Instead of directly predicting the precise value of noise, we categorize the intensity into several bins and determine the bin to which the feature corresponds. This approach naturally groups similar configurations while separating dissimilar ones and thus reduces abrupt changes or outlines, leading to a smoother configuration representation space. By focusing on the key features that distinguish between different levels of noise, the model can learn more robust features and enhance its generalization ability to new scenarios.

The atom feature recovery task is shown in block 3 of Figure 1. We randomly mask some atoms in each sample with a certain ratio and then obtain the atomic representations by feeding the masked atomic structure to the encoder. The representations are subsequently used to rebuild the original atomic features. We can infer the most probable types of atoms in the vicinity of each atom by restoring the masked atoms, and thereby improving the extraction of local information.

The pseudo force recovery task is shown in block 4 of Figure 1. We mask the pseudo forces, which are defined as the negative gradient of the pseudo energy obtained from the masked node features. Then, we feed the masked pseudo forces into the decoder to reconstruct pseudo forces. By restoring the pseudo forces, the model can capture the relationship among the forces on neighboring atoms, which can better understand the local environment of the atoms and is helpful for the prediction of the atomic forces.

2.2 Experiments

We conducted a series of experiments across multiple datasets to evaluate the performance of TAIP on the widely used MLIPs of SchNet [38] and PaiNN [39], which are invariant and equivariant, respectively. First, we tested the enhanced accuracy in predicting energies and forces on the MD-17 dataset [40]. Next, we extended our evaluation to the ISO17 [38] dataset and complex systems featuring periodic boundary conditions, including water and electrolyte solutions. The test data from these datasets were designed to have a larger distribution shift from the training data, as indicated in Supplementary Figure S1, and therefore are used to investigate the improvement in generalizability achieved by TAIP. We then performed MD simulations with MLIPs on the aforementioned complex systems to assess the practical utility of TAIP. Moreover, we explored the impact of TAIP on feature distributions through dimensionality reductions using the t-SNE method [41], illustrating the mechanism behind the improvement from the feature embedding perspective.

MD-17. We evaluated the performance of TAIP on the widely used MD-17 dataset, which contains small organic molecules with reference values of energies and forces generated by ab initio MD simulations. Table 1 compares the mean absolute errors (MAEs) of the predicted energies and forces for different molecules using different models. The results show that TAIP-based models remarkably outperform their corresponding baseline models for all eight molecules, demonstrating the generalization ability of our method.

| SchNet | SchNet-TAIP | PaiNN | PaiNN-TAIP | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Aspirin | Energy | 0.37 | 0.3220.041 | 0.159 | 0.1440.011 | ||||

| Force | 1.35 | 0.8530.045 | 0.376 | 0.3300.012 | |||||

| Benzene | Energy | 0.08 | 0.0590.019 | 0.101 | 0.0670.012 | ||||

| Force | 0.31 | 0.2510.013 | 0.226 | 0.1860.007 | |||||

| Ethanol | Energy | 0.08 | 0.0570.020 | 0.086 | 0.0610.015 | ||||

| Force | 0.39 | 0.2720.035 | 0.230 | 0.1800.010 | |||||

| Malonaldehyde | Energy | 0.13 | 0.1180.011 | 0.100 | 0.0910.011 | ||||

| Force | 0.67 | 0.6120.023 | 0.319 | 0.2970.009 | |||||

| Naphthalene | Energy | 0.16 | 0.1100.017 | 0.113 | 0.0930.005 | ||||

| Force | 0.58 | 0.3180.033 | 0.079 | 0.0720.003 | |||||

| Salicylic acid | Energy | 0.20 | 0.1540.026 | 0.114 | 0.1050.004 | ||||

| Force | 0.85 | 0.6710.036 | 0.209 | 0.1930.003 | |||||

| Tolunene | Energy | 0.12 | 0.1030.010 | 0.119 | 0.1090.003 | ||||

| Force | 0.57 | 0.4610.023 | 0.102 | 0.0900.004 | |||||

| Uracil | Energy | 0.14 | 0.1090.006 | 0.104 | 0.0900.006 | ||||

| Force | 0.56 | 0.4930.013 | 0.143 | 0.1300.003 |

ISO17. The ISO17 dataset contains trajectories of isomers of C7O2H10. We consider two scenarios with the ISO17 dataset. In the first scenario (known molecules/unknown conformation), the isomers in the test set are also present in the training set. In the second scenario (unknown molecules/unknown conformation), the test set contains a different subset of isomers. This task is more challenging and is used to evaluate TAIP in the case where training and test data are drawn from different distributions. Table 2 shows that, for the energy and force MAEs on the ISO17 dataset, TAIP achieves an average reduction of 40% and 31% in scenario 1 and scenario 2, respectively.

| SchNet | SchNet-TAIP | PaiNN | PaiNN-TAIP | ||||

|---|---|---|---|---|---|---|---|

| ISO17 | known molecules/ | Energy | 0.39 | 0.2910.013 | 0.32 | 0.1110.079 | |

| unknown conformation | Force | 1.00 | 0.5540.079 | 0.26 | 0.2020.011 | ||

| ISO17 | unknown molecules/ | Energy | 2.40 | 1.1300.123 | 0.92 | 0.6620.054 | |

| unknown conformation | Force | 2.18 | 1.7830.111 | 0.89 | 0.6840.048 | ||

| Water | known phase/ | Energy | 0.2830.027 | 0.2360.023 | 0.2160.034 | 0.0900.017 | |

| unknown configuration | Force | 0.0760.005 | 0.0660.004 | 0.0270.004 | 0.0210.004 | ||

| Water | unknown phase/ | Energy | 1.1930.237 | 0.4230.204 | 0.5850.233 | 0.0420.084 | |

| unknown configuration | Force | 0.0830.010 | 0.0550.005 | 0.0230.003 | 0.0180.003 | ||

| Electrolyte | known concentration/ | Energy | 0.2630.031 | 0.2030.010 | 0.2380.014 | 0.1990.006 | |

| unknown configuration | Force | 0.0640.012 | 0.0430.008 | 0.0490.010 | 0.0370.003 | ||

| Electrolyte | unknown concentration/ | Energy | 9.4590.459 | 4.8160.274 | 9.8720.423 | 4.9140.307 | |

| unknown configuration | Force | 0.3410.043 | 0.1970.032 | 0.1430.025 | 0.1130.012 |

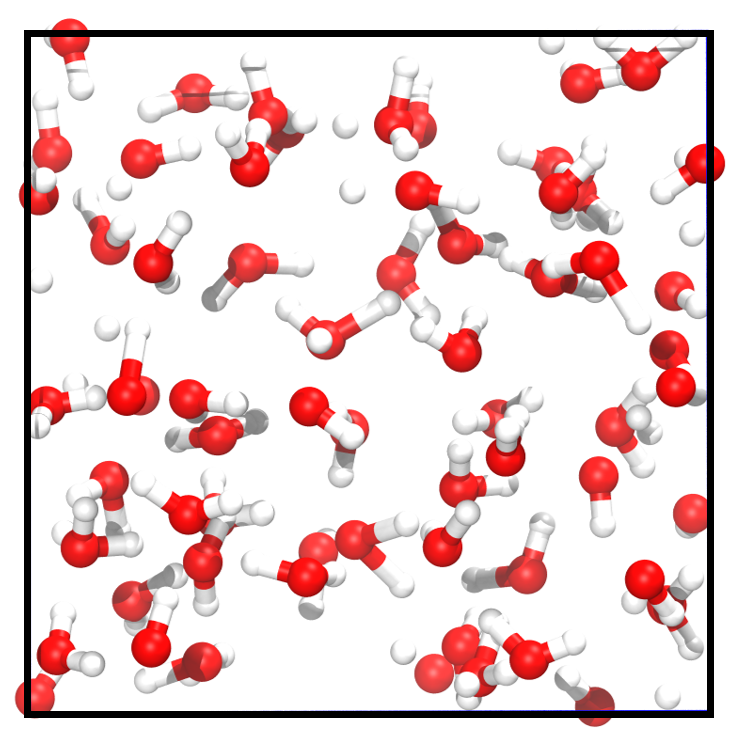

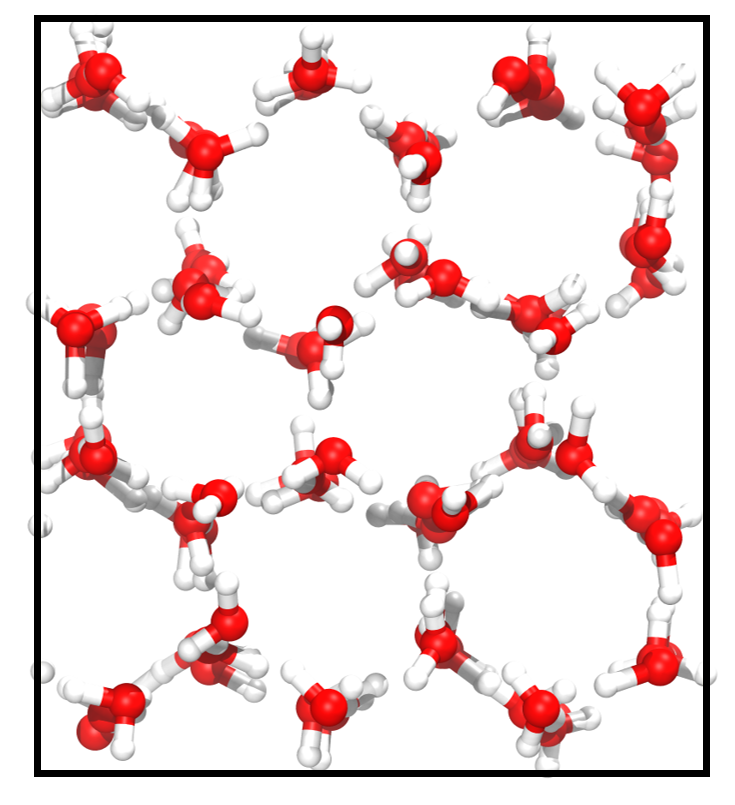

Liquid Water and Ice. Water has complex phase behaviors that pose considerable challenges for computational studies. As shown in Figure 2 and Figure 2, there exists a substantial difference between the microscopic structures of liquid water and ice. In the liquid state, water molecules form a highly dynamic network through hydrogen bonding. In contrast, water molecules form a stable hexagonal lattice structure in an ice crystal. The structural differences between different phases can lead to a decrease in prediction accuracy. Thus, we train the models only with liquid water, using a training set of 1000 frames and a validation set of 100 frames, and report the test accuracy on randomly sampled 1,000 liquid water and ice structures from the remaining dataset, respectively. The results of MAE on liquid water (known phase/unknown configuration) and on ice (unknown phase/unknown configuration) are shown in Table 2. The TAIP method notably reduces the errors of force and energy predictions by an average of 40% for unknown configurations across known and unknown phases in the two baseline models.

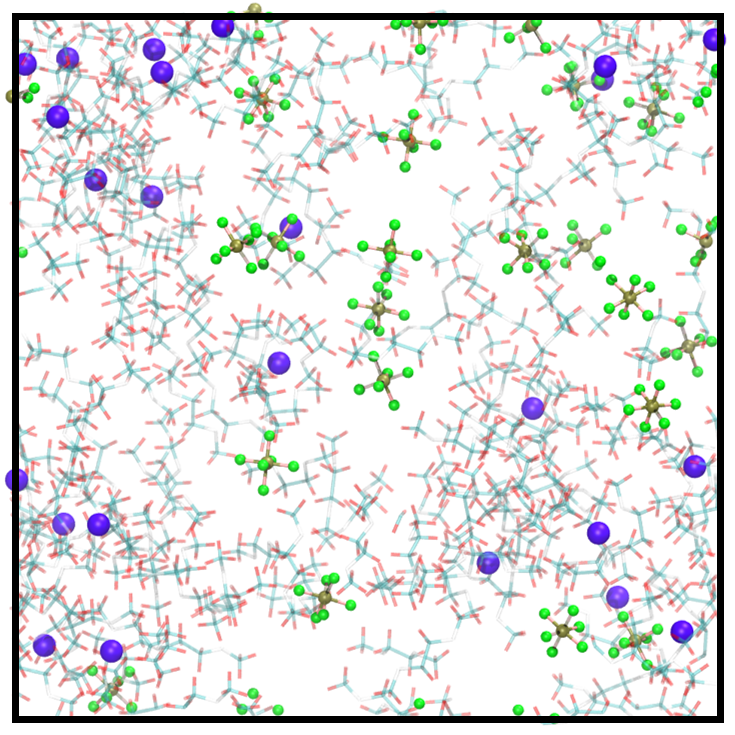

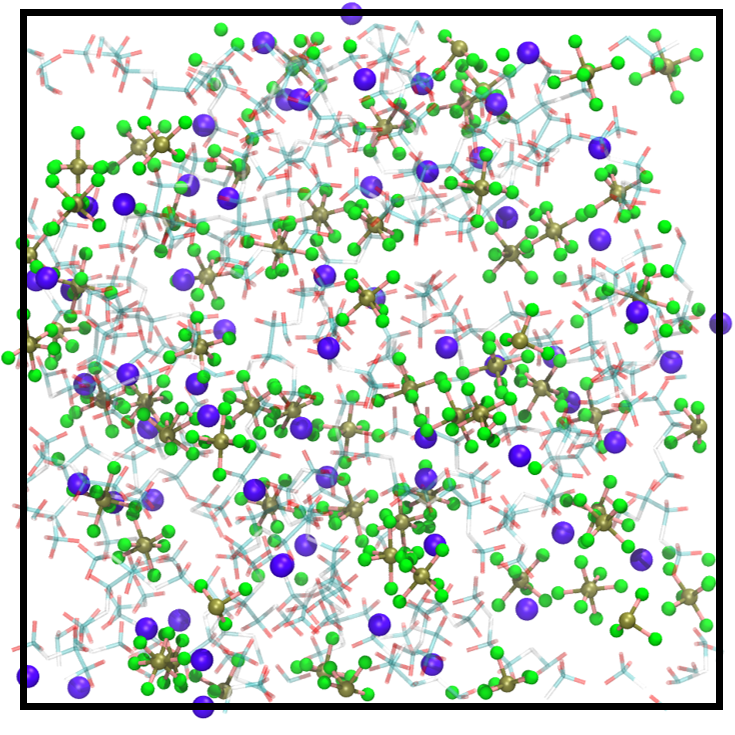

Electrolyte solutions of different concentrations. We examine TAIP on the electrolyte solution dataset, which is developed in our previous work [23], to further analyze compatibility with complex systems. Consisting of charged ions and electrolyte solvents, the dataset includes more elements, stronger electrostatic forces, and more complicated interatomic interactions, thereby exhibiting a higher degree of complexity than water. Training MLIPs for such complex systems and generalizing the trained models on different concentrations are both challenging. Here, we train the models on the 1 M electrolyte solutions and test the generalization across configurational space at different concentrations. The atomic structures of 1 M and 4 M electrolyte solutions are illustrated in Figure 2 and Figure 2, respectively. The MAE results for the test set of 1 M solutions (known concentration/unknown configuration) and 4 M solutions (unknown concentration/unknown configuration) are presented in Table 2. The data reveals that TAIP substantially enhances the precision of energy and force predictions for unknown configurations, irrespective of whether the concentration is known or unknown.

MD simulations. The MAEs of predicted forces and potential energies on fixed datasets are not enough to characterize the performance of MLIPs on long-time MD simulations [15]. During MD simulations, new molecular structures are constantly produced, and the false prediction of the interatomic forces on a single atom may cause the collapse of the entire simulation system. Therefore, we perform MD simulations using SchNet with and without TAIP on periodic systems (water and electrolyte solutions) to further analyze how TAIP can affect the predicted forces and, thus, the quality of MD simulations.

All simulations are set up with a timestep of 0.5 fs, using the Berendsen thermostat [42] as the temperature coupling method with a coupling temperature of 300 K and a decaying time constant of 100 fs. Without TAIP, the simulations for electrolyte solutions with concentrations of 1 M and 4 M collapse due to losing several atoms at the step of 258 (0.1 ps) and of 1843 (0.9 ps), respectively. The simulation for water exhibits undesired force and energy instability after 250 ps, and that for ice collapses at 248 ps. In contrast, all the simulations with TAIP can run stably for more than 500 ps. The simulation trajectories are provided in Supplementary Figure S2 and Supplementary Video S1-S4. We show the potential energies, kinetic energies, and the maximum absolute force of these trajectories on Figure 3. It is evident that the failure of MD simulations is caused by the divergence of predicted potential energies and forces. Instability is a fundamental issue for MLIP-based MD simulations, as the accuracy of MLIPs without TAIP is crucially compromised when encountered with unknown molecular configurations. The inclusion of the TAIP method remarkably enhances the overall performance of MILP-based MD simulations when dealing with unknown molecular structures, and it can be suggested as an applicable solution to MD instability.

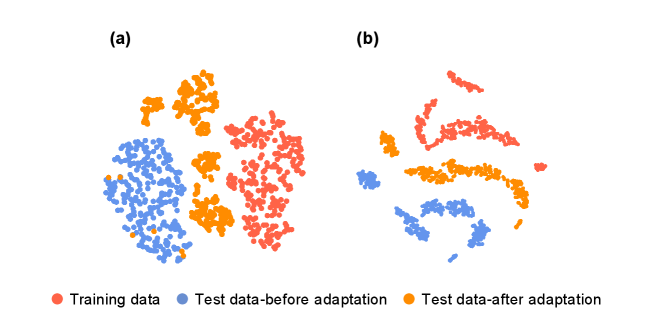

Feature visualization. To visually identify the effect of TAIP. We perform a series of dimensionality reductions using the t-SNE method [41] to map the feature embeddings of the training dataset and the testing dataset on benchmark models and TAIP models. In Figure 4, we can notice that the feature embeddings of the test dataset in the TAIP models are much closer to that of the training dataset than the feature embeddings in the baseline models. It indicates that the TAIP enables the MLIPs to process unknown molecular structures with less uncertainty, which helps the energies and forces predictions to harness more accuracy and generalizability.

3 Discussion

The primary challenge for MLIPs in practical applications lies in their ability to make accurate predictions on test data that exhibit a distribution shift from the training set. Specifically, a feasible MLIP must maintain sufficient accuracy for the new atomic structures that continuously emerge during simulations. This study demonstrates that our TAIP framework remarkably enhances the performance of MLIPs on test datasets, which are designed to exhibit substantial distribution shifts from the training data, without requiring any extra data. The efficacy of TAIP is attributed to self-supervised learning tasks including noise intensity prediction, atomic feature recovery, and pseudo force recovery. These tasks are trained concurrently with the interatomic potential task during the training phase. During the inference phase, the shared feature extractor is refined through self-supervised learning tasks in an online manner. This approach effectively bridges the domain gap between training and testing datasets, as evidenced by t-SNE visualizations of the feature space. Beyond improving the accuracy of predicting energy and force, TAIP also enables stable MLIP-based MD simulations, outperforming baseline models that otherwise fail. The experiments across various molecular systems underscores the vast potential of our method.

4 Methods

4.1 Dataset

MD-17. The MD17 dataset is composed of ab initio molecular dynamics trajectories of eight small molecules [40]. Obtained from http://www.sgdml.org/#datasets, we use the training set of 1,000, the validation set of 1,000, and the test set of 1,000 configurations for each small molecule.

ISO17. ISO17 dataset consists of ab initio MD trajectories of 129 isomers whose energies and forces are calculated by Density Functional Theory (DFT) [38]. We adopted the same splitting strategy reported in the original literature[38], using 80% trajectory steps of the 80% isomers for training and validation (totaling 404,000 molecular conformations for training and 4,000 for validation). We further set up two test datasets to evaluate the effectiveness of TAIP: (1) The other 20% unseen trajectory steps of the isomers included in the training set (totaling 101,000 conformations) and (2) all of the remaining 20% isomers not included in the training and validation set (totaling 130, 000 conformations).

Liquid Water and ice. The atomic structures are sampled from MD trajectories, which consist of a training set of 1,000 snapshots, a validation set of 500 snapshots, and a test set of 1,000 liquid water. In addition, 1,000 ice snapshots in the form of hexagonal ice crystals are sampled to be the other test set. There are 96 molecules or 288 atoms in each snapshot. The forces and energies for each sampled snapshot are calculated by DFT using the cp2k package[43]. The DFT calculations are conducted using PBE exchange-correlation functional[44] with the Projector Augmented-Wave (PAW) pseudo-potential[45]. The DFT-D3 method is used for the vdW-dispersion energy correction[46]. A single gamma k-point is used to sample the Brillouin zone, the cutoff energy for the plane-wave-basis set is set to be 400 eV, and the electronic self-consistency is considered to be achieved if the change of total energy between two steps is smaller than eV.

Electrolyte solutions. The electrolyte solutions dataset is taken from our previous work [23]. Here, we used the combination of [DME], DME], DME], DME], [EC+DMC], EC+DMC], EC+DMC], and EC+DMC] as electrolyte solutions with ionic concentrations of 1 M and 4 M. The training and validation data are randomly selected from the 1 M solutions. The training set contains 1000 samples and the validation set contains 250 samples. We construct two different test sets to evaluate TAIP: one is randomly sampled from the remaining 1 M solutions and the other is sampled from the 4 M solutions.

4.2 Training Settings

The TAIP framework is implemented based on PyTorch 1.8.0. The experiments are conducted with NVIDIA GeForce RTX 3090 GPU. The models are trained using the Adam optimizer [47], employing single-GPU training for efficient processing. The hyperparameters are provided in Supplementary Table S1-S3.

In order to achieve optimal performance on both properties, we incorporate both energy and forces into the criterion for training:

| (1) |

where represents the predicted energy, is the number of atoms in each sample and represents the coordinate of atom . The weight is used to in line with the setting of the baseline models. The , and are self-supervised learning losses corresponding to noise intensity prediction, masked atom reconstruction and masked pseudo force reconstruction, respectively.

For the periodic systems including water and electrolyte solutions, we employ the atomization energy as the target energy by shifting the original potential energy according to the energy of each single atom:

| (2) |

where is the potential energy of a single atom in vacuum.

Noise intensity prediction. For each sample, we randomly choose a noise intensity from a uniform distribution and perturb the coordinates accordingly, which will facilitate the exploration of the configurational space. The GNNs are trained to predict the noise intensity instead of the specific noise value. The graph feature of the perturbed structure is concatenated with that of the original structure and passes through a classification head to produce the classification logit. The training objective can be formulated as follows:

| (3) |

where is the one-hot encoding of , and denotes the cross entropy loss.

Atomic feature recovery. The masked atomic structures are fed into the encoder to get the masked node features. We utilize the cosine error as the criterion for reconstructing the original node features. Additionally, we reduce the weight of easy samples in the training set by scaling the cosine error with a power of , as shown below:

| (4) |

where denotes the original feature, is the output of Decoder 1, is the set of indices of the masked nodes .

Pseudo force recovery. The pseudo force is defined as the negative gradient the pseudo energy, which is obtained from the masked node features. We utilize the L1 loss as the criterion for reconstructing the pseudo force:

| (5) |

where represents the pseudo forces predicted by Decoder 2 and are the pseudo forces calculated from the pseudo energy.

Data availability

The data other than publicly available data used for experiments in this paper are available at https://cloud.tsinghua.edu.cn/d/7d2bfe81ed3b4269a692/.

Code availability

The source code for reproducing the findings in this paper is available at xxx.

Acknowledgments

This work was supported by the National Key R&D Program of China (NO.2022ZD0160101). M.S. was partially supported by Shanghai Committee of Science and Technology, China (Grant No. 23QD1400900). T.C. and C.T. did this work during their internship at Shanghai Artificial Intelligence Laboratory.

Author contributions

M.S. and S.Z. conceived the idea and led the research. T.C. developed the codes and trained the models. C.T. performed experiments and analyses. Y.L. and X.G. contributed technical ideas for datasets and experiments. D.Z., and W.O. contributed technical ideas for self-supervised methods. T.C., C.T., D.Z., M.S., and S.Z. wrote the paper. All authors discussed the results and reviewed the manuscript.

References

- \bibcommenthead

- Hospital et al. [2015] Hospital, A., Goñi, J.R., Orozco, M., Gelpí, J.L.: Molecular dynamics simulations: advances and applications. Advances and applications in bioinformatics and chemistry, 37–47 (2015)

- Senftle et al. [2016] Senftle, T.P., Hong, S., Islam, M.M., Kylasa, S.B., Zheng, Y., Shin, Y.K., Junkermeier, C., Engel-Herbert, R., Janik, M.J., Aktulga, H.M., et al.: The reaxff reactive force-field: development, applications and future directions. npj Computational Materials 2(1), 1–14 (2016)

- Karplus and Petsko [1990] Karplus, M., Petsko, G.A.: Molecular dynamics simulations in biology. Nature 347, 631–639 (1990)

- [4] Yao, N., Chen, X., Fu, Z.-H., Zhang, Q.: Applying classical, Ab Initio , and machine-learning molecular dynamics simulations to the liquid electrolyte for rechargeable batteries 122(12), 10970–11021 https://doi.org/10.1021/acs.chemrev.1c00904 . Accessed 2023-01-17

- Car and Parrinello [1985] Car, R., Parrinello, M.: Unified approach for molecular dynamics and density-functional theory. Physical review letters 55(22), 2471 (1985)

- [6] Butler, K.T., Davies, D.W., Cartwright, H., Isayev, O., Walsh, A.: Machine learning for molecular and materials science 559(7715), 547–555 https://doi.org/10.1038/s41586-018-0337-2 . Accessed 2023-02-11

- [7] Noé, F., Tkatchenko, A., Müller, K.-R., Clementi, C.: Machine learning for molecular simulation 71(1), 361–390 https://doi.org/10.1146/annurev-physchem-042018-052331 . Accessed 2023-01-17

- [8] Unke, O.T., Chmiela, S., Sauceda, H.E., Gastegger, M., Poltavsky, I., Schütt, K.T., Tkatchenko, A., Müller, K.-R.: Machine learning force fields 121(16), 10142–10186 https://doi.org/10.1021/acs.chemrev.0c01111 . Accessed 2023-01-17

- Zhang et al. [2021] Zhang, L., Wang, H., Car, R., Weinan, E.: Phase diagram of a deep potential water model. Physical review letters 126(23), 236001 (2021)

- Niu et al. [2020] Niu, H., Bonati, L., Piaggi, P.M., Parrinello, M.: Ab initio phase diagram and nucleation of gallium. Nature communications 11(1), 2654 (2020)

- Li et al. [2023] Li, J.-L., Li, Y.-F., Liu, Z.-P.: In situ structure of a mo-doped pt–ni catalyst during electrochemical oxygen reduction resolved from machine learning-based grand canonical global optimization. JACS Au 3(4), 1162–1175 (2023)

- Chen et al. [2024] Chen, D., Chen, L., Zhao, Q.-C., Yang, Z.-X., Shang, C., Liu, Z.-P.: Square-pyramidal subsurface oxygen [ag4oag] drives selective ethene epoxidation on silver. Nature Catalysis, 1–10 (2024)

- Su et al. [2022] Su, M., Yang, J.-H., Liu, Z.-P., Gong, X.-G.: Exploring large-lattice-mismatched interfaces with neural network potentials: the case of the cds/cdte heterostructure. The Journal of Physical Chemistry C 126(31), 13366–13372 (2022)

- Zhang et al. [2024] Zhang, D., Yi, P., Lai, X., Peng, L., Li, H.: Active machine learning model for the dynamic simulation and growth mechanisms of carbon on metal surface. Nature Communications 15(1), 344 (2024)

- Fu et al. [2022] Fu, X., Wu, Z., Wang, W., Xie, T., Keten, S., Gomez-Bombarelli, R., Jaakkola, T.: Forces are not enough: Benchmark and critical evaluation for machine learning force fields with molecular simulations. arXiv preprint arXiv:2210.07237 (2022)

- Zhang et al. [2020] Zhang, Y., Wang, H., Chen, W., Zeng, J., Zhang, L., Wang, H., E, W.: Dp-gen: A concurrent learning platform for the generation of reliable deep learning based potential energy models. Computer Physics Communications 253, 107206 (2020) https://doi.org/10.1016/j.cpc.2020.107206

- Kulichenko et al. [2023] Kulichenko, M., Barros, K., Lubbers, N., Li, Y.W., Messerly, R., Tretiak, S., Smith, J.S., Nebgen, B.: Uncertainty-driven dynamics for active learning of interatomic potentials. Nature Computational Science 3(3), 230–239 (2023)

- Yuan et al. [2023] Yuan, X., Zhou, Y., Peng, Q., Yang, Y., Li, Y., Wen, X.: Active learning to overcome exponential-wall problem for effective structure prediction of chemical-disordered materials. npj Computational Materials 9(1), 12 (2023)

- Zhang et al. [2022] Zhang, D., Bi, H., Dai, F.-Z., Jiang, W., Zhang, L., Wang, H.: Dpa-1: Pretraining of attention-based deep potential model for molecular simulation. arXiv preprint arXiv:2208.08236 (2022)

- Zhang et al. [2023] Zhang, D., Liu, X., Zhang, X., Zhang, C., Cai, C., Bi, H., Du, Y., Qin, X., Huang, J., Li, B., et al.: Dpa-2: Towards a universal large atomic model for molecular and material simulation. arXiv preprint arXiv:2312.15492 (2023)

- Wang et al. [2023] Wang, Y., Xu, C., Li, Z., Farimani, A.B.: Denoise pre-training on non-equilibrium molecules for accurate and transferable neural potentials. arXiv preprint arXiv:2303.02216 (2023)

- Gardner et al. [2024] Gardner, J.L., Baker, K.T., Deringer, V.L.: Synthetic pre-training for neural-network interatomic potentials. Machine Learning: Science and Technology 5(1), 015003 (2024)

- Cui et al. [2024] Cui, T., Tang, C., Su, M., Zhang, S., Li, Y., Bai, L., Dong, Y., Gong, X., Ouyang, W.: Geometry-enhanced pretraining on interatomic potentials. Nature Machine Intelligence, 1–9 (2024)

- Liang et al. [2020] Liang, J., Hu, D., Feng, J.: Do we really need to access the source data? source hypothesis transfer for unsupervised domain adaptation. In: International Conference on Machine Learning, pp. 6028–6039 (2020). PMLR

- Sun et al. [2020] Sun, Y., Wang, X., Liu, Z., Miller, J., Efros, A., Hardt, M.: Test-time training with self-supervision for generalization under distribution shifts. In: International Conference on Machine Learning, pp. 9229–9248 (2020). PMLR

- Wang et al. [2020] Wang, D., Shelhamer, E., Liu, S., Olshausen, B., Darrell, T.: Tent: Fully test-time adaptation by entropy minimization. arXiv preprint arXiv:2006.10726 (2020)

- Iwasawa and Matsuo [2021] Iwasawa, Y., Matsuo, Y.: Test-time classifier adjustment module for model-agnostic domain generalization. Advances in Neural Information Processing Systems 34, 2427–2440 (2021)

- Boudiaf et al. [2022] Boudiaf, M., Mueller, R., Ben Ayed, I., Bertinetto, L.: Parameter-free online test-time adaptation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 8344–8353 (2022)

- Wang et al. [2022] Wang, Q., Fink, O., Van Gool, L., Dai, D.: Continual test-time domain adaptation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 7201–7211 (2022)

- Voigtlaender and Leibe [2017] Voigtlaender, P., Leibe, B.: Online adaptation of convolutional neural networks for video object segmentation. arXiv preprint arXiv:1706.09364 (2017)

- Karani et al. [2021] Karani, N., Erdil, E., Chaitanya, K., Konukoglu, E.: Test-time adaptable neural networks for robust medical image segmentation. Medical Image Analysis 68, 101907 (2021)

- Zhang et al. [2022] Zhang, Y., Borse, S., Cai, H., Porikli, F.: Auxadapt: Stable and efficient test-time adaptation for temporally consistent video semantic segmentation. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 2339–2348 (2022)

- Shin et al. [2022] Shin, I., Tsai, Y.-H., Zhuang, B., Schulter, S., Liu, B., Garg, S., Kweon, I.S., Yoon, K.-J.: Mm-tta: Multi-modal test-time adaptation for 3d semantic segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 16928–16937 (2022)

- Veksler [2023] Veksler, O.: Test time adaptation with regularized loss for weakly supervised salient object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 7360–7369 (2023)

- Kim et al. [2024] Kim, D., Park, S., Choo, J.: When model meets new normals: Test-time adaptation for unsupervised time-series anomaly detection. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 38, pp. 13113–13121 (2024)

- Gao et al. [2023] Gao, Z., Yan, S., He, X.: Atta: Anomaly-aware test-time adaptation for out-of-distribution detection in segmentation. Advances in Neural Information Processing Systems 36, 45150–45171 (2023)

- Segu et al. [2023] Segu, M., Schiele, B., Yu, F.: Darth: Holistic test-time adaptation for multiple object tracking. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 9717–9727 (2023)

- Schütt et al. [2017] Schütt, K., Kindermans, P.-J., Sauceda Felix, H.E., Chmiela, S., Tkatchenko, A., Müller, K.-R.: Schnet: A continuous-filter convolutional neural network for modeling quantum interactions. Advances in neural information processing systems 30 (2017)

- Schütt et al. [2021] Schütt, K., Unke, O., Gastegger, M.: Equivariant message passing for the prediction of tensorial properties and molecular spectra. In: International Conference on Machine Learning, pp. 9377–9388 (2021). PMLR

- Chmiela et al. [2017] Chmiela, S., Tkatchenko, A., Sauceda, H.E., Poltavsky, I., Schütt, K.T., Müller, K.-R.: Machine learning of accurate energy-conserving molecular force fields. Science advances 3(5), 1603015 (2017)

- Van der Maaten and Hinton [2008] Maaten, L., Hinton, G.: Visualizing data using t-sne. Journal of machine learning research 9(11) (2008)

- Berendsen et al. [1984] Berendsen, H.J., Postma, J.v., Van Gunsteren, W.F., DiNola, A., Haak, J.R.: Molecular dynamics with coupling to an external bath. The Journal of chemical physics 81(8), 3684–3690 (1984)

- Kühne et al. [2020] Kühne, T.D., Iannuzzi, M., Del Ben, M., Rybkin, V.V., Seewald, P., Stein, F., Laino, T., Khaliullin, R.Z., Schütt, O., Schiffmann, F., et al.: Cp2k: An electronic structure and molecular dynamics software package-quickstep: Efficient and accurate electronic structure calculations. The Journal of Chemical Physics 152(19) (2020)

- Perdew et al. [1996] Perdew, J.P., Burke, K., Ernzerhof, M.: Generalized gradient approximation made simple. Physical review letters 77(18), 3865 (1996)

- Blöchl [1994] Blöchl, P.E.: Projector augmented-wave method. Physical review B 50(24), 17953 (1994)

- Grimme et al. [2010] Grimme, S., Antony, J., Ehrlich, S., Krieg, H.: A consistent and accurate ab initio parametrization of density functional dispersion correction (dft-d) for the 94 elements h-pu. The Journal of chemical physics 132(15) (2010)

- Kingma and Ba [2014] Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)