Online Testing of Subgroup Treatment Effects Based on Value Difference

Abstract

Online A/B testing plays a critical role in the high-tech industry to guide product development and accelerate innovation. It performs a null hypothesis statistical test to determine which variant is better. However, a typical A/B test presents two problems: (i) a fixed-horizon framework inflates the false-positive errors under continuous monitoring; (ii) the homogeneous effects assumption fails to identify a subgroup with a beneficial treatment effect. In this paper, we propose a sequential test for subgroup treatment effects based on value difference, named SUBTLE, to address these two problems simultaneously. SUBTLE allows the experimenters to "peek" at the results during the experiment without harming the statistical guarantees. It assumes heterogeneous treatment effects and aims to test if some subgroup of the population will benefit from the investigative treatment. If the testing result indicates the existence of such a subgroup, a subgroup will be identified using a readily available estimated optimal treatment rule. We examine the empirical performance of our proposed test on both simulations and a real dataset. The results show that SUBTLE has high detection power with controlled type I error at any time, is more robust to noise covariates, and can achieve early stopping compared with the corresponding fixed-horizon test.

Index Terms: sequential testing, heterogeneous treatment effects

I Introduction

Online A/B testing, as a kind of randomized control experiment, is widely used in the high-tech industry to assess the value of ideas in a scientific manner [1]. It randomly exposes users to one of the two variants: control (A), the currently-used version, or treatment (B), a new version being evaluated, and collects the metric of interest, such as conversion rate, revenue, etc. Then, a null hypothesis statistical test is performed to evaluate whether there is a statistically significant difference between the two variants on the metric of interest. This scientific design helps to control for the external variations and thus establish the causality between the variants and the outcome. However, the current A/B testing has its limitations in terms of framework and model assumptions.

First of all, most A/B tests employ a fixed-horizon framework, whose validity requires that the sample size be fixed and determined before the experiment starts. However, experimenters, driven by fast-paced product evolution in practice, often "peek" at the experiment and hope to find significance as quickly as possible to avoid large (i) time cost: an A/B test may take a prohibitively long time to collect the predetermined number of samples; and (ii) opportunity cost: the users who have been assigned to a suboptimal variant will be stuck in a bad experience for a long time [2]. Continuously monitoring an experiment and concluding it prematurely biases the outcome toward significant results and leads to false-positive probabilities well in excess of the nominal significance level [3, 4]. Another limitation of A/B tests is that they assume homogeneous treatment effects among the population and mainly focus on testing the average treatment effect. However, it is common that treatment effects vary across subpopulations. Testing the subgroup treatment effects will help decision-makers distinguish the subpopulation that may benefit from a particular treatment from those who may not, and thereby guide companies’ marketing strategies in promoting new products.
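The inflation caused by peeking can be illustrated with a small simulation. The sketch below is only an illustration under assumed settings (standard normal differences, a two-sided z-test at the 5% level, a look every 50 observations); the function name and all parameter values are hypothetical and not taken from the paper.

```python
import numpy as np

def peeking_false_positive_rate(n_max=1000, n_sims=2000, check_every=50,
                                z_crit=1.96, seed=0):
    """Estimate how often a two-sided z-test rejects at least once under H0
    when it is re-run every `check_every` observations."""
    rng = np.random.default_rng(seed)
    checkpoints = np.arange(check_every, n_max + 1, check_every)
    # Treatment-minus-control differences; H0 is true (mean 0, variance 1).
    diffs = rng.standard_normal((n_sims, n_max))
    cumsum = np.cumsum(diffs, axis=1)
    # z-statistic at each checkpoint: running sum divided by sqrt(n).
    z = cumsum[:, checkpoints - 1] / np.sqrt(checkpoints)
    reject_any = (np.abs(z) > z_crit).any(axis=1)   # stop at the first "significant" peek
    reject_final = np.abs(z[:, -1]) > z_crit        # analyze only at the fixed horizon
    return reject_any.mean(), reject_final.mean()

peek_rate, fixed_rate = peeking_false_positive_rate()
print(f"peeking: {peek_rate:.3f}, fixed horizon: {fixed_rate:.3f}")
```

With these settings the fixed-horizon rate should stay near the nominal level, while the rate under repeated looks is several times larger.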

The first problem can be addressed by applying the sequential testing framework. Sequential testing, in contrast to the classic fixed-horizon test, is a statistical testing procedure that continuously checks for significance at every new sample and stops the test as soon as a significant result is detected, while controlling the type I error at any time. It generally gives a significant decrease in the required sample size compared to the fixed-horizon test with the same type I error and type II error control [5], and is thus able to end an experiment much earlier. This field was first introduced by Wald [5], who proposed the sequential probability ratio test (SPRT) for simple hypotheses using the likelihood ratio as the test statistic, and was then extended to composite hypotheses by many subsequent works [6, 7, 8, 9, 10]. A thorough review was given by Lai [11]. However, the advantage of sequential testing in online A/B testing was not recognized until recently, when Johari et al. [12] brought mSPRT, a variant of SPRT, to A/B tests.

The second problem shows a demand for a test on subgroup treatment effects. Although sequential testing is rapidly developing in online A/B tests, little work focuses on subgroup treatment effect testing. Yu et al. [13] proposed a sequential score test (SST) based on the score statistics under a generalized linear model, which aims to test if there is any difference between treatment and control groups among any subjects. However, this test is based on a restrictive parametric assumption on treatment-covariates interaction and cannot be used to test the subgroup treatment effects.

In this paper, we consider a flexible model and propose a sequential test for SUBgroup Treatment effects based on vaLuE difference (SUBTLE), which aims to test if some group of the population would benefit from the investigative treatment. Our method does not require specifying any parametric form of covariate-specific treatment effects. If the null hypothesis is rejected, a beneficial subgroup can be easily obtained based on the estimated optimal treatment rule.

The remainder of this paper is structured as follows. In Section II, we review the idea of the mSPRT and SST, and discuss how they are related to our test. Then in Section III, we introduce our proposed method SUBTLE and provide the theoretical guarantee for its validity. We conduct simulations in Section IV and real data experiments in Section V to demonstrate the validity, detection power, robustness, and efficiency of our proposed test. Finally, in Section VI, we conclude the paper and present future directions.

II Related Work

II-A Mixture Sequential Probability Ratio Test

The mixture sequential probability ratio test (mSPRT) [9] supposes that the independent and identically distributed (i.i.d.) random variables $Y_1, Y_2, \ldots$ have a probability density function $f_\theta(y)$ induced by parameter $\theta$, and aims to test

$$H_0: \theta = \theta_0 \quad \text{vs.} \quad H_1: \theta \neq \theta_0.$$

Its test statistic $\Lambda_n^\pi$ at sample size $n$ is a mixture of likelihood ratios weighted by a mixture density $\pi(\cdot)$ over the parameter space $\Theta$:

$$\Lambda_n^\pi = \int_\Theta \prod_{i=1}^{n} \frac{f_\theta(Y_i)}{f_{\theta_0}(Y_i)} \, \pi(\theta) \, d\theta.$$

The mSPRT stops the sampling at stage

$$T(\alpha) = \inf\{n \geq 1 : \Lambda_n^\pi \geq \alpha^{-1}\} \tag{1}$$

and rejects the null hypothesis $H_0$ in favor of $H_1$. If no such time exists, it continues the sampling indefinitely and accepts $H_0$. Since the likelihood ratio under $H_0$ is a nonnegative martingale with initial value equal to 1, and so is the mixture of such likelihood ratios $\Lambda_n^\pi$, the type I error of mSPRT can be proved to be always controlled at $\alpha$ by an application of Markov’s inequality and the optional stopping theorem: $P_{H_0}(\Lambda_n^\pi \geq \alpha^{-1} \text{ for some } n) \leq \alpha \, E_{H_0}[\Lambda_0^\pi] = \alpha$. Besides, mSPRT is a test of power one [14], which means that any small deviation from $\theta_0$ can be detected given a long enough wait. It has also been shown that mSPRT is almost optimal for data from an exponential family of distributions, with respect to the expected stopping time [15].
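For normal observations with known variance and a normal mixture density centered at the null value, the mixture likelihood ratio has a well-known closed form, which makes the stopping rule (1) easy to sketch in code. The snippet below is a minimal illustration, assuming unit variance and hypothetical names (`msprt_normal`, `msprt_stopping_time`, `sigma2`, `tau2`); it is not the implementation used in the paper.

```python
import numpy as np

def msprt_normal(y, theta0=0.0, sigma2=1.0, tau2=1.0):
    """Mixture likelihood ratio after each observation for N(theta, sigma2) data
    with a N(theta0, tau2) mixture density over theta."""
    y = np.asarray(y, dtype=float)
    n = np.arange(1, y.size + 1)
    d = np.cumsum(y) / n - theta0          # running mean minus the null value
    shrink = sigma2 / (sigma2 + n * tau2)
    return np.sqrt(shrink) * np.exp(n**2 * tau2 * d**2 / (2 * sigma2 * (sigma2 + n * tau2)))

def msprt_stopping_time(y, alpha=0.05, **kwargs):
    """First n with mixture ratio >= 1/alpha, or None if the threshold is never crossed."""
    crossed = np.flatnonzero(msprt_normal(y, **kwargs) >= 1.0 / alpha)
    return int(crossed[0]) + 1 if crossed.size else None

rng = np.random.default_rng(1)
null_stops = [msprt_stopping_time(rng.standard_normal(500)) for _ in range(400)]
alt_stops = [msprt_stopping_time(0.5 + rng.standard_normal(500)) for _ in range(400)]
null_rate = np.mean([t is not None for t in null_stops])
alt_rate = np.mean([t is not None for t in alt_stops])
print(f"rejection rate under H0: {null_rate:.3f}, under theta = 0.5: {alt_rate:.3f}")
```

Because the statistic is checked after every observation, the rejection rate under the null reflects continuous monitoring, yet it should remain at or below the nominal level.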

The mSPRT was brought to A/B tests by Johari et al. [12, 16], who assume that the observations in control and treatment groups arrive in pairs $(Y_i^{(0)}, Y_i^{(1)})$, $i = 1, 2, \ldots$. They restricted their data model to the two most common cases in practice: normal distribution and Bernoulli distribution, with $\mu_A$ and $\mu_B$ denoting the mean for the control and treatment groups, respectively. They test the hypotheses as below:

$$H_0: \theta := \mu_B - \mu_A = 0 \quad \text{vs.} \quad H_1: \theta \neq 0,$$

by directly applying mSPRT to the distribution of the differences $Z_i = Y_i^{(1)} - Y_i^{(0)}$ (normal), or the joint distribution of data pairs $(Y_i^{(0)}, Y_i^{(1)})$ (Bernoulli), $i = 1, 2, \ldots$. After making some approximations to the likelihood ratio and choosing a normal mixture density $\pi(\theta) \sim N(0, \tau^2)$, the test statistic $\Lambda_n^\pi$ has a closed form for both normal and Bernoulli observations.

However, the mSPRT does not work well in testing heterogeneous treatment effects due to the complexity of the likelihood induced by individual covariates. Specifically, a conjugate prior for the likelihood ratio may not exist anymore, making the computation for the test statistic challenging. The unknown baseline covariates effect also increases the difficulty in constructing and approximating the likelihood ratio [13].

II-B Sequential Score Test

The sequential score test (SST) [13] assumes a generalized linear model with a link function $g(\cdot)$ for the outcome $Y$:

$$g\{E(Y \mid A, X)\} = \mu^\top X + (\theta^\top X) A, \tag{2}$$

where $A$ and $X$ respectively denote the binary treatment indicator and user covariates vector. It tests the multi-dimensional treatment-covariates interaction effect:

$$H_0: \theta = 0 \quad \text{vs.} \quad H_1: \theta \neq 0, \tag{3}$$

while accounting for the linear baseline covariates effect $\mu^\top X$. For the test statistic $\Lambda_n^\pi$, instead of using a mixture of likelihood ratios as mSPRT, SST employs a mixture of asymptotic probability ratios of the score statistics. Since the probability ratio has the same martingale structure as the likelihood ratio, the type I error can still be controlled with the same decision rule as mSPRT (1). The asymptotic normality of the score statistics also guarantees a closed form of $\Lambda_n^\pi$ with a multivariate normal mixture density $\pi(\cdot)$. However, the considered parametric model (2) can only be used to test if there is a linear covariate-treatment interaction effect and may fail to detect the existence of a subgroup with enhanced treatment effects. In addition, the subgroup estimated based on the index $\hat{\theta}^\top X$ may be biased if the assumed linear model (2) is misspecified. Therefore, in this paper, we propose a subgroup treatment effect test, which is able to test the existence of a beneficial subgroup and does not require specifying the form of treatment effects.

III Subgroup Treatment Effects Test Based on Value Difference

III-A Problem Setup

Suppose we have i.i.d. data $O_i = (Y_i, A_i, X_i)$, $i = 1, 2, \ldots$, where $Y_i$, $A_i$, $X_i$ respectively denote the observed outcome, binary treatment indicator, and user covariates vector. Here, we consider a flexible generalized linear model:

$$g\{E(Y_i \mid A_i, X_i)\} = \mu(X_i) + \theta(X_i) A_i, \tag{4}$$

where baseline covariates effect $\mu(\cdot)$ and treatment-covariates interaction effect $\theta(\cdot)$ are completely unspecified functions, and $g(\cdot)$ is a prespecified link function. For example, we use the identity link for normal responses and the logit link for binary outcomes.

Assuming $Y$ is coded such that larger values indicate a better outcome, we consider the following test of subgroup treatment effects:

$$H_0: \theta(x) \leq 0 \text{ for all } x \quad \text{vs.} \quad H_1: \text{there exists } \Omega_0 \text{ such that } \theta(x) > 0 \text{ for all } x \in \Omega_0, \tag{5}$$

where $\Omega_0$ is the beneficial subgroup with $P(X \in \Omega_0) > 0$. Note that the above subgroup test is very different from the covariate-treatment interaction test considered in (3) and is much more challenging in several respects. First, both $\mu(\cdot)$ and $\theta(\cdot)$ are nonparametric and need to be estimated. Second, the considered hypotheses (5) are moment inequalities, which are nonstandard. Third, it allows a nonregular setting, i.e. $P\{\theta(X) = 0\} > 0$, which makes the associated inference difficult. Here, we propose a test based on the value difference between the optimal treatment rule and a fixed treatment rule.

Let $Y^*(a)$ denote the potential outcome had the subject received treatment $a$ ($a = 0, 1$), and $V(d) = E_{Y^*, X}[Y^*\{d(X)\}]$ denote a value function for a fixed treatment rule $d(\cdot)$, which maps the information in $X$ to treatment $\{0, 1\}$, where the subscript indicates that the expectation is taken with respect to the joint distribution of $(Y^*, X)$. Consider the value difference $\Delta = V(I\{\theta(X) > 0\}) - V(0)$ between the optimal treatment rule $I\{\theta(X) > 0\}$ and the treatment rule that assigns control to everyone, where $I\{\cdot\}$ is an indicator function. If the null hypothesis is true, no one would benefit from the treatment and the optimal treatment rule assigns everyone to control, and therefore the value difference is zero. However, if the alternative hypothesis is true, some people would have higher outcomes when assigned to treatment and thus the value difference is positive. In this way, the testing hypotheses (5) can be equivalently transformed into the following pair:

$$H_0: \Delta = 0 \quad \text{vs.} \quad H_1: \Delta > 0. \tag{6}$$

We make the following standard causal inference assumptions: (i) consistency, which states that the observed outcome is equal to the potential outcome under the actual treatment received, i.e. $Y = Y^*(0)(1 - A) + Y^*(1) A$; (ii) no unmeasured confounders, i.e. $Y^*(a) \perp A \mid X$ for $a = 0, 1$, which means that the potential outcome is independent of treatment given covariates; (iii) positivity, i.e. $P(A = a \mid X = x) > 0$ for $a = 0, 1$ and all $x$ such that $P(X = x) > 0$. Under these assumptions, it can be shown that

$$V(d) = E_X\left[ E\{Y \mid A = d(X), X\} \right].$$
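To make the value difference concrete, the following sketch approximates it by Monte Carlo for a toy model with an identity link. The baseline effect, the two interaction effects, and the covariate distribution are hypothetical choices for illustration only; `value_difference` is not a function from the paper.

```python
import numpy as np

def value_difference(mu, theta, x, inv_link=lambda eta: eta):
    """Monte Carlo value difference between the rule I{theta(x) > 0} and all-control."""
    m, t = mu(x), theta(x)
    gain = inv_link(m + t) - inv_link(m)     # E[Y | A=1, X] - E[Y | A=0, X]
    return np.mean(gain * (t > 0))           # only units with theta(x) > 0 switch to treatment

rng = np.random.default_rng(0)
x = rng.standard_normal(200_000)
mu = lambda x: 1.0 + x                       # hypothetical baseline effect

delta_null = value_difference(mu, lambda x: -np.abs(x), x)   # nobody benefits
delta_alt = value_difference(mu, lambda x: x, x)             # units with x > 0 benefit
print(delta_null, delta_alt)
```

In the first case no one benefits and the value difference is exactly zero; in the second it equals $E[X \, I\{X > 0\}] = 1/\sqrt{2\pi} \approx 0.399$ for a standard normal covariate, so the two hypotheses in the pair above are separated by the sign of a single scalar.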

III-B Algorithm and Implementation

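We estimate the value function of a given treatment rule $d$ by the augmented inverse probability weighted (AIPW) estimator [17, 18]:

$$\hat{V}_{AIPW}(d) = \frac{1}{n} \sum_{i=1}^{n} \left[ E\{Y \mid A = d(X_i), X_i\} + \frac{I\{A_i = d(X_i)\}}{p_{A_i}(X_i)} \left( Y_i - E\{Y \mid A_i, X_i\} \right) \right],$$

where $p_A(X) = A\,p(X) + (1 - A)\{1 - p(X)\}$ and $p(X) = P(A = 1 \mid X)$ is the propensity score. This estimator is unbiased, i.e. $E\{\hat{V}_{AIPW}(d)\} = V(d)$. Moreover, the AIPW estimator is doubly robust, that is, the estimator remains consistent if either the estimator of $E(Y \mid A, X)$ or the estimator of the propensity score $p(X)$ is consistent, which gives much flexibility. Then the value difference $\Delta$ is unbiasedly estimated by

$$\frac{1}{n} \sum_{i=1}^{n} \left[ \left\{ g^{-1}\big(\mu(X_i) + \theta(X_i)\big) - g^{-1}\big(\mu(X_i)\big) \right\} I\{\theta(X_i) > 0\} + \frac{I\{A_i = I(\theta(X_i) > 0)\} - I\{A_i = 0\}}{p_{A_i}(X_i)} \left\{ Y_i - g^{-1}\big(\mu(X_i) + \theta(X_i) A_i\big) \right\} \right]$$

under our assumed model (4), where $g^{-1}(\cdot)$ is the inverse of the link function. That is,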

| (7) |
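The AIPW value estimator and its double robustness can be checked numerically. The sketch below assumes an identity link, a simulated model with $\mu(x) = 1 + x$ and $\theta(x) = x$, and 50/50 randomization; these choices and the names `aipw_value`, `q_true`, and `q_wrong` are illustrative assumptions rather than anything specified in the paper.

```python
import numpy as np

def aipw_value(y, a, x, rule, q, p):
    """AIPW estimate of the value of `rule`.

    q(a, x): model for E[Y | A=a, X=x];  p(x): model for P(A=1 | X=x).
    """
    d = rule(x).astype(int)
    p_obs = np.where(a == 1, p(x), 1.0 - p(x))    # probability of the treatment actually received
    follows = (a == d).astype(float)              # 1 if the observed treatment agrees with the rule
    return np.mean(q(d, x) + follows / p_obs * (y - q(a, x)))

# Simulated data with an identity link; mu, theta and the randomization are illustrative.
rng = np.random.default_rng(0)
n = 200_000
x = rng.uniform(-1, 1, n)
a = rng.integers(0, 2, n)
y = (1 + x) + x * a + rng.standard_normal(n)

rule = lambda x: x > 0                      # treat only when theta(x) = x is positive
p = lambda x: np.full_like(x, 0.5)
q_true = lambda a, x: (1 + x) + x * a       # correctly specified outcome model
q_wrong = lambda a, x: np.zeros_like(x)     # badly misspecified outcome model

v_correct = aipw_value(y, a, x, rule, q_true, p)
v_robust = aipw_value(y, a, x, rule, q_wrong, p)   # still consistent because p is correct
print(v_correct, v_robust)                          # both should be near the true value 1.25
```

With the misspecified outcome model the estimator reduces to plain inverse probability weighting, which is noisier but still targets the same value as long as the propensity score is correct.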

Since , , and are usually unknown, we let data come in batches and estimate them based on previous batches of data.
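The batch-wise plug-in idea (fit the unknown functions on earlier batches, then evaluate on the newest batch) can be sketched as follows. This is a simplified illustration under assumptions that differ from the paper: it uses an identity link, a least-squares fit as a stand-in for the regression learner, and a toy data model; the helper names are hypothetical, and the code stops at the per-batch value-difference estimates without forming the test statistic.

```python
import numpy as np

def fit_linear(x, y):
    """Least-squares fit of y on (1, x); stands in for any regression learner."""
    design = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return lambda x_new: coef[0] + coef[1] * x_new

def batchwise_value_differences(batches):
    """For each batch k >= 2, estimate nuisances on batches 1..k-1 and evaluate
    the AIPW value-difference estimate on batch k (identity link)."""
    estimates = []
    for k in range(1, len(batches)):
        y0, a0, x0 = (np.concatenate(parts) for parts in zip(*batches[:k]))
        m0 = fit_linear(x0[a0 == 0], y0[a0 == 0])     # control-arm mean, i.e. mu(x)
        m1 = fit_linear(x0[a0 == 1], y0[a0 == 1])     # treatment-arm mean, i.e. mu(x) + theta(x)
        p_hat = a0.mean()                              # propensity: share treated so far
        y, a, x = batches[k]
        d = (m1(x) - m0(x) > 0).astype(int)            # estimated optimal rule on the new batch
        q_obs = np.where(a == 1, m1(x), m0(x))
        p_obs = np.where(a == 1, p_hat, 1 - p_hat)
        # AIPW for the rule d minus AIPW for the all-control rule
        weight = ((a == d).astype(float) - (a == 0).astype(float)) / p_obs
        estimates.append(np.mean((m1(x) - m0(x)) * d + weight * (y - q_obs)))
    return np.array(estimates)

rng = np.random.default_rng(0)

def make_batch(n=2000):
    """Toy batch: mu(x) = 1 + x, theta(x) = x, 50/50 randomization (all hypothetical)."""
    x = rng.uniform(-1, 1, n)
    a = rng.integers(0, 2, n)
    y = (1 + x) + x * a + rng.standard_normal(n)
    return y, a, x

batches = [make_batch() for _ in range(30)]
delta_hat = batchwise_value_differences(batches)
print(delta_hat.mean())    # the true value difference for this toy model is 0.25
```

Because each batch is scored with functions fitted only on earlier data, the per-batch estimates are conditionally centered given the past, which is the property a martingale-based argument relies on.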

| (8) |

| (9) |

Like SST, the key idea behind SUBTLE is to construct a statistic that has an (asymptotic) normal distribution with different means under the null hypothesis and alternative hypothesis (6), and then build the test statistics as a mixture of (asymptotic) probability ratios of it. Define as below:

| (10) |

Note that is an asymptotically unbiased estimator for by (7). (8) is a multiplier of and thus has the mean related to the value difference . In Section III-C we will show that has an asymptotic normal distribution with the same variance but different means under the null and local alternative hypotheses, so that our test statistic (9) is a mixture of asymptotic probability ratios of . As we discussed in Section II, the probability ratio has the same martingale structure as the likelihood ratio, so SUBTLE can be shown to control type I error with the decision rule (1). Algorithm 1 shows our complete testing procedure.

In step (ii) of Algorithm 1, we estimate and by respectively building a random forest on control observations and treatment observations in previous batches. The propensity score is estimated by computing the proportion of treatment observations () in previous batches. In step (iv) we estimate with , where is the sample variance of . Since the value difference is always nonnegative, in step (v) we choose a truncated normal as the mixture density. The normality of the mixture density guarantees a closed form for our test statistic:

where is the cumulative distribution function of a normal distribution with mean and variance . In theory, the choice of mixture density variance will not have any effect on the type I error control. Johari et al. [12] proved that an optimal in terms of stopping time is the prior variance times a correction for truncating, so we suggest estimating based on historical data. In the last step, we add a failure time to the decision rule to terminate the test externally and accept the null hypothesis if we ever reach it. If the null hypothesis is rejected, we can employ random forests to estimate based on all the data up to the time that the experiment ends. Then the estimated treatment effect naturally gives the beneficial subgroup .
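To show why a truncated normal mixture density still yields a closed form, the sketch below works out a generic single-statistic version: a statistic $z$ that is $N(0, s^2)$ under the null and $N(\delta, s^2)$ under the alternative, mixed over $\delta > 0$ with a $N(0, \tau^2)$ density truncated to the positive half-line. It is an illustration of the mechanism only, not the exact expression used by SUBTLE; the names `half_normal_mixture_ratio`, `s2`, and `tau2` are hypothetical, and the numerical integral is included purely as a check.

```python
import math

def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def half_normal_mixture_ratio(z, s2=1.0, tau2=1.0):
    """Mixture of probability ratios N(delta, s2) / N(0, s2) evaluated at z,
    weighted by a N(0, tau2) density truncated to delta > 0."""
    total = s2 + tau2
    untruncated = math.sqrt(s2 / total) * math.exp(tau2 * z**2 / (2.0 * s2 * total))
    post_mean = tau2 * z / total                 # mean of the Gaussian kernel in delta
    post_sd = math.sqrt(s2 * tau2 / total)       # its standard deviation
    return 2.0 * untruncated * norm_cdf(post_mean / post_sd)

def numeric_check(z, s2=1.0, tau2=1.0, upper=20.0, steps=200_000):
    """Midpoint-rule approximation of the same integral, for verification only."""
    h = upper / steps
    acc = 0.0
    for i in range(steps):
        delta = (i + 0.5) * h
        ratio = math.exp((2.0 * z * delta - delta**2) / (2.0 * s2))
        prior = 2.0 * math.exp(-delta**2 / (2.0 * tau2)) / math.sqrt(2.0 * math.pi * tau2)
        acc += ratio * prior * h
    return acc

print(half_normal_mixture_ratio(2.0), numeric_check(2.0))
```

The normal CDF factor arises because integrating a Gaussian kernel over the positive half-line gives a normal tail probability, which is what keeps the truncated mixture tractable.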

III-C Validity

In this section, we will show that our proposed test SUBTLE is able to control type I error at any time, that is, for any . Theorem III.1 gives the respective asymptotic distributions of under the null and local alternative hypotheses, which demonstrates that the test statistics is a mixture of asymptotic probability ratios weighted by . Proposition 1 shows that this asymptotic probability ratio has a martingale structure under when the sample size is large enough. Combining these two results with the demonstration in Section II-A, we can conclude that the type I error of SUBTLE is always controlled at .

The upcoming theorem relies on the following conditions:

- (C1) The number of batches diverges to infinity as sample size diverges to infinity.

- (C2) Lindeberg-like condition: for all

- (C3) .

- (C4) where is with replaced by the true value , but unchanged.

- (C5) .

Similar conditions are also used in the literature for studying the properties of doubly robust estimators. Their appropriateness was discussed in Section 7 of [19]. Both (C4) and (C5) rely on the convergence rate of the estimator of . Wager and Athey [20] showed that under certain constraints on the subsampling rate, random forest predictions converge at the rate , where is chosen to satisfy some conditions. We assume that under this rate, (C4) and (C5) hold.

Theorem III.1

For defined in (10), under conditions (C1)-(C5),

where represents convergence in distribution. In particular, as , under the null hypothesis , while under the local alternative , where is fixed.

Proposition 1

Let

and denote a filtration that contains all the historical information in the first batches . Then under the null hypothesis , is approximately equal to .

The proofs of the above results are given in the appendices.

IV Simulated Experiments

In this section, we evaluate the test SUBTLE on three metrics: type I error, power, and sample size. We first compare SUBTLE with SST in terms of type I error and power under five models in Section IV-A. Then in Section IV-B, we present the impact of noise covariates on their powers. Finally, in Section IV-C, we compare the stopping time of SUBTLE to the required sample size of a fixed-horizon value difference test. The significance level , initial batch size , failure time , and variance of mixture distribution are fixed for both SUBTLE and SST in all simulation settings unless otherwise specified.

IV-A Type I Error & Power

We consider five data generation models in the form of (4) with logistic link . Data are generated in batches with batch size and are randomly assigned to two groups with a fixed propensity score . Each experiment is repeated 1000 times to estimate the type I error and power. For the first four models, we consider

- Five covariates:

- Two baseline effects:

- Two treatment-covariates interaction effects:

Table I displays which covariates, , and are employed in each model. For model V, we consider the following high-dimensional setting:

where $c$ varies among $\{-1, 0, 0.6, 0.8, 1\}$, indicating the intensity of the value difference. When $c = -1$ and $0$, the null hypothesis is true and the type I error is estimated; while when $c = 0.6$, $0.8$, and $1$, the alternative is true and the power is estimated.

| Model | Input covariates | ||

|---|---|---|---|

| I | , | ||

| II | , , , , | ||

| III | , , , , | ||

| IV | , , , , |
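A data generator for a logistic model of the form (4) might look like the sketch below. The covariate distributions, baseline effect, and interaction effect are hypothetical stand-ins, since the exact simulation settings are not reproduced here; only the overall structure (logit link, randomized binary treatment, an intensity parameter that scales the interaction) follows the description above.

```python
import numpy as np

def expit(eta):
    """Inverse of the logit link."""
    return 1.0 / (1.0 + np.exp(-eta))

def generate_batch(n, c, rng, p=0.5):
    """One batch of (Y, A, X) from a logistic model g{E(Y|A,X)} = mu(X) + theta(X) A.
    The covariates, mu and theta below are hypothetical stand-ins."""
    x = np.column_stack([rng.standard_normal(n), rng.uniform(-1, 1, n)])
    a = rng.binomial(1, p, n)               # randomized treatment with fixed propensity p
    mu = -1.0 + 0.5 * x[:, 0]               # hypothetical baseline effect
    theta = c * (x[:, 1] > 0)               # hypothetical interaction: a benefit only if c > 0
    y = rng.binomial(1, expit(mu + theta * a))
    return y, a, x

rng = np.random.default_rng(0)
y, a, x = generate_batch(20_000, c=1.0, rng=rng)
benefit = x[:, 1] > 0
print(y[benefit & (a == 1)].mean(), y[benefit & (a == 0)].mean())
```

In this toy version, a non-positive intensity makes the null hypothesis true, while a positive one creates a beneficial subgroup defined by the second covariate.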

Table II shows that SUBTLE is able to control the type I error and achieve competitive detection power, especially under the high-dimensional setting (Model V); however, SST fails to control the type I error, especially when $c = -1$. This can be explained by two things: (i) the linearity of the model (2) is violated; (ii) SST is testing if there is a difference between treatment and control groups among any subjects, instead of the existence of a beneficial subgroup. Specifically, SST is testing if the least false parameter , to which the MLE of under model misspecification converges, is zero or not. We also perform experiments with batch size , and the results (Table III) are similar.

| Intensity $c$ | I (SUBTLE) | I (SST) | II (SUBTLE) | II (SST) | III (SUBTLE) | III (SST) | IV (SUBTLE) | IV (SST) | V (SUBTLE) | V (SST) |
|---|---|---|---|---|---|---|---|---|---|---|
| -1 | 0.009 | 0.695 | 0.002 | 0.589 | 0.003 | 0.224 | 0.004 | 0.411 | 0.002 | 0.008 |
| 0 | 0.015 | 0.134 | 0.010 | 0.023 | 0.006 | 0.095 | 0.010 | 0.023 | 0.006 | 0.038 |
| 0.6 | 0.323 | 0.564 | 0.491 | 0.513 | 0.269 | 0.389 | 0.424 | 0.425 | 0.559 | 0.170 |
| 0.8 | 0.623 | 0.845 | 0.878 | 0.900 | 0.719 | 0.723 | 0.822 | 0.824 | 0.925 | 0.390 |
| 1 | 0.911 | 0.974 | 0.988 | 0.996 | 0.952 | 0.943 | 0.985 | 0.982 | 0.997 | 0.742 |

| Intensity $c$ | I (SUBTLE) | I (SST) | II (SUBTLE) | II (SST) | III (SUBTLE) | III (SST) | IV (SUBTLE) | IV (SST) | V (SUBTLE) | V (SST) |
|---|---|---|---|---|---|---|---|---|---|---|
| -1 | 0.003 | 0.662 | 0.000 | 0.588 | 0.000 | 0.219 | 0.000 | 0.368 | 0.002 | 0.006 |
| 0 | 0.012 | 0.126 | 0.002 | 0.023 | 0.003 | 0.077 | 0.002 | 0.023 | 0.006 | 0.034 |
| 0.6 | 0.297 | 0.552 | 0.465 | 0.549 | 0.326 | 0.397 | 0.414 | 0.451 | 0.585 | 0.216 |
| 0.8 | 0.633 | 0.837 | 0.868 | 0.896 | 0.680 | 0.703 | 0.826 | 0.816 | 0.931 | 0.373 |
| 1 | 0.901 | 0.969 | 0.993 | 0.995 | 0.947 | 0.933 | 0.985 | 0.978 | 0.999 | 0.715 |

To evaluate the robustness of SUBTLE to the mixture density variance , we conduct additional simulations with . The data are generated from Model I with or , and batch size for computation efficiency. When we estimate the type I error, while when we estimate the power. The results in Table IV show that the type I error is always controlled below significance level 0.05 and the power has considerable robustness to the choice of .

| Mixture density variance | 0.0001 | 0.001 | 0.01 | 0.1 | 1 | 10 |
|---|---|---|---|---|---|---|
| Type I error | 0.003 | 0.024 | 0.021 | 0.016 | 0.005 | 0.002 |
| Power | 0.887 | 0.976 | 0.956 | 0.932 | 0.892 | 0.825 |

IV-B Noise Covariates

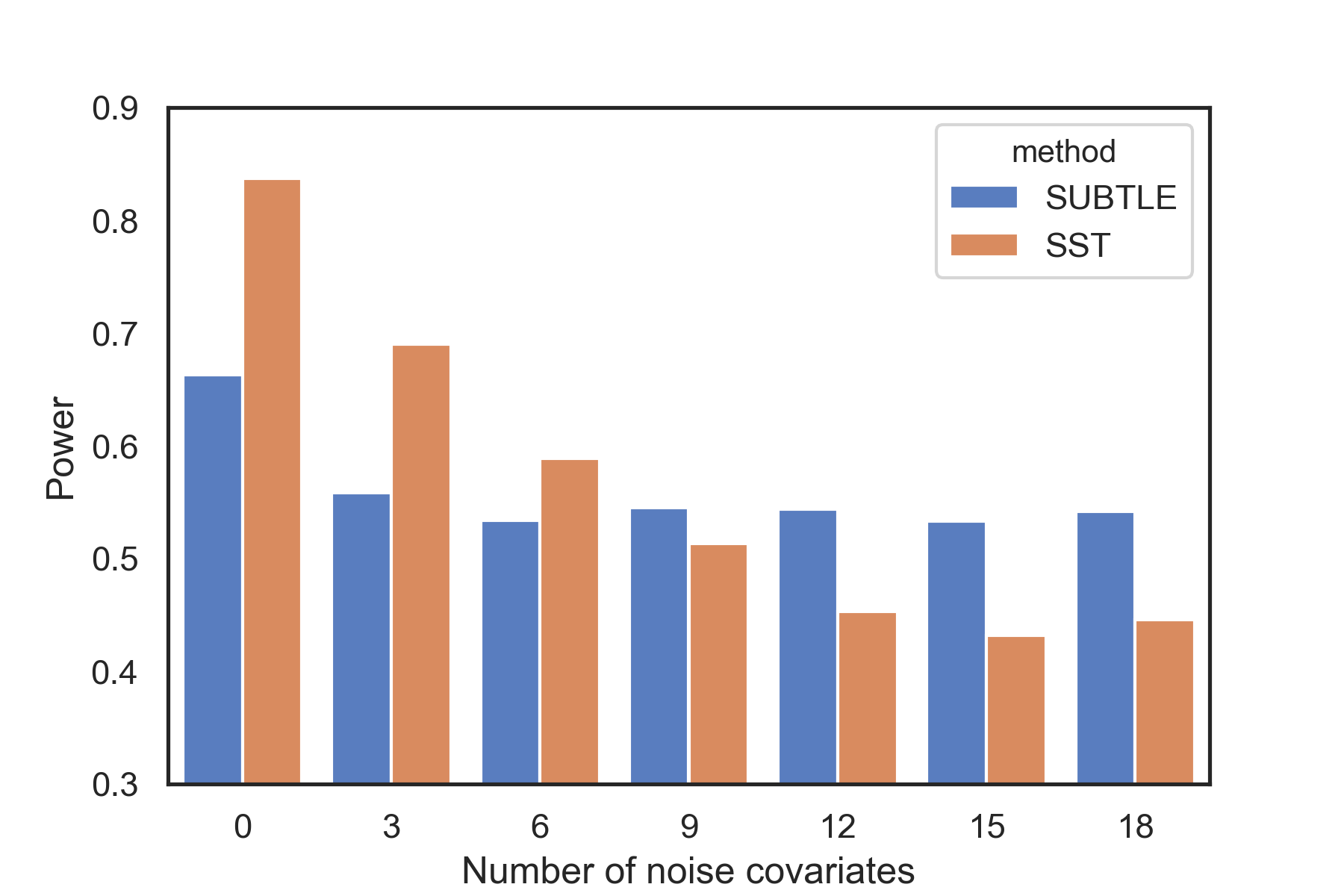

It is common in practice that a large number of covariates are incorporated in the experiment whereas the actual outcome only depends on a few of them. Some covariates do not have any effect on the response, as in Models II, III, and IV, and we call them noise covariates. In the following simulation, we explore the impact of noise covariates on the detection power of SUBTLE and SST. We choose Model I with as the base model, and at each step add three noise covariates which are respectively from normal N, uniform U, and Bernoulli Ber distributions. The batch size is set to for computational efficiency. Fig. 1 shows that the power of SST decreases steadily as the number of noise covariates increases, while the power of SUBTLE is more robust to the noise covariates.

IV-C Stopping Time

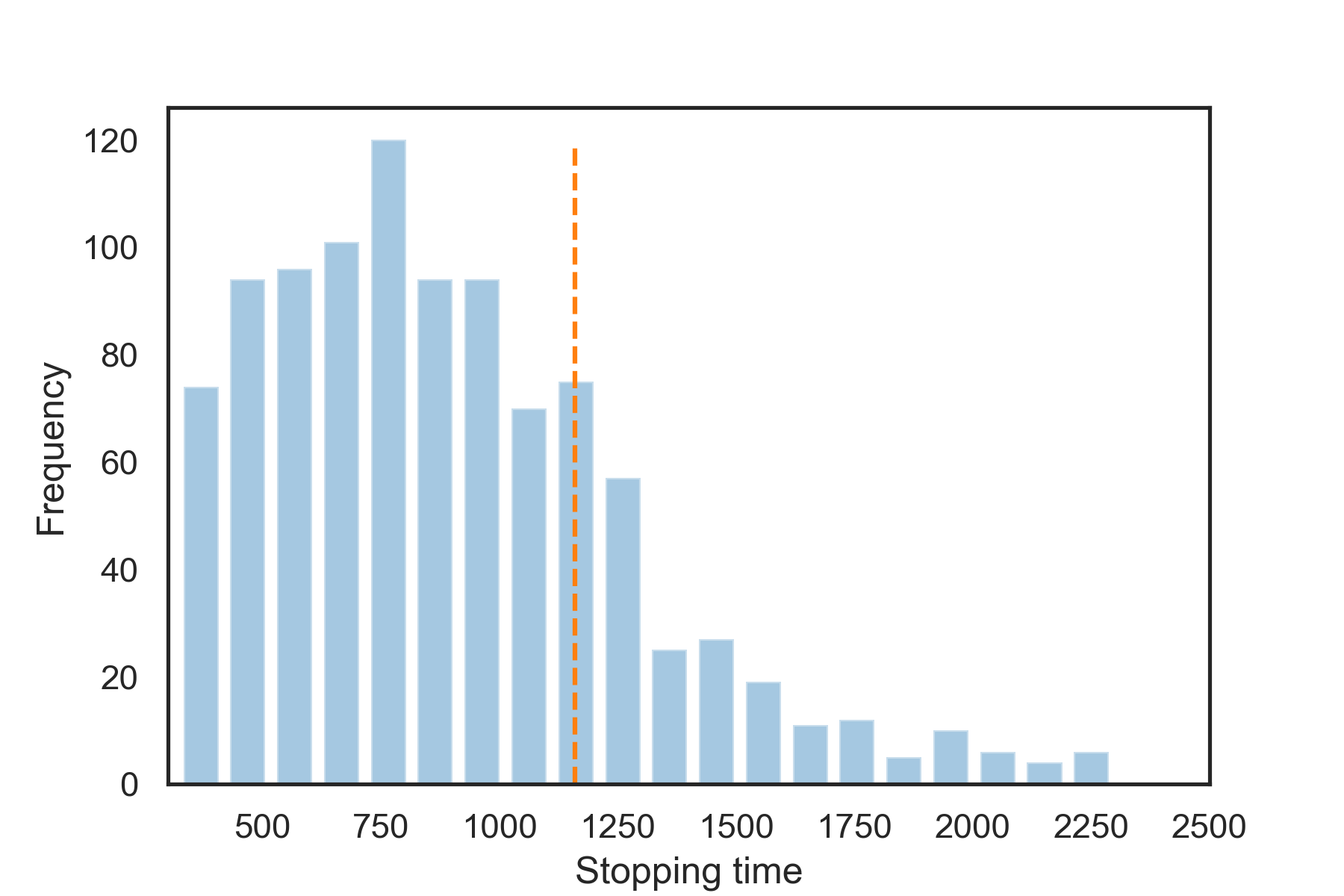

A key feature of sequential testing is that it has a smaller expected sample size than fixed-horizon testing. For comparison, we consider a fixed-horizon version of SUBTLE, which leverages Theorem III.1 and rejects the null hypothesis when for some predetermined , where denotes the quantile of the standard normal distribution. We assume , then the required number of batches can be calculated as , and thus the required sample size is . The true value difference can be directly estimated from data generated under the true model with two treatment rules, while is estimated by the sample variance of times for some fixed large . Here, we choose Model V with $c = 1$ and batch size . The stopping sample size of our sequential SUBTLE over 1000 replicates is shown in Fig. 2, and the dashed vertical line indicates the required sample size for the fixed-horizon SUBTLE with the same power of 0.997 (seen from Table II) under the same setting. We find that most of the time our sequential SUBTLE arrives at the decision earlier than the fixed-horizon version, but occasionally it can take longer. The distribution of the stopping time for sequential SUBTLE is right-skewed, which is in line with the findings in [12] and [2].
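For orientation, the textbook sample-size calculation for a one-sided z-test can be sketched as below. This is the generic formula for an estimator whose standard deviation scales as $\sigma / \sqrt{n}$, offered as an assumption-laden stand-in; it is not the paper's exact batch-count expression, and the function name and arguments are hypothetical.

```python
import math
from statistics import NormalDist

def fixed_horizon_sample_size(delta, sigma, alpha=0.05, power=0.8):
    """Sample size for a one-sided z-test of H0: delta = 0 vs. H1: delta > 0
    when the estimator has standard deviation sigma / sqrt(n)."""
    z_alpha = NormalDist().inv_cdf(1.0 - alpha)   # critical value of the test
    z_power = NormalDist().inv_cdf(power)         # quantile matching the target power
    return math.ceil(((z_alpha + z_power) * sigma / delta) ** 2)

print(fixed_horizon_sample_size(delta=0.5, sigma=1.0))
```

The required size grows with the inverse square of the effect, which is why a fixed-horizon design that must plan for a small value difference can be much slower than a sequential test when the true difference turns out to be larger.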

V Real Data Experiments

We use the Yahoo dataset to examine the performance of our SUBTLE, which contains user click events on articles over 10 days. Each event has a timestamp, a unique article id (variant), a binary click indicator (response), and four independent user features (covariates). We choose two articles (id=109520 and 109510) with the highest click-through rates as control and treatment, respectively. We set the significance level , initial batch size and batch size , and the failure time .

To demonstrate the false-positive control of our method, we conduct an A/A test and a permutation test using SUBTLE. For the A/A test, we only use data from article 109510 and randomly generate fake treatment indicators. Our method accepts the null hypothesis. For the permutation test, we use combined data from articles 109510 and 109520 and permute their response 1000 times while leaving the treatment indicator and covariates unchanged. The estimated false-positive rate is below the significance level.
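The permutation check described above can be sketched generically: permute the responses, keep the treatment indicators and covariates fixed, and record how often a test rejects. In the snippet below the test being evaluated is a simple placeholder (a one-sided two-sample z-test), not SUBTLE, and the simulated click data and all names are hypothetical.

```python
import numpy as np

def permutation_false_positive_rate(y, a, x, test_fn, n_perm=1000, seed=0):
    """Share of permuted data sets on which `test_fn` rejects.
    Permuting y breaks any link between response and (a, x), so H0 holds by construction."""
    rng = np.random.default_rng(seed)
    rejections = sum(bool(test_fn(rng.permutation(y), a, x)) for _ in range(n_perm))
    return rejections / n_perm

def one_sided_z_test(y, a, x, z_crit=1.645):
    """Placeholder test: difference in mean response, treatment minus control."""
    y1, y0 = y[a == 1], y[a == 0]
    se = np.sqrt(y1.var(ddof=1) / y1.size + y0.var(ddof=1) / y0.size)
    return (y1.mean() - y0.mean()) / se > z_crit

rng = np.random.default_rng(1)
n = 2000
x = rng.standard_normal(n)
a = rng.integers(0, 2, n)
y = rng.binomial(1, 0.05 + 0.02 * a)       # treatment has a real effect before permuting
rate = permutation_false_positive_rate(y, a, x, one_sided_z_test, n_perm=500)
print(rate)
```

Any test with valid type I error control should yield a rejection share close to or below its nominal level on the permuted data, regardless of the effect present in the original data.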

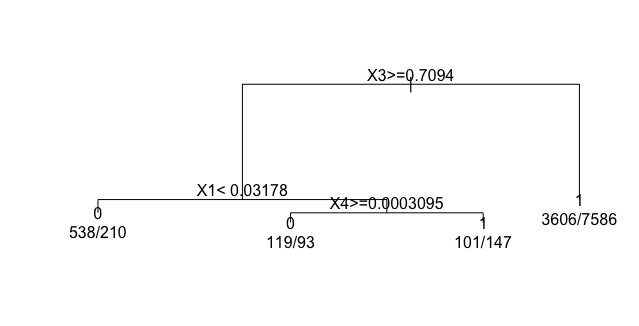

Then we test if there is any subgroup of users who would have a higher click-through rate on article 109510. In this experiment, SUBTLE rejects the null hypothesis with a sample size of 12400. We identify the beneficial subgroup by estimating with random forest on the first 12400 observations. To get a structured optimal treatment rule, we then build a classification tree on the same 12400 samples with a random forest estimator as true labels. The resulting decision tree (Fig. 3) suggests that the users in the subgroup defined by have a higher click-through rate on article 109510 compared with article 109520.

We then use the 50000 samples after the first 12400 samples as test data and compute the difference in click-through rates between articles 109510 and 109520 on the test data (overall treatment effect), and the same difference in the subgroup of the test data (subgroup treatment effect). We found that the subgroup treatment effect of 0.009 is larger than the overall treatment effect of 0.006, which shows that the identified subgroup has a larger treatment effect than the overall population.

We further compute the inverse probability weighted (IPW) estimator of the values of two treatment rules using the test data: a treatment rule that assigns everyone to article 109520 and the optimal treatment rule estimated by random forest. Their IPW estimates are respectively 0.043 and 0.049, which suggests that the estimated optimal treatment rule is better than the fixed rule that assigns all users to article 109520 in terms of click-through rate. It also implies that there exists a subgroup of the population that has a higher click-through rate on article 109510 compared with article 109520.
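A minimal version of the IPW value estimate for a treatment rule is sketched below. It assumes a known assignment probability (0.5 here, purely for illustration) and uses a tiny hand-made data set; `ipw_value` and the example arrays are hypothetical and unrelated to the Yahoo data.

```python
import numpy as np

def ipw_value(y, a, d, p_treat):
    """IPW estimate of the value of a rule with assignments d (0/1 per user).
    p_treat is P(A=1 | X), given as a scalar or a per-user array."""
    y, a, d = (np.asarray(v) for v in (y, a, d))
    p_obs = np.where(a == 1, p_treat, 1.0 - np.asarray(p_treat))
    # Keep only users whose observed arm matches the rule, reweighted by 1 / p_obs.
    return np.mean(y * (a == d) / p_obs)

y = np.array([1, 0, 1, 1])          # click indicators
a = np.array([1, 0, 0, 1])          # observed article (1 = treatment)
all_control = np.zeros(4, dtype=int)
rule = np.array([1, 0, 1, 1])       # hypothetical estimated rule
print(ipw_value(y, a, all_control, 0.5), ipw_value(y, a, rule, 0.5))
```

Comparing the two estimates on held-out data mirrors the comparison above: a larger value for the estimated rule than for the all-control rule suggests that some users do better under the treatment.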

VI Conclusion

In this paper, we propose SUBTLE, which is able to sequentially test if some subgroup of the population will benefit from the investigative treatment. If the null hypothesis is rejected, a beneficial subgroup can be easily identified based on the estimated optimal treatment rule. The validity of the test is supported by both theoretical and simulation results. The experiments also show that SUBTLE has high detection power, especially under high-dimensional settings, is robust to noise covariates, and allows quicker inference than fixed-horizon testing most of the time.

As with mSPRT and SST, the rejection condition of SUBTLE may never be reached in some cases, especially when the true effect size is negligible. Thus, a failure time is needed to terminate the test externally and accept the null hypothesis if we ever reach it. How to choose a failure time that balances waiting time and power needs to be studied in the future. Another future direction is the application of our test under adaptive allocation, where users have higher probabilities of being assigned to a beneficial variant based on previous observations. However, validity may no longer be guaranteed under adaptive allocation, and more theoretical investigation is needed.

References

- [1] R. Kohavi, R. Longbotham, D. Sommerfield, and R. M. Henne, “Controlled experiments on the web: survey and practical guide,” Data Mining and Knowledge Discovery, vol. 18, no. 1, pp. 140–181, 2009.

- [2] N. Ju, D. Hu, A. Henderson, and L. Hong, “A sequential test for selecting the better variant: Online a/b testing, adaptive allocation, and continuous monitoring,” in Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining. ACM, 2019, pp. 492–500.

- [3] J. P. Simmons, L. D. Nelson, and U. Simonsohn, “False-positive psychology: undisclosed flexibility in data collection and analysis allows presenting anything as significant,” Psychological Science, vol. 22, no. 11, pp. 1359–1366, 2011.

- [4] M. Goodson. (2014) Most winning a/b test results are illusory. [Online]. Available: http://www.datascienceassn.org/sites/default/files/Most%20Winning%20A-B%20Test%20Results%20are%20Illusory.pdf

- [5] A. Wald, “Sequential tests of statistical hypotheses,” The Annals of Mathematical Statistics, vol. 16, no. 2, pp. 117–186, 1945.

- [6] G. Schwarz, “Asymptotic shapes of bayes sequential testing regions,” The Annals of Mathematical Statistics, vol. 33, no. 1, pp. 224–236, 1962.

- [7] D. R. Cox, “Large sample sequential tests for composite hypotheses,” Sankhyā: The Indian Journal of Statistics, Series A, vol. 25, no. 1, pp. 5–12, 1963.

- [8] P. Armitage, C. McPherson, and B. Rowe, “Repeated significance tests on accumulating data,” Journal of the Royal Statistical Society: Series A (General), vol. 132, no. 2, pp. 235–244, 1969.

- [9] H. Robbins, “Statistical methods related to the law of the iterated logarithm,” The Annals of Mathematical Statistics, vol. 41, no. 5, pp. 1397–1409, 1970.

- [10] T. L. Lai, “Nearly optimal sequential tests of composite hypotheses,” The Annals of Statistics, vol. 16, no. 2, pp. 856–886, 1988.

- [11] ——, “Sequential analysis: some classical problems and new challenges,” Statistica Sinica, vol. 11, no. 2, pp. 303–351, 2001.

- [12] R. Johari, L. Pekelis, and D. J. Walsh, “Always valid inference: Bringing sequential analysis to a/b testing,” arXiv preprint arXiv:1512.04922, 2015.

- [13] M. Yu, W. Lu, and R. Song, “A new framework for online testing of heterogeneous treatment effect,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 06, 2020, pp. 10 310–10 317.

- [14] H. Robbins and D. Siegmund, “The expected sample size of some tests of power one,” The Annals of Statistics, vol. 2, no. 3, pp. 415–436, 1974.

- [15] M. Pollak, “Optimality and almost optimality of mixture stopping rules,” The Annals of Statistics, vol. 6, no. 4, pp. 910–916, 1978.

- [16] R. Johari, P. Koomen, L. Pekelis, and D. Walsh, “Peeking at a/b tests: Why it matters, and what to do about it,” in Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, 2017, pp. 1517–1525.

- [17] J. M. Robins, A. Rotnitzky, and L. P. Zhao, “Estimation of regression coefficients when some regressors are not always observed,” Journal of the American Statistical Association, vol. 89, no. 427, pp. 846–866, 1994.

- [18] B. Zhang, A. A. Tsiatis, E. B. Laber, and M. Davidian, “A robust method for estimating optimal treatment regimes,” Biometrics, vol. 68, no. 4, pp. 1010–1018, 2012.

- [19] A. R. Luedtke and M. J. Van Der Laan, “Statistical inference for the mean outcome under a possibly non-unique optimal treatment strategy,” The Annals of Statistics, vol. 44, no. 2, pp. 713–742, 2016.

- [20] S. Wager and S. Athey, “Estimation and inference of heterogeneous treatment effects using random forests,” Journal of the American Statistical Association, vol. 113, no. 523, pp. 1228–1242, 2018.

Appendices

A Proof of Theorem III.1

Let , , denote a filtration generated by observations in first batches , and denote an AIPW estimator for with only optimal decision rule estimated by previous batches:

Then

| (11) | |||

| (12) |

Above (11) follows by condition (C5) and (12) follows by condition (C4). For , let

It is obvious that for each , , , is a martingale with respect to the filtration . In particular, for all , and as by (C3). The conditional Lindeberg condition holds in (C2), so the martingale central limit theory for triangular arrays gives

Plugging it back into (12), we can get

| (13) |

B Proof of Proposition 1

We first simplify the formula of to:

where we denote with for simplicity. By the Delta method, we have

Then the expectation term can be calculated as

Since the true value difference is not very large in practice, we assume local alternative here as in Theorem III.1. Therefore,