Open-World Continual Learning: Unifying Novelty Detection and Continual Learning

Abstract

As AI agents are increasingly used in the real open world with unknowns or novelties, they need the ability to (1) recognize objects that they have learned before and detect items that they have never seen or learned, and (2) learn the new items incrementally to become more and more knowledgeable and powerful. (1) is called novelty detection or out-of-distribution (OOD) detection and (2) is called class incremental learning (CIL), which is a setting of continual learning (CL). In existing research, OOD detection and CIL are regarded as two completely different problems. This paper first provides a theoretical proof that good OOD detection for each task within the set of learned tasks (called closed-world OOD detection) is necessary for successful CIL. We show this by decomposing CIL into two sub-problems, within-task prediction (WP) and task-id prediction (TP), and proving that TP is correlated with closed-world OOD detection. The key theoretical result is that regardless of whether WP and OOD detection (or TP) are defined explicitly or implicitly by a CIL algorithm, good WP and good closed-world OOD detection are necessary and sufficient conditions for good CIL, which unifies novelty or OOD detection and continual learning (CIL, in particular). We call this traditional CIL closed-world CIL, as it does not detect future OOD data in the open world. The paper then proves that the theory can be generalized or extended to open-world CIL, the proposed open-world continual learning, which performs CIL in the open world and detects future or open-world OOD data. Based on the theoretical results, new CIL methods are also designed, which outperform strong baselines in CIL accuracy and in continual OOD detection by a large margin.

Keywords: Open-world learning, continual learning, OOD detection

Affiliations:
[1] University of Illinois Chicago, 851 S Morgan St, Chicago, Illinois 60607, United States
[2] ByteDance, Building 24, Zone B, 1999 Yishan Road, Shanghai 201100, China

1 Introduction

The currently dominant machine learning (ML) paradigm makes the closed-world assumption, which means that the classes of objects seen by the system in testing or deployment must have been seen during training [60, 59, 5, 14, 39], i.e., nothing novel occurs during testing or deployment. This assumption is invalid in practice because the real environment is an open world full of unknowns or novel objects. To thrive in the open world, an AI agent has to detect novelties and learn them incrementally so that it becomes more knowledgeable and adaptable over time. This process involves multiple activities, such as novelty/OOD detection, novelty characterization, adaptation, risk assessment, and continual learning of the detected novel items or objects [40, 41]. Novelty detection, also called out-of-distribution (OOD) detection, aims to detect unseen objects that the agent has not learned. On detecting novel objects or situations, the agent has to respond or adapt its actions. But in order to adapt, it must first characterize the novel object; without a characterization, the agent would not know how to respond or adapt. For example, it may characterize a detected novel object as looking like a dog. The agent may then react as it would react to a dog. In the process, the agent also constantly assesses the risk of its actions. Finally, it also learns to recognize the new object incrementally so that it will not be surprised when it sees the same kind of object in the future. This incremental learning is called continual learning (CL) or lifelong learning [13, 27]. Note that before learning, the agent must obtain labeled training data, which can be collected by the agent through interaction with the environment or human users. This aspect is outside the scope of this paper; see [40, 41] for details.

This paper focuses only on the key learning aspects of the open world scenario: (1) OOD/novelty detection and (2) continual learning, more specifically class incremental learning (CIL) (see the definition below). In the research community, (1) and (2) are regarded as two completely different problems, but this paper theoretically unifies them by proving that good OOD detection for each task within the set of learned tasks, which we call closed-world OOD detection, is in fact necessary for CIL. Below, we define the concepts of OOD detection and continual learning.

Out-of-distribution (OOD) detection: Given the training data $\mathcal{D} = \{(x^i, y^i)\}_{i=1}^{n}$, where $n$ is the number of data samples, $x^i \in \mathbf{X}$ is an input sample, and $y^i \in \mathbf{Y}$ (the set of all class labels in $\mathcal{D}$) is its class label, our goal is to build a classifier $f: \mathbf{X} \rightarrow \mathbf{Y} \cup \{O\}$ that can detect test instances that do not belong to any classes in $\mathbf{Y}$ (called OOD detection), which are assigned to the class $O$. $\mathbf{Y}$ is often called the set of in-distribution (IND) classes.

We also call this open-world OOD detection. As the definition shows, an OOD detection algorithm can also classify test instances belonging to $\mathbf{Y}$ into their respective classes, which is called IND classification, although most OOD detection papers do not report IND classification results.
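As a minimal sketch of this definition (an illustration, not a method from this paper), the classifier below performs IND classification by taking the argmax of a softmax over class logits and assigns the OOD class $O$ when the maximum softmax probability falls below a threshold. The threshold `tau`, the label string `"O"`, and the example logits are hypothetical choices.

```python
import math

OOD_LABEL = "O"  # hypothetical label standing in for the OOD class O


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def predict_with_reject(logits, classes, tau=0.5):
    """Return an IND class from `classes`, or OOD_LABEL if the maximum
    softmax probability is below the assumed threshold `tau`."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    if probs[best] < tau:
        return OOD_LABEL
    return classes[best]


classes = ["cat", "dog", "tiger"]
print(predict_with_reject([4.0, 0.5, 0.1], classes))  # confident: "cat"
print(predict_with_reject([1.0, 1.0, 1.0], classes))  # flat output: "O"
```

Maximum softmax probability is only one possible OOD score; the definition itself does not prescribe how test instances are scored or how the threshold is set.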

Continual learning (CL) aims to incrementally learn a sequence of tasks. Each task consists of a set of classes to be learned together (the set may contain only a single class). Once a task is learned, its training data (at least a majority of it) is no longer accessible. Thus, unlike multitask learning, in learning a new task, CL will not be able to use the data of the previous tasks. A major challenge of CL is catastrophic forgetting (CF), which refers to the phenomenon that in learning a new task, the neural network model parameters need to be modified, which may corrupt the knowledge learned for previous tasks in the network and cause performance degradation for the previous tasks [47]. Although many CL techniques have been proposed, they are mainly empirical. Limited theoretical work has been done on how to solve CL. This paper performs such a theoretical study about the necessary and sufficient conditions for effective CL. Two main CL settings have been extensively studied: class incremental learning (CIL) and task incremental learning (TIL) [68]. In CIL, the learning process builds a single classifier for all tasks/classes learned so far. In testing, a test instance from any class may be presented for the model to classify. No prior task information (e.g., task-id) of the test instance is provided. Formally, CIL is defined as follows.

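Class incremental learning (CIL). CIL learns a sequence of tasks, $1, 2, \ldots, T$. Let $T$ be the number of tasks that have been learned so far. Each task $k$ ($1 \le k \le T$) has a training dataset $\mathcal{D}_k = \{(x_k^i, y_k^i)\}_{i=1}^{n_k}$, where $n_k$ is the number of data samples in task $k$, $x_k^i \in \mathbf{X}$ is an input sample, and $y_k^i \in \mathbf{Y}_k$ (the set of all classes of task $k$) is its class label. All $\mathbf{Y}_k$'s are disjoint ($\mathbf{Y}_k \cap \mathbf{Y}_{k'} = \emptyset, \forall k \neq k'$) and $\bigcup_{k=1}^{T} \mathbf{Y}_k = \mathbf{Y}$. The goal of CIL is to construct a single predictive function or classifier $f: \mathbf{X} \rightarrow \mathbf{Y}$ that can identify the class label $y$ of each given test instance $x$ from the $T$ tasks.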

Based on CIL, we can also define the term closed-world OOD detection.

Closed-world OOD detection: Closed-world OOD detection for a given task $k$ among the $T$ tasks that have been learned so far is OOD detection that regards the classes $\mathbf{Y}_k$ of task $k$ as the IND classes and the classes of the other tasks ($\mathbf{Y}_{k'}$, $k' \neq k$) as the OOD classes.

From now on when we refer to OOD detection on its own (which is open-world OOD detection), we mean it is not limited to the learned tasks, as opposed to the closed-world OOD detection. Clearly, (open-world) OOD detection encompasses closed-world OOD detection, but not vice versa.
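The difference between the two notions can be made concrete with a small labeling sketch. The task layout and the helper name `closed_world_ood_label` are hypothetical; the point is only which test instances each notion covers.

```python
# Hypothetical task layout: task id -> set of its classes (disjoint across tasks).
TASK_CLASSES = {1: {"cat", "dog"}, 2: {"car", "truck"}}


def closed_world_ood_label(y, k, task_classes=TASK_CLASSES):
    """Label an instance of class y for closed-world OOD detection of task k.

    Returns 1 (IND) if y is a class of task k, 0 (OOD) if y belongs to another
    learned task, and None if y is from no learned task: such an instance is
    covered only by open-world OOD detection.
    """
    if y in task_classes[k]:
        return 1
    if any(y in classes for t, classes in task_classes.items() if t != k):
        return 0
    return None


print(closed_world_ood_label("dog", 1))    # 1: IND for task 1
print(closed_world_ood_label("truck", 1))  # 0: OOD for task 1, from task 2
print(closed_world_ood_label("zebra", 1))  # None: outside all learned tasks
```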

Unlike CIL, each task in TIL is a separate or independent classification problem. For example, one task could be to classify different breeds of dogs and another task could be to classify different types of animals (the tasks may not be disjoint). One model is built for each task in a shared network. In testing, the task-id of each test instance is provided and the system uses only the specific model for the task (dog or animal classification) to classify the test instance. Formally, TIL is defined as follows.

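Task incremental learning (TIL). TIL learns a sequence of tasks, $1, 2, \ldots, T$. Let $T$ be the number of tasks that have been learned so far. Each task $k$ ($1 \le k \le T$) has a training dataset $\mathcal{D}_k = \{((x_k^i, k), y_k^i)\}_{i=1}^{n_k}$, where $n_k$ is the number of data samples in task $k \in \mathbf{T} = \{1, 2, \ldots, T\}$, $x_k^i \in \mathbf{X}$ is an input sample, and $y_k^i \in \mathbf{Y}_k \subset \mathbf{Y}$ is its class label. The goal of TIL is to construct a predictor $f: \mathbf{X} \times \mathbf{T} \rightarrow \mathbf{Y}$ to identify the class label $y \in \mathbf{Y}_k$ for $(x, k)$ (the given test instance $x$ from task $k$).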
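To make the contrast between the two settings concrete, the sketch below assumes a hypothetical model that outputs one logit per class for every task learned so far. In TIL the task-id $k$ is given, so prediction is an argmax restricted to $\mathbf{Y}_k$; in CIL no task-id is available, so the argmax ranges over all of $\mathbf{Y}$. The task layout and logit values are made-up examples.

```python
# Hypothetical layout: task id -> list of class names (disjoint, as CIL requires).
TASKS = {
    1: ["cat", "dog"],
    2: ["car", "truck"],
}


def til_predict(logits_by_task, task_id):
    """TIL: the task-id is given, so only that task's classes compete."""
    logits = logits_by_task[task_id]
    best = max(range(len(logits)), key=lambda i: logits[i])
    return TASKS[task_id][best]


def cil_predict(logits_by_task):
    """CIL: no task-id, so all classes of all learned tasks compete."""
    best_class, best_logit = None, float("-inf")
    for task_id, logits in logits_by_task.items():
        for name, z in zip(TASKS[task_id], logits):
            if z > best_logit:
                best_class, best_logit = name, z
    return best_class


# A "dog" image whose task-2 head happens to produce a large logit:
logits = {1: [0.2, 2.0], 2: [0.1, 3.0]}
print(til_predict(logits, 1))  # "dog": correct when the task-id is known
print(cil_predict(logits))     # "truck": inter-task confusion without it
```

The second call illustrates why CIL is harder: even a perfect within-task classifier fails if scores from different tasks are not comparable, which is the inter-task class separation problem described in the next paragraph.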

This paper focuses on CIL, which involves incrementally learning new or novel object classes—a key aspect of open-world learning. While the proposed methods are also applicable to TIL, we do not address it in this paper. TIL is generally simpler, and several existing techniques can achieve it without CF [61, 69]. In contrast, CIL remains highly challenging due to the difficulty of Inter-task Class Separation (ICS), i.e., establishing decision boundaries between classes from the new task and those from previous tasks in learning the new task without accessing the training data of previous tasks.

Problem Statement (open-world continual learning): Open-world continual learning (OWCL) is defined as CIL with the OOD detection capability. We also call it open-world CIL or CIL+. At any time, the resulting open-world CIL model can classify test instances belonging to the classes in the tasks that have been learned so far to their respective classes and also detect OOD instances that do not belong to any of the learned classes so far.

Note that OOD detection in CIL+ is different from traditional OOD detection (which sees the full IND data together) because, in CIL+, the model does not see all the IND data together. Instead, the IND data comes in a sequence of tasks incrementally, and in learning each task, the model does not see any data (or only a very small sample) of the old or previous tasks.

Main contributions: This paper makes three main contributions. First, it theoretically proves the necessary and sufficient conditions for solving the CIL problem. A good closed-world OOD detection performance is one of the necessary conditions, which connects or unifies OOD detection and CIL. Since in this traditional CIL, the test instances are assumed to be from one of the tasks that have been learned, we call the existing CIL the closed-world CIL. Second, we prove that the theory can naturally be generalized or extended to the open-world CIL, which is the proposed open-world continual learning. Open-world CIL can perform CIL in the open world and detect OOD test data that do not belong to any of the tasks learned so far. Third, based on the theory, several new CIL algorithms are designed, which are also able to detect novel (or OOD) instances for the open-world continual learning (OWCL) setting. Note that from here onward, when we do not explicitly say open-world CIL, CIL means the traditional CIL.

Theory. We conduct a theoretical study of CIL, which is applicable to any CIL classification model. Instead of focusing on the traditional PAC generalization bound [53] or neural tangent kernel (NTK) [26], we focus on how to solve the CIL problem. We first decompose the CIL problem into two sub-problems in a probabilistic framework: Within-task Prediction (WP) and Task-id Prediction (TP). WP means that the prediction for a test instance is only made within the classes of the task to which the test instance belongs, which is basically the TIL problem. TP predicts the task-id. TP is needed because, in CIL, task-id is not provided at test time. This paper then proves based on cross-entropy loss that (i) the CIL performance is bounded by WP and TP performances, and (ii) TP and closed-world OOD detection performance bound each other. This paper further generalizes the result to open-world CIL (or CIL+). These results unify CIL and OOD detection.

Key theoretical results: Regardless of whether WP and TP or OOD detection are defined explicitly or implicitly by a closed-world or open-world CIL algorithm, (1) good WP and good TP or closed-world OOD detection are necessary and sufficient conditions for good closed-world CIL performance, and (2) good WP and good TP or open-world OOD detection are necessary and sufficient conditions for good open-world CIL performance. (This result applies to both batch/offline and online/stream CIL, and to CIL problems with blurry task boundaries, meaning that some training data of a task may come later together with a future task.)

The intuition behind the theory is simple: if a closed-world or open-world CIL model is perfect at detecting OOD samples for each task, which solves the ICS problem, then closed-world or open-world CIL reduces to WP, which is traditional single-task supervised learning for each task. Note that many OOD detection algorithms can also perform IND classification, which is WP.

New CIL Algorithms for OWCL. The theoretical result provides principled guidance for solving the (closed-world or open-world) CIL problem. Based on the theory, several new CIL methods are designed. (1) The first few methods integrate a TIL method and an OOD detection method, which outperform strong baselines in both the CIL and TIL settings by a large margin. This combination is attractive because TIL has achieved no forgetting, and we only need a strong OOD detection technique that can perform both IND prediction and OOD detection to learn each task to achieve strong CIL results. We do not propose a new OOD detection method as there are numerous such methods in the literature. We use two existing ones. (2) Another method is based on a pre-trained model and an OOD replay technique, which performs even better, outperforming existing baselines markedly in both CIL and OOD detection in the OWCL setting.

2 Related Work

Although a large number of algorithms have been proposed to solve the CIL problem, they are mainly empirical. Two papers have focused on the traditional PAC generalization bound [53] or NTK [26], but they do not show how to solve the CL problem. This paper focuses on how to solve the CIL problem. To the best of our knowledge, no existing work has proposed a theory on how to solve CIL, and none has connected CIL and OOD detection. Our work shows that a good CIL algorithm can naturally perform OOD detection in the open world. Below, we first survey four popular families of CL approaches, which are mainly designed to overcome catastrophic forgetting (CF). We then discuss related work on open-world learning.

Regularization-based methods prevent forgetting by restricting the learned parameters for previous tasks from being modified significantly by using a regularization term to penalize such changes [31, 76] or to regularize the learned representations or outputs so that they are not far from those of the previously learned network [37, 77].

Replay-based methods [56, 10, 7, 6, 72] mitigate forgetting by saving a small amount of training data from previous tasks in a memory buffer and jointly training the network using the current data and the previous task data saved in the memory. Some methods in this family also study which samples in the memory should be used in replaying [2] or which samples in the training data should be saved for later replaying [56, 43].

Generative methods construct a generative network to generate raw training data [62, 48, 3]. The generated data are used with the current task training data to jointly train the classification network. [77] generates features instead of raw data. The generated samples in these methods are used to prevent forgetting in both the generative network and the classification network.

Parameter-isolation methods [61, 69] train a set of task-specific parameters to protect the important parameters of each task from being updated, so they have almost no forgetting. A limitation of the approach is that the correct task-id of each test instance must be known to the system to select the corresponding task-specific parameters at inference. These methods are thus mainly used for task incremental learning (TIL). Some CIL methods also use this approach [1, 49, 54, 22], with separate mechanisms to predict the task-id (more on this below). However, their CIL performance is far below that of recent replay-based counterparts (see Sections 4.2 and 5.2 for details). Two of our proposed CIL methods also use parameter-isolation methods (HAT [61] and SupSup [69]) for TIL as one of their components.

Using a TIL method for CIL means that CIL is decomposed into WP and TP. Task-id prediction (TP) is the key challenge. For example, CCG [1] constructs an additional network to predict the task-id. iTAML [54] identifies the task-id of the test data in a batch. A serious limitation of this is that it requires the test data in a batch to belong to the same task. Our methods are different as they can predict for a single test instance at a time. HyperNet [49] and PR [22] propose an entropy-based task-id prediction method. SupSup [69] predicts task-id by finding the optimal superpositions at inference. However, these methods perform poorly because they either do not know that OOD detection is the key for accurate task-id prediction or their task models are not built for OOD detection. It is also important to note that our theory does not explicitly predict task-id. Instead, it uses the TP probability and WP probability for test prediction.

Several papers have explicitly or implicitly indicated the use of OOD detection for task-id prediction in continual learning. For example, the CIL method in [24] is based on one-class classification, which is OOD detection with only a single class as the in-distribution (IND) class. In [22], the authors proposed an uncertainty-based OOD detection framework for task-id prediction. Two specific methods were presented. One uses entropy to quantify the uncertainty (which has also been used in some other systems discussed above), and the other, called agree, selects the task that leads to the highest agreement in predictions across task models. Some related works did not explicitly connect CIL and OOD detection, but their methods implicitly imply the connection. For example, [72] uses a regularization similar to OOD detection, which employs the replay data from previous tasks as OOD samples. [67] proposed to train a VAE model for each class to be learned. It then estimates the likelihood and uses Bayes' rule to predict the class ($y$) of each test instance ($x$). Our work makes a theoretical contribution by formally connecting CIL and OOD detection and proving that, for good CIL performance, a good OOD detection capability for each task is necessary.

Open-world learning has been studied by many researchers [60, 59, 5, 14, 39, 41]. However, the existing research has mainly focused on novelty detection, also called open set recognition or out-of-distribution (OOD) detection. Some researchers have also studied learning the novel objects after they are detected and manually labeled [5, 14, 71, 25]. However, none of them perform continual learning, which has the additional challenges of catastrophic forgetting (CF) and inter-task class separation (ICS). Several researchers have also studied other related tasks in addition to novelty detection, e.g., characterization of novelties and adaptation to novelties to maximize task performance [46, 65]. Again, these works are not about continual learning. Excellent surveys of novelty or OOD detection and open-world learning can be found in [73, 51, 52, 25]. [16] performed novelty detection and also continual learning, but its continual learning uses a regularization-based method, which is quite weak because it suffers from serious forgetting. A position paper [33] recently presented some nice blue-sky ideas about open-world learning, but it does not propose or implement any algorithm.

Our proposed algorithms are quite different. In training, based on our theory, we use two existing OOD detection methods to verify that our theory can guide us to design new and much more effective CIL algorithms. In testing, our OOD detection is in the open-world continual learning (OWCL) setting, which has been described in the introduction section.

Several researchers have studied novel class discovery [17], which is defined as discovering the hidden classes in the detected novel or OOD instances. Our work does not perform this function. We assume that the training data for each new task is given. Performing automatic class label discovery is still very challenging as in many cases, the class assignments can be subjective and are determined by human users. For example, for a dog, whether it should just be labeled as a dog or a specific breed of dog is a subjective decision and depends on applications.

Some existing works have combined OOD detection and continual learning [16, 18, 57]. These papers use OOD score thresholds to determine OOD instances and also do continual learning afterward. However, their continual learning still assumes that the training data are given as it is hard to do real-time detection and learning. This is because, without human involvement, it is impossible to obtain novel class labels in general and verify the correctness of OOD detection results. Any error in OOD detection will propagate to the continual learning phase. [40, 41] reported a continual learning chatbot that can detect novel user utterances that the system does not understand and chat with the user through its novelty characterization mechanism to get the ground truth. However, this system is based on saving new/novel utterances and performing matching and retrieval in subsequent chatting. [46] proposed an integrated online architecture that combines and extends probabilistic programming and planning to (1) detect novelty, (2) incrementally characterize the novelty, and (3) continually adapt its task-based reasoning to the evolving understanding of the novelty to maximize task performance. However, this work is not about continual learning. [50] also reported a system for continuous emotion novelty detection.

3 A Theoretical Study on Solving CIL

This section presents our theory for solving CIL, which also covers novelty or OOD detection. It first shows that the CIL performance improves if the within-task prediction (WP) performance and/or the task-id prediction (TP) performance improve, and then shows that TP and OOD detection bound each other, which indicates that CIL performance is controlled by WP and OOD detection. This connects CIL and OOD detection. After that, we study the necessary conditions for a good CIL model, which include a good WP and a good TP or OOD detection. In the first four sub-sections, we focus on traditional CIL, which is limited to the tasks that have been learned so far and which we also call closed-world CIL. OOD detection in this context is also within the learned tasks and is called closed-world OOD detection (see Section 1). For simplicity of presentation, we will not add closed-world before CIL or OOD detection below. In Section 3.5, we generalize/extend the theory to open-world CIL or open-world continual learning, which also detects OOD data that do not belong to any of the tasks learned so far. Table 1 gives the list of acronyms used in the paper.

Table 1: Acronyms used in the paper.

| Acronym | Meaning |
|---|---|
| CL | Continual learning |
| CIL | Class incremental learning |
| TIL | Task incremental learning |
| OOD | Out-of-distribution |
| IND | In-distribution |
| WP | Within-task prediction |
| TP | Task-id prediction |
| CF | Catastrophic forgetting |
| OWCL | Open-world continual learning |
| NTK | Neural tangent kernel |
| AUC | Area Under the ROC Curve |

3.1 CIL Problem Decomposition

This sub-section first presents the assumptions made by CIL based on its definition and then proposes a decomposition of the CIL problem into two sub-problems. Assume that a CIL system has learned a sequence of tasks $\{(\mathbf{X}_k, \mathbf{Y}_k)\}_{k=1,\ldots,T}$ so far, where $\mathbf{X}_k$ is the domain of task $k$ and $\mathbf{Y}_k$ are the classes of task $k$, written as $\mathbf{Y}_k = \{\mathbf{Y}_{k,j}\}$, where $j$ indicates the $j$th class in task $k$. Let $\mathbf{X}_{k,j}$ be the domain of the $j$th class of task $k$, where $\mathbf{X}_k = \bigcup_j \mathbf{X}_{k,j}$. For accuracy, we will use $x \in \mathbf{X}_{k,j}$ instead of $\mathbf{Y}_{k,j}$ in the probabilistic analysis. Based on the definition of class incremental learning (CIL) (Section 1), the following assumptions are implied.

Assumption 1.

The domains of classes of the same task are disjoint, i.e., $\mathbf{X}_{k,j} \cap \mathbf{X}_{k,j'} = \emptyset, \forall j \neq j'$.

Assumption 2.

The domains of tasks are disjoint, i.e., $\mathbf{X}_k \cap \mathbf{X}_{k'} = \emptyset, \forall k \neq k'$.

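For any ground event $D$, the goal of a CIL problem is to learn $\mathbf{P}(x \in \mathbf{X}_{k,j} \mid D)$. This can be decomposed into two probabilities, the within-task IND prediction (WP) probability and the task-id prediction (TP) probability. The WP probability is $\mathbf{P}(x \in \mathbf{X}_{k,j} \mid x \in \mathbf{X}_k, D)$ and the TP probability is $\mathbf{P}(x \in \mathbf{X}_k \mid D)$. We can rewrite the CIL problem using WP and TP based on the two assumptions: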

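$$
\begin{align}
\mathbf{P}(x \in \mathbf{X}_{k_0,j_0} \mid D) &= \sum_{k=1}^{T} \mathbf{P}(x \in \mathbf{X}_{k_0,j_0} \mid x \in \mathbf{X}_k, D)\, \mathbf{P}(x \in \mathbf{X}_k \mid D) \tag{1}\\
&= \mathbf{P}(x \in \mathbf{X}_{k_0,j_0} \mid x \in \mathbf{X}_{k_0}, D)\, \mathbf{P}(x \in \mathbf{X}_{k_0} \mid D) \tag{2}
\end{align}
$$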

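where $k_0$ denotes a particular task and $j_0$ a particular class in that task. Eq. 1 follows from the law of total probability, and Eq. 2 holds because, by Assumption 2, $\mathbf{P}(x \in \mathbf{X}_{k_0,j_0} \mid x \in \mathbf{X}_k, D) = 0$ for every $k \neq k_0$.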
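The decomposition can be checked numerically. The sketch below assumes a hypothetical model that produces within-task logits for each task plus one task-level score per task, and defines (as an illustrative choice, not something the theory prescribes) WP as a softmax within each task and TP as a softmax over the task scores. Multiplying them as in Eq. 2 yields a valid distribution over all classes of all tasks.

```python
import math


def softmax(xs):
    m = max(xs)
    e = [math.exp(x - m) for x in xs]
    s = sum(e)
    return [v / s for v in e]


def cil_from_wp_tp(wp_logits, tp_logits):
    """Combine WP and TP as in Eq. 2:
    P(x in X_{k,j} | D) = P(x in X_{k,j} | x in X_k, D) * P(x in X_k | D).

    wp_logits: list over tasks; entry k holds the within-task logits of task k.
    tp_logits: one score per task from a hypothetical TP head.
    Returns a dict mapping (k, j) -> CIL probability.
    """
    tp = softmax(tp_logits)
    cil = {}
    for k, logits in enumerate(wp_logits):
        wp = softmax(logits)
        for j, p in enumerate(wp):
            cil[(k, j)] = p * tp[k]
    return cil


cil = cil_from_wp_tp(wp_logits=[[2.0, 0.0], [0.5, 0.5, 1.0]], tp_logits=[1.5, -0.5])
print(round(sum(cil.values()), 6))  # 1.0: a proper distribution over all classes
```

The sum equals one because each WP distribution sums to one and the TP weights sum to one, which is exactly the marginalization behind Eq. 1.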

Some remarks are in order about Eq. 2 and our subsequent analysis to set the stage.

Remark 1.

Eq. 2 shows that if we can improve either the WP or TP performance, or both, we can improve the CIL performance.

Remark 2.

It is important to note that our theory is not concerned with the learning algorithm or training process. But we will propose some concrete CIL algorithms based on the theoretical result in the experiment section.

Remark 3.

We note that the CIL definition and the subsequent analysis apply to tasks with any number of classes (including only one class per task) and to online CIL, where the training data for each task or class comes gradually in a data stream and may also cross task boundaries (blurry tasks [4]), because our analysis is based on an already-built CIL model after training. Regarding blurry task boundaries, suppose dataset 1 has classes {dog, cat, tiger} and dataset 2 has classes {dog, computer, car}. We can define task 1 as {dog, cat, tiger} and task 2 as {computer, car}. The dog data in dataset 2 is simply additional training data for the class dog that appears after task 1.
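The task construction in this example can be written as a short procedure. The sketch below is a hypothetical helper, not part of the paper's method: it assigns each class to the first dataset in which it appears and treats later occurrences as extra training data for an existing class, so the resulting tasks have disjoint class sets as CIL requires.

```python
def define_tasks(datasets):
    """Split a stream of datasets (each a list of class names) into CIL tasks
    with disjoint class sets. A class already owned by an earlier task is not
    re-added; its new samples count as extra training data for that class.

    Returns (tasks, extra): tasks is a list of class lists, and extra maps each
    dataset index to the previously seen classes it contributes data to.
    """
    seen = set()
    tasks, extra = [], {}
    for i, classes in enumerate(datasets):
        new = [c for c in classes if c not in seen]
        old = [c for c in classes if c in seen]
        if new:
            tasks.append(new)
        extra[i] = old
        seen.update(new)
    return tasks, extra


tasks, extra = define_tasks([["dog", "cat", "tiger"], ["dog", "computer", "car"]])
print(tasks)  # [['dog', 'cat', 'tiger'], ['computer', 'car']]
print(extra)  # {0: [], 1: ['dog']}
```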

Remark 4.

CIL = WP * TP in Eq. 2 means that when we have WP and TP (defined either explicitly or implicitly by implementation), we can find a corresponding CIL model defined by WP * TP. Similarly, when we have a CIL model, we can find the corresponding underlying WP and TP defined by their probabilistic definitions.

In the following sub-sections, we develop this further concretely to derive the sufficient and necessary conditions for solving the CIL problem in the context of cross-entropy loss as it is used in almost all supervised CIL systems.

3.2 CIL Improves as WP and/or TP Improve

As stated in Remark 2 above, the study here is based on a trained CIL model and is not concerned with the algorithm used to train the model. We use cross-entropy as the performance measure of a trained model, as it is the most popular loss function used in supervised CL. For experimental evaluation, we use accuracy, following CL papers. Denote the cross-entropy of two probability distributions $p$ and $q$ as

$$
H(p, q) \overset{\text{def}}{=} -\mathbb{E}_{p}[\log q] = -\sum_{i} p_i \log q_i. \tag{3}
$$

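For any $x \in \mathbf{X}$, let $y$ be the CIL ground-truth label of $x$, where $y_{k_0,j_0} = 1$ if $x \in \mathbf{X}_{k_0,j_0}$, and $y_{k,j} = 0$ otherwise, i.e., for all $(k,j) \neq (k_0,j_0)$. Let $\tilde{y}$ be the WP ground-truth label of $x$, where $\tilde{y}_{k_0,j_0} = 1$ if $x \in \mathbf{X}_{k_0,j_0}$, and $\tilde{y}_{k_0,j} = 0$ for all $j \neq j_0$. Let $\bar{y}$ be the TP ground-truth label of $x$, where $\bar{y}_{k_0} = 1$ if $x \in \mathbf{X}_{k_0}$, and $\bar{y}_k = 0$ for all $k \neq k_0$. Denote

$$
\begin{align}
H_{WP}(x) &= H\big(\tilde{y}, \{\mathbf{P}(x \in \mathbf{X}_{k_0,j} \mid x \in \mathbf{X}_{k_0}, D)\}_j\big), \tag{4}\\
H_{CIL}(x) &= H\big(y, \{\mathbf{P}(x \in \mathbf{X}_{k,j} \mid D)\}_{k,j}\big), \tag{5}\\
H_{TP}(x) &= H\big(\bar{y}, \{\mathbf{P}(x \in \mathbf{X}_k \mid D)\}_k\big), \tag{6}
\end{align}
$$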

where $H_{WP}$, $H_{CIL}$, and $H_{TP}$ are the cross-entropy values of WP, CIL, and TP, respectively. We now present our first theorem. The theorem connects CIL to WP and TP and suggests that with a good WP or TP, the CIL performance improves, as the upper bound on the CIL loss decreases.

Theorem 1.

If $H_{TP}(x) \le \delta$ and $H_{WP}(x) \le \epsilon$, we have $H_{CIL}(x) \le \epsilon + \delta$.

The detailed proof is given in A.1. This theorem holds regardless of whether WP and TP are trained together or separately. When they are trained separately, if WP is fixed and we let $\epsilon = H_{WP}(x)$, then $H_{CIL}(x) \le H_{WP}(x) + \delta$, which means that if TP is better, CIL is better. Similarly, if TP is fixed, we have $H_{CIL}(x) \le \epsilon + H_{TP}(x)$. When they are trained concurrently, there exists a functional relationship between $\epsilon$ and $\delta$ that depends on the implementation. But no matter what it is, when $\epsilon + \delta$ decreases, CIL gets better.
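Because the ground-truth labels are one-hot, each cross-entropy reduces to the negative log-probability of the true class, and by Eq. 2 the CIL loss of an instance equals the sum of its WP and TP losses; the bound in Theorem 1 is therefore attained with $\epsilon = H_{WP}(x)$ and $\delta = H_{TP}(x)$. The sketch below checks this on made-up probabilities (two tasks with two classes each); the numbers carry no meaning beyond illustration.

```python
import math


def cross_entropy(p, q):
    """H(p, q) = -sum_i p_i log q_i (Eq. 3); terms with p_i = 0 contribute 0."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)


def one_hot(n, i):
    return [1.0 if t == i else 0.0 for t in range(n)]


# Made-up example: 2 tasks x 2 classes; x belongs to task k0 = 1, class j0 = 0.
tp = [0.3, 0.7]                # P(x in X_k | D)
wp = [[0.6, 0.4], [0.9, 0.1]]  # P(x in X_{k,j} | x in X_k, D)
k0, j0 = 1, 0

cil = [wp[k][j] * tp[k] for k in range(2) for j in range(2)]  # Eq. 2, flattened
h_wp = cross_entropy(one_hot(2, j0), wp[k0])   # Eq. 4
h_tp = cross_entropy(one_hot(2, k0), tp)       # Eq. 6
h_cil = cross_entropy(one_hot(4, k0 * 2 + j0), cil)  # Eq. 5

print(abs(h_cil - (h_wp + h_tp)) < 1e-12)  # True
```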

Theorem 1 holds for any $x \in \mathbf{X} = \bigcup_{k} \mathbf{X}_k$ that satisfies $H_{TP}(x) \le \delta$ and $H_{WP}(x) \le \epsilon$. To measure the overall performance in expectation, we present the following corollary.

Corollary 1.

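Let $U(\mathbf{X})$ represent the uniform distribution on $\mathbf{X}$. i) If $\mathbb{E}_{x \sim U(\mathbf{X})}[H_{TP}(x)] \le \delta$, then $\mathbb{E}_{x \sim U(\mathbf{X})}[H_{CIL}(x)] \le \mathbb{E}_{x \sim U(\mathbf{X})}[H_{WP}(x)] + \delta$. Similarly, ii) if $\mathbb{E}_{x \sim U(\mathbf{X})}[H_{WP}(x)] \le \epsilon$, then $\mathbb{E}_{x \sim U(\mathbf{X})}[H_{CIL}(x)] \le \mathbb{E}_{x \sim U(\mathbf{X})}[H_{TP}(x)] + \epsilon$.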

The proof is given in A.2. The corollary is a direct extension of Theorem 1 in expectation. The implication is that given TP performance, CIL is positively related to WP. The better the WP is, the better the CIL is as the upper bound of the CIL loss decreases. Similarly, given WP performance, a better TP performance results in a better CIL performance. Due to the positive relation, we can improve CIL by improving either WP or TP using their respective methods developed in each area.

3.3 Task Prediction (TP) to OOD Detection

Building on Eq. 2, we have studied the relationship of CIL, WP, and TP in Theorem 1. We now connect TP and OOD detection. They are shown to bound each other up to a constant factor.

We again use cross-entropy to measure the performance of TP and OOD detection of a trained network, as in Section 3.2. To build the connection between $H_{TP}(x)$ and the OOD detection of each task, we first define the notation for OOD detection. We use $\mathbf{P}'_k(x \in \mathbf{X}_k \mid D)$ to represent the probability distribution predicted by the $k$th task's OOD detector. Notice that the task prediction (TP) probability distribution $\mathbf{P}(x \in \mathbf{X}_k \mid D)$ is a categorical distribution over $T$ tasks, while the OOD detection probability distribution $\mathbf{P}'_k(x \in \mathbf{X}_k \mid D)$ is a Bernoulli distribution. For any $x \in \mathbf{X}$, define

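$$
H_{OOD,k}(x) =
\begin{cases}
-\log \mathbf{P}'_k(x \in \mathbf{X}_k \mid D), & x \in \mathbf{X}_k,\\
-\log \mathbf{P}'_k(x \notin \mathbf{X}_k \mid D) = -\log\big(1 - \mathbf{P}'_k(x \in \mathbf{X}_k \mid D)\big), & x \notin \mathbf{X}_k.
\end{cases} \tag{7}
$$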

Note that the OOD detection here is closed-world OOD detection, but for simplicity of presentation, we still call it OOD detection below. In CIL, the OOD detection probability for a task can be defined as a function of the output values corresponding to the classes of that task. Examples of such a function are the sigmoid of the maximum logit value and the maximum softmax probability after rescaling to [0, 1]. It is also possible to define the OOD detector directly as a function of the task, instead of as a function of the output values of the task's classes, e.g., using the Mahalanobis distance. Theorem 2 shows that TP and OOD detection bound each other.
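The two example functions mentioned above can be sketched as follows. Both map the logits of one task's classes to a value in $[0, 1]$ that plays the role of $\mathbf{P}'_k(x \in \mathbf{X}_k \mid D)$. The linear rescaling of the maximum softmax probability from $[1/C, 1]$ to $[0, 1]$ (with $C$ the number of classes in the task) is one plausible reading of "rescaling to [0, 1]" and is an assumption here, not necessarily the exact transform used in the paper.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def ood_score_max_logit(task_logits):
    """Sigmoid of the maximum logit among task k's classes."""
    return sigmoid(max(task_logits))


def ood_score_msp(task_logits):
    """Maximum softmax probability over task k's classes, linearly rescaled
    from [1/C, 1] to [0, 1], where C is the number of classes in the task.
    The rescaling is an assumed choice for illustration."""
    c = len(task_logits)
    m = max(task_logits)
    msp = 1.0 / sum(math.exp(z - m) for z in task_logits)  # top class has exp(0) = 1
    if c == 1:
        return msp  # single-class task: MSP is always 1 and carries no signal
    return (msp - 1.0 / c) / (1.0 - 1.0 / c)


print(ood_score_max_logit([3.0, -1.0]))  # about 0.953: looks in-distribution
print(ood_score_msp([0.0, 0.0]))         # 0.0: uniform output, least confident
```

Note that the MSP score is uninformative for single-class tasks, which is one reason a task-level detector such as a distance-based score can be preferable in that case.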

Theorem 2.

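i) If $H_{TP}(x) \le \delta$, let $\mathbf{P}'_k(x \in \mathbf{X}_k \mid D) = \mathbf{P}(x \in \mathbf{X}_k \mid D)$; then $H_{OOD,k}(x) \le \delta$, $\forall k = 1, \ldots, T$. ii) If $H_{OOD,k}(x) \le \delta_k$, $k = 1, \ldots, T$, let $\mathbf{P}(x \in \mathbf{X}_k \mid D) = \frac{\mathbf{P}'_k(x \in \mathbf{X}_k \mid D)}{\sum_{k'} \mathbf{P}'_{k'}(x \in \mathbf{X}_{k'} \mid D)}$; then $H_{TP}(x) \le \big(\sum_k \mathbf{1}_{x \in \mathbf{X}_k} e^{\delta_k}\big)\big(\sum_k (1 - e^{-\delta_k})\big)$, where $\mathbf{1}_{x \in \mathbf{X}_k}$ is an indicator function.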

See A.3 for the proof. As we use cross-entropy, the lower the bound, the better the performance. The first statement (i) says that the OOD detection performance improves if the TP performance gets better (i.e., lower $\delta$). Similarly, the second statement (ii) says that the TP performance improves if the OOD detection performance on each task improves (i.e., lower $\delta_k$). Besides, since $\big(\sum_k \mathbf{1}_{x \in \mathbf{X}_k} e^{\delta_k}\big)\big(\sum_k (1 - e^{-\delta_k})\big)$ converges to $0$ as the $\delta_k$'s converge to $0$, in order of $O(\sum_k \delta_k)$, we further know that $H_{TP}$ and $\sum_k H_{OOD,k}$ are equivalent in quantity up to a constant factor.
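Part (ii) of Theorem 2 is constructive: TP is obtained by normalizing the per-task OOD detection probabilities. The sketch below applies that normalization to made-up detector outputs, computes $H_{OOD,k}$ from Eq. 7, and checks the bound with $\delta_k = H_{OOD,k}(x)$. For an instance from task $k_0$, the indicator sum reduces to $e^{\delta_{k_0}}$.

```python
import math


def h_ood(p_in, belongs):
    """Eq. 7: -log P'_k(x in X_k) if x is in task k, else -log(1 - P'_k(x in X_k))."""
    return -math.log(p_in) if belongs else -math.log(1.0 - p_in)


def tp_from_ood(p_in_list):
    """Theorem 2 (ii): P(x in X_k | D) = P'_k(x in X_k) / sum_k' P'_k'(x in X_k')."""
    total = sum(p_in_list)
    return [p / total for p in p_in_list]


# Made-up detector outputs P'_k(x in X_k | D) for T = 3 tasks; x is from task k0 = 0.
p_in = [0.9, 0.2, 0.05]
k0 = 0

deltas = [h_ood(p, k == k0) for k, p in enumerate(p_in)]
h_tp = -math.log(tp_from_ood(p_in)[k0])
# (sum_k 1[x in X_k] e^{delta_k}) * (sum_k (1 - e^{-delta_k})), indicator picks k0
bound = math.exp(deltas[k0]) * sum(1.0 - math.exp(-d) for d in deltas)

print(h_tp <= bound)  # True
```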

For the traditional CIL, Theorem 1 studied how CIL is related to WP and TP. Theorem 2 showed that TP and OOD detection bound each other. Now we explicitly give the upper bound of CIL in relation to WP and OOD detection of each task. The detailed proof can be found in A.5.

Theorem 3.

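If $H_{OOD,k}(x) \le \delta_k$, $k = 1, \ldots, T$, and $H_{WP}(x) \le \epsilon$, we have

$$
H_{CIL}(x) \le \epsilon + \Big(\sum_k \mathbf{1}_{x \in \mathbf{X}_k} e^{\delta_k}\Big)\Big(\sum_k \big(1 - e^{-\delta_k}\big)\Big),
$$

where $\mathbf{1}_{x \in \mathbf{X}_k}$ is an indicator function.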

3.4 Necessary Conditions for Improving CIL

In Theorem 1, we showed that good performances of WP and TP are sufficient to guarantee a good performance of CIL. In Theorem 3, we showed that good performances of WP and OOD detection are sufficient to guarantee a good performance of CIL. Again, for simplicity, OOD detection here refers to the closed-world OOD detection. For completeness, we study the necessary conditions of a well-performed CIL in this sub-section.

Theorem 4.

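If $H_{CIL}(x) \le \eta$, then there exist i) a WP, s.t. $H_{WP}(x) \le \eta$, ii) a TP, s.t. $H_{TP}(x) \le \eta$, and iii) an OOD detector for each task, s.t. $H_{OOD,k}(x) \le \eta$, $k = 1, \ldots, T$.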

The detailed proof is given in A.6. This theorem tells us that if a good CIL model is trained, then a good WP, a good TP, and a good OOD detector for each task are always implied. More importantly, by transforming Theorem 4 into its contrapositive, we have the following statements: If $H_{WP}(x) > \eta$ for any WP, then $H_{CIL}(x) > \eta$. If $H_{TP}(x) > \eta$ for any TP, then $H_{CIL}(x) > \eta$. If, for any OOD detector, $H_{OOD,k}(x) > \eta$ for some $k \in \{1, \ldots, T\}$, then $H_{CIL}(x) > \eta$. Regardless of whether WP and TP (or OOD detection) are defined explicitly or implicitly by a CIL algorithm, the existence of a good WP and the existence of a good TP or OOD detection are necessary conditions for good CIL performance. Note that the OOD detection here is closed-world OOD detection.
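The existence claims in Theorem 4 can be illustrated with one natural construction, assumed here for illustration (the proof in A.6 may proceed differently): marginalize the CIL probabilities over each task's classes to get TP, condition on the task to get WP, and reuse TP as every task's OOD detector. The sketch checks the three inequalities on made-up numbers.

```python
import math


def derive_wp_tp(cil):
    """Given CIL probabilities cil[k][j] = P(x in X_{k,j} | D), build
    TP_k = sum_j cil[k][j]            (marginalize over classes of task k)
    WP_k = [cil[k][j] / TP_k for j]   (condition on task k)
    """
    tp = [sum(row) for row in cil]
    wp = [[p / t for p in row] for row, t in zip(cil, tp)]
    return wp, tp


# Made-up CIL output over 2 tasks x 2 classes; x is in task k0 = 0, class j0 = 1.
cil = [[0.1, 0.6], [0.2, 0.1]]
k0, j0 = 0, 1

wp, tp = derive_wp_tp(cil)
h_cil = -math.log(cil[k0][j0])
h_wp = -math.log(wp[k0][j0])
h_tp = -math.log(tp[k0])
# Reuse TP as each task's OOD detector: P'_k(x in X_k) = tp[k], scored with Eq. 7.
h_ood = [-math.log(tp[k]) if k == k0 else -math.log(1.0 - tp[k]) for k in range(len(tp))]

print(h_wp <= h_cil, h_tp <= h_cil, all(h <= h_cil for h in h_ood))  # True True True
```

The inequalities hold in general for this construction: TP and WP each drop a factor that is at most one from the CIL probability, and for $k \neq k_0$, $1 - \text{TP}_k \ge \text{TP}_{k_0}$.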

Remark 5.

It is important to note again that our study in this section is based on a CIL model that has already been built. In other words, our study tells the CIL designers what should be achieved in the final model. Clearly, one would also like to know how to design a strong CIL model based on the theoretical results, which also considers catastrophic forgetting (CF). One effective method is to make use of a strong existing TIL algorithm, which can already achieve no or little forgetting (CF), and combine it with a strong OOD detection algorithm (as mentioned earlier, most OOD detection methods can also perform WP). Thus, any improved method from the OOD detection community can be applied to CIL to produce improved CIL systems (see Sections 4.2.3 and 4.2.4).

3.5 Generalization to Open-World Continual Learning

As mentioned at the beginning of this section, the first four subsections focus on traditional closed-world CIL. This subsection generalizes the theory to open-world CIL, denoted by CIL+. CIL+ is CIL with an additional pseudo-task, added on top of the learned tasks, that represents OOD detection beyond those tasks. We call it the OOD task. It has a single pseudo-class (called the OOD class) because the actual class of an OOD sample is unknown and therefore cannot be predicted. In this context, OOD detection refers to open-world OOD detection; for simplicity, we continue to use the term OOD detection.

We first note that Eq. 2 still applies because CIL+ only adds a new OOD task with one OOD class. Theorem 1 for closed-world CIL can be extended to open-world CIL (CIL+) by replacing $H_{CIL}$ with $H_{CIL+}$ and $H_{TP}$ with $H_{TP+}$; $H_{WP}$ stays the same because the definition of WP does not change in CIL+. The proof is identical to that of Theorem 1. The key extension concerns Theorem 2 for traditional closed-world CIL, so that test samples that do not belong to any of the already-learned tasks (i.e., that are OOD to all of them) can also be detected.

Theorem 2 can be generalized to CIL+ by changing the closed-world TP to open-world TP, denoted by TP+, which must now predict the additional OOD task.

We denote as the open-world OOD domain beyond . For any , define

| (8) | ||||

| (9) |

For any , define

| (10) |

where OOD+ denotes the open-world OOD detection.

It is clear that open-world OOD detection implies closed-world OOD detection, but the reverse is not true. Since the classification in the closed-world CIL is limited to the tasks learned so far, it cannot derive open-world OOD detection but only closed-world OOD detection. Thus, only closed-world OOD detection is necessary for the traditional closed-world CIL.

We now generalize Theorem 2 to the open-world CIL (CIL+) setting with the following Corollary. The proof is given in A.4.

Corollary 2.

i) If , let , then . ii) If , let and , then , where is an indicator function.

For Corollary 2, we have that converges to as ’s converges to in order of , and converges to in order of . Therefore, we know that and are equivalent in quantity up to a constant factor.

We can extend Theorem 3 to the open-world CIL+ using Corollary 2. By substituting with and with , we obtain a new upper bound for the open-world CIL, . The proof is trivially identical to the original proof of Theorem 3.

We can establish the same theorem as Theorem 4 for CIL+ by replacing with and with . Again, the proof is trivially identical to the original proof of Theorem 4.

The new theorems establish that a good TP+ or OOD+ (open-world OOD detection) and a good WP are necessary and sufficient for a good CIL+.

4 Proposed Approach 1: Combining TIL and OOD Detection

Based on the above theoretical result, we have designed two approaches to solving CIL that employ OOD detection methods, more precisely open-world OOD detection methods. Although theoretically speaking, open-world OOD detection implies closed-world OOD detection, in practical applications, we often do not need to distinguish whether an OOD detection method is a closed-world or an open-world method as they usually can be used for either closed-world or open-world CIL. We just want them to be as accurate as possible for the applications.

This section presents the first approach, which combines a task incremental learning (TIL) method and an OOD detection method. The approach does not save any training data from previous tasks. The OOD detection method here is an open-world method as it does not use any information from the other tasks learned in the CIL process. The next section presents the second approach, which is based on replay and needs to save some training data from previous tasks. The OOD detection method used there is a closed-world OOD detection method as it treats the replay data from previous tasks as OOD data in model building, but this method can also be used for open-world CIL.⁵

⁵ Note that this paper focuses on establishing a theoretical connection between novelty (or OOD) detection and class incremental learning (CIL). Our experiments show the validity of the theory. We also report OOD detection results using AUC, but this paper does not address real-time decision-making and learning, i.e., using OOD detection to detect each novel instance, acquire its class label, and incrementally learn it. That would involve setting an OOD score threshold to decide whether each instance is OOD and interacting with human users to acquire the class labels. Such user interactions would not provide a large number of labeled training examples, so the highly challenging few-shot continual learning setting would be required. We leave this to future work.

4.1 Combining a TIL Method and an OOD Detection Method

As mentioned earlier, several existing TIL methods can overcome CF. This proposed approach basically leverages the CF prevention ability in two TIL methods (HAT [61] and SupSup (Sup) [69]) and replaces their task learning methods with an OOD detection technique, called CSI [64], which can perform both within-task or IND prediction (WP) and OOD instance detection. Below, we first introduce the two TIL methods, HAT and SupSup, and the OOD detection method, CSI. The combinations give two new CIL methods, HAT+CSI and Sup+CSI. None of these methods needs to save any data from previous tasks.

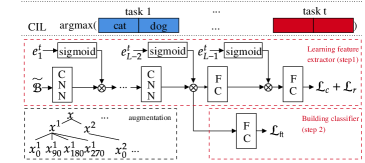

Figure 1 shows the overall training frameworks of HAT+CSI and Sup+CSI. Note that both HAT and Sup are multi-head methods (one head for each task) designed for task incremental learning (TIL).

4.1.1 HAT: Hard Attention Masks

To prevent forgetting the trained OOD detection model for each task in subsequent task learning, the hard attention mask (HAT) [61] for TIL is employed (which prevents forgetting in the feature extractor). Specifically, in learning a task, a set of embeddings is trained to protect the important neurons so that the corresponding parameters are not interfered with by subsequent tasks. The importance of a neuron is measured by the 0-1 pseudo-step function, where 0 indicates not important and 1 indicates important (and thus protected).

The hard attention mask is the output of a sigmoid function $\sigma$ with a scaling hyper-parameter $s$:

$$\mathbf{a}_t^l = \sigma\big(s\,\mathbf{e}_t^l\big), \tag{11}$$

where $\mathbf{e}_t^l$ is a learnable embedding at layer $l$ of task $t$. Since the step function is not differentiable, a sigmoid function with a large $s$ is used to approximate it: with a large $s$, the sigmoid is approximately a 0-1 step function. The attention is multiplied element-wise with the output $\mathbf{h}^l$ of layer $l$:

$$\mathbf{h}^l \leftarrow \mathbf{a}_t^l \otimes \mathbf{h}^l. \tag{12}$$

The $j$th element $a_{t,j}^l$ of the attention mask blocks (or unblocks) the information flow from neuron $j$ at layer $l$ if its value is 0 (or 1). When $a_{t,j}^l = 0$, the corresponding parameters in $\mathbf{W}^l$ and $\mathbf{b}^l$ can be changed freely because the output values are not affected. The neurons with non-zero mask values are necessary to perform the task and thus need protection from catastrophic forgetting.
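The masking of Eqs. 11 and 12 can be sketched in a few lines of plain Python. This is a minimal illustration, not the HAT implementation: the embedding and layer output are hypothetical values, and it only shows how a large $s$ turns the sigmoid into a near-binary gate.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def hat_mask(embedding, s):
    """Eq. 11 style attention mask: a = sigmoid(s * e), element-wise over one layer's task embedding."""
    return [sigmoid(s * e) for e in embedding]


def apply_mask(h, a):
    """Eq. 12 style gating: multiply the layer output element-wise by the mask."""
    return [hi * ai for hi, ai in zip(h, a)]


e_t = [2.0, -1.5, 0.3, -0.2]   # hypothetical learnable embedding for one layer of task t
h_l = [0.7, 1.2, -0.4, 0.9]    # hypothetical output of that layer

for s in (1.0, 400.0):          # small vs. large scaling hyper-parameter s
    a = hat_mask(e_t, s)
    print(f"s={s}: mask={[round(v, 3) for v in a]} gated={[round(v, 3) for v in apply_mask(h_l, a)]}")
```

With $s = 1$ the mask is soft and every unit leaks some signal; with $s = 400$ it is effectively 0/1, so units with negative embeddings are switched off for this task.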

We modify the gradients of parameters that are important for performing the previous tasks during training of task $t$ so that they are not interfered with. Denote the accumulated mask by

$$\mathbf{a}_{<t}^l = \max\big(\mathbf{a}_{<t-1}^l,\ \mathbf{a}_{t-1}^l\big), \tag{13}$$

where $\max$ is the element-wise maximum and the initial mask $\mathbf{a}_{<1}^l$ is a zero vector. It is a collection of mask values at layer $l$ in which a neuron has value 1 if it has ever been activated previously. The gradient of parameter $w_{ij,l}$ is modified as

$$\nabla w'_{ij,l} = \Big(1 - \min\big(a_{<t,i}^l,\ a_{<t,j}^{l-1}\big)\Big)\,\nabla w_{ij,l}, \tag{14}$$

where $a_{<t,i}^l$ is the $i$th unit of $\mathbf{a}_{<t}^l$. The gradient flow is blocked if both the neuron in the current layer and the neuron in the previous layer have been activated. We apply the mask to all layers except the last layer. The parameters in the last layer do not need to be protected as they are task-specific parameters.
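The accumulated mask and the gradient gating of Eqs. 13 and 14 can be illustrated on a single 3x3 weight matrix. This is a sketch with hypothetical binary masks; real HAT masks are near-binary sigmoid outputs and the gating is applied to every layer's gradients inside the optimizer step.

```python
def accumulate_mask(cum_mask, last_task_mask):
    """Accumulated mask update in the style of Eq. 13: element-wise max over all previous task masks."""
    return [max(c, a) for c, a in zip(cum_mask, last_task_mask)]


def gate_gradient(grad, cum_cur, cum_prev):
    """Scale the gradient of w_ij (unit i of layer l, unit j of layer l-1) by 1 - min(cum_cur[i], cum_prev[j]).

    With (near-)binary masks, the update is blocked only when both units were used by an earlier task.
    """
    return [[g * (1.0 - min(cum_cur[i], cum_prev[j])) for j, g in enumerate(row)]
            for i, row in enumerate(grad)]


# Hypothetical binary masks learned for task 1 at layer l (3 units) and layer l-1 (3 units).
cum_l = accumulate_mask([0, 0, 0], [1, 0, 1])      # the initial accumulated mask is all zeros
cum_prev = accumulate_mask([0, 0, 0], [1, 1, 0])

grad = [[1.0, 1.0, 1.0] for _ in range(3)]          # raw gradient while training task 2
gated = gate_gradient(grad, cum_l, cum_prev)
for row in gated:
    print(row)
```

Only connections between two previously used units are frozen; any weight touching a still-free unit keeps its full gradient, which is what lets later tasks reuse and extend the network.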

A regularization term is introduced to encourage sparsity in $\mathbf{a}_t$ and parameter sharing with $\mathbf{a}_{<t}$. The capacity of a network is depleted when $\mathbf{a}_{<t}^l$ becomes an all-ones vector in all layers. Although new neurons can be added to the network at any point in training for more capacity, we utilize resources more efficiently by minimizing the loss

$$\mathcal{L}_r = \lambda\,\frac{\sum_l \sum_i a_{t,i}^l\big(1 - a_{<t,i}^l\big)}{\sum_l \sum_i \big(1 - a_{<t,i}^l\big)}, \tag{15}$$

where $\lambda$ is a hyper-parameter. The final objective for training a comprehensive task network without forgetting is

$$\mathcal{L} = \mathcal{L}_{ce} + \mathcal{L}_r, \tag{16}$$

where $\mathcal{L}_{ce}$ is the cross-entropy loss. The overall framework of the algorithm is shown in Figure 1(a).
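The sparsity regularizer of Eq. 15 can be sketched as follows, assuming it takes the form used in the original HAT paper [61]. The masks, $\lambda$, and the cross-entropy value are hypothetical; the point is that reusing already-protected units costs nothing, while claiming free units is penalized.

```python
def hat_regularizer(task_masks, cum_masks, lam):
    """Sparsity regularizer in the style of the original HAT paper.

    task_masks[l][i]: mask value of unit i in layer l for the current task t.
    cum_masks[l][i]:  accumulated mask of previous tasks for the same unit.
    Units already used by earlier tasks (cum = 1) are free to reuse; only newly claimed units are penalized,
    normalized by how many units are still unclaimed.
    """
    num = sum(a * (1.0 - c)
              for layer_a, layer_c in zip(task_masks, cum_masks)
              for a, c in zip(layer_a, layer_c))
    den = sum(1.0 - c for layer_c in cum_masks for c in layer_c)
    return lam * num / den if den > 0 else 0.0


cum = [[1, 0, 0], [1, 1, 0]]          # hypothetical accumulated masks for two layers
reuse = [[1, 0, 0], [1, 1, 0]]        # current task only reuses protected units
fresh = [[1, 1, 1], [1, 1, 1]]        # current task also claims every free unit

ce_loss = 0.42                        # placeholder cross-entropy value
print(ce_loss + hat_regularizer(reuse, cum, lam=0.75))   # total loss as in Eq. 16
print(ce_loss + hat_regularizer(fresh, cum, lam=0.75))
```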

Note that for TIL, HAT needs the task-id of each test instance in order to choose the right task model for prediction or classification. However, by replacing the original model-building method for each task in HAT with the OOD detection method of CSI (more specifically, its contrastive loss in Eq. 19) during training, HAT+CSI does not need to know the task-id of each test instance at inference, which makes HAT+CSI suitable for CIL (class incremental learning). We describe the detailed prediction/classification method in Section 4.2.

4.1.2 SupSup: Supermasks in Superposition

SupSup (Sup) [69] is another highly effective method for overcoming forgetting in the TIL setting. Sup trains supermasks with the Edge Popup algorithm [55]. Specifically, given the fixed initial weights $W$ of a base network, it finds a binary mask $M_t$ for task $t$ that minimizes the cross-entropy loss

$$\mathcal{L}_{ce} = -\frac{1}{|\mathcal{D}_t|}\sum_{(x,y)\in\mathcal{D}_t} \log p(y \mid x, t), \tag{17}$$

where $\mathcal{D}_t$ is the training data for task $t$, and

$$p(y \mid x, t) = \mathrm{softmax}\big(f(x;\ W \odot M_t)\big)_y, \tag{18}$$

where $\odot$ indicates the element-wise product. The masks are obtained by selecting the top $k\%$ of entries in learned score matrices $V_t$. The value $k$ determines the sparsity of the mask $M_t$. The subnetwork found by the Edge Popup algorithm is indicated by different colors in Figure 1(b).
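The top-$k\%$ supermask selection can be sketched on a tiny weight matrix. This is an illustrative sketch: the weights and scores are hypothetical, and the straight-through training of the scores in Edge Popup [55] is not shown. Only the scores change per task; the base weights $W$ are never updated.

```python
def topk_mask(scores, k_percent):
    """Binary supermask: 1 for the top k% of entries of a score matrix, 0 elsewhere (ties broken by position)."""
    flat = [(v, i, j) for i, row in enumerate(scores) for j, v in enumerate(row)]
    n_keep = max(1, round(len(flat) * k_percent / 100))
    keep = {(i, j) for _, i, j in sorted(flat, reverse=True)[:n_keep]}
    return [[1 if (i, j) in keep else 0 for j in range(len(row))] for i, row in enumerate(scores)]


def masked_weights(weights, mask):
    """W ⊙ M: the fixed base weights are kept; the mask decides which entries survive for this task."""
    return [[w * m for w, m in zip(w_row, m_row)] for w_row, m_row in zip(weights, mask)]


W = [[0.5, -1.0, 2.0], [1.5, 0.3, -0.7]]          # hypothetical fixed base weights
scores_t = [[0.9, -0.1, 0.4], [0.2, 0.8, -0.5]]   # hypothetical learned scores for task t
M_t = topk_mask(scores_t, 50)                     # keep the top 50% of entries
print(M_t)
print(masked_weights(W, M_t))
```

Storing one binary mask per task is what lets Sup switch between task subnetworks without any interference among them.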

Like HAT, Sup is designed for TIL and needs the task-id of each test instance at inference. Given the task-id $t$, the system (referred to as Sup GG in the original Sup paper) uses the task-specific mask $M_t$ to obtain the classification output. As with HAT+CSI, by replacing the cross-entropy loss in mask finding with the OOD detection loss of CSI, Sup+CSI does not require the task-id of each test instance, which makes Sup+CSI applicable to CIL (class incremental learning). We discuss the detailed prediction/classification method in Section 4.2.

4.1.3 CSI: Contrasting Shifted Instances for OOD Detection

The OOD detection method CSI is based on contrastive learning [12, 29], data and class augmentations, and result ensembling [64]. The OOD training process is similar to that of contrastive learning. It consists of two steps: Step 1 learns the feature representation with the composite $g \circ h$, where $h$ is the feature extractor and $g$ is the projection to the contrastive representation; Step 2 learns/fine-tunes the linear classifier $f$, which maps the feature representation of $h$ to the label space (this classifier is the OOD model). This two-step training process is outlined in Figure 1(b). In the following, we first describe the two-step training process and then explain how to make a prediction with an ensemble method that further improves the prediction.

Step 1 (Contrastive Loss for Feature Learning). Supervised contrastive learning is used to try to repel data of different classes and align data of the same class more closely to make it easier to classify them. A key operation is data augmentation via transformations.

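Given a batch $\mathcal{B}$ of $N$ samples, each sample $x$ is first duplicated. Each copy then goes through three initial augmentations (horizontal flip, color changes, and Inception crop [63]) to generate two different views $x^1$ and $x^2$ (they keep the same class label as $x$). Denote the augmented batch by $\mathcal{B}'$, which now has $2N$ samples. In [21] and [64], it was shown that image rotations are effective for learning OOD detection models because rotated images can effectively serve as out-of-distribution (OOD) training data. For each augmented sample $x \in \mathcal{B}'$ with class $y$ of a task, we rotate $x$ by $90^\circ$, $180^\circ$, and $270^\circ$ to create three images, which are assigned three new classes $y_1$, $y_2$, and $y_3$, respectively. This results in a larger augmented batch $\tilde{\mathcal{B}}$. Since we generate three new images from each $x \in \mathcal{B}'$, the size of $\tilde{\mathcal{B}}$ is $4 \times 2N = 8N$. For each original class, we now have 4 classes. For a sample $x \in \tilde{\mathcal{B}}$, let $\tilde{\mathcal{B}}(x) = \tilde{\mathcal{B}} \setminus \{x\}$ and let $P(x) \subset \tilde{\mathcal{B}}(x)$ be the set of samples of the same class as $x$. The contrastive representation of a sample $x$ is $z_x = g(h(x, t))/\lVert g(h(x, t))\rVert$, where $t$ is the current task. In learning, we minimize the supervised contrastive loss

$$\mathcal{L}_{c} = \frac{1}{8N}\sum_{x\in\tilde{\mathcal{B}}} \frac{-1}{|P(x)|} \times \sum_{p\in P(x)} \log\frac{\exp(z_x \cdot z_p/\tau)}{\sum_{x'\in\tilde{\mathcal{B}}(x)}\exp(z_x\cdot z_{x'}/\tau)}, \tag{19}$$

where $\tau$ is a scalar temperature, $\cdot$ is the dot product, and $\times$ is multiplication. The loss is reduced by pushing apart the representations $z_x$ of different classes and pulling together those of the same class. $\mathcal{L}_c$ thus trains a feature extractor with good representations for learning an OOD classifier.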
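A minimal sketch of a supervised contrastive loss of the Eq. 19 form on a four-sample toy batch. The representations are hypothetical 2-D vectors standing in for $g(h(x,t))$, and averaging over anchors that have at least one positive is an implementation choice of this sketch. The labels are meant to be the rotation-augmented ones, so each (class, rotation) pair counts as its own class.

```python
import math


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def supcon_loss(reps, labels, tau=0.5):
    """Supervised contrastive loss in the form of Eq. 19 for a small batch.

    reps:   projected representations g(h(x)), one list of floats per sample (normalized here).
    labels: class labels after rotation augmentation, so (class, rotation) pairs are distinct labels.
    """
    z = [normalize(v) for v in reps]
    total, anchors = 0.0, 0
    for i in range(len(z)):
        positives = [p for p in range(len(z)) if p != i and labels[p] == labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        denom = sum(math.exp(dot(z[i], z[a]) / tau) for a in range(len(z)) if a != i)
        total += -sum(math.log(math.exp(dot(z[i], z[p]) / tau) / denom) for p in positives) / len(positives)
        anchors += 1
    return total / anchors


reps = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]   # toy 2-D representations
print(supcon_loss(reps, [0, 0, 1, 1]))   # labels agree with the geometry: lower loss
print(supcon_loss(reps, [0, 1, 0, 1]))   # labels disagree with the geometry: higher loss
```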

Since the feature extractor is shared across tasks in continual learning, protection is needed to prevent catastrophic forgetting. HAT and Sup use their respective techniques to protect their feature extractors from forgetting. Therefore, the cross-entropy losses in Eq. 17 (Sup) and Eq. 16 (HAT) are replaced by the contrastive loss in Eq. 19, while the forgetting prevention mechanisms still hold.

Step 2 (Fine-tuning the Classifier). Given the feature extractor $h$ trained with the loss in Eq. 19, we freeze $h$ and only fine-tune the linear classifier $f$, which is trained to predict the classes of task $t$ and the augmented rotation classes. $f$ maps the feature representation to the label space $\mathbb{R}^{|\mathcal{R}||\mathcal{C}_t|}$, where $|\mathcal{R}|$ is the number of rotation classes including the original data with $0^\circ$ rotation and $|\mathcal{C}_t|$ is the number of original classes in task $t$. We minimize the cross-entropy loss

$$\mathcal{L}_{ft} = -\frac{1}{|\tilde{\mathcal{B}}|}\sum_{(x,y)\in\tilde{\mathcal{B}}} \log \tilde{p}(y \mid x, t), \tag{20}$$

where ft indicates fine-tuning, and

$$\tilde{p}(y \mid x, t) = \mathrm{softmax}\big(f(h(x, t))\big)_y, \tag{21}$$

where $f(h(x,t)) \in \mathbb{R}^{|\mathcal{R}||\mathcal{C}_t|}$. The output includes the rotation classes. The linear classifier is trained to predict both the original and the rotation classes. Since an individual classifier is trained for each task and the feature extractor is frozen, no protection is necessary.

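Ensemble Class Prediction. We now discuss the prediction of the class label $y$ for a test sample $x$. Note that the network $f(h(x,t))$ in Eq. 21 returns logits for all rotation classes (including the original task classes). Note also that for each original class label $j$ (one of the $|\mathcal{C}_t|$ original classes) of a task $t$, we created three additional rotation classes. For class $j$, the classifier $f$ produces four output values from its four rotation-class logits, i.e., $f(h(x_0,t))_{j,0}$, $f(h(x_{90},t))_{j,90}$, $f(h(x_{180},t))_{j,180}$, and $f(h(x_{270},t))_{j,270}$, where 0, 90, 180, and 270 represent $0^\circ$, $90^\circ$, $180^\circ$, and $270^\circ$ rotations, respectively, and $x_0$ is the original $x$. We compute an ensemble output for each class $j$ of task $t$:

$$f(x)_{t,j} = \frac{1}{4}\sum_{\theta\in\{0,90,180,270\}} f\big(h(x_\theta, t)\big)_{j,\theta}. \tag{22}$$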
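The rotation ensemble of Eq. 22 can be sketched for a toy task with two original classes. The logit layout (rotation-major, so that column $r \cdot |\mathcal{C}_t| + j$ holds class $j$ under the $r$-th rotation) is a hypothetical choice made for this sketch; the logit values are made up.

```python
ROTATIONS = (0, 90, 180, 270)


def logit_index(j, r, num_classes):
    """Hypothetical layout: rotation-major, so column r * num_classes + j is class j under ROTATIONS[r]."""
    return r * num_classes + j


def ensemble_scores(logits_per_rotation, num_classes):
    """Eq. 22 style ensemble for one task.

    logits_per_rotation[r] is the (4 * num_classes)-dim logit vector produced for x rotated by ROTATIONS[r].
    For each original class j, average the logit of (j, ROTATIONS[r]) taken from the r-rotated input.
    """
    n_rot = len(ROTATIONS)
    return [sum(logits_per_rotation[r][logit_index(j, r, num_classes)] for r in range(n_rot)) / n_rot
            for j in range(num_classes)]


# Toy task with 2 original classes, hence 8 logits per rotated copy of x.
logits = [
    [3.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],  # x rotated by 0 degrees
    [0.0, 0.0, 2.0, 0.0, 0.0, 0.0, 0.0, 0.0],  # x rotated by 90 degrees
    [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0],  # x rotated by 180 degrees
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 2.0, 2.0],  # x rotated by 270 degrees
]
print(ensemble_scores(logits, num_classes=2))  # [2.0, 1.0]
```

Each rotated copy of the test image is scored only by the logit of the matching rotation class, so the four views vote consistently for the same original class.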

4.2 Experiments

We now present the experimental results of the combination techniques HAT+CSI and Sup+CSI for class incremental learning (CIL). We also use another OOD detection method, ODIN [38], to show that a better OOD detection method leads to better CIL results. We do not conduct extensive experiments on ODIN as it is much weaker than CSI in terms of OOD detection. Note that we do not report the OOD detection results of HAT+CSI and Sup+CSI in the open world during the continual learning process, as the proposed method MORE in the next section performs better.

4.2.1 Experimental Datasets and Baselines

Datasets and CIL tasks: We use three standard image classification benchmark datasets and construct five different CIL experiments.

1. CIFAR-10 [32]: This dataset consists of 32x32 color images of 10 classes with 50,000 training and 10,000 testing samples. We construct an experiment (C10-5T) of 5 tasks with 2 classes per task.

2. CIFAR-100 [32]: This dataset consists of 32x32 color images of 100 classes with 50,000 training and 10,000 testing samples. We construct two experiments of 10 tasks (C100-10T) and 20 tasks (C100-20T), where each task has 10 classes and 5 classes, respectively.

3. Tiny-ImageNet [34]: This is an image classification dataset with 64x64 color images of 200 classes with 100,000 training and 10,000 validation samples. Since the dataset does not provide labels for testing data, we use the validation data for testing. We construct two experiments of 5 tasks (T-5T) and 10 tasks (T-10T) with 40 classes per task and 20 classes per task, respectively.

Baselines: We use 18 diverse continual learning baselines:

1. One projection method (OWM [75]).

4.2.2 Training Details and Evaluation Metrics

Training Details. For the backbone structure, we follow [69, 77, 6] and use ResNet-18 [19]. For CIFAR-100 and Tiny-ImageNet, the number of channels is doubled to fit more classes. For all baselines, the same ResNet-18 backbone architecture is employed except for OWM and HyperNet, for which we use their original architectures. OWM uses AlexNet. It is not obvious how to apply its orthogonal projection technique to the ResNet structure. HyperNet uses ResNet-32 and we are unable to replace it due to model initialization arguments unexplained in the original paper. For the replay methods, we use the memory buffer of 200 for CIFAR-10 and 2000 for CIFAR-100 and Tiny-ImageNet as in [56, 6]. We use the hyper-parameters suggested by the authors. If we cannot reproduce any result, we use 10% of the training data as a validation set to grid-search for good hyper-parameters. For our proposed methods, we report the hyper-parameters in F. All the results are averages over 5 runs with random seeds.

Evaluation Metrics.

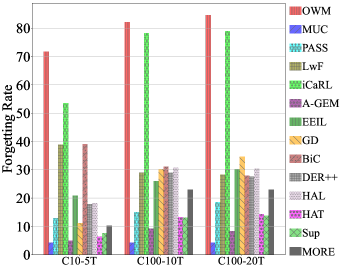

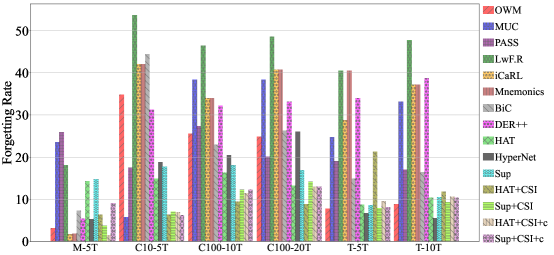

1. Average classification accuracy over all classes after learning the last task. The final class prediction depends on the prediction method (see below). We also report the forgetting rate in G.

2. Average AUC (Area Under the ROC Curve) over all task models for the evaluation of OOD detection. AUC is the main measure used in OOD detection papers. Using this measure, we show that a better OOD detection method results in better CIL performance. Let $AUC_k$ be the AUC score of task $k$. It is computed by using only the model (or classes) of task $k$ to score the test data of task $k$ as the in-distribution (IND) data and the test data from other tasks as the out-of-distribution (OOD) data. The average AUC score is $AUC = \frac{1}{n}\sum_{k=1}^{n} AUC_k$, where $n$ is the number of tasks.
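The average-AUC metric can be sketched with the rank (Mann-Whitney) form of AUC: the probability that a random IND sample receives a higher score than a random OOD sample. The scores below are hypothetical outputs of each task's model (for example, its maximum softmax value); in practice a library routine would be used on the full test sets.

```python
def auc(ind_scores, ood_scores):
    """AUC as P(random IND score > random OOD score), counting ties as 1/2."""
    wins = 0.0
    for s_in in ind_scores:
        for s_out in ood_scores:
            wins += 1.0 if s_in > s_out else 0.5 if s_in == s_out else 0.0
    return wins / (len(ind_scores) * len(ood_scores))


def average_auc(per_task):
    """per_task[k] = (scores of task k's test data, scores of other tasks' test data), both from task k's model."""
    return sum(auc(ind, ood) for ind, ood in per_task) / len(per_task)


per_task = [
    ([0.9, 0.8], [0.1, 0.85]),   # task 1: one OOD sample outscores one IND sample
    ([0.7], [0.2, 0.3]),         # task 2: perfect separation
]
print(average_auc(per_task))     # 0.875
```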

It is not straightforward to change existing CL algorithms to include a new OOD detection method that needs training, e.g., CSI, except for TIL (task incremental learning) methods like HAT and Sup. For HAT and Sup, we can simply switch their methods for learning each task with CSI (see Section 4.1.1 and Section 4.1.2).

Prediction Methods. Following the theoretical result in Section 3, we use Eq. 2 to perform the final prediction. The first probability (WP) in Eq. 2 is easy to obtain as we can simply use the softmax values of the classes in each task. However, the second probability (TP) in Eq. 2 is tricky because each task is learned without the data of other tasks. There are many options. We take the following approaches for prediction (which are special cases of Eq. 2, see below):

1. For approaches that use a single classification head covering all classes learned so far, we predict as follows (which is also the approach taken by existing papers):

$$\hat{y} = \arg\max f(x), \tag{23}$$

where $f(x)$ is the logit output of the network.

2. For multi-head methods (e.g., HAT, HyperNet, and Sup), which use one head for each task, we use the concatenated output:

$$\hat{y} = \arg\max \bigoplus_{1\le k\le t} f(x)_k, \tag{24}$$

where $\bigoplus$ indicates concatenation and $f(x)_k$ is the output of task $k$.⁶

⁶ The Sup paper proposed a one-shot task-id prediction method that assumes the test instances come in a batch and all belong to the same task, as in iTAML. We assume a single test instance per batch. Under this assumption, its task-id prediction results in an accuracy of 50.2 on C10-5T, which is much lower than the 62.6 obtained using Eq. 24. The task-id prediction of HyperNet also works poorly: its accuracy is 49.34 on C10-5T, while it is 53.4 using Eq. 24. PR uses entropy to find the task-id. Among the many variants of PR, we use the best-performing variant for each dataset under the exemplar-free setting with a single sample per batch at testing (i.e., no PR-BW).

These methods (in fact, they are the same method used in two different settings) are a special case of Eq. 2 if we define as , where is the sigmoid. Hence, the theoretical results in Section 3 are still applicable. We present a detailed explanation of this prediction method and some other options in C. These two approaches work quite well.
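The two prediction rules of Eqs. 23 and 24 can be sketched directly. The head outputs are hypothetical; the multi-head version also maps the winning index of the concatenation back to a (task, within-task class) pair, which is how the rule implicitly performs task-id prediction.

```python
def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def predict_single_head(logits):
    """Eq. 23: a single head covers all classes learned so far; return the global class index."""
    return argmax(logits)


def predict_multi_head(head_logits):
    """Eq. 24: concatenate the heads of tasks 1..t, take the argmax, and map it back to (task, class)."""
    concat = [v for head in head_logits for v in head]
    g = argmax(concat)
    offset = 0
    for k, head in enumerate(head_logits):
        if g < offset + len(head):
            return k, g - offset
        offset += len(head)


heads = [[1.2, 0.3], [2.5, -0.1], [0.4, 2.2]]   # three tasks with two classes each
print(predict_multi_head(heads))               # (1, 0): task 1, its first class
```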

4.2.3 Better OOD Detection Produces Better CIL Performance

The key theoretical result in Section 3 is that better OOD detection will produce better CIL performance. We compare a weaker OOD method ODIN with the strong CSI. ODIN is a post-processing method for OOD detection [38]. Note that it does not always improve the OOD detection performance compared to without the ODIN post-processing (see below).

| Method | AUC (Original) | AUC (ODIN) | CIL (Original) | CIL (ODIN) |
|---|---|---|---|---|
| OWM | 71.31 | 70.06 | 28.91 | 28.88 |
| MUC | 72.69 | 72.53 | 30.42 | 29.79 |
| PASS | 69.89 | 69.60 | 33.00 | 31.00 |
| LwF | 88.30 | 87.11 | 45.26 | 51.82 |
| A-GEM | 78.01 | 79.00 | 9.29 | 13.48 |
| EEIL | 83.37 | 79.73 | 48.99 | 41.74 |
| GD | 85.37 | 82.98 | 49.67 | 47.28 |
| BiC | 87.89 | 86.73 | 52.92 | 48.65 |
| DER++ | 85.99 | 88.21 | 53.71 | 55.29 |
| HAL | 64.21 | 64.83 | 15.59 | 21.01 |
| HAT | 77.72 | 77.80 | 41.06 | 41.21 |
| HyperNet | 71.82 | 72.32 | 30.23 | 30.83 |
| Sup | 79.16 | 80.58 | 44.58 | 46.74 |

Applying ODIN. We first train the baseline models using their original algorithms, and then apply the temperature scaling and input noise of ODIN at testing for each task (no training data is needed). More precisely, the output of class $j$ in task $k$ is changed by the temperature scaling factor $T_k$ of task $k$ as

$$s(x; T_k)_j = \frac{\exp\big(f(x)_{k,j}/T_k\big)}{\sum_{j'}\exp\big(f(x)_{k,j'}/T_k\big)}, \tag{25}$$

and the input is changed by the noise factor $\epsilon_k$ as

$$\tilde{x} = x - \epsilon_k\,\mathrm{sign}\big(-\nabla_x \log s(x; T_k)_{\hat{y}}\big), \tag{26}$$

where $\hat{y}$ is the class with the maximum output value in task $k$. This is a positive adversarial example inspired by [15]. The values $T_k$ and $\epsilon_k$ are hyper-parameters, and we use the same values for all tasks except for PASS, for which we use a validation set to tune them (see B).
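The two ODIN steps can be sketched for one task head. To keep the input gradient in closed form, this sketch assumes a hypothetical linear head $f(x) = Wx + b$; real networks would obtain the gradient by automatic differentiation. The score returned is the maximum temperature-scaled softmax after the input perturbation.

```python
import math


def softmax(v):
    m = max(v)
    e = [math.exp(x - m) for x in v]
    s = sum(e)
    return [x / s for x in e]


def linear_logits(W, b, x):
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]


def sign(v):
    return (v > 0) - (v < 0)


def odin_score(W, b, x, T, eps):
    """Max temperature-scaled softmax of one task head after ODIN's input perturbation.

    The head is a hypothetical linear model f(x) = W x + b, so the input gradient has a closed form.
    """
    p = softmax([z / T for z in linear_logits(W, b, x)])
    y_hat = max(range(len(p)), key=p.__getitem__)
    # d/dx log S_yhat(x; T) for a linear head: (W[y_hat] - sum_j p_j W[j]) / T
    grad = [(W[y_hat][d] - sum(p[j] * W[j][d] for j in range(len(W)))) / T for d in range(len(x))]
    # Eq. 26 style step: x~ = x - eps * sign(-grad), i.e., move to increase the predicted-class score
    x_tilde = [xd - eps * sign(-gd) for xd, gd in zip(x, grad)]
    return max(softmax([z / T for z in linear_logits(W, b, x_tilde)]))


W = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # hypothetical 3-class head on 2-D inputs
b = [0.0, 0.0, 0.0]
x = [0.6, 0.4]
print(odin_score(W, b, x, T=2.0, eps=0.0), odin_score(W, b, x, T=2.0, eps=0.01))
```

The perturbation raises the score of the predicted class; ODIN relies on this increase typically being larger for IND inputs than for OOD inputs.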

Table 2 gives the results for C100-10T. The CIL results clearly show that CIL performance increases when the AUC increases with ODIN. For instance, the CIL accuracy of DER++ and Sup improves from 53.71 to 55.29 and from 44.58 to 46.74, respectively, as their AUC increases from 85.99 to 88.21 and from 79.16 to 80.58. This shows that incorporating ODIN into each task model of an already-trained CIL network can improve the CIL performance of the original method. We note that ODIN does not always improve the average AUC. For the methods whose AUC decreases, the CIL performance also decreases, except for LwF. The inconsistency of LwF is due to its severe classification bias towards later tasks, as discussed in BiC [70]. The temperature scaling in ODIN has an effect similar to the bias correction in BiC, and the CIL accuracy of LwF becomes close to that of BiC after the correction. Regardless of whether ODIN improves AUC or not, the positive correlation between AUC and CIL (except for LwF) supports Theorem 3, indicating that better OOD detection results in better CIL performance.

| CL | OOD | C10-5T AUC | C10-5T CIL | C100-10T AUC | C100-10T CIL | C100-20T AUC | C100-20T CIL | T-5T AUC | T-5T CIL | T-10T AUC | T-10T CIL |
|---|---|---|---|---|---|---|---|---|---|---|---|
| HAT | ODIN | 82.5 | 62.6 | 77.8 | 41.2 | 75.4 | 25.8 | 72.3 | 38.6 | 71.8 | 30.0 |
| HAT | CSI | 91.2 | 87.8 | 84.5 | 63.3 | 86.5 | 54.6 | 76.5 | 45.7 | 78.5 | 47.1 |
| Sup | ODIN | 82.4 | 62.6 | 80.6 | 46.7 | 81.6 | 36.4 | 74.0 | 41.1 | 74.6 | 36.5 |
| Sup | CSI | 91.6 | 86.0 | 86.8 | 65.1 | 88.3 | 60.2 | 77.1 | 48.9 | 79.4 | 45.7 |

Applying CSI. We now apply the OOD detection method CSI. Due to its sophisticated data augmentation, supervised contrastive learning, and results ensemble, it is hard to apply CSI to other baselines without fundamentally changing them except for HAT and Sup (SupSup) as these methods are parameter isolation-based TIL methods. We can simply replace their model for training each task with CSI wholesale. As mentioned earlier, both HAT and Sup as TIL methods have almost no forgetting.

Table 3 reports the results of using CSI and ODIN. ODIN is a weaker OOD method than CSI. Both HAT and Sup improve greatly as the systems are equipped with a better OOD detection method CSI. These experiment results empirically demonstrate the efficacy of Theorem 3, i.e., the CIL performance can be improved if a better OOD detection method is used.

4.2.4 Full Comparison of HAT+CSI and Sup+CSI with Baselines

| | Method | C10-5T | C100-10T | C100-20T | T-5T | T-10T | Avg. |
|---|---|---|---|---|---|---|---|
| (a) | OWM | 51.8±0.05 | 28.9±0.60 | 24.1±0.26 | 10.0±0.55 | 8.6±0.42 | 24.7 |
| (b) | MUC | 52.9±1.03 | 30.4±1.18 | 14.2±0.30 | 33.6±0.19 | 17.4±0.17 | 29.7 |
| | PASS† | 47.3±0.98 | 33.0±0.58 | 25.0±0.69 | 28.4±0.51 | 19.1±0.46 | 30.6 |
| (c) | LwF | 54.7±1.18 | 45.3±0.75 | 44.3±0.46 | 32.2±0.50 | 24.3±0.26 | 40.2 |
| | iCaRL∗ | 63.4±1.11 | 51.4±0.99 | 47.8±0.48 | 37.0±0.41 | 28.3±0.18 | 45.6 |
| | A-GEM | 20.0±0.37 | 9.3±0.17 | 4.1±0.89 | 13.5±0.08 | 7.7±0.07 | 10.9 |
| | EEIL | 57.1±0.28 | 49.0±1.27 | 33.5±0.08 | 14.7±0.40 | 9.8±0.19 | 32.8 |
| | GD | 58.7±0.31 | 49.7±0.33 | 38.9±0.02 | 16.4±1.40 | 11.7±0.25 | 35.1 |
| | Mnemonics†∗ | 64.1±1.47 | 51.0±0.34 | 47.6±0.74 | 37.1±0.46 | 28.5±0.72 | 45.7 |
| | BiC | 61.4±1.74 | 52.9±0.64 | 48.9±0.54 | 41.7±0.74 | 33.8±0.40 | 47.7 |
| | DER++ | 66.0±1.20 | 53.7±1.30 | 46.6±1.44 | 35.8±0.77 | 30.5±0.47 | 46.5 |
| | HAL | 32.8±2.17 | 15.6±0.31 | 13.5±1.53 | 3.4±0.35 | 3.4±0.38 | 13.7 |
| | Co2L | 65.6 | | | | | |
| (d) | CCG | 70.1 | | | | | |
| | HAT | 62.7±1.45 | 41.1±0.93 | 25.6±0.51 | 38.5±1.85 | 29.8±0.65 | 39.5 |
| | HyperNet | 53.4±2.19 | 30.2±1.54 | 18.7±1.10 | 7.9±0.69 | 5.3±0.50 | 23.1 |
| | Sup | 62.4±1.45 | 44.6±0.44 | 34.7±0.30 | 41.8±1.50 | 36.5±0.36 | 44.0 |
| | PR-Ent | 61.9 | 45.2 | | | | |
| (e) | HAT+CSI | 87.8±0.71 | 63.3±1.00 | 54.6±0.92 | 45.7±0.26 | 47.1±0.18 | 59.7 |
| | Sup+CSI | 86.0±0.41 | 65.1±0.39 | 60.2±0.51 | 48.9±0.25 | 45.7±0.76 | 61.2 |
| | HAT+CSI+c | 88.0±0.48 | 65.2±0.71 | 58.0±0.45 | 51.7±0.37 | 47.6±0.32 | 62.1 |
| | Sup+CSI+c | 87.3±0.37 | 65.2±0.37 | 60.5±0.64 | 49.2±0.28 | 46.2±0.53 | 61.7 |

| Method | C10-5T | C100-10T | C100-20T | T-5T | T-10T | Avg. |
|---|---|---|---|---|---|---|
| BiC | 95.4±0.35 | 84.6±0.48 | 88.7±0.19 | 61.5±0.60 | 62.2±0.45 | 78.5 |
| HAT | 96.7±0.18 | 84.0±0.23 | 85.0±0.98 | 61.2±0.72 | 63.8±0.41 | 78.1 |
| Sup | 96.6±0.21 | 87.9±0.27 | 91.6±0.15 | 64.3±0.24 | 68.4±0.22 | 81.8 |
| HAT+CSI | 98.7±0.06 | 92.0±0.37 | 94.3±0.06 | 68.4±0.16 | 72.4±0.21 | 85.2 |
| Sup+CSI | 98.7±0.07 | 93.0±0.13 | 95.3±0.20 | 65.9±0.25 | 74.1±0.28 | 85.4 |

We now make a full comparison of the two proposed systems HAT+CSI and Sup+CSI designed based on the theoretical results with baselines. Since HAT and Sup are exemplar-free CL methods, HAT+CSI and Sup+CSI do not need to save any previous task data for replaying. Table 4 shows that HAT and Sup equipped with CSI outperform the baselines by large margins. DER++, the best replay method, achieves 66.0 and 53.7 on C10-5T and C100-10T, respectively, while HAT+CSI achieves 87.8 and 63.3 and Sup+CSI achieves 86.0 and 65.1. The large performance gap remains consistent in more challenging problems, T-5T and T-10T.

Due to the definition of OOD in the prediction method and the fact that each task is trained separately in HAT and Sup, the outputs from different tasks can be on different scales, which can result in incorrect predictions. To deal with this problem, we can calibrate the output of each task $k$ as $\alpha_k f(x)_k + \beta_k$ and use $\hat{y} = \arg\max \bigoplus_{1\le k\le t} \big(\alpha_k f(x)_k + \beta_k\big)$. The optimal $\alpha_k$ and $\beta_k$ for each task can be found by optimization with a memory buffer that saves a very small number of training examples from previous tasks, as in replay-based methods. We refer to the calibrated methods as HAT+CSI+c and Sup+CSI+c. They are trained using a memory buffer of the same size as the replay methods (see Section 4.2.2). Table 4 shows that calibration improves over the memory-free versions, i.e., those without calibration. We provide the details of how to train the calibration parameters $\alpha_k$ and $\beta_k$ in D.
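The effect of per-task calibration on the concatenated argmax can be sketched as follows. The head outputs are hypothetical and the $\alpha_k$, $\beta_k$ values are hand-picked for illustration; the method itself fits them on a small memory buffer, as described in D.

```python
def calibrated_predict(head_logits, alphas, betas):
    """Apply alpha_k * f(x)_k + beta_k to each task head k, concatenate, and return (task, class) of the argmax."""
    best = None
    for k, head in enumerate(head_logits):
        for j, v in enumerate(head):
            score = alphas[k] * v + betas[k]
            if best is None or score > best[0]:
                best = (score, k, j)
    return best[1], best[2]


heads = [[9.0, 1.0], [4.0, 3.0]]          # task 0's head happens to produce larger-scale outputs
print(calibrated_predict(heads, [1.0, 1.0], [0.0, 0.0]))   # uncalibrated: (0, 0)
print(calibrated_predict(heads, [0.3, 1.0], [0.0, 0.0]))   # hand-picked alpha shrinks task 0: (1, 0)
```

Because only two scalars per task are learned, a very small buffer can suffice to put the task heads on a comparable scale.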

We note that CSI uses extensive data augmentations in its OOD detection, whereas the baseline systems do not. For a fair comparison, we added the same data augmentations to the three top-performing baselines, Mnemonics, BiC, and DER++. Their average accuracy values over the five CIL experiments are 36.66, 35.75, and 18.43, respectively. Our methods, HAT+CSI, Sup+CSI, HAT+CSI+c, and Sup+CSI+c, achieve average accuracy values of 59.7, 61.2, 62.1, and 61.7, respectively, which are significantly better. In fact, with the augmentations, the three baselines perform worse than their original versions. We believe the reason is that, while augmentations improve the performance on the current task by helping the model learn finer-grained and more task-specific features, they also cause more model updates in learning a task due to the additional augmented data, which leads to significantly more forgetting of prior tasks. In contrast, our technique incorporates robust TIL mechanisms that prevent forgetting while also benefiting from the strong per-task OOD detection performance of CSI, which exploits data augmentations.

5 Proposed Approach 2: Out-of-Distribution Replay

The approach presented above does not save any training data from previous tasks except for the optional step of calibration. The method presented in this section is based on the replay approach to solving CIL, which saves a small number of training data from each previous task. The proposed method is called Multi-head model for continual learning via OOD REplay (MORE). As mentioned in Section 4, the OOD detection method used in this section is a closed-world method as it uses the saved samples from previous tasks as OOD samples in learning each new task.

5.1 The Proposed MORE Technique

Recall that a replay-based method for continual learning works by saving a small subset of the training samples from each previous task in a memory buffer. The saved data is called the replay data. In learning a new task, the new task data and the replay data are trained jointly to update the model. Clearly, using the replay data can partially deal with the inter-class separation (ICS) problem because the model sees some data from all classes learned so far. However, it cannot solve the ICS problem completely because the amount of replay data is often very small.

Unlike existing replay-based CIL methods, which simply use the replay data to update the decision boundaries between the old classes and the new classes (in the new task), the proposed method uses the replay data to build an OOD detection model for each task in continual learning, hence the name out-of-distribution replay. Further, unlike existing OOD detection methods, which usually do not use any OOD data in training, the proposed method uses the replay data from previous tasks as the OOD data for the current new task in building its OOD detection model.

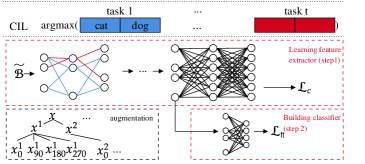

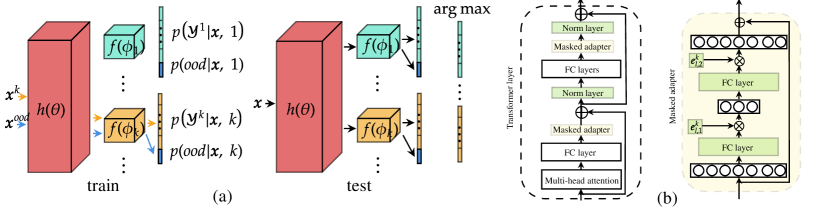

Unlike HAT+CSI and Sup+CSI, which do not use a pre-trained network, MORE trains a multi-head network as an adapter [23] to a pre-trained network (see Figure 2(b)). Note that using a pre-trained transformer network and adapter modules is a common practice in existing continual learning methods in the natural language processing community [28, 27]. Here we also leverage this approach for image classification tasks. In continual learning, the pre-trained network is frozen; only the adapters and the normalization layers are trainable. As in HAT+CSI, a hard attention mask (HAT) is again employed to protect each task model or classifier from forgetting. Each head is also an OOD detection model for a task, but, as mentioned above, MORE uses the replay data as the OOD data to build its OOD detection model. Since HAT has been described in Section 4.1.1, we do not discuss it further, except to note that the loss in Eq. 27 replaces the cross-entropy loss in Eq. 16 once the trainable HAT embedding is incorporated. We describe the whole training and prediction process in H.

5.1.1 Training an OOD Detection Model

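At task $t$, the system receives the training data $\mathcal{D}^t = \{(x_i^t, y_i^t)\}_{i=1}^{N^t}$, where $N^t$ is the number of samples, $x_i^t$ is an input sample, and $y_i^t \in \mathcal{Y}^t$ (the set of all classes of task $t$) is its class label. We train the feature extractor $f$ (with shared parameters $\theta$ and the trainable task embedding $e^t$) and the task-specific classifier $h^t$ using $\mathcal{D}^t$ and the samples in the memory buffer $\mathcal{M}$. We treat the buffer data as OOD data so that the network learns the current task and also learns to detect OOD samples (the models or classifiers of the previous tasks are not touched). We achieve this by maximizing $p(y \mid x, t)$ for an IND sample $(x, y) \in \mathcal{D}^t$ and maximizing $p(\text{ood} \mid x, t)$ for an OOD sample $x$ from $\mathcal{M}$, where $p(\cdot \mid x, t)$ is the softmax output of $h^t$ over $\mathcal{Y}^t \cup \{\text{ood}\}$. The additional label ood is reserved for previous and possible future unseen classes. Figure 2(a) shows the overall idea of the proposed approach. We formulate the problem as follows.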

Given the training data $\mathcal{D}^t$ of size $N^t$ at task $t$ and the memory buffer $\mathcal{M}$ of size $|\mathcal{M}|$, we minimize the loss

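$$
\mathcal{L}(\theta, e^t, h^t) = -\frac{1}{N^t + |\mathcal{M}|}\Bigg(\sum_{(x, y) \in \mathcal{M}} \log p(\text{ood} \mid x, t) + \sum_{(x, y) \in \mathcal{D}^t} \log p(y \mid x, t)\Bigg) \tag{27}
$$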

It is the sum of two cross-entropy losses: the first is for learning the OOD (replay) samples and the second is for learning the classes of the current task. We optimize the shared parameters $\theta$ of the feature extractor, while the task-specific classification parameters of $h^t$ are independent of other tasks. The learned representation of the current data should thus be robust to OOD data, and the classifier can classify both IND and OOD data.
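A minimal, framework-free sketch of the Eq. 27 objective for the head of the current task follows. It assumes the head outputs one logit per class of the current task plus a final logit reserved for the ood label, and that the loss is averaged over all current and replayed samples; the normalization and the function names are assumptions for illustration. In practice the logits would come from the task head applied to the adapter features, and the loss would be minimized with an autodiff framework.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax of a list of floats."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def ood_replay_loss(current_batch, buffer_batch):
    """Sketch of the Eq. 27 objective for the head of task t.

    current_batch: list of (logits, y), y a within-task class index of task t
    buffer_batch:  list of logits for replayed samples from earlier tasks
    Every logits list has C + 1 entries; the last entry is the extra ood class.
    """
    total = 0.0
    for logits in buffer_batch:        # replay data is labelled "ood"
        total += log_softmax(logits)[-1]
    for logits, y in current_batch:    # current-task data keeps its own label
        total += log_softmax(logits)[y]
    return -total / (len(current_batch) + len(buffer_batch))
```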

At test time, we make a prediction by comparing the softmax probabilities produced by all task classifiers from task 1 to $t$, excluding the ood class:

$$
\hat{y} = \arg\max \bigoplus_{1 \le k \le t} p(\mathcal{Y}^k \mid x, k) \tag{28}
$$

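where $\bigoplus$ denotes concatenation over the output spaces and $p(\mathcal{Y}^k \mid x, k)$ is the vector of softmax probabilities of the classes of task $k$ (without the ood entry). Figure 2(a) shows the prediction rule. In other words, we choose the class with the highest softmax probability over all classes of all learned tasks.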
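The prediction rule of Eq. 28 can be sketched as follows, assuming each task head returns its class logits followed by one ood logit; the helper names are hypothetical. The ood probability takes part in each head's softmax but is dropped before the heads are compared.

```python
import math


def softmax(logits):
    """Numerically stable softmax of a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    z = sum(exps)
    return [e / z for e in exps]


def predict_class(task_logits):
    """Sketch of Eq. 28: pick the best class over all learned task heads.

    task_logits: one logits list per task head k = 1..t, each with
    the class entries of task k followed by a final ood entry.
    Returns (task index, within-task class index), both 0-based.
    """
    concatenated = []  # concatenation over the task output spaces
    for k, logits in enumerate(task_logits):
        probs = softmax(logits)[:-1]  # drop the ood probability
        concatenated.extend((p, k, j) for j, p in enumerate(probs))
    _, task, cls = max(concatenated)
    return task, cls
```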

5.1.2 Back-Updating the Previous OOD Models

Each task model works better if more diverse OOD data is provided during training. Like other replay-based approaches, MORE saves an equal number of samples per class after each task [11], and the saved samples in memory are used as OOD samples for each new task. Consequently, early in continual learning, when the system has been trained on only a few tasks, the samples in memory come from fewer classes than after more tasks have been learned. OOD detection is therefore stronger for later tasks but weaker for earlier tasks. To reduce this asymmetry, we update the model of each previous task so that it also identifies samples from subsequent classes (which were unseen when the previous task was trained) as OOD samples.
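The asymmetry can be made concrete by counting how many distinct earlier classes are in memory (and hence available as OOD data) when each task head is first trained, and how many a head has seen once back-updating has been applied at every later task. The counts below assume equal-sized tasks with disjoint classes, and the function names are hypothetical.

```python
def ood_classes_at_training(num_tasks, classes_per_task):
    """Distinct earlier classes in memory when the head of task k (0-based) is trained."""
    return [k * classes_per_task for k in range(num_tasks)]


def ood_classes_after_back_update(num_tasks, classes_per_task):
    """OOD classes each head has seen after the final task if back-updating runs at
    every task: the memory classes of all other earlier tasks plus the final task's classes."""
    return [(num_tasks - 1) * classes_per_task] * num_tasks


# CIFAR-10 split into 5 tasks of 2 classes each.
print(ood_classes_at_training(5, 2))        # [0, 2, 4, 6, 8]
print(ood_classes_after_back_update(5, 2))  # [8, 8, 8, 8, 8]
```

Under these assumptions the first head sees no replayed OOD data at all when it is trained, which is the gap back-updating is meant to close.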

At task $t$, we update each previous task model $j$ ($j < t$) as follows. Denote the samples of task $j$ in memory by $\mathcal{M}^j$. We construct a new dataset from the current task data $\mathcal{D}^t$ and the samples in the memory buffer $\mathcal{M}$: we randomly select a subset of samples from $\mathcal{D}^t$ and pool them with the remaining samples in $\mathcal{M}$ after removing the IND samples of task $j$ (i.e., $\mathcal{M}^j$). We do not use the entire training data $\mathcal{D}^t$ because we do not want a large imbalance between the numbers of IND and OOD samples. Denote the new dataset by $\mathcal{M}'$. Using $\mathcal{M}^j$ as the IND data and $\mathcal{M}'$ as the OOD data, we update only the parameters of the classifier $h^j$ of task $j$, with the feature representations frozen, by minimizing the loss

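$$
\mathcal{L}(h^j) = -\frac{1}{|\mathcal{M}^j|}\sum_{(x, y) \in \mathcal{M}^j} \log p(y \mid x, j) - \frac{1}{|\mathcal{M}'|}\sum_{(x, y) \in \mathcal{M}'} \log p(\text{ood} \mid x, j) \tag{29}
$$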

Minimizing this loss updates the parameters of classifier $h^j$ so as to maximize the probability of the true class if a sample belongs to task $j$ and to maximize the ood probability otherwise.
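The data construction and loss of the back-updating step can be sketched as below. The sketch assumes that the buffer samples of task j serve as the IND data, that the pooled set (the other buffer samples plus a random subset of the current task data) serves as the OOD data, and that each of the two loss terms is averaged over its own set; the number of current-task samples drawn (`n_current`) and all function names are hypothetical. Only the head of task j would be trained on these logits; the features stay frozen.

```python
import math
import random


def log_softmax(logits):
    """Numerically stable log-softmax of a list of floats."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def build_back_update_sets(memory, task_of, j, current_data, n_current, seed=0):
    """Split data for back-updating the head of an earlier task j.

    memory:       list of (x, y) pairs in the replay buffer
    task_of:      dict mapping a class label to its task index
    current_data: list of (x, y) pairs of the current task
    n_current:    hypothetical knob; kept small to limit IND/OOD imbalance
    Returns (ind, ood): task-j buffer samples and the pooled OOD samples.
    """
    rng = random.Random(seed)
    ind = [(x, y) for x, y in memory if task_of[y] == j]
    ood = [(x, y) for x, y in memory if task_of[y] != j]
    ood += rng.sample(current_data, min(n_current, len(current_data)))
    return ind, ood


def back_update_loss(ind_logits, ood_logits):
    """Loss for head j: true class for task-j samples, ood for everything else.

    ind_logits: list of (logits, y) with y a within-task class index of task j
    ood_logits: list of logits; the last entry of each list is the ood class
    """
    ind_term = sum(log_softmax(l)[y] for l, y in ind_logits) / len(ind_logits)
    ood_term = sum(log_softmax(l)[-1] for l in ood_logits) / len(ood_logits)
    return -(ind_term + ood_term)
```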

5.1.3 Improving Prediction Performance by a Distance-Based Technique

We further improve the prediction in Eq. 28 by introducing a distance-based factor that is used as a coefficient of the softmax probabilities in Eq. 28. Intuitively, if a test instance is close to a class, it is more likely to belong to that class. We therefore combine this distance factor with the softmax output of the task model to make the final prediction. In this sense, the approach can be viewed as an ensemble of the two methods.

We define the distance-based coefficient $c^k(x)$ of task $k$ for a test instance $x$ as the maximum inverse Mahalanobis distance [35] between the feature of $x$ and the Gaussian distributions of the classes in task $k$, each parameterized by the class mean $\mu_j^k$ and the sample covariance $\Sigma^k$. These parameters are estimated from the features of the training data of each class $j$ in task $k$. If a test instance is from task $k$, its feature should be close to the distribution of the class it belongs to. Conversely, if the instance is OOD to task $k$, its feature should not be close to any of the class distributions of the task. More precisely, for task $k$ with classes $y_1^k, \dots, y_{|\mathcal{Y}^k|}^k$ (where $|\mathcal{Y}^k|$ is the number of classes in task $k$), we define the coefficient as

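$$
c^k(x) = \max_{1 \le j \le |\mathcal{Y}^k|} \frac{1}{\mathrm{MD}\big(f(x); \mu_j^k, \Sigma^k\big)} \tag{30}
$$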

where $\mathrm{MD}(\cdot)$ is the Mahalanobis distance. The coefficient is large if at least one of the Mahalanobis distances is small, and small if all the distances are large (i.e., the feature is far from all the class distributions of the task). The parameters $\mu_j^k$ and $\Sigma^k$ can be computed and saved when task $k$ is learned. The mean $\mu_j^k$ is computed from the training samples $\mathcal{D}_j^k$ of class $j$ as follows:

$$
\mu_j^k = \frac{1}{|\mathcal{D}_j^k|} \sum_{x \in \mathcal{D}_j^k} f(x) \tag{31}
$$

and the covariance $\Sigma^k$ of task $k$ is the mean of the covariances of the classes in task $k$:

$$
\Sigma^k = \frac{1}{|\mathcal{Y}^k|} \sum_{j=1}^{|\mathcal{Y}^k|} S_j^k \tag{32}
$$

where $S_j^k$ is the sample covariance of class $j$ in task $k$. Multiplying the softmax probabilities $p(\mathcal{Y}^k \mid x, k)$ by the coefficient $c^k(x)$ increases the outputs of task $k$ if $x$ is from task $k$ and decreases them otherwise. The final prediction is made by (replacing Eq. 28)

$$
\hat{y} = \arg\max \bigoplus_{1 \le k \le t} c^k(x)\, p(\mathcal{Y}^k \mid x, k) \tag{33}
$$

where $t$ is the last task that has been learned.
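A NumPy sketch of Eqs. 30 to 33 follows. It assumes that each task yields its own feature vector for the test instance (because the task-specific masks change the forward pass), that MD is the standard square-root Mahalanobis distance (the squared form is also common, so this choice is an assumption), and that a pseudo-inverse and a small epsilon are acceptable numerical guards. The function names are hypothetical.

```python
import numpy as np


def fit_task_gaussians(features, labels):
    """Class means (Eq. 31) and the task covariance as the mean of class covariances (Eq. 32).

    features: (n, d) training features of one task; labels: (n,) within-task class ids.
    """
    classes = np.unique(labels)
    means = np.stack([features[labels == c].mean(axis=0) for c in classes])
    covs = [np.cov(features[labels == c], rowvar=False) for c in classes]  # sample covariances
    return means, np.mean(covs, axis=0)


def distance_coefficient(z, means, cov, eps=1e-12):
    """Eq. 30: the largest inverse Mahalanobis distance from z to any class of the task."""
    cov_inv = np.linalg.pinv(cov)  # pseudo-inverse in case the covariance is singular
    # Clip tiny negative round-off before the square root.
    dists = [np.sqrt(max(float((z - m) @ cov_inv @ (z - m)), 0.0)) for m in means]
    return max(1.0 / max(d, eps) for d in dists)


def predict_with_coefficient(z_per_task, probs_per_task, stats_per_task):
    """Eq. 33: scale each task's class probabilities (ood removed) by its coefficient, then argmax.

    Returns (task index, within-task class index), both 0-based.
    """
    scores = []
    for k, (z, probs, (means, cov)) in enumerate(
            zip(z_per_task, probs_per_task, stats_per_task)):
        c = distance_coefficient(z, means, cov)
        scores.extend((c * p, k, j) for j, p in enumerate(probs))
    _, task, cls = max(scores)
    return task, cls
```

Because the class means and the shared covariance depend only on a task's training data, they can be computed once when the task is learned and stored alongside its head.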

5.2 Experiments

We now report the experimental results of the proposed method MORE. We use the same three image classification benchmark datasets as in Section 4.2.1. The baselines are also the same (see Section 4.2.1), except for Mnemonics, HyperNet, CCG, Co2L, and PR-Ent. Mnemonics requires optimizing the training instances, and it is not clear how to do so for images that are interpolated to the input size of a pre-trained model (see below). HyperNet is excluded for the reason explained in Training Details in Section 4.2.2. CCG has no released code, and the code of Co2L and PR-Ent does not run in our environment, so we could not convert it to use a pre-trained model. This leaves 13 baselines. HAT+CSI and Sup+CSI are not included because they are much weaker (up to 15% lower than MORE in accuracy): CSI's approach of using contrastive learning and data augmentations does not work well with a pre-trained model.

Evaluation Metrics. We use the same evaluation measures as in Section 4.2.2: (1) average classification accuracy over all classes after learning the last task, and (2) average AUC (Area Under the ROC Curve) for evaluating the OOD detection performance of continual learning in the open world. See Section 5.2.4 for more details.
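The two measures can be sketched in plain Python. AUC is computed here through its equivalence with the Mann-Whitney statistic, i.e., the probability that a randomly chosen IND sample receives a higher score than a randomly chosen OOD sample. Which score is used and over which evaluation points the AUC is averaged are specified in Section 5.2.4, so the `average_auc` helper simply assumes a list of (IND scores, OOD scores) pairs. All names are hypothetical.

```python
def accuracy(preds, labels):
    """Fraction of test samples (over all classes learned so far) predicted correctly."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def auc(ind_scores, ood_scores):
    """ROC AUC via the Mann-Whitney statistic; ties count one half.

    Quadratic in the number of samples, which is fine for a sketch.
    """
    wins = 0.0
    for s in ind_scores:
        for o in ood_scores:
            if s > o:
                wins += 1.0
            elif s == o:
                wins += 0.5
    return wins / (len(ind_scores) * len(ood_scores))


def average_auc(per_step_scores):
    """Mean AUC over evaluation points, each given as an (ind_scores, ood_scores) pair."""
    return sum(auc(i, o) for i, o in per_step_scores) / len(per_step_scores)
```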

5.2.1 Pre-trained Network

We pre-train a vision transformer [66] on a subset of the ImageNet data [58] and use the pre-trained network for all baselines and our method. To ensure that there is no data overlap between ImageNet and our experimental datasets, we manually removed the 389 classes of the original 1000 ImageNet classes that are similar or identical to classes in CIFAR-10, CIFAR-100, or Tiny-ImageNet. We pre-train the network on the remaining 611 classes of ImageNet.

With the pre-trained network, both our system and the baselines improve dramatically over their versions without it. For instance, the two best baselines in our experiments (DER++ and PASS) achieved average classification accuracies of 66.89 and 68.25 (after the final task, averaged over 5 runs) with the pre-trained network, but only 46.88 and 32.42 without it.

We insert an adapter module at each transformer layer to exploit the pre-trained transformer network in continual learning. During training, the adapter module and the layer norm are trained while the transformer parameters are unchanged to prevent forgetting in the pre-trained network.
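A NumPy sketch of one bottleneck adapter with the dimensions given in Section 5.2.2 (384 to the bottleneck, ReLU, then back to 384) is shown below. The residual connection and the zero-initialized up-projection, which make the adapter start as the identity so that the frozen backbone's behavior is initially preserved, follow common adapter practice and are assumptions here, as is the class name. Only the forward pass is shown; in training, only the adapter (and layer-norm) parameters would be passed to the optimizer.

```python
import numpy as np


class Adapter:
    """Bottleneck adapter: Linear(d -> b), ReLU, Linear(b -> d), plus an assumed residual."""

    def __init__(self, d_model=384, bottleneck=64, seed=0):
        rng = np.random.default_rng(seed)
        self.w_down = rng.normal(0.0, 0.02, size=(d_model, bottleneck))
        self.b_down = np.zeros(bottleneck)
        # Zero-initialized up-projection: the adapter starts as the identity map.
        self.w_up = np.zeros((bottleneck, d_model))
        self.b_up = np.zeros(d_model)

    def __call__(self, h):
        """h: (tokens, d_model) hidden states produced by a frozen transformer sub-layer."""
        a = np.maximum(h @ self.w_down + self.b_down, 0.0)  # down-project, then ReLU
        return h + a @ self.w_up + self.b_up                 # up-project, add residual

    def num_params(self):
        """Number of trainable adapter parameters (weights plus biases)."""
        return sum(p.size for p in (self.w_down, self.b_down, self.w_up, self.b_up))
```

With bottleneck 64, each such adapter has 49,600 parameters (two 384 by 64 weight matrices plus biases); with bottleneck 128 it has 98,816.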

5.2.2 Training Details

For all experiments, we use the same backbone architecture, DeiT-S/16 [66], with a 2-layer adapter [23] at each transformer layer, and the same class order for the baselines and our method. The first fully connected layer of the adapter maps from dimension 384 to the bottleneck dimension; the second fully connected layer, which follows a ReLU activation, maps from the bottleneck dimension back to 384. All adapters in a model use the same bottleneck dimension. For our method, we use SGD with momentum 0.9. Whether to apply the back-updating method of Section 5.1.2 is also a hyper-parameter choice; if it is applied, we train each classifier for 10 epochs using SGD with learning rate 0.01, batch size 16, and momentum 0.9. We set the hyper-parameter in Eq. 11 to 500 and the one in Eq. 15 to 0.75, as recommended in [61]. We select the learning rates and numbers of epochs on a validation set consisting of 10% of the training data. We follow [11] and save an equal number of random samples per class in the replay memory. Following the experimental settings in [56, 77], we fix the size of the memory buffer and reduce the number of saved samples per class to make room for the samples of each newly learned task. We follow the class order protocol in [56, 6] and generate random class orders for the experiments; the baselines and our method use the same class ordering. The memory required for each experiment is reported in Appendix I.

For CIFAR-10, we split the 10 classes into 5 tasks (2 classes per task). The bottleneck size of each adapter is 64. Following [6], we use a memory size of 200, train for 20 epochs with learning rate 0.005, and apply the back-updating method of Section 5.1.2.

For CIFAR-100, we conduct experiments with 10 tasks and 20 tasks, where each task has 10 and 5 classes, respectively. We double the bottleneck size of the adapters to accommodate more classes. Following [56], we use a memory size of 2000 and train for 40 epochs with learning rates 0.001 and 0.005 for 10 tasks and 20 tasks, respectively, and apply the back-updating method of Section 5.1.2.

For Tiny-ImageNet, we conduct two experiments, splitting the 200 classes into 5 and 10 tasks with 40 and 20 classes per task, respectively. We use bottleneck size 128 and save 2000 samples in memory. We train with learning rate 0.005 for 15 and 10 epochs for 5 tasks and 10 tasks, respectively. The back-updating method is not needed because the earlier tasks already have diverse OOD classes.
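The per-dataset settings above can be collected into a small configuration sketch. The field names are hypothetical, and the CIFAR-100 bottleneck of 128 reads "double" as twice the CIFAR-10 value of 64, which is an interpretation of the text. Shared settings (SGD with momentum 0.9; back-updating for 10 epochs with learning rate 0.01 and batch size 16) are kept separately.

```python
from dataclasses import dataclass

# Settings shared by all runs (Section 5.2.2).
MOMENTUM = 0.9
BACK_UPDATE = {"epochs": 10, "lr": 0.01, "batch_size": 16, "momentum": 0.9}


@dataclass(frozen=True)
class SplitConfig:
    dataset: str
    num_classes: int
    num_tasks: int
    bottleneck: int
    memory_size: int
    epochs: int
    lr: float
    back_update: bool

    @property
    def classes_per_task(self) -> int:
        if self.num_classes % self.num_tasks:
            raise ValueError("classes must split evenly into tasks")
        return self.num_classes // self.num_tasks


CONFIGS = [
    SplitConfig("CIFAR-10", 10, 5, 64, 200, 20, 0.005, True),
    SplitConfig("CIFAR-100", 100, 10, 128, 2000, 40, 0.001, True),
    SplitConfig("CIFAR-100", 100, 20, 128, 2000, 40, 0.005, True),
    SplitConfig("Tiny-ImageNet", 200, 5, 128, 2000, 15, 0.005, False),
    SplitConfig("Tiny-ImageNet", 200, 10, 128, 2000, 10, 0.005, False),
]

for cfg in CONFIGS:
    print(f"{cfg.dataset}-{cfg.num_tasks}T: {cfg.classes_per_task} classes/task")
```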

5.2.3 Accuracy and Forgetting Rate Results and Analysis

| | Method | C10-5T | C100-10T | C100-20T | T-5T | T-10T | Avg. |
|---|---|---|---|---|---|---|---|
| (a) | OWM | 41.69 (6.34) | 21.39 (3.18) | 16.98 (4.44) | 24.55 (2.48) | 17.52 (3.45) | 24.43 |
| (b) | MUC | 73.95 (7.24) | 57.87 (1.11) | 43.98 (2.68) | 62.47 (0.34) | 55.79 (0.49) | 58.81 |
| | PASS | 86.21 (1.10) | 68.90 (0.94) | 66.77 (1.18) | 61.03 (0.38) | 58.34 (0.42) | 68.25 |
| (c) | LwF | 67.59 (4.27) | 66.50 (1.93) | 67.54 (0.97) | 33.51 (4.36) | 36.85 (4.46) | 54.40 |
| | iCaRL | 87.55 (0.99) | 68.90 (0.47) | 69.15 (0.99) | 53.13 (1.04) | 51.88 (2.36) | 66.12 |
| | A-GEM | 56.33 (7.77) | 25.21 (4.00) | 21.99 (4.01) | 30.53 (3.99) | 21.90 (5.52) | 31.20 |
| | EEIL | 82.34 (3.13) | 68.08 (0.51) | 63.79 (0.66) | 53.34 (0.54) | 50.38 (0.97) | 63.59 |
| | GD | 89.16 (0.53) | 64.36 (0.57) | 60.10 (0.74) | 53.01 (0.97) | 42.48 (2.53) | 61.82 |
| | BiC | 67.44 (3.93) | 64.47 (1.30) | 67.69 (1.97) | 38.78 (1.26) | 40.98 (2.39) | 55.87 |
| | DER++ | 84.63 (2.91) | 69.73 (0.99) | 70.03 (1.46) | 55.84 (2.21) | 54.20 (3.28) | 66.89 |
| | HAL | 84.38 (2.70) | 67.17 (1.50) | 67.37 (1.45) | 52.80 (2.37) | 55.25 (3.60) | 65.39 |
| (d) | HAT | 83.30

1.54 |

62.34

0.93 |