2 School of Computer Science and Technology, Harbin Institute of Technology(Shenzhen), China

3 School of Electronic and Computer Engineering, Peking University, China

4 National Laboratory for Parallel and Distributed Processing, National University of Defense Technology, China

Email: zhangt02@pcl.ac.cn

OpenMedIA: Open-Source Medical Image Analysis Toolbox and Benchmark under Heterogeneous AI Computing Platforms

Abstract

In this paper, we present OpenMedIA, an open-source toolbox library containing a rich set of deep learning methods for medical image analysis under heterogeneous Artificial Intelligence (AI) computing platforms. Various medical image analysis methods, including 2D/3D medical image classification, segmentation, localisation, and detection, have been included in the toolbox with PyTorch and/or MindSpore implementations under heterogeneous NVIDIA and Huawei Ascend computing systems. To the best of our knowledge, OpenMedIA is the first open-source algorithm library providing side-by-side PyTorch and MindSpore implementations and results on several benchmark datasets. The source code and models are available at https://git.openi.org.cn/OpenMedIA.

1 Introduction

Deep learning has been extensively studied and has achieved beyond human-level performance in various research and application fields [10, 26, 31, 33]. To facilitate the development of such Artificial Intelligence (AI) systems, a number of open-source deep learning frameworks, including TensorFlow [1], PyTorch [21], MXNet [4], and MindSpore (https://www.mindspore.cn), have been developed and integrated into various AI hardware. The medical image analysis community has also witnessed similarly rapid development, with deep learning methods developed for medical image reconstruction, classification, segmentation, registration, and detection [3, 6, 23, 29, 32, 35]. In particular, MONAI (https://monai.io), implemented in PyTorch, is a popular open-source toolbox of deep learning algorithms for healthcare imaging. Despite its popularity, the accuracy and performance of those algorithms may vary when implemented on different AI frameworks and/or AI hardware. Moreover, several PyTorch or CUDA libraries included in MONAI are not supported by Huawei Ascend and/or other NPU computing hardware.

In this paper, we report our open-source library of algorithms and AI models for medical image analysis, OpenMedIA, which has been implemented and verified with the PyTorch and MindSpore AI frameworks on heterogeneous computing hardware. All the algorithms implemented with the MindSpore framework in OpenMedIA have been evaluated and compared to the original papers and the PyTorch versions.

Table 1: Overview of the algorithms included in OpenMedIA.

| Task | Algorithm | PyTorch | MindSpore |
| --- | --- | --- | --- |
| Classification | Covid-ResNet [9, 15] | ✓ | ✓ |
| | Covid-Transformer [7] | ✓ | - |
| | U-CSRNet [17] | ✓ | ✓ |
| | MTCSN [18] | ✓ | ✓ |
| Segmentation | 2D-UNet [16] | ✓ | ✓ |
| | LOD-Net [5] | - | ✓ |
| | Han-Net [14] | ✓ | ✓ |
| | CD-Net [13] | ✓ | ✓ |
| | 3D-UNet [28] | ✓ | ✓ |
| | UNETR [12] | ✓ | - |
| Localization | WeaklyLesionLocalisation [30] | ✓ | - |
| | TS-CAM [11] | ✓ | - |
| Detection | LungNodules-Detection [24] | - | ✓ |
| | Covid-Detection-CNN (https://github.com/ultralytics/yolov5) | ✓ | - |
| | Covid-Detection-Transformer [2] | ✓ | - |
| | EllipseNet-Fit [3, 27] | - | ✓ |
| | EllipseNet [3] | ✓ | - |

Our contributions can be summarized as follows:

Firstly, we provide an open-source library of recent State-Of-The-Art (SOTA) algorithms in the medical image analysis domain under two deep learning frameworks: PyTorch (with NVIDIA) and MindSpore (with Huawei Ascend).

Secondly, we benchmark the SOTA algorithms in terms of both accuracy and runtime performance.

Thirdly, we not only open-source the code but also provide all the training logs and checkpoints under different AI frameworks. In this study, PyTorch is run on NVIDIA GPUs, while MindSpore is run on Huawei Ascend.

Table 1 shows the basic overview of the algorithms included in OpenMedIA. We categorize these algorithms into Classification, Segmentation, Localisation, and Detection tasks for easy understanding and comparison.

2 Algorithms

This study summarises seventeen SOTA algorithms for medical image classification, segmentation, localisation, and detection tasks. Eight of them are implemented in both MindSpore and PyTorch. We will continue updating the OpenMedIA library in the next few years. In this section, we briefly introduce the selected algorithms.

2.1 Medical image classification

Four well-known methods are introduced for this task. It should be noted that the current open-source code and models target 2D classification settings.

Covid-ResNet. The contributions of Covid-ResNet (PyTorch: https://git.openi.org.cn/OpenMedIA/Covid-ResNet.Pytorch; MindSpore: https://git.openi.org.cn/OpenMedIA/Covid-ResNet.Mindspore) are listed as follows:

- ResNet [15] was proposed in 2015 and became one of the most famous Convolutional Neural Networks (CNNs) in deep learning.

- It uses a residual learning framework to ease the training of networks deeper than those in previous work and shows promising performance.

- Covid-ResNet is an early CNN model built for COVID-19 CT image classification.
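
The residual learning idea mentioned above can be illustrated with a minimal sketch. It assumes a toy fully connected block with hypothetical weights `w1` and `w2`; it is not the Covid-ResNet implementation, which uses convolutional layers.

```python
import numpy as np

def residual_block(x, w1, w2):
    """Toy fully connected residual block: y = relu(F(x) + x)."""
    hidden = np.maximum(x @ w1, 0.0)   # first layer followed by ReLU
    fx = hidden @ w2                   # residual branch F(x)
    return np.maximum(fx + x, 0.0)     # identity shortcut, then ReLU

x = np.array([[1.0, 2.0, 3.0]])
zeros = np.zeros((3, 3))
# With zero weights the residual branch contributes nothing,
# so the block reduces to an identity mapping of its (positive) input.
print(residual_block(x, zeros, zeros))
```

Because the shortcut passes the input through unchanged, a block only has to learn a correction to the identity, which is the property that eases the training of deeper networks.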

Covid-Transformer. The contributions of Covid-Transformer (PyTorch: https://git.openi.org.cn/OpenMedIA/Covid-Transformer.Pytorch) are listed as follows:

- ViT [7] was inspired by the success of the transformer in Natural Language Processing (NLP) and was proposed for computer vision in 2020.

- Unlike a convolutional network such as ResNet, which relies on convolutions to extract features, ViT is built on self-attention and does not introduce any image-specific inductive biases into the architecture. ViT interprets an image as a sequence of patches and processes it with a pure transformer encoder, achieving performance comparable to CNNs.
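
How an image becomes a sequence of patches can be sketched as follows. The 224x224 input and the patch size of 16 are illustrative assumptions, and `image_to_patches` is a hypothetical helper rather than a function from the OpenMedIA code.

```python
import numpy as np

def image_to_patches(image, patch_size):
    """Split an (H, W, C) image into a sequence of flattened square patches."""
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0
    gh, gw = h // patch_size, w // patch_size
    # Separate the grid indices from the within-patch indices.
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    # Bring the two grid axes to the front: (gh, gw, patch, patch, C).
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(gh * gw, patch_size * patch_size * c)

image = np.arange(224 * 224 * 3, dtype=np.float32).reshape(224, 224, 3)
tokens = image_to_patches(image, 16)
print(tokens.shape)  # (196, 768)
```

Each row of `tokens` would then be linearly projected and fed to the transformer encoder as one element of the input sequence.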

U-CSRNet. The contributions of U-CSRNet (PyTorch: https://git.openi.org.cn/OpenMedIA/U-CSRNet.Pytorch; MindSpore: https://git.openi.org.cn/OpenMedIA/U-CSRNet.Mindspore) are listed as follows:

- U-CSRNet [17] adds transposed convolution layers after the CSRNet backend so that the final output probability map has the same resolution as the input. The outputs of CSRNet and U-CSRNet are also modified from one channel to two channels to represent the two kinds of tumor cells.
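
To see how transposed convolutions can restore the input resolution, the sketch below applies the standard output-size relation for a transposed convolution with dilation 1. The 512-pixel input, the downsampling factor of 8, and the three stride-2 layers are illustrative assumptions, not values taken from U-CSRNet.

```python
def conv_transpose_out(size, kernel, stride, padding=0, output_padding=0):
    """Output length of a transposed convolution along one axis (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding

# Hypothetical setting: a 512-pixel input reduced by a factor of 8 in the backbone.
size = 512 // 8
for _ in range(3):  # three stride-2 transposed convolutions with kernel size 2
    size = conv_transpose_out(size, kernel=2, stride=2)
print(size)  # 512
```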

MTCSN. The contributions of MTCSN (PyTorch: https://git.openi.org.cn/OpenMedIA/MTCSN.Pytorch; MindSpore: https://git.openi.org.cn/OpenMedIA/MTCSN.Mindspore) are listed as follows:

- MTCSN [18] is mainly used to evaluate the image definition of capsule endoscopy.

- It is an end-to-end evaluation method.

- MTCSN uses the structure of ResNet in the encoding part and designs two multi-task branches in the decoding part: a classification branch for measuring the availability of image definition, and a segmentation branch for tissue segmentation, which generates interpretable visualizations to help doctors understand the whole image.

2.2 Medical image segmentation

Most methods are designed for 2D segmentation tasks. We also include 3D-UNet and UNETR for 3D segmentation tasks for further research.

2D-UNet. The contributions of 2D-UNet (PyTorch: https://git.openi.org.cn/OpenMedIA/2D-UNet.Pytorch; MindSpore: https://git.openi.org.cn/OpenMedIA/2D-UNet.Mindspore) are listed as follows:

- 2D-UNet [16] was proposed in 2015 as a type of neural network that directly consumes 2D images.

- The U-Net architecture achieves excellent performance on different biomedical segmentation applications. With strong data augmentation, it needs only very few annotated images and has a reasonable training time.

LOD-Net. The contributions of LOD-Net (MindSpore: https://git.openi.org.cn/OpenMedIA/LOD-Net.Mindspore) are listed as follows:

- LOD-Net [5] is mainly used for polyp segmentation.

- It is an end-to-end segmentation method based on the Mask R-CNN architecture. A parallel branch learns pixel-level directional derivatives of the feature maps, and a designed strategy measures the gradient behaviour of the pixels, which is then used to rank and screen out possible boundary regions.

- The directional derivative feature is used to enhance the features of the boundary regions and, finally, to optimize the segmentation results.

HanNet. The contributions of HanNet (PyTorch: https://git.openi.org.cn/OpenMedIA/HanNet.Pytorch; MindSpore: https://git.openi.org.cn/OpenMedIA/HanNet.Mindspore) are listed as follows:

- HanNet [14] proposes a hybrid-attention nested UNet for nuclear instance segmentation, which consists of two modules: a hybrid nested U-shaped network (H-part) and a hybrid attention block (A-part).

CDNet. The contributions of CDNet (PyTorch: https://git.openi.org.cn/OpenMedIA/CDNet.Pytorch; MindSpore: https://git.openi.org.cn/OpenMedIA/CDNet.Mindspore) are listed as follows:

- CDNet [13] proposes a novel centripetal direction network for nuclear instance segmentation.

- The centripetal feature is defined as a class of adjacent directions pointing to the nucleus center, representing the spatial relationship between pixels within a nucleus. These directional features are then used to construct a direction difference map that expresses the similarity within instances and the differences between instances.

- This method also includes a refining module for direction guidance. As a plug-and-play module, it can effectively integrate additional tasks and aggregate the features of different branches.
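
The notion of a centripetal direction class can be sketched by quantizing the direction from a pixel to its instance center into a fixed number of bins. The choice of eight classes, the bin layout, and the helper name `direction_class` are assumptions made for illustration and may differ from the CDNet implementation.

```python
import math

def direction_class(pixel, center, num_classes=8):
    """Quantize the direction from pixel to center into one of num_classes bins.

    Points are (row, col). Class 0 is centered on the +col axis and classes
    increase with the angle returned by atan2(d_row, d_col).
    """
    dy, dx = center[0] - pixel[0], center[1] - pixel[1]
    if dx == 0 and dy == 0:
        return None  # the center itself has no direction
    angle = math.atan2(dy, dx) % (2 * math.pi)
    bin_width = 2 * math.pi / num_classes
    return int((angle + bin_width / 2) // bin_width) % num_classes

center = (5, 5)
for pixel in [(5, 0), (0, 5), (5, 9), (5, 5)]:
    print(pixel, direction_class(pixel, center))
```

Pixels of the same instance point towards a common center, so neighbouring pixels share similar classes inside an instance and differ sharply across instance boundaries.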

3D-UNet. The contributions of 3D-UNet (PyTorch: https://git.openi.org.cn/OpenMedIA/3D-UNet.Pytorch; MindSpore: https://git.openi.org.cn/OpenMedIA/3D-UNet.mindspore) are listed as follows:

- 3D-UNet [6] was proposed in 2016. It is a type of neural network that directly consumes volumetric images.

- 3D-UNet extends the previous U-Net architecture by replacing all 2D operations with their 3D counterparts.

- The implementation performs on-the-fly elastic deformations for efficient data augmentation during training. It is trained end-to-end from scratch, i.e., no pre-trained network is required.

UNETR. The contributions of UNETR (PyTorch: https://git.openi.org.cn/OpenMedIA/Transformer3DSeg) are listed as follows:

- UNETR [12] was inspired by the success of transformers in NLP and uses a transformer as the encoder to learn sequence representations of the input 3D volume and capture multi-scale information.

- With the help of a U-shaped network design, the model learns the final semantic segmentation output.

2.3 Weakly supervised image localisation

Two weakly supervised medical image localisation methods are included in OpenMedIA. The methods are designed for image localisation tasks with generative adversarial network (GAN) and class activation mapping (CAM) settings, respectively. It is worth noting that WeaklyLesionLocalisation [30] also supports weakly supervised lesion segmentation. In this work, the comparisons of lesion segmentation are not included.

WeaklyLesionLocalisation. The contributions of WeaklyLesionLocalisation (PyTorch: https://git.openi.org.cn/OpenMedIA/WeaklyLesionLocalisation) are listed as follows:

- WeaklyLesionLocalisation [30] proposes a data-driven framework supervised by only image-level labels.

- The framework can explicitly separate potential lesions from original images with the help of a generative adversarial network and a lesion-specific decoder.

TS-CAM. The contributions of TS-CAM (PyTorch: https://git.openi.org.cn/OpenMedIA/TS-CAM.Pytorch) are listed as follows:

- TS-CAM [11] introduces the token semantic coupled attention map to take full advantage of the self-attention mechanism in the visual transformer for long-range dependency extraction.

- TS-CAM first splits an image into a sequence of patch tokens for spatial embedding, which produces attention maps of long-range visual dependency to avoid partial activation.

- TS-CAM then re-allocates category-related semantics to the patch tokens, making them aware of object categories. Finally, TS-CAM couples the patch tokens with the semantic-agnostic attention map to achieve semantic-aware localisation.
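
The final coupling step can be sketched as follows, assuming that coupling means an element-wise product between the class-agnostic attention map and the semantic map of the target class. The toy 2x2 maps, the min-max normalisation, and the helper name `couple_maps` are illustrative assumptions rather than the TS-CAM code.

```python
import numpy as np

def couple_maps(attention_map, semantic_maps, class_index):
    """Element-wise product of a class-agnostic attention map and one class's semantic map."""
    coupled = attention_map * semantic_maps[class_index]
    span = coupled.max() - coupled.min()
    # Min-max normalisation is an illustrative choice that eases thresholding.
    return (coupled - coupled.min()) / span if span > 0 else np.zeros_like(coupled)

attention = np.array([[0.1, 0.8], [0.2, 0.4]])     # shared across classes
semantic = np.array([[[0.9, 0.5], [0.1, 0.1]],     # class 0
                     [[0.1, 0.2], [0.9, 0.9]]])    # class 1
print(couple_maps(attention, semantic, class_index=1))
```

In this toy case the attention map alone peaks at the top-right cell, while the coupled map for class 1 peaks at the bottom-right cell, where both attention and class-1 semantics are high.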

2.4 Medical image detection

We include five popular SOTA detection methods in this library and train them on various medical image detection tasks.

LungNodules-Detection. The contributions of LungNodules-Detection (MindSpore: https://git.openi.org.cn/OpenMedIA/LungNodules-Detection.MS) are listed as follows:

- It uses CenterNet [34] as the backbone to detect lung disease.

- CenterNet is a novel and practical anchor-free method for object detection, which detects and identifies objects as axis-aligned boxes in an image.

- The detector uses keypoint estimation to find center points and regresses to all other object properties, such as size, location, orientation, and even pose.

- In essence, it is a one-stage method that simultaneously predicts the center location and boxes at real-time speed and with higher accuracy than corresponding bounding box-based detectors.
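
Finding center points from a keypoint heatmap can be sketched as a local-maximum search. The sketch assumes a 3x3 neighbourhood and a score threshold of 0.5; `heatmap_peaks` is a hypothetical helper and not part of the LungNodules-Detection code.

```python
import numpy as np

def heatmap_peaks(heatmap, threshold=0.5):
    """Return (row, col, score) for cells that are 3x3 local maxima above threshold."""
    h, w = heatmap.shape
    padded = np.pad(heatmap, 1, mode="constant", constant_values=-np.inf)
    # Stack the nine shifted views; their maximum is each cell's neighbourhood maximum.
    neighbourhood = np.stack([
        padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)
    ]).max(axis=0)
    keep = (heatmap == neighbourhood) & (heatmap >= threshold)
    return [(int(r), int(c), float(heatmap[r, c])) for r, c in zip(*np.nonzero(keep))]

heatmap = np.zeros((5, 5))
heatmap[1, 1] = 0.9
heatmap[1, 2] = 0.6   # suppressed by the stronger neighbour at (1, 1)
heatmap[3, 4] = 0.7
print(heatmap_peaks(heatmap))  # [(1, 1, 0.9), (3, 4, 0.7)]
```

Each surviving peak would then be paired with the size and offset values regressed at the same location to form a box.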

LungNodule-Detection-CNN. The contributions of LungNodule-Detection-CNN (PyTorch: https://git.openi.org.cn/OpenMedIA/LungNodule-Detection-CNN.Pytorch) are listed as follows:

- It uses YOLOv5 (https://github.com/ultralytics/yolov5), a single-stage method, to detect lung nodules.

- YOLOv5 is an object detection algorithm that divides images into a grid system. Each cell in the grid is responsible for detecting objects within itself.
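
The grid idea described above can be sketched by mapping a box center to the cell that contains it. This is a simplified illustration with a hypothetical helper `responsible_cell`; it does not reproduce the actual target assignment used in the YOLOv5 code base.

```python
def responsible_cell(box_center, image_size, grid_size):
    """Return (row, col) of the grid cell containing a box center given in pixels."""
    cx, cy = box_center
    width, height = image_size
    # Clamp so that a center lying exactly on the right or bottom border stays in the grid.
    col = min(int(cx / width * grid_size), grid_size - 1)
    row = min(int(cy / height * grid_size), grid_size - 1)
    return row, col

# Hypothetical nodule centered at (320, 100) in a 640x640 image with a 20x20 grid.
print(responsible_cell((320, 100), (640, 640), 20))  # (3, 10)
```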

LungNodule-Detection-Transformer. The contributions of LungNodule-Detection-Transformer (PyTorch: https://git.openi.org.cn/OpenMedIA/LungNodule-Detection-Transformer.Pytorch) are listed as follows:

- It uses DETR [2] to detect the location of lung nodules.

- DETR is an end-to-end object detection framework based on the transformer and shows promising performance.

EllipseNet-fit. The contributions of EllipseNet-fit (MindSpore: https://git.openi.org.cn/OpenMedIA/EllipseFit.Mindspore) are listed as follows:

- Compared to fetal head ellipse detection, fetal echocardiographic measurement is challenged by the moving heart and shadowing artifacts around the fetal sternum.

EllipseNet. The contributions of EllipseNet (PyTorch: https://git.openi.org.cn/OpenMedIA/EllipseNet) are listed as follows:

- EllipseNet [3] is an anchor-free ellipse detection network that detects the cardiac and thoracic regions as ellipses and automatically calculates the cardiothoracic ratio and cardiac axis for fetal cardiac biometrics in the 4-chamber view.

- The detection network detects each object's center as a point and simultaneously regresses the ellipse parameters. A rotated intersection-over-union loss is defined to further regulate the regression module.

3 Benchmarks and results

Algorithms with PyTorch versions were implemented and tested on NVIDIA GeForce 1080/2080Ti and Tesla V100 (https://www.nvidia.com/en-us/data-center/v100/). Algorithms with MindSpore versions were trained and evaluated on Huawei Ascend 910 (https://e.huawei.com/en/products/cloud-computing-dc/atlas/ascend-910). Huawei Ascend 910, released in 2019, is reported to deliver twice the performance of the rival NVIDIA Tesla V100 (https://www.jiqizhixin.com/articles/2019-08-23-7).

3.1 Datasets

We conduct seventeen experiments on ten medical image datasets to evaluate different implementations of deep medical image analysis algorithms. For 2D classification tasks, the Covid-19 CT image dataset (https://covid-segmentation.grand-challenge.org), denoted as Covid-Classification in this paper, and the BCData [17] and Endoscope [18] datasets were included. For 2D image segmentation tasks, besides the COVID lesion segmentation in the same Covid-19 CT image dataset, denoted as Covid-Segmentation, we also generated a lung segmentation dataset derived from it, denoted as 2D-Lung-Segmentation (https://git.openi.org.cn/OpenMedIA/2D-UNet.Pytorch/datasets). In addition, ETIS-LaribPolypDB [25] and MoNuSeg [19] were used in this study. For 3D image segmentation tasks, we adopted the MM-WHS [36] dataset. For 2D image detection tasks, both LUNA16 (https://luna16.grand-challenge.org/) and the fetal four chamber view ultrasound (FFCV) dataset [3] were evaluated. Some of the datasets with public copyright licenses have been uploaded to OpenMedIA.

Table 2: Performance of the original papers and of the re-implementations.

| Algorithm | Dataset | Metric | Original | Re-implementation (PyTorch/MindSpore) |
| --- | --- | --- | --- | --- |
| Covid-ResNet [9] | Covid-Classification | Acc | 0.96 | 0.97/0.97 |
| Covid-Transformer [7] | Covid-Classification | Acc | - | 0.87/- |
| U-CSRNet [17] | BCData | F1 | 0.85 | 0.85/0.85 |
| MTCSN [18] | Endoscope | Acc | 0.80 | 0.80/0.75 |
| 2D-UNet [16] | 2D-Lung-Segmentation | Dice | - | 0.76/0.76 |
| LOD-Net [5] | ETIS-LaribPolypDB | Dice | 0.93 | -/0.91 |
| Han-Net [14] | MoNuSeg | Dice | 0.80 | 0.80/0.79 |
| CD-Net [13] | MoNuSeg | Dice | 0.80 | 0.80/0.80 |
| 3D-UNet [28] | MM-WHS | Dice | 0.85 | 0.88/0.85 |
| UNETR [12] | MM-WHS | Dice | - | 0.84/- |
| WeaklyLesionLocalisation [30] | Covid-Segmentation | | | |
| TS-CAM [11] | Covid-Segmentation | AUC | - | 0.50/- |
| LungNodules-Detection [24] | Luna16 | mAP | - | -/0.51 |
| LungNodule-Detection-CNN | Luna16 | mAP | - | 0.72/- |
| LungNodule-Detection-Transformer [2] | Luna16 | mAP | - | 0.72/- |
| EllipseNet-Fit [3, 27] | FFCV | Dice | 0.91 | 0.91/0.91 |
| EllipseNet [3] | FFCV | Dice | 0.93 | 0.93/- |

3.2 Metrics

For 2D classification tasks, accuracy (Acc) [22] and F1 score [22] are used to evaluate the classification accuracy. For 2D/3D segmentation tasks, the Dice score [6] is used to measure the segmentation accuracy. For weakly supervised lesion localisation and segmentation tasks, AUC [20] and the Dice score [6] are used for quantitative comparisons. For 2D detection tasks, mAP [8], AUC [20], and the Dice score are used for evaluation.
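
For reference, the Dice score, accuracy, and F1 score can be computed as in the minimal sketch below. It assumes binary masks and binary labels; the helper names and the small `eps` smoothing term are illustrative choices and are not taken from the OpenMedIA evaluation scripts.

```python
import numpy as np

def dice_score(pred, target, eps=1e-8):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    return (2.0 * intersection + eps) / (pred.sum() + target.sum() + eps)

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))

def binary_f1(y_true, y_pred):
    """F1 = 2TP / (2TP + FP + FN) for binary labels, with 1 as the positive class."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0

pred_mask = [[1, 1], [0, 0]]
true_mask = [[1, 0], [0, 0]]
print(round(dice_score(pred_mask, true_mask), 3))   # 0.667
print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))         # 0.75
print(binary_f1([1, 0, 1, 1], [1, 0, 0, 1]))        # 0.8
```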

3.3 Evaluation on image classification

In Table 2, Rows 1-4 show the accuracy of four image classification algorithms with different implementations. On Covid-Classification, the CNN backbone (0.97) outperforms the Transformer backbone (0.87), which suggests that Transformers are more difficult to train and usually require more training samples. The PyTorch and MindSpore implementations achieve the same accuracy for Covid-ResNet and U-CSRNet, while the MindSpore version of MTCSN is 0.05 lower (0.75 versus 0.80). It should be noted that the reported classification algorithms and results are implemented and verified in 2D scenarios.

3.4 Evaluation on image segmentation

Both 2D and 3D segmentation methods are evaluated, and their results are shown in Rows 5-10 of Table 2. For the 2D segmentation tasks shown in Rows 5-8, our implementations achieve performance comparable to the reported results. For the 3D segmentation tasks shown in Rows 9-10, 3D-UNet is based on a CNN architecture, while UNETR uses a Transformer as the backbone. It is also worth noting that the 2D algorithms achieve similar results in both the MindSpore and PyTorch frameworks, while the 3D-UNet algorithm implemented in MindSpore shows a 0.03 drop in Dice score compared to the PyTorch re-implementation, which indicates that the MindSpore version could be further optimized in the future, especially regarding the 3D data augmentation libraries.
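
The framework gaps discussed above can be recomputed directly from the Table 2 entries. The sketch below copies the Dice scores of the segmentation algorithms that have both versions; the helper name `framework_gaps` and the tolerance are illustrative assumptions.

```python
# (PyTorch, MindSpore) Dice scores copied from Rows 5-10 of Table 2,
# restricted to algorithms that have both implementations.
results = {
    "2D-UNet": (0.76, 0.76),
    "Han-Net": (0.80, 0.79),
    "CD-Net": (0.80, 0.80),
    "3D-UNet": (0.88, 0.85),
}

def framework_gaps(results, tolerance=0.005):
    """Return PyTorch-minus-MindSpore gaps whose magnitude exceeds the tolerance."""
    gaps = {}
    for name, (pytorch_score, mindspore_score) in results.items():
        gap = round(pytorch_score - mindspore_score, 2)
        if abs(gap) > tolerance:
            gaps[name] = gap
    return gaps

print(framework_gaps(results))  # {'Han-Net': 0.01, '3D-UNet': 0.03}
```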

3.5 Evaluation on weakly supervised image localisation

For medical image localisation, two well-known weakly supervised methods are reported in Rows 11-12. Following the settings reported in [30], both methods are evaluated on the Covid-Segmentation dataset. MindSpore implementations will be added in the future.

3.6 Evaluation on image detection

For image detection, the results in Rows 13-15 show that networks based on both CNN and Transformer backbones are applicable to medical image detection tasks. YOLOv5m is used for the CNN-based network. In many medical image detection scenarios, rotated ellipses and/or bounding boxes are more suitable, considering the rotated and ellipse-shaped targets. In this study, we include EllipseNet [3] and its variant [27] in OpenMedIA. Rows 16-17 show that EllipseNet delivers the highest accuracy for rotated ellipse detection tasks.

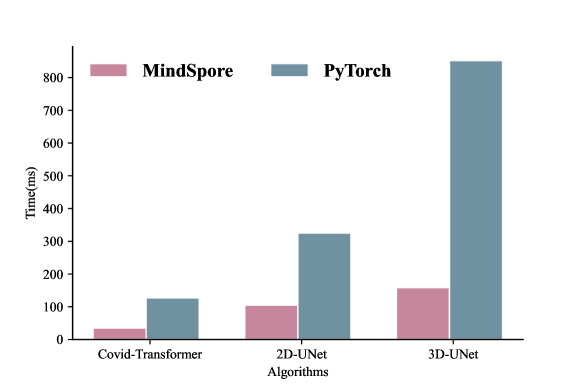

3.7 Evaluation on time efficiency

To evaluate the time efficiency of different implementations with PyTorch and MindSpore, we select one method from each category and measure their inference time, as shown in Figure 1. For fair comparison, all the algorithms implemented with PyTorch were assessed on a single NVIDIA Tesla V100, while those implemented with MindSpore were tested on a single Huawei Ascend 910. All the tested algorithms are more time-efficient in the MindSpore environment than in the PyTorch setting: the time cost of the former is less than 1/3 of that of the latter, which is consistent with the reported time efficiency advantage of MindSpore and Huawei Ascend 910.
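
A framework-agnostic way to measure average inference time is sketched below. The warm-up count, the number of repeats, and the `dummy_model` stand-in are assumptions for illustration; this is not the benchmarking script used to produce Figure 1.

```python
import statistics
import time

def mean_inference_time(predict, inputs, warmup=2, repeats=5):
    """Average wall-clock seconds per input for a callable `predict`."""
    for sample in inputs[:warmup]:
        predict(sample)  # warm-up runs are excluded from the timing
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        for sample in inputs:
            predict(sample)
        timings.append((time.perf_counter() - start) / len(inputs))
    return statistics.mean(timings)

def dummy_model(x):
    """Stand-in for a real model's forward pass."""
    return sum(v * v for v in x)

inputs = [list(range(1000)) for _ in range(8)]
print(f"{mean_inference_time(dummy_model, inputs):.6f} s per input")
```

On accelerators that execute asynchronously, `predict` should block until the device has finished (for example by copying the output back to the host) so that the clock measures completed work.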

4 Conclusions and future works

This paper introduces OpenMedIA, an open-source heterogeneous AI computing algorithm library for medical image analysis. We summarise the contributions of a set of SOTA deep learning methods and re-implement them with both the PyTorch and MindSpore AI frameworks. This work not only reports the model training/inference accuracy, but also includes the performance of the algorithms on various NVIDIA GPU and Huawei Ascend NPU hardware. OpenMedIA aims to boost AI development in the medical image analysis domain with open-source, easy-to-use implementations, benchmark models, and open-source computational power. More SOTA algorithms under various AI platforms, such as self-supervised methods, will be added in the future.

Acknowledgement

The computing resources of Pengcheng Cloudbrain are used in this research. We acknowledge the support provided by OpenI Community (https://git.openi.org.cn).

References

- [1] Abadi, M., Barham, P., Chen, J., Chen, Z., Davis, A., Dean, J., Devin, M., Ghemawat, S., Irving, G., Isard, M., et al.: TensorFlow: a system for Large-Scale machine learning. In: OSDI. pp. 265–283 (2016)

- [2] Carion, N., Massa, F., Synnaeve, G., Usunier, N., Kirillov, A., Zagoruyko, S.: End-to-end object detection with transformers. In: ECCV. pp. 213–229. Springer (2020)

- [3] Chen, J., Zhang, Y., Wang, J., Zhou, X., He, Y., Zhang, T.: Ellipsenet: Anchor-free ellipse detection for automatic cardiac biometrics in fetal echocardiography. In: MICCAI. pp. 218–227. Springer (2021)

- [4] Chen, T., Li, M., Li, Y., Lin, M., Wang, N., Wang, M., Xiao, T., Xu, B., Zhang, C., Zhang, Z.: Mxnet: A flexible and efficient machine learning library for heterogeneous distributed systems. NeurIPS Workshop (2015)

- [5] Cheng, M., Kong, Z., Song, G., Tian, Y., Liang, Y., Chen, J.: Learnable oriented-derivative network for polyp segmentation. In: MICCAI. pp. 720–730 (2021)

- [6] Çiçek, Ö., Abdulkadir, A., Lienkamp, S.S., Brox, T., Ronneberger, O.: 3d u-net: learning dense volumetric segmentation from sparse annotation. In: International conference on medical image computing and computer-assisted intervention. pp. 424–432. Springer (2016)

- [7] Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., et al.: An image is worth 16x16 words: Transformers for image recognition at scale. ICLR (2020)

- [8] Everingham, M., Van Gool, L., Williams, C.K.I., Winn, J., Zisserman, A.: The PASCAL Visual Object Classes Challenge 2007 (VOC2007) Results. http://www.pascal-network.org/challenges/VOC/voc2007/workshop/index.html

- [9] Farooq, M., Hafeez, A.: Covid-resnet: A deep learning framework for screening of covid19 from radiographs. Arxiv (2020)

- [10] Floridi, L., Chiriatti, M.: Gpt-3: Its nature, scope, limits, and consequences. Minds Mach. 30(4), 681–694 (2020)

- [11] Gao, W., Wan, F., Pan, X., Peng, Z., Tian, Q., Han, Z., Zhou, B., Ye, Q.: Ts-cam: Token semantic coupled attention map for weakly supervised object localization. In: ICCV. pp. 2886–2895 (2021)

- [12] Hatamizadeh, A., Tang, Y., Nath, V., Yang, D., Myronenko, A., Landman, B., Roth, H.R., Xu, D.: Unetr: Transformers for 3d medical image segmentation. In: WACV. pp. 574–584 (2022)

- [13] He, H., Huang, Z., Ding, Y., Song, G., Wang, L., Ren, Q., Wei, P., Gao, Z., Chen, J.: Cdnet: Centripetal direction network for nuclear instance segmentation. In: CVPR. pp. 4026–4035 (2021)

- [14] He, H., Zhang, C., Chen, J., Geng, R., Chen, L., Liang, Y., Lu, Y., Wu, J., Xu, Y.: A hybrid-attention nested unet for nuclear segmentation in histopathological images. Front. Mol. Biosci. 8, 6 (2021)

- [15] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR. pp. 770–778 (2016)

- [16] Hofmanninger, J., Prayer, F., Pan, J., Röhrich, S., Prosch, H., Langs, G.: Automatic lung segmentation in routine imaging is primarily a data diversity problem, not a methodology problem. European Radiology Experimental 4(1), 1–13 (2020)

- [17] Huang, Z., Ding, Y., Song, G., Wang, L., Geng, R., He, H., Du, S., Liu, X., Tian, Y., Liang, Y., et al.: Bcdata: A large-scale dataset and benchmark for cell detection and counting. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 289–298. Springer (2020)

- [18] Kong, Z., He, M., Luo, Q., Huang, X., Wei, P., Cheng, Y., Chen, L., Liang, Y., Lu, Y., Li, X., et al.: Multi-task classification and segmentation for explicable capsule endoscopy diagnostics. Front. Mol. Biosci. 8 (2021)

- [19] Kumar, N., Verma, R., Sharma, S., Bhargava, S., Vahadane, A., Sethi, A.: A dataset and a technique for generalized nuclear segmentation for computational pathology. IEEE TMI 36(7), 1550–1560 (2017)

- [20] Ling, C.X., Huang, J., Zhang, H., et al.: Auc: a statistically consistent and more discriminating measure than accuracy. In: IJCAI. vol. 3, pp. 519–524 (2003)

- [21] Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., et al.: Pytorch: An imperative style, high-performance deep learning library. NeurIPS 32 (2019)

- [22] Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., Duchesnay, E.: Scikit-learn: Machine learning in Python. Journal of Machine Learning Research 12, 2825–2830 (2011)

- [23] Rueckert, D., Schnabel, J.A.: Model-based and data-driven strategies in medical image computing. Proceedings of the IEEE 108(1), 110–124 (2019)

- [24] Setio, A.A.A., Traverso, A., De Bel, T., Berens, M.S., Van Den Bogaard, C., Cerello, P., Chen, H., Dou, Q., Fantacci, M.E., Geurts, B., et al.: Validation, comparison, and combination of algorithms for automatic detection of pulmonary nodules in computed tomography images: the luna16 challenge. MIA 42, 1–13 (2017)

- [25] Siegel, R.L., Miller, K.D., Goding Sauer, A., Fedewa, S.A., Butterly, L.F., Anderson, J.C., Cercek, A., Smith, R.A., Jemal, A.: Colorectal cancer statistics, 2020. CA: a cancer journal for clinicians 70(3), 145–164 (2020)

- [26] Silver, D., Schrittwieser, J., Simonyan, K., Antonoglou, I., Huang, A., Guez, A., Hubert, T., Baker, L., Lai, M., Bolton, A., et al.: Mastering the game of go without human knowledge. Nature 550(7676), 354–359 (2017)

- [27] Sinclair, M., Baumgartner, C.F., Matthew, J., Bai, W., Martinez, J.C., Li, Y., Smith, S., Knight, C.L., Kainz, B., Hajnal, J., et al.: Human-level performance on automatic head biometrics in fetal ultrasound using fully convolutional neural networks. In: EMBC. pp. 714–717. IEEE (2018)

- [28] Tong, Q., Ning, M., Si, W., Liao, X., Qin, J.: 3d deeply-supervised u-net based whole heart segmentation. In: International Workshop on Statistical Atlases and Computational Models of the Heart. pp. 224–232. Springer (2017)

- [29] Uus, A., Zhang, T., Jackson, L.H., Roberts, T.A., Rutherford, M.A., Hajnal, J.V., Deprez, M.: Deformable slice-to-volume registration for motion correction of fetal body and placenta mri. IEEE Transactions on Medical Imaging 39(9), 2750–2759 (2020)

- [30] Yang, Y., Chen, J., Wang, R., Ma, T., Wang, L., Chen, J., Zheng, W.S., Zhang, T.: Towards unbiased covid-19 lesion localisation and segmentation via weakly supervised learning. In: ISBI. pp. 1966–1970 (2021)

- [31] Zhang, T., Biswal, S., Wang, Y.: Shmnet: Condition assessment of bolted connection with beyond human-level performance. Struct. Health Monit. 19(4), 1188–1201 (2020)

- [32] Zhang, T., Jackson, L.H., Uus, A., Clough, J.R., Story, L., Rutherford, M.A., Hajnal, J.V., Deprez, M.: Self-supervised recurrent neural network for 4d abdominal and in-utero mr imaging. In: Knoll, F., Maier, A., Rueckert, D., Ye, J.C. (eds.) Machine Learning for Medical Image Reconstruction. pp. 16–24 (2019)

- [33] Zhou, W., Yang, Y., Yu, C., Liu, J., Duan, X., Weng, Z., Chen, D., Liang, Q., Fang, Q., Zhou, J., et al.: Ensembled deep learning model outperforms human experts in diagnosing biliary atresia from sonographic gallbladder images. Nature Communications 12(1), 1–14 (2021)

- [34] Zhou, X., Wang, D., Krähenbühl, P.: Objects as points. ECCV (2020)

- [35] Zhuang, J., Cai, J., Wang, R., Zhang, J., Zheng, W.S.: Deep knn for medical image classification. In: MICCAI. pp. 127–136. Springer (2020)

- [36] Zhuang, X.: Challenges and methodologies of fully automatic whole heart segmentation: a review. Journal of healthcare engineering 4(3), 371–407 (2013)