Opportunistic Advertisement Scheduling in Live Social Media: A Multiple Stopping Time POMDP Approach

Abstract

Live online social broadcasting services like YouTube Live and Twitch have steadily gained popularity due to improved bandwidth, ease of generating content and the ability to earn revenue on the generated content. In contrast to traditional cable television, revenue in online services is generated solely through advertisements, and depends on the number of clicks generated. Channel owners aim to opportunistically schedule advertisements so as to generate maximum revenue. This paper considers the problem of optimal scheduling of advertisements in live online social media. The problem is formulated as a multiple stopping problem and is addressed in a partially observed Markov decision process (POMDP) framework. Structural results are provided on the optimal advertisement scheduling policy. By exploiting the structure of the optimal policy, best linear thresholds are computed using stochastic approximation. The proposed model and framework are validated on real datasets, and the following observations are made: (i) The policy obtained by the multiple stopping problem can be used to detect changes in ground truth from online search data (ii) Numerical results show a significant improvement in the expected revenue by opportunistically scheduling the advertisements. The revenue can be improved by in comparison to currently employed periodic scheduling.

Index Terms:

scheduling, advertisement, live channel, POMDP, multiple stopping, structural result

I Introduction

The popularity of online video streaming has grown sharply due to improved bandwidth for streaming and the ease of sharing User-Generated Content (UGC) on internet platforms. One of the primary motivations for users to generate content is that platforms like YouTube and Twitch allow users to generate revenue through advertising and royalties. The revenue of Twitch, which deals with live video gaming, playthroughs of video games, and e-sport competitions, was around 3.8 billion for the year 2015, of which 77% was generated from advertisements (see http://www.tubefilter.com/2015/07/10/twitch-global-gaming-content-revenue-3-billion/).

Some of the common ways advertisements (ads) are scheduled on pre-recorded video content on social media like YouTube are pre-roll, mid-roll and post-roll, where the names indicate the time at which the ads are displayed. In recent research on viewer engagement, Adobe Research (https://gigaom.com/2012/04/16/adobe-ad-research/) concluded that mid-roll video ads constitute the most engaging ad type for pre-recorded video content, outperforming pre-roll and post-roll ads when it comes to completion rate (the probability that the ad will not be skipped). Viewers are more likely to engage with an ad if they are interested in the content of the video that the ad has been inserted into. Until recently, most content sharing platforms hosted pre-recorded videos, owing to the higher bandwidth requirements of real-time video streaming. However, this has changed with improved bandwidths (e.g. Google Fiber, Comcast Xfinity) and with well-known content sharing websites like YouTube and Facebook making provisions for live streaming videos to capture major events in real time. For example, as early as 2011, millions watched the Royal wedding live through YouTube, and, more recently, the Caribbean Premier League is scheduled to be broadcast live on Facebook. Online live video streaming, popularly known as “Social TV”, now boasts a number of popular applications like Twitch, YouTube Live, Facebook Live, etc.

When a channel is streaming a live video, the mid-roll ads need to be scheduled manually (see YouTube Live: Slate and Ad Insertion, https://is.gd/i6c7ku). Twitch allows only periodic ad scheduling [1], and YouTube and other live services currently offer no automated method of scheduling ads for live channels. The ad revenue in a live channel depends on the click rate (the probability that the ad will be clicked), which in turn depends on the viewer engagement with the channel content. Hence, ads need to be scheduled when the viewer engagement of the channel is high. The problem of optimal scheduling of ads has been well studied in the context of advertising in television; see [2], [3], [4] and the references therein. However, scheduling ads on live online social media differs from scheduling ads on television in two significant ways [5]: (i) viewer engagement is measured in real time, and (ii) revenue is based on ads rather than a negotiated contract. Prior literature on scheduling ads on social media is limited to ad scheduling in real time for social network games, where the ads are served either to video game consoles in real time over the Internet [6], or in digital games that are played via major social networks [7].

Footnote links for the live streaming examples in the Introduction: Royal wedding live on YouTube, http://www.telegraph.co.uk/news/uknews/royal-wedding/8460801/Royal-wedding-Kate-and-William-marriage-live-on-YouTube.html; Caribbean Premier League on Facebook Live, http://www.si.com/tech-media/2016/07/06/caribbean-premier-league-matches-facebook-live

Problem Formulation: This paper deals with optimal scheduling of ads on live channels in social media by taking viewer engagement into account, termed active scheduling, to maximize the revenue generated from the advertisements. We model the viewer engagement of the channel using a Markov chain [8, 9]. The viewer engagement is not observed directly; instead, a noisy observation of it is obtained from the current number of viewers of the channel. Hence, the problem of computing the optimal policy for scheduling ads on a live channel can be formulated as an instance of a stochastic control problem called the partially observable Markov decision process (POMDP). To the best of our knowledge, this is the first time in the literature that ad scheduling in live channels on social media is studied in a POMDP framework. The main contribution of this paper is two-fold:

1) We provide a POMDP framework for the optimal ad-scheduling problem on live channels and show that it is an instance of the optimal multiple stopping problem.

2) We provide structural results on the optimal policy of the multiple stopping problem and, using stochastic approximation, compute the best approximate policy.

Structural Results: The problem of optimal multiple stopping has been well studied in the literature; see [10], [11], [12], [13] and the references therein. The optimal multiple stopping problem generalizes the classical (single) stopping problem, where the objective is to stop once to obtain maximum reward. Nakai [10] considers optimal -stopping over a finite horizon of length in a partially observed Markov chain. More recently, [13] considers -stopping over a random horizon. The state of the finite horizon partially observed Markov chain in [10] can be summarized by the “belief state”, which is a sufficient statistic for all the past observations and actions [14]. For a stopping time POMDP, the policy can be characterized by the stopping region (the set of belief states where we stop) and the continuance region (the set of belief states where we do not stop). Nakai [10] shows that there are stopping regions corresponding to each time index and stops and these regions form a nested structure. However, in live channels, the time horizon is very large (in comparison to decision epochs) or initially unknown. Therefore, we extend the results in Nakai [10] to the infinite horizon case. The extension is both important and non-trivial. In the infinite horizon case, the policy is stationary (the stopping regions do not depend on the time index) and hence the stopping regions characterize the policy. We obtain structural results similar to those of [10] in the infinite horizon case.

Our main structural result (Theorem 1) is that the optimal policy is characterized by a switching curve on the unit simplex of Bayesian posteriors (belief states). To prove this result we use the monotone likelihood ratio (MLR) stochastic order since it is preserved under conditional expectations. However, determining the optimal policy is non-trivial since the policy can only be characterized on a partially ordered set (more generally a lattice) within the unit simplex. We modify the MLR stochastic order to operate on line segments within the unit simplex of posterior distributions. Such line segments form chains (totally ordered subsets of a partially ordered set) and permit us to prove that the optimal decision policy has a threshold structure. Having established the existence of a threshold curve, Theorem 2 and Theorem 3 give necessary and sufficient conditions for the best linear hyperplane approximation to this curve. Then a simulation-based stochastic approximation algorithm (Algorithm 1) is presented to compute this best linear hyperplane approximation.

Context: The optimal multiple stopping problem can be contrasted to the recent work on sampling with “causality constraints”. In sampling with causality constraints, not all the observations are observable. [15] considers the case where an agent is limited to a finite number of observations (sampling constraints) and must adaptively decide the observation strategy so as to perform quickest detection on a data stream. The extension to the case where the sampling constraints are replenished randomly is considered in [16]. In the multiple stopping problem, considered in this paper, there is no constraint on the observations and the objective is to stop times at states that correspond to maximum reward.

The optimal multiple stopping problem, considered in this paper, is similar to sequential hypothesis testing [17, 18], sequential scheduling problem with uncertainty [19] and the optimal search problem considered in the literature. [20] and [21] consider the problem of finding the optimal launch times for a firm under strategic consumers and competition from other firms to maximize profit. However, in this paper we deal with sequential scheduling in a partially observed case. [22],[23] consider an optimal search problem where the searcher receives imperfect information on a (static) target location and decides optimally to search or interdict by solving a classical optimal stopping problem (). However, the multiple-stopping problem considered in this paper is equivalent to a search problem where the underlying process is evolving (Markovian) and the searcher needs to optimally stop times to achieve a specific objective.

The paper is organized as follows: Section II provides a model of a live channel and introduces the notation, assumptions and key definitions. Section III provides the main results of the paper. First, similar to [10], we show that the stopping regions of the optimal ad scheduling policy form a nested structure. Second, we show the threshold structure of the optimal ad scheduling policy. In Section IV, we use the nested property of the stopping regions and the threshold property from Section III, together with a stochastic approximation algorithm, to compute the best approximate policies using linear thresholds. Such linear threshold policies are computationally inexpensive to implement. In Section V, we validate the model on three different datasets. First, we illustrate the analysis using a synthetic dataset and verify the performance of the optimal ad scheduling policy against conventional scheduling policies. Second, we show that the policy obtained by the multiple stopping problem can be used to detect changes in ground truth using data from online search. Finally, we use real datasets from YouTube Live and Twitch to optimally schedule multiple ads () in a sequential manner so as to maximize the revenue.

II Opportunistic Scheduling on Live Channels: Model and Problem Formulation

II-A Live Channel Model

In this section, we develop a model of the live channel. The three main components of the live channel are i) Viewer engagement: How to model the viewer engagement of a live channel? ii) Dynamics of the channel viewers: How does the number of viewers vary with respect to the engagement? iii) Reward of the channel owner: What is the (monetary) reward obtained by the channel owner through advertising? Below, we develop models to address each of these questions.

1) Dynamics of viewer engagement: Similar to [24], viewer engagement, in the context of live channels, can be defined as the following process: (i) The viewer decides to watch the live channel. (ii) The viewer is “engaged” with the content of the live channel. (iii) The viewer will watch the live content without switching to other channels. (iv) The viewer is more attentive when watching the live content. The potential benefit of (iii) and (iv) above is that they increase the odds of the viewers being exposed to and persuaded by advertisements.

Viewer engagement, as defined above, is an abstract concept which captures viewer attitude, behaviour and attentiveness. Archak et al. [8, 9] developed a Markov chain model for the online behaviour of users and the effects of advertising. Their main finding is that user behaviour in online social media can be approximated by a first-order Markov chain. Following Archak et al. [8, 9], we model the viewer engagement at time , denoted by , as an -state Markov chain with state-space . The dynamics of the viewer engagement of the channel, modelled as a Markov chain, can be characterized by the transition matrix and initial probability vector as follows:

(1)

The Markov chain model for the viewer engagement of the channel is validated using simulations in Sec. V.

2) Dynamics of channel viewers: The number of viewers at time depends on the viewer engagement of the live channel. As viewers become more engaged with the content, they are less likely to switch channels. Hence, a higher viewer engagement state has a higher number of viewers than a lower engagement state. We therefore model the dynamics of channel viewers as follows: the number of viewers at time , denoted by , belongs to the countably infinite set of non-negative integers. Denote the conditional probability of viewers () in viewer engagement state () by . Note that the conditional probability is assumed to be time homogeneous, i.e. it does not depend on the time index, . The number of viewers is modeled as a Poisson random variable with state dependent mean , based on evidence in [25] and [11], as follows:

(2)

The states with higher state dependent mean correspond to states with higher viewer engagement.

The channel owner does not observe the true viewer engagement of the channel, . However, at each time instant , the channel owner receives a noisy observation of the viewer engagement of the channel by the number of viewers, . Hence, the channel owner needs to estimate the viewer engagement using the history of noisy observations and schedule ads.

3) Reward of channel owner: The channel owner agrees to show ads during the live session, which are decided prior to the beginning of the session. For example, the ads during the Super Bowl 50 on YouTube Live had to be booked in advance (http://www.campaignlive.co.uk/article/youtube-launches-real-time-ads-major-live-events-starting-super-bowl-50/1380260). Hence, at each time instant , the channel owner chooses an action as follows: the channel owner can continue with the live session (denoted by Continue) or can pause the live session to insert an ad (denoted by Stop). Hence, denote the actions available to the channel owner at time ; the Stop and Continue actions will be denoted by and , respectively, in the remainder of the paper. This problem of scheduling the ads opportunistically, so as to obtain maximum revenue, corresponds to a multiple stopping problem with stops.

Choosing to stop at time (and schedule an ad), with stops remaining ( ads remaining), the channel owner will accrue a reward , where is the state of the Markov chain at time . The reward obtained by the channel owner depends on two factors: (i) the number of viewers and (ii) the completion rate and click rate of the ad. To capture these, we model the reward as follows:

(3)

In (3), captures the average number of viewers in any viewer engagement state. The term captures the completion and click rate of the ads at any viewer engagement state. Similarly, if the channel owner chooses to continue, the owner will accrue . When an ad is not shown, the reward obtained by the channel owner is usually zero, hence, .

Hence, to maximize revenue, the channel owner needs to opportunistically schedule ads at time slots when the viewer engagement is high, corresponding to a higher number of viewers and higher click rate.
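The three model components above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' implementation: the three-state transition matrix `P`, the Poisson means `means`, the click rates `click_rate`, and the product form of `reward` are all hypothetical values chosen for the example, with state 0 standing for the most engaged state.

```python
import math
import random

def sample_poisson(rng, lam):
    """Draw one Poisson(lam) sample with Knuth's method (adequate for moderate lam)."""
    limit = math.exp(-lam)
    count, prod = 0, rng.random()
    while prod > limit:
        count += 1
        prod *= rng.random()
    return count

def sample_categorical(rng, probs):
    """Draw an index from a discrete distribution given as a list of probabilities."""
    u, acc = rng.random(), 0.0
    for i, p in enumerate(probs):
        acc += p
        if u < acc:
            return i
    return len(probs) - 1  # guard against floating-point rounding

def simulate_channel(P, pi0, means, horizon, seed=0):
    """Simulate hidden engagement states and the observed viewer counts."""
    rng = random.Random(seed)
    states, viewers = [], []
    x = sample_categorical(rng, pi0)
    for _ in range(horizon):
        states.append(x)
        viewers.append(sample_poisson(rng, means[x]))  # noisy observation of the state
        x = sample_categorical(rng, P[x])              # first-order Markov transition
    return states, viewers

# Hypothetical example values (assumptions, not taken from the paper).
P = [[0.8, 0.2, 0.0], [0.1, 0.8, 0.1], [0.0, 0.2, 0.8]]
pi0 = [1 / 3, 1 / 3, 1 / 3]
means = [30.0, 15.0, 5.0]        # mean viewers per state, decreasing in the state index
click_rate = [0.06, 0.03, 0.01]  # completion and click rate per state
# Assumed reward form: mean viewers times click rate in each state.
reward = [m * c for m, c in zip(means, click_rate)]

states, viewers = simulate_channel(P, pi0, means, horizon=50)
```

The channel owner would only see `viewers`; `states` is kept here so that a scheduling rule can be scored against the hidden engagement.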

II-B Ad Scheduling : Problem formulation & Stochastic Dynamic Programming

II-B1 Problem Formulation

The ad scheduling policy (or the control policy) prescribes a decision rule that determines the action taken by the channel owner. Let the initial probability vector, and the history of past observations at time for the channel owner be denoted as . The control policy, at time , maps the history to an action. Hence, the policy of the channel owner belongs to the set of admissible policies . Below, we reformulate the sequential multiple stopping problem of scheduling ads in terms of the belief state.

Let denote the belief space of all -dimensional probability vectors. The belief-state is a sufficient statistic of [14], and evolves as , where

| (4) |

Here represents the -dimensional vector of ones.
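A belief update of this kind is the standard hidden Markov model filter: predict with the transition matrix, weight by the likelihood of the new observation, and normalize. The sketch below assumes the Poisson observation model of Sec. II-A. The function names and the example values of `P` and `means` are hypothetical.

```python
import math

def poisson_pmf(y, lam):
    """Poisson probability mass at y for mean lam, computed in log space."""
    return math.exp(y * math.log(lam) - lam - math.lgamma(y + 1))

def belief_update(pi, y, P, means):
    """One filter step: returns the updated belief and the normalizing constant.

    pi    : current belief (list of state probabilities)
    y     : newly observed number of viewers
    P     : transition matrix, P[i][j] = Prob(next state j | current state i)
    means : Poisson mean of the viewer count in each state
    """
    n = len(pi)
    predicted = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    unnormalized = [poisson_pmf(y, means[j]) * predicted[j] for j in range(n)]
    sigma = sum(unnormalized)  # probability of observing y under the current belief
    return [u / sigma for u in unnormalized], sigma

# Hypothetical example: a uniform prior followed by a large viewer count.
P = [[0.8, 0.2, 0.0], [0.1, 0.8, 0.1], [0.0, 0.2, 0.8]]
means = [30.0, 15.0, 5.0]
posterior, sigma = belief_update([1 / 3, 1 / 3, 1 / 3], 30, P, means)
```

Observing 30 viewers moves almost all of the posterior mass onto state 0, the state with mean 30. For observations far in the tail of every state, `sigma` can underflow, so a production filter would work with log-likelihoods throughout.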

The aim is to compute the optimal stationary ad scheduling policy as a function of the belief, , and the number of stops (or the number of ads) remaining, , to maximize the infinite horizon criterion defined in (6). Let denote the stopping time when there are stops remaining:

| (5) |

The infinite horizon discounted criterion with stationary policy , and initial belief is as below:

| (6) |

| (7) |

where . In (6) and (7), denotes the discount factor. Below, we study the special case, where and , i.e. the reward for stopping and scheduling an ad is independent of the number of stops remaining and the channel owner accrues no reward for continuing. The extension to the general case is straightforward.
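For the special case just described (a stop reward that does not depend on the number of stops remaining, and zero reward for continuing), the discounted revenue of a single sample path reduces to a discounted sum over the stopping times. The helper below is a hypothetical illustration of that arithmetic. It scores one realized path using the true states, whereas the criterion in the text is an expectation over such paths.

```python
def discounted_revenue(states, stop_times, reward, rho):
    """Discounted revenue of one sample path.

    states     : realized engagement state at each time step
    stop_times : time indices at which an ad was scheduled
    reward     : reward for stopping in each state
    rho        : discount factor in [0, 1)
    """
    if not 0.0 <= rho < 1.0:
        raise ValueError("discount factor must lie in [0, 1)")
    return sum(rho ** t * reward[states[t]] for t in stop_times)

# Two ads shown at times 0 and 2 on a three-step path.
example = discounted_revenue(states=[0, 2, 1], stop_times=[0, 2],
                             reward=[4.0, 2.0, 1.0], rho=0.5)
# example = 0.5**0 * 4.0 + 0.5**2 * 2.0 = 4.5
```

Averaging this quantity over many simulated paths gives a Monte Carlo estimate of the criterion for a fixed policy.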

II-B2 Stochastic Dynamic Programming

The computation of the optimal ad scheduling policy , to maximize the infinite horizon discounted criterion in (6) and (7), is equivalent to solving Bellman’s dynamic programming equation [14]:

| (8) |

where,

| (9) |

The above dynamic programming formulation is a POMDP. Since the state-space , is a continuum, Bellman’s equation (8) does not translate into practical solution methodologies as needs to be evaluated at each . This in turn renders the calculation of the optimal policy computationally intractable. In Sec. III we derive structural results of the optimal ad scheduling policy. The advantage of deriving structural results is that the optimal policy can be computed efficiently. Sec. IV provides stochastic approximation algorithms to compute approximations of the optimal policy using the structural results derived in Sec. III.
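For orientation, a Bellman recursion of the kind described here can be written as follows for the special case of Sec. II-B1. The notation is assumed for this sketch rather than recovered from the text: $\pi$ is the belief, $l$ the number of stops remaining, $r$ the reward vector, $\rho$ the discount factor, $T(\pi, y)$ the belief update after observing $y$, and $\sigma(\pi, y)$ the probability of observing $y$ under belief $\pi$.

```latex
\begin{align*}
V(\pi, l) &= \max\Big\{\, r^{\top}\pi + \rho \sum_{y} V\big(T(\pi, y),\, l-1\big)\,\sigma(\pi, y),\;\;
             \rho \sum_{y} V\big(T(\pi, y),\, l\big)\,\sigma(\pi, y) \Big\}, \\
V(\pi, 0) &= 0 .
\end{align*}
```

The first term inside the maximum corresponds to stopping (collect the expected reward and continue with one fewer ad), and the second to continuing with the same number of ads remaining.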

III Optimal ad scheduling: Structural results

In this section, we derive structural results for the optimal ad scheduling policy. We first introduce the value iteration algorithm in Sec. III-A, a successive approximation method to solve the dynamic programming recursion in (8). The value iteration algorithm is a valuable tool for deriving the structural results. In Section III-B, we use the value iteration algorithm in Sec. III-A to prove structural results of the optimal ad scheduling policy. Using the structural results in Sec. III-B, we provide a simulation based algorithm to compute the policy in Sec. IV.

III-A Value Iteration Algorithm

The value iteration algorithm is a successive approximation approach for solving Bellman’s equation (8).

The procedure is as follows: For iterations , the value function and the policy are obtained as follows

| (10) |

| (11) |

where

| (12) |

and

| (13) |

with initialized arbitrarily. It can be easily shown that the above procedure converges [14].
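To make the recursion concrete, the following sketch runs value iteration for a two-state channel, where the belief is summarized by the single number p = Prob(state 0). It is an approximation under stated assumptions, not the procedure used in the paper: the belief is discretized on a uniform grid with nearest-neighbour lookup, the Poisson observations are truncated at `y_max`, the reward for continuing is zero, and all numerical values are hypothetical.

```python
import math

def poisson_pmf(y, lam):
    """Poisson probability mass at y for mean lam, computed in log space."""
    return math.exp(y * math.log(lam) - lam - math.lgamma(y + 1))

def value_iteration(P, means, reward, num_stops, rho=0.9, grid=50, y_max=25, sweeps=150):
    """Approximate value iteration on a belief grid for a two-state model."""
    beliefs = [g / grid for g in range(grid + 1)]
    # For each grid belief and observation y, store
    # (probability of y, nearest grid index of the updated belief).
    trans = []
    for p in beliefs:
        pred0 = p * P[0][0] + (1.0 - p) * P[1][0]
        row = []
        for y in range(y_max + 1):
            u0 = poisson_pmf(y, means[0]) * pred0
            u1 = poisson_pmf(y, means[1]) * (1.0 - pred0)
            row.append((u0 + u1, round(grid * u0 / (u0 + u1))))
        trans.append(row)
    stop_now = [p * reward[0] + (1.0 - p) * reward[1] for p in beliefs]

    def q_values(V, l, g):
        """Return (value of stopping, value of continuing) at grid point g."""
        future_less = sum(s * V[l - 1][j] for s, j in trans[g])
        future_same = sum(s * V[l][j] for s, j in trans[g])
        return stop_now[g] + rho * future_less, rho * future_same

    # V[l][g]: value with l stops remaining; V[0] stays zero (no ads left).
    V = [[0.0] * (grid + 1) for _ in range(num_stops + 1)]
    for _ in range(sweeps):
        V = [V[0]] + [[max(q_values(V, l, g)) for g in range(grid + 1)]
                      for l in range(1, num_stops + 1)]
    stop_sets = [{g for g in range(grid + 1)
                  if q_values(V, l, g)[0] >= q_values(V, l, g)[1]}
                 for l in range(1, num_stops + 1)]
    return beliefs, V, stop_sets

# Hypothetical two-state example: state 0 is the high-engagement state.
beliefs, V, stop_sets = value_iteration(
    P=[[0.9, 0.1], [0.2, 0.8]], means=[6.0, 2.0], reward=[1.0, 0.2], num_stops=2)
```

Here `stop_sets[l - 1]` holds the grid indices at which stopping is at least as good as continuing with `l` ads remaining. Because of the grid approximation, the sets should be read as indicative only near their boundaries.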

In order to prove the structural results of the stationary ad scheduling policy, defined in Sec. II, we define the stopping and the continuance regions of the policy as below. Let be defined as

| (14) |

The stopping and continuance region (at each iteration ) is defined as follows:

| (15) |

Since the value iteration converges, the optimal stationary policy is defined as

| (16) |

Correspondingly, the stationary stopping and continuance sets are defined by

| (17) |

The value function, in (10), can be rewritten, using (15), as follows:

| (18) |

where and are indicator functions on the continuance and stopping regions respectively, for each iteration .

The value iteration algorithm (10) to (13) does not translate into a practical solution methodology since the belief state belongs to an uncountable set. Hence, there is a strong motivation to characterize the structure of the optimal policy. In the following section, we use the definition of in (19) to prove structural results on the stopping region defined in (15).

III-B Structural results for the optimal ad scheduling policy

In this section, we provide structural results on the optimal policy of the multiple stopping problem corresponding to maximizing the ad revenue.

III-B1 Assumptions

The main result below, namely Theorem 1, requires the following assumptions on the reward vector, , the transition matrix, , and the observation distribution, . The first assumption, on the reward, says that is the state with the highest reward and that the reward decreases monotonically. This captures the channel owner’s preference for scheduling ads at the highest viewer engagement state. A sufficient condition for the reward to decrease monotonically is that both the mean number of viewers, , and the completion and click rate, , be non-increasing. The second and third assumptions, (A2) and (A3), relate to the underlying stochastic model and to the MLR ordering of the updated belief vector in (4) (see Theorem 4 and Theorem 5 in Appendix A). The assumption (A2) models the following facts: i) The user behaviour in online social media can be approximated by a first order Markov chain. ii) The viewer engagement changes at a smaller time scale compared to sampling or decision epochs. The assumption (A3) is due to the fact that the viewer engagement states can be ordered corresponding to decreasing mean number of viewers, . The last assumption (A4) is a technical condition required for our proof.

• (A1) The vector, , has decreasing elements. Hence, is increasing in .

• (A2)

• (A3) is TP2.

• (A4) The vector, , has decreasing elements.
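Conditions of this type are easy to check numerically for a candidate model. The sketch below uses the standard definitions, which are not restated in this section: a matrix is TP2 (totally positive of order 2) when all of its second-order minors are non-negative, and a belief p dominates a belief q in the MLR order when p(i) q(j) <= p(j) q(i) for all i < j. The function names and example matrices are hypothetical.

```python
def is_tp2(M, tol=1e-12):
    """True if every 2x2 minor of the matrix M is non-negative."""
    rows, cols = len(M), len(M[0])
    for i1 in range(rows):
        for i2 in range(i1 + 1, rows):
            for j1 in range(cols):
                for j2 in range(j1 + 1, cols):
                    minor = M[i1][j1] * M[i2][j2] - M[i1][j2] * M[i2][j1]
                    if minor < -tol:
                        return False
    return True

def mlr_geq(p, q, tol=1e-12):
    """True if belief p dominates belief q in the MLR order."""
    n = len(p)
    return all(p[i] * q[j] <= p[j] * q[i] + tol
               for i in range(n) for j in range(i + 1, n))

def is_decreasing(v):
    """True if the elements of v are non-increasing, as in (A1) and (A4)."""
    return all(a >= b for a, b in zip(v, v[1:]))

# Hypothetical examples.
tridiagonal = [[0.8, 0.2, 0.0], [0.1, 0.8, 0.1], [0.0, 0.2, 0.8]]
swapping = [[0.2, 0.8], [0.8, 0.2]]
tp2_results = (is_tp2(tridiagonal), is_tp2(swapping))
```

A slowly mixing tridiagonal chain such as `tridiagonal` passes the TP2 check, while `swapping`, which tends to flip between states at every step, fails it. Note that MLR is only a partial order on beliefs with three or more states: `mlr_geq(p, q)` and `mlr_geq(q, p)` can both be false.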

III-B2 Main Result

The following (Theorem 1) is the main result of the paper and the proof is provided in Appendix B. Theorem 1.A states that the optimal policy is a monotone policy. The optimal policy decreases monotonically with the belief state . However, for a monotone policy to be well defined, we need to first define the ordering between two belief states. In this paper, we use the Monotone Likelihood Ratio (MLR) ordering (defined in Def. 2 in Appendix A-B) and the less restrictive MLR ordering on lines and (defined in Appendix A-C) over the belief states [26, 27]. Fig. 1 illustrates the definition of .

Theorem 1

Assume (A1), (A2), (A3) and (A4), then,

A) There exists an optimal policy that is monotonically decreasing on lines , and for each .

B) There exists an optimal switching curve , for each , that partitions the belief space into two individually connected regions and , such that the optimal policy is

(20)

C) .

Theorem 1A implies that the optimal action is monotonically decreasing on the line , as shown in Fig. 1. Hence, on each line there exists a threshold above (in the MLR sense) which it is optimal to Stop and below which it is optimal to Continue. Theorem 1B says that, for each , the stopping and continuance sets are connected. Hence, there exists a threshold curve, , as shown in Fig. 1, obtained by joining the thresholds, from Theorem 1A, on each of the lines . Theorem 1C proves the sub-setting structure of the stopping and continuance sets, as shown in Fig. 1.

In order to prove the theorem, we introduce the following propositions, proofs of which are provided in Appendix B. The value function of the classical (single) stopping POMDP increases with (in the MLR sense) [27, 28, 29]. Proposition 1 states that the above result holds even in the multiple stopping problem. In the classical stopping POMDPs in [27], the stopping and continuance sets are characterized using the value function. However, in the multiple stopping problem considered in this paper, plays the role of the value function in characterizing the stopping and continuance regions. Proposition 2 proves the corresponding result in the multiple stopping problem. Proposition 3 proves the nested stopping regions at each iteration of the value iteration. Since the value iteration converges, Proposition 3 implies Theorem 1C.

Proposition 1

is increasing in .

Proposition 2

is decreasing in .

Proposition 3

To summarize, we showed the following properties of the optimal policy:

(i) the optimal policy is monotone on the lines .

(ii) there exists a unique threshold curve for the stopping region, .

(iii) the stopping regions have a sub-setting property, i.e. .

IV Stochastic Approximation Algorithm for computing best approximate ad scheduling policy

In this section, we synthesize policies satisfying the properties of the optimal ad scheduling policy derived in Sec. III. The policies can be characterized by a threshold curve, corresponding to each of the stopping regions. In this section, we parametrize the threshold curve, , as . Here, denotes the parameter of the threshold curve. To capture the essence of Theorem 1, we require that the parametrized optimal policy, , be decreasing on lines and , and that the stopping sets be connected and satisfy the sub-setting property, i.e. . Below, we restrict our attention to obtaining the best linear threshold policy, i.e. a policy of the form given in (21). We characterize the parameters of the threshold policy in Sec. IV-A and provide an algorithm to compute the parameters using stochastic approximation in Sec. IV-B.

IV-A Structure of best linear MLR threshold policy for ad scheduling

Consider a threshold hyperplane, on the simplex , of the form (21) where denotes the coefficient vector. The linear threshold scheduling policy, denoted by is defined as

| (21) |

Here, is the concatenation of the vectors.

To capture the essence of Theorem 1A, we require that the policy be decreasing on lines, i.e. for . Theorem 2 gives necessary and sufficient conditions on the coefficient vector such that the above condition holds.

Theorem 2

The linear threshold policy is

1. MLR decreasing on line , iff and .

2. MLR decreasing on line , iff , and .

The proof of Theorem 2 is similar to the proof of Theorem 12.4.1 in [27] and hence is omitted in this paper.

The stopping sets are connected since we parametrize the threshold curve using a linear hyperplane. Finally, the linear threshold approximation curve needs to satisfy the sub-setting property in Theorem 1C. Theorem 3 provides sufficient conditions such that the parametrized linear threshold curve satisfies the sub-setting property; the proof is provided in Appendix B-E.

Theorem 3

Therefore, under the conditions of Theorem 2 and Theorem 3, the linear threshold policy in (21) satisfies all the conditions in Theorem 1 and hence qualifies as the best linear threshold policy.

IV-B Simulation-based stochastic approximation algorithm for estimating the best linear MLR threshold policy for ad scheduling

In this section, we provide an algorithm to compute the best linear thresholds satisfying the conditions in Theorem 2 and Theorem 3. Recall that the optimal policy maximizes the infinite horizon discounted reward in (6) and (7). The optimal linear thresholds can be obtained by a policy gradient algorithm [14]. Algorithm 1 is a policy gradient algorithm to compute the best linear threshold policy. In this algorithm, we approximate in (6) and (7) by the finite time approximation

| (24) |

Here, we have made explicit the dependence of the discounted reward on the parameter vector, , and, with an abuse of notation, have suppressed the dependence on the policy . It can be shown that is an asymptotically unbiased estimate of and can be obtained by simulation for large .

The policy gradient algorithm in Algorithm 1 requires the gradient at each iteration. Computing the gradient is difficult because the discounted reward depends non-linearly on the parameter. Hence, in this paper, we estimate the gradient through a stochastic approximation algorithm.

There are several stochastic approximation algorithms available in the literature: infinitesimal perturbation analysis [30], weak derivatives [31] and the SPSA algorithm [32]. In this paper, we use the SPSA algorithm and, because of the constraints in Theorem 3, we use a variant of SPSA that can handle linear inequality constraints [33].
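The basic SPSA iteration can be sketched as follows. This is the plain unconstrained form, shown only to illustrate the gradient estimate. It does not reproduce Algorithm 1 or the constrained variant of [33]. The gain sequences, the function name, and the toy objective are assumptions. In the ad scheduling setting, `objective` would be a simulation that returns the finite time discounted reward for a given threshold parameter.

```python
import random

def spsa_maximize(objective, theta0, iterations=300, a=0.2, c=0.1, seed=0):
    """Basic unconstrained SPSA ascent on a (possibly noisy) objective."""
    rng = random.Random(seed)
    theta = list(theta0)
    for k in range(iterations):
        a_k = a / (k + 1) ** 0.602  # decreasing step size
        c_k = c / (k + 1) ** 0.101  # decreasing perturbation size
        # Random +/-1 perturbation applied to all coordinates simultaneously.
        delta = [rng.choice((-1.0, 1.0)) for _ in theta]
        plus = [t + c_k * d for t, d in zip(theta, delta)]
        minus = [t - c_k * d for t, d in zip(theta, delta)]
        diff = objective(plus) - objective(minus)
        # Two evaluations yield the full gradient estimate, whatever the dimension.
        grad = [diff / (2.0 * c_k * d) for d in delta]
        theta = [t + a_k * g for t, g in zip(theta, grad)]
    return theta

def toy_objective(theta):
    """Hypothetical concave objective with its maximum at (1, -2)."""
    return -((theta[0] - 1.0) ** 2 + (theta[1] + 2.0) ** 2)

theta_hat = spsa_maximize(toy_objective, [3.0, -3.0])
```

The appeal of SPSA for this problem is that each iteration needs only two simulation runs regardless of the number of threshold parameters, whereas a coordinate-wise finite difference scheme would need two runs per parameter.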

V Numerical Results: Synthetic and Real Dataset

In this section, we present numerical results on synthetic and real datasets. First, we illustrate our analysis of Theorem 1 using synthetic data. Second, we demonstrate how the optimal multiple stopping framework, used for ad scheduling in live media, can be used to detect changes in ground truth using real data from online search. Online search is linked to advertising in television and online social media [34]. Third, we show, through simulations, that the scheduling policy obtained from Algorithm 1 outperforms conventional techniques for scheduling ads in live social media. In this paper, we compare the scheduling policy obtained from Algorithm 1 with two schemes: “Periodic” and “Random”. The periodic scheme models the most common method of advertisement scheduling in pre-recorded videos on platforms like YouTube. In the context of live channels, Twitch uses periodic scheduling, where an ad is inserted periodically into the live channel [1]. In contrast, in the random scheduling scheme, the ad is inserted randomly into the live channel. The random scheduling scheme is used as a benchmark to compare the revenue obtained through the periodic scheme and the policy obtained through Algorithm 1.

V-A Synthetic Data

In this section, we carry out a simulation study based on synthetic data for the optimal multiple stopping problem. The objective is to illustrate the analysis in Theorem 1. In addition, we obtain the policy by solving the dynamic programming equations (8) and show through simulations that it outperforms the conventional method of scheduling ads periodically.

In this “toy” example, the viewer engagement of the live channel can be categorized into states: “Popular”, “Interesting” and “Boring”, denoted by , and , respectively. The transitions between the various states follow a Markov chain with the transition matrix given in (29). The viewer engagement state is observed through a state dependent Poisson process with mean given in (30). The reward structure for scheduling the ads is as in (31).

| (29) |

| (30) |

| (31) |

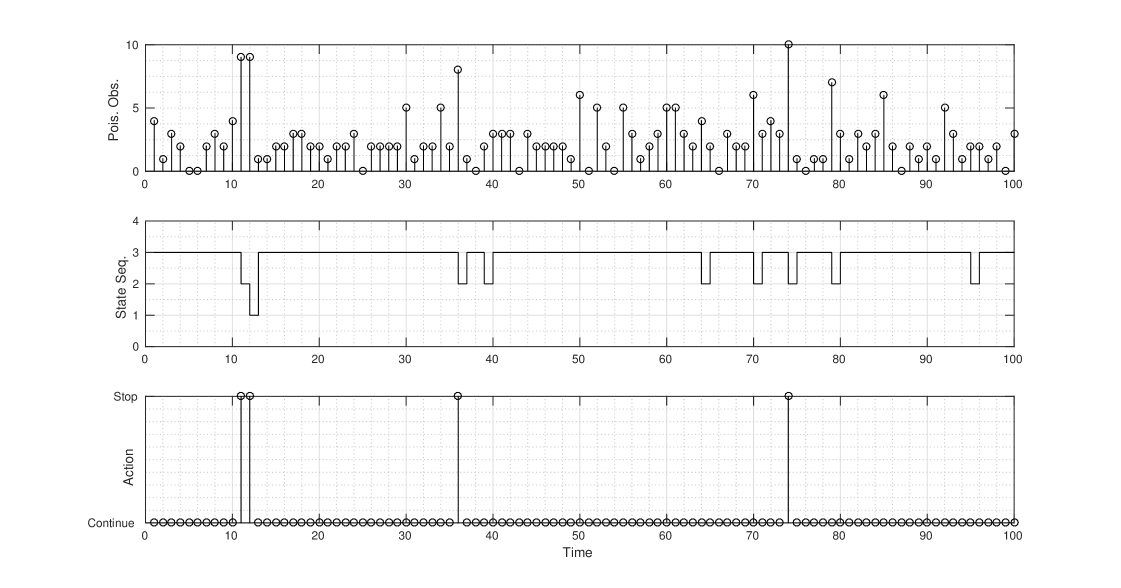

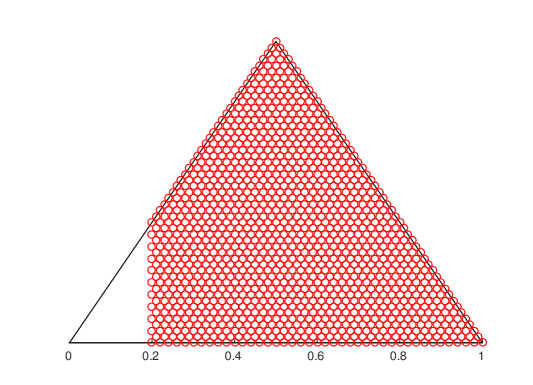

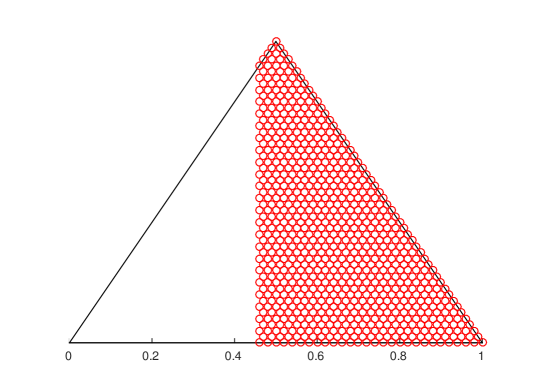

Fig. 2 shows a sample trajectory of the observation and the state sequence. For , i.e. for a total of ads to be scheduled, we obtained the policy by solving the dynamic programming equations in (8). The resulting policy, in terms of stopping and continuance set as defined in (14), is shown in Fig. 3. We also show the corresponding points where the policy was chosen in Fig. 2. Only the stopping set for and , i.e. and respectively are shown in Figure 3. As can be seen from Figure 3, , verifying Theorem 1.C. The stopping regions and are connected and the optimal policy is threshold on any line , verifying Theorem 1.B and Theorem 1.A respectively.

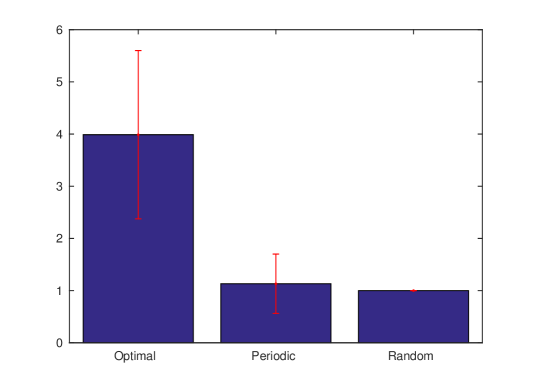

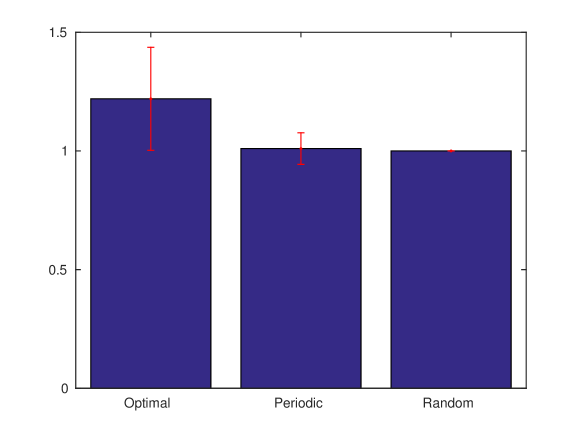

We now compare the policy obtained by solving the dynamic programming equation in (8) with the conventional technique of scheduling ads periodically within the live session. We also include a random scheduling policy, where the ads are scheduled randomly within the live session, for benchmarking purposes. Fig. 4 shows the comparison between the various schemes. The results in Fig. 4 were obtained by independent Monte Carlo (M.C.) simulations. It can be seen from Fig. 4 that the policy obtained from (8) significantly outperforms (close to times) periodic scheduling. This is not surprising, since the policy obtained by solving the dynamic programming equation in (8) opportunistically schedules ads when the viewer engagement of the channel is high.
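The flavour of this comparison can be reproduced with a small Monte Carlo experiment. The sketch below is not the policy obtained from (8): it uses a simple heuristic that schedules an ad whenever the filtered belief of the most engaged state exceeds a fixed threshold, and forces the remaining ads at the end of a finite session so that every scheme schedules the same number of ads. The model parameters, the rewards, the session length `T`, the number of ads `L`, the threshold, and the function names are all hypothetical choices made for illustration, and back-to-back ads are allowed.

```python
import numpy as np

# Hypothetical model and rewards, NOT the values in (29)-(31).
P = np.array([[0.8, 0.2, 0.0],
              [0.1, 0.8, 0.1],
              [0.0, 0.2, 0.8]])
lam = np.array([12.0, 7.0, 2.0])      # Poisson means per state
reward = np.array([3.0, 1.0, 0.0])    # reward of an ad shown in each state


def filter_step(pi, y):
    """HMM filter update for Poisson observations."""
    log_lik = y * np.log(lam) - lam
    unnorm = np.exp(log_lik - log_lik.max()) * (P.T @ pi)
    return unnorm / unnorm.sum()


def run_session(scheme, T, L, rng, thresh=0.6):
    """Total reward of one live session of T epochs with L ads."""
    X = len(lam)
    if scheme == "periodic":
        slots = set(((np.arange(1, L + 1) * T) // (L + 1)).tolist())
    elif scheme == "random":
        slots = set(rng.choice(T, size=L, replace=False).tolist())
    else:
        slots = set()
    pi = np.full(X, 1.0 / X)
    s = rng.integers(X)
    remaining, total = L, 0.0
    for t in range(T):
        s = rng.choice(X, p=P[s])
        pi = filter_step(pi, rng.poisson(lam[s]))
        if scheme == "threshold":
            forced = (T - t) <= remaining   # no slack left: must stop now
            stop = remaining > 0 and (pi[0] >= thresh or forced)
        else:
            stop = t in slots
        if stop:
            total += reward[s]
            remaining -= 1
    return total


def compare(n_runs=200, T=100, L=5, seed=0):
    """Average reward of each scheme over independent Monte Carlo runs."""
    rng = np.random.default_rng(seed)
    return {
        scheme: float(np.mean([run_session(scheme, T, L, rng)
                               for _ in range(n_runs)]))
        for scheme in ("periodic", "random", "threshold")
    }


print(compare())
```

Under these assumptions the periodic and random schemes earn roughly the stationary average reward per ad, whereas the belief-threshold heuristic concentrates ads in epochs where the channel is believed to be popular.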

V-B Change Detection (Real Dataset)

In this section, we illustrate Theorem 1 for change detection problems. Change detection problems are a special case of multiple stopping problems, when . We apply Theorem 1 for detecting changes in ground truth using an online search dataset. Online search is linked to advertising in television and online social media [34]. In addition, detecting changes in ground truth using online search data has been used for detection of outbreaks of illness, political elections, or major sporting events [35]. Hence, detection of changes in ground truth is important for optimizing advertising strategy. The dataset that we use is the Tech Buzz dataset from Yahoo!. We first describe the Tech Buzz dataset in Sec. V-B1 and then show through simulations that the policy obtained by solving the dynamic programming equation in (8) can be used for detecting changes in ground truth using data from online search.

V-B1 Dataset

The dataset that we use in our study is the Yahoo! Buzz Game Transactions dataset from the Webscope datasets (Yahoo! Webscope dataset: A2 - Yahoo! Buzz Game Transactions with Buzz Scores, version 1.0, http://research.yahoo.com/Academic_Relations), available from Yahoo! Labs. In 2005, Yahoo! along with O’Reilly Media started a fantasy market where the trending technologies at that point were pitted against each other. For example, in the browser market there were “Internet Explorer”, “Firefox”, “Opera”, “Mozilla”, “Camino”, “Konqueror”, and “Safari”. The players in the game have access to the “buzz”, which is the online search index, measured by the number of people searching on the Yahoo! search engine for the technology. The objective of the game is to use the buzz and trade stocks accordingly.

V-B2 Change Detection

We consider a subset of the data containing the WIMAX buzz scores and the number of stocks traded (volume of the stocks). The unknown valuation of the WIMAX technology is modelled using a two-state Markov chain (“1” for high valuation and “2” for low valuation). The valuation of the stock is not observed directly, but through noisy observations of the volume of the stocks traded. Fig. 5 shows the volume of the stocks traded and the buzz during the month of April. The volume of stocks traded depends on the unknown valuation and, for ease of analysis, is quantized into three states (“High”, “Medium” and “Low”), denoted by , and respectively. Given the time series of the (quantized) volume of stocks traded, we estimate the hidden Markov model, consisting of the transition matrix of the WIMAX valuation and the observation probabilities of the volume of the stocks traded given the WIMAX valuation, using an EM algorithm [27]. The parameters of the Markov chain obtained using the EM algorithm are as below:

| (32) |

| (33) |

| (34) |

As can be seen from Fig. 5, the WIMAX buzz and the volume of stocks traded are initially low. Hence, the objective is to find the time point at which the WIMAX value switches to the high value. The reward structure in (34) reflects the fact that, by choosing to Stop at the high value state, an agent obtains more money by trading the high value WIMAX stocks. The policy obtained by solving the dynamic programming equation in (8) shows a high valuation at April 18. The change point corresponds to Intel’s announcement of the WIMAX chip (http://www.dailywireless.org/2005/04/17/intel-shipping-wimax-silicon/). The high valuation of WIMAX stock can also be noticed from the “spike” in the buzz around April 18 in Fig. 5.
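The single-stop change detection described above can be illustrated with a short sketch. It is a simplification: instead of solving (8), it stops the first time the filtered probability of the high valuation state crosses a fixed threshold, which is the kind of threshold rule that the structural result motivates. The transition matrix `P`, the observation matrix `B`, the prior, the threshold, and the function name `detect_change` are hypothetical and are not the estimates in (32) to (34).

```python
import numpy as np

# Hypothetical HMM, NOT the EM estimates in (32)-(33).
# Hidden state: index 0 = high valuation ("1"), index 1 = low valuation ("2").
P = np.array([[0.95, 0.05],
              [0.05, 0.95]])
# Quantized volume symbols: 0 = High, 1 = Medium, 2 = Low.
B = np.array([[0.6, 0.3, 0.1],    # P(volume symbol | high valuation)
              [0.1, 0.3, 0.6]])   # P(volume symbol | low valuation)


def detect_change(obs, P, B, pi0, thresh):
    """Return the first epoch at which the belief of the high state reaches thresh."""
    pi = np.asarray(pi0, dtype=float)
    for t, y in enumerate(obs):
        pi = B[:, y] * (P.T @ pi)   # HMM filter: predict, then correct
        pi = pi / pi.sum()
        if pi[0] >= thresh:
            return t                # declare the change (Stop)
    return None                     # never stopped


# Ten epochs of low volume followed by ten epochs of high volume.
obs = [2] * 10 + [0] * 10
print(detect_change(obs, P, B, pi0=[0.1, 0.9], thresh=0.9))
```

With a sequence that is low and then high, the rule stops shortly after the volume switches, since a few consecutive high-volume observations are needed before the belief crosses the threshold.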

V-C Ad Scheduling on Live Channels (YouTube and Twitch Datasets)

In this section, we illustrate the policy from Algorithm 1 on real data from YouTube and Twitch. In comparison to Sec. V-A and Sec. V-B, real data from YouTube and Twitch has a wide range of viewer engagement states and hence requires more states in the Markov chain model. As the number of states increases, solving the dynamic programming equation in (8) becomes impractical. Hence, we resort to the best linear threshold policy computed through Algorithm 1. We first describe the dataset in Sec. V-C1 and then show that the policy obtained from Algorithm 1 outperforms conventional periodic scheduling.

V-C1 Dataset

In this paper, we use the dataset in [36] (available from http://dash.ipv6.enstb.fr/dataset/live-sessions/). The dataset consists of live sessions on two popular live broadcasting platforms, YouTube Live and Twitch, between January and April 2014. The dataset contains samples of the live sessions sampled at a -minute interval on each of the platforms. Each sample contains the identification of the channel, the number of viewers and some additional meta-data of the channel. The main finding in [36] is that the viewer engagement is more heterogeneous than in other user-generated content platforms such as YouTube. The heterogeneity of viewer engagement in live channels can be used to opportunistically schedule advertisements.

V-C2 Entertainment (YouTube Live)

In this section, we use real data from a YouTube Live channel and show that the policy obtained from Algorithm 1 outperforms conventional periodic scheduling.

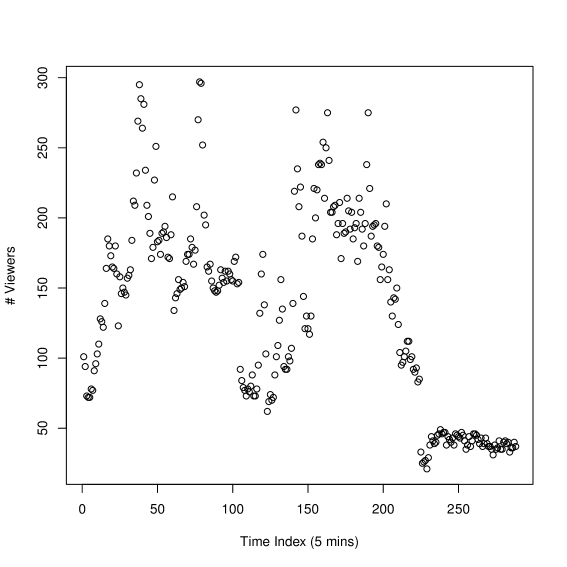

We selected data from the “entertainment” category from the YouTube Live dataset. The channel contains data from January 01, 2014 to Jan 31, 2014, i.e. for a period of one month. Fig. 6(a) shows the distribution of the viewers of the channel during Jan 01, 2014.

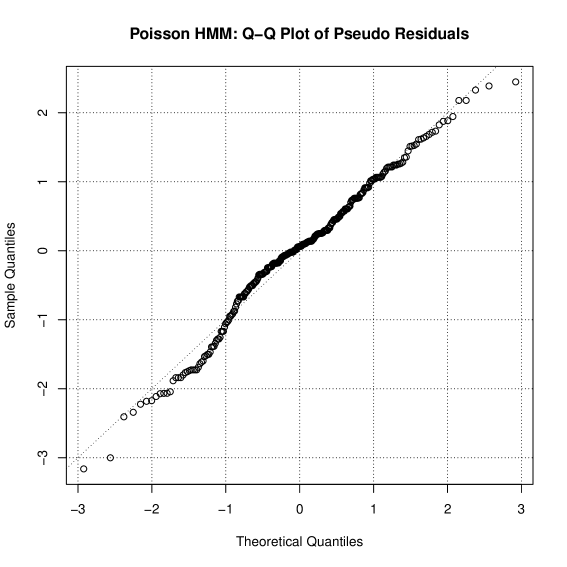

The parameters of the channel were obtained by the EM-Algorithm A.2.3 in [37]. The EM-Algorithm was run for Markov chains with states. Using the AIC and BIC criteria, we chose to model the channel by a state Markov chain with the transition matrix in (35) and observations following a state dependent Poisson distribution with mean given by (36). As can be seen from (35), the transition matrix is that of a first-order Markov chain, validating our initial assumptions. Moreover, the transition matrix has diagonally dominant entries, ensuring the TP2 assumption. The diagonally dominant entries in the transition matrix in (35) model the fact that the viewer engagement of the channel changes at a slower time scale compared to the decision epochs (or sampling epochs). The reward depends on both the in (36) and the completion and click rate . Since the click rate is not available in the dataset, we assume . Due to the ordinality of the reward, is assumed to be equal to . The model is validated using the QQ-plot of pseudo-residuals defined in Sec. 6.1 in [37] and is shown in Fig. 6(b). As can be seen from Fig. 6(b), the QQ-plot closely follows the straight line, which indicates that the model is a good fit for the data.

| (35) |

| (36) |
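The order selection step can be sketched as follows. The sketch assumes that the maximized log-likelihood of each candidate Poisson hidden Markov model is already available (for example from an EM fit), and counts the free parameters of an m-state model as m(m-1) transition probabilities plus m Poisson means, with the initial distribution not treated as a free parameter. The log-likelihood values and the sample size used below are made up for illustration, and the function names are hypothetical.

```python
import math


def poisson_hmm_num_params(m):
    """Free parameters of an m-state Poisson HMM (initial distribution not counted)."""
    return m * (m - 1) + m


def aic(log_lik, k):
    """Akaike information criterion: -2 log L + 2 k."""
    return -2.0 * log_lik + 2.0 * k


def bic(log_lik, k, n):
    """Bayesian information criterion: -2 log L + k log n."""
    return -2.0 * log_lik + k * math.log(n)


def select_order(log_liks, n):
    """Pick the number of states minimizing AIC and BIC.

    log_liks maps the number of states m to the maximized log-likelihood.
    """
    scores = {
        m: (aic(ll, poisson_hmm_num_params(m)),
            bic(ll, poisson_hmm_num_params(m), n))
        for m, ll in log_liks.items()
    }
    best_aic = min(scores, key=lambda m: scores[m][0])
    best_bic = min(scores, key=lambda m: scores[m][1])
    return best_aic, best_bic, scores


# Made-up log-likelihoods for m = 2, ..., 5 states and n = 1000 samples.
fitted = {2: -1500.0, 3: -1400.0, 4: -1395.0, 5: -1393.0}
best_aic, best_bic, scores = select_order(fitted, n=1000)
print("AIC picks", best_aic, "states; BIC picks", best_bic, "states")
```

Both criteria trade off goodness of fit against the number of parameters, so adding states is accepted only while the gain in log-likelihood outweighs the quadratic growth in the parameter count.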

V-C3 Gaming (Twitch)

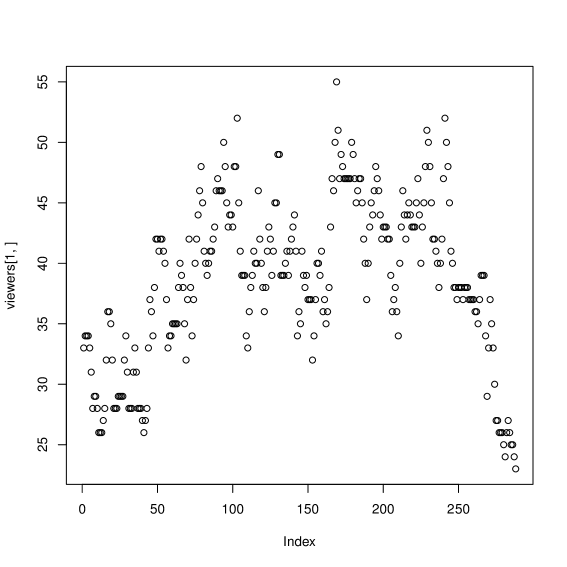

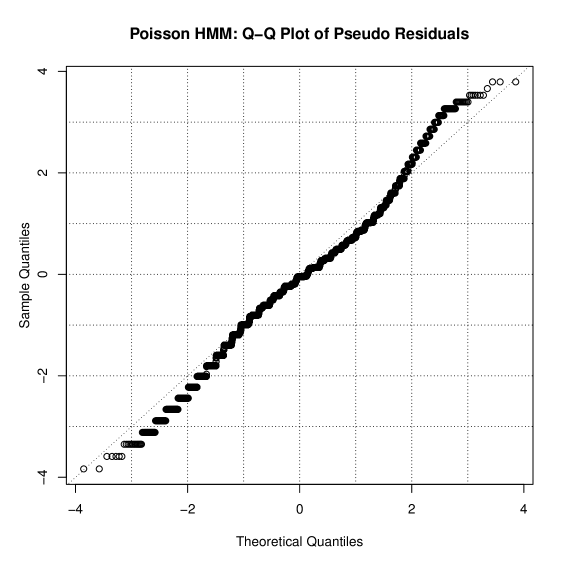

The Twitch dataset contains channels with “gaming” content. Fig. 7(a) shows the distribution of the viewers of the channel during Jan 01, 2014. Similar to Sec. V-C2 above, we use the EM-Algorithm in [37] to estimate the parameters of the Markov model. The parameters of the Markov model, consisting of the transition matrix and the state dependent means of the Poisson distribution, are given in (37) and (38), respectively. The model is validated using the QQ-plot of pseudo-residuals, shown in Fig. 7(b).

| (37) |

| (38) |

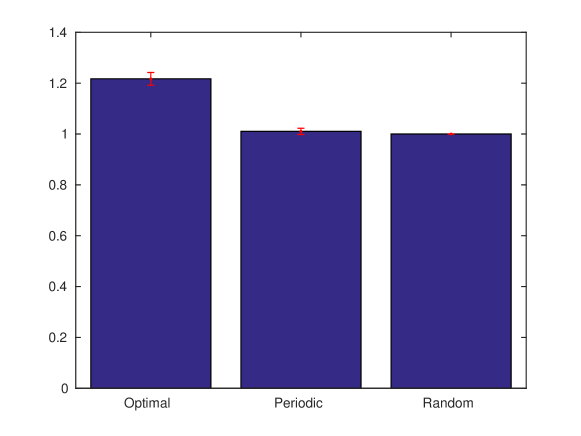

Fig. 8(b) shows the comparison between the various schemes. Similar to the result in the YouTube Live session, the policy obtained through Algorithm 1 outperforms conventional periodic scheduling by close to .

VI Conclusion

In this paper, we considered the problem of optimal scheduling of ads on live online social broadcasting channels. First, we cast the problem as an optimal multiple stopping problem in the POMDP framework. Second, we characterized the structural results of the optimal ad scheduling policy. Using the structural results of the optimal ad scheduling policy, we computed best approximate policies using stochastic approximation. Finally, we validated the results on real datasets. First, we illustrated the analysis using synthetic data. In addition, using synthetic data, we showed that the optimal ad scheduling policy outperforms conventional scheduling techniques. Second, we showed through simulations that the policy obtained from the multiple stopping problem framework, used for ad scheduling, can be used to detect changes in the ground truth using data from online search. Detecting changes in ground truth is useful for optimizing ad strategy. Finally, we showed through simulations that the best approximate ad scheduling policies obtained through stochastic approximation outperform conventional periodic scheduling by .

Extensions of the current work could involve developing upper and lower myopic bounds to the optimal policy as in [38], optimizing the ad length, and incorporating constraints on ad placement. These issues promise to offer interesting avenues for future work.

Appendix A Preliminaries and Definitions

A-A First-order stochastic dominance

Definition 1

Let and be two belief state vectors. Then, is greater than with respect to first-order stochastic dominance–denoted as , if

| (39) |

A-B MLR ordering

Definition 2

Let and be two belief state vectors. Then, is greater than with respect to Monotone Likelihood Ratio (MLR) ordering–denoted as , if

| (40) |
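The two orderings can be checked numerically. The sketch below assumes the standard conventions, since the statements of (39) and (40) are not reproduced here: a belief `p1` dominates `p2` in the first-order sense if every upper tail sum of `p1` is at least that of `p2`, and `p1` dominates `p2` in the MLR sense if `p1[i] * p2[j] <= p2[i] * p1[j]` for all `i < j`. The function names and the example vectors are introduced for illustration only.

```python
import numpy as np


def fsd_geq(p1, p2, tol=1e-12):
    """True if p1 dominates p2 in first-order stochastic dominance (assumed convention)."""
    p1, p2 = np.asarray(p1, float), np.asarray(p2, float)
    tail1 = np.cumsum(p1[::-1])[::-1]   # tail1[j] = sum of p1[j:]
    tail2 = np.cumsum(p2[::-1])[::-1]
    return bool(np.all(tail1 >= tail2 - tol))


def mlr_geq(p1, p2, tol=1e-12):
    """True if p1 dominates p2 in the MLR order (assumed convention)."""
    p1, p2 = np.asarray(p1, float), np.asarray(p2, float)
    X = len(p1)
    for i in range(X):
        for j in range(i + 1, X):
            if p1[i] * p2[j] > p2[i] * p1[j] + tol:
                return False
    return True


a = [0.1, 0.3, 0.6]
b = [0.5, 0.3, 0.2]
print(mlr_geq(a, b), fsd_geq(a, b))     # a dominates b in both orders

c = [0.3, 0.1, 0.6]
d = [0.4, 0.3, 0.3]
# c dominates d in the first-order sense, but c and d are not MLR comparable.
print(fsd_geq(c, d), mlr_geq(c, d), mlr_geq(d, c))
```

The second pair shows why MLR ordering is the stronger condition: two beliefs can be ordered by their tail sums without being MLR comparable in either direction.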

MLR ordering over is a strong condition. In order to show threshold structure, we define the following weaker notion of MLR ordering over two types of lines.

A-C MLR ordering over lines

First, we define as the dimensional linear hyperplane which connects the vertices as follows:

| (41) |

Figure 15 illustrates the definition (41) for an optimal multiple stopping problem with . Next, we construct two types of lines as follows:

- : For any , construct the line that connects to as below:

| (42) |

- : For any , construct the line that connects to as below:

| (43) |

With an abuse of notation, we denote by and by . Figure 15 illustrates the definition of .

Definition 3 (MLR ordering on lines)

is greater than with respect to MLR ordering on the lines , denoted as , if , for some and .

The MLR order is a partial order; however, the MLR ordering on lines is a complete order. The MLR ordering on lines requires less stringent conditions and can be used for devising threshold policies over lines.

A-D TP2 ordering

Definition 4 (TP2 ordering)

A transition probability matrix, is Totally Positive of order 2 (TP2), if all the second order minors are non-negative i.e. the determinants

| (44) |
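Definition 4 can be verified directly by enumerating all second order minors. The following sketch is a brute-force check with a small numerical tolerance; the function name `is_tp2` and the two example matrices are hypothetical and are not the matrices estimated in this paper.

```python
import numpy as np


def is_tp2(P, tol=1e-12):
    """True if every 2x2 minor of P (rows i1 < i2, columns j1 < j2) is non-negative."""
    P = np.asarray(P, dtype=float)
    n_rows, n_cols = P.shape
    for i1 in range(n_rows):
        for i2 in range(i1 + 1, n_rows):
            for j1 in range(n_cols):
                for j2 in range(j1 + 1, n_cols):
                    minor = P[i1, j1] * P[i2, j2] - P[i1, j2] * P[i2, j1]
                    if minor < -tol:
                        return False
    return True


# A diagonally dominant tridiagonal transition matrix (hypothetical).
P_good = [[0.8, 0.2, 0.0],
          [0.1, 0.8, 0.1],
          [0.0, 0.2, 0.8]]
# A matrix whose rows are ordered the "wrong" way (hypothetical).
P_bad = [[0.5, 0.5],
         [0.9, 0.1]]
print(is_tp2(P_good), is_tp2(P_bad))
```

Note that diagonal dominance alone does not guarantee TP2 in general, so an explicit check of the minors is a cheap safeguard after estimating a transition matrix.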

An important consequence of the TP2 ordering in Definition 4 is the following theorem, which states that the filter preserves MLR dominance.

Theorem 4 (Theorem 10.3.1 in [27])

If the transition matrix, , and the observation matrix, , satisfy the conditions in 11 and Sec. III-B1, then

- For , the filter satisfies .

- For ,

Theorem 5 ([39])

if and only if for any increasing function ,

Appendix B Proof of Prop. 1, Prop. 2, Prop. 3, Theorem 1

To prove Prop. 1, Prop. 2 and Prop. 3, we assume that the propositions hold for all values less than .

B-A Proof of Prop. 1

Recall from (12),

Using Theorem 4, Theorem 5 and the induction hypothesis, the term is increasing in . From the assumption in Sec. III-B1, is increasing in . The proof for increasing in is similar and is omitted. Hence, is increasing in .

B-B Proof of Prop. 2

The proof follows by induction. Recall from (19), we have

| (45) |

Hence, we compare and in the following regions:

- a.) which is non-negative by the induction assumption.

- b.) which is non-negative since .

- c.) which is non-negative since .

- d.) which is non-negative by the induction assumption.

B-C Proof of Prop. 3

If , then . By Prop. 2, . Hence .

B-D Proof of Theorem 1

Existence of optimal policy: In order to show the existence of a threshold policy of , we need to show that is supermodular in . Since,

We need to show that is decreasing in .

| (46) |

The term in (46) is decreasing in due to our assumption. Hence, to show that is decreasing in it is sufficient to show that is decreasing in . Define,

| (47) |

Now,

| (48) |

We prove using induction that is decreasing in , using the recursive relation over in (48).

For ,

| (49) |

The initial conditions of the value iteration algorithm can be chosen such that in (49) is decreasing in . A suitable choice of the initial conditions is given below:

| (50) |

The intuition behind the initial conditions in (50) is that the value function, gives the expected total reward if we stop times successively starting at belief . Hence, it is clear that is decreasing in , if is decreasing in , finishing the induction step.

Characterization of the switching curve : For each construct the line segment . The line segment can be described as . On the line segment all the belief states are MLR orderable. Since is monotone decreasing in , for each , we pick the largest such that . The belief state, is the threshold belief state, where . Denote by . The above construction implies that there is a unique threshold on . The entire simplex can be covered by considering all pairs of lines , for , i.e. . Combining all points yields a unique threshold curve in given by .

Connectedness of : Since for all , call , the subset of that contains . Suppose is the subset that was disconnected from . Since every point on lies on the line segment , for some , there exists a line segment starting from that would leave the region , pass through the region where action is optimal and then intersect region , where action is optimal. But, this violates the requirement that the policy is monotone on . Hence, and are connected.

Connectedness of : Assume , otherwise and there is nothing to prove. Call the region that contains as . Suppose is disconnected from . Since every point in can be covered by a line segment , for some , there exists a line starting from that would leave the region , pass through the region where action is optimal and then intersect the region (where action is optimal). But this violates the monotone property of .

Sub-setting structure: The proof is straightforward from Prop. 3.

B-E Proof of Theorem 2

References

- [1] T. Smith, M. Obrist, and P. Wright, “Live-streaming changes the (video) game,” in Proc. of the 11th European Conference on Interactive TV and Video. ACM, 2013, pp. 131–138.

- [2] S. Bollapragada, M. R. Bussieck, and S. Mallik, “Scheduling commercial videotapes in broadcast television,” Oper. Res., vol. 52, no. 5, pp. 679–689, Oct. 2004.

- [3] D. G. Popescu and P. Crama, “Ad revenue optimization in live broadcasting,” Management Science, vol. 62, no. 4, pp. 1145–1164, 2015.

- [4] S. Seshadri, S. Subramanian, and S. Souyris, “Scheduling spots on television,” 2015.

- [5] H. Kang and M. P. McAllister, “Selling you and your clicks: examining the audience commodification of google,” Journal for a Global Sustainable Information Society, vol. 9, no. 2, pp. 141–153, 2011.

- [6] R. Terlutter and M. L. Capella, “The gamification of advertising: analysis and research directions of in-game advertising, advergames, and advertising in social network games,” Journal of Advertising, vol. 42, no. 2-3, pp. 95–112, 2013.

- [7] J. Turner, A. Scheller-Wolf, and S. Tayur, “Scheduling of dynamic in-game advertising,” Operations Research, vol. 59, no. 1, pp. 1–16, 2011.

- [8] N. Archak, V. Mirrokni, and S. Muthukrishnan, “Budget optimization for online campaigns with positive carryover effects,” in Proc. of the 8th International Conference on Internet and Network Economics. Springer-Verlag, 2012, pp. 86–99.

- [9] N. Archak, V. S. Mirrokni, and S. Muthukrishnan, “Mining advertiser-specific user behavior using adfactors,” in Proceedings of the 19th International Conference on World Wide Web, ser. WWW ’10. ACM, 2010, pp. 31–40.

- [10] T. Nakai, “The problem of optimal stopping in a partially observable markov chain,” Journal of optimization Theory and Applications, vol. 45, no. 3, pp. 425–442, 1985.

- [11] W. Stadje, “An optimal k-stopping problem for the poisson process,” in Mathematical Statistics and Probability Theory. Springer, 1987, pp. 231–244.

- [12] M. Nikolaev, “On optimal multiple stopping of markov sequences,” Theory of Probability & Its Applications, vol. 43, no. 2, pp. 298–306, 1999.

- [13] A. Krasnosielska-Kobos, “Multiple-stopping problems with random horizon,” Optimization, vol. 64, no. 7, pp. 1625–1645, 2015.

- [14] D. P. Bertsekas, Dynamic programming and optimal control. Athena Scientific Belmont, MA, 1995, vol. 1, no. 2.

- [15] E. Bayraktar and R. Kravitz, “Quickest detection with discretely controlled observations,” Sequential Analysis, vol. 34, no. 1, pp. 77–133, 2015.

- [16] J. Geng, E. Bayraktar, and L. Lai, “Bayesian quickest change-point detection with sampling right constraints,” IEEE Transactions on Information Theory, vol. 60, no. 10, pp. 6474–6490, 2014.

- [17] T. L. Lai, “On optimal stopping problems in sequential hypothesis testing,” Statistica Sinica, vol. 7, no. 1, pp. 33–51, 1997.

- [18] ——, Sequential analysis. Wiley Online Library, 2001.

- [19] A. G. Nikolaev and S. H. Jacobson, “Stochastic sequential decision-making with a random number of jobs,” Operations Research, vol. 58, no. 4, pp. 1023–1027, 2010.

- [20] S. Savin and C. Terwiesch, “Optimal product launch times in a duopoly: Balancing life-cycle revenues with product cost,” Operations Research, vol. 53, no. 1, pp. 26–47, 2005.

- [21] I. Lobel, J. Patel, G. Vulcano, and J. Zhang, “Optimizing product launches in the presence of strategic consumers,” Management Science, vol. 62, no. 6, pp. 1778–1799, 2015.

- [22] K. E. Wilson, R. Szechtman, and M. P. Atkinson, “A sequential perspective on searching for static targets,” European Journal of Operational Research, vol. 215, no. 1, pp. 218 – 226, 2011.

- [23] M. Atkinson, M. Kress, and R.-J. Lange, “When is information sufficient for action? search with unreliable yet informative intelligence,” Operations Research, vol. 64, no. 2, pp. 315–328, 2016.

- [24] I. D. Askwith, “Television 2.0: Reconceptualizing tv as an engagement medium,” Ph.D. dissertation, Massachusetts Institute of Technology, 2007.

- [25] H. Yu, D. Zheng, B. Y. Zhao, and W. Zheng, “Understanding user behavior in large-scale video-on-demand systems,” SIGOPS Oper. Syst. Rev., vol. 40, no. 4, pp. 333–344, Apr. 2006.

- [26] V. Krishnamurthy and D. V. Djonin, “Structured threshold policies for dynamic sensor scheduling–a partially observed markov decision process approach,” IEEE Transactions on Signal Processing, vol. 55, no. 10, pp. 4938–4957, Oct 2007.

- [27] V. Krishnamurthy, Partially Observed Markov Decision Processes. Cambridge University Press, 2016.

- [28] ——, “How to schedule measurements of a noisy Markov chain in decision making?” IEEE Transactions on Information Theory, vol. 59, no. 7, pp. 4440–4461, July 2013.

- [29] ——, “Bayesian sequential detection with phase-distributed change time and nonlinear penalty – A POMDP Lattice programming approach,” IEEE Transactions on Information Theory, vol. 57, no. 10, pp. 7096–7124, Oct 2011.

- [30] M. E. Johnson and J. Jackman, “Infinitesimal perturbation analysis: A tool for simulation,” The Journal of the Operational Research Society, vol. 40, no. 3, pp. 243–254, 1989.

- [31] G. C. Pflug, Optimization of stochastic models: the interface between simulation and optimization. Springer Science & Business Media, 2012, vol. 373.

- [32] J. C. Spall, Introduction to stochastic search and optimization: estimation, simulation, and control. John Wiley & Sons, 2005, vol. 65.

- [33] I.-J. Wang and J. C. Spall, “Stochastic optimisation with inequality constraints using simultaneous perturbations and penalty functions,” International Journal of Control, vol. 81, no. 8, pp. 1232–1238, 2008.

- [34] M. Joo, K. C. Wilbur, B. Cowgill, and Y. Zhu, “Television advertising and online search,” Management Science, vol. 60, no. 1, pp. 56–73, 2013.

- [35] J. Ginsberg, M. H. Mohebbi, R. S. Patel, L. Brammer, M. S. Smolinski, and L. Brilliant, “Detecting influenza epidemics using search engine query data,” Nature, vol. 457, no. 7232, pp. 1012–1014, 2009.

- [36] K. Pires and G. Simon, “Youtube live and twitch: A tour of user-generated live streaming systems,” in Proceedings of the 6th ACM Multimedia Systems Conference, ser. MMSys ’15. ACM, 2015, pp. 225–230.

- [37] W. Zucchini and I. L. MacDonald, Hidden Markov models for time series: an introduction using R. CRC press, 2009.

- [38] V. Krishnamurthy and U. Pareek, “Myopic bounds for optimal policy of POMDPs: An extension of Lovejoy’s structural results,” Operations Research, vol. 62, no. 2, pp. 428–434, 2015.

- [39] P. C. Kiessler, “Comparison methods for stochastic models and risks,” Journal of the American Statistical Association, vol. 100, no. 470, pp. 704–704, 2005.