Optimal Clustering in Anisotropic Gaussian Mixture Models

Abstract

We study the clustering task under anisotropic Gaussian Mixture Models where the covariance matrices from different clusters are unknown and are not necessarily the identity matrix. We characterize the dependence of signal-to-noise ratios on the cluster centers and covariance matrices and obtain the minimax lower bound for the clustering problem. In addition, we propose a computationally feasible procedure and prove it achieves the optimal rate within a few iterations. The proposed procedure is a hard EM type algorithm, and it can also be seen as a variant of Lloyd’s algorithm that is adjusted to the anisotropic covariance matrices.

1 Introduction

Clustering is a fundamentally important task in statistics and machine learning [7, 2]. The most popular and well-studied model for clustering is the Gaussian Mixture Model (GMM) [18, 20], which can be written as

Here are the observations with being the sample size. Let be the number of clusters that is assumed to be known. Denote to be unknown centers and to be unknown covariance matrices for the clusters. Let be the cluster structure such that for each index , the value of indicates which cluster the th data point belongs to. The goal is to recover from . For any estimator , its clustering performance is measured by a misclustering error rate which will be introduced later in (4).

There has been increasing interest in the theoretical and algorithmic analysis of clustering under GMMs. When the GMM is isotropic (that is, all the covariance matrices are equal to the same identity matrix), [15] obtains the minimax rate for clustering which takes a form of under the loss . Various methods have been studied in the isotropic setting. k-means clustering [16] might be the most natural choice but it is NP-hard [4]. As a local approach to optimize the k-means objective, Lloyd’s algorithm [13] is one of the most popular clustering algorithms and has achieved many successes in different disciplines [24]. [15, 8] establish computational and statistical guarantees for Lloyd’s algorithm by showing it achieves the optimal rates after a few iterations provided with some decent initialization. Another popular approach to clustering, especially for high-dimensional data, is spectral clustering [22, 19, 21], which is an umbrella term for clustering after a dimension reduction through a spectral decomposition. [14, 17, 1] prove that spectral clustering also achieves the optimality under the isotropic GMM. Another line of work is to consider semidefinite programming (SDP) as a convex relaxation of the k-means objective. Its statistical properties have been studied in [6, 9].

In spite of all the exciting results, most of the existing literature focuses on isotropic GMMs, and clustering under the anisotropic case where the covariance matrices are not necessarily the identity matrix is not well-understood. The results of some papers [15, 6] hold under sub-Gaussian mixture models, where the errors are assumed to follow some sub-Gaussian distribution with variance proxy . It seems that their result already covers the anisotropic case, as are indeed sub-Gaussian distributions. However, from a minimax point of view, among all the sub-Gaussian distributions with variance proxy , the least favorable case (the case where clustering is the most difficult) is when the errors are . Therefore, the minimax rate for clustering under the sub-Gaussian mixture model is essentially the one under isotropic GMMs, and methods such as Lloyd’s algorithm that require no covariance matrix information can be rate-optimal. As a result, the aforementioned results are all for isotropic GMMs.

A few papers have explored the direction of clustering under anisotropic GMMs. [3] gives a polynomial-time clustering algorithm that provably works well when the Gaussian distributions are well separated by hyperplanes. Their idea is further developed in [11] which allows the Gaussians to be overlapped with each other but only for two-cluster cases. A recent paper [23] proposes another method for clustering under a balanced mixture of two elliptical distributions. They give a provable upper bound of their clustering performance with respect to an excess risk. Nevertheless, it remains unknown what is the fundamental limit of clustering under the anisotropic GMMs and whether there is any polynomial-time procedure that achieves it.

In this paper, we will investigate the optimal rates of the clustering task under two anisotropic GMMs. Model 1 is when the covariance matrices are all equal to each other (i.e., homogeneous) and are equal to some unknown matrix . Model 2 is more flexible, where the covariance matrices are unknown and are not necessarily equal to each other (i.e., heterogeneous). The contribution of this paper is two-fold, summarized as follows.

Our first contribution is on the fundamental limits. We obtain the minimax lower bound for clustering under the anisotropic GMMs with respect to the loss . We show it takes the form

where the signal-to-noise ratio under Model 1 is equal to and the one for Model 2 is more complicated. For both models, we can see the minimax rates depend not only on the centers but also the covariance matrices. This is different from the isotropic case whose signal-to-noise ratio is . Our results precisely capture the role the covariance matrices play in the clustering problem. It shows covariance matrices impact the fundamental limits of the clustering problem through complicated interaction with the centers especially in Model 2. The minimax lower bounds are obtained by establishing connections with Linear Discriminant Analysis (LDA) and Quadratic Discriminant Analysis (QDA).

Our second and more important contribution is on the computational side. We propose a computationally feasible and rate-optimal algorithm for the anisotropic GMM. Popular methods including the Lloyd’s algorithm and the spectral clustering no longer work well as they are developed under the isotropic case and only consider the distances among the centers [3]. We study an adjusted Lloyd’s algorithm which estimates the covariance matrices in each iteration and recovers the clusters using the covariance matrix information. It can also be seen as a hard EM algorithm [5]. As an iterative algorithm, we give a statistical and computational guarantee and guidance to practitioners by showing that it obtains the minimax lower bound within iterations. That is, let be the output of the algorithm after iterations, we have with high probability,

holds for all . The algorithm can be initialized by popular methods such as spectral clustering or Lloyd’s algorithm. In numerical studies, we show the proposed algorithm improves greatly on the two aforementioned methods under anisotropic GMMs, and matches the optimal exponent given in the minimax lower bound.

Paper Organization.

The remainder of the paper is organized as follows. In Section 2, we study Model 1 where the covariance matrices are unknown but homogeneous. In Section 3, we consider Model 2 where covariance matrices are unknown and heterogeneous. For both cases, we obtain the minimax lower bound for clustering and study the adjusted Lloyd’s algorithm. In Section 4, we provide a numerical comparison with other popular methods. The proofs of theorems in Section 2 are given in Section 5 and the proofs for Section 3 are included in Section 6. All the technical lemmas are included in Section 7.

Notation.

Let for any positive integer . For any set , we denote for its cardinality. For any matrix , we denote to be its smallest eigenvalue and to be its largest eigenvalue. In addition, we denote to be its operator norm. For any two vectors of the same dimension, we denote to be their inner product. For any positive integer , we denote to be the identity matrix. We denote to be the normal distribution with mean and covariance matrix . We denote to be the indicator function. Given two positive sequences , we denote if when . We write if there exists a constant independent of such that for all .

2 GMM with Unknown but Homogeneous Covariance Matrices

2.1 Model

We first consider a GMM where covariance matrices of different clusters are unknown but are assumed to be equal to each other. The data-generating process can be displayed as follows:

| Model 1: | (1) |

It is called Stretched Mixture Model in [23] as the density of is elliptical. Throughout the paper, we call it Model 1 for simplicity and to distinguish it from a more complicated model that will be introduced in Section 3. The goal is to recover the underlying cluster assignment vector from .
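As an illustration of the data-generating process, the following minimal sketch draws a sample from a mixture whose clusters share one covariance matrix. The additive form (an observation equals the center of its cluster plus Gaussian noise with the shared covariance) is assumed from the description above; the function name `sample_model1` and all numerical values are hypothetical.

```python
import numpy as np

def sample_model1(centers, cov, z, rng):
    """Draw one observation per label in z: cluster center plus N(0, cov) noise."""
    centers = np.asarray(centers, dtype=float)
    z = np.asarray(z)
    noise = rng.multivariate_normal(np.zeros(centers.shape[1]), cov, size=len(z))
    return centers[z] + noise

rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [6.0, 0.0]])   # hypothetical centers
cov = np.array([[4.0, 1.0], [1.0, 1.0]])       # hypothetical shared covariance
z = np.repeat([0, 1], 1000)                    # hypothetical cluster labels
Y = sample_model1(centers, cov, z, rng)

# Residuals around the true centers should have covariance close to cov.
resid = Y - centers[z]
empirical_cov = resid.T @ resid / len(z)
print(empirical_cov)
```

Only the labels decide which center is added; the noise distribution is the same for every cluster, which is what makes this the homogeneous case.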

Signal-to-noise Ratio.

Define the signal-to-noise ratio

| (2) |

which is a function of all the centers and the covariance matrix . As we will show later in Theorem 2.1, SNR captures the difficulty of the clustering problem and determines the minimax rate. We defer the geometric interpretation of SNR until after Lemma 2.1.

A quantity closely related to SNR is the minimum distance among the centers. Define as

| (3) |

Then we can see SNR and are in the same order if all eigenvalues of the covariance matrix are assumed to be constants. If is further assumed to be the identity matrix, then we have SNR equal to . As a result, in [15, 8, 14] where isotropic GMMs are studied, plays the role of signal-to-noise ratio and appears in the minimax rates.
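The relation between the two quantities can be checked numerically. The sketch below assumes that SNR is the smallest distance between two centers measured in the metric induced by the inverse covariance matrix (the "effective distance" described later in the geometric interpretation), and that the minimum distance among the centers is the smallest Euclidean distance. The function names and the example values are hypothetical.

```python
import numpy as np
from itertools import combinations

def min_center_distance(centers):
    """Smallest Euclidean distance between two distinct centers."""
    return min(float(np.linalg.norm(a - b)) for a, b in combinations(centers, 2))

def snr_shared_cov(centers, cov):
    """Smallest covariance-adjusted distance between two distinct centers."""
    precision = np.linalg.inv(cov)
    return min(float(np.sqrt((a - b) @ precision @ (a - b)))
               for a, b in combinations(centers, 2))

centers = np.array([[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]])   # hypothetical centers
cov = np.diag([4.0, 1.0])                                   # hypothetical covariance

delta = min_center_distance(centers)
snr_aniso = snr_shared_cov(centers, cov)
snr_iso = snr_shared_cov(centers, np.eye(2))
print(delta, snr_aniso, snr_iso)
```

With the identity covariance the two quantities coincide, while stretching the noise along the first coordinate halves the effective distance of the pair of centers that differ only in that coordinate.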

Loss Function.

To measure the clustering performance, we consider the misclustering error rate defined as follows. For any , we define

| (4) |

where . Here the minimum is over all the permutations over due to the identifiability issue of the labels . Another loss that will be used is defined as

| (5) |

It also measures the clustering performance of considering the distances among the true centers. It is related to as and hence provides more information than . We will mainly use in the technical analysis but will eventually present the results using which is more interpretable.
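A minimal sketch of the misclustering error rate is given below, assuming it is the fraction of mislabeled points minimized over all relabelings of the clusters, as described above. The function name `misclustering_rate` and the toy labels are hypothetical, and the brute-force search over permutations is only practical for a small number of clusters.

```python
from itertools import permutations

def misclustering_rate(z_hat, z_star, k):
    """Fraction of mislabeled points, minimized over permutations of the k labels."""
    n = len(z_star)
    best = n
    for perm in permutations(range(k)):
        # perm[a] is the label that estimated cluster a is mapped to.
        errors = sum(1 for a, b in zip(z_hat, z_star) if perm[a] != b)
        best = min(best, errors)
    return best / n

z_star = [0, 0, 1, 1, 2, 2]
z_hat = [1, 1, 0, 0, 2, 0]   # labels 0 and 1 are swapped; the last point is wrong
rate = misclustering_rate(z_hat, z_star, k=3)
print(rate)
```

Swapping two labels costs nothing under this loss, so only the last point counts as an error in the toy example.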

2.2 Minimax Lower Bound

We first establish the minimax lower bound for the clustering problem under Model 1.

Theorem 2.1.

Under the assumption , we have

| (6) |

If instead, we have for some constant .

Theorem 2.1 allows the number of clusters to grow with and shows that is a necessary condition to have a consistent clustering if is a constant. Theorem 2.1 holds for any arbitrary and , and the minimax lower bound depends on them through SNR. The parameter space is only for while and are fixed. Hence, (6) can be interpreted as a pointwise result, and it captures precisely the explicit dependence of the minimaxity on and .

Theorem 2.1 is closely related to the Linear Discriminant Analysis (LDA). If there are only two clusters, and if the centers and the covariance matrix are known, then estimating each is exactly the task of LDA: we want to figure out which normal distribution an observation is generated from, where the two normal distributions have different means but the same covariance matrix. In fact, this is also how Theorem 2.1 is proved: we will first reduce the estimation problem of into a two-point hypothesis testing problem for each individual , the error of which is given in Lemma 2.1 by the analysis of LDA, and then aggregate all the testing errors together.

In the following lemma, we give a sharp and explicit formula for the testing error of the LDA. Here we have two normal distributions and and an observation that is generated from one of them. We are interested in estimating which distribution it is from. By the Neyman-Pearson lemma, it is known that the likelihood ratio test is the optimal testing procedure. Then by using the Gaussian tail probability, we are able to obtain the optimal testing error, the lower bound of which is given in Lemma 2.1.

Lemma 2.1 (Testing Error for Linear Discriminant Analysis).

Consider two hypotheses and . Define a testing procedure

Then we have If , we have

Otherwise, for some constant .

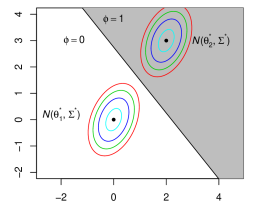

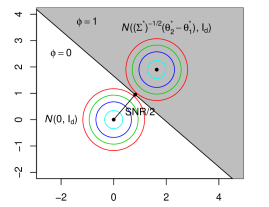

With the help of Lemma 2.1, we have a geometric interpretation of SNR. In the left panel of Figure 1, we have two normal distributions and for to be generated from. The black line represents the optimal testing procedure displayed in Lemma 2.1 that divides the space into two half-spaces. To calculate the testing error, we can make a transformation so that the two normal distributions become isotropic: and as displayed in the right panel. Then the distance between the two centers is , and the distance between a center and the black curve is half of it. Then is the probability of in the grayed area, which is equal to by Gaussian tail probability. As a result, is the effective distance between the two centers of and for the clustering problem, considering the geometry of the covariance matrix. Since we have multiple clusters, SNR defined in (2) can be interpreted as the minimum effective distance among the centers considering the anisotropic structure of and it captures the intrinsic difficulty of the clustering problem.
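The tail calculation in this interpretation can be sketched numerically. The code below assumes the standard fact that, for two Gaussians with a common covariance matrix, the likelihood ratio test errs under either hypothesis with probability equal to the standard normal upper tail at half the effective distance. The name `d` for the effective distance and the comparison with the exponent d²/8 (the Chernoff-type bound on that tail) are assumptions made for illustration.

```python
import math

def gaussian_upper_tail(t):
    """P(Z > t) for a standard normal Z."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))

def lda_error(d):
    """Error of the likelihood ratio test under one hypothesis when the
    effective (covariance-adjusted) distance between the two centers is d."""
    return gaussian_upper_tail(d / 2.0)

distances = [2.0, 4.0, 8.0, 16.0]
# Ratio of the exact exponent -log(error) to d^2 / 8.
ratios = [-math.log(lda_error(d)) / (d ** 2 / 8.0) for d in distances]
for d, r in zip(distances, ratios):
    print(d, lda_error(d), r)
```

The ratio decreases toward 1 as the effective distance grows, which is the sense in which an exponent of this form is sharp only up to a lower-order factor.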

2.3 Rate-Optimal Adaptive Procedure

In this section, we will propose a computationally feasible and rate-optimal procedure for clustering under Model 1. Summarized in Algorithm 1, the proposed algorithm is a variant of Lloyd’s algorithm. Starting from some initialization, it updates the estimation of the centers (in (7)), the covariance matrix (in (8)), and the cluster assignment vector (in (9)) iteratively. It differs from Lloyd’s algorithm in the sense that Lloyd’s algorithm is for isotropic GMMs without the covariance matrix update (8). In addition, in (9) it updates the estimation of by instead. To distinguish them from each other, we call the classical Lloyd’s algorithm the vanilla Lloyd’s algorithm, and name Algorithm 1 the adjusted Lloyd’s algorithm, as it is adjusted to the unknown and anisotropic covariance matrix.

Algorithm 1 can also be interpreted as a hard EM algorithm. If we apply the Expectation Maximization (EM) for Model 1, we will have an M step for estimating parameters and and an E step for estimating . It turns out the updates on the parameters (7) - (8) are exactly the same as the updates of EM (M step). However, the update on in Algorithm 1 is different from that in the EM. Instead of taking a conditional expectation (E step), we also take a maximization in (9). As a result, Algorithm 1 consists solely of M steps for both the parameters and , which is known as a hard EM algorithm.

| (7) |

| (8) |

| (9) |
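The three updates can be sketched as follows. This is a minimal illustration rather than the exact Algorithm 1: it assumes the center update is the within-cluster mean, the covariance update is the pooled covariance of the residuals (the usual M step for a shared covariance matrix), and the assignment update picks the closest center in the metric induced by the inverse of the estimated covariance. The function name `adjusted_lloyd_shared`, the stopping rule, and the toy data are hypothetical.

```python
import numpy as np

def adjusted_lloyd_shared(Y, z_init, k, n_iter=10):
    """Hard-EM style iterations with one covariance matrix shared by all clusters.

    Assumes every cluster stays non-empty and the pooled covariance is invertible.
    """
    n = Y.shape[0]
    z = np.asarray(z_init).copy()
    for _ in range(n_iter):
        # Center update: average the points currently assigned to each cluster.
        centers = np.vstack([Y[z == a].mean(axis=0) for a in range(k)])
        # Covariance update: pooled covariance of residuals around assigned centers.
        resid = Y - centers[z]
        cov = resid.T @ resid / n
        # Assignment update: closest center in the metric induced by inv(cov).
        diffs = Y[:, None, :] - centers[None, :, :]            # shape (n, k, d)
        dist = np.einsum("nkd,de,nke->nk", diffs, np.linalg.inv(cov), diffs)
        z_new = dist.argmin(axis=1)
        if np.array_equal(z_new, z):
            break                                              # labels are stable
        z = z_new
    return z

# Toy data: the centers differ only along the low-variance coordinate.
rng = np.random.default_rng(0)
z_true = np.repeat([0, 1], 200)
centers_true = np.array([[0.0, 0.0], [0.0, 2.0]])
cov_true = np.diag([25.0, 0.04])
Y = centers_true[z_true] + rng.multivariate_normal(np.zeros(2), cov_true, size=400)

z_init = z_true.copy()
z_init[::5] = 1 - z_init[::5]          # a "decent" initialization: 20% of labels flipped
z_hat = adjusted_lloyd_shared(Y, z_init, k=2)
error = float(np.mean(z_hat != z_true))
print(error)
```

In this toy setting the Euclidean distance between the centers is small relative to the noise in the first coordinate, so the covariance-adjusted assignment step is what separates the clusters.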

In Theorem 2.2, we give a computational and statistical guarantee of the proposed Algorithm 1. We show that starting from a decent initialization, within iterations, Algorithm 1 achieves an error rate which matches the minimax lower bound given in Theorem 2.1. As a result, Algorithm 1 is a rate-optimal procedure. In addition, the algorithm is fully adaptive to the unknown and . The only information assumed to be known is the number of clusters, which is commonly assumed to be known in the clustering literature [15, 8, 14]. The theorem also shows that the number of iterations to achieve the optimal rate is at most , which provides implementation guidance to practitioners.

Theorem 2.2.

Assume and for some constant . Assume and . For Algorithm 1, suppose satisfies with probability at least . Then with probability at least , we have

We have remarks on the assumptions of Theorem 2.2. We allow the number of clusters to grow with . When is a constant, the assumption on is the necessary condition to have a consistent recovery of according to the minimax lower bound presented in Theorem 2.1. The assumption on is to make sure the covariance matrix is well-conditioned. The dimensionality is assumed to be at most , an assumption that is stronger than that in [15, 8, 14] which only needs . This is because, compared to these papers, we need to estimate the covariance matrix and to control the estimation error .

The requirement for the initialization can be fulfilled by simple procedures. A popular choice is the vanilla Lloyd’s algorithm, the performance of which is studied in [15, 8]. Since are sub-Gaussian random variables with variance proxy , [8] implies the vanilla Lloyd’s algorithm output satisfies with probability at least , under the assumption that . Note that [8] is for isotropic GMMs, but its results can be extended to sub-Gaussian mixture models with nearly identical proof. Then we have , as and are both in the same order under the assumption . As a result, we immediately have the following corollary.

Corollary 2.1.

Assume and for some constant . Assume and . Using the vanilla Lloyd’s algorithm as the initialization in Algorithm 1, we have with probability at least ,

3 GMM with Unknown and Heterogeneous Covariance Matrices

3.1 Model

In this section, we study the GMM with covariance matrices from each cluster unknown and not necessarily equal to each other. The data-generating process can be displayed as follows:

| Model 2: | (10) |

We call it Model 2 throughout the paper to distinguish it from Model 1 studied in Section 2. The difference between (10) and (1) is that we now have instead of a shared . We consider the same loss functions as in (4) and (5).

Signal-to-noise Ratio.

The signal-to-noise ratio for Model 2 is defined as follows. We use the notation to distinguish it from SNR for Model 1. Compared to SNR, is much more complicated and does not have an explicit formula. We first define a space for any such that :

We then define and

| (11) |

The form of is closely connected to the testing error of the Quadratic Discriminant Analysis (QDA), which we will give in Lemma 3.1. For the interpretation of the (especially from a geometric point of view), we defer it after presenting Lemma 3.1. Here let us consider a few special cases where we are able to simplify : (1) When for all , by simple algebra, we have for any such that . Hence, and Model 2 is reduced to Model 1. (2) When for any where are large constants, we have both close to . From these examples, we can see is determined by both the centers and the covariance matrices .

3.2 Minimax Lower Bound

We first establish the minimax lower bound for the clustering problem under Model 2.

Theorem 3.1.

Under the assumption , we have

If instead, we have for some constant .

Although the statement of Theorem 3.1 looks similar to that of Theorem 2.1, the two minimax lower bounds are different from each other due to the discrepancy in the dependence of the centers and the covariance matrices in and SNR. By the same argument as in Section 2.2, the minimax lower bound established in Theorem 3.1 is closely related to the Quadratic Discriminant Analysis (QDA) between two normal distributions with different means and different covariance matrices.

Lemma 3.1 (Testing Error for Quadratic Discriminant Analysis).

Consider two hypotheses and . Define a testing procedure

Then we have If , we have

Otherwise, for some constant .

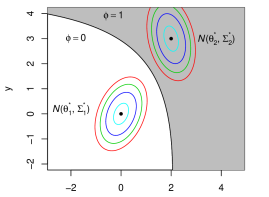

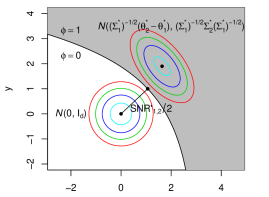

From Lemma 3.1, we can have a geometric interpretation of . In the left panel of Figure 2, we have two normal distributions and where can be generated from. The black curve represents the optimal testing procedure displayed in Lemma 3.1. Since is not necessarily equal to , the black curve is not necessarily a straight line. If is true, then the probability for to be incorrectly classified is when falls in the gray area, which is . To calculate it, we can make a transformation . Then displayed in the right panel of Figure 2, the two distributions become and , and the optimal testing procedure . As a result, in the right panel of Figure 2, represents the space colored by gray, and the black curve is its boundary. Then is equal to , which can be shown to be determined by the minimum distance between the center of and the space . Denote the minimum distance by , by Lemmas 7.10 and 7.11, we can show . As a result, the can be interpreted as the minimum effective distance among the centers considering the anisotropic and heterogeneous structure of and it captures the intrinsic difficulty of the clustering problem under Model 2.

3.3 Optimal Adaptive Procedure

In this section, we will propose a computationally feasible and rate-optimal procedure for clustering under Model 2. Similar to Algorithm 1, the proposed Algorithm 2 can be seen as a variant of the Lloyd’s algorithm that is adjusted to the unknown and heterogeneous covariance matrices. It can also be interpreted as a hard EM algorithm under Model 2. Algorithm 2 differs from Algorithm 1 in (13) and (14), as now there are covariance matrices to be estimated.

| (12) |

| (13) |

| (14) |
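A minimal sketch of the heterogeneous variant is given below. It is an illustration under stated assumptions, not the exact Algorithm 2: each cluster gets its own mean and its own sample covariance, and the assignment step minimizes the covariance-adjusted squared distance plus the log-determinant of the cluster covariance, which is the hard-assignment version of the Gaussian log-likelihood. The function name `adjusted_lloyd_hetero` and the toy data are hypothetical.

```python
import numpy as np

def adjusted_lloyd_hetero(Y, z_init, k, n_iter=10):
    """Hard-EM style iterations with a separate covariance matrix per cluster.

    Assumes every cluster keeps enough points for an invertible covariance.
    """
    n = Y.shape[0]
    z = np.asarray(z_init).copy()
    for _ in range(n_iter):
        scores = np.empty((n, k))
        for a in range(k):
            pts = Y[z == a]
            center = pts.mean(axis=0)                    # center update
            resid = pts - center
            cov = resid.T @ resid / len(pts)             # per-cluster covariance update
            diff = Y - center
            quad = np.einsum("nd,nd->n", diff @ np.linalg.inv(cov), diff)
            _, logdet = np.linalg.slogdet(cov)
            scores[:, a] = quad + logdet                 # negative log-likelihood up to constants
        z_new = scores.argmin(axis=1)                    # assignment update
        if np.array_equal(z_new, z):
            break
        z = z_new
    return z

rng = np.random.default_rng(1)
z_true = np.repeat([0, 1], 200)
noise0 = rng.multivariate_normal(np.zeros(2), np.diag([0.04, 9.0]), size=200)
noise1 = rng.multivariate_normal(np.zeros(2), np.diag([0.04, 0.04]), size=200)
Y = np.vstack([noise0, np.array([1.5, 0.0]) + noise1])

z_init = z_true.copy()
z_init[::5] = 1 - z_init[::5]          # initialization with 20% of labels flipped
z_hat = adjusted_lloyd_hetero(Y, z_init, k=2)
error = float(np.mean(z_hat != z_true))
print(error)
```

Compared with the shared-covariance sketch, the only structural changes are the per-cluster covariance estimate and the extra log-determinant term in the assignment score.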

In Theorem 3.2, we give a computational and statistical guarantee of the proposed Algorithm 2. We show that provided with some decent initialization, Algorithm 2 is able to achieve the minimax lower bound within iterations. The assumptions needed in Theorem 3.2 are similar to those in Theorem 2.2, except that we require stronger assumptions on and the dimensionality since now we have (instead of one) covariance matrices to be estimated. In addition, by we not only assume each of the covariance matrices is well-conditioned, but also assume they are comparable to each other.

Theorem 3.2.

Assume and for some constant . Assume and . For Algorithm 2 , suppose satisfies with probability at least . Then with probability at least , we have

The vanilla Lloyd’s algorithm can be used as the initialization for Algorithm 2. Under the assumption that , Model 2 is also a sub-Gaussian mixture model. By the same argument as in Section 2.3 we have the following corollary.

Corollary 3.1.

Assume and for some constant . Assume and . Using the vanilla Lloyd’s algorithm as the initialization in Algorithm 2, we have with probability at least ,

4 Numerical Studies

In this section, we compare the performance of the proposed methods with other popular clustering methods on synthetic datasets under different settings.

Model 1.

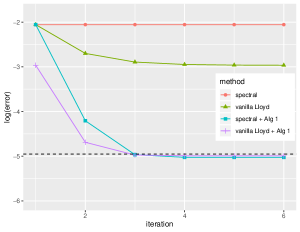

The first simulation is designed for the GMM with unknown but homogeneous covariance matrices (i.e., Model 1). We independently generate samples with dimension from clusters. Each cluster has 40 samples. We set , where is a diagonal matrix with diagonal elements equally spaced from 0.5 to 8 and is a randomly generated orthogonal matrix. The centers are orthogonal to each other with . We consider four popular clustering methods: (1) the spectral clustering method [14] (denoted as “spectral”), (2) the vanilla Lloyd’s algorithm [15] (denoted as “vanilla Lloyd”), (3) the proposed Algorithm 1 initialized by the spectral clustering (denoted as “spectral + Alg 1”), and (4) Algorithm 1 initialized by the vanilla Lloyd (denoted as “vanilla Lloyd + Alg 1”). The comparison is presented in the left panel of Figure 3.
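A covariance matrix of the kind described here can be constructed as in the sketch below. The dimension `d = 10`, the seed, and the way the orthogonal matrix is drawn (QR decomposition of a Gaussian matrix) are assumptions for illustration; the paragraph above only fixes the eigenvalue range and the diagonal-times-orthogonal structure.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 10                                          # hypothetical dimension
eigenvalues = np.linspace(0.5, 8.0, d)          # equally spaced from 0.5 to 8

# Random orthogonal matrix from the QR decomposition of a Gaussian matrix.
Q, _ = np.linalg.qr(rng.standard_normal((d, d)))
cov = Q @ np.diag(eigenvalues) @ Q.T

# The spectrum of cov is exactly the chosen eigenvalues.
print(np.linalg.eigvalsh(cov))
```

Rotating the diagonal matrix keeps the condition number at 16 while removing any alignment between the principal axes and the coordinate axes.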

In the plot, the -axis is the number of iterations and the -axis is the logarithm of the mis-clustering error rate, i.e., . Each of the curves plotted is an average of 100 independent trials. We can see the proposed Algorithm 1 outperforms the spectral clustering and the vanilla Lloyd’s algorithm significantly. What is more, the dashed line represents the optimal exponent of the minimax lower bound given in Theorem 2.1. Then we can see Algorithm 1 achieves it after 3 iterations. This justifies the conclusion established in Theorem 2.2 that Algorithm 1 is rate-optimal.

Model 2.

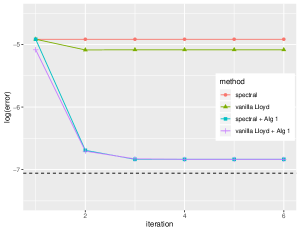

We also compare the performances of four methods (spectral, vanilla Lloyd, spectral + Alg 2, and vanilla Lloyd + Alg 2) for the GMM with unknown and heterogeneous covariance matrices (i.e., Model 2). In this case, we take , and . We set , which is a diagonal matrix with elements equally spaced from 0.5 to 8 and , where is a diagonal matrix with elements selected uniformly from 0.5 to 2 and is a randomly generated orthogonal matrix. To simplify the calculation of , we take as a randomly selected unit vector, with denoting the vector with a 1 in the first coordinate and 0’s elsewhere and with randomly selected satisfying . The comparison is presented in the right panel of Figure 3 where each curve plotted is an average of 100 independent trials.

From the plot, we can clearly see the proposed Algorithm 2 improves greatly on the spectral clustering and the vanilla Lloyd’s algorithm. The dashed line represents the optimal exponent of the minimax lower bound given in Theorem 3.1, which is achieved by Algorithm 2. Hence, this numerically justifies Theorem 3.2 that Algorithm 2 is rate-optimal for clustering under Model 2.

5 Proofs in Section 2

5.1 Proofs for The Lower Bound

Proof of Lemma 2.1.

Note that is the likelihood ratio test. Hence by the Neyman-Pearson lemma, it is the optimal procedure. Let . By Gaussian tail probability, we have

for some constant . The proof is complete. ∎

Proof of Theorem 2.1.

We adopt the idea from [15]. Without loss of generality, assume the minimum in (2) is achieved at so that . Consider an arbitrary such that for any . Then for each , we can choose a subset of with cardinality , denoted by . Let . Then we can define a parameter space

Notice that for any , we have and for any permutation on . Thus we can conclude

We notice that

Now consider a fixed . Define for . Then we can see and . What is more, there exists a one-to-one mapping between and , such that for any , we have with for any and . Hence, we can reduce the problem into a two-point testing problem and then apply Lemma 2.1. We first consider the case that . We have

for some . Here the second inequality is due to Lemma 2.1. Then,

for some other , where we use .

The proof for the case is similar and hence is omitted here. ∎

5.2 Proofs for The Upper Bound

In this section, we will prove Theorem 2.2 using the framework developed in [8] for analyzing iterative algorithms. The key idea to establish statistical guarantees of the proposed iterative algorithm (i.e., Algorithm 1) is to perform a “one-step” analysis. That is, assume we have an estimation for . Then we can apply (7), (8), and (9) on to obtain , , and sequentially, which all depend on . Then can be seen as a refined estimation of . We will first build the connection between with as in Lemma 5.1. To establish the connection, we will decompose the loss into several errors according to the difference in their behaviors. Then we will give conditions (Condition 5.2.1 - 5.2.3), under which we will show these errors are either negligible or well controlled by . With Lemma 5.1 established, in Lemma 5.2 we will show the connection can be extended to multiple iterations, under two more conditions (Condition 5.2.4 - 5.2.5). Last, we will show all these conditions hold with high probability, and hence prove Theorem 2.2.

In the statement of Theorem 2.2, the covariance matrix is assumed to satisfy . Without loss of generality, we can replace it by assuming satisfies

| (15) |

where are two constants. This is due to the following simple argument using the scaling properties of normal distributions. Let be some dataset generated according to Model 1 with parameters , , and . The assumption is equivalent to assuming there exist some constants and some quantity that may depend on such that . Then performing a scaling transformation we obtain another dataset . Note that: 1) can be seen to be generated from Model 1 with parameters , , and , 2) clustering on is equivalent to clustering on , 3) by the definition in (2), the SNRs that are associated with the data generating processes of and are exactly equal to each other, and 4) we have . Hence, in this section, we will assume (15) holds and it will not lose any generality.
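The scale invariance used in this argument can be verified numerically. The sketch assumes, as in the geometric interpretation of Section 2.2, that SNR is the smallest covariance-adjusted distance between two centers; dividing every observation by a factor `c` divides the centers by `c` and the covariance matrix by the square of `c`. The function name, the factor, and the parameter values are hypothetical.

```python
import numpy as np
from itertools import combinations

def snr_shared_cov(centers, cov):
    """Smallest covariance-adjusted distance between two distinct centers."""
    precision = np.linalg.inv(cov)
    return min(float(np.sqrt((a - b) @ precision @ (a - b)))
               for a, b in combinations(centers, 2))

centers = np.array([[0.0, 0.0], [3.0, 1.0], [-2.0, 5.0]])   # hypothetical centers
cov = np.array([[50.0, 10.0], [10.0, 20.0]])                 # hypothetical covariance
c = 7.0                                                      # hypothetical scaling factor

snr_original = snr_shared_cov(centers, cov)
snr_rescaled = snr_shared_cov(centers / c, cov / c ** 2)
print(snr_original, snr_rescaled)
```

The two values agree up to floating-point error, while the eigenvalues of the rescaled covariance shrink by the square of the factor, which is why the eigenvalues can be normalized to a constant range without changing the problem.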

In the proof, we will mainly use the loss for convenience. Recall is defined as the minimum distance among centers in (3). We have

| (16) |

The algorithmic guarantees Lemma 5.1 and Lemma 5.2 are established with respect to the loss. But eventually we will use (16) to convert it into a result with respect to in the proof of Theorem 2.2.

Error Decomposition for the One-step Analysis:

Consider an arbitrary . Apply (7), (8), and (9) on to obtain , , and :

For simplicity we use that is short for . Let be an arbitrary index with . According to (9), will be incorrectly estimated after one iteration in if . That is, it is important to analyze the event

| (17) |

for any . Note that . After some rearrangements, we can see (17) is equivalent to,

where

and

Here is the main term that will lead to the optimal rate. Among all the remaining terms, includes all terms involving . includes all terms related to and consists of terms that only involve . Readers can refer to [8] for more information about the decomposition.

Conditions and Guarantees for One-step Analysis.

To establish the guarantee for the one-step analysis, we first give several conditions on the error terms and .

Condition 5.2.1.

Assume that

holds with probability at least for some .

Condition 5.2.2.

Assume that

holds with probability at least for some .

Condition 5.2.3.

Assume that

holds with probability at least for some .

We next define a quantity that we refer to as the ideal error,

Conditions and Guarantees for Multiple Iterations.

In the above we establish a statistical guarantee for the one-step analysis. Now we will extend the result to multiple iterations. That is, starting from some initialization , we will characterize how the losses , , , …, decay. We impose a condition on and a condition for .

Condition 5.2.4.

Assume that

holds with probability at least for some .

Finally, we need a condition on the initialization.

Condition 5.2.5.

Assume that

holds with probability at least for some .

With these conditions satisfied, we can give a lemma that shows the linear convergence guarantee for our algorithm.

Lemma 5.2.

With-high-probability Results for the Conditions and The Proof of The Main Theorem.

Recall the definition of in (3). Recall that in (15) we assume for two constants . Hence we have is in the same order of SNR. Specifically, we have

| (18) |

Hence the assumption in the statement of Theorem 2.2 is equivalently . Next, we give two lemmas to clarify the Conditions. The first lemma shows that can be taken for some term and the second lemma shows for any , is upper bounded by the desired minimax rate multiplied by the sample size .

Lemma 5.3.

Under the same conditions as in Theorem 2.2, for any constant , there exists some constant only depending on and such that

| (19) |

| (20) |

| (21) |

with probability at least .

Proof.

Under the conditions of Theorem 2.2, the inequalities (33)-(38) hold with probability at least . In the remaining proof, we will work on the event these inequalities hold. Denote and for any . Then we have the equivalence

and

By the assumption that , and , we have , which implies . Thus, we have

| (22) |

and similarly

| (23) |

Now we start to prove (19)-(21). Let where

Notice that

where we use (34), (23), and the fact that and for the last inequality. From (41) we have under the assumption . By the similar analysis as in , we have

Similarly, we have

where we use (42) and the fact that has bounded operator norm. Combining these terms together, we obtain (20).

Lemma 5.4.

Proof.

Under the conditions of Theorem 2.2, the inequalities (33)-(38) hold with probability at least . In the remaining proof, we will work on the event these inequalities hold. Recall the definition of , we can write

where is some sequence to be chosen later. We bound the four terms respectively. Suppose , where . By (22), we know

where is a constant which may differ line by line. Recall that , , and by assumption. Let . Using the tail probability in Lemma 7.1, we have for any ,

We can obtain similar bounds on and by using (41). For , the Gaussian tail bound leads to the inequality

Thus,

Overall, we have . By Markov’s inequality, we have

In other words, with probability at least , we have

∎

Proof of Theorem 2.2.

By Lemmas 5.3 - 5.4, Conditions 5.2.1 - 5.2.5 are satisfied with probability at least . Then applying Lemma 5.2, we have

By (16) and the existence of a constant C such that , we can conclude

Since takes values in the set , the term in the above inequality is negligible as long as . Thus, we can claim

∎

6 Proofs in Section 3

6.1 Proofs for The Lower Bound

Proof of Lemma 3.1.

6.2 Proofs for The Upper Bound

We adopt a proof idea similar to that in Section 5.2. We first present an error decomposition for the one-step analysis of Algorithm 2. In Lemma 6.1, we show that the loss decays after one iteration under Conditions 6.2.1 - 6.2.6. Then in Lemma 6.2 we extend the result to multiple iterations, under two extra Conditions 6.2.7 - 6.2.8. Finally, we show that all the conditions are satisfied with high probability and thus prove Theorem 3.2.

In the statement of Theorem 3.2, we assume for the covariance matrix . Without loss of generality, we can replace it by assuming satisfies

| (24) |

where are two constants. This is due to the scaling properties of normal distributions. The reasoning is the same as that of (15) for Model 1 and hence is omitted here. In the remainder of this section, we will assume (24) holds for the covariance matrices.

Error Decomposition for the One-step Analysis:

Consider an arbitrary . Apply (12), (13), and (14) on to obtain , , and :

For simplicity, we write as shorthand for . Let be an arbitrary index with . According to (9), will be incorrectly estimated after one iteration in if . That is, it is important to analyze the event

| (25) |

for any . After some rearrangement, we see that (25) is equivalent to

where

and

Among these terms, are nearly identical to their counterparts in Section 5.2 with replaced by or . There are three extra terms not appearing in Section 5.2: is a quadratic term of and are terms involving matrix determinants.
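To make the comparison behind (25) concrete, the following is a minimal Python sketch of a label update of this type. It assumes, since the update rule (9) is not restated in this section, that each point is assigned to the cluster minimizing the Mahalanobis distance to the estimated center plus the log-determinant of the estimated covariance matrix. The function names `cost` and `assign`, the restriction to two dimensions, and the toy parameters are ours and purely illustrative.

```python
import math

def inv_and_logdet_2x2(S):
    """Inverse and log-determinant of a 2x2 positive definite matrix."""
    (a, b), (c, d) = S
    det = a * d - b * c
    if det <= 0:
        raise ValueError("covariance must be positive definite")
    inv = [[d / det, -b / det], [-c / det, a / det]]
    return inv, math.log(det)

def cost(y, theta, S):
    """Assumed per-cluster cost: quadratic (Mahalanobis) term plus log det."""
    inv, logdet = inv_and_logdet_2x2(S)
    r0, r1 = y[0] - theta[0], y[1] - theta[1]
    quad = (r0 * (inv[0][0] * r0 + inv[0][1] * r1)
            + r1 * (inv[1][0] * r0 + inv[1][1] * r1))
    return quad + logdet

def assign(y, thetas, Sigmas):
    """Index of the cluster with the smallest cost for the point y."""
    costs = [cost(y, t, S) for t, S in zip(thetas, Sigmas)]
    return min(range(len(costs)), key=costs.__getitem__)

# Toy configuration (hypothetical): the second cluster is stretched
# along the first coordinate.
thetas = [(0.0, 0.0), (4.0, 0.0)]
Sigmas = [[[1.0, 0.0], [0.0, 1.0]], [[9.0, 0.0], [0.0, 1.0]]]
```

In this toy configuration the point (-4, 0) is closer to the first center in Euclidean distance, yet it is assigned to the second cluster because of the stretched covariance. This is the kind of discrepancy that the quadratic and determinant terms account for.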

Conditions and Guarantees for One-step Analysis.

To establish the guarantee for the one-step analysis, we first give several conditions on the error terms.

Condition 6.2.1.

Assume that

holds with probability at least for some .

Condition 6.2.2.

Assume that

holds with probability at least for some .

Condition 6.2.3.

Assume that

holds with probability at least for some .

Condition 6.2.4.

Assume that

holds with probability at least for some .

Condition 6.2.5.

Assume that

holds with probability at least for some .

Condition 6.2.6.

Assume that

holds with probability at least for some .

We next define a quantity that we refer to as the ideal error,

Proof.

The proof of this lemma is quite similar to the proof of Lemma 5.1. The additional terms and can be dealt with in the same way as , while can be dealt with in the same way as . We omit the details here. ∎

Conditions and Guarantees for Multiple Iterations.

In the above we establish a statistical guarantee for the one-step analysis. Now we will extend the result to multiple iterations. That is, starting from some initialization , we will characterize how the losses , , , …, decay. We impose a condition on and a condition for .

Condition 6.2.7.

Assume that

holds with probability at least for some .

Finally, we need a condition on the initialization.

Condition 6.2.8.

Assume that

holds with probability at least for some .

With these conditions satisfied, we can give a lemma that shows the linear convergence guarantee for our algorithm.

Lemma 6.2.

Proof.

The proof of this lemma is the same as the proof of Lemma 5.2. ∎

With-high-probability Results for the Conditions and The Proof of The Main Theorem.

Recall the definition of in (3). Lemma 6.3 shows that is of the same order as , which will play a role similar to that of (18) in Section 5.2. It immediately implies that the assumption in the statement of Theorem 3.2 is equivalent to . The proof of Lemma 6.3 is deferred to Section 7.

Lemma 6.3.

Assume and . Further assume there exist constants such that for any . Then, there exist constants only depending on such that

for any . As a result, is of the same order as .

Lemma 6.4.

Under the same conditions as in Theorem 3.2, for any constant , there exists some constant only depending on such that

| (26) | ||||

| (27) | ||||

| (28) | ||||

| (29) | ||||

| (30) | ||||

| (31) |

with probability at least .

Proof.

Under the conditions of Theorem 3.2, the inequalities (33)-(38) hold with probability at least . In the remainder of the proof, we work on the event that these inequalities hold. Hence, we can use the results from Lemmas 7.7 and 7.8. Using the same arguments as in the proof of Lemma 5.3, we can get (26), (27) and (28).

As for (29), we first use Lemma 7.9 to obtain with probability at least . Then, we have

where the last inequality is due to (53) and the fact that .

Next for (30), notice that by (43), (44), and , we have for any , and

Thus by Lemma 7.6, we know

| (32) |

where the last inequality is due to the fact that is of constant order, and the inequality for any . (32) yields the inequality

Finally for (31), by (43) and an argument similar to that for (32), we can get

which implies (31). We complete the proof. ∎

Lemma 6.5.

Proof.

Under the conditions of Theorem 3.2, the inequalities (33)-(38) hold with probability at least . In the remainder of the proof, we work on the event that these inequalities hold. Similar to the proof of Lemma 5.4, we have a decomposition where

is the main term and

Using the same arguments as in the proof of Lemma 5.4, we can choose some which converges to zero slowly and satisfies

As for , by (43) we have

where is a constant and . Since there exists some constant such that , we can choose appropriate such that

is essentially the same as . Finally for , using Lemma 7.10, we have

Then we have

Using Markov’s inequality, we complete the proof of Lemma 6.5. ∎

7 Technical Lemmas

Here are the technical lemmas.

Lemma 7.1.

For any , we have

Proof.

These results are Lemma 1 of [12]. ∎
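For reference, the chi-square form of Lemma 1 of [12] that is commonly used, and that we assume is the form intended in Lemma 7.1, reads: for $X \sim \chi^2_d$ and any $x > 0$, $\mathbb{P}(X \ge d + 2\sqrt{dx} + 2x) \le e^{-x}$ and $\mathbb{P}(X \le d - 2\sqrt{dx}) \le e^{-x}$. The Python sketch below evaluates both tails exactly for even $d$, using the closed-form survival function available in that case. The function names and the chosen values of $d$ and $x$ are ours.

```python
import math

def chi2_sf_even(t, d):
    """Exact P(X > t) for X ~ chi-square with an even number d of degrees
    of freedom: exp(-t/2) * sum_{k < d/2} (t/2)^k / k!."""
    if d <= 0 or d % 2 != 0:
        raise ValueError("d must be a positive even integer")
    if t <= 0:
        return 1.0
    h = t / 2.0
    term, total = 1.0, 1.0
    for k in range(1, d // 2):
        term *= h / k
        total += term
    return math.exp(-h) * total

def tail_probs(d, x):
    """Return (upper, lower) tail probabilities at the two thresholds."""
    upper = chi2_sf_even(d + 2.0 * math.sqrt(d * x) + 2.0 * x, d)
    lower = 1.0 - chi2_sf_even(d - 2.0 * math.sqrt(d * x), d)
    return upper, lower

for d in (2, 10, 50):
    for x in (0.5, 1.0, 5.0):
        upper, lower = tail_probs(d, x)
        print(d, x, upper <= math.exp(-x), lower <= math.exp(-x))
```

Both comparisons hold for every pair printed, as the lemma guarantees. The restriction to even $d$ is only a convenience that keeps the sketch free of external libraries.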

Lemma 7.2.

For any and , consider independent vectors for any . Assume there exists a constant such that for any . Then, for any constant , there exists some constant only depending on such that

| (33) | ||||

| (34) | ||||

| (35) | ||||

| (36) |

with probability at least . We have used the convention that .

Proof.

Note that is sub-Gaussian with parameter which is a constant. The inequalities (33) and (35) are respectively Lemmas A.4, A.1 in [15]. The inequality (34) is a slight extension of Lemma A.2 in [15]. This extension can be done by a standard union bound argument. The proof of (36) is identical to that of (35). ∎

Lemma 7.3.

Consider the same assumptions as in Lemma 7.2. Assume additionally for some constant and . Then, for any constant , there exists some constant only depending on such that

| (37) |

with probability at least .

Proof.

Note that we have where for any . Since , we have

Define

Take and is an -covering of . In particular, we pick , then . By the definition of the -covering, we have

For any ,

Denote . Then . Using Lemma 7.1, we have

Since and where is a constant, we can take for some large constant and the proof is complete. ∎
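The discretization step in this proof can be illustrated in a generic form. For a symmetric matrix $A$ and an $\epsilon$-covering $\mathcal{N}$ of the unit sphere with $\epsilon < 1/2$, a standard fact is $\|A\| \le (1-2\epsilon)^{-1}\max_{v\in\mathcal{N}}|v^\top A v|$, and the covering can be taken with at most $(1+2/\epsilon)^d$ points. The sketch below checks both statements in dimension two. The choice $\epsilon = 1/4$, the helper names, and the test matrices are our assumptions for illustration and are not taken from the proof.

```python
import math

def circle_net(eps):
    """An eps-covering of the unit circle in R^2 by equally spaced points.
    With angular spacing s, every point of the circle is within chord
    distance 2*sin(s/4) of the net, so s <= 4*asin(eps/2) suffices."""
    max_step = 4.0 * math.asin(eps / 2.0)
    m = math.ceil(2.0 * math.pi / max_step)
    return [(math.cos(2.0 * math.pi * k / m), math.sin(2.0 * math.pi * k / m))
            for k in range(m)]

def op_norm_sym2(a, b, c):
    """Operator norm of the symmetric matrix [[a, b], [b, c]]."""
    mid = (a + c) / 2.0
    rad = math.hypot((a - c) / 2.0, b)
    return max(abs(mid + rad), abs(mid - rad))

def net_max_quadratic(a, b, c, net):
    """Maximum of |v^T A v| over the points v of the net."""
    return max(abs(a * x * x + 2.0 * b * x * y + c * y * y) for x, y in net)

eps = 0.25
net = circle_net(eps)
for a, b, c in [(1.0, 0.0, 1.0), (2.0, 1.0, -1.0), (0.0, 3.0, 0.0)]:
    lower = net_max_quadratic(a, b, c, net)
    print(lower <= op_norm_sym2(a, b, c) <= lower / (1.0 - 2.0 * eps))
```

The point of the reduction is that a supremum over a continuum of directions is replaced by a maximum over finitely many, so that a union bound over the net applies.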

Lemma 7.4.

Consider the same assumptions as in Lemma 7.2. Then, for any and for any constant , there exists some constant only depending on such that

| (38) |

with probability at least . We have used the convention that .

Proof.

Consider any and a fixed such that . Similar to the proof of Lemma 7.3, we can take and its -covering with and . Then we have

Note that is a sub-Gaussian random variable with parameter 1. By [10], for any fixed , we have

Since , there exists a constant such that . We can take with , then . Thus,

Hence, we have

As a result,

Since is an increasing function when and , a choice of , that is , can yield the desired result.

Finally, to allow , we note that . The proof is complete. ∎

Lemma 7.5.

For any and , assume and , then

| (39) |

Proof.

The next lemma is the famous Weyl’s Theorem and we omit the proof here.

Lemma 7.6 (Weyl’s Theorem).

Let and be any two symmetric real matrices. Then for any , we have

In the following lemma, we are going to analyze estimation errors of under the anisotropic GMMs. For any and for any , recall the definitions

Lemma 7.7.

For any and , consider independent vectors where for any . Assume there exist constants such that for any , and a constant such that . Assume and . Assume (33)-(38) hold. Then for any and for any constant , there exists some constant only depending on such that

| (41) | ||||

| (42) | ||||

| (43) | ||||

| (44) |

for all such that .

Proof.

Using (33) we obtain (41). By the same argument as (118) in [8], we can obtain (42). By (33), (37), and (41), we can obtain (43). In the remainder of the proof, we will establish (44).

Since , we have for any . The difference will be decomposed into several terms. We notice that

| (45) |

where

and

Also, we notice that

| (46) |

where

For , we have

| (47) |

By (36), (39), (40), we have uniformly for any ,

| (48) |

Since , by (39), (42), (41), (47), and (48), we have uniformly for any ,

| (49) |

To bound , we first give the following simple fact. For any positive integer and any , we have . Hence, for , we have the following decomposition

| (50) |

where

Since , we have

| (51) |

By (38) and the fact that , we also have

We are going to simplify the above bounds for . Under the assumption that , , and , we have , , and . Hence . Also we have

where in the last inequality, we use the fact that is an increasing function of when . Then,

Since is similar to , by (46) we have uniformly for any

| (52) |

Lemma 7.8.

Under the same assumptions as in Lemma 7.7, if we additionally assume and , there exists some constant only depending on such that

| (53) |

Lemma 7.9.

Let for any where are positive integers. Then we have

Proof.

We have and . Then we have . Then we obtain the desired result by Chebyshev’s inequality. ∎
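The mean, variance, and Chebyshev steps of this proof can be illustrated numerically. The sketch below assumes, as the displays are not reproduced here, that the quantity of interest is a sum of $m$ squared independent standard normal variables, which has mean $m$ and variance $2m$, so that Chebyshev's inequality gives $\mathbb{P}(|X - m| \ge t) \le 2m/t^2$. The function names, the seed, and the values of $m$ and $t$ are ours.

```python
import random

def chebyshev_bound_chi2(m, t):
    """Chebyshev bound on P(|X - m| >= t) when E[X] = m and Var(X) = 2m."""
    return min(1.0, 2.0 * m / (t * t))

def empirical_tail(m, t, trials=2000, seed=0):
    """Monte Carlo estimate of P(|X - m| >= t) for X a sum of m squared
    independent standard normals (seeded for reproducibility)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        x = sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(m))
        if abs(x - m) >= t:
            hits += 1
    return hits / trials

bound = chebyshev_bound_chi2(20, 20.0)
estimate = empirical_tail(20, 20.0)
print(estimate <= bound)
```

Chebyshev's inequality is far from tight here, but a polynomial tail is all that this step of the argument needs.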

Proof of Lemma 6.3.

Consider any . We are going to prove

| (55) |

We first prove the upper bound. Denote . Since we have assumed , we have that . Note that we have an equivalent expression of :

We consider the following scenarios.

(1). If , we have . Let , we can know . This tells us .

(2). If , let and assume , where is an orthogonal matrix and is a diagonal matrix with diagonal elements and . We can rewrite with and . Since , we can take and for . Then, we have

It means and hence . Then Thus we have .

(3). If , we still use the notations in scenario (2). Notice that , we have

Now we take and for , then we have

Thus we have .

Overall, we have for all the three scenarios.

To prove the lower bound, we have

By the upper bound we know for any , when . Thus, we have

Hence,

∎

In the following lemmas, we are going to establish connections between testing errors and . Consider any such that . Let , , and . Define

for any . In addition, we define

| and |

Recall the definitions of and in Section 3. Then they are a special case of and with . That is, we have and .

Lemma 7.10.

Assume and where are constants. Under the condition , for any positive sequence , there exists a that depends on such that

Proof.

For convenience and conciseness, we will use the notation instead of throughout the proof. By Lemma 6.3, we have in the same order of , which means .

Assume we had obtained . Then by Lemma 6.3, we have in the same order of which is far bigger than by assumption. Since , using Lemma 7.1, we have

which is the desired result. Hence, the proof of this lemma is all about establishing .

To prove it, we first simplify . In spite of some abuse of notation, denote to be the eigenvalues of such that its eigen-decomposition can be written as where are orthogonal vectors. Denote and

and

Then can be seen as a reflection-rotation of by the transformation . Hence we have for any . Moreover, let be its boundary, i.e.,

Since , we have As a result, we only need to work on instead of . Denote to be for simplicity.

We then give an equivalent expression of . From (55), we have an upper bound of : where we use . The same upper bound actually holds for for any following the same proof. Define . We then have

We have the following inequality. Let be any mapping. By the triangle inequality, we have . We have

| (56) |

As a result, if we are able to find some such that , we will immediately have and the proof will be complete.

Let be some vector. Define . If is a well-defined mapping, we have

| (57) |

which can be used to derive an upper bound. However, to make well-defined, we need that for any , there exists some such that . This means we have the following two equations:

| (58) | ||||

| and |

It is equivalent to require to satisfy

| (59) |

Hence, all we need is to find a decent vector such that: for any there exists a satisfying (59), and we can obtain the desired upper bound for (57).

In the following, we will consider four different scenarios according to the spectrum . For each scenario, we will construct a with decent bounds for (57). Denote .

Scenario 1: . We choose . Note that we have in the same order of and in the same order of . Note that we have

where in the last inequality we use . Define . Then we have

Hence for any there exists a such that (59) is satisfied. Hence, is an upper bound for (57).

Scenario 2: . We choose which is the first standard basis of . Then, (59) can be written as

Since , the above equation has two different solutions . Simple algebra leads to

Hence, an upper bound for (57) is .

Scenario 3: and there exists a such that and . We choose . Then (59) can be written as

Note that for any , we have . Denote . Then we have

As a result, there exists some satisfying (59). Hence, is an upper bound for (57).

Scenario 4: and for all such that . This scenario is slightly more complicated as we need to be dependent on . Denote it as . Then (56) still holds and (57) can be changed into

| (60) |

Denote by the integer such that for all and for all . We can assume , since otherwise this scenario reduces to Scenario 1. Define

for any . Instead of using (59), we will analyze it slightly differently.

For , (58) can be rewritten as

| (61) |

On the other hand, if is well-defined, we need which means

Note that we have for and for . Then the above display can be written as

Together with (61) multiplied, the above equation leads to

| (62) |

It is sufficient to find some such that

| (63) |

then there definitely exists some satisfying (62).

We are going to give a lower bound for the right hand side of (63). Particularly, we need to lower bound . Denote . Then using the definition of and , we have

Then we have

| (64) |

Hence, the right hand side of (63) can be lower bounded by

| (65) |

where we use both and the assumption that and for any . Then a sufficient condition for (63) is satisfies

Since is in the same order of and , it can be achieved by .

As a result, from (60) we have

Combining the above four scenarios, we see that in every case we have , which is . By the argument before the discussion of the four scenarios, we have and the proof is complete. ∎

Lemma 7.11.

Assume and where are constants. Under the condition , for any positive sequence , there exists a that depends on such that

Proof.

For convenience and conciseness, we will use the notation instead of throughout the proof. From Lemma 6.3, we know is in the same order of , which means . Similar to the proof of Lemma 7.10, denote to be the eigenvalues of such that its eigen-decomposition can be written as where are orthogonal vectors. Denote , . Then denote

and its boundary

By the same argument as in the proof of Lemma 7.10, can be seen as a reflection-rotation of by the transformation . Hence we have and we can work on instead of . Denote to be the one such that . From the proof of Lemma 7.10 we also know which is defined as . In addition, we know .

We first give the main idea of the remaining proof. Denote to be the density function of We will construct a set around such that for any we have and . Then we have

| (66) |

Hence if then the proof will be complete. So it is all about constructing such . We will consider the same four scenarios as in the proof of Lemma 7.10. Let be some positive sequence going to 0 very slowly and denote .

Scenario 1: . Define . We define as follows:

Since , we have

| (67) |

It is obvious . Hence we only need to show that for any , , i.e.,

| (68) |

From the above two displays, we need to show

| (69) |

Note that satisfies .

where we use the fact that are in the order of . Hence, for any , we have shown . From Lemma 7.12, we have . Since , , and goes to 0 slowly, (66) leads to .

Scenario 2: . Denote the first standard basis in . Define as

Here for the sign function we define . It is obvious . Hence we only need to establish (69) to show that for any , . Note that

where we use and are in the order of . It is easy to verify the right hand side is negative when . From Lemma 7.12, we have . Then (66) leads to the desired result.

Scenario 3: and there exists a such that and . Denote the th standard basis in . Define as

Again define and it is obvious . Now we are going to verify (69), i.e., to show . On one hand, we have

On the other hand, we have

where we use and are in the order of . Hence (69) is established. From Lemma 7.12, we have . Then (66) leads to the desired result.

Scenario 4: and for all such that . Denote by the integer such that for all and for all . We can assume , since otherwise this scenario reduces to Scenario 1.

Define to be a unit vector such that

Define

Now we are going to verify (69), i.e., to show . On one hand, we have

We are going to give a lower bound for . Note that (67) can be written as

Denote . Using (64), we have

for some constant . Here the second inequality is by the same argument as (65) and the last inequality uses the fact that is in the order of and . Hence,

On the other hand, we have

where we use the properties of as . Summing the above two displays together, we have (69) satisfied. From Lemma 7.12, we have . Then (66) leads to the desired result. ∎

Lemma 7.12.

Consider any positive integer and any , . Define a set . Then we have

Proof.

Define a -dimensional ball . We can easily verify that . First, for all , we have as . Then, we have . As a result, by the expression of the volume of a -dimensional ball, we have

where is the Gamma function. ∎
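The volume expression used in the last step is the standard one: a $d$-dimensional Euclidean ball of radius $r$ has volume $\pi^{d/2} r^d / \Gamma(d/2 + 1)$. A minimal Python sketch that evaluates it and compares against the familiar low-dimensional cases follows. The function name `ball_volume` is ours, and the specific radius appearing in the lemma is not reproduced here.

```python
import math

def ball_volume(d, r):
    """Volume of a d-dimensional Euclidean ball of radius r."""
    if d < 1 or r < 0:
        raise ValueError("need d >= 1 and r >= 0")
    return math.pi ** (d / 2.0) * r ** d / math.gamma(d / 2.0 + 1.0)

# Sanity checks against the interval, the disc, and the 3-dimensional ball.
print(math.isclose(ball_volume(1, 2.0), 4.0))
print(math.isclose(ball_volume(2, 1.0), math.pi))
print(math.isclose(ball_volume(3, 1.0), 4.0 * math.pi / 3.0))
```

For fixed $r$ the volume decays super-exponentially in $d$ through the Gamma factor, which is why the radius has to be tracked carefully when the dimension is allowed to grow.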

References

- Abbe et al. [2020] E. Abbe, J. Fan, and K. Wang. An $\ell_p$ theory of PCA and spectral clustering. arXiv preprint, 2020.

- Bishop [2006] Christopher M Bishop. Pattern recognition and machine learning. Springer, 2006.

- Brubaker and Vempala [2008] S Charles Brubaker and Santosh S Vempala. Isotropic PCA and affine-invariant clustering. In Building Bridges, pages 241–281. Springer, 2008.

- Dasgupta [2008] Sanjoy Dasgupta. The hardness of k-means clustering. Department of Computer Science and Engineering, University of California …, 2008.

- Dempster et al. [1977] Arthur P Dempster, Nan M Laird, and Donald B Rubin. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society: Series B (Methodological), 39(1):1–22, 1977.

- Fei and Chen [2018] Yingjie Fei and Yudong Chen. Hidden integrality of SDP relaxation for sub-Gaussian mixture models. arXiv preprint arXiv:1803.06510, 2018.

- Friedman et al. [2001] Jerome Friedman, Trevor Hastie, and Robert Tibshirani. The elements of statistical learning, volume 1. Springer series in statistics New York, 2001.

- Gao and Zhang [2019] Chao Gao and Anderson Y Zhang. Iterative algorithm for discrete structure recovery. arXiv preprint arXiv:1911.01018, 2019.

- Giraud and Verzelen [2018] Christophe Giraud and Nicolas Verzelen. Partial recovery bounds for clustering with the relaxed K-means. arXiv preprint arXiv:1807.07547, 2018.

- Hsu et al. [2012] Daniel Hsu, Sham Kakade, Tong Zhang, et al. A tail inequality for quadratic forms of subgaussian random vectors. Electronic Communications in Probability, 17, 2012.

- Kalai et al. [2010] Adam Tauman Kalai, Ankur Moitra, and Gregory Valiant. Efficiently learning mixtures of two Gaussians. In Proceedings of the forty-second ACM symposium on Theory of computing, pages 553–562, 2010.

- Laurent and Massart [2000] Beatrice Laurent and Pascal Massart. Adaptive estimation of a quadratic functional by model selection. Annals of Statistics, pages 1302–1338, 2000.

- Lloyd [1982] Stuart Lloyd. Least squares quantization in PCM. IEEE Transactions on Information Theory, 28(2):129–137, 1982.

- Löffler et al. [2019] Matthias Löffler, Anderson Y Zhang, and Harrison H Zhou. Optimality of spectral clustering for Gaussian mixture model. arXiv preprint arXiv:1911.00538, 2019.

- Lu and Zhou [2016] Yu Lu and Harrison H Zhou. Statistical and computational guarantees of Lloyd’s algorithm and its variants. arXiv preprint arXiv:1612.02099, 2016.

- MacQueen et al. [1967] James MacQueen et al. Some methods for classification and analysis of multivariate observations. 1967.

- Ndaoud [2019] M. Ndaoud. Sharp optimal recovery in the two component Gaussian mixture model. arXiv preprint, 2019.

- Pearson [1894] Karl Pearson. Contributions to the mathematical theory of evolution. Philosophical Transactions of the Royal Society of London. A, 185:71–110, 1894.

- Spielman and Teng [1996] Daniel A Spielman and Shang-Hua Teng. Spectral partitioning works: Planar graphs and finite element meshes. In Proceedings of 37th Conference on Foundations of Computer Science, pages 96–105. IEEE, 1996.

- Titterington et al. [1985] D Michael Titterington, Adrian FM Smith, and Udi E Makov. Statistical analysis of finite mixture distributions. Wiley, 1985.

- Vempala and Wang [2004] S. Vempala and G. Wang. A spectral algorithm for learning mixture models. J. Comput. Syst. Sci., 68(4):841–860, 2004.

- Von Luxburg [2007] Ulrike Von Luxburg. A tutorial on spectral clustering. Statistics and computing, 17(4):395–416, 2007.

- Wang et al. [2020] Kaizheng Wang, Yuling Yan, and Mateo Diaz. Efficient clustering for stretched mixtures: Landscape and optimality. arXiv preprint arXiv:2003.09960, 2020.

- Wu et al. [2008] Xindong Wu, Vipin Kumar, J Ross Quinlan, Joydeep Ghosh, Qiang Yang, Hiroshi Motoda, Geoffrey J McLachlan, Angus Ng, Bing Liu, Philip S Yu, et al. Top 10 algorithms in data mining. Knowledge and information systems, 14(1):1–37, 2008.