Optimal Decision Rules for Simple Hypothesis Testing under General Criterion Involving Error Probabilities

Abstract

The problem of simple $M$-ary hypothesis testing under a generic performance criterion that depends on arbitrary functions of error probabilities is considered. Using results from convex analysis, it is proved that an optimal decision rule can be characterized as a randomization among at most two deterministic decision rules, each of a form reminiscent of the Bayes rule, if the boundary points corresponding to each rule have zero probability under each hypothesis. Otherwise, a randomization among at most $M(M-1)+1$ deterministic decision rules is sufficient. The form of the deterministic decision rules is explicitly specified. Likelihood ratios are shown to be sufficient statistics. Classical performance measures including Bayesian, minimax, Neyman-Pearson, generalized Neyman-Pearson, restricted Bayesian, and prospect theory based approaches are all covered under the proposed formulation. A numerical example is presented for prospect theory based binary hypothesis testing.

Index Terms– Hypothesis testing, optimal tests, convexity, likelihood ratio, randomization.

I Problem Statement

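Consider a detection problem with $M$ simple hypotheses:

$$\mathcal{H}_j : \; Y \sim f_j(y), \quad j = 0, 1, \ldots, M-1, \tag{1}$$

where the random observation $Y$ takes values from an observation set $\Gamma$ with $\Gamma \subseteq \mathbb{R}^N$. Depending on whether the observed random vector $Y \in \Gamma$ is continuous-valued or discrete-valued, $f_j(y)$ denotes either the probability density function (pdf) or the probability mass function (pmf) under hypothesis $\mathcal{H}_j$. For compactness of notation, the term density is used for both pdf and pmf. In order to decide among the hypotheses, we consider the set of pointwise randomized decision functions, denoted by $\mathcal{D}$, i.e., $\delta := (\delta_0, \delta_1, \ldots, \delta_{M-1}) \in \mathcal{D}$ such that $\sum_{i=0}^{M-1} \delta_i(y) = 1$ and $\delta_i(y) \in [0, 1]$ for $0 \le i \le M-1$ and $y \in \Gamma$. More explicitly, given the observation $y$, the detector decides in favor of hypothesis $\mathcal{H}_i$ with probability $\delta_i(y)$. Then, the probability of choosing hypothesis $\mathcal{H}_i$ when hypothesis $\mathcal{H}_j$ is true, denoted by $p_{ij}$ with $0 \le i, j \le M-1$, is given by

$$p_{ij} := \mathbb{E}_j[\delta_i(Y)] = \int_{\Gamma} \delta_i(y) f_j(y) \, \mu(dy), \tag{2}$$

where $\mathbb{E}_j[\cdot]$ denotes expected value under hypothesis $\mathcal{H}_j$ and $\mu(dy)$ is used in (2) to denote the $N$-fold integral and sum for continuous and discrete cases, respectively. Let $\mathbf{p}(\delta)$ denote the (column) vector containing all pairwise error probabilities $p_{ij}$ for $0 \le i, j \le M-1$ and $i \ne j$ corresponding to the decision rule $\delta$. It is sufficient to include only the $M(M-1)$ pairwise error probabilities in $\mathbf{p}(\delta)$, i.e., $p_{ij}$ with $i \ne j$. To see this, note that (2) in conjunction with $\sum_{i=0}^{M-1} \delta_i(y) = 1$ implies $\sum_{i=0}^{M-1} p_{ij} = 1$, from which we get the probability of correctly identifying hypothesis $\mathcal{H}_j$ as $p_{jj} = 1 - \sum_{i \ne j} p_{ij}$.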
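For a finite observation set, the integral in (2) reduces to a sum over observation values. The following is a minimal sketch under that assumption; the function name `decision_probabilities` and the toy pmfs and decision functions are illustrative choices, not taken from the letter.

```python
from typing import List

def decision_probabilities(delta: List[List[float]],
                           pmfs: List[List[float]]) -> List[List[float]]:
    """Return the M x M matrix p with p[i][j] = sum_y delta[i][y] * pmfs[j][y].

    delta[i][y] is the probability of deciding hypothesis i given observation y,
    and pmfs[j][y] is the pmf of observation y under hypothesis j.
    """
    M = len(pmfs)
    n_obs = len(pmfs[0])
    # For every observation, the decision probabilities must sum to one.
    for y in range(n_obs):
        if abs(sum(delta[i][y] for i in range(M)) - 1.0) > 1e-12:
            raise ValueError("delta must sum to 1 over hypotheses for each y")
    return [[sum(delta[i][y] * pmfs[j][y] for y in range(n_obs))
             for j in range(M)]
            for i in range(M)]

# Hypothetical toy problem: M = 2 hypotheses, three observation values.
pmfs = [[0.5, 0.3, 0.2],    # pmf under hypothesis 0
        [0.1, 0.3, 0.6]]    # pmf under hypothesis 1
delta = [[1.0, 0.5, 0.0],   # probability of deciding 0 for each y
         [0.0, 0.5, 1.0]]   # probability of deciding 1 for each y

p = decision_probabilities(delta, pmfs)
# Off-diagonal entries p[1][0] and p[0][1] are the pairwise error probabilities;
# each column sums to one, so the diagonal entries are redundant.
```

Because every column of `p` sums to one, only the off-diagonal entries need to be carried in the pairwise error probability vector.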

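For $M$-ary hypothesis testing, we consider a generic decision criterion that can be expressed in terms of the error probabilities as follows:

$$\begin{aligned} \underset{\delta \in \mathcal{D}}{\text{minimize}} \quad & g_0(\mathbf{p}(\delta)) \\ \text{subject to} \quad & g_k(\mathbf{p}(\delta)) \le 0, \quad k = 1, \ldots, m, \\ & h_l(\mathbf{p}(\delta)) = 0, \quad l = 1, \ldots, q, \end{aligned} \tag{3}$$

where $g_k$ and $h_l$ denote arbitrary functions of the pairwise error probability vector. Classical hypothesis testing criteria such as Bayesian, minimax, Neyman-Pearson (NP) [1], generalized Neyman-Pearson [2], restricted Bayesian [3], and prospect theory based hypothesis testing [4] are all special cases of the formulation in (3). For example, in the restricted Bayesian framework, the Bayes risk with respect to (w.r.t.) a certain prior is minimized subject to a constraint on the maximum conditional risk [3]:

$$\underset{\delta \in \mathcal{D}}{\text{minimize}} \;\; r_B(\delta) \quad \text{subject to} \quad \max_{0 \le j \le M-1} R_j(\delta) \le \alpha \tag{4}$$

for some $\alpha \ge \alpha_{\mathrm{mm}}$, where $\alpha_{\mathrm{mm}}$ is the maximum conditional risk of the minimax procedure [1]. The conditional risk when hypothesis $\mathcal{H}_j$ is true, denoted by $R_j(\delta)$, is given by $R_j(\delta) = \sum_{i=0}^{M-1} c_{ij} p_{ij}$ and the Bayes risk is expressed as $r_B(\delta) = \sum_{j=0}^{M-1} \pi_j R_j(\delta)$, where $\pi_j$ denotes the a priori probability of hypothesis $\mathcal{H}_j$ and $c_{ij}$ is the cost incurred by choosing hypothesis $\mathcal{H}_i$ when in fact hypothesis $\mathcal{H}_j$ is true. Since $p_{jj} = 1 - \sum_{i \ne j} p_{ij}$, both risks are functions of the pairwise error probability vector. Hence, (4) is a special case of (3).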
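To make the restricted Bayesian special case concrete, the sketch below evaluates the conditional risks and the Bayes risk from a matrix of decision probabilities and checks the maximum-risk constraint. The function names, the 0-1 cost matrix, the equal priors, and the threshold `alpha` are assumptions chosen for illustration only.

```python
from typing import List

def conditional_risks(p: List[List[float]],
                      costs: List[List[float]]) -> List[float]:
    """Conditional risk under each hypothesis j: sum_i costs[i][j] * p[i][j]."""
    M = len(p)
    return [sum(costs[i][j] * p[i][j] for i in range(M)) for j in range(M)]

def bayes_risk(p: List[List[float]],
               costs: List[List[float]],
               priors: List[float]) -> float:
    """Bayes risk: prior-weighted average of the conditional risks."""
    return sum(pi * r for pi, r in zip(priors, conditional_risks(p, costs)))

# Hypothetical values: p[i][j] is the probability of deciding i when j is true.
p = [[0.65, 0.25],
     [0.35, 0.75]]
costs = [[0.0, 1.0],
         [1.0, 0.0]]        # 0-1 costs (assumption)
priors = [0.5, 0.5]         # equal priors (assumption)
alpha = 0.40                # hypothetical bound on the maximum conditional risk

risks = conditional_risks(p, costs)
objective = bayes_risk(p, costs, priors)
feasible = max(risks) <= alpha   # constraint of the restricted Bayesian problem
```

With 0-1 costs, the conditional risks coincide with the pairwise error probabilities, so both the objective and the constraint depend on the decision rule only through its error probabilities.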

In this letter, for the first time in the literature, we provide a unified characterization of optimal decision rules for simple hypothesis testing under a general criterion involving error probabilities.

II Preliminaries

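Let $\mathbf{v}$ be a real (column) vector of length $M(M-1)$ whose elements are denoted as $v_{ij}$ for $0 \le i, j \le M-1$ and $i \ne j$. Next, we present an optimal deterministic decision rule that minimizes the weighted sum of $p_{ij}$'s with arbitrary real weights $v_{ij}$. (Footnote 1: In classical Bayesian $M$-ary hypothesis testing, $v_{ij} = \pi_j (c_{ij} - c_{jj})$, where $\pi_j$ is the prior probability of $\mathcal{H}_j$ and $c_{ij}$ is the cost of choosing $\mathcal{H}_i$ when $\mathcal{H}_j$ is true.)

II-A Optimal decision rule that minimizes $\mathbf{v}^T \mathbf{p}(\delta)$

The corresponding weighted sum of pairwise error probabilities can be written as

$$\mathbf{v}^T \mathbf{p}(\delta) = \sum_{i=0}^{M-1} \sum_{j \ne i} v_{ij} \, p_{ij} = \int_{\Gamma} \sum_{i=0}^{M-1} \delta_i(y) \Big( \sum_{j \ne i} v_{ij} f_j(y) \Big) \mu(dy), \tag{5}$$

where (2) is substituted for $p_{ij}$ in (5). Defining $V_i(y) := \sum_{j \ne i} v_{ij} f_j(y)$, we get

$$\mathbf{v}^T \mathbf{p}(\delta) = \int_{\Gamma} \sum_{i=0}^{M-1} \delta_i(y) V_i(y) \, \mu(dy) \ge \int_{\Gamma} \min_{0 \le i \le M-1} V_i(y) \, \mu(dy). \tag{6}$$

The lower bound in (6) is achieved if, for all $y \in \Gamma$, we set

$$\delta_{\ell}(y) = 1 \quad \text{for} \quad \ell = \arg\min_{0 \le i \le M-1} V_i(y) \tag{7}$$

(and hence, $\delta_i(y) = 0$ for all $i \ne \ell$), i.e., each observed vector $y$ is assigned to the corresponding hypothesis that minimizes $V_i(y)$ over all $0 \le i \le M-1$. In case there are multiple hypotheses that achieve the same minimum value of $V_i(y)$ for a given observation $y$, the ties can be broken by arbitrarily selecting one of them since the boundary decision does not affect the decision criterion $\mathbf{v}^T \mathbf{p}(\delta)$. However, pairwise probabilities for erroneously selecting hypotheses $\mathcal{H}_i$ and $\mathcal{H}_j$ will change if the set of boundary points

$$\mathcal{B}_{ij}(\mathbf{v}) := \{ y \in \Gamma : V_i(y) = V_j(y) \le V_k(y) \ \text{for all} \ k \ne i, j \} \tag{8}$$

occurs with nonzero probability. We also define the set of all boundary points

$$\mathcal{B}(\mathbf{v}) := \bigcup_{0 \le i < j \le M-1} \mathcal{B}_{ij}(\mathbf{v}) \tag{9}$$

and the complementary set where $V_i(y)$ for some $i$ is strictly smaller than the rest:

$$\bar{\mathcal{B}}(\mathbf{v}) := \{ y \in \Gamma : V_i(y) < V_j(y) \ \text{for some} \ i \ \text{and all} \ j \ne i \}. \tag{10}$$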
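The rule in (7) and the boundary set in (9) can be sketched for a finite observation set as follows. Each observation is scored by the weighted density sum for every hypothesis and assigned to a minimizer, and observations with tied minimizers are reported as boundary points. The function names, the tolerance `tol`, the lowest-index tie-breaking, and the toy pmfs and weights are assumptions made for illustration.

```python
from typing import List, Tuple

def weighted_densities(v: List[List[float]],
                       pmfs: List[List[float]], y: int) -> List[float]:
    """Score of hypothesis i at observation y: sum over j != i of v[i][j] * pmfs[j][y]."""
    M = len(pmfs)
    return [sum(v[i][j] * pmfs[j][y] for j in range(M) if j != i)
            for i in range(M)]

def deterministic_rule(v: List[List[float]], pmfs: List[List[float]],
                       tol: float = 1e-12) -> Tuple[List[int], List[int]]:
    """Return (decisions, boundary): the chosen hypothesis per observation and
    the observations where the minimum score is attained more than once."""
    M, n_obs = len(pmfs), len(pmfs[0])
    decisions, boundary = [], []
    for y in range(n_obs):
        scores = weighted_densities(v, pmfs, y)
        best = min(scores)
        minimizers = [i for i in range(M) if scores[i] - best <= tol]
        decisions.append(minimizers[0])   # ties broken by lowest index (arbitrary)
        if len(minimizers) > 1:
            boundary.append(y)            # y is a boundary point for these weights
    return decisions, boundary

# Hypothetical toy problem: two hypotheses, three observation values.
pmfs = [[0.5, 0.3, 0.2],
        [0.1, 0.3, 0.6]]
v = [[0.0, 0.5],
     [0.5, 0.0]]            # v[i][j] weights the error "decide i, j true"

decisions, boundary = deterministic_rule(v, pmfs)
```

In this toy case the middle observation is a tie with nonzero probability under both hypotheses, so reassigning it changes the individual error probabilities while leaving the weighted sum unchanged.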

II-B The set of achievable pairwise error probability vectors

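Let $\mathcal{P}$ denote the set of all pairwise error probability vectors that can be achieved by randomized decision functions $\delta \in \mathcal{D}$, i.e., $\mathcal{P} := \{ \mathbf{p}(\delta) : \delta \in \mathcal{D} \}$. In this part, we present some properties of $\mathcal{P}$.

Property 1: $\mathcal{P}$ is a convex set.

Proof: Let $\mathbf{p}(\delta^{(1)})$ and $\mathbf{p}(\delta^{(2)})$ be two pairwise error probability vectors obtained by employing randomized decision functions $\delta^{(1)}$ and $\delta^{(2)}$, respectively. Then, for any $\theta$ with $0 \le \theta \le 1$, $\theta \mathbf{p}(\delta^{(1)}) + (1-\theta) \mathbf{p}(\delta^{(2)}) \in \mathcal{P}$ since it is the pairwise error probability vector corresponding to the randomized decision rule $\theta \delta^{(1)} + (1-\theta) \delta^{(2)}$, as seen from (2).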
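The convexity argument rests on the fact that each error probability is linear in the decision function. As a short worked step, writing $\delta^{(1)}$ and $\delta^{(2)}$ for the two decision functions, $\theta \in [0, 1]$ for the mixing weight, $f_j$ for the density under hypothesis $j$, and $\mu$ for the integration measure (notation assumed here for illustration), each entry of the error vector satisfies:

```latex
\begin{align}
p_{ij}\big(\theta \delta^{(1)} + (1-\theta)\delta^{(2)}\big)
  &= \int_{\Gamma} \big(\theta \delta^{(1)}_i(y) + (1-\theta)\delta^{(2)}_i(y)\big) f_j(y)\, \mu(dy) \\
  &= \theta \int_{\Gamma} \delta^{(1)}_i(y) f_j(y)\, \mu(dy)
     + (1-\theta) \int_{\Gamma} \delta^{(2)}_i(y) f_j(y)\, \mu(dy) \\
  &= \theta\, p_{ij}\big(\delta^{(1)}\big) + (1-\theta)\, p_{ij}\big(\delta^{(2)}\big).
\end{align}
```

The mixture $\theta \delta^{(1)} + (1-\theta)\delta^{(2)}$ is itself a valid randomized decision function because its components are nonnegative and sum to one at every observation.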

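Property 2: Let $\mathbf{p}^0$ be a point on the boundary of $\mathcal{P}$. There exists a hyperplane $\{ \mathbf{p} : \mathbf{v}^T \mathbf{p} = \mathbf{v}^T \mathbf{p}^0 \}$ that is tangent to $\mathcal{P}$ at $\mathbf{p}^0$ and $\mathbf{v}^T \mathbf{p} \ge \mathbf{v}^T \mathbf{p}^0$ for all $\mathbf{p} \in \mathcal{P}$.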

Proof: Follows immediately from the supporting hyperplane theorem [5, Sec. 2.5.2].

III Characterization of Optimal Decision Rule

In order to characterize the solution of (3), we first present the following lemma.

Lemma: Let $\mathbf{p}^0$ be a point on the boundary of $\mathcal{P}$ and $\{ \mathbf{p} : \mathbf{v}^T \mathbf{p} = \mathbf{v}^T \mathbf{p}^0 \}$ be a supporting hyperplane to $\mathcal{P}$ at the point $\mathbf{p}^0$.

Case 1: Any deterministic decision rule of the form given in (7) corresponding to the weights specified by $\mathbf{v}$ yields $\mathbf{p}^0$ if $\mathcal{B}(\mathbf{v})$, defined in (9), has zero probability under all hypotheses.

Case 2: $\mathbf{p}^0$ is achieved by a randomization among at most $M(M-1)$ deterministic decision rules of the form given in (7), all corresponding to the same weights specified by $\mathbf{v}$, if $\mathcal{B}(\mathbf{v})$, defined in (9), has nonzero probability under some hypotheses.

Proof: See Appendix A.

It should be noted that the condition in case 1 of the lemma, i.e., $\mathcal{B}(\mathbf{v})$ has zero probability under all hypotheses, is not difficult to satisfy. A simple example is when the observation under hypothesis $\mathcal{H}_j$ is Gaussian distributed with mean $\mu_j$ and variance $\sigma_j^2$ for all $0 \le j \le M-1$. Furthermore, the lemma implies that any extreme point of the convex set $\mathcal{P}$, i.e., any point on the boundary of the convex set that is not a convex combination of any other points in the set, can be achieved by a deterministic decision rule of the form (7) without any randomization. The points that are on the boundary but are not extreme points can be obtained via randomization as stated in case 2.

Next, we present a unified characterization of the optimal decision rule for problems that are in the form of (3). We suppose that the problem in (3) is feasible and let $\delta^{\ast}$ and $\mathbf{p}^{\ast} = \mathbf{p}(\delta^{\ast})$ denote an optimal decision rule and the corresponding pairwise error probabilities, respectively.

Theorem: An optimal decision rule that solves (3) can be obtained as

Case 1: a randomization among at most two deterministic decision rules of the form given in (7), each specified by some real $\mathbf{v}$, if $\mathcal{B}(\mathbf{v})$, defined in (9), has zero probability under all hypotheses for all real $\mathbf{v}$; otherwise

Case 2: a randomization among at most $M(M-1)+1$ deterministic decision rules of the form given in (7), one specified by some real $\mathbf{v}$ and the remaining $M(M-1)$ corresponding to the same weights specified by another real $\mathbf{v}'$.

Proof: If the optimal point $\mathbf{p}^{\ast}$ is on the boundary of $\mathcal{P}$, then the lemma takes care of the proof. Here, we consider the case when $\mathbf{p}^{\ast}$ is an interior point of $\mathcal{P}$. First, we pick an arbitrary $\mathbf{v}$ and derive the optimal deterministic decision rule according to (7). Let $\mathbf{p}^{1}$ denote the pairwise error probability vector corresponding to the employed decision rule. Then, we move along the ray that originates from $\mathbf{p}^{1}$ and passes through $\mathbf{p}^{\ast}$. Since $\mathcal{P}$ is bounded, this ray will intersect the boundary of $\mathcal{P}$ at some point, say $\mathbf{p}^{2}$. If the condition in case 1 is satisfied, then by case 1 of the lemma, there exists a deterministic decision rule of the form given in (7) that yields $\mathbf{p}^{2}$. Otherwise, by case 2 of the lemma, $\mathbf{p}^{2}$ is achieved by a randomization among at most $M(M-1)$ deterministic decision rules of the form given in (7), all sharing the same weight vector $\mathbf{v}'$. Since $\mathbf{p}^{\ast}$ resides on the line segment that connects $\mathbf{p}^{1}$ to $\mathbf{p}^{2}$, it can be attained by appropriately randomizing among the decision rules that yield $\mathbf{p}^{1}$ and $\mathbf{p}^{2}$.
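The final step of the proof, recovering an interior point as a mixture of two points on a line through it, can be sketched numerically. Given the error vector of the first rule, the target point, and the boundary point hit by the ray, the mixing weight follows from a projection onto the segment. The function name `mixing_weight` and the numeric vectors are hypothetical and serve only to illustrate the construction.

```python
from typing import Sequence

def mixing_weight(p1: Sequence[float], p_star: Sequence[float],
                  p2: Sequence[float]) -> float:
    """Return lam such that p_star = lam * p1 + (1 - lam) * p2.

    Assumes p_star lies on the segment between p1 and p2 (as in the proof),
    so that p2 - p_star = lam * (p2 - p1).
    """
    num = sum((b - s) * (b - a) for a, s, b in zip(p1, p_star, p2))
    den = sum((b - a) ** 2 for a, b in zip(p1, p2))
    if den == 0.0:
        raise ValueError("p1 and p2 coincide; the segment is degenerate")
    return num / den

# Hypothetical error vectors for a binary problem.
p1 = [0.5, 0.1]        # achieved by the first deterministic rule
p2 = [0.1, 0.3]        # boundary point reached along the ray
p_star = [0.2, 0.25]   # target interior point on the segment

lam = mixing_weight(p1, p_star, p2)
# Using the first rule with probability lam and the rule(s) achieving p2 with
# probability 1 - lam reproduces p_star, by linearity of the error probabilities.
recovered = [lam * a + (1.0 - lam) * b for a, b in zip(p1, p2)]
```

Since the error probabilities are linear in the decision function, applying the same weight to the decision functions themselves yields a randomized rule whose error vector is the target point.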

When the optimization problem in (3) possesses certain structure, the maximum number of deterministic decision rules required to achieve optimal performance may be reduced below the numbers given in the theorem. For example, suppose that the objective is a concave function of $\mathbf{p}$ and that (3) has a total of $K$ constraints (inequalities and equalities combined), all of which are linear in $\mathbf{p}$ (i.e., the feasible set, denoted by $\mathcal{P}'$, is the intersection of $\mathcal{P}$ with halfspaces and hyperplanes). It is well known that the minimum of a concave function over a closed bounded convex set is achieved at an extreme point [5]. Hence, in this case, an optimal point is attained at an extreme point of $\mathcal{P}'$. By Dubins' theorem [6], any extreme point of $\mathcal{P}'$ can be written as a convex combination of $K+1$ or fewer extreme points of $\mathcal{P}$. Since any extreme point of $\mathcal{P}$ can be achieved by a deterministic decision rule of the form (7), the optimal decision rule is obtained as a randomization among at most $K+1$ deterministic decision rules of the form (7). If there are no constraints in (3), i.e., $K = 0$, the deterministic decision rule given in (7) is optimal and no randomization is required with a concave objective function.

An immediate and important corollary of the theorem is given below.

Corollary: Likelihood ratios are sufficient statistics for simple $M$-ary hypothesis testing under any decision criterion that is expressed in terms of arbitrary functions of error probabilities as specified in (3).

Proof: It is stated in the theorem that a solution of the generic optimization problem in (3) can be expressed in terms of decision rules of the form given in (7). These decision rules only involve comparisons among $V_i(y)$'s, which are linear w.r.t. the density terms $f_j(y)$'s. Normalizing $V_i(y)$'s with $f_0(y)$ and defining $L_j(y) := f_j(y)/f_0(y)$, we see that an optimal decision rule that solves the problem in (3) depends on the observation $y$ only through the likelihood ratios.
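The normalization step in this proof can be checked on a small example: dividing every weighted density sum by the density under hypothesis 0 does not change which hypothesis attains the minimum, so the decision can be computed from likelihood ratios alone. The sketch assumes a finite observation set and a strictly positive density under hypothesis 0; the function names, pmfs, and weights are hypothetical.

```python
from typing import List

def decide_from_densities(v: List[List[float]],
                          densities: List[float]) -> int:
    """Pick the hypothesis i minimizing sum over j != i of v[i][j] * densities[j]."""
    M = len(densities)
    scores = [sum(v[i][j] * densities[j] for j in range(M) if j != i)
              for i in range(M)]
    return min(range(M), key=lambda i: scores[i])

def decide_from_ratios(v: List[List[float]], ratios: List[float]) -> int:
    """Same rule, but fed only the likelihood ratios (ratios[0] equals 1)."""
    return decide_from_densities(v, ratios)

# Hypothetical toy problem: three hypotheses, three observation values.
pmfs = [[0.5, 0.3, 0.2],
        [0.2, 0.5, 0.3],
        [0.1, 0.2, 0.7]]
v = [[0.0, 1.0, 1.0],
     [1.0, 0.0, 1.0],
     [1.0, 1.0, 0.0]]       # unit weights on every pairwise error (assumption)

by_density, by_ratio = [], []
for y in range(3):
    densities = [pmfs[j][y] for j in range(3)]
    ratios = [d / densities[0] for d in densities]   # requires densities[0] > 0
    by_density.append(decide_from_densities(v, densities))
    by_ratio.append(decide_from_ratios(v, ratios))
```

Both computations select the same hypothesis for every observation, because scaling all scores by the same positive factor leaves the minimizer unchanged.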

IV Numerical Examples

In this section, numerical examples are presented by considering a binary hypothesis testing problem; i.e., $M = 2$ in (1). Suppose that a bit ($0$ or $1$) is sent over two independent binary channels to a decision maker, which aims to make an optimal decision based on the binary channel outputs. The output of binary channel $k$ is denoted by $y_k \in \{0, 1\}$, and the decision maker declares its decision based on $y = [y_1, y_2]$. The probability that the output of binary channel $k$ is $i$ when bit $j$ is sent is denoted by $\beta^{(k)}_{ij}$ for $i, j \in \{0, 1\}$ with $k \in \{1, 2\}$. Then, the pmf of $y$ under $\mathcal{H}_j$ is given by

$$f_j(y) = \beta^{(1)}_{y_1 j} \, \beta^{(2)}_{y_2 j} \tag{11}$$

for $j \in \{0, 1\}$. As in the previous sections, the pairwise error probability vector of the decision maker for a given decision rule $\delta$ is represented by $\mathbf{p}(\delta)$, which consists of the two entries $p_{10}$ and $p_{01}$ in this case. It is assumed that the decision maker knows the conditional pmfs in (11).
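The observation model above can be sketched in a few lines. Independence of the two channels makes the joint pmf a product of per-channel probabilities, and the error pair of any deterministic rule follows by summing the pmf over the outputs mapped to the wrong bit. The channel parameters, the names `beta`, `pmf`, and `error_pair`, and the example rule are hypothetical and are not the values used in the letter.

```python
from itertools import product

# Hypothetical channel parameters (not the values used in the letter):
# beta[k][i][j] = probability that channel k outputs i when bit j is sent.
beta = [
    [[0.8, 0.1], [0.2, 0.9]],   # first channel
    [[0.7, 0.3], [0.3, 0.7]],   # second channel
]

def pmf(y, j):
    """Joint pmf of the output pair y = (y1, y2) when bit j is sent."""
    return beta[0][y[0]][j] * beta[1][y[1]][j]

def error_pair(rule):
    """Return (p10, p01) for a deterministic rule mapping each output pair to a bit."""
    outputs = list(product((0, 1), repeat=2))
    p10 = sum(pmf(y, 0) for y in outputs if rule[y] == 1)  # decide 1, bit 0 sent
    p01 = sum(pmf(y, 1) for y in outputs if rule[y] == 0)  # decide 0, bit 1 sent
    return p10, p01

# Example rule (assumption): follow the output of the first channel only.
rule = {y: y[0] for y in product((0, 1), repeat=2)}
p10, p01 = error_pair(rule)
total_under_0 = sum(pmf(y, 0) for y in product((0, 1), repeat=2))
```

Enumerating all sixteen deterministic maps from output pairs to bits in the same way gives a finite set of candidate error pairs whose convex hull can then be examined.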

In this section, a special case of (3) is considered based on prospect theory by focusing on a behavioral decision maker [4, 7, 8, 9]. In particular, there exist no constraints (i.e., in (3)) and the objective function in (3) is expressed as

| (12) |

where is a weight function and is a value function, which characterize how a behavioral decision maker distorts probabilities and costs, respectively [4], and denotes the probability of its argument. In the numerical examples, the following weight function is employed: [4, 7, 8, 9]. In addition, the other parameters are set as , , , and . Furthermore, the prior probabilities of bit and bit are assumed to be equal.

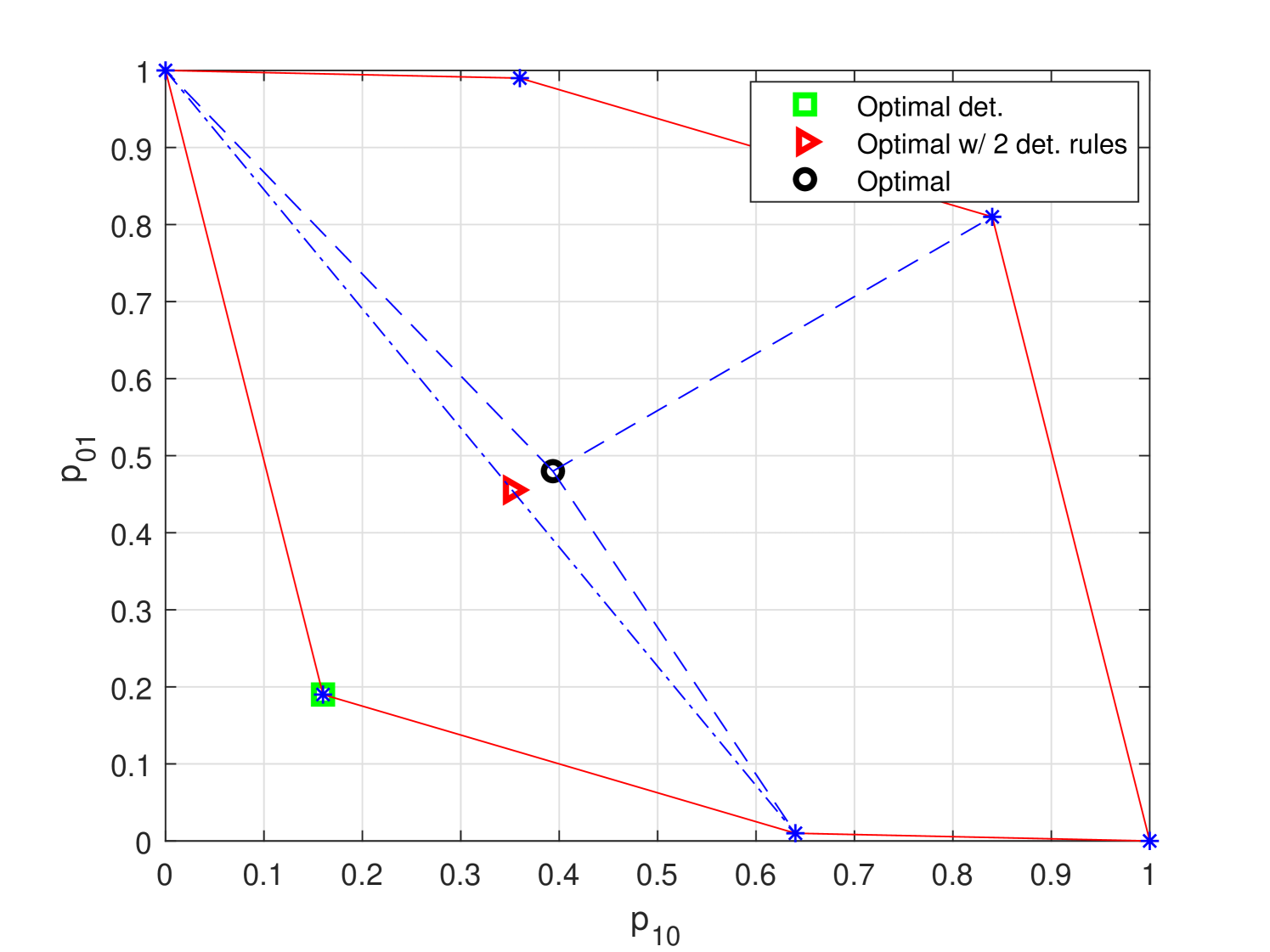

The aim of the decision maker is to obtain a decision rule that minimizes (12). In the first example, is set to , and the parameters of the binary channels are selected as and . In this case, it can be shown via (11) that there exist different deterministic decision rules in the form of (7), which achieve the pairwise error probability vectors marked with blue stars in Fig. 1. The convex hull of these pairwise error probability vectors is also illustrated in the figure. Over these deterministic decision rules (i.e., in the absence of randomization), the minimum achievable value of (12) becomes , which corresponds to the pairwise error probability vector shown with the green square in Fig. 1. If randomization between two deterministic decision rules in the form of (7) is considered, the resulting minimum objective value becomes , and the corresponding pairwise error probability vector is indicated with the red triangle in the figure. On the other hand, in compliance with the theorem (case 2), the minimum value of (12) is achieved via randomization of (at most) three deterministic decision rules in the form of (7) (since ). In this case, the optimal decision rule randomizes among , , and , with randomization coefficients of , , and , respectively, as given below:

| (13) | ||||

This optimal decision rule achieves the lowest objective value of , and the corresponding pairwise error probability vector is marked with the black circle in Fig. 1. Hence, this example shows that randomization among three deterministic decision rules may be required to obtain the solution of (3).

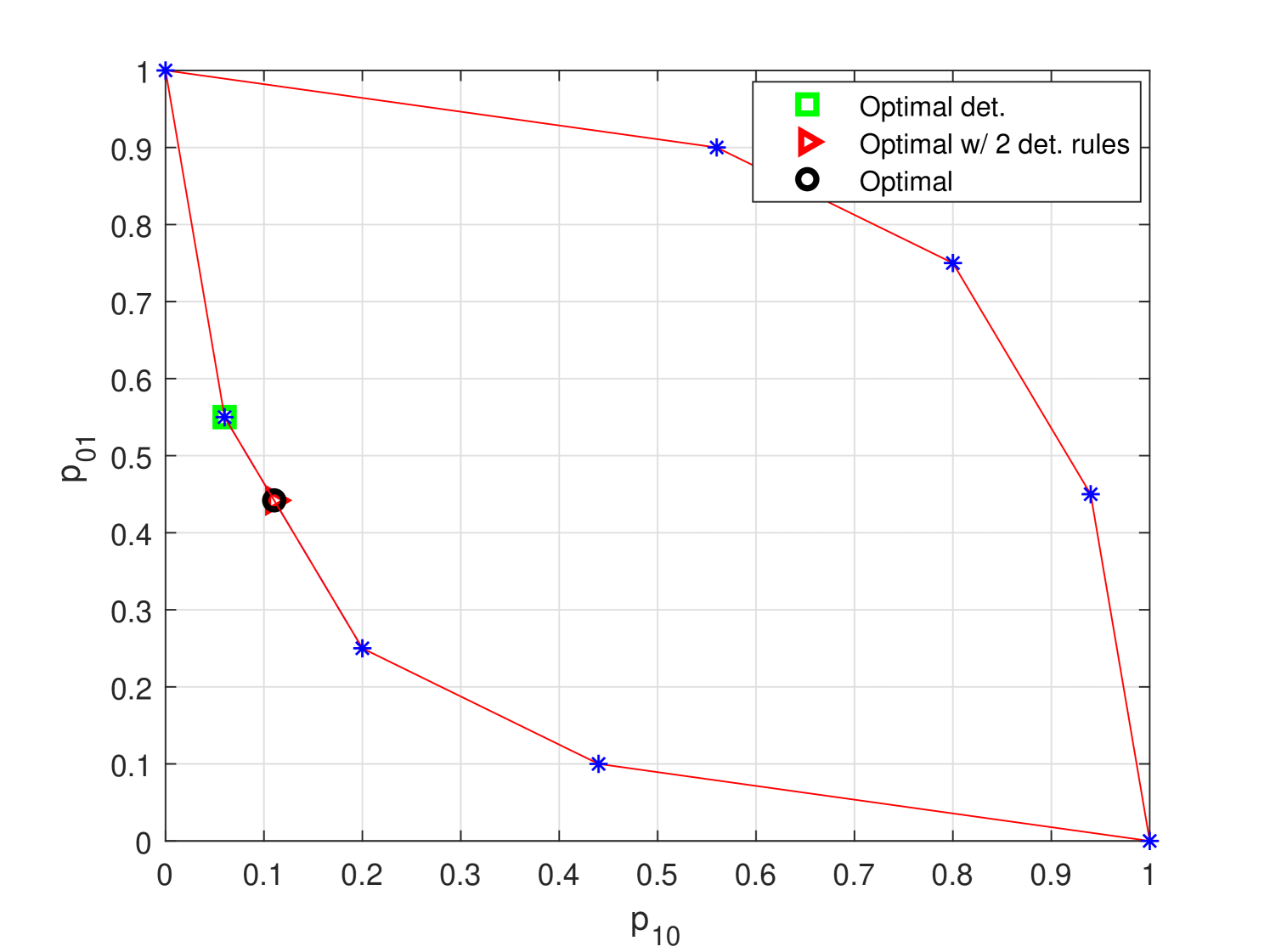

In the second example, the parameters are taken as , , , , and . In this case, there exist different deterministic decision rules in the form of (7), which achieve the pairwise error probability vectors marked with blue stars in Fig. 2. The minimum value of (12) among these deterministic decision rules is , which corresponds to the pairwise error probability vector shown with the green square in the figure. In addition, the pairwise error probability vectors corresponding to the solutions with randomization of two and three deterministic decision rules are marked with the red triangle and the black circle, respectively. In this scenario, the minimum objective value () can be achieved via randomization of two deterministic decision rules, as well. This is again in compliance with the theorem (case 2), which states that an optimal decision rule can be obtained as a randomization among at most deterministic decision rules of the form given in (7).

V Concluding Remarks

This letter presents a unified characterization of optimal decision rules for simple $M$-ary hypothesis testing under a generic performance criterion that depends on arbitrary functions of error probabilities. It is shown that optimal performance with respect to the design criterion can be achieved by randomizing among at most two deterministic decision rules of a form reminiscent of (but not necessarily identical to) the Bayes rule when points on the decision boundary do not contribute to the error probabilities. For the general case, the solution for an optimal decision rule is reduced to a search over two weight coefficient vectors, each of length $M(M-1)$. Likelihood ratios are shown to be sufficient statistics. Classical performance measures including Bayesian, minimax, Neyman-Pearson, generalized Neyman-Pearson, restricted Bayesian, and prospect theory based approaches all appear as special cases of the considered framework.

Appendix A Proof of Lemma

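Since $\{ \mathbf{p} : \mathbf{v}^T \mathbf{p} = \mathbf{v}^T \mathbf{p}^0 \}$ is a supporting hyperplane to $\mathcal{P}$ at the point $\mathbf{p}^0$, we get $\mathbf{v}^T \mathbf{p} \ge \mathbf{v}^T \mathbf{p}^0$ for all $\mathbf{p} \in \mathcal{P}$. Furthermore, the deterministic decision rule given in (7), denoted here by $\delta^{\mathbf{v}}$, minimizes $\mathbf{v}^T \mathbf{p}(\delta)$ among all decision rules $\delta \in \mathcal{D}$ (and consequently over all $\mathbf{p} \in \mathcal{P}$). Since $\mathbf{p}^0 \in \mathcal{P}$ as well, the deterministic decision rule given in (7) achieves a performance score of $\mathbf{v}^T \mathbf{p}(\delta^{\mathbf{v}}) = \mathbf{v}^T \mathbf{p}^0$. Any other decision rule that disagrees with $\delta^{\mathbf{v}}$ on a subset of $\bar{\mathcal{B}}(\mathbf{v})$ with nonzero probability measure will have a strictly greater performance score than $\mathbf{v}^T \mathbf{p}^0$ (due to the optimality of $\delta^{\mathbf{v}}$), and hence, cannot be on the supporting hyperplane.

Case 1: We prove the first part by contrapositive. Suppose that the deterministic decision rule given in (7) yields $\mathbf{p}(\delta^{\mathbf{v}}) \ne \mathbf{p}^0$, meaning that $\mathbf{p}^0$ is achieved by some other decision rule $\delta'$. Since $\delta^{\mathbf{v}}$ minimizes $\mathbf{v}^T \mathbf{p}(\delta)$ over all $\delta \in \mathcal{D}$, $\mathbf{v}^T \mathbf{p}(\delta^{\mathbf{v}}) = \mathbf{v}^T \mathbf{p}(\delta') = \mathbf{v}^T \mathbf{p}^0$ holds and both $\mathbf{p}(\delta^{\mathbf{v}})$ and $\mathbf{p}^0$ are located on the supporting hyperplane. This implies that $\delta^{\mathbf{v}}$ and $\delta'$ must agree on every subset of $\bar{\mathcal{B}}(\mathbf{v})$ with nonzero probability measure. As a result, the difference between the pairwise error probability vectors $\mathbf{p}(\delta^{\mathbf{v}})$ and $\mathbf{p}^0$ must stem from the difference of $\delta^{\mathbf{v}}$ and $\delta'$ over $\mathcal{B}(\mathbf{v})$. Consequently, the set $\mathcal{B}(\mathbf{v})$ cannot have zero probability under all hypotheses.

Case 2: Suppose that the set of boundary points specified by $\mathcal{B}(\mathbf{v})$ has nonzero probability under some hypotheses. In this case, each point in $\mathcal{B}_{ij}(\mathbf{v})$ can be assigned arbitrarily (or in a randomized manner) to hypotheses $\mathcal{H}_i$ and $\mathcal{H}_j$. Since the way the ties are broken does not change $\mathbf{v}^T \mathbf{p}(\delta)$, the resulting error probability vectors are all located on the intersection of the set $\mathcal{P}$ with the $(M(M-1)-1)$-dimensional supporting hyperplane. By Carathéodory's theorem [14], any point (including $\mathbf{p}^0$) in the intersection set, whose dimension is at most $M(M-1)-1$, can be represented as a convex combination of at most $M(M-1)$ extreme points of this set. Since these extreme points can only be obtained via deterministic decision rules which all agree with $\delta^{\mathbf{v}}$ on the set $\bar{\mathcal{B}}(\mathbf{v})$, $\mathbf{p}^0$ can be achieved by a randomization among at most $M(M-1)$ deterministic decision rules of the form given in (7), all corresponding to the weights specified by $\mathbf{v}$.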

References

- [1] H. V. Poor, An Introduction to Signal Detection and Estimation. New York: Springer-Verlag, 1994.

- [2] E. L. Lehmann, Testing Statistical Hypotheses, 2nd ed. New York, USA: Chapman & Hall, 1986.

- [3] J. L. Hodges, Jr. and E. L. Lehmann, “The use of previous experience in reaching statistical decisions,” Ann. Math. Stat., vol. 23, no. 3, pp. 396–407, Sep. 1952.

- [4] S. Gezici and P. K. Varshney, “On the optimality of likelihood ratio test for prospect theory-based binary hypothesis testing,” IEEE Signal Process. Lett., vol. 25, no. 12, pp. 1845–1849, Dec. 2018.

- [5] S. Boyd and L. Vandenberghe, Convex Optimization. Cambridge, UK: Cambridge University Press, 2004.

- [6] H. Witsenhausen, “Some aspects of convexity useful in information theory,” IEEE Trans. Inform. Theory, vol. 26, no. 3, pp. 265–271, May 1980.

- [7] R. Gonzalez and G. Wu, “On the shape of the probability weighting function,” Cognitive Psychology, vol. 38, no. 1, pp. 129–166, 1999.

- [8] D. Prelec, “The probability weighting function,” Econometrica, vol. 66, no. 3, pp. 497–527, 1998.

- [9] A. Tversky and D. Kahneman, “Advances in prospect theory: Cumulative representation of uncertainty,” Journal of Risk and Uncertainty, vol. 5, pp. 297–323, 1992.

- [10] C. Altay and H. Delic, “Optimal quantization intervals in distributed detection,” IEEE Trans. Aerosp. Electron. Syst., vol. 52, no. 1, pp. 38–48, Feb. 2016.

- [11] C. A. M. Sotomayor, R. P. David, and R. Sampaio-Neto, “Adaptive nonassisted distributed detection in sensor networks,” IEEE Trans. Aerosp. Electron. Syst., vol. 53, no. 6, pp. 3165–3174, Dec. 2017.

- [12] A. Ghobadzadeh and R. S. Adve, “Separating function estimation test for binary distributed radar detection with unknown parameters,” IEEE Trans. Aerosp. Electron. Syst., 2018.

- [13] D. Warren and P. Willett, “Optimum quantization for detector fusion: some proofs, examples, and pathology,” J. Franklin Inst., vol. 336, no. 2, pp. 323–359, 1999.

- [14] R. T. Rockafellar, Convex Analysis. Princeton, NJ: Princeton University Press, 1968.