Optimal kernel selection for density estimation

Abstract

We provide new general kernel selection rules thanks to penalized least-squares criteria. We derive optimal oracle inequalities using adequate concentration tools. We also investigate the problem of minimal penalty as described in [BM07].

keywords:

density estimation, kernel estimators, optimal penalty, minimal penalty, oracle inequalities

1 Introduction

Concentration inequalities are central in the analysis of adaptive nonparametric statistics. They lead to sharp penalized criteria for model selection [Mas07], to select bandwidths and even approximation kernels for Parzen’s estimators in high dimension [GL11], to aggregate estimators [RT07] and to properly calibrate thresholds [DJKP96].

In the present work, we are interested in the selection of a general kernel estimator based on a least-squares density estimation approach. The problem has been considered in $L^1$-loss by Devroye and Lugosi [DL01]. Other methods combining log-likelihood and roughness/smoothness penalties have also been proposed in [EL99b, EL99a, EL01]. However, these estimators are usually quite difficult to compute in practice. We propose here to minimize penalized least-squares criteria and obtain from them more easily computable estimators. Sharp concentration inequalities for U-statistics [GLZ00, Ada06, HRB03] control the variance term of the kernel estimators, whose asymptotic behavior has been precisely described, for instance in [MS11, MS15, DO13]. We derive from these bounds (see Proposition 4.1) a penalization method to select a kernel which satisfies an asymptotically optimal oracle inequality, i.e. with leading constant asymptotically equal to 1.

In the spirit of [GN09], we use an extended definition of kernels that allows us to deal simultaneously with classical collections of estimators such as projection estimators, weighted projection estimators, or Parzen’s estimators. This method can be used for example to select an optimal model in model selection (in accordance with [Mas07]) or to select an optimal bandwidth together with an optimal approximation kernel among a finite collection of Parzen’s estimators. In this sense, our method deals, in particular, with the same problem as that of Goldenshluger and Lepski [GL11] and we establish in this framework that a leading constant 1 in the oracle inequality is indeed possible.

Another main consequence of concentration inequalities is to prove the existence of a minimal level of penalty, under which no oracle inequalities can hold. Birgé and Massart shed light on this phenomenon in a Gaussian setting for model selection [BM07]. Moreover, in this setting, they prove that the optimal penalty is twice the minimal one. In addition, there is a sharp phase transition in the dimension of the selected models leading to an estimate of the optimal penalty in their case (which is known up to a multiplicative constant). Indeed, starting from the idea that in many models the optimal penalty is twice the minimal one (this is the slope heuristic), Arlot and Massart [AM09] propose to detect the minimal penalty by the phase transition and to apply the "$\times 2$" rule (this is the slope algorithm). They prove that this algorithm works at least in some regression settings.

In the present work, we also show that minimal penalties exist in the density estimation setting. In particular, we exhibit a sharp "phase transition" of the behavior of the selected estimator around this minimal penalty. The analysis of this last result is not standard however. First, the "slope heuristic" of [BM07] only holds in particular cases such as the selection of projection estimators, see also [Ler12]. As in the selection of a linear estimator in a regression setting [1], the heuristic can sometimes be corrected: for example for the selection of a bandwidth when the approximation kernel is fixed. In general, since there is no simple relation between the minimal penalty and the optimal one, the slope algorithm of [AM09] shall only be used with care for kernel selection. Surprisingly, our work reveals that the minimal penalty can be negative. In this case, minimizing an unpenalized criterion leads to oracle estimators. To our knowledge, such a phenomenon has only been noticed previously in a very particular classification setting [FT06]. We illustrate all of these different behaviors by means of a simulation study.

In Section 2, after fixing the main notation, providing some examples and defining the framework, we explain our goal, describe what we mean by an oracle inequality and state the exponential inequalities that we shall need. Then we derive optimal penalties in Section 3 and study the problem of minimal penalties in Section 4. All of these results are illustrated for our three main examples: projection kernels, approximation kernels and weighted projection kernels. In Section 5, some simulations are performed in the approximation kernel case. The main proofs are detailed in Section 6 and technical results are discussed in the appendix.

2 Kernel selection for least-squares density estimation

2.1 Setting

Let denote i.i.d. random variables taking values in the measurable space , with common distribution . Assume has density with respect to and is uniformly bounded. Hence, belongs to , where, for any ,

Moreover, and denote respectively the -norm and the associated inner product and is the supremum norm. We systematically use and for and respectively, and denote the cardinality of the set . Recall that and, for any , .

Let denote a collection of symmetric functions indexed by some given finite set such that

A function satisfying these assumptions is called a kernel, in the sequel. A kernel is associated with an estimator of defined for any by

Our aim is to select a “good” in the family . Our results are expressed in terms of a constant such that for all ,

| (1) |

This condition plays the same role as , the milder condition used in [DL01] when working with $L^1$-losses. Before describing the method, let us give three examples of such estimators that are used for density estimation, and see how they can naturally be associated to some kernels. Section A of the appendix gives the computations leading to the corresponding ’s.

Example 1: Projection estimators.

Projection estimators are among the most classical density estimators. Given a linear subspace , the projection estimator on is defined by

Let be a family of linear subspaces of . For any , let denote an orthonormal basis of . The projection estimator can be computed and is equal to

It is therefore easy to see that it is the estimator associated to the projection kernel defined for any and in by

Notice that actually depends on the basis even if does not. In the sequel, we always assume that some orthonormal basis is given with . Given a finite collection of linear subspaces of , one can choose the following constant in (1) for the collection

| (2) |
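To make the projection kernel concrete, here is a minimal Python sketch for one simple case: regular histograms with `D` bins on [0, 1], assuming Lebesgue measure as reference measure and the orthonormal basis made of the functions sqrt(D) times the indicator of each bin. It checks numerically that averaging the kernel over the sample gives back the usual histogram. The function names and the Beta-distributed sample are illustrative choices, not taken from the paper.

```python
import numpy as np

def histogram_kernel(x, y, D):
    """Projection kernel sum_j phi_j(x) phi_j(y) for D regular bins on [0, 1].

    With phi_j = sqrt(D) * indicator(bin j), the kernel equals D when x and y
    fall in the same bin and 0 otherwise.
    """
    bx = np.minimum((np.asarray(x) * D).astype(int), D - 1)
    by = np.minimum((np.asarray(y) * D).astype(int), D - 1)
    return D * (bx[:, None] == by[None, :]).astype(float)

def kernel_estimator(sample, grid, D):
    """Kernel form of the estimator: average of k(X_i, x) over the sample."""
    return histogram_kernel(sample, grid, D).mean(axis=0)

def histogram_estimator(sample, grid, D):
    """Classical histogram: D * (fraction of the sample in the bin of x)."""
    sample_bins = np.minimum((sample * D).astype(int), D - 1)
    counts = np.bincount(sample_bins, minlength=D)
    grid_bins = np.minimum((grid * D).astype(int), D - 1)
    return D * counts[grid_bins] / sample.size

rng = np.random.default_rng(0)
sample = rng.beta(2.0, 5.0, size=500)   # illustrative data on [0, 1]
grid = np.linspace(0.0, 1.0, 201)
k_est = kernel_estimator(sample, grid, D=8)
h_est = histogram_estimator(sample, grid, D=8)
```

Both functions return the same values on the grid, which is the sense in which the projection estimator is a kernel estimator.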

Example 2: Parzen’s estimators.

Given a bounded symmetric integrable function such that , and a bandwidth , the Parzen estimator is defined by

It can also naturally be seen as a kernel estimator, associated to the function defined for any and in by

We shall call the function an approximation or Parzen kernel.

Given a finite collection of pairs , one can choose in (1) if,

| (3) |
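The following Python sketch illustrates how a Parzen estimator can be written as an average of a two-argument kernel over the sample. It assumes the standard form k(x, y) = K((x - y) / h) / h with a Gaussian K; the function names, the bandwidth value and the simulated sample are illustrative assumptions.

```python
import numpy as np

def gaussian(u):
    """Standard Gaussian density, used here as the function K."""
    return np.exp(-0.5 * u ** 2) / np.sqrt(2.0 * np.pi)

def parzen_kernel(x, y, h, K=gaussian):
    """Two-argument kernel k(x, y) = K((x - y) / h) / h on all pairs."""
    return K((x[:, None] - y[None, :]) / h) / h

def parzen_estimator(sample, grid, h, K=gaussian):
    """Average of k(X_i, x) over the sample, evaluated at each grid point."""
    return parzen_kernel(sample, grid, h, K).mean(axis=0)

rng = np.random.default_rng(1)
sample = rng.standard_normal(400)
grid = np.linspace(-6.0, 6.0, 1201)
density = parzen_estimator(sample, grid, h=0.4)
mass = np.sum(density) * (grid[1] - grid[0])   # Riemann sum of the estimate
```

Because K integrates to one, the estimate integrates to one as well (up to discretization error), and the symmetry of K makes the two-argument kernel symmetric.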

Example 3: Weighted projection estimators.

Let denote an orthonormal system in and let denote real numbers in . The associated weighted kernel projection estimator of is defined by

These estimators are used to derive very sharp adaptive results. In particular, Pinsker’s estimators are weighted kernel projection estimators (see for example [Rig06]). When , we recover a classical projection estimator. A weighted projection estimator is associated to the weighted projection kernel defined for any and in by

Given any finite collection of weights, one can choose in (1)

| (4) |
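As an illustration of a weighted projection estimator, the sketch below uses the cosine basis on [0, 1] (an orthonormal system for Lebesgue measure, chosen here for convenience and not necessarily the system used in the paper) and multiplies each empirical coefficient by a weight in [0, 1]. The particular decreasing weights are hypothetical; taking all weights equal to one gives the plain projection estimator.

```python
import numpy as np

def cosine_basis(x, m):
    """First m functions of the cosine basis on [0, 1], shape (m, len(x))."""
    j = np.arange(m)[:, None]
    phi = np.sqrt(2.0) * np.cos(np.pi * j * x[None, :])
    phi[0] = 1.0   # the constant function has norm one without the sqrt(2)
    return phi

def weighted_projection_estimator(sample, grid, weights):
    """Sum over j of w_j * (empirical mean of phi_j) * phi_j(x)."""
    weights = np.asarray(weights, dtype=float)
    m = weights.size
    coeffs = cosine_basis(sample, m).mean(axis=1)
    return (weights * coeffs) @ cosine_basis(grid, m)

rng = np.random.default_rng(5)
sample = rng.beta(2.0, 5.0, size=500)
grid = np.linspace(0.0, 1.0, 1001)
m = 10
projection = weighted_projection_estimator(sample, grid, np.ones(m))
# Hypothetical smoothly decreasing weights in [0, 1], with w_0 = 1.
smooth_weights = 1.0 / (1.0 + (np.arange(m) / 5.0) ** 2)
shrunk = weighted_projection_estimator(sample, grid, smooth_weights)
```

Since only the constant basis function has a nonzero integral and its weight is one, both estimates integrate to one over [0, 1].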

2.2 Oracle inequalities and penalized criterion

The goal is to estimate in the best possible way using a finite collection of kernel estimators . In other words, the purpose is to select among an estimator from the data such that is as close as possible to . More precisely our aim is to select such that, with high probability,

| (5) |

where is the leading constant and is usually a remaining term. In this case, is said to satisfy an oracle inequality, as long as is small compared to and is a bounded sequence. This means that the selected estimator does as well as the best estimator in the family up to some multiplicative constant. The best case one can expect is to get close to 1. This is why, when , the corresponding oracle inequality is called asymptotically optimal. To do so, we study minimizers of penalized least-squares criteria. Note that in our three examples choosing amounts to choosing the smoothing parameter, that is respectively to choosing , or .

Let $P_n$ denote the empirical measure, that is, for any real-valued function $t$,

$$P_n(t) = \frac{1}{n}\sum_{i=1}^{n} t(X_i).$$

For any , let also

The least-squares contrast is defined, for any , by

Then for any given function , the least-squares penalized criterion is defined by

| (6) |

Finally the selected is given by any minimizer of , that is,

| (7) |
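The selection rule can be sketched in Python for Gaussian Parzen kernels. The sketch assumes the usual least-squares contrast, so that the criterion is the squared norm of the estimator, minus twice its empirical mean, plus the penalty. For a Gaussian kernel both terms have closed forms as double sums over the sample. The penalty `c * K(0) / (n * h)` with a tunable constant `c` is an illustrative assumption: with `c = 2` it cancels exactly the diagonal terms of the double sum in the empirical mean, as in least-squares cross-validation.

```python
import numpy as np

def normal_pdf(u, sd):
    """Density of a centered Gaussian with standard deviation sd."""
    return np.exp(-0.5 * (u / sd) ** 2) / (sd * np.sqrt(2.0 * np.pi))

def penalized_criterion(sample, h, c):
    """Least-squares criterion for the Gaussian Parzen estimator.

    The squared norm of the estimator is the mean over all pairs (i, j) of a
    Gaussian density with standard deviation sqrt(2) * h at X_i - X_j, and its
    empirical mean is the same average with standard deviation h.
    """
    n = sample.size
    diffs = sample[:, None] - sample[None, :]
    sq_norm = normal_pdf(diffs, np.sqrt(2.0) * h).mean()
    emp_mean = normal_pdf(diffs, h).mean()
    penalty = c * normal_pdf(0.0, h) / n       # equals c * K(0) / (n * h)
    return sq_norm - 2.0 * emp_mean + penalty

def select_bandwidth(sample, bandwidths, c):
    """Return the bandwidth minimizing the penalized criterion."""
    values = [penalized_criterion(sample, h, c) for h in bandwidths]
    return bandwidths[int(np.argmin(values))]

rng = np.random.default_rng(7)
sample = rng.standard_normal(300)
bandwidths = np.array([0.005, 0.02, 0.05, 0.1, 0.2, 0.3, 0.4, 0.5, 0.7, 1.0])
h_unpenalized = select_bandwidth(sample, bandwidths, c=0.0)
h_penalized = select_bandwidth(sample, bandwidths, c=2.0)
```

Without a penalty the diagonal terms dominate for small bandwidths and the criterion favors the smallest bandwidth of the grid, whereas the penalized version selects a larger one.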

As , it is equivalent to minimize or . As our goal is to select satisfying an oracle inequality, an ideal penalty should satisfy , i.e. criterion (6) with

To identify the main quantities of interest, let us introduce some notation and develop . For all , let

and

Because those quantities are fundamental in the sequel, let us also define where for

| (8) |

Denoting

the ideal penalty is then equal to

| (9) |

The main point is that by using concentration inequalities, we obtain:

The term depends on which is unknown. Fortunately, it can be easily controlled as detailed in the sequel. Therefore one can hope that the choice

is convenient. In general, this choice still depends on the unknown density but it can be easily estimated in a data-driven way by

The goal of Section 3 is to prove this heuristic and to show that and are optimal choices for the penalty, that is, they lead to an asymptotically optimal oracle inequality.

2.3 Concentration tools

To derive sharp oracle inequalities, we only need two fundamental concentration tools, namely a weak Bernstein’s inequality and the concentration bounds for degenerate U-statistics of order two. We cite them here under their most suitable form for our purpose.

A weak Bernstein’s inequality.

Proposition 2.1.

For any bounded real valued function and any i.i.d. with distribution , for any ,

The proof is straightforward and can be derived from either Bennett’s or Bernstein’s inequality [BLM13].
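The exact statement of Proposition 2.1 is not reproduced here. As an illustration of the kind of bound involved, the Python sketch below checks by simulation the classical Bernstein inequality: for n independent centered variables bounded by b with variance v, the sum exceeds sqrt(2 n v x) + b x / 3 with probability at most exp(-x). The uniform distribution, the sample size and the level x are illustrative choices.

```python
import numpy as np

def bernstein_threshold(n, variance, bound, x):
    """Deviation level sqrt(2 n v x) + b x / 3 of the classical inequality."""
    return np.sqrt(2.0 * n * variance * x) + bound * x / 3.0

rng = np.random.default_rng(2)
n, trials, x = 100, 20000, 2.0
# Uniform variables on [-1, 1]: centered, bounded by 1, variance 1/3.
samples = rng.uniform(-1.0, 1.0, size=(trials, n))
sums = samples.sum(axis=1)
threshold = bernstein_threshold(n, variance=1.0 / 3.0, bound=1.0, x=x)
empirical_tail = np.mean(sums >= threshold)   # Monte Carlo tail frequency
bound_value = np.exp(-x)                      # Bernstein upper bound
```

The empirical tail frequency stays below `bound_value`, typically by a wide margin since the inequality is not tight at this level.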

Concentration of degenerate U-statistics of order 2.

Proposition 2.2.

Let be i.i.d. random variables defined on a Polish space equipped with its Borel σ-algebra and let denote bounded real valued symmetric measurable functions defined on , such that for any , and

| (10) |

Let be the following totally degenerate U-statistic of order 2,

Let be an upper bound of for any and

where

Then there exists some absolute constant such that for any , with probability larger than ,

The present result is a simplification of Theorem 3.4.8 in [GN15], which provides explicit constants for any variables defined on a Polish space. It is mainly inspired by [HRB03], where the result was stated only for real variables. This inequality actually dates back to Giné, Latała and Zinn [GLZ00]. This result has been further generalized by Adamczak to U-statistics of any order [Ada06], though the constants are not explicit.
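To fix ideas about the object controlled by Proposition 2.2, the sketch below computes a degenerate U-statistic of order two for a toy choice: the function g(x, y) = x y applied to centered, bounded variables. This g is an assumption made for illustration and is not one of the kernels of the paper; the convention of summing over pairs i < j is also an assumption. Degeneracy here means that the expectation of g(x, X) is zero for every fixed x.

```python
import numpy as np

def u_statistic(sample, g):
    """Sum of g(X_i, X_j) over all pairs with i < j."""
    values = g(sample[:, None], sample[None, :])
    return np.triu(values, k=1).sum()

def product_kernel(x, y):
    """Toy symmetric function; degenerate when the variables are centered."""
    return x * y

rng = np.random.default_rng(3)
sample = rng.uniform(-1.0, 1.0, size=200)   # centered and bounded by 1
u_value = u_statistic(sample, product_kernel)
# For this g, the statistic has a closed form that serves as a check.
closed_form = 0.5 * (sample.sum() ** 2 - np.sum(sample ** 2))
```

For a degenerate function the standard deviation of this sum is of order n, compared with n^{3/2} in the non-degenerate case, which is why such terms can be treated as remainders.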

3 Optimal penalties for kernel selection

The main aim of this section is to show that is a theoretical optimal penalty for kernel selection, which means that if is close to , the selected kernel satisfies an asymptotically optimal oracle inequality.

3.1 Main assumptions

To express our results in a simple form, a positive constant is assumed to control, for any and in , all the following quantities.

| (11) | |||||

| (12) | |||||

| (13) | |||||

| (14) | |||||

| (15) | |||||

| (16) |

where is the set of functions that can be written for some with .

These assumptions may seem very intricate. They are actually fulfilled by our three main examples under very mild conditions (see Section 3.3).

3.2 The optimal penalty theorem

In the sequel, denotes a positive absolute constant whose value may change from line to line and if there are indices such as , it means that this is a positive function of and only whose value may change from line to line.

Theorem 3.1.

If Assumptions (11), (12), (13), (14), (15), (16) hold, then, for any , with probability larger than , for any , any minimizer of the penalized criterion (6) satisfies the following inequality

| (17) |

Assume moreover that there exists , and such that for any , with probability larger than , for any ,

| (18) |

Then for all and all , the following holds with probability at least ,

Let us make some remarks.

• First, this is an oracle inequality (see (5)) with leading constant and remaining term given by

as long as

  – is small enough for to be positive,

  – is large enough for the probability to be large and

  – is large enough for to be negligible.

Typically, and are bounded w.r.t. and has to be of the order of for the remainder to be negligible. In particular, may grow with as long as (i) remains negligible with respect to and (ii) does not depend on .

• If , that is if and in (18), the estimator satisfies an asymptotically optimal oracle inequality i.e. since can be chosen as close to 0 as desired. Take for instance, .

• In general depends on the unknown and this last penalty cannot be used in practice. Fortunately, its empirical counterpart satisfies (18) with , , and for any and in particular (see (34) in Proposition B.1). Hence, the estimator selected with this choice of penalty also satisfies an asymptotically optimal oracle inequality, by the same argument.

• Finally, we only get an oracle inequality when , that is when is larger than up to some residual term. We discuss the necessity of this condition in Section 4.

3.3 Main examples

This section shows that Theorem 3.1 can be applied in the examples. In addition, it provides the computation of in some specific cases of special interest.

Example 1 (continued).

Proposition 3.2.

These particular projection kernels satisfy for all

In particular, and .

Moreover, it appears that the function is constant in some linear spaces of interest (see [Ler12] for more details). Let us mention one particular case studied further on in the sequel. Suppose is a collection of regular histogram spaces on , that is, any is a space of piecewise constant functions on a partition of such that for any in . Assumption (20) is satisfied for this collection as soon as . The family , where is an orthonormal basis of and

Example 2 (continued).

Proposition 3.3.

These approximation kernels satisfy, for all ,

Therefore, the optimal penalty can be computed in practice and yields an asymptotically optimal selection criterion. Surprisingly, the lower bound can be negative if . In this case, a minimizer of (6) satisfies an oracle inequality, even if this criterion is not penalized. This remarkable fact is illustrated in the simulation study in Section 5.

Example 3 (continued).

Proposition 3.4.

For these weighted projection kernels, for all

In this case, the optimal penalty has to be estimated in general. However, in the following example it can still be directly computed.

Let , let be the Lebesgue measure. Let and, for any ,

Consider some odd and a family of weights such that, for any and any . In this case, the values of the functions of interest do not depend on

In particular, this family includes Pinsker’s and Tikhonov’s weights.

4 Minimal penalties for kernel selection

The purpose of this section is to see whether the lower bound is sharp in Theorem 3.1. To do so we first need the following result which links to deterministic quantities, thanks to concentration tools.

4.1 Bias-Variance decomposition with high probability

Proposition 4.1.

Assume is a finite collection of kernels satisfying Assumptions (11), (12), (13), (14), (15) and (16). For all , for all in , with probability larger than

Moreover, for all and for all in , with probability larger than , for all , each of the following inequalities holds

This means that not only in expectation but also with high probability can the term be decomposed in a bias term and a "variance" term . The bias term measures the capacity of the kernel to approximate whereas is the price to pay for replacing by its empirical version . In this sense, measures the complexity of the kernel in a way which is completely adapted to our problem of density estimation. Even if it does not seem like a natural measure of complexity at first glance, note that in the previous examples, it is indeed always linked to a natural complexity. When dealing with regular histograms defined on , is the dimension of the considered space , whereas for approximation kernels is proportional to the inverse of the considered bandwidth .

4.2 Some general results about the minimal penalty

In this section, we assume that we are in the asymptotic regime where the number of observations $n \to \infty$. In particular, the asymptotic notation refers to this regime.

From now on, the family may depend on as long as both and remain absolute constants that do not depend on it. Indeed, in the previous examples, this seems to be a reasonable regime. Since now depends on , our selected also depends on .

To prove that the lower bound is sharp, we need to show that the estimator chosen by minimizing (6) with a penalty smaller than does not satisfy an oracle inequality. This is only possible if the ’s are not of the same order and if they are larger than the remaining term . From an asymptotic point of view, we rewrite this thanks to Proposition 4.1 as for all , there exist and in such that

| (21) |

where means that . More explicitly, denoting by a sequence only depending on and tending to 0 as tends to infinity and whose value may change from line to line, one assumes that there exists and positive constants such that for all , there exist and in such that

| (22) | |||

| (23) |

We put a log-cube factor in the remaining term to allow some choices of and .

But (22) and (23) (or (21)) are not sufficient. Indeed, the following result explains what happens when the bias terms are always the leading terms.

Corollary 4.2.

Let be a sequence of finite collections of kernels satisfying Assumptions (11), (12), (13), (14), (15), (16) for a positive constant independent of and such that

| (24) |

for some positive constant .

Assume that there exist real numbers of any sign and a sequence of nonnegative real numbers such that, for all , with probability larger than , for all ,

Then, with probability larger than ,

The proof easily follows by taking in (17), for instance in Proposition 4.1 and by using Assumption (24) and the bounds on . This result shows that the estimator satisfies an asymptotically optimal oracle inequality when condition (24) holds, whatever the values of and even when they are negative. This proves that the lower bound is not sharp in this case.

Therefore, we have to assume that at least one bias is negligible with respect to . Actually, to conclude, we assume that this happens for in (21).

Theorem 4.3.

Let be a sequence of finite collections of kernels satisfying Assumptions (11), (12), (13), (14), (15), (16), with not depending on . Each is also assumed to satisfy (22) and (23) with a kernel in (22) such that

| (25) |

for some fixed positive constant . Suppose that there exist and a sequence of nonnegative real numbers such that and such that for all , with probability larger than , for all ,

| (26) |

Then, with probability larger than , the following holds

| (27) |

| (28) |

4.3 Examples

Example 1 (continued).

Let be the collection of spaces of regular histograms on with dimensions and let be the selected space thanks to the penalized criterion. Recall that, for any , the orthonormal basis is defined by and . Assume that is -Hölderian, with with -Hölderian norm . It is well known (see for instance Section 1.3.3. of [Bir06]) that the bias is bounded above by

Hence, (21) holds with and . Therefore, Theorem 4.3 and Theorem 3.1 apply in this example. If , the dimension and is not consistent and does not satisfy an oracle inequality. On the other hand, if ,

and satisfies an oracle inequality which implies that, with probability larger than ,

by taking It achieves the minimax rate of convergence over the class of -Hölderian functions.

From Theorem 3.1, the penalty provides an estimator that achieves an asymptotically optimal oracle inequality. Therefore the optimal penalty is equal to 2 times the minimal one. In particular, the slope heuristic of [BM07] holds in this example, as already noticed in [Ler12].
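The effect of the penalty level in the histogram example can be explored with a short Python sketch. It assumes regular histograms on [0, 1] with dyadic dimensions and a penalty of the form `c * D / n`, where the constant `c` is a free parameter of the sketch (the exact penalties of the paper are not reproduced here). For a histogram, the squared norm of the estimator and its empirical mean coincide, so the unpenalized least-squares criterion reduces to minus D times the sum of squared bin frequencies.

```python
import numpy as np

def histogram_criterion(sample, D, c):
    """Penalized least-squares criterion for D regular bins on [0, 1].

    With p_j the fraction of the sample in bin j, both the squared norm of the
    histogram and its empirical mean equal D * sum_j p_j^2, so the contrast
    (squared norm minus twice the empirical mean) is -D * sum_j p_j^2.
    """
    n = sample.size
    bins = np.minimum((sample * D).astype(int), D - 1)
    p = np.bincount(bins, minlength=D) / n
    return -D * np.sum(p ** 2) + c * D / n

def select_dimension(sample, dims, c):
    """Return the dimension minimizing the penalized criterion."""
    values = [histogram_criterion(sample, D, c) for D in dims]
    return dims[int(np.argmin(values))]

rng = np.random.default_rng(4)
sample = rng.beta(2.0, 5.0, size=500)   # illustrative density on [0, 1]
dims = [2 ** j for j in range(9)]       # 1, 2, 4, ..., 256
d_unpenalized = select_dimension(sample, dims, c=0.0)
d_penalized = select_dimension(sample, dims, c=2.0)
```

On nested dyadic partitions the unpenalized criterion can only decrease when bins are split, so `c = 0` selects the finest partition, while `c = 2` selects a much smaller dimension. Scanning `c` over a grid is one way to look for the jump in the selected dimension that the slope algorithm relies on.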

Finally to illustrate , let us take and the collection of regular histograms with dimension in , with . Simple calculations show that

Hence (24) applies and the penalized estimator with penalty always satisfies an oracle inequality even if or . This was actually expected since it is likely to choose the largest dimension which is also the oracle choice in this case.

Example 2 (continued).

Let be a fixed function, let denote the following grid of bandwidths

and let be the selected bandwidth. Assume as before that is a density on that belongs to the Nikol’ski class with and . By Proposition 1.5 in [Tsy09], if satisfies

In particular, when ,

On the other hand, for ,

Hence, (21) and (25) hold with kernels and . Therefore, Theorem 4.3 and Theorem 3.1 apply in this example. If for some we set , then and is not consistent and does not satisfy an oracle inequality. On the other hand, if , then

and satisfies an oracle inequality which implies that, with probability larger than ,

for In particular it achieves the minimax rate of convergence over the class . Finally, if , achieves an asymptotically optimal oracle inequality, thanks to Theorem 3.1.

The minimal penalty is therefore

In this case, the optimal penalty derived from Theorem 3.1 is not twice the minimal one, but one still has, if ,

even if they can be of opposite sign depending on . This type of nontrivial relationship between optimal and minimal penalty has already been underlined in [1] in a regression framework for selecting linear estimators.

Note that if one allows two kernel functions and in the family of kernels such that , and

then there is no absolute constant multiplicative factor linking the minimal penalty and the optimal one.

5 Small simulation study

In this section we illustrate Theorem 3.1 and Theorem 4.3 on simulated data. We focus on approximation kernels only, since projection kernels have already been discussed in [Ler12].

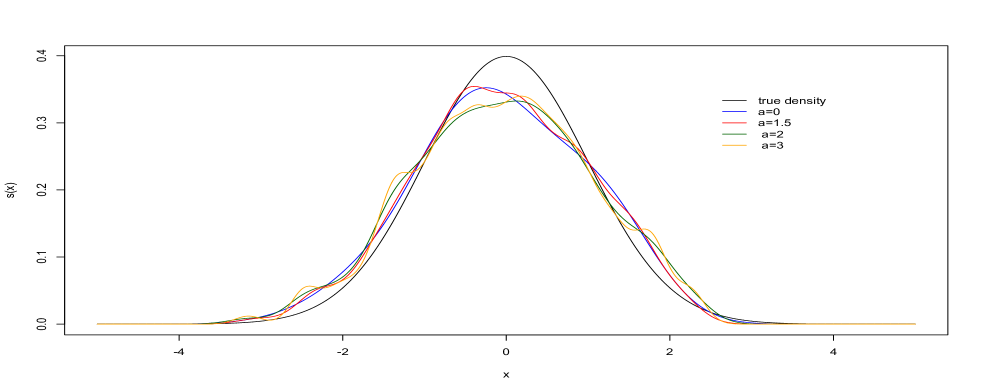

We observe an i.i.d. sample of standard Gaussian distribution. For a fixed parameter we consider the family of kernels

where for

In particular the kernel estimator with is the classical Gaussian kernel estimator. Moreover

Thus, depending on the value of , the minimal penalty may be negative. We study the behavior of the penalized criterion

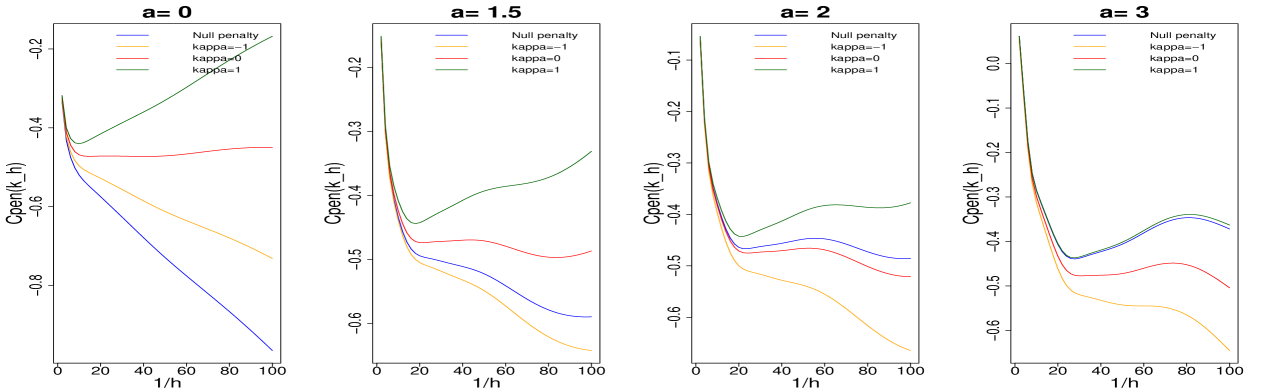

with penalties of the form

| (29) |

for different values of () and (). Figure 1 shows the estimates selected by the optimal penalty for the different values of , and Figure 2 shows the evolution of the different penalized criteria as a function of .


The contrast curves for in Figure 2 are classical. Without penalization, the criterion decreases and leads to the selection of the smallest bandwidth. At the minimal penalty, the curve is flat and at the optimal penalty one selects a meaningful bandwidth, as shown in Figure 1.

When , despite the choice of those unusual kernels, the reconstructions on Figure 1 for the optimal penalty are also meaningful. However when or , the criterion with minimal penalty is smaller than the unpenalized criterion, meaning that minimizing the latter criterion leads by Theorem 3.1 to an oracle inequality. In our simulation, when , the curves for the optimal criterion and the unpenalized one are so close that the same estimator is selected by both methods.

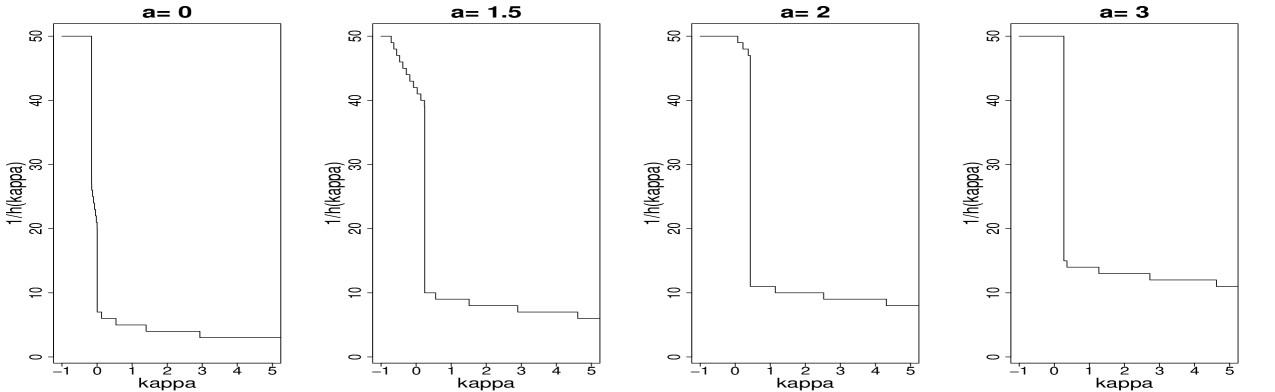


Finally Figure 3 shows that there is indeed in all cases a sharp phase transition around i.e. at the minimal penalty for the complexity of the selected estimate.

6 Proofs

6.1 Proof of Theorem 3.1

The starting point to prove the oracle inequality is to notice that any minimizer of satisfies

Using the expression of the ideal penalty (9) we find

| (30) |

By Proposition B.1 (see the appendix), for all , for all in , with probability larger than ,

Hence

This bound holds using (11), (12) and (13) only. Now by Proposition 4.1 applied with , we have for all , for all , with probability larger than ,

This gives the first part of the theorem.

For the second part, by the condition (18) on the penalty, we find for all , for all in , with probability larger than ,

By Proposition 4.1 applied with , we have with probability larger than ,

that is

Hence, because , we obtain the desired result.

6.2 Proof of Proposition 4.1

First, let us denote for all

and

Some easy computations then provide the following useful equality

We need only treat the terms on the right-hand side, thanks to the probability tools of Section 2.3. Applying Proposition 2.1, we get, for any , with probability larger than ,

One can then check the following link between and

In particular, since ,

It follows from these computations and from (11) that there exists an absolute constant such that, for any , with probability larger than , for any ,

We now need to control the term . From Proposition 2.2, for any , with probability larger than ,

By (1), (11) and Cauchy-Schwarz inequality,

In addition, by (15),

Moreover, applying the Assumption (14),

Finally, applying the Cauchy-Schwarz inequality and proceeding as for , the quantity used to define can be bounded above as follows:

Hence for any , with probability larger than ,

Therefore, for all ,

and the first part of the result follows by choosing . Concerning the two remaining inequalities appearing in the proposition, we begin by developing the loss. For all

Then, for all

Moreover, since , we find

This expression motivates us to apply again Proposition 2.1 to this term. We find by (1), (11) and Cauchy-Schwarz inequality

Moreover,

Thus by (16), for any ,

Putting together all of the above, one concludes that for all in , for all , with probability larger than

and

Choosing, leads to the second part of the result.

6.3 Proof of Theorem 4.3

It follows from (17) (applied with and ) and Assumption (26) that with probability larger than we have for any and any

| (31) |

Applying this inequality with and using Proposition 4.1 with and as a lower bound for and as an upper bound for , we obtain asymptotically that with probability larger than ,

This gives (27). In addition, starting with the event where (31) holds and using Proposition 4.1, we also have with probability larger than ,

Since , this leads to

Appendix A Proofs for the examples

A.1 Computation of the constant for the three examples

Example 1: Projection kernels.

First, notice that from Cauchy-Schwarz inequality we have for all and by orthonormality, for any ,

In particular, for any , . Hence, projection kernels satisfy (1) for . We conclude by writing

For we have . Hence with ,

Example 2: Approximation kernels.

First, Second, since

Now and implies , hence (1) holds with if one assumes that .

Example 3: Weighted projection kernels.

For all

From Cauchy-Schwarz inequality, for any ,

We thus find that verifies (1) with . Since we find the announced result which is independent of .

A.2 Proof of Proposition 3.2

Since , we find that (11) only requires . Assumption (12) holds: this follows from and

Now for proving Assumption (14), we write

In the same way, Assumption (15) follows from . Suppose (19) holds with so that the basis of is included in the one of . Since we have

Hence, (13) holds in this case. Assuming (20) implies that (13) holds since

Finally for (16), for any ,

is the orthogonal projection of onto . Therefore, is the unit ball in for the -norm and, for any ,

A.3 Proof of Proposition 3.3

First, since

Hence, Assumption (11) holds if . Now, we have

so it is sufficient to have (since ) to ensure (12). Moreover, for any and any ,

Therefore, Assumption (13) holds for . Then on one hand

And on the other hand

Therefore,

and Hence Assumptions (14) and (15) hold when . Finally, let us prove that Assumption (16) is satisfied. Let and be such that and for all . Then the following follows from the Cauchy-Schwarz inequality

Thus for any

We conclude that all the assumptions hold if

A.4 Proof of Proposition 3.4

Let us define for convenience , so . Then we have for these kernels: for all . Moreover, denoting by the orthogonal projection of onto the linear span of ,

Assumption (11) holds for this family if . We prove in what follows that all the remaining assumptions are valid using only (1) and (11).

First, it follows from Cauchy-Schwarz inequality that, for any , . Assumption (12) is then automatically satisfied from the definition of

Now let and be any two vectors in , we have

Hence and, by Cauchy-Schwarz inequality, for any ,

Assumption (13) follows using (11). Concerning Assumptions (14) and (15), let us first notice that by orthonormality, for any ,

Therefore, Assumption (15) holds since

Assumption (14) also holds from similar computations:

We finish with the proof of (16). Let us prove that , where

First, notice that any can be written

Then, consider some . By definition, there exists a collection such that , and . If , and , hence . Conversely, for , there exists some function such that , and . Since is an orthonormal system, one can take . With , we find and . For any , . Hence

Appendix B Concentration of the residual terms

The following proposition gathers the concentration bounds of the remaining terms appearing in (6.1).

Proposition B.1.

Proof. First, for (32), notice that, by (13), for any

Then, by Proposition 2.1, with probability larger than ,

Since by (11)

Moreover, by (13) Hence, for , with probability larger than

Concerning (34), we get by (12), , hence, for any we have with probability larger than

For (35), we apply Proposition 2.2 to obtain with probability larger than , for any ,

where are defined as in Proposition 2.2. Let us evaluate all these terms. First, by (1) and (11). Next,

Using (1), we find

References

- [1] S. Arlot and F. Bach. Data-driven calibration of linear estimators with minimal penalties. In Advances in Neural Information Processing Systems 22, pages 46–54, 2009.

- [Ada06] R. Adamczak. Moment inequalities for U-statistics. Ann. Probab., 34(6):2288–2314, 2006.

- [AM09] S. Arlot and P. Massart. Data-driven calibration of penalties for least-squares regression. J. Mach. Learn. Res., 10:245–279, 2009.

- [Bir06] L. Birgé. Statistical estimation with model selection. Indag. Math. (N.S.), 17(4):497–537, 2006.

- [BLM13] S. Boucheron, G. Lugosi, and P. Massart. Concentration inequalities. Oxford University Press, Oxford, 2013.

- [BM07] L. Birgé and P. Massart. Minimal penalties for Gaussian model selection. Probab. Theory Related Fields, 138(1-2):33–73, 2007.

- [DJKP96] D. L. Donoho, I. M. Johnstone, G. Kerkyacharian, and D. Picard. Density estimation by wavelet thresholding. Ann. Statist., 24(2):508–539, 1996.

- [DL01] L. Devroye and G. Lugosi. Combinatorial methods in density estimation. Springer Series in Statistics. Springer-Verlag, 2001.

- [DO13] P. Deheuvels and S. Ouadah. Uniform-in-bandwidth functional limit laws. J. Theoret. Probab., 26(3):697–721, 2013.

- [EL99a] P. P. B. Eggermont and V. N. LaRiccia. Best asymptotic normality of the kernel density entropy estimator for smooth densities. IEEE Trans. Inform. Theory, 45(4):1321–1326, 1999.

- [EL99b] P. P. B. Eggermont and V. N. LaRiccia. Optimal convergence rates for Good’s nonparametric maximum likelihood density estimator. Ann. Statist., 27(5):1600–1615, 1999.

- [EL01] P. P. B. Eggermont and V. N. LaRiccia. Maximum penalized likelihood estimation, volume I of Springer Series in Statistics. Springer-Verlag, New York, 2001.

- [FT06] M. Fromont and C. Tuleau. Functional classification with margin conditions. In Learning theory, volume 4005 of Lecture Notes in Comput. Sci., pages 94–108. Springer, Berlin, 2006.

- [GL11] A. Goldenshluger and O. Lepski. Bandwidth selection in kernel density estimation: oracle inequalities and adaptive minimax optimality. Ann. Statist., 39(3):1608–1632, 2011.

- [GLZ00] E. Giné, R. Latała, and J. Zinn. Exponential and moment inequalities for U-statistics. In High dimensional probability, II, volume 47 of Progr. Probab., pages 13–38. Birkhäuser Boston, 2000.

- [GN09] E. Giné and R. Nickl. Uniform limit theorems for wavelet density estimators. Ann. Probab., 37(4):1605–1646, 2009.

- [GN15] E. Giné and R. Nickl. Mathematical foundations of infinite-dimensional statistical models. Cambridge University Press, 2015.

- [HRB03] C. Houdré and P. Reynaud-Bouret. Exponential inequalities, with constants, for U-statistics of order two. In Stochastic inequalities and applications, volume 56 of Progr. Probab., pages 55–69. Birkhäuser, Basel, 2003.

- [Ler12] M. Lerasle. Optimal model selection in density estimation. Ann. Inst. H. Poincaré Probab. Statist., 48(3):884–908, 2012.

- [Mas07] P. Massart. Concentration inequalities and model selection, volume 1896 of Lecture Notes in Mathematics. Springer, Berlin, 2007. Lectures from the 33rd Summer School in Saint-Flour.

- [MS11] D. M. Mason and J. W. H. Swanepoel. A general result on the uniform in bandwidth consistency of kernel-type function estimators. TEST, 20(1):72–94, 2011.

- [MS15] D. M. Mason and J. W. H. Swanepoel. Erratum to: A general result on the uniform in bandwidth consistency of kernel-type function estimators. TEST, 24(1):205–206, 2015.

- [Rig06] P. Rigollet. Adaptive density estimation using the blockwise Stein method. Bernoulli, 12(2):351–370, 2006.

- [RT07] P. Rigollet and A. B. Tsybakov. Linear and convex aggregation of density estimators. Math. Methods Statist., 16(3):260–280, 2007.

- [Tsy09] A. B. Tsybakov. Introduction to nonparametric estimation. Springer Series in Statistics. Springer, New York, 2009.