Optimal Learning for Sequential Decision Making for Expensive Cost Functions with Stochastic Binary Feedbacks

Abstract

We consider the problem of sequentially making decisions that are rewarded by “successes” and “failures”, which can be predicted through an unknown relationship that depends on a partially controllable vector of attributes for each instance. The learner takes an active role in selecting samples from the instance pool. The goal is to maximize the probability of success in either offline (training) or online (testing) phases. Our problem is motivated by real-world applications where observations are time consuming and/or expensive. We develop a knowledge gradient policy using an online Bayesian linear classifier to guide the experiment by maximizing the expected value of information of labeling each alternative. We provide a finite-time analysis of the estimation error and show that the maximum likelihood estimator based on the alternatives selected by the KG policy is consistent and asymptotically normal. We also show that the knowledge gradient policy is asymptotically optimal in an offline setting. This work further extends the knowledge gradient to the setting of contextual bandits. We report the results of a series of experiments that demonstrate its efficiency.

1 Introduction

There are many real-world optimization tasks where observations are time consuming and/or expensive. This often occurs in experimental sciences where testing different values of controllable parameters of a physical system is expensive. Another example arises in health services, where physicians have to make medical decisions (e.g. a course of drugs, surgery, and expensive tests) and we can characterize an outcome as a success (patient does not need to return for more treatment) or a failure (patient does need followup care such as repeated operations). This puts us in the setting of optimal learning where the number of objective function samples is limited, requiring that we learn from our decisions as quickly as possible. This represents a distinctly different learning environment from what has traditionally been considered using popular policies such as upper confidence bounding (UCB), which have proven effective in fast-paced settings such as learning ad-clicks.

In this paper, we are interested in a sequential decision-making setting where at each time step we choose one of finitely many decisions and observe a stochastic binary reward where each instance is described by various controllable and uncontrollable attributes. The goal is to maximize the probability of success in either offline (training) or online (testing) phases. There are a number of applications that can easily fit into our success/failure model:

• Producing single-walled nanotubes. Scientists have physical procedures to produce nanotubes. These procedures can produce either single-walled or double-walled nanotubes through an unknown relationship with the controllable parameters, e.g. laser power, ethylene, hydrogen and pressure. Yet only the single-walled nanotubes are acceptable. The problem is to quickly learn the parameter values with the highest probability of success (producing single-walled nanotubes).

• Personalized health care. We consider the problem of how to choose clinical pathways (including surgery, medication and tests) for different upcoming patients to maximize the success of the treatment.

• Minimizing the default rate for loan applications. When facing borrowers with different background information and credit history, a lending company needs to decide whether to grant a loan, and with what terms (interest rate, payment schedule).

• Enhancing the acceptance rate of admitted students. A university needs to decide which students to admit and how much aid to offer so that the students will then accept the offer of admission and matriculate.

Our work will initially focus on offline settings such as laboratory experiments or medical trials where we are not punished for errors incurred during training and instead are only concerned with the final recommendation after the offline training phases. We then extend our discussion to online learning settings where the goal is to minimize cumulative regret. We also consider problems with partially controllable attributes, a setting known as contextual bandits. For example, in the health care problems, we do not have control over the patients (each represented by a feature vector including demographic characteristics, diagnoses, and medical history) and can only choose the medical decision. A university cannot control which students are applying to the university. When deciding whether to grant a loan, the lending company cannot choose the personal information of the borrowers.

Scientists can draw on an extensive body of literature on the classic design of experiments [12, 59, 42] where the goal is to decide which observations to make when fitting a function. Yet in our setting, the decisions are guided by a well-defined utility function (that is, maximize the probability of success). The problem is related to the literature on active learning [49, 55, 18, 50], where our setting is most similar to membership query synthesis where the learner may request labels for any unlabeled instance in the input space to learn a classifier that accurately predicts the labels of new examples. By contrast, our goal is to maximize a utility function such as the success of a treatment. Moreover, the expense of labeling each alternative sharpens the conflicts of learning the prediction and finding the best alternative.

Another similar sequential decision making setting is the multi-armed bandit problem [2, 6, 14, 39, 52, 7, 56]. Different belief models have been studied under the name of contextual bandits, including linear models [9] and Gaussian process regression [33]. The focus of bandit work is minimizing cumulative regret in an online setting, while we consider the performance of the final recommendation after an offline training phase. Recent work addresses the problem we describe here by minimizing the simple regret. However, first, UCB-type policies [1] are not best suited for expensive experiments. Second, the work on simple regret minimization [28, 27] mainly focuses on real-valued functions and does not consider the problem with stochastic binary feedback.

There is a literature on Bayesian optimization [26, 8, 44]. Efficient global optimization (EGO), and related methods such as sequential kriging optimization (SKO) [32, 30] assume a Gaussian process belief model which does not scale to the higher dimensional settings that we consider. Others assume lookup table, or low-dimensional parametric methods, e.g. response surface/surrogate models [24, 31, 46]. The existing literature mainly focuses on real-valued functions and none of these methods are directly suitable for our problem of maximizing the probability of success with binary outcomes. A particularly relevant body of work in the Bayesian optimization literature is the expected improvement (EI) for binary outputs [53]. Yet when EI decides which alternative to measure, it is based on the expected improvement over current predictive posterior distribution while ignoring the potential change of the posterior distribution resulting from the next stochastic measurement (see Section 5.6 of [44] and [30] for detailed explanations).

We investigate a knowledge gradient policy that maximizes the value of information, since this approach is particularly well suited to problems where observations are expensive. After its first appearance for ranking and selection problems [16], KG has been extended to various other belief models (e.g. [41, 43, 57]). Yet there is no KG variant designed for binary classification with parametric belief models. In this paper, we extend the KG policy to the setting of classification problems under a Bayesian classification belief model which introduces the computational challenge of working with nonlinear belief models.

This paper makes the following contributions. 1. Due to the sequential nature of the problem, we develop a fast online Bayesian linear classification procedure based on Laplace approximation for general link functions to recursively predict the response of each alternative. 2. We design a knowledge-gradient type policy for stochastic binary responses to guide the experiment. It can work with any choice of link function and approximation procedure. 3. Since the knowledge gradient policy adaptively chooses the next sampled points, we provide a finite-time analysis of the estimation error that does not rely on the i.i.d. assumption. 4. We show that the maximum likelihood estimator based on the points adaptively sampled by the KG policy is consistent and asymptotically normal. We show furthermore that the knowledge gradient policy is asymptotically optimal. 5. We extend the KG policy to contextual bandit settings with stochastic binary outcomes.

The paper is organized as follows. In Section 2, we establish the mathematical model for the problem of sequentially maximizing the response under binary outcomes. We give a brief overview of (Bayesian) linear classification in Section 3. We develop an online Bayesian linear classification procedure based on Laplace approximation in Section 4. In Section 5, we propose a knowledge-gradient type policy for stochastic binary responses with or without context information, and we theoretically study its finite-time and asymptotic behavior. Extensive demonstrations and comparisons of methods for the offline objective are presented in Section 6.

2 Problem formulation

We assume that we have a finite set of alternatives $x \in \mathcal{X} = \{x_1, \dots, x_M\}$. The observation from measuring each $x$ is a binary outcome $y \in \{-1, +1\}$ (failure, success) with some unknown probability $p(y = +1 \mid x)$. The learner sequentially chooses a series of points $(x^0, \dots, x^{N-1})$ at which to run the experiments. Under a limited measurement budget $N$, the goal of the learner is to recommend an implementation decision $x^N$ that maximizes $p(y = +1 \mid x^N)$.

We adopt probabilistic models for classification. Under general assumptions, the probability of success can be written as a link function acting on a linear function of the feature vector:

$$p(y = +1 \mid x) = \sigma(w^T x).$$

In this paper, we illustrate the ideas using the logistic link function $\sigma(a) = \frac{1}{1 + \exp(-a)}$ and the probit link function $\sigma(a) = \Phi(a) = \int_{-\infty}^{a} \mathcal{N}(s \mid 0, 1)\, ds$, given their analytic simplicity and popularity, but any monotonically increasing function can be used. The main difference between the two sigmoid functions is that the logistic function has slightly heavier tails than the normal CDF. Classification using the logistic function is called logistic regression and that using the normal CDF is called probit regression.
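As a small illustration of the two link functions discussed above, the following sketch evaluates the logistic function and the normal CDF and compares their tails. The function names (`logistic`, `probit`, `success_probability`) are hypothetical helpers chosen for this example, not notation from the paper.

```python
import math

def logistic(a: float) -> float:
    """Logistic link 1 / (1 + exp(-a)), written to avoid overflow."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def probit(a: float) -> float:
    """Probit link: the standard normal CDF, computed via math.erfc."""
    return 0.5 * math.erfc(-a / math.sqrt(2.0))

def success_probability(w, x, link=logistic):
    """Link function applied to the linear score w^T x (assumed model form)."""
    return link(sum(wi * xi for wi, xi in zip(w, x)))

if __name__ == "__main__":
    # The logistic function puts more mass in the tails than the normal CDF.
    for a in (-4.0, -2.0, 0.0, 2.0, 4.0):
        print(a, logistic(a), probit(a))
```

Both functions are monotonically increasing and equal 1/2 at zero; the printed values show the logistic tail decaying more slowly.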

We start with a multivariate prior distribution for the unknown parameter vector . At iteration , we choose an alternative to measure and observe a binary outcome assuming labels are generated independently given . Each alternative can be evaluated more than once with potentially different outcomes. Let denote the previous measured data set for any . Define the filtration by letting be the sigma-algebra generated by . We use and interchangeably. Note that the notation here is slightly different from the (passive) PAC learning model where the data are i.i.d. and are denoted as . Yet in our (adaptive) sequential decision setting, measurement and implementation decisions are restricted to be -measurable so that decisions may only depend on measurements made in the past. This notation with superscript indexing time stamp is standard, for example, in control theory, stochastic optimization and optimal learning. We use Bayes’ theorem to form a sequence of posterior predictive distributions .

The next lemma states the equivalence of using true probabilities and sample estimates when evaluating a policy. The proof is left in the supplementary material.

Lemma 2.1.

Let be the set of policies, , and be the implementation decision after the budget is exhausted. Then

where the expectation is taken over the prior distribution of .

By denoting as an implementation policy for selecting an alternative after the measurement budget is exhausted, then is a mapping from the history to an alternative . Then as a corollary of Lemma 2.1, we have [44]

In other words, the optimal decision at time is to go with our final set of beliefs. By the equivalence of using true probabilities and sample estimates when evaluating a policy as stated in Lemma 2.1, while we want to learn the unknown true value , we may write our objective function as

| (1) |

3 Background: Linear classification

Linear classification, especially logistic regression, is widely used in machine learning for binary classification [29]. Assume that the probability of success is a parameterized function $\sigma(w^T x)$ and further assume that observations are independent of each other. Given a training set $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{n}$ with $x_i$ a $d$-dimensional vector and $y_i \in \{-1, +1\}$, the likelihood function is $p(\mathcal{D} \mid w) = \prod_{i=1}^{n} \sigma(y_i \cdot w^T x_i)$. The weight vector $w$ is found by maximizing the likelihood or, equivalently, minimizing the negative log-likelihood:

$$\min_{w} \; -\sum_{i=1}^{n} \log \sigma(y_i \cdot w^T x_i).$$

In order to avoid over-fitting, especially when there are a large number of parameters to be learned, regularization is often used. The estimate of the weight vector is then given by:

| (2) |

It can be shown that this log-likelihood function is globally concave in $w$ for both logistic and probit regression. As a result, numerous optimization techniques are available for solving it, such as steepest ascent, Newton’s method and conjugate gradient ascent.

This logic is suitable for batch learning where we only need to conduct the minimization once to find the estimation of weight vector based on a given batch of training examples . Yet due to the sequential nature of our problem setting, observations come one by one as in online learning. After each new observation, if we retrain the linear classifier using all the previous data, we need to re-do the minimization, which is computationally inefficient. In this paper, we instead extend Bayesian linear classification to perform recursive updates with each observation.

A Bayesian approach to linear classification models requires a prior distribution for the weight parameters , and the ability to compute the conditional posterior given the observation. Specifically, suppose we begin with an arbitrary prior and apply Bayes’ theorem to calculate the posterior: where the normalization constant is the unknown evidence. An -regularized logistic regression can be interpreted as a Bayesian model with a Gaussian prior on the weights with standard deviation .

Unfortunately, exact Bayesian inference for linear classifiers is intractable since the evaluation of the posterior distribution comprises a product of sigmoid functions; in addition, the integral in the normalization constant is intractable as well, for both the logistic and the probit function. We can either use analytic approximations to the posterior, or solutions based on Monte Carlo sampling, foregoing a closed-form expression for the posterior. In this paper, we consider different analytic approximations to the posterior to make the computation tractable.

3.1 Online Bayesian probit regression based on assumed Gaussian density filtering

Assumed density filtering (ADF) is a general online learning schema for computing approximate posteriors in statistical models [5, 35, 40, 48]. In ADF, observations are processed one by one, updating the posterior which is then approximated and is used as the prior distribution for the next observation.

For a given Gaussian prior distribution on some latent parameter , and a likelihood , the posterior is generally non-Gaussian,

We find the best approximation by minimizing the Kullback-Leibler (KL) divergence between the true posterior and the Gaussian approximation. It is well known that when is Gaussian, the distribution that minimizes KL is the one whose first and second moments match that of . It can be shown that the Gaussian approximation found by moment matching is given as:

| (3) |

where the vector and the matrix are given by

and the normalization function is defined by

For the sake of analytic convenience, we only consider probit regression under assumed Gaussian density filtering. Specifically, the distribution of after observations is modeled as . The likelihood function for the next available data is . Thus we have,

Since the convolution of the normal CDF and a Gaussian distribution is another normal CDF, moment matching (3) results in an analytical solution to the Gaussian approximation:

| (4) | |||||

| (5) |

where

In this work, we focus on diagonal covariance matrices with as the diagonal element due to computational simplicity and its equivalence with regularization, resulting in the following update for the posterior parameters:

| (6) | |||||

| (7) |

where . See, for example, [22] and [10] for successful applications of this online probit regression model in prediction of click-through rates and stream-based active learning.
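The recursive update described above can be sketched as follows. This is a minimal illustration that uses the standard moment-matching formulas for a probit likelihood under a Gaussian prior, restricted to a diagonal covariance; it is not claimed to reproduce the notation of Eqs. (6) and (7) exactly. The function name `adf_probit_update` and the representation of the belief as a list of means `m` and a list of variances `var` are assumptions made for this example.

```python
import math

def _pdf(z: float) -> float:
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def _cdf(z: float) -> float:
    """Standard normal CDF."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

def adf_probit_update(m, var, x, y):
    """One assumed-density-filtering step for probit regression.

    m, var: means and variances of independent Gaussian beliefs on the weights.
    x: feature vector of the measured alternative; y: label in {-1, +1}.
    Returns the moment-matched (diagonal) posterior means and variances.
    Note: _pdf/_cdf ratios lose accuracy for very negative z (assumed moderate here).
    """
    s2 = 1.0 + sum(xi * xi * vi for xi, vi in zip(x, var))  # 1 + x^T Sigma x
    s = math.sqrt(s2)
    z = y * sum(mi * xi for mi, xi in zip(m, x)) / s
    v = _pdf(z) / _cdf(z)   # correction to the mean
    w = v * (v + z)         # correction to the variance, lies in (0, 1)
    m_new = [mi + y * xi * vi * v / s for mi, xi, vi in zip(m, x, var)]
    var_new = [vi - vi * vi * xi * xi * w / s2 for xi, vi in zip(x, var)]
    return m_new, var_new

if __name__ == "__main__":
    print(adf_probit_update([0.0, 0.0], [1.0, 1.0], [1.0, 0.0], +1))
```

Observing a success at `x = [1, 0]` raises the mean of the first weight and shrinks its variance, while the second weight, whose feature is zero, is left untouched.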

Due to the popularity of logistic regression and the computational limitations of ADF (on general link functions other than probit function), we develop an online Bayesian linear classification procedure for general link functions to recursively predict the response of each alternative in the next section.

4 Online Bayesian Linear Classification based on Laplace approximation

In this section, we consider the Laplace approximation to the posterior and develop an online Bayesian linear classification schema for general link functions.

4.1 Laplace approximation

Laplace’s method aims to find a Gaussian approximation to a probability density defined over a set of continuous variables. It can be obtained by finding the mode of the posterior distribution and then fitting a Gaussian distribution centered at that mode [4]. Specifically, define the logarithm of the unnormalized posterior distribution as

$$\Psi(w) = \log p(\mathcal{D} \mid w) + \log p(w).$$

Since the logarithm of a Gaussian distribution is a quadratic function, we consider a second-order Taylor expansion of $\Psi$ around its MAP (maximum a posteriori) solution $\hat{w} = \arg\max_{w} \Psi(w)$:

$$\Psi(w) \approx \Psi(\hat{w}) - \frac{1}{2} (w - \hat{w})^T H (w - \hat{w}), \tag{8}$$

where $H$ is the Hessian of the negative log posterior evaluated at $\hat{w}$:

$$H = -\nabla^2 \Psi(w) \big|_{w = \hat{w}}.$$

By exponentiating both sides of Eq. (8), we can see that the Laplace approximation results in a normal approximation to the posterior

$$p(w \mid \mathcal{D}) \approx \mathcal{N}(w \mid \hat{w}, H^{-1}). \tag{9}$$

For multivariate Gaussian priors ,

| (10) |

and the Hessian evaluated at is given for both logistic and normal CDF link functions as:

| (11) |

where and .

4.2 Online Bayesian linear classification based on Laplace approximation

Starting from a Gaussian prior , after the first observations, the Laplace approximated posterior distribution is according to (9). We formally define the state space to be the cross-product of and the space of positive semidefinite matrices. At each time , our state of knowledge is thus . Observations come one by one due to the sequential nature of our problem setting. After each new observation, if we retrain the Bayesian classifier using all the previous data, we need to calculate the MAP solution of (10) with to update from to . It is computationally inefficient even with a diagonal covariance matrix. It is better to extend the Bayesian linear classifier to handle recursive updates with each observation.

Here, we propose a fast and stable online algorithm for model updates with independent normal priors (with , where is the identity matrix), which is equivalent to regularization and which also offers greater computational efficiency [58]. At each time step , the Laplace approximated posterior serves as a prior to update the model when the next observation is made. In this recursive way of model updating, previously measured data need not be stored or used for retraining the model. By setting the batch size in Eq. (10) and (11), we have the sequential Bayesian linear model for classification as in Algorithm 1, where .

It is generally assumed that is concave to ensure a unique solution of Eq. (10). It is satisfied by commonly used sigmoid functions for classification problems, including logistic function, probit function, complementary log-log function and log-log function .

We can tap a wide range of convex optimization algorithms including gradient search, conjugate gradient, and BFGS method [60]. But if we set and in Eq. (10), a stable and efficient algorithm for solving

| (12) |

can be obtained as follows. First, taking derivatives with respect to and setting to zero, we have

Defining as

we then have Plugging this back into the definition of to eliminate ’s, we get the equation for :

Since is concave, by its derivative we know the function is monotonically decreasing, and thus the right hand side of the equation decreases as goes from to . We notice that the right hand side is positive when and the left hand side is larger than the right hand side when . Hence the equation has a unique solution in interval . A simple one dimensional bisection method is sufficient to efficiently find the root and thus the solution to the -dimensional optimization problem (12).

We illustrate and validate this schema using logistic and probit functions. For logistic function , by setting for all and then by denoting as , we have

resulting in the following equation for :

It is easy to see that the left hand side decreases from infinity to 1 and the right hand side increases from 1 when goes from 0 to 1, therefore the solution exists and is unique in .
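The one-dimensional root-finding step for the logistic case can be sketched as below. The sketch assumes independent normal beliefs with means `m` and precisions `q` (inverse variances), a single observation with label `y` in {-1, +1}, and the scalar equation 1/p = 1 + exp(y m^T x + p Σ_i x_i²/q_i), which matches the monotonicity argument in the text. The names `one_minus_sigma` and `laplace_logistic_update` are hypothetical, and the precision update adds the curvature of the negative log-likelihood at the new mode.

```python
import math

def one_minus_sigma(a: float) -> float:
    """1 - sigma(a) = 1 / (1 + exp(a)) for the logistic sigma, overflow-safe."""
    if a > 0:
        e = math.exp(-a)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(a))

def laplace_logistic_update(m, q, x, y, iterations=60):
    """One recursive Laplace update of beliefs N(m_i, 1/q_i) for logistic regression.

    Solves the d-dimensional MAP problem through bisection on a scalar p in (0, 1).
    """
    a0 = y * sum(mi * xi for mi, xi in zip(m, x))   # y * m^T x
    c = sum(xi * xi / qi for xi, qi in zip(x, q))   # sum_i x_i^2 / q_i
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        # p - 1/(1 + exp(a0 + c p)) is increasing in p, so bisection applies.
        if mid > one_minus_sigma(a0 + c * mid):
            hi = mid
        else:
            lo = mid
    p = 0.5 * (lo + hi)
    m_new = [mi + y * p * xi / qi for mi, xi, qi in zip(m, x, q)]
    # Curvature of -log sigma at the mode is sigma * (1 - sigma) = p * (1 - p).
    q_new = [qi + p * (1.0 - p) * xi * xi for qi, xi in zip(q, x)]
    return m_new, q_new

if __name__ == "__main__":
    print(laplace_logistic_update([0.5, -1.0], [2.0, 4.0], [1.0, 2.0], -1))
```

Sixty halvings shrink the bracket well below double precision, so no tolerance parameter is needed; each update costs time linear in the dimension and requires no stored history.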

For normal CDF link function , the computation is a little lengthier. First use to denote the standard normal distribution , we have

Let and for all , and thus we have . Plugging these into the definition of we have

| (13) |

Define the right-hand side of the above equation as . The next lemma shows that and thus the right-hand side of Eq. (13) is non-increasing. Together with the fact that the left-hand side increases from 0 to 1, the bisection method can also be used based on Eq. (13). The proof can be found in Appendix 9.

Lemma 4.1.

Define

We have for every .

5 Knowledge Gradient Policy for Bayesian Linear Classification Belief Model

We begin with a brief review of the knowledge gradient (KG) for ranking and selection problems, where each of the alternatives can be measured sequentially to estimate its unknown underlying expected performance . The goal is to adaptively allocate alternatives to measure so as to find an implementation decision that has the largest mean after the budget is exhausted. In a Bayesian setting, the performance of each alternative is represented by a (non-parametric) lookup table model of Gaussian distribution. Specifically, by imposing a Gaussian prior , the posterior after the first observations is denoted by . At the th iteration, the knowledge gradient policy chooses its ()th measurement to maximize the single-period expected increase in value [16]:

It enjoys nice properties, including myopic and asymptotic optimality. KG has been extended to various belief models (e.g. [41, 43, 47, 57]). The knowledge gradient can be extended to online problems where we need to maximize cumulative rewards [47],

where reflects a planning horizon.

Yet there is no KG variant designed for binary classification with parametric models, primarily because of the computational intractability of dealing with nonlinear belief models. In what follows, we first formulate our learning problem as a Markov decision process and then extend the KG policy for stochastic binary outcomes where, for example, each choice (say, a medical decision) influences the success or failure of a medical outcome.

5.1 Markov decision process formulation

Our learning problem is a dynamic program that can be formulated as a Markov decision process. Define the state space as the space of all possible predictive distributions for . By Bayes’ Theorem, the transition function : is:

| (14) |

If we start from a Gaussian prior , after the first observed data, the approximated posterior distribution is . The state space is the cross-product of and the space of positive semidefinite matrices. The transition function for updating the belief state depends on the belief model and the approximation strategy. For example, for different update equations in Algorithm 1 and (6)(7) under different approximation methods, the transition function can be defined as follows with degenerate state space :

Definition 5.1.

The transition function based on online Bayesian classification with Laplace approximation : is defined as

where for either logistic or probit functions, is a column vector containing the diagonal elements of and is understood as a column vector containing , so that . denotes the unobserved binary random variable at time .

Definition 5.2.

The transition function based on assumed density filtering : is defined as

where , , and is understood as the column vector containing , so that .

In a dynamic program, the value function is defined as the value of the optimal policy given a particular state at time , and may also be determined recursively through Bellman’s equation. In the case of stochastic binary feedback, the terminal value function is given by (1) as

The dynamic programming principle tells us that the value function at any other time , , is given recursively by

Since the curse of dimensionality on the state space makes direct computation of the value function intractable, computationally efficient approximate policies need to be considered. A computationally attractive policy for ranking and selection problems is known as the knowledge gradient (KG) [16], which will be extended to handle Bayesian classification models in the next section.

5.2 Knowledge Gradient for Binary Responses

The knowledge gradient of measuring an alternative can be defined as follows:

Definition 5.3.

The knowledge gradient of measuring an alternative while in state is

| (15) |

is deterministic given and is independent of alternatives . Since the label for alternative is not known at the time of selection, the expectation is computed conditional on the current belief state . Specifically, given a state , the outcome of an alternative is a random variable that follows from a Bernoulli distribution with a predictive distribution

| (16) |

We can calculate the expected value in the next state as

The knowledge gradient policy suggests at each time selecting the alternative that maximizes where ties are broken randomly. Because of the errors incurred by approximation and numerical calculation, the tie should be understood as within -accuracy. The knowledge gradient policy can work with any choice of link function and approximation procedures by adjusting the transition function accordingly. That is, or .

The predictive distribution is obtained by marginalizing with respect to the distribution specified by current belief state . Denoting and as the Dirac delta function, we have Hence

where Since the delta function imposes a linear constraint on and is Gaussian, the marginal distribution is also Gaussian. We can evaluate by calculating the mean and variance of this distribution [4]. We have

Thus

For probit function , the convolution of a Gaussian and a normal CDF can be evaluated analytically. Thus for probit regression, the predictive distribution can be solved exactly as Yet, the convolution of a Gaussian with a logistic sigmoid function cannot be evaluated analytically. We apply the approximation with (see [3, 51]), leading to the following approximation for the convolution of a logistic sigmoid with a Gaussian

where .
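The two predictive formulas can be illustrated with a short sketch. It assumes independent Gaussian beliefs with means `m` and precisions `q`, so that the marginal of the linear score has mean m^T x and variance Σ_i x_i²/q_i; the probit case uses the exact Gaussian/normal-CDF convolution, and the logistic case uses the scaling factor κ(σ²) = (1 + πσ²/8)^(-1/2). The function names are hypothetical.

```python
import math

def _dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def _logistic(a: float) -> float:
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def _normal_cdf(a: float) -> float:
    return 0.5 * math.erfc(-a / math.sqrt(2.0))

def predictive_probit(m, q, x):
    """Exact predictive probability of success under a probit link."""
    mu = _dot(m, x)
    var = sum(xi * xi / qi for xi, qi in zip(x, q))
    return _normal_cdf(mu / math.sqrt(1.0 + var))

def predictive_logistic(m, q, x):
    """Approximate predictive probability of success under a logistic link."""
    mu = _dot(m, x)
    var = sum(xi * xi / qi for xi, qi in zip(x, q))
    kappa = 1.0 / math.sqrt(1.0 + math.pi * var / 8.0)
    return _logistic(kappa * mu)

if __name__ == "__main__":
    # Same mean score, different uncertainty: more variance pulls toward 1/2.
    print(predictive_logistic([2.0], [1e6], [1.0]))
    print(predictive_logistic([2.0], [0.1], [1.0]))
```

In both cases a larger posterior variance moves the predicted probability toward 1/2, which is how uncertainty about the weights enters the prediction.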

Because of the one-step look ahead, the KG calculation can also benefit from the online recursive update of the belief either from ADF or from online Bayesian linear classification based on Laplace approximation. We summarize the decision rules of the knowledge gradient policy at each iteration under different sigmoid functions and different approximation methods in Algorithm 2, 3, and 4, respectively.
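To make the one-step look-ahead concrete, the sketch below computes knowledge gradient values for a logistic belief model under stated assumptions: independent Gaussian beliefs with means `m` and precisions `q`, the recursive Laplace update solved by bisection, and the approximate logistic predictive distribution. It is an illustrative sketch rather than a transcription of Algorithms 2 to 4, and all function names are hypothetical.

```python
import math

def _sig(a: float) -> float:
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)

def predict(m, q, x):
    """Approximate p(y = +1 | x) under beliefs N(m_i, 1/q_i), logistic link."""
    mu = sum(mi * xi for mi, xi in zip(m, x))
    var = sum(xi * xi / qi for xi, qi in zip(x, q))
    return _sig(mu / math.sqrt(1.0 + math.pi * var / 8.0))

def update(m, q, x, y, iterations=60):
    """Recursive Laplace update for one observation (x, y), y in {-1, +1}."""
    a0 = y * sum(mi * xi for mi, xi in zip(m, x))
    c = sum(xi * xi / qi for xi, qi in zip(x, q))
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if mid > _sig(-(a0 + c * mid)):   # 1 - sigma(a) = sigma(-a)
            hi = mid
        else:
            lo = mid
    p = 0.5 * (lo + hi)
    m_new = [mi + y * p * xi / qi for mi, xi, qi in zip(m, x, q)]
    q_new = [qi + p * (1.0 - p) * xi * xi for qi, xi in zip(q, x)]
    return m_new, q_new

def knowledge_gradient(m, q, alternatives):
    """KG value of each alternative: expected gain in the best predicted
    success probability after one more (simulated) measurement."""
    best_now = max(predict(m, q, z) for z in alternatives)
    values = []
    for x in alternatives:
        p_plus = predict(m, q, x)
        expected_best = 0.0
        for y, prob in ((+1, p_plus), (-1, 1.0 - p_plus)):
            m2, q2 = update(m, q, x, y)
            expected_best += prob * max(predict(m2, q2, z) for z in alternatives)
        values.append(expected_best - best_now)
    return values

if __name__ == "__main__":
    alts = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
    kg = knowledge_gradient([0.2, -0.1], [1.0, 1.0], alts)
    print(kg, "-> measure alternative", max(range(len(alts)), key=kg.__getitem__))
```

In this toy example the all-zero alternative carries no information about the weights, so its KG value is zero, whereas measuring the first alternative has strictly positive value. Because both the update and the predictive distribution are approximations here, small negative values can in principle occur, which is one reason ties are treated up to a numerical tolerance.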

We next present the following finite-time bound on the mean squared error (MSE) of the estimated weight for Bayesian logistic regression. The proof is in the supplement. Since the learner plays an active role in selecting the measurements, the bound does not make the i.i.d. assumption on the examples, which differs from the PAC learning bound. Without loss of generality, we assume , . In our finite-time analysis, we would like to highlight the difference between the ideal n-step estimate with the prior distribution , and its approximation . The approximation error may come from the Laplace approximation we adopt when an explicit form is not available, or from accumulation of numerical error.

Theorem 5.4.

Let be the measurements produced by the KG policy. Then with probability , the expected error of is bounded as

where the distribution is the vector Bernoulli distribution , is the probability that a d-dimensional standard normal random variable falls in the ball with radius and The same finite-time bound holds for as long as the approximation satisfies

| (17) |

In the special case where , we have and . The bound holds with higher probability with larger . This is natural since a larger represents a normal distribution with narrower bandwidth, resulting in a more concentrated around .

Condition (17) quantifies how well the approximation should be carried out in order for the bound to hold. It places a condition on the optimality of the log-likelihood value instead of the distance to the global maximizer. This is a friendly condition since the dependence of the maximizer on the objective function is generally not continuous. The approximation error could come from either the Laplace approximation or the numerical calculation in practice, which calls for experiments to further verify the performance of the algorithm. Indeed, we conduct extensive experiments in Section 6 to demonstrate the behavior of the proposed algorithm together with benchmark methods.

If the goal of the learner is to maximize the cumulative successes as in bandit settings, rather than finding the final recommendation after the offline training phases, the online knowledge gradient policy can be modified by deleting at each time step as:

| (18) |

where reflects a planning horizon.

5.3 Behavior and Asymptotic Optimality

In this section, we study theoretically the behavior of the KG policy, especially in the limit as the number of measurements grows large. For the purposes of the theoretical analysis, we do not approximate the predictive posterior distribution. We use logistic function as the sigmoid link function throughout this section. Yet the theoretical results can be generalized to other link functions. We begin by showing the positive value of information (benefits of measurement).

Proposition 5.5 (Benefits of measurement).

The knowledge gradient of measuring any alternative while in any state is nonnegative, The state space is the space of all possible predictive distributions for .

The next lemma shows that the asymptotic probability of success of each alternative is well defined.

Lemma 5.6.

For any alternative , converges almost surely to a random variable , where is short hand notation for

Proof.

Since , the definition of implies that is a bounded martingale and hence converges. ∎

The rest of this section shows that this limiting probability of success of each alternative is one in which the posterior is consistent and thus the KG is asymptotically optimal. We also show that as the number of measurements grows large, the maximum likelihood estimator based on the alternatives measured by the KG policy is consistent and asymptotically normal. The next proposition states that if we have measured an alternative infinitely many times, there is no benefit to measuring it one more time. This is a key step for establishing the consistency of the KG policy and the MLE. The proof is similar to that by [15] with additional mathematical steps for Bernoulli distributed random variables. See Appendix 11.2 for details.

Proposition 5.7.

If the policy measures alternative infinitely often almost surely, then the value of information of that alternative almost surely under policy .

Without loss of generality, we assume and that the matrix formed by is invertible. The next theorem states the strong consistency and asymptotic normality of the maximum likelihood estimator (e.g. with ) based on KG’s sequential selection of alternatives by verification of the following regularity conditions:

- (C1) The exogenous variables are uniformly bounded.

- (C2) Let and be respectively the smallest and the largest eigenvalue of the Fisher information of the first observations . There exists such that , .

- (C3) The smallest eigenvalue of the Fisher information is divergent.

Theorem 5.8.

The sequence of based on KG’s sequential selection of alternatives converges almost surely to the true value and is asymptotically normal:

Proof.

We first prove that for any alternative , it will be measured infinitely many times almost surely. We will prove it by contradiction. If this is not the case, then there exists a time such that for any ,

| (19) |

This is because otherwise the difference between the KG value of and the maximum KG value will be smaller than for infinitely many times, and thus the probability of not measuring after will be 0.

In addition, since the KG value is always non-negative, we have for each . Since is a finite set, it follows that there exists an alternative such that the following happens infinitely many times:

| (20) |

Therefore, is measured infinitely many times almost surely. However, we have proved in Proposition 5.7 that goes to zero as long as we measure infinitely many times, which contradicts (20). The contradiction shows that our original assumption that is measured only finitely many times is incorrect. As a consequence, we have proved that, almost surely, will be measured infinitely many times.

Since our proof is for arbitrary , we actually proved that every alternative will be measured infinitely many times, which immediately leads to . Therefore the algorithm will eventually pick the alternative uniformly at random. Hence it satisfies the conditions (C2) and (C3), leading to the strong consistency and asymptotic normality [21, 13, 25, 11].

In particular, we can prove that the smallest eigenvalue of the Fisher’s information goes to infinity in a simple way. Without loss of generality, assume that the alternatives are in general linear position, which means that the matrix is invertible. We use to denote the set of these alternatives and denote by . Then is a matrix whose every element equals 0 except that its -th diagonal element equals one.

We use to denote the Fisher’s information at time . Then from the fact that the matrix of type is positive semi-definite, it is straightforward to see that

where the constant since is a finite set. Now define matrix as

which is a diagonal matrix whose -th element equals the number of times that is measured. Since is measured infinitely many times for , each diagonal element of goes to infinity. Since is congruent to and is a constant matrix, it follows that the smallest eigenvalue of , and hence the smallest eigenvalue of , goes to infinity.

Similarly, by using the congruence argument, we only need to show that almost surely for any , there exists a constant such that , where is the number of times that is measured in the first measurements. Since the algorithm eventually selects alternatives uniformly at random, without loss of generality, we assume that is the sum of i.i.d. Bernoulli random variables with probability . It is then easy to check that , and . Let be the event that . Notice that implies that there exists a sequence such that . However, by Chebyshev’s inequality, we have

Notice that the above inequality holds for any , which means and thus almost surely, which completes the proof. ∎
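The eigenvalue behavior used in conditions (C2) and (C3) can be illustrated numerically. The following sketch assumes the standard Fisher information of a logistic model, the sum over measurements of s(1 - s) x xᵀ with s the success probability, and a hypothetical set of six alternatives that spans the space and is sampled uniformly at random. The true weight vector `w_true` and all sizes are illustrative choices.

```python
import numpy as np

def fisher_information(X_measured, w):
    """Fisher information of a logistic model: sum_i s_i (1 - s_i) x_i x_i^T."""
    s = 1.0 / (1.0 + np.exp(-(X_measured @ w)))
    weights = s * (1.0 - s)
    return (X_measured * weights[:, None]).T @ X_measured

rng = np.random.default_rng(1)
d = 3
# Hypothetical alternatives; the identity rows guarantee they span R^d.
alternatives = np.vstack([np.eye(d), rng.uniform(-1.0, 1.0, size=(3, d))])
w_true = np.array([0.5, -1.0, 0.25])

# One long sequence of uniformly random selections; prefixes give nested samples.
idx = rng.integers(len(alternatives), size=20000)

min_eigs, ratios = [], []
for n in (200, 2000, 20000):
    info = fisher_information(alternatives[idx[:n]], w_true)
    eigs = np.linalg.eigvalsh(info)      # ascending eigenvalues
    min_eigs.append(eigs[0])
    ratios.append(eigs[-1] / eigs[0])
    print(n, eigs[0], eigs[-1] / eigs[0])
```

In this setting the smallest eigenvalue grows with the number of measurements, as in (C3), while the ratio of the largest to the smallest eigenvalue can be inspected for boundedness, as in (C2).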

After establishing the consistency and asymptotic normality for , for any as the inverse of the variance of the prior Gaussian distribution, the asymptotic bias of the estimator is as follows [36]:

and the asymptotic variance of is

Finally, we show in the next theorem that given the opportunity to measure infinitely often, for any given neighborhood of , the probability that the posterior distribution lies in this neighborhood goes to 1 and KG will discover which one is the true best alternative. The detailed proof can be found in Appendix 11.3.

Theorem 5.9 (Asymptotic optimality).

For any true parameter value and any normal prior distribution with positive definite covariance matrix, under the knowledge gradient policy, the posterior is consistent and the KG policy is asymptotically optimal: .

5.4 Knowledge Gradient for contextual bandits

In many applications, the “success” or “failure” can be predicted through an unknown relationship that depends on a partially controllable vector of attributes for each instance. For example, patient attributes are given and are beyond our control. We can only choose a medical decision, and the outcome is based on both the patient attributes and the selected medical decision.

This problem is known as a contextual bandit [34, 37, 33]. At each round , the learner is presented with a context vector (patient attributes) and a set of actions (medical decisions). After choosing an alternative , we observe an outcome . The goal is to find a policy that selects actions such that the cumulative reward is as large as possible over time. In contrast, the previous section considers a context-free scenario with the goal of maximizing the probability of success after the offline training phase so that the error incurred during the training is not punished.

Since there are very few patients with the same characteristics, it is unlikely that we can work with one type of patients that have the same characteristics to produce statistically reliable performance measurements on medical outcomes. The contextual bandit formulation provides the potential to use parametric belief models that allow us to learn relationships across a wide range of patients and medical decisions and personalize health care based on the patient attributes.

Each of the past observations is a triplet . Under Bayesian linear classification belief models, the binary outcome is predicted through ,

| (21) |

where we suppose that each context and each action are represented by feature vectors and , respectively. At each round , the model updates can be slightly modified based on the observation triplet by treating as the alternative , where denotes the concatenation of the two vectors and .
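The concatenation step can be sketched as follows. This is a minimal illustration assuming a logistic link; the helper names `joint_feature` and `success_probability`, the feature dimensions and the numeric values are hypothetical.

```python
import numpy as np

def joint_feature(context, action):
    """Treat the concatenated context and action features as one alternative."""
    return np.concatenate([context, action])

def success_probability(context, action, w):
    """Predictive success probability under a logistic link (assumed here)."""
    z = joint_feature(context, action) @ w
    return 1.0 / (1.0 + np.exp(-z))

# Illustrative values: 3 context features, 2 action features.
context = np.array([0.2, -1.0, 0.5])
actions = [np.array([1.0, 0.0]), np.array([0.0, 1.0])]
w = np.array([0.3, 0.1, -0.4, 0.8, -0.2])

probs = [success_probability(context, a, w) for a in actions]
print(probs)
```

Because the weight vector covers both blocks of features, a single belief model is shared across all contexts and actions, which is what allows learning across patients with different attributes.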

The knowledge gradient of measuring an action given context can be defined as follows:

Definition 5.10.

The knowledge gradient of measuring an action given a context while in state is

| (22) |

The calculation of can be adapted from Section 5.2 by replacing with throughout.

Since the objective in the contextual bandit problems is to maximize the cumulative number of successes, the knowledge gradient policy developed in Section 5.2 for stochastic binary feedback can be easily extended to online learning following the “stop-learning” (SL) policy adopted by [47]. The action that is chosen by KG at time given a context and a knowledge state can be obtained as:

where reflects a planning horizon.

It should be noted that if the contexts are constant over time, degenerates to the case of context-free multi-armed bandit problems as discussed in Eq. (18).

6 Experimental results

In this section, we evaluate the proposed method in offline learning settings where we are not punished for errors incurred during training and are concerned only with the final recommendation after the offline training phases.

We experiment with both synthetic datasets and the UCI machine learning repository [38] which includes classification problems drawn from settings including sonar, glass identification, blood transfusion, survival, breast cancer (wpbc), planning relax and climate model failure. We first analyze the behavior of the KG policy and then compare it to state-of-the-art learning algorithms. On synthetic datasets, we randomly generate a set of -dimensional alternatives from . At each run, the stochastic binary labels are simulated using a -dimensional weight vector which is sampled from the prior distribution . The label for each alternative is generated with probability . For each UCI dataset, we use all the data points as the set of alternatives with their original attributes. We then simulate their labels using a weight vector . This weight vector could have been chosen arbitrarily, but it was in fact a perturbed version of the weight vector trained through logistic regression on the original dataset.
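The synthetic setup described above can be sketched in a few lines. The sampling range of the alternatives and the prior over the weight vector are not recoverable from the text here, so the sketch assumes a uniform box and a standard normal prior purely for illustration; the function names are hypothetical, and labels are encoded as +1 and -1.

```python
import numpy as np

def make_synthetic_problem(num_alternatives, dim, rng):
    """Generate alternatives and a true weight vector (assumed distributions)."""
    X = rng.uniform(-3.0, 3.0, size=(num_alternatives, dim))  # assumed range
    w_star = rng.normal(0.0, 1.0, size=dim)                   # assumed N(0, I) prior
    p_true = 1.0 / (1.0 + np.exp(-(X @ w_star)))              # logistic success probability
    return X, w_star, p_true

def draw_labels(p_true, rng):
    """Draw one stochastic binary label (+1 or -1) per alternative."""
    return np.where(rng.random(p_true.shape) < p_true, 1, -1)

rng = np.random.default_rng(0)
X, w_star, p_true = make_synthetic_problem(200, 2, rng)
labels = draw_labels(p_true, rng)
print(X.shape, labels[:10])
```

For a UCI dataset, the same `draw_labels` step would apply with `X` taken from the original attributes and the weight vector taken from a perturbed logistic regression fit, as described in the text.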

6.1 Behavior of the KG policy


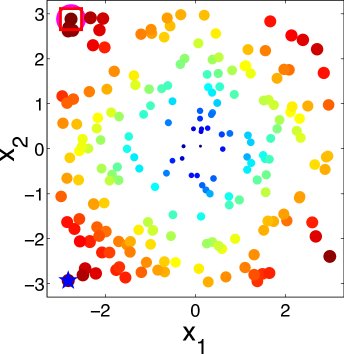

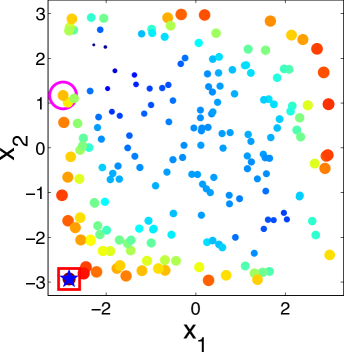

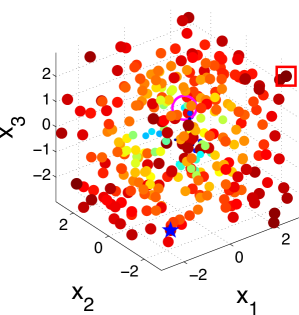

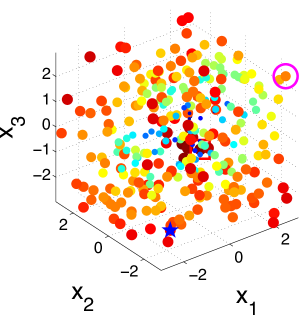

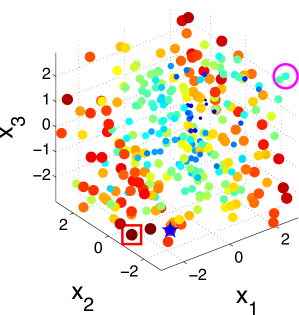

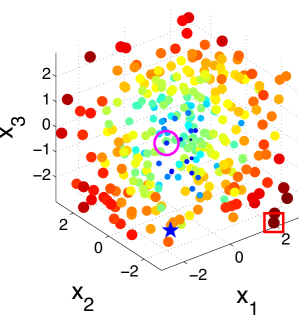

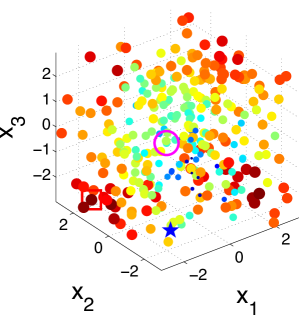

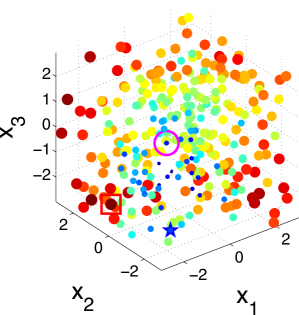

To better understand the behavior of the KG policy, we show snapshots of the KG policy at each iteration on a 2-dimensional and a 3-dimensional synthetic dataset in one run. Fig. 1 shows the snapshots on the 2-dimensional dataset with 200 alternatives. The scatter plots show the KG values, with both the color and the size of each point reflecting the KG value of the corresponding alternative. The star denotes the true alternative with the largest response. The red square is the alternative with the largest KG value. The pink circle is the implementation decision that maximizes the response under the current estimate of if the budget is exhausted after that iteration.

It can be seen from the figure that the KG policy finds the true best alternative after only three measurements, reaching out to different alternatives to improve its estimates. We can infer from Fig. 1 that the KG policy tends to choose alternatives near the boundary of the region. This criterion is natural since, in order to find the true maximum, we need to get enough information about and estimate well the probability of points near the true maximum, which appears near the boundary. On the other hand, in a logistic model with labeling noise, a data point with small inherently brings little information, as pointed out by [61]. For an extreme example, when the label is always completely random for any since . This is an issue when perfect classification is not achievable. So it is essential to label a data point with larger that has the most potential to enhance its confidence non-randomly.


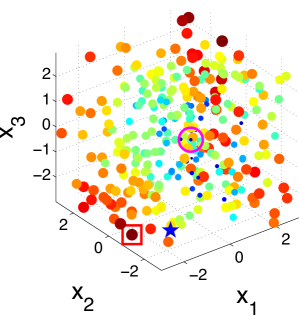

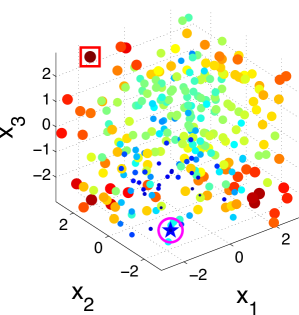

Fig. 2 illustrates the snapshots of the KG policy on a 3-dimensional synthetic dataset with 300 alternatives. It can be seen that the KG policy finds the true best alternative after only 10 measurements. This set of plots also verifies our statement that the KG policy tends to choose data points near the boundary of the region.

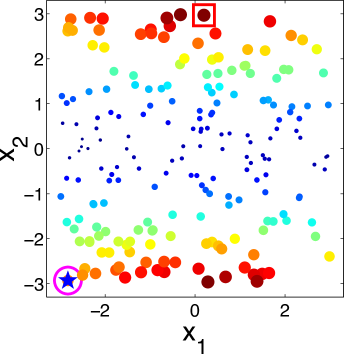

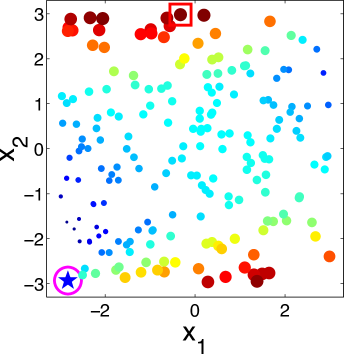

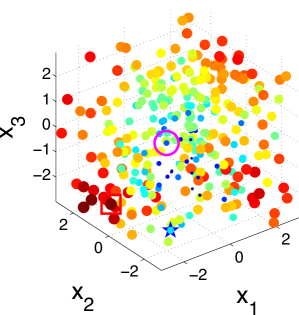

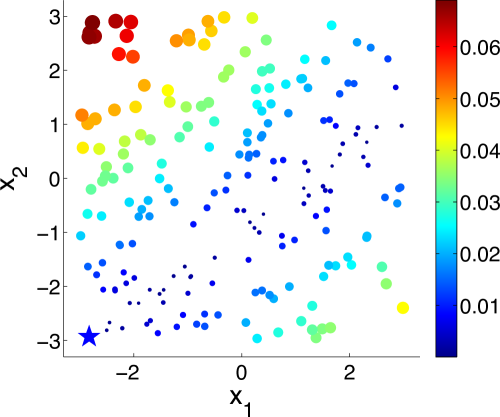

Also depicted in Fig. 3 is the absolute class distribution error of each alternative, which is the absolute difference between the predictive probability of class under current estimate and the true probability after iterations for the 2-dimensional dataset and 10 iterations for the 3-dimensional dataset. We see that the probability at the true maximum is well approximated, while moderate error in the estimate is located away from this region of interest.

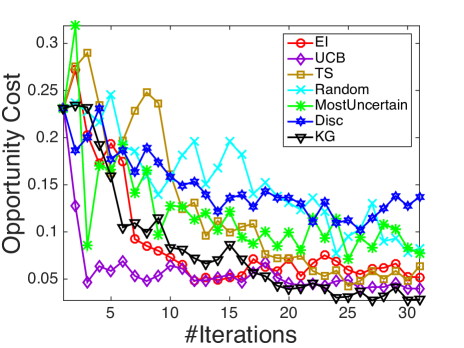

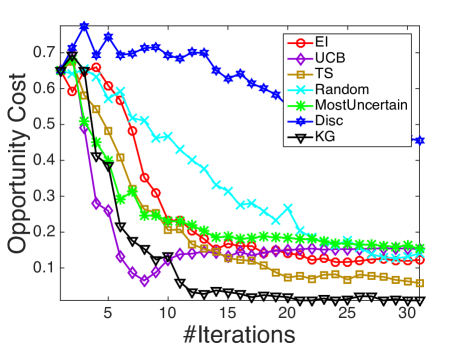

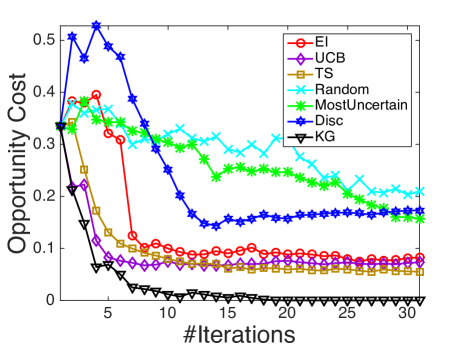

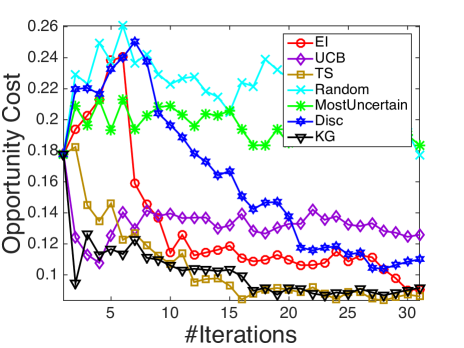

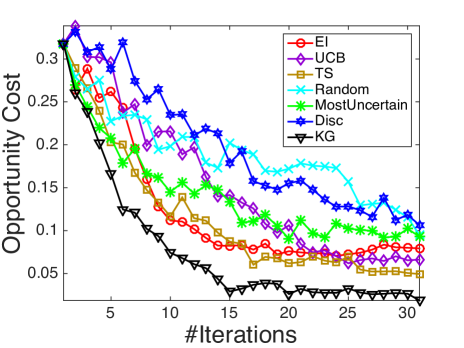

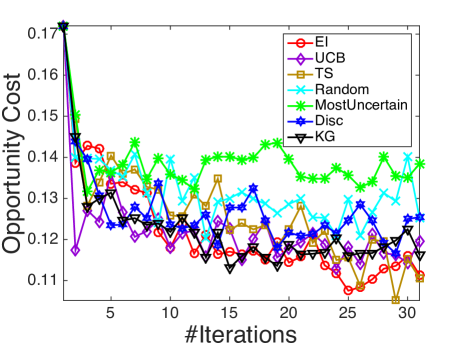

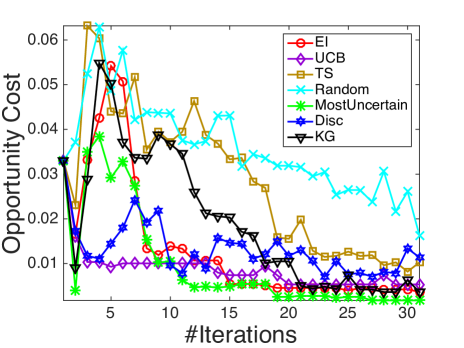

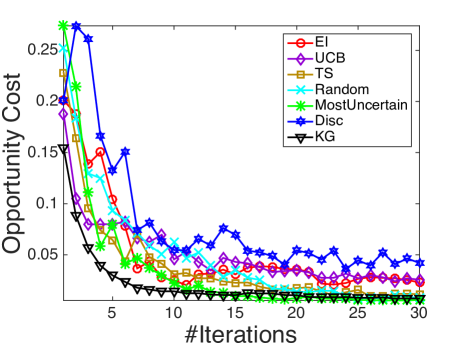

6.2 Comparison with other policies

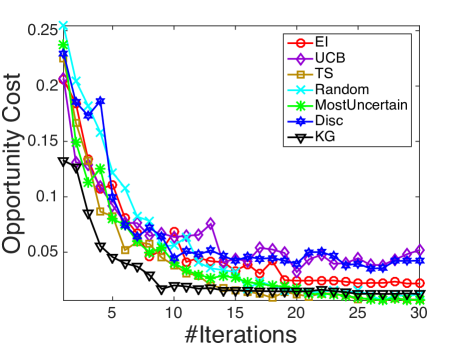

Recall that our goal is to maximize the expected response of the implementation decision. We define the Opportunity Cost (OC) metric as the expected response of the implementation decision compared to the true maximal response under weight :

Note that the opportunity cost is always non-negative and the smaller the better. To make a fair comparison, on each run, all the time- labels of all the alternatives are randomly pre-generated according to the weight vector and shared across all the competing policies. We allow each algorithm to sequentially measure N = 30 alternatives.
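The opportunity cost can be computed as in the following sketch, which assumes a logistic response and takes the implementation decision to be the alternative with the largest estimated success probability. The function name and the toy inputs are hypothetical.

```python
import numpy as np

def opportunity_cost(X, w_true, p_estimated):
    """True best response minus the true response of the implementation decision."""
    p_true = 1.0 / (1.0 + np.exp(-(X @ w_true)))
    chosen = int(np.argmax(p_estimated))   # implementation decision under the estimate
    return float(p_true.max() - p_true[chosen])

# Toy example with three alternatives (illustrative values only).
X = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])
w_true = np.array([1.0, 2.0])

p_true = 1.0 / (1.0 + np.exp(-(X @ w_true)))
oc_perfect = opportunity_cost(X, w_true, p_true)             # estimate equals the truth
oc_wrong = opportunity_cost(X, w_true, np.array([0.9, 0.5, 0.1]))
print(oc_perfect, oc_wrong)
```

The cost is zero exactly when the estimate ranks a truly best alternative first, regardless of how accurate the estimated probabilities are elsewhere.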

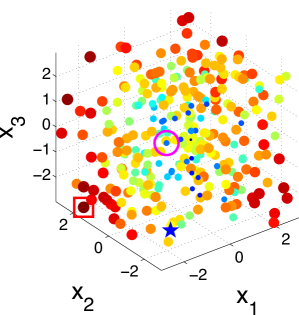

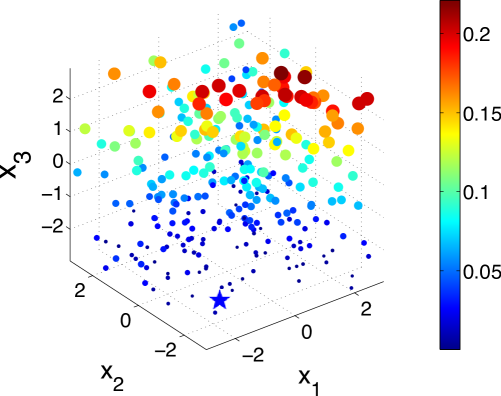

We compare with the following state-of-the-art active learning and Bayesian optimization policies that are compatible with logistic regression: random sampling (Random), a myopic method that selects the most uncertain instance at each step (MostUncertain), discriminative batch-mode active learning (Disc) [23] with batch size equal to 1, expected improvement (EI) [53] with an initial fit of 5 examples, and Thompson sampling (TS) [7]. In addition, as upper confidence bound (UCB) methods are often considered in bandit and optimization problems, we compare against UCB on the latent function (UCB) [37] with tuned to be 1. All the state transitions are based on the online Bayesian logistic regression framework developed in Section 4, while different policies provide different rules for measurement decisions at each iteration. The experimental results are shown in Fig. 4. In all the figures, the x-axis denotes the number of measured alternatives and the y-axis represents the opportunity cost averaged over 100 runs.
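To make the difference between measurement rules concrete, the sketch below gives simple versions of three of the baselines under a Gaussian belief on the weight vector with mean `mean` and covariance `cov`. These are common textbook forms and are assumptions rather than the exact implementations used in the experiments: MostUncertain is taken to pick the predictive probability closest to 0.5, Thompson sampling draws one weight vector from the belief, and UCB adds `alpha` standard deviations to the latent mean.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def most_uncertain(X, mean):
    """Pick the alternative whose predicted success probability is closest to 0.5."""
    p = sigmoid(X @ mean)
    return int(np.argmin(np.abs(p - 0.5)))

def thompson_sampling(X, mean, cov, rng):
    """Sample a weight vector from the belief and act greedily on the sample."""
    w = rng.multivariate_normal(mean, cov)
    return int(np.argmax(X @ w))

def ucb_latent(X, mean, cov, alpha=1.0):
    """Upper confidence bound on the latent linear function x^T w."""
    latent_mean = X @ mean
    latent_std = np.sqrt(np.einsum("ij,jk,ik->i", X, cov, X))
    return int(np.argmax(latent_mean + alpha * latent_std))

# Illustrative belief state and alternatives.
X = np.array([[2.0, 0.0], [0.0, 0.1], [-1.0, 1.0]])
mean = np.array([1.0, 0.0])
cov = np.diag([0.01, 4.0])
rng = np.random.default_rng(0)

print(most_uncertain(X, mean),
      thompson_sampling(X, mean, cov, rng),
      ucb_latent(X, mean, cov, alpha=3.0))
```

All three rules share the same belief state and differ only in how they turn it into a measurement decision, which mirrors the experimental design described above.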

Fig. 4 shows that KG outperforms the other policies in most cases, especially in early iterations, without requiring a tuning parameter. As an unbiased selection procedure, random sampling is at least a consistent algorithm. Yet it is not suitable for expensive experiments where one needs to learn the most within a small experimental budget. MostUncertain and Disc perform quite well on some datasets but badly on others. A possible explanation is that the goal of active learning is to learn a classifier that accurately predicts the labels of new examples, so their criteria are not directly related to maximizing the probability of success aside from the intent to learn the prediction. After enough iterations, when active learning methods presumably have the ability to achieve a good estimator of , their performance will be enhanced. Thompson sampling works quite well in general, as reported in other literature [7]. Yet KG performs better, especially during the early iterations. When an experiment is expensive and only a small budget is allowed, the KG policy, which is designed specifically to maximize the response, is preferred.

We also note that KG works better than EI in most cases, especially in Fig. 4(b), 4(c) and 4(e). Although both KG and EI work with the expected value of information, when EI decides which alternative to measure, it is based on the expected improvement over current predictive posterior distribution while ignoring the potential change of the posterior distribution resulting from the next (stochastic) outcome . In comparison, KG considers an additional level of expectation over the random (since at the time of decision, we have not yet observed outcome) binary output .

Finally, KG, EI and Thompson sampling outperform the naive use of UCB policies on the latent function due to the errors in the variance introduced by the nonlinear transformation. At each time step, the posterior of is approximated as a Gaussian distribution. An upper confidence bound on does not translate to one on with binary outcomes. In the meantime, KG, EI and Thompson sampling make decisions in the underlying binary outcome probability space and find the right balance of exploration and exploitation.

7 Conclusion

Motivated by real-world applications, we consider binary classification problems where we have to sequentially run expensive experiments, forcing us to learn the most from each experiment. With a small budget of measurements, the goal is to learn the classification model as quickly as possible to identify the alternative with the highest probability of success. Due to the sequential nature of this problem, we develop a fast online Bayesian linear classifier for general response functions to achieve recursive updates. We propose a knowledge gradient policy using Bayesian linear classification belief models, for which we use different analytical approximation methods to overcome computational challenges. We further extend the knowledge gradient to the contextual bandit setting. We provide a finite-time analysis of the estimated error, and show that the maximum likelihood estimator based on the points adaptively sampled by the KG policy is consistent and asymptotically normal. We show furthermore that the knowledge gradient policy is asymptotically optimal. We demonstrate its efficiency through a series of experiments.

References

- [1] Jean-Yves Audibert and Sébastien Bubeck. Best arm identification in multi-armed bandits. In COLT-23th Conference on Learning Theory-2010, pages 13–p, 2010.

- [2] P. Auer, N. Cesa-Bianchi, and P. Fischer. Finite-time analysis of the multiarmed bandit problem. Mach. Learn., 47(2-3):235–256, 2002.

- [3] David Barber and Christopher M Bishop. Ensemble learning for multi-layer networks. Advances in neural information processing systems, pages 395–401, 1998.

- [4] Christopher M Bishop et al. Pattern recognition and machine learning, volume 4. springer New York, 2006.

- [5] Xavier Boyen and Daphne Koller. Tractable inference for complex stochastic processes. In Proceedings of the Fourteenth conference on Uncertainty in artificial intelligence, pages 33–42. Morgan Kaufmann Publishers Inc., 1998.

- [6] Sébastien Bubeck and Nicolò Cesa-Bianchi. Regret analysis of stochastic and nonstochastic multi-armed bandit problems. Foundations and Trends in Machine Learning, 2012.

- [7] Olivier Chapelle and Lihong Li. An empirical evaluation of thompson sampling. In Advances in neural information processing systems, pages 2249–2257, 2011.

- [8] Stephen E Chick. New two-stage and sequential procedures for selecting the best simulated system. Operations Research, 49(5):732–743, 2001.

- [9] Wei Chu, Lihong Li, Lev Reyzin, and Robert E Schapire. Contextual bandits with linear payoff functions. In International Conference on Artificial Intelligence and Statistics, pages 208–214, 2011.

- [10] Wei Chu, Martin Zinkevich, Lihong Li, Achint Thomas, and Belle Tseng. Unbiased online active learning in data streams. In Proceedings of the 17th ACM SIGKDD international conference on Knowledge discovery and data mining, pages 195–203. ACM, 2011.

- [11] David Roxbee Cox and David Victor Hinkley. Theoretical statistics. CRC Press, 1979.

- [12] M. H. DeGroot. Optimal Statistical Decisions. McGraw-Hill, 1970.

- [13] Ludwig Fahrmeir and Heinz Kaufmann. Consistency and asymptotic normality of the maximum likelihood estimator in generalized linear models. The Annals of Statistics, pages 342–368, 1985.

- [14] Sarah Filippi, Olivier Cappe, Aurélien Garivier, and Csaba Szepesvári. Parametric bandits: The generalized linear case. In Advances in Neural Information Processing Systems, pages 586–594, 2010.

- [15] Peter Frazier, Warren Powell, and Savas Dayanik. The knowledge-gradient policy for correlated normal beliefs. INFORMS journal on Computing, 21(4):599–613, 2009.

- [16] Peter I Frazier, Warren B Powell, and Savas Dayanik. A knowledge-gradient policy for sequential information collection. SIAM Journal on Control and Optimization, 47(5):2410–2439, 2008.

- [17] David A Freedman. On the asymptotic behavior of bayes’ estimates in the discrete case. The Annals of Mathematical Statistics, pages 1386–1403, 1963.

- [18] Yoav Freund, H Sebastian Seung, Eli Shamir, and Naftali Tishby. Selective sampling using the query by committee algorithm. Machine learning, 28(2-3):133–168, 1997.

- [19] Subhashis Ghosal, Jayanta K Ghosh, RV Ramamoorthi, et al. Posterior consistency of dirichlet mixtures in density estimation. Ann. Statist, 27(1):143–158, 1999.

- [20] Subhashis Ghosal and Anindya Roy. Posterior consistency of gaussian process prior for nonparametric binary regression. The Annals of Statistics, pages 2413–2429, 2006.

- [21] Christian Gourieroux and Alain Monfort. Asymptotic properties of the maximum likelihood estimator in dichotomous logit models. Journal of Econometrics, 17(1):83–97, 1981.

- [22] Thore Graepel, Joaquin Q Candela, Thomas Borchert, and Ralf Herbrich. Web-scale bayesian click-through rate prediction for sponsored search advertising in microsoft’s bing search engine. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), pages 13–20, 2010.

- [23] Yuhong Guo and Dale Schuurmans. Discriminative batch mode active learning. In Advances in neural information processing systems, pages 593–600, 2008.

- [24] H-M Gutmann. A radial basis function method for global optimization. Journal of Global Optimization, 19(3):201–227, 2001.

- [25] Shelby J Haberman. The analysis of frequency data, volume 194. University of Chicago Press Chicago, 1974.

- [26] Donghai He, Stephen E Chick, and Chun-Hung Chen. Opportunity cost and OCBA selection procedures in ordinal optimization for a fixed number of alternative systems. Systems, Man, and Cybernetics, Part C: Applications and Reviews, IEEE Transactions on, 37(5):951–961, 2007.

- [27] Philipp Hennig and Christian J Schuler. Entropy search for information-efficient global optimization. The Journal of Machine Learning Research, 13(1):1809–1837, 2012.

- [28] Matthew D Hoffman, Bobak Shahriari, and Nando de Freitas. On correlation and budget constraints in model-based bandit optimization with application to automatic machine learning. In AISTATS, pages 365–374, 2014.

- [29] David W Hosmer Jr and Stanley Lemeshow. Applied logistic regression. John Wiley & Sons, 2004.

- [30] Deng Huang, Theodore T Allen, William I Notz, and N Zeng. Global optimization of stochastic black-box systems via sequential kriging meta-models. Journal of global optimization, 34(3):441–466, 2006.

- [31] Donald R Jones. A taxonomy of global optimization methods based on response surfaces. Journal of global optimization, 21(4):345–383, 2001.

- [32] Donald R Jones, Matthias Schonlau, and William J Welch. Efficient global optimization of expensive black-box functions. Journal of Global optimization, 13(4):455–492, 1998.

- [33] Andreas Krause and Cheng S Ong. Contextual gaussian process bandit optimization. In Advances in Neural Information Processing Systems, pages 2447–2455, 2011.

- [34] John Langford and Tong Zhang. The epoch-greedy algorithm for contextual multi-armed bandits. In Advances in Neural Information Processing Systems, 2008.

- [35] Steffen L Lauritzen. Propagation of probabilities, means, and variances in mixed graphical association models. Journal of the American Statistical Association, 87(420):1098–1108, 1992.

- [36] Saskia Le Cessie and Johannes C Van Houwelingen. Ridge estimators in logistic regression. Applied statistics, pages 191–201, 1992.

- [37] Lihong Li, Wei Chu, John Langford, and Robert E Schapire. A contextual-bandit approach to personalized news article recommendation. In Proceedings of the 19th international conference on World wide web, pages 661–670. ACM, 2010.

- [38] M. Lichman. UCI machine learning repository, 2013.

- [39] Dhruv Kumar Mahajan, Rajeev Rastogi, Charu Tiwari, and Adway Mitra. Logucb: an explore-exploit algorithm for comments recommendation. In Proceedings of the 21st ACM international conference on Information and knowledge management, pages 6–15. ACM, 2012.

- [40] Peter S Maybeck. Stochastic models, estimation, and control, volume 3. Academic press, 1982.

- [41] Martijn RK Mes, Warren B Powell, and Peter I Frazier. Hierarchical knowledge gradient for sequential sampling. The Journal of Machine Learning Research, 12:2931–2974, 2011.

- [42] D. C. Montgomery. Design and Analysis of Experiments. John Wiley and Sons, 2008.

- [43] Diana M Negoescu, Peter I Frazier, and Warren B Powell. The knowledge-gradient algorithm for sequencing experiments in drug discovery. INFORMS Journal on Computing, 23(3):346–363, 2011.

- [44] Warren B Powell and Ilya O Ryzhov. Optimal learning. John Wiley & Sons, 2012.

- [45] Pradeep Ravikumar, Martin J Wainwright, John D Lafferty, et al. High-dimensional ising model selection using ℓ1-regularized logistic regression. The Annals of Statistics, 38(3):1287–1319, 2010.

- [46] Rommel G Regis and Christine A Shoemaker. Constrained global optimization of expensive black box functions using radial basis functions. Journal of Global Optimization, 31(1):153–171, 2005.

- [47] Ilya O Ryzhov, Warren B Powell, and Peter I Frazier. The knowledge gradient algorithm for a general class of online learning problems. Operations Research, 60(1):180–195, 2012.

- [48] Mehran Sahami, Susan Dumais, David Heckerman, and Eric Horvitz. A bayesian approach to filtering junk e-mail. In Learning for Text Categorization: Papers from the 1998 workshop, volume 62, pages 98–105, 1998.

- [49] Andrew I Schein and Lyle H Ungar. Active learning for logistic regression: an evaluation. Machine Learning, 68(3):235–265, 2007.

- [50] Burr Settles. Active learning literature survey. University of Wisconsin, Madison, 52(55-66):11, 2010.

- [51] David J Spiegelhalter and Steffen L Lauritzen. Sequential updating of conditional probabilities on directed graphical structures. Networks, 20(5):579–605, 1990.

- [52] Niranjan Srinivas, Andreas Krause, Sham M Kakade, and Matthias Seeger. Gaussian process optimization in the bandit setting: No regret and experimental design. arXiv preprint arXiv:0912.3995, 2009.

- [53] Matthew Tesch, Jeff Schneider, and Howie Choset. Expensive function optimization with stochastic binary outcomes. In Proceedings of The 30th International Conference on Machine Learning, pages 1283–1291, 2013.

- [54] Surya T Tokdar and Jayanta K Ghosh. Posterior consistency of logistic gaussian process priors in density estimation. Journal of Statistical Planning and Inference, 137(1):34–42, 2007.

- [55] Simon Tong and Daphne Koller. Support vector machine active learning with applications to text classification. The Journal of Machine Learning Research, 2:45–66, 2002.

- [56] Yingfei Wang, Hua Ouyang, Chu Wang, Jianhui Chen, Tsvetan Asamov, and Yi Chang. Efficient ordered combinatorial semi-bandits for whole-page recommendation. In AAAI, pages 2746–2753, 2017.

- [57] Yingfei Wang, Kristofer G Reyes, Keith A Brown, Chad A Mirkin, and Warren B Powell. Nested-batch-mode learning and stochastic optimization with an application to sequential multistage testing in materials science. SIAM Journal on Scientific Computing, 37(3):B361–B381, 2015.

- [58] Yingfei Wang, Chu Wang, and Warren Powell. The knowledge gradient for sequential decision making with stochastic binary feedbacks. In Proceedings of the 33rd international conference on Machine learning, 2016.

- [59] G. B. Wetherill and K. D. Glazebrook. Sequential Methods in Statistics. Chapman and Hall, 1986.

- [60] Stephen J Wright and Jorge Nocedal. Numerical optimization, volume 2. Springer New York, 1999.

- [61] Tong Zhang and F Oles. The value of unlabeled data for classification problems. In Proceedings of the Seventeenth International Conference on Machine Learning,(Langley, P., ed.), pages 1191–1198. Citeseer, 2000.

8 Proof of Lemma 2.1

First notice that for any fixed point ,

By the tower property of conditional expectations, and since is measurable,

Then by the definition of , we have

9 Proof of Lemma 4.1.

Let . Then

It is obvious that . We only need to show that . Since the normal density function is symmetric with respect to the origin and , we have . Define . Since , we show for by proving that .

First note that . So we have . Thus .

10 Proof of Theorem 5.4

For notational simplicity, we use and instead of and , and use , to denote and in the proof. Consider the objective function defined as

We use and to denote the quadratic term and the rest, respectively. By applying the mean value theorem to , we have

where is the Hessian of . To analyze the first and second order terms in the expansion, we use a similar technique adopted by [45]. For the gradient in the first order term, we have

For the second order term, we have

where .

We expand to its first order and use mean value theorem again,

where we omit the dependence of on for simplicity and use the fact .

Combining the first order and second order analysis and denoting as , we have

where we use the fact that is positive semi-definite and . By using to denote the minimal eigenvalue in the last inequality, we have

On the other hand, for the quadratic term , we have

where.

Now define function as , then we have

| (23) |

From now on we will use the simplified symbols and instead of and . We consider the sign of in the case when and

| (24) |

Based on the analysis in (23), we have

Notice that and recall that minimizes so we have

Then based on the convexity of we know that , otherwise the values of at 0, and the intersection between the ball and the line from 0 to form a concave pattern, which is contradictory. Similarly, based on the assumption that , we have

Recall that and , then the convexity of also ensures that .

11 Proofs of Asymptotic Optimality

In this section, we provide detailed proofs of all the asymptotic optimality results in Section 5.3.

11.1 Proof of Proposition 5.5

We use to denote the predictive distribution under the state and use to denote the posterior distribution after we observe the outcome of to be . By Jensen’s inequality, we have

We then show that for any and , which leads to . Since is binomial distributed with mean , we have

Recall that By Bayes’ Theorem, the posterior distribution in the updated state becomes

and

Notice that

and similarly, we have

Therefore,

and thus we obtain

11.2 Proof of Proposition 5.7

The proof is similar to that by [15] with additional tricks for Bernoulli distributed random variables. Let be the sigma-algebra generated by the collection . If the policy measures alternative infinitely often, this collection is an infinite sequence of independent random variables with common Bernoulli distribution with mean , so the strong law of large numbers implies . Since , we have . Let be a uniform random variable in . Then the Bernoulli random variable can be rewritten as . Since is independent of and the -algebra generated by , it can be shown by properties of conditional expectations that

We next show that the knowledge gradient value of measuring alternative is zero by substituting this relation into the definition of the knowledge gradient. We have

11.3 Proof of the Theorem 5.9: Consistency of the KG Policy

It has been established that almost surely the knowledge gradient policy will reach a state (at time T) in which the KG values for all the alternatives are smaller than , after which the probability of selecting each alternative is where is the number of alternatives. Notice that in each round, the KG policy first picks one out of alternatives, then a feedback of either 1 or -1 is observed. Equivalently, we can interpret the two procedures as one of the possible outcomes from the set , where each is a -dimensional vector with only element being +1 or -1. It should be noted that changing the feedback schema in this way does not affect the Bayesian update equations because the likelihood function and the normalization factor in the posterior are both multiplied by a factor of . On the other hand, this combined feedback schema makes it possible to treat the measurements as i.i.d. samples in .

Define the K-L neighborhood as , where the K-L divergence is defined as . Since the prior distribution is Gaussian with positive definite covariance matrix, and the likelihood function is the sigmoid function, which only takes positive values, after time the posterior probability in the K-L neighborhood of is positive. Based on standard results on the consistency of Bayes’ estimates [20, 19, 54, 17], the posterior is weakly consistent at in the sense that for any neighborhood of , the probability that lies in converges to 1.

Without loss of generality, assume that the alternative with the largest probability of +1 is unique, which means for any alternative other than . Then we can pick to be the neighborhood of such that holds for any . The neighborhood exists because we only have a finite number of alternatives. From the consistency results, the probability that the best arm under posterior estimation is the true best alternative goes to 1 as the measurement budget goes to infinity.