Optimization and Learning With Nonlocal Calculus

Abstract

Nonlocal models have recently had a major impact in nonlinear continuum mechanics and are used to describe physical systems/processes which cannot be accurately described by classical, calculus based “local” approaches. In part, this is due to their multiscale nature that enables aggregation of micro-level behavior to obtain a macro-level description of singular/irregular phenomena such as peridynamics, crack propagation, anomalous diffusion and transport phenomena. At the core of these models are nonlocal differential operators, including nonlocal analogs of the gradient/Hessian. This paper initiates the use of such nonlocal operators in the context of optimization and learning. We define and analyze the convergence properties of nonlocal analogs of (stochastic) gradient descent and Newton’s method on Euclidean spaces. Our results indicate that as the nonlocal interactions become less noticeable, the optima corresponding to nonlocal optimization converge to the “usual” optima. At the same time, we argue that nonlocal learning is possible in situations where standard calculus fails. As a stylized numerical example of this, we consider the problem of non-differentiable parameter estimation on a non-smooth translation manifold and show that our nonlocal gradient descent recovers the unknown translation parameter from a non-differentiable objective function.

Keywords: Nonlocal operators, optimization, machine learning

1 Introduction

Nonlocality is an important paradigm in understanding the behavior of a system undergoing spatio-temporal or structural changes, particularly when such changes are abrupt or singular. A system exhibits nonlocality if the changes occurring at some point are influenced by the interactions between the and its neighbors. In contrast, a local phenomenon is one where changes at depend solely on . In order to perform predictive analysis on nonlocal systems, an important step is to develop nonlocal analytic/computational tools i.e., nonlocal calculus and corresponding nonlocal descriptive models.

A generic description of a nonlocal operator is a mapping from some topological space into a set such that the value of at a point depends on not only but also points in some neighborhood . In contrast, for a local operator , its value at depends solely on . Some of these nonlocal analyses lend themselves to a multiscale viewpoint, where instead of a single nonlocal operator , we have instead a family of such operators indexed by a scale parameter . A common theme is the convergence of the nonlocal operators to a local counterpart, under suitable assumptions. In other words, the scale parameter controls the nonlocality in such a way that, asymptotically, as , the nonlocal operators , where is a local operator. The exact nature of convergence, i.e., the topology of the function spaces in question, naturally has an important role to play in such a situation.

The prototypical class of nonlocal operators is the class of integral operators. If is an open subset of a Euclidean space, and a suitable function, then we can consider the operator

| (1) |

for an appropriate kernel . A generic enough can capture the nonlocal interaction between points of , or said differently, the modeler has the flexibility of choosing the kernel generic enough to model the phenomena under investigation. We also can consider a sequence of such kernels which give rise to the integral operators

| (2) |

Assuming that the converge, in some sense, to a kernel , we can conclude, with reasonable assumptions, the convergence of the corresponding operators to (see [6]). In case , where is the Dirac mass at the origin, we have, at least formally, that the converge to the identity, i.e., . This observation underpins most of our further discussions.

While classical, differential operator (gradient, Hessian etc.) based methods work well under benign conditions, they cannot be used with singular domains and/or functions, since partial derivatives with the required regularity do not exist. The nonlocal calculus we study here is based on integral operators. The integral operators of nonlocal models come equipped with so-called “interaction kernels" and can be defined for irregular domains or non-differentiable functions. As such, they do not suffer from singularity issues to the extent of their differential counterparts ([3, 22, 19]) and have been applied in practical problems with great success. In addition to being applicable to singular problems, nonlocal operators can be shown to converge to their local counterparts under suitably natural conditions: nonlocal gradients (resp. Hessians) converge to the usual gradient (resp. Hessian) when the latter exists (see [10, 35, 30]).

1.1 Contributions

Our primary contributions are the theory and algorithmic applications of nonlocal differential operators, mainly nonlocal first and second order (gradient and Hessian) operators to perform optimization tasks. Specifically, we develop nonlocal analogs of gradient (including stochastic) descent, as well as Newton’s method. We establish convergence results for these methods that show how the optima obtained from nonlocal optimization converge to the standard gradient/Hessian based optima, as the nonlocal interaction effects become less pronounced. While our definition of nonlocal optimization methods is valid for irregular problems, our analysis will assume the required regularity of the objective functions in order to compare the local and nonlocal approaches. As a stylized numerical application of our nonlocal learning framework, we apply our nonlocal gradient descent procedure to the problem of parameter estimation on a non-differentiable “translation" manifold as studied via multiscale smoothing in [51] and [50]. In our framework, this problem can be solved without resorting to a multiscale analysis of the manifold; instead, the nonlocal gradient operators we use can be viewed as providing the required smoothing. While we have emphasized the use of nonlocal operators to non-differentiable optimization as an alternative to traditional subgradient methods ([43]), the scope of the theory is much wider. Indeed, one may use nonlocal methods to model long range interactions ([37]), phase transitions ([15]) etc.

1.2 Prior Work

Nonlocal models have, in recent years, been fruitfully deployed in a wide variety of application areas: in image processing ([25]) nonlocal operators are used for total variation (TV) inpainting and also image denoising ([11, 33, 32, 52]) with the nonlocal means framework. Computational/engineering mechanics has profited greatly in recent years with a plethora of nonlocal models. Plasticity theory ([8]) and fracture mechanics ([27, 21]) are two prominent examples. Indeed, the field of peridynamics (see [46, 45, 39]) is a prime consumer of nonlocal analysis. Nonlocal approaches have also featured in crowd dynamics [14], and cell biology ([1]). A nonlocal theory of vector calculus has been developed along with several numerical implementations (see [20, 4, 18]). The nonlocal approach has also found its way into deep learning ([49]). On the theoretical side, nonlocal and fractional differential operators have been studied in the context of nonlocal diffusion equations ([5] and references therein) as well as purely in the context of nonlocal gradient/Hessian operators ([35, 30, 10, 9, 29]). The use of fractional calculus via Caputo derivatives and Riemann-Liouville fractional integrals for gradient descent (GD) and backpropagation has been considered in [54, 38, 12, 42, 53]. Our work uses instead the related notion of nonlocal calculus from [35, 30, 10, 18] as its basis for developing not only a nonlocal (stochastic) GD, but also a nonlocal Newton’s method, and is backed by rigorous convergence theory. In addition, our nonlocal stochastic GD approach interprets, in a very natural way, the nonlocal interaction kernel as a conditional probability density. The multiple definitions of fractional and nonlocal calculi are related (see for e.g. [16]).

1.3 Notation and Review

In all that follows, will denote a simply connected bounded domain (i.e., a bounded, connected, simply connected, open subset of ) with a smooth, compact boundary where is a fixed positive integer. will be endowed with the subspace topology of . We will consistently denote by points in . Subscripts of vectorial (resp. tensorial) quantities will denote the corresponding components. For e.g., or will denote the component of the vector or entry of matrix respectively for . For vectors the notation denotes the outer product of and . The letters will denote positive integers while denote arbitrary real quantities. By , we mean an open ball centered at with radius . Furthermore, will in general denote real-valued functions with domain . All integrals will be in the sense of Lebesgue, and a.e. means almost every or almost everywhere with respect to Lebesgue measure. Given an arbitrary collection of subsets of , the -algebra generated by will be denoted by .

Given , we define the support as

where the overbar denotes closure in . A multi-index is an ordered -tuple of non-negative integers , and we let . For a suitably regular function , we denote by

If we consider all multi-indices with , we can identify with the usual gradient of . In this case, we denote the component of as for . Likewise, the Hessian of at a point is the matrix . We shall use the following function spaces:

| (3) |

We let . Note that each of the above spaces is a normed linear space. In particular is a Hilbert space with the inner product . The subspace of consists of those that vanish (i.e., have zero trace) on the boundary . Note that the space consisting of continuously differentiable functions vanishing on is dense in (see [2]). Next, denotes the absolute value of real quantities, will denote the Euclidean norm of and will denote the norm in any of the function spaces above.

Following [35, 10], we will fix a sequence of radial functions which satisfy the following properties:

| (4) |

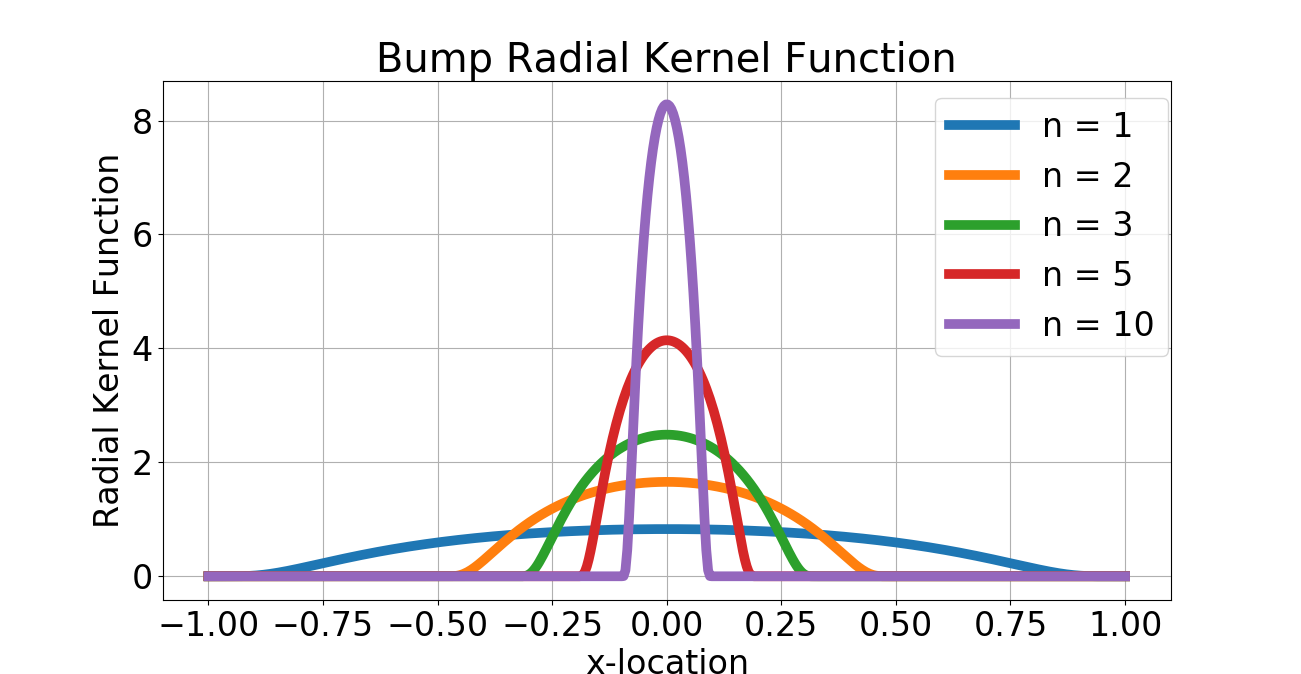

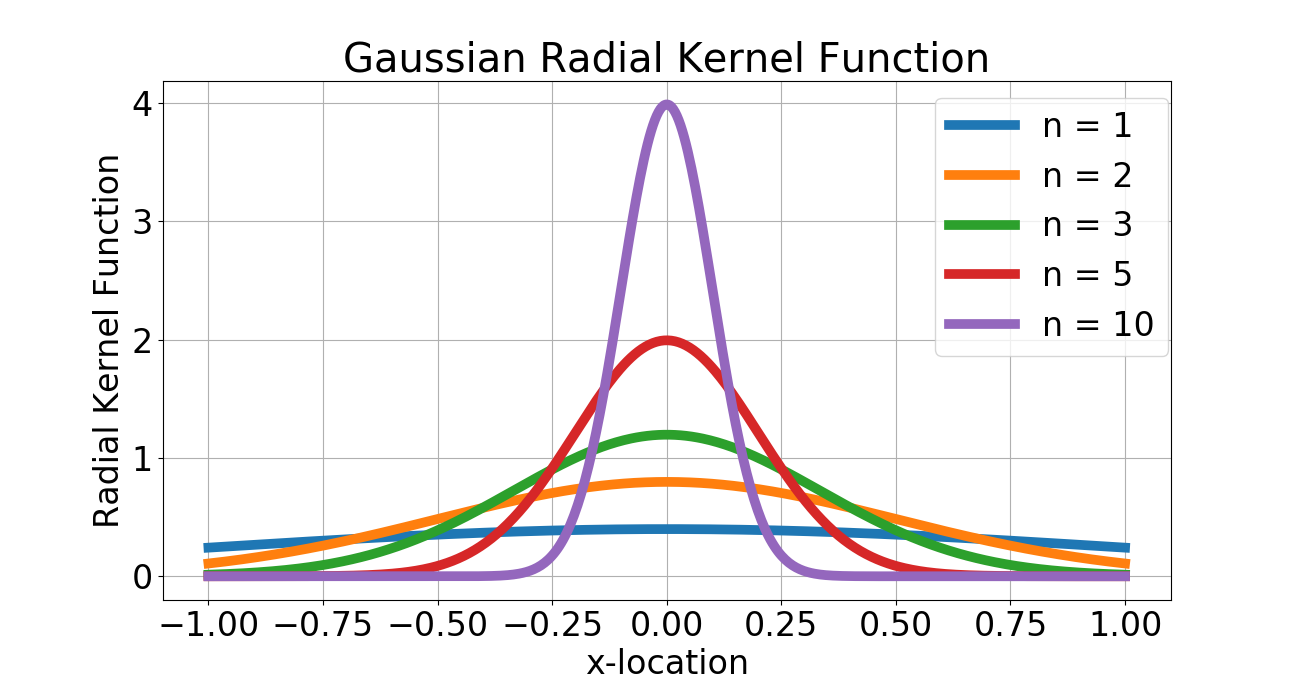

Note that by radial we mean that for any orthogonal matrix with unit determinant. Alternatively, this means that for some function . We can specify (but will not insist) that the have compact support or even be of class . Note that for our analysis of the nonlocal stochastic gradient descent method, we shall interpret the as a sequence of probability densities on that approach the Dirac density centered at the origin. Prototypical examples of are given by sequences of Gaussian distributions with increasing variance (the non-compact support case) and the so-called bump functions with increasing height (the compact support case). In both these cases, we can show that the sequence of converge as Schwartz distributions to the Dirac density . Details can be found in [23]. Finally, the set of all radial probability densities on , i.e., those that satisfy the first two conditions of equation 4 will be denoted by .

1.4 Nonlocal Operators

Let us now focus on the main objects of study in this paper, nonlocal differential operators. We note that there is no unique notion of “the" nonlocal gradient (resp. Hessian). Indeed, differing choices of kernels may give rise to differing notions of gradients (resp. Hessians).

1.4.1 First Order Operators

As done in [35], for any radial density , we can consider the linear operators for defined as:

| (5) |

where the domain of is the set of all functions for which the principle value integral on the right hand side of equation 5 exists (see [35]).

Stacking the as a vector yields a nonlocal gradient:

| (6) |

in other words for . Purely formally, if we allow for , the Dirac delta distribution centered at , then the “nonlocal" gradient defined above coincides (in the sense of distributions) to the usual “local" gradient. However, we would then be operating in the highly irregular realm of distributions, far removed from the space of functions that are more in line with the applications in mind. Nevertheless, much of the analysis in this paper revolves considering the case of a “well-behaved" approximating so that approximates the true gradient .

If , or more generally, as [35] note (see also [10]), if , the map

for a.e. . In these cases, the principle value integral in equation 6 exists and coincides with the Lebesgue integral of over all of . Thus, if (or ), then we may drop the limit in equation 6 and write:

| (7) |

At times, we may wish to evaluate the integral on a subset . In this case, we write to denote the corresponding nonlocal gradient:

| (8) |

If , we simply denote this as .

The nonlocal gradient defined via above exists for , which consists of functions that are only weakly differentiable and for which the standard (strong) gradient does not exist.

Specializing to the fixed sequence defined in equation 4, we write or, more succinctly, as , and similarly . We refer to the subscript as the “scale of nonlocality". It is easy to verify that unlike the usual local gradient , the nonlocal does not satisfy the product rule. The mode and topology of convergence of the sequence to as has been investigated by many authors (see [25, 20, 35])

1.4.2 Second Order Operators

Just as a first order nonlocal gradient operator has been defined earlier, we can define second order nonlocal differential operators as well. We consider nonlocal Hessians. In this case, even for a fixed scale of nonlocality and associated density (kernel) , one has several choices for defining a nonlocal Hessian. We list below four possibilities, and specify the entry of nonlocal Hessian matrices for . For the time being, we assume enough regularity for in order for the Hessians to make sense. We will specialize to and in section 4. Recall the notation .

| (9) |

Of the four Hessians above, is explicitly defined and analyzed in great detailed by [30]. It is interesting to note that is a non-local analog of the usual second-order central difference approximation to the second derivative ([31]).

1.5 Paper Organization

After this introductory section, we consider order nonlocal optimization in section 2. section 3 deals with a stylized numerical example of the order theory. In section 4, we develop the second order nonlocal optimization theory and the nonlocal analog of Newton’s method. We conclude in section 5. The proofs of all theoretical results are provided in the supplementary appendix section 6.

2 Order Nonlocal Theory and Methods

We now analyze the order nonlocal optimization theory and associated numerical methods. Recall that exists for , even if the classical gradient is not defined and we also know from [35] that if, in addition, (so that the usual gradient also exists), then we have that locally uniformly. Thus, if is a local minimizer of , then and we have that , from which we can immediately conclude the following.

Proposition 2.1.

Let be a local minimum of . Then given an , there is an such that for all .

While we shall not be using the following result directly in the development of nonlocal optimization methods, it is nonetheless an important analog of the local case. refers to the restriction of to a measurable subset (see supplementary appendix for more details).

Theorem 2.2.

Let be an a.e. local minimum of . For each there exists a measurable subset such that .

2.1 Nonlocal Taylor’s Theorem

Consider first the standard affine approximant

to at with the corresponding remainder term The nonlocal analog of the affine approximant is

and the corresponding nonlocal remainder term is Standard calculus dictates that the remainder term , and by uniqueness of the derivative, we can conclude that the nonlocal remainder will in general not be . Indeed, the standard (local) affine approximant is asymptotically the unique best fit linear polynomial and hence, the nonlocal affine approximant must necessarily be sub-optimal. It is therefore important to understand how relates to as , and this is the objective of the next result.

Proposition 2.3.

-

•

Let . Then , and, uniformly as .

-

•

Let . Then as .

2.2 Nonlocal Gradient Descent

We define the nonlocal gradient descent as follows. We first fix the scale of nonlocality , and initialize . We then perform the update:

| (10) |

Although one can easily conceive of an adaptive procedure with varying with step , we emphasize that for our analysis, the scale of nonlocality does not change between the steps of the descent. Henceforth, we denote by and . The step size and initial point are chosen small enough to ensure that the iterates all remain in . We emphasize that nonlocal gradient descent is applicable so long as exists, in particular, even if does not exist.

2.2.1 Suboptimal Stepsize

Consider first the sub-optimal step size situation, i.e., we use the same step size for both the local and nonlocal descent methods. This stepsize does not have to be the one obtained by linesearch. We will later consider the more involved situation of a nonlocal stepsize, or a stepsize that changes with , the scale of nonlocality. We thus now consider the situation with and compare the two descent methods:

| (11) |

with the same initial condition for all . Note that if , then we can conclude that the sequence of nonlocal iterates is bounded if the step sizes are bounded, and decreasing fast enough:

Proposition 2.4.

If the step sizes are chosen such that the sum for any , then we can conclude that the sequence is bounded.

In particular, proposition 2.4 and the Heine-Borel Theorem imply that we are guaranteed (at least) a convergent subsequence of the sequence . For the remainder of this subsection, we assume for purposes of our comparative analysis of the local and nonlocal gradient descent methods, that . Thus, the sequence of nonlocal iterates in this case are bounded, and hence, there is a compact such that for all . We are interested in studying the quantity as increases. We have the following result.

Theorem 2.5.

Let and let be given. Assume that nonlocal gradient descent is initialized with for all , and with the same sequence of step sizes for all satisfying for any . Then, for each , there is an such that for all , we have for .

Theorem 2.5 implies that, if the objective function admits a gradient, then for large enough, the iterates of nonlocal gradient descent get arbitrarily close to the iterates of the standard gradient descent. The important point to note is that while nonlocal gradient descent may be applicable in situations where the classical gradient is unavailable, we would not have a convergence analysis comparing the nonlocal gradient descent with the local analog. However, even in such cases, as we noted earlier, proposition 2.4 guarantees that we have a convergent subsequence of iterates of the nonlocal gradient descent method.

2.2.2 Optimal Stepsize

We now consider the case of optimal stepsize obtained by linesearch. We fix a maximal stepsize range of for some . As with the suboptimal stepsize case, we assume that so that the sequence of nonlocal iterates are bounded. Fix a compact such that for all . In the classical gradient descent approach, exact linesearch for the optimal stepsize at iteration involves finding , i.e., we perform:

where

We adapt it now to the nonlocal case. The steps of nonlocal gradient descent with exact linesearch for the optimal stepsize takes the form:

| (12) |

where

| (13) |

and again we assume that for all . Note that must be defined in such a way that for all . Also, let us assume for simplicity that is unique.

Theorem 2.6.

Let . Assume that the optimal stepsize version of nonlocal gradient descent is initialized with for all . Then, for each , there exists a subsequence of such that as and a subsequence of such that as .

2.3 Nonlocal Stochastic Gradient Descent

In this section, we consider the nonlocal version of stochastic gradient descent (SGD). A generic descent algorithm with a proxy-gradient :

| (14) |

After iterations, we output the averaged vector . If the expected value of equals the true gradient , then, roughly, the difference between the expected value of and the true minimum approaches as the number of iterations approaches . This assumes that we sample from a distribution such that , or, more generally, , where is the subdifferential set of at (see [41]).

As motivation for our nonlocal stochastic gradient descent analysis, let be valued random variables, with a conditional density Here, is a radial density in the class defined in section 1.3. Note that since we interpret to be , we can choose a density and arrive at a joint distribution , and upon integration, arrive at the marginal distribution of . Let us denote by

| (15) |

If we let denote the expectation with respect to the density , then it is evident that

| (16) |

or if we interpret as a random variable, we can write We will set , where is sampled from and perform the standard SGD with the knowledge that . We can therefore consider a sequence of nonlocal SGD with kernels , and as , we prove that the nonlocal optima converge to the usual SGD optima. In the following, we assume is a convex, Lipschitz function with Lipschitz constant .

Lemma 2.7.

Assume is convex. Given , there is an , such that for all , we have

Lemma 2.7 is a motivation of the following (well-known, see [36, 26, 48, 7]) generalization of the notion of the subderivative set of a function.

Definition 1.

Let be given. A vector is an subgradient of at if for all . The collection of all subgradients of at is called the subdifferential set of at and is denoted by

It is obvious from the definition of and Lemma 2.7 that given an , there is an such that for all , we have that and in particular, for any If we choose to run SGD with an subgradient in place of a usual subgradient, we call this an -stochastic subgradient descent (-SGD) algorithm.

Theorem 2.8.

Let be a convex, Lipschitz function and let Let be given. If we run an subgradient descent algorithm on for steps with then the output vector satisfies

Furthermore, for every , to achieve it suffices to run the SGD algorithm for a number of iterations that satisfies

We come now to the main result of this section. The result and proof are modeled after Theorem 14.8 of [41].

Theorem 2.9.

Let . Let be a convex function and let Let be given. Assume that is run for iterations with . Assume also that for all , we have with probability . Then,

Thus, running for iterations with ensures that

Note that Theorem 2.9 shows that the estimate of is composed of two parts: corresponds to the usual SGD estimate as in [41] while the term corresponds to the effects of nonlocal interactions. We can now specialize the general theory above to the case of the nonlocal gradient operator. Recall our observation that given an , we have for large enough. Thus, we can set to be the subgradients used in and choose to be the distribution from which the samples are chosen. We then have a nonlocal procedure.

3 Stylized Application: Non-differentiable Parameter Estimation

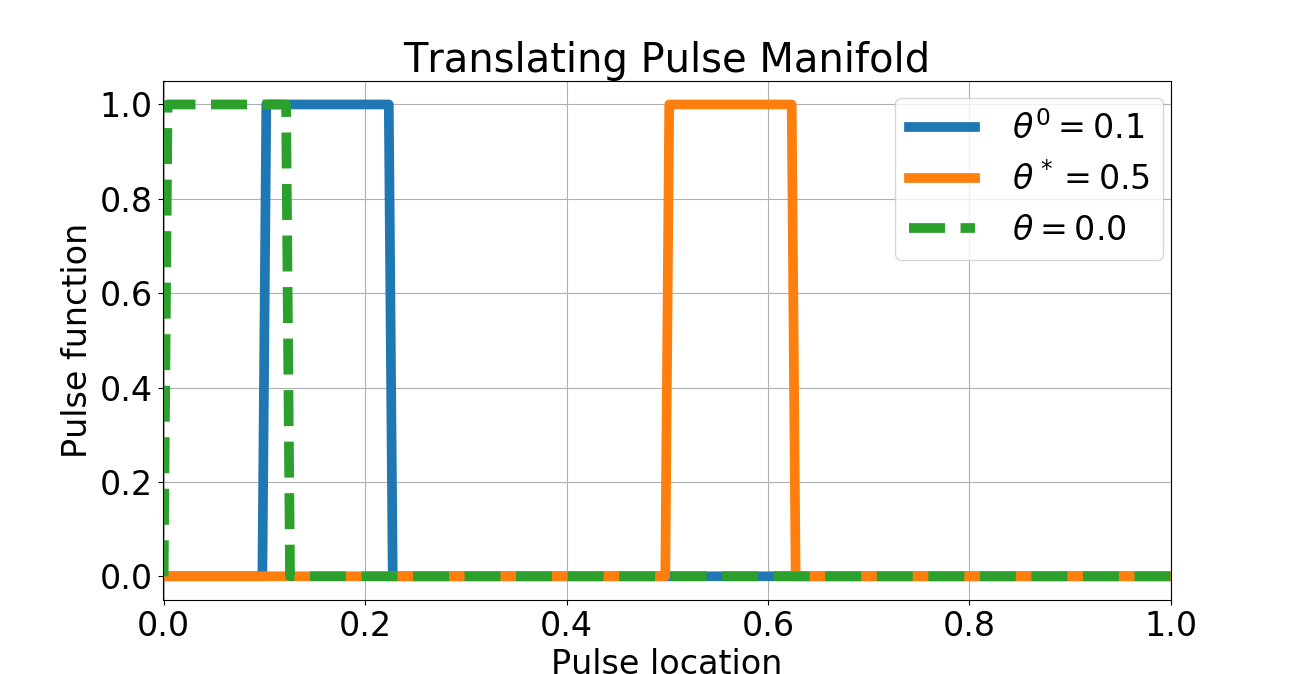

As a numerical example of the theory developed in section 2, we consider the case of parameter estimation of non-differentiable parametric mappings motivated by [51, 50] but restrict our attention to the case of a compact dimensional Euclidean parameter space . Let be the dimensional manifold consisting of translations of a rectangular pulse as shown in figure 1. is therefore the dimensional manifold viewed as a submanifold of and is the template pulse in figure 1 (green dashed line with support ). Note that while is a flat Euclidean space, its image is a topological manifold. It is known (see [17, 51]) that if is endowed with the metric, then the map is non-differentiable. Indeed [17, 51] observe that

| (17) |

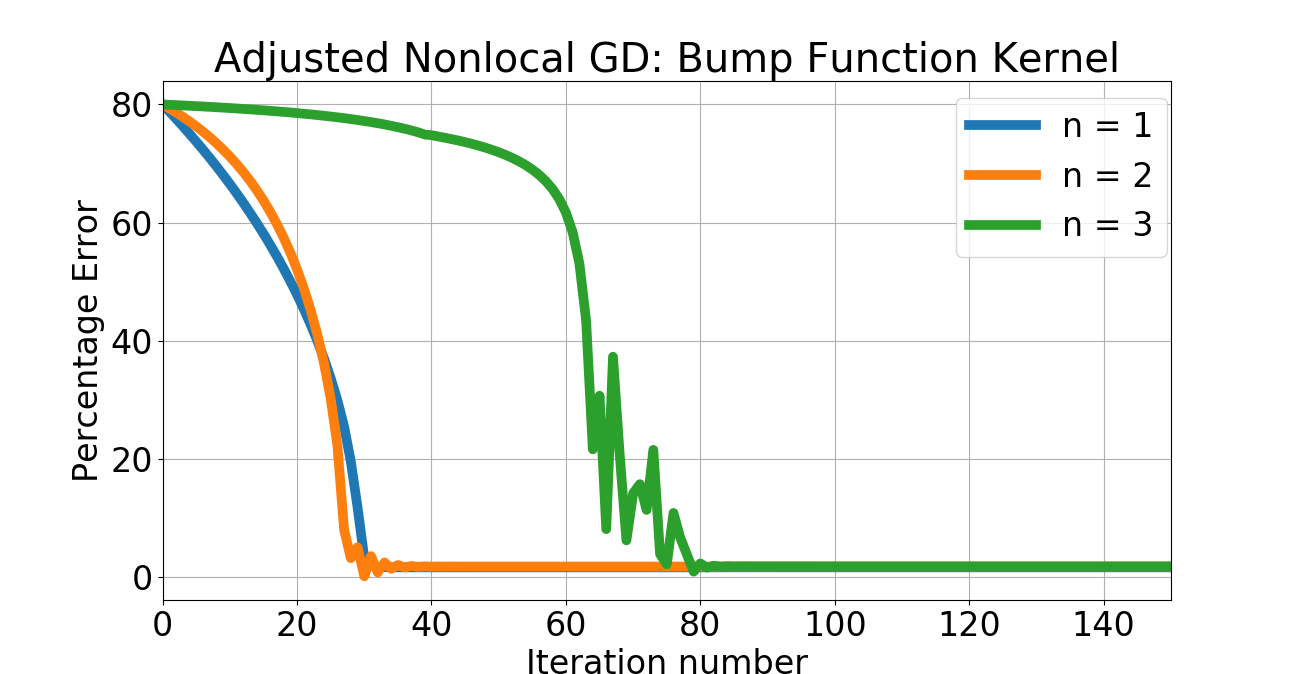

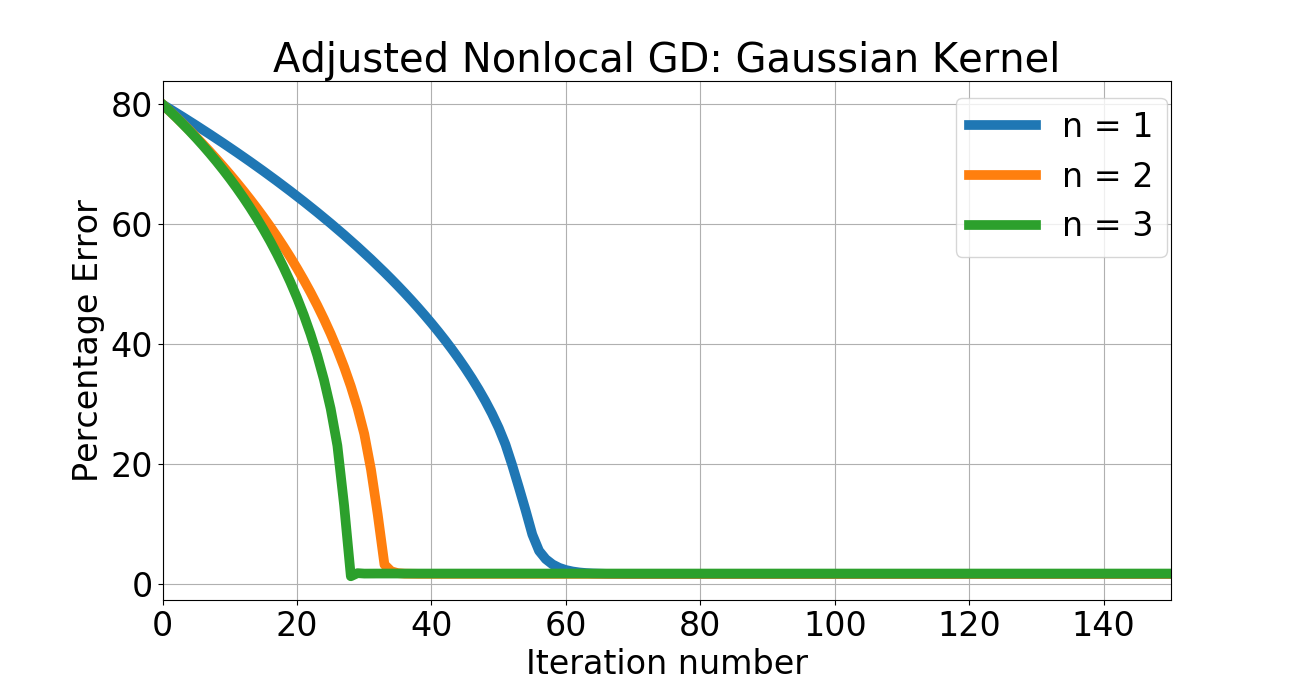

Given a template point corresponding to an unknown the objective is to recover an estimate of by minimizing the function . The approach taken in [51] is to “smoothen" the manifold by convolution with a Gaussian kernel and applying the standard Newton’s method to the smoothed manifold. We can directly address this problem without smoothing the manifold. Indeed, it is clear from the estimate 17 that is integrable as a function of for any , and hence the nonlocal gradient of exists. We can therefore use our nonlocal gradient descent to this problem to arrive at an estimate of . Note that we can view the scale of nonlocality as playing the role of a scale parameter vis-a-vis the approach of [51], however, our approach can be viewed as smoothing the tangent space, rather than the manifold itself. We apply our “vanilla" nonlocal gradient descent with a learning rate . Experiments were done on a 64-bit Linux laptop with GB RAM and 2.60GHz Intel Core i5 CPU. We consider the case of both bump function and Gaussian kernels that serve as in the nonlocal gradient definition, with (see figure 2). The initial and “ground truth" pulses corresponding to and are shown in figure 2. Given the extreme nonconvexity of , we adapted the nonlocal gradient descent presented earlier by decrementing the learning rate by half whenever the ratio of successive gradients between iterations exceeds . The convergence for both bump function and Gaussian kernels is rapid as seen in figure 3. The Gaussian kernel case gracefully converges: increasing results in more rapid convergence, as expected. However, the effect of numerical instability due to vanishing gradients is clear in the case of the bump function kernel: increasing results in nonmonotone convergence. Nevertheless, the efficacy of the nonlocal approach is apparent from this example, and extensions to higher dimensions are certainly possible, although the cost of dense quadrature-based integration like the one used here become prohibitive as the dimension of the parameter space increases.

4 Order Nonlocal Theory and Methods

We now turn our attention to second order methods, in particular Newton’s method. Recall from equation 9 that we have (at least) 4 distinct choices for defining a nonlocal analog of the Hessian of a function at a point. It is natural to consider the relationship between the four choices, and analyze the modes of convergence of each to the “usual" local Hessian operator. The following results make this relationship clear.

Theorem 4.1.

-

•

Let . Assume that there exists an such that for all , . Then, For fixed and , we have locally uniformly as that is, locally uniformly. If , the convergence is uniform.

-

•

Let . As , locally uniformly, that is, locally uniformly. If , the convergence is uniform.

We say a sequence (read: converges weakly to in ) with if for all as . We have the following theorem.

Theorem 4.2.

Let . Then:

-

•

As , we have , that is,

-

•

Combined with the hypothesis of theorem 4.1, we have:

with the convergence of to being locally uniform if and uniform if .

The proofs of theorem 4.1 and 4.2 are found in section 6. While we have shown weak convergence of to as , we can show that under additional hypothesis on the radial functions , we have an upper bound on how the sequence deviates from strong (uniform) convergence.

Theorem 4.3.

Assume in addition to the existing hypotheses on , that . Let . Then, given an there is an such that for all , we have that

Thus, if is independent of , we have local uniform convergence of to . Otherwise, the error grows as .

We note that theorem 4.3 provides a worst-case upper bound. Indeed, for the case of the Gaussian and “bump" function densities we discussed earlier, a simple scaling argument shows that the corresponding constants of theorem 4.3 grow with , and therefore, the error is possibly unbounded. However, in practice, it seems plausible that there is a subclass of functions for which the error between and “usual" local Hessian is bounded. We do not go further into the analysis of finding the optimal class of functions for which the error is bounded.

4.1 Nonlocal Newton’s Method

We now consider a nonlocal version of Newton’s method. Recall the standard Newton iterations to minimize for with stepsize :

| (18) |

where is the usual Hessian of evaluated at , and, as before, is the gradient of evaluated at . We shall consider the case of having been chosen by some line-search type method, sufficient to ensure a descent property at each step. The important point is that we consider the same for the nonlocal case:

| (19) |

where is the nonlocal Hessian defined earlier, and for all . As we did for gradient descent, in order to compare the nonlocal and local approaches, we will assume that the functions we wish to minimize are sufficiently regular. Again, we note that the nonlocal methods are still valid for possibly non-differentiable functions, so long as the nonlocal operators are well defined. We choose to do our analysis with ; while we can certainly consider the other possible nonlocal Hessians for numerical computations, we cannot guarantee theoretical convergence properties, due to only weak convergence of the other Hessians to the standard Hessian. Also, by [30], we can ensure uniform convergence of to , though we tacitly expand the domain of from to all of . Thus, if has compact support , we extend the domain of from to all of by zero while maintaining the regularity of in the extension to all of . We continue to denote by the extension of to all of .

Suppose is a minimizer of . We assume that is continuous, i.e., is at least . Also, we assume exists, and therefore, by [13, 40], there is a neighborhood of such that exists on and is continuous as . We let , on and assume enough regularity of to allow . We also assume that is uniformly continuous on . We can now state the following result, whose proof can be found in section 6.

Theorem 4.4.

Assume that has compact support , that is uniformly continuous on a neighborhood of , and enough regularity of to allow . Then, given , there exists an so that for , we have

We can now give a comparison between the usual Newton’s method’s quadratic convergence with the nonlocal counterpart.

Theorem 4.5.

Assume the hypotheses of theorem 4.4. Then, given , there exists an so that for , and large enough, we have

for some depending on the regularity of .

Proof.

It is interesting to note that the “error" in approximating the full Hessian by the nonlocal one has a clear effect in the convergence of the nonlocal Newton iterations. The term in the proof of theorem 4.5 corresponds to the “usual" quadratic convergence of the standard Newton iterations. However, the additional term corresponds to the error made in the approximation of the true Hessian by the nonlocal one. Indeed, can be made smaller by choosing a larger in the nonlocal Hessian. However, we are not guaranteed a clean quadratic convergence in the nonlocal case, indeed, the rate of convergence to is always limited by , i.e., the effect of nonlocal interactions in computing the nonlocal Hessian.

5 Concluding Remarks

While we have developed basic first and second order nonlocal optimization methods, much remains to be done, both theoretically and numerically. For instance, one can transfer nonlocal optimization to regularized objective functions, constrained optimization and quasi-Newton methods. A bottleneck on the computational side is the quadrature used for integration. Sparse grid methods going back to Smolyak ([47]) can be employed for higher dimensional problems. Nonlocal gradients can also be incorporated into deep learning models during backpropagation and would be particularly useful for data that exhibits singular behavior. Our analysis assumes the parameter space is Euclidean, and extending it to the case of having nonzero curvature is a non-trivial open problem. Nevertheless, the results presented here can serve as a first step towards such expansions.

References

- [1] Mathematical models for cell migration: a nonlocal perspective. Philosophical Transactions of the Royal Society of London B: Biological Sciences, 11 2019.

- [2] Robert A. Adams and John J. F. Fournier. Sobolev Spaces. Academic Press, 2 edition, Jun 2003.

- [3] Bacim Alali and Robert Lipton. Multiscale dynamics of heterogeneous media in the peridynamic formulation. Journal of Elasticity, 106:71–103, 04 2010.

- [4] Bacim Alali, Kuo Liu, and Max Gunzburger. A generalized nonlocal vector calculus. Zeitschrift für angewandte Mathematik und Physik, 66, 03 2015.

- [5] Fuensanta Andreu-Vaillo, Jose M. Mazon, Julio D. Rossi, and J. Julian Toledo-melero. Nonlocal Diffusion Problems. American Mathematical Society (Mathematical Surveys and Monographs (Book 165) ), USA, 2010.

- [6] Kendall E. Atkinson. The Numerical Solution of Integral Equations of the Second Kind. Cambridge Monographs on Applied and Computational Mathematics. Cambridge University Press, 1997.

- [7] A. Auslender and M. Teboulle. Interior gradient and epsilon-subgradient descent methods for constrained convex minimization. Mathematics of Operations Research, 29:1–26, 2004.

- [8] Zdenek P. Bazant and Milan Jirasek. Nonlocal integral formulations of plasticity and damage: Survey of progress. Journal of Engineering Mechanics, 128(11):1119–1149, 2002.

- [9] C. Bjorland, Luis Caffarelli, and Alessio Figalli. Non-local gradient dependent operators. Advances in Mathematics, 230:1859–1894, 07 2012.

- [10] J. Bourgain, H. Brezis, and P. Mironescu. Another look at sobolev spaces. Optimal control and partial differential equations, IOS Press, A volume in honour of A. Benssoussan’s 60th birthday(Menaldi, J.L., et al. (eds.)):439–455, 2001.

- [11] A. Buades, B. Coll, and J. . Morel. A non-local algorithm for image denoising. In 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), volume 2, pages 60–65 vol. 2, 2005.

- [12] Yuquan Chen, Qing Gao, Yiheng Wei, and Yong Wang. Study on fractional order gradient methods. Applied Mathematics and Computation, 314:310 – 321, 2017.

- [13] Edwin K. P. Chong and Stanislaw H. Zak. An Introduction to Optimization, Fourth Edition. Wiley-Interscience Series in Discrete Mathematics and Optimization, John Wiley and Sons, Inc., USA, 2013.

- [14] Rinaldo M. Colombo and Magali Lecureux-Mercier. Nonlocal crowd dynamics models for several populations. Acta Mathematica Scientia, 32(1):177–196, 2012. Mathematics Dedicated to professor Constantine M. Dafermos on the occasion of his 70th birthday.

- [15] Matteo Cozzi, Serena Dipierro, and Enrico Valdinoci. Nonlocal phase transitions in homogeneous and periodic media. Journal of Fixed Point Theory and Applications, 19:387–405, 11 2016.

- [16] Marta D’Elia, Mamikon Gulian, Hayley Olson, and George Em Karniadakis. A unified theory of fractional, nonlocal, and weighted nonlocal vector calculus. arXiv preprint, arXiv:2005.07686v2 [math.AP], 2020.

- [17] David Donoho and Carrie Grimes. Image manifolds which are isometric to euclidean space. Journal of Mathematical Imaging and Vision, 23(1):5–24, 12 2005.

- [18] Qiang Du. Nonlocal Modeling, Analysis, and Computation. Society for Industrial and Applied Mathematics (CBMS-NSF Regional Conference Series in Applied Mathematics), USA, 2019.

- [19] Qiang Du, Max Gunzburger, R. Lehoucq, and Kun Zhou. Analysis and approximation of nonlocal diffusion problems with volume constraints. SIAM Review, 54:667–696, 04 2011.

- [20] Qiang Du, Max Gunzburger, R. Lehoucq, and Kun Zhou. A nonlocal vector calculus, nonlocal volume constrained problems, and nonlocal balance laws. Mathematical Models and Methods in Applied Sciences, 23(3):493–540, 2013.

- [21] Ravindra Duddu and Haim Waisman. A nonlocal continuum damage mechanics approach to simulation of creep fracture in ice sheets. Computational Mechanics, 51(6):961–974, June 2013.

- [22] Etienne Emmrich, Richard Lehoucq, and Dimitri Puhst. Peridynamics: A nonlocal continuum theory. Lecture Notes in Computational Science and Engineering, 89, 09 2013.

- [23] Lawrence C. Evans. Partial Differential Equations. American Mathematical Society, 2 edition, 2010.

- [24] Andrzej Fryszkowski. Fixed Point Theory for Decomposable Sets (Topological Fixed Point Theory and Its Applications Book 2). Springer, 1 edition, 2004.

- [25] Guy Gilboa and Stanley J. Osher. Nonlocal operators with applications to image processing. Multiscale Modeling and Simulation, 7(3):1005–1028, 2008.

- [26] X. L. Guo, C. J. Zhao, and Z. W. Li. On generalized -subdifferential and radial epiderivative of set-valued mappings. Optimization Letters, 8(5):1707–1720, 2014.

- [27] Milan Jirásek. Nonlocal models for damage and fracture: Comparison of approaches. International Journal of Solids and Structures, 35(31):4133–4145, 1998.

- [28] Olav Kallenberg. Foundations of Modern Probability. Probability and Its Applications. Springer 2nd edition, 2002.

- [29] Hwi Lee and Qiang Du. Nonlocal gradient operators with a nonspherical interaction neighborhood and their applications. ESAIM: Mathematical Modelling and Numerical Analysis, 54, 08 2019.

- [30] Jan. Lellmann, Konstantinos. Papafitsoros, Carola. Schonlieb, and Daniel. Spector. Analysis and application of a nonlocal hessian. SIAM Journal on Imaging Sciences, 8(4):2161–2202, 2015.

- [31] Randall LeVeque. Finite Difference Methods for Ordinary and Partial Differential Equations: Steady-State and Time-Dependent Problems (Classics in Applied Mathematics Classics in Applied Mathemat). Society for Industrial and Applied Mathematics, USA, 2007.

- [32] A. Maleki, M. Narayan, and R. Baraniuk. Suboptimality of nonlocal means on images with sharp edges. In 2011 49th Annual Allerton Conference on Communication, Control, and Computing (Allerton), pages 299–305, 2011.

- [33] Arian Maleki, Manjari Narayan, and Richard G. Baraniuk. Anisotropic nonlocal means denoising. Applied and Computational Harmonic Analysis, 35(3):452 – 482, 2013.

- [34] G.F Dal Maso. Introduction to -Convergence. Birkhauser (Progress in Nonlinear Differential Equations and Their Applications (8)), Jan 1993.

- [35] Tadele Mengesha and Daniel Spector. Localization of nonlocal gradients in various topologies. Calculus of Variations and Partial Differential Equations, 52(1):253–279, Jan 2015.

- [36] R. Diaz Millan and M. Penton Machado. Inexact proximal -subgradient methods for composite convex optimization problems. Journal of Global Optimization, 75:1029–1060, 2019.

- [37] Mario Di Paola and Massimiliano Zingales. Long-range cohesive interactions of non-local continuum faced by fractional calculus. International Journal of Solids and Structures, 45(21):5642–5659, 2008.

- [38] Y. Pu, J. Zhou, Y. Zhang, N. Zhang, G. Huang, and P. Siarry. Fractional extreme value adaptive training method: Fractional steepest descent approach. IEEE Transactions on Neural Networks and Learning Systems, 26(4):653–662, 2015.

- [39] Srujan Rokkam, Max Gunzburger, Michael Brothers, Nam Phan, and Kishan Goel. A nonlocal peridynamics modeling approach for corrosion damage and crack propagation. Theoretical and Applied Fracture Mechanics, 101:373 – 387, 2019.

- [40] David L. Russell. Optimization theory. New York, W.A. Benjamin, 1970, Series Mathematics lecture note series., USA, 1970.

- [41] Shai Shalev-Shwartz and Shai Ben-David. Understanding Machine Learning: From Theory to Algorithms. Cambridge University Press, USA, 2014.

- [42] Dian Sheng, Yiheng Wei, Yuquan Chen, and Yong Wang. Convolutional neural networks with fractional order gradient method. Neurocomputing, 2019.

- [43] N.Z. Shor, K.C. Kiwiel, and A. Ruszczynski. Minimization Methods for Non-Differentiable Functions. Springer Series in Computational Mathematics. Springer Berlin Heidelberg, 2012.

- [44] Waclaw Sierpinski. Sur les fonctions d’ensemble additives et continues. Fundamenta Mathematicae, 3:240–246, 1922.

- [45] S. Silling and R. Lehoucq. Convergence of peridynamics to classical elasticity theory. Journal of Elasticity, 93:13–37, 10 2008.

- [46] S.A. Silling. Reformulation of elasticity theory for discontinuities and long-range forces. Journal of the Mechanics and Physics of Solids, 48(1):175 – 209, 2000.

- [47] S. A. Smolyak. Quadrature and interpolation formulas for tensor products of certain classes of functions. Dokl. Akad. Nauk SSSR, 148:1042–1045, 1963.

- [48] M. V. Solodov and B. F. Svaiter. A hybrid approximate extragradient-proximal point algorithm using the enlargement of a maximal monotone operator. Set-Valued Analysis, 7:323–345, 1999.

- [49] Yunzhe Tao, Qi Sun, Qiang Du, and Wei Liu. Nonlocal neural networks, nonlocal diffusion and nonlocal modeling. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, NIPS’18, page 494–504, Red Hook, NY, USA, 2018. Curran Associates Inc.

- [50] M. B. Wakin, D. L. Donoho, Hyeokho Choi, and R. G. Baraniuk. High-resolution navigation on non-differentiable image manifolds. In Proceedings. (ICASSP ’05). IEEE International Conference on Acoustics, Speech, and Signal Processing, 2005., volume 5, pages v/1073–v/1076 Vol. 5, March 2005.

- [51] Michael Wakin, David Donoho, Hyeokho Choi, and Richard Baraniuk. The multiscale structure of non-differentiable image manifolds. Proc SPIE, 5914, 08 2005.

- [52] J. Wang, Y. Guo, Y. Ying, Y. Liu, and Q. Peng. Fast non-local algorithm for image denoising. In 2006 International Conference on Image Processing, pages 1429–1432, 2006.

- [53] Jian Wang, Yanqing Wen, Yida Gou, Zhenyun Ye, and Hua Chen. Fractional-order gradient descent learning of bp neural networks with caputo derivative. Neural Networks, 89:19 – 30, 2017.

- [54] Yiheng Wei, Yu Kang, Weidi Yin, and Yong Wang. Generalization of the gradient method with fractional order gradient direction. Journal of the Franklin Institute, 357(4):2514 – 2532, 2020.

6 Appendix

6.1 Nonlocal Gradients and Hessians: More Background

We provide here some more details on nonlocal gradients and Hessians. Recall that if , or more generally, as [35] note (see also [10]), if , then the map

for a.e. . Thus, in these cases, the principle value integral in equation 6 exists and coincides with the Lebesgue integral of over all of .

Indeed, Lemma of [35] states:

Proposition 6.1.

(Lemma of [35]) Let . Then where .

Recall that we write to denote the corresponding nonlocal gradient on :

| (20) |

If the sequence of approaches a limit, albeit perhaps as distributions, we can expect the nonlocal gradients to converge to a limiting operator as well. For example, as we remarked earlier, in case the approach , we can presume that the approach as well. The mode and topology of convergence of the sequence to as has been investigated by many authors. In [25], it is formally shown that

while [20] show for (or more generally for tensors) the convergence of the nonlocal gradient in :

The localization of nonlocal gradients in multiple different topologies has been studied in [35], and we restate the following theorem from [35].

Theorem 6.2.

(Theorem of [35])

-

•

Let . Then locally uniformly as . If , then the convergence is uniform.

-

•

Let . Then in as .

We record the following theorem from [30] regarding a similar result in the Hessian case.

Theorem 6.3.

(Theorems and of [30])

-

•

Let , then in as for .

-

•

Let , then in for .

Note that in the Hessian case, the authors of [30] define and analyze the Hessian defined on all of , due to the definition of involving translation terms , yet still obtain convergence both in and uniform norms. In section 4, we will show weak convergence of the remaining Hessians and to as . The above theorems will be one of the main results we draw upon in our analysis. In the sequel, when we use the adjective “the" nonlocal gradient or “the" nonlocal Hessians , with , we mean the ones defined above associated with a fixed sequence .

6.2 Proofs

6.3 Proof of Theorem 2.2

In order to prove theorem 2.2, we will need the following lemma.

Lemma 6.4.

Let be a -finite measure space, i.e., is a set, is a -algebra generated by , a collection of subsets of , and a -finite measure on . Assume . Let , i.e., is -integrable, with almost everywhere. Then for any with , there is an such that .

Proof.

We now prove theorem 2.2.

Proof.

Define for for each and any

| (21) |

and

| (22) |

Notice that and that . Now, by assumption, and hence, is integrable with respect to , i.e., . Thus, for any there is a such that for any ball with volume we have

Pick such a ball with . Define

| (23) |

Note that and that .

It is easy to see, based on how we have set things up, that for varying we have that on while on . Indeed, since is an a.e. local minimum, a.e., the sign of is determined by that of . On , we have that so that a.e. while on , we have that so that a.e.

Therefore, we see that

| (24) |

and, since is the disjoint union

| (25) |

If , then setting , we are done. Otherwise, we consider two cases.

Case 1:

This is of course equivalent to

We shall shortly appeal to lemma 6.4. Let and be the -algebra generated by open subsets of . We have that , where is the Borel -algebra on . Let be the Lebesgue measure on restricted to , so that . If we let , then and almost everywhere. Finally, set

and . The conditions of lemma 6.4 being satisfied, we are guaranteed a measurable with . By definition, this means that . Thus,

Setting , we have

Case 2:

This equivalent to

In this case, let and be the -algebra generated by open subsets of containing . We have that and is the Lebesgue measure on restricted to , so that . If we let , then and almost everywhere. Similar to the previous case, we set

and . As before, the conditions of lemma 6.4 are satisfied, and we are guaranteed a measurable with . By definition, this means that . Thus,

Setting , we have ∎ Note that the constructed in the above proof implicitly depends on due to the dependency of on .

6.4 Proof of Proposition 2.3

Proof.

Case 1, uniform convergence: Since , we show uniformly, from which it follows that uniformly. We can estimate, using Cauchy-Schwarz,

| (26) |

where, since and is bounded, we have for some independent of . Since , we know uniformly by Theorem 1.1 of [35]. Thus, given , there is an such that for all , we have . Putting this into the earlier estimate yields for .

Case 2, convergence: We know that is dense in (see [2]). Hence, we select a such that and we consider the standard (local) and nonlocal affine approximant of which we denote by and respectively. As before, we have

Now, as we noted earlier, we have that for some independent of due to being bounded. Thus, holding constant, we have,

| (27) |

Assume that the measure of is . Then, integrating again with respect to we have

| (28) |

By Theorem 1.1 of [35], as , so we conclude that given , we can find an such that

when . Thus, we have for , that

| (29) |

We now write

and estimate each of the three terms in the above equation. Now,

By the density assumption, we have that and , and hence, we conclude that

| (30) |

Similarly, we have

We also have

| (31) |

By theorem 1.1 of [35], there exists an such that each of the three terms in equation 31 can be bounded by , for all , which yields

| (32) |

for all , which in turn implies that

| (33) |

Putting equations 29, 30 and 33 together, we have, for , that

and since was arbitrary, we conclude that as . ∎

6.5 Proof of Proposition 2.4

Proof.

Let . We know from Lemma 2.2 of [35] that and since , we conclude that

Now,

Iterating this observation, we get

By assumption, we have for any . Thus, , and the sequence of iterates is bounded. ∎

6.6 Proof of Theorem 2.5

Proof.

The proof is by induction on .

Base case:

Let . Now, , so

By [35], we know that as uniformly for all . Thus, given there is an so that for all , we have . Thus, for .

Induction Step:

Having completed the base case, we assume the result to be true for , and we prove the estimate for . Again,

Thus,

By the induction hypothesis, there is an such that term is bounded by for .

For the second term, we have

First, by uniform convergence of that there is an such that for , we have

Second, by the mean-value theorem applied to , we have that

where . Indeed, since the Hessian is continuous on the compact set and hence is uniformly bounded. By the induction hypothesis, there is an such that for all . Choosing , we have that for , , and the induction step is complete. ∎

6.7 Proof of Theorem 2.6

In the proof of the theorem, we shall need the notion of convergence in the context of metric spaces (see [34] for more details).

Definition 2.

Let be a metric space (or, more generally, a first countable topological space) and be a sequence of extended real valued functions on . Then the sequence is said to -converge to the -limit (written ) if the following two conditions hold:

-

•

For every sequence such that as , we have

-

•

For all , there is a sequence of with (called a -realizing sequence) such that

The notion of -convergence is an essential ingredient for the study of convergence of minimizers. Indeed, if , and is a minimizer of , then it is true that every limit point of the sequence is a minimizer of . Moreover, if uniformly, then , where is the lower semi-continuous envelope of , i.e., the largest lower semi-continuous function bounded above by . In particular, if and happen to be continuous, then , and therefore, if uniformly then (see [34]).

Proof.

The proof is by induction on .

Base case:

We consider . Recall that

since . Now let

and

We first notice that are continuous and uniformly as . Indeed,

and, by the uniform -independent convergence (see [35]), we conclude that

as for any . Now, since is compact and are continuous, the composition converges uniformly to .

By our remarks about -convergence earlier, we know that uniform convergence of a sequence of continuous functions implies -convergence, which in turn implies convergence of limits of minimizers. Thus, limit points of the sequence of minimizers of are minimizers of , i.e., . In other words, there is a subsequence of such that

as .

| (34) |

because so that,

| (35) |

Since as , for any , there is an such that

for . Likewise, there is an such that

for since as . Choosing , we see . The base case is proved.

Induction Step:

We assume the result is true for . We prove it for . Similar to the base case, we let

and

We now show that by the induction hypothesis, there is a subsequence of such that uniformly. Indeed,

Thus,

so that

By the induction hypothesis, there is a subsequence of such that as . Thus, given an , there is an such that for all ,

Next, by the uniform convergence of , we have that there exists an such that

for all . As in the suboptimal stepsize case, we now apply the mean value theorem to . We have that

where . Indeed, since , the Hessian is continuous on the compact set containing the iterates and hence is uniformly bounded. Putting all this together, and noting that we see that

for . Thus, uniformly on the compact interval . Therefore, the composition converges uniformly to since is continuous. This implies that -converges to , so that every limit point of the collection of minimizers of is a (and since we have assumed uniqueness of the linesearch minimizer, we can say the) minimizer of . There is therefore a further subsequence of , which we will denote by , such that . This proves the first half of the result. We still need to show that there exists a subsequence of such that as .

To this end, consider

| (36) |

We write

| (37) |

The last inequality follows since and by Lemma 2.2 of [35], we have

Now,

By the mean value theorem, the first term

with due to . The second term

as by uniform convergence of to on . Thus, putting all the above together, we see that

Now, by our induction hypothesis, there is a subsequence of such that as . Thus, given an , there is an such that for all ,

Next, by what was proved earlier, there is a subsequence of and an such that for ,

Finally, there exists an such that

Define a new subsequence to be the diagonal of and . Then, for , we have

| (38) |

The subsequence is the required subsequence of , and the induction is complete. ∎

6.8 Proof of Lemma 2.7

Proof.

By convexity of , we have that

| (39) |

On a compact neighborhood of , uniformly by theorem 1.1 of [35]. Thus, as . For any , this implies that we have . Choosing , we have . Thus, given , there is an such that for all , we have , so that . Thus, ∎

6.9 Proof of Theorem 2.8

As a motivating discussion of the nonlocal stochastic gradient descent method, we consider a simple example. If is a differentiable function, we can consider, for any , and , the difference quotient

which, by the mean value theorem, equals for some . If we now think of as valued random variables, then we have that the difference quotient is also a random variable. Thus, it is reasonable to expect that if the the conditional distribution of given is centered sufficiently closely around , then

While this approximation depends on the exact nature of , it nevertheless motivates our interpretation of as being a nonlocal subgradient.

We recall a relevant result from [41].

Corollary 6.5.

(Corollary 14.2 of [41]) Let be a convex, Lipschitz function and let If we run the GD algorithm on for steps with then the output vector satisfies

Furthermore, for every , to achieve it suffices to run the GD algorithm for a number of iterations that satisfies

Proof.

Consider

| (40) |

By the choice of subgradient of , we have for every , that

| (41) |

which upon summing and dividing by yields

| (42) |

We appeal now to lemma 14.1 of [41] to conclude that , which results in the final estimate

| (43) |

Now, given an , it is clear that picking results in . ∎

6.10 Proof of Theorem 2.9

Proof.

The proof follows that of theorem 14.8 of [41]. Let denote the sequence of subgradients , and indicates the expected value of the bracketed quantity over the draws . Now, , and therefore, By lemma 14.1 of [41], we have

We show now that

| (44) |

which would establish the result.

Now,

| (45) |

where the first equality is by linearity of expectation, and the second equality is due to the fact that the quantity depends on iterates upto and including only, and not beyond . Next, by the law of total expectation, we have

| (46) |

Conditioned on , the quantity is deterministic, and hence

| (47) |

We now make the crucial observation that . Indeed, we have noted that is deterministic given , so that . However, since depends on , the Doob-Dynkin lemma (see [28]) implies that the sigma algebra generated by is a subalgebra of , the sigma algebra generated by . Therefore, by the tower property of conditional expectation, we have that . This implies that since by construction we know that . Thus, by the subgradient property of the , we have that

Thus, we have shown that Summing over and dividing by we obtain equation 44, and thereby we have established the result. ∎

6.11 Proof of Theorem 4.1

Proof.

Convergence of : Let be compact. Assume that an has been chosen large enough so as to ensure that for all . Fix such an . Then, applying Theorem 1.1 of [35], we obtain that as , i.e., locally on . If the uniform convergence of follows from the analogous result of Theorem 1.1 of [35].

Convergence of : The proof of this case is exactly like that of the previous case obtained by replacing with . With the analogous set-up as before, we have . Thus, applying Theorem 1.1 of [35], we conclude that locally uniformly. If the uniform convergence of follows from the analogous result of Theorem 1.1 of [35].

∎

6.12 Proof of Theorem 4.2

We now provide the proof of theorem 4.2.

Proof.

Let . We show that as .

Now, integrating by parts we obtain,

where the boundary term is zero due to coming from and having zero trace. We can now estimate:

| (48) |

where the first inequality above is Cauchy-Schwarz, while the second follows from the definition of the norm. Now, since , in particular, we have that and therefore . By [35], Theorem 1.1, we have that as for . Therefore, given , there is an such that for all , we have . Thus, for . Since is arbitrary, we have proved as .

6.13 Proof of Theorem 4.3

The proof follows that of Theorem 1.1 of [35]. We record here the following Lemma from [35] that we will need in our proof as well.

Lemma 6.6.

We now provide the proof of theorem 4.3.

Proof.

For , with , being compact, we show that uniformly as . Let

| (49) |

where we have used the fact that exists due to . Now, since uniformly as by lemma 6.6, we have that uniformly.

We show that there exists an such that for fixed, we have uniformly for as , which implies that uniformly as , i.e., .

Now, we have by definition

so that

| (50) |

Now, by Theorem 1.1 of [35], we have that for , uniformly. Thus, given , there exists an independent of such that for all ,

or, for any , and , which upon combining together, result in

| (51) |

| (52) |

Term in equation 52 can be estimated as:

| (53) |

since, by the assumption on , we have . We now focus on term in equation 52.

Since by assumption , i.e., , we can find, given an , a small enough so that implies that .

Assume an is given and the corresponding has been found. Thus, we can write

| (54) |

By the mean value theorem, such that

Putting in this equality into equation 54, we obtain:

| (55) |

By the assumption on the radial densities , we have that and . Putting equations 52, 53, and 54 together, we obtain for . As were arbitrary, we obtain the result that the error of as is bounded by .

If , and is independent of , we can conclude uniform convergence on using an argument similar to that of Theorem 1.1 of [35] adapted to the current situation. Indeed, if then is a proper closed subset of . Thus, the distance between and is strictly positive. If

then . For points , we have

∎

6.14 Proof of Theorem 4.4

Proof.

By theorem 1.1 of [35], we have locally uniformly, and extending smoothly by zero outside of , we have uniform convergence on all of . By theorem 1.4 of [30], we have uniformly on . By assumption on being uniformly continuous on , it follows that converges uniformly to on . Therefore, converges uniformly to on . We now prove the result by induction.

Base case :

We have:

| (56) |

so that

Thus, for , we have, given an , an such that for all We can therefore conclude that for and the base case is complete.

Induction case :

Assume that the result is true for , and we proceed to prove the induction step . Let be given. Consider

| (57) |

We have

| (58) |

By the induction hypothesis, there is an such that for we have We now consider term of equation 58. We write:

| (59) |

Since uniformly on , we have that there exists an such that for . Next, we use the assumption that . This implies, by the mean value theorem that where Therefore, there exists an such that for , we have . Putting these estimates together into equation 59 and 58, we get for . The induction step is proved. ∎