Orthogonal Attention: A Cloze-Style Approach to Negation Scope Resolution

Abstract

Negation Scope Resolution is an extensively researched problem, which is used to locate the words affected by a negation cue in a sentence. Recent works have shown that simply finetuning transformer-based architectures yield state-of-the-art results on this task. In this work, we look at Negation Scope Resolution as a Cloze-Style task, with the sentence as the Context and the cue words as the Query. We also introduce a novel Cloze-Style Attention mechanism called Orthogonal Attention, which is inspired by Self Attention. First, we propose a framework for developing Orthogonal Attention variants, and then propose 4 Orthogonal Attention variants: OA-C, OA-CA, OA-EM, and OA-EMB. Using these Orthogonal Attention layers on top of an XLNet backbone, we outperform the finetuned XLNet state-of-the-art for Negation Scope Resolution, achieving the best results to date on all 4 datasets we experiment with: BioScope Abstracts, BioScope Full Papers, SFU Review Corpus and the *sem 2012 Dataset (Sherlock).

1 Introduction

Negation Scope Resolution involves finding the words in a sentence whose meaning was affected by the use of a negation cue (a word that expresses negation). Consider the following examples:

-

1.

This place is[n’t] familiar.

-

2.

I do [not] know the answer.

-

3.

I am [neither] a saint [nor] a sinner.

The words enclosed in square brackets are the negation cues, and the words in italics are their corresponding scopes. As we can see, negation cues can be of multiple types: an affix (1), a single word cue (2) and a multi-word cue (3). A sentence can also have multiple cue words, each of which can have different scopes. Hence, the input to any system performing Negation Scope Resolution is the sentence-negation cue pair.

Approaches to solve the task of negation scope resolution have varied significantly over the years, ranging from simple rule based systems to BiLSTM and CRF classifiers. To represent the cue word input, traditionally, these methods utilized either cue dependent hand-crafted features (for CRF classifiers), or an additional binary input vector representing the cue words in the sentence. More recently though, Khandelwal and Sawant (2020) and Britto and Khandelwal (2020) used transformer-based architectures to address the task, and represented the cue words in the sentence via a preprocessing strategy: by augmenting the input sentence with a special token which is added before each cue word to tell the system that the following word is a cue word.

In this paper, we propose a novel approach to solving the problem of Negation Scope resolution: by viewing it as a Cloze-Style task, where the sentence is used as the Context input and the cue words are used as the Query input. We also develop a novel Cloze-Style Attention mechanism called Orthogonal Attention, which uses the key-query-value structure used in Self-Attention Vaswani et al. (2017).

Cloze-Style Question Answering (and Machine Reading Comprehension) are 2 classes of problems that involve using 2 distinct inputs to produce the output desired. In most cases, the input is a query-context pair from which an answer is generated. This is akin to posing a Question (Query) over a paragraph containing information (Context). The format of the answer can vary significantly, from pointing to a part of the context that contains the answer, as in SQuADRajpurkar et al. (2016), to filling in the blanks of the Query using relevant information from the Context. Thus, these tasks require modelling the interaction between the query and the context, to produce the corresponding answer. Such tasks became popular after the release of the CNN and Daily News Datasets in Hermann et al. (2015).

Given the success of Attention Mechanisms for traditional Natural Language Processing tasks (like translation), researchers have also developed Attention mechanisms to address Cloze-Style tasks (by using them to model the interaction between the Query and Context). A few examples of such Attention mechanisms include: Attention Sum Readers Kadlec et al. (2016), Attention over Attention Cui et al. (2016), Gated-Attention Dhingra et al. (2016), and Dynamic Coattention Networks Xiong et al. (2016). Most attention mechanisms used a similar approach: compute a score for each query word-context word pair, and use that score to perform some form of a weighted summation of certain vectors to generate context-aware representations of the query and query-aware representations of the context. We review a few such attention mechanisms in Section 2.

The novel Cloze-Style Attention mechanism we propose is called Orthogonal Attention. We propose a framework to develop Orthogonal Attention variants, and propose 4 such variants (OA-C OA-CA, OA-EM, OA-EMB). The exact specifications are covered in Section 3, but at it’s core, it uses the key-query-value structure and multiheaded structure found in Self Attention Vaswani et al. (2017).

These layers are then appended to the current state-of-the-art architecture for Negation Scope Resolution (XLNet-base), and we observe that adding Orthogonal Attention layers to the architectures yield improvements over the current state-of-the-art architectures.

2 Literature Review

2.1 Attention for Cloze-Style Tasks

Kadlec et al. (2016) proposed Attention Sum Reader, wherein they performed a dot product between the question embedding and each context word embedding to get probability scores per word of the context.

Dhingra et al. (2016) proposed a Gated Attention Reader Network to compute a query-aware representation of the context words. They used a multi-hop architecture with k hops (layers), where each layer incrementally infused the context embeddings with information from the query embeddings. They used a “Gated-Attention Module” for each word of the context, which performs attention over the query words using the context word followed by an element-wise multiplication between the summarized query and the context word. Each layer computes a different query representation using a separate BiGRU, and the output of the Gated-Attention Modules is also passed through a BiGRU before being fed to the next query.

Seo et al. (2016) proposed Bidirectional Attention Flow (BiDAF), wherein they first computed a similarity matrix between the context words and the query words, which was then used to compute context-aware query representations and query-aware context representations. The context-to-query attention was modelled as an attention over the context representation using query tokens, where the attention weights came from the similarity matrix. The query-to-context attention produces a weighted sum of the query words, using the attention weights as the maximum across the columns of the similarity matrix. Finally, all the generated matrices and the original context embeddings were concatenated to generate the query-aware representation of each word.

Wang et al. (2017a) introduced a Gated Self-Matching Network to perform Question Answering. Their model included a gated matching layer to match the question and the context, and a self-matching layer to aggregate information from the whole passage. This was done by using an RNN to summarize a concatenation of: the context embeddings at time t , and a special context vector , which was derived from an attention over the query embeddings using the previous output of the summarizer RNN and . Thus, they generated a summary of the context and the query. The self-matching layer allowed each context embedding to gain information from its surrounding context.

Xiong et al. (2016) proposed Dynamic Coattention Network, which uses a Coattention encoder to model the interaction between the query and context. The coattention encoder involved computation of an attention matrix as the dot products between the query and context words: . This matrix was then normalized row-wise to produce (attention weights over the context for each query word), and column-wise to produce (attention weights over the query for each context word). was further used to compute summaries of the context: . Then, was used to summarise the concatenation of and , the summary of context words for each word of the query and the query word itself: . As a final step, they passed the concatenation of the context and the query aware representation of the context through a BiLSTM.

2.2 Negation Scope Resolution

Khandelwal and Sawant (2020) provide an extensive summary of papers using non-transformer based models addressing Negation Scope Resolution. We summarise their paper, and the follow-up paper by Britto and Khandelwal (2020), which use transformer-based architectures to address this task. They report state-of-the-art results, and we use their models as the baseline.

Khandelwal and Sawant (2020) proposed using BERT Devlin et al. (2018) to address negation scope resolution. The transfer learning capability of such models allowed these models to perform much better than all previous models. They encoded the cue words in the sentence using a preprocessing strategy, by adding special tokens representing the type of the cue word before the cue words. For example:

I do not know the answer. I do [cue_tok] not know the answer.

This yielded the best results to date on all datasets (BioScope Full Papers and Abstract Subcorpora, SFU Review Corpus and Sherlock Dataset).

Britto and Khandelwal (2020) went a step further and used XLNet and RoBERTa in place of BERT, improving results even further.

The strategy adopted by these papers could be summarized as follows:

2.3 Self-Attention

Self-attention was introduced in Attention is All you NeedVaswani et al. (2017) as a key part of the transformer architecture. It was designed to get contextual representation of each token. The process was as follows: extracting Key , Query and Value vectors from each input vector, and then performing a Scaled Dot-Product Attention operation to compute the attention weights for each token , using the token’s query vector and all Key vectors . These weights were used to summarise the Value vectors , to compute the contextual representation. This operation was repeated multiple times in parallel (using different weight vectors), and their outputs combined using a weight matrix. This was called Multiheaded Self Attention. We can summarise the above process using the following equations:

3 Orthogonal Attention

Inspired by self-attention Vaswani et al. (2017), we look to use the key-query-value structure and the multiheaded process to create an Attention mechanism to address Cloze-Style tasks. We first detail the overall modelling framework, and then explain individual components.

We use the following notational convention: is Query Inputs, is Context Input, is Query Vectors for Orthogonal Attention, is Key Vectors for Orthogonal Attention, is Value Vectors for Orthogonal Attention, is Query Vectors for Dot-Product Attention and is Context Vectors for Dot-Product Attention.

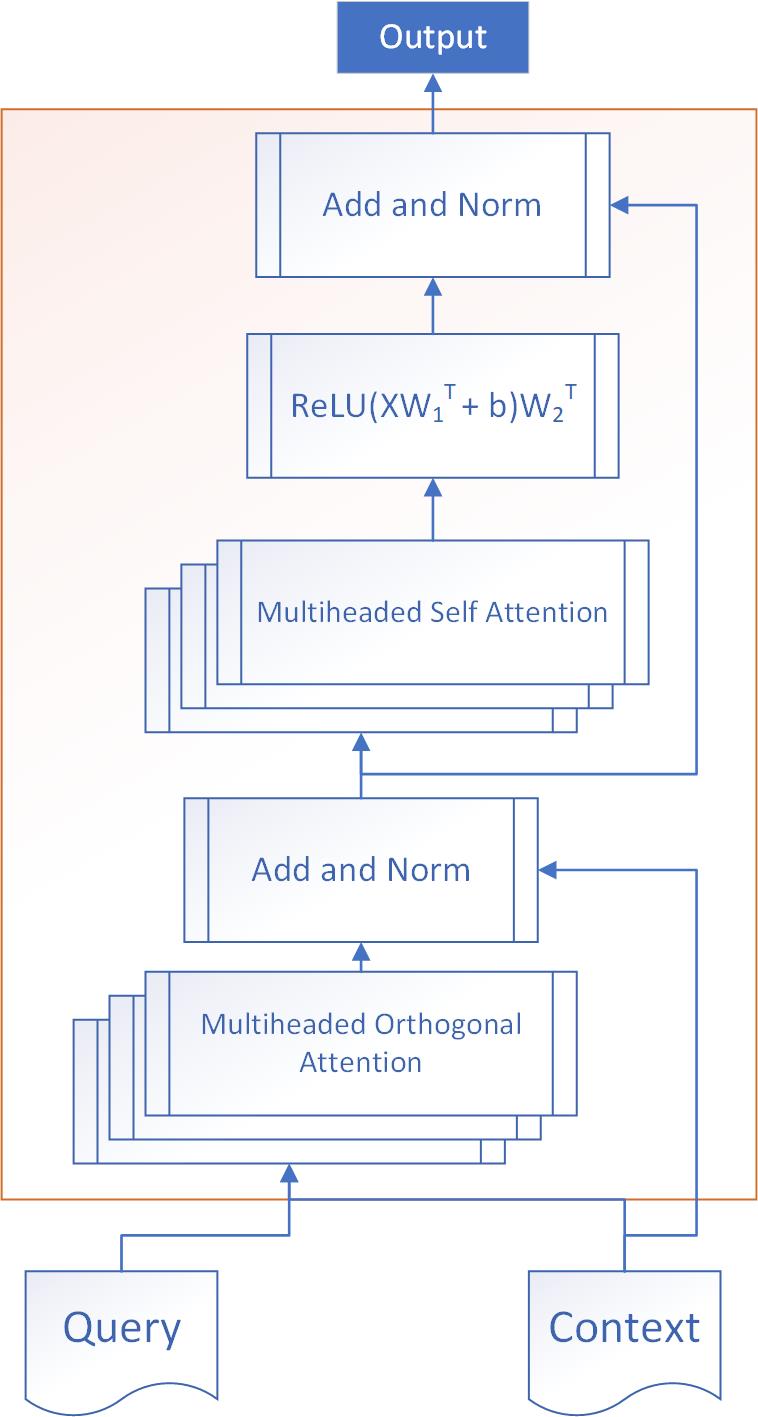

3.1 Orthogonal Attention Encoder Block (OA)

Multiheaded Orthogonal Attention:

Residual Connection:

Layer Normalization:

Multiheaded Self-Attention:

2 Fully Connected Layers:

Residual Connection:

Layer Normalization:

This is quite similar to the transformer encoder block proposed in Vaswani et al. (2017).

We use a Multiheaded Self-Attention module after the Multiheaded Orthogonal Attention module so as to allow the query-aware contextual representations to exchange information among themselves. This is similar in function to the self-matching layer by Wang et al. (2017b).

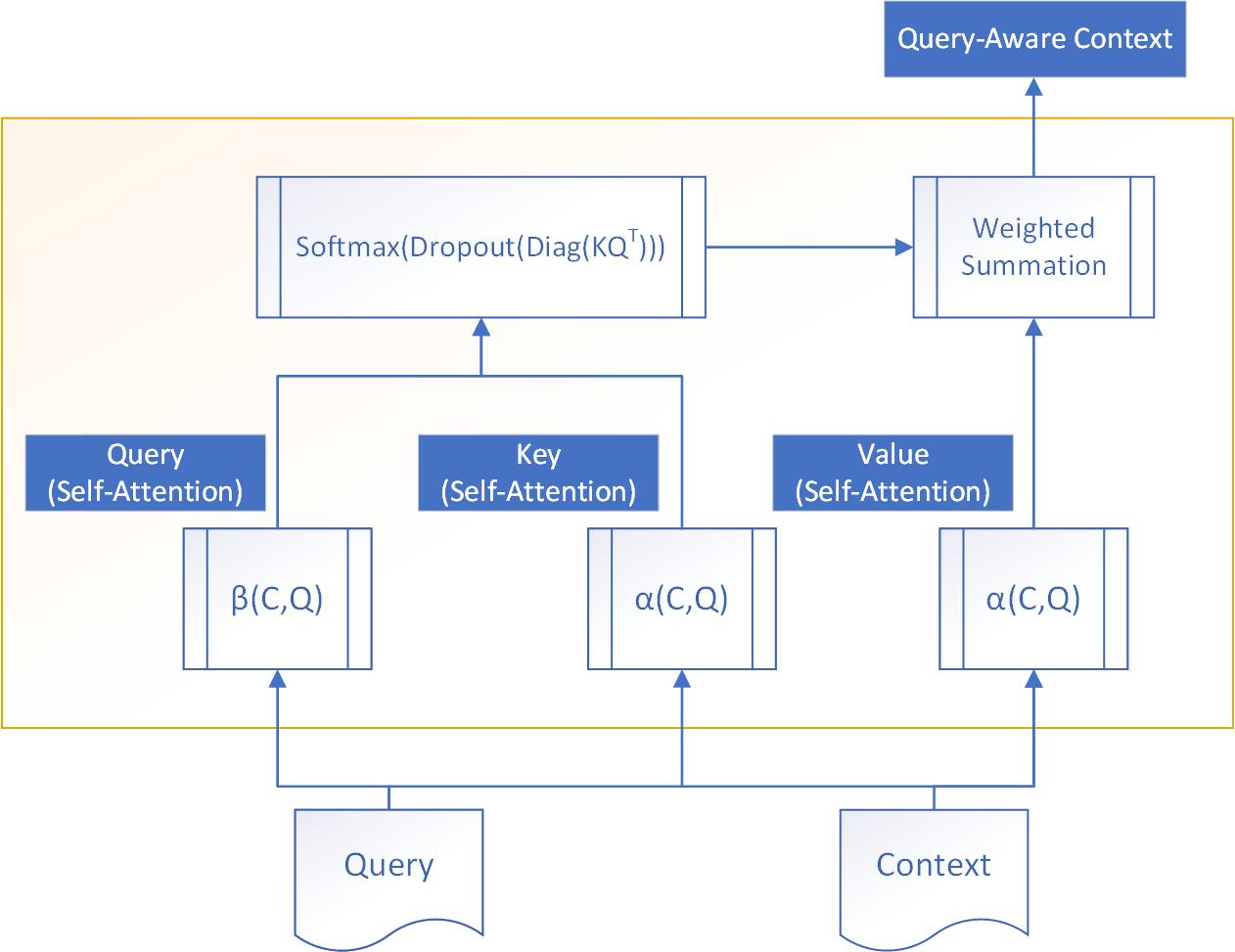

3.2 Multiheaded Orthogonal Attention

First, we detail the design of an individual head of Orthogonal Attention:

We use a function to generate key-value pairs ( and ), and to generate query vectors . Then, we summarise the value vectors using the key and query vectors, similar to self-attention. Specifically, the summation is performed for each context word () over all in . This generates a context word representation that is query-aware. This operation is performed in a multiheaded fashion. Specifically, the following equations can be used to summarise the entire process:

Specifically,

Query:

Context:

Attention Weights:

represent the output of the Orthogonal Attention head. represents the output of the Multiheaded Orthogonal Attention Layer

Like Self-Attention, we choose . is used to project the output back to a dimensional space.

3.3 Orthogonal Attention Variants

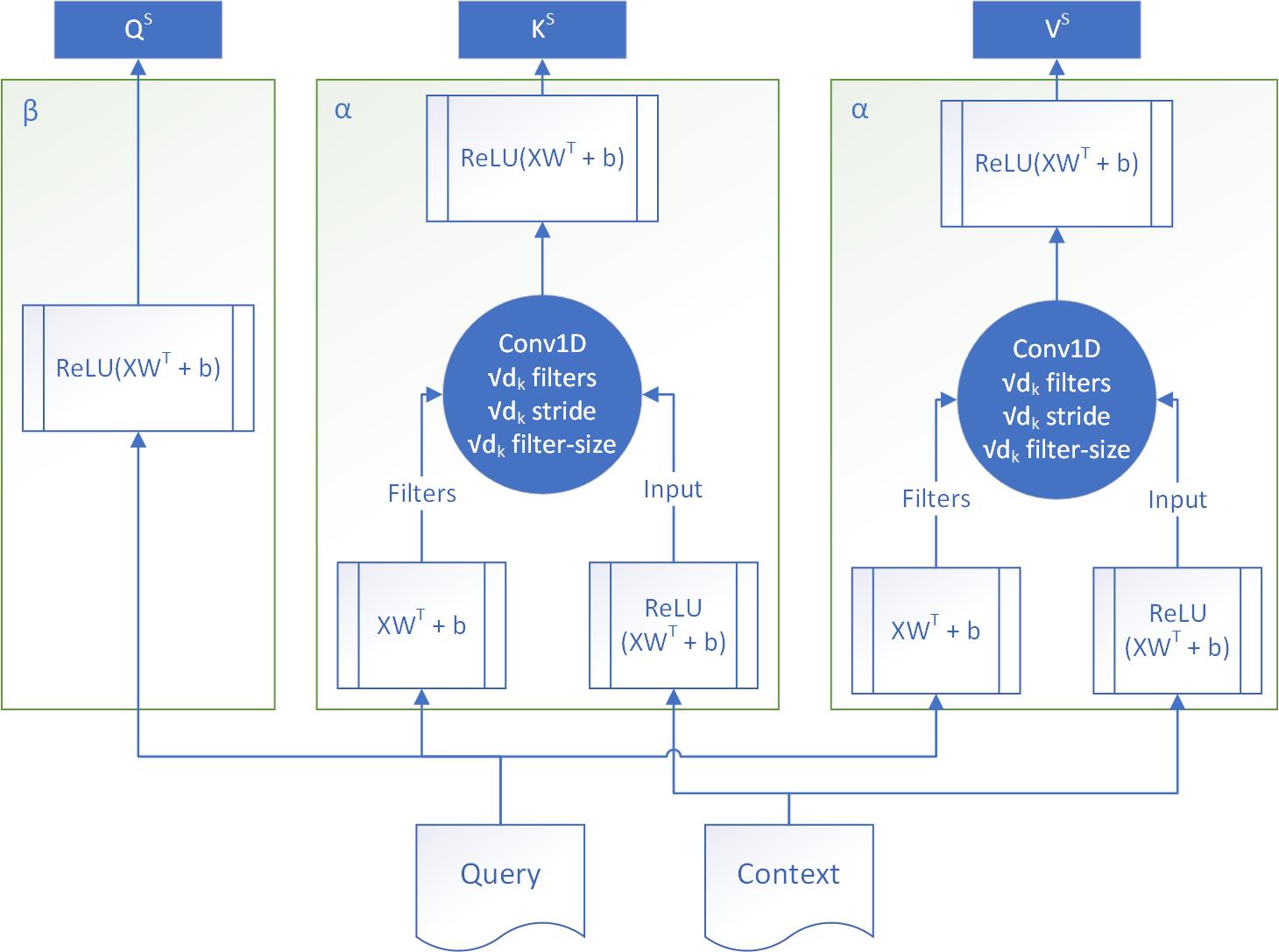

Orthogonal Attention models the interaction between the Query and Context using 2 functions, and , which are used to produce the key, query and value vectors , and . In this section, we detail 4 choices for and .

We experiment with 2 primary modes of interaction between the query vectors and context vectors to generate the and :

-

•

An elementwise multiplication operation (this is used in OA-EM and OA-EMB). This approach is similar to Dhingra et al. (2016), who also relied on element-wise multiplicative interaction between query and context words. This could be thought of as the query acting as a filter for each context dimension.

-

•

A 1D Convolutional operation using Query vectors as filters to convolve over the Context vectors (this is used in OA-C and OA-CA). The intuition is that instead of an elementwise multiplicative filter, we generate filters from each query word that could be convolved over the context word embeddings.

To generate , we experiment with 2 choices:

-

•

Only using the Query input (this is used in OA-C and OA-EM).

-

•

Using both the Query and the Context (this is used in OA-CA and OA-EMB). This choice is inspired by Seo et al. (2016), who proposed that using bidirectionality helps the model.

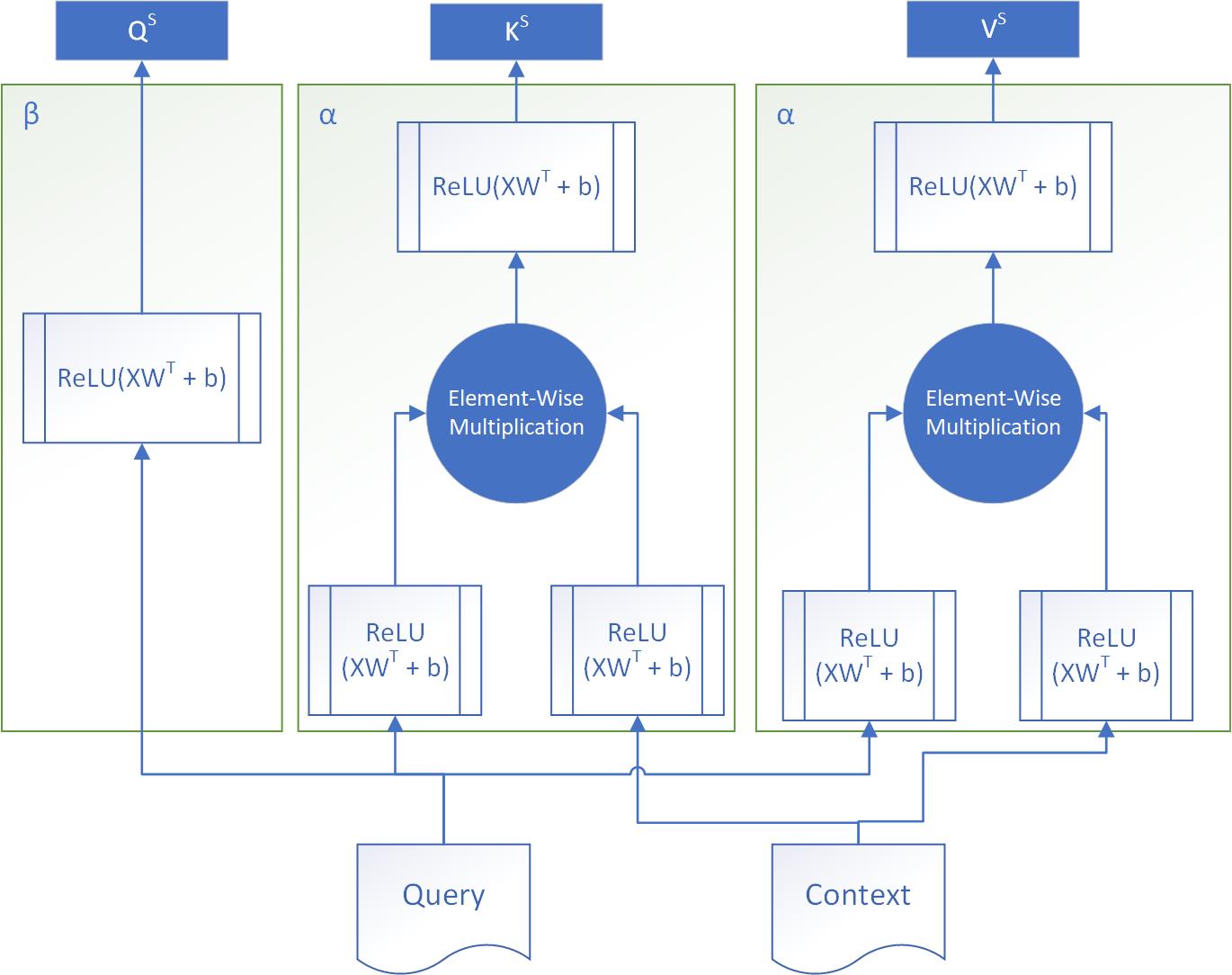

3.3.1 OA-EM

Here, uses Elementwise Multiplication to model the interaction between each context word-query word pair. only uses to generate . Formally,

-

•

:

Linear: )

Linear:

Elementwise Multiplication:

Linear:

-

•

Linear:

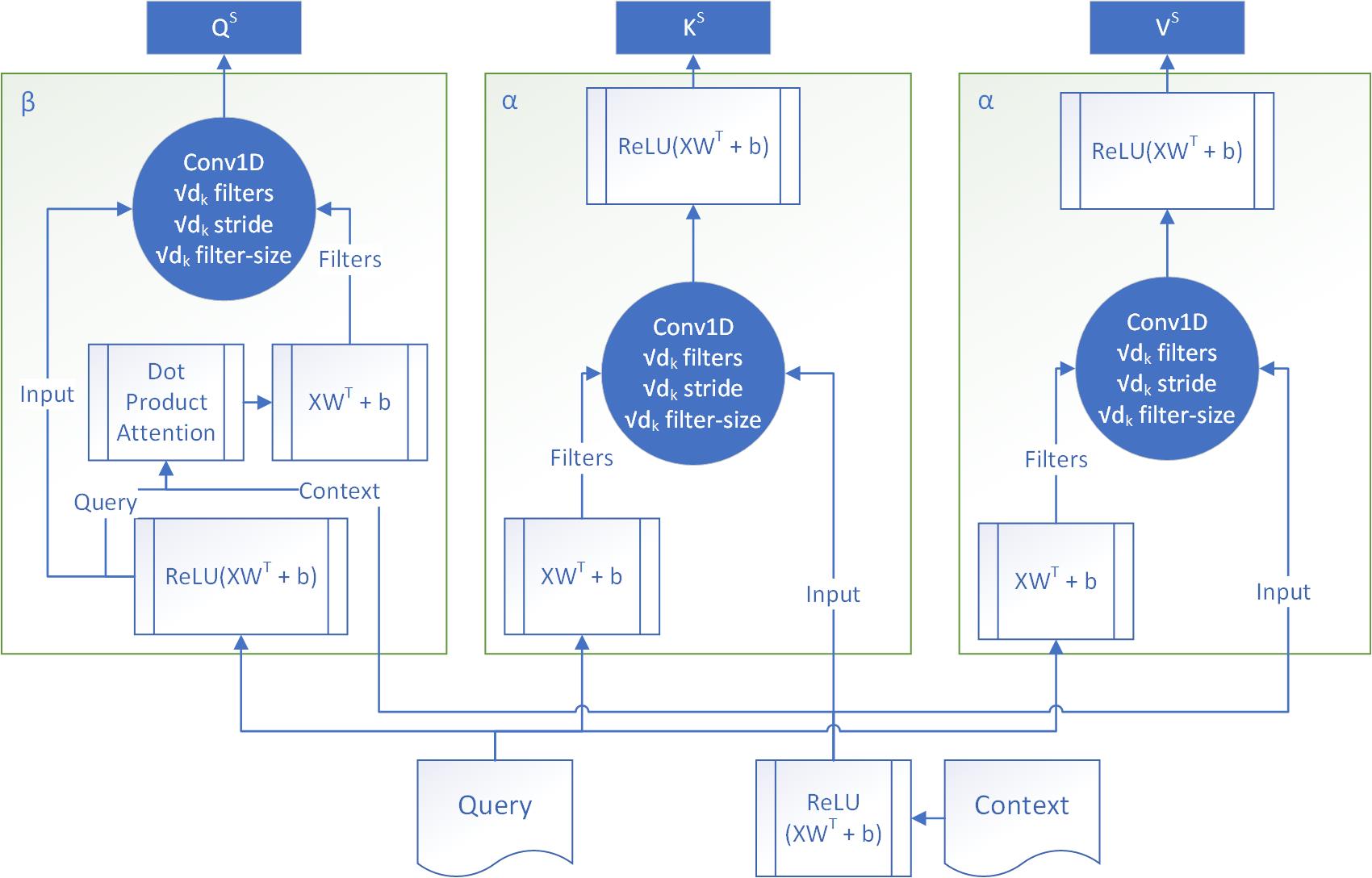

3.3.2 OA-EMB

Here, uses Elementwise Multiplication to model the interaction between each context word-query word pair, just like in . Unlike OA-EM though, generates as context-aware query representations using elementwise multiplication. Specifically, it uses the dot product attention as implemented in the PyTorchNLP Library 111https://pytorchnlp.readthedocs.io to calculate a summary of the context per query word . This generates a vector summary of for each query word, which is then multiplied elementwise with the query vectors themselves to get the context-aware query representation . Formally,

-

•

:

Elementwise Multiplication:

-

•

Linear:

Linear:

Dot Product Attention:

Elementwise Multiplication:

Linear:

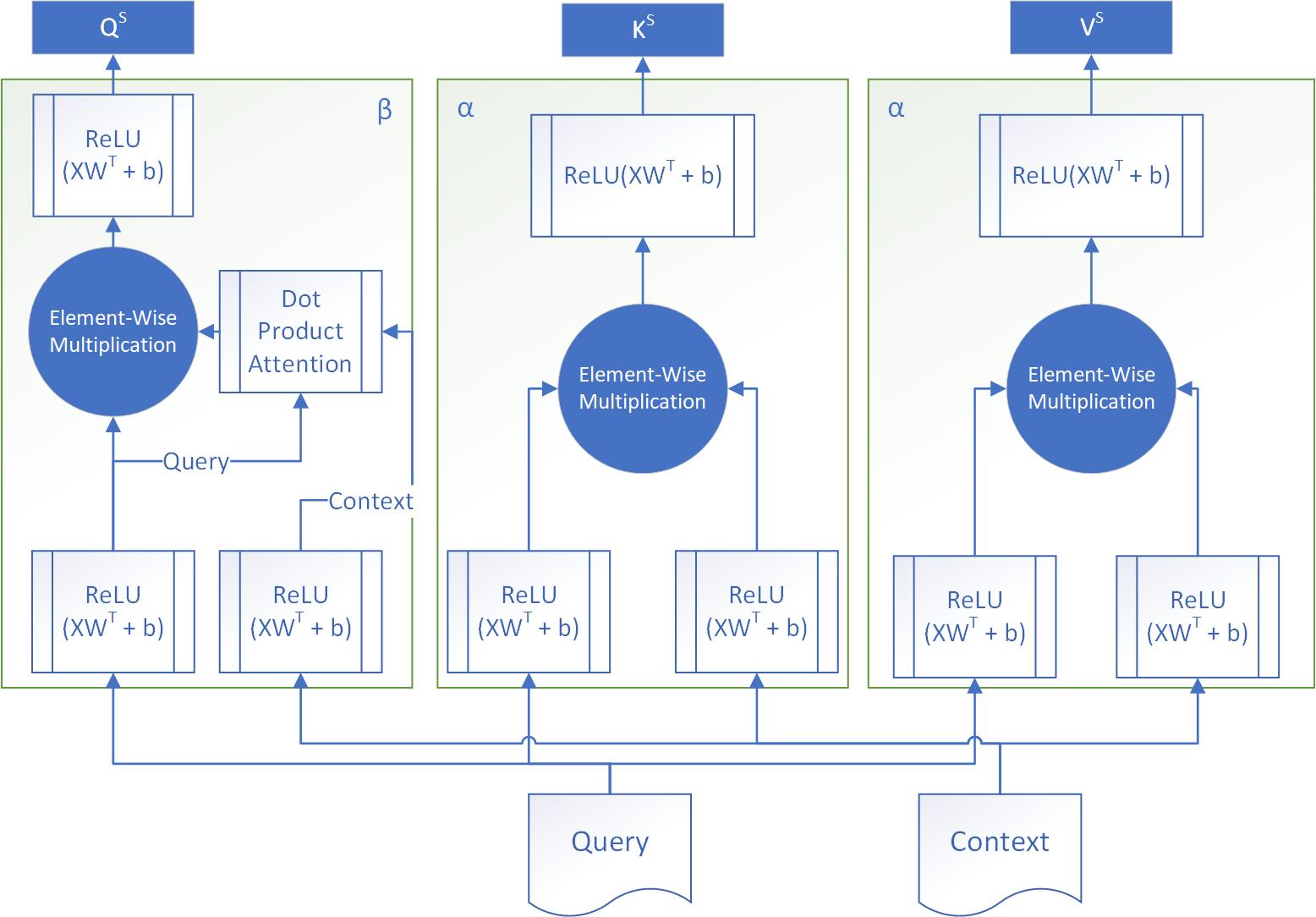

3.3.3 OA-C

Here, uses 1D Convolutional Operation to model the interaction between each context word-query word pair. Specifically, we use to generate 1D Convolutional filters, which are then convolved over each context word separately. We use filters of size , with a stride of . The resulting feature maps are flattened to produce embeddings for each context word-query word pair, from which and are generated. only uses to generate , just like . Formally,

-

•

Linear:

Linear (Generate convolutional filters):

Linear (Generate convolutional biases):

1D Convolution:

Linear:

-

•

Linear:

3.3.4 OA-CA

Here, uses 1D Convolutions to model the interaction between each context word-query word pair, just like in . Unlike OA-C though, uses and to generate . Specifically, we perform a dot product attention mechanism over using each individual query word to generate a vector summary of for each query word (like in OA-EMB), which are then used to generate filters for a 1D Convolution over the corresponding query word (from which the filter was generated). We use filters of size , with a stride of . The resulting feature maps are flattened to produce embeddings for each query word, from which are generated. Formally,

-

•

:

Conv1D:

-

•

Linear:

Linear:

Dot Product Attention:

Linear (Generate convolutional filters):

Linear (Generate convolutional biases):

1D Convolution:

4 Experimentation Details

The model architecture is a straightforward modification of Khandelwal and Sawant (2020), by passing the output of the XLNet-base model to 2 Orthogonal Attention Encoder blocks. Finally, we add a linear layer to get the output probabilities. We also use Dropout and Residual Connections to regularize the model and stabilize training.

The model architecture can be summarised as follows:

Sentence Embeddings:

Dropout:

Orthogonal Attention Encoder:

Orthogonal Attention Encoder:

Dropout:

Residual Connection:

Dropout:

Linear:

We experiment with 4 datasets:

-

•

BioScope Abstracts Subcorpora (BA): 1075 samples

-

•

BioScope Full Papers Subcorpora (BF): 235 samples

-

•

SFU Review Corpus (SFU): 2205 samples

-

•

*sem 2012 Shared Task dataset (Sherlock): 615 samples

We perform a 10-fold cross validation. When the Sherlock dataset is involved, we use the train-dev-test split provided by *sem 2012 organizers, so the 10-fold CV becomes a 10-run average over the test set provided. We report the scores for the Token level F1, which is an F1 score over the number of word level label matches. Using the proposed model architecture, we experiment with the 4 proposed variants of Orthogonal Attention. For each of the variants, we also experiment with 2 preprocessing techniques for the input sentence:

-

•

Normal: The input sentence is passed as is. We hypothesise that this approach will require the model to learn the dependencies between the cue words and their scope via leveraging the multiheaded orthogonal attention blocks, as the XLNet layer does not know which words are the cue words in the sentence.

-

•

Augment: Similar to the Augment method used in prior work (Khandelwal and Sawant (2020), Britto and Khandelwal (2020)), the cue words are preceded with a special token (tok[0]). This way, we explicitly tell the XLNet layer about the cue words as well, and thus the Orthogonal Attention Encoder layers would be able to enhance the representation of each token, making it easier for the linear layer to find the scope of the cue.

While the Normal Preprocessing strategy would be the default method for a Cloze-style task, we observed that our model overfit to the training set, due to the limited amount of training data (ranging from 200 samples to 2200 samples). Each Orthogonal Attention layer had around 4-7 million parameters which had to be learnt from scratch (as shown in Table 1). To reduce overfitting, we experimented with also allowing access to cue information to the XLNet layer,so that it generated meaningful cue-aware embeddings, via the Augment preprocessing technique. In our analysis, we show that all the Orthogonal Attention variants give us a gain in performance over using XLNet-base only.

We use a differential learning rate strategy, where XLNet-base is finetuned using an initial learning rate of 3e-5 and the Orthogonal Attention Encoder Layers are trained using an initial learning rate of 1e-4, both using the Adam optimizer.

We use a batch size of 32, a dropout probability of 0.3, and the maximum input sequence length as 128. We train for 60 epochs, with an early stopping on the validation set F1 with a patience of 6 epochs. From k folds that the model is divided into, one fold is kept as the test set while a validation set (for early stopping) is sampled from the k-1 folds, whose size is the same as the test set.

We also perform a 10-fold Cross-Validation for the model proposed by Britto and Khandelwal (2020), using XLNet as the base model (which is the state-of-the-art model for BF, BA and SFU), to accurately compare the results. This model is referred to as Baseline in all further results.

5 Results and Analysis

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c05a60e-0c92-4b15-bf22-a6e8ad4af8e2/Inference_Time_Graph.png)

Table 1 contains a summary of the model sizes and the execution times for an inference run with various batch sizes. We see that OA-C and OA-EM have similar execution profiles, and that OA-CA and OA-EMB (the bidirectional variants) have similar execution profiles.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c05a60e-0c92-4b15-bf22-a6e8ad4af8e2/Augment_F1.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c05a60e-0c92-4b15-bf22-a6e8ad4af8e2/Normal_F1.png)

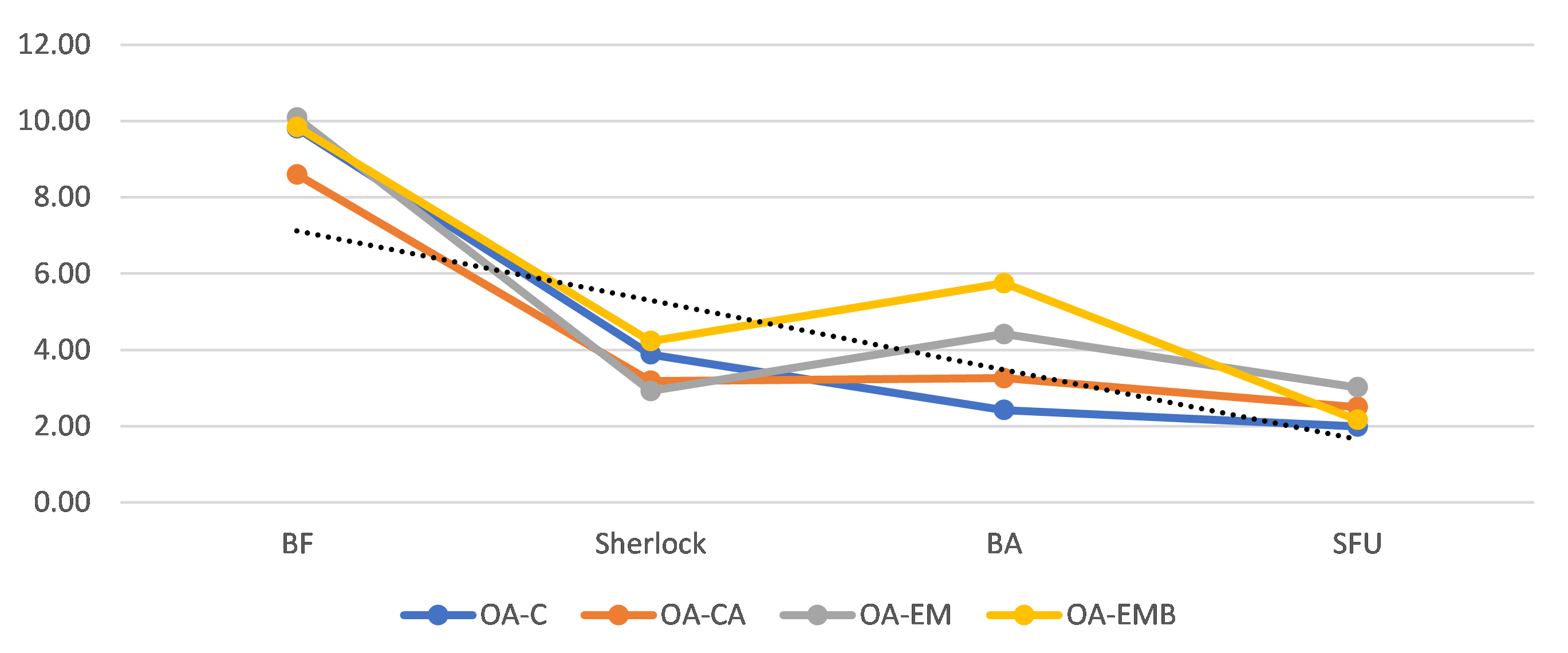

Tables 2 and 3 contain the results for Augment and Normal Preprocessing method respectively (Token-level F1 score (Macro Average over a 10-fold CV)). Across a row, the best model for that train dataset-test dataset combination is highlighted in bold. The best score for a given test dataset is highlighted in yellow. We observe that:

-

•

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c05a60e-0c92-4b15-bf22-a6e8ad4af8e2/Difference_to_Baseline_KV_2.png)

Table 4: Performance Difference (F1 points) Table 4 shows that for the Augment preprocessing method, all models yield improvements over the baseline(non-OA) for Augment preprocessing method.To compute the second column, we take the difference of the scores of that model to the best score that any model had on that train dataset-test dataset combination. It also shows that the 2 most promising variants are OA-EMB and OA-CA (the bidirectional variants).

-

•

Figure 7: Performance Difference between Augment and Normal (F1 Points) Figure 7 contains the difference between performance for the a model when the only difference was the preprocessing method used, averaged. We observe that the while the Normal preprocessing method has a significant difference in performance compared to Augment, but that this difference decreases with increasing dataset sizes (supporting our earlier hypothesis of overfitting).

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c05a60e-0c92-4b15-bf22-a6e8ad4af8e2/Summary_F1.png)

A summary of the best results by each model variant is shown in Table 5. A final summary of the results in comparison to the previous state-of-the-art results is shown in Table 6.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c05a60e-0c92-4b15-bf22-a6e8ad4af8e2/Final_Summary_Table_3.png)

6 Conclusion and Future Scope

In this paper, we proposed a novel approach to solving Negation Scope Resolution by viewing it as a Cloze-Style task, and also proposed a novel Cloze-Style Attention mechanism called Orthogonal Attention. We proposed 4 such variants. Our results showed that Orthogonal Attention is very effective as a Cloze-Style Attention mechanism, and using it with the current state-of-the-art models (XLNet-base) gives us an increase in performance over them. Thus, we report the best results till date on Negation Scope Resolution.

Future work could utilize Orthogonal Attention to address Question Answering and other Machine Reading Comprehension tasks. The Orthogonal Attention framework could be used to create better variants of Orthogonal Attention, which could better model the interaction between the Query and Context.

References

- Britto and Khandelwal (2020) Benita Kathleen Britto and Aditya Khandelwal. 2020. Resolving the scope of speculation and negation using transformer-based architectures.

- Cui et al. (2016) Yiming Cui, Zhipeng Chen, Si Wei, Shijin Wang, Ting Liu, and Guoping Hu. 2016. Attention-over-attention neural networks for reading comprehension. CoRR, abs/1607.04423.

- Devlin et al. (2018) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2018. BERT: pre-training of deep bidirectional transformers for language understanding. CoRR, abs/1810.04805.

- Dhingra et al. (2016) Bhuwan Dhingra, Hanxiao Liu, William W. Cohen, and Ruslan Salakhutdinov. 2016. Gated-attention readers for text comprehension. CoRR, abs/1606.01549.

- Hermann et al. (2015) Karl Moritz Hermann, Tomás Kociský, Edward Grefenstette, Lasse Espeholt, Will Kay, Mustafa Suleyman, and Phil Blunsom. 2015. Teaching machines to read and comprehend. CoRR, abs/1506.03340.

- Kadlec et al. (2016) Rudolf Kadlec, Martin Schmid, Ondrej Bajgar, and Jan Kleindienst. 2016. Text understanding with the attention sum reader network. CoRR, abs/1603.01547.

- Khandelwal and Sawant (2020) Aditya Khandelwal and Suraj Sawant. 2020. Negbert: A transfer learning approach for negation detection and scope resolution. In Proceedings of The 12th Language Resources and Evaluation Conference, pages 5739–5748, Marseille, France. European Language Resources Association.

- Rajpurkar et al. (2016) Pranav Rajpurkar, Jian Zhang, Konstantin Lopyrev, and Percy Liang. 2016. Squad: 100, 000+ questions for machine comprehension of text. CoRR, abs/1606.05250.

- Seo et al. (2016) Min Joon Seo, Aniruddha Kembhavi, Ali Farhadi, and Hannaneh Hajishirzi. 2016. Bidirectional attention flow for machine comprehension. CoRR, abs/1611.01603.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. ArXiv, abs/1706.03762.

- Wang et al. (2017a) Wenhui Wang, Nan Yang, Furu Wei, Baobao Chang, and Ming Zhou. 2017a. Gated self-matching networks for reading comprehension and question answering. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 189–198, Vancouver, Canada. Association for Computational Linguistics.

- Wang et al. (2017b) Wenhui Wang, Nan Yang, Furu Wei, Baobao Chang, and Ming Zhou. 2017b. Gated self-matching networks for reading comprehension and question answering. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 189–198, Vancouver, Canada. Association for Computational Linguistics.

- Xiong et al. (2016) Caiming Xiong, Victor Zhong, and Richard Socher. 2016. Dynamic coattention networks for question answering. CoRR, abs/1611.01604.