Parallel ADMM Algorithm with Gaussian Back Substitution for High-Dimensional Quantile Regression and Classification

Abstract

In the field of high-dimensional data analysis, modeling methods based on quantile loss function are highly regarded due to their ability to provide a comprehensive statistical perspective and effective handling of heterogeneous data. In recent years, many studies have focused on using the parallel alternating direction method of multipliers (P-ADMM) to solve high-dimensional quantile regression and classification problems. One efficient strategy is to reformulate the quantile loss function by introducing slack variables. However, this reformulation introduces a theoretical challenge: even when the regularization term is convex, the convergence of the algorithm cannot be guaranteed. To address this challenge, this paper proposes the Gaussian Back-Substitution strategy, which requires only a simple and effective correction step that can be easily integrated into existing parallel algorithm frameworks, achieving a linear convergence rate. Furthermore, this paper extends the parallel algorithm to handle some novel quantile loss classification models. Numerical simulations demonstrate that the proposed modified P-ADMM algorithm exhibits excellent performance in terms of reliability and efficiency.

Keywords: Three-block ADMM; Gaussian back substitution; Massive data; Parallel algorithm

1 Introduction

Quantile regression, pioneered by Koenker and Basset (1978), explores how a response variable depends on a set of predictors by modeling the conditional quantile as a function of these predictors. Unlike mean regression, which focuses solely on estimating the conditional mean of the response, quantile regression offers a more precise representation of the relationship between the response and the predictors. Furthermore, quantile regression exhibits superior robustness when handling datasets with heterogeneous characteristics and can effectively process data with heavy-tailed distributions, owing to its less restrictive assumptions regarding error distribution. Quantile loss is also utilized in support vector machines (SVM) for classification purposes (see Section 9.3 in Christmann and Steinwart (2008) and Proposition 1 in Wu et al. (2025b)). Compared to traditional SVM (support vector machine) in Vapnik (1995), quantile loss SVM has been shown to be less sensitive to noise around the separating hyperplane, making it more robust to resampling. For a detailed discussion, see Huang et al. (2014).

Consider a regression or classification problem with observations of the form

| (1.1) |

where the data is assumed to be a random sample from an unknown joint distribution with a probability density function. The random variable represents the “response” or “outcome”, while denotes the predictor variables (features). These features may include the original observations and/or functions derived from them. If considering the intercept term, the first column of is set to all 1. Without loss of generality, is quantitative for regression models, but equals to -1 or 1 for classification models. For a given quantile , one can obtain an estimate of quantile regression by optimizing the following objective function,

where (for ) is the check loss, and is the indicator function. Note that when , the quantile loss degenerates into hinge loss in SVM for classification. Many studies have shown that quantile loss can also be used for classification when , such as Christmann and Steinwart (2008), Huang et al. (2014), Liang et al. (2024) and Wu et al. (2025b). The estimator for quantile loss SVM can be obtained by solving the following optimization problem,

where is a penalization parameter vector, where the first element is always set to 0, and the remaining elements are constrained to be non-negative. Here, the symbol is commonly used to denote the Hadamard product, also known as the element-wise product or the entrywise product. Clearly, when , degenerates into the hinge loss commonly used in SVM, and the above expression simplifies to the form of the ordinary SVM, specifically, hinge loss plus ridge penalty term.

To accommodate high-dimensional scenarios where , it is common practice to substitute the ridge term (Hoerl and Kennard (1970)) with sparse regularization techniques, including LASSO in Tibshirani (1996), elastic net in Zou (2006), adaptive LASSO in Zou (2006), SCAD in Fan and Li (2001), and MCP in Zhang (2010). Consequently, the sparse penalized quantile regression and classification formulation becomes

| (1.2) |

Here, denotes a sparse regularization term that is separable, meaning:

This formulation allows for a distinct penalization of each component using its respective regularization parameter . For regression, is , is ; for classification, is 1; is . Optimization formula (1.2) is a highly flexible expression hat can represent numerous quantile regression and SVM classification models, including penalized quantile regression (Belloni and Chernozhukov (2011), Wang et al. (2012), Fan et al. (2012) and Gu et al. (2018)) and SVM with sparse regularizationin (Zhu et al. (2003), Wang et al. (2006), Zhang et al. (2016) and Liang et al. (2024))

The advancement of modern science and technology has made data collection increasingly effortless, leading to an explosion of variables and vast amounts of data. Due to the sheer volume of data and other factors such as privacy concerns, it has become essential to store it in a distributed manner. Consequently, designing parallel algorithms that can effectively manage these large and distributed datasets is crucial. Several parallel algorithms have been proposed to address problem (1.2), including QR-ADMM (ADMM for quantile regression) in Yu and Lin (2017), QPADM (quantile regression with parallel ADMM) in Yu et al. (2017) and Wu et al. (2025a). More recently, inspired by Guan et al. (2018), Fan et al. (2021) introduced a slack variable representation of the quantile regression problem, demonstrating that this new formulation is significantly faster than QPADM, especially when the data volume or the dimensionality is large. In addition, the slack variable representation is also used by Guan et al. (2020) to design parallel algorithms for solving SVMs with sparse regularization.

However, these slack variable representations raise a new issue, which is that convergence cannot be proven mathematically. The main reason for this is that the slack variable representation introduces two slack variables, which transform the parallel ADMM algorithms (both distributed and non-distributed) into a three-block ADMM algorithm, see Section 3.1 for detailed information. Chen et al. (2016) demonstrated that directly extending the ADMM algorithm for convex optimization with three or more separable blocks may not guarantee convergence, and they even provided an example of divergence. Therefore, although the parallel ADMM algorithms proposed by Guan et al. (2020) and Fan et al. (2021) did not exhibit non-convergence of iterative solutions in numerical experiments, there is no theoretical guarantee of convergence for the iterative solutions, even when the optimization objective is convex.

In this paper, we apply the Gaussian back substitution technique to refine the iterative steps, which allows the parallel ADMM algorithm proposed by Guan et al. (2020) and Fan et al. (2021) to achieve a linear convergence rate. This Gaussian back substitution technique is straightforward and easy to implement, requiring only a linear operation on a portion of the iterative sequences generated by their algorithm. Besides demonstrating that the algorithm in Guan et al. (2020) and Fan et al. (2021) can theoretically guarantee convergence with a simple adjustment, the main contributions of this paper are as follows:

-

1.

We suggest changing the order of variable iteration in Fan et al. (2021) such that our Gaussian back substitution technique involves only simple vector additions and subtractions, thereby eliminating the need for matrix-vector multiplication. Although this change may seem minor, it can significantly enhance computational efficiency in algorithms where both and are relatively large. More importantly, this change will not impact the linear convergence of the algorithm.

-

2.

This paper proposes some new classification models with nonconvex regularization terms based on quantile loss. Leveraging the equivalence of quantile loss in classification and regression tasks, it indicates that existing parallel ADMM algorithms for solving penalized quantile regression, as well as those proposed in this paper, can also be applied to solve these new classification models.

The remainder of this paper is organized as follows. Section 2 provides a review of relevant literature along with an introduction to preliminary knowledge. Section 3 outlines the existing QPADM-slack algorithm, incorporating adjustments to the Gaussian back-substitution process to achieve linear convergence. Section 4 proposes modifications to the variable update sequence in QPADM-slack, simplifying the correction steps during Gaussian back-substitution. Numerical experiments in Section 5 demonstrate that Gaussian back-substitution not only theoretically guarantees the linear convergence of QPADM-slack but also significantly improves computational efficiency and accuracy. Section 6 summarizes the key findings of the study and identifies avenues for future research. The proofs of the theorems and supplementary experimental results are included in the online appendix.

Notations: and represent -dimensional vectors with all elements being 0 and 1, respectively. is a matrix with all elements being 0, except for 1 on the diagonal and -1 on the superdiagonal. represents the -dimensional identity matrix. The Hadamard product is denoted by . The sign function is defined component-wise such that sign if , sign if , and sign if . signifies the element-wise operation of extracting the positive component, while denotes the element-wise absolute value function. For any vector , and denote the norm and the norm of , respectively. is used to denote the norm of under the matrix , where is a matrix.

2 Preliminaries and Literature Review

A traditional method for solving penalized quantile regression and SVMs is to transform the corresponding optimization problem into a linear program (see Zhu et al. (2003), Koenker (2005), Wu and Liu (2009), Wang et al. (2012) and Zhang et al. (2016)), which can then be solved using many existing optimization methods. Koenker and Ng (2005) proposed an interior-point method for quantile regression and penalized quantile regression. Hastie et al. (2004), Rosset and Zhu (2007) and Li and Zhu (2008) introduced an algorithm for computing the solution path of the LASSO-penalized quantile regression and SVM, building on the LARS approach (Efron et al. (2004)). However, these linear programming algorithms are known to be inefficient for high-dimensional quantile regressions and SVMs.

Although gradient descent algorithms (Beck and Teboulle (2009), Yang and Zou (2015)) and coordinate descent methods (Friedman et al. (2010), Yang and Zou (2013)) are efficient in solving penalized smooth regression and classification problems (including some smooth SVMs), they cannot be directly applied to penalized quantile regressions and SVMs due to the non-smooth nature of quantile loss. To extend the coordinate descent method to nonsmooth loss regressions, Peng and Wang (2015) integrated the majorization-minimization algorithm with the coordinate descent algorithm to develop an iterative coordinate descent algorithm (QICD) for solving sparse penalized quantile regression. Yi and Huang (2016) introduced a coordinate descent algorithm for penalized Huber regression and utilized it to approximate penalized quantile regression. However, these algorithms are not suitable for distributed storage and are not easy to implement in parallel.

An efficient algorithm for solving penalized quantile regressions and SVMs is the alternating direction method of multipliers (ADMM), which, owing to its split structure, is well-suited for parallel computing environments. In the following subsection, we will review these ADMM algorithms and their parallel versions.

2.1 ADMM

The alternating direction method of multipliers (ADMM) is an iterative optimization method designed to solve complex convex minimization problems with linear constraints. It works by breaking down the original problem into smaller, more manageable subproblems that are easier to solve. ADMM alternates between optimizing these subproblems and updating dual variables to enforce the constraints, making it particularly useful for large-scale problems and various statistical learning applications (see Boyd et al. (2010) for more details). Since the quantile loss function is nonsmooth and nondifferentiable, it is necessary to introduce the linear constraint to apply ADMM for solving problem (1.2). To better address the subproblem involving , one can introduce the equality constraint . Consequently, the constrained optimization problem can be formulated as follows,

| s.t. | (2.1) |

where . The augmented Lagrangian form of (2.1) is

| (2.2) |

where and are dual variables corresponding to the linear constraints, and is a given augmented parameter. Given , the iterative scheme of ADMM for problem (2.1) is as follows,

| (2.3) |

The entire iterative process of (2.3) is the non parallel QPADM algorithm proposed by Yu et al. (2017) and Wu et al. (2024). On the other hand, Gu et al. (2018) and Wu et al. (2025b) introduced the linearized ADMM algorithms for solving penalized quantile regression and SVM. These methods do not introduce an auxiliary variable , and instead linearize the quadratic function in the -subproblem to facilitate finding the solution for . However, their did not extend the algorithms to handle distributed storage data, meaning there is no parallel version of their ADMM algorithms. Therefore, we will focus on discussing the parallel QPADM algorithm from Yu et al. (2017) and Wu et al. (2025a).

2.2 Parallel ADMM

When designing algorithms for distributed parallel processing, the setup generally involves a central machine and several local machines. Assume the data matrix and the response vector are distributed across local machines as follows,

| (2.4) |

where , and . To accommodate the structure of distributed storage data, Boyd et al. (2010) introduced , to enable parallel processing of the data. Then, the constrained optimization problem can be formulated as

| (2.5) |

where . The augmented Lagrangian form of (2.2) is

| (2.6) |

where and are dual variables corresponding to the linear constraints. Given , the iterative scheme of parallel ADMM for problem (2.2) is as follows,

| (2.7) |

This distribution enables efficient parallelization of the algorithm. Each local machine independently solves its own subproblem (e.g., ), while the central machine consolidates the results by updating and coordinates updates among the local machines. This framework facilitates parallel processing and effective management of large datasets. In subsection 3.2 of Yu et al. (2017), it is indicated that for convex penalties , the QPADM converges to the solution of (2.2). Here, we will briefly explain the proof approach. For ease of description, we will only discuss the case where ; the case where is similar.

When , the constraint form of (2.2) is written in matrix form as

where

Note that , , and are mutually orthogonal, as are and . Therefore, , , and can be considered as a single variable, and and can also be considered as a single variable. Consequently, the QPADM degrades to a traditional two-block ADMM algorithm in the parallel case. A similar conclusion applies to non-parallel QPADM, provided that and are treated as a single block, and is treated as a separate block. The convergence of the two-block traditional ADMM algorithm has been well-studied, thus QPADM is convergent as well. According to He and Yuan (2015), it exhibits linear convergence rate.

However, this does not apply to QPADM-slack in Guan et al. (2020) and Fan et al. (2021) because the introduction of slack variables prevents it from being formulated as a two-block ADMM. We will provide a detailed discussion on this in subsequent sections, which also serves as the motivation for this paper.

3 QPADM-slack and Gaussian Back Substitution

3.1 QPADM-slack

Simulation results from Yu et al. (2017) indicated that QPADM may require a large number of iterations to converge for penalized quantile regressions. This poses challenges for their practical application to big data, particularly in distributed environments where communication costs are high. To address this issue, Fan et al. (2021) proposed using two sets of nonnegative slack variables and to represent the quantile loss. Thus, (2.2) can be written as

| (3.1) |

where and . When , the above equation is used by Guan et al. (2020) to complete the classification task.

The augmented Lagrangian form of (3.1) is

| (3.2) |

Given , the iterative scheme of parallel ADMM for problem (3.1) is as follows,

| (3.3) |

Clearly, will be updated on the central machine, while and will be updated in parallel on the sub machines. In section 3 of Fan et al. (2021), they provided closed-form solutions for the ()-subproblems, which significantly facilitated the implementation of the parallel algorithm.

The iteration process described above cannot be reduced to a two-block ADMM algorithm; it can only be reduced to a three-block ADMM algorithm. Next, we will briefly explain this point using mathematical expressions. When , the constraint form of (3.1) is written in matrix form as

| (3.4) |

where , , and are block matrices partitioned into blocks by rows, and is the corresponding column vector partitioned into blocks. For example, for , , , , , , , , and . The above six matrices cannot be divided into two sets of mutually orthogonal matrices like QPADM. Indeed, these six matrices can be partitioned into three mutually orthogonal groups in the following order: , and . The same operation can also be implemented when , that is,

| (3.5) |

Hence, and can be considered as the first variable, as the second variable, and as the third variable. Thus, QPADM-slack is actually a three-block ADMM algorithm in terms of optimization form.

Chen et al. (2016) demonstrated that directly extending the ADMM algorithm for convex optimization with three or more separable blocks may not guarantee convergence, and they provided an example of divergence. As a result, QPADM-slack does not have a theoretical guarantee of convergence even in the convex case.

3.2 Gaussian Back Substitution

He et al. (2012) pointed out that although the ADMM algorithm with three blocks cannot be theoretically proven to converge, the algorithm can be corrected for some iterative solutions through simple Gaussian back substitution, thereby making the algorithm convergent. Because the correction matrix is an upper triangular block matrix, it is called Gaussian back substitution. The technique of Gaussian back substitution was extensively applied to various statistical and machine learning domains, including but not limited to the works of Ng et al. (2013), He and Yuan (2013), He et al. (2017), and He and Yuan (2018).

Let

| (3.6) |

and . To facilitate the description of the correction process (Gaussian back substitution), we define the sequence of iterations , generated by (3.3), as . Then, the Gaussian back substitution of QPADM-slack is defined as

| (3.7) |

where . Note that is an identity matrix, and thus

it follows that

| (3.8) |

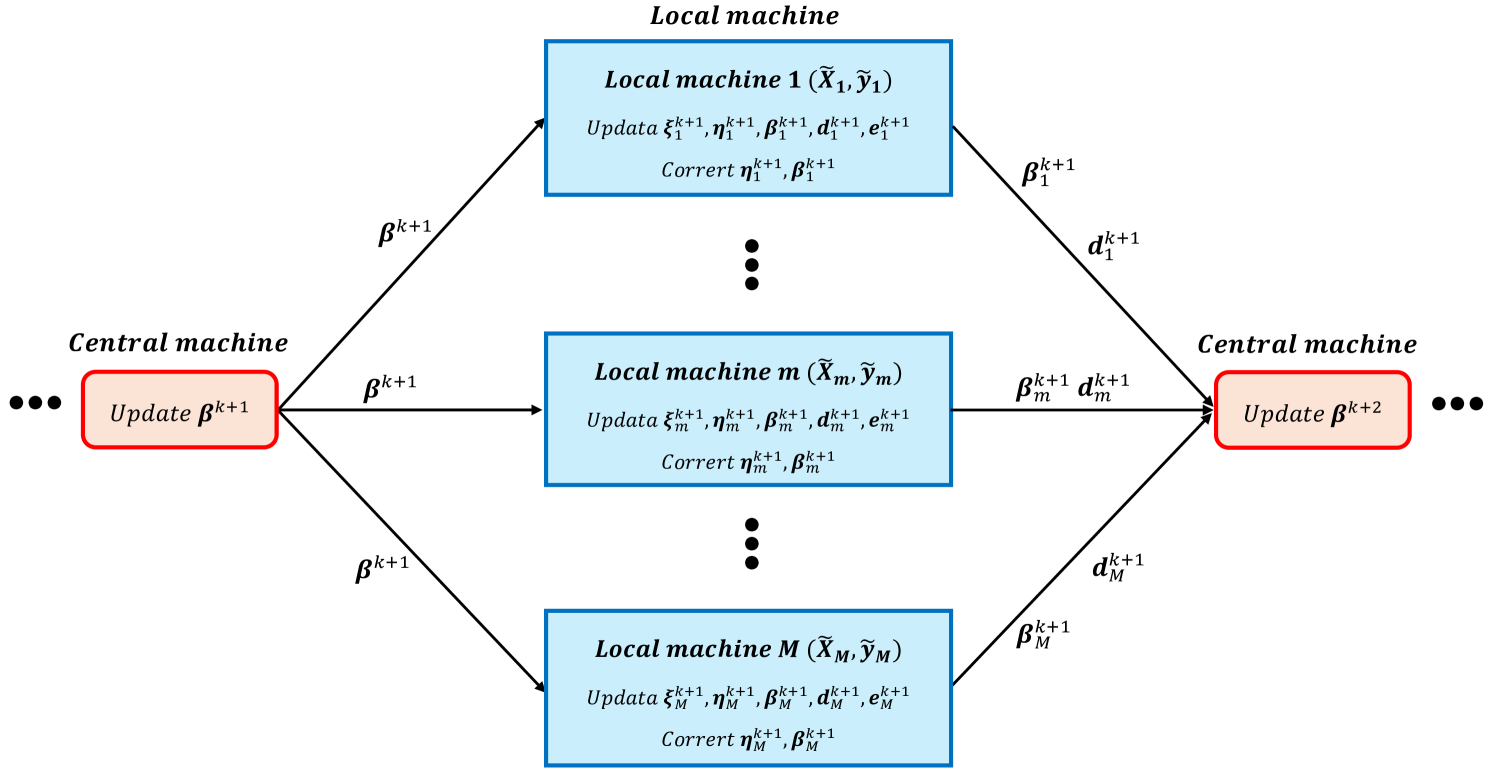

We summarize the process of QPADM-slack with Gaussian back substitution (QPADM-slack(GB)) in Algorithm 1. Its parallel algorithm diagram is shown in Figure 1. Algorithm 1 differs from QPADM-slack only in the correction steps performed in each local machine, specifically steps 3 and 4 in Local machines. This correction process involves only linear operations, with the heaviest computational load being the matrix-vector multiplication in the correction step. Next, we will establish the global convergence of QPADM-slack(GB) and its linear convergence rate. The proof of the following theorem is provided in Appendix A.

Theorem 1

Let the sequence be generated by QPADM-slack(GB).

-

1.

(Algorithm global convergence). It converges to some that belongs to , where , and is the set of optimal solutions for (3.1).

-

2.

(Linear convergence rate). For any integer , we have

(3.9) where is a positive constant and is a positive definite matrix.

Remark 1

It is clear that is a positive constant. Therefore, , which is known as a linear convergence rate. In addition, during the proof of this theorem, we demonstrated that and are monotonically nonincreasing, that is, and , see the propositions in the Appendix A.

3.3 Nonconvex Extension and Local Linear Approximation

In recent years, nonconvex penalized quantile regression has been extensively studied, both theoretically and algorithmically, see Wang et al. (2012), Fan et al. (2014), Gu et al. (2018), Fan et al. (2021), Wang and He (2024). However, there has been little research on quantile loss SVMs with nonconvex regularization terms. In this subsection, we propose the nonconvex quantile loss SVMs, defined as

| (3.10) |

When , the second inequality is always satisfied, and thus the aforementioned SVM simplifies to the nonconvex hinge SVM addressed in Zhang et al. (2016) and Guan et al. (2018). It is not difficult to see that (3.10) can be included in (1.2). In this paper, we mainly consider two popular nonconvex regularizations, SCAD penalty and MCP penalty.

In high-dimensional penalized linear regression and classification models, convex regularization terms like adaptive LASSO and elastic net ensure global optimality and computational efficiency, while non-convex regularization terms may provide better estimation and prediction performance but pose computational challenges due to the lack of global optimality. Fortunately, for quantile regression models and SVM models with nonconvex penalties, Fan et al. (2014) and Zhang et al. (2016) respectively pointed out that these problems can be uniformly solved by combining the local linear approximation (LLA, Zou and Li (2008)) method with an effective solution for the weighted penalized quantile regression and SVM.

LLA involves using the first-order Taylor expansion of the nonconvex regularization term to replace the original nonconvex regularization term, that is

| (3.11) |

where is the solution from the last iteration, and .

For SCAD, we have

| (3.12) |

For MCP, we have

| (3.13) |

Then, the nonconvex penalized quantile regressions and SVMs can be written as

| (3.14) |

By substituting equation (3.11) into equation (3.14), we can obtain the following optimized form in a weighted manner,

| (3.15) |

Note that we only need to make a small change to solve this weighted optimization form using Algorithm 1. This change only requires replacing with .

To address nonconvex penalized quantile regressions and SVMs through the LLA algorithm, it is crucial to identify a suitable initial value. Following the guidance of Zhang et al. (2016) and Gu et al. (2018), we can utilize the solution derived from in (3.14) as the initial starting point. Subsequently, the solution to (3.14) is obtained by iteratively solving a series of weighted penalized quantile regressions and SVMs. The comprehensive iterative steps of this approach are systematically outlined in Algorithm 2.

The preceding discussion reveals that resolving nonconvex penalized quantile regressions and SVMs requires multiple iterations of weighted penalized models. Theoretically, as shown by Fan et al. (2014) and Zhang et al. (2016), only two to three iterations suffice to find the Oracle solution for (3.14). In implementing Algorithm 2, we utilize the warm-start technique (Friedman et al. (2010)), initializing with , significantly reducing step 2.2’s iteration count, often leading to convergence within just a few to a dozen steps.

4 Modified QPADM-slack and Gaussian Back Substitution

The iteration order of variables in QPADM-slack is

| (4.1) |

and its Gaussian back substitution is and . We should note that the correction step for involves matrix-vector multiplication, which can impose an additional computational burden when both and are large.

4.1 Modified QPADM-slack

To address this issue, we suggest changing the variable update order of QPADM-slack in (4.1) to

| (4.2) |

We will explain the reason for adopting this order below. From the discussion in Section 3.2, it is evident that the first block among the three blocks of primal variables to be updated does not require correction. To avoid matrix operations in the correction step, should be placed in the first block because only the constraint matrix corresponding to is not composed of identity and zero matrices. During the Gaussian back substitution process, we need to compute the inverse of . To keep this inverse as simple as possible, we must place either or in the second block. Here, we choose to place in the second block in sequence. The remaining , form the third block.

Thus, with , the iterative scheme of modified QPADM-slack (M-QPADM-slack) for problem (3.1) is as follows,

| (4.3) |

where is defined as in (3.1). , , , and will be updated in parallel by each sub machine, while will be updated on the central machine. However, this update sequence will cause a new problem, that is, the updates of local sub machines become incoherent, necessitating that the central machine first completes the update of before proceeding with the update of . This will result in an additional round of communication between the central machine and the local machines, thereby reducing the efficiency of the algorithm. This issue will not occur in (3.3) because the updates of variables on its local sub machines are coherent. Fortunately, since the updates of each sub problem in (4.3) do not strictly depend on the previously updated variables, this issue can be resolved by adjusting the update positions of the dual variables and .

For the convenience of describing the correction steps, we need to define

| (4.4) |

Correspondingly,

It is obvious that the matrices and are partitioned, while is partitioned. , and correspond to the block matrices of the -th columns of , and , respectively. We also define the sequence of iterations , generated by (4.3), as . According to (3.7), it follows from

that

| (4.5) |

where . Unlike the correction step in (3.8), the correction step in (4.5) involves only simple addition and subtraction operations, without matrix-vector multiplications. As a result, the computational burden of this correction is less affected by large values of and .

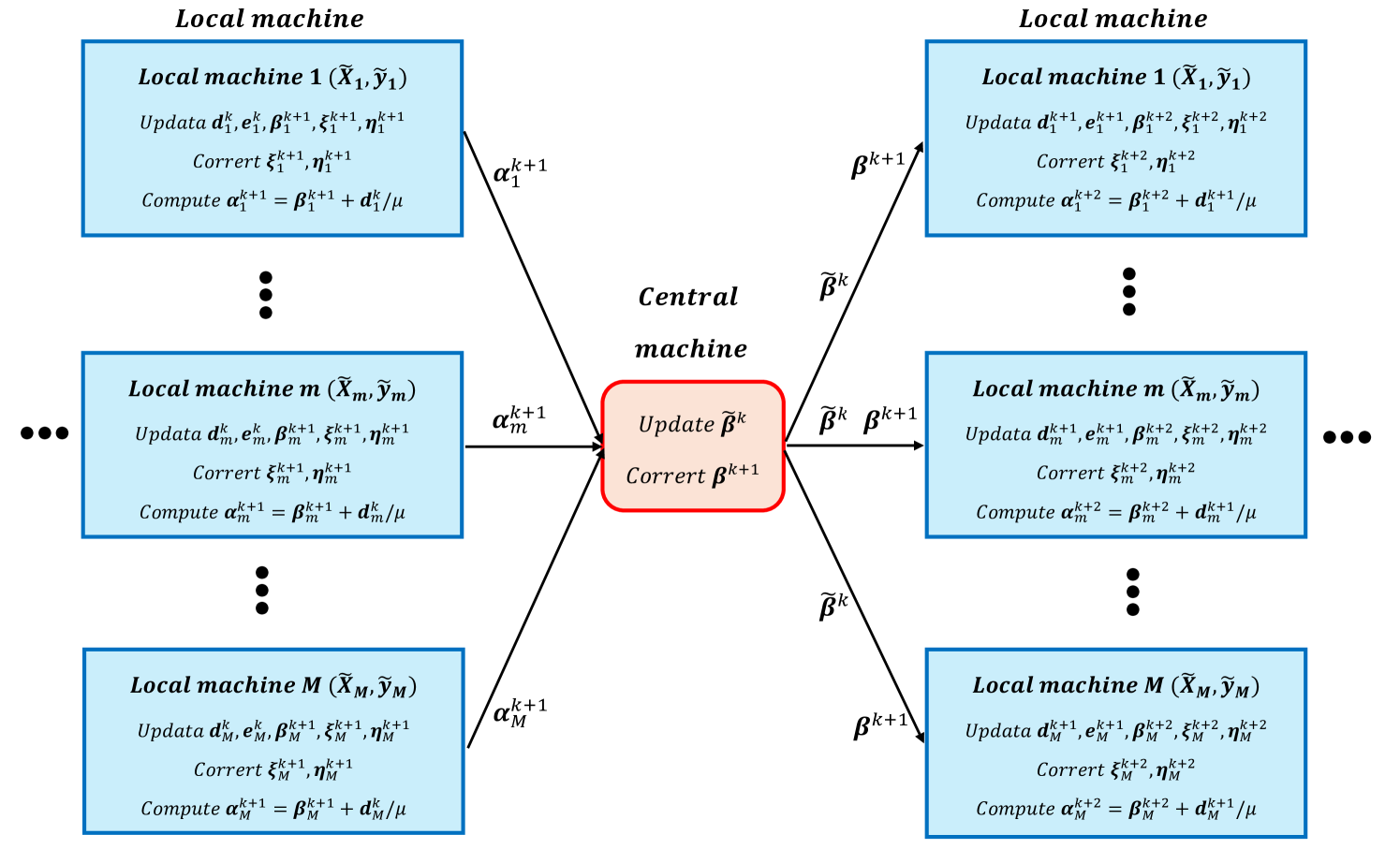

We summarize the process of M-QPADM-slack with Gaussian back substitution (M-QPADM-slack(GB)) in Algorithm 3, and its parallel algorithm diagram is shown in Figure 2. In Algorithm 3, prior to commencing the -th iteration, it is essential to first update the dual variables ( and ) from the -th iteration. This is achievable due to the sequence outlined in (4.3), where the dual variable updates are positioned at the conclusion of each iteration. Specifically, the updates for the primal variables in the -th iteration depend only on the dual variables and from the previous iteration, and not on the newly updated and . By relocating the updates of and , which are initially set to occur at the end of the -th iteration, to the start of the -th iteration, the incoherence in the sequence of iteration variables is effectively addressed. It is crucial to note that this rearrangement does not modify the iterative process delineated in (4.3) within the execution of Algorithm 3.

For some nonconvex regularization terms, M-QPADM-slack(GB) can also be embedded in Algorithm 2 to solve, simply replacing Algorithm 1 in its step 2.2 with Algorithm 3. In addition, Algorithm 3 also inherits the convergence result of Theorem 1, with and in (3.6) replaced by those in (4.4).

4.2 Details of Iteration

In this subsection, we describe the details of solving each subproblem in (4.3). In fact, our M-QPADM-slack (4.3) differs from the QPADM-slack in (3.3) only in the order of the iteration variables. Therefore, the solutions for each subproblem in (4.3) are generally the same as those used in Section 3, except for the order of the iteration variables. For completeness, we also provide detailed iteration steps.

For the update of the subproblems , and in (4.3), the minimization problem is quadratic and differentiable, allowing us to solve the subproblem by solving

| (4.6) | ||||

| (4.7) | ||||

| (4.8) |

For the first equation, Yu et al. (2017) suggested using the Woodbury matrix identity . This method is actually very practical when the size of is small because the inverse only needs to be computed once throughout the ADMM iteration.

For the subproblem of updating in (4.3), by rearranging the optimization equation and omitting some constant terms, we get

| (4.9) |

where . It is clear that the above expression represents a proximal operator, applicable to most convex regularization terms, such as LASSO and group LASSO (see Boyd et al. (2010)), elastic-net (see Parikh and Boyd (2013)), and sparse group LASSO (see Sprechmann et al. (2010)). Since the entire objective function is additive, the optimization problem can be transformed into smaller, independent subproblems during implementation.

5 Numerical Results

This section is dedicated to demonstrating the efficacy of the proposed algorithm in solving classification and regression problems. It focuses on evaluating the algorithm’s model selection capability, estimation accuracy, and computational efficiency. The algorithm, augmented with the Gaussian back substitution technique, is assessed in both non-parallel and parallel computing environments to showcase its robustness and scalability. To this end, the P-ADMM algorithm, combined with the Gaussian back substitution technique, is applied to various problems, including Penalized Quantile Regression (PQR, Fan et al. (2021)) and support vector machines (SVM) with sparse regularization (Guan et al. (2020)).

To select the optimal values for the regularization parameters , we employ the method proposed in Zhang et al. (2016) and Yu et al. (2017), which minimizes the HBIC criterion. The HBIC criterion is defined as follows:

| (5.1) |

Here, represents the specific loss function, denotes the parameter estimates obtained from the non-convex estimation, and denotes the number of non-zero coordinates in . The value of is recommended by Peng and Wang (2015) and Fan et al. (2021). By minimizing the HBIC criterion, we can effectively select the optimal values for for convex and non-convex estimation. This selection method balances model complexity and goodness of fit for optimal estimation. Moreover, reviewing the correction steps (3.8) and (4.5), an additional parameter needs to be selected. According to the proof of Theorem 1, as long as is within the interval (0, 1), it guarantees the convergence and the linear convergence rate of the two proposed algorithms. Empirically, we suggest setting , as this choice assigns a weight of 0.75 to the current iteration variable and a weight of 0.25 to the previous iteration variable. This selection places primary emphasis on the current iteration solution while also appropriately considering the previous iteration solution.

For the initial vector in the iterative algorithm, all elements are initialized to 0.01, although other values are also feasible. For all tested ADMM algorithms, the maximum number of iterations was set to 500, and the following stopping criterion was employed:

This stopping criterion ensures that the difference between the estimated coefficients in consecutive iterations does not exceed the specified threshold. All experiments were conducted on a computer equipped with an AMD Ryzen 9 7950X 16-core processor (clocked at 4.50 GHz) and 32 GB of memory, using the R programming language. For clarity, we denote QPADM-slack(GB) as the standard QRADM-slack with Gaussian back substitution (Algorithm 1), and M-QPADM-slack(GB) as the modified QRADM-slack with Gaussian back substitution (Algorithm 3).

5.1 Synthetic Data

In the first simulation, the P-ADMM algorithm with Gaussian back substitution proposed in this section is used to solve the quantile regression (-QR, Li and Zhu (2008)) problem, and its performance is compared with several state-of-the-art algorithms, including QPADM proposed by Yu et al. (2017) and QPADM-slack proposed by Fan et al. (2021). We have included the experiment on nonconvex regularization terms (SCAD and MCP) in the Appendix B.1.

Although Yu et al. (2017) and Fan et al. (2021) provide R packages for their respective algorithms, these packages are only compatible with the Mac operating system. Furthermore, the package in Yu et al. (2017) only provides the estimated coefficients, lacking information such as the number of iterations and computational time. To ensure a fair comparison, the R code for the QPADM and QPADM-slack algorithms was rewritten based on the descriptions in their respective papers.

In the simulation study of this section, the models used in the numerical experiments of Peng and Wang (2015), Yu et al. (2017), and Fan et al. (2021) were adopted. Specifically, data were generated from the heteroscedastic regression model , where . The independent variables were generated in two steps.

-

•

First, was generated from a -dimensional multivariate normal distribution , where the covariance matrix satisfies for .

-

•

Second, was set to , while for , we directly set .

As stated by Yu et al. (2017) and Fan et al. (2021), does not present when . For simplicity, in the ensuing numerical experiments pertaining to regression, the default selection for is set to 0.7. In a non-parallel environment (), this section simulates datasets of different sizes, specifically (, ) = (30,000, 1,000), (1,000, 30,000), (10,000, 30,000), and (30,000, 30,000). In a parallel environment (), datasets of sizes (, ) = (200,000, 500) and (500,000, 1,000) are simulated. For each setting, 100 independent simulations were conducted. The average results for non-parallel and parallel computations are presented in Tables 1 and 2, respectively.

| (30000,1000) | (10000,30000) | |||||||||

| P1 | P2 | AE | Ite | Time | P1 | P2 | AE | Ite | Time | |

| QPADM | 100 | 100 | 0.018(0.03) | 107.9(12.6) | 41.35(6.78) | 86 | 100 | 4.010(0.71) | 500+(0.0) | 2015.5(69.2) |

| QPADMslack | 100 | 100 | 0.058(0.06) | 56.7(6.04) | 37.56(5.27) | 84 | 100 | 4.196(1.05) | 279(28.6) | 1776.5(34.2) |

| QPADM-slack(GB) | 100 | 100 | 0.029(0.04) | 47.3(5.11) | 35.17(5.30) | 90 | 100 | 3.616(0.86) | 233(20.8) | 1031.9(23.1) |

| M-QPADM-slack(GB) | 100 | 100 | 0.021(0.03) | 31.6(4.50) | 24.42(3.08) | 97 | 100 | 3.241(0.78) | 189(16.6) | 795.6(16.3) |

| (1000,30000) | (30000,30000) | |||||||||

| P1 | P2 | AE | Ite | Time | P1 | P2 | AE | Ite | Time | |

| QPADM | 76 | 100 | 8.012(1.05) | 500+(0.0) | 2024.6(60.1) | 100 | 100 | 1.701(0.33) | 500+(0.0) | 3217.3(68.6) |

| QPADMslack | 73 | 100 | 8.324(1.22) | 243(27.8) | 1526.7(46.9) | 100 | 100 | 2.010(0.39) | 322(21.8) | 2713.6(41.7) |

| QPADM-slack(GB) | 85 | 100 | 8.107(1.09) | 213(24.3) | 928.1(20.8) | 100 | 100 | 1.929(0.36) | 299(18.8) | 1928.3(37.5) |

| M-QPADM-slack(GB) | 91 | 100 | 7.352(0.77) | 166(11.9) | 833.6(15.7) | 100 | 100 | 1.512(0.09) | 227(14.7) | 1532.6(28.1) |

-

*

The symbols in this table are defined as follows: P1 (%) represents the proportion of times is selected; P2 (%) represents the proportion of times , , , and are selected; AE denotes the absolute estimation error; Ite indicates the number of iterations; and Time (s) refers to the running time. The numbers in parentheses represent the corresponding standard deviations, and the optimal results are highlighted in bold.

The results in Table 1 reveal that the Gaussian back substitution method significantly enhances the convergence rate of QPADM-slack, as evidenced by the reduction in the number of iterations (Ite). The QPADM-slack(GB) algorithm introduces an additional correction step compared to QPADM-slack, which involves matrix-vector multiplication. Consequently, despite achieving a notably lower iteration count, QPADM-slack(GB) does not exhibit a substantial advantage in computational time (Time, in seconds) over QPADM-slack. This is because during the correction step, there are frequent matrix multiplication operations by vectors. By contrast, the modified M-QPADM-slack(GB), which adjusts the sequence of variable updates, demonstrates statistically significant improvements in both iteration count (Ite) and computational time (Time). In terms of computational precision, specifically absolute estimation error (AE), QPADM performs the best when the dimensionality is small. However, as the dimensionality increases, the situation changes. While the AE of QPADM remains better than that of QPADM-slack, it performs slightly worse compared to QPADM-slack(GB) and M-QPADM-slack(GB). Furthermore, regarding variable selection performance (P1 and P2), all methods perform well when the sample size () is larger than or slightly smaller than the dimensionality (). However, in scenarios where dimensionality significantly exceeds sample size, the Gaussian back substitution methods exhibit statistically superior performance compared to their counterparts.

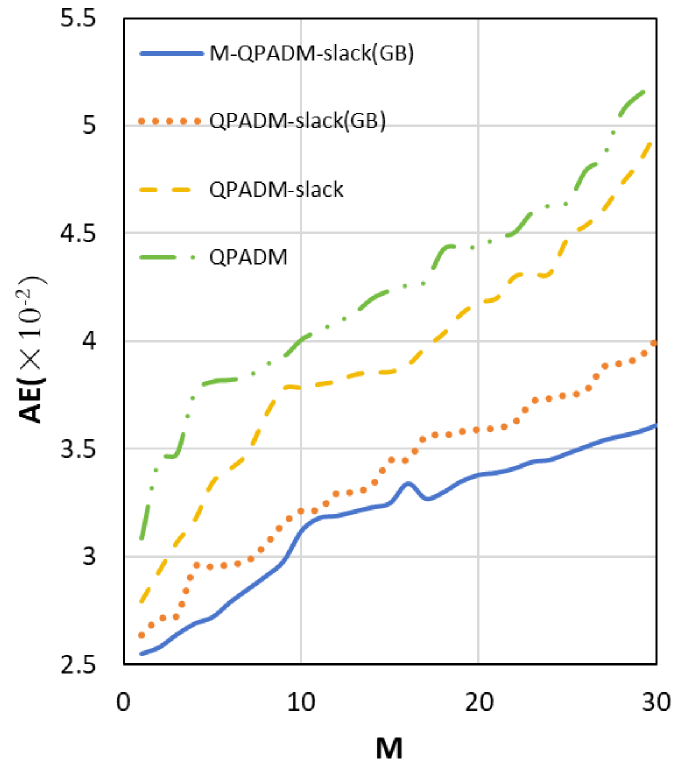

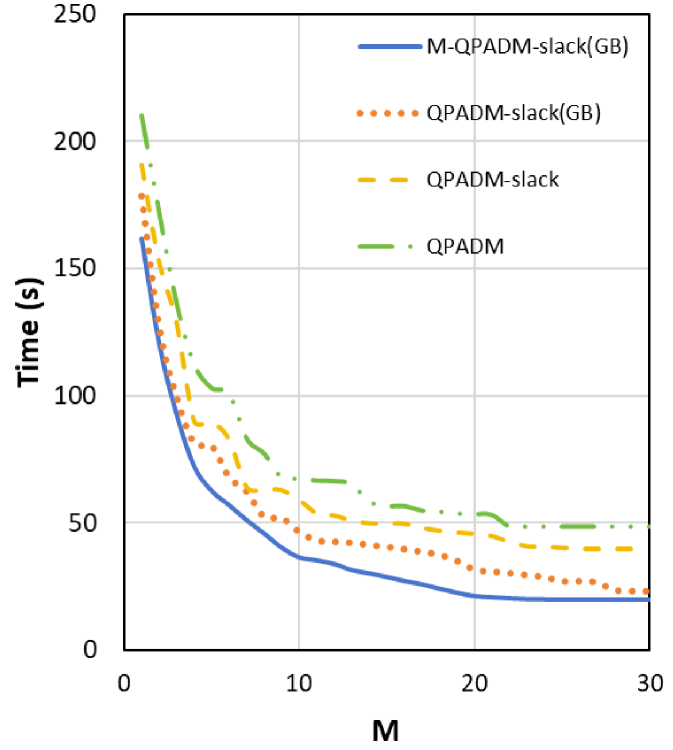

In Table 2, as the number of local sub-machines () increases, a deteriorating trend is observed in both Nonzero and AE for all variants of P-ADMM, a numerical observation consistent with the findings in the numerical experiments reported by Fan et al. (2021). Across different numbers of local sub-machines (), M-QPADM-slack(GB) consistently surpasses other P-ADMM methods by a notable margin, exhibiting superior performance in both computational accuracy and efficiency. This further supports the necessity of modifying the iteration sequence in QPADM-slack when Gaussian back substitution is incorporated.

More detailed results on estimation errors and computation times are presented in Figure 3. Concerning computation time, we noticed that after surpasses 20, the tendency of computation time to decrease with the increase of diminishes and may stabilize. This is not a result of the algorithm struggling to manage a high number of sub-machines, but rather a constraint imposed by our computer’s configuration. Using a machine with ample memory would prevent this issue from arising.

| QPADM | QPADM | |||||||

| M | Nonzero | AE | Ite | Time | Nonzero | AE | Ite | Time |

| 5 | 41.0(3.83) | 0.074(0.0009) | 359.4(27.1) | 80.1(5.82) | 28.3(2.15) | 0.042(0.0006) | 442.1(37.0) | 177.2(12.6) |

| 10 | 44.5(4.01) | 0.071(0.0009) | 372.3(28.9) | 48.2(2.98) | 29.1(2.33) | 0.049(0.0007) | 471.2(40.8) | 87.6(6.63) |

| 20 | 47.2(4.32) | 0.075(0.0011) | 405.2(32.6) | 29.7(1.64) | 32.2(2.01) | 0.052(0.0008) | 494.5(47.1) | 43.5(3.88) |

| QPADM-slack | QPADM-slack | |||||||

| M | Nonzero | AE | Ite | Time | Nonzero | AE | Ite | Time |

| 5 | 36.5(2.95) | 0.079(0.0010) | 255.6(22.0) | 61.3(3.56) | 25.2(1.92) | 0.049(0.0007) | 361.5(32.6) | 136.6(9.32) |

| 10 | 39.9(3.06) | 0.080(0.0011) | 269.1(26.1) | 35.8(2.28) | 28.9(2.09) | 0.051(0.0008) | 379.9(36.8) | 78.4(5.17) |

| 20 | 42.3(3.16) | 0.083(0.0013) | 356.8(42.2) | 22.6(1.53) | 31.6(2.23) | 0.055(0.0009) | 423.6(40.2) | 42.9(2.61) |

| QPADM-slack(GB) | QPADM-slack(GB) | |||||||

| M | Nonzero | AE | Ite | Time | Nonzero | AE | Ite | Time |

| 5 | 25.5(2.20) | 0.058(0.0006) | 195.6(12.6) | 49.5(3.03) | 24.4(1.90) | 0.033(0.0005) | 258.1(23.2) | 82.6(6.62) |

| 10 | 26.4(2.41) | 0.062(0.0008) | 203.0(13.4) | 29.8(1.63) | 25.6(1.97) | 0.037(0.0005) | 269.3(23.5) | 50.2(3.88) |

| 20 | 27.0(2.43) | 0.065(0.0009) | 211.9(13.5) | 15.7(0.92) | 26.2(2.04) | 0.039(0.0006) | 271.0(26.0) | 35.1(2.12) |

| M-QPADM-slack(GB) | M-QPADM-slack(GB) | |||||||

| M | Nonzero | AE | Ite | Time | Nonzero | AE | Ite | Time |

| 5 | 20.1(1.91) | 0.050(0.0005) | 148.5(9.31) | 39.2(2.82) | 15.21(1.44) | 0.027(0.0004) | 196.7(13.6) | 62.5(4.32) |

| 10 | 21.6(1.97) | 0.053(0.0005) | 152.1(9.92) | 22.2(1.73) | 15.38(1.57) | 0.030(0.0005) | 199.6(13.4) | 36.4(2.73) |

| 20 | 22.3(2.01) | 0.054(0.0006) | 156.6(10.8) | 12.9(0.95) | 15.52(1.64) | 0.033(0.0005) | 201.1(13.9) | 20.2(1.51) |

-

*

Since the values of all methods for metrics P1 and P2 are 100, they are not listed in Table 2. “Nonzero” indicates the number of non-zero coefficients in the estimates. The numbers in parentheses represent the corresponding standard deviations, and the optimal results are shown in bold.

5.2 Real Data Experiment

In this section, the empirical analysis focuses on classification tasks using real-world data. The dataset employed is rcv1.binary, which consists of 47,236 features, 20,242 training samples, and 677,399 testing samples. This dataset is publicly accessible at https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/binary.html#rcv1.binary.

In the subsequent experiments, the training samples are utilized to fit the model, where the data matrix has dimensions (number of observations) and (number of features). This high-dimensional setting, where , necessitates the use of regularization techniques to address potential overfitting. Specifically, an regularization term is incorporated to induce sparsity in the model, as many features are expected to be irrelevant for classification. The experiment on SVM with nonconvex regularization terms is included in Appendix B.2.

As demonstrated in Proposition 1 of Wu et al. (2025b), the optimization algorithms for piecewise linear classification and regression models are interchangeable. Consequently, both QPADM and QPADM-slack can be applied to solve the -SVM problem (Zhu et al. (2003)). Notably, the algorithm proposed in Guan et al. (2020) reformulates the piecewise linear classification loss using slack variables, making it equivalent to QPADM-slack with the quantile parameter . Therefore, QPADM-slack () is adopted as the baseline method for comparison in the following analysis.

To evaluate the algorithms, several performance metrics are defined. For the testing set, a random subsample of 10,000 observations is drawn from the 677,399 testing samples, yielding . The metrics include: (1) “Time”, which measures the computational runtime of the algorithm; (2) “Iteration”, which records the number of iterations required for convergence; (3) “Sparsity”, defined as the proportion of zero coefficients (i.e., the number of zero coefficients divided by the total number of coefficients); (4) “Train”, which quantifies the classification accuracy on the training set; and (5) “Test”, which measures the classification accuracy on the testing set. Each experimental setting is independently simulated 100 times, and the average results are reported in Table 3. Since the previous experiments demonstrated that the Gaussian back substitution technique outperforms QPADM-slack in terms of iteration count and computational time, the results for “Ite” and “Time” are omitted in Table 3.

| M-QPADM-slack(GB) | QPADM-slack(GB) | QPADM-slack | |||||||

| M | Sparsity | Train | Test | Sparsity | Train | Test | Sparsity | Train | Test |

| 2 | 90.36 | 99.42 | 97.25 | 85.98 | 95.12 | 92.98 | 83.63 | 93.37 | 91.99 |

| 4 | 90.36 | 99.23 | 97.15 | 84.60 | 94.55 | 92.02 | 81.36 | 92.25 | 91.04 |

| 6 | 90.36 | 99.15 | 97.05 | 83.59 | 93.87 | 91.46 | 80.13 | 91.76 | 90.58 |

| 8 | 90.31 | 99.02 | 96.91 | 82.10 | 93.03 | 90.93 | 79.36 | 91.04 | 89.91 |

| 10 | 90.12 | 98.89 | 96.82 | 81.36 | 92.56 | 90.07 | 77.98 | 90.76 | 89.07 |

| 12 | 90.01 | 98.77 | 96.69 | 80.75 | 91.87 | 89.38 | 77.12 | 90.01 | 88.36 |

| 14 | 89.85 | 98.65 | 96.57 | 79.36 | 91.15 | 88.64 | 76.42 | 89.72 | 87.82 |

| 16 | 89.58 | 98.51 | 96.41 | 78.69 | 90.36 | 87.39 | 73.95 | 88.36 | 87.05 |

| 18 | 89.65 | 98.43 | 96.36 | 77.65 | 89.78 | 86.27 | 72.65 | 87.77 | 86.23 |

| 20 | 89.55 | 98.32 | 96.27 | 76.25 | 88.62 | 85.33 | 71.45 | 87.65 | 85.16 |

The numerical results in Table 3 reveal that, not only in the regression numerical experiments, but also in the classification experiments, the performance of the three P-ADMM methods based on the consensus structure deteriorates as the number of local sub-machines () increases. This occurs because the consensus structure, designed to support the parallel algorithm, unavoidably introduces consensus constraints, namely auxiliary variables, as the number of local sub-machines grows. These additional auxiliary variables degrade the quality of the iterative solutions of the P-ADMM algorithm. In terms of classification accuracy (Train and Test) and variable selection (Sparsity), M-QPADM-slack(GB) performs the best, followed by QPADM-slack(GB), and lastly, QPADM-slack. This indicates that when solving sparse regularization classification problems using the slack-based P-ADMM algorithm, incorporating the Gaussian back substitution technique can enhance both the efficiency and accuracy of the algorithm.

6 Conclusions and Future Prospects

This paper introduces a Gaussian back substitution technique to adapt the parallel ADMM (P-ADMM) algorithms proposed by Guan et al. (2020) and Fan et al. (2021). Specifically, we incorporate minor linear adjustments at each iteration step, which, despite their minimal computational overhead, significantly enhance the algorithm’s convergence speed. More importantly, this technique theoretically achieves linear convergence rates and demonstrates high efficiency and robustness in practical applications.

Furthermore, this paper proposes a new iteration variable sequence for P-ADMM with slack variables. When combined with the Gaussian back-substitution technique, this approach can significantly enhance computational efficiency. The new iteration sequence is designed to better align with the Gaussian back-substitution technique. By streamlining key steps in the algorithm to involve only basic addition and subtraction operations, we avoid complex matrix-vector multiplications, thereby improving overall efficiency. This is particularly advantageous for large datasets and high-dimensional spaces, as it avoids computational load and complexity. This modification not only preserves the algorithm’s convergence properties but potentially accelerates the convergence process, offering a more efficient solution for practical applications.

Another significant contribution of this paper is the extension of quantile loss applications from traditional regression tasks to classification tasks. Given the intrinsic equivalence of quantile loss optimization forms in both classification and regression tasks, the proposed parallel algorithm can be seamlessly applied to quantile regression classification models. This extension provides a novel perspective and tool for addressing classification problems.

Despite the advancements presented in this paper, a challenges remains. To be specific, even with the incorporation of the Gaussian back-substitution technique, the quality of the solution and the algorithm’s convergence speed deteriorate as the number of local sub-machines increases. This limitation arises from the consensus-based parallel structure, which complicates resource allocation in practical applications. Consequently, developing a parallel algorithm that circumvents the consensus structure emerges as a highly promising research direction. Such an algorithm could provide more robust and efficient solutions for larger datasets and higher-dimensional feature spaces.

Acknowledgements

We express our sincere gratitude to Professor Bingsheng He for engaging in invaluable discussions with us. His insights and expertise have greatly assisted us in effectively utilizing Gaussian back substitution to correct the convergence issues of the QPADM-slack algorithm. The research of Zhimin Zhang was supported by the National Natural Science Foundation of China [Grant Numbers 12271066, 12171405], and the research of Xiaofei Wu was supported by the Scientific and Technological Research Program of Chongqing Municipal Education Commission [Grant Numbers KJQN202302003].

References

- Beck and Teboulle (2009) Beck, A., Teboulle, M., 2009. A Fast Iterative Shrinkage-Thresholding Algorithm for Linear Inverse Problems. SIAM Journal on Imaging Sciences 2, 183–202.

- Belloni and Chernozhukov (2011) Belloni, A., Chernozhukov, V., 2011. -penalized quantile regression in high-dimensional sparse models. The Annals of Statistics 39, 82 – 130.

- Boyd et al. (2010) Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J., 2010. Distributed Optimization and Statistical Learning Via the Alternating Direction Method of Multipliers. Foundation and Trends in Machine Learning 3, 1–122.

- Chen et al. (2016) Chen, C., He, B., Ye, Y., Yuan, X., 2016. The direct extension of ADMM for multi-block convex minimization problems is not necessarily convergent. Mathematical Programming 155, 57–79.

- Christmann and Steinwart (2008) Christmann, A., Steinwart, I., 2008. Support Vector Machines. Springer New York, NY.

- Efron et al. (2004) Efron, B., Hastie, T., Johnstone, I., Tibshirani, R., 2004. Least angle regression. Annals of Statistics 32, 407–499.

- Fan et al. (2012) Fan, J., Fan, Y., Barut, E., 2012. Adaptive robust variable selection. Annals of statistics 42(1), 324–351.

- Fan and Li (2001) Fan, J., Li, R., 2001. Variable Selection via Nonconcave Penalized Likelihood and its Oracle Properties. Journal of the American Statistical Association 96, 1348–1360.

- Fan et al. (2014) Fan, J., Xue, L., Zou, H., 2014. Strong Oracle Optimality of Folded Concave Penalized Estimation. The Annals of Statistics 42, 819–849.

- Fan et al. (2021) Fan, Y., Lin, N., Yin, X., 2021. Penalized Quantile Regression for Distributed Big Data Using the Slack Variable Representation. Journal of Computational and Graphical Statistics 30, 557–565.

- Friedman et al. (2010) Friedman, J.H., Hastie, T.J., Tibshirani, R., 2010. Regularization paths for generalized linear models via coordinate descent. Journal of statistical software 33 1, 1–22.

- Gu et al. (2018) Gu, Y., Fan, J., Kong, L., Ma, S., Zou, H., 2018. ADMM for High-Dimensional Sparse Penalized Quantile Regression. Technometrics 60, 319–331.

- Guan et al. (2018) Guan, L., Qiao, L., Li, D., Sun, T., Ge, K., Lu, X., 2018. An Efficient ADMM-Based Algorithm to Nonconvex Penalized Support Vector Machines. 2018 IEEE International Conference on Data Mining Workshops , 1209–1216.

- Guan et al. (2020) Guan, L., Sun, T., Qiao, L.b., Yang, Z.h., Li, D.s., Ge, K.s., Lu, X., 2020. An efficient parallel and distributed solution to nonconvex penalized linear SVMs. Frontiers of Information Technology & Electronic Engineering 21, 587–603.

- Hastie et al. (2004) Hastie, T.J., Rosset, S., Tibshirani, R., Zhu, J., 2004. The entire regularization path for the support vector machine. Journal of machine learning research 5, 1391–1415.

- He et al. (2022) He, B., Ma, F., Xu, S., Yuan, X., 2022. A rank-two relaxed parallel splitting version of the augmented Lagrangian method with step size in (0,2) for separable convex programming. Math. Comput. 92, 1633–1663.

- He et al. (2012) He, B., Tao, M., Yuan, X., 2012. Alternating direction method with gaussian back substitution for separable convex programming. SIAM Journal on Optimization 22, 313–340.

- He et al. (2017) He, B., Tao, M., Yuan, X., 2017. Convergence rate and iteration complexity on the alternating direction method of multipliers with a substitution procedure for separable convex programming. Mathematics of Operations Research 42, 662–691.

- He and Yuan (2013) He, B., Yuan, X., 2013. Linearized alternating direction method of multipliers with gaussian back substitution for separable convex programming. Numerical Algebra, Control and Optimization 3, 247–260.

- He and Yuan (2015) He, B., Yuan, X., 2015. On non-ergodic convergence rate of Douglas–Rachford alternating direction method of multipliers. Numerische Mathematik 130, 567–577.

- He and Yuan (2018) He, B., Yuan, X., 2018. A class of ADMM-based algorithms for three-block separable convex programming. Computational Optimization and Applications 70, 791 – 826.

- Hoerl and Kennard (1970) Hoerl, E., Kennard, R.W., 1970. Ridge Regression: Biased Estimation for Nonorthogonal Problems. Technometrics 12, 55–67.

- Huang et al. (2014) Huang, X., Shi, L., Suykens, J.A.K., 2014. Support vector machine classifier with pinball loss. IEEE Transactions on Pattern Analysis and Machine Intelligence 36, 984–997.

- Koenker (2005) Koenker, R., 2005. Quantile Regression. Cambridge University Press.

- Koenker and Basset (1978) Koenker, R., Basset, G., 1978. Regressions Quantiles. Econometrica 46, 33–50.

- Koenker and Ng (2005) Koenker, R.W., Ng, P.T., 2005. A frisch-newton algorithm for sparse quantile regression. Acta Mathematicae Applicatae Sinica 21, 225–236.

- Li and Zhu (2008) Li, Y., Zhu, J., 2008. L1-norm quantile regression. Journal of Computational and Graphical Statistics 17, 163 – 185.

- Liang et al. (2024) Liang, R., Wu, X., Zhang, Z., 2024. Linearized Alternating Direction Method of Multipliers for Elastic-net Support Vector Machines. Pattern Recognition 148, 110134.

- Ng et al. (2013) Ng, M.K., Yuan, X., Zhang, W., 2013. Coupled variational image decomposition and restoration model for blurred cartoon-plus-texture images with missing pixels. IEEE Transactions on Image Processing 22, 2233–2246.

- Parikh and Boyd (2013) Parikh, N., Boyd, S.P., 2013. Proximal Algorithms. Now Foundations and Trends 1, 127–239.

- Peng and Wang (2015) Peng, B., Wang, L., 2015. An Iterative Coordinate Descent Algorithm for High-Dimensional Nonconvex Penalized Quantile Regression. Journal of Computational & Graphical Statistics 24, 676–694.

- Rosset and Zhu (2007) Rosset, S., Zhu, J., 2007. Piecewise linear regularized solution paths. The Annals of Statistics 35, 1012 – 1030.

- Sprechmann et al. (2010) Sprechmann, P., Paulino, I.F.R., Sapiro, G., Eldar, Y.C., 2010. C-hilasso: A collaborative hierarchical sparse modeling framework. IEEE Transactions on Signal Processing 59, 4183–4198.

- Tibshirani (1996) Tibshirani, R., 1996. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society, Series B 58.

- Vapnik (1995) Vapnik, V.N., 1995. The Nature of Statistical Learning Theory. Springer .

- Wang and He (2024) Wang, L., He, X., 2024. Analysis of global and local optima of regularized quantile regression in high dimensions: A subgradient approach. Econometric Theory 40, 233–277.

- Wang et al. (2012) Wang, L., Wu, Y., Li, R., 2012. Quantile Regression for Analyzing Heterogeneity in Ultra-High Dimension. Journal of the American Statistical Association 107, 214–222.

- Wang et al. (2006) Wang, L., Zhu, J., Zou, H., 2006. The doubly regularized support vector machine. Statistica Sinica 16, 589–615.

- Wen et al. (2024) Wen, J., Yang, S., Zhao, D., 2024. Nonconvex Dantzig selector and its parallel computing algorithm. Statistics and Computing 34, 1573–1375.

- Wu et al. (2025a) Wu, X., Liang, R., Zhang, Z., Cui, Z., 2025a. A unified consensus-based parallel ADMM algorithm for high-dimensional regression with combined regularizations. Computational Statistics & Data Analysis 203, 108081.

- Wu et al. (2025b) Wu, X., Liang, R., Zhang, Z., Cui, Z., 2025b. Multi-block linearized alternating direction method for sparse fused Lasso modeling problems. Applied Mathematical Modelling 137, 115694.

- Wu et al. (2024) Wu, X., Ming, H., Zhang, Z., Cui, Z., 2024. Multi-block Alternating Direction Method of Multipliers for Ultrahigh Dimensional Quantile Fused Regression. Computational Statistics & Data Analysis 192, 107901.

- Wu and Liu (2009) Wu, Y., Liu, Y., 2009. Variable selection in quantile regression. Statistica Sinica 19, 801–817.

- Yang and Zou (2013) Yang, Y., Zou, H., 2013. An Efficient Algorithm for Computing the HHSVM and Its Generalizations. Journal of Computational and Graphical Statistics 22, 396–415.

- Yang and Zou (2015) Yang, Y., Zou, H., 2015. A fast unified algorithm for solving group-lasso penalize learning problems. Statistics and Computing 25, 1129–1141.

- Yi and Huang (2016) Yi, C., Huang, J., 2016. Semismooth newton coordinate descent algorithm for elastic-net penalized huber loss regression and quantile regression. Journal of Computational and Graphical Statistics 26, 547 – 557.

- Yu and Lin (2017) Yu, L., Lin, N., 2017. ADMM for Penalized Quantile Regression in Big Data. International Statistical Review 85, 494–518.

- Yu et al. (2017) Yu, L., Lin, N., Wang, L., 2017. A Parallel Algorithm for Large-Scale Nonconvex Penalized Quantile Regression. Journal of Computational and Graphical Statistics 26, 935–939.

- Zhang (2010) Zhang, C., 2010. Nearly Unbiased Variable Selection Under Minimax Concave Penalty. Annals of Statistics 38, 894–942.

- Zhang et al. (2016) Zhang, X., Wu, Y., Wang, L., Li, R., 2016. Variable selection for support vector machines in moderately high dimensions. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 78, 53–76.

- Zhu et al. (2003) Zhu, J., Rosset, S., Tibshirani, R., Hastie, T., 2003. 1-norm support vector machines, in: Thrun, S., Saul, L., Schölkopf, B. (Eds.), Advances in Neural Information Processing Systems, MIT Press.

- Zou (2006) Zou, H., 2006. The Adaptive Lasso and Its Oracle Properties. Journal of the American Statistical Association 101, 1418–1429.

- Zou and Li (2008) Zou, H., Li, R., 2008. One-step Sparse Estimates in Nonconcave Penalized Likelihood Models. The Annals of Statistics 36, 1509–1533.

Online Appendix

Appendix A Proofs of Convergence Theorems

A.1 Preliminary

A.1.1 Lemma

Lemma 1

(Lemma 2.1 in He et al. (2022)) Let be a closed convex set, and let and be convex functions. Suppose is differentiable on an open set containing , and the minimization problem

has a nonempty solution set. Then, if and only if

Lemma 2

Assume that and are both positive definite matrices, and are four arbitrary -dimensional vectors. Then the following identities hold:

-

1.

(A.1) -

2.

(A.2)

A.1.2 Four Matrices

To simplify the presentation of analysis, we define the following four matrices,

| (A.3) |

| (A.4) |

It is obvious that the matrices , , and are all partitioned, and they satisfy the condition

| (A.5) |

It is not difficult to verify that both and are positive-definite matrices when .

A.1.3 Variational Inequality Characterization

To transform the optimization objective function (3.1) into an equality optimization problem, that is, to eliminate the nonnegative constraints of and , we need to introduce the following indicator function,

If we consider the QPADM-Slack(GB) method, we define:

Thus, from (3.5), equation (3.1) can be rewritten as:

| (A.6) | ||||

| s.t. |

where the definitions of , , , and are provided in Section 3.1.

If the modified update sequence as described in Section 4.1 is employed, the variables are defined as follows:

Equation (3.1) can then be rewritten as (A.6), with the corresponding matrices , , and as detailed in Section 4.1.

Based on the variational inequality characterization discussed in Section 2.4.2 of this paper, the solution to the constrained optimization problem (A.6) is the saddle point of the following Lagrangian function:

| (A.7) |

where . However, since and are non-differentiable in this context, the variational inequality mentioned in He and Yuan (2018) cannot be directly applied to our scenario. Recently, the work in He et al. (2022) has covered the non-differentiable setting considered in this paper. As described in their Section 2.2, finding the saddle point of is equivalent to finding that satisfy the following variational inequality:

| (A.8) |

where , , and

| (A.9) |

and

| (A.10) |

It is important to note that the operator defined in (A.10) is antisymmetric , as

| (A.11) |

A.2 Proof

With the preparation above, we can now proceed to prove the convergence and convergence rate of QPADM-Slack with Gaussian backtracking (which includes both QPADM-Slack(GB) and M-QPADM-Slack(GB)). For brevity, both of these methods with Gaussian backtracking will hereafter be referred to as QPADM-Slack(GB).

The process of QPADM-Slack(GB) can be divided into two steps: The first step involves generating the predicted values , , , and ; the second step involves correcting , , and to produce , , and .

The specific iteration formulas for the first step (prediction step) are as follows:

| (A.12) |

where . Here, the updates for , , and are essentially the same as those for , , and in QPADM-Slack, or as improved in Section 4.1. For the sake of convenience in subsequent proofs, the update method for differs from that in QPADM-Slack, primarily because it does not utilize the newly generated and .

According to Lemma 1, we have

| (A.13) |

The last equation is derived from the final part of equation (A.12). By combining the four equations in (A.13), we obtain

| (A.14) |

where , and by definition, and are both components of .

The second step (correction step) involves setting , followed by the equations:

| (A.15) |

The first two rows of the above equation correspond to the correction steps for and , while the third row adjusts to align with the expression for in QPADM-Slack. The adjustment in the third row is necessary because QPADM-Slack (GB) only requires corrections for and . Hence, the correction step in (A.15) can be rewritten as

| (A.16) |

where the definition of the matrix is given in (A.3).

The above discussion indicates that the QPADM-slack (GB) algorithm can be simplified into two steps: the prediction step (see (A.12)) and the correction step (see (A.15)). The prediction and correction steps can also be expressed as:

| (A.17) | ||||

| (A.18) |

A.2.1 Global Convergence

Next, based on the predictive and corrective steps, we derive that the sequence exhibits a contraction property.

Proposition 1

Proof. By applying (see (A.5)) and the update expression in (A.18), the right-hand side of (A.17) can be rewritten as:

| (A.20) |

Using the identity in (A.1), let , , , and , we obtain:

| (A.21) |

For the last term in (A.2.1), we have:

| (A.22) | ||||

where the second-to-last equality holds due to and .

Substituting (A.2.1) and (A.22) into (A.20), we obtain:

| (A.23) | ||||

Let and in (A.23), which yields

| (A.24) | ||||

By the optimality of and the monotonicity of (see (A.11)), we have:

| (A.25) |

The conclusion in (A.19) follows directly from (A.24) and (A.25).

The contraction property mentioned above is crucial for the convergence of the sequence. The proof of sequence convergence derived from (A.19) has been extensively documented in the literature, including Theorem 2 in He and Yuan (2018) and Theorem 4.1 in He et al. (2022). For completeness, this section provides a detailed proof process here.

Theorem 2

Proof. Based on (A.19), the sequence is bounded, and

| (A.26) |

Hence, is also bounded. Let be an accumulation point of and be a subsequence converging to .

Recalling the statement in (A.14), the sequences and are associated with and respectively. According to (A.17), we have

Noting that the matrix is nonsingular (see (A.3)), this implies . Due to the continuity of and , we obtain

The above variational inequality indicates that is a solution point of (A.8). Together with (A.19), we obtain

| (A.27) |

A.2.2 Linear Convergence Rate

Here, we demonstrate that a worst-case convergence rate of in a non-ergodic sense can be established for QPADM-slack using Gaussian back substitution. To do this, we first need to prove the following proposition.

Proposition 2

Proof. First, by setting in (A.17), we obtain

| (A.29) |

Note that (A.17) is also true for . Thus, we also have

Setting in the above inequality, we obtain

| (A.30) |

By adding (A.29) and (A.30) , and utilizing the antisymmetry of in (A.11), we obtain

| (A.31) |

By adding the term

to both sides of (A.31), and using , we obtain

By substituting

| (A.32) |

(from (A.18)) into the left-hand side of the last inequality, and using , it follows that

| (A.33) |

Letting and in the identity (A.2), we have

By inserting (A.33) into the first term on the right-hand side of the last equality, we obtain

By inserting (A.32) into the second term on the right-hand side of the last equality, we get

Since is a positive-definite matrix, the assertion in (A.28) follows immediately.

Note that and are positive-definite matrices. Together with Proposition 1, there exists a constant such that

| (A.34) |

Proposition 2 and (A.32) indicate that

| (A.35) |

Now, with (A.34) and (A.35), we can establish the worst-case convergence rate in a nonergodic sense for QPADM-slack(GB).

Theorem 3

Appendix B Supplementary Experiments

Here, we will supplement numerical experiments on nonconvex regularization regression and classification problems. Note that when employing algorithms QPADM-slack(GB) and M-QPADM-slack(GB) to solve the problem of nonconvex regularizations, the LLA method (referred to as Algorithm 2) is actually used. Consequently, the number of iterations (Ite) corresponds to the total number of iterations generated within Algorithm 2. Typically, Algorithm 2 involves two ADMM iteration processes: one for solving the unweighted regularization problem and another for solving the weighted regularization problem. This counting method was also adopted by Wen et al. (2024) in their ADMM algorithms. We have implemented warm-start technique (Friedman et al. (2010)), which allows the second ADMM algorithm to converge rapidly, typically requiring only around ten to twenty iterations in most cases.

B.1 Supplementary Experiments for Section 5.1

In this section, we first supplement the experiments with nonconvex regularization terms for Table 1 (non-parallel computing environment), where the dimensions are chosen as and . The specific numerical results are provided in Table 4. The numerical results indicate that when solving the quantile regression problem with MCP and SCAD penalties, the ADMM algorithm with Gaussian back substitution still outperforms the one without it. Additionally, it is observed that the number of iterations required by algorithms QPADM-slack(GB) and M-QPADM-slack(GB) for these two nonconvex penalized quantile regressions is no more than 20 steps greater than that required for solving the quantile regression with penalty. This advantage is attributed to the warm-start technique, where the solution obtained from the penalized regularization serves as the initial solution for the weighted penalty regularization.

| MCP | ||||||||||

| P1 | P2 | AE | Ite | Time | P1 | P2 | AE | Ite | Time | |

| QPADM | 100 | 100 | 0.014(0.03) | 138.1(14.5) | 41.35(6.78) | 82 | 100 | 7.532(1.12) | 500+(0.0) | 2038.7(68.6) |

| QPADMslack | 100 | 100 | 0.051(0.05) | 68.3(6.69) | 37.56(5.27) | 89 | 100 | 7.807(1.33) | 266(28.6) | 1276.5(30.6) |

| QPADM-slack(GB) | 100 | 100 | 0.025(0.04) | 52.1(5.44) | 35.17(5.30) | 93 | 100 | 7.352(1.08) | 241(22.8) | 1101.4(27.6) |

| M-QPADM-slack(GB) | 100 | 100 | 0.017(0.03) | 39.5(4.78) | 24.42(3.08) | 96 | 100 | 7.082(0.92) | 172(16.3) | 885.7(19.4) |

| SCAD | ||||||||||

| P1 | P2 | AE | Ite | Time | P1 | P2 | AE | Ite | Time | |

| QPADM | 100 | 100 | 0.012(0.03) | 142.3(14.9) | 44.2(6.91) | 85 | 100 | 7.233(1.02) | 500+(0.0) | 2056.2(67.9) |

| QPADMslack | 100 | 100 | 0.056(0.05) | 65.2(6.22) | 34.78(5.10) | 88 | 100 | 7.886(1.38) | 251(24.6) | 1202.5(28.9) |

| QPADM-slack(GB) | 100 | 100 | 0.026(0.04) | 53.0(5.61) | 37.09(5.5) | 94 | 100 | 7.426(1.12) | 261(25.7) | 1208.6(29.6) |

| M-QPADM-slack(GB) | 100 | 100 | 0.015(0.03) | 40.9(4.91) | 26.32(3.17) | 98 | 100 | 7.021(0.89) | 175(16.7) | 892.2(20.2) |

-

*

The symbols in this table are defined as follows: P1 (%) represents the proportion of times is selected; P2 (%) represents the proportion of times , , , and are selected; AE denotes the absolute estimation error; Ite indicates the number of iterations; and Time (s) refers to the running time. The numbers in parentheses represent the corresponding standard deviations, and the optimal results are highlighted in bold.

Next, we supplement the experiments with nonconvex regularizer terms for Table 2 (parallel computing environment), where the dimensions are chosen as . The specific numerical results are provided in Table 5, which demonstrate that the Gaussian back substitution technique effectively enhances both the accuracy and efficiency of the QPADM-slack algorithm.

| QPADM | MCP | QPADM | SCAD | |||||

| M | Nonzero | AE | Ite | Time | Nonzero | AE | Ite | Time |

| 5 | 26.5(2.01) | 0.034(0.0005) | 451.3(37.5) | 180.4(15.02) | 27.1(1.99) | 0.035(0.0005) | 479.2(39.2) | 189.3(14.3) |

| 10 | 27.2(2.09) | 0.039(0.0006) | 475.7(40.1) | 98.3(6.99) | 28.5(2.07) | 0.040(0.0006) | 496.3(42.7) | 99.2(7.12) |

| 20 | 29.7(2.16) | 0.045(0.0008) | 500+(0.00) | 49.8(4.11) | 29.9(2.18) | 0.047(0.0007) | 500+(0.00) | 50.2(4.03) |

| QPADM-slack | MCP | QPADM-slack | SCAD | |||||

| M | Nonzero | AE | Ite | Time | Nonzero | AE | Ite | Time |

| 5 | 25.1(1.97) | 0.037(0.0006) | 332.5(30.0) | 141.2(9.66) | 24.7(1.95) | 0.038(0.0006) | 341.6(29.8) | 136.4(9.52) |

| 10 | 29.1(2.06) | 0.044(0.0007) | 360.1(34.5) | 75.2(5.23) | 28.9(2.11) | 0.043(0.0007) | 366.8(32.3) | 76.6(5.15) |

| 20 | 31.1(2.17) | 0.050(0.0008) | 431.1(41.7) | 42.1(2.52) | 30.1(2.23) | 0.049(0.0008) | 425.9(40.2) | 43.2(2.74) |

| QPADM-slack(GB) | MCP | QPADM-slack(GB) | SCAD | |||||

| M | Nonzero | AE | Ite | Time | Nonzero | AE | Ite | Time |

| 5 | 23.5(1.91) | 0.028(0.0005) | 290.1(24.8) | 87.2(6.53) | 23.1(1.88) | 0.031(0.0005) | 271.2(25.2) | 88.9(6.81) |

| 10 | 24.4(1.98) | 0.032(0.0005) | 263.0(22.4) | 55.1(3.99) | 24.2(1.91) | 0.035(0.0005) | 269.3(23.5) | 54.6(4.02) |

| 20 | 25.1(2.03) | 0.035(0.0006) | 291.2(27.0) | 39.5(2.35) | 25.3(2.00) | 0.038(0.0006) | 283.4(27.6) | 37.3(2.21) |

| M-QPADM-slack(GB) | MCP | M-QPADM-slack(GB) | SCAD | |||||

| M | Nonzero | AE | Ite | Time | Nonzero | AE | Ite | Time |

| 5 | 14.10(1.40) | 0.020(0.0004) | 228.1(14.9) | 71.1(4.90) | 14.05(1.37) | 0.022(0.0004) | 215.3(15.1) | 70.5(4.84) |

| 10 | 14.5(1.47) | 0.028(0.0005) | 232.2(17.83) | 40.1(2.73) | 14.77(1.48) | 0.027(0.0005) | 219.6(16.2) | 39.2(2.77) |

| 20 | 15.3(1.51) | 0.034(0.0005) | 230.7(13.8) | 22.0(1.55) | 15.01(1.54) | 0.031(0.0005) | 221.3(14.1) | 21.3(1.59) |

-

*

Since the values of all methods for metrics P1 and P2 are 100, they are not listed in Table 5. “Nonzero” indicates the number of non-zero coefficients in the estimates. The numbers in parentheses represent the corresponding standard deviations, and the optimal results are shown in bold.

B.2 Supplementary Experiments for Section 5.2

In this section, we provide additional experiments involving nonconvex regularization terms for the data presented in Table 6 and Table 7. All experimental settings remain consistent with those described in Section 5.2. The numerical results in both tables demonstrate that incorporating Gaussian back substitution steps into the QPADM algorithm significantly enhances the sparsity and accuracy of nonconvex SVM classifiers.

| M-QPADM-slack(GB) | QPADM-slack(GB) | QPADM-slack | |||||||

| M | Sparsity | Train | Test | Sparsity | Train | Test | Sparsity | Train | Test |

| 2 | 92.00 | 99.80 | 97.80 | 87.50 | 95.50 | 93.50 | 85.00 | 94.00 | 92.50 |

| 4 | 92.00 | 99.60 | 97.50 | 85.50 | 94.80 | 92.50 | 82.00 | 93.00 | 91.50 |

| 6 | 92.00 | 99.50 | 97.40 | 84.50 | 94.00 | 91.60 | 81.00 | 92.00 | 90.60 |

| 8 | 91.80 | 99.20 | 97.10 | 83.00 | 93.20 | 91.00 | 80.00 | 91.20 | 90.00 |

| 10 | 91.60 | 99.00 | 96.90 | 82.00 | 92.80 | 90.20 | 79.00 | 90.80 | 89.20 |

| 12 | 91.50 | 98.80 | 96.70 | 81.50 | 92.00 | 89.50 | 77.50 | 90.00 | 88.50 |

| 14 | 91.40 | 98.70 | 96.60 | 80.50 | 91.30 | 88.70 | 76.50 | 89.80 | 87.80 |

| 16 | 91.20 | 98.50 | 96.40 | 79.50 | 90.50 | 87.50 | 74.50 | 88.50 | 87.20 |

| 18 | 91.10 | 98.40 | 96.30 | 78.50 | 89.80 | 86.50 | 73.50 | 87.80 | 86.30 |

| 20 | 91.00 | 98.30 | 96.20 | 77.50 | 88.80 | 85.50 | 72.50 | 87.70 | 85.20 |

| M-QPADM-slack(GB) | QPADM-slack(GB) | QPADM-slack | |||||||

| M | Sparsity | Train | Test | Sparsity | Train | Test | Sparsity | Train | Test |

| 2 | 92.20 | 99.90 | 97.90 | 87.30 | 95.40 | 93.40 | 84.80 | 93.80 | 92.40 |

| 4 | 92.10 | 99.70 | 97.60 | 85.30 | 94.70 | 92.40 | 81.80 | 92.90 | 91.40 |

| 6 | 92.00 | 99.60 | 97.50 | 84.30 | 93.90 | 91.50 | 80.80 | 91.90 | 90.50 |

| 8 | 91.90 | 99.30 | 97.20 | 82.80 | 93.10 | 90.90 | 79.80 | 91.10 | 89.90 |

| 10 | 91.70 | 99.10 | 97.00 | 81.80 | 92.70 | 90.10 | 78.80 | 90.70 | 89.10 |

| 12 | 91.60 | 98.90 | 96.80 | 81.30 | 91.90 | 89.40 | 77.30 | 89.90 | 88.40 |

| 14 | 91.50 | 98.80 | 96.70 | 80.30 | 91.20 | 88.60 | 76.30 | 89.70 | 87.70 |

| 16 | 91.30 | 98.60 | 96.50 | 79.30 | 90.40 | 87.40 | 74.30 | 88.40 | 87.10 |

| 18 | 91.20 | 98.50 | 96.40 | 78.30 | 89.70 | 86.40 | 73.30 | 87.70 | 86.20 |

| 20 | 91.10 | 98.40 | 96.30 | 77.30 | 88.70 | 85.40 | 72.30 | 87.60 | 85.10 |